
Red Hat GFS 5.2.1

Administrator’s Guide


Red Hat GFS 5.2.1: Administrator’s Guide

Copyright © 2004 by Red Hat, Inc.

Red Hat, Inc.

1801 Varsity Drive

Raleigh NC 27606-2072 USA

Phone: +1 919 754 3700

Phone: 888 733 4281

Fax: +1 919 754 3701

PO Box 13588

Research Triangle Park NC 27709 USA

Red Hat GFS Administrator’s Guide(EN)-5.2.1-Print-RHI (2004-01-15T12:12-0400)

Copyright © 2004 by Red Hat, Inc. This material may be distributed only subject to the terms and conditions set forth in the Open Publication License, V1.0 or later (the latest version is presently available at http://www.opencontent.org/openpub/).

Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder.

Distribution of the work or derivative of the work in any standard (paper) book form for commercial purposes is prohibited unless prior permission is obtained from the copyright holder.

Red Hat, Red Hat Network, the Red Hat "Shadow Man" logo, RPM, Maximum RPM, the RPM logo, Linux Library, PowerTools, Linux Undercover, RHmember, RHmember More, Rough Cuts, Rawhide and all Red Hat-based trademarks and logos are trademarks or registered trademarks of Red Hat, Inc. in the United States and other countries.

Linux is a registered trademark of Linus Torvalds.

Motif and UNIX are registered trademarks of The Open Group.

XFree86 is a trademark of The XFree86 Project, Inc, and is pending registration.

Intel and Pentium are registered trademarks of Intel Corporation. Itanium and Celeron are trademarks of Intel Corporation.

AMD, Opteron, Athlon, Duron, and K6 are registered trademarks of Advanced Micro Devices, Inc.

Netscape is a registered trademark of Netscape Communications Corporation in the United States and other countries.

Java and Swing are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. or other countries.

Oracle is a registered trademark, and Oracle8i, Oracle9i, and interMedia are trademarks or registered trademarks of Oracle Corporation.

Microsoft and Windows are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

SSH and Secure Shell are trademarks of SSH Communications Security, Inc.

FireWire is a trademark of Apple Computer Corporation.

IBM, AS/400, OS/400, RS/6000, S/390, and zSeries are registered trademarks of International Business Machines Corporation. eServer, iSeries, and pSeries are trademarks of International Business Machines Corporation.

All other trademarks and copyrights referred to are the property of their respective owners.

The GPG fingerprint of the security@redhat.com key is:

CA 20 86 86 2B D6 9D FC 65 F6 EC C4 21 91 80 CD DB 42 A6 0E

Table of Contents

Introduction
1. Audience
2. Document Conventions
3. More to Come
3.1. Send in Your Feedback
4. Sign Up for Support
5. Recommended References
1. GFS Overview
1.1. New and Changed Features
1.2. Performance, Scalability, and Economy
1.2.1. Superior Performance and Scalability
1.2.2. Performance, Scalability, Moderate Price
1.2.3. Economy and Performance
1.3. GFS Functions
1.3.1. Cluster Volume Management
1.3.2. Lock Management
1.3.3. Cluster Management, Fencing, and Recovery
1.3.4. Cluster Configuration Management
1.4. GFS Software Subsystems
1.5. Before Configuring GFS
2. System Requirements
2.1. Platform Requirements
2.2. TCP/IP Network
2.3. Fibre Channel Storage Network
2.4. Fibre Channel Storage Devices
2.5. Network Power Switches
2.6. Console Access
2.7. I/O Fencing
3. Installing System Software
3.1. Prerequisite Tasks
3.1.1. Net::Telnet Perl Module
3.1.2. Clock Synchronization Software
3.1.3. Stunnel Utility
3.2. Installation Tasks
3.2.1. Installing a Linux Kernel
3.2.2. Installing A GFS RPM
3.2.3. Loading the GFS Kernel Modules
4. Initial Configuration
4.1. Prerequisite Tasks
4.2. Initial Configuration Tasks
4.2.1. Setting Up Logical Devices
4.2.2. Setting Up and Starting the Cluster Configuration System
4.2.3. Starting Clustering and Locking Systems
4.2.4. Setting Up and Mounting File Systems

5. Using the Pool Volume Manager
5.1. Overview of GFS Pool Volume Manager
5.2. Synopsis of Pool Management Commands
5.2.1. pool_tool
5.2.2. pool_assemble
5.2.3. pool_info
5.2.4. pool_mp
5.3. Scanning Block Devices
5.3.1. Usage
5.3.2. Example
5.4. Creating a Configuration File for a New Volume
5.4.1. Examples
5.5. Creating a Pool Volume
5.5.1. Usage
5.5.2. Example
5.5.3. Comments
5.6. Activating/Deactivating a Pool Volume
5.6.1. Usage
5.6.2. Examples
5.6.3. Comments
5.7. Displaying Pool Configuration Information
5.7.1. Usage
5.7.2. Example
5.8. Growing a Pool Volume
5.8.1. Usage
5.8.2. Example procedure
5.9. Erasing a Pool Volume
5.9.1. Usage
5.9.2. Example
5.9.3. Comments
5.10. Renaming a Pool Volume
5.10.1. Usage
5.10.2. Example
5.11. Changing a Pool Volume Minor Number
5.11.1. Usage
5.11.2. Example
5.11.3. Comments
5.12. Displaying Pool Volume Information
5.12.1. Usage
5.12.2. Examples
5.13. Using Pool Volume Statistics
5.13.1. Usage
5.13.2. Examples
5.14. Adjusting Pool Volume Multipathing
5.14.1. Usage
5.14.2. Examples
6. Creating the Cluster Configuration System Files
6.1. Prerequisite Tasks
6.2. CCS File Creation Tasks
6.3. Dual Power and Multipath FC Fencing Considerations
6.4. GNBD Multipath Considerations
6.5. Adding the license.ccs File
6.6. Creating the cluster.ccs File
6.7. Creating the fence.ccs File
6.8. Creating the nodes.ccs File

7. Using the Cluster Configuration System
7.1. Creating a CCS Archive
7.1.1. Usage
7.1.2. Example
7.1.3. Comments
7.2. Starting CCS in the Cluster
7.2.1. Usage
7.2.2. Example
7.2.3. Comments
7.3. Using Other CCS Administrative Options
7.3.1. Extracting Files from a CCS Archive
7.3.2. Listing Files in a CCS Archive
7.3.3. Comparing CCS Configuration Files to a CCS Archive
7.4. Changing CCS Configuration Files
7.4.1. Example Procedure
7.5. Alternative Methods to Using a CCA Device
7.5.1. CCA File and Server
7.5.2. Local CCA Files
7.6. Combining CCS Methods
8. Using Clustering and Locking Systems
8.1. Locking System Overview
8.2. LOCK_GULM
8.2.1. Selection of LOCK_GULM Servers
8.2.2. Number of LOCK_GULM Servers
8.2.3. Starting LOCK_GULM Servers
8.2.4. Fencing and LOCK_GULM
8.2.5. Shutting Down a LOCK_GULM Server
8.3. LOCK_NOLOCK
9. Managing GFS
9.1. Making a File System
9.1.1. Usage
9.1.2. Examples
9.1.3. Complete Options
9.2. Mounting a File System
9.2.1. Usage
9.2.2. Example
9.2.3. Complete Usage
9.3. Unmounting a File System
9.3.1. Usage
9.4. GFS Quota Management
9.4.1. Setting Quotas
9.4.2. Displaying Quota Limits and Usage
9.4.3. Synchronizing Quotas
9.4.4. Disabling/Enabling Quota Enforcement
9.4.5. Disabling/Enabling Quota Accounting
9.5. Growing a File System
9.5.1. Usage
9.5.2. Comments
9.5.3. Examples
9.5.4. Complete Usage
9.6. Adding Journals to a File System
9.6.1. Usage
9.6.2. Comments
9.6.3. Examples
9.6.4. Complete Usage

9.7. Direct I/O
9.7.1. O_DIRECT
9.7.2. GFS File Attribute
9.7.3. GFS Directory Attribute
9.8. Data Journaling
9.8.1. Usage
9.8.2. Examples
9.9. Configuring atime Updates
9.9.1. Mount with noatime
9.9.2. Tune GFS atime Quantum
9.10. Suspending Activity on a File System
9.10.1. Usage
9.10.2. Examples
9.11. Displaying Extended GFS Information and Statistics
9.11.1. Usage
9.11.2. Examples
9.12. Repairing a File System
9.12.1. Usage
9.12.2. Example
9.13. Context-Dependent Path Names
9.13.1. Usage
9.13.2. Example
9.14. Shutting Down a GFS Cluster
9.15. Restarting a GFS Cluster
10. Using the Fencing System
10.1. How the Fencing System Works
10.2. Fencing Methods
10.2.1. APC MasterSwitch
10.2.2. WTI Network Power Switch
10.2.3. Brocade FC Switch
10.2.4. Vixel FC Switch
10.2.5. HP RILOE Card
10.2.6. GNBD
10.2.7. Fence Notify GNBD
10.2.8. Manual
11. Using GNBD
11.1. Considerations for Using GNBD Multipath
11.1.1. Linux Page Caching
11.1.2. Lock Server Startup
11.1.3. CCS File Location
11.1.4. Fencing GNBD Server Nodes
11.2. GNBD Driver and Command Usage
11.2.1. Exporting a GNBD from a Server
11.2.2. Importing a GNBD on a Client
12. Software License
12.1. Overview
12.2. Installing a License
12.3. Upgrading and Replacing a License
12.3.1. Replacing a License File: Not Changing the Lock Manager
12.3.2. Replacing a License File: Changing the Lock Manager
12.4. License FAQ
12.5. Solving License Problems

A. Upgrading GFS ..........................................................................................................................123

A.1. Overview of Differences between GFS 5.1.x and GFS 5.2.x ....................................... 123

A.1.1. Configuration Information .............................................................................123

A.1.2. GFS License................................................................................................... 124

A.1.3. GFS LockProto ..............................................................................................124

A.1.4. GFS LockTable .............................................................................................. 124

A.1.5. GFS Mount Options....................................................................................... 124

A.1.6. Pool ................................................................................................................ 125

A.1.7. Fencing Agents .............................................................................................. 125

A.2. Upgrade Procedure........................................................................................................125

B. Basic GFS Examples ..................................................................................................................131

B.1. LOCK_GULM, RLM Embedded .................................................................................131

B.1.1. Key Characteristics

B.1.2. Prerequisites

B.1.3. Setup Process

B.2. LOCK_GULM, RLM External

B.2.1. Key Characteristics

B.2.2. Prerequisites

B.2.3. Setup Process

B.3. LOCK_GULM, SLM Embedded

B.3.1. Key Characteristics

B.3.2. Prerequisites

B.3.3. Setup Process

B.4. LOCK_GULM, SLM External

B.4.1. Key Characteristics

B.4.2. Prerequisites

B.4.3. Setup Process

B.5. LOCK_GULM, SLM External, and GNBD

B.5.1. Key Characteristics

B.5.2. Prerequisites

B.5.3. Setup Process

B.6. LOCK_NOLOCK

B.6.1. Key Characteristics

B.6.2. Prerequisites

B.6.3. Setup Process

Index

Colophon


Introduction

Welcome to the Red Hat GFS Administrator’s Guide. This book provides information about installing, configuring, and maintaining GFS (Global File System). The document contains procedures for commonly performed tasks, reference information, and examples of complex operations and tested GFS configurations.

HTML and PDF versions of all the official Red Hat Enterprise Linux manuals and release notes are available online at http://www.redhat.com/docs/.

1. Audience

This book is intended primarily for Linux system administrators who are familiar with the following activities:

• Linux system administration procedures, including kernel configuration

• Installation and configuration of shared storage networks, such as Fibre Channel SANs

2. Document Conventions

When you read this manual, certain words are represented in different fonts, typefaces, sizes, and weights. This highlighting is systematic; different words are represented in the same style to indicate their inclusion in a specific category. The types of words that are represented this way include the following:

command

Linux commands (and other operating system commands, when used) are represented this way. This style should indicate to you that you can type the word or phrase on the command line and press [Enter] to invoke a command. Sometimes a command contains words that would be displayed in a different style on their own (such as file names). In these cases, they are considered to be part of the command, so the entire phrase is displayed as a command. For example:

Use the cat testfile command to view the contents of a file, named testfile, in the current working directory.

file name

File names, directory names, paths, and RPM package names are represented this way. This style should indicate that a particular file or directory exists by that name on your system. Examples:

The .bashrc file in your home directory contains bash shell definitions and aliases for your own use.

The /etc/fstab file contains information about different system devices and file systems.

Install the webalizer RPM if you want to use a Web server log file analysis program.

application

This style indicates that the program is an end-user application (as opposed to system software). For example:

Use Mozilla to browse the Web.


[key]

A key on the keyboard is shown in this style. For example:

To use [Tab] completion, type in a character and then press the [Tab] key. Your terminal displays the list of files in the directory that start with that letter.

[key]-[combination]

A combination of keystrokes is represented in this way. For example:

The [Ctrl]-[Alt]-[Backspace] key combination exits your graphical session and returns you to the graphical login screen or the console.

text found on a GUI interface

A title, word, or phrase found on a GUI interface screen or window is shown in this style. Text shown in this style is being used to identify a particular GUI screen or an element on a GUI screen (such as text associated with a checkbox or field). Example:

Select the Require Password checkbox if you would like your screensaver to require a password before stopping.

top level of a menu on a GUI screen or window

A word in this style indicates that the word is the top level of a pulldown menu. If you click on the word on the GUI screen, the rest of the menu should appear. For example:

Under File on a GNOME terminal, the New Tab option allows you to open multiple shell prompts in the same window.

If you need to type in a sequence of commands from a GUI menu, they are shown like the following example:

Go to Main Menu Button (on the Panel) => Programming => Emacs to start the Emacs text editor.

button on a GUI screen or window

This style indicates that the text can be found on a clickable button on a GUI screen. For example:

Click on the Back button to return to the webpage you last viewed.

computer output

Text in this style indicates text displayed to a shell prompt such as error messages and responses to commands. For example:

The ls command displays the contents of a directory. For example:

Desktop about.html logs paulwesterberg.png

Mail backupfiles mail reports

The output returned in response to the command (in this case, the contents of the directory) is shown in this style.

prompt

A prompt, which is a computer’s way of signifying that it is ready for you to input something, is shown in this style. Examples:

$

#

[stephen@maturin stephen]$

leopard login:


user input

Text that the user has to type, either on the command line, or into a text box on a GUI screen, is displayed in this style. In the following example, text is displayed in this style:

To boot your system into the text based installation program, you must type in the text command at the boot: prompt.

replaceable

Text used for examples which is meant to be replaced with data provided by the user is displayed in this style. In the following example, version-number is displayed in this style:

The directory for the kernel source is /usr/src/version-number/, where version-number is the version of the kernel installed on this system.

Additionally, we use several different strategies to draw your attention to certain pieces of information. In order of how critical the information is to your system, these items are marked as a note, tip, important, caution, or warning. For example:

Note

Remember that Linux is case sensitive. In other words, a rose is not a ROSE is not a rOsE.

Tip

The directory /usr/share/doc/ contains additional documentation for packages installed on your system.

Important

If you modify the DHCP configuration file, the changes will not take effect until you restart the DHCP daemon.

Caution

Do not perform routine tasks as root; use a regular user account unless you need to use the root account for system administration tasks.

Warning

Be careful to remove only the necessary partitions. Removing other partitions could result in data loss or a corrupted system environment.


3. More to Come

The Red Hat GFS Administrator’s Guide is part of Red Hat’s growing commitment to provide useful and timely support to Red Hat Enterprise Linux users.

3.1. Send in Your Feedback

If you spot a typo in the Red Hat GFS Administrator’s Guide, or if you have thought of a way to make this manual better, we would love to hear from you! Please submit a report in Bugzilla (http://www.redhat.com/bugzilla) against the component rh-gfsg.

Be sure to mention the manual’s identifier:

Red Hat GFS Administrator’s Guide(EN)-5.2.1-Print-RHI (2004-01-15T12:12-0400)

If you mention this manual’s identifier, we will know exactly which version of the guide you have.

If you have a suggestion for improving the documentation, try to be as specific as possible. If you have found an error, please include the section number and some of the surrounding text so we can find it easily.

4. Sign Up for Support

If you have a variant of Red Hat GFS 5.2.1, please remember to sign up for the benefits you are entitled to as a Red Hat customer.

Registration enables access to the Red Hat Services you have purchased, such as technical support and Red Hat Network. To register your product, go to:

http://www.redhat.com/apps/activate/

Note

You must activate your product before attempting to connect to Red Hat Network. If your product has not been activated, Red Hat Network rejects registration to channels to which the system is not entitled.

Good luck, and thank you for choosing Red Hat GFS!

The Red Hat Documentation Team

5. Recommended References

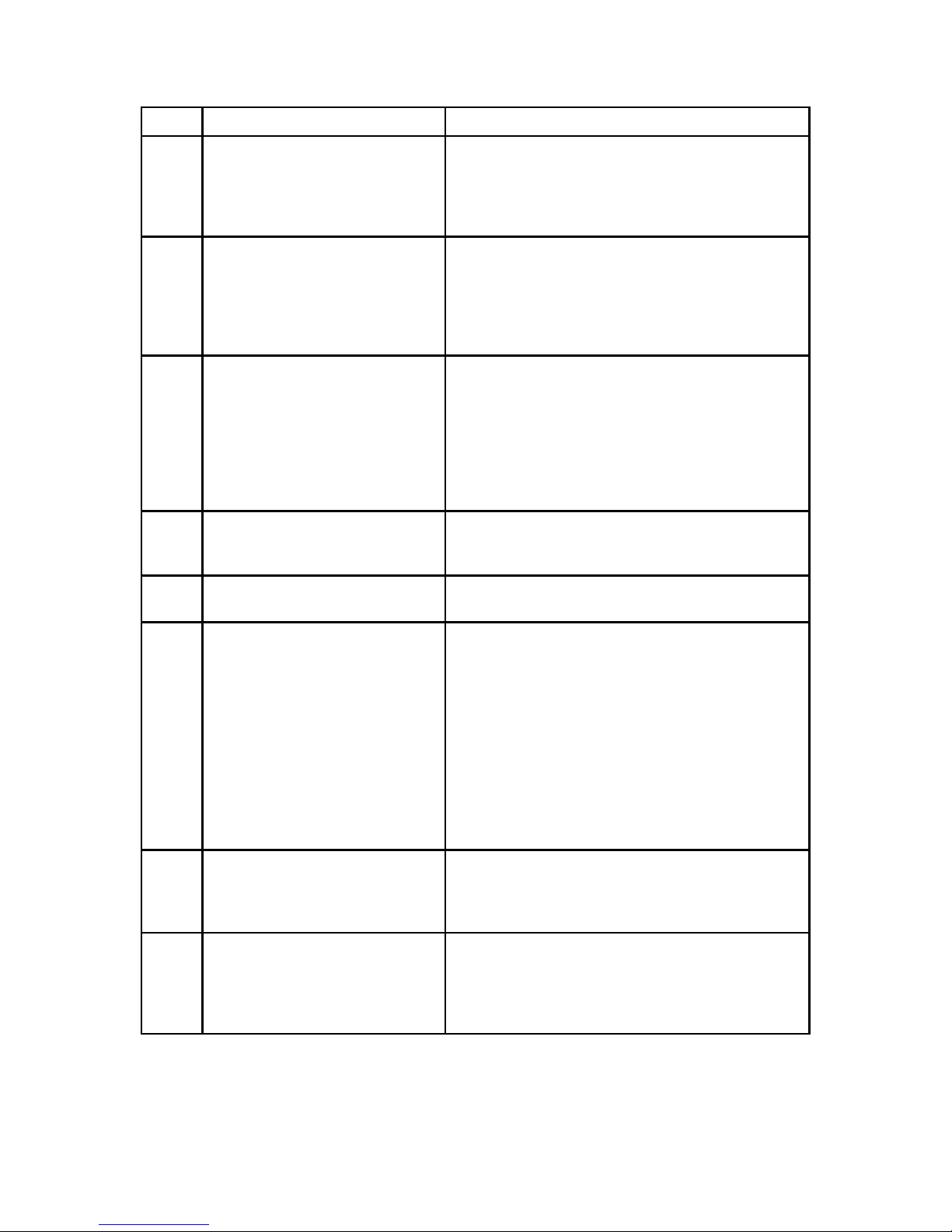

For additional references about related topics, refer to the following table:


| Topic | Reference | Comment |
|---|---|---|
| Shared Data Clustering and File Systems | Shared Data Clusters by Dilip M. Ranade. Wiley, 2002. | Provides detailed technical information on cluster file system and cluster volume manager design. |
| Storage Area Networks (SANs) | Designing Storage Area Networks: A Practical Reference for Implementing Fibre Channel and IP SANs, Second Edition by Tom Clark. Addison-Wesley, 2003. | Provides a concise summary of Fibre Channel and IP SAN technology. |
| | Building SANs with Brocade Fabric Switches by C. Beauchamp, J. Judd, and B. Keo. Syngress, 2001. | Best practices for building Fibre Channel SANs based on the Brocade family of switches, including core-edge topology for large SAN fabrics. |
| | Building Storage Networks, Second Edition by Marc Farley. Osborne/McGraw-Hill, 2001. | Provides a comprehensive overview reference on storage networking technologies. |
| Applications and High Availability | Blueprints for High Availability: Designing Resilient Distributed Systems by E. Marcus and H. Stern. Wiley, 2000. | Provides a summary of best practices in high availability. |

Table 1. Recommended References Table


Chapter 1.

GFS Overview

GFS is a cluster file system that provides data sharing among Linux-based computers. GFS provides a single, consistent view of the file system name space across all nodes in a cluster. It allows applications to install and run without much knowledge of the underlying storage infrastructure. GFS is fully compliant with the IEEE POSIX interface, allowing applications to perform file operations as if they were running on a local file system. Also, GFS provides features that are typically required in enterprise environments, such as quotas, multiple journals, and multipath support.

GFS provides a versatile method of networking your storage according to the performance, scalability, and economic needs of your storage environment.

This chapter provides some very basic, abbreviated information as background to help you understand GFS. It contains the following sections:

• Section 1.1 New and Changed Features

• Section 1.2 Performance, Scalability, and Economy

• Section 1.3 GFS Functions

• Section 1.4 GFS Software Subsystems

• Section 1.5 Before Configuring GFS

1.1. New and Changed Features

New for this release is GNBD (Global Network Block Device) multipath. GNBD multipath allows you to configure multiple GNBD server nodes (nodes that export GNBDs to GFS nodes) with redundant paths between the GNBD server nodes and storage devices. The GNBD servers, in turn, present multiple storage paths to GFS nodes via redundant GNBDs. With GNBD multipath, if a GNBD server node becomes unavailable, another GNBD server node can provide GFS nodes with access to storage devices.

With GNBD multipath, you need to take additional factors into account; for example, Linux page caching must be disabled on GNBD servers in a GNBD multipath configuration. For information about CCS files with GNBD multipath, refer to Section 6.4 GNBD Multipath Considerations. For information about using GNBD with GNBD multipath, refer to Section 11.1 Considerations for Using GNBD Multipath.

For upgrade instructions, refer to Appendix A Upgrading GFS.

1.2. Performance, Scalability, and Economy

You can deploy GFS in a variety of configurations to suit your needs for performance, scalability, and economy. For superior performance and scalability, you can deploy GFS in a cluster that is connected directly to a SAN. For more economical needs, you can deploy GFS in a cluster that is connected to a LAN with servers that use the GFS VersaPlex architecture. The VersaPlex architecture allows a GFS cluster to connect to servers that present block-level storage via an Ethernet LAN. The VersaPlex architecture is implemented with GNBD (Global Network Block Device), a method of presenting block-level storage over an Ethernet LAN. GNBD is a software layer that can be run on network nodes connected to direct-attached storage or storage in a SAN. GNBD exports a block interface from those nodes to a GFS cluster.


You can configure GNBD servers for GNBD multipath. GNBD multipath allows you to configure multiple GNBD server nodes with redundant paths between the GNBD server nodes and storage devices. The GNBD servers, in turn, present multiple storage paths to GFS nodes via redundant GNBDs. With GNBD multipath, if a GNBD server node becomes unavailable, another GNBD server node can provide GFS nodes with access to storage devices.
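The failover idea behind GNBD multipath can be illustrated with a minimal Python sketch that tries redundant GNBD servers in order of preference. The function name, the is_alive() health check, and the hostnames gnbd-a and gnbd-b are hypothetical; this only models the concept and is not how GNBD or pool multipathing is actually implemented.

```python
from typing import Callable, Iterable, Optional


def pick_gnbd_server(servers: Iterable[str],
                     is_alive: Callable[[str], bool]) -> Optional[str]:
    """Return the first GNBD server that passes the health check.

    `servers` lists redundant GNBD server nodes in order of preference.
    `is_alive` is a hypothetical health check supplied by the caller.
    Returns None when no server can provide access to the storage.
    """
    for server in servers:
        if is_alive(server):
            return server
    return None


# Hypothetical example: gnbd-a has failed, so access moves to gnbd-b.
down = {"gnbd-a"}
chosen = pick_gnbd_server(["gnbd-a", "gnbd-b"], lambda s: s not in down)
print(chosen)  # gnbd-b
```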

The following sections provide examples of how GFS can be deployed to suit your needs for performance, scalability, and economy:

• Section 1.2.1 Superior Performance and Scalability

• Section 1.2.2 Performance, Scalability, Moderate Price

• Section 1.2.3 Economy and Performance

Note

The deployment examples in this chapter reflect basic configurations; your needs might require a combination of configurations shown in the examples. Also, the examples show GNBD (Global Network Block Device) as the method of implementing the VersaPlex architecture.

1.2.1. Superior Performance and Scalability

You can obtain the highest shared-file performance when applications access storage directly. The GFS SAN configuration in Figure 1-1 provides superior file performance for shared files and file systems. Linux applications run directly on GFS clustered application nodes. Without file protocols or storage servers to slow data access, performance is similar to individual Linux servers with direct-connect storage; yet, each GFS application node has equal access to all data files. GFS supports over 300 GFS application nodes.

[Diagram labels: GFS Applications, Shared Files, SAN Fabric, FC or iSCSI SAN]

Figure 1-1. GFS with a SAN


1.2.2. Performance, Scalability, Moderate Price

Multiple Linux client applications on a LAN can share the same SAN-based data as shown in Figure 1-2. SAN block storage is presented to network clients as block storage devices by GNBD servers. From the perspective of a client application, storage is accessed as if it were directly attached to the server in which the application is running. Stored data is actually on the SAN. Storage devices and data can be equally shared by network client applications. File locking and sharing functions are handled by GFS for each network client.

Note

Clients implementing ext2 and ext3 file systems can be configured to access their own dedicated slice of SAN storage.

GFS with VersaPlex (implemented with GNBD, as shown in Figure 1-2) and a SAN provide fully automatic application and device failover with failover software and redundant devices.

[Diagram labels: LAN, Clients, GFS Applications, GNBD servers, SAN Fabric, Shared Files]

Figure 1-2. GFS and GNBD with a SAN


1.2.3. Economy and Performance

Figure 1-3 shows how Linux client applications can take advantage of an existing Ethernet topology to gain shared access to all block storage devices. Client data files and file systems can be shared with GFS on each client. Application and device failover can be fully automated with mirroring, failover software, and redundant devices.

[Diagram labels: LAN, Clients, GFS Applications, GNBD servers, Disks A, B, and C, Mirrored disk pairs for data availability, Shared Files]

Figure 1-3. GFS and GNBD with Direct-Attached Storage

1.3. GFS Functions

GFS is a native file system that interfaces directly with the VFS layer of the Linux kernel file-system interface. GFS is a cluster file system that employs distributed metadata and multiple journals for optimal operation in a cluster.

GFS provides the following main functions:

• Cluster volume management

• Lock management

• Cluster management, fencing, and recovery

• Cluster configuration management


1.3.1. Cluster Volume Management

Cluster volume management provides simplified management of volumes and the ability to dynamically extend file system capacity without interrupting file-system access. With cluster volume management, you can aggregate multiple physical volumes into a single, logical device across all nodes in a cluster.

Cluster volume management provides a logical view of the storage to GFS, which provides flexibility for the administrator in how the physical storage is managed. Also, cluster volume management provides increased availability because it allows increasing the storage capacity without shutting down the cluster. Refer to Chapter 5 Using the Pool Volume Manager for more information about cluster volume management.
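To make the idea of aggregating physical volumes into one logical device concrete, the following Python sketch maps a logical block number onto a simple concatenation of devices. It is an illustrative assumption only: the pool volume manager's real layout (including any striping) is not modeled, and the function name and device sizes are hypothetical.

```python
from bisect import bisect_right
from itertools import accumulate
from typing import List, Tuple


def map_logical_block(block: int, device_sizes: List[int]) -> Tuple[int, int]:
    """Map a logical block to (device_index, offset_within_device).

    `device_sizes` gives the size, in blocks, of each physical device in the
    order the devices are concatenated.
    """
    ends = list(accumulate(device_sizes))  # e.g. [100, 300, 350]
    if block < 0 or not ends or block >= ends[-1]:
        raise ValueError("block is outside the logical volume")
    # Index of the first device whose end boundary is strictly greater than block.
    index = bisect_right(ends, block)
    start = ends[index - 1] if index > 0 else 0
    return index, block - start


sizes = [100, 200, 50]  # hypothetical device sizes in blocks
print(map_logical_block(0, sizes))    # (0, 0)
print(map_logical_block(150, sizes))  # (1, 50)
print(map_logical_block(349, sizes))  # (2, 49)
```

Appending a device to `sizes` only adds a new range after the existing ones, so blocks already in use keep their mapping. That property is what lets a concatenated volume grow without moving existing data; for how pool volumes are actually built and extended, refer to Chapter 5.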

1.3.2. Lock Management

A lock management mechanism is a key component of any cluster file system. The GFS OmniLock architecture provides the following lock managers:

• Single Lock Manager (SLM): A simple centralized lock manager that can be configured to run either on a file system node or on a separate dedicated lock manager node.

• Redundant Lock Manager (RLM): A high-availability lock manager. It allows the configuration of a master and multiple hot-standby failover lock manager nodes. The failover nodes provide failover in case the master lock manager node fails.

The lock managers also provide cluster management functions that control node recovery. Refer to Chapter 8 Using Clustering and Locking Systems for a description of the GFS lock protocols.

1.3.3. Cluster Management, Fencing, and Recovery

Cluster management functions in GFS monitor node status through heartbeat signals to determine cluster membership. Also, cluster management keeps track of which nodes are using each GFS file system, and initiates and coordinates the recovery process when nodes fail. This process involves recovery coordination from the fencing system, the lock manager, and the file system. The cluster management functions are embedded in each of the lock management modules described earlier in Section 1.3.2 Lock Management. Refer to Chapter 8 Using Clustering and Locking Systems for more information on cluster management.
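The following Python sketch shows the general idea of heartbeat-based membership: a node that has not sent a heartbeat within a timeout is treated as failed. The class name, the 10-second timeout, and the use of plain numeric timestamps are assumptions for illustration; this is not the algorithm lock_gulmd uses.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class HeartbeatMonitor:
    """Toy membership tracker based on the time of each node's last heartbeat."""
    timeout: float  # seconds of silence before a node is considered failed
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, node: str, now: float) -> None:
        """Record that `node` sent a heartbeat at time `now`."""
        self.last_seen[node] = now

    def members(self, now: float) -> Set[str]:
        """Nodes whose last heartbeat is within the timeout."""
        return {n for n, t in self.last_seen.items() if now - t <= self.timeout}

    def failed(self, now: float) -> Set[str]:
        """Nodes that have gone silent and would be candidates for fencing."""
        return set(self.last_seen) - self.members(now)


mon = HeartbeatMonitor(timeout=10.0)
mon.heartbeat("node1", now=0.0)
mon.heartbeat("node2", now=5.0)
print(sorted(mon.members(now=12.0)))  # ['node2']
print(sorted(mon.failed(now=12.0)))   # ['node1']
```

In GFS, a node that drops out of membership like node1 here is not simply forgotten; it is fenced and then recovered, as described in the following paragraphs.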

Fencing is the ability to isolate or "fence off" a cluster node when that node loses heartbeat communication with the rest of the cluster nodes. Fencing ensures that data integrity is maintained during the recovery of a failed cluster node. GFS supports a variety of automated fencing methods and one manual method. In addition, GFS provides the ability to configure each cluster node for cascaded fencing with the automated fencing methods. Refer to Chapter 10 Using the Fencing System for more information about the GFS fencing capability.
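Cascaded fencing can be pictured as trying each configured fencing method in order until one succeeds. In this minimal Python sketch the method names echo GFS fence agents (fence_apc, fence_brocade), but the callables power_switch and fc_switch are hypothetical stand-ins; in a real cluster the methods and their order come from the CCS files, not from code like this.

```python
from typing import Callable, List, Optional, Tuple

# A fencing method takes a node name and returns True if the node was fenced.
FenceMethod = Callable[[str], bool]


def cascade_fence(node: str,
                  methods: List[Tuple[str, FenceMethod]]) -> Optional[str]:
    """Try each (name, method) pair in order; return the name of the first
    method that fences `node`, or None if every method fails."""
    for name, method in methods:
        try:
            if method(node):
                return name
        except Exception:
            # Treat an error raised by an agent like a failed attempt
            # and fall through to the next method in the cascade.
            continue
    return None


# Hypothetical agents: the power switch is unreachable, the FC switch works.
def power_switch(node: str) -> bool:
    return False


def fc_switch(node: str) -> bool:
    return True


print(cascade_fence("node3", [("apc", power_switch), ("brocade", fc_switch)]))  # brocade
```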

Recovery is the process of controlling reentry of a node into a cluster after the node has been fenced. Recovery ensures that storage data integrity is maintained in the cluster while the previously fenced node is reentering the cluster. As stated earlier, recovery involves coordination from fencing, lock management, and the file system.

1.3.4. Cluster Configuration Management

Cluster configuration management provides a centralized mechanism for the configuration and maintenance of configuration files throughout the cluster. It provides high-availability access to configuration-state information for all nodes in the cluster.


For information about cluster configuration management refer to Chapter 6 Creating the Cluster Configuration System Files and Chapter 7 Using the Cluster Configuration System.

1.4. GFS Software Subsystems

GFS consists of the following subsystems:

• Cluster Configuration System (CCS)

• Fence

• Pool

• LOCK_GULM

• LOCK_NOLOCK

Table 1-1 summarizes the GFS Software subsystems and their components.

| Software Subsystem | Components | Description |
|---|---|---|
| Cluster Configuration System (CCS) | ccs_tool | Command used to create CCS archives. |
| | ccs_read | Diagnostic and testing command that is used to retrieve information from configuration files through ccsd. |
| | ccsd | CCS daemon that runs on all cluster nodes and provides configuration file data to cluster software. |
| | ccs_servd | CCS server daemon that distributes CCS data from a single server to ccsd daemons when a shared device is not used for storing CCS data. |
| Fence | fence_node | Command used by lock_gulmd when a fence operation is required. This command takes the name of a node and fences it based on the node’s fencing configuration. |
| | fence_apc | Fence agent for APC power switch. |
| | fence_wti | Fence agent for WTI power switch. |
| | fence_brocade | Fence agent for Brocade Fibre Channel switch. |
| | fence_vixel | Fence agent for Vixel Fibre Channel switch. |
| | fence_rib | Fence agent for RIB card. |
| | fence_gnbd | Fence agent used with GNBD storage. |
| | fence_notify_gnbd | Fence agent for notifying GFS nodes about fenced GNBD server nodes. |
| | fence_manual | Fence agent for manual interaction. |
| | fence_ack_manual | User interface for fence_manual agent. |
| Pool | pool.o | Kernel module implementing the pool block-device driver. |
| | pool_assemble | Command that activates and deactivates pool volumes. |
| | pool_tool | Command that configures pool volumes from individual storage devices. |
| | pool_info | Command that reports information about system pools. |
| | pool_grow | Command that expands a pool volume. |
| | pool_mp | Command that manages pool multipathing. |
| LOCK_GULM | lock_gulm.o | Kernel module that is installed on GFS nodes using the LOCK_GULM lock module. |
| | lock_gulmd | Server/daemon that runs on one or more nodes and communicates with all the nodes using LOCK_GULM GFS file systems. |
| | gulm_tool | Command that configures and debugs the lock_gulmd server. |
| LOCK_NOLOCK | lock_nolock.o | Kernel module installed on a node using GFS as a local file system. |
| GFS | gfs.o | Kernel module that implements the GFS file system and is loaded on GFS cluster nodes. |
| | lock_harness.o | Kernel module that implements the GFS lock harness into which GFS lock modules can plug. |
| | gfs_mkfs | Command that creates a GFS file system on a storage device. |
| | gfs_tool | Command that configures or tunes a GFS file system. This command can also gather a variety of information about the file system. |
| | gfs_quota | Command that manages quotas on a mounted GFS file system. |
| | gfs_grow | Command that grows a mounted GFS file system. |
| | gfs_jadd | Command that adds journals to a mounted GFS file system. |
| | gfs_fsck | Command that repairs an unmounted GFS file system. |
| | gfs_mount | Command that can optionally be used to mount a GFS file system. |
| License | license_tool | Command that is used to check a license file. |
| GNBD | gnbd.o | Kernel module that implements the GNBD block-device driver on clients. |
| | gnbd_serv.o | Kernel module that implements the GNBD server. It allows a node to export local storage over the network. |
| | gnbd_export | Command to create, export, and manage GNBDs on a GNBD server. |
| | gnbd_import | Command to import and manage GNBDs on a GNBD client. |
| Upgrade | gfs_conf | Command that retrieves configuration information for earlier versions of GFS from a cidev. |

Table 1-1. GFS Software Subsystem Components

1.5. Before Configuring GFS

Before you install and configure GFS, note the following key characteristics of your GFS configuration:

Cluster name

Determine a cluster name for your GFS cluster. The cluster name is required in the form of a parameter variable, ClusterName, later in this book. The cluster name can be 1 to 16 characters long. For example, this book uses a cluster name alpha in some example configuration procedures.

Number of file systems

Determine how many GFS file systems to create initially. (More file systems can be added later.)

File system name

Determine a unique name for each file system. Each file system name is required in the form of a parameter variable, FSName, later in this book. For example, this book uses file system names gfs1 and gfs2 in some example configuration procedures.
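A planning script might check the naming rules above before you write any CCS files. This Python sketch checks only what this section states (a cluster name of 1 to 16 characters and a unique name for each file system); any other character restrictions are not covered, and the helper name check_names is hypothetical. The names alpha, gfs1, and gfs2 are the examples used in this book.

```python
from typing import List


def check_names(cluster_name: str, fs_names: List[str]) -> List[str]:
    """Return a list of problems with planned names (empty if none).

    Checks only the rules stated in this section: the cluster name is
    1 to 16 characters long, and each file system name is unique.
    """
    problems = []
    if not 1 <= len(cluster_name) <= 16:
        problems.append(
            f"cluster name {cluster_name!r} must be 1 to 16 characters long")
    seen = set()
    for fs in fs_names:
        if fs in seen:
            problems.append(f"file system name {fs!r} is not unique")
        seen.add(fs)
    return problems


print(check_names("alpha", ["gfs1", "gfs2"]))  # []
```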

Number of nodes

Determine how many nodes will mount the file systems. You will need the hostname and IP address of each node.

LOCK_GULM server daemons

Determine the number of LOCK_GULM server daemons to use and on which GFS nodes the server daemons will run. Multiple LOCK_GULM server daemons (only available with RLM) provide redundancy. RLM requires a minimum of three and a maximum of five nodes running LOCK_GULM server daemons. Information about LOCK_GULM server daemons is required for a CCS (Cluster Configuration System) file, cluster.ccs. Refer to Section 6.6 Creating the cluster.ccs File for information about the cluster.ccs file.
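The server-count rule above can be checked mechanically when planning. This Python sketch assumes that SLM runs exactly one server daemon (multiple daemons being available only with RLM) and that RLM needs three to five distinct server nodes; the function name and the hostnames in the usage line are hypothetical.

```python
from typing import List


def lock_servers_ok(lock_manager: str, hostnames: List[str]) -> bool:
    """Check a planned list of LOCK_GULM server nodes.

    Assumes SLM runs exactly one server daemon and RLM needs
    three to five distinct server nodes, as stated above.
    """
    if len(set(hostnames)) != len(hostnames):
        return False  # listing the same node twice adds no redundancy
    count = len(hostnames)
    if lock_manager == "SLM":
        return count == 1
    if lock_manager == "RLM":
        return 3 <= count <= 5
    raise ValueError(f"unknown lock manager: {lock_manager!r}")


print(lock_servers_ok("RLM", ["n01", "n02", "n03"]))  # True
```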


GNBD server nodes

If you are using GNBD, determine how many GNBD server nodes are needed. You will need the hostname and IP address of each GNBD server node.

Fencing method

Determine the fencing method for each GFS node. If you are using GNBD multipath, determine the fencing method for each GNBD server node (node that exports GNBDs to GFS nodes). Information about fencing methods is required later in this book for the CCS files, fence.ccs and nodes.ccs. (Refer to Section 6.7 Creating the fence.ccs File and Section 6.8 Creating the nodes.ccs File for more information.) To help determine the type of fencing methods available with GFS, refer to Chapter 10 Using the Fencing System. When using RLM, you must use a fencing method that shuts down and reboots the node being fenced.

Storage devices and partitions

Determine the storage devices and partitions to be used for creating pool volumes in the file systems. Make sure to account for space on one or more partitions for storing cluster configuration information as follows: 2 KB per GFS node or 2 MB total, whichever is larger.
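The sizing rule for cluster configuration information is simple arithmetic, shown here in Python. The sketch assumes binary units (1 KB = 1024 bytes, 1 MB = 1024 KB), since the guide does not say which convention it uses; the function name is hypothetical.

```python
KB = 1024
MB = 1024 * KB


def ccs_space_bytes(num_nodes: int) -> int:
    """Space to reserve for cluster configuration information:
    2 KB per GFS node or 2 MB total, whichever is larger."""
    if num_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return max(2 * KB * num_nodes, 2 * MB)


for nodes in (8, 300, 1024, 2048):
    print(nodes, ccs_space_bytes(nodes) // KB, "KB")
# 8 2048 KB
# 300 2048 KB
# 1024 2048 KB
# 2048 4096 KB
```

The per-node term only exceeds the 2 MB floor above 1024 nodes, so for clusters of the sizes discussed in this guide the answer is effectively 2 MB.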


Chapter 2.

System Requirements

This chapter describes the system requirements for Red Hat GFS 5.2.1 and consists of the following sections:

• Section 2.1 Platform Requirements

• Section 2.2 TCP/IP Network

• Section 2.3 Fibre Channel Storage Network

• Section 2.4 Fibre Channel Storage Devices

• Section 2.5 Network Power Switches

• Section 2.6 Console Access

• Section 2.7 I/O Fencing

2.1. Platform Requirements

Table 2-1 shows the platform requirements for GFS.

| Operating System | Hardware Architecture | RAM |
|---|---|---|
| RHEL3 AS, ES, WS | ia64, x86-64, x86 (SMP supported) | 256 MB, minimum |
| RHEL2.1 AS | x86, ia64 (SMP supported) | 256 MB, minimum |
| SLES8 | x86 (SMP supported) | 256 MB, minimum |
| SuSE9 | x86-64 (SMP supported) | 256 MB, minimum |

Table 2-1. Platform Requirements

2.2. TCP/IP Network

All GFS nodes must be connected to a TCP/IP network. Network communication is critical to the operation of the GFS cluster, specifically to the clustering and locking subsystems. For optimal performance and security, it is recommended that a private, dedicated, switched network be used for GFS. GFS subsystems do not use dual-network interfaces for failover purposes.

2.3. Fibre Channel Storage Network

Table 2-2 shows requirements for GFS nodes that are to be connected to a Fibre Channel SAN.


| Requirement | Description |
|---|---|
| HBA (Host Bus Adapter) | One HBA minimum per GFS node |
| Connection method | Fibre Channel switch. Note: If an FC switch is used for I/O fencing nodes, you may want to consider using Brocade and Vixel FC switches, for which GFS fencing agents exist. Note: When a small number of nodes is used, it may be possible to connect the nodes directly to ports on the storage device. Note: FC drivers may not work reliably with FC hubs. |

Table 2-2. Fibre Channel Network Requirements

2.4. Fibre Channel Storage Devices

Table 2-3 shows requirements for Fibre Channel devices that are to be connected to a GFS cluster.

| Requirement | Description |
|---|---|
| Device Type | FC RAID array or JBOD. Note: Make sure that the devices can operate reliably when heavily accessed simultaneously from multiple initiators. Note: Make sure that your GFS configuration does not exceed the number of nodes an array or JBOD supports. |
| Size | 2 TB maximum, for the total of all storage connected to a GFS cluster. Linux 2.4 kernels do not support devices larger than 2 TB; therefore, the total size of storage available to GFS cannot exceed 2 TB. |

Table 2-3. Fibre Channel Storage Device Requirements
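A quick way to check a planned configuration against the 2 TB limit is to add up the sizes of all storage devices connected to the cluster. This Python sketch assumes the limit is 2 TiB (2^41 bytes, that is, 2^32 sectors of 512 bytes each); the function name and device sizes are hypothetical.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4
LIMIT_BYTES = 2 * TIB  # assumed: 2^32 sectors x 512 bytes = 2^41 bytes


def total_storage_ok(device_sizes_bytes) -> bool:
    """True if the combined size of all storage stays within the limit."""
    return sum(device_sizes_bytes) <= LIMIT_BYTES


print(total_storage_ok([500 * GIB, 500 * GIB, 800 * GIB]))  # True (1800 GiB)
print(total_storage_ok([1 * TIB, 1 * TIB, 100 * GIB]))      # False (2148 GiB)
```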

2.5. Network Power Switches

GFS provides fencing agents for APC and WTI network power switches.

2.6. Console Access

Make sure that you have console access to each GFS node. Console access to each node ensures that you can monitor the cluster and troubleshoot kernel problems.

2.7. I/O Fencing

You need to configure each node in your GFS cluster for at least one form of I/O fencing. For more information about fencing options, refer to Section 10.2 Fencing Methods.


Chapter 3.

Installing System Software

Installing system software consists of installing a Linux kernel and the corresponding GFS software on each node of your GFS cluster. This chapter explains how to install system software and includes the following sections:

• Section 3.1 Prerequisite Tasks

• Section 3.2 Installation Tasks

• Section 3.2.1 Installing a Linux Kernel

• Section 3.2.2 Installing A GFS RPM

• Section 3.2.3 Loading the GFS Kernel Modules

3.1. Prerequisite Tasks

Before installing GFS software, make sure that you have noted the key characteristics of your GFS configuration (refer to Section 1.5 Before Configuring GFS). Make sure that you have installed the following software:

• Net::Telnet Perl module

• Clock synchronization software

• Stunnel utility (optional)

3.1.1. Net::Telnet Perl Module

The Net::Telnet Perl module is used by several fencing agents and should be installed on all GFS nodes. The Net::Telnet Perl module must be installed before you install GFS; otherwise, GFS will not install.

You can download the Net::Telnet Perl module and installation instructions for the module from the Comprehensive Perl Archive Network (CPAN) website at http://www.cpan.org.
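To confirm that the module is present on a node, you can ask Perl to load it: perl -MNet::Telnet -e 1 exits with status 0 only if the module loads. The Python wrapper below is a minimal sketch for running that check from a local setup script; the function name is hypothetical, and it reports False if no perl interpreter is on the PATH.

```python
import shutil
import subprocess


def perl_module_installed(module: str = "Net::Telnet") -> bool:
    """Return True if Perl can load `module` on this node."""
    perl = shutil.which("perl")
    if perl is None:
        return False  # no Perl interpreter at all
    # Equivalent to: perl -MNet::Telnet -e 1
    result = subprocess.run(
        [perl, f"-M{module}", "-e", "1"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


if __name__ == "__main__":
    print("Net::Telnet installed:", perl_module_installed())
```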

3.1.2. Clock Synchronization Software

Make sure that each GFS node is running clock synchronization software. The system clocks in GFS nodes need to be within a few minutes of each other for the following reasons:

• Avoiding unnecessary inode time-stamp updates: If the node clocks are not synchronized, the inode time stamps will be updated unnecessarily, severely impacting cluster performance. Refer to Section 9.9 Configuring atime Updates for additional information.

• Maintaining license validity: If system times are incorrect, the license may be considered invalid.
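Given the current time reported by each node (for example, as seconds since the epoch), the check below computes the largest difference between any two clocks. This Python sketch is an assumption for illustration: the function names and readings are hypothetical, the 60-second tolerance is a chosen value tighter than "a few minutes", and collecting timestamps over the network adds its own delay, so clock synchronization software such as NTP remains the actual remedy.

```python
from typing import Dict


def max_clock_skew(node_times: Dict[str, float]) -> float:
    """Largest difference, in seconds, between any two node clocks."""
    if not node_times:
        return 0.0
    return max(node_times.values()) - min(node_times.values())


def clocks_in_sync(node_times: Dict[str, float], tolerance_s: float = 60.0) -> bool:
    """True if every pair of clocks is within `tolerance_s` seconds."""
    return max_clock_skew(node_times) <= tolerance_s


# Hypothetical readings from three nodes.
readings = {"node1": 1000.0, "node2": 1030.0, "node3": 995.0}
print(max_clock_skew(readings))  # 35.0
print(clocks_in_sync(readings))  # True
```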


Note

One example of time synchronization software is the Network Time Protocol (NTP) software. You can find more information about NTP at http://www.ntp.org.

3.1.3. Stunnel Utility

The Stunnel utility needs to be installed only on nodes that use the HP RILOE PCI card for I/O fencing. (For more information about fencing with the HP RILOE card, refer to HP RILOE Card on page 147.) Verify that the utility is installed on each of those nodes by looking for /usr/sbin/stunnel.

If you need the Stunnel utility, download it from the Stunnel website at http://www.stunnel.org.
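The check for /usr/sbin/stunnel can be scripted for the nodes that use the HP RILOE card. A minimal sketch; has_stunnel and report_stunnel are hypothetical helper names, and the optional argument exists only so that a different path can be checked.

```shell
# Return 0 if the Stunnel binary exists and is executable.
# Defaults to /usr/sbin/stunnel, the location named in this section.
has_stunnel() {
    [ -x "${1:-/usr/sbin/stunnel}" ]
}

# Print the result of the check; return nonzero if Stunnel is missing.
report_stunnel() {
    stunnel_path=${1:-/usr/sbin/stunnel}
    if has_stunnel "$stunnel_path"; then
        echo "stunnel found at $stunnel_path"
    else
        echo "stunnel not found at $stunnel_path; download it from http://www.stunnel.org"
        return 1
    fi
}
```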

3.2. Installation Tasks

To install system software, perform the following steps:

1. Install a Linux kernel.

Note

This step is required only if you are using Red Hat Enterprise Linux Version 3, Update 1 or earlier,

or if you are using Red Hat Linux Version 2.1.

2. Install the GFS RPM.

3. Load the GFS kernel modules.

3.2.1. Installing a Linux Kernel

Note

This step is required only if you are using Red Hat Enterprise Linux Version 3, Update 1 or earlier, or

if you are using Red Hat Linux Version 2.1.

Installing a Linux kernel consists of downloading and installing a binary kernel built with GFS patches

applied and, optionally, compiling third-party drivers not included with the kernel (for example, HBA

drivers) against that kernel. The GFS kernels have been built by applying GFS patches against vendor

kernels, fully enabling all GFS features.

To install a Linux kernel, follow these steps:


1. Action: Acquire the appropriate binary GFS kernel. Copy or download the appropriate RPM file for each GFS node. For example:

kernel-gfs-smp-2.4.21-9.0.1.EL.i686.rpm

Comment: Make sure that the file is appropriate for the hardware and libraries of the computer on which the kernel will be installed.

2. Action: At each node, install the new kernel by issuing the following rpm command:

rpm -ivh GFSKernelRPM

Comment: GFSKernelRPM is the GFS kernel RPM file.

3. Action: At each node, issue an rpm command to check the kernel level. For example:

rpm -q kernel-gfs

Comment: Verify that the correct kernel has been installed.

4. Action: At each node, make sure that the boot loader is configured to load the new kernel.

Comment: For example, check the GRUB menu.lst file.

5. Action: Reboot each node into the new kernel.

6. Action: At each node, verify that the node is running the appropriate kernel as follows:

uname -r

If you need to compile third-party drivers against the kernel, proceed to the next step. If not, proceed to Section 3.2.2 Installing A GFS RPM.

Comment: Displays the release level of the running kernel.

7. Action: Acquire the tar file containing the GFS kernel patches from RHN:

GFS_kpatches-5.2.1.tgz

Comment: The GFS_kpatches-5.2.1.tgz file contains GFS kernel patches and patch instructions for supported kernels.

8. Action: Extract a patch-instructions file from GFS_kpatches-5.2.1.tgz. For example:

rh_kernel_patch.txt

Comment: Follow the patch instructions and check which kernel versions are supported.

Table 3-1. Installing a Linux Kernel
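Steps 2 through 6 of Table 3-1 can be scripted at each node. The following is a minimal sketch, not a Red Hat-supplied tool: the function names are hypothetical, /boot/grub/menu.lst is an assumed GRUB location, and setting DRY_RUN=1 makes the helpers print the rpm commands instead of running them. The reboot in Step 5 is left to you.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Steps 2 and 3: install the kernel RPM, then query the package by name.
# Usage: install_gfs_kernel RPM_FILE PACKAGE_NAME
install_gfs_kernel() {
    run rpm -ivh "$1" && run rpm -q "$2"
}

# Step 4: return 0 if the boot loader file mentions the given kernel release.
# The default path is an assumption; the GRUB menu.lst location can vary.
grub_lists_kernel() {
    grep -F -q -- "$1" "${2:-/boot/grub/menu.lst}"
}

# Step 6 (after rebooting): return 0 if the running kernel is the expected release.
# The optional second argument replaces the "uname -r" output, for testing.
running_kernel_is() {
    [ "${2:-$(uname -r)}" = "$1" ]
}
```

For example, DRY_RUN=1 install_gfs_kernel kernel-gfs-smp-2.4.21-9.0.1.EL.i686.rpm kernel-gfs-smp prints the two rpm commands. The package name passed to rpm -q is normally the file name without the version, release, and architecture, which for the example file in Step 1 is kernel-gfs-smp.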


3.2.2. Installing A GFS RPM

Installing a GFS RPM consists of acquiring the appropriate GFS software and installing it.

Note

Only one instance of a GFS RPM can be installed on a node. If a GFS RPM is present on a node,

that RPM must be removed before installing another GFS RPM. To remove a GFS RPM, you can use

the rpm command with its erase option (-e).
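Following the note above, replacing a GFS RPM means erasing the installed one first. A minimal sketch, assuming that every installed package whose name starts with GFS (a narrower form of the grep used in Table 3-2) should be removed; the function names are hypothetical, and DRY_RUN=1 prints the rpm commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# List installed packages; kept as a separate function so it is easy to replace.
list_installed() {
    rpm -qa
}

# Erase any installed GFS packages, then install the new GFS RPM.
# Usage: replace_gfs_rpm NEW_GFS_RPM_FILE
replace_gfs_rpm() {
    for pkg in $(list_installed | grep '^GFS'); do
        run rpm -e "$pkg" || return 1
    done
    run rpm -ivh "$1"
}
```

For example, DRY_RUN=1 replace_gfs_rpm GFSRPM prints an rpm -e command for each installed GFS package, followed by rpm -ivh GFSRPM.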

Tip

GNBD modules are included with the GFS RPM.

To install a GFS RPM, follow these steps:

1. Action: Acquire the appropriate GFS software and copy or download it to each GFS node.

Comment: Make sure to select the GFS software that matches the kernel selected in Section 3.2.1 Installing a Linux Kernel.

2. Action: At each node, issue the following rpm command:

rpm -ivh GFSRPM

Comment: Installs the GFS software. GFSRPM is the GFS RPM file.

3. Action: At each node, issue the following rpm command to check the GFS version:

rpm -qa | grep GFS

Comment: Lists the GFS software installed in the previous step. Verify that the GFS software has been installed.

Table 3-2. Installing a GFS RPM
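Steps 2 and 3 of Table 3-2 are repeated at every node. If the nodes accept ssh logins from an administration host (an assumption; GFS itself does not require ssh), a small loop can issue the same command everywhere. The node names are hypothetical, and DRY_RUN=1 prints the ssh commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical node names; replace with the GFS nodes in your cluster.
NODES=${NODES:-"n01 n02 n03"}

# Run the same command line on every node in NODES over ssh.
# A failure on one node is reported, and the loop continues with the next node.
on_each_node() {
    for node in $NODES; do
        run ssh "$node" "$@" || echo "command failed on $node" >&2
    done
}
```

For example, on_each_node rpm -ivh /tmp/GFSRPM performs Step 2 on every node (assuming the RPM file was copied to /tmp on each node in Step 1), and on_each_node 'rpm -qa | grep GFS' performs Step 3; the quotes make the pipe run on the remote node.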

3.2.3. Loading the GFS Kernel Modules

Once the GFS software has been installed on the GFS nodes, the following GFS kernel modules need

to be loaded into the running kernel before GFS can be set up and used:

• ccs.o

• pool.o

• lock_harness.o

• lock_gulm.o

• gfs.o


Note

The GFS kernel modules need to be loaded every time a GFS node is started. It is recommended

that you use a startup script to automate loading the GFS kernel modules.
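The note above recommends loading the modules from a startup script. The following minimal sketch shows the core of such a script, using the modprobe method of Table 3-3 for a LOCK_GULM configuration. The function and variable names are hypothetical, hooking the function into your boot sequence is system-specific and not shown, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Modules named in Table 3-3 for a LOCK_GULM configuration. modprobe loads
# the remaining GFS modules (ccs, lock_harness) automatically as dependencies.
GFS_MODULES="pool lock_gulm gfs"

# Load the GFS modules in order; stop and report at the first failure.
# depmod -a is not repeated here because it is needed only once after installation.
load_gfs_modules() {
    for mod in $GFS_MODULES; do
        if ! run modprobe "$mod"; then
            echo "failed to load GFS module $mod" >&2
            return 1
        fi
    done
}
```

Call load_gfs_modules at boot, before ccsd, lock_gulmd, or any GFS mounts are started.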

Note

The procedures in this section are for a GFS configuration that uses LOCK_GULM. If you are using

LOCK_NOLOCK, refer to Appendix B Basic GFS Examples for information about which GFS kernel

modules you should load.

Note

When loading the GFS kernel modules, you may see warning messages about missing or non-GPL licenses. These messages are not related to the GFS license and can be ignored.

To load the GFS kernel modules, follow the steps in Table 3-3. The steps in Table 3-3 use modprobe commands to load the GFS kernel modules; all dependent modules are loaded automatically.

Alternatively, you can load the GFS kernel modules by following the steps in Table 3-4, which use insmod commands to load each module individually.

Step  Command             Description
1.    depmod -a           Run this only once, after the RPMs are installed.
2.    modprobe pool       Loads pool.o and any modules it depends on.
3.    modprobe lock_gulm  Loads lock_gulm.o and any modules it depends on.
4.    modprobe gfs        Loads gfs.o and any modules it depends on.
5.    lsmod               Lists the currently loaded modules. Verify that the
                          listing includes all the GFS kernel modules loaded in
                          the previous steps, along with other system kernel
                          modules.

Table 3-3. Loading GFS Kernel Modules
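Step 5 can be checked mechanically rather than by eye. This sketch reads lsmod output and prints any of the five GFS modules listed at the start of this section that are missing. It assumes the usual lsmod layout (one header line, then one module per line with the name in the first column); the function name is hypothetical.

```shell
# Read "lsmod" output on standard input and print each GFS module that is
# not loaded, one per line. No output means all five modules are loaded.
missing_gfs_modules() {
    # Skip the lsmod header line and keep only the module-name column.
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in ccs pool lock_harness lock_gulm gfs; do
        printf '%s\n' "$loaded" | grep -qx -- "$mod" || echo "$mod"
    done
}
```

For example, lsmod | missing_gfs_modules prints nothing when every GFS module is loaded.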

Step  Command              Description
1.    insmod ccs           Loads the ccs.o module.
2.    insmod pool          Loads the pool.o module.
3.    insmod lock_harness  Loads the lock_harness.o module.
4.    insmod lock_gulm     Loads the lock_gulm.o module.
5.    insmod gfs           Loads the gfs.o module.
6.    lsmod                Lists the currently loaded modules. Verify that the
                           listing includes all the GFS kernel modules loaded
                           in the previous steps, along with other system
                           kernel modules.

Table 3-4. (Alternate Method) Loading Kernel Modules
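Unlike modprobe, insmod loads exactly the module it is given and does not pull in dependencies, which is presumably why Table 3-4 fixes the order. A minimal sketch of the same sequence as a loop that stops at the first failure; the function name is hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Insert the GFS modules in the order of Table 3-4. insmod does not load
# dependencies, so the order matters; stop at the first module that fails.
insmod_gfs_modules() {
    for mod in ccs pool lock_harness lock_gulm gfs; do
        run insmod "$mod" || return 1
    done
}
```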


Chapter 4.

Initial Configuration

This chapter describes procedures for initial configuration of GFS and contains the following sections:

• Section 4.1 Prerequisite Tasks

• Section 4.2 Initial Configuration Tasks

• Section 4.2.1 Setting Up Logical Devices

• Section 4.2.2 Setting Up and Starting the Cluster Configuration System

• Section 4.2.3 Starting Clustering and Locking Systems

• Section 4.2.4 Setting Up and Mounting File Systems

4.1. Prerequisite Tasks

Before setting up the GFS software, make sure that you have noted the key characteristics of your GFS

configuration (refer to Section 1.5 Before Configuring GFS) and that you have installed the kernel and

the GFS software into each GFS node (refer to Chapter 3 Installing System Software).

4.2. Initial Configuration Tasks

Initial configuration consists of the following tasks:

1. Setting up logical devices (pools).

2. Setting up and starting the Cluster Configuration System (CCS).

3. Starting clustering and locking systems.

4. Setting up and mounting file systems.

Note

GFS kernel modules must be loaded prior to performing initial configuration tasks. Refer to Section

3.2.3 Loading the GFS Kernel Modules.

Note

For examples of GFS configurations, refer to Appendix B Basic GFS Examples.

The following sections describe the initial configuration tasks.


4.2.1. Setting Up Logical Devices

To set up logical devices (pools), follow these steps:

1. Create file system pools.

a. Create pool configuration files. Refer to Section 5.4 Creating a Configuration File for a

New Volume.

b. Create a pool for each file system. Refer to Section 5.5 Creating a Pool Volume.

Command usage:

pool_tool -c ConfigFile

2. Create a CCS pool.

a. Create a pool configuration file. Refer to Section 5.4 Creating a Configuration File for a New Volume.

b. Create a pool to be the Cluster Configuration Archive (CCA) device. Refer to Section 5.5

Creating a Pool Volume.

Command usage:

pool_tool -c ConfigFile

3. At each node, activate pools. Refer to Section 5.6 Activating/Deactivating a Pool Volume.

Command usage:

pool_assemble
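Steps 1 and 2 are not marked "at each node", so the sketch below runs pool_tool once from a single node, while Step 3 runs at every node. The configuration file names are hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Steps 1 and 2: create a pool from each configuration file (the file system
# pools and the CCS pool). Usage: create_pools CONFIG_FILE...
create_pools() {
    for cfg in "$@"; do
        run pool_tool -c "$cfg" || return 1
    done
}

# Step 3: activate the pools; run this at each node.
activate_pools() {
    run pool_assemble
}
```

For example, run create_pools gfs1.cfg cca.cfg on one node, then activate_pools on each node.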

4.2.2. Setting Up and Starting the Cluster Configuration System

To set up and start the Cluster Configuration System, follow these steps:

1. Create CCS configuration files and place them into a temporary directory. Refer to Chapter 6

Creating the Cluster Configuration System Files.

2. Create a CCS archive on the CCA device. (The CCA device is the pool created in Step 2 of

Section 4.2.1 Setting Up Logical Devices.) Put the CCS files (created in Step 1) into the CCS

archive. Refer to Section 7.1 Creating a CCS Archive.

Command usage:

ccs_tool create Directory CCADevice

3. At each node, start the CCS daemon, specifying the CCA device at the command line. Refer to

Section 7.2 Starting CCS in the Cluster.

Command usage:

ccsd -d CCADevice
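A minimal sketch of Steps 2 and 3. Step 2 is not marked "at each node", so the archive is created once, while ccsd is started on every node. The directory and device paths are hypothetical placeholders, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical values; set these to match your configuration.
CCS_DIR=${CCS_DIR:-/root/alpha}                # temporary directory with the CCS files
CCA_DEVICE=${CCA_DEVICE:-/dev/pool/alpha_cca}  # pool created as the CCA device

# Step 2: create the CCS archive on the CCA device from the files in CCS_DIR.
create_ccs_archive() {
    if [ ! -d "$CCS_DIR" ]; then
        echo "CCS directory $CCS_DIR not found" >&2
        return 1
    fi
    run ccs_tool create "$CCS_DIR" "$CCA_DEVICE"
}

# Step 3: start the CCS daemon; run this at each node.
start_ccsd() {
    run ccsd -d "$CCA_DEVICE"
}
```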


4.2.3. Starting Clustering and Locking Systems

To start clustering and locking systems, follow these steps:

1. Check the cluster.ccs file to identify (or verify) which nodes are designated as lock server nodes.

2. Start the LOCK_GULM servers. At each lock-server node, start lock_gulmd. Refer to Section

8.2.3 Starting LOCK_GULM Servers.

Command usage:

lock_gulmd
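Step 1 can be scripted if you know how the lock servers are written in cluster.ccs. The sketch below assumes that they appear on one line of the form servers = ["n01", "n02", "n03"] (refer to Chapter 6 for the actual file syntax) and that each node's name as reported by uname -n matches its entry in that list. Both are assumptions, the function names are hypothetical, and DRY_RUN=1 prints the lock_gulmd command instead of running it.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Print the lock-server names from a cluster.ccs file, one per line.
# Assumes a single line such as:  servers = ["n01", "n02", "n03"]
lock_servers() {
    sed -n 's/^[[:space:]]*servers[[:space:]]*=[[:space:]]*\[\(.*\)].*/\1/p' "$1" |
        tr ',' '\n' | tr -d ' "\t'
}

# Return 0 if NODE is listed as a lock server. Usage: is_lock_server NODE CCS_FILE
is_lock_server() {
    lock_servers "$2" | grep -qx -- "$1"
}

# Step 2: start lock_gulmd only if this node is a lock server.
start_lock_server() {
    ccs_file=${1:-cluster.ccs}
    this_node=$(uname -n)
    if is_lock_server "$this_node" "$ccs_file"; then
        run lock_gulmd
    else
        echo "$this_node is not a lock server in $ccs_file; lock_gulmd not started"
    fi
}
```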

4.2.4. Setting Up and Mounting File Systems

To set up and mount file systems, follow these steps:

1. Create GFS file systems on the pools created in Step 1 of Section 4.2.1 Setting Up Logical Devices. Choose a unique name for each file system. Refer to Section 9.1 Making a File System.

Command usage:

gfs_mkfs -p lock_gulm -t ClusterName:FSName -j NumberJournals BlockDevice

2. At each node, mount the GFS file systems. Refer to Section 9.2 Mounting a File System.

Command usage:

mount -t gfs BlockDevice MountPoint
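Because each node that mounts a GFS file system needs its own journal, NumberJournals is normally at least the number of nodes that will mount it. The sketch below derives -j from a list of node names and builds both commands. The cluster name, node names, file system name, pool device, and mount point are hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Echo the command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical names for illustration only.
CLUSTER_NAME=${CLUSTER_NAME:-alpha}
NODES=${NODES:-"n01 n02 n03"}

# One journal per node that will mount the file system: count the words in NODES.
journal_count() {
    set -- $NODES
    echo "$#"
}

# Step 1 (once): make a GFS file system on a pool.
# Usage: make_gfs FS_NAME BLOCK_DEVICE
make_gfs() {
    run gfs_mkfs -p lock_gulm -t "$CLUSTER_NAME:$1" -j "$(journal_count)" "$2"
}

# Step 2 (at each node): mount the file system.
# Usage: mount_gfs BLOCK_DEVICE MOUNT_POINT
mount_gfs() {
    run mount -t gfs "$1" "$2"
}
```

For example, with three names in NODES, DRY_RUN=1 make_gfs gfs1 /dev/pool/pool_gfs1 prints a gfs_mkfs command with -t alpha:gfs1 and -j 3.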


Chapter 5.

Using the Pool Volume Manager

This chapter describes the GFS volume manager — named Pool — and its commands. The chapter

consists of the following sections: