
Red Hat Enterprise Linux 4

Cluster Administration

Configuring and Managing a Red Hat Cluster


Edition 1.0

Copyright © 2009 Red Hat, Inc. This material may only be distributed subject to the terms and conditions set forth in the Open Publication License, V1.0 or later (the latest version of the OPL is presently available at http://www.opencontent.org/openpub/).

Red Hat and the Red Hat "Shadow Man" logo are registered trademarks of Red Hat, Inc. in the United States and other countries.

All other trademarks referenced herein are the property of their respective owners.

1801 Varsity Drive

Raleigh, NC 27606-2072 USA

Phone: +1 919 754 3700

Phone: 888 733 4281

Fax: +1 919 754 3701

PO Box 13588 Research Triangle Park, NC 27709 USA

Configuring and Managing a Red Hat Cluster describes the configuration and management of Red Hat cluster systems for Red Hat Enterprise Linux 4. It does not include information about Linux Virtual Server (LVS). Information about installing and configuring LVS is in a separate document.

Introduction

1. Document Conventions

1.1. Typographic Conventions

1.2. Pull-quote Conventions

1.3. Notes and Warnings

2. Feedback

1. Red Hat Cluster Configuration and Management Overview

1.1. Configuration Basics

1.1.1. Setting Up Hardware

1.1.2. Installing Red Hat Cluster software

1.1.3. Configuring Red Hat Cluster Software

1.2. Conga

1.3. system-config-cluster Cluster Administration GUI

1.3.1. Cluster Configuration Tool

1.3.2. Cluster Status Tool

1.4. Command Line Administration Tools

2. Before Configuring a Red Hat Cluster

2.1. Compatible Hardware

2.2. Enabling IP Ports

2.2.1. Enabling IP Ports on Cluster Nodes

2.2.2. Enabling IP Ports on Computers That Run luci

2.2.3. Examples of iptables Rules

2.3. Configuring ACPI For Use with Integrated Fence Devices

2.3.1. Disabling ACPI Soft-Off with chkconfig Management

2.3.2. Disabling ACPI Soft-Off with the BIOS

2.3.3. Disabling ACPI Completely in the grub.conf File

2.4. Configuring max_luns

2.5. Considerations for Using Quorum Disk

2.6. Red Hat Cluster Suite and SELinux

2.7. Considerations for Using Conga

2.8. General Configuration Considerations

3. Configuring Red Hat Cluster With Conga

3.1. Configuration Tasks

3.2. Starting luci and ricci

3.3. Creating A Cluster

3.4. Global Cluster Properties

3.5. Configuring Fence Devices

3.5.1. Creating a Shared Fence Device

3.5.2. Modifying or Deleting a Fence Device

3.6. Configuring Cluster Members

3.6.1. Initially Configuring Members

3.6.2. Adding a Member to a Running Cluster

3.6.3. Deleting a Member from a Cluster

3.7. Configuring a Failover Domain

3.7.1. Adding a Failover Domain

3.7.2. Modifying a Failover Domain

3.8. Adding Cluster Resources

3.9. Adding a Cluster Service to the Cluster

3.10. Configuring Cluster Storage

4. Managing Red Hat Cluster With Conga

4.1. Starting, Stopping, and Deleting Clusters

4.2. Managing Cluster Nodes

4.3. Managing High-Availability Services

4.4. Diagnosing and Correcting Problems in a Cluster

5. Configuring Red Hat Cluster With system-config-cluster

5.1. Configuration Tasks

5.2. Starting the Cluster Configuration Tool

5.3. Configuring Cluster Properties

5.4. Configuring Fence Devices

5.5. Adding and Deleting Members

5.5.1. Adding a Member to a New Cluster

5.5.2. Adding a Member to a Running DLM Cluster

5.5.3. Deleting a Member from a DLM Cluster

5.5.4. Adding a GULM Client-only Member

5.5.5. Deleting a GULM Client-only Member

5.5.6. Adding or Deleting a GULM Lock Server Member

5.6. Configuring a Failover Domain

5.6.1. Adding a Failover Domain

5.6.2. Removing a Failover Domain

5.6.3. Removing a Member from a Failover Domain

5.7. Adding Cluster Resources

5.8. Adding a Cluster Service to the Cluster

5.9. Propagating The Configuration File: New Cluster

5.10. Starting the Cluster Software

6. Managing Red Hat Cluster With system-config-cluster

6.1. Starting and Stopping the Cluster Software

6.2. Managing High-Availability Services

6.3. Modifying the Cluster Configuration

6.4. Backing Up and Restoring the Cluster Database

6.5. Disabling the Cluster Software

6.6. Diagnosing and Correcting Problems in a Cluster

A. Example of Setting Up Apache HTTP Server

A.1. Apache HTTP Server Setup Overview

A.2. Configuring Shared Storage

A.3. Installing and Configuring the Apache HTTP Server

B. Fence Device Parameters

C. Revision History

Index


Introduction

This document provides information about installing, configuring and managing Red Hat Cluster components. Red Hat Cluster components are part of Red Hat Cluster Suite and allow you to connect a group of computers (called nodes or members) to work together as a cluster. This document does not include information about installing, configuring, and managing Linux Virtual Server (LVS) software. Information about LVS is in a separate document.

Readers of this document should have an advanced working knowledge of Red Hat Enterprise Linux and understand the concepts of clusters, storage, and server computing.

This document is organized as follows:

• Chapter 1, Red Hat Cluster Configuration and Management Overview

• Chapter 2, Before Configuring a Red Hat Cluster

• Chapter 3, Configuring Red Hat Cluster With Conga

• Chapter 4, Managing Red Hat Cluster With Conga

• Chapter 5, Configuring Red Hat Cluster With system-config-cluster

• Chapter 6, Managing Red Hat Cluster With system-config-cluster

• Appendix A, Example of Setting Up Apache HTTP Server

• Appendix B, Fence Device Parameters

• Appendix C, Revision History

For more information about Red Hat Enterprise Linux 4, refer to the following resources:

• Red Hat Enterprise Linux Installation Guide — Provides information regarding installation.

• Red Hat Enterprise Linux Introduction to System Administration — Provides introductory information for new Red Hat Enterprise Linux system administrators.

• Red Hat Enterprise Linux System Administration Guide — Provides more detailed information about configuring Red Hat Enterprise Linux to suit your particular needs as a user.

• Red Hat Enterprise Linux Reference Guide — Provides detailed information suited for more experienced users to reference when needed, as opposed to step-by-step instructions.

• Red Hat Enterprise Linux Security Guide — Details the planning and the tools involved in creating a secured computing environment for the data center, workplace, and home.

For more information about Red Hat Cluster Suite for Red Hat Enterprise Linux 4 and related products, refer to the following resources:

• Red Hat Cluster Suite Overview — Provides a high-level overview of the Red Hat Cluster Suite.

• LVM Administrator's Guide: Configuration and Administration — Provides a description of the Logical Volume Manager (LVM), including information on running LVM in a clustered environment.


• Global File System: Configuration and Administration — Provides information about installing, configuring, and maintaining Red Hat GFS (Red Hat Global File System).

• Using Device-Mapper Multipath — Provides information about using the Device-Mapper Multipath feature of Red Hat Enterprise Linux 4.7.

• Using GNBD with Global File System — Provides an overview of using Global Network Block Device (GNBD) with Red Hat GFS.

• Linux Virtual Server Administration — Provides information on configuring high-performance systems and services with the Linux Virtual Server (LVS).

• Red Hat Cluster Suite Release Notes — Provides information about the current release of Red Hat Cluster Suite.

Red Hat Cluster Suite documentation and other Red Hat documents are available in HTML, PDF, and RPM versions on the Red Hat Enterprise Linux Documentation CD and online at http://www.redhat.com/docs/.

1. Document Conventions

This manual uses several conventions to highlight certain words and phrases and draw attention to specific pieces of information.

In PDF and paper editions, this manual uses typefaces drawn from the Liberation Fonts set (https://fedorahosted.org/liberation-fonts/). The Liberation Fonts set is also used in HTML editions if the set is installed on your system. If not, alternative but equivalent typefaces are displayed. Note: Red Hat Enterprise Linux 5 and later include the Liberation Fonts set by default.

1.1. Typographic Conventions

Four typographic conventions are used to call attention to specific words and phrases. These conventions, and the circumstances they apply to, are as follows.

Mono-spaced Bold

Used to highlight system input, including shell commands, file names and paths. Also used to highlight key caps and key-combinations. For example:

To see the contents of the file my_next_bestselling_novel in your current working directory, enter the cat my_next_bestselling_novel command at the shell prompt and press Enter to execute the command.

The above includes a file name, a shell command and a key cap, all presented in Mono-spaced Bold and all distinguishable thanks to context.

Key-combinations can be distinguished from key caps by the plus sign connecting each part of a key-combination. For example:

Press Enter to execute the command.

Press Ctrl+Alt+F1 to switch to the first virtual terminal. Press Ctrl+Alt+F7 to return to your X-Windows session.


The first sentence highlights the particular key cap to press. The second highlights two sets of three key caps, each set pressed simultaneously.

If source code is discussed, class names, methods, functions, variable names and returned values mentioned within a paragraph will be presented as above, in Mono-spaced Bold. For example:

File-related classes include filesystem for file systems, file for files, and dir for directories. Each class has its own associated set of permissions.

Proportional Bold

This denotes words or phrases encountered on a system, including application names; dialogue box text; labelled buttons; check-box and radio button labels; menu titles and sub-menu titles. For example:

Choose System > Preferences > Mouse from the main menu bar to launch Mouse Preferences. In the Buttons tab, click the Left-handed mouse check box and click Close to switch the primary mouse button from the left to the right (making the mouse suitable for use in the left hand).

To insert a special character into a gedit file, choose Applications > Accessories > Character Map from the main menu bar. Next, choose Search > Find… from the Character Map menu bar, type the name of the character in the Search field and click Next. The character you sought will be highlighted in the Character Table. Double-click this highlighted character to place it in the Text to copy field and then click the Copy button. Now switch back to your document and choose Edit > Paste from the gedit menu bar.

The above text includes application names; system-wide menu names and items; application-specific menu names; and buttons and text found within a GUI interface, all presented in Proportional Bold and all distinguishable by context.

Note the > shorthand used to indicate traversal through a menu and its sub-menus. This is to avoid the difficult-to-follow 'Select Mouse from the Preferences sub-menu in the System menu of the main menu bar' approach.

Mono-spaced Bold Italic or Proportional Bold Italic

Whether Mono-spaced Bold or Proportional Bold, the addition of Italics indicates replaceable or variable text. Italics denotes text you do not input literally or displayed text that changes depending on circumstance. For example:

To connect to a remote machine using ssh, type ssh username@domain.name at a shell prompt. If the remote machine is example.com and your username on that machine is john, type ssh john@example.com.

The mount -o remount file-system command remounts the named file system. For example, to remount the /home file system, the command is mount -o remount /home.

To see the version of a currently installed package, use the rpm -q package command. It will return a result as follows: package-version-release.

Note the words in bold italics above: username, domain.name, file-system, package, version and release. Each word is a placeholder, either for text you enter when issuing a command or for text displayed by the system.


Aside from standard usage for presenting the title of a work, italics denotes the first use of a new and important term. For example:

When the Apache HTTP Server accepts requests, it dispatches child processes or threads to handle them. This group of child processes or threads is known as a server-pool. Under Apache HTTP Server 2.0, the responsibility for creating and maintaining these server-pools has been abstracted to a group of modules called Multi-Processing Modules (MPMs). Unlike other modules, only one module from the MPM group can be loaded by the Apache HTTP Server.

1.2. Pull-quote Conventions

Two, commonly multi-line, data types are set off visually from the surrounding text.

Output sent to a terminal is set in Mono-spaced Roman and presented thus:

    books        Desktop   documentation  drafts  mss    photos   stuff  svn
    books_tests  Desktop1  downloads      images  notes  scripts  svgs

Source-code listings are also set in Mono-spaced Roman but are presented and highlighted as follows:

    package org.jboss.book.jca.ex1;

    import javax.naming.InitialContext;

    // EchoHome and Echo are EJB interfaces assumed to be defined in this same package.
    public class ExClient
    {
       public static void main(String[] args)
          throws Exception
       {
          // Look up the bean's home interface through JNDI, then create the bean.
          InitialContext iniCtx = new InitialContext();
          Object         ref    = iniCtx.lookup("EchoBean");
          EchoHome       home   = (EchoHome) ref;
          Echo           echo   = home.create();

          System.out.println("Created Echo");

          System.out.println("Echo.echo('Hello') = " + echo.echo("Hello"));
       }
    }

1.3. Notes and Warnings

Finally, we use three visual styles to draw attention to information that might otherwise be overlooked.


Note

A note is a tip, shortcut, or alternative approach to the task at hand. Ignoring a note should have no negative consequences, but you might miss out on a trick that makes your life easier.

Important

Important boxes detail things that are easily missed: configuration changes that only apply to the current session, or services that need restarting before an update will apply. Ignoring Important boxes won't cause data loss but may cause irritation and frustration.

Warning

A Warning should not be ignored. Ignoring warnings will most likely cause data loss.

2. Feedback

If you spot a typo, or if you have thought of a way to make this manual better, we would love to hear from you. Please submit a report in Bugzilla (http://bugzilla.redhat.com/bugzilla/) against the component rh-cs.

Be sure to mention the manual's identifier:

Cluster_Administration(EN)-4.8 (2009-5-13T12:45)

By mentioning this manual's identifier, we know exactly which version of the guide you have.

If you have a suggestion for improving the documentation, try to be as specific as possible. If you have found an error, please include the section number and some of the surrounding text so we can find it easily.

Chapter 1. Red Hat Cluster Configuration and Management Overview

Red Hat Cluster allows you to connect a group of computers (called nodes or members) to work together as a cluster. You can use Red Hat Cluster to suit your clustering needs (for example, setting up a cluster for sharing files on a GFS file system or setting up service failover).

1.1. Configuration Basics

To set up a cluster, you must connect the nodes to certain cluster hardware and configure the nodes into the cluster environment. This chapter provides an overview of cluster configuration and management, and of the tools available for configuring and managing a Red Hat Cluster.

Configuring and managing a Red Hat Cluster consists of the following basic steps:

1. Setting up hardware. Refer to Section 1.1.1, “Setting Up Hardware”.

2. Installing Red Hat Cluster software. Refer to Section 1.1.2, “Installing Red Hat Cluster software”.

3. Configuring Red Hat Cluster Software. Refer to Section 1.1.3, “Configuring Red Hat Cluster Software”.

1.1.1. Setting Up Hardware

Setting up hardware consists of connecting cluster nodes to other hardware required to run a Red Hat Cluster. The amount and type of hardware varies according to the purpose and availability requirements of the cluster. Typically, an enterprise-level cluster requires the following types of hardware (refer to Figure 1.1, “Red Hat Cluster Hardware Overview”).

• Cluster nodes — Computers that are capable of running Red Hat Enterprise Linux 4 software, with at least 1GB of RAM.

• Ethernet switch or hub for public network — This is required for client access to the cluster.

• Ethernet switch or hub for private network — This is required for communication among the cluster nodes and other cluster hardware such as network power switches and Fibre Channel switches.

• Network power switch — A network power switch is recommended to perform fencing in an enterprise-level cluster.

• Fibre Channel switch — A Fibre Channel switch provides access to Fibre Channel storage. Other options are available for storage according to the type of storage interface; for example, iSCSI or GNBD. A Fibre Channel switch can be configured to perform fencing.

• Storage — Some type of storage is required for a cluster. The type required depends on the purpose of the cluster.

For considerations about hardware and other cluster configuration concerns, refer to Chapter 2, Before Configuring a Red Hat Cluster or check with an authorized Red Hat representative.
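Before adding a computer as a cluster node, you may want to confirm the RAM requirement listed above. The following Python sketch reads /proc/meminfo and compares the MemTotal value with 1 GB. It is an illustrative pre-flight check, not a Red Hat tool; the function names are hypothetical, and it assumes that 1 GB means 1024 × 1024 kB and that MemTotal is reported in kB, as the Linux kernel does.

```python
"""Hypothetical pre-flight check: does this node meet the 1 GB RAM minimum?"""
import os

# 1 GB expressed in kB (assumption: binary gigabyte, 1024 * 1024 kB).
MIN_RAM_KB = 1024 * 1024


def mem_total_kb(meminfo_text: str) -> int:
    """Return the MemTotal value, in kB, from /proc/meminfo-style text."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            # A typical line looks like: "MemTotal:      2059516 kB"
            return int(line.split()[1])
    raise ValueError("MemTotal line not found")


def meets_ram_minimum(meminfo_text: str) -> bool:
    """True if MemTotal is at least MIN_RAM_KB."""
    return mem_total_kb(meminfo_text) >= MIN_RAM_KB


if __name__ == "__main__":
    # Only read the real file when running on a Linux system.
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            text = f.read()
        status = "OK" if meets_ram_minimum(text) else "below the 1 GB minimum"
        print(f"MemTotal: {mem_total_kb(text)} kB ({status})")
```

MemTotal excludes memory reserved by firmware and the kernel, so a node with exactly 1 GB installed can report slightly less and fail this strict comparison. Treat a near miss as a prompt to check the installed hardware rather than as a definitive answer.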

Page 12

Chapter 1. Red Hat Cluster Configuration and Management Overview

2

Figure 1.1. Red Hat Cluster Hardware Overview

1.1.2. Installing Red Hat Cluster software

To install Red Hat Cluster software, you must have entitlements for the software. If you are using

the Conga configuration GUI, you can let it install the cluster software. If you are using other tools

to configure the cluster, secure and install the software as you would with Red Hat Enterprise Linux

software.

1.1.3. Configuring Red Hat Cluster Software

Configuring Red Hat Cluster software consists of using configuration tools to specify the relationship

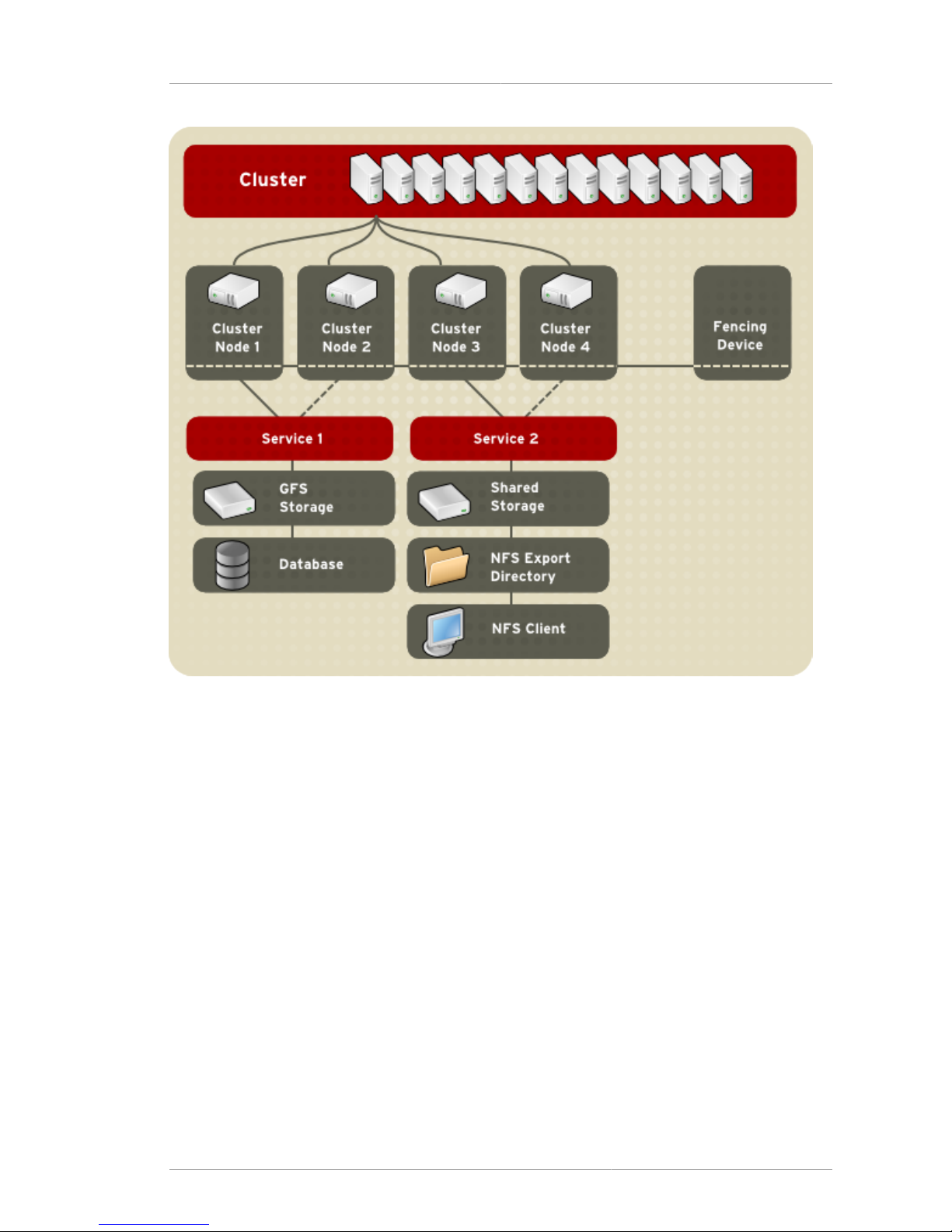

among the cluster components. Figure 1.2, “Cluster Configuration Structure” shows an example of the

hierarchical relationship among cluster nodes, high-availability services, and resources. The cluster

nodes are connected to one or more fencing devices. Nodes can be grouped into a failover domain for

a cluster service. The services comprise resources such as NFS exports, IP addresses, and shared

GFS partitions.


Figure 1.2. Cluster Configuration Structure
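To make the hierarchy in Figure 1.2 concrete, the following Python sketch models nodes with their fence devices, a failover domain, and a service built from resources, plus a helper that chooses where the service should run. It is a simplified, hypothetical model rather than the behavior of the real resource manager: it assumes an ordered failover domain in which priority 1 is the most preferred node, and it ignores other policies (such as restricted domains) that a real cluster applies.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    fence_devices: List[str]  # each node connects to one or more fencing devices
    online: bool = True


@dataclass
class Resource:
    kind: str   # illustrative labels such as "ip", "fs", "nfsexport"
    value: str


@dataclass
class FailoverDomain:
    name: str
    priorities: Dict[str, int]  # node name -> priority (assumption: 1 = most preferred)


@dataclass
class Service:
    name: str
    domain: FailoverDomain
    resources: List[Resource] = field(default_factory=list)


def pick_node(service: Service, nodes: Dict[str, Node]) -> Optional[str]:
    """Return the online domain member with the lowest priority number, or None.

    Ties are broken alphabetically by node name.
    """
    candidates = [
        (priority, name)
        for name, priority in service.domain.priorities.items()
        if name in nodes and nodes[name].online
    ]
    return min(candidates)[1] if candidates else None


if __name__ == "__main__":
    nodes = {n: Node(n, ["apc-switch"]) for n in ("node-a", "node-b", "node-c")}
    web = Service(
        "web",
        FailoverDomain("web-domain", {"node-a": 1, "node-b": 2, "node-c": 3}),
        [Resource("ip", "10.0.0.50"), Resource("fs", "/var/www")],
    )
    print(pick_node(web, nodes))    # node-a
    nodes["node-a"].online = False  # simulate a failed node
    print(pick_node(web, nodes))    # node-b
```

The node names, the fence device name, and the resource values are placeholders chosen for illustration.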

The following cluster configuration tools are available with Red Hat Cluster:

• Conga — This is a comprehensive user interface for installing, configuring, and managing Red Hat clusters, computers, and storage attached to clusters and computers.

• system-config-cluster — This is a user interface for configuring and managing a Red Hat cluster.

• Command line tools — This is a set of command line tools for configuring and managing a Red Hat cluster.

A brief overview of each configuration tool is provided in the following sections:

• Section 1.2, “Conga”

• Section 1.3, “system-config-cluster Cluster Administration GUI”

• Section 1.4, “Command Line Administration Tools”

In addition, information about using Conga and system-config-cluster is provided in subsequent chapters of this document. Information about the command line tools is available in the man pages for the tools.


1.2. Conga

Conga is an integrated set of software components that provides centralized configuration and management of Red Hat clusters and storage. Conga provides the following major features:

• One Web interface for managing cluster and storage

• Automated Deployment of Cluster Data and Supporting Packages

• Easy Integration with Existing Clusters

• No Need to Re-Authenticate

• Integration of Cluster Status and Logs

• Fine-Grained Control over User Permissions

The primary components in Conga are luci and ricci, which are separately installable. luci is a server that runs on one computer and communicates with multiple clusters and computers via ricci. ricci is an agent that runs on each computer (either a cluster member or a standalone computer) managed by Conga.

luci is accessible through a Web browser and provides three major functions that are accessible through the following tabs:

• homebase — Provides tools for adding and deleting computers, adding and deleting users, and configuring user privileges. Only a system administrator is allowed to access this tab.

• cluster — Provides tools for creating and configuring clusters. Each instance of luci lists clusters that have been set up with that luci. A system administrator can administer all clusters listed on this tab. Other users can administer only clusters that the user has permission to manage (granted by an administrator).

• storage — Provides tools for remote administration of storage. With the tools on this tab, you can manage storage on computers whether they belong to a cluster or not.
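The access rules for the homebase and cluster tabs can be summarized in a few lines. The following Python sketch is a hypothetical model of those rules, not luci's implementation; the class and function names are invented for illustration. The storage tab is not modeled, because the text above does not state a permission rule for it.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class LuciUser:
    """Hypothetical stand-in for a luci user account."""
    name: str
    is_admin: bool = False
    # Clusters an administrator has granted this user permission to manage.
    granted_clusters: Set[str] = field(default_factory=set)


def can_use_homebase(user: LuciUser) -> bool:
    """homebase tab: only a system administrator may access it."""
    return user.is_admin


def manageable_clusters(user: LuciUser, listed_clusters: Set[str]) -> Set[str]:
    """cluster tab: an administrator manages every cluster this luci lists;
    any other user manages only the listed clusters they were granted."""
    if user.is_admin:
        return set(listed_clusters)
    return listed_clusters & user.granted_clusters
```

For example, a non-administrator granted access to one listed cluster and one unlisted cluster would see only the listed one on the cluster tab.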

To administer a cluster or storage, an administrator adds (or registers) a cluster or a computer to a luci server. When a cluster or a computer is registered with luci, the FQDN hostname or IP address of each computer is stored in a luci database.

You can populate the database of one luci instance from another luci instance. That capability provides a means of replicating a luci server instance and provides an efficient upgrade and testing path. When you install an instance of luci, its database is empty. However, you can import part or all of a luci database from an existing luci server when deploying a new luci server.

Each luci instance has one user at initial installation: admin. Only the admin user may add systems to a luci server. Also, the admin user can create additional user accounts and determine which users are allowed to access clusters and computers registered in the luci database. It is possible to import users as a batch operation in a new luci server, just as it is possible to import clusters and computers.
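The registration and import behavior described in the last three paragraphs can be pictured as operations on a small data model. The following Python sketch is a hypothetical model, not luci's actual database schema or API: it records the FQDN hostname or IP address of each registered computer, starts with only the admin user, lets only admin register systems, and imports either selected clusters (part) or everything (all), optionally including users.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Set


@dataclass
class LuciDatabase:
    """Hypothetical model of what a luci server records (not luci's real schema)."""

    # system name -> FQDN hostname or IP address, stored at registration time
    systems: Dict[str, str] = field(default_factory=dict)
    # cluster name -> names of its member systems
    clusters: Dict[str, Set[str]] = field(default_factory=dict)
    # a fresh installation has exactly one user: admin
    users: Set[str] = field(default_factory=lambda: {"admin"})

    def register_system(self, acting_user: str, name: str, address: str) -> None:
        """Add a computer; only the admin user may do this."""
        if acting_user != "admin":
            raise PermissionError("only the admin user may add systems")
        self.systems[name] = address

    def import_from(self, other: "LuciDatabase",
                    clusters: Optional[Iterable[str]] = None,
                    include_users: bool = True) -> None:
        """Copy selected clusters (part) or everything (all) from another database."""
        if clusters is None:
            # Import all: every cluster plus standalone computers.
            self.systems.update(other.systems)
            clusters = list(other.clusters)
        for cname in clusters:
            members = other.clusters[cname]
            self.clusters[cname] = set(members)
            for member in members:
                self.systems[member] = other.systems[member]
        if include_users:
            # Batch user import, analogous to importing clusters and computers.
            self.users |= other.users
```

A new LuciDatabase() starts empty apart from admin, which matches the state of a freshly installed luci instance described above.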

When a computer is added to a luci server to be administered, authentication is done once. No authentication is necessary from then on (unless the certificate used is revoked by a CA). After that, you can remotely configure and manage clusters and storage through the luci user interface. luci and ricci communicate with each other via XML.
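The "authenticate once, then trust the certificate unless it is revoked" behavior can be illustrated with a small trust store. The following Python sketch is a conceptual, hypothetical model and not how luci or ricci are implemented: the class name, the password_ok flag (standing in for whatever one-time authentication step is used), and the SHA-256 fingerprinting of certificate bytes are all assumptions made for illustration.

```python
import hashlib
from typing import Dict, Set


class TrustStore:
    """Hypothetical model: authenticate a computer once, then trust its certificate."""

    def __init__(self) -> None:
        self._pinned: Dict[str, str] = {}  # host -> certificate fingerprint
        self.revoked: Set[str] = set()     # fingerprints revoked by a CA

    @staticmethod
    def fingerprint(cert_der: bytes) -> str:
        """Identify a certificate by the SHA-256 digest of its bytes."""
        return hashlib.sha256(cert_der).hexdigest()

    def register(self, host: str, cert_der: bytes, password_ok: bool) -> None:
        """The one-time authentication step performed when a computer is added."""
        if not password_ok:
            raise PermissionError(f"authentication to {host} failed")
        self._pinned[host] = self.fingerprint(cert_der)

    def is_trusted(self, host: str, cert_der: bytes) -> bool:
        """Later connections need no re-authentication while the pinned,
        unrevoked certificate is presented."""
        fp = self.fingerprint(cert_der)
        return self._pinned.get(host) == fp and fp not in self.revoked
```

The design choice shown is certificate pinning: after the single authentication, only the presented certificate is checked, and adding its fingerprint to the revoked set forces the host back through registration.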


The following figures show sample displays of the three major luci tabs: homebase, cluster, and storage.

For more information about Conga, refer to Chapter 3, Configuring Red Hat Cluster With Conga, Chapter 4, Managing Red Hat Cluster With Conga, and the online help available with the luci server.

Figure 1.3. luci homebase Tab

Figure 1.4. luci cluster Tab


Figure 1.5. luci storage Tab

1.3. system-config-cluster Cluster Administration GUI

This section provides an overview of the cluster administration graphical user interface (GUI) available with Red Hat Cluster Suite: system-config-cluster. It is for use with the cluster infrastructure and the high-availability service management components. system-config-cluster consists of two major functions: the Cluster Configuration Tool and the Cluster Status Tool. The Cluster Configuration Tool provides the capability to create, edit, and propagate the cluster configuration file (/etc/cluster/cluster.conf). The Cluster Status Tool provides the capability to manage high-availability services. The following sections summarize those functions.

Note

While system-config-cluster provides several convenient tools for configuring and managing a Red Hat Cluster, the newer, more comprehensive tool, Conga, provides more convenience and flexibility than system-config-cluster.
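The Cluster Configuration Tool reads and writes /etc/cluster/cluster.conf, which is an XML file. To show the general shape of that file, the following Python sketch builds a minimal skeleton with the standard-library xml.etree.ElementTree module: cluster nodes with a fence method, a shared fence device, and a resource manager (rm) section that holds a failover domain, an IP resource, and a service referencing it. The element and attribute names reflect common RHEL 4 cluster.conf usage but should be treated as an assumption; the cluster name, node names, addresses, and fence device values are placeholders. Use the Cluster Configuration Tool or Conga to create and propagate a real configuration. ET.indent requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET
from typing import List


def build_cluster_conf(name: str, nodes: List[str], fence_name: str, service_ip: str) -> str:
    """Return a minimal, illustrative cluster.conf document as a string."""
    cluster = ET.Element("cluster", name=name, config_version="1")

    # Cluster nodes, each fenced through the same (placeholder) power switch.
    clusternodes = ET.SubElement(cluster, "clusternodes")
    for node in nodes:
        cn = ET.SubElement(clusternodes, "clusternode", name=node, votes="1")
        method = ET.SubElement(ET.SubElement(cn, "fence"), "method", name="1")
        ET.SubElement(method, "device", name=fence_name)

    # Fence devices are defined once and referenced by name from each node.
    fencedevices = ET.SubElement(cluster, "fencedevices")
    ET.SubElement(fencedevices, "fencedevice", name=fence_name,
                  agent="fence_apc", ipaddr="192.168.0.10", login="apc")  # placeholders

    # Resource manager section: failover domain, shared resource, service.
    rm = ET.SubElement(cluster, "rm")
    domains = ET.SubElement(rm, "failoverdomains")
    fd = ET.SubElement(domains, "failoverdomain", name="web-domain",
                       ordered="1", restricted="0")
    for priority, node in enumerate(nodes, start=1):
        ET.SubElement(fd, "failoverdomainnode", name=node, priority=str(priority))
    resources = ET.SubElement(rm, "resources")
    ET.SubElement(resources, "ip", address=service_ip, monitor_link="1")
    service = ET.SubElement(rm, "service", name="web", domain="web-domain", autostart="1")
    ET.SubElement(service, "ip", ref=service_ip)  # reuse the shared IP resource

    ET.indent(cluster)  # pretty-print (Python 3.9+)
    return ET.tostring(cluster, encoding="unicode")


if __name__ == "__main__":
    print(build_cluster_conf("example",
                             ["node-a.example.com", "node-b.example.com"],
                             "apc1", "10.0.0.50"))
```

The nesting mirrors the hierarchy that the Cluster Configuration Tool displays: nodes and fence devices at the top level, with failover domains, resources, and services grouped under the resource manager.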

Page 17

Cluster Configuration Tool

7

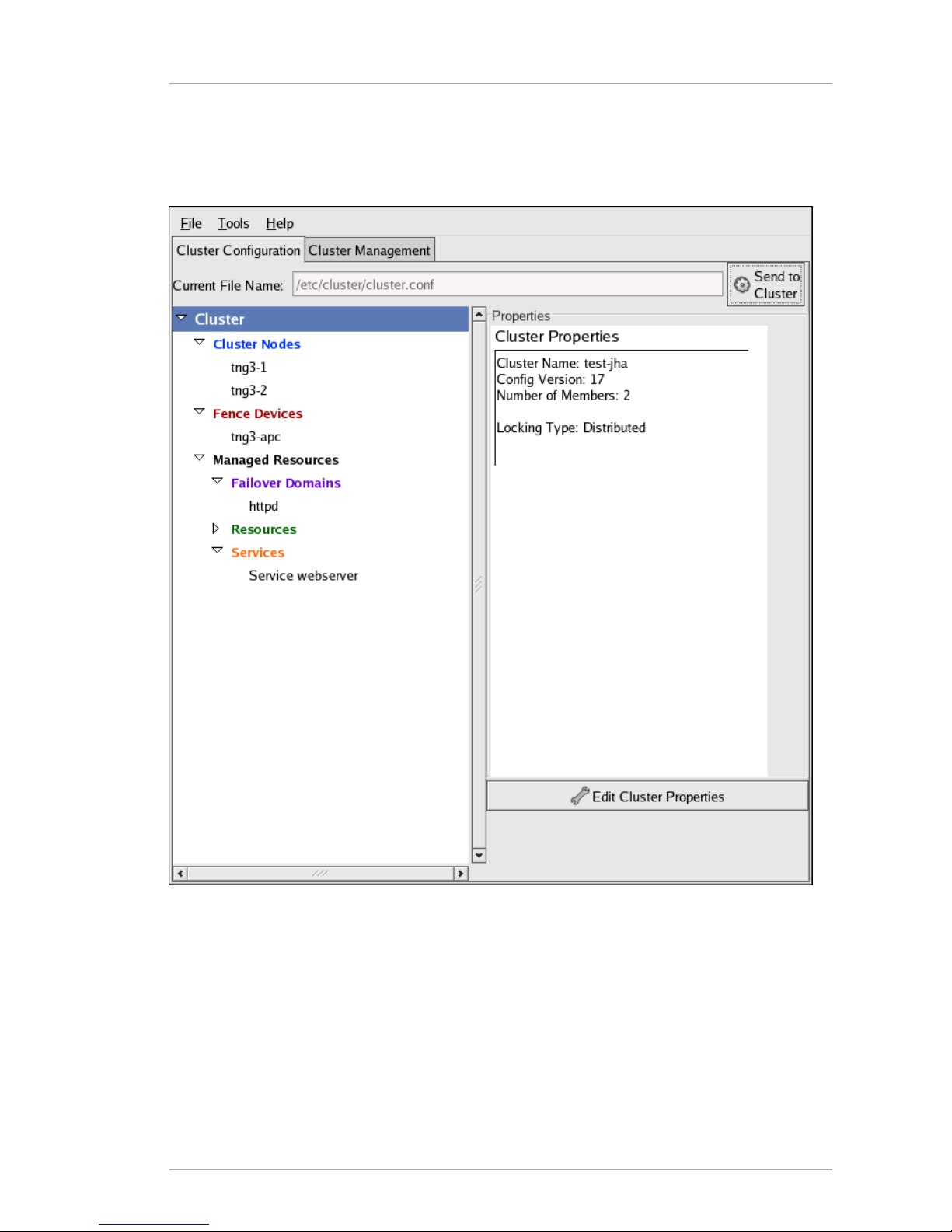

1.3.1. Cluster Configuration Tool

You can access the Cluster Configuration Tool (Figure 1.6, “Cluster Configuration Tool”) through the

Cluster Configuration tab in the Cluster Administration GUI.

Figure 1.6. Cluster Configuration Tool

The Cluster Configuration Tool represents cluster configuration components in the configuration file (/etc/cluster/cluster.conf) with a hierarchical graphical display in the left panel. A triangle icon to the left of a component name indicates that the component has one or more subordinate components assigned to it. Clicking the triangle icon expands and collapses the portion of the tree below a component. The components displayed in the GUI are summarized as follows:

• Cluster Nodes — Displays cluster nodes. Nodes are represented by name as subordinate elements under Cluster Nodes. Using configuration buttons at the bottom of the right frame (below Properties), you can add nodes, delete nodes, edit node properties, and configure fencing methods for each node.


• Fence Devices — Displays fence devices. Fence devices are represented as subordinate elements under Fence Devices. Using configuration buttons at the bottom of the right frame (below Properties), you can add fence devices, delete fence devices, and edit fence-device properties. Fence devices must be defined before you can configure fencing (with the Manage Fencing For This Node button) for each node.

• Managed Resources — Displays failover domains, resources, and services.

• Failover Domains — For configuring one or more subsets of cluster nodes used to run a high-

availability service in the event of a node failure. Failover domains are represented as subordinate

elements under Failover Domains. Using configuration buttons at the bottom of the right frame

(below Properties), you can create failover domains (when Failover Domains is selected) or edit

failover domain properties (when a failover domain is selected).

• Resources — For configuring shared resources to be used by high-availability services. Shared

resources consist of file systems, IP addresses, NFS mounts and exports, and user-created

scripts that are available to any high-availability service in the cluster. Resources are represented

as subordinate elements under Resources. Using configuration buttons at the bottom of the

right frame (below Properties), you can create resources (when Resources is selected) or edit

resource properties (when a resource is selected).

Note

The Cluster Configuration Tool can also configure private resources. A private resource is a resource that is configured for use with only one service. You can configure a private resource within a Service component in the GUI.

• Services: For creating and configuring high-availability services. A service is configured by assigning resources (shared or private), assigning a failover domain, and defining a recovery policy for the service. Services are represented as subordinate elements under Services. Using configuration buttons at the bottom of the right frame (below Properties), you can create services (when Services is selected) or edit service properties (when a service is selected).

1.3.2. Cluster Status Tool

You can access the Cluster Status Tool (Figure 1.7, “Cluster Status Tool”) through the Cluster Management tab in the Cluster Administration GUI.


Figure 1.7. Cluster Status Tool

The nodes and services displayed in the Cluster Status Tool are determined by the cluster configuration file (/etc/cluster/cluster.conf). You can use the Cluster Status Tool to enable, disable, restart, or relocate a high-availability service.
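The same four operations are available from the command line through clusvcadm (listed in Table 1.1, Section 1.4). The sketch below is illustrative only: the helper name svc_cmd and the service and member names are hypothetical, and the option letters (-e enable, -d disable, -R restart, -r relocate, -m target member) are assumptions based on the clusvcadm(8) man page that you should confirm on your release. The helper prints the command instead of running it, so you can review it before executing it on a cluster node.

```shell
#!/bin/sh
# Hypothetical helper: print the clusvcadm command that matches a
# Cluster Status Tool action. It only prints; it does not run anything.
# Option letters are assumed from clusvcadm(8); verify on your system.
svc_cmd() {
    action=$1 service=$2 member=$3
    case "$action" in
        enable)  echo "clusvcadm -e $service" ;;
        disable) echo "clusvcadm -d $service" ;;
        restart) echo "clusvcadm -R $service" ;;
        relocate)
            # A target member is optional; without one, no -m is added.
            if [ -n "$member" ]; then
                echo "clusvcadm -r $service -m $member"
            else
                echo "clusvcadm -r $service"
            fi ;;
        *)
            echo "unknown action: $action" >&2
            return 1 ;;
    esac
}

# Example (hypothetical names):
#   svc_cmd relocate webservice node2.example.com
#   -> clusvcadm -r webservice -m node2.example.com
```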

1.4. Command Line Administration Tools

In addition to Conga and the system-config-cluster Cluster Administration GUI, command line tools are available for administering the cluster infrastructure and the high-availability service management components. The command line tools are used by the Cluster Administration GUI and init scripts supplied by Red Hat. Table 1.1, “Command Line Tools” summarizes the command line tools.


| Command Line Tool | Used With | Purpose |
|---|---|---|
| ccs_tool (Cluster Configuration System Tool) | Cluster Infrastructure | ccs_tool is a program for making online updates to the cluster configuration file. It provides the capability to create and modify cluster infrastructure components (for example, creating a cluster, adding and removing a node). For more information about this tool, refer to the ccs_tool(8) man page. |
| cman_tool (Cluster Management Tool) | Cluster Infrastructure | cman_tool is a program that manages the CMAN cluster manager. It provides the capability to join a cluster, leave a cluster, kill a node, or change the expected quorum votes of a node in a cluster. cman_tool is available with DLM clusters only. For more information about this tool, refer to the cman_tool(8) man page. |
| gulm_tool (Cluster Management Tool) | Cluster Infrastructure | gulm_tool is a program used to manage GULM. It provides an interface to lock_gulmd, the GULM lock manager. gulm_tool is available with GULM clusters only. For more information about this tool, refer to the gulm_tool(8) man page. |
| fence_tool (Fence Tool) | Cluster Infrastructure | fence_tool is a program used to join or leave the default fence domain. Specifically, it starts the fence daemon (fenced) to join the domain and kills fenced to leave the domain. fence_tool is available with DLM clusters only. For more information about this tool, refer to the fence_tool(8) man page. |
| clustat (Cluster Status Utility) | High-availability Service Management Components | The clustat command displays the status of the cluster. It shows membership information, quorum view, and the state of all configured user services. For more information about this tool, refer to the clustat(8) man page. |
| clusvcadm (Cluster User Service Administration Utility) | High-availability Service Management Components | The clusvcadm command allows you to enable, disable, relocate, and restart high-availability services in a cluster. For more information about this tool, refer to the clusvcadm(8) man page. |

Table 1.1. Command Line Tools
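Because three of the tools in Table 1.1 depend on the lock manager the cluster uses, it can help to know which ones apply before working on a node. The following minimal sketch simply encodes the table (cman_tool and fence_tool for DLM clusters only, gulm_tool for GULM clusters only); the function name tools_for is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list the Table 1.1 tools that apply to a cluster
# using the given lock manager ("dlm" or "gulm").
tools_for() {
    # Tools listed in Table 1.1 without a lock-manager restriction.
    common="ccs_tool clustat clusvcadm"
    case "$1" in
        dlm)  echo "$common cman_tool fence_tool" ;;
        gulm) echo "$common gulm_tool" ;;
        *)    echo "lock manager must be dlm or gulm" >&2; return 1 ;;
    esac
}

# Example: tools_for gulm
#   -> ccs_tool clustat clusvcadm gulm_tool
```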

Chapter 2. Before Configuring a Red Hat Cluster

This chapter describes tasks to perform and considerations to make before installing and configuring a Red Hat Cluster, and consists of the following sections:

• Section 2.1, “Compatible Hardware”

• Section 2.2, “Enabling IP Ports”

• Section 2.3, “Configuring ACPI For Use with Integrated Fence Devices”

• Section 2.4, “Configuring max_luns”

• Section 2.5, “Considerations for Using Quorum Disk”

• Section 2.6, “Red Hat Cluster Suite and SELinux”

• Section 2.7, “Considerations for Using Conga”

• Section 2.8, “General Configuration Considerations”

2.1. Compatible Hardware

Before configuring Red Hat Cluster software, make sure that your cluster uses appropriate hardware (for example, supported fence devices, storage devices, and Fibre Channel switches). Refer to the hardware configuration guidelines at http://www.redhat.com/cluster_suite/hardware/ for the most current hardware compatibility information.

2.2. Enabling IP Ports

Before deploying a Red Hat Cluster, you must enable certain IP ports on the cluster nodes and on computers that run luci (the Conga user interface server). The following sections specify the IP ports to be enabled and provide examples of iptables rules for enabling the ports:

• Section 2.2.1, “Enabling IP Ports on Cluster Nodes”

• Section 2.2.2, “Enabling IP Ports on Computers That Run luci”

• Section 2.2.3, “Examples of iptables Rules”

2.2.1. Enabling IP Ports on Cluster Nodes

To allow Red Hat Cluster nodes to communicate with each other, you must enable the IP ports assigned to certain Red Hat Cluster components. Table 2.1, “Enabled IP Ports on Red Hat Cluster Nodes” lists the IP port numbers, their respective protocols, the components to which the port numbers are assigned, and references to iptables rule examples. At each cluster node, enable IP ports according to Table 2.1, “Enabled IP Ports on Red Hat Cluster Nodes”. (All examples are in Section 2.2.3, “Examples of iptables Rules”.)

| IP Port Number | Protocol | Component | Reference to Example of iptables Rules |
|---|---|---|---|
| 6809 | UDP | cman (Cluster Manager), for use in clusters with Distributed Lock Manager (DLM) selected | Example 2.1, “Port 6809: cman” |
| 11111 | TCP | ricci (part of Conga remote agent) | Example 2.3, “Port 11111: ricci (Cluster Node and Computer Running luci)” |
| 14567 | TCP | gnbd (Global Network Block Device) | Example 2.4, “Port 14567: gnbd” |
| 16851 | TCP | modclusterd (part of Conga remote agent) | Example 2.5, “Port 16851: modclusterd” |
| 21064 | TCP | dlm (Distributed Lock Manager), for use in clusters with Distributed Lock Manager (DLM) selected | Example 2.6, “Port 21064: dlm” |
| 40040, 40042, 41040 | TCP | lock_gulmd (GULM daemon), for use in clusters with Grand Unified Lock Manager (GULM) selected | Example 2.7, “Ports 40040, 40042, 41040: lock_gulmd” |
| 41966, 41967, 41968, 41969 | TCP | rgmanager (high-availability service management) | Example 2.8, “Ports 41966, 41967, 41968, 41969: rgmanager” |
| 50006, 50008, 50009 | TCP | ccsd (Cluster Configuration System daemon) | Example 2.9, “Ports 50006, 50008, 50009: ccsd (TCP)” |
| 50007 | UDP | ccsd (Cluster Configuration System daemon) | Example 2.10, “Port 50007: ccsd (UDP)” |

Table 2.1. Enabled IP Ports on Red Hat Cluster Nodes
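Which rows of Table 2.1 apply to a node depends on the lock manager: the cman and dlm rows are for DLM clusters, and the lock_gulmd row is for GULM clusters. The sketch below prints the resulting port/protocol list for each case. It assumes that gnbd and the Conga agents (ricci and modclusterd) are used on every node; the function name cluster_ports is hypothetical, so adjust the list to the components your site actually runs.

```shell
#!/bin/sh
# Hypothetical helper: print "port/protocol" entries from Table 2.1
# for a node in a cluster that uses the given lock manager.
# Assumes gnbd, ricci, and modclusterd run on the node; remove those
# entries if your site does not use them.
cluster_ports() {
    # Rows of Table 2.1 that apply whatever the lock manager.
    common="11111/tcp 14567/tcp 16851/tcp 41966/tcp 41967/tcp 41968/tcp 41969/tcp 50006/tcp 50007/udp 50008/tcp 50009/tcp"
    case "$1" in
        dlm)  echo "6809/udp 21064/tcp $common" ;;
        gulm) echo "40040/tcp 40042/tcp 41040/tcp $common" ;;
        *)    echo "lock manager must be dlm or gulm" >&2; return 1 ;;
    esac
}
```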

2.2.2. Enabling IP Ports on Computers That Run luci

To allow client computers to communicate with a computer that runs luci (the Conga user interface server), and to allow a computer that runs luci to communicate with ricci in the cluster nodes, you must enable the IP ports assigned to luci and ricci. Table 2.2, “Enabled IP Ports on a Computer That Runs luci” lists the IP port numbers, their respective protocols, the components to which the port numbers are assigned, and references to iptables rule examples. At each computer that runs luci, enable IP ports according to Table 2.2, “Enabled IP Ports on a Computer That Runs luci”. (All examples are in Section 2.2.3, “Examples of iptables Rules”.)

Note

If a cluster node is running luci, port 11111 should already have been enabled.

| IP Port Number | Protocol | Component | Reference to Example of iptables Rules |
|---|---|---|---|
| 8084 | TCP | luci (Conga user interface server) | Example 2.2, “Port 8084: luci (Cluster Node or Computer Running luci)” |
| 11111 | TCP | ricci (Conga remote agent) | Example 2.3, “Port 11111: ricci (Cluster Node and Computer Running luci)” |

Table 2.2. Enabled IP Ports on a Computer That Runs luci

2.2.3. Examples of iptables Rules

This section provides iptables rule examples for enabling IP ports on Red Hat Cluster nodes and computers that run luci. The examples enable IP ports on a computer with the IP address 10.10.10.200, accepting connections from hosts on the 10.10.10.0/24 subnet.

Note

Examples are for cluster nodes unless otherwise noted in the example titles.

-A INPUT -m state --state NEW -p udp -s 10.10.10.0/24 -d 10.10.10.200 --dport 6809 -j ACCEPT

Example 2.1. Port 6809: cman

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 8084 -j ACCEPT

Example 2.2. Port 8084: luci (Cluster Node or Computer Running luci)

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 11111 -j ACCEPT

Example 2.3. Port 11111: ricci (Cluster Node and Computer Running luci)

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 14567 -j ACCEPT

Example 2.4. Port 14567: gnbd

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 16851 -j ACCEPT

Example 2.5. Port 16851: modclusterd

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 21064 -j ACCEPT

Example 2.6. Port 21064: dlm


-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 40040,40042,41040 -j ACCEPT

Example 2.7. Ports 40040, 40042, 41040: lock_gulmd

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 41966,41967,41968,41969 -j ACCEPT

Example 2.8. Ports 41966, 41967, 41968, 41969: rgmanager

-A INPUT -m state --state NEW -m multiport -p tcp -s 10.10.10.0/24 -d 10.10.10.200 --dports 50006,50008,50009 -j ACCEPT

Example 2.9. Ports 50006, 50008, 50009: ccsd (TCP)

-A INPUT -m state --state NEW -m multiport -p udp -s 10.10.10.0/24 -d 10.10.10.200 --dports 50007 -j ACCEPT

Example 2.10. Port 50007: ccsd (UDP)
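If you open many ports, generating the rules can reduce typing errors. The following is a minimal sketch, not a Red Hat tool: make_rule and dlm_node_rules are hypothetical names, and the defaults for SUBNET and HOST are this section's example addresses. It prints rules in the iptables-save format used in the examples above (the format of /etc/sysconfig/iptables). It matches the local host with -d rather than -i, because the iptables -i option takes a network interface name, not an IP address.

```shell
#!/bin/sh
# Hypothetical generator for rules in iptables-save format.
# SUBNET and HOST default to the example addresses in this section.
SUBNET=${SUBNET:-10.10.10.0/24}
HOST=${HOST:-10.10.10.200}

# Print one rule accepting new connections from SUBNET to HOST.
# $1 = protocol (tcp or udp), $2 = port or comma-separated port list.
make_rule() {
    proto=$1 ports=$2
    printf '%s\n' "-A INPUT -m state --state NEW -m multiport -p $proto -s $SUBNET -d $HOST --dports $ports -j ACCEPT"
}

# Rules for a node in a DLM cluster (DLM rows of Table 2.1).
dlm_node_rules() {
    make_rule udp 6809
    make_rule tcp 11111,14567,16851,21064
    make_rule tcp 41966,41967,41968,41969
    make_rule tcp 50006,50008,50009
    make_rule udp 50007
}
```

Review the printed rules before adding them to your firewall configuration.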

2.3. Configuring ACPI For Use with Integrated Fence Devices

If your cluster uses integrated fence devices, you must configure ACPI (Advanced Configuration and Power Interface) to ensure immediate and complete fencing.

Note

For the most current information about integrated fence devices supported by Red Hat Cluster Suite, refer to http://www.redhat.com/cluster_suite/hardware/.

If a cluster node is configured to be fenced by an integrated fence device, disable ACPI Soft-Off for that node. Disabling ACPI Soft-Off allows an integrated fence device to turn off a node immediately and completely rather than attempting a clean shutdown (for example, shutdown -h now). Otherwise, if ACPI Soft-Off is enabled, an integrated fence device can take four or more seconds to turn off a node (refer to the note that follows). In addition, if ACPI Soft-Off is enabled and a node panics or freezes during shutdown, an integrated fence device may not be able to turn off the node. Under those circumstances, fencing is delayed or unsuccessful. Consequently, when a node is fenced with an integrated fence device and ACPI Soft-Off is enabled, a cluster recovers slowly or requires administrative intervention to recover.

Note

The amount of time required to fence a node depends on the integrated fence device used. Some integrated fence devices perform the equivalent of pressing and holding the power button; therefore, the fence device turns off the node in four to five seconds. Other integrated fence devices perform the equivalent of pressing the power button momentarily, relying on the operating system to turn off the node; therefore, the fence device turns off the node in a time span much longer than four to five seconds.

To disable ACPI Soft-Off, use chkconfig management and verify that the node turns off immediately when fenced. The preferred way to disable ACPI Soft-Off is with chkconfig management; however, if that method is not satisfactory for your cluster, you can disable ACPI Soft-Off with one of the following alternate methods:

• Changing the BIOS setting to "instant-off" or an equivalent setting that turns off the node without delay

Note

Disabling ACPI Soft-Off with the BIOS may not be possible with some computers.

• Appending acpi=off to the kernel boot command line of the /boot/grub/grub.conf file

Important

This method completely disables ACPI; some computers do not boot correctly if ACPI is completely disabled. Use this method only if the other methods are not effective for your cluster.

The following sections provide procedures for the preferred method and alternate methods of disabling ACPI Soft-Off:

• Section 2.3.1, “Disabling ACPI Soft-Off with chkconfig Management” — Preferred method

• Section 2.3.2, “Disabling ACPI Soft-Off with the BIOS” — First alternate method

• Section 2.3.3, “Disabling ACPI Completely in the grub.conf File” — Second alternate method

2.3.1. Disabling ACPI Soft-Off with chkconfig Management

You can use chkconfig management to disable ACPI Soft-Off either by removing the ACPI daemon (acpid) from chkconfig management or by turning off acpid.

Note

This is the preferred method of disabling ACPI Soft-Off.

Disable ACPI Soft-Off with chkconfig management at each cluster node as follows:

1. Run either of the following commands:

• chkconfig --del acpid — This command removes acpid from chkconfig management.

— OR —


• chkconfig --level 2345 acpid off — This command turns off acpid.

2. Reboot the node.

3. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with the fence_node command or Conga.
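Before rebooting in step 2, you may want to confirm that acpid no longer starts in runlevels 2 through 5. The sketch below assumes the usual chkconfig --list layout, in which the service name is followed by fields such as 3:on or 3:off; the function name acpid_off_2345 is hypothetical. It checks the text you pass to it, so you can feed it the output of chkconfig --list acpid on each node.

```shell
#!/bin/sh
# Hypothetical check: succeed if a "chkconfig --list acpid" line does
# not show acpid on in runlevels 2-5. Assumes "N:on"/"N:off" fields.
acpid_off_2345() {
    line=$1
    for level in 2 3 4 5; do
        case "$line" in
            *"$level:on"*) return 1 ;;   # still enabled at this level
        esac
    done
    return 0
}

# Usage on a node, after step 1:
#   if acpid_off_2345 "$(chkconfig --list acpid 2>&1)"; then
#       echo "acpid will not start in runlevels 2-5"
#   fi
```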

2.3.2. Disabling ACPI Soft-Off with the BIOS

The preferred method of disabling ACPI Soft-Off is with chkconfig management (Section 2.3.1, “Disabling ACPI Soft-Off with chkconfig Management”). However, if the preferred method is not effective for your cluster, follow the procedure in this section.

Note

Disabling ACPI Soft-Off with the BIOS may not be possible with some computers.

You can disable ACPI Soft-Off by configuring the BIOS of each cluster node as follows:

1. Reboot the node and start the BIOS CMOS Setup Utility program.

2. Navigate to the Power menu (or equivalent power management menu).

3. At the Power menu, set the Soft-Off by PWR-BTTN function (or equivalent) to Instant-Off (or the equivalent setting that turns off the node via the power button without delay). Example 2.11, “BIOS CMOS Setup Utility: Soft-Off by PWR-BTTN set to Instant-Off” shows a Power menu with ACPI Function set to Enabled and Soft-Off by PWR-BTTN set to Instant-Off.

Note

The equivalents to ACPI Function, Soft-Off by PWR-BTTN, and Instant-Off may vary among computers. However, the objective of this procedure is to configure the BIOS so that the computer is turned off via the power button without delay.

4. Exit the BIOS CMOS Setup Utility program, saving the BIOS configuration.

5. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with the fence_node command or Conga.


+---------------------------------------------|------------------------+
| ACPI Function                [Enabled]      | Item Help              |
| ACPI Suspend Type            [S1(POS)]      |------------------------|
| x Run VGABIOS if S3 Resume   Auto           | Menu Level *           |
| Suspend Mode                 [Disabled]     |                        |
| HDD Power Down               [Disabled]     |                        |
| Soft-Off by PWR-BTTN         [Instant-Off]  |                        |
| CPU THRM-Throttling          [50.0%]        |                        |
| Wake-Up by PCI card          [Enabled]      |                        |
| Power On by Ring             [Enabled]      |                        |
| Wake Up On LAN               [Enabled]      |                        |
| x USB KB Wake-Up From S3     Disabled       |                        |
| Resume by Alarm              [Disabled]     |                        |
| x Date(of Month) Alarm       0              |                        |
| x Time(hh:mm:ss) Alarm       0 : 0 : 0      |                        |
| POWER ON Function            [BUTTON ONLY]  |                        |
| x KB Power ON Password       Enter          |                        |
| x Hot Key Power ON           Ctrl-F1        |                        |
|                                             |                        |
|                                             |                        |
+---------------------------------------------|------------------------+

This example shows ACPI Function set to Enabled, and Soft-Off by PWR-BTTN set to Instant-Off.

Example 2.11. BIOS CMOS Setup Utility: Soft-Off by PWR-BTTN set to Instant-Off


2.3.3. Disabling ACPI Completely in the grub.conf File

The preferred method of disabling ACPI Soft-Off is with chkconfig management (Section 2.3.1, “Disabling ACPI Soft-Off with chkconfig Management”). If the preferred method is not effective for your cluster, you can disable ACPI Soft-Off with the BIOS power management (Section 2.3.2, “Disabling ACPI Soft-Off with the BIOS”). If neither of those methods is effective for your cluster, you can disable ACPI completely by appending acpi=off to the kernel boot command line in the grub.conf file.

Important

This method completely disables ACPI; some computers do not boot correctly if ACPI is completely disabled. Use this method only if the other methods are not effective for your cluster.

You can disable ACPI completely by editing the grub.conf file of each cluster node as follows:

1. Open /boot/grub/grub.conf with a text editor.

2. Append acpi=off to the kernel boot command line in /boot/grub/grub.conf (refer to Example 2.12, “Kernel Boot Command Line with acpi=off Appended to It”).

3. Reboot the node.

4. When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with the fence_node command or Conga.


# grub.conf generated by anaconda
#
# Note that you do not have to rerun grub after making changes to this file
# NOTICE: You have a /boot partition. This means that
# all kernel and initrd paths are relative to /boot/, eg.
# root (hd0,0)
# kernel /vmlinuz-version ro root=/dev/VolGroup00/LogVol00
# initrd /initrd-version.img
#boot=/dev/hda
default=0
timeout=5
serial --unit=0 --speed=115200
terminal --timeout=5 serial console
title Red Hat Enterprise Linux Server (2.6.18-36.el5)
        root (hd0,0)
        kernel /vmlinuz-2.6.18-36.el5 ro root=/dev/VolGroup00/LogVol00 console=ttyS0,115200n8 acpi=off
        initrd /initrd-2.6.18-36.el5.img

In this example, acpi=off has been appended to the kernel boot command line: the line starting with "kernel /vmlinuz-2.6.18-36.el5".

Example 2.12. Kernel Boot Command Line with acpi=off Appended to It
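On several nodes, step 2 of the procedure can be scripted. This sed-based sketch (the function name add_acpi_off is hypothetical) reads a grub.conf on standard input and appends acpi=off to every line whose first word is kernel, leaving lines that already contain acpi=off unchanged. It writes to standard output rather than editing /boot/grub/grub.conf in place, so keep a backup and review the result before installing it.

```shell
#!/bin/sh
# Hypothetical helper: copy a grub.conf from standard input to standard
# output, appending " acpi=off" to each "kernel" line that lacks it.
# Trailing whitespace on those lines is replaced; other lines pass through.
add_acpi_off() {
    sed '/^[[:space:]]*kernel[[:space:]]/{
/acpi=off/!s/[[:space:]]*$/ acpi=off/
}'
}

# Usage (review the output before installing it):
#   cp /boot/grub/grub.conf /boot/grub/grub.conf.bak
#   add_acpi_off < /boot/grub/grub.conf.bak > /boot/grub/grub.conf
```

Because lines that already contain acpi=off are skipped, running the helper twice produces the same file.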

2.4. Configuring max_luns

If RAID storage in your cluster presents multiple LUNs (Logical Unit Numbers), each cluster node must be able to access those LUNs. To enable access to all LUNs presented, configure max_luns in the /etc/modprobe.conf file of each node as follows:

1. Open /etc/modprobe.conf with a text editor.

2. Append the following line to /etc/modprobe.conf. Set N to the highest numbered LUN that is presented by RAID storage.

options scsi_mod max_luns=N

For example, with the following line appended to the /etc/modprobe.conf file, a node can access LUNs numbered as high as 255:

options scsi_mod max_luns=255

3. Save /etc/modprobe.conf.

4. Run mkinitrd to rebuild initrd for the currently running kernel as follows. Set the kernel variable to the currently running kernel:


# cd /boot

# mkinitrd -f -v initrd-kernel.img kernel

For example, the currently running kernel in the following mkinitrd command is 2.6.9-34.0.2.EL:

# mkinitrd -f -v initrd-2.6.9-34.0.2.EL.img 2.6.9-34.0.2.EL

Note

You can determine the currently running kernel by running uname -r.

5. Restart the node.
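Steps 2 and 4 can be combined in a short script. This is a sketch under stated assumptions: the function names are hypothetical; set_max_luns replaces only a line that begins with options scsi_mod max_luns= (any other scsi_mod options on that same line would be lost); and mkinitrd_cmd prints the step 4 command, with uname -r supplying the running kernel, instead of running it. Try set_max_luns on a copy of /etc/modprobe.conf first.

```shell
#!/bin/sh
# Hypothetical helper: make FILE contain exactly one
# "options scsi_mod max_luns=N" line, replacing any existing one.
set_max_luns() {
    file=$1 n=$2
    case "$n" in
        ''|*[!0-9]*) echo "max_luns must be a number" >&2; return 1 ;;
    esac
    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
    # Keep every line except an existing max_luns options line.
    # grep exits 1 when it selects nothing; that is not an error here.
    grep -v '^options scsi_mod max_luns=' "$file" > "$file.tmp" || true
    echo "options scsi_mod max_luns=$n" >> "$file.tmp"
    # Copy back over the original so its ownership and mode are kept.
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Hypothetical helper: print the step 4 mkinitrd command for a kernel
# version (default: the running kernel, from uname -r).
mkinitrd_cmd() {
    kernel=${1:-$(uname -r)}
    echo "mkinitrd -f -v /boot/initrd-$kernel.img $kernel"
}
```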

2.5. Considerations for Using Quorum Disk

Quorum Disk is a disk-based quorum daemon, qdiskd, that provides supplemental heuristics to determine node fitness. With heuristics you can determine factors that are important to the operation of the node in the event of a network partition. For example, in a four-node cluster with a 3:1 split, the three nodes ordinarily "win" automatically because of the three-to-one majority, and the one node is fenced. With qdiskd, however, you can set up heuristics that allow the one node to win based on access to a critical resource (for example, a critical network path). If your cluster requires additional methods of determining node health, then you should configure qdiskd to meet those needs.

Note

Configuring qdiskd is not required unless you have special requirements for node health. An example of a special requirement is an "all-but-one" configuration. In an all-but-one configuration, qdiskd is configured to provide enough quorum votes to maintain quorum even though only one node is working.
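The all-but-one case comes down to vote arithmetic. Assume N nodes with one vote each, a quorum disk with N - 1 votes, and quorum defined as a simple majority of the expected votes, floor(expected / 2) + 1. The expected votes are then 2N - 1, quorum is N, and a single surviving node plus the quorum disk holds exactly N votes. The sketch below checks this arithmetic; the function names are hypothetical, and the majority formula is a simplification, so confirm the exact rules for your release in the qdisk(5) and cman documentation.

```shell
#!/bin/sh
# Sketch of the vote arithmetic for an "all-but-one" qdiskd setup.
# Assumptions: every node has 1 vote, the quorum disk has N-1 votes,
# and quorum is a simple majority: floor(expected_votes / 2) + 1.
quorum_for() {            # $1 = expected votes
    echo $(( $1 / 2 + 1 ))
}

all_but_one() {           # $1 = number of nodes N
    n=$1
    qdisk_votes=$(( n - 1 ))
    expected=$(( n + qdisk_votes ))
    needed=$(quorum_for "$expected")
    survivor=$(( 1 + qdisk_votes ))   # one live node plus the quorum disk
    echo "nodes=$n expected=$expected quorum=$needed survivor=$survivor"
}

# all_but_one 4  ->  nodes=4 expected=7 quorum=4 survivor=4
```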

Important

Overall, heuristics and other qdiskd parameters for your Red Hat Cluster depend on the site environment and special requirements needed. To understand the use of heuristics and other qdiskd parameters, refer to the qdisk(5) man page. If you require assistance understanding and using qdiskd for your site, contact an authorized Red Hat support representative.

If you need to use qdiskd, you should take into account the following considerations:

Cluster node votes

Each cluster node should have the same number of votes.

CMAN membership timeout value

The CMAN membership timeout value (the time a node needs to be unresponsive before CMAN considers that node to be dead, and not a member) should be at least two times that of the qdiskd membership timeout value. This is because the quorum daemon must detect failed nodes on its own, and can take much longer to do so than CMAN. The default value for CMAN membership timeout is 10 seconds. Other site-specific conditions may affect the relationship between the membership timeout values of CMAN and qdiskd. For assistance with adjusting the CMAN membership timeout value, contact an authorized Red Hat support representative.

Fencing

To ensure reliable fencing when using qdiskd, use power fencing. While other types of fencing (such as watchdog timers and software-based solutions to reboot a node internally) can be reliable for clusters not configured with qdiskd, they are not reliable for a cluster configured with qdiskd.

Maximum nodes

A cluster configured with qdiskd supports a maximum of 16 nodes. The limit exists for scalability reasons: increasing the node count increases the amount of synchronous I/O contention on the shared quorum disk device.

Quorum disk device

A quorum disk device should be a shared block device with concurrent read/write access by all nodes in a cluster. The minimum size of the block device is 10 megabytes. Examples of shared block devices that can be used by qdiskd are a multi-port SCSI RAID array, a Fibre Channel RAID SAN, or a RAID-configured iSCSI target. You can create a quorum disk device with mkqdisk, the Cluster Quorum Disk Utility. For information about using the utility, refer to the mkqdisk(8) man page.

Note

Using JBOD as a quorum disk is not recommended. A JBOD cannot provide dependable performance and therefore may not allow a node to write to it quickly enough. If a node is unable to write to a quorum disk device quickly enough, the node is falsely evicted from a cluster.
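The timeout and node-count considerations above can be checked with simple arithmetic before you deploy. The sketch below assumes that the qdiskd membership timeout equals the qdiskd polling interval multiplied by its tko count (the interval and tko parameters described in the qdisk(5) man page); the function name check_qdisk and the example values are hypothetical. A passing result does not account for the site-specific conditions mentioned above.

```shell
#!/bin/sh
# Hypothetical sanity check for a qdiskd configuration.
# Arguments: node count, CMAN membership timeout (s),
#            qdiskd interval (s), qdiskd tko (missed cycles).
# Assumption: qdiskd timeout = interval * tko (see qdisk(5)).
check_qdisk() {
    nodes=$1 cman_timeout=$2 interval=$3 tko=$4
    qdisk_timeout=$(( interval * tko ))
    status=0
    if [ "$nodes" -gt 16 ]; then
        echo "too many nodes for qdiskd: $nodes (maximum 16)"
        status=1
    fi
    if [ "$cman_timeout" -lt $(( 2 * qdisk_timeout )) ]; then
        echo "CMAN timeout ${cman_timeout}s is less than 2 x qdiskd timeout ${qdisk_timeout}s"
        status=1
    fi
    return $status
}

# Example: 4 nodes, CMAN timeout 21 s, qdiskd interval 1 s, tko 10
#   check_qdisk 4 21 1 10 && echo ok   ->  ok
```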

2.6. Red Hat Cluster Suite and SELinux

Red Hat Cluster Suite for Red Hat Enterprise Linux 4 requires that SELinux be disabled. Before configuring a Red Hat cluster, make sure to disable SELinux. For example, you can disable SELinux upon installation of Red Hat Enterprise Linux 4 or you can specify SELINUX=disabled in the /etc/selinux/config file.
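To confirm the setting on each node, you can read the SELINUX= line of /etc/selinux/config. The sketch below (the function name selinux_mode is hypothetical) prints the configured value from that file or from a file you name. The file controls the mode used at the next boot, so a node that reports enforcing or permissive must have the file corrected and be rebooted.

```shell
#!/bin/sh
# Hypothetical helper: print the SELINUX= value from an SELinux config
# file (default /etc/selinux/config). Comment lines and SELINUXTYPE=
# are ignored; if SELINUX= appears more than once, the last is printed.
selinux_mode() {
    conf=${1:-/etc/selinux/config}
    sed -n 's/^[[:space:]]*SELINUX=[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$conf" | tail -n 1
}

# Usage: [ "$(selinux_mode)" = disabled ] || echo "SELinux is not disabled"
```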

2.7. Considerations for Using Conga

When using Conga to configure and manage your Red Hat Cluster, make sure that each computer running luci (the Conga user interface server) is on the same network that the cluster is using for cluster communication. Otherwise, luci cannot configure the nodes to communicate on the right network. If the computer running luci is on another network (for example, a public network rather than the private network that the cluster is communicating on), contact an authorized Red Hat support representative to make sure that the appropriate host name is configured for each cluster node.


2.8. General Configuration Considerations

You can configure a Red Hat Cluster in a variety of ways to suit your needs. Take into account the following considerations when you plan, configure, and implement your Red Hat Cluster.

No-single-point-of-failure hardware configuration

Clusters can include a dual-controller RAID array, multiple bonded network channels, multiple paths between cluster members and storage, and redundant uninterruptible power supply (UPS) systems to ensure that no single failure results in application down time or loss of data.

Alternatively, a low-cost cluster can be set up to provide less availability than a no-single-point-of-failure cluster. For example, you can set up a cluster with a single-controller RAID array and only a single Ethernet channel.

Certain low-cost alternatives, such as host RAID controllers, software RAID without cluster support, and multi-initiator parallel SCSI configurations are not compatible or appropriate for use as shared cluster storage.

Data integrity assurance

To ensure data integrity, only one node can run a cluster service and access cluster-service data at a time. The use of power switches in the cluster hardware configuration enables a node to power-cycle another node before restarting that node's cluster services during a failover process. This prevents two nodes from simultaneously accessing the same data and corrupting it. It is strongly recommended that fence devices (hardware or software solutions that remotely power, shutdown, and reboot cluster nodes) are used to guarantee data integrity under all failure conditions. Watchdog timers provide an alternative way to ensure correct operation of cluster service failover.

Ethernet channel bonding

Cluster quorum and node health are determined by communication of messages among cluster nodes via Ethernet. In addition, cluster nodes use Ethernet for a variety of other critical cluster functions (for example, fencing). With Ethernet channel bonding, multiple Ethernet interfaces are configured to behave as one, reducing the risk of a single-point-of-failure in the typical switched Ethernet connection among cluster nodes and other cluster hardware.

Chapter 3. Configuring Red Hat Cluster With Conga

This chapter describes how to configure Red Hat Cluster software using Conga, and consists of the following sections:

• Section 3.1, “Configuration Tasks”

• Section 3.2, “Starting luci and ricci”

• Section 3.3, “Creating A Cluster”

• Section 3.4, “Global Cluster Properties”

• Section 3.5, “Configuring Fence Devices”

• Section 3.6, “Configuring Cluster Members”

• Section 3.7, “Configuring a Failover Domain”

• Section 3.8, “Adding Cluster Resources”

• Section 3.9, “Adding a Cluster Service to the Cluster”

• Section 3.10, “Configuring Cluster Storage”

3.1. Configuration Tasks

Configuring Red Hat Cluster software with Conga consists of the following steps:

1. Configuring and running the Conga configuration user interface (the luci server). Refer to Section 3.2, “Starting luci and ricci”.

2. Creating a cluster. Refer to Section 3.3, “Creating A Cluster”.

3. Configuring global cluster properties. Refer to Section 3.4, “Global Cluster Properties”.

4. Configuring fence devices. Refer to Section 3.5, “Configuring Fence Devices”.

5. Configuring cluster members. Refer to Section 3.6, “Configuring Cluster Members”.

6. Creating failover domains. Refer to Section 3.7, “Configuring a Failover Domain”.

7. Creating resources. Refer to Section 3.8, “Adding Cluster Resources”.

8. Creating cluster services. Refer to Section 3.9, “Adding a Cluster Service to the Cluster”.

9. Configuring storage. Refer to Section 3.10, “Configuring Cluster Storage”.

3.2. Starting luci and ricci

To administer Red Hat Clusters with Conga, install and run luci and ricci as follows:

1. At each node to be administered by Conga, install the ricci agent. For example:


# up2date -i ricci

2. At each node to be administered by Conga, start ricci. For example:

# service ricci start
Starting ricci: [ OK ]

3. Select a computer to host luci and install the luci software on that computer. For example:

# up2date -i luci

Note

Typically, a computer in a server cage or a data center hosts luci; however, a cluster computer can host luci.

4. At the computer running luci, initialize the luci server using the luci_admin init command. For example:

# luci_admin init
Initializing the Luci server
Creating the 'admin' user
Enter password: <Type password and press ENTER.>
Confirm password: <Re-type password and press ENTER.>
Please wait...
The admin password has been successfully set.
Generating SSL certificates...
Luci server has been successfully initialized
Restart the Luci server for changes to take effect
eg. service luci restart

5. Start luci using service luci restart. For example:

# service luci restart
Shutting down luci: [ OK ]
Starting luci: generating https SSL certificates... done
[ OK ]
Please, point your web browser to https://nano-01:8084 to access luci

6. At a Web browser, place the URL of the luci server into the URL address box and click Go (or the equivalent). The URL syntax for the luci server is https://luci_server_hostname:8084.

The first time you access luci, two SSL certificate dialog boxes are displayed. Upon acknowledging the dialog boxes, your Web browser displays the luci login page.

3.3. Creating A Cluster

Creating a cluster with luci consists of selecting cluster nodes, entering their passwords, and submitting the request to create a cluster. If the node information and passwords are correct, Conga automatically installs software onto the cluster nodes and starts the cluster. Create a cluster as follows:

1. As administrator of luci, select the cluster tab.

2. Click Create a New Cluster.

3. In the Cluster Name text box, enter a cluster name. The cluster name cannot exceed 15 characters. Add the node name and root password for each cluster node: enter the node name in the Node Hostname column and the root password in the Root Password column. Check the Enable Shared Storage Support checkbox if clustered storage is required.

4. Click Submit. The Create a new cluster page is displayed again, showing the parameters entered in the preceding step along with the Lock Manager parameters. The Lock Manager parameters consist of the lock manager option buttons, DLM (preferred) and GULM, and the Lock Server text boxes in the GULM lock server properties group box. Configure the Lock Manager parameters for either DLM or GULM as follows:

• For DLM: click DLM (preferred) or confirm that it is set.

• For GULM: click GULM or confirm that it is set. In the GULM lock server properties group box, enter the FQDN or the IP address of each lock server in a Lock Server text box.

Note

You must enter the FQDN or the IP address of one, three, or five GULM lock servers.

5. Re-enter the root password for each node in the Root Password column.

6. Click Submit. Clicking Submit causes the following actions:

a. Cluster software packages are downloaded onto each cluster node.

b. Cluster software is installed onto each cluster node.

c. The cluster configuration file is created and propagated to each node in the cluster.

d. The cluster is started.

A progress page shows the progress of those actions for each node in the cluster.

When the process of creating a new cluster is complete, a page is displayed providing a configuration interface for the newly created cluster.
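The two numeric rules in this procedure are easy to check in a script before you click Submit, for example when you prepare several clusters. Those rules are the 15-character limit on the cluster name and the requirement for one, three, or five GULM lock servers. The Python sketch below is hypothetical: check_cluster_request is not a Conga interface. It checks only those rules, not node reachability or passwords.

```python
from typing import List, Optional

MAX_CLUSTER_NAME_LEN = 15             # from step 3
VALID_GULM_SERVER_COUNTS = (1, 3, 5)  # from the GULM note in step 4


def check_cluster_request(name: str, lock_manager: str,
                          lock_servers: Optional[List[str]] = None) -> List[str]:
    """Return the problems found in a create-cluster request; an empty list means none."""
    problems: List[str] = []
    if not name:
        problems.append("cluster name is empty")
    elif len(name) > MAX_CLUSTER_NAME_LEN:
        problems.append(f"cluster name exceeds {MAX_CLUSTER_NAME_LEN} characters")

    if lock_manager == "GULM":
        # GULM needs an odd number of lock servers: one, three, or five
        count = len(lock_servers or [])
        if count not in VALID_GULM_SERVER_COUNTS:
            problems.append(f"GULM requires 1, 3, or 5 lock servers, got {count}")
    elif lock_manager != "DLM":
        problems.append(f"unknown lock manager: {lock_manager!r}")
    return problems
```

For example, a GULM request with two lock servers returns a single problem, and a DLM request with a 12-character name returns an empty list.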

3.4. Global Cluster Properties

When a cluster is created, or when you select a cluster to configure, a cluster-specific page is displayed. The page provides an interface for configuring cluster-wide properties and detailed properties.

You can configure cluster-wide properties with the tabbed interface below the cluster name. The interface provides the following tabs: General, GULM (GULM clusters only), Fence (DLM clusters only), Multicast (DLM clusters only), and Quorum Partition (DLM clusters only). To configure the parameters in those tabs, follow the steps in this section. If you do not need to configure the parameters in a tab, skip the step for that tab.
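Values set on these tabs are stored in the cluster configuration file, /etc/cluster/cluster.conf, on each node. The Python sketch below reads a few of them back, which can be handy for confirming that every node has the same Configuration Version after you click Apply. It assumes the usual cluster.conf layout: the root <cluster> element carries name and config_version attributes, and a <fence_daemon> element carries post_fail_delay and post_join_delay. The function names are hypothetical. The sketch also includes a small check that an address entered on the Multicast tab is in fact an IP multicast address.

```python
import ipaddress
import xml.etree.ElementTree as ET


def properties_from_root(root: ET.Element) -> dict:
    """Extract a few cluster-wide properties from a parsed <cluster> element."""
    version = root.get("config_version")
    fence = root.find("fence_daemon")
    # Fall back to the defaults described for the Fence tab (0 and 3 seconds).
    post_fail = fence.get("post_fail_delay", "0") if fence is not None else "0"
    post_join = fence.get("post_join_delay", "3") if fence is not None else "3"
    return {
        "name": root.get("name"),
        "config_version": int(version) if version is not None else None,
        "post_fail_delay": int(post_fail),
        "post_join_delay": int(post_join),
    }


def parse_cluster_properties(xml_text: str) -> dict:
    """Parse cluster.conf content held in a string."""
    return properties_from_root(ET.fromstring(xml_text))


def read_cluster_properties(path: str = "/etc/cluster/cluster.conf") -> dict:
    """Read and parse the cluster configuration file on the local node."""
    return properties_from_root(ET.parse(path).getroot())


def is_multicast_address(addr: str) -> bool:
    """Return True if addr is a valid IP multicast address."""
    try:
        return ipaddress.ip_address(addr).is_multicast
    except ValueError:  # not an IP address at all
        return False
```

Running read_cluster_properties() on each node and comparing the config_version values is one way to spot a node that did not receive the latest configuration.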

1. General tab: This tab displays the cluster name and provides an interface for configuring the configuration version and advanced cluster properties. The parameters are summarized as follows:

• The Cluster Name text box displays the cluster name; it does not accept a name change. The only way to change the name of a Red Hat cluster is to create a new cluster configuration with the new name.

• The Configuration Version value is set to 1 by default and is automatically incremented each time you modify your cluster configuration. However, if you need to set it to another value, you can specify it in the Configuration Version text box.

• You can enter advanced cluster properties by clicking Show advanced cluster properties, which reveals a list of advanced properties. You can click any advanced property for online help about the property.

Enter the required values and click Apply for the changes to take effect.

2. Fence tab (DLM clusters only): This tab provides an interface for configuring these Fence Daemon Properties parameters: Post-Fail Delay and Post-Join Delay. The parameters are summarized as follows:

• The Post-Fail Delay parameter is the number of seconds the fence daemon (fenced) waits before fencing a node (a member of the fence domain) after the node has failed. The Post-Fail Delay default value is 0. Its value may be varied to suit cluster and network performance.

• The Post-Join Delay parameter is the number of seconds the fence daemon (fenced) waits before fencing a node after the node joins the fence domain. The Post-Join Delay default value is 3. A typical setting for Post-Join Delay is between 20 and 30 seconds, but it can vary according to cluster and network performance.

Enter the required values and click Apply for the changes to take effect.

Note

For more information about Post-Join Delay and Post-Fail Delay, refer to the fenced(8) man page.

3. GULM tab (GULM clusters only): This tab provides an interface for configuring GULM lock servers. The tab indicates each node in the cluster that is configured as a GULM lock server and provides the capability to change lock servers. Follow the rules provided at the tab for configuring GULM lock servers and click Apply for the changes to take effect.

Important

The number of nodes that can be configured as GULM lock servers is limited to one, three, or five.

4. Multicast tab (DLM clusters only): This tab provides an interface for configuring these Multicast Configuration parameters: Do not use multicast and Use multicast. Multicast Configuration specifies whether a multicast address is used for cluster management communication among cluster nodes. Do not use multicast is the default setting. To use a multicast address for cluster management communication among cluster nodes, click Use multicast. Selecting Use multicast enables the Multicast address and Multicast network interface text boxes; enter the multicast address in the Multicast address text box and the multicast network interface in the Multicast network interface text box. Click Apply for the changes to take effect.

5. Quorum Partition tab (DLM clusters only): This tab provides an interface for configuring these Quorum Partition Configuration parameters: Do not use a Quorum Partition, Use a Quorum Partition, Interval, Votes, TKO, Minimum Score, Device, Label, and Heuristics. The Do not use a Quorum Partition parameter is enabled by default. Table 3.1, “Quorum-Disk Parameters” describes the parameters. If you need to use a quorum disk, click Use a Quorum Partition, enter the quorum disk parameters, click Apply, and restart the cluster for the changes to take effect.

Important

Quorum-disk parameters and heuristics depend on the site environment and any special requirements. To understand the use of quorum-disk parameters and heuristics, refer to the qdisk(5) man page. If you require assistance understanding and using quorum disk, contact an authorized Red Hat support representative.

Note

Clicking Apply on the Quorum Partition tab propagates changes to the cluster configuration file (/etc/cluster/cluster.conf) on each cluster node. However, for the quorum disk to operate, you must restart the cluster (refer to Section 4.1, “Starting, Stopping, and Deleting Clusters”).

Parameter                       Description

Do not use a Quorum Partition   Disables the quorum partition and the quorum-disk parameters in the Quorum Partition tab.

Use a Quorum Partition          Enables the quorum partition and the quorum-disk parameters in the Quorum Partition tab.

Interval                        The frequency of read/write cycles, in seconds.

Votes                           The number of votes the quorum daemon advertises to CMAN when it has a high enough score.

TKO                             The number of cycles a node must miss to be declared dead.

Minimum Score                   The minimum score for a node to be considered "alive". If omitted or set to 0, the default function, floor((n+1)/2), is used, where n is the sum of the heuristics scores. The Minimum Score value must never exceed the sum of the heuristic scores; otherwise, the quorum disk can never become available.

Device                          The storage device the quorum daemon uses. The device must be the same on all nodes.

Label                           Specifies the quorum disk label created by the mkqdisk utility. If this field contains an entry, the label overrides the Device field. If this field is used, the quorum daemon reads /proc/partitions and checks for qdisk signatures on every block device found, comparing the label against the specified label. This is useful in configurations where the quorum device name differs among nodes.

Heuristics                      Path to Program: The program used to determine if this heuristic is alive. This can be anything that can be executed by /bin/sh -c. A return value of 0 indicates success; anything else indicates failure. This field is required.
                                Interval: The frequency (in seconds) at which the heuristic is polled. The default interval for every heuristic is 2 seconds.
                                Score: The weight of this heuristic. Be careful when determining scores for heuristics. The default score for each heuristic is 1.

Apply                           Propagates the changes to the cluster configuration file (/etc/cluster/cluster.conf) on each cluster node.

Table 3.1. Quorum-Disk Parameters
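The scoring rules in Table 3.1 can be made concrete with a short Python sketch. It runs each heuristic once through /bin/sh -c and adds up the scores of the heuristics that exit with status 0. It then compares the total against Minimum Score, falling back to floor((n+1)/2) when Minimum Score is 0. This is a simplified, one-shot model for planning scores, not how qdiskd is implemented: the real daemon polls each heuristic on its own interval and also reads and writes the quorum disk. The class and function names are hypothetical. The last helper estimates how long it takes for a node that stops updating the quorum disk to be declared dead, computed as Interval multiplied by TKO.

```python
import subprocess
from dataclasses import dataclass
from typing import List


@dataclass
class Heuristic:
    program: str       # command run via /bin/sh -c; exit status 0 means success
    score: int = 1     # default score from Table 3.1
    interval: int = 2  # default polling interval in seconds (unused in this one-shot sketch)


def default_min_score(heuristics: List[Heuristic]) -> int:
    """floor((n+1)/2), where n is the sum of the heuristic scores."""
    n = sum(h.score for h in heuristics)
    return (n + 1) // 2


def current_score(heuristics: List[Heuristic], timeout: float = 10.0) -> int:
    """Run each heuristic once and add up the scores of those that succeed."""
    total = 0
    for h in heuristics:
        try:
            # shell=True runs the program through /bin/sh -c on POSIX systems
            result = subprocess.run(h.program, shell=True, timeout=timeout,
                                    stdout=subprocess.DEVNULL,
                                    stderr=subprocess.DEVNULL)
        except subprocess.TimeoutExpired:
            continue  # this sketch treats a hung heuristic as a failure
        if result.returncode == 0:
            total += h.score
    return total


def is_alive(heuristics: List[Heuristic], min_score: int = 0) -> bool:
    """Compare the current score against Minimum Score (0 means use the default)."""
    if min_score == 0:
        min_score = default_min_score(heuristics)
    if min_score > sum(h.score for h in heuristics):
        raise ValueError("Minimum Score exceeds the sum of the heuristic scores")
    return current_score(heuristics) >= min_score


def approx_eviction_seconds(interval: int, tko: int) -> int:
    """Rough time (Interval x TKO) before a silent node is declared dead."""
    return interval * tko
```

For example, three heuristics with scores 1, 1, and 2 give n = 4 and a default Minimum Score of (4 + 1) // 2 = 2. The node therefore stays alive as long as heuristics worth at least 2 points succeed. With Interval set to 1 and TKO set to 10, a node is declared dead after roughly 10 seconds of missed cycles.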

3.5. Configuring Fence Devices

Configuring fence devices consists of creating, modifying, and deleting fence devices. Creating a