DESIGN GUIDE

1.9.0 | September 2016 | 3725-33186-002A

Polycom® SoundStructure® C16, C12, C8, and SR12

Copyright © 2016, Polycom, Inc. All rights reserved. No part of this document may be reproduced, translated into another language or format, or transmitted in any form or by any means, electronic or mechanical, for any purpose, without the express written permission of Polycom, Inc.

6001 America Center Drive

San Jose, CA 95002

USA

Trademarks Polycom®, the Polycom logo and the names and marks associated with Polycom products are trademarks and/or service marks of Polycom, Inc., and are registered and/or common law marks in the United States and various other countries.

All other trademarks are property of their respective owners. No portion hereof may be reproduced or transmitted in any form or by any means, for any purpose other than the recipient's personal use, without the express written permission of Polycom.

Disclaimer While Polycom uses reasonable efforts to include accurate and up-to-date information in this document, Polycom makes no warranties or representations as to its accuracy. Polycom assumes no liability or responsibility for any typographical or other errors or omissions in the content of this document.

Limitation of Liability Polycom and/or its respective suppliers make no representations about the suitability of the information contained in this document for any purpose. Information is provided "as is" without warranty of any kind and is subject to change without notice. The entire risk arising out of its use remains with the recipient. In no event shall Polycom and/or its respective suppliers be liable for any direct, consequential, incidental, special, punitive or other damages whatsoever (including without limitation, damages for loss of business profits, business interruption, or loss of business information), even if Polycom has been advised of the possibility of such damages.

End User License Agreement By installing, copying, or otherwise using this product, you acknowledge that you have read, understand and agree to be bound by the terms and conditions of the End User License Agreement for this product. The EULA for this product is available on the Polycom Support page for the product.

Patent Information The accompanying product may be protected by one or more U.S. and foreign patents and/or pending patent applications held by Polycom, Inc.

Open Source Software Used in this Product This product may contain open source software. You may receive the open source software from Polycom up to three (3) years after the distribution date of the applicable product or software at a charge not greater than the cost to Polycom of shipping or distributing the software to you. To receive software information, as well as the open source software code used in this product, contact Polycom by email at OpenSourceVideo@polycom.com.

Customer Feedback We are striving to improve our documentation quality and we appreciate your feedback. Email your opinions and comments to DocumentationFeedback@polycom.com.

Polycom Support Visit the Polycom Support Center for End User License Agreements, software downloads, product documents, product licenses, troubleshooting tips, service requests, and more.

Contents

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Introducing the Polycom SoundStructure Product Family . . . . . . . . . . . . . . . . . 17

Defining SoundStructure Architectural Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Understanding Polycom OBAM™ - One Big Audio Matrix . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Understanding SoundStructure C-Series Products . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Understanding C-Series Input Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Creating C-Series Matrix Crosspoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Understanding C-Series Output processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Processing C-Series Submixes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Understanding C-Series Acoustic Echo Canceller References . . . . . . . . . . . . . . . . . . . . 31

Understanding SoundStructure SR-Series Products . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Understanding SR-Series Input Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Creating SR-Series Matrix Crosspoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Understanding SR-Series Output Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Processing SR-Series Submix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Understanding Telephony Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Introducing SoundStructure Design Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Understanding Device Inputs and Outputs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Understanding Physical Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Numbering Physical Channel On A Single SoundStructure Device . . . . . . . . . . . . . . . . . 48

Numbering Physical Channel With Multiple SoundStructure Devices . . . . . . . . . . . . . . . 49

Physical Channel Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Understanding Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Virtual Channel Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Understanding Virtual Channel Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

Virtual Channel Group Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Understanding Telephone Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Defining Logic Pins . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Labeling Physical Logic Pins . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Controlling Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Polycom, Inc. 3

Design Guide for Polycom C16, C12, C8, and SR12 SoundStructure Studio 1.9.0

Controlling Array Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Understanding IR Receiver Virtual Channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Creating Designs with SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Understanding SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Understanding System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Viewing Recommended Operating System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Installing SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Operating in Online and Offline Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Customizing SoundStructure Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Using the Wiring Page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Editing Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

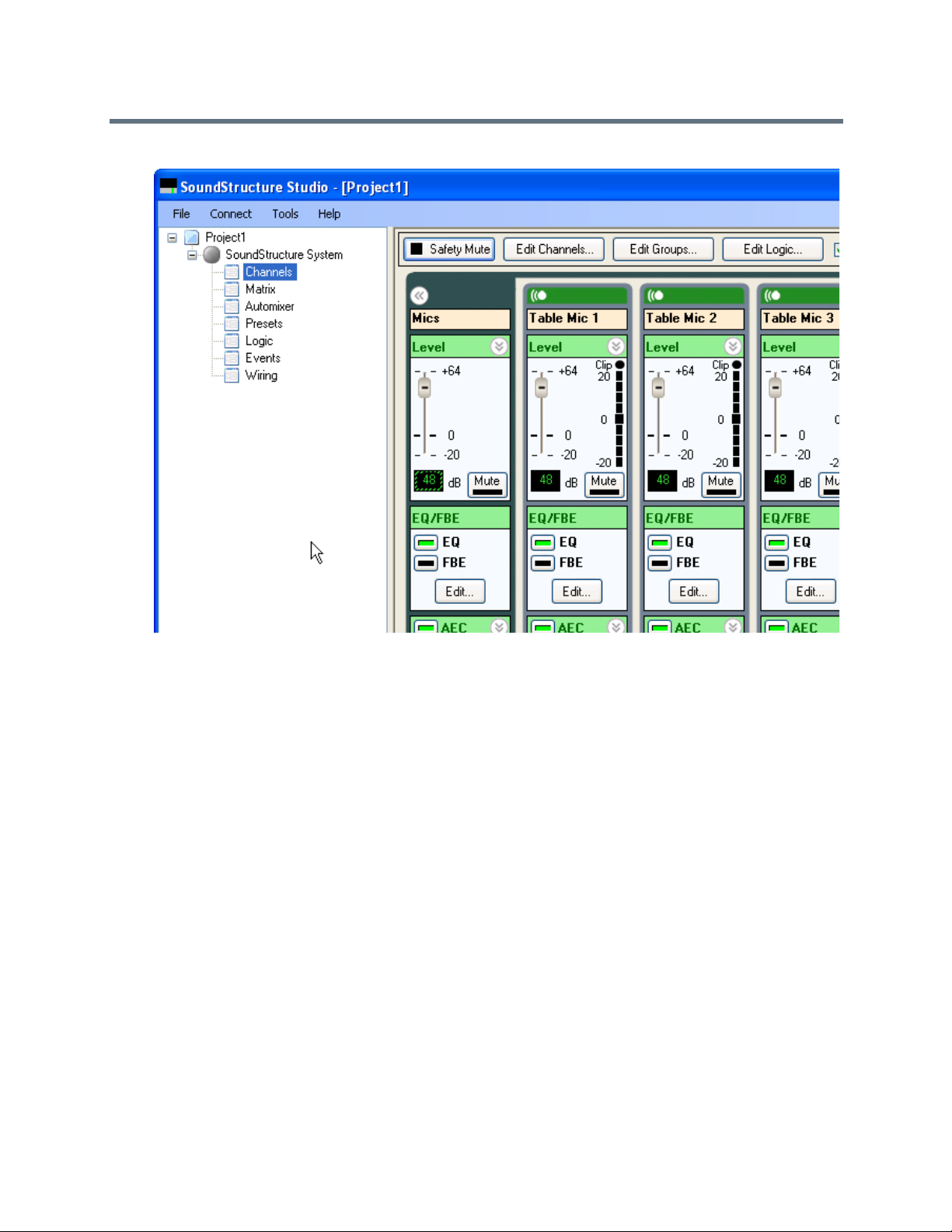

Using the Channels Page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Editing Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

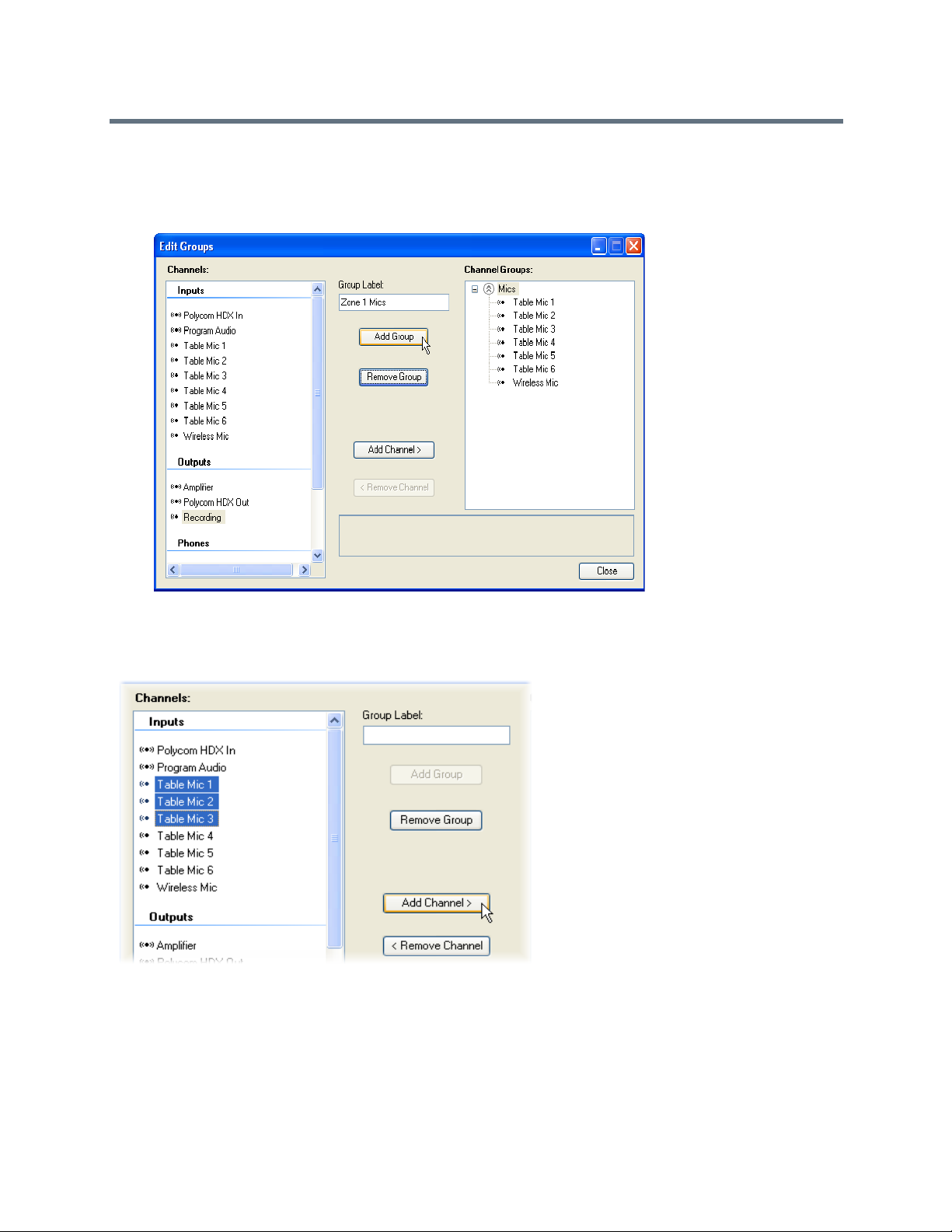

Creating Virtual Channel Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Setting Input Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

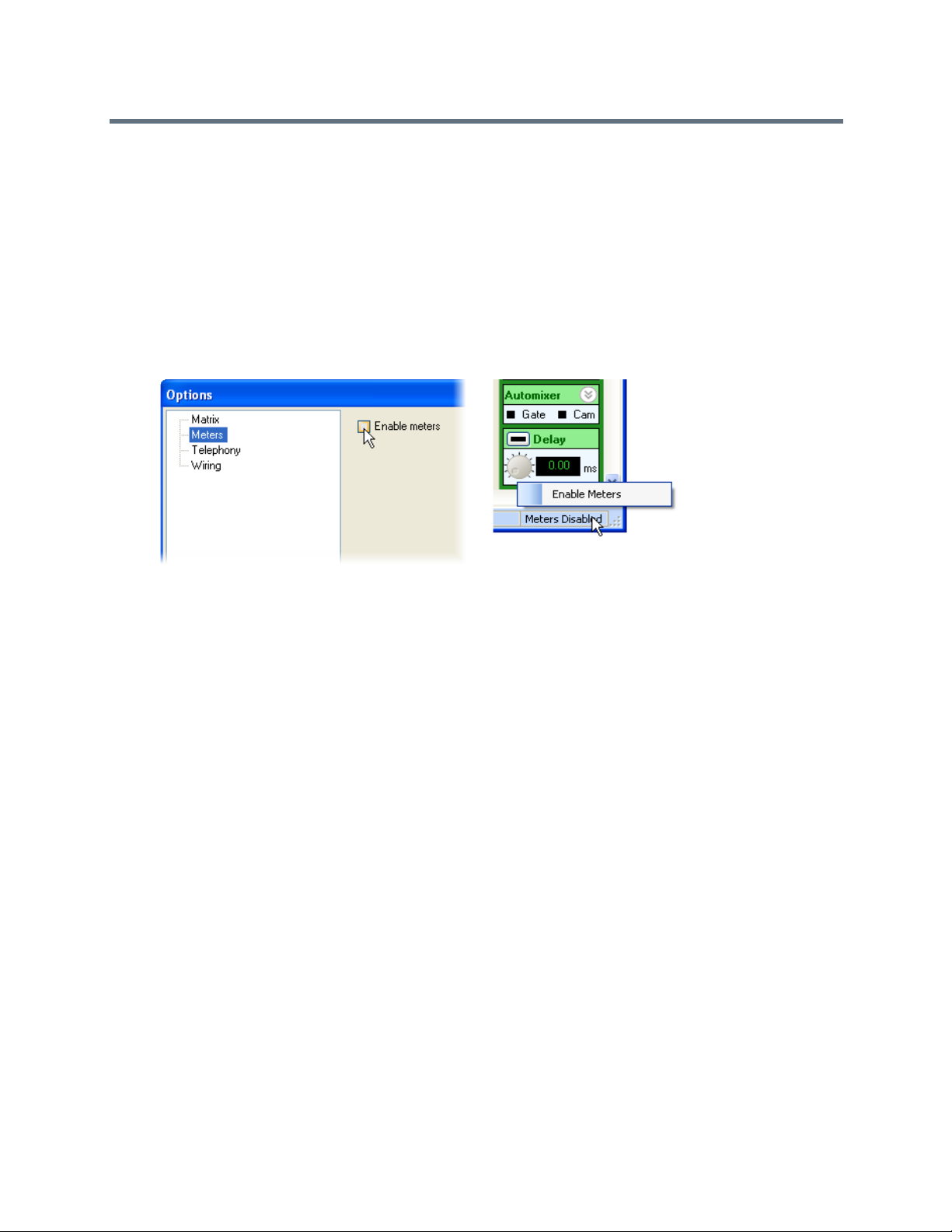

Enabling Input Signal Meters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Using Input Channel Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Operating Analog Signal Gain . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Changing the Mute Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Enabling Phantom Power . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Using the Ungated Type . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Using Delay Type . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

Using Delay Compensation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

Using Trim . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Processing Equalization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Eliminating Feedback . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Enabling Acoustic Echo Cancellation (AEC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Processing Noise Cancellation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Using Automatic Gain Control (AGC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Using Dynamics Processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Using Automatic Microphone Mixing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Processing Delay . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Controlling Fader . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Defining a Signal Generator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

Setting Output Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

Processing Output Dynamics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Processing Output Equalization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Processing Delay . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Processing Submix Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Processing Output Dynamics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Processing Output Equalization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Processing Delay . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Controlling Fader . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

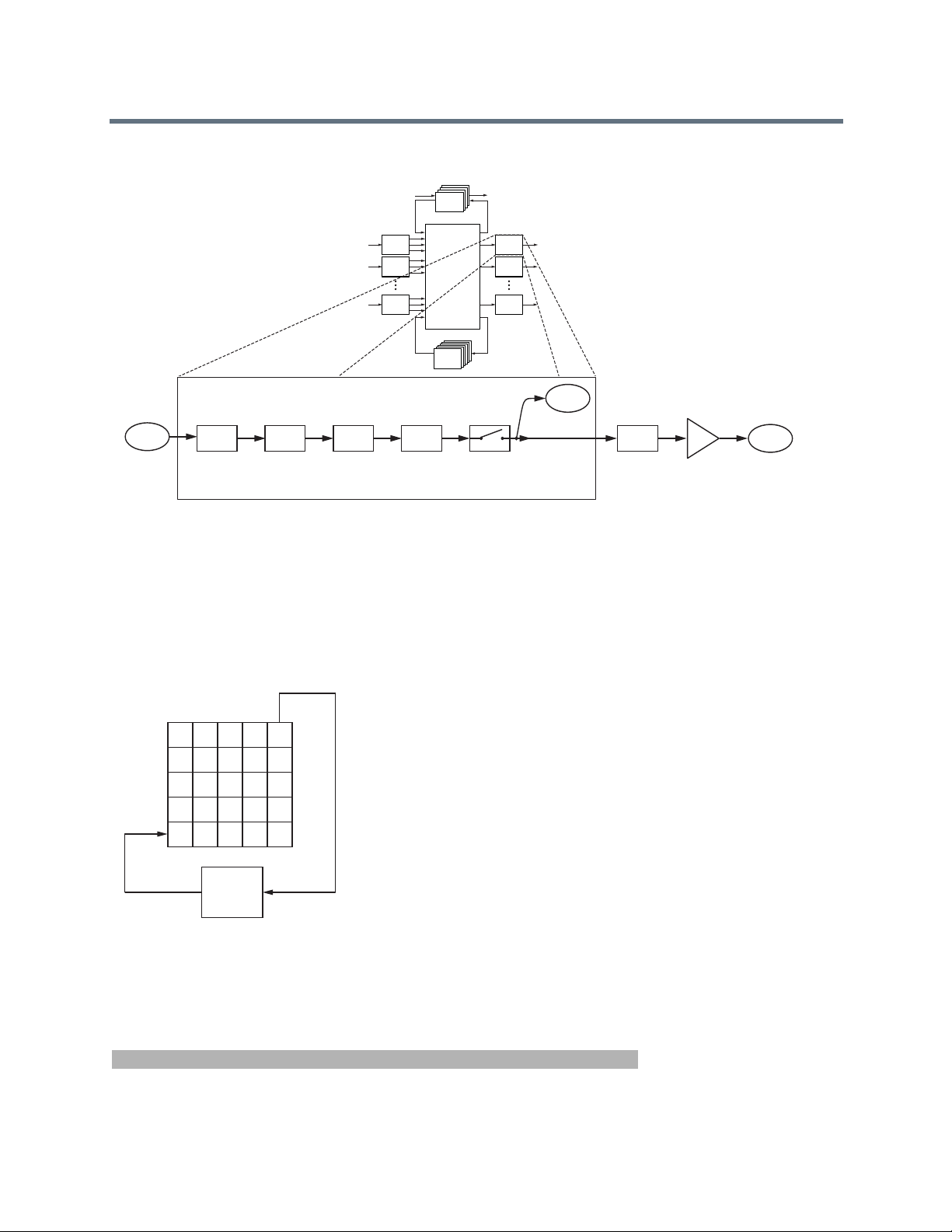

Using the Matrix Page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Adjusting Crosspoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Matrix summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Using the Telephony Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

Adjusting Input Gain . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Processing Noise Cancellation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

Using Automatic Gain Control (AGC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

Processing Output Dynamics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Processing Equalization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Controlling Fader . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Processing Delay . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Using Telephone Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Connecting Over Conference Link2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Connecting SoundStructure Conference Link2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Integrating Polycom Video Codec . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Designing with The Polycom Video Codec . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Editing The Polycom Video Codec Input Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Processing The Polycom Video Codec SoundStructure Signals . . . . . . . . . . . . . . . . . . 156

Understanding The Polycom Video Codec Output Channels . . . . . . . . . . . . . . . . . . . . . 157

Routing The Polycom Video Codec Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

Using the Mute Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

Using the Volume Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

Designing With Polycom Digital Microphone Arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

Understanding Digital Microphone Cabling Requirements . . . . . . . . . . . . . . . . . . . . . . . 164

Updating Digital Microphone Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

Detecting CLink2 Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

Viewing Digital Microphone Array Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

Assigning Digital Microphone Array Channels To Physical Inputs . . . . . . . . . . . . . . . . . 169

Numbering Digital Microphone Array . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Understanding Installation Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Linking Multiple SoundStructure Devices with One Big Audio Matrix . . . . . . 179

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Preparing Units for Linking with OBAM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Updating SoundStructure Device Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Linking SoundStructure Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

Creating a Multi-Device Configuration File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

Expanding or Contracting an Existing Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

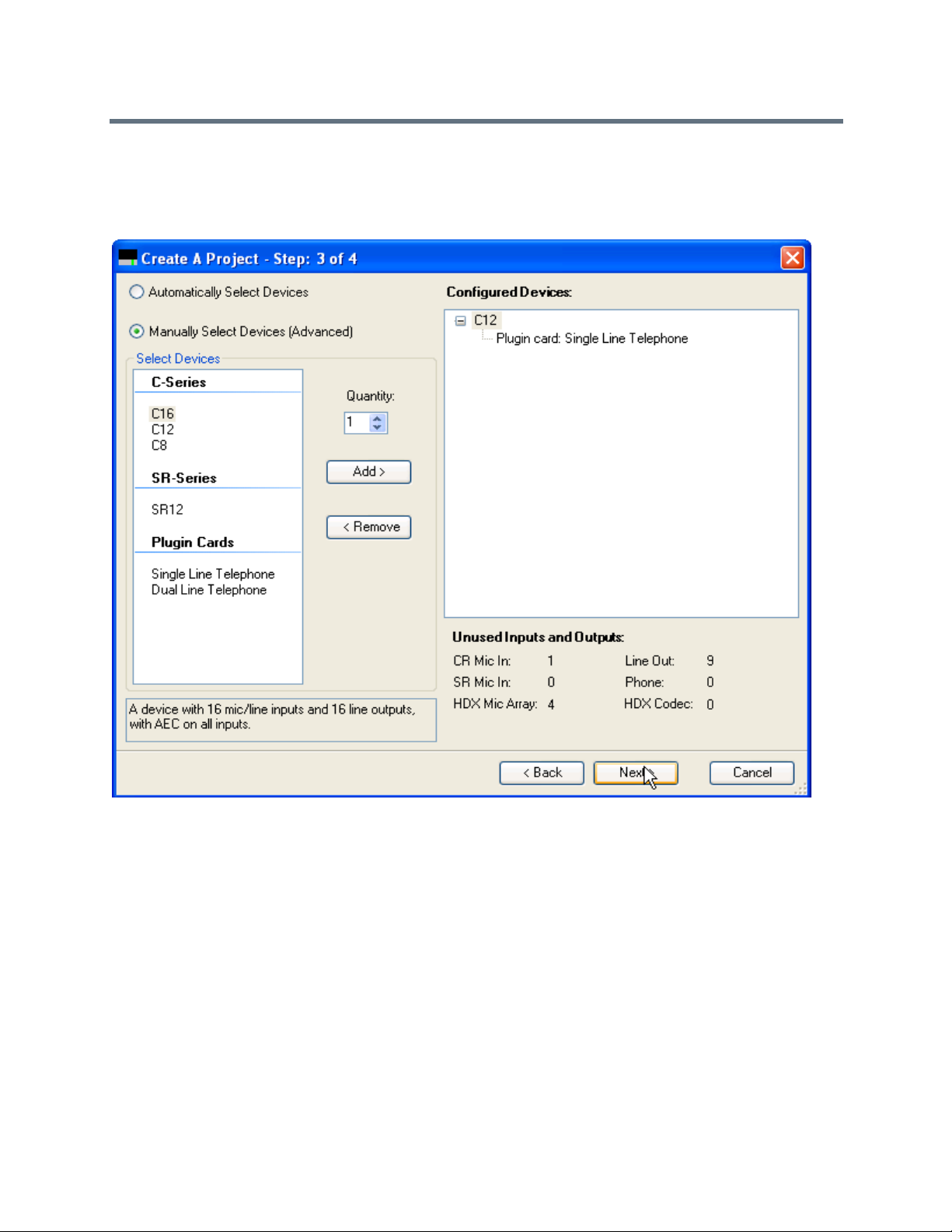

Creating a New Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

Uploading Configuration Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

Controlling the SoundStructure System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Accessing SoundStructure Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

Connecting Polycom Microphones . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Connecting Multiple Polycom Video Codec Conferencing Systems . . . . . . . . . . . . . . . . 198

Installing SoundStructure Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

Configuration Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

Wiring The Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

Uploading A Configuration File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

Downloading A Configuration File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Updating Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Configuring The Signal Gains . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Input Signal Level Adjustment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Signal Meters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Room Gain . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Telephony Signal Levels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Output Signal Levels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218

Presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Preset Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

Saving Presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

Creating Partial Presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

Running Presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Removing Presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

Using Events, Logic, and IR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Understanding Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Sources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Triggers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Creating Events With SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Adding New Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Enable And Disable Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Event Entries In The Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

Removing Events With Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

SoundStructure Studio Automatically Creates Events . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Polycom IR Remote . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

Polycom IR Remote Channel ID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

IR Receiver Connector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

Logic Ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

Digital Logic Inputs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 248

Analog Logic Inputs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

Logic Outputs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

Logic Arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Viewing Event Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

Splitting and Combining Presets Triggered from a Logic Input . . . . . . . . . . . . . . . . . . . . 253

Viewing Push To Talk Microphones with LEDs Example . . . . . . . . . . . . . . . . . . . . . . . . 256

Viewing Push and Hold to Temporarily Mute A Microphone . . . . . . . . . . . . . . . . . . . . . . 260

Viewing the Phone Off Hook Drives A Relay Example . . . . . . . . . . . . . . . . . . . . . . . . . . 261

Viewing the Volume Knob Adjusts “Amplifier” Fader Example . . . . . . . . . . . . . . . . . . . . 263

Viewing the Gating Information Sent To A Control System Example . . . . . . . . . . . . . . . 265

Positioning A Polycom Video Codec Camera Example . . . . . . . . . . . . . . . . . . . . . . . . . 267

Creating SoundStructure Events Best Practices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

Managing SoundStructure Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

Connecting To The Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

LAN Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

Dynamic IP Addresses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 271

Link-Local IP Addresses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

Static IP Addresses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 274

Setting The Time Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 276

Control And Command Sessions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 276

SoundStructure Device Discovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

AMX Beacon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

RS-232 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Configuring And Accessing The Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Using the Polycom® RealPresence Touch™ with a SoundStructure System . 281

Setting Up and Enabling the RealPresence Touch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 281

Pairing the RealPresence Touch Device with a SoundStructure System . . . . . . . . . . . . . . . 281

Placing Calls on the RealPresence Touch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 282

Use the RealPresence Touch to Generate Touch Tones in a SoundStructure Call . . . . . . . 282

Use the RealPresence Touch to Generate a Flash Hook Command . . . . . . . . . . . . . . . . . . 283

Integrating The Polycom® Touch Control with SoundStructure Systems . . . 284

Polycom Touch Control and SoundStructure Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

SoundStructure System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

Using a Polycom Touch Control with Video Codec Systems Versus SoundStructure Systems . . . . 285

Pairing the Polycom Touch Control with SoundStructure . . . . . . . . . . . . . . . . . . . . . . . . . . . 286

Polycom Touch Control Administrative Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

Configuring the Polycom Touch Control LAN Properties . . . . . . . . . . . . . . . . . . . . . . . . 291

Configuring Polycom Touch Control Regional Settings . . . . . . . . . . . . . . . . . . . . . . . . . 292

Configuring Security Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 293

Setting up Polycom Touch Control log management . . . . . . . . . . . . . . . . . . . . . . . . . . . 293

Updating Polycom Touch Control Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 293

Using the Polycom Touch Control with SoundStructure . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

Designing a SoundStructure Project with the Polycom Touch Control . . . . . . . . . . . . . . 295

Using Multiple SoundStructure Telephony Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . 299

Using Multiple Polycom Touch Controls with SoundStructure . . . . . . . . . . . . . . . . . . . . 300

Validating Polycom Touch Control and SoundStructure integration . . . . . . . . . . . . . . . . 301

Integrating SoundStructure with SoundStructure VoIP Interface . . . . . . . . . . 305

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

How to Read This Chapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 306

SoundStructure VoIP Interface Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 306

SoundStructure System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

Upgrading a Project to the SoundStructure VoIP Interface . . . . . . . . . . . . . . . . . . . . . . . . . . 308

Upgrading an Existing TEL1/TEL2 Project to the SoundStructure VoIP Interface . . . . . 309

Creating a New Project with the SoundStructure VoIP interface . . . . . . . . . . . . . . . . . . 316

Upgrading the Firmware in the SoundStructure System . . . . . . . . . . . . . . . . . . . . . . . . . . 322

Installing the New Plugin Cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 324

Uploading the Configuration File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 326

Configuring the SoundStructure VoIP Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 327

Setting the IP address of the SoundStructure VoIP Interface . . . . . . . . . . . . . . . . . . . . . 327

Setting the Provisioning Server settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 333

Registering Lines with the SoundStructure VoIP Interface . . . . . . . . . . . . . . . . . . . . . . . 339

Using the SoundStructure VoIP Interface with SoundStructure Studio . . . . . . . . . . . . . . . . . 344

Using the Phone Settings Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

Customizing SoundStructure Telephony Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 347

SoundStructure VoIP Interface Settings on the Wiring Page . . . . . . . . . . . . . . . . . . . . . 348

Setting an IP address with SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

Using the SoundStructure Studio Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 352

Updating Software on the SoundStructure VoIP Interface . . . . . . . . . . . . . . . . . . . . . . . . . . 354

Upgrading Software with a Local FTP Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 354

Upgrading Software with an Existing Provisioning Server . . . . . . . . . . . . . . . . . . . . . . . 355

Upgrading Software with the Web Configuration Utility . . . . . . . . . . . . . . . . . . . . . . . . . 357

Validating a SoundStructure VoIP Interface Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

VoIP Interface Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 372

Back up and Restore the VoIP Specific Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

Importing and Exporting VoIP Parameter Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

SoundStructure Log Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

Information Required for Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 379

Understanding SoundStructure VoIP Interface API Commands . . . . . . . . . . . . . . . . . . . . . . 379

Using the SoundStructure API . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

Dialing a Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

Hanging up a Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 383

Putting a Call on Hold and Resuming the Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 383

Forwarding an Incoming Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 384

Transferring a Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 384

Blind Transfer of a Call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 385

Dialing Two Calls on the Same Line . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 386

Dialing Two Calls on Different Lines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 387

SoundStructure API Behavior Changes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 388

Adding Authentication to SoundStructure Systems . . . . . . . . . . . . . . . . . . . . . 390

SoundStructure Authentication Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

SoundStructure System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

Enabling Authentication on a SoundStructure System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 391

Discovering a System with Authentication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 392

Removing Authentication from a SoundStructure System . . . . . . . . . . . . . . . . . . . . . . . . . . 394

Viewing SoundStructure Command Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 395

Understanding SoundStructure System Compatibility Considerations . . . . . . . . . . . . . . . . . 395

SoundStructure Authentication API Command Summary . . . . . . . . . . . . . . . . . . . . . . . . 398

Creating Advanced Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

Creating a One Microphone And Mono Video Conferencing System . . . . . . . . . . . . . . . . . . 400

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

Using the Channels Page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

Using the Matrix Page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

Understanding Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 406

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

Creating Four Digital Array Microphones and A SoundStation VTX1000 Conferencing System . . . . 408

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 410


Editing Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 413

Editing Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

Understanding Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 418

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

Creating an Eight Microphones, Video, and Telephony Application Conferencing System . 420

Creating a Project in SoundStructure Studio . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 421

Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 425

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 427

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 428

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 429

Creating a Two PSTN Line Positional “Receive” Audio Conferencing System . . . . . . . . . . . 430

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 436

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 441

Creating an Eight Microphones and Stereo Video Conferencing System . . . . . . . . . . . . . . . 443

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 448

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 449

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 450

Creating an Eight Microphones with The Polycom Video Codec Conferencing System . . . . 451

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 451

Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 455

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 456

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 457

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 458

Creating an Eight Microphones with Wireless and Lectern Microphones Reinforcement Conferencing System . . . . 459

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 465

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 473

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 474

Creating a Sixteen Microphones with Six-Zone Sound Reinforcement Conferencing System . . . . 475

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 476

Matrix Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 481

Channels Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 484

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 486

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 488


Creating a Room Combining Application Conferencing System . . . . . . . . . . . . . . . . . . . . . . 488

SoundStructure Studio Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 491

Combined Room Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 495

Split Room Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 499

Wiring Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 503

Controlling The System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 504

Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 506

Audio Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 506

Echo Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 508

API Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 512

RS-232 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 515

Polycom Video Codec Integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 517

Telco Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 518

Ethernet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 518

Hardware Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 519

OBAM Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 520

Troubleshooting The IR Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

Contacting Technical Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 522

Technical Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 522

Pin Out Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 524

PSTN Cable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 525

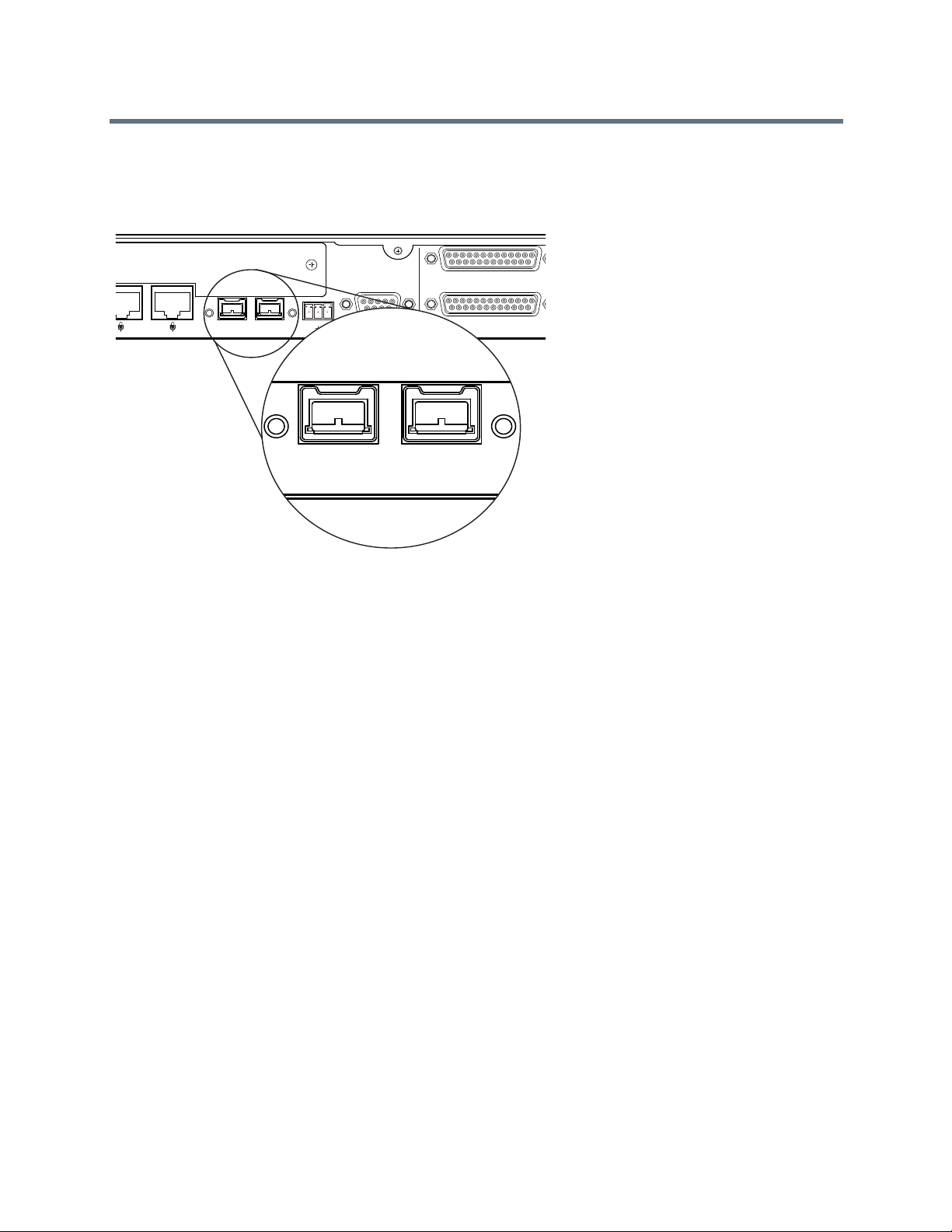

Conference Link2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 525

OBAM Link . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 526

IR Receiver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 527

RS-232 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 527

Logic Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 528

Audio Connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 529

Using SoundStructure Studio Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 531

Adjusting Knobs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 531

Adjusting Matrix Crosspoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 531

Appendix A: Command Protocol Reference Guide . . . . . . . . . . . . . . . . . . . . . . 533

Using SoundStructure Command Protocols . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 533

Understanding SoundStructure Control Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 533

Understanding RS-232 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 533

Connecting with the Ethernet Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 534

Using Virtual Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 535


Understanding Virtual Channel Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 536

Understanding Virtual Channel Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 537

Understanding SoundStructure Command Syntax . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

Controlling SoundStructure Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

Understanding the Command Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 540

Understanding the Control Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 541

Understanding Virtual Channel Definition Commands . . . . . . . . . . . . . . . . . . . . . . . . . . 542

Virtual Channel Group Definition Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 546

Adjusting Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 550

Command List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 554

Command Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 555

SoundStructure Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

Gain and Mute Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

Matrix Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 564

Telephony Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

Equalizer Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

Dynamics Processing Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 634

Algorithm Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 652

Input Path Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 670

Automixer Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 672

GPIO Control Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 682

Control Port Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 685

System Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 695

Appendix B: Address Book . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 709

Using the Address Book . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 709

Address Book SoundStructure System Entries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 710

Address Book Folders . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 713

Removing Entries from the Address Book . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 715

Changing the Location of the Address Book . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 715

Appendix C: Designing Audio Conferencing Systems . . . . . . . . . . . . . . . . . . . 716

Large Room Environments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 717

Microphone Selection And Placement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 717

Microphone Fundamentals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 717

Microphones For Conferencing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 720

Automatic Microphone Mixers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 722

Noise Cancellation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 722

Acoustic Echo Cancellation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 723

AEC Reference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 725


Tail Time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 725

Transmission Delay . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 726

Echo Return Loss . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 727

Multi Channel vs. Single Channel AEC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 728

Muting Microphones . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 728

Volume Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 729

AEC Troubleshooting Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 729

Telephone Hybrid . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 730

Amplifiers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 731

Loudspeakers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 731

Speaker Zoning And Placement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 733

Loudspeakers - How Much Power Is Required . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 735

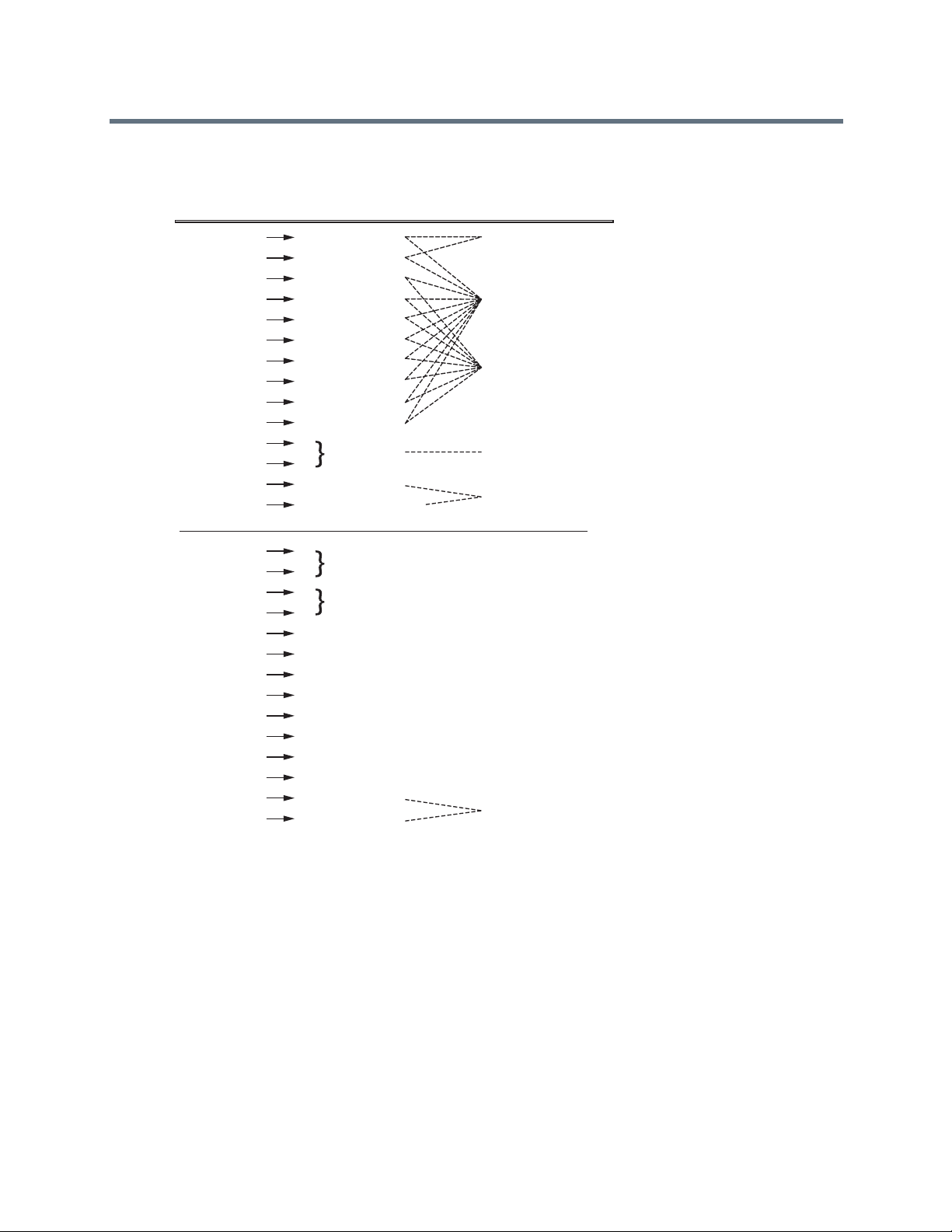

Spatial Directionality . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 735

Microphone And Loudspeaker Placement Considerations . . . . . . . . . . . . . . . . . . . . . . . 735

In-Room Reinforcement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 736


Introduction

The Polycom® SoundStructure® products are professional, rack-mountable audio processing devices that set a new standard for audio performance and conferencing in any style of room. With both monaural and stereo acoustic echo cancellation capabilities, the SoundStructure conferencing products provide an immersive conferencing experience that is unparalleled. The SoundStructure products are designed to integrate seamlessly with supported Polycom Video Codec conferencing systems and Polycom touch devices for the ultimate experience with HD voice, video, content, and ease of use.

Note: Recent Product Name Changes Not Shown in Graphics

With the release of SoundStructure Firmware 1.7.0 and SoundStructure Studio 1.9.0, the product names for the Polycom video and microphone conferencing products have changed to reflect added support for Polycom RealPresence® Group Series. However, the product name changes are not reflected in the graphics and screenshots shown in this guide. For example, although Polycom HDX is now Polycom Video Codec, some of the graphics in this guide still display the Polycom Video Codec as HDX.

Additionally, RealPresence Group Series is compatible with older versions of SoundStructure Studio and Firmware, and any concepts that refer to HDX apply to Group Series as well.

The Polycom SoundStructure C16, C12, and C8 audio conferencing devices are single rack unit devices that have 16 inputs and 16 outputs, 12 inputs and 12 outputs, or 8 inputs and 8 outputs, respectively. The SoundStructure SR12 is an audio device for commercial sound applications that do not require acoustic echo cancellation capabilities and has 12 inputs and 12 outputs. Any combination of SoundStructure devices can be used together to build systems up to a total of eight SoundStructure devices and up to 128 inputs and 128 outputs. SoundStructure products can be used with any style of analog microphone or line-level input and output sources and are also compatible with the Polycom table and ceiling microphones.
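The following Python sketch is a planning aid, not a Polycom tool: it totals the inputs and outputs of a proposed combination of SoundStructure devices and checks the eight-device limit described above. The per-model counts come from this guide; `DEVICE_IO` and `plan_system` are hypothetical names chosen for illustration.

```python
# Per-device channel counts from this guide: model -> (inputs, outputs).
DEVICE_IO = {
    "C16": (16, 16),
    "C12": (12, 12),
    "C8": (8, 8),
    "SR12": (12, 12),
}

MAX_DEVICES = 8  # up to eight SoundStructure devices in one system


def plan_system(models):
    """Return (total_inputs, total_outputs) for a list of model names.

    Raises ValueError for an unknown model or more than MAX_DEVICES devices.
    """
    unknown = [m for m in models if m not in DEVICE_IO]
    if unknown:
        raise ValueError(f"unknown model(s): {unknown}")
    if len(models) > MAX_DEVICES:
        raise ValueError(
            f"{len(models)} devices exceeds the limit of {MAX_DEVICES}"
        )
    inputs = sum(DEVICE_IO[m][0] for m in models)
    outputs = sum(DEVICE_IO[m][1] for m in models)
    return inputs, outputs


if __name__ == "__main__":
    print(plan_system(["C16", "C12", "C8"]))  # (36, 36)
```

For example, a C16, a C12, and a C8 used together provide 36 inputs and 36 outputs, and eight C16 devices reach the 128-input, 128-output maximum. Because no single device has more than 16 channels, the eight-device check alone also keeps a plan within 128 channels.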

The SoundStructure products are used in similar applications as Polycom’s Vortex® installed voice products but have additional capabilities including:

● Stereo acoustic echo cancellation on all inputs

● Direct digital integration with Polycom Video Codec or RealPresence® Group Series systems

● Feedback elimination on all inputs

● More equalization options available on all inputs, outputs, and submixes

● Dynamics processing on all inputs, outputs, and submixes

● Modular telephony options that can be used with any SoundStructure device

● Submix processing and as many submixes as inputs

● Ethernet port for configuration and device management


● Event engine for controlling SoundStructure based on internal state information such as mute status, logic input and logic output ports, and an IR remote

SoundStructure devices are configured with Polycom's SoundStructure Studio software, a Windows®-based comprehensive design tool used to create audio configurations either online (connected to a SoundStructure system) or offline (not connected to a SoundStructure system). SoundStructure Studio is also used to upload and retrieve configuration files to and from SoundStructure systems.

For detailed information on how to install a device, terminate cables, and connect other devices to the SoundStructure devices, refer to the SoundStructure Hardware Installation Guide. For information on the SoundStructure API command syntax used to configure SoundStructure devices and control the devices with third-party controllers, refer to Appendix A: Command Protocol Reference Guide. The SoundStructure Command Protocol Reference Guide can also be found by pointing a browser to the SoundStructure device’s IP address.
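Appendix A is the authority on command syntax. As a hedged illustration only, the Python sketch below assumes that a command is a plain-text line made of an action (`set` or `get`), a parameter name such as `fader` or `mute`, a quoted virtual channel name, and an optional value; confirm this form against Appendix A before relying on it. `build_command` and the channel name "Table Mic 1" are hypothetical, and the sketch only formats strings; it does not open a connection to a device.

```python
def build_command(action, parameter, channel, value=None):
    """Format one command line of the ASSUMED form:
    <action> <parameter> "<virtual channel name>" [value]
    Verify this form against Appendix A before use.
    """
    if action not in ("set", "get"):
        raise ValueError(f"unsupported action in this sketch: {action!r}")
    if action == "set" and value is None:
        raise ValueError("'set' needs a value")
    if action == "get" and value is not None:
        raise ValueError("'get' takes no value")
    if '"' in channel:
        # The channel name is wrapped in quotes, so it cannot contain one.
        raise ValueError("channel names cannot contain a double quote")
    parts = [action, parameter, f'"{channel}"']
    if value is not None:
        parts.append(str(value))
    return " ".join(parts)


if __name__ == "__main__":
    print(build_command("set", "fader", "Table Mic 1", -5))
    print(build_command("get", "mute", "Table Mic 1"))
```

Quoting the virtual channel name keeps names that contain spaces intact, which is why the sketch rejects names with embedded double quotes instead of trying to escape them.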

This guide is designed for the technical user and A/V designer who needs to use SoundStructure products, create audio designs, customize audio designs, and verify the performance of SoundStructure designs. This guide is organized as follows:

● Introducing the Polycom SoundStructure Product Family is an introduction to the SoundStructure products, including the OBAM™ architecture and details of the signal processing available for inputs, outputs, telephony, and submix processing.

● Introducing SoundStructure Design Concepts presents the SoundStructure design concepts of

physical channels, virtual channels, and virtual channel groups. These concepts are integral to

making SoundStructure products easy to use and enable control system application code to be

reused and portable across multiple installations.

● Creating Designs with SoundStructure Studio describes how to use the SoundStructure Studio Windows software to create a design. Start with this section if you want to get up and running quickly using SoundStructure Studio.

● Customizing SoundStructure Designs provides detailed information on customizing the design

created with SoundStructure Studio including all the controls presented as part of the user interface.

Start with this chapter if you have a design and would like to customize it for your application.

● Connecting Over Conference Link2 provides information on the Conference Link2 interface and how SoundStructure devices integrate with the Polycom Video Codec conferencing system.

● Linking Multiple SoundStructure Devices with One Big Audio Matrix provides information on how to

link multiple SoundStructure devices with the OBAM™ interface.

● Installing SoundStructure Devices provides information on how to install, set signal levels, and

validate the performance of the SoundStructure devices. Start here if you have a system already up

and running and would like to adjust the system in real-time.

● Using Events, Logic, and IR provides information on how to use SoundStructure ‘events’ with logic input and output pins and an IR remote, and on options for sending commands from SoundStructure’s RS-232 interface to other devices, including a Polycom Video Codec.

● Managing SoundStructure Systems provides information for the network administrator, including how to set IP addresses, how to view the internal SoundStructure logs, and more.

● Using the Polycom® RealPresence Touch™ with a SoundStructure System provides the steps for pairing and using the Polycom RealPresence Touch device with a SoundStructure system.

● Integrating the Polycom® Touch Control with SoundStructure Systems provides the steps for using the Polycom Touch Control with a SoundStructure system. See the SoundStructure and the Polycom Touch Control Users Guide for instructions on how to use the Polycom Touch Control with SoundStructure.


● Integrating SoundStructure with SoundStructure VoIP Interface provides the steps for designing with, and configuring, the SoundStructure VoIP interface.

● Adding Authentication to SoundStructure Systems introduces authentication and how to enable

password protection on SoundStructure systems.

● Creating Advanced Applications provides example applications with SoundStructure products, including stereo audio conferencing applications, room combining, and more.

● Troubleshooting provides troubleshooting information and steps, including details on the status LEDs on SoundStructure.

● Specifications lists the specifications for the SoundStructure devices, including audio performance, power requirements, and more.

● Using SoundStructure Studio Controls provides information on how to use the different UI elements

in the SoundStructure Studio software including knobs and matrix crosspoints.

● Appendix A: Command Protocol Reference Guide provides detailed information on the

SoundStructure command protocol and the full command set.

● Appendix B: Address Book provides detailed information on how to use SoundStructure Studio’s

address book functionality to manage and connect to SoundStructure systems across an enterprise’s

network.

● Appendix C: Designing Audio Conferencing Systems is an audio conferencing design guide. Refer to this section if you are new to audio conferencing or would like to better understand audio conferencing concepts.

If you are new to the SoundStructure products, read this guide starting with Introducing the Polycom SoundStructure Product Family for an overview, Creating Designs with SoundStructure Studio to begin using SoundStructure Studio, and the remaining chapters as necessary to learn more about using SoundStructure products.


Introducing the Polycom SoundStructure Product Family

There are two product lines in the SoundStructure product family: the SoundStructure Conferencing series devices (C-series) designed for audio conferencing applications and the SoundStructure Sound Reinforcement series devices (SR-series) designed for commercial sound applications.

While the C-series and SR-series product families share a common design philosophy, each has audio processing capabilities designed for its respective applications. As described in detail below, the C-series products include acoustic echo cancellation on all inputs and are designed for audio and video conferencing applications. The SR-series products do not include acoustic echo cancellation and are designed for dedicated sound reinforcement, live sound, broadcast, and other commercial sound applications that do not require acoustic echo cancellation processing.

Defining SoundStructure Architectural Features

This section defines the common architectural features of the SoundStructure products and details the specific processing for both the C-series and SR-series products. Details on how to configure the devices are provided in Introducing SoundStructure Design Concepts, Creating Designs with SoundStructure Studio, and Customizing SoundStructure Designs.

All SoundStructure products are designed to combine the flexibility of an open architecture with the ease of design and installation of a fixed architecture system. The result is tremendous flexibility in how signals are processed, while also making it easy to achieve exceptional system performance.

The SoundStructure processing includes input processing available on all inputs, output processing

available on all outputs, submix processing available on all submix signals, telephony processing available

on all optional telephony interfaces, and an audio matrix that connects this processing together. The

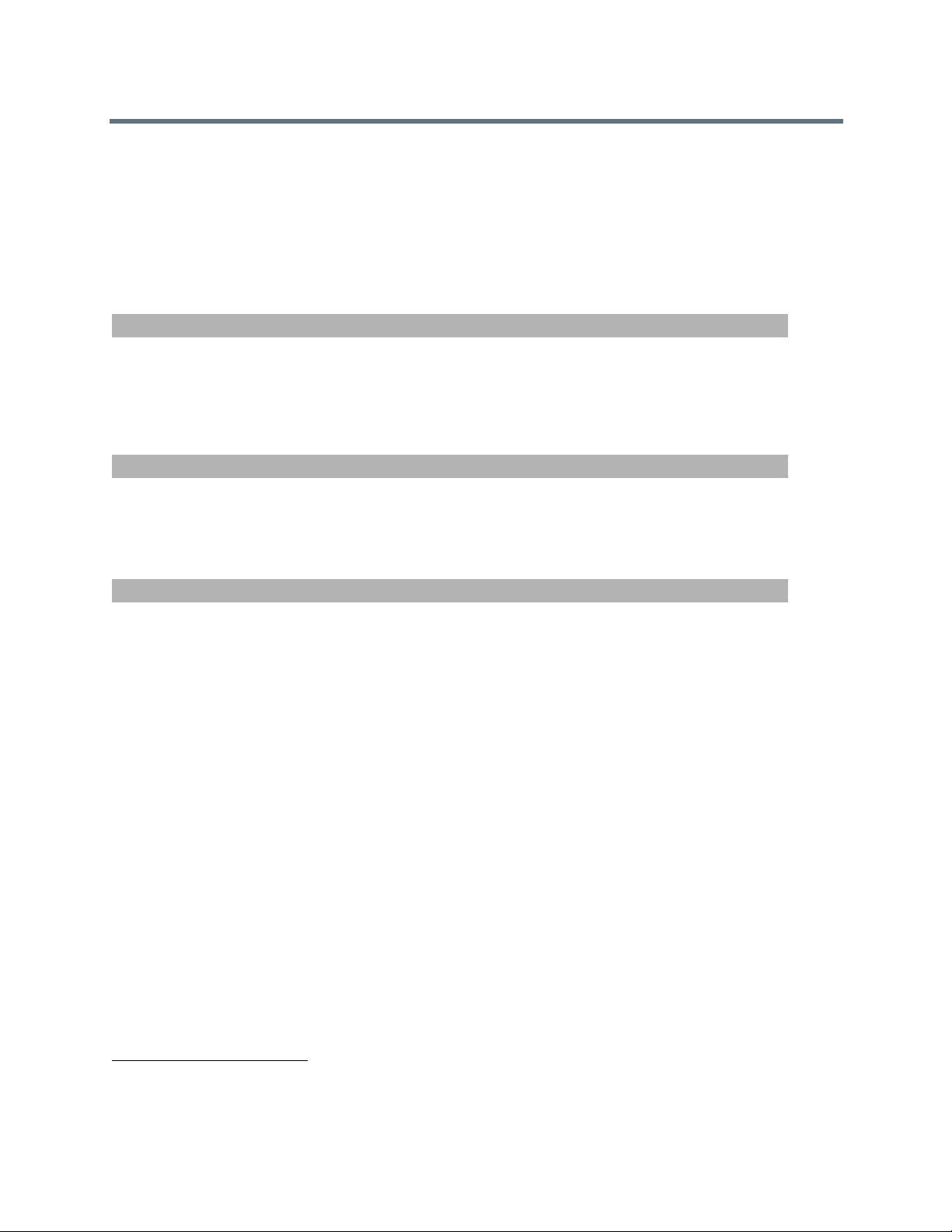

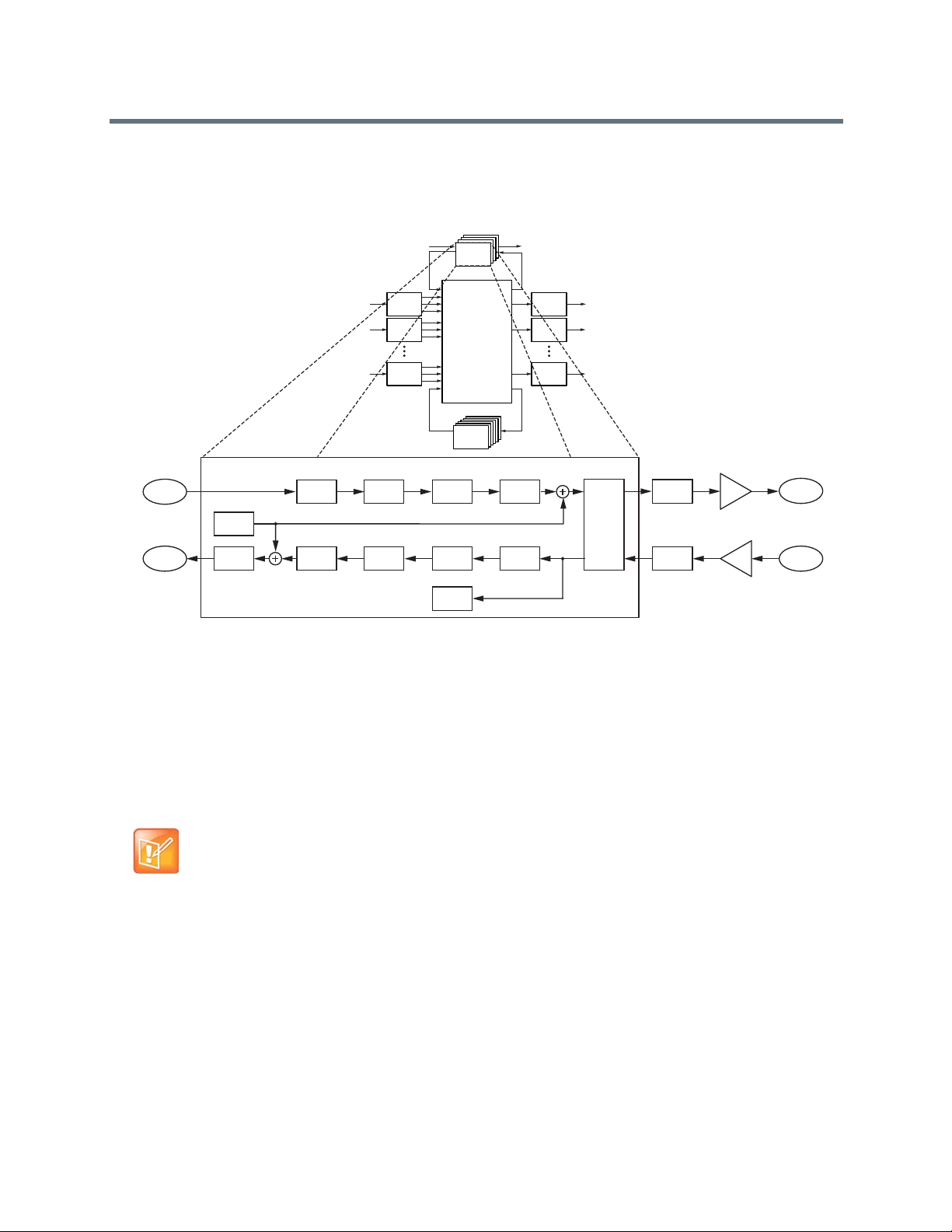

high-level architecture is shown in the following figure for a SoundStructure device that has N inputs and N

Polycom, Inc. 17

1

2

N

1

2

N

Telco

Processing

Telco

Processing

Telco

Processing

Telco

Processing

Matrix

Processing

SubMix

Submix

Processsing

Output

Processing

Output

Processing

Output

Processing

Input

Processing

Input

Processing

Input

Processing

outputs. The specific input and output processing depends on the product family (C-series or SR-series)

and is described later in this chapter.

SoundStructure High-Level Architecture
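To make the role of the matrix concrete, the Python sketch below models a generic mixing matrix: each matrix output is a weighted sum of the matrix inputs, and each crosspoint gain is given in dB or is off. This is a conceptual model under that assumption, not SoundStructure's internal implementation; `db_to_linear` and `mix` are hypothetical names.

```python
def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude factor."""
    return 10 ** (gain_db / 20)


def mix(samples, crosspoints_db):
    """Mix one frame of samples through a matrix.

    samples: one value per matrix input.
    crosspoints_db: crosspoints_db[out][inp] is the crosspoint gain in dB,
        or None when that crosspoint is off (not routed).
    Returns one mixed value per matrix output.
    """
    outputs = []
    for row in crosspoints_db:
        if len(row) != len(samples):
            raise ValueError("each crosspoint row needs one entry per input")
        total = 0.0
        for sample, gain_db in zip(samples, row):
            if gain_db is not None:
                total += sample * db_to_linear(gain_db)
        outputs.append(total)
    return outputs


if __name__ == "__main__":
    # Two inputs, two outputs: output 1 takes only input 1 at 0 dB,
    # output 2 sums both inputs at 0 dB.
    print(mix([1.0, 0.5], [[0.0, None], [0.0, 0.0]]))  # [1.0, 1.5]
```

Representing an unrouted crosspoint as `None` rather than a very low dB value keeps "off" distinct from "attenuated", which mirrors how a matrix crosspoint is either connected with a gain or not connected at all.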

The following table summarizes the number of inputs, outputs, and submixes supported within each type of device. As shown in this table, each SoundStructure device has as many submixes as there are inputs to the device.

Supported SoundStructure Inputs, Outputs, and Submixes

| SoundStructure | C16 | C12 | C8 | SR12 |
|---|---|---|---|---|
| Inputs | 16 | 12 | 8 | 12 |
| Outputs | 16 | 12 | 8 | 12 |
| Submixes | 16 | 12 | 8 | 12 |

A summary of the different types of processing in the C-series and SR-series products is shown in the

following table. As can be seen in this table, the difference between the products is that the C-series

products include acoustic echo cancellation while the SR-series products do not include acoustic echo

cancellation. The processing capabilities are described in the following sections.

Types of C-series and SR-series Product Processing

| Product Processing | C-Series | SR-Series |
|---|---|---|
| **Input Processing** | | |
| Up to 8th order highpass and lowpass filters | ✓ | ✓ |
| 1st or 2nd order high shelf and low shelf filters | ✓ | ✓ |
| 10-band parametric equalization | ✓ | ✓ |
| Acoustic echo cancellation: 20 Hz to 22 kHz, 200 msec tail time, monaural or stereo | ✓ | |
| Automatic gain control: +15 to -15 dB | ✓ | ✓ |
| Dynamics processing: gate, expander, compressor, limiter, peak limiter | ✓ | ✓ |
| Feedback eliminator: 10 adaptive filters | ✓ | ✓ |
| Noise cancellation: 0-20 dB noise reduction | ✓ | ✓ |
| Automixer: gain sharing or gated mixer | ✓ | ✓ |
| Signal fader gain: +20 to -100 dB | ✓ | ✓ |
| Signal delay: up to 1000 msec | ✓ | ✓ |
| **Output Processing** | | |
| 1st or 2nd order high shelf and low shelf filters | ✓ | ✓ |
| 10-band parametric or 31-band graphic equalizer | ✓ | ✓ |
| Dynamics processing: gate, expander, compressor, limiter, peak limiter | ✓ | ✓ |
| Signal fader gain: +20 to -100 dB | ✓ | ✓ |
| Crossover equalization: up to 8th order highpass and lowpass filters, 1st order | ✓ | ✓ |
| Crossover delay: up to 100 msec | ✓ | ✓ |
| Signal delay: up to 1000 msec | ✓ | ✓ |
| **Submix Processing** | | |
| Up to 8th order highpass and lowpass filters | ✓ | ✓ |
| 1st or 2nd order high shelf and low shelf filters | ✓ | ✓ |
| 10-band parametric equalization | ✓ | ✓ |
| Dynamics processing: gate, expander, compressor, limiter, peak limiter | ✓ | ✓ |
| Signal fader gain: +20 to -100 dB | ✓ | ✓ |
| Signal delay: up to 1000 msec | ✓ | ✓ |
| **Telco Processing** | | |
| Line echo cancellation: 80-3300 Hz, 32 msec tail time | ✓ | ✓ |
| Dynamics processing: gate, expander, compressor, limiter, peak limiter on telco transmit and receive | ✓ | ✓ |
| Up to 8th order highpass and lowpass filters | ✓ | ✓ |
| 1st or 2nd order high shelf and low shelf filters | ✓ | ✓ |
| 10-band parametric equalization on telco transmit and receive | ✓ | ✓ |
| Call progress detection | ✓ | ✓ |
| Signal fader gain: +20 to -100 dB | ✓ | ✓ |
| Automatic gain control: +15 to -15 dB on telco receive | ✓ | ✓ |
| Signal delay on telco transmit and receive: up to 1000 msec | ✓ | ✓ |
| Noise cancellation: 0-20 dB noise reduction on telco receive | ✓ | ✓ |

Understanding Polycom OBAM™ - One Big Audio Matrix

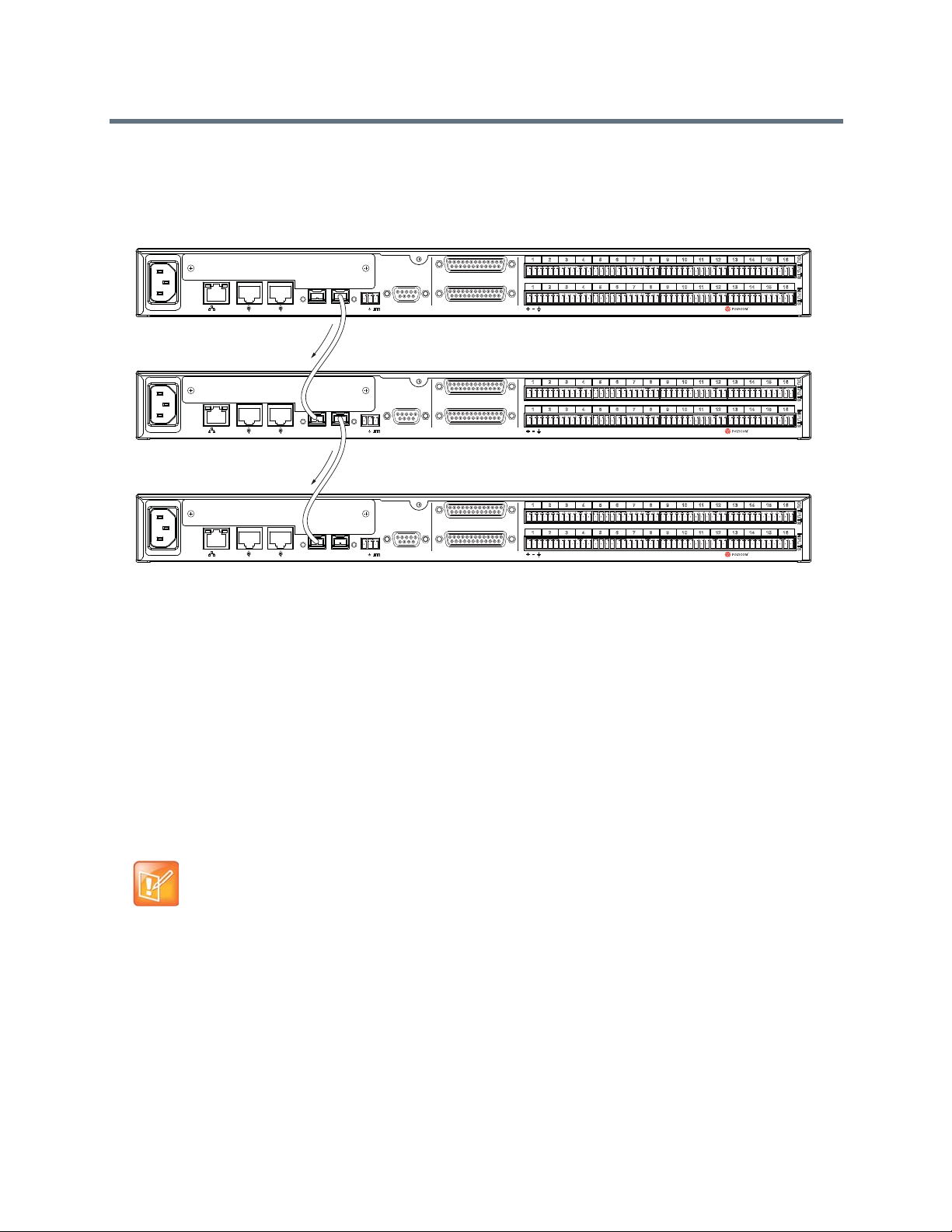

One of the significant advancements in the SoundStructure products is the ability to link together multiple devices and to configure and operate those devices as one large system rather than as multiple individual devices¹. This feature dramatically simplifies any installation where audio from more than one device is required, such as complicated sound reinforcement applications.

OBAM's 'one large system' approach provides many benefits, including:

● All input signals feed into a single matrix, and all outputs are fed from that single matrix.

● There are no limitations on how signals from multiple devices are used together, which is beneficial for A/V designers.

● A transparent device-linking scheme shares all input signals with all devices, which simplifies the setup, configuration, and maintenance of large systems.

● All inputs and outputs can be viewed on one screen, which eliminates the need to configure multiple devices by viewing multiple pages.

1. Requires SoundStructure firmware release 1.2 or higher.

This one big system design approach is the result of the SoundStructure architectural design and the OBAM

high-speed bi-directional link interface between devices. With OBAM, you can link up to eight devices

together. If there are plug-in cards installed in multiple linked SoundStructure devices, the plug-in card

resources are available for routing to any output across the system. See the Hardware Installation Guide or

Introducing SoundStructure Design Concepts for more information on how to link multiple devices together.

The one large system design philosophy means that the audio matrix of a system of SoundStructure devices is as large as the total number of inputs and outputs of all the linked devices. Because one SoundStructure C16 device has a 16x16 matrix, two linked C16 devices create a 32x32 matrix, and so forth.
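The matrix-size arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a Polycom tool: the helper name `obam_matrix_size` and the `DEVICE_IO` lookup are hypothetical, with per-device channel counts taken from the Supported SoundStructure Inputs, Outputs, and Submixes table and the eight-device limit taken from the OBAM description.

```python
# Minimal sketch: size of the combined OBAM matrix for a set of linked devices.
# DEVICE_IO and obam_matrix_size are hypothetical names, not a Polycom API.

DEVICE_IO = {"C16": 16, "C12": 12, "C8": 8, "SR12": 12}  # inputs = outputs per device
MAX_LINKED_DEVICES = 8  # OBAM links up to eight devices


def obam_matrix_size(devices):
    """Return (inputs, outputs) of the single matrix formed by linked devices."""
    if not 1 <= len(devices) <= MAX_LINKED_DEVICES:
        raise ValueError("OBAM supports linking 1 to 8 devices")
    # Every device adds all of its inputs and outputs to the one big matrix.
    total = sum(DEVICE_IO[d] for d in devices)
    return total, total


print(obam_matrix_size(["C16", "C16"]))        # two C16 devices
print(obam_matrix_size(["C16", "C12", "C8"]))  # mixed C-series system
print(obam_matrix_size(["C16"] * 8))           # largest possible system
```

The same sum also gives the submix count, since each device has one submix per input.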

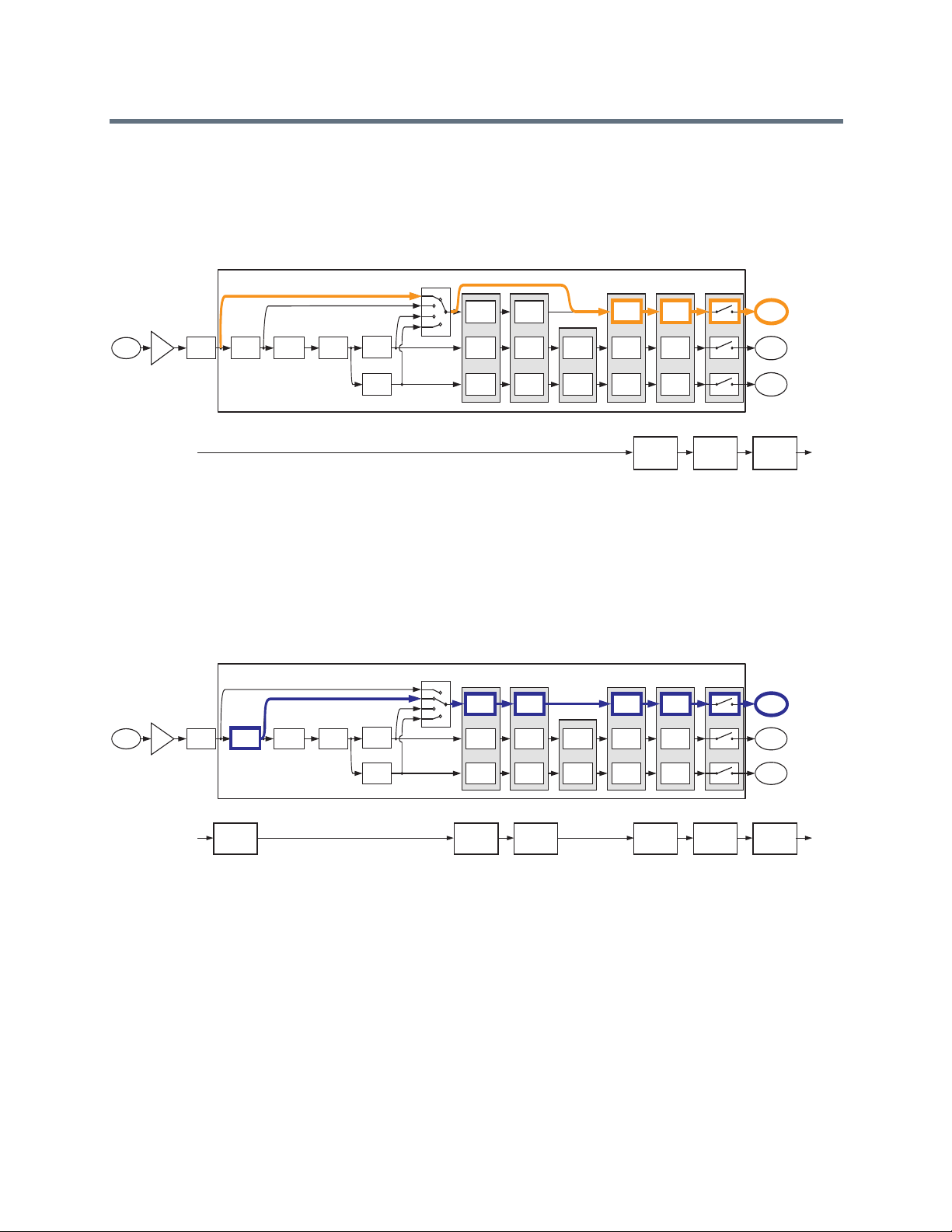

The OBAM architecture is shown in the following figure, where a C16 device is linked to a C12 device, which is in turn linked to a C8 device. The resulting system has 36 inputs and 36 outputs (16 + 12 + 8 = 36). In addition to all the inputs and outputs, the submixes of each device also feed the matrix, giving the designer 36 submix signals (not shown in the following figure), one for each input in the system.

OBAM Architecture with Linked SoundStructure Devices

With the OBAM design architecture, A/V designers no longer need to be concerned with device linking, because multiple SoundStructure devices behave as, and are configured as, one large system.


Understanding SoundStructure C-Series Products

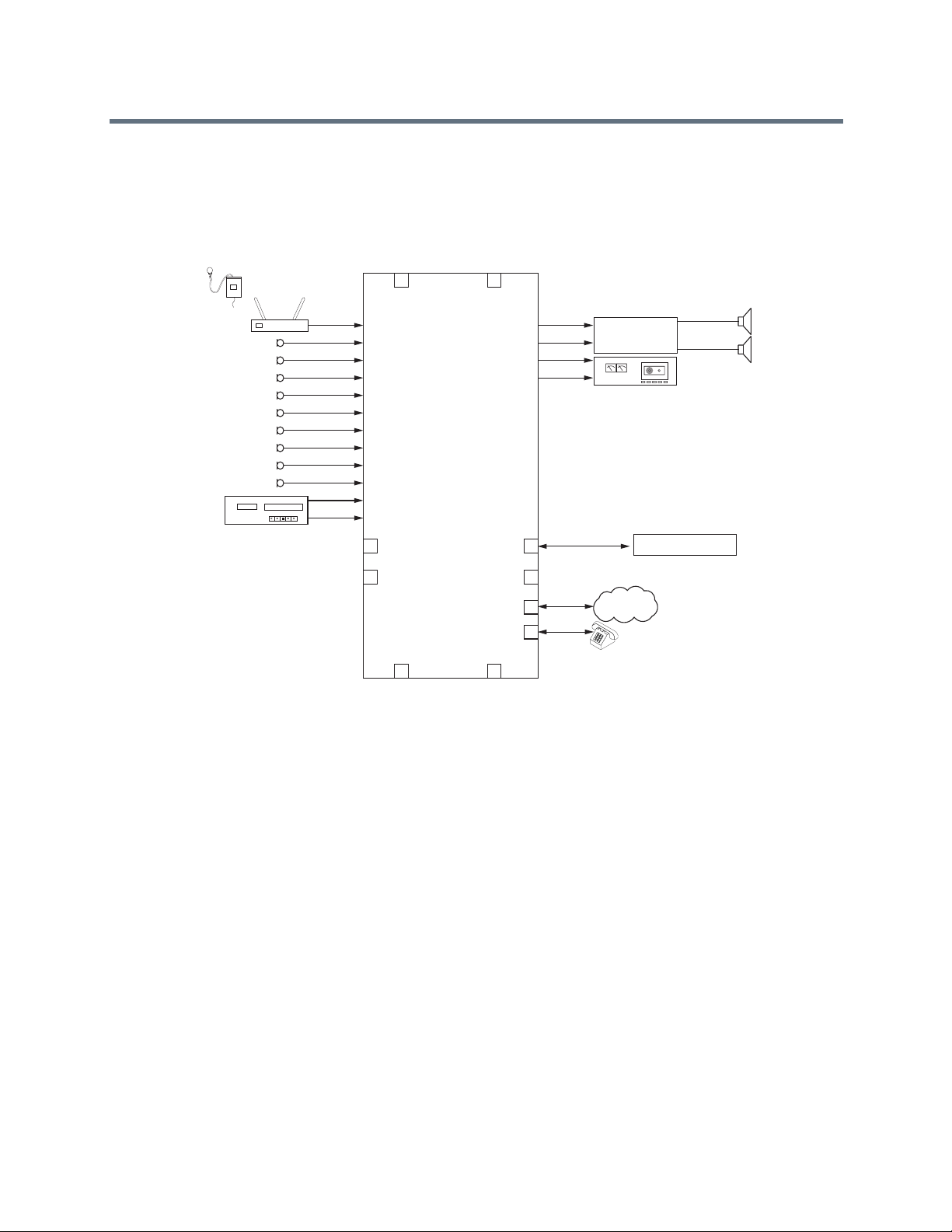

The SoundStructure C16, C12, and C8 devices are designed for audio conferencing applications where groups of people want to communicate with other individuals or groups, such as in the typical room shown in the following figure.

A Conference Room Used with SoundStructure C-series Products

The SoundStructure C-series products feature both monaural and stereo acoustic echo cancellation, noise cancellation, equalization, dynamics processing, feedback elimination, and automatic microphone mixing.

Note: Processing Capability for Audio Inputs and Outputs

All audio inputs have the same processing capability, and each input accepts either microphone-level or line-level signals. Phantom power is available on all inputs.

All outputs have the same processing capability.

A single SoundStructure C16, C12, or C8 device supports 16, 12, or 8 microphone or line inputs and 16, 12, or 8 line outputs, respectively. You can link up to eight SoundStructure devices together, in any combination of C-series and SR-series products, to build audio processing systems that support up to 128 analog inputs and outputs.

You can use each SoundStructure C-series device with traditional analog microphones or with Polycom's table and ceiling microphones¹. For detailed information on using the Polycom table and ceiling microphones, see Connecting Over Conference Link2.

1. Requires SoundStructure firmware release 1.1 or later.



SoundStructure Installation

Audio and video conferencing are typical applications of the SoundStructure C-series conferencing products, where two or more remote locations are conferenced together. The typical connections in a conference room are shown in the following figure.

Typical SoundStructure Video and Audio Connections in a Conference Room

Before you design with SoundStructure products, it helps to understand the details of the SoundStructure signal processing capabilities, which are presented in the following sections.

Understanding C-Series Input Processing

The input processing on the SoundStructure C-series devices is designed to help you create conferencing solutions with or without sound reinforcement. The audio input processing on a SoundStructure C-series device is shown in the following table.

SoundStructure Input Processing

Input Processing

Up to 8th order highpass and lowpass

1st or 2nd order high shelf and low shelf

10-band parametric equalization

Acoustic echo cancellation, 20-22kHz 200 msec tail-time, monaural or stereo

Automatic gain control: +15 to -15dB

Dynamics processing: gate, expander, compressor, limiter, peak limiter

Feedback Eliminator: 10 adaptive filters

Noise cancellation: 0-20dB noise reduction

Automixer: gain sharing or gated mixer

Signal fader gain: +20 to -100 dB

Signal delay to 1000 msec


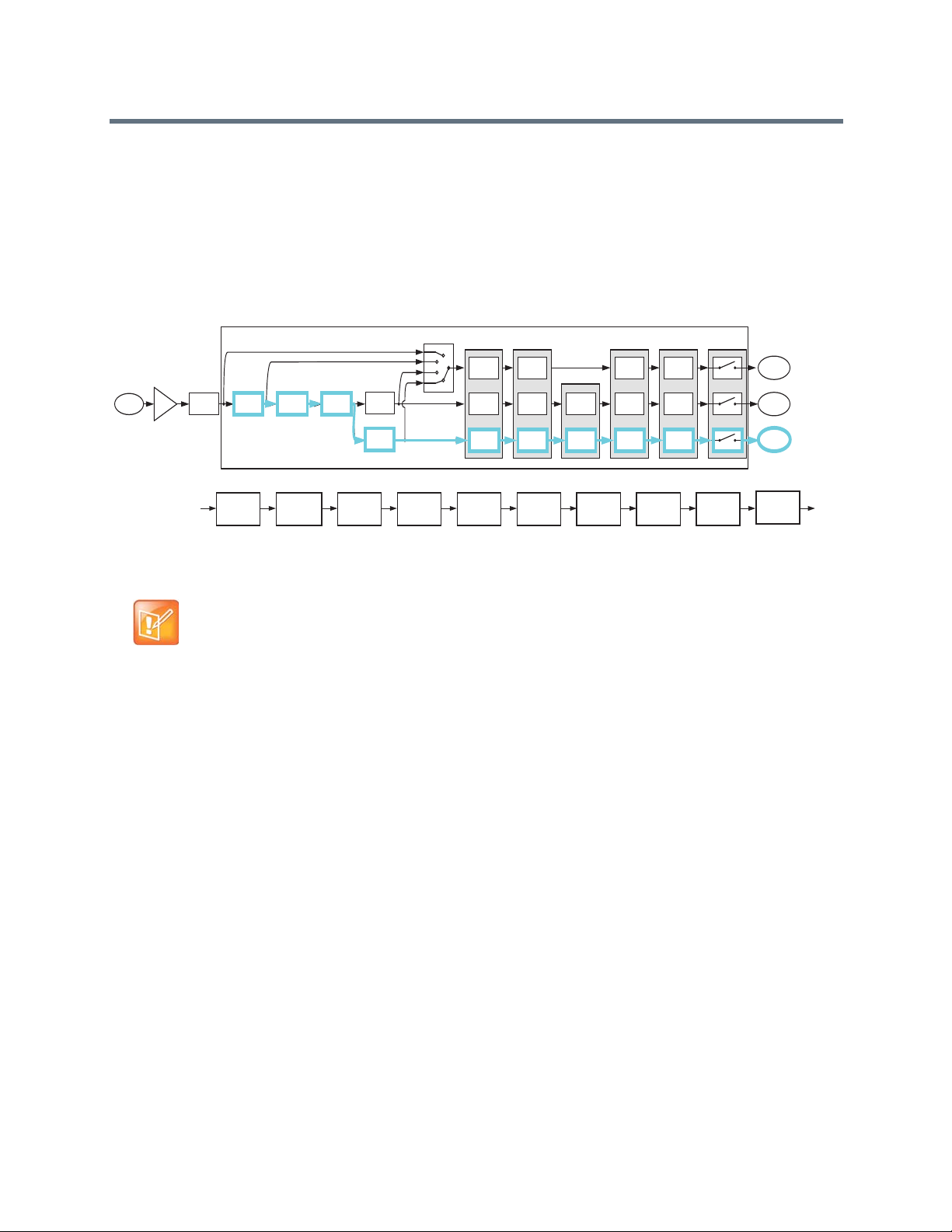

The signal processing follows the signal flow, as shown in the following figure.

SoundStructure C-Series Signal Processing and Signal Flow


Each analog input signal has an analog gain stage that adjusts the gain of the input signal to the SoundStructure nominal signal level of 0 dBu. The analog gain stage provides from -20 to 64 dB of gain in 0.5 dB steps, and 48 V phantom power can optionally be enabled on each input. Finally, the analog input signal is digitized and becomes available for processing. The digital signal is processed by five different DSP algorithms: parametric equalization, acoustic echo cancellation, noise cancellation, feedback reduction, and echo suppression (non-linear processing).
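As a worked illustration of the analog gain range, the following Python sketch picks a gain setting that moves a source toward the 0 dBu nominal level, clamps it to the -20 to 64 dB range, and snaps it to a 0.5 dB step. The helper `analog_gain_for` is hypothetical, and deriving the gain from a known source level in dBu is an assumption made for this example, not a description of how the device sets gain.

```python
# Minimal sketch: pick an analog gain that brings a source to 0 dBu.
# Only the -20..64 dB range, the 0.5 dB step, and the 0 dBu nominal level
# come from the text; analog_gain_for is a hypothetical helper.

GAIN_MIN_DB = -20.0
GAIN_MAX_DB = 64.0
GAIN_STEP_DB = 0.5
NOMINAL_LEVEL_DBU = 0.0


def analog_gain_for(source_level_dbu):
    """Return the gain (dB) that moves source_level_dbu toward 0 dBu."""
    wanted = NOMINAL_LEVEL_DBU - source_level_dbu
    # Clamp to the analog gain stage's range.
    clamped = min(max(wanted, GAIN_MIN_DB), GAIN_MAX_DB)
    # Snap to a 0.5 dB step (Python's round() sends exact ties to the even step).
    return round(clamped / GAIN_STEP_DB) * GAIN_STEP_DB


print(analog_gain_for(-50.0))  # typical microphone-level source
print(analog_gain_for(-70.0))  # very quiet source: limited by the 64 dB maximum
print(analog_gain_for(24.0))   # hot source: limited by the -20 dB minimum
```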


SoundStructure C-Series Signal Input Processing


Further along the signal path, as shown in the next figure, the input signal passes through automatic gain control (AGC), dynamics processing, an automixer, an audio fader, and finally the input delay.
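The last two stages of this path, the fader and the input delay, can be illustrated with a short Python sketch that uses the ranges from the processing table (fader gain +20 to -100 dB, delay up to 1000 msec). The 48 kHz sample rate, the helper names, and the block-based delay are assumptions for illustration only; the sketch does not describe how the SoundStructure DSP implements these stages.

```python
# Minimal sketch of the fader and delay stages (illustrative only).
# Ranges come from the processing table; the 48 kHz sample rate and
# all names here are assumptions, not SoundStructure internals.

FADER_MIN_DB = -100.0
FADER_MAX_DB = 20.0
MAX_DELAY_MS = 1000.0
SAMPLE_RATE_HZ = 48_000  # assumed sample rate


def fader_linear_gain(fader_db):
    """Clamp a fader setting to the +20/-100 dB range and convert it to a linear factor."""
    db = min(max(fader_db, FADER_MIN_DB), FADER_MAX_DB)
    return 10.0 ** (db / 20.0)


def delay_samples(delay_ms, sample_rate_hz=SAMPLE_RATE_HZ):
    """Clamp a delay to 0-1000 msec and express it as a whole number of samples."""
    ms = min(max(delay_ms, 0.0), MAX_DELAY_MS)
    return round(ms * sample_rate_hz / 1000.0)


def fader_then_delay(samples, fader_db, delay_ms):
    """Scale a block of samples by the fader gain, then delay it by prepending silence."""
    gain = fader_linear_gain(fader_db)
    pad = [0.0] * delay_samples(delay_ms)
    return pad + [gain * s for s in samples]


# Example: -6 dB of fader gain and 10 msec of delay on a short test block.
block = fader_then_delay([1.0, 0.5, -0.5], -6.0, 10.0)
print(len(block), round(block[480], 3))
```

Clamping rather than rejecting out-of-range settings mirrors how a control with fixed limits behaves, which keeps the example short.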

SoundStructure C-Series Input Signal Path


Each analog input signal is processed to generate three different versions of the processed input signal that can be used simultaneously in the matrix:

● Conferencing version

● Sound reinforcement version

● Recording/ungated version
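One way to picture the three simultaneous versions is as a matrix in which each crosspoint selects which processed version of an input feeds a given output. The Python model below is purely illustrative: the `InputVersion` and `Crosspoint` types, the signal names, and the per-crosspoint gain are hypothetical and are not the SoundStructure Studio or device API.

```python
from dataclasses import dataclass
from enum import Enum


class InputVersion(Enum):
    """The three processed versions generated for every input."""
    CONFERENCING = "conferencing"
    SOUND_REINFORCEMENT = "sound reinforcement"
    RECORDING_UNGATED = "recording/ungated"


@dataclass
class Crosspoint:
    """Hypothetical matrix cell: which version of an input feeds an output, and at what gain."""
    version: InputVersion
    gain_db: float = 0.0


# Hypothetical routing for one microphone: all three versions exist at the
# same time, so each output can take a different one.
matrix = {
    ("Mic 1", "Video Codec Out"): Crosspoint(InputVersion.CONFERENCING),
    ("Mic 1", "Amplifier Out"): Crosspoint(InputVersion.SOUND_REINFORCEMENT, -6.0),
    ("Mic 1", "Recorder Out"): Crosspoint(InputVersion.RECORDING_UNGATED),
}

for (src, dst), xp in matrix.items():
    print(f"{src} -> {dst}: {xp.version.value} at {xp.gain_db:+.1f} dB")
```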
