PMC-Sierra, Inc.

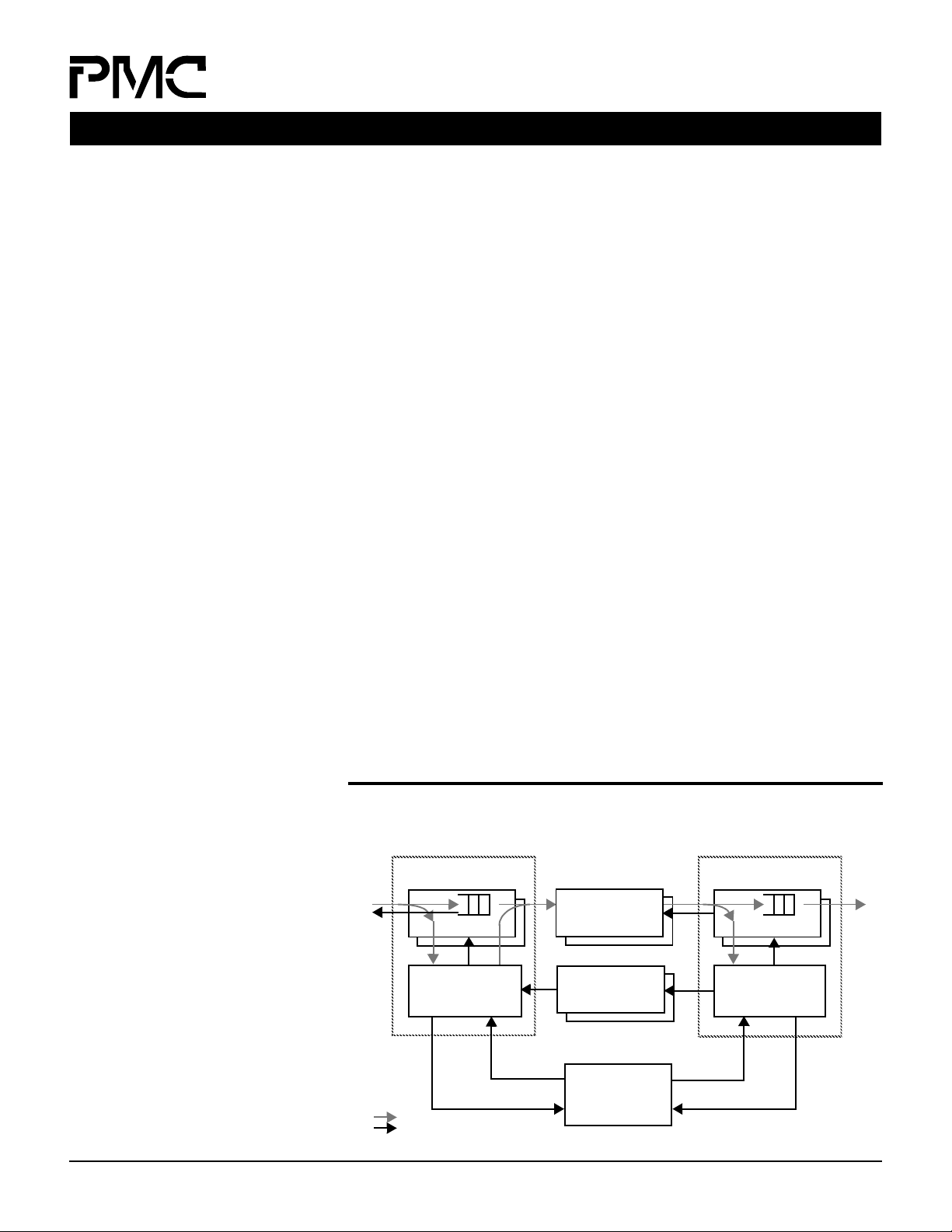

Figure (device configuration): two of the 32 ETT1 ports. The ingress side (flow from linecard, via the LCS protocol interface) and the egress side (flow to linecard, via the LCS protocol interface) each show a Dataslice (PM9313-HC) and an Enhanced Port Processor (PM9315-HC). The ports connect through the Crossbar (PM9312-UC) under the control of the Scheduler (PM9311-UC). Labels also mark data cell flow, control flow, and flow control.

Enhanced TT1™ Switch Fabric

Preliminary

PM9311/2/3/5

ETT1™ Chip Set

FEATURES

The ETT1™ Chip Set provides a full 32-port crossbar-based switch fabric. Shelves of physically separated linecards can be attached to the switch fabric by fiber optic links running the Linecard-to-Switch (LCS™) protocol.

CONFIGURATIONS

• 320 Gbit/s aggregate full-duplex bandwidth with a typical configuration of 32 ports of OC-192c or 10 Gbit/s Ethernet.

• Each port operates at a fixed rate of 25 million fixed-length cells per second. The user payload in each cell can be either 64 or 76 bytes. A 64-byte cell payload is equivalent to a 12.8 Gbit/s line rate.

• Each port can be configured as:

a) 1 channel of 25 million cells per second, appropriate for a single OC-192c stream or 10 Gbit/s Ethernet, or as

b) 4 channels of 6.25 million cells per second, appropriate for a quad OC-48c linecard.
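The rates quoted above can be cross-checked with simple arithmetic. The following is only an illustrative sketch: the function and constant names are hypothetical, it counts user payload bytes only, and it assumes 1 Gbit/s = 10^9 bit/s. The 76-byte figure is derived here and is not quoted in the text.

```python
# Illustrative arithmetic only; all names are hypothetical, not from the datasheet.
CELLS_PER_SECOND = 25_000_000   # fixed per-port cell rate stated above
PORTS = 32                      # ports in a full fabric
NOMINAL_PORT_GBPS = 10          # OC-192c / 10 Gbit/s Ethernet class port


def payload_rate_gbps(payload_bytes, cells_per_second=CELLS_PER_SECOND):
    """User payload bandwidth in Gbit/s, assuming 1 Gbit/s = 1e9 bit/s."""
    return payload_bytes * 8 * cells_per_second / 1e9


rate_64 = payload_rate_gbps(64)                 # 64-byte payload option
rate_76 = payload_rate_gbps(76)                 # 76-byte payload option (derived)
cells_per_channel = CELLS_PER_SECOND / 4        # quad-channel (quad OC-48c) mode
aggregate_gbps = PORTS * NOMINAL_PORT_GBPS      # nominal aggregate bandwidth

print(f"64-byte payload: {rate_64:.1f} Gbit/s")
print(f"76-byte payload: {rate_76:.1f} Gbit/s")
print(f"cells per channel in quad mode: {cells_per_channel:,.0f}")
print(f"aggregate: {aggregate_gbps} Gbit/s")
```

The gap between the 12.8 Gbit/s payload rate and the nominal 10 Gbit/s line rate leaves headroom for segmentation overhead on the linecard side; how that headroom is used is outside the scope of this sketch.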

SERVICES

• All configurations support four strict priorities of best-effort traffic for both unicast and multicast data traffic.

• TDM service provides guaranteed bandwidth and zero delay variation with 10 Mbit/s per channel resolution. It can provide Add-Drop type functionality or ATM CBR service.

• Highly efficient cell scheduling algorithm together with the use of Virtual Output Queues and Virtual Input Queues provides near-output-queued performance in conjunction with the fabric’s internal speedup.

• Efficient support for multicast with cell replication performed within the switch core. Up to 4096 multicast groups per port.
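To illustrate why Virtual Output Queues help, the sketch below models the queue structure at a single ingress port: one FIFO per (egress port, priority) pair, so a cell waiting for a busy output never blocks a cell bound for a different output. This is a hedged illustration, not the ETT1 implementation: the class and method names are hypothetical, and treating priority 0 as the highest is an assumption of the sketch.

```python
from collections import deque

NUM_PORTS = 32        # fabric ports, from the text above
NUM_PRIORITIES = 4    # strict best-effort priorities, from the text above


class IngressVOQs:
    """One FIFO per (egress port, priority) at a single ingress port (hypothetical model)."""

    def __init__(self):
        self.queues = [
            [deque() for _ in range(NUM_PRIORITIES)] for _ in range(NUM_PORTS)
        ]

    def enqueue(self, egress, priority, cell):
        self.queues[egress][priority].append(cell)

    def dequeue_for(self, egress):
        """Pop from the highest-priority non-empty queue for this egress port.

        Assumes priority 0 is the highest; returns None if nothing is queued.
        """
        for queue in self.queues[egress]:
            if queue:
                return queue.popleft()
        return None


voqs = IngressVOQs()
voqs.enqueue(egress=5, priority=2, cell="low-priority cell for port 5")
voqs.enqueue(egress=5, priority=0, cell="high-priority cell for port 5")
voqs.enqueue(egress=7, priority=3, cell="cell for port 7")
```

With a single shared FIFO, the cell for port 7 would wait behind both cells for port 5; with per-output queues it can be served as soon as port 7 is free.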


MANAGEMENT

• In-band management and control via Control Packets. These Control Packets are exchanged between the linecard and the switch core, and enable the linecards to communicate directly with the CPU that controls the switch fabric.

• Out-of-band (OOB) management and control via a dedicated CPU interface. Every ETT1 device has an OOB interface that provides a CPU-based control and diagnostics interface. A single CPU can control every ETT1 device in a full fabric.

FAULT TOLERANCE

• Optional redundancy of all shared components for fault tolerance. A fully redundant fabric is capable of sustaining single errors within any replicated device without losing or reordering any cells.

• Hot-swap support for live insertion/removal of boards from an active system.

ADDITIONAL SPECIFICATIONS

• Provides a standard five-signal P1149.1 JTAG test port for boundary scan test purposes.

• 2.5V and 1.5V rails. CMOS I/Os are 3.3V tolerant (except JTAG I/Os).

• PM9311-UC and PM9312-UC are in a 1088 ceramic column grid array (CCGA) package. PM9313-HC is a 474 ceramic ball grid array (CBGA). PM9315-HC is a 624 ceramic ball grid array (CBGA).

DEVICE CONFIGURATION

The following illustration shows how the ETT1 devices are configured to create a complete fabric. Many instances of each device may be needed in a fabric.

Shown are two fabric ports; each port would be connected to its own linecard(s). While every fabric port is full-duplex, the illustration only shows the ingress stream for the left port and the egress stream for the port on the right.

The linecard sends cells to the ingress queues within the Dataslice devices. The EPP observes their arrival and issues requests to the Scheduler device. At some later time, the Scheduler will issue a grant back to the EPP. On receipt of the grant, the EPP instructs the Dataslice to send the cell at the head of the relevant ingress queue to the Crossbar. The cell passes through the Crossbar and is stored in the appropriate queue at the egress Dataslices. The egress EPP then instructs the Dataslices to send the cell to the destination linecard.
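The request/grant sequence described above can be sketched as a toy model. This is an assumption-laden illustration, not the ETT1 scheduling algorithm: the class `ToyFabric` and its methods are hypothetical, and the grant policy used here (serve pending requests in arrival order, at most one per input and one per output in each cell time) is chosen only to keep the example short.

```python
from collections import deque


class ToyFabric:
    """Toy model of the request/grant cell flow (hypothetical, greatly simplified)."""

    def __init__(self, num_ports=32):
        # ingress[src][dst]: Dataslice ingress queue at port src for egress port dst
        self.ingress = [[deque() for _ in range(num_ports)] for _ in range(num_ports)]
        # egress[dst]: Dataslice egress queue at port dst
        self.egress = [deque() for _ in range(num_ports)]
        # Requests pending at the Scheduler, as (src, dst) pairs
        self.requests = deque()

    def cell_arrives(self, src, dst, cell):
        self.ingress[src][dst].append(cell)   # linecard -> Dataslice ingress queue
        self.requests.append((src, dst))      # EPP observes arrival, issues a request

    def schedule_one_cell_time(self):
        """Grant requests in arrival order, one per input and one per output (assumed policy)."""
        busy_inputs, busy_outputs, deferred = set(), set(), deque()
        while self.requests:
            src, dst = self.requests.popleft()
            if src in busy_inputs or dst in busy_outputs:
                deferred.append((src, dst))   # conflict: retry in a later cell time
                continue
            busy_inputs.add(src)
            busy_outputs.add(dst)
            # Grant received: EPP tells the Dataslice to send the head-of-queue cell,
            # which crosses the Crossbar into the egress queue.
            self.egress[dst].append(self.ingress[src][dst].popleft())
        self.requests = deferred

    def deliver(self, dst):
        """Egress EPP instructs the Dataslices to send the next cell to the linecard."""
        return self.egress[dst].popleft() if self.egress[dst] else None


fabric = ToyFabric(num_ports=4)
fabric.cell_arrives(0, 2, "a")
fabric.cell_arrives(1, 2, "b")   # contends with "a" for output 2
fabric.cell_arrives(1, 3, "c")
fabric.schedule_one_cell_time()
first_round = (list(fabric.egress[2]), list(fabric.egress[3]))
fabric.schedule_one_cell_time()
second_round = list(fabric.egress[2])
```

In the first cell time, "a" and "c" are granted while "b" waits because output 2 is already taken; "b" is granted in the next cell time.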

LINECARD SUPPORT

• Linecard-to-Switch (LCS) protocol supports a physical separation between switch core and linecards of up to 200 feet/70 m.

• LCS provides a simple credit-based flow control mechanism to avoid cell loss due to buffer overrun.
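The general idea of credit-based flow control can be sketched as follows. This is a generic illustration under stated assumptions, not the LCS message format or state machine: the class `CreditedLink` and its methods are hypothetical, and the sketch assumes the sender starts with one credit per receiver buffer slot.

```python
from collections import deque


class CreditedLink:
    """Toy credit-based flow control: the sender transmits only while it holds credits."""

    def __init__(self, buffer_cells):
        self.capacity = buffer_cells
        self.credits = buffer_cells   # assumed: one initial credit per receiver buffer slot
        self.buffer = deque()         # receiver-side cell buffer

    def send(self, cell):
        """Send one cell if a credit is available; otherwise hold it at the sender."""
        if self.credits == 0:
            return False              # no credit: sending now could overrun the buffer
        self.credits -= 1
        self.buffer.append(cell)
        return True

    def drain_one(self):
        """Receiver forwards one cell, frees its slot, and returns a credit to the sender."""
        if not self.buffer:
            return None
        self.credits += 1
        return self.buffer.popleft()


link = CreditedLink(buffer_cells=2)
results = [link.send("a"), link.send("b"), link.send("c")]   # third send is refused
drained = link.drain_one()                                   # frees a slot, returns a credit
retry = link.send("c")                                       # now succeeds
```

Because the sender never transmits without a credit, the receiver buffer occupancy can never exceed its capacity, which is the property that prevents cell loss from overrun.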

PMC-2000411

PROPRIETARY AND CONFIDENTIAL TO PMC-SIERRA, INC., AND FOR ITS CUSTOMERS’ INTERNAL USE

© Copyright PMC-Sierra, Inc. 2000


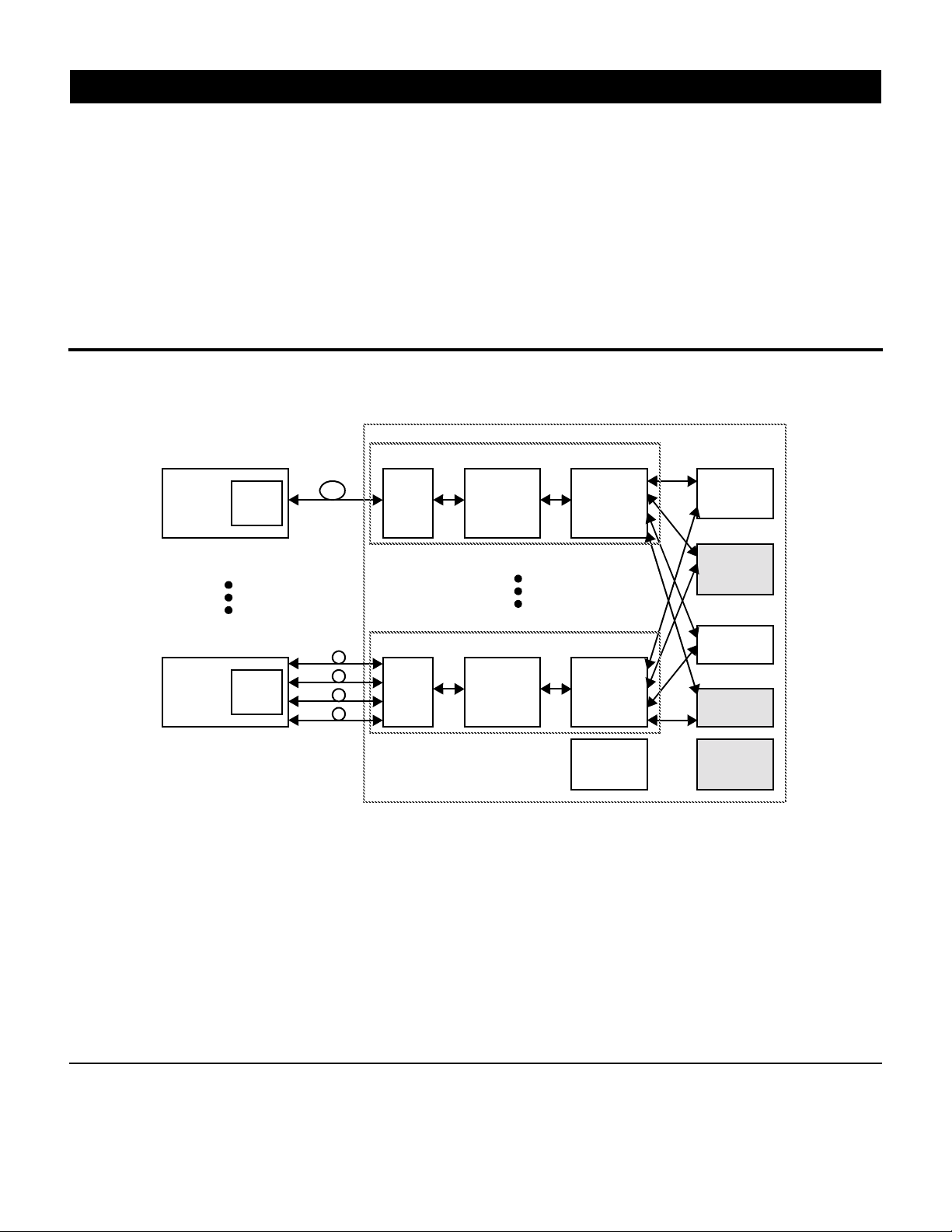

SYSTEM CONFIGURATION

The following illustration shows a complete switch, with 32 linecards connecting to the 32-port fabric. As shown, fiber links are used to interconnect the distant (up to 200 feet) linecards to the fabric.

Alternatively, linecard silicon can interface directly to the ETT1 devices, if sufficient board space, power, thermal regulation, etc., is available.

Figure (system configuration): Fabric Port 1 through Fabric Port 32 each connect a linecard (an OC-192 linecard and a quad OC-48 linecard are shown) with E/O and O/E conversion, over fiber, to E/O and O/E conversion and SerDes (12 or 14) at the fabric, and then to an EPP plus 6 or 7 Dataslices. The ETT1 Fabric also contains the Crossbar (14 or 16), Redundant Crossbar (14 or 16), Scheduler, Redundant Scheduler, CPU, and Redundant CPU.

APPLICATIONS

• ATM switches with 32 ports of OC-192c, or up to 128 ports of OC-48c, or combinations thereof.

• SONET Add/Drop Muxes.

• IP switches with 32 ports of 10 Gbit/s.

• Any combination of the above.

Head Office:
PMC-Sierra, Inc.
#105 - 8555 Baxter Place
Burnaby, B.C. V5A 4V7
Canada
Tel: 604.415.6000
Fax: 604.415.6200

To order documentation, send email to: document@pmc-sierra.com or contact the head office, Attn: Document Coordinator

All product documentation is available on our web site at: http://www.pmc-sierra.com

For corporate information, send email to: info@pmc-sierra.com

© Copyright PMC-Sierra, Inc. 2000. All rights reserved.

March 2000

ETT1, Enhanced TT1, and LCS are all trademarks of PMC-Sierra, Inc.
