Released

Datasheet

PMC-Sierra, Inc.

PMC-980616 Issue 3 5 Gbit/s ATM Switch Fabric Element

PM73488 QSE

PM73488

QSE

5 Gbit/s ATM Switch Fabric Element

DATASHEET

Released

Issue 3: June 1999

Long Form Data Sheet


AAL1gator, AAL1gator2, Evil Twin Switching, QRT, QSE, and SATURN are trademarks of PMC-Sierra, Inc.

AMCC is a registered trademark of Applied MicroCircuits Corporation.

i960 is a registered trademark of Intel Corporation.

National Semiconductor is a registered trademark of National Semiconductor Corporation.

Vitesse is a trademark of Vitesse Semiconductor Corporation.

All other brand or product names are trademarks of their respective companies or organizations.

U.S. Patents 5,557,607, 5,570,348, and 5,583,861

Copyright © 1999 PMC-Sierra, Inc.

All Rights Reserved

Released

Datasheet

PMC-Sierra, Inc.

PM73488 QSE

PMC-980616 Issue 3 5 Gbit/s ATM Switch Fabric Element

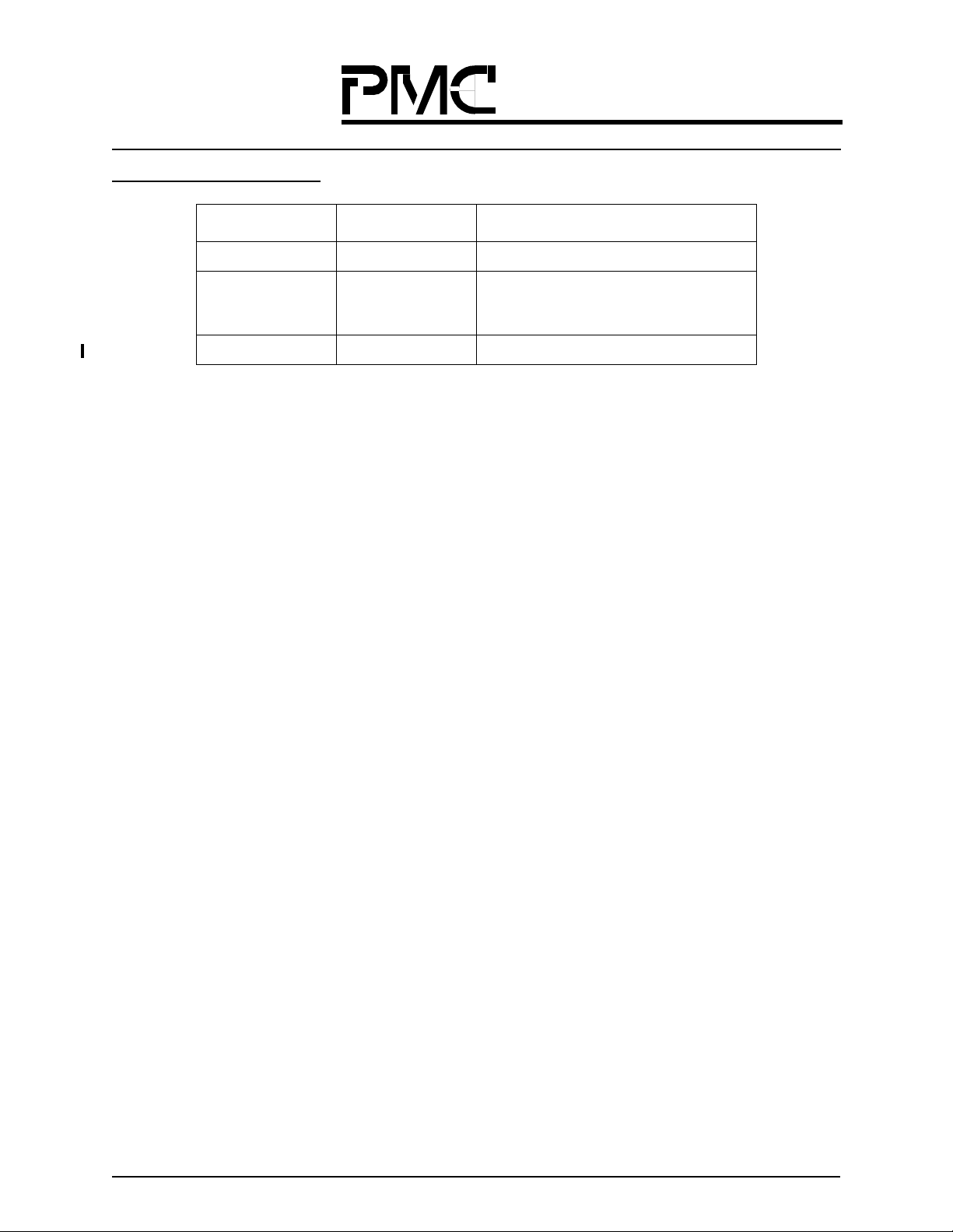

Public Revision History

| Issue Number | Issue Date | Details of Change |
|---|---|---|
| Issue 1 | March 1998 | Creation of Document |
| Issue 2 | October 1998 | Fixed all known typos/errors (e.g. wrong pin-out: RAM_ADD(16) and RAM_PARITY swapped). |
| Issue 3 | June 1999 | Production Release Version |

© 1999

PMC-Sierra, Inc.

105-8555 Baxter Place

Burnaby BC Canada V5A 4V7

Phone: 604.415.6000 FAX: 604.415.6200


Table of Contents

Description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Switching Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Multicast Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Diagnostic/Robustness Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

I/O Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Physical Characteristics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1 How the QSE Fits into Your System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.1 QSE System Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

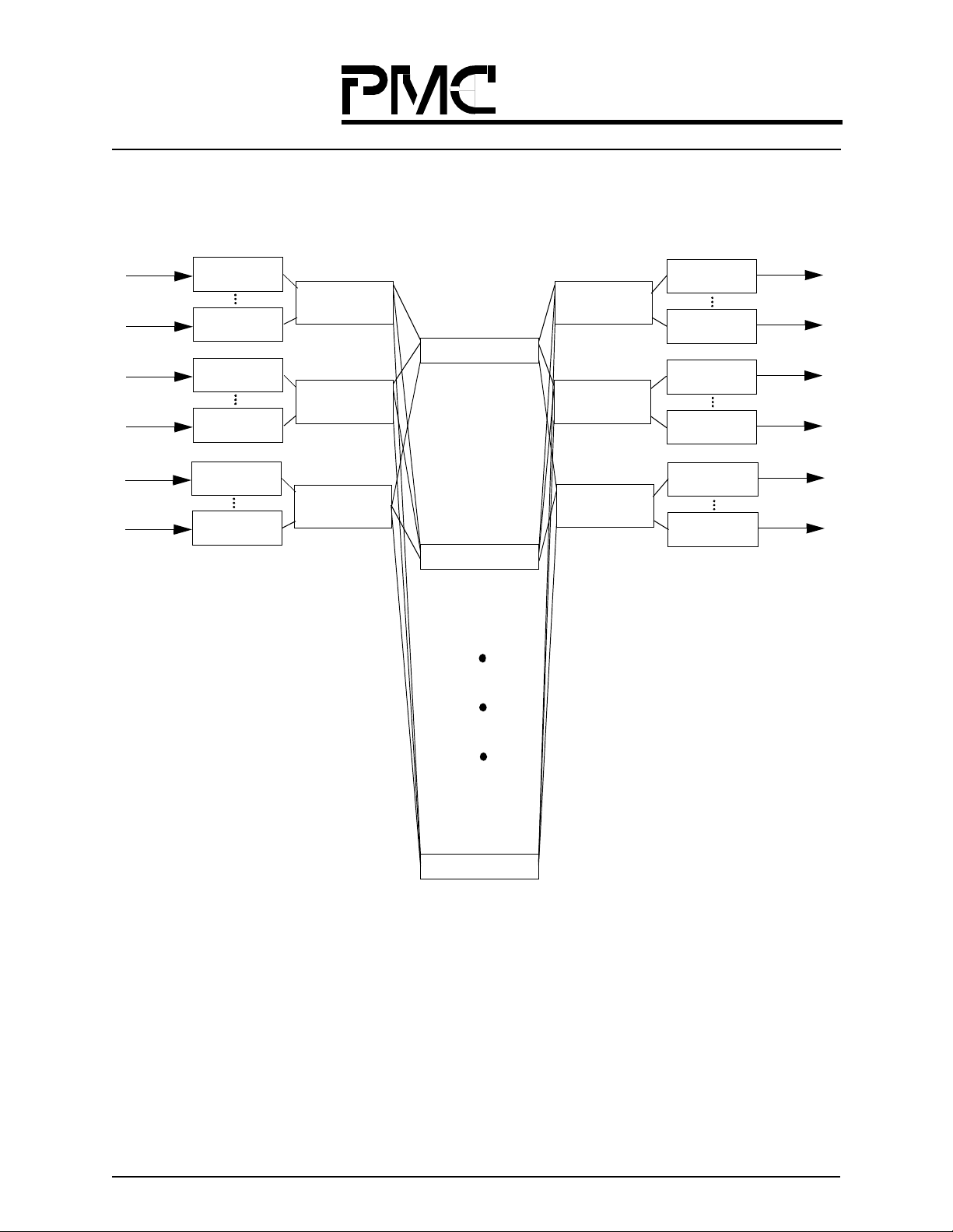

1.2 32 x 32 Switch Application (5 Gbps) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.3 64 x 64 Switch Application (10 Gbps) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.4 5 Gbps to 20 Gbps Application Example - Seamless Growth. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.5 5 Gbps to 160 Gbps Application Example – LAN-to-WAN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2 Theory of Operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.1 Phase Aligners . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.2 Data Drivers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3 Unicast Routing and Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.4 Multicast Cell Flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.4.1 Multicast Queue Engine . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.4.2 Multicast Dequeue Engine. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.5 Arbiter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.6 BP_ACK Drivers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.7 Interdevic e Interconnectability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.8 Network Topologies and the Speedup Factor (SF) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.8.1 Network Philosophy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.8.2 Network Definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

2.8.3 Speedup Factor (SF) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

3 External Port Descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.1 Switch Fabric Port and Interface Description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.1.1 SE_SOC Encodings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.1.2 Data Cell Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

3.1.3 BP_ACK Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

3.2 Data Acknowledge. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

3.3 Microprocessor Interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.4 Multicast SRAM Interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.5 Clocks and Timing Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.6 CTRL_IN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.7 STAT_OUT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.8 Fabric Switch-Over

3.9 Cell Timing/Latency

4 QSE Feature Descriptions

4.1 Distribution Algorithm

4.2 Cell Start Offset Logic

4.2.1 Relation Between External CELL_START and Local CELL_START

4.2.2 Relation Between Local CELL_START and Data Out of the QSE

4.3 General Description of Phase Aligners

4.4 Multicast Backpressure Control

4.5 Multilevel Reset

5 Fault Specification

5.1 Purpose

5.2 Basic Data and BP/ACK Flow

5.3 Fault Detection Mechanisms

5.4 Interface Behavior

5.5 IRT-to-Switch Fabric Interface

5.6 QSE Interface, Receive Data Direction

5.7 QSE Interface, Transmit Data Direction

5.8 Switch Fabric-to-ORT Interface

5.9 Types of Failures and Their Manifestation

6 Signal Descriptions

6.1 Package Diagram

6.2 Signal Locations (Signal Name to Ball)

6.3 Signal Locations (Ball to Signal Name)

6.4 Pin Descriptions

6.4.1 Processor Interface Signals

6.4.2 Multicast RAM Interface Signals

6.4.3 QSE Interface Signals

6.4.4 Boundary Scan Signals

6.4.5 Miscellaneous Signals

6.4.6 Total Pin Count

7 Physical Characteristics

8 Timing Diagrams

8.1 Microprocessor Timing

8.2 RAM Timing

8.3 QSE Interface Timing

8.4 Miscellaneous Timing

9 Microprocessor Ports

9.1 Microprocessor Ports Summary

9.2 Note on Error Detection and Reporting

9.3 Microprocessor Ports Bit Definitions

9.3.1 REVISION

9.3.2 CHIP_MODE

9.3.3 MULTICAST_GROUP_INDEX_REGISTER

9.3.4 MULTICAST_GROUP_VECTOR_REGISTER

9.3.5 MULTICAST_GROUP_OP

9.3.6 UC/MC_FAIRNESS_REGISTER

9.3.7 EXTENDED_CHIP_MODE

9.3.8 MULTICAST_GROUP_INDEX_MSB

9.3.9 INPUT_PORT_ENABLE

9.3.10 OUTPUT_PORT_ENABLE

9.3.11 INPUT_MARKED_CELLS_COUNT

9.3.12 OUTPUT_MARKED_CELLS_COUNT

9.3.13 PARITY_ERROR_PRESENT

9.3.14 PARITY_ERROR_LATCH

9.3.15 PARITY_ERROR_INT_MASK

9.3.16 SE_INPUT_PORT_FAIL_PRESENT

9.3.17 SE_INPUT_PORT_FAIL_LATCH

9.3.18 BP_ACK_FAIL_PRESENT

9.3.19 BP_ACK_FAIL_LATCH

9.3.20 BP_REMOTE_FAIL_PRESENT

9.3.21 BP_REMOTE_FAIL_LATCH

9.3.22 CONTROL_REGISTER

9.3.23 INTERRUPT_STATUS_REGISTER

9.3.24 MULTICAST_AGGREGATE_OUTPUT_AND_INPUT_MODES

9.3.25 UNICAST_AGGREGATE_OUTPUT_MODE

9.3.26 SWITCH_FABRIC_ROW

9.3.27 SWITCH_FABRIC_COLUMN

9.3.28 CELL_START_OFFSET

9.3.29 BP_CONTROL_REGISTER

9.3.30 ACK_PAYLOAD

9.3.31 GANG_DEAD_ACK_PAYLOAD

9.3.32 EXTENDED_SWITCH_MODE

10 JTAG

10.1 JTAG Support

10.2 TAP Controller

10.2.1 Test-Logic-Reset:

10.2.2 Run-Test-Idle:

10.2.3 Capture-DR:

10.2.4 Shift-DR:

10.2.5 Update-DR:

10.2.6 Capture-IR:

10.2.7 Shift-IR:

10.2.8 Update-IR:

10.3 Boundary Scan Instructions

10.3.1 BYPASS

10.3.2 EXTEST

10.3.3 SAMPLE

10.3.4 IDCODE

10.3.5 STCTEST

10.4 Boundary Scan Pin Order


List of Figures

Figure 1. QSE Interface Block Diagram

Figure 2. QSE System Overview

Figure 3. 32 x 32 Switch Application (5 Gbps)

Figure 4. 64 x 64 Switch Application (10 Gbps)

Figure 5. 5 Gbps ATM Switch Using 8 QRTs and 1 QSE

Figure 6. 10 Gbps ATM Switch Using 16 QRTs and 2 QSEs

Figure 7. 20 Gbps ATM Switch Using 32 QRTs and 4 QSEs

Figure 8. 5 Gbps to 160 Gbps Switches Modeled Using Only Two Cards

Figure 9. 5 Gbps ATM Switch

Figure 10. 10 Gbps ATM Switch

Figure 11. 15 Gbps ATM Switch

Figure 12. 20 Gbps ATM Switch

Figure 13. Basic QSE Flow

Figure 14. Routing Bits Rotation for Unicast Traffic, Gang Mode of Four

Figure 15. Example of Multicast Cell Handling in the QSE

Figure 16. Ideal Distributed Network

Figure 17. More Realistic Distributed Network

Figure 18. “Large” Distributed Network (Will Not Work Well with Banyan Alone)

Figure 19. High-Level QRT/QSE System

Figure 20. (3) x 1 - 5 Gbps System

Figure 21. (5) x 4 - 20 Gbps System

Figure 22. (1,3) x 1 - 10 Gbps System

Figure 23. Randomizer (with Evil Twin Switching Algorithm)

Figure 24. Network Needs to be Run Faster than the Line Rate

Figure 25. Definition of the Speedup Factor

Figure 26. How to Use the SF to Select Favorable Networks

Figure 27. SE_SOC Encodings

Figure 28. Expanded SE_SOC Encodings

Figure 29. BP_ACK Encodings

Figure 30. QSE Cell-Level Timing

Figure 31. QSE Switch Latency

Figure 32. Basic Forward and Backward Data Path

Figure 33. Basic Data Path (SE_D_OUT/IN and SE_SOC_OUT/IN in Forward Path, BP_ACK_OUT/IN in Backward Path)

Figure 34. 596-Ball Enhanced Plastic BGA Physical Dimensions Diagram (Top View)

Figure 35. 596-Ball Enhanced Plastic BGA Physical Dimensions Diagram (Bottom View)

Figure 36. QSE Pinout Block Diagram

Figure 37. Microprocessor Timing

Figure 38. RAM Interface

Figure 39. QSE Bit-Level Timing

Figure 40. Reset Timing

Figure 41. JTAG Timing

Figure 42. Boundary Scan Architecture

Figure 43. TAP Controller Finite State Machine


List of Tables

Table 1. BP_CONTROL_REGISTER; Threshold Control Bits for Each Set of 32 Buffers

Table 2. Speedup Factor (1-Stage Networks)

Table 3. Speedup Factor (3-Stage Networks)

Table 5. Regular Cell Format

Table 6. PM73488 Mode Idle Cell Format

Table 7. Information Bit Encoding

Table 8. Data Latencies

Table 9. Failure Conditions, IRT-to-Switch Fabric Interface

Table 10. Failure Conditions, QSE Receive Interface

Table 11. Failure Conditions, QSE Transmit Interface

Table 12. Failure Conditions, Switch Fabric-to-ORT Interface

Table 13. Faults

Table 14. Signal Locations (Signal Name to Ball)

Table 15. Signal Locations (Ball to Signal Name)

Table 16. Processor Interface Signals (21 Signal Pins)

Table 17. Multicast RAM Interface Signals (39 Signal Pins)

Table 18. QSE Interface Signals (364 Signal Pins)

Table 19. Boundary Scan Signals (8 Signal Pins)

Table 20. Miscellaneous Signals (8 Signal Pins)

Table 21. Pin Allocations

Table 22. Absolute Maximum Ratings

Table 23. Recommended Operating Conditions

Table 24. DC Operating Conditions

Table 25. Capacitance

Table 26. Estimated Package Thermal Characteristics

Table 27. Microprocessor Timing

Table 28. RAM Interface Timing

Table 29. CTRL_IN, STAT_OUT, TEST_MODE and DEBUG Timing

Table 30. Valid Window Timing

Table 31. Microprocessor Ports Summary

Table 32. Boundary Scan Pin Order

Table 33. Standard Abbreviations

Table 34. Ordering Information


WAC- 488-B


Product Overview

DESCRIPTION

The PM73488 (QSE) is an advanced communications device that enables the implementation of high-performance switching systems. The QSE is a 32 × 32 cell-based switch element with a total sustainable bandwidth of 5 Gb/s. (The peak, or raw, bandwidth is considerably higher: about 8 Gb/s.) The QSE is designed to be used with other QSEs as part of a larger switch fabric. Various QSE combinations allow fabrics with sustained capacities ranging from 5 Gb/s (one QSE) to 160 Gb/s. The QSE is not ATM specific; however, when the QSE is used to switch ATM cells, the QSE cell is large enough to allow efficient direct mapping between QSE cells and ATM cells.

Multistage QSE fabrics (Delta-Reverse Delta configuration) have rich connectivity, with multiple paths between each source/destination pair. A QSE fabric performs cut-through unicast switching and uses randomization and the Evil Twin algorithm to fully utilize these multiple paths and avoid the buildup of internal hot spots. Randomization, in combination with multiple routing paths, allows graceful degradation of QSE fabric performance if internal links fail. To detect failed links, the QSE maintains and checks liveness patterns on input and output ports in hardware, and automatically routes around ports that die.

QSE data ports are 6 bits wide: a 4-bit-wide 66 MHz data path, a 1-bit start-of-cell indication, and a 1-bit acknowledgment signal. Each port contains "Phase Aligners" that recover the clock for that port, removing the need to synchronize all data to a single global clock.

When switching unicast traffic in a multistage fabric (one to three stages), the first nibble of a cell comes out of the last QSE stage before the last nibble of that cell enters the first stage. The cell thereby traverses the entire fabric in one cell time. If the cell successfully reaches its destination, the ("egress") device accepting the cell from the last-stage QSE has the opportunity to send a four-bit "Ack Information Packet" back to the source indicating what it did with the cell. At its simplest, the egress device can send back one pattern to indicate that the cell was accepted and another to indicate that the cell was dropped due to, say, buffer overflow.

A cell can also be dropped inside the QSE fabric, for example because of a collision with another cell. The QSE classifies lost cells by one of three causes (collision, all possible outputs dead, or parity error) and generates an "Ack Information Packet" back to the source to communicate the event. In each QSE, the 4-bit pattern in the information packet can be configured in software independently for each of the three cases. Because each QSE can be programmed separately, the patterns can even be set up so that the source knows where the cell was dropped.

The information provided by the "Ack Information Packets" can be used by the device injecting cells into the first QSE stage to decide how to handle the cells. At its simplest, the device can resend cells that did not get through. A more sophisticated algorithm might also take into account where the cell was lost and the behavior of the Evil Twin algorithm when deciding when to resend the cell; for example, if the cell was dropped due to output congestion, it might make sense to back off on cells to that output.
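To make the source-side handling concrete, here is a minimal C sketch of how an ingress device might decode a 4-bit Ack Information Packet and pick an action. The pattern table, the outcome and action names, and the choice to back off only when the egress device reports a drop are all hypothetical; the datasheet leaves the actual 4-bit values to software. Note that 16 patterns are enough to give each of the three drop causes a distinct code at each of three stages (9 codes) plus the two egress codes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Outcome classes. The three QSE drop causes come from the text; the names
 * and the two egress classes are illustrative. */
typedef enum {
    ACK_UNKNOWN,
    ACK_EGRESS_ACCEPTED,   /* egress device accepted the cell */
    ACK_EGRESS_DROPPED,    /* egress device dropped it, e.g. buffer overflow */
    ACK_QSE_COLLISION,     /* lost to another cell inside the fabric */
    ACK_QSE_OUTPUTS_DEAD,  /* all possible outputs dead */
    ACK_QSE_PARITY         /* parity error */
} ack_outcome;

typedef enum { ACTION_DONE, ACTION_RESEND, ACTION_BACK_OFF } src_action;

/* One entry per 4-bit pattern that software programmed into the fabric. */
typedef struct {
    uint8_t pattern;       /* 4-bit Ack Information Packet value */
    ack_outcome outcome;
    int stage;             /* 1..3 = QSE stage, 0 = egress device */
} ack_entry;

/* Translate a received nibble back into what happened and where. */
static ack_entry decode_ack(const ack_entry *table, size_t n, uint8_t nibble)
{
    ack_entry unknown = { nibble, ACK_UNKNOWN, -1 };
    assert(nibble <= 0xF);
    for (size_t i = 0; i < n; i++)
        if (table[i].pattern == nibble)
            return table[i];
    return unknown;
}

/* Simplest policy: resend whatever did not get through. Backing off when the
 * egress device reports a drop is an assumed stand-in for "output congestion". */
static src_action choose_action(ack_outcome o)
{
    switch (o) {
    case ACK_EGRESS_ACCEPTED: return ACTION_DONE;
    case ACK_EGRESS_DROPPED:  return ACTION_BACK_OFF;
    default:                  return ACTION_RESEND;
    }
}
```

A real policy would also use the decoded `stage` field, for example to treat drops in the last stage differently from drops in the first.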

For unicast traffic, part of the switch bandwidth is used to resend cells that did not make it through the first time. This implies that sustained throughput is less than peak switching capacity. The amount of bandwidth required for resending cells, and the effect of resending on latency and Cell Delay Variation (CDV), have been studied extensively with analytical models of the fabric. These results have then been cross-checked against simulations of software models of the fabric. This data is crucial for designing fabrics that can efficiently support guaranteed Quality of Service (QoS) requirements. The recommended QSE fabric configurations for high-quality switching take these results into account; for example, the 3-stage fabric with 160 Gb/s sustained throughput has a peak capacity of 256 Gb/s (60% margin).

The QSE fabric is store-and-forward for multicast traffic. Cell replication is performed in an optimal tree-based manner: replication is done as far downstream as possible, and each QSE contains cell buffers to hold multicast cells. Multipriority backpressure feedback is used to control the flow of multicast cells through the fabric.


FEATURES

Switching Algorithm

• Supports blocking resolution in the switch fabric.

• Guarantees a lower bound on switch performance using a patented randomization algorithm called Evil Twin Switching.

• Determines routes using specified bits in the header (self-routing switch fabric) for unicast traffic.

• Determines output groupings using a lookup table for multicast traffic.

• Allows output ports to be combined in groups of 1, 2, 4, 8, 16, or 32 for unicast traffic.

• Allows output ports to be combined in groups of 1, 2, or 4 for multicast traffic.

Multicast Support

• Supports optimal tree-based multicast replication in the switch fabric.

• Supports 512 internal multicast groups, expandable to 256K with external SRAM.

• Provides 64 internal cell buffers for multicast cells.

Diagnostic/Robustness Features

• Checks the header parity.

• Counts tagged cells.

• Checks for connectivity and stuck-at faults on all switch fabric interconnects.

I/O Features

• Provides 32 switch fabric interfaces with integrated phase aligner clock recovery circuitry.

• Provides a Start-Of-Cell (SOC) output per four switch element interfaces.

• Provides an external 8-bit Synchronous SRAM (SSRAM) interface for multicast group expansion.

• Provides a demultiplexed address/data CPU interface.

• Provides an IEEE 1149.1 (JTAG) boundary scan test bus.

Physical Characteristics

• 3.3 V supply voltage.

• 5 V tolerant inputs.

• 596-pin Enhanced Plastic Ball Grid Array (EPBGA) package.

• Operates from a single 66 MHz clock.


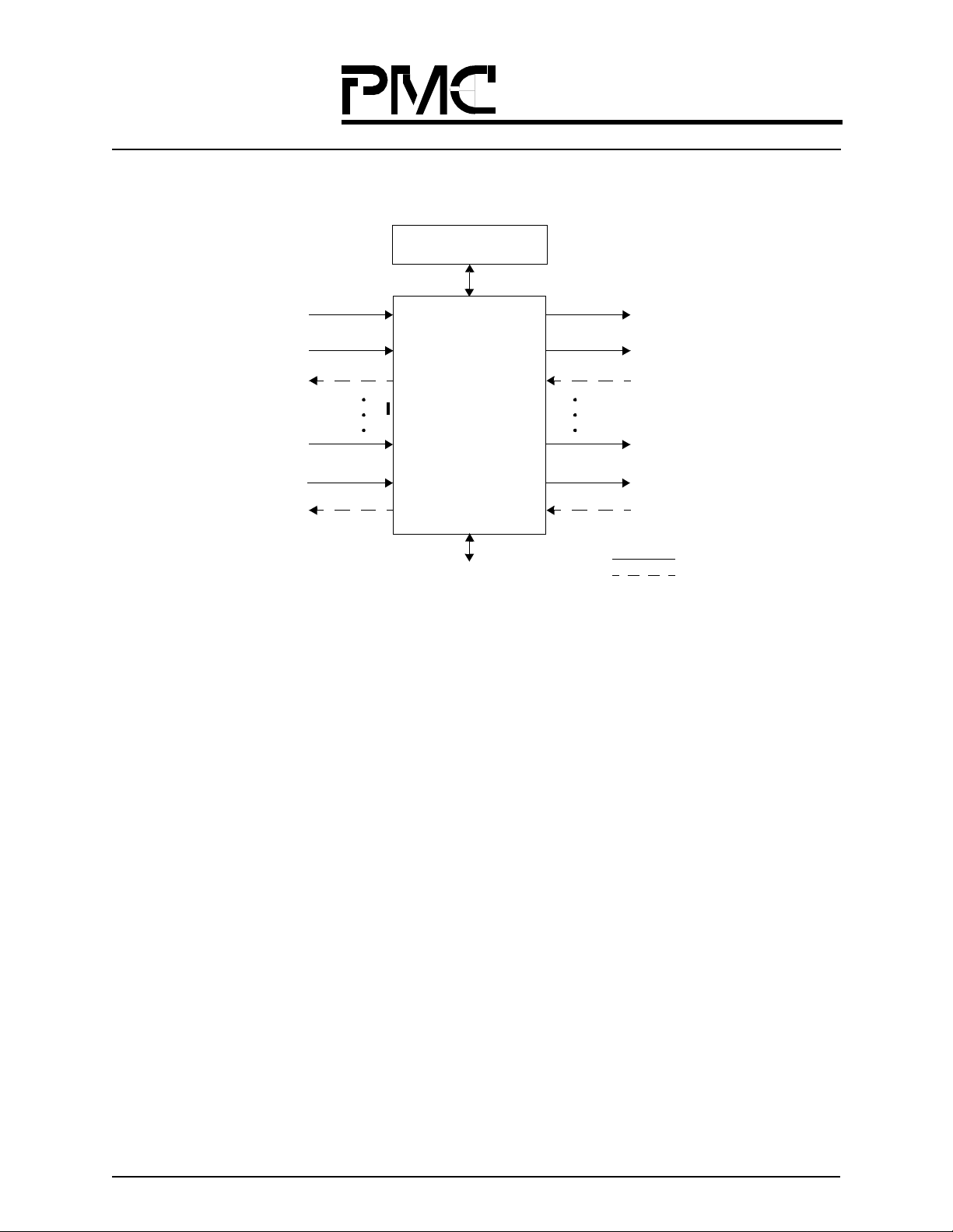

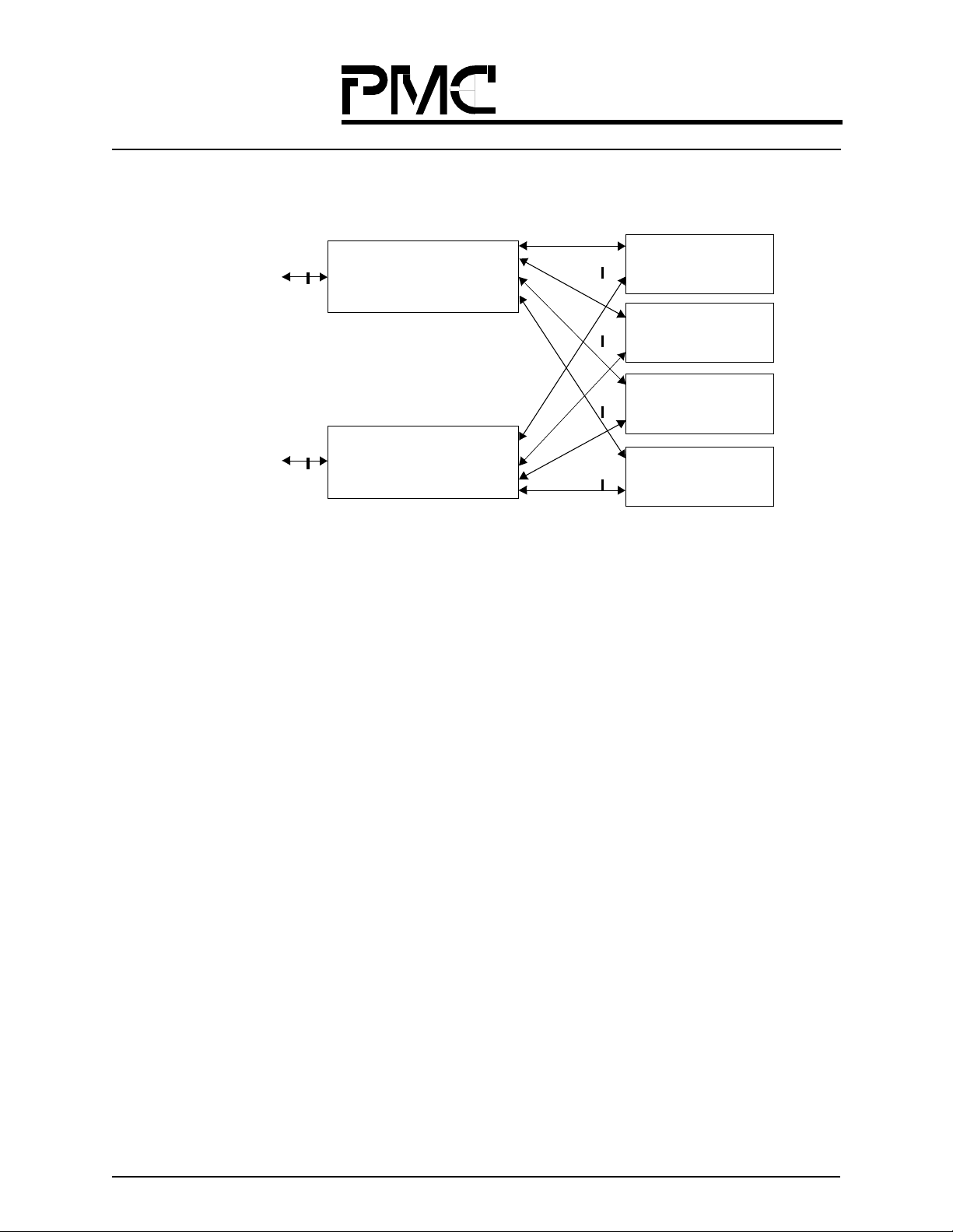

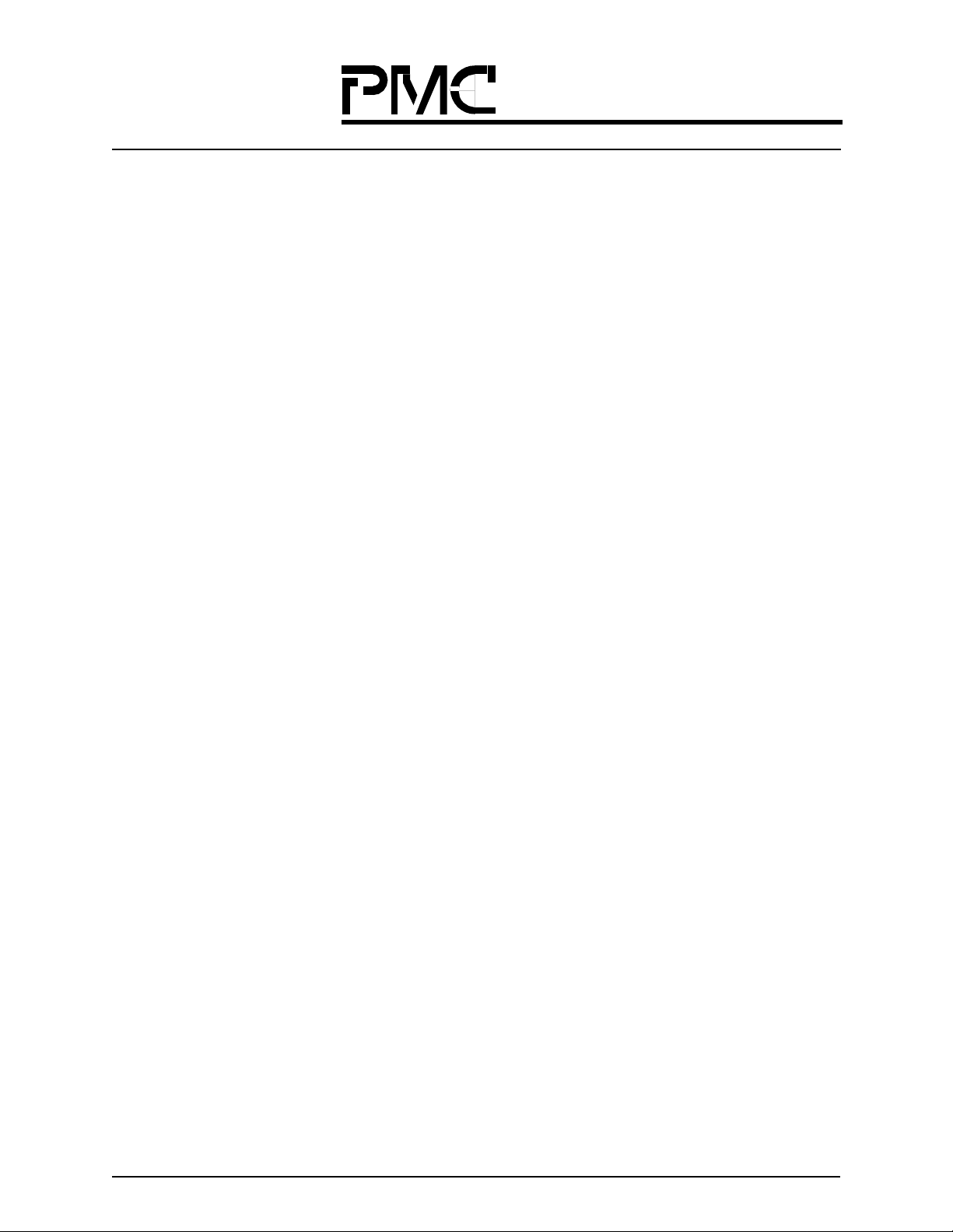

Figure 1 shows a QSE system block diagram.

Figure 1. QSE Interface Block Diagram (input ports 0 to 31, each with SE_SOC_IN, data from QRTs or QSEs, and NACK to QRTs or QSEs; output ports in groups of four, from SE_SOC_OUT(0) for ports 0:3 to SE_SOC_OUT(7) for ports 28:31, each with data to and NACK from QRTs or QSEs; optional multicast SSRAM; host interface)


1 HOW THE QSE FITS INTO YOUR SYSTEM

The QSE, together with the QRT, supports a wide range of high-performance ATM switching systems. These systems range in size from 5 Gbps to 160 Gbps and can be built to scale with linear cost.

Another key feature of the QSE/QRT architecture is that it is exceptionally fault-tolerant, both in the switch fabric and in the UTOPIA interface.

This section contains a quick overview of the QSE and several example applications:

• a 5 Gbps switch using PM73487s and a PM73488,

• a 10 Gbps switch using PM73487s and PM73488s,

• a switch architecture using PM73487s and PM73488s that scales from 5 Gbps to 20 Gbps,

• a switch architecture using PM73487s and PM73488s that scales from 5 Gbps to 160 Gbps.

1.1 QSE System Overview

The QSE is a switch element; combinations of QSEs allow switch fabric implementations that span from 5 Gbps to 160 Gbps. A single QSE provides 5 Gbps of sustainable bandwidth; its raw, or peak, bandwidth is 8 Gbps. (Thus the QSE has a built-in speed-up factor of 8/5 = 1.6.)

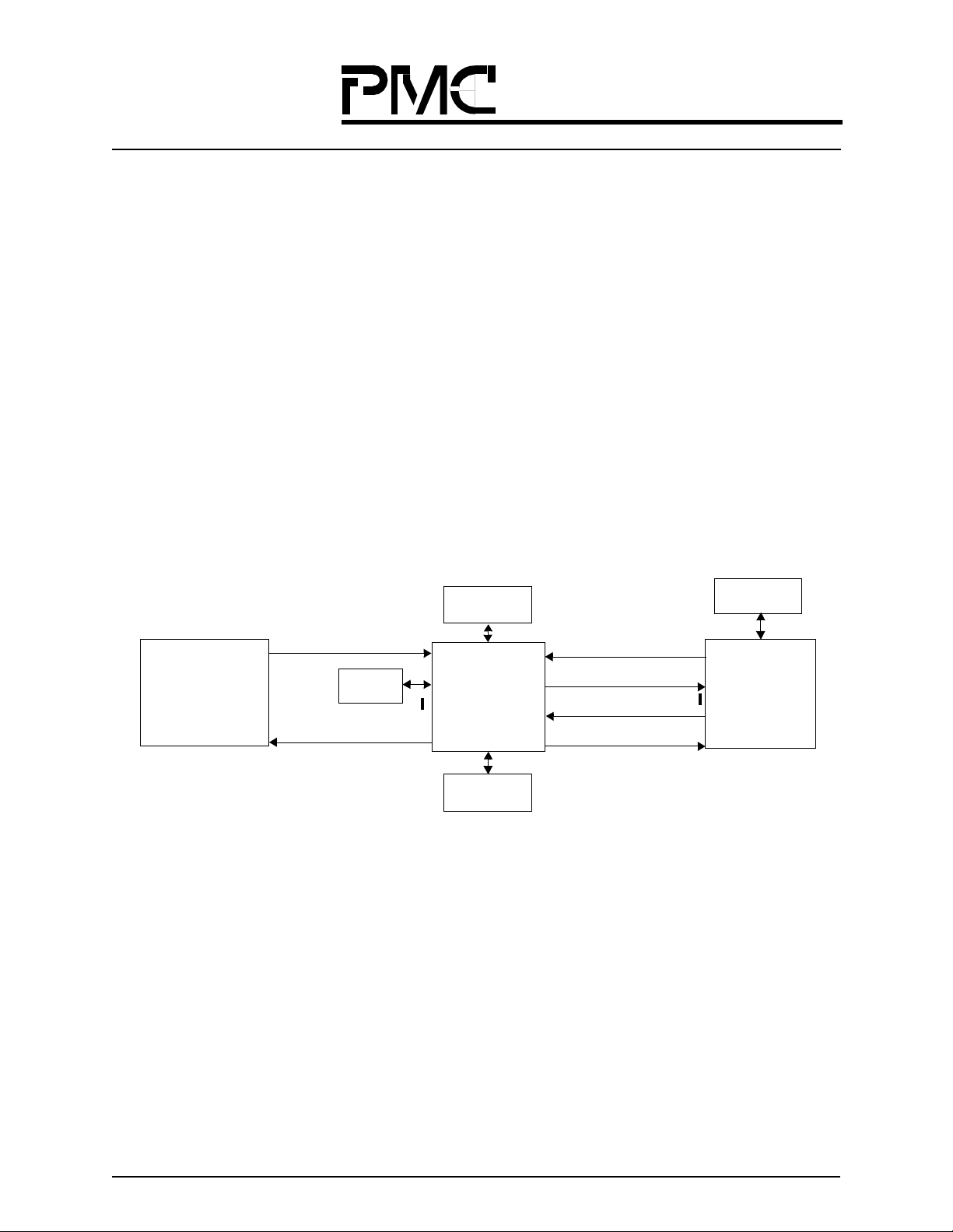

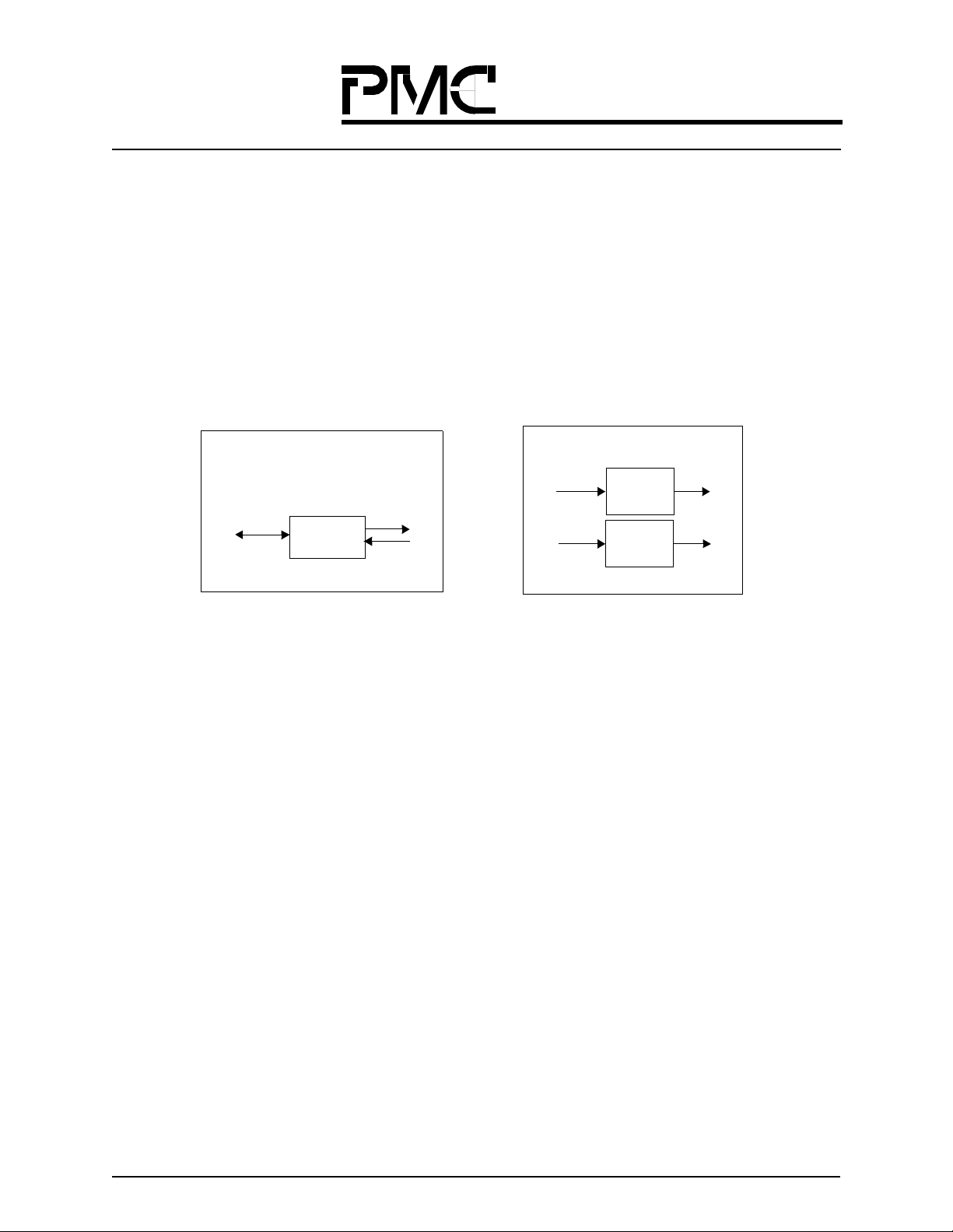

The QSE has 32 input ports and 32 output ports. Each port is a 66 MHz, 6-bit interface, of which 4 bits are data and 2 are control. Each port can be connected to another QSE or to a QRT. Figure 2 shows a QSE connected to a QRT.
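The 8 Gbps peak figure follows directly from the port parameters: 32 ports, each carrying 4 data bits per 66 MHz clock. The short C sketch below reproduces that arithmetic; the function names are illustrative only.

```c
#include <assert.h>

/* Port parameters taken from the text. */
enum { QSE_PORTS = 32, DATA_BITS_PER_PORT = 4 };
static const double PORT_CLOCK_MHZ = 66.0;

/* Raw (peak) data bandwidth across all 32 ports, in Gbit/s. */
static double qse_raw_gbps(void)
{
    return QSE_PORTS * DATA_BITS_PER_PORT * PORT_CLOCK_MHZ / 1000.0;
}

/* Ratio of the raw rate to the sustained rate. */
static double qse_speedup(double raw_gbps, double sustained_gbps)
{
    assert(sustained_gbps > 0.0);
    return raw_gbps / sustained_gbps;
}
```

The exact product is 32 × 4 × 66 MHz = 8.448 Gbps; dividing the rounded 8 Gbps by the 5 Gbps sustained rate gives the quoted speed-up of 1.6.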

Figure 2. QSE System Overview (the QRT (PM73487) connects to the physical and/or adaptation layers through Receive and Transmit UTOPIA Level 2 interfaces and uses input cell SDRAM, output cell SDRAM, and a control SSRAM; the QRT and QSE (PM73488) exchange receive nibble data, receive feedback, transmit nibble data, and transmit feedback; the QSE has a multicast SRAM)


1.2 32 x 32 Switch Application (5 Gbps)

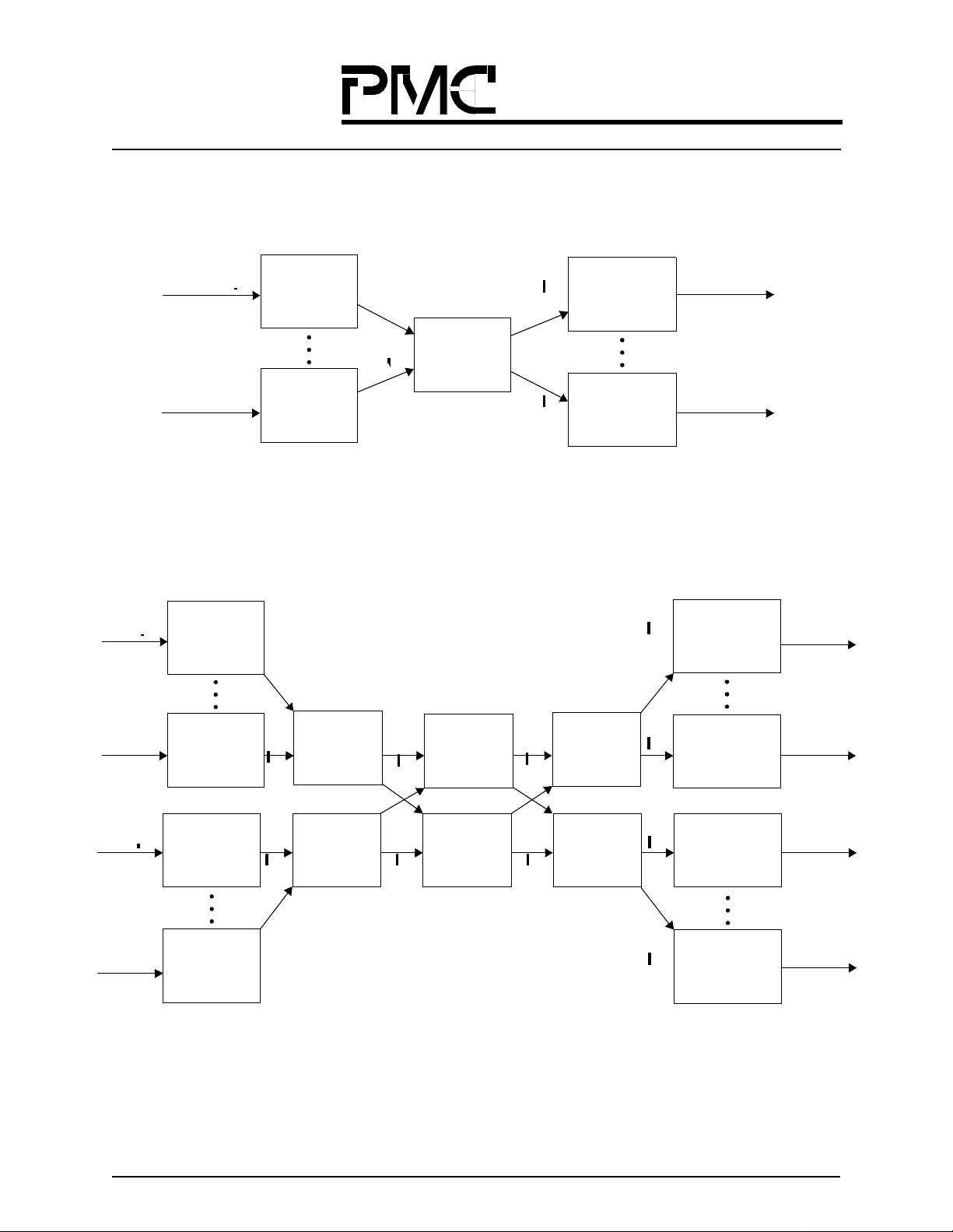

Figure 3 shows a basic 32 × 32 switch application (5 Gbps) using one QSE and eight QRTs.

Figure 3. 32 x 32 Switch Application (5 Gbps) (the receive inputs of QRT #1 to QRT #8 (PM73487), each with a 622 Mbps aggregate Receive UTOPIA Level 2 interface, connect to the QSE (PM73488) over ×4 links; the QSE connects over ×4 links to the transmit outputs of QRT #1 to QRT #8, each with a 622 Mbps aggregate Transmit UTOPIA Level 2 interface)

1.3 64 x 64 Switch Application (10 Gbps)

Figure 4 shows a 64 × 64 switch application (10 Gbps) using six QSEs and 16 QRTs. This application uses QSEs in a 3-stage fabric. A system of this size can be implemented in a single 19-inch rack.

Figure 4. 64 x 64 Switch Application (10 Gbps) (the receive inputs of QRT #1 to QRT #16 (PM73487), each with a 622 Mbps aggregate Receive UTOPIA Level 2 interface, connect over ×4 links to six QSEs (PM73488) arranged in three stages and interconnected with ×16 links; the last stage connects over ×4 links to the transmit outputs of QRT #1 to QRT #16, each with a 622 Mbps aggregate Transmit UTOPIA Level 2 interface)


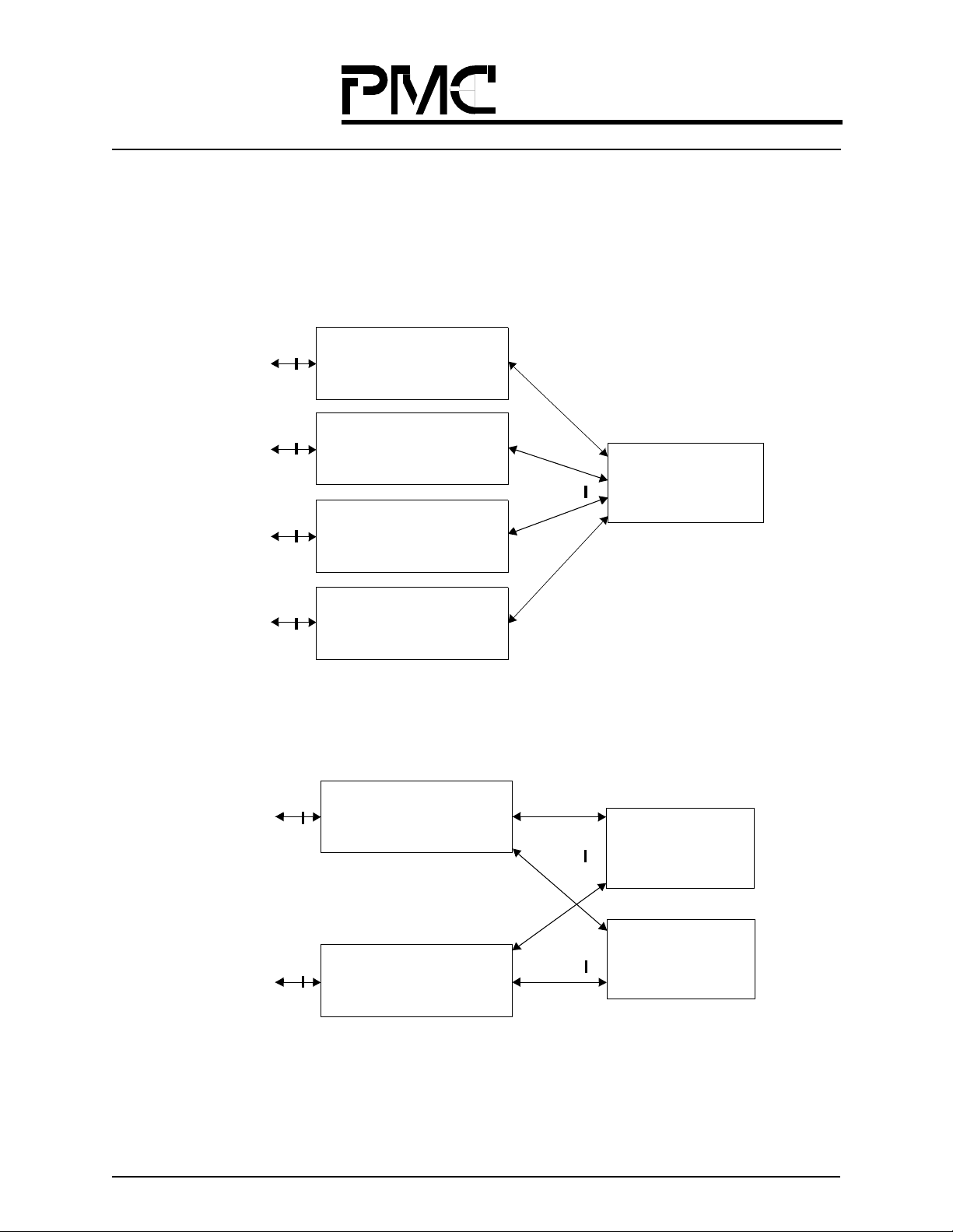

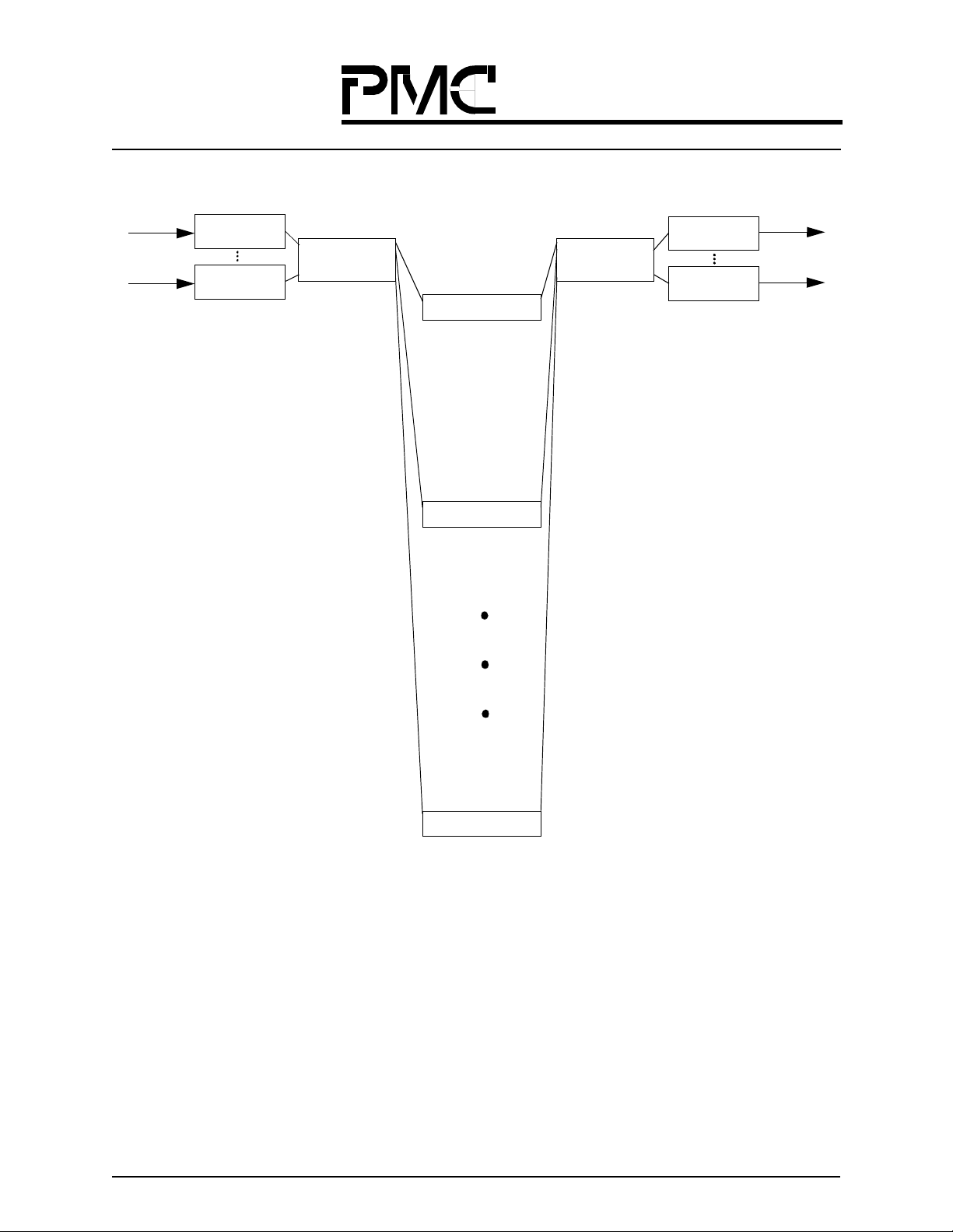

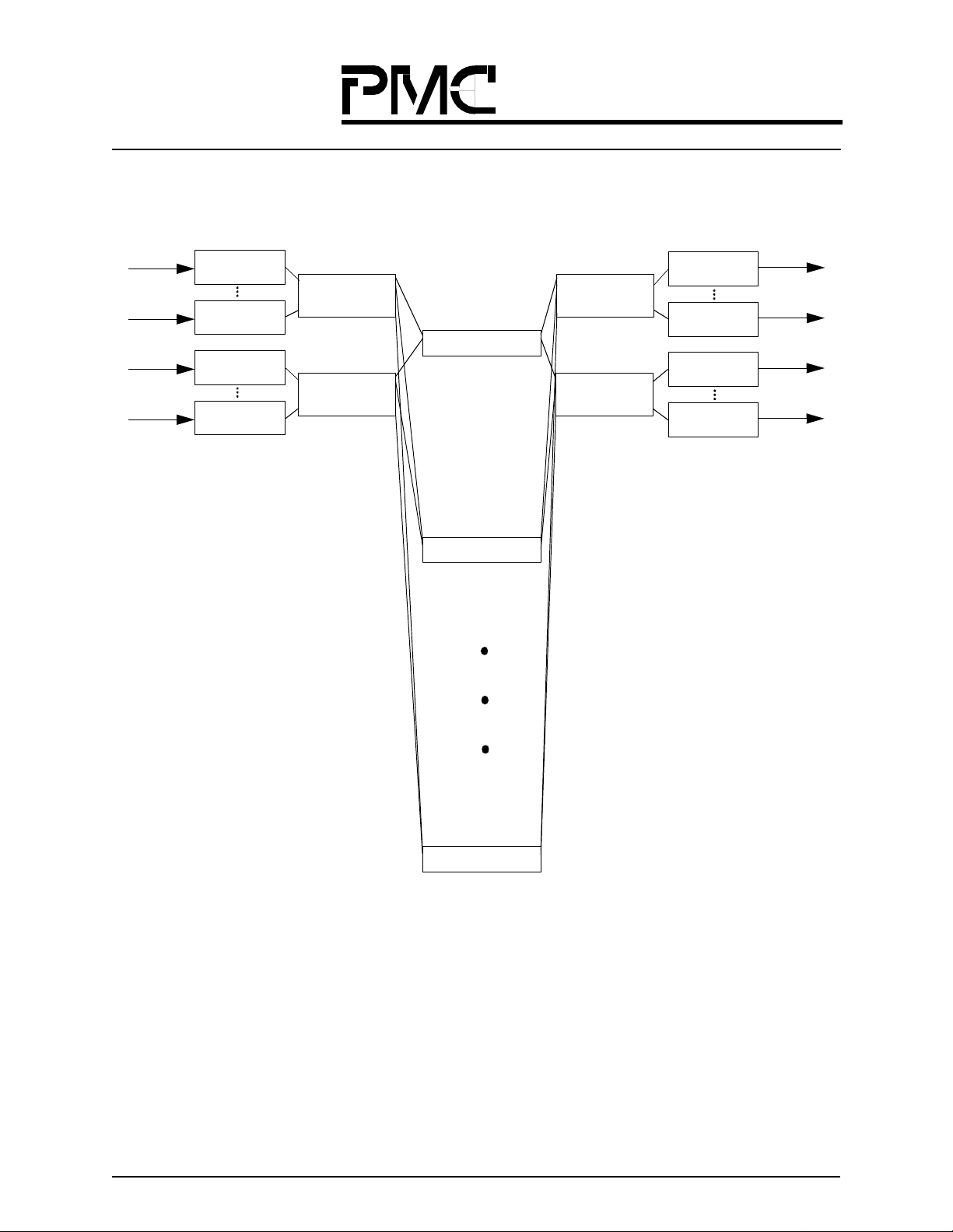

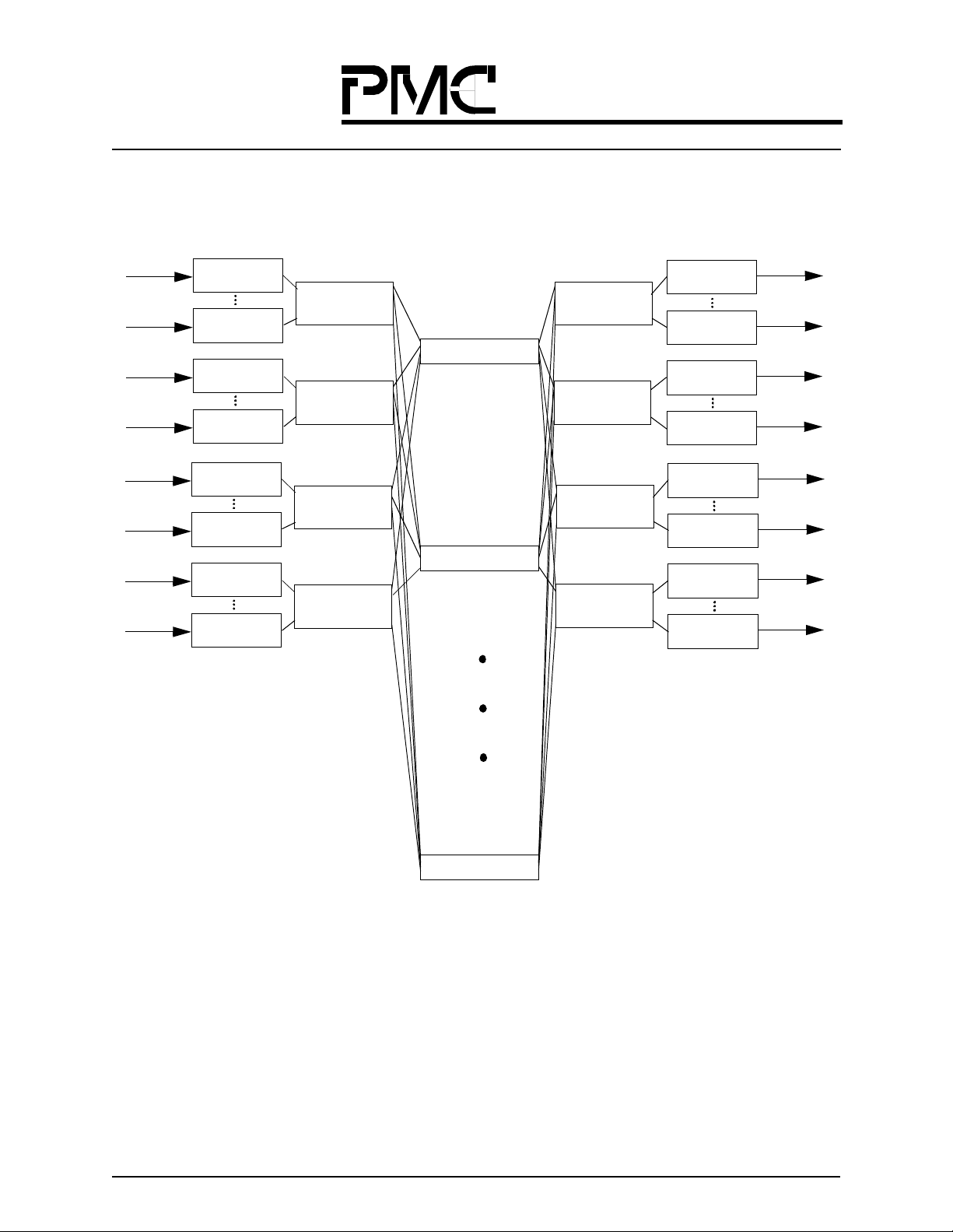

1.4 5 Gbps to 20 Gbps Application Example - Seamless Growth

Figure 5 illustrates the modularity of the QSE and QRT architecture. A 5 Gbps system can be built immediately (as shown in Figure 5), then upgraded to 10 Gbps (as shown in Figure 6) or to 20 Gbps (as shown in Figure 7 on page 19) with the QSE and the QRT. Since systems composed of QSEs and QRTs in this example are based on a single-stage switch fabric, the per-port cost remains the same for each system.

Figure 5. 5 Gbps ATM Switch Using 8 QRTs and 1 QSE (four port cards, each with two QRTs (PM73487s) serving eight 155 Mbps interfaces, connected to one switch card with one QSE (PM73488))

Figure 6. 10 Gbps ATM Switch Using 16 QRTs and 2 QSEs (port cards 1 to 8, each with two QRTs (PM73487s) serving eight 155 Mbps interfaces, connected to two switch cards, each with one QSE (PM73488))


Figure 7. 20 Gbps ATM Switch Using 32 QRTs and 4 QSEs (port cards 1 to 16, each with two QRTs (PM73487s) serving eight 155 Mbps interfaces, connected to four switch cards, each with one QSE (PM73488))


1.5 5 Gbps to 160 Gbps Application Example – LAN-to-WAN

A powerful application of the QRT and QSE devices is the creation of modules that can be used in a range of switches, with only the interconnection changing between different sizes. ATM switches from 5 Gbps to 160 Gbps can be realized with only two unique cards. A port card has one QRT, and a switch card has two QSEs. The switch fabric consists of three stages, each with 32 QSEs (or 16 switch cards). To plan for future scalability, the middle stage must be built in up front. This is a one-time cost. Then, to scale in 5 Gbps increments, one switch card and its accompanying eight port cards are added. Finer bandwidth scaling is possible by populating the additional switch card with port cards as needed (in increments of 622 Mbps). With this switch fabric topology, scaling is possible up to 160 Gbps. Once the initial middle-stage cost has been incurred, the per-port cost for 5 Gbps through 160 Gbps systems remains constant.
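The growth rule above can be written as a small formula. In this C sketch (all names hypothetical), `steps` is the number of 5 Gbps increments; each step adds one switch card (one stage 1 QSE plus one stage 3 QSE) and eight port cards on top of the 16 middle-stage switch cards that are built in up front. Its results match the counts given for Figures 9 through 12 (for example, 17 switch cards and 34 QSEs at 5 Gbps), and at 32 steps it reproduces three full stages of 32 QSEs.

```c
#include <assert.h>

/* Card counts for the two-card fabric: port cards hold one QRT each,
 * switch cards hold two QSEs each. */
typedef struct {
    int port_cards;
    int switch_cards;
    int qses;
} fabric_size;

enum { MIDDLE_STAGE_CARDS = 16, PORT_CARDS_PER_STEP = 8, MAX_STEPS = 32 };

/* steps = sustained capacity / 5 Gbps, from 1 (5 Gbps) to 32 (160 Gbps). */
static fabric_size fabric_for_steps(int steps)
{
    fabric_size f;
    assert(steps >= 1 && steps <= MAX_STEPS);
    f.port_cards = PORT_CARDS_PER_STEP * steps;
    f.switch_cards = MIDDLE_STAGE_CARDS + steps;  /* fixed middle stage + one card per step */
    f.qses = 2 * f.switch_cards;
    return f;
}
```

For example, `fabric_for_steps(4)` gives the 20 Gbps system of Figure 12: 32 port cards, 20 switch cards, and 40 QSEs.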


Figure 8. 5 Gbps to 160 Gbps Switches Modeled Using Only Two Cards (port card: one QRT (PM73487) with one UTOPIA Level 2 interface; switch card: two QSEs (PM73488), each with ×32 connections on its input and output sides)


Figure 9. 5 Gbps ATM Switch (port cards #1 to #8, shown as Rx input and Tx output at 622 Mbps each; switch card #17, shown as one stage 1 QSE and one stage 3 QSE with ×2 links; middle-stage switch cards #1 to #16)

Figure 9 shows a 5 Gbps ATM switch using 8 port cards (8 QRTs) and 17 switch cards (34 QSEs). The middle stage is composed of 16 switch cards. The 5 Gbps bandwidth is achieved by adding switch card #17 (which is depicted using two boxes: one stage 1 QSE and one stage 3 QSE) and eight port cards (each of which is depicted using two boxes: one for the Rx input side and one for the Tx output side). Lines between stage 1 and stage 2, and between stage 2 and stage 3, switch cards represent two sets of wires, one to each of the QSEs in the middle-stage switch cards.


Figure 10 shows a 10 Gbps ATM switch using 16 port cards (16 QRTs) and 18 switch cards (36 QSEs). Here, another switch card and eight port cards have been added to the 5 Gbps switch depicted in Figure 9.

Figure 10. 10 Gbps ATM Switch (port cards #1 to #16, shown as Rx input and Tx output at 622 Mbps each; switch cards #17 and #18, each shown as one stage 1 QSE and one stage 3 QSE with ×2 links; middle-stage switch cards #1 to #16)


Figure 11 shows a 15 Gbps ATM switch using 24 port cards (24 QRTs) and 19 switch cards (38 QSEs). Here, once again, another switch card and eight port cards have been added.

Figure 11. 15 Gbps ATM Switch (port cards #1 to #24, shown as Rx input and Tx output at 622 Mbps each; switch cards #17 to #19, each shown as one stage 1 QSE and one stage 3 QSE with ×2 links; middle-stage switch cards #1 to #16)


Figure 12 shows a 20 Gbps ATM switch composed of 32 port cards (32 QRTs) and 20 switch cards (40 QSEs). By adding further sets of one switch card and eight port cards in the same manner, this system can scale up to 160 Gbps.

Figure 12. 20 Gbps ATM Switch (port cards #1 to #32, shown as Rx input and Tx output at 622 Mbps each; switch cards #17 to #20, each shown as one stage 1 QSE and one stage 3 QSE with ×2 links; middle-stage switch cards #1 to #16)


2 THEORY OF OPERATION

Multiple PM73488 QSEs can be combined to build a scalable switch fabric. The QSE switches data in the form of 118-nibble cells. The QSE has 32 input ports and 32 output ports, each containing a nibble-wide data interface, an SOC signal, and a backpressure/data-acknowledge signal.

Groups of 1, 2, 4, 8, 16, or 32 ports can be internally configured to act as a single aggregate port (also called a gang) for unicast traffic. For multicast traffic, inputs and outputs can be grouped together in groups of 1, 2, or 4 ports. The input multicast grouping mode, the output multicast grouping mode, and the unicast grouping mode do not need to be the same. Also, the QSE can be configured as a single 32-input × 32-output switch.

The cell flow through the QSE has two separate data paths: one for unicast cells and another for multicast cells. Unicast cells are routed from one end of the switch fabric to the other in a single cell time; in other words, unicast cells are never stored in the switch fabric, only at its ingress and egress. Multicast cells are routed in a store-and-forward manner. Each QSE can store up to 64 multicast cells.

The QRT, used as the interface to a switch fabric constructed with QSEs, allows the construction of ATM switches of up to 160 Gbps.

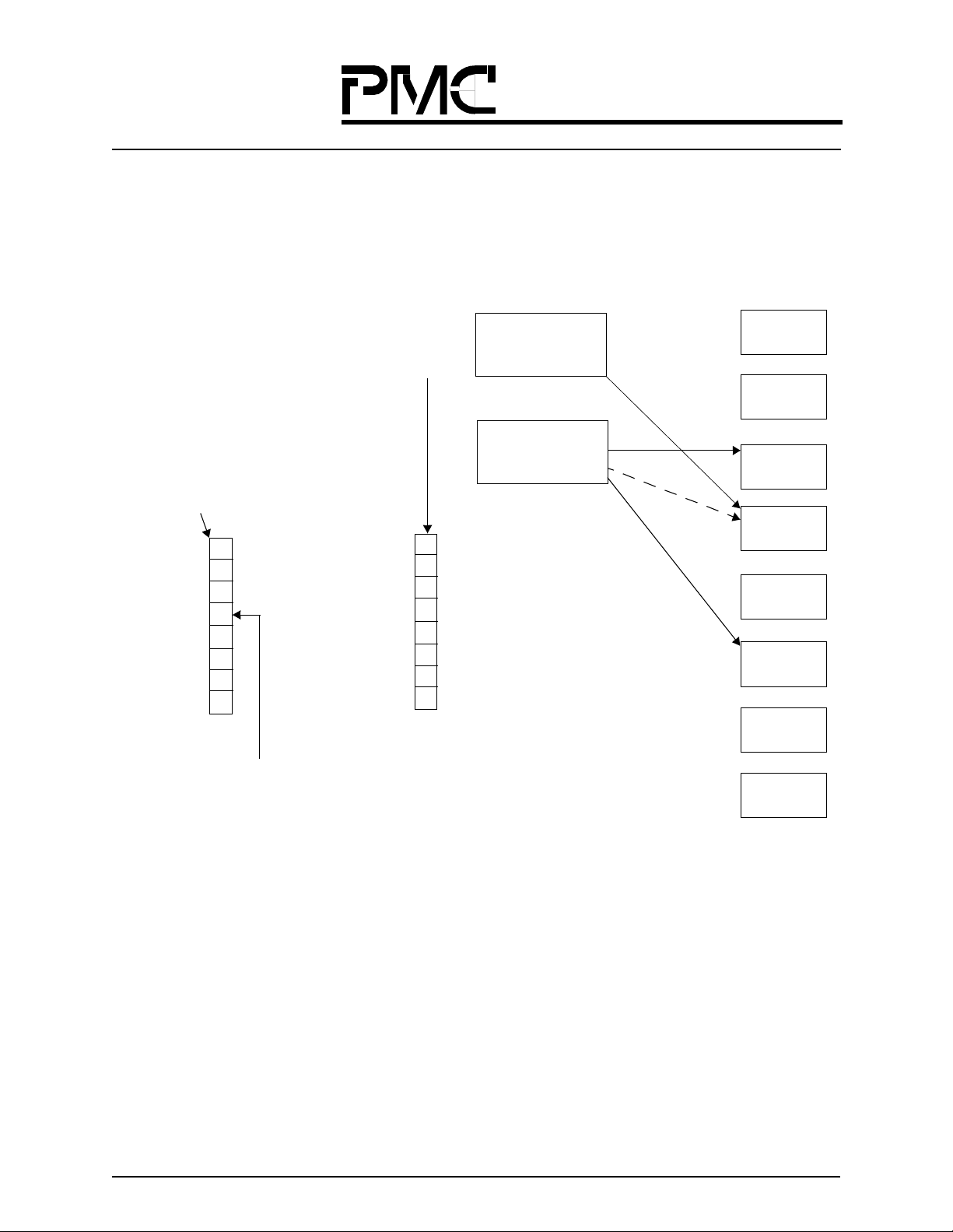

A diagram of the QSE cell flow is shown in Figure 13. The unicast cell flow contains a routing stage that uses routing

information from the cell header to determine the output group. The multicast cell flow contains an interface to an

external SSRAM that contains the Multicast Port Vector (MPV) information for routing cells to multiple output

groups.

Figure 13. Basic QSE Flow (blocks: Phase Aligners and Receive for SE_D_IN and SE_SOC_IN; Unicast Routing and Distribution Path; Multicast Path with External SSRAM; Arbiter; Data Drivers; Phase Aligners and Receive for BP_ACK_IN; BP_ACK Drivers. Key: forward cell flow, backpressure/ack flow, miscellaneous signals)


2.1 Phase Aligners

Phase aligners aid in constructing large systems. Clock information is recovered from the data sent to each QSE switch fabric port. Phase aligners are used on the BP_ACK_IN(31:0), SE_SOC_IN(31:0), and SE_D_IN(31:0, 3:0) signal lines. Since there are no setup or hold time requirements on these signals, the overall clock distribution scheme within the system can be simplified. However, overall system jitter and skew between signals on the same switch fabric data port must still be managed.

2.2 Data Drivers

Another aid to constructing large systems is an elastic store at each QSE input data port. The data elastic store allows data arriving on different ports to be offset by up to a maximum of eight clock cycles. The internally generated, software-programmable local CELL_START signal marks the end of an 8-clock-period window within which the SOC marker on each of the SE_SOC_IN(31:0) lines must arrive.
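The CELL_START window rule can be checked per cell time as in the C sketch below. The function name, the use of clock-cycle timestamps, and the exact edge convention (the window is taken as the eight clock periods ending at, and including, CELL_START) are assumptions for illustration.

```c
#include <assert.h>
#include <stdbool.h>

enum { SOC_WINDOW_CLOCKS = 8 };

/* True if every port's SOC marker arrived within the 8-clock window that
 * ends at CELL_START. Times are clock-cycle counts. Assumed edges: an SOC at
 * cell_start itself is accepted; one 8 or more clocks earlier is not. */
static bool soc_arrivals_ok(const long *soc_clock, int nports, long cell_start)
{
    assert(nports >= 0);
    for (int p = 0; p < nports; p++) {
        long lead = cell_start - soc_clock[p];  /* clocks before CELL_START */
        if (lead < 0 || lead >= SOC_WINDOW_CLOCKS)
            return false;                       /* too late or too early */
    }
    return true;
}
```

In other words, the skew between the earliest and latest SOC across all inputs must stay within the elastic store's eight-clock range, and the whole group must fall before CELL_START.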

2.3 Unicast Routing and Distribution

Each of the 32 nibble-wide inputs is connected to an output by a crossbar. This crossbar is transparently controlled by the cell's routing tag, which specifies the input-to-output connection. In the event of a conflict for an output port, higher-priority cells are given preference over lower-priority cells. There are three unicast cell priorities: high, medium, and low.
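A minimal C model of the priority rule for unicast output conflicts follows. It assumes a gang mode of one (each routing tag names a single output port) and uses lowest input index as a placeholder tie-break between equal-priority cells, because this section does not describe how such ties are resolved. All names are hypothetical.

```c
#include <assert.h>

enum { NPORTS = 32, NO_CELL = -1 };
typedef enum { PRIO_LOW = 0, PRIO_MEDIUM = 1, PRIO_HIGH = 2 } uc_prio;

typedef struct {
    int valid;      /* nonzero if a unicast cell arrived on this input */
    int out_port;   /* output selected by the routing tag (gang of 1 assumed) */
    uc_prio prio;
} uc_request;

/* winner[o] receives the input granted output o, or NO_CELL. Inputs that
 * are not granted lose the contention for this cell time. */
static void resolve_unicast(const uc_request req[NPORTS], int winner[NPORTS])
{
    for (int o = 0; o < NPORTS; o++)
        winner[o] = NO_CELL;
    for (int i = 0; i < NPORTS; i++) {
        if (!req[i].valid)
            continue;
        int o = req[i].out_port;
        assert(o >= 0 && o < NPORTS);
        /* A strictly higher priority displaces the current holder; on a tie
         * the lower-numbered input keeps the port (placeholder tie-break). */
        if (winner[o] == NO_CELL || req[i].prio > req[winner[o]].prio)
            winner[o] = i;
    }
}
```

In the fabric, an input that loses this contention is the "collision" case for which the QSE generates an Ack Information Packet.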


The gang of 32, also known as distribution mode, is a special unicast routing mode in which incoming unicast cells are routed to outputs using PMC's patented congestion-minimization (Evil Twin Switching) algorithm. In this mode, no routing information is used from the cell's routing tag.

Depending on the gang mode, the QSE needs a number of routing bits to determine the output gang of a unicast cell. For example, in gang mode of four there are eight output gangs, so three routing bits are required to select the output gang. In distribution mode, however, no routing bits are needed. The routing bits are taken from the head of the routing tag and then shifted back in at the tail (which preserves header parity). This keeps the next set of routing bits always accessible at the same spot in the tag, namely the head. The cell routing tag is broken into eight nibbles, TAG_0 through TAG_7.

Figure 14 on page 27 shows the tag rotation for gang mode of four (three routing bits are used by the QSE from TAG_0 and then shifted back in at the tail of TAG_7). TAG_0 is broken up, and part of it ends up at the end of TAG_7 (shown by the white area in Figure 14 on page 27). As a result, all the other tags (TAG_1 through TAG_7) also get broken up and shifted (as shown by the light and dark gray areas of Figure 14 on page 27).
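The routing-bit count and the head-to-tail rotation can be sketched in C as follows. The sketch assumes the 32-bit tag (eight nibbles) is held in a `uint32_t` with TAG_0 as the most significant nibble and the head of the tag at bit 31; that bit ordering and the function names are assumptions, not taken from the register descriptions. Because a rotation only moves bits, the parity of the tag is unchanged.

```c
#include <assert.h>
#include <stdint.h>

/* Routing bits consumed by one QSE: log2(32 / gang), i.e. log2 of the
 * number of output gangs. Gang of 32 (distribution mode) needs none. */
static unsigned routing_bits(unsigned gang)
{
    unsigned gangs, bits = 0;
    assert(gang == 1 || gang == 2 || gang == 4 || gang == 8 || gang == 16 || gang == 32);
    for (gangs = 32u / gang; gangs > 1u; gangs >>= 1)
        bits++;
    return bits;
}

/* Take the routing bits from the head (bit 31 downward) and rotate them
 * into the tail, so the next stage again finds its bits at the head. */
static uint32_t consume_routing_bits(uint32_t tag, unsigned gang, unsigned *out_gang)
{
    unsigned k = routing_bits(gang);
    if (k == 0) {          /* distribution mode: tag not used for routing */
        *out_gang = 0;
        return tag;
    }
    *out_gang = (unsigned)(tag >> (32u - k));    /* top k bits select the gang */
    return (tag << k) | (tag >> (32u - k));      /* rotate left by k */
}
```

For gang mode of four, `consume_routing_bits(0xA0000001, 4, &g)` sets `g` to 5 (the top three bits, 101) and returns 0x0000000D, with those three bits now at the tail.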


Figure 14. Routing Bits Rotation for Unicast Traffic, Gang Mode of Four (routing tag nibbles TAG_0 through TAG_7, bits 3 to 0 of each, before rotation and in the resulting bit mapping)

2.4 Multicast Cell Flow

There are 64 internal cell buffers for multicast traffic. These buffers are shared among three multicast priorities: high, medium, and low. The 64 buffers are grouped into two sets of 32 cell buffers each. One set is dedicated to ports 0 to 15, the other to ports 16 to 31.


A multicast queue engine dynamically allocates the cell buffers to incoming multicast cells. Each cell is buffered until it can be sent out on all output ports to which it should be routed. These output ports are designated by a Multicast Group Vector (MGV) that is associated with a Multicast Group Index (MGI) carried by each multicast cell. Each QSE holds MGVs in an MGV RAM. The QSE has internal RAM to support up to 128 MGVs. This support can be extended to up to 256K MGVs by using an external MGV RAM.

Each multicast cell contains the RAM address of the MGV it is supposed to use. When a multicast cell is received, its MGV is fetched from RAM and copied to the MULTICAST_QUEUE_COMPLETION register. The MULTICAST_QUEUE_COMPLETION register tracks the QSE ports to which the cell needs to be sent before its cell buffer can be cleared. In a multistage QSE fabric, each multicast cell looks up an MGV at each QSE. The sequence of MGVs determines which output ports finally receive the cell. The MGV structure allows software to create an optimal distribution tree for each multicast cell.

Multicast operation is best understood by considering the QSE multicast path as two separate engines: the multicast queue engine and the multicast dequeue engine. The multicast queue engine queues cells into the multicast cell buffers (of which there are 64) and issues backpressure on the BP_ACK_OUT(31:0) lines. The multicast dequeue engine selects and dequeues cells from the buffers for output ports, as guided by the backpressure received on the BP_ACK_IN(31:0) lines.


2.4.1 Multicast Queue Engine

The multicast queue engine associates input ports with cell buffers, computes backpressure for the input ports, and stores incoming cells into the buffers. In doing so, it guarantees that:

• No input port will have more than three cells pending in the QSE. This can be changed to allow four pending cells by setting the "Allow 4 Bits Per Port" bit (bit 1) in the BP_CONTROL_REGISTER.

• No input port will have more than two high-priority cells pending.

• The sum of low- and medium-priority cells pending from any single input port will be less than two.
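The per-port limits above can be expressed as an admission check. This C sketch models only the default three-cell setting: taken together, the three rules already cap a port at 2 + 1 = 3 cells, and how the other limits change when four cells are allowed is not described here. Type and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { MC_LOW, MC_MEDIUM, MC_HIGH } mc_prio;

/* Cells currently pending in this QSE from one input port. */
typedef struct {
    int high;
    int low_or_medium;
} port_pending;

/* Would one more cell of priority p keep this port within the limits?
 * Default setting only: at most 3 pending, at most 2 high priority,
 * and fewer than 2 low/medium priority. */
static bool port_may_accept(port_pending s, mc_prio p)
{
    assert(s.high >= 0 && s.low_or_medium >= 0);
    if (s.high + s.low_or_medium + 1 > 3)
        return false;                      /* total pending limit */
    if (p == MC_HIGH)
        return s.high + 1 <= 2;            /* high-priority limit */
    return s.low_or_medium + 1 < 2;        /* low + medium limit */
}
```

Backpressure toward the upstream device would then advertise only the priorities for which `port_may_accept` returns true.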

In addition, the queue engine allows buffers to be reserved for high-priority cells or for high/medium-priority cells. This is controlled by bits 2 and 3 of the BP_CONTROL_REGISTER (refer to section 9.3.29 "BP_CONTROL_REGISTER" on page 109). The four possible combinations of these two bits are listed in Table 1.

The multicast queue engine computes backpressure for the preceding QSE/QRT to ensure that the constraints listed in Table 1 are satisfied. The same reservation policy applies to both sets of 32 buffers.

Table 1. BP_CONTROL_REGISTER Threshold Control Bits for Each Set of 32 Buffers

| Bit 3 | Bit 2 | Description |
|---|---|---|
| 0 | 0 | Four buffers are reserved for high-priority cells. Four buffers are reserved for high- or medium-priority cells. All other buffers can be used by any cell. |
| 0 | 1 | Four buffers are reserved for high-priority cells. All other buffers can be used by any cell. |
| 1 | 0 | Eight buffers are reserved for high- or medium-priority cells. All other buffers can be used by any cell. |
| 1 | 1 | All buffers can be used by any cell. |
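One way to read Table 1 is as a set of thresholds on the number of free buffers in a set. The C sketch below assumes that a "reserved" buffer means that the last N free buffers of the set can be taken only by the qualifying priorities; that interpretation, the packing of bits 3:2 into one value, and the names used are assumptions rather than documented hardware behavior.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { P_LOW, P_MEDIUM, P_HIGH } cell_prio;

/* bp_bits_3_2 = (bit 3 << 1) | bit 2 of BP_CONTROL_REGISTER.
 * free_buffers = free buffers remaining in this set of 32. */
static bool buffer_available(unsigned bp_bits_3_2, int free_buffers, cell_prio p)
{
    int high_only = 0, high_or_med = 0;
    assert(bp_bits_3_2 <= 3u && free_buffers >= 0 && free_buffers <= 32);
    switch (bp_bits_3_2) {
    case 0u: high_only = 4; high_or_med = 4; break;  /* 00 */
    case 1u: high_only = 4; break;                   /* 01 */
    case 2u: high_or_med = 8; break;                 /* 10 */
    default: break;                                  /* 11: fully shared */
    }
    if (free_buffers == 0)
        return false;
    switch (p) {
    case P_HIGH:   return true;                        /* may use any buffer */
    case P_MEDIUM: return free_buffers > high_only;   /* not the high-only ones */
    default:       return free_buffers > high_only + high_or_med;
    }
}
```

With setting 00, for example, a medium-priority cell needs at least five free buffers and a low-priority cell at least nine.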

After the MGV address for the cell enters the QSE, the MGV associated with that cell is fetched and loaded into the QUEUE_COMPLETION_REGISTER (an internal register) as soon as possible.

2.4.2 Multicast Dequeue Engine

In each cell time, the multicast dequeue engine selects one multicast cell for each of the 32 output ports. In effect, all multicast cells wanting to go to a particular output port arbitrate among themselves to select the most appropriate cell. Arbitration occurs independently for all 32 ports. The cells winning the internal multicast arbitration then compete with the incoming unicast cells for access to the output ports. Multicast arbitration winners are chosen to satisfy the following conditions, in this sequence:

• Obey backpressure from the downstream QSE or QRT. Only cells with the allowed priorities take part in arbitration.

• Higher-priority cells win over lower-priority cells.

• Cells that came in earlier win over cells that came in later (if they have the same priority).

• If multiple cells have the same priority and came in simultaneously, cells from a random input gang group are selected.

• If multiple cells have the same priority, came in simultaneously, and belong to the same input gang group, the cell with the lowest port number is selected.

• Ties are broken randomly.
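The arbitration order above can be sketched as a selection over the candidate cells for one output port. In this C sketch, the candidate structure, the backpressure bit-mask encoding, and the use of `rand()` for the random gang choice are all assumptions. The final "ties are broken randomly" rule never fires here because input port numbers are distinct.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct {
    int prio;       /* 0 = low, 1 = medium, 2 = high */
    long arrival;   /* cell time the cell entered the QSE (smaller = earlier) */
    int gang;       /* input gang group the cell arrived on */
    int port;       /* input port number */
} mc_cand;

/* Rule 1: bit p of allowed_mask set means downstream allows priority p
 * (hypothetical encoding of the backpressure information). */
static int allowed(const mc_cand *c, unsigned allowed_mask)
{
    return (int)((allowed_mask >> c->prio) & 1u);
}

/* Rules 2 and 3: higher priority first, then earlier arrival. */
static int beats(const mc_cand *a, const mc_cand *b)
{
    if (a->prio != b->prio)
        return a->prio > b->prio;
    return a->arrival < b->arrival;
}

static int same_rank(const mc_cand *a, const mc_cand *b)
{
    return a->prio == b->prio && a->arrival == b->arrival;
}

/* Index of the cell that wins one output port, or -1 if none may go. */
static int mc_arbitrate(const mc_cand *c, int n, unsigned allowed_mask)
{
    int best = -1, seen = 0, chosen_gang, win = -1;

    assert(n >= 0);
    for (int i = 0; i < n; i++)
        if (allowed(&c[i], allowed_mask) && (best < 0 || beats(&c[i], &c[best])))
            best = i;
    if (best < 0)
        return -1;

    /* Rule 4: pick uniformly among the distinct gangs still tied
     * (reservoir sampling, counting each gang once). */
    chosen_gang = c[best].gang;
    for (int i = 0; i < n; i++) {
        int first_of_gang = 1;
        if (!allowed(&c[i], allowed_mask) || !same_rank(&c[i], &c[best]))
            continue;
        for (int j = 0; j < i; j++)
            if (allowed(&c[j], allowed_mask) && same_rank(&c[j], &c[best]) &&
                c[j].gang == c[i].gang)
                first_of_gang = 0;
        if (first_of_gang && rand() % ++seen == 0)
            chosen_gang = c[i].gang;
    }

    /* Rule 5: lowest input port number within the chosen gang. */
    for (int i = 0; i < n; i++)
        if (allowed(&c[i], allowed_mask) && same_rank(&c[i], &c[best]) &&
            c[i].gang == chosen_gang && (win < 0 || c[i].port < c[win].port))
            win = i;
    return win;
}
```

Running this once per output port, from port 0 to port 31, mirrors the sequential view of arbitration described next; the device itself uses a parallel implementation.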


This arbitration occurs among all cells in the cell buffers and occurs for all 32 ports. In effect, arbitration occurs for output ports in sequence, starting with cells arbitrating for port 0, then port 1, and continuing up to port 31 (even though the actual implementation uses a parallel algorithm).

Multicast cells that have won this arbitration then compete with unicast cells for access to the output ports. In this contention, the cell with the highest priority wins, and ties are broken randomly according to the programmable ratio set in the UC/MC_FAIRNESS_REGISTER (refer to section 9.3.6 "UC/MC_FAIRNESS_REGISTER" on page 97).

All these operations are optimized so that, in the absence of congestion, a multicast cell can leave the QSE in the cell time immediately after it arrived.

As mentioned before, the queue completion register (a 32-bit vector) indicates the outputs to which each multicast cell needs to go. As the cell goes out on its desired outputs, the corresponding bits in the queue completion register are cleared. When all bits in the queue completion register have been cleared, the cell is deleted from the internal buffers and the buffer is reused for new incoming traffic.
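The completion-register bookkeeping can be sketched with a 32-bit mask per buffer: the MGV is copied in on arrival, one bit is cleared per output served, and the buffer is freed when the mask reaches zero. This C sketch uses hypothetical names and leaves out MGV fetching and arbitration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* One multicast cell buffer. Bit o of `pending` set = output o still owed. */
typedef struct {
    bool in_use;
    uint32_t pending;      /* models the queue completion register */
} mc_buffer;

/* On arrival: the cell's MGV is copied into the completion register. */
static void mc_enqueue(mc_buffer *b, uint32_t mgv)
{
    assert(!b->in_use && mgv != 0);
    b->in_use = true;
    b->pending = mgv;
}

/* After the cell wins output `port`: clear that bit, and free the
 * buffer once every requested output has been served. */
static void mc_sent_on(mc_buffer *b, unsigned port)
{
    assert(b->in_use && port < 32u);
    b->pending &= ~(UINT32_C(1) << port);
    if (b->pending == 0)
        b->in_use = false;  /* buffer can be reused for new traffic */
}
```

For a cell whose MGV selects outputs 3 and 6 (mask 0x48), the buffer stays in use after the copy to output 6 and is released only after output 3 is also served.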


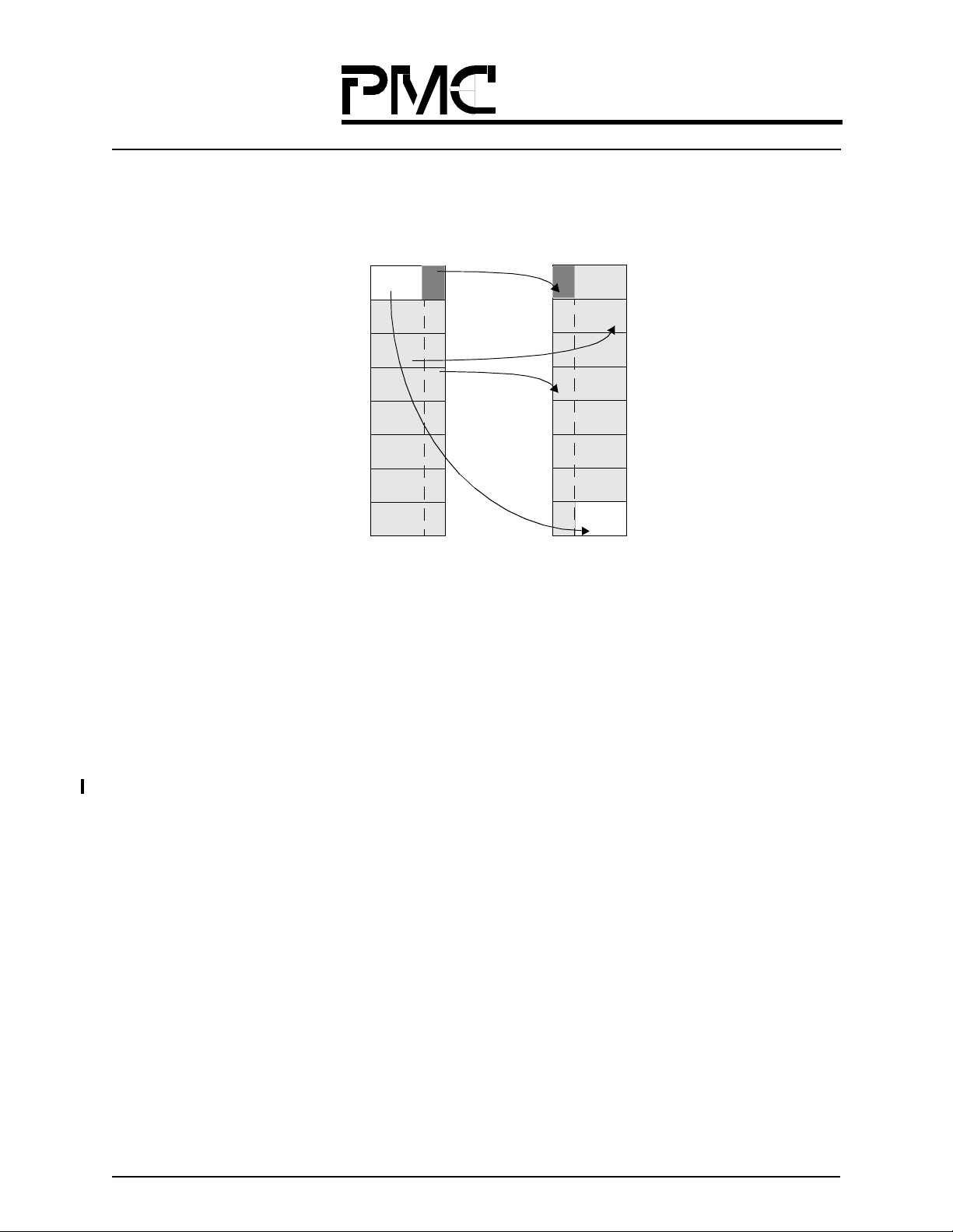

Figure 15 shows an example of a high-priority cell preempting a cell in the multicast queue, and the resulting bit settings in the MULTICAST_QUEUE_COMPLETION_REGISTER (an internal register). (For simplicity, only 8 of the 32 outputs are shown, along with eight bits of the MGV_REGISTER (refer to section 9.3.4 "MULTICAST_GROUP_VECTOR_REGISTER" on page 96) and of the MULTICAST_QUEUE_COMPLETION_REGISTER.)

Figure 15. Example of Multicast Cell Handling in the QSE (an 8-bit Multicast Group Vector specifies where the multicast-queue cell CELL_M should be sent across Output(0) to Output(7); a high-priority cell CELL_H is output on Output(3), preempting CELL_M there; the MULTICAST_QUEUE_COMPLETION_REGISTER records whether the cell arrived at the destinations indicated in the MGV, and its bit for Output(3) is not set because the higher-priority cell was output on Output(3))

2.5 Arbiter

The arbiter selects between unicast cells and multicast cells contending for the same output port. Higher-priority cells are given preference over lower-priority cells. If a multicast cell and a unicast cell have the same priority, one cell is chosen randomly. The random choice can be biased in favor of either unicast cells or multicast cells at different points in the switch fabric by using the UC/MC_FAIRNESS_REGISTER (refer to section 9.3.6 “UC/MC_FAIRNESS_REGISTER” on page 97).

In general, unicast cells should be favored at later stages in the switch fabric. Favoring unicast cells is necessary in multiple-stage switch fabrics because unicast cells are routed in a cut-through fashion, while multicast cells are routed in a store-and-forward fashion. As a result, a unicast cell becomes more “valuable” as it proceeds further in the switch fabric, since it advanced at the expense of other cells.
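The following Python sketch illustrates the arbitration rule under stated assumptions. It is not the device's implementation, and `arbitrate` and `unicast_survival` are hypothetical names. A larger number is assumed to mean a higher priority. The parameter `uc_share` is the probability that unicast wins a tie. It stands in for the ratio programmed in the UC/MC_FAIRNESS_REGISTER, whose actual encoding is not modeled. The function `unicast_survival` reproduces the arithmetic of the unbiased 3-stage example in the next paragraph.

```python
import random


def arbitrate(uc_priority: int, mc_priority: int, uc_share: float,
              rng: random.Random) -> str:
    """Choose which cell gets one output port in this cell time.

    Assumption: a larger number means a higher priority. `uc_share` is a
    hypothetical stand-in for the UC/MC_FAIRNESS_REGISTER tie-break ratio.
    """
    if uc_priority != mc_priority:
        # Different priorities: the higher-priority cell always wins.
        return "unicast" if uc_priority > mc_priority else "multicast"
    # Equal priorities: biased random choice.
    return "unicast" if rng.random() < uc_share else "multicast"


def unicast_survival(stages: int, uc_share: float) -> float:
    """Chance that a cut-through unicast cell wins an equal-priority collision
    at every stage, assuming one independent collision per stage."""
    return uc_share ** stages


rng = random.Random(1)
print(arbitrate(3, 1, 0.5, rng))  # unicast: higher priority always wins
print(unicast_survival(3, 0.5))   # 0.125, the unbiased 3-stage case
```

Raising `uc_share` at later stages expresses the guidance above. It increases the chance that a unicast cell that has already advanced through earlier stages also wins the remaining collisions.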


For example, consider a congested 3-stage switch fabric where unicast cells and multicast cells of equal priority collide at each stage in the fabric, without any biasing. A unicast cell must make it from ingress to egress in one cell time, and the chance of doing so would be a little more than $(1/2)^3 = 12.5\%$. However, each multicast cell would have a 50% chance of advancing to the next stage in the switch fabric.

2.6 BP_ACK Drivers

The BP_ACK_OUT(31:0) lines are used to send information from a QSE to upstream QSEs or QRTs. These lines are used to send two types of information:

• Backpressure information (for multicast cells).

• Transmit acknowledge information (for unicast cells).

Backpressure information is sent for multicast cells. This information indicates to an upstream QRT or QSE whether the QSE can accept another multicast cell in the next cell time. Backpressure information also indicates which multicast cell priorities the QSE can accept.

Cell transmit acknowledge information is sent for unicast cells. This information signals whether the unicast cell transmitted in the current cell time made it to its destination QRT. If the cell makes it to the destination QRT, an Acknowledgment (ACK) is sent. If the cell has been dropped in the switch fabric, information is sent back indicating whether the cell was dropped internally (Mid Switch Negative Acknowledgment, MNACK) or at the output of the switch fabric (Output Negative Acknowledgment, ONACK). The QRT uses the MNACK and ONACK to determine when to retry sending the given cell.
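The following sketch shows how an upstream device might consume this feedback. The names `Feedback`, `UnicastSender`, and `may_send_multicast` are hypothetical. So is the representation of backpressure as a set of accepted priorities. The datasheet states only that the QRT uses MNACK and ONACK to decide when to retry. The sketch therefore records which NACK arrived but does not model the retry timing.

```python
from collections import Counter
from enum import Enum


class Feedback(Enum):
    ACK = "ACK"      # unicast cell reached its destination QRT
    MNACK = "MNACK"  # dropped internally (mid-switch)
    ONACK = "ONACK"  # dropped at the output of the switch fabric


class UnicastSender:
    """Hypothetical upstream bookkeeping for unicast transmit acknowledgments."""

    def __init__(self) -> None:
        self.retry_queue: list[str] = []
        self.nack_counts: Counter = Counter()

    def on_feedback(self, cell_id: str, fb: Feedback) -> None:
        if fb is Feedback.ACK:
            return  # delivered: nothing to resend
        # Either NACK keeps the cell for a later retry. The NACK type is kept
        # because the QRT uses it to decide when to retry (timing not modeled).
        self.nack_counts[fb] += 1
        self.retry_queue.append(cell_id)


def may_send_multicast(priority: int, accepted_priorities: set[int]) -> bool:
    """Backpressure check: send a multicast cell only at a priority the
    downstream QSE reported it can accept in the next cell time."""
    return priority in accepted_priorities


sender = UnicastSender()
sender.on_feedback("c1", Feedback.ACK)
sender.on_feedback("c2", Feedback.MNACK)
sender.on_feedback("c3", Feedback.ONACK)
print(sender.retry_queue)             # ['c2', 'c3']
print(may_send_multicast(2, {0, 1}))  # False
```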

2.7 Interdevice Interconnectability

All input and output ports can be configured in groups of four to directly connect to either QRT devices or other QSE devices. This allows considerable flexibility in the switch fabric types and sizes that can be constructed using the entire PMC chip set.

2.8 Network Topologies and the Speedup Factor (SF)

For many switch fabric architectures using the QSE, a single metric called the Speedup Factor (SF) allows different network topologies to be compared independently of traffic load and type. The SF also allows predictions about network performance.

Before describing the SF metric, we briefly discuss the network philosophy and the different network topologies.

Long Form Data Sheet

31

Released

Datasheet

PMC-Sierra, Inc.

PM73488 QSE

PMC-980616 Issue 3 5 Gbit/s ATM Switch Fabric Element

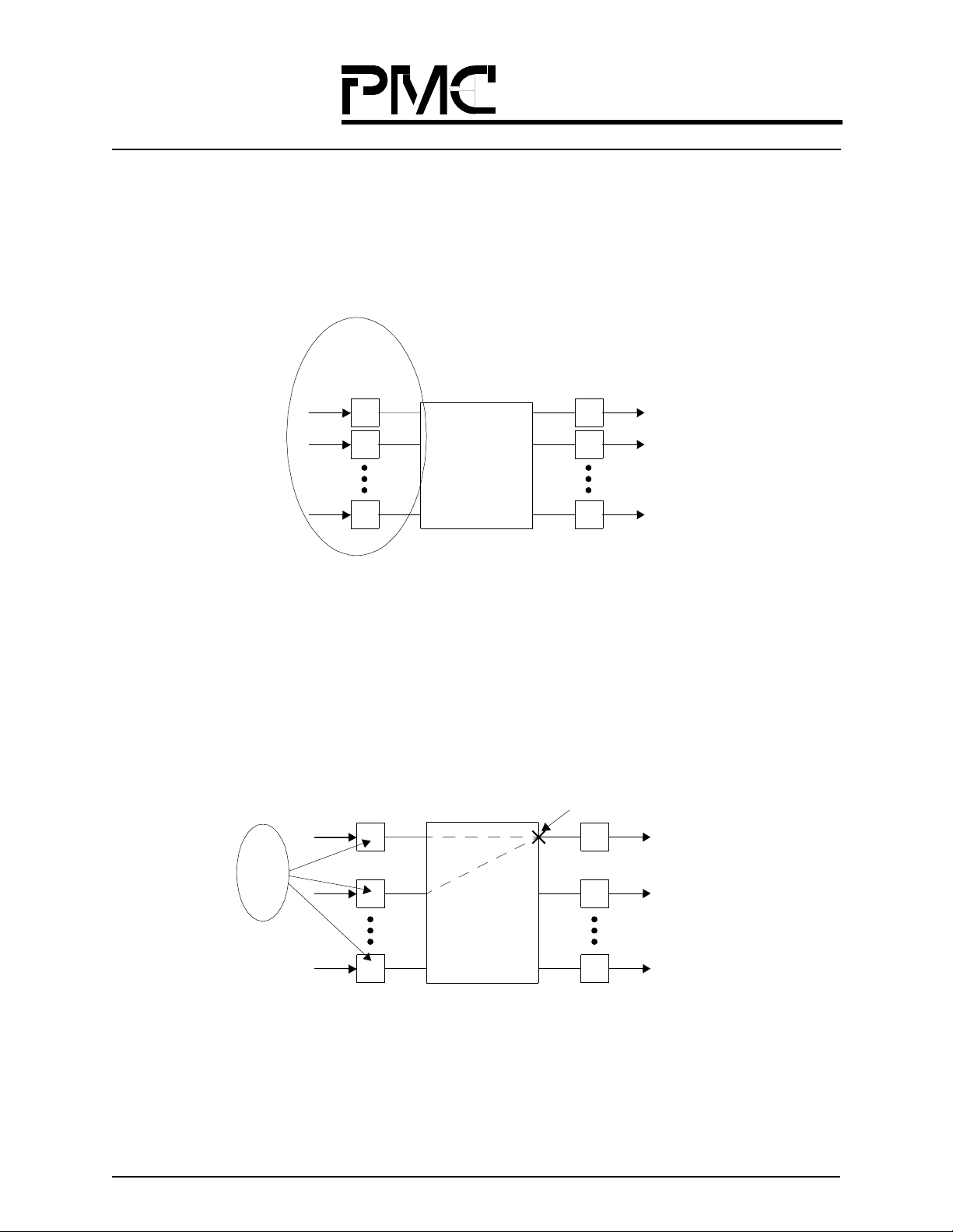

2.8.1 Network Philosophy

Given current technology, to scale to 160 Gbps, a network must be distributed and use buffers at the network inputs and outputs. In an ideal world, crossbars of any arbitrary size could be built to provide connectivity for the network inputs and outputs. Additionally, there would be a central “brain”, or global arbiter, to control the input buffers and schedule cells optimally for routing in the network, as shown in Figure 16.

Figure 16. Ideal Distributed Network

[Figure: Input Buffers feed a “Perfect Crossbar” under the control of a Global Arbiter; the crossbar delivers cells to Output Buffers.]

Unfortunately, given real constraints, it is not possible to have a global arbiter that is wired to each input, has knowledge of all cells in the system, and can quickly make optimal routing decisions. Each input must therefore make decisions using knowledge local to its buffers. As a result, collisions can occur at the network outputs even though the crossbar is “perfect”, as shown in Figure 17.

Figure 17. More Realistic Distributed Network

[Figure: Input Buffers with Local Arbiters feed a “Perfect Crossbar”; cells from different inputs can meet in an Output Collision before reaching the Output Buffers.]
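The output collisions in Figure 17 can be quantified under a simple traffic model, which is an assumption and is not specified by this datasheet. Suppose every one of $N$ inputs sends one cell per cell time to a uniformly random output, with no coordination between inputs. The expected fraction of outputs that receive a cell is then $1-(1-1/N)^N$. This fraction falls toward $1-1/e \approx 63.2\%$ as $N$ grows. The Python sketch below computes that value and checks it with a small simulation. Both function names are hypothetical.

```python
import random


def expected_busy_fraction(n: int) -> float:
    """Fraction of n outputs that receive a cell when each of n inputs sends
    one cell to a uniformly random output (assumed traffic model)."""
    return 1.0 - (1.0 - 1.0 / n) ** n


def simulate_busy_fraction(n: int, cell_times: int, seed: int = 0) -> float:
    rng = random.Random(seed)
    busy = 0
    for _ in range(cell_times):
        # Local arbiters: each input picks its destination independently.
        destinations = {rng.randrange(n) for _ in range(n)}
        # Cells that collide on an output occupy it only once per cell time.
        busy += len(destinations)
    return busy / (n * cell_times)


print(round(expected_busy_fraction(32), 3))  # 0.638
print(simulate_busy_fraction(32, 5000))      # close to the value above
```

Under this model, roughly a third of the outputs sit idle in each cell time even though the crossbar itself never blocks.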


Replacing the idealized crossbar with a buildable, traditional Banyan network increases the possibility of internal network collisions, as shown in Figure 18. Given a particular Banyan network, one can always find a large class of traffic patterns that will cause many internal collisions. For large Banyan networks, the collision problem is greatly increased.

Figure 18. “Large” Distributed Network (Will not Work Well with Banyan Alone)

[Figure: Input Buffers with Local Arbiters feed a Banyan network; cells can suffer Internal Collisions inside the Banyan and an Output Collision before reaching the Output Buffers.]

To reduce internal collisions in the traffic-dependent Banyan networks, the QRT/QSE network adds a distribution/randomizing network (shown in Figure 19). This network uses a patented intelligent configuration algorithm known as Evil Twin Switching. The algorithm (described in section 2.8.3 “Speedup Factor (SF)” on page 36) makes it possible to place a lower bound on network performance, independent of traffic patterns.

Figure 19. High-Level QRT/QSE System

[Figure labels: Input Buffers with Local Arbiters; a combined Banyan and Randomizer network configured by the Intelligent Configuration Algorithm; Internal Collisions; Output Buffers.]


To overcome the inefficiencies caused by collisions in the network, the fabric must be run at a rate greater than the line rate. The speedup factor is the minimum fabric rate, relative to the line rate, needed to guarantee that the network is no longer the system bottleneck. At that point, the network efficiently moves data from the input buffers to the output buffers, and the switch performs similarly to a purely output-buffered switch.

2.8.2 Network Definition

A large range of switch fabrics can be described with the following notation. Here “p” refers to the number of fabric planes. “x,” “y,” and “z” refer to the routing tag sizes needed to make routing decisions in the Banyan section of the network, so that cells are routed to the correct output port:

(z) × p: 1-stage network

(y,z) × p: 3-stage network

Hence, the (3) × 1 network shown in Figure 20 refers to a single switch stage, and three routing bits are required to select one of the eight output port groupings. (Recall that the QSE has 32 output ports that can be configured in groups of 1, 2, 4, 8, 16, or 32; in Figure 20, they are configured in groups of four.) The input and output buffers are provided by the QRT. The QRT has four input ports and four output ports to the switch fabric. For convenience, it is logically divided into an input half (IRT) and an output half (ORT).

Figure 20. (3) x 1 - 5 Gbps System

[Figure labels: IRT with 622 Mbps UTOPIA; x4 links to a single QSE; x4 links to ORT with 622 Mbps UTOPIA.]

The (5) × 4 network shown in Figure 21 is an example of a network with four parallel planes. It demonstrates the flexibility that comes from the QRT having four input and four output ports. In this case, randomization is performed in the IRT.

Figure 21. (5) x 4 - 20 Gbps System

[Figure labels: IRT with Randomizer and 622 Mbps UTOPIA; x4 and x1 links to the parallel QSE planes; x1 and x4 links to ORT with 622 Mbps UTOPIA.]


In Figure 22, the first stage of QSEs is configured to provide the required randomization, and the next two switch stages route the cells to their final port destination. The second QSE stage needs only to make an “up” or “down” decision, which requires a single routing bit. The third QSE stage needs to select between eight QRTs, which requires three routing bits.

Figure 22. (1,3) x 1 - 10 Gbps System

[Figure labels: IRT with Randomizer and 622 Mbps UTOPIA; x4 links into three stages of QSEs (two QSEs per stage) connected by x16 links; x4 links to ORT with 622 Mbps UTOPIA.]
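The notation and the routing-bit counts above can be checked with a little arithmetic, sketched in Python below. The helpers `routing_bits`, `describe`, and `approx_capacity_gbps` are hypothetical. The capacity estimate rests on an assumption: the routing bits together address $2^{\text{total bits}}$ QRTs, each with one 622 Mbps UTOPIA interface as labeled in Figures 20 to 22. Under that assumption, it reproduces the approximate 5, 20, and 10 Gbps figures in the captions.

```python
UTOPIA_MBPS = 622  # per-QRT UTOPIA rate, as labeled in Figures 20 to 22


def routing_bits(num_groups: int) -> int:
    """Bits needed to select one of `num_groups` output port groupings."""
    if num_groups < 1 or num_groups & (num_groups - 1):
        raise ValueError("number of groups must be a power of two")
    return num_groups.bit_length() - 1


def describe(tag_bits: tuple[int, ...], planes: int) -> str:
    """Format a fabric in the (y,z) x p notation, e.g. '(1,3) x 1'."""
    return f"({','.join(str(b) for b in tag_bits)}) x {planes}"


def approx_capacity_gbps(tag_bits: tuple[int, ...]) -> float:
    # Assumption: 2**sum(tag_bits) QRTs, each with one 622 Mbps UTOPIA port.
    return 2 ** sum(tag_bits) * UTOPIA_MBPS / 1000


print(routing_bits(32 // 4))  # 3: 32 ports in groups of four -> 8 groups
print(routing_bits(2))        # 1: an "up" or "down" decision
for bits, planes in [((3,), 1), ((5,), 4), ((1, 3), 1)]:
    print(describe(bits, planes), approx_capacity_gbps(bits))
```

The loop prints 4.976, 19.904, and 9.952 Gbps for the (3) x 1, (5) x 4, and (1,3) x 1 systems respectively.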


2.8.3 Speedup Factor (SF)

If the traffic pattern presented to a particular Banyan network results in many internal collisions, a shuffling pattern exists that has been proven to result in few internal collisions. Although a purely random reshuffling results in good