Released

Datasheet

PMC-Sierra, Inc.

PMC-980618 Issue 3 622 Mbps ATM Traffic Management Device

PM73487 QRT

PM73487

QRT

622 Mbps ATM Traffic Management Device

DATASHEET

Released

Issue 3: JUN 1999

Long Form Data Sheet


AAL1gator, AAL1gator2, Evil Twin Switching, QRT, QSE, and SATURN are trademarks of PMC-Sierra, Inc.

AMCC is a registered trademark of Applied MicroCircuits Corporation.

i960 is a registered trademark of Intel Corporation.

National Semiconductor is a registered trademark of National Semiconductor Corporation.

is a trademark of PMC-Sierra, Inc.

Vitesse is a trademark of Vitesse Semiconductor Corporation.

All other brand or product names are trademarks of their respective companies or organizations.

U.S. Patents 5,557,607, 5,570,348, and 5,583,861

Copyright © 1998 PMC-Sierra, Inc.

All Rights Reserved


Public Revision History

| Issue Number | Issue Date | Details of Change |
|---|---|---|
| Issue 1 | March 1998 | Creation of document |
| Issue 2 | October 1998 | This data sheet includes registers added in the B version of the device: RX_QUEUE_ENGINE_TEST (bits 26:16), TX_QUEUE_ENGINE_TEST (bits 22:15), QUEUE_ENGINE_CONDITION_PRES_BITS, QUEUE_ENGINE_CONDITION_LATCH_BITS, QUEUE_ENGINE_INT_MASK, RX_LOWER16_SCG_CONFIG, RX_LOWER16_SCG_STATE, RX_LOWER32_SCG_CONFIG, RX_LOWER32_SCG_STATE, RX_LOWER48_SCG_CONFIG, RX_LOWER48_SCG_STATE, TX_LOWER4_SCG_CONFIG, TX_LOWER4_SCG_STATE, TX_LOWER8_SCG_CONFIG, TX_LOWER8_SCG_STATE, TX_LOWER12_SCG_CONFIG, TX_LOWER12_SCG_STATE. Updated RX_SERVICE_TABLE. |
| Issue 3 | June 1999 | Production release version |

© 1999

PMC-Sierra, Inc.

105-8555 Baxter Place

Burnaby BC Canada V5A 4V7

Phone: 604.415.6000 FAX: 604.415.6200

In any event, no part of this document may be reproduced in any form without the express written consent of PMC-Sierra, Inc.


Table of Contents

1 System Applications

1.1 QRT System Overview

1.2 622 Mbps Switch Configuration

1.3 32 x 32 Switch Application (5 Gbps)

1.4 64 x 64 Switch Application (10 Gbps)

1.5 5 Gbps to 20 Gbps Application Example - Seamless Growth

1.6 5 Gbps to 160 Gbps Application Example – LAN-to-WAN

2 Theory of Operations

2.1 Overview

2.2 Interface Descriptions

2.2.1 Switch Fabric Interface

2.2.2 Phase Aligners

2.2.3 UTOPIA Interface

2.2.4 Cell Buffer SDRAM Interface

2.2.5 Channel RAM (CH_RAM) Interface

2.2.6 Address Lookup RAM (AL_RAM) Interface

2.2.7 AB_RAM Interface

2.2.8 Host Processor Interface

2.2.9 SE_SOC Encodings

2.2.10 BP_ACK Encodings

2.2.11 Relation Between External CELL_START and Local CELL_START

2.3 Cell Flow Overview

2.4 UTOPIA Operation

2.4.1 General

2.4.2 UTOPIA Interface

2.5 Receiver Operation

2.5.1 Receive Channel Lookup

2.5.2 Receive VC (Channel) Queuing

2.5.3 Receive Channel Ring

2.5.4 Receive Congestion Management

2.5.5 Receive Queue Service Algorithm

2.5.6 Receive Sequencing Algorithm

2.6 Transmitter Operation

2.6.1 Transmit Queuing

2.6.2 Transmit Congestion Management

2.6.3 Transmit Queue Service Algorithm

2.6.4 Transmit Resequencing Algorithm

2.6.5 Transmit Recovery Algorithm

2.6.6 Transmit Multicast Cell Background Process

2.6.7 Transmit Multicast Congestion Management

2.7 System Diagram of Internal QRT Blocks and External RAM

3 Fault Tolerance


3.1 The Data Path

3.1.1 UTOPIA Interface

3.1.2 Switch Fabric Interface

3.2 Fault Detection and Isolation

3.2.1 Memory Parity Checking

3.2.2 UTOPIA Interface Fault Detection and Recovery Mechanisms

3.2.3 Switch Fabric Fault Detection and Recovery Mechanisms

3.2.4 Tables of Switch Fabric Interface Failure Behaviors

4 Pin Descriptions

4.1 Package Diagram

4.2 Signal Locations

4.3 Signal Descriptions (372 Signal Pins)

4.3.1 Processor Interface Signals

4.3.2 Statistics Interface Signal

4.3.3 Switch Element Interface Signals

4.3.4 CH_RAM Interface Signals

4.3.5 AL_RAM Interface Signals

4.3.6 ABR_RAM Interface Signals

4.3.7 Receive Cell Buffer DRAMs

4.3.8 Transmit Cell Buffer DRAMs

4.3.9 UTOPIA ATM Layer Interface Signals

4.3.10 Boundary Scan Signals

4.3.11 Miscellaneous Signals

5 Physical Characteristics

6 Timing Diagrams

6.1 UTOPIA Timing

6.2 DRAM External Memory Timing

6.3 SRAM Timings

6.4 QRT-QSE Interface Timing

6.4.1 Switch Fabric Cell Formats

6.5 Processor Interface Timing

6.6 Miscellaneous Timing

7 Microprocessor Ports

7.1 Microprocessor Ports Summary

7.2 Microprocessor Ports Bit Definitions

7.2.1 REVISION

7.2.2 RESET

7.2.3 TEST_CONFIG

7.2.4 SRAM_CONFIG

7.2.5 SWITCH_CONFIG

7.2.6 RAM BIST RESULT

7.2.7 MARKED_CELLS_COUNT

7.2.8 CONDITION_PRES_BITS

7.2.9 CONDITION_LATCH_BITS


7.2.10 INTR_MASK

7.2.11 UTOPIA_CONFIG

7.2.12 UT_PRIORITY

7.2.13 UT_ENABLE

7.2.14 TX_UT_STAT

7.2.15 TX_UT_WD_ALIVE

7.2.16 RX_CELL_START_ALIGN (Internal Structure)

7.2.17 RX_QUEUE_ENGINE_TEST

7.2.18 TX_QUEUE_ENGINE_TEST

7.2.19 QUEUE_ENGINE_CONDITION_PRES_BITS

7.2.20 QUEUE_ENGINE_CONDITION_LATCH_BITS

7.2.21 QUEUE_ENGINE_INT_MASK

7.2.22 RX_DIR_CONFIG

7.2.23 RX_DIR_STATE (Internal Structure)

7.2.24 TX_DIR_CONFIG

7.2.25 TX_DIR_STATE (Internal Structure)

7.2.26 RX_SENT_CELLS

7.2.27 RX_DROPPED_CELLS

7.2.28 TX_SENT_CELLS

7.2.29 TX_DROPPED_CELLS

7.2.30 RX_LOWER16_SCG_CONFIG

7.2.31 RX_LOWER16_SCG_STATE (Internal State)

7.2.32 RX_LOWER32_SCG_CONFIG

7.2.33 RX_LOWER32_SCG_STATE (Internal State)

7.2.34 RX_LOWER48_SCG_CONFIG

7.2.35 RX_LOWER48_SCG_STATE (Internal State)

7.2.36 TX_LOWER4_SCG_CONFIG

7.2.37 TX_LOWER4_SCG_STATE (Internal State)

7.2.38 TX_LOWER8_SCG_CONFIG

7.2.39 TX_LOWER8_SCG_STATE (Internal State)

7.2.40 TX_LOWER12_SCG_CONFIG

7.2.41 TX_LOWER12_SCG_STATE (Internal State)

8 Internal RAM Memory Map

8.1 Internal RAM Summary

8.2 Transmit Service Class RAM (TSC_RAM) Summary

8.2.1 Transmit Service Class Queue (TX SCQ) Control Block

8.3 Receive Service Class RAM (RSC_RAM) Summary

8.3.1 Receive Service Class (RX SC) Control Block

8.4 Virtual Output Control RAM (VO_RAM) Summary

8.4.1 Transmit VO Control Block

8.4.2 Transmit SC Control Block

8.4.3 Transmit Multicast SC Control Block Summary

8.5 Receive Switch Fabric Control RAM (RSF_CONTROL) Summary

8.5.1 RX_RSF_CONFIG (Internal Structure)


8.5.2 RX_RSF_TAG (Internal Structure)

8.5.3 RX_RSF_NEW_VPI_VCI (Internal Structure)

8.5.4 RX_RSF_SN_CHAN (Internal Structure)

8.5.5 RX_RSF_ER_CELL_PTR (Internal Structure)

8.6 Transmit Switch Fabric Control RAM (TSF_CONTROL_RAM) Summary

8.6.1 TX_TSF_SN_CHAN (Internal Structure)

8.7 Test Access to the Receive UTOPIA RAM (RU_RAM)

8.8 Test Access to the Transmit UTOPIA RAM (TU_RAM)

8.9 Test Access to Receive Switch Element RAM (RS_RAM)

8.10 Test Access to Transmit Switch Element RAM (TS_RAM)

9 External RAM Memory Map

9.1 External RAM Summary

9.2 Address Lookup RAM (AL_RAM)

9.2.1 VI_VPI_TABLE

9.2.2 VCI_TABLE

9.2.3 Multicast Cell Instance Control Block (Internal Structure)

9.2.4 RX_NEXT_CELL (Internal Structure)

9.2.5 Transmit Cell Buffer Control Block (Internal Structure)

9.2.6 Service Order Control Block (Internal Structure)

9.3 Channel RAM (CH_RAM)

9.3.1 Channel Control Block

9.3.2 Multicast Control Block

9.4 ABR_RAM

9.4.1 Receive Channel Queue Block

9.4.2 Receive Channel Statistics Block

9.5 SDRAM/SGRAM Interface Description

9.5.1 RX_DRAM_REGISTER

9.5.2 TX_DRAM_REGISTER

9.5.3 Receive Cell Buffer SDRAM/SGRAM Summary (Internal Structure)

9.5.4 Transmit Cell Buffer SDRAM/SGRAM Summary (Internal Structure)

10 JTAG

11 Application Notes

11.1 Connecting QRTs to QSEs Using Gigabit Ethernet Transceivers

11.2 Connecting to Standard Serializer/Deserializer Chipsets

11.3 Connecting the QRT to the S/UNI-ATLAS PM7324

11.4 Relationships Among Various Clock Domains

11.4.1 Relationship Among the SYSCLK, SE_CLK, and the Switch Speed-Up Factor

11.4.2 The Phase Aligner SE_CLK Frequency Constraint

11.4.3 The SYSCLK DRAM Refresh Constraint

11.4.4 Relationship Between ATM_CLK and SYSCLK

11.4.5 Relationship Between the PCLK and the SYSCLK


List of Figures

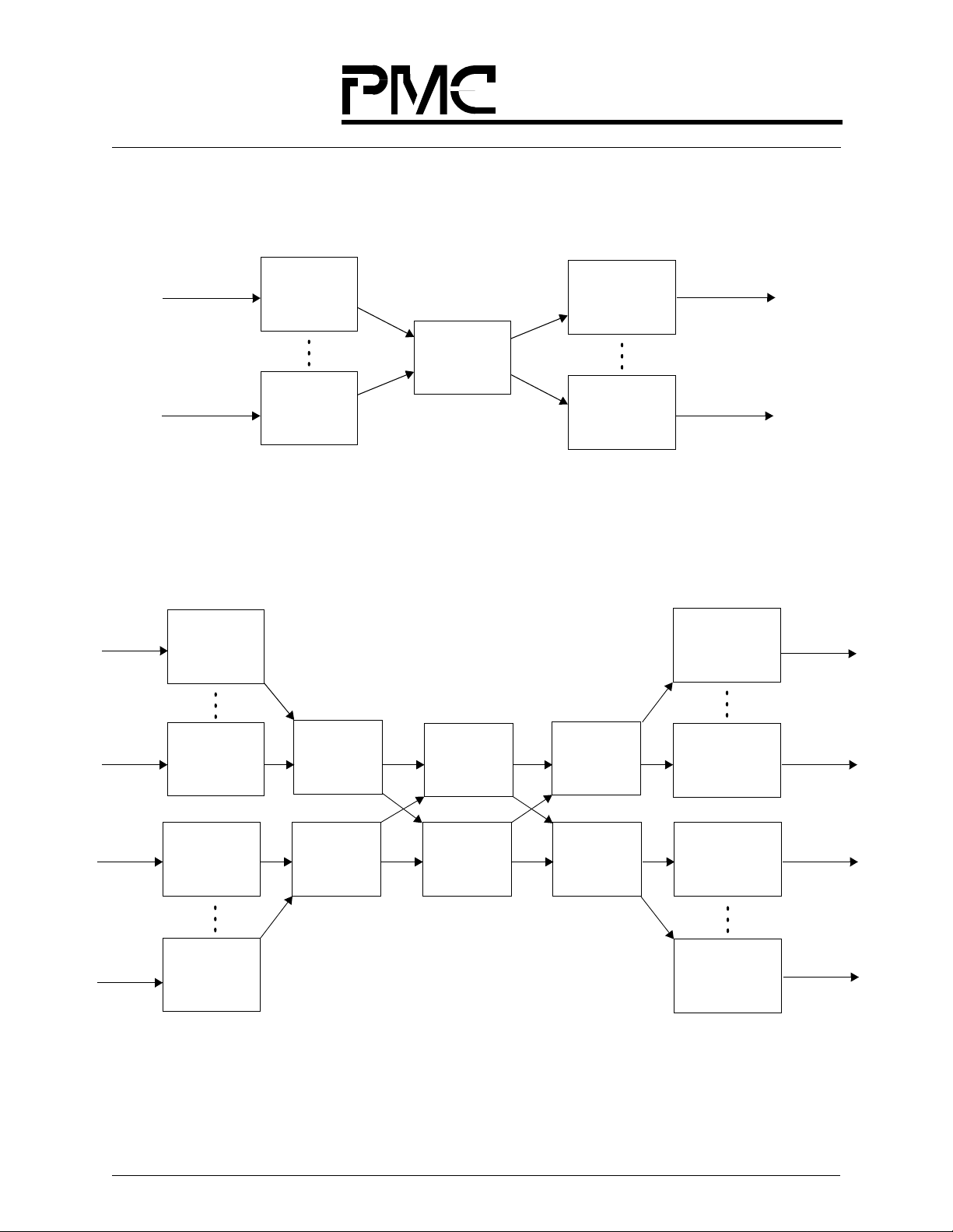

Figure 1. QRT System Block Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Figure 2. QRT System Overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Figure 3. 622 Mbps Switch Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

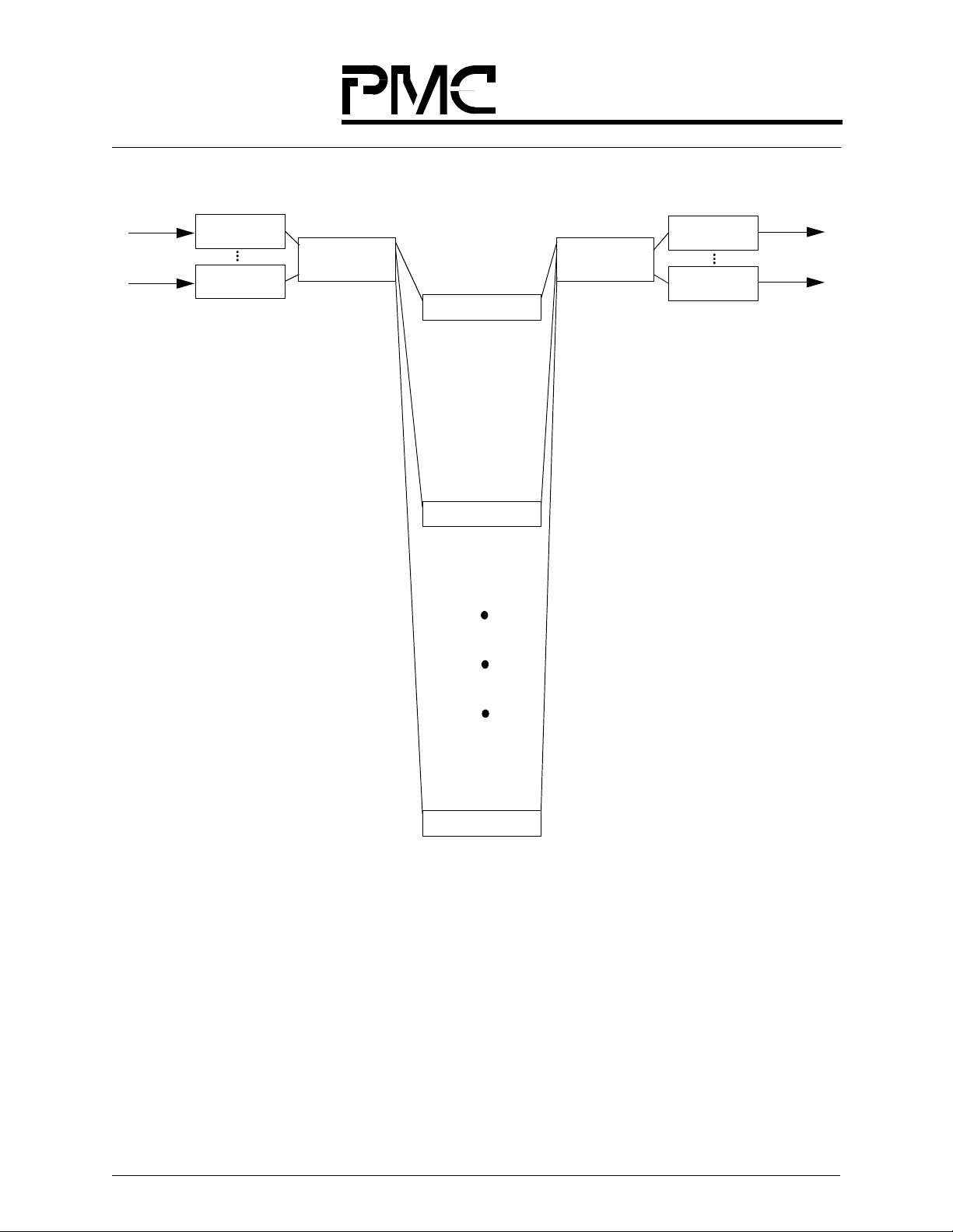

Figure 4. 32 x 32 Switch Application (5 Gbps). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

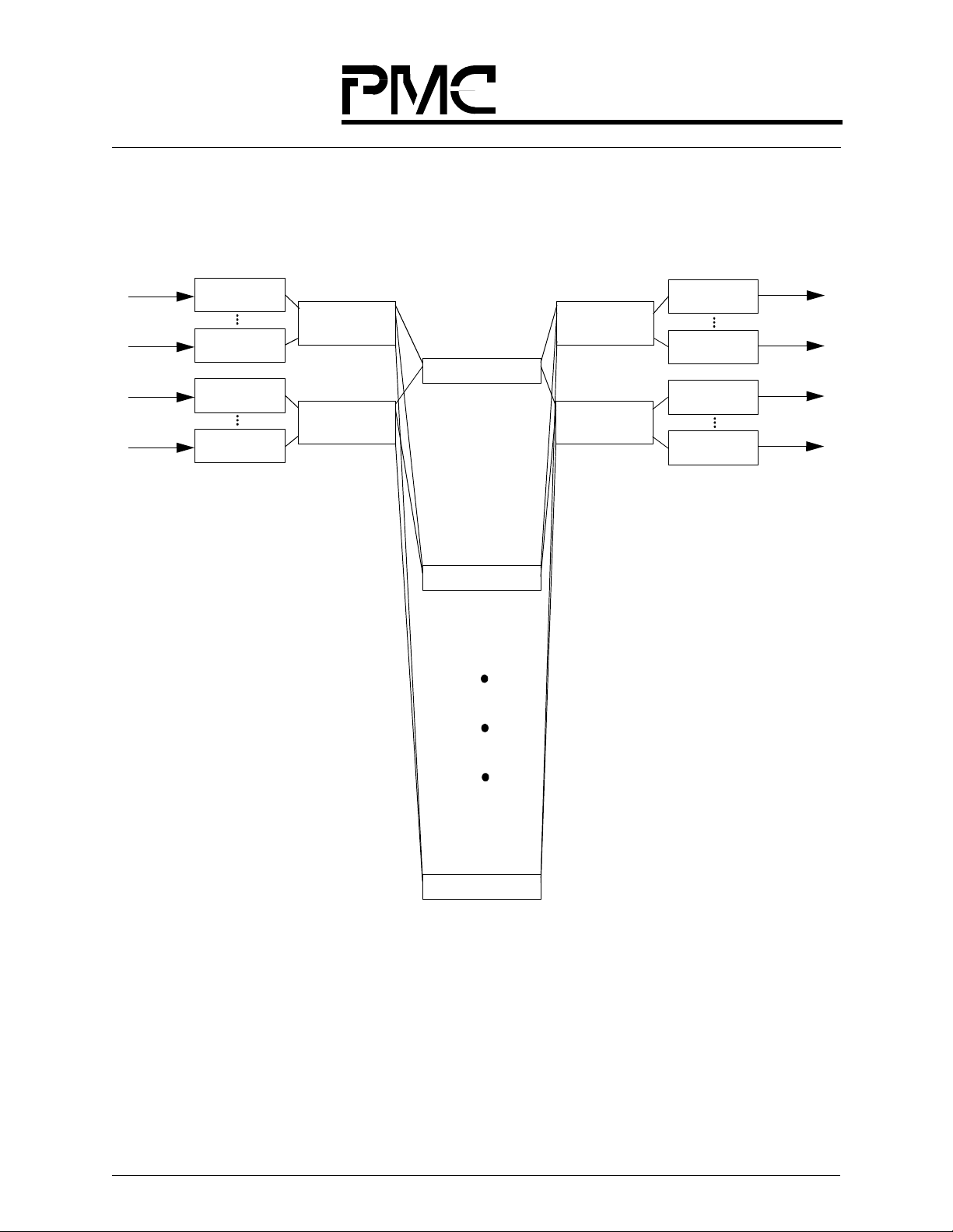

Figure 5. 64 x 64 Switch Application (10 Gbps). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

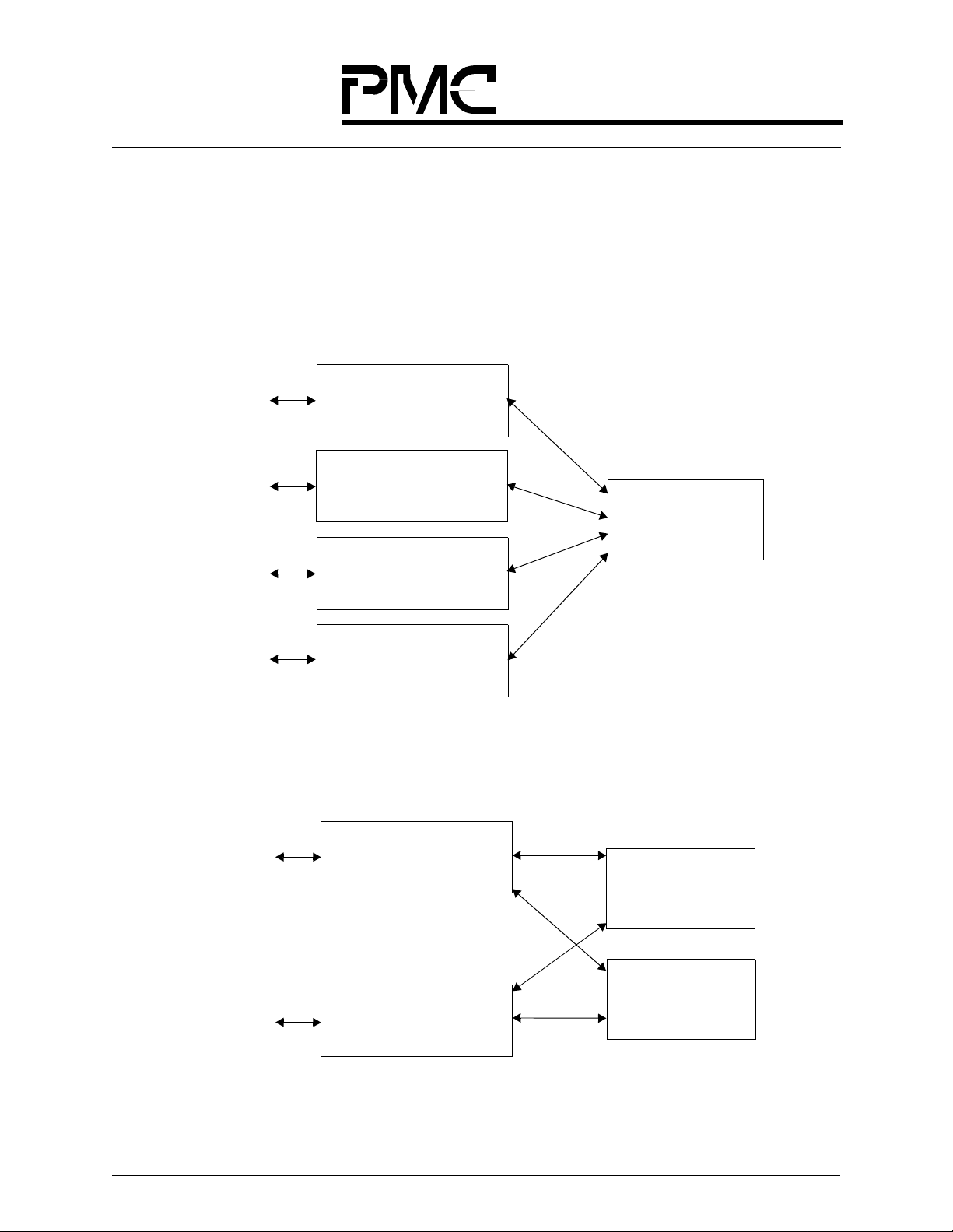

Figure 6. 5 Gbps ATM Switch Using 16 Dual S/UNIs, 8 QRTs, and 1 QSE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Figure 7. 10 Gbps ATM Switch Using 32 Dual S/UNIs, 16 QRTs, and 2 QSEs . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Figure 8. 20 Gbps ATM Switch Using 64 Dual S/UNIs, 32 QRTs, and 4 QSEs . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Figure 9. 5 Gbps to 160 Gbps Switches Modeled Using Only Two Cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Figure 10. 5 Gbps ATM Switch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Figure 11. 1 0 Gbps ATM Switch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

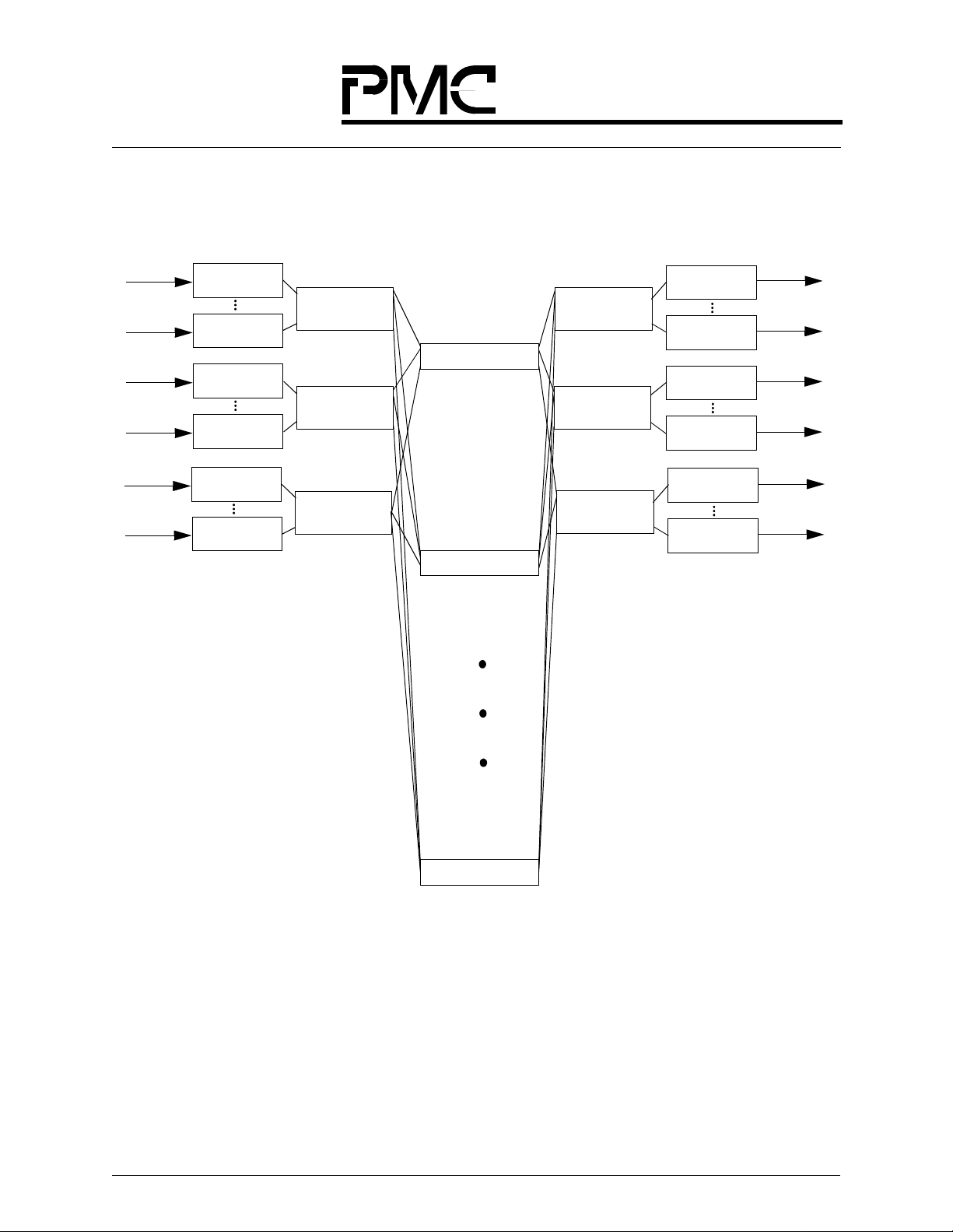

Figure 12. 1 5 Gbps ATM Switch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

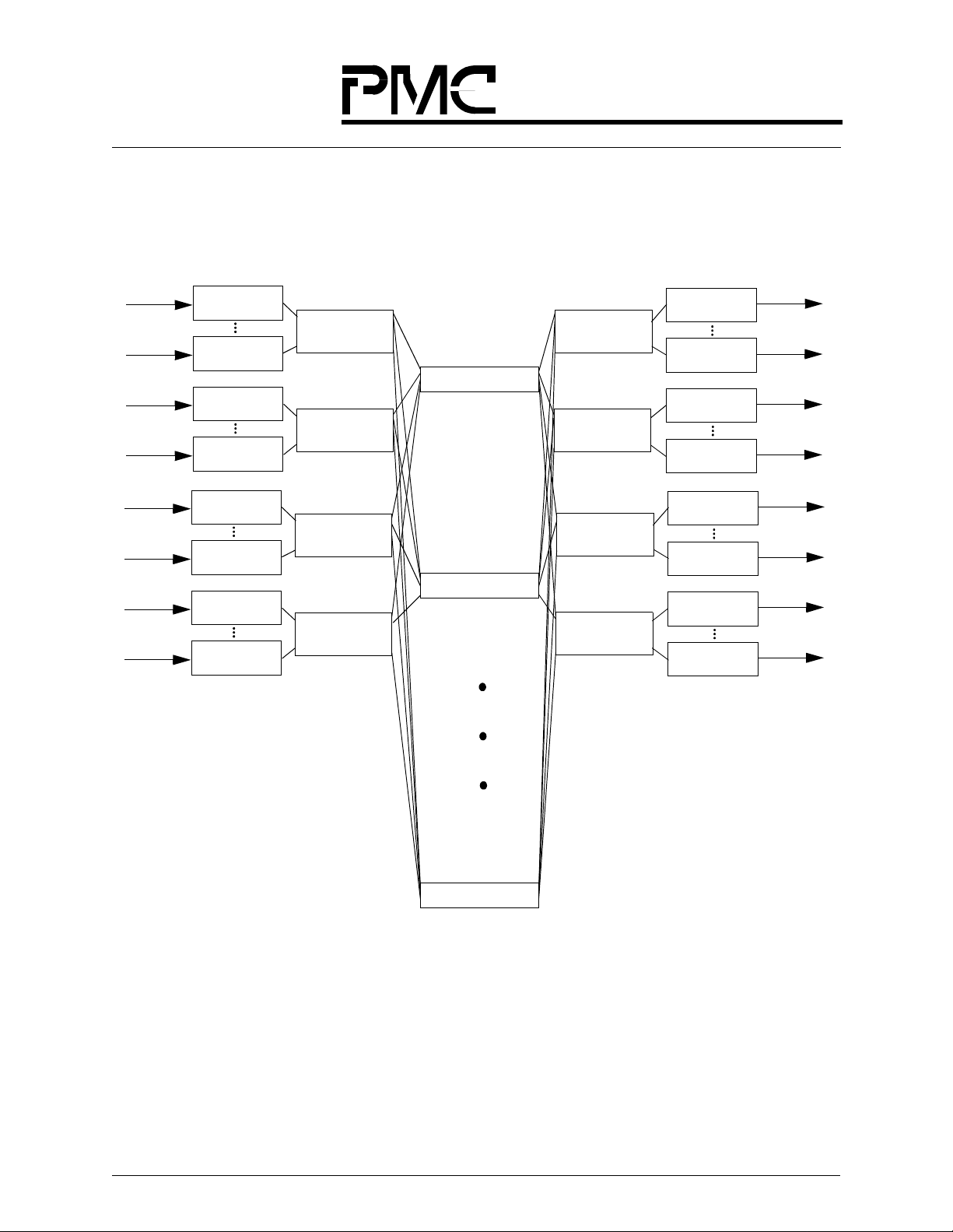

Figure 13. 2 0 Gbps ATM Switch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Figure 14. QRT System Overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Figure 15. SE_SOC Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Figure 16. BP_ACK Encodings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Figure 17. QRT Cell-Level Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Figure 18. QRT Data Flow Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Figure 19. Receive UTOPIA Operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Figure 20. Transmit UTOPIA Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Figure 21. Receive Standard Single Cell Available Polling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Figure 22. Transmit Standard Single Cell Available Polling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Figure 23. Receive UTOPIA Multiplexed Status Polling (MSP), Including Cell Transfer. . . . . . . . . . . . . . . . . . . . 22

Figure 24. Transmit UTOPIA 50 MHz Multiplexed Status Polling (MSP), Including Cell Transfer . . . . . . . . . . . . 22

Figure 25. VCC Channel Lookup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Figure 26. VPC Channel Lookup. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Figure 27. Channel Linked List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Figure 28. Channel Linked List – a New Cell Arrives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Figure 29. Channel Linked List – a Cell Is Sent to the Fabric. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Figure 30. Receive Channel Ring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Figure 31. Receive Channel Ring after Channel_A Becomes Run-Limited. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Figure 32. Receive Channel Ring after Channel_B is Served But It is Not Run-Limited. . . . . . . . . . . . . . . . . . . . . 29

Figure 33. Receive Channel Ring After Channel_A Gets Cell Through Fabric and is Added to Ring. . . . . . . . . . . 29

Figure 34. Receive Congestion Limits. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Figure 35. EPD/PTD Operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Figure 36. EPD/PTD with CLP Operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Figure 37. EFCI Operation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Figure 38. Steps to Send a Cell to the Fabric. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Figure 39. Receive Service Class (SC) Map. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Figure 40. Transmit Per-SCQ Linked List. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Figure 41. Transmit Maximum and Congested Threshold Checks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Figure 42. Transmit Service Class (SC) Map (Per VO) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38


Figure 43. Cell Playout Steps. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Figure 44. Transmit Resequencing Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Figure 45. Multicast Background Process. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Figure 46. Multicast Pointer FIFO Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Figure 47. System Diagram of Internal QRT Blocks and External RAM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Figure 48. Basic Data Path Through the Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Figure 49. 503-Pin EPBGA Top and Side Views (Part 1 of 2) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Figure 49. 503-Pin EPBGA Bottom View (Part 2 of 2) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Figure 50. Receive UTOPIA 50 MHz Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Figure 51. Transmit UTOPIA 50 MHz Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Figure 52. Receive DRAM External Memory 100 MHz Read Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Figure 53. Receive DRAM External Memory 100 MHz Write Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Figure 54. Transmit DRAM External Memory 100 MHz Read Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Figure 55. Transmit DRAM External Memory 100 MHz Write Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Figure 56. Address Lookup RAM Read Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Figure 57. Address Lookup RAM Write Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Figure 58. Channel RAM Read Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Figure 59. Channel RAM Write Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Figure 60. AB RAM Read Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Figure 61. AB RAM Write Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Figure 62. QRT Bit-Level Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Figure 63. Microprocessor Read Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Figure 65. Reset Timing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Figure 66. JTAG Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Figure 67. Transmit Service Class RAM (TSC_RAM) Memory Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Figure 68. Receive Service Class RAM (RSC_RAM) Memory Map. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Figure 69. Virtual Output Control RAM (VO_RAM) Memory Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

Figure 70. Boundary Scan Architecture. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

Figure 71. TAP Controller Finite State Machine. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

Figure 72. Connecting the QRT to Gigabit Ethernet Transceivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

Figure 73. Connecting the QRT to the RCMP-800 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229


List of Tables

Table 1. Backpressure and Acknowledgment Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Table 2. Failure Conditions, IRT-to-Switch Fabric Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Table 3. Failure Conditions, QSE Receive Interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Table 4. Failure Conditions, QSE Transmit Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Table 5. Failure Conditions, Switch Fabric-to-ORT Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Table 6. Faults and Effects on the Network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Table 7. Signal Locations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Table 8. Processor Interface Signals (38 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Table 9. Statistics Interface Signal (1 Pin) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Table 10. Switch Element Interface Signals (47 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Table 11. CH_RAM Interface Signals (58 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

Table 12. Address Lookup RAM Interface Signals (42 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Table 13. ABR_RAM Interface Signals (22 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Table 14. Receive Cell Buffer RAM Interface Signals (49 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Table 15. Transmit Cell Buffers RAM Interface Signals (48 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Table 16. Transmit UTOPIA ATM Layer Interface Signals (29 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Table 17. Receive UTOPIA ATM Layer Interface Signals (27 Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Table 18. Test Signals (8 Signal Pins). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

Table 19. Miscellaneous Signals (3 Signal Pins) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Table 20. Estimated Package Thermal Characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Table 21. QRT-QSE Interface Cell Format. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Table 22. QRT-QSE Interface Idle Cell Format . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Table 23. Microprocessor Ports Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Table 24. Various ways to configure UTOPIA interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Table 25. Internal RAM Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Table 26. Transmit Service Class Queue (TX SCQ) Control Block Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

Table 27. Receive Service Class (RX SC) Control Block Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Table 28. Transmit VO Control Block Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

Table 29. Transmit SC Control Block Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

Table 30. Transmit Multicast SC Control Block Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

Table 31. Receive Switch Fabric Control RAM (RSF_CONTROL) Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

Table 32. Receive UTOPIA Cell Buffers Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Table 33. Transmit UTOPIA Cell Buffers Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Table 34. Address Lookup RAM (AL_RAM) Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

Table 35. Determining the NUM_VPI Value . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Table 36. VI_VPI_TABLE Entry if VPC_ENTRY = 1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Table 37. VI_VPI_TABLE Entry if VPC_ENTRY = 0 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Table 38. Service Order Control Block Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

Table 39. Channel RAM (CH_RAM) Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

Table 40. Channel Control Block Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

Table 41. Multicast Control Block Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

Table 42. AB_RAM Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196


Table 43. Receive Channel Queue Block Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

Table 44. Receive Channel Queue Block Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Table 45. Receive Cell Buffers SDRAM/SGRAM Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Table 46. Transmit Cell Buffers SDRAM/SGRAM Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

Table 47. Boundary Scan Pin Order . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

Table 48. Prefixes and Associated Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

Table 49. QRT RAM Selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

Table 50. Ordering Information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238


Product Overview

The PM73487 622 Mbps ATM Traffic Management Device (QRT) is an advanced communications device capable of supporting very large, high-performance ATM switching systems. The rich feature set of the QRT enables systems to offer many sophisticated network services. The QRT provides 622 Mbps UTOPIA (Level 1 or Level 2) access to switch fabrics composed of PM73488 5 Gbps ATM Switch Fabric Elements (QSEs). Together, these devices can be used to build architectures with capacities from 622 Mbps to 160 Gbps. The QRT can also act as a standalone 622 Mbps switch.

The QRT/QSE architecture virtually eliminates head-of-line blocking by means of the QRT’s per-Virtual Channel (VC) receive queues and congestion feedback from the QSE switch fabric. The distributed architecture acts as an output-buffered switch by incorporating Evil Twin Switching (a congestion-reducing routing algorithm in the switch fabric) and a speed-up factor in the switch fabric (running the fabric faster than the line rate).

The QRT uses per-VC receive queues, 64 receive Service Classes (SCs), and 16 transmit SCs for each of the 31 Virtual Outputs (VOs) to enable flexible multi-priority scheduling algorithms. The scheduler can be used to ensure Quality-of-Service (QoS) guarantees for Constant Bit Rate (CBR), Variable Bit Rate (VBR), and Unspecified Bit Rate (UBR) VCs. The QRT also provides five separate congestion thresholds, each with hysteresis, that selectively control AAL5 Early Packet Discard (EPD) and/or Cell Loss Priority (CLP)-based cell dropping for UBR support.
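The hysteresis behavior of a congestion threshold can be illustrated with a minimal sketch. It assumes each threshold is a pair of levels: a set level at or above which the queue is marked congested, and a lower clear level below which the mark is removed. The class and field names are hypothetical and do not correspond to QRT registers.

```python
from dataclasses import dataclass


@dataclass
class CongestionThreshold:
    """Hypothetical model of one congestion threshold with hysteresis.

    set_level and clear_level are illustrative names, not QRT register fields.
    """
    set_level: int          # depth at or above which the queue is congested
    clear_level: int        # depth below which the congested state clears
    congested: bool = False

    def update(self, depth: int) -> bool:
        """Update the state for the current queue depth and return it.

        Between clear_level and set_level the previous state is kept,
        which stops the indication from toggling on every cell.
        """
        if depth >= self.set_level:
            self.congested = True
        elif depth < self.clear_level:
            self.congested = False
        return self.congested


if __name__ == "__main__":
    t = CongestionThreshold(set_level=100, clear_level=80)
    print([t.update(d) for d in (50, 100, 90, 85, 79, 90)])
```

With these levels, the queue stays congested while it drains from 100 to 85 cells and only clears once it drops below 80; a later rise to 90 does not re-trigger congestion.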

Additional highlights of the QRT include full Virtual Path Identifier (VPI)/Virtual Channel Identifier (VCI) header translation, separate input and output cell buffers (up to 64K each), Virtual Path (VP)/VC switching, and support for up to 16K VCs on both the receive and transmit sides.

PMC-Sierra also offers the QRT Device Control Package, which is a software package that harnesses the QRT’s rich feature set and shortens system development times.

FEATURES

QUEUING ALGORITHMS

Receive

• Maintains 64 weighted, bandwidth-controlled SCs with per-VC queues.

• Provides round-robin servicing of queues within each SC.

• Provides per-channel (VP or VC), per-SC, and per-direction congested and maximum queue depth limits.

• Provides up to 64K cell buffers.
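Round-robin servicing of the per-VC queues within one receive SC can be sketched as a ring of backlogged VCs, each with its own FIFO. This is a minimal model under stated assumptions: it ignores the SC weights, bandwidth control, and run limits mentioned elsewhere in this document, and the class and method names are hypothetical.

```python
from collections import deque


class ServiceClass:
    """Hypothetical model of one receive SC holding per-VC queues.

    VCs with queued cells sit on an active ring; each service takes one
    cell from the VC at the head of the ring and, if that VC still has
    cells, moves it to the tail (round-robin).
    """

    def __init__(self):
        self.queues = {}     # VC id -> deque of cells
        self.ring = deque()  # VCs that currently have cells queued

    def enqueue(self, vc, cell):
        q = self.queues.setdefault(vc, deque())
        if not q:
            self.ring.append(vc)  # VC becomes active
        q.append(cell)

    def serve(self):
        """Return (vc, cell) for the next cell, or None if the SC is empty."""
        if not self.ring:
            return None
        vc = self.ring.popleft()
        cell = self.queues[vc].popleft()
        if self.queues[vc]:
            self.ring.append(vc)  # still backlogged: go to the back of the ring
        return vc, cell
```

Because a VC returns to the tail after each cell, a heavily loaded VC cannot starve a lightly loaded one in the same SC, which is the property per-VC queuing is meant to provide.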

Transmit

• Provides 31 VOs.

• Maintains 16 SCs for each VO with per-VC accounting.


• Provides per-channel (VP or VC), per-SC Queue (SCQ), per-SC, per-VO, and per-direction congested and maximum queue depth limits.

• Provides up to 64K cell buffers.

CONGESTION MANAGEMENT ALGORITHMS

• Supports EPD and Partial Packet Discard (PPD) for UBR traffic, and as a backup for ABR traffic.

• Supports CLP-based cell discard and Explicit Forward Congestion Indicator (EFCI) cell marking.

• Supports three congestion limits (as well as EPD, CLP, EFCI, and/or backpressure) for logical multicast on the transmit side.
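The EPD and PPD decisions can be illustrated with a per-VC state machine. This is a sketch, not the QRT logic: it assumes the end-of-frame flag comes from the AAL5 indication in the cell header PTI field, that a congestion threshold supplies `congested`, and that `full` means a cell cannot be buffered at all. It drops a partial frame's tail through the end-of-frame cell; some implementations instead forward that cell to preserve frame delineation. All names are hypothetical.

```python
class FrameDiscard:
    """Hypothetical per-VC EPD/PPD state for AAL5 traffic (illustrative only).

    eof marks the last cell of an AAL5 frame; congested comes from a
    congestion threshold; full means the cell cannot be buffered at all.
    """

    def __init__(self):
        self.frame_start = True  # the next cell begins a new frame
        self.dropping = False    # the rest of the current frame is discarded

    def accept(self, eof: bool, congested: bool, full: bool) -> bool:
        """Return True to queue the cell, False to discard it."""
        if self.frame_start:
            # EPD: decide once, on the first cell, and drop the whole frame.
            self.dropping = congested or full
        elif full:
            # PPD: a cell inside the frame cannot be stored, so the frame is
            # already corrupt; discard the remainder instead of queuing it.
            self.dropping = True
        keep = not self.dropping
        self.frame_start = eof  # after the last cell, a new frame starts
        return keep
```

EPD avoids wasting buffer space on frames that would be damaged anyway, while PPD limits the damage once a frame has already lost a cell.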

SWITCHING

• Supports VC and VP switching.

• Supports up to 16K VCs.

ADDRESS MAPPING

• Supports all 12 VP and 16 VC bits through use of a double, indirect lookup table.

• Performs header translation in both the input (receive) and output (transmit) directions. Input header translation is used to pass the output queue channel number through the switch.
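A double, indirect lookup can be sketched as two table levels: the VPI selects a first-level entry that either names a channel directly (VP switching) or points at a second-level table indexed by VCI (VC switching). Dictionaries stand in for the RAM-resident tables here; the actual AL_RAM entry formats and sizes are not reproduced, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Union


@dataclass
class VpcEntry:
    channel: int  # VP switched: every VCI on this VPI maps to one channel


@dataclass
class VccEntry:
    vci_table: Dict[int, int]  # VC switched: second-level table, VCI -> channel


def lookup(vpi_table: Dict[int, Union[VpcEntry, VccEntry]],
           vpi: int, vci: int) -> Optional[int]:
    """Two-level lookup: the VPI selects an entry, the VCI an optional second."""
    if not (0 <= vpi < 1 << 12 and 0 <= vci < 1 << 16):
        raise ValueError("VPI is 12 bits and VCI is 16 bits")
    entry = vpi_table.get(vpi)
    if entry is None:
        return None                   # unprovisioned VPI
    if isinstance(entry, VpcEntry):
        return entry.channel          # VPC: VCI is not used for the lookup
    return entry.vci_table.get(vci)   # VCC: index the second table by VCI
```

The indirection lets a sparse set of provisioned VPI/VCI pairs share a compact table instead of a flat table covering all 28 header bits.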

MULTICAST

• Supports logical multicast with a superior queue-clearing algorithm.

DIAGNOSTIC/ROBUSTNESS FEATURES

• Checks the header parity.

• Counts tagged cells.

• Runs error checks continually on all fabric lines.

• Checks liveness of control signal lines at both switch fabric and UTOPIA interfaces, working around partial fabric failures.

• Checks Static Random Access Memory (SRAM) and Dynamic Random Access Memory (DRAM) parity.

STATISTICS FEATURES

• In the receive direction, counts cells transmitted and dropped.

• In the transmit direction, counts cells transmitted and dropped on a per-VC basis.

I/O FEATURES

• Provides four switch element interfaces with phase aligners. The phase aligners allow for external serialization of the data stream, enabling systems to be built that support device separation of up to 10 meters.

• Provides a UTOPIA Level 2 Multi-PHY (MPHY) 16-bit, 50 MHz interface.

• Provides a 2-level priority servicing algorithm for high and low bandwidth UTOPIA PHY layer devices.

• Provides a multiplexed address/data CPU interface.


• Provides two 100 MHz, 32-bit, synchronous DRAM cell buffer interfaces.

• Provides three 100 MHz, synchronous SRAM control interfaces.

• Provides a JTAG boundary scan interface.

COMPATIBILITY FEATURES

• Compatible with the ATM Forum 3.0, 3.1, and 4.0 specifications.

• Compatible with the ATM Forum UTOPIA Level 1 and Level 2 specifications.

• Compatible with the PM73488 ATM QSE.

PHYSICAL CHARACTERISTICS

• 3.3 V supply voltage.

• 5 V tolerant inputs on the microprocessor and UTOPIA interfaces.

• Available in a 503-pin Enhanced Plastic Ball Grid Array (EPBGA) package.

BLOCK DIAGRAM

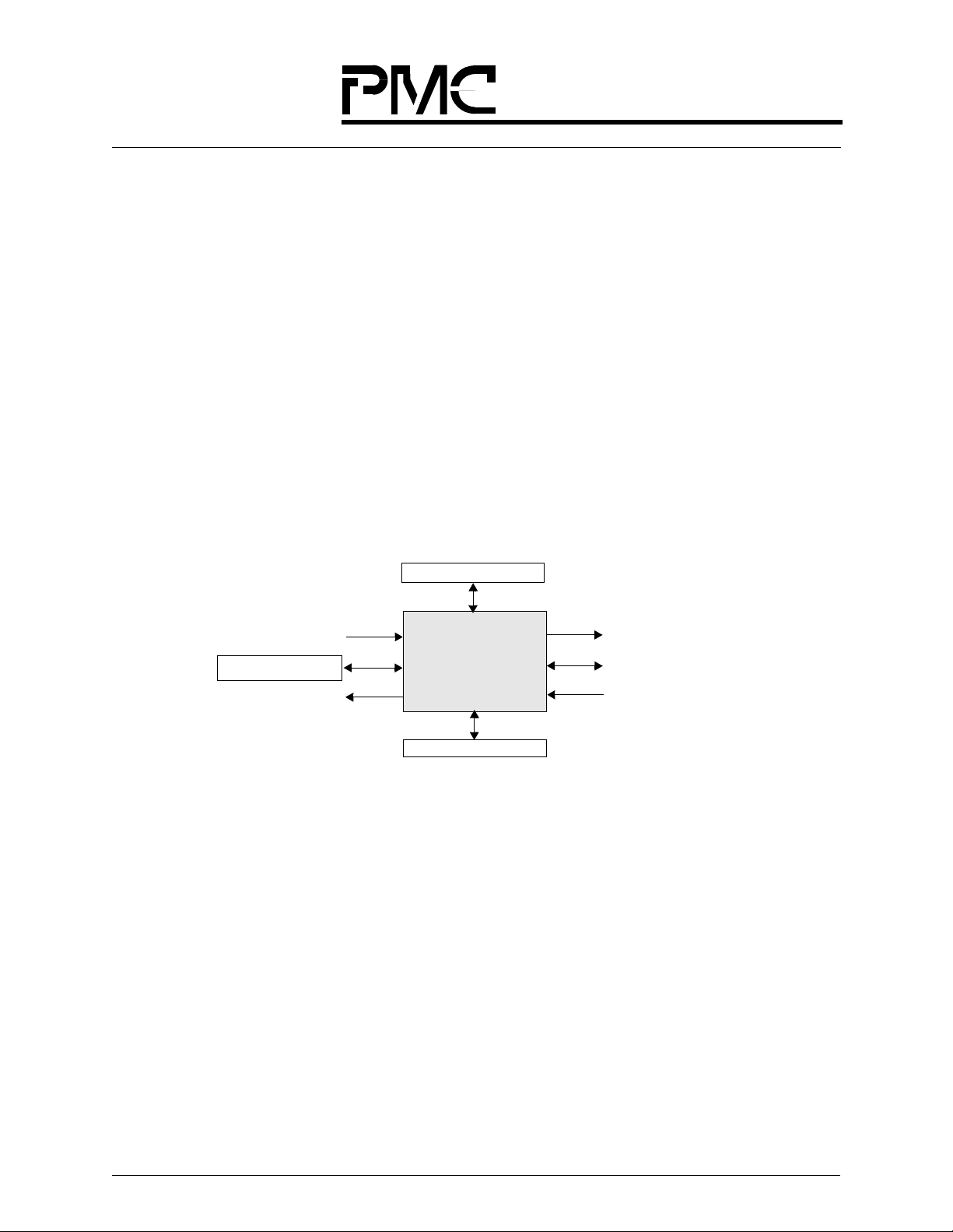

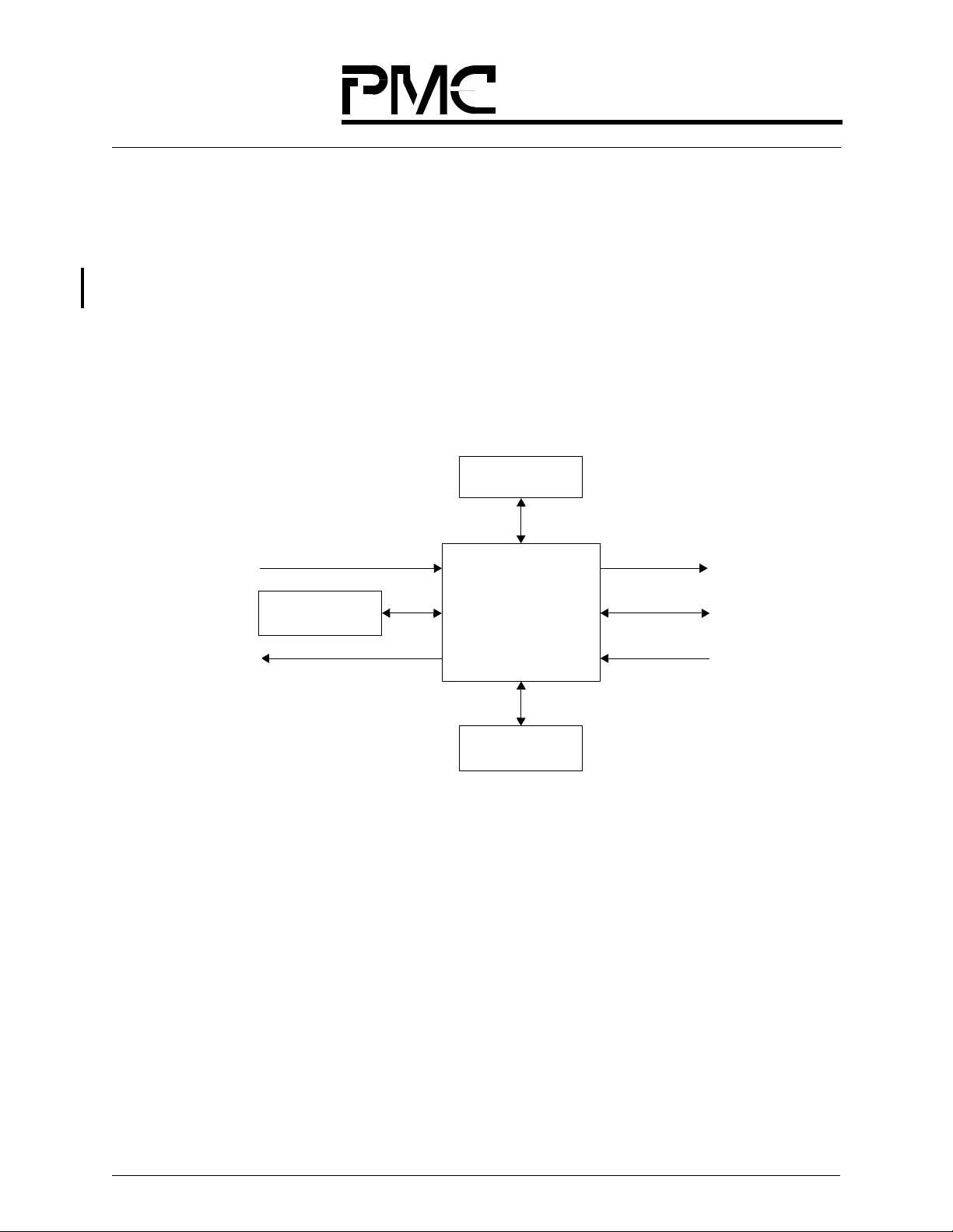

Figure 1 shows a QRT system block diagram.

Figure 1. QRT System Block Diagram (PM73487 QRT with Receive and Transmit UTOPIA interfaces, Receive and Transmit Cell SDRAM, Control SSRAM, Host Interface, and connections to and from the switch fabric)


1 SYSTEM APPLICATIONS

The QRT, together with the QSE, supports a wide range of high-performance ATM switching systems ranging in size from 622 Mbps to 160 Gbps. These systems can be built so that cost scales linearly with capacity. Another key feature of the QRT/QSE architecture is that it is exceptionally fault-tolerant, both in the switch fabric and at the UTOPIA interface.

This section contains a quick overview of the QRT and several example applications:

• a stand-alone 622 Mbps switch using a single QRT,

• a 5 Gbps switch using QRTs and a QSE,

• a 10 Gbps switch using QRTs and QSEs,

• a switch architecture using QRTs and QSEs that scales from 5 Gbps to 20 Gbps,

• a switch architecture using QRTs and QSEs that scales from 5 Gbps to 160 Gbps.

1.1 QRT System Overview

The QRT provides 622 Mbps of input- and output-buffered access to switch fabrics composed of QSEs (PM73488 32 x 32 switch elements). In addition, the QRT supports a stand-alone, purely output-buffered 622 Mbps switch mode. Head-of-line blocking, commonly associated with input buffers, is virtually eliminated by per-VC receive queues, three types of per-cell switch fabric feedback, and per-VC cell selection algorithms. The QRT also provides eight separate congestion thresholds, each with hysteresis, that selectively control AAL5 Early Packet Discard (EPD)/Packet Tail Discard (PTD), CLP-based cell dropping, and/or EFCI marking. Eight separate maximum thresholds are also supported. Additional highlights of the QRT include full VPI/VCI header translation, separate input and output cell buffers (up to 64K each), Virtual Path Connection (VPC)/Virtual Channel Connection (VCC) switching, and up to 16K VCs. The QRT provides a bidirectional connection between a UTOPIA Level 2 interface and four nibble-wide, 66 MHz switch fabric interfaces, as shown in Figure 2 on page 5. A significant switch speed-up factor, up to 1.6 times the line rate, is used to support full throughput for many switch fabric configurations.


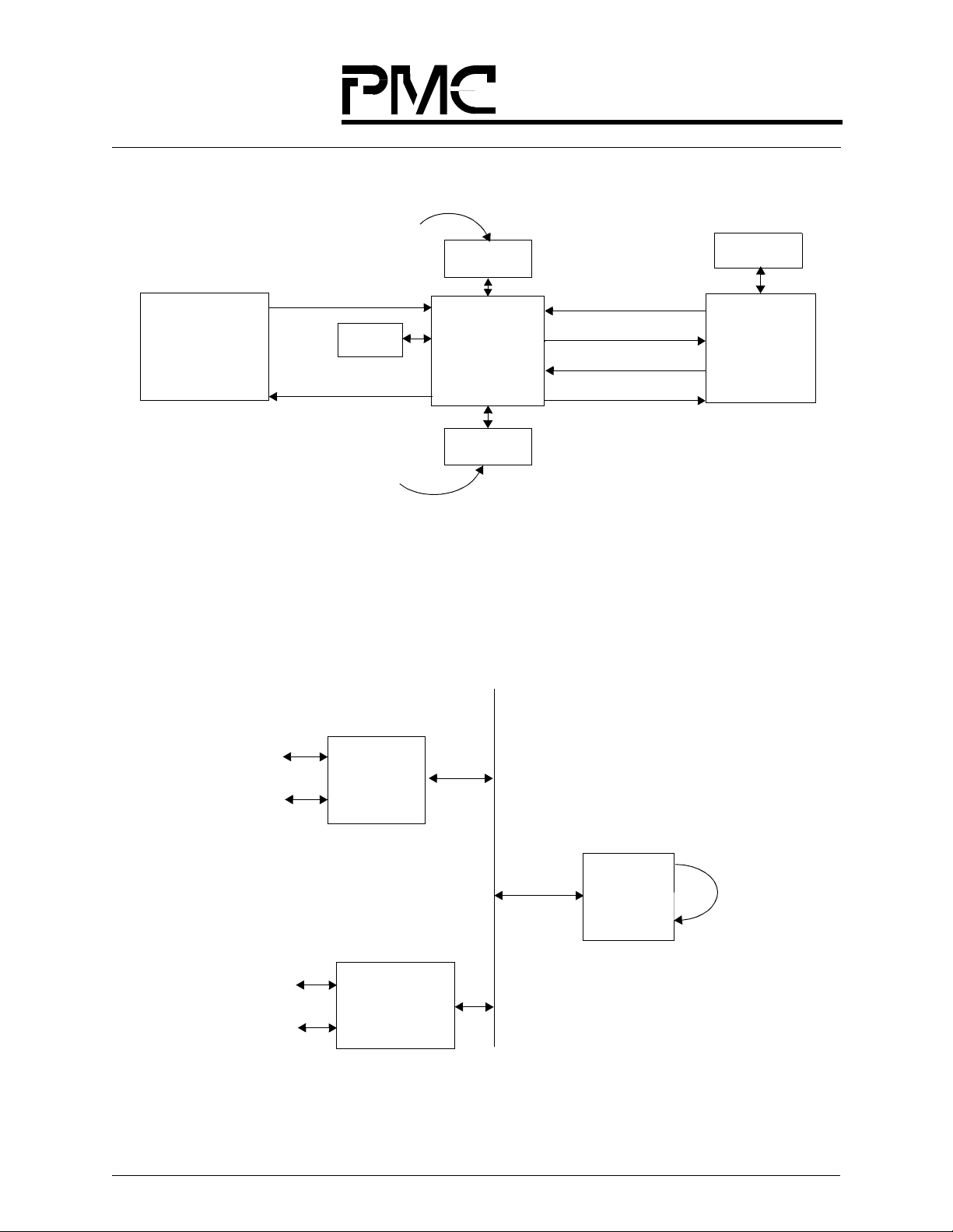

Figure 2. QRT System Overview (QRT (PM73487) between Receive and Transmit UTOPIA Level 2 interfaces to physical and/or ATM layers and a QSE (PM73488) carrying Receive Nibble Data, Transmit Nibble Data, and Transmit Feedback; Input Cell SDRAM, Output Cell SDRAM, Control SSRAM, and Multicast SRAM attached. Cells are stored in per-VC queues on the input, and in per-priority queues with per-VC accounting on the output.)

1.2 622 Mbps Switch Configuration

The QRT can be used in a stand-alone application that supports ATM switching up to 622 Mbps, as shown in Figure 3. The four switch fabric interfaces are looped back to the QRT, allowing the UTOPIA interface to be fully used. In this application, the QRT operates as an output-buffered switch.

Figure 3. 622 Mbps Switch Configuration (QRT (PM73487) with a UTOPIA Level 2 Multi-PHY interface to AAL1 SAR Processors 1 to 8 (PM73121) at 2M and S/UNI-QUADs 1 to 4 (PM5349) at 155M)


1.3 32 x 32 Switch Application (5 Gbps)

Figure 4 shows a basic 32 × 32 switch application (5 Gbps) using eight QRTs and one QSE.
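The port arithmetic behind this configuration can be checked with a short sketch. It uses only figures stated in this document (four switch element interfaces per QRT, a 32 x 32 QSE, and 622 Mbps per QRT); the constant and function names are illustrative.

```python
QRT_FABRIC_LINKS = 4   # switch element interfaces per QRT (feature list)
QRT_RATE_MBPS = 622    # nominal line rate handled by one QRT
QSE_PORTS = 32         # a QSE is a 32 x 32 switch element


def fabric_ports(num_qrts: int) -> int:
    """Fabric links needed on each side of the switch for num_qrts QRTs."""
    return num_qrts * QRT_FABRIC_LINKS


def aggregate_gbps(num_qrts: int) -> float:
    """Aggregate UTOPIA bandwidth of num_qrts QRTs, in Gbps."""
    return num_qrts * QRT_RATE_MBPS / 1000


if __name__ == "__main__":
    for n in (8, 16):
        print(n, "QRTs:", fabric_ports(n), "fabric ports,",
              round(aggregate_gbps(n), 2), "Gbps")
```

Eight QRTs use exactly the 32 ports of one QSE and carry about 5 Gbps; sixteen QRTs need 64 ports, which is why the 10 Gbps configuration moves to a multi-stage QSE fabric.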

Figure 4. 32 x 32 Switch Application (5 Gbps) (the receive inputs of QRT #1 to QRT #8 (PM73487), each a 622 Mbps aggregate Receive UTOPIA Level 2 port, connect with x4 links to one QSE (PM73488), which connects with x4 links to the transmit outputs of QRT #1 to QRT #8, each a 622 Mbps aggregate Transmit UTOPIA Level 2 port)

1.4 64 x 64 Switch Application (10 Gbps)

Figure 5 shows a 64 × 64 switch application (10 Gbps) using 16 QRTs and 6 QSEs. This application uses QSEs in a 3-stage fabric. A system of this size can be implemented in a single 19-inch rack.

Figure 5. 64 x 64 Switch Application (10 Gbps) (QRT #1 to QRT #16 (PM73487), each with a 622 Mbps aggregate UTOPIA Level 2 receive and transmit interface, connect through a 3-stage fabric of QSEs (PM73488), with x4 links from each QRT receive input to the first stage, x16 link groups between stages, and x4 links from the last stage to each QRT transmit output)


1.5 5 Gbps to 20 Gbps Application Example - Seamless Growth

This section illustrates the modularity of the QRT (PM73487) and QSE (PM73488) architecture. A 5 Gbps system can be created immediately (as shown in Figure 6 on page 7) and then upgraded to 10 Gbps (as shown in Figure 7 on page 7) or 20 Gbps (as shown in Figure 8 on page 8). Since all these systems are based on a single-stage switch fabric, the per-port cost remains the same for each system.
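The bill of materials for the three single-stage examples follows a fixed ratio per 5 Gbps step, which can be sketched as follows. The ratios are read from the Figure 6 to Figure 8 captions (per step: four port cards, each with eight 155 Mbps interfaces and two QRTs, plus one QSE); the count of two interfaces per dual S/UNI is inferred from those captions, and the function name is hypothetical.

```python
def growth_bom(gbps: int) -> dict:
    """Component counts for the single-stage 5, 10, and 20 Gbps examples.

    Each 5 Gbps step adds four port cards (eight 155 Mbps interfaces and
    two QRTs each) and one QSE.
    """
    if gbps not in (5, 10, 20):
        raise ValueError("the examples cover 5, 10, and 20 Gbps")
    steps = gbps // 5
    port_cards = 4 * steps
    return {
        "port_cards": port_cards,
        "interfaces_155m": 8 * port_cards,
        "dual_sunis": 4 * port_cards,  # two 155 Mbps interfaces per dual S/UNI
        "qrts": 2 * port_cards,
        "qses": steps,
    }


if __name__ == "__main__":
    for rate in (5, 10, 20):
        print(rate, "Gbps:", growth_bom(rate))
```

Every count grows in proportion to capacity, so the devices per 155 Mbps interface stay fixed, which is the constant per-port cost the text describes.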

Figure 6. 5 Gbps ATM Switch Using 16 Dual S/UNIs, 8 QRTs, and 1 QSE (four port cards, each with eight 155 Mbps interfaces and two QRTs (PM73487s), and one switch card with one QSE (PM73488))

Figure 7. 10 Gbps ATM Switch Using 32 Dual S/UNIs, 16 QRTs, and 2 QSEs (port cards 1 to 8, each with eight 155 Mbps interfaces and two QRTs (PM73487s), and two switch cards, each with one QSE (PM73488))


Figure 8. 20 Gbps ATM Switch Using 64 Dual S/UNIs, 32 QRTs, and 4 QSEs (port cards 1 to 16, each with eight 155 Mbps interfaces and two QRTs (PM73487s), and four switch cards, each with one QSE (PM73488))


1.6 5 Gbps to 160 Gbps Application Example – LAN-to-WAN

A powerful application of the QRT and QSE devices is the creation of modules that can be used in a range of switches, with only the interconnection changing between different sizes. ATM switches from 5 Gbps to 160 Gbps can be realized with only two unique cards: a port card has one QRT, and a switch card has two QSEs. The switch fabric consists of three stages, each with 32 QSEs (or 16 switch cards). To plan for future scalability, the middle stage must be built in up front; this is a one-time cost. Then, to scale in 5 Gbps increments, one switch card and its accompanying eight port cards are added. Finer bandwidth scaling is possible by populating the additional switch card with port cards as needed (in increments of 622 Mbps). With this switch fabric topology, scaling is possible up to 160 Gbps. Once the initial middle-stage cost has been incurred, the per-port cost for 5 Gbps through 160 Gbps systems remains almost constant.
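The card counts quoted for Figures 10 to 13 follow from this rule: a fixed middle stage of 16 switch cards, plus one edge switch card and eight single-QRT port cards per 5 Gbps step. A minimal sketch of that arithmetic, with hypothetical names, is:

```python
MIDDLE_STAGE_SWITCH_CARDS = 16  # built in up front: 32 middle-stage QSEs
PORT_CARDS_PER_STEP = 8         # eight port cards (one QRT each) per 5 Gbps step
PORT_CARD_MBPS = 622


def lan_to_wan_counts(steps: int) -> dict:
    """Card and device counts for `steps` 5 Gbps increments (1 to 32)."""
    if not 1 <= steps <= 32:
        raise ValueError("the fabric supports 1 to 32 increments (5 to 160 Gbps)")
    port_cards = PORT_CARDS_PER_STEP * steps
    switch_cards = MIDDLE_STAGE_SWITCH_CARDS + steps  # one edge card per step
    return {
        "port_cards": port_cards,
        "qrts": port_cards,
        "switch_cards": switch_cards,
        "qses": 2 * switch_cards,
        "capacity_mbps": port_cards * PORT_CARD_MBPS,
    }


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 32):
        print(n, lan_to_wan_counts(n))
```

One step gives 8 port cards, 17 switch cards, and 34 QSEs (Figure 10); four steps give 32, 20, and 40 (Figure 13). At 32 steps the fabric holds 96 QSEs, matching three stages of 32, and the capacity of 256 port cards is about 159 Gbps.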

Figure 9. 5 Gbps to 160 Gbps Switches Modeled Using Only Two Cards (port card: one QRT (PM73487) with one UTOPIA Level 2 interface; switch card: two QSEs (PM73488), each with x32 links on each side)


622 Mbps

622 Mbps

Port Card #1

Rx Input

Port Card #8

Rx Input

Switch Card #17

Stage 1 QSE

x2

Switch Card #1

Switch Card #2

Switch Card #17

Stage 3 QSE

x2

Port Card #1

Tx Output

Port Card #8

Tx Output

622 Mbps

622 Mbps

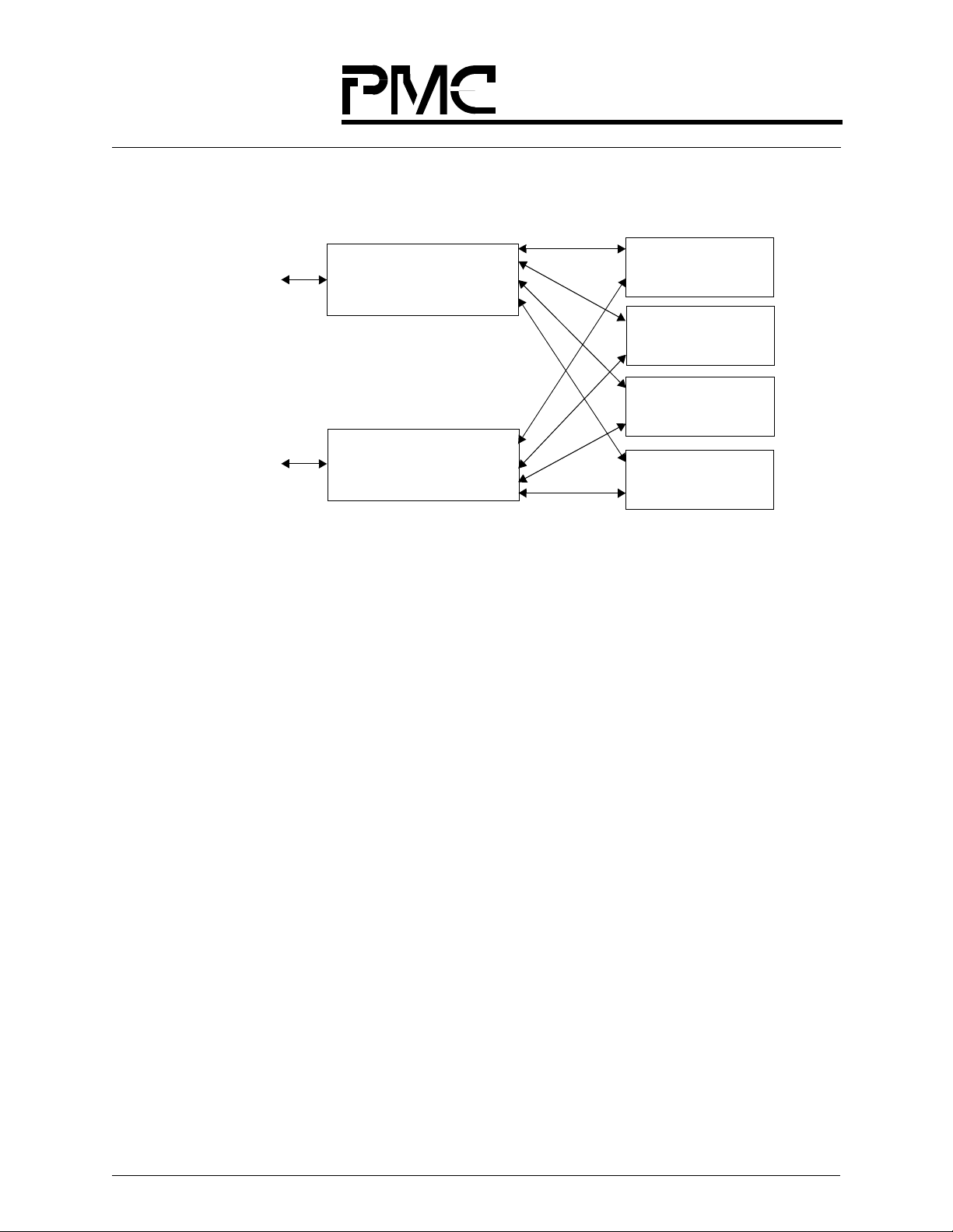

Switch Card #16

Figure 10. 5 Gbps ATM Switch

Figure 10 shows a 5 Gbps ATM switch using 8 port cards (8 QRTs) and 17 switch cards (34 QSEs). The middle stage is composed of 16 switch cards. The 5 Gbps bandwidth is achieved by adding switch card #17 (depicted as two boxes: one stage 1 QSE and one stage 3 QSE) and eight port cards (each depicted as two boxes: one for the Rx input side and one for the Tx output side). Lines between the stage 1 and stage 2 switch cards, and between the stage 2 and stage 3 switch cards, represent two sets of wires, one to each of the QSEs in the middle-stage switch cards.


Figure 11 shows a 10 Gbps ATM switch using 16 port cards (16 QRTs) and 18 switch cards (36 QSEs). Here, another switch card and eight port cards have been added to the 5 Gbps switch depicted in Figure 10.

Figure 11. 10 Gbps ATM Switch (port cards #1 to #16, each shown as a 622 Mbps Rx input and Tx output; edge switch cards #17 and #18, each shown as one stage 1 QSE and one stage 3 QSE with x2 links; middle-stage switch cards #1 to #16)


Figure 12 shows a 15 Gbps ATM switch using 24 port cards (24 QRTs) and 19 switch cards (38 QSEs). Here, once again, another switch card and eight port cards have been added.

Figure 12. 15 Gbps ATM Switch (port cards #1 to #24, each shown as a 622 Mbps Rx input and Tx output; edge switch cards #17 to #19, each shown as one stage 1 QSE and one stage 3 QSE with x2 links; middle-stage switch cards #1 to #16)


Figure 13 shows a 20 Gbps ATM switch composed of 32 port cards (32 QRTs) and 20 switch cards (40 QSEs). By adding further sets of one switch card and eight port cards in the same manner, this system can scale up to 160 Gbps.

Figure 13. 20 Gbps ATM Switch (port cards #1 to #32, each shown as a 622 Mbps Rx input and Tx output; edge switch cards #17 to #20, each shown as one stage 1 QSE and one stage 3 QSE with x2 links; middle-stage switch cards #1 to #16)


2 THEORY OF OPERATIONS

2.1 Overview

The QRT is a 622 Mbps, full-duplex, intelligent routing table which, when used with a switch fabric composed of either SE or QSE devices, can implement ATM switches from 622 Mbps to 160 Gbps. The QRT supports a 16-bit UTOPIA Level 2 interface for ease of connection to PHY or AAL layer devices. Four nibble-wide data interfaces connect the QRT to the switch fabric. External DRAM devices provide receive and transmit cell buffering, and external SRAM devices hold control data for the QRT. This section describes the algorithms that govern the data flow.

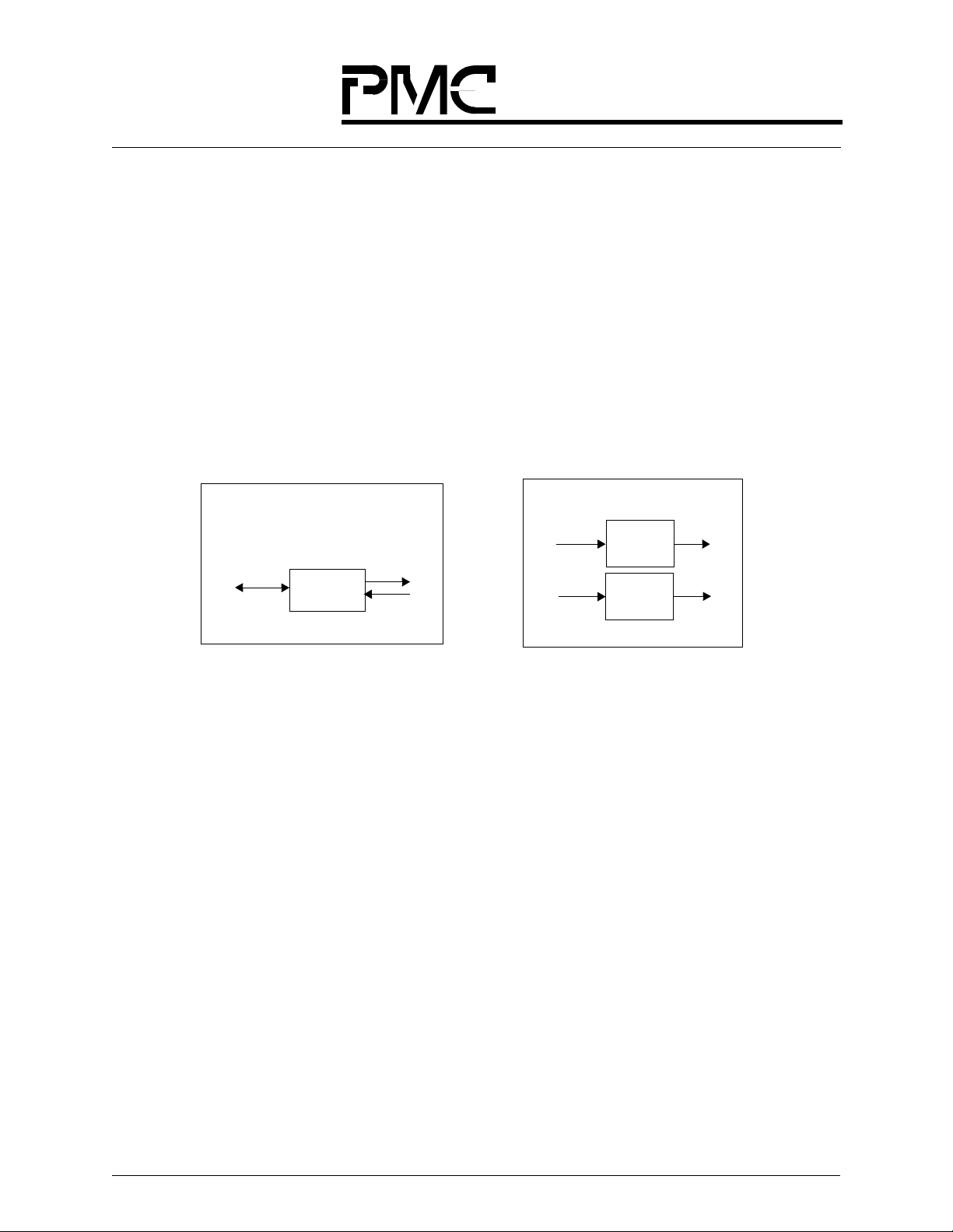

Figure 14 shows an overview of the QRT system.

Figure 14. QRT System Overview (the QRT (PM73487) connects to receive and transmit cell SDRAM, control SSRAM, receive and transmit UTOPIA Level 2 interfaces, a host interface, and to and from the QSE)

2.2 Interface Descriptions

2.2.1 Switch Fabric Interface

The QRT switch fabric interface consists of four groups of signals on both the ingress (receive side) and the egress (transmit side). Each group consists of a Start-Of-Cell (SE_SOC_OUT) signal, a nibble-wide data bus, and a backpressure/acknowledgment (BP_ACK_IN) signal. At the ingress, SE_SOC_OUT is transmitted coincident with the beginning of a cell and is common to all four groups. The BP_ACK_OUT signal flows from the egress back through the switch fabric, in the direction opposite to the data, and indicates whether a cell has successfully passed through the switch fabric. Other signals associated with the switch fabric interface are the switch element clock (SE_CLK) and RX_CELL_START.

To support the highest possible throughput for various switch fabric configurations, a clock speed-up factor of 1.6 is used. That is, the switch fabric runs at an effective rate of 1.6 times the line rate.
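The speed-up factor can be read two ways: as a rate (the fabric moves a port's traffic at 1.6 times the line rate) or as time (a cell crosses the fabric in 1/1.6 = 62.5% of a line-rate cell time, leaving the remaining 37.5% as headroom). The sketch below assumes "622 Mbps" means the SONET OC-12 rate of 622.08 Mbps; the function names are hypothetical.

```python
# Effect of the 1.6x switch fabric speed-up on rate and per-cell transfer time.
SPEEDUP = 1.6
OC12_MBPS = 622.08   # assumption: "622 Mbps" taken as the SONET OC-12 line rate


def fabric_rate_mbps(line_rate_mbps: float, speedup: float = SPEEDUP) -> float:
    """Effective rate at which the fabric moves traffic for one port."""
    return line_rate_mbps * speedup


def fabric_time_fraction(speedup: float = SPEEDUP) -> float:
    """Fraction of a line-rate cell time needed to move the same cell through the fabric."""
    return 1 / speedup


print(f"fabric rate: {fabric_rate_mbps(OC12_MBPS):.1f} Mbps")          # prints: fabric rate: 995.3 Mbps
print(f"fabric time per cell: {fabric_time_fraction():.3f} cell times")  # prints: fabric time per cell: 0.625 cell times
```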


2.2.2 Phase Aligners


Phase aligners allow extended separation between devices. They use a clock recovery technique that requires only the switch fabric to be frequency-synchronous: a master clock is distributed to all devices associated with the switch fabric, and the phase of the clock at each interface is dynamically adjusted to compensate for the skew introduced on the signals. The phase aligner circuitry for each interface tracks the cell start (SE_SOC) and feedback (BP_ACK) signals, which carry a high density of transitions to ensure accurate phase adjustment of the clock used to sample data and control signals.

2.2.3 UTOPIA Interface

The QRT’s UTOPIA interface implements the ATM Forum standardized 16-bit Level 2 configuration, which supports up to 31 Virtual Outputs (VOs) through five address bits. Up to 31 PHY or AAL layer devices with 16-bit UTOPIA Level 2 functionality can be connected to this interface, providing a full-duplex throughput of 675 Mbps.
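Five address bits give 32 codes, yet only 31 devices are supported. A plausible reading, assumed here from the usual UTOPIA Level 2 convention rather than stated in this data sheet, is that address 0x1F is the reserved null address to which no device responds. A minimal sketch of address validation under that assumption (all names are hypothetical):

```python
# UTOPIA Level 2 Virtual Output addressing (sketch; assumes 0x1F is the null address).
ADDRESS_BITS = 5                          # UTOPIA Level 2 address width
NULL_ADDRESS = (1 << ADDRESS_BITS) - 1    # 0x1F: assumed reserved, never assigned to a device


def valid_vo_addresses() -> list[int]:
    """Addresses that can be assigned to a PHY/AAL device (one Virtual Output each)."""
    return [a for a in range(1 << ADDRESS_BITS) if a != NULL_ADDRESS]


def check_vo_address(addr: int) -> int:
    """Return addr if it may be assigned to a device, else raise ValueError."""
    if not 0 <= addr < NULL_ADDRESS:
        raise ValueError(f"UTOPIA L2 address {addr} is out of range or reserved")
    return addr
```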

2.2.4 Cell Buffer SDRAM Interface

The QRT supports two Synchronous DRAM (SDRAM or SGRAM) interfaces providing up to 64K cells of buffering in each of the receive and transmit directions. Each interface consists of a 32-bit data bus, a 9-bit address bus, two chip select signals, and associated control signals. These interfaces run at 100 MHz. Both Synchronous Graphic RAM (SGRAM) and SDRAM devices are supported. The QRT provides the clocking for both interfaces.
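A back-of-the-envelope check of the cell buffer interfaces: a 32-bit bus at 100 MHz has a raw peak of 3.2 Gbps. If every buffered cell is written once and read once (an assumption made for this sketch), each direction needs at least twice the 622 Mbps port rate, about 1.24 Gbps. Real SDRAM loses part of its peak to row activation, precharge, refresh, and read/write turnaround, so the raw figure overstates what is actually usable. Function names are hypothetical.

```python
# Raw SDRAM interface bandwidth versus a simple lower bound on buffer traffic (sketch).
BUS_WIDTH_BITS = 32   # SDRAM/SGRAM data bus width per interface
CLOCK_MHZ = 100       # interface frequency


def peak_bandwidth_mbps(width_bits: int = BUS_WIDTH_BITS, clock_mhz: int = CLOCK_MHZ) -> int:
    """Raw peak transfer rate: one data word per clock, ignoring all SDRAM overhead."""
    return width_bits * clock_mhz


def min_buffer_traffic_mbps(port_rate_mbps: float) -> float:
    """Assumed lower bound: each cell is written once and read once."""
    return 2 * port_rate_mbps


ratio = peak_bandwidth_mbps() / min_buffer_traffic_mbps(622)
print(f"raw peak / minimum buffer traffic = {ratio:.2f}")
```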

2.2.5 Channel RAM (CH_RAM) Interface

The QRT supports up to 16K channels through a Synchronous SRAM (SSRAM) interface. The interface consists of a 32-bit data bus, a 16-bit address bus, and associated control signals. It runs at 100 MHz, and the QRT provides its clocking.

2.2.6 Address Lookup RAM (AL_RAM) Interface

The QRT keeps data structures in the AL_RAM, including those used for VPI/VCI address translation. The interface consists of a 6-bit data bus, a 17-bit address bus, and associated control signals. It runs at 100 MHz, and the QRT provides its clocking.

2.2.7 AB_RAM Interface

The QRT stores the per-VC head/tail pointers and sent/dropped counters for the receive direction in the AB_RAM. Each interface consists of a 17-bit multiplexed address/data bus and associated control signals. The frequency of this interface is 100 MHz.

2.2.8 Host Processor Interface

The QRT host processor interface allows connection of a microprocessor through a multiplexed

32-bit address/data bus. The suggested microprocessor for this interface is the Intel i960®. The

microprocessor has direct access to all of the QRT control registers.


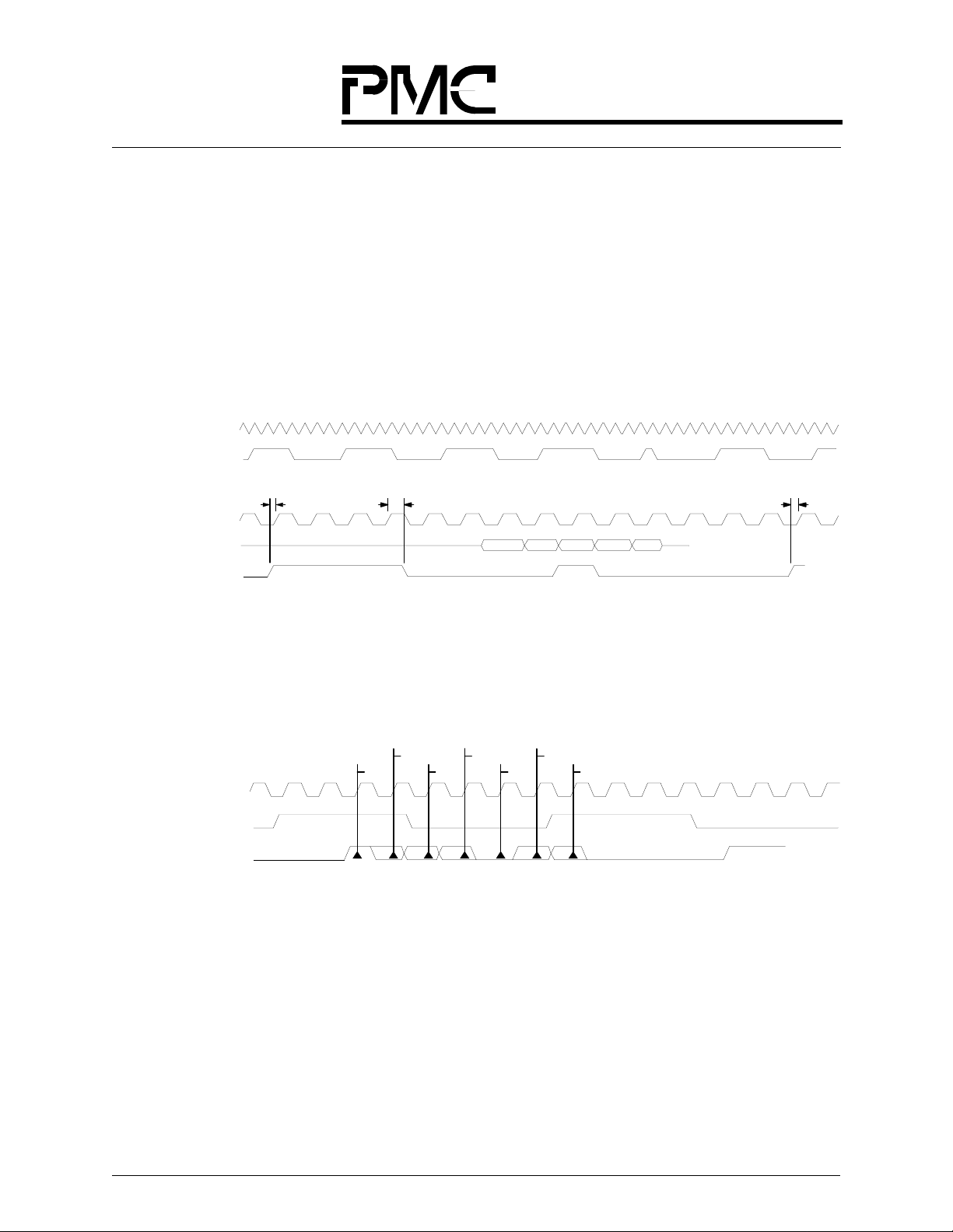

2.2.9 SE_SOC Encodings

The SE_SOC and BP_ACK signals have guaranteed transitions and special encodings, which are defined in this section and in “BP_ACK Encodings”, which follows. The SE_SOC_IN and SE_SOC_OUT signals have guaranteed transitions and SOC encodings as shown in Figure 15.

The SE_SOC signals carry a repeating pattern of four zeros and four ones to guarantee the transitions required by the phase aligner. A Start-Of-Cell on the data lines associated with an SE_SOC line is indicated by a change in this pattern. For a valid SE_SOC, the change in pattern is followed by a reset of the background pattern, so that the change is followed by four zeros and four ones. The first nibble (PRES) of the header is coincident with SE_SOC (the change in pattern).

Figure 15. SE_SOC Encodings (SE_CLK and SE_SOC_OUT, with magnified CLK, data, and SE_SOC_OUT; the background alternates 4 ones and 4 zeros, and the Start-Of-Cell appears as 4 ones, 4 zeros, 1 one, 5 zeros, coincident with the PRES nibble; setup and hold times Tsesu and Tseho)
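The SE_SOC rule can be modeled in software as a tracker of the expected background bit: any bit that differs from the four-zeros/four-ones pattern marks a Start-Of-Cell, after which the expected pattern restarts with four zeros. This is a behavioral sketch under stated assumptions (one SE_SOC bit per sample, known starting phase), not the QRT's actual receiver logic; `find_soc` and `start_phase` are hypothetical names. Applied to the "1 one, 5 zeros" sequence in Figure 15, the deviation is the first of the five zeros.

```python
# Behavioral model of SE_SOC Start-Of-Cell detection (sketch, not the hardware design).
def find_soc(bits: list[int], start_phase: int = 0) -> list[int]:
    """Return the bit indices at which the SE_SOC background pattern is broken.

    Assumptions: the idle line repeats four zeros then four ones, and
    `start_phase` is the position (0-7) of bits[0] within that 8-bit period,
    where positions 0-3 are zeros and 4-7 are ones.
    """
    socs = []
    phase = start_phase
    for i, bit in enumerate(bits):
        expected = 0 if phase < 4 else 1
        if bit != expected:
            socs.append(i)  # change in pattern: the PRES nibble is coincident with this bit
            phase = 0       # background resets: the next bits are 0000 then 1111
        else:
            phase = (phase + 1) % 8
    return socs


# Idle background, then the "4 ones, 4 zeros, 1 one, 5 zeros" sequence from Figure 15
stream = [0] * 4 + [1] * 4 + [0] * 4 + [1] + [0] + [0] * 4 + [1] * 4 + [0] * 4
print(find_soc(stream))  # prints: [13]
```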

2.2.10 BP_ACK Encodings

Figure 16 shows the BP_ACK encodings.

Figure 16. BP_ACK Encodings (clk; BP_ACK base pattern of 4 ones and 4 zeros; BP_ACK signaling packet fields Inversion1, Mode, Data2, Data1, Inversion2, Data0, Code Ext 0)

The BP_ACK_IN and BP_ACK_OUT signals have guaranteed transitions and carry the BP and ACK encodings. The BP_ACK signal is used at the egress to send backpressure and cell acknowledgment information to the fabric (QSE), and at the ingress to receive backpressure and cell acknowledgment information from the fabric (QSE).

To provide the transitions required by the phase aligner, the BP_ACK signal carries a repeating pattern of four zeros and four ones. The actual information is transferred in encoded 7-bit packets that start with a change in this background pattern. The change (an inversion) on the line is followed by a mode bit, two bits of coded message, and a second inversion (the inverse of the first inversion). In an acknowledgment packet, these are followed by two code extension bits, which are reserved for future use and must currently be “00”. In a backpressure packet, the next bit is the backpressure bit for low priority multicast cells, followed by one code extension bit.

The background is reset to four zeros and four ones after transmission of each packet.

The QRT and QSE allow back-to-back acknowledgment and backpressure packets. In the case of

back-to-back acknowledgment and backpressure packets, the receiving device may see an

inverted bit (a “1”) followed by the rest of the packet instead of a reset background pattern.

One backpressure packet and at most one acknowledgment packet are expected to be received during each cell time. Receipt of multiple acknowledgment or backpressure packets is a failure condition.
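The per-cell-time rule is simple to check once packets have been classified. In this sketch the labels "BP" and "ACK" and the function name are hypothetical. The "bp_missing" outcome reflects Table 1, which notes that backpressure is withheld when a problem is detected on the input port; treating that case separately from a failure is an interpretation, not something this data sheet specifies.

```python
# Check of BP_ACK packet counts within one cell time (sketch).
from collections import Counter


def cell_time_status(packet_kinds: list[str]) -> str:
    """Classify the BP_ACK packets seen in one cell time ("BP" or "ACK" per packet)."""
    counts = Counter(packet_kinds)
    if counts["BP"] > 1 or counts["ACK"] > 1:
        return "failure"     # multiple BP or ACK packets in one cell time
    if counts["BP"] == 0:
        return "bp_missing"  # BP withheld, e.g. a problem detected on the input port
    return "ok"              # one BP packet plus zero or one ACK packet
```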

Table 1 describes the backpressure and acknowledgment encodings.

Table 1. Backpressure and Acknowledgment Encodings

| Mode | Data 2 | Data 1 | Data 0 | Code Ext 0 | Description |
|---|---|---|---|---|---|
| 0 | 1 = Backpressure on high priority multicast cell | 1 = Backpressure on medium priority multicast cell | 1 = Backpressure on low priority multicast cell | 0 | Backpressure information. This signal is present each cell time, regardless of whether a cell was transmitted or not (on that link). This signal is withheld if any problem is detected on the input port. |
| 1 | 0 | 0 | 0 | 0 | Signals no response. Treated as acknowledgment. |
| 1 | 0 | 1 | 0 | 0 | Signals Mid-switch Negative ACKnowledgment (MNACK). |
| 1 | 1 | 0 | 0 | 0 | Signals Output Negative ACKnowledgment (ONACK). |
| 1 | 1 | 1 | 0 | 0 | Signals ACKnowledgment (ACK). |
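Table 1 and the packet format can be combined into a small decoder. This sketch assumes the packet has already been located on the line and is given as raw bits in the field order labeled in Figure 16 (Inversion1, Mode, Data2, Data1, Inversion2, Data0, Code Ext 0). The function name and the returned dictionary keys are hypothetical. The reserved code extension bits of an acknowledgment packet are not checked.

```python
# Decoder for one 7-bit BP_ACK packet, following Table 1 (sketch).
ACK_CODES = {
    (0, 0): "NO_RESPONSE",  # treated as an acknowledgment
    (0, 1): "MNACK",        # mid-switch negative acknowledgment
    (1, 0): "ONACK",        # output negative acknowledgment
    (1, 1): "ACK",          # acknowledgment
}


def decode_bp_ack(packet: list[int]) -> dict:
    """Decode bits [Inversion1, Mode, Data2, Data1, Inversion2, Data0, Code Ext 0]."""
    if len(packet) != 7:
        raise ValueError("BP_ACK packets are 7 bits long")
    inv1, mode, d2, d1, inv2, d0, _ext0 = packet
    if inv2 != 1 - inv1:
        raise ValueError("second inversion must be the inverse of the first")
    if mode == 0:
        # Backpressure packet: one bit per multicast priority
        return {"type": "BP", "high": bool(d2), "medium": bool(d1), "low": bool(d0)}
    # Acknowledgment packet: Data0 and Code Ext 0 are reserved code extension bits
    code = ACK_CODES[(d2, d1)]
    return {"type": "ACK", "code": code, "is_ack": code in ("ACK", "NO_RESPONSE")}
```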

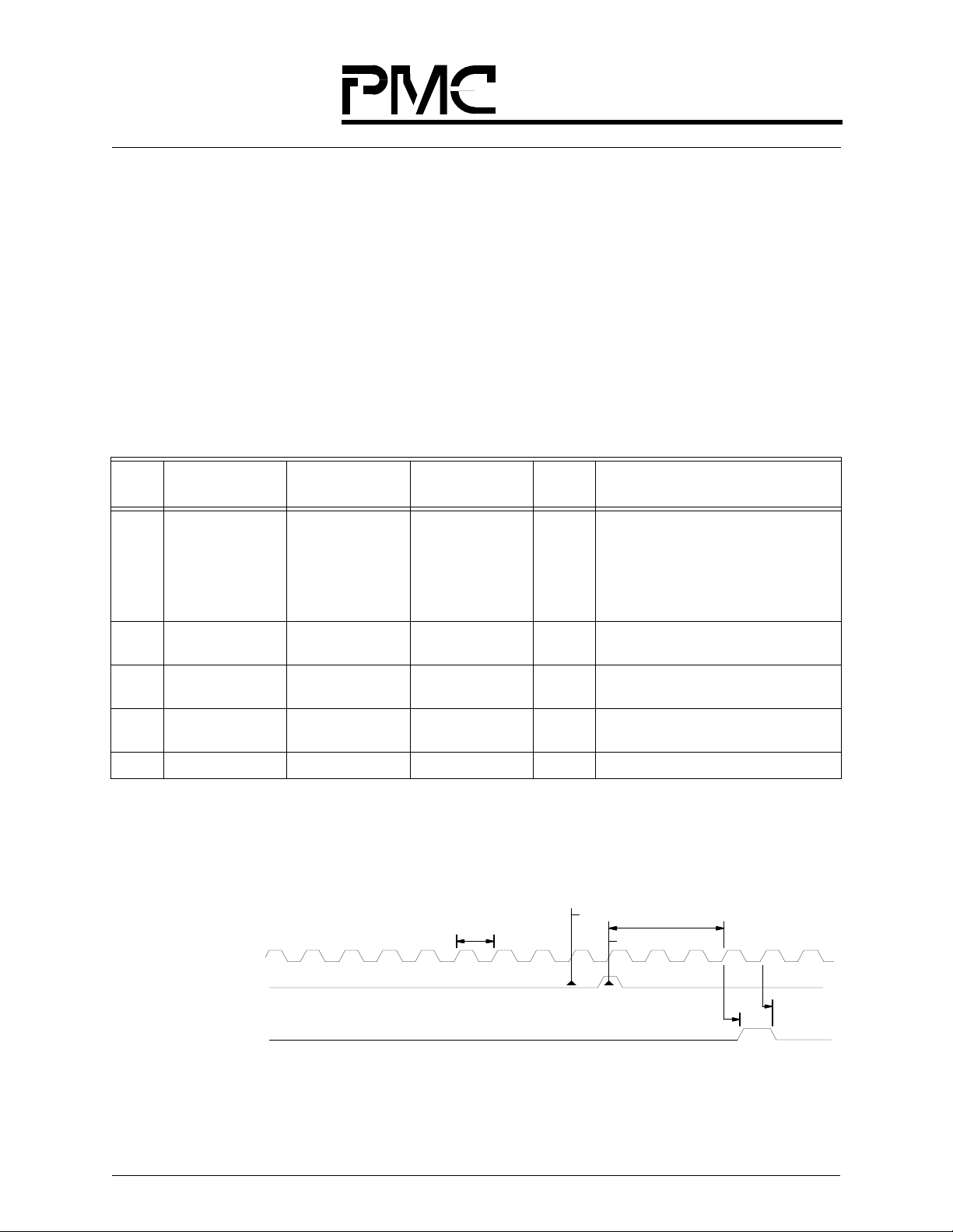

2.2.11 Relation Between External CELL_START and Local CELL_START

Figure 17 shows the relationship between external RX_CELL_START and local CELL_START

signals.

Figure 17. QRT Cell-Level Timing (SE_CLK, external RX_CELL_START, and local CELL_START, with the CELL_START delay (Delta) between them)


The delay between the external RX_CELL_START and the local CELL_START is programmable through the RX_CELL_START_ALIGN register (refer to “RX_CELL_START_ALIGN (Internal Structure)” on page 122).

The local CELL_START affects when cell transmission to the fabric starts. It also determines the period within a cell time during which BP_ACK_IN(3:0) at the ingress is valid. The programmable CELL_START delay therefore provides the flexibility to synchronize the QRTs and QSEs in a system.

2.3 Cell Flow Overview

The QRT functions as a 622 Mbps port for an ATM switch fabric composed of either the SE or