Page 1

Interplay® Central Services

Version 1.8 Installation & Configuration Guide

ICS Version: 1.8 Document Version: 1.0.1

Important Infor mati on

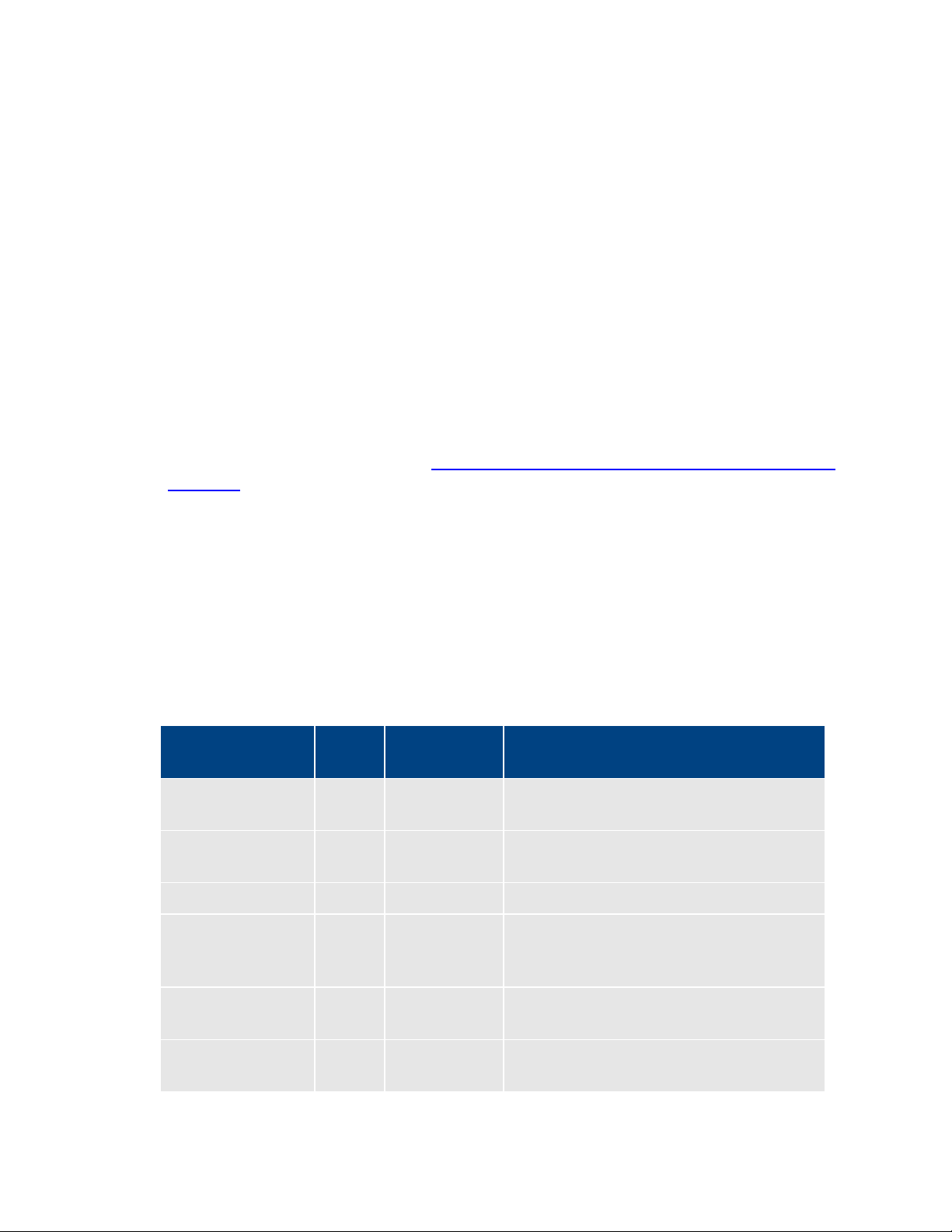

Revision Histor y

Date Revised

Version

Changes Made

July 30, 2014

1.0.1

New section “Verifying the hosts file Contents”.

This document provides in str uctions to install and configure Avid Interplay Central Services (ICS)

version 1.8 for use with Interplay Central 1.8, Sphere (latest plug-in for Media Composer 6.5.x

and 7.0.x and correspondi ng NewsCutter versions), and Interplay MAM 4.3.x.

For the latest information on the Interplay Central Services, see the documentation available

from the Interplay Central Services page of the Avid Knowledge Base. Updates are occasionally

issued after initial release.

http://avid.force.com/pkb/articles/en_US/readme/Avid-Interplay-Central-Services-Version-1-8Documentation

Important: Search the Avid Knowledge Base ICS 1.8 web page for the most up-to-date

ICS 1.8 Installation and Co nfiguration Guide , which contains the latest information that

might have become available after this document was published.

Note: For information on upgrading to ICS 1.8 from an earlier release, see the ICS 1.8

Upgrading Guide, available from the Avid Knowledge Base

March 24, 2014 1.0 First publication

ICS 1.8 web page.

Updated “Adding Host Names and IP Addresses to the hosts File”.

Removed redundant editing of rc.local file from “Mounting the

GlusterFS Volumes in Linux”.

Page 2

ICS 1.8 Installation & Configuration Guide

About ICS 1.8

Please see the Interplay Central Services 1.8 ReadMe and any ReadMe documents pertaining to the solution(s) by which ICS is used.

Page 3

Contents

Important Information ....................................................................................................................... 1

Revision History .................................................................................................................................. 1

PART I: INTRODUCTION & OVERVIEW ........................................................................................................... 10

Welcome .................................................................................................................................................. 11

About this Guide ...................................................................................................................................... 12

Licensing and Additional Installation Information ................................................................................... 12

Front End License Configuration .......................................................................................................... 12

Delivery of Licenses on Back-End Systems ........................................................................................... 13

Installing the iPhone and iPad Apps ..................................................................................................... 13

Intended Audiences and Prerequisites .................................................................................................... 13

Basic Installation Skills .......................................................................................................................... 14

Clustering Skills ..................................................................................................................................... 14

Interplay MAM Skills ............................................................................................................................ 14

Deployment Options ................................................................................................................................ 15

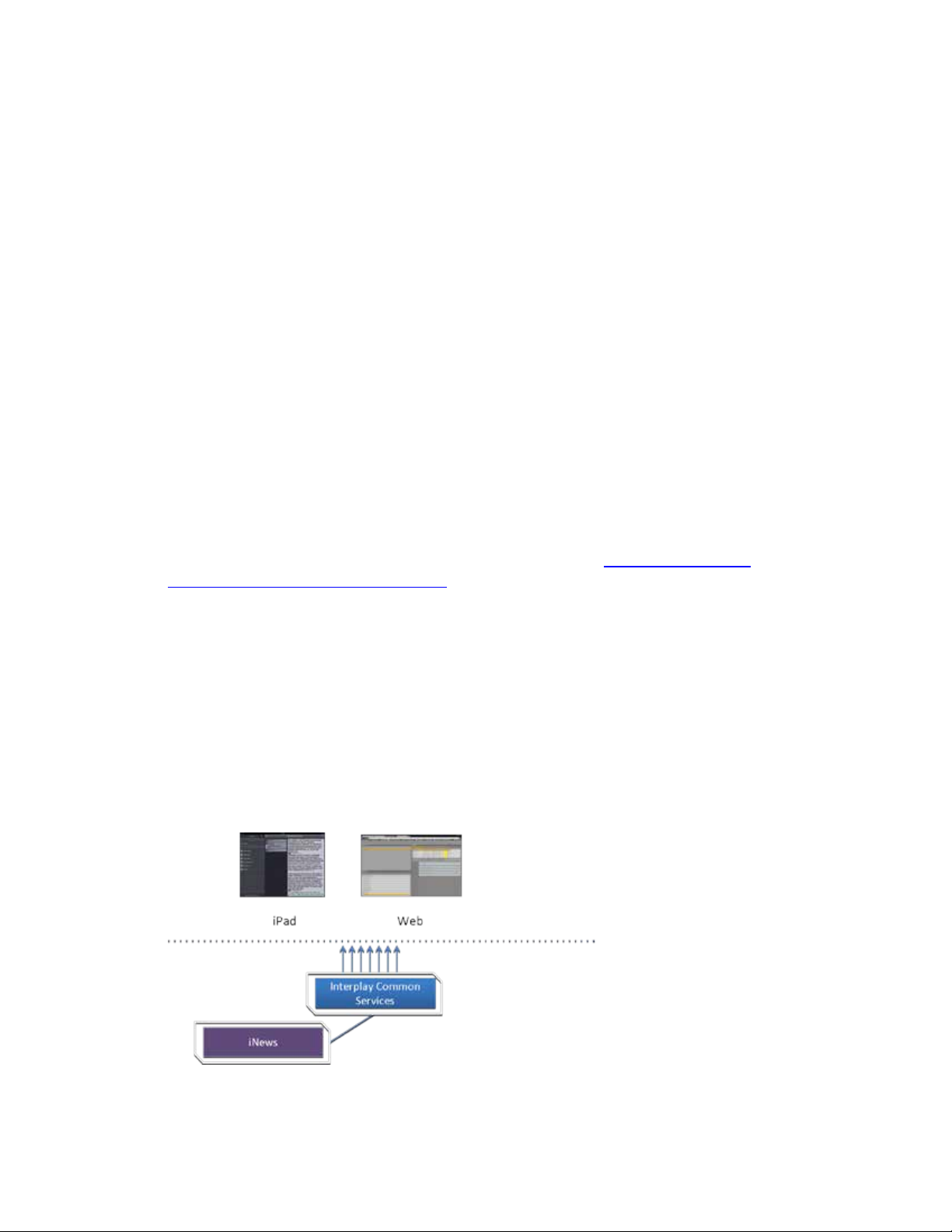

Interplay Central – iNEWS Only ............................................................................................................ 15

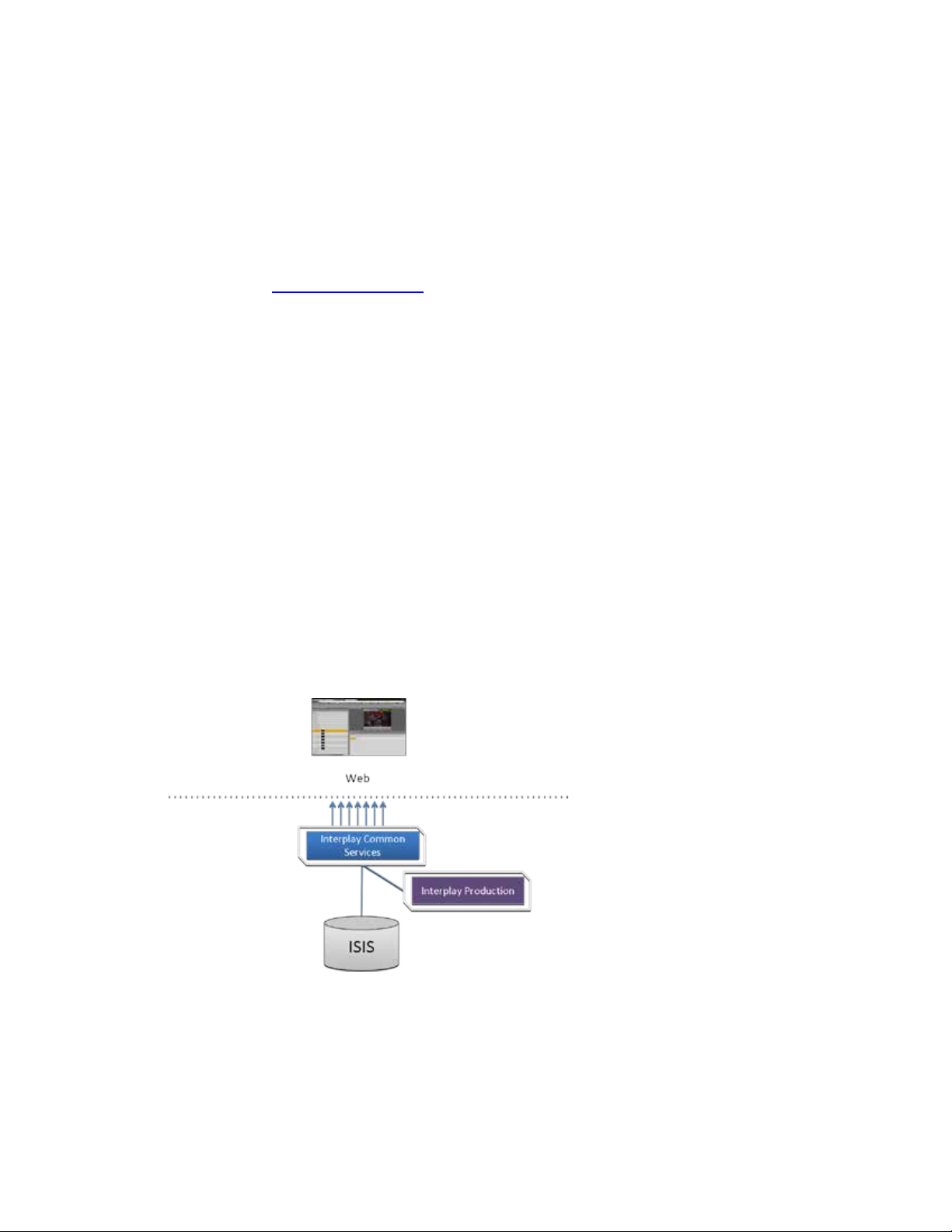

Interplay Central – Interplay Production Only ..................................................................................... 16

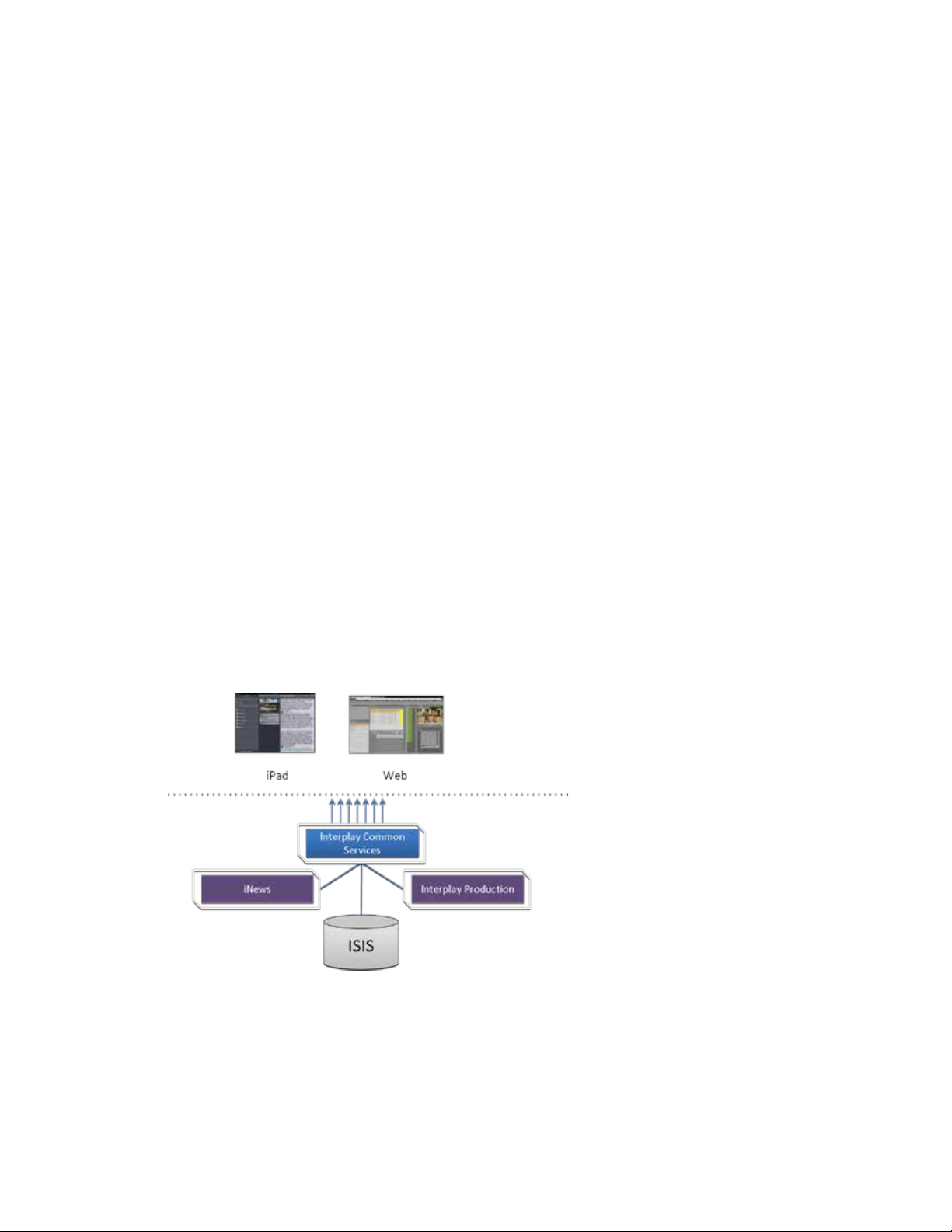

Interplay Central – iNEWS and Interplay Production ........................................................................... 17

Interplay Sphere Only ........................................................................................................................... 18

Both Interplay Central and Interplay Sphere (Shared ICS) ................................................................... 19

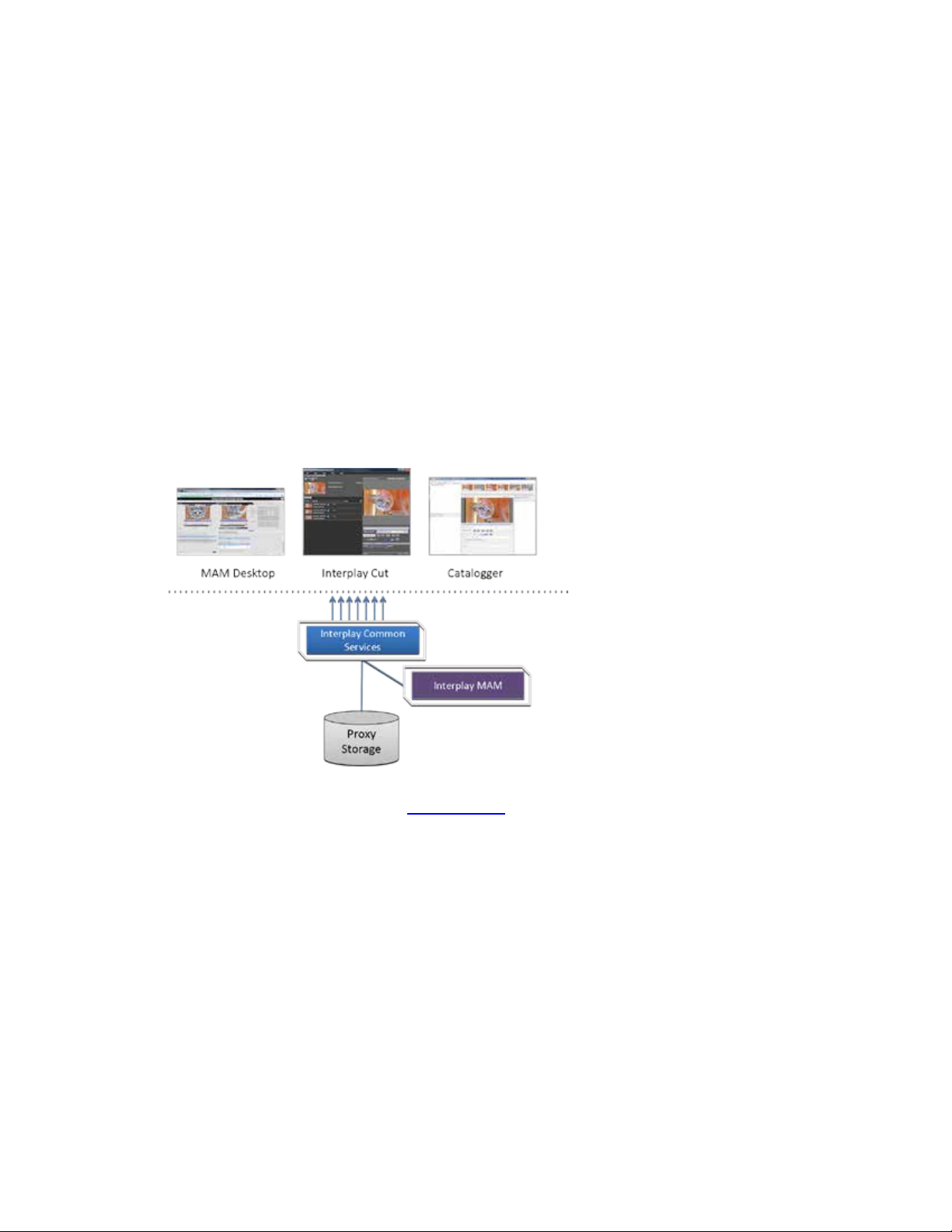

Interplay MAM ..................................................................................................................................... 20

Port Bonding in Interplay MAM ........................................................................................................ 21

Port Requirements ................................................................................................................................... 21

Caching in ICS ........................................................................................................................................... 22

The Dedicated Caching Volume ........................................................................................................... 22

Caching for Interplay MAM .................................................................................................................. 23

Caching for iOS Devices in Interplay Central ........................................................................................ 23

Caching for Sphere ............................................................................................................................... 23

Working with Linux .................................................................................................................................. 24

Installing Linux ...................................................................................................................................... 24

Linux Concepts ..................................................................................................................................... 24

Key Linux Directories ............................................................................................................................ 25

Linux Command Line ............................................................................................................................ 25

Page 4

Linux Text Editor (vi) ............................................................................................................................. 27

Linux Usage Tips ................................................................................................................................... 28

Volumes in Linux .................................................................................................................................. 29

Clock Synchronization in Linux ............................................................................................................. 29

Time Zones in RHEL .............................................................................................................................. 30

RAIDs in ICS .............................................................................................................................................. 30

Introduction to Clustering ........................................................................................................................ 31

Single Server Deployment .................................................................................................................... 32

Cluster Deployment .............................................................................................................................. 33

Multicast vs Unicast ............................................................................................................................. 33

Working with Gluster ........................................................................................................................... 34

PART II: INSTALLING & CONFIGURING ........................................................................................................... 35

Installation Workflow............................................................................................................................... 36

Before You Begin ...................................................................................................................................... 39

Make Sure the Host Solutions Are Installed and Running ................................................................... 39

Make Sure You Have the Following Items ............................................................................................ 39

Make Sure You Can Answer the Following Questions ......................................................................... 40

Make Sure You Have All the Information You Need ............................................................................ 42

Make Sure You Change the Default Passwords ................................................................................... 42

Obtaining the Software ............................................................................................................................ 43

Obtaining the ICS Installation Package ................................................................................................. 43

Obtaining Red Hat Enterprise Linux ..................................................................................................... 44

Obtaining Gluster ................................................................................................................................. 45

Obtaining Additional Packages ............................................................................................................. 45

Preparing the ICS Installation USB Key .................................................................................................... 46

Transferring ICS and Linux to the USB Key ........................................................................................... 46

Copying Gluster to the USB Key ........................................................................................................... 48

Installing the Network Interface Cards .................................................................................................... 49

Connecting to ISIS Proxy Storage ......................................................................................................... 49

Connecting to non-ISIS Proxy Storage .................................................................................................. 50

Setting the System Clock and Disabling HP Power Saving Mode ............................................................ 51

Setting Up the RAID Level 1 Mirrored System Drives .............................................................................. 52

Setting Up the RAID Level 5 Cache Drives ............................................................................................... 54

Page 5

Installing RHEL and the ICS Software ....................................................................................................... 56

Booting RHEL for the First Time ............................................................................................................... 58

Booting from the System Drive ............................................................................................................ 59

Changing the root Password ................................................................................................................ 60

Verifying the Date and Time................................................................................................................. 60

Setting the Time Zone .......................................................................................................................... 61

Editing the Network Connections ............................................................................................................ 62

Identifying NIC Interfaces by Sight ....................................................................................................... 62

Verifying the NIC Interface Name ........................................................................................................ 63

Swapping NIC Interface Names ............................................................................................................ 64

Removing the MAC Address Hardware References ............................................................................. 65

Configuring the Hostname and Static Network Route ......................................................................... 66

Verifying the hosts file Contents .......................................................................................................... 68

Verifying Network and DNS Connectivity ............................................................................................. 69

Synching the System Clock ....................................................................................................................... 70

Creating the File Cache on the RAID ........................................................................................................ 72

Partitioning the RAID ............................................................................................................................ 72

Creating the Logical Volume and Mounting the Cache ........................................................................ 73

Installing the Interplay Central Distribution Service ................................................................................ 76

Determining Where to Install ICDS ...................................................................................................... 76

Before You Begin .................................................................................................................................. 77

Configuring ICS for Interplay MAM .......................................................................................................... 78

Configuring ICS for Interplay Central and/or Interplay Sphere ............................................................... 80

Configuring Workflow .......................................................................................................................... 80

Before You Begin .................................................................................................................................. 82

Configuring the Interplay Central UI .................................................................................................... 83

Logging into Interplay Central .............................................................................................................. 84

Changing the Administrator Password ................................................................................................. 88

Configuring iNEWS Settings .................................................................................................................. 88

Configuring Interplay Production Settings ........................................................................................... 89

Configuring ICPS for Interplay .............................................................................................................. 90

Configuring the ICPS Player .................................................................................................................. 92

Configuring the ICPS Player for Interplay Sphere ................................................................................. 92

Page 6

Configuring the ISIS Connection(s) ....................................................................................................... 93

Mounting the ISIS System(s) ................................................................................................................ 94

Verifying the ISIS Mount ....................................................................................................................... 95

Verifying Video Playback ...................................................................................................................... 96

Configuring Wi-Fi Only Encoding for Facility-Based iOS Devices ......................................................... 97

PART III: CLUSTERING .................................................................................................................................. 98

Setting up the Server Cluster ................................................................................................................... 99

Clustering Workflow .............................................................................................................................. 101

Before You Begin ................................................................................................................................ 102

Configuring the Hosts File and Name Services File ................................................................................ 103

Adding Host Names and IP Addresses to the hosts File ..................................................................... 103

Optimizing the Lookup Service Order: Editing the Name Service Switch File .................................... 104

Setting Up DRBD .................................................................................................................................... 105

Starting the Cluster Services .................................................................................................................. 108

Joining the Cluster .................................................................................................................................. 111

Replicating the Cluster File Caches ........................................................................................................ 112

Before You Begin ................................................................................................................................ 112

Mounting the USB Key ....................................................................................................................... 113

Installing Gluster................................................................................................................................. 114

Unmounting and Removing the USB Key ........................................................................................... 115

Creating the Trusted Storage Pool ..................................................................................................... 115

Configuring the GlusterFS Volumes ................................................................................................... 117

Making Cache Directories and Changing Ownership ......................................................................... 119

Mounting the GlusterFS Volumes in Linux ......................................................................................... 121

Testing the Cache ............................................................................................................................... 122

Ensuring Gluster is On at Boot ........................................................................................................... 122

Reconfiguring the ICPS Player for Interplay Central in a Cluster........................................................ 123

PART IV: POST-INSTALLATION .................................................................................................................... 124

Post-Installation Steps ........................................................................................................................... 125

Determining the Installed ICS Version................................................................................................ 125

Verifying Cache Directory Permissions .............................................................................................. 125

Securing the System ........................................................................................................................... 126

Enabling and Securing the Player Demonstration Web Page ............................................................ 126

Page 7

Backing up the ICS System Settings and the ICS Database ................................................................ 127

Monitoring Services and Resources ................................................................................................... 130

Monitoring the AAF Generator Service .............................................................................................. 133

Monitoring ICS High-Availability......................................................................................................... 135

Monitoring Load Balancing ................................................................................................................ 136

Observing Failover in the Cluster ....................................................................................................... 137

Testing the Cluster Email Service ....................................................................................................... 140

Changing the Cluster Administrator Email Address ........................................................................... 141

Reconfiguring Interplay Central Settings in a Cluster......................................................................... 142

Taking a Cluster Node Off-Line Temporarily ...................................................................................... 142

Permanently Removing a Node from a Cluster .................................................................................. 142

Adding a New Node to a Cluster ........................................................................................................ 142

Retrieving ICS Logs ............................................................................................................................. 145

Log Cycling .......................................................................................................................................... 146

Using SNMP Monitoring on the ICPS Server ...................................................................................... 146

Migrating the ICP Database from Windows to Linux ......................................................................... 146

Backing up and Restoring the ICS Database ....................................................................................... 146

Appendix A: Installing ICS on Non-HP Hardware ................................................................................... 148

Non-HP Installation Notes .................................................................................................................. 148

Appendix B: Table of Deployment Options and Requirements ............................................................. 150

Appendix C: Configuring Port Bonding for Interplay MAM (Optional) .................................................. 152

Verifying the Ethernet Ports ............................................................................................................... 152

Configuring the Port Bonding ............................................................................................................. 153

Appendix D: Handling SSL Certificates ................................................................................................... 155

Built-In Browser Functionality ........................................................................................................ 155

SAN Certificates .............................................................................................................................. 156

Understanding the “Certificate Not Trusted” Warning ...................................................................... 156

Eliminating the Certificate not Trusted and Name Mismatch Warnings ........................................... 157

Generating a Self-Signed Certificate for a Single Server .................................................................... 158

Generating a Self-Signed Certificate for a Server Cluster .................................................................. 160

Before You Begin ............................................................................................................................ 161

Obtaining a Trusted CA-signed Certificate ......................................................................................... 168

Adding a CA-Signed Certificate to a Single Server .............................................................................. 171

Page 8

Adding a CA-Signed Certificate to a Server Cluster ............................................................................ 176

Configuring Google Chrome (Windows) ............................................................................................ 178

Configuring Internet Explorer (Windows) .......................................................................................... 182

Configuring Safari (Mac OS) ............................................................................................................... 186

Launching the Windows Import SSL Certificate Directly .................................................................... 187

The Interplay Central Application Properties File .............................................................................. 188

Appendix E: Migrating the UMS Database with the User Management Utilities Tool .......................... 189

Appendix F: Installing the Chrome Extension for Interplay Central MOS Plug-Ins ................................ 192

Setting Up Your Browser .................................................................................................................... 192

Enabling MOS ..................................................................................................................................... 192

Installing Plug-Ins ............................................................................................................................... 192

Uninstalling the Chrome Extension .................................................................................................... 193

Appendix G: Enabling Interplay Central MOS Plug-Ins in IE9................................................................. 194

Sample ActiveX Object in the Preferences File .................................................................................. 195

Appendix H: Unicast Support in Clustering ............................................................................................ 197

Appendix I: Installing the Interplay Production License for Interplay Central ....................................... 200

Appendix J: Configuring iNEWS for Integration with Interplay Central ................................................. 201

Verifying Interplay Central Licenses on iNEWS .................................................................................. 201

Editing SYSTEM.CLIENT.VERSIONS ..................................................................................................... 202

Editing SYSTEM.CLIENT.WINDOWS .................................................................................................... 203

Appendix K: Installing and Configuring the Avid Central Mobile Application for the iPad or iPhone ... 205

Before You Begin ................................................................................................................................ 205

iNEWS Configuration for iPad and iPhone Integration ...................................................................... 205

Editing SYSTEM.CLIENT.VERSIONS ..................................................................................................... 206

Adding iPad and iPhone Devices to the iNEWS Configuration File .................................................... 207

Installing Avid Central on the iPad or iPhone ..................................................................................... 208

Appendix L: Installation Pre-Flight Checklist .......................................................................................... 210

Default Password Information ........................................................................................................... 210

Contact Information ........................................................................................................................... 210

Hardware ............................................................................................................................................ 211

Software ............................................................................................................................................. 211

Network Settings ................................................................................................................................ 211

NTP Time Server ................................................................................................................................. 212

Page 9

ICS Server Information ....................................................................................................................... 212

Cluster Information ............................................................................................................................ 213

iNEWS Information ............................................................................................................................. 214

Interplay Central and Interplay Sphere Information .......................................................................... 214

Interplay Production Information ...................................................................................................... 215

ISIS Information .................................................................................................................................. 216

Interplay MAM Information ............................................................................................................... 217

Copyright and Disclaimer ....................................................................................................................... 218

Page 10

PART I: INTRODUCTION & OVERVIEW

Page 11

Welcome

Welcome to the ICS Installation and Configuration Guide. This document guides you through the installation and setup of the Interplay Central Services (ICS) software components. It provides step-by-step instructions to visually verify the hardware setup, install Linux and the ICS software, and configure the software systems that will make use of ICS. It also provides detailed steps for optional activities, such as setting up a cluster of ICS servers or configuring for an iPad-only deployment.

Note: Beginning with version 1.6, the term “Interplay Central Services” replaces “Interplay Common Services.” In addition, the term “Interplay Central Playback Service” replaces “Interplay Common Playback Service.”

ICS is a set of software services running under the Linux operating system. ICS serves layouts for applications, provides user authentication, manages system configuration settings, and provides proxy-based playback of video assets over the network to web-based and mobile clients.

ICS supports several different Avid Integrated Media Enterprise (IME) solutions, including Interplay Central, Interplay Sphere, and Interplay MAM. ICS installs on its own set of servers, distinct from the IME solution it is supporting. Multiple ICS servers can be clustered together to obtain one or more of high-availability, load balancing, and scalability.

Note: Refer to the “How to Buy Hardware for Interplay Central Services” guide for detailed information on hardware specifications and deployment options. The guide is available on the Avid Knowledge Base ICS 1.8 web page.

The installation and configuration steps vary depending on the deployment model, target

hardware, and optional steps. For example, installations on qualified HP servers can use an

express process involving a USB key and the supplied Red Hat Enterprise Linux kickstart (ks.cfg)

file. Kickstart files are commonly used in Linux installs to automatically answer questions for

hardware known in advance. On non-HP servers you must install Red Hat Enterprise Linux

manually.

Note: All decisions pertaining to hardware, deployment model, optional activities (such as setting up a cluster), and network connections (GigE vs 10GigE) must be made before beginning the installation. If these decisions have not been made, or to verify a non-HP server, please consult an Avid representative.

Red Hat Enterprise Linux (sometimes just called Red Hat, but referred to in this guide as RHEL) is a commercially supported, open source version of the popular Linux operating system. Regardless of the deployment model and target hardware, the installation of RHEL is mandatory.

Note: ICS requires RHEL 6.3. Do not install any OS updates or patches. Do not upgrade to RHEL 6.4 or higher. Do not run the Linux yum update command.
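The RHEL version requirement in the note above can be checked from the command line once the OS is installed. The following is a minimal sketch, assuming the standard /etc/redhat-release file is present on the server. The helper name check_rhel_release is hypothetical and is not part of the ICS installation package.

```shell
#!/bin/sh
# check_rhel_release (hypothetical helper): succeeds only when the given
# release string reports RHEL 6.3, the version this guide requires.
check_rhel_release() {
    case "$1" in
        *"release 6.3 "*|*"release 6.3") return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on a real server: read the standard release file, if it exists.
if [ -r /etc/redhat-release ]; then
    if check_rhel_release "$(cat /etc/redhat-release)"; then
        echo "RHEL 6.3 detected"
    else
        echo "Unexpected release: $(cat /etc/redhat-release)"
    fi
fi
```

The check only reads a file and changes nothing on the system, so it can be repeated at any point during the installation.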

For more information on Red Hat see “Working with Linux” on page 24. RHEL licensing and support options are covered in the “How to Buy Hardware for Interplay Central Services” guide, available on the Avid Knowledge Base ICS 1.8 web page.


Note: Clock setting and synchronization play an important role in some ICS deployments. For a discussion of the issues associated with clock synchronization and using a time server to set the system clock, see “Clock Synchronization in Linux” on page 29.

About this Guide

This guide provides all the instructions you need to set up ICS 1.8. The installation and configuration is complex and can be difficult, particularly if you are unfamiliar with Linux.

The following tips will help ensure a smooth installation:

• Read the whole guide thoroughly before beginning the installation process.

• Gather all the information required to perform the install before you start. Waiting until the information is called for by an installation step will result in considerable delays.

• For a list of required information, see “Appendix L: Installation Pre-Flight Checklist” on page 210.

• Complete all the relevant sections in the pre-flight checklist for your deployment.

Licensing and Additional Installation Information

Licenses must be installed on an iNEWS server, an Interplay Production server, or both. No licenses are installed on the Interplay Central Services server.

For Interplay Production, the license types are J (Interplay Production Base license) and G (Advance license).

• Base license: Can connect to only one system type: iNEWS or Interplay Production. Access is limited to specific panes.

• Advance license: Can connect to both system types: iNEWS and Interplay Production, with access to all panes.

Note: Please refer to the “Interplay Central Administration Guide” for licensing details, such as the panes and features made available by each license type. The guide is available with other Interplay Central v1.8 documentation on the Avid Knowledge Base:

http://avid.force.com/pkb/articles/en_US/readme/Avid-Interplay-Central-Version-1-81-8-Documentation

Front End License Configuration

You specify the type of license for each Interplay Central role in the Details tab of the Users layout. For more information, see "Interplay Central Client Licensing" in the Avid Interplay Central Administration Guide.


Delivery of Licenses on Back-End Systems

An iNEWS client license or an Interplay Central mobile license for a specified number of clients is sent to the customer through email along with specific installation instructions. However, to ensure proper licensed integration between Interplay Central and iNEWS, additional modification to system files in the iNEWS database is also required.

For more information see “Appendix J: Configuring iNEWS for Integration with Interplay Central” on page 201.

An Interplay Production license for a specified number of clients is supplied to the customer on a USB flash drive as a file with the extension nxn.

For more information, see “Appendix I: Installing the Interplay Production License for Interplay Central” on page 200.

Installing the iPhone and iPad Apps

The Avid Central mobile application is a native user interface designed to run on the Apple iPad touch-screen tablet and the Apple iPhone touch-screen phone, and enable direct, secure access to your station’s iNEWS newsroom computer system.

For installation information, see “Appendix K: Installing and Configuring the Avid Central Mobile Application for the iPad or iPhone” on page 205.

Intended Audiences and Prerequisites

This guide is aimed at the person responsible for performing a fresh install of ICS, or upgrading or maintaining an existing ICS installation. It can also be used by someone creating a cluster of ICS nodes out of a non-clustered setup. In particular, the following audiences have been identified:

• Avid Professional Services: Avid personnel whose responsibilities include installing and upgrading the ICS system, on-site at a customer’s facility.

• Avid Channel Partners and Resellers: Selected organizations qualified by Avid to educate,

market, sell, install, integrate and provide support for the Avid product line, including ICS.

• In-House Installers: Clients with a sophisticated in-house IT department that has expertise

in systems integration and Linux (including networking, port-bonding, etc.). This kind of

person might be called on to add a new server to an already established cluster of ICS

servers, for example.


Basic Installation Skills

The following skills are needed to perform the basic installation:

• Windows: Format a USB key, unzip files, etc.

• Server: Access to the physical server, booting/rebooting, interrupting startup screens to enter BIOS and other utilities, navigating and altering BIOS, setting up RAIDs.

• Network Interface Cards (NICs): Identify a NIC, knowledge of which NIC interface is being used.

• Linux (install): Previous experience installing Linux is preferred but not essential; knowledge of manually installing RPM files will be helpful.

• Linux (general): Work with Linux directories (cd, mkdir, ls), create volumes, mount/unmount directories, volumes and devices (e.g. USB key), verify the status of a Linux service.

• Linux (file editing): Use the Linux text editor (vi) to open/create files, add/delete text, save/close files, etc.

• Networking: An understanding of network topologies and Ethernet protocols (TCP/IP), using the ping command, verifying/changing a NIC Ethernet interface (e.g. eth0).

• System Clocks: Setting the system clock in BIOS and in Linux. For a discussion of system clock options, see “Clock Synchronization” on page 29.

Clustering Skills

The following skills are desirable for setting up a cluster of ICS nodes:

• Gluster: Familiarity with Gluster, as it is used to create a shared pool of storage, including starting/stopping Gluster services, creating shared storage pools, creating GlusterFS volumes, etc.

• Networking: A basic understanding of unicast or multicast and IP networking. An advanced understanding of networking in Linux would be helpful, but is not essential, since all instructions are provided.

Interplay MAM Skills

The following skills are desirable for setting up ICS for Interplay MAM (port bonding optional):

• Port Bonding (general): Knowledge of theory and practice of port bonding (also called link aggregation).

• Port Bonding (Linux): Understanding contents and purpose of Linux network-scripts directory, editing interface configuration (ifcfg-ethN) files, restarting network services.

Note: Port bonding is an option that is exclusive to Interplay MAM installations. Do not perform port bonding when performing any other kind of install.

• Interplay MAM configuration: Ability to work as administrator in Interplay MAM.


Deployment Options

ICS is a collection of software services designed to support a number of Avid enterprise solutions and deployment options. Since each deployment scenario has different hardware and software configuration requirements (and playback characteristics), it will be helpful to have a high-level overview of the deployment of interest before proceeding.

As noted, the installation follows one of these basic deployment models:

• ICS for Interplay Central

o iNEWS only

o Interplay Production only

o iNEWS and Interplay Production

• ICS for Interplay Sphere

• ICS for Interplay Central and Interplay Sphere (Shared ICS)

• ICS for Interplay MAM

This section provides an overview of each of these deployments.

For a detailed technical summary of deployment options, see “Appendix B: Table of Deployment Options and Requirements” on page 150.

Interplay Central – iNEWS Only

One of the most straightforward deployments is ICS for Interplay Central in an iNEWS-only environment; that is, with connections to iNEWS but no connection to Interplay Production. In this deployment ICS provides the ability to browse and edit iNEWS content (queues, stories) from a remote web client. The ability to browse, play and edit associated video requires Interplay Production and is not provided by the iNEWS-only deployment.

Interplay Central for iNEWS:


The iNEWS-only deployment typically requires a RAID 1 (mirrored RAID) for the Linux operating system. Since ICS is not providing playback of any video assets, there is no need for caching, so the media cache volume referred to in this guide is not required. Typically, a single ICS server is sufficient. Two ICS servers configured as a cluster provide high-availability.

Note: The iNEWS-only deployment can run on smaller, less expensive server hardware. Refer to the “How to Buy Hardware for Interplay Central Services” guide for detailed information on hardware specifications and deployment options. The guide is available on the Avid Knowledge Base ICS 1.8 web page.

Deployment Summary:

• Browse and edit iNEWS content

• RAID 1 required

• Media cache volume not required

• Clustering yields high-availability

Interplay Central – Interplay Production Only

ICS for Interplay Central with Interplay Production has connections to Interplay Production only. In this deployment ICS serves layouts for applications, provides user authentication, manages system configuration settings, and provides proxy-based playback of video assets over the network to web-based and mobile clients. ICS decodes the source format and streams images and sound to the remote web-based Interplay Central client.

Interplay Central for Interplay Production:

This deployment typically requires two HDs configured as a RAID 1 (mirrored RAID) for the Linux operating system. Because no iOS devices are involved, there are no special caching requirements; however, multicam requires a media cache volume. You can configure two or more ICS servers as a cluster to obtain high-availability and load balancing.


Deployment Summary:

• Browse and play video assets

• RAID 1 required

• Media cache volume required

o RAID 5, or

o RAID 1, or

o Single HD

• Clustering yields high-availability and load-balancing

Interplay Central – iNEWS and Interplay Production

ICS for Interplay Central with iNEWS and Interplay Production has both iNEWS connectivity and

Interplay Production connectivity. Similarly to the iNEWS-only deployment, this provides the

ability to browse and edit iNEWS content (queues, stories) from a remote web client. Interplay

Production connectivity provides the ability to browse, play and edit associated video.

In this deployment ICS serves layouts for applications, provides user authentication, manages

system configuration settings, and provides proxy-based playback of video assets over the

network to web-based and mobile clients. ICS decodes ISIS source formats and streams images

and sound to the remote web-based Interplay Central client.

Interplay Central with iNEWS and Interplay Production:

This deployment typically requires two HDs configured as a RAID 1 (mirrored RAID) for the Linux

operating system. In a configuration where the iOS application is used, the ICS server should also

have a media cache volume. Multicam also requires a media cache volume. You can configure

two or more ICS servers as a cluster to obtain high-availability and load balancing.


Deployment Summary:

• Browse and edit iNEWS content

• Browse and play the associated video assets

• RAID 1 required

• Media cache volume required

o RAID 5, or

o RAID 1, or

o Single HD

• Clustering yields high-availability and load-balancing

Interplay Sphere Only

ICS for Interplay Sphere provides playback of different format video assets registered by Interplay Production and residing on an ISIS. ICS decodes the source format and streams images and sound to the remote Interplay Sphere enabled Media Composer or NewsCutter.

Interplay Sphere:

This deployment typically requires two HDs configured as a RAID 1 (mirrored RAID) for the Linux operating system. A media cache is also required. In its most basic form, the Interplay Sphere deployment is a single ICS server. You can configure two or more ICS servers as a cluster to obtain high-availability and load balancing.

Deployment Summary:

• Browse and play the video assets for Sphere enabled Media Composer and/or NewsCutter

• RAID 1 required

• Media cache volume required

o RAID 5, or

o RAID 1, or

o Single HD

• Clustering yields high-availability and load-balancing

Both Interplay Central and Interplay Sphere (Shared ICS)

Interplay Central and Interplay Sphere can easily share the same ICS server(s). In this deployment, ICS serves layouts for applications, provides user authentication, and manages system configuration settings. ICS also provides proxy-based playback over the network of different format video assets registered by Interplay Production and residing on an ISIS. ICS decodes the source format and streams images and sound to the remote web-based Interplay Central and/or Interplay Sphere clients.

This is the most sophisticated deployment model, since other elements can also be present, such as iNEWS with corresponding iOS device applications.

Interplay Central and Interplay Sphere (Shared ICS):

This deployment typically requires a RAID 1 (mirrored RAID) for the Linux operating system. In a configuration with iOS devices (as with iNEWS), the ICS server should also have a media cache volume. If iOS devices are not deployed, there are no media cache volume requirements; however, multicam requires a media cache volume. You can configure two or more ICS servers as a cluster to obtain high-availability and load balancing.

Deployment Summary:

• Browse and play video assets

• Browse and play video assets for Sphere enabled Media Composer and/or NewsCutter

• RAID 1 required

• Media cache volume required

o RAID 5, or

o RAID 1, or

o Single HD

• Clustering yields high-availability and load-balancing

Interplay MAM

In an Interplay MAM deployment, ICS provides playback of video assets registered as browse proxies by Interplay MAM. The registered browse proxies can reside on standard filesystem storage, or proprietary storage that provides a standard system gateway. The Interplay MAM deployment presents two main options: setting up a media cache volume, and port bonding to improve throughput.

Interplay MAM:

This deployment typically requires a RAID 1 (mirrored RAID) for the Linux operating system. Under some circumstances (see “Caching in ICS” on page 22) the ICS server should also have a media cache volume. You can configure two or more ICS servers as a cluster to obtain high-availability and load balancing.

Deployment Summary:

• Browse and play video assets

• RAID 1 required

• Media cache volume might be required

o RAID 5, or

o RAID 1, or

o Single HD

• Clustering yields high-availability and load-balancing


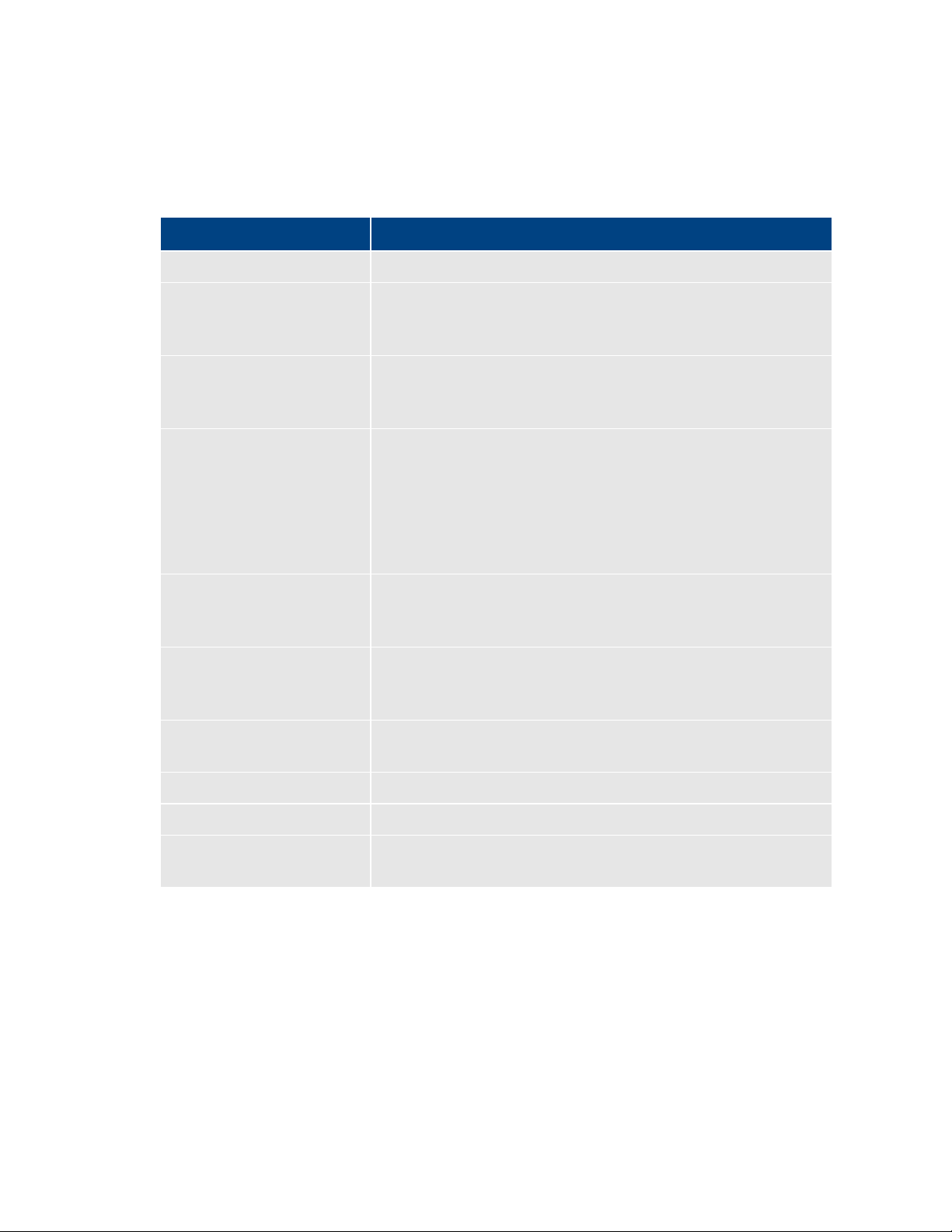

Port Bonding in Interplay MAM

Port bonding (also called link aggregation) is an OS-level technique for combining multiple Ethernet ports into a group, making them appear and behave as a single port. Ethernet ports correspond to the physical connectors in a NIC where network cables are plugged in. Bonded ports retain their individual cable connections to the network router or switch. However, they are seen by the network as a single port.

Port bonding must be configured in “round-robin” mode. In this mode, Ethernet packets are automatically sent, in turn, to each of the bonded ports, reducing bottlenecks and increasing the available bandwidth. For example, bonding two ports together in round-robin increases bandwidth by approximately 50% (some efficiency is lost due to overhead).

In MAM deployments of ICS, port bonding improves playback performance when multiple clients are making requests of the ICS server simultaneously. With port bonding, more concurrent playback requests can be sustained by a single server, especially for file-based playback. File-based playback is a playback method for which a single port-bonded ICS server can support thousands of requests.

For instructions on port bonding see “Appendix C: Configuring Port Bonding for Interplay MAM (Optional)” on page 152.

Port Requirements

The following table lists the ICS port requirements for the client-side applications (the browser-based Interplay Central application and mobile applications). Ports 80 and 443 are required for the HTTP(S) traffic. In addition, the Adobe Flash Player (running inside the browser) requires ports 843 and 5000.

For more information see the ICS Security Architecture and Analysis document.

| Component | Port | Protocol and Direction | Usage |
| --- | --- | --- | --- |
| Interplay Central Web application | 80 | TCP Inbound | Interplay Central Playback Service (ICPS) HTTP calls |
| | 443 | Secure TCP Inbound | IPC HTTPS calls |
| | 843 | TCP Inbound | Serving Flash Player socket policy files |
| | 5000 | TCP Inbound | Playback service (loading assets, serving JPEG images, and audio, etc.). Output flow to client serving inbound request. |
| Interplay Central mobile applications | 80 | TCP Inbound | ICPS HTTP calls |
| | 443 | Secure TCP Inbound | ICPS HTTPS calls |
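As an illustration of the client-side port table above, the following sketch probes the four listed TCP ports from a client machine. It assumes the nc (netcat) utility is installed and supports the -z (scan only) and -w (timeout) options. The helper name check_ics_ports and the example host name are hypothetical and are not part of ICS.

```shell
#!/bin/sh
# Client-side ports from the table above: HTTP, HTTPS, Flash socket policy
# files, and the playback service.
ICS_CLIENT_PORTS="80 443 843 5000"

# check_ics_ports (hypothetical helper): reports whether each client-side
# port on the given host accepts a TCP connection.
check_ics_ports() {
    host="$1"
    for port in $ICS_CLIENT_PORTS; do
        if nc -z -w 2 "$host" "$port" >/dev/null 2>&1; then
            echo "$port open"
        else
            echo "$port closed-or-filtered"
        fi
    done
}

# Example (the host name is a placeholder):
# check_ics_ports ics-server.example.com
```

A port reported as closed-or-filtered may simply mean the corresponding service is not yet running, so the result is a hint for firewall checks rather than a verdict.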

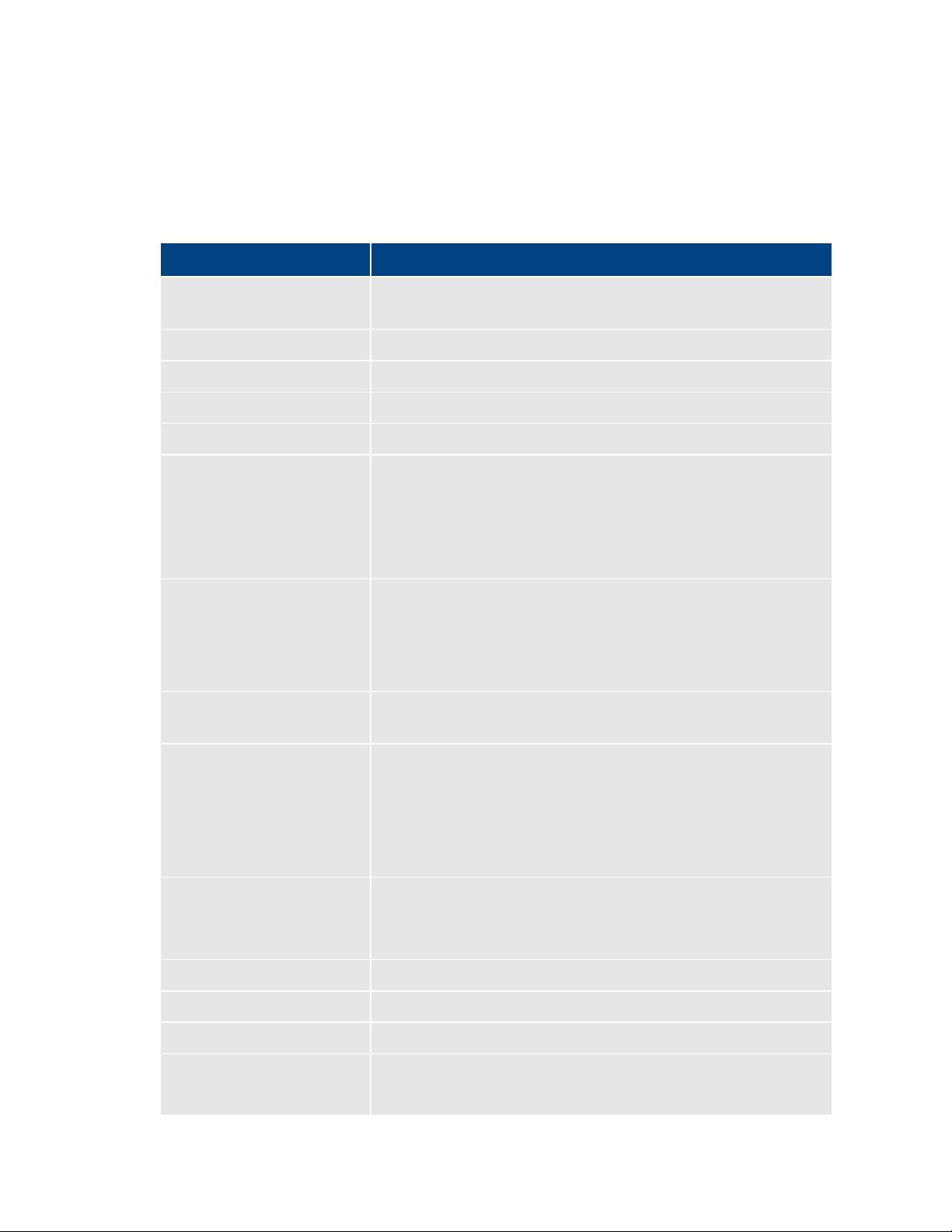


The following table lists the server-side port requirements. For more information see the ICS Security Architecture and Analysis document.

| Service Name | Port |
| --- | --- |
| Interplay Central | 80, 443 |
| ICPS | 843 (Flash), 80, 5000, 26000 |
| ICS | 8000 (optional Admin UI), 8183 (bus cluster info) |
| ISIS | 5000 – 5399 (UDP and TCP) |
| RabbitMQ | 5672 (AMQP), 15672 (Management UI/API) |
| MongoDB | 27017 |
| PostgreSQL | 53087 |
| System | 22, ICMP, 111, 24007, 24008, 24009-(24009 + number of bricks across all volumes for gluster). If you will be using NFS, open additional ports 38465-(38465 + number of Gluster servers). Some MAM configurations might require additional NFS ports (111, 2049 tcp & udp) or CIFS (137, 138 udp and 137, 139 tcp). Other filesystems will have to be checked individually (Isilon, Harmonic Omneon, etc.). |

Caching in ICS

In its work to provide proxy-based playback of video assets over a network, ICS generates temporary files in certain workflows. For example, ICS deployed for Interplay MAM typically generates a multitude of temporary files as it converts proxies from their native MAM formats into formats compatible with the player. The ICS multicam feature introduced in ICS 1.5 also produces numerous temporary files. By default, ICS caches temporary files on the system drive. Better performance is achieved by allocating a dedicated media cache volume (separate from the system drive) for the temporary files. In a cluster setup, an open-source software solution called Gluster is also used.

Note: All Interplay Central deployments making use of multicam require a dedicated volume for media caching. Gluster is also required, for file replication between clustered caches.

Note: This document provides instructions for creating a media cache volume as a RAID 5 using multiple disks in the server enclosure. However, other configurations are possible, including two drives in a RAID 1 configuration, or a single drive. For details, see the “How to Buy Hardware for Interplay Central Services” guide.

The Dedicated Caching Volume

All ICS servers require a RAID 1 that mirrors the operating system across two HD drives. Some deployments also require a media cache volume consisting of the remaining disks in the

enclosure, used exclusively for ICS file caching. In a RAID 5 volume (recommended), the disk controller automatically distributes (stripes) data across all the disks in the RAID 5, yielding increased performance and redundancy.

In an ICS server cluster the media cache volume is taken one step further. An open source software solution called Gluster is used to replicate the contents of the media cache volumes across each server in the cluster. In this way, each ICS server in the cluster can make use of file data already transcoded and cached by the others.

Note: All Interplay Central deployments making use of multicam require a dedicated media cache volume for caching. Gluster is also required, for file replication between clustered caches.

Caching for Interplay MAM

For caching, it is important to understand how MAM browse proxies get from proxy storage to the MAM desktop. For each playback request, ICS does one of the following:

• File-based playback (native): When MAM proxies are in a format that an Adobe Flash-based player can play natively, ICS serves the proxy file as-is to the remote web-based client. Adobe Flash-based players natively play MP4-wrapped h.264/aac or FLV. This is the least CPU-intensive playback mode.

• File-based playback (alternate): When file-based playback requests are made of proxy formats that cannot be played natively by an Adobe Flash-based player, ICS transcodes the proxy into FLV, which is stored in the ICS file cache on the media cache volume. This is then served to the remote web-based client. ICS regularly scans the media cache, and, when necessary, the least-requested files are purged.

The above playback method has a one-time CPU hit on initial playback request for each asset, but is subsequently very light because the same cached file is served.

• Frame-based playback: This playback mode is the same one used by Interplay Central, and is required in MAM for “growing file” workflows and variable-speed playback. In this case ICS decodes the proxy and streams images and audio to the remote web-based client frame-by-frame. This is the most CPU-intensive playback mode.

ICS for Interplay MAM requires a dedicated media cache volume when registered browse proxies include formats that cannot be natively loaded in the Adobe Flash player. For example, if MAM registered browse proxies are MPEG-1, Sony XDCAM, MXF or WMV, a media cache volume is needed in ICS. This guide includes instructions for setting up a RAID level 5 cache.

Caching for iOS Devices in Interplay Central

In an Interplay Central deployment where an iOS application is used, the ICS server should have a dedicated media cache volume.

Caching for Sphere

Interplay Sphere caches the video and audio it receives locally on the editor (Media Composer and/or NewsCutter). With the introduction of multicam support for Sphere (in ICS 1.5) there

is also a dedicated media cache volume requirement for Sphere. This is a result of server-side caching of the multicam “grid” of proxy images. Sphere continues to cache video and audio locally.

Working with Linux

As noted, RHEL is a commercially supported, open source version of the Linux operating system. If you have run DOS commands in Windows or have used the Mac terminal window, the Linux environment will be familiar to you. While many aspects of the ICS installation are automated, much of it requires entering commands and editing files using the Linux command-line.

Note: RHEL is not free, and Avid does not redistribute it or include it as part of the ICS installation. RHEL licensing and support options are covered in the “How to Buy Hardware for Interplay Central Services” guide.

Installing Linux

Installations on qualified HP servers can use an express process involving a USB key and the supplied RHEL kickstart (ks.cfg) file. Kickstart files are commonly used in Linux installs to automate the OS installation. A kickstart file automatically answers questions posed by the Linux installer, for hardware known in advance.

Since RHEL is a licensable product, redistribution by Avid is not possible. However, the ICS installation package includes a Windows executable (ISO2USB) for creating a bootable USB drive from a RHEL installation DVD or image (.iso) file. We use ISO2USB to prepare the USB drive to install the ICS components too.

Note: The USB key and kickstart file shortcuts apply only to ICS installations performed on qualified HP hardware. For non-HP hardware, see “Appendix A: Installing ICS on Non-HP Hardware” on page 148.

Once RHEL is installed you can begin the work of setting up the server for ICS. This involves simple actions such as verifying the system time. It also involves more complex actions, such as verifying and modifying hardware settings related to networking, and editing files. Depending on the deployment, you may also be required to create logical volumes, configure port bonding, and perform other advanced actions.

Linux Concepts

Advance knowledge of the following Linux concepts will be helpful:

• root user: The root user (sometimes called the “super” user) is the Linux user with highest privileges. All steps in the installation are performed as root.

• mounting: Linux does not recognize HDs or removable devices such as USB keys unless they are formally mounted.

• files and directories: In Linux, everything is a file or a directory.
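The mounting concept above can be illustrated with a short sketch that checks whether a directory is already in use as a mount point. It assumes a Linux system where /proc/mounts lists active mounts, with the mount point as the second field. The helper name is_mounted, the device /dev/sdb1, and the directory /media/usb are hypothetical placeholders.

```shell
#!/bin/sh
# is_mounted (hypothetical helper): succeeds if the given directory appears
# as a mount point in a mounts table. The table defaults to /proc/mounts,
# whose second whitespace-separated field is the mount point.
is_mounted() {
    mount_point="$1"
    table="${2:-/proc/mounts}"
    awk -v mp="$mount_point" '$2 == mp { found = 1 } END { exit !found }' "$table"
}

# Example: mount a USB key only if nothing is mounted there yet.
# /dev/sdb1 and /media/usb are placeholders; confirm the device name first.
# is_mounted /media/usb || mount /dev/sdb1 /media/usb
```

The optional second argument exists only so the check can be tried against a sample file without touching real devices.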


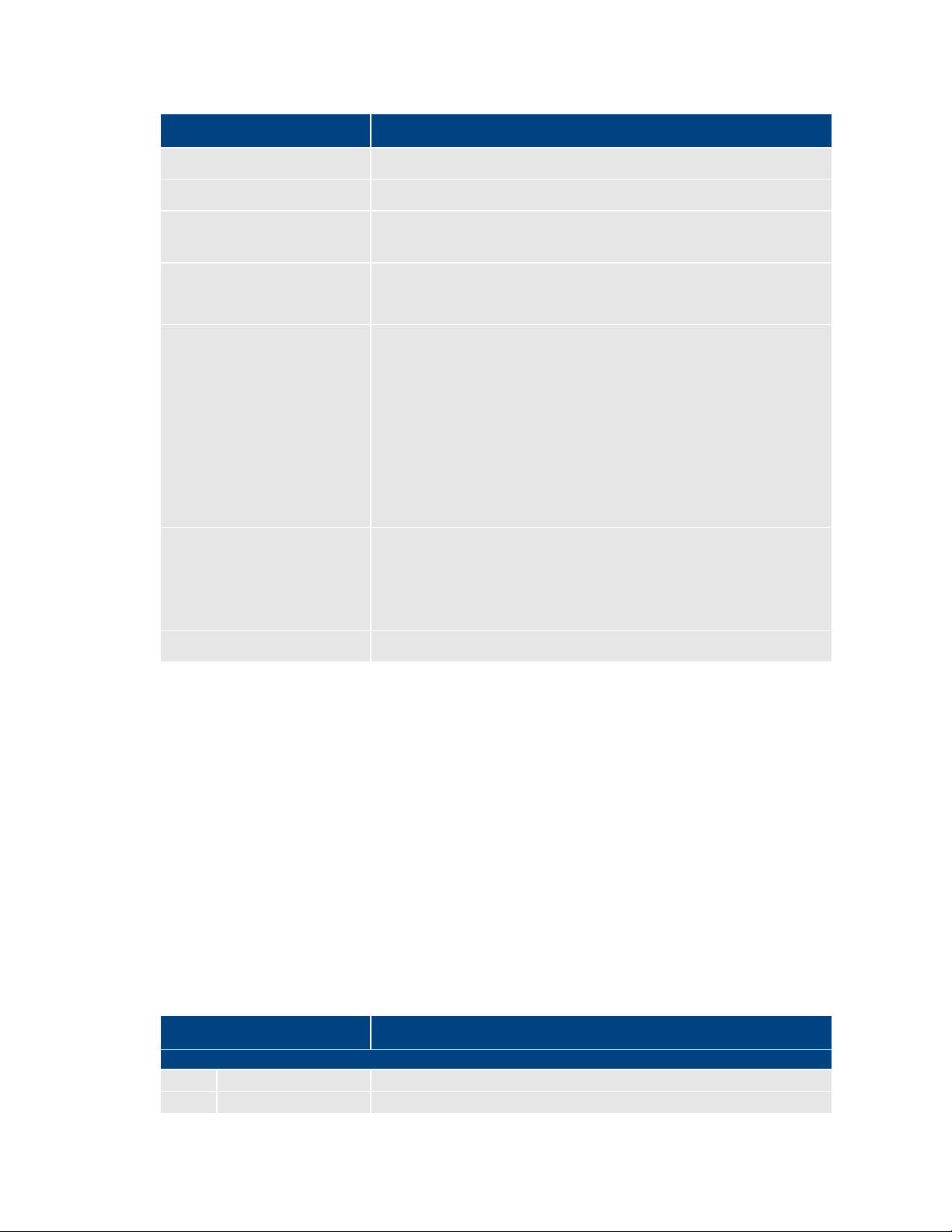

Key Linux Directories

Like other file systems, the Linux filesystem is represented as a hierarchical tree. In Linux, directories are reserved for particular purposes. The following table presents some of the key Linux directories encountered during the ICS installation and configuration:

| Directory | Description |
| --- | --- |
| / | The root of the filesystem. |
| /dev | Contains device files, including those identifying HD partitions, USB and CD drives, and so on. For example, sda1 represents the first partition (1) of the first hard disk (a). |
| /etc | Contains Linux system configuration files, including the filesystem table, fstab, which tells the operating system what volumes to mount at boot-time. |
| /etc/udev/rules.d | Contains rules used by the Linux device manager, including network script files where persistent names are assigned to network interfaces. In Linux, every network interface has a unique name. If a NIC has four connection “ports”, for example, they might be named eth0 through eth3. |
| /etc/sysconfig/network-scripts | Contains, amongst other things, files providing Linux with boot-time network configuration information, including which NIC interfaces to bring up. |
| /media | Contains the mount points for detachable storage, such as USB keys. In Linux, volumes and removable storage must be mounted before they can be accessed. |
| /opt | Contains add-on application packages that are not a native part of Linux, including the ICS components. |
| /usr | Contains user binaries, including some ICS components. |
| /tmp | The directory for temporary files. |
| /var | Contains data files that change in size (variable data), including the ICS server log files. |

Linux Command Line

The Linux command line is a powerful tool that lets you perform simple and powerful actions alike with equal speed and ease. For example, entering the Linux list command, ls, at the root directory produces results similar to the following.

# ls
/bin
/boot
/dev
/etc
/lib
/media
/mnt
/opt
/sbin
/srv
/tmp
/usr
/var

In the above command, the pound sign (#) indicates the presence of the Linux command prompt. You do not type the pound sign. Linux commands, paths, and file names are case-sensitive.

The following table presents a few of the more commonly used Linux commands.

| Command | Description |
| --- | --- |
| ls | Lists directory contents. Use the -l option (hyphen lower-case L) for a detailed listing. |
| cd | Changes directories. |
| cat | Outputs the contents of the named file to the screen. |
| clear | Clears screen. |
| cp | Copies files and directories. |
| <tab> | Auto-completes the command based on contents of the command line and directory contents. For example, typing cd and the beginning of a directory name, then pressing the tab key fills in the remaining letters in the name. |
| \| | “Pipes” the output from one command to the input of another. For example, to view the output of a command one screen at a time, pipe into the more command, as in: ls \| more |
| dmesg | Displays messages from the Linux kernel buffer. Useful to see if a device (such as USB key) mounted correctly. |
| find | Searches for files. For example, the following use of the find command searches for <filename> on all local filesystems (avoiding network mounts): find / -mount -name <filename> |
| grep | Searches for the named regular expression. Often used in conjunction with the pipe command, as in: ps \| grep avid |
| lvdisplay | Displays information about logical volumes. |
| man | Presents help (the “manual page”) for the named command. |
| mkdir | Creates a new directory. |
| mount, umount | Mounts and unmounts an external device to a directory. A device must be mounted before its contents can be accessed. |
| ps | Lists the running processes. |
| passwd | Changes the password for the logged-in user. |
| scp | Securely copies files between machines (across an ssh connection). |
| service | Runs an initialization script, e.g. service avid-all |
| tail | Shows you the last 10 (or n) lines in a file, e.g. tail <filename>, tail -50 <filename>, tail -f <filename>. The “-f” option keeps the tail command outputting appended data as the file grows. Useful for monitoring log files. |
| udevadm | Requests device events from the Linux kernel. Can be used to replay device events and create/update the 70-persistent-net.rules file, e.g. udevadm trigger --action=add |
| vi | Starts a vi editing session. |

Linux Text Editor (vi)

Linux features a powerful text editor called vi. To invoke vi, type the vi command followed by the target file at the command prompt.

# vi <filename>

Vi operates in one of two modes, insert mode and command mode. Insert mode lets you perform text edits (insertion, deletion, etc.). Command mode acts upon the file as a whole, for example to save it or to quit without saving.

• Press the “i” (as in Indigo) key to switch to insert mode.

• Press the colon (“:”) key to switch to command mode.

The following table presents a few of the more useful vi commands.

| Key Press | Description |
| --- | --- |
| Command Mode | |
| : | Prefix to commands in command mode |
| :wq | Write file and quit vi (in command mode) |
| :q! | Quit without writing (in command mode) |
| Insert Mode | |
| i | Insert text before the cursor, until you press <Esc> |
| I | Insert text at beginning of current line |
| a | Insert text after the cursor |
| A | Insert text at end of current line |
| <Esc> | Turn off Insert mode and switch to command mode. |
| w | Next word |
| b | Previous word |
| Shift-g | Move cursor to last line of the file |
| D | Delete remainder of line |
| x | Delete character under the cursor |
| dd | Delete current line |
| yy | “Yank” (copy) a whole line in command mode. |
| p | Paste the yanked line in command mode. |

For a series of short and helpful vi tutorials, see:

Linux Usage Tips

The following table presents tips that will make it easier to work in RHEL.

Tip Description

Getting Help

For help with Linux commands, the Linux System Manual (“man” pages) is easily available by typing the man command followed by the item of interest.

For example, for help with the ls command, type:

man ls

Searching within a man page

To search for a string within a Linux man page, type the forward slash (“/”) followed by the string of interest. This can be helpful for finding a parameter of interest in a long man entry.

“command not found” error

A common experience for users new to the Linux command line is to receive a “command not found” error after invoking a command or script that is definitely in the current directory.

Linux has a PATH variable, but for reasons of security, the current directory (“.” in Linux) is not included in it by default.

Thus, to execute a command or script in a directory that is unknown to the PATH variable, you must enter the full path to the script from the root directory (“/”) or, from the directory containing the script, use dot-slash (“./”) notation, which tells Linux the command you are looking for is in the current directory.

cat

Prints the contents of a file to the command line.

| more

Piping (“|”) the output of a command through the more command breaks up the output into screen-sized chunks.

For example, to view the contents of a large directory one screen at a time, type the following:

ls | more

less

Similar to the cat command, but automatically breaks up the output into screen-sized chunks, with navigation. Useful for navigating large amounts of text one screen at a time.

For example:

less <filename>

Volumes in Linux

For those more familiar with Windows, the steps to creating a usable volume in Linux are similar to preparing a new hard drive (HD) for use in Windows.

In Windows, you initialize the disk, create a partition, and assign it a drive letter. You must then format the disk, specify its file system, its allocation unit size, and assign it a volume label.

In Linux, you must also initialize the disk (this takes place during RHEL installation) and create a partition. You also format the disk and specify its file system and sector size. Volume labels do not apply, but have a parallel in the Linux device names (for example /dev/hda or /dev/hdb in the case of HDs).

Linux builds up to a usable volume in a series of “layers”, each building upon the previous. From lowest to highest they are physical volumes, volume groups, and logical volumes. The filesystem is built on top of the logical volume.

Clock Synchronization in Linux

The basic mechanism for clock synchronization under Linux is the Network Time Protocol (NTP)

daemon, ntpd, which can be used to automatically maintain synchronization of the system clock

with a specified time server. The time server might be a master clock within a firewall, or one of

the numerous time-servers based on an atomic clock and available via the internet. For reasons

of security, it ought to be a Linux NTP server (or compatible solution) within the corporate

firewall.
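As a rough illustration of checking synchronization: the ntpq -p command lists the time servers that ntpd is polling, and prefixes the peer currently selected for synchronization with an asterisk (*). The sketch below wraps that check in a small helper. The function name synced_peer and the sample host names are hypothetical and are not part of ICS.

```shell
# synced_peer: read "ntpq -p" output on stdin and print the peer that ntpd
# has selected for synchronization (the line prefixed with "*").
# Exits non-zero if no peer is selected. Hypothetical helper, not part of ICS.
synced_peer() {
    awk '/^\*/ { print substr($1, 2); found = 1 } END { exit !found }'
}

# Typical use on a node running ntpd:
#   ntpq -p | synced_peer || echo "clock is not synchronized yet"

# Demonstration with made-up ntpq output (host names are examples only).
printf '%s\n' \
    '     remote           refid      st t when poll reach   delay   offset  jitter' \
    '==============================================================================' \
    '*ntp1.example.com 10.0.0.1        2 u   35   64  377    0.412    0.051   0.018' \
    '+ntp2.example.com 10.0.0.1        2 u   12   64  377    0.530   -0.102   0.025' \
    | synced_peer
```

Running the same check on every node is one simple way to see whether all nodes have selected a time server.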

It is particularly important when setting up a cluster of ICS nodes that each node should have precisely the same time.

Clock synchronization is covered in “Synching the System Clock” on page 70.

Time Zones in RHEL

Like most operating systems, RHEL needs to know the time zone in which it is operating. In RHEL this is set by assigning geographic information and/or a specific time zone. For example the following are all valid time zone specifications in RHEL:

• America/EST

• America/Los_Angeles

• Australia/Sydney

• Brazil/East

• Europe/Amsterdam

The installation script automatically sets the time zone to Eastern Standard Time. You will have the opportunity to set the time zone to something more appropriate when you boot RHEL for the first time.

RAIDs in ICS

RAID stands for redundant array of inexpensive (or independent) disks. RAIDs are used in ICS to

provide data redundancy and for efficiency in caching large amounts of data across multiple

disks. On supported HP servers, you implement these RAIDs at the level of the HP disk

controller, using the HP RAID configuration BIOS utility.

ICS makes use of the following RAID types:

• RAID 1: All ICS implementations require a RAID 1 (mirror) for the system (OS) drive. This

RAID provides redundancy in the event of HD failure.

• RAID 5: Certain deployments also require additional disks configured as a RAID 5 (data

striping with parity blocks) for caching file data. This RAID provides redundancy and

increased performance.

Note: This document provides instructions for creating a media cache volume as a RAID 5 using multiple disks in the server enclosure. However, other configurations are possible, including two drives in a RAID 1 configuration, or a single drive. For details, see the “How to Buy Hardware for Interplay Central Services” guide.
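To make the capacity trade-off between these RAID types concrete: a two-drive RAID 1 mirror offers the capacity of a single drive, while a RAID 5 set gives up one drive's worth of space to parity. A minimal sketch of that arithmetic follows. The function names and the 450 GB drive size are illustrative assumptions, not Avid specifications.

```shell
# Usable capacity of a RAID 5 set: one drive's worth of space holds parity.
# Arguments: number of drives, size of each drive in GB (example values only).
raid5_usable_gb() {
    if [ "$1" -lt 3 ]; then
        echo "RAID 5 needs at least 3 drives" >&2
        return 1
    fi
    echo $(( ($1 - 1) * $2 ))
}

# Usable capacity of a two-drive RAID 1 mirror: the size of a single drive.
# Argument: size of each drive in GB.
raid1_usable_gb() {
    echo "$1"
}

raid5_usable_gb 6 450   # six hypothetical 450 GB cache drives; prints 2250
raid1_usable_gb 450     # mirrored system drive; prints 450
```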

The following deployments typically benefit from the configuration of a media cache volume:

• Interplay MAM: Interplay MAM deployments require a media cache volume when

registered browse proxies include formats that cannot be natively loaded by the Adobe

Flash-based player. That is, for non MP4 h.264 browse proxies (such as MPEG-1, Sony

XDCAM, MXF, and WMV), media on proxy storage is transcoded to FLV and stored.

• Interplay Central: Interplay Central installations deploying the iNEWS iOS (Apple mobile operating system) app require a media cache volume. In this case, media on the ISIS are transcoded to MPEG-TS (MPEG-2 transport stream), and stored.

With regards to particular servers:

• HP DL360: The HP DL360 may have up to 8 drives present. Configure two as RAID 1 for the system drive. The additional drives (up to 6), if present, can be configured as a RAID 5 volume for caching per deployment requirements.

• Other Servers: Other servers will have different hard drive capacities. Configure two drives as RAID 1 for the system drive and the remaining drives as a RAID 5 volume for caching.

Introduction to Clustering

Redundancy and scale for ICS are obtained by setting up a cluster of two or more servers. Within

the cluster, requests for media are automatically distributed to the available servers. An ICS

server cluster provides the following:

• Redundancy/High-availability. If any node in the cluster fails, connections to that node

will automatically be redirected to another node.

• Scale/Load balancing. All incoming playback connections are routed to a cluster IP

address, and are subsequently distributed evenly to the nodes in the cluster.

• Replicated Cache: The media transcoded by one node in the cluster is automatically

replicated in the other nodes. If another node receives the same playback request, the

media is immediately available without the need to re-transcode.

• Cluster monitoring. You can monitor the status of the cluster by entering a command. If

a node fails (or if any other serious problem is detected by the cluster monitoring

service), an e-mail is automatically sent to one or more e-mail addresses.

Generally speaking, clusters consist of nodes with identical hardware profiles. However, this is

not required. You can use different hardware profiles for the servers in a cluster.

Note: For detailed information on how ICS servers operate in a cluster, see the “ICS 1.8

Service and Server Clustering Overview” guide.


Single Server Deployment

In a single server deployment, all ICS services and the ICPS playback service run on the same

server. This server also holds the ICS database and the dedicated media cache volume.

The following diagram illustrates a typical single-server deployment.


Cluster Deployment

Multicast vs Unicast

In a cluster deployment, there is one master-slave pair of nodes (providing high-availability and

failover), and additional nodes supporting transcoding (for scale and load-balancing). In a

cluster, all ICS traffic is routed to the master node. Player requests, handled by the ICPS playback