
Interplay® Central Services

Service and Server Clustering Overview

Version 1.8


Legal Notices

Product specifications are subject to change without notice and do not represent a commitment on the part of Avid Technology, Inc.

This product is subject to the terms and conditions of a software license agreement provided with the software. The product may only be used in accordance with the license agreement.

This product may be protected by one or more U.S. and non-U.S. patents. Details are available at www.avid.com/patents.

This document is protected under copyright law. An authorized licensee of Interplay Central may reproduce this publication for the licensee’s own use in learning how to use the software. This document may not be reproduced or distributed, in whole or in part, for commercial purposes, such as selling copies of this document or providing support or educational services to others. This document is supplied as a guide for Interplay Central. Reasonable care has been taken in preparing the information it contains. However, this document may contain omissions, technical inaccuracies, or typographical errors. Avid Technology, Inc. does not accept responsibility of any kind for customers’ losses due to the use of this document. Product specifications are subject to change without notice.

Copyright © 2014 Avid Technology, Inc. and its licensors. All rights reserved.

The following disclaimer is required by Apple Computer, Inc.:

APPLE COMPUTER, INC. MAKES NO WARRANTIES WHATSOEVER, EITHER EXPRESS OR IMPLIED, REGARDING THIS PRODUCT, INCLUDING WARRANTIES WITH RESPECT TO ITS MERCHANTABILITY OR ITS FITNESS FOR ANY PARTICULAR PURPOSE. THE EXCLUSION OF IMPLIED WARRANTIES IS NOT PERMITTED BY SOME STATES. THE ABOVE EXCLUSION MAY NOT APPLY TO YOU. THIS WARRANTY PROVIDES YOU WITH SPECIFIC LEGAL RIGHTS. THERE MAY BE OTHER RIGHTS THAT YOU MAY HAVE WHICH VARY FROM STATE TO STATE.

The following disclaimer is required by Sam Leffler and Silicon Graphics, Inc. for the use of their TIFF library:

Copyright © 1988–1997 Sam Leffler

Copyright © 1991–1997 Silicon Graphics, Inc.

Permission to use, copy, modify, distribute, and sell this software [i.e., the TIFF library] and its documentation for any purpose is hereby granted without fee, provided that (i) the above copyright notices and this permission notice appear in all copies of the software and related documentation, and (ii) the names of Sam Leffler and Silicon Graphics may not be used in any advertising or publicity relating to the software without the specific, prior written permission of Sam Leffler and Silicon Graphics.

THE SOFTWARE IS PROVIDED “AS-IS” AND WITHOUT WARRANTY OF ANY KIND, EXPRESS, IMPLIED OR OTHERWISE, INCLUDING WITHOUT LIMITATION, ANY WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. IN NO EVENT SHALL SAM LEFFLER OR SILICON GRAPHICS BE LIABLE FOR ANY SPECIAL, INCIDENTAL, INDIRECT OR CONSEQUENTIAL DAMAGES OF ANY KIND, OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER OR NOT ADVISED OF THE POSSIBILITY OF DAMAGE, AND ON ANY THEORY OF LIABILITY, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

The following disclaimer is required by the Independent JPEG Group:

This software is based in part on the work of the Independent JPEG Group.

This Software may contain components licensed under the following conditions:

Copyright (c) 1989 The Regents of the University of California. All rights reserved.

Redistribution and use in source and binary forms are permitted provided that the above copyright notice and this paragraph are duplicated in all such forms and that any documentation, advertising materials, and other materials related to such distribution and use acknowledge that the software was developed by the University of California, Berkeley. The name of the University may not be used to endorse or promote products derived from this software without specific prior written permission. THIS SOFTWARE IS PROVIDED “AS IS” AND WITHOUT ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE.

Copyright (C) 1989, 1991 by Jef Poskanzer.

Permission to use, copy, modify, and distribute this software and its documentation for any purpose and without fee is hereby granted, provided that the above copyright notice appear in all copies and that both that copyright notice and this permission notice appear in supporting documentation. This software is provided "as is" without express or implied warranty.

Copyright 1995, Trinity College Computing Center. Written by David Chappell.


Permission to use, copy, modify, and distribute this software and its documentation for any purpose and without fee is hereby granted, provided that the above copyright notice appear in all copies and that both that copyright notice and this permission notice appear in supporting documentation. This software is provided "as is" without express or implied warranty.

Copyright 1996 Daniel Dardailler.

Permission to use, copy, modify, distribute, and sell this software for any purpose is hereby granted without fee, provided that the above copyright notice appear in all copies and that both that copyright notice and this permission notice appear in supporting documentation, and that the name of Daniel Dardailler not be used in advertising or publicity pertaining to distribution of the software without specific, written prior permission. Daniel Dardailler makes no representations about the suitability of this software for any purpose. It is provided "as is" without express or implied warranty.

Modifications Copyright 1999 Matt Koss, under the same license as above.

Copyright (c) 1991 by AT&T.

Permission to use, copy, modify, and distribute this software for any purpose without fee is hereby granted, provided that this entire notice is included in all copies of any software which is or includes a copy or modification of this software and in all copies of the supporting documentation for such software.

THIS SOFTWARE IS BEING PROVIDED "AS IS", WITHOUT ANY EXPRESS OR IMPLIED WARRANTY. IN PARTICULAR, NEITHER THE AUTHOR NOR AT&T MAKES ANY REPRESENTATION OR WARRANTY OF ANY KIND CONCERNING THE MERCHANTABILITY OF THIS SOFTWARE OR ITS FITNESS FOR ANY PARTICULAR PURPOSE.

This product includes software developed by the University of California, Berkeley and its contributors.

The following disclaimer is required by Paradigm Matrix:

Portions of this software licensed from Paradigm Matrix.

The following disclaimer is required by Ray Sauers Associates, Inc.:

“Install-It” is licensed from Ray Sauers Associates, Inc. End-User is prohibited from taking any action to derive a source code equivalent of “Install-It,” including by reverse assembly or reverse compilation, Ray Sauers Associates, Inc. shall in no event be liable for any damages resulting from reseller’s failure to perform reseller’s obligation; or any damages arising from use or operation of reseller’s products or the software; or any other damages, including but not limited to, incidental, direct, indirect, special or consequential Damages including lost profits, or damages resulting from loss of use or inability to use reseller’s products or the software for any reason including copyright or patent infringement, or lost data, even if Ray Sauers Associates has been advised, knew or should have known of the possibility of such damages.

The following disclaimer is required by Videomedia, Inc.:

“Videomedia, Inc. makes no warranties whatsoever, either express or implied, regarding this product, including warranties with respect to its merchantability or its fitness for any particular purpose.”

“This software contains V-LAN ver. 3.0 Command Protocols which communicate with V-LAN ver. 3.0 products developed by Videomedia, Inc. and V-LAN ver. 3.0 compatible products developed by third parties under license from Videomedia, Inc. Use of this software will allow “frame accurate” editing control of applicable videotape recorder decks, videodisc recorders/players and the like.”

The following disclaimer is required by Altura Software, Inc. for the use of its Mac2Win software and Sample Source Code:

©1993–1998 Altura Software, Inc.

The following disclaimer is required by 3Prong.com Inc.:

Certain waveform and vector monitoring capabilities are provided under a license from 3Prong.com Inc.

The following disclaimer is required by Interplay Entertainment Corp.:

The “Interplay” name is used with the permission of Interplay Entertainment Corp., which bears no responsibility for Avid products.

This product includes portions of the Alloy Look & Feel software from Incors GmbH.

This product includes software developed by the Apache Software Foundation (http://www.apache.org/).

© DevelopMentor

This product may include the JCifs library, for which the following notice applies:

JCifs © Copyright 2004, The JCIFS Project, is licensed under LGPL (http://jcifs.samba.org/). See the LGPL.txt file in the Third Party Software directory on the installation CD.


Avid Interplay contains components licensed from LavanTech. These components may only be used as part of and in connection with Avid Interplay.

This product includes FFmpeg, which is covered by the GNU Lesser General Public License.

This product includes software that is based in part on the work of the FreeType Team.

This software is based in part on the work of the Independent JPEG Group.

This product includes libjpeg-turbo, which is covered by the wxWindows Library License, Version 3.1.

Portions copyright 1994, 1995, 1996, 1997, 1998, 1999, 2000, 2001, 2002 by Cold Spring Harbor Laboratory. Funded under Grant P41-RR02188 by the National Institutes of Health.

Portions copyright 1996, 1997, 1998, 1999, 2000, 2001, 2002 by Boutell.Com, Inc.

Portions relating to GD2 format copyright 1999, 2000, 2001, 2002 Philip Warner.

Portions relating to PNG copyright 1999, 2000, 2001, 2002 Greg Roelofs.

Portions relating to gdttf.c copyright 1999, 2000, 2001, 2002 John Ellson (ellson@lucent.com).

Portions relating to gdft.c copyright 2001, 2002 John Ellson (ellson@lucent.com).

Portions relating to JPEG and to color quantization copyright 2000, 2001, 2002, Doug Becker and copyright (C) 1994, 1995, 1996, 1997, 1998, 1999, 2000, 2001, 2002, Thomas G. Lane. This software is based in part on the work of the Independent JPEG Group. See the file README-JPEG.TXT for more information. Portions relating to WBMP copyright 2000, 2001, 2002 Maurice Szmurlo and Johan Van den Brande.

Permission has been granted to copy, distribute and modify gd in any context without fee, including a commercial application, provided that this notice is present in user-accessible supporting documentation.

This does not affect your ownership of the derived work itself, and the intent is to assure proper credit for the authors of gd, not to interfere with your productive use of gd. If you have questions, ask. "Derived works" includes all programs that utilize the library. Credit must be given in user-accessible documentation.

This software is provided "AS IS." The copyright holders disclaim all warranties, either express or implied, including but not limited to implied warranties of merchantability and fitness for a particular purpose, with respect to this code and accompanying documentation.

Although their code does not appear in gd, the authors wish to thank David Koblas, David Rowley, and Hutchison Avenue Software Corporation for their prior contributions.

This product includes software developed by the OpenSSL Project for use in the OpenSSL Toolkit (http://www.openssl.org/)

Interplay Central may use OpenLDAP. Copyright 1999-2003 The OpenLDAP Foundation, Redwood City, California, USA. All Rights Reserved. OpenLDAP is a registered trademark of the OpenLDAP Foundation.

Avid Interplay Pulse enables its users to access certain YouTube functionality, as a result of Avid's licensed use of YouTube's API. The charges levied by Avid for use of Avid Interplay Pulse are imposed by Avid, not YouTube. YouTube does not charge users for accessing YouTube site functionality through the YouTube APIs.

Avid Interplay Pulse uses the bitly API, but is neither developed nor endorsed by bitly.

Attn. Government User(s). Restricted Rights Legend

U.S. GOVERNMENT RESTRICTED RIGHTS. This Software and its documentation are “commercial computer software” or “commercial computer software documentation.” In the event that such Software or documentation is acquired by or on behalf of a unit or agency of the U.S. Government, all rights with respect to this Software and documentation are subject to the terms of the License Agreement, pursuant to FAR §12.212(a) and/or DFARS §227.7202-1(a), as applicable.


Trademarks

003, 192 Digital I/O, 192 I/O, 96 I/O, 96i I/O, Adrenaline, AirSpeed, ALEX, Alienbrain, AME, AniMatte, Archive, Archive II, Assistant Station, AudioPages, AudioStation, AutoLoop, AutoSync, Avid, Avid Active, Avid Advanced Response, Avid DNA, Avid DNxcel, Avid DNxHD, Avid DS Assist Station, Avid Ignite, Avid Liquid, Avid Media Engine, Avid Media Processor, Avid MEDIArray, Avid Mojo, Avid Remote Response, Avid Unity, Avid Unity ISIS, Avid VideoRAID, AvidRAID, AvidShare, AVIDstripe, AVX, Beat Detective, Beauty Without The Bandwidth, Beyond Reality, BF Essentials, Bomb Factory, Bruno, C|24, CaptureManager, ChromaCurve, ChromaWheel, Cineractive Engine, Cineractive Player, Cineractive Viewer, Color Conductor, Command|24, Command|8, Control|24, Cosmonaut Voice, CountDown, d2, d3, DAE, D-Command, D-Control, Deko, DekoCast, D-Fi, D-fx, Digi 002, Digi 003, DigiBase, Digidesign, Digidesign Audio Engine, Digidesign Development Partners, Digidesign Intelligent Noise Reduction, Digidesign TDM Bus, DigiLink, DigiMeter, DigiPanner, DigiProNet, DigiRack, DigiSerial, DigiSnake, DigiSystem, Digital Choreography, Digital Nonlinear Accelerator, DigiTest, DigiTranslator, DigiWear, DINR, DNxchange, Do More, DPP-1, D-Show, DSP Manager, DS-StorageCalc, DV Toolkit, DVD Complete, D-Verb, Eleven, EM, Euphonix, EUCON, EveryPhase, Expander, ExpertRender, Fader Pack, Fairchild, FastBreak, Fast Track, Film Cutter, FilmScribe, Flexevent, FluidMotion, Frame Chase, FXDeko, HD Core, HD Process, HDpack, Home-to-Hollywood, HYBRID, HyperSPACE, HyperSPACE HDCAM, iKnowledge, Image Independence, Impact, Improv, iNEWS, iNEWS Assign, iNEWS ControlAir, InGame, Instantwrite, Instinct, Intelligent Content Management, Intelligent Digital Actor Technology, IntelliRender, Intelli-Sat, Intelli-sat Broadcasting Recording Manager, InterFX, Interplay, inTONE, Intraframe, iS Expander, iS9, iS18, iS23, iS36, ISIS, IsoSync, LaunchPad, LeaderPlus, LFX, Lightning, Link & Sync, ListSync, LKT-200, Lo-Fi, MachineControl, Magic Mask, Make Anything Hollywood, make manage move | media, Marquee, MassivePack, Massive Pack Pro, Maxim, Mbox, Media Composer, MediaFlow, MediaLog, MediaMix, Media Reader, Media Recorder, MEDIArray, MediaServer, MediaShare, MetaFuze, MetaSync, MIDI I/O, Mix Rack, Moviestar, MultiShell, NaturalMatch, NewsCutter, NewsView, NewsVision, Nitris, NL3D, NLP, NSDOS, NSWIN, OMF, OMF Interchange, OMM, OnDVD, Open Media Framework, Open Media Management, Painterly Effects, Palladium, Personal Q, PET, Podcast Factory, PowerSwap, PRE, ProControl, ProEncode, Profiler, Pro Tools, Pro Tools|HD, Pro Tools LE, Pro Tools M-Powered, Pro Transfer, QuickPunch, QuietDrive, Realtime Motion Synthesis, Recti-Fi, Reel Tape Delay, Reel Tape Flanger, Reel Tape Saturation, Reprise, Res Rocket Surfer, Reso, RetroLoop, Reverb One, ReVibe, Revolution, rS9, rS18, RTAS, Salesview, Sci-Fi, Scorch, ScriptSync, SecureProductionEnvironment, Serv|GT, Serv|LT, Shape-to-Shape, ShuttleCase, Sibelius, SimulPlay, SimulRecord, Slightly Rude Compressor, Smack!, Soft SampleCell, Soft-Clip Limiter, SoundReplacer, SPACE, SPACEShift, SpectraGraph, SpectraMatte, SteadyGlide, Streamfactory, Streamgenie, StreamRAID, SubCap, Sundance, Sundance Digital, SurroundScope, Symphony, SYNC HD, SYNC I/O, Synchronic, SynchroScope, Syntax, TDM FlexCable, TechFlix, Tel-Ray, Thunder, TimeLiner, Titansync, Titan, TL Aggro, TL AutoPan, TL Drum Rehab, TL Everyphase, TL Fauxlder, TL In Tune, TL MasterMeter, TL Metro, TL Space, TL Utilities, tools for storytellers, Transit, TransJammer, Trillium Lane Labs, TruTouch, UnityRAID, Vari-Fi, Video the Web Way, VideoRAID, VideoSPACE, VTEM, Work-N-Play, Xdeck, X-Form, Xmon and XPAND! are either registered trademarks or trademarks of Avid Technology, Inc. in the United States and/or other countries.

Adobe and Photoshop are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. Apple and Macintosh are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. Windows is either a registered trademark or trademark of Microsoft Corporation in the United States and/or other countries. All other trademarks contained herein are the property of their respective owners.

Avid Interplay Central Services — Service and Server Clustering Overview • XXXX-XXXXXX-XX Rev A • May 2014 • Created 5/27/14 • This document is distributed by Avid in online (electronic) form only, and is not available for purchase in printed form.


Contents

Using This Guide

Chapter 1 Overview

Single Server Deployment

Cluster Deployment

How a Failover Works

How Load Balancing Works

Chapter 2 System Architecture

Disk and File System Layout

ICS Services and Databases in a Cluster

Clustering Infrastructure Services

DRBD and Database Replication

GlusterFS and Cache Replication

Clustering and RabbitMQ

Chapter 3 Services, Resources and Logs

Services vs Resources

Tables of Services, Resources and Utilities

Interacting with Services

Interacting with Resources

Services Start Order and Dependencies

Working with Cluster Logs

Understanding Log Rotation and Compression

Viewing the Content of Log Files

Retrieving Log Files

Important Log Files at a Glance

RHEL Logs in /var/log

RHEL Subdirectories in /var/log

ICS Logs in /var/log

ICS Subdirectories in /var/log

Chapter 4 Validating the Cluster

Verifying Cluster Configuration

Verifying the Contents of the Hosts File

Verifying the Lookup Service Order

Verifying the Cluster IP Addresses and NIC Names

Verifying Node Connectivity

Checking DRBD Status

Identifying the Master, Slave, and Load-Balancing Nodes

Forcing a Failover

Resetting Fail Counts

Chapter 5 Cluster Maintenance and Administrator Best Practices

Checking Cluster Status

Understanding the Cluster Resource Monitor Output

Verifying Clock Synchronization

Verifying the AAF Generator Service

Shutting Down / Rebooting a Single Node

Shutting Down / Rebooting an Entire Cluster

Performing a Rolling Shutdown / Reboot

Responding to Automated Cluster Email

Chapter 6 Cluster Troubleshooting

Common Troubleshooting Commands

Troubleshooting DRBD

Manually Connecting the DRBD Slave to the Master

Correcting a DRBD Split Brain

Chapter 7 Frequently Asked Questions


Using This Guide

This guide is intended for the person responsible for installing, maintaining, or administering a cluster of Avid Interplay Central Services (ICS) servers. It provides background and technical information on clustering in ICS, an inventory of ICS services, and instructions for interacting with those services for maintenance purposes. It also explains the specifics of an ICS cluster and how each service operates in a cluster, and offers guidance on best practices for cluster administration. Its aim is to give the person charged with installing, maintaining, or troubleshooting an ICS cluster the technical proficiency the job requires.

For a general introduction to Interplay Central Services, including ICS installation and clustering steps, see the Avid Interplay Central Services Installation and Configuration Guide. For administrative information for Interplay Central, see the Avid Interplay Central Administration Guide.


1 Overview

Interplay Central Services (ICS) is a collection of software services running on a server, supplying interfaces, video playback, and other services to Avid solutions including Interplay Central, Interplay Sphere, Interplay MAM, and mobile applications. A cluster is a collection of servers that have ICS and additional clustering infrastructure installed. The cluster is configured to appear to the outside world as a single server. The primary advantages of a cluster are high availability and additional playback capacity.

High availability is obtained through automatic failover of services from one node to another. This can be achieved with a cluster of just two servers, a primary (master) and a secondary (slave). All ICS services run on the primary node. Key ICS services also run on the secondary node. If a service fails on the primary node and cannot be recovered there, the secondary node automatically takes over, without the need for human intervention.

When additional capacity is the aim, more servers can be added to the master-slave cluster. In this case, playback requests (which, as always, are fielded by the master) are automatically distributed to the available servers, which transcode the media and serve the transcoded result. This is referred to as load balancing. A load-balanced cluster provides better performance for a deployment that supports multiple simultaneous users or connections.

An additional benefit of a load-balanced cluster is cache replication, in which media transcoded by one server is immediately distributed to all the other nodes in the cluster. If another node receives the same playback request, the material is immediately available without being transcoded again.
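The cache-replication behavior described above can be pictured with a toy model. This is a minimal Python sketch, not the ICS implementation: the class names, the `play()` method, and the in-memory dictionaries standing in for each node's file cache are all hypothetical, and real replication happens in the cluster's storage layer rather than inside one process. It shows only the property that matters here: media is transcoded once, on a cache miss, and every other node can then serve it from its own copy.

```python
"""Toy model of a replicated transcode cache (illustration only, not ICS code)."""
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cache: dict[str, bytes] = field(default_factory=dict)  # stands in for the node's file cache
    transcodes: int = 0  # how many times this node actually transcoded something


class ReplicatedCluster:
    def __init__(self, names: list[str]) -> None:
        self.nodes = {n: Node(n) for n in names}

    def play(self, node_name: str, asset_id: str) -> bytes:
        """Serve asset_id from node_name, transcoding only on a cache miss."""
        node = self.nodes[node_name]
        if asset_id in node.cache:
            return node.cache[asset_id]          # cache hit: no re-transcode needed
        media = self._transcode(node, asset_id)  # cache miss: do the work once
        for peer in self.nodes.values():         # replicate the result to every node
            peer.cache[asset_id] = media
        return media

    @staticmethod
    def _transcode(node: Node, asset_id: str) -> bytes:
        node.transcodes += 1
        return f"proxy-of-{asset_id}".encode()   # placeholder for real transcoded media
```

For example, after `node1` transcodes `clip42`, a request for the same clip on `node3` is served from `node3`'s replicated copy, and `node3`'s transcode counter stays at zero.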

In summary, an ICS server cluster provides the following:

• Redundancy/High availability. If any node in the cluster fails, connections to that node are automatically redirected to another node.

• Scale/Load balancing. All incoming playback connections are routed to a single cluster IP address, and are subsequently distributed evenly to the nodes in the cluster.

• Replicated cache. The media transcoded by one node in the cluster is automatically replicated on the other nodes. If another node receives the same playback request, the media is immediately available without the need to re-transcode.

• Cluster monitoring. A cluster resource monitor lets you actively monitor the status of the cluster. In addition, if a node fails (or if any other serious problem is detected), e-mail is automatically sent to one or more e-mail addresses.


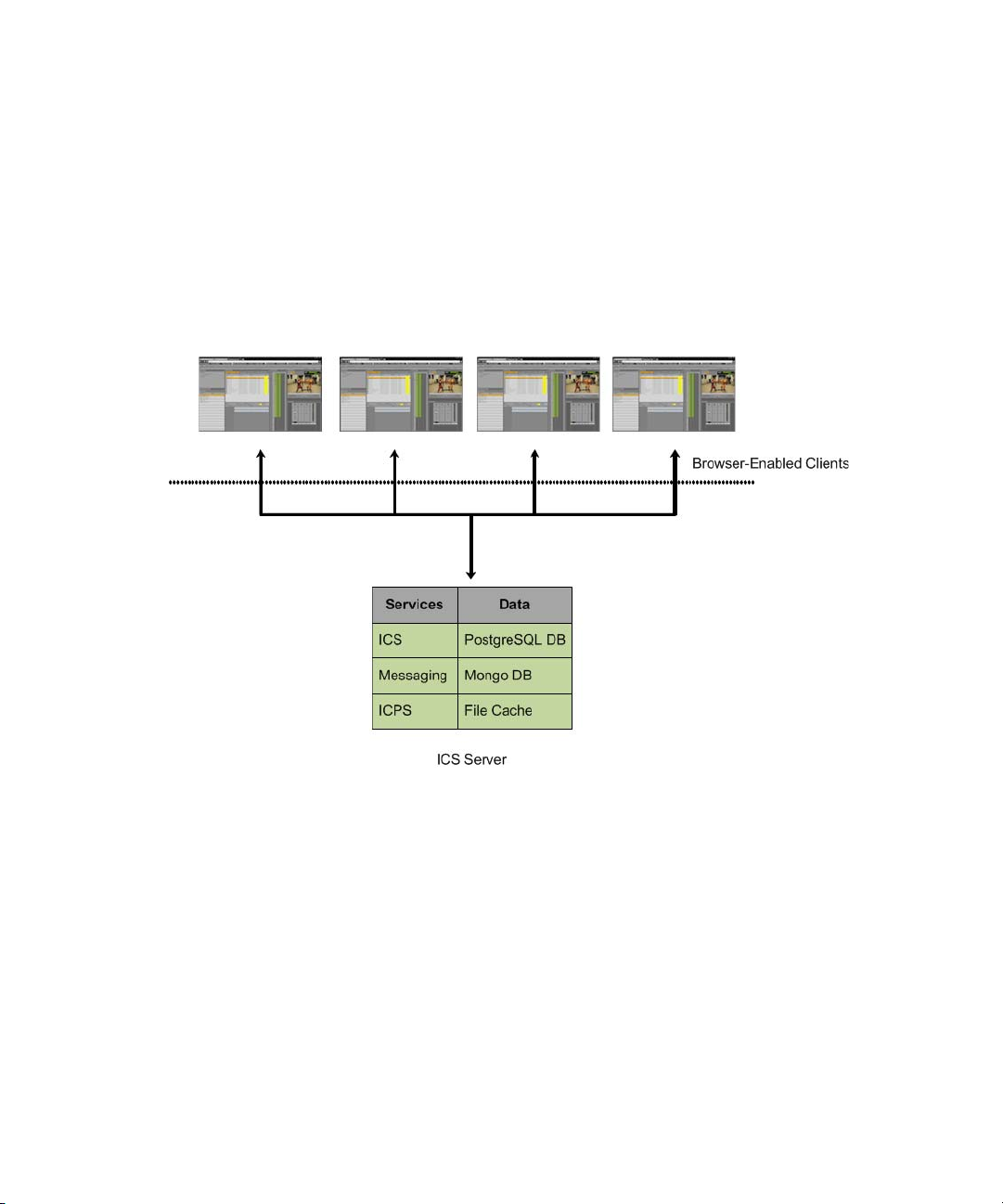

Single Server Deployment

In a single server deployment, all ICS services (including the playback service) run on the same server. This server also holds the ICS database and the RAID 5 file cache. Since there is only one server, all tasks, including transcoding, are performed by the same machine. The single server has its own host name and IP address, which Interplay Central users, for example, enter in a web browser to connect to it directly.

The following diagram illustrates a typical single-server deployment.


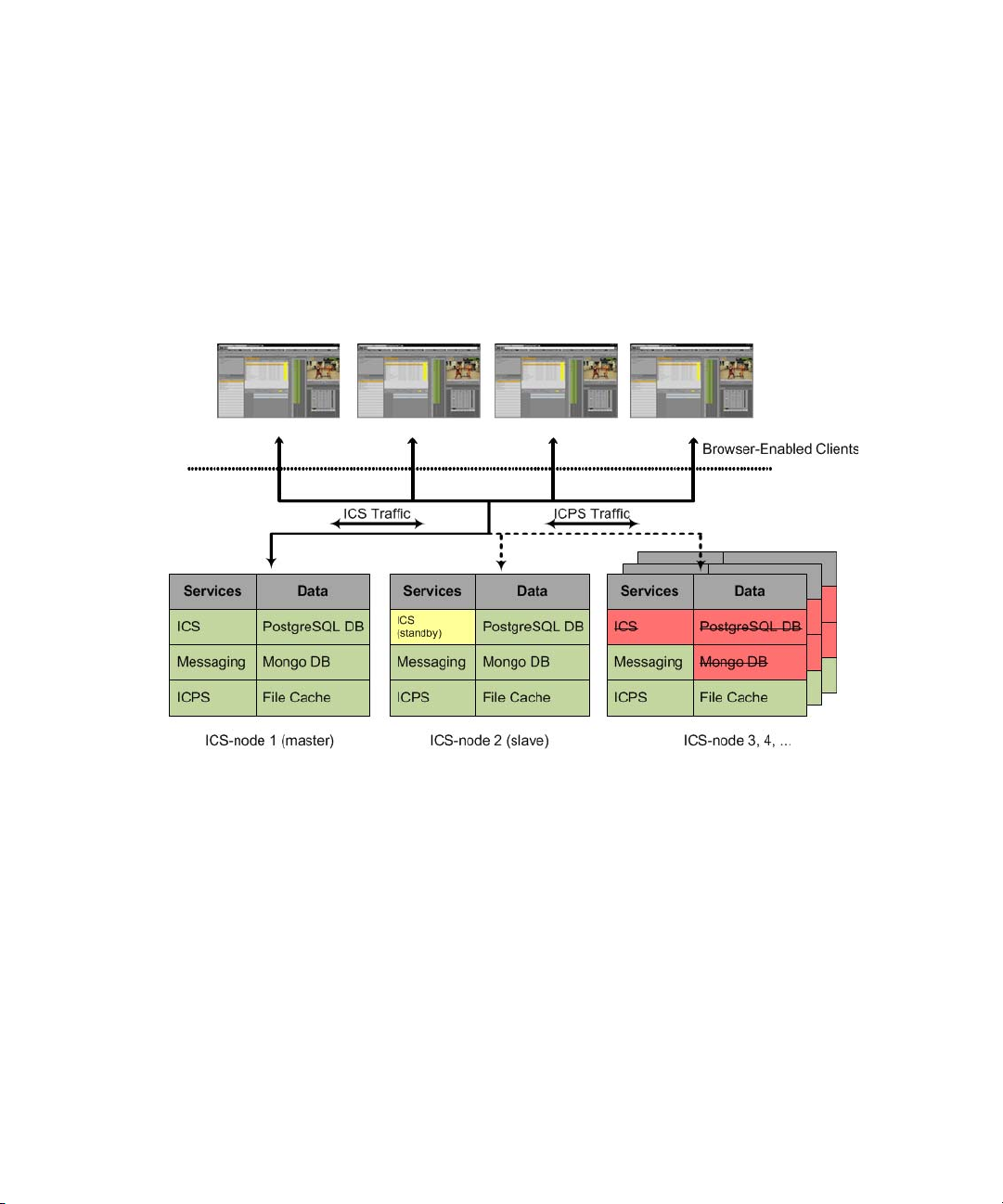

Cluster Deployment

In a basic deployment, a cluster consists of one master-slave pair of nodes configured for high availability. Typically, other nodes are also present to support load-balanced transcoding and playback. As in a single server deployment, all ICS traffic is routed through a single node: in this case the master, which runs all ICS services. Key ICS services and databases are replicated on the slave node, which is ready to assume the role of master at any time. On the slave, some of these services are actively running and some are in standby mode.

Playback requests, handled by the ICPS playback service, are distributed by the master to all available nodes. The load-balancing nodes perform transcoding, but do not participate in failovers; that is, without human intervention, they can never take on the role of master or slave.


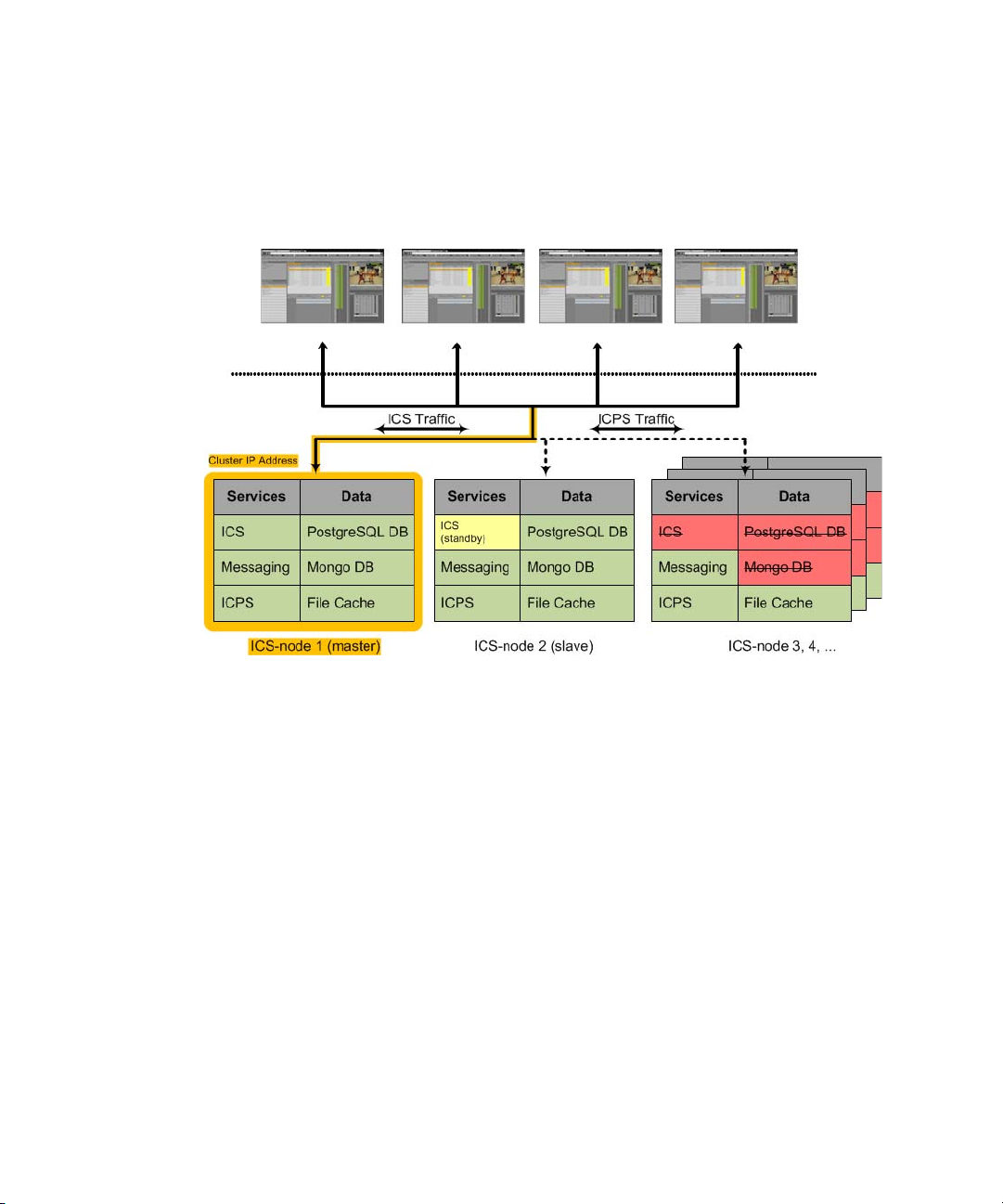

A key difference in a cluster deployment concerns the IP address. In a single server deployment, each server owns its host name and IP address, which is used, for example, by Interplay Central users to connect using a web browser. In contrast, a cluster has a virtual IP address (and a corresponding host name) defined at the DNS level. Interplay Central users enter the cluster IP address or host name in the browser's address bar, not the name of an individual server. The cluster directs the connection request to one of its servers, which remains hidden from the user.

The following diagram illustrates a typical cluster deployment.

How a Failover Works

Failovers in ICS operate at two distinct levels: service and node. A cluster monitor oversees both levels. When a service fails, the cluster monitor swiftly restarts it and increments the service's fail count. If the service fails too often (or cannot be restarted), the cluster monitor gives responsibility for the service to another node in the cluster, in a process referred to as a failover. A service restart in itself is not enough to trigger a failover. A failover occurs when the fail count for the service reaches the threshold value.

The node on which the service failed remains in the cluster, but no longer performs the duties of the failed service. The failed service is not restarted on that node until you manually reset its fail count.
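The service-level rules above (restart in place, count the failure, fail over only at the threshold, and keep the service off the failed node until its fail count is reset) can be sketched as follows. This is a minimal illustration under stated assumptions: the `ClusterMonitor` class, the threshold of 3, and the service and node names are hypothetical; the real threshold is defined by the cluster configuration, and the case of a service that cannot be restarted at all is not modeled.

```python
"""Toy model of service-level failover driven by a fail count (illustration only)."""
from dataclasses import dataclass, field

FAIL_THRESHOLD = 3  # hypothetical value; the real threshold comes from the cluster configuration


@dataclass
class ClusterMonitor:
    nodes: list[str]
    owner: dict[str, str] = field(default_factory=dict)  # service -> node currently running it
    fail_counts: dict[tuple[str, str], int] = field(default_factory=dict)  # (service, node) -> count

    def on_failure(self, service: str) -> str:
        """Record a failure of `service` on its current node; return the node that runs it next."""
        node = self.owner[service]
        key = (service, node)
        self.fail_counts[key] = self.fail_counts.get(key, 0) + 1
        if self.fail_counts[key] < FAIL_THRESHOLD:
            return node  # below the threshold: restart in place, no failover
        # Threshold reached: hand the service to a node that is still eligible to run it.
        for candidate in self.nodes:
            if self.fail_counts.get((service, candidate), 0) < FAIL_THRESHOLD:
                self.owner[service] = candidate
                return candidate
        raise RuntimeError(f"no eligible node left for {service}")

    def reset_fail_count(self, service: str, node: str) -> None:
        """Manual step: make `node` eligible to run `service` again."""
        self.fail_counts.pop((service, node), None)
```

With two nodes and a threshold of 3, the first two failures of a service on `node1` simply restart it there; the third moves it to `node2`, and `node1` becomes eligible again only after `reset_fail_count()` is called.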


To achieve this state of high availability, one node in the cluster is assigned the role of master node. It runs all the key ICS services. The master node also owns the cluster IP address, so all incoming requests come directly to this node and are serviced by it. This is shown in the following illustration:

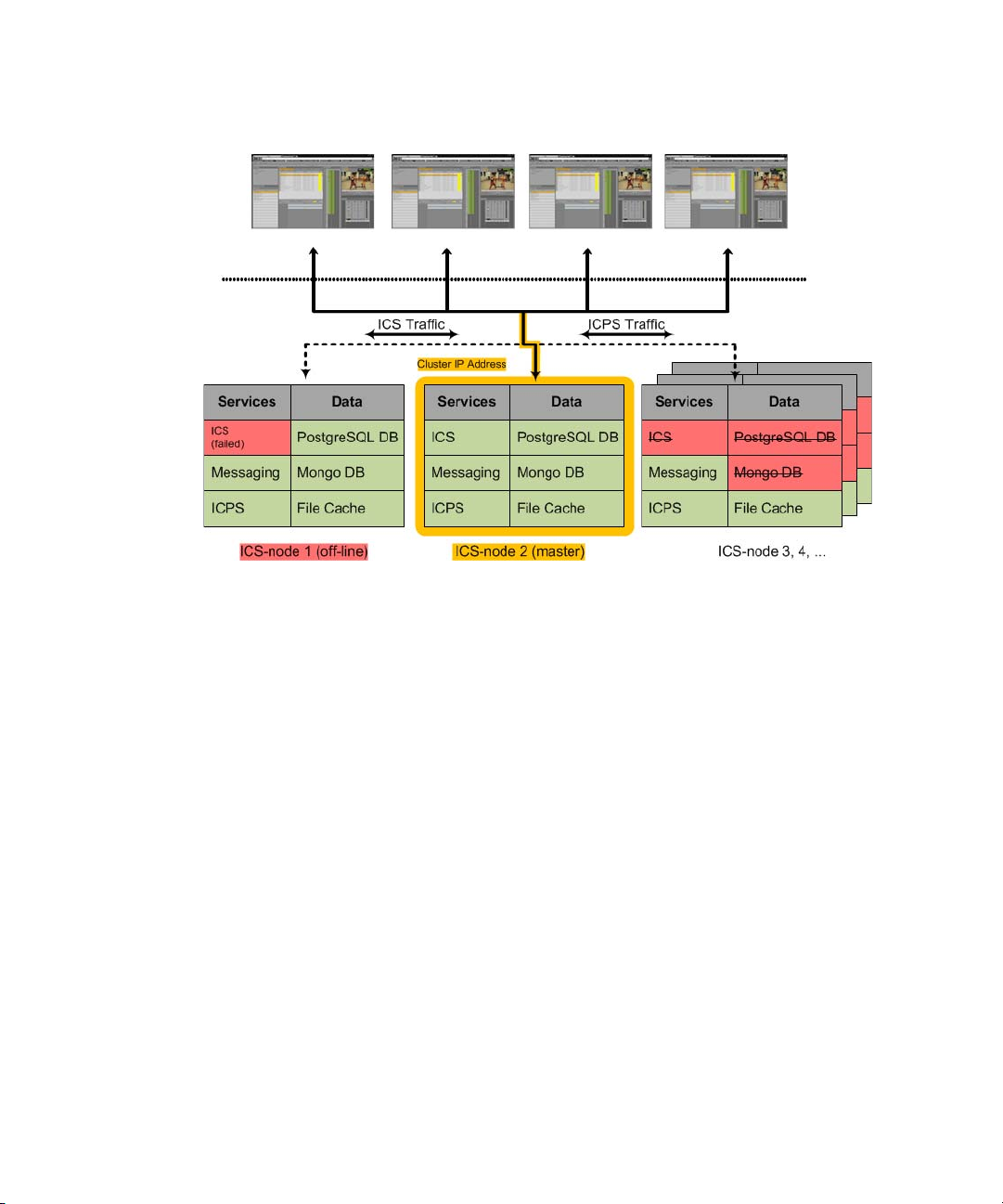

Should any of the key ICS services running on the master node fail without recovery (or reach the failure threshold), the node is automatically taken out of the cluster and another node takes on the role of master node. The node that takes over inherits the cluster IP address, and its own ICS services (previously in standby) become fully active. From this point, the new master receives all incoming requests. An administrator must then determine the cause of the fault on the failed node and restore the node to service promptly.
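The node-level failover described above can be modeled the same way. The sketch below is hypothetical throughout (the `Node` fields, the role strings, and the `fail_over()` function are illustrative, not part of ICS); it captures only the rules stated in this section: the slave is the node promoted, it inherits the cluster IP address and activates its standby services, and load-balancing nodes never change role.

```python
"""Toy model of node-level failover (illustration only, not ICS code)."""
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    role: str                      # "master", "slave", "load-balancing" or "failed"
    healthy: bool = True
    owns_cluster_ip: bool = False  # True only on the node answering the cluster IP
    services_active: bool = False  # False means key services are in standby


def fail_over(nodes: list[Node]) -> Node:
    """Promote the slave if the master is unhealthy; return the node that owns the cluster IP."""
    master = next(n for n in nodes if n.role == "master")  # assumes exactly one master exists
    if master.healthy:
        return master  # nothing to do
    slave = next((n for n in nodes if n.role == "slave" and n.healthy), None)
    if slave is None:
        raise RuntimeError("no healthy slave available; manual intervention required")
    # The failed master gives up its role, the cluster IP and its active services.
    master.role, master.owns_cluster_ip, master.services_active = "failed", False, False
    # The slave inherits the cluster IP and its standby services become fully active.
    slave.role, slave.owns_cluster_ip, slave.services_active = "master", True, True
    # Load-balancing nodes are never considered, so their roles are unchanged.
    return slave
```

For example, with `node1` as master, `node2` as slave and `node3` as a load-balancing node, marking `node1` unhealthy and calling `fail_over()` returns `node2`, which now owns the cluster IP; `node3` keeps its role.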

In a correctly sized cluster, a single node can fail and the cluster will still serve its users properly. However, if two nodes fail, the resulting cluster is likely under-provisioned for expected use and will be oversubscribed.

This failover from master to slave is shown in the following illustration.


How Load Balancing Works

In ICS, the video playback service is load-balanced, meaning incoming video playback requests are distributed evenly across all nodes in the cluster. This is possible because the Interplay Central Playback Service (ICPS) runs actively on all nodes in the cluster concurrently. A load-balancing algorithm controlled by the master node monitors the clustered nodes and determines which node gets each job.

The exception is the master node, which is treated differently. A portion of its CPU capacity is

preserved for the duties performed by the master node alone, which include serving the UI,

handling logins and user session information, and so on. When the system is under heavy usage,

the master node will not take on additional playback jobs.
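The effect of reserving part of the master's capacity can be illustrated with a simple placement rule. The actual ICS load-balancing algorithm is not described here, so this Python sketch substitutes a plain least-loaded heuristic. `MASTER_PLAYBACK_CAP`, the function name and the load figures are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical placement rule; not the ICS load-balancing algorithm.
# Hypothetical share of master CPU that playback jobs may use.
MASTER_PLAYBACK_CAP = 0.5


def pick_node(loads: Dict[str, float], master: str) -> Optional[str]:
    """Choose a node for a new playback job.

    loads maps node name to current CPU utilization (0.0 to 1.0). Every node is
    eligible while below full load, except the master, which stops taking
    playback jobs once it reaches its cap. Returns None if no node is eligible.
    """
    eligible = {
        node: load
        for node, load in loads.items()
        if load < (MASTER_PLAYBACK_CAP if node == master else 1.0)
    }
    if not eligible:
        return None
    return min(eligible, key=eligible.__getitem__)


print(pick_node({"ics-node1": 0.2, "ics-node2": 0.9, "ics-node3": 0.95}, "ics-node1"))
# ics-node1
print(pick_node({"ics-node1": 0.6, "ics-node2": 0.9, "ics-node3": 0.95}, "ics-node1"))
# ics-node2
```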

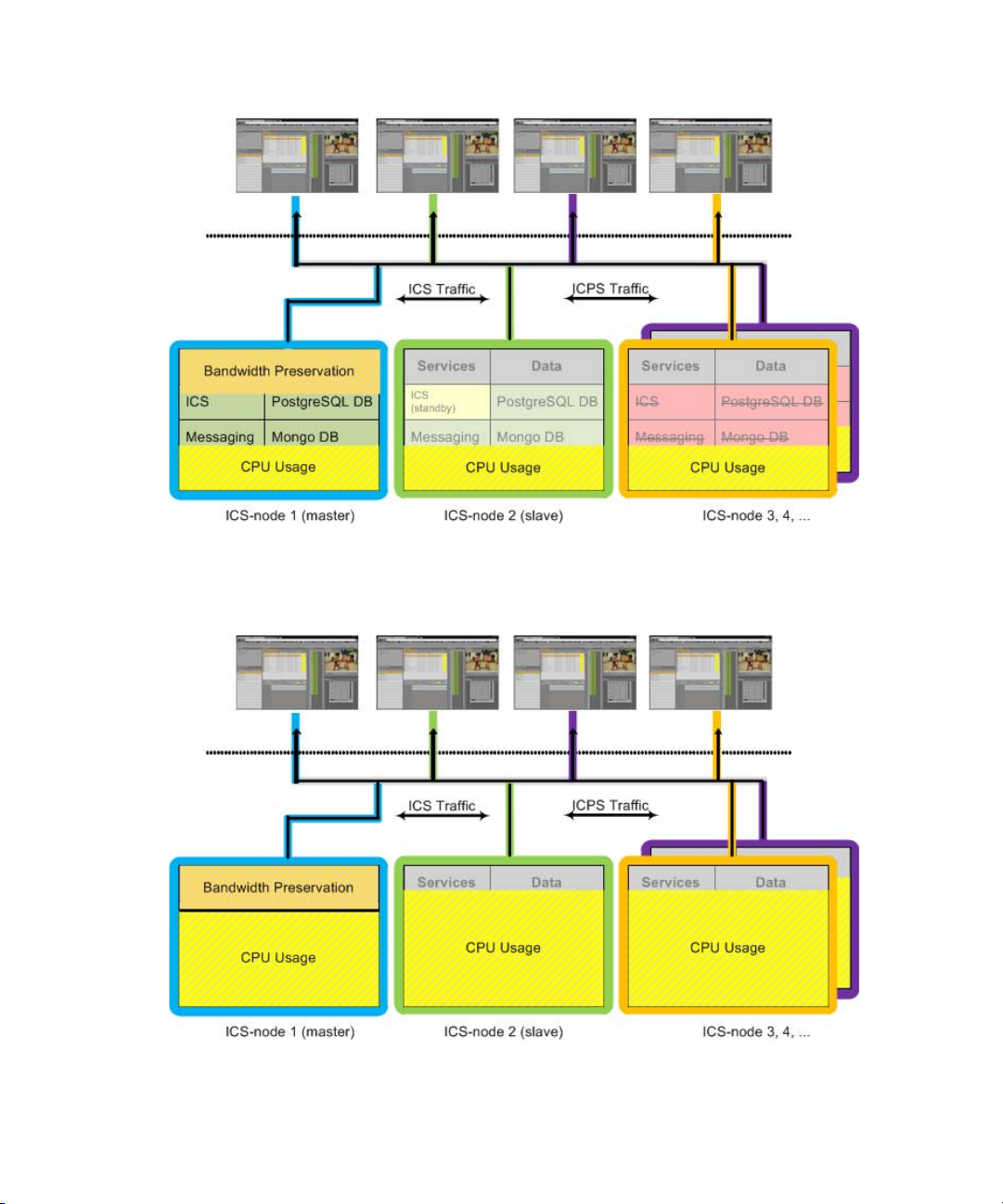

The following illustration shows a typical load-balanced cluster. The colored lines indicate that

playback jobs are sent to different nodes in the cluster. They do not indicate that a particular

client is bound to a particular node for its entire session, which may not be the case. Notice the

master node’s bandwidth preservation.


The next illustration shows a cluster under heavy usage. As illustrated, CPU usage on the master

node will not exceed a certain amount, even when the other nodes approach saturation.


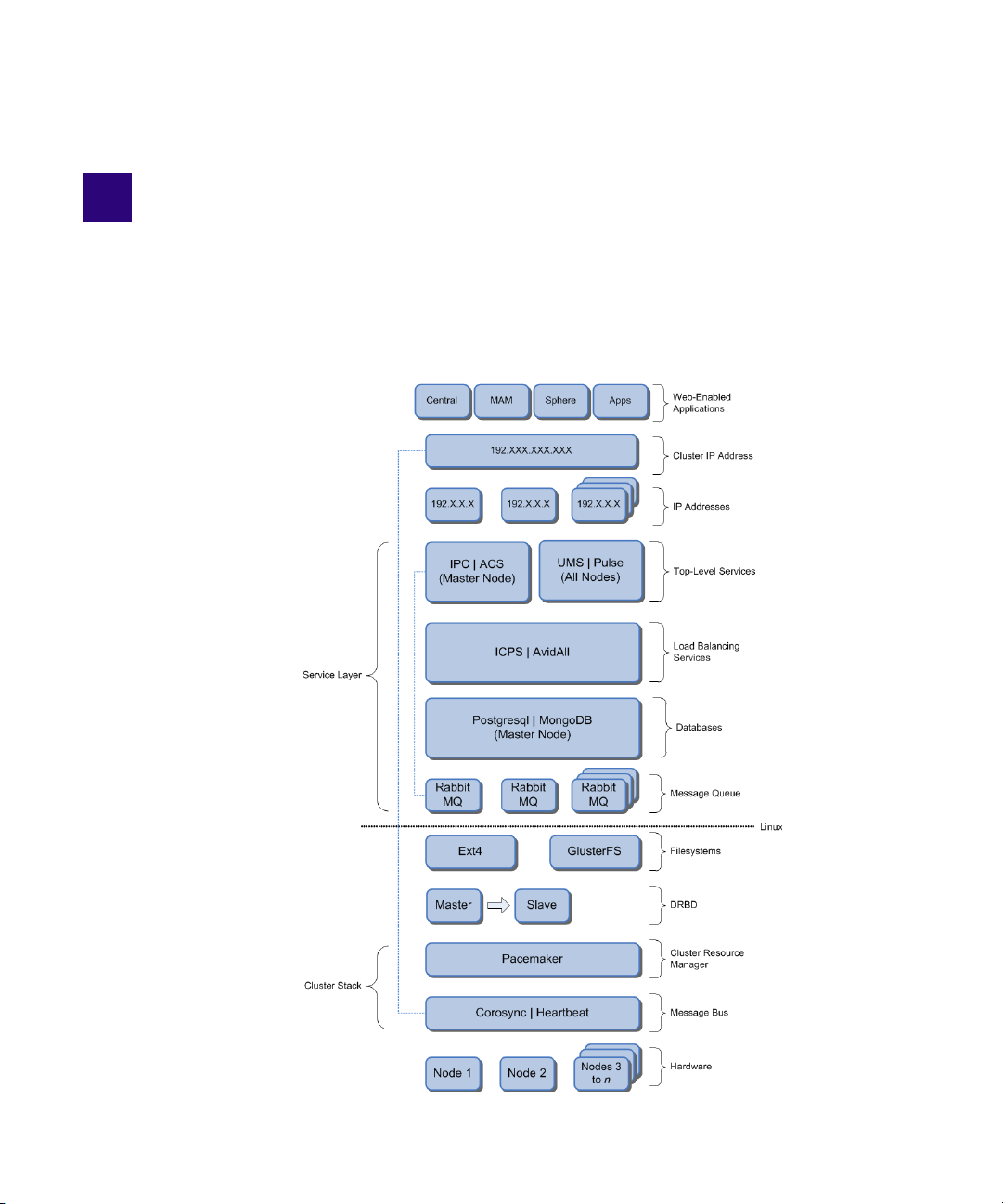

2 System Architecture

ICS features messaging systems, cluster management infrastructure, user management services,

and so on. Many are interdependent, but they are nevertheless best initially understood as

operating at logically distinct layers of the architecture, as shown in the following illustration.


The following table explains the role of each layer:

System Architecture Layer Description

Web-Enabled Applications At the top of the stack are the web-enabled client

applications that take advantage of the ICS cluster. These include

Interplay Central, Interplay MAM, Sphere and the iOS apps.

Cluster IP Address All client applications gain access to ICS via the cluster IP

address. This is a virtual address, established at the network level

in DNS and owned by the node that is currently master. In the

event of a failover, ownership of the cluster IP address is

transferred to the slave node.

The dotted line in the illustration indicates it is Corosync that

manages ownership of the cluster IP address. For example, during

a failover, it is Corosync that transfers ownership of the cluster IP

address from the master node to the slave node.

Node IP Addresses While the cluster is seen from the outside as a single machine

with one IP address and host name, it is important to note that all

the nodes within the cluster retain individual host names and IP

addresses. Network level firewalls and switches must allow the

nodes to communicate with one another.

Top-Level Services At the top level of the service layer are the ICS services running

on the master node only. These include:

• IPC - Interplay Central core services (aka "middleware")

• ACS - Avid Common Service bus (aka "the bus")

(configuration and messaging; uses RabbitMQ)

• UMS - User Management Services

• Pulse - Interplay Pulse services (aka "multi-platform

distribution")

The dotted line in the illustration indicates the top level services

communicate with one another via ACS, which, in turn, uses

RabbitMQ.

Load Balancing Services The mid-level service layer includes the ICS services that are

load-balanced. These services run on all nodes in the cluster.

• ICPS - Interplay Central Playback Services: Transcodes and

serves transcoded media.

• AvidAll - Encapsulates all other ICPS back-end services.


Databases The mid-level service layer also includes two databases:

• PostgreSQL: Stores data for several ICS services (UMS,

ACS, ICPS, Pulse).

• MongoDB: Stores data related to ICS messaging.

Both these databases are synchronized from master to slave for

failover readiness.

RabbitMQ Message Queue RabbitMQ is the message broker ("task queue") used by the ICS

top level services.

RabbitMQ maintains its own independent clustering system. That

is, RabbitMQ is not managed by Pacemaker. This allows

RabbitMQ to continue delivering service requests to underlying

services in the event of a failure.

Filesystem The standard Linux filesystem.

This layer also conceptually includes GlusterFS, the Gluster

"network filesystem" used for cache replication. GlusterFS

performs its replication at the file level.

Unlike the Linux filesystem, GlusterFS operates in the "user

space" - the advantage being any GlusterFS malfunction does not

bring down the system.

DRBD Distributed Replicated Block Device (DRBD) is responsible for

volume mirroring.

DRBD replicates and synchronizes the system disk's logical

volume containing the PostgreSQL and MongoDB databases

across the master and slave, for failover readiness. DRBD carries

out replication at the block level.

Pacemaker The cluster resource manager. Resources are collections of

services grouped together for oversight by Pacemaker.

Pacemaker sees and manages resources, not individual services.

Corosync and Heartbeat Corosync and Heartbeat are the clustering infrastructure.

Corosync uses a multicast address to communicate with the other

nodes in the cluster. Heartbeat contains Open Cluster Framework

(OCF) compliant scripts used by Corosync for communication

within the cluster.


Hardware At the lowest layer is the server hardware.

It is at the hardware level that the system disk is established in a

RAID1 (mirror) configuration.

Note that this is distinct from the replication of a particular

volume by DRBD. The RAID 1 mirror protects against disk

failure. The DRBD mirror protects against node failure.

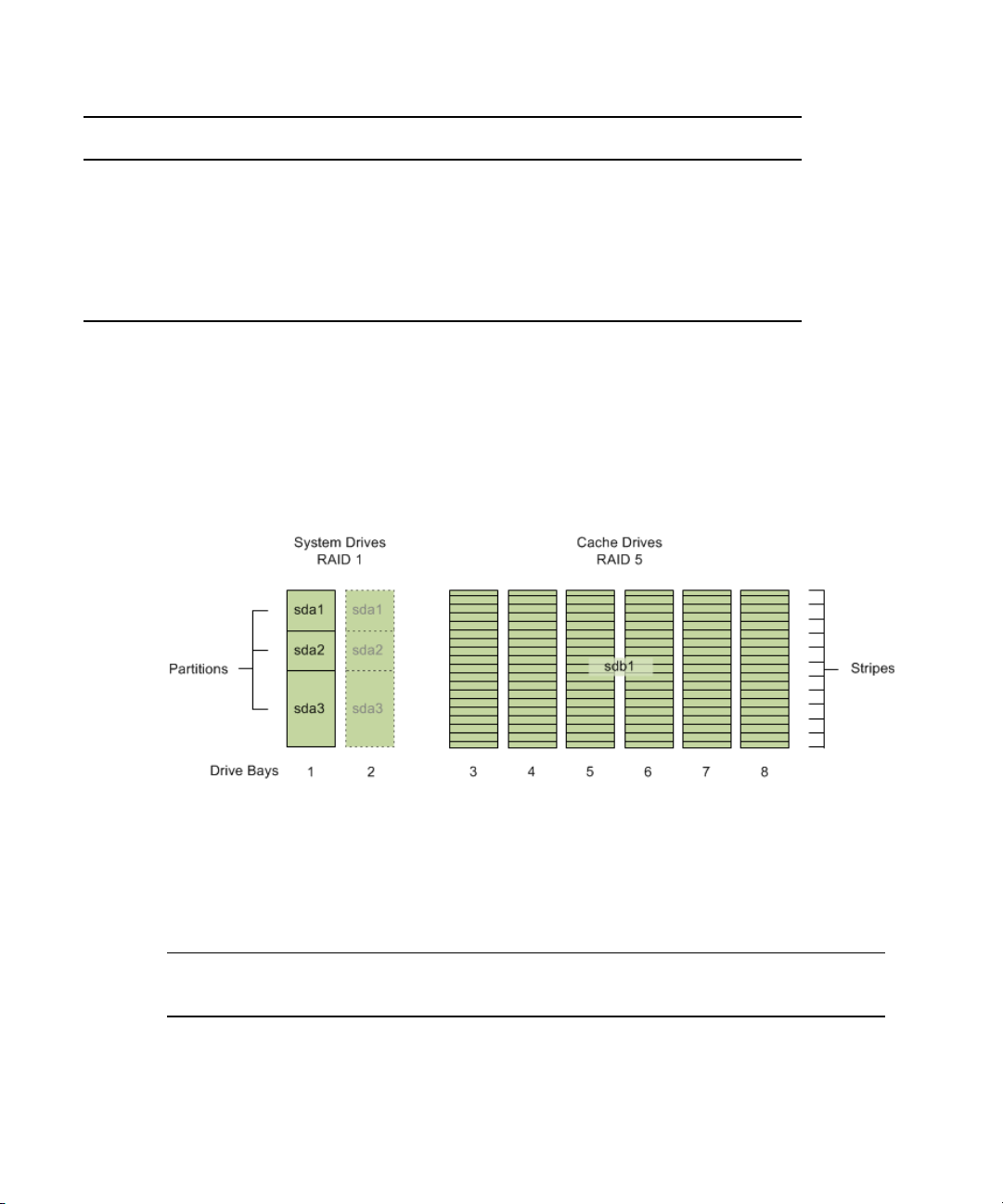

Disk and File System Layout

It is helpful to have an understanding of a node's disk and filesystem layout. The following

illustration represents the layout of a typical node:


In ICS 1.5 a RAID 5 cache was required for multi-cam, iOS, and MAM non-h264 systems only. As of ICS 1.6 a separate cache is required, but it does not always need to be RAID 5.

The following table presents the contents of each volume:

| Physical Volume (pv) | Volume Group (vg) | Logical Volume (lv) | Directory | Content |
|---|---|---|---|---|
| sda1 | | | /boot | RHEL boot partition |
| sda2 | | | /dev/drbd1 | ICS databases |
| sda3 | icps | swap | | swap space |
| sda3 | icps | root (/dev/dm-0) | / | RHEL system partition |
| sdb1 | ics | cache | /cache | ICS file cache |

Note the following:

• sda1 is a standard Linux partition created by RHEL during installation of the operating system.

• sda2 is a dedicated volume created for the PostgreSQL (UMS, ACS, ICPS, Pulse) and MongoDB (ICS messaging) databases. The sda2 partition is replicated and synchronized between master and slave by DRBD.

• sda3 contains the system swap disk and the root partition.

• sdb1 is the RAID 5 cache volume used to store transcoded media and various other temporary files.

ICS Services and Databases in a Cluster

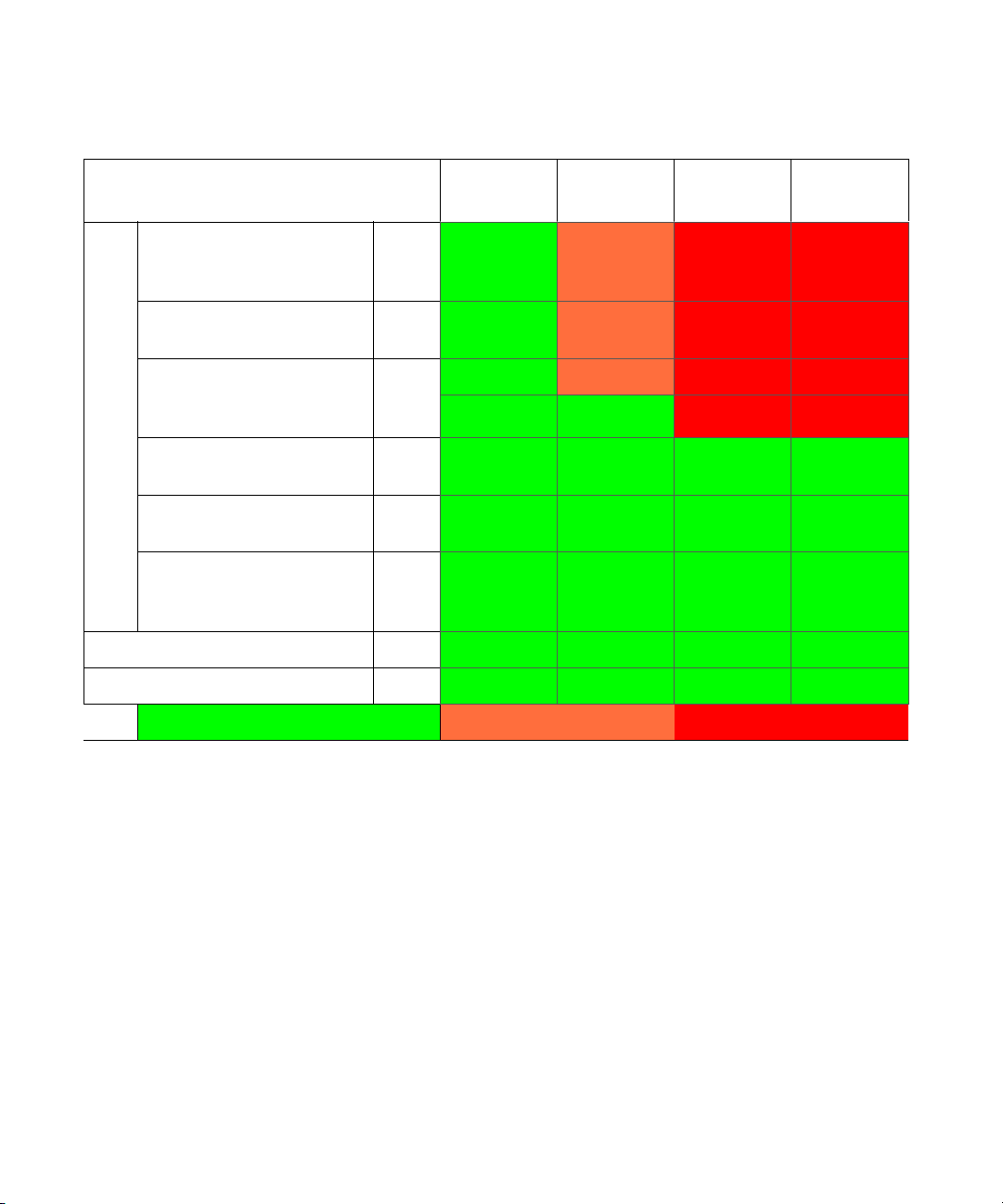

The following table lists the most important ICS services that take advantage of clustering, and

where they run:


Services

ICS IPC Core Services

(“the middleware”)

(avid-interplay-central)

User Management Service

(avid-ums)

Avid Common Services bus

(“the bus”)

(acs-ctrl-core)

AAF Generator

(avid-aaf-gen)

ICS Messaging

(acs-ctrl-messenger)

Playback Services

(“the back-end”)

(avid-all)

Interplay Pulse (avid-mpd) MPD

Load Balancing (avid-icps-manager) XLB

IPC ON OFF OFF OFF

UMS

ACS

AAF

ICPS

ics-node 1

(Master)

ON OFF OFF OFF

ON OFF OFF OFF

ON ON OFF OFF

ON ON ON ON

ON ON ON ON

ON ON ON ON

ON ON ON ON

ON ON ON ON

ics-node 2

(Slave) ics-node 3 ics-node n

= ON (RUNNING) = OFF (STANDBY) = OFF (DOES NOT RUN)

Note the following:

• All ICS services run on the Master node in the cluster.

• Most ICS services are off on the Slave node but start automatically during a failover.

• On all other nodes, the ICS services never run.

• Some services spawned by the Avid Common Service bus run on the master node only (in

standby on the slave node); others are always running on both nodes.

• The Playback service (ICPS) runs on all nodes for Performance Scalability (load balancing

supports many concurrent clients and/or large media requests) and High Availability (service

is always available).
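The placement rules in these notes can be captured as a lookup. This Python sketch is a simplification. It models only three Pacemaker-managed services and the playback back-end (`avid-all`). The grouping is inferred from the notes above rather than taken from an ICS configuration file, and the function name is hypothetical.

```python
from typing import Literal

# Hypothetical lookup inferred from the notes; not an exhaustive list of ICS services.
Role = Literal["master", "slave", "other"]

LOAD_BALANCED = {"avid-all"}  # playback back-end, runs on every node
MASTER_WITH_STANDBY = {"avid-interplay-central", "avid-ums", "acs-ctrl-core"}


def service_state(service: str, role: Role) -> str:
    """Return 'running', 'standby' or 'does not run' for a service on a node role."""
    if service in LOAD_BALANCED:
        return "running"
    if service in MASTER_WITH_STANDBY:
        if role == "master":
            return "running"
        if role == "slave":
            return "standby"  # started automatically during a failover
        return "does not run"
    raise ValueError(f"service not modeled: {service}")
```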

The following table lists the ICS databases, and where they run:

| ICS Databases | ICS-node 1 (Master) | ICS-node 2 (Slave) | ICS-node 3 | ICS-node n |
|---|---|---|---|---|
| ICS Database (PostgreSQL) | ON | OFF | OFF | OFF |
| Service Bus Messaging Database (MongoDB) | ON | OFF | OFF | OFF |

Legend: ON = running; OFF = in standby or does not run.

Clustering Infrastructure Services

The ICS services and databases presented in the previous section depend upon the correct functioning of a clustering infrastructure. The infrastructure is supplied by a small number of open-source software packages designed specifically (or very well suited) for clustering. For example, Pacemaker and Corosync work in tandem to restart failed services, maintain a fail count, and fail over from the master node to the slave node when failover criteria are met.

The following table presents the services pertaining to the infrastructure of the cluster:

| Software | Function | Node 1 (Master) | Node 2 (Slave) | Node 3 | Node n |
|---|---|---|---|---|---|
| RabbitMQ | Cluster Message Broker/Queue | ON | ON | ON | ON |
| GlusterFS | File Cache Mirroring | ON | ON | ON | ON |
| DRBD | Database Volume Mirroring | ON | ON | OFF | OFF |
| Pacemaker | Cluster Management & Service Failover | ON | ON | ON | ON |
| Corosync | Cluster Engine Data Bus | ON | ON | ON | ON |
| Heartbeat | Cluster Message Queue | ON | ON | ON | ON |

Legend: ON = running; OFF = does not run.

Note the following:

• RabbitMQ, the message broker/queue used by ACS, maintains its own clustering system. It

is not managed by Pacemaker.

• GlusterFS mirrors media cached on an individual RAID 5 drive to all other RAID 5 drives in

the cluster.

• DRBD mirrors the ICS databases across the two servers that are in a master-slave

configuration.

• Pacemaker: The cluster resource manager. Resources are collections of services

participating in high-availability and failover.

• Corosync and Heartbeat: The fundamental clustering infrastructure.

• Corosync and Pacemaker work in tandem to detect server and application failures, and

allocate resources for failover scenarios.

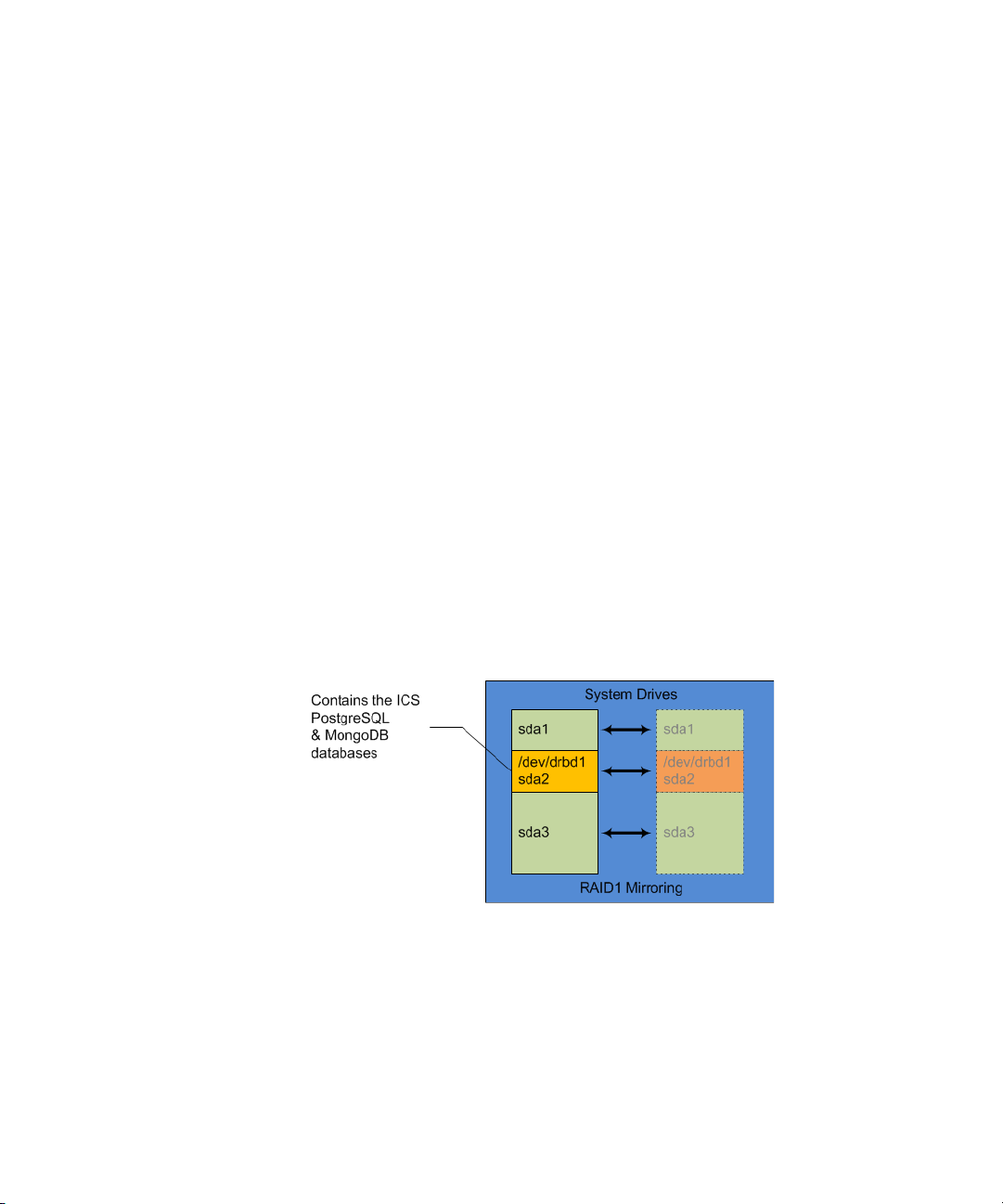

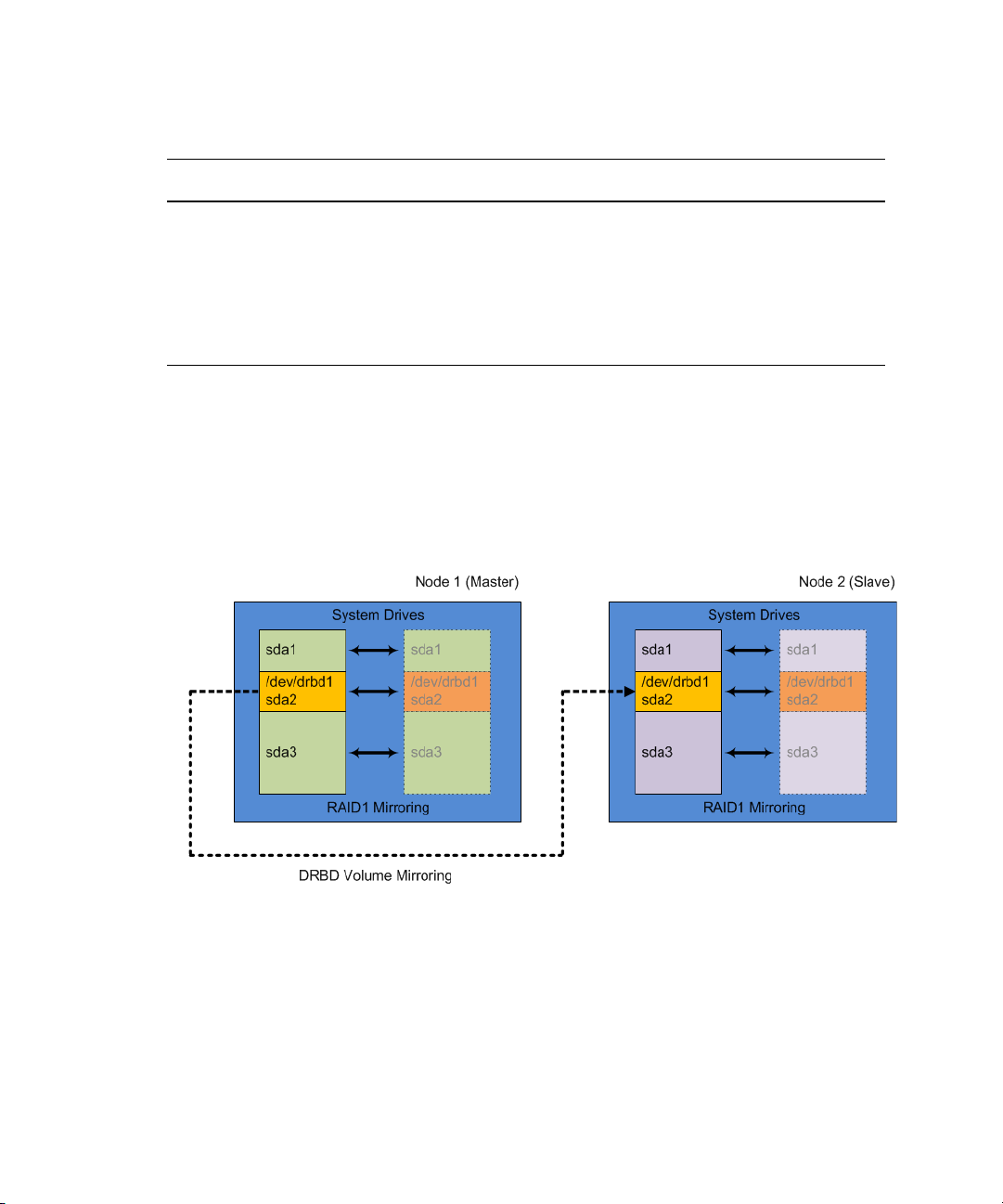

DRBD and Database Replication

Recall the filesystem layout of a typical node. The system drive (in RAID 1) consists of three partitions: sda1, sda2 and sda3. As noted earlier, sda2 is the partition used for storing the ICS databases (PostgreSQL and MongoDB).


The following table details the contents of the databases stored on the sda2 partition:


| Database | Directory | Contents |
|---|---|---|
| PostgreSQL | /mnt/drbd/postgres_data | UMS - User Management Services; ACS - Avid Common Service bus; ICPS - Interplay Central Playback Services; MPD - Multi-platform distribution (Pulse) |
| MongoDB | /mnt/drbd/mongo_data | ICS Messaging |

In a clustered configuration, ICS uses the open source Distributed Replicated Block Device

(DRBD) storage system software to replicate the sda2 partition across the Master-Slave cluster

node pair. DRBD runs on the master node and slave node only, even in a cluster with more than

two nodes. PostgreSQL maintains the databases on sda2. DRBD mirrors them.
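To make the idea of block-level mirroring concrete, the following Python toy copies every block written on the primary to a secondary. It is not DRBD. The class is hypothetical, it ignores networking, and it treats replication as synchronous for simplicity. What it does show is that the mirror works on numbered blocks and has no notion of files or databases.

```python
from typing import List

# Hypothetical toy model; not DRBD.


class MirroredBlockDevice:
    """Toy primary/secondary block mirror, loosely modeled on the DRBD idea."""

    def __init__(self, num_blocks: int, block_size: int = 4096) -> None:
        self.block_size = block_size
        # bytes objects are immutable, so sharing the zero block is safe.
        self.primary: List[bytes] = [bytes(block_size)] * num_blocks
        self.secondary: List[bytes] = [bytes(block_size)] * num_blocks

    def write(self, index: int, data: bytes) -> None:
        """Write one whole block on the primary and mirror it to the secondary."""
        if len(data) != self.block_size:
            raise ValueError("writes must cover exactly one block")
        self.primary[index] = data
        self.secondary[index] = data  # synchronous copy in this sketch

    def promote_secondary(self) -> List[bytes]:
        """On failover, the secondary copy becomes the active device."""
        return self.secondary


dev = MirroredBlockDevice(num_blocks=4, block_size=8)
dev.write(2, b"pgdata!!")
print(dev.promote_secondary()[2])  # b'pgdata!!'
```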

The following illustration shows DRBD volume mirroring of the sda2 partition across the master

and slave.

GlusterFS and Cache Replication

Recall that the ICS server transcodes media from the format in which it is stored on the ISIS (or

standard filesystem storage) into an alternate delivery format, such as an FLV, MPEG-2

Transport Stream, or JPEG image files. In a deployment with a single ICS server, the ICS server

maintains a cache where it keeps recently-transcoded media. In the event that the same media is

requested again, the ICS server can deliver the cached media, without the need to re-transcode it.


In an ICS cluster, caching is taken one step further. In a cluster, the contents of the RAID 5

volumes are replicated across all the nodes, giving each server access to all the transcoded

media. The result is that each ICS server sees and has access to all the media transcoded by the

others. When one ICS server transcodes media, the other ICS servers can also make use of it,

without re-transcoding.
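The benefit of a replicated cache, where media transcoded by one node is reused by every other node, can be sketched as follows. In this Python model a shared dictionary stands in for the GlusterFS-replicated cache volume. The class, the key format and the transcoding placeholder are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical model; a dict stands in for the replicated cache volume.


class SharedTranscodeCache:
    """Toy stand-in for a cache volume replicated to every node."""

    def __init__(self) -> None:
        self.entries: Dict[Tuple[str, str], bytes] = {}
        self.transcodes = 0

    def fetch(self, node: str, media_id: str, fmt: str) -> bytes:
        """Return cached media, transcoding only on the first request cluster-wide."""
        key = (media_id, fmt)
        if key not in self.entries:
            self.transcodes += 1
            # Placeholder for the real transcoding work done by `node`.
            self.entries[key] = f"{media_id} as {fmt} by {node}".encode()
        return self.entries[key]


cache = SharedTranscodeCache()
cache.fetch("ics-node1", "clip-42", "flv")
cache.fetch("ics-node2", "clip-42", "flv")  # served from cache, no re-transcode
print(cache.transcodes)  # 1
```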

The replication process is set up and maintained by GlusterFS, an open source software solution

for creating shared filesystems. In ICS, Gluster manages data replication using its own highly

efficient network protocol. In this respect, it can be helpful to think of Gluster as a "network

filesystem" or even a "network RAID" system.

GlusterFS operates independently of other clustering services. You do not have to worry about

starting or stopping GlusterFS when interacting with ICS services or cluster management

utilities. For example, if you remove a node from the cluster, GlusterFS itself continues to run

and continues to replicate its cache against other nodes in the Gluster group. If you power down

the node for maintenance reasons, it will re-synchronize and 'catch up' with cache replication

when it is rebooted.

The correct functioning of the cluster cache requires that the clocks on each server in the cluster are set to the same time. See “Verifying Clock Synchronization” on page 67.

Clustering and RabbitMQ

RabbitMQ is the message broker ("task queue") used by the ICS top level services. ICS makes

use of RabbitMQ in an active/active configuration, with all queues mirrored to exactly two

nodes, and partition handling set to ignore. The RabbitMQ cluster operates independently of the

ICS master/slave failover cluster, but is often co-located on the same two nodes. The ICS

installation scripts create the RabbitMQ cluster without the need for human intervention.

Note the following:

• All RabbitMQ servers in the cluster are active and can accept connections

• Any client can connect to any RabbitMQ server in the cluster and access all data

• Each queue and its data exists on two nodes in the cluster (for failover & redundancy)

• In the event of a failover, clients should automatically reconnect to another node

• If a network partition / split brain occurs (very rare), manual intervention will be required
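Two of these points, each queue living on exactly two nodes and clients reconnecting elsewhere after a failure, are sketched below. RabbitMQ makes its own placement decisions. The CRC32-based rule in `queue_replicas` is a hypothetical stand-in, and the queue and node names are placeholders.

```python
import zlib
from typing import List

# Hypothetical sketch; RabbitMQ's real mirror placement is not modeled here.


def queue_replicas(queue: str, nodes: List[str], copies: int = 2) -> List[str]:
    """Pick `copies` distinct nodes to hold a queue (hypothetical placement rule)."""
    if len(nodes) < copies:
        raise ValueError("not enough nodes for the requested number of copies")
    start = zlib.crc32(queue.encode()) % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(copies)]


def reconnect(failed: str, nodes: List[str]) -> str:
    """Every RabbitMQ node accepts connections, so any other node will do."""
    for node in nodes:
        if node != failed:
            return node
    raise ConnectionError("no other RabbitMQ node is available")


nodes = ["ics-node1", "ics-node2", "ics-node3"]
print(queue_replicas("acs.messages", nodes))
print(reconnect("ics-node1", nodes))  # ics-node2
```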

The RabbitMQ Cookie

A notable aspect of the RabbitMQ cluster is the special cookie it requires, which allows

RabbitMQ on the different nodes to communicate with each other. The RabbitMQ cookie must

be identical on each machine, and is set, by default, to a predetermined hardcoded string.


Powering Down and Rebooting

With regards to RabbitMQ and powering down and rebooting nodes:

• If you take down the entire cluster, the last node down must always be the first node up. For

example, if "ics-serv3" is the last node you stop, it must be the first node you start.

• Because of the guideline above, it is not advised to power down all nodes at exactly the same

time. There must always be one node that was clearly powered down last.

• If you don't take the whole cluster down at once then the order of stopping/starting servers

doesn't matter.
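The “last node down, first node up” rule lends itself to a quick check before restarting a whole cluster. This Python sketch assumes you have recorded when each node was powered down. The function name and the timestamp format are hypothetical.

```python
from typing import List, Tuple

# Hypothetical helper; not an ICS or RabbitMQ tool.


def first_node_to_start(shutdown_log: List[Tuple[str, float]]) -> str:
    """Return the node to start first after the entire cluster was taken down.

    shutdown_log holds (node, shutdown_time) pairs. The last node down must be
    the first node up, so there must be exactly one node that stopped last.
    """
    if not shutdown_log:
        raise ValueError("empty shutdown log")
    ordered = sorted(shutdown_log, key=lambda entry: entry[1])
    if len(ordered) > 1 and ordered[-1][1] == ordered[-2][1]:
        raise ValueError("no single node was clearly powered down last")
    return ordered[-1][0]


log = [("ics-serv1", 100.0), ("ics-serv3", 220.0), ("ics-serv2", 160.0)]
print(first_node_to_start(log))  # ics-serv3
```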

For details, see “Shutting Down / Rebooting an Entire Cluster” on page 70.

Handling Network Disruptions

• RabbitMQ does not handle network partitions well. If the network is disrupted on only some of the machines and then restored, shut down the machines that lost the network and then power them back on. This ensures they rejoin the cluster correctly. This happens rarely, mainly when the cluster is split between two different switches and only one of them fails.

• On the other hand, if the network is disrupted to all nodes in the cluster simultaneously (as in a single-switch setup), no special handling should be required.

Suggestions for Further Reading

• Clustering: http://www.rabbitmq.com/clustering.html

• Mirrored queues: http://www.rabbitmq.com/ha.html

• Network Partitions: http://www.rabbitmq.com/partitions.html


3 Services, Resources and Logs

Services and resources are key to the correct operation and health of a cluster. As noted in

“System Architecture” on page 15, services are responsible for all aspects of ICS activity, from

the ACS bus, to end-user management and transcoding. Additional services supply the clustering

infrastructure. Some ICS services are managed by Pacemaker, for the purposes of

high-availability and failover readiness. Services overseen by Pacemaker are called resources.

All services produce logs that are stored in the standard Linux log directories (under /var/log), as

detailed later in this chapter.

Services vs Resources

A typical cluster features both Linux services and Pacemaker cluster resources. Thus, it is

important to understand the difference between the two. In the context of clustering, resources

are simply one or more Linux services under management by Pacemaker. Managing services in

this way allows Pacemaker to monitor the services, automatically restart them when they fail,

and shut them down on one node and start them on another when they fail too many times.

It can be helpful to regard a cluster resource as a Linux service inside a Pacemaker “wrapper”. The

wrapper includes the actions defined for it (start, stop, restart, etc.), timeout values, failover

conditions and instructions, and so on. In short, Pacemaker sees and manages resources, not

services.

For example, the Interplay Central (avid-interplay-central) service is the core Interplay Central

service. Since the platform cannot function without it, this service is overseen and managed by

Pacemaker as the AvidIPC resource.

As you may know, the status of a Linux service can be verified by entering a command of the following form at the command line:

service <servicename> status

In contrast, the state of a cluster resource is verified via the Pacemaker Cluster Resource Manager, crm, as follows:

crm resource status <resource>

For details see:


• “Interacting with Services” on page 32

• “Interacting with Resources” on page 33

Tables of Services, Resources and Utilities

The tables in this section list essential services that need to be running in a clustered configuration. The section includes three tables:

• All Nodes: The services that must be running on all nodes.

• Master Node: The services that must be running on the master node only. These services do not need to be, and should not be, running on any other node.

• Pacemaker Resources: These are the services under management by Pacemaker. They run on the master node, but can fail over to the slave node.

The lists are not exhaustive.

All Nodes

The following table presents the services that must be running on all nodes.

All Nodes

Service Description

avid-all Encapsulates all ICPS back-end services:

• avid-config

• avid-isis

• avid-fps

• avid-jips

• avid-spooler

• avid-edit

pacemaker Cluster Management and Service Failover

Management

corosync Cluster Engine Data Bus

glusterd GlusterFS daemon responsible for cache

replication.


rabbitmq-server Messaging broker/queue for the ACS bus.

Maintains its own cluster functionality to

deliver high-availability.

avid-aaf-gen AAF Generator service, the service responsible

for saving sequences.

To reduce bottlenecks when the system is under

heavy load, five instances of this service run

concurrently, by default.

Installed on all nodes but only used on the

master or slave node, depending on where the

IPC Core service (avid-interplay-central) is

running.

This service is not managed by Pacemaker,

therefore you should check its status regularly,

and restart it if any instance has failed. See “Verifying the AAF Generator Service” on page 68.

acs-ctrl-messenger The services related to the IPC end-user

messaging feature:

• "messenger" service (handles delivery of

user messages)

• "mail" service (handles mail-forwarding

feature)

This service registers itself on the ACS bus. All

instances are available for handling requests,

which are received by way of the bus via a

round-robin-type distribution system.

This service operates independently, and is not

managed by Pacemaker.

avid-mpd Interplay Pulse services.

Operates similarly to the acs-ctrl-messenger

service described above.

This service is only available when Interplay

Pulse (separate installer) is installed on the

system.


avid-ics A utility script (not a service) that can be used

to verify the status of all the major ICS services.

Verifies the status of the following services:

- avid-all

- avid-interplay-central

- acs-ctrl-messenger

- acs-ctrl-core

- avid-ums

The utility script enables you to stop, start and

view the status of all the services it

encapsulates at once:

avid-ics status

avid-ics stop

avid-ics start

Note that the utility script cannot be invoked

like a true service. The form "service avid-ics

status" will not work. Use the following form

instead:

avid-ics <command>

Master Node Only

The following table presents the services that must be running on the master node.

Master Node

Service Description

avid-interplay-central IPC Core services (“the middleware”)


acs-ctrl-core Avid Common Service bus (“the bus”)

Essential bus services needed for the overall

platform to work:

• "boot" service (provides registry services to

bus services)

• "attributes" services (provides system

configuration of IPC)

• "federation" service (initializes multi-zone

configurations)

The acs-ctrl-core service is a key service. The following services will not start or function correctly if acs-ctrl-core is not running.

• avid-icps-manager

• avid-ums

• avid-interplay-central

• avid-all

• acs-ctrl-messenger

• avid-mpd

avid-ums User Management Service

avid-icps-manager Manages the ICPS connection and load

balancing services.

postgresql-9.1 PostgreSQL database for user management and

attributes data

mongod MongoDB database for data from the following

services:

• ICS Messaging (acs-ctrl-messenger) data

• ACS bus (acs-ctrl-core) registry


drbd DRBD (Distributed Replicated Block Device)

is used to mirror the system disk partition

containing the two databases from master to

slave, for failover readiness:

• PostgreSQL

• MongoDB

DRBD is fully functional on both master and

slave. It is included in this table for

convenience.

Pacemaker Resources

The following table presents the cluster resources overseen and managed by

Pacemaker/Corosync. The underlying resources must be running on the master node, but will fail

over to the slave node.

Managed by Pacemaker/Corosync

Resource Description

drbd_postgres Encapsulates:

• drbd

• postgresql-9.1

AvidIPC Encapsulates:

• avid-interplay-central

AvidUMS Encapsulates:

• avid-ums

AvidACS Encapsulates:

• acs-ctrl-core

MongoDB Encapsulates:

• mongod


AvidAll Encapsulates:

• avid-all

AvidICPS Encapsulates:

• avid-icps-manager

Pacemaker and Corosync manage numerous other cluster resources. The table lists the most important ones. For a complete list, query the Cluster Resource Manager using the following command at the command line:

crm configure show

In the output that appears, “primitive” is the token that defines a cluster resource.

Interacting with Services

ICS services are standard Linux applications and/or daemons, and you interact with them

following the standard Linux protocols.

The command line for interacting with services follows the standard Linux format:

service <servicename> <action>

Standard actions include the following (some services may permit other actions):

status returns the current status of the service

stop stops the service

start starts the service

restart stops then restarts the service


For example:

service avid-ums restart
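If you script routine service checks, a thin wrapper keeps the command form consistent. This Python sketch is hypothetical. It builds the standard `service <servicename> <action>` command and, as a deliberate restriction, accepts only the four standard actions listed above. It should not be used on services managed by Pacemaker, and it cannot drive the `avid-ics` utility script, which is not a true service.

```python
import subprocess
from typing import List

# Hypothetical wrapper; the function names are illustrative only.
STANDARD_ACTIONS = ("status", "stop", "start", "restart")


def service_command(name: str, action: str) -> List[str]:
    """Build the argument list for `service <servicename> <action>`."""
    if action not in STANDARD_ACTIONS:
        raise ValueError(f"not a standard action: {action}")
    return ["service", name, action]


def run_service(name: str, action: str) -> int:
    """Run the command on this host and return its exit status."""
    return subprocess.run(service_command(name, action), check=False).returncode


# Example (requires root on an ICS node):
# run_service("avid-ums", "restart")
```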


Interacting with Resources

Cluster resources are Linux services that are under management by Pacemaker. These should not

normally be touched using typical Linux tools. You must interact with cluster resources using the

Pacemaker Cluster Resource Manager, crm. If you try to stop the underlying service directly —

that is, without going through the Cluster Resource Manager — Pacemaker will do its job and

restart it immediately.

Under special circumstances (such as during troubleshooting), you can shut down Pacemaker and Corosync, then directly stop, start and restart the underlying services managed by Pacemaker. The simplest way to gain direct access to a node’s managed services is by taking the node offline. See “Recommended Approach: Remove Node from Cluster” on page 34.

The command line for interacting with resources uses a format particular to the Cluster Resource

Manager:

crm resource <action> <resourcename>

For example:

crm resource status AvidIPC


Returns information similar to the following:

resource AvidIPC is running on: icps-mam-large

Issuing the above command without specifying a resource returns the status of all cluster

resources.

crm resource status

Returns information similar to the following:

AvidConnectivityMonEverywhere [AvidConnectivityMon]

Started: [ icps-mam-large icps-mam-med icps-mam-small ]

AvidClusterMon (lsb:avid-monitor) Started

MongoDB (lsb:mongod) Started

Resource Group: postgres

postgres_fs (ocf::heartbeat:Filesystem) Started

AvidClusterIP (ocf::heartbeat:IPaddr2) Started

pgsqlDB (ocf::avid:pgsql_Avid) Started

Master/Slave Set: ms_drbd_postgres [drbd_postgres]

Masters: [ icps-mam-large ]

Slaves: [ icps-mam-med ]

Clone Set: AvidAllEverywhere [AvidAll]

Started: [ icps-mam-small icps-mam-med icps-mam-large ]


AvidIPC (lsb:avid-interplay-central) Started

AvidUMS (lsb:avid-ums) Started

AvidACS (lsb:acs-ctrl-core) Started

Clone Set: AvidICPSEverywhere [AvidICPS]

Started: [ icps-mam-small icps-mam-med icps-mam-large ]
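When checking resources from a script, it can help to parse this output. The Python sketch below extracts primitive resources (`name (class:type) State`), the node lists printed for clone and master/slave sets, and the single-resource “is running on” form, using the sample lines shown above. The regular expressions are assumptions based on that sample. The exact layout of `crm resource status` output can vary between Pacemaker and crm shell versions.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical parser; patterns are inferred from the sample output only.
# "MongoDB (lsb:mongod) Started" -> name, resource agent, state
PRIMITIVE = re.compile(r"^\s*(\S+)\s+\(([^)]+)\)\s+(\w+)\s*$")
# "Masters: [ icps-mam-large ]" -> label, node list
NODE_LIST = re.compile(r"^\s*(Started|Masters|Slaves):\s*\[\s*(.*?)\s*\]\s*$")
# "resource AvidIPC is running on: icps-mam-large"
RUNNING_ON = re.compile(r"^resource (\S+) is running on: (\S+)")


def parse_primitives(text: str) -> Dict[str, Tuple[str, str]]:
    """Map each primitive resource name to (agent, state)."""
    result = {}
    for line in text.splitlines():
        m = PRIMITIVE.match(line)
        if m:
            result[m.group(1)] = (m.group(2), m.group(3))
    return result


def parse_node_lists(text: str) -> List[Tuple[str, List[str]]]:
    """Return (label, nodes) pairs for Started/Masters/Slaves lines."""
    pairs = []
    for line in text.splitlines():
        m = NODE_LIST.match(line)
        if m:
            pairs.append((m.group(1), m.group(2).split()))
    return pairs


def running_on(line: str) -> Optional[Tuple[str, str]]:
    """Parse the single-resource form into (resource, node), or None."""
    m = RUNNING_ON.match(line)
    return (m.group(1), m.group(2)) if m else None


sample = """\
MongoDB (lsb:mongod) Started
Resource Group: postgres
    postgres_fs (ocf::heartbeat:Filesystem) Started
Master/Slave Set: ms_drbd_postgres [drbd_postgres]
    Masters: [ icps-mam-large ]
    Slaves: [ icps-mam-med ]
"""
print(parse_primitives(sample))
print(parse_node_lists(sample))
```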

For more information, see the discussion of the Cluster Resource Manager tool, crm, in “Verifying Cluster Configuration” on page 46.

Stopping the Underlying Services Directly: Two Approaches

If you stop a resource's underlying service directly — that is, without going through the cluster

resource manager — Pacemaker will attempt to restart it immediately. This not only restarts the

service, it also increases the fail count of the corresponding resource, and can result in an

unexpected failover. Always use the cluster resource manager utility.

The exception to this rule is during cluster installation, upgrading, or troubleshooting. For

example, if you incorrectly complete an installation and configuration procedure, you might

need to back up a few steps, and redo the procedure. In order for the new settings to take hold,

you might need to restart the corresponding service or services directly. Similar arguments can

be made for upgrading and troubleshooting. In these cases, there are two main approaches.

Recommended Approach: Remove Node from Cluster

The recommended approach is to temporarily remove the node from the cluster using the cluster

resource manager:

crm node standby <node>

Putting a node into standby shuts down Pacemaker and Corosync, freeing all services from

management as resources.

To bring a node back online, issue the following command (which restarts Pacemaker and puts its

services back under management):

crm node online <node>

Alternative Approach: Stop Pacemaker and Corosync

An alternative approach is to shut down Pacemaker and Corosync directly:

service pacemaker stop

service corosync stop

Restart them in the reverse order.
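The stop-then-restart sequence for this alternative approach can be written down explicitly. This Python sketch only builds the command lists and does not run them. The names `CLUSTER_STACK`, `stop_commands` and `start_commands` are hypothetical.

```python
from typing import List

# Hypothetical helper that only builds commands; nothing is executed.
# Stop order given in the text: Pacemaker first, then Corosync.
CLUSTER_STACK = ["pacemaker", "corosync"]


def stop_commands() -> List[List[str]]:
    return [["service", name, "stop"] for name in CLUSTER_STACK]


def start_commands() -> List[List[str]]:
    # Restart in the reverse order: Corosync first, then Pacemaker.
    return [["service", name, "start"] for name in reversed(CLUSTER_STACK)]


for cmd in stop_commands() + start_commands():
    print(" ".join(cmd))
```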

Services Start Order and Dependencies

When direct intervention with a service is required, take special care with regard to stopping,

starting, or restarting. The services on a node operate within a framework of dependencies.

Services must be stopped and started in a specific order. This order is particularly important

when you have to restart an individual service (in comparison to rebooting the entire server).

Before doing anything, identify and shut down the services that depend on the target service.

If Pacemaker and Corosync are running on the node, stop Pacemaker first and then Corosync. Otherwise, Pacemaker will automatically restart the service. If the node is actively part of a cluster, putting it into standby using the Cluster Resource Manager utility (crm) will stop Pacemaker and Corosync for you. See “Interacting with Services” on page 32.

The start order and dependencies relationships of the main cluster services are summarized in the

following illustration.


The following table summarizes the order in which services can be safely started.

| Start Order | Service Name | Process Name | Notes |
|---|---|---|---|
| 1 | DRBD | drbd | |
| 2 | PostgreSQL | postgresql-9.1 | |
| 3 | MongoDB | mongod | |
| 4 | RabbitMQ | rabbitmq-server | |
| 5 | Avid Common Service bus (ACS: “the bus”) | acs-ctrl-core | |
| 6 | Node.js | avid-icps-manager | |
| 7 | User Management Services (UMS) | avid-ums | |
| 8 | AAF Generator | avid-aaf-gen | Five instances of this service should always be running. See “Verifying the AAF Generator Service” on page 68. |
| 9 | IPC Core Services | avid-interplay-central | |
| 10 | ICPS Backend Services | avid-all | |
| 11 | ICS Messaging | acs-ctrl-messenger | |
| 12 | Pulse | avid-mpd | |

Example: Restarting the User Management Services

A simple example will demystify the illustration and table. Suppose you need to restart the User

Management Services (avid-ums).

1. Identify its position in the dependency table (#7).

2. Identify all the services that are directly or indirectly dependent on it (services #8, #9 and #12).

3. Since avid-ums and avid-interplay-central are managed by Pacemaker, stop Pacemaker and Corosync by putting the node into standby mode.

4. Stop the dependent services first, in order from most dependencies to least: stop service #12 first, then #9, #8, and finally #7.

5. Restart UMS (#7).

6. Restart services #8 through #12, in that order.
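The worked example follows a mechanical rule that can be expressed in code. This Python sketch encodes the start-order table. The set of dependent services must still be read from the dependency illustration, so it is passed in by hand. The restart sequence mirrors the last two steps above: restart the target, then every later service in start order. The function name is hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical helper; START_ORDER is transcribed from the start-order table.
START_ORDER: Dict[int, str] = {
    1: "drbd",
    2: "postgresql-9.1",
    3: "mongod",
    4: "rabbitmq-server",
    5: "acs-ctrl-core",
    6: "avid-icps-manager",
    7: "avid-ums",
    8: "avid-aaf-gen",
    9: "avid-interplay-central",
    10: "avid-all",
    11: "acs-ctrl-messenger",
    12: "avid-mpd",
}


def restart_plan(target: int, dependents: Set[int]) -> Tuple[List[str], List[str]]:
    """Return (stop sequence, restart sequence) for restarting one service.

    Stop the dependents and the target from the highest start-order number down,
    then restart the target followed by every later service in start order.
    """
    stop = [START_ORDER[n] for n in sorted(dependents | {target}, reverse=True)]
    restart = [START_ORDER[n] for n in sorted(START_ORDER) if n >= target]
    return stop, restart


stop, restart = restart_plan(7, {8, 9, 12})
print(stop)     # ['avid-mpd', 'avid-interplay-central', 'avid-aaf-gen', 'avid-ums']
print(restart)  # ['avid-ums', 'avid-aaf-gen', 'avid-interplay-central', 'avid-all', 'acs-ctrl-messenger', 'avid-mpd']
```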

For a closer look at the start orders assigned to Linux services, see the content of the /etc/rc3.d

directory. The files in this directory are prefixed Sxx or Kxx (e.g. S24, S26, K02). The prefix Sxx

indicates the start order. Kxx indicates the shutdown order.

The content of a typical /etc/rc3.d directory is shown below:

The Linux start order, as reflected in /etc/rc3.d and the other run-level (“/etc/rcX.d”) directories, reflects the boot order and shutdown order for the server. It does not always reflect dependencies within ICS itself.
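To list the boot and shutdown order from a run-level directory, the Sxx and Kxx prefixes can be parsed as shown in this Python sketch. The function name is hypothetical and the sample entries are made up for illustration. On a real node you would pass `os.listdir("/etc/rc3.d")`. As the note above says, this order reflects boot and shutdown sequencing, not ICS dependencies.

```python
import re
from typing import List, Tuple

# Hypothetical helper for reading run-level link names.
RC_LINK = re.compile(r"^([SK])(\d{2})(.+)$")


def rc_order(names: List[str]) -> Tuple[List[str], List[str]]:
    """Split run-level directory entries into start order and shutdown order.

    Sxx<script> entries give the start order and Kxx<script> entries give the
    shutdown (kill) order, each ascending by xx. Other entries are ignored.
    """
    starts, kills = [], []
    for name in names:
        m = RC_LINK.match(name)
        if not m:
            continue
        kind, number, script = m.groups()
        (starts if kind == "S" else kills).append((int(number), script))
    return [s for _, s in sorted(starts)], [s for _, s in sorted(kills)]


# Hypothetical directory listing.
print(rc_order(["S26example-b", "S24example-a", "K02example-c", "README"]))
# (['example-a', 'example-b'], ['example-c'])
```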


Working with Cluster Logs

ICS and its associated open-source services — such as Pacemaker, Corosync, and RabbitMQ —

produce numerous logs. These are stored in the standard RHEL directory and subdirectories:

/var/log

Typically, log files have a name of the following form:

<process>.log


For example:

spooler.log

spooler.log-20131025.gz

spooler.log.old20131024_141055

Note the following:

• *.log are current log files, for the active process.

• *.gz are "rotated out" log files, compressed and with a date appended.

• *.old are backlogs.
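The naming conventions above can be expressed as a small shell function that labels a file by its suffix. This is an illustrative sketch: classify_log is a hypothetical name, it assumes only the three patterns shown in the example, and anything else is reported as "other".

```shell
#!/bin/sh
# Label a log file name using the naming conventions described above.
classify_log() {
    name=${1##*/}          # strip any leading directory path
    case "$name" in
        *.gz)   echo "rotated" ;;   # compressed, with a date appended
        *.old*) echo "backlog" ;;   # e.g. spooler.log.old20131024_141055
        *.log)  echo "current" ;;   # log of the active process
        *)      echo "other" ;;
    esac
}

# Example: label every entry in /var/log/avid
# for f in /var/log/avid/*; do printf '%s\t%s\n' "$(classify_log "$f")" "$f"; done
```

The *.gz pattern is tested first so that a rotated file such as spooler.log-20131025.gz is not mistaken for a current log.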

Log files are rotated (replaced), compressed and eventually deleted automatically by the Linux

logrotate log management utility. In addition, most ICS logs have the following characteristics,

determined by the logrotate configuration file (/etc/logrotate.conf):

• Fresh logs are begun with each reboot

• New log files are uncompressed text files (some are binaries)

• Older logs are rotated (replaced) weekly

• Older logs are stored in the gzip format

• Four weeks' worth of backlogs are kept

• A new empty log file is created after rotating out the old one

• The date is appended as a suffix to the rotated file

Specific processes can override the logrotate configuration file settings by supplying their own configuration file in the /etc/logrotate.d directory. If a log file is not behaving as expected, check there.
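For reference, the characteristics listed above correspond to logrotate directives such as the following. This is an illustrative fragment, not a copy of the file shipped on an ICS server, so check /etc/logrotate.conf on your own system. The second stanza shows the general shape of a per-process override in /etc/logrotate.d, using a hypothetical log path.

```
# /etc/logrotate.conf (illustrative)
weekly                    # rotate logs once a week
rotate 4                  # keep four rotated copies (four weeks of backlogs)
create                    # create a new empty log file after rotating
dateext                   # append the date (by default -YYYYMMDD) to rotated files
compress                  # gzip the rotated files
include /etc/logrotate.d  # read per-process settings from this directory

# /etc/logrotate.d/example (hypothetical override for a single log)
/var/log/avid/example.log {
    daily                 # rotate daily instead of weekly
    rotate 7              # keep seven rotated copies
    compress
    missingok             # do not report an error if the log is missing
    notifempty            # do not rotate an empty log
}
```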

Understanding Log Rotation and Compression

The Linux logrotate utility runs daily, rotating and compressing any logs that are due according to its configuration. Although it is invoked by the Linux cron daemon, the exact time at which logrotate runs cannot be stated precisely. It varies, for example, depending on when the system was most recently rebooted, and it does not run at a fixed time after the reboot. This is by design, in order to vary and minimize the impact on other system resources. By default, rotated log files are stored as gzip (.gz) compressed files.

Log rotation is controlled by the following files:

• /etc/cron.daily/logrotate specifies the job to be run and the file containing configuration parameters

• /usr/sbin/logrotate is the job that is run


• /etc/logrotate.conf is the file containing configuration parameters

• /etc/logrotate.d is a directory containing additional configuration information that might override the default instructions

Further details on the log rotation configuration files are beyond the scope of this document. For

more information, see the Linux man page for logrotate by typing the following at the Linux

command line:

man logrotate
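Before changing any of these files, you can check how logrotate interprets the current configuration. The -d (debug) option makes logrotate report what it would do without rotating, compressing, or removing anything. A minimal wrapper, with preview_logrotate as a hypothetical name:

```shell
#!/bin/sh
# Preview log rotation: with -d (debug), logrotate reports what it would
# do but does not rotate, compress, or delete any file.
# Run as root so that logrotate can read every configured log.
preview_logrotate() {
    logrotate -d "${1:-/etc/logrotate.conf}"
}

# Example: page through the debug report (the report may be written to
# standard error, hence the 2>&1)
# preview_logrotate /etc/logrotate.conf 2>&1 | less
```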

Viewing the Content of Log Files

You can search and examine the contents of logs from the Linux command line using the usual

Linux tools and commands:

• vi - Opens the log file in the vi editor. To avoid modifying a log accidentally, use vi -R (or view) to open it read-only.

• tail - Displays the last few lines of a log file. With the -f option, tail keeps displaying new lines as they are written, which makes it an excellent tool for monitoring "growing" files such as log files.

To view the content of multiple log files in real time, use the "-f" option and list the files:

tail -f <file1> <file2>


For example, the following command displays the last few lines of both the edit.log and isis.log files in the same shell, and continues to show new lines as they are written:

tail -f /var/log/avid/edit.log /var/log/avid/isis.log

• more - Outputs the content of a file one screen at a time.

• less - Like more, but permitting forwards and backwards movement through the file.

• grep - Use the grep command to search for regular expressions within a log file from the

command line.

For example, the following command searches all log files in the current directory for the term "fail-count":

grep fail-count *.log

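The following more general form of the grep command searches all log files in the current directory and all its subdirectories. The --include option limits the recursive search to files whose names end in .log:

grep -r --include="*.log" <searchterm> .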

• gzip - Use the gzip command to decompress rotated log files for viewing. Rotated log files are stored as compressed gzip files by default.

The general form of the gzip command for uncompressing .gz files is as follows:

gzip -d <logfile>.log.gz
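The grep and gzip commands above can be combined to search current and rotated logs in one pass. In the sketch below (search_logs is a hypothetical helper), grep's --include option restricts the recursive search to *.log files, and each .gz file is decompressed to standard output with gzip -cd, which, unlike gzip -d, leaves the rotated file on disk unchanged. RHEL also provides zcat, zless, and zgrep, which work the same way on individual compressed files.

```shell
#!/bin/sh
# Search current (*.log) and rotated (*.gz) logs under a directory tree.
# Prints "file:matching line" for every match.
# Usage: search_logs <searchterm> [directory]  (default: /var/log/avid)
search_logs() {
    term=$1
    dir=${2:-/var/log/avid}
    # Current logs: --include limits the recursive search to *.log names.
    grep -r --include='*.log' -- "$term" "$dir"
    # Rotated logs: gzip -cd writes the decompressed text to standard
    # output, so the .gz files on disk are left unchanged.
    find "$dir" -type f -name '*.gz' | while IFS= read -r f; do
        gzip -cd "$f" | grep -- "$term" | while IFS= read -r line; do
            printf '%s:%s\n' "$f" "$line"
        done
    done
}

# Example:
# search_logs fail-count /var/log/avid
```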


Retrieving Log Files

In addition to viewing logs directly on the Linux server as outlined above, it can be convenient to copy logs of interest to a desktop machine. To do so, you need network access to the server of interest and login credentials. You also need an SSH File Transfer Protocol (SFTP) client installed on the desktop machine.

WinSCP is the recommended free, open-source client for securely copying files from a Linux server to a Windows desktop machine. It can be downloaded at the following location:

http://winscp.net

To copy files using WinSCP:

1. Connect to the server of interest using WinSCP as the root user.

The root user has the necessary permission levels to establish the connection.

WinSCP uses the standard TCP port 22 for its SSH connection. If you can establish an SSH connection to the server outside of WinSCP, you can use WinSCP.

2. If a Warning dialog appears, click Yes/Update as appropriate to accept the key.

3. WinSCP opens an interface providing a view of the Windows filesystem on the left and the RHEL filesystem on the right.

WinSCP automatically opens in the home directory of the logged-in user. Since you logged in as the root user, this is /root on the RHEL machine. This should not be confused with the Linux root directory itself (/).

4. Navigate to the directory on the Windows machine where you want to put log files.

5. Navigate to the directory on the Linux server containing the logs of interest (for example,

/var/log/avid).

6. Select the log files of interest. Shift-click to select multiple files.

7. Drag and drop the files to the Windows side of the WinSCP interface. Alternatively, click the Copy button for more options.

WinSCP copies the files from the Linux server to the Windows machine.
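Rather than selecting many individual files in WinSCP, you can first bundle the logs of interest into a single compressed archive on the server and then copy that one file. A minimal sketch using the standard tar command; the function name bundle_logs and the archive path are illustrative.

```shell
#!/bin/sh
# Bundle one or more log directories into a single gzip-compressed archive.
# Usage: bundle_logs <archive.tar.gz> <directory>...
bundle_logs() {
    archive=$1
    shift
    # -c create, -z compress with gzip, -f write to the named archive;
    # the remaining arguments are the directories to include.
    tar -czf "$archive" "$@"
}

# Example: collect the ICS and Corosync logs, then copy
# /tmp/ics-logs.tar.gz to the Windows machine with WinSCP.
# bundle_logs /tmp/ics-logs.tar.gz /var/log/avid /var/log/cluster
```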

Important Log Files at a Glance

The following table presents the most important logs for clustering:


Log File Description

/var/log/cluster Corosync log files.

/var/log/mongo MongoDB log files.

/var/log/rabbitmq RabbitMQ log files.

/var/log/avid avid-db.log - Log file of the avid-db database management tool.

ICPS (playback service / "back-end") logs:

• config.log IPC configuration information, as found in the System Settings panels. Produced by avid-config service.

• edit.log Traffic between the back-end and the editors (NewsCutter/MediaComposer), including host and log-in information, timeline warnings, and so on. Produced by avid-edit service.

• fps.log Flash Player Security (FPS) information, relating to the player appearing in the IPC UI. Produced by avid-fps service.

• isis.log Information pertaining to ISIS mounting and connections. Produced by avid-isis service.

• jips.log Java Interplay Production service. Contains information pertaining to low-level connections between the ICS back-end services and the Interplay Production services used to obtain AAF metadata. Produced by avid-jips service.

• reconfigure.log Activity associated with running "service avid-all reconfigure", which runs during setup.

• spooler.log Information relating to playback. Produced by avid-spooler service.

All the above bulleted logs are produced by the named services, which in turn are overseen by the avid-all service.

/var/log/avid/acs • acs-app-server.log Log file for the ACS Administrative web pages, to be used for troubleshooting only (not on by default).

• acs-ctrl-boot.log Log file for the ACS Boot Service (which serves as a registry of services).

• acs-ctrl-generic.log Log file for most other ACS services, but in the IPC 1.3 case, this is only the Attributes Service.


/var/log/avid/avid-aaf-gen AAF Generator logs. This is the service responsible for saving sequences.

/var/log/avid/avid-interplay-central Contains the Interplay Central Java middleware logs (including httpd information).

• interplay_central_x.log Interplay Central server log

• YYYY_MM_DD.request.log Per-day request logs, currently empty

• health-check Internal health-check logs directory

• interplay Internal production connection logs directory

• performance Interplay performance logs directory, disabled by default.

/var/log/avid/avid-register-ics-zone Reserved for future use.

/var/log/avid/avid-register-workgroup Reserved for future use.

/var/log/avid/avid-ums service.log - this information is being prepared.

session.log - log-in requests and session information.

/var/log/avid/qm Quality Manager (relink) logs.
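When you are not sure which of the logs above is relevant, it can help to list the ones that were written to recently, for example just after reproducing a problem. A sketch using find's -mmin test; the name recent_logs and the 10-minute default are arbitrary choices for illustration.

```shell
#!/bin/sh
# List *.log files under a directory modified within the last N minutes.
# Usage: recent_logs [directory] [minutes]  (defaults: /var/log/avid, 10)
recent_logs() {
    dir=${1:-/var/log/avid}
    mins=${2:-10}
    # -mmin -N matches files whose content changed less than N minutes ago.
    find "$dir" -type f -name '*.log' -mmin "-$mins" | sort
}

# Example:
# recent_logs /var/log/avid 5
```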

RHEL Logs in /var/log

The following table presents the standard RHEL logs found in the /var/log directory:

Log File Description

/var/log/anaconda.log Linux installation messages.

/var/log/boot.log Information pertaining to boot time.

/var/log/btmp Failed login attempts. Use the Linux lastb command to view the contents of this log.

/var/log/cron Information logged by the Linux cron daemon.

/var/log/dmesg Information about hardware detected by the kernel at boot time. The Linux dmesg command shows the contents of this log.


/var/log/dracut.log Log file of the Linux initramfs image creation process.

/var/log/lastlog Most recent log-in for all system users. Use the Linux lastlog command to view the contents of this log.

/var/log/maillog Mail server log.

/var/log/mcelog The machine check events (memory and CPU error) log.

/var/log/messages Global system messages, including startup messages, logins, and packet logging.

/var/log/secure Authentication and authorization messages.

/var/log/spooler Usenet and uucp log.

/var/log/tallylog Failed login attempts.

/var/log/wtmp Login and logout history. Use the Linux last command to display the contents.

/var/log/yum.log Information about packages installed using Linux yum utility.
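Several of the logs above (btmp, wtmp, and lastlog) are binary files, so they cannot be paged with less or searched with grep. The last, lastb, and lastlog commands read them directly: last reads /var/log/wtmp, lastb reads /var/log/btmp, and lastlog reads /var/log/lastlog. The following sketch simply groups them; show_login_history is a hypothetical name, and lastb normally requires root.

```shell
#!/bin/sh
# Summarize login activity from the binary RHEL login logs.
show_login_history() {
    echo "== Login and logout history (/var/log/wtmp) =="
    last
    echo "== Failed login attempts (/var/log/btmp, requires root) =="
    lastb
    echo "== Most recent login per user (/var/log/lastlog) =="
    lastlog
}

# Example:
# show_login_history | less
```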

RHEL Subdirectories in /var/log

The following table presents the standard RHEL subdirectories found in the /var/log directory: