Administrator's Guide

Release 5.0.5

Published April 2010

ParaStation5 Administrator's Guide

Release 5.0.5

Copyright © 2002-2010 ParTec Cluster Competence Center GmbH

April 2010

Printed 7 April 2010, 14:11

Reproduction in any manner whatsoever without the written permission of ParTec Cluster Competence Center GmbH is strictly forbidden.

All rights reserved. ParTec and ParaStation are registered trademarks of ParTec Cluster Competence Center GmbH. The ParTec logo and the ParaStation logo are trademarks of ParTec Cluster Competence Center GmbH. Linux is a registered trademark of Linus Torvalds. All other marks and names mentioned herein may be trademarks or registered trademarks of their respective owners.

ParTec Cluster Competence Center GmbH

Possartstr. 20

D-81679 München

Phone +49-89-99809-0

Fax +49-89-99809-555

http://www.par-tec.com

<info@par-tec.com>

Please note that you will always find the most up-to-date version of this technical documentation on our Web site at http://www.par-tec.com/support.php.

Share your knowledge with others. It's a way to achieve immortality.

—Dalai Lama

Table of Contents

1. Introduction
  1.1. What is ParaStation
  1.2. The history of ParaStation
  1.3. About this document
2. Technical overview
  2.1. Runtime daemon
  2.2. Libraries
  2.3. Kernel modules
  2.4. License
3. Installation
  3.1. Prerequisites
  3.2. Directory structure
  3.3. Installation via RPM packages
  3.4. Installing the documentation
  3.5. Installing MPI
  3.6. Further steps
  3.7. Uninstalling ParaStation5
4. Configuration
  4.1. Configuration of the ParaStation system
  4.2. Enable optimized network drivers
  4.3. Testing the installation
5. Insight ParaStation5
  5.1. ParaStation5 pscom communication library
  5.2. ParaStation5 protocol p4sock
    5.2.1. Directory /proc/sys/ps4/state
    5.2.2. Directory /proc/sys/ps4/ether
    5.2.3. Directory /proc/sys/ps4/local
    5.2.4. p4stat
  5.3. Controlling process placement
  5.4. Using the ParaStation5 queuing facility
  5.5. Exporting environment variables for a task
  5.6. Using non-ParaStation applications
  5.7. ParaStation5 TCP bypass
  5.8. Controlling ParaStation5 communication paths
  5.9. Authentication within ParaStation5
  5.10. Homogeneous user ID space
  5.11. Single system view
  5.12. Parallel shell tool
  5.13. Nodes and CPUs
  5.14. Integration with AFS
  5.15. Integrating external queuing systems
    5.15.1. Integration with PBS PRO
    5.15.2. Integration with OpenPBS
    5.15.3. Integration with Torque
    5.15.4. Integration with LSF
    5.15.5. Integration with LoadLeveler
  5.16. Multicasts
  5.17. Copying files in parallel
  5.18. Using ParaStation accounting
  5.19. Using ParaStation process pinning
  5.20. Using memory binding
  5.21. Spawning processes belonging to all groups
  5.22. Changing the default ports for psid(8)
6. Troubleshooting
  6.1. Problem: psiadmin returns error
  6.2. Problem: node shown as "down"
  6.3. Problem: cannot start parallel task
  6.4. Problem: bad performance
  6.5. Problem: different groups of nodes are seen as up or down
  6.6. Problem: cannot start process on front end
  6.7. Warning issued on task startup
  6.8. Problem: pssh fails
  6.9. Problem: psid does not startup, reports port in use
  6.10. Problem: processes cannot access files on remote nodes
I. Reference Pages
  parastation.conf
  psiadmin
  psid
  test_config
  test_nodes
  test_pse
  p4stat
  p4tcp
  psaccounter
  psaccview
  mlisten
A. Quick Installation Guide
B. ParaStation license
C. Upgrading ParaStation4 to ParaStation5
  C.1. Building and installing ParaStation5 packages
  C.2. Changes to the runtime environment
Glossary

Chapter 1. Introduction

1.1. What is ParaStation

ParaStation is an integrated cluster management and communication solution. It combines features unique to ParaStation with common techniques widely used in high performance computing to deliver an integrated, easy-to-use and reliable compute cluster environment.

Version 5 of ParaStation supports various communication technologies as interconnect networks. It comes with an optimized communication protocol for Ethernet that enables Gigabit Ethernet to play a new role in the market of high-throughput, low-latency communication. Besides InfiniBand and Myrinet, it also supports the upcoming 10G Ethernet networks.

Like previous versions, ParaStation5 includes an integrated cluster administration and management environment. Communicating daemon processes running on each cluster node implement effective resource management and a single point of administration. This results in a single system image view of the cluster.

From the user's point of view, this cluster management makes using the cluster easier and more effective. Important features like load balancing, job control and input/output management, common on classical supercomputers but rarely found on compute clusters, are implemented by ParaStation and are thus now also available on clusters.

1.2. The history of ParaStation

The foundations of the ParaStation software were laid in 1995, when the ParaStation communication hardware and software system was presented. It was developed at the chair of Professor Tichy at the computer science department of the University of Karlsruhe.

When ParaStation2 was presented in 1998, it was a pure software project. The communication platform used at that time was Myrinet, a Gigabit interconnect developed by Myricom. ParaStation2 was still developed at the University of Karlsruhe.

ParaStation became commercial in 1999 when ParTec AG was founded. This spin-off from the University of Karlsruhe now owns all rights and patents connected with the ParaStation software. ParTec promotes the further development and improvement of the software. This includes support for a broader range of processor types, communication interconnects and operating systems.

Version 3 of the ParaStation software for Myrinet was a complete rewrite, now fully under the responsibility of ParTec. All the know-how and experience gained from the former versions of the software was incorporated into this version. Presented in 2001, it was a major breakthrough with respect to throughput, latency and stability of the software. Nevertheless, it was enhanced continuously with regard to performance, stability and usability.

In 2002, the ParaStation FE software was presented, opening the ParaStation software environment to Ethernet communication hardware. This first step towards independence from the underlying communication hardware brought the convenient ParaStation management facility to Beowulf clusters for the first time. Furthermore, the suboptimal communication performance for large packets obtained with the MPIch/P4 implementation of the MPI message passing interface, the de facto standard on Beowulf clusters, was improved up to the limits that can be expected from the physical hardware.

With ParaStation4, presented in 2003, the software became truly independent of the communication platform. With this version of the software, even Gigabit Ethernet became a serious alternative as a cluster interconnect due to the throughput and latency that could be achieved.

In the middle of 2004, all rights to ParaStation were transferred from ParTec AG to the ParTec Cluster Competence Center GmbH. This new company takes a much more service-oriented approach to the customer. The main goal is to deliver integrated and complete software stacks for Linux-based compute clusters by selecting state-of-the-art software components and driving software development efforts in areas where real added value can be provided. The ParTec Cluster Competence Center GmbH continues to develop and support the ParaStation product as an important part of its portfolio.

At the end of 2007, ParaStation5 was released, supporting MPI2 as well as additional interconnects and, in particular, protocols like DAPL. ParaStation5 is backward compatible with the previous ParaStation4 version.

1.3. About this document

This manual discusses the installation, configuration and administration of ParaStation5. Furthermore, all the system management utilities are described.

For a detailed discussion of the user utilities and the programming interfaces included in the standard distribution, refer to the ParaStation5 User's Guide and the API reference, respectively.

This document describes version 5.0 of the ParaStation software. Previous versions of ParaStation are no longer covered by this document. Information about these outdated versions can be found in previous versions of this document.

Chapter 2. Technical overview

This chapter gives a brief technical overview of ParaStation5 and explains the various software modules that constitute it.

2.1. Runtime daemon

In order to enable ParaStation5 on a cluster, the ParaStation daemon psid(8) has to be installed on each cluster node. This daemon process implements various functions:

• Install and configure local communication devices and protocols, e.g. load the p4sock kernel module and set up proper routing information, if not already done at system startup.

• Queue parallel and serial tasks until requested resources are available.

• Distribute processes onto the available cluster nodes.

• Start up and monitor processes on cluster nodes. Also terminate and clean up processes upon request.

• Monitor availability of other cluster nodes, send “I'm alive” messages.

• Handle input/output and signal forwarding.

• Service management commands from the administration tools.

The daemon processes periodically send information about application processes, system load and other data to all other nodes within the cluster. Thus, each daemon is able to monitor every other node. If alive messages from a node are missing, it will initiate appropriate actions, e.g. terminate a parallel task or mark this node as "no longer available". Conversely, if a previously unavailable node responds again, it will be marked as "available" and will be used for upcoming parallel tasks. No intervention by the system administrator is required.

2.2. Libraries

In addition, a couple of libraries providing communication and management functionality must be installed. All libraries are provided as static versions, which are linked into the application at compile time, and as shared (dynamic) versions, which are referenced when the application is linked and loaded at runtime. A set of management and test tools is also installed on the cluster.

ParaStation5 comes with its own version of MPI, based on MPIch2. The MPI library provides standard MPIch2-compatible MPI functions. For communication purposes, it supports several communication paths in parallel, e.g. local communication using shared memory, TCP or p4sock, Ethernet using p4sock and TCP, InfiniBand using verbs, Myrinet using GM, or 10G Ethernet using DAPL. Thus, ParaStation5 is able to spawn parallel tasks across nodes connected by different communication networks. ParaStation will also make use of redundant interconnects if a failure is encountered during startup of a parallel task.

There are different versions of the ParaStation MPI library available, depending on the hardware architecture and compiler in use. For IA32, versions for the GNU, Intel and Portland Group compilers are available. For x86_64, versions for the GCC, Intel, Portland Group and Pathscale EKO compiler suites are available. The versions support all available languages and language options for the selected compiler, e.g. Fortran, Fortran90, C or C++. The different versions of the MPI library can be installed in parallel, so it is possible to compile and run applications using different compilers on the same node.

2.3. Kernel modules

Besides libraries enabling efficient communication and task management, ParaStation5 also provides a set of kernel modules:

• p4sock.o: this module implements the kernel based ParaStation5 communication protocol.

• e1000_glue.o, bcm5700_glue.o: these modules enable even more efficient communication with the network drivers coming with ParaStation5 (see below).

• p4tcp.o: this module provides a feature called "TCP bypass". With it, applications using standard TCP communication channels on top of Ethernet are able to use the optimized ParaStation5 protocol and therefore achieve improved performance.

No modification of the application, not even relinking, is necessary to use this feature. For best performance, however, relinking with the MPI library provided by ParaStation is recommended.

To enable maximum performance on Gigabit Ethernet, ParaStation5 comes with its own set of network drivers. These drivers are based on the standard device drivers for the corresponding NICs and are specially tuned for best performance within a cluster environment. They also support all standard communication protocols. To achieve the best performance within an Ethernet-based cluster, these drivers should replace their counterparts currently configured within the kernel.

ParaStation currently comes with drivers for Intel (e1000) and Broadcom (bcm5700) network interface controllers. Dedicated helper modules (glue modules) for these drivers decrease the latency even further.

ParaStation is also able to use all standard Ethernet network drivers configured into the Linux kernel. However, to get the best performance, use of the provided drivers is recommended where applicable.

2.4. License

This version of ParaStation does not require a dedicated license key to run. However, use of the software implies acceptance of the license!

For licensing details, refer to Appendix B, ParaStation license.

Chapter 3. Installation

This chapter describes the installation of ParaStation5. First, the prerequisites for using ParaStation5 are discussed. Next, the directory structure of all installed components is explained. Finally, the installation using RPM packages is described in detail.

Of course, the less automated the chosen installation method, the more room there is for customization during the installation process. On the other hand, even the most automated method, installation via RPM, will give a suitable result in most cases.

For a quick installation guide refer to Appendix A, Quick Installation Guide.

3.1. Prerequisites

In order to prepare a set of nodes for the installation of the ParaStation5 communication system, a few prerequisites have to be met.

Hardware

The cluster must have a homogeneous processor architecture, e.g. Intel IA32 and AMD IA32 can be used together, but not Intel IA32 and IA64 [1]. The currently supported processor architectures are:

• i586: Intel IA32 (including AMD Athlon)

• ia64: Intel IA64

• x86_64: Intel EM64T and AMD64

• ppc: IBM Power4 and Power5

Multi-core CPUs are supported, as well as single and multi-CPU (SMP) nodes.

Furthermore, the nodes need to be interconnected. In principle, ParaStation5 uses two different kinds of interconnects:

• First, a so-called administration network, which is used to handle all the administrative tasks that have to be dealt with within a cluster. Besides commonly used services like sharing of NFS partitions or NIS tables, on a ParaStation cluster this also includes the inter-daemon communication used to implement the effective cluster administration and parallel task handling mechanisms. This administration network is usually implemented using a Fast or Gigabit Ethernet network.

• Second, a high-speed interconnect is required for high-bandwidth, low-latency communication within parallel applications. While historically this kind of communication was usually done using specialized high-speed networks like Myrinet, nowadays Gigabit Ethernet is a much cheaper and only slightly slower alternative. ParaStation5 currently supports Ethernet (Fast, Gigabit and 10G Ethernet), Myrinet, InfiniBand, QsNetII and Shared Memory.

If IP connections over the high-speed interconnect are available, two distinct networks are not strictly required; instead, one physical network can be used for both tasks. IP connections are usually configured by default in the case of Ethernet. For other networks, particular measures have to be taken in order to enable IP over these interconnects.

[1] It is possible to span a ParaStation cluster across multiple processor architectures. The daemons will communicate with each other, but this is currently not a supported configuration. For more details, please contact <support@par-tec.com>.

Software

ParaStation requires an RPM-based Linux installation, as the ParaStation software is distributed as installable RPM packages.

All current distributions from Novell and Red Hat are supported, such as

• SuSE Linux Enterprise Server (SLES) 9 and 10

• SuSE Professional 9.1, 9.2, 9.3 and 10.0, OpenSuSE 10.1, 10.2, 10.3

• Red Hat Enterprise Linux (RHEL) 3, 4 and 5

• Fedora Core, up to version 7

For other distributions and non-RPM based installations, please contact <support@par-tec.com>.

In order to use high-speed networks, additional libraries and kernel modules may be required. These packages are typically provided by the hardware vendors.

Kernel version

If only TCP is used as the high-speed interconnect protocol, no dedicated kernel modules are required. This is the ParaStation default communication path and is always enabled.

Other interconnects and protocols require additional kernel modules. In particular, if the optimized ParaStation p4sock protocol is used, a couple of additional modules are loaded. Refer to the section called “Installing the RPMs” for details. The ParaStation modules can be compiled for all major kernel versions within the 2.4 and 2.6 kernel streams.

Using InfiniBand and Myrinet requires additional modules and may restrict the supported kernels.

3.2. Directory structure

The default location to install ParaStation5 is /opt/parastation. Underneath this directory, several subdirectories are created containing the actual ParaStation5 installation:

bin

contains all executables and scripts forming the ParaStation system. This directory may be added to the PATH environment variable for easy access to the ParaStation administration tools.

config

contains the example configuration file parastation.conf.tmpl.

Depending on which communication parts of the ParaStation system are installed, additional scripts and configuration files may be found within this directory.

doc

contains the ParaStation documentation after installing the corresponding RPM file. The necessary steps are described in Section 3.4, “Installing the documentation”.

include

contains the header files needed in order to build ParaStation applications. These files are primarily needed for building applications using the low-level PSPort or PSE libraries.

These header files are not needed if only MPI applications are to be built or precompiled third-party applications are used.

lib and lib64

contains various libraries needed in order to build and/or run applications using ParaStation and the ParaStation MPI libraries.

man

contains the manual pages describing the ParaStation daemons, utilities and configuration files after installing the documentation package. The necessary steps are described in Section 3.4, “Installing the documentation”.

To enable users to access these pages using the man(1) command, please consult the corresponding documentation [2].

mpi2, mpi2-intel, mpi2-pgi, mpi2-psc

contains an adapted version of MPIch2 after installing one of the various psmpi2 RPM files. The necessary steps are described in Section 3.5, “Installing MPI”.

In particular, the subdirectories mpich/bin, mpich-intel/bin, etc. contain all the commands to run (mpirun) and compile (mpicc, mpif90, ...) parallel tasks.

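All ParaStation5-specific kernel modules are located within the directory /lib/modules/<kernel release>/kernel/drivers/net/ps4, where <kernel release> is the kernel release string (as printed by uname -r for the running kernel).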
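The bin and man entries above, together with footnote 2, amount to a few lines of shell setup. The following is a minimal sketch of such a snippet, assuming the default prefix /opt/parastation and a bash login shell (sourcing it from a file under /etc/profile.d is an assumption about the distribution's layout). The helper name ps_module_dir is hypothetical; it only prints the module directory described above for a given kernel release.

```bash
#!/bin/bash
# Sketch: make the ParaStation5 tools and manual pages reachable.
# Assumes the default install prefix /opt/parastation.
PS_PREFIX=/opt/parastation

# Prepend bin/ to PATH unless it is already present.
case ":$PATH:" in
  *":$PS_PREFIX/bin:"*) ;;
  *) export PATH="$PS_PREFIX/bin:$PATH" ;;
esac

# Prepend man/ to MANPATH. If MANPATH was unset, the trailing colon
# asks man-db to append its default search path after this entry.
export MANPATH="$PS_PREFIX/man:${MANPATH:-}"

# Hypothetical helper: print the directory holding the ParaStation5
# kernel modules for a kernel release (default: the running kernel).
ps_module_dir() {
  local release=${1:-$(uname -r)}
  printf '/lib/modules/%s/kernel/drivers/net/ps4\n' "$release"
}
```

For example, ls "$(ps_module_dir)" lists the ParaStation5 modules installed for the running kernel, provided the pscom-modules package is installed. The trailing-colon convention for MANPATH is man-db behaviour; other man implementations may treat it differently, in which case editing the manpath(1) configuration file as described in footnote 2 is the safer route.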

3.3. Installation via RPM packages

The recommended way to install ParaStation5 is to install the packages using the rpm command. This is the preferred method on all SuSE or Red Hat based systems.

Getting the ParaStation5 RPM packages

Packages containing the different parts of the ParaStation5 system can be obtained from the download section of the ParaStation homepage.

At least two packages are needed: one containing the management part, the other providing the communication part of the ParaStation5 system. Besides this core system, packages supplying MPIch for the GNU, Intel, Portland Group and Pathscale compilers are available. A documentation package is also available.

The full names of the RPM files follow a simple structure:

name-x.y.z-n.arch.rpm

where name denotes the name and thus the content of the package, x.y.z the version number, n the build number, and arch the architecture, i.e. one of i586, ia64, x86_64, ppc or noarch. The latter is used, e.g., for the documentation package.
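The naming scheme can be taken apart mechanically, which is handy when scripting installations across many nodes. This is a minimal sketch using only bash parameter expansion; the function name parse_rpm_name is hypothetical. It splits from the right, so package names that themselves contain dashes (such as pscom-modules) are handled.

```bash
#!/bin/bash
# Hypothetical helper: split an RPM file name of the form
# name-x.y.z-n.arch.rpm into name, version, build number and arch.
parse_rpm_name() {
  local f=${1##*/}        # drop any leading directory
  f=${f%.rpm}             # drop the .rpm suffix
  local arch=${f##*.}     # text after the last dot: i586, x86_64, noarch, src
  f=${f%.*}
  local build=${f##*-}    # n: the build number
  f=${f%-*}
  local version=${f##*-}  # x.y.z: the version number
  local name=${f%-*}      # may itself contain dashes, e.g. pscom-modules
  printf '%s %s %s %s\n' "$name" "$version" "$build" "$arch"
}
```

For example, parse_rpm_name psmgmt-5.0.0-0.i586.rpm prints "psmgmt 5.0.0 0 i586". For a package file that is actually present, rpm can report the same fields from the package header instead, with rpm -qp --queryformat '%{NAME} %{VERSION} %{RELEASE} %{ARCH}\n' file.rpm, which does not depend on the file name being intact.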

The package called psmgmt holds the management part of ParaStation. This package is required for any installation of the ParaStation5 system, independent of the underlying communication platform.

The communication libraries and modules for ParaStation5 come with the pscom package. As explained above, all file names contain an individual version number, the build number and the architecture.

The package versions available on the ParaStation homepage at any given time are tested to work properly together. It is recommended to always install corresponding package versions. If only part of the installation is to be updated (e.g. only the management part while keeping the communication part untouched), the corresponding release notes should be consulted in order to verify that the intended combination is supported.

The release notes of the different packages can be found either within the installation directory /opt/parastation or in the download section of the ParaStation homepage.

[2] Usually this is done either by modifying the MANPATH environment variable or by editing the manpath(1) configuration file, which is /etc/manpath.config by default.

Please note that the individual version numbers of the distinct packages making up the ParaStation5 system do not necessarily have to match.

Compiling the ParaStation5 packages from source

To build RPM packages suitable for a particular setup, the source code for the ParaStation packages can be downloaded from www.parastation.com/download [3].

Typically, it is not necessary to recompile the ParaStation packages, as the provided precompiled packages will install on all major distributions. Only the kernel modules should be recompiled to provide modules suitable for the current Linux kernel; see below.

To build the psmgmt package, use

# rpmbuild --rebuild psmgmt-5.0.0-0.src.rpm

After installing the psmgmt package, the pscom package can be built using

# rpm -Uv psmgmt-5.0.0-0.i586.rpm

# rpmbuild --rebuild pscom-5.0.0-0.src.rpm

This will build the packages pscom-5.0.0-0.i586.rpm and pscom-modules-5.0.0-0.i586.rpm.

The architecture will of course vary depending on the system the packages are built on.

While compiling the package, support for InfiniBand will be included if one of the following files is found:

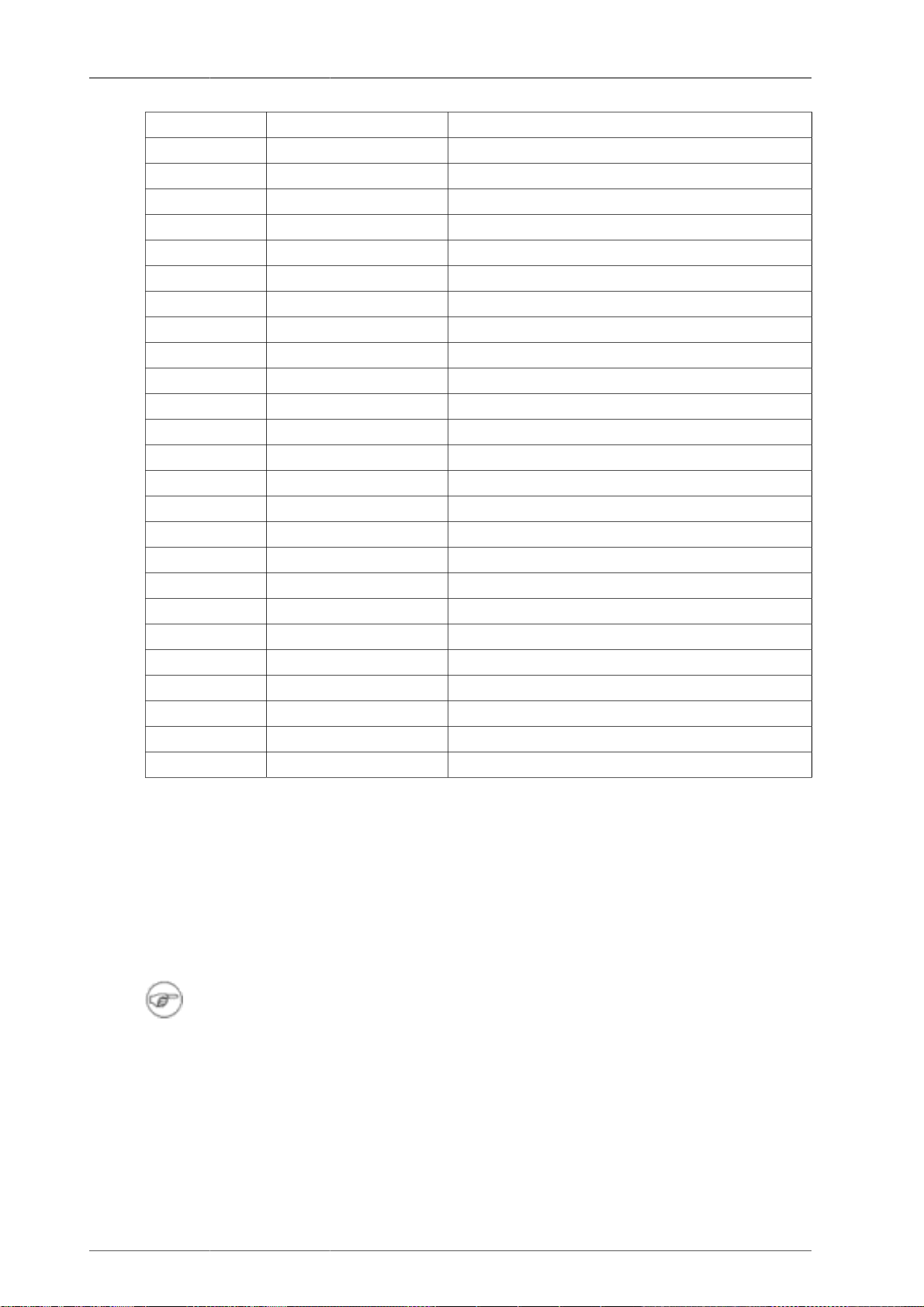

File                                     Version
/usr/mellanox/include/vapi/evapi.h       Mellanox
/usr/include/infiniband/verbs.h          OpenFabrics
/usr/local/ofed/include/verbs.h          OpenFabrics (Voltaire)

Table 3.1. Supported InfiniBand implementations
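The following shell function is a minimal sketch that checks which of the header files listed in Table 3.1 are present on the build host, which can help predict whether the pscom build will pick up InfiniBand support. The optional root-directory argument is a hypothetical convenience for checking a build chroot; the actual detection is performed by the pscom build itself.

```shell
#!/bin/sh
# Report which InfiniBand header files from Table 3.1 exist.
# $1 (optional, hypothetical): root directory to check instead of "/".
# Returns 0 if at least one header was found, 1 otherwise.
detect_ib_headers() {
    root=${1:-}
    found=0
    for entry in \
        "/usr/mellanox/include/vapi/evapi.h:Mellanox" \
        "/usr/include/infiniband/verbs.h:OpenFabrics" \
        "/usr/local/ofed/include/verbs.h:OpenFabrics (Voltaire)"
    do
        file=${entry%%:*}      # part before the first colon: header path
        impl=${entry#*:}       # part after the first colon: implementation
        if [ -f "$root$file" ]; then
            echo "$impl: $file"
            found=1
        fi
    done
    [ "$found" -eq 1 ]
}
```

For example, running detect_ib_headers without arguments on a host with the OpenFabrics stack installed would be expected to print the /usr/include/infiniband/verbs.h line.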

To enable Myrinet GM, the environment variable GM_HOME must be set.

To generate only the pscom-modules package, holding the ParaStation5 protocol-specific kernel modules and patched device drivers, use the command

# rpmbuild --rebuild --with modules pscom-5.0.0-0.src.rpm

After installing the pscom package, the MPIch2 package can be built using

# rpm -Uv pscom-5.0.0-0.i586.rpm

# rpmbuild --rebuild psmpi2-5.0.0.src.rpm

This will create an installable MPIch RPM package, built with gcc. Support for other compilers can be enabled using the --with compiler option, where compiler is intel for Intel icc, pgi for the Portland Group compilers or psc for Pathscale pathcc. The option g77_ will use gcc, generating Fortran symbol names with a single trailing underscore.
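The build and installation order described above (psmgmt first, then pscom, then psmpi2) can be sketched as a shell function that only prints the commands, so they can be reviewed before being run as root. The directory where rpmbuild places binary RPMs differs between distributions and rpm configurations, so rpmdir is an assumption you must supply; the file names follow the pattern used in this guide.

```shell
#!/bin/sh
# Print (do not run) the build/install sequence psmgmt -> pscom -> psmpi2.
# Assumptions: package files are named name-version-release.arch.rpm and
# rpmdir is where your rpmbuild writes binary RPMs.
build_plan() {
    version=$1      # e.g. 5.0.0-0
    arch=$2         # e.g. i586
    rpmdir=$3       # distribution-specific RPM output directory
    compiler=$4     # optional: intel, pgi, psc or g77_; empty means gcc

    echo "rpmbuild --rebuild psmgmt-$version.src.rpm"
    echo "rpm -Uv $rpmdir/psmgmt-$version.$arch.rpm"
    echo "rpmbuild --rebuild pscom-$version.src.rpm"
    echo "rpm -Uv $rpmdir/pscom-$version.$arch.rpm"
    # The psmpi2 source RPM name is taken as given in this guide.
    if [ -n "$compiler" ]; then
        echo "rpmbuild --rebuild --with $compiler psmpi2-5.0.0.src.rpm"
    else
        echo "rpmbuild --rebuild psmpi2-5.0.0.src.rpm"
    fi
}
```

For example, build_plan 5.0.0-0 i586 /usr/src/packages/RPMS/i586 intel (a SuSE-style output path; adjust as needed) prints the sequence for an Intel-compiled MPI package; after checking it, the output can be piped to sh.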

Installing the RPMs

The installation on the cluster nodes has to be performed with administrator privileges. The packages are installed using the rpm -U command:

[3] Source code for the documentation is currently not available.


# rpm -Uv psmgmt-5.0.0-0.i586.rpm pscom-5.0.0-0.i586.rpm \
    pscom-modules-5.0.0-0.i586.rpm

This will copy all the necessary files to /opt/parastation and the kernel modules to /lib/modules/kernelversion/kernel/drivers/net/ps4.

On a frontend node or file server, the pscom-modules package is only required if this node should run processes of a parallel task. If the frontend or file server node is not configured to run compute processes of parallel tasks, the installation of the pscom-modules package may be skipped. For details on how to configure frontend nodes, refer to Section 4.1, “Configuration of the ParaStation system”.

To enable the ParaStation version of the e1000 or bcm5700 network drivers, rename (or delete) the original version of the driver in use, which is typically located in the system directory /lib/modules/kernelversion/kernel/drivers/net/e1000 or bcm, respectively. See modinfo e1000 for details. The module dependency database must then be rebuilt using the command depmod. See Section 4.2, “Enable optimized network drivers” for details.

It is not required to use the ParaStation version of the e1000 or bcm5700 driver, as the p4sock protocol of ParaStation is able to use every network driver within the Linux kernel. However, to increase performance and to minimize latency, it is highly recommended.

Using the provided drivers does not influence other network communication.

While installing the ParaStation management RPM, the file /etc/xinetd.d/psidstarter is installed. This enables remote startup of ParaStation daemons using xinetd(8).

To make the xinetd daemon read this file, execute:

/etc/init.d/xinetd reload

Refer to Section 5.22, “Changing the default ports for psid(8)” on how to change the default network port used by psid(8).

In case the system still uses the older inetd(8) server to start up network services, please add the following lines to /etc/services:

# ParaStation entries
psid 888/tcp # ParaStation Daemon Start Port
# end of ParaStation entries

Add the next lines to /etc/inetd.conf:

#
# ParaStation daemon
#
psid stream tcp nowait root /opt/parastation/bin/psid psid
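For the inetd case, the /etc/services entry maps the service name psid to TCP port 888, and the /etc/inetd.conf entry tells inetd to start /opt/parastation/bin/psid for that service. The sketch below appends both entries only if no psid entry exists yet, so it can safely be run more than once; the file names are parameters so it can first be tried on copies.

```shell
#!/bin/sh
# Append the ParaStation psid entries to the services and inetd files,
# skipping a file that already contains a line starting with "psid".
add_psid_inetd_entries() {
    services=${1:-/etc/services}
    inetd_conf=${2:-/etc/inetd.conf}
    if ! grep -q '^psid[[:space:]]' "$services"; then
        printf '%s\n' '# ParaStation entries' \
            'psid 888/tcp # ParaStation Daemon Start Port' \
            '# end of ParaStation entries' >> "$services"
    fi
    if ! grep -q '^psid[[:space:]]' "$inetd_conf"; then
        printf '%s\n' '#' '# ParaStation daemon' '#' \
            'psid stream tcp nowait root /opt/parastation/bin/psid psid' \
            >> "$inetd_conf"
    fi
}
```

After /etc/inetd.conf has been changed, inetd has to re-read its configuration, which is typically done by sending it a SIGHUP.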

3.4. Installing the documentation

The ParaStation5 documentation is delivered in three formats: as PDF files, as browsable HTML documentation, and as manual pages for all ParaStation5 commands and configuration files.

In order to install the documentation files, an up-to-date version of the documentation package psdoc has to be retrieved. It can be found in the download section of the ParaStation homepage. The package is called psdoc and its architecture is noarch, since this part is platform independent. The name of this package follows the conventions of all other packages making up the ParaStation5 distribution.

To install the package, simply execute


# rpm -Uv psdoc-5.0.0-1.noarch.rpm

All the PDF and HTML files will be installed within the directory /opt/parastation/doc; the manual pages will reside in /opt/parastation/man.

The intended starting point to browse the HTML version of the documentation is file:///opt/parastation/doc/html/index.html.

The documentation is available in two PDF files called adminguide.pdf for the ParaStation5 Administrator's Guide and userguide.pdf for the ParaStation5 User's Guide. Both can be found in the directory /opt/parastation/doc/pdf.

In order to make the manual pages available to users, please consult the documentation of the man(1) command and the remark in Section 3.2, “Directory structure”, on how to do this.

3.5. Installing MPI

The standard for the implementation of parallel applications on distributed memory machines like clusters is MPI, the Message Passing Interface. In order to enable a ParaStation5 cluster for the development and execution of MPI programs, the installation of an adapted version of MPIch2 is necessary. A corresponding RPM package can be found within the download section of the ParaStation homepage.

The corresponding package psmpi2 follows the common naming conventions of all ParaStation5 packages.

Besides the plain MPI package (psmpi2), which is compiled using the GNU gcc compiler, other versions of the MPI package are available, built using different compilers: the PGI or Intel compilers on the Intel IA32 platform, the Intel compiler on the IA64 platform, and the PGI, Intel and Pathscale compilers on the x86_64 platform. These packages of course depend on the corresponding compilers being installed.

Keep in mind that the use of these compilers might require further licenses.

After downloading the correct MPI package, make sure you are logged in as root. In order to install MPIch for ParaStation5 from the RPM file, the command

# rpm -Uv psmpi2-5.0.0-1.i586.rpm

must be executed.

The command will extract the ParaStation5 MPI package to the directory /opt/parastation/mpi2.

In order to make the MPI commands available to users, make sure that the directory /opt/parastation/mpi2/bin is included in the system-wide PATH environment variable. Furthermore, the administrator might want to make the MPI manual pages available to all users. These pages reside in /opt/parastation/mpi2/man. Please consult the documentation of the man(1) command and the remark in Section 3.2, “Directory structure” on how to do this.
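One way to extend PATH and MANPATH system-wide is a small profile snippet; where such snippets live (for example /etc/profile.d/) depends on the distribution and is an assumption here. The helper prepends a directory only if it is not already present. For MANPATH, the sketch starts from the output of manpath(1) when MANPATH is unset, since setting MANPATH usually replaces man's default search path.

```shell
#!/bin/sh
# Print $1 with directory $2 prepended, unless $2 is already contained.
prepend_path() {
    case ":$1:" in
        *":$2:"*) echo "$1" ;;        # already present, keep as is
        "::")     echo "$2" ;;        # variable was empty
        *)        echo "$2:$1" ;;
    esac
}

# ParaStation MPI commands
PATH=$(prepend_path "$PATH" /opt/parastation/mpi2/bin)
# Manual pages: start from man's current search path so defaults are kept
MANPATH=$(prepend_path "${MANPATH:-$(manpath 2>/dev/null)}" /opt/parastation/mpi2/man)
MANPATH=$(prepend_path "$MANPATH" /opt/parastation/man)
export PATH MANPATH
```

Additional compiler-specific trees such as /opt/parastation/mpi2-intel/bin can be handled the same way, but only one MPI bin directory should come first in PATH.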

In general, all versions of ParaStation5 MPI supporting different compilers can be installed in parallel. The files will be copied to the directories /opt/parastation/mpi2 (for GNU gcc), /opt/parastation/mpi2-intel (for Intel compiler) or /opt/parastation/mpi2-pgi (for Portland Group compiler), depending on the compiler version supported by the MPI package.

3.6. Further steps

The previous sections described the basic installation of ParaStation5. There are still some steps to do, especially:

• configuration of the ParaStation5 system


• testing

These steps will be discussed in Chapter 4, Configuration.

3.7. Uninstalling ParaStation5

After stopping the ParaStation daemons, the corresponding packages can be removed using

# /etc/init.d/parastation stop

# rpm -e psmgmt pscom psdoc psmpi2

on all nodes of the cluster.


Chapter 4. Configuration

After installing the ParaStation software successfully, only a few modifications to the configuration file parastation.conf(5) have to be made in order to enable ParaStation on the local cluster.

4.1. Configuration of the ParaStation system

This section describes the basic configuration procedure to enable ParaStation. It covers the configuration of ParaStation5 using TCP/IP (Ethernet) and the optimized ParaStation5 protocol p4sock.

The primary configuration work consists of editing the central configuration file parastation.conf, which is located in /etc.

A template file can be found in /opt/parastation/config/parastation.conf.tmpl. Copy this file to /etc/parastation.conf and edit it as appropriate.

This section describes all parameters of /etc/parastation.conf necessary to customize ParaStation for a basic cluster environment. A detailed description of all possible configuration parameters in the configuration file can be found within the parastation.conf(5) manual page.

The following steps have to be executed on the frontend node to configure the ParaStation daemon psid(8):

1. Copy template

Copy the file /opt/parastation/config/parastation.conf.tmpl to /etc/parastation.conf.

The template file contains all possible parameters known by the ParaStation daemon psid(8). Most of these parameters are set to their default values within lines marked as comments. Only those that have to be modified in order to adapt ParaStation to the local environment are enabled. Additionally, all parameters are explained by comments. A more detailed description of all the parameters can be found in the parastation.conf(5) manual page.

The template file is a good starting point to create a working configuration of ParaStation for your cluster. Besides basic information about the cluster, this template file defines all hardware components ParaStation is able to handle. Since these definitions require deeper knowledge of ParaStation, it is easier to start from the template file than to write them from scratch.

2. Define Number of nodes

The parameter NrOfNodes has to be set to the actual number of nodes within the cluster. Front end nodes have to be counted as part of the cluster. E.g. if the cluster contains 8 nodes with a fast interconnect plus a front end node, then NrOfNodes has to be set to 9 in order to allow the start of parallel tasks from this machine.

3. HWType

In order to tell ParaStation which general kind of communication hardware should be used, the HWType parameter has to be set. This can be changed on a per-node basis within the Nodes section (see below).

For clusters running ParaStation5 utilizing the optimized ParaStation communication stack on Ethernet hardware of any flavor, this parameter has to be set to:

HWType { p4sock ethernet }

This will use the optimized ParaStation protocol, if available. Otherwise, TCP/IP will be used.


The values that might be assigned to the HWType parameter have to be defined within the parastation.conf configuration file. Have a brief look at the various Hardware sections of this file in order to find out which hardware types are actually defined.

Other possible types are: mvapi, openib, gm, ipath, elan, dapl.

To enable shared memory communication used within SMP nodes, no dedicated hardware entry is required. Shared memory support is always enabled by default. As there are no options for shared memory, no dedicated hardware section for this kind of interconnect is provided.

4. Define Nodes

Furthermore, ParaStation has to be told which nodes should be part of the cluster. The usual way of using the Nodes parameter is the environment mode, which is already enabled in the template file.

The general syntax of the Nodes environment is one entry per line. Each entry has the form

hostname id [HWType] [runJob] [starter] [accounter]

This will register the node hostname with the ParaStation system under the ParaStation ID id. The ParaStation ID has to be an integer between 0 and NrOfNodes-1.

For each cluster node defined within the Nodes environment, at least the hostname of the node and the ParaStation ID of this node have to be given. The optional parameters HWType, runJobs, starter and accounter may be ignored for now. For a detailed description of these parameters refer to the parastation.conf(5) manual page.

Usually the nodes are listed in order of increasing ParaStation ID, beginning with 0 for the first node. If a front end node exists and should be integrated into the ParaStation system, it should usually be configured with ID 0.

Within an Ethernet cluster the mapping between hostnames and ParaStation IDs is completely unrestricted.

5. More options

More configuration options may be set as described in the configuration file parastation.conf. For details refer to the parastation.conf(5) manual page.

If using the vapi (HWType ib) or DAPL (HWType dapl) layers for communication, e.g. for InfiniBand or 10G Ethernet, the amount of lockable memory must be increased. To do so, use the option rlimit memlock within the configuration file.

6. Copy configuration file to all other nodes

The modified configuration file must be copied to all other nodes of the cluster, e.g. using psh. Afterwards, restart all ParaStation daemons.

In order to verify the configuration, the command

# /opt/parastation/bin/test_config

can be run. This command will analyze the configuration file and report any configuration errors. After finishing these steps, the configuration of ParaStation is done.
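Steps 2 to 4 above can be summarized by a small generator that prints the cluster-specific lines for a given list of host names, assigning ParaStation IDs 0 to N-1 in the order given (list the front end node first to give it ID 0). This is a sketch: the host names in the usage example are hypothetical, and the output should be merged into the copied template rather than replacing it, because the template also contains the Hardware sections.

```shell
#!/bin/sh
# Print NrOfNodes, HWType and a Nodes environment for the given host names.
# IDs are assigned in argument order, starting at 0.
gen_psconf_fragment() {
    echo "NrOfNodes $#"
    echo "HWType { p4sock ethernet }"
    echo "Nodes {"
    id=0
    for host in "$@"; do
        echo "    $host $id"
        id=$((id + 1))
    done
    echo "}"
}
```

For example, gen_psconf_fragment frontend node01 node02 node03 node04 node05 node06 node07 node08 would produce NrOfNodes 9, matching the example of 8 compute nodes plus a front end node.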

4.2. Enable optimized network drivers

As explained in the previous chapter, ParaStation5 comes with its own versions of adapted network drivers for Intel (e1000) and Broadcom (bcm5700) NICs. If the optimized ParaStation protocol p4sock is used to transfer application data across Ethernet, these adapted drivers should be used, too. The simplest way to enable these drivers is to rename the original modules and recreate the module dependencies:

# cd /lib/modules/$(uname -r)/kernel/drivers/net

# mv e1000/e1000.o e1000/e1000-orig.o

# mv bcm/bcm5700.o bcm/bcm5700-orig.o

# depmod -a
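The commands above use the .o suffix of kernel 2.4 modules; on kernel 2.6 the modules end in .ko (compare the modprobe output shown for kernel 2.6). The following sketch renames whichever variant exists. The module directory is a parameter so the function can be tried on a scratch directory first; depmod -a still has to be run afterwards.

```shell
#!/bin/sh
# Rename the original e1000 and bcm5700 modules (.o for 2.4, .ko for 2.6)
# so that the ParaStation versions in .../drivers/net/ps4 are used.
disable_orig_drivers() {
    moddir=${1:-/lib/modules/$(uname -r)/kernel/drivers/net}
    for mod in e1000/e1000 bcm/bcm5700; do
        for ext in o ko; do
            if [ -f "$moddir/$mod.$ext" ]; then
                mv "$moddir/$mod.$ext" "$moddir/$mod-orig.$ext"
                echo "renamed $mod.$ext"
            fi
        done
    done
}
```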

If your system uses the e1000 driver, a subsequent modinfo command for kernel version 2.4 should report that the new ParaStation version of the driver will be used:

# modinfo e1000

filename: /lib/modules/2.4.24/kernel/drivers/net/ps4/e1000.o

description: "Intel(R) PRO/1000 Network Driver"

author: "Intel Corporation, <linux.nics@intel.com>"

...

The "filename" entry reports that the ParaStation version of the driver will be used. The same should apply for the bcm5700 network driver.

For kernel version 2.6, use the modprobe command:

# modprobe -l e1000

/lib/modules/2.6.5-7.97/kernel/drivers/net/ps4/e1000.ko

To reload the new version of the network drivers, it is necessary to reboot the system.

4.3. Testing the installation

After installing and configuring ParaStation on each node of the cluster, the ParaStation daemons can be started up. These daemons will set up all necessary communication relations and thus form the virtual cluster consisting of the available nodes.

The ParaStation daemons are started using the psiadmin command. This command will establish a connection to the local psid. If this daemon is not already up and running, xinetd (or inetd) will start it up automatically.

If the daemon is not configured to be started automatically by xinetd, it must be started using /etc/init.d/parastation start.

# /opt/parastation/bin/psiadmin

After connecting to the local psid daemon, this command will issue a prompt

psiadmin>

To start up the ParaStation daemons on all other nodes, use the add command:

psiadmin> add

The following status enquiry command

psiadmin> list

should list all nodes as "up". To verify that all nodes have installed the proper kernel modules, type

psiadmin> list hw

The command should report all configured hardware types for every node, e.g. p4sock, ethernet.


Alternatively, it is possible to use the single command form of the psiadmin command:

# /opt/parastation/bin/psiadmin -s -c "list"

The command should be repeated until all nodes are up. The ParaStation administration tool is described in detail in the corresponding manual page psiadmin(1).
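Instead of repeating the command by hand, a loop can re-run it until no node is reported as down. This is a sketch under an assumption: that nodes which are not up are shown with the word "down" in the output of psiadmin -s -c "list"; check psiadmin(1) for the exact output format before relying on it.

```shell
#!/bin/sh
# Re-run a status command until its output no longer contains the word
# "down". Usage: wait_until_up MAX_TRIES DELAY_SECONDS command [args...]
# Returns 0 once no "down" is seen, 1 if MAX_TRIES attempts were used up.
wait_until_up() {
    max_tries=$1; delay=$2; shift 2
    i=1
    while [ "$i" -le "$max_tries" ]; do
        # A failing status command counts as "not yet up".
        if out=$("$@") && ! printf '%s\n' "$out" | grep -qw down; then
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

For example, wait_until_up 30 10 /opt/parastation/bin/psiadmin -s -c list would try up to 30 times, 10 seconds apart.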

If some nodes are still marked as "down", the log file /var/log/messages on these nodes should be inspected. Entries like “psid: ....” at the end of the file may report problems or errors.

After bringing up all nodes, the communication can be tested using

# /opt/parastation/bin/test_nodes -np nodes

where nodes has to be replaced by the actual number of nodes within the cluster. After a while a result like

---------------------------------------

Master node 0

Process 0-31 to 0-31 ( node 0-31 to 0-31 ) OK

All connections ok

PSIlogger: done

should be reported. Of course, the number '31' will be replaced by the highest node number for the number of nodes given on the command line, i.e. nodes-1.

In case of failure, test_nodes may continuously report results like

---------------------------------------

Master node 0

Process 0-2,4-6 to 0-7 ( node 0-2,4-6 to 0-7 ) OK

Process 3 to 0-6 ( node 3 to 0-6 ) OK

Process 7 to 0-2,4-7 ( node 7 to 0-2,4-7 ) OK

A detailed description of test_nodes can be found within the corresponding manual page test_nodes(1).
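Since test_nodes may keep reporting results when a connection fails, a wrapper that looks for the "All connections ok" line of the successful example can be combined with a time limit. The use of timeout(1) from GNU coreutils and the 300-second limit in the usage example are assumptions, not part of ParaStation.

```shell
#!/bin/sh
# Run a connectivity test command and succeed only if its output contains
# a line starting with "All connections ok".
check_connections() {
    if "$@" 2>&1 | grep -q '^All connections ok'; then
        echo "connectivity OK"
        return 0
    fi
    echo "connectivity FAILED" >&2
    return 1
}
```

For example: check_connections timeout 300 /opt/parastation/bin/test_nodes -np 32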


Chapter 5. Insight ParaStation5

This chapter provides more technical details and background information about ParaStation5.

5.1. ParaStation5 pscom communication library

The ParaStation communication library libpscom offers secure and reliable end-to-end connectivity. It hides the actual transport and communication characteristics from the application and higher-level libraries.

The libpscom library supports a wide range of interconnects and protocols for data transfers. Using a generic plug-in system, this library may open connections using the following networks and protocols:

• TCP: uses standard TCP/IP sockets to transfer data. This protocol may use any interconnect. Support for this protocol is built into libpscom.

• P4sock: uses an optimized network protocol for Ethernet (see Section 5.2, “ParaStation5 protocol p4sock”, below). Support for this protocol is built into libpscom.

• InfiniBand: based on a vapi kernel layer and a libvapi library, typically provided by the hardware vendor, libpscom may use InfiniBand to transfer data. The corresponding plug-in library is called libpscom4vapi.

• Myrinet: using the GM library and kernel-level module, libpscom is able to use Myrinet for data transfer. The corresponding plug-in library is called libpscom4gm.

• Shared Memory: for communication within an SMP node, libpscom uses shared memory. Support for this protocol is built into libpscom.

• DAPL: libpscom supports a DAPL transport layer. Using the libpscom4dapl plug-in, it may transfer data across various networks like InfiniBand or 10G Ethernet using a vendor-provided libdapl.

• QsNet: libpscom supports the QsNetII transport layer. Using the libpscom4elan plug-in, it may transfer data using libelan.

The interconnect and protocol used between two distinct processes are chosen while opening the connection between those processes. Depending on available hardware, configuration (see Section 4.1, “Configuration of the ParaStation system”) and current environment variables (see Section 5.8, “Controlling ParaStation5 communication paths”), the library automatically selects the fastest available communication path.

The library routines for sending and receiving data handle arbitrarily large buffers. If necessary, the buffers will be fragmented and reassembled to meet the requirements of the underlying transport.

The application is dynamically linked with the libpscom.so library. At runtime, this library loads plug-ins for the various interconnects listed above. For more information on controlling ParaStation communication paths, refer to Section 5.8, “Controlling ParaStation5 communication paths”.
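To see which plug-ins are actually installed on a node, the library directory can be listed. This sketch assumes that the plug-ins follow the libpscom4<name> naming used above and live in /opt/parastation/lib64 (the library directory used elsewhere in this chapter); adjust the directory for 32-bit installations.

```shell
#!/bin/sh
# List installed pscom plug-ins (libpscom4<name>.so*) by their <name> part.
list_pscom_plugins() {
    libdir=${1:-/opt/parastation/lib64}
    for f in "$libdir"/libpscom4*.so*; do
        [ -e "$f" ] || continue            # glob matched nothing
        name=${f##*/libpscom4}             # strip directory and prefix
        echo "${name%%.so*}"               # strip .so and version suffixes
    done | sort -u
}
```

On a node with the DAPL and GM plug-ins installed, list_pscom_plugins would be expected to print dapl and gm.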

5.2. ParaStation5 protocol p4sock

ParaStation5 provides its own communication protocol for Ethernet, called p4sock. This protocol is designed for extremely fast and reliable communication within a closed and homogeneous compute cluster environment.

The protocol implements a reliable, connection-oriented communication layer, especially designed for very low overhead. As a result, it delivers very low latencies.

The p4sock protocol is encapsulated within the kernel module p4sock.ko. This module is loaded on system startup or whenever the ParaStation5 daemon psid(8) starts up and the p4sock protocol is enabled within the configuration file parastation.conf(5).


The p4sock.ko module inserts a number of entries into the /proc file system. All ParaStation5 entries are located within the subdirectory /proc/sys/ps4. Three different subdirectories, listed below, are available.

To read a value, e.g., type

# cat /proc/sys/ps4/state/connections

to get the number of currently open connections. To modify a value, e.g., type

# echo 10 > /proc/sys/ps4/ether/ResendTimeout

to set a new value for ResendTimeout.

5.2.1. Directory /proc/sys/ps4/state

This state directory contains various entries showing protocol counters. All these entries, except polling, are read-only!

• HZ: reads the number of timer interrupts per second for this kernel ("jiffies").

A jiffy is the base unit for system timers used by the Linux kernel, so all timeouts within the kernel are based on this timer resolution. On kernels with version 2.4, this is typically 100 Hz (= 10 ms per jiffy). However, some kernel versions, e.g. those of newer SuSE Linux releases, include patches that change this to a much higher value!

• connections: reads the current number of open connections.

• polling: returns the current value of the polling flag: 0 = never poll, 1 = poll if otherwise idle (number of runnable processes < number of CPUs), 2 = always poll. Writing this value immediately changes the polling strategy.

• recv_net_ack: number of received ACKs.

• recv_net_ctrl: number of received control packets (ACK, NACK, SYN, SYNACK, ...).

• recv_net_data: number of received data packets.

• recv_net_nack: number of received NACKs.

• recv_user: number of packets delivered to application buffers.

• send_net_ack: number of sent ACKs.

• send_net_ctrl: number of sent control packets.

• send_net_data: number of sent data packets.

• send_net_nack: number of sent NACKs.

• send_user: number of packets sent by the application.

• sockets: number of open sockets connecting to the ParaStation5 protocol module.

• timer_ack: number of expired delayed ACK timers.

• timer_resend: number of expired resend timers.
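The counters listed above can be collected in one go with a small loop over the state directory. This sketch prints one name/value pair per line and decodes the polling flag according to the values listed above; the directory argument exists only so the function can be tried on a copy.

```shell
#!/bin/sh
# Print every entry of the p4sock state directory as "name value",
# adding a short description for the polling flag.
show_p4sock_state() {
    dir=${1:-/proc/sys/ps4/state}
    for f in "$dir"/*; do
        [ -f "$f" ] || continue
        name=${f##*/}
        val=$(cat "$f")
        case $name:$val in
            polling:0) val="0 (never poll)" ;;
            polling:1) val="1 (poll if otherwise idle)" ;;
            polling:2) val="2 (always poll)" ;;
        esac
        echo "$name $val"
    done
}
```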

5.2.2. Directory /proc/sys/ps4/ether

This directory groups all Ethernet-related parameters of the ParaStation5 p4sock protocol. All these entries can be read and written; newly written values take effect immediately.

• AckDelay: maximum delay in "jiffies" for ACK messages. If no message onto which an ACK for already received packets can be "hooked up" is sent within this time frame, a separate ACK message will be generated. Must be less than ResendTimeout.


• MaxAcksPending: maximum number of pending ACKs before an "urgent" ACK message is sent.

• MaxDevSendQSize: maximum number of entries of the (protocol-internal) send queue to the network device.

• MaxMTU: maximum packet size used for network packets. For sending packets, the minimum of MaxMTU and the service-specific MTU will be used.

• MaxRecvQSize: size of the protocol internal receive queue.

• MaxResend: number of retries until a connection is declared dead.

• MaxSendQSize: size of the protocol internal send queue.

• ResendTimeout: delay in "jiffies" before resending packets that have not yet been acknowledged. Must be greater than AckDelay.
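Because ResendTimeout must stay greater than AckDelay and both are given in jiffies, a helper that checks the constraint before writing and converts the result to milliseconds using HZ can help avoid mistakes. This is a sketch; the directory arguments default to the /proc paths described above and are parameters only so the function can be tested on copies.

```shell
#!/bin/sh
# Set ResendTimeout (in jiffies) only if it is greater than AckDelay, then
# report the value in milliseconds (one jiffy lasts 1000/HZ ms).
set_resend_timeout() {
    new=$1
    ether=${2:-/proc/sys/ps4/ether}
    state=${3:-/proc/sys/ps4/state}
    ack=$(cat "$ether/AckDelay")
    hz=$(cat "$state/HZ")
    if [ "$new" -le "$ack" ]; then
        echo "ResendTimeout ($new) must be greater than AckDelay ($ack)" >&2
        return 1
    fi
    echo "$new" > "$ether/ResendTimeout"
    echo "ResendTimeout=$new jiffies (~$((new * 1000 / hz)) ms at HZ=$hz)"
}
```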

5.2.3. Directory /proc/sys/ps4/local

Currently, there are no entries defined for this directory.

5.2.4. p4stat

The command p4stat can be used to list open sockets and network connections of the p4sock protocol.

$ /opt/parastation/bin/p4stat -s

Socket #0 : Addr: <00><00><00><00><00><'........' last_idx 0 refs 2

Socket #1 : Addr: <70><6f><72><74><33><'port384.' last_idx 0 refs 10

Socket #2 : Addr: <70><6f><72><74><31><'port144.' last_idx 0 refs 10

$ /opt/parastation/bin/p4stat -n

net_idx SSeqNo SWindow RSeqNo RWindow lusridx lnetidx rnetidx snq rnq refs

84 30107 30467 30109 30468 84 84 230 0 0 2

85 30106 30466 30106 30465 85 85 231 0 0 2

86 30107 30467 30109 30468 86 86 84 0 0 2

87 30106 30466 30106 30465 87 87 85 0 0 2

88 30107 30467 30109 30468 88 88 217 0 0 2

89 30106 30466 30106 30465 89 89 218 0 0 2

90 30106 30466 30106 30465 90 90 220 0 0 2

91 30106 30466 30106 30465 91 91 221 0 0 2

92 30001 30361 30003 30362 92 92 232 0 0 2

93 30001 30361 30003 30362 93 93 219 0 0 2

94 30000 30000 30001 30360 94 94 233 0 0 2

95 30000 30000 30001 30360 95 95 222 0 0 2

96 30000 30000 30001 30360 96 96 222 0 0 2

This command shows some protocol-internal parameters, like open connections, sequence numbers, reference counters, etc. For more information, see p4stat(8).

5.3. Controlling process placement

ParaStation includes sophisticated functions to control the process placement for newly created parallel and serial tasks. These processes typically require a dedicated CPU (core). Upon task startup, the environment variables PSI_NODES, PSI_HOSTS and PSI_HOSTFILE are looked up (in this order) to get a predefined node list. If none of them is defined, all currently known nodes are taken into account. Also, the variables PSI_NODES_SORT, PSI_LOOP_NODES_FIRST, PSI_EXCLUSIVE and PSI_OVERBOOK are observed.

Based on these variables and the list of currently active processes, a sorted list of nodes is constructed, defining the final node list for this new task.
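The lookup order of the node-list variables can be illustrated with a small function that reports which source would take effect. This is an illustration only, not ParaStation code: it treats an empty variable as undefined (an assumption) and does not interpret or validate the values; see ps_environment(5) for their formats.

```shell
#!/bin/sh
# Report which source defines the predefined node list, following the
# documented order PSI_NODES, PSI_HOSTS, PSI_HOSTFILE.
node_list_source() {
    if [ -n "${PSI_NODES:-}" ]; then
        echo "PSI_NODES=$PSI_NODES"
    elif [ -n "${PSI_HOSTS:-}" ]; then
        echo "PSI_HOSTS=$PSI_HOSTS"
    elif [ -n "${PSI_HOSTFILE:-}" ]; then
        echo "PSI_HOSTFILE=$PSI_HOSTFILE"
    else
        echo "all currently known nodes"
    fi
}
```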

Besides these environment variables, node reservations for users and groups are also observed. See psiadmin(1).

In addition, only available nodes will be used to start up processes. Nodes that are currently not available will be ignored.

Obeying all these restrictions, the processes making up a parallel task will be spawned on the nodes listed within the final node list. On SMP systems, all available CPUs (cores) of a node may be used for consecutive ranks, depending on the environment variable PSI_LOOP_NODES_FIRST.

For administrative tasks not requiring a dedicated CPU (core), e.g. processes spawned using pssh, other strategies apply. As these processes are intended to run on specific nodes predefined by the user, the procedure described above is bypassed and the processes will be run on the user-defined nodes.

For a detailed discussion of placing processes within ParaStation5, please refer to process_placement(7), ps_environment(5), pssh(8) and mpiexec(8).

5.4. Using the ParaStation5 queuing facility

ParaStation is able to queue task start requests if the required resources are currently in use. This queuing facility is disabled by default. It can be enabled by each user independently. All requests of all users are held within one queue and managed on a first-come-first-served basis.

For details, refer to the ParaStation5 User's Guide.

5.5. Exporting environment variables for a task

ParaStation by default exports only a limited set of environment variables to newly spawned processes, like HOME, USER, SHELL or TERM.

Additional variables can be exported using PSI_EXPORTS.

For a complete list of environment variables known to and exported automatically by ParaStation, refer to ps_environment(5).

For more details, refer to the ParaStation5 User's Guide.

5.6. Using non-ParaStation applications

It is possible to run programs linked with 3rd party MPI libraries within the ParaStation environment.

Currently supported MPI1 compatible libraries are:

• MPIch using ch_p4 (mpirun_chp4)

• MPIch using GM (mpirun_chgm)

• InfiniPath (mpirun-ipath-ps)

• MVAPIch (mpirun_openib)

• QsNet MPI (mpirun_elan)


In order to run applications linked with one of those MPI libraries, ParaStation5 provides dedicated mpirun commands. The processes of such parallel tasks are spawned obeying all restrictions described in Section 5.3, “Controlling process placement”. Of course, the data transfer will be based on the communication channels supported by the particular MPI library. For MPIch using ch_p4 (TCP), ParaStation5 provides an alternative, see Section 5.7, “ParaStation5 TCP bypass”.

The command mpirun-ipath-ps for running programs linked with InfiniPath™ MPI is part of the psipath package. For details on how to obtain this package, please contact <support@par-tec.com>.

For more information refer to mpirun_chp4(8), mpirun_chgm(8), mpirun-ipath-ps(8), mpirun_openib(8) and mpirun_elan(8).

Using the ParaStation5 command mpiexec, any parallel application supporting the PMI protocol, the process management interface used by MPI2 implementations such as MPIch2, may be run within the ParaStation process environment. Therefore, many other MPI2 compatible MPI libraries are now supported by ParaStation5.

It is also possible to run serial applications, i.e. applications not parallelized with MPI, within ParaStation. ParaStation distinguishes between serial tasks allocating a dedicated CPU within the resource management system and administrative tasks not allocating a CPU. To execute a serial program, run it using mpiexec -n 1. To run an administrative task, use pssh or mpiexec -A -n 1.

For more details on how to start up serial and parallel jobs, refer to mpiexec(8), pssh(8) and the ParaStation5 User's Guide.

5.7. ParaStation5 TCP bypass

ParaStation5 offers a feature called "TCP bypass", enabling applications based on TCP to use the efficient p4sock protocol. The data will be redirected within the kernel to the p4sock protocol. No modifications to the application are necessary!

To automatically configure the TCP bypass during ParaStation startup, insert a line like

Env PS_TCP FirstAddress-LastAddress

in the p4sock section of the configuration file parastation.conf, where FirstAddress and LastAddress are the first and last IP addresses for which the bypass should be configured.
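To check whether a given peer address is covered by a PS_TCP range, the dotted addresses can be compared as 32-bit integers. The helper names below are hypothetical and the input is not validated (for example, octets with leading zeros would be misread as octal).

```shell
#!/bin/sh
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1                      # split into the four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $2 lies within the range $1 ("FirstAddress-LastAddress").
in_bypass_range() {
    range=$1; addr=$2
    first=${range%-*}; last=${range#*-}
    a=$(ip_to_int "$addr")
    [ "$a" -ge "$(ip_to_int "$first")" ] && [ "$a" -le "$(ip_to_int "$last")" ]
}
```

For example, in_bypass_range 192.168.2.1-192.168.2.32 192.168.2.17 && echo covered prints covered.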

To enable the bypass for a pair of processes, the library libp4tcp.so, located in the directory /opt/parastation/lib64, must be preloaded by both processes using:

export LD_PRELOAD=/opt/parastation/lib64/libp4tcp.so

For parallel and serial tasks launched by ParaStation, this environment variable is exported to all processes by default. Please refer to ps_environment(5).

It is not recommended to insert libp4tcp.so into the global preload configuration file /etc/ld.so.preload, as this may hang connections to daemon processes started up before the bypass was configured.

See also p4tcp(8).

5.8. Controlling ParaStation5 communication paths

ParaStation uses different communication paths, see Section 5.1, “ParaStation5 pscom communication library” for details. In order to restrict the paths to use, a number of environment variables are recognized by ParaStation.


PSP_SHM or PSP_SHAREDMEM

Don't use shared memory for communication within the same node.

PSP_P4S or PSP_P4SOCK

Don't use ParaStation p4sock protocol for communication.

PSP_MVAPI

Don't use Mellanox InfiniBand vapi for communication.

PSP_OPENIB

Don't use OpenIB InfiniBand verbs for communication.

PSP_GM

Don't use GM (Myrinet) for communication.

PSP_DAPL

Don't use DAPL for communication.

To disable a particular transport, set the corresponding variable to 0. To enable a transport, set the variable to 1 or leave it undefined.

TCP cannot be disabled dynamically as a communication path. If configured, TCP is always used as the last resort for communication.
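A minimal bash sketch of disabling transports in the current shell before starting a job might look like this. The function name disable_paths is hypothetical; the variable names and the value 0 come from the list above, and TCP is rejected because it cannot be disabled.

```shell
#!/bin/bash
# Sketch: disable pscom transports by exporting PSP_<name>=0.
# disable_paths is a hypothetical helper; names follow the list above.

disable_paths() {
    local t
    for t in "$@"; do
        case $t in
            SHM|SHAREDMEM|P4S|P4SOCK|MVAPI|OPENIB|GM|DAPL)
                export "PSP_$t=0" ;;
            TCP)
                echo "TCP cannot be disabled; it is the last resort" >&2
                return 1 ;;
            *)
                echo "unknown transport: $t" >&2
                return 1 ;;
        esac
    done
}
```

For example, disable_paths P4S SHM sets PSP_P4S=0 and PSP_SHM=0 in the current shell before a job is started with mpiexec. To re-enable a transport, unset the variable again or set it to 1.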

Using the environment variable PSP_LIB, it is possible to define the communication library to use, independent of the variables mentioned above. This library must match the currently available interconnect and protocol; otherwise an error will occur.

The library name must be specified using the full path and filename, e.g.

export PSP_LIB=/opt/parastation/lib64/libpscomopenib.so

This variable is automatically exported to all processes started by ParaStation. Refer to Section 5.1, “ParaStation5 pscom communication library” for a full list of available library variants.
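Because a wrong PSP_LIB only shows up as an error at run time, it can help to check the value before exporting it. The bash sketch below, using the hypothetical helper use_pscom_lib, verifies that the value is an absolute path to a readable file. It cannot check whether the library matches the installed interconnect.

```shell
#!/bin/bash
# Sketch: export PSP_LIB only if it names an existing library by full path.
# use_pscom_lib is a hypothetical helper name.

use_pscom_lib() {
    local lib=$1
    case $lib in
        /*) ;;                                    # full path given
        *)  echo "PSP_LIB must be a full path: $lib" >&2; return 1 ;;
    esac
    [ -r "$lib" ] || { echo "library not found: $lib" >&2; return 1; }
    export PSP_LIB=$lib
}
```

For example: use_pscom_lib /opt/parastation/lib64/libpscomopenib.so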

If more than one path for a particular interconnect exists, e.g. if the nodes are connected by two Gigabit Ethernet networks in parallel, it is desirable to specify the interface, and therefore the network, to be used for application data. To do so, define the environment variable PSP_NETWORK.

Assuming the network 192.168.1.0 is dedicated to management data and the network 192.168.2.0 is intended for application data, the following configuration within parastation.conf would redirect the application data to the network 192.168.2.0:

Env PSP_NETWORK 192.168.2.0

Nodes {
    node0 0 # 192.168.1.1
    node1 1 # 192.168.1.2
    ...
}

Refer to ps_environment(5) for details.
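In the example above, PSP_NETWORK is set to a network address. If only one node's address and the prefix length on the application network are at hand, the network address can be derived by clearing the host bits, as in this bash sketch. network_address is a hypothetical helper, not part of ParaStation.

```shell
#!/bin/bash
# Sketch: compute the network address for "Env PSP_NETWORK ..." from a
# node address and a prefix length. network_address is hypothetical.

network_address() {
    local ip=$1 prefix=$2
    # Check the prefix first: the IP regex below fills BASH_REMATCH.
    [[ $prefix =~ ^[0-9]{1,2}$ ]] || return 1
    prefix=$((10#$prefix))
    (( prefix <= 32 )) || return 1
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$'
    [[ $ip =~ $re ]] || return 1
    local n=0 i o
    for i in 1 2 3 4; do
        o=$((10#${BASH_REMATCH[i]}))
        (( o <= 255 )) || return 1
        n=$(( (n << 8) | o ))
    done
    # Keep only the leading $prefix bits (bash arithmetic is 64-bit).
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    n=$(( n & mask ))
    echo "$(( n >> 24 & 255 )).$(( n >> 16 & 255 )).$(( n >> 8 & 255 )).$(( n & 255 ))"
}
```

For example, echo "Env PSP_NETWORK $(network_address 192.168.2.17 24)" prints Env PSP_NETWORK 192.168.2.0.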

5.9. Authentication within ParaStation5

Whenever a process of a parallel task is spawned within the cluster, ParaStation does not authenticate the user. Only the user and group IDs are copied to the remote node and used for starting up processes.

Thus, it is not necessary for the user to be known on the compute node, e.g. by having an entry in /etc/passwd. On the contrary, the administrator may disallow logins for users by removing their entries from /etc/passwd. Common authentication schemes like NIS are therefore not required, and user management can be limited to the frontend nodes.

Authentication of users is restricted to login or frontend nodes and is outside the scope of ParaStation.

5.10. Homogeneous user ID space

As explained in the previous section, ParaStation uses only user and group IDs for starting up remote processes. Therefore, all processes will have identical user and group IDs on all nodes.

A homogeneous user ID space thus spans the entire cluster.

5.11. Single system view

The ParaStation administration tool collects and displays information from all or a selected subset of nodes in the cluster. Actions can be initiated on any node and are automatically and transparently forwarded to the destination node(s), if necessary. From a management perspective, all nodes are seen as one homogeneous system, giving the administrator a single system view of the cluster.

5.12. Parallel shell tool

ParaStation provides a parallel shell tool called psh, which runs commands on all or selected nodes of the cluster in parallel. The output of the individual commands is presented in a condensed form that shows common parts and differences between the nodes.

psh may also be used to copy files to all nodes of the cluster in parallel.

This command is not intended to run interactive commands in parallel, but to run a single task in parallel on all or a subset of nodes and to prepare the output so that it is easy to read.
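To illustrate the idea of condensed output only, the following bash sketch lists each distinct output line once, followed by the nodes that produced it. This is not how psh is implemented; the tab-separated input format, the per-line grouping and the function name group_by_output are assumptions made for the example.

```shell
#!/bin/bash
# Illustrative sketch, NOT psh's implementation: group identical output
# lines across nodes. Input lines: "node<TAB>output". Needs bash 4.

group_by_output() {
    local node text key
    local -A nodes_for=()    # output line -> comma-separated node list
    local -a order=()        # output lines in order of first appearance
    while IFS=$'\t' read -r node text; do
        key="_$text"         # prefix avoids an empty associative-array key
        if [[ -z ${nodes_for[$key]+set} ]]; then
            order+=("$key")
            nodes_for[$key]=$node
        else
            nodes_for[$key]+=",$node"
        fi
    done
    for key in "${order[@]}"; do
        printf '%s: %s\n' "${nodes_for[$key]}" "${key#_}"
    done
}
```

Fed with the lines node0/Linux 2.6.18, node1/Linux 2.6.18 and node2/Linux 2.6.27, it prints node0,node1: Linux 2.6.18 followed by node2: Linux 2.6.27, so differences stand out at a glance.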

5.13. Nodes and CPUs

Though ParaStation by default tries to use a dedicated CPU per compute process, there is currently no way to bind a process to a particular CPU. Therefore, there is no guarantee that each process will use its own CPU. However, due to the nature of parallel tasks, the operating system scheduler will typically place each process on its own CPU.

Care must be taken if the hardware is able to provide virtual CPUs, e.g. Intel Xeon CPUs using Hyper-Threading. The ParaStation daemon detects virtual CPUs and uses all virtual CPUs found for placing processes. Detecting virtual CPUs requires that the kernel module cpuid is loaded before the ParaStation daemon is started. Use

# psiadmin -c "s hw"
Node  CPUs  Available Hardware
   0  4/ 2  ethernet p4sock
   1  4/ 2  ethernet p4sock

to show the number of virtual and physical CPUs per node.
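As a cross-check of the "s hw" output, virtual and physical core counts can also be read from /proc/cpuinfo. The bash sketch below assumes the x86 field names processor, physical id and core id, and counts each distinct (physical id, core id) pair as one physical core. count_cpus is a hypothetical name.

```shell
#!/bin/bash
# Sketch: count virtual CPUs and physical cores from a /proc/cpuinfo-style
# file (x86 field names assumed). count_cpus is a hypothetical helper.

count_cpus() {
    local file=${1:-/proc/cpuinfo}
    awk -F': *' '
        /^processor/   { virt++ }                  # one entry per virtual CPU
        /^physical id/ { phys = $2 }               # socket of current entry
        /^core id/     { cores[phys "/" $2] = 1 }  # unique socket/core pairs
        END {
            n = 0
            for (c in cores) n++
            if (n == 0) n = virt   # no topology fields: assume no SMT
            printf "%d virtual, %d physical\n", virt, n
        }' "$file"
}
```

On a dual-core node with Hyper-Threading, like those in the example above, count_cpus would be expected to report 4 virtual and 2 physical cores. The cpuid module itself can be loaded as root with modprobe cpuid before the daemon is started.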

It is possible to spawn more processes on a node than physical or virtual CPUs are available ("overbooking"). See the ParaStation5 User's Guide for details.


5.14. Integration with AFS

To run parallel tasks spawned by ParaStation on clusters using AFS, ParaStation provides the scripts env2tok and tok2env.

On the frontend side, calling

. tok2env

will create an environment variable AFS_TOKEN containing an encoded access token for AFS. This variable must be added to the list of exported variables:

export PSI_EXPORTS="AFS_TOKEN,$PSI_EXPORTS"

In addition, the variable PSI_RARG_PRE_0 must be set:

export PSI_RARG_PRE_0=/some/path/env2tok

This causes the script env2tok to be called on each node before the actual program is run. env2tok itself decodes the token and sets up the AFS environment.

The commands SetToken and GetToken, which are part of the AFS package, must be available on each node. Also, the commands uuencode and uudecode must be installed.

Script tok2env:

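#!/bin/bash
# Sourced on the frontend (". tok2env"): exports AFS_TOKEN into the calling shell.
# The quotes keep the line breaks of the uuencoded token intact.
export AFS_TOKEN="$(GetToken | uuencode /dev/stdout)"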

Script env2tok:

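#!/bin/bash
# Runs on each node in front of the actual program (see PSI_RARG_PRE_0).
# Decode the token and install it; quoting preserves its line breaks.
printf '%s\n' "$AFS_TOKEN" | uudecode | SetToken
# Replace this shell by the actual program, keeping its arguments intact.
exec "$@"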
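The frontend-side steps described at the beginning of this section can be combined in a small bash function. In this sketch, prepare_afs_job is a hypothetical name, the env2tok path is passed in as an argument (the /some/path placeholder above is left to the site), and AFS_TOKEN is added to PSI_EXPORTS without leaving a trailing comma when the list was empty.

```shell
#!/bin/bash
# Sketch: frontend setup for AFS jobs. prepare_afs_job is hypothetical;
# it expects AFS_TOKEN to be set already (". tok2env").

prepare_afs_job() {
    local env2tok=$1
    [ -n "${AFS_TOKEN-}" ] || { echo "AFS_TOKEN not set; source tok2env first" >&2; return 1; }
    case ",${PSI_EXPORTS-}," in
        *,AFS_TOKEN,*) ;;                         # already in the list
        *) export PSI_EXPORTS="AFS_TOKEN${PSI_EXPORTS:+,$PSI_EXPORTS}" ;;
    esac
    export PSI_RARG_PRE_0=$env2tok                # run env2tok before the program
}
```

A session would then source tok2env, call prepare_afs_job with the site's env2tok path, and start the job as usual.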

5.15. Integrating external queuing systems

ParaStation can easily be integrated with batch queuing and scheduling systems. In this case, the queuing system decides where (and when) to run a parallel task. ParaStation then starts, monitors and terminates the task. In favor of higher-priority jobs, the batch system may also suspend a task using the ParaStation signal forwarding.

Integration is done by setting up ParaStation environment variables, like PSI_HOSTFILE. ParaStation itself need not be modified in any way. It is not necessary to use a remote shell (rsh) to start mpirun on the first node of the selected partition. The batch system should only run the command on the node where the batch system itself is running; ParaStation will start all necessary processes on the remote nodes. For details about spawning processes, refer to the ParaStation5 User's Guide.


If an external queuing system is used, the environment variable PSI_NODES_SORT should be set to "none", so that ParaStation does not sort any predefined node list.

ParaStation includes its own queuing facility. For more details, refer to Section 5.4, “Using the ParaStation5 queuing facility” and the ParaStation5 User's Guide.

5.15.1. Integration with PBS PRO

Parallel jobs started by PBS PRO using the ParaStation mpirun command are recognized automatically. Because PBS PRO defines the environment variable PBS_NODEFILE, ParaStation automatically sets PSI_HOSTFILE to PBS_NODEFILE. The environment variable PSI_NODES_SORT is set to "none", so the predefined node list is not sorted. The tasks are spawned on the predefined list of nodes in the given order.

Therefore, ParaStation will use the (unsorted) hostfile supplied by PBS PRO to start up the parallel task.

5.15.2. Integration with OpenPBS

Refer to the previous Section 5.15.1, “Integration with PBS PRO”.

5.15.3. Integration with Torque

Refer to the previous Section 5.15.1, “Integration with PBS PRO”.

5.15.4. Integration with LSF

Similar to Section 5.15.1, “Integration with PBS PRO”, ParaStation also recognizes the variable LSB_HOSTS, provided by LSF. This variable holds a list of nodes for the parallel task. It is copied to the ParaStation variable PSI_HOSTS and is consequently used for starting up the task. The environment variable PSI_NODES_SORT is set to "none", so the predefined node list is not sorted. The tasks are spawned on the predefined list of nodes in the given order.

5.15.5. Integration with LoadLeveler

ParaStation recognizes the variable LOADL_PROCESSOR_LIST, provided by IBM LoadLeveler. This variable holds a list of nodes for the parallel task. It is copied to the ParaStation variable PSI_HOSTS and is consequently used for starting up the task. The environment variable PSI_NODES_SORT is set to "none", so the predefined node list is not sorted. The tasks are spawned on the predefined list of nodes in the given order.
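To check what ParaStation will pick up inside a batch job, the mappings described in Sections 5.15.1 to 5.15.5 can be mirrored by a short bash sketch that only prints the resulting settings; ParaStation performs the actual mapping itself. The function name show_psi_mapping and the order in which the variables are checked are assumptions.

```shell
#!/bin/bash
# Sketch: print the PSI_* settings that correspond to the batch system's
# node-list variable. Check order and function name are assumptions.

show_psi_mapping() {
    if [ -n "${PBS_NODEFILE-}" ]; then                # PBS PRO, OpenPBS, Torque
        echo "PSI_HOSTFILE=$PBS_NODEFILE"
    elif [ -n "${LSB_HOSTS-}" ]; then                 # LSF
        echo "PSI_HOSTS=$LSB_HOSTS"
    elif [ -n "${LOADL_PROCESSOR_LIST-}" ]; then      # LoadLeveler
        echo "PSI_HOSTS=$LOADL_PROCESSOR_LIST"
    else
        echo "no batch system node list found" >&2
        return 1
    fi
    echo "PSI_NODES_SORT=none"
}
```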

5.16. Multicasts

This version of ParaStation uses the ParaStation RDP protocol to exchange status information between the psid(8) daemons. Therefore, multicast functionality is no longer required. It is still possible to use multicast, if desired.

To enable multicast message exchange, edit parastation.conf and uncomment the

# UseMCast

statement.
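Uncommenting the statement can be scripted, for example with GNU sed as in the bash sketch below. The function name enable_mcast and the default path /etc/parastation.conf are assumptions; a backup copy is written next to the file before it is changed.

```shell
#!/bin/bash
# Sketch: uncomment "# UseMCast" in parastation.conf (GNU sed -i assumed).
# enable_mcast and the default path are assumptions; adjust as needed.

enable_mcast() {
    local conf=${1:-/etc/parastation.conf}
    cp -p "$conf" "$conf.bak" || return 1      # keep a backup copy
    sed -i 's/^[[:space:]]*#[[:space:]]*\(UseMCast\)/\1/' "$conf"
    grep -q '^UseMCast' "$conf"                # succeed only if now active
}
```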

If multicast is enabled, the ParaStation daemons exchange status information using multicast messages. Thus, a Linux kernel supporting multicast is required on all nodes of the cluster. This is usually no problem, since all standard kernels of all common distributions are compiled with multicast support. If a customized kernel is used, multicast support must be enabled in the kernel configuration. To learn more about multicast, take a look at the Multicast over TCP/IP HOWTO.

In addition, the hardware has to support multicast packets. Since all modern Ethernet switches support multicast and the nodes of a cluster typically live in a private subnet, this should not be a problem. If the cluster nodes are connected by a gateway, it has to be configured to let multicast packets from every node reach all other nodes of the cluster.

Using a gateway in order to link parts of a cluster is not a recommended configuration.

On nodes with more than one Ethernet interface, typically frontend or head nodes, or on systems where the default route does not point to the private cluster subnet, a proper route for the multicast traffic must be set up. This is done by the command

route add -net 224.0.0.0 netmask 240.0.0.0 dev ethX

where ethX should be replaced by the actual name of the interface connecting to all other nodes. In order