Page 1

GraniteRack 3U Chassis

28080AB

Digital Storage System

User Manual

model no.

OE-GRANITE3U

Please carefully read these instructions before using this product.

Save this manual for future use.

Page 2

Copyright

No part of this publication may be reproduced, stored in a retrieval system, or

transmitted in any form or by any means, electronic, mechanical, photocopying,

recording or otherwise, without the prior written consent.

Trademarks

All products and trade names used in this document are trademarks or registered trademarks of their respective holders.

Changes

The material in this document is for information only and is subject to change without notice.

Page 3

FCC Compliance Statement

This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference in residential installations. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications.

However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures:

1. Reorient or relocate the receiving antenna.

2. Move the equipment away from the receiver.

3. Plug the equipment into an outlet on a circuit different from that to which the receiver is connected.

4. Consult the dealer or an experienced radio/television technician for help.

All external connections should be made using shielded cables.

Page 4

About This Manual

Welcome to your Redundant Array of Independent Disks (RAID) System User’s Guide. This manual covers everything you need to know to install and configure your RAID system. It assumes that you know the basic concepts of RAID technology. It includes the following information:

Chapter 1 Introduction

Introduces the Disk Array’s features and general technology concepts.

Chapter 2 Getting Started

Helps you identify the parts of the Disk Array and prepare the hardware for configuration.

Chapter 3 Configuring

Quick Setup

Provides a simple way to set up your Disk Array.

Customizing Setup

Provides step-by-step instructions to help you set up or reconfigure your Disk Array.

Chapter 4 Array Maintenance

Adding Cache Memory

Provides a detailed procedure for increasing cache memory beyond the default 128MB.

Updating Firmware

Provides step-by-step instructions to help you update the firmware to the latest version.

Hot Swap Components

Describes all hot-swap modules on the Disk Array and provides a detailed procedure for replacing them.

Page 5

Table of Contents

Chapter 1 Introduction

1.1 Key Features..........................................................................................................

1.2 RAID Concepts......................................................................................................

1.3 SCSI Concepts......................................................................................................

1.3.1 Multiple SCSI Format Support..................................................................

1.3.2 Host SCSI ID Selection..............................................................................

1.3.3 Terminators..................................................................................................

1.4 Array Definition.......................................................................................................

1.4.1 RAID set........................................................................................................

1.4.2 Volume Set...................................................................................................

1.4.3 Easy of Use features..................................................................................

1.4.4 High Availability............................................................................................

Chapter 2 Getting Started

2. 1 Unpacking the subsystem.........................................................................................

2.2 Identifying Parts of the subsystem.....................................................................

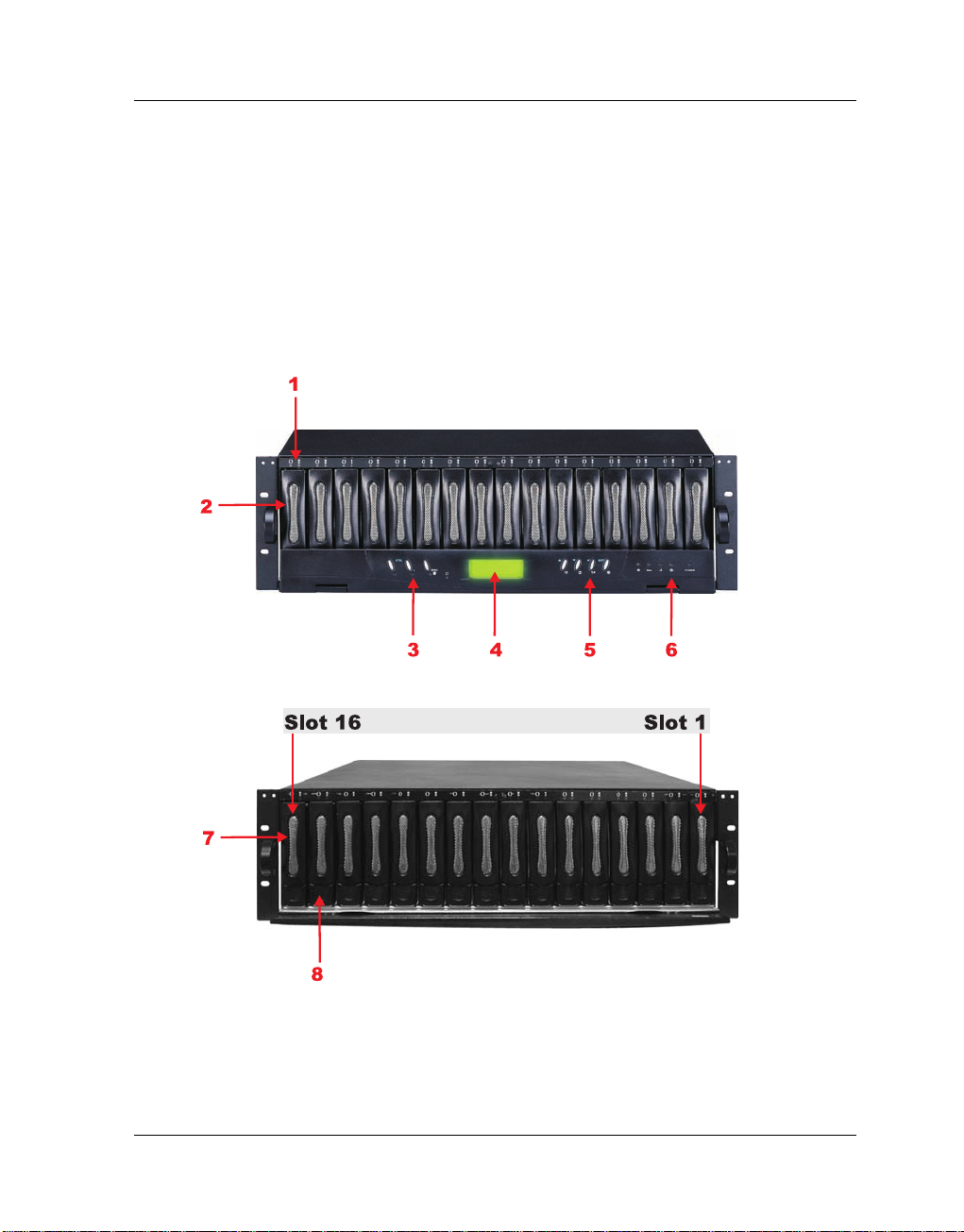

2.2.1 Front View......................................................................................................

2.2.2 Rear View.....................................................................................................

2.3 Connecting to Host...............................................................................................

2.4 SCSI Termination..................................................................................................

2.5 Powering-on the subsystem..............................................................................

2.6 Install Hard Drives................................................................................................

2.7 Connecting UPS...................................................................................................

2.8 Connecting to PC or Terminal............................................................................

1-2

1-3

1-10

1-10

1-10

1-11

1-12

1-12

1-12

1-13

1-15

2-1

2-3

2-3

2-6

2-9

2-10

2-12

2-13

2-15

2-16

Chapter 3 Configuring

3.1 Configuring through a Terminal .......... 3-1

3.2 Configuring the Subsystem Using the LCD Panel .......... 3-9

3.3 Menu Diagram .......... 3-10

3.4 Web Browser-based Remote RAID Management via R-Link Ethernet .......... 3-15

3.5 Quick Create .......... 3-17

3.6 Raid Set Functions .......... 3-19

3.6.1 Create Raid Set .......... 3-19

3.6.2 Delete Raid Set .......... 3-20

3.6.3 Expand Raid Set .......... 3-21

3.6.4 Activate Incomplete Raid Set .......... 3-23

Page 6

3.6.5 Create Hot Spare .......... 3-25

3.6.6 Delete Hot Spare .......... 3-25

3.6.7 Rescue Raid Set .......... 3-26

3.7 Volume Set Function .......... 3-27

3.7.1 Create Volume Set .......... 3-27

3.7.2 Delete Volume Set .......... 3-30

3.7.3 Modify Volume Set .......... 3-31

3.7.3.1 Volume Expansion .......... 3-31

3.7.4 Volume Set Migration .......... 3-33

3.7.5 Check Volume Set .......... 3-34

3.7.6 Stop Volume Set Check .......... 3-34

3.8 Physical Drive .......... 3-35

3.8.1 Create Pass-Through Disk .......... 3-35

3.8.2 Modify Pass-Through Disk .......... 3-36

3.8.3 Delete Pass-Through Disk .......... 3-37

3.8.4 Identify Selected Drive .......... 3-37

3.9 System Configuration .......... 3-38

3.9.1 System Configuration .......... 3-38

3.9.2 U320 SCSI Target Configuration .......... 3-40

3.9.3 Ethernet Config .......... 3-41

3.9.4 Alert By Mail Config .......... 3-42

3.9.5 SNMP Configuration .......... 3-43

3.9.6 View Events .......... 3-44

3.9.7 Generate Test Events .......... 3-45

3.9.8 Clear Events Buffer .......... 3-46

3.9.9 Modify Password .......... 3-46

3.9.10 Upgrade Firmware .......... 3-47

3.10 Information Menu .......... 3-48

3.10.1 RaidSet Hierarchy .......... 3-48

3.10.2 System Information .......... 3-48

3.10.3 Hardware Monitor .......... 3-49

3.11 Creating a New RAID or Reconfiguring an Existing RAID .......... 3-50

Chapter 4 Array Maintenance

4.1 Memory Upgrades .......... 4-1

4.1.1 Installing Memory Module .......... 4-2

4.2 Upgrading the Firmware .......... 4-3

4.3 Hot Swap Components .......... 4-10

4.3.1 Replacing a Disk .......... 4-10

4.3.2 Replacing a Power Supply .......... 4-11

4.3.3 Replacing a Fan .......... 4-12

Appendix A Technical Specification .......... A-1

Page 7

Chapter 1

Introduction

The RAID subsystem is an Ultra 320 LVD SCSI-to-Serial ATA II RAID (Redundant Array of Independent Disks) disk array subsystem. It consists of a RAID disk array controller and sixteen (16) disk trays.

The subsystem is a “Host Independent” RAID subsystem supporting RAID levels 0, 1, 3, 5, 6, 0+1 and JBOD. Regardless of the RAID level the subsystem is configured for, each RAID array consists of a set of disks that appears to the user as a single large disk.

One feature of the redundant RAID levels (1, 3, 5, 6 and 0+1) is that data is spread across separate disks together with redundant information. If a disk in such an array fails, the subsystem continues to function without losing data, because the redundant information is stored separately from the data it protects and is used to reconstruct any data that was stored on the failed disk. In other words, the subsystem can tolerate the failure of a drive without losing data, because the drives operate independently of each other.

The subsystem is also equipped with an environment controller that monitors the internal environment of the subsystem, such as its power supplies, fans, temperatures and voltages. The disk trays accept any 3.5-inch Serial ATA II hard drive. The modular design allows hot-swapping of hard drives without interrupting the subsystem’s operation.


Page 8

1.1 Key Features

Subsystem Features:

• Intel 80321 64-bit RISC I/O processor

• Built-in 128MB cache memory, expandable up to 1024MB

• Ultra 320 LVD host port

• Smart-function LCD panel

• Supports up to sixteen (16) 1" hot-swappable Serial ATA II hard drives

• Redundant load-sharing hot-swappable power supplies

• High-quality advanced cooling fans

• Local audible event notification alarm

• Supports password protection and UPS connection

• Built-in R-Link LAN port interface for remote management and event notification

• Dual host channels support clustering technology

• Aluminum chassis; aluminum is an excellent thermal conductor and offers a unique combination of light weight and high strength

• Real-time drive activity and status indicators

RAID Function Features:

• Supports RAID levels 0, 1, 0+1, 3, 5, 6 and JBOD

• Supports hot spare and automatic hot rebuild

• Allows online capacity expansion within the enclosure

• Tagged command queuing for 256 commands, which allows for overlapping data streams

• Transparent data protection for all popular operating systems

• Bad block auto-remapping

• Supports multiple array enclosures per host connection

• Multiple RAID selection

• Array roaming

• Online RAID level migration


Page 9

1.2 RAID Concepts

RAID Fundamentals

The basic idea of RAID (Redundant Array of Independent Disks) is to combine

multiple inexpensive disk drives into an array of disk drives to obtain performance,

capacity and reliability that exceeds that of a single large drive. The array of

drives appears to the host computer as a single logical drive.

Five types of array architectures, RAID 1 through RAID 5, were originally defined, each providing disk fault tolerance with different compromises in features and performance. In addition to these five redundant array architectures, it has become popular to refer to a non-redundant array of disk drives as a RAID 0 array.

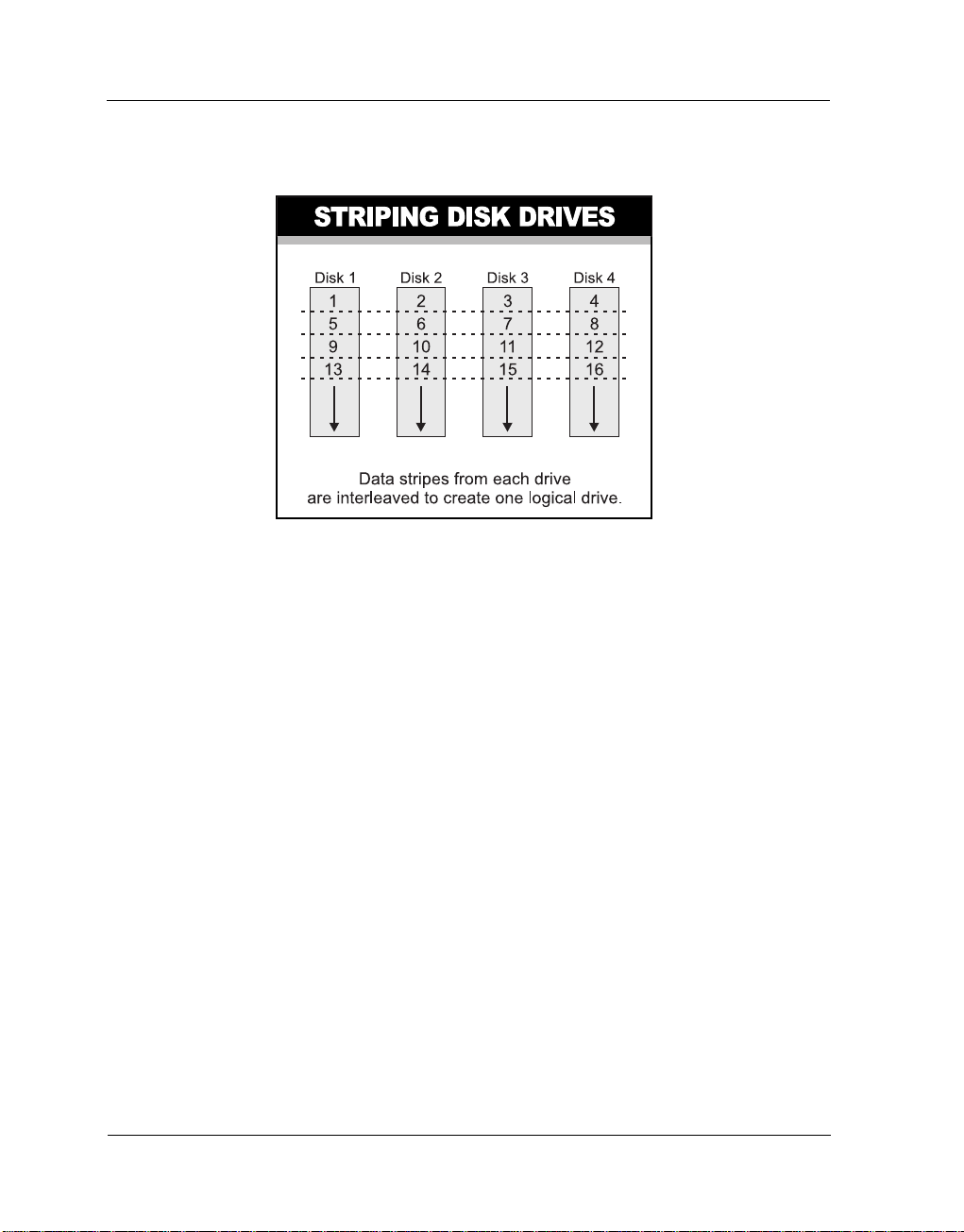

Disk Striping

Fundamental to RAID technology is striping. This is a method of combining

multiple drives into one logical storage unit. Striping partitions the storage

space of each drive into stripes, which can be as small as one sector (512

bytes) or as large as several megabytes. These stripes are then interleaved

in a rotating sequence, so that the combined space is composed alternately

of stripes from each drive. The specific type of operating environment determines whether large or small stripes should be used.

Most operating systems today support concurrent disk I/O operations across

multiple drives. However, in order to maximize throughput for the disk subsystem,

the I/O load must be balanced across all the drives so that each drive can be

kept busy as much as possible. In a multiple drive system without striping, the

disk I/O load is never perfectly balanced. Some drives will contain data files that

are frequently accessed and some drives will rarely be accessed.


Page 10

By striping the drives in the array with stripes large enough that each record falls entirely within one stripe, most records can be evenly distributed across all drives. This keeps all drives in the array busy during heavy load situations, allowing them to work concurrently on different I/O operations and thus maximizing the number of simultaneous I/O operations that the array can perform.
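The interleaving described above can be sketched in a few lines of Python. This is only an illustration of the general striping idea, not the subsystem's firmware logic; the function name `locate_block` and its parameters are hypothetical, and the stripe size is counted in blocks for simplicity.

```python
from typing import Tuple


def locate_block(logical_block: int, num_drives: int, blocks_per_stripe: int) -> Tuple[int, int, int]:
    """Map a logical block number to (drive index, stripe row, offset in stripe).

    Assumes simple round-robin striping: consecutive stripes are placed on
    consecutive drives, wrapping around after the last drive.
    """
    stripe_number = logical_block // blocks_per_stripe  # which stripe overall
    offset = logical_block % blocks_per_stripe          # position inside that stripe
    drive = stripe_number % num_drives                  # stripes rotate across the drives
    row = stripe_number // num_drives                   # how far down that drive the stripe sits
    return drive, row, offset


# Four drives, eight blocks per stripe: block 35 falls in the fifth stripe,
# which wraps around to the first drive again.
print(locate_block(35, num_drives=4, blocks_per_stripe=8))
```

With a large stripe, a short record stays on one drive and other drives remain free for other requests; with a small stripe, a long record is spread over several drives that transfer in parallel.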

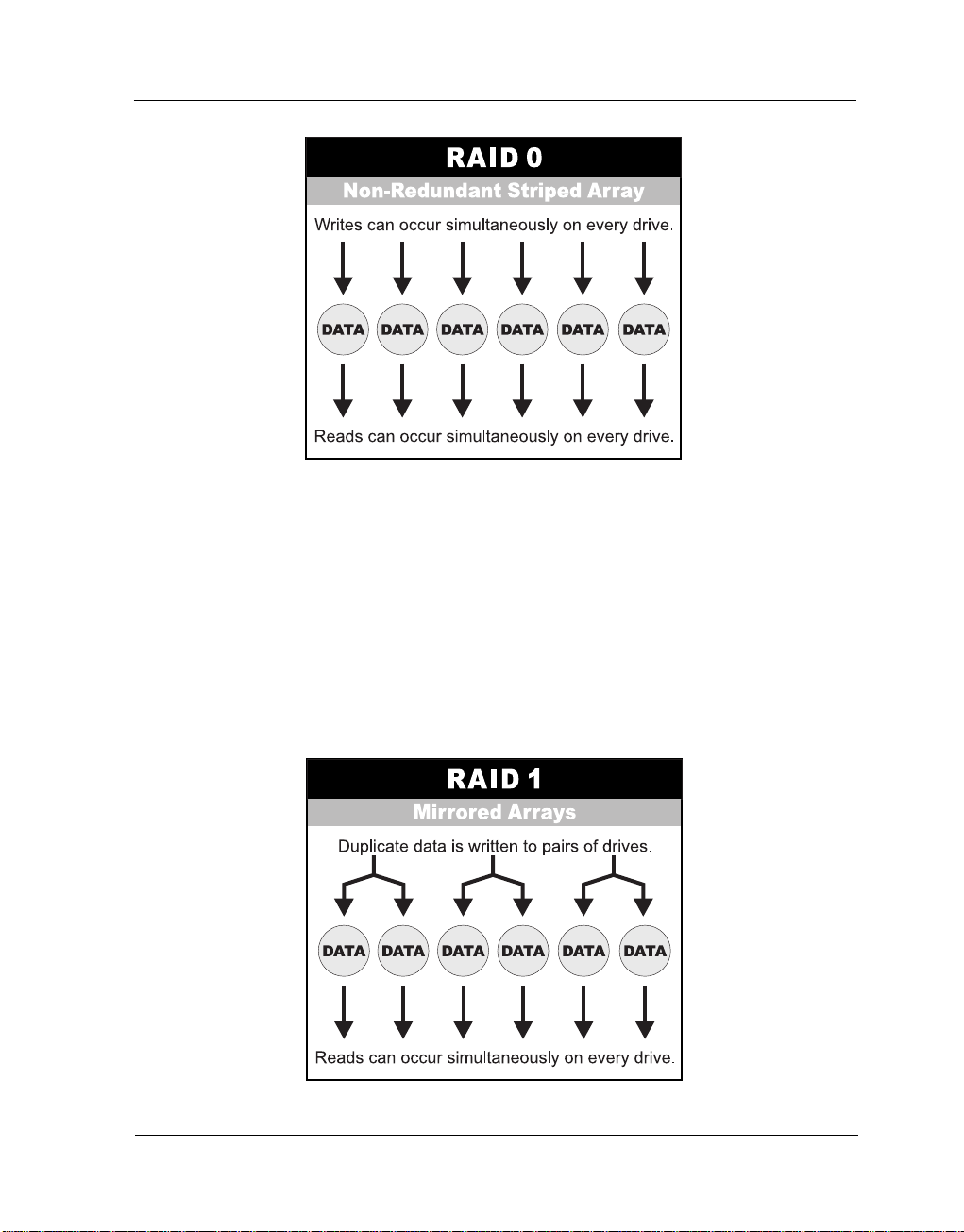

Definition of RAID Levels

RAID 0 is typically defined as a group of striped disk drives without parity or data

redundancy. RAID 0 arrays can be configured with large stripes for multi-user

environments or small stripes for single-user systems that access long sequential

records. RAID 0 arrays deliver the best data storage efficiency and performance

of any array type. The disadvantage is that if one drive in a RAID 0 array fails, the

entire array fails.


Page 11

RAID 1, also known as disk mirroring, is simply a pair of disk drives that store duplicate data but appear to the computer as a single drive. Although striping is not used within a single mirrored drive pair, multiple RAID 1 arrays can be striped together to create a single large array consisting of pairs of mirrored drives. All writes must go to both drives of a mirrored pair so that the information on the drives is kept identical. However, each individual drive can perform simultaneous, independent read operations. Mirroring can thus double the read performance of a single non-mirrored drive, while the write performance is unchanged. RAID 1 delivers the best performance of any redundant array type. In addition, there is less performance degradation during drive failure than in RAID 5 arrays.


Page 12

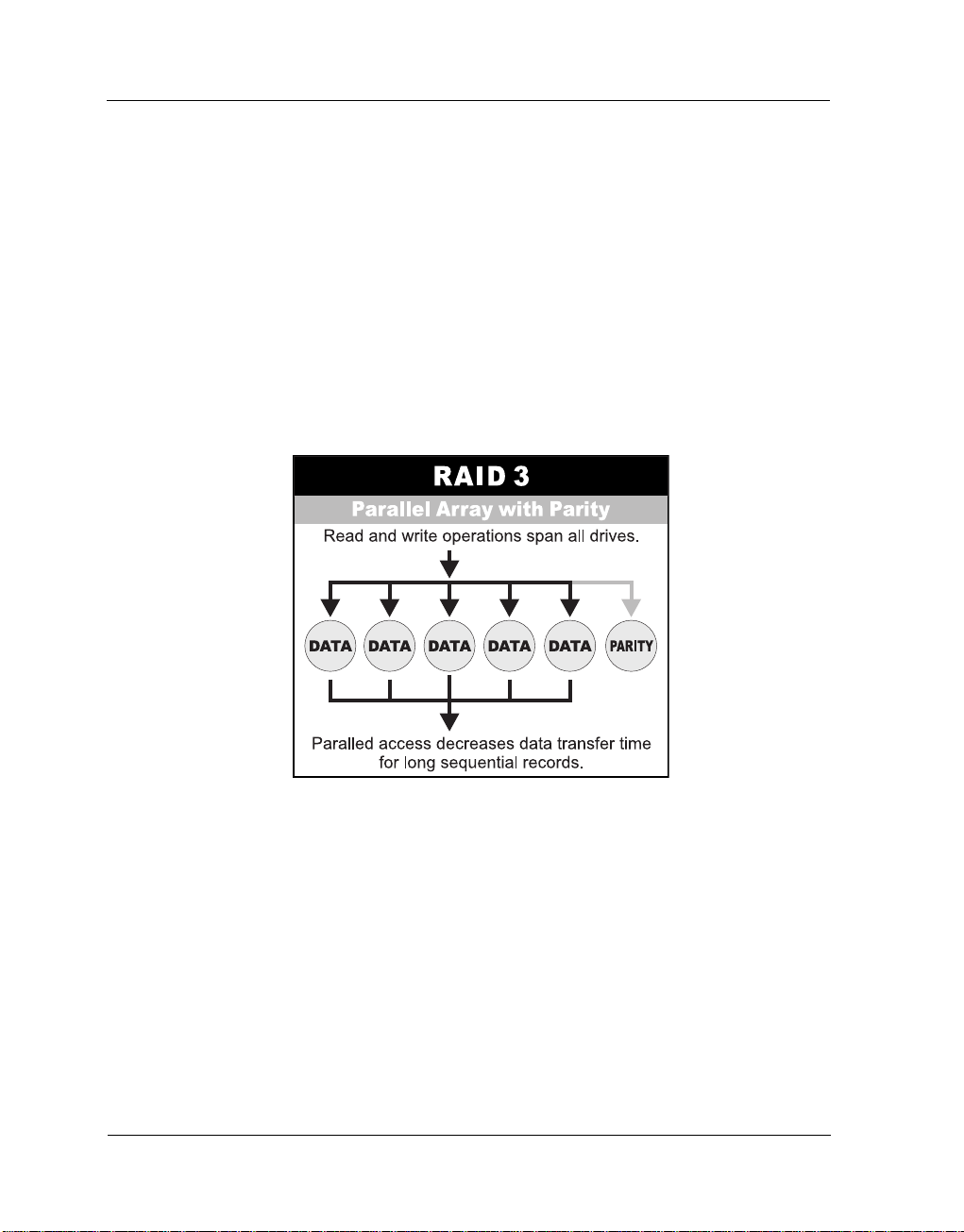

RAID 3 sector-stripes data across groups of drives, but one drive in the group is

dedicated to storing parity information. RAID 3 relies on the embedded ECC in

each sector for error detection. In the case of drive failure, data recovery is

accomplished by calculating the exclusive OR (XOR) of the information recorded

on the remaining drives. Records typically span all drives, which optimizes the

disk transfer rate. Because each I/O request accesses every drive in the array,

RAID 3 arrays can satisfy only one I/O request at a time. RAID 3 delivers the

best performance for single-user, single-tasking environments with long records.

Synchronized-spindle drives are required for RAID 3 arrays in order to avoid

performance degradation with short records. RAID 5 arrays with small stripes

can yield similar performance to RAID 3 arrays.
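The XOR-based recovery mentioned above can be illustrated with a short Python sketch. It is a toy model under stated assumptions: each "drive" is just a byte string of equal length, and the helper name `xor_blocks` is hypothetical rather than part of any controller interface.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the byte-wise XOR of several equally sized byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data drives and one parity drive (parity = XOR of all data blocks).
data = [b"RAID", b"disk", b"data"]
parity = xor_blocks(data)

# Simulate losing the second data drive: XOR of the survivors and the
# parity block yields the missing block again.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
assert rebuilt == data[1]
```

The same identity holds whichever single drive is lost, including the parity drive itself, because XOR-ing all blocks of a stripe together always gives zero.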

Under RAID 5, parity information is distributed across all the drives. Since there is no dedicated parity drive, all drives contain data and read operations can be overlapped on every drive in the array. Write operations will typically access one data drive and one parity drive. However, because different records store their parity on different drives, write operations can usually be overlapped.
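To make the idea of distributed parity concrete, the following Python sketch prints which drive holds parity for each stripe row. The rotation rule used here is an assumption chosen for illustration; the manual does not document the order in which this controller rotates parity, and the names `parity_drive` and `layout` are hypothetical.

```python
def parity_drive(stripe_row: int, num_drives: int) -> int:
    """Drive index holding parity for a stripe row (one possible rotation)."""
    return (num_drives - 1 - stripe_row) % num_drives


def layout(rows: int, num_drives: int):
    """Return a table of 'D' (data) and 'P' (parity) markers, one list per row."""
    table = []
    for row in range(rows):
        p = parity_drive(row, num_drives)
        table.append(["P" if drive == p else "D" for drive in range(num_drives)])
    return table


for row in layout(rows=3, num_drives=3):
    print(" ".join(row))
```

Because the parity position changes from row to row, two writes that touch different rows usually update different parity drives, which is why they can proceed at the same time.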

Page 13

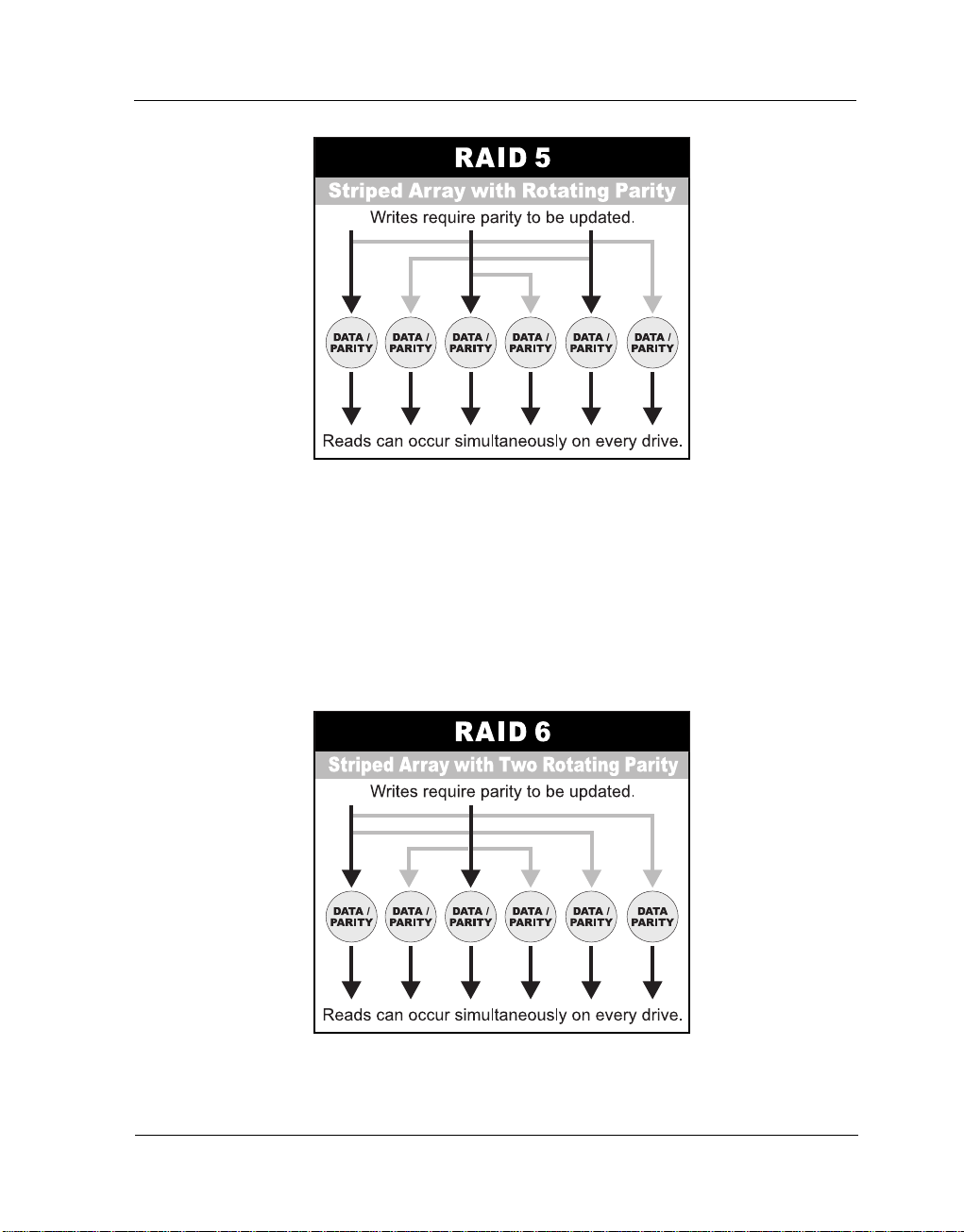

RAID 6 is similar to RAID 5 in that data protection is achieved by writing parity information to the physical drives in the array. With RAID 6, however, two sets of parity data are used. These two sets are different, and each set occupies a capacity equivalent to that of one of the constituent drives. The main advantage of RAID 6 is high data availability: any two drives can fail without loss of critical data.

Page 14

Dual-level RAID achieves a balance between the increased data availability inherent in RAID 1 and RAID 5 and the increased read performance inherent in disk striping (RAID 0). These arrays are sometimes referred to as RAID 0+1 or RAID 10 and RAID 0+5 or RAID 50.

In summary:

• RAID 0 is the fastest and most efficient array type but offers no fault tolerance. RAID 0 requires a minimum of two drives.

• RAID 1 is the best choice for performance-critical, fault-tolerant environments. RAID 1 is the only choice for fault tolerance if no more than two drives are used.

• RAID 3 can be used to speed up data transfer and provide fault tolerance in single-user environments that access long sequential records. However, RAID 3 does not allow overlapping of multiple I/O operations and requires synchronized-spindle drives to avoid performance degradation with short records. RAID 5 with a small stripe size offers similar performance.

• RAID 5 combines efficient, fault-tolerant data storage with good performance characteristics. However, write performance and performance during drive failure are slower than with RAID 1. Rebuild operations also require more time than with RAID 1 because parity information is also reconstructed. At least three drives are required for RAID 5 arrays.

• RAID 6 is essentially an extension of RAID level 5 that allows for additional fault tolerance by using a second independent distributed parity scheme (two-dimensional parity). Data is striped at block level across a set of drives, just as in RAID 5, and a second set of parity is calculated and written across all the drives. RAID 6 provides very high data fault tolerance and can sustain two simultaneous drive failures, which makes it well suited to mission-critical applications.


Page 15

RAID Management

The subsystem can implement several different levels of RAID technology.

RAID levels supported by the subsystem are shown below.

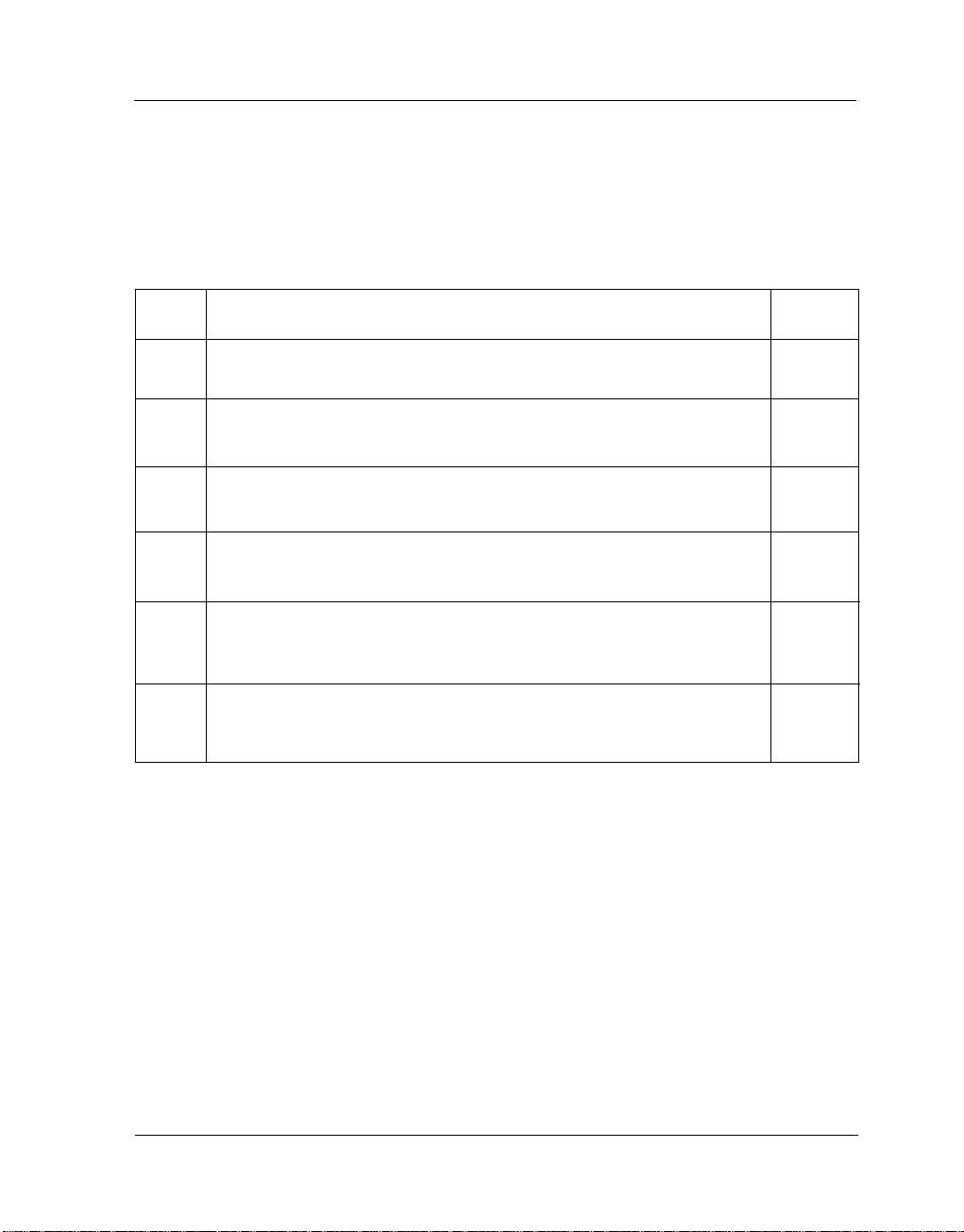

| RAID Level | Description | Min Drives |
|---|---|---|
| 0 | Block striping is provided, which yields higher performance than with individual drives. There is no redundancy. | 1 |
| 1 | Drives are paired and mirrored. All data is 100% duplicated on an equivalent drive. Fully redundant. | 2 |
| 3 | Data is striped across several physical drives. Parity protection is used for data redundancy. | 3 |
| 5 | Data is striped across several physical drives. Parity protection is used for data redundancy. | 3 |
| 6 | Data is striped across several physical drives. Parity protection is used for data redundancy. Requires N+2 drives to implement because of the two-dimensional parity scheme. | 4 |
| 0 + 1 | Combination of RAID levels 0 and 1. This level provides striping and redundancy through mirroring. | 4 |
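As a rough planning aid, the capacity cost of each level in the table can be worked out with a small Python sketch. The minimum drive counts come from the table; the capacity rules are the usual ones (mirroring halves the capacity, RAID 3 and 5 give up one drive's worth to parity, RAID 6 gives up two) and assume equally sized drives. The function name `usable_capacity_gb` is hypothetical, and actual usable space on the subsystem may differ slightly because of formatting overhead.

```python
# Minimum drive counts as listed in the RAID levels table.
MIN_DRIVES = {"0": 1, "1": 2, "3": 3, "5": 3, "6": 4, "0+1": 4}


def usable_capacity_gb(level: str, num_drives: int, drive_gb: int) -> int:
    """Estimate usable capacity for equally sized drives at a given RAID level."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if num_drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == "0":
        data_drives = num_drives            # no redundancy
    elif level in ("1", "0+1"):
        if num_drives % 2:
            raise ValueError("mirrored levels need an even number of drives")
        data_drives = num_drives // 2       # every drive has a mirror
    elif level in ("3", "5"):
        data_drives = num_drives - 1        # one drive's worth of parity
    else:                                   # RAID 6
        data_drives = num_drives - 2        # two drives' worth of parity
    return data_drives * drive_gb


for level in ("0", "5", "6", "0+1"):
    print(level, usable_capacity_gb(level, num_drives=4, drive_gb=250))
```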


Page 16

1.3 SCSI Concepts

Before configuring the subsystem, you must first understand some basic

SCSI concepts so that the subsystem and SCSI devices will function

properly.

1.3.1 Multiple SCSI Format Support

The subsystem supports the SCSI interface standards listed below. Note that the data bit and cable length restrictions must be followed.

| SCSI Type | Data Bit | Data Rate | Cable Length |
|---|---|---|---|
| SCSI-1 | 8 Bits | 5 MB/Sec | 6 m |
| Fast SCSI | 8 Bits | 10 MB/Sec | 3 m |
| Fast Wide SCSI | 16 Bits | 20 MB/Sec | 3 m |
| Ultra SCSI | 8 Bits | 20 MB/Sec | 1.5 m |
| Ultra Wide SCSI | 16 Bits | 40 MB/Sec | 1.5 m |
| Ultra 2 SCSI | 8 Bits | 40 MB/Sec | 12 m |
| Ultra 2 Wide SCSI | 16 Bits | 80 MB/Sec | 12 m |
| Ultra 160 Wide LVD | 16 Bits | 160 MB/Sec | 12 m |
| Ultra 320 LVD | 16 Bits | 320 MB/Sec | 12 m |

1.3.2 Host SCSI ID Selection

A SCSI ID is an identifier assigned to each SCSI device that enables it to communicate with a computer when attached to a host adapter via the SCSI bus. Each SCSI device, and the host adapter itself, must have a SCSI ID number (Ultra 320 Wide SCSI = 0 to 15). The ID identifies each device on the SCSI bus. If there is more than one SCSI adapter in the host system, each adapter forms a separate SCSI bus. SCSI IDs can be reused as long as each ID is assigned to a device on a separate SCSI bus. Refer to the documentation that came with your peripheral device to determine its ID and how to change it. The subsystem must be assigned a unique SCSI ID ranging from 0 to 15 on the Ultra 320 LVD SCSI host bus. The default value is ID 0.
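When planning a bus with several devices, the two rules above (IDs in the range 0 to 15, no ID used twice on the same bus) can be checked on paper or with a small script such as the Python sketch below. The function name `check_scsi_ids` and the device names are hypothetical, and the host adapter ID of 7 is only an example value; check your adapter's documentation for its actual setting.

```python
from collections import Counter


def check_scsi_ids(bus_devices):
    """Check the planned IDs for ONE SCSI bus and return a list of problems.

    bus_devices maps a device name to its planned SCSI ID.
    """
    problems = []
    for name, scsi_id in bus_devices.items():
        if not 0 <= scsi_id <= 15:
            problems.append(f"{name}: ID {scsi_id} is outside 0-15")
    counts = Counter(bus_devices.values())
    for scsi_id, count in sorted(counts.items()):
        if count > 1:
            names = sorted(n for n, i in bus_devices.items() if i == scsi_id)
            problems.append(f"ID {scsi_id} is shared by {', '.join(names)}")
    return problems


# Example plan: the subsystem keeps its default ID 0.
print(check_scsi_ids({"host adapter": 7, "raid subsystem": 0}))
```

Each bus is checked separately, since the same ID may be reused on a different bus.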


Page 17

1.3.3 Terminators

Based on SCSI specifications, the SCSI bus must be terminated at both

ends, meaning the devices that are connected to the ends of the SCSI bus

must have their bus terminators enabled. Devices connected in the middle of

the SCSI bus must have their terminators disabled. Proper termination allows

data and SCSI commands to be transmitted reliably on the SCSI bus. The

host adapter and the SCSI devices attached to it must be properly

terminated, or they will not work reliably.

Termination means that terminators are installed in the devices at each end

of the bus. Some SCSI devices require you to manually insert or remove the

terminators. Other devices have built-in terminators that are enabled or disabled via switches or software commands. Refer to the device’s documentation on how to enable or disable termination.

If your RAID subsystem is the last device on the SCSI bus, attach

the terminator included in the package to the Host Channel A & B

Out port before using the subsystem.


Page 18

1.4 Array Definition

1.4.1 RAID Set

A RAID Set is a group of disks containing one or more volume sets. It has

the following features in the RAID subsystem controller:

1. Up to sixteen RAID Sets are supported per RAID subsystem controller.

2. From one to sixteen drives can be included in an individual RAID Set.

3. It is impossible to have multiple RAID Sets on the same disks.

A Volume Set must be created either on an existing RAID Set or on a group of available individual disks (disks that are not yet part of a RAID Set). If there are existing RAID Sets with available capacity and enough disks for the desired RAID level, the Volume Set will be created in the existing RAID Set of the user’s choice. If physical disks of different capacities are grouped together in a RAID Set, the capacity of the smallest disk becomes the effective capacity of every disk in the RAID Set.
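The smallest-disk rule is easy to underestimate when mixing drives, so here is a short worked example in Python. The function names and the drive sizes are illustrative only; the figures are raw capacity before any RAID level overhead is applied.

```python
def raid_set_raw_capacity_gb(disk_sizes_gb):
    """Raw RAID Set capacity: every disk counts as the smallest disk."""
    return min(disk_sizes_gb) * len(disk_sizes_gb)


def unused_capacity_gb(disk_sizes_gb):
    """Capacity left unused on the larger disks."""
    return sum(disk_sizes_gb) - raid_set_raw_capacity_gb(disk_sizes_gb)


disks = [250, 250, 400]  # two 250GB drives and one 400GB drive
print(raid_set_raw_capacity_gb(disks))  # each disk is treated as 250GB
print(unused_capacity_gb(disks))        # the extra space on the 400GB drive
```

For this reason, drives of equal capacity make the most efficient RAID Set.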

1.4.2 Volume Set

A Volume Set is seen by the host system as a single logical device. It is

organized in a RAID level with one or more physical disks. RAID level refers

to the level of data performance and protection of a Volume Set. A Volume

Set capacity can consume all or a portion of the disk capacity available in a

RAID Set. Multiple Volume Sets can exist on a group of disks in a RAID Set.

Additional Volume Sets created in a specified RAID Set will reside on all the

physical disks in the RAID Set. Thus each Volume Set on the RAID Set will

have its data spread evenly across all the disks in the RAID Set.

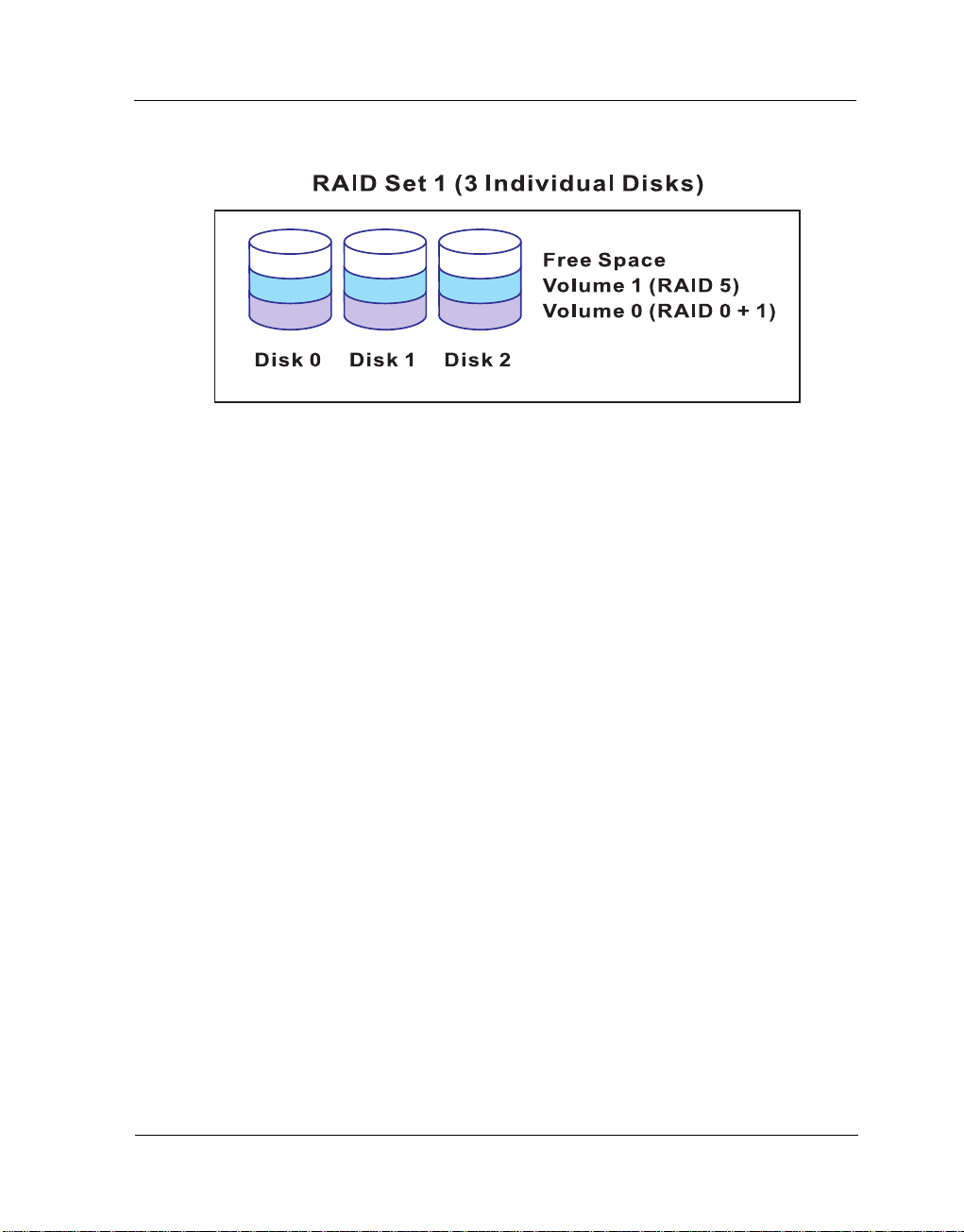

1. Volume Sets of different RAID levels may coexist on the same RAID Set.

In the illustration below, Volume 1 can be assigned a RAID 5 level of operation while Volume 0 might be assigned a RAID 0+1 level of operation.


Page 19

1.4.3 Ease of Use Features

1.4.3.1 Instant Availability/Background Initialization

RAID 0 and RAID 1 Volume Sets can be used immediately after creation, but RAID 3, 5 and 6 Volume Sets must be initialized to generate the parity. In Normal Initialization, the initialization proceeds as a background task and the Volume Set is fully accessible for system reads and writes. The operating system can access the newly created arrays immediately, without requiring a reboot or waiting for the initialization to complete. Furthermore, the RAID Volume Set is protected against a single disk failure while it is initializing. In Fast Initialization, the initialization must be completed before the Volume Set is ready for system access.

1.4.3.2 Array Roaming

The RAID subsystem stores configuration information both in NVRAM and on the disk drives. This protects the configuration settings in the case of a disk drive or controller failure. Array roaming gives administrators the ability to move a complete RAID Set to another system without losing the RAID configuration and data on that RAID Set. If a server fails, the RAID Set disk drives can be moved to another server and inserted in any order.


Page 20

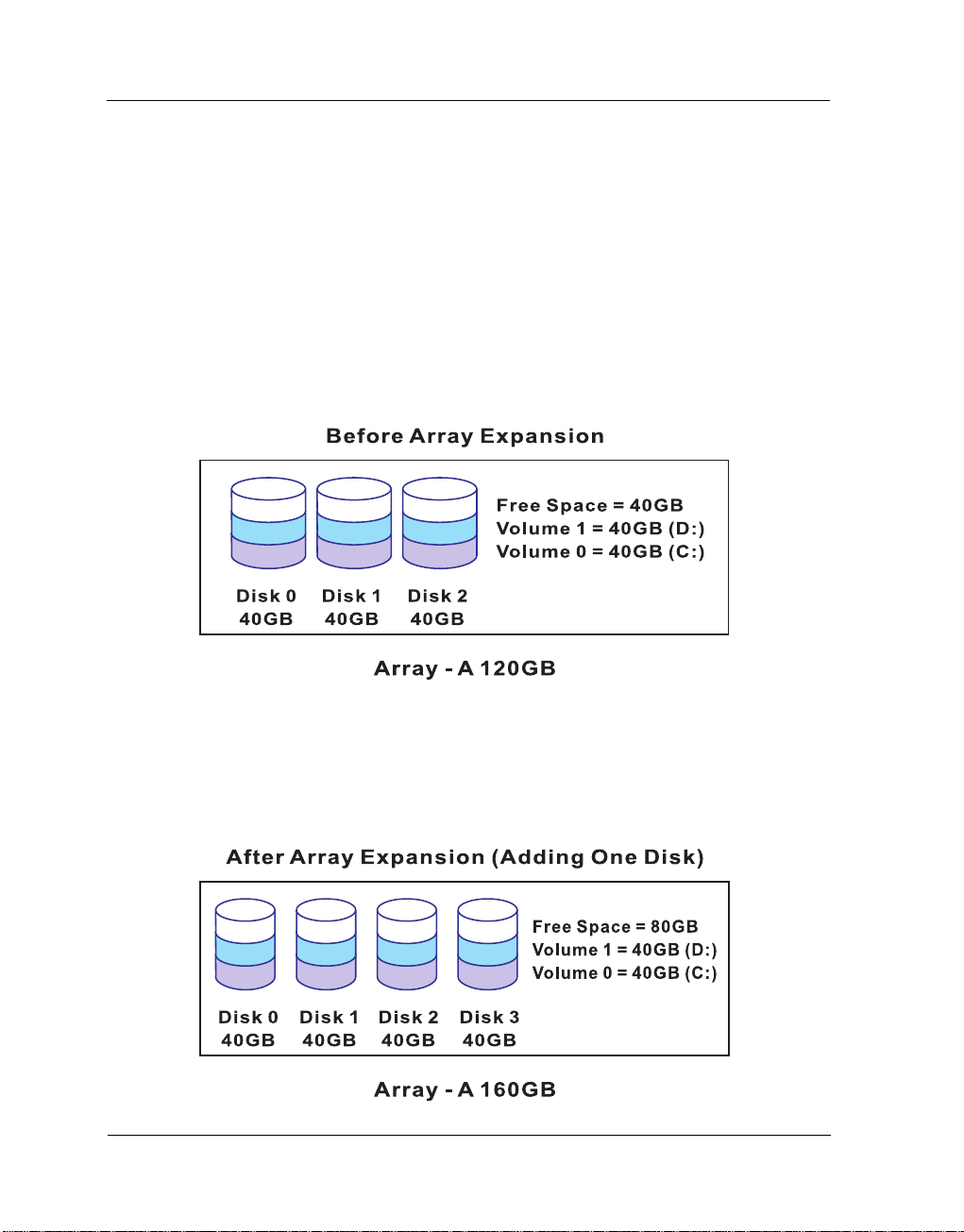

1.4.3.3 Online Capacity Expansion

Online Capacity Expansion makes it possible to add one or more physical drives to a RAID Set while the server is in operation, eliminating the need to back up and restore after reconfiguring the RAID Set. When disks are added to a RAID Set, unused capacity is added to the end of the RAID Set. Data on the existing Volume Sets residing on that RAID Set is redistributed evenly across all the disks, and a contiguous block of unused capacity is made available on the RAID Set. The unused capacity can be used to create additional Volume Sets. The expansion process is illustrated in the accompanying figure.

The RAID subsystem controller redistributes the original Volume Set over the original and newly added disks, using the same fault-tolerance configuration. The unused capacity on the expanded RAID Set can then be used to create additional Volume Sets, with a different fault-tolerance setting if required.


Page 21

1.4.3.4 Online RAID Level and Stripe Size Migration

You can migrate both the RAID level and the stripe size of an existing Volume Set while the server is online and the Volume Set is in use. Online RAID level and stripe size migration can prove helpful during performance tuning, as well as when additional physical disks are added to the RAID subsystem. For example, in a system using two drives in RAID level 1, you could add capacity and retain fault tolerance by adding one drive. With the addition of a third disk, you have the option of adding this disk to your existing RAID logical drive and migrating from RAID level 1 to 5. The result would be parity fault tolerance and double the available capacity, without taking the system offline.

1.4.4 High Availability

1.4.4.1 Creating Hot Spares

A hot spare drive is an unused, online, available drive that is ready to replace a failed disk drive. In a RAID level 1, 0+1, 3, 5 or 6 RAID Set, any unused online available drive that is installed but does not belong to a RAID Set can be defined as a hot spare drive. Hot spares permit you to replace failed drives without powering down the system. When the RAID subsystem detects a UDMA drive failure, it automatically and transparently rebuilds using the hot spare drives. The RAID Set is reconfigured and rebuilt in the background while the RAID subsystem continues to handle system requests. During the automatic rebuild process, system activity continues as normal; however, system performance and fault tolerance will be affected.

Important:

The hot spare must have at least as much capacity as the drive it replaces.
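The capacity rule above can be expressed as a simple check. The Python sketch below is illustrative only: the function name `pick_hot_spare` is hypothetical, and choosing the smallest eligible spare is an assumption made for the example, not a documented behavior of the controller.

```python
def pick_hot_spare(failed_drive_gb, spare_sizes_gb):
    """Return the capacity of a spare that can replace the failed drive, or None.

    A spare is eligible only if it is at least as large as the failed drive.
    Among eligible spares, the smallest is chosen (an assumption for this sketch).
    """
    eligible = [size for size in spare_sizes_gb if size >= failed_drive_gb]
    return min(eligible) if eligible else None


print(pick_hot_spare(250, [160, 250, 400]))  # the 250GB spare is sufficient
print(pick_hot_spare(400, [160, 250]))       # no spare is large enough
```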


Page 22

1.4.4.2 Hot-Swap Disk Drive Support

The RAID subsystem has a built-in protection circuit that supports the replacement of UDMA hard disk drives without having to shut down or reboot the system. The removable hard drive tray can deliver “hot swappable,” fault-tolerant RAID solutions at prices much lower than the cost of conventional SCSI hard disk RAID subsystems. This feature gives the subsystem advanced fault-tolerant RAID protection and “online” drive replacement.

1.4.4.3 Hot-Swap Disk Rebuild

The Hot-Swap function can be used to rebuild disk drives in arrays with data redundancy, such as RAID levels 1, 0+1, 3, 5 and 6. If a hot spare is not available, the failed disk drive must be replaced with a new disk drive so that the data on the failed drive can be rebuilt. If a hot spare is available, the rebuild starts automatically when a drive fails. The RAID subsystem automatically and transparently rebuilds failed drives in the background at a user-definable rebuild rate. If the system is shut down or powered off abnormally during a rebuild, the RAID subsystem automatically restarts the system and the rebuild. When a disk is hot-swapped, the system remains operational but may no longer be fault tolerant. Fault tolerance is lost until the removed drive is replaced and the rebuild operation is completed.


Page 23

Chapter 2

Getting Started

Getting started with the subsystem consists of the following steps:

Unpack the storage subsystem.

Identify the parts of the subsystem.

Connect the SCSI cables.

Terminate the SCSI bus.

Power on the subsystem.

Install the hard drives.

2.1 Unpacking the Subsystem

Before continuing, first unpack the subsystem and verify that the contents of

the shipping carton are all there and in good condition. Before removing the

subsystem from the shipping carton, visually inspect the physical condition of

the shipping carton. Exterior damage to the shipping carton may indicate that

the contents of the carton are damaged. If any damage is found, do not remove the components; contact the dealer where the subsystem was purchased for further instructions.

The package contains the following items:


Page 24

• RAID subsystem unit

• Three power cords

• Two external SCSI cables

• One external null modem cable

• One external UPS cable

• One RJ-45 ethernet cable

• Two Active LVD/SE terminators

• Installation Reference Guide

• Spare screws, etc.

If any of these items are missing or damaged, please contact your dealer or

sales representative for assistance.


Page 25

2.2 Identifying Parts of the subsystem

The illustrations below identify the various features of the subsystem. Familiarize yourself with these terms, as they will help you when you read the following sections.

2.2.1 Front View


Page 26

1. HDD status indicator

Parts and functions:

HDD Status LEDs: A green LED indicates that power is on and the hard drive status is good for this slot. If there is no hard drive, the LED is red. If the hard drive in this slot is defective or has failed, the LED is orange.

HDD access LEDs: These LEDs blink blue when the hard drive is being accessed.

2. HDD trays 1 ~ 16 (From right to left)

3. Smart Function Panel - Function Keys

Parts and functions:

Activity LED: A blinking blue LED indicates controller activity.

4. LCD display panel

5. Smart Function Panel - Function Keys for RAID configuration

The smart LCD panel is where you will configure the RAID subsystem. If you are configuring the subsystem using the LCD panel, please press the controller button to configure your RAID subsystem.

Parts and functions:

Up and Down arrow buttons: Use the Up or Down arrow keys to go through the information on the LCD screen. They are also used to move between menus when you configure the subsystem.

Select button: Use this button to enter the option you have selected.

Exit button: Press this button to return to the previous menu.

Page 27

6. Environment status

Parts and functions:

Voltage warning LED: If a voltage abnormality occurs, this LED will turn red and an alarm will sound.

Over temp LED: If a temperature irregularity occurs (HDD slot temperature over 55°C), this LED will turn red and an alarm will sound.

Fan fail LED: When a fan’s rotation speed is lower than 2600 rpm, this LED will turn red and an alarm will sound.

Power fail LED: If a redundant power supply fails, this LED will turn red and an alarm will sound.

Power LED: A green LED indicates power is on.

7. Tray Lever

8. Tray Latch
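
The alarm conditions for the environment status LEDs can be summarized in a short Python sketch. The 55°C and 2600 rpm thresholds come from the descriptions above; the function name, argument layout and sample readings are hypothetical, and the sketch does not read any values from the subsystem.

```python
TEMP_LIMIT_C = 55    # HDD slot temperature limit from this manual
FAN_MIN_RPM = 2600   # minimum fan speed from this manual


def front_panel_alarms(slot_temps_c, fan_rpms, psu_ok):
    """Return the names of the LEDs that would turn red for the given readings."""
    alarms = []
    if any(t > TEMP_LIMIT_C for t in slot_temps_c):
        alarms.append("Over temp")
    if any(rpm < FAN_MIN_RPM for rpm in fan_rpms):
        alarms.append("Fan fail")
    if not all(psu_ok):
        alarms.append("Power fail")
    return alarms


# Hypothetical readings: one hot slot, one slow fan, all power supplies good.
print(front_panel_alarms([41, 56], [3100, 2400], [True, True, True]))
```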


Page 28

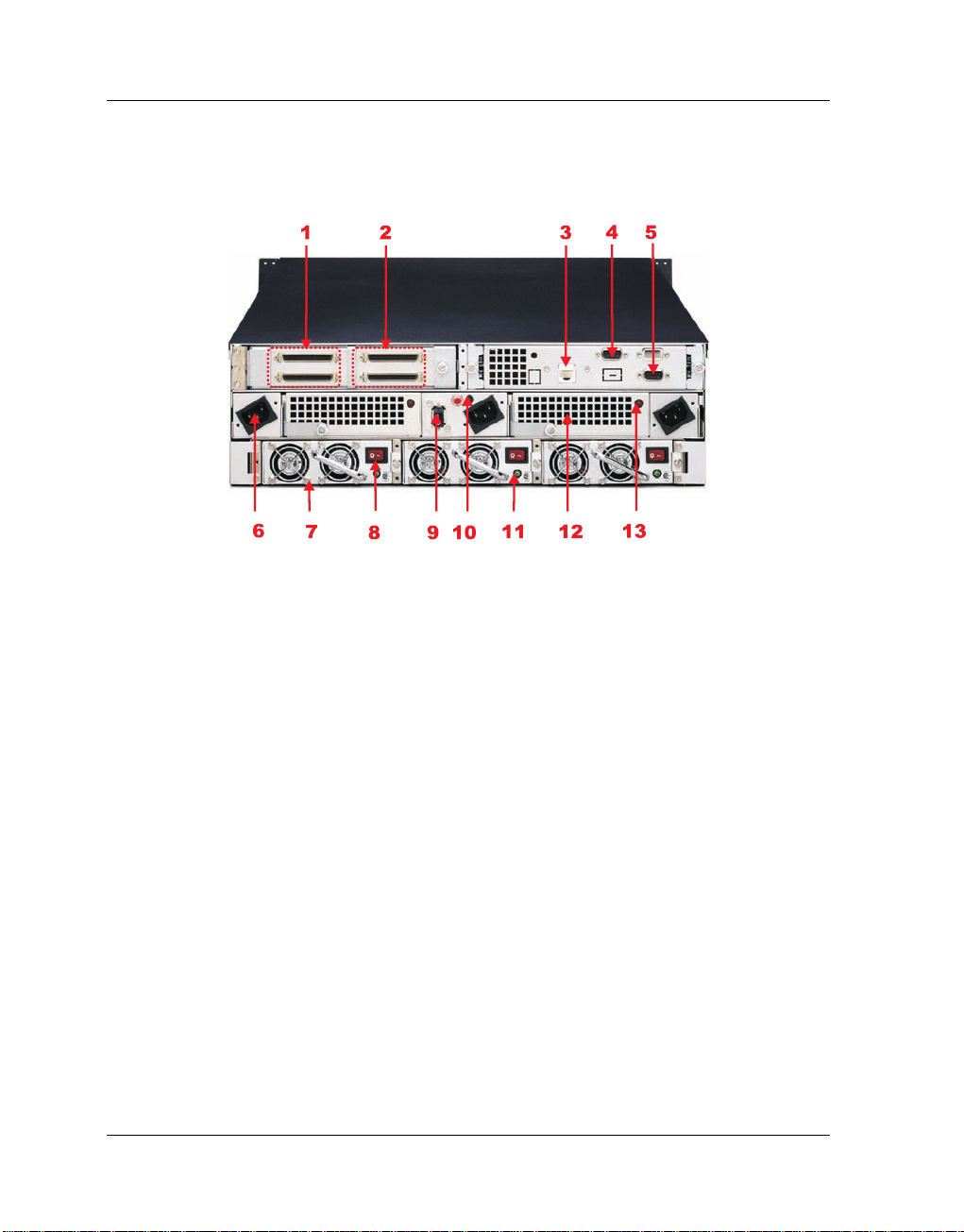

2.2.2 Rear View

1. Host Channel A

The subsystem is equipped with two host channels (Host channel A and Host channel B). Each host channel has two 68-pin SCSI connectors at the rear of the subsystem for SCSI in and out.

2. Host Channel B

Connect to Host’s SCSI adapter or other devices.

3. R-Link Port : Remote Link through RJ-45 ethernet for remote management

The subsystem is equipped with one 10/100 Ethernet RJ-45 LAN port. You can use a web browser to manage the RAID subsystem over Ethernet for remote configuration and monitoring.


Page 29

Link LED: A green LED indicates that the Ethernet link is established.

Access LED: The LED blinks orange when the 100 Mbps Ethernet port is being accessed.

4. Uninterrupted Power Supply (UPS) Port

The subsystem may come with an optional UPS port allowing you to connect

a UPS device. Connect the cable from the UPS device to the UPS port located at the rear of the subsystem. This will automatically allow the subsystem to use the functions and features of the UPS.

5. Monitor Port

The subsystem is equipped with a serial monitor port allowing you to connect

a PC or terminal.

6. AC power input socket 1 ~ 3 (From left to right)

7. Power Supply Unit 1 ~ 3 (From left to right)

Three power supplies (power supply 1, power supply 2 and power supply 3)

are located at the rear of the subsystem. Turn on the power of these power

supplies to power-on the subsystem. The “power” LED at the front panel will

turn green.

If a power supply fails to function or was not turned on, the Power fail LED will turn red and an alarm will sound. An error message will also appear on the LCD screen warning of the power failure.

8. Power Supply Unit on / off switch

9. System power on / off switch

10. Power Supply Fail indicator

If a power supply fails, this LED will turn red.


Page 30

11. Power Supply Power On Indicator

Green LED indicates power is on.

12. Cooling Fan module 1 ~ 2 (From left to right)

Two blower fans are located at the rear of the subsystem. They provide sufficient airflow and heat dispersion inside the chassis. If a fan fails to function, the Fan fail LED will turn red and an alarm will sound. An error message will also appear on the LCD screen warning you of the fan failure.

14. Fan Fail indicator

If a fan fails, this LED will turn red.


Page 31

2.3 Connecting to Host

The subsystem supports the Ultra 320 SCSI LVD interface, which provides data transfer rates of up to 320 MB/s over a 16-bit SCSI bus. Installation of the disk array is very similar to the installation of a standard SCSI drive. The SCSI connector accepts the standard 68-pin LVD SCSI connector used on most LVD SCSI devices. Refer to your system and/or SCSI host adapter manual for additional installation procedures that may apply to your system or host adapter.
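
The 320 MB/s figure follows from the bus width and clocking. The Python sketch below works through the arithmetic, assuming the usual Ultra320 parameters of an 80 MHz bus clock with data transferred on both clock edges; those two values are not stated in this manual and the function name is illustrative.

```python
def scsi_peak_mb_per_s(bus_width_bits, clock_mhz, transfers_per_clock):
    """Peak bus rate in MB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits // 8
    return bytes_per_transfer * clock_mhz * transfers_per_clock


# 16-bit bus, 80 MHz clock (assumed), two transfers per clock (assumed).
print(scsi_peak_mb_per_s(16, 80, 2))
```

This is a peak bus rate; the sustained throughput seen by the host depends on the drives, the RAID level and the workload.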

1. The package comes with two external SCSI cables. For each pair of host channel SCSI connectors at the rear of the subsystem, attach one end of an external SCSI cable to one of the SCSI connectors and the other end to the host adapter’s external SCSI connector. (The host adapter is installed in your host system.)

2. If you want to configure the subsystem for multi-host attachment, connect the other host system using the other external SCSI cable.

Note:

1. When one or more SCSI devices are connected, the total length of all cables (internal or external) must not exceed 3 meters (9.8 ft.) to ensure reliable operation.

2. For safety reasons, make sure the Disk Array and Host Computer are turned off when you plug in the SCSI cable.
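
The cable length rule in the note can be checked with a few lines of Python before cabling. The 3 meter limit is taken from the note; the cable lengths in the example are hypothetical.

```python
MAX_TOTAL_CABLE_M = 3.0  # limit from the note above


def cable_budget_ok(lengths_m):
    """True if the combined internal and external cable length is within the limit."""
    return sum(lengths_m) <= MAX_TOTAL_CABLE_M


print(cable_budget_ok([1.0, 1.5, 0.5]))  # exactly 3 m in total
print(cable_budget_ok([2.0, 1.5]))       # 3.5 m in total
```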


Page 32

2.4 SCSI Termination

Two 68-pin wide SCSI connectors are provided on the back of the enclosure

for connecting the array to the system. These connectors are used in one of

two ways:

If the disk array is the only external SCSI device, or is the last external

device in a daisy-chained configuration, connect the incoming cable (the

one which is attached to the SCSI adapter) to the Host A & B in connector and install the external SCSI Terminator on the Host A & B out

connector.

If the array is to be placed in the middle of a daisy-chained configuration,

connect the incoming cable (the one which is attached to the SCSI

adapter) to the Host A & B in connector and connect the outgoing cable

(the one which continues on to other devices) to the Host A & B out

connector. In this case, no terminator is required at the disk array but

the last device in the daisy chain must have a terminator.

Correct SCSI termination requires that the last device on the SCSI bus be terminated. If the last device is not terminated, or if devices other than the last are terminated, erratic SCSI bus performance may occur.

Typically, the system or host adapter (SCSI card inside the PC) is the first

device and is already terminated. When installing the disk array on a SCSI


Page 33

bus with other devices, be sure to observe the above rules with all devices

on the SCSI bus. Consult your system and/or host adapter manual for additional information on correct termination procedure.
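
The termination rules above can be expressed as a small check over the devices in bus order. This Python sketch is illustrative only: the device names and the list-of-tuples layout are hypothetical, and it assumes the host adapter sits at one end of the bus and is terminated, as described above.

```python
def termination_errors(chain):
    """chain: list of (device name, terminated) tuples in bus order.

    Devices at either end of the bus must be terminated;
    devices in the middle must not be.
    """
    errors = []
    last = len(chain) - 1
    for index, (name, terminated) in enumerate(chain):
        at_end = index in (0, last)
        if at_end and not terminated:
            errors.append(f"{name}: end of bus but not terminated")
        elif not at_end and terminated:
            errors.append(f"{name}: mid-chain but terminated")
    return errors


# Disk array in the middle of a daisy chain: no terminator at the array.
print(termination_errors([("host adapter", True),
                          ("disk array", False),
                          ("tape drive", True)]))
# Disk array as the last device without its terminator installed.
print(termination_errors([("host adapter", True),
                          ("disk array", False)]))
```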

Note:

If you are connecting another external device after the subsystem, make sure to disable the terminator at the subsystem’s host channel A & B out connector. By default, the host channel terminator is disabled.


Page 34

2.5 Powering-on the Subsystem

After you connect the Disk Array to the Host computer, press the ON/OFF Power Supply Switch on each of the power supply units and then the Main Switch. This turns the Disk Array on and starts the Self-Test automatically.

1. Plug in all the power cords or power connectors located at the rear of

the subsystem.

Note:

The subsystem is equipped with N+1 redundant, full-range PFC (power factor correction) power supplies. The subsystem selects the input voltage automatically.

2. Turn on the power. Before turning the system power switch on, make

sure all the power switches of power units 1 ~ 3 are on.

3. The “Power” LED on the front panel will turn green. After a few moments

the LCD should display the following message:

{Model Name}

xxx.xxx.xxx.xxx


Page 35

2.6 Install Hard Drives

This section describes the physical locations of the hard drives supported by

the subsystem and gives instructions on installing a hard drive. The subsystem supports hot-swapping allowing you to install or replace a hard drive

while the subsystem is running.

1. Pull out an empty disk tray. (You can install in any available slot.)

2. Take off the bracket before installing the hard drive.

3. Place the hard drive in the disk tray.

4. Install the mounting screws on each side to secure the drive in the mobile rack.

Note:

Insert screws through the front sides of the mounting holes.


Page 36

5. Slide the tray into a slot until it clicks into place. The HDD status LED on the front panel will turn green.

6. Press the lever in until you hear the latch click into place.

7. If the HDD status LED does not turn green, check that the hard drive is in good condition.

8. If the hard drive is not being accessed, the HDD access LED will not illuminate. The LED blinks only when the drive is being accessed.


Page 37

2.7 Connecting an Uninterrupted Power Supply (UPS)

The subsystem is equipped with a UPS port located at the rear of the system unit. It allows you to connect a UPS fail signal.

Pin    Description
1      Not used
2      UPS Line Fail
3      Not used
4      UPS Common
5      Not used
6      Not used
7      Not used
8      Not used
9      Not used

Note:

The UPS connection is compliant with NetWare UPS management. Smart-mode UPS is not supported.


Page 38

2.8 Connecting to a PC or Terminal

The subsystem is equipped with a serial monitor port located at the rear of

the system unit. This serves as an alternative display when accessing the

setup utility.


Pin    Description
1      Data Carrier Detect (DCD)
2      Receive Data (RD)
3      Transmit Data (TD)
4      Data Terminal Ready (DTR)
5      Signal Ground (SG)
6      Data Set Ready (DSR)
7      Request To Send (RTS)
8      Clear To Send (CTS)
9      Ring Indicator (RI)

Note:

Refer to Chapter 3 for instructions on accessing the setup utility through a PC or terminal, as well as instructions on setting the baud rate, stop bits, data bits and parity of your monitor or terminal. The default setting of the monitor port is 115200 baud, no parity, 8 data bits and no flow control.
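
When scripting a terminal connection, it can help to keep the port settings in one place and validate them first. The Python sketch below is a hypothetical helper, not part of any terminal program: the `PortConfig` class and its field names are illustrative. The defaults are the monitor port defaults given in this manual (section 3.1 also lists 1 stop bit), and the allowed baud rates and stop bits are the values listed under Terminal Port Config in the menu diagram.

```python
from dataclasses import dataclass

# Values offered by the Terminal Port Config menu in this manual.
BAUD_RATES = (1200, 2400, 4800, 9600, 19200, 38400, 57600, 115200)
STOP_BITS = (1, 2)


@dataclass(frozen=True)
class PortConfig:
    """Hypothetical record of terminal-side serial settings."""
    baud: int = 115200
    data_bits: int = 8
    parity: str = "N"          # no parity
    stop_bits: int = 1
    flow_control: str = "none"

    def validate(self):
        if self.baud not in BAUD_RATES:
            raise ValueError(f"unsupported baud rate: {self.baud}")
        if self.stop_bits not in STOP_BITS:
            raise ValueError(f"unsupported stop bits: {self.stop_bits}")
        return self

    def summary(self):
        return f"{self.baud} {self.data_bits}{self.parity}{self.stop_bits}"


print(PortConfig().validate().summary())
```

The terminal program must use the same values as the monitor port; if the port settings are changed in the setup utility, the terminal side has to be changed to match.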


Page 39

Chapter 3

Configuring

The subsystem has a setup configuration utility built in containing important

information about the configuration as well as settings for various optional

functions in the subsystem. This chapter explains how to use and make

changes to the setup utility.

Configuration Methods

There are three methods of configuring the subsystem. You may configure

through the following methods:

• VT100 terminal connected through the controller’s serial port

• Front panel touch-control keypad

• Web browser-based Remote RAID management via the R-Link ethernet

port

Important:

The subsystem allows you to access the utility using only one method at a time. You cannot use more than one method at the same time.

3.1 Configuring through a Terminal

Configuring through a terminal will allow you to use the same configuration

options and functions that are available from the LCD panel. To start-up:

1. Connect a VT100 compatible terminal, or a PC operating in an equivalent terminal emulation mode, to the monitor port located at the rear of the subsystem.

Page 40

Note:

You may connect a terminal while the subsystem’s power is on.

2. Power-on the terminal.

3. Run the VT100 program or an equivalent terminal program.


Page 41

4. Set the terminal to the default settings of the monitor port: 115200 baud, 8 data bits, no parity, 1 stop bit and no flow control.


Page 42

5. Click the Disconnect button.

6. Open the File menu, and then open Properties.


Page 43

7. Open the Settings tab.

8. In the Settings tab, set the following options: Function, arrow and ctrl keys act as: Terminal Keys; Backspace key sends: Ctrl+H; Emulation: VT100; Telnet terminal: VT100; Back scroll buffer lines: 500. Click OK.


Page 44

9. The VT100 terminal is now ready to use. After you have finished the VT100 terminal setup, press the “X” key on your terminal to link the RAID subsystem and the terminal together and to display the disk array Monitor Utility screen.

10. The Main Menu will appear.

Keyboard Function Key Definitions

“ A “ key - to move to the line above

“ Z “ key - to move to the next line

“ Enter “ key - Submit selection function

“ ESC “ key - Return to previous screen

“ L ” key - Line draw

“ X ” key - Redraw


Page 45

Main Menu

The main menu lists all the available functions. Select an item to execute the corresponding action.

Note:

The password option allows the user to set or clear the RAID subsystem’s password protection feature. Once the password has been set, the user can only monitor and configure the RAID subsystem by providing the correct password. The password protects the internal RAID subsystem from unauthorized entry. The controller checks the password only when entering the Main Menu from the initial screen. The RAID subsystem automatically returns to the initial screen when it does not receive any command for twenty seconds. The default RAID subsystem password set by the manufacturer is 00000000.


Page 46

VT100 terminal configuration Utility Main Menu Options

Select an option and the related information or submenu items are displayed beneath it. The submenus for each item are explained in section 3.3. The configuration utility main menu options are:

Option: Description

Quick Volume And Raid Set Setup: Create a RAID configuration made up of the physical disks installed.

Raid Set Functions: Create a customized raid set.

Volume Set Functions: Create a customized volume set.

Physical Drive Functions: View individual disk information.

Raid System Functions: Set the raid system configuration.

Ethernet Configuration: Set the Ethernet configuration.

View System Events: View all system events recorded in the buffer.

Clear Event Buffer: Clear all event buffer information.

Hardware Monitor: Show the system environment status.

System Information: View the controller information.


Page 47

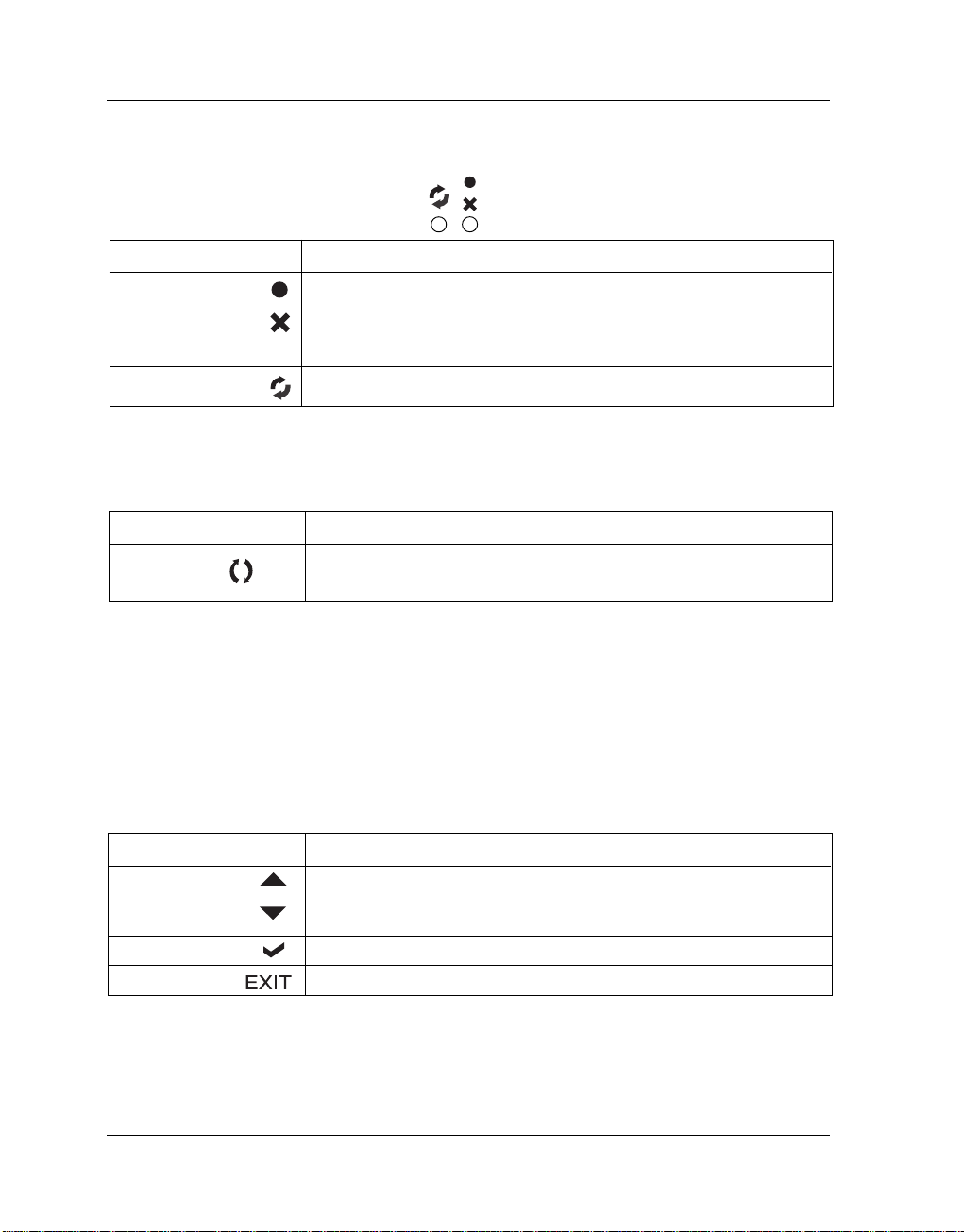

3.2 Configuring the Subsystem Using the LCD Panel

The LCD display front panel function keys are the primary user interface for the Disk Array. Except for “Firmware update”, all configuration can be performed through this interface. The LCD provides a system of screens with areas for information, status indication, or menus. The LCD screen displays up to two lines at a time of menu items or other information. The default RAID subsystem password set by the manufacturer is 00000000.

Function Key Definitions

The four function keys at the top of the front panel (Up, Down, Select and Exit) perform the following functions:

Parts and functions:

Up or Down arrow buttons: Use the Up or Down arrow keys to go through the information on the LCD screen. They are also used to move between menus when you configure the subsystem.

Select button: Use this button to enter the option you have selected.

Exit button: Press this button to return to the previous menu.


Page 48

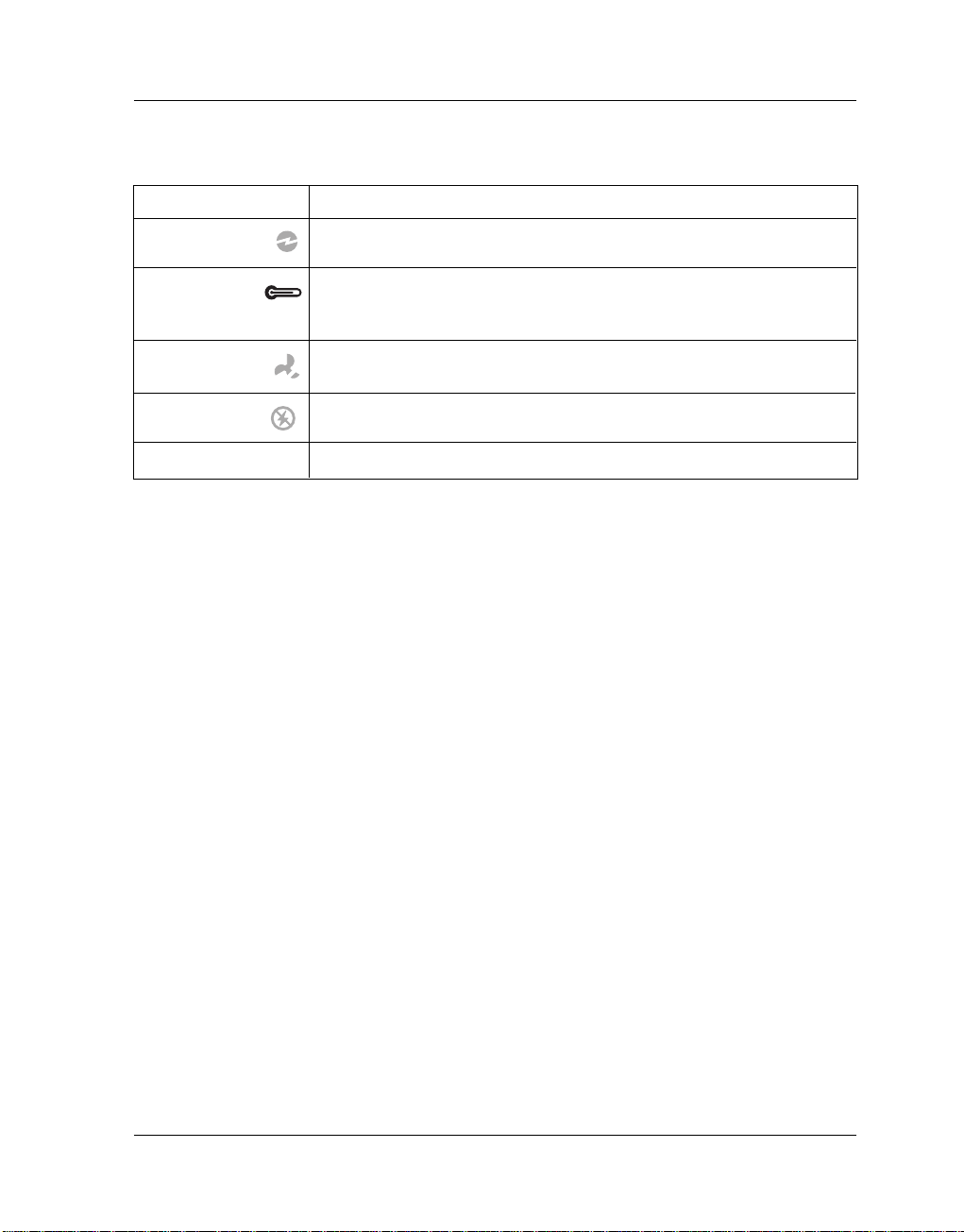

3.3 Menu Diagram

The following tree diagram is a summary of the various configuration and

setting functions that can be accessed through the LCD panel menus or the

terminal monitor.


Page 49


Page 50


Page 51

Physical Drives
    View Drive Information
        Select The Drives
    Create Pass Through Disk
        Select The Drives
            SCSI Channel, SCSI ID, SCSI LUN, Cache Mode, Tag Queuing, Max Sync Rate
    Modify Pass Through Disk
        Select The Drives
            SCSI Channel, SCSI ID, SCSI LUN, Cache Mode, Tag Queuing, Max Sync Rate
    Delete Pass Through Disk
        Select The Drives
            Delete Pass Through (Yes, No)
                Are you sure? (Yes, No)
    Identify Selected Drive
        Select The Drives

Raid System Function
    Mute The Alert Beeper (Yes, No)
    Alert Beeper Setting (Disabled, Enabled)
        Save The Settings (Yes, No)
    Change Password
        Enter New Password
            Re-Enter Password
                Save The Password (Yes, No)
    JBOD / RAID Function (RAID, JBOD)
        Configured AS JBOD? (Yes, No)
            Are you sure? (Yes, No)
    Raid Rebuild Priority (UltraLow(5%), Low(20%), Medium(50%), High(80%))
        Save The Settings (Yes, No)
    Terminal Port Config
        Baud Rate (1200, 2400, 4800, 9600, 19200, 38400, 57600, 115200)
        Stop Bits (1 bit, 2 bits)
    Update Firmware
    Restart Controller
        Are you sure? (Yes, No)


Page 52


Page 53

3.4 Web browser-based Remote RAID management via R-Link ethernet port

Remote RAID management of the internal RAID subsystem is a web browser-based application, which uses the browser installed on your operating system. Web browser-based remote RAID management can be used to manage all the raid functions.

To configure the internal RAID subsystem from a remote machine, you need to know its IP Address. Launch the web browser on the remote machine and enter http://[IP Address] in the address bar.

Important:

The default Ethernet IP address is “192.168.001.100”. The DHCP function is enabled by default. You can configure the correct IP Address through the LCD panel or the terminal “Ethernet Configuration” menu.

Note that you must be logged in as administrator with local admin rights on the

remote machine to remotely configure it. The RAID subsystem controller default

User Name is “admin” and the Password is “00000000”.
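
The default address is printed with zero-padded octets, which some software rejects or interprets differently. The Python sketch below builds the management URL from an address in either form. The function name is hypothetical, and the sketch only formats the string; it does not contact the subsystem.

```python
def management_url(ip_text):
    """Build http://[IP Address] from dotted text, dropping zero padding."""
    octets = [int(part) for part in ip_text.split(".")]
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a valid IPv4 address: {ip_text!r}")
    return "http://" + ".".join(str(o) for o in octets)


print(management_url("192.168.001.100"))
```

If DHCP assigns a different address, read the current address from the LCD panel or the terminal “Ethernet Configuration” menu and pass that value instead.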


Page 54

Main Menu

The main menu shows all the available functions. Click on the appropriate link to execute an action.

Individual Category: Description

Quick Create: Create a RAID configuration made up of the physical disks installed. You can modify the volume set Capacity, Raid Level, and Stripe Size.

Raid Set Functions: Create a customized raid set.

Volume Set Functions: Create customized volume sets and modify the parameters of existing volume sets.

Physical Drive: Create pass-through disks and modify the parameters of existing pass-through drives. It also provides a function to identify the respective disk drive.

System Control: Set the raid system configuration.

Information: View the controller and hardware monitor information. The Raid Set Hierarchy can also be viewed through the RaidSet Hierarchy item.


Page 55

Configuration Procedures

Below are a few practical examples of concrete configuration procedures.

3.5 Quick Create

The number of physical drives in the RAID subsystem determines the RAID levels that can be implemented with the raid set. Quick Create creates a raid set associated with exactly one volume set. You can change the raid level, capacity, Volume Initialization Mode and stripe size. A hot spare option is also offered, depending on the existing configuration.

If the volume size is over 2TB, the option “Greater Two TB Volume Support” is provided automatically in the menu. This option has three settings: “No”, “64bit LBA”, and “For Windows”.


Page 56

Greater Two TB Volume Support:

No: keeps the volume size within the 2TB maximum.

64bit LBA: the maximum size is 512TB, for Unix or Linux.

Because the LSI53C1030T does not support the 16-byte CDB for 64-bit LBA, a vendor-specific 12-byte CDB is used for 64-bit LBA support. The system may detect up to two terabytes only. A patch driver is needed to enable the system to detect more than two terabytes. Please contact your vendor for support.

For Windows: the maximum size is 16TB. Use it only with the “basic disk manager” under Windows 2000, 2003 or XP. Note that it cannot be used with the dynamic disk manager.
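
The 2TB boundary corresponds to the largest volume that a 32-bit logical block address can describe with 512-byte sectors. The Python sketch below works through that arithmetic and picks one of the three settings for a given volume size. It assumes 512-byte sectors and binary terabytes (2^40 bytes), neither of which is stated in this manual, and the function name and `host_os` values are hypothetical.

```python
SECTOR_BYTES = 512                     # assumed sector size
LBA32_LIMIT = 2**32 * SECTOR_BYTES     # largest volume addressable with 32-bit LBA
TB = 2**40                             # assumed: binary terabytes


def volume_support_option(size_bytes, host_os):
    """Suggest a Greater Two TB Volume Support setting for a volume size."""
    if size_bytes <= LBA32_LIMIT:
        return "No"                    # fits within the 2TB limit
    if host_os == "windows":
        if size_bytes > 16 * TB:
            raise ValueError("exceeds the 16TB maximum of For Windows")
        return "For Windows"
    if size_bytes > 512 * TB:
        raise ValueError("exceeds the 512TB maximum of 64bit LBA")
    return "64bit LBA"


print(LBA32_LIMIT == 2 * TB)
print(volume_support_option(3 * TB, "linux"))
print(volume_support_option(3 * TB, "windows"))
```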

Tick the Confirm The Operation box and click the Submit button in the Quick Create screen. The raid set and volume set will start to initialize.

Note: In Quick Create, your volume set is automatically configured based on the number of disks in your system. Use the Raid Set Function and Volume Set Function if you prefer to customize your system.


Page 57

3.6 Raid Set Functions

Use the Raid Set Function and Volume Set Function if you prefer to customize your system. Manual configuration gives you full control of the raid set settings, but it takes longer to complete than the Quick Volume/Raid Setup configuration. Select the Raid Set Function to manually configure the raid set for the first time, or to delete an existing raid set and reconfigure it. A raid set is a group of disks containing one or more volume sets.

3.6.1 Create Raid Set

To create a raid set, click on the Create Raid Set link. A “Select The IDE Drive For RAID Set” screen is displayed showing the IDE drives connected to the current controller. Click on the physical drives to include in the raid set. Enter 1 to 15 alphanumeric characters to define a unique identifier for the raid set. The default raid set name will always appear as Raid Set #.

Tick the Confirm The Operation box and click the Submit button in the screen. The raid set will start to initialize.
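
The naming rule can be checked before a name is typed into the form. This Python sketch assumes that “alphanumeric” means ASCII letters and digits only; the controller may accept other characters (the default name itself contains a space), so treat the function, which is hypothetical, as a conservative check.

```python
def is_valid_raid_set_name(name):
    """True for 1 to 15 ASCII letters or digits (assumed reading of the rule)."""
    return 1 <= len(name) <= 15 and name.isascii() and name.isalnum()


print(is_valid_raid_set_name("Archive01"))
print(is_valid_raid_set_name("A" * 16))
```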


Page 58

3.6.2 Delete Raid Set

To delete a raid set, click on the Delete Raid Set link. A “Select The RAID SET To Delete” screen is displayed showing all raid sets existing in the current controller. In the select column, click the number of the raid set you wish to delete.

Tick the Confirm The Operation box and click the Submit button in the screen to delete it.


Page 59

3.6.3 Expand Raid Set

Use this option to expand a raid set when a disk is added to your system. This function is active when at least one drive is available.

To expand a raid set, click on the Expand Raid Set link. Select the target raid set that you want to expand.

Tick the available disk and the Confirm The Operation box, and then click the Submit button in the screen to add disks to the raid set.

Note:

1. Once the Expand Raid Set process has started, the user cannot stop it. The process must be completed.

2. If a disk drive fails during raid set expansion and a hot spare is available, an auto rebuild operation will occur after the raid set expansion completes.


Page 60

Migrating occurs when a disk is added to a raid set. Migration status is displayed in the raid status area of the Raid Set information when a disk is

added to a raid set. Migrating status is also displayed in the associated volume status area of the volume set Information when a disk is added to a raid

set.


Page 61

3.6.4 Activate Incomplete Raid Set

When one of the disk drives is removed while the power is off, the raid set state changes to Incomplete State. If you want to continue working after the RAID subsystem is powered on, you can use the Activate Raid Set option to activate the raid set. After the function completes, the Raid State changes to Degraded Mode.

To activate the incomplete raid set, click on the Activate Raid Set link. A “Select The RAID SET To Activate” screen is displayed showing all raid sets existing in the current controller. In the select column, click the number of the raid set you wish to activate.


Page 62

Click the Submit button in the screen to activate the raid set from which a disk drive was removed while the power was off. The RAID subsystem will continue to work in degraded mode.


Page 63

3.6.5 Create Hot Spare

When you choose the Create Hot Spare option in the Raid Set Function, all unused physical devices connected to the current controller appear. Select the target disk by clicking on the appropriate check box. Tick the Confirm The Operation box, and click the Submit button in the screen to create the hot spares.

The Create Hot Spare option gives you the ability to define a global hot spare.

3.6.6 Delete Hot Spare

Select the target Hot Spare disk to delete by clicking on the appropriate

check box.

Tick the Confirm The Operation box, and click the Submit button in the screen to delete the hot spares.


Page 64

3.6.7 Rescue Raid Set

If you need to rescue a missing raid set, please contact our engineers for assistance.


Page 65

3.7 Volume Set Function

A volume set is seen by the host system as a single logical device. It is organized in a RAID level with one or more physical disks. RAID level refers to the

level of data performance and protection of a volume set. A volume set capacity can consume all or a portion of the disk capacity available in a raid set.

Multiple volume sets can exist on a group of disks in a raid set. Additional

volume sets created in a specified raid set will reside on all the physical disks

in the raid set. Thus each volume set on the raid set will have its data spread

evenly across all the disks in the raid set.

3.7.1 Create Volume Set

The volume set features are as follows:

1. Volume sets of different RAID levels may coexist on the same raid set.

2. Up to 16 volume sets in a raid set can be created by the RAID subsystem controller.

To create a volume set from a raid set, move the cursor bar to the main menu and click on the Create Volume Set link. The Select The Raid Set To Create On It screen will show all raid set numbers. Tick the raid set number on which you want to create the volume set and then click the Submit button.

The new volume set allows the user to select the Volume name, capacity, RAID level, stripe size, SCSI ID/LUN, Cache mode, tag queuing and Max Sync Rate.


Page 66

Volume Name:

The default volume name will always appear as Volume Set #. You can rename the volume set, provided the name does not exceed the 15-character limit.

Raid Level:

Set the RAID level for the Volume Set. Highlight Raid Level and press Enter. The available RAID levels for the current Volume Set are displayed. Select a RAID level and press Enter to confirm.

Capacity:

The maximum volume size is the default value. Enter the appropriate volume size to fit your application.

Greater Two TB Volume Support: If the volume size is over 2TB, the option “Greater Two TB Volume Support” is provided automatically.

No: keeps the volume size within the 2TB maximum.


Page 67

64bit LBA: the maximum size is 512TB, for Unix or Linux.

Because the LSI53C1030T does not support the 16-byte CDB for 64-bit LBA, a vendor-specific 12-byte CDB is used for 64-bit LBA support. The system may detect up to two terabytes only. A patch driver is needed to enable the system to detect more than two terabytes. Please contact your vendor for support.

For Windows: the maximum size is 16TB. Use it only with the “basic disk manager” under Windows 2000, 2003 or XP. Note that it cannot be used with the dynamic disk manager.

Initialization Mode:

Set the Initialization Mode for the Volume Set. Foreground mode completes faster, while background mode makes the volume available immediately.

Stripe Size:

This parameter sets the size of the stripe written to each disk in a RAID 0, 1, 0+1, or 5 logical drive. You can set the stripe size to 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, or 128 KB.

A larger stripe size produces better read performance, especially if your computer does mostly sequential reads. However, if you are sure that your computer does random reads more often, select a small stripe size.

Note: The stripe size cannot be modified for RAID level 3.
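
To see what the stripe size changes, the Python sketch below maps a byte offset in a volume to a member disk using a simple round-robin RAID 0 layout. This layout is an assumption for illustration; the controller's actual on-disk layout is not documented here, and the function names are hypothetical. The allowed sizes are the ones listed above.

```python
STRIPE_SIZES_KB = (4, 8, 16, 32, 64, 128)  # sizes offered in this manual


def locate(offset_bytes, stripe_kb, n_disks):
    """Return (disk index, stripe row) for a byte offset, round-robin layout."""
    if stripe_kb not in STRIPE_SIZES_KB:
        raise ValueError(f"unsupported stripe size: {stripe_kb} KB")
    chunk = offset_bytes // (stripe_kb * 1024)
    return chunk % n_disks, chunk // n_disks


def disks_touched(start_bytes, length_bytes, stripe_kb, n_disks):
    """Count the distinct disks involved in one contiguous request."""
    first = start_bytes // (stripe_kb * 1024)
    last = (start_bytes + length_bytes - 1) // (stripe_kb * 1024)
    return len({chunk % n_disks for chunk in range(first, last + 1)})


# A 64 KB request on a four-disk set with small and large stripes.
print(disks_touched(0, 64 * 1024, 4, 4))
print(disks_touched(0, 64 * 1024, 128, 4))
```

With a small stripe, one request is spread over several disks; with a large stripe, it stays on one disk and leaves the others free for other requests.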

Cache Mode:

The RAID subsystem supports Write-Through Cache and Write-Back Cache.

Tag Queuing:

The Enabled option is useful for enhancing overall system performance under multi-tasking operating systems. The Command Tag (Drive Channel) function controls the SCSI command tag queuing support for each drive channel. This function should normally remain enabled. Disable this function only when using older SCSI drives that do not support command tag queuing.

Max SCSI Speed:

The RAID subsystem supports 320 MB/sec as the highest data transfer rate.

SCSI Channel/SCSI ID/SCSI Lun:

SCSI Channel: The RAID subsystem supports one SCSI Channel or Dual

SCSI Channel.


Page 68

SCSI ID: Each SCSI device attached to the SCSI card, as well as the card itself, must be assigned a unique SCSI ID number. A Wide SCSI channel can connect up to 15 devices. The RAID subsystem acts as one large SCSI device; assign it an ID from the list of SCSI IDs.

SCSI LUN: Each SCSI ID can support up to 8 LUNs. Most SCSI host adapters treat each LUN like a SCSI disk.
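
The uniqueness rule for SCSI IDs can be checked with a short Python sketch before volume sets are assigned. It assumes that a Wide SCSI channel uses IDs 0 to 15 (15 devices plus the host adapter) and LUNs 0 to 7. The device names and the ID given to the host adapter are hypothetical examples.

```python
from collections import Counter

WIDE_SCSI_IDS = range(16)   # assumed: IDs 0-15 on a Wide SCSI channel
LUNS_PER_ID = range(8)      # up to 8 LUNs per SCSI ID


def valid_address(scsi_id, lun):
    """True if the ID and LUN are within the ranges above."""
    return scsi_id in WIDE_SCSI_IDS and lun in LUNS_PER_ID


def id_conflicts(assignments):
    """assignments: dict of device name -> SCSI ID. Returns a list of problems."""
    problems = [f"{name}: ID {sid} out of range"
                for name, sid in assignments.items()
                if sid not in WIDE_SCSI_IDS]
    counts = Counter(assignments.values())
    problems += [f"ID {sid} used by more than one device"
                 for sid, n in sorted(counts.items()) if n > 1]
    return problems


print(id_conflicts({"host adapter": 7, "RAID subsystem": 0}))
print(id_conflicts({"host adapter": 7, "RAID subsystem": 7}))
```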

3.7.2 Delete Volume Set

To delete a volume set from a raid set, move the cursor bar to the main menu and click on the Delete Volume Set link. The Select The Volume Set To Delete screen will show all raid set numbers. Tick a raid set number and the Confirm The Operation box, and then click the Submit button to show all volume sets in the selected raid set. Tick a volume set number and the Confirm The Operation box, and then click the Submit button to delete the volume set.


Page 69

3.7.3 Modify Volume Set

To modify a volume set from a raid set:

(1). Click on the Modify Volume Set link.

(2). Tick on the volume set from the list that you wish to modify. Click on the

Submit button.

The following screen appears.

Use this option to modify the volume set configuration. To modify volume set attribute values, move the cursor bar to the volume set attribute menu and click on it. The modify value screen appears. Move the cursor bar to an attribute item, and then click on the attribute to modify the value. After you complete the modification, tick the Confirm The Operation box and click the Submit button to complete the action. You can modify all values except the capacity.

3.7.3.1 Volume Expansion

Volume Capacity (Logical Volume Concatenation Plus Re-stripe)

Use the Expand Raid Set function to expand a raid set when a disk is added to your system (refer to section 3.6.3).

The expanded capacity can be used to enlarge the volume set size or to create another volume set. The Modify Volume Set function supports volume set expansion. To expand the volume set capacity, move the cursor bar to the Volume Capacity item of the volume set and enter the capacity size.

Tick the Confirm The Operation box and click the Submit button to complete the action. The volume set starts to expand.

3.7.4 Volume Set Migration

Migration occurs when a volume set migrates from one RAID level to another, when a volume set strip size changes, or when a disk is added to a raid set. While any of these operations is in progress, the migration status is displayed in the volume status area of the RaidSet Hierarchy screen.

3.7.5 Check Volume Set

To check a volume set from a raid set:

(1). Click on the Check Volume Set link.

(2). Tick the volume set that you wish to check in the list. Tick Confirm The Operation and click on the Submit button.

Use this option to verify the correctness of the redundant data in a volume set. For example, in a system with dedicated parity, a volume set check means computing the parity of the data disk drives and comparing the results to the contents of the dedicated parity disk drive. The checking percentage can also be viewed by clicking on RaidSet Hierarchy in the main menu.
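As an illustration of the dedicated parity example above, the following Python sketch recomputes the parity of one stripe and compares it with the stored parity block. It assumes plain XOR parity, which is the usual scheme for dedicated-parity RAID levels; the manual does not document the controller's internal check routine, and the function names xor_parity and check_stripe are hypothetical.

```python
from functools import reduce


def xor_parity(blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def check_stripe(data_blocks, parity_block):
    """Return True when the stored parity matches the recomputed parity."""
    return xor_parity(data_blocks) == parity_block


if __name__ == "__main__":
    # Three data drives contribute one 2-byte block each to this stripe.
    data = [b"\x0f\xf0", b"\x33\x33", b"\x55\xaa"]
    stored_parity = xor_parity(data)           # what the parity drive should hold
    print(check_stripe(data, stored_parity))   # consistent stripe
    print(check_stripe(data, b"\x00\x00"))     # corrupted parity block
```

A mismatch only shows that the data and the parity disagree; it does not by itself indicate which drive holds the incorrect block.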

3.7.6 Stop VolumeSet Check

Use this option to stop the Check Volume Set function.

3.8 Physical Drive

Choose this option from the Main Menu to select a physical disk and to perform the operations listed below.

3.8.1 Create Pass-Through Disk

To create a pass-through disk, move the mouse cursor to the main menu and click on the Create Pass-Through link. The related settings screen appears.

A pass-through disk is not controlled by the internal RAID subsystem firmware and thus cannot be part of a volume set. The disk is available to the operating system as an individual disk. It is typically used on a system where the operating system is on a disk not controlled by the RAID firmware. You can also select the cache mode, Tagged Command Queuing, Max SCSI speed, and SCSI channel/SCSI_ID/SCSI_LUN for this volume.

3.8.2 Modify Pass-Through Disk

Use this option to modify the pass-through disk attributes. You can modify the cache mode, Tagged Command Queuing, Max SCSI speed, and SCSI channel/ID/LUN of an existing pass-through disk.

To modify the attributes of a drive in the pass-through drive pool, click on the Modify Pass-Through link. The Select The Pass Through Disk For Modification screen appears. Tick the pass-through disk in the pass-through drive pool and click on the Submit button to select the drive.

The Enter Pass-Through Disk Attribute screen appears. Modify the drive attribute values as required.

3.8.3 Delete Pass-Through Disk

To delete a pass-through drive from the pass-through drive pool, move the mouse cursor to the main menu and click on the Delete Pass Through link. After you complete the selection, tick Confirm The Operation and click on the Submit button to complete the delete action.

3.8.4 Identify Selected Drive

To prevent removal of the wrong drive, the LED of the selected disk lights up when Identify Selected Drive is selected, so that the disk can be located physically.

To identify a drive in the drives pool, click on the Identify Selected Drive link. The Select The IDE Device For identification screen appears. Tick the IDE device in the drives pool and the Flash method. After completing the selection, click on the Submit button to identify the selected drive.

3.9 System Configuration

3.9.1 System Configuration

To set the raid system function, move the cursor bar to the main menu and click on the Raid System Function link. The Raid System Function menu will show all items. Select the desired function.

System Beeper Setting:

The Alert Beeper function item is used to disable or enable the RAID subsystem controller alarm tone generator.

RAID Rebuild Priority:

The Raid Rebuild Priority is a relative indication of how much time the controller devotes to a rebuild operation. The RAID subsystem allows the user to choose the rebuild priority (UltraLow, Low, Medium, High) to balance volume set access and rebuild tasks appropriately. For high array performance, specify a Low value.

Terminal Port Configuration:

Speed setting values are 1200, 2400, 4800, 9600, 19200, 38400, 57600, and 115200.

Stop Bits values are 1 bit and 2 bits.

Note: Parity value is fixed at None.

Data Bits value is fixed at 8 bits.
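The terminal port values listed above can be captured in a small record that rejects unsupported combinations before they are used, for example to configure a terminal emulator on the host. This Python sketch is a hypothetical helper and not a vendor utility; the class name TerminalPortSettings and its field names are assumptions.

```python
from dataclasses import dataclass

BAUD_RATES = (1200, 2400, 4800, 9600, 19200, 38400, 57600, 115200)
STOP_BITS = (1, 2)


@dataclass(frozen=True)
class TerminalPortSettings:
    """Hypothetical record of the terminal port settings listed in the manual."""
    baud_rate: int
    stop_bits: int = 1
    parity: str = "none"  # fixed at None on the RAID subsystem
    data_bits: int = 8    # fixed at 8 bits on the RAID subsystem

    def __post_init__(self):
        if self.baud_rate not in BAUD_RATES:
            raise ValueError(f"unsupported speed: {self.baud_rate}")
        if self.stop_bits not in STOP_BITS:
            raise ValueError(f"unsupported stop bits: {self.stop_bits}")
        if self.parity != "none" or self.data_bits != 8:
            raise ValueError("parity and data bits are fixed at none and 8")


if __name__ == "__main__":
    print(TerminalPortSettings(baud_rate=115200, stop_bits=1))
```

The terminal emulator on the host must use the same speed and stop bits as the values selected on the RAID subsystem.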

JBOD/RAID Configuration:

The RAID subsystem supports JBOD and RAID configuration.

Maximum SATA Mode Supported:

The 16 SATA drive channels support up to SATA II, which runs at up to 300 MB/s. NCQ (Native Command Queuing) is a command protocol in Serial ATA that can only be implemented on native Serial ATA hard drives. It allows multiple commands to be outstanding within a drive at the same time. Drives that support NCQ have an internal queue where outstanding commands can be dynamically rescheduled or re-ordered, along with the necessary tracking mechanisms for outstanding and completed portions of the workload. The RAID subsystem allows the user to choose the SATA mode: SATA150, SATA150+NCQ, SATA300, SATA300+NCQ.

Disk Write Cache Mode:

The RAID subsystem supports Auto, Enabled, and Disabled. When the RAID subsystem has a BBM (battery backup module) installed, the Auto option enables the disk write cache. Otherwise, the Auto option disables the disk write cache.
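The behavior of the three write cache modes can be summarized as a small decision function. This Python sketch only restates the rule described above; the function name disk_write_cache_enabled and the lowercase mode strings are hypothetical and do not correspond to a firmware interface.

```python
def disk_write_cache_enabled(mode, bbm_installed):
    """Return True when the disk write cache ends up enabled.

    mode is one of "auto", "enabled", "disabled" (case-insensitive);
    bbm_installed tells whether a battery backup module is present.
    """
    mode = mode.lower()
    if mode == "enabled":
        return True
    if mode == "disabled":
        return False
    if mode == "auto":
        # Auto follows the presence of the battery backup module.
        return bbm_installed
    raise ValueError(f"unknown disk write cache mode: {mode}")


if __name__ == "__main__":
    for bbm in (True, False):
        print("auto, BBM installed:", bbm, "->", disk_write_cache_enabled("auto", bbm))
```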

Disk Capacity Truncation Mode:

This RAID subsystem uses drive truncation so that drives from different vendors are more likely to be usable as spares for each other. Drive truncation slightly decreases the usable capacity of a drive that is used in redundant units.

Multiples Of 10G: If you have 120 GB drives from different vendors, chances are that the capacity varies slightly. For example, one drive might be 123.5 GB, and the other 120 GB. The Multiples Of 10G truncation mode uses the same capacity for both of these drives so that one could replace the other.

Multiples Of 1G: If you have 123 GB drives from different vendors, chances are that the capacity varies slightly. For example, one drive might be 123.5 GB, and the other 123.4 GB. The Multiples Of 1G truncation mode uses the same capacity for both of these drives so that one could replace the other.

No Truncation: The capacity is not truncated.
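The two examples above can be worked through numerically. The following Python sketch assumes that truncation rounds the drive capacity down to the nearest multiple of the selected step and that 1 GB is counted as 1000 MB; the manual does not state the exact rounding rule used by the firmware, and the function name truncated_capacity_mb is hypothetical.

```python
# Truncation step per mode, in MB (assumption: 1 GB = 1000 MB).
TRUNCATION_STEP_MB = {
    "Multiples Of 10G": 10_000,
    "Multiples Of 1G": 1_000,
    "No Truncation": None,
}


def truncated_capacity_mb(capacity_mb, mode):
    """Round a drive capacity down to the step of the given truncation mode."""
    step = TRUNCATION_STEP_MB[mode]
    if step is None:
        return capacity_mb
    return (capacity_mb // step) * step


if __name__ == "__main__":
    # 123.5 GB and 120 GB drives become interchangeable at 120 GB.
    print(truncated_capacity_mb(123_500, "Multiples Of 10G"))
    print(truncated_capacity_mb(120_000, "Multiples Of 10G"))
    # 123.5 GB and 123.4 GB drives become interchangeable at 123 GB.
    print(truncated_capacity_mb(123_500, "Multiples Of 1G"))
    print(truncated_capacity_mb(123_400, "Multiples Of 1G"))
```

Under these assumptions both drives in each example report the same usable capacity, which is what allows one to serve as a spare for the other.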

3.9.2 U320 SCSI Target Config

To set the U320 SCSI Target function, move the cursor bar to the main menu and click on the U320 SCSI Target Config link. The Enabled option supports Quick Arbitration and Selection (QAS). QAS reduces the overhead of releasing control of the SCSI bus from one device to another. This improvement reduces command overhead and maximizes bus utilization. Select the desired function.

3.9.3 EtherNet Config

To set the EtherNet function, move the cursor bar to the main menu and click on the EtherNet Config link. The Raid System EtherNet Function menu will show all items. Select the desired function.

3.9.4 Alert By Mail Config

To set the Event Notification function, move the cursor bar to the main menu and click on the Alert By Mail Config link. The Raid System Event Notification Function menu will show all items. Select the desired function. When an abnormal condition occurs, an error message is emailed to the administrator to report that a problem has occurred. Events are classified into 4 levels (urgent, serious, warning, message).
