
eIQ MACHINE LEARNING SOFTWARE DEVELOPMENT ENVIRONMENT

The eIQ Machine Learning (ML) software development environment leverages inference engines, neural network compilers, optimized libraries, deep learning toolkits and open-source technologies for easier, more secure system-level application development, ML algorithm enablement and auto-quality ML enablement.

FACT SHEET

eIQ™ ML SOFTWARE

OVERVIEW

The NXP® eIQ (“edge intelligence”) ML software environment provides the key ingredients to run inference with neural network (NN) models on embedded systems and to deploy ML algorithms on NXP microprocessors and microcontrollers for edge nodes. It includes inference engines, NN compilers, libraries, and hardware abstraction layers that support Google TensorFlow Lite, Glow, Arm NN, Arm CMSIS-NN, and OpenCV.

With NXP’s i.MX applications processors and i.MX RT crossover processors, based on Arm® Cortex®-A and Cortex-M cores, respectively, embedded designs can now support deep learning applications that require high-performance data analytics and fast inferencing.

eIQ software includes a variety of application examples that demonstrate how to integrate neural networks into voice, vision and sensor applications. Developers can choose to deploy their ML applications on Arm Cortex-A cores, Cortex-M cores, or GPUs, or, for high-end acceleration, on the neural processing unit of the i.MX 8M Plus.

APPLICATIONS

eIQ ML software helps enable a variety of vision and sensor applications, working in conjunction with a collection of device drivers and functions for cameras, microphones and a wide range of environmental sensor types.

• Object detection and recognition

• Voice command and keyword recognition

• Anomaly detection

• Image and video processing

• Other AI and ML applications include:

– Smart wearables

– Intelligent factories and smart buildings

– Healthcare and diagnostics

– Augmented reality

– Logistics

– Public safety

FEATURES

• Open-source inference engines

• Neural network compilers

• Optimized libraries

• Application samples

• Included in NXP’s Yocto Linux® BSP and MCUXpresso SDK software releases


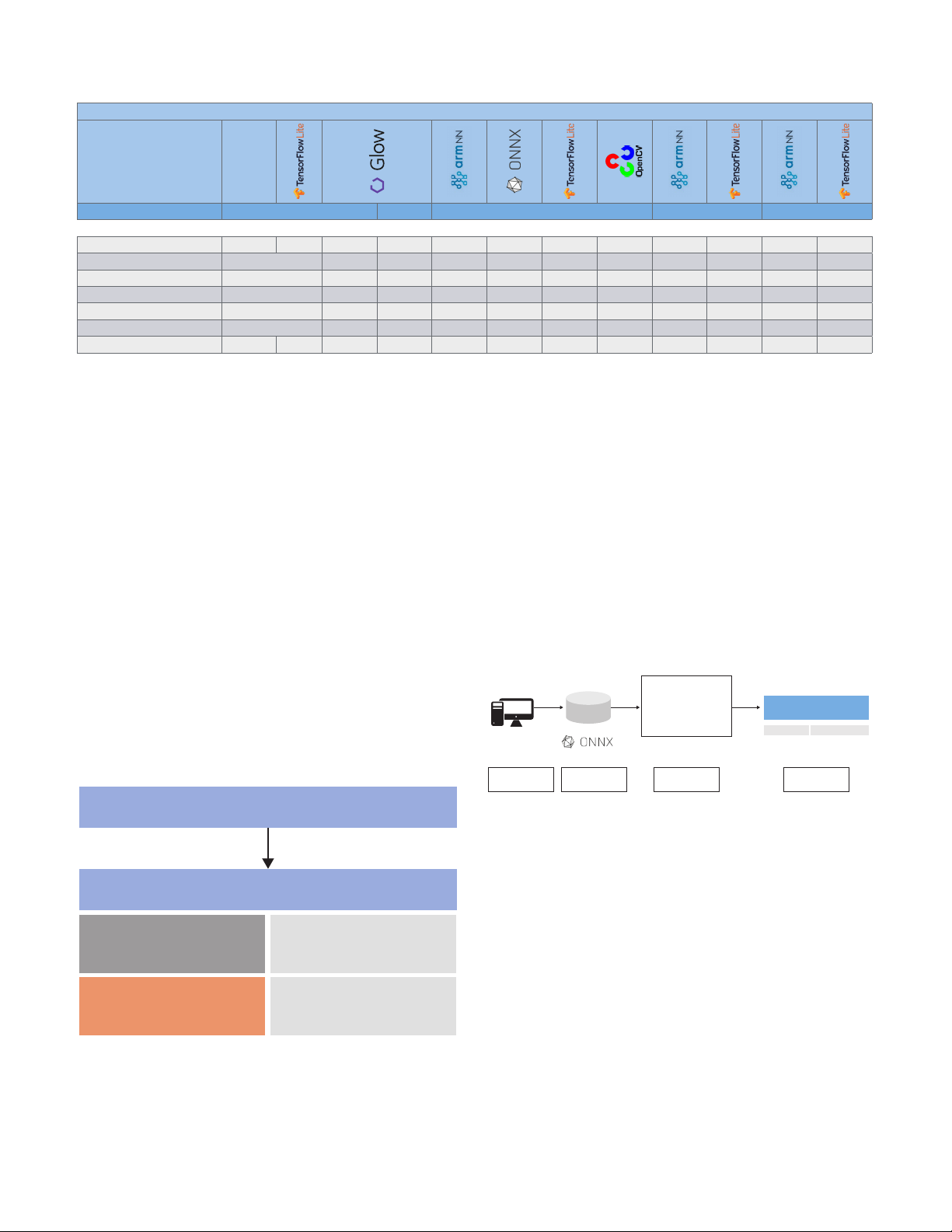

NXP eIQ MACHINE LEARNING SOFTWARE - INFERENCE ENGINES BY CORE

®

NEURAL NETWORK

eIQ™ Inference Engine Deployment (Public version; Subject to Change; 7/6/20)

NXP eIQ Inference

Engines and Libraries

CMSIS-NN

Compute Engines Cortex-M DSP Cortex-A GPU NPU

i.MX 8M Plus --- --- --- --- √ √ √ √ --- --- √ √

i.MX 8QM --- --- --- √ √ √ √ √ √ NA NA

i.MX 8QXP --- --- --- √ √ √ √ √ √ NA NA

i.MX 8M Quad/Nano --- --- --- √ √ √ √ √ √ NA NA

i.MX 8M Mini --- --- --- √ √ √ √ NA NA NA NA

i.MX RT600 --- --- √ NA NA NA NA NA NA NA NA

i.MX RT1050/1060 √ √ √ NA NA NA NA NA NA NA NA NA

NA = Not Applicable

--- = Not Supported

OPEN-SOURCE INFERENCE ENGINES

The following inference engines are included as part of the

eIQ ML software development kit and serve as options for

deploying trained NN models.

Arm NN INFERENCE ENGINE

eIQ ML software supports Arm NN SDK on the i.MX 8 series

applications processor family and is available through the

NXP Yocto Linux-based releases.

Arm NN SDK is open-source inference engine software that allows embedded processors to run trained deep learning models. It uses the Arm Compute Library to optimize neural network operations running on Cortex-A cores (using Neon acceleration). NXP has also integrated Arm NN with proprietary drivers to support the i.MX GPUs and the i.MX 8M Plus NPU.

eIQ SOFTWARE FOR Arm NN

Neural Network (NN) Frameworks

GLOW

eIQ ML software supports Glow neural network compiler

on the i.MX RT crossover MCU family and is available in the

MCUXpresso SDK.

Glow is a machine learning compiler that enables ahead-of-time compilation for increased performance and a smaller memory footprint compared to a traditional runtime inference engine. NXP offers optimizations for its i.MX RT crossover MCUs based on Cortex-M cores and the Cadence® Tensilica® HiFi 4 DSP.

eIQ FOR GLOW NEURAL NETWORK COMPILER

Host machine:

• Neural network model: model design and training (PC or cloud), or a pre-trained model in standard formats

• Glow AOT NN compiler: model optimization, model compression, model compilation

– If available, generates ‘external function calls’ to CMSIS-NN kernels or NN Library

– Otherwise it compiles code from its own native library

• Deploy executable code

Target machine:

• Inference on i.MX RT (Arm® Cortex®-M, Tensilica® HiFi 4 DSP)
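The kernel-selection rule in the Glow figure (call an optimized external kernel when one is available, otherwise fall back to code from the compiler's own native library) can be pictured with a small planner. This is only a toy sketch of that decision, not Glow's implementation: the function `plan_kernels`, the operator names, and the kernel symbols are all hypothetical placeholders rather than real CMSIS-NN or NN Library functions.

```python
from typing import Dict, List, Tuple

# Hypothetical catalogue: operator name -> symbol of an optimized external kernel.
# These symbol names are placeholders, not real CMSIS-NN or NN Library functions.
EXTERNAL_KERNELS: Dict[str, str] = {
    "conv2d": "ext_conv2d_optimized",
    "fully_connected": "ext_fully_connected_optimized",
}


def plan_kernels(ops: List[str], external: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """For each operator, pick an external kernel if one exists, else native code."""
    plan = []
    for op in ops:
        if op in external:
            plan.append((op, "external", external[op]))
        else:
            plan.append((op, "native", f"native_{op}"))
    return plan


# Assumed operator sequence of a small classifier (illustrative only).
model_ops = ["conv2d", "relu", "fully_connected", "softmax"]
plan = plan_kernels(model_ops, EXTERNAL_KERNELS)
for op, source, symbol in plan:
    print(f"{op:16s} -> {source:8s} ({symbol})")
```

Because the decision is made once at compile time, the generated executable contains only direct calls, which is one way to read the smaller footprint claimed for ahead-of-time compilation.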

Arm® NN

• Arm Compute Library: Cortex-A CPU

• Hardware Abstraction Layer and Drivers: VeriSilicon GPU and Neural Processing Unit

www.nxp.com/eiq


Arm® CMSIS-NN

eIQ ML software supports Arm CMSIS-NN on the i.MX

RT crossover processor family and is fully integrated and

available in the MCUXpresso SDK.

Arm CMSIS-NN is a collection of efficient neural network

kernels used to maximize the performance and minimize

the memory footprint of neural networks on Arm

Cortex-M processor cores. Although it involves more

manual intervention than TensorFlow Lite, it yields faster

performance and a smaller memory footprint.

eIQ SOFTWARE WITH Arm CMSIS-NN

• Trained models: TensorFlow, Caffe

• PC tools to generate model: a) parse; b) quantize network; c) flatten NN model to C array source file

• MCU firmware (inference engine + application): input data (e.g., video, audio), data buffer and processor, neural network application code

• CMSIS-NN NN functions: convolution, pooling, fully connected, activations

• CMSIS-NN NN support functions: data type conversion, activation tables

• Silicon: NXP i.MX RT Series (Cortex-M)
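Steps b) and c) of the PC tool flow (quantize the network, then flatten it to a C array source file) might look roughly like the sketch below. It assumes a symmetric signed 8-bit fixed-point format with a power-of-two scale and skips the parsing step; the helper names `quantize_q7` and `to_c_array` and the array name `fc1_weights` are hypothetical and do not come from the eIQ tools.

```python
import math
from typing import List, Tuple


def quantize_q7(weights: List[float]) -> Tuple[List[int], int]:
    """Quantize floats to signed 8-bit fixed point; returns (values, fractional bits)."""
    max_abs = max(abs(w) for w in weights)
    # Integer bits needed for the largest magnitude; the remaining bits are fractional.
    int_bits = max(0, math.ceil(math.log2(max_abs))) if max_abs > 0 else 0
    frac_bits = 7 - int_bits
    scale = 2 ** frac_bits
    # Round to the nearest step and saturate to the int8 range.
    values = [max(-128, min(127, round(w * scale))) for w in weights]
    return values, frac_bits


def to_c_array(name: str, values: List[int]) -> str:
    """Flatten quantized values into a one-line C array definition."""
    body = ", ".join(str(v) for v in values)
    return f"const int8_t {name}[{len(values)}] = {{ {body} }};"


# Assumed float weights of one small layer (illustrative only).
float_weights = [0.5, -0.25, 0.75, -1.0]
q, frac_bits = quantize_q7(float_weights)
c_source = to_c_array("fc1_weights", q)
print(f"fractional bits: {frac_bits}")
print(c_source)
```

The generated line can be pasted into a C source file that the MCU firmware links against; the number of fractional bits has to travel with the array so the firmware can apply the matching shifts.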

OPENCV NEURAL NETWORK AND ML ALGORITHM

SUPPORT

eIQ ML software supports the Open-Source Computer Vision Library (OpenCV) on the i.MX 8 series applications processor family and is available through the NXP Yocto Linux-based releases.

OpenCV consists of more than 2,500 optimized computer vision and machine learning algorithms for image processing, video encoding/decoding, video analysis, object detection, and neural network processing. This solution utilizes Arm Neon™ for acceleration.

eIQ SOFTWARE FOR OPENCV NEURAL NETWORK AND MACHINE LEARNING ALGORITHMS

• Linux®

• Bindings: Python, Java®

• Demos, apps

• eIQ™ OpenCV deep learning neural network and machine learning models

• OpenCV Hardware Abstraction Layer (e.g., Neon™)

TENSORFLOW LITE

eIQ ML software supports TensorFlow Lite on the i.MX 8

applications processor and i.MX RT crossover processor

families, and is available through Yocto and MCUXpresso

environments, respectively.

TensorFlow Lite is a set of tools that allows users to convert

and deploy TensorFlow models to perform faster inferences.

It requires less memory than TensorFlow, making it a good

match for resource-constrained, low-power devices.

eIQ SOFTWARE FOR TENSORFLOW LITE

PC:

• Pre-trained TensorFlow model

• Optimizations (quantization, pruning), optional

• TF to TF Lite conversion

• Convert to runtime parameters

NXP® device (i.MX 8 Series):

• Camera/microphone/sensor input

• Inference engine

• Prediction
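The optional quantization step in the TensorFlow Lite flow is one reason a converted model needs less memory: each 32-bit float is replaced by an 8-bit integer plus a shared scale and zero point. The sketch below illustrates the affine mapping real = (q - zero_point) * scale in plain Python. It is a simplified illustration, not the converter's code; the function names and the sample activation values are assumptions.

```python
from typing import Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def choose_params(rmin: float, rmax: float) -> Tuple[float, int]:
    """Pick scale and zero point so that [rmin, rmax] maps onto [QMIN, QMAX]."""
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)  # the range must contain 0.0
    if rmax == rmin:
        return 1.0, 0
    scale = (rmax - rmin) / (QMAX - QMIN)
    zero_point = round(QMIN - rmin / scale)
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, saturating at the ends of the range."""
    q = round(x / scale) + zero_point
    return max(QMIN, min(QMAX, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value from its int8 code."""
    return (q - zero_point) * scale


# Assumed activation values observed during calibration (illustrative only).
activations = [0.0, 1.0, 2.55]
scale, zero_point = choose_params(min(activations), max(activations))
quantized = [quantize(a, scale, zero_point) for a in activations]
restored = [dequantize(q, scale, zero_point) for q in quantized]
print("zero point:", zero_point)
print("int8 values:", quantized)
print("bytes as float32:", 4 * len(activations), "bytes as int8:", len(quantized))
```

The round trip loses at most half a quantization step per value inside the calibrated range, which is the accuracy cost traded for the fourfold reduction in storage.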

SECURITY SOFTWARE FOR MACHINE LEARNING

In addition to system-level security, NXP’s eIQ Machine Learning (ML) embedded software development environment supports security measures to protect machine learning applications, including protection against model cloning, extraction of private data through model inversion, and adversarial attacks. This software is available through NXP Yocto Linux-based BSP releases.

IMPROVE SECURITY OF ML SYSTEMS

eIQ™ Security Software for ML Models:

• Adversarial examples

• Model cloning

• Model inversion and privacy

• Watermarking
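To make the adversarial-example threat concrete, the sketch below shows how a small, bounded change to an input can flip the decision of a simple linear classifier. It illustrates the attack class only and says nothing about how the eIQ security software detects or mitigates it; the weights, input, and perturbation budget `eps` are made-up values.

```python
from typing import List


def score(w: List[float], x: List[float], b: float) -> float:
    """Linear decision score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: List[float], x: List[float], b: float) -> int:
    """Class 1 if the score is positive, else class 0."""
    return 1 if score(w, x, b) > 0 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def perturb(w: List[float], x: List[float], eps: float) -> List[float]:
    """Shift every feature by at most eps in the direction that lowers the score."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Made-up model and input (illustrative only).
w, b = [2.0, -1.0, 0.5], -0.5
x = [0.5, 0.2, 0.4]
eps = 0.2

x_adv = perturb(w, x, eps)
print("original prediction: ", predict(w, x, b))
print("perturbed prediction:", predict(w, x_adv, b))
print("largest feature change:", max(abs(a - o) for a, o in zip(x_adv, x)))
```

For a linear model the score drops by eps times the sum of the absolute weights, so even a small per-feature budget can cross the decision boundary when the input has many features.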


SOFTWARE AVAILABILITY

eIQ ML software currently supports NXP i.MX and i.MX RT

processors, with additional MCU/MPU support planned in

the future.

• eIQ ML software for i.MX applications processors is

supported on the current Yocto Linux release

• eIQ ML software for i.MX RT crossover processors is fully

integrated into the MCUXpresso SDK release

GET STARTED:

Learn more: www.nxp.com/ai and www.nxp.com/eiq

Join the eIQ Community: https://community.nxp.com/community/eiq and www.nxp.com/eiqauto

www.nxp.com/eIQ

NXP, the NXP logo and eIQ are trademarks of NXP B.V. All other product or service names are the property of their respective owners. TensorFlow, the TensorFlow logo and any related marks are

trademarks of Google Inc. Oracle and Java are registered trademarks of Oracle and/or its affiliates. Arm, Cortex and Neon are trademarks or registered trademarks of Arm Limited (or its subsidiaries) in

the US and/or elsewhere. The related technology may be protected by any or all of patents, copyrights, designs and trade secrets. All rights reserved. © 2020 NXP B.V.

Document Number: EIQFS REV 2
