Page 1

SUSE Linux Enterprise

www.novell.com11

February18,2010 High Availability Guide

High Availability

Extension

Page 2

High Availability Guide

Copyright © 2006- 2010 Novell, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU

Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section

being this copyright notice and license. A copy of the license version 1.2 is included in the section

entitled “GNU Free Documentation License”.

SUSE®, openSUSE®,the openSUSE® logo, Novell®, the Novell® logo, the N® logo, are registered

trademarks of Novell, Inc. in the United States and other countries. Linux* is a registered trademark

of Linus Torvalds. All other third party trademarks are the property of their respective owners. A

trademark symbol (® , ™, etc.) denotes a Novell trademark; an asterisk (*) denotes a third-party

trademark.

All information found in this book has been compiled with utmost attention to detail. However, this

does not guarantee complete accuracy.Neither Novell, Inc., SUSE LINUX Products GmbH, the authors,

nor the translators shall be held liable for possible errors or the consequences thereof.

Page 3

Contents

About This Guide vii

Part I Installation and Setup 1

1 Conceptual Overview 3

1.1 Product Features . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 Product Benets . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3 Cluster Congurations . . . . . . . . . . . . . . . . . . . . . . . 8

1.4 Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.5 What's New? . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2 Getting Started 19

2.1 Hardware Requirements . . . . . . . . . . . . . . . . . . . . . . 19

2.2 Software Requirements . . . . . . . . . . . . . . . . . . . . . . 20

2.3 Shared Disk System Requirements . . . . . . . . . . . . . . . . . . 20

2.4 Preparations . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.5 Overview: Installing and Setting Up a Cluster . . . . . . . . . . . . . 21

3 Installation and Basic Setup with YaST 23

3.1 Installing the High Availability Extension . . . . . . . . . . . . . . . 23

3.2 Initial Cluster Setup . . . . . . . . . . . . . . . . . . . . . . . . 24

3.3 Bringing the Cluster Online . . . . . . . . . . . . . . . . . . . . . 27

Page 4

Part II Conguration and Administration 29

4 Conguring Cluster Resources with the GUI 31

4.1 Linux HA Management Client . . . . . . . . . . . . . . . . . . . . 32

4.2 Creating Cluster Resources . . . . . . . . . . . . . . . . . . . . . 33

4.3 Creating STONITH Resources . . . . . . . . . . . . . . . . . . . . 37

4.4 Conguring Resource Constraints . . . . . . . . . . . . . . . . . . 38

4.5 Specifying Resource Failover Nodes . . . . . . . . . . . . . . . . . 43

4.6 Specifying Resource Failback Nodes (Resource Stickiness) . . . . . . . . 45

4.7 Conguring Resource Monitoring . . . . . . . . . . . . . . . . . . 46

4.8 Starting a New Cluster Resource . . . . . . . . . . . . . . . . . . . 48

4.9 Removing a Cluster Resource . . . . . . . . . . . . . . . . . . . . 49

4.10 Conguring a Cluster Resource Group . . . . . . . . . . . . . . . . 49

4.11 Conguring a Clone Resource . . . . . . . . . . . . . . . . . . . . 54

4.12 Migrating a Cluster Resource . . . . . . . . . . . . . . . . . . . . 55

4.13 For More Information . . . . . . . . . . . . . . . . . . . . . . . 57

5 Conguring Cluster Resources From Command Line 59

5.1 Command Line Tools . . . . . . . . . . . . . . . . . . . . . . . 59

5.2 Debugging Your Conguration Changes . . . . . . . . . . . . . . . 60

5.3 Creating Cluster Resources . . . . . . . . . . . . . . . . . . . . . 60

5.4 Creating a STONITH Resource . . . . . . . . . . . . . . . . . . . . 65

5.5 Conguring Resource Constraints . . . . . . . . . . . . . . . . . . 66

5.6 Specifying Resource Failover Nodes . . . . . . . . . . . . . . . . . 68

5.7 Specifying Resource Failback Nodes (Resource Stickiness) . . . . . . . . 69

5.8 Conguring Resource Monitoring . . . . . . . . . . . . . . . . . . 69

5.9 Starting a New Cluster Resource . . . . . . . . . . . . . . . . . . . 69

5.10 Removing a Cluster Resource . . . . . . . . . . . . . . . . . . . . 70

5.11 Conguring a Cluster Resource Group . . . . . . . . . . . . . . . . 70

5.12 Conguring a Clone Resource . . . . . . . . . . . . . . . . . . . . 71

5.13 Migrating a Cluster Resource . . . . . . . . . . . . . . . . . . . . 72

5.14 Testing with Shadow Conguration . . . . . . . . . . . . . . . . . 73

5.15 For More Information . . . . . . . . . . . . . . . . . . . . . . . 74

6 Setting Up a Simple Testing Resource 75

6.1 Conguring a Resource with the GUI . . . . . . . . . . . . . . . . . 75

6.2 Manual Conguration of a Resource . . . . . . . . . . . . . . . . . 77

7 Adding or Modifying Resource Agents 79

7.1 STONITH Agents . . . . . . . . . . . . . . . . . . . . . . . . . 79

7.2 Writing OCF Resource Agents . . . . . . . . . . . . . . . . . . . . 80

Page 5

8 Fencing and STONITH 81

8.1 Classes of Fencing . . . . . . . . . . . . . . . . . . . . . . . . . 81

8.2 Node Level Fencing . . . . . . . . . . . . . . . . . . . . . . . . 82

8.3 STONITH Conguration . . . . . . . . . . . . . . . . . . . . . . 84

8.4 Monitoring Fencing Devices . . . . . . . . . . . . . . . . . . . . 88

8.5 Special Fencing Devices . . . . . . . . . . . . . . . . . . . . . . 89

8.6 For More Information . . . . . . . . . . . . . . . . . . . . . . . 90

9 Load Balancing with Linux Virtual Server 91

9.1 Conceptual Overview . . . . . . . . . . . . . . . . . . . . . . . 91

9.2 High Availability . . . . . . . . . . . . . . . . . . . . . . . . . 93

9.3 For More Information . . . . . . . . . . . . . . . . . . . . . . . 94

10 Network Device Bonding 95

11 Updating Your Cluster to SUSE Linux Enterprise 11 99

11.1 Preparation and Backup . . . . . . . . . . . . . . . . . . . . . . 100

11.2 Update/Installation . . . . . . . . . . . . . . . . . . . . . . . . 101

11.3 Data Conversion . . . . . . . . . . . . . . . . . . . . . . . . . 101

11.4 For More Information . . . . . . . . . . . . . . . . . . . . . . 103

Part III Storage and Data Replication 105

12 Oracle Cluster File System 2 107

12.1 Features and Benets . . . . . . . . . . . . . . . . . . . . . . . 107

12.2 Management Utilities and Commands . . . . . . . . . . . . . . . . 108

12.3 OCFS2 Packages . . . . . . . . . . . . . . . . . . . . . . . . . 109

12.4 Creating an OCFS2 Volume . . . . . . . . . . . . . . . . . . . . 110

12.5 Mounting an OCFS2 Volume . . . . . . . . . . . . . . . . . . . . 113

12.6 Additional Information . . . . . . . . . . . . . . . . . . . . . . 115

13 Cluster LVM 117

13.1 Conguration of cLVM . . . . . . . . . . . . . . . . . . . . . . 117

13.2 Conguring Eligible LVM2 Devices Explicitly . . . . . . . . . . . . . 119

13.3 For More Information . . . . . . . . . . . . . . . . . . . . . . 120

14 Distributed Replicated Block Device (DRBD) 121

14.1 Installing DRBD Services . . . . . . . . . . . . . . . . . . . . . . 122

Page 6

14.2 Conguring the DRBD Service . . . . . . . . . . . . . . . . . . . 122

14.3 Testing the DRBD Service . . . . . . . . . . . . . . . . . . . . . 124

14.4 Troubleshooting DRBD . . . . . . . . . . . . . . . . . . . . . . 126

14.5 Additional Information . . . . . . . . . . . . . . . . . . . . . . 128

Part IV Troubleshooting and Reference 131

15 Troubleshooting 133

15.1 Installation Problems . . . . . . . . . . . . . . . . . . . . . . . 133

15.2 “Debugging” a HA Cluster . . . . . . . . . . . . . . . . . . . . . 134

15.3 FAQs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

15.4 Fore More Information . . . . . . . . . . . . . . . . . . . . . . 137

16 Cluster Management Tools 139

17 Cluster Resources 193

17.1 Supported Resource Agent Classes . . . . . . . . . . . . . . . . . 193

17.2 OCF Return Codes . . . . . . . . . . . . . . . . . . . . . . . . 194

17.3 Resource Options . . . . . . . . . . . . . . . . . . . . . . . . 197

17.4 Resource Operations . . . . . . . . . . . . . . . . . . . . . . . 198

17.5 Instance Attributes . . . . . . . . . . . . . . . . . . . . . . . . 199

18 HA OCF Agents 201

Part V Appendix 275

A GNU Licenses 277

A.1 GNU General Public License . . . . . . . . . . . . . . . . . . . . 277

A.2 GNU Free Documentation License . . . . . . . . . . . . . . . . . 280

Terminology 285

Page 7

About This Guide

SUSE® Linux Enterprise High Availability Extension is an integrated suite of open

source clustering technologies that enables you to implement highly available physical

and virtual Linux clusters. For quick and efcient conguration and administration,

the High Availability Extension includes both a graphical user interface (GUI) and a

command line interface (CLI).

This guide is intended for administrators who need to set up, congure, and maintain

High Availability (HA) clusters. Both approaches (GUI and CLI) are covered in detail

to help the administrators choose the appropriate tool that matches their needs for performing the key tasks.

The guide is divided into the following parts:

Installation and Setup

Before starting to install and congure your cluster, make yourself familiar with

cluster fundamentals and architecture, get an overview of the key features and

benets, as well as modications since the last release. Learn which hardware and

software requirements must be met and what preparations to take before executing

the next steps. Perform the installation and basic setup of your HA cluster using

YaST.

Conguration and Administration

Add, congure and manage resources, using either the GUI or the crm command

line interface. Learn how to make use of load balancing and fencing. In case you

consider writing your own resource agents or modifying existing ones, get some

background information on how to create different types of resource agents.

Storage and Data Replication

SUSE Linux Enterprise High Availability Extension ships with a cluster-aware le

system (Oracle Cluster File System, OCFS2) and volume manager (clustered

Logical Volume Manager, cLVM). For replication of your data, the High Availability Extension also delivers DRBD (Distributed Replicated Block Device) which

you can use to mirror the data of a high availabilitly service from the active node

of a cluster to its standby node.

Page 8

Troubleshooting and Reference

Managing your own cluster requires you to perform a certain amount of troubleshooting. Learn about the most common problems and how to x them. Find a comprehensive reference of the command line tools the High Availability Extension offers

for administering your own cluster. Also, nd a list of the most important facts and

gures about cluster resources and resource agents.

Many chapters in this manual contain links to additional documentation resources.

These include additional documentation that is available on the system as well as documentation available on the Internet.

For an overview of the documentation available for your product and the latest documentation updates, refer to http://www.novell.com/documentation.

1 Feedback

Several feedback channels are available:

• To report bugs for a product component or to submit enhancement requests, please

use https://bugzilla.novell.com/. If you are new to Bugzilla, you

might nd the Bug Writing FAQs helpful, available from the Novell Bugzilla home

page.

• We want to hear your comments and suggestions about this manual and the other

documentation included with this product. Please use the User Comments feature

at the bottom of each page of the online documentation and enter your comments

there.

2 Documentation Conventions

The following typographical conventions are used in this manual:

•

/etc/passwd: directory names and lenames

•

placeholder: replace placeholder with the actual value

•

PATH: the environment variable PATH

viii High Availability Guide

Page 9

•

ls, --help: commands, options, and parameters

•

user: users or groups

•

Alt, Alt + F1: a key to press or a key combination; keys are shown in uppercase as

on a keyboard

•

File, File > Save As: menu items, buttons

• This paragraph is only relevant for the specied architectures. The arrows mark

the beginning and the end of the text block.

This paragraph is only relevant for the specied architectures. The arrows mark

the beginning and the end of the text block.

•

Dancing Penguins (Chapter Penguins, ↑Another Manual): This is a reference to a

chapter in another manual.

About This Guide ix

Page 10

Page 11

Part I. Installation and Setup

Page 12

Page 13

Conceptual Overview

SUSE® Linux Enterprise High Availability Extension is an integrated suite of open

source clustering technologies that enables you to implement highly available physical

and virtual Linux clusters, and to eliminate single points of failure. It ensures the high

availability and manageability of critical network resources including data, applications,

and services. Thus, it helps you maintain business continuity, protect data integrity,

and reduce unplanned downtime for your mission-critical Linux workloads.

It ships with essential monitoring, messaging, and cluster resource management functionality (supporting failover, failback, and migration (load balancing) of individually

managed cluster resources). The High Availability Extension is available as add-on to

SUSE Linux Enterprise Server 11.

1.1 Product Features

SUSE® Linux Enterprise High Availability Extension helps you ensure and manage

the availability of your network resources. The following list highlights some of the

key features:

Support for a Wide Range of Clustering Scenarios

Including active/active and active/passive (N+1, N+M, N to 1, N to M) scenarios,

as well as hybrid physical and virtual clusters (allowing virtual servers to be clustered with physical servers to improve services availability and resource utilization).

1

Conceptual Overview 3

Page 14

Multi-node active cluster, containing up to 16 Linux servers. Any server in the

cluster can restart resources (applications, services, IP addresses, and le systems)

from a failed server in the cluster.

Flexible Solution

The High Availability Extension ships with OpenAIS messaging and membership

layer and Pacemaker Cluster Resource Manager. Using Pacemaker, administrators

can continually monitor the health and status of their resources, manage dependencies, and automatically stop and start services based on highly congurable rules

and policies. The High Availability Extension allows you to tailor a cluster to the

specic applications and hardware infrastructure that t your organization. Timedependent conguration enables services to automatically migrate back to repaired

nodes at specied times.

Storage and Data Replication

With the High Availability Extension you can dynamically assign and reassign

server storage as needed. It supports Fibre Channel or iSCSI storage area networks

(SANs). Shared disk systems are also supported, but they are not a requirement.

SUSE Linux Enterprise High Availability Extension also comes with a clusteraware le system (Oracle Cluster File System, OCFS2) and volume manager

(clustered Logical Volume Manager, cLVM). For replication of your data, the High

Availability Extension also delivers DRBD (Distributed Replicated Block Device)

which you can use to mirror the data of a high availably service from the active

node of a cluster to its standby node.

Support for Virtualized Environments

SUSE Linux Enterprise High Availability Extension supports the mixed clustering

of both physical and virtual Linux servers. SUSE Linux Enterprise Server 11 ships

with Xen, an open source virtualization hypervisor. The cluster resource manager

in the High Availability Extension is able to recognize, monitor and manage services

running within virtual servers created with Xen, as well as services running in

physical servers. Guest systems can be managed as services by the cluster.

Resource Agents

SUSE Linux Enterprise High Availability Extension includes a huge number of

resource agents to manage resources such as Apache, IPv4, IPv6 and many more.

It also ships with resource agents for popular third party applications such as IBM

WebSphere Application Server. For a list of Open Cluster Framework (OCF) resource agents included with your product, refer to Chapter 18, HA OCF Agents

(page 201).

4 High Availability Guide

Page 15

User-friendly Administration

For easy conguration and administration, the High Availability Extension ships

with both a graphical user interface (like YaST and the Linux HA Management

Client) and a powerful unied command line interface. Both approaches provide

a single point of administration for effectively monitoring and administrating your

cluster. Learn how to do so in the following chapters.

1.2 Product Benets

The High Availability Extension allows you to congure up to 16 Linux servers into

a high-availability cluster (HA cluster), where resources can be dynamically switched

or moved to any server in the cluster. Resources can be congured to automatically

migrate in the event of a server failure, or they can be moved manually to troubleshoot

hardware or balance the workload.

The High Availability Extension provides high availability from commodity components.

Lower costs are obtained through the consolidation of applications and operations onto

a cluster. The High Availability Extension also allows you to centrally manage the

complete cluster and to adjust resources to meet changing workload requirements (thus,

manually “load balance” the cluster). Allowing clusters of more than two nodes also

provides savings by allowing several nodes to share a “hot spare”.

An equally important benet is the potential reduction of unplanned service outages as

well as planned outages for software and hardware maintenance and upgrades.

Reasons that you would want to implement a cluster include:

• Increased availability

• Improved performance

• Low cost of operation

• Scalability

• Disaster recovery

• Data protection

• Server consolidation

Conceptual Overview 5

Page 16

• Storage consolidation

Shared disk fault tolerance can be obtained by implementing RAID on the shared disk

subsystem.

The following scenario illustrates some of the benets the High Availability Extension

can provide.

Example Cluster Scenario

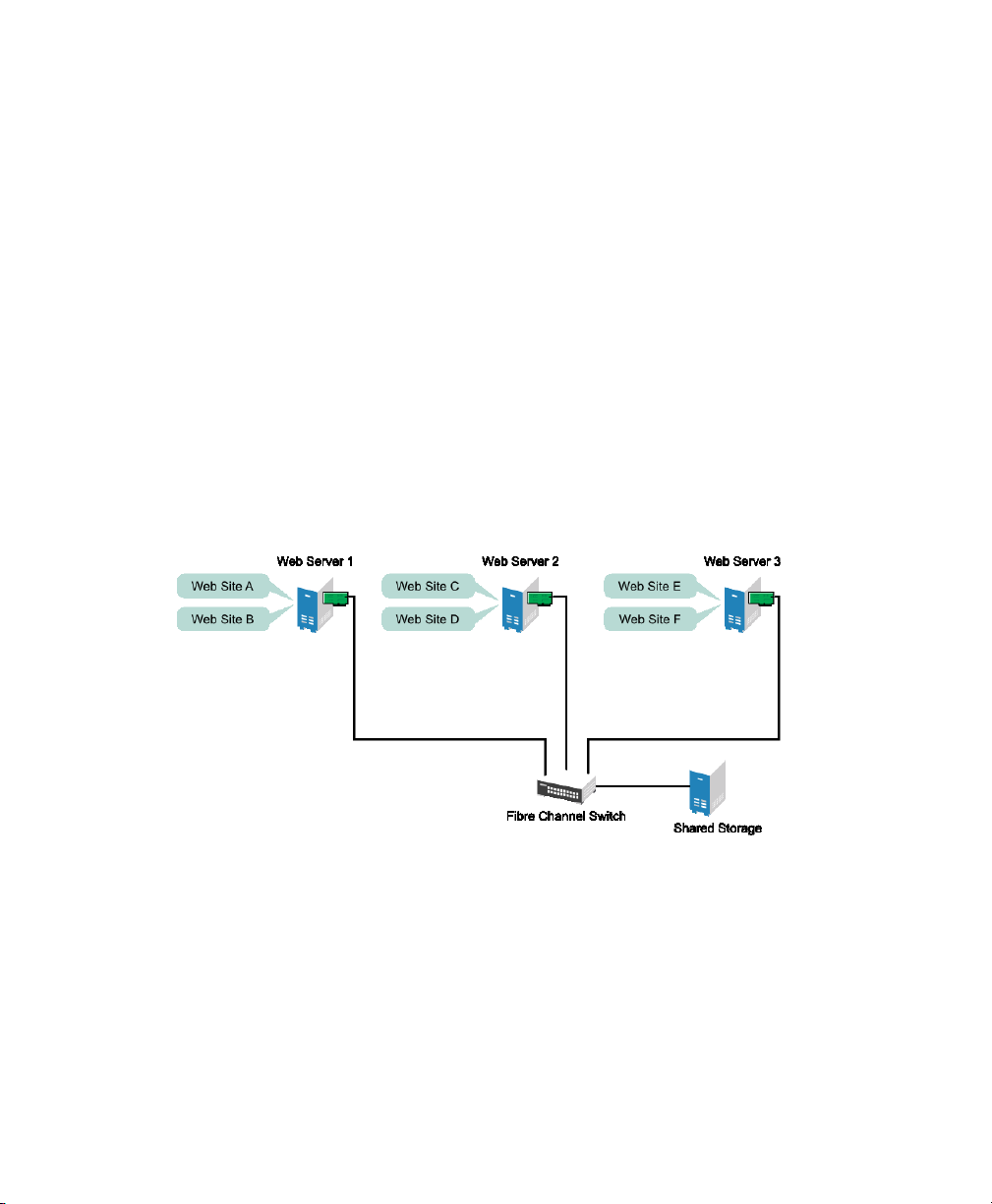

Suppose you have congured a three-server cluster, with a Web server installed on

each of the three servers in the cluster. Each of the servers in the cluster hosts two Web

sites. All the data, graphics, and Web page content for each Web site are stored on a

shared disk subsystem connected to each of the servers in the cluster. The following

gure depicts how this setup might look.

Figure 1.1

During normal cluster operation, each server is in constant communication with the

other servers in the cluster and performs periodic polling of all registered resources to

detect failure.

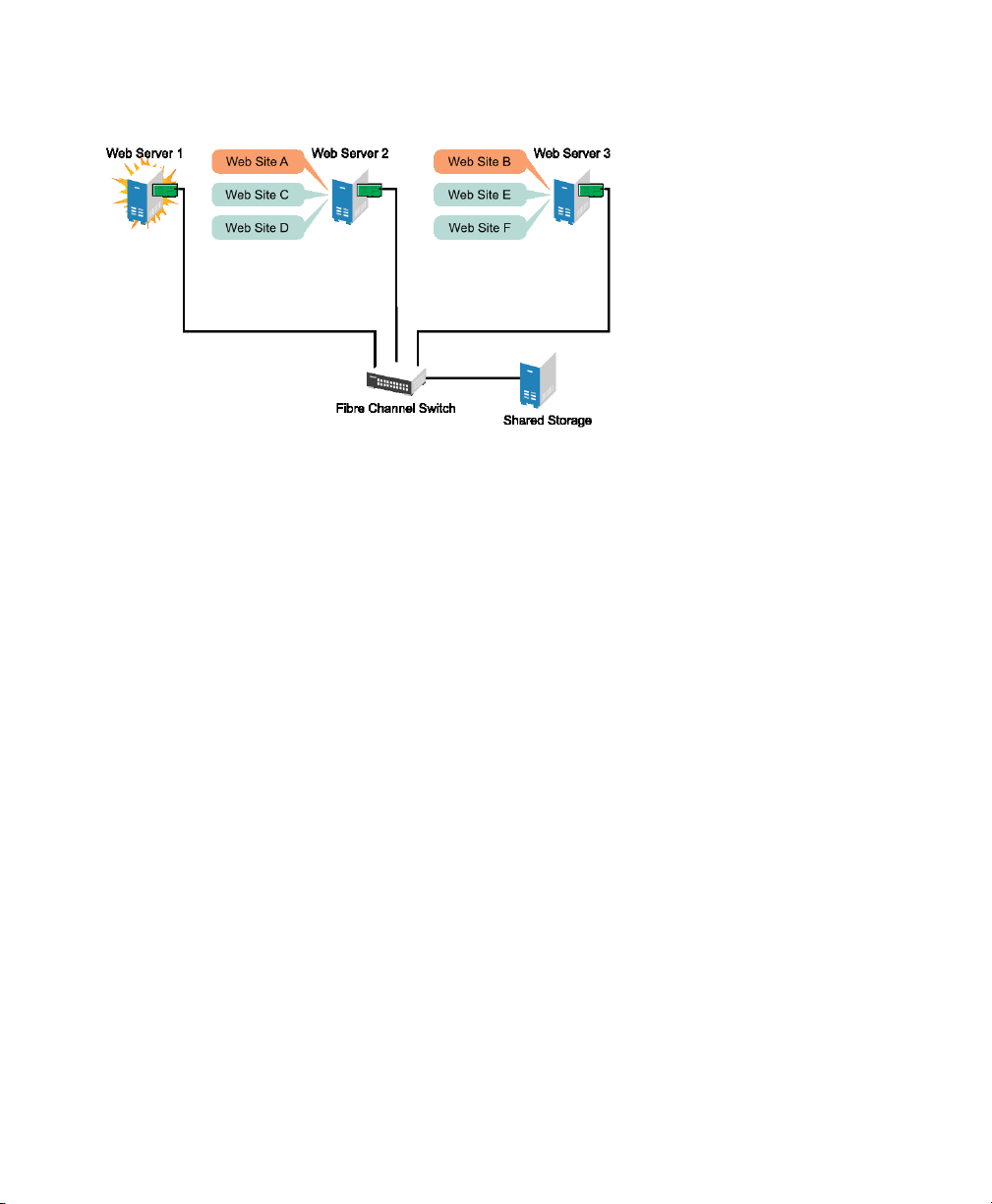

Suppose Web Server 1 experiences hardware or software problems and the users depending on Web Server 1 for Internet access, e-mail, and information lose their connections. The following gure shows how resources are moved when Web Server 1 fails.

Three-Server Cluster

6 High Availability Guide

Page 17

Figure 1.2

Web Site A moves to Web Server 2 and Web Site B moves to Web Server 3. IP addresses

and certicates also move to Web Server 2 and Web Server 3.

When you congured the cluster, you decided where the Web sites hosted on each Web

server would go should a failure occur. In the previous example, you congured Web

Site A to move to Web Server 2 and Web Site B to move to Web Server 3. This way,

the workload once handled by Web Server 1 continues to be available and is evenly

distributed between any surviving cluster members.

Three-Server Cluster after One Server Fails

When Web Server 1 failed, the High Availability Extension software

• Detected a failure and veried with STONITH that Web Server 1 was really dead

• Remounted the shared data directories that were formerly mounted on Web server

1 on Web Server 2 and Web Server 3.

• Restarted applications that were running on Web Server 1 on Web Server 2 and

Web Server 3

• Transferred IP addresses to Web Server 2 and Web Server 3

In this example, the failover process happened quickly and users regained access to

Web site information within seconds, and in most cases, without needing to log in again.

Now suppose the problems with Web Server 1 are resolved, and Web Server 1 is returned

to a normal operating state. Web Site A and Web Site B can either automatically fail

Conceptual Overview 7

Page 18

back (move back) to Web Server 1, or they can stay where they are. This is dependent

on how you congured the resources for them. Migrating the services back to Web

Server 1 will incur some down-time, so the High Availability Extension also allows

you to defer the migration until a period when it will cause little or no service interruption. There are advantages and disadvantages to both alternatives.

The High Availability Extension also provides resource migration capabilities. You

can move applications, Web sites, etc. to other servers in your cluster as required for

system management.

For example, you could have manually moved Web Site A or Web Site B from Web

Server 1 to either of the other servers in the cluster. You might want to do this to upgrade

or perform scheduled maintenance on Web Server 1, or just to increase performance

or accessibility of the Web sites.

1.3 Cluster Congurations

Cluster congurations with the High Availability Extension might or might not include

a shared disk subsystem. The shared disk subsystem can be connected via high-speed

Fibre Channel cards, cables, and switches, or it can be congured to use iSCSI. If a

server fails, another designated server in the cluster automatically mounts the shared

disk directories that were previously mounted on the failed server. This gives network

users continuous access to the directories on the shared disk subsystem.

IMPORTANT: Shared Disk Subsystem with cLVM

When using a shared disk subsystem with cLVM, that subsystem must be connected to all servers in the cluster from which it needs to be accessed.

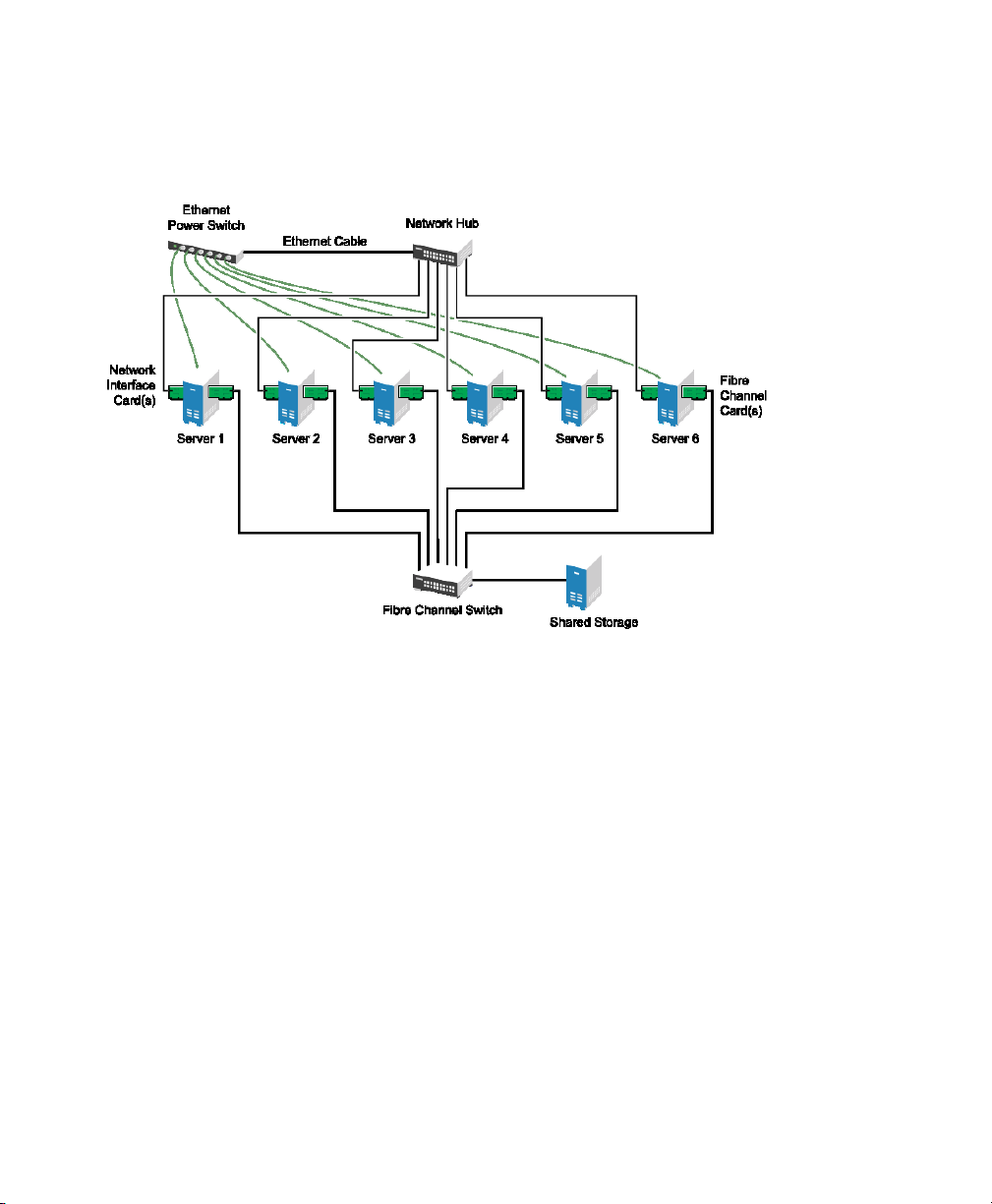

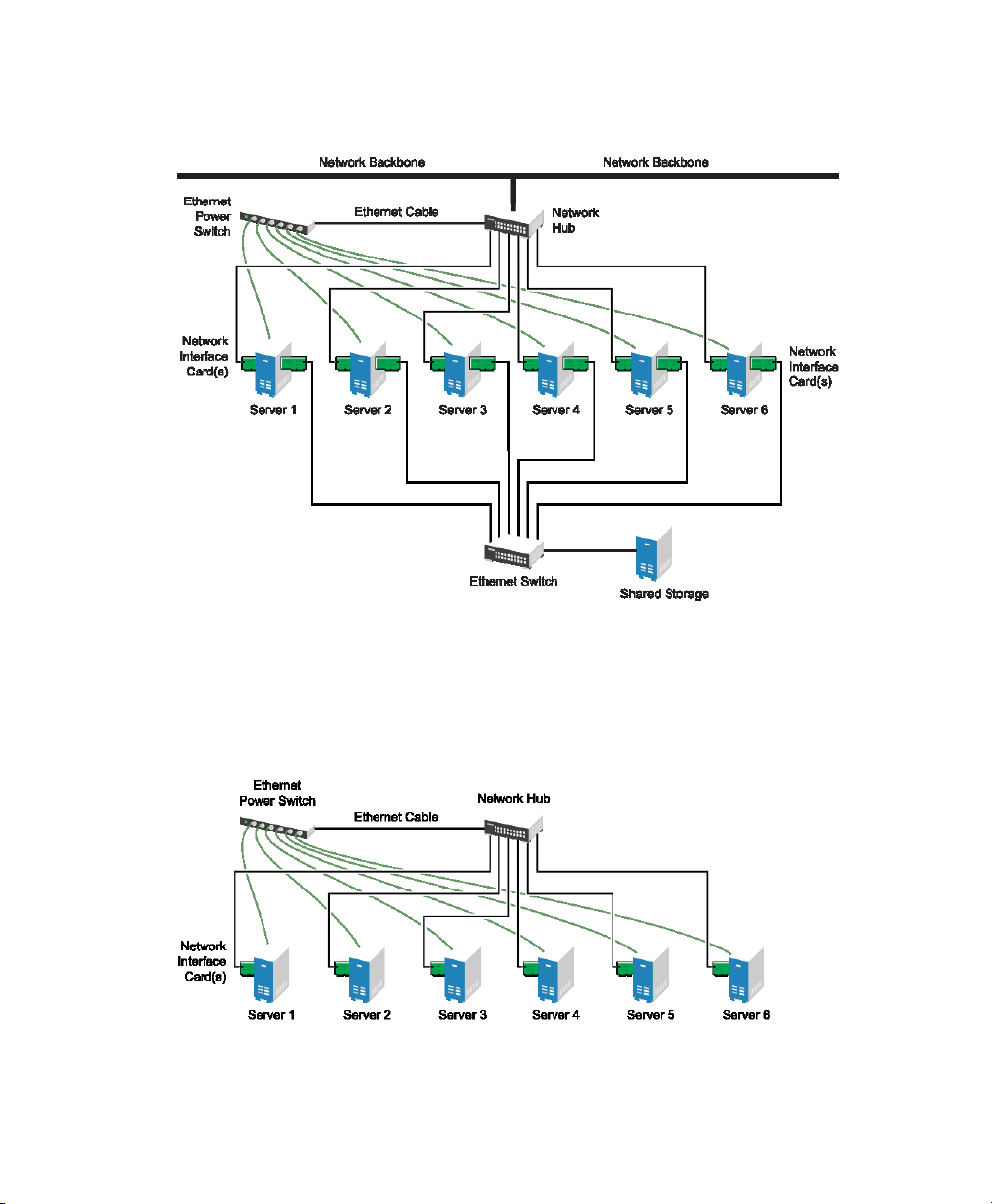

Typical resources might include data, applications, and services. The following gure

shows how a typical Fibre Channel cluster conguration might look.

8 High Availability Guide

Page 19

Figure 1.3

Typical Fibre Channel Cluster Conguration

Although Fibre Channel provides the best performance, you can also congure your

cluster to use iSCSI. iSCSI is an alternative to Fibre Channel that can be used to create

a low-cost Storage Area Network (SAN). The following gure shows how a typical

iSCSI cluster conguration might look.

Conceptual Overview 9

Page 20

Figure 1.4

Although most clusters include a shared disk subsystem, it is also possible to create a

cluster without a share disk subsystem. The following gure shows how a cluster

without a shared disk subsystem might look.

Typical iSCSI Cluster Conguration

Figure 1.5

10 High Availability Guide

Typical Cluster Conguration Without Shared Storage

Page 21

1.4 Architecture

This section provides a brief overview of the High Availability Extension architecture.

It identies and provides information on the architectural components, and describes

how those components interoperate.

1.4.1 Architecture Layers

The High Availability Extension has a layered architecture. Figure 1.6, “Architecture”

(page 11) illustrates the different layers and their associated components.

Figure 1.6

Architecture

Conceptual Overview 11

Page 22

Messaging and Infrastructure Layer

The primary or rst layer is the messaging/infrastructure layer, also known as the

OpenAIS layer. This layer contains components that send out the messages containing

“I'm alive” signals, as well as other information. The program of the High Availability

Extension resides in the messaging/infrastructure layer.

Resource Allocation Layer

The next layer is the resource allocation layer. This layer is the most complex, and

consists of the following components:

Cluster Resource Manager (CRM)

Every action taken in the resource allocation layer passes through the Cluster Resource Manager. If other components of the resource allocation layer (or components

which are in a higher layer) need to communicate, they do so through the local

CRM.

On every node, the CRM maintains the Cluster Information Base (CIB) (page 12),

containing denitions of all cluster options, nodes, resources their relationship and

current status. One CRM in the cluster is elected as the Designated Coordinator

(DC), meaning that it has the master CIB. All other CIBs in the cluster are a replicas

of the master CIB. Normal read and write operations on the CIB are serialized

through the master CIB. The DC is the only entity in the cluster that can decide

that a cluster-wide change needs to be performed, such as fencing a node or moving

resources around.

Cluster Information Base (CIB)

The Cluster Information Base is an in-memory XML representation of the entire

cluster conguration and current status. It contains denitions of all cluster options,

nodes, resources, constraints and the relationship to each other. The CIB also synchronizes updates to all cluster nodes. There is one master CIB in the cluster,

maintained by the DC. All other nodes contain a CIB replica.

Policy Engine (PE)

Whenever the Designated Coordinator needs to make a cluster-wide change (react

to a new CIB), the Policy Engine calculates the next state of the cluster based on

the current state and conguration. The PE also produces a transition graph con-

12 High Availability Guide

Page 23

taining a list of (resource) actions and dependencies to achieve the next cluster

state. The PE runs on every node to speed up DC failover.

Local Resource Manager (LRM)

The LRM calls the local Resource Agents (see Section “Resource Layer” (page 13))

on behalf of the CRM. It can thus perform start / stop / monitor operations and report

the result to the CRM. It also hides the difference between the supported script

standards for Resource Agents (OCF, LSB, Heartbeat Version 1). The LRM is the

authoritative source for all resource-related information on its local node.

Resource Layer

The highest layer is the Resource Layer. The Resource Layer includes one or more

Resource Agents (RA). Resource Agents are programs (usually shell scripts) that have

been written to start, stop, and monitor a certain kind of service (a resource). Resource

Agents are called only by the LRM. Third parties can include their own agents in a

dened location in the le system and thus provide out-of-the-box cluster integration

for their own software.

1.4.2 Process Flow

SUSE Linux Enterprise High Availability Extension uses Pacemaker as CRM. The

CRM is implemented as daemon (crmd) that has an instance on each cluster node.

Pacemaker centralizes all cluster decision-making by electing one of the crmd instances

to act as a master. Should the elected crmd process (or the node it is on) fail, a new one

is established.

A CIB, reecting the cluster’s conguration and current state of all resources in the

cluster is kept on each node. The contents of the CIB are automatically kept in sync

across the entire cluster.

Many actions performed in the cluster will cause a cluster-wide change. These actions

can include things like adding or removing a cluster resource or changing resource

constraints. It is important to understand what happens in the cluster when you perform

such an action.

For example, suppose you want to add a cluster IP address resource. To do this, you

can use one of the command line tools or the GUI to modify the CIB. It is not required

to perform the actions on the DC, you can use either tool on any node in the cluster and

Conceptual Overview 13

Page 24

they will be relayed to the DC. The DC will then replicate the CIB change to all cluster

nodes.

Based on the information in the CIB, the PE then computes the ideal state of the cluster

and how it should be achieved and feeds a list of instructions to the DC. The DC sends

commands via the messaging/infrastructure layer which are received by the crmd peers

on other nodes. Each crmd uses it LRM (implemented as lrmd) to perform resource

modications. The lrmd is non-cluster aware and interacts directly with resource agents

(scripts).

The peer nodes all report the results of their operations back to the DC. Once the DC

concludes that all necessary operations are successfully performed in the cluster, the

cluster will go back to the idle state and wait for further events. If any operation was

not carried out as planned, the PE is invoked again with the new information recorded

in the CIB.

In some cases, it may be necessary to power off nodes in order to protect shared data

or complete resource recovery. For this Pacemaker comes with a fencing subsystem,

stonithd. STONITH is an acronym for “Shoot The Other Node In The Head” and is

usually implemented with a remote power switch. In Pacemaker, STONITH devices

are modeled as resources (and congured in the CIB) to enable them to be easily

monitored for failure. However, stonithd takes care of understanding the STONITH

topology such that its clients simply request a node be fenced and it does the rest.

1.5 What's New?

With SUSE Linux Enterprise Server 11, the cluster stack has changed from Heartbeat

to OpenAIS. OpenAIS implements an industry standard API, the Application Interface

Specication (AIS), published by the Service Availability Forum. The cluster resource

manager from SUSE Linux Enterprise Server 10 has been retained but has been significantly enhanced, ported to OpenAIS and is now known as Pacemaker.

For more details what changed in the High Availability components from SUSE®

Linux Enterprise Server 10 SP2 to SUSE Linux Enterprise High Availability Extension

11, refer to the following sections.

14 High Availability Guide

Page 25

1.5.1 New Features and Functions Added

Migration Threshold and Failure Timeouts

The High Availability Extension now comes with the concept of a migration

threshold and failure timeout. You can dene a number of failures for resources,

after which they will migrate to a new node. By default, the node will no longer

be allowed to run the failed resource until the administrator manually resets the

resource’s failcount. However it is also possible to expire them by setting the re-

source’s failure-timeout option.

Resource and Operation Defaults

You can now set global defaults for resource options and operations.

Support for Ofine Conguration Changes

Often it is desirable to preview the effects of a series of changes before updating

the conguration atomically. You can now create a “shadow” copy of the

conguration that can be edited with the command line interface, before committing

it and thus changing the active cluster conguration atomically.

Reusing Rules, Options and Sets of Operations

Rules, instance_attributes, meta_attributes and sets of operations can be dened

once and referenced in multiple places.

Using XPath Expressions for Certain Operations in the CIB

The CIB now accepts XPath-based create, modify, delete operations. For

more information, refer to the cibadmin help text.

Multi-dimensional Collocation and Ordering Constraints

For creating a set of collocated resources, previously you could either dene a resource group (which could not always accurately express the design) or you could

dene each relationship as an individual constraint—causing a constraint explosion

as the number of resources and combinations grew. Now you can also use an alter-

nate form of collocation constraints by dening resource_sets.

Connection to the CIB From Non-cluster Machines

Provided Pacemaker is installed on a machine, it is possible to connect to the

cluster even if the machine itself is not a part of it.

Conceptual Overview 15

Page 26

Triggering Recurring Actions at Known Times

By default, recurring actions are scheduled relative to when the resource started,

but this is not always desirable. To specify a date/time that the operation should

be relative to, set the operation’s interval-origin. The cluster uses this point to calculate the correct start-delay such that the operation will occur at origin + (interval

* N).

1.5.2 Changed Features and Functions

Naming Conventions for Resource and Custer Options

All resource and cluster options now use dashes (-) instead of underscores (_). For

example, the master_max meta option has been renamed to master-max.

Renaming of master_slave Resource

The master_slave resource has been renamed to master. Master resources

are a special type of clone that can operate in one of two modes.

Container Tag for Attributes

The attributes container tag has been removed.

Operation Field for Prerequisites

The pre-req operation eld has been renamed requires.

Interval for Operations

All operations must have an interval. For start/stop actions the interval must be set

to 0 (zero).

Attributes for Collocation and Ordering Constraints

The attributes of collocation and ordering constraints were renamed for clarity.

Cluster Options for Migration Due to Failure

The resource-failure-stickiness cluster option has been replaced by

the migration-threshold cluster option. See also Migration Threshold and

Failure Timeouts (page 15).

Arguments for Command Line Tools

The arguments for command-line tools have been made consistent. See also Naming

Conventions for Resource and Custer Options (page 16).

16 High Availability Guide

Page 27

Validating and Parsing XML

The cluster conguration is written in XML. Instead of a Document Type Denition

(DTD), now a more powerful RELAX NG schema is used to dene the pattern for

the structure and content. libxml2 is used as parser.

id Fields

id elds are now XML IDs which have the following limitations:

• IDs cannot contain colons.

• IDs cannot begin with a number.

• IDs must be globally unique (not just unique for that tag).

References to Other Objects

Some elds (such as those in constraints that refer to resources) are IDREFs. This

means that they must reference existing resources or objects in order for the

conguration to be valid. Removing an object which is referenced elsewhere will

therefor fail.

1.5.3 Removed Features and Functions

Setting Resource Meta Options

It is no longer possible to set resource meta-options as top-level attributes. Use

meta attributes instead.

Setting Global Defaults

Resource and operation defaults are no longer read from crm_cong.

Conceptual Overview 17

Page 28

Page 29

Getting Started

In the following, learn about the system requirements and which preparations

to take before installing the High Availability Extension. Find a short overview of the

basic steps to install and set up a cluster.

2.1 Hardware Requirements

The following list species hardware requirements for a cluster based on SUSE® Linux

Enterprise High Availability Extension. These requirements represent the minimum

hardware conguration. Additional hardware might be necessary, depending on how

you intend to use your cluster.

• 1 to 16 Linux servers with software as specied in Section 2.2, “Software Requirements” (page 20). The servers do not require identical hardware (memory, disk

space, etc.).

• At least two TCP/IP communication media. Cluster nodes use multicast for communication so the network equipment must support multicasting. The communication

media should support a data rate of 100 Mbit/s or higher. Preferably, the Ethernet

channels should be bonded.

2

• Optional: A shared disk subsystem connected to all servers in the cluster from

where it needs to be accessed.

• A STONITH mechanism. STONITH is an acronym for “Shoot the other node in

the head”. A STONITH device is a power switch which the cluster uses to reset

Getting Started 19

Page 30

nodes that are thought to be dead or behaving in a strange manner. Resetting nonheartbeating nodes is the only reliable way to ensure that no data corruption is

performed by nodes that hang and only appear to be dead.

For more information, refer to Chapter 8, Fencing and STONITH (page 81).

2.2 Software Requirements

Ensure that the following software requirements are met:

• SUSE® Linux Enterprise Server 11 with all available online updates installed on

all nodes that will be part of the cluster.

• SUSE Linux Enterprise High Availability Extension 11 including all available online

updates installed on all nodes that will be part of the cluster.

2.3 Shared Disk System Requirements

A shared disk system (Storage Area Network, or SAN) is recommended for your cluster

if you want data to be highly available. If a shared disk subsystem is used, ensure the

following:

• The shared disk system is properly set up and functional according to the manufacturer’s instructions.

• The disks contained in the shared disk system should be congured to use mirroring

or RAID to add fault tolerance to the shared disk system. Hardware-based RAID

is recommended. Host-based software RAID is not supported for all congurations.

• If you are using iSCSI for shared disk system access, ensure that you have properly

congured iSCSI initiators and targets.

• When using DRBD to implement a mirroring RAID system that distributes data

across two machines, make sure to only access the replicated device. Use the same

(bonded) NICs that the rest of the cluster uses to leverage the redundancy provided

there.

20 High Availability Guide

Page 31

2.4 Preparations

Prior to installation, execute the following preparatory steps:

• Congure hostname resolution and use static host information by by editing the

/etc/hosts le on each server in the cluster. For more information, refer to

SUSE Linux Enterprise Server Administration Guide, chapter Basic Networking ,

section Conguring Hostname and DNS , available at http://www.novell

.com/documentation.

It is essential that members of the cluster are able to nd each other by name. If

the names are not available, internal cluster communication will fail.

• Congure time synchronization by making cluster nodes synchronize to a time

server outside the cluster . For more information, refer to SUSE Linux Enterprise

Server Administration Guide, chapter Time Synchronization with NTP , available

at http://www.novell.com/documentation.

The cluster nodes will use the time server as their time synchronization source.

2.5 Overview: Installing and Setting Up a Cluster

After the preparations are done, the following steps are necessary to install and set up

a cluster with SUSE® Linux Enterprise High Availability Extension:

1. Installing SUSE® Linux Enterprise Server 11 and SUSE® Linux Enterprise High

Availability Extension 11 as add-on on top of SUSE Linux Enterprise Server. For

detailed information, see Section 3.1, “Installing the High Availability Extension”

(page 23).

2. Conguring OpenAIS. For detailed information, see Section 3.2, “Initial Cluster

Setup” (page 24).

3. Starting OpenAIS and monitoring the cluster status. For detailed information, see

Section 3.3, “Bringing the Cluster Online” (page 27).

Getting Started 21

Page 32

4. Adding and conguring cluster resources, either with a graphical user interface

(GUI) or from command line. For detailed information, see Chapter 4, Conguring

Cluster Resources with the GUI (page 31) or Chapter 5, Conguring Cluster Resources From Command Line (page 59).

To protect your data from possible corruption by means of fencing and STONITH,

make sure to congure STONITH devices as resources. For detailed information,

see Chapter 8, Fencing and STONITH (page 81).

You might also need to create le systems on a shared disk (Storage Area Network,

SAN) if they do not already exist and, if necessary, congure those le systems as

cluster resources.

Both cluster-aware (OCFS 2) and non-cluster-aware le systems can be congured

with the High Availability Extension. If needed, you can also make use of data

replication with DRBD. For detailed information, see Part III, “Storage and Data

Replication” (page 105).

22 High Availability Guide

Page 33

Installation and Basic Setup with YaST

There are several ways to install the software needed for High Availability clusters:

either from a command line, using zypper, or with YaST which provides a graphical

user interface. After installing the software on all nodes that will be part of your cluster,

the next step is to initially congure the cluster so that the nodes can communicate with

each other. This can either be done manually (by editing a conguration le) or with

the YaST cluster module.

NOTE: Installing the Software Packages

The software packages needed for High Availability clusters are not automatically copied to the cluster nodes. Install SUSE® Linux Enterprise Server 11 and

SUSE® Linux Enterprise High Availability Extension 11 on all nodes that will be

part of your cluster.

3.1 Installing the High Availability Extension

The packages needed for conguring and managing a cluster with the High Availability

Extension are included in the High Availability installation pattern. This patterns

is only available after SUSE® Linux Enterprise High Availability Extension has been

installed as add-on. For information on how to install add-on products, refer to the

SUSE Linux Enterprise 11 Deployment Guide, chapter Installing Add-On Products .

3

Installation and Basic Setup with YaST 23

Page 34

Start YaST and select Software > Software Management to open the YaST

1

package manager.

From the Filter list, select Patterns and activate the High Availability pattern in

2

the pattern list.

Click Accept to start the installation of the packages.

3

3.2 Initial Cluster Setup

After having installed the HA packages, you can congure the initial cluster setup with

YaST. This includes the communication channels between the nodes, security aspects

(like using encrypted communication) and starting OpenAIS as service.

For the communication channels, you need to dene a bind network address

(bindnetaddr), a multicast address (mcastaddr) and a multicast port

(mcastport). The bindnetaddr is the network address to bind to. To ease sharing

conguration les across the cluster, OpenAIS uses network interface netmask to mask

only the address bits that are used for routing the network. The mcastaddr can be a

IPv4 or IPv6 multicast address. The mcastport is the UDP port specied for

mcastaddr.

The nodes in the cluster will know each other from using the same multicast address

and the same port number. For different clusters, use a different multicast address.

Procedure 3.1

1

Start YaST and select Miscellaneous > Cluster or run yast2 cluster on a

command line to start the initial cluster conguration dialog.

In the Communication Channel category, congure the channels used for com-

2

munication between the cluster nodes. This information is written to the /etc/

ais/openais.conf conguration le.

24 High Availability Guide

Conguring the Cluster

Page 35

Dene the Bind Network Address, the Multicast Address and the Multicast Port

to use for all cluster nodes.

Specify a unique Node ID for every cluster node. It is recommended to start at

3

1.

In the Security category, dene the authentication settings for the cluster. If Enable

4

Security Authentication is activated, HMAC/SHA1 authentication is used for

communication between the cluster nodes.

This authentication method requires a shared secret, which is used to protect and

authenticate messages. The authentication key (password) you specify will be

used on all nodes in the cluster. For a newly created cluster, click Generate Auth

Key File to create an authentication key that is written to /etc/ais/authkey.

Installation and Basic Setup with YaST 25

Page 36

In the Service category, choose whether you want to start OpenAIS on this cluster

5

server each time it is booted.

If you select Off, you must start OpenAIS manually each time this cluster server

is booted. To start OpenAIS manually, use the rcopenais start command.

To start OpenAIS immediately, click Start OpenAIS Now.

If all options are set according to your wishes, click Finish. YaST then automat-

6

ically also adjusts the rewall settings and opens the UDP port used for multicast.

26 High Availability Guide

Page 37

After the initial conguration is done, you need to transfer the conguration to

7

the other nodes in the cluster. The easiest way to do so is to copy the /etc/

ais/openais.conf le to the other nodes in the cluster. As each node needs

to have a unique node ID, make sure to adjust the node ID accordingly after

copying the le.

8

If you want to use encrypted communication, also copy the /etc/ais/

authkey to the other nodes in the cluster.

3.3 Bringing the Cluster Online

After the basic conguration, you can bring the stack online and check the status.

Run the following command on each of the cluster nodes to start OpenAIS:

1

rcopenais start

On one of the nodes, check the cluster status with the following command:

2

crm_mon

If all nodes are online, the output should be similar to the following:

============

Last updated: Thu Feb 5 18:30:33 2009

Current DC: d42 (d42)

Version: 1.0.1-node: b7ffe2729e3003ac8ff740bebc003cf237dfa854

3 Nodes configured.

0 Resources configured.

============

Node: d230 (d230): online

Node: d42 (d42): online

Node: e246 (e246): online

After the basic conguration is done and the nodes are online, you can now start to

congure cluster resources, either with the crm command line tool or with a graphical

user interface. For more information, refer to Chapter 4, Conguring Cluster Resources

with the GUI (page 31) or Chapter 5, Conguring Cluster Resources From Command

Line (page 59).

Installation and Basic Setup with YaST 27

Page 38

Page 39

Part II. Conguration and

Administration

Page 40

Page 41

Conguring Cluster Resources

with the GUI

The main purpose of an HA cluster is to manage user services. Typical examples of

user services are an Apache web server or a database. From the user's point of view,

the services do something specic when ordered to do so. To the cluster, however, they

are just resources which may be started or stopped—the nature of the service is irrelevant

to the cluster.

As a cluster administrator, you need to create cluster resources for every resource or

application you run on servers in your cluster. Cluster resources can include Web sites,

e-mail servers, databases, le systems, virtual machines, and any other server-based

applications or services you want to make available to users at all times.

To create cluster resources, either use the graphical user interface (the Linux HA

Management Client) or the crm command line utility. For the command line approach,

refer to Chapter 5, Conguring Cluster Resources From Command Line (page 59).

This chapter introduces the Linux HA Management Client and then covers several

topics you need when conguring a cluster: creating resources, conguring constraints,

specifying failover nodes and failback nodes, conguring resource monitoring, starting

or removing resources, conguring resource groups or clone resources, and migrating

resources manually.

The graphical user interface for conguring cluster resources is included in the

pacemaker-pygui package.

4

Conguring Cluster Resources with the GUI 31

Page 42

4.1 Linux HA Management Client

When starting the Linux HA Management Client you need to connect to a cluster.

NOTE: Password for the hacluster User

The installation creates a linux user named hacluster. Prior to using the

Linux HA Management Client, you must set the password for the hacluster

user. To do this, become root, enter passwd hacluster at the command

line and enter a password for the hacluster user.

Do this on every node you will connect to with the Linux HA Management

Client.

To start the Linux HA Management Client, enter crm_gui at the command line. To

connect to the cluster, select Connection > Login. By default, the Server eld shows

the localhost's IP address and hacluster as User Name. Enter the user's password

to continue.

Figure 4.1

If you are running the Linux HA Management Client remotely, enter the IP address of

a cluster node as Server. As User Name, you can also use any other user belonging to

the haclient group to connect ot the cluster.

32 High Availability Guide

Connecting to the Cluster

Page 43

After being connected, the main window opens:

Figure 4.2

The Linux HA Management Client lets you add and modify resources, constraints,

congurations etc. It also provides functionalities for managing cluster components

like starting, stopping or migrating resources, cleaning up resources, or setting nodes

to standby. Additionally, you can easily view, edit, import and export the XML

structures of the CIB by selecting any of the Conguration subitems and selecting Show

> XML Mode.

Linux HA Management Client - Main Window

In the following, nd some examples how to create and manage cluster resources with

the Linux HA Management Client.

4.2 Creating Cluster Resources

You can create the following types of resources:

Primitive

A primitive resource, the most basic type of a resource.

Conguring Cluster Resources with the GUI 33

Page 44

Group

Groups contain a set of resources that need to be located together, start sequentially

and stop in the reverse order. For more information, refer to Section 4.10, “Conguring a Cluster Resource Group” (page 49).

Clone

Clones are resources that can be active on multiple hosts. Any resource can be

cloned, provided the respective resource agent supports it. For more information,

refer to Section 4.11, “Conguring a Clone Resource” (page 54).

Master

Masters are a special type of a clone resources, masters can have multiple modes.

Masters must contain exactly one group or one regular resource.

Procedure 4.1

Start the Linux HA Management Client and log in to the cluster as described in

1

Section 4.1, “Linux HA Management Client” (page 32).

In the left pane, select Resources and click Add > Primitive.

2

In the next dialog, set the following parameters for the resource:

3

3a

Enter a unique ID for the resource.

From the Class list, select the resource agent class you want to use for that

3b

resource: heartbeat, lsb, ocf or stonith. For more information, see Section 17.1, “Supported Resource Agent Classes” (page 193).

If you selected ocf as class, specify also the Provider of your OCF resource

3c

agent. The OCF specication allows multiple vendors to supply the same

resource agent.

From the Type list, select the resource agent you want to use (for example,

3d

IPaddr or Filesystem). A short description for this resource agent is displayed

below.

The selection you get in the Type list depends on the Class (and for OCF

resources also on the Provider) you have chosen.

Adding Primitive Resources

Below Options, set the Initial state of resource.

3e

34 High Availability Guide

Page 45

Activate Add monitor operation if you want the cluster to monitor if the re-

3f

source is still healthy.

Click Forward. The next window shows a summary of the parameters that you

4

have already dened for that resource. All required Instance Attributes for that

resource are listed. You need to edit them in order to set them to appropriate

values. You may also need to add more attributes, depending on your deployment

and settings. For details how to do so, refer to Procedure 4.2, “Adding or Modifying Meta and Instance Attributes” (page 36).

If all parameters are set according to your wishes, click Apply to nish the con-

5

guration of that resource. The conguration dialog is closed and the main window

shows the newly added resource.

You can add or modify the following parameters for primitive resources at any time:

Meta Attributes

Meta attributes are options you can add for a resource. They tell the CRM how to

treat a specic resource. For an overview of the available meta attributes, their

values and defaults, refer to Section 17.3, “Resource Options” (page 197).

Conguring Cluster Resources with the GUI 35

Page 46

Instance Attributes

Instance attributes are parameters for certain resource classes that determine how

they behave and which instance of a service they control. For more information,

refer to Section 17.5, “Instance Attributes” (page 199).

Operations

The monitor operations added for a resource. These instruct the cluster to make

sure that the resource is still healthy. Monitor operations can be added for all

classes of resource agents. You can also set particular parameters, such as Timeout

for start or stop operations. For more information, refer to Section 4.7, “Con-

guring Resource Monitoring” (page 46).

Procedure 4.2

In the Linux HA Management Client main window, click Resources in the left

1

pane to see the resources already congured for the cluster.

In the right pane, select the resource to modify and click Edit (or double-click

2

the resource). The next window shows the basic resource parameters and the

Meta Attributes, Instance Attributes or Operations already dened for that resource.

Adding or Modifying Meta and Instance Attributes

36 High Availability Guide

Page 47

To add a new meta attribute or instance attribute, select the respective tab and

3

click Add.

Select the Name of the attribute you want to add. A short Description is displayed.

4

If needed, specify an attribute Value. Otherwise the default value of that attribute

5

will be used.

Click OK to conrm your changes. The newly added or modied attribute appears

6

on the tab.

If all parameters are set according to your wishes, click OK to nish the congu-

7

ration of that resource. The conguration dialog is closed and the main window

shows the modied resource.

TIP: XML Source Code

The Linux HA Management Client allows you to view the XML that is generated

from the parameters that you have dened for a specic resource or for all

the resources. Select Show > XML Mode in the top right corner of the resource

conguration dialog or in the Resources view of the main window.

The editor displaying the XML code allows you to Import or Export the XML

elements or to manually edit the XML code.

4.3 Creating STONITH Resources

To congure fencing, you need to congure one or more STONITH resources.

Procedure 4.3

Start the Linux HA Management Client and log in to the cluster as described in

1

Section 4.1, “Linux HA Management Client” (page 32).

In the left pane, select Resources and click Add > Primitive.

2

In the next dialog, set the following parameters for the resource:

3

3a

Enter a unique ID for the resource.

Adding a STONITH Resource

Conguring Cluster Resources with the GUI 37

Page 48

From the Class list, select the resource agent class stonith.

3b

From the Type list, select the STONITH plug-in for controlling your

3c

STONITH device. A short description for this plug-in is displayed below.

Below Options, set the Initial state of resource.

3d

Activate Add monitor operation if you want the cluster to monitor the fencing

3e

device. For more information, refer to Section 8.4, “Monitoring Fencing

Devices” (page 88).

Click Forward. The next window shows a summary of the parameters that you

4

have already dened for that resource. All required Instance Attributes for the

selected STONITH plug-in are listed. You need to edit them in order to set them

to appropriate values. You may also need to add more attributes or monitor operations, depending on your deployment and settings. For details how to do so,

refer to Procedure 4.2, “Adding or Modifying Meta and Instance Attributes”

(page 36) and Section 4.7, “Conguring Resource Monitoring” (page 46).

If all parameters are set according to your wishes, click Apply to nish the con-

5

guration of that resource. The conguration dialog is closed and the main window

shows the newly added resource.

To complete your fencing conguration add constraints, or use clones or both. For more

details, refer to Chapter 8, Fencing and STONITH (page 81).

4.4 Conguring Resource Constraints

Having all the resources congured is only part of the job. Even if the cluster knows

all needed resources, it might still not be able to handle them correctly. Resource constraints let you specify which cluster nodes resources can run on, what order resources

will load, and what other resources a specic resource is dependent on.

There are three different kinds of constraints available:

Resource Location

Locational constraints that dene on which nodes a resource may be run, may not

be run or is preferred to be run.

38 High Availability Guide

Page 49

Resource Collocation

Collocational constraints that tell the cluster which resources may or may not run

together on a node.

Resource Order

Ordering constraints to dene the sequence of actions.

When dening constraints, you also need to deal with scores. Scores of all kinds are

integral to how the cluster works. Practically everything from migrating a resource to

deciding which resource to stop in a degraded cluster is achieved by manipulating scores

in some way. Scores are calculated on a per-resource basis and any node with a negative

score for a resource cannot run that resource. After calculating the scores for a resource,

the cluster then chooses the node with the highest score. INFINITY is currently dened

as 1,000,000. Additions or subtractions with it follows the following 3 basic rules:

• Any value + INFINITY = INFINITY

• Any value - INFINITY = -INFINITY

• INFINITY - INFINITY = -INFINITY

When dening resource constraints, you also specify a score for each constraint. The

score indicates the value you are assigning to this resource constraint. Constraints with

higher scores are applied before those with lower scores. By creating additional location

constraints with different scores for a given resource, you can specify an order for the

nodes that a resource will fail over to.

Procedure 4.4

Start the Linux HA Management Client and log in to the cluster as described

1

in Section 4.1, “Linux HA Management Client” (page 32).

In the Linux HA Management Client main window, click Constraints in the

2

left pane to see the constraints already congured for the cluster.

In the left pane, select Constraints and click Add.

3

Select Resource Location and click OK.

4

Enter a unique ID for the constraint. When modifying existing constraints,

5

the ID is already dened and is displayed in the conguration dialog.

Adding or Modifying Locational Constraints

Conguring Cluster Resources with the GUI 39

Page 50

Select the Resource for which to dene the constraint. The list shows the IDs

6

of all resources that have been congured for the cluster.

Set the Score for the constraint. Positive values indicate the resource can run

7

on the Node you specify below. Negative values indicate the resource can not

run on this node. Values of +/- INFINITY change “can” to must.

Select the Node for the constraint.

8

If you leave the Node and the Score eld empty, you can also add rules by

9

clicking Add > Rule. To add a lifetime, just click Add > Lifetime.

If all parameters are set according to your wishes, click OK to nish the con-

10

guration of the constraint. The conguration dialog is closed and the main

window shows the newly added or modied constraint.

Procedure 4.5

Start the Linux HA Management Client and log in to the cluster as described

1

in Section 4.1, “Linux HA Management Client” (page 32).

In the Linux HA Management Client main window, click Constraints in the

2

left pane to see the constraints already congured for the cluster.

In the left pane, select Constraints and click Add.

3

Select Resource Collocation and click OK.

4

40 High Availability Guide

Adding or Modifying Collocational Constraints

Page 51

Enter a unique ID for the constraint. When modifying existing constraints,

5

the ID is already dened and is displayed in the conguration dialog.

Select the Resource which is the collocation source. The list shows the IDs

6

of all resources that have been congured for the cluster.

If the constraint cannot be satised, the cluster may decide not to allow the

resource to run at all.

If you leave both the Resource and the With Resource eld empty, you can

7

also add a resource set by clicking Add > Resource Set. To add a lifetime, just

click Add > Lifetime.

In With Resource, dene the collocation target. The cluster will decide where

8

to put this resource rst and then decide where to put the resource in the Resource eld.

Dene a Score to determine the location relationship between both resources.

9

Positive values indicate the resources should run on the same node. Negative

values indicate the resources should not run on the same node. Values of +/-

INFINITY change should to must. The score will be combined with other

factors to decide where to put the node.

If needed, specify further parameters, like Resource Role.

10

Depending on the parameters and options you choose, a short Description

explains the effect of the collocational constraint you are conguring.

If all parameters are set according to your wishes, click OK to nish the con-

11

guration of the constraint. The conguration dialog is closed and the main

window shows the newly added or modied constraint.

Procedure 4.6

Start the Linux HA Management Client and log in to the cluster as described in

1

Section 4.1, “Linux HA Management Client” (page 32).

In the Linux HA Management Client main window, click Constraints in the left

2

pane to see the constraints already congured for the cluster.

In the left pane, select Constraints and click Add.

3

Adding or Modifying Ordering Constraints

Conguring Cluster Resources with the GUI 41

Page 52

Select Resource Order and click OK.

4

Enter a unique ID for the constraint. When modifying existing constraints, the

5

ID is already dened and is displayed in the conguration dialog.

With First, dene the resource that must be started before the Then resource is

6

allowed to.

With Then dene the resource that will start after the First resource.

7

If needed, dene further parameters, for example Score (if greater than zero, the

8

constraint is mandatory; otherwise it is only a suggestion) or Symmetrical (if

true, stop the resources in the reverse order).

Depending on the parameters and options you choose, a short Description explains

the effect of the ordering constraint you are conguring.

If all parameters are set according to your wishes, click OK to nish the congu-

9

ration of the constraint. The conguration dialog is closed and the main window

shows the newly added or modied constraint.

You can access and modify all constraints that you have congured in the Constraints

view of the Linux HA Management Client.

Figure 4.3

42 High Availability Guide

Linux HA Management Client - Constraints

Page 53

For more information on conguring constraints and detailed background information

about the basic concepts of ordering and collocation, refer to the following documents

available at http://clusterlabs.org/wiki/Documentation:

•

Conguration 1.0 Explained , chapter Resource Constraints

•

Collocation Explained

•

Ordering Explained

4.5 Specifying Resource Failover Nodes

A resource will be automatically restarted if it fails. If that cannot be achieved on the

current node, or it fails N times on the current node, it will try to fail over to another

node. You can dene a number of failures for resources (a migration-threshold),

after which they will migrate to a new node. If you have more than two nodes in your

cluster, the node a particular resource fails over to is chosen by the High Availability

software.

If you want to choose which node a resource will fail over to, you must do the following:

Congure a location constraint for that resource as described in Procedure 4.4,

1

“Adding or Modifying Locational Constraints” (page 39).

2

Add the migration-threshold meta attribute to that resource as described

in Procedure 4.2, “Adding or Modifying Meta and Instance Attributes” (page 36)

and enter a Value for the migration-threshold. The value should be positive and

less that INFINITY.

If you want to automatically expire the failcount for a resource, add the

3

failure-timeout meta attribute to that resource as described in Procedure 4.2, “Adding or Modifying Meta and Instance Attributes” (page 36) and

enter a Value for the failure-timeout.

If you want to specify additional failover nodes with preferences for a resource,

4

create additional location constraints.

Conguring Cluster Resources with the GUI 43

Page 54

For example, let us assume you have congured a location constraint for resource r1

to preferably run on node1. If it fails there, migration-threshold is checked

and compared to the failcount. If failcount >= migration-threshold then the resource is

migrated to the node with the next best preference.

By default, once the threshold has been reached, the node will no longer be allowed to

run the failed resource until the administrator manually resets the resource’s failcount

(after xing the failure cause).

However, it is possible to expire the failcounts by setting the resource’s failure-timeout

option. So a setting of migration-threshold=2 and failure-timeout=60s

would cause the resource to migrate to a new node after two failures and potentially

allow it to move back (depending on the stickiness and constraint scores) after one

minute.

There are two exceptions to the migration threshold concept, occurring when a resource

either fails to start or fails to stop: Start failures set the failcount to INFINITY and thus

always cause an immediate migration. Stop failures cause fencing (when

stonith-enabled is set to true which is the default). In case there is no STONITH

resource dened (or stonith-enabled is set to false), the resource will not mi-

grate at all.

To clean up the failcount for a resource with the Linux HA Management Client, select

Management in the left pane, select the respective resource in the right pane and click

Cleanup Resource in the toolbar. This executes the commands crm_resource -C

and crm_failcount -D for the specied resource on the specied node. For more

information, see also crm_resource(8) (page 166) and crm_failcount(8) (page 157).

44 High Availability Guide

Page 55

4.6 Specifying Resource Failback Nodes (Resource Stickiness)

A resource might fail back to its original node when that node is back online and in the

cluster. If you want to prevent a resource from failing back to the node it was running

on prior to failover, or if you want to specify a different node for the resource to fail

back to, you must change its resource stickiness value. You can either specify resource

stickiness when you are creating a resource, or afterwards.

Consider the following when specifying a resource stickiness value:

Value is 0:

This is the default. The resource will be placed optimally in the system. This may

mean that it is moved when a “better” or less loaded node becomes available. This

option is almost equivalent to automatic failback, except that the resource may be

moved to a node that is not the one it was previously active on.

Value is greater than 0:

The resource will prefer to remain in its current location, but may be moved if a

more suitable node is available. Higher values indicate a stronger preference for a

resource to stay where it is.

Value is less than 0:

The resource prefers to move away from its current location. Higher absolute values

indicate a stronger preference for a resource to be moved.

Value is INFINITY:

The resource will always remain in its current location unless forced off because

the node is no longer eligible to run the resource (node shutdown, node standby,

reaching the migration-threshold, or conguration change). This option

is almost equivalent to completely disabling automatic failback .

Value is -INFINITY:

The resource will always move away from its current location.

Conguring Cluster Resources with the GUI 45

Page 56

Procedure 4.7

1

Add the resource-stickiness meta attribute to the resource as described

in Procedure 4.2, “Adding or Modifying Meta and Instance Attributes” (page 36).

2

As Value for the resource-stickiness, specify a value between -INFINITY and

INFINITY.

Specifying Resource Stickiness

4.7 Conguring Resource Monitoring

Although the High Availability Extension can detect a node failure, it also has the

ability to detect when an individual resource on a node has failed. If you want to ensure

that a resource is running, you must congure resource monitoring for it. Resource

monitoring consists of specifying a timeout and/or start delay value, and an interval.

The interval tells the CRM how often it should check the resource status.

Procedure 4.8

Start the Linux HA Management Client and log in to the cluster as described

1

in Section 4.1, “Linux HA Management Client” (page 32).

In the Linux HA Management Client main window, click Resources in the

2

left pane to see the resources already congured for the cluster.

In the right pane, select the resource to modify and click Edit. The next window

3

shows the basic resource parameters and the meta attributes, instance attributes

and operations already dened for that resource.

46 High Availability Guide

Adding or Modifying Monitor Operations

Page 57

To add a new monitor operation, select the respective tab and click Add.

4

To modify an existing operation, select the respective entry and click Edit.

Enter a unique ID for the monitor operation. When modifying existing monitor

5

operations, the ID is already dened and is displayed in the conguration dialog.

6

In Name, select the action to perform, for example monitor, start, or

stop.

In the Interval eld, enter a value in seconds.

7

In the Timeout eld, enter a value in seconds. After the specied timeout pe-

8

riod, the operation will be treated as failed. The PE will decide what to do

or execute what you specied in the On Fail eld of the monitor operation.

If needed, set optional parameters, like On Fail (what do if this action ever

9

fails?) or Requires (what conditions need to be satised before this action

occurs?).

If all parameters are set according to your wishes, click OK to nish the con-

10

guration of that resource. The conguration dialog is closed and the main

window shows the modied resource.

Conguring Cluster Resources with the GUI 47

Page 58

If you do not congure resource monitoring, resource failures after a successful start

will not be communicated, and the cluster will always show the resource as healthy.

If the resource monitor detects a failure, the following takes place:

• Log le messages are generated, according to the conguration specied in the

logging section of /etc/ais/openais.conf (by default, written to syslog,

usually /var/log/messages).

•

The failure is reected in the Linux HA Management Client, the crm_mon tools,

and in the CIB status section. To view them in the Linux HA Management Client,

click Management in the left pane, then in the right pane, select the resource whose

details you want to see.

• The cluster initiates noticeable recovery actions which may include stopping the

resource to repair the failed state and restarting the resource locally or on another

node. The resource also may not be restarted at all, depending on the conguration

and state of the cluster.

4.8 Starting a New Cluster Resource

NOTE: Starting Resources

When conguring a resource with the High Availability Extension, the same

resource should not be started or stopped manually (outside of the cluster).

The High Availability Extension software is responsible for all service start or

stop actions.

If a resource's initial state was set to stopped when being created (target-role

meta attribute has the value stopped), it does not start automatically after being cre-

ated. To start a new cluster resource with the Linux HA Management Client, select

Management in the left pane. In the right pane, right click the resource and select Start

(or start it from the toolbar).

48 High Availability Guide

Page 59

4.9 Removing a Cluster Resource

To remove a cluster resource with the Linux HA Management Client, switch to the

Resources view in the left pane, then select the respective resource and click Remove.

NOTE: Removing Referenced Resources

Cluster resources cannot be removed if their ID is referenced by any constraint.

If you cannot delete a resource, check where the resource ID is referenced and