Page 1

Novell®

www.novell.com

High Availability Configuration Guide

PlateSpin® Orchestrate

novdocx (en) 13 May 2009

AUTHORIZED DOCUMENTATION

2.0.2

June 17, 2009

PlateSpin Orchestrate 2.0 High Availability Configuration Guide

Page 2

Legal Notices

Novell, Inc. makes no representations or warranties with respect to the contents or use of this documentation, and

specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose.

Further, Novell, Inc. reserves the right to revise this publication and to make changes to its content, at any time,

without obligation to notify any person or entity of such revisions or changes.

Further, Novell, Inc. makes no representations or warranties with respect to any software, and specifically disclaims

any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc.

reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to

notify any person or entity of such changes.

Any products or technical information provided under this Agreement may be subject to U.S. export controls and the

trade laws of other countries. You agree to comply with all export control regulations and to obtain any required

licenses or classification to export, re-export or import deliverables. You agree not to export or re-export to entities on

the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws.

You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. See the

Novell International Trade Services Web page (http://www.novell.com/info/exports/) for more information on

exporting Novell software. Novell assumes no responsibility for your failure to obtain any necessary export

approvals.

Copyright © 2008-2009 Novell, Inc. All rights reserved. No part of this publication may be reproduced, photocopied,

stored on a retrieval system, or transmitted without the express written consent of the publisher.

Novell, Inc. has intellectual property rights relating to technology embodied in the product that is described in this

document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S.

patents listed on the Novell Legal Patents Web page (http://www.novell.com/company/legal/patents/) and one or

more additional patents or pending patent applications in the U.S. and in other countries.

Novell, Inc.

404 Wyman Street, Suite 500

Waltham, MA 02451

U.S.A.

www.novell.com

Online Documentation: To access the latest online documentation for this and other Novell products, see

the Novell Documentation Web page (http://www.novell.com/documentation).

Page 3

Novell Trademarks

For Novell trademarks, see the Novell Trademark and Service Mark list (http://www.novell.com/company/legal/

trademarks/tmlist.html).

Third-Party Materials

All third-party trademarks are the property of their respective owners.

Page 4

Page 5

Contents

About This Guide 7

1 Preparing PlateSpin Orchestrate for High Availability Support 9

1.1 Overview 9

1.2 Installing PlateSpin Orchestrate to a High Availability Environment 10

1.2.1 Meeting the Prerequisites 11

1.2.2 Installing the High Availability Pattern for SLES 10 12

1.2.3 Configuring Nodes with Time Synchronization and Installing Heartbeat 2 to Each Node 13

1.2.4 Setting Up OCFS2 14

1.2.5 Installing and Configuring PlateSpin Orchestrate on the First Clustered Node 15

1.2.6 Running the High Availability Configuration Script 28

1.2.7 Installing and Configuring Orchestrate Server Packages on Other Nodes in the Cluster for High Availability 28

1.2.8 Creating the Cluster Resource Group 29

1.2.9 Testing the Failover of the PlateSpin Orchestrate Server in a Cluster 31

1.2.10 Installing and Configuring other PlateSpin Orchestrate Components to the High Availability Grid 31

2 PlateSpin Orchestrate Failover Behaviors in a High Availability Environment 33

2.1 Use Case 1: Orchestrate Server Failover 33

2.2 Use Case 2: Agent Behavior at Orchestrate Server Failover and Failback 33

2.3 Use Case 3: VM Builder Behavior at Orchestrate Server Failover and Failback 33

2.4 Use Case 4: Monitoring Behavior at Orchestrate Server Failover and Failback 34

3 High Availability Best Practices 35

3.1 Jobs Using scheduleSweep() Might Need a Start Constraint 35

Page 6

Page 7

About This Guide

This High Availability Configuration Guide provides the information for installing and configuring PlateSpin® Orchestrate 2.0 from Novell® in a high availability environment. The guide provides information about the components and configuration steps necessary for preparing this environment, including instructions for configuring the PlateSpin Orchestrate Server in a cluster. The guide also provides some information regarding the behaviors you can expect from PlateSpin Orchestrate in various failover scenarios. The guide is organized as follows:

Chapter 1, “Preparing PlateSpin Orchestrate for High Availability Support,” on page 9

Chapter 2, “PlateSpin Orchestrate Failover Behaviors in a High Availability Environment,” on page 33

Chapter 3, “High Availability Best Practices,” on page 35

Audience

The contents of this guide are of interest to the following individuals:

VM Administrator: A PlateSpin Orchestrate virtual machine (VM) administrator manages the life cycle of the VMs in the enterprise, including creating, starting, stopping, migrating, and deleting VMs. For more information about the tasks and tools used by the VM administrator, see the PlateSpin Orchestrate 2.0 VM Client Guide and Reference.

Orchestrate Administrator: A PlateSpin Orchestrate Administrator deploys jobs, manages users, and monitors distributed computing resources. Administrators can also create and set policies for automating the usage of these computing resources. For more information about the tasks and tools used by the Orchestrate Administrator, see the PlateSpin Orchestrate 2.0 Administrator Reference.

User: The end user of PlateSpin Orchestrate, also called a “Job Manager,” runs and manages jobs

that have been created by a Job Developer and deployed by the administrator. It is also possible that

the end user could be a developer who has created applications to run on distributed computing

resources. For more information about the tasks and tools used by the Job Manager, see the

PlateSpin Orchestrate 2.0 Server Portal Reference.

Job Developer: The developer controls a self-contained development system where he or she

creates jobs and policies and tests them in a laboratory environment. When the jobs are tested and

proven to function as intended, the developer delivers them to the PlateSpin Orchestrate

administrator. For more information about the tasks and tools used by the job developer, see the

PlateSpin Orchestrate 2.0 Developer Guide and Reference.

Prerequisite Skills

As data center managers or IT or operations administrators, it is assumed that users of the product

have the following background:

General understanding of network operating environments and systems architecture.

Knowledge of basic Linux* shell commands and text editors.

Page 8

Feedback

We want to hear your comments and suggestions about this manual and the other documentation included with this product. Please use the User Comments feature at the bottom of each page of the online documentation, or go to www.novell.com/documentation/feedback.html (http://www.novell.com/documentation/feedback.html) and enter your comments there.

Documentation Updates

For the most recent updates of this High Availability Guide, visit the PlateSpin Orchestrate 2.0 Web site (http://www.novell.com/documentation/pso_orchestrate20/).

Documentation Conventions

In Novell documentation, a greater-than symbol (>) is used to separate actions within a step and

items in a cross-reference path.

A trademark symbol (®, TM, etc.) denotes a Novell trademark. An asterisk (*) denotes a third-party trademark.

When a single pathname can be written with a backslash for some platforms or a forward slash for

other platforms, the pathname is presented with a backslash. Users of platforms that require a

forward slash, such as Linux or UNIX*, should use forward slashes as required by your software.

Page 9

1 Preparing PlateSpin Orchestrate for High Availability Support

Ensuring maximum service-level availability and data protection is paramount to enterprise IT

infrastructure. Automated failure detection and recovery prevents downtime, and reduces the

financial and operational impact of outages to the business. Highly available infrastructure is a key

requirement for IT decision makers.

The PlateSpin® Orchestrate Server from Novell® is a critical component of your enterprise infrastructure. It continuously monitors and manages physical servers and virtual machines (VMs), and provides high availability for virtual machines by automatically restarting them on alternate physical servers if the server they are running on becomes unavailable because of a planned or unplanned outage. Therefore, the Orchestrate Server itself must be highly available.

In PlateSpin Orchestrate, high availability services are provided by a specialized set of applications that run on SUSE® Linux Enterprise Server (SLES 10). Heartbeat 2 is high availability clustering software that provides multinode failover and service-level health monitoring for Linux-based services. Heartbeat 2 monitors both physical servers and the services running on them, and automatically restarts services if they fail.

This guide describes how to configure PlateSpin Orchestrate in a Heartbeat 2 cluster and how to provide both service-level restart for the Orchestrate Server and failover among the physical servers of a Linux cluster to ensure that PlateSpin Orchestrate remains available and responsive to the infrastructure that it manages.

This section includes the following information:

Section 1.1, “Overview,” on page 9

Section 1.2, “Installing PlateSpin Orchestrate to a High Availability Environment,” on page 10

1.1 Overview

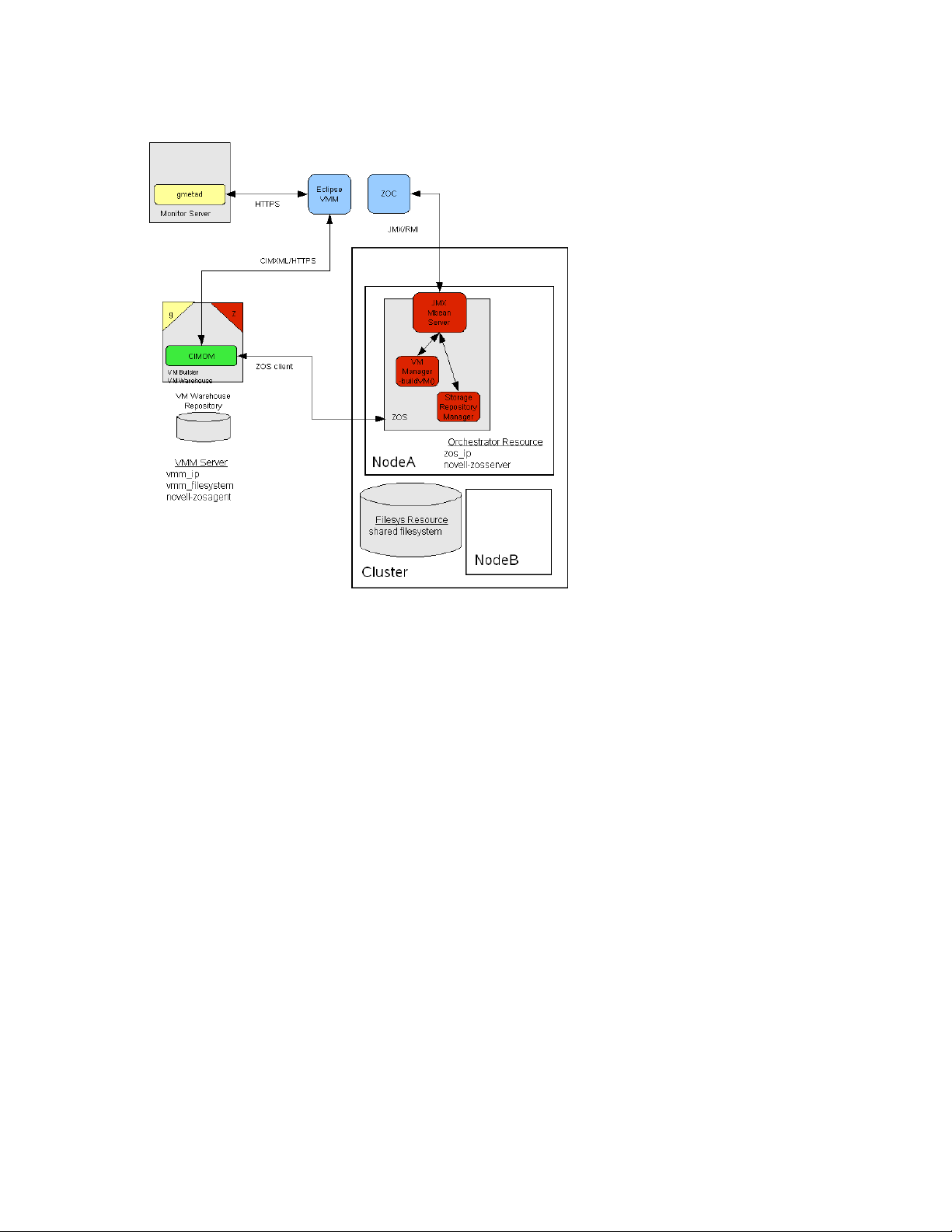

The following figure illustrates how PlateSpin Orchestrate is configured for use in a high

availability environment.

Preparing PlateSpin Orchestrate for High Availability Support

9

Page 10

Figure 1-1 The Oirchestrate Server in a Clustered, High Availability Environment

novdocx (en) 13 May 2009

1.2 Installing PlateSpin Orchestrate to a High Availability Environment

This section includes information to help you install PlateSpin Orchestrate Server components in a

high availability environment. The sequence below is the supported method for configuring this

environment.

1. Section 1.2.1, “Meeting the Prerequisites,” on page 11

2. Section 1.2.2, “Installing the High Availability Pattern for SLES 10,” on page 12

3. Section 1.2.3, “Configuring Nodes with Time Synchronization and Installing Heartbeat 2 to

Each Node,” on page 13

4. Section 1.2.4, “Setting Up OCFS2,” on page 14

5. Section 1.2.5, “Installing and Configuring PlateSpin Orchestrate on the First Clustered Node,”

on page 15

6. Section 1.2.6, “Running the High Availability Configuration Script,” on page 28

7. Section 1.2.8, “Creating the Cluster Resource Group,” on page 29

8. Section 1.2.7, “Installing and Configuring Orchestrate Server Packages on Other Nodes in the

Cluster for High Availability,” on page 28

9. Section 1.2.9, “Testing the Failover of the PlateSpin Orchestrate Server in a Cluster,” on

page 31

10. Section 1.2.10, “Installing and Configuring other PlateSpin Orchestrate Components to the

High Availability Grid,” on page 31

Page 11

NOTE: Upgrading from earlier versions of PlateSpin Orchestrate (including an earlier installation

of version 1.3) to a high availability environment is supported. For more information, see

“Upgrading a ZENworks Orchestrator 1.3 High Availability Configuration” in the PlateSpin

Orchestrate 2.0 Upgrade Guide.

If you plan to use the PlateSpin Orchestrate VM Client in a high availability environment, see

Section 1.2.10, “Installing and Configuring other PlateSpin Orchestrate Components to the High

Availability Grid,” on page 31.

1.2.1 Meeting the Prerequisites

The environment where PlateSpin Orchestrate Server is installed must meet the hardware and

software requirements for high availability. This section includes the following information to help

you understand those requirements.

“Hardware Requirements for Creating a High Availability Environment” on page 11

“Software Requirements for Creating a High Availability Environment” on page 11

Hardware Requirements for Creating a High Availability Environment

The following hardware components are required for creating a high availability environment for

PlateSpin Orchestrate:

A minimum of two SLES 10 SP2 (or greater) physical servers, each having dual network

interface cards (NICs). These servers are the nodes of the cluster where the PlateSpin

Orchestrate Server is installed and are a key part of the high availability infrastructure.

A Fibre Channel or iSCSI Storage Area Network (SAN)

A STONITH device, to provide node fencing. A STONITH device is a power switch that the

cluster uses to reset nodes that are considered dead. Resetting non-heartbeating nodes is the

only reliable way to ensure that no data corruption is performed by nodes that hang and only

appear to be dead. For more information about setting up STONITH, see the “Configuring

Stonith” (http://www.novell.com/documentation/sles10/heartbeat/data/b8nrkl5.html) section of

the SLES 10 Heartbeat Guide (http://www.novell.com/documentation/sles10/heartbeat/data/

heartbeat.html).

Software Requirements for Creating a High Availability Environment

The following software components are required for creating a high availability environment for

PlateSpin Orchestrate:

The high availability pattern on the SLES 10 SP2 RPM install source, which includes

Heartbeat 2 software package, which is a high-availability resource manager that supports

multinode failover. This should include all available online updates installed to all nodes

that will be part of the Heartbeat 2 cluster.

Oracle Cluster File System 2 (OCFS2), a parallel cluster file system that offers concurrent

access to a shared file system. See Section 1.2.4, “Setting Up OCFS2,” on page 14 for

more information.

SLES 10 SP2 integrates these open source storage technologies (Heartbeat 2 and OCFS2)

in a high availability installation pattern, which, when installed and configured, is known

as the Novell High Availability Storage Infrastructure. This combined technology

Page 12

automatically shares cluster configuration and coordinates cluster-wide activities to ensure

deterministic and predictable administration of storage resources for shared-disk-based

clusters.

DNS installed on the nodes of the cluster for resolving the cluster hostname to the cluster IP.

PlateSpin Orchestrate Server installed on all nodes of the cluster (a two-node or three-node

configuration is recommended).

(Optional) VM Builder installed on a non-clustered server (for more information, see

Section 1.2.10, “Installing and Configuring other PlateSpin Orchestrate Components to the

High Availability Grid,” on page 31).

(Optional) Orchestrate Monitoring Server installed on a non-clustered server (for more

information, see Section 1.2.10, “Installing and Configuring other PlateSpin Orchestrate

Components to the High Availability Grid,” on page 31).

1.2.2 Installing the High Availability Pattern for SLES 10

The high availability install pattern is included in the distribution of SLES 10 SP2. Use YaST2 (or

the command line, if you prefer) to install the packages that are associated with the high availability

pattern to each physical node that is to participate in the PlateSpin Orchestrate cluster.

NOTE: The high availability pattern is included on the SLES 10 SP2 install source, not the

PlateSpin Orchestrate install source.

The packages associated with high availability include:

drbd (Distributed Replicated Block Device)

EVMS high availability utilities

The Heartbeat 2 subsystem for high availability on SLES

Heartbeat 2 CIM provider

A monitoring daemon for maintaining high availability resources that can be used by Heartbeat

2

A plug-in and interface loading library used by Heartbeat 2

An interface for the STONITH device

OCFS2 GUI tools

OCFS2 Core tools

For more information, see “Installing and Removing Software” (http://www.novell.com/

documentation/sles10/sles_admin/data/sec_yast2_sw.html) in the SLES 10 Installation and

Administration Guide.
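After installing the pattern, it can be useful to confirm that the key packages are present on each node. The following is a minimal sketch, not part of the product. The names `ocfs2-tools`, `ocfs2console`, and `drbd` appear in this guide; `heartbeat` is an assumed package name, and the function name `missing_packages` is hypothetical. Adjust the list to match the package names on your install source.

```shell
#!/bin/sh
# Assumed package list: ocfs2-tools, ocfs2console, and drbd are named in this
# guide; "heartbeat" is an assumption. Edit to match your install source.
REQUIRED_PACKAGES="heartbeat drbd ocfs2-tools ocfs2console"

# Hypothetical helper: reads installed package names (one per line) on stdin
# and prints each required package that is absent from that list.
missing_packages() {
    installed=$(cat)
    for pkg in $REQUIRED_PACKAGES; do
        # -x matches the whole line, -F treats the name as a fixed string.
        printf '%s\n' "$installed" | grep -qxF -- "$pkg" || echo "$pkg"
    done
}

# On a cluster node, feed it the real package list:
#   rpm -qa --qf '%{NAME}\n' | missing_packages
# Sample input for illustration only:
printf '%s\n' heartbeat ocfs2-tools | missing_packages
```

With the sample input, the function lists `drbd` and `ocfs2console` as missing. An empty result means every package in the list was found.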

Page 13

1.2.3 Configuring Nodes with Time Synchronization and Installing Heartbeat 2 to Each Node

When you have installed the high availability packages to each node of the cluster, you need to

configure the Network Time Protocol (NTP) and Heartbeat 2 clustering environment on each

physical machine that participates in the cluster.

“Configuring Time Synchronization” on page 13

“Configuring Heartbeat 2” on page 13

Configuring Time Synchronization

To configure time synchronization, you need to configure the nodes in the cluster to synchronize to a

time server outside the cluster. The cluster nodes use the time server as their time synchronization

source.

NTP is included as a network service in SLES 10 SP2. Use the time synchronization instructions

(http://www.novell.com/documentation/sles10/heartbeat/data/b5ez1wc.html) in the SLES 10

Heartbeat Guide to help you configure each cluster node with NTP.

Configuring Heartbeat 2

Heartbeat 2 is an open source server clustering system that ensures high availability and

manageability of critical network resources including data, applications, and services. It is a

multinode clustering product for Linux that supports failover, failback, and migration (load

balancing) of individually managed cluster resources.

Heartbeat 2 packages are installed with the high availability pattern on the SLES 10 SP2 install

source. For detailed information about configuring Heartbeat 2, see the installation and setup

instructions (http://www.novell.com/documentation/sles10/heartbeat/index.html?page=/

documentation/sles10/heartbeat/data/b3ih73g.html) in the SLES 10 Heartbeat Guide (http://

www.novell.com/documentation/sles10/heartbeat/data/heartbeat.html).

For Heartbeat 2 to support high availability for PlateSpin Orchestrate, you need to specify an important value in the Default Action Timeout field on the settings page of the Heartbeat 2 console (hb_gui).

Page 14

Figure 1-2 The Main Settings Page in the Heartbeat 2 Graphical Interface

The value in this field controls how long Heartbeat 2 waits for services to start. The default value is 20 seconds. The PlateSpin Orchestrate Server requires more time than this to start. We recommend that you specify the value in this field at 120s. More time might be required if your PlateSpin Orchestrate grid is very large.
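The recommendation above can be expressed as a quick sanity check. The following is a minimal sketch, not part of the product: the function name `timeout_ok` and the `RECOMMENDED_MIN` variable are illustrative assumptions, and you supply the value yourself after reading it from the Default Action Timeout field in hb_gui.

```shell
#!/bin/sh
# Hypothetical helper: compares a Default Action Timeout value (for example
# "20s" or "120") with the 120-second minimum recommended in this guide.
RECOMMENDED_MIN=120

timeout_ok() {
    # Strip an optional trailing "s" so that "120s" and "120" both work.
    value=${1%s}
    case "$value" in
        ''|*[!0-9]*) echo "invalid"; return 2 ;;
    esac
    if [ "$value" -ge "$RECOMMENDED_MIN" ]; then
        echo "ok"
    else
        echo "too short"
        return 1
    fi
}

timeout_ok 20s || true   # the Heartbeat 2 default: prints "too short"
timeout_ok 120s          # the recommended value: prints "ok"
```

If your grid is very large, raise `RECOMMENDED_MIN` to whatever start time you have measured for your own Orchestrate Server.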

1.2.4 Setting Up OCFS2

OCFS2 is a general-purpose journaling file system that is fully integrated in the Linux 2.6 and later

kernel that ships with SLES 10 SP2. OCFS2 allows you to store application binary files, data files,

and databases on devices in a SAN. All nodes in a cluster have concurrent read and write access to

the file system. A distributed lock manager helps prevent file access conflicts. OCFS2 supports up to

32,000 subdirectories and millions of files in each directory. The O2CB cluster service (a driver)

runs on each node to manage the cluster.

To set up the high availability environment for PlateSpin Orchestrate, you need to first install the High Availability pattern in YaST (this includes the ocfs2-tools and ocfs2console software packages) and configure the Heartbeat 2 cluster management system on each physical machine that participates in the cluster, and then provide a SAN in OCFS2 where the PlateSpin Orchestrate files can be stored. For information on setting up and configuring OCFS2, see the “Oracle Cluster File System 2” (http://www.novell.com/documentation/sles10/sles_admin/data/ocfs2.html) section of the SLES 10 Administration Guide.

Shared Storage Requirements for Creating a High Availability Environment

If you want data to be highly available, we recommend that you set up a Fibre Channel Storage Area

Network (SAN) to be used by your PlateSpin Orchestrate cluster.

Page 15

SAN configuration is beyond the scope of this document. For information about setting up a SAN,

see the Oracle Cluster File System 2 (http://www.novell.com/documentation/sles10/sles_admin/

data/ocfs2.html) documentation in the SLES 10 Administration Guide.

IMPORTANT: PlateSpin Orchestrate requires a specific mount point for file storage on the SAN. Use /zos (in the root directory) for this mount point.
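Before you install the Orchestrate Server, you might want to confirm that /zos is mounted and uses OCFS2. The following is a minimal sketch under stated assumptions: the function name `zos_fstype` is hypothetical, the device names in the sample data are invented for illustration, and the check simply reads a mounts table in the /proc/mounts format (device, mount point, file system type, options).

```shell
#!/bin/sh
# Hypothetical check: prints the file system type recorded for /zos in a
# mounts table, or "not mounted" if no entry for /zos exists.
zos_fstype() {
    mounts_file=${1:-/proc/mounts}
    awk '$2 == "/zos" { fstype = $3 }
         END { print (fstype == "" ? "not mounted" : fstype) }' "$mounts_file"
}

# Illustration with invented sample data; on a cluster node, call
# zos_fstype with no argument to read /proc/mounts.
sample=$(mktemp)
printf '%s\n' '/dev/sda2 / ext3 rw 0 0' '/dev/sdb1 /zos ocfs2 rw 0 0' > "$sample"
zos_fstype "$sample"
rm -f "$sample"
```

A result other than `ocfs2` suggests that the shared storage is not yet mounted at the required location on that node.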

1.2.5 Installing and Configuring PlateSpin Orchestrate on the First Clustered Node

This section includes information about installing the Orchestrate Server components, configuring

those components using two possible methods, and then checking the configuration:

“Installing the Orchestrate Server YaST Patterns on the Node” on page 15

“Running the PlateSpin Orchestrate Configuration Script” on page 16

“Running the PlateSpin Orchestrate Configuration Wizard” on page 19

“PlateSpin Orchestrate Configuration Information” on page 24

“Checking the Configuration” on page 27

Installing the Orchestrate Server YaST Patterns on the Node

NOTE: As you prepare to install PlateSpin Orchestrate 2.0 and use it in a high availability

environment, make sure that the requirements to do so are met. For more information, see “Planning

the Orchestrate Server Installation” in the PlateSpin Orchestrate 2.0 Installation and Configuration

Guide.

The PlateSpin Orchestrate Server (Orchestrate Server) is supported on SUSE Linux Enterprise

Server 10 Service Pack 2 (SLES 10 SP2) only.

To install the PlateSpin Orchestrate Server packages on the first node of the cluster:

1 Download the appropriate PlateSpin Orchestrate Server ISO (32-bit or 64-bit) to an accessible

network location.

2 (Optional) Create a DVD ISO (32-bit or 64-bit) that you can take with you to the machine where you want to install it, or use a network install source.

3 Install PlateSpin Orchestrate software:

3a Log in to the target SLES 10 SP2 server as root, then open YaST2.

3b In the YaST Control Center, click Software, then click Add-on Product to display the Add-on Product Media dialog box.

3c In the Add-on Product Media dialog box, select the ISO media (Local Directory or DVD)

to install.

(Conditional) If you are using a DVD, select DVD, click Next, insert the DVD, then

click Continue.

(Conditional) If you are using a directory, select Local Directory, click Next, select

the ISO Image check box, browse to ISO on the file system, then click OK.

3d Read and accept the license agreement, then click Next to display YaST2.

Page 16

3e In the YaST2 Filter drop-down menu, select Patterns to display the install patterns

available on the PlateSpin Orchestrate ISO.

3f Select the PlateSpin Orchestrate Server installation pattern for installation on the first

node. When you do so, the Monitoring Server installation pattern and the Monitoring

Agent pattern are also selected. These patterns are the gateway between enterprise

applications and resource servers. The Orchestrate Server manages computing nodes

(resources) and the jobs that are submitted from applications to run on these resources.

TIP: If they are not already selected by default, you need to select the packages that are in

the PlateSpin Orchestrate Server pattern, the Monitoring Server pattern, and the

Monitoring Client pattern.

3g Click Accept to install the packages.

4 Configure the PlateSpin Orchestrate Server components that you have installed. You can use

one of two methods to perform the configuration:

The PlateSpin Orchestrate product (text-based) configuration script. If you use this

method, continue with “Running the PlateSpin Orchestrate Configuration Script” on

page 16.

The PlateSpin Orchestrate GUI Configuration Wizard, which might be more user-friendly.

If you use this method, skip to “Running the PlateSpin Orchestrate Configuration Wizard”

on page 19.

TIP: Although the text-based configuration process detects which RPM patterns are installed,

the GUI Configuration Wizard requires that you specify which components are to be

configured.

You can use the content in “PlateSpin Orchestrate Configuration Information” on page 24 to

help you understand what is needed during the configuration.

5 Finish the configuration by following the instructions in “Checking the Configuration” on

page 27.

Running the PlateSpin Orchestrate Configuration Script

Use the following procedure to finish the initial installation and configuration script for the first

node in the cluster.

Page 17

TIP: You can use the content included in “PlateSpin Orchestrate Configuration Information” on

page 24 to help you complete the configuration.

1 Make sure you are logged in as root to run the configuration script.

2 Run the script, as follows:

/opt/novell/zenworks/orch/bin/config

When the script runs, the following information is initially displayed:

Welcome to Novell PlateSpin Orchestrate.

This program will configure Novell PlateSpin Orchestrate 2.0

Select whether this is a new install or an upgrade

i) install

u) upgrade

- - - - - -

Selection [install]:

3 Press Enter (or enter i) to accept a new installation and to display the next part of the script.

Select products to configure

# selected Item

1) yes PlateSpin Orchestrate Monitoring Service

2) yes PlateSpin Orchestrate Server

3) no PlateSpin Orchestrate Agent (not installed)

4) no PlateSpin Orchestrate VM Builder (not installed)

Select from the following:

1 - 4) toggle selection status

a) all

n) none

f) finished making selections

q) quit -- exit the program

Selection [finish]:

Because you installed only the PlateSpin Orchestrate Server and the PlateSpin Orchestrate

Monitoring Service, no other products need to be selected.

4 Press Enter (or enter f) to finish the default selection and to display the next part of the script.

Gathering information for PlateSpin Orchestrate Monitoring Service configuration. . .

You can configure this host to be the Monitoring Server or a monitored node

Configure this host as the Monitoring Server? (y/n) [y]:

5 Press Enter (or enter y) to configure this host as a Monitoring Server. This step of the

configuration also configures the Monitoring Agent you installed previously.

6 Specify a name (or accept the default computer name) that describes this monitored computer’s

location.

The next part of the configuration script is displayed:

Page 18

Gathering information for PlateSpin Orchestrate Server configuration. . .

Select whether this is a standard or high-availability server

configuration

s) standard

h) ha

- - - - - -

Selection [standard]:

7 Enter h to specify that this is a high availability server configuration and to display the next part

of the script.

8 Specify the fully qualified cluster hostname or the IP address that is used for configuring the

Orchestrate Server instance in a high availability cluster.

The configuration script binds the IP address of the cluster to this server.

9 Specify a name for the PlateSpin Orchestrate grid.

This grid is an administrative domain container that contains all of the objects in your network

or data center that PlateSpin Orchestrate monitors and manages, including users, resources, and

jobs. The grid name is displayed at the root of the tree in the Explorer Panel of the Orchestrate

Development Client.

10 Specify a name for the PlateSpin Orchestrate Administrator user.

This name is used to log in as the administrator of the PlateSpin Orchestrate Server and the

objects it manages.

11 Specify a password for the PlateSpin Orchestrate Administrator user, then retype the password

to validate it.

12 Choose whether to enable an audit database by entering either y or n.

PlateSpin Orchestrate can send audit information to a relational database (RDBMS). If you

enable auditing, you need access to an RDBMS. If you use a PostgreSQL* database, you can

configure it for use with PlateSpin Orchestrate auditing at this time. If you use a different

RDBMS, you must configure it separately for use with PlateSpin Orchestrate.

13 Specify the full path to the file containing the license key you received from Novell.

Example:

/opt/novell/zenworks/zos/server/license/key.txt

14 Specify the port you want the Orchestrate Server to use for the Server Portal interface so users

(also known as Job Managers) can access the PlateSpin Orchestrate Server to manage jobs.

NOTE: If you plan to use PlateSpin Orchestrate Monitoring outside your cluster, we

recommend that you do not use the default port, 80.

15 Specify a port that you want to designate for the Administrator Information page.

This page includes links to product documentation, agent and client installers, and product

tools to help you understand and use the product. The default port is 8001.

16 Specify a port to be used for communication between the Orchestrate Server and the

Orchestrate Agent. The default port is 8100.

17 Specify (yes or no) whether you want the Orchestrate Server to generate a PEM-encoded TLS certificate for secure communication between the server and the agent. If you choose not to generate a certificate, you need to provide the location of an existing certificate and key.

18 Specify the password to be used for the VNC on Xen* hosts, then verify that password.

19 Specify whether to view (yes or no) or change (yes or no) the information you have supplied in the configuration script.

If you choose to not change the script, the configuration process launches.

If you decide to change the information, the following choices are presented in the script:

Select the component that you want to change
1) PlateSpin Orchestrate Server
- - - - - - - - - - - - - - -
d) Display Summary
f) Finish and Install

Specify 1 if you want to reconfigure the server.

Specify d if you want to review the configuration summary again.

Specify f if you are satisfied with the configuration and want to install using the specifications as they are.

20 Continue with “Checking the Configuration” on page 27.

Running the PlateSpin Orchestrate Configuration Wizard

Use the following steps to run the PlateSpin Orchestrate Configuration Wizard.

1 Run the script for the PlateSpin Orchestrate Configuration Wizard as follows:

/opt/novell/zenworks/orch/bin/guiconfig

The Configuration Wizard launches.

IMPORTANT: If you only have a keyboard to navigate through the pages of the GUI

Configuration Wizard, use the Tab key to shift the focus to a control you want to use (for

example, a Next button), then press the Spacebar to activate that control.

2 Click Next to display the license agreement.

3 Accept the agreement, then click Next to display the installation type page.

4 Select New Installation, then click Next to display the PlateSpin Orchestrate components page.

The components page lists the PlateSpin Orchestrate components that are available for

configuration. By default, only the installed components (the PlateSpin Orchestrate Server, in

this case) are selected for configuration.

If other PlateSpin Orchestrate patterns were installed by mistake, make sure that you deselect

them now. As long as these components are not configured for use, there should be no problem

with the errant installation.

5 Click Next to display the Monitoring Services configuration page, then select the options you

want.

On this page of the wizard, you can change the default node name for this monitored node, and

you can also configure this computer to be the Monitoring Server. Make sure that the option to

configure as a Monitoring Server is selected.

6 Click Next to display the high availability configuration page.

7 Select Install to a High Availability clustered environment to configure the server for high availability, enter the hostname or IP address of the cluster in the Cluster hostname or IP address field, then click Next to display the configuration settings page.

Refer to the information in Table 1-1, “PlateSpin Orchestrate Configuration Information,” on

page 25 for details about the configuration data that you need to provide. The GUI

Configuration Wizard uses this information to build a response file that is consumed by the

setup program inside the GUI Configuration Wizard.

TIP: Select Configure advanced settings to display a page where you can specify various port

settings and certificate files. Details for this page are provided in Table 1-1, “PlateSpin

Orchestrate Configuration Information,” on page 25.

8 Click Next to display the Xen VNC password page.

9 Enter the VNC password you intend to use for VNC on Xen virtualization hosts, confirm the

password, then click Next to display the PlateSpin Orchestrate Configuration Summary page.

IMPORTANT: Although this page of the wizard lets you navigate using the Tab key and

spacebar, you need to use the Shift+Tab combination to navigate past the summary list. If you

accidentally enter the summary list, click Back to re-enter the page to navigate to the control

buttons.

By default, the Configure now check box on this page is selected. If you accept the default, the

wizard starts PlateSpin Orchestrate and applies the configuration settings.

If you deselect the check box, the wizard writes out the configuration file to /etc/opt/novell/novell_zenworks_orch_install.conf without starting PlateSpin Orchestrate or applying the configuration settings. You can use this saved .conf file to start the Orchestrate Server and apply the settings. Do this either by running the configuration script manually or by using an installation script. Use the following command to run the configuration script:

/opt/novell/zenworks/orch/bin/config -rs <path_to_config_file>
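If you drive the saved configuration file from an installation script, the command above can be assembled programmatically. The following is a minimal sketch, not part of the product: the helper name build_config_command is hypothetical, and the two paths are simply the ones quoted in this guide. It only prints the command, so it is safe to try on any machine.

```python
import shlex

# Both paths are the ones quoted in this guide.
CONFIG_SCRIPT = "/opt/novell/zenworks/orch/bin/config"
SAVED_CONF = "/etc/opt/novell/novell_zenworks_orch_install.conf"


def build_config_command(conf_path=SAVED_CONF):
    """Return the argument list that runs the config script against a saved .conf file."""
    # build_config_command is a hypothetical helper, not a PlateSpin Orchestrate tool.
    return [CONFIG_SCRIPT, "-rs", conf_path]


if __name__ == "__main__":
    # Print the command instead of running it.
    print(shlex.join(build_config_command()))
    # On the first cluster node, as root, it could be executed with:
    #   subprocess.run(build_config_command(), check=True)
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems if the path to the configuration file contains spaces.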

10 Click Next to display the following wizard page:

11 Click Next to launch the configuration script. When the configuration is finished, the following

page is displayed:

12 Click Finish to close the configuration wizard.

13 Continue with “Checking the Configuration” on page 27.

PlateSpin Orchestrate Configuration Information

The following table includes the information required by the PlateSpin Orchestrate configuration script (config) and the configuration wizard (guiconfig) when configuring the Orchestrate Server component for high availability. The information is organized in this way to make it readily available. The information is listed in the order that it is requested by the configuration script or wizard.

Table 1-1 PlateSpin Orchestrate Configuration Information

Configuration Information: Orchestrate Server

Explanation: Because the PlateSpin Orchestrate Server must always be installed for a full PlateSpin Orchestrate system, the following questions are always asked when you have installed server patterns prior to the configuration process:

Administrator username: Create an Administrator user for PlateSpin Orchestrate.

    Default = zosadmin

    The name you create here is required when you access the PlateSpin Orchestrate Console or the zosadmin command line interface.

    You should remember this username for future use.

Administrator password: Specify the password for <Administrator user>

    Default = none

    The password you create here is required when you access the PlateSpin Orchestrate Console or the zosadmin command line interface.

    You should remember this password for future use.

Grid name: Select a name for the PlateSpin Orchestrate grid.

    Default = hostname_grid

    A grid is an administrative domain container holding all of the objects in your network or data center. PlateSpin Orchestrate monitors and manages these objects, including users, resources, and jobs.

    The grid name you create here is displayed as the name for the container placed at the root of the tree in the Explorer panel of the Orchestrate Development Client.

License file: Specify the full path to the license file.

    Default = none

    A license key is required to use this product. You should have received this key from Novell, then you should have subsequently copied it to the network location that you specify here. Be sure to include the name of the license file in the path.

Audit Database: Choose whether to configure the audit database.

    Default = no. We recommend that you do not install the audit database on this server.

Agent Port¹: Specify the Agent port.

    Default = 8100

    Port 8100 is used for communication between the Orchestrate Server and the Orchestrate Agent. Specify another port number if 8100 is reserved for another use.

Server Portal¹: Specify the Server Portal port.

    Default = 8080 (if PlateSpin Orchestrate Monitoring is installed) or 80 (if Orchestrate Monitoring is not installed).

    Because Apache* uses port 80 for PlateSpin Orchestrate Monitoring, it forwards non-monitoring requests to the Orchestrate Server on the port you specify here.

Administrator Information port¹: Specify the Administrator Information page port.

    Default = 8001

    Port 8001 on the Orchestrate Server provides access to an Administrator Information page that includes links to product documentation, agent and client installers, and product tools to help you understand and use the product. Specify another port number if 8001 is reserved for another use on this server.

TLS Certificate and Key¹: Choose whether to generate a TLS certificate and key.

    Default = yes (the Orchestrate Server must generate a certificate and key for authentication)

    A PEM-encoded TLS certificate and key is needed for secure communication between the Orchestrate Server and Orchestrate Agent.

    If you respond with no, you need to provide the location of an existing certificate and key.

TLS Server Certificate²: Specify the full path to the TLS server certificate.

    Default = /etc/ssl/servercerts/servercert.pem

    Specify the path to the existing TLS certificate.

TLS Server Key²: Specify the full path to the TLS server private key.

    Default = /etc/ssl/servercerts/serverkey.pem

    Specify the path to the existing TLS private key.

Xen VNC password: Set the password that will be used for VNC on Xen virtualization hosts. You will need this password when logging into virtual machines using VNC.

Configuration Information: Configuration Summary

Explanation: When you have completed the configuration process, you have the option of viewing a summary of the configuration information.

View summary: Specify whether you want to view a summary of the configuration parameters.

    Default = yes

    Answering yes to this question displays a list of all the PlateSpin Orchestrate components you have configured and the information with which they will be configured.

    Answering no to this question starts the configuration program.

Configuration information change: Do you want to make changes?

    Default = no

    Answering yes to this question restarts the configuration process so that you can make changes to the configuration information.

    Answering no to this question starts the configuration program.

¹ This configuration parameter is considered an advanced setting for the Orchestrate Server in the PlateSpin Orchestrate Configuration Wizard. If you select the Configure Advanced Settings check box in the wizard, the setting is configured with normal defaults. Leaving the check box deselected lets you have the option of changing the default value.

² This configuration parameter is considered an advanced setting for the Orchestrate Server in the PlateSpin Orchestrate Configuration Wizard. If you select the Configure Advanced Settings check box in the wizard, this parameter is listed, but default values are provided only if the previous value is manually set to no.
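Table 1-1 advises choosing another port when a default is reserved for another use. As a rough pre-check, the sketch below tries to bind each default port on the node and reports whether it is currently free. The port numbers come from the table; the function name port_is_free and the dictionary labels are illustrative only. Port 80 is left out because binding it normally requires root privileges.

```python
import socket

# Default ports named in Table 1-1 of this guide.
DEFAULT_PORTS = {
    "Agent": 8100,
    "Administrator Information page": 8001,
    "Server Portal (Monitoring installed)": 8080,
}


def port_is_free(port, host="0.0.0.0"):
    """Return True if a TCP socket can bind to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use" or "permission denied".
            return False
    return True


if __name__ == "__main__":
    for name, port in DEFAULT_PORTS.items():
        state = "free" if port_is_free(port) else "in use or not bindable"
        print(f"{name} port {port}: {state}")
```

A port that is free at check time can still be claimed by another service later, so treat the result as a hint rather than a guarantee.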

Checking the Configuration

When the configuration is completed (using either “Running the PlateSpin Orchestrate

Configuration Script” on page 16 or “Running the PlateSpin Orchestrate Configuration Wizard” on

page 19), the first node of the Orchestrate Server cluster is set up. You then need to check the

configuration.

1 Open the configuration log file (/var/opt/novell/novell_zenworks_orch_install.log) to make sure that the components were correctly configured.

You can change the configuration if you change your mind about some of the parameters you provided in the configuration process. To do so, rerun the configuration and change your responses.

The configuration script performs the following functions in sequence on the PlateSpin Orchestrate Server:

1. Binds the cluster IP on this server by issuing the following command internally:

IPaddr2 start <IP_address_you_provided>

IMPORTANT: Make sure you configure DNS to resolve the cluster hostname to the cluster IP.

2. Configures the Orchestrate Server.

3. Shuts down the Orchestrate Server because you specified that this is a High-Availability configuration.

4. Unbinds the cluster IP on this server by issuing the following command internally:

IPaddr2 stop <IP_address_you_provided>
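The DNS requirement noted in the sequence above can be verified from any machine that will talk to the cluster. The following sketch assumes an IPv4 cluster address; the function name and the example hostname and address are hypothetical placeholders, not values from your installation.

```python
import socket


def cluster_name_resolves(hostname, cluster_ip, resolver=socket.gethostbyname):
    """Return True if hostname resolves to cluster_ip (IPv4 only)."""
    try:
        return resolver(hostname) == cluster_ip
    except OSError:
        # socket.gaierror (name not found) is a subclass of OSError.
        return False


if __name__ == "__main__":
    # Hypothetical values; substitute your cluster hostname and cluster IP.
    name, ip = "zos-cluster.example.com", "192.0.2.10"
    print(f"{name} -> {ip}: {cluster_name_resolves(name, ip)}")
```

The resolver parameter exists only so the check can be exercised without a live DNS server; in normal use the default is sufficient.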

1.2.6 Running the High Availability Configuration Script

Before you run the high availability configuration script, make sure that you have installed the

PlateSpin Orchestrate Server to a single node of your high availability cluster. For more information,

see Section 1.2.5, “Installing and Configuring PlateSpin Orchestrate on the First Clustered Node,”

on page 15.

IMPORTANT: The high availability configuration script asks for the mount point on the Fibre Channel SAN. Make sure that you have that information (/zos) before you run the script.

The high availability script, zos_server_ha_post_config, is located in /opt/novell/zenworks/orch/bin with the other configuration tools. You need to run this script on the first node of the cluster (that is, the node where you installed PlateSpin Orchestrate Server) as the next step in setting up PlateSpin Orchestrate to work in a high availability environment.

The script performs the following functions:

Verifies that the Orchestrate Server is not running

Copies Apache files to shared storage

Copies gmond and gmetad files to shared storage

Moves the PlateSpin Orchestrate files to shared storage (first node of the cluster)

Creates symbolic links pointing to the location of shared storage (all nodes of the cluster)

The high availability configuration script must be run on all nodes of the cluster. Make sure that you follow the prompts in the script exactly; do not misidentify a secondary node in the cluster as the primary node.
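To make the move-then-link pattern described above concrete, here is an illustrative sketch of the general technique. It is not the code of zos_server_ha_post_config, whose internals this guide does not describe. The function name, the directory layout under the shared mount point, and the choice to rename a secondary node's local copy aside are all assumptions of the sketch.

```python
import os
import shutil
from pathlib import Path


def relocate_to_shared(local_dir, shared_root, primary):
    """Replace local_dir with a symlink to a same-named directory under shared_root.

    On the primary node the existing files are moved to shared storage first.
    On other nodes the local copy is only renamed aside (an assumption of this
    sketch), because the shared copy already exists.
    """
    local_dir, shared_root = Path(local_dir), Path(shared_root)
    target = shared_root / local_dir.name
    if local_dir.is_symlink():
        return target  # already linked, nothing to do
    if primary:
        if target.exists():
            raise FileExistsError(f"{target} already exists on shared storage")
        shutil.move(str(local_dir), str(target))
    elif local_dir.exists():
        local_dir.rename(local_dir.with_name(local_dir.name + ".local"))
    os.symlink(target, local_dir, target_is_directory=True)
    return target
```

The sketch also shows why identifying the primary node correctly matters: only the primary branch moves data onto shared storage, while every other node just points at it.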

1.2.7 Installing and Configuring Orchestrate Server Packages on Other Nodes in the Cluster for High Availability

After you have followed the steps to set up the primary node in your planned cluster, you need to set

up the other nodes that you intend to use for failover in that cluster. Use the following sequence as

you set up other cluster nodes (the sequence is nearly identical to setting up the primary node):

1. Make sure that the SLES 10 SP2 nodes have the high availability pattern. For information, see

Section 1.2.2, “Installing the High Availability Pattern for SLES 10,” on page 12.

2. Make sure that the SLES 10 SP2 nodes have been configured with time synchronization. For

information, see Section 1.2.3, “Configuring Nodes with Time Synchronization and Installing

Heartbeat 2 to Each Node,” on page 13.

3. Set up OCFS2 on each node so that the nodes can communicate with the SAN, making sure to designate /zos as the shared mount point. For more information, see Section 1.2.4, “Setting Up OCFS2,” on page 14.

4. Install PlateSpin Orchestrate Server packages on this node. Use the steps as described in

“Installing the Orchestrate Server YaST Patterns on the Node” on page 15.

NOTE: Do not run the initial configuration script (config or guiconfig) on any node other than the primary node.

5. Copy the license file (key.txt) from the first node to the /opt/novell/zenworks/zos/server/license/ directory on this node.

6. Run the high availability configuration script on this node, as described in “Running the High

Availability Configuration Script” on page 28. This creates the symbolic link to the file paths of

the SAN.

1.2.8 Creating the Cluster Resource Group

The resource group creation script, zos_server_ha_resource_group, is located in /opt/novell/zenworks/orch/bin with the other configuration tools. You can run this script on the first node of the cluster to set up the cluster resource group. If you want to set up the resource group using Heartbeat 2 (GUI console or command line tool), running the script is optional.

The script performs the following functions:

Obtains the DNS name from the PlateSpin Orchestrate configuration file.

Creates the cluster resource group.

Configures resource stickiness to avoid unnecessary failbacks.

The zos_server_ha_resource_group script prompts you for the IP address of the Orchestrate Server cluster. The script then adds this address to a Heartbeat 2 Cluster Information Base (CIB) XML template called cluster_zos_server.xml and uses the following command to create the cluster resource group:

/usr/sbin/cibadmin -o resources -C -x $XMLFILE

The CIB XML template is located at /opt/novell/zenworks/orch/bin/ha/cluster_zos_server.xml. An unaltered template sample is shown below:

<group id="ZOS_Server">
  <primitive id="ZOS_Server_Cluster_IP" class="ocf" type="IPaddr2" provider="heartbeat">
    <instance_attributes>
      <attributes>
        <nvpair name="ip" value="$CONFIG_ZOS_SERVER_CLUSTER_IP"/>
      </attributes>
    </instance_attributes>
  </primitive>
  <primitive id="ZOS_Server_Instance" class="lsb" type="novell-zosserver" provider="heartbeat">
    <instance_attributes id="zos_server_instance_attrs">
      <attributes>
        <nvpair id="zos_server_target_role" name="target_role" value="started"/>
      </attributes>
    </instance_attributes>
    <operations>
      <op id="ZOS_Server_Status" name="status" description="Monitor the status of the ZOS service" interval="60" timeout="15" start_delay="15" role="Started" on_fail="restart"/>
    </operations>
  </primitive>
  <primitive id="Apache2" class="lsb" type="apache2" provider="heartbeat">
    <instance_attributes id="apache_attr">
      <attributes>
        <nvpair id="apache2_target_role" name="target_role" value="started"/>
      </attributes>
    </instance_attributes>
    <operations>
      <op id="Apache2_Status" name="status" description="Monitor the status of Apache2" interval="120" timeout="15" start_delay="15" role="Started" on_fail="restart"/>
    </operations>
  </primitive>
  <primitive id="Gmetad" class="lsb" type="novell-gmetad" provider="heartbeat">
    <instance_attributes id="gmetad_attr">
      <attributes>
        <nvpair id="gmetad_target_role" name="target_role" value="started"/>
      </attributes>
    </instance_attributes>
    <operations>
      <op id="Gmetad_Status" name="status" description="Monitor the status of Gmetad" interval="300" timeout="15" start_delay="15" role="Started" on_fail="restart"/>
    </operations>
  </primitive>
  <primitive id="Gmond" class="lsb" type="novell-gmond" provider="heartbeat">
    <instance_attributes id="gmond_attr">
      <attributes>
        <nvpair id="gmond_target_role" name="target_role" value="started"/>
      </attributes>
    </instance_attributes>
    <operations>
      <op id="Gmond_Status" name="status" description="Monitor the status of Gmetad" interval="300" timeout="15" start_delay="15" role="Started" on_fail="restart"/>
    </operations>
  </primitive>
</group>
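If you prefer to prepare the CIB XML yourself rather than run the script, the placeholder in the template has to be replaced with the cluster IP address before the file is passed to cibadmin. The sketch below shows one way to do that and to confirm that the result is still well-formed XML. How the product script performs the substitution is not documented here, so the use of Python's string.Template, the function name render_cib, and the example address are assumptions. Only a shortened fragment of the template is embedded to keep the example small.

```python
import xml.etree.ElementTree as ET
from string import Template

# Shortened fragment of cluster_zos_server.xml, copied from the sample above.
TEMPLATE_FRAGMENT = """\
<group id="ZOS_Server">
  <primitive id="ZOS_Server_Cluster_IP" class="ocf" type="IPaddr2" provider="heartbeat">
    <instance_attributes>
      <attributes>
        <nvpair name="ip" value="$CONFIG_ZOS_SERVER_CLUSTER_IP"/>
      </attributes>
    </instance_attributes>
  </primitive>
</group>
"""


def render_cib(template_text, cluster_ip):
    """Fill in the cluster IP placeholder and check that the result parses as XML."""
    rendered = Template(template_text).substitute(
        CONFIG_ZOS_SERVER_CLUSTER_IP=cluster_ip
    )
    ET.fromstring(rendered)  # raises ParseError if the XML is malformed
    return rendered


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only example address.
    print(render_cib(TEMPLATE_FRAGMENT, "192.0.2.10"))
```

The rendered text could then be written to a file and supplied to the cibadmin command shown earlier in place of $XMLFILE.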

The template shows that a cluster resource group comprises these components:

The PlateSpin Orchestrate Server

The PlateSpin Orchestrate Server cluster IP address

A dependency on the cluster file system resource group that you already created

Resource stickiness to avoid unnecessary failbacks

When you have installed and configured the nodes in the cluster and created a cluster resource

group, use the Heartbeat 2 tools to start the cluster resource group. You are then ready to test the

failover of the PlateSpin Orchestrate Server in the high-availability cluster (see Section 1.2.9,

“Testing the Failover of the PlateSpin Orchestrate Server in a Cluster,” on page 31).

1.2.9 Testing the Failover of the PlateSpin Orchestrate Server in a Cluster

You can optionally simulate a failure of the Orchestrate Server by powering off or performing a

shutdown of the server. After approximately 30 seconds, the clustering software detects that the

primary node is no longer functioning, binds the IP address to the failover server, then starts the

failover server in the cluster.

Access the PlateSpin Orchestrate Administrator Information Page to verify that the Orchestrate

Server is installed and running (stopped or started). Use the following URL to open the page in a

Web browser:

http://DNS_name_or_IP_address_of_cluster:8001

The Administrator Information page includes links to separate installation programs (installers) for

the PlateSpin Orchestrate Agent and the PlateSpin Orchestrate Clients. The installers are used for

various operating systems.
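When testing failover, it can help to measure how long the Administrator Information page stays unreachable. The following sketch polls the URL described above until it answers. The function names, the timeout values, and the example hostname are assumptions; port 8001 is the default given in this guide.

```python
import time
import urllib.error
import urllib.request
from typing import Optional


def page_is_up(url, timeout=5.0):
    """Return True if an HTTP GET to url receives any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, even if with an error status
    except (urllib.error.URLError, OSError):
        return False


def wait_for_failover(url, max_wait=120.0, interval=5.0, probe=page_is_up) -> Optional[float]:
    """Poll url until it responds; return elapsed seconds, or None on timeout."""
    start = time.monotonic()
    while True:
        if probe(url):
            return time.monotonic() - start
        if time.monotonic() - start >= max_wait:
            return None
        time.sleep(interval)


if __name__ == "__main__":
    # Hypothetical cluster name; substitute the DNS name or IP of your cluster.
    elapsed = wait_for_failover("http://zos-cluster.example.com:8001", max_wait=10.0)
    print("no response within the wait period" if elapsed is None else f"up after {elapsed:.1f}s")
```

Start the poll just before powering off the primary node; the returned time then approximates how long clients see the service as unavailable.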

1.2.10 Installing and Configuring other PlateSpin Orchestrate Components to the High Availability Grid

To install and configure other PlateSpin Orchestrate components (including the Orchestrate Agent,

the Monitoring Agent, the Monitoring Server, or the VM Builder) on servers that authenticate to the

cluster, you need to do the following:

Determine which components you want to install, remembering these dependencies:

All non-agent PlateSpin Orchestrate components must be installed to a SLES 10 SP2

server, a RHEL 4 server, or a RHEL 5 server.

The PlateSpin Orchestrate Agent must be installed to a SLES 10 SP1 server, a RHEL 4

server, a RHEL 5 server, or a Windows* (NT, 2000, XP) server.

A VM Warehouse must be installed on the same server as a VM Builder. A VM Builder

can be installed independent of the VM Warehouse on its own server.

Use YaST2 to install the PlateSpin Orchestrate packages of your choice to the network server

resources of your choice. For more information, see “Installing and Configuring All PlateSpin

Orchestrate Components Together” or “Installing the Orchestrate VM Client” in the PlateSpin

Orchestrate 2.0 Installation and Configuration Guide.

If you want to, you can download the Orchestrate Agent or clients from the Administrator

Information page and install them to a network resource as directed in “Installing the

Orchestrate Agent Only” in the PlateSpin Orchestrate 2.0 Getting Started Reference.

Run the text-based configuration script or the GUI Configuration Wizard to configure the

PlateSpin Orchestrate components you have installed (including any type of installation of the

agent). As you do this, you need to remember the hostname of the Orchestrate Server (that is,

the primary Orchestrate Server node), and the administrator name and password of this server.

For more information, see “Installing and Configuring All PlateSpin Orchestrate Components

Together” or “Installing the Orchestrate VM Client” in the PlateSpin Orchestrate 2.0 Getting

Started Reference.

It is important to understand that virtual machines under the management of PlateSpin Orchestrate

are also highly available—the loss of a host causes PlateSpin Orchestrate to re-provision it

elsewhere. This is true as long as the constraints in PlateSpin Orchestrate allow it to re-provision

(for example, if the virtual machine image is on shared storage).

2 PlateSpin Orchestrate Failover Behaviors in a High Availability Environment

This section includes information to help you understand the failover behavior of the PlateSpin® Orchestrate Server from Novell® in a high availability environment.

Section 2.1, “Use Case 1: Orchestrate Server Failover,” on page 33

Section 2.2, “Use Case 2: Agent Behavior at Orchestrate Server Failover and Failback,” on page 33

Section 2.3, “Use Case 3: VM Builder Behavior at Orchestrate Server Failover and Failback,” on page 33

Section 2.4, “Use Case 4: Monitoring Behavior at Orchestrate Server Failover and Failback,” on page 34

2.1 Use Case 1: Orchestrate Server Failover

If the primary node in the PlateSpin Orchestrate cluster fails, you should see a job restart on another Orchestrate Server in the cluster. The job must have been flagged as restartable. For more information, see Section 1.2.9, “Testing the Failover of the PlateSpin Orchestrate Server in a Cluster,” on page 31.

2.2 Use Case 2: Agent Behavior at Orchestrate Server Failover and Failback

If the primary node in the PlateSpin Orchestrate cluster fails, the Orchestrate Agent sees this server

go down and then reauthenticates with a secondary PlateSpin Orchestrate node as it takes over

responsibilities of the cluster.

2.3 Use Case 3: VM Builder Behavior at Orchestrate Server Failover and Failback

If the Orchestrate Server fails, any VM builds in progress are canceled and potentially incomplete

residual artifacts of the build are cleaned up. When the Orchestrate Server restarts or when it fails

over in the cluster (the server operates identically in these scenarios), the job controlling the VM

build restarts and runs briefly. The purpose of this brief run is to clean up residual objects in the

PlateSpin Orchestrate model.

2.4 Use Case 4: Monitoring Behavior at Orchestrate Server Failover and Failback

The PlateSpin Orchestrate Monitoring Server and the Monitoring Agent are installed on the same

server as the PlateSpin Orchestrate Server in the high availability PlateSpin Orchestrate cluster. The

Monitoring Agent reports data to the PlateSpin Orchestrate Monitoring Server, and the Orchestrate

VM Client provides access to the charts created from the monitoring data.

The Monitoring Server and the Monitoring Agent services are made highly available along with the

Orchestrate Server and move between clustered machines as the Orchestrate Server does. If a

monitoring agent is installed on an Orchestrate Server and if that server goes down, the server is

displayed as “Down” in the Orchestrate VM Client (Monitoring view).

3 High Availability Best Practices

This section includes information that might be useful to users of PlateSpin® Orchestrate from Novell® in high availability environments. We anticipate that the contents of the section will expand as the product is adopted and deployed. We encourage your contributions to this document. All comments are tested and approved by product engineers before they appear in this section. Please use the User Comments feature at the bottom of each page of the online documentation, or go to www.novell.com/documentation/feedback.html (http://www.novell.com/documentation/feedback.html) and enter your comments there.

Section 3.1, “Jobs Using scheduleSweep() Might Need a Start Constraint,” on page 35

3.1 Jobs Using scheduleSweep() Might Need a Start Constraint

If you write a custom job that uses the scheduleSweep() JDL function to schedule joblets and that job is either 1) marked as restartable in a high availability failover situation or 2) scheduled through the Job Scheduler to run at server startup, the job might fail to schedule any joblets. This failure is easy to spot because the job shows a 0 second run time. It occurs because scheduleSweep(), by default, creates joblets only for online nodes.

If the Job runs during failover, resources might not be immediately available, so the job ends immediately.

To keep the Job from running until a resource is online, you can use a start constraint. For example, you could add the following to the job policy:

<constraint type="start" >
  <gt fact="jobinstance.matchingresources" value="0" />
</constraint>

If you implement this constraint, the Job is queued (not started) until at least one resource matches the policy resource constraint.

As alternatives to using the constraint approach, you can:

Code in a waiting interval for the required Agents in your Job

Use the schedule() API for creating Joblets instead of the scheduleSweep() function.

Choose an alternative set of resources to consider for the scheduleSweep(). For more information, see the “ScheduleSpec” API.

For more information about using constraints, see “Working with Facts and Constraints” in the

PlateSpin Orchestrate 2.0 Developer Guide and Reference.
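To illustrate the effect of the start constraint shown earlier in this section, the toy evaluator below reads the same constraint XML and decides whether a job would be allowed to start for a given set of fact values. It is only a teaching aid written in plain Python: it is not the PlateSpin Orchestrate constraint engine, it handles only the gt test, and the function name start_allowed is hypothetical.

```python
import xml.etree.ElementTree as ET

# The start constraint from this section.
START_CONSTRAINT = """\
<constraint type="start">
  <gt fact="jobinstance.matchingresources" value="0" />
</constraint>
"""


def start_allowed(constraint_xml, facts):
    """Toy check: True only if every <gt> test holds for the given fact values.

    A fact that is missing from `facts` is treated as 0 (an assumption of this sketch).
    """
    root = ET.fromstring(constraint_xml)
    return all(
        facts.get(test.get("fact"), 0) > int(test.get("value"))
        for test in root.findall("gt")
    )


if __name__ == "__main__":
    during_failover = {"jobinstance.matchingresources": 0}
    after_agents_return = {"jobinstance.matchingresources": 2}
    print(start_allowed(START_CONSTRAINT, during_failover))
    print(start_allowed(START_CONSTRAINT, after_agents_return))
```

With no matching resources the check fails, which corresponds to the job staying queued; once at least one resource matches, the check passes and the job can start.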
