Novell®

www.novell.com

AUTHORIZED DOCUMENTATION

Novell Cluster Services™ 1.8.7 for Linux Administration Guide

Open Enterprise Server 2 SP2

novdocx (en) 7 January 2010

March 15, 2010

OES 2 SP2: Novell Cluster Services 1.8.7 for Linux Administration Guide

Legal Notices

Novell, Inc., makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose.

Further, Novell, Inc., reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes.

Further, Novell, Inc., makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to notify any person or entity of such changes.

Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. See the Novell International Trade Services Web page (http://www.novell.com/info/exports/) for more information on exporting Novell software. Novell assumes no responsibility for your failure to obtain any necessary export approvals.

Copyright © 2007–2010 Novell, Inc. All rights reserved. No part of this publication may be reproduced, photocopied, stored on a retrieval system, or transmitted without the express written consent of the publisher.

Novell, Inc.

404 Wyman Street, Suite 500

Waltham, MA 02451

U.S.A.

www.novell.com

Online Documentation: To access the latest online documentation for this and other Novell products, see the Novell Documentation Web page (http://www.novell.com/documentation).

Novell Trademarks

For Novell trademarks, see the Novell Trademark and Service Mark list (http://www.novell.com/company/legal/trademarks/tmlist.html).

Third-Party Materials

All third-party trademarks are the property of their respective owners.

Contents

About This Guide 13

1 Overview of Novell Cluster Services 15
1.1 Why Should I Use Clusters? 15
1.2 Benefits of Novell Cluster Services 15
1.3 Product Features 16
1.4 Clustering for High-Availability 16
1.5 Shared Disk Scenarios 18
1.5.1 Using Fibre Channel Storage Systems 19
1.5.2 Using iSCSI Storage Systems 20
1.5.3 Using Shared SCSI Storage Systems 21

2 What’s New 23
2.1 What’s New (January 2010) 23
2.2 What’s New (OES 2 SP2) 23
2.2.1 Improved Error Reporting 23
2.2.2 Improved Time Calculations 23
2.2.3 Specifying the Size of the SBD Partition 24
2.2.4 Customizing Translation Syntax 24
2.2.5 Migration Tool Support for Cluster Configurations 24
2.2.6 New iFolder Resource Template 24
2.2.7 Removed MPK Calls from the Code 24
2.2.8 Cluster Restart Is No Longer Required in a Rolling Cluster Upgrade 24
2.3 What’s New (OES 2 SP1) 25
2.3.1 Schema Extension 25
2.3.2 Installation by Container Administrator 25
2.3.3 Behavior Change for Adding a Node 25
2.3.4 Attribute NCS: GIPC Config Is No Longer Maintained 26
2.3.5 Support for Novell AFP for Linux 26
2.3.6 Support for Novell CIFS for Linux 26
2.3.7 Support for Domain Services for Windows 26
2.4 What’s New (OES 2) 26

3 Installing and Configuring Novell Cluster Services on OES 2 Linux 27
3.1 System Requirements for Novell Cluster Services 27
3.1.1 Hardware Requirements 27
3.1.2 Software Requirements 28
3.1.3 Configuration Requirements 34
3.1.4 Shared Disk System Requirements 36
3.1.5 SAN Rules for LUN Masking 38
3.1.6 Using Device Mapper Multipath with Novell Cluster Services 38
3.2 Novell Cluster Services Licensing 39
3.3 Extending the eDirectory Schema to Add Cluster Objects 40
3.3.1 Prerequisites for Extending the Schema 40
3.3.2 Extending the Schema 41
3.4 Assigning Install Rights for Container Administrators 41
3.5 Installing Novell Cluster Services 42
3.5.1 Installing Novell Cluster Services during an OES 2 Linux Installation 42
3.5.2 Installing Novell Cluster Services on an Existing OES 2 Linux Server 44
3.6 Configuring Novell Cluster Services 46
3.6.1 Opening the Novell Open Enterprise Server Configuration Page 46
3.6.2 Using Different LDAP Credentials for the Cluster Configuration 47
3.6.3 Enabling the Novell Cluster Services Configuration Page in YaST 48
3.6.4 Configuring a New Cluster 48
3.6.5 Adding a Node to an Existing Cluster 50
3.7 Configuring Additional Administrators 52
3.8 What’s Next 52

4 Upgrading OES 2 Linux Clusters 53
4.1 Requirements for Upgrading Clusters 53
4.2 Upgrading OES 2 Clusters (Rolling Cluster Upgrade) 53
4.3 Upgrade Issues for OES 2 SP2 54
4.3.1 Updating the iFolder Resource Template 54

5 Upgrading OES 1 Linux Clusters to OES 2 Linux 55
5.1 Requirements and Guidelines for Upgrading Clusters from OES 1 Linux and OES 2 Linux 55
5.2 Upgrading Existing OES 1 Linux Cluster Nodes to OES 2 (Rolling Cluster Upgrade) 56
5.3 Adding New OES 2 Linux Cluster Nodes to Your OES 1 Linux Cluster 57
5.4 Modifying Cluster Resource Scripts for Mixed OES 1 Linux and OES 2 Linux Clusters 57
5.5 Finalizing the Cluster Upgrade 57

6 Converting NetWare 6.5 Clusters to OES 2 Linux 59
6.1 Guidelines for Converting Clusters from NetWare to OES 2 Linux 59
6.1.1 Supported Mixed-Node Clusters 60
6.1.2 SBD Devices Must Be Marked as Shareable for Clustering 60
6.1.3 Syntax Translation Issues for Load and Unload Scripts 60
6.1.4 Case Sensitivity Issues 61
6.1.5 Adding New NetWare Nodes to a Mixed-Node Cluster 61
6.1.6 Converting Multiple NetWare Cluster Nodes to OES 2 Linux 61
6.1.7 Converting Nodes that Contain the eDirectory Master Replica 62
6.1.8 Failing Over Cluster Resources on Mixed-Node Clusters 62
6.1.9 Managing File Systems in Mixed-Node Clusters 62
6.1.10 Using Novell iManager in Mixed-Node Clusters 62
6.1.11 Using Novell Remote Manager Is Not Supported in Mixed-Node Clusters 63
6.1.12 Using ConsoleOne Is Not Supported for Mixed-Node Clusters 63
6.1.13 Using the Monitor Function in Mixed-Node Clusters Is Not Supported 63
6.2 Guidelines for Converting NSS Pool Resources from NetWare to Linux 63
6.2.1 NSS Pool Cluster Migration 63
6.2.2 NSS File System Migration to NCP Volumes or Linux POSIX File Systems 64
6.2.3 Estimated Time Taken to Build the Trustee File on Linux 64
6.3 Guidelines for Converting Service Cluster Resources from NetWare to Linux 64
6.3.1 Overview of All NetWare 6.5 SP8 Services 65
6.3.2 Apache Web Server 67
6.3.3 Apple Filing Protocol (AFP) 67
6.3.4 Archive and Version Services 67
6.3.5 CIFS 68
6.3.6 DFS VLDB 68
6.3.7 DHCP Server 69
6.3.8 DNS Server 71
6.3.9 eDirectory Server Certificates 72
6.3.10 iPrint 74
6.3.11 QuickFinder Server 75
6.4 Converting NetWare Cluster Nodes to OES 2 Linux (Rolling Cluster Conversion) 78
6.5 Adding New OES 2 Linux Nodes to Your NetWare Cluster 81
6.6 Translation of Cluster Resource Scripts for Mixed NetWare and Linux Clusters 82
6.6.1 Comparing Script Commands for NetWare and Linux 82
6.6.2 Comparing Master IP Address Scripts 83
6.6.3 Comparing NSS Pool Resource Scripts 84
6.6.4 Comparing File Access Protocol Resource Script Commands 85
6.7 Customizing the Translation Syntax for Converting Load and Unload Scripts 87
6.8 Finalizing the Cluster Conversion 88

7 Configuring Cluster Policies and Priorities 91
7.1 Understanding Cluster Settings 91
7.1.1 Cluster Policies 91
7.1.2 Cluster Protocols Properties 92
7.2 Configuring Quorum Membership and Timeout Properties 92
7.2.1 Quorum Triggers (Number of Nodes) 93
7.2.2 Quorum Triggers (Timeout) 93
7.3 Configuring Cluster Protocol Properties 93
7.3.1 Heartbeat 94
7.3.2 Tolerance 94
7.3.3 Master Watchdog 94
7.3.4 Slave Watchdog 94
7.3.5 Maximum Retransmits 94
7.4 Configuring Cluster Event E-Mail Notification 95
7.5 Viewing the Cluster Node Properties 95
7.6 Modifying the Cluster IP Address and Port Properties 96
7.7 What’s Next 96

8 Managing Clusters 97
8.1 Starting and Stopping Novell Cluster Services 97
8.1.1 Starting Novell Cluster Services 98
8.1.2 Stopping Novell Cluster Services 98
8.1.3 Enabling and Disabling the Automatic Start of Novell Cluster Services 98
8.2 Monitoring Cluster and Resource States 99
8.3 Generating a Cluster Configuration Report 101
8.4 Cluster Migrating Resources to Different Nodes 101
8.5 Onlining and Offlining (Loading and Unloading) Cluster Resources from a Cluster Node 102
8.6 Removing (Leaving) a Node from the Cluster 103
8.7 Joining a Node to the Cluster 103
8.8 Configuring the EVMS Remote Request Timeout 103
8.9 Shutting Down Linux Cluster Servers When Servicing Shared Storage 104
8.10 Enabling or Disabling Cluster Maintenance Mode 104
8.11 Preventing a Cluster Node Reboot after a Node Shutdown 104
8.12 Renaming a Pool for a Pool Cluster Resource 105
8.13 Moving a Cluster, or Changing IP Addresses, LDAP Server, or Administrator Credentials for a Cluster 105
8.13.1 Changing the Administrator Credentials or LDAP Server IP Addresses for a Cluster 105
8.13.2 Moving a Cluster or Changing IP Addresses of Cluster Nodes and Resources 107
8.14 Adding a Node That Was Previously in the Cluster 109
8.15 Deleting a Cluster Node from a Cluster, or Reconfiguring a Cluster Node 109
8.16 Creating or Deleting Cluster SBD Partitions 110
8.16.1 Prerequisites for Creating an SBD Partition 111
8.16.2 Before Creating a Cluster SBD Partition 111
8.16.3 Creating a Non-Mirrored Cluster SBD Partition 111
8.16.4 Creating a Mirrored Cluster SBD Partition 112
8.16.5 Deleting a Non-Mirrored Cluster SBD Partition 113
8.16.6 Deleting a Mirrored Cluster SBD Partition 114
8.16.7 Removing a Segment from a Mirrored Cluster SBD Partition 114
8.17 Customizing Cluster Services Management 115

9 Configuring and Managing Cluster Resources 117
9.1 Planning Cluster Maintenance 117
9.2 Creating Cluster Resource Templates 117
9.2.1 Default Resource Templates 118
9.2.2 Creating a Resource Template 119
9.3 Creating Cluster Resources 120
9.4 Configuring a Load Script for a Cluster Resource 121
9.5 Configuring an Unload Script for a Cluster Resource 122
9.6 Enabling Monitoring and Configuring the Monitor Script 123
9.6.1 Configuring Resource Monitoring 123
9.6.2 Example Monitoring Scripts 124
9.6.3 Monitoring Services Critical to Clustering 125
9.7 Setting Start, Failover, and Failback Modes for Cluster Resources 125
9.7.1 Understanding Cluster Resource Modes 125
9.7.2 Viewing or Modifying the Start, Failover, and Failback Modes for a Resource 126
9.8 Assigning Nodes to a Resource 127
9.9 Configuring Resource Priorities for Load Order 127
9.10 Changing the IP Address of a Cluster Resource 128
9.11 Deleting Cluster Resources 128
9.11.1 Deleting a Cluster Resource on a Master Node 128
9.11.2 Deleting a Cluster Resource on a Non-Master Node 129
9.12 Additional Information for Creating Cluster Resources 130
9.12.1 Creating Storage Cluster Resources 130
9.12.2 Creating Service Cluster Resources 130
9.12.3 Creating Virtual Machine Cluster Resources 131

10 Configuring Cluster Resources for Shared NSS Pools and Volumes 133
10.1 Planning for Shared NSS Pools and Volumes 133
10.1.1 Shared Storage 134
10.1.2 Novell Cluster Services 134
10.1.3 Novell Storage Services 134
10.1.4 IP Address 135
10.1.5 NCP Server for Linux 135
10.1.6 Novell CIFS for Linux 135
10.1.7 Novell AFP for Linux 136
10.2 Considerations for Working with Shared NSS Pools and Volumes in the Cluster 136
10.3 Creating NSS Shared Disk Partitions and Pools 137
10.3.1 Initializing Shared Devices 137
10.3.2 Enabling Sharing on a Device 137
10.3.3 Creating Shared NSS Pools 138
10.4 Creating NSS Volumes on a Shared Pool 142
10.4.1 Using iManager to Create NSS Volumes 142
10.4.2 Using NSSMU to Create NSS Volumes 143
10.5 Cluster-Enabling an Existing NSS Pool and Its Volumes 144
10.6 Adding Advertising Protocols 147
10.7 Configuring a Load Script for the Shared NSS Pool 149
10.8 Configuring an Unload Script for the Shared NSS Pool 150
10.9 Configuring a Monitor Script for the Shared NSS Pool 150
10.10 Mirroring and Cluster-Enabling Shared NSS Pools and Volumes 150
10.10.1 Understanding NSS Mirroring 151
10.10.2 Requirements for NSS Mirroring 152
10.10.3 Creating and Mirroring NSS Pools on Shared Storage 152
10.10.4 Verifying the NSS Mirror Status in the Cluster 154
10.11 Deleting NSS Pool Cluster Resources 154
10.12 Changing the Volume ID 155
10.13 What’s Next 155

11 Configuring Cluster Resources for Shared Linux POSIX Volumes 157
11.1 Requirements for Shared Linux POSIX Volumes 157
11.2 Creating Linux POSIX Volumes on Shared Disks 158
11.2.1 Removing Existing Formatting and Segment Managers 158
11.2.2 Creating a Cluster Segment Manager Container 159
11.2.3 Adding a Non-CSM Segment Manager Container 160
11.2.4 Creating an EVMS Volume 161
11.2.5 Making a File System on the EVMS Volume 161
11.3 Cluster-Enabling a Linux POSIX Volume on a Shared Disk 162
11.3.1 Logging in to iManager 163
11.3.2 Creating a Cluster Resource for a Linux POSIX Volume 163
11.3.3 Configuring a Load Script for a Linux POSIX Volume Cluster Resource 164
11.3.4 Configuring an Unload Script for a Linux POSIX Volume Cluster Resource 165
11.3.5 Enabling Monitoring and Configuring a Monitor Script for a Linux POSIX Volume Cluster Resource 166
11.3.6 Configuring Policies for a Linux POSIX Volume Cluster Resource 167
11.4 Creating a Virtual Server Name for the Cluster Resource 169
11.4.1 Using ncs_ncpserv.py to Create an NCS:NCP Server Object 169
11.4.2 Using iManager to Create an NCS:NCP Server Object 171
11.5 Sample Scripts for a Linux POSIX Volume Cluster Resource 172
11.5.1 Sample Load Script for the Linux POSIX Volume Cluster Resource 174
11.5.2 Sample Unload Script for the Linux POSIX Volume Cluster Resource 175
11.5.3 Sample Monitor Script for a Linux POSIX Volume Cluster Resource 176
11.6 Expanding EVMS Volumes on Shared Disks 176
11.6.1 Expanding a Volume to a Separate Disk 176
11.6.2 Moving a Volume to a Larger Disk 177
11.7 Deleting Shared Storage 178
11.8 Known Issues for Working with Cluster Resources for Linux POSIX Volumes 178
11.8.1 Dismount Volumes before Onlining a Comatose Resource 178
11.8.2 Cluster Services Must Be Running When Using EVMS 178
11.8.3 Close EVMS Utilities When They Are Not In Use 178
11.8.4 Do Not Migrate Resources When EVMS Tools Are Running 179
11.9 What’s Next 179

12 Configuring Novell Cluster Services in a Xen Virtualization Environment 181
12.1 Prerequisites for Xen Host Server Environments 181
12.2 Virtual Machines as Cluster Resources 182
12.2.1 Creating a Xen Virtual Machine Cluster Resource 183
12.2.2 Configuring Virtual Machine Load, Unload, and Monitor Scripts 184
12.2.3 Setting Up Live Migration 189
12.3 Virtual Machines as Cluster Nodes 190
12.4 Virtual Cluster Nodes in Separate Clusters 191
12.5 Mixed Physical and Virtual Node Clusters 191
12.6 Additional Information 193

13 Troubleshooting Novell Cluster Services 195
13.1 File Location Information 195
13.2 Diagnosing Cluster Problems 196
13.3 Cluster Search Times Out (Bad XML Error) 197
13.4 A Device Name Is Required to Create a Cluster Partition 197
13.5 Cluster Resource Goes Comatose Immediately After Migration or Failover 197
13.6 smdr.novell Is Not Registered with SLP for a New Cluster Resource 197
13.7 Cluster View Displays the Wrong Cluster Node Name 198
13.8 NSS Takes Up to 10 Minutes to Load When the Server Is Rebooted (Linux) 198
13.9 Could Not Delete This Resource Data_Server (Error 499) 198
13.10 Problem Authenticating to Remote Servers during Cluster Configuration 199
13.11 Problem Connecting to an iSCSI Target 199
13.12 Problem Deleting a Cluster Resource or Clustered Pool 199
13.13 Version Issues 199
13.14 Can’t Find the Prevent Cascading Failover Option 199

13.15 Is there a way to uninstall Novell Cluster Services from a server? . . . . . . . . . . . . . . . . . . . . 200

13.16 Cluster Resource Is Stuck in "NDS Sync" or "eDirectory Sync" State . . . . . . . . . . . . . . . . . . 200


14 Security Considerations 201

14.1 Cluster Administration Rights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

14.2 Ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

14.3 E-Mail Alerts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

14.4 Log Files. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

A Console Commands for Novell Cluster Services 203

A.1 Cluster Management Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

A.2 Business Continuity Clustering Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

A.3 extend_schema Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

A.4 SBD Utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

B Files for Novell Cluster Services 209

C Comparing Novell Cluster Services for Linux and NetWare 213

D Comparing Clustering Support for OES 2 Services on Linux and NetWare 219

E Documentation Updates 225

E.1 March 15, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

E.1.1 Configuring and Managing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

E.1.2 Configuring Cluster Resources for Shared Linux POSIX Volumes . . . . . . . . . . . . . 226

E.1.3 Configuring Novell Cluster Services in a Xen Virtualization Environment . . . . . . . . 227

E.1.4 Troubleshooting Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227


E.2 February 19, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

E.2.1 Installing and Configuring Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . 227

E.2.2 Managing Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

E.3 February 10, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

E.3.1 Configuring Cluster Resources for Linux POSIX Volumes . . . . . . . . . . . . . . . . . . . 228

E.4 January 29, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

E.4.1 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 228

E.4.2 Installing and Configuring Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . 229

E.4.3 Managing Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

E.4.4 Troubleshooting Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

E.4.5 What’s New . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

E.5 January 20, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

E.5.1 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 230

E.5.2 What’s New . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

E.6 January 4, 2010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

E.6.1 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 230

E.6.2 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 231

E.7 December 15, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

E.7.1 Installing and Configuring Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . 231

E.8 December 10, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

E.8.1 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 232

E.8.2 Installing and Configuring Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . 232

E.8.3 Managing Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

E.8.4 Troubleshooting Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

E.9 November 2009 (OES 2 SP2) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

E.9.1 Configuring Novell Cluster Services in a Xen Host Environment. . . . . . . . . . . . . . . 233

E.9.2 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 233

E.9.3 Console Commands for Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . 233

E.9.4 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 234

E.9.5 Managing Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

E.9.6 Troubleshooting Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

E.9.7 Upgrading OES 2 Linux Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

E.9.8 What’s New . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

E.10 July 30, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

E.10.1 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 235

E.10.2 Console Commands for Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . 235

E.10.3 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 236

E.10.4 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 236

E.10.5 Managing Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

E.10.6 Security Considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

E.10.7 Troubleshooting Novell Cluster Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.10.8 What’s New . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.11 June 22, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.11.1 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.11.2 Managing Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.12 June 5, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

E.12.1 Configuring Cluster Policies and Priorities. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

E.12.2 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 238

E.12.3 Console Commands for Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . 238

E.12.4 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 239

E.12.5 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 239

E.12.6 Managing Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

E.13 May 6, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

E.13.1 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 240

E.13.2 Configuring and Managing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

E.13.3 Converting NetWare 6.5 Clusters to OES 2 SP1 Linux. . . . . . . . . . . . . . . . . . . . . . 241

E.13.4 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 241


E.13.5 Troubleshooting Novell Cluster Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

E.13.6 Upgrading OES 2 Linux Servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

E.14 March 3, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

E.14.1 Configuring and Managing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

E.14.2 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 242

E.14.3 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 243

E.14.4 Managing Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

E.14.5 What’s Next . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

E.15 February 13, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

E.15.1 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 244

E.16 February 3, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

E.16.1 Configuring and Managing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

E.16.2 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 244

E.16.3 Converting NetWare 6.5 Clusters to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . 245

E.16.4 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 245

E.17 January 13, 2009 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

E.18 December 2008 (OES 2 SP1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

E.18.1 Comparison of Clustering OES 2 Services for Linux and NetWare . . . . . . . . . . . . . 246

E.18.2 Comparison of Novell Cluster Services for Linux and NetWare . . . . . . . . . . . . . . . 246

E.18.3 Configuring and Managing Cluster Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

E.18.4 Configuring Cluster Resources for Shared Linux POSIX Volumes . . . . . . . . . . . . . 246

E.18.5 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 246

E.18.6 Configuring Novell Cluster Services in a Virtualization Environment . . . . . . . . . . . . 247

E.18.7 Console Commands for Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . 247

E.18.8 Converting NetWare 6.5 Cluster to OES 2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . 248

E.18.9 Installing Novell Cluster Services on OES 2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . 248

E.18.10 Managing Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

E.18.11 Overview of Novell Cluster Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

E.18.12 Troubleshooting Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

E.18.13 Upgrading OES 2 Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

E.18.14 What’s New . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

E.19 June 4, 2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

E.19.1 Configuring Cluster Resources for Shared NSS Pools and Volumes . . . . . . . . . . . 250

E.19.2 Configuring Cluster Resources for Shared Linux POSIX Volumes . . . . . . . . . . . . . 251

E.19.3 Installation and Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

E.19.4 Managing Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 252

E.20 May 2, 2008 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

E.20.1 Installation and Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

E.20.2 Managing Novell Cluster Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253


About This Guide

This guide describes how to install, upgrade, configure, and manage Novell® Cluster Services™. It is intended for cluster administrators and is divided into the following sections:

Chapter 1, “Overview of Novell Cluster Services,” on page 15

Chapter 2, “What’s New,” on page 23

Chapter 3, “Installing and Configuring Novell Cluster Services on OES 2 Linux,” on page 27

Chapter 4, “Upgrading OES 2 Linux Clusters,” on page 53

Chapter 5, “Upgrading OES 1 Linux Clusters to OES 2 Linux,” on page 55

Chapter 6, “Converting NetWare 6.5 Clusters to OES 2 Linux,” on page 59

Chapter 7, “Configuring Cluster Policies and Priorities,” on page 91

Chapter 8, “Managing Clusters,” on page 97

Chapter 9, “Configuring and Managing Cluster Resources,” on page 117

Chapter 10, “Configuring Cluster Resources for Shared NSS Pools and Volumes,” on page 133


Chapter 11, “Configuring Cluster Resources for Shared Linux POSIX Volumes,” on page 157

Chapter 12, “Configuring Novell Cluster Services in a Xen Virtualization Environment,” on page 181

Chapter 13, “Troubleshooting Novell Cluster Services,” on page 195

Appendix A, “Console Commands for Novell Cluster Services,” on page 203

Appendix B, “Files for Novell Cluster Services,” on page 209

Appendix C, “Comparing Novell Cluster Services for Linux and NetWare,” on page 213

Appendix D, “Comparing Clustering Support for OES 2 Services on Linux and NetWare,” on page 219

Audience

This guide is intended for anyone involved in installing, configuring, and managing Novell Cluster Services.

Feedback

We want to hear your comments and suggestions about this manual and the other documentation included with this product. Please use the User Comments feature at the bottom of each page of the online documentation, or go to www.novell.com/documentation/feedback.html and enter your comments there.

Documentation Updates

The latest version of this Novell Cluster Services for Linux Administration Guide is available on the OES 2 documentation Web site (http://www.novell.com/documentation/oes2/cluster-services.html).


Additional Documentation

For information about creating cluster resources for various Linux services on your OES 2 Linux server, refer to the clustering sections in the individual guides. See the “Clustering Linux Services” list on the Clustering (High Availability) Documentation Web site (http://www.novell.com/documentation/oes2/cluster-services.html#clust-config-resources).

For information about Novell Cluster Services 1.8.5 for NetWare®, see the “Clustering NetWare Services” list on the NetWare 6.5 SP8 Clustering (High Availability) Documentation Web site (http://www.novell.com/documentation/nw65/cluster-services.html#clust-config-resources).

Documentation Conventions

In Novell documentation, a greater-than symbol (>) is used to separate actions within a step and items in a cross-reference path.

A trademark symbol (®, ™, etc.) denotes a Novell trademark. An asterisk (*) denotes a third-party trademark.


1 Overview of Novell Cluster Services

Novell® Cluster Services™ is a server clustering system that ensures high availability and manageability of critical network resources, including data, applications, and services. It is a multinode clustering product for Linux* that is enabled for Novell eDirectory™ and supports failover, failback, and migration (load balancing) of individually managed cluster resources.

Section 1.1, “Why Should I Use Clusters?,” on page 15

Section 1.2, “Benefits of Novell Cluster Services,” on page 15

Section 1.3, “Product Features,” on page 16

Section 1.4, “Clustering for High-Availability,” on page 16

Section 1.5, “Shared Disk Scenarios,” on page 18

1.1 Why Should I Use Clusters?

A server cluster is a group of redundantly configured servers that work together to provide highly available access for clients to important applications, services, and data while reducing unscheduled outages. The applications, services, and data are configured as cluster resources that can be failed over or cluster migrated between servers in the cluster. For example, when a failure occurs on one node of the cluster, the clustering software gracefully relocates its resources and current sessions to another server in the cluster. Clients connect to the cluster instead of an individual server, so users are not aware of which server is actively providing the service or data. In most cases, users are able to continue their sessions without interruption.

Each server in the cluster runs the same operating system and applications that are needed to provide the application, service, or data resources to clients. Shared devices are connected to all servers in the cluster but are mounted on only one server at a time. Clustering software monitors the health of each member server by listening for its heartbeat, a simple message that lets the other servers know it is alive.
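The heartbeat mechanism described above can be illustrated with a minimal Python sketch. It is a simplified, hypothetical model, not the Novell Cluster Services implementation: the `HeartbeatMonitor` class and its method names are invented for illustration, and it assumes a node is presumed failed once no heartbeat has arrived for longer than a tolerance period. The default of 8 is an assumption, in seconds, that mirrors the `tolerance=8` value in the `cluster stats display` output shown in Section 2.2.2.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HeartbeatMonitor:
    """Hypothetical model of heartbeat-based failure detection (not NCS code)."""

    # Seconds without a heartbeat before a node is presumed failed (assumed value).
    tolerance: float = 8.0
    # Time at which the most recent heartbeat was received from each node.
    last_seen: Dict[str, float] = field(default_factory=dict)

    def record_heartbeat(self, node: str, now: float) -> None:
        """Record a simple 'I am alive' message from a member server."""
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> List[str]:
        """Return the nodes whose last heartbeat is older than the tolerance."""
        return sorted(
            node
            for node, seen in self.last_seen.items()
            if now - seen > self.tolerance
        )


if __name__ == "__main__":
    monitor = HeartbeatMonitor()
    # node1 sends a heartbeat every second; node2 goes silent after t=0.
    for t in range(10):
        monitor.record_heartbeat("node1", float(t))
    monitor.record_heartbeat("node2", 0.0)
    print(monitor.failed_nodes(now=9.5))  # ['node2']
```

In this model a single missed heartbeat is not treated as a failure; only silence longer than the whole tolerance window is, which is what lets the cluster ride out brief network delays.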

The cluster’s virtual server provides a single point for accessing, configuring, and managing the cluster servers and resources. The virtual identity is bound to the cluster’s master node and remains with the master node regardless of which member server acts as the master node. The master server also keeps information about each of the member servers and the resources they are running. If the master server fails, the control duties are passed to another server in the cluster.

1.2 Benefits of Novell Cluster Services

Novell Cluster Services provides high availability from commodity components. You can configure up to 32 OES 2 Linux servers in a high-availability cluster, where resources can be dynamically relocated to any server in the cluster. Resources can be configured to automatically fail over to one or multiple different preferred servers in the event of a server failure. In addition, costs are lowered through the consolidation of applications and operations onto a cluster.

Novell Cluster Services allows you to manage a cluster from a single point of control and to adjust resources to meet changing workload requirements (that is, to manually load balance the cluster). Resources can also be cluster migrated manually so that you can troubleshoot hardware. For example, you can move applications, Web sites, and so on to other servers in your cluster without waiting for a server to fail. This helps you to reduce unplanned service outages as well as planned outages for software and hardware maintenance and upgrades.

Novell Cluster Services clusters provide the following benefits over standalone servers:

Increased availability of applications, services, and data

Improved performance

Lower cost of operation

Scalability

Disaster recovery

Data protection

Server consolidation

Storage consolidation

1.3 Product Features


Novell Cluster Services includes several important features to help you ensure and manage the availability of your network resources:

Support for shared SCSI, iSCSI, or Fibre Channel storage subsystems. Shared disk fault tolerance can be obtained by implementing RAID on the shared disk subsystem.

Multi-node all-active cluster (up to 32 nodes). Any server in the cluster can restart resources (applications, services, IP addresses, and file systems) from a failed server in the cluster.

A single point of administration through the browser-based Novell iManager cluster configuration and monitoring GUI. iManager also lets you remotely manage your cluster.

The ability to tailor a cluster to the specific applications and hardware infrastructure that fit your organization.

Dynamic assignment and reassignment of server storage as needed.

The ability to use e-mail to automatically notify administrators of cluster events and cluster state changes.

1.4 Clustering for High-Availability

A Novell Cluster Services for Linux cluster consists of the following components:

2 to 32 OES 2 Linux servers, each containing at least one local disk device.

Novell Cluster Services software running on each Linux server in the cluster.

A shared disk subsystem connected to all servers in the cluster (optional, but recommended for most configurations).

Equipment to connect servers to the shared disk subsystem, such as one of the following:

High-speed Fibre Channel cards, cables, and switches for a Fibre Channel SAN

Ethernet cards, cables, and switches for an iSCSI SAN

SCSI cards and cables for external SCSI storage arrays


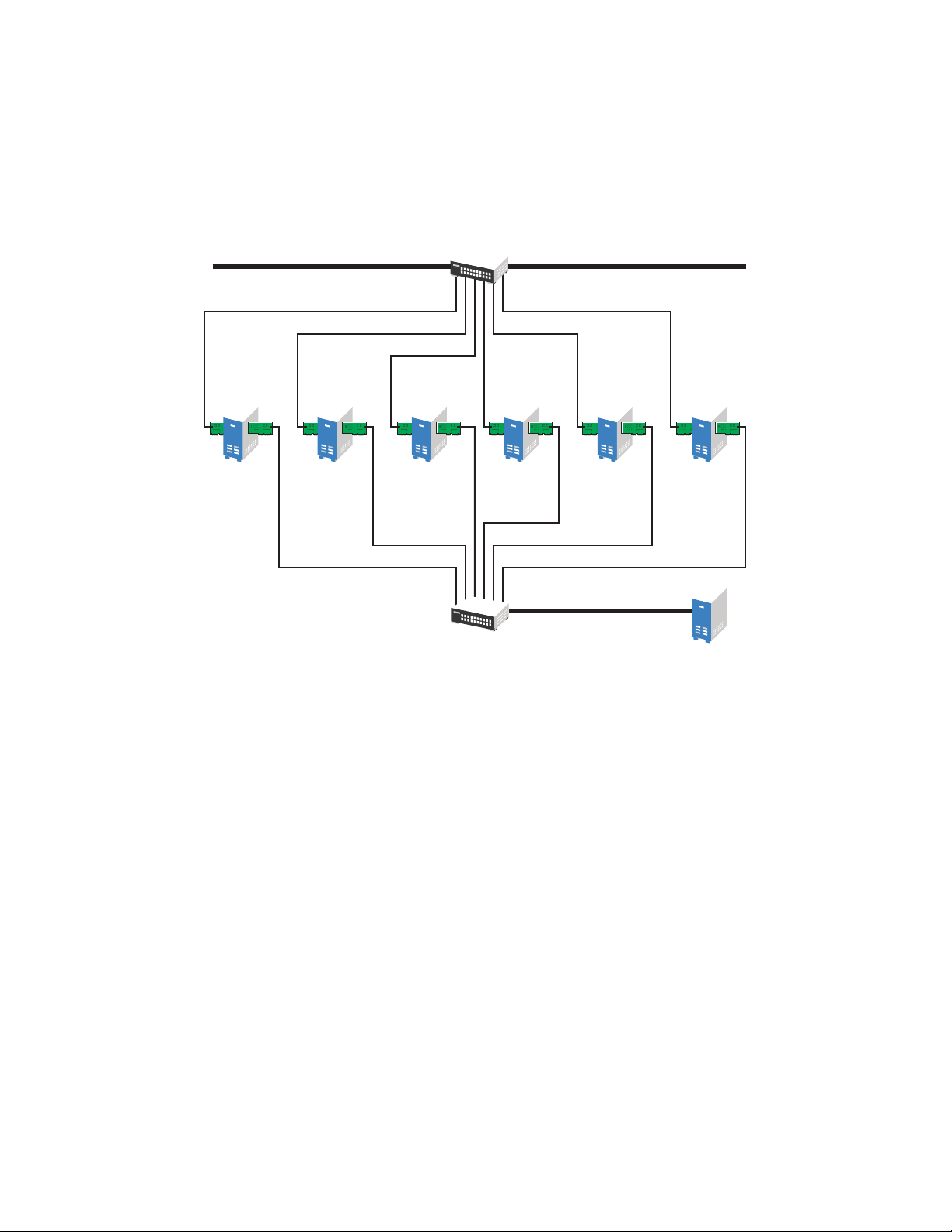

The benefits that Novell Cluster Services provides can be better understood through the following scenario.

[Figures 1-1 and 1-2: Web Server 1, Web Server 2, and Web Server 3 connected through a Fibre Channel switch to a shared disk system, with two of Web Sites A through F hosted on each server. Figure 1-2 shows the same cluster after Web Sites A and B have moved off Web Server 1.]

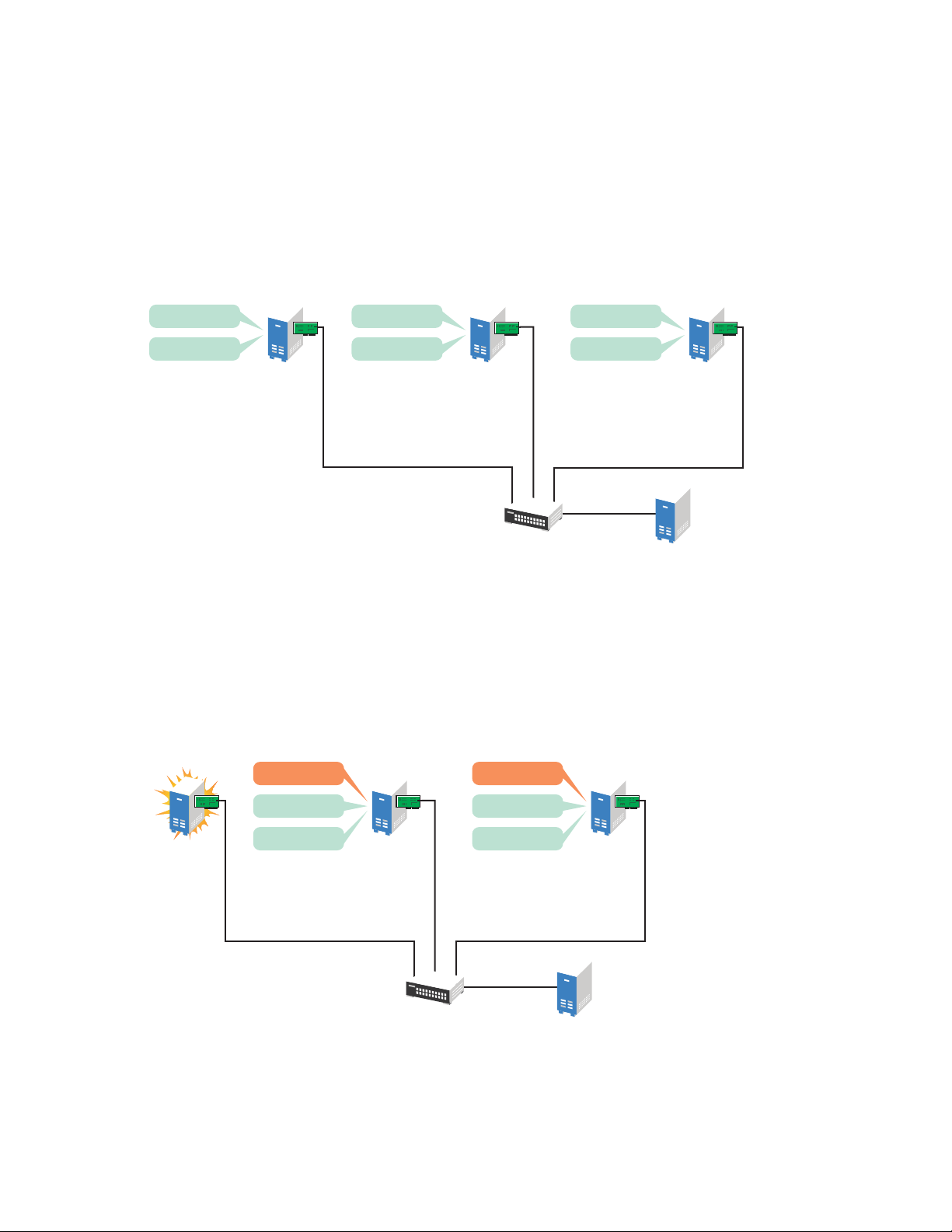

Suppose you have configured a three-server cluster, with a Web server installed on each of the three servers in the cluster. Each of the servers in the cluster hosts two Web sites. All the data, graphics, and Web page content for each Web site is stored on a shared disk system connected to each of the servers in the cluster. Figure 1-1 depicts how this setup might look.

Figure 1-1 Three-Server Cluster


During normal cluster operation, each server is in constant communication with the other servers in the cluster and performs periodic polling of all registered resources to detect failure.

Suppose Web Server 1 experiences hardware or software problems and the users who depend on Web Server 1 for Internet access, e-mail, and information lose their connections. Figure 1-2 shows how resources are moved when Web Server 1 fails.

Figure 1-2 Three-Server Cluster after One Server Fails

Web Site A moves to Web Server 2 and Web Site B moves to Web Server 3. IP addresses and certificates also move to Web Server 2 and Web Server 3.


When you configured the cluster, you decided where the Web sites hosted on each Web server would go if a failure occurred. You configured Web Site A to move to Web Server 2 and Web Site B to move to Web Server 3. This way, the workload once handled by Web Server 1 is evenly distributed.

When Web Server 1 failed, Novell Cluster Services software did the following:

Detected a failure.

Remounted the shared data directories (that were formerly mounted on Web Server 1) on Web Server 2 and Web Server 3 as specified.

Restarted applications (that were running on Web Server 1) on Web Server 2 and Web Server 3 as specified.

Transferred IP addresses to Web Server 2 and Web Server 3 as specified.

In this example, the failover process happened quickly and users regained access to Web site information within seconds, and in most cases, without logging in again.

Now suppose the problems with Web Server 1 are resolved, and Web Server 1 is returned to a normal operating state. Web Site A and Web Site B will automatically fail back, or be moved back, to Web Server 1, and Web server operation will return to the way it was before Web Server 1 failed.
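The failover and failback behavior in this scenario can be sketched as choosing, for each resource, the first running server in an ordered list of preferred servers. This is a hypothetical Python model, not the Novell Cluster Services algorithm: the `place_resources` function and the `PREFERRED` lists are assumptions for illustration, and the model covers only the automatic failback described above.

```python
from typing import Dict, List, Optional, Set


def place_resources(
    preferred: Dict[str, List[str]], up_nodes: Set[str]
) -> Dict[str, Optional[str]]:
    """Assign each resource to the first preferred server that is running.

    Returns None for a resource if none of its preferred servers is up.
    """
    return {
        resource: next((node for node in nodes if node in up_nodes), None)
        for resource, nodes in preferred.items()
    }


# Hypothetical preferred-server order for the two Web sites on Web Server 1.
PREFERRED = {
    "Web Site A": ["Web Server 1", "Web Server 2"],
    "Web Site B": ["Web Server 1", "Web Server 3"],
}

if __name__ == "__main__":
    all_up = {"Web Server 1", "Web Server 2", "Web Server 3"}
    print(place_resources(PREFERRED, all_up))  # both sites on Web Server 1
    # Failover: Web Server 1 fails, so A moves to Server 2 and B to Server 3.
    print(place_resources(PREFERRED, all_up - {"Web Server 1"}))
    # Failback: Web Server 1 returns, so both sites move back to it.
    print(place_resources(PREFERRED, all_up))
```

Because placement is recomputed from the preferred lists whenever cluster membership changes, the same function covers both failover and failback in this model. In a real cluster, whether a resource moves back automatically depends on how its failover and failback behavior is configured.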


Novell Cluster Services also provides resource migration capabilities. You can move applications, Web sites, and so on to other servers in your cluster without waiting for a server to fail.

For example, you could have manually moved Web Site A or Web Site B from Web Server 1 to either of the other servers in the cluster. You might want to do this to upgrade or perform scheduled maintenance on Web Server 1, or just to increase performance or accessibility of the Web sites.

1.5 Shared Disk Scenarios

Typical cluster configurations include a shared disk subsystem connected to all servers in the cluster. The shared disk subsystem can be connected via high-speed Fibre Channel cards, cables, and switches, or it can be configured to use shared SCSI or iSCSI. If a server fails, another designated server in the cluster automatically mounts the shared disk directories previously mounted on the failed server. This gives network users continuous access to the directories on the shared disk subsystem.

Section 1.5.1, “Using Fibre Channel Storage Systems,” on page 19

Section 1.5.2, “Using iSCSI Storage Systems,” on page 20

Section 1.5.3, “Using Shared SCSI Storage Systems,” on page 21


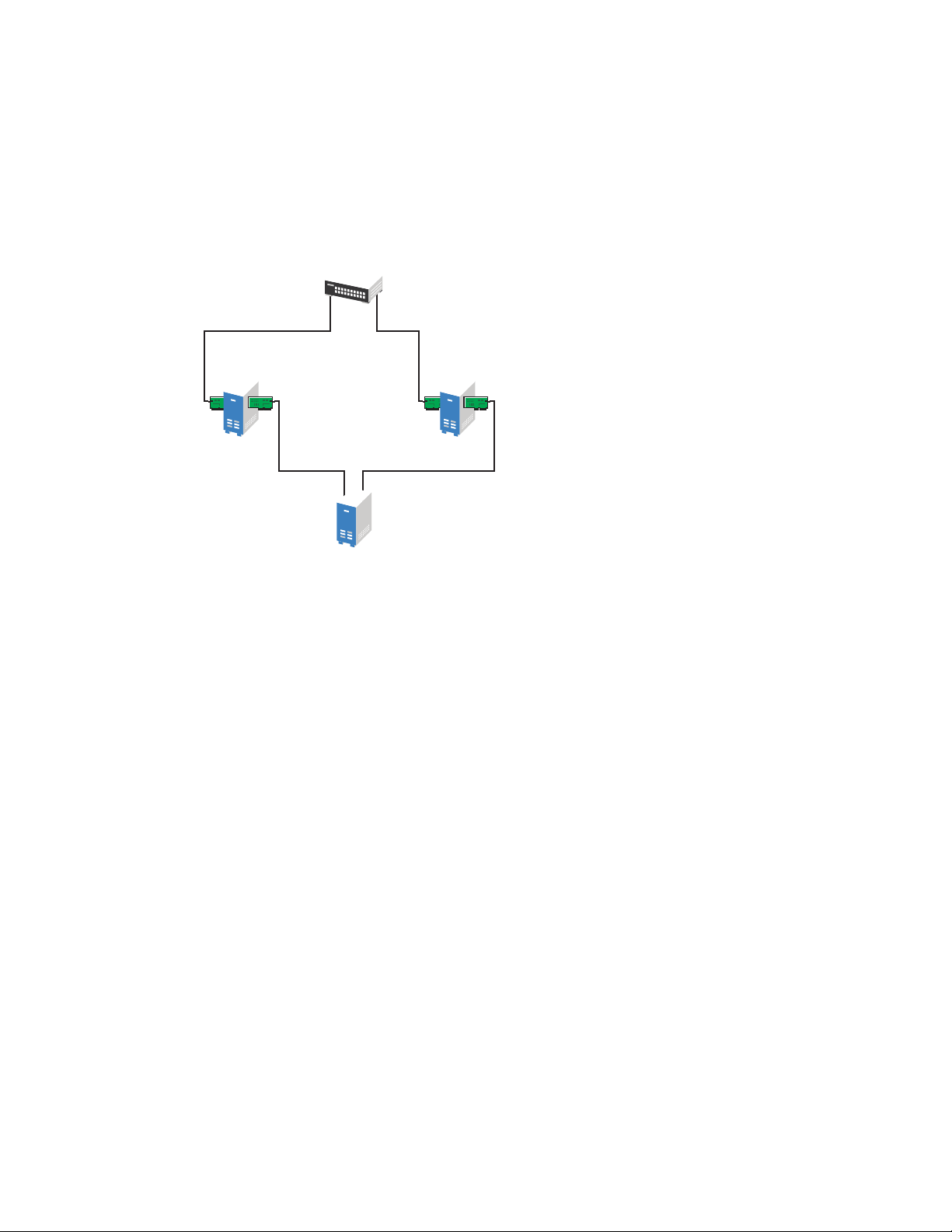

1.5.1 Using Fibre Channel Storage Systems

[Figure 1-3 labels: Servers 1 through 6, each with network interface card(s) connected to a network hub and Fibre Channel card(s) connected through a Fibre Channel switch to a shared disk system.]

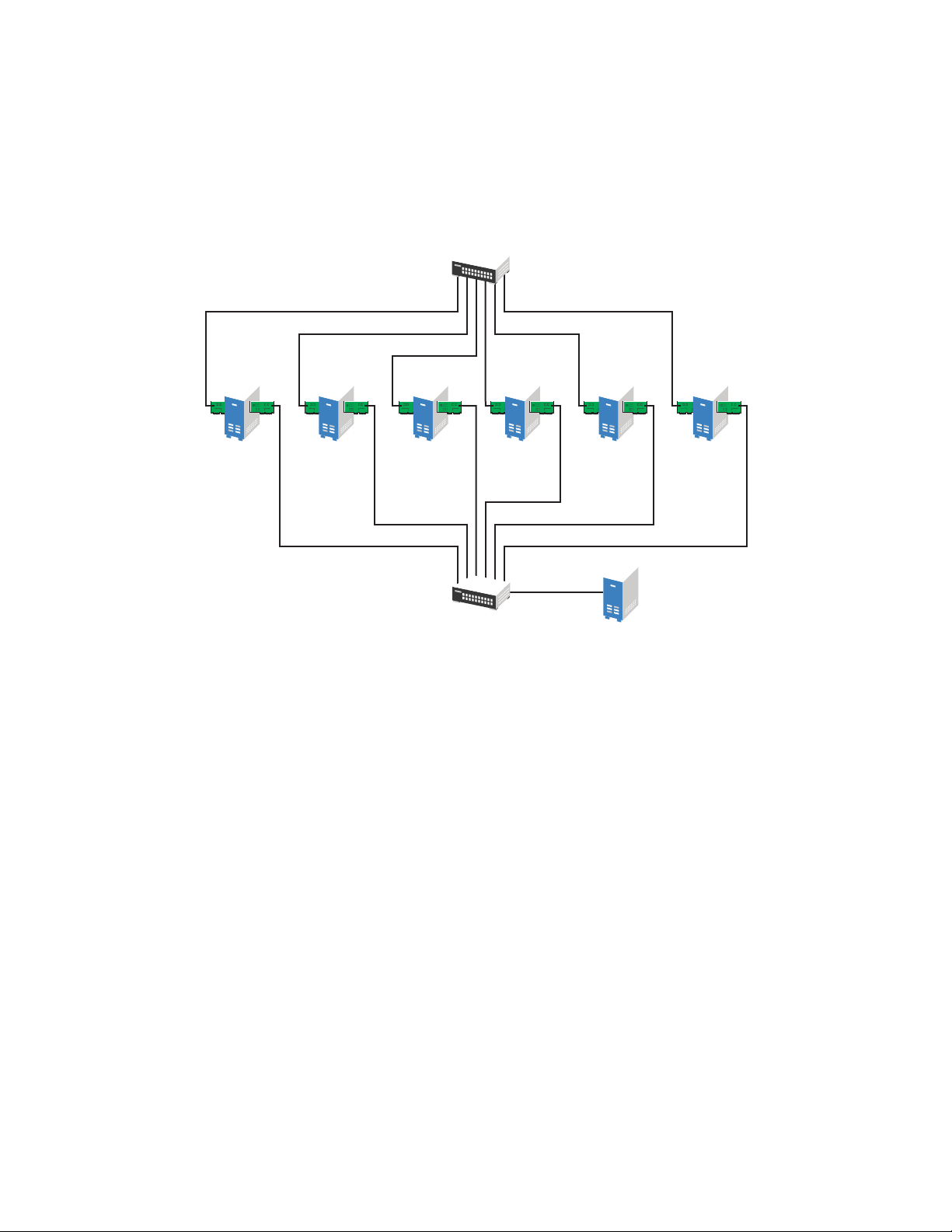

Fibre Channel provides the best performance for your storage area network (SAN). Figure 1-3 shows how a typical Fibre Channel cluster configuration might look.

Figure 1-3 Typical Fibre Channel Cluster Configuration


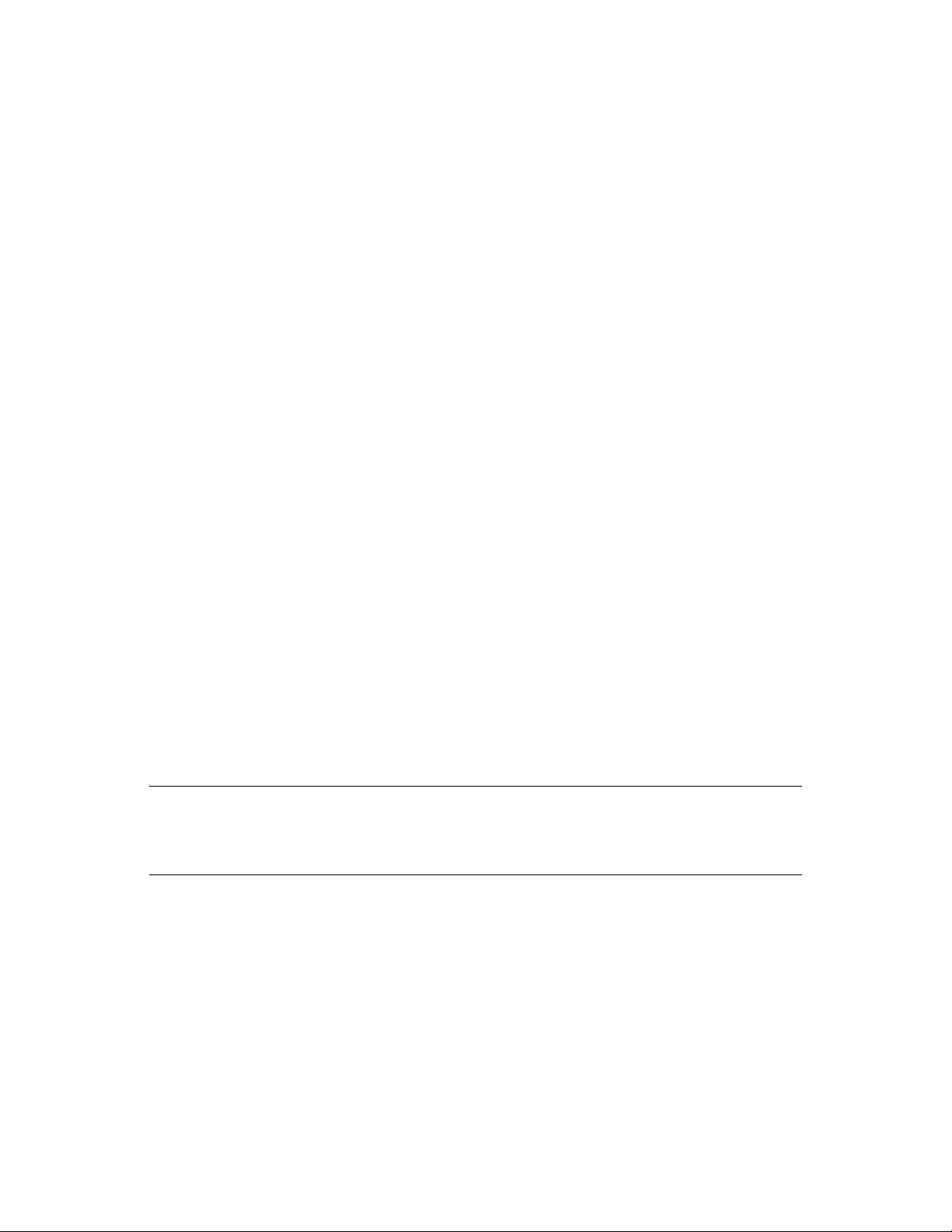

1.5.2 Using iSCSI Storage Systems

[Figure 1-4 labels: Servers 1 through 6, each running an iSCSI initiator, with Ethernet card(s) connected through Ethernet switches and a network backbone to an Ethernet storage system.]

iSCSI is an alternative to Fibre Channel that can be used to create a lower-cost SAN with Ethernet equipment. Figure 1-4 shows how a typical iSCSI cluster configuration might look.

Figure 1-4 Typical iSCSI Cluster Configuration


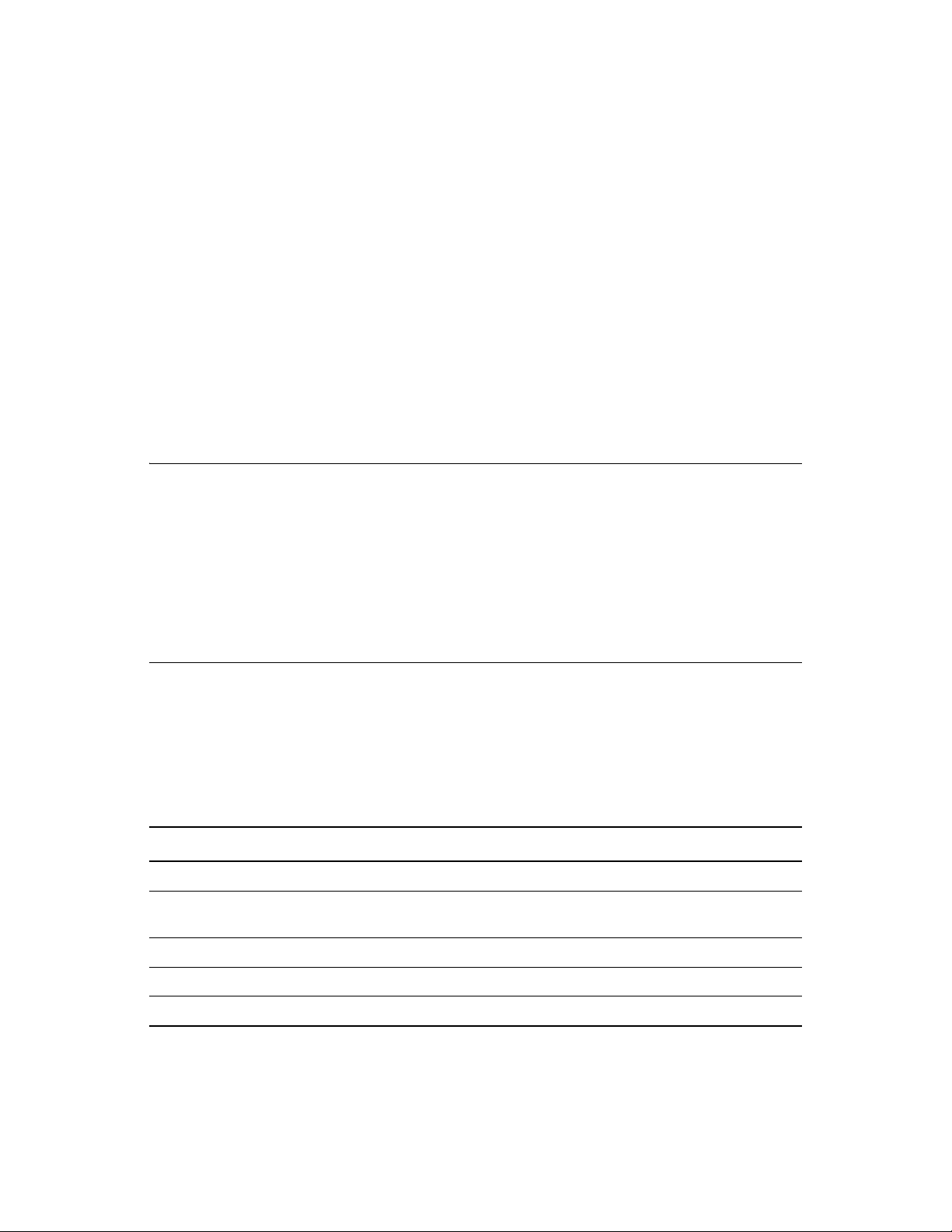

1.5.3 Using Shared SCSI Storage Systems

[Figure 1-5 labels: Server 1 and Server 2, each with a network interface card connected to a network hub and a SCSI adapter connected to a shared disk system.]

You can configure your cluster to use shared SCSI storage systems. This configuration is also a lower-cost alternative to using Fibre Channel storage systems. Figure 1-5 shows how a typical shared SCSI cluster configuration might look.

Figure 1-5 Typical Shared SCSI Cluster Configuration


2 What’s New

This section describes changes and enhancements that were made to Novell® Cluster Services™ for Linux since the initial release of Novell Open Enterprise Server (OES) 2 Linux.

Section 2.1, “What’s New (January 2010),” on page 23

Section 2.2, “What’s New (OES 2 SP2),” on page 23

Section 2.3, “What’s New (OES 2 SP1),” on page 25

Section 2.4, “What’s New (OES 2),” on page 26

2.1 What’s New (January 2010)

The January 2010 patch for OES 2 SP1 Linux contains bug fixes for Novell Cluster Services and Novell Business Continuity Clustering 1.2 for OES 2 SP1 Linux.

The January 2010 patch for OES 2 SP2 Linux contains bug fixes for Novell Cluster Services and adds support for Novell Business Continuity Clustering 1.2.1 for OES 2 SP2 Linux.


2.2 What’s New (OES 2 SP2)

In addition to bug fixes, the following changes and enhancements were made for Novell Cluster Services for Linux in OES 2 SP2.

Section 2.2.1, “Improved Error Reporting,” on page 23

Section 2.2.2, “Improved Time Calculations,” on page 23

Section 2.2.3, “Specifying the Size of the SBD Partition,” on page 24

Section 2.2.4, “Customizing Translation Syntax,” on page 24

Section 2.2.5, “Migration Tool Support for Cluster Configurations,” on page 24

Section 2.2.6, “New iFolder Resource Template,” on page 24

Section 2.2.7, “Removed MPK Calls from the Code,” on page 24

Section 2.2.8, “Cluster Restart Is No Longer Required in a Rolling Cluster Upgrade,” on page 24

2.2.1 Improved Error Reporting

This release provides improved error reporting for file protocol errors.

2.2.2 Improved Time Calculations

This release improves the way time is calculated so that the inter-packet gap between two heartbeat packets is reduced.

This means that you will observe an increase in the instances of packets incrementing the 0x (less than one second) counter, and a decrease in the instances of packets incrementing the 1x (between one second and two seconds) counter.


For example:

cluster stats display

Report taken: startTime= Thu Jul 23 13:16:33 2009, endTime= Mon Jul 27 08:44:36 2009
node=5, name=Cluster_06, heartbeat=1, tolerance=8
0x=645550, 1x=6, 2x=1, 3x=2, 5x=2
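The counter line in this report can also be read programmatically. The following Python sketch is an illustration only, not a Novell tool: it assumes that each Nx counter counts heartbeat inter-packet gaps of N whole seconds (inferred from the 0x and 1x descriptions above), that empty buckets are simply omitted from the report, and that the counter line always has the Nx=count form shown in the sample. The function names are hypothetical.

```python
import re
from collections import Counter

# Assumption: "Nx=count" means `count` heartbeat gaps lasted N whole seconds
# (0x = under one second, 1x = one to two seconds, and so on).
COUNTER_RE = re.compile(r"(\d+)x=(\d+)")


def parse_counters(line: str) -> dict[int, int]:
    """Parse '0x=645550, 1x=6, ...' into {0: 645550, 1: 6, ...}."""
    return {int(bucket): int(count) for bucket, count in COUNTER_RE.findall(line)}


def bucket_gaps(arrival_times: list[float]) -> dict[int, int]:
    """Bucket gaps between consecutive heartbeat arrival times (in seconds)
    by whole seconds, mirroring the assumed meaning of the Nx counters."""
    gaps = (later - earlier for earlier, later in zip(arrival_times, arrival_times[1:]))
    return dict(Counter(int(gap) for gap in gaps))


def sub_second_share(counters: dict[int, int]) -> float:
    """Fraction of all counted gaps that fell in the 0x (< 1 s) bucket."""
    total = sum(counters.values())
    return counters.get(0, 0) / total if total else 0.0


if __name__ == "__main__":
    counters = parse_counters("0x=645550, 1x=6, 2x=1, 3x=2, 5x=2")
    print(counters)
    print(f"share of sub-second gaps: {sub_second_share(counters):.6f}")
```

With the sample report, nearly all gaps land in the 0x bucket, which is the effect the improved time calculation is meant to produce.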

2.2.3 Specifying the Size of the SBD Partition

When you configure the SBD partition during the cluster configuration of a new cluster (as described in “Configuring a New Cluster” on page 48), you can now specify the size of the partition.

2.2.4 Customizing Translation Syntax

Beginning in OES 2 SP2, Novell Cluster Services allows you to customize the translation syntax that is used for load and unload scripts in mixed-platform situations by defining new syntax translations in the /var/opt/novell/ncs/customized_translation_syntax file that you create. The clstrlib.py script reads the additional translation syntax from the syntax file and processes it in addition to the normal translations in the Cluster Translation Library. For information, see Section 6.7, “Customizing the Translation Syntax for Converting Load and Unload Scripts,” on page 87.


2.2.5 Migration Tool Support for Cluster Configurations

Support was added for migrating services and data in cluster configurations by using the OES 2 SP2 Migration Tool. For instructions on using the Migration Tool to migrate services and data, see the OES 2 SP2: Migration Tool Administration Guide.

2.2.6 New iFolder Resource Template

The Novell iFolder 3.x resource template has been modified for OES 2 SP2. For information about using the new template, see Section 4.3.1, “Updating the iFolder Resource Template,” on page 54.

2.2.7 Removed MPK Calls from the Code

MPK calls were removed from the Novell Cluster Services code. The MPK calls were replaced with POSIX and Linux functions. These changes were made in support of the MPK calls being removed from the Novell Storage Services™ (NSS) file system software to achieve performance enhancements for the NSS file system.

2.2.8 Cluster Restart Is No Longer Required in a Rolling Cluster Upgrade

In the OES 2 SP1 release, a cluster restart was required at the end of a rolling cluster upgrade in order to properly update the names of the nodes, as described in Section 2.3.3, “Behavior Change for Adding a Node,” on page 25. This issue was resolved in OES 2 SP2. The rolling cluster upgrade process no longer requires a cluster restart.


2.3 What’s New (OES 2 SP1)

In addition to bug fixes, the following changes and enhancements were made for Novell Cluster Services for Linux in OES 2 SP1.

Section 2.3.1, “Schema Extension,” on page 25

Section 2.3.2, “Installation by Container Administrator,” on page 25

Section 2.3.3, “Behavior Change for Adding a Node,” on page 25

Section 2.3.4, “Attribute NCS: GIPC Config Is No Longer Maintained,” on page 26

Section 2.3.5, “Support for Novell AFP for Linux,” on page 26

Section 2.3.6, “Support for Novell CIFS for Linux,” on page 26

Section 2.3.7, “Support for Domain Services for Windows,” on page 26

2.3.1 Schema Extension

The administrator of a Novell eDirectory™ tree can now extend the schema for cluster objects before clusters are installed in the tree. This allows container administrators to install Novell Cluster Services without needing tree-level administrator rights. See Section 3.3, “Extending the eDirectory Schema to Add Cluster Objects,” on page 40.


2.3.2 Installation by Container Administrator

Container administrators can install Novell Cluster Services without needing tree-level administrator rights. Make sure you have the rights needed for the install. See Section 3.4, “Assigning Install Rights for Container Administrators,” on page 41.

2.3.3 Behavior Change for Adding a Node

In this release, a behavior change was made to address a deadlock defect. After adding a new node to the cluster, the new node cannot be displayed in the Clusters plug-in to iManager until the ncs-configd.py -init script is run, or until the cluster is restarted.

IMPORTANT: A Novell Cluster Services patch is available in the patch channel and on the Novell Downloads Web site (http://www.novell.com/downloads) that allows you to add a new node seamlessly again on a Linux server. For cluster conversions, a cluster restart is still necessary after all NetWare nodes have been removed from the cluster.

Run one of the following commands in order to make cluster view display the new node’s name correctly. It is okay to run ncs-configd.py on an active cluster.

/opt/novell/ncs/bin/ncs-configd.py -init

or

rcnovell-ncs restart

If you are converting a cluster from NetWare to Linux, you must restart the cluster instead so that clstrlib.ko is reloaded.


For example, if you install a server named sales_03 in an existing cluster named oes2_sales_cluster with two existing member nodes named sales_01 and sales_02, the new node is generically displayed as Node_03 when you enter the cluster view command:

Cluster OES2_SALES_CLUSTER
This node SALES_02 [ epoch 4 master node SALES_02 ]
Cluster nodes [ SALES_01, SALES_02, Node_03 ]

After running the cluster configuration daemon or restarting Novell Cluster Services, the new node’s name is properly displayed as SALES_03, and the node is visible in iManager:

Cluster OES2_SALES_CLUSTER
This node SALES_02 [ epoch 4 master node SALES_02 ]
Cluster nodes [ SALES_01, SALES_02, SALES_03 ]
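To check whether a newly added node still shows its generic name, you could save the output of the cluster view command and scan it. The Python sketch below is hypothetical and is not part of Novell Cluster Services: it assumes that generic names always look like Node_ followed by digits and that members are listed on a single "Cluster nodes [ ... ]" line, as in the example output in this section. It only reports stale names; it does not run ncs-configd.py or restart the cluster, and for a NetWare-to-Linux conversion a cluster restart is still the required fix.

```python
import re

# Assumption: generic placeholder names have the form Node_<digits>.
GENERIC_NAME_RE = re.compile(r"^Node_\d+$")
# Assumption: members appear on one line such as "Cluster nodes [ A, B, C ]".
MEMBERS_RE = re.compile(r"^Cluster nodes \[\s*(.*?)\s*\]\s*$", re.MULTILINE)


def cluster_members(view_output: str) -> list[str]:
    """Return the member names listed on the 'Cluster nodes [ ... ]' line."""
    match = MEMBERS_RE.search(view_output)
    if match is None:
        return []
    return [name.strip() for name in match.group(1).split(",") if name.strip()]


def generic_names(view_output: str) -> list[str]:
    """Return members whose names have not yet been updated."""
    return [name for name in cluster_members(view_output) if GENERIC_NAME_RE.match(name)]


if __name__ == "__main__":
    saved_output = (
        "Cluster OES2_SALES_CLUSTER\n"
        "This node SALES_02 [ epoch 4 master node SALES_02 ]\n"
        "Cluster nodes [ SALES_01, SALES_02, Node_03 ]\n"
    )
    stale = generic_names(saved_output)
    if stale:
        print("Names not yet updated:", ", ".join(stale))
        print("Run /opt/novell/ncs/bin/ncs-configd.py -init or rcnovell-ncs restart")
```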

2.3.4 Attribute NCS: GIPC Config Is No Longer Maintained

Beginning in OES 2 SP1 Linux, the attribute NCS:GIPC Config in the Cluster object is no longer maintained. This applies to Linux clusters and mixed NetWare and Linux clusters.

2.3.5 Support for Novell AFP for Linux

This release supports Novell AFP (Apple* Filing Protocol) for Linux in combination with Novell Storage Services™ (NSS) volumes on OES 2 SP1 Linux. See “Novell AFP for Linux” on page 32.

2.3.6 Support for Novell CIFS for Linux

This release supports Novell CIFS for Linux in combination with NSS volumes on OES 2 SP1 Linux. See “Novell CIFS for Linux” on page 32.

2.3.7 Support for Domain Services for Windows

This release supports using clusters in Domain Services for Windows contexts for OES 2 SP1 Linux. See “Novell Domain Services for Windows” on page 32.

2.4 What’s New (OES 2)

The following changes and enhancements were added to Novell Cluster Services for Linux in OES 2.

Resource Monitoring: See Section 9.6, “Enabling Monitoring and Configuring the Monitor Script,” on page 123.

Support for Xen* Virtualization: See Chapter 12, “Configuring Novell Cluster Services in a Xen Virtualization Environment,” on page 181.


3 Installing and Configuring Novell Cluster Services on OES 2 Linux

Novell® Cluster Services™ can be installed during the Novell Open Enterprise Server (OES) 2 Linux installation or afterwards on an existing OES 2 Linux server.

For information about upgrading a cluster server from OES 1 SP2 Linux to OES 2 Linux, see Chapter 5, “Upgrading OES 1 Linux Clusters to OES 2 Linux,” on page 55.

For information about converting a cluster server from NetWare® 6.5 SP7 or later to OES 2 Linux, see Chapter 6, “Converting NetWare 6.5 Clusters to OES 2 Linux,” on page 59.

Section 3.1, “System Requirements for Novell Cluster Services,” on page 27

Section 3.2, “Novell Cluster Services Licensing,” on page 39

Section 3.3, “Extending the eDirectory Schema to Add Cluster Objects,” on page 40

Section 3.4, “Assigning Install Rights for Container Administrators,” on page 41

Section 3.5, “Installing Novell Cluster Services,” on page 42

Section 3.6, “Configuring Novell Cluster Services,” on page 46

Section 3.7, “Configuring Additional Administrators,” on page 52

Section 3.8, “What’s Next,” on page 52

3.1 System Requirements for Novell Cluster Services

Section 3.1.1, “Hardware Requirements,” on page 27

Section 3.1.2, “Software Requirements,” on page 28

Section 3.1.3, “Configuration Requirements,” on page 34

Section 3.1.4, “Shared Disk System Requirements,” on page 36

Section 3.1.5, “SAN Rules for LUN Masking,” on page 38

Section 3.1.6, “Using Device Mapper Multipath with Novell Cluster Services,” on page 38

3.1.1 Hardware Requirements

The following hardware requirements for installing Novell Cluster Services represent the minimum hardware configuration. Additional hardware might be necessary depending on how you intend to use Novell Cluster Services.

A minimum of two Linux servers, and not more than 32 servers in a cluster

At least 512 MB of memory on each server in the cluster

One non-shared device on each server to be used for the operating system

At least one network card per server in the same IP subnet
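The minimum hardware requirements listed above can be expressed as a simple planning check. This Python sketch is an illustration under stated assumptions: the NodeSpec record and check_hardware function are hypothetical, each node is described by a single interface address in CIDR form, and only the node count (2 to 32), 512 MB memory, non-shared OS device, and same-subnet rules from the list are checked. It does not cover the general OES 2 Linux requirements.

```python
import ipaddress
from dataclasses import dataclass

MIN_NODES, MAX_NODES = 2, 32
MIN_MEMORY_MB = 512


@dataclass
class NodeSpec:
    """Hypothetical description of a planned cluster node."""
    name: str
    memory_mb: int
    interface: str  # one interface address with prefix, e.g. "10.10.1.11/24"
    has_local_os_device: bool  # non-shared device for the operating system


def check_hardware(nodes: list[NodeSpec]) -> list[str]:
    """Return a list of problems; an empty list means the minimums are met."""
    problems = []
    if not MIN_NODES <= len(nodes) <= MAX_NODES:
        problems.append(f"cluster has {len(nodes)} nodes; need {MIN_NODES} to {MAX_NODES}")
    for node in nodes:
        if node.memory_mb < MIN_MEMORY_MB:
            problems.append(f"{node.name}: {node.memory_mb} MB is below {MIN_MEMORY_MB} MB")
        if not node.has_local_os_device:
            problems.append(f"{node.name}: no non-shared device for the operating system")
    # Assumption: comparing the network of one interface per node is enough
    # to decide whether all nodes share an IP subnet.
    subnets = {ipaddress.ip_interface(node.interface).network for node in nodes}
    if len(subnets) > 1:
        problems.append("nodes are not in the same IP subnet: " + ", ".join(sorted(map(str, subnets))))
    return problems


if __name__ == "__main__":
    plan = [
        NodeSpec("sales_01", 2048, "10.10.1.11/24", True),
        NodeSpec("sales_02", 1024, "10.10.1.12/24", True),
    ]
    for line in check_hardware(plan) or ["minimum hardware requirements met"]:
        print(line)
```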


In addition, each server must meet the requirements for Novell Open Enterprise Server 2 Linux. For information, see “Meeting All Server Software and Hardware Requirements” in the OES 2 SP2: Installation Guide.

NOTE: Although identical hardware for each cluster server is not required, having servers with the same or similar processors and memory can reduce differences in performance between cluster nodes and make it easier to manage your cluster. There are fewer variables to consider when designing your cluster and failover rules if each cluster node has the same processor and amount of memory.

If you have a Fibre Channel SAN, the host bus adapters (HBAs) for each cluster node should be identical.

3.1.2 Software Requirements

Ensure that your system meets the following software requirements for installing and managing Novell Cluster Services:

“Novell Open Enterprise Server 2 Linux” on page 28


“Novell eDirectory 8.8” on page 29

“Novell iManager 2.7.3” on page 29

“EVMS” on page 30

“Linux POSIX File Systems” on page 30

“NSS File System on Linux” on page 31

“Dynamic Storage Technology Shadow Volume Pairs” on page 31

“NCP Server for Linux” on page 31

“Novell AFP for Linux” on page 32

“Novell CIFS for Linux” on page 32

“Novell Domain Services for Windows” on page 32