Novell® Business Continuity Clustering 1.1 SP2

Administration Guide for NetWare® 6.5 SP8

novdocx (en) 13 May 2009

AUTHORIZED DOCUMENTATION

August 14, 2009

www.novell.com

BCC 1.1 SP2: Administration Guide for NetWare 6.5 SP8

Legal Notices

Novell, Inc., makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes.

Further, Novell, Inc., makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to notify any person or entity of such changes.

Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. See the Novell International Trade Services Web page (http://www.novell.com/info/exports/) for more information on exporting Novell software. Novell assumes no responsibility for your failure to obtain any necessary export approvals.

Copyright © 2007–2009 Novell, Inc. All rights reserved. No part of this publication may be reproduced, photocopied, stored on a retrieval system, or transmitted without the express written consent of the publisher.

Novell, Inc., has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S. patents listed on the Novell Legal Patents Web page (http://www.novell.com/company/legal/patents/) and one or more additional patents or pending patent applications in the U.S. and in other countries.

Novell, Inc.

404 Wyman Street, Suite 500

Waltham, MA 02451

U.S.A.

www.novell.com

Online Documentation: To access the latest online documentation for this and other Novell products, see the Novell Documentation Web page (http://www.novell.com/documentation).

Novell Trademarks

For Novell Trademarks, see the Novell Trademark and Service Mark list (http://www.novell.com/company/legal/trademarks/tmlist.html).

Third-Party Materials

All third-party trademarks are the property of their respective owners.

Contents

About This Guide

1 Overview

1.1 Disaster Recovery Implications

1.2 Disaster Recovery Implementations

1.2.1 LAN-Based versus Internet-Based Applications

1.2.2 Host-Based versus Storage-Based Data Mirroring

1.2.3 Stretch Clusters vs. Cluster of Clusters

1.3 Novell Business Continuity Clusters

1.4 BCC Deployment Scenarios

1.4.1 Two-Site Business Continuity Cluster Solution

1.4.2 Multiple-Site Business Continuity Cluster Solution

1.4.3 Low-Cost Business Continuity Cluster Solution

1.5 Key Concepts

1.5.1 Business Continuity Clusters

1.5.2 Cluster Resources

1.5.3 Landing Zone

1.5.4 BCC Drivers for Identity Manager

2 What’s New for BCC 1.1 for NetWare

2.1 What’s New for BCC 1.1 SP2

2.2 What’s New for BCC 1.1 SP1

2.3 What’s New for BCC 1.1

3 Planning a Business Continuity Cluster

3.1 Determining Design Criteria

3.2 Best Practices

3.3 LAN Connectivity Guidelines

3.3.1 VLAN

3.3.2 NIC Teaming

3.3.3 IP Addresses

3.3.4 Name Resolution

3.3.5 IP Addresses for BCC-Enabled Cluster Resources

3.4 SAN Connectivity Guidelines

3.5 Storage Design Guidelines

3.6 eDirectory Design Guidelines

3.6.1 Object Location

3.6.2 Cluster Context

3.6.3 Partitioning and Replication

3.6.4 Objects Created by the BCC Drivers for Identity Manager

3.6.5 Landing Zone

3.6.6 Naming Conventions for BCC-Enabled Resources

3.7 Cluster Design Guidelines

4 Installing Business Continuity Clustering

4.1 Requirements for BCC 1.1 SP2 for NetWare

4.1.1 Business Continuity Clustering Licensing

4.1.2 Business Continuity Cluster Component Locations

4.1.3 NetWare 6.5 SP8 (OES 2 SP1 NetWare)

4.1.4 Novell Cluster Services 1.8.5 for NetWare

4.1.5 Novell eDirectory 8.8

4.1.6 SLP

4.1.7 Identity Manager 3.5.1 Bundle Edition

4.1.8 Novell iManager 2.7.2

4.1.9 Storage-Related Plug-Ins for iManager 2.7.2

4.1.10 Windows Workstation

4.1.11 OpenWBEM

4.1.12 Shared Disk Systems

4.1.13 Mirroring Shared Disk Systems Between Peer Clusters

4.1.14 LUN Masking for Shared Devices

4.1.15 Link Speeds

4.1.16 Ports

4.1.17 Web Browser

4.1.18 BASH

4.1.19 LIBC

4.1.20 autoexec.ncf File

4.2 Downloading the Business Continuity Clustering Software

4.3 Configuring a BCC Administrator User

4.3.1 Creating the BCC Administrator User

4.3.2 Assigning Trustee Rights for the BCC Administrator User to the Cluster Objects

4.3.3 Assigning Trustee Rights for the BCC Administrator User to the _ADMIN Volume

4.3.4 Assigning Trustee Rights for the BCC Administrator User to the sys:\tmp Directory

4.4 Installing and Configuring the Novell Business Continuity Clustering Software

4.4.1 Installing the BCC Engine

4.4.2 Installing the Identity Manager Templates

4.5 What’s Next

5 Upgrading Business Continuity Clustering for NetWare

5.1 Guidelines for Upgrading

5.1.1 Requirements

5.1.2 Performing a Rolling Cluster Upgrade

5.2 Disabling BCC 1.0, Upgrading Servers to NetWare 6.5 SP8, then Enabling BCC 1.1 SP2

5.3 Upgrading Clusters from BCC 1.0 to BCC 1.1 SP2 for NetWare

5.3.1 Upgrading the BCC Cluster from 1.0 to 1.1 SP1

5.3.2 Upgrading the BCC Cluster from 1.1 SP1 to 1.1 SP2

5.4 Upgrading Clusters from BCC 1.1 SP1 to SP2 for NetWare

5.4.1 Upgrading NetWare and BCC on the Clusters

5.4.2 Authorizing the BCC Administrator User

5.4.3 Upgrading Identity Manager

5.4.4 Deleting and Re-Creating the BCC Driver Sets and Drivers

5.4.5 Verifying the BCC Upgrade

6 Configuring Business Continuity Clustering Software

6.1 Configuring Identity Manager Drivers for the Business Continuity Cluster

6.1.1 Configuring the Identity Manager Drivers and Templates

6.1.2 Creating SSL Certificates

6.1.3 Synchronizing Identity Manager Drivers

6.1.4 Preventing Identity Manager Synchronization Loops

6.2 Configuring Clusters for Business Continuity

6.2.1 Enabling Clusters for Business Continuity

6.2.2 Adding Cluster Peer Credentials

6.2.3 Adding Search-and-Replace Values to the Resource Replacement Script

6.2.4 Adding SAN Management Configuration Information

6.2.5 Verifying BCC Administrator User Trustee Rights and Credentials

6.3 BCC-Enabling Cluster Resources

6.3.1 Enabling a Cluster Resource for Business Continuity

6.3.2 Adding Resource Script Search-and-Replace Values

6.3.3 Selecting Peer Clusters for the Resource

6.3.4 Adding SAN Array Mapping Information

7 Managing a Business Continuity Cluster

7.1 Migrating a Cluster Resource to a Peer Cluster

7.1.1 Understanding BCC Resource Migration

7.1.2 Migrating Cluster Resources between Clusters

7.2 Changing Cluster Peer Credentials

7.3 Viewing the Current Status of a Business Continuity Cluster

7.3.1 Using iManager to View the Cluster Status

7.3.2 Using Console Commands to View the Cluster Status

7.4 Generating a Cluster Report

7.5 Disabling Business Continuity Cluster Resources

7.6 Resolving Business Continuity Cluster Failures

7.6.1 SAN-Based Mirroring Failure Types and Responses

7.6.2 Host-Based Mirroring Failure Types and Responses

8 Virtual IP Addresses

8.1 Virtual IP Address Definitions and Characteristics

8.1.1 Definitions

8.1.2 Characteristics

8.2 Virtual IP Address Benefits

8.2.1 High Availability

8.2.2 Unlimited Mobility

8.2.3 Support for Host Mask

8.2.4 Source Address Selection for Outbound Connections

8.3 Reducing the Consumption of Additional IP Addresses

8.4 Configuring Virtual IP Addresses

8.4.1 Displaying Bound Virtual IP Addresses

9 Troubleshooting Business Continuity Clustering 1.1

9.1 Cluster Connection States

9.2 Driver Ports

9.3 Excluded Users

9.4 Security Equivalent User

9.5 Certificates

9.6 Clusters Cannot Communicate

9.7 BCC Startup Flags

9.8 Problems with Installing BCC on NetWare

9.9 Identity Manager Drivers for Cluster Synchronization Do Not Start

9.10 Identity Manager Drivers Do Not Synchronize Objects from One Cluster to Another

9.11 Tracing Identity Manager Communications

9.12 Peer Cluster Communication Not Working

9.13 Administration of Peer Clusters Not Functional

9.14 A Resource Does Not Migrate to a Peer Cluster

9.15 A Resource Cannot Be Brought Online

9.16 Dumping Syslog on NetWare

9.17 Slow Failovers

9.18 Resource Script Search-and-Replace Functions Do Not Work

9.19 Virtual NCP Server IP Addresses Won’t Change

9.20 The IP Address, Virtual Server DN, or Pool Name Does Not Appear on the iManager Cluster Configuration Page

9.21 Blank Error String iManager Error Appears While Bringing a Resource Online

9.22 Mapping Drives in Login Scripts

9.23 Mapping Drives to Home Directories by Using the %HOME_DIRECTORY Variable

9.24 BCC Error Codes

10 Security Considerations

10.1 Security Features

10.2 Security Configuration

10.2.1 BCC Configuration Settings

10.2.2 Changing the NCS: BCC Settings Attribute in the BCC XML Configuration

10.2.3 Disabling SSL for Inter-Cluster Communication

10.3 General Security Guidelines

10.4 Security Information for Dependent Products

A Console Commands for BCC

B Implementing a Multiple-Tree BCC

B.1 Planning a Multiple-Tree Solution

B.1.1 Cluster Synchronization

B.1.2 User Synchronization

B.1.3 SSL Certificates for Drivers

B.2 Using Identity Manager to Copy User Objects to Another eDirectory Tree

B.3 Configuring User Object Synchronization

B.4 Creating SSL Certificates

B.5 Synchronizing the BCC-Specific Identity Manager Drivers

B.6 Preventing Identity Manager Synchronization Loops

B.7 Migrating Resources to Another Cluster

C Setting Up Auto-Failover

C.1 Enabling Auto-Failover

C.2 Creating an Auto-Failover Policy

C.3 Refining the Auto-Failover Policy

C.4 Adding or Editing Monitor Configurations

D Configuring Host-Based File System Mirroring for NSS Pools

D.1 Creating and Mirroring NSS Partitions on Shared Storage

D.2 Creating NSS Volumes

D.3 Novell Cluster Services Configuration and Setup

D.4 Checking NSS Volume Mirror Status

E Documentation Updates

E.1 August 14, 2009

E.1.1 Console Commands for BCC

E.1.2 Installing Business Continuity Clustering

E.1.3 Upgrading Business Continuity Clustering for NetWare

E.2 April 28, 2009

E.2.1 Converting BCC Clusters from NetWare to Linux

About This Guide

This guide describes how to install, configure, and manage Novell® Business Continuity Clustering 1.1 Support Pack 2 (SP2) for NetWare® 6.5 SP8 (same as Novell Open Enterprise Server 2 SP1 for NetWare) in combination with Novell Cluster Services™ 1.8.5 for NetWare clusters.

Chapter 1, “Overview,” on page 13

Chapter 2, “What’s New for BCC 1.1 for NetWare,” on page 27

Chapter 3, “Planning a Business Continuity Cluster,” on page 29

Chapter 4, “Installing Business Continuity Clustering,” on page 37

Chapter 5, “Upgrading Business Continuity Clustering for NetWare,” on page 55

Chapter 6, “Configuring Business Continuity Clustering Software,” on page 67

Chapter 7, “Managing a Business Continuity Cluster,” on page 83

Chapter 8, “Virtual IP Addresses,” on page 93

Chapter 9, “Troubleshooting Business Continuity Clustering 1.1,” on page 101

Chapter 10, “Security Considerations,” on page 115

Appendix A, “Console Commands for BCC,” on page 123

Appendix B, “Implementing a Multiple-Tree BCC,” on page 127

Appendix C, “Setting Up Auto-Failover,” on page 133

Appendix D, “Configuring Host-Based File System Mirroring for NSS Pools,” on page 137

Audience

This guide is intended for anyone involved in installing, configuring, and managing Novell Cluster Services™ for NetWare in combination with Novell Business Continuity Clustering for NetWare.

Feedback

We want to hear your comments and suggestions about this manual and the other documentation included with this product. Please use the User Comments feature at the bottom of each page of the online documentation, or go to www.novell.com/documentation/feedback.html (http://www.novell.com/documentation/feedback.html) and enter your comments there.

Documentation Updates

The latest version of this Novell Business Continuity Clustering 1.1 SP2 Administration Guide for NetWare 6.5 SP8 (same as OES 2 SP1 NetWare) is available on the Business Continuity Clustering Documentation Web site (http://www.novell.com/documentation/bcc/index.html) under BCC for OES 2 SP1.

Additional Documentation

For information about using Novell Business Continuity Clustering 1.2 for Linux, see the BCC 1.2: Administration Guide for Linux.

For information about Novell Cluster Services for NetWare, see the OES 2 SP2: Novell Cluster Services 1.8.5 for NetWare Administration Guide.

For the latest information about Novell Identity Manager 3.5.1, see the Identity Management Documentation Web site (http://www.novell.com/documentation/idm35/index.html).

For the latest information about OES 2 SP1 for Linux and NetWare, see the OES 2 SP1 Documentation Web site (http://www.novell.com/documentation/oes2/index.html).

Documentation Conventions

In Novell documentation, a greater-than symbol (>) is used to separate actions within a step and items in a cross-reference path.

A trademark symbol (®, ™, etc.) denotes a Novell trademark. An asterisk (*) denotes a third-party trademark.

1 Overview

As corporations become more international, fueled in part by the reach of the Internet, the requirement for service availability has increased. Novell® Business Continuity Clustering (BCC) offers corporations the ability to maintain mission-critical (24x7x365) data and application services to their users while still being able to perform maintenance and upgrades on their systems.

In the past few years, natural disasters (ice storms, earthquakes, hurricanes, tornadoes, and fires) have caused unplanned outages of entire data centers. In addition, U.S. federal agencies have realized the disastrous effects that terrorist attacks could have on the U.S. economy when corporations lose their data and the ability to perform critical business practices. This has resulted in initial recommendations for corporations to build mirrored or replicated data centers that are geographically separated by 300 kilometers (km) or more. (The minimum acceptable distance is 200 km.)

Many companies have built and deployed geographically mirrored data centers. The problem is that setting up and maintaining the multiple centers is a manual process that takes a great deal of planning and synchronizing. Even configuration changes must be carefully planned and replicated. One mistake and the redundant site is no longer able to effectively take over in the event of a disaster.

This section identifies the implications for disaster recovery, provides an overview of some of the network implementations today that attempt to address disaster recovery, and describes how Business Continuity Clustering can improve your disaster recovery solution by providing specialized software that automates cluster configuration, maintenance, and synchronization across two to four geographically separate sites.

Section 1.1, “Disaster Recovery Implications,” on page 13

Section 1.2, “Disaster Recovery Implementations,” on page 14

Section 1.3, “Novell Business Continuity Clusters,” on page 21

Section 1.4, “BCC Deployment Scenarios,” on page 22

Section 1.5, “Key Concepts,” on page 25

1.1 Disaster Recovery Implications

The implications of disaster recovery are directly tied to your data. Is your data mission critical? In many instances, critical systems and data drive the business. If these services stop, the business stops. When calculating the cost of downtime, some things to consider are:

File transfers and file storage

E-mail, calendaring, and collaboration

Web hosting

Critical databases

Productivity

Reputation

Continuous availability of critical business systems is no longer a luxury; it is a competitive business requirement. The Gartner Group estimates that 40% of enterprises that experience a disaster will go out of business within five years, and only 15% of enterprises have a full-fledged business continuity plan that goes beyond core technology and infrastructure.

The cost to the business for each one hour of service outage includes the following:

Income loss measured as the income-generating ability of the service, data, or impacted group

Productivity loss measured as the hourly cost of impacted employees

Recovery cost measured as the hourly cost of IT personnel to get services back online

Future lost revenue because of customer and partner perception
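The four cost components above can be combined into a rough hourly estimate. The following is a minimal sketch, not a Novell tool; the class name, field names, and sample figures are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class OutageCostInputs:
    """Hourly figures for one service. All values are illustrative assumptions."""
    hourly_income: float          # income the service, data, or group generates per hour
    affected_employees: int       # employees who cannot work during the outage
    employee_hourly_cost: float   # hourly cost of one affected employee
    it_staff: int                 # IT personnel working to restore the service
    it_hourly_cost: float         # hourly cost of one IT person
    future_revenue_loss: float = 0.0  # estimated hourly loss from customer perception


def hourly_outage_cost(c: OutageCostInputs) -> float:
    """Sum income loss, productivity loss, recovery cost, and future lost revenue."""
    income_loss = c.hourly_income
    productivity_loss = c.affected_employees * c.employee_hourly_cost
    recovery_cost = c.it_staff * c.it_hourly_cost
    return income_loss + productivity_loss + recovery_cost + c.future_revenue_loss


if __name__ == "__main__":
    example = OutageCostInputs(10_000, 50, 40, 4, 75, 1_000)
    print(hourly_outage_cost(example))
```

Multiplying the result by the expected outage duration gives a first-order figure to compare against the cost of a second site.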

1.2 Disaster Recovery Implementations

Stretch clusters and cluster of clusters are two approaches for making shared resources available

across geographically distributed sites so that a second site can be called into action after one site

fails. To use these approaches, you must first understand how the applications you use and the

storage subsystems in your network deployment can determine whether a stretch cluster or cluster of

clusters solution is possible for your environment.

Section 1.2.1, “LAN-Based versus Internet-Based Applications,” on page 14

Section 1.2.2, “Host-Based versus Storage-Based Data Mirroring,” on page 14

Section 1.2.3, “Stretch Clusters vs. Cluster of Clusters,” on page 15

1.2.1 LAN-Based versus Internet-Based Applications

Traditional LAN applications require a LAN infrastructure that must be replicated at each site, and

might require relocation of employees to allow the business to continue. Internet-based applications

allow employees to work from any place that offers an Internet connection, including homes and

hotels. Moving applications and services to the Internet frees corporations from the restrictions of

traditional LAN-based applications.

By using Novell exteNd Director portal services, iChain®, and ZENworks®, all services, applications, and data can be rendered through the Internet, allowing for loss of service at one site but still providing full access to the services and data by virtue of the ubiquity of the Internet. Data and services continue to be available from the other mirrored sites.

1.2.2 Host-Based versus Storage-Based Data Mirroring

For clustering implementations that are deployed in data centers in different geographic locations,

the data must be replicated between the storage subsystems at each data center. Data-block

replication can be done by host-based mirroring for synchronous replication over short distances up

to 10 km. Typically, replication of data blocks between storage systems in the data centers is

performed by SAN hardware that allows synchronous mirrors over a greater distance.

For stretch clusters, host-based mirroring is required to provide synchronous mirroring of the SBD

(split-brain detector) partition between sites. This means that stretch-cluster solutions are limited to

distances of 10 km.
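The distance rules in this section can be expressed as a small decision helper. This is a sketch under the assumption that the 10 km figure is the only constraint being checked; the function name and return values are hypothetical, and real limits depend on your SAN hardware and media interconnects.

```python
from __future__ import annotations

HOST_BASED_MAX_KM = 10  # synchronous host-based mirroring limit cited in this section


def mirroring_options(distance_km: float, stretch_cluster: bool) -> list[str]:
    """Return the data-mirroring approaches that fit a given site separation.

    An empty list means the deployment type is not feasible at that distance.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    options = []
    if distance_km <= HOST_BASED_MAX_KM:
        options.append("host-based")
    # A stretch cluster needs host-based mirroring of the SBD partition, so
    # storage-based data mirroring does not lift the 10 km limit for it.
    if not stretch_cluster or distance_km <= HOST_BASED_MAX_KM:
        options.append("storage-based")
    return options


if __name__ == "__main__":
    print(mirroring_options(5, stretch_cluster=True))
    print(mirroring_options(300, stretch_cluster=True))
    print(mirroring_options(300, stretch_cluster=False))
```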

Table 1-1 compares the benefits and limitations of host-based and storage-based mirroring.

Table 1-1 Comparison of Host-Based and Storage-Based Data Mirroring

| Capability | Host-Based Mirroring | Storage-Based Mirroring |
|---|---|---|
| Geographic distance between sites | Up to 10 km | Can be up to and over 300 km. The actual distance is limited only by the SAN hardware and media interconnects for your deployment. |
| Mirroring the SBD partition | An SBD can be mirrored between two sites. | Yes, if mirroring is supported by the SAN hardware and media interconnects for your deployment. |
| Synchronous data-block replication of data between sites | Yes | Yes, requires a Fibre Channel SAN or iSCSI SAN. |
| Failover support | No additional configuration of the hardware is required. | Requires additional configuration of the SAN hardware. |
| Failure of the site interconnect | LUNs can become primary at both locations (split brain problem). | Clusters continue to function independently. Minimizes the chance of LUNs at both locations becoming primary (split brain problem). |
| SMI-S compliance | If the storage subsystems are not SMI-S compliant, the storage subsystems must be controllable by scripts running on the nodes of the cluster. | If the storage subsystems are not SMI-S compliant, the storage subsystems must be controllable by scripts running on the nodes of the cluster. |

1.2.3 Stretch Clusters vs. Cluster of Clusters

A stretch cluster and a cluster of clusters are two clustering implementations that you can use with Novell Cluster Services™ to achieve your desired level of disaster recovery. This section describes each deployment type, then compares the capabilities of each.

Novell Business Continuity Clustering automates some of the configuration and processes used in a cluster of clusters. For information, see Section 1.3, “Novell Business Continuity Clusters,” on page 21.

“Stretch Clusters” on page 16

“Cluster of Clusters” on page 16

“Comparison of Stretch Clusters and Cluster of Clusters” on page 18

“Evaluating Disaster Recovery Implementations for Clusters” on page 20

Stretch Clusters

A stretch cluster consists of a single cluster where the nodes are located in two geographically separate data centers. All nodes in the cluster must be in the same Novell eDirectory™ tree, which requires the eDirectory replica ring to span data centers. The IP addresses for nodes and cluster resources in the cluster must share a common IP subnet.

At least one storage system must reside in each data center. The data is replicated between locations

by using host-based mirroring or storage-based mirroring. For information about using mirroring

solutions for data replication, see Section 1.2.2, “Host-Based versus Storage-Based Data Mirroring,”

on page 14. Link latency can occur between nodes at different sites, so the heartbeat tolerance

between nodes of the cluster must be increased to allow for the delay.
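One way to reason about the heartbeat tolerance is to start high and tune down from observed heartbeat gaps, as the comparison table later in this section suggests (up to 30 seconds, then tune down). The sketch below is only an illustrative heuristic: the safety margin, the lower bound of 8 seconds, and the function name are assumptions, not values or interfaces taken from Novell Cluster Services.

```python
import math

MAX_TOLERANCE_SECONDS = 30  # upper starting point mentioned in this guide


def suggest_tolerance(observed_gaps, margin=2.0, floor_seconds=8):
    """Suggest a heartbeat tolerance in whole seconds.

    observed_gaps: longest intervals (in seconds) seen between heartbeats
    margin:        assumed safety factor applied to the worst observed gap
    floor_seconds: assumed lower bound so the tolerance is never tuned too low
    """
    if not observed_gaps:
        # No statistics yet: stay at the conservative maximum.
        return MAX_TOLERANCE_SECONDS
    candidate = math.ceil(max(observed_gaps) * margin)
    return max(floor_seconds, min(candidate, MAX_TOLERANCE_SECONDS))


if __name__ == "__main__":
    print(suggest_tolerance([1.0, 2.5, 6.2]))
```

Treat the output as a starting point for testing, not as a recommended production setting.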

The split-brain detector (SBD) is mirrored between the sites. Failure of the site interconnect can

result in LUNs becoming primary at both locations (split brain problem) if host-based mirroring is

used.

In the stretch-cluster architecture shown in Figure 1-1, the data is mirrored between two data centers

that are geographically separated. The server nodes in both data centers are part of one cluster, so

that if a disaster occurs in one data center, the nodes in the other data center automatically take over.

Figure 1-1 Stretch Cluster

[Figure: An 8-node cluster stretched between two sites. Site 1 (Building A) contains Servers 1 to 4 and Site 2 (Building B) contains Servers 5 to 8. Each site has an Ethernet switch, a Fibre Channel switch, and a Fibre Channel disk array. The cluster heartbeat crosses the WAN, and disk blocks are mirrored over the SAN.]

Cluster of Clusters

A cluster of clusters consists of multiple clusters in which each cluster is located in a geographically

separate data center. Each cluster can be in different Organizational Unit (OU) containers in the

same eDirectory tree, or in different eDirectory trees. Each cluster can be in a different IP subnet.

A cluster of clusters provides the ability to fail over selected cluster resources or all cluster resources

from one cluster to another cluster. For example, the cluster resources in one cluster can fail over to

separate clusters by using a multiple-site fan-out failover approach. A given service can be provided

by multiple clusters. Resource configurations are replicated to each peer cluster and synchronized

manually. Failover between clusters requires manual management of the storage systems and the

cluster.

Nodes in each cluster access only the storage systems co-located in the same data center. Typically,

data is replicated by using storage-based mirroring. Each cluster has its own SBD partition. The

SBD partition is not mirrored across the sites, which minimizes the chance for a split-brain problem

occurring when using host-based mirroring. For information about using mirroring solutions for data

replication, see Section 1.2.2, “Host-Based versus Storage-Based Data Mirroring,” on page 14.

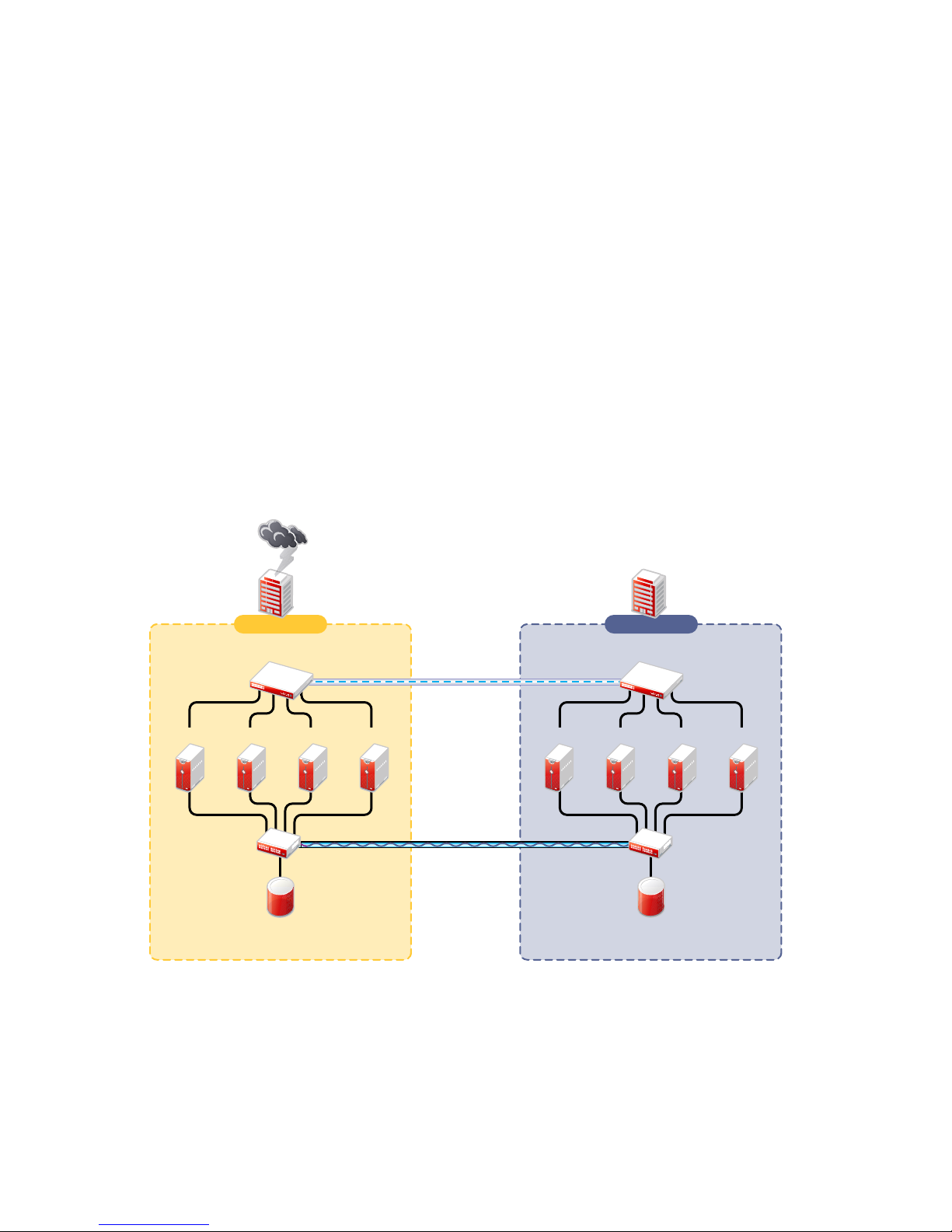

In the cluster-of-clusters architecture shown in Figure 1-2, the data is synchronized by the SAN

hardware between two data centers that are geographically separated. If a disaster occurs in one data

center, the cluster in the other data center takes over.

Figure 1-2 Cluster of Clusters

[Figure: Two independent clusters at geographically separate sites. Cluster Site 1 (Building A) contains Servers 1A to 4A and Cluster Site 2 (Building B) contains Servers 1B to 4B. Each site has an Ethernet switch, a Fibre Channel switch, and Fibre Channel disk arrays. eDirectory and IDM traffic crosses the WAN, and disk blocks are replicated over the SAN.]

Comparison of Stretch Clusters and Cluster of Clusters

Table 1-2 compares the capabilities of a stretch cluster and a cluster of clusters.

Table 1-2 Comparison of Stretch Cluster and Cluster of Clusters

| Capability | Stretch Cluster | Cluster of Clusters |
|---|---|---|
| Number of clusters | One | Two or more |
| Number of geographically separated data centers | Two | Two or more |
| eDirectory trees | Single tree only; requires the replica ring to span data centers. | One or multiple trees |
| eDirectory Organizational Units (OUs) | Single OU container for all nodes. As a best practice, place the cluster container in an OU separate from the rest of the tree. | Each cluster can be in a different OU. Each cluster is in a single OU container. As a best practice, place each cluster container in an OU separate from the rest of the tree. |
| IP subnet | IP addresses for nodes and cluster resources must be in a single IP subnet. Because the subnet spans multiple locations, you must ensure that your switches handle gratuitous ARP (Address Resolution Protocol). | IP addresses in a given cluster are in a single IP subnet. Each cluster can use the same or different IP subnet. If you use the same subnet for all clusters in the cluster of clusters, you must ensure that your switches handle gratuitous ARP. |
| SBD partition | A single SBD is mirrored between two sites by using host-based mirroring, which limits the distance between data centers to 10 km. | Each cluster has its own SBD. Each cluster can have an on-site mirror of its SBD for high availability. If the cluster of clusters uses host-based mirroring, the SBD is not mirrored between sites, which minimizes the chance of LUNs at both locations becoming primary. |
| Failure of the site interconnect if using host-based mirroring | LUNs might become primary at both locations (split brain problem). | Clusters continue to function independently. |
| Storage subsystem | Each cluster accesses only the storage subsystem on its own site. | Each cluster accesses only the storage subsystem on its own site. |
| Data-block replication between sites. For information about data replication solutions, see Section 1.2.2, “Host-Based versus Storage-Based Data Mirroring,” on page 14. | Yes; typically uses storage-based mirroring, but host-based mirroring is possible for distances up to 10 km. | Yes; typically uses storage-based mirroring, but host-based mirroring is possible for distances up to 10 km. |
| Clustered services | A single service instance runs in the cluster. | Each cluster can run an instance of the service. |
| Cluster resource failover | Automatic failover to preferred nodes at the other site. | Manual failover to preferred nodes on one or multiple clusters (multiple-site fan-out failover). Failover requires additional configuration. |
| Cluster resource configurations | Configured for a single cluster | Configured for the primary cluster that hosts the resource, then the configuration is manually replicated to the peer clusters. |
| Cluster resource configuration synchronization | Controlled by the master node | Manual process that can be tedious and error-prone. |
| Failover of cluster resources between clusters | Not applicable | Manual management of the storage systems and the cluster. |
| Link latency between sites | Can cause false failovers. The cluster heartbeat tolerance between master and slave must be increased to as high as 30 seconds. Monitor cluster heartbeat statistics, then tune down as needed. | Each cluster functions independently in its own geographical site. |

Evaluating Disaster Recovery Implementations for Clusters

Table 1-3 illustrates why a cluster-of-clusters solution is less problematic to deploy than a stretch

cluster solution. Manual configuration is not a problem when using Novell Business Continuity

Clustering for your cluster of clusters.

Table 1-3 Advantages and Disadvantages of Stretch Clusters versus Cluster of Clusters

| | Stretch Cluster | Cluster of Clusters |
|---|---|---|
| Advantages | It automatically fails over when configured with host-based mirroring. It is easier to manage than separate clusters. Cluster resources can fail over to nodes in any site. | eDirectory partitions don’t need to span the cluster. Each cluster can be in different OUs in the same eDirectory tree, or in different eDirectory trees. IP addresses for each cluster can be on different IP subnets. Cluster resources can fail over to separate clusters (multiple-site fan-out failover support). Each cluster has its own SBD. Each cluster can have an on-site mirror of its SBD for high availability. If the cluster of clusters uses host-based mirroring, the SBD is not mirrored between sites, which minimizes the chance of LUNs at both locations becoming primary. |
| Disadvantages | The eDirectory partition must span the sites. Failure of site interconnect can result in LUNs becoming primary at both locations (split brain problem) if host-based mirroring is used. An SBD partition must be mirrored between sites. It accommodates only two sites. All IP addresses must reside in the same subnet. | Resource configurations must be manually synchronized. Storage-based mirroring requires additional configuration steps. |
| Other Considerations | Host-based mirroring is required to mirror the SBD partition between sites. Link variations can cause false failovers. You could consider partitioning the eDirectory tree to place the cluster container in a partition separate from the rest of the tree. The cluster heartbeat tolerance between master and slave must be increased to accommodate link latency between sites. You can set this as high as 30 seconds, monitor cluster heartbeat statistics, and then tune down as needed. Because all IP addresses in the cluster must be on the same subnet, you must ensure that your switches handle ARP. Contact your switch vendor or consult your switch documentation for more information. | Depending on the platform used, storage arrays must be controllable by scripts that run on NetWare® if the SANs are not SMI-S compliant. |

1.3 Novell Business Continuity Clusters

A Novell Business Continuity Clustering cluster is an automated cluster of Novell Cluster Services

clusters. It is similar to what is described in “Cluster of Clusters” on page 16, except that the cluster

configuration, maintenance, and synchronization have been automated by adding specialized

software.

BCC supports up to four peer clusters. The sites are geographically separated mirrored data centers,

with a high availability cluster located at each site. Configuration is automatically synchronized

between the sites. Data is replicated between sites. All cluster nodes and their cluster resources are

monitored at each site. If one site goes down, business continues through the mirrored sites.

The business continuity cluster configuration information is stored in eDirectory. eDirectory schema

extensions provide the additional attributes required to maintain the configuration and status

information of BCC enabled cluster resources. This includes information about the peer clusters, the

cluster resources and their states, and storage control commands.

BCC is an integrated set of tools to automate the setup and maintenance of a business continuity

infrastructure. Unlike competitive solutions that attempt to build stretch clusters, BCC uses a cluster

of clusters. Each site has its own independent clusters, and the clusters in each of the geographically

separate sites are each treated as “nodes” in a larger cluster, allowing a whole site to do fan-out

failover to multiple other sites. Although this can currently be done manually with a cluster of

clusters, BCC automates the system by using eDirectory and policy-based management of the

resources and storage systems.

Novell Business Continuity Clustering software provides the following advantages over typical

cluster-of-clusters solutions:

Supports up to four clusters with up to 32 nodes each.

Integrates with shared storage hardware devices to automate the failover process through

standards-based mechanisms such as SMI-S.

Uses Novell Identity Manager technology to automatically synchronize and transfer cluster-

related eDirectory objects from one cluster to another, and between trees.

Provides the capability to fail over as few as one cluster resource, or as many as all cluster

resources.

Includes intelligent failover that allows you to perform site failover testing as a standard

practice.

Provides scripting capability that allows enhanced storage management control and

customization of migration and failover between clusters.

Provides simplified business continuity cluster configuration and management by using the

browser-based Novell iManager management tool. iManager is used for the configuration and

monitoring of the overall system and for the individual resources.

1.4 BCC Deployment Scenarios

There are several Business Continuity Clustering deployment scenarios that can be used to achieve

the desired level of disaster recovery. Three possible scenarios include:

Section 1.4.1, “Two-Site Business Continuity Cluster Solution,” on page 22

Section 1.4.2, “Multiple-Site Business Continuity Cluster Solution,” on page 23

Section 1.4.3, “Low-Cost Business Continuity Cluster Solution,” on page 24

1.4.1 Two-Site Business Continuity Cluster Solution

The two-site business continuity cluster deploys two independent clusters at geographically separate

sites. Each cluster can support up to 32 nodes. The clusters can be designed in one of two ways:

Active Site/Active Site: Two active sites where each cluster supports different applications

and services. Either site can take over for the other site at any time.

Active Site/Passive Site: A primary site in which all services are normally active, and a

secondary site which is effectively idle. The data is mirrored to the secondary site, and the

applications and services are ready to load if needed.

The active/active deployment option is typically used in a company that has more than one large site

of operations. The active/passive deployment option is typically used when the purpose of the

secondary site is primarily testing by the IT department. Replication of data blocks is typically done

by SAN hardware, but it can be done by host-based mirroring for synchronous replication over short

distances up to 10 km.

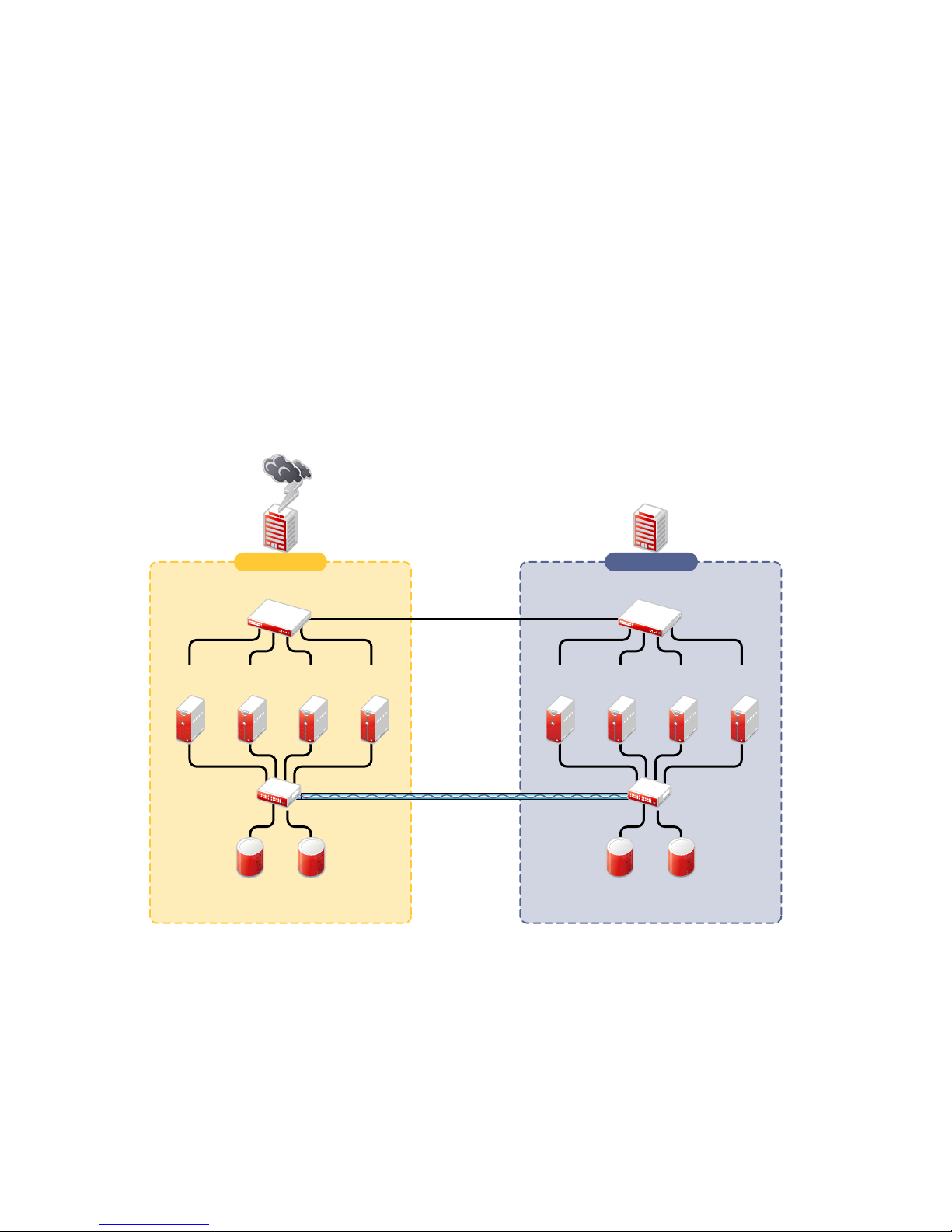

Figure 1-3 shows a two-site business continuity cluster that uses storage-based data replication

between the sites. BCC uses eDirectory and Identity Manager to synchronize cluster information

between the two clusters.

Figure 1-3 Two-Site Business Continuity Cluster

[Figure: Two independent clusters at geographically separate sites. Cluster Site 1 (Building A) contains Servers 1A to 4A and Cluster Site 2 (Building B) contains Servers 1B to 4B. Each site has an Ethernet switch, a Fibre Channel switch, and Fibre Channel disk arrays. eDirectory and IDM traffic crosses the WAN, and disk blocks are replicated over the SAN.]

1.4.2 Multiple-Site Business Continuity Cluster Solution

The multiple-site business continuity cluster is a large solution capable of supporting up to four

sites. Each cluster can support up to 32 nodes. Services and applications can do fan-out failover

between sites. Replication of data blocks is typically done by SAN hardware, but it can be done by

host-based mirroring for synchronous replication over short distances up to 10 km.

Figure 1-4 depicts a four-site business continuity cluster that uses storage-based data replication

between the sites. BCC uses eDirectory and Identity Manager to synchronize cluster information

between the peer clusters.

Figure 1-4 Multiple-Site Business Continuity Cluster

[Figure: Four independent clusters in geographically separate sites. Cluster Site 1 (Building A) contains Servers 1A to 4A. Cluster Sites 2, 3, and 4 (Buildings B, C, and D) each contain their own servers, shown as Servers 1B to 4B, 4C, and 4D. Each site has an Ethernet switch, a Fibre Channel switch, and Fibre Channel disk arrays. eDirectory and IDM traffic crosses the WAN, and disk blocks are replicated over the SAN.]

Using additional products, all services, applications, and data can be rendered through the Internet,

allowing for loss of service at one site but still providing full access to the services and data by virtue

of the ubiquity of the Internet. Data and services continue to be available from the other mirrored

sites. Moving applications and services to the Internet frees corporations from the restrictions of

traditional LAN-based applications. Traditional LAN applications require a LAN infrastructure that

must be replicated at each site, and might require relocation of employees to allow the business to

continue. Internet-based applications allow employees to work from any place that offers an Internet

connection, including homes and hotels.

1.4.3 Low-Cost Business Continuity Cluster Solution

The low-cost business continuity cluster solution is similar to the previous two solutions, but

replaces Fibre Channel storage arrays with iSCSI storage arrays. Data block mirroring can be

accomplished either with iSCSI-based block replication, or host-based mirroring. In either case,

snapshot technology can allow for asynchronous replication over long distances. However, the low-cost solution does not necessarily have the performance associated with higher-end Fibre Channel

storage arrays.

1.5 Key Concepts

The key concepts in this section can help you understand how Business Continuity Clustering

manages your business continuity cluster.

Section 1.5.1, “Business Continuity Clusters,” on page 25

Section 1.5.2, “Cluster Resources,” on page 25

Section 1.5.3, “Landing Zone,” on page 25

Section 1.5.4, “BCC Drivers for Identity Manager,” on page 25

1.5.1 Business Continuity Clusters

A business continuity cluster is a cluster of two to four Novell Cluster Services clusters that are managed together by Business

Continuity Clustering software. All nodes in every peer cluster are running the same operating

system.
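The sizing limits stated in this guide (two to four peer clusters, up to 32 nodes per cluster) can be checked against a planned layout before deployment. The following sketch is a planning aid only; the function name and the dictionary-based input are assumptions and do not correspond to any BCC interface.

```python
MIN_PEER_CLUSTERS = 2
MAX_PEER_CLUSTERS = 4
MAX_NODES_PER_CLUSTER = 32


def validate_bcc_layout(nodes_per_cluster):
    """Return a list of problems found in a planned layout.

    nodes_per_cluster maps a cluster name (hypothetical) to its node count.
    An empty list means the layout is within the limits stated in this guide.
    """
    problems = []
    count = len(nodes_per_cluster)
    if not MIN_PEER_CLUSTERS <= count <= MAX_PEER_CLUSTERS:
        problems.append(f"expected 2 to 4 peer clusters, found {count}")
    for name, nodes in nodes_per_cluster.items():
        if not 1 <= nodes <= MAX_NODES_PER_CLUSTER:
            problems.append(f"{name}: expected 1 to 32 nodes, found {nodes}")
    return problems


if __name__ == "__main__":
    print(validate_bcc_layout({"site1": 4, "site2": 4}))
    print(validate_bcc_layout({"site1": 40}))
```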

1.5.2 Cluster Resources

A cluster resource is a cluster-enabled shared disk that is configured for Novell Cluster Services. It

is also BCC-enabled so that it can be migrated and failed over between nodes in different peer

clusters.

1.5.3 Landing Zone

The landing zone is an eDirectory context in which the objects for the Virtual Server, the Cluster

Pool, and the Cluster Volume are placed when they are created for the peer clusters. You specify the

landing zone context when you configure the Identity Manager drivers for the business continuity

cluster.

1.5.4 BCC Drivers for Identity Manager

Business Continuity Clustering requires a special Identity Manager driver that uses an Identity Vault

to synchronize the cluster resource configuration information between the peer clusters. If the peer

clusters are in different eDirectory trees, an additional BCC driver helps synchronize user

information between the trees. For information, see Section 6.1.1, “Configuring the Identity

Manager Drivers and Templates,” on page 68.

2 What’s New for BCC 1.1 for NetWare

This section describes the enhancements made to Novell® Business Continuity Clustering 1.1 for NetWare®.

Section 2.1, “What’s New for BCC 1.1 SP2,” on page 27

Section 2.2, “What’s New for BCC 1.1 SP1,” on page 27

Section 2.3, “What’s New for BCC 1.1,” on page 27

2.1 What’s New for BCC 1.1 SP2

Business Continuity Clustering 1.1 SP2 for NetWare 6.5 SP8 provides the following enhancements and changes:

Support for NetWare 6.5 SP8 (same as Novell Open Enterprise Server (OES) 2 SP1 NetWare)

Support for Novell Cluster Services™ 1.8.5 for NetWare

Support for Identity Manager 3.5.1

Support for 64-bit architectures

Support for Novell eDirectory™ 8.8

Support for Novell iManager 2.7.2

2.2 What’s New for BCC 1.1 SP1

Business Continuity Clustering 1.1 SP1 for NetWare 6.5 SP6 provides the following enhancements

and changes:

Support for NetWare 6.5 SP6 (same as OES 1 SP2 NetWare update)

Support for Identity Manager 3.x

2.3 What’s New for BCC 1.1

Business Continuity Clustering 1.1 for NetWare 6.5 SP5 provides the following enhancements and

changes as compared to BCC 1.0 for NetWare:

Support for NetWare 6.5 SP5 (same as OES 1 SP2 NetWare)

Support for Identity Manager 2.x

Changed inter-cluster communication from the NCP™ (NetWare Core Protocol) port 524 to the CIM ports 5988 and 5989

Storage Management Initiative Specification (SMI-S) CIM support

Standards-based management of the SAN for automatic LUN failover

Support for most SANs (such as Xiotech*, EMC*, HP*, IBM*, and so on)

Automatic failover

No need for administrator intervention

Based on a configurable minimum number of nodes or a percentage of nodes

Extensible monitoring framework

Disabled by default
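The automatic failover criteria listed above can be pictured as a threshold check. The sketch below assumes that failover is triggered when the number of running nodes drops below either a configured minimum count or a configured minimum percentage; that interpretation, the function name, and the parameters are assumptions for illustration and are not the BCC implementation. With no thresholds set, the check never triggers, which mirrors the feature being disabled by default.

```python
def should_fail_over(total_nodes, running_nodes, min_nodes=None, min_percent=None):
    """Return True when the surviving nodes fall below a configured threshold.

    min_nodes:   assumed minimum number of running nodes the cluster needs
    min_percent: assumed minimum percentage (0 to 100) of nodes that must be running
    """
    if total_nodes <= 0 or not 0 <= running_nodes <= total_nodes:
        raise ValueError("node counts are out of range")
    if min_nodes is not None and running_nodes < min_nodes:
        return True
    if min_percent is not None:
        # Integer comparison avoids floating-point rounding at the boundary.
        return running_nodes * 100 < min_percent * total_nodes
    return False


if __name__ == "__main__":
    print(should_fail_over(8, 2, min_nodes=3))
    print(should_fail_over(8, 4, min_percent=50))
```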

3 Planning a Business Continuity Cluster

Use the guidelines in this section to design your Novell® Business Continuity Clustering solution.

The success of your business continuity cluster depends on the stability and robustness of the

individual peer clusters. BCC cannot overcome weaknesses in a poorly designed cluster

environment.

Section 3.1, “Determining Design Criteria,” on page 29

Section 3.2, “Best Practices,” on page 29

Section 3.3, “LAN Connectivity Guidelines,” on page 30

Section 3.4, “SAN Connectivity Guidelines,” on page 31

Section 3.5, “Storage Design Guidelines,” on page 32

Section 3.6, “eDirectory Design Guidelines,” on page 32

Section 3.7, “Cluster Design Guidelines,” on page 34

3.1 Determining Design Criteria

The design goal for your business continuity cluster is to ensure that your critical data and services

can continue in the event of a disaster. Design the infrastructure based on your business needs.

Determine your design criteria by asking and answering the following questions:

What are the key services that drive your business?

Where are your major business sites, and how many are there?

What services are essential for business continuance?

What is the cost of down time for the essential services?

Based on their mission-critical nature and cost of down time, what services are the highest

priority for business continuance?

Where are the highest-priority services currently located?

Where should the highest-priority services be located for business continuance?

What data must be replicated to support the highest-priority services?

How much data is involved, and how important is it?

3.2 Best Practices

The following practices help you avoid potential problems with your BCC:

IP address changes should always be made on the Protocols page of the iManager cluster plug-in, not in load and unload scripts.

This is the only way to change the IP address on the virtual NCP™ server object in eDirectory™.

Ensure that eDirectory and your clusters are stable before implementing BCC.

Engage Novell Consulting.

Engage a consulting group from your SAN vendor.

The cluster node that hosts the Identity Manager driver should have a full read/write eDirectory™ replica with the following containers in the replica:

Driver set container

Cluster container

(Parent) container where the servers reside

Landing zone container

User object container

Ensure that you have full read/write replicas of the entire tree at each data center.

3.3 LAN Connectivity Guidelines

The primary objective of LAN connectivity in a cluster is to provide uninterrupted heartbeat

communications. Use the guidelines in this section to design the LAN connectivity for each of the

peer clusters in the business continuity cluster:

Section 3.3.1, “VLAN,” on page 30

Section 3.3.2, “NIC Teaming,” on page 30

Section 3.3.3, “IP Addresses,” on page 31

Section 3.3.4, “Name Resolution,” on page 31

Section 3.3.5, “IP Addresses for BCC-Enabled Cluster Resources,” on page 31

3.3.1 VLAN

Use a dedicated VLAN (virtual local area network) for each cluster.

The cluster protocol is non-routable, so you cannot direct communications to specific IP addresses.

Using a VLAN for the cluster nodes provides a protected environment for the heartbeat process and

ensures that heartbeat packets are exchanged only between the nodes of a given cluster.

When using a VLAN, no foreign host can interfere with the heartbeat. For example, it avoids

broadcast storms that slow traffic and result in false split-brain abends.

3.3.2 NIC Teaming

Use NIC teaming for adapters for LAN fault tolerance. NIC teaming combines Ethernet interfaces

on a host computer for redundancy or increased throughput. It helps increase the availability of an

individual cluster node, which helps avoid or reduce the occurrences of failover caused by slow

LAN traffic.

When configuring Spanning Tree Protocol (STP), ensure that Portfast is enabled, or consider Rapid

Spanning Tree. The default settings for STP inhibit the heartbeat for over 30 seconds whenever there

is a change in link status. Test your STP configuration with Novell Cluster Services™ running to

make sure that a node is not cast out of the cluster when a broken link is restored.

Consider connecting cluster nodes to access switches for fault tolerance.

3.3.3 IP Addresses

Use a dedicated IP address range for each cluster. You need a unique static IP address for each of the

following components of each peer cluster:

Cluster (master IP address)

Cluster nodes

Cluster resources that are not BCC-enabled (file system resources and service resources such as

DHCP, DNS, SLP, FTP, and so on)

Cluster resources that are BCC-enabled (file system resources and service resources such as

DHCP, DNS, SLP, FTP, and so on)

Plan your IP address assignment so that it is consistently applied across all peer clusters. Provide an

IP address range with sufficient addresses for each cluster.

3.3.4 Name Resolution

In BCC 1.1 and later, the master IP addresses are stored in the NCS:BCC Peers attribute. Ensure that

SLP is properly configured for name resolution.

3.3.5 IP Addresses for BCC-Enabled Cluster Resources

Use dedicated IP address ranges for BCC-enabled cluster resources. With careful planning, the IP

address and the name of the virtual server for the cluster resource never need to change.

The IP address of an inbound cluster resource is transformed to use an IP address in the same subnet

as the peer cluster to which it is being migrated. You define the transformation rules to

accomplish this by using the Identity Manager driver’s search and replace functionality. The

transformation rules are easier to define and remember when you use strict IP address assignment,

such as using the third octet to identify the subnet of the peer cluster.
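
To illustrate why a strict assignment scheme keeps the rules simple, the following sketch shows the effect of a third-octet transformation. It is not Identity Manager rule syntax. It only models the result of a search-and-replace under the assumption that the third octet identifies the peer cluster's subnet. The function name and the sample addresses are hypothetical.

```python
import ipaddress


def transform_ip(address: str, source_octet: int, target_octet: int) -> str:
    """Replace the third octet when it matches the source cluster's subnet."""
    # Parsing first rejects anything that is not a valid IPv4 address.
    octets = str(ipaddress.IPv4Address(address)).split(".")
    if int(octets[2]) != source_octet:
        return address  # Not in the source cluster's subnet, so leave it unchanged.
    octets[2] = str(target_octet)
    return ".".join(octets)


if __name__ == "__main__":
    # A resource at 10.1.1.50 in the source cluster keeps its host part (50)
    # and moves to subnet 2 in the peer cluster.
    print(transform_ip("10.1.1.50", source_octet=1, target_octet=2))
```

Because only one octet changes, the same rule applies to every BCC-enabled resource in the range.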

3.4 SAN Connectivity Guidelines

The primary objective of SAN (storage area network) connectivity in a cluster is to provide solid

and stable connectivity between cluster nodes and the storage system. Before installing Novell

Cluster Services and Novell Business Continuity Clustering, make sure the SAN configuration is

established and verified.

Use the guidelines in this section to design the SAN connectivity for each of the peer clusters in the

business continuity cluster:

Use host-based multipath I/O management.

Use redundant SAN connections to provide fault-tolerant connectivity between the cluster

nodes and the shared storage devices.

Connect each node via two fabrics to the storage environment.

Use a minimum of two mirror connections between storage environments over different fabrics

and wide area networks.

Make sure the distance between storage subsystems is within the limitations of the fabric used

given the amount of data, how the data is mirrored, and how long applications can wait for

acknowledgement. Also make sure to consider support for asynchronous versus synchronous

connections.

3.5 Storage Design Guidelines

Use the guidelines in this section to design the shared storage solution for each of the peer clusters in

the business continuity cluster.

Use a LUN device as the failover unit for each BCC-enabled cluster resource. Multiple pools

per LUN are possible, but are not recommended. A LUN cannot be concurrently accessed by

servers belonging to different clusters. This means that all resources on a given LUN can be

active in only one cluster at any given time. For maximum flexibility, we recommend that you

create only one cluster resource per LUN.

If you use multiple LUNs for a given shared NSS pool, all LUNs must fail over together. We

recommend that you use only one LUN per pool, and only one pool per LUN.

Data must be mirrored between data centers by using host-based mirroring or storage-based

mirroring. Storage-based mirroring is recommended.

When using host-based mirroring, make sure that the mirrored partitions are accessible for the

nodes of only one of the BCC peer clusters at any given time. If you use multiple LUNs for a

given pool, each segment must be mirrored individually. In large environments, it might be

difficult to determine the mirror state of all mirrored partitions at one time. You must also make

sure that all segments of the resource fail over together.
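
The one-LUN-per-pool and one-pool-per-LUN recommendation can be checked against a planned layout. The following sketch assumes the plan is available as a simple mapping of pool names to LUN names. The mapping format, the names, and the function are hypothetical and are not produced by any Novell utility.

```python
from collections import defaultdict


def check_storage_plan(pool_to_luns):
    """Report pools that span several LUNs and LUNs that hold several pools."""
    lun_to_pools = defaultdict(list)
    for pool, luns in pool_to_luns.items():
        for lun in luns:
            lun_to_pools[lun].append(pool)
    # Pools on more than one LUN: all of their LUNs must fail over together.
    multi_lun_pools = sorted(p for p, luns in pool_to_luns.items() if len(luns) > 1)
    # LUNs with more than one pool: all of their pools move between clusters together.
    shared_luns = sorted(l for l, pools in lun_to_pools.items() if len(pools) > 1)
    return multi_lun_pools, shared_luns


if __name__ == "__main__":
    plan = {"POOL1": ["LUN1"], "POOL2": ["LUN2", "LUN3"], "POOL3": ["LUN1"]}
    print(check_storage_plan(plan))
```

An empty result for both lists means the plan follows the recommended layout.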

3.6 eDirectory Design Guidelines

Your Novell eDirectory solution for each of the peer clusters in the business continuity cluster must

consider the following configuration elements. Make sure your approach is consistent across all peer

clusters.

Section 3.6.1, “Object Location,” on page 32

Section 3.6.2, “Cluster Context,” on page 33

Section 3.6.3, “Partitioning and Replication,” on page 33

Section 3.6.4, “Objects Created by the BCC Drivers for Identity Manager,” on page 33

Section 3.6.5, “Landing Zone,” on page 33

Section 3.6.6, “Naming Conventions for BCC-Enabled Resources,” on page 34

3.6.1 Object Location

Cluster nodes and Cluster objects can exist anywhere in the eDirectory tree. The virtual server

object, cluster pool object, and cluster volume object are automatically created in the eDirectory

context of the server where the cluster resource is created and cluster-enabled. You should create

cluster resources on the master node of the cluster.

3.6.2 Cluster Context

Place each cluster in a separate Organizational Unit (OU). All server objects and cluster objects for a

given cluster should be in the same OU.

Figure 3-1 Cluster Resources in Separate OUs

3.6.3 Partitioning and Replication

Partition the cluster OU and replicate it to dedicated eDirectory servers holding a replica of the

parent partition and to all cluster nodes. This helps prevent resources from being stuck in an NDS

Sync state when a cluster resource’s configuration is modified.

3.6.4 Objects Created by the BCC Drivers for Identity Manager

When a resource is BCC-enabled, its configuration is automatically synchronized with every peer

cluster in the business continuity cluster by using customized Identity Manager drivers. The

following eDirectory objects are created in each peer cluster:

Cluster Resource object

Virtual Server object

Cluster Pool object

Cluster Volume object

The Cluster Resource object is placed in the Cluster object of the peer clusters where the resource

did not exist initially. The Virtual Server, Cluster Pool, and Cluster Volume objects are stored in the

landing zone. Search-and-replace transform rules define cluster-specific modifications such as the

IP address.

3.6.5 Landing Zone

Any OU can be defined as the BCC landing zone. Use a different OU for the landing zone than the one you

use for the cluster OU. The cluster OU for one peer cluster can be the landing zone OU for a different

peer cluster.
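
The landing zone rule can be expressed as a small check over a planned layout. The following sketch assumes each peer cluster is described by its cluster OU and its landing zone OU. The dictionary layout, the OU names, and the function name are hypothetical.

```python
def landing_zone_conflicts(clusters):
    """Return the clusters whose landing zone OU is the same as their own cluster OU."""
    return sorted(
        name
        for name, cfg in clusters.items()
        # eDirectory names are not case sensitive, so compare in lowercase.
        if cfg["cluster_ou"].lower() == cfg["landing_zone_ou"].lower()
    )


if __name__ == "__main__":
    plan = {
        # Each cluster uses the other cluster's OU as its landing zone.
        "cluster1": {"cluster_ou": "ou=cluster1,o=example", "landing_zone_ou": "ou=cluster2,o=example"},
        "cluster2": {"cluster_ou": "ou=cluster2,o=example", "landing_zone_ou": "ou=cluster1,o=example"},
    }
    print(landing_zone_conflicts(plan))
```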

3.6.6 Naming Conventions for BCC-Enabled Resources

Develop a cluster-independent naming convention for BCC-enabled cluster resources. It can

become confusing if a cluster resource's name refers to one cluster and the resource is failed over to a peer

cluster.

You can use a naming convention for resources in your BCC as you create those resources.

Changing existing names of cluster resources is less straightforward and can be error prone.

For example, when cluster-enabling NSS pools, the default naming conventions used by NSS are:

Cluster Resource: poolname_SERVER

Cluster-Enabled Pool: clustername_poolname_POOL

Cluster-Enabled Volume: clustername_volumename

Virtual Server: clustername_poolname_SERVER

Instead, use names that are independent of the clusters and that are unique across all peer clusters.

For example, replace the clustername with something static such as BCC.

Cluster Resource: poolname_SERVER

Cluster-Enabled Pool: BCC_poolname_POOL

Cluster-Enabled Volume: BCC_volumename

Virtual Server: BCC_poolname_SERVER

Resources have an identity in each peer cluster, and the names are the same in each peer cluster. For

example, Figure 3-2 shows the cluster resource identity in each of two peer clusters.

Figure 3-2 Cluster Resource Identity in Two Clusters
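
The cluster-independent naming pattern described in this section can be generated consistently with a small helper. The following sketch follows the BCC example pattern given above. The function name and the sample pool and volume names are hypothetical, and the static prefix is whatever value you choose in place of the cluster name.

```python
def bcc_resource_names(pool: str, volume: str, prefix: str = "BCC") -> dict:
    """Build cluster-independent object names for one pool and one volume."""
    return {
        "Cluster Resource": f"{pool}_SERVER",
        "Cluster-Enabled Pool": f"{prefix}_{pool}_POOL",
        "Cluster-Enabled Volume": f"{prefix}_{volume}",
        "Virtual Server": f"{prefix}_{pool}_SERVER",
    }


if __name__ == "__main__":
    for kind, name in bcc_resource_names("POOL1", "VOL1").items():
        print(f"{kind}: {name}")
```

Generating the names in one place makes it easier to keep them identical in every peer cluster.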

3.7 Cluster Design Guidelines

Your Novell Cluster Services solution for each of the peer clusters in the business continuity cluster

must consider the following configuration guidelines. Make sure your approach is consistent across

all peer clusters.

IP address assignments should be consistently applied within each peer cluster and for all

cluster resources.

Ensure that IP addresses are unique across all BCC peer clusters.

Volume IDs must be unique across all peer clusters. Each cluster node automatically assigns

volume ID 0 to volume SYS and volume ID 1 to volume _ADMIN. Cluster-enabled volumes use

high volume IDs, starting from 254 in descending order. Novell Client uses the volume ID to

access a volume.

When existing clusters are configured and enabled within the same business continuity cluster,

the existing shared volumes in different clusters might use the same volume IDs. To

resolve this conflict, manually edit the load script for each volume that has been enabled for

business continuity and change the volume IDs to unique values for each volume in the

business continuity cluster.

BCC configuration should consider the configuration requirements for each of the services

supported across all peer clusters.

Create failover matrixes for each cluster resource so that you know what service is supported

and which nodes are the preferred nodes for failover within the same cluster and among the

peer clusters.
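
Volume ID conflicts of the kind described in these guidelines are easy to spot once the IDs are collected in one place. The following sketch assumes you have already gathered an inventory of volume IDs for the shared volumes, for example by reading each load script. It does not parse load scripts itself. The inventory format, the names, and the function are hypothetical.

```python
from collections import defaultdict


def duplicate_volume_ids(volume_ids):
    """Map each volume ID used more than once to the (cluster, volume) pairs using it."""
    by_id = defaultdict(list)
    for (cluster, volume), volume_id in volume_ids.items():
        by_id[volume_id].append((cluster, volume))
    return {vid: sorted(users) for vid, users in by_id.items() if len(users) > 1}


if __name__ == "__main__":
    # Two existing clusters that each assigned IDs starting from 254.
    inventory = {
        ("cluster1", "VOL1"): 254,
        ("cluster1", "VOL3"): 253,
        ("cluster2", "VOL2"): 254,
    }
    print(duplicate_volume_ids(inventory))
```

Each ID reported here needs a new, unique value in the load script of all but one of the listed volumes.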

4 Installing Business Continuity Clustering

This section describes how to install, set up, and configure Novell® Business Continuity Clustering

1.1 SP2 for NetWare® 6.5 SP8 for your specific needs:

Section 4.1, “Requirements for BCC 1.1 SP2 for NetWare,” on page 37