
MATRIXx™

Xmath™ Xµ Manual

MATRIXx Xmath Basics

The MATRIXx products and related items have been purchased from Wind River Systems, Inc. (formerly Integrated Systems, Inc.). These reformatted user materials may contain references to those entities. Any trademark or copyright notices to those entities are no longer valid and any references to those entities as the licensor to the MATRIXx products and related items should now be considered as referring to National Instruments Corporation.

National Instruments did not acquire RealSim hardware (AC-1000, AC-104, PCI Pro) and does not plan to further develop or support RealSim software. NI is directing users who wish to continue to use RealSim software and hardware to third parties. The list of NI Alliance Members (third parties) that can provide RealSim support and the parts list for RealSim hardware are available in our online KnowledgeBase. You can access the KnowledgeBase at www.ni.com/support.

NI plans to make it easy for customers to target NI software and hardware, including LabVIEW real-time and PXI, with MATRIXx in the future.

For information regarding NI real-time products, please visit www.ni.com/realtime or contact us at matrixx@ni.com.

April 2004 Edition

Part Number 370760B-01


Support

Worldwide Technical Support and Product Information

ni.com

National Instruments Corporate Headquarters

11500 North Mopac Expressway Austin, Texas 78759-3504 USA Tel: 512 683 0100

Worldwide Offices

Australia 1800 300 800, Austria 43 0 662 45 79 90 0, Belgium 32 0 2 757 00 20, Brazil 55 11 3262 3599, Canada (Calgary) 403 274 9391, Canada (Ottawa) 613 233 5949, Canada (Québec) 450 510 3055, Canada (Toronto) 905 785 0085, Canada (Vancouver) 514 685 7530, China 86 21 6555 7838, Czech Republic 420 224 235 774, Denmark 45 45 76 26 00, Finland 385 0 9 725 725 11, France 33 0 1 48 14 24 24, Germany 49 0 89 741 31 30, Greece 30 2 10 42 96 427, India 91 80 51190000, Israel 972 0 3 6393737, Italy 39 02 413091, Japan 81 3 5472 2970, Korea 82 02 3451 3400, Malaysia 603 9131 0918, Mexico 001 800 010 0793, Netherlands 31 0 348 433 466, New Zealand 0800 553 322, Norway 47 0 66 90 76 60, Poland 48 22 3390150, Portugal 351 210 311 210, Russia 7 095 783 68 51, Singapore 65 6226 5886, Slovenia 386 3 425 4200, South Africa 27 0 11 805 8197, Spain 34 91 640 0085, Sweden 46 0 8 587 895 00, Switzerland 41 56 200 51 51, Taiwan 886 2 2528 7227, Thailand 662 992 7519, United Kingdom 44 0 1635 523545

For further support information, refer to the Technical Support Resources and Professional Services appendix. To comment on the documentation, send email to techpubs@ni.com.

© 2000–2003 National Instruments Corporation. All rights reserved.


Important Information

Warranty

The media on which you receive National Instruments software are warranted not to fail to execute programming instructions, due to defects in materials and workmanship, for a period of 90 days from date of shipment, as evidenced by receipts or other documentation. National Instruments will, at its option, repair or replace software media that do not execute programming instructions if National Instruments receives notice of such defects during the warranty period. National Instruments does not warrant that the operation of the software shall be uninterrupted or error free.

A Return Material Authorization (RMA) number must be obtained from the factory and clearly marked on the outside of the package before any equipment will be accepted for warranty work. National Instruments will pay the shipping costs of returning to the owner parts which are covered by warranty.

National Instruments believes that the information in this document is accurate. The document has been carefully reviewed for technical accuracy. In the event that technical or typographical errors exist, National Instruments reserves the right to make changes to subsequent editions of this document without prior notice to holders of this edition. The reader should consult National Instruments if errors are suspected. In no event shall National Instruments be liable for any damages arising out of or related to this document or the information contained in it.

EXCEPT AS SPECIFIED HEREIN, NATIONAL INSTRUMENTS MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AND SPECIFICALLY DISCLAIMS ANY WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. CUSTOMER’S RIGHT TO RECOVER DAMAGES CAUSED BY FAULT OR NEGLIGENCE ON THE PART OF NATIONAL INSTRUMENTS SHALL BE LIMITED TO THE AMOUNT THERETOFORE PAID BY THE CUSTOMER. NATIONAL INSTRUMENTS WILL NOT BE LIABLE FOR DAMAGES RESULTING FROM LOSS OF DATA, PROFITS, USE OF PRODUCTS, OR INCIDENTAL OR CONSEQUENTIAL DAMAGES, EVEN IF ADVISED OF THE POSSIBILITY THEREOF. This limitation of the liability of National Instruments will apply regardless of the form of action, whether in contract or tort, including negligence. Any action against National Instruments must be brought within one year after the cause of action accrues. National Instruments shall not be liable for any delay in performance due to causes beyond its reasonable control. The warranty provided herein does not cover damages, defects, malfunctions, or service failures caused by owner’s failure to follow the National Instruments installation, operation, or maintenance instructions; owner’s modification of the product; owner’s abuse, misuse, or negligent acts; and power failure or surges, fire, flood, accident, actions of third parties, or other events outside reasonable control.

Copyright

Under the copyright laws, this publication may not be reproduced or transmitted in any form, electronic or mechanical, including photocopying, recording, storing in an information retrieval system, or translating, in whole or in part, without the prior written consent of National Instruments Corporation.

Trademarks

LabVIEW™, MATRIXx™, National Instruments™, NI™, ni.com™, SystemBuild™, and Xmath™ are trademarks of National Instruments Corporation.

Product and company names mentioned herein are trademarks or trade names of their respective companies.

Patents

For patents covering National Instruments products, refer to the appropriate location: Help»Patents in your software, the patents.txt file on your CD, or ni.com/patents.

WARNING REGARDING USE OF NATIONAL INSTRUMENTS PRODUCTS

(1) NATIONAL INSTRUMENTS PRODUCTS ARE NOT DESIGNED WITH COMPONENTS AND TESTING FOR A LEVEL OF RELIABILITY SUITABLE FOR USE IN OR IN CONNECTION WITH SURGICAL IMPLANTS OR AS CRITICAL COMPONENTS IN ANY LIFE SUPPORT SYSTEMS WHOSE FAILURE TO PERFORM CAN REASONABLY BE EXPECTED TO CAUSE SIGNIFICANT INJURY TO A HUMAN.

(2) IN ANY APPLICATION, INCLUDING THE ABOVE, RELIABILITY OF OPERATION OF THE SOFTWARE PRODUCTS CAN BE IMPAIRED BY ADVERSE FACTORS, INCLUDING BUT NOT LIMITED TO FLUCTUATIONS IN ELECTRICAL POWER SUPPLY, COMPUTER HARDWARE MALFUNCTIONS, COMPUTER OPERATING SYSTEM SOFTWARE FITNESS, FITNESS OF COMPILERS AND DEVELOPMENT SOFTWARE USED TO DEVELOP AN APPLICATION, INSTALLATION ERRORS, SOFTWARE AND HARDWARE COMPATIBILITY PROBLEMS, MALFUNCTIONS OR FAILURES OF ELECTRONIC MONITORING OR CONTROL DEVICES, TRANSIENT FAILURES OF ELECTRONIC SYSTEMS (HARDWARE AND/OR SOFTWARE), UNANTICIPATED USES OR MISUSES, OR ERRORS ON THE PART OF THE USER OR APPLICATIONS DESIGNER (ADVERSE FACTORS SUCH AS THESE ARE HEREAFTER COLLECTIVELY TERMED “SYSTEM FAILURES”). ANY APPLICATION WHERE A SYSTEM FAILURE WOULD CREATE A RISK OF HARM TO PROPERTY OR PERSONS (INCLUDING THE RISK OF BODILY INJURY AND DEATH) SHOULD NOT BE RELIANT SOLELY UPON ONE FORM OF ELECTRONIC SYSTEM DUE TO THE RISK OF SYSTEM FAILURE. TO AVOID DAMAGE, INJURY, OR DEATH, THE USER OR APPLICATION DESIGNER MUST TAKE REASONABLY PRUDENT STEPS TO PROTECT AGAINST SYSTEM FAILURES, INCLUDING BUT NOT LIMITED TO BACK-UP OR SHUT DOWN MECHANISMS. BECAUSE EACH END-USER SYSTEM IS CUSTOMIZED AND DIFFERS FROM NATIONAL INSTRUMENTS' TESTING PLATFORMS AND BECAUSE A USER OR APPLICATION DESIGNER MAY USE NATIONAL INSTRUMENTS PRODUCTS IN COMBINATION WITH OTHER PRODUCTS IN A MANNER NOT EVALUATED OR CONTEMPLATED BY NATIONAL INSTRUMENTS, THE USER OR APPLICATION DESIGNER IS ULTIMATELY RESPONSIBLE FOR VERIFYING AND VALIDATING THE SUITABILITY OF NATIONAL INSTRUMENTS PRODUCTS WHENEVER NATIONAL INSTRUMENTS PRODUCTS ARE INCORPORATED IN A SYSTEM OR APPLICATION, INCLUDING, WITHOUT LIMITATION, THE APPROPRIATE DESIGN, PROCESS AND SAFETY LEVEL OF SUCH SYSTEM OR APPLICATION.


Contents

1 Introduction

1.1 Notation

1.2 Manual Outline

1.3 How to avoid really reading this Manual

2 Overview of the Underlying Theory

2.1 Introduction

2.1.1 Notation

2.1.2 An Introduction to Norms

2.2 Modeling Uncertain Systems

2.2.1 Perturbation Models for Robust Control

2.2.2 Linear Fractional Transformations

2.2.3 Assumptions on P, ∆, and the unknown signals

2.2.4 Additional Perturbation Structures

2.2.5 Obtaining Robust Control Models for Physical Systems

2.3 H∞ and H2 Design Methodologies

2.3.1 H∞ Design Overview

2.3.2 Assumptions for the H∞ Design Problem

2.3.3 A Brief Review of the Algebraic Riccati Equation

2.3.4 Solving the H∞ Design Problem for a Special Case

2.3.5 Further Notes on the H∞ Design Algorithm

2.3.6 H2 Design Overview

2.3.7 Details of the H2 Design Procedure

2.4 µ Analysis

2.4.1 Measures of Performance

2.4.2 Robust Stability and µ

2.4.3 Robust Performance

2.4.4 Properties of µ

2.4.5 The Main Loop Theorem

2.4.6 State-space Robustness Analysis Tests

2.4.7 Analysis with both Real and Complex Perturbations

2.5 µ Synthesis and D-K Iteration

2.5.1 µ-Synthesis

2.5.2 The D-K Iteration Algorithm

2.6 Model Reduction

2.6.1 Truncation and Residualization

2.6.2 Balanced Truncation

2.6.3 Hankel Norm Approximation

3 Functional Description of Xµ

3.1 Introduction

3.2 Data Objects

3.2.1 Dynamic Systems

3.2.2 pdms

3.2.3 Subblocks: selecting input & outputs

3.2.4 Basic Functions

3.2.5 Continuous to Discrete Transformations

3.3 Matrix Information, Display and Plotting

3.3.1 Information Functions for Data Objects

3.3.2 Formatted Display Functions

3.3.3 Plotting Functions

3.4 System Response Functions

3.4.1 Creating Time Domain Signals

3.4.2 Dynamic System Time Responses

3.4.3 Frequency Responses

3.5 System Interconnection

3.6 H2 and H∞ Analysis and Synthesis

3.6.1 Controller Synthesis

3.6.2 System Norm Calculations

3.7 Structured Singular Value (µ) Analysis and Synthesis

3.7.1 Calculation of µ

3.7.2 The D-K Iteration

3.7.3 Fitting D Scales

3.7.4 Constructing Rational Perturbations

3.7.5 Block Structured Norm Calculations

3.8 Model Reduction

3.8.1 Truncation and Residualization

3.8.2 Balanced Realizations

3.8.3 Hankel Singular Value Approximation

4 Demonstration Examples

4.1 The Himat Example

4.1.1 Problem Description

4.1.2 State-space Model of Himat

4.1.3 Creating a Weighted Interconnection Structure for Design

4.1.4 H∞ Design

4.1.5 µ Analysis of the H∞ Controller

4.1.6 Fitting D-scales for the D-K Iteration

4.1.7 Design Iteration #2

4.1.8 Simulation Comparison with a Loopshaping Controller

4.2 A Simple Flexible Structure Example

4.2.1 The Control Design Problem

4.2.2 Creating the Weighted Design Interconnection Structure

4.2.3 Design of an H∞ Controller

4.2.4 Robustness Analysis

4.2.5 D-K Iteration

4.2.6 A Simulation Study

5 Bibliography

6 Function Reference

6.1 Xµ Functions

6.2 Xµ Subroutines and Utilities

Appendices

A Translation Between Matlab µ-Tools and Xµ

A.1 Data Objects

A.2 Matrix Information, Display and Plotting

A.3 System Response Functions

A.4 System Interconnection

A.5 Model Reduction

A.6 H2 and H∞ Analysis and Synthesis

A.7 Structured Singular Value (µ) Analysis and Synthesis


Chapter 1

Introduction

Xµ is a suite of Xmath functions for the modeling, analysis and synthesis of linear robust control systems. Robust control theory has developed rapidly during the last decade, to the point where useful computational tools are available for solving a wide range of control problems. The theory has already been applied to many practical problems.

This manual describes the Xµ functions and demonstrates their application. The underlying theory is outlined here, and further theoretical details can be found in the many references provided.

It is assumed that the reader is familiar with the use of Xmath; the Xmath Basics manual and the on-line demos are a good way of getting started with Xmath. A good knowledge of control theory and its application is also assumed. The more the reader knows about robust control theory the better, as not all of the details are covered here.

1.1 Notation

Several font types or capitalization styles are used to distinguish between data objects. The following table lists their meanings.

| Notation | Meaning |
|---|---|
| pdm | Xmath parameter dependent matrix data object |
| Dynamic System | Xmath dynamic system data object |

Code examples and function names are set in typewriter font to distinguish them from narrative text.

1.2 Manual Outline

Chapter 2 outlines the applicable robust control theory. Perturbation models and linear fractional transformations form the basis of the modeling framework. The discussion is aimed at an introductory level and not all of the subtleties are covered. The theory continues with an overview of the $H_\infty$ design technique; again, the reader is referred elsewhere for the details of the theory. The robust control methodology covered here is based on the analysis of systems with perturbations. This is covered in some detail, as such an understanding is required for effective use of this software. Repeated analysis can be used to improve upon the synthesis; this takes us from the standard $H_\infty$ design method to the more sophisticated µ-synthesis techniques.

The translation between the theoretical concepts and the use of the software is made in Chapter 3. The means of performing typical robust control calculations are discussed in some detail. This chapter also serves to introduce the Xµ functions. The discussion is extended to include some of the relevant Xmath functions. A prior reading of Chapter 2 is helpful for putting this material in context.

The best way to get an idea of how to use the software is to study the completed design examples given in Chapter 4. These currently include a design study for an aerospace application (the Himat example) and a simple flexible structure example. Typical modeling, analysis, synthesis, and simulation studies are illustrated. These studies can be used as initial templates for the user’s application.

Chapter 6 is a function reference guide containing a formal description of each function. This is similar to the information available through the on-line help. Functions are listed in relevant groupings at the start of the chapter, which gives an overview of some of the software’s capabilities.


1.3 How to avoid really reading this Manual

The layout of the manual proceeds from introduction to background to syntax detail to application descriptions. This may be tediously theoretical for some. If you are one of those who consider reading the manual the option of last resort¹, then go directly to the applications (Chapter 4). If you have no prior Xmath experience, then skimming through Chapter 3 is essential. After running the demos and getting a feel for what the software can do, look briefly through the theory section.

¹ And it seems that you are now exercising that option.


Chapter 2

Overview of the Underlying Theory

2.1 Introduction

The material covered here is taken from a variety of sources. The basic approach is described by Doyle [1, 2], and further elaborated upon by Packard [3]. Summaries have also appeared in work by Smith [4] and others.

Motivating background can be found in the early paper by Doyle and Stein [5]. An overview of the robust control approach, particularly for process control systems, is given by Morari and Zafiriou [6]. The reader can also find a description of the $H_\infty/\mu$ synthesis robust control approach in [7].

There are a number of descriptions of the application of this approach to practical problems. In the last few years a significant number of these have appeared in the proceedings of the American Control Conference (ACC) and the IEEE Conference on Decision and Control (CDC). Only some of the early illustrative examples are cited here.

Application of µ synthesis to a shuttle control subsystem is given by Doyle et al. [8]. Examples of flexible structure control are described by Balas and coworkers [9, 10, 11, 12] and by Smith, Fanson and Chu [13, 14]. There have also been several studies involving process control applications, particularly high purity distillation columns. These are detailed by Skogestad and Morari in [15, 16, 17, 18].

Section 2.2 introduces robust control perturbation models and linear fractional transformations. Weighted $H_\infty$ design is covered in Section 2.3. The analysis of closed loop systems with the structured singular value (µ) is outlined in Section 2.4. Section 2.5 discusses µ synthesis and the D-K iteration. Model reduction is often used to reduce the controller order prior to implementation, and this is covered in Section 2.6.

2.1.1 Notation

We use fairly standard notation, which is summarized here for reference.

R set of real numbers

C set of complex numbers

n

R

n

C

n×m

R

n×m

C

I

n

0 matrix (or vector or scalar) of zeros of appropriate dimension

set of real valued vectors of dimension n × 1

set of complex valued vectors of dimension n × 1

set of real valued matrices of dimension n × m

set of complex valued matrices of dimension n × m

identity matrix of dimension n × n

The following apply to a matrix, M ∈C

M

M

T

∗

transpose of M

complex conjugate transpose of M

n×m

.

|M| absolute value of each element of M (also applies if M is a vector or scalar)

Re{M} real part of M

Im{M} imaginary part of M

dim(M) dimensions of M

σ

(M) maximum singular value of M

max

(M) minimum singular value of M

σ

min

M

ij

element of M in row i, column j. (also used for the i,j partition of a previously defined

partition of M)

(M) an eigenvalue of M

λ

i

ρ(M) spectral radius (max

i|λi

(M)|)

kMk norm of M (see section 2.1.2 for more details)

Page 15

2.1. INTRODUCTION 7

-

z v

y

P

P

11

21

P

P

12

22

u

Figure 2.1: The generic robust control model structure

P

n

Trace(M) trace of M (

i=1

Mii)

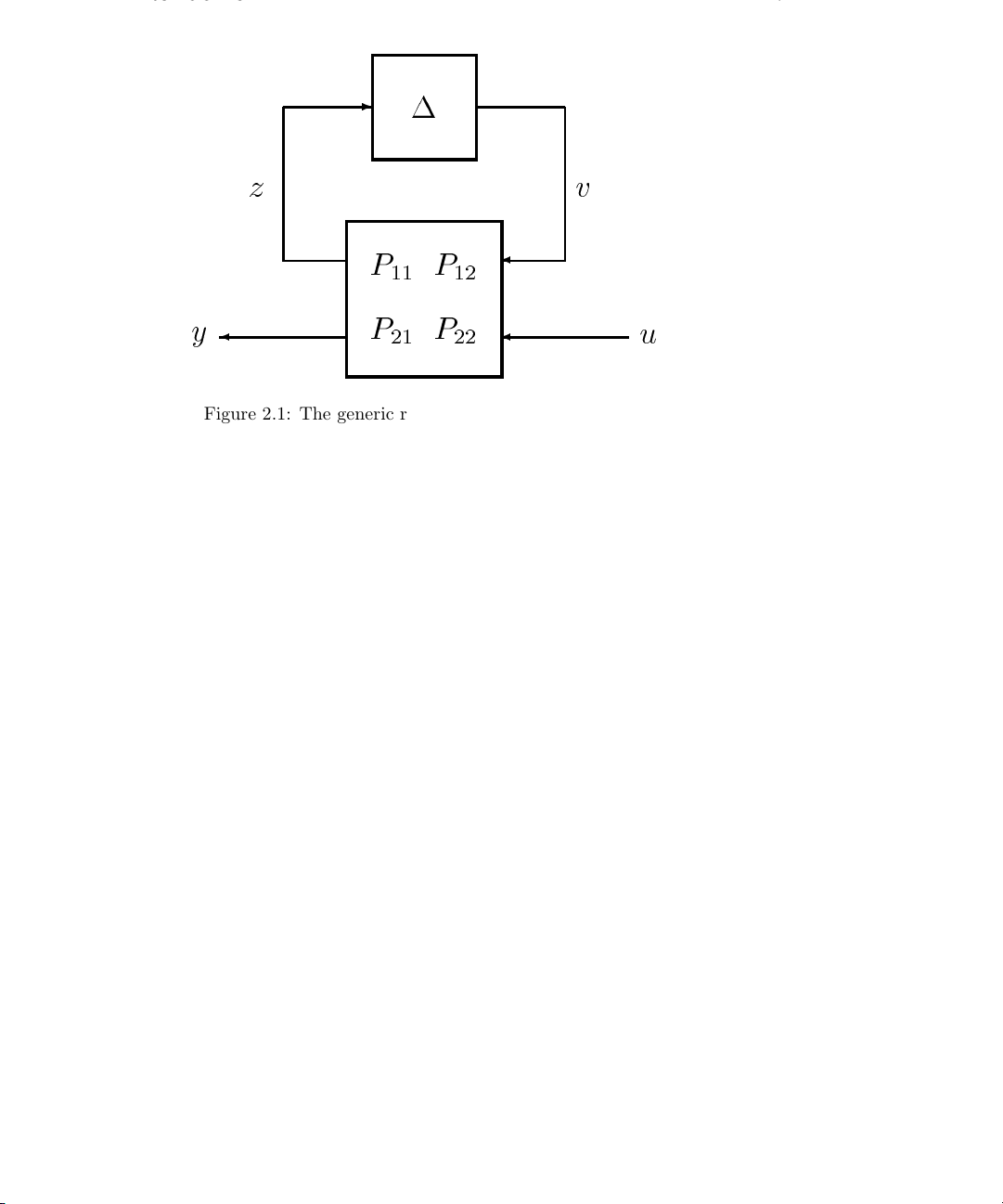

Block diagrams will be used to represent interconnections of systems. Consider the example feedback interconnection shown in Figure 2.1. Notice that $P$ has been partitioned into four parts. This diagram represents the equations

$$
\begin{aligned}
z &= P_{11} v + P_{12} u, \\
y &= P_{21} v + P_{22} u, \\
v &= \Delta z.
\end{aligned}
$$

This type of diagram (and the associated equations) will be used whenever the objects $P$, $z$, $y$, etc., are well defined and compatible. For example, $P$ could be a matrix and $z$, $y$, etc., would be vectors. If $P$ represented a dynamic system, then $z$, $y$, etc., would be signals and

$$
y = P_{21} v + P_{22} u
$$

is interpreted to mean that the signal $y$ is the sum of the response of system $P_{21}$ to input signal $v$ and the response of system $P_{22}$ to input signal $u$. In general, we will not be specific about the representation of the system $P$. If we do need to be more specific about $P$, then $P(s)$ is the Laplace representation and $p(t)$ is the impulse response.
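For the constant-matrix case mentioned above, the loop equations of Figure 2.1 can be solved directly: substituting $v = \Delta z$ into the first equation gives $z = (I - P_{11}\Delta)^{-1} P_{12} u$, and hence $y = [P_{22} + P_{21}\Delta(I - P_{11}\Delta)^{-1}P_{12}]\,u$. The sketch below checks this numerically. It uses Python with NumPy rather than Xmath, and the function name `close_delta_loop` and all matrix values are hypothetical, chosen only for illustration.

```python
import numpy as np

def close_delta_loop(P11, P12, P21, P22, Delta):
    """Matrix mapping u to y once v = Delta z closes the loop of Figure 2.1.

    Eliminating z and v gives y = [P22 + P21 Delta (I - P11 Delta)^{-1} P12] u.
    Assumes (I - P11 Delta) is invertible.
    """
    n = P11.shape[0]
    X = np.linalg.solve(np.eye(n) - P11 @ Delta, P12)  # (I - P11 Delta)^{-1} P12
    return P22 + P21 @ Delta @ X

# Hypothetical constant matrices: z and v have dimension 2, u and y dimension 1.
rng = np.random.default_rng(0)
P11 = 0.3 * rng.standard_normal((2, 2))
P12 = rng.standard_normal((2, 1))
P21 = rng.standard_normal((1, 2))
P22 = rng.standard_normal((1, 1))
Delta = 0.5 * rng.standard_normal((2, 2))
u = np.array([1.0])

y_closed = close_delta_loop(P11, P12, P21, P22, Delta) @ u

# Independent check: solve z - P11 v = P12 u and -Delta z + v = 0 simultaneously.
M = np.block([[np.eye(2), -P11], [-Delta, np.eye(2)]])
zv = np.linalg.solve(M, np.concatenate([P12 @ u, np.zeros(2)]))
z, v = zv[:2], zv[2:]
y_direct = P21 @ v + P22 @ u

print(np.allclose(y_closed, y_direct))
```

The formula only makes sense when $I - P_{11}\Delta$ is invertible; the stacked system in the check is solvable under exactly the same condition.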

Note that Figure 2.1 is drawn from right to left. We use this form of diagram because it more closely represents the order in which the systems are written in the corresponding mathematical equations. We will later see that the particular block diagram shown in Figure 2.1 is used as a generic description of a robust control system.

In the case where we are considering a state-space representation, the following notation is also used. Given $P(s)$ with state-space representation

$$
\begin{aligned}
s\,x(s) &= A x(s) + B u(s), \\
y(s) &= C x(s) + D u(s),
\end{aligned}
$$

we associate this description with the notation

$$
P(s) = \left[\begin{array}{c|c} A & B \\ \hline C & D \end{array}\right].
$$

The motivation for this notation comes from the example presented in Section 2.2.4. We will also use this notation for the state-space representation of discrete-time systems (where $s$ in the above is replaced by $z$). The usage will be clear from the context of the discussion.
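Eliminating $x(s)$ from the two state-space equations gives the transfer function $P(s) = C(sI - A)^{-1}B + D$. A minimal Python/NumPy sketch of this evaluation, assuming a hypothetical second-order example $P(s) = 1/(s^2 + 3s + 2)$ written in controllable canonical form (the helper name `ss_eval` is ours, not an Xµ function):

```python
import numpy as np

def ss_eval(A, B, C, D, s):
    """Evaluate P(s) = C (sI - A)^{-1} B + D at a single complex point s."""
    n = A.shape[0]
    return C @ np.linalg.solve(s * np.eye(n) - A, B) + D

# Hypothetical example: P(s) = 1 / (s^2 + 3 s + 2) in controllable canonical form.
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
D = np.array([[0.0]])

s = 1j * 2.0  # a point on the imaginary axis, omega = 2 rad/s
P_ss = ss_eval(A, B, C, D, s)[0, 0]
P_tf = 1.0 / (s**2 + 3.0 * s + 2.0)  # the same system as a rational function
print(abs(P_ss - P_tf) < 1e-12)
```

For a discrete-time system the same function applies with $s$ replaced by a point $z$ (for example on the unit circle).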

2.1.2 An Introduction to Norms

A norm is simply a measure of the size of a vector, matrix, signal, or system. We will define and concentrate on particular norms for each of these entities. This gives us a formal way of assessing whether the size of a signal is large or small enough. It also allows us to quantify the performance of a system in terms of the sizes of its input and output signals.

Unless stated otherwise, when talking of the size of a vector, we will be using the Euclidean norm. Given

$$
x = \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix},
$$

the Euclidean norm (or 2-norm) of $x$, denoted by $\|x\|$, is defined by

$$
\|x\| = \left( \sum_{i=1}^{n} |x_i|^2 \right)^{1/2}.
$$

Many other norms are also possible; more detail on the easily calculated norms can be found in the on-line help for the `norm` function. The term spatial norm is often applied when we are looking at norms over the components of a vector.
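As a quick numerical illustration of the Euclidean norm definition, the sketch below computes it directly from the sum of squares and compares it with NumPy's `np.linalg.norm` (used here as a stand-in for the Xmath `norm` function, whose options are not reproduced). The vector is a hypothetical example; the 1-norm and ∞-norm are shown only as other common spatial norms.

```python
import math
import numpy as np

x = np.array([3.0, -4.0, 12.0])  # hypothetical example vector

# Euclidean (2-) norm written out from the definition: sqrt of the sum of |x_i|^2.
norm_by_definition = math.sqrt(sum(abs(xi) ** 2 for xi in x))

# NumPy's vector norm defaults to the 2-norm.
norm_numpy = np.linalg.norm(x)

# Two other common spatial norms, for comparison.
norm_1 = np.linalg.norm(x, 1)         # sum of |x_i|
norm_inf = np.linalg.norm(x, np.inf)  # max of |x_i|

print(norm_by_definition, norm_numpy, norm_1, norm_inf)
```

For this vector the 2-norm is 13, while the 1-norm and ∞-norm give 19 and 12; the choice of spatial norm changes the number but not the idea of "size."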

Now consider a vector valued signal,

$$
x(t) = \begin{bmatrix} x_1(t) \\ \vdots \\ x_n(t) \end{bmatrix}.
$$

As well as the issue of the spatial norm, we now have the issue of a time norm. In the theory given here, we concentrate on the 2-norm in the time domain. In other words,

$$
\|x_i(t)\| = \left( \int_{-\infty}^{\infty} |x_i(t)|^2 \, dt \right)^{1/2}.
$$

The square of this norm is simply the energy of the signal. This norm is sometimes denoted by a subscript of two, i.e. $\|x_i(t)\|_2$. Parseval’s relationship means that we can also express this norm in the Laplace domain as follows,

$$
\|x_i(s)\| = \left( \frac{1}{2\pi} \int_{-\infty}^{\infty} |x_i(j\omega)|^2 \, d\omega \right)^{1/2}.
$$
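Parseval’s relationship can be checked numerically on a simple signal. The sketch below assumes the hypothetical example $x(t) = e^{-at}$ for $t \ge 0$ (zero for $t < 0$), whose Laplace transform is $1/(s + a)$, and evaluates both integrals with SciPy’s `quad`; both should equal $1/(2a)$.

```python
import numpy as np
from scipy.integrate import quad

a = 2.0  # decay rate of the example signal x(t) = exp(-a t), t >= 0

# Time domain: ||x||^2 = integral of |x(t)|^2 dt (x(t) = 0 for t < 0).
energy_time, _ = quad(lambda t: np.exp(-2.0 * a * t), 0.0, np.inf)

# Frequency domain: X(s) = 1/(s + a), so |X(j w)|^2 = 1/(w^2 + a^2).
integral_freq, _ = quad(lambda w: 1.0 / (w**2 + a**2), -np.inf, np.inf)
energy_freq = integral_freq / (2.0 * np.pi)

print(energy_time, energy_freq, 1.0 / (2.0 * a))
```

Taking the square root of either value gives the 2-norm $\|x(t)\|_2 = 1/\sqrt{2a}$.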

For persistent signals, where the above norm is unbounded, we can define a power norm,

$$
\|x_i(t)\| = \left( \lim_{T \to \infty} \frac{1}{2T} \int_{-T}^{T} |x_i(t)|^2 \, dt \right)^{1/2}. \tag{2.1}
$$
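A sinusoid is the typical persistent signal: its energy grows without bound as the time window widens, while its power norm settles to a finite value. The sketch below assumes the hypothetical signal $x(t) = \sin(\omega_0 t)$ and approximates the windowed average in Equation 2.1 with a hand-written trapezoidal rule for a few finite values of $T$; the power norm should approach $1/\sqrt{2}$.

```python
import numpy as np

omega0 = 3.0  # frequency (rad/s) of the example signal x(t) = sin(omega0 t)

def mean_square(T, n=200001):
    """Approximate (1/2T) * integral_{-T}^{T} sin(omega0 t)^2 dt by the trapezoidal rule."""
    t = np.linspace(-T, T, n)
    f = np.sin(omega0 * t) ** 2
    integral = np.sum((f[1:] + f[:-1]) * np.diff(t)) / 2.0
    return integral / (2.0 * T)

for T in (10.0, 100.0, 1000.0):
    energy = 2.0 * T * mean_square(T)     # integral of |x|^2 over [-T, T]; grows with T
    power_norm = np.sqrt(mean_square(T))  # settles near 1/sqrt(2)
    print(T, energy, power_norm)
```

The limit $T \to \infty$ in Equation 2.1 is only approximated here by the largest window.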

The above norms have been defined in terms of a single component, $x_i(t)$, of a vector valued signal, $x(t)$. The choice of spatial norm determines how we combine these components to calculate $\|x(t)\|$. We can mix and match the spatial and time parts of the norm of a signal. In practice it usually turns out that the choice of the time norm is more important in terms of system analysis. Unless stated otherwise, $\|x(t)\|$ implies the Euclidean norm spatially and the 2-norm in the time direction.

Certain signal spaces can be defined in terms of their norms. For example, the set of signals $x(t)$ with $\|x(t)\|_2$ finite is denoted by $L_2$. The formal definition is

$$
L_2 = \left\{ x(t) \;\middle|\; \|x(t)\|_2 < \infty \right\}.
$$

A similar approach can be taken in the discrete-time domain. Consider a sequence, $\{x(k)\}_{k=0}^{\infty}$, with 2-norm given by

$$
\|x(k)\|_2 = \left( \sum_{k=0}^{\infty} |x(k)|^2 \right)^{1/2}.
$$

A lower case notation is used to indicate the discrete-time domain. The set of all signals with finite 2-norm is therefore

$$
l_2 = \left\{ x(k),\ k = 0, \ldots, \infty \;\middle|\; \|x(k)\|_2 < \infty \right\}.
$$

We can essentially split the space $L_2$ into two pieces, $H_2$ and $H_2^{\perp}$. $H_2$ is the set of elements of $L_2$ which are analytic in the right half plane. These can be thought of as the signals which have their poles strictly in the left half plane; i.e. all stable signals. Similarly, $H_2^{\perp}$ is the set of signals with their poles strictly in the right half plane; all strictly unstable signals. Strictly speaking, signals in $H_2$ or $H_2^{\perp}$ are not defined on the $j\omega$ axis. However, we usually consider them to be, by taking a limit as we approach the axis.

A slightly more specialized set is $RL_2$, the set of real rational functions in $L_2$. These are strictly proper functions with no poles on the imaginary axis. Similarly, we can consider $RH_2$ as strictly proper stable functions and $RH_2^{\perp}$ as strictly proper functions with no poles in $\mathrm{Re}(s) < 0$. The distinction between $RL_2$ and $L_2$ is of little consequence for the sorts of analysis we will do here.

The concept of a unit ball will also come up in the following sections. This is simply the set of all signals (or vectors, matrices or systems) with norm less than or equal to one. The unit ball of $L_2$, denoted by $BL_2$, is therefore defined as

$$
BL_2 = \left\{ x(t) \;\middle|\; \|x(t)\|_2 \le 1 \right\}.
$$

Now let’s move on to norms of matrices and systems. As expected, the norm of a matrix gives a measure of its size. We will again emphasize only the norms which we will consider in the following sections. Consider defining a norm in terms of the maximum gain of a matrix or system. This is what is known as an induced norm. Consider a matrix, $M$, and vectors, $u$ and $y$, where

$$
y = M u.
$$

Define $\|M\|$ by

$$
\|M\| = \max_{u \ne 0} \frac{\|y\|}{\|u\|}.
$$

Because $M$ is linear, this is equivalent to

$$
\|M\| = \max_{u,\ \|u\| = 1} \|y\|.
$$

The properties of $\|M\|$ will depend on how we define the norms for the vectors $u$ and $y$. If we choose our usual default of the Euclidean norm, then $\|M\|$ is given by

$$
\|M\| = \sigma_{\max}(M),
$$

where $\sigma_{\max}$ denotes the maximum singular value. Not all matrix norms are induced from vector norms. The Frobenius norm (the square root of the sum of the squared magnitudes of all matrix elements) is one such example.
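The sketch below illustrates both statements with NumPy (not Xmath). It compares $\sigma_{\max}(M)$ with NumPy’s induced 2-norm, and with a crude sampling of $\|Mu\|$ over many random unit vectors $u$, for a hypothetical random complex matrix. It then shows why the Frobenius norm cannot be induced: every induced norm gives $\|I\| = 1$, but the Frobenius norm of $I_4$ is $\sqrt{4} = 2$.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.standard_normal((3, 2)) + 1j * rng.standard_normal((3, 2))  # hypothetical matrix

sigma = np.linalg.svd(M, compute_uv=False)  # singular values, largest first
induced_2 = np.linalg.norm(M, 2)            # induced (spectral) 2-norm

# Crude check of the definition: sample many unit vectors u and record ||M u||.
U = rng.standard_normal((2, 20000)) + 1j * rng.standard_normal((2, 20000))
U /= np.linalg.norm(U, axis=0)              # normalize each column to unit length
sampled_max = np.max(np.linalg.norm(M @ U, axis=0))

# Frobenius norm of the identity: sqrt(n), not 1, so it is not an induced norm.
fro_identity = np.linalg.norm(np.eye(4), 'fro')

print(sigma[0], induced_2, sampled_max, fro_identity)
```

The sampled maximum approaches $\sigma_{\max}(M)$ from below; the singular value decomposition gives the exact value without any search.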

Now consider the case where $P(s)$ is a dynamic system and we define an induced norm from $L_2$ to $L_2$ as follows. In this case, $y(s)$ is the output of $P(s)u(s)$ and

$$
\|P(s)\| = \max_{u(s) \in L_2,\ u \ne 0} \frac{\|y(s)\|_2}{\|u(s)\|_2}.
$$

Again, for a linear system, this is equivalent to

$$
\|P(s)\| = \max_{u(s) \in BL_2} \|y(s)\|_2.
$$

This norm is called the $\infty$-norm, usually denoted by $\|P(s)\|_\infty$. In the single-input, single-output case, this is equivalent to

$$
\|P(s)\|_\infty = \operatorname*{ess\,sup}_{\omega} |P(j\omega)|.
$$

This formal definition uses the term ess sup, meaning essential supremum. The “essential” part means that we drop all isolated points from consideration. We will always be considering continuous systems, so this technical point makes no difference to us here. The “supremum” is conceptually the same as a maximum. The difference is that the supremum also includes the case where we need to use a limiting sequence to approach the value of interest. The same is true of the terms “infimum” (abbreviated to “inf”) and “minimum.” For practical purposes, the reader can think instead in terms of maximum and minimum.
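In practice the supremum over $\omega$ is often approximated by the maximum over a dense frequency grid. A minimal Python/NumPy sketch, assuming the hypothetical lightly damped system $P(s) = 1/(s^2 + 2\zeta s + 1)$ with $\zeta = 0.1$, for which the exact peak $1/(2\zeta\sqrt{1-\zeta^2})$ (valid for $\zeta < 1/\sqrt{2}$) is known. This grid search is only an illustration, not the algorithm used by any Xµ function.

```python
import numpy as np

zeta = 0.1  # damping ratio of the hypothetical example P(s) = 1/(s^2 + 2 zeta s + 1)

def P(s):
    return 1.0 / (s**2 + 2.0 * zeta * s + 1.0)

# Dense logarithmic frequency grid (rad/s); the sup is approximated by the grid maximum.
omega = np.logspace(-2, 2, 200001)
gain = np.abs(P(1j * omega))
hinf_estimate = gain.max()
omega_peak = omega[gain.argmax()]

# Closed-form peak: 1 / (2 zeta sqrt(1 - zeta^2)) at omega = sqrt(1 - 2 zeta^2).
hinf_exact = 1.0 / (2.0 * zeta * np.sqrt(1.0 - zeta**2))

print(hinf_estimate, hinf_exact, omega_peak)
```

A grid can miss a sharp resonance that falls between grid points, so the estimate is always a lower bound on the true supremum.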

Actually, we could restrict $u(s) \in H_2$ in the above and the answer would be the same. In other words, we can look over all stable input signals $u(s)$ and measure the 2-norm of the output signal, $y(s)$. The subscript, $\infty$, comes from the fact that we are looking for the supremum of the function on the $j\omega$ axis. Mathematicians sometimes refer to this norm as the “induced 2-norm.” Beware of the possible confusion when reading some of the mathematical literature on this topic.

If we were using the power norm above (Equation 2.1) for the input and output norms, the induced norm would still be $\|P(s)\|_\infty$.

The set of all systems with bounded $\infty$-norm is denoted by $L_\infty$. We can again split this into stable and unstable parts. $H_\infty$ denotes the stable part; those systems with $|P(s)|$ finite for all $\mathrm{Re}(s) > 0$. This is where the name “$H_\infty$ control theory” originates, and we often call this norm the $H_\infty$-norm. Again we can restrict ourselves to real rational functions, so $RL_\infty$ is the set of proper transfer functions with no poles on the $j\omega$ axis. Similarly, $RH_\infty$ is the set of proper, stable transfer functions.

Again, we are free to choose a spatial norm for the input and output signals $u(s)$ and $y(s)$. In keeping with our above choices, we will choose the Euclidean norm. So if $P(s)$ is a MIMO system, then

$$
\|P(s)\|_\infty = \sup_{\omega} \sigma_{\max}[P(j\omega)].
$$
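For a MIMO system in state-space form, the same grid idea applies with $\sigma_{\max}$ replacing the absolute value. The sketch below assumes a hypothetical $2 \times 2$ diagonal system $P(s) = \mathrm{diag}\left(1/(s^2 + 0.2s + 1),\ 2/(s+1)\right)$, realized with three states. It evaluates $P(j\omega) = C(j\omega I - A)^{-1}B + D$ on a grid using NumPy’s batched `solve` and `svd`. Because the system is diagonal, the answer should equal the larger of the two scalar peaks (about 5.025).

```python
import numpy as np

# Hypothetical 2x2 diagonal example written as one realization [A B; C D] with three states.
A = np.array([[0.0, 1.0, 0.0],
              [-1.0, -0.2, 0.0],
              [0.0, 0.0, -1.0]])
B = np.array([[0.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0]])
C = np.array([[1.0, 0.0, 0.0],
              [0.0, 0.0, 2.0]])
D = np.zeros((2, 2))

omega = np.logspace(-2, 2, 20001)                          # frequency grid (rad/s)
jwI_minus_A = 1j * omega[:, None, None] * np.eye(3) - A    # shape (N, 3, 3)
X = np.linalg.solve(jwI_minus_A, np.broadcast_to(B, (omega.size, 3, 2)))
P_jw = C @ X + D                                           # P(j w) at each grid point, (N, 2, 2)
sigma_max = np.linalg.svd(P_jw, compute_uv=False)[:, 0]    # largest singular value per frequency
hinf_estimate = sigma_max.max()

print(hinf_estimate)
```

As in the scalar case, the grid maximum is a lower bound; dedicated $H_\infty$-norm algorithms avoid the gridding altogether.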

There is another choice of system norm that will arise in the following sections. This is the $H_2$-norm for systems, defined as

$$
\|P(s)\|_2 = \left( \frac{1}{2\pi} \int_{-\infty}^{\infty} \mathrm{Trace}\left[ P(j\omega)^* P(j\omega) \right] d\omega \right)^{1/2},
$$

where $P(j\omega)^*$ denotes the conjugate transpose of $P(j\omega)$ and the trace of a matrix is the sum of its diagonal elements. This norm will come up when we are considering linear quadratic Gaussian (LQG) problems.
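The $H_2$-norm can be computed straight from the frequency-domain definition above. For a stable, strictly proper state-space system it can also be obtained from the controllability Gramian $W_c$, which solves $A W_c + W_c A^T + B B^T = 0$, as $\|P\|_2^2 = \mathrm{Trace}(C W_c C^T)$. That second route is a standard result, not something stated in this section. The sketch below compares the two in Python with SciPy (`quad` and `solve_continuous_lyapunov`) for the hypothetical system $P(s) = 1/(s^2 + 3s + 2)$, whose exact $H_2$-norm is $1/\sqrt{12}$.

```python
import numpy as np
from scipy.integrate import quad
from scipy.linalg import solve_continuous_lyapunov

# Hypothetical stable, strictly proper example: P(s) = 1/(s^2 + 3s + 2), D = 0.
A = np.array([[0.0, 1.0], [-2.0, -3.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])

# Frequency-domain definition: (1/2pi) * integral of Trace[P(jw)^* P(jw)] dw.
def trace_PstarP(w):
    P = C @ np.linalg.solve(1j * w * np.eye(2) - A, B)
    return np.real(np.trace(P.conj().T @ P))

integral, _ = quad(trace_PstarP, -np.inf, np.inf)
h2_freq = np.sqrt(integral / (2.0 * np.pi))

# Gramian route: solve A Wc + Wc A^T = -B B^T, then ||P||_2^2 = Trace(C Wc C^T).
Wc = solve_continuous_lyapunov(A, -B @ B.T)
h2_gramian = np.sqrt(np.trace(C @ Wc @ C.T))

print(h2_freq, h2_gramian)
```

Unlike the $\infty$-norm, the $H_2$-norm is finite only for strictly proper stable systems (a nonzero $D$ makes the integral diverge).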

2.2 Modeling Uncertain Systems

2.2.1 Perturbation Models for Robust Control

A simple example will be used to illustrate the idea of a perturbation model. We are interested in describing a system by a set of models, rather than just a nominal model. Our uncertainty about the physical system will be represented in an unknown component of the model. This unknown component is a perturbation, $\Delta$, about which we make as few assumptions as possible (for example, about its maximum size, linearity, or time-invariance). Every different perturbation, $\Delta$, gives a slightly different system model. The complete robust control model is therefore a set description, and we hope that some members of this set capture some of the uncertain or unmodeled aspects of our physical system.

For example, consider the “uncertain” model illustrated in Figure 2.2. This picture is

equivalent to the input-output relationship,

$$y = [(I + \Delta W_m) P_{nom}]\, u. \quad (2.2)$$

Figure 2.2: Generic output multiplicative perturbation model

In this figure, ∆, $W_m$ and $P_{nom}$ are dynamic systems. The most general form for the theory can be stated with these blocks as elements of H∞. For the purposes of calculation we will be dealing with Xmath Dynamic Systems, and in keeping with this we will tend to restrict the theoretical discussion to RH∞, stable, proper real rational transfer function matrices.

The only thing that we know about the perturbation, ∆, is that $\|\Delta\|_\infty \le 1$. Each ∆ with $\|\Delta\|_\infty \le 1$ gives a different transfer function between u and y. The set of all possible transfer functions, generated in this manner, is called $\mathcal{P}$. More formally,

$$\mathcal{P} = \left\{ (I + \Delta W_m) P_{nom} \;:\; \|\Delta\|_\infty \le 1 \right\}. \quad (2.3)$$

Now we are looking at a set of possible transfer functions,

$$y(s) = P(s) u(s), \quad \text{where } P(s) \in \mathcal{P}.$$

Equation 2.2 represents what is known as a multiplicative output perturbation

structure. This is perhaps one of the easiest to look at initially, as $W_m(s)$ can be viewed


as specifying a maximum percentage error between $P_{nom}$ and every other element of $\mathcal{P}$. The system $P_{nom}(s)$ is the element of $\mathcal{P}$ that comes from ∆ = 0 and is called the nominal system. In other words, for ∆ = 0, the input-output relationship is $y(s) = P_{nom}(s) u(s)$. As ∆ deviates from zero (but remains bounded in size), the nominal system is multiplied by $(I + \Delta W_m(s))$. $W_m(s)$ is a frequency weighting function which allows us to specify the maximum effect of the perturbation for each frequency. Including $W_m(s)$ allows us to model $\mathcal{P}$ with ∆ being bounded by one. Any normalization of ∆ is simply included in $W_m(s)$.

We often assume that ∆ is also linear and time-invariant. This means that $\Delta(j\omega)$ is simply an unknown, complex valued matrix at each frequency, ω. If $\|\Delta\|_\infty \le 1$, then, at each frequency, $\sigma_{\max}(\Delta(j\omega)) \le 1$. Section 2.2.3 gives a further discussion on the pros and cons of considering ∆ to be linear, time-invariant.

Now consider an example of this approach from a Nyquist point of view. A simple first order SISO system with multiplicative output uncertainty is modeled as

$$y(s) = (I + W_m(s)\Delta) P_{nom}(s) u(s),$$

where

$$P_{nom}(s) = \frac{1 + 0.05s}{1 + s} \quad \text{and} \quad W_m(s) = \frac{0.1 + 0.2s}{1 + 0.05s}.$$

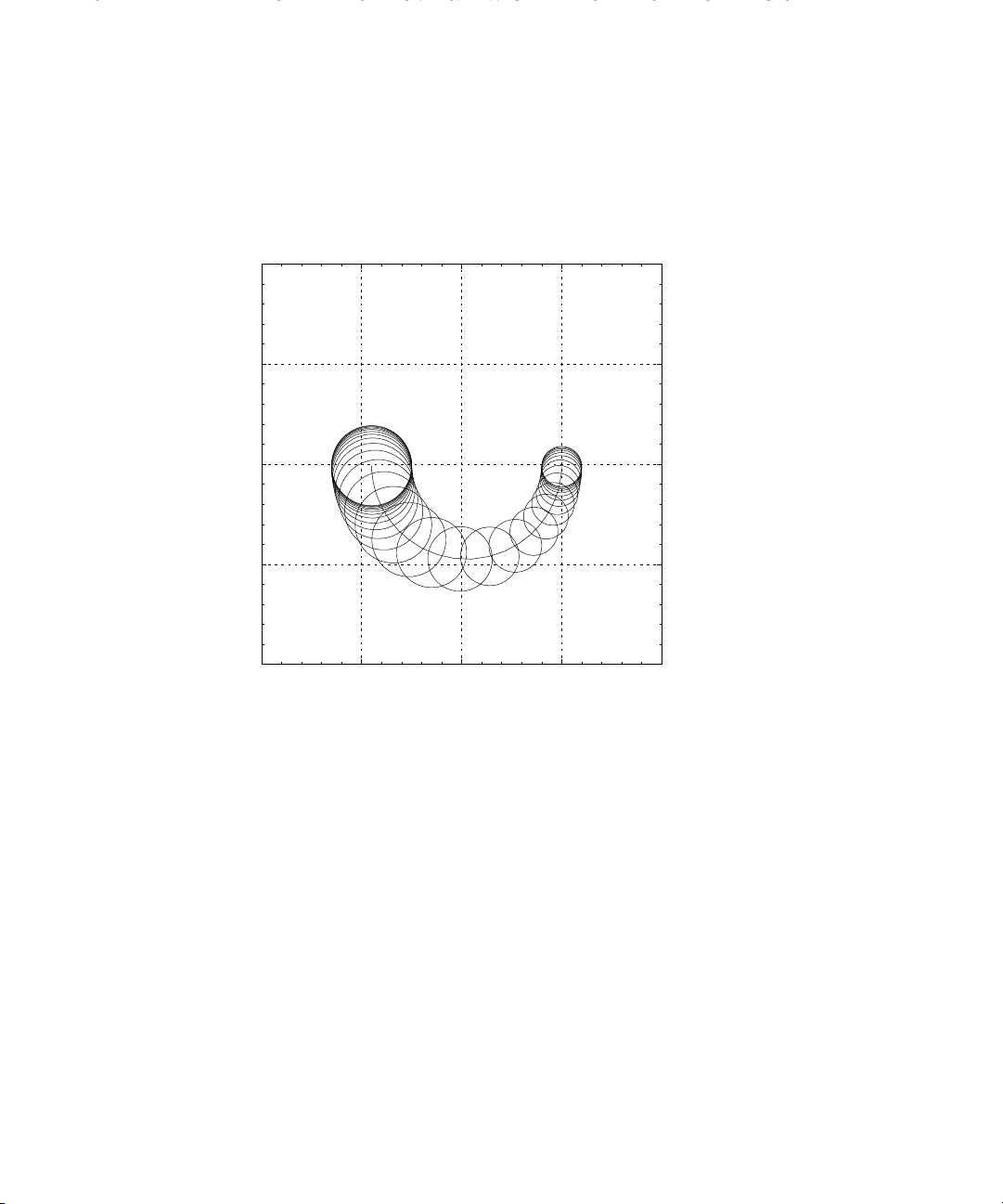

Figure 2.3 illustrates the set of systems generated by a linear time-invariant ∆, $\|\Delta\|_\infty \le 1$.

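At each frequency, ω, the transfer function of every element of $\mathcal{P}$ lies within a circle, centered at $P_{nom}(j\omega)$, of radius $|P_{nom}(j\omega) W_m(j\omega)|$. Note that for certain frequencies the disks enclose the origin; this happens exactly when the radius exceeds the distance from the origin to the center, that is, when $|W_m(j\omega)| > 1$. This allows us to consider perturbed systems that are non-minimum phase even though the nominal system is not.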
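The disk description can be checked numerically for this example. The short Python sketch below (the helper names are ours) evaluates the center and radius of each disk and confirms that a disk encloses the origin exactly when |W_m(jω)| > 1, which for this W_m(s) happens above roughly 5.14 rad/s.

```python
import math


def P_nom(s):
    return (1 + 0.05 * s) / (1 + s)


def W_m(s):
    return (0.1 + 0.2 * s) / (1 + 0.05 * s)


def disk(w):
    """Center P_nom(jw) and radius |P_nom(jw) W_m(jw)| of the disk at w (rad/s)."""
    s = 1j * w
    return P_nom(s), abs(P_nom(s) * W_m(s))


def encloses_origin(w):
    """True when the disk contains 0, i.e. radius > |center| (<=> |W_m(jw)| > 1)."""
    center, radius = disk(w)
    return radius > abs(center)


# |W_m(jw)|^2 = (0.01 + 0.04 w^2) / (1 + 0.0025 w^2) equals 1 when w^2 = 0.99 / 0.0375.
w_cross = math.sqrt(0.99 / 0.0375)
print(round(w_cross, 3))                            # 5.138
print(encloses_origin(1.0), encloses_origin(10.0))  # False True
```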

It is worth pointing out that $\mathcal{P}$ is still a model; in this case a set of regions in the Nyquist plane. This model set is now able to describe a larger set of system behaviors

than a single nominal model. There is still an inevitable mismatch between any model

(robust control model set or otherwise) and the behaviors of a physical system.

Figure 2.3: Nyquist diagram of the set of systems, $\mathcal{P}$ (axes: Real, Imaginary)

Page 25

2.2. MODELING UNCERTAIN SYSTEMS 17

W

a

y

?

j

u u j

+

P

0

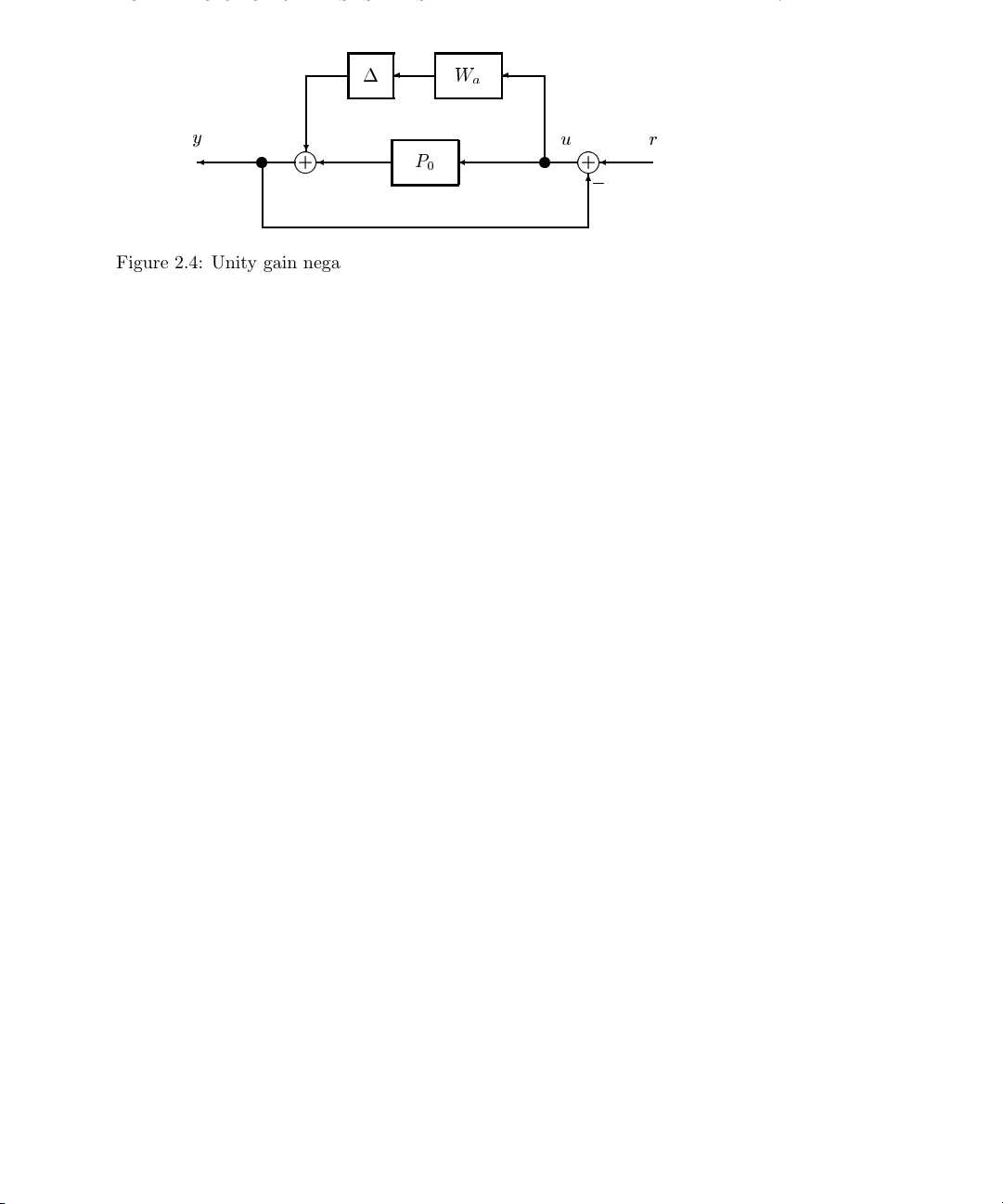

Figure 2.4: Unity gain negative feedback for the example system, P0+∆W

u r

+

,

6

a

2.2.2 Linear Fractional Transformations

A model is considered to be an interconnection of lumped components and perturbation

blocks. In this discussion we will denote the input to the model by u, which can be a

vector valued signal representing input signals such as control inputs, disturbances, and

noise. The output signal, denoted in this discussion by y, is also vector valued and can represent system outputs and other variables of interest.

In order to treat large systems of interconnected components, it is necessary to use a

model formulation that is general enough to handle interconnections of systems. To

illustrate this point consider an affine model description:

$$y = (P_0 + \Delta W_a) u, \quad \|\Delta\|_\infty \le 1, \quad (2.4)$$

where u is the input and y is the output. ∆ again represents an unknown but bounded perturbation. This form of perturbed model is known as an additive perturbation description. While such a description could be applied to a large class of linear systems, it is not general enough to describe the interconnection of models. More specifically, an interconnection of affine models is not necessarily affine. To see this, consider unity gain negative feedback around the above system. This is illustrated in Figure 2.4.

The new input-output transfer function is

$$y = (P_0 + \Delta W_a)[I + (P_0 + \Delta W_a)]^{-1} r. \quad (2.5)$$

It is not possible to represent the new system with an affine model. Note that stability questions arise from the consideration of the invertibility of $[I + (P_0 + \Delta W_a)]$.

Page 26

18 CHAPTER 2. OVERVIEW OF THE UNDERLYING THEORY

1

-

z v

.

.

.

m

P

11

y

P

21

P

P

12

22

u

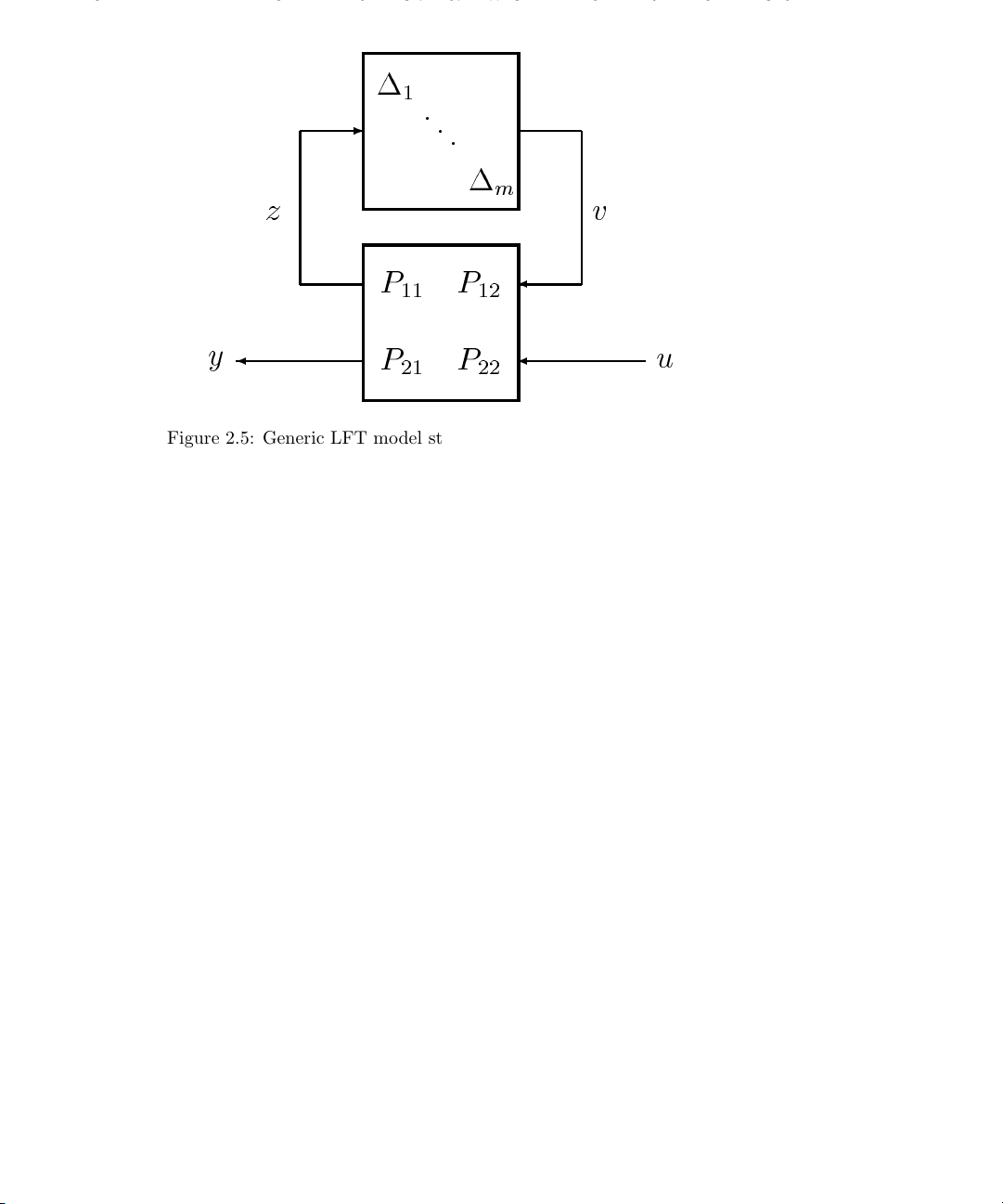

Figure 2.5: Generic LFT model structure including perturbations,∆

A generic model structure, referred to as a linear fractional transformation (LFT),

overcomes the difficulties outlined above. The LFT model is equivalent to the

relationship,

$$y = \left[ P_{22} + P_{21}\Delta(I - P_{11}\Delta)^{-1}P_{12} \right] u, \quad (2.6)$$

where ∆ is the norm bounded perturbation. Figure 2.5 shows a block diagram equivalent to the system described by Equation 2.6. Because this form of interconnection is widely used, we will give it a specific notation. Equation 2.6 is abbreviated to,

$$y = F_u(P, \Delta)\, u.$$

The subscript, u, indicates that ∆ is closed in the upper loop. We will also use $F_l(\cdot, \cdot)$ when the lower loop is closed.

In this figure, the signals, u, y, z and v can all be vector valued, meaning that the partitioned parts of P ($P_{11}$, etc.) can themselves be matrices of transfer functions.

To make this clear we will look at the perturbed system example, given in Equation 2.4,


in an LFT format. The open-loop system is described by,

$$y = F_u(P_{olp}, \Delta)\, u,$$

where

$$P_{olp} = \begin{bmatrix} 0 & W_a \\ I & P_0 \end{bmatrix}.$$

The unity gain, negative feedback configuration, illustrated in Figure 2.4 (and given in Equation 2.5) can be described by,

$$y = F_u(G_{clp}, \Delta)\, r,$$

where

$$G_{clp} = \begin{bmatrix} -W_a (I + P_0)^{-1} & W_a (I + P_0)^{-1} \\ (I + P_0)^{-1} & P_0 (I + P_0)^{-1} \end{bmatrix}.$$
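For scalar (SISO) blocks, the upper LFT of Equation 2.6 is a one-line formula. The Python sketch below is our own illustration with hypothetical values, $P_0(s) = 1/(s+1)$ and $W_a = 0.5$ evaluated at s = 2j; it checks that $F_u(P_{olp}, \Delta)$ reproduces Equation 2.4 and that $F_u(G_{clp}, \Delta)$ reproduces Equation 2.5 for one admissible ∆.

```python
def lft_upper(P, delta):
    """Scalar upper LFT: F_u(P, delta) = P22 + P21 delta (1 - P11 delta)^-1 P12."""
    (p11, p12), (p21, p22) = P
    den = 1 - p11 * delta
    if den == 0:
        raise ZeroDivisionError("1 - P11*delta is singular: the LFT is not well posed")
    return p22 + p21 * delta * p12 / den


# Hypothetical data: P0(s) = 1/(s + 1), Wa = 0.5, evaluated at s = 2j.
s = 2j
P0, Wa = 1 / (s + 1), 0.5
delta = 0.3 - 0.4j                 # |delta| = 0.5 <= 1, so delta is admissible

P_olp = ((0, Wa), (1, P0))
G_clp = ((-Wa / (1 + P0), Wa / (1 + P0)),
         (1 / (1 + P0), P0 / (1 + P0)))

open_loop = lft_upper(P_olp, delta)     # Equation 2.4: P0 + delta*Wa
closed_loop = lft_upper(G_clp, delta)   # Equation 2.5
print(abs(open_loop - (P0 + delta * Wa)) < 1e-12)                            # True
print(abs(closed_loop - (P0 + delta * Wa) / (1 + P0 + delta * Wa)) < 1e-12)  # True
```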

Figure 2.5 also shows the perturbation, ∆, as block structured. In other words,

$$\Delta = \operatorname{diag}(\Delta_1, \ldots, \Delta_m). \quad (2.7)$$

This allows us to consider different perturbation blocks in a complex interconnected

system. If we interconnect two systems, each with a ∆ perturbation, then the result can

always be expressed as an LFT with a single, structured perturbation. This is a very

general formulation as we can always rearrange the inputs and outputs of P to make ∆

block diagonal.

The distinction between perturbations and noise in the model can be seen from both

Equation 2.6 and Figure 2.5. Additive noise will enter the model as a component of u.

The ∆ block represents the unknown but bounded perturbations. It is possible that for some ∆, $(I - P_{11}\Delta)$ is not invertible. This type of model can describe nominally stable

systems which can be destabilized by perturbations. Attributing unmodeled effects

purely to additive noise will not have this characteristic.


The issue of the invertibility of $(I - P_{11}\Delta)$ is fundamental to the study of the stability of

a system under perturbations. We will return to this question in much more detail in

Section 2.4. It forms the basis of the µ analysis approach.

Note that Equation 2.7 indicates that we have m blocks, $\Delta_i$, in our model. For

notational purposes we will assume that each of these blocks is square. This is actually

without loss of generality as in all of the analysis we will do here we can square up P by

adding rows or columns of zeros. This squaring up will not affect any of the analysis

results. The software actually deals with the non-square ∆ case; we must

specify the input and output dimensions of each block.

The block structure is an m-tuple of integers, $(k_1, \ldots, k_m)$, giving the dimensions of each $\Delta_i$ block. It is convenient to define a set, denoted here by $\boldsymbol{\Delta}$, with the appropriate block structure representing all possible ∆ blocks, consistent with that described above. By this it is meant that each member of the set $\boldsymbol{\Delta}$ be of the appropriate type (complex matrices, real matrices, or operators, for example) and have the appropriate dimensions.

In Figure 2.5 the elements $P_{11}$ and $P_{12}$ are not shown partitioned with respect to the $\Delta_i$. For consistency the sum of the column dimensions of the $\Delta_i$ must equal the row dimension of $P_{11}$. Now define $\boldsymbol{\Delta}$ as

$$\boldsymbol{\Delta} = \left\{ \operatorname{diag}(\Delta_1, \ldots, \Delta_m) \;:\; \dim(\Delta_i) = k_i \times k_i \right\}.$$

It is assumed that each $\Delta_i$ is norm bounded. Scaling P allows the assumption that the norm bound is one. If the input to $\Delta_i$ is $z_i$ and the output is $v_i$, then

$$\|v_i\| = \|\Delta_i z_i\| \le \|z_i\|.$$

It will be convenient to denote the unit ball of $\boldsymbol{\Delta}$, the subset of $\boldsymbol{\Delta}$ norm bounded by one, by $\mathbf{B}\boldsymbol{\Delta}$. More formally

$$\mathbf{B}\boldsymbol{\Delta} = \left\{ \Delta \in \boldsymbol{\Delta} \;:\; \|\Delta\| \le 1 \right\}.$$

Putting all of this together gives the following abbreviated representation of the perturbed model,

$$y = F_u(P, \Delta)\, u, \quad \Delta \in \mathbf{B}\boldsymbol{\Delta}. \quad (2.8)$$

Page 29

2.2. MODELING UNCERTAIN SYSTEMS 21

w

?

W

n

W

u

y

+

?

j

?

j

+

u

P

nom

u

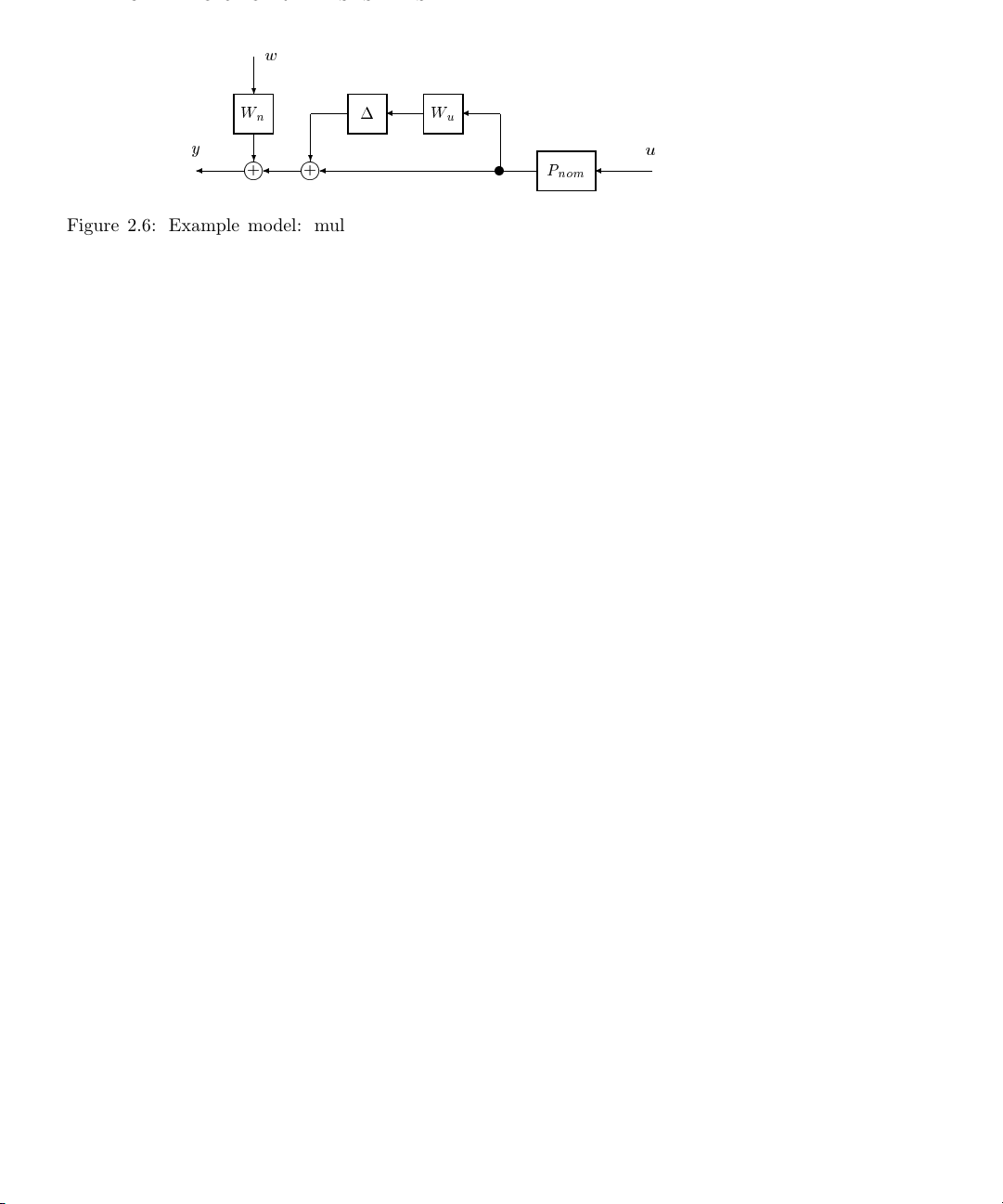

Figure 2.6: Example model: multiplicative output perturbation with weighted output

noise

References to a robust control model will imply a description of the form given in

Equation 2.8.

As an example, consider one of the most common perturbation model descriptions,

illustrated in Figure 2.6. This model represents a perturbed system with bounded noise

at the output.

The example model is given by,

$$y = W_n w + (I + \Delta W_u) P_{nom} u.$$

The system $W_n$ is a frequency dependent weight on the noise signal, w. This allows us to use a normalized representation for w. In other words the model includes the assumption that $\|w\|_\infty \le 1$. Similarly, we assume that $\|\Delta\|_\infty \le 1$ and $W_u$ is a frequency dependent weight which specifies the contribution of the perturbation at each frequency. In a typical model $W_n$ will be small (assuming that the noise is small compared to the nominal output) and $W_u$ will increase at high frequencies (to capture the likely case that we know less about the model at higher frequencies). The LFT representation of this model is,

$$y = F_u(P, \Delta) \begin{bmatrix} w \\ u \end{bmatrix},$$

where

$$P = \begin{bmatrix} 0 & 0 & W_u P_{nom} \\ I & W_n & P_{nom} \end{bmatrix}.$$


Robust control models are therefore set descriptions. In the analysis of such models it is

also assumed that the unknown inputs belong to some bounded set. Several choices of

set for the unknown signals can be made, leading to different mathematical problems for

the analysis. Unfortunately not all of them are tractable. The following section discusses

the assumptions typically applied to the robust control models.

2.2.3 Assumptions on P, ∆, and the unknown signals

It will be assumed that the elements of P are either real-rational transfer function

matrices or complex valued matrices. The second case arises in the frequency by

frequency analysis of systems.

In modeling a system, $P_{22}$ defines the nominal model. Input/output effects not

described by the nominal model can be attributed to either unknown signals which are

components of the model input (w in the previous example), or the perturbation ∆.

Unmodeled effects which can destabilize a system should be accounted for in ∆. The ∆

can loosely be considered as accounting for the following. This list is by no means

definitive and is only included to illustrate some of the physical effects better suited to

description with ∆.

• Unmodeled dynamics. Certain dynamics may be difficult to identify and there

comes a point when further identification does not yield significant design

performance improvement.

• Known dynamics which have been bounded and included in ∆ to simplify the

model. As the controller complexity depends on the order of the nominal model a

designer may not wish to explicitly include all of the known dynamics.

• Parameter variations in a differential equation model. For example linearization

constants which can vary over operating ranges.

• Nonlinear or inconsistent effects. At some point a linear model will no longer

account for the residual differences between the behaviors of the model and the

physical system.

Several assumptions on ∆ are possible. In the most general case ∆ is a bounded

operator. Alternatively ∆ can be considered as a linear time varying multiplier. This

assumption can be used to capture nonlinear effects which shift energy between

frequencies. Analysis and synthesis are possible with this assumption; Doyle and


Packard [19] discuss the implications of this assumption on robust control theory and we

briefly touch upon this in Section 2.4.6. The most common assumption is that ∆ is an

unknown, norm-bounded, linear time-invariant system.

Systems often do not fall neatly into one of the usual choices of ∆ discussed above.

Consider a nonlinear system linearized about an operating point. If a range of operation

is desired then the linearization constants can be considered to lie within an interval.

The model will have a ∆ block representing the variation in the linearization constants.

If this is considered to be a fixed function of frequency then the model can be considered

to be applicable for small changes about any operating point in the range. The precise

meaning of small will depend on the effect of the other ∆ blocks in the problem.

If the ∆ block is assumed to be time-varying then arbitrary variation is allowed in the

operating point. However this variation is now arbitrarily fast, and the model set now

contains elements which will not realistically correspond to any observed behavior in the

physical system.

The robust control synthesis theory gives controllers designed to minimize the maximum

error over all possible elements in the model set. Including non-physically motivated

signals or conditions can lead to a conservative design as it may be these signals or

conditions that determine the worst case error and consequently the controller.

Therefore the designer wants a model which describes all physical behaviors of the

system but does not include any extraneous elements.

The designer must select the assumptions on P and ∆. An inevitable tradeoff arises

between the ideal assumptions given the physical considerations of the system, and those

for which good synthesis techniques exist.

The most commonly used assumption is that ∆ is a linear time invariant system. This

allows us to consider the interconnection, $F_u(P, \Delta)$, from a frequency domain point of view. At each frequency ∆ can be taken as an unknown complex valued matrix of norm

less than or equal to one. This leads to analyses (covered in Section 2.4) involving the

complex structured singular value. The following section discusses more complicated

block structures and their use in modeling uncertain systems.

2.2.4 Additional Perturbation Structures

Equation 2.7 introduced a perturbation structure, $\boldsymbol{\Delta}$, containing m perturbation blocks, $\Delta_i$. This form of perturbation is applicable to a wide range of models for uncertain


systems. We will now look at other possible perturbation structures. For more detail on

these structures (in the complex case) refer to Packard and Doyle [20].

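Consider blocks which are of the form scalar × identity, where the scalar is unknown. In the following we will include q of these blocks in $\boldsymbol{\Delta}$. The definition of $\boldsymbol{\Delta}$ is therefore modified to be,

$$\boldsymbol{\Delta} = \left\{ \operatorname{diag}(\delta_1 I_1, \ldots, \delta_q I_q, \Delta_1, \ldots, \Delta_m) \;:\; \dim(I_j) = l_j \times l_j,\ \dim(\Delta_i) = k_i \times k_i \right\}. \quad (2.9)$$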

The block structure now contains the dimension of the q scalar × identity blocks and the m full blocks. The block structure is therefore, $(l_1, \ldots, l_q, k_1, \ldots, k_m)$. If $\dim(\Delta) = n \times n$, then these dimensions must be consistent. In other words,

$$\sum_{j=1}^{q} l_j + \sum_{i=1}^{m} k_i = n.$$

Note that this block structure collapses to the previously defined structure (Equation 2.7) when q = 0.
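The consistency condition and the block-diagonal form of Equation 2.9 are easy to encode. The pure-Python sketch below is illustrative only: the helper names `block_diag` and `structured_delta` are hypothetical, not Xµ functions. It assembles a member of $\boldsymbol{\Delta}$ from given scalars and full blocks and rejects block structures whose sizes do not add up to n.

```python
def block_diag(blocks):
    """Place square blocks (nested lists) along the diagonal of an n x n matrix."""
    n = sum(len(b) for b in blocks)
    out = [[0j] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, value in enumerate(row):
                out[offset + i][offset + j] = value
        offset += len(b)
    return out


def structured_delta(scalars, l, fulls, n):
    """Build diag(delta_1 I_1, ..., delta_q I_q, Delta_1, ..., Delta_m).

    scalars -- the q unknown scalars delta_j
    l       -- their repetition sizes l_j (dim I_j = l_j x l_j)
    fulls   -- the m full blocks Delta_i, each k_i x k_i
    n       -- required size of Delta; sum(l_j) + sum(k_i) must equal n
    """
    if len(scalars) != len(l):
        raise ValueError("need one size l_j for each scalar delta_j")
    for F in fulls:
        if any(len(row) != len(F) for row in F):
            raise ValueError("full blocks must be square")
    if sum(l) + sum(len(F) for F in fulls) != n:
        raise ValueError("block structure is inconsistent with dim(Delta) = n x n")
    repeated = [[[d if i == j else 0j for j in range(lj)] for i in range(lj)]
                for d, lj in zip(scalars, l)]
    return block_diag(repeated + list(fulls))


# Structure (l_1; k_1) = (2; 1): one scalar repeated twice plus a 1 x 1 full block.
Delta = structured_delta([0.5], [2], [[[0.2 - 0.1j]]], n=3)
```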

The most obvious application of a repeated scalar block structure occurs when we know

that perturbations occurring in several places in a system are identical (or perhaps just

correlated). For example, dynamic models of aircraft often have the altitude (or

dynamic pressure) occurring in several places in the model. Naturally the same value

should be used in each place and if we model the altitude as an LFT parameter then the

repeated scalar × identity approach is the most appropriate.

This structure also allows us to express uncertain state-space models as LFTs. To

illustrate this consider the following discrete time system.

$$x(k+1) = Ax(k) + Bu(k)$$

$$y(k) = Cx(k) + Du(k).$$

This digital system has transfer function,

$$P(z) = C(zI - A)^{-1}B + D = Cz^{-1}(I - z^{-1}A)^{-1}B + D = F_u(P_{ss}, z^{-1}I),$$

where $P_{ss}$ is the real valued matrix,

$$P_{ss} = \begin{bmatrix} A & B \\ C & D \end{bmatrix},$$

and the scalar × identity, $z^{-1}I$, has dimension equal to the state dimension of P(z).

This is now in the form of an LFT model with a single scalar × identity element in the

upper loop.
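The identity $C(zI - A)^{-1}B + D = F_u(P_{ss}, z^{-1}I)$ can be spot-checked numerically. The Python sketch below uses a hypothetical two-state SISO system (the matrices are made up for illustration) and hand-coded 2 × 2 helpers, and compares both expressions at one point z.

```python
def inv2(M):
    """Inverse of a 2 x 2 matrix given as nested lists."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(M, v):
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


# Hypothetical two-state SISO system (numbers chosen only for illustration).
A = [[0.5, 0.1], [0.0, -0.3]]
B = [1.0, 2.0]
C = [1.0, -1.0]
D = 0.25


def P_direct(z):
    """C (zI - A)^-1 B + D."""
    zI_minus_A = [[z - A[0][0], -A[0][1]], [-A[1][0], z - A[1][1]]]
    return dot(C, matvec(inv2(zI_minus_A), B)) + D


def P_lft(z):
    """F_u(P_ss, z^-1 I) = D + C z^-1 (I - z^-1 A)^-1 B, with P_ss = [[A, B], [C, D]]."""
    d = 1 / z
    I_minus_dA = [[1 - d * A[0][0], -d * A[0][1]], [-d * A[1][0], 1 - d * A[1][1]]]
    return D + dot(C, [d * x for x in matvec(inv2(I_minus_dA), B)])


z = 1.2 + 0.7j
print(abs(P_direct(z) - P_lft(z)) < 1e-12)  # True
```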

One possible use of this is suggested by the following. Define,

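$$\boldsymbol{\Delta} = \left\{ \delta I_{n_x} \;:\; \delta \in \mathbb{C} \right\},$$

where $n_x$ is the state dimension. The set of models,

$$F_u(P_{ss}, \Delta), \quad \Delta \in \mathbf{B}\boldsymbol{\Delta},$$

is equivalent to P(z), |z| ≥ 1. This hints at using this formulation for a stability analysis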

of P(z). This is investigated further in Section 2.4.6.

In the analyses discussed in Section 2.4 we will concentrate on the assumption that ∆ is

complex valued at each frequency. For some models we may wish to restrict ∆ further.

The most obvious restriction is that some (or all) of the ∆ blocks are real valued. This is

applicable to the modeling of systems with uncertain, real-valued, parameters. Such

models can arise from mathematical system models with unknown parameters.

Consider, for example, a very simplified model of the combustion characteristics of an

automotive engine. This is a simplified version of the model given by Hamburg and

Shulman [21]. The system input to be considered is the air/fuel ratio at the carburettor.

The output is equivalent to the air/fuel ratio after combustion. This is measured by an

oxygen sensor in the exhaust. Naturally, this model is a strong function of the engine

speed, v (rpm). We model the relationship as,

$$y = e^{-T_d s} \left( \frac{0.9}{1 + T_c s} + \frac{0.1}{1 + s} \right) u,$$


where the transport delay, $T_d$, and the combustion lag, $T_c$, are approximately,

$$T_d = \frac{252}{v} \quad \text{and} \quad T_c = \frac{202}{v}.$$

For the purposes of our example we want to design an air/fuel ratio controller that works for all engine speeds in the range 2,000 to 6,000 rpm. We will use a first order Padé approximation for the delay and express the $T_d$ and $T_c$ relationships in an LFT form with a normalized speed deviation, $\delta_v$.

The dominant combustion lag can be expressed as an LFT on $1/T_c$ as follows,

$$\frac{0.9}{1 + T_c s} = F_u(P_{tc}, T_c^{-1}),$$

where

$$P_{tc} = \begin{bmatrix} -s^{-1} & s^{-1} \\ 0.9 & 0 \end{bmatrix}.$$

Note that $T_c^{-1}$ is easily modeled in terms of $\delta_v$,

$$\frac{1}{T_c} = \frac{4000 + 2000\delta_v}{202}, \quad \delta_v \in \mathbb{R},\ |\delta_v| \le 1.$$
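The speed-dependent parameters and the combustion-lag LFT can be checked numerically. In the Python sketch below (our own illustration; the helper names and the test frequencies are arbitrary choices), $\delta_v$ sweeps [−1, 1], which maps v over 2,000 to 6,000 rpm and $1/T_c$ over roughly 9.9 to 29.7 s⁻¹, and $F_u(P_{tc}, T_c^{-1})$ is compared with $0.9/(1 + T_c s)$.

```python
def engine_params(delta_v):
    """Map the normalized speed deviation delta_v in [-1, 1] to (v, T_d, T_c)."""
    v = 4000 + 2000 * delta_v       # engine speed (rpm): 2000 .. 6000
    return v, 252 / v, 202 / v      # transport delay T_d and combustion lag T_c (s)


def lft_upper(P, delta):
    """Scalar upper LFT: P22 + P21 * delta * P12 / (1 - P11 * delta)."""
    (p11, p12), (p21, p22) = P
    return p22 + p21 * delta * p12 / (1 - p11 * delta)


def combustion_lag_error(delta_v, s):
    """|F_u(P_tc, 1/T_c) - 0.9/(1 + T_c s)| at one frequency point s."""
    _, _, Tc = engine_params(delta_v)
    P_tc = ((-1 / s, 1 / s), (0.9, 0))
    return abs(lft_upper(P_tc, 1 / Tc) - 0.9 / (1 + Tc * s))


for dv in (-1.0, 0.0, 1.0):
    v, Td, Tc = engine_params(dv)
    print(v, round(1 / Tc, 2), combustion_lag_error(dv, 3j) < 1e-12)
```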

The Padé approximation (with delay time, $T_d$) is given by

$$e^{-T_d s} \approx F_u(P_{delay}, T_d^{-1}),$$

where,

$$P_{delay} = \begin{bmatrix} -2s^{-1} & 4s^{-1} \\ 1 & -1 \end{bmatrix}.$$
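The same scalar LFT formula confirms that $F_u(P_{delay}, T_d^{-1})$ reproduces the first-order Padé approximation $(1 - T_d s/2)/(1 + T_d s/2)$. The Python sketch below is illustrative only (the nominal delay at 4,000 rpm and the test frequencies are our choices); it also reports how far the approximation is from the exact delay $e^{-T_d s}$.

```python
import cmath


def lft_upper(P, delta):
    """Scalar upper LFT: P22 + P21 * delta * P12 / (1 - P11 * delta)."""
    (p11, p12), (p21, p22) = P
    return p22 + p21 * delta * p12 / (1 - p11 * delta)


def pade_lft(Td, s):
    """F_u(P_delay, 1/T_d) with P_delay = [[-2/s, 4/s], [1, -1]]."""
    P_delay = ((-2 / s, 4 / s), (1, -1))
    return lft_upper(P_delay, 1 / Td)


def pade_direct(Td, s):
    """First-order Pade approximation of exp(-T_d s)."""
    return (1 - Td * s / 2) / (1 + Td * s / 2)


Td = 252 / 4000                      # nominal transport delay at 4000 rpm (s)
for w in (1.0, 10.0, 50.0):
    s = 1j * w
    approx = pade_lft(Td, s)
    print(w, abs(approx - pade_direct(Td, s)) < 1e-12,
          round(abs(approx - cmath.exp(-Td * s)), 4))
```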


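Putting all the pieces together gives an engine model in the following fractional form.

$$P(s) = F_u(P_{mod}, \Delta),$$

where

$$P_{mod} = \begin{bmatrix} \dfrac{-15.87}{s} & 0 & \dfrac{-27.75}{s} \\[2mm] \dfrac{7.14(s - 19.8)}{s^2} & \dfrac{-9.9}{s} & \dfrac{-0.9(s - 15.8)(s - 19.8)}{s^3} \\[2mm] \dfrac{141.5(1 + 1.006s)}{s(s+1)} & 9.9 & \dfrac{-17.82(s - 15.8)(1 + 1.006s)}{s(s+1)} \end{bmatrix}$$

and $\Delta \in \mathbf{B}\boldsymbol{\Delta}$, with the structure defined as,

$$\boldsymbol{\Delta} = \left\{ \delta_v I_2 \;:\; \delta_v \in \mathbb{R} \right\}.$$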

To capture the effects of unmodeled high frequency dynamics we will also include an output multiplicative perturbation. If the output multiplicative weight is $W_m(s)$ then the complete open-loop model is,

$$P(s) = F_u(P_{mod}, \Delta), \quad (2.10)$$

where,

$$P_{mod} = \begin{bmatrix} \dfrac{-15.87}{s} & 0 & \dfrac{-27.75}{s} & \dfrac{-27.75}{s} \\[2mm] \dfrac{7.14(s - 19.8)}{s^2} & \dfrac{-9.9}{s} & \dfrac{-0.9(s - 15.8)(s - 19.8)}{s^3} & \dfrac{-0.9(s - 15.8)(s - 19.8)}{s^3} \\[2mm] 0 & 0 & 0 & W_m(s) \\[2mm] \dfrac{141.5(1 + 1.006s)}{s(s+1)} & 9.9 & \dfrac{-17.82(s - 15.8)(1 + 1.006s)}{s(s+1)} & \dfrac{-17.82(s - 15.8)(1 + 1.006s)}{s(s+1)} \end{bmatrix}$$


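and $\Delta \in \mathbf{B}\boldsymbol{\Delta}$, with the structure defined as,

$$\boldsymbol{\Delta} = \left\{ \operatorname{diag}(\delta_v I_2, \Delta_1) \;:\; \delta_v \in \mathbb{R},\ \Delta_1 \in \mathbb{C} \right\}.$$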

Note that this is an LFT with a repeated real-valued parameter, $\delta_v$ ($|\delta_v| \le 1$), and a complex perturbation, $\Delta_1$ ($|\Delta_1| \le 1$).

Note that as $\mathbb{R} \subset \mathbb{C}$, assuming that $\Delta \in \mathbb{C}^{n \times n}$ always covers the case where some of the $\Delta_i$ (or $\delta_j$) are more appropriately modeled as real-valued. However this may be conservative, as $\Delta \in \mathbb{C}^{n \times n}$ allows many more systems in the model set. In this case it would be somewhat better to consider combining the effects of $\delta_v$ and $\Delta_1$ into a single complex valued ∆ with an appropriate weight.

In principle, if we have additional information about the system (some $\delta_j \in \mathbb{R}$, for

example) then we should use this information. Performing analyses with real valued

perturbations is currently at the forefront of the structured singular value research. We

will return to this issue in more detail when we cover the analysis methods (Section 2.4).

2.2.5 Obtaining Robust Control Models for Physical Systems

Obtaining a model of the above form is where the real engineering comes in. A designer

must model and identify the physical system to arrive at such a model. This is usually

an iterative process whereby designs are performed and then tested on the system. In

this way the designer often obtains a feeling for the adequacy of the model.

The best way of studying the modeling problem is to look at the documented

experiences of others applying these approaches; particularly in similar applications. The

citations given in Section 2.1 will be useful in this regard. There are also approaches

addressing the problem of obtaining LFT models from descriptions with variable

state-space matrix coefficients [22, 23]. In the area of SISO process control, Laughlin et

al. [24] describe the relationship between uncertainties in time constant, delay, and gain,

and a suitable ∆ weighting. Models of this form are often applicable to process control.


There is little formal theory addressing the robust control modeling problem although

this is an area of increasing interest. A recent workshop proceedings volume on the

subject is an excellent reference for those interested in this area [25]. Other references

can be found in the review article by Gevers [26].


An area of work, known as identification in H∞, looks at experimental identification techniques which minimize the worst case H∞ error between the physical system and the model. The following works address this issue: [27, 28, 29, 30, 31, 32, 33, 34, 35].

Applying the more standard, probabilistically based, identification techniques to

uncertain systems is also receiving attention. Relevant work in this area is described

in: [36, 37, 38, 39].

Model validation is the experimental testing of a given robust control model. This can

be useful in assessing model quality. This work is covered in the

following: [4, 40, 41, 42, 43, 44, 45, 46]. An experimental example is described by

Smith [47].

The problems of identifying model parameters in an uncertain model are discussed further

in [48, 49, 50]. A nonlinear ad-hoc approach for obtaining suitable multiplicative

perturbation models for certain classes of systems is given in [51].

Several researchers are also formalizing the interplay between identification and design

in iterative approaches. In practical situations the designer usually ends up with ad-hoc

identification/design iterations. The work in this area is described in [52, 53, 54, 55, 56].

On reading the above works, one will get the impression that this area is the most

poorly developed of the current robust control theory. In obtaining these models

engineering judgement is of paramount importance. The users of this software are

encouraged to document their experiences and bring this work to the authors’ attention.

2.3 H∞ and H2 Design Methodologies

The generic synthesis configuration is illustrated in LFT form in Figure 2.7. Here P(s) is referred to as the interconnection structure. The objective is to design K(s) such that the closed loop interconnection is stable and the resulting transfer function from w to e (denoted by G(s)),

$$e = F_l[P(s), K(s)]\, w = G(s)\, w,$$

satisfies a norm objective.
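For scalar blocks the lower LFT is $F_l(P, K) = P_{11} + P_{12}K(1 - P_{22}K)^{-1}P_{21}$. As an illustration (our own, with a hypothetical plant G(s) = 1/(s+1) and a constant controller K = 2), the Python sketch below sets up an interconnection in which w is a reference, e = w − Gu is the tracking error and y = e is measured, so that $F_l(P, K)$ should equal the sensitivity function $1/(1 + GK)$.

```python
def lft_lower(P, K):
    """Scalar lower LFT: F_l(P, K) = P11 + P12 * K * P21 / (1 - P22 * K)."""
    (p11, p12), (p21, p22) = P
    den = 1 - p22 * K
    if den == 0:
        raise ZeroDivisionError("1 - P22*K is singular: the loop is not well posed")
    return p11 + p12 * K * p21 / den


def G(s):
    return 1 / (s + 1)               # hypothetical plant


K = 2.0                              # hypothetical constant controller
s = 1j                               # evaluate at w = 1 rad/s
# Inputs (w, u), outputs (e, y): e = w - G u and y = w - G u.
P = ((1, -G(s)), (1, -G(s)))
print(abs(lft_lower(P, K) - 1 / (1 + G(s) * K)) < 1e-12)  # True
```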

Figure 2.7: LFT configuration for controller synthesis, $G(s) = F_l[P(s), K(s)]$

Note that the interconnection structure, P (s), given here, differs from that discussed in

the previous section. Here we set up P(s) so that the input, w, represents the unknown signals entering our system. Typical examples would be sensor noise, plant disturbances or tracking commands. The output, e, represents signals that we would like to make small.

In an engineering application these could include actuator signals and tracking errors.

The signal y is the measurement available to the controller, K(s). In any realistic

problem, some weighted component of w would be added to y to model sensor noise.

The output of the controller, u, is our actuation input to the system. Again, a

reasonable engineering problem would include a weighted u signal as a component of the

penalty output, e.

The interconnection structure, P(s), also contains any frequency weightings on the

signals e and w. Weightings on components of e are used to determine the relative

importance of the various error signals. Weight functions on w indicate the relative

expected size of the unknown inputs.

Xµ provides functions to calculate the controllers minimizing either the H2 or H∞ norm

of G(s). We will cover both of these approaches in the context of the design problem

illustrated in Figure 2.7.

Note that neither of these design approaches takes advantage of any information about

structured perturbations occurring within the model. The following discussion can be

considered as applying to a nominal design problem. Section 2.5 uses D-K iteration to


extend these approaches to the case where P(s) is replaced by $F_u(P(s), \Delta)$, $\Delta \in \mathbf{B}\boldsymbol{\Delta}$.

2.3.1 H∞ Design Overview

Again, recall from Section 2.1.2 that the H∞ norm of G(s) is,

$$\|G(s)\|_\infty = \sup_{\omega} \sigma_{\max}[G(j\omega)].$$

The H∞ norm is the induced $L_2$ to $L_2$ norm. Therefore minimizing the H∞ norm of G(s) will have the effect of minimizing the worst-case energy of e over all bounded energy inputs at w.

Consider γ(K) to be the closed loop H∞ norm achieved for a particular controller K. In other words,

$$\gamma(K) = \|F_l(P, K)\|_\infty.$$

The minimum of γ(K) over all stabilizing controllers K is often referred to as the optimal value of γ and is denoted by $\gamma_{opt}$. Furthermore, there is no stabilizing controller which satisfies

$$\|G(s)\|_\infty < \gamma_{opt}.$$

In a particular design problem, $\gamma_{opt}$ is not known a priori. Therefore the functions calculating the H∞ controller use some form of optimization to obtain a value of γ close to $\gamma_{opt}$.
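One common way to organize this optimization is bisection on γ (often called γ-iteration), which relies on the fact that if some γ is achievable then every larger γ is too. The Python sketch below assumes a hypothetical oracle `is_achievable(gamma)` standing in for the Riccati-based existence test of Section 2.3.4; it is not the Xµ implementation, and the toy oracle in the example simply pretends that $\gamma_{opt} = 1.7$.

```python
def gamma_bisection(is_achievable, lo, hi, tol=1e-4, max_iter=200):
    """Bisection on gamma, returning an achievable gamma within tol of gamma_opt.

    is_achievable(gamma) is a hypothetical oracle: it should return True when
    some stabilizing K gives ||F_l(P, K)||_inf < gamma. It must be monotone
    (achievable gamma implies every larger gamma is achievable), hi must be
    achievable and lo must not be.
    """
    if not is_achievable(hi):
        raise ValueError("upper bound hi is not achievable")
    if is_achievable(lo):
        raise ValueError("lower bound lo is already achievable")
    for _ in range(max_iter):
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if is_achievable(mid):
            hi = mid      # a controller exists: gamma_opt <= mid
        else:
            lo = mid      # no controller achieves mid: gamma_opt >= mid
    return hi


# Toy oracle standing in for the real existence test: pretend gamma_opt = 1.7.
gamma = gamma_bisection(lambda g: g > 1.7, lo=0.0, hi=10.0)
print(round(gamma, 3))  # 1.7
```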

The first approaches to the solution of this problem were described by Doyle [1]. The

book by Francis [57] gives a good overview of the early version of this theory. A

significant breakthrough was achieved with the development of state-space calculation

techniques for the problem. These are discussed in the paper colloquially known as

DGKF [58]. The algorithmic details are actually given by Glover and Doyle [59].


2.3.2 Assumptions for the H∞ Design Problem

There are several assumptions required in order to achieve a well-posed design problem.

The DGKF paper gives a state-space solution to the H∞ design problem and we will use a similar notation here.

Consider the open loop state-space representation of P(s), partitioned according to the signals shown in Figure 2.7,

$$P(s) = \left[ \begin{array}{c|cc} A & B_1 & B_2 \\ \hline C_1 & D_{11} & D_{12} \\ C_2 & D_{21} & D_{22} \end{array} \right]. \quad (2.11)$$

We will assume that P (s) is a minimal representation. The following assumptions are

required for a well-posed problem.

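(i) $(A, B_2)$ is stabilizable and $(C_2, A)$ is detectable;

(ii) $D_{12}$ and $D_{21}$ are full rank;

(iii) The matrix,

$$\begin{bmatrix} A - j\omega I & B_2 \\ C_1 & D_{12} \end{bmatrix},$$

has full column rank for all $\omega \in \mathbb{R}$;

(iv) The matrix,

$$\begin{bmatrix} A - j\omega I & B_1 \\ C_2 & D_{21} \end{bmatrix},$$

has full row rank for all $\omega \in \mathbb{R}$.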

Item (i) is required so that input-output stability is equivalent to internal stability. If it

is not satisfied then there are unstable modes which cannot be stabilized by any K(s).

Items (ii) and (iii) mean that, at every frequency, there is no component of the output signal, e, that cannot be influenced by the controller. Similarly, items (ii) and (iv) mean


that the effect of all disturbances, w, at every frequency, can be measured by the

controller. If either of these conditions is not met then the problem could be ill-posed.

It is possible to violate these conditions by using pure integrators as design weights. While this could still give a meaningful design problem, solution via the state-space H∞ approach requires that an approximation be used for the integrator weight. If item (iii) or (iv) is violated at ω = 0, then the integrator should be replaced with a very low frequency pole.
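In the single-state, single-input, single-output case the matrix in item (iii) is 2 × 2 and its determinant is $D_{12}(z - j\omega)$, where $z = A - B_2 C_1 / D_{12}$ is a real invariant zero. Item (iii) therefore fails exactly when z = 0, so the rank drops at ω = 0, which is the situation an undamped integrator creates. The Python sketch below covers only that scalar case, and its data are hypothetical; it shows how moving the integrator pole slightly into the left half plane restores the rank condition.

```python
def invariant_zero(a, b2, c1, d12):
    """Invariant zero of [[A - s, B2], [C1, D12]] for one state and scalar data."""
    if d12 == 0:
        raise ValueError("item (ii) fails: D12 must be full rank")
    return a - b2 * c1 / d12


def item_iii_holds(a, b2, c1, d12, tol=1e-9):
    """Full column rank for all real w  <=>  the real zero z is not at s = 0."""
    return abs(invariant_zero(a, b2, c1, d12)) > tol


def rank_test_det(a, b2, c1, d12, w):
    """Determinant of [[A - jw, B2], [C1, D12]] at one frequency w."""
    return (a - 1j * w) * d12 - b2 * c1


# Hypothetical data: an integrator state (A = 0) driven by u that does not appear in e.
print(item_iii_holds(0.0, 1.0, 0.0, 1.0))    # False: the rank drops at w = 0
# Replace the integrator by a very low frequency pole at s = -1e-3.
print(item_iii_holds(-1e-3, 1.0, 0.0, 1.0))  # True
```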

2.3.3 A Brief Review of the Algebraic Riccati Equation

Solution of the H∞ design problem requires the solution of coupled Algebraic Riccati

Equations (AREs). This is illustrated in more detail in the next section. Here we give a

very brief review of the Riccati equation and the most common solution techniques.

Some knowledge of this area is helpful because the design software displays variables

related to the Riccati solutions and the user has the option of adjusting several software

tolerances relating to these solutions. The notation used here comes from DGKF [58].

The matrix equation,

$$A^T X + XA + XRX - Q = 0,$$

is an ARE. Given A, R and Q (with R and Q symmetric), we are interested in finding a symmetric positive semidefinite solution, X. In other words, $X = X^T \ge 0$. With this ARE we associate a Hamiltonian matrix, denoted by H,

$$H = \begin{bmatrix} A & R \\ Q & -A^T \end{bmatrix}.$$

If dim(A) = n × n, then dim(H) = 2n × 2n. Assume that H has no jω axis eigenvalues. Because the eigenvalues of a Hamiltonian matrix are symmetric about the jω axis (if λ is an eigenvalue, so is −λ), H has n stable (Re{s} < 0) and n unstable (Re{s} > 0) eigenvalues.

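Now consider finding a basis for the stable invariant subspace of H, that is, the n-dimensional subspace associated with its stable eigenvalues. Stacking the basis vectors together will give a 2n × n matrix,

$$\begin{bmatrix} X_1 \\ X_2 \end{bmatrix}.$$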


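We have partitioned the matrix into two n × n blocks, $X_1$ and $X_2$. If $X_1$ is invertible, then

$$X = X_2 X_1^{-1},$$

is the unique, stabilizing solution to the ARE. Neither the invertibility of $X_1$ nor the resulting X depends on the particular choice of basis: any other basis has the form $\begin{bmatrix} X_1 T \\ X_2 T \end{bmatrix}$ with T invertible, and $X_2 T (X_1 T)^{-1} = X_2 X_1^{-1}$.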

Given a Hamiltonian, H, we say that H ∈ dom(Ric) if H has no jω axis eigenvalues and the associated $X_1$ matrix is invertible. Therefore, if H ∈ dom(Ric), we can obtain a unique stabilizing solution, X. This mapping, from H to X, is often written as the function, X = Ric(H).

To give an idea of the application of the ARE consider the following lemma (taken from

DGKF).

Lemma 1 Suppose H ∈ dom(Ric) and X = Ric(H). Then:

a) X is symmetric;

b) X satisfies the ARE,

$$A^T X + X A + X R X - Q = 0;$$

c) A + RX is stable.

This is, of course, the well-known result relating AREs to the design of stabilizing state-feedback controllers.
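A scalar example (numbers chosen here purely for illustration) shows Lemma 1 at work. Take the state-feedback problem with A = 1, B = 1 and state weight C = 1, so that $R = -BB^T = -1$, $Q = -C^T C = -1$, and the ARE reads $2X - X^2 + 1 = 0$:

$$H = \begin{bmatrix} 1 & -1 \\ -1 & -1 \end{bmatrix}, \qquad \lambda(H) = \pm\sqrt{2},$$

$$\begin{bmatrix} X_1 \\ X_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 1 + \sqrt{2} \end{bmatrix} \;(\text{eigenvector for } -\sqrt{2}), \qquad X = X_2 X_1^{-1} = 1 + \sqrt{2} \approx 2.414, \qquad A + RX = -\sqrt{2}.$$

The other root of the scalar ARE, $1 - \sqrt{2}$, gives $A + RX = \sqrt{2}$, which is unstable; building X from the stable subspace selects the stabilizing root automatically.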

AREs can also be used in calculating the H∞-norm of a state-space system. The approach outlined here is actually that used in the software for the calculation of ‖P(s)‖∞. Consider a stable system,

$$P(s) = \left[\begin{array}{c|c} A & B \\ \hline C & 0 \end{array}\right].$$


Choose γ > 0 and form the following Hamiltonian matrix,

$$H = \begin{bmatrix} A & \gamma^{-2} B B^T \\ -C^T C & -A^T \end{bmatrix}.$$

The following lemma gives a means of checking whether or not ‖P(s)‖∞ < γ. A proof of this lemma is given in DGKF, although it is based on the work of Anderson [60], Willems [61] and Boyd et al. [62].

Lemma 2 The following conditions are equivalent:

a) ‖P(s)‖∞ < γ;

b) H has no eigenvalues on the ω axis;

c) H ∈ dom(Ric);

d) H ∈ dom(Ric) and Ric(H) ≥ 0 (if (C, A) is observable then Ric(H) > 0).
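Lemma 2 turns the H∞-norm calculation into a sequence of eigenvalue tests: bisect on γ and, at each step, check condition b). The sketch below assumes a one-state system (A, B and C scalars), so the eigenvalues of the 2 × 2 Hamiltonian have a closed form; a general implementation would call a dense eigenvalue or Schur routine instead. The function names and the tolerance are illustrative assumptions, not the software's own.

```python
import cmath


def hamiltonian_eigs(a: float, b: float, c: float, gamma: float):
    """Eigenvalues of H = [[a, b^2/gamma^2], [-c^2, -a]] for a one-state system.

    H has zero trace, so its eigenvalues are +/- sqrt(-det(H)).
    """
    det_h = -a * a + (b * b / gamma**2) * (c * c)
    s = cmath.sqrt(-det_h)
    return s, -s


def has_axis_eigs(a: float, b: float, c: float, gamma: float, tol: float = 1e-9) -> bool:
    """True if some eigenvalue of H has |Re| <= tol, i.e. condition b) of Lemma 2 fails."""
    return any(abs(lam.real) <= tol for lam in hamiltonian_eigs(a, b, c, gamma))


def hinf_norm(a: float, b: float, c: float, rel_tol: float = 1e-9) -> float:
    """Bisection on gamma: ||P||_inf < gamma exactly when H has no omega axis eigenvalues."""
    if a >= 0:
        raise ValueError("P(s) must be stable (a < 0)")
    lo, hi = 0.0, 1.0
    while has_axis_eigs(a, b, c, hi):  # enlarge hi until ||P||_inf < hi
        lo, hi = hi, 2.0 * hi
    while hi - lo > max(rel_tol * hi, 1e-15):
        mid = 0.5 * (lo + hi)
        if has_axis_eigs(a, b, c, mid):
            lo = mid  # ||P||_inf >= mid
        else:
            hi = mid  # ||P||_inf < mid
    return hi


# P(s) = C B / (s - A) with A = -2, B = 1, C = 3, so ||P||_inf = |CB| / |A| = 1.5.
print(hinf_norm(-2.0, 1.0, 3.0))
```

For this first-order example the printed value is close to 1.5, the peak gain |CB|/|A| reached at ω = 0. The bisection returns the upper end of the final bracket, so the result is a γ for which ‖P(s)‖∞ < γ is verified.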

As the above illustrates, AREs play a role in both stabilization and H∞-norm calculations for state-space systems. Before giving more detail on the H∞ design problem (Section 2.3.4), we will discuss some of the issues that arise in the practical calculation of ARE solutions.

We can summarize an ARE solution method as follows:

(i) Form the Hamiltonian, H.

(ii) Check that H has no ω axis eigenvalues.

(iii) Find a basis for the stable subspace of H.

(iv) Check that $X_1$ is invertible.

(v) Form $X = X_2 X_1^{-1}$.

The first issue to note is that it is difficult to numerically determine whether or not H

has ω axis eigenvalues. A numerical calculation of the eigenvalues is unlikely to give


any with a zero real part. In practice, we must use a tolerance to decide which real parts are treated as zero.
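Steps (i) to (v), including the tolerance on the real parts, can be sketched for the scalar case n = 1, where $H = \begin{bmatrix} a & r \\ q & -a \end{bmatrix}$ has eigenvalues $\pm\sqrt{a^2 + rq}$ in closed form. This is a minimal illustration under that assumption only; the name `scalar_ric` and the default tolerance are hypothetical, and a general implementation would use a Schur or eigenvalue decomposition of the full 2n × 2n Hamiltonian.

```python
import math


def scalar_ric(a: float, r: float, q: float, tol: float = 1e-8):
    """X = Ric(H) for the 2x2 Hamiltonian H = [[a, r], [q, -a]] (n = 1).

    Follows steps (i)-(v); returns None if H is not in dom(Ric) to within tol.
    """
    # (i)-(ii) H has zero trace, so its eigenvalues are +/- sqrt(a^2 + r*q).
    # An eigenvalue whose real part is no larger than tol counts as omega axis.
    d = a * a + r * q
    if d <= 0.0 or math.sqrt(d) <= tol:
        return None
    lam = -math.sqrt(d)  # the single stable eigenvalue

    # (iii) The stable subspace is spanned by a null vector [x1, x2] of H - lam*I,
    # built from whichever row of H - lam*I has the larger norm.
    if math.hypot(a - lam, r) >= math.hypot(q, -a - lam):
        x1, x2 = r, lam - a
    else:
        x1, x2 = a + lam, q
    scale = math.hypot(x1, x2)
    x1, x2 = x1 / scale, x2 / scale

    # (iv) X1 (here a scalar) must be invertible to within tol.
    if abs(x1) <= tol:
        return None

    # (v) X = X2 X1^{-1}
    return x2 / x1


# State feedback with A = 1, B = 1, C = 1: R = -B B^T = -1, Q = -C^T C = -1.
print(scalar_ric(1.0, -1.0, -1.0))  # approximately 2.41421 (= 1 + sqrt(2))
print(scalar_ric(0.0, 1.0, 1e-20))  # None: eigenvalues +/-1e-10 fall inside tol
print(scalar_ric(1.0, 0.0, -1.0))   # None: X1 = 0, no stabilizing solution
```

The second call shows the tolerance issue described above: the eigenvalues ±10⁻¹⁰ are technically off the axis but are treated as lying on it. The third call has R = 0 with an unstable A, so no X can make A + RX stable and step (iv) fails.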

Finding a basis for the stable subspace of H involves either an eigenvalue or Schur

decomposition. Numerical errors will be introduced at this stage. In most cases using an

eigenvalue decomposition is faster and less accurate than using a Schur decomposition.

Similarly, forming $X = X_2 X_1^{-1}$ will also introduce numerical errors. The Schur solution

approach, developed by Laub et al. [63, 64, 65], is currently the best numerical approach

to solving the ARE and is used in the software as the default method. An overview of

invariant subspace methods for ARE solution is given by Laub [66]. Accurate solution of

the ARE is still very much an active area of research.

2.3.4 Solving the H∞ Design Problem for a Special Case

We will now look at the H∞ design problem under a simplifying set of assumptions. The

general problem (with assumptions given in Section 2.3.2) can be transformed into the

simplified one given here via scalings and other transformations. The simplified problem

illustrates the nature of the solution procedure and is actually the problem studied in

DGKF. The formulae for the general problem are given in Glover and Doyle [59]. The

software solves the general problem.

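Consider the following assumptions, with reference to the system in Equation 2.11:

(i) $(A, B_1)$ stabilizable and $(C_1, A)$ detectable;

(ii) $(A, B_2)$ stabilizable and $(C_2, A)$ detectable;

(iii) $D_{12}^T \begin{bmatrix} C_1 & D_{12} \end{bmatrix} = \begin{bmatrix} 0 & I \end{bmatrix}$;

(iv) $\begin{bmatrix} B_1 \\ D_{21} \end{bmatrix} D_{21}^T = \begin{bmatrix} 0 \\ I \end{bmatrix}$;

(v) $D_{11} = D_{22} = 0$.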

Assumption (i) is included in DGKF for technical reasons. The formulae are still correct if it is violated. Note that, with these assumptions,

$$e = C_1 x + D_{12} u,$$


and the components $C_1 x$ and $D_{12} u$ are orthogonal. $D_{12}$ is also assumed to be normalized. This essentially means that there is no cross-weighting between the state and input penalties. Assumption (iv) is the dual of this: the components of the unknown input (disturbance and noise) that drive the state and those that affect the measurement, y, are orthogonal, with the weight on the unknown input in y being unity.
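Expanding the squared size of e shows where the orthogonality and normalization in assumption (iii) enter:

$$e^T e = x^T C_1^T C_1 x + 2\, x^T C_1^T D_{12} u + u^T D_{12}^T D_{12} u = x^T C_1^T C_1 x + u^T u,$$

since (iii) gives $D_{12}^T C_1 = 0$ and $D_{12}^T D_{12} = I$. Dually, (iv) gives $B_1 D_{21}^T = 0$ and $D_{21} D_{21}^T = I$.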

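To solve the H∞ design problem we define two Hamiltonian matrices,

$$H_\infty = \begin{bmatrix} A & \gamma^{-2} B_1 B_1^T - B_2 B_2^T \\ -C_1^T C_1 & -A^T \end{bmatrix},$$

and

$$J_\infty = \begin{bmatrix} A^T & \gamma^{-2} C_1^T C_1 - C_2^T C_2 \\ -B_1 B_1^T & -A \end{bmatrix}.$$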
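Matching $H_\infty$ and $J_\infty$ against the general Hamiltonian $\begin{bmatrix} A & R \\ Q & -A^T \end{bmatrix}$ of Section 2.3.3 shows which AREs $X_\infty$ and $Y_\infty$ solve ($J_\infty$ uses $A^T$ in place of A):

$$A^T X_\infty + X_\infty A + X_\infty (\gamma^{-2} B_1 B_1^T - B_2 B_2^T) X_\infty + C_1^T C_1 = 0,$$

$$A Y_\infty + Y_\infty A^T + Y_\infty (\gamma^{-2} C_1^T C_1 - C_2^T C_2) Y_\infty + B_1 B_1^T = 0.$$

As γ → ∞ the $\gamma^{-2}$ terms vanish and these become the familiar LQR (control) and Kalman filter (estimation) Riccati equations.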

The following theorem gives the solution to the problem.

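Theorem 3 There exists a stabilizing controller satisfying ‖G(s)‖∞ < γ if and only if the following three conditions are satisfied:

a) $H_\infty \in \mathrm{dom(Ric)}$ and $X_\infty = \mathrm{Ric}(H_\infty) \geq 0$;

b) $J_\infty \in \mathrm{dom(Ric)}$ and $Y_\infty = \mathrm{Ric}(J_\infty) \geq 0$;

c) $\rho(X_\infty Y_\infty) < \gamma^2$, where ρ(·) denotes the spectral radius.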

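When these conditions are satisfied, one such controller is,

$$K_\infty(s) = \left[\begin{array}{c|c} \hat{A}_\infty & -Z_\infty L_\infty \\ \hline F_\infty & 0 \end{array}\right],$$

where,

$$F_\infty = -B_2^T X_\infty,$$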


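$$L_\infty = -Y_\infty C_2^T,$$

$$Z_\infty = (I - \gamma^{-2} Y_\infty X_\infty)^{-1},$$

$$\hat{A}_\infty = A + \gamma^{-2} B_1 B_1^T X_\infty + B_2 F_\infty + Z_\infty L_\infty C_2.$$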
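The conditions of Theorem 3 and the central-controller formulas can be exercised end to end on a plant with a single state. The sketch assumes a one-state plant that meets assumptions (i) to (v) with $C_1 = [c_1\ 0]^T$, $D_{12} = [0\ 1]^T$, $B_1 = [b_1\ 0]$ and $D_{21} = [0\ 1]$, so that $B_1 B_1^T = b_1^2$ and $C_1^T C_1 = c_1^2$. All function names and numbers are hypothetical; the code bisects on γ to approximate the smallest achievable γ, then forms $F_\infty$, $L_\infty$, $Z_\infty$ and $\hat{A}_\infty$ and checks that the closed loop is stable.

```python
import math


def scalar_ric(a, r, q, tol=1e-10):
    """Ric(H) for H = [[a, r], [q, -a]]; None if H is not in dom(Ric)."""
    d = a * a + r * q                  # eigenvalues of H are +/- sqrt(d)
    if d <= 0.0 or math.sqrt(d) <= tol:
        return None                    # omega axis eigenvalues
    lam = -math.sqrt(d)                # stable eigenvalue
    if math.hypot(a - lam, r) >= math.hypot(q, -a - lam):
        x1, x2 = r, lam - a            # null vector of H - lam*I from row 1
    else:
        x1, x2 = a + lam, q            # null vector of H - lam*I from row 2
    if abs(x1) <= tol * math.hypot(x1, x2):
        return None                    # X1 singular
    return x2 / x1


def theorem3(gamma, a, b1, b2, c1, c2, tol=1e-10):
    """(X_inf, Y_inf) if conditions a)-c) of Theorem 3 hold, else None."""
    g = gamma ** -2
    x = scalar_ric(a, g * b1**2 - b2**2, -c1**2)   # H_inf
    y = scalar_ric(a, g * c1**2 - c2**2, -b1**2)   # J_inf (A^T = A when n = 1)
    if x is None or y is None or x < -tol or y < -tol:
        return None
    return (x, y) if x * y < gamma**2 else None    # spectral radius of scalar X*Y


def gamma_opt(a, b1, b2, c1, c2, lo=1e-3, hi=1e3, tol=1e-9):
    """Bisect for the smallest gamma meeting Theorem 3 (hi must be achievable)."""
    if theorem3(hi, a, b1, b2, c1, c2) is None:
        raise ValueError("upper bound hi is not achievable")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if theorem3(mid, a, b1, b2, c1, c2) is None:
            lo = mid
        else:
            hi = mid
    return hi


def central_controller(gamma, a, b1, b2, c1, c2):
    """(A_hat, B_K, C_K) of the central controller K_inf = [A_hat, -Z L; F, 0]."""
    sol = theorem3(gamma, a, b1, b2, c1, c2)
    if sol is None:
        raise ValueError("gamma is not achievable")
    x, y = sol
    f_inf = -b2 * x                              # F_inf = -B2^T X_inf
    l_inf = -y * c2                              # L_inf = -Y_inf C2^T
    z_inf = 1.0 / (1.0 - y * x / gamma**2)       # Z_inf = (I - gamma^-2 Y X)^-1
    a_hat = a + b1**2 * x / gamma**2 + b2 * f_inf + z_inf * l_inf * c2
    return a_hat, -z_inf * l_inf, f_inf


def closed_loop_stable(a, b2, c2, a_hat, bk, ck):
    """Loop x' = a x + b2 u, y = c2 x, u = ck xk, xk' = a_hat xk + bk y."""
    m11, m12, m21, m22 = a, b2 * ck, bk * c2, a_hat
    # A real 2x2 matrix is Hurwitz iff its trace < 0 and its determinant > 0.
    return m11 + m22 < 0 and m11 * m22 - m12 * m21 > 0


plant = dict(a=1.0, b1=1.0, b2=1.0, c1=1.0, c2=1.0)  # open-loop unstable
g = gamma_opt(**plant)
a_hat, bk, ck = central_controller(1.1 * g, **plant)
print(g, closed_loop_stable(plant["a"], plant["b2"], plant["c2"], a_hat, bk, ck))
```

For these plant numbers, working the scalar equations by hand gives $X_\infty = Y_\infty = \gamma$ at $\gamma = 1 + \sqrt{3} \approx 2.732$, which is where condition c) first holds, and the bisection reproduces that value. The controller is built at 10% above the optimum because $Z_\infty$ becomes singular as γ approaches the optimal value.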

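Actually, the above formulation can be used to parametrize all stabilizing controllers which satisfy ‖G(s)‖∞ < γ. This can be expressed as an LFT. All such controllers are given by,

$$K_\infty = F_l(M_\infty, Q),$$

where,

$$M_\infty = \left[\begin{array}{c|cc} \hat{A}_\infty & -Z_\infty L_\infty & Z_\infty B_2 \\ \hline F_\infty & 0 & I \\ -C_2 & I & 0 \end{array}\right],$$

and Q satisfies $Q \in \mathcal{RH}_\infty$, $\|Q\|_\infty < \gamma$. Note that if Q = 0 we get back the controller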

given in Theorem 3. This controller is referred to as the central controller and it is the

controller calculated by the software.
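Writing out the realization of $M_\infty$ shows why Q = 0 gives the central controller. Label, only for this calculation, the state of $M_\infty$ as ξ, its inputs as (y, r) and its outputs as (u, v), with the lower loop closed by r = Qv:

$$\dot{\xi} = \hat{A}_\infty \xi - Z_\infty L_\infty y + Z_\infty B_2 r, \qquad u = F_\infty \xi + r, \qquad v = -C_2 \xi + y.$$

With Q = 0 we have r = 0, leaving $\dot{\xi} = \hat{A}_\infty \xi - Z_\infty L_\infty y$ and $u = F_\infty \xi$, which is exactly the realization of $K_\infty(s)$ in Theorem 3.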