Page 1

TM

MATRIXx

XmathTM Model Reduction Module

Xmath Model Reduction Module

April 2004 Edition

Part Number 370755B-01

Page 2

Support

Worldwide Technical Support and Product Information

ni.com

National Instruments Corporate Headquarters

11500 North Mopac Expressway Austin, Texas 78759-3504 USA Tel: 512 683 0100

Worldwide Offices

Australia1800300800, Austria4306624579900, Belgium32027570020, Brazil551132623599,

Canada (Calgary) 403 274 9391, Canada (Ottawa) 613 233 5949, Canada (Québec) 450 510 3055,

Canada (Toronto) 905 785 0085, Canada (Vancouver) 514 685 7530, China 86 21 6555 7838,

Czech Republic 420 224 235 774, Denmark 45 45 76 26 00, Finland 3850972572511,

France330148142424, Germany490897413130, Greece302104296427, India918051190000,

Israel972036393737, Italy3902413091, Japan81354722970, Korea820234513400,

Malaysia 603 9131 0918, Mexico 001 800 010 0793, Netherlands 31 0 348 433 466,

New Zealand 0800 553 322, Norway 47 0 66 90 76 60, Poland 48 22 3390150, Portugal 351 210 311 210,

Russia 7 095 783 68 51, Singapore 65 6226 5886, Slovenia 386 3 425 4200, South Africa 27 0 11 805 8197,

Spain34916400085, Sweden460858789500, Switzerland41562005151, Taiwan886225287227,

Thailand 662 992 7519, United Kingdom 44 0 1635 523545

For further support information, refer to the Technical Support and Professional Services appendix. To comment

on the documentation, send email to techpubs@ni.com.

© 2000–2004 National Instruments Corporation. All rights reserved.

Page 3

Important Information

Warranty

The media on which you receive National Instruments software are warranted not to fail to execute programming instructions, due to defects

in materials and workmanship, for a period of 90 days from date of shipment, as evidenced by receipts or other documentation. National

Instruments will, at its option, repair or replace software media that do not execute programming instructions if National Instruments receives

notice of such defects during the warranty period. National Instruments does not warrant that the operation of the software shall be

uninterrupted or error free.

A Return Material Authorization (RMA) number must be obtained from the factory and clearly marked on the outside of the package before

any equipment will be accepted for warranty work. National Instruments will pay the shipping costs of returning to the owner parts which are

covered by warranty.

National Instruments believes that the information in this document is accurate. The document has been carefully reviewed for technical

accuracy. In the event that technical or typographical errors exist, National Instruments reserves the right to make changes to subsequent

editions of this document without prior notice to holders of this edition. The reader should consult National Instruments if errors are suspected.

In no event shall National Instruments be liable for any damages arising out of or related to this document or the information contained in it.

E

XCEPT AS SPECIFIED HEREIN, NATIONAL INSTRUMENTS MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AND SPECIFICALLY DISCLAIMS ANY WARRANTY OF

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. CUSTOMER’S RIGHT TO RECOVER DAMAGES CAUSED BY FAULT OR NEGLIGENCE ON THE PART OF

N

ATIONAL INSTRUMENTS SHALL BE LIMITED TO THE AMOUNT THERETOFORE PAID BY THE CUSTO MER. NATIONAL INSTRUMENTS WILL NOT BE LIA BLE FOR

DAMAGES RESULTIN G FROM LOSS OF DATA, PROFITS, USE OF PRODUCTS, OR INCIDENTAL OR CONSEQUENTIAL DAMAGES, EVEN IF ADVI SED OF THE POSSIB ILITY

THEREOF. This limitation of the liability of National Instruments will apply regardless of the form of action, whether in contract or tort, including

negligence. Any action against National Instruments must be brought within one year after the cause of action accrues. National Instruments

shall not be liable for any delay in performance due to causes beyond its reasonable control. The warranty provided herein does not cover

damages, defects, malfunctions, or service failures caused by owner’s failure to follow the National Instruments installation, operation, or

maintenance instructions; owner’s modification of the product; owner’s abuse, misuse, or negligent acts; and power failure or surges, fire,

flood, accident, actions of third parties, or other events outside reasonable control.

Copyright

Under the copyright laws, this publication may not be reproduced or transmitted in any form, electronic or mechanical, including photocopying,

recording, storing in an information retrieval system, or translating, in whole or in part, without the prior written consent of National

Instruments Corporation.

Trademarks

MATRIXx™, National Instruments™, NI™, ni.com™, and Xmath™ are trademarks of National Instruments Corporation.

Product and company names mentioned herein are trademarks or trade names of their respective companies.

Patents

For patents covering National Instruments products, refer to the appropriate location: Help»Patents in your software, the patents.txt file

on your CD, or ni.com/patents.

WARNING REGARDING USE OF NATIONAL INSTRUMENTS PRODUCTS

(1) NATIONAL INSTRUMENTS PRODUCTS ARE NOT DESIGNED WITH COMPONENTS AND TESTING FOR A LEVEL OF

RELIABILITY SUITABLE FOR USE IN OR IN CONNECTION WITH SURGICAL IMPLANTS OR AS CRITICAL COMPONENTS IN

ANY LIFE SUPPORT SYSTEMS WHOSE FAILURE TO PERFORM CAN REASONABLY BE EXPECTED TO CAUSE SIGNIFICANT

INJURY TO A HUMAN.

(2) IN ANY APPLICATION, INCLUDING THE ABOVE, RELIABILITY OF OPERATION OF THE SOFTWARE PRODUCTS CAN BE

IMPAIRED BY ADVERSE FACTORS, INCLUDING BUT NOT LIMITED TO FLUCTUATIONS IN ELECTRICAL POWER SUPPLY,

COMPUTER HARDWARE MALFUNCTIONS, COMPUTER OPERATING SYSTEM SOFTWARE FITNESS, FITNESS OF COMPILERS

AND DEVELOPMENT SOFTWARE USED TO DEVELOP AN APPLICATION, INSTALLATION ERRORS, SOFTWARE AND

HARDWARE COMPATIBILITY PROBLEMS, MALFUNCTIONS OR FAILURES OF ELECTRONIC MONITORING OR CONTROL

DEVICES, TRANSIENT FAILURES OF ELECTRONIC SYSTEMS (HARDWARE AND/OR SOFTWARE), UNANTICIPATED USES OR

MISUSES, OR ERRORS ON THE PART OF THE USER OR APPLICATIONS DESIGNER (ADVERSE FACTORS SUCH AS THESE ARE

HEREAFTER COLLECTIVELY TERMED “SYSTEM FAILURES”). ANY APPLICATION WHERE A SYSTEM FAILURE WOULD

CREATE A RISK OF HARM TO PROPERTY OR PERSONS (INCLUDING THE RISK OF BODILY INJURY AND DEATH) SHOULD

NOT BE RELIANT SOLELY UPON ONE FORM OF ELECTRONIC SYSTEM DUE TO THE RISK OF SYSTEM FAILURE. TO AVOID

DAMAGE, INJURY, OR DEATH, THE USER OR APPLICATION DESIGNER MUST TAKE REASONABLY PRUDENT STEPS TO

PROTECT AGAINST SYSTEM FAILURES, INCLUDING BUT NOT LIMITED TO BACK-UP OR SHUT DOWN MECHANISMS.

BECAUSE EACH END-USER SYSTEM IS CUSTOMIZED AND DIFFERS FROM NATIONAL INSTRUMENTS' TESTING

PLATFORMS AND BECAUSE A USER OR APPLICATION DESIGNER MAY USE NATIONAL INSTRUMENTS PRODUCTS IN

COMBINATION WITH OTHER PRODUCTS IN A MANNER NOT EVALUATED OR CONTEMPLATED BY NATIONAL

INSTRUMENTS, THE USER OR APPLICATION DESIGNER IS ULTIMATELY RESPONSIBLE FOR VERIFYING AND VALIDATING

THE SUITABILITY OF NATIONAL INSTRUMENTS PRODUCTS WHENEVER NATIONAL INSTRUMENTS PRODUCTS ARE

INCORPORATED IN A SYSTEM OR APPLICATION, INCLUDING, WITHOUT LIMITATION, THE APPROPRIATE DESIGN,

PROCESS AND SAFETY LEVEL OF SUCH SYSTEM OR APPLICATION.

Page 4

Conventions

The following conventions are used in this manual:

[ ] Square brackets enclose optional items—for example, [

brackets also cite bibliographic references.

» The » symbol leads you through nested menu items and dialog box options

to a final action. The sequence File»Page Setup»Options directs you to

pull down the File menu, select the Page Setup item, and select Options

from the last dialog box.

This icon denotes a note, which alerts you to important information.

bold Bold text denotes items that you must select or click in the software, such

as menu items and dialog box options. Bold text also denotes parameter

names.

italic Italic text denotes variables, emphasis, a cross reference, or an introduction

to a key concept. This font also denotes text that is a placeholder for a word

or value that you must supply.

monospace Text in this font denotes text or characters that you should enter from the

keyboard, sections of code, programming examples, and syntax examples.

This font is also used for the proper names of disk drives, paths, directories,

programs, subprograms, subroutines, device names, functions, operations,

variables, filenames, and extensions.

monospace bold Bold text in this font denotes the messages and responses that the computer

automatically prints to the screen. This font also emphasizes lines of code

that are different from the other examples.

response]. Square

monospace italic Italic text in this font denotes text that is a placeholder for a word or value

that you must supply.

Page 5

Contents

Chapter 1

Introduction

Using This Manual.........................................................................................................1-1

Document Organization...................................................................................1-1

Bibliographic References ................................................................................1-2

Commonly Used Nomenclature ......................................................................1-2

Conventions.....................................................................................................1-2

Related Publications ........................................................................................1-3

MATRIXx Help...............................................................................................1-3

Overview........................................................................................................................1-3

Functions .........................................................................................................1-4

Nomenclature .................................................................................................1-6

Commonly Used Concepts ............................................................................................1-7

Controllability and Observability Grammians ................................................1-7

Hankel Singular Values...................................................................................1-8

Internally Balanced Realizations.....................................................................1-10

Singular Perturbation.......................................................................................1-11

Spectral Factorization......................................................................................1-13

Low Order Controller Design Through Order Reduction .............................................1-15

Chapter 2

Additive Error Reduction

Introduction....................................................................................................................2-1

Truncation of Balanced Realizations.............................................................................2-2

Reduction Through Balanced Realization Truncation...................................................2-4

Singular Perturbation of Balanced Realization..............................................................2-5

Hankel Norm Approximation ........................................................................................2-6

balmoore( ).....................................................................................................................2-8

Related Functions ............................................................................................2-11

truncate( ).......................................................................................................................2-11

Related Functions ............................................................................................2-11

redschur( )......................................................................................................................2-12

Algorithm ........................................................................................................2-12

Related Functions ............................................................................................2-14

ophank( ) ........................................................................................................................2-14

Restriction........................................................................................................2-14

Algorithm ........................................................................................................2-15

Behaviors.........................................................................................................2-15

© National Instruments Corporation v Xmath Model Reduction Module

Page 6

Contents

Onepass Algorithm ......................................................................................... 2-18

Multipass Algorithm ...................................................................................... 2-20

Discrete-Time Systems ................................................................................... 2-21

Impulse Response Error.................................................................................. 2-22

Unstable System Approximation .................................................................... 2-23

Related Functions............................................................................................ 2-23

Chapter 3

Multiplicative Error Reduction

Selecting Multiplicative Error Reduction......................................................................3-1

Multiplicative Robustness Result.................................................................... 3-2

bst( )............................................................................................................................... 3-3

Restrictions...................................................................................................... 3-4

Algorithm ........................................................................................................ 3-4

Algorithm with the Keywords right and left................................................... 3-5

Securing Zero Error at DC.............................................................................. 3-8

Hankel Singular Values of Phase Matrix of G

Further Error Bounds ...................................................................................... 3-9

Reduction of Minimum Phase, Unstable G .................................................... 3-9

Imaginary Axis Zeros (Including Zeros at ∞)................................................. 3-10

Related Functions............................................................................................ 3-14

mulhank( ) ..................................................................................................................... 3-14

Restrictions...................................................................................................... 3-14

Algorithm ........................................................................................................ 3-14

right and left .................................................................................................... 3-15

Consequences of Step 5 and Justification of Step 6........................................ 3-18

Error Bounds................................................................................................... 3-20

Imaginary Axis Zeros (Including Zeros at ∞)................................................. 3-21

Related Functions............................................................................................ 3-24

............................................... 3-9

r

Chapter 4

Frequency-Weighted Error Reduction

Introduction ................................................................................................................... 4-1

Controller Reduction....................................................................................... 4-2

Controller Robustness Result.......................................................................... 4-2

Fractional Representations.............................................................................. 4-5

wtbalance( ) ................................................................................................................... 4-10

Algorithm ........................................................................................................ 4-12

Related Functions............................................................................................ 4-15

fracred( ) ........................................................................................................................ 4-15

Restrictions...................................................................................................... 4-15

Defining and Reducing a Controller............................................................... 4-16

Xmath Model Reduction Module vi ni.com

Page 7

Chapter 5

Utilities

hankelsv( )......................................................................................................................5-1

stable( ) ..........................................................................................................................5-2

compare( ) ......................................................................................................................5-4

Chapter 6

Tutorial

Plant and Full-Order Controller.....................................................................................6-1

Controller Reduction......................................................................................................6-5

Contents

Algorithm ........................................................................................................4-18

Additional Background ...................................................................................4-20

Related Functions ............................................................................................4-21

Related Functions ............................................................................................5-2

Algorithm ........................................................................................................5-2

Related Functions ............................................................................................5-4

ophank( )..........................................................................................................6-9

wtbalance.........................................................................................................6-12

fracred..............................................................................................................6-20

Appendix A

Bibliography

Appendix B

Technical Support and Professional Services

Index

© National Instruments Corporation vii Xmath Model Reduction Module

Page 8

Introduction

This chapter starts with an outline of the manual and some useful notes. It

also provides an overview of the Model Reduction Module, describes the

functions in this module, and introduces nomenclature and concepts used

throughout this manual.

Using This Manual

This manual describes the Model Reduction Module (MRM), which

provides a collection of tools for reducing the order of systems.

Readers who are not familiar with Parameter Dependent Matrices (PDMs)

should consult the Xmath User Guide before using MRM functions and

tools. Although several MRM functions accept both PDMs and matrices as

input parameters, PDMs are preferable because they can include additional

information that is useful for simulation, plotting, and signal labeling.

Document Organization

This manual includes the following chapters:

• Chapter 1, Introduction, starts with an outline of the manual and some

useful notes. It also provides an overview of the Model Reduction

Module, describes the functions in this module, and introduces

nomenclature and concepts used throughout this manual.

• Chapter 2, Additive Error Reduction, describes additive error

reduction including discussions of truncation of, reduction by,

and perturbation of balanced realizations.

• Chapter 3, Multiplicative Error Reduction, describes multiplicative

error reduction presenting considerations for using multiplicative

rather than additive error reduction.

• Chapter 4, Frequency-Weighted Error Reduction, describes

frequency-weighted error reduction problems, including controller

reduction and fractional representations.

1

© National Instruments Corporation 1-1 Xmath Model Reduction Module

Page 9

Chapter 1 Introduction

• Chapter 5, Utilities, describes three utility functions: hankelsv( ),

stable( ), and compare( ).

• Chapter 6, Tutorial, illustrates a number of the MRM functions and

their underlying ideas.

Bibliographic References

Throughout this document, bibliographic references are cited with

bracketed entries. For example, a reference to [VODM1] corresponds

to a paper published by Van Overschee and De Moor. For a table of

bibliographic references, refer to Appendix A, Bibliography.

Commonly Used Nomenclature

This manual uses the following general nomenclature:

• Matrix variables are generally denoted with capital letters; vectors are

represented in lowercase.

• G(s) is used to denote a transfer function of a system where s is the

Laplace variable. G(q) is used when both continuous and discrete

systems are allowed.

• H(s) is used to denote the frequency response, over some range of

frequencies of a system where s is the Laplace variable. H(q) is used

to indicate that the system can be continuous or discrete.

• A single apostrophe following a matrix variable, for example, x’,

denotes the transpose of that variable. An asterisk following a matrix

variable, for example, A*, indicates the complex conjugate, or

Hermitian, transpose of that variable.

Conventions

This publication makes use of the following types of conventions: font,

format, symbol, mouse, and note. These conventions are detailed in

Chapter 2, MATRIXx Publications, Online Help, and Customer Support,

of the MATRIXx Getting Started Guide.

Xmath Model Reduction Module 1-2 ni.com

Page 10

Related Publications

For a complete list of MATRIXx publications, refer to Chapter 2,

MATRIXx Publications, Online Help, and Customer Support, of the

MATRIXx Getting Started Guide. The following documents are particularly

useful for topics covered in this manual:

• MATRIXx Getting Started Guide

• Xmath User Guide

• Control Design Module

• Interactive Control Design Module

• Interactive System Identification Module, Part 1

• Interactive System Identification Module, Part 2

• Model Reduction Module

• Optimization Module

• Robust Control Module

•X

MATRIXx Help

Model Reduction Module function reference information is available in

the MATRIXx Help. The MATRIXx Help includes all Model Reduction

functions. Each topic explains a function’s inputs, outputs, and keywords

in detail. Refer to Chapter 2, MATRIXx Publications, Online Help, and

Customer Support, of the MATRIXx Getting Started Guide for complete

instructions on using the help feature.

µ

Module

Chapter 1 Introduction

Overview

The Xmath Model Reduction Module (MRM) provides a collection of tools

for reducing the order of systems. Many of the functions are based on

state-of-the-art algorithms in conjunction with researchers at the Australian

National University, who were responsible for the original development of

some of the algorithms. A common theme throughout the module is the use

of Hankel singular values and balanced realizations, although

considerations of numerical accuracy often dictates whether these tools are

used implicitly rather than explicitly. The tools are particularly suitable

when, as generally here, quality of approximation is measured by closeness

of frequency domain behavior.

© National Instruments Corporation 1-3 Xmath Model Reduction Module

Page 11

Chapter 1 Introduction

Functions

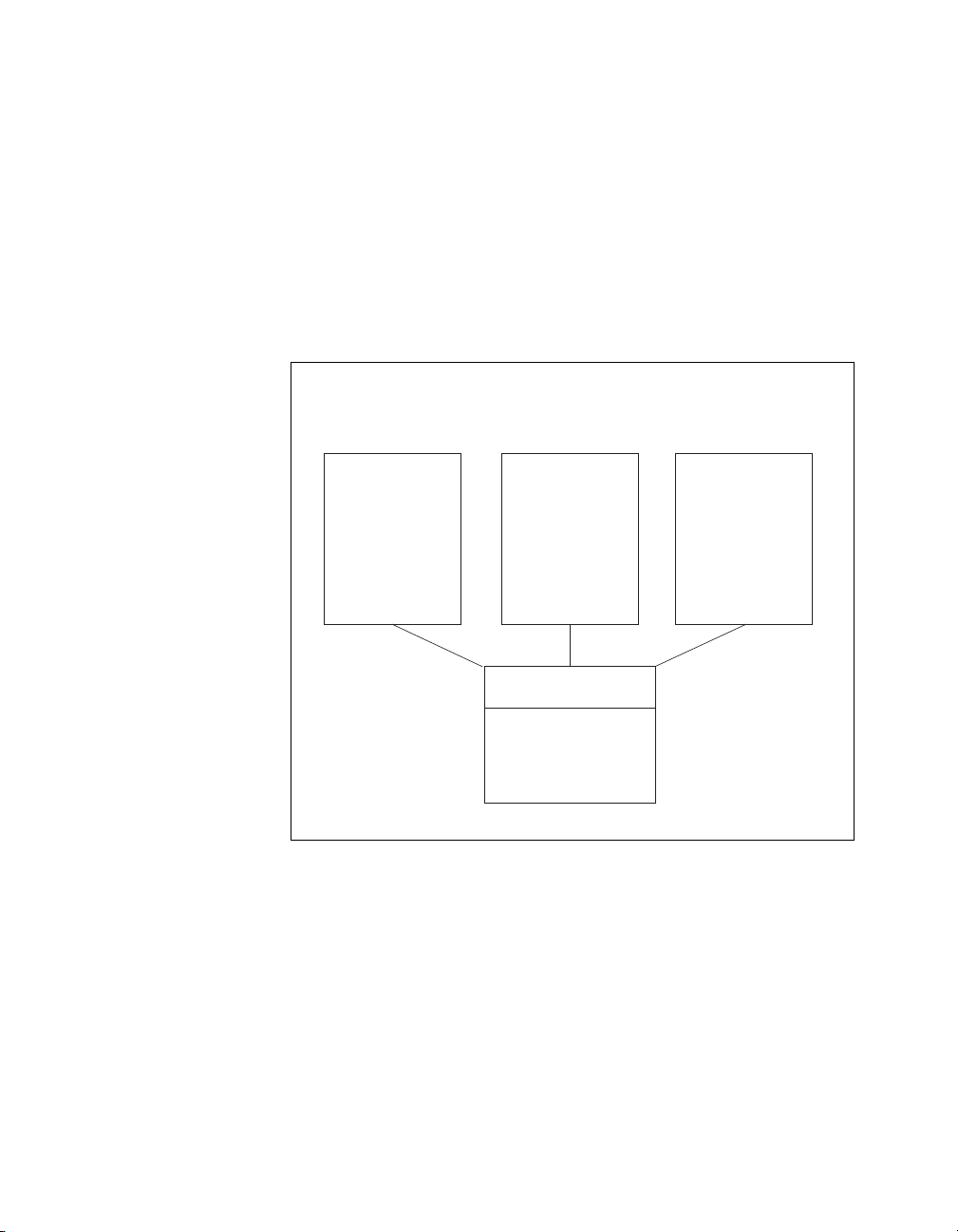

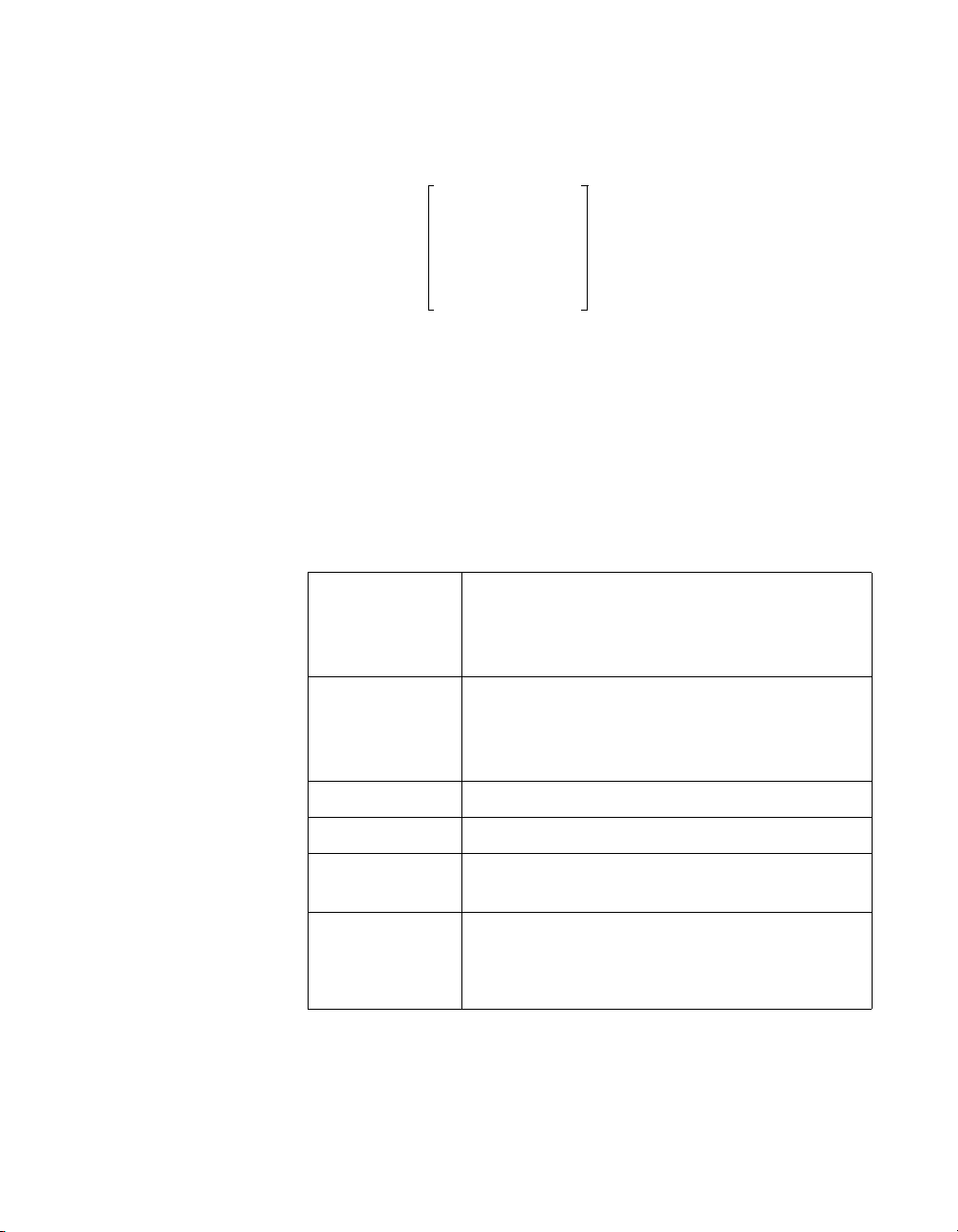

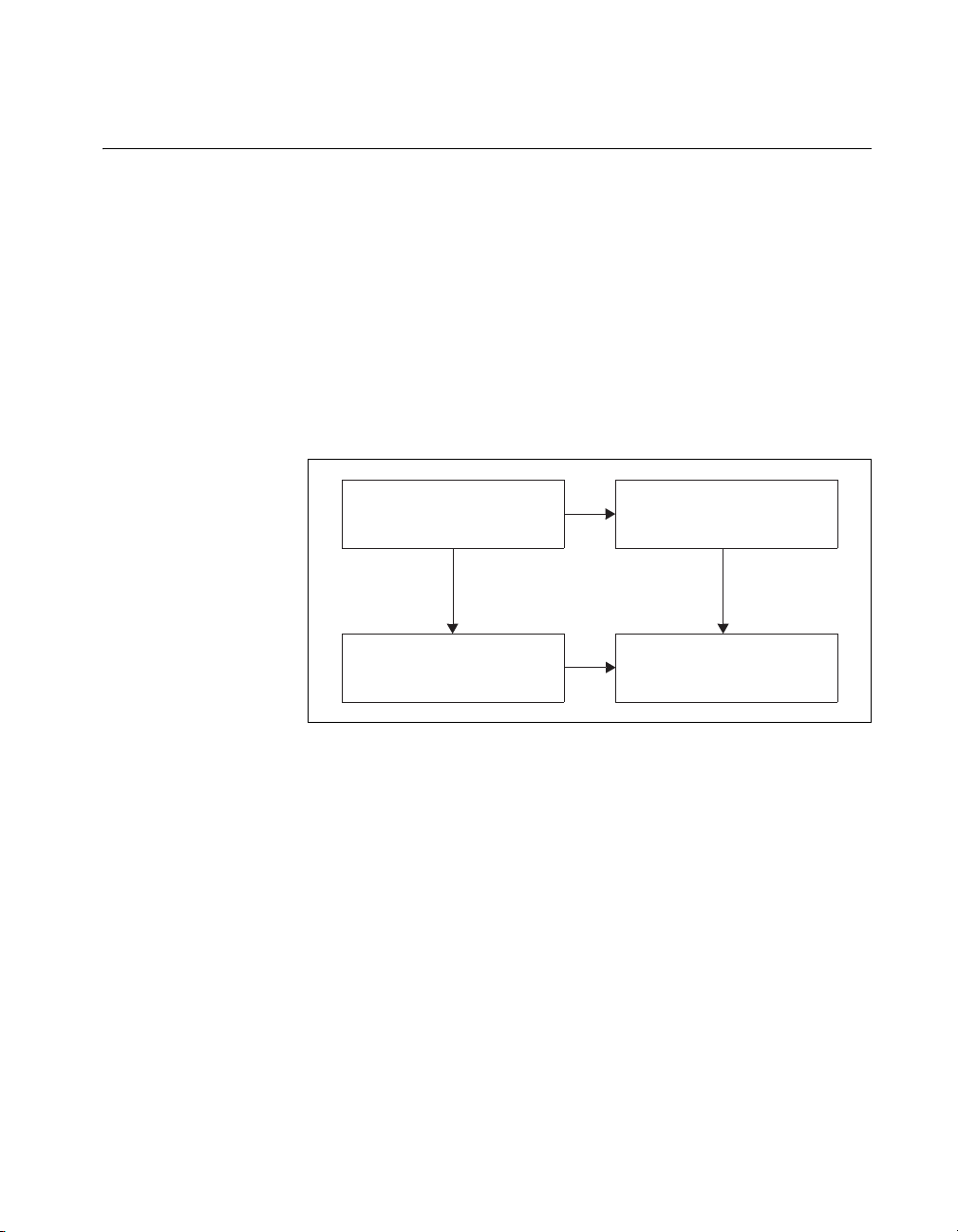

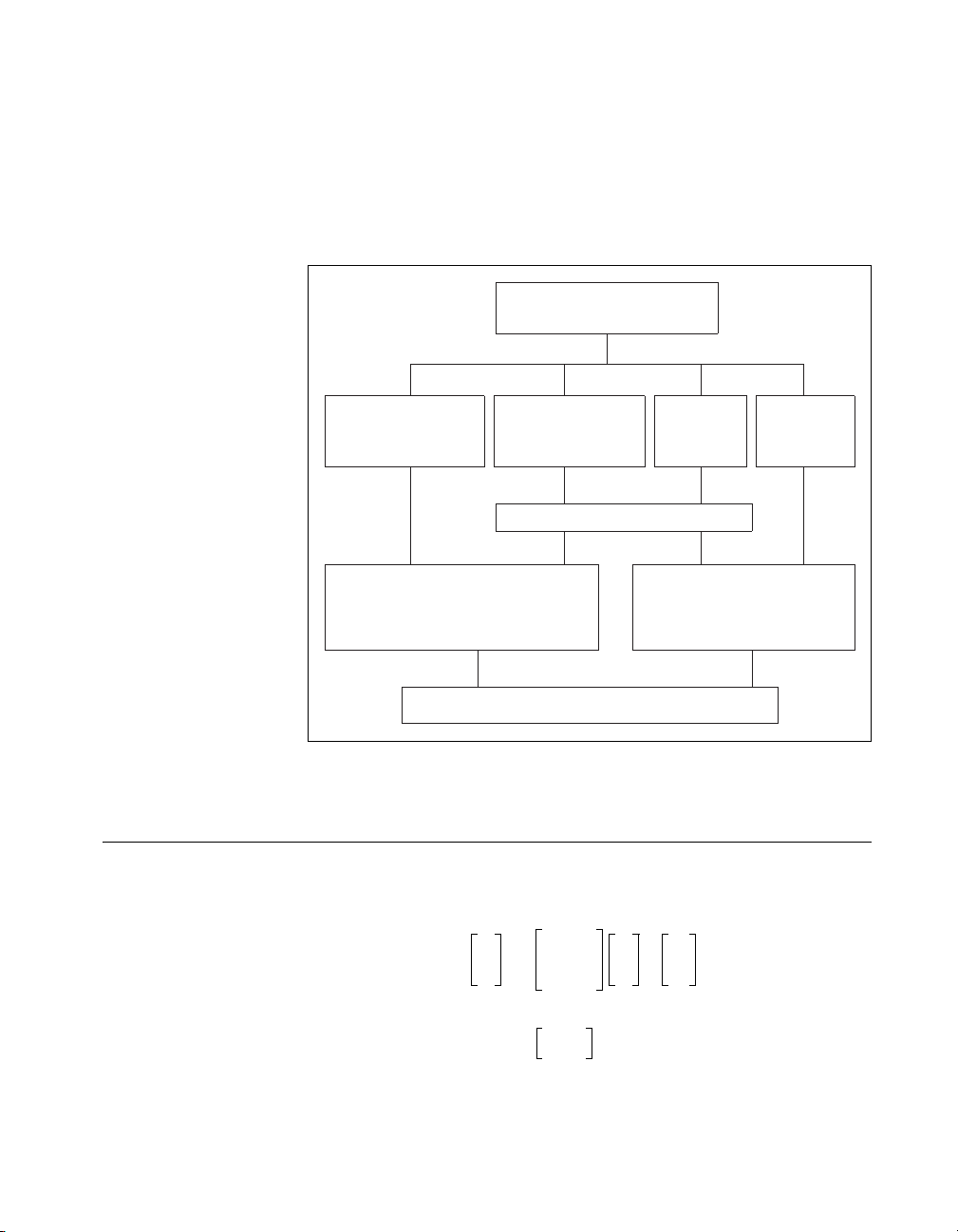

As shown in Figure 1-1, functions are provided to handle four broad tasks:

• Model reduction with additive errors

• Model reduction with multiplicative errors

• Model reduction with frequency weighting of an additive error,

including controller reduction

• Utility functions

Additive Error

Model Reduction

balmoore

redschur

ophank

truncate

balance

mreduce

Multiplicative

Model Reduction

bst

mulhank

Utility Functions

hankelsv

stable

compare

Figure 1-1. MRM Function

Frequency Weighted

Model Reduction

wtbalance

fracred

Xmath Model Reduction Module 1-4 ni.com

Page 12

Chapter 1 Introduction

Certain restrictions regarding minimality and stability are required of the

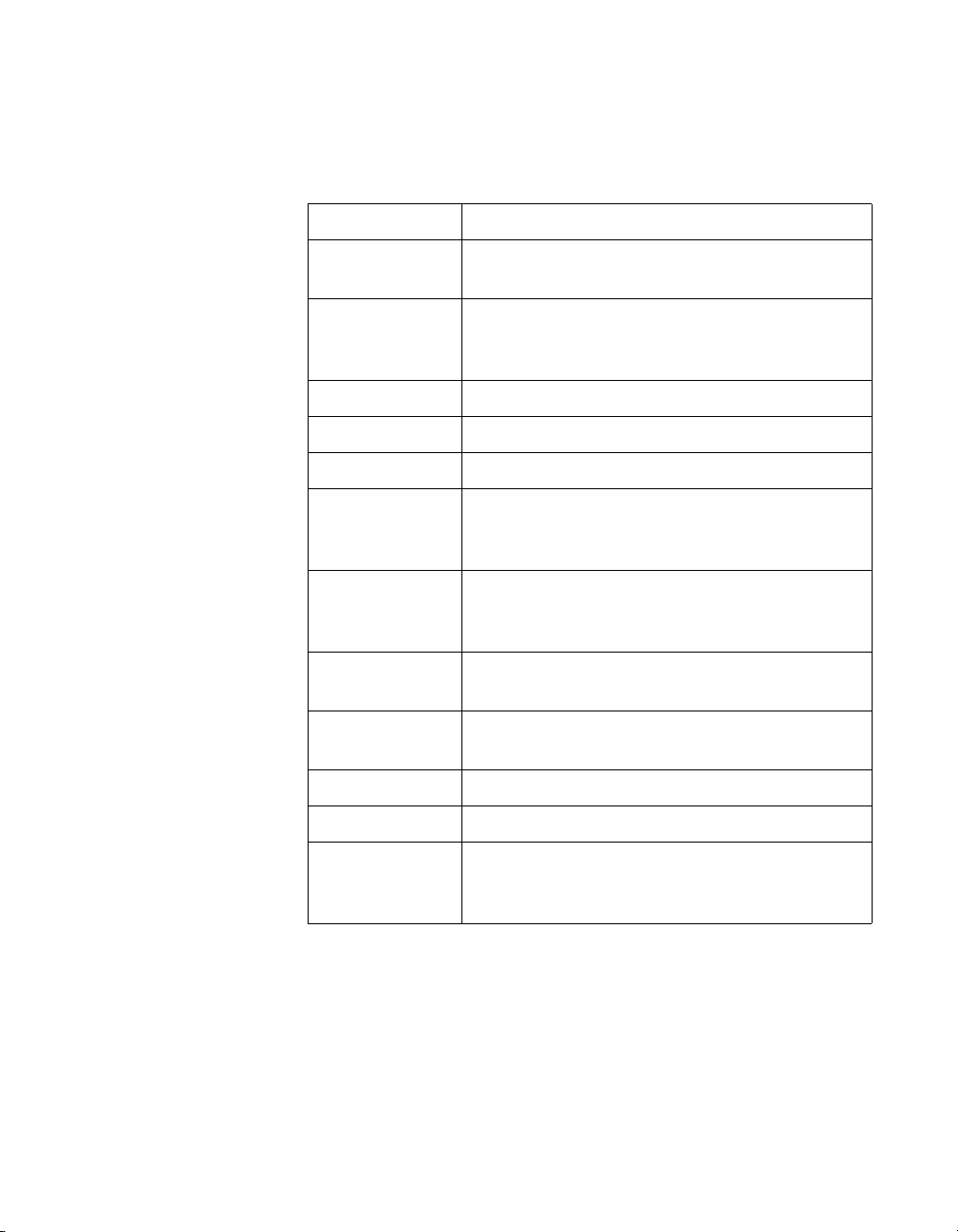

input data, and are summarized in Table 1-1.

Table 1-1. MRM Restrictions

balance( )

balmoore ( ) A state-space system must be stable and minimal,

A stable, minimal system

having at least one input, output, and state

bst( ) A state-space system must be linear,

continuous-time, and stable, with full rank along

the jω-axis, including infinity

compare( ) Must be a state-space system

fracred( )

hankelsv( ) A system must be linear and stable

mreduce( ) A submatrix of a matrix must be nonsingular

A state-space system must be linear and continuous

for continuous systems, and variant for discrete

systems

mulhank( )

A state-space system must be linear,

continuous-time, stable and square, with full

rank along the jω-axis, including infinity

ophank( )

A state-space system must be linear,

continuous-time and stable, but can be nonminimal

redschur( ) A state-space system must be stable and linear,

but can be nonminimal

stable ( ) No restriction

truncate( )

wtbalance( )

Any full-order state-space system

A state-space system must be linear and

continuous. Interconnection of controller and plant

must be stable, and/or weight must be stable.

Documentation of the individual functions sometimes indicates how the

restrictions can be circumvented. There are a number of model reduction

methods not covered here. These include:

• Padé Approximation

• Methods based on interpolating, or matching at discrete frequencies

© National Instruments Corporation 1-5 Xmath Model Reduction Module

Page 13

Chapter 1 Introduction

Nomenclature

• L2 approximation, in which the L2 norm of impulse response error (or,

by Parseval’s theorem, the L

norm of the transfer-function error along

2

the imaginary axis) serves as the error measure

• Markov parameter or impulse response matching, moment matching,

covariance matching, and combinations of these, for example,

q-COVER approximation

• Controller reduction using canonical interactions, balanced Riccati

equations, and certain balanced controller reduction algorithms

This manual uses standard nomenclature. The user should be familiar with

the following:

• sup denotes supremum, the least upper bound.

• The acute accent (´) denotes matrix transposition.

• A superscripted asterisk (*) denotes matrix transposition and complex

conjugation.

• λ

(A) for a square matrix A denotes the maximum eigenvalue,

max

presuming there are no complex eigenvalues.

•Reλ

(A) and |λi(A)| for a square matrix A denote an arbitrary real part

i

and an arbitrary magnitude of an eigenvalue of A.

Xjω()

• for a transfer function X(·) denotes:

∞

sup

∞– ω∞<<

λ

X*jω()Xjω()[][]

max

12/

• An all-pass transfer-function W(s) is one where for all ω;

Xjω() 1=

to each pole, there corresponds a zero which is the reflection through

the jω-axis of the pole, and there are no jω-axis poles.

• An all-pass transfer-function matrix W(s) is a square matrix where

W′ jω–()Wjω() I=

P >0 and P ≥ 0 for a symmetric or hermitian matrix denote positive

and nonnegative definiteness.

> P2 and P1≥ P2 for symmetric or hermitian P1 and P2 denote

• P

1

P

– P2 is positive definite and nonnegative definite.

1

•A superscripted number sign (#) for a square matrix A denotes the

Moore-Penrose pseudo-inverse of A.

Xmath Model Reduction Module 1-6 ni.com

Page 14

• An inequality or bound is tight if it can be met in practice, for example

1 xx– 0≤log+

is tight because the inequality becomes an equality for x =1. Again,

if F(jω) denotes the Fourier transform of some , the

Heisenberg inequality states,

ft()

-------------------------------------------------------------------------------------------

2

t

ft()2dt

∫

and the bound is tight since it is attained for f(t) = exp + (–kt

∫

12⁄

∫

Commonly Used Concepts

This section outlines some frequently used standard concepts.

Controllability and Observability Grammians

Suppose that G(s)=D + C(sI–A)–1B is a transfer-function matrix with

Reλ

(A)<0. Then there exist symmetric matrices P, Q defined by:

i

2

dt

2

ω

Fjω()2dω

Chapter 1 Introduction

ft() L

∈

2

4π≤

12⁄

2

).

PA′ +AP = –BB′

QA + A′Q = –C′C

These are termed the controllability and observability grammians of the

realization defined by {A,B,C,D}. (Sometimes in the code, WC is used for

P and WO for Q.) They have a number of properties:

• P ≥ 0, with P > 0 if and only if [A,B] is controllable, Q ≥ 0 with Q >0

if and only if [A,C] is observable.

∞

• and

PeAtBB′e

= Qe

∫

• With vec P denoting the column vector formed by stacking column 1

• The controllability grammian can be thought of as measuring the

© National Instruments Corporation 1-7 Xmath Model Reduction Module

0

of P on column 2 on column 3, and so on, and ⊗ denoting Kronecker

product

difficulty of controlling a system. More specifically, if the system is in

the zero state initially, the minimum energy (as measured by the L

norm of u) required to bring it to the state x

eigenvalues of P correspond to systems that are difficult to control,

while zero eigenvalues correspond to uncontrollable systems.

A′ t

dt

IAAI⊗+⊗[]vecP vec(– BB′)=

∞

A′ t

∫

0

C′CeAtdt

0

is x0P –1x0; so small

2

=

Page 15

Chapter 1 Introduction

• The controllability grammian is also E[x(t)x′(t)] when the system

x·Ax Bw+=

noise with .

has been excited from time –∞ by zero mean white

Ewt()w′ s()[]Iδ ts–()=

• The observability grammian can be thought of as measuring the

information contained in the output concerning an initial state.

x·Ax= y, Cx=

If with x(0) = x

∞

y′ t()yt()dt

∫

0

then:

0

=

x′0Qx

0

Systems that are easy to observe correspond to Q with large

eigenvalues, and thus large output energy (when unforced).

lyapunov(A,B*B') produces P and lyapunov(A',C'*C)

•

produces Q.

For a discrete-time G(z)=D + C(zI-A)

–1

B with |λi(A)|<1, P and Q are:

P – APA′ = BB′

Q – A′QA = C′C

The first dot point above remains valid. Also,

∞

k

• and

= QA

PA

∑

k 0=

BB′A ′

k

∞

=

k

∑

k 0=

C′CA′

k

with the sums being finite in case A is nilpotent (which is the case if

the transfer-function matrix has a finite impulse response).

•[I–A⊗ A] vec P = vec (BB′)

lyapunov( ) can be used to evaluate P and Q.

Hankel Singular Values

If P, Q are the controllability and observability grammians of a

transfer-function matrix (in continuous or discrete time), the Hankel

Singular Values are the quantities λ

• All eigenvalues of PQ are nonnegative, and so are the Hankel singular

values.

• The Hankel singular values are independent of the realization used to

calculate them: when A,B,C,D are replaced by TAT

then P and Q are replaced by TPT′ and (T

by TPQT

–1

and the eigenvalues are unaltered.

• The number of nonzero Hankel singular values is the order or

McMillan degree of the transfer-function matrix, or the state

dimension in a minimal realization.

Xmath Model Reduction Module 1-8 ni.com

1/2

(PQ). Notice the following:

i

–1

–1

)′QT–1; then PQ is replaced

, TB, CT

–1

and D,

Page 16

Chapter 1 Introduction

• Suppose the transfer-function matrix corresponds to a discrete-time

system, with state variable dimension n. Then the infinite Hankel

matrix,

2

B

2

B

H

CB CAB CA

CAB CA

=

2

CA

B

has for its singular values the n nonzero Hankel singular values,

together with an infinite number of zero singular values.

The Hankel singular values of a (stable) all pass system (or all pass matrix)

are all 1.

Slightly different procedures are used for calculating the Hankel singular

values (and so-called weighted Hankel singular values) in the various

functions. These procedures are summarized in Table 1-2.

Table 1-2. Calculating Hankel Singular Values

(balance( ))

balmoore( )

hankelsv( )

ophank( )

redschur( )

bst( )

mulhank( )

wtbalance( )

fracred( )

For a discussion of the balancing algorithm, refer to

the Internally Balanced Realizations section; the

Hankel singular values are given by

1/2

diag(R

)=HSV

For a discussion of the balancing algorithm, refer to

the Internally Balanced Realizations section; the

matrix S

diag(SH)

real(sqrt(eig(p*q)))

yields the Hankel singular values through

H

Calls hankelsv( )

Computes a Schur decomposition of P*Q and then

takes the square roots of the diagonal entries

Same as redschur( ) except either P or Q can be

a weighted grammian

© National Instruments Corporation 1-9 Xmath Model Reduction Module

Page 17

Chapter 1 Introduction

Internally Balanced Realizations

Suppose that a realization of a transfer-function matrix has the

controllability and observability grammian property that P = Q = Σ for

some diagonal Σ. Then the realization is termed internally balanced. Notice

that the diagonal entries σ

that is, they are the Hankel singular values. Often the entries of Σ are

assumed ordered with σ

As noted in the discussion of grammians, systems with small (eigenvalues

of) P are hard to control and those with small (eigenvalues of) Q are hard

to observe. Now a state transformation T = α I will cause P = Q to be

replaced by α

expense of difficulty of observation, and conversely. Balanced realizations

are those when ease of control has been balanced against ease of

observation.

Given an arbitrary realization, there are a number of ways of finding a

state-variable coordinate transformation bringing it to balanced form.

A good survey of the available algorithms for balancing is in [LHPW87].

One of these is implemented in the Xmath function

2

P, α–2Q, implying that ease of control can be obtained at the

of Σ are square roots of the eigenvalues of PQ,

i

≥ σ

.

i

i+1

balance( ).

The one implemented in

balmoore( ) as part of this module is more

sophisticated, but more time consuming. It proceeds as follows:

1. Singular value decompositions of P and Q are defined. Because P and

Q are symmetric, this is equivalent to diagonalizing P and Q by

orthogonal transformations.

P=U

Q=UoSo U′

cSc

U′

c

o

2. The matrix,

12⁄

=

HS

0

UHSHV

12⁄

H

is constructed, and from it, a singular value decomposition is obtained:

=

HU

′

HSHVH

3. The balancing transformation is given by:

=

TU

The balanced realization is T

Xmath Model Reduction Module 1-10 ni.com

12⁄–

0S0

–1

AT, T–1B, CT.

UHS

12⁄

H

Page 18

Chapter 1 Introduction

This is almost the algorithm set out in Section II of [LHPW87]. The one

difference (and it is minor) is that in [LHPW87], lower triangular Cholesky

factors of P and Q are used, in place of U

cSc

1/2

and UOS

1/2

in forming H

O

in step 2. The grammians themselves need never be formed, as these

Cholesky factors can be computed directly from A, B, and C in both

continuous and discrete time; this, however, is not done in

balmoore.

The algorithm has the property that:

Thus the diagonal entries of S

The algorithm implemented in

A lower triangular Cholesky factor L

Then the symmetric matrix L

value decomposition), thus L′

the coordinate basis transformation is given by T = L

T′QT = T

Singular Perturbation

A common procedure for approximating systems is the technique of

Singular Perturbation. The underlying heuristic reasoning is as follows.

Suppose there is a system with the property that the unforced responses of

the system contain some modes which decay to zero extremely fast. Then

an approximation to the system behavior may be attained by setting state

variable derivatives associated with these modes to zero, even in the forced

case. The phrase “associated with the modes” is loose: exactly what occurs

is shown below. The phrase “even in the forced case” captures a logical

flaw in the argument: smallness in the unforced case associated with initial

conditions is not identical with smallness in the forced case.

Suppose the system is defined by:

–1

P(T–1)′ = R

T′ QT′ T

1/2

.

1–

PT1–()′ S

==

are the Hankel singular values.

H

balance( ) is older, refer to [Lau80].

of P is found, so that LcLc′ = P.

c

′QLc is diagonalized (through a singular

c

= VRU′, with actually V = U. Finally,

cQLc

H

VR

c

–1/4

, resulting in

·

x

·

x

© National Instruments Corporation 1-11 Xmath Model Reduction Module

A11A

1

A21A

2

y

C

1C2

x

B

1

12

x

22

x

1

x

2

1

u+=

B

2

2

Du+=

(1-1)

Page 19

Chapter 1 Introduction

and also:

Reλ

)<0 and .

i(A22

Reλ

iA11A12A22

–()0<

1–

A

21

Usually, we expect that,

()ReλiA11A12A

Reλ

iA22

–()«

1–

A

22

21

in the sense that the intuitive argument hinges on this, but it is not necessary.

·

Then a singular perturbation is obtained by replacing by zero; this

x

2

means that:

A21x1A22x2B2u++ 0=

or

x

2

1–

A–

A21x1A

22

1–

B2u–=

22

Accordingly,

·

x

A11A12A

1

yC

–()x1DC2A

1C2A22

1–

=()x1B1A12A

A

22

21

1–

A

21

–()u+=

–()u+=

1–

B

22

2

1–

B

22

2

(1-2)

Equation 1-2 may be an approximation for Equation 1-1. This means that:

• The transfer-function matrices may be similar.

• If Equation 1-2 is excited by some u(·), with initial condition x

1(to

), and

if Equation 1-1 is excited by the same u(·) with initial condition given

by,

•x

1(to

then x

) and x2(to) = –A

(·) and y(·) computed from Equation 1-1 and from Equation 1-2

1

–1

A21x1(to)–A

22

–1

22

B2u(to),

should be similar.

• If Equation 1-1 and Equation 1-2 are excited with the same u(·), have

the same x

) and Equation 1-1 has arbitrary x2, then x1(·) and y(·)

1(to

computed from Equation 1-1 and Equation 1-2 should be similar after

a possible initial transient.

As far as the transfer-function matrices are concerned, it can be verified that

they are actually equal at DC.

Xmath Model Reduction Module 1-12 ni.com

Page 20

Chapter 1 Introduction

Similar considerations govern the discrete-time problem, where,

can be approximated by:

mreduce( ) can carry out singular perturbation. For further discussion,

refer to Chapter 2, Additive Error Reduction. If Equation 1-1 is balanced,

singular perturbation is provably attractive.

Spectral Factorization

Let W(s) be a stable transfer-function matrix, and suppose a system S with

transfer-function matrix W(s) is excited by zero mean unit intensity white

noise. Then the output of S is a stationary process with a spectrum Φ(s)

related to W(s) by:

x

k 1+()

1

k 1+()

x

2

A

11A12

A21A

22

x1k()

x

2

x1k()

yk()

x1k 1+()A11A12IA

y

k

C

1C2

x

k()

2

+[]x1k()+=

B

+[]uk()

1A12

C1C2IA

+[]x1k()+=

DC

+[]uk()

–()

IA

–()

–()

22

IA

–()

2

22

22

22

k()

1–

1–

B

1

uk()+=

B

2

Du k()+=

1–

A

21

1–

B

2

A

21

B

2

Φ s() Ws()W′ s–()=

(1-3)

Evidently,

Φ jω() Wjω()W

*

jω()=

so that Φ( jω) is nonnegative hermitian for all ω; when W( jω) is a scalar, so

is Φ( jω) with Φ( jω)=|W( jω)|

2

.

In the matrix case, Φ is singular for some ω only if W does not have full

rank there, and in the scalar case only if W has a zero there.

Spectral factorization, as shown in Example 1-1, seeks a W(jω), given

Φ(jω). In the rational case, a W(jω) exists if and only if Φ(jω) is

© National Instruments Corporation 1-13 Xmath Model Reduction Module

Page 21

Chapter 1 Introduction

nonnegative hermitian for all ω. If Φ is scalar, then Φ(jω)≥0 for all ω.

Normally one restricts attention to Φ(·) with lim

Φ(jω)<∞. A key result

ω→∞

is that, given a rational, nonnegative hermitian Φ(jω) with

lim

Φ(jω)<∞, there exists a rational W(s) where,

ω→∞

• W(∞)<∞.

• W(s) is stable.

• W(s) is minimum phase, that is, the rank of W(s) is constant in Re[s]>0.

In the scalar case, all zeros of W(s) lie in Re[s]≤0, or in Re[s]<0 if Φ(jω)>0

for all ω.

In the matrix case, and if Φ(jω) is nonsingular for some ω, it means that

W(s) is square and W

is nonsingular for all ω.

Moreover, the particular W(s) previously defined is unique, to within right

multiplication by a constant orthogonal matrix. In the scalar case, this

means that W(s) is determined to within a ±1 multiplier.

Example 1-1 Example of Spectral Factorization

Suppose:

Then Equation 1-3 is satisfied by , which is stable and

minimum phase.

Also, Equation 1-3 is satisfied by and , and , and

so forth, but none of these is minimum phase.

bst( ) and mulhank( ) both require execution within the program of

a spectral factorization; the actual algorithm for achieving the spectral

factorization depends on a Riccati equation. The concepts of a spectrum

and spectral factor also underpin aspects of

–1

(s) has all its poles in Re[s]≤ 0, or in Re[s]<0 if Φ(jω)

2

1+

Ws()

s 1–

----------s 2+

ω

---------------=

2

ω

4+

s 1+

-----------

±=

s 2+

s 3–

----------s 2+

wtbalance( ).

s 1–

-----------

s 2+

s 1+

sT–

-----------

e

s 2+

Φ jω()

Xmath Model Reduction Module 1-14 ni.com

Page 22

Chapter 1 Introduction

Low Order Controller Design Through Order Reduction

The Model Reduction Module is particularly suitable for achieving low

order controller design for a high order plant. This section explains some of

the broad issues involved.

Most modern controller design methods, for example, LQG and H

∞, yield

controllers of order roughly comparable with that of the plant. It follows

that, to obtain a low order controller using such methods, one must either

follow a high order controller design by a controller reduction step,

or reduce an initially given high order plant model, and then design a

controller using the resulting low order plant, with the understanding that

the controller will actually be used on the high order plant. Refer to

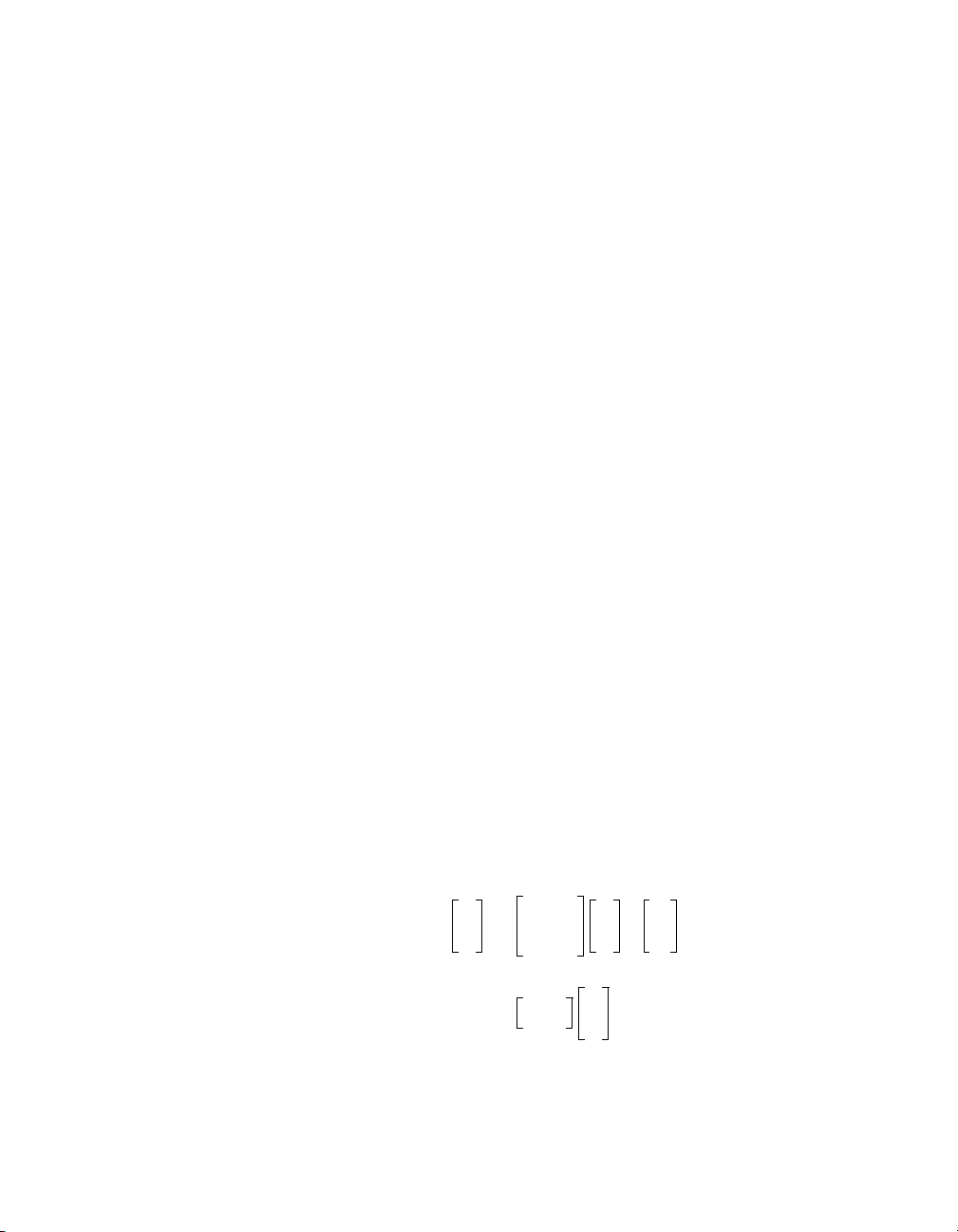

Figure 1-2.

High Order Plant

Plant

Reduction Reduction

Low Order Plant

Figure 1-2. Low Order Controller Design for a High Order Plant

High Order Controller

Controller

Low Order Controller

Generally speaking, in any design procedure, it is better to postpone

approximation to a late step of the procedure: if approximation is done

early, the subsequent steps of the design procedure may have unpredictable

effects on the approximation errors. Hence, the scheme based on high order

controller design followed by reduction is generally to be preferred.

Controller reduction should aim to preserve closed-loop properties as far

as possible. Hence the controller reduction procedures advocated in this

module reflect the plant in some way. This leads to the frequency weighted

reduction schemes of

wtbalance( ) and fracred( ), as described in

Chapter 4, Frequency-Weighted Error Reduction. Plant reduction logically

should also seek to preserve closed-loop properties, and thus should involve

the controller. With the controller unknown however, this is impossible.

Nevertheless, it can be argued, on the basis of the high loop gain property

within the closed-loop bandwidth that is typical of many systems, that

© National Instruments Corporation 1-15 Xmath Model Reduction Module

Page 23

Chapter 1 Introduction

multiplicative reduction, as described in Chapter 4, Frequency-Weighted

Error Reduction, is a sound approach. Chapter 3, Multiplicative Error

Reduction, and Chapter 4, Frequency-Weighted Error Reduction, develop

these arguments more fully.

Xmath Model Reduction Module 1-16 ni.com

Page 24

Additive Error Reduction

This chapter describes additive error reduction including discussions of

truncation of, reduction by, and perturbation of balanced realizations.

Introduction

Additive error reduction focuses on errors of the form,

2

Gjω()G

where G is the originally given transfer function, or model, and G

reduced one. Of course, in discrete-time, one works instead with:

Gejω()Grejω()–

As is argued in later chapters, if one is reducing a plant that will sit inside

a closed loop, or if one is reducing a controller, that again is sitting in a

closed loop, focus on additive error model reduction may not be

appropriate. It is, however, extremely appropriate in considering reducing

the transfer function of a filter. One pertinent application comes specifically

from digital filtering: a great many design algorithms lead to a finite

impulse response (FIR) filter which can have a very large number of

coefficients when poles are close to the unit circle. Model reduction

provides a means to replace an FIR design by a much lower order infinite

impulse response (IIR) design, with close matching of the transfer function

at all frequencies.

jω()–

r

∞

is the

r

∞

© National Instruments Corporation 2-1 Xmath Model Reduction Module

Page 25

Chapter 2 Additive Error Reduction

Truncation of Balanced Realizations

A group of functions can be used to achieve a reduction through truncation

of a balanced realization. This means that if the original system is

·

x

·

x

A11A

1

2

A21A

12

22

x

B

1

1

x

2

u+=

B

2

y

C

1C2

xD

+=

u

(2-1)

and the realization is internally balanced, then a truncation is provided by

·

x

A11x1B1u+=

1

1x1

Du+=

yC

The functions in question are:

• balmoore( )

• balance( ) (refer to the Xmath Help)

• truncate( )

• redschur( )

One only can speak of internally balanced realizations for systems which

are stable; if the aim is to reduce a transfer function matrix G(s) which

contains unstable poles, one must additively decompose it into a stable part

and unstable part, reduce the stable part, and then add the unstable part back

in. The function

stable( ), described in Chapter 5, Utilities, can be used

to decompose G(s). Thus:

G(s) = G

(s) + Gu(s)(Gs(s) stable, Gu(s) unstable)

s

Gsr(s) = found by algorithm (reduction of Gs(s))

(s) = Gsr(s) + Gu(s) (reduction of G(s))

G

r

Xmath Model Reduction Module 2-2 ni.com

Page 26

Chapter 2 Additive Error Reduction

A very attractive feature of the truncation procedure is the availability

of an error bound. More precisely, suppose that the controllability and

observability grammians for [Enn84] are

Σ

0

PQΣ

===

1

0 Σ

2

(2-2)

with the diagonal entries of Σ in decreasing order, that is, σ

≥ σ2 ≥ ···. Then

1

the key result is,

with G, G

Gjω()Grjω()–

the transfer function matrices of Equation 2-1 and Equation 2-2,

r

2trΣ

≤

∞

2

respectively. This formula shows that small singular values can, without

great cost, be thrown away. It also is valid in discrete time, and can be

improved upon if there are repeated Hankel singular values. Provided that

the smallest diagonal entry of Σ

of Σ

, the reduced order system is guaranteed to be stable.

2

strictly exceeds the largest diagonal entry

1

Several other points concerning the error can be made:

• The error G( jω)–G

( jω) as a function of frequency is not flat; it is zero

r

at ω = ∞, and may take its largest value at ω = 0, so that there is in

general no matching of DC gains of the original and reduced system.

• The actual error may be considerably less than the error bound at all

frequencies, so that the error bound formula can be no more than an

advance guide. However, the bound is tight when the dimension

reduction is 1 and the reduction is of a continuous-time

transfer-function matrix.

• With g(·) and g

responses of G and G

(·) denoting the impulse responses for impulse

r

and with Gr of degree k, the following L1 bound

r

holds [GCP88]

gg

–

This bound also will apply for the L

≤

r

1

42k 1+()trΣ

error on the step response.

∞

2

It is helpful to note one situation where reduction is likely to be difficult (so

that Σ will contain few diagonal entries which are, relatively, very small).

Suppose G(s), strictly proper, has degree n and has (n – 1) unstable zeros.

Then as ω runs from zero to infinity, the phase of G(s) will change by

(2n – 1)π/2. Much of this change may occur in the passband. Suppose G

r

(s)

has degree n–1; it can have no more than (n – 2) zeros, since it is strictly

© National Instruments Corporation 2-3 Xmath Model Reduction Module

Page 27

Chapter 2 Additive Error Reduction

proper. So, even if all zeros are unstable, the maximum phase shift when ω

moves from 0 to ∞ is (2n – 3)π/2. It follows that if G(jω) remains large in

magnitude at frequencies when the phase shift has moved past (2n – 3)π/2,

approximation of G by G

approximation may depend somehow on removing roughly cancelling

pole-zeros pairs; when there are no left half plane zeros, there can be no

rough cancellation, and so approximation is unsatisfactory.

As a working rule of thumb, if there are p right half plane zeros in the

passband of a strictly proper G(s), reduction to a G

p + 1 is likely to involve substantial errors. For non-strictly proper G(s),

having p right half plane zeros means that reduction to a G

than p is likely to involve substantial errors.

An all-pass function exemplifies the problem: there are n stable poles and

n unstable zeros. Since all singular values are 1, the error bound formula

indicates for a reduction to order n – 1 (when it is not just a bound, but

exact) a maximum error of 2.

Another situation where poor approximation can arise is when a highly

oscillatory system is to be replaced by a system with a real pole.

will necessarily be poor. Put another way, good

r

(s) of order less than

r

(s) of order less

r

Reduction Through Balanced Realization Truncation

This section briefly describes functions that reduce( ), balance( ),

and

truncate( ) to achieve reduction.

balmoore( )—Computes an internally balanced realization of a

•

system and optionally truncates the realization to form an

approximation.

•

balance( )—Computes an internally balanced realization of a

system.

•

truncate( )—This function truncates a system. It allows

examination of a sequence of different reduced order models formed

from the one balanced realization.

redschur( )—These functions in theory function almost the same

•

as the two features of

state-variable realization of a reduced order model, such that the

transfer function matrix of the model could have resulted by truncating

a balanced realization of the original full order transfer function

matrix. However, the initially given realization of the original transfer

function matrix is never actually balanced, which can be a numerically

hazardous step. Moreover, the state-variable realization of the reduced

Xmath Model Reduction Module 2-4 ni.com

balmoore( ). That is, they produce a

Page 28

Chapter 2 Additive Error Reduction

order model is not one in general obtainable by truncation of an

internally-balanced realization of the full order model.

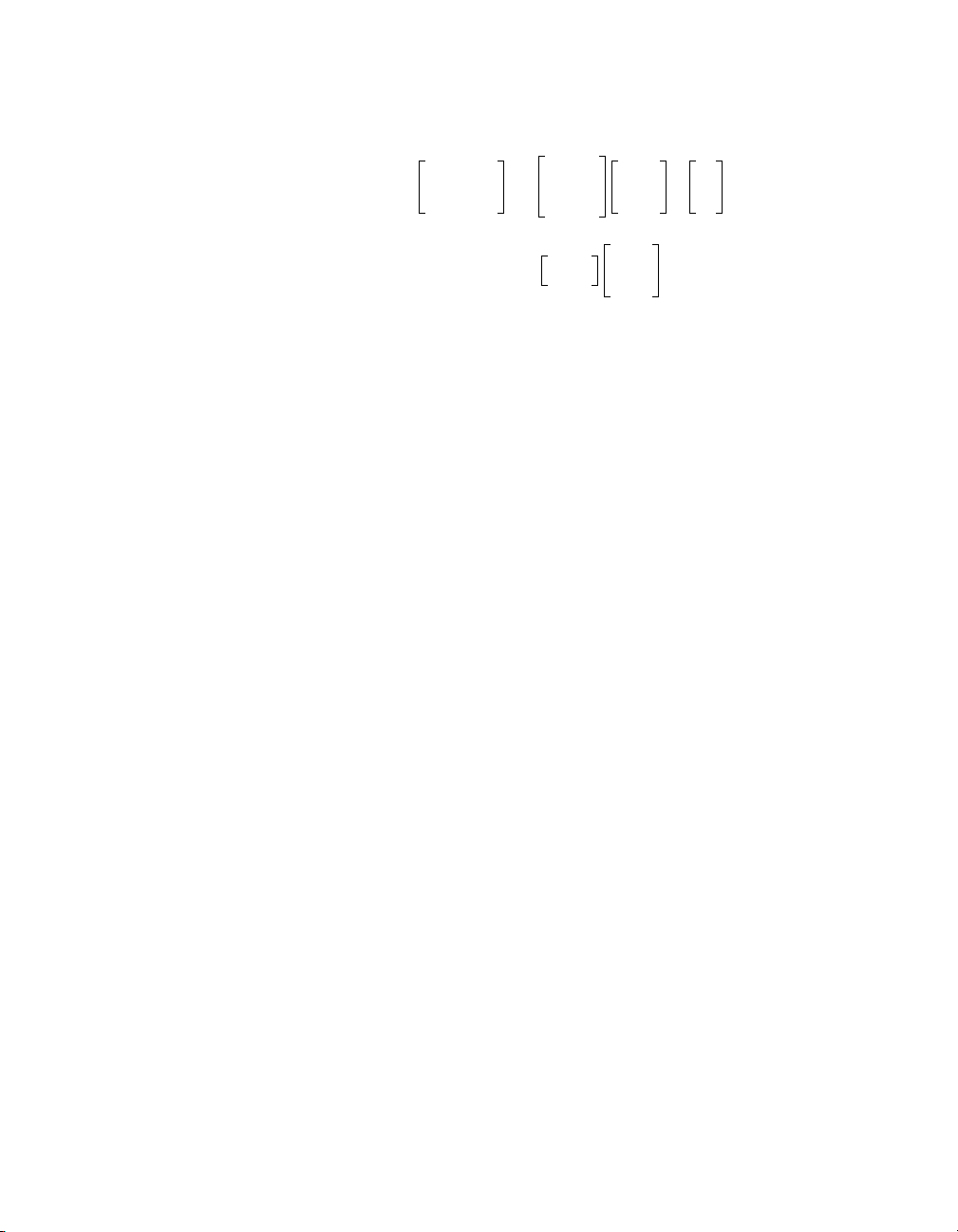

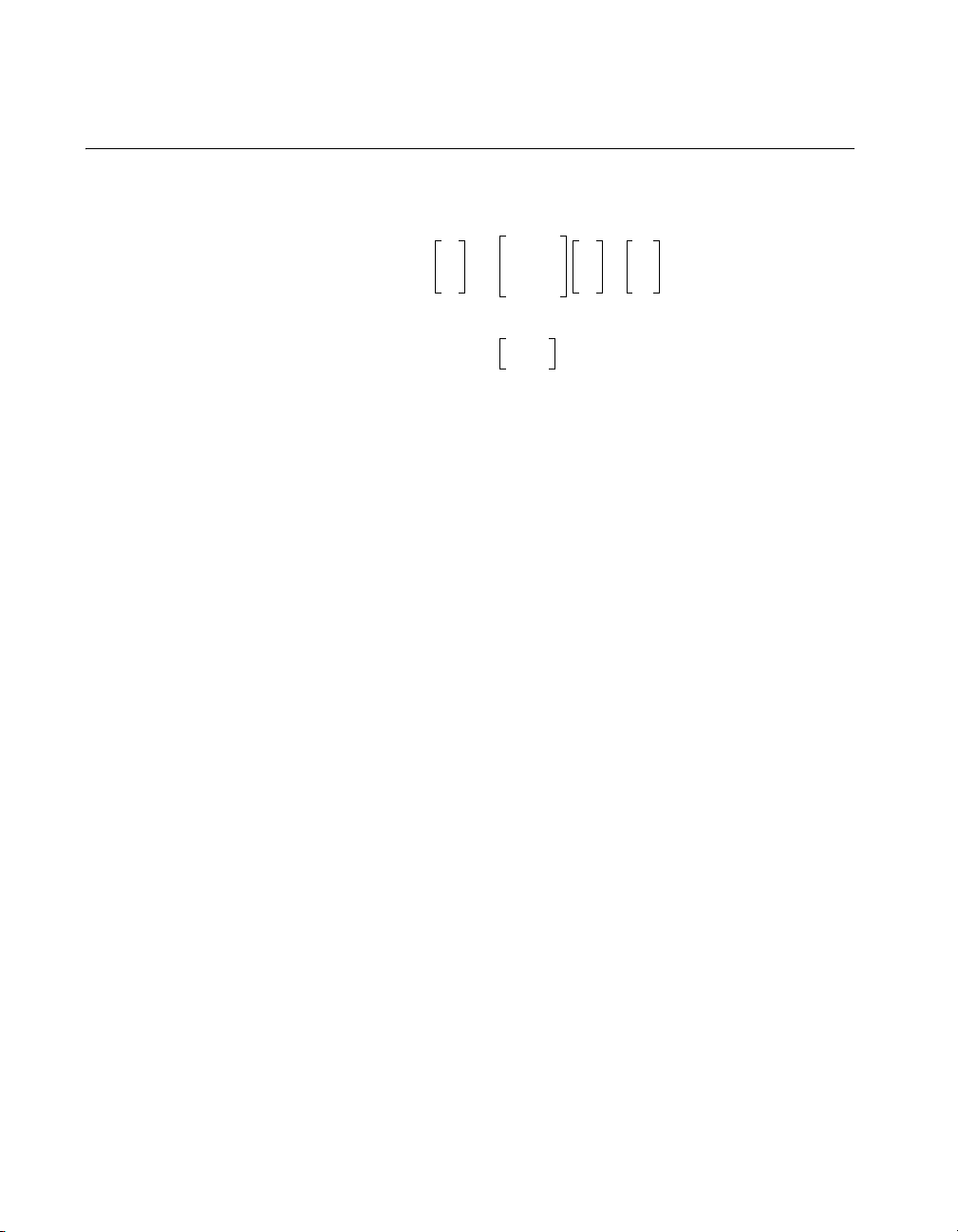

Figure 2-1 sets out several routes to a reduced-order realization. In

continuous time, a truncation of a balanced realization is again balanced.

This is not the case for discrete time, but otherwise it looks the same.

Full Order Realization

balmoore

(with both steps)

Balanced Realization of

Reduced Order Model

(in continuous time)

Reduced Order Model Transfer Function

balmoore

(with first step)

truncate

balance redschur

Nonbalanced

Realization of

Reduced Order Model

Figure 2-1. Different Approaches for Obtaining the Same Reduced Order Model

Singular Perturbation of Balanced Realization

Singular perturbation of a balanced realization is an attractive way to

produce a reduced order model. Suppose G(s) is defined by,

·

x

·

x

A11A

1

A21A

2

x

B

1

12

x

22

1

u+=

B

2

2

y

© National Instruments Corporation 2-5 Xmath Model Reduction Module

C

1C2

xDu+=

Page 29

Chapter 2 Additive Error Reduction

with controllability and observability grammians given by,

in which the diagonal entries of Σ are in decreasing order, that is,

σ

1≥σ2

the first diagonal entry of Σ

Reλ

defined by:

Σ

0

PQΣ

===

≥ ···, and such that the last diagonal entry of Σ1 exceeds

. It turns out that Reλi( )<0 and

(A11–A

i

1–

A21)< 0, and a reduced order model Gr(s) can be

A

12

22

2

1

0 Σ

2

1–

A

22

x·A11A12A

yC

1C2A22

The attractive feature [LiA89] is that the same error bound holds as for

balanced truncation. For example,

Although the error bounds are the same, the actual frequency pattern of

the errors, and the actual maximum modulus, need not be the same for

reduction to the same order. One crucial difference is that balanced

truncation provides exact matching at ω = ∞, but does not match at DC,

while singular perturbation is exactly the other way round. Perfect

matching at DC can be a substantial advantage, especially if input signals

are known to be band-limited.

Singular perturbation can be achieved with

the two alternative approaches. For both continuous-time and discrete-time

reductions, the end result is a balanced realization.

Hankel Norm Approximation

1–

–()xB1A12– A

–()xDC2A

Gjω()G

A

22

21

1–

A

21

jω()–

r

+()u+=

1–

–()u+=

22

2trΣ

≤

∞

mreduce( ). Figure 2-1 shows

2

1–

B

22

2

B

2

In Hankel norm approximation, one relies on the fact that if one chooses an

approximation to exactly minimize one norm (the Hankel norm) then the

infinity norm will be approximately minimized. The Hankel norm is

defined in the following way. Let G(s) be a (rational) stable transfer

Xmath Model Reduction Module 2-6 ni.com

Page 30

Chapter 2 Additive Error Reduction

ˆ

ˆ

function matrix. Consider the way the associated impulse response maps

inputs defined over (–∞,0] in L

into outputs, and focus on the output over

2

[0,∞). Define the input as u(t) for t <0, and set v(t)=u(–t). Define the

output as y(t) for t > 0. Then the mapping is

∞

yt() CexpAt r+()Bv r()dr

=

∫

0

–1

if G(s)=C(sI-A)

G

norm of G. A key result is that if σ

H

values of G(s), then .

B. The norm of the associated operator is the Hankel

≥ ···, are the Hankel singular

G

σ1=

H

1≥σ2

To avoid minor confusion, suppose that all Hankel singular values of G are

distinct. Then consider approximating G by some stable of prescribed

degree k much that is minimized. It turns out that

ˆ

GG

–

H

G

inf

Gˆof degree k

and there is an algorithm available for obtaining . Further, the

optimum which is minimizing does a reasonable job

of minimizing , because it can be shown that

ˆ

G

ˆ

GG

–

∞

GG

–

where n =deg G, with this bound subject to the proviso that G and are

GG

–

GG

–

ˆ

≤

∞

ˆ

σ

k 1+

G()=

H

G

ˆ

H

σ

j

∑

jk1+=

ˆ

G

allowed to be nonzero and different at s = ∞.

The bound on is one half that applying for balanced truncation.

ˆ

GG

–

However,

• It is actual error that is important in practice (not bounds).

• The Hankel norm approximation does not give zero error at ω = ∞

or at ω = 0. Balanced realization truncation gives zero error at ω = ∞,

and singular perturbation of a balanced realization gives zero error

at ω =0.

There is one further connection between optimum Hankel norm

approximation and L

ˆ

with stable and of degree k and with F unstable, then:

G

error. If one seeks to approximate G by a sum + F,

∞

ˆ

G

ˆ

GG

inf

Gˆof degree k and F unstable

© National Instruments Corporation 2-7 Xmath Model Reduction Module

– F–

σ

k 1+

G()=

∞

Page 31

Chapter 2 Additive Error Reduction

Further, the which is optimal for Hankel norm approximation also is

optimal for this second type of approximation.

ˆ

G

balmoore( )

In Xmath Hankel norm approximation is achieved with

ophank( ).

The most comprehensive reference is [Glo84].

[SysR,HSV,T] = balmoore(Sys,{nsr,bound})

The balmoore( ) function computes an internally-balanced realization of

a continuous system and then optionally truncates it to provide a balance

reduced order system using B.C. Moore’s algorithm.

balmoore( ) is being used to reduce a system, its objective mirrors

When

that of

redschur( ), therefore, if the same Sys and nsr are used for both

algorithms, the reduced order system should have the same transfer

function (though in general the state-variable realizations will be different).

When

balmoore( ) is being used to balance a system, its objective, like

that of balance, is to generate an internally-balanced state-variable

realization. The implementations are not identical.

balmoore( ) only can be applied on systems that have a stable A matrix,

and are controllable and observable, (that is, minimal). Checks, which are

rather time-consuming, are included. The computation is intrinsically not

well-conditioned if

balmoore( ) serves to find a transformation matrix T such that the

Sys is nearly nonminimal. The first part of

controllability and observability grammians after transformation are equal,

and diagonal, with decreasing entries down the diagonal, that is, the system

representation is internally balanced. (The condition number of T is a

measure of the ill-conditioning of the algorithm. If there is a problem with

ill-conditioning,

redschur( ) can be used as an alternative.) If this

common grammian is Σ, then after transformation:

Σ A′

(continuous)

(discrete)

Σ diag σ

1σ2σ3

Singular Values of

Xmath Model Reduction Module 2-8 ni.com

... σns,,,[]= σiσ

Sys. In the second part of balmoore( ), a truncation

+ A Σ = –BB

Σ

– A Σ A

with with the the Hankel

= –BB

i 1+

′Σ

A + A′Σ = –C′C

′Σ

- A

′ Σ

A = –C′C

0>≤σ

i

Page 32

Chapter 2 Additive Error Reduction

s

of the balanced system occurs, (assuming nsr is less than the number of

states). Thus, if the state-space representation of the balanced system is

A

11A12

= B

A21A

22

=

with A

possessing dimension nsr × nsr, B1 possessing nsr rows and C1

11

possessing

A

nsr columns, the reduced order system SysR is:

(continuous) (discrete)

·

x

A11x1B1u+=

1

yC

xDu+=

1

x1k 1+()A11x1k() B1uk()+=

The following error formula is relevant:

(continuous)

CjωIA–()

[]C1jωIA

1–

(discrete)

CejωIA–()

B

1

B

2

yk() C

2 σ

1–

BD+[]C1ejωIA

nsr 1+

C

=

C

1C2

k() Du k()+=

1x1

1–

–()

1

σ

+++[]≤

nsr 2+

B1D+()[]–

–()

... σ

1

∞

ns

1–

B1D+[]–

∞

2 σ

nsr 1+

σ

+++[]≤

nsr 2+

... σ

ns

It is this error bound which is the basis of the determination of the order

of the reduced system when the keyword

bound sought is smaller than , then no reduction is possible which is

2σ

n

consistent with the error bound. If it is larger than , then the constant

bound is specified. If the error

2trΣ

transfer function matrix D achieves the bound.

For continuous systems, the actual approximation error depends on

frequency, but is always zero at ω = ∞. In practice it is often greatest at

ω = 0; if the reduction of state dimension is 1, the error bound is exact, with

the maximum error occurring at DC. The bound also is exact in the special

case of a single-input, single-output transfer function which has poles and

zero alternating along the negative real axis. It is far from exact when the

poles and zeros approximately alternate along the imaginary axis (with the

poles stable).

© National Instruments Corporation 2-9 Xmath Model Reduction Module

Page 33

Chapter 2 Additive Error Reduction

The actual approximation error for discrete systems also depends on

frequency, and can be large at ω = 0. The error bound is almost never tight,

that is, the actual error magnitude as a function of ω almost never attains

the error bound, so that the bound can only be a guide to the selection of the

reduced system dimension.

In principle, the error bound formula for both continuous and discrete

systems can be improved (that is, made tighter or less likely to overestimate

the actual maximum error magnitude) when singular values occur with

multiplicity greater than one. However, because of errors arising in

calculation, it is safer to proceed conservatively (that is, work with the error

bound above) when using the error bound to select

actual error achieved. If this is smaller than required, a smaller dimension

for the reduced order system can be selected.

mreduce( ) provides an alternative reduction procedure for a balanced

realization which achieves the same error bound, but which has zero error

at ω = 0. For continuous systems there is generally some error at ω = ∞,

because the D matrix is normally changed. (This means that normally the

approximation of a strictly proper system through

strictly proper, in contrast to the situation with

systems the D matrix is also normally changed so that, for example, a

system which was strictly causal, or guaranteed to contain a delay (that is,

D = 0), will be approximated by a system

nsr, and examine the

mreduce( ) will not be

balmoore( ).) For discrete

SysR without this property.

The presentation of the Hankel singular values may suggest a logical

σ

dimension for the reduced order system; thus if , it may be

»

kσk 1+

sensible to choose nsr = k.

With

mreduce( ) and a continuous system, the reduced order system

SysR is internally balanced, with the grammian , so

diag σ

...,σ

,,[]

1σ2

nsr

that its Hankel Singular Values are a subset of those of the original system

Sys. Provided , SysR also is controllable, observable, and

stable. This is not guaranteed if , so it is highly advisable to

σ

>

nsrσnsr 1+

σ

nsr

σ

=

nsr 1+

avoid this situation. Refer to the balmoore( ) section for more on the

balmoore( ) algorithm.

With

mreduce( ) and discrete systems, the reduced order system SysR is

not in general balanced (in contrast to

singular values are not in general a subset of those of

>

σ

nsrσsrn 1+

, the reduced order system

observable and stable. This is not guaranteed if , so it is

balmoore( )), and its Hankel

Sys. Provided

SysR also is controllable,

σ

nsr

σ

=

nsr 1+

highly advisable to avoid this situation. For additional information about

the

balmoore( ) function, refer to the Xmath Help.

Xmath Model Reduction Module 2-10 ni.com

Page 34

Related Functions

truncate( )

Chapter 2 Additive Error Reduction

balance(), truncate(), redschur(), mreduce()

SysR = truncate(Sys,nsr,{VD,VA})

The truncate( ) function reduces a system Sys by retaining the first

nsr states and throwing away the rest to form a system SysR.

If for

Sys one has,

Related Functions

A

A

11A12

= B

A21A

22

B

1

=

B

2

C

=

C

1C2

the reduced order system (in both continuous-time and discrete-time cases)

is defined by A

approximation of

well be used after an initial application of

, B1, C1, and D. If Sys is balanced, then SysR is an

11

Sys achieving a certain error bound. truncate( ) may

balmoore( ) to further reduce

a system should a larger approximation error be tolerable. Alternatively, it

may be used after an initial application of

If

Sys was calculated from redschur( ) and VA,VD were posed as

arguments, then

SysR is calculated as in redschur( ) (refer to the

balance( ) or redschur( ).

redschur( ) section).

truncate( ) should be contrasted with mreduce( ), which achieves a

reduction through a singular perturbation calculation. If

Sys is balanced,

the same error bound formulas apply (though not necessarily the same

errors),

truncate( ) always ensures exact matching at s = ∞ (in the

continuous-time case), or exacting matching of the first impulse response

coefficient D (in the discrete-time case), while

matching of DC gains for

Sys and SysR in both the continuous-time and

discrete-time case. For a additional information about the

mreduce( ) ensures

truncate( )

function, refer to the Xmath Help.

balance(), balmoore(), redschur(), mreduce()

© National Instruments Corporation 2-11 Xmath Model Reduction Module

Page 35

Chapter 2 Additive Error Reduction

redschur( )

[SysR,HSV,slbig,srbig,VD,VA] = redschur(Sys,{nsr,bound})

The redschur( ) function uses a Schur method (from Safonov and

Chiang) to calculate a reduced version of a continuous or discrete system

without balancing.

Algorithm

The objective of redschur( ) is the same as that of balmoore( ) when

the latter is being used to reduce a system; this means that if the same

and

the same transfer function matrix. However, in contrast to

redschur( ) do not initially transform Sys to an internally balanced

realization and then truncate it; nor is

there is no balancing offers numerical advantages, especially if

nearly nonminimal.

Sys should be stable, and this is checked by the algorithm. In contrast to

balmoore( ), minimality of Sys (that is, controllability and

observability) is not required.

Sys

nsr are used for both algorithms, the reduced order system should have

balmoore( ),

SysR in balanced form. The fact that

Sys is

If the Hankel singular values of

then those of

SysR in the continuous-time case are .

A restriction of the algorithm is that is required for both

Sys are ordered as ,

σ

>

nsrσnsr 1+

continuous-time and discrete-time cases. Under this restriction,

σ

σ

1σ2

1σ2

... σns0≥≥≥ ≥

... σ

nsr

SysR is

0>≥≥≥

guaranteed to be stable and minimal.

The algorithms depend on the same algorithm, apart from the calculation

of the controllability and observability grammians W

original system. These are obtained as follows:

(continuous)

(discrete)

WcA′ AW

+ BB′–= WoAA′W

c

W

AWcA′– BB′= WoA′WoA– C′C=

c

and Wo of the

c

+ C′C=

o

The maximum order permitted is the number of nonzero eigenvalues of

that are larger than ε.

W

cWo

Xmath Model Reduction Module 2-12 ni.com

Page 36

Chapter 2 Additive Error Reduction

Next, Schur decompositions of WcWo are formed with the eigenvalues of

W

in ascending and descending order. These eigenvalues are the square

cWo

of the Hankel singular values of

Sys, and if Sys is nonminimal, some can

be zero.

The matrices V

V′

AWcWoVA

V′

DWcWoVD

, VD are orthogonal and S

A

S

=

asc

S

=

des

, S

are upper triangular. Next,

asc

des

submatrices are obtained as follows:

V

= V

V

lbig

A

0

I

nsr

rbig

I

=

nsr

V

D

0

and then a singular value decomposition is found:

U

ebigSebigVebig

=

V′

lbigVrbig

From these quantities, the transformation matrices used for calculating

SysR are defined:

S

S

lbig

rbig

V

=

lbigUebigSebig

V

=

rbigVebigSebig

12⁄

12⁄

and the reduced order system is:

′

S

AS

=

A

R

lbig

rbig

B

′

S

lbig

B=

R

A

CS

=

R

rbig

D

An error bound is available. In the continuous-time case it is as follows. Let

G( jω) and G

( jω) be the transfer function matrices of Sys and SysR,

R

respectively.

For the continuous case:

Gjω()G

© National Instruments Corporation 2-13 Xmath Model Reduction Module

jω()–

R

2 σ

∞

nsr 1+

σ

+++()≤

nsr 2+

... σ

ns

Page 37

Chapter 2 Additive Error Reduction

For the discrete-time case:

Related Functions

ophank( )

Gejω()GRejω()–

{bound} is specified, the error bound just enunciated is used to

When

choose the number of states in

is as small as possible. If the desired error bound is smaller than 2σ

2 σ

∞

nsr 1+

SysR so that the bound is satisfied and nsr

σ

+++()≤

nsr 2+

... σ

ns

,

ns

no reduction is made.

In the continuous-time case, the error depends on frequency, but is always

zero at ω = ∞. If the reduction in dimension is 1, or the system

Sys is

single-input, single-output, with alternating poles and zeros on the real

axis, the bound is tight. It is far from tight when the poles and zeros

approximately alternate along the jω-axis. It is not normally tight in the

discrete-time case, and for both continuous-time and discrete-time cases,

it is not tight if there are repeated singular values.

The presentation of the Hankel singular values may suggest a logical

dimension for the reduced order system; thus if , it may be

σkσ

»

k 1+

sensible to choose nsr = k.

ophank(), balmoore()

[SysR,SysU,HSV] = ophank(Sys,{nsr,onepass})

The ophank( ) function calculates an optimal Hankel norm reduction

of

Sys.

Restriction

This function has the following restriction:

• Only continuous systems are accepted; for discrete systems use

makecontinuous( ) before calling bst( ), then discretize the

result.

Sys=ophank(makecontinuous(SysD));

SysD=discretize(Sys);

Xmath Model Reduction Module 2-14 ni.com

Page 38

Algorithm

Chapter 2 Additive Error Reduction

The algorithm does the following. The system Sys and the reduced order

system