
Mellanox ConnectX®-5 VPI Adapter Cards for Open Compute Project User Manual

P/N: MCX545A-ECAN, MCX545B-ECAN, MCX545M-ECAN

Rev 1.5

www.mellanox.com

Mellanox Technologies


Mellanox Technologies

350 Oakmead Parkway Suite 100

Sunnyvale, CA 94085

U.S.A.

www.mellanox.com

Tel: (408) 970-3400

Fax: (408) 970-3403

© Copyright 2018. Mellanox Technologies Ltd. All Rights Reserved.

Mellanox®, Mellanox logo, Accelio®, BridgeX®, CloudX logo, CompustorX®, Connect-IB®, ConnectX®, CoolBox®, CORE-Direct®, EZchip®, EZchip logo, EZappliance®, EZdesign®, EZdriver®, EZsystem®, GPUDirect®, InfiniHost®, InfiniBridge®, InfiniScale®, LinkX®, Kotura®, Kotura logo, Mellanox CloudRack®, Mellanox CloudXMellanox®, Mellanox Federal Systems®, Mellanox HostDirect®, Mellanox Multi-Host®, Mellanox Open Ethernet®, Mellanox OpenCloud®, Mellanox OpenCloud Logo®, Mellanox PeerDirect®, Mellanox ScalableHPC®, Mellanox StorageX®, Mellanox TuneX®, Mellanox Connect Accelerate Outperform logo, Mellanox Virtual Modular Switch®, MetroDX®, MetroX®, MLNX-OS®, NP-1c®, NP-2®, NP-3®, NPS®, Open Ethernet logo, PhyX®, PlatformX®, PSIPHY®, SiPhy®, StoreX®, SwitchX®, Tilera®, Tilera logo, TestX®, TuneX®, The Generation of Open Ethernet logo, UFM®, Unbreakable Link®, Virtual Protocol Interconnect®, Voltaire® and Voltaire logo are registered trademarks of Mellanox Technologies, Ltd.

All other trademarks are property of their respective owners.

For the most updated list of Mellanox trademarks, visit http://www.mellanox.com/page/trademarks

NOTE:

THIS HARDWARE, SOFTWARE OR TEST SUITE PRODUCT ("PRODUCT(S)") AND ITS RELATED DOCUMENTATION ARE PROVIDED BY MELLANOX TECHNOLOGIES "AS-IS" WITH ALL FAULTS OF ANY KIND AND SOLELY FOR THE PURPOSE OF AIDING THE CUSTOMER IN TESTING APPLICATIONS THAT USE THE PRODUCTS IN DESIGNATED SOLUTIONS. THE CUSTOMER'S MANUFACTURING TEST ENVIRONMENT HAS NOT MET THE STANDARDS SET BY MELLANOX TECHNOLOGIES TO FULLY QUALIFY THE PRODUCT(S) AND/OR THE SYSTEM USING IT. THEREFORE, MELLANOX TECHNOLOGIES CANNOT AND DOES NOT GUARANTEE OR WARRANT THAT THE PRODUCTS WILL OPERATE WITH THE HIGHEST QUALITY. ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT ARE DISCLAIMED. IN NO EVENT SHALL MELLANOX BE LIABLE TO CUSTOMER OR ANY THIRD PARTIES FOR ANY DIRECT, INDIRECT, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES OF ANY KIND (INCLUDING, BUT NOT LIMITED TO, PAYMENT FOR PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY FROM THE USE OF THE PRODUCT(S) AND RELATED DOCUMENTATION EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

Doc #: MLNX-15-5136 2Mellanox Technologies


Table of Contents

Table of Contents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

List of Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

List of Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Revision History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

About This Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Chapter 1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.1 Product Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2 OCP Spec 2.0 Type Stacking Heights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.2.1 OCP Spec 2.0 Type 2 Stacking Height - Single-port Card . . . . . . . . . . . . . . 12

1.2.2 OCP Spec 2.0 Type 1 Stacking Height - Single-port Card . . . . . . . . . . . . . . 12

1.3 Features and Benefits. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.4 Multi-Host Technology. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.5 Operating Systems/Distributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.6 Connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Chapter 2 Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.1 InfiniBand Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.2 Ethernet QSFP28 Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.3 PCI Express Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.4 LED Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.5 FRU EEPROM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Chapter 3 Hardware Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1.1 Hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1.2 Operating Systems/Distributions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.1.3 Software Stacks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.2 Safety Precautions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.3 Pre-Installation Checklist . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.4 Card Installation Instructions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.5 Cables and Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.5.1 Cable Installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.6 Adapter Card Disassembly Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.6.1 Safety Precautions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.6.2 Un-Installing the Cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.7 Identify the Card in Your System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Rev 1.5 3Mellanox Technologies


3.7.1 On Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.7.2 On Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Chapter 4 Driver Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.1 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.1.1 Hardware and Software Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.1.2 Downloading Mellanox OFED. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.1.3 Installing Mellanox OFED . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

4.1.3.1 Installation Script . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

4.1.3.2 Installation Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

4.1.3.3 Installation Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.1.3.4 Installation Logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.1.3.5 openibd Script . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.1.3.6 Driver Load Upon System Boot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

4.1.3.7 mlnxofedinstall Return Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

4.1.4 Uninstalling Mellanox OFED . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

4.1.5 Installing MLNX_OFED Using YUM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

4.1.5.1 Setting up MLNX_OFED YUM Repository . . . . . . . . . . . . . . . . . . . . . . . . . 36

4.1.5.2 Installing MLNX_OFED Using the YUM Tool . . . . . . . . . . . . . . . . . . . . . . . 37

4.1.5.3 Uninstalling Mellanox OFED Using the YUM Tool . . . . . . . . . . . . . . . . . . . 38

4.1.5.4 Installing MLNX_OFED Using apt-get Tool. . . . . . . . . . . . . . . . . . . . . . . . . 39

4.1.5.5 Setting up MLNX_OFED apt-get Repository . . . . . . . . . . . . . . . . . . . . . . . 39

4.1.5.6 Installing MLNX_OFED Using the apt-get Tool . . . . . . . . . . . . . . . . . . . . . 39

4.1.5.7 Uninstalling Mellanox OFED Using the apt-get Tool . . . . . . . . . . . . . . . . . 40

4.1.6 Updating Firmware After Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

4.1.6.1 Updating the Device Online . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

4.1.6.2 Updating the Device Manually . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

4.1.6.3 Updating the Device Firmware Automatically upon System Boot . . . . . 41

4.1.7 UEFI Secure Boot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

4.1.7.1 Enrolling Mellanox's x.509 Public Key On your Systems . . . . . . . . . . . . . 42

4.1.7.2 Removing Signature from kernel Modules . . . . . . . . . . . . . . . . . . . . . . . . 42

4.1.8 Performance Tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

4.2 Windows Driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

4.2.1 Hardware and Software Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

4.2.2 Downloading Mellanox WinOF-2 Driver . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

4.2.3 Installing Mellanox WinOF-2 Driver. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

4.2.3.1 Attended Installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

4.2.3.2 Unattended Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

4.2.4 Installation Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

4.2.5 Extracting Files Without Running Installation. . . . . . . . . . . . . . . . . . . . . . . 52

4.2.6 Uninstalling Mellanox WinOF-2 Driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.2.6.1 Attended Uninstallation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.2.6.2 Unattended Uninstallation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55


4.2.7 Firmware Upgrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.2.8 Deploying the Driver on a Nano Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.2.8.1 Offline Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

4.2.8.2 Online Update . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Chapter 5 Updating Adapter Card Firmware. . . . . . . . . . . . . . . . . . . . . . . . . . 57

5.1 Firmware Update Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Chapter 6 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

6.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

6.2 Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

6.3 Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Chapter 7 Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

7.1 MCX545A-ECAN/MCX545B-ECAN Specifications. . . . . . . . . . . . . . . . . . . . 61

7.2 MCX545M-ECAN Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

7.3 Adapter Card LED Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

7.4 Board Mechanical Drawing and Dimensions . . . . . . . . . . . . . . . . . . . . . . . 65

Appendix A Finding the GUID/MAC and Serial Number on the Adapter Card 67

Appendix B Safety Warnings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Appendix C Avertissements de sécurité d’installation (Warnings in French) 71

Appendix D Sicherheitshinweise (Warnings in German) . . . . . . . . . . . . . . . . 73

Appendix E Advertencias de seguridad para la instalación (Warnings in Spanish) 75


List of Tables

Table 1: Revision History Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Table 2: Documents List. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Table 3: Single-Port VPI Adapter Card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Table 4: Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Table 5: Hardware and Software Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Table 6: Installation Results. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Table 7: mlnxofedinstall Return Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Table 8: Hardware and Software Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Table 9: General Troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

Table 10: Linux Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Table 11: Windows Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Table 12: MCX545A-ECAN/MCX545B-ECAN Specification Table . . . . . . . . . . . . . . . . . . . . . . 61

Table 13: MCX545M-ECAN Specification Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Table 14: Physical and Logical Link Indications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64


List of Figures

Figure 1: Type 2 Vertical Stack Front View - Single-port Card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Figure 2: Type 1 Vertical Stack Front View - Single Port Cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Figure 3: Multi-Host Technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

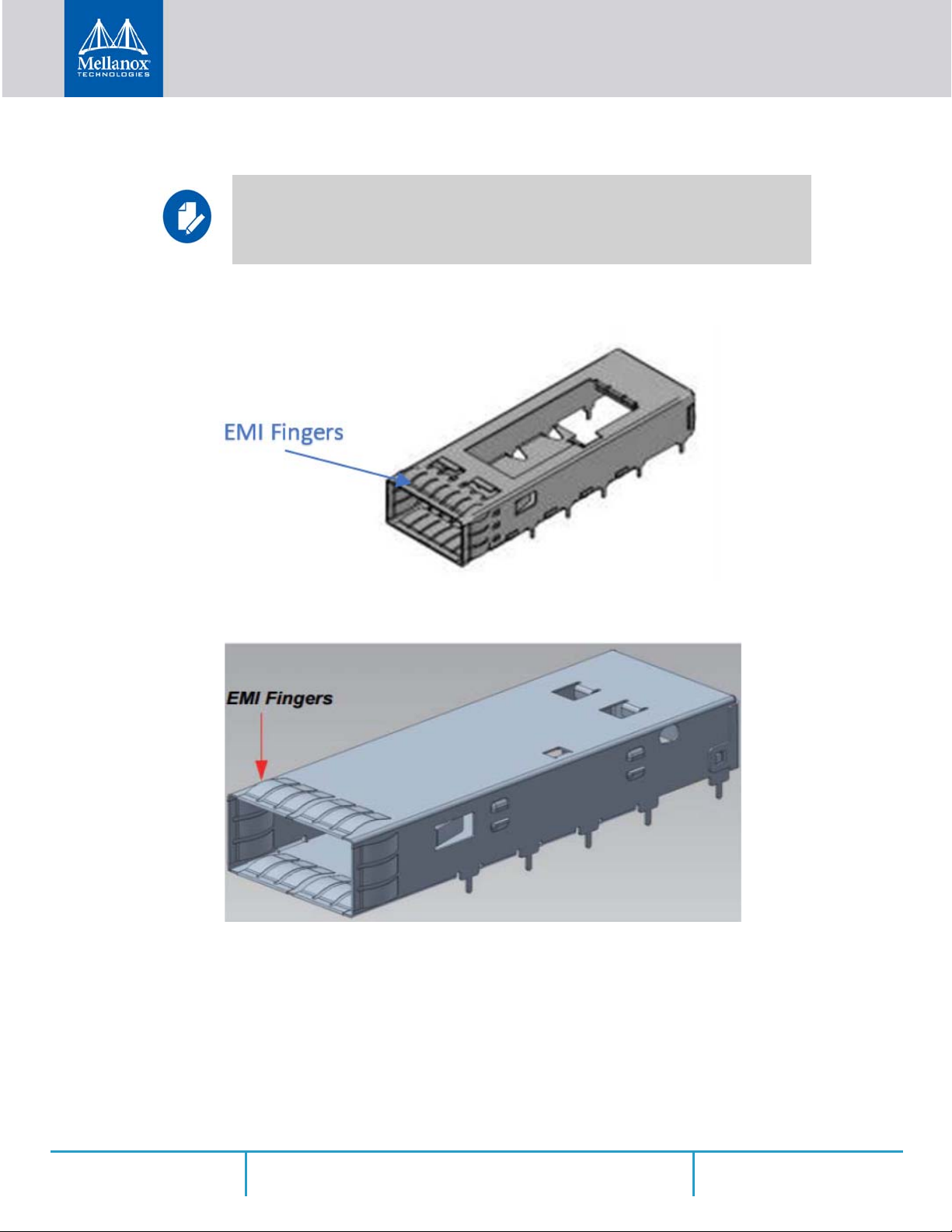

Figure 4: EMI fingers on QSFP28 Cage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

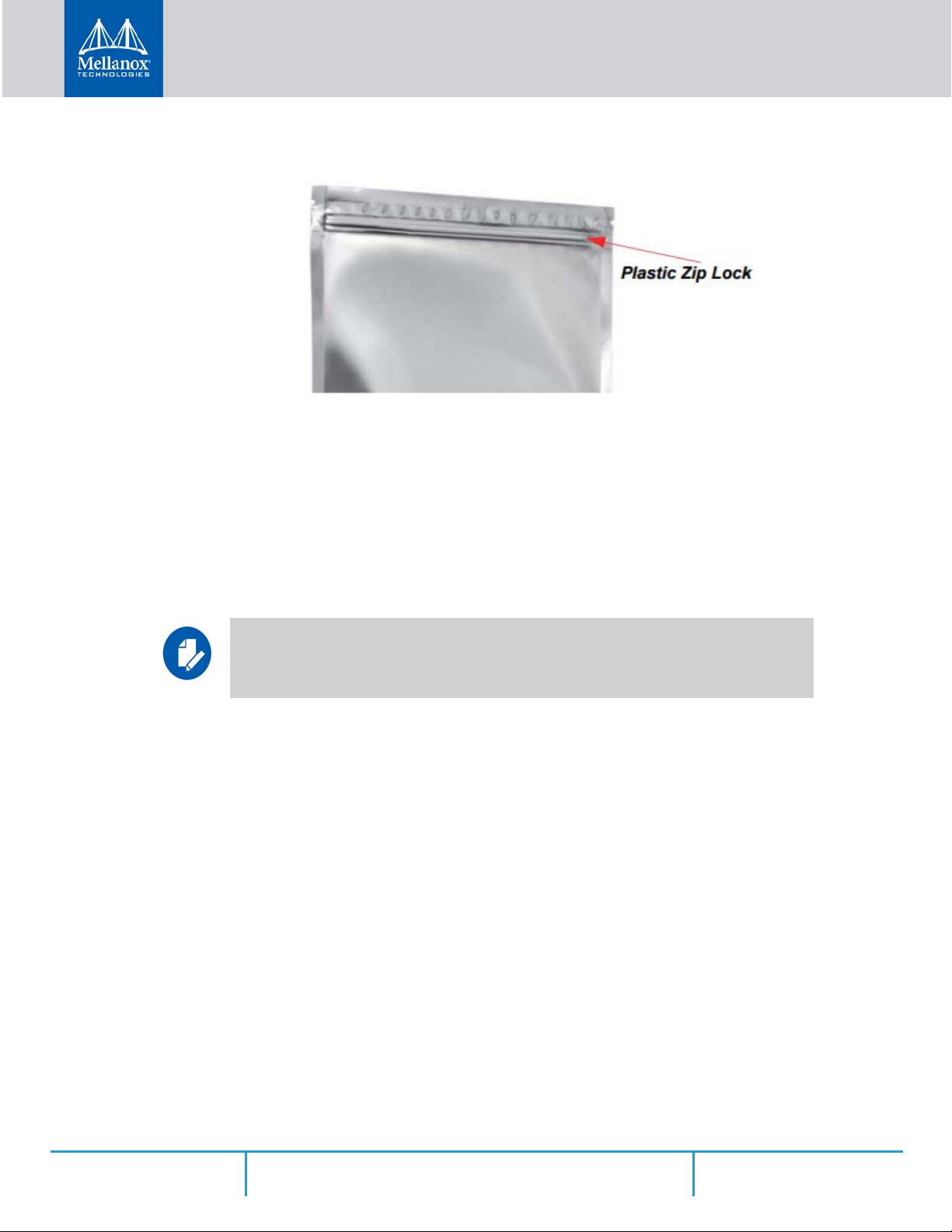

Figure 5: EMI Fingers on QSFP Connector . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

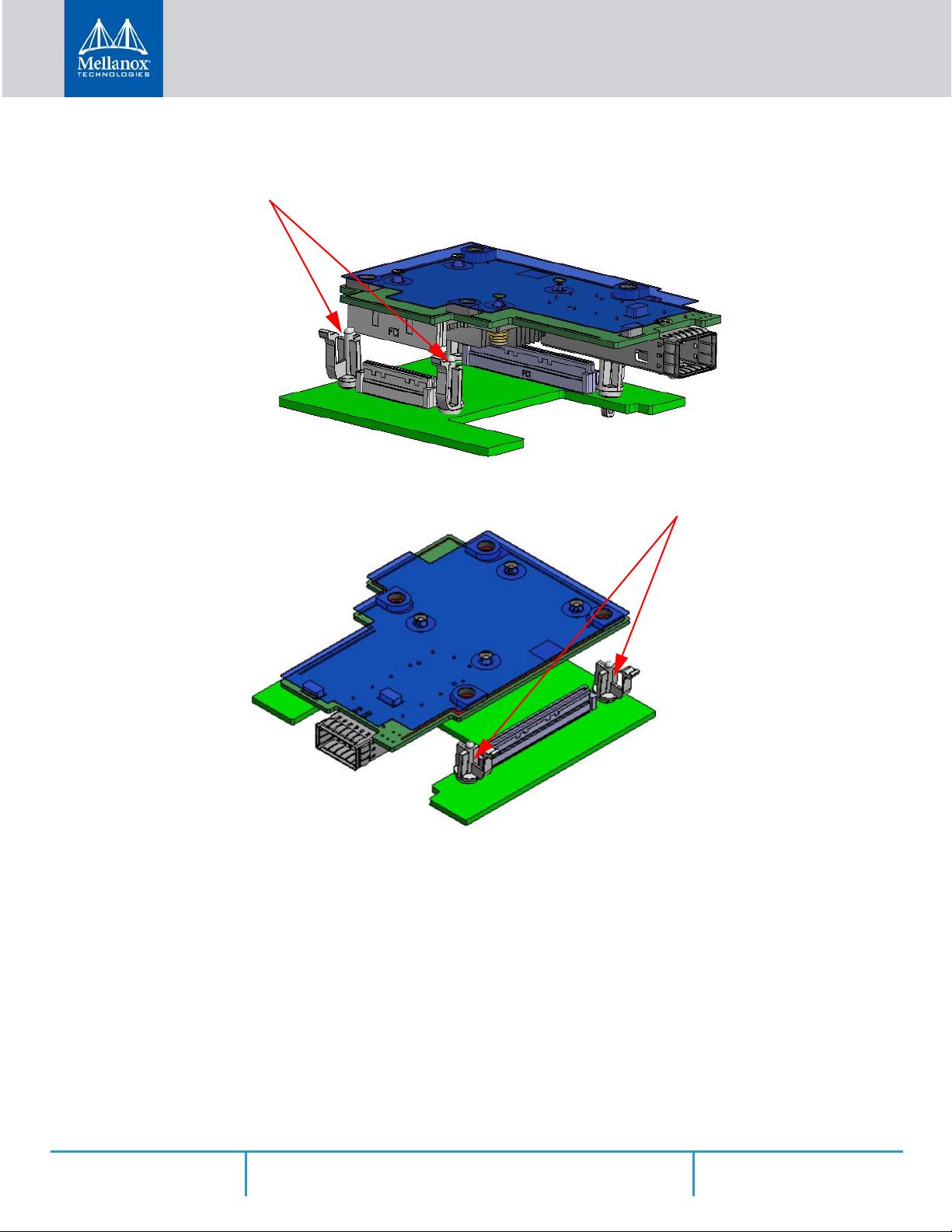

Figure 6: Plastic Ziplock . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Figure 7: PCI Device (Example) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Figure 8: Installation Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Figure 9: Mechanical Drawing of MCX545A-ECAN and MCX545M-ECAN . . . . . . . . . . . . . . . . . . . . 65

Figure 10: Mechanical Drawing of MCX545B-ECAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

Figure 11: MCX545A-ECAN Board Labels (Example) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Figure 12: MCX545B-ECAN Board Labels (Example) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Figure 13: MCX545M-ECAN Board Labels (Example) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68


Revision History

This document was printed on October 23, 2018.

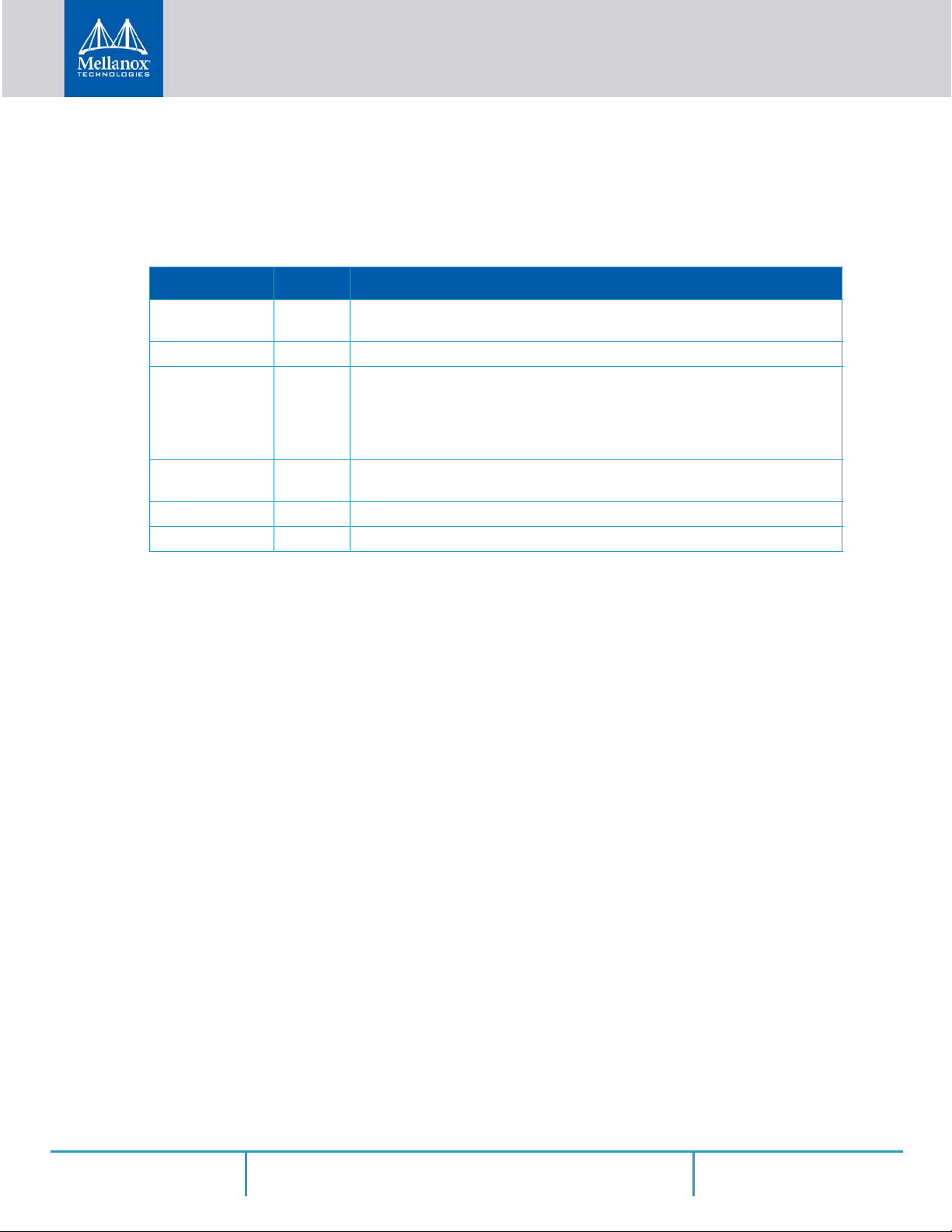

Table 1 - Revision History Table

Date Rev Comments/Changes

October 2018 1.5 • Added MCX545B-ECAN support across document

• Updated MCX545M-ECAN Specification Table on page 62

April 2018 1.4 • Updated MCX545A-ECAN/MCX545B-ECAN Specification Table on page 61

July 2017 1.3 • Updated Product Overview on page 11

• Updated Features and Benefits on page 13

• Added FRU EEPROM on page 18

• Updated MCX545A-ECAN Specifications on page 69

• Added Airflow Specifications on page 70

June 2017 1.2 • Removed MCX545M-ECAN support across document

• Updated MCX545A-ECAN Specifications on page 69

May 2017 1.1 Updated “OCP Spec 2.0 Type Stacking Heights” on page 12

March 2017 1.0 First release


About This Manual

This User Manual describes Mellanox Technologies ConnectX®-5 single-port QSFP28 PCI Express x16 adapter cards for Open Compute Project, Spec 2.0. It covers the board's interfaces and specifications, the software and firmware required to operate the board, and relevant documentation.

Intended Audience

This manual is intended for the installer and user of these cards.

The manual assumes basic familiarity with InfiniBand and Ethernet network and architecture

specifications.

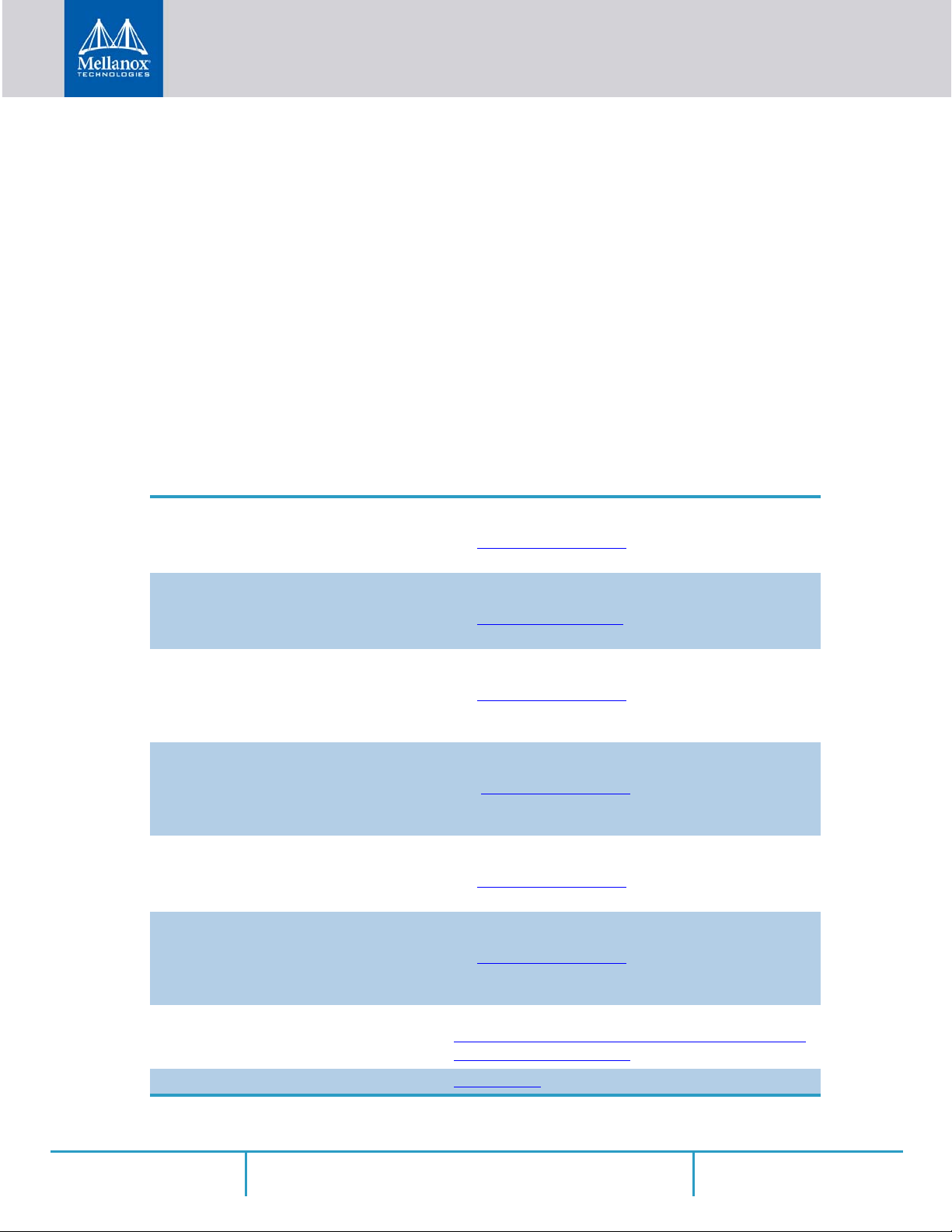

Related Documentation

Table 2 - Documents List

Mellanox Firmware Tools (MFT) User Manual, Document no. 2204UG
User Manual describing the set of MFT firmware management tools for a single node.
See http://www.mellanox.com => Products => Software => Firmware Tools

Mellanox Firmware Utility (mlxup) User Manual and Release Notes
Mellanox firmware update and query utility used to update the firmware.
See http://www.mellanox.com => Products => Software => Firmware Tools => mlxup Firmware Utility

Mellanox OFED for Linux User Manual, Document no. 2877
User Manual describing OFED features, performance, InfiniBand diagnostic, tools content and configuration.
See http://www.mellanox.com => Products => Software => InfiniBand/VPI Drivers => Mellanox OpenFabrics Enterprise Distribution for Linux (MLNX_OFED)

Mellanox OFED for Linux Release Notes, Document no. 2877
Release Notes for the Mellanox OFED for Linux driver kit for Mellanox adapter cards.
See http://www.mellanox.com => Products => Software => InfiniBand/VPI Drivers => Linux SW/Drivers => Release Notes

WinOF-2 for Windows User Manual, Document no. MLX-15-3280
User Manual describing WinOF-2 features, performance, Ethernet diagnostic, tools content and configuration.
See http://www.mellanox.com => Products => Software => Windows SW/Drivers

Mellanox OFED for Windows Driver Release Notes
Release notes for the Mellanox WinOF-2 for Windows driver kit for Mellanox adapter cards.
See http://www.mellanox.com => Products => Software => Ethernet Drivers => Mellanox OFED for Windows => WinOF2 Release Notes

IBTA Specification Release 1.3
InfiniBand Architecture Specification:
http://www.infinibandta.org/content/pages.php?pg=technology_public_specification

Open Compute Project 2.0 Specification
OCP Spec 2.0


Document Conventions

When discussing memory sizes, MB and MBytes are used in this document to mean size in megabytes. The use of Mb or Mbits (small b) indicates size in megabits. IB is used in this document to mean InfiniBand. In this document, PCIe is used to mean PCI Express.
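As an illustration of the MB/Mb convention, the short Python sketch below converts the 128Mb SPI Flash listed in Table 4 into bytes and megabytes. It assumes binary prefixes (1 Mb = 2^20 bits), which is the usual convention for flash densities but is not stated in this manual; the function names are illustrative only.

```python
BITS_PER_BYTE = 8
MEBI = 2**20  # assumption: binary "mega" (2^20) for memory densities


def megabits_to_bytes(mbits: int) -> int:
    """Size in Mb (megabits, small b) -> size in bytes."""
    return mbits * MEBI // BITS_PER_BYTE


def megabits_to_megabytes(mbits: int) -> float:
    """Size in Mb (megabits) -> size in MB (megabytes, capital B)."""
    return megabits_to_bytes(mbits) / MEBI


if __name__ == "__main__":
    spi_flash_mbits = 128  # "128Mb SPI Flash" (Table 4)
    print(f"{spi_flash_mbits} Mb = {megabits_to_bytes(spi_flash_mbits):,} bytes "
          f"= {megabits_to_megabytes(spi_flash_mbits):g} MB")
```

Under this assumption the 128Mb device holds 16,777,216 bytes, that is, 16 MB.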

Technical Support

Customers who purchased Mellanox products directly from Mellanox are invited to contact us

through the following methods.

• URL: http://www.mellanox.com => Support

• E-mail: support@mellanox.com

• Tel: +1.408.916.0055

Customers who purchased Mellanox M-1 Global Support Services should refer to their contract for details regarding Technical Support.

Customers who purchased Mellanox products through a Mellanox approved reseller should first seek assistance through their reseller.

Firmware Updates

The Mellanox support downloader contains software, firmware and knowledge database information for Mellanox products. Access the database from the Mellanox Support web page,

http://www.mellanox.com => Support

Or use the following link to go directly to the Mellanox Support Download Assistant page,

http://www.mellanox.com/supportdownloader/.


1 Introduction

This is the User Manual for Mellanox Technologies VPI adapter cards based on the ConnectX®-5 integrated circuit device for Open Compute Project. These adapters provide the highest-performing, low-latency, and most flexible interconnect solution for PCI Express Gen 3.0 servers used in enterprise data centers and high-performance computing environments.

This chapter covers the following topics:

• Section 1.1, “Product Overview”, on page 11

• Section 1.3, “Features and Benefits”, on page 13

• Section 1.5, “Operating Systems/Distributions”, on page 15

• Section 1.6, “Connectivity”, on page 16

1.1 Product Overview

Table 3 lists the ordering part numbers, data transmission rates, network connector type, and PCI Express speed of the cards.

Table 3 - Single-Port VPI Adapter Card

Ordering Part Number (OPN):
Single-host cards with host management:
• MCX545A-ECAN - OCP Spec 2.0 Type 2 (a)
• MCX545B-ECAN - OCP Spec 2.0 Type 1 (b)
Multi-host cards with host management:
• MCX545M-ECAN - OCP Spec 2.0 Type 2 (a)

Data Transmission Rate:
InfiniBand: SDR/DDR/QDR/FDR/EDR
Ethernet: 1/10/25/40/50/100Gb/s

Network Connector Types: Single-port QSFP28

PCI Express (PCIe) SerDes Speed: PCIe 3.0 x16 8GT/s (through two x8 B2B FCI connectors)

RoHS: RoHS Compliant

Adapter IC Part Number: MT27808A0-FCCF-EV

Device ID (decimal):
4119 for Physical Function (PF)
4120 for Virtual Function (VF)

a. See “OCP Spec 2.0 Type 2 Stacking Height - Single-port Card”
b. See “OCP Spec 2.0 Type 1 Stacking Height - Single-port Card”
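Linux tools such as lspci report PCI IDs in hexadecimal, so the decimal Device IDs in Table 3 correspond to 0x1017 (PF) and 0x1018 (VF). The Python sketch below shows one way to find matching functions by reading the `vendor` and `device` files under `/sys/bus/pci/devices`. It assumes a Linux host and Mellanox's PCI vendor ID 0x15b3, neither of which is stated in this manual; the function name is hypothetical.

```python
from pathlib import Path

MELLANOX_VENDOR_ID = 0x15B3  # assumption: Mellanox PCI vendor ID (not given in this manual)
CX5_PF_DEVICE_ID = 4119      # Table 3, decimal (0x1017)
CX5_VF_DEVICE_ID = 4120      # Table 3, decimal (0x1018)


def find_connectx5_functions(sysfs_root: str = "/sys/bus/pci/devices") -> dict[str, str]:
    """Map PCI address -> 'PF' or 'VF' for each matching function under sysfs_root."""
    roles = {CX5_PF_DEVICE_ID: "PF", CX5_VF_DEVICE_ID: "VF"}
    found: dict[str, str] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return found
    for dev in sorted(root.iterdir()):
        try:
            # sysfs stores IDs as hex strings such as "0x15b3"
            vendor = int((dev / "vendor").read_text().strip(), 16)
            device = int((dev / "device").read_text().strip(), 16)
        except (OSError, ValueError):
            continue  # skip entries without readable ID files
        if vendor == MELLANOX_VENDOR_ID and device in roles:
            found[dev.name] = roles[device]
    return found


if __name__ == "__main__":
    print(f"PF device ID {CX5_PF_DEVICE_ID} = {CX5_PF_DEVICE_ID:#06x}")
    print(f"VF device ID {CX5_VF_DEVICE_ID} = {CX5_VF_DEVICE_ID:#06x}")
    for address, role in find_connectx5_functions().items():
        print(address, role)
```

Virtual functions (device ID 4120) only appear in this scan after SR-IOV VFs have been enabled on the physical function.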


1.2 OCP Spec 2.0 Type Stacking Heights

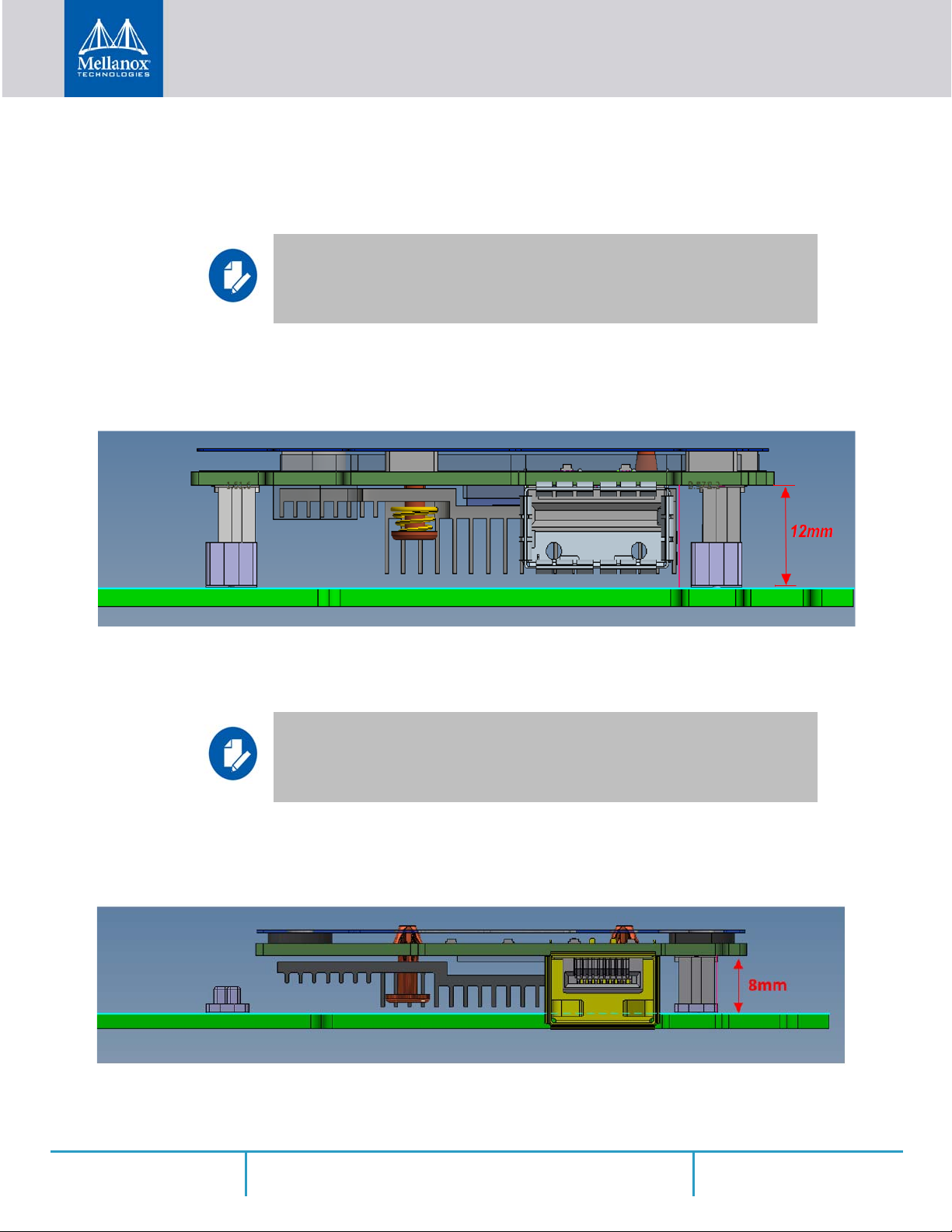

1.2.1 OCP Spec 2.0 Type 2 Stacking Height - Single-port Card

Applies to MCX545A-ECAN, MCX545M-ECAN.

The single-port adapter card follows OCP Spec 2.0 Type 2 with a 12mm stacking height. See Figure 1.

Figure 1: Type 2 Vertical Stack Front View - Single-port Card


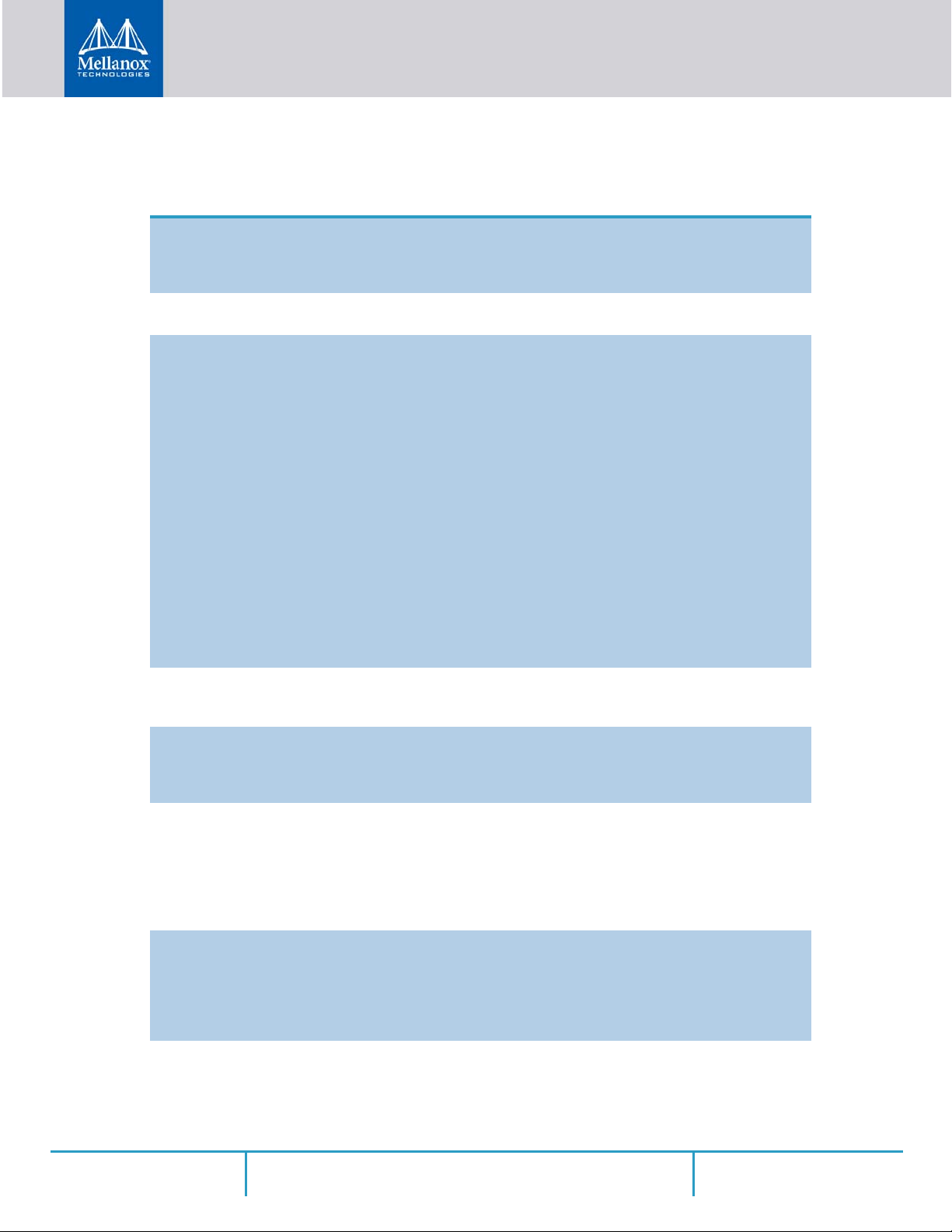

1.2.2 OCP Spec 2.0 Type 1 Stacking Height - Single-port Card

Applies to MCX545B-ECAN only.

The single-port adapter card complies with OCP Spec 2.0 Type 1 with an 8mm stacking height. See Figure 2 for the single-port front view.

Figure 2: Type 1 Vertical Stack Front View - Single Port Cards


1.3 Features and Benefits

Table 4 - Features (a)

100Gb/s Virtual Protocol Interconnect (VPI) Adapter
ConnectX-5 offers the highest throughput VPI adapter, supporting EDR 100Gb/s InfiniBand and 100Gb/s Ethernet and enabling any standard networking, clustering, or storage to operate seamlessly over any converged network leveraging a consolidated software stack.

PCI Express (PCIe)
Uses Gen 3.0 (8GT/s) through an x16 edge connector (two B2B FCI x8 connectors).

Up to 100 Gigabit Ethernet
Mellanox adapters comply with the following IEEE 802.3 standards:
– 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE
– IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet
– IEEE 802.3by, Ethernet Consortium 25, 50 Gigabit Ethernet, supporting all FEC modes
– IEEE 802.3ba 40 Gigabit Ethernet
– IEEE 802.3by 25 Gigabit Ethernet
– IEEE 802.3ae 10 Gigabit Ethernet
– IEEE 802.3ap based auto-negotiation and KR startup
– Proprietary Ethernet protocols (20/40GBASE-R2, 50GBASE-R4)
– IEEE 802.3ad, 802.1AX Link Aggregation
– IEEE 802.1Q, 802.1P VLAN tags and priority
– IEEE 802.1Qau (QCN) Congestion Notification
– IEEE 802.1Qaz (ETS)
– IEEE 802.1Qbb (PFC)
– IEEE 802.1Qbg
– IEEE 1588v2
– Jumbo frame support (9.6KB)

InfiniBand EDR
A standard InfiniBand data rate, where each lane of a 4X port runs a bit rate of 25.78125Gb/s with 64b/66b encoding, resulting in an effective bandwidth of 100Gb/s.

Memory
PCI Express - stores and accesses InfiniBand and/or Ethernet fabric connection information and packet data.
SPI - includes a 128Mb SPI Flash device (W25Q128FVSIG by WINBOND-NUVOTON).
FRU EEPROM - capacity is 2Kb.

Overlay Networks
In order to better scale their networks, data center operators often create overlay networks that carry traffic from individual virtual machines over logical tunnels in encapsulated formats such as NVGRE and VXLAN. While this solves network scalability issues, it hides the TCP packet from the hardware offloading engines, placing higher loads on the host CPU. ConnectX-5 effectively addresses this by providing advanced NVGRE and VXLAN hardware offloading engines that encapsulate and de-capsulate the overlay protocol.

RDMA and RDMA over Converged Ethernet (RoCE)
ConnectX-5, utilizing IBTA RDMA (Remote Direct Memory Access) and RoCE (RDMA over Converged Ethernet) technology, delivers low-latency and high-performance over InfiniBand and Ethernet networks. Leveraging data center bridging (DCB) capabilities as well as ConnectX-5 advanced congestion control hardware mechanisms, RoCE provides efficient low-latency RDMA services over Layer 2 and Layer 3 networks.

Mellanox PeerDirect™
PeerDirect™ communication provides high-efficiency RDMA access by eliminating unnecessary internal data copies between components on the PCIe bus (for example, from GPU to CPU), and therefore significantly reduces application run time. ConnectX-5 advanced acceleration technology enables higher cluster efficiency and scalability to tens of thousands of nodes.

CPU Offload
Adapter functionality that reduces CPU overhead, leaving more CPU available for computation tasks.
Open vSwitch (OVS) offload using ASAP²™:
• Flexible match-action flow tables
• Tunneling encapsulation / decapsulation

Quality of Service (QoS)
Support for port-based Quality of Service, enabling various application requirements for latency and SLA.

Hardware-based I/O Virtualization
ConnectX-5 provides dedicated adapter resources and guaranteed isolation and protection for virtual machines within the server.

Storage Acceleration
A consolidated compute and storage network achieves significant cost-performance advantages over multi-fabric networks. Standard block and file access protocols can leverage InfiniBand RDMA for high-performance storage access.
• NVMe over Fabric offloads for target machine
• Erasure Coding
• T10-DIF Signature Handover

SR-IOV
ConnectX-5 SR-IOV technology provides dedicated adapter resources and guaranteed isolation and protection for virtual machines (VMs) within the server.

NC-SI
The adapter supports a Network Controller Sideband Interface (NC-SI), MCTP over SMBus, and MCTP over PCIe as Baseboard Management Controller interfaces.
The adapter supports a slave Network Controller Sideband Interface (NC-SI) that can be connected to a BMC.

High-Performance Accelerations
• Tag Matching and Rendezvous Offloads
• Adaptive Routing on Reliable Transport
• Burst Buffer Offloads for Background Checkpointing

Wake-on-LAN (WoL)
Supported

Reset-on-LAN (RoL)
Supported

a. This section describes hardware features and capabilities. Please refer to the driver release notes for feature availability. See “Related Documentation” on page 9.
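The InfiniBand EDR entry in Table 4 can be checked with a one-line calculation: a 4X port has four lanes at 25.78125Gb/s each, and 64b/66b encoding carries 64 data bits in every 66 transmitted bits, which yields exactly 100Gb/s of effective bandwidth.

```latex
B_{\mathrm{eff}} = 4 \times 25.78125\ \mathrm{Gb/s} \times \frac{64}{66}
                 = 103.125\ \mathrm{Gb/s} \times \frac{64}{66}
                 = 100\ \mathrm{Gb/s}
```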

1.4 Multi-Host Technology

The ConnectX®-5 Multi-Host adapter card (MCX545M-ECAN), designed specifically for supported servers (see Section 3.1), implements Multi-Host technology to deliver direct and independent PCIe connections to each of the four CPUs in the server.

The ConnectX-5 PCIe x16 interface is separated into four independent PCIe x4 interfaces. Each

interface is connected to a separate host with no performance degradation.

Connecting server CPUs directly to the network delivers a performance gain, as each CPU can send and receive network traffic independently, without having to pass network data to other CPUs over the QPI bus.

Figure 3: Multi-Host Technology
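As a rough illustration of how the x16 interface is divided into four x4 interfaces, the Python sketch below computes the raw per-direction data rate of each Gen 3.0 link. It assumes the standard PCIe Gen 3.0 128b/130b line encoding (not stated in this manual) and ignores packet and protocol overhead, so achievable throughput is lower; the helper names are hypothetical.

```python
GEN3_GT_PER_S = 8.0        # per-lane transfer rate from Table 3 (8GT/s)
GEN3_ENCODING = 128 / 130  # assumption: standard PCIe Gen 3.0 128b/130b line encoding


def pcie_gen3_gbps(lanes: int) -> float:
    """Raw per-direction data rate (Gb/s) of a Gen 3.0 link, before protocol overhead."""
    return lanes * GEN3_GT_PER_S * GEN3_ENCODING


def split_lanes(total_lanes: int = 16, hosts: int = 4) -> list[int]:
    """Divide the x16 interface evenly among hosts (four x4 interfaces by default)."""
    if hosts <= 0 or total_lanes % hosts:
        raise ValueError("lanes must divide evenly among a positive number of hosts")
    return [total_lanes // hosts] * hosts


if __name__ == "__main__":
    print(f"single host, x16: {pcie_gen3_gbps(16):.2f} Gb/s")
    for host, width in enumerate(split_lanes()):
        print(f"host {host}, x{width}: {pcie_gen3_gbps(width):.2f} Gb/s")
```

Run as a script, this prints about 126.03 Gb/s for the full x16 link and 31.51 Gb/s for each host's x4 link.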


1.5 Operating Systems/Distributions

• RHEL/CentOS

• Windows

• FreeBSD

• VMware

• OpenFabrics Enterprise Distribution (OFED)

• OpenFabrics Windows Distribution (WinOF-2)


1.6 Connectivity

• Interoperable with 1/10/25/40/50/100 Gb/s Ethernet switches

• Passive copper cable with ESD protection

• Powered connectors for optical and active cable support


2 Interfaces

The adapter card includes special circuits that protect the card and the server from ESD shocks when copper cables are plugged in.

Each adapter card includes the following interfaces:

• “InfiniBand Interface”

• “Ethernet QSFP28 Interface”

• “PCI Express Interface”

• “LED Interface”

• “FRU EEPROM”

2.1 InfiniBand Interface

The network ports of the ConnectX®-5 adapter cards are compliant with the InfiniBand Architecture Specification, Release 1.3. InfiniBand traffic is transmitted through the card's QSFP28 connector.

2.2 Ethernet QSFP28 Interface


The network ports of the ConnectX®-5 adapter card are compliant with the IEEE 802.3 Ethernet standards listed in Table 4. Ethernet traffic is transmitted through the card's QSFP28 connector.

2.3 PCI Express Interface

The ConnectX®-5 adapter card supports PCI Express Gen 3.0 (1.1 and 2.0 compatible) through two x8 FCI B2B connectors: connector A and connector B. The device can act either as a master, initiating PCI Express bus operations, or as a slave, responding to PCI bus operations.

The following lists PCIe interface features:

• PCIe Gen3.0 compliant, 2.0 and 1.1 compatible

• 2.5, 5.0, 8.0 GT/s link rate x16

• Auto-negotiates to x16, x8, x4, x2, or x1

• Support for MSI/MSI-X mechanisms
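On Linux, one way to confirm what the link actually auto-negotiated to is to read the PCI function's sysfs link attributes. The Python sketch below assumes the kernel exposes `current_link_speed`, `current_link_width`, `max_link_speed`, and `max_link_width` under `/sys/bus/pci/devices/<address>/`; the speed string format varies between kernel versions (for example "8 GT/s" or "8.0 GT/s PCIe"), so only its first token is parsed. The helper names and the default address are hypothetical.

```python
import sys
from pathlib import Path


def read_link(dev_dir: str, kind: str) -> tuple[float, int]:
    """Return (speed in GT/s, lane width) for kind 'current' or 'max'."""
    d = Path(dev_dir)
    # e.g. "8.0 GT/s PCIe" or "8 GT/s" -> 8.0
    speed = float((d / f"{kind}_link_speed").read_text().split()[0])
    # e.g. "16" -> 16
    width = int((d / f"{kind}_link_width").read_text().strip())
    return speed, width


def link_is_degraded(dev_dir: str) -> bool:
    """True if the negotiated speed or width is below what the device supports."""
    cur_speed, cur_width = read_link(dev_dir, "current")
    max_speed, max_width = read_link(dev_dir, "max")
    return cur_speed < max_speed or cur_width < max_width


if __name__ == "__main__":
    # Hypothetical default address; pass the card's PCI address as the first argument.
    address = sys.argv[1] if len(sys.argv) > 1 else "0000:3b:00.0"
    dev = Path("/sys/bus/pci/devices") / address
    try:
        speed, width = read_link(str(dev), "current")
        print(f"negotiated {speed:g} GT/s x{width}, degraded={link_is_degraded(str(dev))}")
    except (OSError, ValueError) as err:
        print(f"cannot read link state for {dev}: {err}")
```

A card in a correctly wired x16 Gen 3.0 slot would be expected to report 8 GT/s x16 and no degradation.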

2.4 LED Interface

There are two I/O LEDs per port on the adapter card. For LED specifications, please refer to Section 7.3, “Adapter Card LED Operations”, on page 64.


2.5 FRU EEPROM

The FRU EEPROM allows the baseboard to identify different types of mezzanine cards. The mezzanine (MEZZ) FRU EEPROM is accessible through the MEZZ_SMCLK and MEZZ_SMDATA signals (connector pins A18 and A19). Its address is 0xA2 and its capacity is 2Kb.
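SMBus tools differ in whether they expect the 8-bit form of a device address (with the read/write bit) or the 7-bit form. The Python sketch below assumes that 0xA2 is the 8-bit form, which would make the 7-bit address 0x51, and converts the 2Kb capacity to bytes assuming 1 Kb = 1024 bits. Both interpretations and the helper names are assumptions rather than statements from this manual.

```python
FRU_ADDR_8BIT = 0xA2   # Section 2.5; assumed to be the 8-bit (R/W bit included) form
FRU_CAPACITY_KBIT = 2  # "2Kb" = kilobits (small b), per the Document Conventions


def to_7bit_address(addr_8bit: int) -> int:
    """Drop the R/W bit (LSB) to get the 7-bit SMBus/I2C address."""
    if not 0 <= addr_8bit <= 0xFF:
        raise ValueError(f"{addr_8bit:#x} is not an 8-bit address")
    return addr_8bit >> 1


def kbit_to_bytes(kbit: int) -> int:
    """Assumes 1 Kb = 1024 bits, the usual convention for EEPROM densities."""
    return kbit * 1024 // 8


if __name__ == "__main__":
    print(f"7-bit address: {to_7bit_address(FRU_ADDR_8BIT):#04x}")
    print(f"capacity: {kbit_to_bytes(FRU_CAPACITY_KBIT)} bytes")
```

Under these assumptions the script reports a 7-bit address of 0x51 and a capacity of 256 bytes.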


3 Hardware Installation

3.1 System Requirements

3.1.1 Hardware

Unless otherwise specified, Mellanox products are designed to work in an environmentally

controlled data center with low levels of gaseous and dust (particulate) contamination.

The operating environment should meet severity level G1 per ISA 71.04 for gaseous contamination and ISO 14644-1 class 8 for cleanliness.

A system with a PCI Express x16 slot (two FCI B2B x8 connectors) is required for installing the card.

3.1.2 Operating Systems/Distributions

Please refer to Section 1.5, “Operating Systems/Distributions”, on page 15.


3.1.3 Software Stacks

Mellanox OpenFabric software package MLNX_OFED for Linux and WinOF-2 for Windows. See Chapter 4, “Driver Installation”.

3.2 Safety Precautions

The adapter is being installed in a system that operates with voltages that can be lethal. Before opening the case of the system, observe the following precautions to avoid injury and prevent damage to system components.

1. Remove any metallic objects from your hands and wrists.

2. Make sure to use only insulated tools.

3. Verify that the system is powered off and is unplugged.

4. It is strongly recommended to use an ESD strap or other antistatic devices.

3.3 Pre-Installation Checklist

1. Verify that your system meets the hardware and software requirements stated above.

2. Shut down your system if active.

3. After shutting down the system, turn off the power and unplug the cord.


4. Remove the card from its package.

Please note that if the card is removed hastily from the antistatic bag, the plastic ziplock may harm the EMI fingers on the QSFP connector. Carefully remove the card from the antistatic bag to avoid damaging the EMI fingers. See Figure 4 and Figure 6.

Figure 4: EMI fingers on QSFP28 Cage

Figure 5: EMI Fingers on QSFP Connector


Figure 6: Plastic Ziplock

5. Place the card on an antistatic surface.

6. Check the card for visible signs of damage. Do not attempt to install the card if damaged.


3.4 Card Installation Instructions

Please note that the following figures are for illustration purposes only.

1. Before installing the card, make sure that the system is off and the power cord is not connected to the server. Please follow proper electrical grounding procedures.

2. Open the system case.

3. Make sure the adapter clips or screws are open.


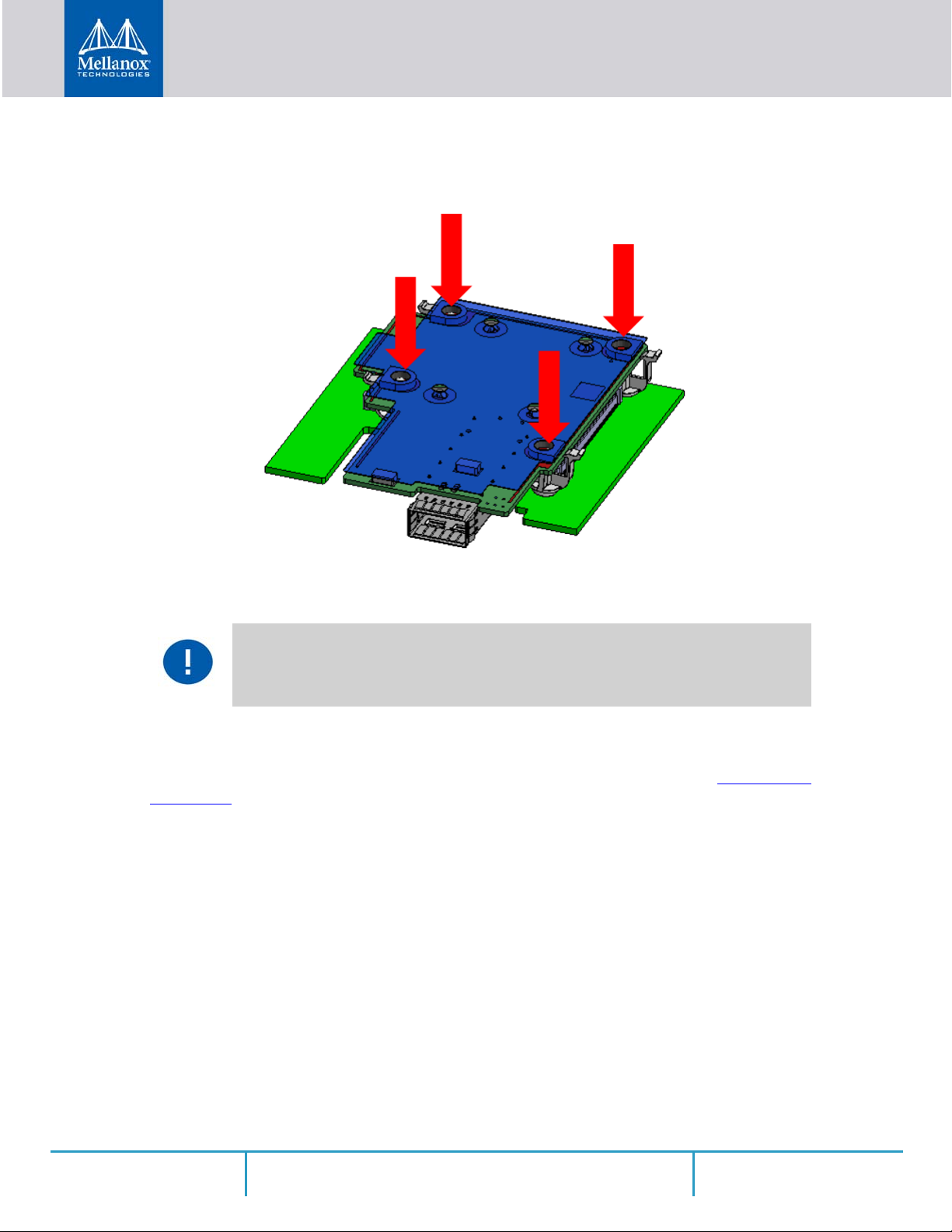

4. Place the adapter card on the clips without applying any pressure.

Figure: Open Clips


5. Applying even pressure to all four corners of the card, insert the adapter card into the PCI Express slot until it is firmly seated.

6. Secure the adapter with the adapter clip or screw.

7. Close the system case.

Do not use excessive force when seating the card, as this may damage the system or the adapter.

3.5 Cables and Modules

To obtain the list of supported Mellanox cables for your adapter, please refer to the Cables Reference Table.

3.5.1 Cable Installation

1. All cables can be inserted or removed with the unit powered on.

2. To insert a cable, press the connector into the port receptacle until the connector is firmly seated.

a. Support the weight of the cable before connecting the cable to the adapter card. Do this by using a cable holder or tying the cable to the rack.

b. Determine the correct orientation of the connector to the card before inserting the connector. Do not try to insert the connector upside down, as this may damage the adapter card.

c. Insert the connector into the adapter card. Be careful to insert the connector straight into the cage. Do not apply any torque, up or down, to the connector cage in the adapter card.


d. Make sure that the connector locks in place.

When installing cables make sure that the latches engage.

Always install and remove cables by pushing or pulling the cable and connector in a straight line with the card.

3. After inserting a cable into a port, the Yellow or Green LED0 indicator will light when the physical connection is established (that is, when the unit is powered on and a cable is plugged into the port with the other end of the connector plugged into a functioning port). See Section 7.3, “Adapter Card LED Operations”, on page 64.

4. After plugging in a cable, lock the connector using the latching mechanism particular to the cable vendor. When a logical connection is made, Green LED1 will light. When data is being transferred, Green LED1 will blink. See Section 7.3, “Adapter Card LED Operations”, on page 64.

5. Take care not to impede the exhaust airflow through the ventilation holes. Use cable lengths that allow the cables to be routed horizontally around to the side of the chassis before bending upward or downward in the rack.

6. To remove a cable, disengage the locks and slowly pull the connector away from the port receptacle. The LED indicator will turn off when the cable is unseated.

3.6 Adapter Card Disassembly Instructions

3.6.1 Safety Precautions

The adapter card and auxiliary PCIe connection cards are installed in a system that operates with voltages that can be lethal. Before uninstalling the cards, please observe the following precautions to avoid injury and prevent damage to system components.

1. Remove any metallic objects from your hands and wrists.

2. It is strongly recommended to use an ESD strap or other antistatic devices.

3. Turn off the system and disconnect the power cord from the server.

3.6.2 Un-Installing the Cards

1. Verify that the system is powered off and unplugged.

2. Wait 30 seconds.


3. To remove the card, disengage clips 1 and 2 on the connector A side.

Figure: Connector A, Clip 1, Clip 2

4. To disconnect connector A, gently pull the adapter card upwards.

Do not use excessive force when disconnecting the adapter card, as this may damage the system or the adapter.


5. Disengage clip 3 and clip 4 on the Connector B side of the adapter card.

Figure: Connector B, Clip 3, Clip 4

6. To remove the card, gently pull the adapter card upwards.


Do not use excessive force when disconnecting the adapter card, as this may damage the system or the adapter.

3.7 Identify the Card in Your System

3.7.1 On Windows

1. Open Device Manager on the server. Click Start => Run, and then enter “devmgmt.msc”.


2. Expand System Devices and locate your Mellanox ConnectX-5 adapter card.

3. Right-click your adapter's row and select Properties to display the adapter card properties window.

4. Click the Details tab and select Hardware Ids (Windows 2012/R2/2016) from the Properties pull-down menu.

Figure 7: PCI Device (Example)


5. In the Value display box, check the fields VEN and DEV (fields are separated by ‘&’). In the display example above, notice the sub-string “PCI\VEN_15B3&DEV_1003”: VEN is equal to 0x15B3, the Vendor ID of Mellanox Technologies, and DEV is a valid Mellanox Technologies PCI Device ID (1018 for ConnectX-5; the example above shows a different device).

If the PCI device does not have a Mellanox adapter ID, return to Step 2 to check another device.

The list of Mellanox Technologies PCI Device IDs can be found in the PCI ID repository at http://pci-ids.ucw.cz/read/PC/15b3.


3.7.2 On Linux

Get the device location on the PCI bus by running lspci and locating lines with the string “Mellanox Technologies”:

lspci | grep -i Mellanox

Network controller: Mellanox Technologies MT28800 Family [ConnectX-5]
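Running lspci with -nn also prints the numeric [vendor:device] pair, which can be matched against the Mellanox Vendor ID 0x15B3 described for Windows above. The sketch below uses hypothetical helper names: it extracts that pair from one line of lspci -nn output and checks it for the Mellanox vendor ID. The sample lines in the tests are illustrative, not captured from a real system.

```shell
# Hypothetical helpers: extract "vvvv:dddd" from a line of `lspci -nn`
# output, e.g. "... Mellanox Technologies ... [15b3:1018]".
pci_ids_from_line() {
  # The greedy ".*" makes sed keep the last bracketed hex pair on the line.
  printf '%s\n' "$1" |
    sed -n 's/.*\[\([0-9a-f]\{4\}:[0-9a-f]\{4\}\)\].*/\1/p'
}

# Succeeds if the line belongs to a device with vendor ID 0x15b3.
is_mellanox_line() {
  case "$(pci_ids_from_line "$1")" in
    15b3:*) return 0 ;;
    *) return 1 ;;
  esac
}

# Print the vendor:device pair of every function lspci finds for vendor 15b3.
list_mellanox_ids() {
  lspci -nn -d 15b3: | while IFS= read -r line; do
    pci_ids_from_line "$line"
  done
}
```

Filtering with -d 15b3: lets lspci do the vendor match itself; the string parsing is only needed when the output is post-processed, for example to compare the device ID with the list in the PCI ID repository.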


4 Driver Installation

4.1 Linux

For Linux, download and install the latest OpenFabrics Enterprise Distribution (OFED) software package available via the Mellanox web site at: http://www.mellanox.com => Products => Software => InfiniBand/VPI Drivers => Linux SW/Drivers => Download. This chapter describes how to install and test the Mellanox OFED for Linux package on a single host machine with Mellanox ConnectX-5 adapter hardware installed.

4.1.1 Hardware and Software Requirements

Table 5 - Hardware and Software Requirements

Platforms: A server platform with an adapter card based on one of the following Mellanox Technologies’ InfiniBand/VPI HCA devices:

• MT4119 ConnectX®-5 (VPI, IB, EN) (firmware: fw-ConnectX5)

Required Disk Space for Installation: 1GB

Device ID: For the latest list of device IDs, please visit the Mellanox website.

Operating System: Linux operating system. For the list of supported operating system distributions and kernels, please refer to the Mellanox OFED Release Notes file.

Installer Privileges: The installation requires administrator (root) privileges on the target machine.

4.1.2 Downloading Mellanox OFED

Step 1. Download the ISO image to your host.

The image’s name has the format MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso. You can download it from http://www.mellanox.com --> Products --> Software --> Drivers --> Mellanox OFED Linux (MLNX_OFED).

Step a. Scroll down to the Download wizard, and click the Download tab.

Step b. Choose the relevant package for your host operating system.

Step c. Click the desired ISO/tgz package.

Step d. To obtain the download link, accept the End User License Agreement (EULA).

Step 2. Use the md5sum utility to confirm the file integrity of your ISO image. Run the following command and compare the result to the value provided on the download page.

md5sum MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso
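Step 2 can be scripted so the comparison is not done by eye. The sketch below is a hypothetical helper, not part of MLNX_OFED: it hashes the image with md5sum (reading from standard input, so the file name cannot change the output format) and compares the result, case-insensitively, with the value copied from the download page.

```shell
# Hypothetical helper: verify_md5 <file> <expected-md5>
verify_md5() {
  # md5sum prints "<hash>  -" when reading stdin; keep only the hash.
  actual=$(md5sum < "$1" | cut -d' ' -f1)
  # Normalize the expected value to lowercase hex.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $1"
  else
    echo "MISMATCH: $1 (got $actual, expected $expected)" >&2
    return 1
  fi
}
```

Usage: verify_md5 MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso <value from the download page>. The standard md5sum -c option achieves the same result when given a line of the form "<hash>  <file>" on standard input.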


4.1.3 Installing Mellanox OFED

4.1.3.1 Installation Script

The installation script, mlnxofedinstall, performs the following:

• Discovers the currently installed kernel

• Uninstalls any software stacks that are part of the standard operating system distribution or another vendor's commercial stack

• Installs the MLNX_OFED_LINUX binary RPMs (if they are available for the current kernel)

• Identifies the currently installed InfiniBand and Ethernet network adapters and automatically upgrades the firmware (see footnote 1)

Note: If you wish to perform a firmware upgrade using customized FW binaries, you can provide a path to the folder that contains the FW binary files by running --fw-image-dir. Using this option, the FW version embedded in the MLNX_OFED package will be ignored.

Example:

./mlnxofedinstall --fw-image-dir /tmp/my_fw_bin_files

Usage

/mnt/mlnxofedinstall [OPTIONS]

The installation script removes all previously installed Mellanox OFED packages and re-installs from scratch. You will be prompted to acknowledge the deletion of the old packages.

Pre-existing configuration files will be saved with the extension “.conf.rpmsave”.

• If you need to install Mellanox OFED on an entire (homogeneous) cluster, a common strategy is to mount the ISO image on one of the cluster nodes and then copy it to a shared file system such as NFS. To install on all the cluster nodes, use cluster-aware tools (such as pdsh).

• If your kernel version does not match any of the offered pre-built RPMs, you can add your kernel version by using the “mlnx_add_kernel_support.sh” script located inside the MLNX_OFED package.

On RedHat and SLES distributions with an errata kernel installed, there is no need to use the mlnx_add_kernel_support.sh script. The regular installation can be performed, and the weak-updates mechanism will create symbolic links to the MLNX_OFED kernel modules.


1. The firmware will not be updated if you run the install script with the ‘--without-fw-update’ option.


The “mlnx_add_kernel_support.sh” script can be executed directly from the mlnxofedinstall script. For further information, please see the '--add-kernel-support' option below.

On Ubuntu and Debian distributions, driver installation uses the Dynamic Kernel Module Support (DKMS) framework. Thus, the drivers' compilation will take place on the host during MLNX_OFED installation. Therefore, using "mlnx_add_kernel_support.sh" is irrelevant on Ubuntu and Debian distributions.

Example

The following command will create a MLNX_OFED_LINUX TGZ package for RedHat 6.3 under the /tmp directory.

# ./MLNX_OFED_LINUX-x.x-x-rhel6.3-x86_64/mlnx_add_kernel_support.sh -m /tmp/MLNX_OFED_LINUX-x.x-x-rhel6.3-x86_64/ --make-tgz

Note: This program will create MLNX_OFED_LINUX TGZ for rhel6.3 under /tmp directory.

All Mellanox, OEM, OFED, or Distribution IB packages will be removed.

Do you want to continue?[y/N]:y

See log file /tmp/mlnx_ofed_iso.21642.log

Building OFED RPMs. Please wait...

Removing OFED RPMs...

Created /tmp/MLNX_OFED_LINUX-x.x-x-rhel6.3-x86_64-ext.tgz

• The script adds the following lines to /etc/security/limits.conf for the userspace

components such as MPI:

• * soft memlock unlimited

• * hard memlock unlimited

• These settings set the amount of memory that can be pinned by a user space application to unlimited.

If desired, tune the value unlimited to a specific amount of RAM.
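To confirm that these limits are in place, the file can be inspected, and ulimit -l in a new login session should then report unlimited. The helper below is a hypothetical sketch that checks a limits.conf-style file for both wildcard memlock lines. It does not examine /etc/security/limits.d/, where the same limits can also be set or overridden.

```shell
# Hypothetical helper: has_unlimited_memlock <limits-file>
# Succeeds only if both "* soft" and "* hard" memlock lines are unlimited.
has_unlimited_memlock() {
  grep -Eq '^[[:space:]]*\*[[:space:]]+soft[[:space:]]+memlock[[:space:]]+unlimited' "$1" &&
    grep -Eq '^[[:space:]]*\*[[:space:]]+hard[[:space:]]+memlock[[:space:]]+unlimited' "$1"
}
```

Usage: has_unlimited_memlock /etc/security/limits.conf && echo "memlock limits set".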

For your machine to be part of the InfiniBand/VPI fabric, a Subnet Manager must be running on one of the fabric nodes. At this point, Mellanox OFED for Linux has already installed the OpenSM Subnet Manager on your machine.

For the list of installation options, run: ./mlnxofedinstall --h

The DKMS (on Debian-based OS) and the weak-modules (RedHat OS) mechanisms rebuild the initrd/initramfs for the respective kernel in order to add the MLNX_OFED drivers.

When installing MLNX_OFED without DKMS support on a Debian-based OS, or without KMP support on RedHat or any other distribution, the initramfs will not be changed. Therefore, the inbox drivers may be loaded on boot. In this case, the openibd service script will automatically unload them and load the new drivers that come with MLNX_OFED.

4.1.3.2 Installation Procedure


Step 1. Log in to the installation machine as root.

Step 2. Mount the ISO image on your machine.

# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt


Step 3. Run the installation script.

/mnt/mlnxofedinstall

Logs dir: /tmp/MLNX_OFED_LINUX-x.x-x.logs

This program will install the MLNX_OFED_LINUX package on your machine.

Note that all other Mellanox, OEM, OFED, RDMA or Distribution IB packages will be removed.

Those packages are removed due to conflicts with MLNX_OFED_LINUX, do not reinstall them.

Starting MLNX_OFED_LINUX-x.x.x installation ...

........

........

Installation finished successfully.

Attempting to perform Firmware update...

Querying Mellanox devices firmware ...

For unattended installation, use the --force installation option while running the MLNX_OFED installation script:

/mnt/mlnxofedinstall --force

MLNX_OFED for Ubuntu should be installed with the following flags in a chroot environment:

./mlnxofedinstall --without-dkms --add-kernel-support --kernel <kernel version in chroot> --without-fw-update --force

For example:

./mlnxofedinstall --without-dkms --add-kernel-support --kernel 3.13.0-85-generic --without-fw-update --force

Note that the path to the kernel sources (--kernel-sources) should be added if the sources are not in their default location.

If your machine already has the latest firmware, no firmware update will occur, and the installation script will print a message similar to the following at the end of the installation:

Device #1:

----------

Device Type: ConnectX-5

Part Number: MCX545E-ECAN

Description: ConnectX®-5 VPI network interface card for OCP, with host management, EDR IB (100Gb/s) and 100GbE, single-port QSFP28, PCIe3.0 x16, no bracket

PSID: MT_2190110032

PCI Device Name: 0b:00.0

Base MAC: 0000e41d2d5cf810

Versions: Current Available

FW 16.22.0228 16.22.0228

Status: Up to date


If your machine has an unsupported network adapter device, no firmware update will occur, and one of the error messages below will be printed. Please contact your hardware vendor for help with firmware updates.

Error message 1:

Device #1:

----------

Device Type: ConnectX-5

Part Number: MCX545E-ECAN

Description: ConnectX®-5 VPI network interface card for OCP, with host management, EDR IB (100Gb/s) and 100GbE, single-port QSFP28, PCIe3.0 x16, no bracket

PSID: MT_2190110032

PCI Device Name: 0b:00.0

Base MAC: 0000e41d2d5cf810

Versions: Current Available

FW 16.22.0228 N/A

Status: No matching image found

Error message 2:

The firmware for this device is not distributed inside Mellanox

driver: 0000:01:00.0 (PSID: IBM2150110033)

To obtain firmware for this device, please contact your HW vendor.


Step 4. If the installation script has performed a firmware update on your network adapter, complete the step relevant to your adapter card type to load the firmware:

Otherwise, restart the driver by running: "/etc/init.d/openibd restart"

Step 5. (InfiniBand only) Run the hca_self_test.ofed utility to verify whether or not the InfiniBand link is up. The utility also checks for and displays additional information such as:

• HCA firmware version

• Kernel architecture

• Driver version

• Number of active HCA ports along with their states

• Node GUID

For more details on hca_self_test.ofed, see the file docs/readme_and_user_manual/hca_self_test.readme.

After installation is complete, information about the Mellanox OFED installation, such as prefix, kernel version, and installation parameters, can be retrieved by running the command /etc/infiniband/info.

Most of the Mellanox OFED components can be configured or reconfigured after the installation by modifying the relevant configuration files. See the relevant chapters in this manual for details.

The list of the modules that will be loaded automatically upon boot can be found in the /etc/infiniband/openib.conf file.


4.1.3.3 Installation Results

Table 6 - Installation Results

Software:

• Most of the MLNX_OFED packages are installed under the “/usr” directory, except for the following packages, which are installed under the “/opt” directory:

  • fca and ibutils

• The kernel modules are installed under:

  • /lib/modules/`uname -r`/updates on SLES and Fedora distributions

  • /lib/modules/`uname -r`/extra/mlnx-ofa_kernel on RHEL and other RedHat-like distributions

Firmware:

• The firmware of existing network adapter devices will be updated if the following two conditions are fulfilled:

  • The installation script is run in default mode; that is, without the option ‘--without-fw-update’

  • The firmware version of the adapter device is older than the firmware version included with the Mellanox OFED ISO image

  Note: If an adapter’s Flash was originally programmed with an Expansion ROM image, the automatic firmware update will also burn an Expansion ROM image.

• If your machine has an unsupported network adapter device, no firmware update will occur and the error message below will be printed.

  The firmware for this device is not distributed inside Mellanox driver: 0000:01:00.0 (PSID: IBM2150110033)

  To obtain firmware for this device, please contact your HW vendor.

4.1.3.4 Installation Logging

While installing MLNX_OFED, the install log for each selected package will be saved in a separate log file.

The path to the directory containing the log files will be displayed after running the installation script in the following format: "Logs dir: /tmp/MLNX_OFED_LINUX-<version>.<PID>.logs".

Example:

Logs dir: /tmp/MLNX_OFED_LINUX-x.x-x.logs

4.1.3.5 openibd Script

As of MLNX_OFED v2.2-1.0.0, the openibd script supports pre/post start/stop scripts. This can be controlled by setting the variables below in the /etc/infiniband/openibd.conf file.

OPENIBD_PRE_START

OPENIBD_POST_START

OPENIBD_PRE_STOP

OPENIBD_POST_STOP

Example:

OPENIBD_POST_START=/sbin/openibd_post_start.sh


An example of an OPENIBD_POST_START script for activating all interfaces is provided in the MLNX_OFED package under the docs/scripts/openibd-post-start-configure-interfaces/ folder.

4.1.3.6 Driver Load Upon System Boot

Upon system boot, the Mellanox drivers will be loaded automatically.

To prevent automatic load of the Mellanox drivers upon system boot:

Step 1. Add the following lines to the "/etc/modprobe.d/mlnx.conf" file.

blacklist mlx4_core

blacklist mlx4_en

blacklist mlx5_core

blacklist mlx5_ib

Step 2. Set “ONBOOT=no” in the "/etc/infiniband/openib.conf" file.

Step 3. If the modules exist in the initramfs file, they can be loaded automatically by the kernel. To prevent this behavior, update the initramfs using your operating system's standard tools.

Note: The process of updating the initramfs will add the blacklists from step 1, and will prevent the kernel from loading the modules automatically.
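Step 1 can be made idempotent so that rerunning it does not duplicate lines. The sketch below, with a hypothetical function name, appends each blacklist entry only if it is not already present in the file passed as its argument (normally /etc/modprobe.d/mlnx.conf).

```shell
# Hypothetical helper: write_mlnx_blacklist <modprobe-conf-file>
write_mlnx_blacklist() {
  for mod in mlx4_core mlx4_en mlx5_core mlx5_ib; do
    line="blacklist $mod"
    # Append only if this exact line is not already present (idempotent).
    grep -qxF "$line" "$1" 2>/dev/null || printf '%s\n' "$line" >> "$1"
  done
}
```

For Step 3, the standard tools are typically dracut -f on RHEL-like and SLES systems and update-initramfs -u on Debian and Ubuntu; check your distribution's documentation before rebuilding the initramfs.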


4.1.3.7 mlnxofedinstall Return Codes

The table below lists the mlnxofedinstall script return codes and their meanings.

Table 7 - mlnxofedinstall Return Codes

Return Code Meaning

0 The Installation ended successfully

1 The installation failed

2 No firmware was found for the adapter device

22 Invalid parameter

28 Not enough free space

171 Not applicable to this system configuration. This can occur when the required hardware is not present on the system.

172 Prerequisites are not met. For example, the required software is not installed or the hardware is not configured correctly.

173 Failed to start the mst driver
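When the installer runs unattended (for example, with --force), a wrapper script can translate its exit status using the table above. A minimal sketch follows; the function name describe_install_rc is hypothetical, and its messages only restate Table 7.

```shell
# Hypothetical helper: map an mlnxofedinstall exit status to its meaning
# (messages restated from Table 7).
describe_install_rc() {
  case "$1" in
    0) echo "Installation ended successfully" ;;
    1) echo "Installation failed" ;;
    2) echo "No firmware was found for the adapter device" ;;
    22) echo "Invalid parameter" ;;
    28) echo "Not enough free space" ;;
    171) echo "Not applicable to this system configuration" ;;
    172) echo "Prerequisites are not met" ;;
    173) echo "Failed to start the mst driver" ;;
    *) echo "Unknown return code: $1" ;;
  esac
}
```

Usage: run /mnt/mlnxofedinstall --force, then immediately call describe_install_rc $? so that $? still holds the installer's exit status.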

4.1.4 Uninstalling Mellanox OFED

Use the script /usr/sbin/ofed_uninstall.sh to uninstall the Mellanox OFED package. The script is part of the ofed-scripts RPM.


4.1.5 Installing MLNX_OFED Using YUM

This type of installation is applicable to RedHat/OL, Fedora, XenServer Operating Systems.

4.1.5.1 Setting up MLNX_OFED YUM Repository

Step 1. Log into the installation machine as root.

Step 2. Mount the ISO image on your machine and copy its content to a shared location in your network.

# mount -o ro,loop MLNX_OFED_LINUX-<ver>-<OS label>-<CPU arch>.iso /mnt

Step 3. Download and install Mellanox Technologies GPG-KEY:

The key can be downloaded via the following link:

http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox

# wget http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox

--2014-04-20 13:52:30-- http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox

Resolving www.mellanox.com... 72.3.194.0

Connecting to www.mellanox.com|72.3.194.0|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1354 (1.3K) [text/plain]

Saving to: ‘RPM-GPG-KEY-Mellanox’

100%[=================================================>] 1,354 --.-K/s in 0s

2014-04-20 13:52:30 (247 MB/s) - ‘RPM-GPG-KEY-Mellanox’ saved [1354/1354]

Step 4. Install the key.

# sudo rpm --import RPM-GPG-KEY-Mellanox

warning: rpmts_HdrFromFdno: Header V3 DSA/SHA1 Signature, key ID 6224c050: NOKEY

Retrieving key from file:///repos/MLNX_OFED/<MLNX_OFED file>/RPM-GPG-KEY-Mellanox

Importing GPG key 0x6224C050:

Userid: "Mellanox Technologies (Mellanox Technologies - Signing Key v2) <support@mellanox.com>"

From : /repos/MLNX_OFED/<MLNX_OFED file>/RPM-GPG-KEY-Mellanox

Is this ok [y/N]:

Step 5. Check that the key was successfully imported.

# rpm -q gpg-pubkey --qf '%{NAME}-%{VERSION}-%{RELEASE}\t%{SUMMARY}\n' | grep Mellanox

gpg-pubkey-a9e4b643-520791ba gpg(Mellanox Technologies <support@mellanox.com>)

Step 6. Create a yum repository configuration file called "/etc/yum.repos.d/mlnx_ofed.repo" with the following content:

[mlnx_ofed]

name=MLNX_OFED Repository

baseurl=file:///<path to extracted MLNX_OFED package>/RPMS

enabled=1

gpgkey=file:///<path to the downloaded key RPM-GPG-KEY-Mellanox>

gpgcheck=1


Step 7. Check that the repository was successfully added.

# yum repolist

Loaded plugins: product-id, security, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

repo id repo name status

mlnx_ofed MLNX_OFED Repository 108

rpmforge RHEL 6Server - RPMforge.net - dag 4,597

repolist: 8,351
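Step 6 can also be performed from a script. The sketch below, with a hypothetical function name, writes the same repository definition shown in Step 6. Both paths must be absolute, so that "file://" followed by the path produces the "file:///" form used above.

```shell
# Hypothetical helper: write_mlnx_yum_repo <repo-file> <package-dir> <key-file>
# <package-dir> and <key-file> must be absolute paths.
write_mlnx_yum_repo() {
  cat > "$1" <<EOF
[mlnx_ofed]
name=MLNX_OFED Repository
baseurl=file://$2/RPMS
enabled=1
gpgkey=file://$3
gpgcheck=1
EOF
}
```

Usage: write_mlnx_yum_repo /etc/yum.repos.d/mlnx_ofed.repo <path to extracted MLNX_OFED package> <path to RPM-GPG-KEY-Mellanox>, followed by yum repolist as in Step 7.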

4.1.5.2 Installing MLNX_OFED Using the YUM Tool

After setting up the YUM repository for MLNX_OFED package, perform the following:

Step 1. View the available package groups by invoking:

# yum search mlnx-ofed

mlnx-ofed-all.noarch : MLNX_OFED all installer package (with KMP support)

mlnx-ofed-basic.noarch : MLNX_OFED basic installer package (with KMP support)

mlnx-ofed-guest.noarch : MLNX_OFED guest installer package (with KMP support)

mlnx-ofed-hpc.noarch : MLNX_OFED hpc installer package (with KMP support)

mlnx-ofed-hypervisor.noarch : MLNX_OFED hypervisor installer package (with KMP support)

mlnx-ofed-vma.noarch : MLNX_OFED vma installer package (with KMP support)

mlnx-ofed-vma-eth.noarch : MLNX_OFED vma-eth installer package (with KMP support)

mlnx-ofed-vma-vpi.noarch : MLNX_OFED vma-vpi installer package (with KMP support)


Where:

mlnx-ofed-all Installs all available packages in MLNX_OFED.

mlnx-ofed-basic Installs basic packages required for running Mellanox cards.

mlnx-ofed-guest Installs packages required by guest OS.

mlnx-ofed-hpc Installs packages required for HPC.

mlnx-ofed-hypervisor Installs packages required by hypervisor OS.

mlnx-ofed-vma Installs packages required by VMA.

mlnx-ofed-vma-eth Installs packages required by VMA to work over Ethernet.

mlnx-ofed-vma-vpi Installs packages required by VMA to support VPI.

Note: MLNX_OFED provides kernel module RPM packages with KMP support for RHEL and SLES. For other operating systems, kernel module RPM packages are provided only for the operating systems' default kernel. In this case, the group RPM packages have the supported kernel version in their package's name.

Example:

mlnx-ofed-all-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED all installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-basic-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED basic installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-guest-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED guest installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-hpc-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED hpc installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)


mlnx-ofed-hypervisor-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED hypervisor installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-vma-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED vma installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-vma-eth-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED vma-eth installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

mlnx-ofed-vma-vpi-3.17.4-301.fc21.x86_64.noarch : MLNX_OFED vma-vpi installer package for kernel 3.17.4-301.fc21.x86_64 (without KMP support)

If you have an operating system other than RHEL or SLES, or you have installed a kernel that is not supported by default in MLNX_OFED, you can use the mlnx_add_kernel_support.sh script to build MLNX_OFED for your kernel.

The script will automatically build the matching group RPM packages for your kernel so that you can still install MLNX_OFED via yum.

Please note that the resulting MLNX_OFED repository will contain unsigned RPMs; therefore, you should set 'gpgcheck=0' in the repository configuration file.

Step 2. Install the desired group.

# yum install mlnx-ofed-all

Loaded plugins: langpacks, product-id, subscription-manager

Resolving Dependencies

--> Running transaction check

---> Package mlnx-ofed-all.noarch 0:3.1-0.1.2 will be installed

--> Processing Dependency: kmod-isert = 1.0-OFED.3.1.0.1.2.1.g832a737.rhel7u1 for package: mlnx-ofed-all-3.1-0.1.2.noarch

..................

..................

qperf.x86_64 0:0.4.9-9

rds-devel.x86_64 0:2.0.7-1.12

rds-tools.x86_64 0:2.0.7-1.12

sdpnetstat.x86_64 0:1.60-26

srptools.x86_64 0:1.0.2-12

Complete!

Installing MLNX_OFED using the “yum” tool does not automatically update the firmware.

To update the firmware to the version included in MLNX_OFED package, run:

# yum install mlnx-fw-updater

OR:

Update the firmware to the latest version available on Mellanox Technologies’ Web site as described in Section 4.1.6, “Updating Firmware After Installation”.

4.1.5.3 Uninstalling Mellanox OFED Using the YUM Tool

Use the script /usr/sbin/ofed_uninstall.sh to uninstall the Mellanox OFED package. The

script is part of the ofed-scripts RPM.


4.1.5.4 Installing MLNX_OFED Using apt-get Tool

This type of installation is applicable to Debian and Ubuntu operating systems.

4.1.5.5 Setting up MLNX_OFED apt-get Repository

Step 1. Log into the installation machine as root.

Step 2. Extract the MLNX_OFED package to a shared location in your network.

You can download it from http://www.mellanox.com > Products > Software > Ethernet Drivers.

Step 3. Create an apt-get repository configuration file called "/etc/apt/sources.list.d/mlnx_ofed.list" with the following content:

deb file:/<path to extracted MLNX_OFED package>/DEBS ./

Step 4. Download and install Mellanox Technologies GPG-KEY.

# wget -qO - http://www.mellanox.com/downloads/ofed/RPM-GPG-KEY-Mellanox | sudo apt-key add -

Step 5. Check that the key was successfully imported.

# apt-key list

pub 1024D/A9E4B643 2013-08-11

uid Mellanox Technologies <support@mellanox.com>

sub 1024g/09FCC269 2013-08-11


Step 6. Update the apt-get cache.

# sudo apt-get update
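Step 3 can be scripted in the same way. A minimal sketch with a hypothetical function name, writing the repository line to the given file; the package path must be absolute so that the result matches the "deb file:/..." form above. Note that the line must not start with "#", which apt would treat as a comment.

```shell
# Hypothetical helper: write_mlnx_apt_list <list-file> <package-dir>
# <package-dir> must be an absolute path to the extracted MLNX_OFED package.
write_mlnx_apt_list() {
  printf 'deb file:%s/DEBS ./\n' "$2" > "$1"
}
```

Usage: write_mlnx_apt_list /etc/apt/sources.list.d/mlnx_ofed.list <path to extracted MLNX_OFED package>, then run apt-get update as in Step 6.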

4.1.5.6 Installing MLNX_OFED Using the apt-get Tool

After setting up the apt-get repository for MLNX_OFED package, perform the following:

Step 1. View the available package groups by invoking:

# apt-cache search mlnx-ofed

mlnx-ofed-vma-eth - MLNX_OFED vma-eth installer package (with DKMS support)

mlnx-ofed-hpc - MLNX_OFED hpc installer package (with DKMS support)

mlnx-ofed-vma-vpi - MLNX_OFED vma-vpi installer package (with DKMS support)

mlnx-ofed-basic - MLNX_OFED basic installer package (with DKMS support)

mlnx-ofed-vma - MLNX_OFED vma installer package (with DKMS support)

mlnx-ofed-all - MLNX_OFED all installer package (with DKMS support)

Where:

mlnx-ofed-all MLNX_OFED all installer package.

mlnx-ofed-basic MLNX_OFED basic installer package.

mlnx-ofed-vma MLNX_OFED vma installer package.

mlnx-ofed-hpc MLNX_OFED HPC installer package.

mlnx-ofed-vma-eth MLNX_OFED vma-eth installer package.

mlnx-ofed-vma-vpi MLNX_OFED vma-vpi installer package.


Step 2. Install the desired group.

# apt-get install '<group name>'


Example:

# apt-get install mlnx-ofed-all

Installing MLNX_OFED using the “apt-get” tool does not automatically update the firmware.

To update the firmware to the version included in MLNX_OFED package, run:

# apt-get install mlnx-fw-updater

OR:

Update the firmware to the latest version available on Mellanox Technologies’ Web site as described in Section 4.1.6, “Updating Firmware After Installation”.

4.1.5.7 Uninstalling Mellanox OFED Using the apt-get Tool

Use the script /usr/sbin/ofed_uninstall.sh to uninstall the Mellanox OFED package. The script is part of the ofed-scripts package.

4.1.6 Updating Firmware After Installation

The firmware can be updated either manually or automatically (upon system boot), as described in the sections below.


4.1.6.1 Updating the Device Online

To update the device online from the Mellanox site, use the following command line:

mlxfwmanager --online -u -d <device>

Example:

mlxfwmanager --online -u -d 0000:09:00.0

Querying Mellanox devices firmware ...

Device #1:

----------

Device Type: ConnectX-5

Part Number: MCX545A-ECAN

Description: ConnectX®-5 VPI network interface card for OCP , with

host management, EDR IB (100Gb/s) and 100GbE, single-port QSFP28, PCIe3.0

x16, no bracket

PSID: MT_1020120019

PCI Device Name: 0000:09:00.0

Port1 GUID: 0002c9000100d051

Port2 MAC: 0002c9000002

Versions: Current Available

FW 2.32.5000 2.33.5000

Status: Update required

----------

Found 1 device(s) requiring firmware update. Please use -u flag to perform the update.
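To check for a pending update from a script, one option is to read the FW row of the query output. This is a minimal sketch that assumes the row layout shown in the example above ("FW", current version, available version). Other mlxfwmanager releases may format the table differently. fw_versions and fw_update_pending are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: read mlxfwmanager query output on stdin and print
# "<current> <available>" taken from the first row whose first field is "FW".
fw_versions() {
    awk '$1 == "FW" && NF >= 3 { print $2, $3; exit }'
}

# Hypothetical helper: succeed (return 0) only when a FW row was found and
# the current and available versions differ.
fw_update_pending() {
    set -- $(fw_versions)
    [ "$#" -eq 2 ] && [ "$1" != "$2" ]
}
```

For example, `mlxfwmanager --online -d 0000:09:00.0 | fw_update_pending && echo "update available"` queries the device without the -u flag and reports whether the versions differ. Comparing for inequality, rather than ordering the version strings, keeps the sketch simple.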


4.1.6.2 Updating the Device Manually

To update the device manually, please refer to the OEM Firmware Download page.

If you ran the mlnxofedinstall script with the ‘--without-fw-update’ option, or you are using an OEM card and now want to update the firmware on your adapter card(s) manually, perform the steps below. These steps also apply if you want to burn newer firmware that you have downloaded from Mellanox Technologies’ Web site (http://www.mellanox.com > Support > Firmware Download).

Step 1. Get the device’s PSID.

mlxfwmanager_pci | grep PSID

PSID: MT_1210110019

Step 2. Download the firmware BIN file from the Mellanox website or the OEM website.

Step 3. Burn the firmware.

mlxfwmanager_pci -i <fw_file.bin>

Step 4. Reboot your machine after the firmware burning completes.
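Steps 1 to 4 can be wrapped in two small shell functions. This is a sketch under stated assumptions: psid_from_query and burn_fw are hypothetical names, psid_from_query only parses a "PSID: <value>" line like the one in Step 1, and the PSID it prints is what you use to pick the matching BIN file in Step 2. The burn itself is the unchanged mlxfwmanager_pci -i command from Step 3.

```shell
#!/bin/sh
# Hypothetical helper: read "mlxfwmanager_pci" output on stdin and print the
# value of the first "PSID:" line (Step 1).
psid_from_query() {
    awk '$1 == "PSID:" { print $2; exit }'
}

# Hypothetical wrapper for Step 3: check that the BIN file exists, burn it
# with "mlxfwmanager_pci -i", then remind the user to reboot (Step 4).
burn_fw() {
    fw_file="$1"
    if [ ! -f "$fw_file" ]; then
        echo "Firmware file not found: $fw_file" >&2
        return 1
    fi
    mlxfwmanager_pci -i "$fw_file" || return 1
    echo "Firmware burning completed. Reboot the machine for it to take effect."
}
```

A typical sequence is `mlxfwmanager_pci | psid_from_query`, download the BIN file for that PSID, then `burn_fw <fw_file.bin>` and reboot.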

4.1.6.3 Updating the Device Firmware Automatically upon System Boot

As of MLNX_OFED v3.1-x.x.x, firmware can be automatically updated upon system boot.

The firmware update package (mlnx-fw-updater) is installed in the “/opt/mellanox/mlnx-fw-updater” folder, and the openibd service script can invoke the firmware update process on boot if requested.

If the firmware is updated, the following message is printed to the system’s standard logging file:

fw_updater: Firmware was updated. Please reboot your system for the changes to take effect.


Otherwise, the following message is printed:

fw_updater: Didn't detect new devices with old firmware.

Please note that this feature is disabled by default. To enable automatic firmware update upon system boot, set the RUN_FW_UPDATER_ONBOOT parameter to “yes” (“RUN_FW_UPDATER_ONBOOT=yes”) in the openibd service configuration file “/etc/infiniband/openib.conf”.

You can exclude a list of devices from the automatic firmware update procedure. To do so, edit the configuration file “/opt/mellanox/mlnx-fw-updater/mlnx-fw-updater.conf” and provide a comma-separated list of PCI devices to exclude from the firmware update.

Example:

MLNX_EXCLUDE_DEVICES="00:05.0,00:07.0"

4.1.7 UEFI Secure Boot

All kernel modules included in MLNX_OFED for RHEL7 and SLES12 are signed with an x.509 key to support loading the modules when Secure Boot is enabled.


4.1.7.1 Enrolling Mellanox's x.509 Public Key on Your Systems

To support loading MLNX_OFED drivers when an OS supporting Secure Boot boots on a UEFI-based system with Secure Boot enabled, the Mellanox x.509 public key must be added to the UEFI Secure Boot key database and loaded onto the system key ring by the kernel.

Follow the steps below to add the Mellanox x.509 public key to your system.

Before adding the Mellanox x.509 public key to your system, make sure that:

• The 'mokutil' package is installed on your system

• The system is booted in UEFI mode
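Both prerequisites can be checked before running mokutil. This sketch relies on the fact that Linux exposes /sys/firmware/efi only when it was booted in UEFI mode. check_mok_prereqs and the EFI_DIR override are hypothetical names, and EFI_DIR exists only so the check can be exercised on a test path.

```shell
#!/bin/sh
# Hypothetical helper: verify the two prerequisites listed above.
# Returns 0 when both hold, 1 otherwise, printing a reason for each failure.
check_mok_prereqs() {
    # EFI_DIR is a hypothetical override; the default is the real sysfs path.
    efi_dir="${EFI_DIR:-/sys/firmware/efi}"
    ok=0
    if ! command -v mokutil >/dev/null 2>&1; then
        echo "The 'mokutil' package is not installed" >&2
        ok=1
    fi
    if [ ! -d "$efi_dir" ]; then
        echo "System does not appear to be booted in UEFI mode ($efi_dir missing)" >&2
        ok=1
    fi
    return "$ok"
}
```

Run `check_mok_prereqs` before Step 1 and continue only if it returns 0.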

Step 1. Download the x.509 public key.

# wget http://www.mellanox.com/downloads/ofed/mlnx_signing_key_pub.der

Step 2. Add the public key to the MOK list using the mokutil utility.

You will be asked to enter and confirm a password for this MOK enrollment request.

# mokutil --import mlnx_signing_key_pub.der

Step 3. Reboot the system.

The pending MOK key enrollment request will be noticed by shim.efi, which launches MokManager.efi so that you can complete the enrollment from the UEFI console. Enter the password you previously associated with this request and confirm the enrollment. Once done, the public key is added to the MOK list, which is persistent. Once a key is in the MOK list, it is automatically propagated to the system key ring on subsequent boots when UEFI Secure Boot is enabled.


To see what keys have been added to the system key ring on the current boot, install the 'keyutils'

package and run:

# keyctl list %:.system_keyring
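To confirm from a script that the enrolled key reached the system key ring, you can search the keyctl output for the key description. The sketch assumes the description contains the string "Mellanox Technologies signing key", as in the kernel message quoted in Section 4.1.7.2. mellanox_key_loaded is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read "keyctl list %:.system_keyring" output on stdin
# and succeed if any line mentions the Mellanox signing key.
# The match string is an assumption based on the kernel message text.
mellanox_key_loaded() {
    grep -q 'Mellanox Technologies signing key'
}
```

For example: `keyctl list %:.system_keyring | mellanox_key_loaded && echo "Mellanox key is loaded"`.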

4.1.7.2 Removing Signature from Kernel Modules

The signature can be removed from a signed kernel module using the 'strip' utility, which is provided by the 'binutils' package.

# strip -g my_module.ko

The strip utility changes the given file without saving a backup. The operation can be undone only by re-signing the kernel module. Hence, we recommend backing up a copy before removing the signature.
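One way to follow this recommendation is to copy every module into a backup tree before stripping it. In this sketch, backup_modules and BACKUP_DIR are hypothetical names. The function reads module paths on stdin and keeps each file's full path under the backup directory, so a module can be restored by copying it back.

```shell
#!/bin/sh
# Hypothetical helper: copy each kernel module path read from stdin into
# $BACKUP_DIR, preserving its full directory path and file attributes.
backup_modules() {
    backup_dir="${BACKUP_DIR:-/root/ko-backup}"
    while IFS= read -r ko; do
        # Skip empty lines and paths that are not regular files.
        [ -f "$ko" ] || continue
        dest="$backup_dir/$(dirname "$ko")"
        mkdir -p "$dest" || return 1
        cp -p "$ko" "$dest/" || return 1
    done
}
```

For example, feed it the same module list used in Step 1 below before running strip: `rpm -qa | grep -E "kernel-ib|mlnx-ofa_kernel|iser|srp|knem|mlnx-rds|mlnx-nfsrdma|mlnx-nvme|mlnx-rdma-rxe" | xargs rpm -ql | grep "\.ko$" | backup_modules`.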

To remove the signature from the MLNX_OFED kernel modules:

Step 1. Remove the signature.

# rpm -qa | grep -E "kernel-ib|mlnx-ofa_kernel|iser|srp|knem|mlnx-rds|mlnx-nfsrdma|mlnx-nvme|mlnx-rdma-rxe" | xargs rpm -ql | grep "\.ko$" | xargs strip -g

After the signature has been removed, a message such as the following is no longer displayed upon module loading:

"Request for unknown module key 'Mellanox Technologies signing key: 61feb074fc7292f958419386ffdd9d5ca999e403' err -11"


However, a message similar to the following is still displayed:

"my_module: module verification failed: signature and/or required key missing - tainting kernel"

This message is displayed only once per boot, for the first module that either has no signature or whose key is not in the kernel key ring, so it is easy to miss. It does not reappear on repeated tests in which you unload and reload a kernel module until you reboot. There is no way to eliminate this message.

Step 2. Update the initramfs on RHEL systems with the stripped modules.

mkinitrd /boot/initramfs-$(uname -r).img $(uname -r) --force

4.1.8 Performance Tuning

Depending on the applications running on your system, it may be necessary to modify the default configuration of ConnectX®-based network adapters. If tuning is required, refer to the Performance Tuning Guide for Mellanox Network Adapters.


4.2 Windows Driver

The snapshots in the following sections are presented for illustration purposes only. The installation interface may vary slightly, depending on the operating system used.

For Windows, download and install the latest Mellanox WinOF-2 for Windows software package, available on the Mellanox web site at: http://www.mellanox.com => Products => Software => InfiniBand/VPI Drivers => Download. Follow the installation instructions included in the download package (also available from the download page).

4.2.1 Hardware and Software Requirements

Table 8 - Hardware and Software Requirements

Description (a)                     Package
Windows Server 2012 R2              MLNX_WinOF2-1_10_All_x64.exe
Windows Server 2012                 MLNX_WinOF2-1_10_All_x64.exe
Windows Server 2016                 MLNX_WinOF2-1_10_All_x64.exe
Windows 8.1 Client (64 bit only)    MLNX_WinOF2-1_10_All_x64.exe
Windows 10 Client (64 bit only)     MLNX_WinOF2-1_10_All_x64.exe

a. The operating systems listed above must run with administrator privileges.

4.2.2 Downloading Mellanox WinOF-2 Driver

To download the .exe according to your operating system, follow the steps below:

Step 1. Obtain the machine architecture.

1. Go to the Start menu by positioning your mouse in the bottom-right corner of the Remote Desktop screen.

2. Open a CMD console (click Task Manager --> File --> Run new task, and enter CMD).

3. Enter the following command:

echo %PROCESSOR_ARCHITECTURE%

On an x64 (64-bit) machine, the output will be “AMD64”.

Step 2. Go to the Mellanox WinOF-2 web page at:

http://www.mellanox.com => Products => InfiniBand/VPI Drivers => Windows SW/Drivers.

Step 3. Download the .exe image according to the architecture of your machine (see Step 1).

The name of the .exe is in the following format:

MLNX_WinOF2-<version>_x.exe


Installing the incorrect .exe file is prohibited. If you attempt to do so, an error message is displayed. For example, if you try to install a 64-bit .exe on a 32-bit machine, the wizard displays the following (or a similar) error message:

“The installation package is not supported by this processor type. Contact your vendor”

4.2.3 Installing Mellanox WinOF-2 Driver

WinOF-2 supports only adapter cards based on the Mellanox ConnectX®-4 family of adapter IC devices and above. If you have ConnectX-3 or ConnectX-3 Pro adapters on your server, you need to install the WinOF driver instead.