Optimization Toolbox™ 5

User’s Guide

Page 2

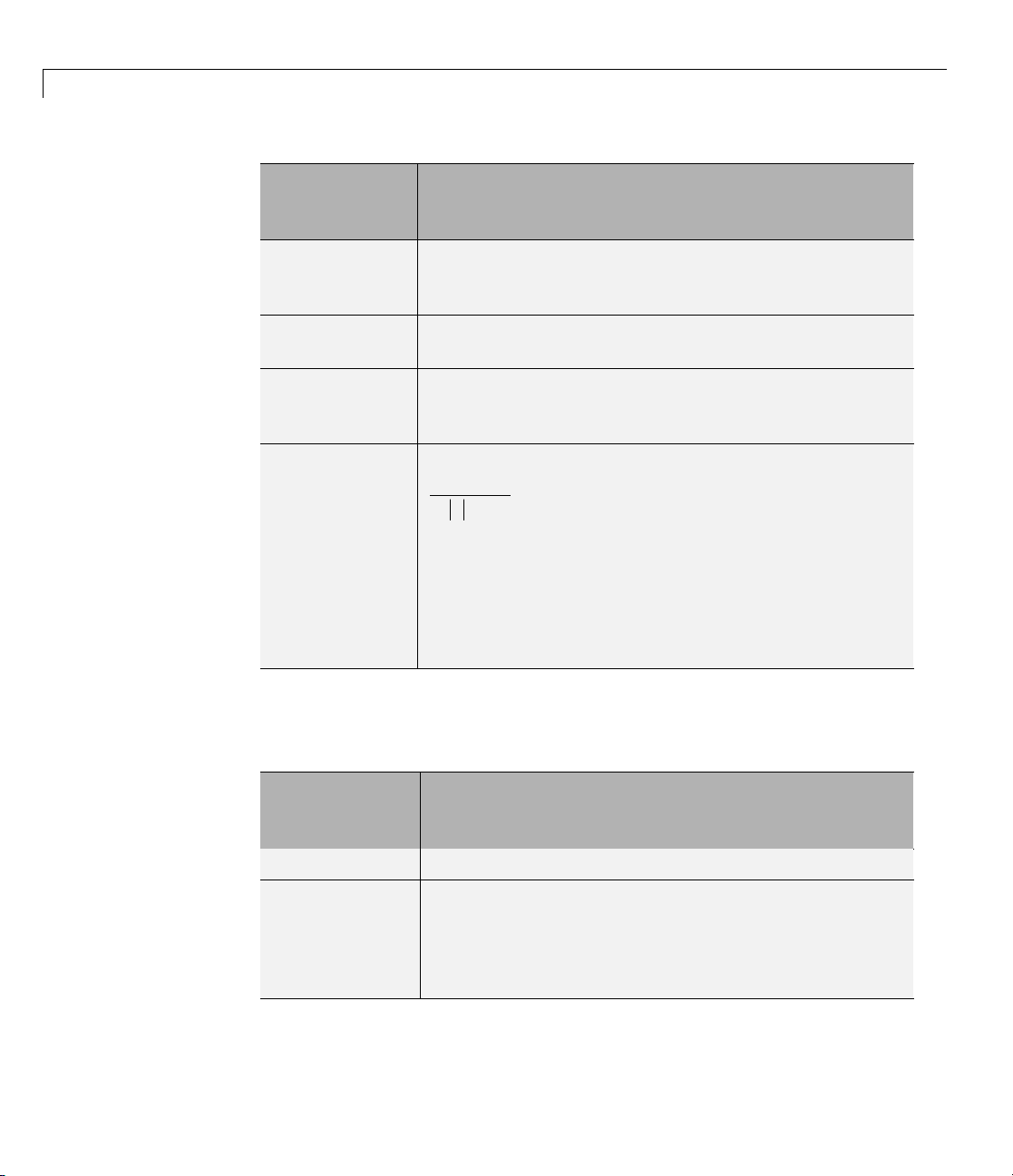

How to Contact The MathWorks

www.mathworks.

comp.soft-sys.matlab Newsgroup

www.mathworks.com/contact_TS.html T echnical Support

suggest@mathworks.com Product enhancement suggestions

bugs@mathwo

doc@mathworks.com Documentation error reports

service@mathworks.com Order status, license renewals, passcodes

info@mathwo

com

rks.com

rks.com

Web

Bug reports

Sales, prici

ng, and general information

508-647-7000 (Phone)

508-647-7001 (Fax)

The MathWorks, Inc.

3 Apple Hill Drive

Natick, MA 01760-2098

For contact information about worldwide offices, see the MathWorks Web site.

Optimization Toolbox™ User’s Guide

© COPYRIGHT 1990–2010 by The MathWorks, Inc.

The software described in this document is furnished under a license agreement. The software may be used or copied only under the terms of the license agreement. No part of this manual may be photocopied or reproduced in any form without prior written consent from The MathWorks, Inc.

FEDERAL ACQUISITION: This provision applies to all acquisitions of the Program and Documentation by, for, or through the federal government of the United States. By accepting delivery of the Program or Documentation, the government hereby agrees that this software or documentation qualifies as commercial computer software or commercial computer software documentation as such terms are used or defined in FAR 12.212, DFARS Part 227.72, and DFARS 252.227-7014. Accordingly, the terms and conditions of this Agreement and only those rights specified in this Agreement, shall pertain to and govern the use, modification, reproduction, release, performance, display, and disclosure of the Program and Documentation by the federal government (or other entity acquiring for or through the federal government) and shall supersede any conflicting contractual terms or conditions. If this License fails to meet the government’s needs or is inconsistent in any respect with federal procurement law, the government agrees to return the Program and Documentation, unused, to The MathWorks, Inc.

Trademarks

MATLAB and Simulink are registered trademarks of The MathWorks, Inc. See

www.mathworks.com/trademarks for a list of additional trademarks. Other product or brand

names may be trademarks or registered trademarks of their respective holders.

Patents

The MathWorks products are protected by one or more U.S. patents. Please see

www.mathworks.com/patents for more information.

Page 3

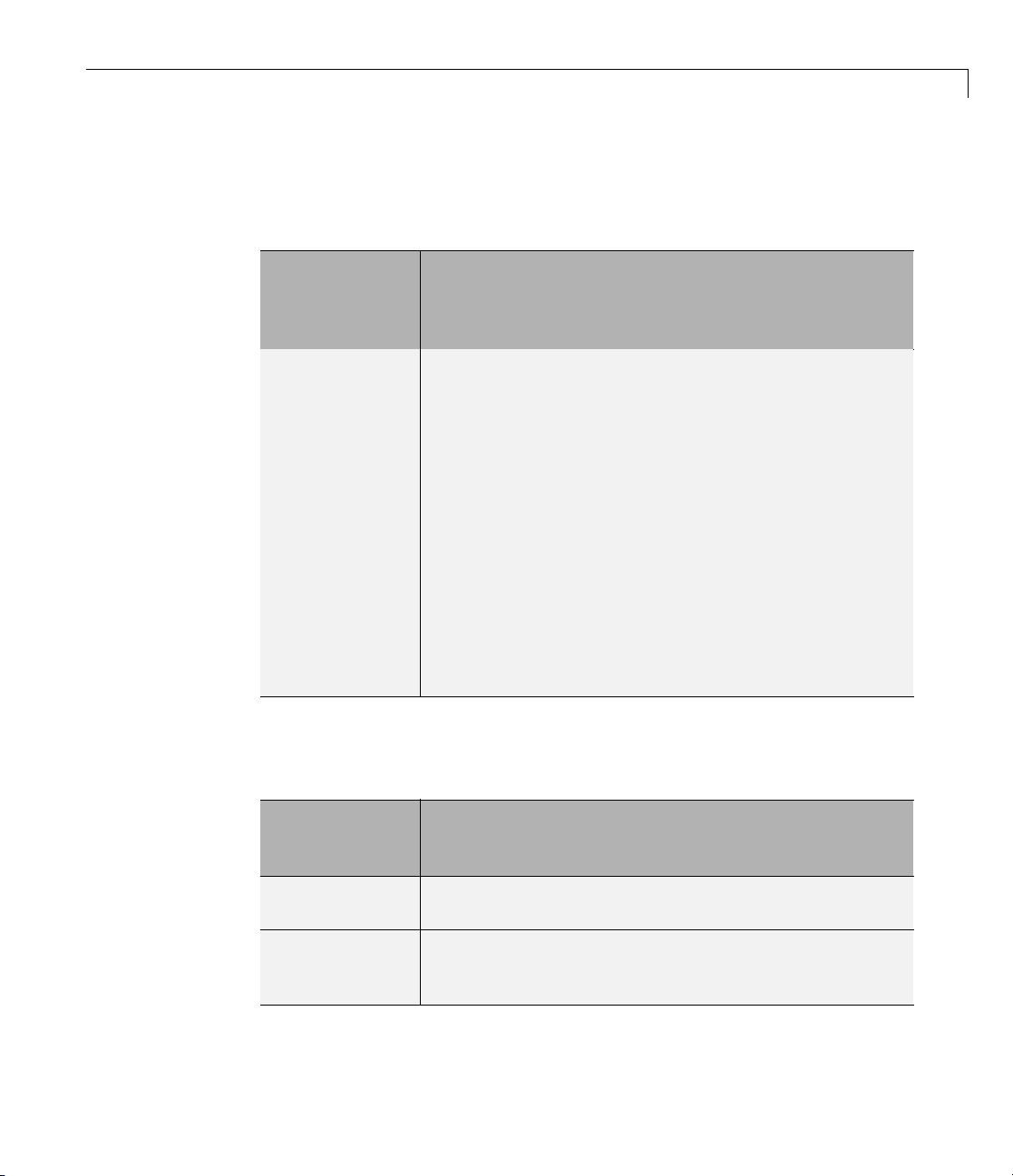

Revision History

November 1990 First printing

December 1996 Second printing For MATLAB

®

5

January 1999 Third printing For Version 2 (Release 11)

September 2000 Fourth printing For Version 2.1 (Release 12)

June 2001 Online only Revised for Version 2.1.1 (Release 12.1)

September 2003 Online only Revised for Version 2.3 (Release 13SP1)

June 2004 Fifth printing Revised for Version 3.0 (Release 14)

October 2004 Online only Revised for Version 3.0.1 (Release 14SP1)

March 2005 Online only Revised for Version 3.0.2 (Release 14SP2)

September 2005 Online only Revised for Version 3.0.3 (Release 14SP3)

March 2006 Online only Revised for Version 3.0.4 (Release 2006a)

September 2006 Sixth printing Revised for Version 3.1 (Release 2006b)

March 2007 Seventh printing Revised for Version 3.1.1 (Release 2007a)

September 2007 Eighth printing Revised for Version 3.1.2 (Release 2007b)

March 2008 Online only Revised for Version 4.0 (Release 2008a)

October 2008 Online only Revised for Version 4.1 (Release 2008b)

March 2009 Online only Revised for Version 4.2 (Release 2009a)

September 2009 Online only Revised for Version 4.3 (Release 2009b)

March 2010 Online only Revised for Version 5.0 (Release 2010a)


Acknowledgments

The MathWorks™ would like to acknowledge the following contributors to Optimization Toolbox™ algorithms.

Thomas F. Coleman researched and contributed algorithms for constrained and unconstrained minimization, nonlinear least squares and curve fitting, constrained linear least squares, quadratic programming, and nonlinear equations.

Dr. Coleman is Dean of Faculty of Mathematics and Professor of Combinatorics and Optimization at University of Waterloo.

Dr. Coleman has published 4 books and over 70 technical papers in the areas of continuous optimization and computational methods and tools for large-scale problems.

Yin Zhang researched and contributed the large-scale linear programming algorithm.

Dr. Zhang is Professor of Computational and Applied Mathematics on the faculty of the Keck Center for Interdisciplinary Bioscience Training at Rice University.

Dr. Zhang has published over 50 technical papers in the areas of interior-point methods for linear programming and computational mathematical programming.


Contents

1 Getting Started

Product Overview

Introduction

Optimization Functions

Optimization Tool GUI

Example: Nonlinear Constrained Minimization

Problem Formulation: Rosenbrock’s Function

Defining the Problem in Toolbox Syntax

Running the Optimization

Interpreting the Result

2 Setting Up an Optimization

Introduction to Optimization Toolbox Solvers

Choosing a Solver

Optimization Decision Table

Problems Handled by Optimization Toolbox Functions

Writing Objective Functions

Writing Objective Functions

Jacobians of Vector and Matrix Objective Functions

Anonymous Function Objectives

Maximizing an Objective

Writing Constraints

Types of Constraints

Iterations Can Violate Constraints

Bound Constraints

Linear Inequality Constraints

Linear Equality Constraints

Nonlinear Constraints

An Example Using All Types of Constraints

Passing Extra Parameters

Anonymous Functions

Nested Functions

Global Variables

Setting Options

Default Options

Changing the Default Settings

Tolerances and Stopping Criteria

Checking Validity of Gradients or Jacobians

Choosing the Algorithm

Examining Results

3

Current Point and Function Value .................. 3-2

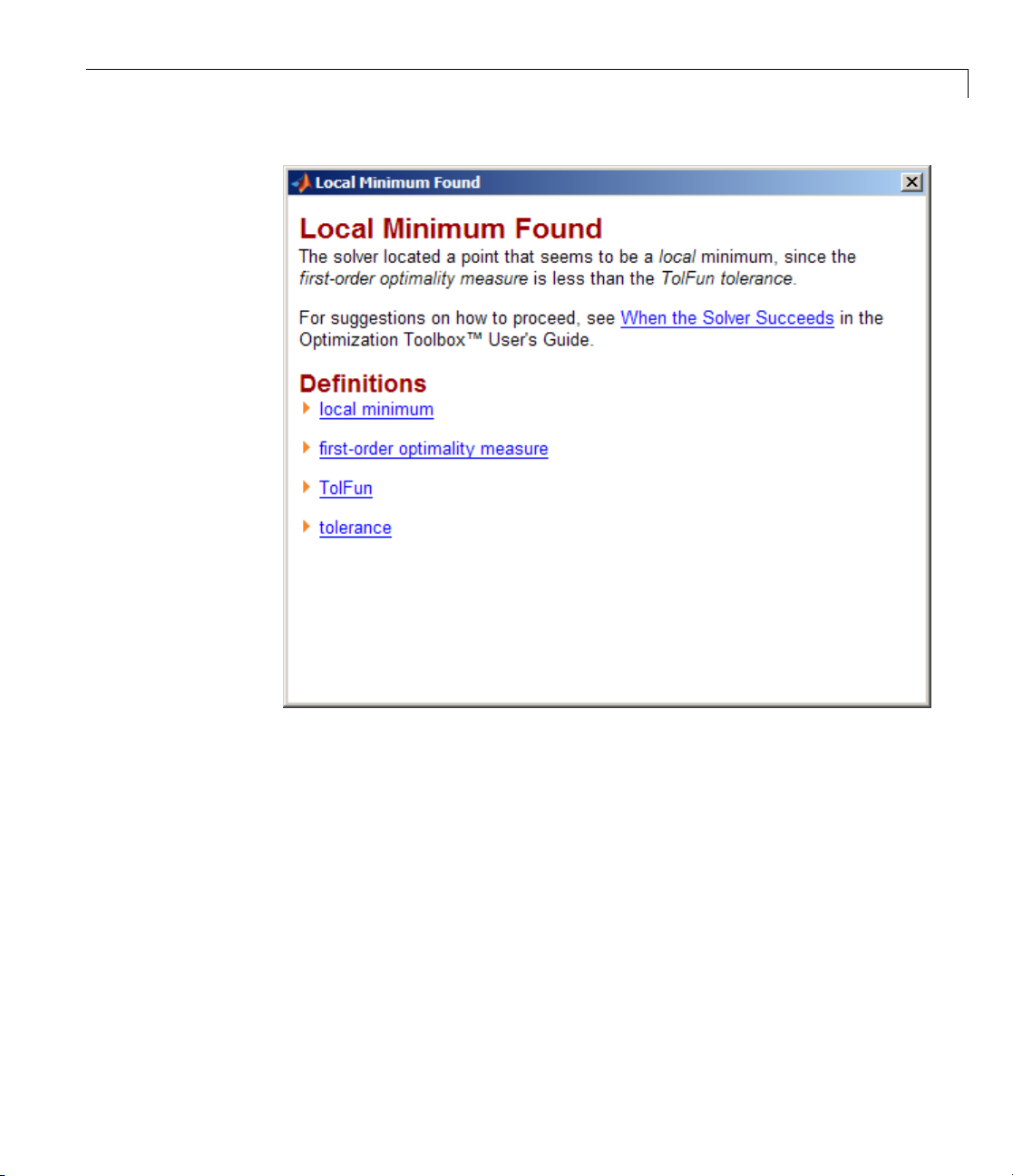

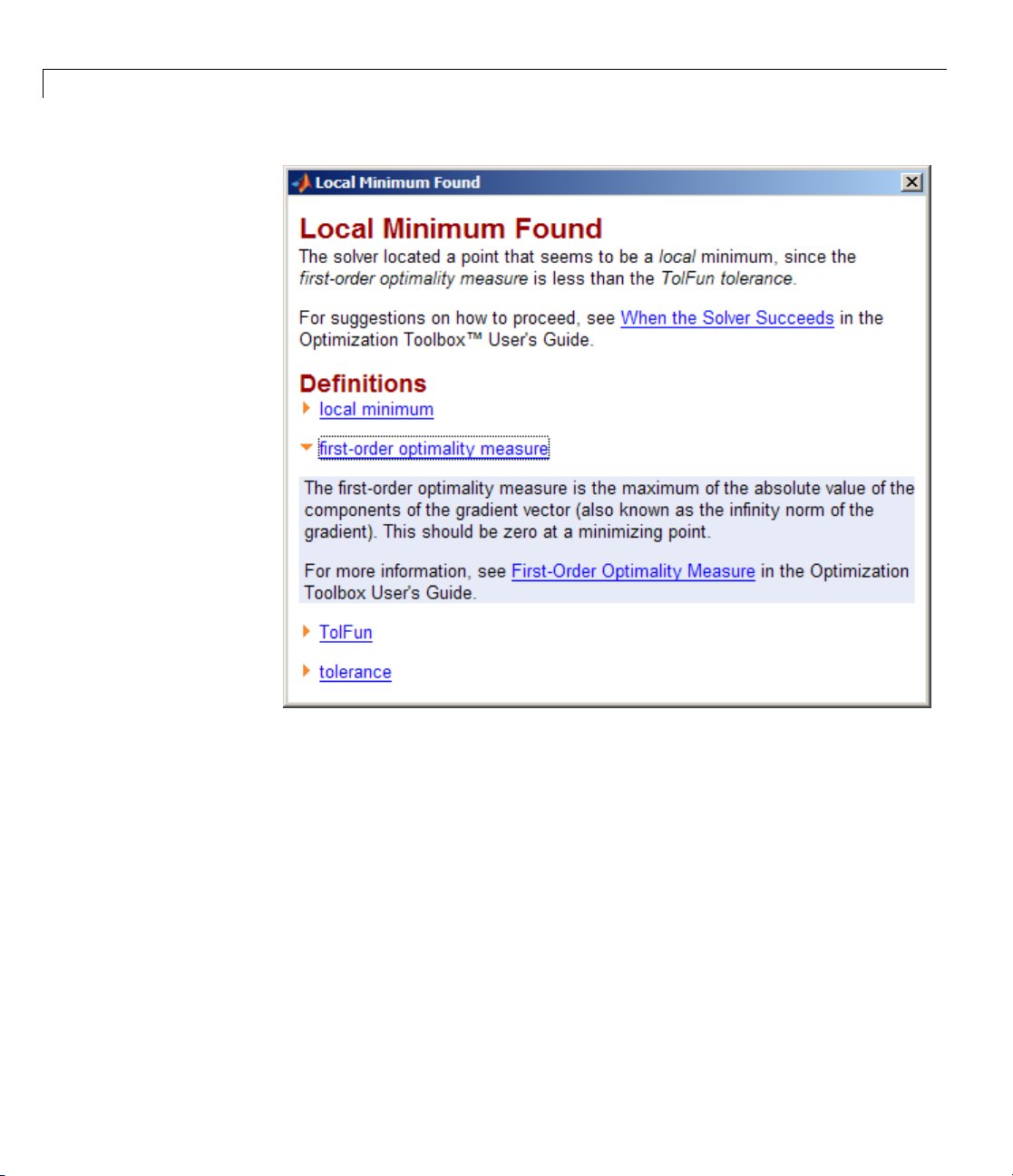

Exit Flags and Exit Messages

Exit Flags

Exit Messages

Enhanced Exit Messages

Exit Message Options

........................................ 3-3

.................................... 3-5

.............................. 3-8

....................... 3-3

........................... 3-5

viii Contents

Iterations and Function C ounts

First-Order Optimality Measure

Unconstrained Optimality

Constrained Optimality—Theory

Constrained Optimality in Solver Form

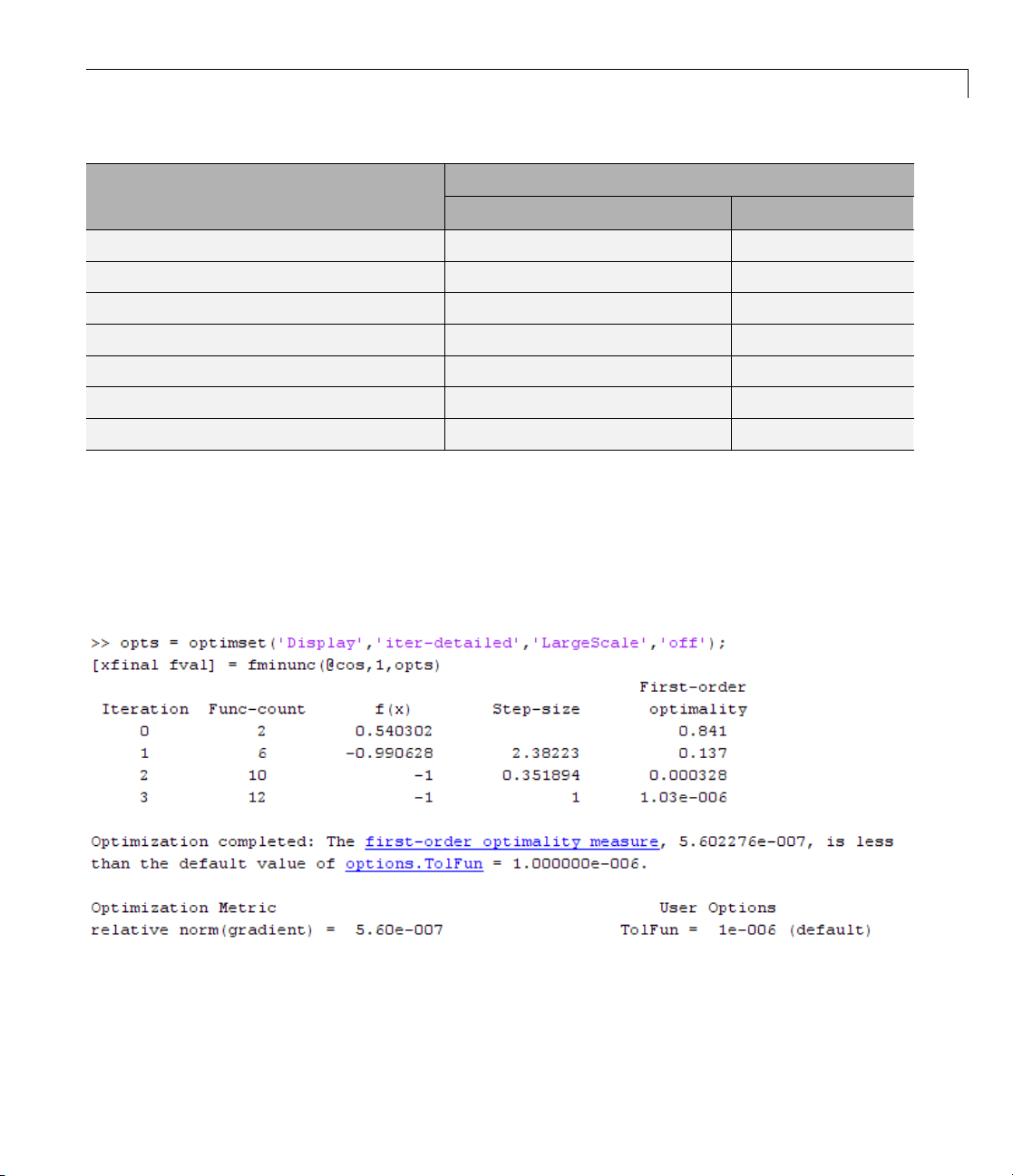

Displaying Iterative Output

Introduction

Most Common Output Headings

...................................... 3-14

.......................... 3-11

........................ 3-14

..................... 3-10

.................... 3-11

..................... 3-11

............... 3-13

..................... 3-14

Function-Specific Output Headings

Output Structures

Lagrange Multiplier Structures

Hessian

fminunc Hessian

fmincon Hessian

Plot Functions

Example: Using a Plot Function

Output Functions

Introduction

Example: Using Output Functions

4 Steps to Take After Running a Solver

Overview of Next Steps

When the Solver Fails

Too Many Iterations or Function Evaluations

No Feasible Point

Problem Unbounded

fsolve Could Not Solve Equation

Solver Takes Too Long

When the Solver Might Have Succeeded

Final Point Equals Initial Point

Local Minimum Possible

When the Solver Succeeds

What Can Be Wrong If The Solver Succeeds?

1. Change the Initial Point

2. Check Nearby Points

3. Check your Objective and Constraint Functions

Local vs. Global Optima

5 Optimization Tool

Getting Started with the Optimization Tool

Introduction

Opening the Optimization Tool

Steps for Using the Optimization Tool

Running a Problem in the Optimization Tool

Introduction

Pausing and Stopping the Algorithm

Viewing Results

Final Point

Starting a New Problem

Closing the Optimization Tool

Specifying Certain Options

Plot Functions

Output function

Display to Command Window

Getting Help in the Optimization Tool

Quick Reference

Additional Help

Importing and Exporting Your Work

Exporting to the MATLAB Workspace

Importing Your Work

Generating a File

Optimization Tool Examples

About Optimization Tool Examples

Optimization Tool with the fmincon Solver

Optimization Tool with the lsqlin Solver

6 Optimization Algorithms and Examples

Optimization Theory Overview

Unconstrained Nonlinear Optimization

Definition

Large Scale fminunc Algorithm

Medium Scale fminunc Algorithm

fminsearch Algorithm

Unconstrained Nonlinear Optimization Examples

Example: fminunc Unconstrained Minimization

Example: Nonlinear Minimization with Gradient and Hessian

Example: Nonlinear Minimization with Gradient and Hessian Sparsity Pattern

Constrained Nonlinear Optimization

Definition

fmincon Trust Region Reflective Algorithm

fmincon Active Set Algorithm

fmincon SQP Algorithm

fmincon Interior Point Algorithm

fminbnd Algorithm

fseminf Problem Formulation and Algorithm

Constrained Nonlinear Optimization Examples

Example: Nonlinear Inequality Constraints

Example: Bound Constraints

Example: Constraints With Gradients

Example: Constrained Minimization Using fmincon’s Interior-Point Algorithm With Analytic Hessian

Example: Equality and Inequality Constraints

Example: Nonlinear Minimization with Bound Constraints and Banded Preconditioner

Example: Nonlinear Minimization with Equality Constraints

Example: Nonlinear Minimization with a Dense but Structured Hessian and Equality Constraints

Example: Using Symbolic Math Toolbox Functions to Calculate Gradients and Hessians

Example: One-Dimensional Semi-Infinite Constraints

Example: Two-Dimensional Semi-Infinite Constraint

Linear Programming

Definition

Large Scale Linear Programming

Active-Set Medium-Scale linprog Algorithm

Medium-Scale linprog Simplex Algorithm

Linear Programming Examples

Example: Linear Programming with Equalities and Inequalities

Example: Linear Programming with Dense Columns in the Equalities

Quadratic Programming

Definition

Large-Scale quadprog Algorithm

Medium-Scale quadprog Algorithm

Quadratic Programming Examples

Example: Quadratic Minimization with Bound Constraints

Example: Quadratic Minimization with a Dense but Structured Hessian

Binary Integer Programming

Definition

bintprog Algorithm

Binary Integer Programming Example

Example: Investments with Constraints

Least Squares (Model Fitting)

Definition

Large-Scale Least Squares

Levenberg-Marquardt Method

Gauss-Newton Method

Least Squares (Model Fitting) Examples

Example: Using lsqnonlin With a Simulink Model

Example: Nonlinear Least-Squares with Full Jacobian Sparsity Pattern

Example: Linear Least-Squares with Bound Constraints

Example: Jacobian Multiply Function with Linear Least Squares

Example: Nonlinear Curve Fitting with lsqcurvefit

Multiobjective Optimization

Definition

Algorithms

Multiobjective Optimization Examples

Example: Using fminimax with a Simulink Model

Example: Signal Processing Using fgoalattain

Equation Solving

Definition

Trust-Region Dogleg Method

Trust-Region Reflective fsolve Algorithm

Levenberg-Marquardt Method

Gauss-Newton Method

\ Algorithm

fzero Algorithm

Equation Solving Examples

Example: Nonlinear Equations with Analytic Jacobian

Example: Nonlinear Equations with Finite-Difference Jacobian

Example: Nonlinear Equations with Jacobian

Example: Nonlinear Equations with Jacobian Sparsity Pattern

Selected Bibliography

7 Parallel Computing for Optimization

Parallel Computing in Optimization Toolbox Functions

Parallel Optimization Functionality

Parallel Estimation of Gradients

Nested Parallel Functions

Using Parallel Computing with fmincon, fgoalattain, and fminimax

Using Parallel Computing with Multicore Processors

Using Parallel Computing with a Multiprocessor Network

Testing Parallel Computations

Improving Performance with Parallel Computing

Factors That Affect Speed

Factors That Affect Results

Searching for Global Optima

8 External Interface

ktrlink: An Interface to KNITRO Libraries

What Is ktrlink?

Installation and Configuration

Example Using ktrlink

Setting Options

Sparse Matrix Considerations

9 Argument and Options Reference

Function Arguments

Input Arguments

Output Arguments

Optimization Options

Options Structure

Output Function

Plot Functions

10 Function Reference

Minimization

Equation Solving

Least Squares (Curve Fitting)

GUI

Utilities

11 Functions — Alphabetical List

A Examples

Constrained Nonlinear Examples

Least Squares Examples

Unconstrained Nonlinear Examples

Linear Programming Examples

Quadratic Programming Examples

Binary Integer Programming Examples

Multiobjective Examples

Equation Solving Examples

Index

1 Getting Started

• “Product Overview” on page 1-2

• “Example: Nonlinear Constrained Minimization” on page 1-4


Product Overview

Introduction

Optimization Toolbox provides widely used algorithms for standard and large-scale optimization. These algorithms solve constrained and unconstrained continuous and discrete problems. The toolbox includes functions for linear programming, quadratic programming, binary integer programming, nonlinear optimization, nonlinear least squares, systems of nonlinear equations, and multiobjective optimization. You can use them to find optimal solutions, perform tradeoff analyses, balance multiple design alternatives, and incorporate optimization methods into algorithms and models.

In this section...

“Introduction” on page 1-2

“Optimization Functions” on page 1-2

“Optimization Tool GUI” on page 1-3

Key features:

• Interactive tools for defining and solving optimization problems and monitoring solution progress

• Solvers for nonlinear and multiobjective optimization

• Solvers for nonlinear least squares, data fitting, and nonlinear equations

• Methods for solving quadratic and linear programming problems

• Methods for solving binary integer programming problems

• Parallel computing support in selected constrained nonlinear solvers

Optimization Functions

Most toolbox functions are MATLAB® function files, made up of MATLAB statements that implement specialized optimization algorithms. You can view the code for these functions using the statement

type function_name

You can extend the capabilities of Optimization Toolbox software by writing your own functions, or by using the software in combination with other toolboxes, or with the MATLAB or Simulink® environments.

Optimization Tool GUI

Optimization Tool (optimtool) is a graphical user interface (GUI) for selecting a toolbox function, specifying optimization options, and running optimizations. It provides a convenient interface for all optimization routines, including those from Global Optimization Toolbox software, which is licensed separately.

Optimization Tool makes it easy to

• Define and modify problems quickly

• Use the correct syntax for optimization functions

• Import and export from the MATLAB workspace

• Generate code containing your configuration for a solver and options

• Change parameters of an optimization during the execution of certain Global Optimization Toolbox functions


Example: Nonlinear Constrained Minimization

In this section...

“Problem Formulation: Rosenbrock’s Function” on page 1-4

“Defining the Problem in Toolbox Syntax” on page 1-5

“Running the Optimization” on page 1-7

“Interpreting the Result” on page 1-12

Problem Formulation: Rosenbrock’s Function

Consider the problem of minimizing Rosenbrock’s function

$$f(x) = 100\left(x_2 - x_1^2\right)^2 + \left(1 - x_1\right)^2$$

over the unit disk, i.e., the disk of radius 1 centered at the origin. In other words, find x that minimizes the function f(x) over the set $x_1^2 + x_2^2 \le 1$. This problem is a minimization of a nonlinear function with a nonlinear constraint.

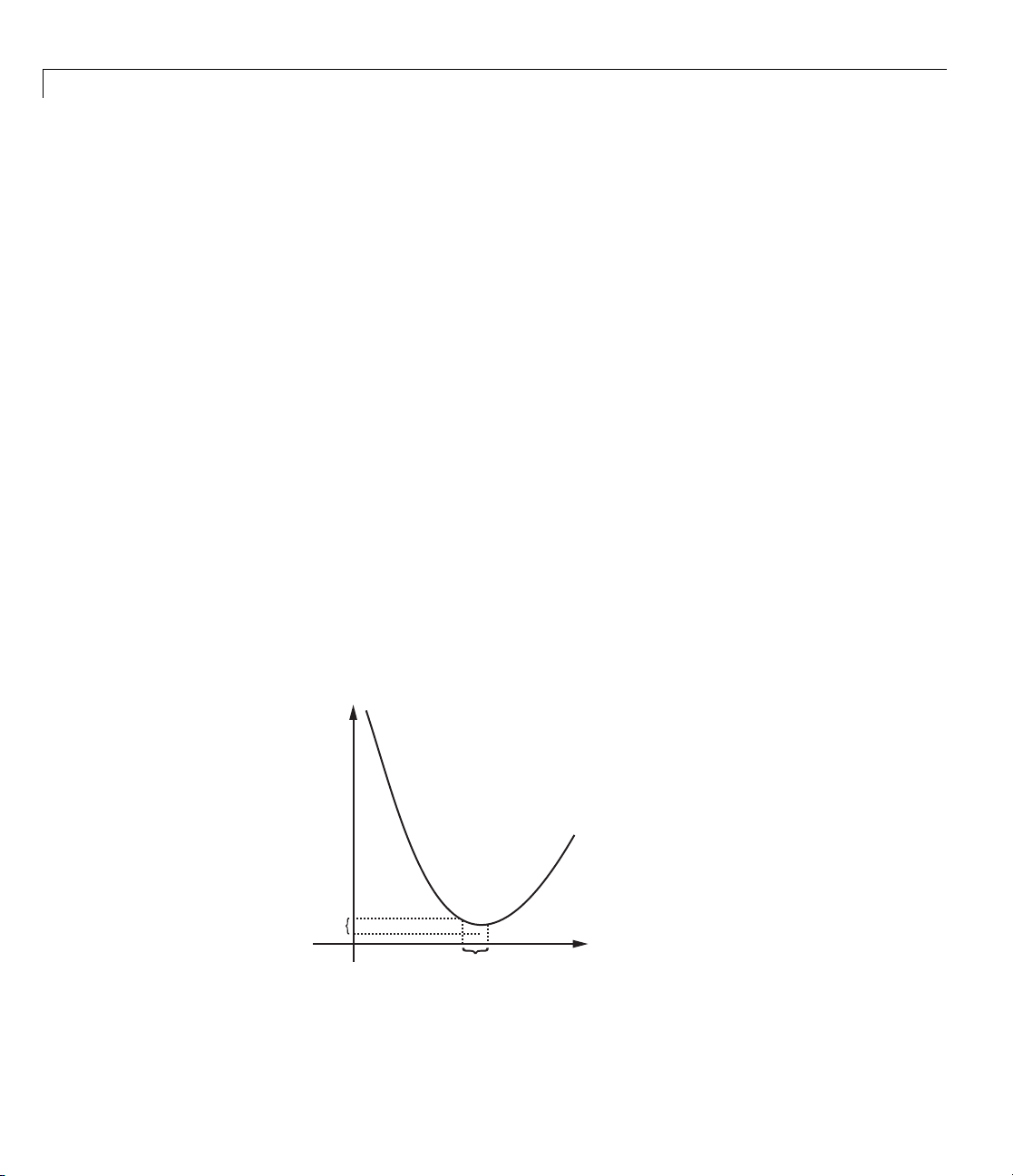

Note Rosenbrock’s function is a standard test function in optimization. It

has a unique minimum value of 0 attained at the point (1,1). Finding the

minimum is a challenge for some algorithms since it has a shallow minimum

inside a deeply curved valley.
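As a quick numerical check of the note above, the following minimal sketch evaluates Rosenbrock’s function outside MATLAB. It is written in Python (standard library only) purely for illustration. The name rosenbrock mirrors the MATLAB file created later in this chapter but is otherwise our own, and (0.7864, 0.6177) is the rounded constrained minimizer that this chapter reports.

```python
def rosenbrock(x):
    """Rosenbrock's function f(x) = 100*(x2 - x1^2)^2 + (1 - x1)^2."""
    x1, x2 = x
    return 100.0 * (x2 - x1**2) ** 2 + (1.0 - x1) ** 2


# Unconstrained minimum: f = 0 at (1, 1).
print(rosenbrock((1.0, 1.0)))                 # 0.0
# The start point used later in this chapter.
print(rosenbrock((0.0, 0.0)))                 # 1.0
# The rounded constrained minimizer reported later in this chapter.
print(round(rosenbrock((0.7864, 0.6177)), 4))  # 0.0457
```

The point (1, 1) lies outside the unit disk because 1² + 1² = 2. That is why the constrained minimum value is about 0.0457 rather than 0.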

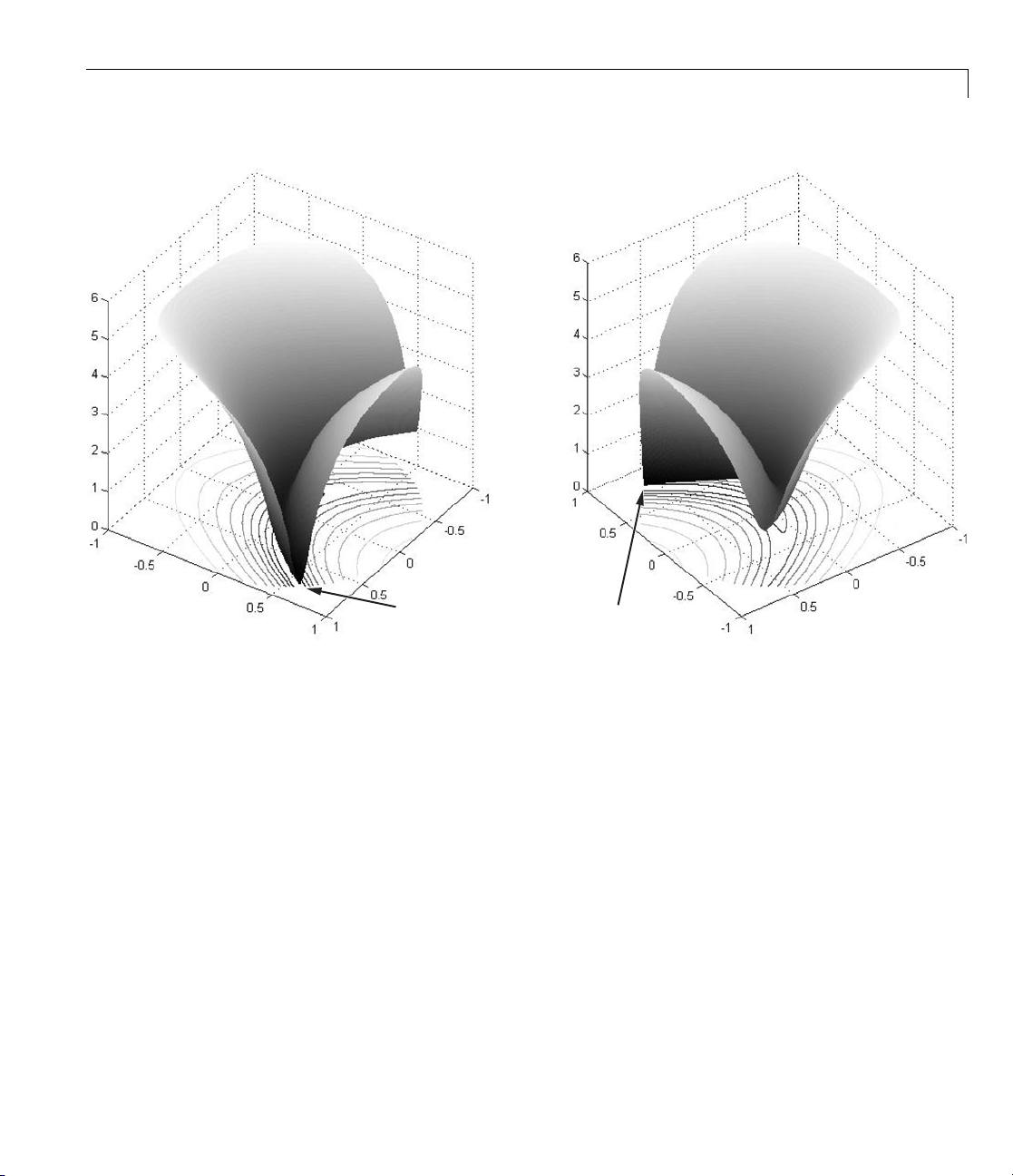

Here are two views of Rosenbrock’s function in the unit disk. The vertical axis is log-scaled; in other words, the plot shows log(1 + f(x)). Contour lines lie beneath the surface plot.

Rosenbrock’s function, log-scaled: two views (minimum at (.7864, .6177)).

The function f(x) is called the objective function. This is the function you wish to minimize. The inequality $x_1^2 + x_2^2 \le 1$ is called a constraint. Constraints limit the set of x over which you may search for a minimum. You can have any number of constraints, which are inequalities or equations.

All Optimization Toolbox optimization functions minimize an objective function. To maximize a function f, apply an optimization routine to minimize –f. For more details about maximizing, see “Maximizing an Objective” on page 2-16.

Defining the Problem in Toolbox Syntax

To use Optimization Toolbox software, you need to

1 Define your objective function in the MATLAB language, as a function file

or anonymous function. This example will use a function file.

2 Define your constraint(s) as a separate file or anonymous function.

Function File for Objective Function

A function file is a text file containing MATLAB commands with the extension .m. Create a new function file in any text editor, or use the built-in MATLAB Editor as follows:

1 At the command line enter:

edit rosenbrock

The MATLAB Editor opens.

2 In the editor enter:

function f = rosenbrock(x)

f = 100*(x(2) - x(1)^2)^2 + (1 - x(1))^2;

3 Save the file by selecting File > Save.

File for Constraint Function

Constraint functions must be formulated so that they are in the form c(x) ≤ 0 or ceq(x) = 0. The constraint $x_1^2 + x_2^2 \le 1$ needs to be reformulated as $x_1^2 + x_2^2 - 1 \le 0$ in order to have the correct syntax.

Furthermore, toolbox functions that accept nonlinear constraints need to have both equality and inequality constraints defined. In this example there is only an inequality constraint, so you must pass an empty array [] as the equality constraint function ceq.

With these considerations in mind, write a function file for the nonlinear constraint:

1 Create a file named unitdisk.m containing the following code:

function [c, ceq] = unitdisk(x)

c = x(1)^2 + x(2)^2 - 1;

ceq = [ ];

2 Save the file unitdisk.m.
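To check feasibility by hand, the same c(x) ≤ 0, ceq(x) = 0 convention can be sketched in Python (standard library only). This is an illustrative translation, not toolbox code. unitdisk mirrors the MATLAB file above, and an empty Python list stands in for MATLAB’s [ ]. The helper is_feasible is hypothetical. Its tolerance of 1e-6 is an assumption, chosen to match the TolCon value that appears in this chapter’s command-line output.

```python
def unitdisk(x):
    """Return (c, ceq) for the unit-disk constraint.

    c is x1^2 + x2^2 - 1, so the constraint holds when c <= 0.
    ceq is an empty list (the analogue of MATLAB's ceq = [ ]) because
    this problem has no equality constraints.
    """
    x1, x2 = x
    c = x1**2 + x2**2 - 1.0
    ceq = []
    return c, ceq


def is_feasible(x, tol=1e-6):
    """Hypothetical helper: True if c(x) <= tol and every |ceq_i(x)| <= tol."""
    c, ceq = unitdisk(x)
    return c <= tol and all(abs(v) <= tol for v in ceq)


print(unitdisk((0.0, 0.0)))      # (-1.0, [])
print(is_feasible((0.6, 0.8)))   # True: on the boundary, up to rounding
print(is_feasible((1.0, 1.0)))   # False: outside the disk
```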

Running the Optimization

There are two ways to run the optimization:

• Using the Optimization Tool graphical user interface (GUI); see “Optimization Tool” on page 1-7.

• Using command line functions; see “Minimizing at the Command Line” on page 1-11.

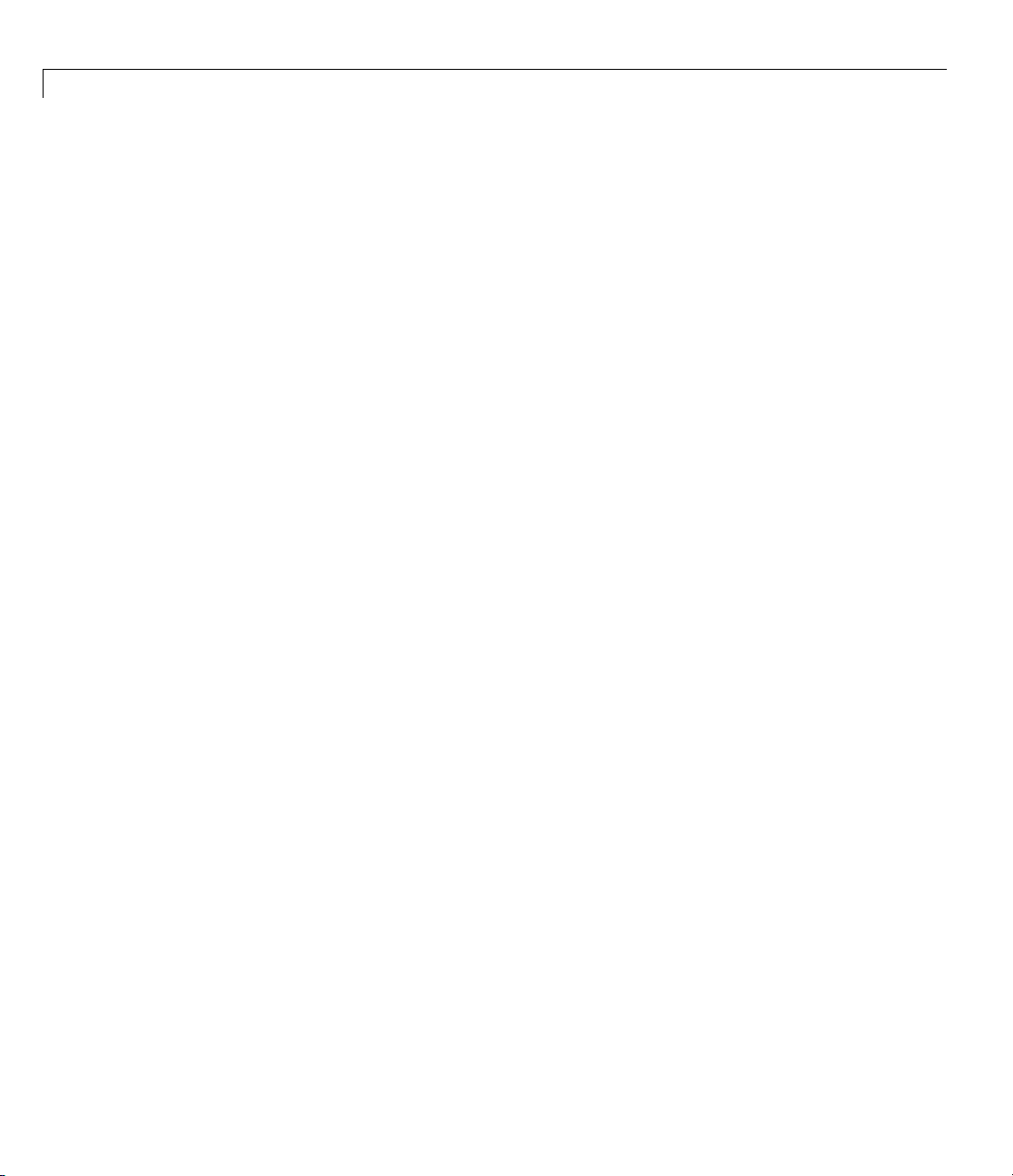

Optimization Tool

1 Start the Optimization Tool by typing optimtool at the command line.

The following GUI opens.

For more information about this tool, see Chapter 5, “Optimization Tool”.

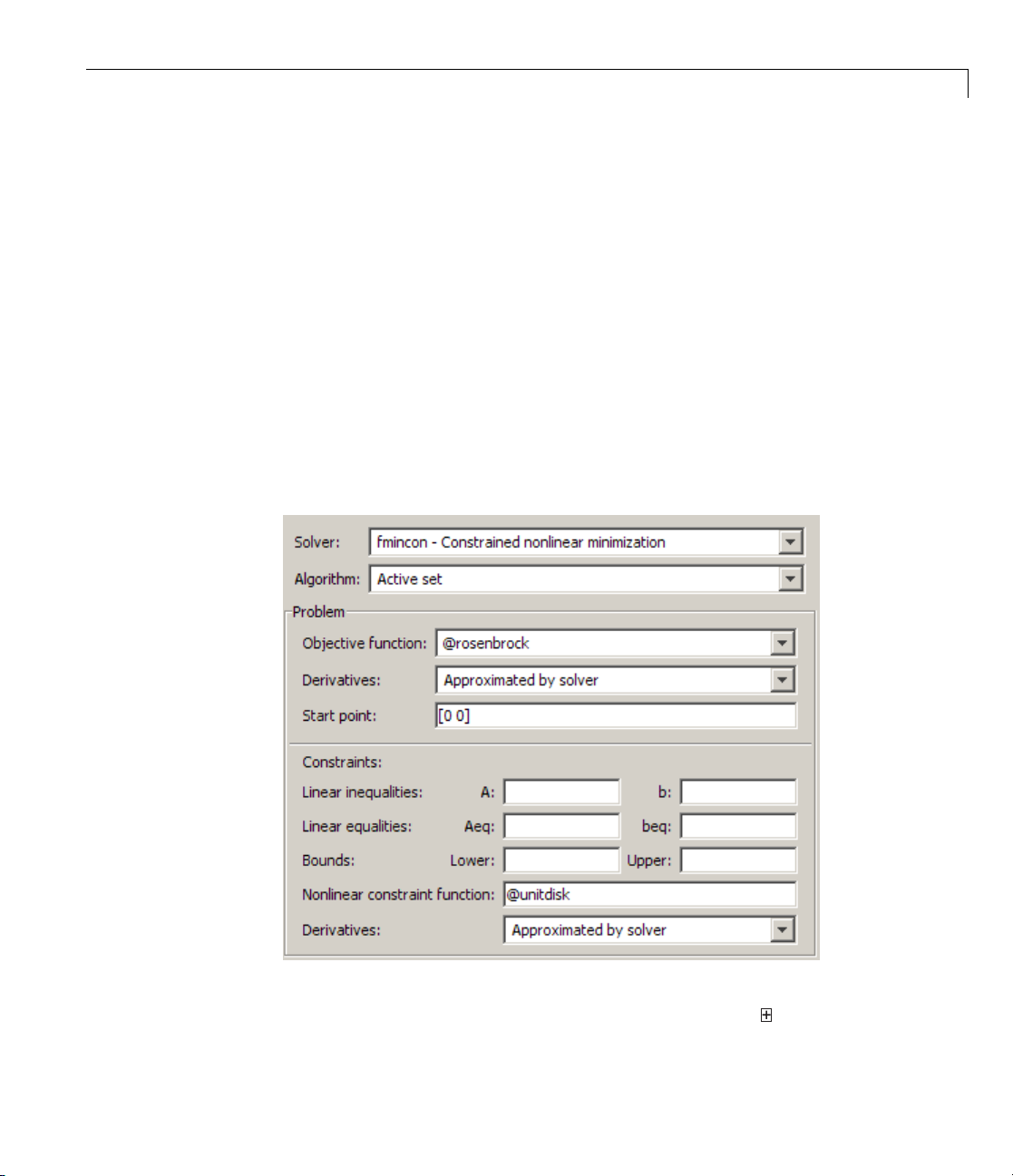

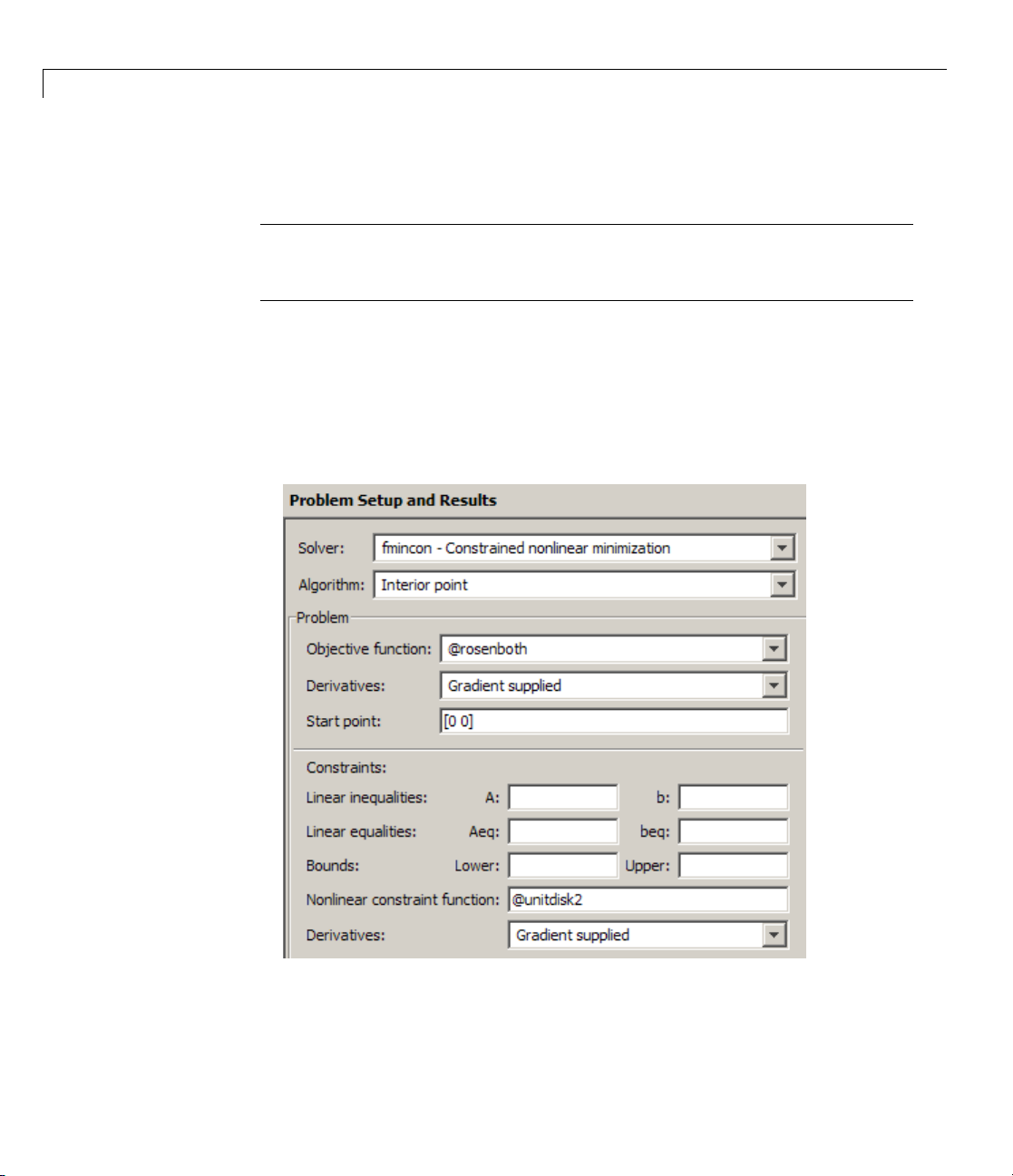

2 The default Solver fmincon - Constrained nonlinear minimization is selected. This solver is appropriate for this problem, since Rosenbrock’s function is nonlinear, and the problem has a constraint. For more information about how to choose a solver, see “Choosing a Solver” on page 2-4.

3 In the Algorithm pop-up menu choose Active set, because the default Trust region reflective algorithm doesn’t handle nonlinear constraints.

4 For Objective function enter @rosenbrock. The @ character indicates that this is a function handle of the file rosenbrock.m.

5 For Start point enter [0 0]. This is the initial point where fmincon begins its search for a minimum.

6 For Nonlinear constraint function enter @unitdisk, the function handle of unitdisk.m.

Your Problem Setup and Results pane should match this figure.

7 In the Options pane (center bottom), select iterative in the Level of display pop-up menu. (If you don’t see the option, click Display to command window.) This shows the progress of fmincon in the command window.

8 Click Start under Run solver and view results.

The following message appears in the box below the Start button:

Optimization running.

Objective function value: 0.045674808692966654

Local minimum possible. Constraints satisfied.

fmincon stopped because the predicted change in the objective function

is less than the default value of the function tolerance and constraints

were satisfied to within the default value of the constraint tolerance.

Your objective function value may differ slightly, depending on your computer system and version of Optimization Toolbox software.

The message tells you that:

• The search for a constrained optimum ended because the derivative of the objective function is nearly 0 in directions allowed by the constraint.

• The constraint is very nearly satisfied.

“Exit Flags and Exit Messages” on page 3-3 discusses exit messages such as these.

The minimizer x appears under Final point.
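Like the other minimizers, fmincon searches for a local minimum near the start point, so a different start point can lead to a different answer. The Python sketch below (standard library only) illustrates this effect. It runs fixed-step gradient descent on the one-variable function f(x) = (x² - 1)², which has local minima at x = -1 and x = +1. Gradient descent is a stand-in chosen for brevity, not the algorithm fmincon uses. The step size and iteration count are assumptions tuned for this particular function.

```python
def grad_descent(fprime, x0, step=0.05, iters=1000):
    """Fixed-step gradient descent from x0 (illustration only, not fmincon)."""
    x = x0
    for _ in range(iters):
        x -= step * fprime(x)
    return x


def f(x):
    """Double-well function with local minima at x = -1 and x = +1."""
    return (x**2 - 1.0) ** 2


def fprime(x):
    """Derivative of f."""
    return 4.0 * x * (x**2 - 1.0)


for x0 in (0.5, -0.5, 1.5):
    xmin = grad_descent(fprime, x0)
    print(f"start {x0:+.1f} -> x = {xmin:+.6f}, f(x) = {f(xmin):.1e}")
```

Starting points on either side of x = 0 end at different minima, even though each run stops at a point with f(x) essentially 0.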

Page 27

Example: Nonlinear Constrained Minimization

Minimizing at the Command Line

You can run the same optimization from the command line, as follows.

1 Create an options structure to choose iterative display and the active-set

algorithm:

options = optimset('Display','iter','Algorithm','active-set');

2 Run the fmincon solver with the options structure, reporting both the location x of the minimizer and the value fval attained by the objective function:

[x,fval] = fmincon(@rosenbrock,[0 0],...

[],[],[],[],[],[],@unitdisk,options)

The six sets of empty brackets represent optional constraints that are not being used in this example. See the fmincon function reference pages for the syntax.

MATLAB outputs a table of iterations, and the results of the optimization:

Local minimum possible. Constraints satisfied.

fmincon stopped because the predicted change in the objective function

is less than the default value of the function tolerance and constraints

were satisfied to within the default value of the constraint tolerance.

<stopping criteria details>

Active inequalities (to within options.TolCon = 1e-006):

lower upper ineqlin ineqnonlin

1

x =

0.7864 0.6177

fval =

0.0457

The message tells you that the search for a constrained optimum ended

because the derivative of the objective function is nearly 0 in directions

allowed by the constraint, and that the constraint is very nearly satisfied.

Several phrases in the message contain links that give you more information about the terms used in the message. For more details about these links, see “Enhanced Exit Messages” on page 3-5.
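The fmincon call above asks a constrained solver for three things: an objective, a feasible set, and a start point. The minimal sketch below makes this concrete by solving the same problem with projected gradient descent. Each step moves downhill on Rosenbrock’s function and then pulls the point back onto the unit disk. This is a deliberately simple substitute, not fmincon’s active-set algorithm. It is written in Python (standard library only). The step size of 1e-3 and the 20000 iterations are assumptions chosen by hand for this problem, and the projection is this easy only because the feasible set is a disk.

```python
import math


def rosenbrock(x1, x2):
    return 100.0 * (x2 - x1**2) ** 2 + (1.0 - x1) ** 2


def rosenbrock_grad(x1, x2):
    """Analytic gradient of Rosenbrock's function."""
    g1 = -400.0 * x1 * (x2 - x1**2) - 2.0 * (1.0 - x1)
    g2 = 200.0 * (x2 - x1**2)
    return g1, g2


def project_to_unit_disk(x1, x2):
    """Closest point of the disk x1^2 + x2^2 <= 1 to (x1, x2)."""
    r = math.hypot(x1, x2)
    if r <= 1.0:
        return x1, x2
    return x1 / r, x2 / r


def projected_gradient(x0, step=1e-3, iters=20000):
    """Fixed-step projected gradient descent (illustrative, not fmincon)."""
    x1, x2 = project_to_unit_disk(*x0)
    for _ in range(iters):
        g1, g2 = rosenbrock_grad(x1, x2)
        x1, x2 = project_to_unit_disk(x1 - step * g1, x2 - step * g2)
    return x1, x2


x = projected_gradient((0.0, 0.0))
print("x =", [round(v, 4) for v in x])
print("fval =", round(rosenbrock(*x), 4))
```

Starting from [0 0], this sketch should land close to the minimizer (0.7864, 0.6177) and objective value 0.0457 reported above. It uses 20000 gradient evaluations to get there, whereas fmincon reports 79 function evaluations for this problem.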

Interpreting the Result

The iteration table in the command window shows how MATLAB searched for the minimum value of Rosenbrock’s function in the unit disk. This table is the same whether you use Optimization Tool or the command line. MATLAB reports the minimization as follows:

| Iter | F-count | f(x) | Max constraint | Line search steplength | Directional derivative | First-order optimality | Procedure |
|---|---|---|---|---|---|---|---|
| 0 | 3 | 1 | -1 | | | | |
| 1 | 9 | 0.953127 | -0.9375 | 0.125 | -2 | 12.5 | |
| 2 | 16 | 0.808446 | -0.8601 | 0.0625 | -2.41 | 12.4 | |
| 3 | 21 | 0.462347 | -0.836 | 0.25 | -12.5 | 5.15 | |
| 4 | 24 | 0.340677 | -0.7969 | 1 | -4.07 | 0.811 | |
| 5 | 27 | 0.300877 | -0.7193 | 1 | -0.912 | 3.72 | |
| 6 | 30 | 0.261949 | -0.6783 | 1 | -1.07 | 3.02 | |
| 7 | 33 | 0.164971 | -0.4972 | 1 | -0.908 | 2.29 | |
| 8 | 36 | 0.110766 | -0.3427 | 1 | -0.833 | 2 | |
| 9 | 40 | 0.0750939 | -0.1592 | 0.5 | -0.5 | 2.41 | |
| 10 | 43 | 0.0580974 | -0.007618 | 1 | -0.284 | 3.19 | |
| 11 | 47 | 0.048247 | -0.003788 | 0.5 | -2.96 | 1.41 | |
| 12 | 51 | 0.0464333 | -0.00189 | 0.5 | -1.23 | 0.725 | |
| 13 | 55 | 0.0459218 | -0.0009443 | 0.5 | -0.679 | 0.362 | |
| 14 | 59 | 0.0457652 | -0.0004719 | 0.5 | -0.4 | 0.181 | |
| 15 | 63 | 0.0457117 | -0.0002359 | 0.5 | -0.261 | 0.0905 | Hessian modified |
| 16 | 67 | 0.0456912 | -0.0001179 | 0.5 | -0.191 | 0.0453 | Hessian modified |
| 17 | 71 | 0.0456825 | -5.897e-005 | 0.5 | -0.156 | 0.0226 | Hessian modified |
| 18 | 75 | 0.0456785 | -2.948e-005 | 0.5 | -0.139 | 0.0113 | Hessian modified |
| 19 | 79 | 0.0456766 | -1.474e-005 | 0.5 | -0.13 | 0.00566 | Hessian modified |

This table might differ from yours depending on toolbox version and computing platform. The following description applies to the table as displayed.

• The first column, labeled Iter, is the iteration number from 0 to 19. fmincon took 19 iterations to converge.

• The second column, labeled F-count, reports the cumulative number of times Rosenbrock’s function was evaluated. The final row shows an F-count of 79, indicating that fmincon evaluated Rosenbrock’s function 79 times in the process of finding a minimum.

• The third column, labeled f(x), displays the value of the objective function. The final value, 0.0456766, is the minimum that is reported in the Optimization Tool Run solver and view results box, and at the end of the exit message in the command window.

• The fourth column, labeled Max constraint, goes from a value of –1 at the initial point to very nearly 0, –1.474e–005, at the final iteration. This column shows the value of the constraint function unitdisk at each iteration. Since the value of unitdisk was nearly 0 at the final iteration, $x_1^2 + x_2^2 \approx 1$ there.

The other columns of the iteration table are described in “Displaying Iterative

Output” on page 3-14.


2

Setting Up an Optimization

• “Introduction to Optimization Toolbox Solvers” on page 2-2

• “Choosing a Solver” on page 2-4

• “Writing Objective Functions” on page 2-10

• “Writing Constraints” on page 2-17

• “Passing Extra Parameters” on page 2-25

• “Setting Options” on page 2-30


Introduction to Optimization Toolbox Solvers

There are four general categories of Optimization Toolbox solvers:

• Minimizers

This group of solvers attempts to find a local minimum of the objective function near a starting point x0. They address problems of unconstrained optimization, linear programming, quadratic programming, and general nonlinear programming.

• Multiobjective minimizers

This group of solvers attempts to either minimize the maximum value of a set of functions (fminimax), or to find a location where a collection of functions is below some prespecified values (fgoalattain).

• Equation solvers

This group of solvers attempts to find a solution to a scalar- or vector-valued nonlinear equation f(x) = 0 near a starting point x0. Equation-solving can be considered a form of optimization because it is equivalent to finding the minimum norm of f(x) near x0.

• Least-Squares (curve-fitting) solvers

This group of solvers attempts to minimize a sum of squares. This type of problem frequently arises in fitting a model to data. The solvers address problems of finding nonnegative solutions, bounded or linearly constrained solutions, and fitting parameterized nonlinear models to data.

For more information see “Problems Handled by Optimization Toolbox Functions” on page 2-7. See “Optimization Decision Table” on page 2-4 for aid in choosing among solvers for minimization.

Minimizers formulate optimization problems in the form

$$\min_x f(x),$$

possibly subject to constraints. f(x) is called an objective function. In general, f(x) is a scalar function of type double, and x is a vector or scalar of type double. However, multiobjective optimization, equation solving, and some sum-of-squares minimizers can have vector or matrix objective functions F(x) of type double. To use Optimization Toolbox solvers for maximization instead of minimization, see “Maximizing an Objective” on page 2-16.

Write the objective function for a solver in the form of a function file or anonymous function handle. You can supply a gradient ∇f(x) for many solvers, and you can supply a Hessian for several solvers. See “Writing Objective Functions” on page 2-10. Constraints have a special form, as described in “Writing Constraints” on page 2-17.

Choosing a Solver

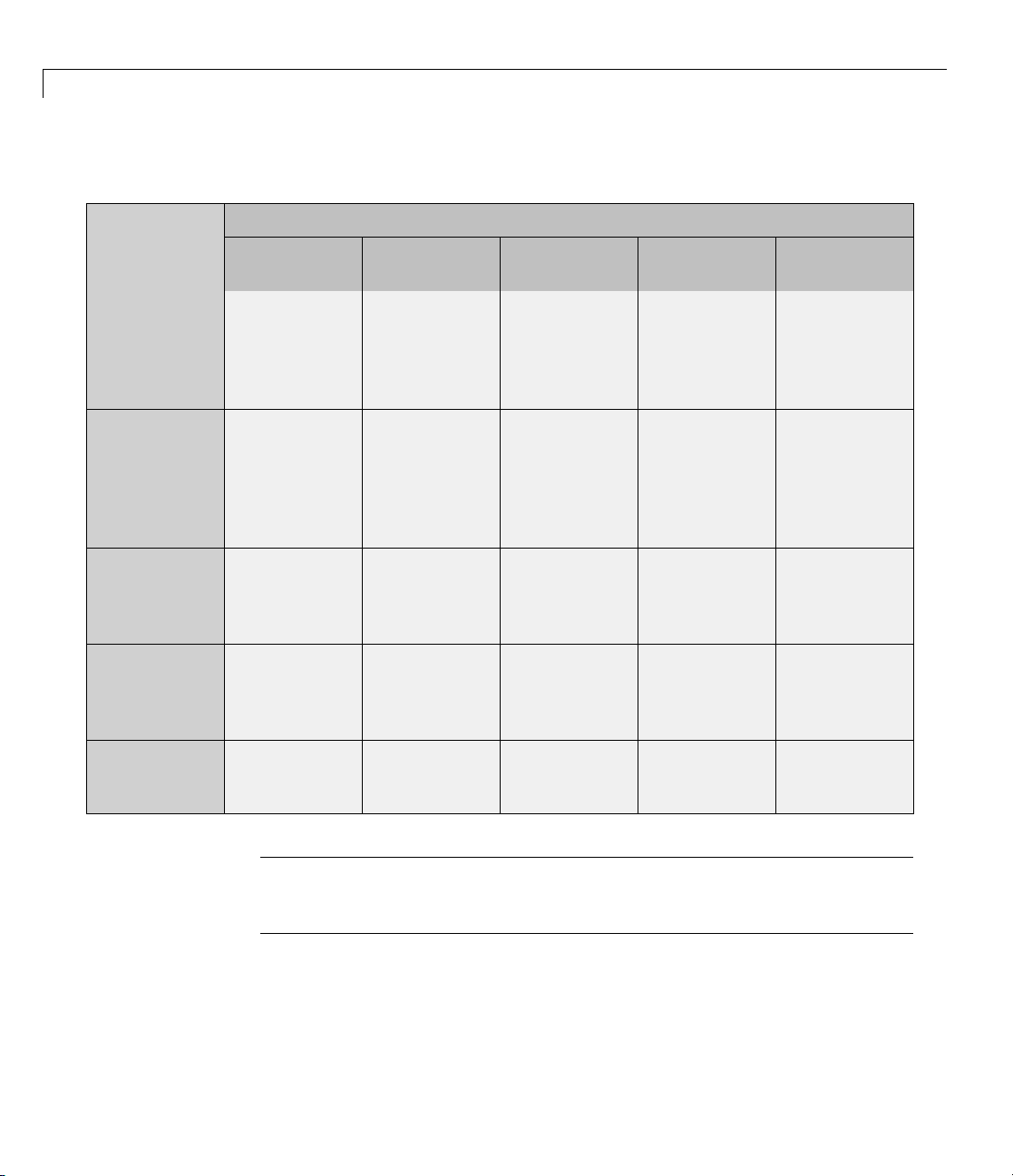

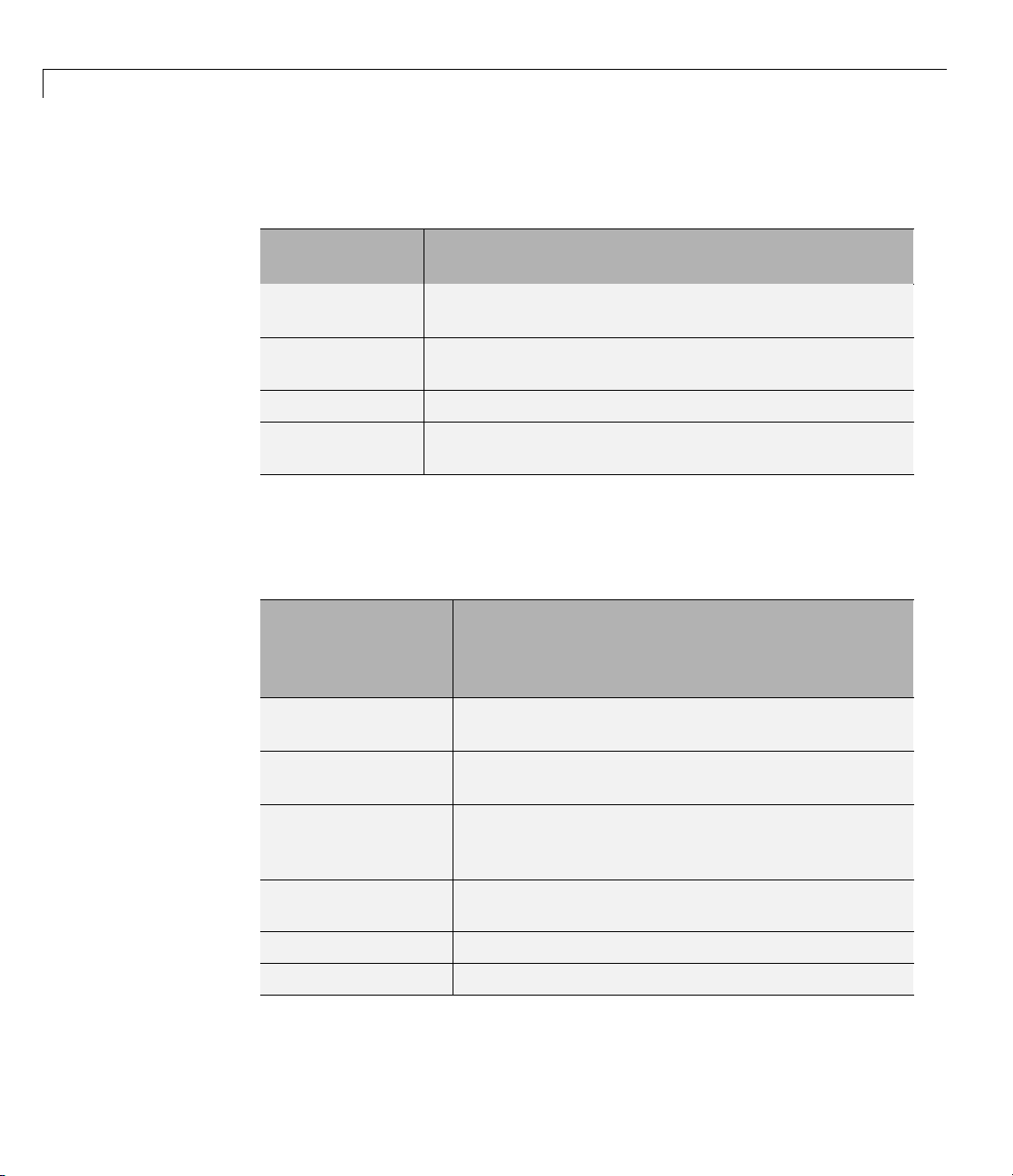

Optimization Decision Table

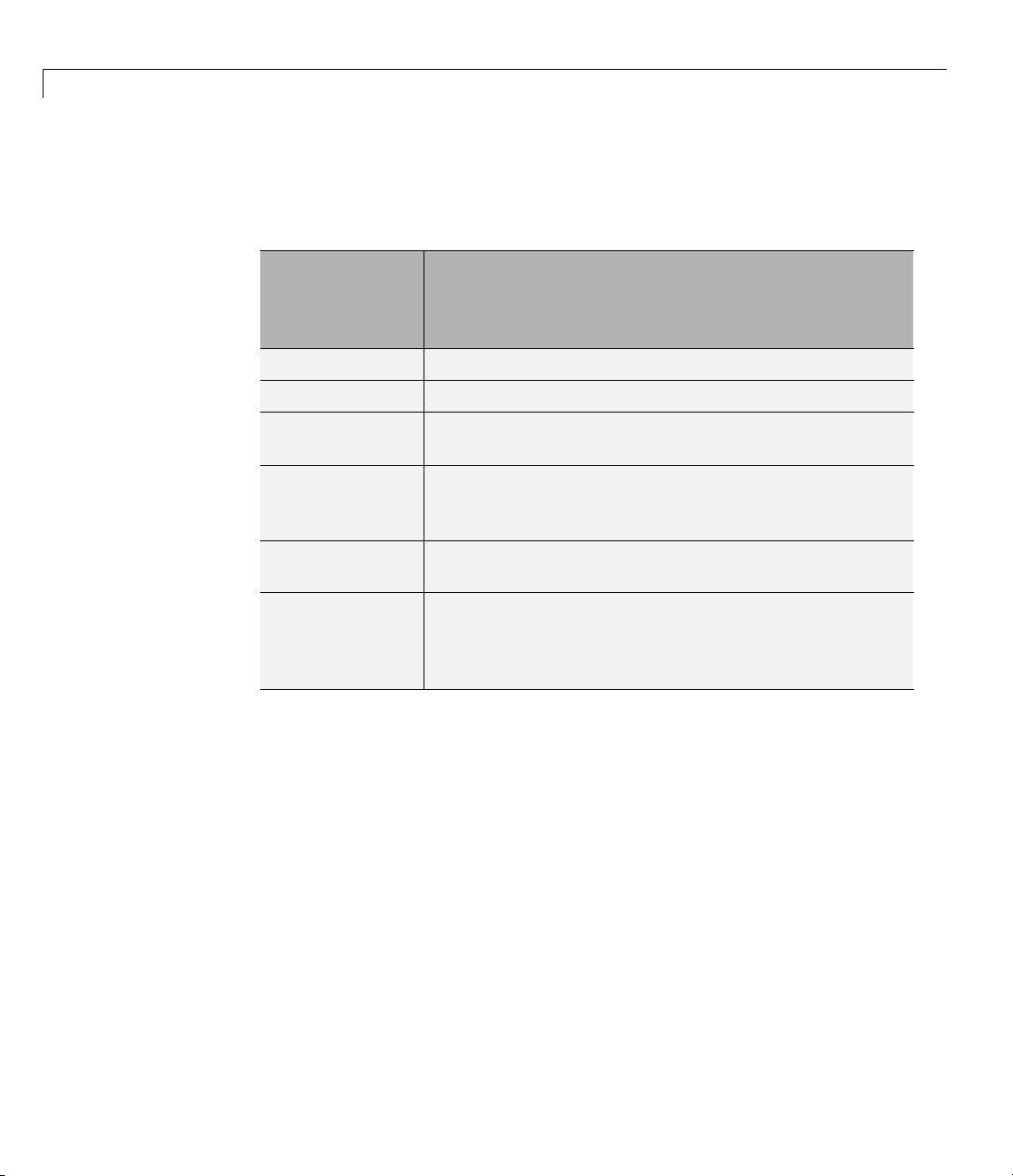

The following table is designed to help you choose a solver. It does not address

multiobjective optimization or equation so lv in g. There are more details on

all the solvers in “Problems Handled by Optimization Toolbox Functions”

on page 2-7.

Usethetableasfollows:

1 Identifyyourobjectivefunctionasoneoffivetypes:

In this section...

“Optimization D ecision Table” on page 2-4

“Problems Handled by Optimization Toolbox Functions” on page 2-7

• Linear

2-4

• Quadratic

• Sum-of-squares (Least squares)

• Smooth nonlinear

• Nonsmooth

2 Identify your constraints as one of five types:

• None (unconstrained)

• Bound

• Linear (including bound)

• General smooth

• Discrete (integer)

3 Use

In

thetabletoidentifyarelevantsolver.

this table:

• Blank entries mean there is no Optimization Toolbox solver specifically designed for this type of problem.

• * means relevant solvers are found in Global Optimization Toolbox functions (licensed separately from Optimization Toolbox solvers).

• fmincon applies to most smooth objective functions with smooth constraints. It is not listed as a preferred solver for least squares or linear or quadratic programming because the listed solvers are usually more efficient.

• The table has suggested functions, but it is not meant to unduly restrict your choices. For example, fmincon is known to be effective on some nonsmooth problems.

• The Global Optimization Toolbox ga function can be programmed to address discrete problems. It is not listed in the table because additional programming is needed to solve discrete problems.

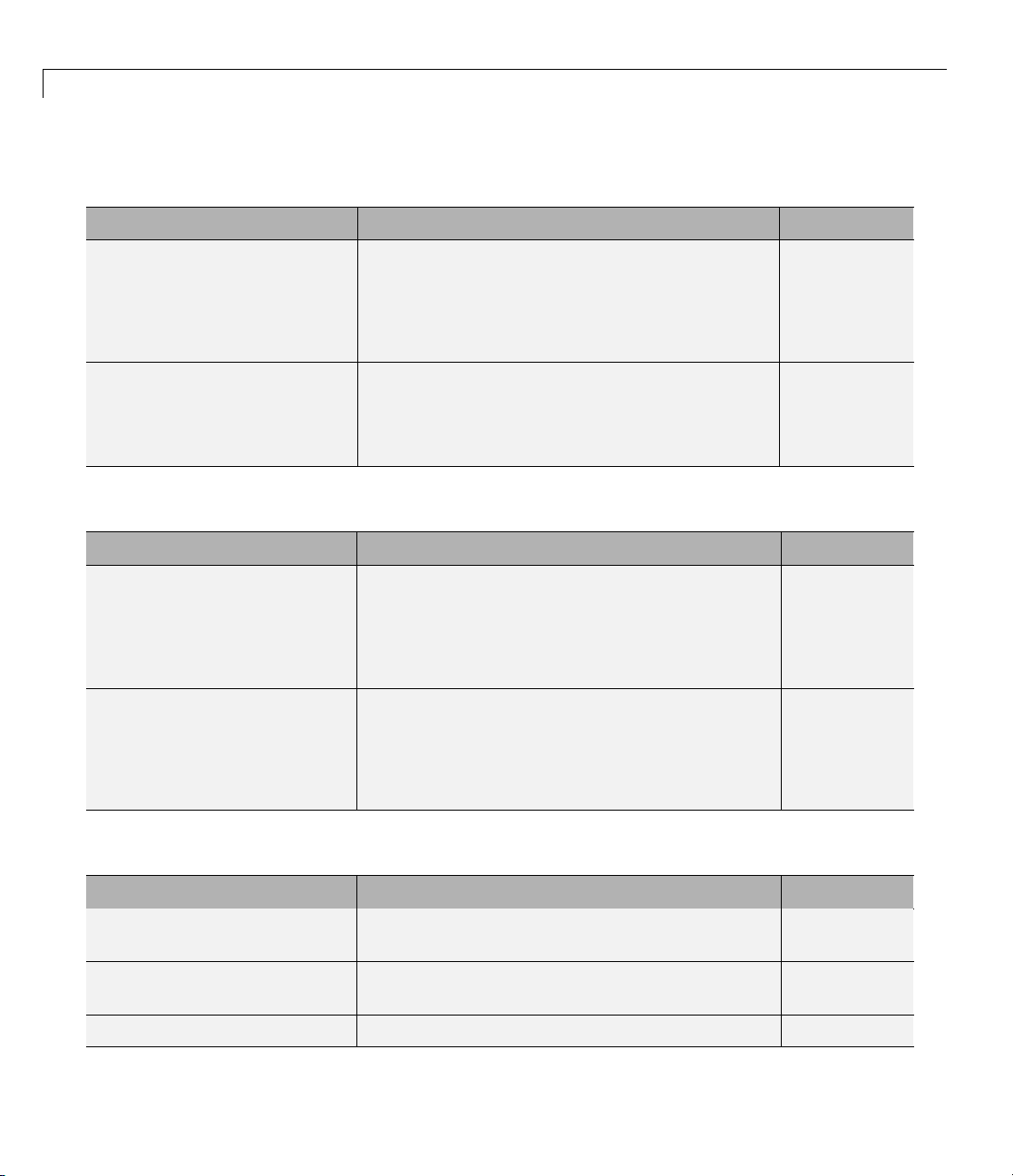

Solvers by Objective and Constraint

Constraint

Type

None

Bound

Linear

General

smooth

Discrete

Linear

n/a (f =const,

or min =

linprog,

−∞

Theory,

Examples

linprog,

Theory,

Examples

fmincon,

Theory,

Examples

bintprog,

Theory,

Example

Quadratic

quadprog,

Theory,

)

Examples

quadprog,

Theory,

Examples

quadprog,

Theory,

Examples

fmincon,

Theory,

Examples

Objective Type

Least

Squares

\,

lsqcurvefit,

lsqnonlin,

Theory,

Examples

lsqcurvefit,

lsqlin,

lsqnonlin,

lsqnonneg,

Theory,

Examples

lsqlin,

Theory,

Examples

fmincon,

Theory,

Examples

Smooth

nonlinear

fminsearch,

fminunc,

Theory,

Examples

fminbnd,

fmincon,

fseminf,

Theory,

Examples

fmincon,

fseminf,

Theory,

Examples

fmincon,

fseminf,

Theory,

Examples

Nonsmooth

fminsearch,*

*

*

*

2-6

Note This table does not list multiobjective solvers nor equation solvers. See

“Problems Handled by Optimization Toolbox Functions” on page 2-7 for a

complete list of problems addressed by O p tim i z a tion Toolbox functions.

Page 37

Note Some solvers have several algorithms. For help cho osing, see “Choosing

the Algorithm” on page 2- 4 5.

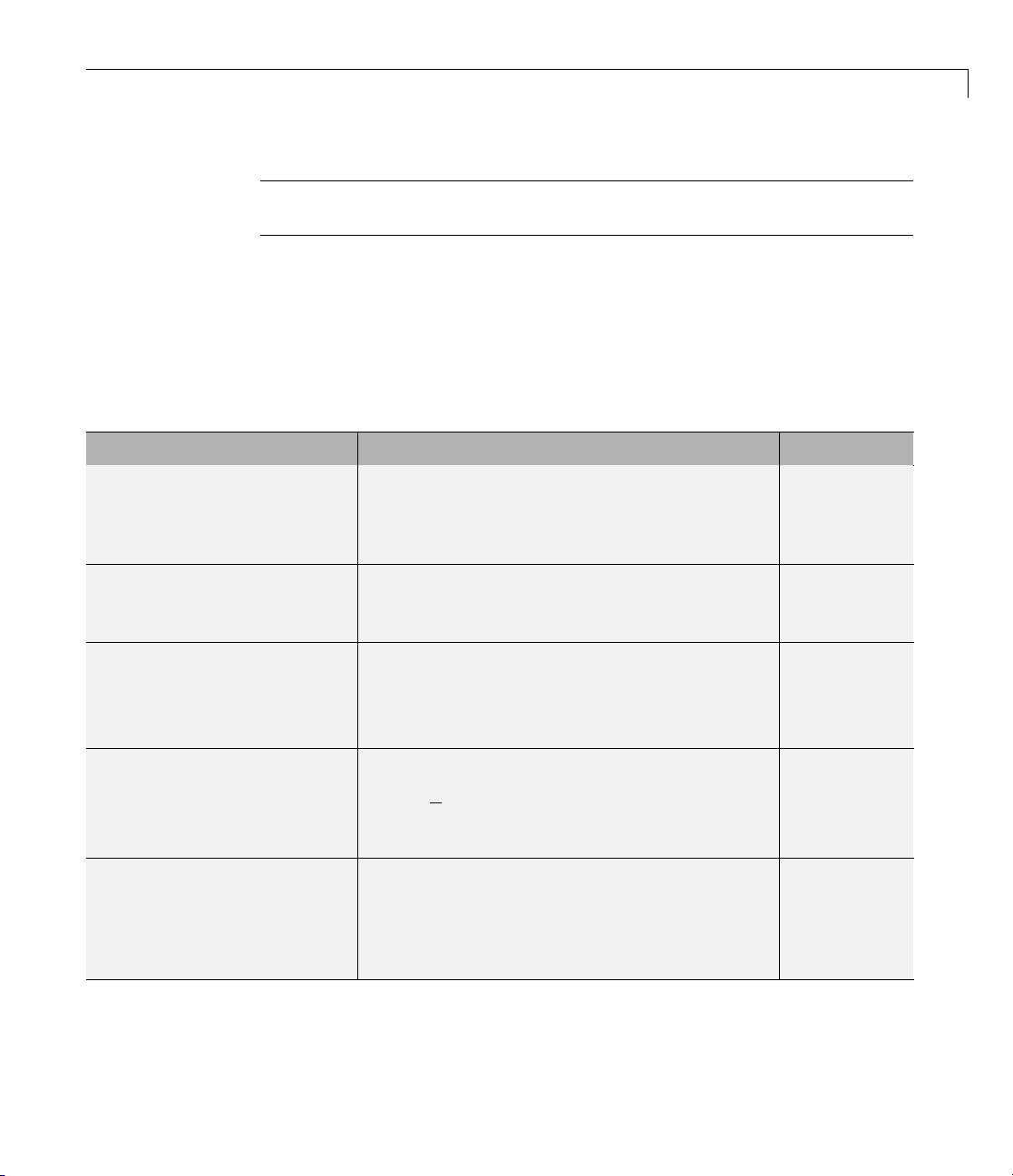

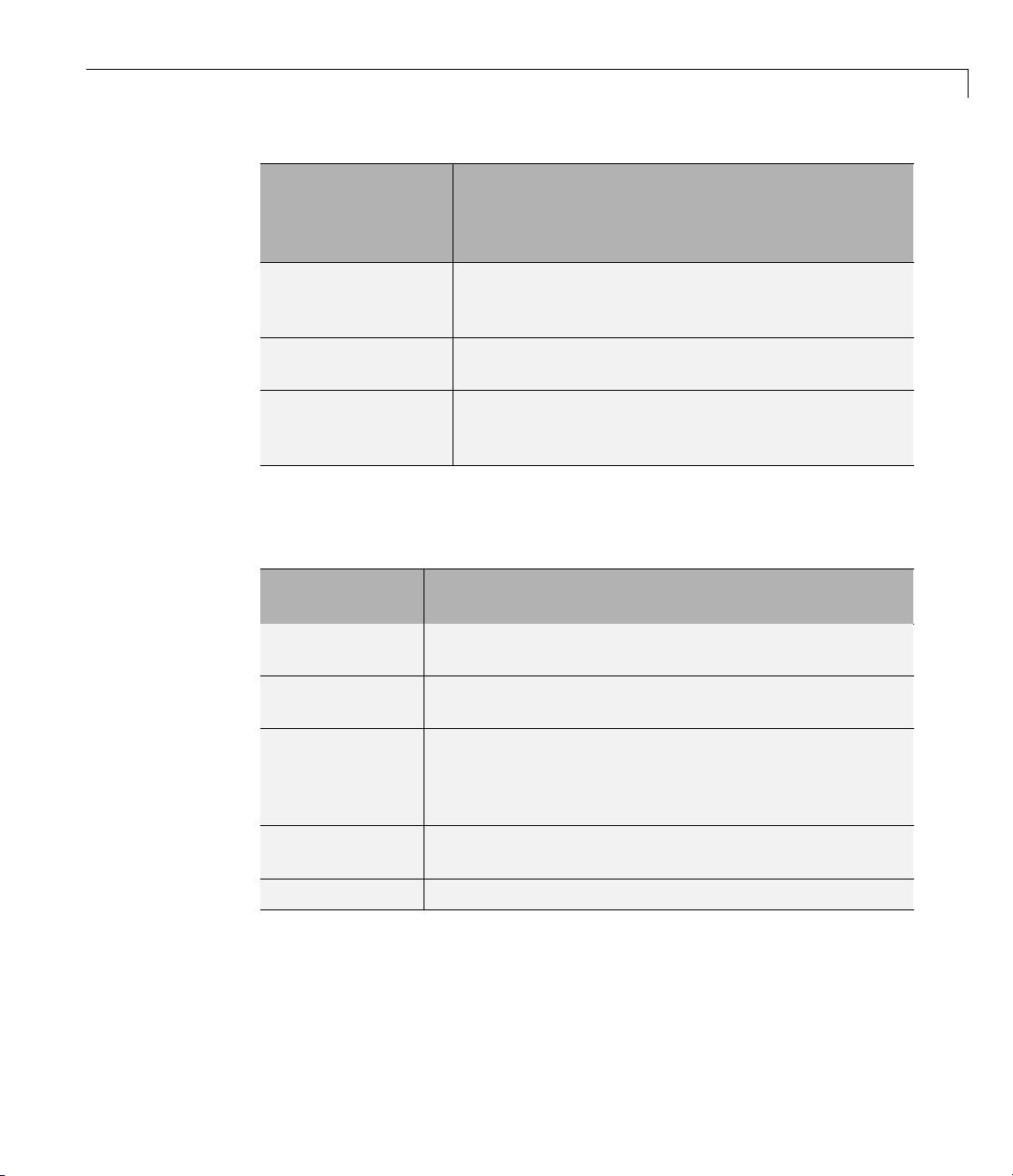
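The three steps of the decision procedure amount to a two-key lookup. The following MATLAB sketch encodes the “Solvers by Objective and Constraint” table as a cell array so that the lookup is explicit. The names objTypes, conTypes, solverTable, and suggestSolver are hypothetical and are not part of any toolbox; the lookup only reproduces the table, so it inherits all the caveats in the bullets and notes that accompany it.

```matlab
% Hypothetical encoding of the "Solvers by Objective and Constraint" table.
% Rows follow objTypes and columns follow conTypes; '' marks a blank entry.
objTypes = {'linear', 'quadratic', 'least squares', 'smooth nonlinear', 'nonsmooth'};
conTypes = {'none', 'bound', 'linear', 'general smooth', 'discrete'};

solverTable = { ...
    'n/a',                       'linprog',                                   'linprog',          'fmincon',          'bintprog'; ...
    'quadprog',                  'quadprog',                                  'quadprog',         'fmincon',          ''; ...
    '\, lsqcurvefit, lsqnonlin', 'lsqcurvefit, lsqlin, lsqnonlin, lsqnonneg', 'lsqlin',           'fmincon',          ''; ...
    'fminsearch, fminunc',       'fminbnd, fmincon, fseminf',                 'fmincon, fseminf', 'fmincon, fseminf', ''; ...
    'fminsearch, *',             '*',                                         '*',                '*',                ''};

% Exactly one row and one column match, so this returns a single string.
suggestSolver = @(obj, con) solverTable{strcmp(objTypes, obj), strcmp(conTypes, con)};

suggestSolver('quadratic', 'bound')          % returns 'quadprog'
suggestSolver('smooth nonlinear', 'linear')  % returns 'fmincon, fseminf'
```

An empty result corresponds to a blank table entry, that is, no Optimization Toolbox solver specifically designed for that combination.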

Problems Handled by Optimization Toolbox Functions

The following tables show the functions available for minimization, equation

solving, multiobjective optimization, and solving least-squares or data-fitting

problems.

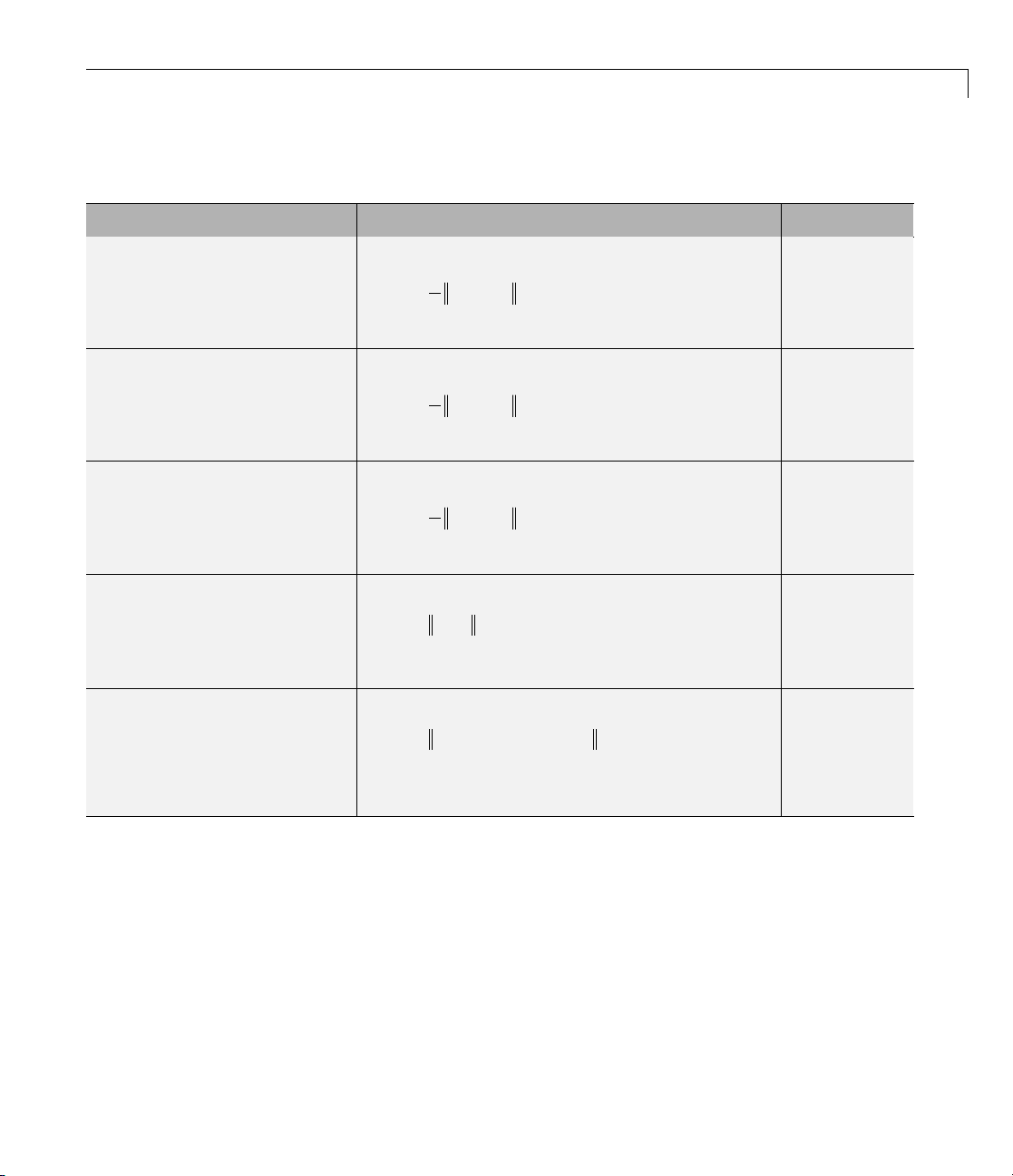

Minimization Problems

Type Formulation Solver

Scalar minimization

min ( )xfx

such that l < x < u (x is scalar)

Unconstrained minimization

min ( )xfx

fminbnd

fminunc,

fminsearch

Choosing a Solver

Linear programming

Quadratic programming

Constrained minimization

T

min

fx

x

such that A·x ≤ b, Aeq·x = beq, l ≤ x ≤ u

1

min

TT

xHx cx

2

x

+

such that A·x ≤ b, Aeq·x = beq, l ≤ x ≤ u

min ( )xfx

such that c(x) ≤ 0, ceq(x)=0, A·x ≤ b,

Aeq·x = beq, l ≤ x ≤ u

linprog

quadprog

fmincon

2-7

Page 38

2 Setting Up an Optimization

Minimization Problems (Continued)

Type Formulation Solver

Semi-infinite minimization

min ( )xfx

such that K(x,w) ≤ 0forallw, c(x) ≤ 0,

ceq(x)=0, A·x ≤ b, Aeq·x = beq, l ≤ x ≤ u

Binary integer programming

T

min

fx

x

such that A·x ≤ b, Aeq·x = beq, x binary

Multiobjective Problems

Type F ormul ation Solve r

Goal attainment

min

γ

,x γ

such that F(x)–w·γ ≤ goal, c(x) ≤ 0, ceq(x)=0,

A·x ≤ b, Aeq·x = beq, l ≤ x ≤ u

Minimax

min max ( )

x

such that c(x) ≤ 0, ceq(x)=0, A·x ≤ b,

Aeq·x = beq, l ≤ x ≤ u

i

Fx

i

fseminf

bintprog

fgoalattain

fminimax

Equation Solving Problems

Type | Formulation | Solver
Linear equations | C·x = d, n equations, n variables | \ (matrix left division)
Nonlinear equation of one variable | f(x) = 0 | fzero
Nonlinear equations | F(x) = 0, n equations, n variables | fsolve

Least-Squares (Model-Fitting) Problems

Type | Formulation | Solver
Linear least-squares | $\min_x \frac{1}{2}\lVert C\cdot x - d\rVert_2^2$, m equations, n variables | \ (matrix left division)
Nonnegative linear-least-squares | $\min_x \frac{1}{2}\lVert C\cdot x - d\rVert_2^2$ such that x ≥ 0 | lsqnonneg
Constrained linear-least-squares | $\min_x \frac{1}{2}\lVert C\cdot x - d\rVert_2^2$ such that A·x ≤ b, Aeq·x = beq, lb ≤ x ≤ ub | lsqlin
Nonlinear least-squares | $\min_x \lVert F(x)\rVert_2^2 = \min_x \sum_i F_i^2(x)$ such that lb ≤ x ≤ ub | lsqnonlin
Nonlinear curve fitting | $\min_x \lVert F(x, xdata) - ydata\rVert_2^2$ such that lb ≤ x ≤ ub | lsqcurvefit
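Several solvers in these tables are, to our knowledge, part of base MATLAB rather than Optimization Toolbox: \ (matrix left division), lsqnonneg, and fzero (as well as fminbnd and fminsearch). The following minimal sketch exercises three of them on small problems; the matrices and the equation are made-up assumptions chosen so the answers are easy to check by hand.

```matlab
% Linear least squares with matrix left division: 3 equations, 2 unknowns.
C = [1 1; 1 2; 1 3];
d = [1; 2; 2];
x = C \ d;                        % least-squares solution [2/3; 1/2]

% Nonnegative linear least squares with lsqnonneg.
C2 = eye(2);
d2 = [-1; 2];
xnn = lsqnonneg(C2, d2);          % the negative component is driven to 0: [0; 2]

% Nonlinear equation of one variable with fzero: cos(t) - t = 0.
r = fzero(@(t) cos(t) - t, 0.5);  % approximately 0.7391
```

For the overdetermined system, \ returns the least-squares solution rather than an exact one, which matches the "Linear least-squares" row of the table.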

Writing Objective Functions

In this section...

“Writing Objective Functions” on page 2-10

“Jacobians of Vector and Matrix Objective Functions” on page 2-12

“Anonymous Function Objectives” on page 2-15

“Maximizing an Objective” on page 2-16

Writing Objective Functions

This section relates to scalar-valued objective functions. A few solvers require vector-valued objective functions: fgoalattain, fminimax, fsolve, lsqcurvefit, and lsqnonlin. For vector-valued or matrix-valued objective functions, see “Jacobians of Vector and Matrix Objective Functions” on page 2-12. For information on how to include extra parameters, see “Passing Extra Parameters” on page 2-25.

An objective function file can return one, two, or three outputs. It can return:

• A single double-precision number, representing the value of f(x)
• Both f(x) and its gradient ∇f(x)
• All three of f(x), ∇f(x), and the Hessian matrix $H(x) = \partial^2 f / \partial x_i \partial x_j$

Some solvers do not require you to provide a gradient, and you are never required to provide a Hessian, but providing one or both can lead to faster execution and more reliable answers. If you do not provide a gradient or Hessian, solvers may attempt to estimate them using finite-difference approximations or other numerical schemes.

For constrained problems, providing a gradient has another advantage. A solver can reach a point x such that x is feasible, but finite differences around x always lead to an infeasible point. In this case, a solver can fail or halt prematurely. Providing a gradient allows a solver to proceed.

Some solvers do not use gradient or Hessian information. You should “conditionalize” a function so that it returns just what is needed:

• f(x) alone
• Both f(x) and ∇f(x)
• All three of f(x), ∇f(x), and H(x)

For example, consider Rosenbrock’s function

$$f(x) = 100\left(x_2 - x_1^2\right)^2 + (1 - x_1)^2,$$

which is described and plotted in “Example: Nonlinear Constrained Minimization” on page 1-4. The gradient of f(x) is

$$\nabla f(x) = \begin{bmatrix} -400\left(x_2 - x_1^2\right)x_1 - 2(1 - x_1) \\ 200\left(x_2 - x_1^2\right) \end{bmatrix},$$

and the Hessian H(x) is

$$H(x) = \begin{bmatrix} 1200x_1^2 - 400x_2 + 2 & -400x_1 \\ -400x_1 & 200 \end{bmatrix}.$$

Function rosenboth returns the value of Rosenbrock’s function in f, the gradient in g, and the Hessian in H if required:

function [f g H] = rosenboth(x)
% Calculate objective f
f = 100*(x(2) - x(1)^2)^2 + (1-x(1))^2;

if nargout > 1 % gradient required
    g = [-400*(x(2)-x(1)^2)*x(1)-2*(1-x(1));
        200*(x(2)-x(1)^2)];

    if nargout > 2 % Hessian required
        H = [1200*x(1)^2-400*x(2)+2, -400*x(1);
            -400*x(1), 200];
    end

end

nargout checks the number of arguments that a calling function specifies; see

“Checking the Number of Input Arguments” in the MATLAB Programming

Fundamentals documentation.

The

to minimize Rosenbrock’s function. Tell

Hessian by setting

Run fminunc starting at [-1;2]:

fminunc solver, designed for unconstrained optimization, allows you

fminunc to use the gradient and

options:

options = optimset('GradObj','on','Hessian','on');

[x fval] = fminunc(@rosenboth,[-1;2],options)

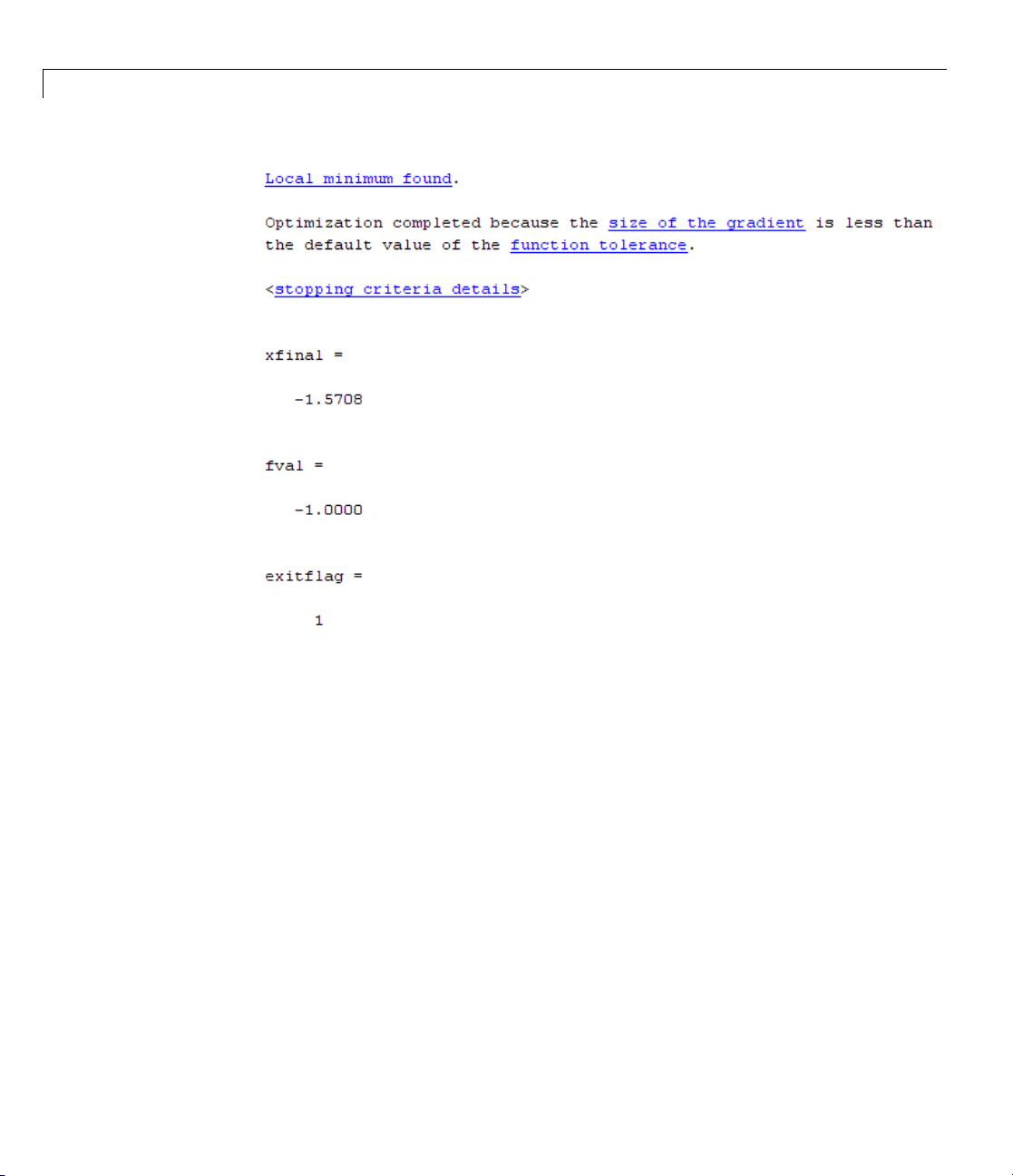

Local minimum found.

Optimization completed because the size of the gradient

is less than the default value of the function tolerance.

x=

1.0000

1.0000

2-12

fval =

1.9310e-017
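Before relying on hand-coded derivatives, it can help to compare them with finite differences. The following sketch is a hand-rolled check, not the toolbox's own derivative checking (that is covered in “Checking Validity of Gradients or Jacobians” on page 2-37). It restates the formulas from rosenboth as anonymous functions and compares them with central differences at the starting point [-1;2]; the step size h = 1e-6 is an assumption.

```matlab
% Rosenbrock's function and the analytic derivatives used in rosenboth.
f = @(x) 100*(x(2) - x(1)^2)^2 + (1 - x(1))^2;
g = @(x) [-400*(x(2) - x(1)^2)*x(1) - 2*(1 - x(1)); 200*(x(2) - x(1)^2)];
H = @(x) [1200*x(1)^2 - 400*x(2) + 2, -400*x(1); -400*x(1), 200];

x0 = [-1; 2];        % the starting point used above
h  = 1e-6;           % finite-difference step (an assumed value)
gFD = zeros(2, 1);
HFD = zeros(2, 2);
for k = 1:2
    e = zeros(2, 1); e(k) = h;
    gFD(k)    = (f(x0 + e) - f(x0 - e)) / (2*h);   % central difference of f
    HFD(:, k) = (g(x0 + e) - g(x0 - e)) / (2*h);   % central difference of the gradient
end

gradErr = norm(gFD - g(x0)) / norm(g(x0));
hessErr = norm(HFD - H(x0)) / norm(H(x0));
fprintf('relative gradient error %.1e, Hessian error %.1e\n', gradErr, hessErr);
```

Relative errors far above roughly 1e-6 would usually point to a mistake in the hand-coded formulas rather than to the finite differences.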

If you have a Symbolic Math Toolbox™ license, you can calculate gradients

and Hessians automatically, as described in “Example: Using Symbolic Math

Toolbox Functions to Calculate Gradients and Hessians” on page 6-69.

Jacobians of Vector and Matrix Objective Functions

Some solvers, such as fsolve and lsqcurvefit, have objective functions that are vectors or matrices. The only difference in usage between these types of objective functions and scalar objective functions is the way to write their derivatives. The matrix of first-order partial derivatives of a vector-valued or matrix-valued function is called a Jacobian; the vector of first-order partial derivatives of a scalar function is called a gradient.

Jacobians of Vector Functions

If x represents a vector of independent variables, and F(x) is the vector function, the Jacobian J(x) is defined as

$$J_{ij}(x) = \frac{\partial F_i(x)}{\partial x_j}.$$

If F has m components, and x has k components, J is an m-by-k matrix.

For example, if

$$F(x) = \begin{bmatrix} x_1^2 + x_2 x_3 \\ \sin\left(x_1 + 2x_2 - 3x_3\right) \end{bmatrix},$$

then J(x) is

$$J(x) = \begin{bmatrix} 2x_1 & x_3 & x_2 \\ \cos\left(x_1 + 2x_2 - 3x_3\right) & 2\cos\left(x_1 + 2x_2 - 3x_3\right) & -3\cos\left(x_1 + 2x_2 - 3x_3\right) \end{bmatrix}.$$
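The definition means that column j of J holds the derivatives of every component of F with respect to x_j. The following sketch checks the example Jacobian against central finite differences using only base MATLAB; the test point and step size are arbitrary assumptions.

```matlab
% F(x) and its analytic Jacobian J(x) from the example above.
F = @(x) [x(1)^2 + x(2)*x(3); sin(x(1) + 2*x(2) - 3*x(3))];
J = @(x) [2*x(1), x(3), x(2); ...
          cos(x(1) + 2*x(2) - 3*x(3)) * [1, 2, -3]];

x0 = [0.3; -0.7; 1.1];   % an arbitrary test point
h  = 1e-6;               % finite-difference step (an assumed value)
JFD = zeros(2, 3);       % m-by-k: 2 components of F, 3 components of x
for j = 1:3
    e = zeros(3, 1); e(j) = h;
    JFD(:, j) = (F(x0 + e) - F(x0 - e)) / (2*h);   % column j holds dF/dx_j
end

maxErr = max(max(abs(JFD - J(x0))));
fprintf('max |J - J_FD| = %.1e\n', maxErr);
```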

Jacobians of Matrix Functions

The Jacobian of a matrix F(x) is defined by changing the matrix to a vector, column by column. For example, the matrix

$$F = \begin{bmatrix} F_{11} & F_{12} \\ F_{21} & F_{22} \\ F_{31} & F_{32} \end{bmatrix}$$

is rewritten as a vector f:

$$f = \begin{bmatrix} F_{11} \\ F_{21} \\ F_{31} \\ F_{12} \\ F_{22} \\ F_{32} \end{bmatrix}.$$

The Jacobian of F is defined as the Jacobian of f,

$$J_{ij} = \frac{\partial f_i(x)}{\partial x_j}.$$

If F is an m-by-n matrix, and x is a k-vector, the Jacobian is an mn-by-k matrix.

For example, if

$$F(x) = \begin{bmatrix} x_1 x_2 & x_1^3 + 3x_2^2 \\ 5x_2 - x_1^4 & x_2/x_1 \\ 4 - x_2^2 & x_1^3 - x_2^4 \end{bmatrix},$$

the Jacobian of F is

$$J(x) = \begin{bmatrix} x_2 & x_1 \\ -4x_1^3 & 5 \\ 0 & -2x_2 \\ 3x_1^2 & 6x_2 \\ -x_2/x_1^2 & 1/x_1 \\ 3x_1^2 & -4x_2^3 \end{bmatrix}.$$
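In MATLAB, F(:) stacks a matrix column by column, which is exactly the ordering used to define f. The following sketch uses reshape(F(x), [], 1), equivalent to F(:), to build a finite-difference Jacobian of the example and compares it with the analytic 6-by-2 Jacobian; the test point and step size are assumptions.

```matlab
% The 3-by-2 matrix function F(x) from the example, with x = [x1; x2].
F = @(x) [x(1)*x(2),        x(1)^3 + 3*x(2)^2; ...
          5*x(2) - x(1)^4,  x(2)/x(1); ...
          4 - x(2)^2,       x(1)^3 - x(2)^4];

% Analytic Jacobian; rows follow f = F(:) = [F11; F21; F31; F12; F22; F32].
J = @(x) [x(2),          x(1); ...
          -4*x(1)^3,     5; ...
          0,             -2*x(2); ...
          3*x(1)^2,      6*x(2); ...
          -x(2)/x(1)^2,  1/x(1); ...
          3*x(1)^2,      -4*x(2)^3];

x0   = [1.5; -0.5];                 % an arbitrary test point
h    = 1e-6;                        % finite-difference step (an assumed value)
vecF = @(x) reshape(F(x), [], 1);   % column by column, the same as F(:)
JFD  = zeros(6, 2);                 % mn-by-k = 6-by-2
for j = 1:2
    e = zeros(2, 1); e(j) = h;
    JFD(:, j) = (vecF(x0 + e) - vecF(x0 - e)) / (2*h);
end

maxErr = max(max(abs(JFD - J(x0))));
```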

Jacobians with Matrix-Valued Independent Variables

If x is a matrix, the Jacobian of F(x) is defined by changing the matrix x to a vector, column by column. For example, if

$$X = \begin{bmatrix} x_{11} & x_{12} \\ x_{21} & x_{22} \end{bmatrix},$$

then the Jacobian is defined in terms of the vector

$$x = \begin{bmatrix} x_{11} \\ x_{21} \\ x_{12} \\ x_{22} \end{bmatrix}.$$

With

$$F = \begin{bmatrix} F_{11} & F_{12} \\ F_{21} & F_{22} \\ F_{31} & F_{32} \end{bmatrix},$$

and f the vector form of F as above, the Jacobian of F(X) is defined to be the Jacobian of f(x):

$$J_{ij} = \frac{\partial f_i(x)}{\partial x_j}.$$

So, for example,

$$J(3,2) = \frac{\partial f(3)}{\partial x(2)} = \frac{\partial F_{31}}{\partial X_{21}}, \qquad J(5,4) = \frac{\partial f(5)}{\partial x(4)} = \frac{\partial F_{22}}{\partial X_{22}}.$$

If F is an m-by-n matrix, and x is a j-by-k matrix, the Jacobian is an mn-by-jk matrix.
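The same column-by-column convention applies to X. The following sketch uses a hypothetical 3-by-2 function F(X) of a 2-by-2 matrix X (invented for illustration, not taken from this guide) to confirm numerically that J(3,2) = ∂F31/∂X21, that J(5,4) = ∂F22/∂X22, and that J is mn-by-jk = 6-by-4. The test point and step size are assumptions.

```matlab
% Hypothetical 3-by-2 function of a 2-by-2 matrix X.
F = @(X) [X(1,1)*X(2,1),  X(1,2) + X(2,2); ...
          X(2,2)^2,       X(1,1)*X(2,2); ...
          3*X(2,1),       X(1,2)^2];

X0 = [2 5; 3 4];                                  % X11 = 2, X21 = 3, X12 = 5, X22 = 4
x0 = X0(:);                                       % column by column: [X11; X21; X12; X22]
f  = @(x) reshape(F(reshape(x, 2, 2)), [], 1);    % f(x) = F(X)(:)

h = 1e-6;                                         % finite-difference step (an assumed value)
J = zeros(6, 4);                                  % mn-by-jk = (3*2)-by-(2*2)
for j = 1:4
    e = zeros(4, 1); e(j) = h;
    J(:, j) = (f(x0 + e) - f(x0 - e)) / (2*h);
end

% F31 = 3*X21, so J(3,2) = 3; F22 = X11*X22, so J(5,4) = X11 = 2.
fprintf('J(3,2) = %.4f, J(5,4) = %.4f\n', J(3,2), J(5,4));
```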

Anonymous Function Objectives

Anonymous functions are useful for writing simple objective functions.

For more information about anonymous functions, see “Constructing an

Anonymous Function”. Rosenbrock’s function is simple enough to write as an

anonymous function:

anonrosen = @(x)(100*(x(2) - x(1)^2)^2 + (1-x(1))^2);

Check that this evaluates correctly at (–1,2):

>> anonrosen([-1 2])

ans =

104

Using anonrosen in fminunc yields the following results:

options = optimset('LargeScale','off');
[x fval] = fminunc(anonrosen,[-1;2],options)

Local minimum found.

Optimization completed because the size of the gradient
is less than the default value of the function tolerance.

x =
    1.0000
    1.0000

fval =
  1.2262e-010

Maximizing an Objective

All solvers are designed to minimize an objective function. If you have a maximization problem, that is, a problem of the form

$$\max_x f(x),$$

then define $g(x) = -f(x)$, and minimize $g$.

For example, to find the maximum of tan(cos(x)) near x = 5, evaluate:

[x fval] = fminunc(@(x)-tan(cos(x)),5)

Warning: Gradient must be provided for trust-region method;

using line-search method instead.

> In fminunc at 356

Local minimum found.

Optimization completed because the size of the gradient is less than

the default value of the function tolerance.

x =
    6.2832

fval =
   -1.5574

The maximum is 1.5574 (the negative of the reported fval), and occurs at x = 6.2832. This is correct since, to 5 digits, the maximum is tan(1) = 1.5574, which occurs at x = 2π = 6.2832.
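Because fminunc belongs to Optimization Toolbox, here is a sketch of the same negate-and-minimize idea using fminbnd, the bounded scalar minimizer listed under Scalar minimization, which to our knowledge also ships with base MATLAB. The search interval [5, 7] is an assumption: it brackets 2π and contains no other local maximum of tan(cos(x)).

```matlab
% Maximize tan(cos(x)) on [5, 7] by minimizing its negative.
negf = @(x) -tan(cos(x));
[xmax, fneg] = fminbnd(negf, 5, 7);

fmax = -fneg;   % undo the sign change to recover the maximum value
fprintf('maximum %.4f at x = %.4f\n', fmax, xmax);
```

The result should agree with the fminunc run above to the displayed precision, since both locate the same point x = 2π.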

Writing Constraints

In this section...

“Types of Constraints” on page 2-17

“Iterations Can Violate Constraints” on page 2-18

“Bound Constraints” on page 2-19

“Linear Inequality Constraints” on page 2-20

“Linear Equality Constraints” on page 2-20

“Nonlinear Constraints” on page 2-21

“An Example Using All Types of Constraints” on page 2-23

Types of Constraints

Optimization Toolbox solvers have special forms for constraints. Constraints are separated into the following types:

• “Bound Constraints” on page 2-19: lower and upper bounds on individual components, x ≥ l and x ≤ u.
• “Linear Inequality Constraints” on page 2-20: A·x ≤ b. A is an m-by-n matrix, which represents m constraints for an n-dimensional vector x. b is m-dimensional.
• “Linear Equality Constraints” on page 2-20: Aeq·x = beq. This is a system of equations.
• “Nonlinear Constraints” on page 2-21: c(x) ≤ 0 and ceq(x) = 0. Both c and ceq are scalars or vectors representing several constraints.

Optimization Toolbox functions assume that inequality constraints are of the form $c_i(x) \le 0$ or A·x ≤ b. Greater-than constraints are expressed as less-than constraints by multiplying them by –1. For example, a constraint of the form $c_i(x) \ge 0$ is equivalent to the constraint $-c_i(x) \le 0$. A constraint of the form A·x ≥ b is equivalent to the constraint –A·x ≤ –b. For more information, see “Linear Inequality Constraints” on page 2-20 and “Nonlinear Constraints” on page 2-21.

You can sometimes write constraints in a variety of ways. To make the best

use of the solvers, use the lowest numbered constraints possible:

1 Bounds

2 Linear equalities

3 Linear inequalities

4 Nonlinear equalities

5 Nonlinear inequalities

For example, with a constraint 5x ≤ 20, use a bound x ≤ 4 instead of a linear inequality or nonlinear inequality.

For information on how to pass extra parameters to constraint functions, see “Passing Extra Parameters” on page 2-25.

Iterations Can Violate Constraints

Be careful when writing your objective and constraint functions. Intermediate

iterations can lead to points that are infeasible (do not satisfy constraints).

If you write objective or constraint functions that assume feasibility, these

functions can error or give unexpected results.

For example, if you take a square root or logarithm of x, and x < 0, the result is not real. You can try to avoid this error by setting 0 as a lower bound on x. Nevertheless, an intermediate iteration can violate this bound.

Algorithms that Satisfy Bound Constraints

Some solvers and algorithms honor bound constraints at every iteration. These include:

• fmincon interior-point, sqp, and trust-region-reflective algorithms
• lsqcurvefit trust-region-reflective algorithm
• lsqnonlin trust-region-reflective algorithm
• fminbnd

Algorithms that can Violate Bound Constraints

The following solvers and algorithms can violate bound constraints at

intermediate iterations:

• fmincon active-set algorithm
• fgoalattain
• fminimax
• fseminf

Bound Constraints

Bound constraints are lower and upper bounds on the components of the vector x. You need not give gradients for this type of constraint; solvers calculate them automatically. Bounds do not affect Hessians.

If you know bounds on the location of your optimum, then you may obtain faster and more reliable solutions by explicitly including these bounds in your problem formulation.

Bounds are given as vectors, normally with the same length as x.

• If a particular component is not bounded below, use –Inf as the bound; similarly, use Inf if a component is not bounded above.
• If you have only bounds of one type (upper or lower), you do not need to write the other type. For example, if you have no upper bounds, you do not need to supply a vector of Infs. Also, if only the first m out of n components are bounded, then you need only supply a vector of length m containing bounds.

For example, suppose your bounds are:

• $x_3 \ge 8$
• $x_2 \le 3$

Write the constraint vectors as

• l = [–Inf; –Inf; 8]
• u = [Inf; 3] or u = [Inf; 3; Inf]
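The rule that a short bound vector leaves the remaining components unbounded can be illustrated with a hypothetical helper, padBounds. It is not a toolbox function; the solvers handle short bound vectors internally in their own way. The sketch pads u = [Inf; 3] to length 3 and checks a candidate point against the bounds from the example.

```matlab
% Hypothetical helper: extend a bound vector that covers only the first m
% of n components, filling the rest with fillValue (Inf or -Inf).
padBounds = @(v, n, fillValue) [v(:); repmat(fillValue, n - numel(v), 1)];

n  = 3;
lb = [-Inf; -Inf; 8];               % x3 >= 8
ub = padBounds([Inf; 3], n, Inf);   % x2 <= 3, giving [Inf; 3; Inf]

% Check a candidate point against both bound vectors.
x = [10; 2; 9];
isWithinBounds = all(x >= lb & x <= ub);   % true for this x
```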

Linear Inequality Constraints

Linear inequality constraints are of the form A·x ≤ b. When A is m-by-n, this represents m constraints on a variable x with n components. You supply the m-by-n matrix A and the m-component vector b. You do not need to give gradients for this type of constraint; solvers calculate them automatically. Linear inequalities do not affect Hessians.

For example, suppose that you have the following linear inequalities as constraints:

$$\begin{aligned} x_1 + x_3 &\le 4, \\ 2x_2 - x_3 &\ge -2, \\ x_1 - x_2 + x_3 - x_4 &\ge 9. \end{aligned}$$

Here m = 3 and n = 4.

Write these using the following matrix A and vector b:

$$A = \begin{bmatrix} 1 & 0 & 1 & 0 \\ 0 & -2 & 1 & 0 \\ -1 & 1 & -1 & 1 \end{bmatrix}, \qquad b = \begin{bmatrix} 4 \\ 2 \\ -9 \end{bmatrix}.$$

Notice that the “greater than” inequalities were first multiplied by –1 in order to get them into “less than” inequality form.
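A quick way to catch sign mistakes when converting “greater than” rows is to compare A*x <= b with the original inequalities at a few points. The following sketch does that for the example; the test points (columns of P) are arbitrary assumptions.

```matlab
% A and b after multiplying the two "greater than" rows by -1.
A = [ 1  0  1  0;
      0 -2  1  0;
     -1  1 -1  1];
b = [4; 2; -9];

% The original inequalities, written directly for comparison.
original = @(x) [x(1) + x(3) <= 4;
                 2*x(2) - x(3) >= -2;
                 x(1) - x(2) + x(3) - x(4) >= 9];

% Each column of P is a test point; both forms must agree row by row.
P = [10  0  1  0;
      0  0  2 -5;
     -6  3  0  1;
     -6  0  4  2];
agree = true;
for k = 1:size(P, 2)
    x = P(:, k);
    agree = agree && isequal(A*x <= b, original(x));
end
```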

Linear Equality Constraints

Linear equalities are of the form Aeq·x = beq. This represents m equations with n-component vector x. You supply the m-by-n matrix Aeq and the m-component vector beq. You do not need to give a gradient for this type of constraint; solvers calculate gradients automatically. Linear equalities do not affect Hessians. The form of this type of constraint is exactly the same as for “Linear Inequality Constraints” on page 2-20. Equalities rather than inequalities are implied by the position in the input argument list of the various solvers.

Nonlinear Constraints

Nonlinear inequality constraints are of the form c(x) ≤ 0, where c is a vector of constraints, one component for each constraint. Similarly, nonlinear equality constraints are of the form ceq(x) = 0.

Nonlinear constraint functions must return both inequality and equality constraints, even if they do not both exist. Return empty [] for a nonexistent constraint.

If you provide gradients for c and ceq, your solver may run faster and give more reliable results.

Providing a gradient has another advantage. A solver can reach a point x such that x is feasible, but finite differences around x always lead to an infeasible point. In this case, a solver can fail or halt prematurely. Providing a gradient allows a solver to proceed.

For example, suppose that you have the following inequalities as constraints:

$$\frac{x_1^2}{9} + \frac{x_2^2}{4} \le 1, \qquad x_2 \ge x_1^2 - 1.$$

Write these constraints in a function file as follows:

function [c,ceq]=ellipseparabola(x)
% Inside the ellipse bounded by (-3<x<3),(-2<y<2)
% Above the parabola y=x^2-1
c(1) = (x(1)^2)/9 + (x(2)^2)/4 - 1;
c(2) = x(1)^2 - x(2) - 1;
ceq = [];
end

ellipseparabola returns empty [] as the nonlinear equality function. Also, both inequalities were put into ≤ 0 form.

To include gradient information, write a conditionalized function as follows:

function [c,ceq,gradc,gradceq]=ellipseparabola(x)
% Inside the ellipse bounded by (-3<x<3),(-2<y<2)
% Above the parabola y=x^2-1
c(1) = x(1)^2/9 + x(2)^2/4 - 1;
c(2) = x(1)^2 - x(2) - 1;
ceq = [];

if nargout > 2
    gradc = [2*x(1)/9, 2*x(1);...
             x(2)/2, -1];
    gradceq = [];
end
end

See “Writing Objective Functions” on page 2-10 for information on conditionalized gradients. The gradient matrix is of the form

$$\mathrm{gradc}_{i,j} = \left[\frac{\partial c(j)}{\partial x_i}\right].$$

The first column of the gradient matrix is associated with c(1), and the second column is associated with c(2). This is the transpose of the form of Jacobians.

To have a solver use gradients of nonlinear constraints, indicate that they exist by using optimset:

options=optimset('GradConstr','on');

Make sure to pass the options structure to your solver:

[x,fval] = fmincon(@myobj,x0,A,b,Aeq,beq,lb,ub,...
    @ellipseparabola,options)

If you have a license for Symbolic Math Toolbox software, you can calculate gradients and Hessians automatically, as described in “Example: Using Symbolic Math Toolbox Functions to Calculate Gradients and Hessians” on page 6-69.
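Because gradc uses the transposed layout (one column per constraint), a common slip is to return the Jacobian layout instead. The following hand-rolled sketch compares gradc with a finite-difference Jacobian of c and its transpose; the test point and step size are assumptions.

```matlab
% Inequality constraints and the gradient matrix from ellipseparabola.
c     = @(x) [x(1)^2/9 + x(2)^2/4 - 1; x(1)^2 - x(2) - 1];
gradc = @(x) [2*x(1)/9, 2*x(1); x(2)/2, -1];   % gradc(i,j) = dc(j)/dx(i)

x0 = [0.8; -0.3];   % an arbitrary test point
h  = 1e-6;          % finite-difference step (an assumed value)
Jfd = zeros(2, 2);  % Jacobian layout: Jfd(j,i) = dc(j)/dx(i)
for i = 1:2
    e = zeros(2, 1); e(i) = h;
    Jfd(:, i) = (c(x0 + e) - c(x0 - e)) / (2*h);
end

% gradc is the transpose of the Jacobian, so compare it with Jfd'.
maxErr = max(max(abs(gradc(x0) - Jfd')));
```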

An Example Using All Types of Constraints

This section contains an example of a nonlinear minimization problem with

all possible types of constraints. The objective function is in the subfunction

myobj(x). The nonlinear constraints are in the subfunction myconstr(x).

This example does not use gradients.

function fullexample
x0 = [1; 4; 5; 2; 5];
lb = [-Inf; -Inf; 0; -Inf; 1];
ub = [ Inf; Inf; 20];
Aeq = [1 -0.3 0 0 0];
beq = 0;
A = [0 0 0 -1 0.1
     0 0 0 1 -0.5
     0 0 -1 0 0.9];
b = [0; 0; 0];

[x,fval,exitflag]=fmincon(@myobj,x0,A,b,Aeq,beq,lb,ub,...
    @myconstr)

%---------------------------------------------------------
function f = myobj(x)

f = 6*x(2)*x(5) + 7*x(1)*x(3) + 3*x(2)^2;

%---------------------------------------------------------
function [c, ceq] = myconstr(x)

c = [x(1) - 0.2*x(2)*x(5) - 71
     0.9*x(3) - x(4)^2 - 67];
ceq = 3*x(2)^2*x(5) + 3*x(1)^2*x(3) - 20.875;

Calling fullexample produces the following display in the command window:

fullexample

Warning: Trust-region-reflective algorithm does not solve this type of problem, using active-set

algorithm. You could also try the interior-point or sqp algorithms: set the Algorithm option to

'interior-point' or 'sqp' and rerun. For more help, see Choosing the Algorithm in the documentation.

> In fmincon at 472

In fullexample at 12

Local minimum found that satisfies the constraints.

Optimization completed because the objective function is non-decreasing in
feasible directions, to within the default value of the function tolerance,
and constraints were satisfied to within the default value of the constraint tolerance.

Active inequalities (to within options.TolCon = 1e-006):
  lower      upper     ineqlin   ineqnonlin
                          3

x =
    0.6114
    2.0380
    1.3948
    0.3587
    1.5498

fval =
   37.3806

exitflag =
     1
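As a sanity check on the reported solution, the following sketch substitutes the displayed x (rounded to four decimals) back into the objective and constraints of fullexample. Because of the rounding, residuals are only expected to be small, not zero; the tolerance of 1e-3 is an assumption.

```matlab
% Reported solution (rounded to 4 decimals) and the data from fullexample.
x   = [0.6114; 2.0380; 1.3948; 0.3587; 1.5498];
A   = [0 0 0 -1 0.1; 0 0 0 1 -0.5; 0 0 -1 0 0.9];
b   = [0; 0; 0];
Aeq = [1 -0.3 0 0 0];
beq = 0;
lb  = [-Inf; -Inf; 0; -Inf; 1];
ub  = [Inf; Inf; 20];              % only the first three components are bounded above

f   = 6*x(2)*x(5) + 7*x(1)*x(3) + 3*x(2)^2;
c   = [x(1) - 0.2*x(2)*x(5) - 71; 0.9*x(3) - x(4)^2 - 67];
ceq = 3*x(2)^2*x(5) + 3*x(1)^2*x(3) - 20.875;

tol = 1e-3;                        % loose, because x is rounded
boundsOK = all(x >= lb) && all(x(1:numel(ub)) <= ub);
fprintf('f = %.4f, |ceq| = %.1e, |Aeq*x - beq| = %.1e\n', f, abs(ceq), abs(Aeq*x - beq));
fprintf('max(A*x - b) = %.1e (the third row is the active inequality)\n', max(A*x - b));
```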

Passing Extra Parameters

Sometimes objective or constraint functions have parameters in addition to the independent variable. There are three methods of including these parameters:

• “Anonymous Functions” on page 2-25
• “Nested Functions” on page 2-28
• “Global Variables” on page 2-28

Global variables are troublesome because they do not allow names to be reused among functions. It is better to use one of the other two methods.

For example, suppose you want to minimize the function

$$f(x) = \left(a - b x_1^2 + \frac{x_1^4}{3}\right)x_1^2 + x_1 x_2 + \left(-c + c x_2^2\right)x_2^2 \qquad (2\text{-}1)$$

for different values of a, b, and c. Solvers accept objective functions that depend only on a single variable (x in this case). The following sections show how to provide the additional parameters a, b, and c. The solutions are for parameter values a = 4, b = 2.1, and c = 4 near x0 = [0.5 0.5] using fminunc.

Anonymous Functions

To pass parameters using anonymous functions:

1 Write a file containing the following code:

function y = parameterfun(x,a,b,c)
y = (a - b*x(1)^2 + x(1)^4/3)*x(1)^2 + x(1)*x(2) + ...
    (-c + c*x(2)^2)*x(2)^2;

2 Assign values to the parameters and define a function handle f to an anonymous function by entering the following commands at the MATLAB prompt:

a = 4; b = 2.1; c = 4; % Assign parameter values
x0 = [0.5,0.5];
f = @(x)parameterfun(x,a,b,c)

3 Call the solver fminunc with the anonymous function:

[x,fval] = fminunc(f,x0)

The following output is displayed in the command window:

Warning: Gradient must be provided for trust-region algorithm;
         using line-search algorithm instead.
> In fminunc at 347

Local minimum found.

Optimization completed because the size of the gradient is less than
the default value of the function tolerance.

x =
   -0.0898    0.7127

fval =
   -1.0316

Note The parameters passed in the anonymous function are those that exist at the time the anonymous function is created. Consider the example

a = 4; b = 2.1; c = 4;
f = @(x)parameterfun(x,a,b,c)

Suppose you subsequently change a to 3 and run

[x,fval] = fminunc(f,x0)

You get the same answer as before, since f still uses a = 4, the value when f was created.

To change the parameters that are passed to the function, renew the anonymous function by reentering it:

a = 3;
f = @(x)parameterfun(x,a,b,c)

You can create anonymous functions of more than one argument. For example, to use lsqcurvefit, first create a function that takes two input arguments, x and xdata:

fh = @(x,xdata)(sin(x).*xdata +(x.^2).*cos(xdata));
x = pi; xdata = pi*[4;2;3];
fh(x, xdata)

ans =
    9.8696
    9.8696
   -9.8696

Now call lsqcurvefit:

% Assume ydata exists
x = lsqcurvefit(fh,x,xdata,ydata)
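The capture behavior described in the note can be observed without running a solver. In this sketch parameterfun is restated as an anonymous function (an assumption, so that the block is self-contained without a separate file), and f is evaluated at the hypothetical point [1 1] before and after it is re-created.

```matlab
% parameterfun restated as an anonymous function for a self-contained sketch.
parameterfun = @(x,a,b,c) (a - b*x(1)^2 + x(1)^4/3)*x(1)^2 + x(1)*x(2) + ...
                          (-c + c*x(2)^2)*x(2)^2;

a = 4; b = 2.1; c = 4;
f = @(x) parameterfun(x,a,b,c);   % captures the current value a = 4

a = 3;                            % changing a afterwards does not affect f
vOld = f([1 1]);                  % still evaluated with a = 4

f = @(x) parameterfun(x,a,b,c);   % re-create f to pick up a = 3
vNew = f([1 1]);                  % now evaluated with a = 3
```

At [1 1] the objective depends on a linearly with coefficient 1, so vNew is exactly one less than vOld.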

Nested Functions

To pass the parameters for Equation 2-1 via a nested function, write a single file that

• Accepts a, b, c, and x0 as inputs
• Contains the objective function as a nested function
• Calls fminunc

Here is the code for the function file for this example:

function [x,fval] = runnested(a,b,c,x0)
[x,fval] = fminunc(@nestedfun,x0);
% Nested function that computes the objective function
    function y = nestedfun(x)
        y = (a - b*x(1)^2 + x(1)^4/3)*x(1)^2 + x(1)*x(2) +...
            (-c + c*x(2)^2)*x(2)^2;
    end
end

The objective function is the nested function nestedfun, which has access to the variables a, b, and c.

To run the optimization, enter:

a = 4; b = 2.1; c = 4;% Assign parameter values
x0 = [0.5,0.5];
[x,fval] = runnested(a,b,c,x0)

The output is the same as in “Anonymous Functions” on page 2-25.

Global Variables

Global variables can be troublesome, so it is better to avoid using them. To use global variables, declare the variables to be global in the workspace and in the functions that use the variables.

1 Write a function file:

function y = globalfun(x)
global a b c
y = (a - b*x(1)^2 + x(1)^4/3)*x(1)^2 + x(1)*x(2) + ...
    (-c + c*x(2)^2)*x(2)^2;

2 In your MATLAB workspace, define the variables and run fminunc:

global a b c;

a = 4; b = 2.1; c = 4; % Assign parameter values

x0 = [0.5,0.5];

[x,fval] = fminunc(@globalfun,x0)

The output is the same as in “Anonymous Functions” on page 2-25.


Setting Options

In this section...
“Default Options” on page 2-30
“Changing the Default Settings” on page 2-30
“Tolerances and Stopping Criteria” on page 2-36
“Checking Validity of Gradients or Jacobians” on page 2-37
“Choosing the Algorithm” on page 2-45

Default Options

The options structure contains options used in the optimization routines. If, on the first call to an optimization routine, the options structure is not provided, or is empty, a set of default options is generated. Some of the default options values are calculated using factors based on problem size, such as MaxFunEvals. Some options are dependent on the specific solver algorithms, and are documented on those solver function reference pages.

“Optimization Options” on page 9-7 provides an overview of all the options in the options structure.

Changing the Default Settings

The optimset function creates or updates an options structure to pass to the various optimization functions. The arguments to optimset are option name and option value pairs, such as TolX and 1e-4. Any options you do not specify keep their default values. You need to type only enough leading characters to define the option name uniquely. Case is ignored for option names. For option values that are strings, however, you must give the exact string with the correct case.
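The short MATLAB sketch below illustrates these name-matching rules. optimset is part of base MATLAB. The abbreviation 'disp' is accepted because no other option name begins with those letters. The lowercase 'tolx' matches TolX exactly.

```matlab
% Option names are case-insensitive and may be shortened to any
% unambiguous prefix: 'disp' -> Display, 'tolx' -> TolX.
opts = optimset('disp','iter','tolx',1e-4);

% The returned structure uses the canonical field names.
disp(opts.Display)
disp(opts.TolX)

% String values are not matched loosely: 'iter' must be spelled out
% exactly, so optimset('Display','it') would not select iterative display.
```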

help optimset provides information that defines the different options and

describes how to use them.

Here are some examples of the use of optimset.

Returning All Options

optimset returns all the options that can be set with typical values and

default values.

Determining Options Used by a Function

The options structure defines the options passed to solvers. Because not every function uses every option, it can be useful to find which options a particular function uses. There are several ways to determine the available options:

• Consult the combined “Optimization Options” on page 9-7 table.

• To find detailed descriptions of the options and their default values,

consult the Options table in the function reference pages. For example, the

available options for

• To find available options at the command line, pass the name of the

function (in this example,

optimset('fmincon')

fmincon appear in this table.

fmincon)tooptimset:

or

optimset fmincon

or

optimset(@fmincon)

This statement returns a structure. Generally, fields not used by the

function have empty values (

[]); fields used by the function are set to their

default values for the given function. However, some solvers have different

default values depending on the algorithm used. For example,

adefault

active-set algorithms, but a default value of 1000 for the interior-point

MaxIter value of 400 for the trust-region-reflective, sqp,and

fmincon has

algorithm. optimset fmincon returns [] for the MaxIter field.

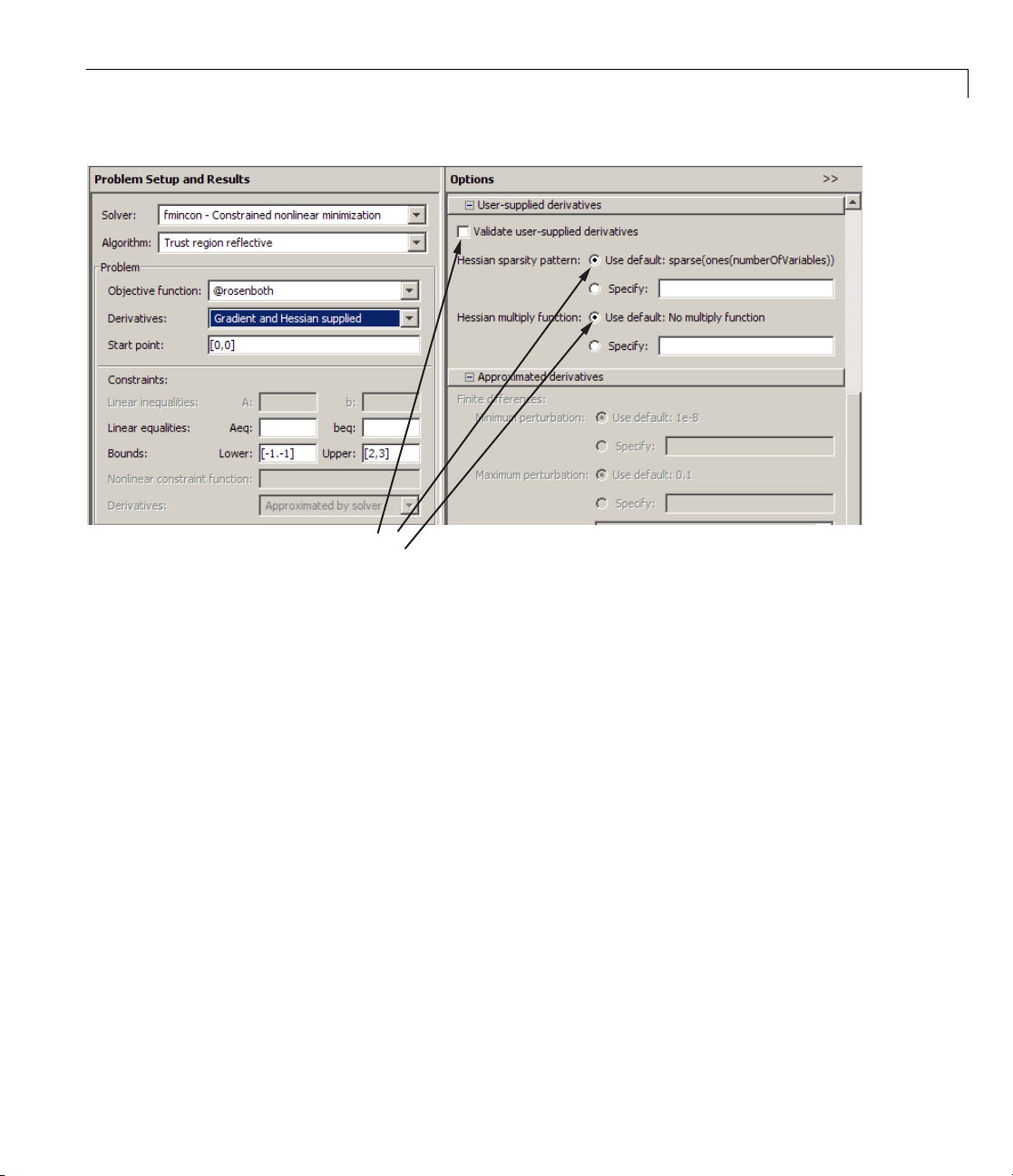

• You can also check the available options and their defaults in the

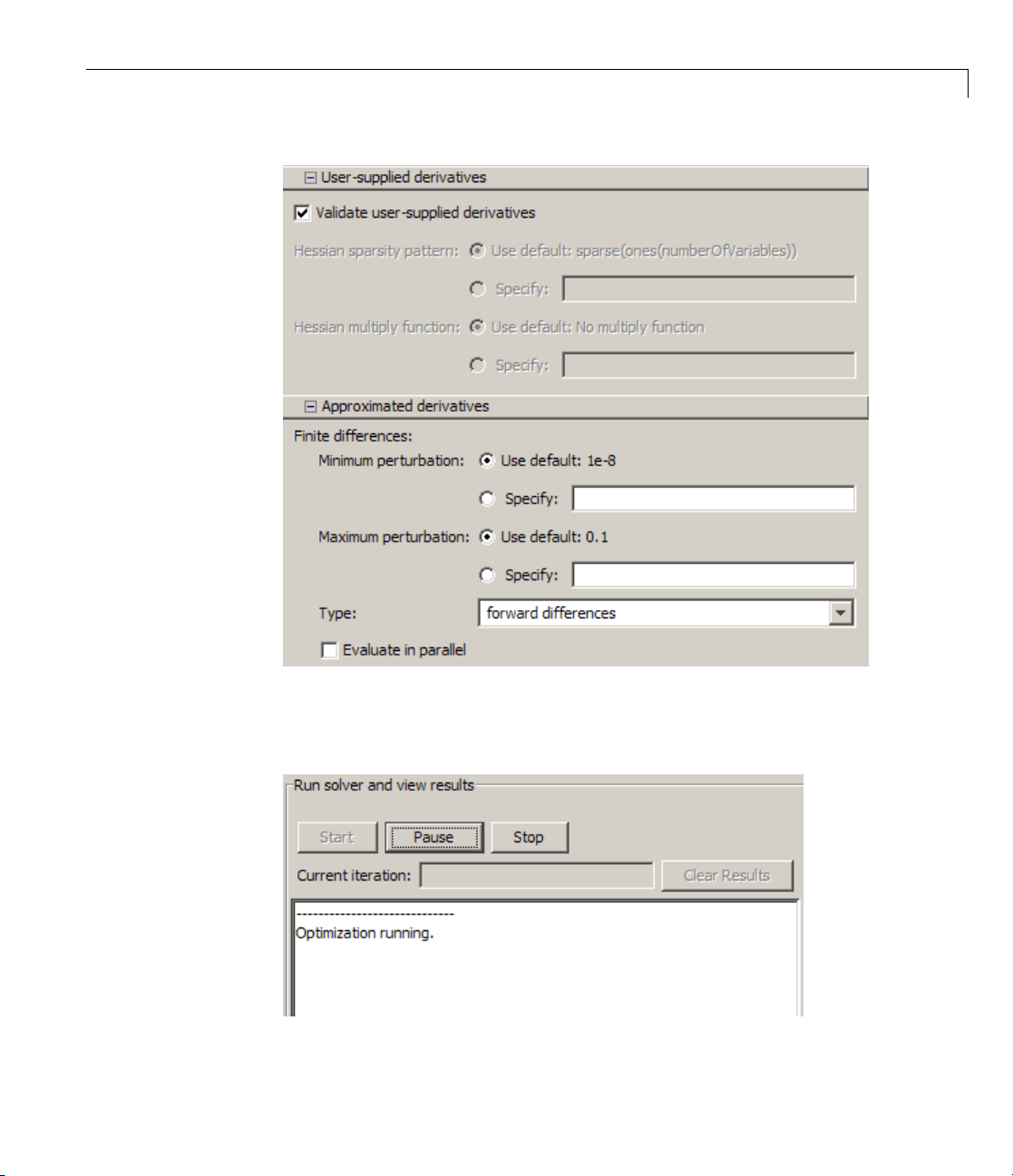

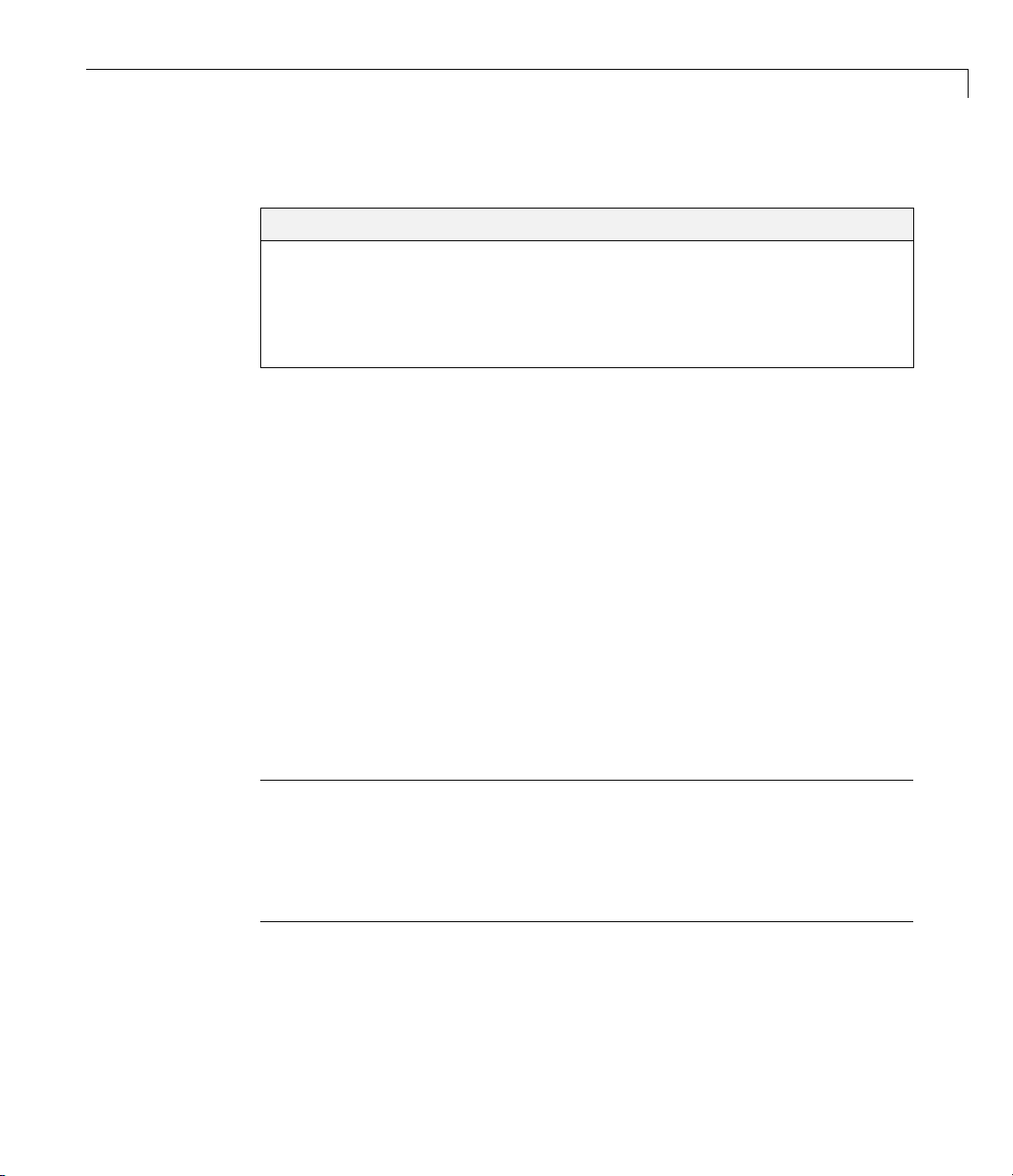

Optimization Tool. These can change depending on problem and algorithm

settings. These three pictures show how the available options for

derivatives change as the type of supplied derivatives change:

2-31

Page 62

2 Setting Up an Optimization

Settable

options

2-32

Settable

options

Page 63

Settable

options

Setting Options
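You can also inspect the structure returned by the command-line query field by field. The minimal sketch below assumes only base MATLAB. It uses fminsearch, which ships with MATLAB, so the sketch runs without Optimization Toolbox. Passing 'fmincon' instead works the same way when the toolbox is installed.

```matlab
% Ask optimset for the default options of one solver.
% fminsearch ships with MATLAB; 'fmincon' requires Optimization Toolbox.
defaults = optimset('fminsearch');

% Print the fields that carry a non-empty default. A field the solver
% uses can still default to [] (an unset function handle, for example),
% so an empty field does not prove that the option is unused.
names = fieldnames(defaults);
for k = 1:numel(names)
    if ~isempty(defaults.(names{k}))
        fprintf('%s\n', names{k});
    end
end
```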

Setting More Than One Option

You can specify multiple options with one call to optimset. For example, to reset the Display option and the tolerance on x, enter

options = optimset('Display','iter','TolX',1e-6);

Updating an options Structure

To update an existing options structure, call optimset and pass the existing

structure name as the first argument:

options = optimset(options,'Display','iter','TolX',1e-6);

Retrieving Option Values

Use the optimget function to get option values from an options structure.

For example, to get the current Display option, enter the following:

verbosity = optimget(options,'Display');
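The sketch below combines creating, updating, and querying a structure. It also uses the three-argument form of optimget, which returns the supplied fallback value when the option has not been set. The values chosen are illustrative only.

```matlab
% Create a structure with two options, then update one of them.
options = optimset('Display','iter','TolX',1e-6);
options = optimset(options,'TolX',1e-8);   % TolX overwritten, Display kept

% Read back an option that was set.
verbosity = optimget(options,'Display');

% TolFun was never set (its field is []), so optimget returns the
% fallback value given as the third argument.
tolfun = optimget(options,'TolFun',1e-6);
```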


Displaying Output

To display output at each iteration, enter

options = optimset('Display','iter');

This command sets the value of the Display option to 'iter', which causes the solver to display output at each iteration. You can also turn off any output display ('off'), or display output only if the problem fails to converge ('notify').
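The sketch below shows the effect of the Display setting by passing options to fminsearch, which is part of base MATLAB. It minimizes a one-variable quadratic chosen for illustration; this objective is an assumption, not an example from this guide. The first run prints one line per iteration, and the second run, with 'off', prints nothing.

```matlab
% Illustrative objective with its minimum at x = 2.
f = @(x) (x - 2)^2;

% Print one line of output per iteration.
options = optimset('Display','iter');
[x,fval] = fminsearch(f,0,options);

% Same problem with all output suppressed.
quiet = optimset(options,'Display','off');
[xq,fvalq] = fminsearch(f,0,quiet);
```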

Choosing an Algorithm

Some solvers explicitly use an option called LargeScale for choosing which

algorithm to use:

the