
MegaRAID® Express 500

Hardware Guide

MAN-475

6/13/2001


© Copyright 2001 LSI Logic Corporation

All rights reserved.

LSI Logic Corporation

6145-D Northbelt Parkway

Norcross, GA 30071

This publication contains proprietary information which is protected by copyright. No part of this publication can be reproduced, transcribed, stored in a retrieval system, translated into any language or computer language, or transmitted in any form whatsoever without the prior written consent of the publisher, LSI Logic Corporation. LSI Logic Corporation acknowledges the following trademarks:

Intel is a registered trademark of Intel Corporation.

Sytos 300 is a registered trademark of Sytron Corporation.

MS-DOS and Microsoft are registered trademarks of Microsoft Corporation. Windows 95, Microsoft Windows, and Windows NT are trademarks of Microsoft Corporation.

SCO, UnixWare, and Unix are registered trademarks of the Santa Cruz Operation, Inc.

Novell NetWare is a registered trademark of Novell Corporation.

IBM, AT, VGA, PS/2, and OS/2 are registered trademarks and XT and CGA are trademarks of International Business Machines Corporation.

NEC is a registered trademark of Nippon Electric Corporation.

Sony is a registered trademark of Sony Corporation.

Toshiba is a registered trademark of Toshiba America Corporation.

Archive and Python are registered trademarks of Archive Corporation.

Quantum is a registered trademark of Quantum Corporation.

Seagate is a registered trademark of Seagate Corporation.

SyQuest is a trademark of SyQuest Corporation.

Panasonic is a registered trademark of Panasonic Corporation.

Hewlett-Packard is a registered trademark of Hewlett-Packard Corporation.

Amphenol is a trademark of Amphenol Corporation.

Siemens is a registered trademark of Siemens Corporation.

AMP is a trademark of AMP Corporation.


Revision History

4/14/00 Initial release.

4/11/01 Corrected RAID 0 graphic, and Array Configuration Planner table.

6/13/01 Made corrections, such as the cache size (16 MB is the smallest option) and the number of physical disk drives supported at various RAID levels.

MegaRAID Express500 Hardware Guide

ii


Table of Contents

1 Overview .................................................. 1

Single Ended and Differential SCSI Buses....................... 2

Maximum Cable Length for SCSI Standards....................2

Documentation..................................................................3

MegaRAID Express 500 Block Diagram..........................4

2 Introduction to RAID................................ 5

RAID Benefits.................................................................. 5

In This Chapter................................................................. 6

MegaRAID Express 500 – Host-Based RAID Solution ... 7

RAID Overview................................................................ 8

Fault Tolerance................................................................. 8

Consistency Check............................................................ 8

Disk Rebuild..................................................................... 9

Hot Spares ......................................................................10

Hot Swap ........................................................................ 10

Parity ..............................................................................11

Disk Striping................................................................... 12

Disk Mirroring................................................................ 13

Disk Spanning.................................................................14

Logical Drive.................................................................. 15

Logical Drive States .......................................................15

SCSI Drive States ........................................................... 15

Disk Array Types............................................................16

Enclosure Management................................................... 16

3 RAID Levels............................................ 17

Selecting a RAID Level.................................................. 18

RAID 0 ........................................................................... 19

RAID 1 ........................................................................... 20

RAID 3 ........................................................................... 21

RAID 5 ........................................................................... 23

RAID 10 ......................................................................... 24

RAID 30 ......................................................................... 25

RAID 50 ......................................................................... 26

Preface

iii


Table of Contents,

Continued

4 Features ................................................. 27

Hardware Requirements .................................................28

Configuration Features ................................................... 28

Hardware Architecture Features..................................... 29

Array Performance Features ........................................... 29

RAID Management Features ..........................................30

Fault Tolerance Features.................................................30

Software Utilities............................................................ 31

Operating System Software Drivers................................31

MegaRAID Express 500 Specifications .........................32

PCI Bridge/CPU ............................................................. 32

Cache Memory................................................................33

MegaRAID BIOS ...........................................................33

Onboard Speaker ............................................................ 33

Serial Port ....................................................................... 33

SCSI Bus ........................................................................34

SCSI Connectors.............................................................34

SCSI Termination........................................................... 34

SCSI Firmware ...............................................................34

RAID Management......................................................... 35

Fault-Tolerance Features ................................................ 35

Compatibility.................................................................. 36

Summary.........................................................................36

5 Configuring MegaRAID Express 500 ... 37

Configuring SCSI Physical Drives..................................37

Current Configuration..................................................... 38

Logical Drive Configuration........................................... 38

Physical Device Layout ..................................................40

Configuring Arrays......................................................... 42

Configuration Strategies ................................................. 43

Assigning RAID Levels.................................................. 45

Configuring Logical Drives ............................................45

Optimizing Data Storage ................................................ 46

Planning the Array Configuration................................... 46

Array Configuration Planner........................................... 47


6 Hardware Installation ............................ 49

Checklist......................................................................... 49

Installation Steps.............................................................50

Step 1 Unpack.................................................................51

Step 2 Power Down ........................................................ 51

Step 3 Configure Motherboard....................................... 51

Step 4 Install Cache Memory..........................................52

Step 5 Set Jumpers.......................................................... 53

MegaRAID Express 500 Card Layout............................ 53

Step 6 Set Termination ................................................... 56

SCSI Termination........................................................... 57

Step 7 Install MegaRAID Express 500........................... 60

Step 8 Connect SCSI Cables...........................................61

Step 9 Set Target IDs...................................................... 62

Device Identification on MegaRAID Express 500 .........63

Step 10 Power Up........................................................... 64

Step 11 Run MegaRAID BIOS Setup.............................64

Step 12 Install the Operating System Driver................... 65

Summary.........................................................................66

7 Cluster Installation and Configuration 67

Software Requirements................................................... 67

Hardware Requirements .................................................68

Installation and Configuration ........................................69

Driver Installation Instructions under Microsoft Windows 2000 Advanced Server ...70

Network Requirements ................................................... 75

Shared Disk Requirements.............................................. 75

Cluster Installation.......................................................... 76

Installing the Windows 2000 Operating System............. 77

Setting Up Networks.......................................................77

Configuring the Cluster Node Network Adapter ............ 79

Configuring the Public Network Adapter ....................... 80

Verifying Connectivity and Name Resolution................ 80

Verifying Domain Membership...................................... 81

Setting Up a Cluster User Account................................. 82

Setting Up Shared Disks................................................. 83

Configuring Shared Disks............................................... 84

Assigning Drive Letters .................................................. 84

Verifying Disk Access and Functionality .......................85

Cluster Service Software Installation.............................. 86

Configuring Cluster Disks ..............................................89

Validating the Cluster Installation ..................................95

Configuring the Second Node......................................... 95

Verify Installation........................................................... 96

SCSI Drive Installations .................................................97

Configuring the SCSI Devices........................................ 97

Terminating the Shared SCSI Bus.................................. 97


8 Troubleshooting .................................... 99

BIOS Boot Error Messages .......................................... 101

Other BIOS Error Messages......................................... 103

DOS ASPI Driver Error Messages ............................... 104

Other Potential Problems.............................................. 105

A SCSI Cables and Connectors ............. 107

SCSI Connectors...........................................................107

68-Pin High Density SCSI Internal Connector ............. 107

High-Density 68-Pin SCSI Connector Pinout............... 113

68-Pin SCSI Connector Pinout, Continued................... 114

68-Pin Connector Pinout for LVD SCSI ...................... 115

B Audible Warnings ................................ 117

C Cluster Configuration with a Crossover Cable...119

Solution.........................................................................120

Glossary.......................................................... 121

Index................................................................ 131


Preface

The MegaRAID Express 500 PCI RAID Controller supports all single ended and low-voltage

differential (LVD) SCSI devices on a 160M Ultra and Wide SCSI channel with data transfer rates

up to 160 MB/s (Megabytes per second). This manual describes MegaRAID Express 500.

Limited Warranty

The buyer agrees if this product proves to be defective, that LSI Logic is obligated only to repair or replace this product at LSI Logic’s discretion according to the terms and conditions of the warranty registration card that accompanies this product. LSI Logic shall not be liable in tort or contract for any loss or damage, direct, incidental or consequential resulting from the use of this product. Please see the Warranty Registration Card shipped with this product for full warranty details.

Limitations of Liability

LSI Logic Corporation shall in no event be held liable for any loss, expenses, or damages of any kind whatsoever, whether direct, indirect, incidental, or consequential (whether arising from the design or use of this product or the support materials provided with the product). No action or proceeding against LSI Logic Corporation may be commenced more than two years after the delivery of product to Licensee of Licensed Software.

Licensee agrees to defend and indemnify LSI Logic Corporation from any and all claims, suits, and liabilities (including attorney’s fees) arising out of or resulting from any actual or alleged act or omission on the part of Licensee, its authorized third parties, employees, or agents, in connection with the distribution of Licensed Software to end-users, including, without limitation, claims, suits, and liability for bodily or other injuries to end-users resulting from use of Licensee’s product not caused solely by faults in Licensed Software as provided by LSI Logic to Licensee.


Preface,

Continued

Package Contents

You should have received:

• a MegaRAID Express 500 PCI RAID Controller

• a CD with drivers, utilities, and documentation

• a MegaRAID Express 500 Hardware Guide (on CD)

• a MegaRAID Configuration Software Guide (on CD)

• a MegaRAID Operating System Drivers Guide (on CD)

• a software license agreement (on CD)

• a warranty registration card (on CD)

Technical Support

If you need help installing, configuring, or running the MegaRAID Express 500 PCI RAID Controller, call your LSI Logic OEM Technical Support representative at 678-728-1250. Before you call, please complete the MegaRAID Problem Report form on the next page.

Web Site

We invite you to access the LSI Logic World Wide Web site at http://www.lsil.com.


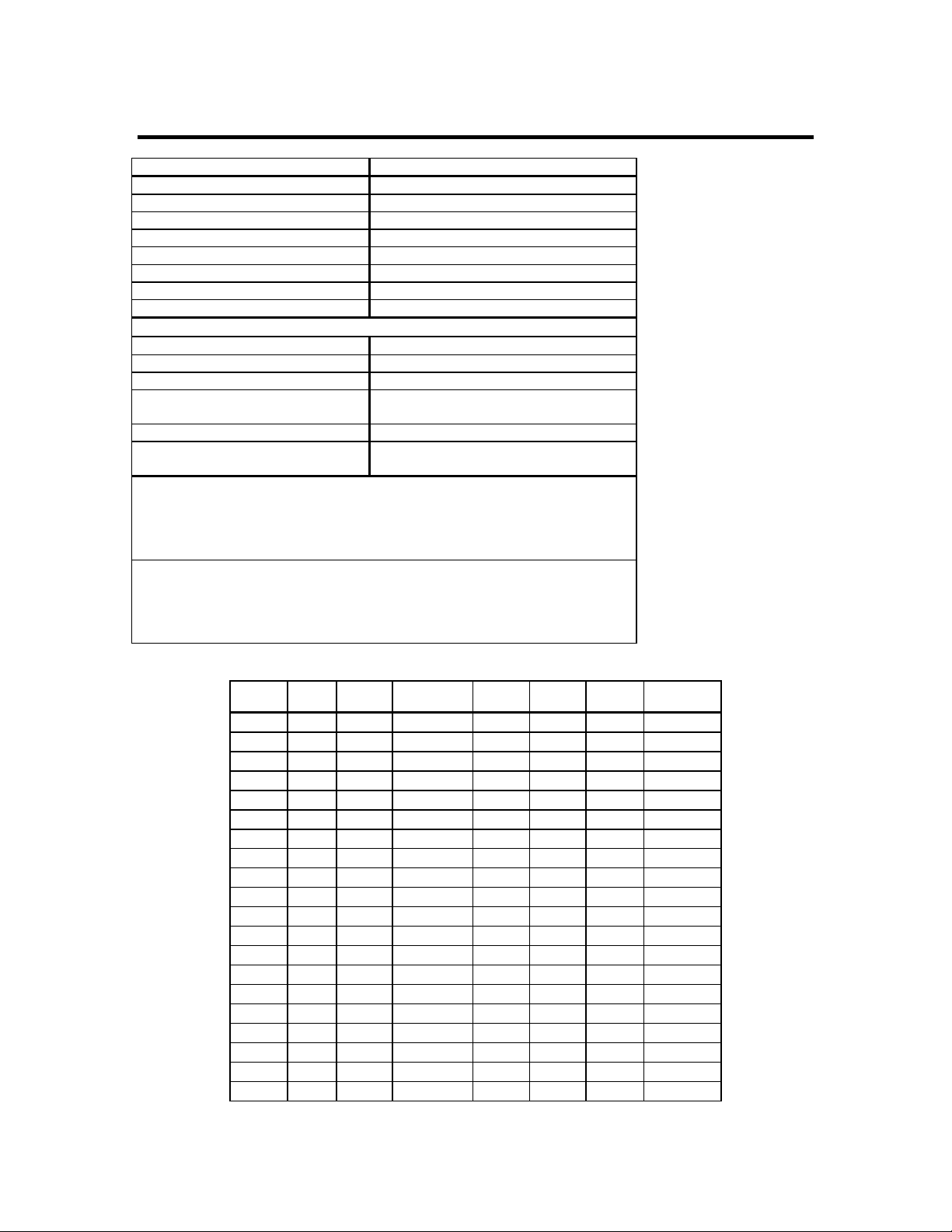

MegaRAID Problem Report Form

| Customer Information | MegaRAID Information |
|---|---|
| Name: | Today’s Date: |
| Company: | Date of Purchase: |
| Address: | Invoice Number: |
| City/State: | Serial Number: |
| Country: | |
| email address: | Cache Memory: |
| Phone: | Firmware Version: |
| Fax: | BIOS Version: |

System Information

| | |
|---|---|
| Motherboard: | BIOS manufacturer: |
| Operating System: | BIOS Date: |
| Op. Sys. Ver.: | Video Adapter: |
| MegaRAID Driver Ver.: | CPU Type/Speed: |
| Network Card: | System Memory: |
| Other disk controllers installed: | Other adapter cards installed: |

Description of problem:

Steps necessary to re-create problem:

1.

2.

3.

4.

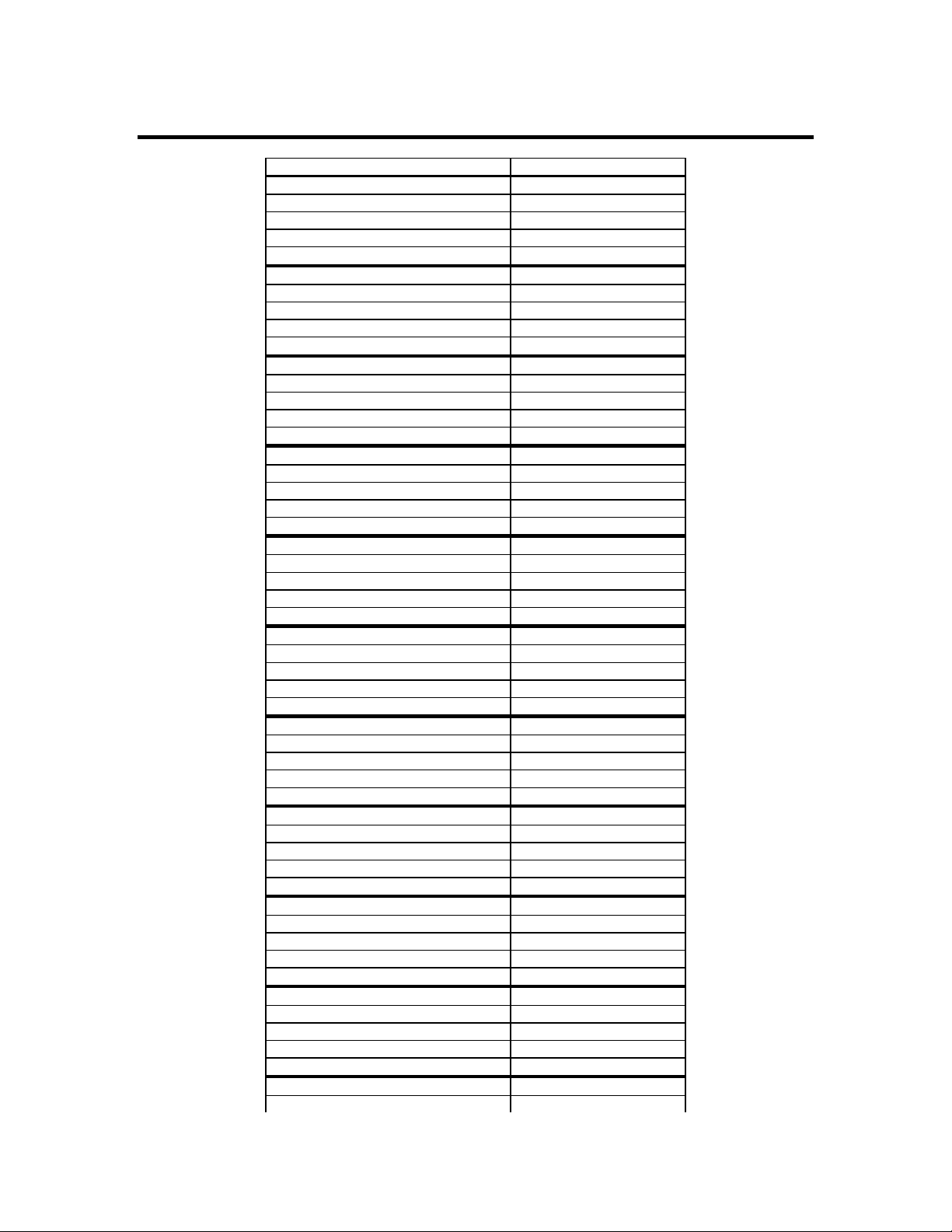

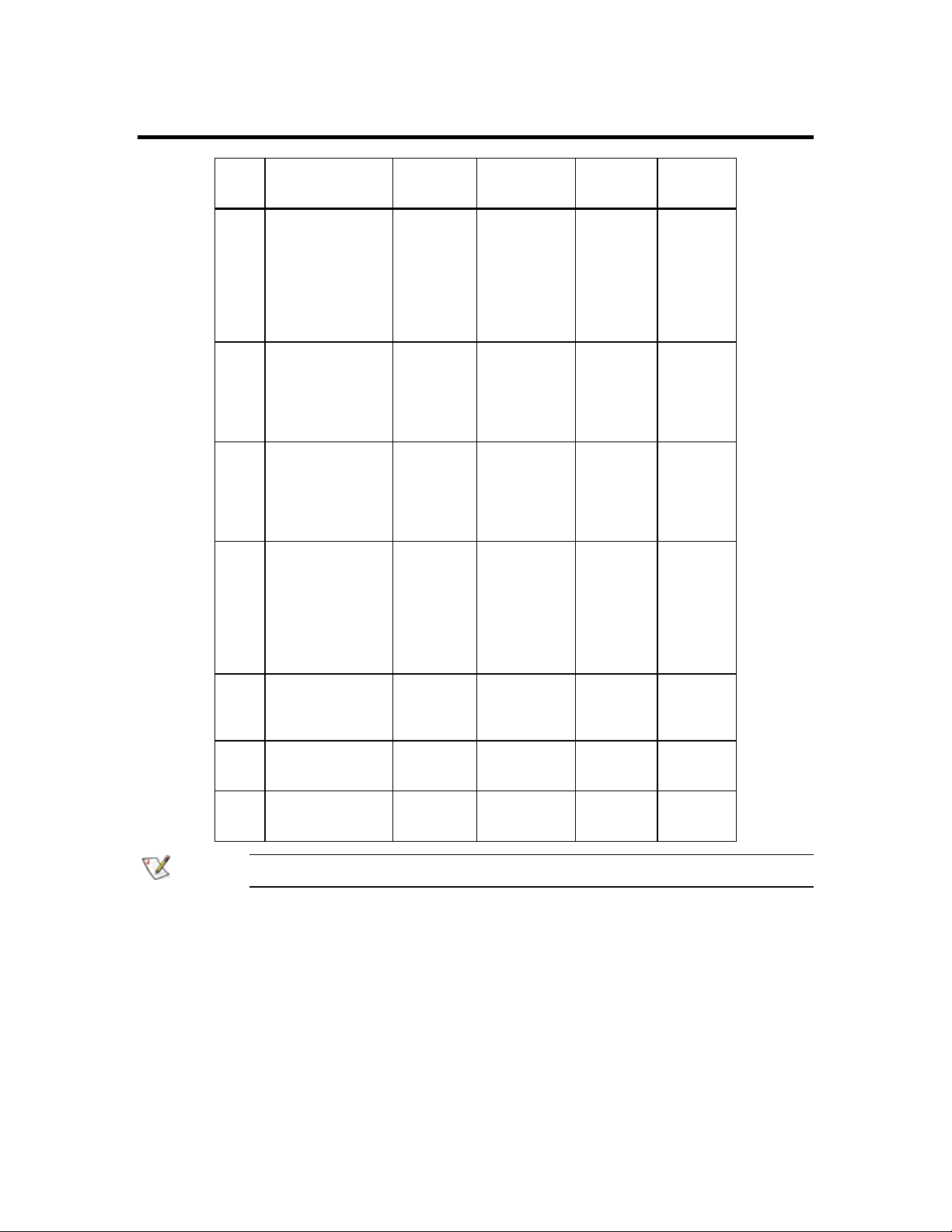

Logical Drive Configuration

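| Logical Drive | RAID Level | Stripe Size | Logical Drive Size | Cache Policy | Read Policy | Write Policy | # of Physical Drives |
|---|---|---|---|---|---|---|---|
| LD0 | | | | | | | |
| LD1 | | | | | | | |
| LD2 | | | | | | | |
| LD3 | | | | | | | |
| LD4 | | | | | | | |
| LD5 | | | | | | | |
| LD6 | | | | | | | |
| LD7 | | | | | | | |
| LD8 | | | | | | | |
| LD9 | | | | | | | |
| LD10 | | | | | | | |
| LD11 | | | | | | | |
| LD12 | | | | | | | |
| LD13 | | | | | | | |
| LD14 | | | | | | | |
| LD15 | | | | | | | |
| LD16 | | | | | | | |
| LD17 | | | | | | | |
| LD18 | | | | | | | |
| LD19 | | | | | | | |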


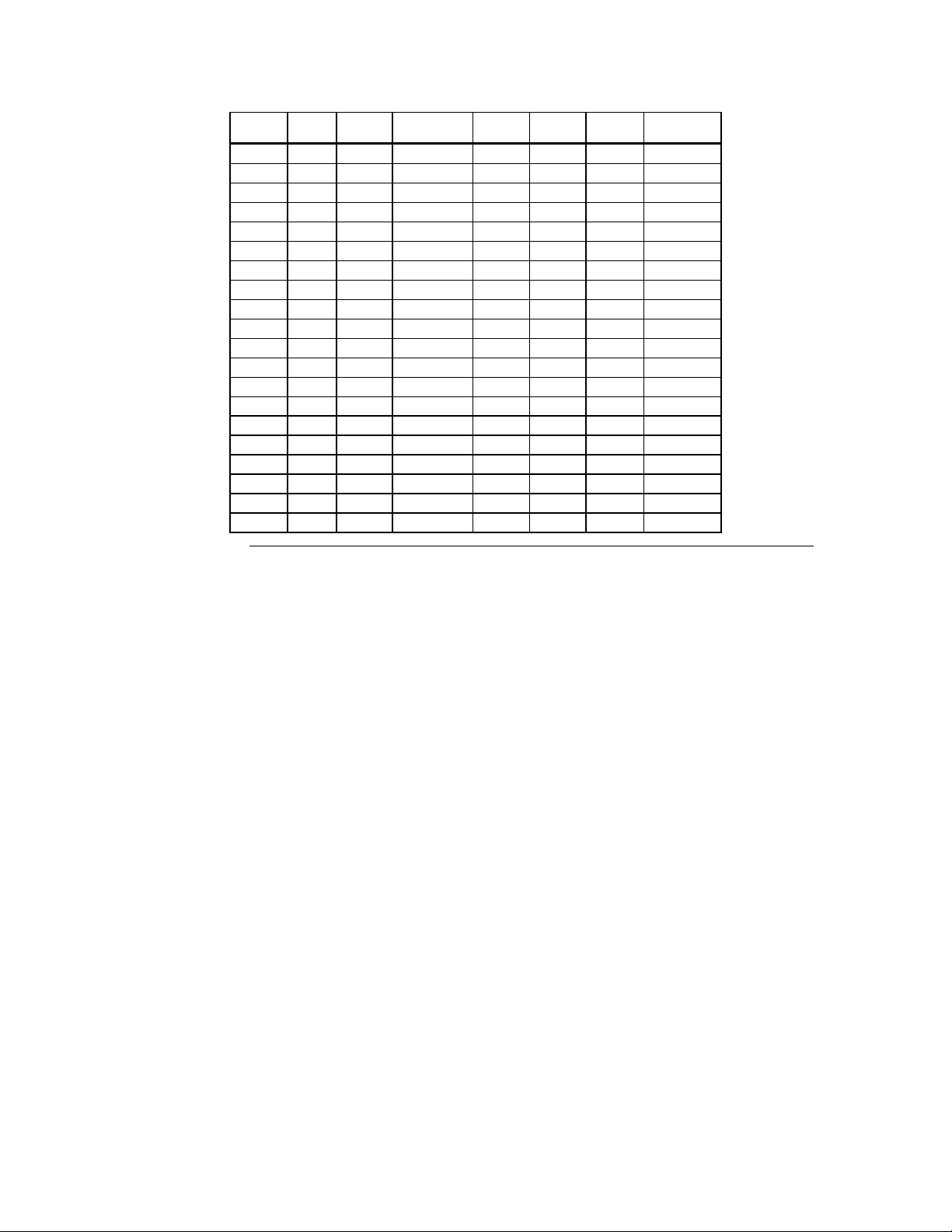

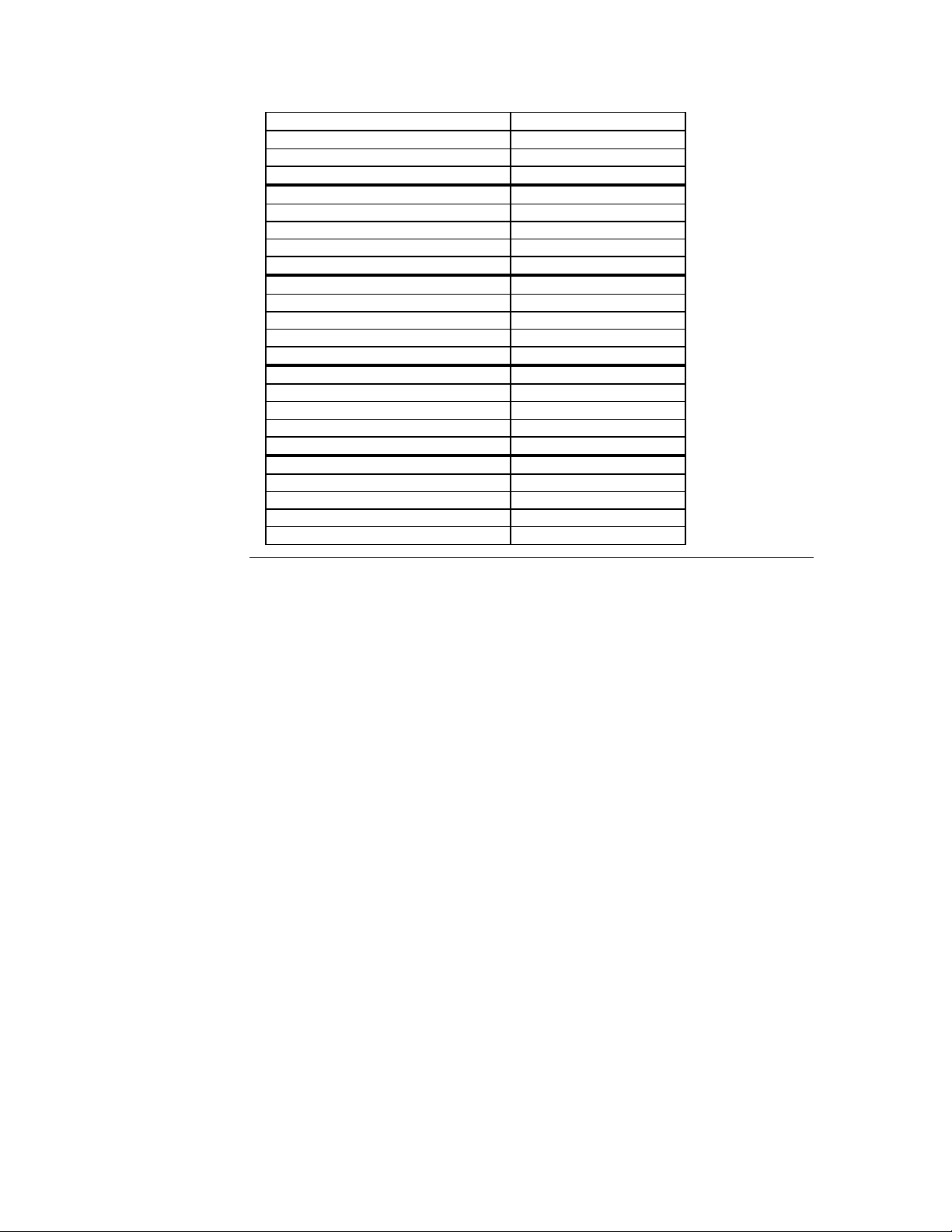

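| Logical Drive | RAID Level | Stripe Size | Logical Drive Size | Cache Policy | Read Policy | Write Policy | # of Physical Drives |
|---|---|---|---|---|---|---|---|
| LD20 | | | | | | | |
| LD21 | | | | | | | |
| LD22 | | | | | | | |
| LD23 | | | | | | | |
| LD24 | | | | | | | |
| LD25 | | | | | | | |
| LD26 | | | | | | | |
| LD27 | | | | | | | |
| LD28 | | | | | | | |
| LD29 | | | | | | | |
| LD30 | | | | | | | |
| LD31 | | | | | | | |
| LD32 | | | | | | | |
| LD33 | | | | | | | |
| LD34 | | | | | | | |
| LD35 | | | | | | | |
| LD36 | | | | | | | |
| LD37 | | | | | | | |
| LD38 | | | | | | | |
| LD39 | | | | | | | |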


Physical Device Layout

Channel 1

| Target ID | Device Type | Logical Drive Number/Drive Number | Manufacturer/Model Number | Firmware Level |
|---|---|---|---|---|
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |
| | | | | |


Disclaimer

This manual describes the operation of the LSI Logic MegaRAID Express 500 Disk Array

Controller. Although efforts have been made to assure the accuracy of the information contained

here, LSI Logic expressly disclaims liability for any error in this information, and for damages,

whether direct, indirect, special, exemplary, consequential or otherwise, that may result from such

error, including but not limited to the loss of profits resulting from the use or misuse of the manual

or information contained therein (even if LSI Logic has been advised of the possibility of such

damages). Any questions or comments regarding this document or its contents should be addressed

to LSI Logic at the address shown on the cover.

LSI Logic Corporation provides this publication “as is” without warranty of any kind, either

expressed or implied, including, but not limited to, the implied warranties of merchantability or

fitness for a specific purpose.

Some states do not allow disclaimer of express or implied warranties or the limitation or exclusion

of liability for indirect, special, exemplary, incidental or consequential damages in certain

transactions; therefore, this statement may not apply to you. Also, you may have other rights which

vary from jurisdiction to jurisdiction.

This publication could include technical inaccuracies or typographical errors. Changes are

periodically made to the information herein; these changes will be incorporated in new editions of

the publication. LSI Logic may make improvements and/or revisions in the product(s) and/or the

program(s) described in this publication at any time.

Requests for technical information about LSI Logic products should be made to your LSI Logic

authorized reseller or marketing representative.


FCC Regulatory Statement

This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.

Warning: Changes or modifications to this unit not expressly approved by the party responsible for compliance could void the user's authority to operate the equipment.

Note: This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a specific installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, try to correct the interference by one or more of the following measures:

1) Reorient or relocate the receiving antenna.

2) Increase the separation between the equipment and the receiver.

3) Connect the equipment into an outlet on a circuit different from that to which the receiver is connected.

4) Consult the dealer or an experienced radio/TV technician for help.

Shielded interface cables must be used with this product to ensure compliance with the Class B FCC limits.

LSI Logic MegaRAID Express 500 PCI RAID Controller

Model Number: Series 475

FCC ID Number: IUESER475

Disclaimer

LSI Logic certifies only that this product will work correctly when this

product is used with the same jumper settings, the same system

configuration, the same memory module parts, and the same

peripherals that were tested by LSI Logic with this product. The

complete list of tested jumper settings, system configurations,

peripheral devices, and memory modules is documented in the LSI

Logic Compatibility Report for this product. Call your LSI Logic sales

representative for a copy of the Compatibility Report for this product.


1 Overview

The MegaRAID® Express 500 PCI RAID controller is a high performance intelligent PCI-to-SCSI host adapter with RAID control capabilities. The MegaRAID Express 500 provides reliability, high performance, and fault-tolerant disk subsystem management.

The MegaRAID Express 500 is part of the LSI Logic Intel i960RM/RS-based MegaRAID controller family. The MegaRAID Express 500 is an entry-level to mid-range RAID controller solution. MegaRAID Express 500 offers a cost-effective way to implement RAID in a server.

The MegaRAID Express 500 has a 160M Ultra and Wide SCSI channel supporting data

transfer rates up to 160 megabytes per second (MB/s) per channel. The SCSI channel

supports up to fifteen non-Ultra SCSI devices. MegaRAID Express 500 includes

MegaRAID features and performance.

Features

MegaRAID Express 500:

• provides a high performance I/O migration path while preserving existing PCI-SCSI software

• performs SCSI data transfers up to 160 MB/s

• performs synchronous operation on a wide LVD SCSI bus

• allows up to 15 LVD SCSI devices on the wide bus

• includes an Intel® i960RM that performs RAID calculations and routing

• supports 16, 32, 64, or 128 MB of SDRAM cache memory in a DIMM socket used for read and write-back caching and RAID 5 parity generation

SCSI Channel

The MegaRAID Express 500 upgrade card includes one Ultra3 SCSI channel. The channel is powered by a Q-Logic ISP10160A 160M SCSI processor.

NVRAM and Flash ROM

A 32 KB x 8 NVRAM stores RAID system configuration information. The MegaRAID Express 500 firmware is stored in flash ROM for easy upgrade.

SCSI Connectors

MegaRAID Express 500 has one ultra high density 68-pin external connector for an external storage subsystem and one high density 68-pin internal connector.

Chapter 1 Overview

1


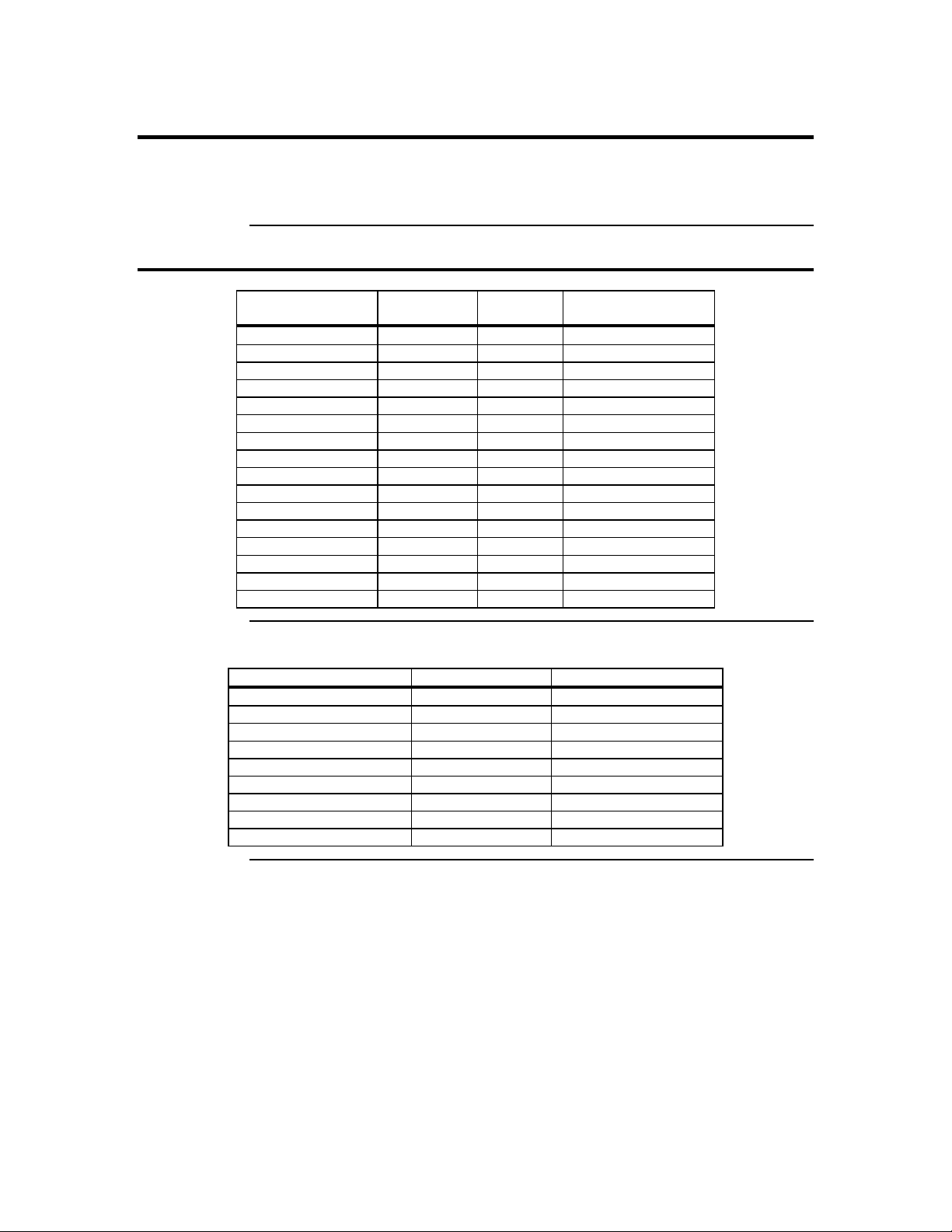

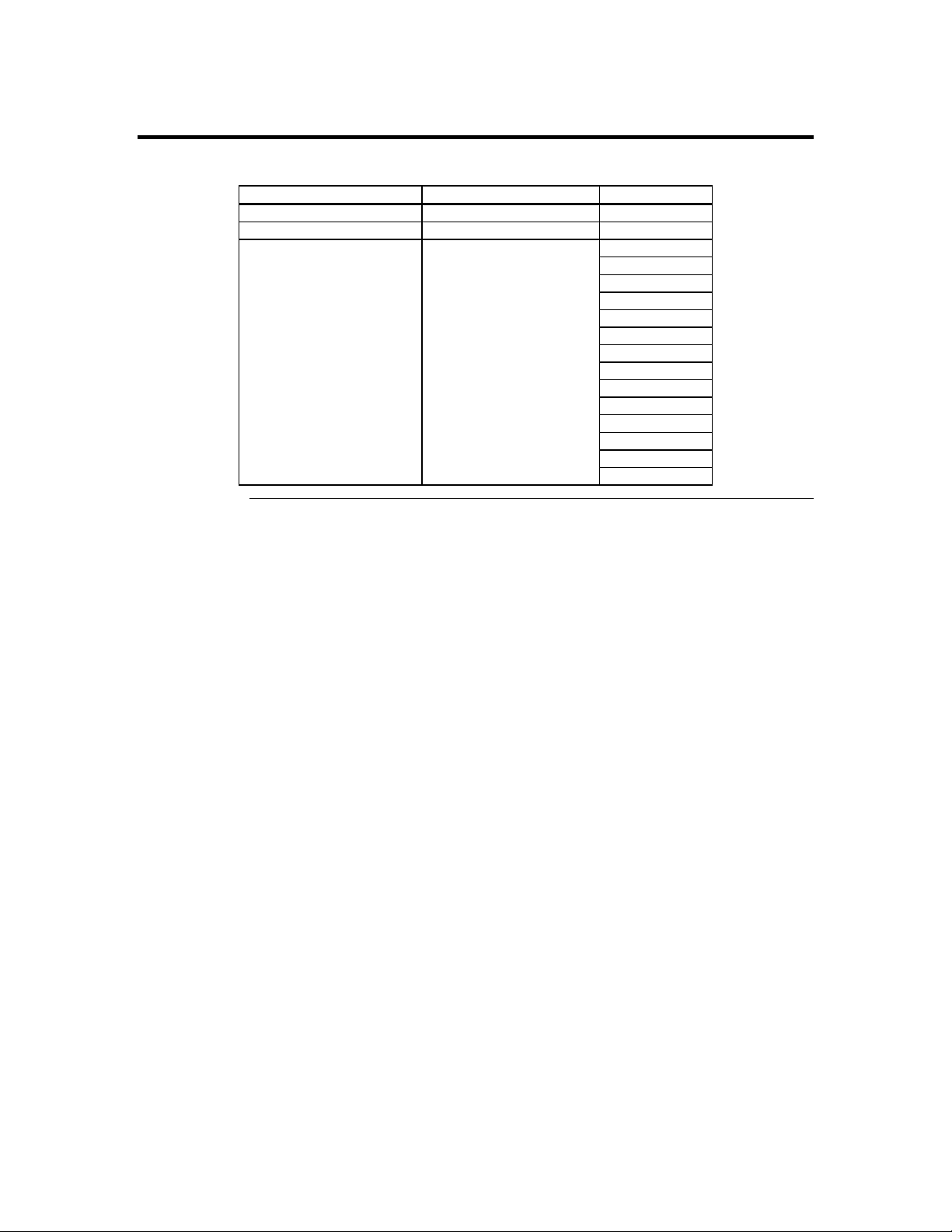

Single Ended and Differential SCSI Buses

The SCSI standard defines two electrical buses:

• a single ended bus

• a low-voltage differential bus

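Maximum Cable Length for SCSI Standards

| Standard | Single Ended | LVD | Maximum Number of Drives |
|---|---|---|---|
| SCSI I | 6 m | 12 m | 7 |
| Fast SCSI | 6 m | 12 m | 7 |
| Fast Wide SCSI | 6 m | 12 m | 15 |
| Ultra SCSI | 1.5 m | 12 m | 7 |
| Ultra SCSI | 3 m | 12 m | 3 |
| Wide Ultra SCSI | N/A | 12 m | 15 |
| Wide Ultra SCSI | 1.5 m | 12 m | 7 |
| Wide Ultra SCSI | 3 m | 12 m | 3 |
| Ultra 2 SCSI | N/A | 25 m | 1 |
| Ultra 2 SCSI | N/A | 12 m | 7 |
| Wide Ultra 2 SCSI | N/A | 25 m | 1 |
| Wide Ultra 2 SCSI | N/A | 12 m | 15 |
| Ultra3 SCSI | N/A | 25 m | 1 |
| Ultra3 SCSI | N/A | 12 m | 7 |
| Wide Ultra3 SCSI | N/A | 25 m | 1 |
| Wide Ultra3 SCSI | N/A | 12 m | 15 |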

SCSI Bus Widths and Maximum Throughput

| SCSI Standard | SCSI Bus Width | SCSI Throughput |
|---|---|---|
| SCSI I | 8 bits | 5 MB/s |
| Fast SCSI | 8 bits | 10 MB/s |
| Fast Wide SCSI | 16 bits | 20 MB/s |
| Ultra SCSI | 8 bits | 20 MB/s |
| Wide Ultra SCSI | 16 bits | 40 MB/s |
| Ultra 2 SCSI | 8 bits | 40 MB/s |
| Wide Ultra 2 SCSI | 16 bits | 80 MB/s |
| Ultra3 SCSI | 8 bits | 80 MB/s |
| Wide Ultra3 SCSI | 16 bits | 160 MB/s |
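The throughput column follows from the bus width: each transfer moves one byte on an 8-bit bus or two bytes on a 16-bit bus, so peak throughput is bytes per transfer multiplied by transfers per second. The minimal Python sketch below reproduces that arithmetic. The per-generation transfer rates (in mega-transfers per second) are standard SCSI figures that this guide does not state, and the dictionary and function names are hypothetical.

```python
# Peak transfer rate of each SCSI generation, in mega-transfers per second (MT/s).
# These standard figures are not listed in this guide; Ultra3 reaches 80 MT/s by
# clocking data on both edges of a 40 MHz signal (double-transition clocking).
TRANSFER_RATE_MTS = {
    "SCSI I": 5,
    "Fast SCSI": 10,
    "Ultra SCSI": 20,
    "Ultra 2 SCSI": 40,
    "Ultra3 SCSI": 80,
}


def peak_throughput_mb_s(generation: str, bus_width_bits: int) -> int:
    """Return peak bus throughput in MB/s (bytes per transfer x MT/s)."""
    if bus_width_bits not in (8, 16):
        raise ValueError("a SCSI bus is either 8 bits (narrow) or 16 bits (wide)")
    bytes_per_transfer = bus_width_bits // 8
    return TRANSFER_RATE_MTS[generation] * bytes_per_transfer


if __name__ == "__main__":
    # Print narrow and wide rates for each generation
    # (the table above has no wide SCSI I row).
    for name in TRANSFER_RATE_MTS:
        narrow = peak_throughput_mb_s(name, 8)
        wide = peak_throughput_mb_s(name, 16)
        print(f"{name:<13} 8-bit: {narrow:>3} MB/s   16-bit: {wide:>3} MB/s")
```

A "Wide" standard in the table is the same generation on a 16-bit bus, so Fast Wide SCSI corresponds to `peak_throughput_mb_s("Fast SCSI", 16)`.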


Documentation

The MegaRAID Express 500 technical documentation set includes:

• the MegaRAID Express 500 Hardware Guide

• the MegaRAID Configuration Software Guide

• the MegaRAID Operating System Drivers Guide

MegaRAID Express 500 Hardware Guide: This manual, which you should read first, contains the RAID overview, RAID planning, and RAID system configuration information.

MegaRAID Configuration Software Guide: This manual describes the software configuration utilities that configure and modify RAID systems.

MegaRAID Operating System Drivers Guide: This manual provides detailed information about installing the MegaRAID Express 500 operating system drivers.


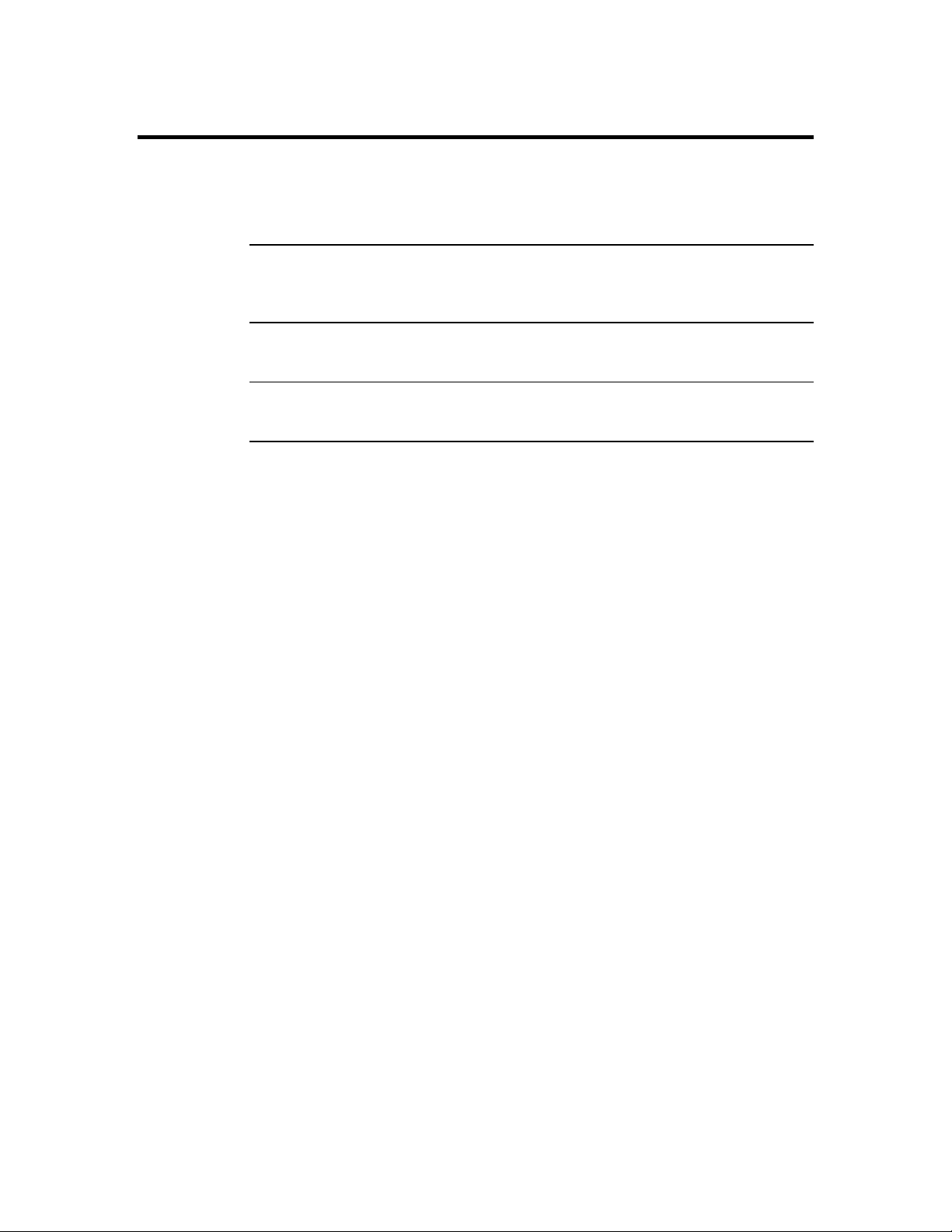

MegaRAID Express 500 Block Diagram


2 Introduction to RAID

RAID (Redundant Array of Independent Disks) is an array of multiple independent hard

disk drives that provide high performance and fault tolerance. A RAID disk subsystem

improves I/O performance over a computer using only a single drive. The RAID array

appears to the host computer as a single storage unit or as multiple logical units. I/O is

expedited because several disks can be accessed simultaneously. RAID systems improve

data storage reliability and fault tolerance compared to single-drive computers. Data lost because of a disk drive failure can be recovered by reconstructing it from the remaining data and parity drives.

RAID Benefits

RAID has gained popularity because it improves I/O performance and increases storage

subsystem reliability. RAID provides data security through fault tolerance and redundant

data storage. The MegaRAID Express 500 management software configures and monitors

RAID disk arrays.

Improved I/O

Although disk drive capabilities have improved drastically, actual performance has been improved only three to four times in the last decade. Computing performance has been improved over 50 times during the same time period.

Increased Reliability

The electromechanical components of a disk subsystem operate more slowly, require more power, and generate more noise and vibration than electronic devices. These factors reduce the reliability of data stored on disks.

Chapter 2 Introduction to RAID

5


In This Chapter

The following topics are discussed:

| Major Topic | Subtopic | Turn to |
|---|---|---|
| Host-based RAID solution | | page 7 |
| RAID overview | | page 8 |
| | Consistency check | page 8 |
| | Fault tolerance | page 8 |
| | Disk rebuild | page 9 |
| | Hot spares | page 10 |
| | Hot swaps | page 10 |
| | Parity | page 11 |
| | Disk striping | page 12 |
| | Disk mirroring | page 13 |
| | Disk spanning | page 14 |
| | Logical drive | page 15 |
| | Logical drive states | page 15 |
| | SCSI drive states | page 15 |
| | Disk array types | page 16 |
| | Enclosure management | page 16 |


MegaRAID Express 500 – Host-Based RAID Solution

RAID products are either:

• host-based or

• SCSI-to-SCSI

The MegaRAID Express 500 controller is a host-based RAID solution. MegaRAID

Express 500 is a PCI adapter card that is installed in any available PCI expansion slot in a

host system.

Host-Based

A host-based RAID product puts all of the RAID intelligence on an adapter card that is installed in a network server. A host-based RAID product provides the best performance. MegaRAID Express 500 is part of the file server, so it can transmit data directly across the computer’s buses at data transfer speeds up to 132 MB/s.

The available sequential data transfer rate is determined by the following factors:

• the sustained data transfer rate on the motherboard PCI bus

• the sustained data transfer rate on the i960RM PCI-to-PCI bridge

• the sustained data transfer rate of the SCSI controller

• the sustained data transfer rate of the SCSI devices

• the number of SCSI channels

• the number of SCSI disk drives

Host-based solutions must provide operating system-specific drivers.

SCSI-to-SCSI

A SCSI-to-SCSI RAID product puts the RAID intelligence inside the RAID chassis and uses a plain SCSI Host Adapter installed in the network server. The data transfer rate is limited to the bandwidth of the SCSI channel. A SCSI-to-SCSI RAID product that has two wide SCSI channels operating at speeds up to 160 MB/s must squeeze the data into a single wide SCSI (160 MB/s) channel back to the host computer.

In SCSI-to-SCSI RAID products, the hard drive subsystem uses only a single SCSI ID, which allows you to connect multiple drive subsystems to a single SCSI controller.
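One way to read the list of factors for host-based products is as a chain of stages: sequential data must pass through every stage, so the slowest one caps the sustained rate. The Python sketch below applies that simplified bottleneck model (an assumption; it ignores protocol overhead, caching, and command latency) to both product types. The class and function names are hypothetical, and every rate passed in is an example value except the 132 MB/s PCI bus figure and the 160 MB/s channel figure quoted in this section.

```python
from dataclasses import dataclass


@dataclass
class HostBasedPath:
    """Hypothetical model of the host-based data path; all rates in MB/s."""
    pci_bus: float          # sustained rate of the motherboard PCI bus
    bridge: float           # sustained rate of the i960RM PCI-to-PCI bridge
    scsi_controller: float  # sustained rate of one SCSI controller channel
    channels: int           # number of SCSI channels
    drive: float            # sustained rate of one SCSI disk drive
    drives: int             # number of SCSI disk drives read in parallel

    def sequential_ceiling(self) -> float:
        # Every byte crosses every stage, so the slowest stage sets the limit.
        return min(
            self.pci_bus,
            self.bridge,
            self.scsi_controller * self.channels,
            self.drive * self.drives,
        )


def scsi_to_scsi_ceiling(internal_channels: int, channel_rate: float,
                         host_link_rate: float) -> float:
    """A SCSI-to-SCSI box funnels all internal channels into one host channel."""
    return min(internal_channels * channel_rate, host_link_rate)


if __name__ == "__main__":
    # Example values only: the bridge and per-drive rates are illustrative guesses.
    four_drives = HostBasedPath(pci_bus=132, bridge=132, scsi_controller=160,
                                channels=1, drive=30, drives=4)
    six_drives = HostBasedPath(pci_bus=132, bridge=132, scsi_controller=160,
                               channels=1, drive=30, drives=6)
    print(four_drives.sequential_ceiling())   # the drives are the bottleneck
    print(six_drives.sequential_ceiling())    # the PCI bus is the bottleneck
    print(scsi_to_scsi_ceiling(2, 160, 160))  # two channels squeezed into one
```

Under this model, adding drives raises throughput only until another stage (here the PCI bus) becomes the slowest link, which is why both bus rates and drive counts appear among the factors.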


RAID Overview

RAID (Redundant Array of Independent Disks) is a collection of specifications that

describe a system for ensuring the reliability and stability of data stored on large disk

subsystems. A RAID system can be implemented in a number of different versions (or

RAID Levels). The standard RAID levels are 0, 1, 3, and 5. MegaRAID Express 500 supports all standard RAID levels, as well as RAID levels 10, 30, and 50, which are special RAID versions.

Fault Tolerance

Fault tolerance is achieved through cooling fans, power supplies, and the ability to hot swap drives. MegaRAID Express 500 provides hot swapping through the hot spare feature. A hot spare drive is an unused, online, available drive that MegaRAID Express 500 automatically substitutes for an active drive when that drive fails.

After the hot spare is automatically moved into the RAID subsystem, the failed drive is automatically rebuilt. The RAID disk array continues to handle requests while the rebuild occurs.

Consistency Check

In RAID, a consistency check verifies the correctness of redundant data in an array. For example, in a system with dedicated parity, checking consistency means computing the parity of the data drives and comparing the results to the contents of the dedicated parity drive.
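For single parity, the check described above can be sketched in a few lines of Python. This illustration assumes that parity is the byte-wise XOR of the data blocks in each stripe, the scheme conventionally used by RAID 3 and RAID 5. It is not the MegaRAID firmware, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def xor_blocks(blocks: Sequence[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    result = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(result):
            raise ValueError("all blocks in a stripe must be the same size")
        for i, value in enumerate(block):
            result[i] ^= value
    return bytes(result)


def inconsistent_stripes(stripes: Sequence[Tuple[List[bytes], bytes]]) -> List[int]:
    """Return indices of stripes whose stored parity differs from recomputed parity.

    Each stripe is given as (data_blocks, stored_parity_block).
    """
    return [
        index
        for index, (data_blocks, stored_parity) in enumerate(stripes)
        if xor_blocks(data_blocks) != stored_parity
    ]


if __name__ == "__main__":
    data = [b"\x01\x02", b"\x10\x20", b"\x0f\xf0"]
    good_parity = xor_blocks(data)            # b"\x1e\xd2"
    stripes = [(data, good_parity), (data, b"\x00\x00")]
    print(inconsistent_stripes(stripes))      # [1]
```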


Disk Rebuild

You rebuild a disk drive by recreating the data that had been stored on the drive before

the drive failed.

Rebuilding can be done only in arrays with data redundancy, such as RAID levels 1, 3, 5, 10, 30, and 50.

Standby (warm spare) rebuild is employed in a mirrored (RAID 1) system. If a disk drive

fails, an identical drive is immediately available. The primary data source disk drive is the

original disk drive.

A hot spare can be used to rebuild disk drives in RAID 1, 3, 5, 10, 30, or 50 systems. If a

hot spare is not available, the failed disk drive must be replaced with a new disk drive so

that the data on the failed drive can be rebuilt.

The MegaRAID Express 500 controller automatically and transparently rebuilds failed

drives with user-definable rebuild rates. If a hot spare is available, the rebuild starts

automatically when a drive fails. MegaRAID Express 500 automatically restarts the

system and the rebuild if the system goes down during a rebuild.
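In a parity array, the data on a failed drive can be recreated because XOR-ing every member of a stripe (data and parity together) gives zero, so any one missing member equals the XOR of the survivors. In a mirrored (RAID 1) array, the rebuild is simply a copy from the surviving drive. The Python sketch below shows the parity case for one stripe, assuming single byte-wise XOR parity. The function names are hypothetical, and the code is not the controller's rebuild logic.

```python
from typing import List, Optional, Sequence


def xor_blocks(blocks: Sequence[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("need at least one block")
    result = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(result):
            raise ValueError("all blocks in a stripe must be the same size")
        for i, value in enumerate(block):
            result[i] ^= value
    return bytes(result)


def rebuild_missing_member(stripe: List[Optional[bytes]]) -> bytes:
    """Recreate the single missing member (None) of a single-parity stripe.

    The stripe lists every member in order, data blocks and parity block alike.
    """
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) != 1:
        raise ValueError("single parity can rebuild exactly one missing member")
    return xor_blocks([block for block in stripe if block is not None])


if __name__ == "__main__":
    # Three data blocks plus their parity; the second data drive has failed.
    stripe = [b"\x01\x02", None, b"\x0f\xf0", b"\x1e\xd2"]
    print(rebuild_missing_member(stripe).hex())  # 1020
```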

Rebuild Rate

The rebuild rate is the fraction of the compute cycles dedicated to rebuilding failed drives. A rebuild rate of 100 percent means the system is totally dedicated to rebuilding the failed drive.

The MegaRAID Express 500 rebuild rate can be configured between 0% and 100%. At 0%, the rebuild is only done if the system is not doing anything else. At 100%, the rebuild has a higher priority than any other system activity.

Physical Array

A RAID array is a collection of physical disk drives governed by the RAID management software. A RAID array appears to the host computer as one or more logical drives.


Hot Spares

A hot spare is an extra, unused disk drive that is part of the disk subsystem. It is usually in standby mode, ready for service if a drive fails. Hot spares permit you to replace failed drives without system shutdown or user intervention.

MegaRAID Express 500 implements automatic and transparent rebuilds using hot spare drives, providing a high degree of fault tolerance and zero downtime. The MegaRAID Express 500 RAID Management software allows you to specify physical drives as hot spares. When a hot spare is needed, the MegaRAID Express 500 controller assigns the hot spare that has a capacity closest to and at least as great as that of the failed drive to take the place of the failed drive.

Important

Hot spares are employed only in arrays with redundancy, for example, RAID levels 1, 3, 5, 10, 30, and 50.

A hot spare connected to a specific MegaRAID Express 500 controller can be used only to rebuild a drive that is connected to the same controller.

Hot Swap

A hot swap is the manual replacement of a defective physical disk unit while the computer is still running. When a new drive has been installed, you must issue a command to rebuild the drive.
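The hot spare selection rule described under Hot Spares can be sketched in Python as follows. The rule has two parts: the spare must be on the same controller, and it must be the smallest spare that is at least as large as the failed drive. The `Drive` record, its fields, and `pick_hot_spare` are hypothetical names chosen for illustration. The sketch does not reproduce the controller firmware.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Drive:
    name: str
    capacity_mb: int
    controller: int  # which controller the drive is connected to


def pick_hot_spare(failed: Drive, spares: List[Drive]) -> Optional[Drive]:
    """Choose the spare whose capacity is closest to, and at least as great as, the failed drive's."""
    candidates = [
        spare for spare in spares
        if spare.controller == failed.controller      # spares never serve another controller
        and spare.capacity_mb >= failed.capacity_mb    # must be large enough
    ]
    # The smallest adequate spare is the one "closest to" the failed capacity.
    return min(candidates, key=lambda spare: spare.capacity_mb, default=None)


spares = [
    Drive("spare-a", 4300, controller=0),
    Drive("spare-b", 18200, controller=0),
    Drive("spare-c", 9100, controller=1),
    Drive("spare-d", 36400, controller=0),
]
failed = Drive("disk-3", 9100, controller=0)
print(pick_hot_spare(failed, spares).name)  # spare-b
```

In this example, spare-c matches the failed drive's capacity but sits on another controller, so it is skipped. If no spare qualifies, the function returns `None`, and the failed drive must be replaced by hand.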


Parity

Parity generates a set of redundancy data from two or more parent data sets. The redundancy data can be used to reconstruct one of the parent data sets. Parity data does not fully duplicate the parent data sets. In RAID, this method is applied to entire drives or stripes across all disk drives in an array. The types of parity are:

Type Description

Dedicated Parity The parity of the data on two or more disk drives is stored on an additional disk.

Distributed Parity The parity data is distributed across all drives in the system.

If a single disk drive fails, it can be rebuilt from the parity and the data on the remaining drives.

RAID level 3 combines dedicated parity with disk striping. The parity disk in RAID 3 is the last drive in a RAID set.

RAID level 5 combines distributed parity with disk striping. Parity provides redundancy for one drive failure without duplicating the contents of entire disk drives, but parity generation can slow the write process. A dedicated parity scheme during normal read/write operations is shown below:


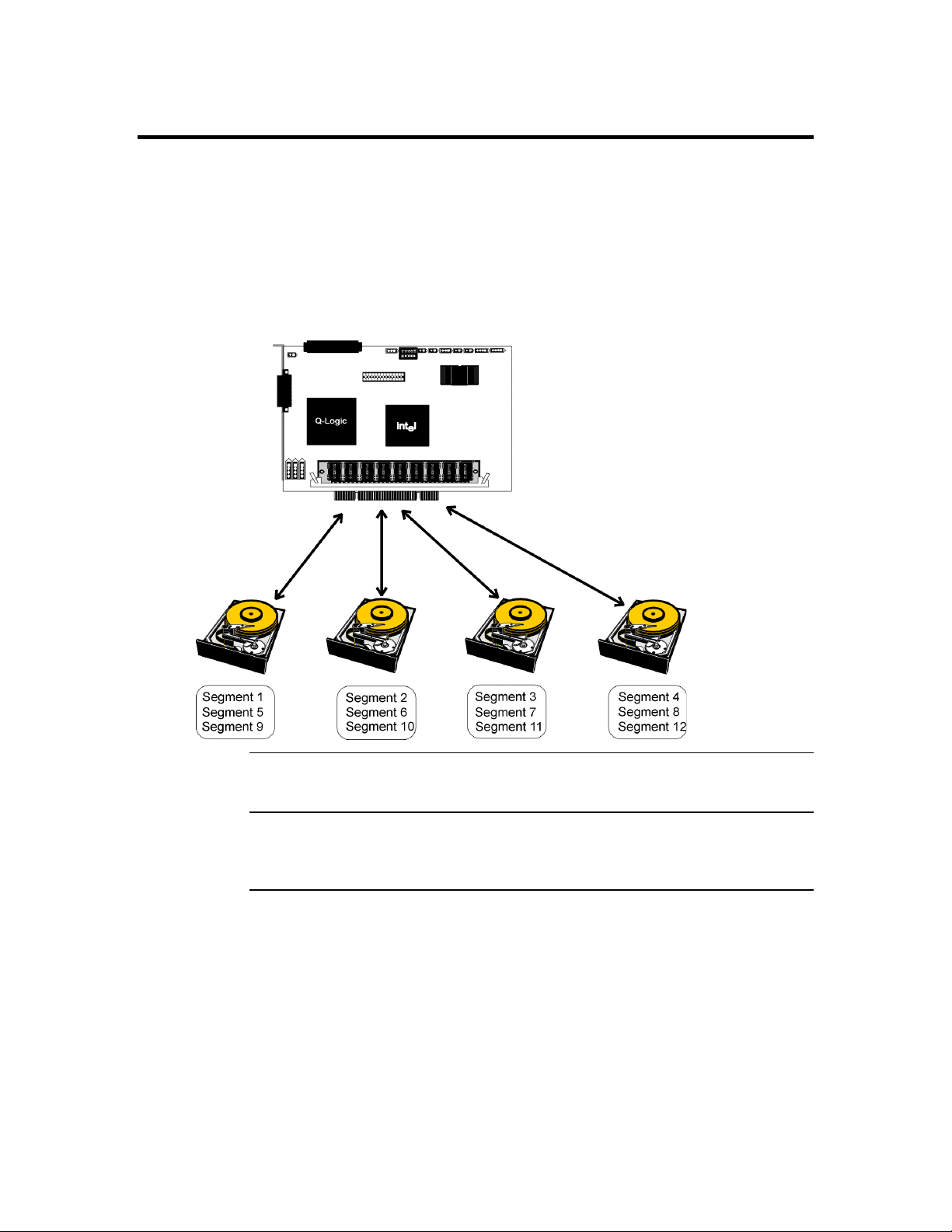

Disk Striping

Disk striping writes data across multiple disk drives instead of just one disk drive. Disk striping involves partitioning each drive's storage space into stripes that can vary in size from 2 KB to 128 KB. These stripes are interleaved in a repeated sequential manner. The combined storage space is composed of stripes from each drive. MegaRAID Express 500 supports stripe sizes of 2 KB, 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, or 128 KB.

For example, in a four-disk system using only disk striping (as in RAID level 0), segment 1 is written to disk 1, segment 2 is written to disk 2, and so on. Disk striping enhances performance because multiple drives are accessed simultaneously, but disk striping does not provide data redundancy.

Stripe Width

Stripe width is a measure of the number of disks involved in an array where striping is implemented. For example, a four-disk array with disk striping has a stripe width of four.

Stripe Size

The stripe size is the length of the interleaved data segments that MegaRAID Express 500 writes across multiple drives. MegaRAID Express 500 supports stripe sizes of 2 KB, 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, or 128 KB.
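Stripe width and stripe size together determine where each byte of a striped logical drive lands. The Python sketch below assumes a plain round-robin RAID 0 layout like the four-disk example under Disk Striping: segment 1 goes to disk 1, segment 2 to disk 2, and so on. The function `locate` is hypothetical, and disks are numbered from 0.

```python
KB = 1024
SUPPORTED_STRIPE_SIZES_KB = (2, 4, 8, 16, 32, 64, 128)


def locate(offset: int, stripe_width: int, stripe_size_kb: int):
    """Map a byte offset on a striped (RAID 0) logical drive to (disk, byte offset on that disk).

    Disks are numbered from 0 here, so disk 0 corresponds to "disk 1" in the text.
    """
    if stripe_size_kb not in SUPPORTED_STRIPE_SIZES_KB:
        raise ValueError(f"unsupported stripe size: {stripe_size_kb} KB")
    stripe_size = stripe_size_kb * KB
    segment, within = divmod(offset, stripe_size)  # which segment, and where inside it
    row, disk = divmod(segment, stripe_width)      # segments are dealt round-robin to the disks
    return disk, row * stripe_size + within


# Four-disk array (stripe width 4) with 64 KB stripes.
print(locate(0, 4, 64))                  # (0, 0): segment 1 on disk 1
print(locate(64 * KB, 4, 64))            # (1, 0): segment 2 on disk 2
print(locate(5 * 64 * KB + 100, 4, 64))  # (1, 65636): second row, disk 2
```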


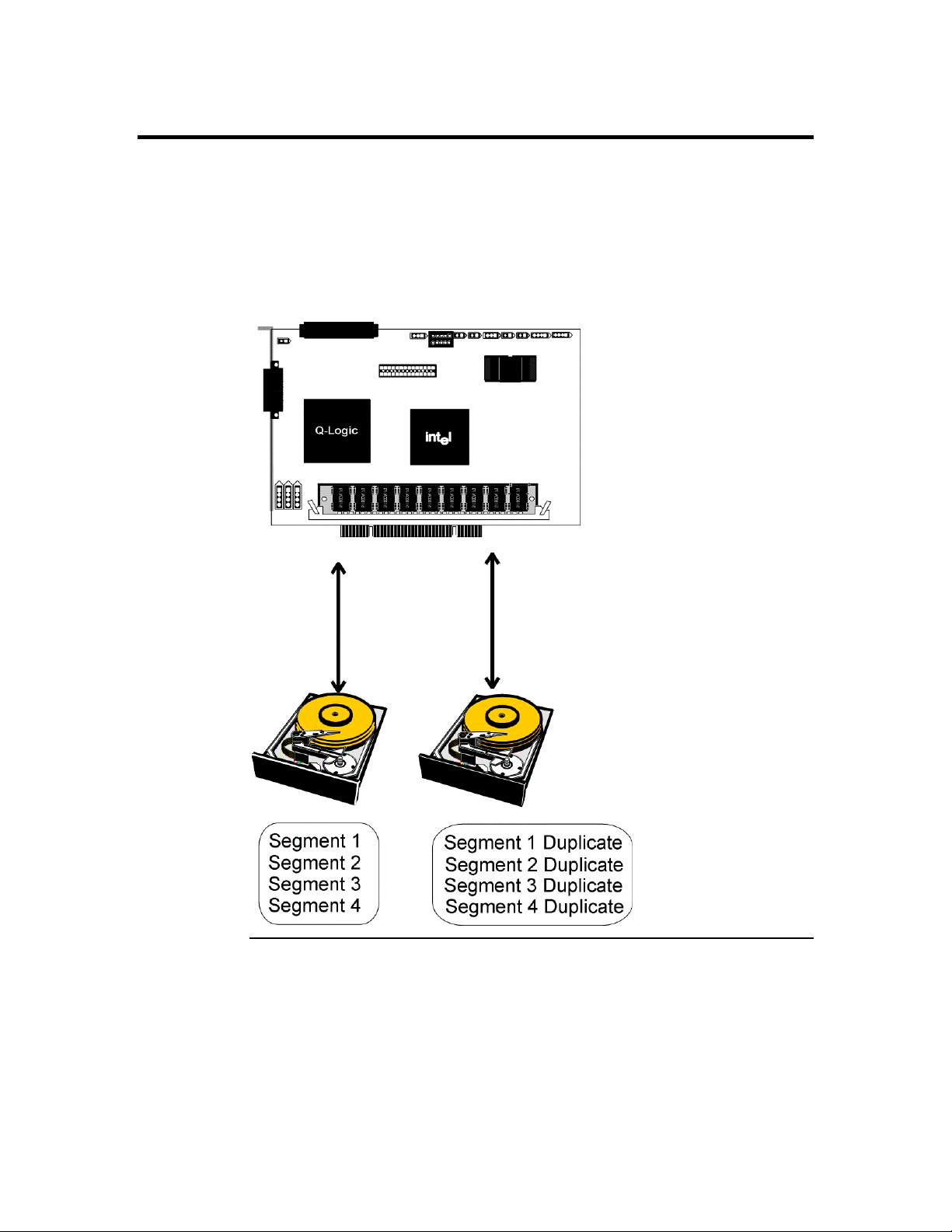

Disk Mirroring

With mirroring (used in RAID 1), data written to one disk drive is simultaneously written to another disk drive. If one disk drive fails, the contents of the other disk drive can be used to run the system and reconstruct the failed drive. The primary advantage of disk mirroring is that it provides 100% data redundancy. Since the contents of the disk drive are completely written to a second drive, it does not matter if one of the drives fails. Both drives contain the same data at all times. Either drive can act as the operational drive.

Disk mirroring provides 100% redundancy, but is expensive because each drive in the system must be duplicated.
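The mirroring behavior described above (every write goes to both drives, either drive can serve reads, and a replacement is rebuilt from the survivor) can be sketched in Python as a toy in-memory model. `MirroredPair` is a hypothetical class. It does not model caching, timing, or how the controller detects failures.

```python
class MirroredPair:
    """Toy RAID 1 pair: writes go to both drives, reads come from any healthy drive."""

    def __init__(self, size: int):
        self.drives = [bytearray(size), bytearray(size)]
        self.healthy = [True, True]

    def write(self, offset: int, data: bytes) -> None:
        for i, drive in enumerate(self.drives):
            if self.healthy[i]:
                drive[offset:offset + len(data)] = data

    def read(self, offset: int, length: int) -> bytes:
        for i, drive in enumerate(self.drives):
            if self.healthy[i]:  # either drive can act as the operational drive
                return bytes(drive[offset:offset + length])
        raise IOError("both drives have failed")

    def fail(self, index: int) -> None:
        self.healthy[index] = False

    def rebuild(self, index: int) -> None:
        """Reconstruct a replacement drive by copying the surviving mirror."""
        source = 1 - index
        if not self.healthy[source]:
            raise IOError("no surviving copy to rebuild from")
        self.drives[index] = bytearray(self.drives[source])
        self.healthy[index] = True


pair = MirroredPair(16)
pair.write(0, b"payroll")
pair.fail(0)
print(pair.read(0, 7))  # b'payroll', served by the second drive
pair.rebuild(0)
pair.fail(1)
print(pair.read(0, 7))  # b'payroll', served by the rebuilt first drive
```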


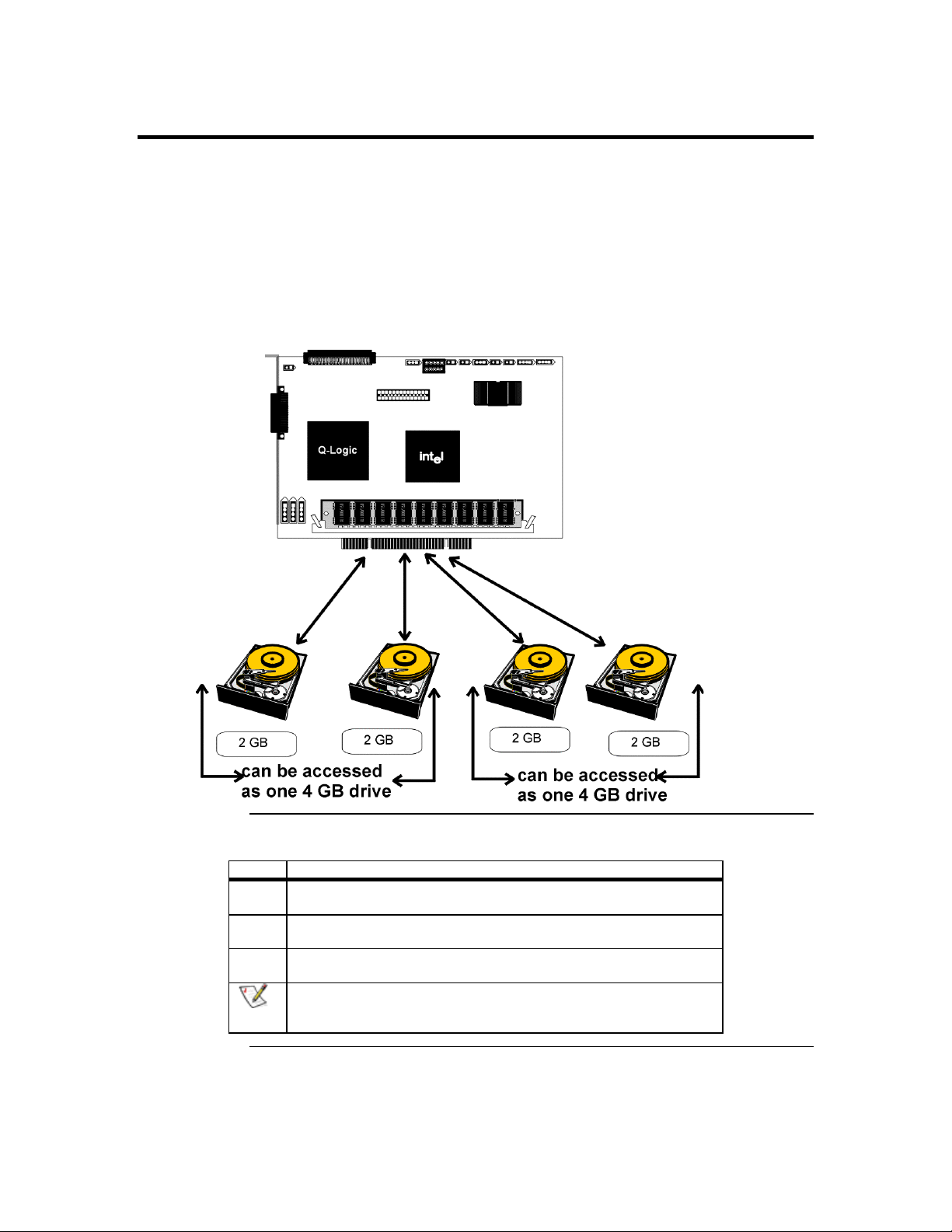

Disk Spanning

Disk spanning allows multiple disk drives to function like one big drive. Spanning overcomes lack of disk space and simplifies storage management by combining existing resources or adding relatively inexpensive resources. For example, four 400 MB disk drives can be combined to appear to the operating system as one single 1600 MB drive.

Spanning alone does not provide reliability or performance enhancements. Spanned logical drives must have the same stripe size and must be contiguous. In the accompanying graphic, a RAID 1 array is turned into a RAID 10 array.

This controller supports a span depth of eight. That means that up to eight RAID 1, 3, or 5 arrays can be spanned to create one logical drive.

Spanning for RAID 10, RAID 30, or RAID 50

Level Description

10 Configure RAID 10 by spanning two contiguous RAID 1 logical drives. The RAID 1 logical drives must have the same stripe size.

30 Configure RAID 30 by spanning two contiguous RAID 3 logical drives. The RAID 3 logical drives must have the same stripe size.

50 Configure RAID 50 by spanning two contiguous RAID 5 logical drives. The RAID 5 logical drives must have the same stripe size.

Note: Spanning two contiguous RAID 0 logical drives does not produce a new RAID level or add fault tolerance. It does increase the size of the logical volume and improves performance by doubling the number of spindles.
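The spanning rules above can be checked mechanically. The Python sketch below assumes each spanned logical drive is described only by its capacity and stripe size. It enforces the span depth of eight and the same-stripe-size rule, then adds up the capacity the operating system would see. The function name is hypothetical. Contiguity, and the way MegaRAID Express 500 actually lays data out across spans, are not modeled.

```python
MAX_SPAN_DEPTH = 8  # span depth stated for this controller


def spanned_capacity_mb(arrays):
    """Check the spanning rules for a list of (capacity_mb, stripe_size_kb) logical drives
    and return the capacity the operating system would see.

    Contiguity and the actual data layout across spans are not modeled here.
    """
    if not 1 <= len(arrays) <= MAX_SPAN_DEPTH:
        raise ValueError(f"span depth must be between 1 and {MAX_SPAN_DEPTH}")
    stripe_sizes = {stripe_kb for _, stripe_kb in arrays}
    if len(stripe_sizes) != 1:
        raise ValueError("spanned logical drives must have the same stripe size")
    return sum(capacity_mb for capacity_mb, _ in arrays)


# The 4 x 400 MB example from the text, assuming a 64 KB stripe size throughout.
print(spanned_capacity_mb([(400, 64)] * 4))  # 1600
```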


Logical Drive

A logical drive is a partition in a physical array of disks that is made up of contiguous data segments on the physical disks. A logical drive can consist of:

• an entire physical array
• more than one entire physical array
• a part of an array
• parts of more than one array, or
• a combination of any two of the above conditions

Logical Drive States

State Description

Optimal The drive operating condition is good. All configured drives are online.

Degraded The drive operating condition is not optimal. One of the configured drives has failed or is offline.

Failed The drive has failed.

Offline The drive is not available to MegaRAID Express 500.

SCSI Drive States

A SCSI disk drive can be in one of these states:

State Description

Online (ONLIN) The drive is functioning normally and is a part of a configured logical drive.

Ready (READY) The drive is functioning normally but is not part of a configured logical drive and is not designated as a hot spare.

Hot Spare (HOTSP) The drive is powered up and ready for use as a spare in case an online drive fails.

Fail (FAIL) A fault has occurred in the drive placing it out of service.

Rebuild (REB) The drive is being rebuilt with data from a failed drive.


Disk Array Types

The RAID disk array types are listed in the following table:

Type Description

Software-Based The array is managed by software running in a host computer using the host CPU bandwidth. The disadvantages associated with this method are the load on the host CPU and the need for different software for each operating system.

SCSI to SCSI The array controller resides outside of the host computer and communicates with the host through a SCSI adapter in the host. The array management software runs in the controller. It is transparent to the host and independent of the host operating system. The disadvantage is the limited data transfer rate of the SCSI channel between the SCSI adapter and the array controller.

Bus-Based The array controller resides on the bus (for example, a PCI or EISA bus) in the host computer and has its own CPU to generate the parity and handle other RAID functions. A bus-based controller can transfer data at the speed of the host bus (PCI, ISA, EISA, VL-Bus) but is limited to the bus it is designed for. MegaRAID Express 500 resides on a PCI bus, which can handle data transfer at up to 132 MB/s. With MegaRAID Express 500, the channel can handle data transfer rates up to 160 MB/s per SCSI channel.
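The two bandwidth figures in the Bus-Based row follow from simple arithmetic. The check below assumes a 32-bit PCI bus clocked at 33 MHz, and an Ultra160 SCSI channel that is 16 bits wide and uses double-transition clocking (two transfers per 40 MHz clock). Neither assumption is stated in this guide.

```latex
\begin{aligned}
B_{\text{PCI}} &= 33\ \text{MHz} \times 4\ \text{bytes per clock} = 132\ \text{MB/s} \\
B_{\text{Ultra160}} &= 40\ \text{MHz} \times 2\ \text{transfers per clock} \times 2\ \text{bytes per transfer} = 160\ \text{MB/s}
\end{aligned}
```

Under these assumptions, one SCSI channel can move data faster than the host PCI bus. Sustained transfers to and from the host would therefore be bounded by the 132 MB/s bus rather than by the channel.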

Enclosure Management

Enclosure management is the intelligent monitoring of the disk subsystem by software and/or hardware.

The disk subsystem can be part of the host computer or separate from it. Enclosure management helps you stay informed of events in the disk subsystem, such as a drive or power supply failure. Enclosure management increases the fault tolerance of the disk subsystem.


3 RAID Levels

There are six official RAID levels (RAID 0 through RAID 5). MegaRAID Express 500 supports RAID levels 0, 1, 3, and 5. LSI Logic has designed three additional RAID levels (10, 30, and 50) that provide additional benefits. The RAID levels that MegaRAID Express 500 supports are:

RAID Level Type Turn to

0 Standard page 19

1 Standard page 20

3 Standard page 21

5 Standard page 23

10 MegaRAID Express 500 only page 24

30 MegaRAID Express 500 only page 25

50 MegaRAID Express 500 only page 26

Select RAID Level

To ensure the best performance, you should select the optimal RAID level when you create a system drive. The optimal RAID level for your disk array depends on a number of factors:

• the number of drives in the disk array

• the capacity of the drives in the array

• the need for data redundancy

• the disk performance requirements

The factors you need to consider when selecting a RAID level are listed in the following table.


Selecting a RAID Level

RAID 0
Description and use: Data divided in blocks and distributed sequentially (pure striping). Use for non-critical data that requires high performance.
Pros: High data throughput for large files.
Cons: No fault tolerance. All data lost if any drive fails.
Maximum physical drives: One to 15
Fault tolerant: No

RAID 1
Description and use: Data duplicated on another disk (mirroring). Use for read-intensive fault-tolerant systems.
Pros: 100% data redundancy.
Cons: Doubles disk space. Reduced performance during rebuilds.
Maximum physical drives: 2
Fault tolerant: Yes

RAID 3
Description and use: Disk striping with a dedicated parity drive. Use for noninteractive apps that process large files sequentially.
Pros: Achieves data redundancy at low cost.
Cons: Performance not as good as RAID 1.
Maximum physical drives: Three to 15
Fault tolerant: Yes

RAID 5
Description and use: Disk striping and parity data across all drives. Use for high read volume but low write volume, such as transaction processing.
Pros: Achieves data redundancy at low cost.
Cons: Performance not as good as RAID 1.
Maximum physical drives: Three to 15
Fault tolerant: Yes

RAID 10
Description and use: Data striping and mirrored drives.
Pros: High data transfers, complete redundancy.
Cons: More complicated.
Maximum physical drives: Four to 14 (must be a multiple of two)
Fault tolerant: Yes

RAID 30
Description and use: Disk striping with a dedicated parity drive.
Pros: High data transfers, redundancy.
Cons: More complicated.
Maximum physical drives: Six to 15
Fault tolerant: Yes

RAID 50
Description and use: Disk striping and parity data across all drives.
Pros: High data transfers, redundancy.
Cons: More complicated.
Maximum physical drives: Six to 15
Fault tolerant: Yes

Note: The maximum number of physical drives supported by the Express 500 controller is 15.
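The drive counts in this table translate directly into usable capacity. The Python sketch below rests on two assumptions: all drives have the same capacity, and RAID 30 and RAID 50 give up one drive's worth of capacity to parity in each spanned array. `usable_capacity` and `DRIVE_LIMITS` are hypothetical names. The capacity the controller actually reports may differ slightly.

```python
# (minimum, maximum) physical drives per RAID level, taken from the table above.
DRIVE_LIMITS = {0: (1, 15), 1: (2, 2), 3: (3, 15), 5: (3, 15),
                10: (4, 14), 30: (6, 15), 50: (6, 15)}


def usable_capacity(level: int, drives: int, drive_gb: int, spans: int = 2) -> int:
    """Approximate usable capacity for equal-size drives.

    `spans` (the number of spanned RAID 3 or RAID 5 arrays) is used only for RAID 30 and 50.
    """
    low, high = DRIVE_LIMITS[level]
    if not low <= drives <= high:
        raise ValueError(f"RAID {level} supports {low} to {high} drives")
    if level == 0:
        data_drives = drives                  # pure striping, no redundancy
    elif level in (1, 10):
        if drives % 2:
            raise ValueError("mirrored levels need an even number of drives")
        data_drives = drives // 2             # every drive has a mirror
    elif level in (3, 5):
        data_drives = drives - 1              # one drive's worth of parity
    else:                                     # RAID 30 or 50
        if spans < 2 or drives // spans < 3:
            raise ValueError("each spanned array needs at least three drives")
        data_drives = drives - spans          # one drive's worth of parity per span
    return data_drives * drive_gb


for level in (0, 5, 10, 50):
    print(level, usable_capacity(level, 6, 18))  # six 18 GB drives
# 0 108
# 5 90
# 10 54
# 50 72
```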


RAID 0

RAID 0 provides disk striping across all drives in the RAID subsystem. RAID 0 does not provide any data redundancy, but does offer the best performance of any RAID level. RAID 0 breaks up data into smaller blocks and then writes a block to each drive in the array. The size of each block is determined by the stripe size parameter, set during the creation of the RAID set. RAID 0 offers high bandwidth. By breaking up a large file into smaller blocks, MegaRAID Express 500 can use several drives to read or write the file faster. RAID 0 involves no parity calculations to complicate the write operation. This makes RAID 0 ideal for applications that require high bandwidth but do not require fault tolerance.

Uses

RAID 0 provides high data throughput, especially for large files. Use it in any environment that does not require fault tolerance.

Strong Points

Provides increased data throughput for large files. No capacity loss penalty for parity.

Weak Points

Does not provide fault tolerance. All data lost if any drive fails.

Drives

One to 15

The initiator takes one ID per channel. This leaves 15 IDs available for one channel.


RAID 1

In RAID 1, MegaRAID Express 500 duplicates all data from one drive to a second drive. RAID 1 provides complete data redundancy, but at the cost of doubling the required data storage capacity.

Uses

Use RAID 1 for small databases or any other environment that requires fault tolerance but small capacity.

Strong Points

RAID 1 provides complete data redundancy. RAID 1 is ideal for any application that requires fault tolerance and minimal capacity.

Weak Points

RAID 1 requires twice as many disk drives. Performance is impaired during drive rebuilds.

Drives

Two


RAID 3

RAID 3 provides disk striping and complete data redundancy through a dedicated parity drive. The stripe size must be 64 KB if RAID 3 is used. RAID 3 handles data at the block level, not the byte level, so it is ideal for networks that often handle very large files, such as graphic images. RAID 3 breaks up data into smaller blocks, calculates parity by performing an exclusive-or on the blocks, and then writes the blocks to all but one drive in the array. The parity data created during the exclusive-or is then written to the last drive in the array. The size of each block is determined by the stripe size parameter, which is set during the creation of the RAID set.

If a single drive fails, a RAID 3 array continues to operate in degraded mode. If the failed drive is a data drive, writes continue as normal, except that no data is written to the failed drive. Reads reconstruct the data on the failed drive by performing an exclusive-or operation on the remaining data in the stripe and the parity for that stripe. If the failed drive is the parity drive, writes occur as normal, except that no parity is written. Reads retrieve data from the disks.

Uses

Best suited for applications such as graphics, imaging, or video that call for reading and writing huge, sequential blocks of data.

Strong Points

Provides data redundancy and high data transfer rates.

Weak Points

The dedicated parity disk is a bottleneck with random I/O.

Drives

Three to 15


RAID 5 vs RAID 3

You may find that RAID 5 is preferable to RAID 3, even for applications characterized by sequential reads and writes, because MegaRAID Express 500 has very robust caching algorithms.

The benefits of RAID 3 disappear if there are many small I/O operations scattered randomly and widely across the disks in the logical drive. The RAID 3 fixed parity disk becomes a bottleneck in such applications. For example, suppose the host makes two small writes that are widely scattered, involving two different stripes and different disk drives. Ideally both writes should take place at the same time, but this is not possible in RAID 3, since the writes must take turns accessing the fixed parity drive. For this reason, RAID 5 is the clear choice in this scenario.
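The parity bottleneck in this example can be made concrete. In the Python sketch below, a small write touches its data drive plus the parity drive of its stripe. The RAID 5 layout uses one common rotating-parity scheme, assumed here for illustration only; the controller's actual parity placement is not documented in this guide. All function names are hypothetical.

```python
def parity_disk_raid3(stripe: int, disks: int) -> int:
    """RAID 3: the parity block of every stripe is on the last drive."""
    return disks - 1


def parity_disk_raid5(stripe: int, disks: int) -> int:
    """RAID 5 (assumed rotation): parity moves one drive to the left on each stripe."""
    return (disks - 1 - stripe) % disks


def drives_touched(write, parity_disk, disks):
    """A small write updates its data drive plus the parity drive of the same stripe."""
    stripe, data_disk = write
    return {data_disk, parity_disk(stripe, disks)}


def can_run_concurrently(first, second, parity_disk, disks):
    """Two small writes can overlap only if they touch no drive in common."""
    shared = drives_touched(first, parity_disk, disks) & drives_touched(second, parity_disk, disks)
    return not shared


# Two small writes on a five-drive logical drive, given as (stripe, data drive).
write_a, write_b = (0, 0), (1, 1)
print(can_run_concurrently(write_a, write_b, parity_disk_raid3, 5))  # False: both need drive 4
print(can_run_concurrently(write_a, write_b, parity_disk_raid5, 5))  # True: parity on drives 4 and 3
```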


RAID 5

RAID 5 includes disk striping at the block level and parity. In RAID 5, the parity information is written to several drives. RAID 5 is best suited for networks that perform a lot of small I/O transactions simultaneously.

RAID 5 addresses the bottleneck issue for random I/O operations. Since each drive contains both data and parity, numerous writes can take place concurrently. In addition, robust caching algorithms and hardware-based exclusive-or assist make RAID 5 performance exceptional in many different environments.

Uses

RAID 5 provides high data throughput, especially for large files. Use RAID 5 for transaction processing applications because each drive can read and write independently. If a drive fails, MegaRAID Express 500 uses the distributed parity data to recreate all missing information. Use also for office automation and online customer service that requires fault tolerance. Use for any application that has high read request rates but low write request rates.

Strong Points

Provides data redundancy and good performance in most environments.

Weak Points

Disk drive performance will be reduced if a drive is being rebuilt. Environments with few processes do not perform as well because the RAID overhead is not offset by the performance gains in handling simultaneous processes.

Drives

Three to 15
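Part of the RAID overhead on writes comes from updating parity. With XOR parity, a small write does not need to read every data block in the stripe. The new parity can be computed from the old data, the new data, and the old parity: two reads and two writes per small write. The Python sketch below shows this standard read-modify-write update. This guide does not say how the MegaRAID firmware schedules these operations, and the function names are hypothetical.

```python
from functools import reduce


def xor(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def small_write_parity(old_data: bytes, new_data: bytes, old_parity: bytes) -> bytes:
    """Read-modify-write: remove the old data from the parity, then add the new data."""
    return xor(xor(old_parity, old_data), new_data)


# A stripe with three data blocks and its parity block.
data = [b"\x01", b"\x02", b"\x04"]
parity = reduce(xor, data)  # b'\x07'

# Overwrite the second block without reading the other data blocks.
new_parity = small_write_parity(data[1], b"\x0a", parity)
data[1] = b"\x0a"
print(new_parity)                       # b'\x0f'
print(new_parity == reduce(xor, data))  # True: matches a full recomputation
```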


RAID 10

RAID 10 is a combination of RAID 0 and RAID 1. RAID 10 has mirrored drives. RAID 10 breaks up data into smaller blocks, and then stripes the blocks of data to each RAID 1 RAID set. Each RAID 1 RAID set then duplicates its data to its other drive. The size of each block is determined by the stripe size parameter, which is set during the creation of the RAID set. RAID 10 can sustain one to four drive failures while maintaining data integrity if each failed disk is in a different RAID 1 array.

Uses

RAID 10 works best for data storage that must have 100% redundancy of mirrored arrays and that also needs the enhanced I/O performance of RAID 0 (striped arrays). RAID 10 works well for medium-sized databases or any environment that requires a higher degree of fault tolerance and moderate to medium capacity.

Strong Points

RAID 10 provides both high data transfer rates and complete data redundancy.

Weak Points

RAID 10 requires twice as many drives as all other RAID levels except RAID 1.

Drives

Four to 14 (must be a multiple of two)
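The survival rule for RAID 10 is simple: the array keeps its data as long as no RAID 1 pair loses both of its drives. The same rule applies to RAID 30 and RAID 50, where no spanned RAID 3 or RAID 5 array may lose more than one drive. The Python sketch below checks a set of failed drives against that rule. Drive numbering and the `survives` name are hypothetical.

```python
def survives(failed, spans):
    """Return True if no spanned array has lost more than one drive.

    `spans` is a list of sets of drive IDs, one set per RAID 1 pair (RAID 10);
    the same rule applies to the single-parity RAID 3/RAID 5 arrays in RAID 30/50.
    """
    return all(len(span & failed) <= 1 for span in spans)


# Eight drives arranged as four spanned RAID 1 pairs.
raid10 = [{0, 1}, {2, 3}, {4, 5}, {6, 7}]
print(survives({0, 2, 4, 6}, raid10))  # True: four failures, one per pair
print(survives({0, 1}, raid10))        # False: both halves of one mirror lost
```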


RAID 30

RAID 30 is a combination of RAID 0 and RAID 3. RAID 30 provides high data transfer speeds and high data reliability. RAID 30 is best implemented on two RAID 3 disk arrays with data striped across both disk arrays. RAID 30 breaks up data into smaller blocks, and then stripes the blocks of data to each RAID 3 RAID set. RAID 3 breaks up data into smaller blocks, calculates parity by performing an exclusive-or on the blocks, and then writes the blocks to all but one drive in the array. The parity data created during the exclusive-or is then written to the last drive in each RAID 3 array. The size of each block is determined by the stripe size parameter, which is set during the creation of the RAID set.

RAID 30 can sustain one to four drive failures while maintaining data integrity if each failed disk is in a different RAID 3 array.

Uses

Use RAID 30 for sequentially written and read data, such as prepress and video on demand, that requires a higher degree of fault tolerance and medium to large capacity.

Strong Points

Provides data reliability and high data transfer rates.

Weak Points

Requires 2 to 4 times as many parity drives as RAID 3.

Drives

Six to 15

The initiator takes one ID per channel. This leaves 15 IDs available for one channel.


RAID 50

RAID 50 provides the features of both RAID 0 and RAID 5. RAID 50 includes both parity and disk striping across multiple drives. RAID 50 is best implemented on two RAID 5 disk arrays with data striped across both disk arrays. RAID 50 breaks up data into smaller blocks, and then stripes the blocks of data to each RAID 5 RAID set. RAID 5 breaks up data into smaller blocks, calculates parity by performing an exclusive-or on the blocks, and then writes the blocks of data and parity to each drive in the array. The size of each block is determined by the stripe size parameter, which is set during the creation of the RAID set.

RAID 50 can sustain one to four drive failures while maintaining data integrity if each failed disk is in a different RAID 5 array.

Uses

RAID 50 works best when used with data that requires high reliability, high request rates, high data transfer, and medium to large capacity.

Strong Points

RAID 50 provides high data throughput, data redundancy, and very good performance.

Weak Points

Requires 2 to 4 times as many parity drives as RAID 5.

Drives

Six to 15

The initiator takes one ID per channel. This leaves 15 IDs available for one channel.


4 Features

MegaRAID is a family of high-performance intelligent PCI-to-SCSI host adapters with RAID control capabilities. MegaRAID Express 500 has a SCSI channel that supports 160M Ultra and Wide SCSI at data transfer rates up to 160 MB/s. The SCSI channel supports up to 15 Wide devices and up to seven non-Wide devices.

In This Chapter

Topics described in this chapter include:

• new features
• configuration features
• hardware architecture features
• array performance features
• RAID management features
• fault tolerance features
• utility programs
• software drivers

SMART Technology

The MegaRAID Express 500 Self-Monitoring, Analysis and Reporting Technology (SMART) detects up to 70% of all predictable drive failures. SMART monitors the internal performance of all motors, heads, and drive electronics.

Configuration on Disk

Configuration on Disk (drive roaming) saves configuration information both in NVRAM on MegaRAID Express 500 and on the disk drives connected to MegaRAID Express 500. If MegaRAID Express 500 is replaced, the new MegaRAID Express 500 controller can detect the actual RAID configuration, maintaining the integrity of the data on each drive, even if the drives have changed channel and/or target ID.


Hardware Requirements

MegaRAID Express 500 can be installed in an IBM AT®-compatible or EISA computer with a motherboard that has 5 volt/3.3 volt PCI expansion slots. The computer must support PCI version 2.1 or later. The computer should have an Intel Pentium, Pentium Pro, or more powerful CPU, a floppy drive, a color monitor and VGA adapter card, a mouse, and a keyboard.

Configuration Features

Specification Feature

RAID Levels 0, 1, 3, 5, 10, 30, and 50.

SCSI Channels One

Maximum number of drives per channel 15

Array interface to host PCI 2.1

Drive interface Fast and Wide, Ultra 160M SE and LVD

Upgradable cache size 16 MB, 32 MB, 64 MB, or 128 MB

Cache Function Write-through, write-back, ARA, NRA, RA

Multiple logical drives/arrays per controller Up to 40 logical drives per controller

Maximum number of MegaRAID Express 500 controllers per system 12

Online capacity expansion Yes

Dedicated and pool hot spare Yes

Flashable firmware Yes

Hot swap devices supported Yes

Non-disk devices supported Yes

Mixed capacity hard disk drives Yes

Number of 16-bit internal connectors One

Number of 16-bit external connectors One

Support for hard disk drives with capacities of more than 8 GB Yes

Clustering support (Failover control) No

Online RAID level migration Yes

RAID remapping Yes

No reboot necessary after expansion Yes

More than 200 Qtags per physical drive Yes

Hardware clustering support on the board Yes

User-specified rebuild rate Yes


Hardware Architecture Features

The hardware architecture features include:

Specification Feature

Processor Intel i960RM 100

SCSI Controller QLogic ISP10160A

Size of Flash ROM 1 MB

Amount of NVRAM 32 KB

Hardware XOR assistance Yes

Direct I/O Yes

Removable cache memory module Yes

SCSI bus termination Active, single-ended or LVD

Double-sided DIMMs Yes

Auxiliary TermPWR source No

Direct I/O bandwidth 132 MB/s

Array Performance Features

The array performance features include:

Specification Feature

Host data transfer rate 132 MB/s

Drive data transfer rate 160 MB/s

Maximum Scatter/Gathers 26 elements

Maximum size of I/O requests 6.4 MB in 64 KB stripes

Maximum Queue Tags per drive 211

Stripe Sizes 2 KB, 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, or 128 KB

Maximum number of concurrent commands 255


RAID Management Features

The RAID management features include:

Specification Feature

Support for SNMP Yes

Performance Monitor provided Yes

Remote control and monitoring Yes

Event broadcast and event alert Yes

Hardware connector RS232C

Drive roaming Yes

Support for concurrent multiple stripe sizes Yes

Web-based management tools Not released yet

Windows NT and NetWare server support via GUI client utility Yes

SCO Unix, OS/2, and UnixWare server support via GUI client utility Yes

DMI support Yes

Management through an industry-standard browser Not released yet

Fault Tolerance Features

The fault tolerance features include:

Specification Feature

Support for SMART Yes

Enclosure management SAF-TE compliant

Drive failure detection Automatic

Drive rebuild using hot spares Automatic

Parity Generation and checking Software


Software Utilities

The software utility features include:

Specification Feature

Graphical user interface Yes

Management utility Yes

Bootup configuration via MegaRAID Manager Yes

Online Read, Write, and cache policy switching Yes

Internet and intranet support through TCP/IP Yes

Operating System Software Drivers

MegaRAID Express 500 includes a DOS software configuration utility and drivers for:

• Windows NT V4.0
• Novell NetWare 4.x
• OS/2
• SCO UnixWare 2.1x
• SCO OpenServer R5.0x

The DOS drivers for MegaRAID Express 500 are contained in the firmware on MegaRAID Express 500, except the DOS ASPI and CD-ROM drivers. Call your LSI Logic OEM support representative for information about drivers for other operating systems.


MegaRAID Express 500 Specifications

Parameter Specification

Card Size 5.875" x 4.2" (half length PCI)

Processor Intel i960RM™ 32-bit RISC processor @ 100 MHz

Bus Type PCI 2.1

PCI Controller Intel i960RM

Bus Data Transfer Rate Up to 132 MB/s

BIOS AMIBIOS MegaRAID BIOS

Cache Configuration 16, 32, 64, or 128 MB ECC through a 66MHz 72-bit unbuffered 3.3V SDRAM.

Firmware 1 MB × 8 flash ROM

Nonvolatile RAM 32 KB × 8 for storing RAID configuration

Operating Voltage 5.00 V ± 0.25 V

SCSI Controller One SCSI controller for 160 M Ultra and Wide support.

SCSI Data Transfer Rate Up to 160 MB/s

SCSI Bus LVD or single-ended

SCSI Termination Active

Termination Disable Automatic through cable and device detection

Devices per SCSI Channel Up to 15 wide or seven non-wide SCSI devices. Up to 6 non-disk SCSI drives per MegaRAID Express 500 controller.

SCSI Device Types Supported Synchronous or Asynchronous. Disk and non-disk.

RAID Levels Supported 0, 1, 3, 5, 10, 30, and 50

SCSI Connectors One 68-pin internal high-density connector for 16-bit SCSI devices. One ultra-high density 68-pin external connector for Ultra and Wide SCSI.

Serial Port 3-pin RS232C-compatible berg

PCI Bridge/CPU

MegaRAID Express 500 uses the Intel i960RM PCI bridge with an embedded 80960JX RISC processor running at 100 MHz. The RM bridge handles data transfers between the primary (host) PCI bus, the secondary PCI bus, cache memory, and the SCSI bus. The DMA controller supports chaining and unaligned data transfers. The embedded 80960JX CPU directs all controller functions, including command processing, SCSI bus transfers, RAID processing, drive rebuilding, cache management, and error recovery.


Cache Memory

MegaRAID Express 500 cache memory resides in a memory bank that uses 2 M x 72 (16 MB), 4 M x 72 (32 MB), 8 M x 72 (64 MB) or 16 M x 72 (128 MB) unbuffered 3.3V SDRAM. Possible configurations are 16, 32, 64, or 128 MB. The maximum achievable memory bandwidth is 528 MB/s.
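These figures fit a 72-bit-wide SDRAM bank in which 64 bits carry data and the remaining 8 bits carry ECC check bits. This split is an assumption based on the "ECC" and "72-bit" entries in the specifications table. The worked check below uses M = 2^20 and the 66 MHz clock given there.

```latex
\begin{aligned}
2\,\mathrm{M} \times 64\ \text{bits} &= 2 \times 2^{20} \times 8\ \text{bytes} = 16\ \text{MB} \\
16\,\mathrm{M} \times 64\ \text{bits} &= 16 \times 2^{20} \times 8\ \text{bytes} = 128\ \text{MB} \\
66\ \text{MHz} \times 8\ \text{bytes per clock} &= 528\ \text{MB/s}
\end{aligned}
```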

MegaRAID supports write-through or write-back caching, which can be selected for each logical drive. To improve performance in sequential disk accesses, MegaRAID can use read-ahead caching for the current logical drive. The default setting for the read policy is Normal, meaning no read-ahead caching. You can enable or disable read-ahead caching.

Warning!

Write caching is not recommended for the physical drives. When write cache is enabled, loss of data can occur when power is interrupted.

MegaRAID BIOS

The BIOS resides on a 1 MB × 8 flash ROM for easy upgrade. The MegaRAID BIOS supports INT 13h calls to boot DOS without special software or device drivers. The MegaRAID BIOS provides an extensive setup utility that can be accessed by pressing <Ctrl> <M> at BIOS initialization. MegaRAID BIOS Setup is described in the MegaRAID Configuration Software Guide.

Onboard Speaker

The MegaRAID Express 500 controller has an onboard tone generator that sounds audible warnings through the onboard speaker when system errors occur. The audible warnings are listed on page 117.

Serial Port

MegaRAID Express 500 includes a 3-pin RS232C-compatible serial port berg connector, which can connect to communications devices.


SCSI Bus

MegaRAID Express 500 has a Fast and Wide Ultra 160M SCSI channel that supports both LVD and single-ended devices with active termination. Synchronous and asynchronous devices are supported. MegaRAID Express 500 provides automatic termination disable via cable detection. The SCSI channel supports up to 15 wide or seven non-wide SCSI devices at speeds up to 160 MB/s. MegaRAID Express 500 supports up to six non-disk devices per controller.

SCSI Connectors

MegaRAID Express 500 has two types of SCSI connectors:

• a 68-pin high-density internal connector
• a 68-pin external ultra-high-density connector

Both connector types can be used for the SCSI channel.

SCSI Termination

MegaRAID Express 500 uses active termination on the SCSI bus conforming to Alternative 2 of the SCSI-2 specifications. Termination enable/disable is automatic through cable detection.

SCSI Firmware

The MegaRAID Express 500 firmware handles all RAID and SCSI command processing

and also supports:

Feature Description

Disconnect/Reconnect Optimizes SCSI Bus seek.

Tagged Command Queuing Multiple tags to improve random access

Scatter/Gather Multiple address/count pairs

Multi-threading Up to 255 simultaneous commands with elevator sorting and concatenation of requests per SCSI channel

Stripe Size Variable for all logical drives: 2 KB, 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, or 128 KB.

Rebuild Multiple rebuilds and consistency checks with user-definable priority.
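The Multi-threading entry mentions elevator sorting of queued commands. The classic elevator (SCAN) policy serves pending requests in the direction the heads are already moving, then reverses the sweep. The Python sketch below shows that textbook policy applied to a list of block addresses. It is an illustration only: the firmware's actual ordering and request concatenation are not described in this guide, and `elevator_order` is a hypothetical name.

```python
def elevator_order(pending, head, moving_up=True):
    """Order pending block addresses with the SCAN ("elevator") policy.

    Requests in the direction the heads are already moving are served first, in
    address order; then the sweep reverses and serves the remaining requests.
    """
    if moving_up:
        first = sorted(lba for lba in pending if lba >= head)
        then = sorted((lba for lba in pending if lba < head), reverse=True)
    else:
        first = sorted((lba for lba in pending if lba <= head), reverse=True)
        then = sorted(lba for lba in pending if lba > head)
    return first + then


queue = [950, 10, 520, 130, 700]
print(elevator_order(queue, head=500))                   # [520, 700, 950, 130, 10]
print(elevator_order(queue, head=500, moving_up=False))  # [130, 10, 520, 700, 950]
```

Compared with serving requests in arrival order, this reduces back-and-forth seeking across the disk surface.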


RAID Management

RAID management is provided by software utilities that manage and configure the RAID system and MegaRAID Express 500, create and manage multiple disk arrays, control and monitor multiple RAID servers, provide error statistics logging, and provide online maintenance. They include:

• MegaRAID BIOS Setup
• Power Console 500
• MegaRAID Manager
• General Alert Module

MegaRAID BIOS Setup

BIOS Setup configures and maintains RAID arrays, formats disk drives, and manages the RAID system. It is independent of any operating system. See the MegaRAID Configuration Software Guide for additional information.

Power Console 500

Power Console 500 runs in Windows NT. It configures, monitors, and maintains multiple RAID servers from any network node or a remote location. See the MegaRAID Configuration Software Guide for additional information.

MegaRAID Manager

This is a character-based utility that works in DOS, SCO Unix SVR3.2 R4.2, SCO UnixWare, OS/2 2.x, OS/2 Warp, Linux Red Hat 6.x, and Novell NetWare 3.x and 4.x. See the MegaRAID Configuration Software Guide for additional information.

Fault-Tolerance Features

The MegaRAID Express 500 fault-tolerance features are:

• automatic failed drive detection

• automatic failed drive rebuild with no user intervention required

• hot-swap manual replacement without bringing the system down

• SAF-TE compliant enclosure management

Detect Failed Drive

The MegaRAID Express 500 firmware automatically detects and rebuilds failed drives. When hot spares are available, the rebuild is done transparently.

Hot Swap

MegaRAID Express 500 supports the manual replacement of a disk unit in the RAID subsystem without system shutdown.
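One way to picture the automatic detect-and-rebuild behavior is as a small event handler. When a member drive fails, the handler picks a hot spare large enough to replace it and starts a rebuild onto that spare. This is a hypothetical model, not the firmware's logic. Choosing the smallest adequate spare is an assumed policy, and the names `Drive` and `on_drive_failure` are invented for illustration. The capacity check mirrors the hot-spare rule under Basic Configuration Rules in Chapter 5.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Drive:
    target_id: int
    capacity_mb: int
    state: str = "online"  # hypothetical states: "online", "failed", "hotspare", "rebuild"


def on_drive_failure(failed: Drive, spares: list[Drive]) -> Optional[Drive]:
    """Mark a drive failed and start a rebuild onto a suitable hot spare.

    Returns the spare being rebuilt, or None if no spare is large enough,
    in which case the drive has to be replaced manually (hot swap).
    """
    failed.state = "failed"
    candidates = [s for s in spares
                  if s.state == "hotspare" and s.capacity_mb >= failed.capacity_mb]
    if not candidates:
        return None
    # Assumed policy: use the smallest spare that is large enough.
    spare = min(candidates, key=lambda s: s.capacity_mb)
    spare.state = "rebuild"
    return spare
```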


Compatibility

MegaRAID Express 500 compatibility issues include:

• server management

• SCSI device compatibility

• software compatibility

Server Management

As an SNMP agent, MegaRAID Express 500 supports all SNMP managers and RedAlert from Storage Dimensions.

SCSI Device Compatibility

MegaRAID Express 500 supports SCSI hard disk drives, CD-ROMs, tape drives, optical drives, DAT drives, and other SCSI peripheral devices.

Software

All SCSI backup and utility software should work with MegaRAID Express 500. Software that has been tested and approved for use with MegaRAID Express 500 includes Cheyenne®, CorelSCSI®, Arcserve®, and Novaback®. This software is not provided with MegaRAID Express 500.

Summary

MegaRAID Express 500 features were discussed in this chapter. Configuring MegaRAID Express 500 is discussed in Chapter 5.


5 Configuring MegaRAID Express 500

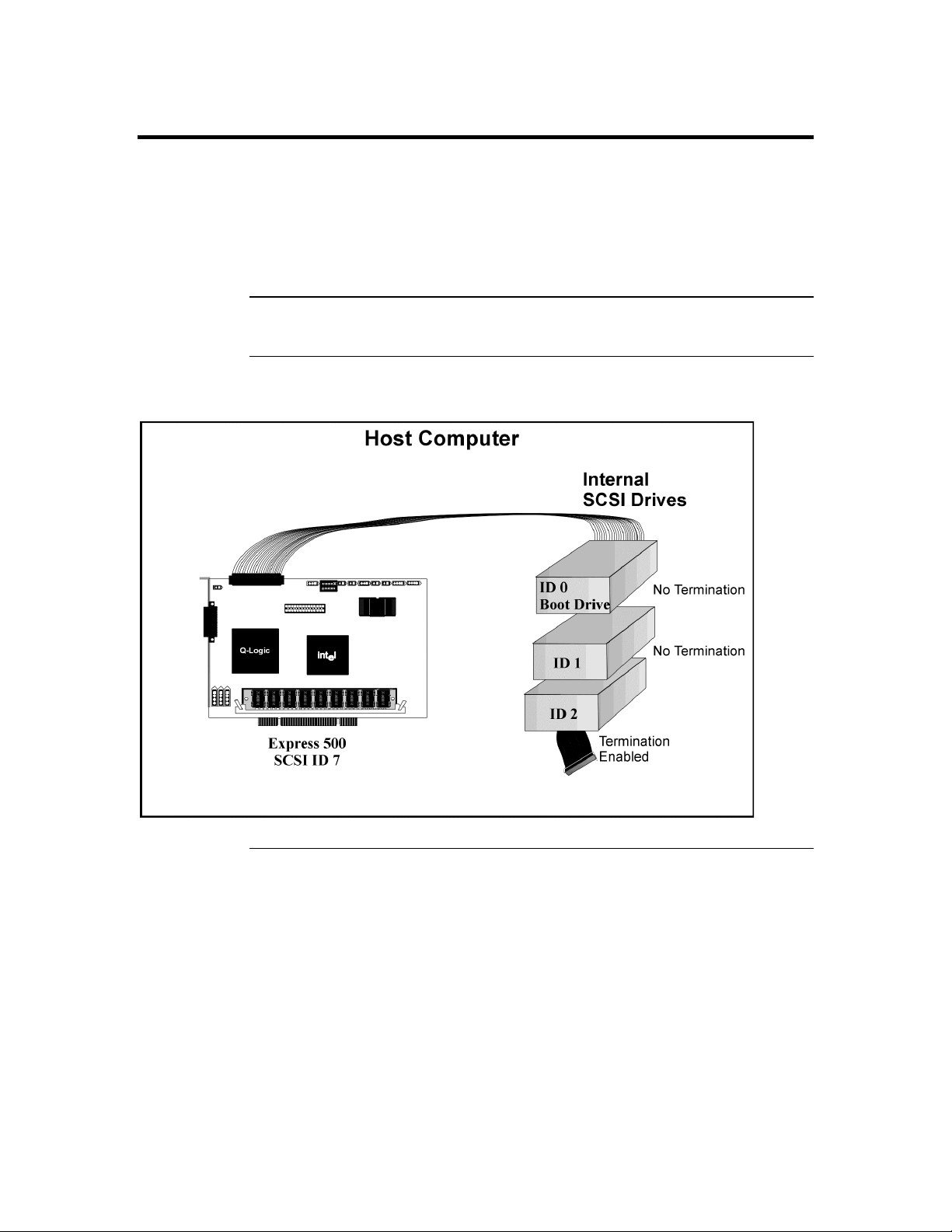

Configuring SCSI Physical Drives

Physical SCSI drives must be organized into logical drives. The arrays and logical drives that you construct must be able to support the RAID level that you select.

SCSI Channel

Your MegaRAID Express 500 adapter has one SCSI channel.

Basic Configuration Rules

You should observe the following guidelines when connecting and configuring SCSI devices in a RAID array:

• attach non-disk SCSI devices to a single SCSI channel that does not have any disk drives

• place up to 15 physical disk drives in an array, depending on the RAID level

• include all drives that have the same capacity in the same array

• make sure any hot spare has a capacity that is at least as large as the largest drive that may be replaced by the hot spare

• when replacing a failed drive, make sure that the replacement drive has a capacity that is at least as large as the drive being replaced

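The size and capacity rules in Basic Configuration Rules reduce to simple comparisons. Below is a minimal checker sketch. It assumes drive capacities are given in MB and that an array is represented as a plain list of capacities. The function names are hypothetical, and the 15-drive ceiling is the general upper bound from the rules, since the actual maximum depends on the RAID level.

```python
from __future__ import annotations

MAX_DRIVES_PER_ARRAY = 15  # general upper bound; the RAID level may lower it


def check_array(capacities_mb: list[int]) -> list[str]:
    """Return guideline violations for a proposed array (empty list means OK)."""
    problems = []
    if len(capacities_mb) > MAX_DRIVES_PER_ARRAY:
        problems.append(f"{len(capacities_mb)} drives exceeds {MAX_DRIVES_PER_ARRAY}")
    if len(set(capacities_mb)) > 1:
        problems.append("mixed capacities; group same-capacity drives in one array")
    return problems


def spare_is_adequate(spare_mb: int, protected_mb: list[int]) -> bool:
    """A hot spare must be at least as large as the largest drive it may replace."""
    return spare_mb >= max(protected_mb)


def replacement_is_adequate(new_mb: int, failed_mb: int) -> bool:
    """A replacement drive must be at least as large as the drive it replaces."""
    return new_mb >= failed_mb
```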

Current Configuration

SCSI ID | Device Description | Termination?
0
1
2
3
4
5
6
8
9
10
11
12
13
14
15

Logical Drive Configuration

SCSI Channel 1

Logical

Drive

LD0

LD1

LD2

LD3

LD4

LD5

LD6

LD7

LD8

LD9

LD10

LD11

LD12

LD13

LD14

LD15

LD16

LD17

LD18

LD19

LD20

LD21

LD22

LD23

LD24

LD25

LD26

LD27

LD28

RAID

Level

Stripe

Size

Logical Drive

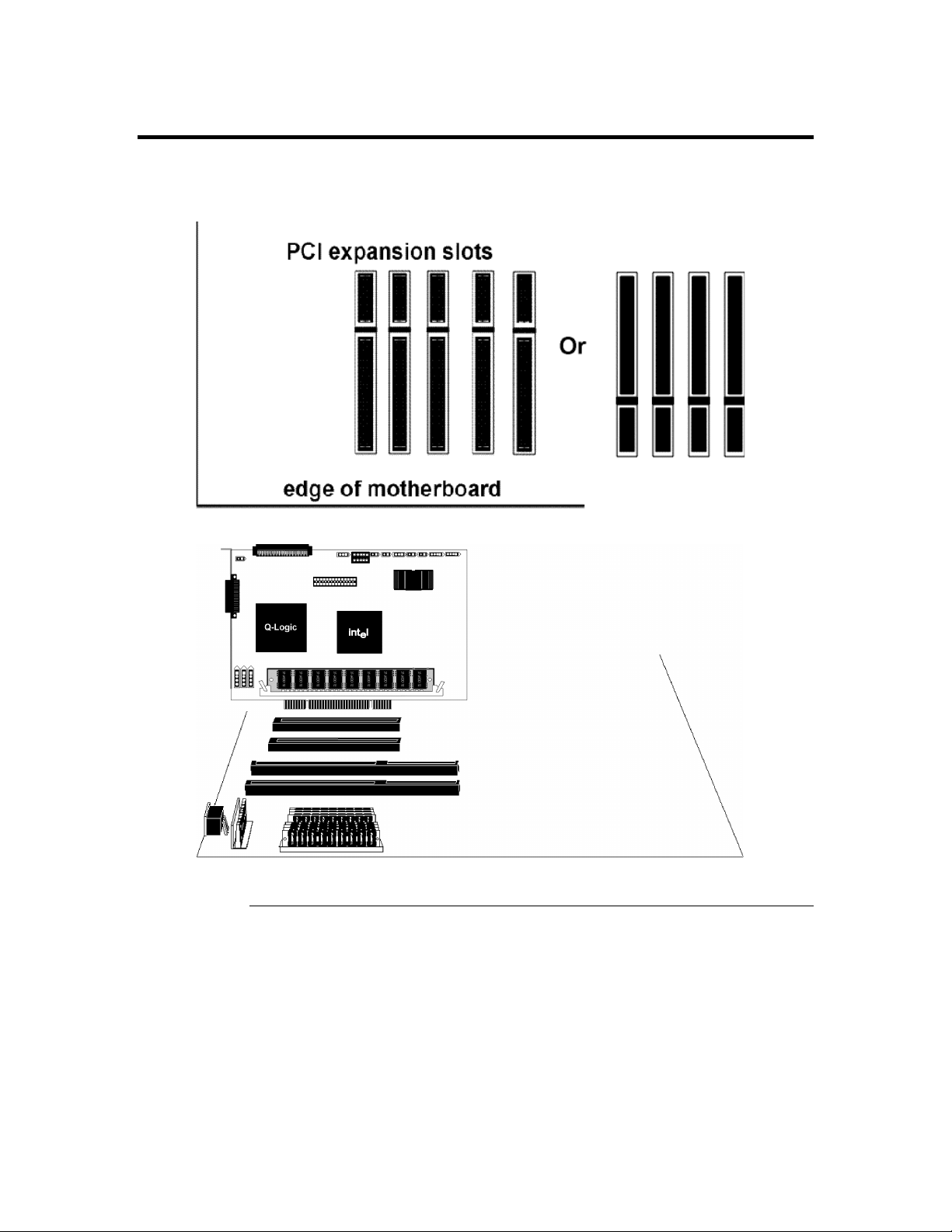

Size

Cache

Policy

Read

Policy

Write

Policy

# of Physical

Drives

MegaRAID Express 500 Hardware Guide

38

Page 53

Logical

Drive

LD29

LD30

LD31

LD32

LD33

LD34

LD35

LD36

LD37

LD38

LD39

RAID

Level

Stripe

Size

Logical Drive

Size

Cache

Policy

Read

Policy

Write

Policy

# of Physical

Drives

Cont’d
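The Stripe Size column takes one of the values listed under SCSI Firmware (2 KB to 128 KB). To show what that choice means, this sketch maps a byte offset in a striped logical drive to a physical drive and to an offset on that drive. It assumes the textbook round-robin layout without parity (RAID 0 style) purely for illustration. Parity levels such as RAID 5 rotate parity and lay out data differently. The function name `locate` is hypothetical.

```python
from __future__ import annotations

VALID_STRIPE_KB = (2, 4, 8, 16, 32, 64, 128)  # stripe sizes from the SCSI Firmware table


def locate(offset: int, stripe_kb: int, n_drives: int) -> tuple[int, int]:
    """Map a logical byte offset to (drive index, byte offset on that drive).

    Assumes simple round-robin striping with no parity.
    """
    if stripe_kb not in VALID_STRIPE_KB:
        raise ValueError(f"unsupported stripe size: {stripe_kb} KB")
    if n_drives < 1:
        raise ValueError("need at least one drive")
    stripe = stripe_kb * 1024
    stripe_index, within = divmod(offset, stripe)  # which stripe unit, and where in it
    drive = stripe_index % n_drives                # units are dealt out round-robin
    row = stripe_index // n_drives                 # complete rows before this unit
    return drive, row * stripe + within
```

For example, with a 64 KB stripe on four drives, byte 307200 of the logical drive falls in stripe unit 4. That unit wraps back to drive 0 at offset 110592.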


Physical Device Layout

Channel 1

Target ID | Device Type | Logical Drive Number/Drive Number | Manufacturer/Model Number | Firmware Level
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____
____ | ____ | ____ | ____ | ____


Configuring Arrays

Organize the physical disk drives in arrays after the drives are connected to MegaRAID Express 500, formatted, and initialized. An array can consist of up to 15 physical disk drives, depending on the RAID level.

MegaRAID Express 500 supports up to eight arrays. The number of drives in an array determines the RAID levels that can be supported.
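The limits in the paragraph above can be written as a small validation sketch: at most eight arrays, at most 15 drives per array, and a drive count that decides which RAID levels fit. The per-level (minimum, maximum) drive counts in `DRIVE_LIMITS` are assumptions chosen for illustration (a RAID 1 mirror is a pair, and single parity needs at least three drives). They are not the controller's published limits, so check the RAID level descriptions in this guide for those. All names are hypothetical.

```python
from __future__ import annotations

MAX_ARRAYS = 8             # "supports up to eight arrays"
MAX_DRIVES_PER_ARRAY = 15  # "up to 15 physical disk drives"

# Assumed (min, max) drive counts per RAID level, for illustration only.
DRIVE_LIMITS = {0: (1, 15), 1: (2, 2), 5: (3, 15)}


def supported_levels(n_drives: int) -> list[int]:
    """RAID levels from the assumed table that an n-drive array could use."""
    if not 1 <= n_drives <= MAX_DRIVES_PER_ARRAY:
        return []
    return [level for level, (lo, hi) in sorted(DRIVE_LIMITS.items())
            if lo <= n_drives <= hi]


def check_arrays(drive_counts: list[int]) -> None:
    """Raise ValueError if the proposed arrays exceed the array or drive limits."""
    if len(drive_counts) > MAX_ARRAYS:
        raise ValueError(f"{len(drive_counts)} arrays; at most {MAX_ARRAYS} are supported")
    for i, n in enumerate(drive_counts):
        if not supported_levels(n):
            raise ValueError(f"array {i} has {n} drives; no RAID level fits")
```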

Arranging Arrays

Arrange the arrays to provide additional organization for the drive array and so that you can create system drives that can function as boot devices.

You can sequentially arrange arrays with an identical number of drives so that the drives in the group are spanned. Spanned drives can be treated as one large drive, and data can be striped across multiple arrays as one logical drive.

You can create spanned drives by using the MegaRAID BIOS Setup utility or MegaRAID Manager.
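The spanning condition described above is that the arrays are arranged sequentially and each has the same number of drives. The hypothetical sketch below checks that condition for a chosen run of arrays and also lists every maximal run that qualifies. It models only the rule stated in the text. The requirement that a span covers at least two arrays is an assumption, and the names `can_span` and `spannable_runs` are invented.

```python
from __future__ import annotations


def can_span(drive_counts: list[int], first: int, count: int) -> bool:
    """Check whether arrays first..first+count-1 may be spanned.

    drive_counts[i] is the number of drives in array i. The arrays must form
    a contiguous run and all have the same number of drives.
    """
    if count < 2 or first < 0 or first + count > len(drive_counts):
        return False
    return len(set(drive_counts[first:first + count])) == 1


def spannable_runs(drive_counts: list[int]) -> list[tuple[int, int]]:
    """Return (first array, number of arrays) for each maximal spannable run."""
    runs = []
    start = 0
    for i in range(1, len(drive_counts) + 1):
        if i == len(drive_counts) or drive_counts[i] != drive_counts[start]:
            if i - start >= 2:
                runs.append((start, i - start))
            start = i
    return runs
```

For arrays with 3, 3, 3, 2, 2, and 4 drives, the first three arrays can be spanned together, and so can the two 2-drive arrays. The 4-drive array stands alone.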

Creating Hot Spares