Model 2001 Multimeter

Calibration Manual

A GREATER MEASURE OF CONFIDENCE

©1992, Keithley Instruments, Inc.

All rights reserved.

Cleveland, Ohio, U.S.A.

Seventh Printing May 2004

Document Number: 2001-905-01 Rev. G

WARRANTY

Keithley Instruments, Inc. warrants this product to be free from defects in material and workmanship for a period of 3 years from date of shipment.

Keithley Instruments, Inc. warrants the following items for 90 days from the date of shipment: probes, cables, rechargeable batteries, diskettes, and documentation.

During the warranty period, we will, at our option, either repair or replace any product that proves to be defective.

To exercise this warranty, write or call your local Keithley representative, or contact Keithley headquarters in Cleveland, Ohio. You will be given prompt assistance and return instructions. Send the product, transportation prepaid, to the indicated service facility. Repairs will be made and the product returned, transportation prepaid. Repaired or replaced products are warranted for the balance of the original warranty period, or at least 90 days.

LIMITATION OF WARRANTY

This warranty does not apply to defects resulting from product modification without Keithley’s express written consent, or misuse of any product or part. This warranty also does not apply to fuses, software, non-rechargeable batteries, damage from battery leakage, or problems arising from normal wear or failure to follow instructions.

THIS WARRANTY IS IN LIEU OF ALL OTHER WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR USE. THE REMEDIES PROVIDED HEREIN ARE BUYER’S SOLE AND EXCLUSIVE REMEDIES.

NEITHER KEITHLEY INSTRUMENTS, INC. NOR ANY OF ITS EMPLOYEES SHALL BE LIABLE FOR ANY DIRECT, INDIRECT, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF ITS INSTRUMENTS AND SOFTWARE EVEN IF KEITHLEY INSTRUMENTS, INC., HAS BEEN ADVISED IN ADVANCE OF THE POSSIBILITY OF SUCH DAMAGES. SUCH EXCLUDED DAMAGES SHALL INCLUDE, BUT ARE NOT LIMITED TO: COSTS OF REMOVAL AND INSTALLATION, LOSSES SUSTAINED AS THE RESULT OF INJURY TO ANY PERSON, OR DAMAGE TO PROPERTY.

Keithley Instruments, Inc.

Corporate Headquarters • 28775 Aurora Road • Cleveland, Ohio 44139 • 440-248-0400 • Fax: 440-248-6168 • 1-888-KEITHLEY (534-8453) • www.keithley.com

Belgium: Sint-Pieters-Leeuw • 02-363 00 40 • Fax: 02-363 00 64 • www.keithley.nl
China: Beijing • 8610-82251886 • Fax: 8610-82251892 • www.keithley.com.cn
Finland: Helsinki • 09-5306-6560 • Fax: 09-5306-6565 • www.keithley.com
France: Saint-Aubin • 01-64 53 20 20 • Fax: 01-60 11 77 26 • www.keithley.fr
Germany: Germering • 089/84 93 07-40 • Fax: 089/84 93 07-34 • www.keithley.de
Great Britain: Theale • 0118 929 7500 • Fax: 0118 929 7519 • www.keithley.co.uk
India: Bangalore • 91-80 2212 8027 • Fax: 91-80 2212 8005 • www.keithley.com
Italy: Milano • 02-48 39 16 01 • Fax: 02-48 39 16 28 • www.keithley.it
Japan: Tokyo • 81-3-5733-7555 • Fax: 81-3-5733-7556 • www.keithley.jp
Korea: Seoul • 82-2-574-7778 • Fax: 82-2-574-7838 • www.keithley.com
Netherlands: Gorinchem • 0183-635333 • Fax: 0183-630821 • www.keithley.nl
Singapore: Singapore • 65-6747-9077 • Fax: 65-6747-2991 • www.keithley.com
Sweden: Solna • 08-509 04 600 • Fax: 08-655 26 10 • www.keithley.com
Taiwan: Hsinchu • 886-3-572-9077 • Fax: 886-3-572-9031 • www.keithley.com.tw

3/04

Manual Print History

The print history shown below lists the printing dates of all Revisions and Addenda created for this manual. The Revision Level letter increases alphabetically as the manual undergoes subsequent updates. Addenda, which are released between Revisions, contain important change information that the user should incorporate immediately into the manual. Addenda are numbered sequentially. When a new Revision is created, all Addenda associated with the previous Revision of the manual are incorporated into the new Revision of the manual. Each new Revision includes a revised copy of this print history page.

Revision A (Document Number 2001-905-01) ....................................................................................... April 1992

Revision B (Document Number 2001-905-01) ........................................................................................ June 1992

Revision C (Document Number 2001-905-01) ........................................................................................ May 1993

Addendum C (Document Number 2001-905-02)..................................................................................... June 1993

Addendum C (Document Number 2001-905-03)............................................................................November 1993

Addendum C (Document Number 2001-905-04)................................................................................ January 1995

Revision D (Document Number 2001-905-01) .................................................................................... August 1995

Revision E (Document Number 2001-905-01) ........................................................................................ April 1996

Revision F (Document Number 2001-905-01)................................................................................November 2003

Revision G (Document Number 2001-905-01) ........................................................................................ May 2004

All Keithley product names are trademarks or registered trademarks of Keithley Instruments, Inc.

Other brand and product names are trademarks or registered trademarks of their respective holders.

Safety Precautions

The following safety precautions should be observed before using this product and any associated instrumentation. Although some instruments and accessories would normally be used with non-hazardous voltages, there are situations where hazardous conditions may be present.

This product is intended for use by qualified personnel who recognize shock hazards and are familiar with the safety precautions required to avoid possible injury. Read and follow all installation, operation, and maintenance information carefully before using the product. Refer to the manual for complete product specifications.

If the product is used in a manner not specified, the protection provided by the product may be impaired.

The types of product users are:

Responsible body is the individual or group responsible for the use and maintenance of equipment, for ensuring that the equipment is operated within its specifications and operating limits, and for ensuring that operators are adequately trained.

Operators use the product for its intended function. They must be trained in electrical safety procedures and proper use of the instrument. They must be protected from electric shock and contact with hazardous live circuits.

Maintenance personnel perform routine procedures on the product to keep it operating properly, for example, setting the line voltage or replacing consumable materials. Maintenance procedures are described in the manual. The procedures explicitly state if the operator may perform them. Otherwise, they should be performed only by service personnel.

Service personnel are trained to work on live circuits, and perform safe installations and repairs of products. Only properly trained service personnel may perform installation and service procedures.

Keithley products are designed for use with electrical signals that are rated Measurement Category I and Measurement Category II, as described in the International Electrotechnical Commission (IEC) Standard IEC 60664. Most measurement, control, and data I/O signals are Measurement Category I and must not be directly connected to mains voltage or to voltage sources with high transient overvoltages. Measurement Category II connections require protection for high transient overvoltages often associated with local AC mains connections. Assume all measurement, control, and data I/O connections are for connection to Category I sources unless otherwise marked or described in the manual.

Exercise extreme caution when a shock hazard is present. Lethal voltage may be present on cable connector jacks or test fixtures. The American National Standards Institute (ANSI) states that a shock hazard exists when voltage levels greater than 30V RMS, 42.4V peak, or 60VDC are present. A good safety practice is to expect that hazardous voltage is present in any unknown circuit before measuring.

Operators of this product must be protected from electric shock at all times. The responsible body must ensure that operators are prevented access and/or insulated from every connection point. In some cases, connections must be exposed to potential human contact. Product operators in these circumstances must be trained to protect themselves from the risk of electric shock. If the circuit is capable of operating at or above 1000 volts, no conductive part of the circuit may be exposed.

Do not connect switching cards directly to unlimited power circuits. They are intended to be used with impedance limited sources. NEVER connect switching cards directly to AC mains. When connecting sources to switching cards, install protective devices to limit fault current and voltage to the card.

Before operating an instrument, make sure the line cord is connected to a properly grounded power receptacle. Inspect the connecting cables, test leads, and jumpers for possible wear, cracks, or breaks before each use.

When installing equipment where access to the main power cord is restricted, such as rack mounting, a separate main input power disconnect device must be provided, in close proximity to the equipment and within easy reach of the operator.

For maximum safety, do not touch the product, test cables, or any other instruments while power is applied to the circuit under test. ALWAYS remove power from the entire test system and discharge any capacitors before: connecting or disconnecting cables or jumpers, installing or removing switching cards, or making internal changes, such as installing or removing jumpers.

Do not touch any object that could provide a current path to the common side of the circuit under test or power line (earth) ground. Always make measurements with dry hands while standing on a dry, insulated surface capable of withstanding the voltage being measured.

The instrument and accessories must be used in accordance with their specifications and operating instructions or the safety of the equipment may be impaired.

Do not exceed the maximum signal levels of the instruments and accessories, as defined in the specifications and operating information, and as shown on the instrument or test fixture panels, or switching card.

When fuses are used in a product, replace with same type and rating for continued protection against fire hazard.

Chassis connections must only be used as shield connections for measuring circuits, NOT as safety earth ground connections.

If you are using a test fixture, keep the lid closed while power is applied to the device under test. Safe operation requires the use of a lid interlock.

5/03

If a screw is present, connect it to safety earth ground using the wire recommended in the user documentation.

The ! symbol on an instrument indicates that the user should refer to the operating instructions located in the manual.

The symbol on an instrument shows that it can source or measure 1000 volts or more, including the combined effect of normal and common mode voltages. Use standard safety precautions to avoid personal contact with these voltages.

The symbol indicates a connection terminal to the equipment frame.

The WARNING heading in a manual explains dangers that might result in personal injury or death. Always read the associated information very carefully before performing the indicated procedure.

The CAUTION heading in a manual explains hazards that could damage the instrument. Such damage may invalidate the warranty.

Instrumentation and accessories shall not be connected to humans.

Before performing any maintenance, disconnect the line cord and all test cables.

To maintain protection from electric shock and fire, replacement components in mains circuits, including the power transformer, test leads, and input jacks, must be purchased from Keithley Instruments. Standard fuses, with applicable national safety approvals, may be used if the rating and type are the same. Other components that are not safety related may be purchased from other suppliers as long as they are equivalent to the original component. (Note that selected parts should be purchased only through Keithley Instruments to maintain accuracy and functionality of the product.) If you are unsure about the applicability of a replacement component, call a Keithley Instruments office for information.

To clean an instrument, use a damp cloth or mild, water-based cleaner. Clean the exterior of the instrument only. Do not apply cleaner directly to the instrument or allow liquids to enter or spill on the instrument. Products that consist of a circuit board with no case or chassis (e.g., data acquisition board for installation into a computer) should never require cleaning if handled according to instructions. If the board becomes contaminated and operation is affected, the board should be returned to the factory for proper cleaning/servicing.

Table of Contents

1 Performance Verification

1.1 Introduction..........................................................................................................................................................1-1

1.2 Environmental conditions .................................................................................................................................... 1-1

1.3 Warm-up period ...................................................................................................................................................1-1

1.4 Line power ...........................................................................................................................................................1-1

1.5 Recommended test equipment .............................................................................................................................1-2

1.6 Verification limits ................................................................................................................................................1-2

1.7 Restoring default conditions ................................................................................................................................1-2

1.8 Verification procedures........................................................................................................................................1-4

1.8.1 DC volts verification.................................................................................................................................... 1-4

1.8.2 AC volts verification.................................................................................................................................... 1-5

1.8.3 DC current verification ..............................................................................................................................1-10

1.8.4 AC current verification ..............................................................................................................................1-11

1.8.5 Resistance verification ...............................................................................................................................1-13

1.8.6 Frequency accuracy verification................................................................................................................1-16

1.8.7 Temperature reading checks ...................................................................................................................... 1-17

2 Calibration

2.1 Introduction..........................................................................................................................................................2-1

2.2 Environmental conditions .................................................................................................................................... 2-1

2.3 Warm-up period ...................................................................................................................................................2-2

2.4 Line power ...........................................................................................................................................................2-2

2.5 Calibration lock.................................................................................................................................................... 2-2

2.5.1 Comprehensive calibration lock...................................................................................................................2-2

2.5.2 Low-level calibration lock ...........................................................................................................................2-2

2.5.3 IEEE-488 bus calibration lock status ........................................................................................................... 2-2

2.6 IEEE-488 bus calibration commands and program .............................................................................................2-2

2.6.1 Calibration commands .................................................................................................................................2-2

2.6.2 Required order of command execution........................................................................................................2-4

2.6.3 Example calibration command program ......................................................................................................2-4

2.7 Calibration errors ................................................................................................................................................. 2-5

2.7.1 Front panel error message summary.............................................................................................................2-5

2.7.2 IEEE-488 bus error reporting.......................................................................................................................2-6

2.8 Comprehensive calibration...................................................................................................................................2-6

2.8.1 Recommended equipment for comprehensive calibration...........................................................................2-6

2.8.2 Front panel comprehensive calibration ........................................................................................................2-6

2.8.3 IEEE-488 bus comprehensive calibration....................................................................................................2-9

2.9 AC self-calibration .............................................................................................................................................2-12

2.9.1 Front panel AC calibration.........................................................................................................................2-12

2.9.2 IEEE-488 bus AC self-calibration..............................................................................................................2-12

2.10 Low-level calibration..........................................................................................................................................2-12

2.10.1 Recommended equipment for low-level calibration ..................................................................................2-13

2.10.2 Low-level calibration summary..................................................................................................................2-13

2.10.3 Front panel low-level calibration procedure...............................................................................................2-15

2.10.4 IEEE-488 bus low-level calibration procedure ..........................................................................................2-18

3 Calibration Command Reference

3.1 Introduction .........................................................................................................................................................3-1

3.2 Command summary..............................................................................................................................................3-1

3.3 :CALibration:PROTected subsystem...................................................................................................................3-3

3.3.1 :LOCK..........................................................................................................................................................3-3

3.3.2 :SWITch?......................................................................................................................................................3-3

3.3.3 :SAVE...........................................................................................................................................................3-4

3.3.4 :DATA?........................................................................................................................................................3-4

3.3.5 :DATE..........................................................................................................................................................3-5

3.3.6 :DATE?.........................................................................................................................................................3-5

3.3.7 :NDUE..........................................................................................................................................................3-5

3.3.8 :NDUE?........................................................................................................................................................3-6

3.3.9 :LLEVel........................................................................................................................................................3-6

3.3.10 :DC ...............................................................................................................................................................3-9

3.4 :CALibration:UNPRotected Subsystem.............................................................................................................3-12

3.4.1 :ACCompensation ......................................................................................................................................3-12

3.5 Bus error reporting .............................................................................................................................................3-13

3.5.1 Calibration error summary .........................................................................................................................3-13

3.5.2 Detecting calibration errors........................................................................................................................3-13

3.6 Detecting calibration step completion................................................................................................................3-13

3.6.1 Using the *OPC? query..............................................................................................................................3-13

3.6.2 Using the *OPC command.........................................................................................................................3-14

Appendices

A Model 2001 Specifications..................................................................................................................................A-1

B Calibration Programs...........................................................................................................................................B-1

C Calibration Messages...........................................................................................................................................C-1

D Alternate Calibration Sources .............................................................................................................................D-1

List of Illustrations

1 Performance Verification

Figure 1-1 Connections for DC volts verification ...................................................................................................... 1-5

Figure 1-2 Connections for AC volts verification (all except 2MHz test).................................................................. 1-6

Figure 1-3 Connections for AC volts verification (2MHz frequency only) ............................................................... 1-7

Figure 1-4 Connections for DC current verification................................................................................................. 1-11

Figure 1-5 Connections for AC current verification................................................................................................. 1-12

Figure 1-6 Connections for resistance verification (20Ω-200kΩ ranges)................................................................. 1-14

Figure 1-7 Connections for resistance verification (2MΩ-200MΩ ranges) ............................................................. 1-15

Figure 1-8 1GΩ resistor test box construction.......................................................................................................... 1-15

Figure 1-9 Connections for frequency accuracy verification.................................................................................... 1-16

2 Calibration

Figure 2-1 Low-thermal short connections................................................................................................................. 2-7

Figure 2-2 Connections for comprehensive calibration.............................................................................................. 2-8

Figure 2-3 Calibration voltage connections.............................................................................................................. 2-16

Figure 2-4 Current calibration connections .............................................................................................................. 2-17

Figure 2-5 Synthesizer connections .......................................................................................................................... 2-18

Appendices

Figure B-1 Low-thermal short connections..................................................................................................................................................... B-3

Figure B-2 Calibration connection for comprehensive calibration............................................................................................................... B-4

Figure B-3 Calibration voltage connections..................................................................................................................................................... B-4

Figure B-4 Calibration current connections..................................................................................................................................................... B-5

Figure B-5 Synthesizer connections.................................................................................................................................................................. B-5

List of Tables

1 Performance Verification

Table 1-1 Recommended equipment for performance verification........................................................................... 1-2

Table 1-2 Limits for DC volts verification................................................................................................................ 1-5

Table 1-3 Limits for normal mode AC voltage verification...................................................................................... 1-8

Table 1-4 Limits for low-frequency mode AC voltage verification.......................................................................... 1-9

Table 1-5 Limits for AC peak voltage verification ................................................................................................. 1-10

Table 1-6 Limits for DC current verification .......................................................................................................... 1-11

Table 1-7 Limits for AC current verification .......................................................................................................... 1-12

Table 1-8 Limits for resistance verification (20Ω-200MΩ ranges)......................................................................... 1-13

Table 1-9 Limits for resistance verification (1GΩ range) ....................................................................................... 1-15

Table 1-10 Frequency verification limits .................................................................................................................. 1-16

Table 1-11 Thermocouple temperature reading checks............................................................................................. 1-17

Table 1-12 RTD probe temperature reading checks.................................................................................................. 1-18

2 Calibration

Table 2-1 IEEE-488 bus calibration command summary.......................................................................................... 2-3

Table 2-2 Calibration error messages........................................................................................................................ 2-5

Table 2-3 Recommended equipment for comprehensive calibration........................................................................ 2-6

Table 2-4 Front panel comprehensive calibration summary ..................................................................................... 2-6

Table 2-5 IEEE-488 bus comprehensive calibration summary ............................................................................... 2-10

Table 2-6 Recommended equipment for low-level calibration............................................................................... 2-13

Table 2-7 Low-level calibration summary .............................................................................................................. 2-14

3 Calibration Command Reference

Table 3-1 IEEE-488 bus calibration command summary.......................................................................................... 3-2

Table 3-2 Low-level calibration commands.............................................................................................................. 3-6

Table 3-3 Comprehensive calibration commands ..................................................................................................... 3-9

Table 3-4 Calibration error summary ...................................................................................................................... 3-13

Appendices

Table B-1 Recommended equipment for comprehensive calibration ....................................................................... B-2

Table B-2 Recommended equipment for low-level calibration................................................................................. B-2

Table C-1 Calibration errors...................................................................................................................................... C-2

Table D-1 Alternate calibration sources .................................................................................................................... D-1


1 Performance Verification

1.1 Introduction

The procedures in this section are intended to verify that Model 2001 accuracy is within the limits stated in the instrument one-year specifications. These procedures can be performed when the instrument is first received to ensure that no damage or misadjustment has occurred during shipment. Verification may also be performed whenever there is a question of instrument accuracy, or following calibration, if desired.

NOTE

If the instrument is still under warranty, and its performance is outside specified limits, contact your Keithley representative or the factory to determine the correct course of action.

This section includes the following:

1.2 Environmental conditions: Covers the temperature and humidity limits for verification.

1.3 Warm-up period: Describes the length of time the Model 2001 should be allowed to warm up before testing.

1.4 Line power: Covers power line voltage ranges during testing.

1.5 Recommended test equipment: Summarizes recommended equipment and pertinent specifications.

1.6 Verification limits: Explains how reading limits were calculated.

1.7 Restoring default conditions: Gives step-by-step procedures for restoring default conditions before each test procedure.

1.8 Verification procedures: Details procedures to verify measurement accuracy of all Model 2001 measurement functions.

1.2 Environmental conditions

Verification measurements should be made at an ambient temperature of 18-28°C (65-82°F), and at a relative humidity of less than 80% unless otherwise noted.

1.3 Warm-up period

The Model 2001 must be allowed to warm up for at least one hour before performing the verification procedures. If the instrument has been subjected to temperature extremes (outside the range stated in paragraph 1.2), allow additional time for internal temperatures to stabilize. Typically, it takes one additional hour to stabilize a unit that is 10°C (18°F) outside the specified temperature range.

The calibration equipment should also be allowed to warm up for the minimum period specified by the manufacturer.


1.4 Line power

The Model 2001 should be tested while operating from a line voltage in the range of 90-134V or 180-250V at a frequency of 50, 60, or 400Hz.

1.5 Recommended test equipment

Table 1-1 lists all test equipment required for verification. Alternate equipment may be used as long as that equipment has specifications at least as good as those listed in the table. See Appendix D for a list of alternate calibration sources.

1.6 Verification limits

The verification limits stated in this section have been calculated using only Model 2001 one year specifications, and

they do not include test equipment tolerance. If a particular

measurement falls slightly outside the allowed range, recalculate new limits based both on Model 2001 specifications

and pertinent calibration equipment specifications.
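As a rough illustration of that recalculation, the sketch below widens a published reading limit by the calibrator uncertainty at the applied value. It assumes the two tolerances simply add, which is a conservative choice that this manual does not spell out, and the function name `widen_limits` is hypothetical. The example values are the 200mV range limits from Table 1-2 and the 5700A figure of ±11ppm at 190mV from Table 1-1.

```python
def widen_limits(low, high, applied, calibrator_ppm):
    """Widen reading limits by the calibrator uncertainty (assumed additive).

    low, high      -- published Model 2001 reading limits
    applied        -- nominal applied value, same unit as the limits
    calibrator_ppm -- calibrator uncertainty at that output, in ppm
    """
    margin = abs(applied) * calibrator_ppm * 1e-6
    return low - margin, high + margin


# 200mV range: 190.0000mV applied, limits 189.9918mV to 190.0082mV,
# calibrator uncertainty ±11ppm at 190mV.
low, high = widen_limits(189.9918, 190.0082, 190.0, 11.0)
print(f"Recalculated limits: {low:.4f}mV to {high:.4f}mV")
```

A reading that falls between the original and the widened limits cannot be judged in or out of specification with this calibrator alone.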

1.7 Restoring default conditions

Before performing each performance verification procedure, restore instrument bench default conditions as follows:

1. From the normal display mode, press the MENU key. The instrument will display the following:

MAIN MENU

SAVESETUP GPIB CALIBRATION

2. Select SAVESETUP, and press ENTER. The following will be displayed:

SETUP MENU

SAVE RESTORE POWERON RESET

3. Select RESET, and press ENTER. The display will then appear as follows:

RESET ORIGINAL DFLTS

BENCH GPIB

4. Select BENCH, then press ENTER. The following will be displayed:

RESETTING INSTRUMENT

ENTER to confirm; EXIT to abort

5. Press ENTER again to confirm instrument reset.

Table 1-1

Recommended equipment for performance verification

Mfg. / Model / Description / Specifications*

Fluke 5700A Calibrator: ±5ppm basic uncertainty.

DC voltage: 190mV: ±11ppm; 1.9V: ±5ppm; 19V: ±5ppm; 190V: ±7ppm; 1000V: ±9ppm

AC voltage, 10Hz-1MHz (40Hz-20kHz specifications): 190mV: ±150ppm; 1.9V: ±78ppm; 19V: ±78ppm; 190V: ±85ppm

DC current: 190µA: ±102ppm; 1.9mA: ±55ppm; 19mA: ±55ppm; 190mA: ±65ppm; 1.9A: ±96ppm

AC current, 40Hz-10kHz (40Hz-1kHz specifications): 190µA: ±245ppm; 1.9mA: ±160ppm; 19mA: ±160ppm; 190mA: ±170ppm; 1.9A: ±670ppm

Resistance: 19Ω: ±26ppm; 190Ω: ±17ppm; 1.9kΩ: ±12ppm; 19kΩ: ±11ppm; 190kΩ: ±13ppm; 1.9MΩ: ±19ppm; 19MΩ: ±47ppm; 100MΩ: ±120ppm

Fluke 5725A Amplifier: AC voltage, 1kHz-10kHz: 750V: ±85ppm

Fluke 5700A-03 Wideband AC option: 190mV, 1.9V @ 2MHz, ±0.1%

Fluke 5440A-7002 Low thermal cable set

Keithley CA-18-1 Low capacitance cable: Low capacitance dual banana to dual banana shielded cable (for ACV), 1.2m (4 ft.) in length.

Keithley R-289-1G 1GΩ resistor: NOTE: Resistor should be characterized to within ±10,000 ppm and mounted in shielded test box (see procedure).

Metal component box (for 1GΩ resistor)

Insulated banana plugs (2) (for test box)

Keithley 3940 Multifunction Synthesizer: 1Hz-15MHz, ±5ppm

General Radio 1433-T Precision Decade Resistance Box: 10-400Ω, ±0.02%

⎯⎯ Megohmmeter: 1GΩ, ±1%

* 90-day calibrator specifications shown include total uncertainty at specified output. The 1.9V output includes 0.5ppm transfer uncertainty. See Appendix D for recommendation on alternate calibration sources.

1.8 Verification procedures

The following paragraphs contain procedures for verifying instrument accuracy specifications for the following measuring functions:

• DC volts

• AC volts

• DC current

• AC current

• Resistance

• Frequency

• Temperature

If the Model 2001 is out of specifications and not under warranty, refer to the calibration procedures in Section 2.

WARNING

The maximum common-mode voltage (voltage between INPUT LO and chassis ground) is 500V peak. Exceeding this value may cause a breakdown in insulation, creating a shock hazard. Some of the procedures in this section may expose you to dangerous voltages. Use standard safety precautions when such dangerous voltages are encountered to avoid personal injury caused by electric shock.

NOTE

Do not connect test equipment to the Model 2001 through a scanner.

1.8.1 DC volts verification

DC voltage accuracy is verified by applying accurate DC

voltages from a calibrator to the Model 2001 input and verifying that the displayed readings fall within specified ranges.

Follow the steps below to verify DCV measurement accuracy.

CAUTION

Do not exceed 1100V peak between INPUT HI and INPUT LO, or instrument

damage may occur.

1. Turn on the Model 2001 and the calibrator, and allow a

one-hour warm-up period before making measurements.

NOTE

Use shielded, low-thermal connections

when testing the 200mV range to avoid errors caused by noise or thermal offsets.

Connect the shield to calibrator output

LO. (See Table 1-1.)

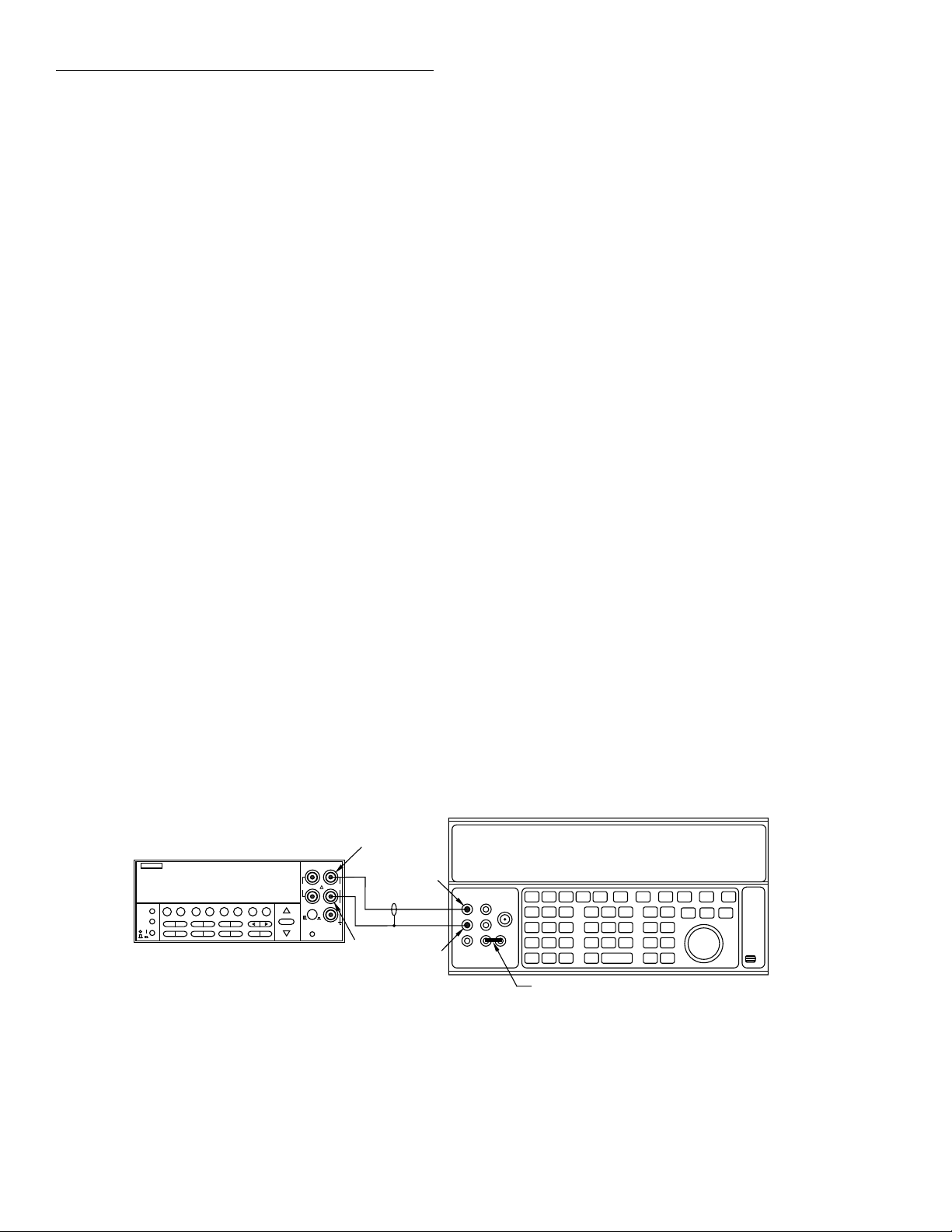

2. Connect the Model 2001 to the calibrator, as shown in

Figure 1-1. Be sure to connect calibrator HI to Model

2001 INPUT HI and calibrator LO to Model 2001 INPUT LO as shown.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Set digital filter averaging as follows:

A. From normal display, press CONFIG then DCV.

B. Select FILTER, then press ENTER.

C. Select AVERAGING, then press ENTER.

D. Using the cursor and range keys, set the averaging

parameter to 10 readings, then press ENTER.

E. Press EXIT as necessary to return to normal display.

F. If the FILT annunciator is off, press FILTER to enable the filter.

5. Select the Model 2001 200mV DC range.

NOTE

Do not use auto-ranging for any of the verification tests because auto-range hysteresis may cause the Model 2001 to be on an

incorrect range.

6. Set the calibrator output to 0.000000mVDC, and allow

the reading to settle.

7. Enable the Model 2001 REL mode. Leave REL enabled

for the remainder of the DC volts verification test.

8. Set the calibrator output to +190.0000mVDC, and allow

the reading to settle.

9. Verify that the Model 2001 reading is within the limits

summarized in Table 1-2.

10. Repeat steps 8 and 9 for the remaining ranges and voltages listed in Table 1-2.

11. Repeat the procedure for each of the ranges with negative voltages of the same magnitude as those listed in Table 1-2.
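The pass/fail comparison in steps 9 through 11 can be sketched as a simple table lookup. The limits below are copied from Table 1-2. The names `DCV_LIMITS` and `dcv_reading_ok` are hypothetical, and treating the negative-polarity limits as a mirror image of the positive ones is an assumption based on step 11.

```python
# Reading limits from Table 1-2 (1 year, 18° to 28°C), in the unit of each range.
DCV_LIMITS = {
    "200mV": (189.9918, 190.0082),   # mV
    "2V": (1.899949, 1.900052),      # V
    "20V": (18.99946, 19.00054),     # V
    "200V": (189.9922, 190.0078),    # V
    "1000V": (999.953, 1000.047),    # V
}


def dcv_reading_ok(range_name, reading):
    """Return True if the reading magnitude lies within the Table 1-2 limits.

    Negative readings are checked against mirrored limits (assumption).
    """
    low, high = DCV_LIMITS[range_name]
    return low <= abs(reading) <= high


print(dcv_reading_ok("200mV", 190.0031))    # positive polarity check
print(dcv_reading_ok("20V", -19.00102))     # negative polarity check
```

These limits exclude calibrator uncertainty, so a marginal failure should be re-evaluated as described in paragraph 1.6.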

Figure 1-1

Connections for DC volts verification

(Figure labels: 5700A Calibrator (Output DC Voltage); calibrator Output HI to Model 2001 Input HI; calibrator Output LO to Model 2001 Input LO; ground link installed.)

Note: Use shielded, low-thermal cables when testing 200mV range. Use internal Guard (EX GRD LED is off).

Table 1-2

Limits for DC volts verification

2001 DCV range    Applied DC voltage    Reading limits (18° to 28°C, 1 year)
200mV             190.0000mV            189.9918mV to 190.0082mV
2V                1.900000V             1.899949V to 1.900052V
20V               19.00000V             18.99946V to 19.00054V
200V              190.0000V             189.9922V to 190.0078V
1000V             1000.000V             999.953V to 1000.047V

Notes:

1. Repeat procedure for negative voltages.

2. Reading limits shown do not include calibrator uncertainty.

1.8.2 AC volts verification

AC voltage accuracy is checked by applying accurate AC voltages at specific frequencies from an AC calibration source and then verifying that each Model 2001 AC voltage reading falls within the specified range. The three ACV verification procedures that follow include:

• Normal mode

• Low-frequency mode

• Peak ACV

CAUTION

Do not exceed 1100V peak or 2 × 10⁷ V•Hz between INPUT HI and INPUT LO, or instrument damage may occur.

Normal mode

1. Turn on the Model 2001, calibrator, and amplifier, and allow a one-hour warm-up period before making measurements.

2. Connect the Model 2001 to the calibrator, as shown in Figure 1-2. Be sure to connect the amplifier HI to Model 2001 INPUT HI, and amplifier LO to Model 2001 INPUT LO as shown. Connect the power amplifier to the calibrator using the appropriate connector on the rear of the calibrator.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Select the ACV function and the 200mV range on the Model 2001, and make sure that REL is disabled.

NOTE

Do not use REL to null offsets when performing AC volts tests.

5. Set the calibrator output to 190.000mVAC at a frequency of 20Hz, and allow the reading to settle.

6. Verify that the Model 2001 reading is within the limits summarized in Table 1-3.

7. Repeat steps 5 and 6 for 190mVAC at the remaining frequencies listed in Table 1-3 (except 2MHz). Verify that instrument readings fall within the required limits listed in the table.

8. Repeat steps 5 through 7 for the 2V, 20V, 200V, and 750VAC ranges, using the input voltages and limits stated in Table 1-3.

9. Connect the Model 2001 to the wideband calibrator output (Figure 1-3).


10. Set the calibrator output to 190.0000mV at a frequency

of 2MHz.

11. Verify that the reading is within limits stated in Table 1-3.

12. Repeat steps 10 and 11 for 1.900V input on the 2V

range.

Low-frequency mode

1. Turn on the Model 2001, calibrator, and amplifier, and

allow a one-hour warm-up period before making measurements.

2. Connect the Model 2001 to the calibrator, as shown in

Figure 1-2. Be sure to connect the amplifier HI to Model

2001 INPUT HI, and amplifier LO to Model 2001 INPUT LO as shown. Connect the power amplifier to the

calibrator using the appropriate connector on the rear of

the calibrator.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Select the ACV function and the 200mV range on the

Model 2001, and make sure that REL is disabled.

NOTE

Do not use REL to null offsets when performing AC volts tests. Also, do not enable the filter.

5. Select the low-frequency mode as follows:

A. Press CONFIG ACV, select AC-TYPE, then press

ENTER.

B. Select LOW-FREQ-RMS, then press ENTER.

C. Press EXIT as required to return to normal display.

6. Set the calibrator output to 190.000mVAC at a frequency of 10Hz, and allow the reading to settle.

7. Verify that the Model 2001 reading is within the limits

summarized in Table 1-4.

8. Repeat steps 6 and 7 for 190mVAC at the remaining frequencies listed in the table.

9. Repeat steps 6 through 8 for the 2V, 20V, 200V, and

750VAC ranges, using the input voltages and limits stated in Table 1-4.

CAUTION

Do not apply more than 400V at 50kHz,

80V at 250kHz, 40V at 500kHz, or 20V

at 1MHz, or instrument damage may

occur.
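Each voltage and frequency pair in this CAUTION works out to the same volt-hertz product as the 2 × 10⁷ V•Hz input limit quoted for the AC volts tests. The short sketch below checks a planned test point against that product before it is applied. The function name and the treatment of the limit as inclusive are assumptions made for illustration.

```python
MAX_VOLT_HERTZ = 2e7  # V•Hz limit between INPUT HI and INPUT LO


def within_volt_hertz(volts, freq_hz):
    """Return True if the volts × frequency product does not exceed the limit."""
    return volts * freq_hz <= MAX_VOLT_HERTZ


# The four points named in the CAUTION sit exactly on the limit.
for volts, freq_hz in [(400, 50e3), (80, 250e3), (40, 500e3), (20, 1e6)]:
    print(volts, "V at", freq_hz, "Hz ->", volts * freq_hz, "V•Hz")

# A 190V test point is acceptable at 100kHz but not at 200kHz.
print(within_volt_hertz(190, 100e3))
print(within_volt_hertz(190, 200e3))
```

This is why some high-voltage, high-frequency cells in the AC voltage limit tables are marked with an asterisk instead of a reading limit.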

Figure 1-2

Connections for AC volts verification (all except 2MHz test)

(Figure labels: 5700A Calibrator (Output AC Voltage); 5725 Amplifier (Connect to calibrator); CA-18-1 low-capacitance cable; Output HI to Model 2001 Input HI; Output LO to Model 2001 Input LO; ground link installed.)

Note: Use internal Guard (EX GRD LED is off).

Figure 1-3

Connections for AC volts verification (2MHz frequency only)

(Figure labels: 5700A Calibrator (Output AC Voltage) wideband output; 50Ω coax; 50Ω terminator; BNC to dual banana adapter at Model 2001 INPUT HI and LO; 5725 Amplifier (Connect to calibrator); ground link installed.)

Note: Use internal Guard (EX GRD LED is off).

Table 1-3

Limits for normal mode AC voltage verification

Allowable readings (1 year, 18° to 28°C)

2001 ACV range 200mV, applied voltage 190mV:
20Hz: 188.716mV to 191.284mV
50Hz: 189.685mV to 190.315mV
1kHz: 189.875mV to 190.125mV
5kHz: 189.875mV to 190.125mV
25kHz: 189.875mV to 190.125mV
50kHz: 189.856mV to 190.144mV
100kHz: 189.647mV to 190.353mV
200kHz: 189.000mV to 191.000mV
1MHz: 186.000mV to 194.000mV
2MHz**: 180.100mV to 199.900mV

2001 ACV range 2V, applied voltage 1.9V:
20Hz: 1.88716V to 1.91284V
50Hz: 1.89685V to 1.90315V
1kHz: 1.89875V to 1.90125V
5kHz: 1.89875V to 1.90125V
25kHz: 1.89875V to 1.90125V
50kHz: 1.89856V to 1.90144V
100kHz: 1.89647V to 1.90353V
200kHz: 1.89000V to 1.91000V
1MHz: 1.86000V to 1.94000V
2MHz**: 1.80100V to 1.99900V

2001 ACV range 20V, applied voltage 19V:
20Hz: 18.8716V to 19.1284V
50Hz: 18.9685V to 19.0315V
1kHz: 18.9856V to 19.0144V
5kHz: 18.9809V to 19.0192V
25kHz: 18.9742V to 19.0258V
50kHz: 18.9723V to 19.0277V
100kHz: 18.9647V to 19.0353V
200kHz: 18.9000V to 19.1000V
1MHz: 18.2000V to 19.8000V
2MHz**: *

2001 ACV range 200V, applied voltage 190V:
20Hz: 188.709V to 191.291V
50Hz: 189.678V to 190.322V
1kHz: 189.849V to 190.151V
5kHz: 189.802V to 190.198V
25kHz: 189.735V to 190.265V
50kHz: 189.716V to 190.284V
100kHz: 189.640V to 190.360V
200kHz: *
1MHz: *
2MHz**: *

2001 ACV range 750V, applied voltage 750V:
20Hz: ⎯
50Hz: 748.12V to 751.88V
1kHz: 748.72V to 751.28V
5kHz: 748.49V to 751.51V
25kHz: 748.12V to 751.88V
50kHz: *
100kHz: *
200kHz: *
1MHz: *
2MHz**: *

*CAUTION: Do not exceed 2 × 10⁷ V•Hz input.

**Use wideband option and connections when performing 2MHz tests.

NOTE: Limits shown do not include calibrator uncertainty. Reading limits do include the adder for AC Coupling of the input.

Table 1-4

Limits for low-frequency mode AC voltage verification

Allowable readings (1 year, 18° to 28°C)

2001 ACV range    Applied voltage    10Hz                      50Hz                      100Hz
200mV             190mV              189.837mV to 190.163mV    189.875mV to 190.125mV    189.875mV to 190.125mV
2V                1.9V               1.89837V to 1.90163V      1.89875V to 1.90125V      1.89875V to 1.90125V
20V               19V                18.9818V to 19.0182V      18.9856V to 19.0144V      18.9856V to 19.0144V
200V              190V               189.811V to 190.189V      189.849V to 190.151V      189.849V to 190.151V
750V              750V               ⎯                         748.72V to 751.28V        748.72V to 751.28V

NOTE: Specifications above 100Hz are the same as normal mode. Limits shown do not include calibrator uncertainty.

AC peak mode

1. Turn on the Model 2001, calibrator, and amplifier, and allow a one-hour warm-up period before making measurements.

2. Connect the Model 2001 to the calibrator, as shown in Figure 1-2. Be sure to connect the amplifier HI to Model 2001 INPUT HI, and amplifier LO to Model 2001 INPUT LO as shown. Connect the power amplifier to the calibrator using the appropriate connector on the rear of the calibrator.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Select the ACV function and the 200mV range on the Model 2001, and make sure that REL is disabled.

NOTE

Do not use REL to null offsets when performing AC volts tests. Use AC coupling for 5kHz-1MHz tests. Use AC+DC coupling for 20Hz tests. (Use CONFIG-ACV to set coupling.)

5. Select the AC peak and filter modes as follows:

A. Press CONFIG then ACV, select AC-TYPE, then press ENTER.

B. Select PEAK, then press ENTER.

C. Select FILTER, then press ENTER.

D. Select AVERAGING, then press ENTER.

E. Using the cursor and range keys, set the averaging parameter to 10 readings, then press ENTER.

F. Press EXIT as necessary to return to normal display.

G. If the FILT annunciator is off, press FILTER to enable the filter.

6. Set the calibrator output to 100.000mVAC at a frequency of 5kHz, and allow the reading to settle.

7. Verify that the Model 2001 reading is within the limits summarized in Table 1-5.

8. Repeat steps 6 and 7 for 100mVAC at the remaining frequencies listed in the table.

9. Repeat steps 6 through 8 for the 2V, 20V, 200V, and 750VAC ranges, using the input voltages and limits stated in Table 1-5.

CAUTION

Do not apply more than 400V at 50kHz, 80V at 250kHz, 40V at 500kHz, or 20V at 1MHz, or instrument damage may occur.

10. Set input coupling to AC+DC, then repeat the procedure for a 20Hz input signal.
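In the AC peak tests the calibrator output is specified as an RMS value while the Model 2001 reading limits are peak values. For an undistorted sine wave the expected reading is the RMS value times √2. The sketch below, with a hypothetical function name and the sine-wave assumption stated loudly, compares that expectation with the 5kHz limits from Table 1-5.

```python
import math


def rms_to_peak(v_rms):
    """Peak value of an ideal sine wave with the given RMS amplitude."""
    return v_rms * math.sqrt(2.0)


# (applied RMS volts, low limit, high limit) for the 5kHz column of Table 1-5,
# all expressed in volts.
PEAK_LIMITS_5KHZ = [
    (0.100, 0.1399, 0.1429),
    (1.0, 1.407, 1.421),
    (10.0, 13.98, 14.30),
    (190.0, 267.8, 269.6),
    (750.0, 1054.0, 1067.0),
]

for v_rms, low, high in PEAK_LIMITS_5KHZ:
    expected = rms_to_peak(v_rms)
    inside = low <= expected <= high
    print(f"{v_rms}V RMS -> about {expected:.4g}V peak, inside limits: {inside}")
```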

Table 1-5

Limits for AC peak voltage verification

Allowable Readings (1 year, 18° to 28°C)

2001 ACV range 200mV, applied voltage* 100mV:
20Hz†: 139.9mV to 142.9mV
5kHz: 139.9mV to 142.9mV
25kHz: 139.9mV to 142.9mV
50kHz: 139.8mV to 143.0mV
100kHz: 139.6mV to 143.2mV
250kHz: 138.6mV to 144.2mV
500kHz: 136.5mV to 146.3mV
750kHz: 132.2mV to 150.6mV
1MHz: 127.3mV to 155.5mV

2001 ACV range 2V, applied voltage* 1V:
20Hz†: 1.407V to 1.421V
5kHz: 1.407V to 1.421V
25kHz: 1.407V to 1.421V
50kHz: 1.406V to 1.422V
100kHz: 1.404V to 1.424V
250kHz: 1.394V to 1.434V
500kHz: 1.373V to 1.455V
750kHz: 1.330V to 1.498V
1MHz: 1.281V to 1.547V

2001 ACV range 20V, applied voltage* 10V:
20Hz†: 13.99V to 14.29V
5kHz: 13.98V to 14.30V
25kHz: 13.98V to 14.30V
50kHz: 13.97V to 14.31V
100kHz: 13.96V to 14.32V
250kHz: 13.86V to 14.42V
500kHz: 13.65V to 14.63V
750kHz: 13.22V to 15.06V
1MHz: 12.73V to 15.55V

2001 ACV range 200V, applied voltage* 190V:
20Hz†: 267.8V to 269.6V
5kHz: 267.8V to 269.6V
25kHz: 267.7V to 269.7V
50kHz: 267.6V to 269.8V
100kHz: 267.4V to 270.0V
250kHz: **
500kHz: **
750kHz: **
1MHz: **

2001 ACV range 750V, applied voltage* 750V:
20Hz†: ⎯
5kHz: 1054V to 1067V
25kHz: 1053V to 1068V
50kHz: **
100kHz: **
250kHz: **
500kHz: **
750kHz: **
1MHz: **

*Calibrator voltage is given as an RMS value. Model 2001 reading limits are peak AC values.

**CAUTION: Do not apply more than 2 × 10⁷ V•Hz.

†Use AC+DC input coupling for 20Hz tests only. (Use CONFIG-ACV to set coupling.)

NOTE: Limits shown do not include calibrator uncertainty.

1.8.3 DC current verification

DC current accuracy is checked by applying accurate DC currents from a calibrator to the instrument AMPS input and then verifying that the current readings fall within appropriate limits.

Follow the steps below to verify DCI measurement accuracy.

CAUTION

Do not apply more than 2A, 250V to the AMPS input, or the amps protection fuse will blow.

1. Turn on the Model 2001 and the calibrator, and allow a one-hour warm-up period before making measurements.

2. Connect the Model 2001 to the calibrator, as shown in Figure 1-4. Be sure to connect calibrator HI to the AMPS input, and connect calibrator LO to INPUT LO as shown.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Set digital filter averaging as follows:

A. From normal display, press CONFIG then DCI.

B. Select FILTER, then press ENTER.

C. Select AVERAGING, then press ENTER.

D. Using the cursor and range keys, set the averaging parameter to 10 readings, then press ENTER.

E. Press EXIT as necessary to return to normal display.

F. If the FILT annunciator is off, press FILTER to enable the filter.

5. Select the DC current function (DCI) and the 200µA range on the Model 2001.

6. Set the calibrator output to +190.0000µADC, and allow the reading to settle.

7. Verify that the Model 2001 reading is within the limits summarized in Table 1-6.

8. Repeat steps 6 and 7 for the remaining currents listed in Table 1-6.

9. Repeat the procedure for each of the ranges with negative currents of the same magnitude as those listed in Table 1-6.

Figure 1-4

Connections for DC current verification

(Figure labels: 5700A Calibrator (Output DC Current); calibrator Output HI to Model 2001 AMPS input; calibrator Output LO to Model 2001 Input LO; ground link installed.)

Note: Use internal Guard (EX GRD LED is off).

Table 1-6

Limits for DC current verification

2001 DCI range    Applied DC current    Reading limits (1 year, 18° to 28°C)
200µA             190.0000µA            189.9000µA to 190.1000µA
2mA               1.900000mA            1.899200mA to 1.900800mA
20mA              19.00000mA            18.99200mA to 19.00800mA
200mA             190.0000mA            189.9010mA to 190.0990mA
2A                1.900000A             1.898200A to 1.901800A

NOTES:

1. Repeat procedure for negative currents.

2. Reading limits shown do not include calibrator uncertainty.

1.8.4 AC current verification

AC current verification is performed by applying accurate

AC currents at specific frequencies and then verifying that

Model 2001 readings fall within specified limits.

Follow the steps below to verify ACI measurement accuracy.

CAUTION

Do not apply more than 2A, 250V to the

AMPS input, or the current protection

fuse will blow.

1. Turn on the Model 2001 and the calibrator, and allow a

one-hour warm-up period before making measurements.

2. Connect the Model 2001 to the calibrator, as shown in

Figure 1-5. Be sure to connect calibrator HI to the

AMPS input, and connect calibrator LO to INPUT LO

as shown.

3. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

4. Select the AC current function and the 200µA range on

the Model 2001.

5. Set the calibrator output to 190.000µA AC at a frequency of 40Hz, and allow the reading to settle.

6. Verify that the Model 2001 reading is within the limits

for the present current and frequency summarized in Table 1-7.

7. Repeat steps 5 and 6 for each frequency listed in Table 1-7.

8. Repeat steps 4 through 7 for the remaining ranges and

frequencies listed in Table 1-7.

Figure 1-5

Connections for AC current verification

(Figure labels: 5700A Calibrator (Output AC Current); calibrator Output HI to Model 2001 AMPS input; calibrator Output LO to Model 2001 Input LO; ground link installed.)

Note: Use internal Guard (EX GRD LED is off).

Table 1-7

Limits for AC current verification

Reading limits (1 year, 18° to 28°C)

2001 ACI range    Applied AC current    40Hz                      100Hz                     1kHz                      10kHz
200µA             190.000µA             188.260µA to 191.740µA    189.560µA to 190.440µA    189.210µA to 190.790µA    189.020µA to 190.980µA
2mA               1.90000mA             1.88355mA to 1.91645mA    1.89657mA to 1.90344mA    1.89742mA to 1.90258mA    1.89742mA to 1.90258mA
20mA              19.0000mA             18.8355mA to 19.1645mA    18.9657mA to 19.0344mA    18.9742mA to 19.0258mA    18.9742mA to 19.0258mA
200mA             190.000mA             188.355mA to 191.645mA    189.657mA to 190.344mA    189.742mA to 190.258mA    189.685mA to 190.315mA
2A                1.90000A              1.88250A to 1.91750A      1.89556A to 1.90444A      1.89390A to 1.90610A      1.89105A to 1.90895A

Note: Reading limits shown do not include calibrator uncertainty.

1.8.5 Resistance verification

Resistance verification is performed by connecting accurate

resistance values to the instrument and verifying that Model

2001 resistance readings are within stated limits.

Follow the steps below to verify resistance measurement accuracy.

CAUTION

Do not apply more than 1100V peak between INPUT HI and LO or more than

350V peak between SENSE HI and LO,

or instrument damage may occur.

20Ω - 200kΩ range verification

1. Turn on the Model 2001 and the calibrator, and allow a

one-hour warm-up period before making measurements.

2. Set the calibrator for 4-wire resistance (external sense

on).

3. Using shielded 4-wire connections, connect the Model

2001 to the calibrator, as shown in Figure 1-6. Be sure

to connect calibrator HI and LO terminals to the Model

2001 HI and LO terminals (including SENSE HI and

LO) as shown.

4. Restore Model 2001 factory default conditions, as explained in paragraph 1.7.

5. Set operating modes as follows:

A. From normal display, press CONFIG then Ω4.

B. Select FILTER, then press ENTER.

C. Select AVERAGING, then press ENTER.

D. Using the cursor and range keys, set the averaging

parameter to 10 readings, then press ENTER.

E. Select OFFSETCOMP, then press ENTER.

F. Select ON, then press ENTER.

G. Press EXIT to return to normal display.

6. Set the calibrator to output 19.000Ω, and allow the reading to settle. Verify that the reading is within the limits stated in Table 1-8.

NOTE

Resistance values available in the Model

5700A calibrator may be slightly different

than the stated nominal resistance values.

Calculated limits stated in Table 1-8

should be recalculated based on actual calibrator resistance values.

7. Set the calibrator output to 190.000Ω, and allow the reading to settle.

8. Verify that the reading is within the limits stated in Table

1-8. (NOTE: Recalculate limits if calibrator resistance is

not exactly as listed.)

9. Repeat steps 7 and 8 for the 2kΩ through 200kΩ ranges using the values listed in Table 1-8. NOTE: Turn offset compensation off when testing the 200kΩ range (see

step 5).
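When the calibrator's actual resistance differs slightly from the nominal value, a quick approximation is to shift the Table 1-8 limits by the same offset. This is only a sketch: it keeps the width of the tolerance band fixed, which is reasonable for small deviations, whereas a full recalculation applies the Model 2001 specifications to the actual value. The function name and the example calibrator value are hypothetical.

```python
def recenter_limits(nominal, low, high, actual):
    """Shift published limits so they are centered on the actual resistance.

    Approximation: the tolerance band width is left unchanged.
    """
    offset = actual - nominal
    return low + offset, high + offset


# 20Ω range row of Table 1-8: 19.0000Ω nominal, 18.99849Ω to 19.00151Ω.
# Suppose the calibrator reports an actual value of 18.99990Ω (made-up figure).
low, high = recenter_limits(19.0, 18.99849, 19.00151, 18.99990)
print(f"Recalculated limits: {low:.5f}Ω to {high:.5f}Ω")
```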

Table 1-8

Limits for resistance verification (20Ω-200MΩ ranges)

2001 Ω range    Applied resistance    Reading limits (1 year, 18° to 28°C)
20Ω             19.0000Ω              18.99849Ω to 19.00151Ω
200Ω            190.000Ω              189.9880Ω to 190.0120Ω
2kΩ             1.90000kΩ             1.899897kΩ to 1.900103kΩ
20kΩ            19.0000kΩ             18.99897kΩ to 19.00103kΩ
200kΩ           190.000kΩ             189.9820kΩ to 190.0180kΩ
2MΩ             1.90000MΩ             1.899687MΩ to 1.900313MΩ
20MΩ            19.0000MΩ             18.98281MΩ to 19.01719MΩ
200MΩ           100.000MΩ             97.9800MΩ to 102.0200MΩ

NOTES:

1. Limits shown do not include calibrator uncertainty and are based on absolute calibration values shown. Recalculate limits using Model 2001 specifications if calibrator resistance values differ from nominal values shown.

2. Use 4-wire connections and function for 20Ω-200kΩ ranges. Use 2-wire connections and function for 2MΩ-200MΩ ranges.

Figure 1-6

Connections for resistance verification (20Ω-200kΩ ranges)

Note: Use shielded cables to minimize noise. Use internal Guard (EX GRD LED is off).

2MΩ - 200MΩ range verification

1. Connect the DC calibrator and Model 2001 using the 2-wire connections shown in Figure 1-7.

2. Set the calibrator to the 2-wire mode (external sense off).

3. Set operating modes as follows:

A. From normal display, press CONFIG then Ω2.

B. Select FILTER, then press ENTER.

C. Select AVERAGING, then press ENTER.

D. Using the cursor and range keys, set the averaging parameter to 10 readings, then press ENTER.

E. Press EXIT to return to normal display.

F. If the FILT annunciator is off, press FILTER to enable the filter.

4. Select the Model 2001 Ω2 function, and change to the 2MΩ range.

5. Set the calibrator to output 1.90000MΩ, and allow the reading to settle.

6. Verify that the reading is within the limits for the 2MΩ range stated in Table 1-8. (NOTE: Recalculate limits if actual calibrator resistance differs from value shown.)

7. Repeat steps 4 through 6 for the 20MΩ (output 19.0000MΩ) and 200MΩ (output 100.000MΩ) ranges.

1GΩ range verification

1. Mount the 1GΩ resistor and the banana plugs to the test box, as shown in Figure 1-8. Be sure to mount the banana plugs with the correct spacing. The resistor should be completely enclosed in and shielded by the metal test box. The resistor LO lead should be electrically connected to the test box to provide adequate shielding.

2. Characterize the 1GΩ resistor to within ±10,000ppm or better using an accurate megohmmeter (see Table 1-1). Record the characterized value where indicated in Table 1-9. Also, compute the limits based on the value of R using the formula at the bottom of the table.

NOTE

The value of the 1GΩ resistor should not exceed 1.05GΩ.

3. Set operating modes as follows:

A. From normal display, press CONFIG then Ω2.

B. Select FILTER, then press ENTER.

C. Select AVERAGING, then press ENTER.

D. Using the cursor and range keys, set the averaging parameter to 10 readings, then press ENTER.

E. Press EXIT to return to normal display.

F. If the FILT annunciator is off, press FILTER to enable the filter.

4. Select the 2-wire ohms function (Ω2) and the 1GΩ range on the Model 2001.

5. Connect the 1GΩ resistor test box (from steps 1 and 2) to the INPUT HI and LO terminals of the Model 2001. Allow the reading to settle.

6. Verify that the Model 2001 reading is within the limits you calculated and recorded in Table 1-9.

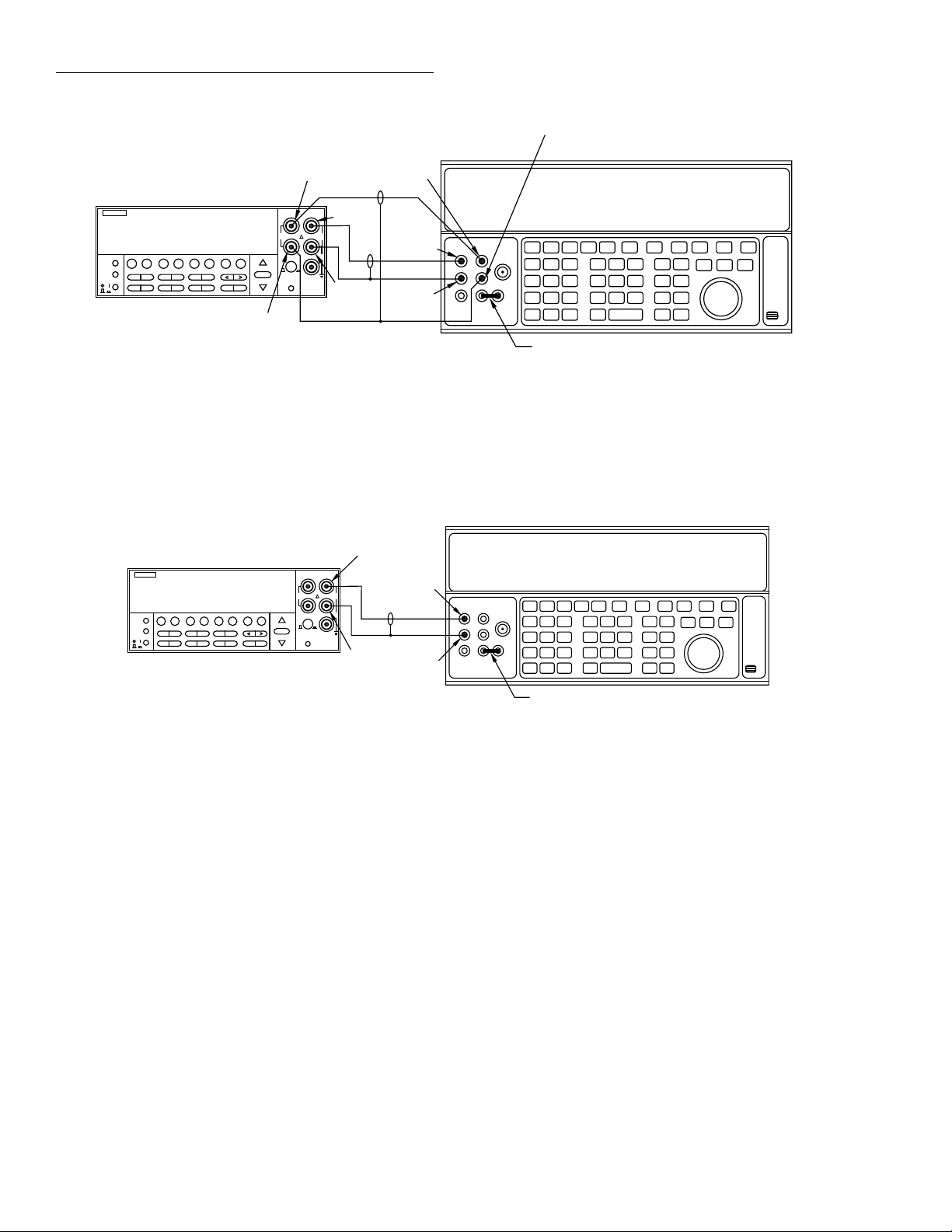

Figure 1-7

Connections for resistance verification (2MΩ - 200MΩ ranges)

Note: Use shielded cables to minimize noise. Use internal Guard (EX GRD LED is off).

Figure 1-8

1GΩ resistor test box construction

(Figure labels: 1GΩ resistor (Keithley part # R-289-1G) inside metal test box; banana plugs spaced 0.75"; HI: insulated plug; LO: non-insulated plug.)

Table 1-9

Limits for resistance verification (1GΩ range)

Characterized resistor (R)    Reading limit (1 year, 18° to 28°C)*

____________ GΩ    _________GΩ to _________GΩ

*1 Year limits = R ± (0.04R + 100,000)

Where R = characterized value of 1GΩ resistor.

Note: Resistor must be accurately characterized

before use (see text).
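The Table 1-9 formula can be worked through as follows. This sketch assumes that R and the 100,000 constant are both expressed in ohms, which the table does not state explicitly. The function name and the example resistor value are hypothetical.

```python
def one_gig_limits(r_ohms):
    """Apply the Table 1-9 formula: limits = R ± (0.04R + 100,000).

    r_ohms is the characterized resistor value in ohms (unit assumed).
    """
    margin = 0.04 * r_ohms + 100_000
    return r_ohms - margin, r_ohms + margin


# Example: a resistor characterized at 1.002GΩ (made-up value below the
# 1.05GΩ maximum noted in the procedure).
low, high = one_gig_limits(1.002e9)
print(f"Reading limits: {low / 1e9:.4f}GΩ to {high / 1e9:.4f}GΩ")
```

The resulting pair of values is what gets recorded in the blank limit fields of Table 1-9.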


1.8.6 Frequency accuracy verification

Frequency accuracy verification is performed by connecting

an accurate frequency source to the Model 2001 inputs, and

then verifying that the frequency readings are within stated

limits.

Use the procedure below to verify the frequency measurement accuracy of the Model 2001.

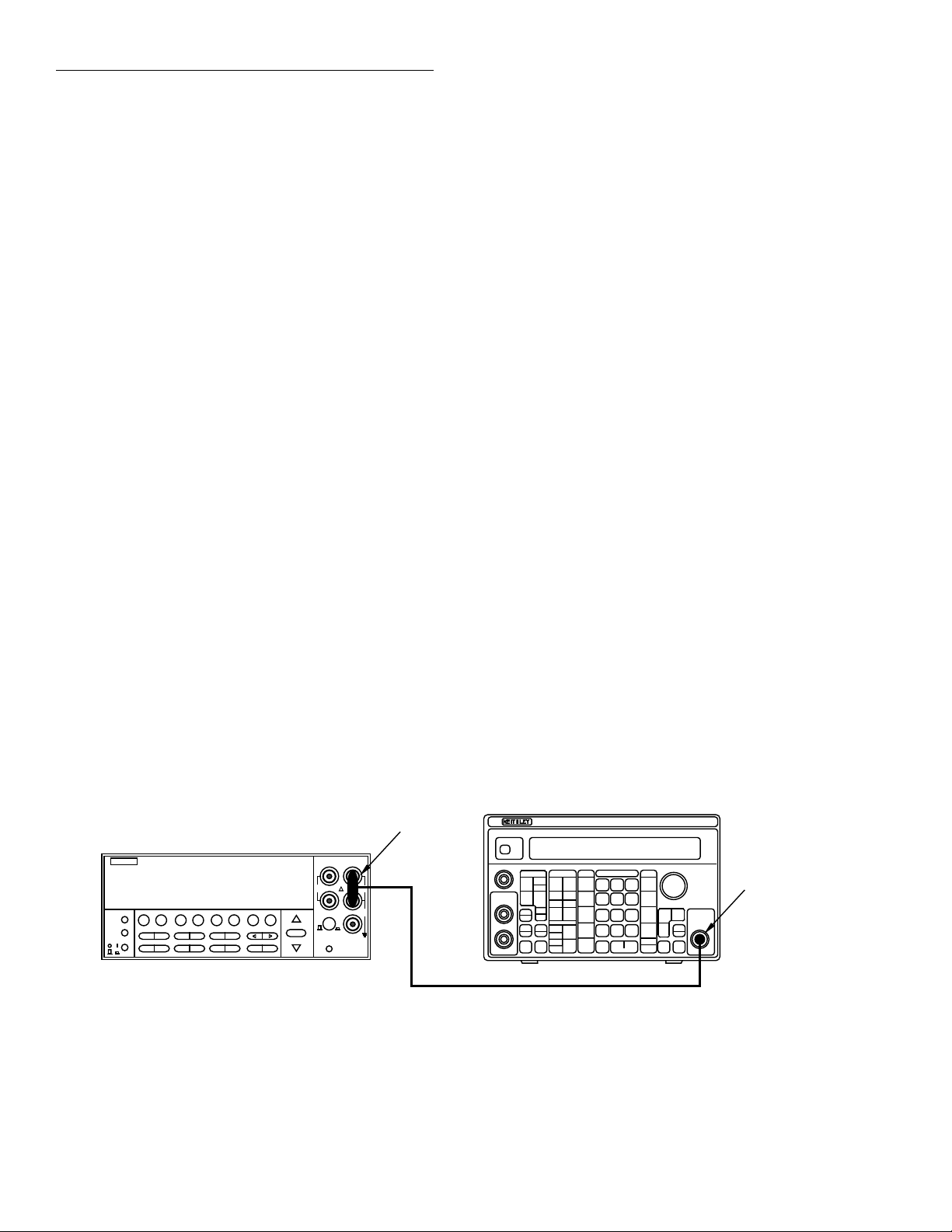

1. Connect the frequency synthesizer to the Model 2001

INPUT terminals, as shown in Figure 1-9.

2. Turn on both instruments, and allow a one-hour warm-up period before measurement.

3. Set the synthesizer operating modes as follows:

FREQ: 1Hz

AMPTD: 5V p-p

OFFSET: 0V

MODE: CONT

FCTN: sine wave

4. Restore Model 2001 factory defaults, as explained in

paragraph 1.7.

5. Press FREQ to place the Model 2001 in the frequency

measurement mode.

6. Set maximum signal level to 10V as follows:

A. Press CONFIG then FREQ.

B. Select MAX-SIGNAL-LEVEL, then press ENTER.

C. Select VOLTAGE, then press ENTER.

D. Select 10V, then press ENTER.

E. Press EXIT to return to normal display.

7. Verify that the Model 2001 frequency reading is within

the limits shown in the first line of Table 1-10.

8. Set the synthesizer to each of the frequencies listed in

Table 1-10, and verify that the Model 2001 frequency

reading is within the required limits.

Table 1-10

Frequency verification limits

| Synthesizer frequency | Reading limits (1 year, 18° to 28°C) |
|---|---|
| 1Hz | 0.9997Hz to 1.0003Hz |
| 10Hz | 9.997Hz to 10.003Hz |
| 100Hz | 99.97Hz to 100.03Hz |
| 1kHz | 0.9997kHz to 1.0003kHz |
| 10kHz | 9.997kHz to 10.003kHz |
| 100kHz | 99.97kHz to 100.03kHz |
| 1MHz | 0.9997MHz to 1.0003MHz |
| 10MHz | 9.997MHz to 10.003MHz |
| 15MHz | 14.995MHz to 15.005MHz |
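When readings are logged by hand or by a script, the pass/fail comparison against Table 1-10 can be sketched as follows. The dictionary and function names are hypothetical; the limit values are copied from the table and converted to hertz.

```python
# Table 1-10 limits keyed by synthesizer frequency in Hz: (low, high) in Hz.
FREQ_LIMITS_HZ = {
    1.0: (0.9997, 1.0003),
    10.0: (9.997, 10.003),
    100.0: (99.97, 100.03),
    1e3: (0.9997e3, 1.0003e3),
    10e3: (9.997e3, 10.003e3),
    100e3: (99.97e3, 100.03e3),
    1e6: (0.9997e6, 1.0003e6),
    10e6: (9.997e6, 10.003e6),
    15e6: (14.995e6, 15.005e6),
}


def frequency_reading_ok(source_hz, reading_hz):
    """Return True if reading_hz lies within the Table 1-10 limits."""
    low, high = FREQ_LIMITS_HZ[source_hz]
    return low <= reading_hz <= high


# Hypothetical reading taken with the synthesizer set to 1MHz.
print(frequency_reading_ok(1e6, 1.00002e6))
```

A frequency that is not listed in Table 1-10 raises a KeyError, which keeps the check from silently passing an untested point.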

Figure 1-9

Connections for frequency accuracy verification

[Figure: Model 3940 Synthesizer main function output connected through a 50Ω BNC coaxial cable and a BNC-to-dual banana plug adapter to the Model 2001 INPUT HI and LO terminals.]

1.8.7 Temperature reading checks

When using thermocouples, the Model 2001 displays temperature by measuring the DC thermocouple voltage, and

then calculating the corresponding temperature. Similarly,

the instrument computes RTD temperature readings by measuring the resistance of the RTD probe and calculating temperature from the resistance value.

Since the instrument computes temperature from DCV and

resistance measurements, verifying the accuracy of those

DCV and resistance measurement functions guarantees the

accuracy of corresponding temperature measurements.

Thus, it is not necessary to perform a comprehensive temperature verification procedure if DCV and resistance verification procedures show the instrument meets its specifications

in those areas. However, those who wish to verify that the

Model 2001 does in fact properly display temperature can

use the following procedure to do so.

Selecting the temperature sensor

Follow the steps below to select the type of temperature sensor:

1. From normal display, press CONFIG then TEMP.

2. Select SENSOR, then press ENTER.

3. Select 4-WIRE RTD or THERMOCOUPLE as desired,

then press ENTER.

4. Select the type of RTD probe or thermocouple you wish

to test, then return to the CONFIG TEMPERATURE

menu.

5. Select UNITS, then press ENTER.

6. Select DEG-C, then press ENTER.

7. Press EXIT as necessary to return to normal display.

8. Press the TEMP key to place the Model 2001 in the temperature display mode. The paragraphs below explain how to check thermocouple and RTD probe readings.

Thermocouple temperature reading checks

To check thermocouple readings, simply apply the appropriate DC voltage listed in Table 1-11 to the Model 2001 INPUT jacks using a precision DC voltage source (such as the

one used to verify DC voltage accuracy in paragraph 1.8.1),

and check the displayed temperature reading. Be sure to use

low-thermal cables for connections between the DC calibrator and the Model 2001 when making these tests.

NOTE

The voltages shown are based on a 0°C

reference junction temperature. Use CONFIG TEMP to set the default reference

junction temperature to 0°C.

Table 1-11

Thermocouple temperature reading checks

| Thermocouple type | Applied DC voltage* | Displayed temperature (°C) |
|---|---|---|
| J | -4.215mV | -90.5 to -89.5 |
| | 0mV | -0.5 to +0.5 |
| | 1.277mV | 24.5 to 25.5 |
| | 5.268mV | 99.5 to 100.5 |
| | 42.283mV | 749.5 to 750.5 |
| K | -3.242mV | -90.5 to -89.5 |
| | 0mV | -0.5 to +0.5 |
| | 1.000mV | 24.5 to 25.5 |
| | 4.095mV | 99.5 to 100.5 |
| | 54.125mV | 1349.5 to 1350.5 |
| T | -3.089mV | -90.5 to -89.5 |
| | 0mV | -0.5 to +0.5 |
| | 0.992mV | 24.5 to 25.5 |
| | 4.277mV | 99.5 to 100.5 |
| | 20.252mV | 389.5 to 390.5 |
| E | -4.777mV | -90.6 to -89.4 |
| | 0mV | -0.6 to +0.6 |
| | 1.495mV | 24.4 to 25.6 |
| | 6.317mV | 99.4 to 100.6 |
| | 75.608mV | 989.4 to 990.6 |
| R | 0.054mV | 7 to 13 |
| | 0.647mV | 97 to 103 |
| | 4.471mV | 497 to 503 |
| | 20.878mV | 1747 to 1753 |
| S | 0.055mV | 7 to 13 |
| | 0.645mV | 97 to 103 |
| | 4.234mV | 497 to 503 |
| | 18.504mV | 1747 to 1753 |
| B | 0.632mV | 355 to 365 |
| | 1.241mV | 495 to 505 |
| | 4.833mV | 995 to 1005 |
| | 13.585mV | 1795 to 1805 |

*Voltages shown are based on 0°C reference junction temperature. Use CONFIG-TEMP menu to set default reference junction to 0°C.
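The displayed-temperature windows in Table 1-11 are symmetric about a nominal temperature, so a pass/fail check can be sketched as below. The names are hypothetical, and the per-type tolerances are simply the half-widths read off the table rows (for example, 24.5 to 25.5 for type J gives ±0.5°C). They are not quoted from a separate specification.

```python
# Half-width of the displayed-temperature window in degrees C, per
# thermocouple type, as inferred from the rows of Table 1-11.
TOLERANCE_C = {
    "J": 0.5, "K": 0.5, "T": 0.5, "E": 0.6,
    "R": 3.0, "S": 3.0, "B": 5.0,
}


def display_limits(tc_type, nominal_c):
    """Return (low, high) display limits in degrees C for a nominal point."""
    tol = TOLERANCE_C[tc_type]
    return nominal_c - tol, nominal_c + tol


def display_ok(tc_type, nominal_c, displayed_c):
    """Return True if the displayed temperature is inside the window."""
    low, high = display_limits(tc_type, nominal_c)
    return low <= displayed_c <= high


# Hypothetical type K check at the 1350 degree C point (54.125mV applied).
print(display_limits("K", 1350))
print(display_ok("K", 1350, 1350.2))
```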

RTD temperature reading checks

Use a precision decade resistance box (see Table 1-1) to simulate probe resistances at various temperatures (Table 1-12).

Be sure to use 4-wire connections between the decade resistance box and the Model 2001.
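As a rough cross-check of the PT385 resistances used for this test, the sketch below evaluates the standard Callendar-Van Dusen equation for a 100Ω platinum RTD. The coefficients are the commonly published IEC 60751 values for α = 0.00385. That the manual's table was generated with exactly these coefficients is an assumption, and the function name is hypothetical.

```python
# Commonly published IEC 60751 coefficients for a platinum RTD
# (alpha = 0.00385). Assumed here; not quoted from this manual.
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12  # this term applies only below 0 degrees C
R0 = 100.0      # probe resistance at 0 degrees C, in ohms


def pt385_resistance(temp_c):
    """Resistance in ohms of a 100-ohm PT385 probe at temp_c (degrees C)."""
    ratio = 1.0 + A * temp_c + B * temp_c**2
    if temp_c < 0:
        ratio += C * (temp_c - 100.0) * temp_c**3
    return R0 * ratio


# Evaluate at the nominal temperatures of the PT385 test points.
for t in (-90, 0, 25, 100, 600):
    print(f"{t:>5} degC -> {pt385_resistance(t):.2f} ohms")
```

Under these assumptions the computed values agree with the PT385 applied resistances in Table 1-12 to within 0.01Ω, which gives a quick sanity check on the decade box settings before the test.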

Table 1-12

RTD probe temperature reading checks

RTD probe

type

PT385

(α=0.00385055)

PT3916

(α=0.00392)

Applied

resistance

64.30Ω

100Ω

109.73Ω

138.51Ω

313.71Ω

63.68Ω

100Ω

109.90Ω

139.16Ω

266.94Ω

Displayed

temperature (°C)

-90.08 to -89.92

-0.08 to +0.08

24.92 to 25.08

99.92 to 100.08

599.86 to 600.14