QFX3000-G QFabric System Deployment Guide

Release 13.1

Modified: 2015-06-30

Copyright © 2015, Juniper Networks, Inc.

Juniper Networks, Inc.

1133 Innovation Way

Sunnyvale, California 94089

USA

408-745-2000

www.juniper.net

Juniper Networks, Junos, Steel-Belted Radius, NetScreen, and ScreenOS are registered trademarks of Juniper Networks, Inc. in the United States and other countries. The Juniper Networks Logo, the Junos logo, and JunosE are trademarks of Juniper Networks, Inc. All other trademarks, service marks, registered trademarks, or registered service marks are the property of their respective owners.

Juniper Networks assumes no responsibility for any inaccuracies in this document. Juniper Networks reserves the right to change, modify, transfer, or otherwise revise this publication without notice.

QFX3000-G QFabric System Deployment Guide

13.1

Copyright © 2015, Juniper Networks, Inc.

All rights reserved.

The information in this document is current as of the date on the title page.

YEAR 2000 NOTICE

Juniper Networks hardware and software products are Year 2000 compliant. Junos OS has no known time-related limitations through the year 2038. However, the NTP application is known to have some difficulty in the year 2036.

END USER LICENSE AGREEMENT

The Juniper Networks product that is the subject of this technical documentation consists of (or is intended for use with) Juniper Networks software. Use of such software is subject to the terms and conditions of the End User License Agreement (“EULA”) posted at http://www.juniper.net/support/eula.html. By downloading, installing or using such software, you agree to the terms and conditions of that EULA.

Table of Contents

About the Documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Documentation and Release Notes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Supported Platforms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Using the Examples in This Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Merging a Full Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxii

Merging a Snippet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxii

Documentation Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxiii

Documentation Feedback . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxv

Requesting Technical Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxv

Self-Help Online Tools and Resources . . . . . . . . . . . . . . . . . . . . . . . . . . xxv

Opening a Case with JTAC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxvi

Part 1 Overview

Chapter 1 Before You Begin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

QFabric System Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

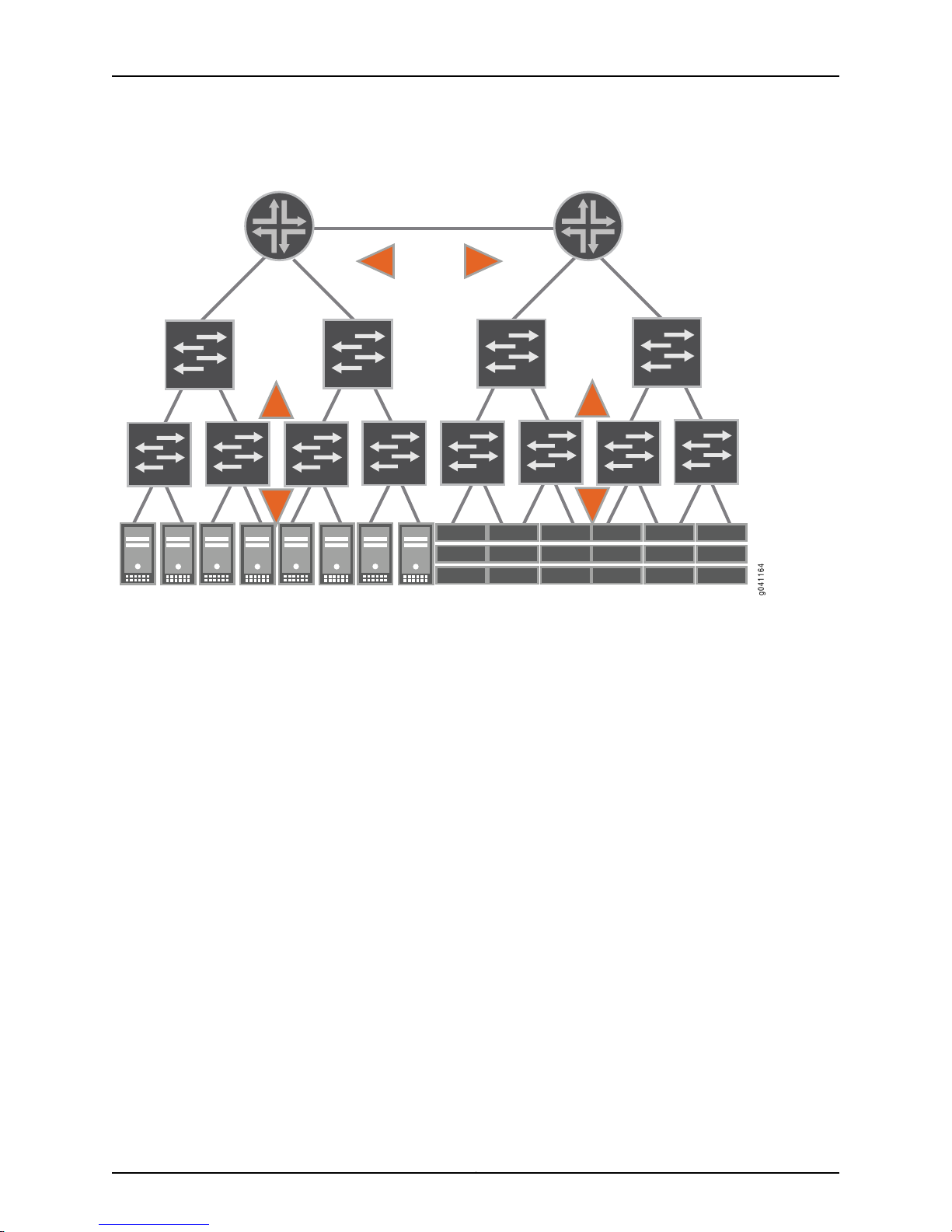

Legacy Data Center Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

QFX Series QFabric System Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Understanding QFabric System Terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Understanding Interfaces on the QFabric System . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Four-Level Interface Naming Convention . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

QSFP+ Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Link Aggregation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Chapter 2 Hardware Architecture Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Understanding the QFabric System Hardware Architecture . . . . . . . . . . . . . . . . . . 15

QFabric System Hardware Architecture Overview . . . . . . . . . . . . . . . . . . . . . . 15

QFX3000-G QFabric System Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

QFX3000-M QFabric System Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Understanding the Director Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Director Group Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Director Group Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Understanding Routing Engines in the QFabric System . . . . . . . . . . . . . . . . . . . . . 19

Hardware-Based Routing Engines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Software-Based External Routing Engines . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Understanding Interconnect Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Interconnect Device Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

QFX3008-I Interconnect Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

QFX3600-I Interconnect Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Understanding Node Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Node Device Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

QFX3500 Node Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

QFX3600 Node Devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Understanding Node Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Network Node Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Server Node Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Understanding Port Oversubscription on Node Devices . . . . . . . . . . . . . . . . . . . . 29

Chapter 3 Software Architecture Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Understanding the QFabric System Software Architecture . . . . . . . . . . . . . . . . . . 31

Understanding the Director Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Understanding Partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

QFabric System Default Partition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Understanding the QFabric System Control Plane . . . . . . . . . . . . . . . . . . . . . . . . . 35

Control Plane Elements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Control Plane Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Understanding the QFabric System Data Plane . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Data Plane Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

QFabric System Fabric . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Chapter 4 Software Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

QFX Series Software Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Understanding Software Upgrade on the QFabric System . . . . . . . . . . . . . . . . . . 42

Operational Software Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Operational Reboot Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Understanding Nonstop Software Upgrade for QFabric Systems . . . . . . . . . . . . . 43

Understanding Statements and Commands on the QFabric System . . . . . . . . . . 47

Chassis Statements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Chassis Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Understanding NTP on the QFabric System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Understanding Network Management Implementation on the QFabric System . . . . . . . . . . . . . . . . . . . . 49

Understanding the Implementation of SNMP on the QFabric System . . . . . . . . . 50

Understanding the Implementation of System Log Messages on the QFabric System . . . . . . . . . . . . . . . 52

Understanding User and Access Management Features on the QFabric System . . . . . . . . . . . . . . . . . . . 54

Understanding QFabric System Login Classes . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Understanding Interfaces on the QFabric System . . . . . . . . . . . . . . . . . . . . . . . . . 56

Four-Level Interface Naming Convention . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

QSFP+ Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Link Aggregation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Understanding Layer 3 Features on the QFabric System . . . . . . . . . . . . . . . . . . . . 59

Understanding Security Features on the QFabric System . . . . . . . . . . . . . . . . . . . 60

Understanding Port Mirroring on the QFabric System . . . . . . . . . . . . . . . . . . . . . . . 61

Understanding Fibre Channel Fabrics on the QFabric System . . . . . . . . . . . . . . . . 61

Understanding CoS Fabric Forwarding Class Sets . . . . . . . . . . . . . . . . . . . . . . . . . 62

Default Fabric Forwarding Class Sets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Fabric Forwarding Class Set Configuration and Implementation . . . . . . . . . . 67

Mapping Forwarding Classes to Fabric Forwarding Class Sets . . . . . . . . 67

Fabric Forwarding Class Set Implementation . . . . . . . . . . . . . . . . . . . . . 68

Fabric Forwarding Class Set Scheduling (CoS) . . . . . . . . . . . . . . . . . . . . . . . 69

Class Groups for Fabric Forwarding Class Sets . . . . . . . . . . . . . . . . . . . . 69

Class Group Scheduling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

QFabric System CoS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Support for Flow Control and Lossless Transport Across the Fabric . . . . . . . . 71

Viewing Fabric Forwarding Class Set Information . . . . . . . . . . . . . . . . . . . . . . 73

Summary of Fabric Forwarding Class Set and Node Device Forwarding Class Set Differences . . . . . . . . . . 75

Chapter 5 Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Junos OS Feature Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Software Features That Require Licenses on the QFX Series . . . . . . . . . . . . . . . . . 77

Junos OS License Keys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Licensable Ports on MX5, MX10, and MX40 Routers . . . . . . . . . . . . . . . . . . . . 79

Part 2 Installation

Chapter 6 Before You Begin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

QFX3000-G QFabric System Installation Overview . . . . . . . . . . . . . . . . . . . . . . . 83

Understanding QFX3000-G QFabric System Hardware Configurations . . . . . . . 85

Planning a QFX3000-G QFabric System Deployment . . . . . . . . . . . . . . . . . . . . . 86

General Site Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Site Electrical Wiring Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Environmental Requirements and Specifications for a QFX3100 Director Device . . . . . . . . . . . . . . . . 92

Environmental Requirements and Specifications for a QFX3008-I Interconnect Device . . . . . . . . . . . . 93

Environmental Requirements and Specifications for a QFX3500 Device . . . . . . . 94

Environmental Requirements and Specifications for QFX3600 and QFX3600-I Devices . . . . . . . . . . . . . 95

Chapter 7 Ports and Connectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Interface Support for the QFX3600 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Interface Support for the QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Interface Specifications for SFP, SFP+, and QSFP+ Transceivers for the QFX Series . . . . . . . . . . . . . 104

Interface Specifications for SFP+ DAC Cables for the QFX Series . . . . . . . . . . . . 114

Interface Specifications for QSFP+ DAC Breakout Cables for the QFX Series . . . 120

Interface Specifications for QSFP+ DAC Cables for the QFX Series . . . . . . . . . . . 123

Cable Specifications for Copper-Based Control Plane Connections for the QFabric System . . . . . . . . . 126

Chapter 8 Power . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

AC Power Specifications for a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . 129

AC Power Cord Specifications for a QFX3100 Director Device . . . . . . . . . . . . . . . 130

AC Power Specifications for a QFX3008-I Interconnect Device with Single-Phase Wiring Trays . . . . . . . 131

AC Power Specifications for a QFX3008-I Interconnect Device with Three-Phase Delta Wiring Trays . . . . 132

AC Power Specifications for a QFX3008-I Interconnect Device with Three-Phase Wye Wiring Trays . . . . . 132

AC Power Cord Specifications for a QFX3008-I Interconnect Device with Single-Phase Wiring Trays . . . . 133

AC Power Cord Specifications for a QFX3008-I Interconnect Device with Three-Phase Delta Wiring Trays . . 135

AC Power Cord Specifications for a QFX3008-I Interconnect Device with Three-Phase Wye Wiring Trays . . 136

AC Power Specifications for a QFX3600 or QFX3600-I Device . . . . . . . . . . . . . . 137

AC Power Specifications for a QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . 138

AC Power Cord Specifications for a QFX3500, QFX3600, or QFX3600-I Device . . . . . . . . . . . . . . . . . . 139

DC Power Specifications for a QFX3600 or QFX3600-I Device . . . . . . . . . . . . . 140

DC Power Specifications for a QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Chapter 9 Installing a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Installing and Connecting a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . 143

Unpacking a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

Mounting a QFX3100 Director Device on Two Posts in a Rack or Cabinet . . . . . . 146

Mounting a QFX3100 Director Device on Four Posts in a Rack or Cabinet . . . . . . 147

Connecting AC Power to a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . 149

Connecting a QFX Series Device to a Management Console . . . . . . . . . . . . . . . . . 151

Powering On a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Chapter 10 Installing a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . . . . . . . . . . . 155

Installing and Connecting a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . 155

Unpacking a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Parts Inventory (Packing List) for a QFX3008-I Interconnect Device . . . . . . . . . . 157

Installing QFX3008-I Interconnect Device Mounting Hardware on Four-Post Racks or Cabinets . . . . . . . . 159

Installing Four-Post Mounting Shelf and Rear Support Bracket for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . . . 160

Installing Cage Nuts for the Four-Post Mounting Shelf and Support Bracket for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . . . 162

Installing the Rear Support Bracket for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . . . 162

Installing the Four-Post Mounting Shelf for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . . . 162

Installing Spacer Bars and Shelves for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . . . 163

Installing Cage Nuts for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . 165

Installing the Small Mounting Shelf for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . 165

Installing the Large Mounting Shelf and Spacer Bars for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . 166

Removing the Adjustable Center-Mounting Brackets for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . . 166

Installing QFX3008-I Interconnect Device Mounting Hardware on Two-Post Racks . . . . . . . . . . . . . . . . 167

Installing Cage Nuts for QFX3008-I Interconnect Device Two-Post Rack Mounting . . . . . . . . . . . . . . . . 169

Installing the Small Mounting Shelf for QFX3008-I Interconnect Device Two-Post Rack Mounting . . . . . . 169

Installing the Large Mounting Shelf for QFX3008-I Interconnect Device Two-Post Rack Mounting . . . . . . 170

Mounting a QFX3008-I Interconnect Device on a Rack or Cabinet Using a Mechanical Lift . . . . . . . . . . . 170

Connecting Earth Ground to a QFX3008-I Interconnect Device . . . . . . . . . . . . . . 174

Connecting AC Power to a QFX3008-I Interconnect Device with Single-Phase Wiring Trays . . . . . . . . . . 176

Preparing Delta and Wye Three-Phase Power Cords . . . . . . . . . . . . . . . . . . . . . . 178

Connecting AC Power to a QFX3008-I Interconnect Device with Three-Phase Delta Wiring Trays . . . . . . . 183

Connecting AC Power to a QFX3008-I Interconnect Device with Three-Phase Wye Wiring Trays . . . . . . . . 187

Connecting a QFX Series Device to a Management Console . . . . . . . . . . . . . . . . 190

Powering On a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . . . . . . . . . . . . 191

Chapter 11 Installing a QFX3600 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Installing and Connecting a QFX3600 or QFX3600-I Device . . . . . . . . . . . . . . . . 193

Unpacking a QFX3600 or QFX3600-I Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

Mounting a QFX3600 or QFX3600-I Device on Two Posts in a Rack or Cabinet . . . . . . . . . . . . . . . . . . 195

Mounting a QFX3600 or QFX3600-I Device on Four Posts in a Rack or Cabinet . . . . . . . . . . . . . . . . . . 198

Connecting Earth Ground to QFX3600 or QFX3600-I Devices . . . . . . . . . . . . . . 201

Connecting AC Power to a QFX3500, QFX3600, or QFX3600-I Device . . . . . . . 203

Connecting DC Power to a QFX3500, QFX3600, or QFX3600-I Device . . . . . . . 205

Connecting a QFX Series Device to a Management Console . . . . . . . . . . . . . . . . 209

Chapter 12 Installing a QFX3500 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Installing and Connecting a QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Unpacking a QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Mounting a QFX3500 Device in a Rack or Cabinet . . . . . . . . . . . . . . . . . . . . . . . . 213

Connecting Earth Ground to a QFX3500 Device . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Connecting AC Power to a QFX3500, QFX3600, or QFX3600-I Device . . . . . . . 217

Connecting DC Power to a QFX3500, QFX3600, or QFX3600-I Device . . . . . . . 220

Connecting a QFX Series Device to a Management Console . . . . . . . . . . . . . . . . 223

Chapter 13 Cabling a Copper-Based Control Plane for the QFX3000-G QFabric System . . . . . . . . . . . . . . 225

Interconnecting Two Virtual Chassis for Copper-Based QFX3000-G QFabric System Control Plane Redundancy . . . . . . . . 225

Connecting QFX3100 Director Devices in a Director Group . . . . . . . . . . . . . . . . . 228

Connecting QFX3100 Director Devices to a Copper-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 231

Connecting a QFX3100 Director Device to a Network for Out-of-Band Management . . . . . . . . . . . . . . . . 233

Connecting a QFX3008-I Interconnect Device to a Copper-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 234

Connecting a QFX3600 Node Device to a Copper-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 238

Connecting a QFX3500 Node Device to a Copper-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 241

Chapter 14 Cabling a Fiber-Based Control Plane for the QFX3000-G QFabric System . . . . . . . . . . . . . . . 245

Interconnecting Two Virtual Chassis for Fiber-Based QFX3000-G QFabric System Control Plane Redundancy . . . . . . . . 245

Connecting QFX3100 Director Devices in a Director Group . . . . . . . . . . . . . . . . . 248

Connecting QFX3100 Director Devices to a Fiber-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 251

Connecting a QFX3100 Director Device to a Network for Out-of-Band Management . . . . . . . . . . . . . . . . 254

Connecting a QFX3008-I Interconnect Device to a Fiber-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 255

Connecting a QFX3600 Node Device to a Fiber-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 261

Connecting a QFX3500 Node Device to a Fiber-Based QFX3000-G QFabric System Control Plane Network . . . . . . . . 264

Chapter 15 Cabling the Data Plane for the QFX3000-G QFabric System . . . . . . . . . . 269

Connecting a QFX3600 Node Device to a QFX3008-I Interconnect Device . . . 269

Connecting a QFX3500 Node Device to a QFX3008-I Interconnect Device . . . . 271

Part 3 Configuration

Chapter 16 Initial Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 275

QFabric System Initial and Default Configuration Information . . . . . . . . . . . . . . . 275

Converting the Device Mode for a QFabric System Component . . . . . . . . . . . . . 277

Example: Configuring the Virtual Chassis for the QFX3000-G QFabric System Control Plane . . . . . . . . . . 283

Example: Configuring a Fiber-Based Control Plane for the QFX3000-G QFabric System . . . . . . . . . . . . . 327

Importing a QFX3000-G QFabric System Control Plane Virtual Chassis Configuration with a USB Flash Drive . . . . . . . . 359

Generating the MAC Address Range for a QFabric System . . . . . . . . . . . . . . . . . 361

Performing the QFabric System Initial Setup on a QFX3100 Director Group . . . 362

Performing an Initial Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

Restoring a Backup Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

Chapter 17 QFabric System Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 369

Understanding QFabric System Administration Tasks and Utilities . . . . . . . . . . 369

Gaining Access to the QFabric System Through the Default Partition . . . . . . . . . 373

Example: Configuring QFabric System Login Classes . . . . . . . . . . . . . . . . . . . . . . 374

Configuring Aliases for the QFabric System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

Configuring the Port Type on QFX3600 Node Devices . . . . . . . . . . . . . . . . . . . . 392

Configuring Node Groups for the QFabric System . . . . . . . . . . . . . . . . . . . . . . . . 395

Example: Configuring SNMP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 399

Configuring Graceful Restart for QFabric Systems . . . . . . . . . . . . . . . . . . . . . . . . 401

Enabling Graceful Restart . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

Configuring Graceful Restart Options for BGP . . . . . . . . . . . . . . . . . . . . . . . 403

Configuring Graceful Restart Options for OSPF and OSPFv3 . . . . . . . . . . . 404

Tracking Graceful Restart Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

Optimizing the Number of Multicast Flows on QFabric Systems . . . . . . . . . . . . 405

Chapter 18 QFabric System Licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

Generating the License Keys for a QFabric System . . . . . . . . . . . . . . . . . . . . . . . 407

Adding New Licenses (CLI Procedure) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

Deleting a License (CLI Procedure) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 410

Saving License Keys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 411

Verifying Junos OS License Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 412

Displaying Installed Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 412

Displaying License Usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 413

Chapter 19 Configuration Statements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

aliases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 416

archive (QFabric System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

chassis (QFabric System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 418

director-device (Aliases) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

fabric . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 421

file (QFabric System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 422

graceful-restart (Enabling Globally) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

graceful-restart (Protocols BGP) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 425

graceful-restart (Protocols OSPF) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 427

interconnect-device (Chassis) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 429

interconnect-device (Aliases) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 430

multicast (QFabric Routing Options) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 431

network-domain . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 431

no-make-before-break . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

node-device (Aliases) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 433

node-device (Chassis) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 434

node-device (Resources) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 435

node-group (Chassis) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 436

node-group (Resources) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

pic (Port) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

remote-debug-permission . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

routing-options (QFabric System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

syslog (QFabric System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 441

Part 4 Administration

Chapter 20 Software Upgrade and Recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 445

Performing a Nonstop Software Upgrade on the QFabric System . . . . . . . . . . . 445

Backing Up the Current Configuration Files . . . . . . . . . . . . . . . . . . . . . . . . . . 446

Downloading Software Files Using a Browser . . . . . . . . . . . . . . . . . . . . . . . . 447

Retrieving Software Files for Download . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 447

Performing a Nonstop Software Upgrade for Director Devices in a Director Group . . . . . . . 448

Performing a Nonstop Software Upgrade for Interconnect Devices and Other Fabric-Related Components . . . . . . . 448

(Optional) Creating Upgrade Groups for Node Groups . . . . . . . . . . . . . . . . 448

Performing a Nonstop Software Upgrade on a Node Group . . . . . . . . . . . . 449

Verifying Nonstop Software Upgrade for QFabric Systems . . . . . . . . . . . . . . . . . 450

Verifying a Director Group Nonstop Software Upgrade . . . . . . . . . . . . . . . . . 451

Verifying a Fabric Nonstop Software Upgrade . . . . . . . . . . . . . . . . . . . . . . . 464

Verifying a Redundant Server Node Group Nonstop Software Upgrade . . . 465

Verifying a Network Node Group Nonstop Software Upgrade . . . . . . . . . . . 468

Upgrading Software on a QFabric System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 470

Backing Up the Current Configuration Files . . . . . . . . . . . . . . . . . . . . . . . . . . 471

Downloading Software Files Using a Browser . . . . . . . . . . . . . . . . . . . . . . . . 471

Retrieving Software Files for Download . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 472

Installing the Software Package on the Entire QFabric System . . . . . . . . . . 472

Performing System Backup and Recovery for a QFabric System . . . . . . . . . . . . . 475

Chapter 21 Operational Mode Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 477

QFabric System Operational Mode Commands . . . . . . . . . . . . . . . . . . . . . . . . . . 478

Filtering Operational Mode Command Output in a QFabric System . . . . . . . . . 480

request chassis device-mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 481

request chassis fabric fpc . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 483

request component login . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 484

request fabric administration director-group change-master . . . . . . . . . . . . . . . 486

request fabric administration remove . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 487

request fabric administration system mac-pool add . . . . . . . . . . . . . . . . . . . . . . 489

request fabric administration system mac-pool delete . . . . . . . . . . . . . . . . . . . 490

request system halt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 491

request system reboot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 497

request system software format-qfabric-backup . . . . . . . . . . . . . . . . . . . . . . . . 500

request system software nonstop-upgrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 501

request system software system-backup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 507

set chassis display message . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 509

show chassis device-mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 515

show chassis ethernet-switch interconnect-device cb . . . . . . . . . . . . . . . . . . . . . 518

show chassis ethernet-switch interconnect-device fpc . . . . . . . . . . . . . . . . . . . . 535

show chassis fabric connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 560

show chassis fabric device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 567

show chassis lcd . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 569

show chassis nonstop-upgrade node-group . . . . . . . . . . . . . . . . . . . . . . . . . . . . 583

show fabric administration inventory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 584

show fabric administration inventory director-group status . . . . . . . . . . . . . . . . 589

show fabric administration inventory infrastructure . . . . . . . . . . . . . . . . . . . . . . 594

show fabric administration inventory interconnect-devices . . . . . . . . . . . . . . . . 597

show fabric administration inventory node-devices . . . . . . . . . . . . . . . . . . . . . . 599

show fabric administration inventory node-groups . . . . . . . . . . . . . . . . . . . . . . . 601

show fabric administration system mac-pool . . . . . . . . . . . . . . . . . . . . . . . . . . . 603

show fabric inventory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 604

show fabric session-host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 607

show log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 608

show system software upgrade status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 611

Part 5 Troubleshooting

Chapter 22 QFabric System Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

Performing System Backup and Recovery for a QFabric System . . . . . . . . . . . . . 615

Performing a QFabric System Recovery Installation on the Director Group . . . . . 616

(Optional) Creating an Emergency Boot Device Using a Juniper Networks External Blank USB Flash Drive . . . . . . . 617

Performing a Recovery Installation Using a Juniper Networks External USB Flash Drive with Preloaded Software . . . . . . . 618

Performing a Recovery Installation on a QFX3008-I, QFX3600-I, QFX3600, or QFX3500 Device . . . . . . . 623

Creating an Emergency Boot Device for a QFX Series Device . . . . . . . . . . . . . . . 625

List of Figures

Part 1 Overview

Chapter 1 Before You Begin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Figure 1: Legacy Data Center Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Figure 2: QFX Series QFabric System Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Chapter 2 Hardware Architecture Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Figure 3: QFabric System Hardware Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Figure 4: External Routing Engine Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Figure 5: Clos Switching for QFX3008-I Interconnect Devices . . . . . . . . . . . . . . . . 22

Figure 6: QFX3008-I Data Plane and Control Plane Connections . . . . . . . . . . . . . 23

Figure 7: QFX3600-I Data Plane and Control Plane Connections . . . . . . . . . . . . . 24

Figure 8: QFX3500 Data Plane and Control Plane Connections . . . . . . . . . . . . . . 26

Figure 9: QFX3600 Data Plane and Control Plane Connections . . . . . . . . . . . . . . 27

Chapter 3 Software Architecture Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Figure 10: QFabric System Topology - Default Partition . . . . . . . . . . . . . . . . . . . . . 34

Figure 11: QFabric System Control Plane Network . . . . . . . . . . . . . . . . . . . . . . . . . 36

Figure 12: QFabric System Data Plane Network . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Figure 13: QFX3008-I Interconnect Device Cross-Connect System . . . . . . . . . . . 40

Part 2 Installation

Chapter 7 Ports and Connectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Figure 14: QSFP+ Uplink Port Locations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Chapter 8 Power . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Figure 15: AC Plug Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Figure 16: AC Plug Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Chapter 9 Installing a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Figure 17: Unpacking a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Figure 18: Mounting the QFX3100 Director Device on Two Posts in a Rack . . . . . 147

Figure 19: Mounting a QFX3100 Director Device on Four Posts in a Rack or Cabinet . . . . . . . 149

Figure 20: Connecting an AC Power Cord to an AC Power Supply in a QFX3100 Director Device . . . . . . . 150

Figure 21: Connecting the QFX Series to a Management Console Through a Console Server . . . . . . . 151

Figure 22: Connecting the QFX Series Directly to a Management Console . . . . . . 151

Chapter 10 Installing a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . . . . . . . . . . . 155

Figure 23: Installing Four-Post Mounting Shelf and Rear Support Bracket for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . 161

Figure 24: Installing Spacer Bar and Shelves for QFX3008-I Interconnect Device Four-Post Rack or Cabinet Mounting . . . . . . . 164

Figure 25: Installing the Mounting Hardware for a Two-Post Rack . . . . . . . . . . . 168

Figure 26: Installing a QFX3008-I Interconnect Device in a Four-Post Rack . . . . 173

Figure 27: Attaching Rear Support Anchors to the QFX3008-I Chassis in a Four-Post Rack . . . . . . . 174

Figure 28: Connecting a Grounding Cable to a QFX3008-I Interconnect Device . . . . . . . 176

Figure 29: Connecting an AC Power Cord to a Single-Phase Wiring Tray . . . . . . . 178

Figure 30: Assembling a Power Cord Using a 90° Connector . . . . . . . . . . . . . . . . 179

Figure 31: Assembling a Power Cord Using a Flat Connector . . . . . . . . . . . . . . . . 179

Figure 32: Wye Wiring Tray with a 90° Connector Installed . . . . . . . . . . . . . . . . . 180

Figure 33: Delta Wiring Tray with a Flat Connector Installed . . . . . . . . . . . . . . . . 180

Figure 34: Installing a Three-Phase Wiring Tray with a Power Cord Installed . . . . 181

Figure 35: Connecting Power to a Three-Phase Delta AC Power Supply . . . . . . . 186

Figure 36: Connecting Power to the Three-Phase Wye Wiring Tray . . . . . . . . . . . 189

Figure 37: Connecting the QFX Series to a Management Console Through a Console Server . . . . . . . 190

Figure 38: Connecting the QFX Series Directly to a Management Console . . . . . . 191

Chapter 11 Installing a QFX3600 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Figure 39: Attaching the Front or Rear Mounting Brackets to the Side Panel of the Device . . . . . . . 197

Figure 40: Mounting the Device on Two Posts in a Rack . . . . . . . . . . . . . . . . . . . . 197

Figure 41: Attaching the Installation Blades to the Rear of the Rack . . . . . . . . . . 200

Figure 42: Mounting the Device on Four-Posts . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

Figure 43: Connecting a Grounding Cable to a QFX3600 or QFX3600-I Device . . . . . . . 203

Figure 44: Connecting an AC Power Cord to an AC Power Supply in a QFX3500 Device . . . . . . . 204

Figure 45: Connecting an AC Power Cord to an AC Power Supply in a QFX3600 or QFX3600-I Device . . . . . . . 205

Figure 46: DC Power Supply Faceplate for a QFX3500, QFX3600 or QFX3600-I Device . . . . . . . 208

Figure 47: Securing Ring Lugs to the Terminals on the QFX3500, QFX3600 or QFX3600-I DC Power Supply . . . . . . . 209

Figure 48: Connecting the QFX Series to a Management Console Through a Console Server . . . . . . . 210

Figure 49: Connecting the QFX Series Directly to a Management Console . . . . . 210

Chapter 12 Installing a QFX3500 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Figure 50: Installing an Installation Blade in a Rack . . . . . . . . . . . . . . . . . . . . . . . 214

Figure 51: Mounting the QFX3500 Device on Four Posts in a Rack . . . . . . . . . . . . 215

Figure 52: Connecting a Grounding Cable to a QFX3500 Device . . . . . . . . . . . . . 217

Figure 53: Connecting an AC Power Cord to an AC Power Supply in a QFX3500 Device . . . . . . . 219

Figure 54: Connecting an AC Power Cord to an AC Power Supply in a QFX3600 or QFX3600-I Device . . . . . . . 219

Figure 55: DC Power Supply Faceplate for a QFX3500, QFX3600 or QFX3600-I Device . . . . . . . 222

Figure 56: Securing Ring Lugs to the Terminals on the QFX3500, QFX3600 or QFX3600-I DC Power Supply . . . . . . . 223

Figure 57: Connecting the QFX Series to a Management Console Through a Console Server . . . . . . . 224

Figure 58: Connecting the QFX Series Directly to a Management Console . . . . . 224

Chapter 13 Cabling a Copper-Based Control Plane for the QFX3000-G QFabric System . . . . . . . 225

Figure 59: QFX3000-G QFabric System Copper-Based Control Plane—Inter-Virtual Chassis LAG Connections . . . . . . . 226

Figure 60: Connecting a Fiber-Optic Cable to an Optical Transceiver Installed in an EX Series Switch . . . . . . . 227

Figure 61: QFX3100 Director Group Control Plane Connections for QFX3000-G QFabric System Using Copper-Based Control Plane . . . . . . . 228

Figure 62: QFX3100 Director Group Control Plane Connections for QFX3000-G QFabric System Using Fiber-Based Control Plane . . . . . . . 229

Figure 63: QFX3100 Director Group Control Plane Connections for QFX3000-M QFabric System . . . . . . . 229

Figure 64: QFX3100 Director Group to Virtual Chassis Connections for QFX3000-G QFabric System . . . . . . . 231

Figure 65: QFX3008-I Interconnect Device Control Plane Connections . . . . . . . 235

Figure 66: QFX3600 Node Device Control Plane Connections . . . . . . . . . . . . . . 239

Figure 67: QFX3500 Node Device Control Plane Connections . . . . . . . . . . . . . . . 241

Chapter 14 Cabling a Fiber-Based Control Plane for the QFX3000-G QFabric System . . . . . . . 245

Figure 68: QFX3000-G QFabric System Fiber-Based Control Plane—Inter-Virtual Chassis LAG Connections . . . . . . . 246

Figure 69: Connecting a Fiber-Optic Cable to an Optical Transceiver Installed in an EX Series Switch . . . . . . . 247

Figure 70: QFX3100 Director Group Control Plane Connections for QFX3000-G QFabric System Using Copper-Based Control Plane . . . . . . . 248

Figure 71: QFX3100 Director Group Control Plane Connections for QFX3000-G QFabric System Using Fiber-Based Control Plane . . . . . . . 249

Figure 72: QFX3100 Director Group Control Plane Connections for QFX3000-M QFabric System . . . . . . . 249

Figure 73: QFX3100 Director Group to Virtual Chassis Connections for QFX3000-G QFabric System . . . . . . . 252

Figure 74: QFX3000-G QFabric System Fiber-Based Control Plane—Interconnect Device to Virtual Chassis Connections . . . . . . . 256

Figure 75: QFX3600 Node Device Fiber-Based Control Plane Connections for QFX3000-M QFabric System . . . . . . . 261

Figure 76: QFX3500 Node Device Fiber-Based Control Plane Connections for QFX3000-M QFabric System . . . . . . . 265

Part 3 Configuration

Chapter 16 Initial Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 275

Figure 77: QFX3000-G QFabric System Control Plane—Virtual Chassis Port Ranges . . . . . . . 285

Figure 78: QFX3000-G QFabric System Control Plane—Director Group to Virtual Chassis Connections . . . . . . . 290

Figure 79: QFX3000-G QFabric System Control Plane—Interconnect Device to Virtual Chassis Connections . . . . . . . 292

Figure 80: QFX3000-G QFabric System Control Plane—Node Device to Virtual Chassis Connections . . . . . . . 293

Figure 81: QFX3000-G QFabric System Control Plane—Inter-Virtual Chassis LAG Connections . . . . . . . 294

Figure 82: QFX3000-G QFabric System Fiber-Based Control Plane—Virtual Chassis Port Ranges . . . . . . . 329

Figure 83: QFX3000-G QFabric System Fiber-Based Control Plane—Director Group to Virtual Chassis Connections . . . . . . . 333

Figure 84: QFX3000-G QFabric System Fiber-Based Control Plane—Interconnect Device to Virtual Chassis Connections . . . . . . . 335

Figure 85: QFX3000-G QFabric System Fiber-Based Control Plane—Node Device to Virtual Chassis Connections . . . . . . . 337

Figure 86: QFX3000-G QFabric System Fiber-Based Control Plane—Inter-Virtual Chassis LAG Connections . . . . . . . 338

List of Tables

About the Documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Table 1: Notice Icons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxiii

Table 2: Text and Syntax Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxiii

Part 1 Overview

Chapter 1 Before You Begin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Table 3: QFabric System Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Table 4: QFX3600 Node Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Chapter 2 Hardware Architecture Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Table 5: Supported QFabric System Hardware Configurations . . . . . . . . . . . . . . . . 17

Table 6: Oversubscription Ratio on Node Devices . . . . . . . . . . . . . . . . . . . . . . . . . 29

Chapter 4 Software Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Table 7: QFX3600 Node Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Table 8: Default Fabric Forwarding Class Sets . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Table 9: Default Forwarding Class to Fabric Forwarding Class Set Mapping . . . . 65

Table 10: Class Group Scheduling Properties and Membership . . . . . . . . . . . . . . . 70

Table 11: Lossless Priority (Forwarding Class) Support for QFX3500 and QFX3600 Node Devices When Fewer than Six Lossless Priorities Are Supported . . . . . . . 73

Table 12: show class-of-service forwarding-class-set Command Output Fields . . . . . . . 74

Table 13: Summary of Differences Between Fabric fc-sets and Node Device fc-sets . . . . . . . 75

Chapter 5 Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Table 14: Junos OS Feature Licenses and Model Numbers for QFX Series Devices . . . . . . . 78

Table 15: Upgrade Licenses for Enhancing Port Capacity . . . . . . . . . . . . . . . . . . . 80

Part 2 Installation

Chapter 6 Before You Begin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Table 16: Number of 10-Gigabit Ethernet Access Ports Supported on Node Devices Based on Oversubscription Ratio . . . . . . . 87

Table 17: Maximum Number of Node Devices Supported Based on Oversubscription Ratio and Number of Interconnect Devices . . . . . . . 87

Table 18: Number of Connections Required Between Node and Interconnect Devices Based on Oversubscription Ratio . . . . . . . 89

Table 19: Site Electrical Wiring Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Table 20: QFX3100 Director Device Environmental Tolerances . . . . . . . . . . . . . . . 93

Table 21: QFX3008-I Interconnect Device Environmental Tolerances . . . . . . . . . . 94

Table 22: QFX3500 Device Environmental Tolerances . . . . . . . . . . . . . . . . . . . . . 95

Table 23: QFX3600 and QFX3600-I Device Environmental Tolerances . . . . . . . . 96

Chapter 7 Ports and Connectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Table 24: Supported Transceivers for the QFX3600 Device . . . . . . . . . . . . . . . . . 98

Table 25: Supported DAC and DAC Breakout Cables for the QFX3600 Device . . . . . . . 99

Table 26: Supported Transceivers for the QFX3500 Device . . . . . . . . . . . . . . . . . 101

Table 27: Supported DAC and DAC Breakout Cables for the QFX3500 Device . . 102

Table 28: Copper Interface Support and Optical Interface Support for Gigabit Ethernet SFP Transceivers for the QFX Series . . . . . . . 105

Table 29: Optical Interface Support for Fibre Channel SFP+ Transceivers for the QFX Series . . . . . . . 108

Table 30: Optical Interface Support for 10-Gigabit Ethernet SFP+ Transceivers for the QFX Series . . . . . . . 109

Table 31: Interface Support for 40-Gigabit Ethernet QSFP+ Transceivers for the QFX Series . . . . . . . 113

Table 32: Third-Party SFP+ DAC Cable Support . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Table 33: SFP+ Passive Direct Attach Copper Cable Specifications . . . . . . . . . . . 116

Table 34: SFP+ Active Direct Attach Copper Cable Specifications . . . . . . . . . . . . 118

Table 35: Third-Party QSFP+ DAC Breakout Cable Support . . . . . . . . . . . . . . . . . 121

Table 36: QSFP+ DAC Breakout Cable Specifications . . . . . . . . . . . . . . . . . . . . . . 122

Table 37: QSFP+ Active DAC Breakout Cable Specifications . . . . . . . . . . . . . . . . 123

Table 38: Third-Party QSFP+ DAC Cable Support . . . . . . . . . . . . . . . . . . . . . . . . 124

Table 39: Interface Specifications for QSFP+ DAC Cables . . . . . . . . . . . . . . . . . . 125

Table 40: Cable Specifications for Copper-Based Control Plane Connections for the QFabric System . . . . . . . 126

Chapter 8 Power . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Table 41: AC Power Specifications for a QFX3100 Director Device . . . . . . . . . . . . 129

Table 42: AC Power Cord Specifications for a QFX3100 Director Device . . . . . . . 130

Table 43: AC Power Specifications for a QFX3008-I Interconnect Device with Single-Phase Wiring Trays . . . . . . . 131

Table 44: AC Power Specifications for a QFX3008-I Interconnect Device with Three-Phase Delta Wiring Trays . . . . . . . 132

Table 45: AC Power Specifications for a QFX3008-I Interconnect Device with Three-Phase Wye Wiring Trays . . . . . . . 133

Table 46: AC Power Cord Specifications for a Single-Phase Wiring Tray . . . . . . . 134

Table 47: Three-Phase Delta AC Power Cord Specifications . . . . . . . . . . . . . . . . 136

Table 48: Three-Phase Wye AC Power Cord Specifications . . . . . . . . . . . . . . . . 137

Table 49: AC Power Specifications for a QFX3600 or QFX3600-I Device . . . . . . 138

Table 50: AC Power Specifications for a QFX3500 Device . . . . . . . . . . . . . . . . . . 138

Table 51: AC Power Cord Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Table 52: DC Power Specifications for a QFX3600 or QFX3600-I Device . . . . . . 140

Table 53: DC Power Specifications for a QFX3500 Device . . . . . . . . . . . . . . . . . . 141

Chapter 9 Installing a QFX3100 Director Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Table 54: Inventory of Components Provided with a QFX3100 Director Device . . . . . . . 145

Chapter 10 Installing a QFX3008-I Interconnect Device . . . . . . . . . . . . . . . . . . . . . . . . . 155

Table 55: Parts List for QFX3008-I Interconnect Device Configurations . . . . . . . 158

Table 56: QFX3008-I Interconnect Device Accessory Kit Contents . . . . . . . . . . . 158

Table 57: QFX3008-I Interconnect Device Rack Install Accessory Kit Contents . . . . . . . 159

Table 58: QFX3008-I Interconnect Device Wiring Tray Accessory Kit Part Contents . . . . . . . 159

Table 59: Four-Post Mounting Shelf and Rear Support Bracket Hole Locations . . . . . . . 161

Table 60: Four-Post Rack or Cabinet Mounting Hole Locations . . . . . . . . . . . . . . 164

Table 61: Two-Post Rack Mounting Hole Locations . . . . . . . . . . . . . . . . . . . . . . . 168

Chapter 11 Installing a QFX3600 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Table 62: Accessory Kit Part Contents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Chapter 12 Installing a QFX3500 Node Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Table 63: Inventory of Components Supplied with a QFX3500 Device . . . . . . . . 212

Chapter 13 Cabling a Copper-Based Control Plane for the QFX3000-G QFabric

System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

Table 64: Virtual Chassis-to-Virtual Chassis Copper-Based Control Plane Port

Assignments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

Table 65: QFX3100 Director Device-to-Virtual Chassis Control Plane Port Assignments for DG0 . . . . . . . . 233

Table 66: Second QFX3100 Director Device-to-Virtual Chassis Control Plane Port Assignments for DG1 . . . . . . . . 233

Table 67: Interconnect Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Table 68: QFX3600 Node Device-to-Virtual Chassis Control Plane Port Assignments . . . . . . . . 240

Table 69: QFX3500 Node Device-to-Virtual Chassis Copper-Based Control Plane Port Assignments . . . . . . . . 242

Chapter 14 Cabling a Fiber-Based Control Plane for the QFX3000-G QFabric System . . . . . . . . 245

Table 70: Virtual Chassis-to-Virtual Chassis Fiber-Based Control Plane Port Assignments . . . . . . . . 247

Table 71: QFX3100 Director Device-to-Virtual Chassis Control Plane Port Assignments for DG0 . . . . . . . . 254

Table 72: Second QFX3100 Director Device-to-Virtual Chassis Control Plane Port Assignments for DG1 . . . . . . . . 254

Table 73: Interconnect Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

Table 74: QFX3600 Node Device-to-Virtual Chassis Fiber-Based Control Plane Port Assignments . . . . . . . . 263

Table 75: QFX3500 Node Device-to-Virtual Chassis Fiber-Based Control Plane Port Assignments . . . . . . . . 266

Part 3 Configuration

Chapter 16 Initial Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 275


Table 76: Support for device mode options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

Table 77: QFX3000-G QFabric System Virtual Chassis Control Plane Port Assignments . . . . . . . . 285

Table 78: Director Group Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

Table 79: Hardware to Software Port Mappings for Director Device Network Modules . . . . . . . . 291

Table 80: Interconnect Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . 292

Table 81: Interconnect Device Port Mappings for Two Additional Devices . . . . . 293

Table 82: Node Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

Table 83: Virtual Chassis LAG Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

Table 84: QFX3000-G QFabric System Virtual Chassis Fiber-Based Control Plane Port Assignments . . . . . . . . 330

Table 85: Director Group Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

Table 86: Hardware to Software Port Mappings for Director Device Network Modules . . . . . . . . 334

Table 87: Interconnect Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

Table 88: Interconnect Device Port Mappings for Two Additional Devices . . . . . 336

Table 89: Node Device Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

Table 90: Virtual Chassis LAG Port Mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

Part 4 Administration

Chapter 21 Operational Mode Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 477

Table 91: QFabric System Operational Mode Commands . . . . . . . . . . . . . . . . . . 478

Table 92: show chassis device-mode Output Fields . . . . . . . . . . . . . . . . . . . . . . . 516

Table 93: show chassis ethernet-switch interconnect-device fpc Output Fields . . . . . . . . 518

Table 94: show chassis ethernet-switch interconnect-device fpc Output Fields . . . . . . . . 535

Table 95: show chassis fabric connectivity Output Fields . . . . . . . . . . . . . . . . . . 560

Table 96: show chassis fabric device Output Fields . . . . . . . . . . . . . . . . . . . . . . . 567

Table 97: show chassis lcd Output Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

Table 98: show chassis nonstop-upgrade node-group Output Fields . . . . . . . . 583

Table 99: show fabric administration inventory Output Fields . . . . . . . . . . . . . . 585

Table 100: show fabric administration inventory director-group status Output Fields . . . . . . . . 589

Table 101: show fabric administration inventory infrastructure Output Fields . . 594

Table 102: show fabric administration inventory interconnect-devices Output Fields . . . . . . . . 597

Table 103: show fabric administration inventory node-devices Output Fields . . 599

Table 104: show fabric administration inventory node-groups Output Fields . . . 601

Table 105: show fabric administration system mac-pool Output Fields . . . . . . . 603

Table 106: show fabric inventory Output Fields . . . . . . . . . . . . . . . . . . . . . . . . . . 605

Table 107: show fabric session-host Output Fields . . . . . . . . . . . . . . . . . . . . . . . 607

Table 108: show system software upgrade status Output Fields . . . . . . . . . . . . . 611


About the Documentation

• Documentation and Release Notes on page xxi
• Supported Platforms on page xxi
• Using the Examples in This Manual on page xxi
• Documentation Conventions on page xxiii
• Documentation Feedback on page xxv
• Requesting Technical Support on page xxv

Documentation and Release Notes

To obtain the most current version of all Juniper Networks® technical documentation, see the product documentation page on the Juniper Networks website at http://www.juniper.net/techpubs/.

If the information in the latest release notes differs from the information in the documentation, follow the product Release Notes.

Juniper Networks Books publishes books by Juniper Networks engineers and subject matter experts. These books go beyond the technical documentation to explore the nuances of network architecture, deployment, and administration. The current list can be viewed at http://www.juniper.net/books.

Supported Platforms

For the features described in this document, the following platforms are supported:

• QFabric System
• QFX3000-G

Using the Examples in This Manual

If you want to use the examples in this manual, you can use the load merge or load merge relative command. These commands cause the software to merge the incoming configuration into the current candidate configuration. The example does not become active until you commit the candidate configuration.

If the example configuration contains the top level of the hierarchy (or multiple hierarchies), the example is a full example. In this case, use the load merge command.


If the example configuration does not start at the top level of the hierarchy, the example is a snippet. In this case, use the load merge relative command. These procedures are described in the following sections.

Merging a Full Example

To merge a full example, follow these steps:

1. From the HTML or PDF version of the manual, copy a configuration example into a text file, save the file with a name, and copy the file to a directory on your routing platform.

For example, copy the following configuration to a file and name the file ex-script.conf. Copy the ex-script.conf file to the /var/tmp directory on your routing platform.

    system {
        scripts {
            commit {
                file ex-script.xsl;
            }
        }
    }
    interfaces {
        fxp0 {
            disable;
            unit 0 {
                family inet {
                    address 10.0.0.1/24;
                }
            }
        }
    }

2. Merge the contents of the file into your routing platform configuration by issuing the load merge configuration mode command:

    [edit]
    user@host# load merge /var/tmp/ex-script.conf
    load complete

Merging a Snippet

To merge a snippet, follow these steps:

1. From the HTML or PDF version of the manual, copy a configuration snippet into a text file, save the file with a name, and copy the file to a directory on your routing platform.

For example, copy the following snippet to a file and name the file ex-script-snippet.conf. Copy the ex-script-snippet.conf file to the /var/tmp directory on your routing platform.

    commit {
        file ex-script-snippet.xsl;
    }

2. Move to the hierarchy level that is relevant for this snippet by issuing the following configuration mode command:


    [edit]
    user@host# edit system scripts

    [edit system scripts]

3. Merge the contents of the file into your routing platform configuration by issuing the load merge relative configuration mode command:

    [edit system scripts]
    user@host# load merge relative /var/tmp/ex-script-snippet.conf
    load complete
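In both procedures, the merged statements remain only in the candidate configuration until you commit them. As a minimal sketch, assuming you have just finished the snippet procedure at the [edit system scripts] level, you might return to the top of the hierarchy, compare the candidate configuration with the active one, validate it, and then activate it (user@host is a placeholder prompt):

    [edit system scripts]
    user@host# top

    [edit]
    user@host# show | compare

    [edit]
    user@host# commit check

    [edit]
    user@host# commit

The show | compare step lists the differences between the candidate and active configurations, and commit check validates the candidate configuration without activating it; both steps are optional before commit.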

For more information about the load command, see the CLI User Guide.
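If copying a file to the device is inconvenient, the load command can also read configuration statements directly from the terminal. The following sketch assumes you paste the same snippet at the prompt and end input by pressing Ctrl+D on a new line; as with the file-based procedure, the change is not active until you commit the candidate configuration:

    [edit system scripts]
    user@host# load merge terminal relative
    commit {
        file ex-script-snippet.xsl;
    }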

Documentation Conventions

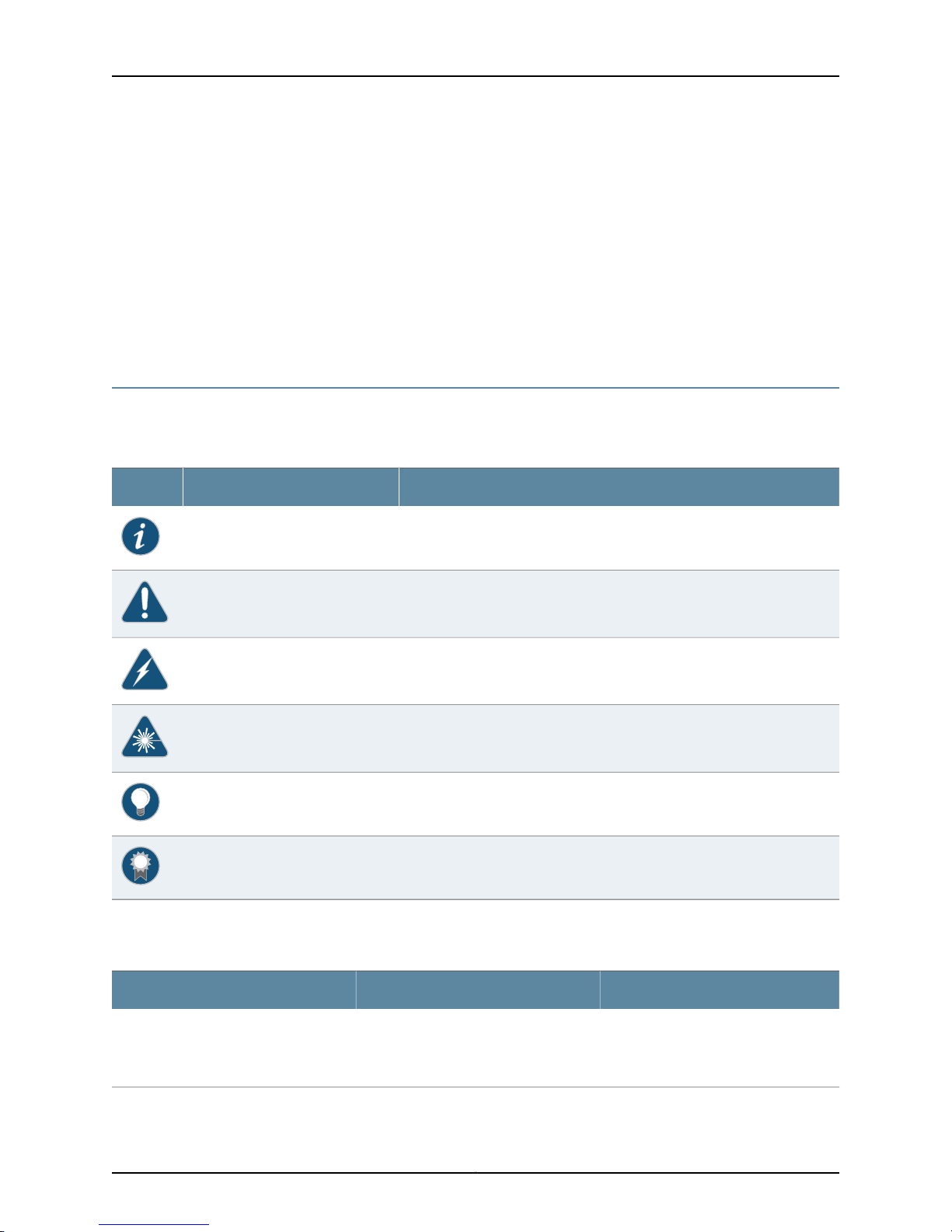

Table 1 on page xxiii defines notice icons used in this guide.

Table 1: Notice Icons


Informational note: Indicates important features or instructions.

Caution: Indicates a situation that might result in loss of data or hardware damage.

Warning: Alerts you to the risk of personal injury or death.

Laser warning: Alerts you to the risk of personal injury from a laser.

Tip: Indicates helpful information.

Best practice: Alerts you to a recommended use or implementation.

Table 2 on page xxiii defines the text and syntax conventions used in this guide.

Table 2: Text and Syntax Conventions

Bold text like this: Represents text that you type.

Example: To enter configuration mode, type the configure command:

    user@host> configure


Table 2: Text and Syntax Conventions (continued)

ExamplesDescriptionConvention

Fixed-width text like this

Italic text like this

Italic text like this

Text like this

| (pipe symbol)

Represents output that appears on the

terminal screen.

•

Introduces or emphasizes important