Intel® Xeon® Processor 7500 Series

Uncore Programming Guide

Reference Number: 323535-001

March 2010

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. INTEL PRODUCTS ARE NOT INTENDED FOR USE IN MEDICAL, LIFE SAVING, OR LIFE SUSTAINING APPLICATIONS.

Intel may make changes to specifications and product descriptions at any time, without notice.

Developers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Improper use of reserved or undefined features or instructions may cause unpredictable behavior or failure in developer's software code when running on an Intel processor. Intel reserves these features or instructions for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from their unauthorized use.

The Intel® 64 architecture processors may contain design defects or errors known as errata. Current characterized errata are available on request.

Hyper-Threading Technology requires a computer system with an Intel® processor supporting Hyper-Threading Technology and an HT Technology enabled chipset, BIOS and operating system. Performance will vary depending on the specific hardware and software you use. For more information, see http://www.intel.com/technology/hyperthread/index.htm; including details on which processors support HT Technology.

Intel® Virtualization Technology requires a computer system with an enabled Intel® processor, BIOS, virtual machine monitor (VMM) and for some uses, certain platform software enabled for it. Functionality, performance or other benefits will vary depending on hardware and software configurations. Intel® Virtualization Technology-enabled BIOS and VMM applications are currently in development.

64-bit computing on Intel architecture requires a computer system with a processor, chipset, BIOS, operating system, device drivers and applications enabled for Intel® 64 architecture. Processors will not operate (including 32-bit operation) without an Intel® 64 architecture-enabled BIOS. Performance will vary depending on your hardware and software configurations. Consult with your system vendor for more information.

Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature, may be obtained from:

Intel Corporation

P.O. Box 5937

Denver, CO 80217-9808

or call 1-800-548-4725

or visit Intel’s website at http://www.intel.com

Copyright © 2010 Intel Corporation

INTEL® XEON® PROCESSOR 7500 SERIES UNCORE PROGRAMMING GUIDE CONTENTS

CONTENTS

CHAPTER 1

INTRODUCTION

1.1 INTRODUCTION . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

1.2 UNCORE PMU OVERVIEW . . . . . . . . . . . . . . . . . . . . 1-1

1.3 UNCORE PMU SUMMARY TABLES . . . . . . . . . . . . . . . . . . . . 1-2

1.4 REFERENCES . . . . . . . . . . . . . . . . . . . . 1-3

CHAPTER 2

UNCORE PERFORMANCE MONITORING

2.1 GLOBAL PERFORMANCE MONITORING CONTROL . . . . . . . . . . . . . . . . . . . . 2-1

2.1.1 Counter Overflow . . . . . . . . . . . . . . . . . . . . 2-1

2.1.1.1 Freezing on Counter Overflow . . . . . . . . . . . . . . . . . . . . 2-1

2.1.1.2 PMI on Counter Overflow . . . . . . . . . . . . . . . . . . . . 2-1

2.1.2 Setting up a Monitoring Session . . . . . . . . . . . . . . . . . . . . 2-1

2.1.3 Reading the Sample Interval . . . . . . . . . . . . . . . . . . . . 2-2

2.1.4 Enabling a New Sample Interval from Frozen Counters . . . . . . . . . . . . . . . . . . . . 2-2

2.1.5 Global Performance Monitors . . . . . . . . . . . . . . . . . . . . 2-3

2.1.5.1 Global PMON Global Control/Status Registers . . . . . . . . . . . . . . . . . . . . 2-3

2.2 U-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-5

2.2.1 U-Box PMON Summary . . . . . . . . . . . . . . . . . . . . 2-5

2.2.1.1 U-Box Box Level PMON State . . . . . . . . . . . . . . . . . . . . 2-5

2.2.1.2 U-Box PMON state - Counter/Control Pairs . . . . . . . . . . . . . . . . . . . . 2-5

2.2.2 U-Box Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . 2-6

2.2.3 U-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . 2-6

2.2.4 U-Box Performance Monitor Event List . . . . . . . . . . . . . . . . . . . . 2-7

2.3 C-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-9

2.3.1 Overview of the C-Box . . . . . . . . . . . . . . . . . . . . 2-9

2.3.2 C-Box Performance Monitoring Overview . . . . . . . . . . . . . . . . . . . . 2-9

2.3.2.1 C-Box PMU - Overflow, Freeze and Unfreeze . . . . . . . . . . . . . . . . . . . . 2-9

2.3.3 C-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . 2-10

2.3.3.1 C-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . 2-13

2.3.3.2 C-Box PMON state - Counter/Control Pairs . . . . . . . . . . . . . . . . . . . . 2-14

2.3.4 C-BOX Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . 2-16

2.3.4.1 An Overview: . . . . . . . . . . . . . . . . . . . . 2-16

2.3.4.2 Acronyms frequently used in C-Box Events: . . . . . . . . . . . . . . . . . . . . 2-16

2.3.4.3 The Queues: . . . . . . . . . . . . . . . . . . . . 2-17

2.3.4.4 Detecting Performance Problems in the C-Box Pipeline: . . . . . . . . . . . . . . . . . . . . 2-17

2.3.5 C-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . 2-17

2.3.6 C-Box Performance Monitor Event List . . . . . . . . . . . . . . . . . . . . 2-18

2.4 B-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-29

2.4.1 Overview of the B-Box . . . . . . . . . . . . . . . . . . . . 2-29

2.4.2 B-Box Performance Monitoring Overview . . . . . . . . . . . . . . . . . . . . 2-29

2.4.2.1 B-Box PMU - On Overflow and the Consequences (PMI/Freeze) . . . . . . . . . . . . . . . . . . . . 2-29

2.4.3 B-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . 2-30

2.4.3.1 B-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . 2-30

2.4.3.2 B-Box PMON state - Counter/Control Pairs + Filters . . . . . . . . . . . . . . . . . . . . 2-31

2.4.4 B-Box Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . 2-33

2.4.4.1 On the ARBQ: . . . . . . . . . . . . . . . . . . . . 2-33

2.4.4.2 On the Major B-Box Structures: . . . . . . . . . . . . . . . . . . . . 2-34

2.4.4.3 On InvItoE Transactions: . . . . . . . . . . . . . . . . . . . . 2-34

2.4.5 B-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . 2-34

2.4.6 B-Box Performance Monitor Event List . . . . . . . . . . . . . . . . . . . . 2-36

2.5 S-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-45

2.5.1 Overview of the S-Box . . . . . . . . . . . . . . . . . . . . 2-45

2.5.2 S-Box Performance Monitoring Overview . . . . . . . . . . . . . . . . . . . . 2-45

2.5.2.1 S-Box PMU - Overflow, Freeze and Unfreeze . . . . . . . . . . . . . . . . . . . . 2-45

2.5.3 S-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . 2-45

2.5.3.1 S-Box PMON for Global State . . . . . . . . . . . . . . . . . . . . 2-46

2.5.3.2 S-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . 2-47

2.5.3.3 S-Box PMON state - Counter/Control Pairs + Filters . . . . . . . . . . . . . . . . . . . . 2-48

2.5.3.4 S-Box Registers for Mask/Match Facility . . . . . . . . . . . . . . . . . . . . 2-49

2.5.4 S-BOX Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . 2-51

2.5.4.1 An Overview . . . . . . . . . . . . . . . . . . . . 2-51

2.5.4.2 On Queue Occupancy Usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-51

2.5.4.3 On Packet Transmission Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-52

2.5.5 S-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-53

2.5.6 S-Box Performance Monitor Event List. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-55

2.6 R-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-72

2.6.1 Overview of the R-Box . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-72

2.6.1.1 R-Box Input Port. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-72

2.6.1.2 R-Box Arbitration Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-72

2.6.1.3 R-Box Output Port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-73

2.6.1.4 R-Box Link Layer Resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-73

2.6.2 R-Box Performance Monitoring Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-73

2.6.2.1 Choosing An Event To Monitor - Example. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-73

2.6.2.2 R-Box PMU - Overflow, Freeze and Unfreeze . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-74

2.6.3 R-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-74

2.6.3.1 R-Box Performance Monitors To Port Mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-77

2.6.3.2 R-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-78

2.6.3.3 R-Box PMON state - Counter/Control Pairs + Filters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-78

2.6.3.4 R-Box IPERF Performance Monitoring Control Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-82

2.6.3.5 R-Box QLX Performance Monitoring Control Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-83

2.6.3.6 R-Box Registers for Mask/Match Facility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-84

2.6.4 R-BOX Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-87

2.6.4.1 An Overview: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-87

2.6.5 R-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-87

2.6.6 R-Box Performance Monitor Event List. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-88

2.7 M-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-95

2.7.1 Overview of the M-Box . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-95

2.7.2 Functional Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-95

2.7.2.1 Intel® 7500 Scalable Memory Buffer . . . . . . . . . . . . . . . . . . . . 2-96

2.7.3 M-Box Performance Monitoring Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-96

2.7.3.1 Choosing An Event To Monitor - Example using subcontrol registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-96

2.7.3.2 M-Box PMU - Overflow, Freeze and Unfreeze . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-97

2.7.4 M-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-98

2.7.4.1 M-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-99

2.7.4.2 M-Box PMON state - Counter/Control Pairs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-100

2.7.4.3 M-Box PMU Filter Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

2.7.4.4 M-Box PMU Subcontrol Registers - Subunit descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

2.7.5 M-Box Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-109

2.7.5.1 An Overview: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-109

2.7.6 M-Box Events Ordered By Code. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-110

2.7.7 M-Box Performance Monitor Event List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-111

2.8 W-BOX PERFORMANCE MONITORING . . . . . . . . . . . . . . . . . . . . 2-124

2.8.1 Overview of the W-Box . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-124

2.8.2 W-Box Performance Monitoring Overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-124

2.8.2.1 W-Box PMU - Overflow, Freeze and Unfreeze . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-124

2.8.3 W-BOX Performance Monitors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-125

2.8.3.1 W-Box Box Level PMON state . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-125

2.8.3.2 W-Box PMON state - Counter/Control Pairs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-126

2.8.4 W-BOX Performance Monitoring Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

2.8.4.1 An Overview: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

2.8.5 W-Box Events Ordered By Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

2.8.6 W-Box Performance Monitor Event List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

2.9 PACKET MATCHING REFERENCE . . . . . . . . . . . . . . . . . . . . 2-130

FIGURES

Figure 1-1. Intel Xeon Processor 7500 Series Block Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .1-1

Figure 2-1. R-Box Block Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-72

Figure 2-2. Memory Controller Block Diagram. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-95

TABLES

Table 1-1. Per-Box Performance Monitoring Capabilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-2

Table 1-2. Uncore Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-2

Table 2-1. Global Performance Monitoring Control MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-3

Table 2-2. U_MSR_PMON_GLOBAL_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-4

Table 2-3. U_MSR_PMON_GLOBAL_STATUS Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-4

Table 2-4. U_MSR_PMON_GLOBAL_OVF_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-4

Table 2-5. U-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-5

Table 2-6. U_MSR_PMON_EVT_SEL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-5

Table 2-7. U_MSR_PMON_CTR Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-6

Table 2-8. Performance Monitor Events for U-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-6

Table 2-9. C-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-10

Table 2-10. C_MSR_PMON_GLOBAL_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-14

Table 2-11. C_MSR_PMON_GLOBAL_STATUS Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-14

Table 2-12. C_MSR_PMON_GLOBAL_OVF_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-14

Table 2-13. C_MSR_PMON_EVT_SEL{5-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-15

Table 2-14. C_MSR_PMON_CTR{5-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-15

Table 2-15. Performance Monitor Events for C-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-17

Table 2-16. B-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-30

Table 2-17. B_MSR_PMON_GLOBAL_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-31

Table 2-18. B_MSR_PMON_GLOBAL_STATUS Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-31

Table 2-19. B_MSR_PMON_GLOBAL_OVF_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-31

Table 2-20. B_MSR_PMON_EVT_SEL{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-32

Table 2-21. B_MSR_PMON_CNT{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-32

Table 2-22. B_MSR_MATCH_REG Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-33

Table 2-23. B_MSR_MASK_REG Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-33

Table 2-24. Performance Monitor Events for B-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-35

Table 2-25. S-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-45

Table 2-26. S_MSR_PMON_SUMMARY Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-47

Table 2-27. S_CSR_PMON_GLOBAL_CTL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-47

Table 2-28. S_MSR_PMON_GLOBAL_STATUS Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-47

Table 2-29. S_MSR_PMON_OVF_CTRL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-48

Table 2-30. S_CSR_PMON_CTL{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-48

Table 2-31. S_CSR_PMON_CTR{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-49

Table 2-32. S_MSR_MM_CFG Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-49

Table 2-33. S_MSR_MATCH Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-50

Table 2-34. S_MSR_MATCH.opc - Opcode Match by Message Class . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-50

Table 2-35. S_MSR_MASK Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-51

Table 2-36. S-Box Data Structure Occupancy Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-52

Table 2-37. Performance Monitor Events for S-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-53

Table 2-38. Input Buffering Per Port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-73

Table 2-39. R-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-74

Table 2-40. R-Box Port Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-77

Table 2-41. R_MSR_PMON_GLOBAL_CTL_{15_8, 7_0} Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-78

Table 2-42. R_MSR_PMON_GLOBAL_STATUS_{15_8, 7_0} Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-78

Table 2-43. R_MSR_PMON_OVF_CTL_{15_8, 7_0} Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-78

Table 2-44. R_MSR_PMON_CTL{15-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-79

Table 2-45. R_MSR_PMON_CTL{15-8} Event Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-80

Table 2-46. R_MSR_PMON_CTL{7-0} Event Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-81

Table 2-47. R_MSR_PMON_CTR{15-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-81

Table 2-48. R_MSR_PORT{7-0}_IPERF_CFG{1-0} Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Table 2-49. R_MSR_PORT{7-0}_QLX_CFG Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-83

Table 2-50. R_MSR_PORT{7-0}_XBR_SET{2-1}_MM_CFG Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-85

Table 2-51. R_MSR_PORT{7-0}_XBR_SET{2-1}_MATCH Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-85

Table 2-52. R_MSR_PORT{7-0}_XBR_SET{2-1}_MASK Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-86

Table 2-53. Message Events Derived from the Match/Mask filters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-87

Table 2-54. Performance Monitor Events for R-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-88

Table 2-55. Unit Masks for ALLOC_TO_ARB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-89

Table 2-56. Unit Masks for EOT_DEPTH_ACC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-89

Table 2-57. Unit Masks for EOT_ROLL_DEPTH_ACC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-90

Table 2-58. Unit Masks for GLOBAL_ARB_BID_FAIL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-91

Table 2-59. Unit Masks for NEW_PACKETS_RECV . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-92

Table 2-60. Unit Masks for QUE_ARB_BID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-93


Table 2-61. Unit Masks for QUE_ARB_BID_FAIL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-93

Table 2-62. Unit Masks for TARGET_AVAILABLE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-94

Table 2-63. M-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-98

Table 2-64. M_MSR_PERF_GLOBAL_CTL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-100

Table 2-65. M_MSR_PERF_GLOBAL_STATUS Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-100

Table 2-66. M_MSR_PERF_GLOBAL_OVF_CTL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-100

Table 2-67. M_MSR_PMU_CNT_CTL{5-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-101

Table 2-68. M_MSR_PMU_CNT_{5-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-101

Table 2-69. M_MSR_PMU_TIMESTAMP_UNIT Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

Table 2-70. M_MSR_PMU_MM_CFG Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

Table 2-71. M_MSR_PMU_ADDR_MATCH Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

Table 2-72. M_MSR_PMU_ADDR_MASK Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-102

Table 2-73. M_MSR_PMU_DSP Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-104

Table 2-74. M_CSR_ISS_PMU Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-104

Table 2-75. M_MSR_PMU_MAP Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-105

Table 2-76. M_CSR_PMU_MA_MSC_THR Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-105

Table 2-77. TRP_PT_{DN,UP}_CND Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-106

Table 2-78. M_MSR_PMU_PGT Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-106

Table 2-79. M_MSR_PMU_PLD Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-107

Table 2-80. M_MSR_PMU_ZDP_CTL_FVC Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-108

Table 2-81. M_MSR_PMU_ZDP_CTL_FVC.evnt{4-1} Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-108

Table 2-82. M_MSR_PMU_ZDP_CTL_FVC.RESP Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-109

Table 2-83. M_MSR_PMU_ZDP_CTL_FVC.BCMD Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-109

Table 2-84. Performance Monitor Events for M-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-110

Table 2-85. Unit Masks for CYCLES_DSP_FILL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-111

Table 2-86. Unit Masks for CYCLES_PGT_STATE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-112

Table 2-87. Unit Masks for CYCLES_SCHED_MODE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-112

Table 2-88. Unit Masks for DSP_FILL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-114

Table 2-89. Unit Masks for FVC_EV0 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-115

Table 2-90. Unit Masks for FVC_EV1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-115

Table 2-91. Unit Masks for FVC_EV2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-116

Table 2-92. Unit Masks for FVC_EV3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-117

Table 2-93. Unit Masks for BCMD_SCHEDQ_OCCUPANCY . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-119

Table 2-94. W-Box Performance Monitoring MSRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-125

Table 2-95. W_MSR_PMON_GLOBAL_CTL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-125

Table 2-96. W_MSR_PMON_GLOBAL_STATUS Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-126

Table 2-97. W_MSR_PMON_GLOBAL_OVF_CTRL Register Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-126

Table 2-98. W_MSR_PMON_EVT_SEL_{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-127

Table 2-99. W_MSR_PMON_FIXED_CTR_CTL Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-127

Table 2-100. W_MSR_PMON_CTR_{3-0} Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

Table 2-101. W_MSR_PMON_FIXED_CTR Register – Field Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

Table 2-102. Performance Monitor Events for W-Box Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-128

Table 2-103. Intel® QuickPath Interconnect Packet Message Classes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-130

Table 2-104. Opcode Match by Message Class . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-131

Table 2-105. Opcodes (Alphabetical Listing) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-132


CHAPTER 1

INTRODUCTION

1.1 INTRODUCTION

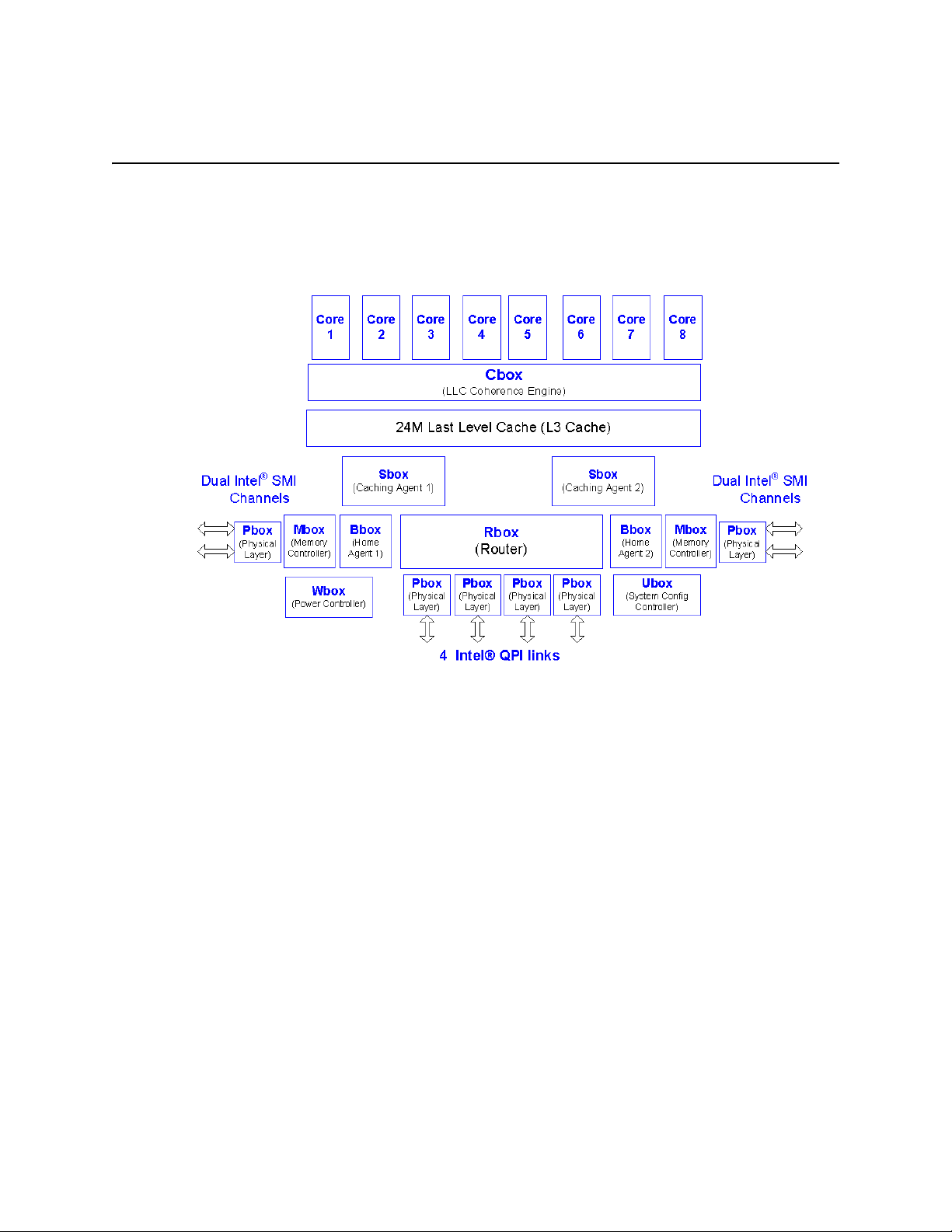

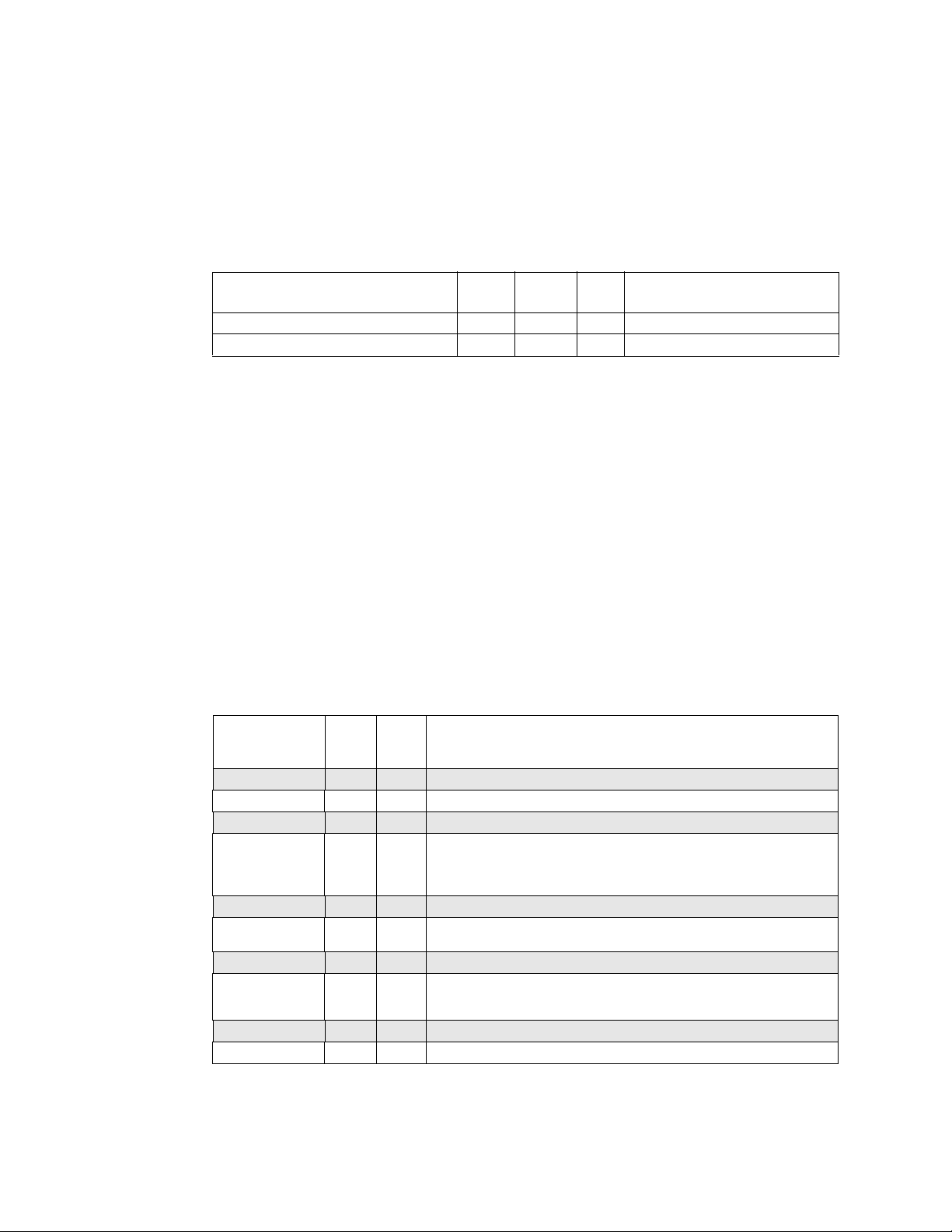

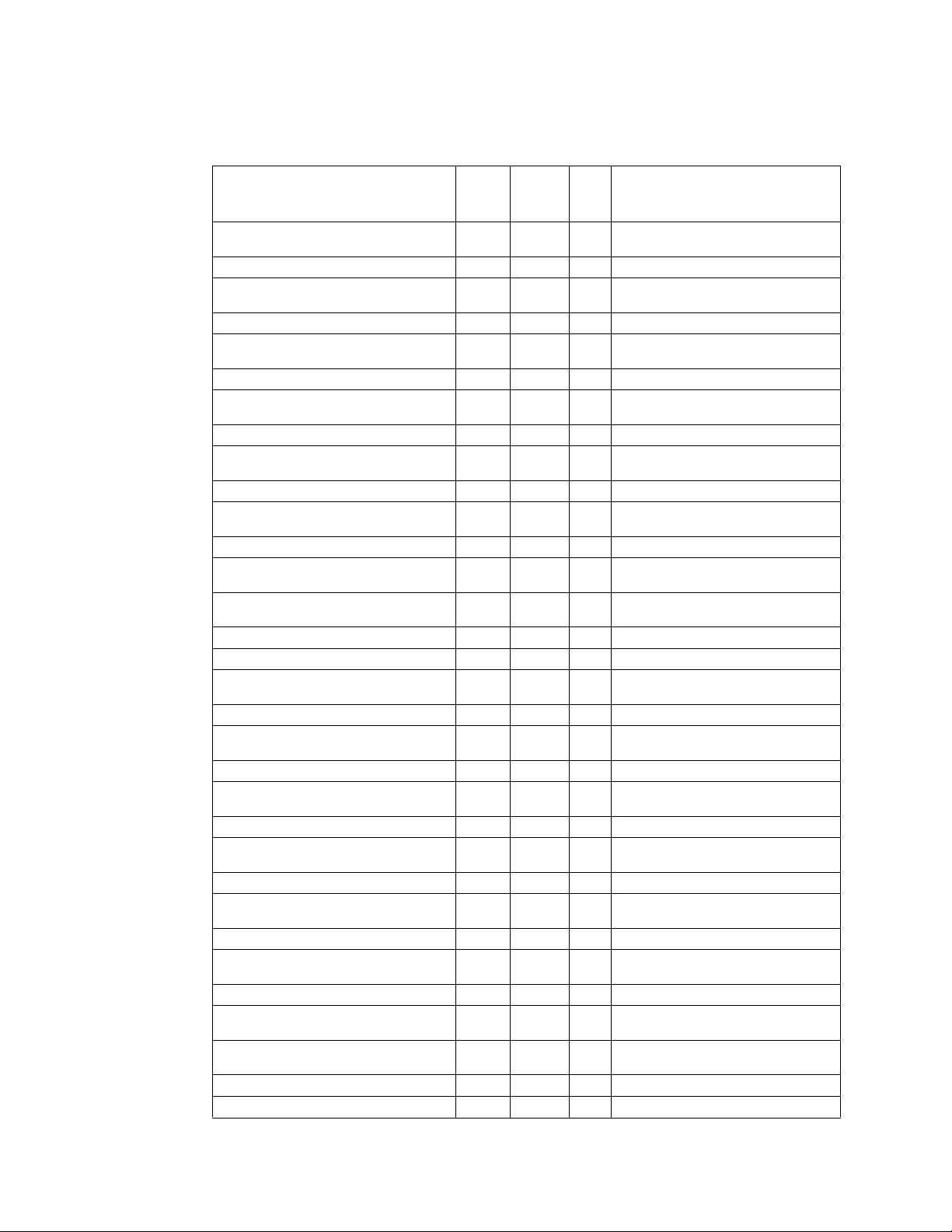

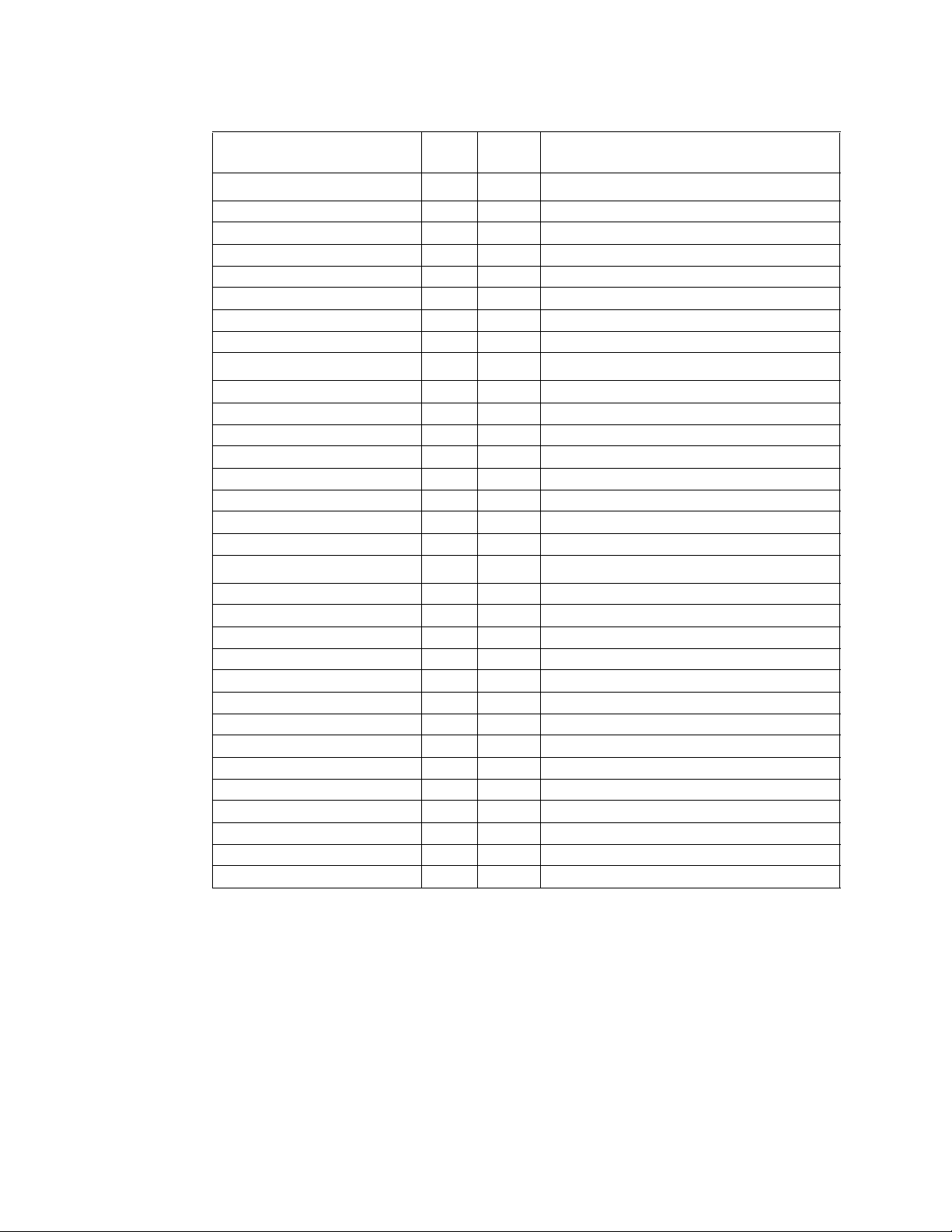

Figure 1-1 provides an Intel® Xeon® Processor 7500 Series block diagram.

Figure 1-1. Intel Xeon Processor 7500 Series Block Diagram

1.2 Uncore PMU Overview

The processor uncore performance monitoring is supported by PMUs local to each of the C, S, B, M, R, U, and W-Boxes. Each of these boxes communicates with the U-Box, which contains registers to control all uncore PMU activity (as outlined in Section 2.1, “Global Performance Monitoring Control”).

All processor uncore performance monitoring features can be accessed through RDMSR/WRMSR instructions executed at ring 0.

Since the uncore performance monitors represent socket-wide resources that are not context switched by the OS, it is highly recommended that only one piece of software (per socket) attempt to program and extract information from the monitors. To keep things simple, it is also recommended that the monitoring software communicate with the OS such that it can be executed on coreId = 0, threadId = 0. Although recommended, this step is not necessary. Software may be notified of an overflowing uncore counter on any core.


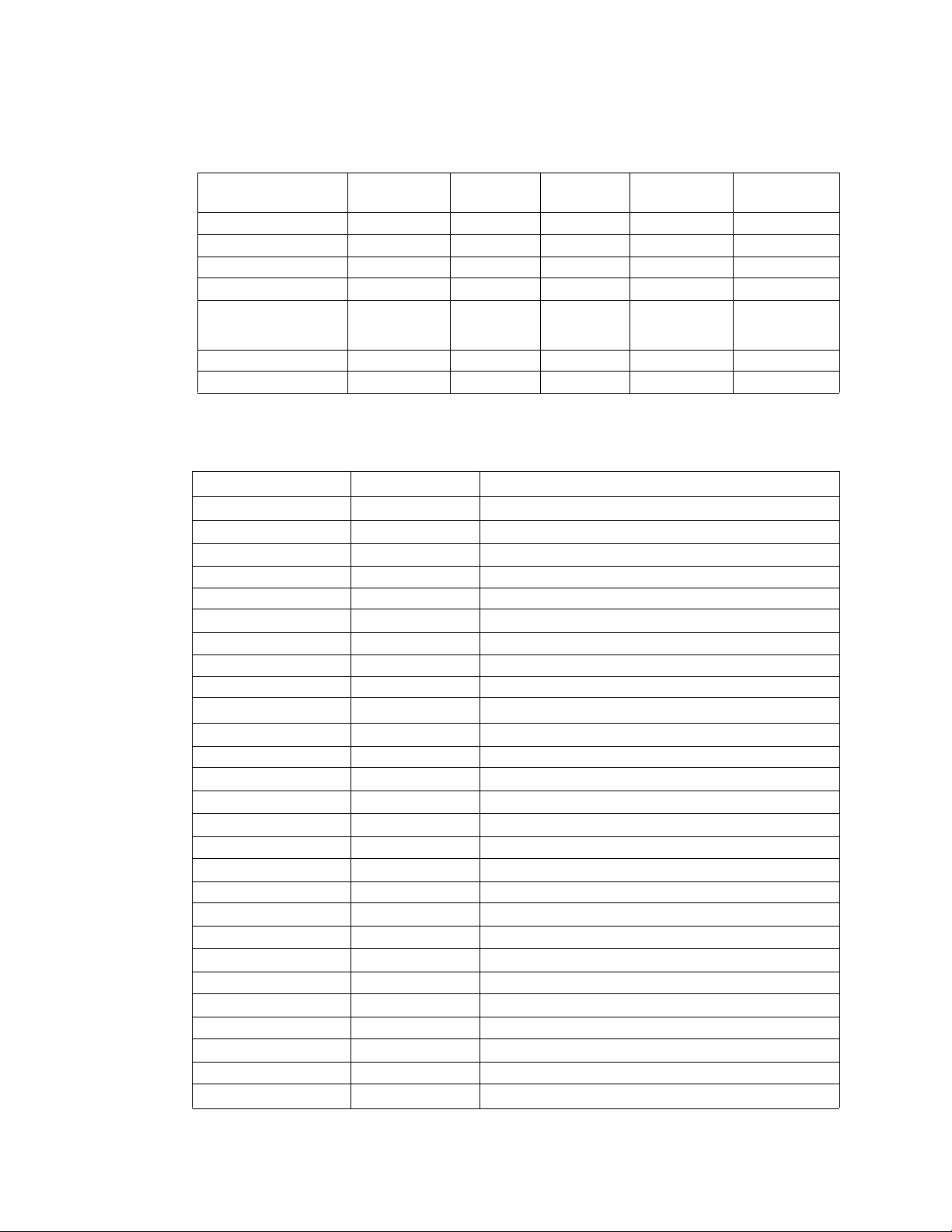

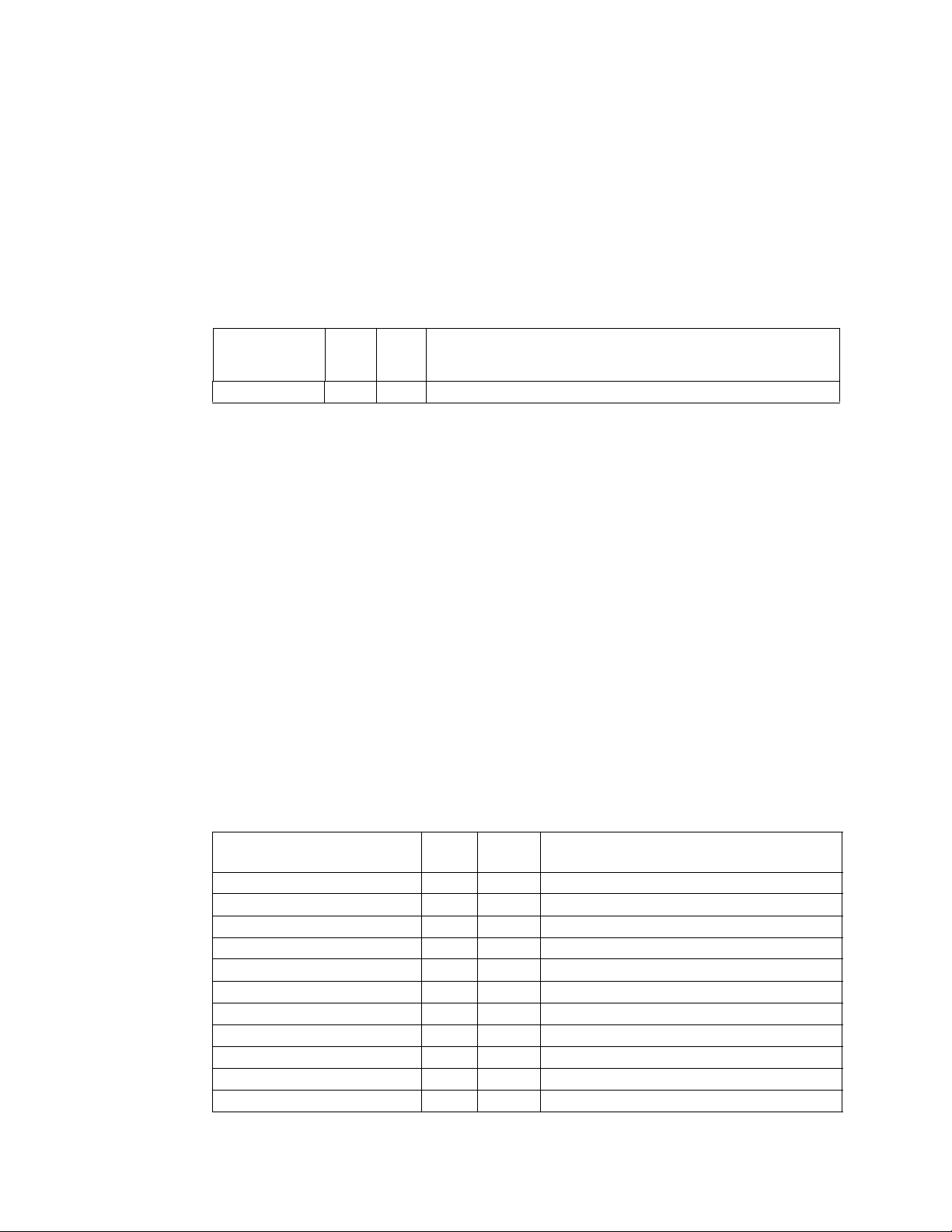

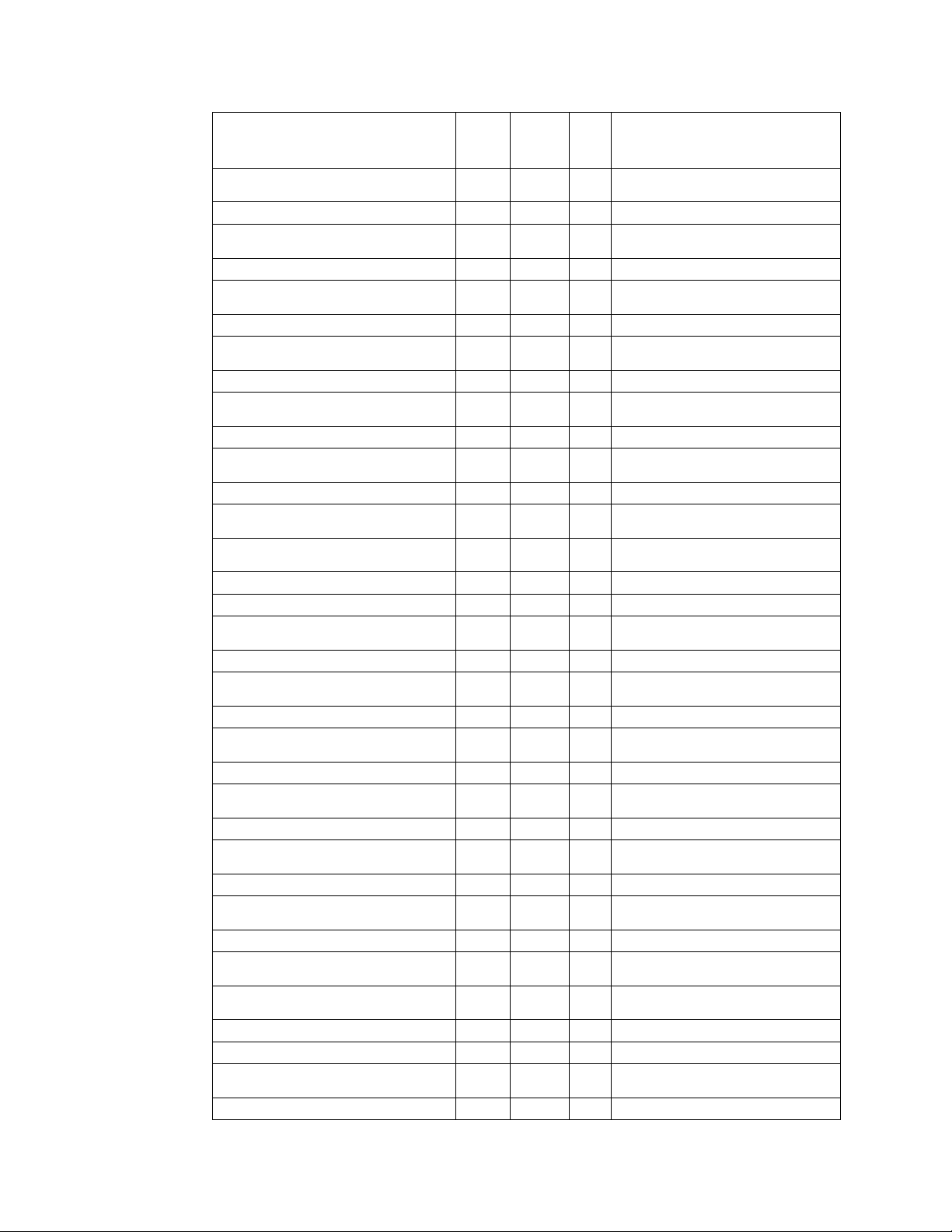

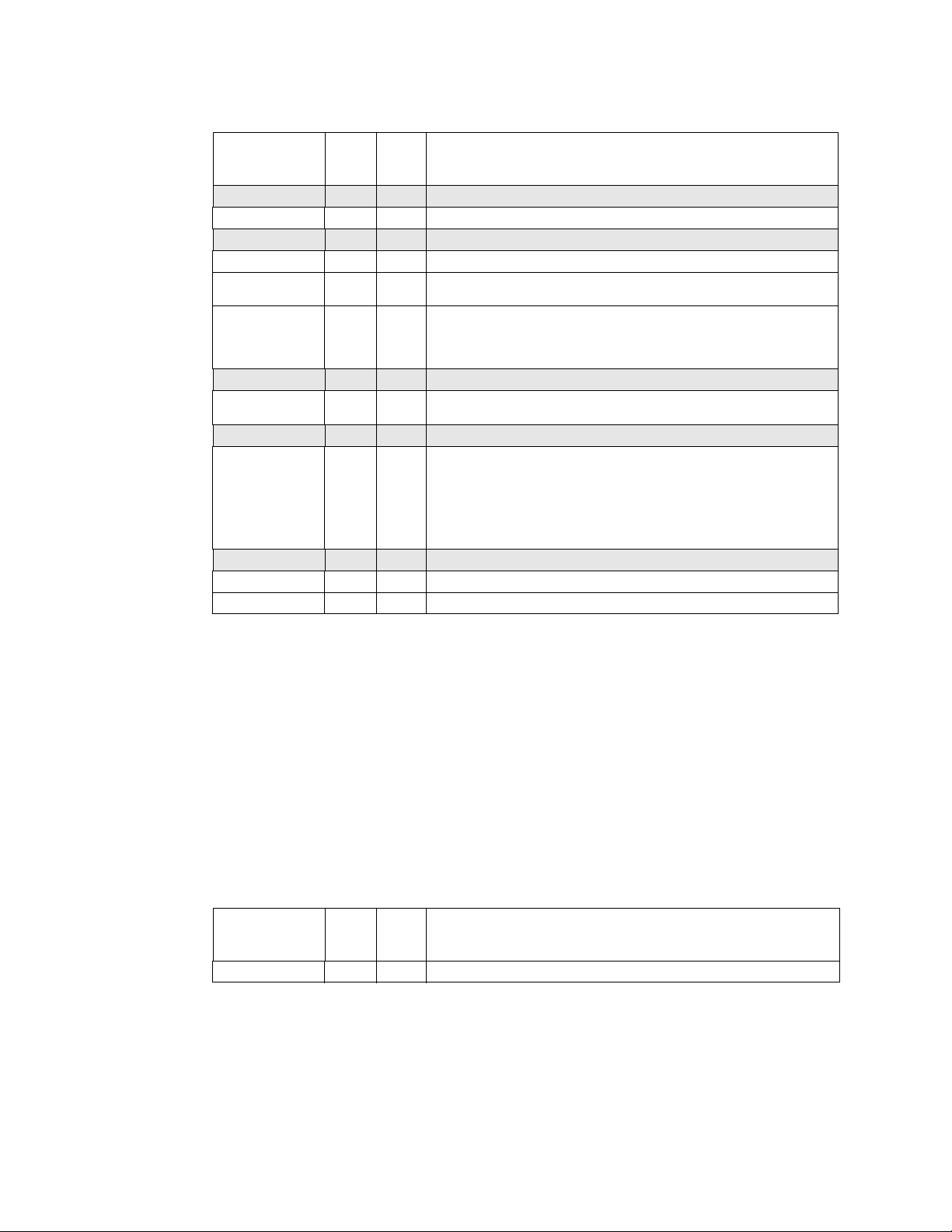

The general performance monitoring capabilities in each box are outlined in the following table.

Table 1-1. Per-Box Performance Monitoring Capabilities

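| Box | # Boxes | # Counters/Box | Generic Counters? | Packet Match/Mask Filters? | Bit Width |
|---|---|---|---|---|---|
| C-Box | 8 | 6 | Y | N | 48 |
| S-Box | 2 | 4 | Y | Y | 48 |
| B-Box | 2 | 4 | N | Y | 48 |
| M-Box | 2 | 6 | N | N | 48 |
| R-Box | 1 (L/R sides) | 16 (2 per port, 8 per side) | N | Y | 48 |
| U-Box | 1 | 1 | Y | N | 48 |
| W-Box | 1 | 4 | Y | N | 48 |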

1.3 Uncore PMU Summary Tables

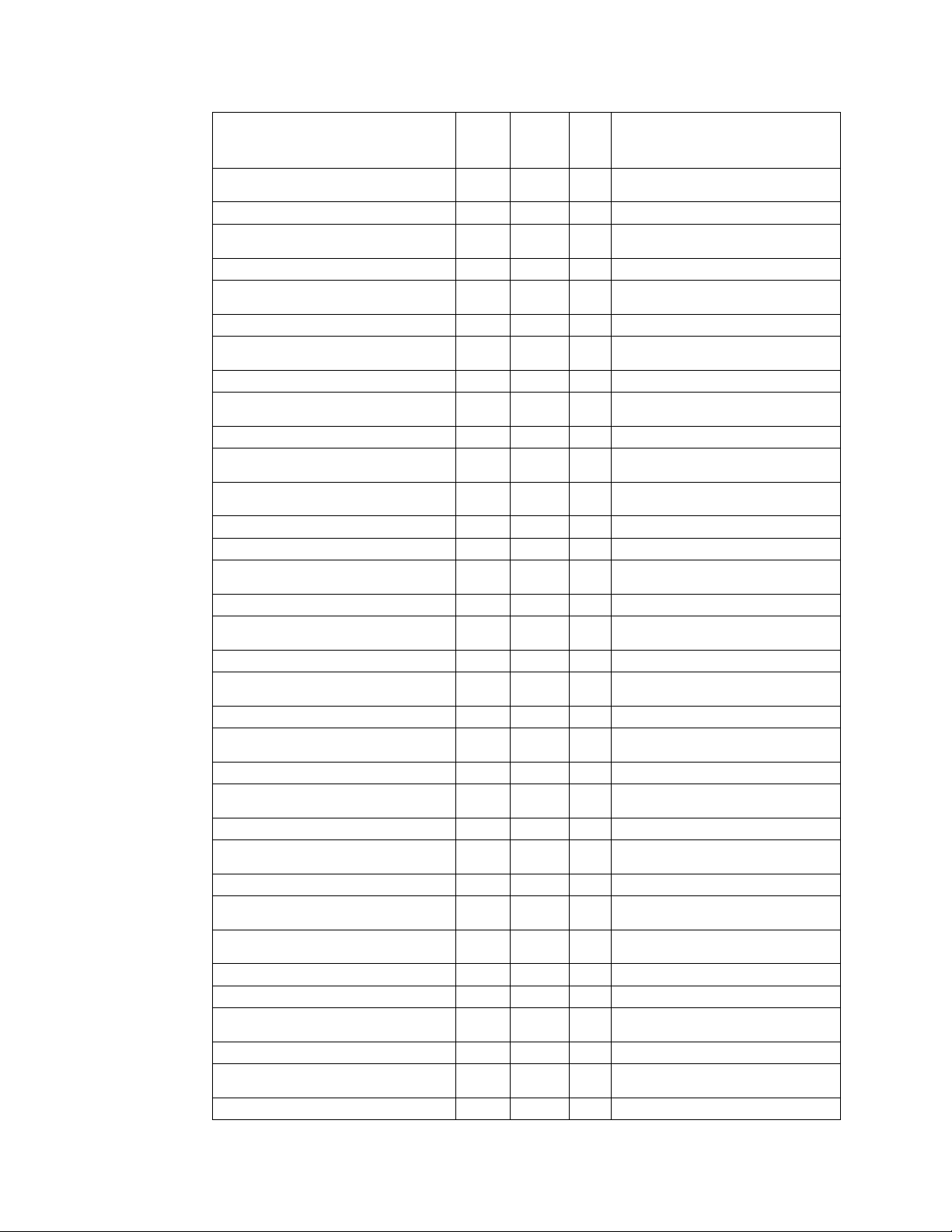

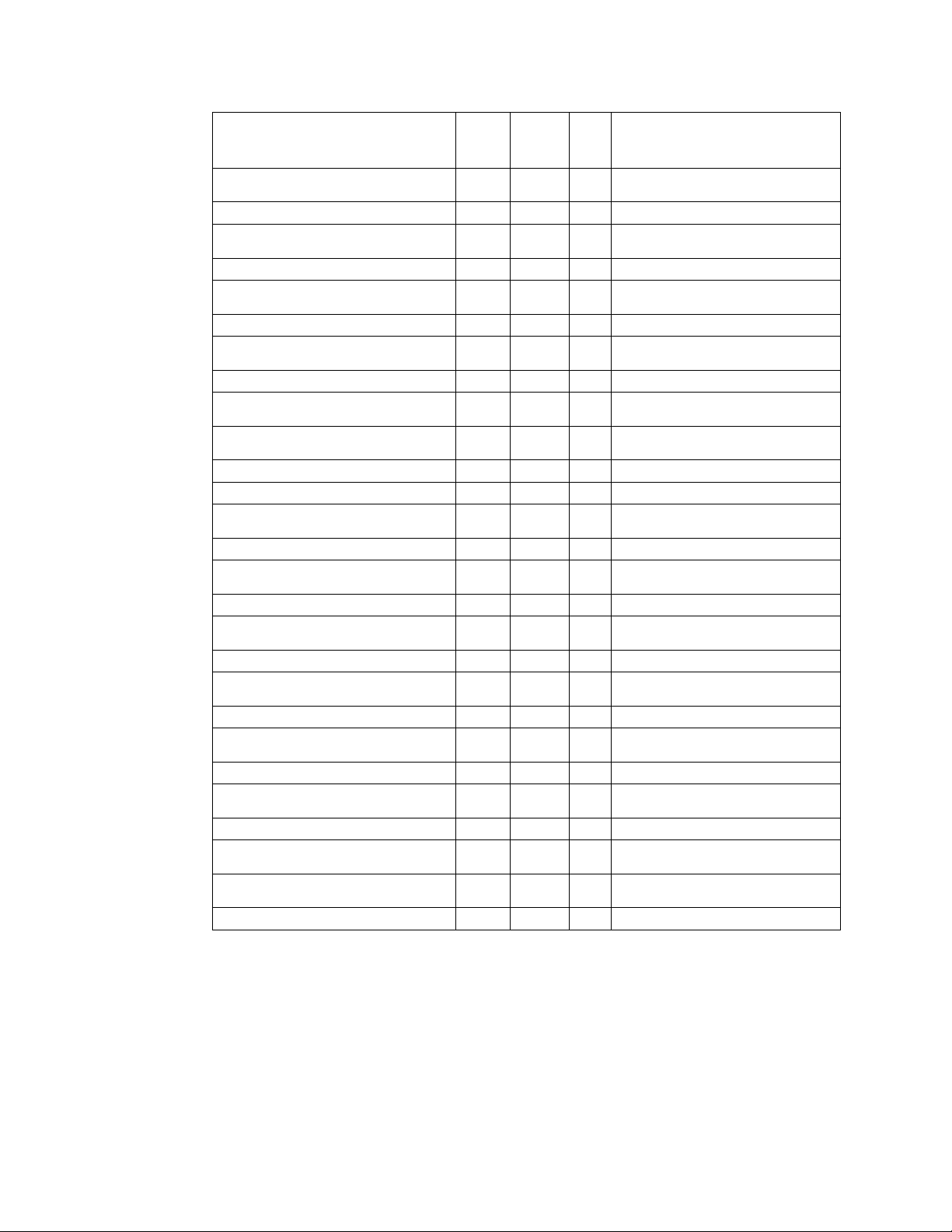

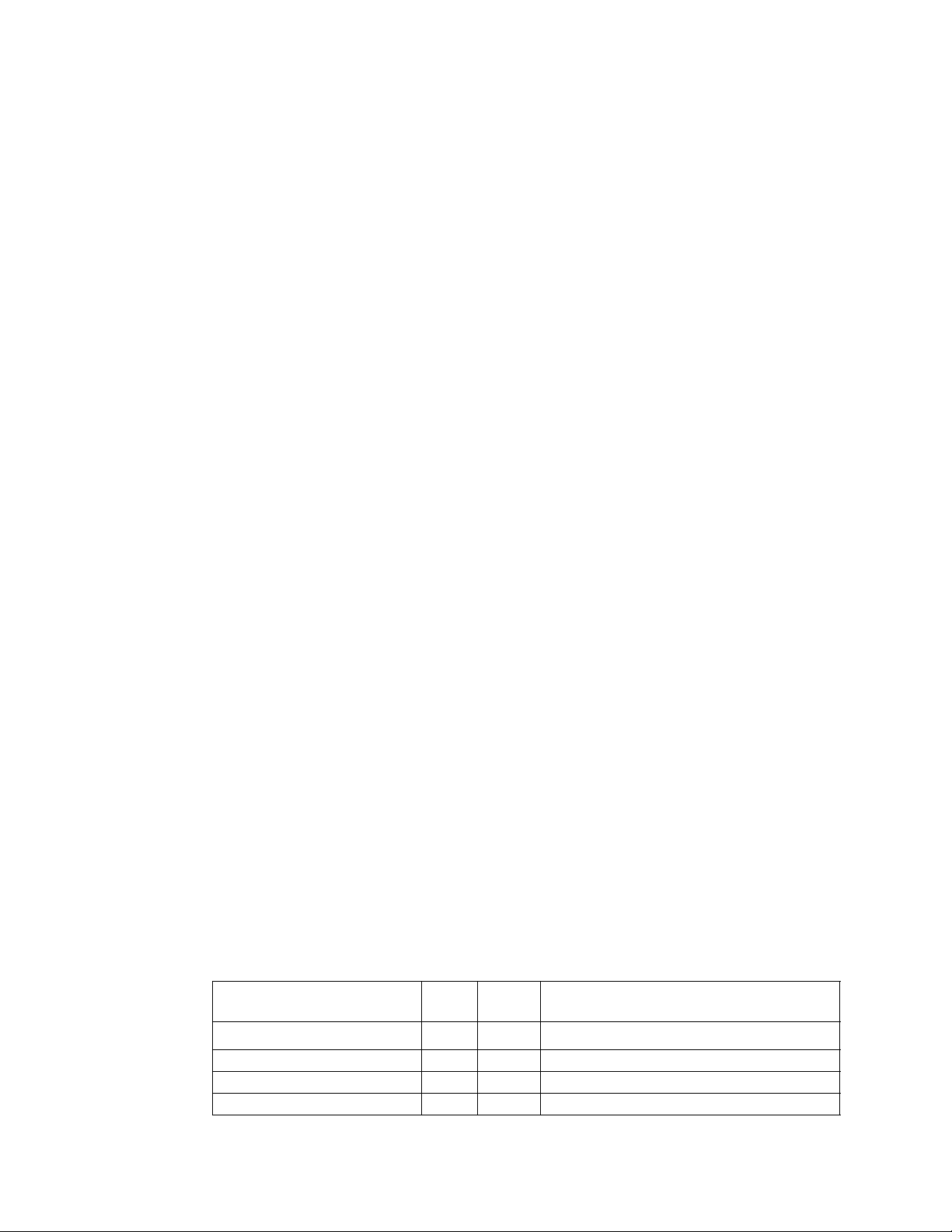

Table 1-2. Uncore Performance Monitoring MSRs

Box MSR Addresses Description

R-Box Counters

R-Box R

R-Box L

C-Box Counters

C-Box 7

C-Box 3

C-Box 5

C-Box 1

C-Box 6

C-Box 2

C-Box 4

C-Box 0

M-Box Counters

0xE3F-0xE30 Counter/Config Registers(15-8)

0xE2F-0xE2C QLX SubConfig Registers for Ports 7-4

0xE2B-0xE24 IPERF 1 SubConfig Registers

0xE22-0xE20 Global (Control/Status/Ovf Control)

0xE1F-0xE10 Counter/Config Registers(7-0)

0xE0F-0xE0C QLX SubConfig Registers for Ports 3-0

0xE0B-0xE04 IPERF 0 SubConfig Registers

0xE02-0xE00 Global (Control/Status/Ovf Control)

0xDFB-0xDF0 Counter/Config Registers

0xDE2-0xDE0 Global (Control/Status/Ovf Control)

0xDDB-0xDD0 Counter/Config Registers

0xDC2-0xDC0 Global (Control/Status/Ovf Control)

0xDBB-0xDB0 Counter/Config Registers

0xDA2-0xDA0 Global (Control/Status/Ovf Control)

0xD9B-0xD90 Counter/Config Registers

0xD82-0xDE0 Global (Control/Status/Ovf Control)

0xD7B-0xD70 Counter/Config Registers

0xD62-0xD60 Global (Control/Status/Ovf Control)

0xD5B-0xD50 Counter/Config Registers

0xD42-0xD40 Global (Control/Status/Ovf Control)

0xD3B-0xD30 Counter/Config Registers

0xD22-0xD20 Global (Control/Status/Ovf Control)

0xD1B-0xD10 Counter/Config Registers

0xD02-0xD00 Global (Control/Status/Ovf Control)

1-2

Page 11

INTEL® XEON® PROCESSOR 7500 SERIES UNCORE PROGRAMMING GUIDE INTRODUCTION

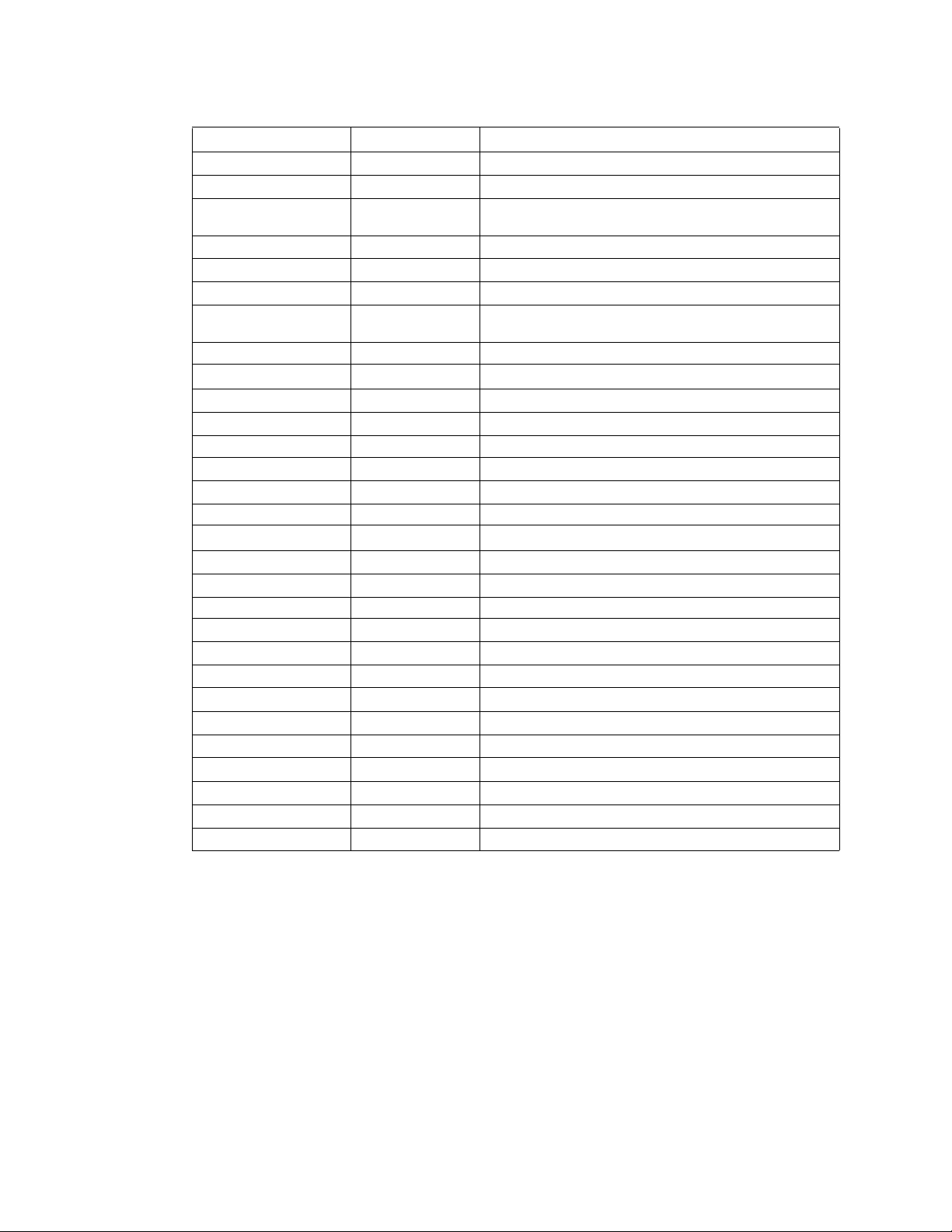

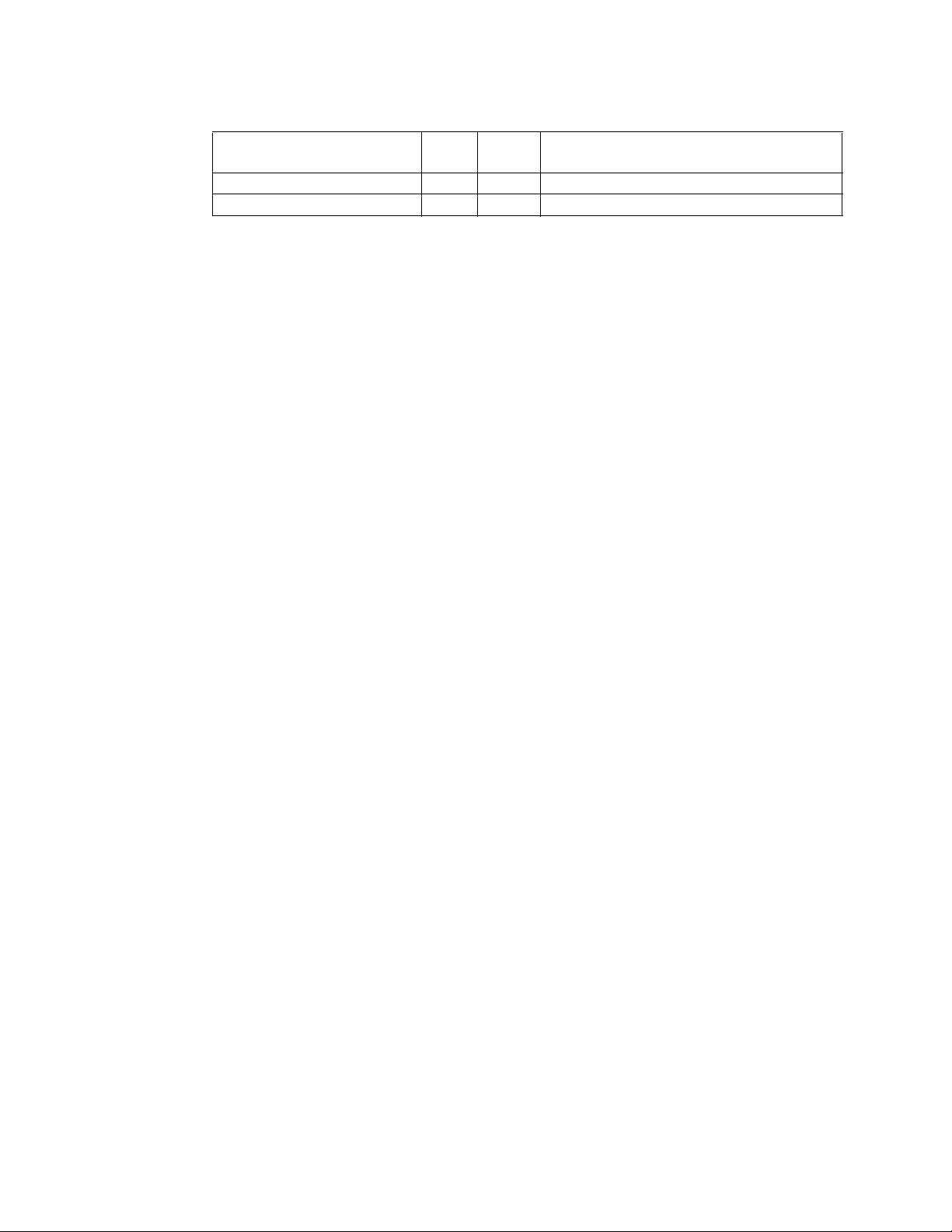

Table 1-2. Uncore Performance Monitoring MSRs

Box MSR Addresses Description

M-Box 1

M-Box 0

S-Box Counters

S-Box 1

S-Box 0

B-Box Counters

B-Box 1

B-Box 0

U-Box Counters

U-Box

W-Box Counters

W-Box

0xE5E-0xE5C Match/Mask Registers

0xCFB-0xCF0 Counter/Config Registers

0xCEB-0xCE4 Subconfig Registers (FVC,PLD,PGT,THR,MAP,ISS,DSP)

+ Timestamp Register

0xCE2-0xCE0 Global (Control/Status/Ovf Control)

0xE56-0xE54 Match/Mask Registers

0xCBB-0xCB0 Counter/Config Registers

0xCAB-0xCA4 Subconfig Registers (FVC,PLD,PGT,THR,MAP,ISS,DSP)

+ Timestamp Register

0xCA2-0xCA0 Global (Control/Status/Ovf Control)

0xE5A-0xE58 Match/Mask Registers

0xCD7-0xCD0 Counter/Config Registers

0xCC3-0xCC0 Global (Control/Status/Ovf Control)

0xE4A-0xE48 Match/Mask Registers

0xC57-0xC50 Counter/Config Registers

0xC43-0xC40 Global (Control/Status/Ovf Control)

0xE4E-0xE4D Match/Mask Registers

0xC77-0xC70 Counter/Config Registers

0xC62-0xC60 Global (Control/Status/Ovf Control)

0xE46-0xE45 Match/Mask Registers

0xC37-0xC30 Counter/Config Registers

0xC22-0xC20 Global (Control/Status/Ovf Control)

0xC11-0xC10 Counter/Config Registers

0xC02-0xC00 Global (Control/Status/Ovf Control)

0x395-0x394 Fixed Counter/Config Registers

0xC97-0xC90 Counter/Config Registers

0xC82-0xC80 Global (Control/Status/Ovf Control)
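The C-Box register blocks in Table 1-2 are not laid out in index order, so a small lookup can help avoid hard-coding that order at each call site. The following is a minimal sketch only: the names `CBOX_GLOBAL_BASE` and `cbox_ranges` are hypothetical, the addresses are copied from Table 1-2, and the C-Box 1 global block is assumed to follow the same three-register pattern as the other C-Boxes (0xD80 through 0xD82).

```python
# Global-register base address for each C-Box, taken from Table 1-2.
# Assumption: C-Box 1 starts at 0xD80, matching the pattern of the other boxes.
CBOX_GLOBAL_BASE = {
    0: 0xD00, 4: 0xD20, 2: 0xD40, 6: 0xD60,
    1: 0xD80, 5: 0xDA0, 3: 0xDC0, 7: 0xDE0,
}


def cbox_ranges(box):
    """Return (global_range, counter_config_range) as inclusive (lo, hi) pairs."""
    base = CBOX_GLOBAL_BASE[box]
    return (base, base + 0x02), (base + 0x10, base + 0x1B)


for box in sorted(CBOX_GLOBAL_BASE):
    (g_lo, g_hi), (c_lo, c_hi) = cbox_ranges(box)
    print(f"C-Box {box}: global 0x{g_lo:03X}-0x{g_hi:03X}, "
          f"counter/config 0x{c_lo:03X}-0x{c_hi:03X}")
```

Keeping the table as data rather than arithmetic on the box index reflects that the index-to-address mapping is irregular.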

1.4 References

The following sections provide a breakdown of the performance monitoring capabilities of each box.

• Section 2.1, “Global Performance Monitoring Control”

• Section 2.2, “U-Box Performance Monitoring”

• Section 2.3, “C-Box Performance Monitoring”

• Section 2.4, “B-Box Performance Monitoring”

• Section 2.5, “S-Box Performance Monitoring”

• Section 2.6, “R-Box Performance Monitoring”

• Section 2.7, “M-Box Performance Monitoring”


• Section 2.8, “W-Box Performance Monitoring”

• Section 2.9, “Packet Matching Reference”


CHAPTER 2

UNCORE PERFORMANCE MONITORING

2.1 Global Performance Monitoring Control

2.1.1 Counter Overflow

If a counter overflows, it will send the overflow signal towards the U-Box. This signal will be

accumulated along the way in summary registers contained in each S-Box and a final summary register

in the U-Box.

The Intel® Xeon® Processor 7500 Series uncore performance monitors may be configured to respond to this overflow with two basic actions:

2.1.1.1 Freezing on Counter Overflow

Each uncore performance counter may be configured to, upon detection of overflow, disable (or ‘freeze’) all other counters in the uncore. To do so, the .pmi_en bit in the individual counter’s control register must be set to 1. If U_MSR_PMON_GLOBAL_CTL.frz_all is also set to 1, then once the U-Box receives the PMI from the uncore box, it will set U_MSR_PMON_GLOBAL_CTL.en_all to 0, which will disable all counting.

2.1.1.2 PMI on Counter Overflow

The uncore may also be configured to, upon detection of a performance counter overflow, send a PMI

signal to the core executing the monitoring software. To do so, the .pmi_en in the individual counter’s

control register must be set to 1 and U_MSR_PMON_GLOBAL_CTL.pmi_core_sel must be set to point to

the core the monitoring software is executing on.

Note: PMI is decoupled from freeze, so if software also wants the counters frozen, it must set

U_MSR_PMON_GLOBAL_CTL.frz_all to 1.

2.1.2 Setting up a Monitoring Session

On HW reset, all the counters should be disabled. Enabling is hierarchical, so the following steps must be taken to set up a new monitoring session:

a) Reset counters to ensure no stale values have been acquired from previous sessions:

- set U_MSR_PMON_GLOBAL_CTL.rst_all to 1.

b) Select event to monitor:

Determine what events should be captured and program the control registers to capture them (typically by programming the .ev_sel bits, although other bit fields may be involved).

e.g. Set B_MSR_PMON_EVT_SEL3.ev_sel to 0x03 to capture SNP_MERGE.

c) Enable counting locally:

e.g. Set B_MSR_PMON_EVT_SEL3.en to 1.

d) Enable counting at the box level:

Enable counters within that box via its ‘GLOBAL_CTL’ register.

e.g. Set B_MSR_PMON_GLOBAL_CTL[3] to 1.

e) Select how to gather data. If polling, skip to step f). If sampling:

To set up a sample interval, software can pre-program the data register with a value of [2^48 - sample interval length]. Doing so allows software, through use of the PMI mechanism, to be notified when the number of events in the sample has been captured. Capturing a performance monitoring sample every ‘X cycles’ (the fixed counter in the W-Box counts uncore clock cycles) is a common use of this mechanism.

e.g. To stop counting and receive notification when the 1,000th SNP_MERGE has been detected,

- set B_MSR_PMON_CNT to (2^48 - 1000)

- set B_MSR_PMON_EVT_SEL.pmi_en to 1

- set U_MSR_PMON_GLOBAL_CTL.frz_all to 1

- set U_MSR_PMON_GLOBAL_CTL.pmi_core_sel to the core the monitoring thread is executing on.

f) Enable counting at the global level by setting the U_MSR_PMON_GLOBAL_CTL.en_all bit to 1. Set the .rst_all field to 0 with the same write.

And with that, counting will begin.
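The steps above can be sketched as an ordered list of MSR writes. This is an illustration under stated assumptions, not a driver: it programs the single U-Box counter (whose addresses and bit positions appear in Tables 2-1, 2-2, 2-5 and 2-6) rather than a B-Box counter, and `wrmsr` is a hypothetical callback standing in for whatever ring-0 WRMSR mechanism the platform provides. Here it only records the writes.

```python
# MSR addresses from Tables 2-1 and 2-5; bit positions from Tables 2-2 and 2-6.
U_MSR_PMON_GLOBAL_CTL = 0x0C00
U_MSR_PMON_EVT_SEL = 0x0C10
U_MSR_PMON_CTR = 0x0C11

FRZ_ALL, RST_ALL, EN_ALL, U_EN = 1 << 31, 1 << 29, 1 << 28, 1 << 0
EVT_EN, EVT_PMI_EN = 1 << 22, 1 << 20


def setup_session(wrmsr, ev_sel, core=0, sample_interval=None):
    """Program one U-Box counter; `wrmsr(addr, value)` is a hypothetical hook."""
    # a) Reset all uncore counters.
    wrmsr(U_MSR_PMON_GLOBAL_CTL, RST_ALL)
    # b) + c) Select the event and enable the counter locally.
    evt = (ev_sel & 0xFF) | EVT_EN
    # d) + f) For the U-Box, the box-level and global enables share one register.
    ctl = EN_ALL | U_EN
    if sample_interval is not None:
        # e) Sampling: overflow raises a PMI, freezes counting, notifies `core`.
        evt |= EVT_PMI_EN
        ctl |= FRZ_ALL | (1 << (core + 1))  # pmi_core_sel occupies bits 8:1
    wrmsr(U_MSR_PMON_EVT_SEL, evt)
    if sample_interval is not None:
        wrmsr(U_MSR_PMON_CTR, (1 << 48) - sample_interval)
    # Final write sets en_all and leaves rst_all at 0, so counting begins.
    wrmsr(U_MSR_PMON_GLOBAL_CTL, ctl)


writes = []
setup_session(lambda addr, value: writes.append((addr, value)),
              ev_sel=0x02, core=0, sample_interval=1000)
for addr, value in writes:
    print(f"WRMSR 0x{addr:04X} <- 0x{value:X}")
```

Passing the write function in as a parameter keeps the sequencing logic testable without touching hardware; on a real system the callback would wrap a privileged MSR interface.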

2.1.3 Reading the Sample Interval

Software can either poll the counters whenever it chooses, or wait to be notified that a counter has

overflowed (by receiving a PMI).

a) Polling - before reading, it is recommended that software freeze and disable the counters (by clearing U_MSR_PMON_GLOBAL_CTL.en_all).

b) Frozen counters - If software set up the counters to freeze on overflow and send notification when it happens, the next question is: Who caused the freeze?

Overflow bits are stored hierarchically within the Intel Xeon Processor 7500 Series uncore. First, software should read the U_MSR_PMON_GLOBAL_STATUS.ov_* bits to determine whether a U-Box or W-Box counter caused the overflow or whether it was a counter in a box attached to the S0 or S1 Box.

The S-Boxes aggregate overflow bits from the M/B/C/R boxes they are attached to. So the next step is to read the S{0,1}_MSR_PMON_SUMMARY.ov_* bits. Once the box(es) that contain the overflowing counter are identified, the last step is to read that box’s *_MSR_PMON_GLOBAL_STATUS.ov field to find the overflowing counter.

Note: More than one counter may overflow at any given time.
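The first level of this lookup can be sketched as a decode of U_MSR_PMON_GLOBAL_STATUS. The bit positions are those listed in Table 2-3; the function names `decode_global_status` and `next_reads` are hypothetical, and the returned strings are only reminders of which register to read next, not real API calls.

```python
# Bit positions of U_MSR_PMON_GLOBAL_STATUS fields, per Table 2-3.
STATUS_BITS = {"cond": 31, "pmi": 30, "ov_s0": 3, "ov_s1": 2, "ov_w": 1, "ov_u": 0}

# Where to look next for each top-level overflow source (descriptive only).
FOLLOW_UP = {
    "ov_s0": "S0_MSR_PMON_SUMMARY.ov_*",
    "ov_s1": "S1_MSR_PMON_SUMMARY.ov_*",
    "ov_w": "W-Box *_MSR_PMON_GLOBAL_STATUS.ov",
    "ov_u": "U-Box counter (single counter, no further lookup)",
}


def decode_global_status(value):
    """Return the names of all fields set in a raw status value."""
    return [name for name, bit in STATUS_BITS.items() if (value >> bit) & 1]


def next_reads(value):
    """List the follow-up reads implied by the set overflow bits."""
    return [FOLLOW_UP[name] for name in decode_global_status(value)
            if name in FOLLOW_UP]


raw = (1 << 30) | (1 << 2)  # example: PMI received, overflow reported via S-Box 1
print(decode_global_status(raw))
print(next_reads(raw))
```

Because more than one counter may overflow at a time, the decode returns a list rather than a single source.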

2.1.4 Enabling a New Sample Interval from Frozen Counters

Note: Software can determine if the counters have been frozen due to a PMI by examining two bits: U_MSR_PMON_GLOBAL_STATUS.pmi should be 1 and U_MSR_PMON_GLOBAL_CTL.en_all should be 0. If not, set U_MSR_PMON_GLOBAL_CTL.en_all to 0 to disable counting.

a) Clear all uncore counters: Set U_MSR_PMON_GLOBAL_CTL.rst_all to 1.

b) Clear all overflow bits. When an overflow bit is cleared, all bits that summarize that overflow (above in the hierarchy) will also be cleared. Therefore it is only necessary to clear the overflow bits corresponding to the actual counter.

e.g. If counter 3 in B-Box 1 overflowed, to clear the overflow bit software should set B_MSR_PMON_GLOBAL_OVF_CTL.clr_ov[3] to 1 in B-Box 1. This action will also clear S_MSR_PMON_SUMMARY.ov_mb in S-Box 1 and U_MSR_PMON_GLOBAL_STATUS.ov_s1.

c) Create the next sample: Reinitialize the sample by setting the monitoring data register to (2^48 - sample_interval). Or set up a new sample interval as outlined in Section 2.1.2, “Setting up a Monitoring Session”.

d) Re-enable counting: Set U_MSR_PMON_GLOBAL_CTL.en_all to 1. Set the .rst_all field back to 0 with the same write.
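A minimal sketch of this re-arm sequence for the U-Box counter follows. It assumes hypothetical `rdmsr(addr)` and `wrmsr(addr, value)` callbacks (backed here by a plain dictionary, which does not model the hardware clearing status bits), and it additionally clears the PMI-received bit via clr_pmi, which is an assumption of this sketch rather than a step stated above. Addresses and bit positions come from Tables 2-1 through 2-5.

```python
GLOBAL_CTL, GLOBAL_STATUS, GLOBAL_OVF_CTL, U_CTR = 0x0C00, 0x0C01, 0x0C02, 0x0C11
RST_ALL, EN_ALL = 1 << 29, 1 << 28
STATUS_PMI = 1 << 30
CLR_PMI, CLR_OV_U = 1 << 30, 1 << 0


def rearm(rdmsr, wrmsr, sample_interval):
    """Start a new sample interval after a freeze (U-Box counter only)."""
    ctl = rdmsr(GLOBAL_CTL)
    frozen = bool(rdmsr(GLOBAL_STATUS) & STATUS_PMI) and not (ctl & EN_ALL)
    if not frozen:
        # Counting is still enabled: disable it before touching the counters.
        ctl &= ~EN_ALL
        wrmsr(GLOBAL_CTL, ctl)
    wrmsr(GLOBAL_CTL, ctl | RST_ALL)                 # a) clear all counters
    wrmsr(GLOBAL_OVF_CTL, CLR_PMI | CLR_OV_U)        # b) clear overflow state
    wrmsr(U_CTR, (1 << 48) - sample_interval)        # c) preload next sample
    wrmsr(GLOBAL_CTL, (ctl | EN_ALL) & ~RST_ALL)     # d) re-enable, rst_all = 0


# Dictionary-backed stand-in for the MSR space, for illustration only.
msrs = {GLOBAL_CTL: (1 << 31) | (1 << 1) | 1, GLOBAL_STATUS: (1 << 30) | 1,
        GLOBAL_OVF_CTL: 0, U_CTR: 5}
rearm(msrs.__getitem__, msrs.__setitem__, sample_interval=1000)
print(f"GLOBAL_CTL = 0x{msrs[GLOBAL_CTL]:X}, CTR = 0x{msrs[U_CTR]:X}")
```

The read-modify-write on the control register preserves frz_all and pmi_core_sel, so the next overflow is routed the same way as the previous one.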

2.1.5 Global Performance Monitors

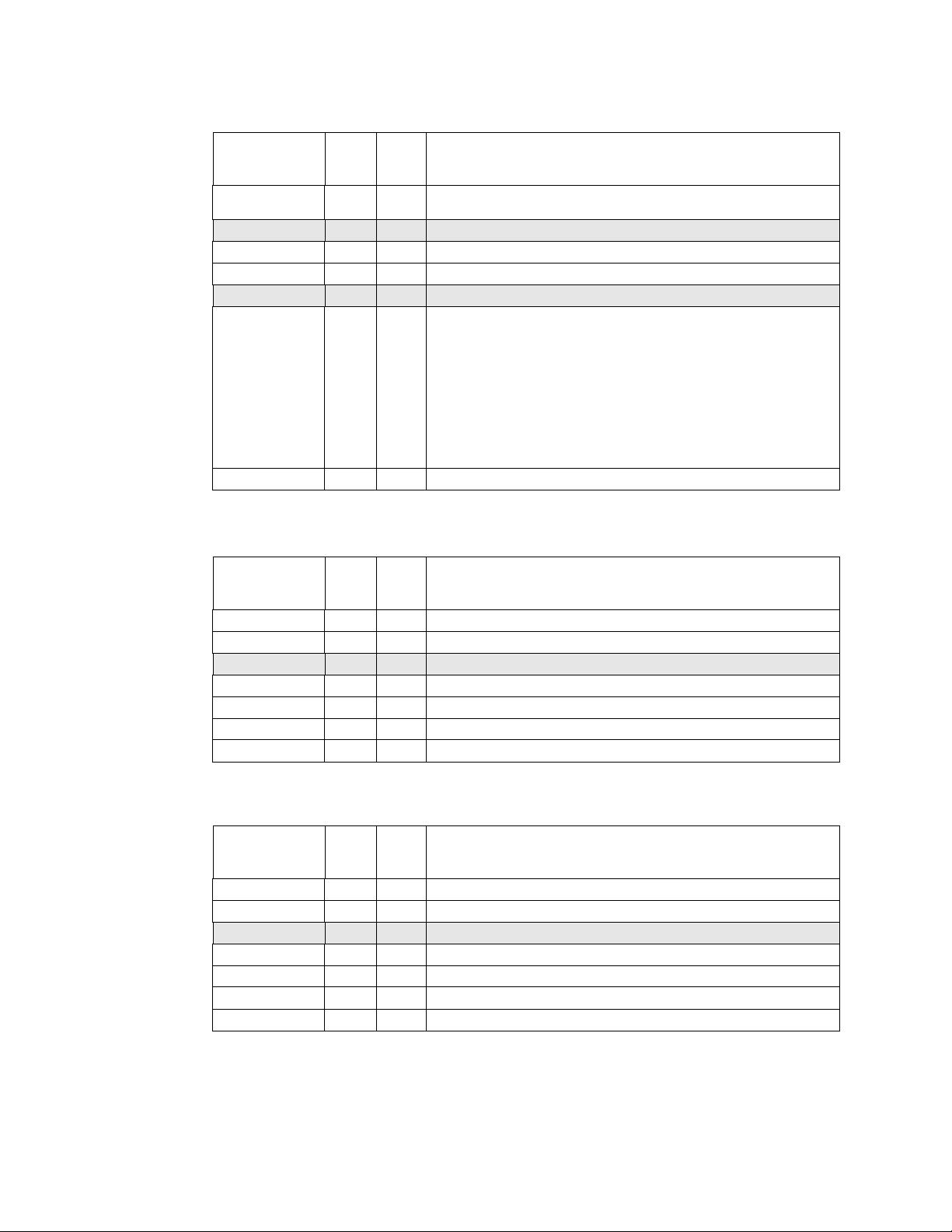

Table 2-1. Global Performance Monitoring Control MSRs

| MSR Name | Access | MSR Address | Size (bits) | Description |
|---|---|---|---|---|
| U_MSR_PMON_GLOBAL_OVF_CTL | RW_RW | 0x0C02 | 32 | U-Box PMON Global Overflow Control |
| U_MSR_PMON_GLOBAL_STATUS | RW_RO | 0x0C01 | 32 | U-Box PMON Global Status |
| U_MSR_PMON_GLOBAL_CTL | RW_RO | 0x0C00 | 32 | U-Box PMON Global Control |

2.1.5.1 Global PMON Global Control/Status Registers

The following registers represent state governing all PMUs in the uncore, both to exert global control

and collect box-level information.

U_MSR_PMON_GLOBAL_CTL contains bits that can reset (.rst_all) and freeze/enable (.en_all) all the

uncore counters. The .en_all bit must be set to 1 before any uncore counters will collect events.

Note: The register also contains the enable for the U-Box counters.

If an overflow is detected in any of the uncore’s PMON registers, it will be summarized in

U_MSR_PMON_GLOBAL_STATUS. This register accumulates overflows sent to it from the U-Box, W-Box

and S-Boxes and indicates if a disable was received from one of the boxes. To reset the summary

overflow bits, a user must set the corresponding bits in the U_MSR_PMON_GLOBAL_OVF_CTL register.


Table 2-2. U_MSR_PMON_GLOBAL_CTL Register – Field Definitions

| Field | Bits | HW Reset Val | Description |
|---|---|---|---|
| frz_all | 31 | 0 | Disable uncore counting (by clearing .en_all) if PMI is received from box with overflowing counter. |
| ig | 30 | 0 | Read zero; writes ignored. (?) |
| rst_all | 29 | 0 | Reset All Uncore PMON Counters |
| en_all | 28 | 0 | Enable All Uncore PMON Counters |
| ig | 27:9 | 0 | Read zero; writes ignored. (?) |
| pmi_core_sel | 8:1 | 0 | PMI Core Select. Ex: If counter pmi is sent to U-Box for Box with overflowing counter... 00000000 - No PMI sent; 00000001 - Send PMI to core 0; 10000000 - Send PMI to core 7; 11000100 - Send PMI to core 2, 6 & 7; etc. |
| en | 0 | 0 | Enable U-Box PMON counters |
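The pmi_core_sel encoding is a one-bit-per-core mask placed in bits 8:1 of the register. The sketch below shows one way to build it and pack the remaining Table 2-2 fields; the function names `pmi_core_sel` and `global_ctl` are hypothetical helpers introduced for illustration.

```python
def pmi_core_sel(cores):
    """Build the 8-bit core mask: bit n set means 'send PMI to core n'."""
    mask = 0
    for core in cores:
        if not 0 <= core <= 7:
            raise ValueError(f"core {core} out of range 0-7")
        mask |= 1 << core
    return mask


def global_ctl(frz_all=False, rst_all=False, en_all=False, cores=(), u_en=False):
    """Pack U_MSR_PMON_GLOBAL_CTL using the bit positions in Table 2-2."""
    return (int(frz_all) << 31 | int(rst_all) << 29 | int(en_all) << 28
            | pmi_core_sel(cores) << 1 | int(u_en))


print(f"{pmi_core_sel([2, 6, 7]):08b}")
print(f"0x{global_ctl(frz_all=True, en_all=True, cores=[0], u_en=True):08X}")
```

The first printed value corresponds to the table's "core 2, 6 & 7" example; the mask is shifted left by one when packed because bit 0 of the register is the U-Box enable.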

Table 2-3. U_MSR_PMON_GLOBAL_STATUS Register – Field Definitions

| Field | Bits | HW Reset Val | Description |
|---|---|---|---|
| cond | 31 | 0 | Condition Change |
| pmi | 30 | 0 | PMI Received from box with overflowing counter. |
| ig | 29:4 | 0 | Read zero; writes ignored. (?) |
| ov_s0 | 3 | 0 | Set if overflow is detected from a S-Box 0 PMON register. |
| ov_s1 | 2 | 0 | Set if overflow is detected from a S-Box 1 PMON register. |
| ov_w | 1 | 0 | Set if overflow is detected from a W-Box PMON register. |
| ov_u | 0 | 0 | Set if overflow is detected from a U-Box PMON register. |

Table 2-4. U_MSR_PMON_GLOBAL_OVF_CTL Register – Field Definitions

| Field | Bits | HW Reset Val | Description |
|---|---|---|---|
| clr_cond | 31 | 0 | Clear Condition Change |
| clr_pmi | 30 | 0 | Clear PMI Received bit. |
| ig | 29:4 | 0 | Read zero; writes ignored. (?) |
| clr_ov_s0 | 3 | 0 | Clear S-Box 0 Overflow |
| clr_ov_s1 | 2 | 0 | Clear S-Box 1 Overflow |
| clr_ov_w | 1 | 0 | Clear W-Box Overflow |
| clr_ov_u | 0 | 0 | Clear U-Box Overflow |


2.2 U-Box Performance Monitoring

The U-Box serves as the system configuration controller for the Intel Xeon Processor 7500 Series.

It contains one counter which can be configured to capture a small set of events.

2.2.1 U-Box PMON Summary

Table 2-5. U-Box Performance Monitoring MSRs

| MSR Name | Access | MSR Address | Size (bits) | Description |
|---|---|---|---|---|
| U_MSR_PMON_CTR | RW_RW | 0x0C11 | 64 | U-Box PMON Counter |
| U_MSR_PMON_EV_SEL | RW_RO | 0x0C10 | 32 | U-Box PMON Event Select |

2.2.1.1 U-Box Box Level PMON State

U-Box global state bits are stored in the uncore global state registers. Refer to Section 2.1, “Global

Performance Monitoring Control” for more information.

2.2.1.2 U-Box PMON state - Counter/Control Pairs

The following table defines the layout of the U-Box performance monitor control register. The main task

of this configuration register is to select the event to be monitored by its respective data counter.

Setting the .ev_sel field performs the event selection. The .en bit must be set to 1 to enable counting.

Additional control bits include:

- .pmi_en which governs what to do if an overflow is detected.

- .edge_detect - Rather than accumulating the raw count each cycle, the register can capture

transitions from no event to an event incoming.

Table 2-6. U_MSR_PMON_EVT_SEL Register – Field Definitions

| Field | Bits | HW Reset Val | Description |
|---|---|---|---|
| ig | 63 | 0 | Read zero; writes ignored. (?) |
| rsv | 62 | 0 | Reserved; Must write to 0 else behavior is undefined. |
| ig | 61:23 | 0 | Read zero; writes ignored. (?) |
| en | 22 | 0 | Local Counter Enable. When set, the associated counter is locally enabled. NOTE: It must also be enabled in C_MSR_PMON_GLOBAL_CTL and the U-Box to be fully enabled. |
| ig | 21 | 0 | Read zero; writes ignored. (?) |
| pmi_en | 20 | 0 | When this bit is asserted and the corresponding counter overflows, a PMI exception is sent to the U-Box. |
| ig | 19 | 0 | Read zero; writes ignored. (?) |
| edge_detect | 18 | 0 | When asserted, the 0 to 1 transition edge of a 1 bit event input will cause the corresponding counter to increment. When 0, the counter will increment for however long the event is asserted. |
| ig | 17:8 | 0 | Read zero; writes ignored. (?) |
| ev_sel | 7:0 | 0 | Select event to be counted. |
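As an illustration of the layout in Table 2-6, the control register can be packed and unpacked with a pair of small helpers. The names `encode_evt_sel` and `decode_evt_sel` are hypothetical; the sketch only touches the four defined fields and leaves the reserved bit 62 and all ignored bits at 0.

```python
EN_BIT, PMI_EN_BIT, EDGE_DETECT_BIT = 22, 20, 18
EV_SEL_MASK = 0xFF  # ev_sel occupies bits 7:0


def encode_evt_sel(ev_sel, en=False, pmi_en=False, edge_detect=False):
    """Pack the defined fields of the U-Box event select register."""
    if not 0 <= ev_sel <= EV_SEL_MASK:
        raise ValueError("ev_sel must fit in bits 7:0")
    return (ev_sel | int(en) << EN_BIT | int(pmi_en) << PMI_EN_BIT
            | int(edge_detect) << EDGE_DETECT_BIT)


def decode_evt_sel(value):
    """Unpack a raw register value into its defined fields."""
    return {
        "ev_sel": value & EV_SEL_MASK,
        "en": bool((value >> EN_BIT) & 1),
        "pmi_en": bool((value >> PMI_EN_BIT) & 1),
        "edge_detect": bool((value >> EDGE_DETECT_BIT) & 1),
    }


raw = encode_evt_sel(0x02, en=True, pmi_en=True)
print(f"0x{raw:X}", decode_evt_sel(raw))
```

Rejecting out-of-range ev_sel values, rather than silently masking them, makes it harder to write into neighbouring bits by accident.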


The U-Box performance monitor data register is 48b wide. A counter overflow occurs when a carry out bit from bit 47 is detected. Software can force all uncore counting to freeze after N events by preloading a monitor with a count value of 2^48 - N and setting the control register to send a PMI to the U-Box. Upon receipt of the PMI, the U-Box will disable counting (Section 2.1.1.1, “Freezing on Counter Overflow”). During the interval of time between overflow and global disable, the counter value will wrap and continue to collect events.

In this way, software can capture the precise number of events that occurred between the time uncore

counting was enabled and when it was disabled (or ‘frozen’) with minimal skew.

If accessible, software can continuously read the data registers without disabling event collection.
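The preload arithmetic, and the recovery of the event count after the counter has wrapped, can be sketched as follows. The helper names are hypothetical, and the calculation assumes the counter wrapped at most once between enable and freeze.

```python
COUNTER_BITS = 48
COUNTER_MASK = (1 << COUNTER_BITS) - 1


def preload_for(n_events):
    """Value to write so the counter overflows on the n-th event."""
    if not 0 < n_events <= COUNTER_MASK:
        raise ValueError("interval must be between 1 and 2^48 - 1")
    return (1 << COUNTER_BITS) - n_events


def events_elapsed(preload, raw_after_freeze):
    """Events counted since the preload, modulo 2^48 (at most one wrap assumed)."""
    return (raw_after_freeze - preload) & COUNTER_MASK


start = preload_for(1000)
# Suppose the frozen counter reads 7: it overflowed on the 1,000th event and
# then counted 7 more before the global disable took effect.
print(hex(start), events_elapsed(start, 7))
```

Working modulo 2^48 means the same subtraction is valid whether or not the counter has overflowed, which is what lets software recover the count with minimal skew.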

Table 2-7. U_MSR_PMON_CTR Register – Field Definitions

| Field | Bits | HW Reset Val | Description |
|---|---|---|---|
| event_count | 47:0 | 0 | 48-bit performance event counter |

2.2.2 U-Box Performance Monitoring Events

The set of events that can be monitored in the U-Box are summarized in the following section.

- Tracks NcMsgS packets generated by the U-Box, as they arbitrate to be broadcast. They are

prioritized as follows: Special Cycle->StopReq1/StartReq2->Lock/Unlock->Remote Interrupts->Local

Interrupts.

- Errors detected and distinguished between recoverable, corrected, uncorrected and fatal.

- Number of times cores were sent IPIs or were Woken up.

- Requests to the Ring or a B-Box.

etc.

2.2.3 U-Box Events Ordered By Code

Table 2-8 summarizes the directly-measured U-Box events.

Table 2-8. Performance Monitor Events for U-Box Events

| Symbol Name | Event Code | Max Inc/Cyc | Description |
|---|---|---|---|
| BUF_VALID_LOCAL_INT | 0x000 | 1 | Local IPI Buffer is valid |
| BUF_VALID_REMOTE_INT | 0x001 | 1 | Remote IPI Buffer is valid |
| BUF_VALID_LOCK | 0x002 | 1 | Lock Buffer is valid |
| BUF_VALID_STST | 0x003 | 1 | Start/Stop Req Buffer is valid |
| BUF_VALID_SPC_CYCLES | 0x004 | 1 | SpcCyc Buffer is valid |
| U2R_REQUESTS | 0x050 | 1 | Number U-Box to Ring Requests |
| U2B_REQUEST_CYCLES | 0x051 | 1 | U to B-Box Active Request Cycles |
| WOKEN | 0x0F8 | 1 | Number of core woken up |
| IPIS_SENT | 0x0F9 | 1 | Number of core IPIs sent |
| RECOV | 0x1DF | 1 | Recoverable |
| CORRECTED_ERR | 0x1E4 | 1 | Corrected Error |
| UNCORRECTED_ERR | 0x1E5 | 1 | Uncorrected Error |
| FATAL_ERR | 0x1E6 | 1 | Fatal Error |

2.2.4 U-Box Performance Monitor Event List

This section enumerates Intel Xeon Processor 7500 Series uncore performance monitoring events for

the U-Box.

BUF_VALID_LOCAL_INT

• Title: Local IPI Buffer Valid

• Category: U-Box Events

• Event Code: 0x000, Max. Inc/Cyc: 1,

• Definition: Number of cycles the Local Interrupt packet buffer contained a valid entry.

BUF_VALID_LOCK

• Title: Lock Buffer Valid

• Category: U-Box Events

• Event Code: 0x002, Max. Inc/Cyc: 1,

• Definition: Number of cycles the Lock packet buffer contained a valid entry.

BUF_VALID_REMOTE_INT

• Title: Remote IPI Buffer Valid

• Category: U-Box Events

• Event Code: 0x001, Max. Inc/Cyc: 1,

• Definition: Number of cycles the Remote IPI packet buffer contained a valid entry.

BUF_VALID_SPC_CYCLES

• Title: SpcCyc Buffer Valid

• Category: U-Box Events

• Event Code: 0x004, Max. Inc/Cyc: 1,

• Definition: Number of uncore cycles the Special Cycle packet buffer contains a valid entry. ‘Special

Cycles’ are NcMsgS packets generated by the U-Box and broadcast to internal cores to cover such

things as Shutdown, Invd_Ack and WbInvd_Ack conditions.

BUF_VALID_STST

• Title: Start/Stop Req Buffer Valid

• Category: U-Box Events

• Event Code: 0x003, Max. Inc/Cyc: 1,

• Definition: Number of uncore cycles the Start/Stop Request packet buffer contained a valid entry.

CORRECTED_ERR

• Title: Corrected Errors

• Category: U-Box Events

• Event Code: 0x1E4, Max. Inc/Cyc: 1,

• Definition: Number of corrected errors.


FATAL_ERR

• Title: Fatal Errors

• Category: U-Box Events

• Event Code: 0x1E6, Max. Inc/Cyc: 1,

• Definition: Number of fatal errors.

IPIS_SENT

• Title: Number Core IPIs Sent

• Category: U-Box Events

• Event Code: 0x0F9, Max. Inc/Cyc: 1,

• Definition: Number of core IPIs sent.

RECOV

• Title: Recoverable

• Category: U-Box Events

• Event Code: 0x1DF, Max. Inc/Cyc: 1,

• Definition: Number of recoverable errors.

U2R_REQUESTS

• Title: Number U2R Requests

• Category: U-Box Events

• Event Code: 0x050, Max. Inc/Cyc: 1,

• Definition: Number of U-Box to Ring requests.

U2B_REQUEST_CYCLES

• Title: U2B Active Request Cycles

• Category: U-Box Events

• Event Code: 0x051, Max. Inc/Cyc: 1,

• Definition: Number of U to B-Box active request cycles.

UNCORRECTED_ERR

• Title: Uncorrected Error

• Category: U-Box Events

• Event Code: 0x1E5, Max. Inc/Cyc: 1,

• Definition: Number of uncorrected errors.

WOKEN

• Title: Number Cores Woken Up

• Category: U-Box Events

• Event Code: 0x0F8, Max. Inc/Cyc: 1,

• Definition: Number of cores woken up.


2.3 C-Box Performance Monitoring

2.3.1 Overview of the C-Box

For the Intel Xeon Processor 7500 Series, the LLC coherence engine (C-Box) manages the interface

between the core and the last level cache (LLC). All core transactions that access the LLC are directed

from the core to a C-Box via the ring interconnect. The C-Box is responsible for managing data delivery

from the LLC to the requesting core. It is also responsible for maintaining coherence between the cores

within the socket that share the LLC; generating snoops and collecting snoop responses to the local

cores when the MESI protocol requires it.

The C-Box is also the gatekeeper for all Intel® QuickPath Interconnect (Intel® QPI) messages that originate in the core and is responsible for ensuring that all Intel QuickPath Interconnect messages that pass through the socket’s LLC remain coherent.

The Intel Xeon Processor 7500 Series contains eight instances of the C-Box, each assigned to manage a distinct 3MB, 24-way set associative slice of the processor’s total LLC capacity. For processors with fewer than eight 3MB LLC slices, the C-Boxes for missing slices will still be active and track ring traffic caused by their co-located core even if they have no LLC-related traffic to track (i.e. hits/misses/snoops).

Every physical memory address in the system is uniquely associated with a single C-Box instance via a proprietary hashing algorithm that is designed to keep the distribution of traffic across the C-Box instances relatively uniform for a wide range of possible address patterns. This enables the individual C-Box instances to operate independently, each managing its slice of the physical address space without any C-Box in a given socket ever needing to communicate with the other C-Boxes in that same socket.

Each C-Box is uniquely associated with a single S-Box. All messages which a given C-Box sends out to

the system memory or Intel QPI pass through the S-Box that is physically closest to that C-Box.
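
The slice geometry described in this overview can be turned into a set count with simple arithmetic. The following is a minimal sketch, not part of the original guide. The 64-byte cache line size is an assumption that this section does not state, and the function names are hypothetical.

```python
# Slice geometry taken from the overview text above.
SLICE_BYTES = 3 * 1024 * 1024   # one 3MB LLC slice per C-Box
WAYS = 24                       # 24-way set associative
NUM_SLICES = 8                  # eight C-Box instances
LINE_BYTES = 64                 # ASSUMPTION: line size is not given in this section


def sets_per_slice(slice_bytes=SLICE_BYTES, ways=WAYS, line_bytes=LINE_BYTES):
    """Number of sets in one slice: capacity / (ways * line size)."""
    return slice_bytes // (ways * line_bytes)


def total_llc_bytes(num_slices=NUM_SLICES, slice_bytes=SLICE_BYTES):
    """Total LLC capacity when every slice is present."""
    return num_slices * slice_bytes
```

Under the stated line-size assumption, each slice holds 2048 sets, and a fully populated part has 24MB of LLC.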

2.3.2 C-Box Performance Monitoring Overview

Each of the C-Boxes in the Intel Xeon Processor 7500 Series supports event monitoring through six
48-bit wide counters (CBx_CR_C_MSR_PMON_CTR{5:0}). Each of these six counters can be programmed
to count any C-Box event. The C-Box counters can increment by a maximum of 5b per cycle.
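
Because the counters are 48 bits wide, software that samples them periodically has to account for wraparound when computing the difference between two reads. The following is a minimal sketch, not taken from the guide. It assumes the counter wraps at most once between the two samples, and the function name is hypothetical.

```python
COUNTER_WIDTH = 48                       # counter width stated in the text
COUNTER_MASK = (1 << COUNTER_WIDTH) - 1  # largest value a counter can hold


def counter_delta(previous, current):
    """Events counted between two raw reads of the same 48-bit counter.

    ASSUMPTION: the counter wrapped at most once between the two reads.
    """
    return (current - previous) & COUNTER_MASK
```

For example, a read of `COUNTER_MASK` followed by a read of `4` yields a delta of 5, since one increment wraps the counter to zero and four more follow.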

For information on how to set up a monitoring session, refer to Section 2.1, “Global Performance
Monitoring Control”.

2.3.2.1 C-Box PMU - Overflow, Freeze and Unfreeze

If an overflow is detected from a C-Box performance counter, the overflow bit is set at the box level
(C_MSR_PMON_GLOBAL_STATUS.ov), and forwarded up the chain towards the U-Box. If a C-Box0
counter overflows, a notification is sent and stored in S-Box0 (S_MSR_PMON_SUMMARY.ov_c_l) which,
in turn, sends the overflow notification up to the U-Box (U_MSR_PMON_GLOBAL_STATUS.ov_s0). Refer
to Table 2-26, “S_MSR_PMON_SUMMARY Register Fields” to determine how each C-Box’s overflow bit is
accumulated in the attached S-Box.

HW can also be configured (by setting the corresponding .pmi_en to 1) to send a PMI to the U-Box
when an overflow is detected. The U-Box may be configured to freeze all uncore counting and/or send a
PMI to selected cores when it receives this signal.

Once a freeze has occurred, in order to see a new freeze, the overflow field responsible for the freeze
must be cleared by setting the corresponding bit in C_MSR_PMON_GLOBAL_OVF_CTL.clr_ov. Assuming
all the counters have been locally enabled (.en bit in data registers meant to monitor events) and the
overflow bit(s) has been cleared, the C-Box is prepared for a new sample interval. Once the global
controls have been re-enabled (Section 2.1.4, “Enabling a New Sample Interval from Frozen
Counters”), counting will resume.
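
The box-level part of this sequence (read the status, then clear the pending overflow bits) can be sketched as follows. This is an illustration only, not code from the guide. The addresses are the C-Box 0 entries from Table 2-9. The bit layout is an assumption: the sketch supposes that bit n of .ov and bit n of .clr_ov both correspond to counter n. `read_msr`, `write_msr` and `FakeCBox` are hypothetical stand-ins for whatever MSR access mechanism the platform provides. Re-enabling the global controls (Section 2.1.4) is not covered here.

```python
# MSR addresses for C-Box 0, as listed in Table 2-9.
CB0_GLOBAL_STATUS = 0xD01
CB0_GLOBAL_OVF_CTL = 0xD02
NUM_COUNTERS = 6


def pending_overflows(status_value):
    """Return the counter indices whose overflow bit is set.

    ASSUMPTION: bit n of the .ov field corresponds to counter n.
    """
    return [n for n in range(NUM_COUNTERS) if status_value & (1 << n)]


def clear_overflows(read_msr, write_msr,
                    status_addr=CB0_GLOBAL_STATUS,
                    ovf_ctl_addr=CB0_GLOBAL_OVF_CTL):
    """Clear all pending overflow bits; return the counters that were pending."""
    pending = pending_overflows(read_msr(status_addr))
    if pending:
        # ASSUMPTION: writing 1 to bit n of .clr_ov clears overflow bit n.
        write_msr(ovf_ctl_addr, sum(1 << n for n in pending))
    return pending


class FakeCBox:
    """In-memory stand-in for the two registers, for illustration only."""

    def __init__(self, status=0):
        self.status = status

    def read_msr(self, addr):
        return self.status if addr == CB0_GLOBAL_STATUS else 0

    def write_msr(self, addr, value):
        if addr == CB0_GLOBAL_OVF_CTL:
            self.status &= ~value
```

Passing the accessors as parameters keeps the sequence independent of how MSRs are actually reached, so the same logic can be exercised against the fake before it is pointed at real hardware.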


2.3.3 C-BOX Performance Monitors

Table 2-9. C-Box Performance Monitoring MSRs

MSR Name                          Access  MSR Address  Size (bits)  Description
CB7_CR_C_MSR_PMON_CTR_5           RW_RW   0xDFB        64           C-Box 7 PMON Counter 5
CB7_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xDFA        64           C-Box 7 PMON Event Select 5
CB7_CR_C_MSR_PMON_CTR_4           RW_RW   0xDF9        64           C-Box 7 PMON Counter 4
CB7_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xDF8        64           C-Box 7 PMON Event Select 4
CB7_CR_C_MSR_PMON_CTR_3           RW_RW   0xDF7        64           C-Box 7 PMON Counter 3
CB7_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xDF6        64           C-Box 7 PMON Event Select 3
CB7_CR_C_MSR_PMON_CTR_2           RW_RW   0xDF5        64           C-Box 7 PMON Counter 2
CB7_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xDF4        64           C-Box 7 PMON Event Select 2
CB7_CR_C_MSR_PMON_CTR_1           RW_RW   0xDF3        64           C-Box 7 PMON Counter 1
CB7_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xDF2        64           C-Box 7 PMON Event Select 1
CB7_CR_C_MSR_PMON_CTR_0           RW_RW   0xDF1        64           C-Box 7 PMON Counter 0
CB7_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xDF0        64           C-Box 7 PMON Event Select 0
CB7_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xDE2        32           C-Box 7 PMON Global Overflow Control
CB7_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xDE1        32           C-Box 7 PMON Global Status
CB7_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xDE0        32           C-Box 7 PMON Global Control
CB3_CR_C_MSR_PMON_CTR_5           RW_RW   0xDDB        64           C-Box 3 PMON Counter 5
CB3_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xDDA        64           C-Box 3 PMON Event Select 5
CB3_CR_C_MSR_PMON_CTR_4           RW_RW   0xDD9        64           C-Box 3 PMON Counter 4
CB3_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xDD8        64           C-Box 3 PMON Event Select 4
CB3_CR_C_MSR_PMON_CTR_3           RW_RW   0xDD7        64           C-Box 3 PMON Counter 3
CB3_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xDD6        64           C-Box 3 PMON Event Select 3
CB3_CR_C_MSR_PMON_CTR_2           RW_RW   0xDD5        64           C-Box 3 PMON Counter 2
CB3_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xDD4        64           C-Box 3 PMON Event Select 2
CB3_CR_C_MSR_PMON_CTR_1           RW_RW   0xDD3        64           C-Box 3 PMON Counter 1
CB3_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xDD2        64           C-Box 3 PMON Event Select 1
CB3_CR_C_MSR_PMON_CTR_0           RW_RW   0xDD1        64           C-Box 3 PMON Counter 0
CB3_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xDD0        64           C-Box 3 PMON Event Select 0
CB3_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xDC2        32           C-Box 3 PMON Global Overflow Control
CB3_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xDC1        32           C-Box 3 PMON Global Status
CB3_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xDC0        32           C-Box 3 PMON Global Control
CB5_CR_C_MSR_PMON_CTR_5           RW_RW   0xDBB        64           C-Box 5 PMON Counter 5
CB5_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xDBA        64           C-Box 5 PMON Event Select 5
CB5_CR_C_MSR_PMON_CTR_4           RW_RW   0xDB9        64           C-Box 5 PMON Counter 4
CB5_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xDB8        64           C-Box 5 PMON Event Select 4
CB5_CR_C_MSR_PMON_CTR_3           RW_RW   0xDB7        64           C-Box 5 PMON Counter 3
CB5_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xDB6        64           C-Box 5 PMON Event Select 3
CB5_CR_C_MSR_PMON_CTR_2           RW_RW   0xDB5        64           C-Box 5 PMON Counter 2
CB5_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xDB4        64           C-Box 5 PMON Event Select 2
CB5_CR_C_MSR_PMON_CTR_1           RW_RW   0xDB3        64           C-Box 5 PMON Counter 1
CB5_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xDB2        64           C-Box 5 PMON Event Select 1
CB5_CR_C_MSR_PMON_CTR_0           RW_RW   0xDB1        64           C-Box 5 PMON Counter 0
CB5_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xDB0        64           C-Box 5 PMON Event Select 0
CB5_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xDA2        32           C-Box 5 PMON Global Overflow Control
CB5_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xDA1        32           C-Box 5 PMON Global Status
CB5_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xDA0        32           C-Box 5 PMON Global Control
CB1_CR_C_MSR_PMON_CTR_5           RW_RW   0xD9B        64           C-Box 1 PMON Counter 5
CB1_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xD9A        64           C-Box 1 PMON Event Select 5
CB1_CR_C_MSR_PMON_CTR_4           RW_RW   0xD99        64           C-Box 1 PMON Counter 4
CB1_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xD98        64           C-Box 1 PMON Event Select 4
CB1_CR_C_MSR_PMON_CTR_3           RW_RW   0xD97        64           C-Box 1 PMON Counter 3
CB1_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xD96        64           C-Box 1 PMON Event Select 3
CB1_CR_C_MSR_PMON_CTR_2           RW_RW   0xD95        64           C-Box 1 PMON Counter 2
CB1_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xD94        64           C-Box 1 PMON Event Select 2
CB1_CR_C_MSR_PMON_CTR_1           RW_RW   0xD93        64           C-Box 1 PMON Counter 1
CB1_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xD92        64           C-Box 1 PMON Event Select 1
CB1_CR_C_MSR_PMON_CTR_0           RW_RW   0xD91        64           C-Box 1 PMON Counter 0
CB1_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xD90        64           C-Box 1 PMON Event Select 0
CB1_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xD82        32           C-Box 1 PMON Global Overflow Control
CB1_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xD81        32           C-Box 1 PMON Global Status
CB1_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xD80        32           C-Box 1 PMON Global Control
CB6_CR_C_MSR_PMON_CTR_5           RW_RW   0xD7B        64           C-Box 6 PMON Counter 5
CB6_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xD7A        64           C-Box 6 PMON Event Select 5
CB6_CR_C_MSR_PMON_CTR_4           RW_RW   0xD79        64           C-Box 6 PMON Counter 4
CB6_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xD78        64           C-Box 6 PMON Event Select 4
CB6_CR_C_MSR_PMON_CTR_3           RW_RW   0xD77        64           C-Box 6 PMON Counter 3
CB6_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xD76        64           C-Box 6 PMON Event Select 3
CB6_CR_C_MSR_PMON_CTR_2           RW_RW   0xD75        64           C-Box 6 PMON Counter 2
CB6_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xD74        64           C-Box 6 PMON Event Select 2
CB6_CR_C_MSR_PMON_CTR_1           RW_RW   0xD73        64           C-Box 6 PMON Counter 1
CB6_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xD72        64           C-Box 6 PMON Event Select 1
CB6_CR_C_MSR_PMON_CTR_0           RW_RW   0xD71        64           C-Box 6 PMON Counter 0
CB6_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xD70        64           C-Box 6 PMON Event Select 0
CB6_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xD62        32           C-Box 6 PMON Global Overflow Control
CB6_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xD61        32           C-Box 6 PMON Global Status
CB6_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xD60        32           C-Box 6 PMON Global Control
CB2_CR_C_MSR_PMON_CTR_5           RW_RW   0xD5B        64           C-Box 2 PMON Counter 5
CB2_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xD5A        64           C-Box 2 PMON Event Select 5
CB2_CR_C_MSR_PMON_CTR_4           RW_RW   0xD59        64           C-Box 2 PMON Counter 4
CB2_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xD58        64           C-Box 2 PMON Event Select 4
CB2_CR_C_MSR_PMON_CTR_3           RW_RW   0xD57        64           C-Box 2 PMON Counter 3
CB2_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xD56        64           C-Box 2 PMON Event Select 3
CB2_CR_C_MSR_PMON_CTR_2           RW_RW   0xD55        64           C-Box 2 PMON Counter 2
CB2_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xD54        64           C-Box 2 PMON Event Select 2
CB2_CR_C_MSR_PMON_CTR_1           RW_RW   0xD53        64           C-Box 2 PMON Counter 1
CB2_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xD52        64           C-Box 2 PMON Event Select 1
CB2_CR_C_MSR_PMON_CTR_0           RW_RW   0xD51        64           C-Box 2 PMON Counter 0
CB2_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xD50        64           C-Box 2 PMON Event Select 0
CB2_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xD42        32           C-Box 2 PMON Global Overflow Control
CB2_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xD41        32           C-Box 2 PMON Global Status
CB2_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xD40        32           C-Box 2 PMON Global Control
CB4_CR_C_MSR_PMON_CTR_5           RW_RW   0xD3B        64           C-Box 4 PMON Counter 5
CB4_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xD3A        64           C-Box 4 PMON Event Select 5
CB4_CR_C_MSR_PMON_CTR_4           RW_RW   0xD39        64           C-Box 4 PMON Counter 4
CB4_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xD38        64           C-Box 4 PMON Event Select 4
CB4_CR_C_MSR_PMON_CTR_3           RW_RW   0xD37        64           C-Box 4 PMON Counter 3
CB4_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xD36        64           C-Box 4 PMON Event Select 3
CB4_CR_C_MSR_PMON_CTR_2           RW_RW   0xD35        64           C-Box 4 PMON Counter 2
CB4_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xD34        64           C-Box 4 PMON Event Select 2
CB4_CR_C_MSR_PMON_CTR_1           RW_RW   0xD33        64           C-Box 4 PMON Counter 1
CB4_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xD32        64           C-Box 4 PMON Event Select 1
CB4_CR_C_MSR_PMON_CTR_0           RW_RW   0xD31        64           C-Box 4 PMON Counter 0
CB4_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xD30        64           C-Box 4 PMON Event Select 0
CB4_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xD22        32           C-Box 4 PMON Global Overflow Control
CB4_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xD21        32           C-Box 4 PMON Global Status
CB4_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xD20        32           C-Box 4 PMON Global Control
CB0_CR_C_MSR_PMON_CTR_5           RW_RW   0xD1B        64           C-Box 0 PMON Counter 5
CB0_CR_C_MSR_PMON_EVT_SEL_5       RW_RO   0xD1A        64           C-Box 0 PMON Event Select 5
CB0_CR_C_MSR_PMON_CTR_4           RW_RW   0xD19        64           C-Box 0 PMON Counter 4
CB0_CR_C_MSR_PMON_EVT_SEL_4       RW_RO   0xD18        64           C-Box 0 PMON Event Select 4
CB0_CR_C_MSR_PMON_CTR_3           RW_RW   0xD17        64           C-Box 0 PMON Counter 3
CB0_CR_C_MSR_PMON_EVT_SEL_3       RW_RO   0xD16        64           C-Box 0 PMON Event Select 3
CB0_CR_C_MSR_PMON_CTR_2           RW_RW   0xD15        64           C-Box 0 PMON Counter 2
CB0_CR_C_MSR_PMON_EVT_SEL_2       RW_RO   0xD14        64           C-Box 0 PMON Event Select 2
CB0_CR_C_MSR_PMON_CTR_1           RW_RW   0xD13        64           C-Box 0 PMON Counter 1
CB0_CR_C_MSR_PMON_EVT_SEL_1       RW_RO   0xD12        64           C-Box 0 PMON Event Select 1
CB0_CR_C_MSR_PMON_CTR_0           RW_RW   0xD11        64           C-Box 0 PMON Counter 0
CB0_CR_C_MSR_PMON_EVT_SEL_0       RW_RO   0xD10        64           C-Box 0 PMON Event Select 0
CB0_CR_C_MSR_PMON_GLOBAL_OVF_CTL  WO_RO   0xD02        32           C-Box 0 PMON Global Overflow Control
CB0_CR_C_MSR_PMON_GLOBAL_STATUS   RW_RW   0xD01        32           C-Box 0 PMON Global Status
CB0_CR_C_MSR_PMON_GLOBAL_CTL      RW_RO   0xD00        32           C-Box 0 PMON Global Control
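
The addresses in Table 2-9 follow a regular pattern within each C-Box, although the base addresses are not in C-Box index order. The following is a minimal sketch, not part of the guide, of how software might compute an address rather than hard-code all 120 entries. The base addresses and offsets are read off the table; the function and constant names are hypothetical.

```python
# Base address (the GLOBAL_CTL register) of each C-Box, from Table 2-9.
# Note that the bases are not ordered by C-Box index.
CBOX_BASE = {
    0: 0xD00, 4: 0xD20, 2: 0xD40, 6: 0xD60,
    1: 0xD80, 5: 0xDA0, 3: 0xDC0, 7: 0xDE0,
}

NUM_COUNTERS = 6

# Offsets from the box base, as observed in Table 2-9.
GLOBAL_CTL_OFFSET = 0x00
GLOBAL_STATUS_OFFSET = 0x01
GLOBAL_OVF_CTL_OFFSET = 0x02
EVT_SEL0_OFFSET = 0x10   # EVT_SEL_n sits at base + 0x10 + 2*n
CTR0_OFFSET = 0x11       # CTR_n sits at base + 0x11 + 2*n


def _check(box, counter=0):
    if box not in CBOX_BASE:
        raise ValueError(f"no such C-Box: {box}")
    if not 0 <= counter < NUM_COUNTERS:
        raise ValueError(f"no such counter: {counter}")


def global_ctl_addr(box):
    _check(box)
    return CBOX_BASE[box] + GLOBAL_CTL_OFFSET


def global_status_addr(box):
    _check(box)
    return CBOX_BASE[box] + GLOBAL_STATUS_OFFSET


def global_ovf_ctl_addr(box):
    _check(box)
    return CBOX_BASE[box] + GLOBAL_OVF_CTL_OFFSET


def evt_sel_addr(box, counter):
    _check(box, counter)
    return CBOX_BASE[box] + EVT_SEL0_OFFSET + 2 * counter


def ctr_addr(box, counter):
    _check(box, counter)
    return CBOX_BASE[box] + CTR0_OFFSET + 2 * counter
```

For example, `ctr_addr(7, 5)` gives 0xDFB and `evt_sel_addr(0, 0)` gives 0xD10, matching the first and next-to-last counter rows of the table.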

2.3.3.1 C-Box Box Level PMON state

The following registers represent the state governing all box-level PMUs in the C-Box.

The _GLOBAL_CTL register contains the bits used to enable monitoring. It is necessary to set the

.ctr_en bit to 1 before the corresponding data register can collect events.
