
Intel® Server Boards S5520HC, S5500HCV, and S5520HCT

Technical Product Specification

Intel order number E39529-013

Revision 1.8

May 2010

Enterprise Platforms and Services Division

Page 2

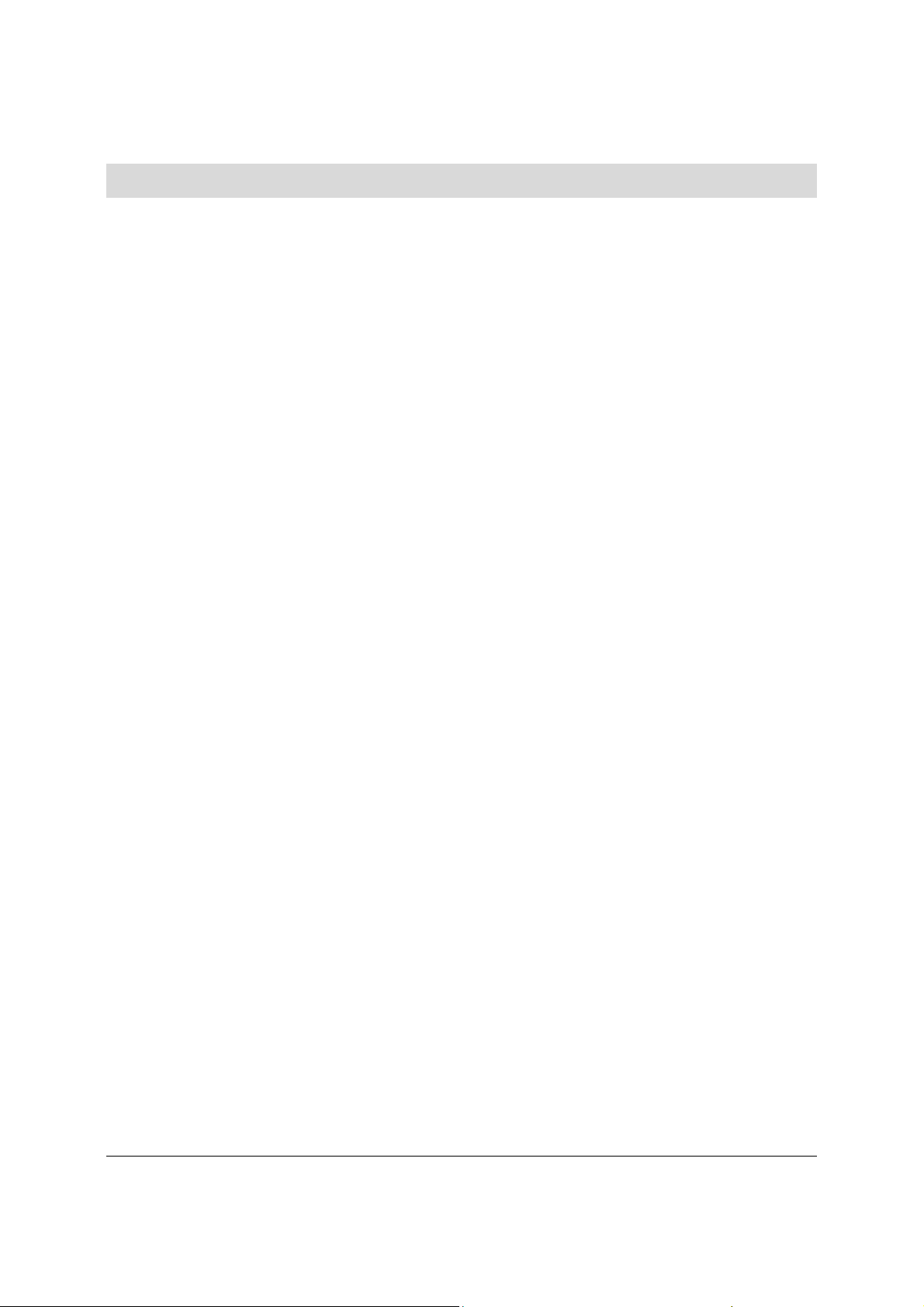

Revision History Intel® Server Boards S5520HC, S5500HCV, and S5520HCT TPS

Revision History

| Date | Revision Number | Modifications |
|---|---|---|
| February 2008 | 0.1 | Preliminary Draft |
| March 2008 | 0.3 | Content Update |
| March 2008 | 0.5 | Updated Sections 2.1 and 3.2 |
| April 2008 | 0.55 | Updated product code and processor support related information |
| August 2008 | 0.6 | Updated product code and memory support related information; S5500HCV DIMM slot population change; Chassis Intrusion header location change |
| September 2008 | 0.65 | Jumper block location change |
| February 2009 | 1.0 | Updated Block Diagram; updated Functional Architecture Section; added BIOS Setup Utility Section; updated Appendix |
| March 2009 | 1.1 | Updated Section 3.3.4.1 Memory Reservation for Memory-mapped Functions; updated Section 3.4.1.2 Onboard SATA Storage Mode Matrix table; added Fan Domain Table in Section 4.3.2.2.1; updated Section 9.2 MTBF; added Appendix G Installation Guidelines; added Processor Stepping Mismatching to Table 2; updated Boot Option BIOS Setup Menu (Table 34 and Figure 36); updated Table 4 Memory Running Frequency; updated Table 12 Intel® SAS Entry RAID Module AXX4SASMOD Storage Mode; updated Table 47 and Table 48, CPU 1 and CPU 2 power connector pin-outs; updated Table 84 POST Codes and Messages; updated Table 87 BMC Beep Codes; updated Figure 21 SMBUS Block Diagram, revised component code names; updated Figure 53 Power Distribution Block Diagram, revised component code names |
| July 2009 | 1.2 | Updated Section 2.1, the feature set table; updated Section 3.3.2 supported memory; updated Section 3.3.3; added Section 3.15; updated Appendix A, adding PCI device SEL event decoding tips; updated Appendix G; updated Section 4.2.2 Keyboard, Video, and Mouse (KVM) Redirection; updated Table 2, Table 8, Table 9, Table 25, Figure 13, and Figure 14 |
| August 2009 | 1.3 | Updated Sections 3.15 and 7.3 |
| November 2009 | 1.4 | Updated Section 3.3.3; updated Section 3.3.9 supported memory population |
| January 2010 | 1.5 | Updated Section 2.1, added security feature for S5520HCT; added Section 3.13 Trusted Platform Module |
| March 2010 | 1.6 | Updated Section 2.1, added Intel® Xeon® Processor 5600 series support; updated Section 3.2, added Intel® Xeon® Processor 5600 series support |
| April 2010 | 1.7 | Removed CCC related notices |
| May 2010 | 1.8 | Added Section 3.13.3 Intel® Trusted Execution Technology (Intel® TXT); updated Section 3.3.2 memory capacity |


Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® Server Boards S5520HC, S5500HCV and S5520HCT may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Refer to the Intel® Server Boards S5520HC, S5500HCV and S5520HCT Specification Update for published errata.

Intel Corporation server baseboards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel’s own chassis are designed and tested to meet the intended thermal requirements of these components when the fully integrated system is used together. It is the responsibility of the system integrator who chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.

Intel, Pentium, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © Intel Corporation 2009-2010.


Table of Contents

1. Introduction ........................................................................................................................ 1

1.1 Chapter Outline ......................................................................................................... 1

1.2 Server Board Use Disclaimer .................................................................................... 1

2. Overview .............................................................................................................................. 2

2.1 Intel® Server Boards S5520HC, S5500HCV and S5520HCT Feature Set .............. 2

2.1.1 Server Board Connector and Component Layout .................................................... 5

2.1.2 Server Board Mechanical Drawings ......................................................................... 8

2.1.3 Server Board Rear I/O Layout ................................................................................ 16

3. Functional Architecture ...................................................................................................... 17

3.1 Intel® 5520 and 5500 I/O Hub (IOH) ....................................................................... 20

3.1.1 Intel® QuickPath Interconnect ................................................................................. 20

3.1.2 PCI Express* Ports .................................................................................................. 20

3.1.3 Enterprise South Bridge Interface (ESI) ................................................................. 21

3.1.4 Manageability Engine (ME) ..................................................................................... 21

3.1.5 Controller Link (CL) ................................................................................................. 21

3.2 Processor Support ................................................................................................... 22

3.2.1 Processor Population Rules .................................................................................... 22

3.2.2 Mixed Processor Configurations ............................................................................. 22

3.2.3 Intel® Hyper-Threading Technology (Intel® HT) ...................................................... 24

3.2.4 Enhanced Intel SpeedStep® Technology (EIST) .................................................... 24

3.2.5 Intel® Turbo Boost Technology ............................................................................... 24

3.2.6 Execute Disable Bit Feature ................................................................................... 24

3.2.7 Core Multi-Processing ............................................................................................. 25

3.2.8 Direct Cache Access (DCA) .................................................................................... 25

3.2.9 Unified Retention System Support .......................................................................... 25

3.3 Memory Subsystem ................................................................................................. 27

3.3.1 Memory Subsystem Nomenclature ......................................................................... 27

3.3.2 Supported Memory .................................................................................................. 29

3.3.3 Processor Cores, QPI Links and DDR3 Channels Frequency Configuration ........ 30

3.3.4 Publishing System Memory .................................................................................... 32

3.3.5 Memory Interleaving ................................................................................................ 32

3.3.6 Memory Test ............................................................................................................ 33

3.3.7 Memory Scrub Engine ............................................................................................. 33

3.3.8 Memory RAS ........................................................................................................... 33

3.3.9 Memory Population and Upgrade Rules ................................................................. 34

3.3.10 Supported Memory Configuration ........................................................................... 36

3.3.11 Memory Error Handling ........................................................................................... 38

3.4 ICH10R .................................................................................................................... 39

3.4.1 Serial ATA Support .................................................................................................. 39


3.4.2 USB 2.0 Support ..................................................................................................... 41

3.5 PCI Subsystem ........................................................................................................ 42

3.5.1 PCI Express* Riser Slot (S5520HC – Slot 6) ......................................................... 43

3.6 Intel® SAS Entry RAID Module AXX4SASMOD (Optional Accessory) .................. 44

3.6.1 SAS RAID Support .................................................................................................. 45

3.7 Baseboard Management Controller ........................................................................ 47

3.7.1 BMC Embedded LAN Channel ............................................................................... 48

3.8 Serial Ports .............................................................................................................. 49

3.9 Floppy Disk Controller ............................................................................................. 49

3.10 Keyboard and Mouse Support ................................................................................ 49

3.11 Video Support .......................................................................................................... 49

3.11.1 Video Modes ............................................................................................................ 49

3.11.2 Dual Video ............................................................................................................... 50

3.12 Network Interface Controller (NIC) ......................................................................... 51

3.12.1 MAC Address Definition .......................................................................................... 51

3.13 *Trusted Platform Module (TPM) – Supported only on S5520HCT ....................... 52

3.13.1 Overview .................................................................................................................. 52

3.13.2 TPM security BIOS .................................................................................................. 52

3.13.3 Intel® Trusted Execution Technology (Intel® TXT) .................................................. 55

3.14 ACPI Support .......................................................................................................... 59

3.15 Intel® Virtualization Technology .............................................................................. 60

3.15.1 Intel® Virtualization Technology for Directed IO (VT-d) .......................................... 60

4. Platform Management ........................................................................................................ 61

4.1 Feature Support ...................................................................................................... 61

4.1.1 IPMI 2.0 Features .................................................................................................... 61

4.1.2 Non-IPMI Features .................................................................................................. 61

4.2 Optional Advanced Management Feature Support ................................................ 63

4.2.1 Enabling Advanced Management Features ........................................................... 63

4.2.2 Keyboard, Video, and Mouse (KVM) Redirection ................................................... 63

4.2.3 Media Redirection ................................................................................................... 64

4.2.4 Web Services for Management (WS-MAN) ............................................................ 65

4.2.5 Embedded Web server ........................................................................................... 66

4.2.6 Lightweight Directory Access Protocol (LDAP) ..................................................... 66

4.3 Platform Control ...................................................................................................... 67

4.3.1 Memory Open and Closed Loop Thermal Throttling .............................................. 68

4.3.2 Fan Speed Control .................................................................................................. 68

4.4 Intel® Intelligent Power Node Manager ................................................................... 70

4.4.1 Manageability Engine (ME) ..................................................................................... 70

5. BIOS Setup Utility ................................................................................................................ 72

5.1 Logo/Diagnostic Screen .......................................................................................... 72

5.2 BIOS Boot Popup Menu .......................................................................................... 72


5.3 BIOS Setup Utility .................................................................................................... 72

5.3.1 Operation ................................................................................................................. 72

5.3.2 Server Platform Setup Utility Screens .................................................................... 75

6. Connector/Header Locations and Pin-outs ...................................................................... 108

6.1 Board Connector Information ................................................................................ 108

6.2 Power Connectors ................................................................................................. 109

6.3 System Management Headers ............................................................................. 110

6.3.1 Intel® Remote Management Module 3 Connector ................................................ 110

6.3.2 LCP/IPMB Header ................................................................................................. 111

6.3.3 HSBP Header ........................................................................................................ 111

6.3.4 SGPIO Header ...................................................................................................... 111

6.4 Front Panel Connector .......................................................................................... 111

6.5 I/O Connectors ...................................................................................................... 112

6.5.1 VGA Connector ..................................................................................................... 112

6.5.2 NIC Connectors ..................................................................................................... 113

6.5.3 SATA Connectors .................................................................................................. 113

6.5.4 SAS Module Slot ................................................................................................... 113

6.5.5 Serial Port Connectors .......................................................................................... 114

6.5.6 USB Connector ..................................................................................................... 115

6.6 Fan Headers .......................................................................................................... 116

7. Jumper Blocks .................................................................................................................. 118

7.1 CMOS Clear and Password Reset Usage Procedure .......................................... 119

7.1.1 Clearing the CMOS ............................................................................................... 119

7.1.2 Clearing the Password .......................................................................................... 119

7.2 Force BMC Update Procedure .............................................................................. 120

7.3 BIOS Recovery Jumper ........................................................................................ 120

8. Intel® Light Guided Diagnostics ...................................................................................... 122

8.1 5-volt Stand-by LED .............................................................................................. 122

8.2 Fan Fault LEDs ...................................................................................................... 123

8.3 System ID LED and System Status LED .............................................................. 124

8.4 DIMM Fault LEDs .................................................................................................. 126

8.5 Post Code Diagnostic LEDs .................................................................................. 127

9. Design and Environmental Specifications ........................................................................ 128

9.1 Intel® Server Boards S5520HC, S5500HCV, and S5520HCT Design Specifications ... 128

9.2 MTBF ..................................................................................................................... 128

9.3 Server Board Power Requirements ...................................................................... 130

9.3.1 Processor Power Support ..................................................................................... 131

9.4 Power Supply Output Requirements .................................................................... 131

9.4.1 Grounding .............................................................................................................. 131

9.4.2 Stand-by Outputs .................................................................................................. 131

9.4.3 Remote Sense ....................................................................................................... 132


9.4.4 Voltage Regulation ................................................................................................ 132

9.4.5 Dynamic Loading ................................................................................................... 132

9.4.6 Capacitive Loading ................................................................................................ 133

9.4.7 Ripple/Noise .......................................................................................................... 133

9.4.8 Timing Requirements ............................................................................................ 133

9.4.9 Residual Voltage Immunity in Stand-by Mode ...................................................... 136

10. Regulatory and Certification Information ...................................................................... 137

10.1 Product Regulatory Compliance ........................................................................... 137

10.1.1 Product Safety Compliance .................................................................................. 137

10.1.2 Product EMC Compliance – Class A Compliance ................................................ 137

10.1.3 Certifications/Registrations/Declarations ............................................................... 138

10.2 Product Regulatory Compliance Markings ........................................................... 138

10.3 Electromagnetic Compatibility Notices ................................................................. 139

FCC (USA) ........................................................................................................................... 139

ICES-003 (Canada) .............................................................................................................. 140

Europe (CE Declaration of Conformity) ............................................................................... 140

VCCI (Japan) ........................................................................................................................ 140

BSMI (Taiwan) ...................................................................................................................... 140

RRL KCC (Korea) ................................................................................................................ 141

10.4 Product Ecology Change (EU RoHS) ................................................................... 141

10.5 Product Ecology Change (CRoHS) ...................................................................... 141

10.6 China Packaging Recycle Marks (or GB18455-2001) .......................................... 144

10.7 CA Perchlorate Warning ....................................................................................... 144

10.8 End-of-Life/Product Recycling .............................................................................. 144

Appendix A: Integration and Usage Tips ................................................................................ 145

Appendix B: Compatible Intel® Server Chassis .................................................................... 147

Appendix C: BMC Sensor Tables ........................................................................................... 150

Appendix D: Platform Specific BMC Appendix ...................................................................... 160

Appendix E: POST Code Diagnostic LED Decoder ................................................................ 161

Appendix F: POST Error Messages and Handling ................................................................. 165

Appendix G: Installation Guidelines ...................................................................................... 170

Glossary .................................................................................................................................... 172

Reference Documents ................................................................................................................ 176


List of Figures

Figure 1. Intel® Server Board S5520HC ......................................................................................... 5

Figure 2. Intel® Server Board S5500HCV ...................................................................................... 5

Figure 3. Major Board Components ............................................................................................... 7

Figure 4. Mounting Hole Locations ................................................................................................. 8

Figure 5. Major Connector Pin-1 Locations (1 of 2) ....................................................................... 9

Figure 6. Major Connector Pin-1 Locations (2 of 2) ..................................................................... 10

Figure 7. Primary Side Keep-out Zone (1 of 2) ............................................................................ 11

Figure 8. Primary Side Keep-out Zone (2 of 2) ............................................................................ 12

Figure 9. Primary Side Air Duct Keep-out Zone ........................................................................... 13

Figure 10. Primary Side Card-Side Keep-out Zone ..................................................................... 14

Figure 11. Second Side Keep-out Zone ....................................................................................... 15

Figure 12. Rear I/O Layout ........................................................................................................... 16

Figure 13. Intel® Server Board S5520HC Functional Block Diagram .......................................... 18

Figure 14. Intel® Server Board S5500HCV Functional Block Diagram ........................................ 19

Figure 15. Unified Retention System and Unified Back Plate Assembly ..................................... 26

Figure 16. Intel® Server Board S5520HC DIMM Slots Arrangement ........................................... 28

Figure 17. Intel® Server Board S5500HCV DIMM Slots Arrangement ........................................ 29

Figure 18. Intel® SAS Entry RAID Module AXX4SASMOD Component and Connector Layout ..... 44

Figure 19. Intel® SAS Entry RAID Module AXX4SASMOD Functional Block Diagram ............... 45

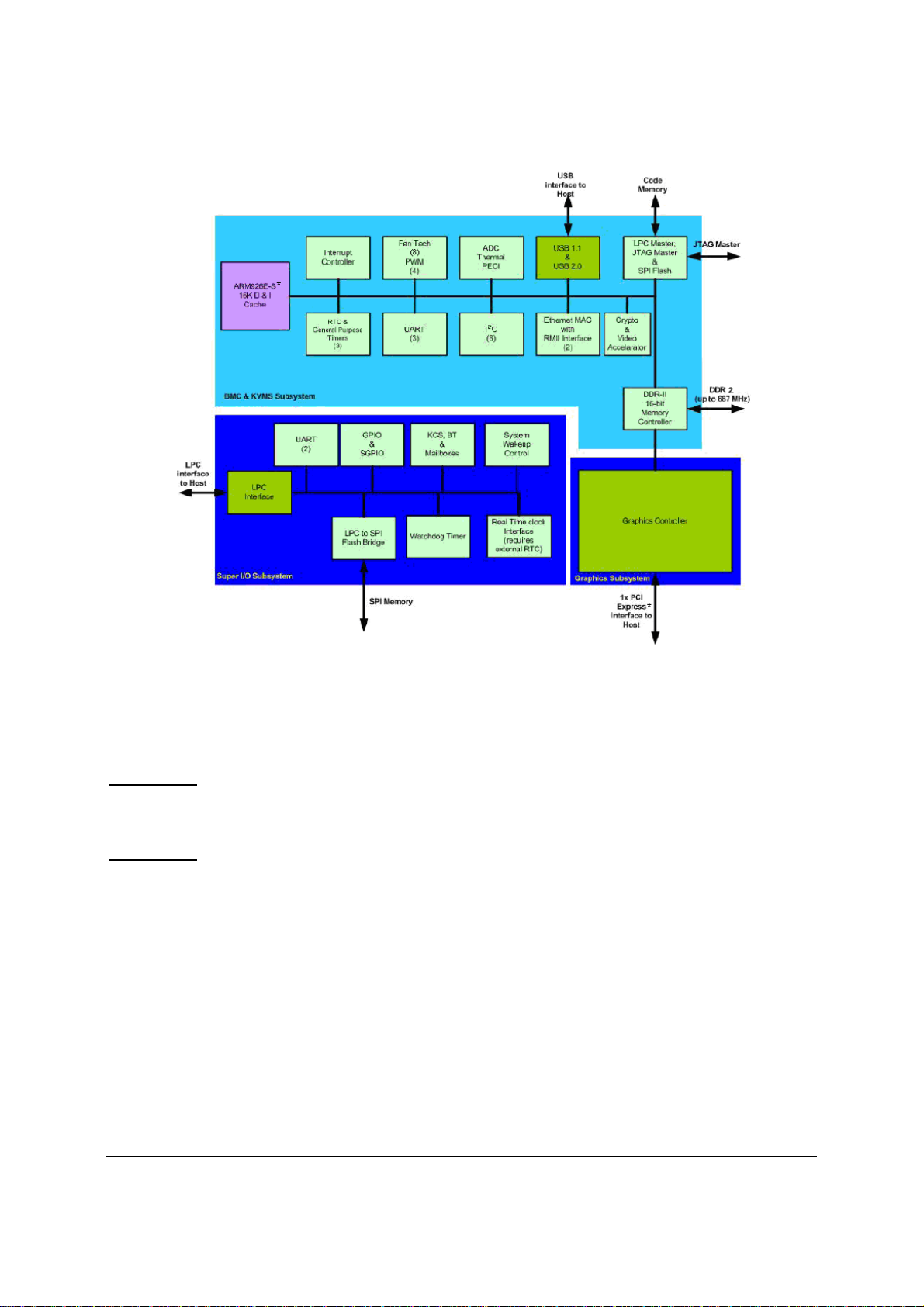

Figure 20. Integrated BMC Hardware .......................................................................................... 48

Figure 21. Setup Utility – TPM Configuration Screen .................................................................. 54

Figure 22. Setting Administrator password in BIOS ..................................................................... 56

Figure 23. Activating TPM ............................................................................................................ 57

Figure 24. TPM activated .............................................................................................................. 58

Figure 25. BIOS setting for TXT ................................................................................................... 59

Figure 26. Platform Control .......................................................................................................... 67

Figure 27. SMBUS Block Diagram ............................................................................................... 71

Figure 28. Setup Utility — Main Screen Display .......................................................................... 76

Figure 29. Setup Utility — Advanced Screen Display .................................................................. 78

Figure 30. Setup Utility — Processor Configuration Screen Display ........................................... 79

Figure 31. Setup Utility — Memory Configuration Screen Display .............................................. 82

Figure 32. Setup Utility — Configure RAS and Performance Screen Display ............................ 84

Figure 33. Setup Utility — Mass Storage Controller Configuration Screen Display .................... 85

Figure 34. Setup Utility — Serial Port Configuration Screen Display .......................................... 87

Figure 35. Setup Utility — USB Controller Configuration Screen Display ................................... 88

Figure 36. Setup Utility — PCI Configuration Screen Display ..................................................... 90

Figure 37. Setup Utility — System Acoustic and Performance Configuration Screen Display ... 91

Figure 38. Setup Utility — Security Configuration Screen Display .............................................. 92

Figure 39. Setup Utility — Server Management Configuration Screen Display .......................... 95


Figure 40. Setup Utility — Console Redirection Screen Display ................................................. 96

Figure 41. Setup Utility — Server Management System Information Screen Display ................ 98

Figure 42. Setup Utility — Boot Options Screen Display ............................................................. 99

Figure 43. Setup Utility — Add New Boot Option Screen Display ............................................. 101

Figure 44. Setup Utility — Delete Boot Option Screen Display ................................................. 102

Figure 45. Setup Utility — Hard Disk Order Screen Display ...................................................... 102

Figure 46. Setup Utility — CDROM Order Screen Display ........................................................ 103

Figure 47. Setup Utility — Floppy Order Screen Display ........................................................... 103

Figure 48. Setup Utility — Network Device Order Screen Display ............................................ 104

Figure 49. Setup Utility — BEV Device Order Screen Display .................................................. 104

Figure 50. Setup Utility — Boot Manager Screen Display ......................................................... 105

Figure 51. Setup Utility — Error Manager Screen Display ........................................................ 106

Figure 52. Setup Utility — Exit Screen Display .......................................................................... 106

Figure 53. Jumper Blocks (J1E2, J1E4, J1E5, J1E6, J1H1) ..................................................... 118

Figure 54. 5-volt Stand-by Status LED Location ........................................................................ 122

Figure 55. Fan Fault LEDs Location ........................................................................................... 123

Figure 56. System Status LED Location .................................................................................... 124

Figure 57. DIMM Fault LEDs Location ....................................................................................... 126

Figure 58. POST Code Diagnostic LED Locations .................................................................... 127

Figure 59. Power Distribution Block Diagram ............................................................................ 130

Figure 60. Output Voltage Timing ............................................................................................... 134

Figure 61. Turn On/Off Timing (Power Supply Signals) ............................................................ 135

Figure 62. Active Processor Heatsink Installation Requirement ................................................ 149

Figure 63. Diagnostic LED Placement Diagram ........................................................................ 161


List of Tables

Table 1. IOH High-Level Summary .............................................................................................. 20

Table 2. Mixed Processor Configurations .................................................................................... 23

Table 3. Memory Running Frequency vs. Processor SKU .......................................................... 31

Table 4. Memory Running Frequency vs. Memory Population .................................................... 31

Table 5. Supported DIMM Population under the Dual Processors Configuration ....................... 37

Table 6. Supported DIMM Population under the Single Processor Configuration ...................... 37

Table 7. Onboard SATA Storage Mode Matrix ............................................................................ 40

Table 8. Intel® Server Board S5520HC PCI Bus Segment Characteristics ................................. 42

Table 9. Intel® Server Board S5500HCV PCI Bus Segment Characteristics .............................. 43

Table 10. Intel® Server Board S5520HC PCI Riser Slot (Slot 6) ................................................. 43

Table 11. PCI Riser Support ......................................................................................................... 43

Table 12. Intel® SAS Entry RAID Module AXX4SASMOD Storage Mode .................................. 46

Table 13. Serial B Header Pin-out ................................................................................................ 49

Table 14. Video Modes ................................................................................................................. 50

Table 15. Onboard NIC Status LED ............................................................................................. 51

Table 16. TSetup Utility – Security Configuration Screen Fields ................................................. 54

Table 17. Basic and Advanced Management Features ............................................................... 63

Table 18. S5520HC, S5500HCV and S5520HCT Fan Domain Table ........................................ 69

Table 19. BIOS Setup Page Layout ............................................................................................. 73

Table 20. BIOS Setup: Keyboard Command Bar ........................................................................ 74

Table 21. Setup Utility — Main Screen Fields .............................................................................. 76

Table 22. Setup Utility — Advanced Screen Display Fields ........................................................ 78

Table 23. Setup Utility — Processor Configuration Screen Fields .............................................. 80

Table 24. Setup Utility — Memory Configuration Screen Fields ................................................. 83

Table 25. Setup Utility — Configure RAS and Performance Screen Fields ................................ 84

Table 26. Setup Utility — Mass Storage Controller Configuration Screen Fields ....................... 86

Table 27. Setup Utility — Serial Ports Configuration Screen Fields ............................................ 87

Table 28. Setup Utility — USB Controller Configuration Screen Fields ...................................... 89

Table 29. Setup Utility — PCI Configuration Screen Fields ......................................................... 90

Table 30. Setup Utility — System Acoustic and Performance Configuration Screen Fields ...... 92

Table 31. Setup Utility — Security Configuration Screen Fields ................................................. 93

Table 32. Setup Utility — Server Management Configuration Screen Fields ............................. 95

Table 33. Setup Utility — Console Redirection Configuration Fields .......................................... 97

Table 34. Setup Utility — Server Management System Information Fields ................................ 98

Table 35. Setup Utility — Boot Options Screen Fields .............................................................. 100

Table 36. Setup Utility — Add New Boot Option Fields ............................................................. 101

Table 37. Setup Utility — Delete Boot Option Fields ................................................................. 102

Table 38. Setup Utility — Hard Disk Order Fields ...................................................................... 102


Table 39. Setup Utility — CDROM Order Fields ........................................................................ 103

Table 40. Setup Utility — Floppy Order Fields ........................................................................... 103

Table 41. Setup Utility — Network Device Order Fields ............................................................ 104

Table 42. Setup Utility — BEV Device Order Fields .................................................................. 105

Table 43. Setup Utility — Boot Manager Screen Fields ............................................................ 105

Table 44. Setup Utility — Error Manager Screen Fields ............................................................ 106

Table 45. Setup Utility — Exit Screen Fields ............................................................................. 107

Table 46. Board Connector Matrix ............................................................................................. 108

Table 47. Main Power Connector Pin-out (J1K3) ...................................................................... 109

Table 48. CPU 1 Power Connector Pin-out (J9A1) .................................................................... 109

Table 49. CPU 2 Power Connector Pin-out (J9K1) .................................................................... 110

Table 50. Power Supply Auxiliary Signal Connector Pin-out (J9K2) ......................................... 110

Table 51. Intel® RMM3 Connector Pin-out (J1C1) ..................................................................... 110

Table 52. LCP/IPMB Header Pin-out (J1G6) ............................................................................. 111

Table 53. HSBP Header Pin-out (J1F5, J1G3) .......................................................................... 111

Table 54. SGPIO Header Pin-out (J1G2) ................................................................................... 111

Table 55. Front Panel SSI Standard 24-pin Connector Pin-out (J1B3) ..................................... 112

Table 56. VGA Connector Pin-out (J7A1) .................................................................................. 112

Table 57. RJ-45 10/100/1000 NIC Connector Pin-out (J5A1, J6A1) ......................................... 113

Table 58. SATA/SAS Connector Pin-out (J1E3, J1G1, J1G4, J1G5, J1F1, J1F4) ................... 113

Table 59. SAS Module Slot Pin-out (J2J1) ................................................................................. 113

Table 60. External DB9 Serial A Port Pin-out (J8A1) ................................................................. 114

Table 61. Internal 9-pin Serial B Header Pin-out (J1B1) ........................................................... 114

Table 62. External USB Connector Pin-out (J5A1, J6A1) ......................................................... 115

Table 63. Internal USB Connector Pin-out (J1D1) ..................................................................... 115

Table 64. Internal USB Connector Pin-out (J1D2) ..................................................................... 115

Table 65. Pin-out of Internal Low-Profile USB Connector for Solid State Drive (J2D2) ............ 116

Table 66. Internal Type A USB Port Pin-out (J1H2) .................................................................. 116

Table 67. SSI 4-pin Fan Header Pin-out (J7K1, J9A2, J9A3) ................................................... 117

Table 68. SSI 6-pin Fan Header Pin-out (J1K1, J1K2, J1K4, J1K5) ......................................... 117

Table 69. Server Board Jumpers (J1E6, J1E2, J1E4, J1E5, J1H1) .......................................... 118

Table 70. System Status LED .................................................................................................... 125

Table 71. Server Board Design Specifications........................................................................... 128

Table 72. MTBF Estimate ........................................................................................................... 129

Table 73. Intel® Xeon® Processor Dual Processor TDP Guidelines .......................................... 131

Table 74. 670-W Load Ratings ................................................................................................... 131

Table 75. Voltage Regulation Limits ........................................................................................... 132

Table 76. Transient Load Requirements .................................................................................... 133

Table 77. Capacitive Loading Conditions ................................................................................... 133

Table 78. Ripple and Noise ........................................................................................................ 133

Table 79. Output Voltage Timing ................................................................................................ 134


Table 80. Turn On/Off Timing ..................................................................................................... 135

Table 81. Compatible Chassis/Heatsink Matrix ......................................................................... 147

Table 82. Integrated BMC Core Sensors ................................................................................... 152

Table 83. Platform Specific BMC Features ................................................................................ 160

Table 84. POST Progress Code LED Example ......................................................................... 161

Table 85. POST Codes and Messages ...................................................................................... 162

Table 86. POST Error Messages and Handling ......................................................................... 166

Table 87. POST Error Beep Codes ............................................................................................ 169

Table 88. BMC Beep Codes ....................................................................................................... 169


1. Introduction

This Technical Product Specification (TPS) provides board-specific information detailing the features, functionality, and high-level architecture of the Intel® Server Boards S5520HC, S5500HCV and S5520HCT.

In addition, you can obtain design-level information for a given subsystem by ordering the External Product Specification (EPS) for that subsystem. EPS documents are not publicly available; you must order them through your local Intel representative.

1.1 Chapter Outline

This document is divided into the following chapters:

Chapter 1 – Introduction

Chapter 2 – Overview

Chapter 3 – Functional Architecture

Chapter 4 – Platform Management

Chapter 5 – BIOS Setup Utility

Chapter 6 – Connector/Header Locations and Pin-outs

Chapter 7 – Jumper Blocks

Chapter 8 – Intel® Light Guided Diagnostics

Chapter 9 – Design and Environmental Specifications

Chapter 10 – Regulatory and Certification Information

Appendix A – Integration and Usage Tips

Appendix B – Compatible Intel® Server Chassis

Appendix C – BMC Sensor Tables

Appendix D – Platform Specific BMC Appendix

Appendix E – POST Code Diagnostic LED Decoder

Appendix F – POST Error Messages and Handling

Appendix G – Installation Guidelines

Glossary

Reference Documents

1.2 Server Board Use Disclaimer

Intel® Server Boards contain a number of high-density VLSI (very-large-scale integration) and power delivery components that require adequate airflow for cooling. Through its own chassis development and testing, Intel ensures that when Intel® server building blocks are used together, the fully integrated system meets the intended thermal requirements of these components. A system integrator who chooses not to use Intel-developed server building blocks is responsible for consulting vendor datasheets and operating parameters to determine the amount of airflow required for the specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of the published operating or non-operating limits.

2. Overview

The Intel® Server Boards S5520HC, S5500HCV and S5520HCT are monolithic printed circuit boards (PCBs) with features designed for the pedestal server market.

2.1 Intel® Server Boards S5520HC, S5500HCV and S5520HCT Feature Set

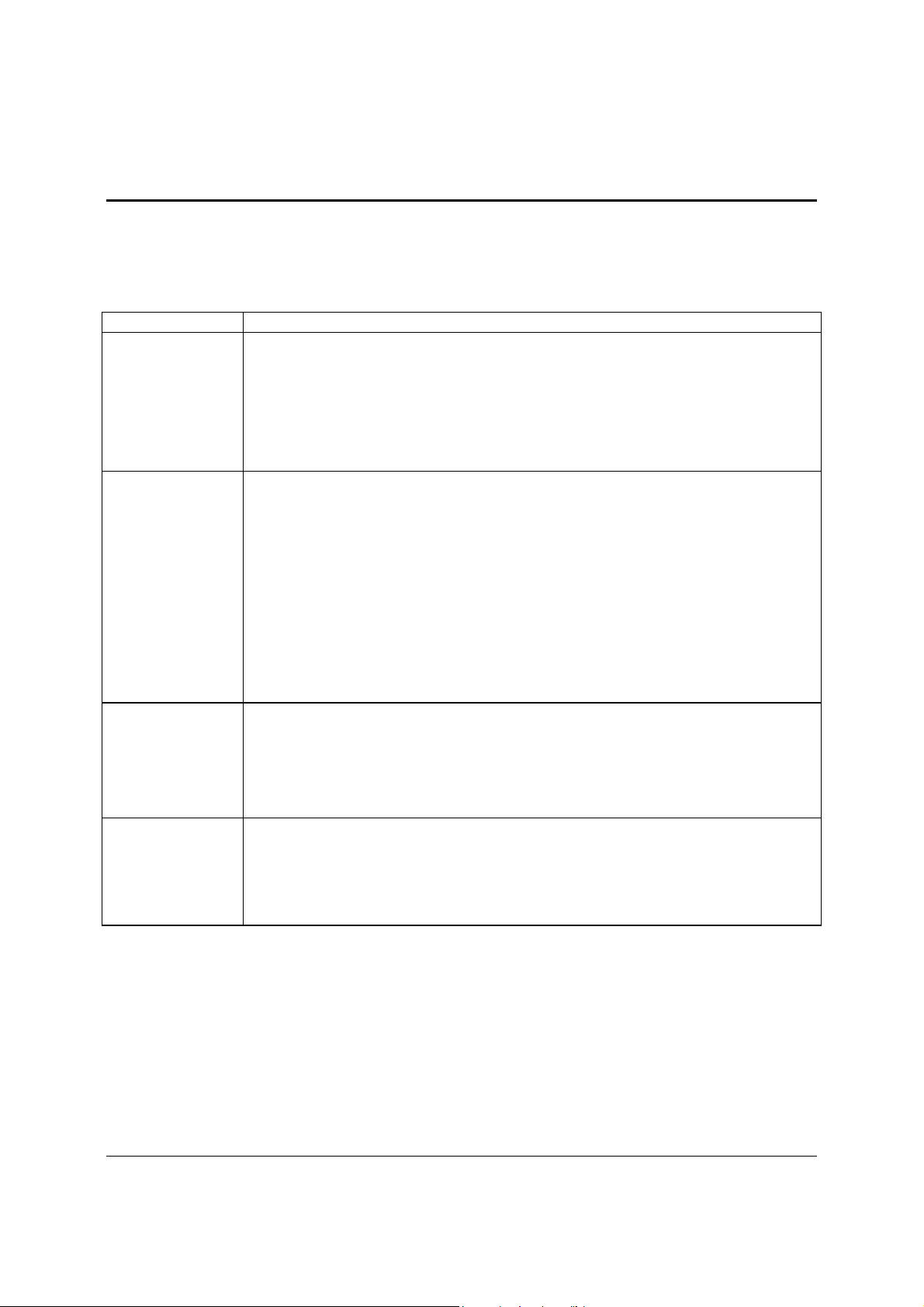

Feature | Description

Processors
• Support for one or two Intel® Xeon® Processor(s) 5500 series up to 95 W Thermal Design Power
• Support for one or two Intel® Xeon® Processor(s) 5600 series up to 130 W Thermal Design Power
• 4.8 GT/s, 5.86 GT/s, and 6.4 GT/s Intel® QuickPath Interconnect (Intel® QPI)
• FC-LGA 1366 Socket B
• Enterprise Voltage Regulator-Down (EVRD) 11.1

Memory
• Six memory channels (three channels for each processor socket)
  – Channels A, B, C, D, E, and F
• Support for 800/1066/1333 MT/s ECC Registered DDR3 memory (RDIMM) and ECC Unbuffered DDR3 memory (UDIMM)
• No support for mixing of RDIMMs and UDIMMs
• Intel® Server Board S5520HC/S5520HCT:
  – 12 DIMM slots
  – Two DIMM slots per channel
• Intel® Server Board S5500HCV:
  – Nine DIMM slots
  – Two DIMM slots on Channels A, B, and C
  – One DIMM slot on Channels D, E, and F

Chipset
• Intel® Server Board S5520HC/S5520HCT:
  – Intel® 5520 Chipset
  – Intel® 82801JIR I/O Controller Hub (ICH10R)
• Intel® Server Board S5500HCV:
  – Intel® 5500 Chipset
  – Intel® 82801JIR I/O Controller Hub (ICH10R)

Cooling Fan Support
Support for:
• Two processor fans (4-pin headers)
• Four front system fans (6-pin headers)
• One rear system fan (4-pin header)
• 3-pin fans are compatible with all fan headers

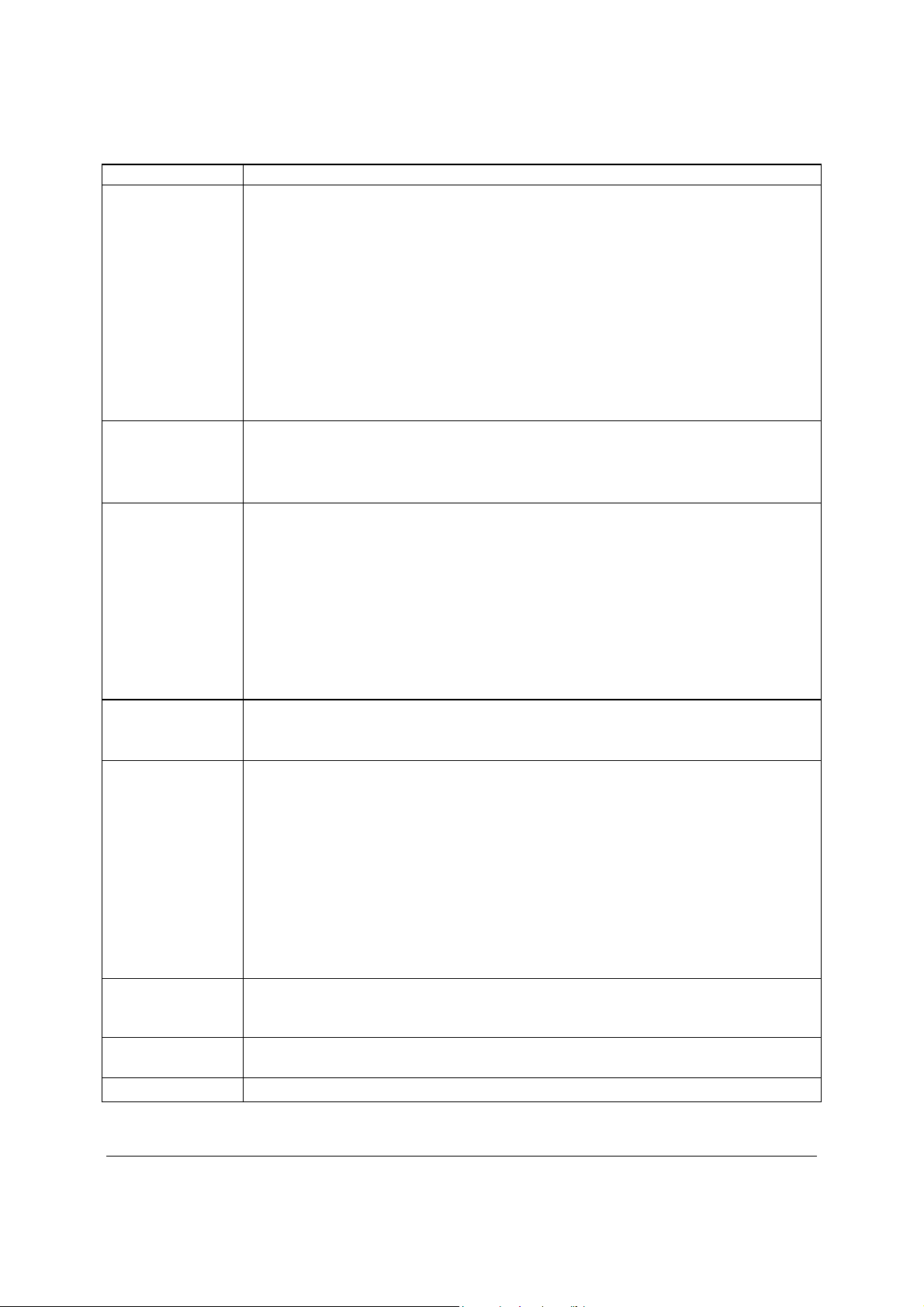

Feature | Description

Add-in Card Slots
• Intel® Server Board S5520HC/S5520HCT: Six expansion slots
  – One full-length/full-height PCI Express* Gen2 slot (x16 Mechanically, x8 Electrically)
  – Three full-length/full-height PCI Express* Gen2 x8 slots
  – One full-length/full-height PCI Express* Gen1 slot (x8 Mechanically, x4 Electrically) shared with SAS Module slot*
  – One 32-bit/33 MHz PCI slot, keying for 5-V and Universal PCI add-in card
• Intel® Server Board S5500HCV: Five expansion slots
  – One full-length/full-height PCI Express* Gen2 slot (x16 Mechanically, x4 Electrically)
  – Two full-length/full-height PCI Express* Gen2 x8 slots
  – One full-length/full-height PCI Express* Gen1 slot (x8 Mechanically, x4 Electrically) shared with SAS Module slot*
  – One 32-bit/33 MHz PCI slot, keying for 5-volt and Universal PCI add-in card

Hard Drive and Optical Drive Support
• Optical devices are supported
• Six SATA connectors at 1.5 Gbps and 3 Gbps
• Four SAS connectors at 3 Gbps through the optional Intel® SAS Entry RAID Module AXX4SASMOD

RAID Support
• Intel® Embedded Server RAID Technology II through the onboard SATA connectors provides SATA RAID 0, 1, and 10, with optional RAID 5 support provided by the Intel® RAID Activation Key AXXRAKSW5
• Intel® Embedded Server RAID Technology II through the optional Intel® SAS Entry RAID Module AXX4SASMOD provides SAS RAID 0, 1, and 10, with optional RAID 5 support provided by the Intel® RAID Activation Key AXXRAKSW5
• IT/IR RAID through the optional Intel® SAS Entry RAID Module AXX4SASMOD provides entry-level hardware RAID 0, 1, 10/10E, and native SAS pass-through mode
• Four-port, full-featured SAS/SATA hardware RAID through the optional Intel® Integrated RAID Module SROMBSASMR (AXXROMBSASMR) provides RAID 0, 1, 5, 6 and striping capability for spans 10, 50, 60

USB Drive Support
• One internal Type A USB 2.0 port that supports a peripheral such as a floppy drive
• One internal low-profile USB port for a USB Solid State Drive

I/O Control Support
• External connections:
  – DB9 serial port A connection
  – One DH-10 serial port connector (optional)
  – Two RJ-45 NIC connectors for 10/100/1000 Mb connections: Dual GbE through the Intel® 82575EB Network Connection
  – Four USB 2.0 ports at the back of the board
• Internal connections:
  – Two 9-pin USB headers, each supporting two USB 2.0 ports
  – One DH-10 serial port B header
  – Six SATA connectors at 1.5 Gbps and 3 Gbps
  – Four SAS connectors at 3 Gbps (optional)
  – One SSI-compliant 24-pin front control panel header

Video Support
• ServerEngines* LLC Pilot II* with 64 MB DDR2 memory, 8 MB allocated to graphics
  – Integrated 2D video controller
  – Dual monitor video mode is supported

LAN
• Two Gigabit Ethernet ports through Intel® 82575EB PHYs with Intel® I/O Acceleration Technology 2 support

Security**
• Trusted Platform Module

Feature | Description

Server Management
• Onboard ServerEngines* LLC Pilot II* Controller
  – Integrated Baseboard Management Controller (Integrated BMC), IPMI 2.0 compliant
  – Integrated Super I/O on LPC interface
• Support for Intel® Remote Management Module 3
• Intel® Light-Guided Diagnostics on field replaceable units
• Support for Intel® System Management Software 3.1 and beyond
• Support for Intel® Intelligent Power Node Manager (requires a PMBus-compliant power supply)

BIOS Flash
• Winbond* W25X64

Form Factor
• SSI EEB (12”x13”)

Compatible Intel® Server Chassis
• Intel® Server Chassis SC5650DP
• Intel® Server Chassis SC5650BRP (PMBus-compliant Power Supply)
• Intel® Server Chassis SC5600Base
• Intel® Server Chassis SC5600BRP (PMBus-compliant Power Supply)
• Intel® Server Chassis SC5600LX (PMBus-compliant Power Supply)

* The PCI Express* Gen1 slot (x8 Mechanically, x4 Electrically) is not available when the SAS module slot is in use, and vice versa.

** The Trusted Platform Module is only available on the S5520HCT.

Server Board Layout

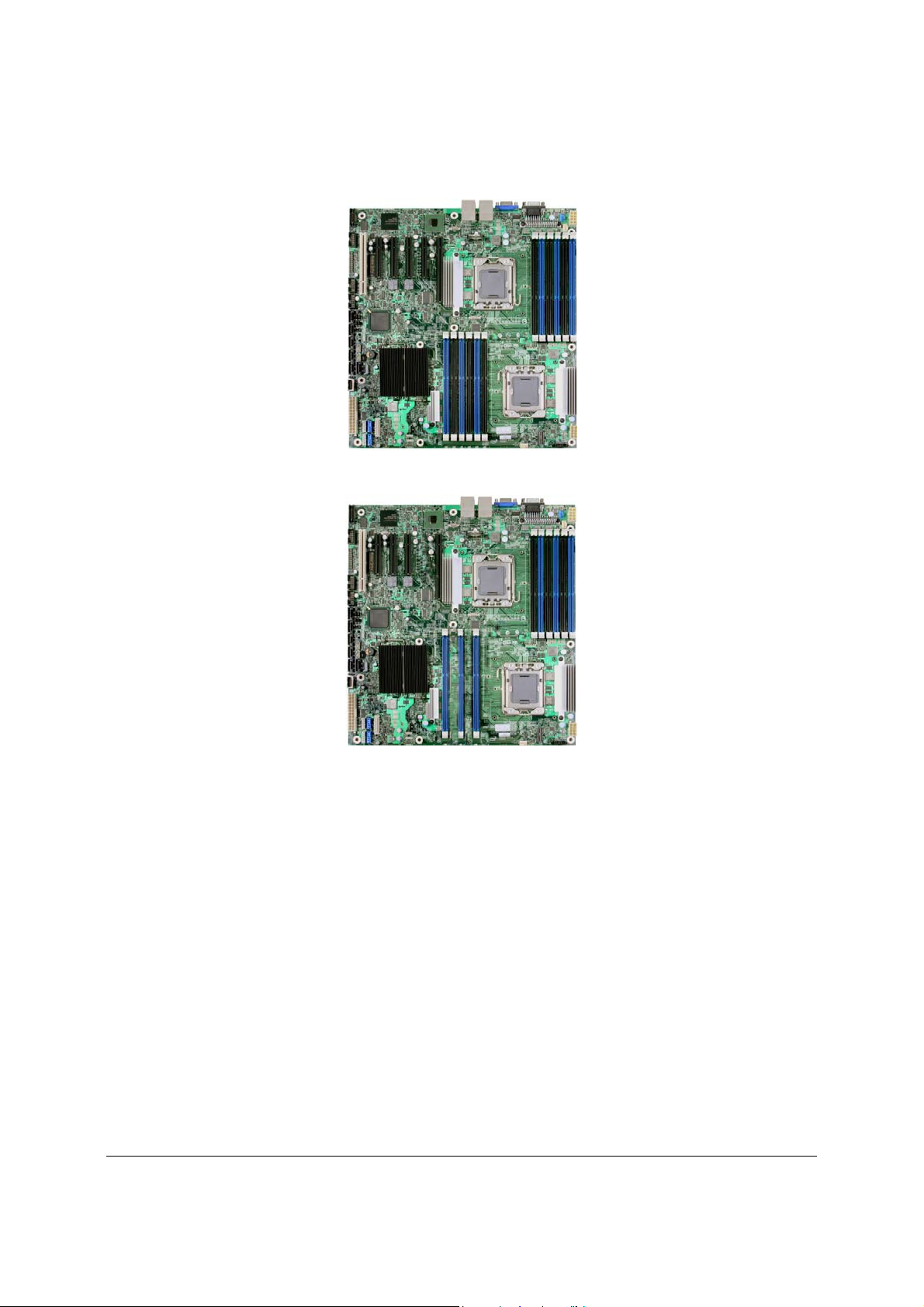

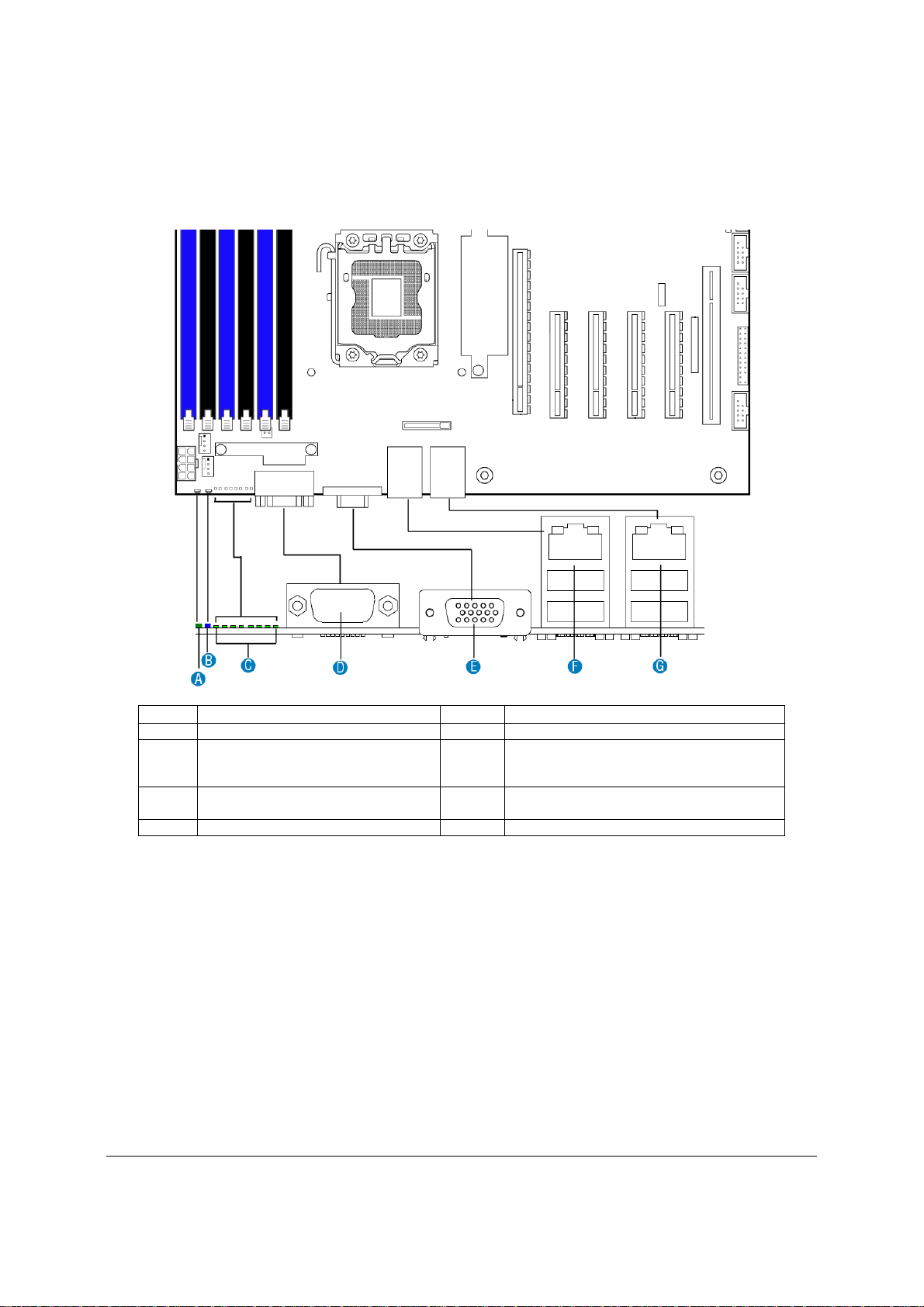

Figure 1. Intel® Server Board S5520HC

Figure 2. Intel® Server Board S5500HCV

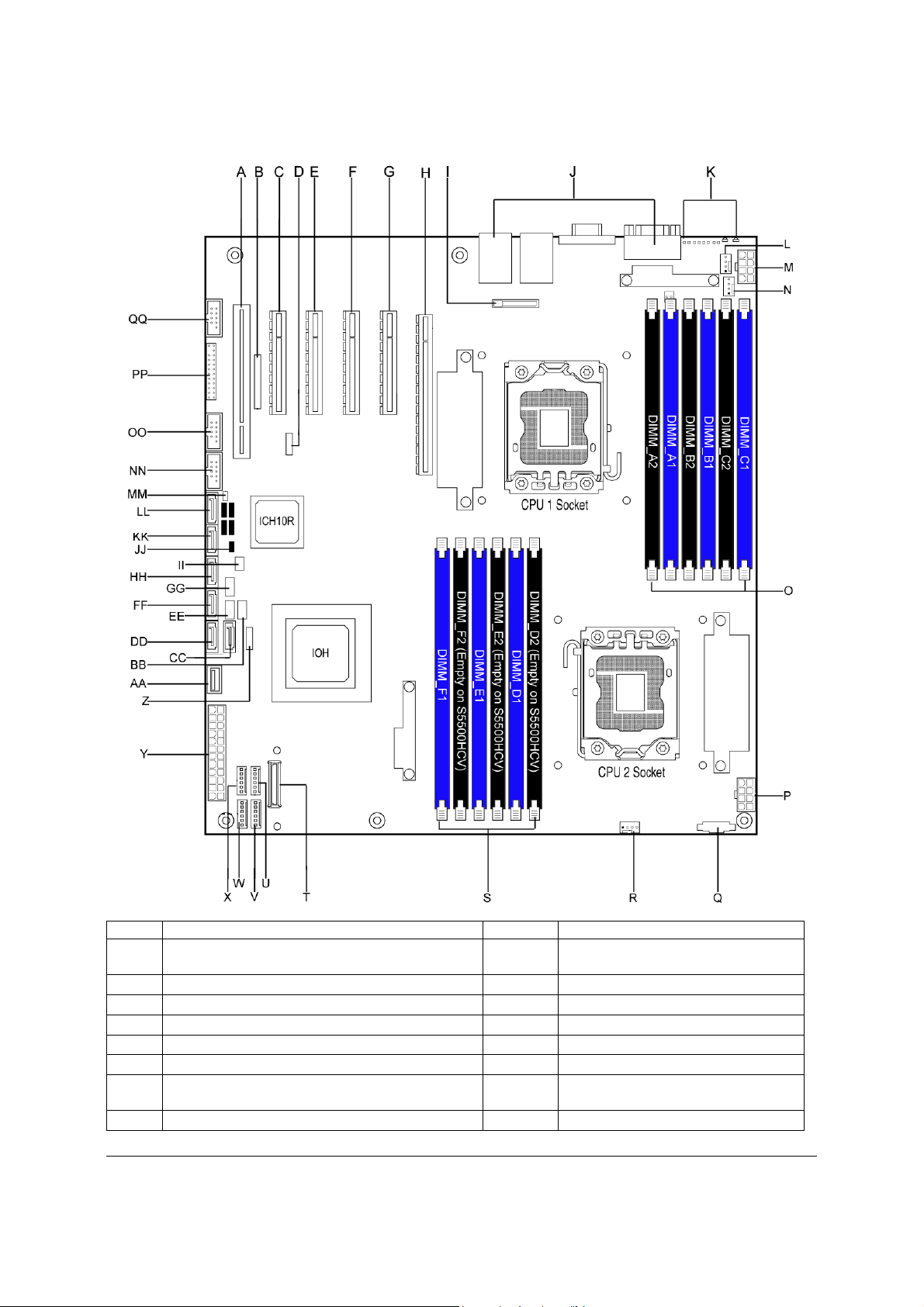

2.1.1 Server Board Connector and Component Layout

The following figure shows the layout of the server board. Each connector and major component is identified by a number or letter, and a description is given below the figure.


Callout | Description
A | Slot 1, 32-bit/33 MHz PCI, keying for 5V and Universal
B | Intel® RMM3 Slot
C | Slot 2, PCI Express* x4 (x8 Mechanically)
D | Low-profile USB Solid State Drive Header
E | Slot 3, PCI Express* Gen2 x8
F | Slot 4, PCI Express* Gen2 x8
G | Slot 5, PCI Express* Gen2 x8 (Empty on Intel® Server Board S5500HCV)
H | S5520HC: Slot 6, PCI Express* Gen2 x8 (x16 Mechanically); S5500HCV: Slot 6, PCI Express* Gen2 x4 (x16 Mechanically)
I | Battery
J | Back Panel I/O Ports
K | Diagnostic and Identify LEDs
L | System Fan 5 Header (4-pin)
M | Power Connector for Processor 1 and Memory attached to Processor 1
N | Processor 1 Fan Header (4-pin)
O | DIMM Sockets of Memory Channels A, B, and C
P | Power Connector for Processor 2 and Memory attached to Processor 2
Q | Auxiliary Power Signal Connector
R | Processor 2 Fan Header (4-pin)
S | DIMM Sockets of Memory Channels D, E, and F
T | SAS Module Slot
U | System Fan 3 Header (6-pin)
V | System Fan 4 Header (6-pin)
W | System Fan 2 Header (6-pin)
X | System Fan 1 Header (6-pin)
Y | Main Power Connector
Z | LCP/IPMB Header
AA | Type A USB Port
BB | SATA SGPIO Header
CC | SATA Port 0
DD | SATA Port 1
EE | HSBP_B
FF | SATA Port 2
GG | HSBP_A
HH | SATA Port 3
II | SATA Software RAID 5 Key Header
JJ | Chassis Intrusion Header
KK | SATA Port 4
LL | SATA Port 5
MM | HDD Activity LED Header (Connect to Add-in Card HDD Activity LED Header)
NN | USB Connector (9-pin, for front panel USB ports)
OO | USB Connector (9-pin)
PP | Front Control Panel Header
QQ | DH-10 Serial B Header

Figure 3. Major Board Components


2.1.2 Server Board Mechanical Drawings

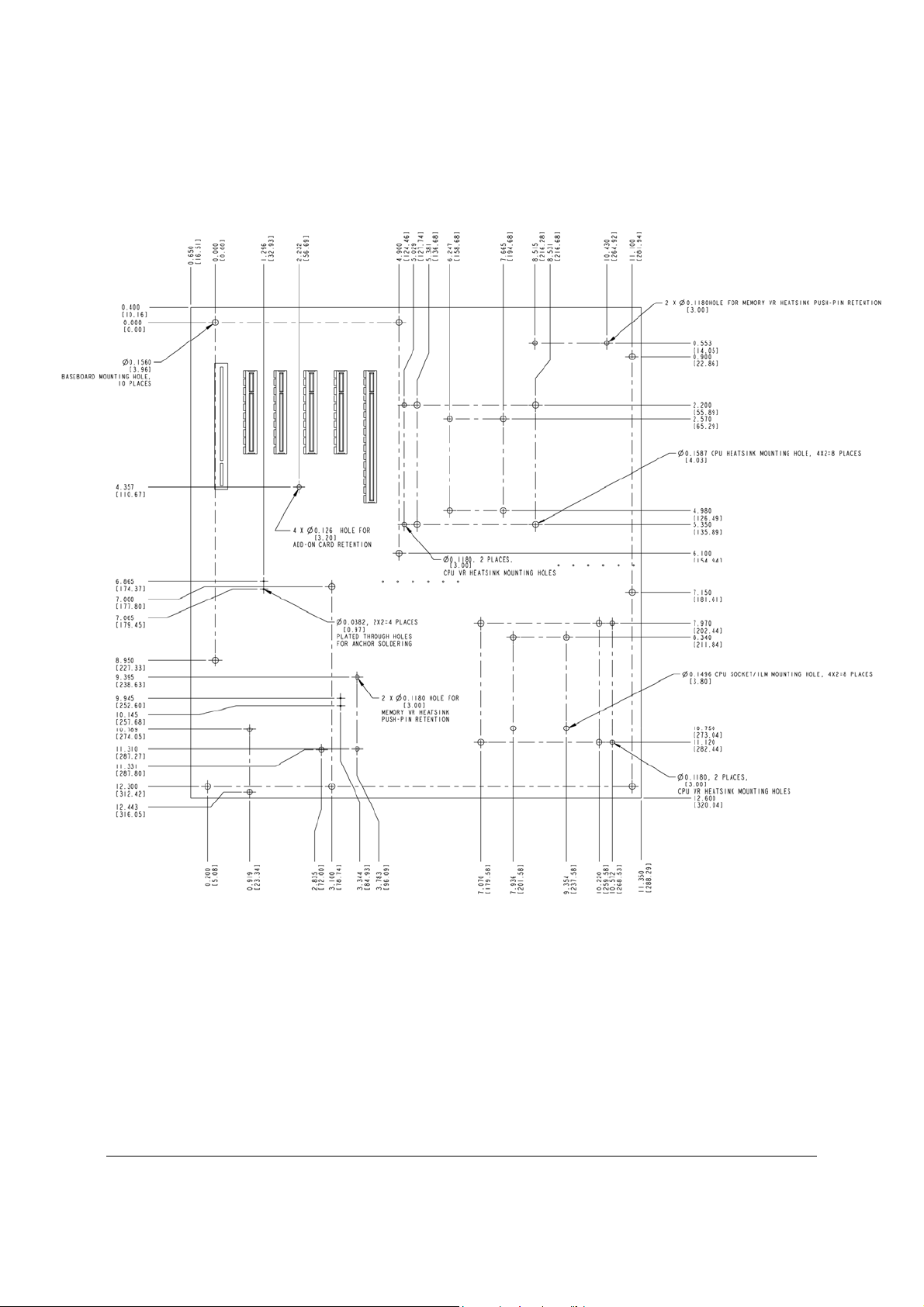

Figure 4. Mounting Hole Locations


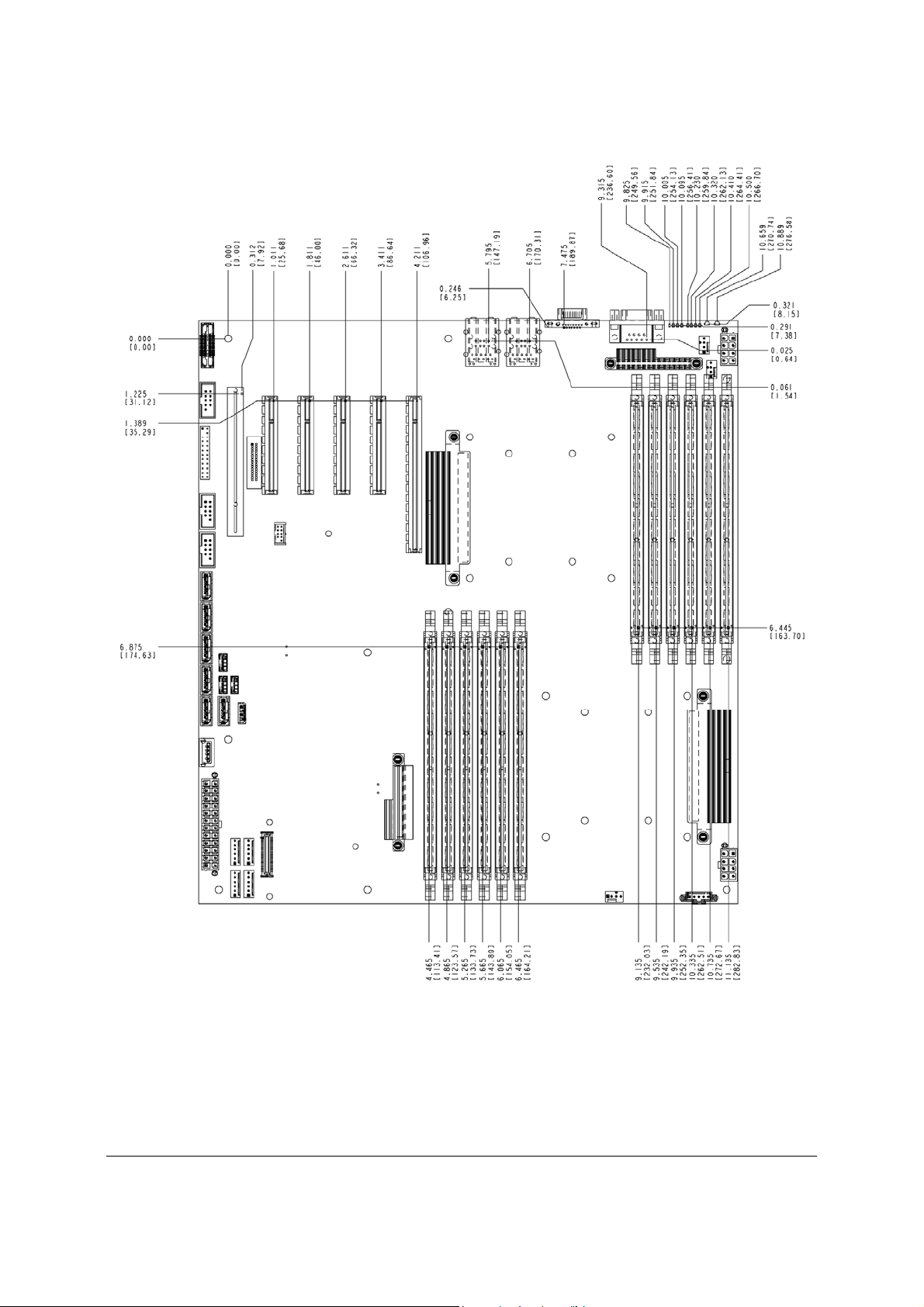

Figure 5. Major Connector Pin-1 Locations (1 of 2)


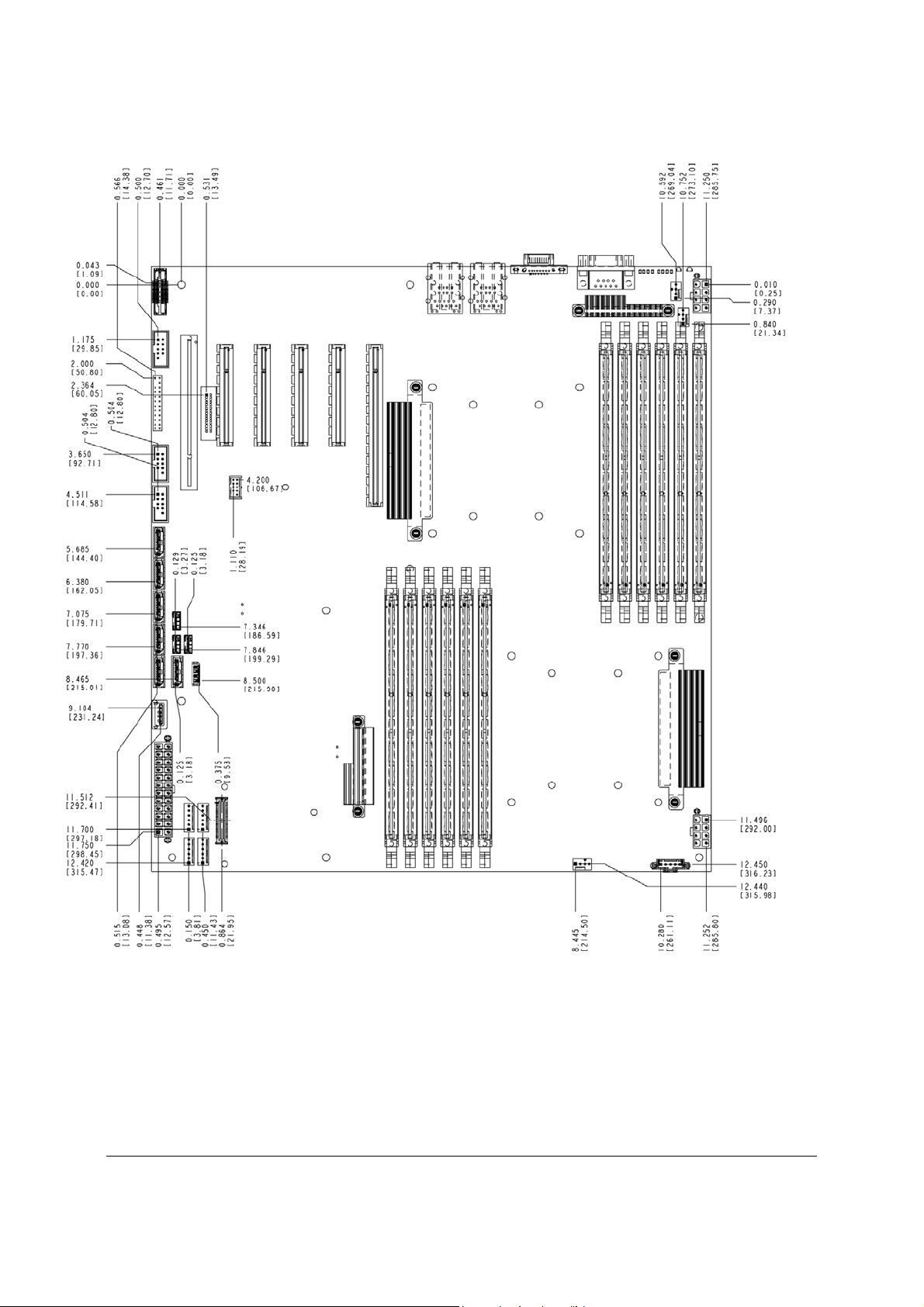

Figure 6. Major Connector Pin-1 Locations (2 of 2)


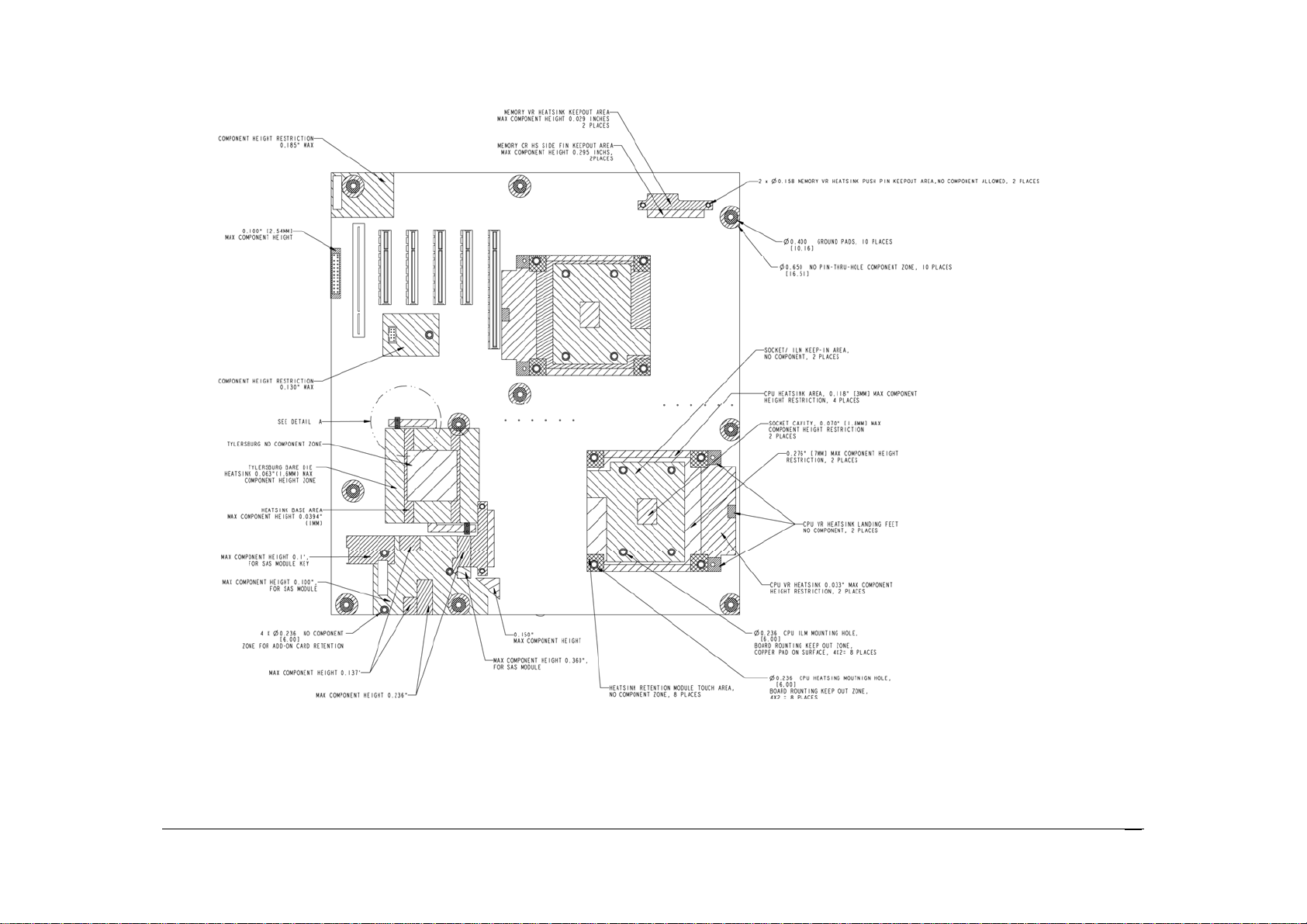

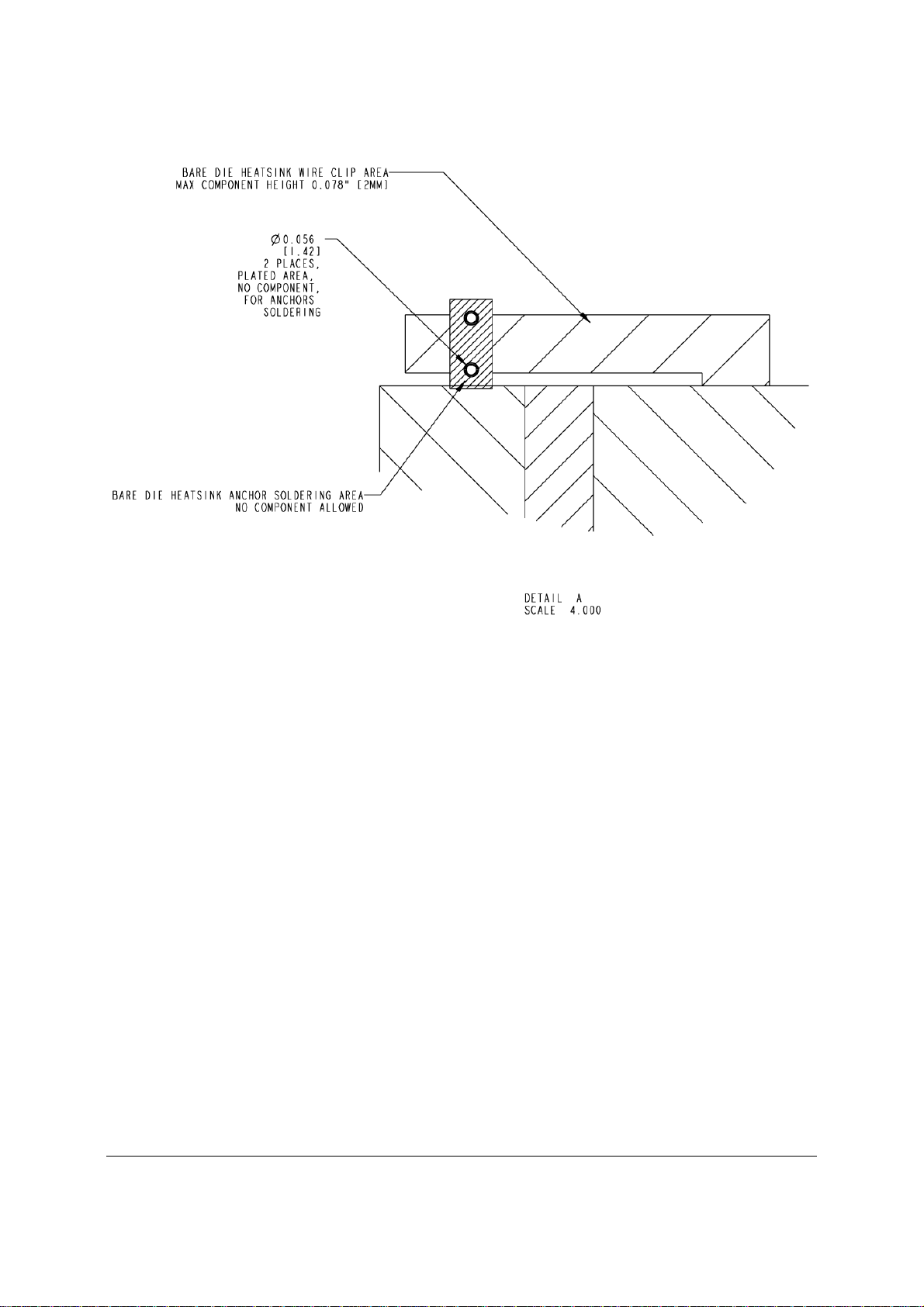

Figure 7. Primary Side Keep-out Zone (1 of 2)


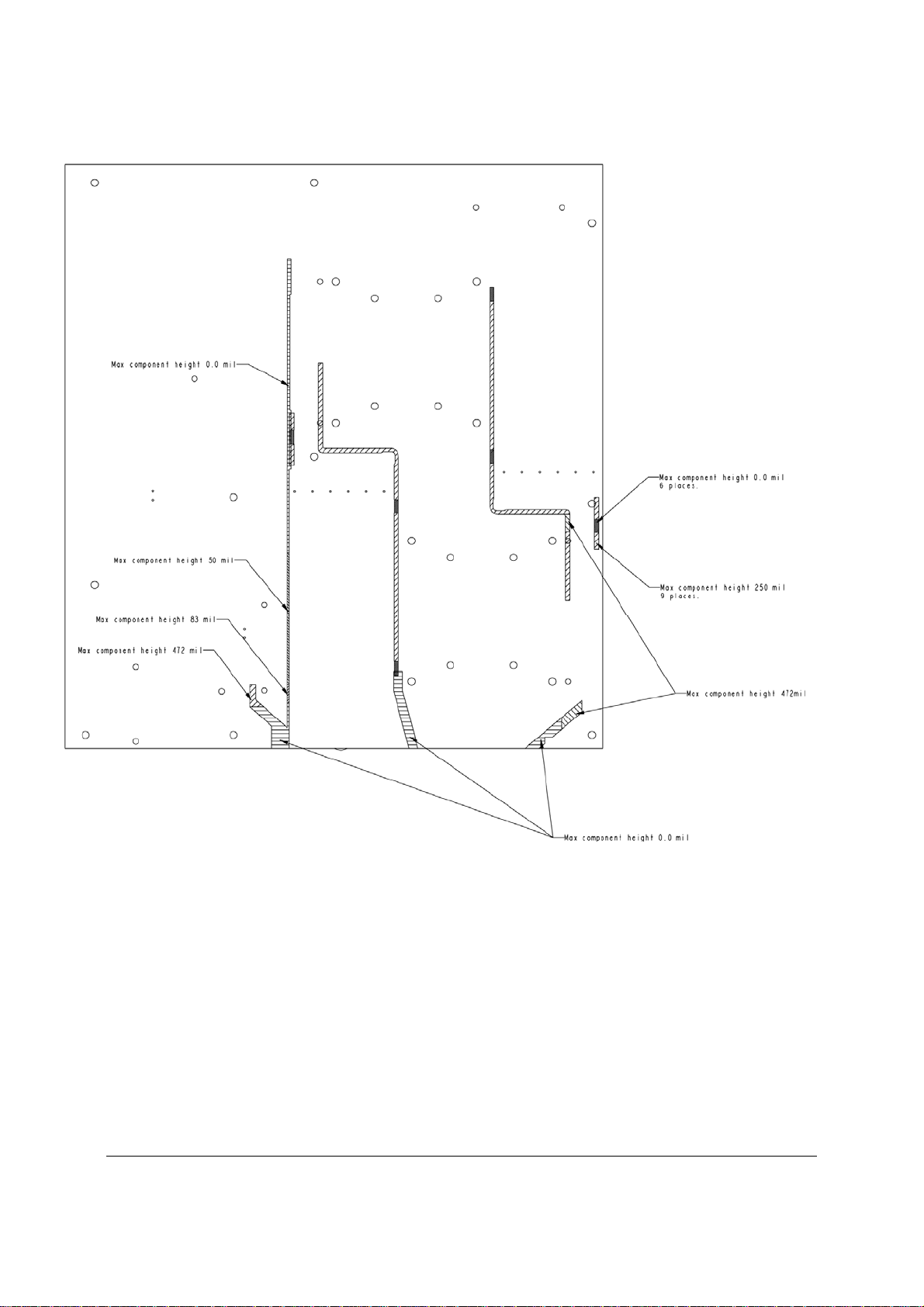

Figure 8. Primary Side Keep-out Zone (2 of 2)


Figure 9. Primary Side Air Duct Keep-out Zone


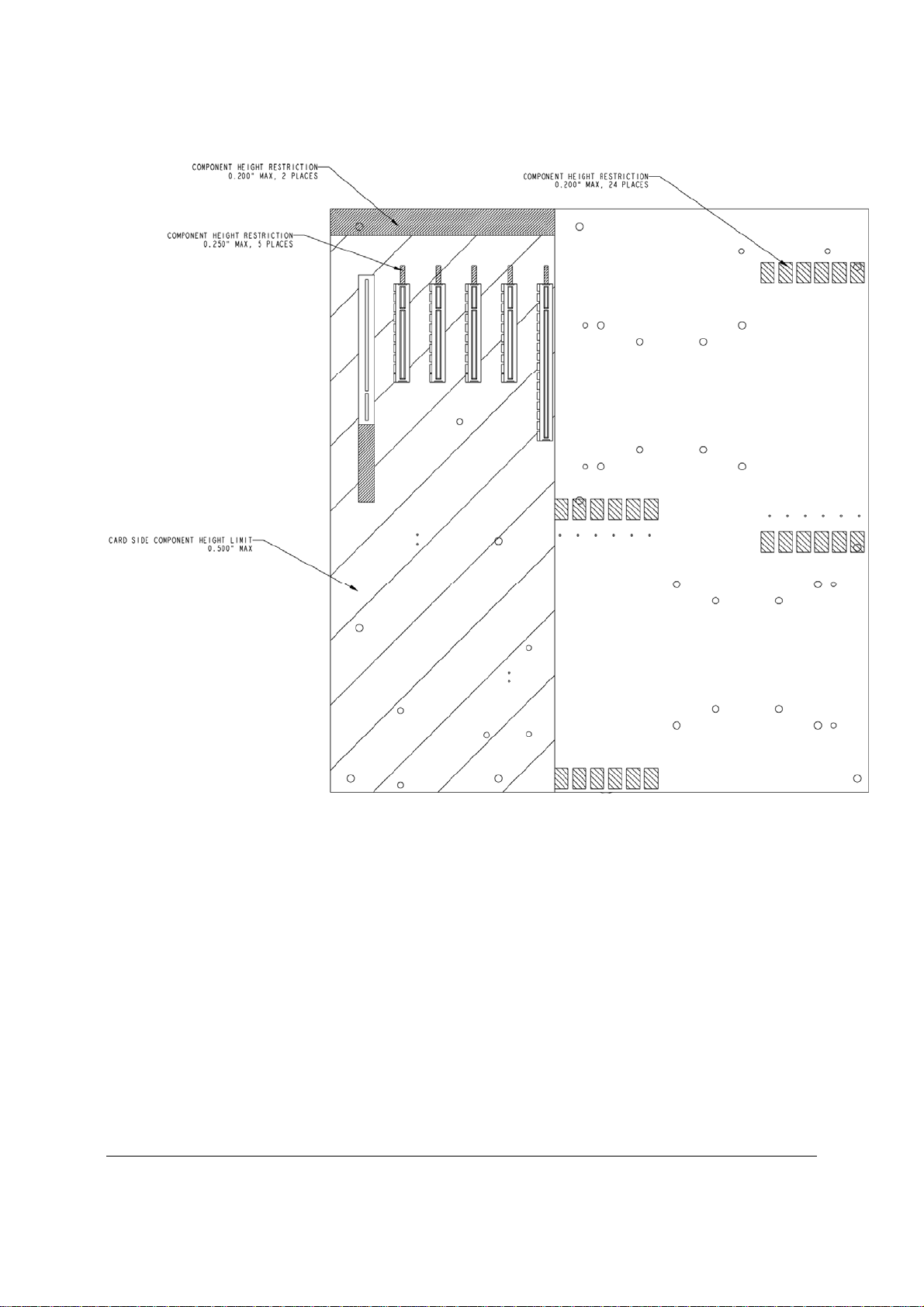

Figure 10. Primary Side Card-Side Keep-out Zone


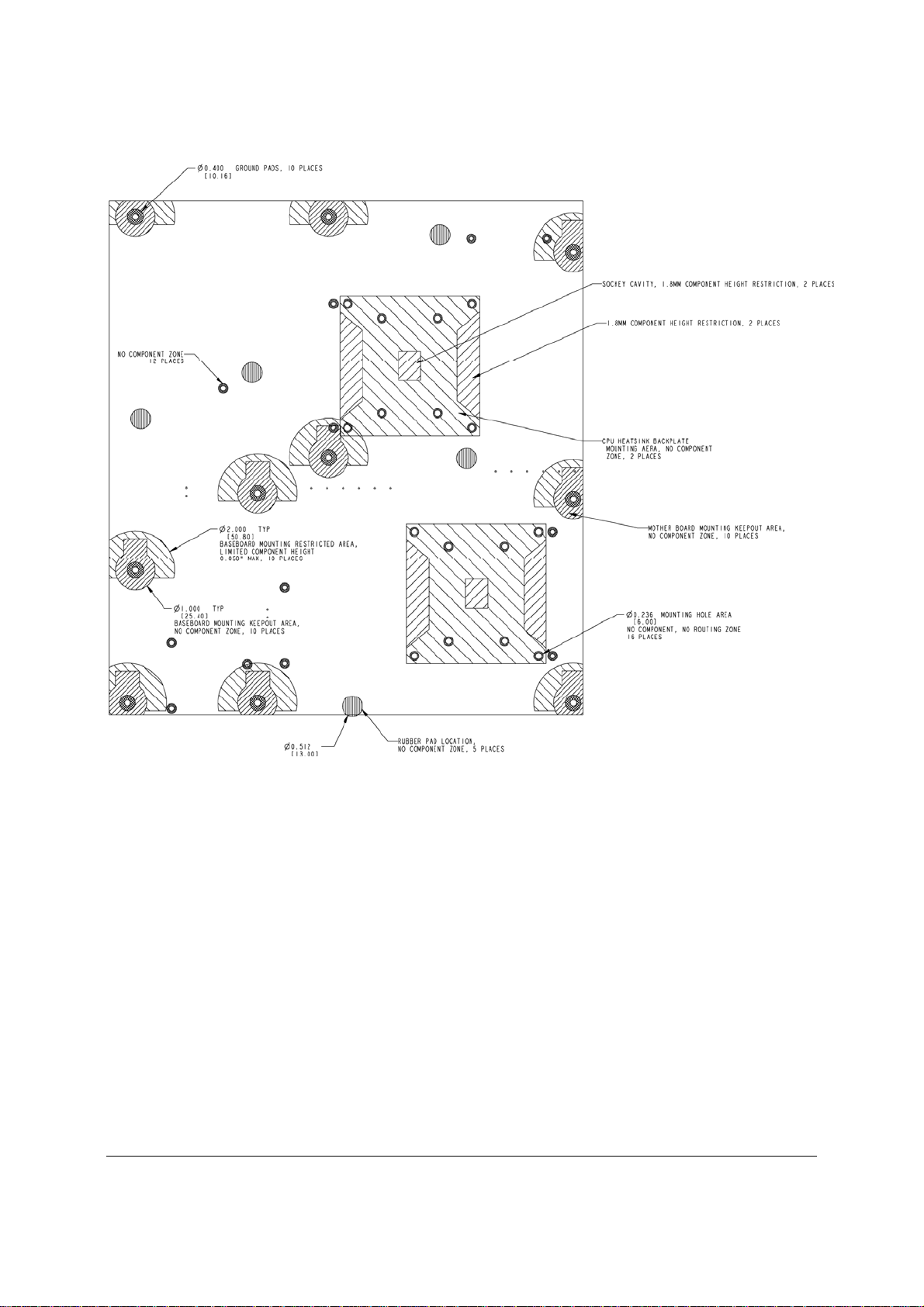

Figure 11. Second Side Keep-out Zone


2.1.3 Server Board Rear I/O Layout

The following drawing shows the layout of the rear I/O components for the server boards.

Callout | Description
A | System Status LED
B | ID LED
C | Diagnostics LEDs
D | Serial Port A
E | Video
F | NIC Port 1 (1 Gb, Default Management Port)
G | USB Port 2 (top), 3 (bottom)

NIC Port 2 (1 Gb)

USB Port 0 (top), 1 (bottom)

Figure 12. Rear I/O Layout


3. Functional Architecture

The architecture and design of the Intel® Server Boards S5520HC, S5500HCV and S5520HCT are based on the Intel® 5520/5500 and ICH10R chipset. The chipset is designed for systems based on the Intel® Xeon® Processor 5500 Series in an FC-LGA 1366 Socket B package with Intel® QuickPath Interconnect (Intel® QPI) speeds of 6.40 GT/s, 5.86 GT/s, and 4.80 GT/s.

The chipset contains two main components:

– Intel® 5520 I/O Hub or 5500 I/O Hub, which provides a connection point between various I/O components and the Intel® QuickPath Interconnect (Intel® QPI) based processors

– Intel® ICH10 RAID (ICH10R) I/O controller hub for the I/O subsystem

This chapter provides a high-level description of the functionality associated with each chipset component and the architectural blocks that make up the server boards.


Figure 13. Intel® Server Board S5520HC Functional Block Diagram


Figure 14. Intel® Server Board S5500HCV Functional Block Diagram


3.1 Intel® 5520 and 5500 I/O Hub (IOH)

The Intel® 5520 and 5500 I/O Hub (IOH) in the Intel® Server Boards S5520HC, S5500HCV and S5520HCT provides a connection point between various I/O components and Intel® QPI-based processors, and includes the following core platform functions:

• Intel® QPI link interface for the processor subsystem
• PCI Express* Ports
• Enterprise South Bridge Interface (ESI) for connecting Intel® ICH10R
• Manageability Engine (ME)
• Controller Link (CL)
• SMBus Interface
• Intel® Virtualization Technology for Directed I/O (Intel® VT-d)

The following table shows the high-level features of the Intel® 5520 and 5500 IOH:

Table 1. IOH High-Level Summary

IOH SKU | Intel® QPI Ports | Supported Processor | PCI Express* Lanes | Manageability
5520 | 2 | Intel® Xeon® Processor 5500 Series | 36 | Intel® Intelligent Power Node Manager
5500 | 2 | Intel® Xeon® Processor 5500 Series | 24 | Intel® Intelligent Power Node Manager

3.1.1 Intel® QuickPath Interconnect

The Intel® Server Boards S5520HC, S5500HCV and S5520HCT provide two full-width, cache-coherent, link-based Intel® QuickPath Interconnect interfaces from the Intel® 5520 and 5500 IOH for connecting Intel® QPI-based processors. The two Intel® QPI link interfaces support full-width communication only and have the following main features:

• Packetized protocol with 18 data/protocol bits and 2 CRC bits per link per direction
  – Supports 4.8 GT/s, 5.86 GT/s, and 6.4 GT/s
• Fully-coherent write cache with inbound write combining
• Read Current command support
• Support for 64-byte cache line size
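The transfer rates above translate into link bandwidth with a short calculation. The sketch below assumes, from general Intel® QPI background rather than from this TPS, that 16 of the 18 data/protocol bits in each transfer carry payload (2 bytes per transfer per direction). The function name `qpi_bandwidth_gb_s` is hypothetical and the results are theoretical peaks, not measured throughput.

```python
from typing import Tuple

# Assumption (general QPI knowledge, not stated in this TPS): of the 18 data/protocol
# bits per transfer, 16 carry payload, i.e. 2 bytes per transfer in each direction.
PAYLOAD_BYTES_PER_TRANSFER = 2
QPI_RATES_GT_S = (4.8, 5.86, 6.4)  # transfer rates listed in this section


def qpi_bandwidth_gb_s(rate_gt_s: float) -> Tuple[float, float]:
    """Return (GB/s per direction, GB/s for both directions combined) for one link."""
    per_direction = rate_gt_s * PAYLOAD_BYTES_PER_TRANSFER
    return per_direction, 2 * per_direction


if __name__ == "__main__":
    for rate in QPI_RATES_GT_S:
        one_way, total = qpi_bandwidth_gb_s(rate)
        print(f"{rate} GT/s: {one_way:.2f} GB/s per direction, {total:.2f} GB/s total")
```

Under this assumption, a 6.4 GT/s link yields 12.8 GB/s per direction, or 25.6 GB/s combined.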

3.1.2 PCI Express* Ports

The Intel® 5520 IOH can interface with up to 36 PCI Express* Gen2 lanes, and the Intel® 5500 IOH with up to 24 PCI Express* Gen2 lanes. Both support devices with the following link widths: x16, x8, x4, x2, and x1.

All ports support PCI Express* Gen1 and Gen2 transfer rates.

For a detailed definition of the PCI Express* slots in the Intel® Server Boards S5520HC, S5500HCV and S5520HCT, see “3.5 PCI Subsystem.”
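The lane counts and link widths above determine how much I/O each IOH can attach and at what rate. The sketch below assumes standard PCI Express* signaling rates (2.5 GT/s for Gen1, 5.0 GT/s for Gen2, both with 8b/10b encoding), which this TPS does not state. The function names are hypothetical, and any lane allocation passed to `fits_lane_budget` is illustrative only; it does not describe how these boards route their slots.

```python
from typing import List

# Assumptions (PCI Express* background, not from this TPS): per-lane signaling rates,
# with 8b/10b encoding so that 8 of every 10 line bits carry data.
GT_PER_S = {1: 2.5, 2: 5.0}
SUPPORTED_WIDTHS = {1, 2, 4, 8, 16}   # link widths listed for both IOHs
IOH_LANES = {"5520": 36, "5500": 24}  # PCI Express* lane counts from Table 1


def link_bandwidth_gb_s(gen: int, width: int) -> float:
    """Theoretical data bandwidth in GB/s, per direction, for one link."""
    if width not in SUPPORTED_WIDTHS:
        raise ValueError(f"unsupported link width x{width}")
    gbit_per_s = GT_PER_S[gen] * width * 8 / 10  # payload Gbit/s after 8b/10b
    return gbit_per_s / 8                        # Gbit/s -> GB/s


def fits_lane_budget(ioh: str, widths: List[int]) -> bool:
    """True if the requested link widths do not exceed the IOH's lane count."""
    if any(w not in SUPPORTED_WIDTHS for w in widths):
        raise ValueError("unsupported link width")
    return sum(widths) <= IOH_LANES[ioh]


if __name__ == "__main__":
    print(link_bandwidth_gb_s(2, 8))                # Gen2 x8: 4.0 GB/s per direction
    print(fits_lane_budget("5520", [16, 8, 8, 4]))  # 36 lanes: fits on the 5520
    print(fits_lane_budget("5500", [16, 8, 8, 4]))  # 36 lanes: exceeds the 5500's 24
```

The same arithmetic applies to the x4 ESI link at the Gen1 rate, which works out to 1.0 GB/s per direction under these assumptions.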


3.1.3 Enterprise South Bridge Interface (ESI)

One x4 ESI link interface, supporting the PCI Express* Gen1 (2.5 Gbps) transfer rate, connects the IOH to the Intel® ICH10R in the Intel® Server Boards S5520HC, S5500HCV and S5520HCT.

3.1.4 Manageability Engine (ME)

An embedded ARC controller within the IOH provides the Intel® Server Platform Services (SPS). This controller is commonly referred to as the Manageability Engine (ME).

3.1.5 Controller Link (CL)

The Controller Link is a private, low-pin-count (LPC), low-power communication interface between the IOH and the ICH10 portions of the Manageability Engine subsystem.


3.2 Processor Support

The Intel® Server Boards S5520HC, S5500HCV and S5520HCT support the following processors:

• One or two Intel® Xeon® Processor 5500 Series with a 4.8 GT/s, 5.86 GT/s, or 6.4 GT/s Intel® QPI link interface and Thermal Design Power (TDP) up to 95 W.

• One or two Intel® Xeon® Processor 5600 Series with a 6.4 GT/s Intel® QPI link interface and Thermal Design Power (TDP) up to 130 W.

The server boards do not support previous generations of the Intel® Xeon® Processors.

For a complete, updated list of supported processors, see http://support.intel.com/support/motherboards/server/S5520HC/. On the Support tab, look for “Compatibility” and then “Supported Processor List”.

3.2.1 Processor Population Rules

Processors must be populated in sequential order: populate processor socket 1 (CPU 1) before processor socket 2 (CPU 2).

When only one processor is installed, it must be in the socket labeled CPU1, which is located near the rear edge of the server board. When a single processor is installed, no terminator is required in the second processor socket.

For optimum performance, when two processors are installed, both must be of identical revision and have the same core voltage and Intel® QPI/core speed.
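The population rules above can be expressed as a small check, for example in an integration or inventory script. This is a minimal sketch under stated assumptions. Each socket is modeled as `None` (empty) or a dictionary, and the function `check_population` and its key names (`revision`, `core_voltage`, `qpi_speed`, `core_speed`) are hypothetical stand-ins for the attributes this section says should match. The sketch reports mismatches as findings, while the BIOS applies its own handling as described in the next section.

```python
from typing import List, Optional

# Hypothetical key names standing for the attributes this section says should match.
MATCH_FIELDS = ("revision", "core_voltage", "qpi_speed", "core_speed")


def check_population(cpu1: Optional[dict], cpu2: Optional[dict]) -> List[str]:
    """Return rule violations for sockets CPU 1 and CPU 2 (empty list = no issues)."""
    if cpu1 is None:
        # Sockets are populated in order, so a lone processor must sit in CPU 1.
        if cpu2 is not None:
            return ["CPU 1 must be populated before CPU 2"]
        return ["no processor installed"]
    issues = []
    if cpu2 is not None:
        # For optimum performance, both processors should match on every field.
        for field in MATCH_FIELDS:
            if cpu1.get(field) != cpu2.get(field):
                issues.append(f"{field} differs between CPU 1 and CPU 2")
    # A single processor in CPU 1 needs no terminator in socket 2, so nothing to flag.
    return issues


if __name__ == "__main__":
    cpu = {"revision": "A", "core_voltage": 1.2, "qpi_speed": 6.4, "core_speed": 2.93}
    print(check_population(cpu, None))
    print(check_population(None, cpu))
    print(check_population(cpu, {**cpu, "qpi_speed": 5.86}))
```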

3.2.2 Mixed Processor Configurations

The following table describes mixed processor conditions and recommended actions for the Intel® Server Boards S5520HC, S5500HCV and S5520HCT. Errors fall into one of three categories:

• Halt: If the system can boot, it pauses at a blank screen with the text “Unrecoverable fatal error found. System will not boot until the error is resolved” and “Press <F2> to enter setup”, regardless of whether the “Post Error Pause” setup option is enabled or disabled. After entering setup, the error message displays on the Error Manager screen, and an error is logged to the System Event Log (SEL) with the error code. The system cannot boot until the error is resolved; the user must replace the faulty part and restart the system.

• Pause: If the “Post Error Pause” setup option is enabled, the system goes directly to the Error Manager screen to display the error and logs the error code to the SEL. Otherwise, the system continues to boot and no prompt is given for the error, although the error code is logged to the Error Manager and in a SEL message.

• Minor: The message is displayed on the screen or on the Error Manager screen. The system continues booting in a degraded state regardless of whether the “Post Error Pause” setup option is enabled or disabled. The user may want to replace the erroneous unit.
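The three error categories reduce to a simple decision over the severity and the “Post Error Pause” setup option. The sketch below restates that logic for readers who script POST-error triage. The names `Severity`, `PostAction`, and `post_error_action` are hypothetical and do not reflect how the BIOS itself is implemented. SEL logging for Minor errors is returned as `None` because this section does not state it.

```python
from enum import Enum
from typing import NamedTuple, Optional


class Severity(Enum):
    HALT = "Halt"
    PAUSE = "Pause"
    MINOR = "Minor"


class PostAction(NamedTuple):
    halts: bool                    # system will not boot until the error is resolved
    waits_for_user: bool           # boot stops at an error prompt or the Error Manager
    logged_to_sel: Optional[bool]  # None = not stated in this section


def post_error_action(severity: Severity, post_error_pause: bool) -> PostAction:
    """Map an error category and the "Post Error Pause" option to the POST behavior."""
    if severity is Severity.HALT:
        # Halt ignores the "Post Error Pause" option and always stops.
        return PostAction(halts=True, waits_for_user=True, logged_to_sel=True)
    if severity is Severity.PAUSE:
        # Pause stops at the Error Manager only when "Post Error Pause" is enabled.
        return PostAction(halts=False, waits_for_user=post_error_pause, logged_to_sel=True)
    # Minor: boot continues in a degraded state whatever the option says.
    return PostAction(halts=False, waits_for_user=False, logged_to_sel=None)
```

Modeling “Post Error Pause” as a parameter makes the key distinction visible: only Pause errors change behavior with that option.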


Table 2. Mixed Processor Configurations

Error | Severity | System Action

Processor family not identical | Halt | The BIOS detects the error condition and responds as follows:
– Logs the error into the system event log (SEL).
– Alerts the Integrated BMC about the configuration error.
– Does not disable the processor.
– Displays “0194: Processor 0x family mismatch detected” message in the Error Manager.
– Halts the system and will not boot until the fault condition is remedied.

Processor stepping mismatch | Pause | The BIOS detects the stepping difference and responds as follows:
– Checks whether the steppings are compatible, typically +/- one stepping.
– If so, no error is generated (this is not an error condition).
– Continues to boot the system successfully.
Otherwise, this is a stepping mismatch error, and the BIOS responds as follows:
– Displays “0193: Processor 0x stepping mismatch” message in the Error Manager and logs it into the SEL.
– Takes Minor Error action and continues to boot the system.

Processor cache not identical | Halt | The BIOS detects the error condition and responds as follows:
– Logs the error into the SEL.
– Alerts the Integrated BMC about the configuration error.
– Does not disable the processor.
– Displays “0192: Processor 0x cache size mismatch detected” message in the Error Manager.
– Halts the system and will not boot until the fault condition is remedied.

Processor frequency (speed) not identical | Halt | The BIOS detects the error condition and responds as follows:
– Adjusts all processor frequencies to the highest common frequency.
– No error is generated; this is not an error condition.
– Continues to boot the system successfully.
If the frequencies for all processors cannot be adjusted to be the same, then the BIOS:
– Logs the error into the SEL.
– Displays “0197: Processor 0x family is not supported” message in the Error Manager.
– Halts the system and will not boot until the fault condition is remedied.


Error: Processor Intel® QuickPath Interconnect speeds not identical
Severity: Halt
System Action: The BIOS detects the error condition and responds as follows:
– Adjusts all processor QPI frequencies to the highest common frequency.
– No error is generated (this is not an error condition).
– Continues to boot the system successfully.
If the link speeds for all QPI links cannot be adjusted to be the same, then the BIOS:
– Logs the error into the SEL.
– Displays “0195: Processor 0x Intel® QPI speed mismatch” message in the Error Manager.
– Halts the system and will not boot until the fault condition is remedied.

Error: Processor microcode missing
Severity: Minor
System Action: The BIOS detects the error condition and responds as follows:
– Logs the error into the SEL.
– Does not disable the processor.
– Displays “8180: Processor 0x microcode update not found” message in the Error Manager or on the screen.
– The system continues to boot in a degraded state, regardless of the setting of POST Error Pause in Setup.
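Table 2 can be read as a lookup from condition to severity and error code, where the stepping and speed conditions are first checked for a recoverable case. The following is a minimal Python sketch under stated assumptions: steppings are modeled as plain integers, each processor's supported core or QPI speeds are modeled as a set of integers, and the +/- one stepping tolerance is the table's “typically” value rather than a guaranteed BIOS rule. The dictionary keys and function names are hypothetical.

```python
from typing import Optional

# Table 2 restated as data: condition -> (severity, POST error code).
# Key names are hypothetical labels for the table rows.
MIXED_PROCESSOR_ERRORS = {
    "family_not_identical": ("Halt", "0194"),
    "stepping_mismatch": ("Pause", "0193"),
    "cache_not_identical": ("Halt", "0192"),
    "frequency_not_identical": ("Halt", "0197"),
    "qpi_speed_not_identical": ("Halt", "0195"),
    "microcode_missing": ("Minor", "8180"),
}


def steppings_compatible(a: int, b: int, tolerance: int = 1) -> bool:
    """Assumed rule: steppings within +/- tolerance are not an error condition."""
    return abs(a - b) <= tolerance


def highest_common_speed(supported: list[set[int]]) -> Optional[int]:
    """Return the highest speed every processor supports, or None if none exists.

    None corresponds to the case where the BIOS cannot adjust all processors
    to the same speed, so it logs the error and halts.
    """
    if not supported:
        return None
    common = set.intersection(*supported)
    return max(common) if common else None
```

With hypothetical speed sets, `highest_common_speed([{2000, 2133, 2266}, {2000, 2133}])` selects 2133, the highest value both processors share, while two processors with no speed in common yield `None`, the halting case.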

3.2.3 Intel® Hyper-Threading Technology (Intel® HT)

If the installed processor supports the Intel® Hyper-Threading Technology, the BIOS Setup

provides an option to enable or disable this feature. The default is enabled.

The BIOS creates additional entries in the ACPI MP tables to describe the virtual processors.

The SMBIOS Type 4 structure shows only the installed physical processors. It does not

describe the virtual processors.

Because some operating systems are not able to efficiently use Intel® HT Technology, the BIOS does not create entries in the Multi-Processor Specification, Version 1.4 tables to describe the virtual processors.

3.2.4 Enhanced Intel SpeedStep® Technology (EIST)

If the installed processor supports the Enhanced Intel SpeedStep® Technology, the BIOS Setup

provides an option to enable or disable this feature. The default is enabled.

3.2.5 Intel® Turbo Boost Technology

Intel® Turbo Boost Technology opportunistically and automatically allows the processor to run

faster than the marked frequency if the part is operating below power, temperature, and current

limits.

If the processor supports this feature, the BIOS setup provides an option to enable or disable

this feature. The default is enabled.
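The eligibility condition above can be sketched as a single predicate: the Setup option must be enabled and the part must be below every one of its power, temperature, and current limits. This is an illustrative Python sketch only. The real decision is made by the processor itself, not by code like this, and the `Limits` type, the function name, and any numbers passed to it are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    # Hypothetical limit values; real limits are specific to each processor SKU.
    power_w: float
    temperature_c: float
    current_a: float


def turbo_allowed(setup_enabled: bool, power_w: float, temperature_c: float,
                  current_a: float, limits: Limits) -> bool:
    """True when running above the marked frequency is permitted.

    Requires the BIOS Setup option to be enabled and the part to be
    operating below all three limits at the same time.
    """
    return (setup_enabled
            and power_w < limits.power_w
            and temperature_c < limits.temperature_c
            and current_a < limits.current_a)
```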

3.2.6 Execute Disable Bit Feature

The Execute Disable Bit feature (XD bit) can prevent data pages from being used by malicious

software to execute code. A processor with the XD bit feature can provide memory protection in

one of the following modes:

• Legacy protected mode if Physical Address Extension (PAE) is enabled.


• Intel® 64 mode when 64-bit extension technology is enabled (entering Intel® 64 mode requires enabling PAE).

You can enable and disable the XD bit in the BIOS Setup. The default behavior is enabled.
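The mode rules above reduce to a small decision: XD protection needs the feature enabled in Setup and PAE active, and Intel® 64 mode always implies PAE. The following is a minimal Python sketch of that logic. It also shows how software can test for XD support, since CPUID leaf 80000001H reports XD support in EDX bit 20. The function names are hypothetical, and the sketch does not read real CPUID registers; callers pass the EDX value in.

```python
from typing import Optional

XD_CPUID_BIT = 20  # CPUID leaf 80000001H, EDX bit 20: Execute Disable Bit available


def cpu_reports_xd(edx_80000001: int) -> bool:
    """Decode the XD support flag from a caller-supplied CPUID 80000001H EDX value."""
    return bool((edx_80000001 >> XD_CPUID_BIT) & 1)


def xd_protection_mode(xd_enabled: bool, pae_enabled: bool,
                       intel64_active: bool) -> Optional[str]:
    """Return the mode in which XD protects memory, or None if it cannot."""
    if not xd_enabled:
        # XD disabled in BIOS Setup: no execute-disable protection.
        return None
    if intel64_active:
        # Entering Intel 64 mode requires PAE, so this combination is invalid.
        if not pae_enabled:
            raise ValueError("Intel 64 mode cannot be entered without PAE")
        return "Intel 64 mode"
    if pae_enabled:
        return "legacy protected mode with PAE"
    # Legacy protected mode without PAE offers no XD protection.
    return None
```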

3.2.7 Core Multi-Processing

The BIOS Setup provides the ability to selectively enable one or more cores through the active core count option. The default behavior is to enable all cores.

The BIOS creates entries in the Multi-Processor Specification, Version 1.4 tables to describe

multi-core processors.
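Sections 3.2.3 and 3.2.7 together determine how many processor entries each firmware table carries. The ACPI tables include the virtual (HT) processors. The Multi-Processor Specification 1.4 tables describe cores but not virtual processors. SMBIOS Type 4 lists only physical processors. The Python sketch below counts these entries. It assumes all populated sockets have the same active core count (Setup options apply commonly across sockets) and that Intel® HT provides two threads per core. The function name is hypothetical.

```python
def processor_table_entries(sockets: int, active_cores: int,
                            ht_enabled: bool) -> dict[str, int]:
    """Count processor entries per firmware table (illustrative sketch)."""
    threads_per_core = 2 if ht_enabled else 1  # assumed: two logical threads per core
    return {
        "acpi": sockets * active_cores * threads_per_core,  # includes virtual processors
        "mps_1_4": sockets * active_cores,                  # cores only, no HT threads
        "smbios_type4": sockets,                            # physical processors only
    }
```

For example, two processors with four active cores each and HT enabled yield 16 ACPI entries, 8 MP 1.4 entries, and 2 SMBIOS Type 4 structures.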

3.2.8 Direct Cache Access (DCA)

Direct Cache Access (DCA) is a system-level protocol in a multi-processor system to improve

I/O network performance, thereby providing higher system performance. The basic idea is to

minimize cache misses when a demand read is executed. This is accomplished by placing the

data from the I/O devices directly into the processor cache through hints to the processor to

perform a data pre-fetch and install it in its local caches.

The BIOS setup provides an option to enable or disable this feature. The default behavior is

enabled.

3.2.9 Unified Retention System Support

The server boards comply with the Unified Retention System (URS) and the Unified Backplate Assembly, and ship with a Unified Backplate Assembly at each processor socket. The URS retention transfers load to the server boards through the Unified Backplate Assembly. The URS spring, captive in the heatsink, provides the necessary compressive load for the thermal interface material (TIM). All components of the URS heatsink solution are captive to the heatsink and require only a Phillips* screwdriver to attach to the Unified Backplate Assembly. See Figure 15 for the stacking order of URS components.

The Unified Backplate Assembly is removable, allowing for the use of non-Intel® heatsink retention solutions.


Figure 15. Unified Retention System and Unified Back Plate Assembly


3.3 Memory Subsystem

On the Intel® Server Boards S5520HC, S5500HCV, and S5520HCT, Intel® Xeon® Processor 5500 Series processors are installed in the CPU sockets. Each installed processor has an integrated memory controller (IMC), which supports up to three DDR3 channels and groups the DIMMs on those channels into autonomous memory for that processor.

3.3.1 Memory Subsystem Nomenclature

The nomenclature for DIMM sockets implemented in the Intel® Server Boards S5520HC,

S5500HCV and S5520HCT is represented in the following figures.

• DIMMs are organized into physical slots on DDR3 memory channels that belong to processor sockets.

• The memory channels for the CPU 1 socket are identified as Channels A, B, and C. The memory channels for the CPU 2 socket are identified as Channels D, E, and F.

• The DIMM identifiers on the board silkscreen indicate which channel and CPU socket each slot belongs to. For example, DIMM_A1 is the first slot on Channel A of the CPU 1 socket, and DIMM_D1 is the first slot on Channel D of the CPU 2 socket.

• Processor sockets are self-contained and autonomous. However, all configurations in the BIOS Setup, such as RAS, Error Management, and so forth, are applied commonly across sockets.
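The naming rules above are regular enough to decode mechanically. The following is a minimal Python sketch that maps a silkscreen identifier such as DIMM_A1 to its CPU socket, channel, and slot. Slots are numbered from 0, following the Channel/Slot column of the S5520HC slot arrangement (A1 is Channel A, Slot 0). `parse_dimm_id` and `DimmLocation` are hypothetical names, and the sketch does not check whether a given board actually populates the slot.

```python
import re
from typing import NamedTuple


class DimmLocation(NamedTuple):
    cpu: int      # 1 or 2
    channel: str  # "A" through "F"
    slot: int     # 0-based slot index on the channel


# Channels A-C belong to the CPU 1 socket; D-F belong to the CPU 2 socket.
_CHANNEL_TO_CPU = {"A": 1, "B": 1, "C": 1, "D": 2, "E": 2, "F": 2}
_DIMM_ID = re.compile(r"DIMM_([A-F])([12])")


def parse_dimm_id(label: str) -> DimmLocation:
    """Decode a silkscreen DIMM identifier such as 'DIMM_A1'."""
    match = _DIMM_ID.fullmatch(label)
    if match is None:
        raise ValueError(f"not a DIMM identifier: {label!r}")
    channel, number = match.group(1), int(match.group(2))
    # Silkscreen numbers start at 1; the Channel/Slot column starts at 0.
    return DimmLocation(cpu=_CHANNEL_TO_CPU[channel], channel=channel, slot=number - 1)
```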

The Intel® Server Board S5520HC supports six DDR3 memory channels (three channels per processor) with two DIMM slots per channel, thus supporting up to twelve DIMMs in a two-processor configuration. See Figure 16 for the Intel® Server Board S5520HC DIMM slots arrangement.

The Intel® Server Board S5500HCV supports six DDR3 memory channels (three channels per processor) with two DIMM slots per channel at Channels A, B, and C, and one DIMM slot per channel at Channels D, E, and F, thereby supporting up to nine DIMMs in a two-processor configuration. See Figure 17 for the Intel® Server Board S5500HCV DIMM slots arrangement.
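The slot counts above follow directly from slots per channel times channels. The following is a minimal Python sketch of that arithmetic. It also reflects that Channels D, E, and F hang off the CPU 2 integrated memory controller, so they are counted only when two processors are installed. The board table and `max_dimms` are hypothetical names.

```python
CPU1_CHANNELS = ("A", "B", "C")
CPU2_CHANNELS = ("D", "E", "F")

# DIMM slots per channel, as described for each board.
SLOTS_PER_CHANNEL = {
    "S5520HC": dict.fromkeys(CPU1_CHANNELS + CPU2_CHANNELS, 2),
    "S5500HCV": {**dict.fromkeys(CPU1_CHANNELS, 2), **dict.fromkeys(CPU2_CHANNELS, 1)},
}


def max_dimms(board: str, processors: int = 2) -> int:
    """Count usable DIMM slots; CPU 2 channels need a processor in the CPU 2 socket."""
    if processors not in (1, 2):
        raise ValueError("these boards take one or two processors")
    slots = SLOTS_PER_CHANNEL[board]
    channels = CPU1_CHANNELS if processors == 1 else CPU1_CHANNELS + CPU2_CHANNELS
    return sum(slots[ch] for ch in channels)
```

This reproduces the stated totals: 6 × 2 = 12 DIMMs for the S5520HC and 3 × 2 + 3 × 1 = 9 DIMMs for the S5500HCV, both in a two-processor configuration.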


Figure 16. Intel® Server Board S5520HC DIMM Slots Arrangement

Server Board: Intel® Server Board S5520HC

CPU 1 (DIMM Identifier: Channel/Slot):
– A1 (Blue): Channel A, Slot 0
– A2 (Black): Channel A, Slot 1
– B1 (Blue): Channel B, Slot 0
– B2 (Black): Channel B, Slot 1
– C1 (Blue): Channel C, Slot 0
– C2 (Black): Channel C, Slot 1

CPU 2 (DIMM Identifier: Channel/Slot):
– D1 (Blue): Channel D, Slot 0
– D2 (Black): Channel D, Slot 1
– E1 (Blue): Channel E, Slot 0
– E2 (Black): Channel E, Slot 1
– F1 (Blue): Channel F, Slot 0
– F2 (Black): Channel F, Slot 1


Figure 17. Intel® Server Board S5500HCV DIMM Slots Arrangement (CPU 1 and CPU 2 sockets)

3.3.2 Supported Memory

• Both Intel

1.5-V DDR3 DIMMs.

Intel

Inte

• Both Intel