IBM Flex System V7000 Storage Node

Introduction and Implementation Guide

Introduction to IBM Flex System family, features, and functions

IBM Flex System V7000 Storage Node hardware overview

Host configuration guide

John Sexton

Tilak Buneti

Eva Ho

Massimo Rosati

ibm.com/redbooks

International Technical Support Organization

IBM Flex System V7000 Storage Node

Introduction and Implementation Guide

September 2013

SG24-8068-01

Note: Before using this information and the product it supports, read the information in “Notices” on page xi.

Second Edition (September 2013)

This edition applies to IBM Flex System V7000 Storage Node Version 7.1.

© Copyright International Business Machines Corporation 2013. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Authors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Summary of changes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

September 2013, Second Edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Chapter 1. Introduction to IBM Flex Systems and IBM PureSystems offerings . . . . . . 1

1.1 IBM PureSystems overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.1.1 Product names . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.2 IBM PureFlex System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.3 IBM PureApplication System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.2 IBM PureFlex System building blocks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.2.1 Highlights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.2.2 Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.3 IBM Flex System Enterprise Chassis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.3.1 Chassis power supplies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.3.2 Fan modules and cooling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.4 Compute nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.4.1 IBM Flex System x440 Compute Node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.4.2 IBM Flex System x240 Compute Node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.4.3 IBM Flex System x220 Compute Node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.4.4 IBM Flex System p260 and p24L Compute Nodes . . . . . . . . . . . . . . . . . . . . . . . . 20

1.4.5 IBM Flex System p460 Compute Node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

1.5 I/O modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

1.5.1 IBM Flex System Fabric CN4093 10 Gb Converged Scalable Switch . . . . . . . . . 25

1.5.2 IBM Flex System Fabric EN4093 and EN4093R 10 Gb Scalable Switch . . . . . . . 26

1.5.3 IBM Flex System EN4091 10 Gb Ethernet Pass-thru . . . . . . . . . . . . . . . . . . . . . . 27

1.5.4 IBM Flex System EN2092 1 Gb Ethernet Scalable Switch . . . . . . . . . . . . . . . . . . 27

1.5.5 IBM Flex System FC5022 16 Gb SAN Scalable Switch . . . . . . . . . . . . . . . . . . . . 28

1.5.6 IBM Flex System FC3171 8 Gb SAN Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.5.7 IBM Flex System FC3171 8 Gb SAN Pass-thru . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.5.8 IBM Flex System IB6131 InfiniBand Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.6 Introduction to IBM Flex System storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

1.6.1 IBM Storwize V7000 and IBM Flex System V7000 Storage Node . . . . . . . . . . . . 30

1.6.2 Benefits and value proposition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.6.3 Data Protection features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.7 External storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.7.1 Storage products. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.7.2 IBM Storwize V7000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Chapter 2. Introduction to IBM Flex System V7000 Storage Node . . . . . . . . . . . . . . . . 37

2.1 IBM Flex System V7000 Storage Node overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.2 IBM Flex System V7000 Storage Node terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.3 IBM Flex System V7000 Storage Node . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.3.1 IBM Flex System V7000 Storage Node releases . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.3.2 IBM Flex System V7000 Storage Node capabilities . . . . . . . . . . . . . . . . . . . . . . . 42

2.3.3 IBM Flex System V7000 Storage Node functions . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.4 IBM Flex System V7000 Storage Node licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.4.1 Mandatory licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.4.2 Optional licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.5 IBM Flex System V7000 Storage Node hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.5.1 Control canister . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.5.2 Expansion canister . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.5.3 Supported disk drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2.5.4 IBM Storwize V7000 expansion enclosure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

2.5.5 SAS cabling requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

2.6 IBM Flex System V7000 Storage Node components . . . . . . . . . . . . . . . . . . . . . . . . . . 59

2.6.1 Hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

2.6.2 Control canisters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

2.6.3 I/O groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

2.6.4 Clustered system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

2.6.5 RAID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

2.6.6 Managed disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

2.6.7 Quorum disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

2.6.8 Storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

2.6.9 Volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

2.6.10 Thin-provisioned volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

2.6.11 Mirrored volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

2.6.12 Easy Tier. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

2.6.13 Real-time Compression. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

2.6.14 iSCSI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

2.6.15 Fibre Channel over Ethernet (FCoE) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

2.7 Advanced copy services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

2.7.1 FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

2.7.2 IBM Flex System V7000 Remote Mirroring software . . . . . . . . . . . . . . . . . . . . . . 76

2.7.3 Synchronous / Asynchronous Remote Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

2.7.4 Copy Services configuration limits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

2.8 Management and support tools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

2.8.1 IBM Assist On-site and remote service. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

2.8.2 Event notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

2.8.3 SNMP traps. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

2.8.4 Syslog messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

2.8.5 Call Home email . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

2.9 Useful references from Storwize V7000 websites. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

2.10 IBM virtual storage learning videos on YouTube . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Chapter 3. Systems management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

3.1 System Management overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.1.1 Integrated platform management tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.1.2 IBM Flex System storage management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

3.1.3 Storage management interfaces. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.2 IBM Flex System Chassis Management Module (CMM). . . . . . . . . . . . . . . . . . . . . . . . 90

3.2.1 Overview of IBM Flex System Chassis Management Module . . . . . . . . . . . . . . . 90

3.2.2 Accessing the CMM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

3.2.3 Viewing and configuring IP addresses of chassis components . . . . . . . . . . . . . . 94

3.2.4 Accessing I/O modules using CMM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

3.2.5 Managing storage using IBM Flex System Chassis Management Module . . . . . . 99

3.2.6 Data collection using CMM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

3.3 Flex System Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

3.3.1 Overview of IBM Flex System Manager (FSM). . . . . . . . . . . . . . . . . . . . . . . . . . 113

3.3.2 IBM Flex System Manager storage management features. . . . . . . . . . . . . . . . . 115

3.3.3 Logging in to the IBM Flex System Manager Node. . . . . . . . . . . . . . . . . . . . . . . 119

3.3.4 Overview of IBM Flex System Manager and IBM FSM Explorer . . . . . . . . . . . . 120

3.3.5 Accessing I/O modules using FSM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

3.3.6 Data collection using FSM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

3.3.7 Managing storage using IBM Flex System Manager . . . . . . . . . . . . . . . . . . . . . 143

Chapter 4. IBM Flex System V7000 Storage Node initial configuration . . . . . . . . . . . 157

4.1 Planning overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

4.1.1 Hardware planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

4.1.2 SAN configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

4.1.3 LAN configuration planning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

4.1.4 Management IP address considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

4.1.5 Service IP address considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

4.1.6 Management interface planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

4.2 Initial setup for IBM Flex System V7000 Storage Node . . . . . . . . . . . . . . . . . . . . . . . 162

4.2.1 Using FSM for initial setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

4.2.2 Using CMM for initial setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

4.3 IBM Flex System V7000 Storage Node Setup Wizard . . . . . . . . . . . . . . . . . . . . . . . . 170

4.4 System management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

4.4.1 Graphical User Interface (GUI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

4.4.2 Launching IBM Flex System V7000 Storage Node GUI from CMM . . . . . . . . . . 183

4.5 Service Assistant. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

4.5.1 Changing the Service IP address . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

4.6 Command-Line interface (CLI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

4.7 Recording system access information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

Chapter 5. IBM Flex System V7000 Storage Node GUI interface . . . . . . . . . . . . . . . . 189

5.1 Overview of IBM Flex System V7000 Storage Node management software . . . . . . . 190

5.1.1 Access to the Graphical User Interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

5.1.2 Graphical User Interface layout. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

5.1.3 Navigation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

5.1.4 Multiple selections. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

5.1.5 Status Indicators menus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

5.2 Home menu. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

5.3 Monitoring menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

5.3.1 Monitoring System Details menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

5.3.2 Monitoring Events menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

5.3.3 Monitoring Performance menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

5.4 Pools menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

5.4.1 Volumes by Pool menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

5.4.2 Internal Storage menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

5.4.3 External Storage menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

5.4.4 System Migration tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

5.4.5 MDisks by Pools menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218

5.5 Volumes menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

5.5.1 The Volumes menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

5.5.2 Volumes by Pool menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

5.5.3 Volumes by Host menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

5.6 Hosts menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

5.6.1 Hosts menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

5.6.2 Ports by Host menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

5.6.3 Host Mappings menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

5.6.4 Volumes by Host menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

5.7 Copy Services menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

5.7.1 FlashCopy menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

5.7.2 FlashCopy Consistency Group menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

5.7.3 FlashCopy Mapping menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

5.7.4 Remote Copy and the Partnerships menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

5.8 Access menu. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

5.8.1 Users menu. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

5.8.2 Audit Log menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

5.9 Settings menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

5.9.1 Event Notification menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

5.9.2 Directory Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

5.9.3 Network menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

5.9.4 Support menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

5.9.5 General menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

Chapter 6. Basic volume and host configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

6.1 Storage provisioning from IBM Flex System V7000 Storage Node. . . . . . . . . . . . . . . 256

6.1.1 Creating a generic volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 258

6.1.2 Creating a thin-provisioned volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

6.1.3 Creating a mirrored volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

6.1.4 Creating a thin-mirror volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

6.1.5 IBM Real-time Compression . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

6.2 Creating a new host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

6.2.1 Creating a Fibre Channel attached host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

6.2.2 Creating an iSCSI attached host. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 276

6.3 Mapping a volume to the host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

6.3.1 Mapping newly created volumes to the host using the wizard . . . . . . . . . . . . . . 279

6.3.2 Additional features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 281

6.4 Scalability enhancements made in v7.1 compared to v6.4 . . . . . . . . . . . . . . . . . . . . . 282

Chapter 7. Storage Migration Wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

7.1 Preparing for data migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

7.2 Migrating data using the Storage Migration Wizard . . . . . . . . . . . . . . . . . . . . . . . . . . 285

7.2.1 Check the Windows 2008 host before the migration. . . . . . . . . . . . . . . . . . . . . . 285

7.2.2 Remapping the disk to IBM Flex System V7000 Storage Node . . . . . . . . . . . . . 286

7.2.3 Storage Migration Wizard on IBM Flex System V7000 Storage Node . . . . . . . . 289

7.2.4 Verifying the disks on the Windows server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.2.5 Finalizing the migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 304

7.2.6 Mapping disk to host after the migration has begun . . . . . . . . . . . . . . . . . . . . . . 306

7.2.7 Renaming the volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 309

Chapter 8. Storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 313

8.1 Working with internal drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

8.1.1 Actions on internal drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 318

8.1.2 Configuring internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 321

8.2 Working with MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

8.2.1 Adding MDisks to storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

8.2.2 Importing MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

8.2.3 RAID action for MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 351

8.2.4 Selecting the tier for MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 353

8.2.5 Additional actions on MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 355

8.2.6 Properties for MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 357

8.3 Working with storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 359

Chapter 9. IBM Flex System V7000 Storage Node Copy Services . . . . . . . . . . . . . . . 363

9.1 Services provided . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

9.2 FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

9.2.1 Business requirements for FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

9.2.2 FlashCopy functional overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 365

9.2.3 Planning for FlashCopy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 373

9.2.4 Managing FlashCopy using the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

9.2.5 Managing FlashCopy mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 381

9.3 Remote Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

9.3.1 Remote Copy concepts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

9.3.2 Global Mirror with Change Volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

9.3.3 Remote Copy planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 414

9.4 Troubleshooting Remote Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

9.4.1 1920 error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 418

9.4.2 1720 error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

9.5 Managing Remote Copy using the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

9.5.1 Managing cluster partnerships . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

9.5.2 Deleting a partnership. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 426

9.5.3 Managing a Remote Copy consistency group . . . . . . . . . . . . . . . . . . . . . . . . . . 436

Chapter 10. Volume mirroring and migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 447

10.1 Volume mirroring and migration options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 448

10.2 Tunable timeout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 448

10.3 Usage of mirroring for migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 448

10.4 Managing Volume Mirror and migration with the GUI . . . . . . . . . . . . . . . . . . . . . . . . 450

Chapter 11. SAN connections and configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 457

11.1 Storage Area Network overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 458

11.2 Connection to chassis I/O modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 459

11.2.1 I/O module configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 459

11.2.2 I/O module connection summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 460

11.3 iSCSI connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

11.3.1 Session establishment and management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462

11.3.2 iSCSI initiators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462

11.3.3 iSCSI multisession configuration and support. . . . . . . . . . . . . . . . . . . . . . . . . . 464

11.3.4 iSCSI multipath connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 465

11.3.5 Configuring multiple iSCSI host links . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 466

11.4 FCoE connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 468

11.4.1 FCoE protocol stack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 469

11.4.2 Converged Network Adapters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 470

11.4.3 FCoE port types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 471

11.4.4 Configuring CN4093 for FCoE connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . 473

11.5 Fibre Channel connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 491

11.5.1 The concept of layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 492

11.5.2 Fibre Channel topologies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 494

11.5.3 FC addressing and port types. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 495

11.5.4 Zoning a compute node for storage allocation . . . . . . . . . . . . . . . . . . . . . . . . . 496

11.5.5 Multipathing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 506

11.5.6 FC Switch Transparent Mode and N_Port ID Virtualization (NPIV) . . . . . . . . . 507

11.6 Storage Area Network summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 509

Chapter 12. Host configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 511

12.1 Host configuration overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 512

12.2 Discovering volumes from the host and multipath settings . . . . . . . . . . . . . . . . . . . . 513

12.3 Windows host attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 514

12.3.1 Windows 2012 R2 Fibre Channel volume attachment . . . . . . . . . . . . . . . . . . . 514

12.3.2 Windows 2008 R2 iSCSI volume attachment . . . . . . . . . . . . . . . . . . . . . . . . . . 520

12.3.3 Windows 2008 R2 FCoE volume attachment . . . . . . . . . . . . . . . . . . . . . . . . . . 533

12.4 VMware ESX host attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

12.4.1 VMware ESX Fibre Channel attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

12.4.2 VMware ESX iSCSI attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 544

12.5 AIX host attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 552

12.5.1 Configuring the AIX compute node for FC connectivity . . . . . . . . . . . . . . . . . . 552

12.5.2 Operating system versions and maintenance levels. . . . . . . . . . . . . . . . . . . . . 553

12.5.3 Checking connectivity to IBM Flex System V7000 Storage Node. . . . . . . . . . . 554

12.5.4 Installing the 2145 host attachment support package. . . . . . . . . . . . . . . . . . . . 555

12.5.5 Subsystem Device Driver Path Control Module . . . . . . . . . . . . . . . . . . . . . . . . 556

12.6 Linux host attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 560

12.6.1 Linux Fibre Channel attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 560

12.6.2 Applying device drivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 561

12.6.3 Creating and preparing the SDD volumes for use . . . . . . . . . . . . . . . . . . . . . . 564

12.6.4 Using the operating system Device Mapper Multipath (DM-MPIO) . . . . . . . . . 566

12.6.5 Creating and preparing DM-MPIO volumes for use . . . . . . . . . . . . . . . . . . . . . 567

Chapter 13. Maintenance and troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

13.1 Reliability, availability, and serviceability (RAS) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

13.2 Hardware and LED descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 573

13.2.1 Understanding the system state using the control enclosure LEDs . . . . . . . . . 573

13.2.2 Understanding the system state using the expansion enclosure LEDs . . . . . . 579

13.2.3 Power-on self-test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 582

13.2.4 Powering on using LED indicators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 583

13.3 Monitoring system status and health. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 584

13.3.1 Using FSM for status and health. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 584

13.3.2 System Status and Health tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 586

13.4 Managing storage nodes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 588

13.4.1 Using FSM Chassis Manager to manage a storage node . . . . . . . . . . . . . . . . 588

13.4.2 Using FSM Storage Management to manage a storage node . . . . . . . . . . . . . 591

13.4.3 Using CMM Chassis Manager to manage a storage node . . . . . . . . . . . . . . . . 592

13.5 Configuration backup and restore process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 593

13.6 Software upgrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 594

13.6.1 Choosing an upgrade method. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 594

13.6.2 Upgrading the system software using IBM Flex System V7000 Storage Node management GUI . . . . . . . . 595

13.7 Drive firmware upgrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 601

13.7.1 Multi drive upgrade utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 601

13.7.2 Upgrade procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 602

13.8 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 602

13.8.1 Using the CMM for troubleshooting tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 603

13.8.2 Using IBM Flex System V7000 Storage Node management GUI for troubleshooting tasks . . . . . . . . 606

13.8.3 Removing and replacing parts for troubleshooting and resolving problems . . . 608

13.8.4 Event reporting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 608

13.8.5 Viewing the event log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 609

13.8.6 Error event IDs and error codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 610

13.9 Audit log navigation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 613

13.10 Support data collection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 613

13.10.1 Collecting System Management Server service data using the CMM . . . . . . 615

13.10.2 Collecting support files using FSM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 616

13.11 Using event notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 617

13.12 Configuring Call Home . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 618

13.12.1 Configuring Call Home if FSM is not included. . . . . . . . . . . . . . . . . . . . . . . . . 618

13.12.2 Configuring Call Home if FSM is included. . . . . . . . . . . . . . . . . . . . . . . . . . . . 620

13.13 IBM Flex System V7000 Storage Node power on and off. . . . . . . . . . . . . . . . . . . . 625

13.13.1 Powering on the system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 626

13.13.2 Powering off the system using management GUI. . . . . . . . . . . . . . . . . . . . . . 627

13.13.3 Shutting down using IBM Flex System V7000 Storage Node command-line interface . . . . . . . . 628

13.13.4 Powering off a node using the CMM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 628

Appendix A. CLI setup and configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 629

Command-line interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 630

Basic setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 630

Example commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 639

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 645

IBM Redbooks publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 645

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 645

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 646

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 646

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area. Any

reference to an IBM product, program, or service is not intended to state or imply that only that IBM product,

program, or service may be used. Any functionally equivalent product, program, or service that does not

infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not grant you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such

provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION

PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR

IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any

manner serve as an endorsement of those websites. The materials at those websites are not part of the

materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring

any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results

obtained in other operating environments may vary significantly. Some measurements may have been made

on development-level systems and there is no guarantee that these measurements will be the same on

generally available systems. Furthermore, some measurements may have been estimated through

extrapolation. Actual results may vary. Users of this document should verify the applicable data for their

specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs.

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines

Corporation in the United States, other countries, or both. These and other IBM trademarked terms are

marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US

registered or common law trademarks owned by IBM at the time this information was published. Such

trademarks may also be registered or common law trademarks in other countries. A current list of IBM

trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Active Cloud Engine™

Active Memory™

AIX®

BladeCenter®

DS4000®

DS6000™

DS8000®

Easy Tier®

Electronic Service Agent™

EnergyScale™

FlashCopy®

HACMP™

IBM Flex System™

IBM Flex System Manager™

IBM PureData™

IBM®

Power Systems™

POWER7®

PowerVM®

POWER®

PureApplication™

PureData™

PureFlex™

PureSystems™

Real-time Compression™

Redbooks®

Redbooks (logo) ®

ServerProven®

Storwize®

System i®

System p®

System Storage DS®

System Storage®

System x®

Tivoli®

X-Architecture®

XIV®

The following terms are trademarks of other companies:

Intel Xeon, Intel, Pentium, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered

trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States,

other countries, or both.

Java, and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its

affiliates.

Other company, product, or service names may be trademarks or service marks of others.

Preface

IBM® Flex System™ products are ideally suited for data center environments that require

flexible, cost-effective, secure, and energy-efficient hardware. IBM Flex System V7000

Storage Node is the latest addition to the IBM Flex Systems product family and is a modular

storage system designed to fit into the IBM Flex System Enterprise chassis.

When purchased in the IBM PureFlex™ configurations, IBM Flex System V7000 Storage

Node is configured from the factory into the hardware solution purchased. If, however, the

configuration wanted is not offered in the predefined offerings, then a “Build to Order”

configuration is designed to meet your needs.

IBM Flex System V7000 Storage Node includes the capability to virtualize its own internal

storage in the same manner as the IBM Storwize® V7000 does. It is designed to be a

scalable internal storage system to support the compute nodes of the IBM Flex System

environment.

This IBM Redbooks® publication introduces the features and functions of IBM Flex System

V7000 Storage Node through several examples. This book is aimed at pre-sales and

post-sales technical support and marketing personnel and storage administrators. It can help

you understand the architecture of IBM Flex System V7000 Storage Node, how to implement

it, and how to take advantage of the industry leading functions and features.

Authors

This book was produced by a team of specialists from around the world working at the

International Technical Support Organization, Raleigh Center.

John Sexton is temporarily assigned at the International Technical Support Organization,

Raleigh Center as team leader for this project. He is a Certified Consulting IT Specialist,

based in Wellington, New Zealand, and has over 25 years of experience working in IT. He has

worked at IBM for the last 17 years. His areas of expertise include IBM System p®, IBM AIX®,

IBM HACMP™, virtualization, storage, cloud, IBM Tivoli® Storage Manager, SAN, SVC, and

business continuity. He provides pre-sales support and technical services for clients

throughout New Zealand, including consulting, solution design and implementation,

troubleshooting, performance monitoring, system migration, and training. Prior to joining IBM

in New Zealand, John worked in the United Kingdom building and maintaining systems in the

UK financial and advertising industries.

Tilak Buneti is an IBM Real-time Compression™ Development Support Engineer based in

North Carolina, USA and has over 15 years of experience working in Storage and IT fields.

He joined IBM directly as a professional and holds a Bachelor's degree in Electronics and

Communication Engineering. He has expertise in NAS, SAN, backup, and storage

optimization technologies. He has certifications for CCNA, MCSE,

NACP, and NACA. In his current role, he is responsible for worldwide product support for IBM

Real-time Compression and documentation updates.

Eva Ho is a worldwide Product Engineer supporting IBM Flex System V7000 with the IBM

Systems Technology Group. She has 28 years of working experience within IBM, which

includes product development, L2/PFE support, and Product Engineer experience working

with IBM products such as servers, networking products, IBM Network Attached Storage

appliances, IBM DS6000™, IBM System Storage® N series, Storwize V7000, Storwize

V7000 Unified, and IBM Flex System V7000. She also worked as technical team lead when

she joined the STG worldwide N series PFE support team in Research Triangle Park, North

Carolina. Eva has a System Storage Certification with IBM. She was a participant in

developing IBM Storage Networking Solutions V1 and V2 Certification test. She holds a

Master's degree in Computer Science.

Massimo Rosati is a Certified Senior Storage IT Specialist in IBM Italy. He has 28 years of

experience in the delivery of Professional Services and SW Support. His areas of expertise

include storage hardware, SANs, storage virtualization, disaster recovery, and business

continuity solutions. He has achieved Brocade and Cisco SAN Design Certifications, and is

supporting critical and complex client engagements in the SAN and storage areas. Massimo

has written extensively about SAN and virtualization products in several IBM Redbooks

publications.

Thanks to the following people for their contributions to this project:

Sangam Racherla

Matt Riddle

Karen Brown

Scott Piper

Roger Bullard

Walter Tita

Andy Sylivant

John Fasano

Bill Wiegand

Andrew Martin

Dan Braden

Marisol Diaz Amador

Royce Espey

Andrew P. Jones

Carlos Fuente

Tayfun Arli

IBM

International Technical Support Organization, Raleigh Center

Tamikia Barrow

Ilya Krutov

David Watts

Thanks also to the authors of the previous editions of this book:

Authors of the first edition, IBM Flex System V7000 Storage Node Introduction and

Implementation Guide, published in March 2013:

– Sangam Racherla

– Eva Ho

– Carsten Larsen

– Kim Serup

– John Sexton

– Mansoor Syed

– Alexander Watson

Now you can become a published author, too!

Here’s an opportunity to spotlight your skills, grow your career, and become a published

author—all at the same time! Join an ITSO residency project and help write a book in your

area of expertise, while honing your experience using leading-edge technologies. Your efforts

will help to increase product acceptance and customer satisfaction, as you expand your

network of technical contacts and relationships. Residencies run from two to six weeks in

length, and you can participate either in person or as a remote resident working from your

home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our books to be as helpful as possible. Send us your comments about this book or

other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks

weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html

Summary of changes

This section describes the technical changes made in this edition of the book and in previous

editions. This edition might also include minor corrections and editorial changes that are not

identified.

Summary of Changes

for SG24-8068-01

for IBM Flex System V7000 Storage Node Introduction and Implementation Guide

as created or updated on March 12, 2014.

September 2013, Second Edition

This revision reflects the addition, deletion, or modification of new and changed information

described below.

IBM Storwize Family Software for Storwize V7000 V7.1.x is now available on IBM Flex

System V7000 Storage Node for upgrades and new purchases.

New hardware

Larger drives increase the maximum internal capacity by up to 33% using 1.2 TB 2.5 inch 10K

RPM SAS drives instead of 900 GB 10K RPM SAS drives. Or, the capacity can be increased

by up to 20% using 1.2 TB 2.5 inch 10K RPM SAS drives instead of 1 TB 7.2K RPM NL SAS

drives, which were formerly the largest drives available.
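
The percentages quoted above follow directly from the per-drive capacities. The following minimal Python sketch reproduces the arithmetic; the function name is a hypothetical helper, and the drive sizes are taken as decimal gigabytes (an assumption, because the text does not state the unit convention).

```python
def capacity_gain_percent(new_gb: int, old_gb: int) -> float:
    """Percentage increase in raw capacity per drive bay when
    old_gb drives are replaced by new_gb drives."""
    return (new_gb - old_gb) * 100 / old_gb


# Drive sizes quoted in the text, expressed in GB (assumed decimal).
gain_vs_900gb = capacity_gain_percent(1200, 900)
gain_vs_1tb = capacity_gain_percent(1200, 1000)

print(f"1.2 TB vs 900 GB: {gain_vs_900gb:.0f}%")  # 1.2 TB vs 900 GB: 33%
print(f"1.2 TB vs 1 TB: {gain_vs_1tb:.0f}%")      # 1.2 TB vs 1 TB: 20%
```

Because every bay holds one drive, the same ratio applies to the maximum internal capacity as long as the number of drive bays is unchanged.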

Changed information

Scalability enhancements enable the Storwize Software family to handle larger configurations

with more hosts using more volumes with more virtual machines:

Increases the number of hosts per I/O group from 256 to 512.

For a cluster, increases the host limit from 1024 to 2048.

Increases the number of volumes or LUNs per host from 512 to 2048. This increase

applies to FC and FCoE host attachment types (subject to host type limitations), but

not to iSCSI.

Increases the number of host WWPNs per I/O group to 2048 and per cluster to 8192.

This increase applies equally to native FC and FCoE WWPNs.
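
As an illustration only, the limits listed above can be captured in a small table and used to sanity-check a planned configuration. This Python sketch is not an IBM tool: the dictionary keys and the `check_plan` function are hypothetical names, and the values are only the V7.1 figures quoted in this summary.

```python
# V7.1 limits as quoted in the list above; key names are hypothetical.
V71_LIMITS = {
    "hosts_per_io_group": 512,
    "hosts_per_cluster": 2048,
    "volumes_per_host": 2048,    # FC and FCoE attachment only, not iSCSI
    "wwpns_per_io_group": 2048,
    "wwpns_per_cluster": 8192,
}


def check_plan(plan: dict) -> list:
    """Return the names of the limits that the planned values exceed.

    Keys missing from the plan are treated as zero (not checked).
    """
    return [name for name, limit in V71_LIMITS.items()
            if plan.get(name, 0) > limit]


# Example: 300 hosts in one I/O group is within the limit,
# but 3000 volumes mapped to a single host is not.
violations = check_plan({"hosts_per_io_group": 300, "volumes_per_host": 3000})
print(violations)  # ['volumes_per_host']
```

Always confirm planned values against the configuration limits published for the installed software level; the figures here are only those stated in this section.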

The ability to use IBM Real-time Compression and Easy Tier together enables users to get high

performance and high efficiency at the same time.

Copy services now has a new function that permits switching from Metro Mirror to Global

Mirror (with or without change volumes) without the need to re-synchronize.

Chapter 1. Introduction to IBM Flex Systems and IBM PureSystems offerings

This chapter provides an overview of the IBM PureSystems offerings and how IBM Flex

System V7000 Storage Node adds to a cloud-ready solution within a single IBM Flex System

Enterprise Chassis. Such a solution consists of compute nodes, storage systems, and the LAN

and SAN infrastructure that connects them.

IBM Flex System products are ideally suited for data center environments that require flexible,

cost-effective, secure, and energy-efficient hardware.

The innovative design features of the IBM Flex System products make it possible for you to

configure totally integrated, customized, secure solutions that meet your data center needs

today and provide flexible expansion capabilities for the future. The scalable hardware

features and the unprecedented cooling capabilities of the IBM Flex System products help

you optimize hardware utilization, minimize cost, and simplify the overall management of your

data center.

The primary focus of this book is to describe features and functions of IBM Flex System

V7000 Storage Node. However, in early versions of the IBM Flex System, the integrated

storage is provided by IBM Storwize V7000. Further developments were made from the time

of initial product GA announcement, and IBM Flex System V7000 Storage Node is now

supported as integrated storage inside the IBM Flex System chassis. Hence this

introduction covers both storage systems. In the following chapters, IBM Flex System V7000

Storage Node and its functions are described.

For more information about IBM PureSystems, see the following website:

http://www.ibm.com/ibm/puresystems/us/en/index.html

1.1 IBM PureSystems overview

During the last 100 years, information technology has moved from a specialized tool to a

pervasive influence on nearly every aspect of life. From tabulating machines that simply

counted with mechanical switches or vacuum tubes to the first programmable computers, IBM

has been a part of this growth, while always helping customers to solve problems.

Information Technology (IT) is a constant part of business and of our lives. IBM expertise in

delivering IT solutions has helped the planet become smarter. And as organizational leaders

seek to extract more real value from their data, business processes and other key

investments, IT is moving to the strategic center of business.

To meet those business demands, IBM is introducing a new category of systems, systems

that combine the flexibility of general-purpose systems, the elasticity of cloud computing and

the simplicity of an appliance that is tuned to the workload. Expert integrated systems are

essentially the building blocks of capability. This new category of systems represents the

collective knowledge of thousands of deployments, established best practices, innovative

thinking, IT leadership, and distilled expertise.

The offerings in IBM PureSystems are designed to deliver value in the following ways:

Built-in expertise helps you to address complex business and operational tasks

automatically.

Integration by design helps you to tune systems for optimal performance and efficiency.

Simplified experience, from design to purchase to maintenance, creates efficiencies

quickly.

IBM PureSystems offerings are optimized for performance and virtualized for efficiency.

These systems offer a no-compromise design with system-level upgradeability. IBM

PureSystems is built for cloud, containing “built-in” flexibility and simplicity.

At IBM, expert integrated systems come in two types:

IBM PureFlex System: Infrastructure systems deeply integrate the IT elements and

expertise of your system infrastructure.

IBM PureApplication™ System: Platform systems include middleware and expertise for

deploying and managing your application platforms.

IBM PureSystems are built for cloud with integrated elasticity and virtualization capabilities to

provision new services in minutes and improve business flexibility while reducing cost.

IBM Flex System is a build-to-order offering that is integrated by the client or a partner and

does not deliver against all three attributes of expert integrated systems (built-in

expertise, integration by design, simplified experience). IBM Flex System allows clients to

build their own system to meet unique IT requirements with a set of no-compromise

components including compute, storage, networking, and systems management.

IBM PureFlex System and IBM PureApplication System are built on elements of the IBM Flex

System. It has been designed for clients that need pre-integrated hardware infrastructure

comprised of compute, storage and networking nodes as well as a choice of operating

systems and hypervisors.

The new offering, IBM Flex System V7000 Storage Node, is supported with IBM PureFlex

System and other IBM Flex System configurations.

1.1.1 Product names

The primary product names for the IBM PureSystems components are as follows:

IBM PureSystems:

– The overall name for IBM's new family of expert integrated systems

IBM Flex System:

– A build-to-order offering with the client's choice of IBM Flex System components

– IBM Flex System that can help you go beyond blades

– An innovative Enterprise Chassis designed for new levels of simplicity, flexibility,

integration, reliability, and upgradability

– A broad range of x86 and IBM POWER® compute nodes

– New IBM Flex System V7000 Storage Node built into the Enterprise Chassis

IBM PureFlex System:

– A solution that combines compute nodes, storage, networking, virtualization and

management into a single infrastructure system. It is expert at sensing and anticipating

resource needs to optimize your infrastructure.

IBM PureApplication System:

– A platform system designed and tuned specifically for transactional web and database

applications. Its workload-aware, flexible platform is designed to be easy to deploy,

customize, safeguard, and manage.

IBM PureData™ System:

– PureData System is the newest member of the IBM PureSystems™ family that is

optimized exclusively for delivering data services to today’s demanding applications

with simplicity, speed, and lower cost.

– Like IBM PureApplication System, it offers built-in expertise, integration by design, and

a simplified experience throughout its life cycle.

IBM Flex System V7000 Storage Node:

– The product name for the IBM Flex System V7000 Storage Node family of controller

and expansion enclosures. The IBM Flex System V7000 Storage Node is an add-on for

the IBM Flex System Enterprise Chassis.

IBM Flex System V7000 Control Enclosure:

– The controller enclosure of the IBM Flex System V7000 Storage Node. The IBM Flex

System V7000 Control Enclosure is an add-on for the IBM Flex System Enterprise

Chassis and mounts internally into it.

– The IBM Flex System V7000 Control Enclosure provides 24 disk drive bays.

– The IBM Flex System V7000 Control Enclosure supports block workloads only.

IBM Flex System V7000 Expansion Enclosure:

– A SAS disk shelf with 24 disk drive bays that connects to the IBM Flex System V7000

Control Enclosure. The IBM Flex System V7000 Expansion Enclosure is an add-on for

the IBM Flex System Enterprise Chassis and mounts internally into it.

IBM Storwize V7000:

– The IBM Storwize V7000 is a disk system with built-in IBM SAN Volume Controller

(SVC) functionality. It has the ability to virtualize a wide range of external storage

systems from either IBM or other Storage vendors.

– The IBM Storwize V7000 Control Enclosure provides a choice of 24 x 2.5" Small Form

Factor (SFF) or 12 x 3.5" Large Form Factor (LFF) disk drives.

– The IBM Storwize V7000 supports block workloads only.

IBM Storwize V7000 Unified:

– Like the IBM Storwize V7000, IBM Storwize V7000 Unified is a disk system that provides

internal storage and external virtualization. However, the IBM Storwize V7000 Unified

also has file modules that provide NAS functionality, such as the CIFS and NFS protocols.

– The Storwize V7000 Unified consolidates block and file workloads into a single system.

IBM Storwize V7000 Control Enclosure:

– This component is the controller enclosure of the IBM Storwize V7000 storage system.

– The IBM Storwize V7000 Control Enclosure provides 12 or 24 disk drive bays,

depending on the model.

IBM Storwize V7000 Expansion Enclosure:

– A SAS disk shelf with either 12 or 24 disk drive bays that can connect to either the IBM

Storwize V7000 Control Enclosure or the IBM Flex System V7000 Control Enclosure.

Figure 1-1 shows the different IBM PureSystems and their building blocks.

Figure 1-1 IBM PureSystems

1.1.2 IBM PureFlex System

To meet today’s complex and ever-changing business demands, you need a solid foundation

of server, storage, networking, and software resources that is simple to deploy and can

quickly and automatically adapt to changing conditions. You also need access to, and the

ability to take advantage of, broad expertise and proven best practices in systems

management, applications, hardware maintenance, and more.

IBM PureFlex System is a comprehensive infrastructure system that provides an expert

integrated computing system, combining servers, enterprise storage, networking,

virtualization, and management into a single structure. Its built-in expertise enables

organizations to simply manage and flexibly deploy integrated patterns of virtual and

hardware resources through unified management. These systems are ideally suited for

customers interested in a system that delivers the simplicity of an integrated solution but who

also want control over tuning middleware and the run-time environment.

IBM PureFlex System recommends workload placement based on virtual machine

compatibility and resource availability. Using built-in virtualization across servers, storage,

and networking, the infrastructure system enables automated scaling of resources and true

workload mobility.

IBM PureFlex System undergoes significant testing and experimentation, so it can mitigate IT

complexity without compromising the flexibility to tune systems to the tasks businesses

demand. By providing both flexibility and simplicity, IBM PureFlex System can provide

extraordinary levels of IT control, efficiency, and operating agility that enable businesses to

rapidly deploy IT services at a reduced cost. Moreover, the system is built on decades of

expertise, enabling deep integration and central management of the comprehensive,

open-choice infrastructure system and dramatically cutting down on the skills and training

required for managing and deploying the system.

IBM PureFlex System combines advanced IBM hardware and software along with patterns of

expertise and integrates them into three optimized configurations that are simple to acquire

and deploy, so you get fast time to value for your solution.

Figure 1-2 shows the IBM PureFlex System with its three different chassis implementations.

Figure 1-2 IBM PureFlex System

The three PureFlex System configurations are as follows:

IBM PureFlex System Express: Designed for small and medium businesses, it is the most

affordable entry point for the PureFlex System.

IBM PureFlex System Standard: Optimized for application servers with supporting storage

and networking, it is designed to support your key ISV solutions.

IBM PureFlex System Enterprise: Optimized for transactional and database systems, it

has built-in redundancy for highly reliable and resilient operation to support your most

critical workloads.

Note: IBM Flex System allows you to build your own system to meet your unique IT

requirements.

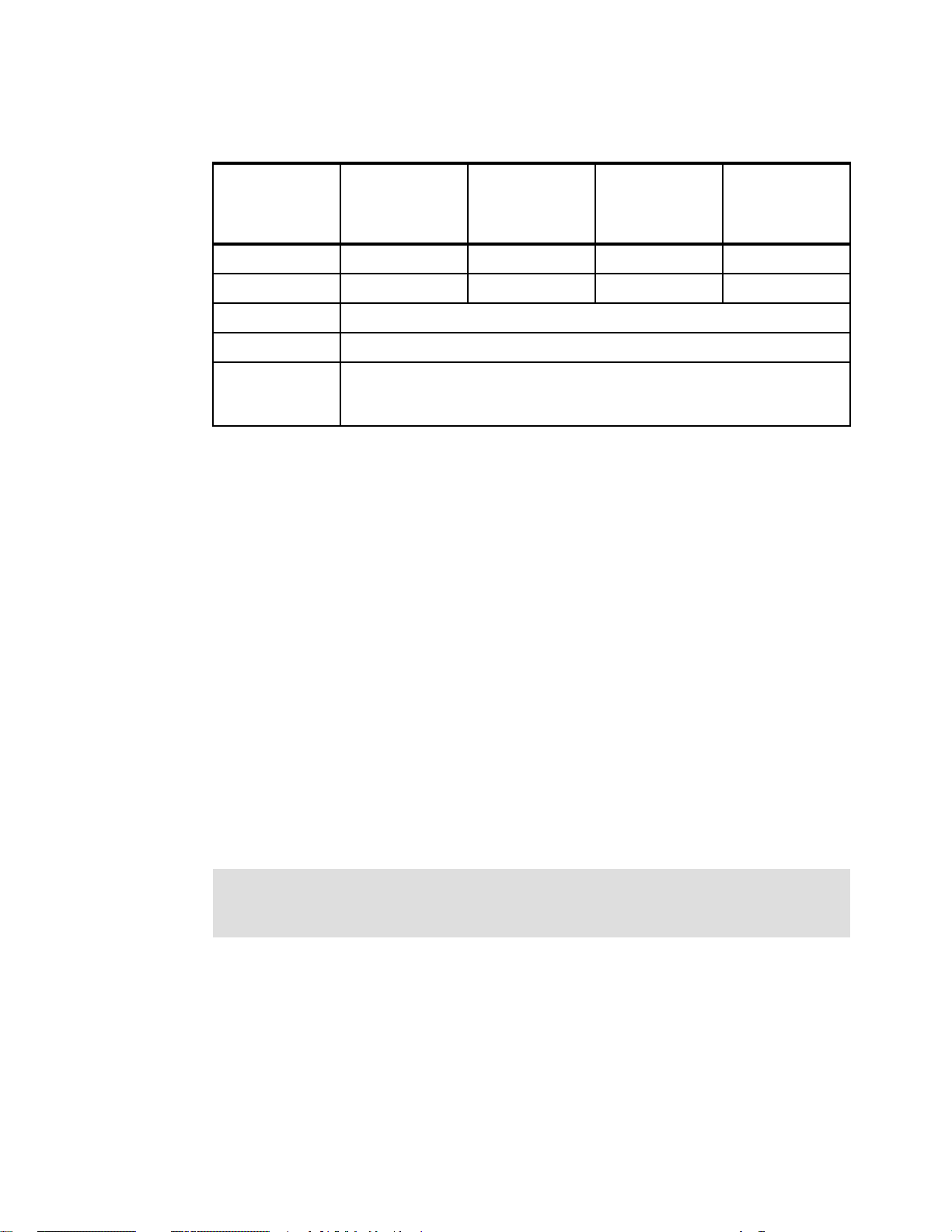

The components of the PureFlex System are summarized in Table 1-1.

Table 1-1 IBM PureFlex System components

| Component | IBM PureFlex System Express | IBM PureFlex System Standard | IBM PureFlex System Enterprise |
| --- | --- | --- | --- |
| IBM PureFlex System 42U Rack | 1 | 1 | 1 |
| IBM Flex System Enterprise Chassis | 1 | 1 | 1 |
| IBM Flex System Fabric EN4093 10Gb Scalable Switch | 1 | 1 | 2 with both port-count upgrades |
| IBM Flex System FC3171 8 Gb SAN Switch | 1 | 2 | 2 |
| IBM Flex System Manager Node | 1 | 1 | 1 |
| IBM Flex System Manager software license | IBM Flex System Manager with 1-year service and support | IBM Flex System Manager Advanced with 3-year service and support | IBM Flex System Manager Advanced with 3-year service and support |
| IBM Flex System Chassis Management Module | 2 | 2 | 2 |
| Chassis power supplies (std/max) | 2 / 6 | 4 / 6 | 6 / 6 |
| IBM Flex System Enterprise Chassis 80 mm Fan Module Pair (std/max) | 4 / 8 | 6 / 8 | 8 / 8 |
| IBM Flex System V7000 Storage Node | Yes (redundant controller) | Yes (redundant controller) | Yes (redundant controller) |
| IBM Flex System V7000 Base Software | Base with 1-year software maintenance agreement | Base with 3-year software maintenance agreement | Base with 3-year software maintenance agreement |

The fundamental building blocks of IBM PureFlex System solutions are the IBM Flex System

Enterprise Chassis complete with compute nodes, networking, and storage. See the next

sections for more information about the building blocks of the IBM PureFlex System.

1.2, “IBM PureFlex System building blocks” on page 8

1.3, “IBM Flex System Enterprise Chassis” on page 10

1.4, “Compute nodes” on page 15

1.5, “I/O modules” on page 24

1.1.3 IBM PureApplication System

The IBM PureApplication System is a platform system that pre-integrates a full application

platform set of middleware and expertise into the IBM PureFlex System with a single

management console. It is a workload-aware, flexible platform that is designed to be easy to

deploy, customize, safeguard, and manage in a traditional or private cloud environment,

ultimately providing superior IT economics.

Availability: IBM Flex System V7000 Storage Node is currently not offered in IBM

PureApplication Systems. Currently, the only available storage for IBM PureApplication

System is IBM Storwize V7000.

With the IBM PureApplication System, you can provision your own patterns of software,

middleware, and virtual system resources. You can provision these patterns within a unique

framework that is shaped by IT best practices and industry standards. Such standards have

been developed from many years of IBM experience with clients and from a deep

understanding of smarter computing. These IT best practices and standards are infused

throughout the system.

With IBM PureApplication System, you enjoy the following benefits:

IBM builds expertise into preintegrated deployment patterns, which can speed the

development and delivery of new services.

By automating key processes such as application deployment, PureApplication System

built-in expertise capabilities can reduce the cost and time required to manage an

infrastructure.

Built-in application optimization expertise reduces the number of unplanned outages

through best practices and automation of the manual processes identified as sources of

those outages.

Administrators can use built-in application elasticity to scale up or to scale down

automatically. Systems can use data replication to increase availability.

Patterns of expertise can automatically balance, manage, and optimize the elements

necessary, from the underlying hardware resources up through the middleware and software.

These patterns of expertise help deliver and manage business processes, services, and

applications by encapsulating best practices and expertise into a repeatable and deployable

form. This best-practice knowledge and expertise has been gained from decades of

optimizing the deployment and management of data centers, software infrastructures, and

applications around the world.

These patterns help you achieve the following types of value:

Agility: As you seek to innovate to bring products and services to market faster, you need

fast time-to-value. Expertise built into a solution can eliminate manual steps, automate

delivery, and support innovation.

Efficiency: To reduce costs and conserve valuable resources, you must get the most out of

your systems with energy efficiency, simple management, and fast, automated response

to problems. With built-in expertise, you can optimize your critical business applications

and get the most out of your investments.

Increased simplicity: You need a less complex environment. Patterns of expertise help you

to easily consolidate diverse servers, storage, and applications onto an easier-to-manage,

integrated system.

Control: With optimized patterns of expertise, you can accelerate cloud implementations

to lower risk by improving security and reducing human error.

IBM PureApplication System is available in four configurations. These configuration options

enable you to choose the size and compute power that meets your needs for application

infrastructure. You can upgrade to the next size when your organization requires more

capacity, and in most cases, you can do so without application downtime.
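
As an illustration only, the sizing choice described above can be sketched in a few lines of Python. The core counts and RAM figures are those listed in Table 1-2; the names Configuration, CONFIGURATIONS, and smallest_fit are hypothetical and are not part of any IBM tool or API.

```python
from typing import NamedTuple, Optional


class Configuration(NamedTuple):
    """One IBM PureApplication System configuration (figures from Table 1-2)."""
    model: str
    cores: int
    ram_tb: float


# Ordered from smallest to largest; values copied from Table 1-2.
CONFIGURATIONS = [
    Configuration("W1500-96", 96, 1.5),
    Configuration("W1500-192", 192, 3.1),
    Configuration("W1500-384", 384, 6.1),
    Configuration("W1500-608", 608, 9.7),
]


def smallest_fit(required_cores: int, required_ram_tb: float) -> Optional[Configuration]:
    """Return the smallest configuration that meets both requirements, or None."""
    for config in CONFIGURATIONS:
        if config.cores >= required_cores and config.ram_tb >= required_ram_tb:
            return config
    return None  # no listed configuration is large enough


if __name__ == "__main__":
    choice = smallest_fit(required_cores=150, required_ram_tb=2.0)
    print(choice.model if choice else "No single configuration fits")
```

This sketch considers only cores and RAM. Real sizing would also account for workload characteristics that the table does not capture.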

Table 1-2 provides a high-level overview of the configurations.

Table 1-2 IBM PureApplication System configurations

| | IBM PureApplication System W1500-96 | IBM PureApplication System W1500-192 | IBM PureApplication System W1500-384 | IBM PureApplication System W1500-608 |
| --- | --- | --- | --- | --- |
| Cores | 96 | 192 | 384 | 608 |
| RAM | 1.5 TB | 3.1 TB | 6.1 TB | 9.7 TB |
| SSD Storage | 6.4 TB | 6.4 TB | 6.4 TB | 6.4 TB |
| HDD Storage | 48.0 TB | 48.0 TB | 48.0 TB | 48.0 TB |
| Application Services Entitlement | Included | Included | Included | Included |

For more details about IBM PureApplication System, see the following website:

http://ibm.com/expert

1.2 IBM PureFlex System building blocks

IBM PureFlex System provides an integrated computing system, combining servers,

enterprise storage, networking, virtualization, and management into a single structure. The

built-in expertise lets organizations simply manage and flexibly deploy integrated patterns of

virtual and hardware resources through unified management.

1.2.1 Highlights

Each system consists of IBM System x® nodes, IBM Power Systems™ compute nodes, or a

combination of these two types, which is known as a hybrid configuration. The bundled,

on-site services provide some initial compute node configuration and might differ for IBM

System x nodes and Power Systems compute nodes. A client-specified primary node

(POWER or x86) is pre-configured with a hypervisor (IBM PowerVM®, VMware, KVM,

Hyper-V) to allow virtual server configuration by IBM services personnel. Services also include

skills transfer to client personnel.

Important: Initial IBM PureFlex System configuration is carried out by IBM services and is

included with the purchase. To ensure configuration success, the default shipped

configuration must not be changed until these services are completed by IBM.

1.2.2 Components

The IBM PureFlex System offerings comprise the following components, as illustrated in

Figure 1-3. With these components, the IBM PureFlex System delivers pre-configured,

pre-integrated infrastructure systems with compute, storage, networking, physical and

virtual management, and entry cloud management with integrated expertise.

Figure 1-3 IBM PureFlex System Building Blocks

Storage components

The storage capabilities of IBM Flex System allow you to gain advanced functionality with