Page 1

TPF

Systems

Technical Newsletter

http://www.s390.ibm.com/products/tpf/tpfhp.html

Volume 7 Number 4Fourth Quarter 2000

In this issue

What Is on Program Update Tape (PUT) 13? ...................3

TCP/IP Congress Ratifies the Balanced Budget

Amendment.......................................................................6

“We are definitely living in the age

of information overload where

TCP/IP Update ..................................................................9

TPF DECB Support ...........................................................12

TPFCS Recoup Changes, but Stays the Same ................18

Another Monumental Home Run for Sammy OSA............ 21

How Do I Find Information about ______? .......................25

The TPFDF Product Gets SET..........................................26

BookManager Hints and Tips: Searching..........................27

The Collection Connection................................................36

TPF Family Libraries Web Page----We’re Growing! ..........42

Letters, We Get Letters . . . Usually C and C++................ 43

Logical Record Caching....................................................47

Recoup - Warp Factor 9 - Engaged .................................. 53

IIOP Connect for TPF---Keeping Current .......................... 58

there is an infinite amount of

information at our disposal every

day. With the onslaught of more

and more information, it has

become crucial that we find the

information we want when we

want it and as quickly as possible.”

How Do I Find Information about ______?

Fay Casatuta, IBM TPF ID Core Team

2001 TPF Holiday Schedule ............................................. 59

Page 2

TPF Systems Technical Newsletter

Vol. 7 No. 4

Managing Editors:

Thomas Brocker

Jennifer Wirsch

Editor:

Frank DiGiandomenico

Web Suppor t:

Al Brajnikoff

The TPF Systems Technical Newsletter is a

publication of the International Business

Machines Corporation. IBM may own patents

and copyrights on the subject matter disclosed

in this newsletter. No license is implied or

intended through the publication and distribution

of this newsletter. IBM is a registered trademark

of the International Business Machines

Corporation.

© Copyright International Business Machines

Corporation 2000. All rights reserved.

Comments, Address Changes

This newsletter is provided free-of-charge

through the IBM TPF Web site. IBM reserves

the right to discontinue this service or change

any of the policies regarding this service at any

time.

To Fax your comments, use the following

number:

• +1-845-432-9788.

To send comments electronically, use the

following Internet E-mail addresses:

• jwirsch@us.ibm.com

• brocker@us.ibm.com

Letters to the Editor and Articles

Policy

Suggestions on topics and comments on articles

are welcome from our readers. Letters or articles

submitted for publication by readers may or may

not be published at the sole discretion of IBM.

Page 3

Fourth Quarter 2000

What Is on Program Update Tape (PUT) 13?

Ellen Smyth, IBM TPF ID Core Team

The following summarizes PUT 13 support:

• Use either Domain Name System (DNS) server support or the enhancements to DNS client support (APAR

PJ27268), or both, to do the following:

- Enable the TPF 4.1 system to process incoming DNS requests, thereby enabling load balancing of the

Transmission Control Protocol/Internet Protocol (TCP/IP) connections in a loosely coupled complex (referred

to as the DNS server portion)

- Customize the load balancing algorithms by using the UDNS user exit

- Enhance DNS client performance of your TPF 4.1 system by providing a cache to store information received

from remote DNS servers (referred to as the client cache portion).

• The following enhancements are included in TPF Support for VisualAge Client (APAR PJ27383):

- Macro breakpoints entered in either the TPF C Debugger for VisualAge Client or the TPF Assembler Debugger

for VisualAge Client are in effect for both C and assembler programs.

- Deferred line breakpoints for the TPF Assembler Debugger for V isualAge Client are saved between debugging

sessions so that you need to set them only once, and they are available during any debugging sessions that

follow.

- Enter/back support for the TPF Performance Trace Execution Analyzer for VisualAge Client means that

when a transaction is run through the performance analyzer, the analyzer will record information for assembler

segments as well as C and C++ programs.

- PRINT NOGEN support addresses the ability to generate assembler debugger ADATA files, which allow

macro expansions to be suppressed.

• FIFO special file support (APAR PJ27214) builds on the infrastructure provided previously with TPF Internet

server support (APARs PJ25589 and PJ25703) and open systems infrastructure (APAR PJ26188).

Enhancements to the infrastructure ease porting efforts and encourage development of new applications for

the TPF system. FIFO special file support provides the following:

- Support for FIFO special files. A FIFO special file is a file that is typically used to send data from one process

to another so that the receiving process reads the data as first-in-first-out (FIFO) format. A FIFO special file

is also known as a named pipe. This support provides a method for independent processes to communicate

with each other by using TPF file system functions such as the read and write functions.

- Enhancements to the select function to allow the use of file descriptors for named pipes.

- A syslog daemon to provide a message logging facility for all application and system processes. Internet

server applications and components use the syslog daemon for logging purposes and can also send trace

information to the syslog daemon. Messages can be logged to file or to tape. Remote syslog daemons can

also log messages to the local syslog daemon through remote sockets.

• File system tools (APAR PJ27277) enhances the file system by making it easier to port or create new applications

and scripting languages on the TPF 4.1 system. File system tools allows you to do the following:

- Activate scripts and TPF segments from the command line using the ZFILE functional message

3

Page 4

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

- Pass data from the standard output (stdout) of one ZFILE functional message to another as standard input

(stdin) by using a vertical bar (|) as a pipe

- Use the expanded family of ZFILE functional messages to support Web hosting and scripting

- Emulate the execl, execp, execv, and execvp Portable Operating System Interface for Computer Environments

(POSIX) functions to process file system files with more than one level of redirection

- Preserve the meaning of special characters by using a quoting mechanism in the ZFILE functional messages.

Many of these enhancements are made possible through updates to the tpf_fork function. This function has

been enhanced as follows to allow you to specify how to pass data:

- Application-defined environment variables can now be passed to the child process.

- Data can be passed as arguments through the argv parameter to the main function of the child process.

• Infrastructure for 32-way loosely coupled processor support (AP AR PJ27387) provides structural changes needed

to support the future growth of application workload beyond the maximum of 8 loosely coupled processors. This

support includes:

- Enhancements to the internal event facility (IEF) so that application processors can track communication

responses from as many as 32 loosely coupled processors

- Changes to the communication control record structure and content so that you can add as many as 32 loosely

coupled processors to future configurations without requiring the reorganization of communication control record

structures

- Enhancements to the processor resource ownership table (PROT) and E-type loaders to accommodate additional

loosely coupled processors.

Note: PUT 13 does not remove the constraint of a maximum of 8 loosely coupled processors. Additional

functions are required to complete 32-way loosely coupled processor support.

• Integrated online pool maintenance and recoup support (APAR PJ27469) enhances the recoup, pool directory

update (PDU), pool generation, pool allocation, and pool deactivation utilities in a TPF 4.1 system environment by

doing the following:

- Eliminating most offline processing

- Eliminating recoup and pool general files

- Increasing performance and data integrity

- Allowing all phases of recoup to be run in NORM state

- Providing multiprocessor and multi-I-stream capability

- Providing online historical data

- Providing recoup and PDU fallback capability.

• The loaders enhancement for the TPF Assembler Debugger for VisualAge Client (APAR PJ27422) offers the

ability to load ADATA files used by the assembler debugger rather than using T rivial File Transfer Protocol (TFTP)

to transfer ADATA files to the online TPF 4.1 system. The loaders enhancement for the TPF Assembler Debugger

provides the following benefits:

- Eliminates the need to remember and specify the path and name of the ADATA file in the hierarchical file

system (HFS). The assembler debugger finds and uses the ADATA file that is loaded by the TPF loader.

- E-type loader (OLDR) support for ADATA files allows the assembler debugger to automatically use the correct

ADATA file for any version of a program.

4

Page 5

Fourth Quarter 2000

- Provides a foundation for changes to the assembler debugger that enable tracing in a multiple database function

(MDBF) environment by loading ADATA files to a specific subsystem.

• Logical record cache and coupling facility (CF) cache support (APAR PJ27083) further exploits CF support and

CF record lock support, which were provided on PUT 9 and PUT 11 respectively. Logical record cache support

provides the ability to maintain data consistency as well as the ability to keep track of data that resides in the local

cache and in permanent storage. The caches can be processor shared or processor unique.

• Open Systems Adapter (OSA)-Express support is now enabled on the TPF 4.1 system. An Open Systems Adapter

is integrated hardware (the OSA-Express card) that combines the functions of an IBM System/390 (S/390) I/O

channel with the functions of a network port to provide direct connectivity between IBM S/390 applications and

remote Transmission Control Protocol/Internet Protocol (TCP/IP) applications on the attached networks. OSAExpress is the third generation of OSA and provides the following enhancements:

- You can dynamically configure an OSA-Express card by using the ZOSAE functional message to manage

OSA-Express connections.

- The queued direct I/O (QDIO) protocol is used to communicate between the TPF 4.1 system and an OSAExpress card by sharing memory and eliminating the need for real I/O operations (channel programs) for data

transfer between them. The load on your I/O processor is reduced, path lengths in the TPF 4.1 system are

reduced, and throughput is increased.

- OSA-Express support enables the TPF 4.1 system to connect to high-bandwidth TCP/IP networks such as the

Gigabit Ethernet (GbE or GENET) network.

- OSA-Express support provides virtual IP address (VIPA) support to eliminate single points of failure in a TCP/

IP network.

- Movable virtual IP address (VIP A) support provides the ability to balance TCP/IP workloads across processors

in the same loosely coupled complex using the ZVIPA functional message.

• Before TPF data event control block (DECB) support (AP AR PJ27393), the TPF 4.1 system restricted the number

of entry control block (ECB) data levels (D0 - DF) that were available for use to 16 (the number of data levels

defined in the ECB). With TPF DECB support, that restriction has been removed. TPF DECB support also provides

the following:

- 8-byte file addressing in 4x4 format, which provides standard 4-byte file addresses (FARF3, F ARF4, or F ARF5)

to be stored in an 8-byte field

- New interfaces to allow TPF programs to access file records with a DECB instead of a data level in an ECB

- New macros for managing DECBs

- The ability to associate symbolic names with each DECB; this allows different components of a program to

easily pass information in core blocks attached to a DECB.

• TPF MQSeries enhancements (APARs PJ27230, PJ27231, PJ27351, and PJ27431) include the following:

- MQSeries TCP/IP support (APAR PJ27230) allows the TPF system to connect to MQSeries server platforms

that do not support the Systems Network Architecture (SNA) protocol.

- MQSeries user exit support (APAR PJ27231) adds the TPF MQSeries start queue manager user exit, CUIA,

which allows you to start your MQSeries applications immediately after the start or restart of the queue manager,

and moves other TPF MQSeries user exits from dynamic link library (DLL) CMQS to DLL CMQU, allowing you

to load CMQS without modifying the DLL.

5

Page 6

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

- MQSeries slow queue sweeper and move support (APARs PJ27351 and PJ27431) creates the slow queue

sweeper mechanism and the ZMQSC MOVEMSGS functional message. The slow queue sweeper mechanism

moves messages on a queue to a TPF collection support (TPFCS) persistent collection if the number of messages

on the queue is increasing. This prevents memory resources from becoming depleted. The ZMQSC MOVEMSGS

functional message allows you to move messages from a processor unique normal local queue when that

processor is deactivated.

• The system initialization program (SIP) enhancement for the TPF Database Facility (TPFDF) (APAR PJ27328)

includes the TPFDF product in the TPF 4.1 system SIP process. This enhancement creates all the JCL that is

required to build the TPFDF product during the TPF SIP process. It is easier to install the TPFDF product because

the required segments are assembled, compiled, and link-edited as part of the TPF SIP process. Before this

enhancement, this occurred after the TPF SIP process, making any installation errors dif ficult to detect and timeconsuming to correct.

TCP/IP Congress Ratifies the Balanced Budget Amendment

Mark Gambino, IBM TPF Development

You have a TCP/IP server application that resides on all TPF processors in a loosely coupled complex, or the

application resides on a single TPF processor that has multiple network interfaces (IP addresses). The problem

becomes how to balance the workload across the different processors and interfaces. To make the situation even

more challenging, the server environment is not static, meaning TPF processors are periodically added or removed

from the complex. Processors in the complex can have different capacity, and the amount of available cycles on a

given processor can change when certain utilities are run. Another way of saying all of this is that the characteristics

of the server environment change throughout the day and the load balancing mechanisms must be able to react to

the changes. TPF provides two TCP/IP load balancing functions on PUT 13: movable virtual IP address (VIPA)

support and Domain Name System (DNS) server support.

Movable VIPA Motor Homes

TPF supports VIP As across OSA-Express connections with PUT 13 APAR PJ27333. Traditionally , VIP As are used to

eliminate single points of failure in the IP network because the physical network through which a VIP A is mapped can

change. TPF has extended the concept of a VIPA where it can be moved from one TPF processor to another in the

loosely coupled complex. This support is included in AP AR PJ27333. During a planned or unplanned outage of a TPF

processor, movable VIPAs currently owned by that processor are moved to another processor. The result is that all

movable VIPAs are always active (except, of course, in the case of a total complex outage). This is an important

concept because a given remote client always connects to the same TPF VIPA. Which TPF processor the client

connection goes to is based on which processor currently owns the VIP A. However, all that the client cares about is

whether or not a connection can be established. Because the movable VIPA is always active somewhere in the

complex, the client is able to connect to TPF.

When you move a VIPA to another TPF processor, connections to that VIPA will fail, causing remote clients to

reconnect. When they reconnect, the new connections will end up on the new TPF processor . Therefore, moving a

VIPA moves a percentage of the workload from one TPF processor to another. The following are situations where

you would want to move VIPAs:

• A TPF processor is taken out of the complex (planned or unplanned).

• A TPF processor is added to the complex.

• The workload in the complex is not balanced.

6

Page 7

Fourth Quarter 2000

A VIPA can be moved manually at any time using the ZVIPA MOVE operator command. In addition, new user exit

UVIP allows you to automatically move VIPAs that are owned by a TPF processor when that TPF processor is

deactivated. Various displays have been included to help you determine and react to unbalanced workloads. For

example, you can use the ZVIPA SUMMARY command to display the distribution of TCP/IP traffic across all the

processors in the complex and to see how busy each processor is. You can then use the ZVIPA DISPLA Y command

to identify which movable VIPAs are on the overloaded processors and use the ZVIPA MOVE command to move

some of those VIPAs to the processors that have available cycles. In the case of a planned outage on processor

TPFA, you can gradually move the VIPAs off TPFA and then deactivate that processor . When new processor TPFB

is added to the complex, you can gradually move work to TPFB by moving VIPAs to TPFB one at a time.

What Is the Matrix?

A TCP/IP client application uses the Domain Name System (DNS) to translate the host name of a server application

to the IP address of the server. The client application issues a gethostbyname() call causing the client node,

which is acting as the DNS client, to send the request to its local DNS server. If the local DNS server cannot resolve

the server host name, the local DNS server communicates with remote DNS servers in the hierarchy until the

request is resolved. The reply sent back to the client node contains the IP address of the server. In the case of a

single server node with a single network interface, there is only one possible server IP address to use. However , in

the case of a server farm, cluster, or complex, the server has multiple IP addresses. Because of this, the reply sent

to the DNS client node can contain multiple server IP addresses. If so, it is implementation-dependent how the client

node selects the IP address to use from the reply . The reply can also indicate that the local DNS server and the client

node can cache the reply information. Caching the information in the client node allows subsequent gethostbyname()

requests issued on that node for the same server host name to be resolved internally without having to go to its local

DNS server . Caching the information in the local DNS server means that the local DNS server can resolve requests

for that server host name if the request is issued by any node using this DNS server.

Assume you have 50 business partners, each with 100 clients, that connect to your server. Each business partner

has its own local DNS server. In the single server node, single network interface environment, there is only one

server IP address; therefore, allowing the 50 local DNS servers and 5000 client nodes to cache the server information

is not a problem. If, instead, you had multiple server nodes and network interfaces, load balancing becomes an

issue. If you allow the client nodes or local DNS servers of the client to select the server IP addresses, you have no

control over the load balancing. The situation becomes even worse if the server environment changes, meaning

server nodes are added and removed throughout the day. For example, assume there is a maximum of 32 server

nodes (each with only one IP address to keep things simple). If the cached information of the remote client DNS

servers includes all 32 server IP addresses, but only 16 of the server nodes are active, there is a 50% chance that

the client DNS server will select an IP address of an inactive server node. If so, depending on the client node’s

implementation, the client application could fail or it has to use trial and error until an IP address is selected that goes

to an active server node, neither of which is a desirable outcome. Rather than pass back all possible server IP

addresses, assume the server node’s DNS server is smart enough to only include active IP addresses in the DNS

reply sent back to your business partners. If the client nodes cache the fact that there are 16 active server IP

addresses and then you activate more server nodes, there is no easy way to get connections to the new server

nodes because none of the client caches know about the IP addresses of the new server nodes.

By now it should be obvious that allowing the client node or client DNS server to select the server IP address cannot

achieve load balancing in a multiple server node environment. To find out the best server IP address to use for a new

remote client connection, you need to ask the question to the server complex. This is why the DNS server function

is now part of the TPF system. PUT 13 APAR PJ27268 provides this function. If a remote client wants to start a

connection with a TPF server application, the DNS request to resolve the TPF host name will now flow into the TPF

system. The TPF DNS server application will look up the host name in the user-created TPF host name file

(/etc/host.txt) to find the IP addresses that are allowed to be used by this server application. Because the DNS

7

Page 8

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

server function is now in the TPF system, it knows all the IP addresses that are active and on which processors in the

complex. The list of IP addresses from the TPF host name file is then trimmed down so that only active IP addresses

remain. One IP address is selected from this list. User exit UDNS allows you to select the IP address or you can let

the TPF DNS server select the IP address. The DNS reply sent back to the remote client contains only one server IP

address; therefore, that is the IP address the client will use to connect to TPF. The other important factor is that the

DNS reply indicates that the information is not allowed to be cached. Not allowing the client nodes or client DNS

servers to cache information forces all DNS requests for TPF host names to flow into TPF. This centralizes the load

balancing in one place (TPF) and allows you to assign new client connections to processors based on current

conditions in the server complex.

As an added bonus, APAR PJ27268 also contains enhancements to TPF DNS client support, which shipped on PUT

8 and enabled TPF client applications to issue gethostbyname() and gethostbyaddr() calls. These requests

were always sent to an external DNS server for processing. APAR PJ27268 adds DNS client cache functionality to

TPF, meaning information learned from external DNS servers in replies is cached in TPF if the reply indicates that

the information is allowed to be cached. Now, when a TPF client application requests a DNS function, TPF first looks

in its local cache to try to resolve the request. If the information is not in the cache, the request is sent to an external

DNS server. If the request cannot be resolved by an external DNS server, TPF will now look in the user-created

external host name file (/etc/hosts) to see if the request can be resolved.

Déjà Vu

If the concepts of these TCP/IP load balancing solutions sound familiar , they should. If you replace the term TCP/IP

with SNA, you would have similar load balancing concepts, just different names. For example, when an SNA LU-LU

session is started, information flows on a control session (CDRM-CDRM or CP-CP) to select the path for the LU-LU

session to use. Putting the DNS server function in TPF emulates the SNA control session concept. The TPF SNA

Advanced Peer-to-Peer Networking (APPN) and TPF TCP/IP DNS server environments are very similar in concept

now. When a remote client wants to connect to TPF, a control message is sent to TPF, TPF selects the path for the

session with a user exit allowing you to customize the path selection algorithm, the reply with the selected path is

sent to the remote client, and then the remote client starts the connection across the selected path. Movable VIPA

support for TCP/IP is similar to how generic LU works for SNA networks. In both cases, a server interface (VIPA or

LU name) can be moved from one server node to another in the complex.

Why the Votes Were Unanimous

Movable VIPA and DNS server support both have attractive features for the TPF environment:

• Solutions are based on proven concepts in other networking environments.

• All the load balancing is centralized in one place (TPF).

• No special hardware or software is required in the network or remote client nodes.

• They use standard protocols (RIP for movable VIPA and standard DNS flows for DNS server).

• Solutions are network-independent, meaning the underlying physical network can change without affecting how

the load balancing is done.

• Solutions are scalable, meaning there are no architectural limits to the number of remote client connections.

High-end TCP/IP connectivity and load balancing solutions for TPF . . . they’re here!

8

Page 9

Fourth Quarter 2000

TCP/IP Update

Dan Yee, IBM TPF Development

Program update tape (PUT) 13 contains a large number of enhancements and fixes to TPF Transmission Control

Protocol/Internet Protocol (TCP/IP) support in various areas. Some of the enhancements are addressed in other

TPF newsletter articles in this issue. This article briefly mentions those enhancements and discusses TCP/IP

enhancements and fixes that are not explained in the other articles.

DNS Server Support on APAR PJ27268

APAR PJ27268 contains Domain Name System (DNS) server support to enable you to load balance TCP/IP traffic

that comes into a TPF loosely coupled complex. The server code will only work with TCP/IP native stack support and

will not work with TCP/IP offload support.

The main features of the server code include the following:

• Support for a User Datagram Protocol (UDP) server that accepts DNS queries on port 53 through the Internet

daemon

• Support for a /etc/host.txt file that enables you to define TPF host names and IP addresses to be associated

with each host name

• Support for a UDNS user exit that enables you to customize the TCP/IP load balancing to suit your particular

loosely coupled environment.

The DNS server code also supports a new flag for the recvfrom() socket API function. The flag is called

MSG_RTN_LOCAL_IP_ADDR and returns the local socket address associated with an incoming message. This

flag is unique to the TPF environment because it enables the TPF DNS server code to run multithreaded without

using routing tables when sending responses to DNS queries; that is, the server code is able to respond to multiple

DNS query requests at the same time by creating a raw socket, binding to the local IP address returned by

recvfrom(), and sending the response on that socket for each DNS query it receives. As a result, the performance

of the TPF DNS server is enhanced because it does not have to process one DNS query at a time. See “TCP/IP

Congress Ratifies the Balanced Budget Amendment” in this issue for more information about the DNS server code.

DNS Client Support on APAR PJ27268

The majority of the code for DNS support on APAR PJ27268 will only work with TCP/IP native stack support.

However, the DNS client cache support in APAR PJ27268 will work with offload support, native stack support, or

both. As a prerequisite, you need to install A PAR PJ27083, which added logical record cache support to the system

on PUT 13. The client cache portion of the APAR applies to the DNS client code in the system that processes the

gethostbyaddr() and gethostbyname() functions. Data that is retrieved from the remote DNS server is saved

in the gethostbyaddr() cache if a gethostbyaddr() function was issued, and is saved in the gethostbyname()

cache if a gethostbyname() function was issued. As a result, host names or IP addresses that you query on a

regular basis from your TCP/IP applications are not retrieved from the remote server each time, and are obtained

from one of the two new client caches. Because TPF does not have to go to the remote server each time, the

performance of your applications is improved.

AP AR PJ27268 also contains support for a /etc/hosts file similar to a file you may use on other TCP/IP platforms,

such as UNIX. The /etc/hosts file, which is optional, must contain a list of IP addresses and host names to be

used by the gethostbyname() function to resolve a host name provided by the application. The gethostbyname()

code only searches this file if the host name cannot be resolved by either the primary or secondary remote DNS

server or if the host name cannot be retrieved from the gethostbyname() cache. Although the format of this file is

similar to /etc/hosts files you may use on other TCP/IP platforms, the host name must precede the IP address in

9

Page 10

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

the file, and any aliases in the file are ignored by TPF. We chose this format so that it would be similar to the format

of the /etc/host.txt file we are now supporting for the new DNS server support contained in APAR PJ27268.

Despite the similarity in format, there are several differences in characteristics between the TPF /etc/hosts and

the /etc/host.txt file:

• Y ou can enter more than one IP address per host name in the /etc/host.txt file. You cannot enter more than

one IP address per host name in the /etc/hosts file.

• The /etc/host.txt file contains host names and IP addresses local to TPF. The /etc/hosts file contains

host names and IP addresses that are remote to TPF; for example, www.ibm.com or www.yahoo.com.

• The /etc/host.txt file is only supported if TCP/IP native stack support has been installed. The /etc/hosts

file is supported for native stack and offload environments.

OSA-Express and VIPA Support (APAR PJ27333)

TCP/IP native stack support (APAR PJ26683) enables the TPF system to directly connect to IP networks through a

channel data link control (CDLC) link with a 374x IP router. With Open Systems Adapter (OSA)-Express support in

APAR PJ27333, TPF can connect to IP networks through a Queued Direct I/O (QDIO) link with an OSA-Express

card. An OSA-Express card is a third generation OSA card that is available on IBM G5 processors and later models.

The OSA-Express card enables TPF to connect to IP networks directly without the need for front-end channelattached routers. The three features of OSA-Express that make it highly desirable in a TPF environment are as

follows:

• It connects to high-bandwidth TCP/IP networks through the Gigabit Ethernet (GbE) adapter. This adapter type is

currently the only one supported by TPF.

• It uses the QDIO link layer to communicate between the host and the OSA-Express card. Older OSA cards use

standard channel programs to exchange data between the host and the card. The QDIO link layer enables the

host and OSA-Express card to share memory , so there is no need for the channel programs to transfer the data.

As a result, throughput and performance are improved.

• The OSA-Express card can be configured by the host. Older OSA cards require the OSA/SF feature to configure

the card.

APAR PJ27333 also contains support for virtual IP addresses (VIPAs), which can be used in an OSA-Express

environment to eliminate single points of failure. In an OSA-Express environment, each OSA-Express connection

has an associated real IP address as well, but it is to your advantage to use VIPAs instead of real IP addresses in

your socket applications. One advantage to using VIPAs instead of real IP addresses is that VIPAs automatically

swing from a primary OSA-Express connection to an alternate primary OSA-Express connection if the primary

connection fails. When that swing occurs, the sockets using those VIPAs remain open. If a real IP address is used

and the associated OSA-Express connection fails, sockets using the real IP address fail as well. Another advantage

to using VIPAs instead of real IP addresses is that the ZVIPA functional message can be used to move them from

one processor to another to balance TCP/IP traffic. For more information about APAR PJ27333, see “Another

Monumental Home Run for Sammy OSA” in this issue.

Offload Support

There are a few APARs on PUT 13 relating to offload support that you need to be aware of. Customers have

experienced CTL-571, -572, or -573 system errors occurring when dump processing and Common Link Access to

Workstation (CLAW) protocol are running at the same time. Program-controlled interruptions (PCIs) from offload

devices may occur during dump processing and cause an I-stream to attempt to lock the CLAW adapter block when

another I-stream is holding the lock. Because the I-stream is still holding the lock as a result of the dump, the various

CTL-571, -572, and -573 dumps will occur in that case. As it turns out, the OSA-Express support code cannot allow

PCIs during dump processing, so the fix to prevent PCIs from being processed during dump processing has been

10

Page 11

Fourth Quarter 2000

included in the OSA-Express support APAR (PJ27333).

A few customers have run into resource problems when writing CLAW trace output to the real-time tape. DASD and

tape queues build because the CLAW trace program retrieves keypoint A from DASD and issues a TOUTC macro

instead of a TOURC macro when writing to tape. The TOUTC macro causes the program to wait before the data is

written to tape, so ECBs, SWBs, and 4-K frames may become depleted as the trace program is reentered numerous

times to write 4-K buffers to tape. APAR PJ27288 solves these problems in the following ways:

• A LODIC macro has been added to check system resources before CLAW trace data is written to tape. If there

are not enough resources, the writing of the CLAW trace data to tape is stopped.

• The TOUTC macro has been replaced by a TOURC macro, which does not require the CLAW trace program to

wait before the data is written to tape.

• Keypoint A is no longer retrieved from DASD.

If the LODIC macro determines that there are not enough resources to write the CLAW trace data to tape, error

message CLAW0082I (ZCLAW TAPE TRACE INTERNALLY INACTIVATED) will now be issued and TPF stops

writing the data to tape. If this condition occurs, the operator needs to enter the ZSYSL functional message to adjust

the shutdown values associated with the IBMHI priority class for ECBs, 4-KB frames, and SWBs. Once the operator

adjusts the shutdown values with the ZSYSL functional message, the operator can reenter ZCLA W TRACE START

TAPE to enable the CLAW trace to be written to tape again.

If you have installed INETD support or your applications contain select() API function calls, you may have noticed

that your CLAW trace output is usually filled with traces of select() API function calls that have timed out. APAR

PJ26995 is designed to reduce the amount of trace output that is produced by timed-out select() API function

calls. If you install this APAR, timed-out select() API function calls are not traced and responses for all other

select() API function calls are traced. As a result, you may notice that your CLA W trace output will be significantly

reduced after you apply this APAR.

Native Stack Support

APAR PJ27204 corrects a problem in which a channel data link control (CDLC) link cannot be activated after a

ZTTCP INACT functional message is entered. What happens is that the 3746 sends an asynchronous interrupt to

TPF after the link is deactivated indicating that it is now ready to start an exchange ID (XID). TPF responds by

sending a prenegotiation write XID that contains control vector X’22' (XID error control vector) with a sense code of

X’08010000' (resource not available). The problem is that this write XID leaves the CDLC link in an inoperable state,

so TPF cannot activate the link with a ZTTCP ACT functional message unless the 3746 is reinitialized. APAR

PJ27204 fixes the problem by ignoring the asynchronous interrupt sent by the 3746 during link deactivation so that

control vector X’22' is not sent to the 3746.

Internet Daemon Support

The ZINET functional message has been enhanced by AP AR PJ27255 to display the socket number in both decimal

and hexadecimal format. The reason for this change is that ZDTCP NETSTAT displays the socket number in

hexadecimal for sockets created by native stack support, and the ZINET functional message previously displayed

the socket number in decimal only. APAR PJ27255 adds consistency to the two messages.

APARs PJ27363 and PJ27474 correct timing problems associated with child processes created by the various

Internet daemon models. APAR PJ27363 eliminates a CTL-4 system error that occurs when the Internet daemon

attempts to address system heap storage that has been released during system cycle-down. AP AR PJ27474 corrects

a CTL-C problem that can occur when the daemon model is run and a loadset is activated or deactivated. If you have

installed APAR PJ26848 on PUT 12, you need to install both of these APARs to correct these problems.

11

Page 12

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

TPF DECB Support

Michele Dalbo, IBM TPF ID Core Team, and Jason Keenaghan and Mike Wang, IBM TPF Development

What Is a DECB?

A data event control block (DECB) can logically be thought of as another data level in an entry control block (ECB).

A DECB can be used in place of an ECB data level for FIND/FILE-type I/O requests by applications. Although a

DECB does not physically reside in an ECB, it contains the same information as standard ECB data levels: a core

block reference word (CBRW), a file address reference word (F ARW), file address extension words (FAXWs), and a

detailed error indicator (SUD).

Before TPF DECB support, the TPF 4.1 system restricted the number of ECB data levels (D0–DF) that were available

for use to 16 (the number of data levels defined in the ECB). DECBs can be acquired dynamically by a single ECB

(through the use of the tpf_decb_create C++ function or the DECBC assembler macro), with no preset limit on

the number of DECBs that a single ECB can create.

How Is a DECB Different from an ECB Data Level?

An ECB data level is simply a series of doubleword reference or control fields (CBRWs, FARWs, and FAXWs) for

data blocks that can be used however the application requirements dictate. A DECB is basically the same except the

file address field in the FARW has been expanded to allow for 8-byte file addresses as compared to 4 bytes in an

ECB data level FARW. Because there is no room for that expansion in an ECB FARW without changing the entire

layout of the ECB, a DECB is the logical place for 8-byte file addresses to be stored.

In addition, using TPF DECB support instead of ECB data levels allows you to associate symbolic names with each

DECB. This feature allows different components of a program to easily pass information in core blocks attached to

a DECB. Each component only needs to know the name of the DECB where the information is located to access it.

What Are the DECB Fields?

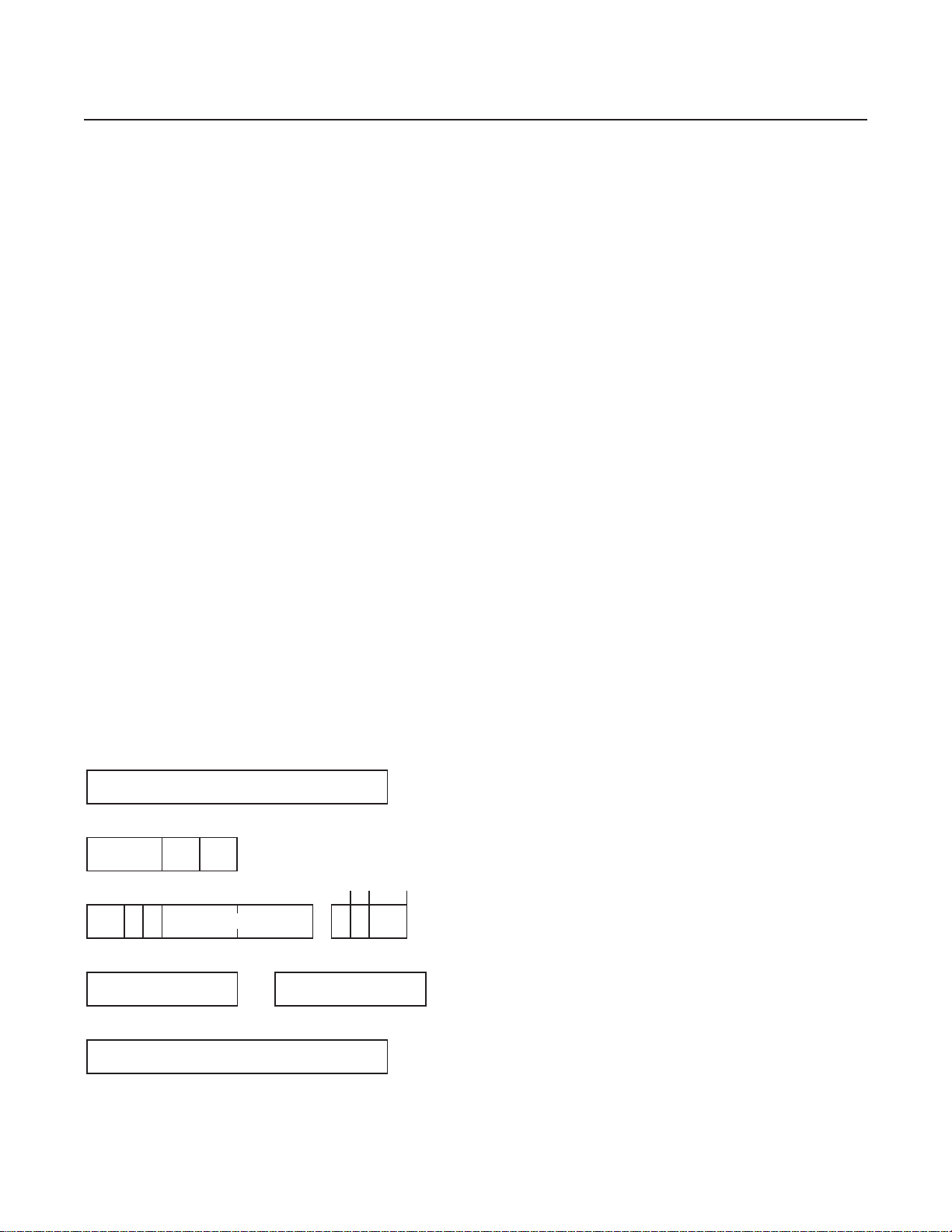

The following figure shows the DECB application area and the fields inside the DECB.

DECB Name (IDECNAM)

CBRW (IDECCRW)

Core Block Type Len

FARW (IDECFRW)

R

C

RID File Address

C

FAXW (IDECFX0) (IDECUSR)

Ext. File Address

Reserved

IDECSUD

Reserved

S

U

D

User Data

IDECDET

12

Page 13

The DECB fields are used as follows:

IDECNAM Contains the name of the DECB.

IDECCRW Corresponds to an ECB core block level.

IDECDAD (Core block)

Contains the address of a core block.

IDECCT0 (Type)

Contains the core block type indicator or X’0001' to indicate there is no core block attached.

IDECDLH (Len)

Contains the data length.

IDECFRW Corresponds to an ECB FARW.

IDECRID (RID)

Contains the record ID for a FIND/FILE request.

IDECRCC (RCC)

Contains the record code check (RCC) value for a FIND/FILE request.

IDECFA (File Address)

Contains the file address for a FIND/FILE request.

Fourth Quarter 2000

IDECSUD When the FIND/FILE request is completed, this field will be set to the SUD error value, or zero if

there is no error.

IDECDET Contains the number of core blocks currently detached from this DECB.

IDECFX0 Contains the FAXW information.

IDECUSR Contains the user data.

What Is 4x4 Format?

The IDECFA field, which contains the file address, is 8 bytes in the DECB. This allows 8-byte file addressing in 4x4

format, which provides for standard 4-byte file addresses (FARF3, FARF4, and FARF5) to be stored in an 8-byte

field. This is done by having the file address reside in the low-order 4 bytes of the field. The high-order 4 bytes of the

field contain an indicator (a fullword of zeros) that classifies it as a valid 4x4 format address. When there is file

activity, the FARW will be used for the record identification (2 bytes), record code check (1 byte), and the symbolic

file address (8 bytes); 1 byte is unused. The following example shows the layout of a 4x4 format file address:

E5 C3 00 00 07 D0 88 4000 00 00 00

IDECFA: 8-byte symbolic file address

not used: 1-byte

IDECRCC: 1-byte record code check, an unsigned character

IDECRID: 2-byte file record identification, an unsigned short integer

How Do I Create and Release DECBs?

TPF programs can dynamically create and release DECBs by using the tpf_decb_create and tpf_decb_release

C++ functions or the DECBC assembler macro. The storage, which will hold the DECB comes from the 1-MB ECB

private area (EPA). Therefore, the number of DECBs that the ECB is restricted to is limited only by the amount of

13

Page 14

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

storage in the private area that is dedicated to DECBs.

When the first DECB creation request is received by the TPF system, a 4-K frame is obtained from the EPA. This

frame, which will act as a DECB frame, is attached to page 2 of the ECB at field CE2DECBPT. The DECB frame

itself contains a header section to help manage the DECBs. The remainder of the frame is then carved into the

individual DECBs themselves; therefore, a single DECB frame contains several individual DECBs (currently a single

frame can hold 31 DECBs). If more DECB creation requests are received than can fit in a single DECB frame,

another 4-K frame is obtained and forward chained to the previous DECB frame.

Individual DECBs are dispensed sequentially using a first-in-first-out (FIFO) approach. The address of the next

DECB to be dispensed is maintained in the header of the DECB frame. When dispensing the DECB, the contents

are first cleared and the DECB is marked as not holding a core block. The DECB is then marked as in-use (that is,

allocated) and the name of the DECB, if one was supplied at creation time, is assigned. Finally, the DECB frame

header is updated to indicate the address of the next DECB that will be dispensed.

When a DECB is released by the application, the DECB is simply marked as not in use. The DECB is not cleared

until it is later redistributed, nor is the DECB frame header updated to indicate the address of the next DECB to be

dispensed. Once released, this DECB will not be redistributed until all other DECBs in this frame have been dispensed

at least one time (FIFO approach). No DECB frames will be detached from the ECB until ECB exit time, even if all

DECBs are currently marked as not in use. This is done to speed processing if another DECB creation request is

received by the TPF system.

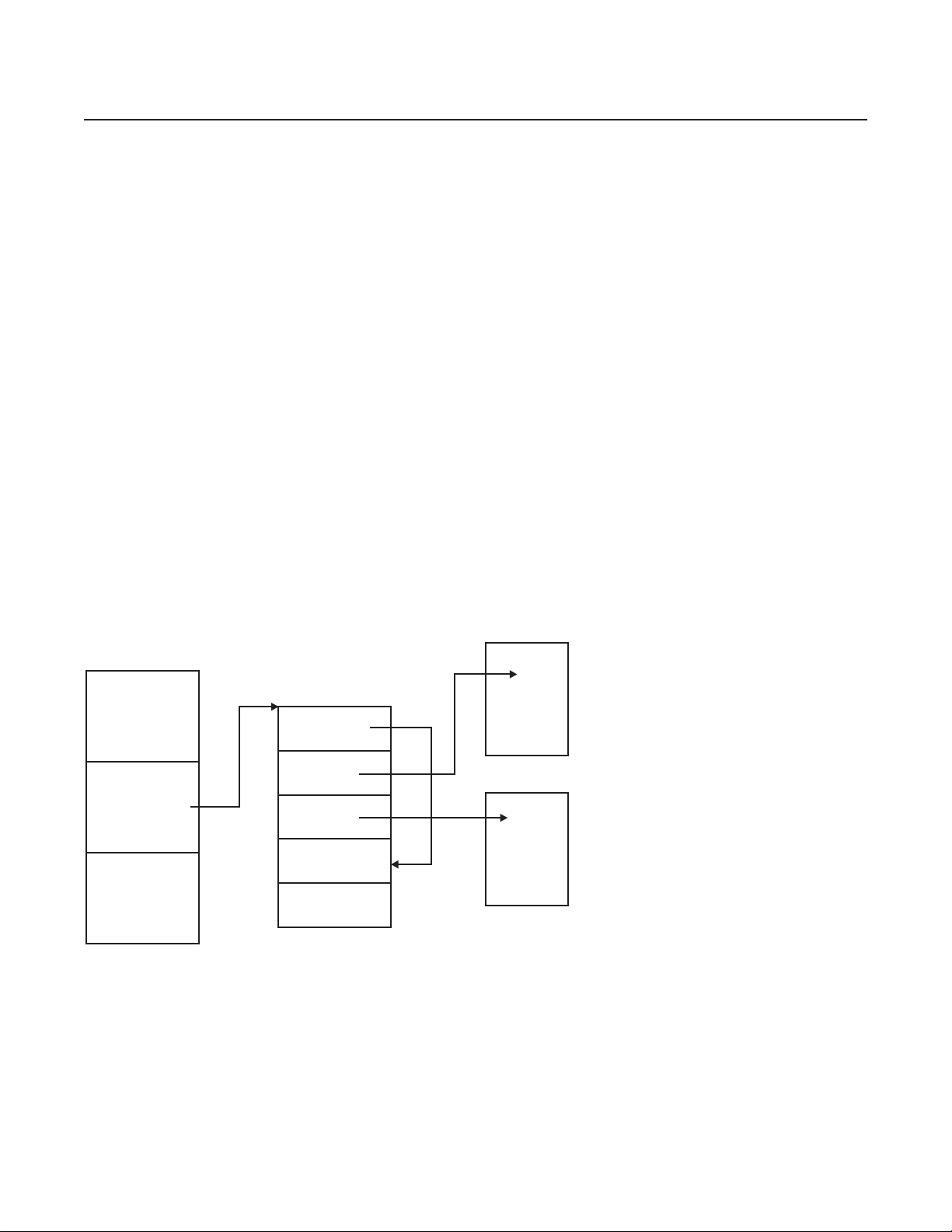

The following figure shows the relationship between DECBs and the ECB.

ECB

Core

Block

Core

Block

CE2DECBPT

DECB Frame

Available

CBRW

CBRW

How Do I Access File Records with a DECB?

All types of applications can use DECBs. Application programming interfaces (APIs) have been added or updated to

allow TPF programs to access file records with a DECB instead of an ECB data level. However , only a subset of the

existing macros and C functions that currently reference ECB data levels accept a DECB in place of an ECB data

level. Macros and C functions that use a file address will first verify that it is a valid address in 4x4 format. If the

address is not valid, a system error will occur.

14

Page 15

Fourth Quarter 2000

How Does a DECB Handle Errors?

With TPF DECB support, each DECB has a detailed error indicator byte (IDECSUD). The existing CE1SUG byte will

include any errors that occur on a DECB-related I/O operation. In addition, the IN1DUD bit in the CE1IN1 byte of the

ECB will be set to indicate that an error occurred on a DECB.

What Other Areas of TPF Will Be Affected by the Introduction of DECBs?

The following areas in the TPF system have been changed to best make use of TPF DECB support:

• The ZFECB functional message has been modified to display DECBs attached to a given ECB.

• The real-time trace (RTT) utility has been modified to save and display information about DECBs for specific

types of macros that are called by a particular ECB.

• ECB macro trace has been modified to handle DECBs as macro parameters instead of ECB data levels as well

as to correctly handle 8-byte file addresses.

• Because of the increased size of each macro trace entry, the number of macro trace entries for each ECB has

been reduced from 66 to 55 when tracing without registers, and from 25 to 23 when tracing with registers.

• System error processing has been updated to gather information about DECBs attached to an ECB when an

error occurs. Additionally, system error processing now searches for any core blocks attached to or detached

from DECBs; this will also be reported in a system dump.

• The interface to the Dump Data user exit (segment CPSU) has been updated to pass along information about

DECBs to the user if an error occurs.

• ECB exit processing has been modified to release any memory resources associated with the DECB before

stopping an ECB.

Why Must I Use the C++ Compiler Instead of the C Compiler When Using

DECBs?

When creating the new C language functions that would support DECBs and, in some cases, 8-byte file addresses

without DECBs, several different approaches were examined. The primary goal was to create functions that could

be quickly learned by experienced TPF programmers. In other words, if a new C function was going to be created to

release a core block on a DECB, it should look very similar to the existing function to release a core block on an ECB

data level (relcc).

Note: In the remainder of this article, relcc is used as an example of the functions updated for TPF DECB support.

The first approach would have been to change the existing function to accept a pointer to a DECB (TPF_DECB *) in

addition to an ECB data level (enum t_lvl). However, this would have forced all existing applications to be recoded

and recompiled to account for the additional parameter that was added. This is obviously an unacceptable solution.

A second approach would have been to create completely new functions with new names and new parameters to

handle similar functions with DECBs instead of ECB data levels. This approach becomes cumbersome to a TPF

application programmer who would have to recognize that when using ECB data levels, the correct function to call

would be relcc; however, when using DECBs, the correct function to call would be something like tpf_relcc_decb

(to follow TPF naming conventions).

The final approach, and the one that has been implemented for TPF DECB support, is to create functions with the

same name (relcc), but have the function take a pointer to a DECB instead of an ECB data level as a parameter.

15

Page 16

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

The benefits of this approach include the following:

• Eliminating the need to recompile any existing applications that call the function

• Using familiar TPF function names so application programmers do not have to remember additional functions.

To enable existing TPF C functions to use DECBs instead of ECB data levels, the functions have been doubly

defined by using the C++ function overload feature. For example, in addition to the existing relcc function prototype:

#ifdef __cplusplus

extern "C"

#endif

void relcc (enum t_lvl level);

an overloaded relcc function that takes a DECB pointer as a parameter has been created:

#ifdef __cplusplus

} /* end extern "C" */

void relcc (TPF_DECB *decb);

#endif

The overloaded function uses C++ function linkage, so programs that use overloaded functions must be compiled

with the C++ compiler.

In TPF, the relcc function (which uses ECB data levels) is actually an assembler program (crelcc.asm) that resides

in the CTAL (TPF Application Library) ISO-C library. This did not change with the introduction of the overloaded

relcc function (which uses DECBs). Any calls made to relcc using ECB data levels will still go to the CT AL library;

it does not matter if the call is made from a C or C++ program.

The definition of the relcc function, which uses DECBs, is part of a C++ segment that contains what can be thought

of as bridge functions (segment ctadov.cpp). This segment has been included as part of the new CTAD (TPF

Application DLL) DLL. The underlying service routine (or stub, as it is sometimes called) is an assembler language

program. However, C++ and assembler programs cannot communicate between each other directly , but C++ programs

can communicate with C programs and C programs can communicate with assembler language programs. Therefore,

the new bridge functions have been created to span the gap between C++ and assembler programs. The following

shows an example from segment ctadov.cpp:

#pragma export(relcc(TPF_DECB *decb)) <--- This makes relcc callable by other

. DLMs and DLLs

.

.

#pragma map(tpf_relcc_decb, "@@RELCCD")

extern "C" tpf_relcc_decb (TPF_DECB *decb); <--- This is internal to this DLL

. and generates C-type linkage

.

.

void relcc (TPF_DECB *decb)

{

tpf_relcc_decb (decb); <--- Call internal C function to process relcc

request

}

The functions shown in the previous example interact in the following way: a pseudo C function called

tpf_relcc_decb has been created. The underlying service routine for this C function can then be written in

16

Page 17

Fourth Quarter 2000

assembler language, much like the service routine for relcc (enum t_lvl level) is in the CTAL library. T o avoid

recompiling or reassembling, a separate segment was added to process the DECB parameter. The segment that

processes tpf_relcc_decb is in an assembler segment (crelcd.asm), which is also a member of the CTAD DLL.

The first few instructions in segment crelcd.asm would look as follows to be correctly resolved at when the DLL is

linked:

BEGIN NAME=CRELCD,IBM=YES,VERSION=40,TPFISOC=YES

...

@@RELCCD TMSPC FRAMESIZE=NO,LWS=R6,PPANAME=tpf_relcc_decb

L R2,0(R1) GET DECB FOR RELEASE

...

...

When a TPF application wants to call the relcc function using DECBs, the TPF application must first be compiled

with the C++ compiler . This does not mean that the application has to be written as an object-oriented C++ application;

it only means that the __cplusplus declaration must be defined (which happens automatically when the segment

is a .cpp file). The second step is to ensure that the definition side-deck for the CT AD DLL is imported correctly when

linking the dynamic load module (DLM) containing the application calling the overloaded function. This enables the

correct function linkage to be generated to the relcc service routine in the CTAD DLL at run time.

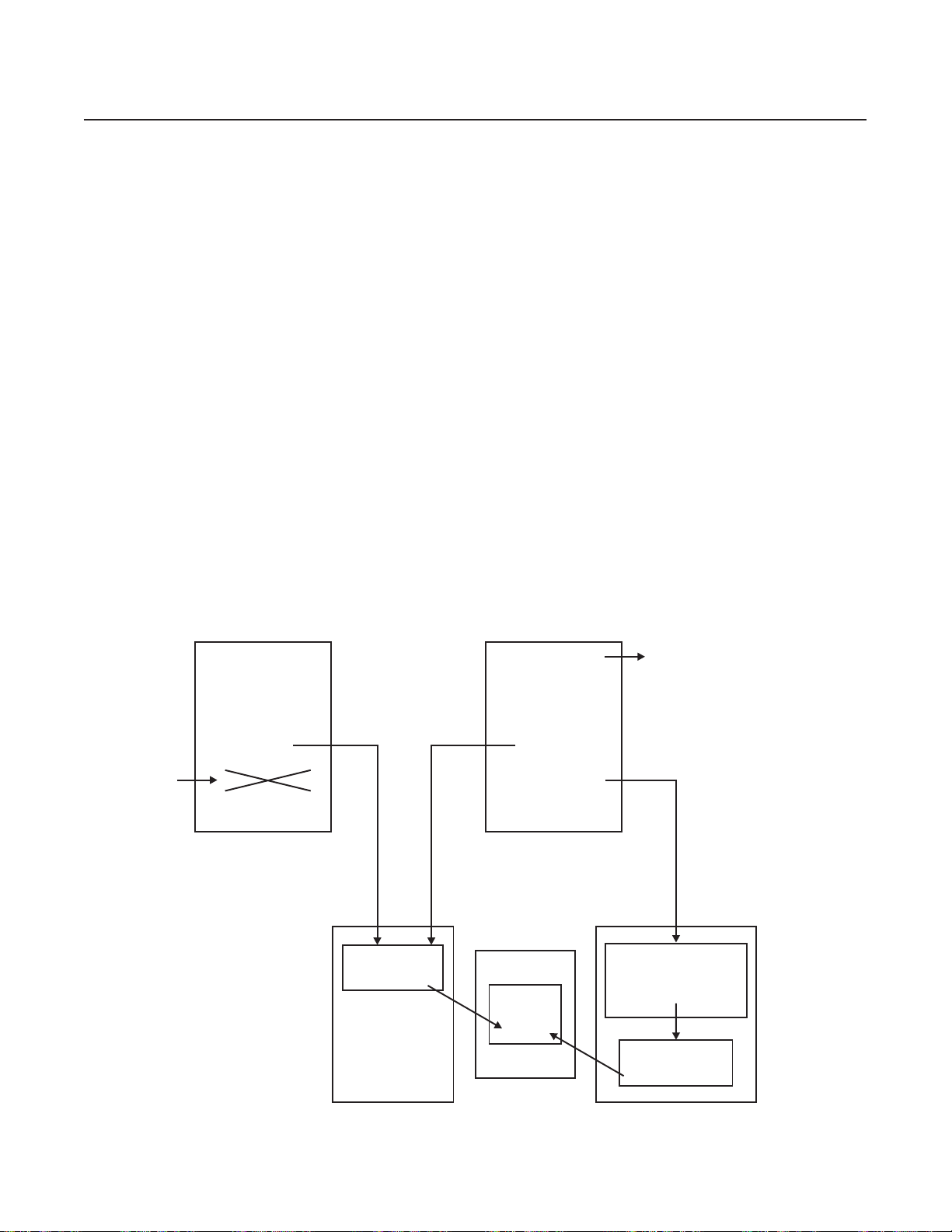

The following shows an example of how all this links together for both C and C++ programs. Notice that both

functions, in the end, call the same SVC service routine in the CCNUCL CSECT of the control program (CP):

Cannot call DECB

from C program

qzz1.c qzz2.cpp

#include <tpfapi.h>

void QZZ1

{

…

relcc (D1);

…

relcc (decb_1);

…

}

CTALLibrary CTAD DLL

crelcc.asm

#include <tpfapi.h>

void QZZ2

{

…

relcc (D2);

…

relcc (decb_2);

…

}

CCNUCL

cicr40.cpy

Includes c$decb.h

ctadov.cpp

void relcc (TPF_DECB *decb)

{

tpf_relcc_decb (decb);

}

crelcd.asm

17

Page 18

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

Where Can I Find More Information about TPF DECB Support?

For more information, see the following books:

• TPF Application Programming

• TPF C/C++ Language Support User’s Guide

• TPF Concepts and Structures

• TPF Database Reference

• TPF General Macros

• TPF Library Guide

• TPF Messages

• TPF Migration Guide

• TPF Operations

• TPF Program Development Support Reference

• TPF System Generation

• TPF System Installation Support Reference

• TPF System Macros

TPFCS Recoup Changes, but Stays the Same

Daniel Jacobs, IBM TPF Development

Y ou have probably been hearing a lot about the improved online recoup deliverable on PUT 13. There were definitely

many changes made to the TPF online recoup package, but there were also some changes made to TPF collection

support (TPFCS) recoup. What are these changes? Do they affect you? How the heck do you even use TPFCS

recoup? What year was the chocolate chip cookie invented? If you’re curious about the answers to any of these

questions, read on!

TPFCS Recoup Overview

For starters, I will describe how TPFCS recoup works on your pre-PUT 13 system. Assuming that the TPFCS

database is initialized in the system, records used by TPFCS are recouped as part of TPF recoup phase 1 before the

traditional TPF recoup chain chase. Because TPFCS has no knowledge of the contents of the data stored in a

collection, the data layout must be provided to TPFCS through another means so that collections that contain

references to standard TPF files or to other collections can be recouped correctly.

So what happens during TPFCS recoup processing? Well, it is a very complicated algorithm, but I will try to explain

it as best as I can. First, TPFCS makes a list of all data stores that reside on the subsystem that recoup is running

on. Second, for each data store, recoup processes each of the TPFCS internal system collections. Third, if any of

those collections contain any embedded references (as described by a recoup index associated with those collections),

those collections are then recursively processed as well. Fourth ... wait, there is no fourth ... that’s it! OK, maybe it

wasn’t so complicated after all (it seemed a lot worse writing the code).

At this point, you might be asking yourself, “What about my user collections? How are they recouped?” Good

questions! You may have cleverly realized that any collections created by user applications will not be recouped

unless they are explicitly made known to TPFCS by establishing one or more recoup indexes. A recoup index

describes the location of PIDs and file addresses embedded within all collections associated with that recoup index.

An anchor collection, usually the data store application dictionary (named DS_USER_DICT) of the data store, refers

to user-created collections, which refer to other collections, and so on. So, in step 3 of the previously described

algorithm, when DS_USER_DICT (an internal system collection) is recouped, embedded references to user collections

will also be processed .

18

Page 19

Fourth Quarter 2000

T o summarize the important points here, (1) every user-created collection must be referred to by an anchor collection

or it will not be recouped, and (2) a recoup index must be created and associated with the anchor collection and any

other collections that contain embedded references.

Recoup Indexes

How do you manage recoup indexes? TPFCS provides APIs that allow you to write applications to create, update, or

delete recoup indexes; or if you have APAR PJ26887 from PUT 12 installed, you can use the ZBROW RECOUP

functional message to do the same thing.

A separate index should be created for each of the different collection formats in a TPFCS data store. After a recoup

index is created, you must add one or more entries to it. Recoup index entries identify the location of file addresses

or PIDs in the collection data. Note that TPFCS recoup supports only traditional TPF chained records. TPFDF

records are not supported.

There are two basic types of recoup indexes. The first type, known as a homogeneous index, is used when each of

the elements in the collections associated with this index has the same format and can be recouped the same way .

The second type, known as a heterogeneous index, is used when the elements in the collections associated with

this index cannot be recouped the same way because they have different formats. When adding entries to a

heterogeneous index, you must specify which elements have embedded file address or PID information (as well as

the displacements in those elements where the embedded references are stored). Any collection type can be

associated with either recoup index type with the exception of binary large objects (BLOBs). Because BLOBs have

a single element, special processing is required and the recoup index type indicator is ignored.

After the recoup index has been created, it can be associated with one or more collections as long as they have the

same format for embedded references. However, a single collection can only be associated with one recoup index

at a time.

TPF Database Reference gives more information about using TPFCS recoup indexes, including:

• A comparison of the APIs to the ZBROW RECOUP functional message parameters

• Details about the format of embedded references

• A sample application that sets up recoup indexes.

PUT 13 TPFCS Recoup Changes

Now that I have explained how TPFCS used to work, let me tell you what has changed with the PUT 13 recoup

enhancements (AP AR PJ27469). Most of the changes made to the TPFCS recoup code were internal and would not

normally be seen by the user. However, there were a few external changes as well.

One big change is that TPF recoup now requires TPFCS to be initialized in your TPF system (this is a one-time only

procedure). With many other TPF functions using TPFCS, such as the POSIX file system, MQSeries, and MATIP,

you probably already have TPFCS initialized. If not, you may want to read about the ZOODB INIT functional message

in TPF Operations.

Another big change was the creation of two structures that could be used by both TPF recoup as well as TPFCS

recoup. The first structure is the IBM Recoup Scheduling Control Table (IRSCT). This table is used to determine the

order that TPF groups and TPFCS data stores are processed. What this means is that TPFCS data stores are no

longer automatically processed first. Data stores are now handled like TPF record IDs, so depending on how your

GROUP macros are defined, TPFCS data stores can be processed before, after, or even while TPF groups are

processed.

19

Page 20

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

The second structure is the IBM Recoup Active Root Table (IRART), which effectively replaces the TPFCS recoup

heap. There is one IRART for each processor defined in your TPF system. The IRART contains information about

each of the root units of work in progress, whether it is a TPF ordinal or a TPFCS persistent identifier (PID). This table

is used to determine timeout conditions and is also used during restart processing.

These structures are represented by TPFCS BLOBs. To initialize these structures, you are now required to enter the

ZRECP SETUP functional message once at CRAS state or above for each subsystem in your TPF system. Once

this is done, recoup can run in any state. However, if the IRSCT needs to expand because you are adding more

items to it, you will need to cycle to CRAS state or above. The ZRECP SETUP functional message also resets all

ZRECP PROFILE options to their default values.

As a result of these changes, many aspects of TPFCS recoup are now obsolete. With TPFCS recoup now using the

IRSCT and IRART, the old TPFCS recoup BLOB (also known as the TPFCS recoup heap) is no longer needed and

has been obsoleted. In fact, it will be automatically removed from your system when you enter the ZRECP SETUP

functional message.

The ZRECP TO2 functional message has also been obsoleted, although the functionality of many of its parameters

are still available:

• The ZRECP SETUP functional message replaces the SETUP parameter.

• The CSERRMAX and CSTIMEOUT parameters on the ZRECP PROFILE functional message replace the MAXERR

and TIMEOUT parameters.

• The ZRECP LEVEL functional message replaces the functionality of the MAXECB parameter.

Furthermore, pre-PUT 13 recoup allowed you to selectively recoup individual collections in test mode or production

mode. The improved online recoup package still allows you to selectively recoup individual collections in production

mode with the PID parameter on the ZRECP SEL functional message. However, the selective processing in test

mode has been changed to now allow you to recoup an individual data store instead of an individual collection by

using the SEL and DS parameters with the ZRECP RECALL functional message. This logically corresponds to being

able to selectively recoup an individual record ID and not a file address in test mode.

Other than what has already been described, very little else has changed! The segments are different and the online

message numbers have changed, but the fundamental TPFCS recoup processing remains the same. (Remember

our three-item algorithm?) Most importantly , TPFCS recoup indexes are still used the same way and existing TPFCS

recoup indexes are still valid and don’t have to be modified at all.

Odds and Ends

If you don’t have any user TPFCS data stores defined, there is very little that you need to do for recoup to protect all

file addresses in your existing TPFCS data stores. There are a few notes worth mentioning though:

• File system users should make sure they have the BKD7 descriptor loaded to their system. Without this descriptor ,

the x'FC2A' fixed file records will not be recouped and your file system will potentially become corrupted if the

recoup results are rolled in.

• MQSeries and file system users should apply A PAR PJ27409 to correct a problem where pool addresses belonging

to deleted collections were being lost.

20

Page 21

Fourth Quarter 2000

• MQSeries users should apply APAR PJ27274 to avoid some online errors while the MQSC data store is being

recouped.

• MATIP users should apply APAR PJ27688 to avoid the potential corruption of their connection definitions.

Finally , the chocolate chip cookie was invented in 1933 by Ruth W akefield. Why was this mentioned here? Because

I got very hungry while writing this article and I was thinking about chocolate chip cookies.

Another Monumental Home Run for Sammy OSA

Mark Gambino, IBM TPF Development

The history of networking technology is similar to the evolution of flight. Networks, like airplanes, keep getting faster

and faster. In the early days there were crop dusters and “puddle jumpers” with a top speed of 100 miles per hour

(provided, of course, there was a strong tailwind), and network line speeds were measured in bytes per second.

Jumping ahead to the end of the 20th century , there are supersonic aircraft capable or traveling at several times the

speed of sound, and network connections are now measured in megabytes (MB) per second. New challenges had

to be overcome as speeds increased, such as creating aircraft out of space-age composite materials that can

withstand the stresses of near warp (or is it warped) speed, and designing scalable TCP/IP stacks that can handle

the ever-increasing volume of traffic (and do so efficiently). In 1999, IBM technicians working in their secret “Area

61” facility (Poughkeepsie, New York) declassified (announced and shipped) two state-of-the-art projects:

• TCP/IP native stack support, enabling the TPF 4.1 operating system to connect to high-volume TCP/IP networks

through channel-attached routers, and doing so with exceptional performance

• OSA-Express support, enabling the S/390 host (running on IBM G5 servers or later models) to directly connect to

the latest generation and preferred high-speed IP backbone network, Gigabit Ethernet (GbE).

“Area 61” is now proud to announce that these technologies have been integrated to produce the first hypersonic

aircraft on the network: OSA-Express support for the TPF system. This support is provided by TPF APAR PJ27333

on PUT 13. You will also need OSA-Express cards at microcode level 4.08 or higher.

Who Is Sammy OSA and What Records Did He Break?

Open Systems Adapter (OSA), not to be confused with origin subarea (OSA), is integrated hardware (a card)

residing in the IBM mainframe that combines the functions of an I/O channel with a network port to provide direct

connectivity between S/390 applications and TCP/IP networks. OSA-Express is the third generation of OSA and

provides the following significant enhancements:

• OSA-Express connects to current high-bandwidth network technology , specifically GbE. Previous generations of

OSA were limited to Fast Ethernet connection speed.

• OSA-Express uses a new link layer, queued direct I/O (QDIO), to exchange information between the host and

OSA-Express card. QDIO uses a shared memory model, eliminating the need for real I/O operations (channel

programs) to transfer data between the host and the card. Previous generations of OSA used channel program

interfaces to exchange data between the host and the card. QDIO reduces the load on your I/O processor (IOP)

and improves the performance of the host.

• OSA-Express can be dynamically configured using TPF functional messages. Previous generations of OSA

required a separate feature, OSA/SF, to configure the OSA card.

These features made OSA-Express a logical choice to couple with TPF TCP/IP native stack support. Data presented

at the October 1999 TPF Users Group (TPFUG) meeting compared high-end native stack support (using IBM 3746

21

Page 22

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

IP routers) to high-end offload support (using Cisco 7500 offload boxes). The results showed that we were comparing

a jet fighter to a propeller-driven plane because TPF was able to send 2.3 to 16 times more data using native stack

and do so much more efficiently using 9.5 to 17.2 times fewer CPU cycles. Each channel-attached router using

native stack support could pump up to about 1500 packets per second and a maximum of about 2.5 MB per second

into a TPF system, depending on message size. To achieve more volume, just add more routers (or channel

connections). Recent testing in lab 416 of “Area 61” compared TPF native stack support using channel-attached

routers to TPF native stack support using OSA-Express. Again, the results showed another giant leap in technology ,

from jet fighter to next-generation hypersonic propulsion aircraft. A TPF system with only one OSA-Express connection

generated over 33 000 packets per second using a mixture of 500- and 1400-byte packets, and 44 MB per second

using large (3500-byte) packets. In other words, a single OSA-Express card can handle the load of a dozen or more

channel-attached connections. This enables you to greatly reduce the cost, size, and complexity of your network at

strategic air command headquarters (where your TPF system is located).

What Do I Do If Sammy OSA Goes on the Disabled List?

A single OSA-Express card can probably handle all the TCP/IP traffic in and out of a TPF processor in your complex

today , but that does not mean you need only one card (per processor). Networks and airplanes have another thing

in common here: single points of failure can be fatal! To avoid single points of failure in the network, OSA-Express

support for TPF (AP AR PJ27333) includes virtual IP address (VIP A) support. An OSA-Express card has one network

port that connects to one GbE network through a GbE switch. TCP/IP is a connectionless architecture, meaning the

failure of any one component in the network should not cause user connections (sockets) to fail provided that

alternate paths exist. Applying this concept to TPF sockets across OSA-Express, you do not want the sockets to fail

if the card, GbE switch, or Ethernet fails. Figure 1 shows the recommended configuration for a processor in a

production TPF system.

VIPA1

TPF

VIPA2

VIPA3

OSA1PRIM OSA1BACK

OSA-Express OSA-Express

GbE Switch GbE Switch

IP Router IPRouter IP Router

Network

Figure 1. Recommended OSA-Express Configuration for TPF

In the figure, TPF is connected to two OSA-Express cards, each of which is connected to a different GbE network

through a different GbE switch. Both GbE switches are connected to a series of IP routers that connect to the

remainder of the network. One or more VIP As in TPF are mapped across one of the OSA-Express cards. TPF uses

Routing Information Protocol (RIP) to broadcast information to all the IP routers. This information, which indicates

the current path to use to reach the VIP As in TPF, is saved in the routing tables of the IP routers. In this example, the

VIP As are mapped across the OSA1PRIM connection. When a packet arrives from the network at an IP router and

22

Page 23

Fourth Quarter 2000

the final destination of the packet is one of the VIPAs in TPF, the IP router will look in its routing table to determine

the GbE switch through which to route the packet to reach the appropriate OSA-Express card, which in turn passes

the packet to TPF.

Figure 2 shows what happens if the OSA1PRIM connection fails for any reason (card, switch, or Ethernet failure).

TPF will automatically swing the VIP As to the alternate connection, which in this example is OSA1BACK. New RIP

messages are broadcast to the IP routers indicating to use a different path (through OSA1BACK) to now reach the

VIPAs. This causes the IP routers to update their routing tables with the new path information and subsequent

packets destined for VIP As in TPF will be routed using the new path. The VIPA swing operation is automatic in this

case and transparent to the end user, meaning that the sockets using the VIP As will remain active despite the failure

of a network component. To use VIPA support on TPF, your IP routers must support and have RIP version 2 enabled

on the interfaces that are used to connect to TPF (meaning the interface on the IP router that connects to the GbE

switch that, in turn, connects to the OSA-Express card).

TPF

OSA1PRIM OSA1BACK

OSA-Express OSA-Express

GbE Switch

IP Router IP Router IP Router

Network

VIPA1

VIPA2

VIPA3

GbE Switch

Figure 2. Network Failure Causes VIPAs to Swing to Alternate Commection

How Do I Use Sammy OSA in Spring Training?

An OSA-Express card can be shared by any combination of logical partitions (LPARs) in the processor (CEC). In

addition, multiple TPF test systems running under VM can each have connections to the same OSA-Express card.

While OSA-Express does not use channel programs for data transfer, it does use channel programs for connection

activation and some error reporting. Each connection between TPF and an OSA-Express card uses three symbolic

device addresses (SDAs). In the test system environment, you need to assign each TPF test ID three SDAs and a

unique set of IP addresses (including VIPAs). For planning purposes, it is important to know how much payload an

aircraft can carry and how many host connections an OSA-Express card can have. The answer of course is, “it

depends.” The formula for the maximum number of host connections to an OSA-Express card is 80 divided by the

number of LPARs that are sharing the OSA-Express card.

For example, if two LPARs are sharing the card, each LPAR can have 40 host connections. If your shop has 150

TPF developers and testers, two OSA-Express cards dedicated to the test system would give TCP/IP connectivity

to every developer and tester. The final important point to mention about test systems is that you need VM/ESA 2.4

or higher to use OSA-Express support on a TPF system that is running under VM.

23

Page 24

TPF Systems Technical Newsletter http://www.s390.ibm.com/products/tpf/tpfhp.html

I Traded to Get Sammy OSA on My Team. Now What?

Once you have decided that you want to use OSA-Express for your TPF system, there are planning steps that you

need to think about well in advance:

• Hardware:

- IBM G5 servers or later models are required

- Determine how many cards are needed and then order OSA-Express cards with GbE adapters at microcode

level 4.08 or higher

- GbE switches to connect to the OSA-Express cards

- IP routers on the other side of the GbE switches to connect to the reaminder of the network. These IP routers

must support RIP version 2 to use VIPA support on TPF.

• Software:

- TPF 4.1 with PUT 13 APAR PJ27333 applied

- VM/ESA 2.4 or later version to use OSA-Express support for TPF test systems running under VM.

• Network Administration:

- Assign IP addresses to the TPF systems, including VIPAs

- Assign IP addresses to the IP routers.

After you have completed the necessary hardware and software upgrades, and the cabling of network devices is

completed, you are ready to install and use OSA-Express support. The procedure is as follows:

1. Define the OSA-Express cards to the processor in the I/O configuration program (IOCP). Each definition includes

which LP ARs will be using (sharing) the card and the range of SDAs that can be used for connections to the card.

2. Update parameter MAXOSA in keypoint 2 (CTK2) with the maximum number of OSA-Express connections for

your TPF system. Load the updated CTK2 to TPF.

3. IPL and cycle your TPF system to NORM state.