TPC Benchmark™ H Full Disclosure Report

for

IBM® eServer™ xSeries® 346

using

IBM DB2® Universal Database 8.2

Submitted for Review

May 18, 2005

Second Edition - December 2005

THE INFORMATION CONTAINED IN THIS DOCUMENT IS DISTRIBUTED ON AN AS IS BASIS

WITHOUT ANY WARRANTY EITHER EXPRESSED OR IMPLIED. The use of this information or the

implementation of any of these techniques is the customer’s responsibility and depends on the customer’s

ability to evaluate and integrate them into the customer’s operational environment. While each item has

been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar

results will be obtained elsewhere. Customers attempting to adapt these techniques to their own

environment do so at their own risk.

In this document, any references made to an IBM licensed program are not intended to state or imply that

only IBM’s licensed program may be used; any functionally equivalent program may be used.

This publication was produced in the United States. IBM may not offer the products, services, or features

discussed in this document in other countries, and the information is subject to change without notice.

Consult your local IBM representative for information on products and services available in your area.

© Copyright International Business Machines Corporation 2004. All rights reserved.

Permission is hereby granted to reproduce this document in whole or in part, provided the copyright notice

as printed above is set forth in full text on the title page of each item reproduced.

U.S. Government Users - Documentation related to restricted rights: Use, duplication, or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Trademarks

IBM, the IBM eServer logo, DB2, DB2 Universal Database, and xSeries are trademarks or registered

trademarks of International Business Machines Corporation.

The following terms used in this publication are trademarks of other companies as follows: TPC

Benchmark, TPC-H, QppH, QthH and QphH are trademarks of Transaction Processing Performance

Council; Intel and Xeon are trademarks or registered trademarks of Intel Corporation; Linux is a registered

trademark of Linus Torvalds in the United States, other countries, or both. Other company, product, or

service names, which may be denoted by two asterisks (**), may be trademarks or service marks of others.

Notes

1. GHz only measures microprocessor internal clock speed, not application performance. Many factors affect application performance.

2. When referring to hard disk capacity, one GB equals one billion bytes. Total user-accessible capacity may be less.

©Copyright IBM Corporation TPC Benchmark H Full Disclosure Report May 2005 Page: 2

IBM® eServer™ x346 with IBM DB2® UDB 8.2

TPC-H Revision 2.1.0

Report Date: May 18, 2005

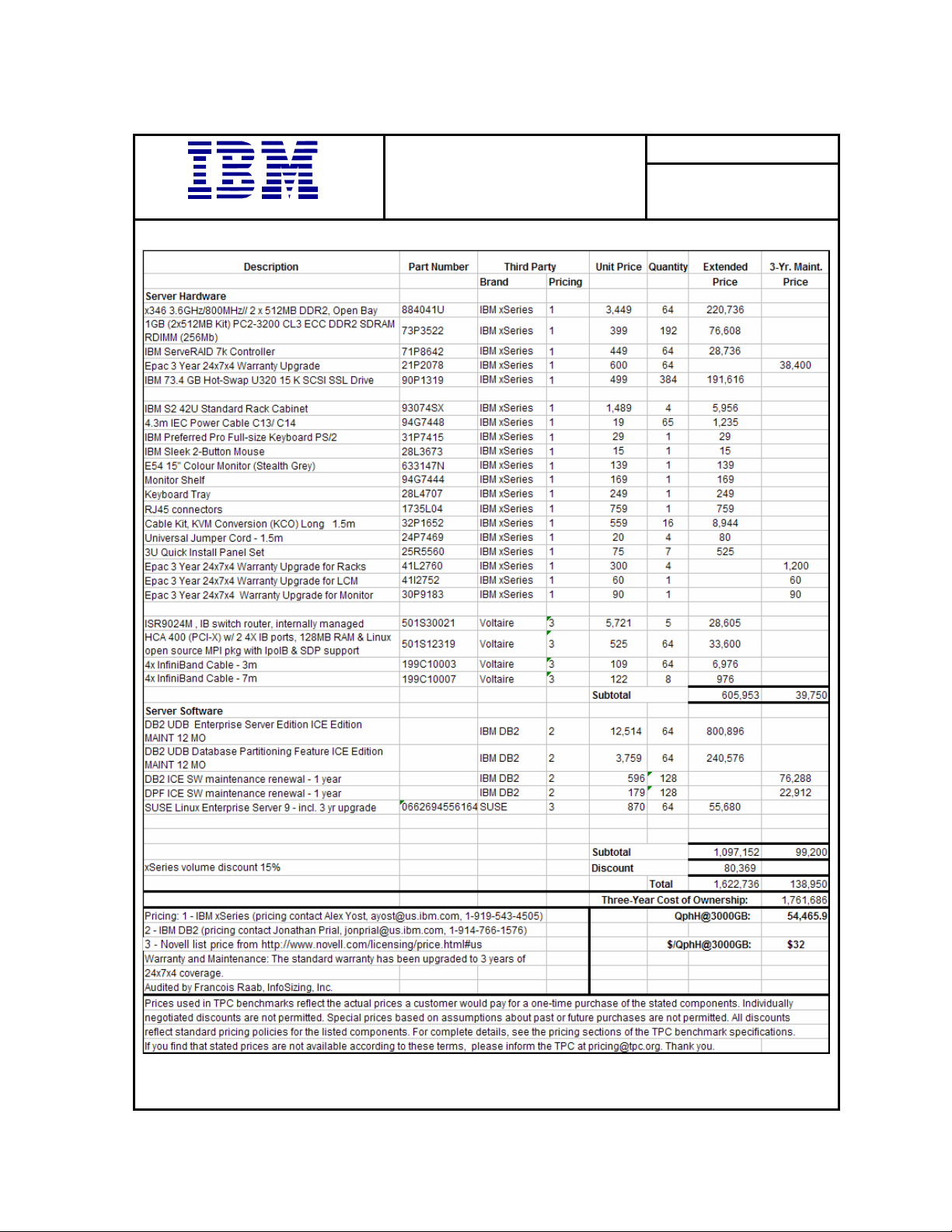

Total System Cost: $1,761,686

Composite Query-per-Hour Metric: 54,465.9 QphH@3000GB

Price/Performance: $32 per QphH@3000GB

Database Size: 3000GB

Database Manager: IBM DB2 UDB 8.2

Operating System: SuSE Linux Enterprise Server 9

Availability Date: Aug 15, 2005

Database Load Time: 2:23:35

Load Included Backup: N

Total Data Storage / Database Size: 8.75

RAID (Base Table): Y

RAID (Base Tables and Auxiliary Data Structures): Y

RAID (ALL): Y

System Configuration:

64 IBM eServer x346 servers, each server with:

Processor: 1 x 3.6GHz Intel Xeon with 2MB cache on 1 processor, 1 core, 2 threads

Memory: Eight (8) 512MB PC-3200 ECC SDRAM RDIMMs

Cluster Interconnect: One Voltaire HCA400 Dual-Port InfiniBand Host Channel Adapter

Disk Controllers: One ServeRAID-7k Ultra320 SCSI controller

Disk Drives: Six (6) 73.4GB 15K Ultra320 SCSI disk drives

Total Disk Storage: 26,249 GB


Measurement Results:

Database Scale Factor 3000

Total Data Storage/Database Size 8.75

Start of Database Load 15:05:38

End of Database Load 17:29:13

Database Load Time 2:23:35

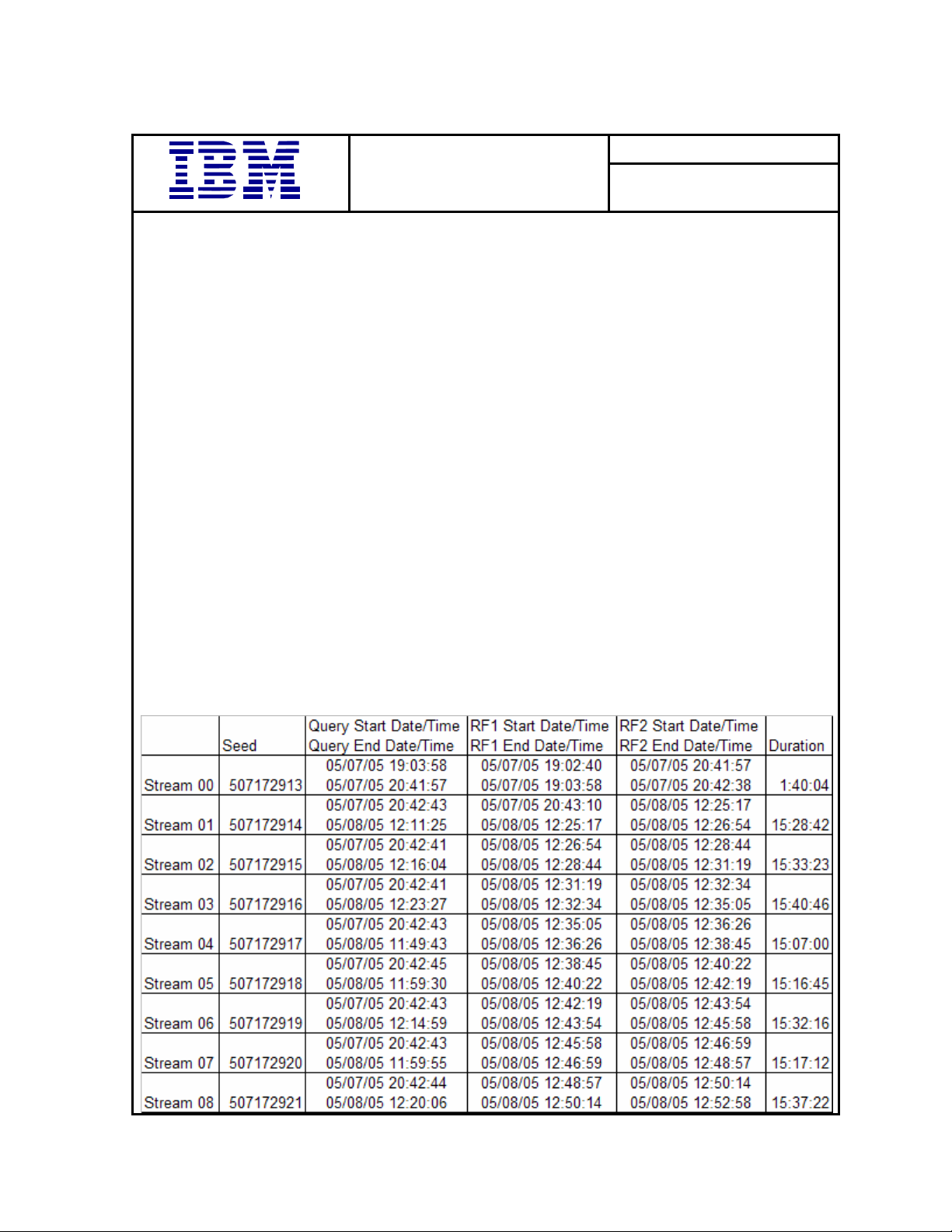

Query Streams for Throughput Test 8

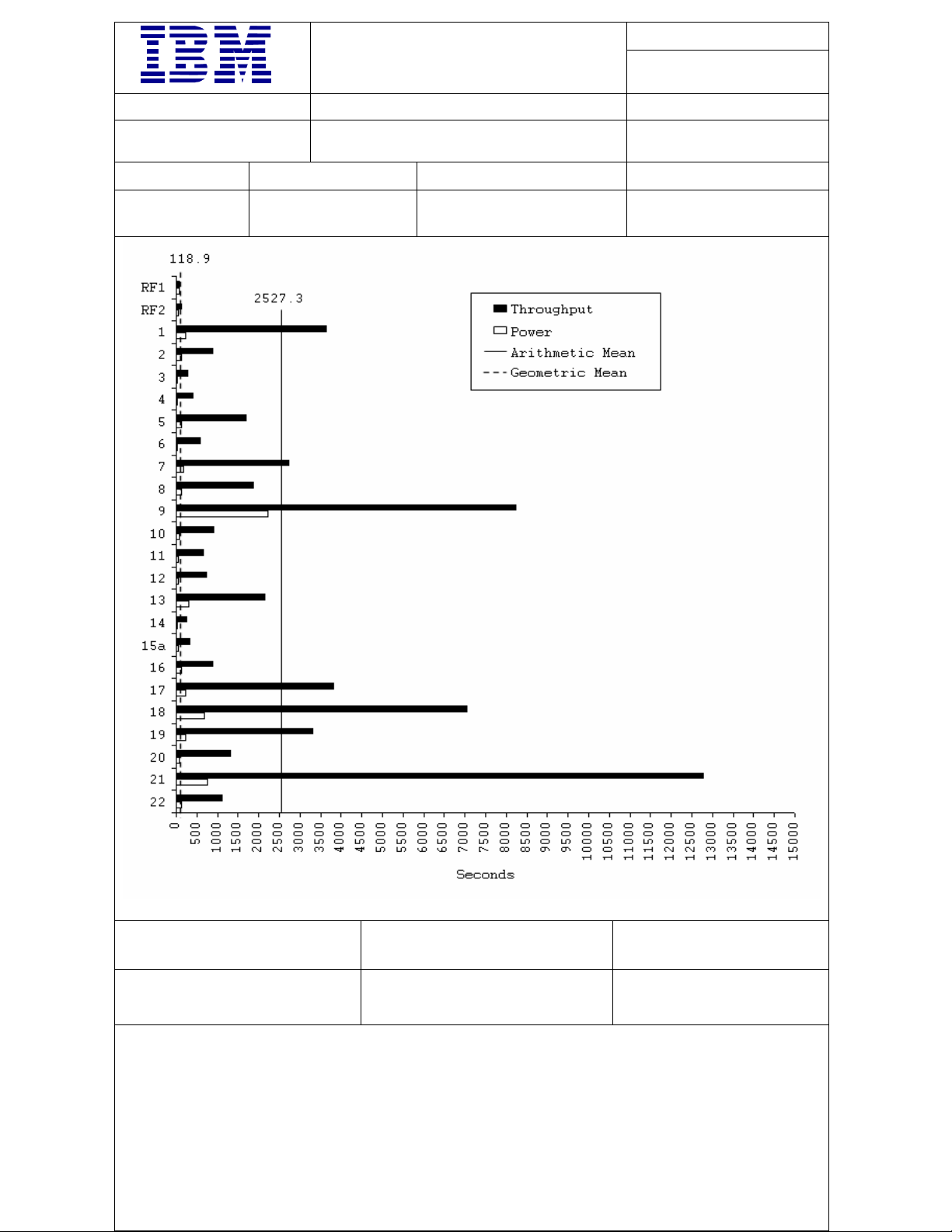

TPC-H Power 90,854.7

TPC-H Throughput 32,651.4

TPC-H Composite Query-per-Hour (QphH@3000GB) 54,465.9

Total System Price over 3 Years $1,761,686

TPC-H Price/Performance Metric ($/QphH@3000GB) $32

Measurement Interval:

Measurement Interval in Throughput Test (Ts) = 58215 seconds
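The reported figures in this summary can be cross-checked with a few lines of arithmetic. The sketch below is not part of the original report; it assumes the standard TPC-H definitions (throughput as S × 22 × 3600 / Ts × SF, and the composite metric as the geometric mean of power and throughput) and uses only numbers quoted in this report. All function and variable names are illustrative.

```python
import math
from datetime import datetime

# Figures copied from this report's measurement results.
SCALE_FACTOR = 3000           # Database Scale Factor (also the database size in GB)
STREAMS = 8                   # Query Streams for Throughput Test
TS_SECONDS = 58215            # Measurement Interval in Throughput Test (Ts)
POWER = 90854.7               # TPC-H Power
TOTAL_PRICE = 1_761_686       # Total System Price over 3 Years, in dollars
TOTAL_STORAGE_GB = 26_249     # Total Disk Storage
QUERIES_PER_STREAM = 22       # each TPC-H query stream runs 22 queries


def throughput(streams, ts_seconds, scale_factor):
    """Throughput@Size = (S * 22 * 3600 / Ts) * SF."""
    return streams * QUERIES_PER_STREAM * 3600 / ts_seconds * scale_factor


def composite(power, tput):
    """QphH@Size is the geometric mean of Power and Throughput."""
    return math.sqrt(power * tput)


def elapsed(start, end, fmt="%H:%M:%S"):
    """Elapsed time between two clock readings taken on the same day."""
    return datetime.strptime(end, fmt) - datetime.strptime(start, fmt)


tput = throughput(STREAMS, TS_SECONDS, SCALE_FACTOR)
qphh = composite(POWER, tput)
price_perf = TOTAL_PRICE / qphh
storage_ratio = TOTAL_STORAGE_GB / SCALE_FACTOR
load_time = elapsed("15:05:38", "17:29:13")

print(f"Throughput:        {tput:,.1f}")
print(f"QphH@3000GB:       {qphh:,.1f}")
print(f"$/QphH@3000GB:     {price_perf:.2f}")
print(f"Storage ratio:     {storage_ratio:.2f}")
print(f"Database load time: {load_time}")
```

Recomputing the derived values this way is a quick sanity check that the summary figures are mutually consistent; it does not replace the audited results.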

Duration of Stream Execution:


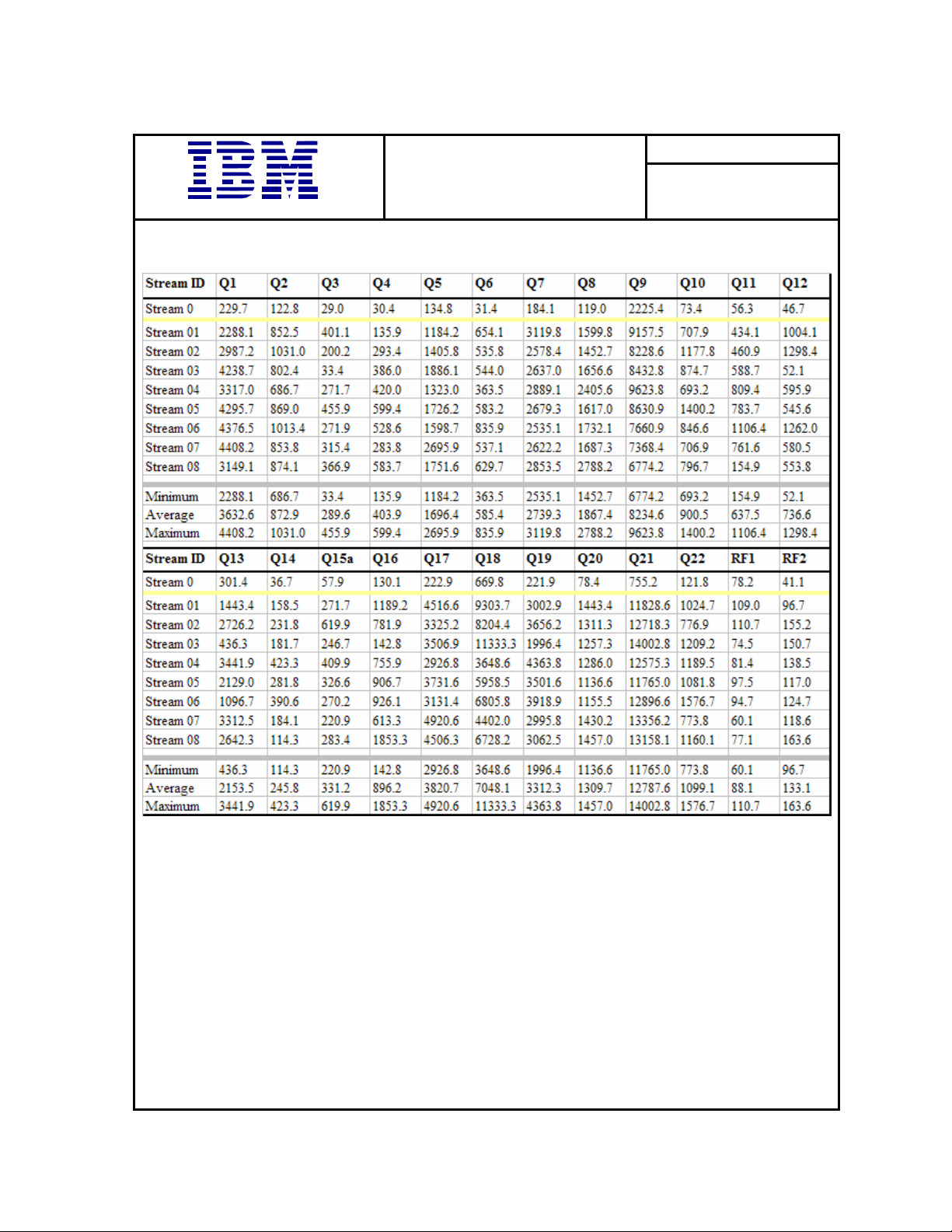

TPC-H Timing Intervals (in seconds):


Benchmark Sponsor: Haider Rizvi

Mgr., DB2 Data Warehouse Performance

IBM Canada Ltd;

8200 Warden Avenue

Markham, Ontario L6G 1C7

May 16, 2005

I verified the TPC Benchmark™ H performance of the following configuration:

Platform: IBM eServer xSeries 346, 64-node cluster

Database Manager: IBM DB2 UDB 8.2

Operating System: SuSE Linux Enterprise Server 9

The results were:

Sixty-four (64) IBM eServer xSeries 346, each with:

CPU (Speed): 1 x Intel Xeon (3.6 GHz)

Memory: 2 MB L2 Cache, 4 GB Main

Disks: 6 x 73.4 GB uSCSI

QphH@3,000GB: 54,465.9

In my opinion, this performance result was produced in compliance with the TPC’s requirements

for the benchmark. The following verification items were given special attention:

• The database records were defined with the proper layout and size

• The database population was generated using DBGEN

• The database was properly scaled to 3,000GB and populated accordingly

• The compliance of the database auxiliary data structures was verified

• The database load time was correctly measured and reported

• The required ACID properties were verified and met

1373 North Franklin Street • Colorado Springs, CO 80903-2527 • Office: 719/473-7555 • Fax: 719/473-7554


• The query input variables were generated by QGEN

• The query text was produced using minor modifications and an approved query variant

• The execution of the queries against the SF1 database produced compliant answers

• A compliant implementation specific layer was used to drive the tests

• The throughput tests involved 8 query streams

• The ratio between the longest and the shortest query was such that no query timing was

adjusted

• The execution times for queries and refresh functions were correctly measured and reported

• The repeatability of the measured results was verified

• The required amount of database log was configured

• The system pricing was verified for major components and maintenance

• The major pages from the FDR were verified for accuracy

Additional Audit Notes:

None.

Respectfully Yours,

François Raab

President


Table of Contents

Preface

1 General Items

1.1 Benchmark Sponsor

1.2 Parameter Settings

1.3 Configuration Diagrams

1.3.1 Measured and Priced Configurations

2 Clause 1: Logical Database Design Related Items

2.1 Database Table Definitions

2.2 Database Organization

2.3 Horizontal Partitioning

2.4 Replication

3 Clause 2: Queries and Update Functions Related Items

3.1 Query Language

3.2 Random Number Generation

3.3 Substitution Parameters Generation

3.4 Query Text and Output Data from Database

3.5 Query Substitution Parameters and Seeds Used

3.6 Query Isolation Level

3.7 Refresh Function Implementation

4 Clause 3: Database System Properties Related Items

4.1 Atomicity Requirements

4.1.1 Atomicity of Completed Transactions

4.1.2 Atomicity of Aborted Transactions

4.2 Consistency Requirements

4.2.1 Consistency Condition

4.2.2 Consistency Tests

4.3 Isolation Requirements

4.3.1 Isolation Test 1

4.3.2 Isolation Test 2

4.3.3 Isolation Test 3

4.3.4 Isolation Test 4

4.3.5 Isolation Test 5

4.3.6 Isolation Test 6

4.4 Durability Requirements

4.4.1 Failure of Durable Medium Containing Recovery Log Data, and Loss of System Power/Memory

4.4.2 Loss of Switch Power

5 Clause 4: Scaling and Database Population Related Items

5.1 Cardinality of Tables

5.2 Distribution of Tables and Logs

5.3 Database Partition / Replication Mapping

5.4 RAID Implementation

5.5 DBGEN Modifications

5.6 Database Load Time

5.7 Data Storage Ratio

5.8 Database Load Mechanism Details and Illustration

5.9 Qualification Database Configuration

6 Clause 5: Performance Metrics and Execution Rules Related Items

6.1 System Activity between Load and Performance Tests

6.2 Steps in the Power Test

6.3 Timing Intervals for Each Query and Refresh Function

6.4 Number of Streams for the Throughput Test

6.5 Start and End Date/Times for Each Query Stream

6.6 Total Elapsed Time for the Measurement Interval

6.7 Refresh Function Start Date/Time and Finish Date/Time

6.8 Timing Intervals for Each Query and Each Refresh Function for Each Stream

6.9 Performance Metrics

6.10 Performance Metric and Numerical Quantities from Both Runs

6.11 System Activity between Tests

7 Clause 6: SUT and Driver Implementation Related Items

7.1 Driver

7.2 Implementation-Specific Layer

7.3 Profile-Directed Optimization

8 Clause 7: Pricing Related Items

8.1 Hardware and Software Components

8.2 Three-Year Cost of System Configuration

8.3 Availability Dates

8.4 Country-Specific Pricing

9 Clause 8: Audit Related Items

9.1 Auditor’s Report

Appendix A: Tunable Parameters and System Configuration

DB2 UDB 8.2 Database and Database Manager Configuration

DB2 Database Manager Configuration

DB2 Registry Variables

Linux Parameters

Appendix B: Database Build Scripts

buildtpcd

create_bufferpools

create_indexes

create_nodegroups

create_tables

create_tablespaces

createuftbls

db2nodes.cfg

Load_db2set.ksh

run_db2set.ksh

runstats.ddl

load_tables.ksh

load_tb_customer.ddl

load_tb_lineitem.ddl

load_tb_orders.ddl

load_tb_part.ddl

load_tb_partsupp.ddl

load_tb_supplier.ddl

Load_dbcfg.ddl

load_dbmcfg.ddl

run_dbcfg.ddl

run_dbmcfg.ddl

setlogpath.ksh

tpcd.setup

Appendix C: Qualification Query Output

Qualification Queries

Query 1

Query 2

Query 3

Query 4

Query 5

Query 6

Query 7

Query 8

Query 9

Query 10

Query 11

Query 12

Query 13

Query 14

Query 15a

Query 16

Query 17

Query 18

Query 19

Query 20

Query 21

Query 22

First 10 Rows of the Database

Query Substitution Parameters

Appendix D: Driver Source Code

ploaduf1

ploaduf2

load_line_uf

load_orders_uf

runpower

runthroughput

tpcdbatch.h

tpcdbatch.sqc

tpcdUF.sqc

Appendix E: ACID Transaction Source Code

acid.h

acid.sqc

makefile

Appendix F: Price Quotations

Preface

TPC Benchmark H Standard Specification was developed by the Transaction Processing Performance Council (TPC). It was released on February 26, 1999, and most recently revised (Revision 2.1.0) August 14, 2003. This is the full disclosure report for benchmark testing of IBM eServer x346 according to the TPC Benchmark H Standard Specification.

The TPC Benchmark H is a decision support benchmark. It consists of a suite of business-oriented ad hoc queries and concurrent data modifications. The queries and the data populating the database have been chosen to have broad industry-wide relevance while maintaining a sufficient degree of ease of implementation. This benchmark illustrates decision support systems that:

• Examine large volumes of data;

• Execute queries with a high degree of complexity;

• Give answers to critical business questions.

TPC-H evaluates the performance of various decision support systems by the execution of a set of queries against a standard database under controlled conditions. The TPC-H queries:

• Give answers to real-world business questions;

• Simulate generated ad hoc queries (e.g., via a point-and-click GUI interface);

• Are far more complex than most OLTP transactions;

• Include a rich breadth of operators and selectivity constraints;

• Generate intensive activity on the part of the database server component of the system under test;

• Are executed against a database complying with specific population and scaling requirements;

• Are implemented with constraints derived from staying closely synchronized with an on-line production database.

The TPC-H operations are modeled as follows:

• The database is continuously available 24 hours a day, 7 days a week, for ad hoc queries from multiple end users and data modifications against all tables, except possibly during infrequent (e.g., once a month) maintenance sessions.

• The TPC-H database tracks, possibly with some delay, the state of the OLTP database through ongoing refresh functions, which batch together a number of modifications impacting some part of the decision support database.

• Due to the worldwide nature of the business data stored in the TPC-H database, the queries and the refresh functions may be executed against the database at any time, especially in relation to each other. In addition, this mix of queries and refresh functions is subject to specific ACIDity requirements, since queries and refresh functions may execute concurrently.

• To achieve the optimal compromise between performance and operational requirements, the database administrator can set, once and for all, the locking levels and the concurrent scheduling rules for queries and refresh functions.

The minimum database required to run the benchmark holds business data from 10,000 suppliers. It contains almost 10 million rows representing a raw storage capacity of about 1 gigabyte. Compliant benchmark implementations may also use one of the larger permissible database populations (e.g., 100 gigabytes, as defined in Clause 4.1.3).

The performance metric reported by TPC-H is called the TPC-H Composite Query-per-Hour Performance Metric (QphH@Size), and reflects multiple aspects of the capability of the system to process queries. These aspects include the selected database size against which the queries are executed, the query processing power when queries are submitted by a single stream, and the query throughput when queries are submitted by multiple concurrent users. The TPC-H Price/Performance metric is expressed as $/QphH@Size. To be compliant with the TPC-H standard, all references to TPC-H results for a given configuration must include all required reporting components (see Clause 5.4.6). The TPC believes that comparisons of TPC-H results measured against different database sizes are misleading and discourages such comparisons.

The TPC-H database must be implemented using a commercially available database management system

(DBMS), and the queries executed via an interface using dynamic SQL. The specification provides for

variants of SQL, as implementers are not required to have implemented a specific SQL standard in full.

Benchmark results are highly dependent upon workload, specific application requirements, and systems

design and implementation. Relative system performance will vary as a result of these and other factors.

Therefore, TPC-H should not be used as a substitute for specific customer application benchmarking when

critical capacity planning and/or product evaluation decisions are contemplated.


1 General Items

1.1 Benchmark Sponsor

A statement identifying the benchmark sponsor(s) and other participating companies must be provided.

IBM Corporation sponsored this TPC-H benchmark.

1.2 Parameter Settings

Settings must be provided for all customer-tunable parameters and options that have been changed from

the defaults found in actual products, including but not limited to:

• Database tuning options

• Optimizer/Query execution options

• Query Processing tool/language configuration parameters

• Recovery/commit options

• Consistency/locking options

• Operating system and configuration parameters

• Configuration parameters and options for any other software component incorporated into the pricing structure

• Compiler optimization options.

Appendix A, “Tunable Parameters,” contains a list of all DB2 parameters and operating system parameters.

Session initialization parameters can be set during or immediately after establishing the connection to the

database within the tpcdbatch program documented in Appendix D, “Driver Source Code.” This result uses

the default session initialization parameters established during preprocessing/binding of the tpcdbatch

program.

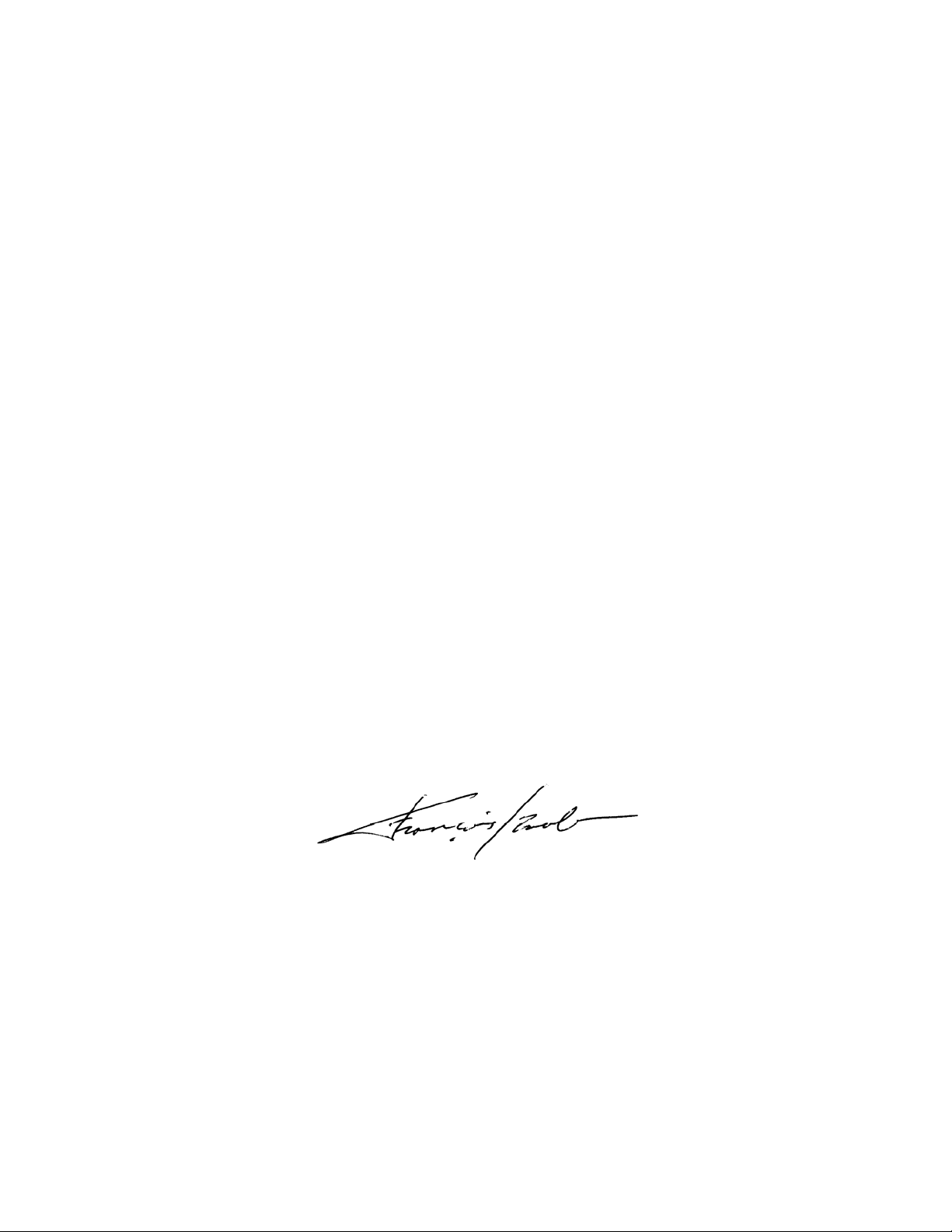

1.3 Configuration Diagrams

Diagrams of both measured and priced configurations must be provided, accompanied by a description of

the differences. This includes, but is not limited to:

• Number and type of processors

• Size of allocated memory and any specific mapping/partitioning of memory unique to the test and type of disk units (and controllers, if applicable)

• Number and type of disk units (and controllers, if applicable)

• Number of channels or bus connections to disk units, including their protocol type

• Number of LAN (e.g., Ethernet) connections, including routers, workstations, terminals, etc., that were physically used in the test or are incorporated into the pricing structure

• Type and run-time execution location of software components (e.g., DBMS, query processing tools/languages, middleware components, software drivers, etc.).

The configuration diagram for the tested and priced system is provided on the following page.


1.3.1 Priced and Measured Configurations

The priced configuration was a cluster of 64 x IBM eServer xSeries 346 servers. Each had:

• 1 Intel Xeon 3.6GHz processor with 2MB cache

• Eight (8) 512MB PC-3200 ECC SDRAM RDIMMs

• One Voltaire HCA400 Dual-Port InfiniBand Host Channel Adapter

• One ServeRAID-7k Ultra320 SCSI controller

• Six (6) 73.4GB 15K Ultra320 SCSI disk drives

For full details of the priced configuration see the pricing spreadsheet in the Executive Summary.

2 Clause 1: Logical Database Design Related Items

2.1 Database Table Definitions

Listings must be provided for all table definition statements and all other statements used to set up the test

and qualification databases. (8.1.2.1)

Appendix B contains the scripts that were used to set up the TPC-H test and qualification databases.

2.2 Database Organization

The physical organization of tables and indexes within the test and qualification databases must be

disclosed. If the column ordering of any table is different from that specified in Clause 1.4, it must be noted.

Appendix B contains the scripts that were used to create the indexes on the test and qualification databases.

2.3 Horizontal Partitioning

Horizontal partitioning of tables and rows in the test and qualification databases must be disclosed (see

Clause 1.5.4).

Horizontal partitioning was used for all tables except for the nation and region tables. See Appendix B,

“Database Build Scripts.”
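As a rough illustration of what horizontal partitioning means here, the sketch below spreads rows across partitions by hashing a partitioning key. It is only a conceptual model: the hash function (CRC32), the partition count of 64 (one per server is an assumption, not something this section states) and the function names are all hypothetical, and DB2's own hashing and partition map differ. The actual definitions are in the Appendix B scripts.

```python
import zlib

# Assumption for illustration: one database partition per server in the cluster.
NUM_PARTITIONS = 64


def partition_for(key, num_partitions=NUM_PARTITIONS):
    """Map a partitioning-key value to a partition number.

    CRC32 is used only because it is deterministic and in the standard
    library; it is not the hash function DB2 uses.
    """
    digest = zlib.crc32(str(key).encode("ascii"))
    return digest % num_partitions


def distribute(keys, num_partitions=NUM_PARTITIONS):
    """Group key values by the partition they hash to."""
    buckets = {p: [] for p in range(num_partitions)}
    for key in keys:
        buckets[partition_for(key, num_partitions)].append(key)
    return buckets


buckets = distribute(range(1, 10001))
sizes = [len(rows) for rows in buckets.values()]
print("rows per partition: min", min(sizes), "max", max(sizes))
```

Small tables such as nation and region gain little from being split this way, which is consistent with the report leaving them unpartitioned.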

2.4 Replication

Any replication of physical objects must be disclosed and must conform to the requirements of Clause

1.5.6.

No replication was used.


3 Clause 2: Queries and Update Functions Related Items

3.1 Query Language

The query language used to implement the queries must be identified.

SQL was the query language used.

3.2 Random Number Generation

The method of verification for the random number generation must be described unless the supplied

DBGEN and QGEN were used.

The TPC-supplied DBGEN version 1.3.0 and QGEN version 1.3.0 were used to generate all database

populations.

3.3 Substitution Parameters Generation

The method used to generate values for substitution parameters must be disclosed. If QGEN is not used for

this purpose, then the source code of any non-commercial tool used must be disclosed. If QGEN is used, the

version number, release number, modification number and patch level of QGEN must be disclosed.

The supplied QGEN version 1.3.0 was used to generate the substitution parameters.

3.4 Query Text and Output Data from Database

The executable query text used for query validation must be disclosed along with the corresponding output

data generated during the execution of the query text against the qualification database. If minor

modifications (see Clause 2.2.3) have been applied to any functional query definitions or approved variants

in order to obtain executable query text, these modifications must be disclosed and justified. The

justification for a particular minor query modification can apply collectively to all queries for which it has

been used. The output data for the power and throughput tests must be made available electronically upon

request.

Appendix C.1, “Qualification Queries,” contains the output for each of the queries. The functional query

definitions and variants used in this disclosure use the following minor query modifications:

• Table names and view names are fully qualified. For example, the nation table is referred to as “TPCD.NATION.”

• The standard IBM SQL date syntax is used for date arithmetic. For example, DATE(‘1996-01-01’)+3 MONTHS.

• The semicolon (;) is used as a command delimiter.
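To make the date-arithmetic modification concrete, the following sketch mimics adding a month duration to a date, as in the DATE(‘1996-01-01’)+3 MONTHS example. The helper name add_months is hypothetical, and the end-of-month clamping is an assumption about how such arithmetic is usually defined rather than something taken from this report.

```python
import calendar
from datetime import date


def add_months(start, months):
    """Return the date `months` calendar months after `start`.

    If the target month is shorter than start.day, the day is clamped to
    the last day of that month (assumed behaviour, for illustration).
    """
    index = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(index, 12)
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


# The example from the text: three months after 1 January 1996.
print(add_months(date(1996, 1, 1), 3))
```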

3.5 Query Substitution Parameters and Seeds Used

All query substitution parameters used for all performance tests must be disclosed in tabular format, along

with the seeds used to generate these parameters.

Appendix C contains the seed and query substitution parameters used.

3.6 Query Isolation Level

The isolation level used to run the queries must be disclosed. If the isolation level does not map closely to

one of the isolation levels defined in Clause 3.4, additional descriptive detail must be provided.

The isolation level used to run the queries was “repeatable read.”


3.7 Refresh Function Implementation

The details of how the refresh functions were implemented must be disclosed (including source code of any

non-commercial program used).

The refresh functions are part of the implementation-specific layer/driver code included in Appendix D,

“Driver Source Code.”


4 Clause 3: Database System Properties Related Items

The results of the ACID tests must be disclosed, along with a description of how the ACID requirements

were met. This includes disclosing the code written to implement the ACID Transaction and Query.

All ACID tests were conducted according to specifications. The Atomicity, Isolation, Consistency and Durability tests were performed on the IBM eServer x346. Appendix E contains the ACID transaction source code.

4.1 Atomicity Requirements

The system under test must guarantee that transactions are atomic; the system will either perform all individual operations on the data, or will assure that no partially completed operations leave any effects on the data.

4.1.1 Atomicity of Completed Transactions

Perform the ACID transactions for a randomly selected set of input data and verify that the appropriate rows have been changed in the ORDER, LINEITEM and HISTORY tables.

The following steps were performed to verify the Atomicity of completed transactions.

1. The total price from the ORDER table and the extended price from the LINEITEM table were retrieved for a randomly selected order key. The number of records in the HISTORY table was also retrieved.

2. The ACID Transaction T1 was executed for the order key used in step 1.

3. The total price and extended price were retrieved for the same order key used in step 1 and step 2. It was verified that:

T1.EXTENDEDPRICE = OLD.EXTENDEDPRICE + ((T1.DELTA) * (OLD.EXTENDEDPRICE / OLD.QUANTITY)),

T1.TOTALPRICE = OLD.TOTALPRICE + ((T1.EXTENDEDPRICE - OLD.EXTENDEDPRICE) * (1 - DISCOUNT) * (1 + TAX)),

and that the number of records in the HISTORY table had increased by 1.

4.1.2 Atomicity of Aborted Transactions

Perform the ACID transactions for a randomly selected set of input data, and verify that the appropriate

rows have not been changed in the ORDER, LINEITEM and HISTORY tables.

The following steps were performed to verify the Atomicity of the aborted ACID transaction:

1. The ACID application is passed a parameter to execute a rollback of the transaction instead of

performing the commit.

2. The total price from the ORDER table and the extended price from the LINEITEM table were

retrieved for a random order key. The number of records in the HISTORY table was also

retrieved.

3. The ACID transaction was executed for the orderkey used in step 2. The transaction was rolled

back.

4. The total price and the extended price were retrieved for the same orderkey used in step 2 and step

3. It was verified that the extended price and the total price were the same as in step 2.
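The checks in 4.1.1 and 4.1.2 can be sketched with a small in-memory model. This is not the ACID transaction code from Appendix E: the dictionary standing in for the ORDER and LINEITEM rows, the list standing in for the HISTORY table, the function names and the sample values are all hypothetical, and exact decimal arithmetic is used instead of the rounding rules of the real transaction.

```python
from decimal import Decimal


def expected_after_commit(old_extended, old_quantity, old_total, delta, discount, tax):
    """Apply the two verification formulas from section 4.1.1."""
    new_extended = old_extended + delta * (old_extended / old_quantity)
    new_total = old_total + (new_extended - old_extended) * (1 - discount) * (1 + tax)
    return new_extended, new_total


def run_transaction(row, history, delta, commit=True):
    """Update the row and append a history record, or undo both on rollback."""
    snapshot = dict(row)  # saved state, restored if the transaction aborts
    row["extendedprice"], row["totalprice"] = expected_after_commit(
        row["extendedprice"], row["quantity"], row["totalprice"],
        delta, row["discount"], row["tax"],
    )
    history.append({"delta": delta})
    if not commit:
        row.clear()
        row.update(snapshot)
        history.pop()


# Hypothetical sample data for one order key.
row = {
    "extendedprice": Decimal("1000.00"), "quantity": Decimal("10"),
    "totalprice": Decimal("2000.00"),
    "discount": Decimal("0.10"), "tax": Decimal("0.05"),
}
history = []

# Aborted transaction: prices and history count must be unchanged.
before = dict(row)
run_transaction(row, history, Decimal("2"), commit=False)
rolled_back_ok = (row == before and len(history) == 0)

# Committed transaction: prices follow the formulas, history grows by one.
run_transaction(row, history, Decimal("2"), commit=True)
print(row["extendedprice"], row["totalprice"], len(history), rolled_back_ok)
```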

4.2 Consistency Requirements

Consistency is the property of the application that requires any execution of transactions to take the

database from one consistent state to another.

4.2.1 Consistency Condition

A consistent state for the TPC-H database is defined to exist when:

O_TOTALPRICE=SUM(L_EXTENDEDPRICE*(1-L_DISCOUNT)*(1+L_TAX))

for each ORDER and LINEITEM defined by (O_ORDERKEY=L_ORDERKEY)


The following queries were executed before and after a measurement to show that the database was always

in a consistent state both initially and after a measurement.

SELECT DECIMAL(SUM(DECIMAL(INTEGER(INTEGER(DECIMAL

(INTEGER(100*DECIMAL(L_EXTENDEDPRICE,20,2)),20,3)*

(1-L_DISCOUNT))*(1+L_TAX)),20,3)/100.0),20,3)

FROM TPCD.LINEITEM WHERE L_ORDERKEY=okey

SELECT DECIMAL(SUM(O_TOTALPRICE),20,3) FROM TPCD.ORDERS WHERE

O_ORDERKEY = okey
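The comparison these two queries perform can be sketched outside the database as follows. The sketch mirrors the structure of the first query (work in cents and truncate to an integer after applying the discount and again after applying the tax), but it is an illustration only: the function names and the sample line items are hypothetical, and Python's int() truncation is assumed to stand in for the SQL INTEGER casts.

```python
from decimal import Decimal


def lineitem_total(lines):
    """Sum the per-line totals for one order, truncating as the query does.

    `lines` is an iterable of (extended_price, discount, tax) Decimals.
    """
    total_cents = 0
    for extended, discount, tax in lines:
        cents = int(100 * extended)               # price in whole cents
        discounted = int(cents * (1 - discount))  # truncate after the discount
        taxed = int(discounted * (1 + tax))       # truncate after the tax
        total_cents += taxed
    return Decimal(total_cents) / 100


def is_consistent(o_totalprice, lines):
    """True when the order total matches the total derived from its line items."""
    return o_totalprice == lineitem_total(lines)


# Hypothetical line items for a single order key.
sample_lines = [
    (Decimal("100.00"), Decimal("0.10"), Decimal("0.05")),
    (Decimal("19.99"), Decimal("0.00"), Decimal("0.08")),
]
print(lineitem_total(sample_lines), is_consistent(Decimal("116.08"), sample_lines))
```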

4.2.2 Consistency Tests

Verify that the ORDER and LINEITEM tables are initially consistent as defined in Clause 3.3.2.1, based on

a random sample of at least 10 distinct values of O_ORDERKEY.

The queries defined in 4.2.1, “Consistency Condition,” were run after initial database build and prior to

executing the ACID transaction. The queries showed that the database was in a consistent condition.

After executing 9 streams of 100 ACID transactions each, the queries defined in 4.2.1, “Consistency

Condition,” were run again. The queries showed that the database was still in a consistent state.

4.3 Isolation Requirements

4.3.1 Isolation Test 1

This test demonstrates isolation for the read-write conflict of a read-write transaction and a read-only

transaction when the read-write transaction is committed.

The following steps were performed to satisfy the test of isolation for a read-only and a read-write

committed transaction:

1. First session: Start an ACID transaction with a randomly selected O_KEY,L_KEY and DELTA.

The transaction is delayed for 60 seconds just prior to the Commit.

2. Second session: Start an ACID query for the same O_KEY as in the ACID transaction.

3. Second session: The ACID query attempts to read the file but is locked out by the ACID

transaction waiting to complete.

4. First session: The ACID transaction is released and the Commit is executed releasing the record.

With the LINEITEM record now released, the ACID query can now complete.

5. Second session: Verify that the ACID query delays for approximately 60 seconds and that the

results displayed for the ACID query match the input for the ACID transaction.
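The blocking behaviour exercised by this test can be imitated with two threads and a lock. This is only an analogy, not the test harness used for the benchmark: a threading.Lock stands in for the row lock held by the database, the delay is shortened from 60 seconds to a fraction of a second, and all names and values are hypothetical.

```python
import threading
import time


def run_isolation_demo(delay_seconds=0.2):
    """Writer holds the lock across a delay; the reader must wait for it."""
    row_lock = threading.Lock()
    row = {"extendedprice": 100}
    writer_has_lock = threading.Event()
    result = {}

    def acid_transaction():
        with row_lock:
            writer_has_lock.set()
            row["extendedprice"] = 150   # update not yet visible to readers
            time.sleep(delay_seconds)    # delay just prior to the commit
        # Leaving the with-block stands in for the commit releasing the lock.

    def acid_query():
        writer_has_lock.wait()           # start only once the writer holds the lock
        started = time.monotonic()
        with row_lock:                   # blocks until the writer commits
            result["value"] = row["extendedprice"]
        result["waited"] = time.monotonic() - started

    writer = threading.Thread(target=acid_transaction)
    reader = threading.Thread(target=acid_query)
    writer.start()
    reader.start()
    writer.join()
    reader.join()
    return result


outcome = run_isolation_demo()
print(f"query saw {outcome['value']} after waiting about {outcome['waited']:.2f}s")
```

As in step 5, the two things to check are that the reader was delayed for roughly the writer's hold time and that it observed the committed value.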

4.3.2 Isolation Test 2

This test demonstrates isolation for the read-write conflict of read-write transaction and read-only

transaction when the read-write transaction is rolled back.

The following steps were performed to satisfy the test of isolation for read-only and a rolled back read-write transaction:

1. First session: Perform the ACID transaction for a random O_KEY, L_KEY and DELTA. The

transaction is delayed for 60 seconds just prior to the Rollback.

2. Second session: Start an ACID query for the same O_KEY as in the ACID transaction. The ACID

query attempts to read the LINEITEM table but is locked out by the ACID transaction.

3. First session: The ACID transaction is released and the Rollback is executed, releasing the record.

4. Second session: With the LINEITEM record now released, the ACID query completes.

4.3.3 Isolation Test 3

This test demonstrates isolation for the write-write conflict of two refresh transactions when the first

transaction is committed.

The following steps were performed to verify isolation of two refresh transactions:


1. First session: Start an ACID transaction T1 for a randomly selected O_KEY, L_KEY and

DELTA. The transaction is delayed for 60 seconds just prior to the COMMIT.

2. Second session: Start a second ACID transaction T2 for the same O_KEY, L_KEY, and for a

randomly selected DELTA2. This transaction is forced to wait while the 1st session holds a lock

on the LINEITEM record requested by the second session.

3. First session: The ACID transaction T1 is released and the Commit is executed, releasing the

record. With the LINEITEM record now released, the ACID transaction T2 can now complete.

4. Verify that:

T2.L_EXTENDEDPRICE = T1.L_EXTENDEDPRICE + DELTA * (T1.L_EXTENDEDPRICE / T1.L_QUANTITY)

4.3.4 Isolation Test 4

This test demonstrates isolation for write-write conflict of two ACID transactions when the first transaction

is rolled back.

The following steps were performed to verify the isolation of two ACID transactions after the first one is

rolled back:

1. First session: Start an ACID transaction T1 for a randomly selected O_KEY, L_KEY, and

DELTA. The transaction is delayed for 60 seconds just prior to the rollback.

2. Second session: Start a second ACID transaction T2 for the same O_KEY, L_KEY used by the 1st

session. This transaction is forced to wait while the 1st session holds a lock on the LINEITEM

record requested by the second session.

3. First session: Rollback the ACID transaction T1. With the LINEITEM record now released, the

ACID transaction T2 completes.

4. Verify that T2.L_EXTENDEDPRICE = T1.L_EXTENDEDPRICE

4.3.5 Isolation Test 5

This test demonstrates the ability of read and write transactions affecting different database tables to make

progress concurrently.

1. First session: Start an ACID transaction, T1, for a randomly selected O_KEY, L_KEY and

DELTA. The ACID transaction was suspended prior to COMMIT.

2. First session: Start a second ACID transaction, T2, which selects random values of

PS_PARTKEY and PS_SUPPKEY and returns all columns of the PARTSUPP table for which

PS_PARTKEY and PS_SUPPKEY are equal to the selected values.

3. T2 completed.

4. T1 was allowed to complete.

5. It was verified that the appropriate rows in the ORDERS, LINEITEM and HISTORY tables have

been changed.

4.3.6 Isolation Test 6

This test demonstrates that the continuous submission of arbitrary (read-only) queries against one or more

tables of the database does not indefinitely delay refresh transactions affecting those tables from making

progress.

1. First session: A transaction T1, which executes modified TPC-H query 1 with DELTA = 0, was

started.

2. Second session: Before T1 completed, an ACID transaction T2, with randomly selected values of

O_KEY, L_KEY and DELTA, was started.

3. Third session: Before T1 completed, a transaction T3, which executes modified TPC-H query 1

with a randomly selected value of DELTA (not equal to 0), was started.

4. T1 completed.

5. T2 completed.

6. T3 completed.

7. It was verified that the appropriate rows in the ORDERS, LINEITEM and HISTORY tables were

changed.

4.4 Durability Requirements

The SUT must guarantee durability: the ability to preserve the effects of committed transactions and ensure

database consistency after recovery from any one of the failures listed in Clause 3.5.3.

4.4.1 Failure of Durable Medium Containing Recovery Log Data, and Loss of System

Power/Memory

Guarantee the database and committed updates are preserved across a permanent irrecoverable failure of

any single durable medium containing TPC-H database tables or recovery log tables.

The database log was stored on RAID-5 protected storage. The tables for the database were stored on

RAID-5 protected storage. The tests were conducted on the qualification database. The steps performed are

shown below.

1. The consistency test described in section 4.2.1 was verified.

2. The current count of the total number of records in the HISTORY table was determined giving

hist1.

3. A test to run 200 ACID transactions on each of 9 execution streams was started such that each

stream executes a different set of transactions.

4. One of the disks containing the DB2 transaction log recovery data and DB2 database tables was

powered off after at least 30 ACID transactions had completed from each of the execution

streams.

5. Because the disks were in RAID 5 configuration the applications continued running the ACID

transactions.

6. The system was shut down by switching off circuit breakers on the power rail connected to all

system component cabinets, after at least a total of 100 transactions had completed for each

stream.

7. The system was powered back on and rebooted.

8. All volumes were re-established and RAID5 volumes were synchronized.

9. Step 2 was performed giving hist2. It was verified that hist2 - hist1 was greater than or equal to the

number of records in the success file.

10. Consistency condition described in 4.2.1 was verified.
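The comparison in step 9 can be sketched as follows. The helper names are hypothetical, and the sketch assumes the success file holds one line per transaction that reported a successful commit; the report does not describe the file layout.

```python
def count_success_records(lines):
    """Count non-empty lines, assuming one committed transaction per line."""
    return sum(1 for line in lines if line.strip())


def durability_holds(hist1, hist2, success_records):
    """True when every transaction reported as successful is in HISTORY.

    hist1 and hist2 are the HISTORY row counts before and after the test.
    """
    return (hist2 - hist1) >= success_records
```

The test is an inequality rather than an equality because a transaction can commit in the database just before the failure, without its entry reaching the success file.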

4.4.2 Loss of Switch Power

This test was conducted on the qualification database. The following steps were performed:

1. The consistency test described in section 4.2.1 was verified.

2. The current count of the total number of records in the HISTORY table was determined giving

hist1.

3. A test to run 200 ACID transactions on each of 9 execution streams was started such that each

stream executes a different set of transactions.

4. The Voltaire Infiniband switch was disconnected from the system.

5. The database detected the network loss and terminated processing.

6. Network connections were reestablished and the database was restarted.

7. Step 2 was performed giving hist2. It was verified that hist2 - hist1 was greater than or equal to

the number of records in the success file.

8. Consistency condition described in 4.2.1 was verified.

5 Clause 4: Scaling and Database Population Related Items

5.1 Cardinality of Tables

The cardinality (e.g., the number of rows) of each table of the test database, as it existed at the completion

of the database load (see Clause 4.2.5), must be disclosed.

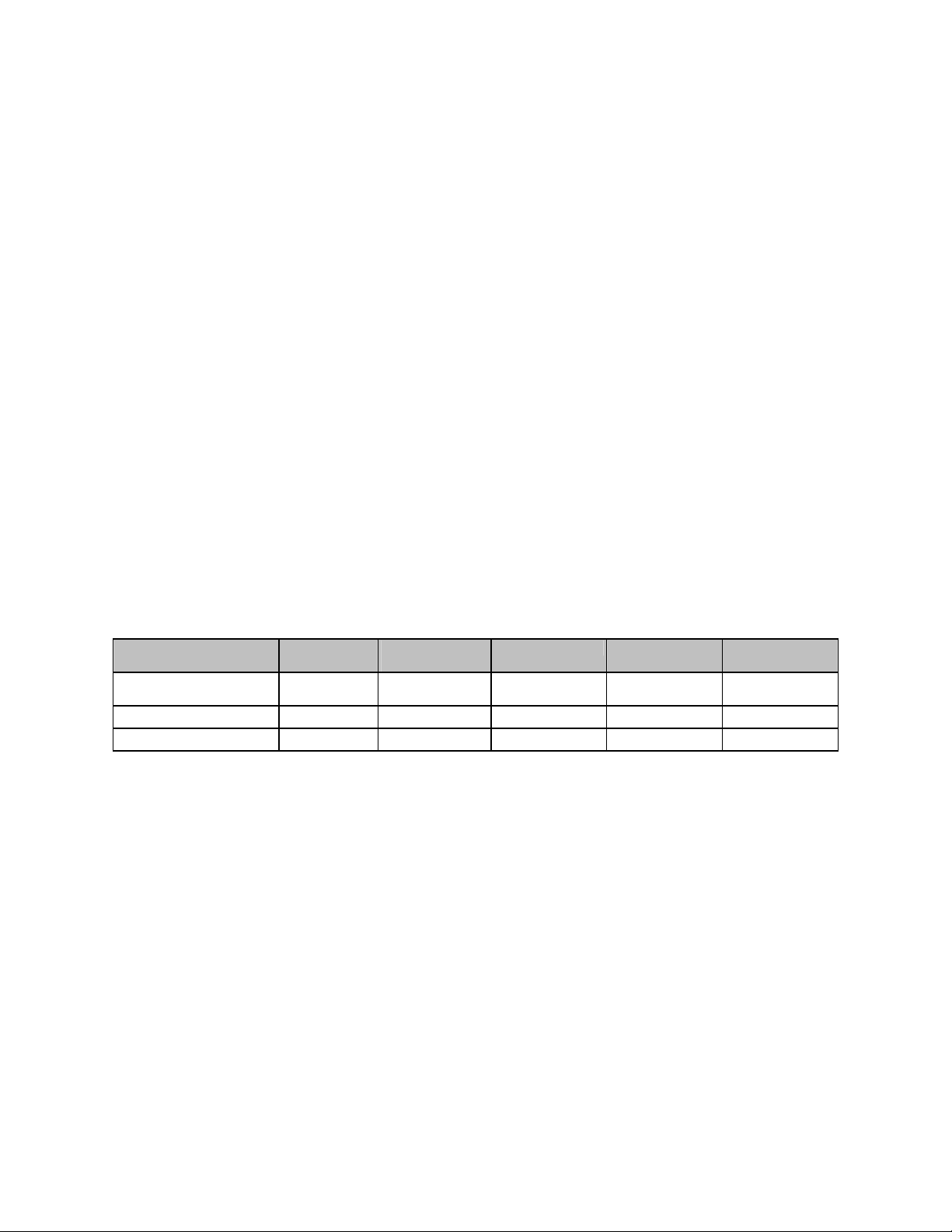

Table Name              Rows
Order          4,500,000,000
Lineitem      18,000,048,306
Customer         450,000,000
Part             600,000,000
Supplier          30,000,000
Partsupp       2,400,000,000
Nation                    25
Region                     5

5.2 Distribution of Tables and Logs

The distribution of tables and logs across all media must be explicitly described.

Controller              Drives               Logical Partition  Size   Use
Internal Serve-RAID 7k  3 disk-73.4GB RAID5  /dev/sda1          50GB   OS root filesystem
                                             /dev/sda2          8GB    Swap
                                             /dev/sda5          18GB   Temp Tables
                                             /dev/sda6          40GB   DB Data
                                             /dev/sda7          25GB   Temp Tables
                                             /dev/sda8          1.5GB  Logs
                        3 disk-73.4GB RAID5  /dev/sdb1          58GB   Filesystem
                                             /dev/sdb5          18GB   Temp Tables
                                             /dev/sdb6          40GB   DB Data
                                             /dev/sdb7          25GB   Temp Tables
                                             /dev/sdb8          1.5GB  Logs

5.3 Database Partition / Replication Mapping

The mapping of database partitions/replications must be explicitly described.

The database was not replicated. The database was logically partitioned into 128 logical nodes, 2 nodes on

each physical server.
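With 2 logical nodes per server, 128 logical nodes correspond to 64 physical servers. The sketch below enumerates such a layout as (node number, host, logical port) rows, the column order used by a DB2 node configuration file. The host names and the assumption that consecutive node numbers share a server are hypothetical; the report does not list the actual assignment.

```python
def logical_node_map(logical_nodes=128, nodes_per_server=2, host_prefix="server"):
    """Return (node number, host name, logical port) for every logical node."""
    if logical_nodes % nodes_per_server:
        raise ValueError("logical_nodes must be a multiple of nodes_per_server")
    rows = []
    for node in range(logical_nodes):
        server = node // nodes_per_server   # physical server index, 0-based
        port = node % nodes_per_server      # logical port on that server
        rows.append((node, f"{host_prefix}{server + 1:02d}", port))
    return rows


if __name__ == "__main__":
    for node, host, port in logical_node_map()[:4]:
        print(node, host, port)
```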

5.4 RAID Implementation

Implementations may use some form of RAID to ensure high availability. If used for data, auxiliary storage

(e.g., indexes) or temporary space, the level of RAID must be disclosed for each device.

RAID level 5 was used across all database tables, indexes, and recovery logs.

5.5 DBGEN Modifications

Any modifications to the DBGEN (see Clause 4.2.1) source code must be disclosed. In the event that a

program other than DBGEN was used to populate the database, it must be disclosed in its entirety.

The standard distribution DBGEN version 1.3.0 was used for database population. No modifications were

made.

5.6 Database Load Time

The database load time for the test database (see Clause 4.3) must be disclosed.

See the Executive Summary at the beginning of this report.

5.7 Data Storage Ratio

The data storage ratio must be disclosed. It is computed as the ratio between the total amount of priced

disk space and the chosen test database size as defined in Clause 4.1.3.

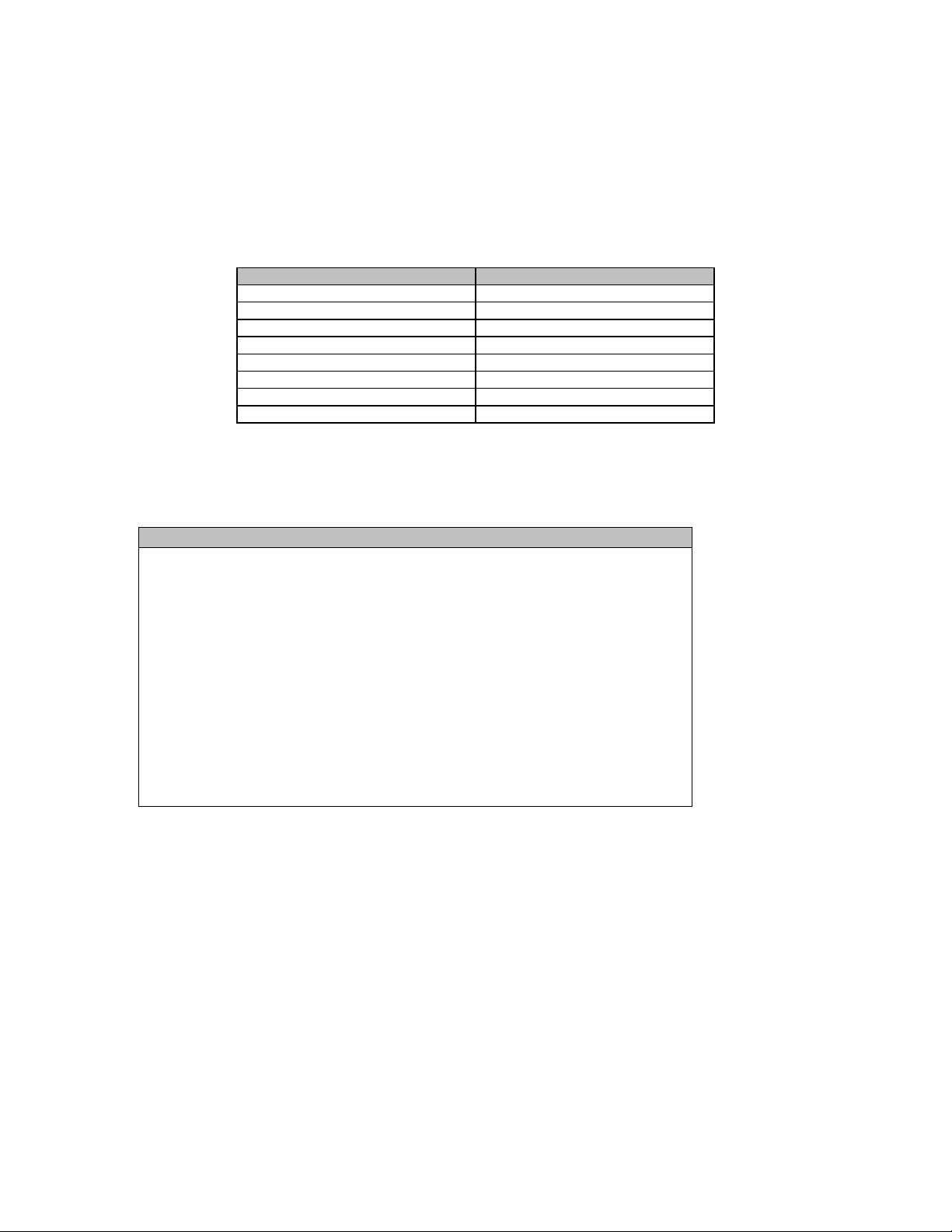

The calculation of the data storage ratio is shown in the following table.

Disk Type                       Number of Disks  Formatted Space per Disk  Total Disk Space
73.4GB 15K Ultra160 SCSI Drive  384              68.359 GB                 26,249.856 GB

       Total Disk Space  Scale Factor  Storage Ratio
Total  26,249.856 GB     3000          8.75

The data storage ratio is 8.75, derived by dividing 26,249.856GB by the database size of 3000GB.
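The arithmetic behind the ratio can be reproduced with a few lines. This is an illustrative sketch; the function name is an assumption, and the inputs are the figures reported in this section.

```python
def data_storage_ratio(disks, formatted_gb_per_disk, database_size_gb):
    """Return (total priced disk space in GB, data storage ratio)."""
    total_gb = disks * formatted_gb_per_disk
    return total_gb, total_gb / database_size_gb


if __name__ == "__main__":
    total_gb, ratio = data_storage_ratio(384, 68.359, 3000)
    print(f"{total_gb:,.3f} GB total, ratio {ratio:.2f}")
```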

5.8 Database Load Mechanism Details and Illustration

The details of the database load must be disclosed, including a block diagram illustrating the overall

process. Disclosure of the load procedure includes all steps, scripts, input and configuration files required

to completely reproduce the test and qualification databases.

Flat files for each of the tables were created using DBGEN.

The NATION and REGION tables were created on node 0 and then loaded from dbgen output. The other

tables were loaded on 128 logical nodes.

The tables were loaded as depicted in Figure 4-1.

1. Create Database, Nodegroups and Tablespaces
2. Configure for Load
3. Create Tables
4. Load Tables
5. Create Indexes
6. Update Statistics
7. Configure for Run

The figure marks the Database Load Timing Period.

Figure 4-1. Database Load Procedure

5.9 Qualification Database Configuration

Any differences between the configuration of the qualification database and the test database must be

disclosed.

The qualification database used identical scripts to create and load the data with changes to adjust for the

database scale factor.

6 Clause 5: Performance Metrics and Execution Rules Related Items

6.1 System Activity between Load and Performance Tests

Any system activity on the SUT that takes place between the conclusion of the load test and the beginning of

the performance test must be fully disclosed.

The auditor requested that queries be run against the database to verify the correctness of the database load.

6.2 Steps in the Power Test

The details of the steps followed to implement the power test (e.g., system reboot, database restart) must be

disclosed.

The following steps were used to implement the power test:

1. RF1 Refresh Transaction

2. Stream 00 Execution

3. RF2 Refresh Transaction

6.3 Timing Intervals for Each Query and Refresh Function

The timing intervals for each query of the measured set and for both update functions must be reported for

the power test.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.4 Number of Streams for the Throughput Test

The number of execution streams used for the throughput test must be disclosed.

Eight streams were used for the throughput test.
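The stream count enters the TPC-H throughput metric as (S x 22 x 3600 / Ts) x SF, where S is the number of streams, Ts the measurement interval in seconds, and SF the scale factor. The sketch below encodes that definition; the function name is an assumption, and the measurement interval for this run is reported in the Executive Summary rather than in this section, so no run-specific value is shown.

```python
def throughput_at_size(streams, elapsed_seconds, scale_factor,
                       queries_per_stream=22):
    """TPC-H throughput metric: queries per hour scaled by database size."""
    queries_per_hour = streams * queries_per_stream * 3600.0 / elapsed_seconds
    return queries_per_hour * scale_factor
```

For this benchmark the inputs would be streams=8 and scale_factor=3000.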

6.5 Start and End Date/Times for Each Query Stream

The start time and finish time for each query execution stream must be reported for the throughput test.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.6 Total Elapsed Time for the Measurement Interval

The total elapsed time for the measurement interval must be reported for the throughput test.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.7 Refresh Function Start Date/Time and Finish Date/Time

The start time and finish time for each update function in the update stream must be reported for the

throughput test.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.8 Timing Intervals for Each Query and Each Refresh Function for Each Stream

The timing intervals for each query of each stream and for each update function must be reported for the

throughput test.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.9 Performance Metrics

The computed performance metrics, related numerical quantities, and the price/performance metric must

be reported.

See the Numerical Quantities Summary in the Executive Summary at the beginning of this report.

6.10 Performance Metric and Numerical Quantities from Both Runs

The performance metric and numerical quantities from both runs must be disclosed.

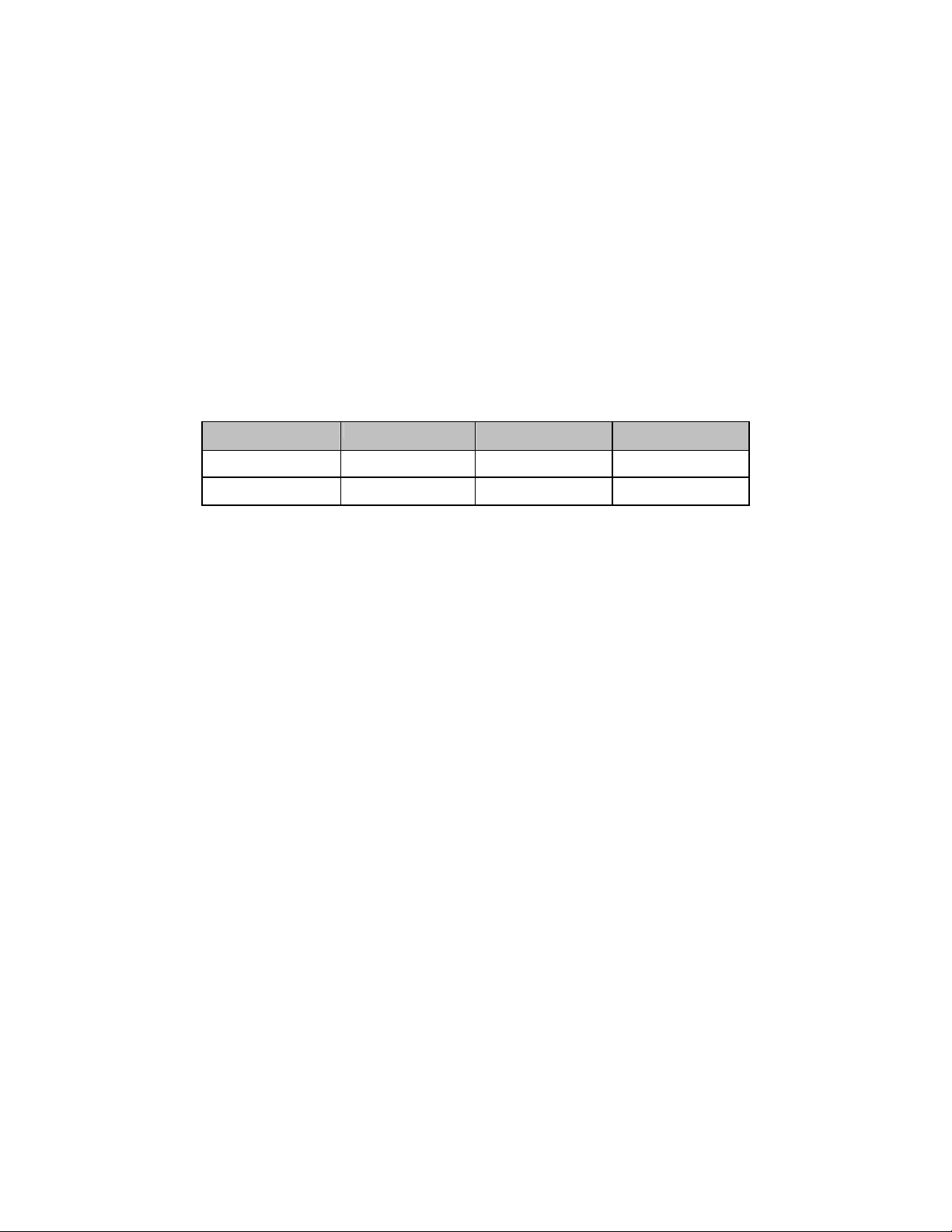

Two consecutive runs of the TPC-H benchmark were performed. The following table contains the results

for both runs.

Run   QppH @ 3000GB  QthH @ 3000GB  QphH @ 3000GB
Run1  90,854.7       32,651.4       54,465.9
Run2  96,326.7       31,892.1       55,426.2
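The composite metric QphH is defined by TPC-H as the geometric mean of the power and throughput metrics, so the third column can be cross-checked from the first two. A minimal sketch, with a hypothetical function name:

```python
import math


def composite_qphh(qpph, qthh):
    """TPC-H composite metric: geometric mean of QppH and QthH."""
    return math.sqrt(qpph * qthh)


if __name__ == "__main__":
    for run, qpph, qthh in (("Run1", 90854.7, 32651.4),
                            ("Run2", 96326.7, 31892.1)):
        print(run, round(composite_qphh(qpph, qthh), 1))
```

Both rows of the table agree with this formula to the reported precision.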

6.11 System Activity between Tests

Any activity on the SUT that takes place between the conclusion of Run1 and the beginning of Run2 must be

disclosed.

The system was rebooted and DB2 was restarted between runs.

7 Clause 6: SUT and Driver Implementation Related Items

7.1 Driver

A detailed textual description of how the driver performs its functions, how its various components interact

and any product functionality or environmental setting on which it relies must be provided. All related

source code, scripts and configurations must be disclosed. The information provided should be sufficient

for an independent reconstruction of the driver.

Appendix D, “Driver Source Code,” contains the source code used for the driver and all scripts used in

connection with it.

The Power test is invoked by calling tpcdbatch with the stream number 0 specified, an indication that the

refresh functions must be run, and the SQL file that contains the power stream queries.

The Throughput test is invoked by initiating a call to tpcdbatch for every query stream that will be run.

Tpcdbatch gets the stream number for each of the streams, and the SQL file specific to that stream number

as the queries to execute. The refresh function is initiated as a separate call to tpcdbatch with the SQL script

for the refresh functions and the total number of query streams specified.

7.2 Implementation-Specific Layer

If an implementation-specific layer is used, then a detailed description of how it performs its functions must

be supplied, including any related source code or scripts. This description should allow an independent

reconstruction of the implementation-specific layer.

The implementation specific layer is a single executable SQL application that uses embedded dynamic

SQL to process the EQT generated by QGEN. The application is called tpcdbatch to indicate that it

processes a batch of TPC-H queries, although it is completely capable of processing any arbitrary SQL

statement (both DML and DDL).

A separate instance of tpcdbatch is invoked for each stream. Each instance establishes a distinct connection

to the database server through which the EQT is transmitted to the database and the results are returned

through the implementation specific layer to the driver. When an instance of tpcdbatch is invoked, it is

provided with a context of whether it is running a power test, query stream or refresh stream, as well as an

input file containing the 22 queries and/or refresh functions. tpcdbatch then connects to the database,

performs any session initialization as well as preparing output files required by the auditor. Then it

proceeds to read from the input file and processes each query or refresh function in turn.

For queries, each query is prepared, described, and a cursor is opened and used to fetch the required

number of rows. After the last row has been retrieved a commit is issued. For the refresh functions, during

the database build all data is first split for each node using the db2split utility. For RF1, the data for each

node is further split into n equal portions for both the lineitem and orders tables taking care that the records

for the same orderkey remain in the same set. For RF2, the data for each node is further split into m equal

portions. During the run, when tpcdbatch encounters a call to execute RF1, it first calls a shell script which

loads these n sets of data into n sets of temporary tables (one each for lineitem and orders). Then tpcdbatch

forks off n children to do an insert with subselect into the original lineitem and orders tables. When

tpcdbatch encounters a call to execute RF2, it calls a shell script that loads these data into a single staging

table. Then tpcdbatch forks off p children (where p * x = m) to do x sets of deletes from the orders and

lineitem tables with a subselect from the staging table.
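The RF1 splitting constraint, that all records for one orderkey stay in the same portion, can be sketched as below. This is not the db2split utility or the report's scripts: the row representation (tuples whose first field is the orderkey), the round-robin assignment, and the function name are assumptions made for illustration.

```python
def split_refresh_set(orders, lineitems, n):
    """Split orders and lineitems into n portions, keeping each orderkey together.

    Rows are tuples whose first field is the orderkey. Every lineitem is
    assumed to have a matching order row, as in an RF1 insert set.
    """
    keys = sorted({row[0] for row in orders})
    # Round-robin over distinct keys gives portions of nearly equal order count.
    portion_of = {key: index % n for index, key in enumerate(keys)}
    order_sets = [[] for _ in range(n)]
    lineitem_sets = [[] for _ in range(n)]
    for row in orders:
        order_sets[portion_of[row[0]]].append(row)
    for row in lineitems:
        lineitem_sets[portion_of[row[0]]].append(row)
    return order_sets, lineitem_sets
```

Because each portion is self-contained, the n children can insert their portions concurrently without one child's lineitem rows depending on an order inserted by another.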

7.3 Profile-Directed Optimization

Profile-directed optimization was not used.

8 Clause 7: Pricing Related Items

8.1 Hardware and Software Components

A detailed list of the hardware and software used in the priced system must be reported. Each item must

have a vendor part number, description and release/revision level, and either general availability status or

committed delivery date. If package-pricing is used, contents of the package must be disclosed. Pricing

source(s) and effective date(s) must also be reported.

A detailed list of all hardware and software, including the 3-year price, is provided in the Executive

Summary at the front of this report. The price quotations are included in Appendix F.

8.2 Three-Year Cost of System Configuration

The total 3-year price of the entire configuration must be reported, including hardware, software and

maintenance charges. Separate component pricing is recommended. The basis of all discounts must be

disclosed.

A detailed list of all hardware and software, including the 3-year price, is provided in the Executive

Summary at the front of this report. The price quotations are included in Appendix F.

8.3 Availability Dates

The committed delivery date for general availability (availability date) of products used in the price

calculations must be reported. When the priced system includes products with different availability dates,

availability date reported on the Executive Summary must be the date by which all components are

committed to being available. The Full Disclosure Report must report availability dates individually for at

least each of the categories for which a pricing subtotal must be provided (see Clause 7.3.1.3).

The system as priced will be generally available August 15, 2005.

8.4 Country-Specific Pricing

Additional Clause 7 related items may be included in the Full Disclosure Report for each country-specific

priced configuration. Country-specific pricing is subject to Clause 7.1.7.

The configuration is priced for the United States of America.

9 Clause 8: Audit Related Items

9.1 Auditor’s Report

The auditor’s agency name, address, phone number, and Attestation letter with a brief audit summary

report indicating compliance must be included in the Full Disclosure Report. A statement should be

included specifying who to contact in order to obtain further information regarding the audit process.

This implementation of the TPC Benchmark H was audited by Francois Raab of InfoSizing, Inc. Further

information can be downloaded from www.tpc.org.

Appendix A: Tunable Parameters and System Configuration

DB2 UDB 8.2 Database and Database Manager Configuration

get database configuration for TPCD

Database Configuration for Database TPCD

Database configuration release level = 0x0a00
Database release level = 0x0a00
Database territory = US
Database code page = 819
Database code set = ISO8859-1
Database country/region code = 1
Database collating sequence = BINARY
Alternate collating sequence (ALT_COLLATE) =
Dynamic SQL Query management (DYN_QUERY_MGMT) = DISABLE
Discovery support for this database (DISCOVER_DB) = ENABLE
Default query optimization class (DFT_QUERYOPT) = 7
Degree of parallelism (DFT_DEGREE) = 1
Continue upon arithmetic exceptions (DFT_SQLMATHWARN) = NO
Default refresh age (DFT_REFRESH_AGE) = 0
Default maintained table types for opt (DFT_MTTB_TYPES) = SYSTEM
Number of frequent values retained (NUM_FREQVALUES) = 0
Number of quantiles retained (NUM_QUANTILES) = 600
Backup pending = NO
Database is consistent = NO
Rollforward pending = NO
Restore pending = NO
Multi-page file allocation enabled = YES
Log retain for recovery status = NO
User exit for logging status = NO
Data Links Token Expiry Interval (sec) (DL_EXPINT) = 60
Data Links Write Token Init Expiry Intvl (DL_WT_IEXPINT) = 60
Data Links Number of Copies (DL_NUM_COPIES) = 1
Data Links Time after Drop (days) (DL_TIME_DROP) = 1
Data Links Token in Uppercase (DL_UPPER) = NO
Data Links Token Algorithm (DL_TOKEN) = MAC0
Database heap (4KB) (DBHEAP) = 10000
Size of database shared memory (4KB) (DATABASE_MEMORY) = AUTOMATIC
Catalog cache size (4KB) (CATALOGCACHE_SZ) = (MAXAPPLS*4)
Log buffer size (4KB) (LOGBUFSZ) = 2048
Utilities heap size (4KB) (UTIL_HEAP_SZ) = 5000
Buffer pool size (pages) (BUFFPAGE) = 70000
Extended storage segments size (4KB) (ESTORE_SEG_SZ) = 16000
Number of extended storage segments (NUM_ESTORE_SEGS) = 0
Max storage for lock list (4KB) (LOCKLIST) = 40000
Max size of appl. group mem set (4KB) (APPGROUP_MEM_SZ) = 2000
Percent of mem for appl. group heap (GROUPHEAP_RATIO) = 70
Max appl. control heap size (4KB) (APP_CTL_HEAP_SZ) = 512
Sort heap thres for shared sorts (4KB) (SHEAPTHRES_SHR) = 250
Sort list heap (4KB) (SORTHEAP) = 10000
SQL statement heap (4KB) (STMTHEAP) = 10000
Default application heap (4KB) (APPLHEAPSZ) = 1024
Package cache size (4KB) (PCKCACHESZ) = (MAXAPPLS*8)
Statistics heap size (4KB) (STAT_HEAP_SZ) = 4384
Interval for checking deadlock (ms) (DLCHKTIME) = 10000
Percent. of lock lists per application (MAXLOCKS) = 20
Lock timeout (sec) (LOCKTIMEOUT) = -1
Changed pages threshold (CHNGPGS_THRESH) = 60
Number of asynchronous page cleaners (NUM_IOCLEANERS) = 2
Number of I/O servers (NUM_IOSERVERS) = 4
Index sort flag (INDEXSORT) = YES
Sequential detect flag (SEQDETECT) = YES

Default prefetch size (pages) (DFT_PREFETCH_SZ) = AUTOMATIC
Track modified pages (TRACKMOD) = OFF
Default number of containers = 1
Default tablespace extentsize (pages) (DFT_EXTENT_SZ) = 32
Max number of active applications (MAXAPPLS) = AUTOMATIC
Average number of active applications (AVG_APPLS) = 1
Max DB files open per application (MAXFILOP) = 1024
Log file size (4KB) (LOGFILSIZ) = 50000
Number of primary log files (LOGPRIMARY) = 4
Number of secondary log files (LOGSECOND) = 1
Changed path to log files (NEWLOGPATH) =
Path to log files = /dev/raw/raw1
Overflow log path (OVERFLOWLOGPATH) =
Mirror log path (MIRRORLOGPATH) =
First active log file =
Block log on disk full (BLK_LOG_DSK_FUL) = NO
Percent of max active log space by transaction (MAX_LOG) = 0
Num. of active log files for 1 active UOW (NUM_LOG_SPAN) = 0
Group commit count (MINCOMMIT) = 1
Percent log file reclaimed before soft chckpt (SOFTMAX) = 360
Log retain for recovery enabled (LOGRETAIN) = OFF
User exit for logging enabled (USEREXIT) = OFF
HADR database role = STANDARD
HADR local host name (HADR_LOCAL_HOST) =
HADR local service name (HADR_LOCAL_SVC) =
HADR remote host name (HADR_REMOTE_HOST) =
HADR remote service name (HADR_REMOTE_SVC) =
HADR instance name of remote server (HADR_REMOTE_INST) =
HADR timeout value (HADR_TIMEOUT) = 120
HADR log write synchronization mode (HADR_SYNCMODE) = NEARSYNC
First log archive method (LOGARCHMETH1) = OFF
Options for logarchmeth1 (LOGARCHOPT1) =
Second log archive method (LOGARCHMETH2) = OFF
Options for logarchmeth2 (LOGARCHOPT2) =
Failover log archive path (FAILARCHPATH) =
Number of log archive retries on error (NUMARCHRETRY) = 5
Log archive retry Delay (secs) (ARCHRETRYDELAY) = 20
Vendor options (VENDOROPT) =
Auto restart enabled (AUTORESTART) = ON
Index re-creation time and redo index build (INDEXREC) = SYSTEM (RESTART)
Log pages during index build (LOGINDEXBUILD) = OFF
Default number of loadrec sessions (DFT_LOADREC_SES) = 1
Number of database backups to retain (NUM_DB_BACKUPS) = 12
Recovery history retention (days) (REC_HIS_RETENTN) = 366
TSM management class (TSM_MGMTCLASS) =
TSM node name (TSM_NODENAME) =
TSM owner (TSM_OWNER) =
TSM password (TSM_PASSWORD) =
Automatic maintenance (AUTO_MAINT) = OFF
Automatic database backup (AUTO_DB_BACKUP) = OFF
Automatic table maintenance (AUTO_TBL_MAINT) = OFF
Automatic runstats (AUTO_RUNSTATS) = OFF
Automatic statistics profiling (AUTO_STATS_PROF) = OFF
Automatic profile updates (AUTO_PROF_UPD) = OFF
Automatic reorganization (AUTO_REORG) = OFF
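Several of the parameters above are expressed in 4KB pages, which makes their real size easy to misread. As a rough illustration, the sketch below converts the log settings (LOGFILSIZ, LOGPRIMARY, LOGSECOND) into bytes. The function name is hypothetical, and the result is an approximation that ignores any per-file header overhead.

```python
PAGE_BYTES = 4096  # the listing gives LOGFILSIZ in 4KB pages


def active_log_bytes(logfilsiz_pages, logprimary, logsecond):
    """Approximate space for all primary and secondary log files."""
    return (logprimary + logsecond) * logfilsiz_pages * PAGE_BYTES


if __name__ == "__main__":
    size = active_log_bytes(50000, 4, 1)
    print(f"{size:,} bytes (about {size / 2**30:.2f} GiB)")
```

With the values listed above this comes to 1,024,000,000 bytes, which fits within the 1.5GB log partitions described in section 5.2.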

DB2 Database Manager Configuration

get database manager configuration

Database Manager Configuration

Node type = Enterprise Server Edition with local and remote clients
Database manager configuration release level = 0x0a00
CPU speed (millisec/instruction) (CPUSPEED) = 1.889377e-06
Communications bandwidth (MB/sec) (COMM_BANDWIDTH) = 1.000000e+00

Max number of concurrently active databases (NUMDB) = 1
Data Links support (DATALINKS) = NO
Federated Database System Support (FEDERATED) = NO
Transaction processor monitor name (TP_MON_NAME) =
Default charge-back account (DFT_ACCOUNT_STR) =
Java Development Kit installation path (JDK_PATH) = /opt/IBMJava2-141
Diagnostic error capture level (DIAGLEVEL) = 0
Notify Level (NOTIFYLEVEL) = 0
Diagnostic data directory path (DIAGPATH) =
Default database monitor switches
  Buffer pool (DFT_MON_BUFPOOL) = OFF
  Lock (DFT_MON_LOCK) = OFF
  Sort (DFT_MON_SORT) = OFF
  Statement (DFT_MON_STMT) = OFF
  Table (DFT_MON_TABLE) = OFF
  Timestamp (DFT_MON_TIMESTAMP) = OFF
  Unit of work (DFT_MON_UOW) = OFF
Monitor health of instance and databases (HEALTH_MON) = OFF
SYSADM group name (SYSADM_GROUP) =
SYSCTRL group name (SYSCTRL_GROUP) =
SYSMAINT group name (SYSMAINT_GROUP) =
SYSMON group name (SYSMON_GROUP) =
Client Userid-Password Plugin (CLNT_PW_PLUGIN) =
Client Kerberos Plugin (CLNT_KRB_PLUGIN) =
Group Plugin (GROUP_PLUGIN) =
GSS Plugin for Local Authorization (LOCAL_GSSPLUGIN) =
Server Plugin Mode (SRV_PLUGIN_MODE) = UNFENCED
Server List of GSS Plugins (SRVCON_GSSPLUGIN_LIST) =
Server Userid-Password Plugin (SRVCON_PW_PLUGIN) =
Server Connection Authentication (SRVCON_AUTH) = NOT_SPECIFIED
Database manager authentication (AUTHENTICATION) = SERVER
Cataloging allowed without authority (CATALOG_NOAUTH) = NO
Trust all clients (TRUST_ALLCLNTS) = YES
Trusted client authentication (TRUST_CLNTAUTH) = CLIENT
Bypass federated authentication (FED_NOAUTH) = NO
Default database path (DFTDBPATH) = /home/tpch
Database monitor heap size (4KB) (MON_HEAP_SZ) = 90
Java Virtual Machine heap size (4KB) (JAVA_HEAP_SZ) = 2048
Audit buffer size (4KB) (AUDIT_BUF_SZ) = 0
Size of instance shared memory (4KB) (INSTANCE_MEMORY) = AUTOMATIC
Backup buffer default size (4KB) (BACKBUFSZ) = 1024
Restore buffer default size (4KB) (RESTBUFSZ) = 1024
Sort heap threshold (4KB) (SHEAPTHRES) = 100000
Directory cache support (DIR_CACHE) = YES
Application support layer heap size (4KB) (ASLHEAPSZ) = 15
Max requester I/O block size (bytes) (RQRIOBLK) = 32767
Query heap size (4KB) (QUERY_HEAP_SZ) = 1000
Workload impact by throttled utilities (UTIL_IMPACT_LIM) = 10
Priority of agents (AGENTPRI) = SYSTEM
Max number of existing agents (MAXAGENTS) = 400
Agent pool size (NUM_POOLAGENTS) = 64
Initial number of agents in pool (NUM_INITAGENTS) = 4
Max number of coordinating agents (MAX_COORDAGENTS) = (MAXAGENTS - NUM_INITAGENTS)
Max no. of concurrent coordinating agents (MAXCAGENTS) = MAX_COORDAGENTS
Max number of client connections (MAX_CONNECTIONS) = MAX_COORDAGENTS
Keep fenced process (KEEPFENCED) = YES
Number of pooled fenced processes (FENCED_POOL) = MAX_COORDAGENTS
Initial number of fenced processes (NUM_INITFENCED) = 0
Index re-creation time and redo index build (INDEXREC) = RESTART
Transaction manager database name (TM_DATABASE) = 1ST_CONN
Transaction resync interval (sec) (RESYNC_INTERVAL) = 180
SPM name (SPM_NAME) =

SPM log size (SPM_LOG_FILE_SZ) = 256
SPM resync agent limit (SPM_MAX_RESYNC) = 20
SPM log path (SPM_LOG_PATH) =
TCP/IP Service name (SVCENAME) = DB2_tpch_SVC
Discovery mode (DISCOVER) = SEARCH
Discover server instance (DISCOVER_INST) = ENABLE
Maximum query degree of parallelism (MAX_QUERYDEGREE) = ANY
Enable intra-partition parallelism (INTRA_PARALLEL) = NO
No. of int. communication buffers (4KB) (FCM_NUM_BUFFERS) = 16384
Number of FCM request blocks (FCM_NUM_RQB) = 8192
Number of FCM connection entries (FCM_NUM_CONNECT) = AUTOMATIC
Number of FCM message anchors (FCM_NUM_ANCHORS) = AUTOMATIC
Node connection elapse time (sec) (CONN_ELAPSE) = 20
Max number of node connection retries (MAX_CONNRETRIES) = 5
Max time difference between nodes (min) (MAX_TIME_DIFF) = 1440
db2start/db2stop timeout (min) (START_STOP_TIME) = 10

DB2 Registry Variables

DB2NOLIOAIO=no

DB2_EXTENDED_OPTIMIZATION=Y

DB2_ANTIJOIN=Y

DB2_LIKE_VARCHAR=Y,Y

DB2BPVARS=/home/custom/bpvar.cfg

DB2RQTIME=30

DB2OPTIONS=-t -v +c

DB2COMM=tcpip

DB2BQTRY=120

DB2_PARALLEL_IO=*

Linux Parameters

kernel.shmmax=268435456

kernel.shmmni=4096

kernel.msgmni=1024

fs.file-max=8129

kernel.sem="250 32000 32 1024"

vm.swappiness=0
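The Linux settings above use the sysctl `key=value` form. The sketch below parses such lines and labels the four fields of kernel.sem, which Linux interprets as SEMMSL, SEMMNS, SEMOPM and SEMMNI in that order. The helper names are hypothetical and the code only reads text; it does not change any kernel setting.

```python
def parse_sysctl(lines):
    """Parse 'key=value' lines into a dict, dropping surrounding quotes."""
    settings = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip().strip('"')
    return settings


def semaphore_limits(sem_value):
    """Label the four kernel.sem fields in the order Linux defines them."""
    semmsl, semmns, semopm, semmni = (int(field) for field in sem_value.split())
    return {"SEMMSL": semmsl, "SEMMNS": semmns,
            "SEMOPM": semopm, "SEMMNI": semmni}


if __name__ == "__main__":
    cfg = parse_sysctl(['kernel.shmmax=268435456',
                        'kernel.sem="250 32000 32 1024"'])
    print(int(cfg["kernel.shmmax"]) // 2**20, "MiB shared memory segment limit")
    print(semaphore_limits(cfg["kernel.sem"]))
```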

Appendix B: Database Build Scripts

buildtpcd

#!/usr/bin/perl

# usage buildtpcd [QUAL]

# ASSUMPTIONS: all ddl files have commits in them!

($myName = $0) =~ s@.*/@@; $usage="
Usage: buildtpcd [QUAL]
       where QUAL is the optional parameter saying to build the qualification
       database (sf = .1 = 100MB)\n";

$qual="";

if (@ARGV == 1){

$qual = $ARGV[0];

}

# get TPC-D specific environment variables

require "getvars";

require "macro.pl";

require "tpcdmacro.pl";

require "version";

$timestamp=`perl gettimestamp "short"`;

# Make output unbuffered.

open(STDOUT, "| tee buildtpcd.out.${timestamp}");

select(STDOUT);

$| = 1 ;

#---------------------------------------------------------------------------#
# verify that necessary environment variables for building the database    #
# are present. Default those that aren't necessary                         #
#---------------------------------------------------------------------------#

# variables that must be specified for script to run

@reqVars = ("TPCD_PLATFORM",

"TPCD_PRODUCT",

"TPCD_VERSION",

"TPCD_DBNAME",

"TPCD_MODE",

"TPCD_SF",

"TPCD_DDLPATH",

"TPCD_AUDIT",

"TPCD_AUDIT_DIR",

"TPCD_BUILD_STAGE");

# variables default to 'NULL' if unspecified

@defNullVars = ("TPCD_LOAD_SCRIPT",

"TPCD_LOAD_SCRIPT_QUAL",

"TPCD_INPUT",

"TPCD_QUAL_INPUT",

"TPCD_DBGEN",

"TPCD_LOGPRIMARY",

"TPCD_LOGSECOND",

"TPCD_LOGFILSIZ",

"TPCD_LOG_DIR",

"TPCD_MACHINE",

"TPCD_AGENTPRI",

"TPCD_STAGING_TABLE_DDL",

"TPCD_PRELOAD_STAGING_TABLE_SCRIPT",

"TPCD_DELETE_STAGING_TABLE_SQL",

"TPCD_RUNSTATSHORT",

"TPCD_ADD_RI",

"TPCD_AST",

"TPCD_DBM_CONFIG",

"TPCD_EXPLAIN_DDL",

"TPCD_NODEGROUP_DEF",

"TPCD_BUFFERPOOL_DEF",

"TPCD_LOAD_DB2SET_SCRIPT",

"TPCD_DB2SET_SCRIPT",

"TPCD_LOG_DIR_SETUP_SCRIPT",

"TPCD_LOAD_CONFIGFILE",

"TPCD_LOAD_DBM_CONFIGFILE",

"TPCD_TEMP");

&setVar(@reqVars, "ERROR");

&setVar(@defNullVars, "NULL");

if ( $qual eq "QUAL" ){

@reqQualVars = ("TPCD_QUAL_DBNAME",

"TPCD_QUAL_DDL",

"TPCD_QUAL_TBSP_DDL",

"TPCD_QUALCONFIGFILE",

"TPCD_DBM_QUALCONFIG",

"TPCD_LOAD_QUALCONFIGFILE",

"TPCD_LOAD_DBM_QUALCONFIGFILE");

&setVar(@reqQualVars, "ERROR");

    if ( ($ENV{"TPCD_QUAL_INPUT"}) eq "NULL" ){
        if ((($ENV{"TPCD_DBGEN"}) eq "NULL") ||
            (($ENV{"TPCD_TEMP"}) eq "NULL")){
            die "TPCD_DBGEN and TPCD_TEMP must be set if flatfiles are not provided.\n";
        }
    }

}

$platform=$ENV{"TPCD_PLATFORM"};

if (length($ENV{"TPCD_DBPATH"}) <= 0){

# if no db pathname specified, build the db in the home directory

if ( $platform eq "aix" ||

$platform eq "sun" ||

$platform eq "ptx" ||

$platform eq "hp" ||

$platform eq "linux"){

$ENV{"TPCD_DBPATH"} = $ENV{"HOME"};

}

elsif ( $platform eq "nt" ){

$ENV{"TPCD_DBPATH"} = $ENV{"HOMEDRIVE"};

}

else{

die "platform '$platform' not supported yet\n";

}

}

if ( ($ENV{"TPCD_INPUT"}) eq "NULL" ){

if ((($ENV{"TPCD_DBGEN"}) eq "NULL") ||

(($ENV{"TPCD_TEMP"}) eq "NULL")){

die "TPCD_DBGEN and TPCD_TEMP must be set if flatfiles are not provided.\n";

}

}

#---------------------------------------------------------------------------#
# ddl script files found under custom directory                            #
#---------------------------------------------------------------------------#

if (length($ENV{"TPCD_DDL"}) <= 0){

$ENV{"TPCD_DDL"} = "dss.ddl";

}

if (length($ENV{"TPCD_TBSP_DDL"}) <= 0){

$ENV{"TPCD_TBSP_DDL"} = "dss.tbsp.ddl";

}

if (length($ENV{"TPCD_INDEXDDL"}) <= 0){

$ENV{"TPCD_INDEXDDL"} = "dss.index";

}

if (length($ENV{"TPCD_RUNSTATS"}) <= 0){

$ENV{"TPCD_RUNSTATS"} = "dss.runstats";

}

if (length($ENV{"TPCD_CONFIGFILE"}) <= 0){

$ENV{"TPCD_CONFIGFILE"} = "dss.dbconfig";

}

#---------------------------------------------------------------------------#

# other settings                                                            #

#---------------------------------------------------------------------------#

if (length($ENV{"TPCD_BACKUP_DIR"}) <= 0){

$ENV{"TPCD_BACKUP_DIR"} = "${delim}dev${delim}null";

}

if (length($ENV{"TPCD_COPY_DIR"}) <= 0){

$ENV{"TPCD_COPY_DIR"} = "${delim}dev${delim}null";

}

if (length($ENV{"TPCD_TEMP"}) <= 1){

$ENV{"TPCD_TEMP"} = "/u/$instance/sqllib/tmp";

}

if (length($ENV{"TPCD_PHYS_NODE"}) <= 0){

$ENV{"TPCD_NODEGROUP_DEF"} = "NULL";

}

if (length($ENV{"TPCD_GENERATE_SEED_FILE"}) <= 0){

$ENV{"TPCD_GENERATE_SEED_FILE"} = "no";

}

if (length($ENV{"TPCD_SORTBUF"}) <= 0){

$ENV{"TPCD_SORTBUF"} = 4096;

}

if (length($ENV{"TPCD_LOAD_PARALLELISM"}) <= 0){

$ENV{"TPCD_LOAD_PARALLELISM"} = 0;

}

if (length($ENV{"TPCD_LOADSTATS"}) <= 0){

$ENV{"TPCD_LOADSTATS"} = "no";

}

if (length($ENV{"TPCD_FASTPARSE"}) <= 0){

$ENV{"TPCD_FASTPARSE"} = "no";

}

if (length($ENV{"TPCD_LOG"}) <= 0){

$ENV{"TPCD_LOG"} = "no";

}

if (length($ENV{"TPCD_SMPDEGREE"}) <= 0 ){

$ENV{"TPCD_SMPDEGREE"} = 1;

}

if (length($ENV{"TPCD_ACTIVATE"}) <= 0){

$ENV{"TPCD_ACTIVATE"} = "no";

}

if (length($ENV{"TPCD_APPEND_ON"}) <= 0){

$ENV{"TPCD_APPEND_ON"} = "yes";

}

if (length($ENV{"TPCD_GENERATE_SEED_FILE"}) <= 0){

$ENV{"TPCD_GENERATE_SEED_FILE"}="no";

}

#setup global variables

$tpcdVersion = $ENV{"TPCD_VERSION"};

$buildStage = $ENV{"TPCD_BUILD_STAGE"};

$mode = $ENV{"TPCD_MODE"};

$delim = $ENV{"TPCD_PATH_DELIM"};

$sep = $ENV{"COMMAND_SEP"};

$ddlpath = $ENV{"TPCD_DDLPATH"};

$extraindex = $ENV{"TPCD_EXTRAINDEX"};

$earlyindex = $ENV{"TPCD_EARLYINDEX"};

$loadstats = $ENV{"TPCD_LOADSTATS"};

$addRI = $ENV{"TPCD_ADD_RI"};

$astFile = $ENV{"TPCD_AST"};

$genSeed = $ENV{"TPCD_GENERATE_SEED_FILE"};

$log = $ENV{"TPCD_LOG"};

$activate = $ENV{"TPCD_ACTIVATE"};

$RealAudit = $ENV{"TPCD_AUDIT"};

$auditDir = $ENV{"TPCD_AUDIT_DIR"};

$loadsetScript = $ENV{"TPCD_LOAD_DB2SET_SCRIPT"};

$user = $ENV{"USER"};

$logDirScript = $ENV{"TPCD_LOG_DIR_SETUP_SCRIPT"};

$logprimary = $ENV{"TPCD_LOGPRIMARY"};

$logsecond = $ENV{"TPCD_LOGSECOND"};

$logfilsiz = $ENV{"TPCD_LOGFILSIZ"};

$dbpath = $ENV{"TPCD_DBPATH"};

$explainDDL = $ENV{"TPCD_EXPLAIN_DDL"};

$platform = $ENV{"TPCD_PLATFORM"};

$buffpooldef = $ENV{"TPCD_BUFFERPOOL_DEF"};

$stagingTbl = $ENV{"TPCD_STAGING_TABLE_DDL"};

$preloadSampleUF = $ENV{"TPCD_PRELOAD_STAGING_TABLE_SCRIPT"};

$deleteSampleUF = $ENV{"TPCD_DELETE_STAGING_TABLE_SQL"};

$machine = $ENV{"TPCD_MACHINE"};

$runstatShort = $ENV{"TPCD_RUNSTATSHORT"};

$runstats = $ENV{"TPCD_RUNSTATS"};

$smpdegree = $ENV{"TPCD_SMPDEGREE"};

$agentpri = $ENV{"TPCD_AGENTPRI"};

$setScript = $ENV{"TPCD_DB2SET_SCRIPT"};

$backupdir = $ENV{"TPCD_BACKUP_DIR"};

$nodegroupdef = $ENV{"TPCD_NODEGROUP_DEF"};

$dbgen = $ENV{"TPCD_DBGEN"};

$appendOn = $ENV{"TPCD_APPEND_ON"};

$indexddl = $ENV{"TPCD_INDEXDDL"};

if($qual eq "QUAL"){

$logDir = $ENV{"TPCD_LOG_QUAL_DIR"};

$dbname = $ENV{"TPCD_QUAL_DBNAME"};

$input = $ENV{"TPCD_QUAL_INPUT"};

$sf = $ENV{"TPCD_QUAL_SF"};

$loadconfigfile = $ENV{"TPCD_LOAD_QUALCONFIGFILE"};

$loadDBMconfig = $ENV{"TPCD_LOAD_DBM_QUALCONFIGFILE"};

$loadscript = $ENV{"TPCD_LOAD_SCRIPT_QUAL"};

$configfile = $ENV{"TPCD_QUALCONFIGFILE"};

$dbmconfig = $ENV{"TPCD_DBM_QUALCONFIG"};

$ddl = $ENV{"TPCD_QUAL_DDL"};

$tbspddl = $ENV{"TPCD_QUAL_TBSP_DDL"};

}else{

$logDir = $ENV{"TPCD_LOG_DIR"};

$dbname = $ENV{"TPCD_DBNAME"};

$input = $ENV{"TPCD_INPUT"};

$sf = $ENV{"TPCD_SF"};

$loadconfigfile = $ENV{"TPCD_LOAD_CONFIGFILE"};

$loadDBMconfig = $ENV{"TPCD_LOAD_DBM_CONFIGFILE"};

$loadscript = $ENV{"TPCD_LOAD_SCRIPT"};

$configfile = $ENV{"TPCD_CONFIGFILE"};

$dbmconfig = $ENV{"TPCD_DBM_CONFIG"};

$ddl = $ENV{"TPCD_DDL"};

$tbspddl = $ENV{"TPCD_TBSP_DDL"};

}

if (( $mode eq "uni" ) || ( $mode eq "smp" )){

$all_ln="once";

$all_pn="once";

$once="once";

}

else{

$all_ln="all_ln";

$all_pn="all_pn";

$once="once";

}

#---------------------------------------------------------------------------#

# echo parameter settings to acknowledge what is being built                #

# and set db2set options for database load                                  #

#---------------------------------------------------------------------------#

&printSummary;

print "\nSleeping for 15 seconds to give you a chance to reconsider...\n";

sleep 15;

if ( $platform eq "nt" ){

if (($mode eq "uni") || ($mode eq "smp")){

#spaces required for NT

$rc=&dodb_noconn("db2set DB2OPTIONS=\" -t -v +c\";db2set DB2NTNOCACHE=ON",$all_ln);

}

else{

$rc=&dodb_noconn("db2set DB2OPTIONS=\\\" -t -v +c\\\";db2set DB2NTNOCACHE=ON",$all_ln);

}

}

else{

if (($mode eq "uni") || ($mode eq "smp")){

$rc=&dodb_noconn("db2set DB2OPTIONS=\"-t -v +c\"",$all_ln);

}

else{

# $rc=&dodb_noconn("db2set DB2OPTIONS=\\\"-t -v +c\\\"",$all_ln);

}

}

if ( $rc != 0 ){

die "failure setting db2 environment variable : rc = $rc\n";

}

#---------------------------------------------------------------------------#

# set the db2 env vars for loading, from the TPCD_LOAD_DB2SET_SCRIPT script #

#---------------------------------------------------------------------------#

if ( $loadsetScript ne "NULL" )

{

if ( $platform eq "nt" ){

if (( $mode eq "uni" ) || ( $mode eq "smp" )){

$rc=system("${ddlpath}${delim}$loadsetScript");

}

else{

$rc=system(" rah \" cd ${ddlpath} & $loadsetScript\" ");

}

}

else{

$rc=system("${ddlpath}${delim}$loadsetScript");

}

($rc == 0) || die "failure loading db2set parms from $loadsetScript \n";

}

!&stopStart || die;

#---------------------------------------------------------------------------#

# Begin complete build: TPCD_BUILD_STAGE = ALL                              #

#---------------------------------------------------------------------------#

if($buildStage eq "ALL") {

#create the database

$rc = &createDb;

($rc == 0) || die "ERROR: create database failed. rc = $rc\n ";

&setLog;

};

$rc = &setLoadConfig;

#---------------------------------------------------------------------------#