IBM System x3850 X5, BladeCenter HX5, System x3950 X5, System x3690 X5 Implementation Manual

Front cover

IBM eX5

Implementation Guide

Covers the IBM System x3950 X5, x3850 X5, x3690 X5, and the IBM BladeCenter HX5

Details technical information about each server and option

Describes how to implement two-node configurations

David Watts

Aaron Belisle

Duncan Furniss

Scott Haddow

Michael Hurman

Jeneea Jervay

Eric Kern

Cynthia Knight

Miroslav Peic

Tom Sorcic

Evans Tanurdin

ibm.com/redbooks

International Technical Support Organization

IBM eX5 Implementation Guide

May 2011

SG24-7909-00

Note: Before using this information and the product it supports, read the information in “Notices” on page xi.

First Edition (May 2011)

This edition applies to the following servers:

IBM System x3850 X5, machine type 7145

IBM System x3950 X5, machine type 7145

IBM System x3690 X5, machine type 7148

IBM BladeCenter HX5, machine type 7872

© Copyright International Business Machines Corporation 2011. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

The team who wrote this book . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvi

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Chapter 1. Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 eX5 systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 Model summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.1 IBM System x3850 X5 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.2 Workload-optimized x3950 X5 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.3 x3850 X5 models with MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.4 Base x3690 X5 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.5 Workload-optimized x3690 X5 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.2.6 BladeCenter HX5 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3 Positioning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.3.1 IBM System x3850 X5 and x3950 X5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.3.2 IBM System x3690 X5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.3.3 IBM BladeCenter HX5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.4 Energy efficiency . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.5 Services offerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.6 What this book contains . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Part 1. Product overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Chapter 2. IBM eX5 technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.1 eX5 chip set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.2 Intel Xeon 6500 and 7500 family processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.2.1 Intel Virtualization Technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.2.2 Hyper-Threading Technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.2.3 Turbo Boost Technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.2.4 QuickPath Interconnect (QPI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.2.5 Processor performance in a green world . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.3 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.3.1 Memory speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

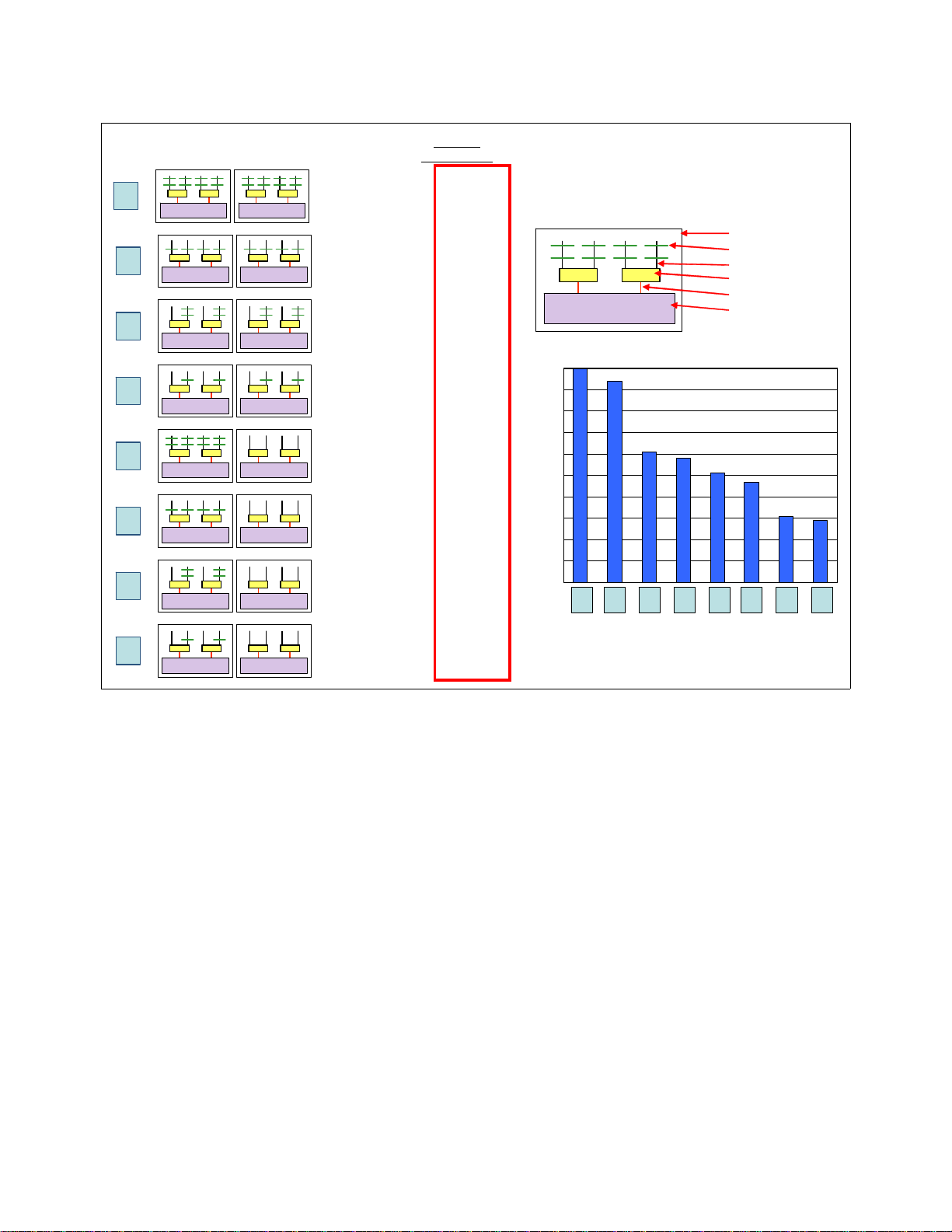

2.3.2 Memory DIMM placement. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.3.3 Memory ranking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3.4 Nonuniform memory architecture (NUMA) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3.5 Hemisphere Mode. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3.6 Reliability, availability, and serviceability (RAS) features . . . . . . . . . . . . . . . . . . . 28

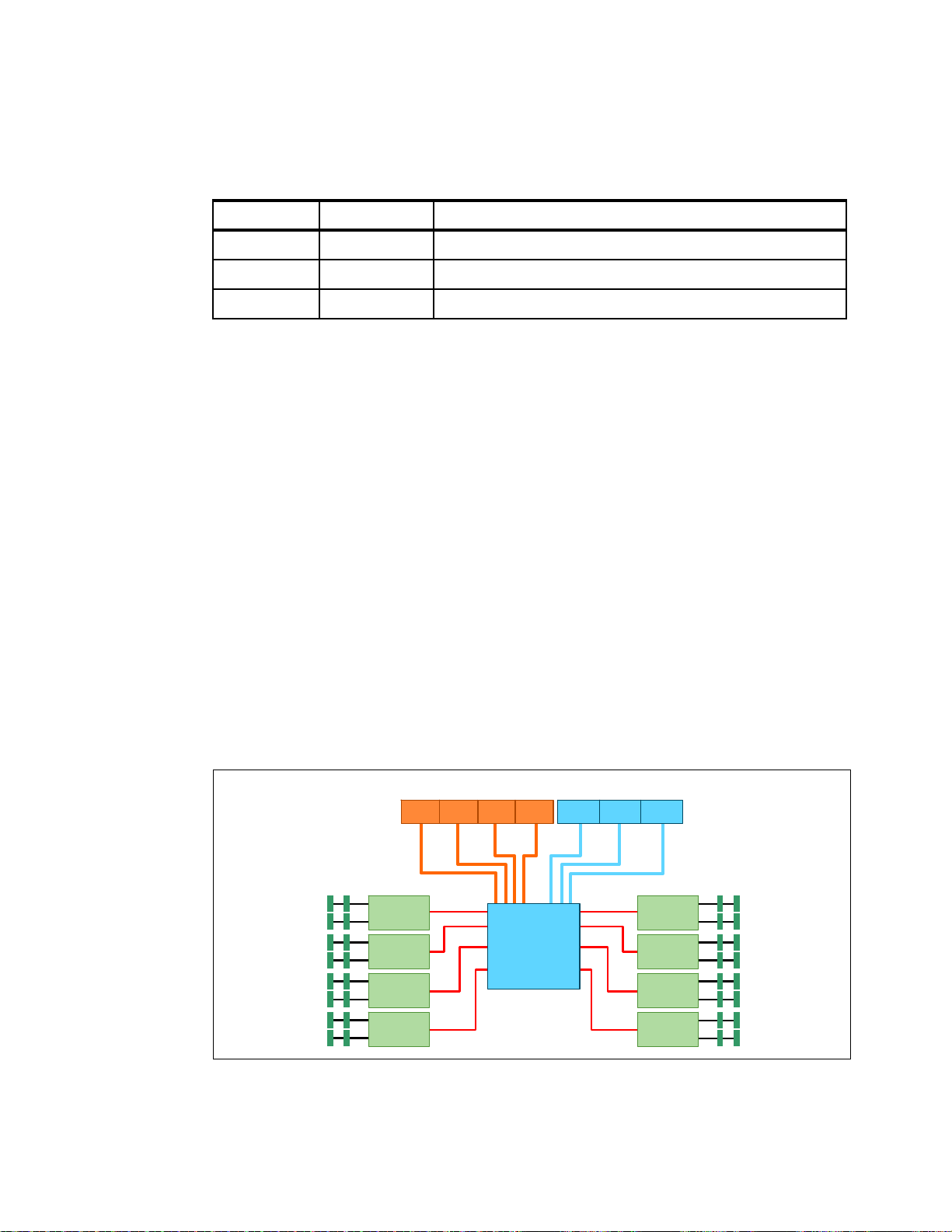

2.3.7 I/O hubs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.4 MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.5 Scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.6 Partitioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

2.7 UEFI system settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

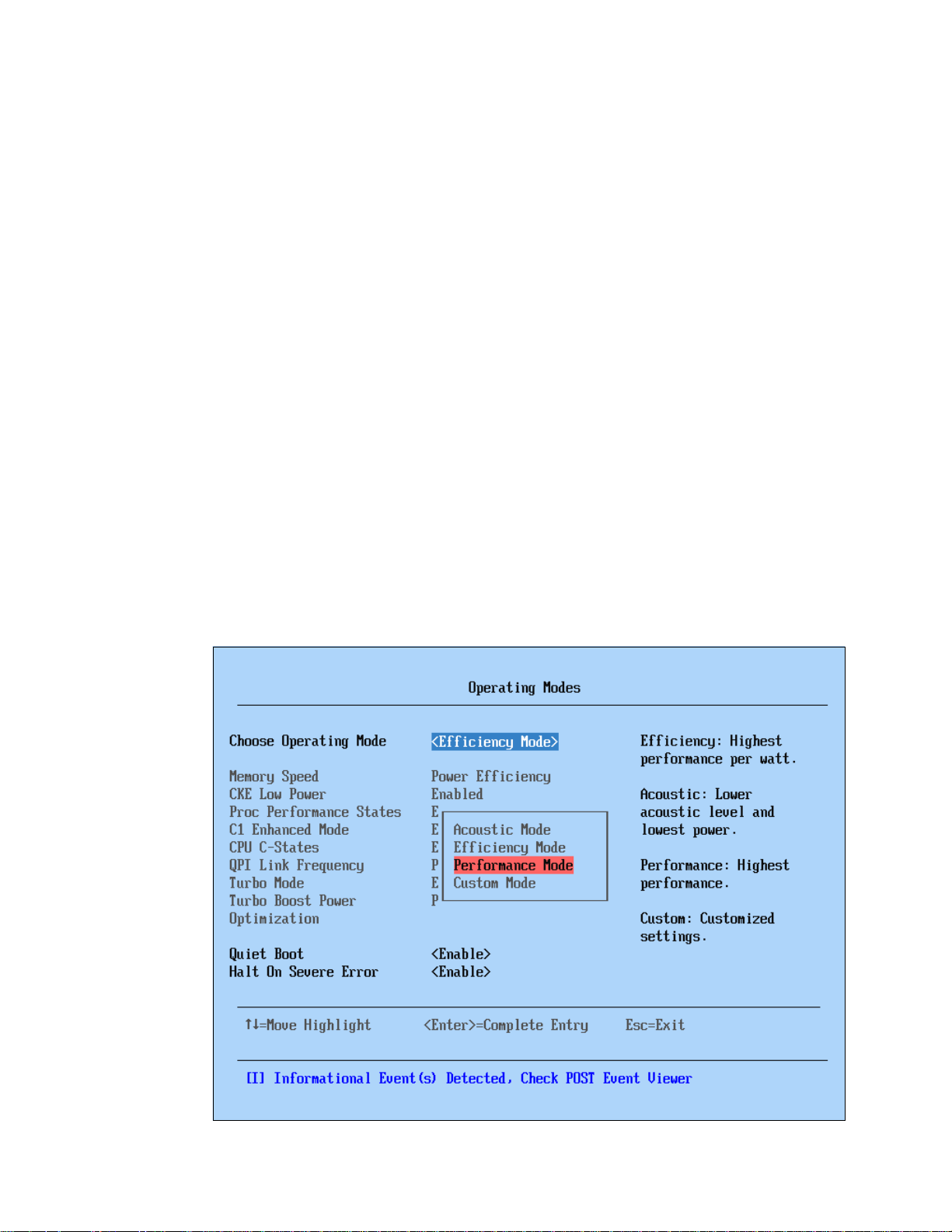

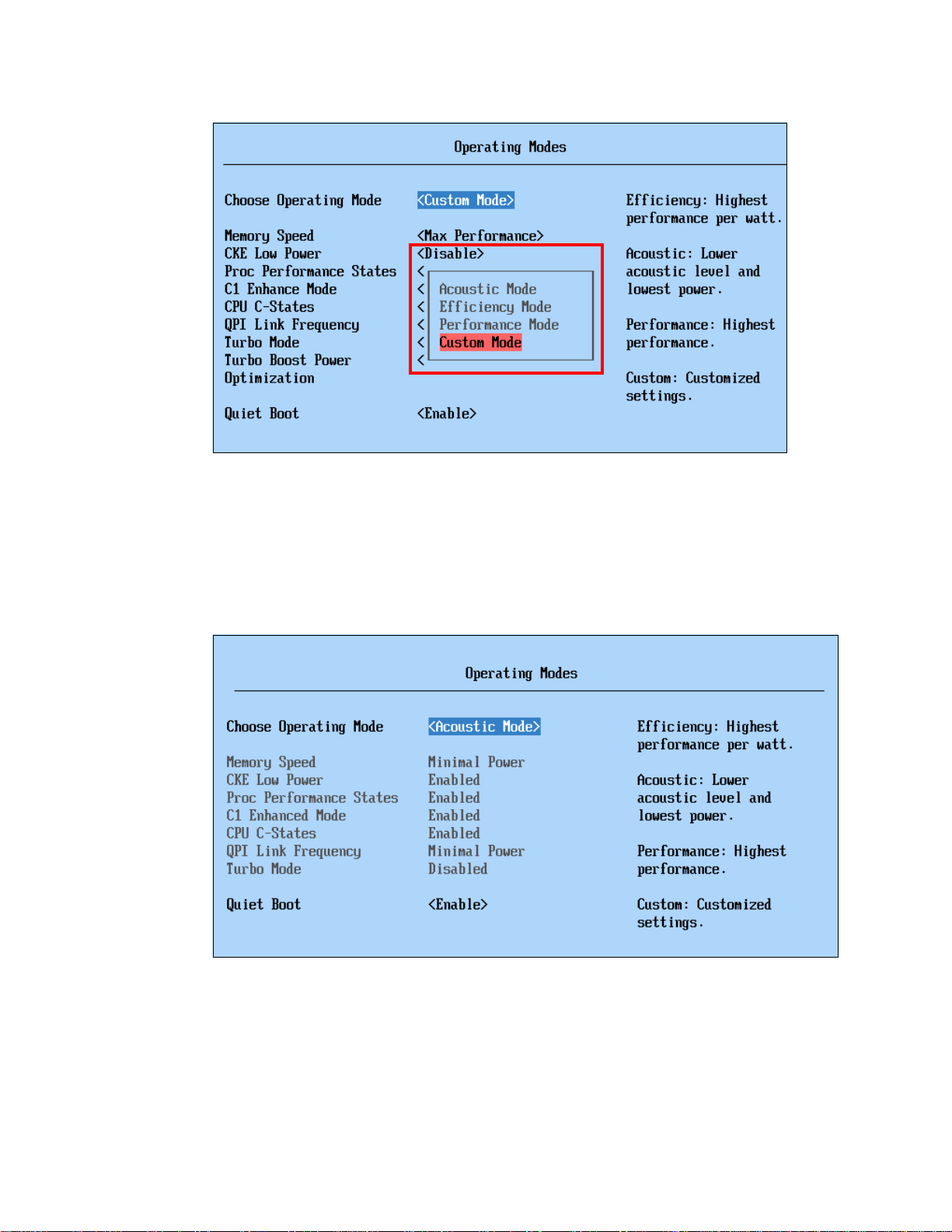

2.7.1 System power operating modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.7.2 System power settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.7.3 Performance-related individual system settings . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.8 IBM eXFlash . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.8.1 IBM eXFlash price-performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

2.9 Integrated virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.9.1 VMware ESXi . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.9.2 Red Hat RHEV-H (KVM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.9.3 Windows 2008 R2 Hyper-V. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.10 Changes in technology demand changes in implementation . . . . . . . . . . . . . . . . . . . 51

2.10.1 Using swap files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.10.2 SSD drives and battery backup cache on RAID controllers . . . . . . . . . . . . . . . . 52

2.10.3 Increased resources for virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.10.4 Virtualized Memcached distributed memory caching . . . . . . . . . . . . . . . . . . . . . 52

Chapter 3. IBM System x3850 X5 and x3950 X5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.1 Product features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.1.1 IBM System x3850 X5 product features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.1.2 IBM System x3950 X5 product features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3.1.3 IBM MAX5 memory expansion unit. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3.1.4 Comparing the x3850 X5 to the x3850 M2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

3.2 Target workloads. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

3.3 Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

3.4 System architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

3.4.1 System board . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

3.4.2 QPI Wrap Card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

3.5 MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

3.6 Scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

3.6.1 Memory scalability with MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

3.6.2 Two-node scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

3.7 Processor options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

3.8 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

3.8.1 Memory cards and DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

3.8.2 DIMM population sequence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

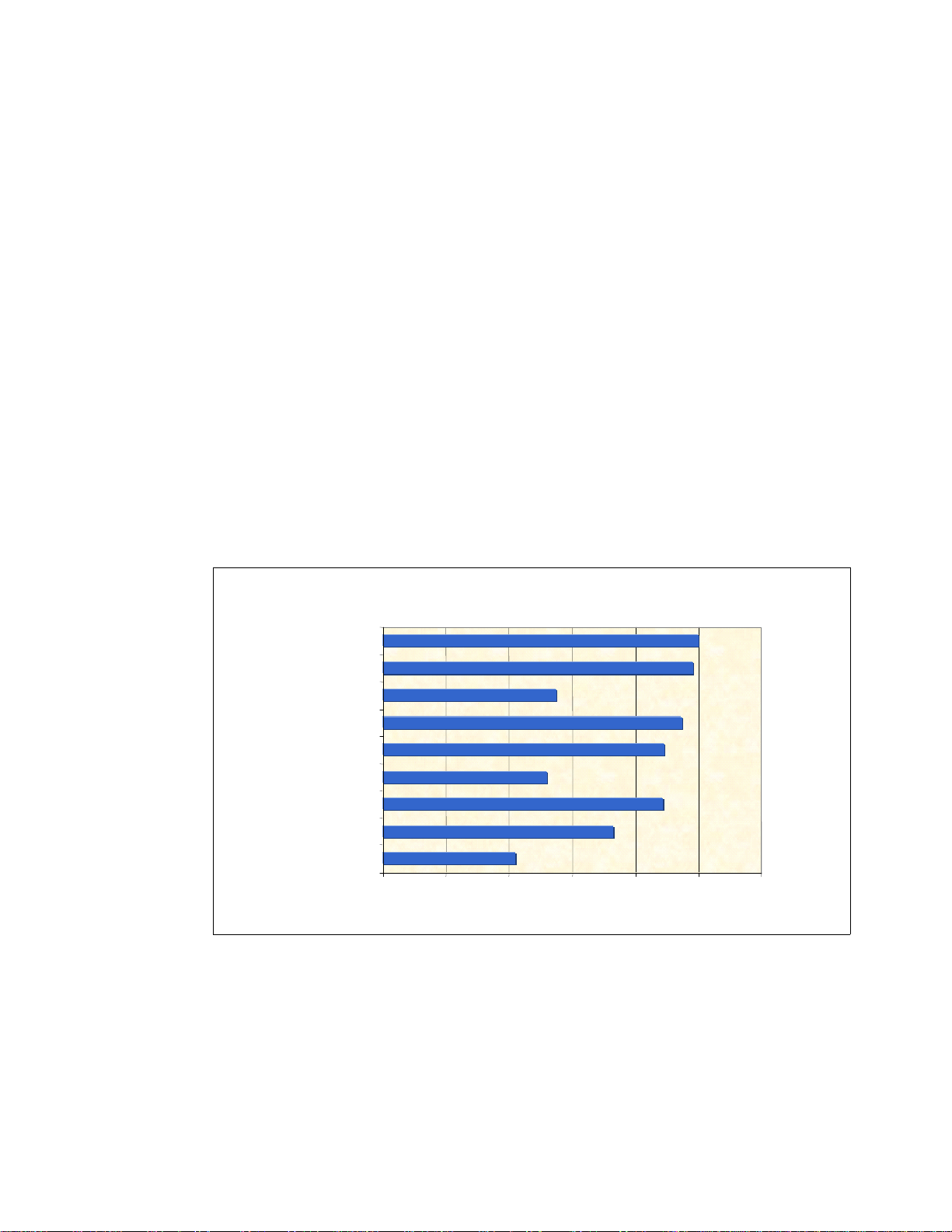

3.8.3 Maximizing memory performance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.8.4 Memory mirroring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.8.5 Memory sparing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

3.8.6 Effect on performance by using mirroring or sparing . . . . . . . . . . . . . . . . . . . . . . 89

3.9 Storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

3.9.1 Internal disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

3.9.2 SAS and SSD 2.5-inch disk support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

3.9.3 IBM eXFlash and 1.8-inch SSD support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

3.9.4 SAS and SSD controllers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

3.9.5 Dedicated controller slot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

3.9.6 External storage connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

3.10 Optical drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

3.11 PCIe slots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

3.12 I/O cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.12.1 Standard Emulex 10Gb Ethernet Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.12.2 Optional adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

3.13 Standard onboard features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

3.13.1 Onboard Ethernet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

3.13.2 Environmental data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

3.13.3 Integrated Management Module (IMM). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

3.13.4 UEFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

3.13.5 Integrated Trusted Platform Module (TPM). . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

3.13.6 Light path diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

3.14 Power supplies and fans of the x3850 X5 and MAX5 . . . . . . . . . . . . . . . . . . . . . . . . 112

3.14.1 x3850 X5 power supplies and fans . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

3.14.2 MAX5 power supplies and fans . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

3.15 Integrated virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

3.16 Operating system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

3.17 Rack considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Chapter 4. IBM System x3690 X5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

4.1 Product features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

4.1.1 System components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

4.1.2 IBM MAX5 memory expansion unit. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.2 Target workloads. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

4.3 Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

4.4 System architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

4.5 MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

4.6 Scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

4.7 Processor options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

4.8 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

4.8.1 Memory DIMM options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

4.8.2 x3690 X5 memory population order . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

4.8.3 MAX5 memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

4.8.4 Memory balance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

4.8.5 Mixing DIMMs and the performance effect . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

4.8.6 Memory mirroring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

4.8.7 Memory sparing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

4.8.8 Effect on performance of using mirroring or sparing. . . . . . . . . . . . . . . . . . . . . . 144

4.9 Storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

4.9.1 2.5-inch SAS drive support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

4.9.2 IBM eXFlash and SSD disk support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

4.9.3 SAS and SSD controller summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

4.9.4 Battery backup placement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

4.9.5 ServeRAID Expansion Adapter. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

4.9.6 Drive combinations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

4.9.7 External SAS storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

4.9.8 Optical drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

4.10 PCIe slots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

4.10.1 Riser 1. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

4.10.2 Riser 2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

4.10.3 Emulex 10Gb Ethernet Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

4.10.4 I/O adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 168

4.11 Standard features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

4.11.1 Integrated management module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

4.11.2 Ethernet subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

4.11.3 USB subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

4.11.4 Integrated Trusted Platform Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

4.11.5 Light path diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

4.11.6 Cooling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

4.12 Power supplies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

4.12.1 x3690 X5 power subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

4.12.2 MAX5 power subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

4.13 Integrated virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

4.14 Supported operating systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

4.15 Rack mounting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

Chapter 5. IBM BladeCenter HX5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

5.1.1 Comparison to the HS22 and HS22V . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

5.2 Target workloads. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

5.3 Chassis support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

5.4 Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

5.5 System architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

5.6 Speed Burst Card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

5.7 IBM MAX5 for BladeCenter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

5.8 Scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

5.8.1 Single HX5 configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

5.8.2 Double-wide HX5 configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

5.8.3 HX5 with MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

5.9 Processor options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

5.10 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

5.10.1 Memory options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

5.10.2 DIMM population order . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

5.10.3 Memory balance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

5.10.4 Memory mirroring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

5.10.5 Memory sparing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

5.11 Storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

5.11.1 Solid-state drives (SSDs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

5.11.2 LSI configuration utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

5.11.3 Determining which SSD RAID configuration to choose . . . . . . . . . . . . . . . . . . 207

5.11.4 Connecting to external SAS storage devices . . . . . . . . . . . . . . . . . . . . . . . . . . 207

5.12 BladeCenter PCI Express Gen 2 Expansion Blade . . . . . . . . . . . . . . . . . . . . . . . . . 208

5.13 I/O expansion cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

5.13.1 CIOv . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

5.13.2 CFFh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

5.14 Standard onboard features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

5.14.1 UEFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

5.14.2 Onboard network adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

5.14.3 Integrated Management Module (IMM). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

5.14.4 Video controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

5.14.5 Trusted Platform Module (TPM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

5.15 Integrated virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

5.16 Partitioning capabilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

5.17 Operating system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Part 2. Implementing scalability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Chapter 6. IBM System x3850 X5 and x3950 X5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

6.1 Before you apply power for the first time after shipping . . . . . . . . . . . . . . . . . . . . . . . 220

6.1.1 Verify that the components are securely installed. . . . . . . . . . . . . . . . . . . . . . . . 220

6.1.2 Clear CMOS memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

6.1.3 Verify that the server completes POST before adding options . . . . . . . . . . . . . . 221

6.2 Processor considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

6.2.1 Minimum processors required. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

6.2.2 Processor operating characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

6.2.3 Processor installation order. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

6.2.4 Processor installation tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

6.3 Local memory configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

6.3.1 Testing the memory DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

6.3.2 Memory fault tolerance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

6.4 Attaching the MAX5 memory expansion unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

6.4.1 Before you attach the MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

6.4.2 Installing in a rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

6.4.3 MAX5 cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

6.4.4 Accessing the DIMMs in the MAX5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

6.5 Forming a 2-node x3850 X5 complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

6.5.1 Firmware requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

6.5.2 Processor requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

6.5.3 Memory requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

6.5.4 Cabling the servers together. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

6.6 PCIe adapters and riser card options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

6.6.1 Generation 2 and Generation 1 PCIe adapters . . . . . . . . . . . . . . . . . . . . . . . . . 239

6.6.2 PCIe adapters: Slot selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

6.6.3 Cleaning up the boot sequence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

6.7 Power supply considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

6.8 Using the Integrated Management Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

6.8.1 IMM network access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

6.8.2 Configuring the IMM network interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

6.8.3 IMM communications troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

6.8.4 IMM functions to help you perform problem determination. . . . . . . . . . . . . . . . . 253

6.9 UEFI settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 259

6.9.1 Settings needed for 1-node, 2-node, and MAX5 configurations . . . . . . . . . . . . . 261

6.9.2 UEFI performance tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

6.10 Installing an OS. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

6.10.1 Installing without a local optical drive . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

6.10.2 Use of embedded VMware ESXi. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 271

6.10.3 Installing the ESX 4.1 or ESXi 4.1 Installable onto x3850 X5 . . . . . . . . . . . . . . 275

6.10.4 OS installation tips and instructions on the web . . . . . . . . . . . . . . . . . . . . . . . . 288

6.10.5 Downloads and fixes for x3850 X5 and MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . 293

6.10.6 SAN storage reference and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

6.11 Failure detection and recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 297

6.11.1 What happens when a node fails or the MAX5 fails . . . . . . . . . . . . . . . . . . . . . 297

6.11.2 Reinserting the QPI wrap cards for extended outages . . . . . . . . . . . . . . . . . . . 297

6.11.3 Tools to aid hardware troubleshooting for x3850 X5. . . . . . . . . . . . . . . . . . . . . 297

6.11.4 Recovery process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 299

Chapter 7. IBM System x3690 X5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.1 Before you apply power for the first time after shipping . . . . . . . . . . . . . . . . . . . . . . . 302

7.1.1 Verify that the components are securely installed. . . . . . . . . . . . . . . . . . . . . . . . 302

7.1.2 Clear CMOS memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

7.1.3 Verify that the server will complete POST before adding options . . . . . . . . . . . . 304

7.2 Processor considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 304

7.2.1 Minimum processors required. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 304

7.2.2 Processor operating characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

7.3 Memory considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 306

7.3.1 Local memory installation considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 306

7.3.2 Testing the memory DIMMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

7.3.3 Memory fault tolerance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 310

7.4 MAX5 considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

7.4.1 Before you attach the MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

7.4.2 Installing in a rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 312

7.4.3 MAX5 cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 312

7.4.4 Accessing the DIMMs in the MAX5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

7.5 PCIe adapters and riser card options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 316

7.5.1 Generation 2 and Generation 1 PCIe adapters . . . . . . . . . . . . . . . . . . . . . . . . . 316

7.5.2 PCIe adapters: Slot selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 321

7.5.3 Cleaning up the boot sequence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 322

7.6 Power supply considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 326

7.7 Using the Integrated Management Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 327

7.7.1 IMM network access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

7.7.2 Configuring the IMM network interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

7.7.3 IMM communications troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 330

7.7.4 IMM functions to help you perform problem determination. . . . . . . . . . . . . . . . . 331

7.8 UEFI settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

7.8.1 Scaled system settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

7.8.2 Operating system-specific settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 339

7.8.3 Power and performance system settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 340

7.8.4 Optimizing boot options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 343

7.9 Operating system installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 346

7.9.1 Installation media . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 346

7.9.2 Integrated virtualization hypervisor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 355

7.9.3 Windows Server 2008 R2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 356

7.9.4 Red Hat Enterprise Linux 6 and SUSE Linux Enterprise Server 11 . . . . . . . . . . 358

7.9.5 VMware vSphere ESXi 4.1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 358

7.9.6 VMware vSphere ESX 4.1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

7.9.7 Downloads and fixes for the x3690 X5 and MAX5 . . . . . . . . . . . . . . . . . . . . . . . 365

7.9.8 SAN storage reference and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 367

7.10 Failure detection and recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 369

7.10.1 System alerts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 369

7.10.2 System recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 371

Chapter 8. IBM BladeCenter HX5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 373

8.1 Before you apply power for the first time after shipping . . . . . . . . . . . . . . . . . . . . . . . 374

8.1.1 Verifying that the components are securely installed . . . . . . . . . . . . . . . . . . . . . 374

8.1.2 Clearing CMOS memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

8.1.3 Verifying the server boots before adding options . . . . . . . . . . . . . . . . . . . . . . . . 376

8.2 Planning to scale: Prerequisites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

8.2.1 Processors supported and requirements to scale. . . . . . . . . . . . . . . . . . . . . . . . 377

8.2.2 Minimum memory requirement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

8.2.3 Required firmware of each blade and the AMM . . . . . . . . . . . . . . . . . . . . . . . . . 379

8.3 Recommendations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

8.3.1 Power sharing cap . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

8.3.2 BladeCenter H considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 383

8.4 Local storage considerations and array setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 385

8.4.1 Launching the LSI Setup Utility. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 386

8.4.2 Creating a RAID-1 mirror using the LSI Setup Utility . . . . . . . . . . . . . . . . . . . . . 389

8.4.3 Using IBM ServerGuide to configure the LSI controller . . . . . . . . . . . . . . . . . . . 392

8.4.4 Speed Burst Card reinstallation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 394

8.5 UEFI settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 396

8.5.1 UEFI performance tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 397

8.5.2 Start-up parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

8.5.3 HX5 single-node UEFI settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 400

8.5.4 HX5 2-node UEFI settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

8.5.5 HX5 with MAX5 attached . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

8.5.6 Operating system-specific settings in UEFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

8.6 Creating an HX5 scalable complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

8.6.1 Troubleshooting HX5 problems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 406

8.7 Operating system installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.7.1 Operating system installation media . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.7.2 VMware ESXi on a USB key. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

8.7.3 Installing ESX 4.1 or ESXi 4.1 Installable onto HX5 . . . . . . . . . . . . . . . . . . . . . . 421

8.7.4 Windows installation tips and settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 434

8.7.5 Red Hat Enterprise Linux installation tips and settings . . . . . . . . . . . . . . . . . . . . 436

8.7.6 SUSE Linux Enterprise Server installation tips and settings. . . . . . . . . . . . . . . . 437

8.7.7 Downloads and fixes for HX5 and MAX5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

8.7.8 SAN storage reference and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

8.8 Failure detection and recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 442

8.8.1 Tools to aid hardware troubleshooting for the HX5. . . . . . . . . . . . . . . . . . . . . . . 443

8.8.2 Reinserting the Speed Burst card for extended outages . . . . . . . . . . . . . . . . . . 444

8.8.3 Effects of power loss on HX5 2-node or MAX5 configurations . . . . . . . . . . . . . . 444

Chapter 9. Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 447

9.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 448

9.2 Integrated Management Module (IMM). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 449

9.2.1 IMM out-of-band configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 449

9.2.2 IMM in-band configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 453

9.2.3 Updating firmware using the IMM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 454

9.3 Advanced Management Module (AMM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 454

9.3.1 Accessing the Advanced Management Module . . . . . . . . . . . . . . . . . . . . . . . . . 456

9.3.2 Service Advisor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 458

9.3.3 Updating firmware using the AMM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

9.4 Remote control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462

9.4.1 Accessing the Remote Control feature on the x3690 X5 and the x3850 X5. . . . 462

9.4.2 Accessing the Remote Control feature for the HX5 . . . . . . . . . . . . . . . . . . . . . . 465

9.5 IBM Systems Director 6.2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 467

9.5.1 Discovering the IMM of a single-node x3690 X5 or x3850 X5 out-of-band via IBM Systems Director . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 468

9.5.2 Discovering a 2-node x3850 X5 via IBM Systems Director 6.2.x . . . . . . . . . . . . 472

9.5.3 Discovering a single-node HX5 via IBM Systems Director . . . . . . . . . . . . . . . . . 477

9.5.4 Discovering a 2-node HX5 via IBM Systems Director 6.2.x . . . . . . . . . . . . . . . . 478

9.5.5 Service and Support Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 481

9.5.6 Performing tasks against a 2-node system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 488

9.6 IBM Electronic Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 493

9.7 Advanced Settings Utility (ASU) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 495

9.7.1 Using ASU to configure settings in IMM-based servers . . . . . . . . . . . . . . . . . . . 495

9.7.2 Common problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 498

9.7.3 Command examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 499

9.8 IBM ServerGuide. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 501

9.9 IBM ServerGuide Scripting Toolkit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 507

9.10 Firmware update tools and methods. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 509

9.10.1 Configuring UEFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 509

9.10.2 Requirements for updating scalable systems . . . . . . . . . . . . . . . . . . . . . . . . . . 510

9.10.3 IBM Systems Director . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 511

9.11 UpdateXpress System Pack Installer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 511

9.12 Bootable Media Creator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 514

9.13 MegaRAID Storage Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

9.13.1 Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

9.13.2 Drive states . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 522

9.13.3 Virtual drive states . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 523

9.13.4 MegaCLI utility for storage management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 523

9.14 Serial over LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 525

9.14.1 Enabling SoL in UEFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 526

9.14.2 BladeCenter requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 527

9.14.3 Enabling SoL in the operating system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 529

9.14.4 How to start a SoL connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 533

Abbreviations and acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 537

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 541

IBM Redbooks publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 541

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 542

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 543

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 543

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 545

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any manner serve as an endorsement of those websites. The materials at those websites are not part of the materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.

© Copyright IBM Corp. 2011. All rights reserved.

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

AIX®

BladeCenter®

Calibrated Vectored Cooling™

DS4000®

Dynamic Infrastructure®

Electronic Service Agent™

eServer™

IBM Systems Director Active Energy Manager™

IBM®

iDataPlex™

Netfinity®

PowerPC®

POWER®

Redbooks®

Redpaper™

Redbooks (logo) ®

RETAIN®

ServerProven®

Smarter Planet™

System Storage®

System x®

System z®

Tivoli®

X-Architecture®

XIV®

xSeries®

The following terms are trademarks of other companies:

Java and all Java-based trademarks are trademarks of Sun Microsystems, Inc. in the United States, other countries, or both.

Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

Intel Xeon, Intel, Itanium, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.

Preface

High-end workloads drive ever-increasing and ever-changing constraints. In addition to requiring greater memory capacity, these workloads challenge you to do more with less and to find new ways to simplify deployment and ownership. And although higher system availability and comprehensive systems management have always been critical, they have become even more important in recent years.

Difficult challenges, such as these, create new opportunities for innovation. The IBM® eX5 portfolio delivers this innovation. This family of high-end computing systems introduces the fifth generation of IBM X-Architecture® technology. The family includes the IBM System x3850 X5, x3690 X5, and the IBM BladeCenter® HX5. These servers are the culmination of more than a decade of x86 innovation and firsts that have changed the expectations of the industry. With this latest generation, eX5 is again leading the way as the shift toward virtualization, platform management, and energy efficiency accelerates.

This book is divided into two parts. In the first part, we provide detailed technical information about the servers in the eX5 portfolio. This information is most useful in designing, configuring, and planning to order a server solution. In the second part of the book, we provide detailed configuration and setup information to get your servers operational. We focus particularly on setting up MAX5 configurations of all three eX5 servers as well as 2-node configurations of the x3850 X5 and HX5.

This book is aimed at clients, IBM Business Partners, and IBM employees who want to understand the features and capabilities of the IBM eX5 portfolio of servers and want to learn how to install and configure the servers for use in production.

The team who wrote this book

This book was produced by a team of specialists from around the world working at the International Technical Support Organization, Raleigh Center.

David Watts is a Consulting IT Specialist at the IBM ITSO Center in Raleigh. He manages residencies and produces IBM Redbooks® publications for hardware and software topics that are related to IBM System x® and IBM BladeCenter servers, and associated client platforms. He has authored over 80 books, papers, and web documents. He holds a Bachelor of Engineering degree from the University of Queensland (Australia) and has worked for IBM both in the US and Australia since 1989. David is an IBM Certified IT Specialist and a member of the IT Specialist Certification Review Board.

Aaron Belisle is a BladeCenter and System x Technical Support Specialist for IBM in Atlanta, Georgia. He has 12 years of experience working with servers and has worked at IBM for seven years. His areas of expertise include IBM BladeCenter, System x, and BladeCenter Fibre Channel fabrics.

Duncan Furniss is a Senior IT Specialist for IBM in Canada. He currently provides technical sales support for System x, BladeCenter, and IBM System Storage® products. He has co-authored six previous IBM Redbooks publications, the most recent being Implementing an IBM System x iDataPlex Solution, SG24-7629. He has helped clients design and implement x86 server solutions from the beginning of the IBM Enterprise X-Architecture initiative. He is an IBM Regional Designated Specialist for Linux®, High Performance Compute Clusters, and Rack, Power and Cooling. He is an IBM Certified IT Specialist and a member of the IT Specialist Certification Review Board.

Scott Haddow is a Presales Technical Support Specialist for IBM in the UK. He has 12 years of experience working with servers and storage. He has worked at IBM for six years, with experience spanning IBM Netfinity®, xSeries®, and now the System x brand. His areas of expertise include Fibre Channel fabrics.

Michael Hurman is a Senior IT Specialist for IBM STG Lab Services in South Africa. He has more than 12 years of international experience in IT and has co-authored previous IBM Redbooks publications, including Implementing the IBM BladeCenter S Chassis, SG24-7682. His areas of expertise include assisting clients to design and implement System x, BladeCenter, IBM Systems Director, midrange storage, and storage area network (SAN)-based solutions. He started his career at IBM in 2006.

Jeneea Jervay (JJ) was a Technical Support Management Specialist in Raleigh at the time of writing this publication. She provided presales technical support to IBM Business Partners, clients, IBM Advanced Technical Support specialists, and IBM Field Technical Sales Support Specialists globally for the BladeCenter portfolio. She authored the IBM BladeCenter Interoperability Guide from 2007 to early 2010. She is a PMI Certified Project Manager and former System x and BladeCenter Top Gun instructor. She was the lead for the System x and BladeCenter Demand Acceleration Units (DAU) program. Previously, she was a member of the Americas System x and BladeCenter Brand team and the Sales Solution Center, which focused exclusively on IBM Business Partners. She started her career at IBM in 1995.

Eric Kern is a Senior Managing Consultant for IBM STG Lab Services. He currently provides technical consulting services for System x, BladeCenter, System Storage, and Systems Software. Since 2007, he has helped clients design and implement x86 server and systems management software solutions. Prior to joining Lab Services, he developed software for the BladeCenter’s Advanced Management Module and for the Remote Supervisor Adapter II. He is a VMware Certified Professional and a Red Hat Certified Technician.

Cynthia Knight is an IBM Hardware Design Engineer in Raleigh and has worked for IBM for 11 years. She is currently a member of the IBM eX5 design team. Previous designs include the Ethernet add-in cards for the IBM Network Processor Reference Platform and the Chassis Management Module for BladeCenter T. She was also the lead designer for the IBM BladeCenter PCI Expansion Units.

Miroslav Peic is a System x Support Specialist in IBM Austria. He has a graduate degree in applied computer science and has many industry certifications, including the Microsoft® Certified Systems Administrator 2003. He trains other IBM professionals and provides technical support to them, as well as to IBM Business Partners and clients. He has 10 years of experience in IT and has worked at IBM since 2008.

Tom Sorcic is an IT specialist and technical trainer for BladeCenter and System x support. He is part of Global Technology Enterprise Services at the Intel® Smart Center in Atlanta, Georgia, where he started working for IBM in 2001. He has 37 years of international experience with IT in banking, manufacturing, and technical support. An author of hundreds of web pages, he continues his original role as a core team member for the Global System x Skills Exchange (GLOSSE) website, assisting in the site design and providing technical content on a wide variety of topics since 2008. He is a subject matter expert in all forms of IBM ServeRAID hardware, Ethernet networking, storage area networks, and Microsoft high availability clusters.

Evans Tanurdin is an IT Specialist at IBM Global Technology Services in Indonesia. He provides technical support and services on the IBM System x, BladeCenter, and System Storage product lines. His technology focus areas include the design, operation, and maintenance services of enterprise x86 server infrastructure. His other significant experience includes application development, system analysis, and database design. Evans holds a degree in Nuclear Engineering from Gadjah Mada University (Indonesia), and certifications from Microsoft, Red Hat, and Juniper.

The authors of this book were divided into two teams. Part 1 of the book is based on the IBM Redpaper™ IBM eX5 Portfolio Overview: IBM System x3850 X5, x3950 X5, x3690 X5, and BladeCenter HX5, REDP-4650, and was written by one team of subject matter experts.

The team that wrote Part 1 (left to right): David, Duncan, JJ, Scott, Cynthia, and Eric

Part 2 of the book was written by a second team of subject matter experts. This team also provided updates to the first part of the book.

The team that wrote Part 2 (left to right): David, Evans, Aaron, Miro, Tom, and Mike

Thanks to the following people for their contributions to this project:

From IBM Marketing:

Mark Chapman

Michelle Gottschalk

Harsh Kachhy

Richard Mancini

Tim Martin

Kevin Powell

Heather Richardson

David Tareen

From IBM Development:

Justin Bandholz

Ralph Begun

Jon Bitner

Charles Clifton

Candice Coletrane-Pagan

David Drez

Royce Espy

Dustin Fredrickson

Larry Grasso

Dan Kelaher

Randy Kolvick

Chris LeBlanc

Mike Schiskey

Greg Sellman

Mehul Shah

Matthew Trzyna

Matt Weber

From the IBM Redbooks organization:

Mary Comianos

Linda Robinson

Stephen Smith

From other IBM employees throughout the world:

Randall Davis, IBM Australia

John Encizo, IBM U.S.

Shannon Meier, IBM U.S.

Keith Ott, IBM U.S.

Andrew Spurgeon, IBM New Zealand

Xiao Jun Wu, IBM China

Now you can become a published author, too!

Here’s an opportunity to spotlight your skills, grow your career, and become a published author, all at the same time! Join an ITSO residency project and help write a book in your area of expertise, while honing your experience using leading-edge technologies. Your efforts will help to increase product acceptance and client satisfaction, as you expand your network of technical contacts and relationships. Residencies run from two to six weeks in length, and you can participate either in person or as a remote resident working from your home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our books to be as helpful as possible. Send us your comments about this book or other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html

Chapter 1. Introduction

The IBM eX5 product portfolio represents the fifth generation of servers built upon Enterprise X-Architecture. Enterprise X-Architecture is the culmination of generations of IBM technology and innovation derived from our experience in high-end enterprise servers. Now with eX5, IBM scalable systems technology for Intel processor-based servers has also been delivered to blades. These servers can be expanded on demand and configured by using a building-block approach that optimizes the system design for your workload requirements.

As part of the IBM Smarter Planet™ initiative, our Dynamic Infrastructure® charter guides us to provide servers that improve service, reduce cost, and manage risk. These servers scale to more CPU cores, memory, and I/O than previous systems, enabling them to handle greater workloads than the systems they supersede. Power efficiency and machine density are optimized, making them affordable to own and operate.

The ability to increase memory capacity independently of the processors means that these systems can be highly utilized, yielding the best return on your application investment. These systems allow your enterprise to grow in the processing, I/O, and memory dimensions, so that you can provision what you need now and expand the system to meet future requirements. System redundancy and availability technologies are more advanced than those previously available in x86 systems.

This chapter contains the following topics:

1.1, “eX5 systems” on page 2

1.2, “Model summary” on page 3

1.3, “Positioning” on page 7

1.4, “Energy efficiency” on page 10

1.5, “Services offerings” on page 11

1.6, “What this book contains” on page 11


1.1 eX5 systems

The four systems in the eX5 family are the x3850 X5, x3950 X5, x3690 X5, and the HX5 blade. The eX5 technology is primarily designed around three major workloads: database servers, server consolidation using virtualization services, and Enterprise Resource Planning (application and database) servers. Each system can scale its memory by adding an IBM MAX5 memory expansion unit to the server. The x3850 X5, x3950 X5, and HX5 can also be scaled by connecting two systems to form a 2-node configuration.

Figure 1-1 shows the IBM eX5 family.

Figure 1-1 eX5 family (top to bottom): BladeCenter HX5 (2-node), System x3690 X5, and System x3850 X5 (the System x3950 X5 looks the same as the x3850 X5)

The IBM System x3850 X5 and x3950 X5 are 4U, highly rack-optimized servers. The x3850 X5 and the workload-optimized x3950 X5 are the new flagship servers of the IBM x86 server family. These systems are designed for maximum utilization, reliability, and performance for compute-intensive and memory-intensive workloads.

The IBM System x3690 X5 is a new 2U rack-optimized server. This machine brings new features and performance to the middle tier, as well as a memory scalability option with MAX5.

The IBM BladeCenter HX5 is a single-wide (30 mm) blade server that follows the same design as all previous IBM blades. The HX5 brings unprecedented levels of capacity to high-density environments. The HX5 is expandable to form either a 2-node system with four processors or a single-node system with the MAX5 memory expansion blade.
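The scaling paths described in this section can be summarized as a small lookup table. The following Python sketch is illustrative only: `ScalingOptions`, `EX5_SCALING`, and `scaling_choices()` are hypothetical names, not part of any IBM tool or configurator. The sketch lists each option separately; it does not claim that MAX5 and 2-node scaling can be combined, and it ignores model-level restrictions such as the E7520/E7530 limit noted under Table 1-1.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScalingOptions:
    max5: bool      # supports an attached MAX5 memory expansion unit
    two_node: bool  # supports joining two systems into a 2-node configuration


# Hypothetical summary of section 1.1; not an IBM API or configurator.
EX5_SCALING = {
    "x3850 X5": ScalingOptions(max5=True, two_node=True),
    "x3950 X5": ScalingOptions(max5=True, two_node=True),
    "x3690 X5": ScalingOptions(max5=True, two_node=False),
    "HX5": ScalingOptions(max5=True, two_node=True),
}


def scaling_choices(system: str) -> List[str]:
    """List the scaling paths this section describes for one eX5 system."""
    opts = EX5_SCALING[system]
    choices = []
    if opts.max5:
        choices.append("MAX5 memory expansion")
    if opts.two_node:
        choices.append("2-node")
    return choices


for name in EX5_SCALING:
    print(name, "->", ", ".join(scaling_choices(name)))
```

The only system in the family without a 2-node option is the x3690 X5, so `scaling_choices("x3690 X5")` returns just the MAX5 path.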

When compared to other machines in the System x portfolio, these systems represent the upper end of the spectrum, are suited for the most demanding x86 tasks, and can handle jobs that previously might have run on other platforms. To assist with selecting the ideal system for a given workload, we have designed workload-specific models for virtualization and database needs.


1.2 Model summary

This section summarizes the models that are available for each of the eX5 systems.

1.2.1 IBM System x3850 X5 models

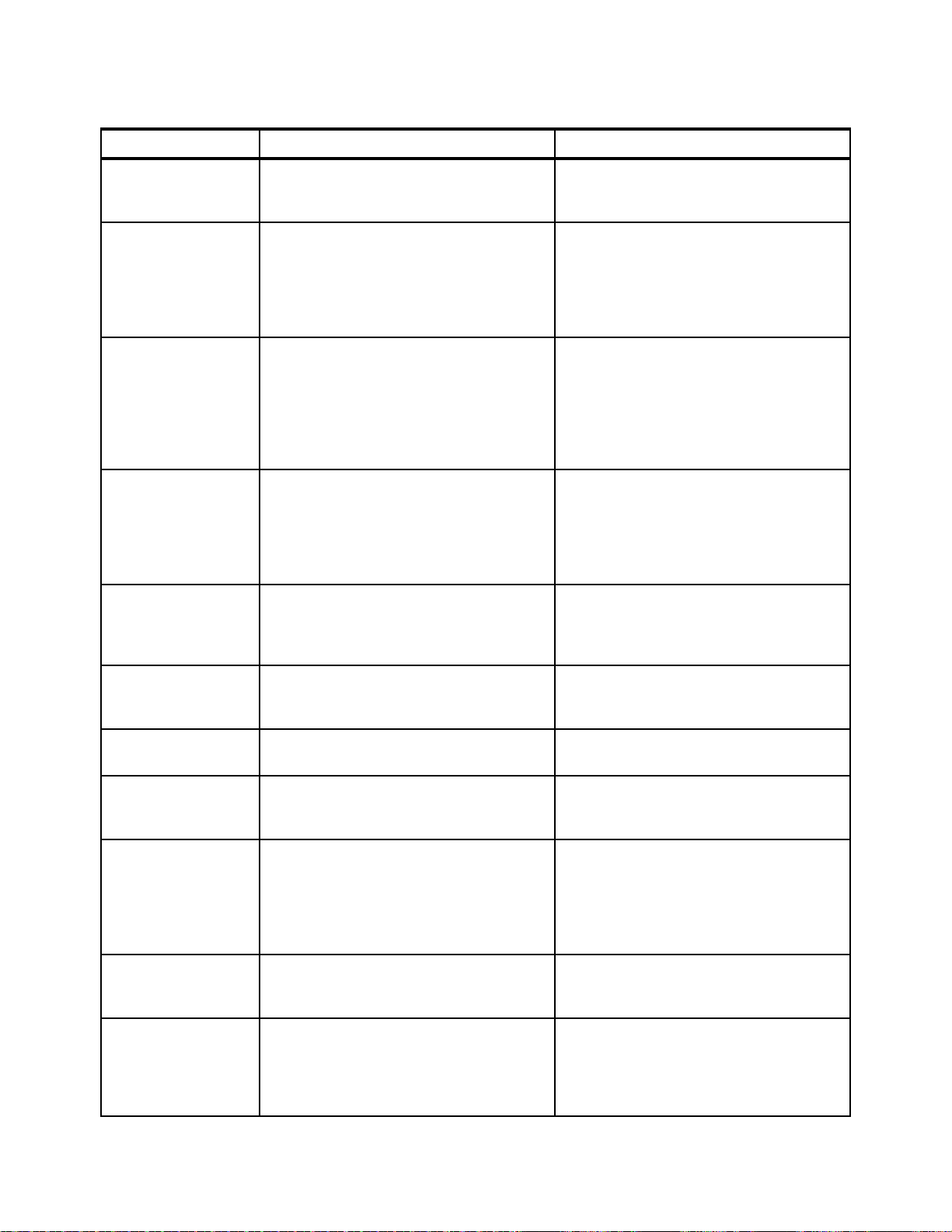

Table 1-1 lists the standard x3850 X5 models.

Table 1-1 Base models of the x3850 X5: Four socket-scalable server

| Model (a) | Intel Xeon processors (two standard; maximum of four) | Memory speed (MHz) | Standard memory (MAX5 is optional) | Memory cards (std/max) | ServeRAID BR10i std | 10Gb Ethernet standard (b) | Power supplies (std/max) | Drive bays (std/max) |
|---|---|---|---|---|---|---|---|---|
| 7145-ARx | E7520 4C 1.86 GHz, 18 MB L3, 95W (c) | 800 MHz | 2x 2 GB | 1/8 | No | No | 1/2 | None |
| 7145-1Rx | E7520 4C 1.86 GHz, 18 MB L3, 95W (c) | 800 MHz | 4x 4 GB | 2/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-2Rx | E7530 6C 1.86 GHz, 12 MB L3, 105W (c) | 978 MHz | 4x 4 GB | 2/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-3Rx | E7540 6C 2.0 GHz, 18 MB L3, 105W | 1066 MHz | 4x 4 GB | 2/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-4Rx | X7550 8C 2.0 GHz, 18 MB L3, 130W | 1066 MHz | 4x 4 GB | 2/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-5Rx | X7560 8C 2.27 GHz, 24 MB L3, 130W | 1066 MHz | 4x 4 GB | 2/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |

a. The x character in the seventh position of the machine model denotes the region-specific character. For example, U indicates US, and G indicates EMEA.

b. Emulex 10Gb Ethernet Adapter is installed in PCIe slot 7.

c. Any model using the E7520 or E7530 CPU cannot scale beyond single-node 4-way, even with the addition of MAX5.
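The model-number convention in footnote a and the scaling restriction in footnote c can be expressed as a short Python sketch. It assumes that every model number takes the form shown in the tables: a four-digit machine type, a hyphen, a two-character model code, and a one-character region code (printed as x in the tables). `ModelNumber`, `parse_model()`, and `can_scale_beyond_4way()` are hypothetical helpers for illustration, not an IBM API.

```python
import re
from typing import NamedTuple


class ModelNumber(NamedTuple):
    machine_type: str  # for example "7145" (x3850 X5 / x3950 X5)
    model: str         # two-character model code, for example "4R"
    region: str        # region-specific character, for example "U" (US)


# Assumed format: 4-digit machine type, hyphen, 2-char model, 1-char region.
MODEL_RE = re.compile(r"^(\d{4})-([0-9A-Z]{2})([A-Z])$")


def parse_model(text: str) -> ModelNumber:
    """Split a machine type-model string into its parts."""
    m = MODEL_RE.match(text.strip().upper())
    if m is None:
        raise ValueError(f"unrecognized machine type-model: {text!r}")
    return ModelNumber(*m.groups())


# Processors that footnote c says cannot scale beyond single-node 4-way.
NO_MULTINODE_CPUS = {"E7520", "E7530"}


def can_scale_beyond_4way(cpu: str) -> bool:
    """True unless the processor is one that footnote c excludes."""
    return cpu.strip().upper() not in NO_MULTINODE_CPUS


print(parse_model("7145-4RU"))
# ModelNumber(machine_type='7145', model='4R', region='U')
print(can_scale_beyond_4way("E7530"))  # False
```

Keeping the processor restriction as a set makes it easy to apply the same check to the MAX5 models in Table 1-3, where model 2Sx also uses the E7530.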

1.2.2 Workload-optimized x3950 X5 models

Table 1-2 on page 4 lists the workload-optimized models of the x3950 X5 that have been announced. The MAX5 is optional on model 5Dx and standard on model 4Dx. (In the table, std is standard, and max is maximum.)

Model 5Dx

Model 5Dx is designed for database applications and uses solid-state drives (SSDs) for the best I/O performance. Backplane connections for eight 1.8-inch SSDs are standard and there is space for an additional eight SSDs. The SSDs themselves must be ordered separately. Because no SAS controllers are standard, you can select from the available cards as described in 3.9, “Storage” on page 90.

Model 4Dx

Model 4Dx is designed for virtualization and is fully populated with 4 GB dual inline memory modules (DIMMs), including those in an attached MAX5 memory expansion unit, for a total of 384 GB of memory. Backplane connections for four 2.5-inch serial-attached SCSI (SAS) hard disk drives (HDDs) are standard; however, the SAS HDDs themselves must be ordered separately. A ServeRAID BR10i SAS controller is standard in this model.
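The 384 GB total for model 4Dx follows from the standard memory listed in Table 1-2: 64 DIMMs in the server plus 32 DIMMs in the MAX5, each 4 GB.

```latex
\underbrace{64}_{\text{server}} + \underbrace{32}_{\text{MAX5}} = 96\ \text{DIMMs},
\qquad 96 \times 4\ \text{GB} = 384\ \text{GB}
```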


Table 1-2 Models of the x3950 X5: Workload-optimized models

| Model (a) | Intel Xeon processors (two standard, maximum of four) | Memory speed | MAX5 | Standard memory | Memory cards (std/max) | ServeRAID BR10i std | 10Gb Ethernet standard (b) | Power supplies (std/max) | Drive bays (std/max) |
|---|---|---|---|---|---|---|---|---|---|
| Database workload-optimized models | | | | | | | | | |
| 7145-5Dx | X7560 8C 2.27 GHz, 24 MB L3, 130W | 1066 MHz | Opt | Server: 8x 4GB | 4/8 | No | Yes | 2/2 | 8x 1.8”/16 (c) |
| Virtualization workload-optimized models | | | | | | | | | |
| 7145-4Dx | 4x X7550 8C 2.0 GHz, 18 MB L3, 130W | 1066 MHz | Std | Server: 64x 4GB; MAX5: 32x 4GB | 8/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |

a. The x character in the seventh position of the machine model denotes the region-specific character. For example, U indicates US, and G indicates EMEA.

b. Emulex 10Gb Ethernet Adapter is installed in PCIe slot 7.

c. Includes, as standard, one 8-bay eXFlash SSD backplane; one additional eXFlash backplane is optional.

1.2.3 x3850 X5 models with MAX5

Table 1-3 lists the x3850 X5 models that include the 1U MAX5 memory expansion unit as standard.

Table 1-3 Models of the x3850 X5 with the MAX5 standard

| Model (a) | Intel Xeon processors (four standard and max) | Memory speed (MHz) | Standard memory (MAX5 is standard) | Memory cards (std/max) | ServeRAID BR10i std | 10Gb Ethernet standard (b) | Power supplies (std/max) | Drive bays (std/max) |
|---|---|---|---|---|---|---|---|---|
| 7145-2Sx | 4x E7530 6C 1.86 GHz, 12 MB L3, 105W (c) | 978 MHz | Server: 8x 4 GB; MAX5: 2x 4 GB | 4/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-4Sx | 4x X7550 8C 2.0 GHz, 18 MB L3, 130W | 1066 MHz | Server: 8x 4 GB; MAX5: 2x 4 GB | 4/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |
| 7145-5Sx | 4x X7560 8C 2.27 GHz, 24 MB L3, 130W | 1066 MHz | Server: 8x 4 GB; MAX5: 2x 4 GB | 4/8 | Yes | Yes | 2/2 | 4x 2.5”/8 |

a. The x character in the seventh position of the machine model denotes the region-specific character. For example, U indicates US, and G indicates EMEA.

b. Emulex 10Gb Ethernet Adapter is installed in PCIe slot 7.

c. Any model using the E7520 or E7530 CPU cannot scale beyond single-node 4-way, even with the addition of MAX5.

1.2.4 Base x3690 X5 models

Table 1-4 on page 5 provides the standard models of the x3690 X5. The MAX5 memory

expansion unit is standard on specific models as indicated.

Drive bays

(std/max)

4 IBM eX5 Implementation Guide

Page 25

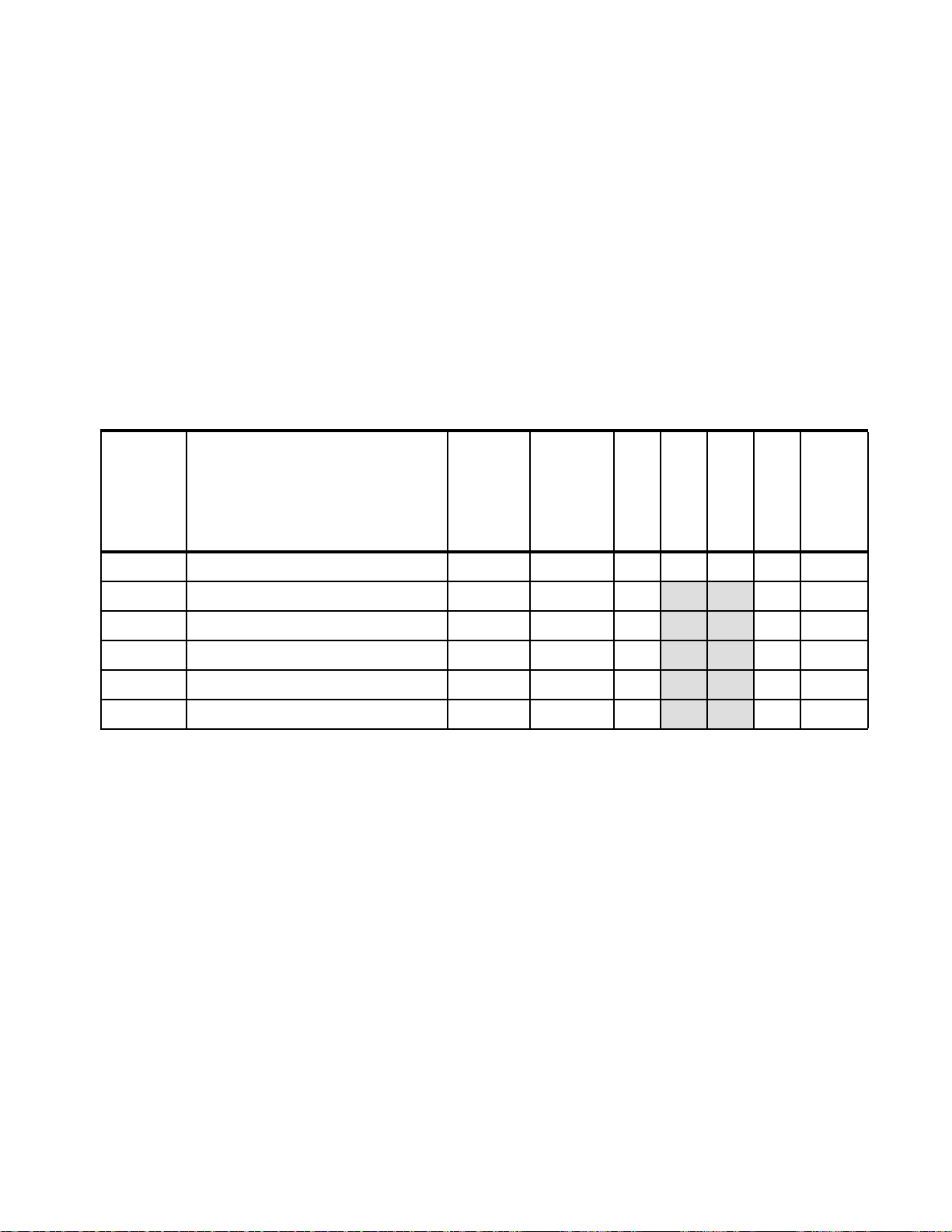

Table 1-4 x3690 X5 models

| Model | Intel Xeon processors (two maximum) | Memory speed | MAX5 | Standard memory | Memory tray (a) | ServeRAID M1015 standard | 10Gb Ethernet standard (b) | Power supplies std/max | Drive bays std/max |
|---|---|---|---|---|---|---|---|---|---|
| 7148-ARx | 1x E7520 4C, 1.86 GHz, 95W | 800 MHz | Opt | Server: 2x 4GB | Opt | Opt | Opt | 1/4 | None |
| 7148-1Rx | 1x E7520 4C, 1.86 GHz, 95W | 800 MHz | Opt | Server: 2x 4GB | Opt | Std | Opt | 1/4 | 4x 2.5”/16 |
| 7148-2Rx | 1x E6540 6C, 2.00 GHz, 105W | 1066 MHz | Opt | Server: 2x 4GB | Opt | Std | Opt | 1/4 | 4x 2.5”/16 |
| 7148-3Rx | 1x X6550 8C, 2.00 GHz, 130W | 1066 MHz | Opt | Server: 2x 4GB | Opt | Std | Opt | 1/4 | 4x 2.5”/16 |
| 7148-3Gx | 1x X6550 8C, 2.00 GHz, 130W | 1066 MHz | Opt | Server: 2x 4GB | Opt | Std | Std | 1/4 | 4x 2.5”/16 |
| 7148-4Rx | 1x X7560 8C, 2.26 GHz, 130W | 1066 MHz | Opt | Server: 2x 4GB | Opt | Std | Opt | 1/4 | 4x 2.5”/16 |
| 7148-3Sx | 1x X7550 8C, 2.00 GHz, 130W | 1066 MHz | Std | Server: 2x 4GB; MAX5: 2x 4GB | Opt | Std | Opt | Server: 2/4; MAX5: 1/2 | 4x 2.5”/16 |
| 7148-4Sx | 1x X7560 8C, 2.26 GHz, 130W | 1066 MHz | Std | Server: 2x 4GB; MAX5: 2x 4GB | Opt | Std | Opt | Server: 2/4; MAX5: 1/2 | 4x 2.5”/16 |

a. Up to 64 DIMM sockets: Each server has 16 DIMM sockets standard or 32 sockets with the addition of the internal memory tray (mezzanine). With the addition of the MAX5 memory expansion unit, 64 DIMM sockets in total are available.

b. Emulex 10Gb Ethernet Adapter.
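Footnote a of Table 1-4 describes how the DIMM socket count of the x3690 X5 grows. The following Python sketch assumes that the MAX5 contributes 32 sockets (consistent with the 64-socket total in footnote a and with the 32 MAX5 DIMMs in model 2Dx) and that the memory tray and MAX5 are independent options, as models 3Sx and 4Sx (MAX5 standard, memory tray optional) suggest. `x3690_dimm_sockets()` is a hypothetical helper, not an IBM tool.

```python
def x3690_dimm_sockets(memory_tray: bool, max5: bool) -> int:
    """Return the DIMM socket count for an x3690 X5 configuration.

    Assumption: 16 sockets in the server, 16 more with the internal
    memory tray (mezzanine), and 32 more with an attached MAX5.
    """
    sockets = 16          # standard server sockets
    if memory_tray:
        sockets += 16     # internal memory tray brings the server to 32
    if max5:
        sockets += 32     # assumed MAX5 contribution
    return sockets


for tray, max5 in [(False, False), (True, False), (True, True)]:
    print(f"tray={tray}, MAX5={max5}: {x3690_dimm_sockets(tray, max5)} sockets")
```

With both options installed, the count reaches the 64 sockets stated in footnote a.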

1.2.5 Workload-optimized x3690 X5 models

Table 1-5 on page 6 lists the workload-optimized models.

Model 3Dx is designed for database applications and uses SSDs for the best I/O performance. Backplane connections for sixteen 1.8-inch SSDs are standard and there is space for an additional 16 SSDs. You must order the SSDs separately. No SAS controllers are standard, which lets you select from the available cards, as described in 4.9, “Storage” on page 145. The MAX5 is optional on this model.

Model 2Dx is designed for virtualization applications and includes VMware ESXi 4.1 on an integrated USB memory key. The server is fully populated with 4 GB memory DIMMs, including those in an attached MAX5 memory expansion unit, for a total of 256 GB of memory. Backplane connections for four 2.5-inch SAS drives are standard and there is space for an additional twelve 2.5-inch disk drives. You must order the drives separately. See 4.9, “Storage” on page 145.
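The memory and drive-bay totals for model 2Dx can be checked against Table 1-5: 32 DIMMs in the server plus 32 DIMMs in the MAX5, each 4 GB, and four standard drive bays plus twelve additional ones (the 4x 2.5”/16 entry).

```latex
(32 + 32)\ \text{DIMMs} \times 4\ \text{GB} = 256\ \text{GB},
\qquad 4 + 12 = 16\ \text{drive bays}
```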


Table 1-5 x3690 X5 workload-optimized models

| Model | Intel Xeon processors (two maximum) | Memory speed | MAX5 | Standard memory | Memory tray (a) | ServeRAID M1015 std | 10Gb Eth standard (b) | Power supplies std/max | Drive bays std/max |
|---|---|---|---|---|---|---|---|---|---|
| Database workload-optimized models | | | | | | | | | |
| 7148-3Dx | 2x X6550 8C, 2.00 GHz, 130W | 1066 MHz | Opt | Server: 4x 4 GB | Std | Opt | Opt | Server: 4/4 | 16x 1.8”/32 |
| Virtualization workload-optimized models | | | | | | | | | |
| 7148-2Dx | 2x E6540 6C, 2.00 GHz, 105W | 1066 MHz | Std | Server: 32x 4GB; MAX5: 32x 4GB | Std | Opt | Std | Server: 4/4; MAX5: 2/2 | 4x 2.5”/16 |