
Front cover

Implementing the IBM Storwize V5000 Gen2 (including the Storwize V5010, V5020, and V5030) with IBM Spectrum Virtualize V8.1

Jon Tate

Dharmesh Kamdar

Hartmut Lonzer

Gustavo Tinelli Martins

Redbooks


International Technical Support Organization

Implementing the IBM Storwize V5000 Gen2 with IBM Spectrum Virtualize V8.1

March 2018

SG24-8162-03


Note: Before using this information and the product it supports, read the information in “Notices” on page xiii.

Fourth Edition (March 2018)

This edition applies to the IBM Storwize V5000 Gen2 and software V8.1.0. Note that since this book was produced, some panels might have changed.

© Copyright International Business Machines Corporation 2018. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.


Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiv

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Authors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xv

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Summary of changes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

October 2017, Fourth Edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Chapter 1. Overview of the IBM Storwize V5000 Gen2 system. . . . . . . . . . . . . . . . . . . . 1

1.1 IBM Storwize V5000 Gen2 overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 IBM Storwize V5000 Gen2 terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 IBM Storwize V5000 Gen2 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.1 IBM Storage Utility Offerings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.4 IBM Storwize V5000 Gen1 and Gen2 compatibility . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.5 IBM Storwize V5000 Gen2 hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.5.1 Control enclosure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.2 Storwize V5010 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.3 Storwize V5020 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.5.4 Storwize V5030 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.5.5 Expansion enclosure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.5.6 Host interface cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.5.7 Disk drive types. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.6 IBM Storwize V5000 Gen2 terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.6.1 Hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.6.2 Node canister . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.6.3 I/O groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.6.4 Clustered system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

1.6.5 RAID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

1.6.6 Managed disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

1.6.7 Quorum disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

1.6.8 Storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

1.6.9 Volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

1.6.10 iSCSI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.6.11 Serial-attached SCSI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.6.12 Fibre Channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.7 IBM Storwize V5000 Gen2 features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.7.1 Mirrored volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.7.2 Thin Provisioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.7.3 Real-time Compression. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

1.7.4 Easy Tier . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

1.7.5 Storage Migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

1.7.6 FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

1.7.7 Remote Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

1.7.8 IP replication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

1.7.9 External virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

© Copyright IBM Corp. 2018. All rights reserved. iii


1.7.10 Encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.8 Problem management and support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.8.1 IBM Support assistance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.8.2 Event notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.8.3 SNMP traps. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.8.4 Syslog messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.8.5 Call Home email . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.9 More information resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.9.1 Useful IBM Storwize V5000 Gen2 websites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Chapter 2. Initial configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.1 Hardware installation planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.1.1 Procedure to install the SAS cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.2 SAN configuration planning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.3 FC direct-attach planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

2.4 SAS direct-attach planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.5 LAN configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

2.5.1 Management IP address considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

2.5.2 Service IP address considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

2.6 Host configuration planning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.7 Miscellaneous configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.8 System management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.8.1 Graphical user interface (GUI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.8.2 Command-line interface (CLI). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

2.9 First-time setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

2.10 Initial configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

2.10.1 Adding enclosures after the initial configuration . . . . . . . . . . . . . . . . . . . . . . . . . 69

2.10.2 Service Assistant Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Chapter 3. Graphical user interface overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

3.1 Overview of IBM Spectrum Virtualize management software . . . . . . . . . . . . . . . . . . . . 80

3.1.1 Access to the storage management software . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

3.1.2 System pane layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

3.1.3 Navigation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

3.1.4 Multiple selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

3.1.5 Status indicators area . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.2 Overview pane . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.3 Monitoring menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

3.3.1 System overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

3.3.2 System details. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

3.3.3 Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

3.3.4 Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

3.3.5 Background Task . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

3.4 Pools menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

3.4.1 Pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

3.4.2 Child pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

3.4.3 Volumes by pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

3.4.4 Internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.4.5 External storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.4.6 MDisks by pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

3.4.7 System migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

3.5 Volumes menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

3.5.1 All volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

iv Implementing the IBM Storwize V5000 Gen2 with IBM Spectrum Virtualize V8.1


3.5.2 Volumes by pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

3.5.3 Volumes by host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

3.6 Hosts menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

3.6.1 Hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

3.6.2 Host Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

3.6.3 Ports by host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

3.6.4 Host mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

3.6.5 Volumes by host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

3.7 Copy services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

3.7.1 FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

3.7.2 Consistency group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

3.7.3 FlashCopy mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

3.7.4 Remote copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

3.7.5 Partnerships . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

3.8 Access menu. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

3.8.1 Users. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

3.8.2 Audit Log option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

3.9 Settings menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

3.9.1 Notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

3.9.2 Network. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

3.9.3 Security . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

3.9.4 System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

3.9.5 Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

3.9.6 GUI preferences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Chapter 4. Storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

4.1 Working with internal drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

4.1.1 Internal Storage window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

4.1.2 Actions on internal drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

4.2 Working with storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

4.2.1 Creating storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

4.2.2 Actions on storage pools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

4.2.3 Child storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

4.3 Working with managed disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

4.3.1 Assigning managed disks to storage pools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

4.3.2 RAID configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

4.3.3 Distributed RAID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

4.3.4 RAID configuration presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

4.3.5 Actions on arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

4.3.6 Actions on external MDisks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

4.3.7 More actions on MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

4.4 Working with external storage controllers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Chapter 5. Host configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

5.1 Host attachment overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

5.2 Planning for direct-attached hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

5.2.1 Fibre Channel direct attachment to host systems . . . . . . . . . . . . . . . . . . . . . . . . 201

5.2.2 FC direct attachment between nodes in a Storwize V5000 system . . . . . . . . . . 201

5.3 Preparing the host operating system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

5.3.1 Windows 2008 R2 and 2012 R2: Preparing for Fibre Channel attachment . . . . 202

5.3.2 Windows 2008 R2 and Windows 2012 R2: Preparing for iSCSI attachment . . . 207

5.3.3 Windows 2012 R2: Preparing for SAS attachment . . . . . . . . . . . . . . . . . . . . . . . 214

5.3.4 VMware ESXi: Preparing for Fibre Channel attachment. . . . . . . . . . . . . . . . . . . 215

Contents v


5.3.5 VMware ESXi: Preparing for iSCSI attachment . . . . . . . . . . . . . . . . . . . . . . . . . 218

5.3.6 VMware ESXi: Preparing for SAS attachment . . . . . . . . . . . . . . . . . . . . . . . . . . 227

5.4 N_Port ID Virtualization (NPIV) support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

5.4.1 NPIV Prerequisites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

5.4.2 Enabling NPIV on a new system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

5.4.3 Enabling NPIV on an existing system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

5.5 Creating hosts by using the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

5.5.1 Creating Fibre Channel hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

5.5.2 Configuring the IBM Storwize V5000 for FC connectivity . . . . . . . . . . . . . . . . . . 246

5.5.3 Creating iSCSI hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

5.5.4 Configuring the IBM Storwize V5000 for iSCSI host connectivity . . . . . . . . . . . . 251

5.5.5 Creating SAS hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

5.6 Host Clusters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 259

5.6.1 Creating a host cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

5.6.2 Adding a member to a host cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

5.6.3 Listing a host cluster member . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

5.6.4 Assigning a volume to a Host Cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

5.6.5 Remove volume mapping from a host cluster. . . . . . . . . . . . . . . . . . . . . . . . . . . 270

5.6.6 Removing a host cluster member . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

5.6.7 Removing a host cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

5.6.8 I/O throttling for hosts and Host Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

5.7 Proactive Host Failover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

Chapter 6. Volume configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 287

6.1 Introduction to volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 288

6.1.1 Image mode volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 289

6.1.2 Managed mode volumes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

6.1.3 Cache mode for volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 291

6.1.4 Mirrored volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 292

6.1.5 Thin-provisioned volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

6.1.6 Compressed volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 297

6.1.7 Volumes for various topologies. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 297

6.2 Create Volumes menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

6.3 Creating volumes using the Volume Creation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 303

6.3.1 Creating Basic volumes using Volume Creation . . . . . . . . . . . . . . . . . . . . . . . . . 303

6.3.2 Creating Mirrored volumes using Volume Creation . . . . . . . . . . . . . . . . . . . . . . 305

6.4 Mapping a volume to the host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 309

6.5 Creating Custom volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 313

6.5.1 Creating a custom thin-provisioned volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

6.5.2 Creating Custom Compressed volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 317

6.5.3 Custom Mirrored Volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 320

6.6 HyperSwap and the mkvolume command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 322

6.6.1 Volume manipulation commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

6.7 Mapping Volumes to Host after volume creation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 326

6.7.1 Mapping newly created volumes to the host using the wizard . . . . . . . . . . . . . . 327

6.8 Migrating a volume to another storage pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 332

6.9 Migrating volumes using the volume copy feature . . . . . . . . . . . . . . . . . . . . . . . . . . . 336

6.10 I/O throttling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

6.10.1 Define throttle on a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

6.10.2 Remove a throttle from a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 343

Chapter 7. Storage migration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 347

7.1 Storage migration wizard overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348


7.2 Interoperation and compatibility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

7.3 Storage migration wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

7.3.1 External virtualization capability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

7.3.2 Model and adapter card considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

7.3.3 Overview of the storage migration wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 350

7.3.4 Storage migration wizard tasks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 351

Chapter 8. Advanced host and volume administration . . . . . . . . . . . . . . . . . . . . . . . . 373

8.1 Advanced host administration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

8.1.1 Modifying volume mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

8.1.2 Unmapping volumes from a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 379

8.1.3 Renaming a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 382

8.1.4 Removing a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 385

8.1.5 Host properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 388

8.2 Adding and deleting host ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 393

8.2.1 Adding host port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 393

8.2.2 Deleting a host port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

8.3 Advanced volume administration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

8.3.1 Advanced volume functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

8.3.2 Mapping a volume to a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

8.3.3 Unmapping volumes from all hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

8.3.4 Viewing which host is mapped to a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . 406

8.3.5 Renaming a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.3.6 Shrinking a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.3.7 Expanding a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

8.3.8 Migrating a volume to another storage pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

8.3.9 Exporting to an image mode volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 410

8.3.10 Deleting a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 412

8.3.11 Duplicating a volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 412

8.3.12 Adding a volume copy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 414

8.4 Volume properties and volume copy properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 416

8.5 Advanced volume copy functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

8.5.1 Volume copy: Make Primary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

8.5.2 Splitting into a new volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 422

8.5.3 Validate Volume Copies option. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

8.5.4 Delete volume copy option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 426

8.5.5 Migrating volumes by using the volume copy features . . . . . . . . . . . . . . . . . . . . 427

8.6 Volumes by storage pool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 428

8.7 Volumes by host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 429

Chapter 9. Advanced features for storage efficiency . . . . . . . . . . . . . . . . . . . . . . . . . 431

9.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

9.2 Easy Tier . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

9.2.1 Easy Tier overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 433

9.2.2 Tiered storage pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 434

9.2.3 Easy Tier process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 436

9.2.4 I/O Monitoring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

9.2.5 Data Placement Advisor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

9.2.6 Data Migration Planner . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

9.2.7 Data Migrator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

9.2.8 Easy Tier accelerated mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

9.2.9 Easy Tier operating modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

9.2.10 Easy Tier status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439


9.2.11 Storage Pool Balancing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

9.2.12 Easy Tier rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

9.2.13 Creating multi-tiered pools: Enabling Easy Tier . . . . . . . . . . . . . . . . . . . . . . . . 442

9.2.14 Downloading Easy Tier I/O measurements. . . . . . . . . . . . . . . . . . . . . . . . . . . . 453

9.2.15 Easy Tier I/O Measurement through the command-line interface. . . . . . . . . . . 455

9.2.16 IBM Storage Tier Advisor Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 459

9.2.17 Processing heat log files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 459

9.3 Thin provisioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 460

9.3.1 Configuring a thin provisioned volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

9.3.2 Performance considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 464

9.3.3 Limitations of virtual capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 465

9.4 Real-time Compression Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 466

9.4.1 Common use cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 466

9.4.2 Real-time Compression concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 467

9.4.3 Random Access Compression Engine . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 468

9.4.4 Random Access Compression Engine in IBM Spectrum Virtualize stack. . . . . . 473

9.4.5 Data write flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 474

9.4.6 Data read flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 474

9.4.7 Compression of existing data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 474

9.4.8 Configuring compressed volumes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 475

9.4.9 Comprestimator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 477

Chapter 10. Copy services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 481

10.1 IBM FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 482

10.1.1 Business requirements for FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 482

10.1.2 Backup improvements with FlashCopy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 482

10.1.3 Restore with FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 483

10.1.4 Moving and migrating data with FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . 483

10.1.5 Application testing with FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 484

10.1.6 Host and application considerations to ensure FlashCopy integrity . . . . . . . . . 484

10.1.7 FlashCopy attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 484

10.1.8 Reverse FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 485

10.1.9 IBM Spectrum Protect Snapshot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 486

10.2 FlashCopy functional overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 487

10.3 Implementing FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 488

10.3.1 FlashCopy mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 488

10.3.2 Multiple Target FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 489

10.3.3 Consistency Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 490

10.3.4 FlashCopy indirection layer. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 492

10.3.5 Grains and the FlashCopy bitmap. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 493

10.3.6 Interaction and dependency between multiple target FlashCopy mappings. . . 494

10.3.7 Summary of the FlashCopy indirection layer algorithm. . . . . . . . . . . . . . . . . . . 495

10.3.8 Interaction with the cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 496

10.3.9 FlashCopy and image mode volumes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 497

10.3.10 FlashCopy mapping events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 500

10.3.11 FlashCopy mapping states . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 502

10.3.12 Thin provisioned FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 504

10.3.13 Background copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 504

10.3.14 Serialization of I/O by FlashCopy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 505

10.3.15 Event handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 506

10.3.16 Asynchronous notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 507

10.3.17 Interoperation with Metro Mirror and Global Mirror . . . . . . . . . . . . . . . . . . . . . 507

10.3.18 FlashCopy presets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 508

10.4 Managing FlashCopy by using the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 510

10.4.1 Creating a FlashCopy mapping. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 511

10.4.2 Single-click snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 523

10.4.3 Single-click clone . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 525

10.4.4 Single-click backup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 526

10.4.5 Creating a FlashCopy Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . 528

10.4.6 Creating FlashCopy mappings in a Consistency Group . . . . . . . . . . . . . . . . . . 529

10.4.7 Showing related volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 534

10.4.8 Moving a FlashCopy mapping to a Consistency Group . . . . . . . . . . . . . . . . . . 535

10.4.9 Removing a FlashCopy mapping from a Consistency Group . . . . . . . . . . . . . . 536

10.4.10 Modifying a FlashCopy mapping. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 537

10.4.11 Renaming FlashCopy mapping. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

10.4.12 Renaming Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 539

10.4.13 Deleting FlashCopy mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 540

10.4.14 Deleting FlashCopy Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 541

10.4.15 Starting FlashCopy process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 542

10.4.16 Stopping FlashCopy process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 542

10.5 Volume mirroring and migration options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 543

10.6 Native IP replication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 545

10.6.1 Native IP replication technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 545

10.6.2 IBM Storwize System Layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 546

10.6.3 IP partnership limitations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 548

10.6.4 VLAN support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 549

10.6.5 IP partnership and terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 550

10.6.6 States of IP partnership . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 551

10.6.7 Remote copy groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 552

10.7 Remote Copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 553

10.7.1 Multiple IBM Storwize V5000 system mirroring. . . . . . . . . . . . . . . . . . . . . . . . . 554

10.7.2 Importance of write ordering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 556

10.7.3 Remote copy intercluster communication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 558

10.7.4 Metro Mirror overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 559

10.7.5 Synchronous remote copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 560

10.7.6 Metro Mirror features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 561

10.7.7 Metro Mirror attributes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 562

10.7.8 Practical use of Metro Mirror. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 562

10.7.9 Global Mirror Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 563

10.7.10 Asynchronous remote copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 564

10.7.11 Global Mirror features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 565

10.7.12 Using Change Volumes with Global Mirror . . . . . . . . . . . . . . . . . . . . . . . . . . . 568

10.7.13 Distribution of work among nodes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 570

10.7.14 Background copy performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

10.7.15 Thin-provisioned background copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

10.7.16 Methods of synchronization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

10.7.17 Practical use of Global Mirror . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

10.7.18 Valid combinations of FlashCopy, Metro Mirror, and Global Mirror . . . . . . . . 572

10.7.19 Remote Copy configuration limits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

10.7.20 Remote Copy states and events. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 573

10.8 Consistency protection for Remote and Global mirror . . . . . . . . . . . . . . . . . . . . . . . 580

10.9 Remote Copy commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 582

10.9.1 Remote Copy process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 582

10.9.2 Listing available system partners . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 583

10.9.3 Changing the system parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 583

10.9.4 System partnership . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 585

10.9.5 Creating a Metro Mirror/Global Mirror consistency group . . . . . . . . . . . . . . . . . 586

10.9.6 Creating a Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . . 586

10.9.7 Changing Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . . . 586

10.9.8 Changing Metro Mirror/Global Mirror consistency group . . . . . . . . . . . . . . . . . 587

10.9.9 Starting Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . . . . 587

10.9.10 Stopping Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . . 587

10.9.11 Starting Metro Mirror/Global Mirror consistency group . . . . . . . . . . . . . . . . . . 588

10.9.12 Stopping Metro Mirror/Global Mirror consistency group . . . . . . . . . . . . . . . . . 588

10.9.13 Deleting Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . . . 588

10.9.14 Deleting Metro Mirror/Global Mirror consistency group. . . . . . . . . . . . . . . . . . 589

10.9.15 Reversing Metro Mirror/Global Mirror relationship . . . . . . . . . . . . . . . . . . . . . 589

10.9.16 Reversing Metro Mirror/Global Mirror consistency group . . . . . . . . . . . . . . . . 589

10.10 Managing Remote Copy using the GUI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 589

10.10.1 Creating Fibre Channel partnership . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 590

10.10.2 Creating stand-alone remote copy relationships. . . . . . . . . . . . . . . . . . . . . . . 592

10.10.3 Creating Consistency Group. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 600

10.10.4 Renaming Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 607

10.10.5 Renaming remote copy relationship . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 608

10.10.6 Moving stand-alone remote copy relationship to Consistency Group. . . . . . . 609

10.10.7 Removing remote copy relationship from Consistency Group . . . . . . . . . . . . 610

10.10.8 Starting remote copy relationship . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 610

10.10.9 Starting remote copy Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . 611

10.10.10 Switching copy direction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 612

10.10.11 Switching the copy direction for a Consistency Group . . . . . . . . . . . . . . . . . 613

10.10.12 Stopping a remote copy relationship. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

10.10.13 Stopping Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 616

10.10.14 Deleting stand-alone remote copy relationships . . . . . . . . . . . . . . . . . . . . . . 617

10.10.15 Deleting Consistency Group . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 618

10.11 Troubleshooting remote copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 619

10.11.1 1920 error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 619

10.11.2 1720 error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 621

10.12 HyperSwap . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 622

10.12.1 Introduction to HyperSwap volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 623

10.12.2 Failure scenarios. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 629

10.12.3 Current HyperSwap limitations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 633

Chapter 11. External storage virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 635

11.1 Planning for external storage virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 636

11.1.1 License for external storage virtualization. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 636

11.1.2 SAN configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 636

11.1.3 External storage configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 638

11.1.4 Guidelines for virtualizing external storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . 638

11.2 Working with external storage. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 639

11.2.1 Adding external storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 639

11.2.2 Importing image mode volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 642

11.2.3 Managing external storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 647

11.2.4 Removing external storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 651

Chapter 12. RAS, monitoring, and troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . 653

12.1 Reliability, availability, and serviceability features. . . . . . . . . . . . . . . . . . . . . . . . . . . 654

12.2 System components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 654

12.2.1 Enclosure midplane . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 655

12.2.2 Node canisters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 655

12.2.3 Expansion canisters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 664

12.2.4 Disk subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 666

12.2.5 Power supply units . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 670

12.3 Configuration backup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 672

12.3.1 Generating a manual configuration backup by using the CLI . . . . . . . . . . . . . . 672

12.3.2 Downloading a configuration backup by using the GUI . . . . . . . . . . . . . . . . . . 673

12.4 System update . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 676

12.4.1 Updating node canister software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 676

12.4.2 Updating the drive firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 690

12.5 Monitoring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 693

12.5.1 Email notifications and Call Home . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 694

12.6 Audit log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 696

12.7 Event log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 698

12.7.1 Managing the event log. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 698

12.7.2 Alert handling and recommended actions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 702

12.8 Support Assistance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 705

12.8.1 Configuring support assistance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 706

12.8.2 Set up Support Assistant . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 706

12.8.3 Disable Support Assistance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 715

12.9 Collecting support information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 715

12.9.1 Collecting support information by using the GUI. . . . . . . . . . . . . . . . . . . . . . . . 715

12.9.2 Automatic upload of Support Packages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 715

12.9.3 Manual upload of Support Packages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 720

12.9.4 Collecting support information by using the SAT . . . . . . . . . . . . . . . . . . . . . . . 724

12.10 Powering off the system and shutting down the infrastructure . . . . . . . . . . . . . . . . 726

12.10.1 Powering off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 726

12.10.2 Shutting down and starting up the infrastructure. . . . . . . . . . . . . . . . . . . . . . . 730

Chapter 13. Encryption. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 731

13.1 Planning for encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 732

13.2 Defining encryption of data at-rest . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 732

13.2.1 Encryption methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 735

13.2.2 Encryption keys. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 736

13.2.3 Encryption licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 737

13.3 Activating encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 737

13.3.1 Obtaining an encryption license . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 737

13.3.2 Start activation process during initial system setup . . . . . . . . . . . . . . . . . . . . . 738

13.3.3 Start activation process on a running system . . . . . . . . . . . . . . . . . . . . . . . . . . 740

13.3.4 Activate the license automatically . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 741

13.3.5 Activate the license manually . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 744

13.4 Enabling encryption. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 745

13.4.1 Starting the Enable Encryption wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 746

13.4.2 Enabling encryption using USB flash drives . . . . . . . . . . . . . . . . . . . . . . . . . . . 748

13.4.3 Enabling encryption using key servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 752

13.4.4 Enabling encryption using both providers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 758

13.5 Configuring additional providers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 765

13.5.1 Adding SKLM as a second provider . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 765

13.5.2 Adding USB flash drives as a second provider . . . . . . . . . . . . . . . . . . . . . . . . . 767

13.6 Migrating between providers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 769

13.6.1 Migration from USB flash drive provider to encryption key server . . . . . . . . . . 769

13.6.2 Migration from encryption key server to USB flash drive provider . . . . . . . . . . 769

13.7 Recovering from a provider loss . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 770

13.8 Using encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 770

13.8.1 Encrypted pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 771

13.8.2 Encrypted child pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 772

13.8.3 Encrypted arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 773

13.8.4 Encrypted MDisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 774

13.8.5 Encrypted volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 776

13.8.6 Restrictions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 778

13.9 Rekeying an encryption-enabled system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 778

13.9.1 Rekeying using a key server. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 779

13.9.2 Rekeying using USB flash drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 781

13.10 Migrating between key providers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 783

13.11 Disabling encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 784

Appendix A. CLI setup and SAN Boot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 785

Command-line interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 786

Basic setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 786

SAN Boot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 798

Enabling SAN Boot for Windows. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 799

Enabling SAN Boot for VMware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 799

Windows SAN Boot migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 799

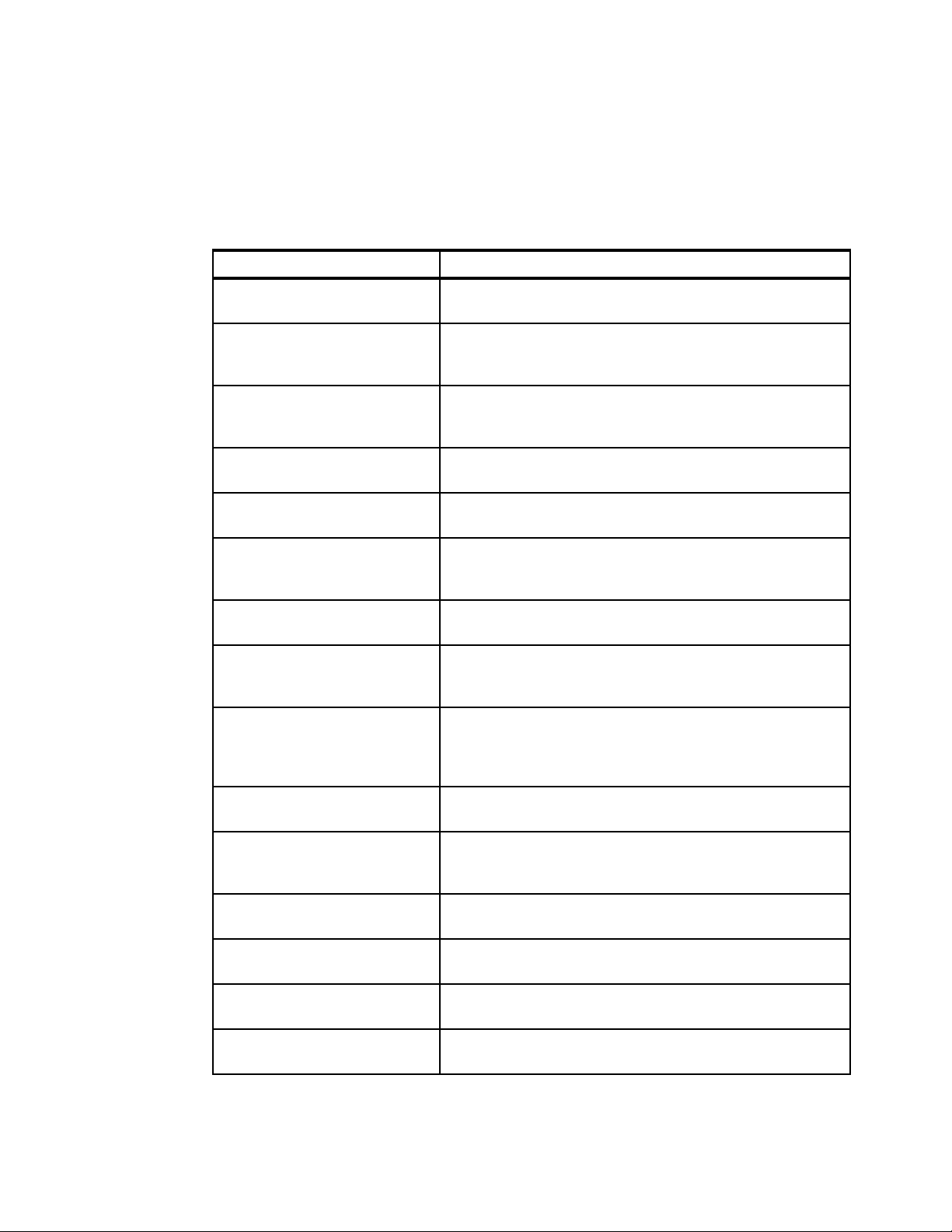

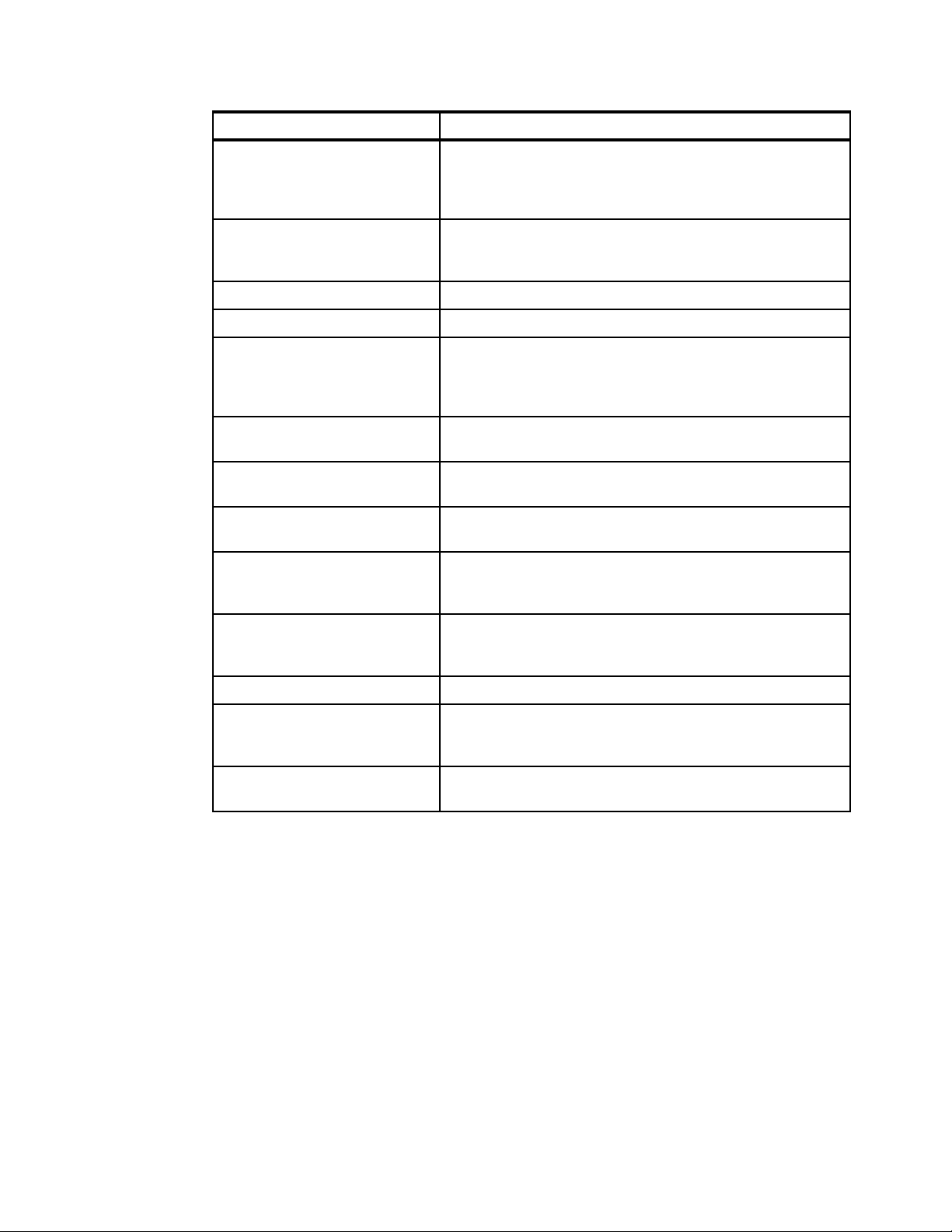

Appendix B. Terminology. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 803

Commonly encountered terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 804

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 821

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 821

IBM Storwize V5000 publications and support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 821

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 821

Notices

This information was developed for products and services offered in the US. This material might be available from IBM in other languages. However, you may be required to own a copy of the product or product version in that language in order to access it.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user’s responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not grant you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, MD-NC119, Armonk, NY 10504-1785, US

INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some jurisdictions do not allow disclaimer of express or implied warranties in certain transactions; therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any manner serve as an endorsement of those websites. The materials at those websites are not part of the materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you provide in any way it believes appropriate without incurring any obligation to you.

The performance data and client examples cited are presented for illustrative purposes only. Actual performance results may vary depending on specific configurations and operating conditions.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

Statements regarding IBM’s future direction or intent are subject to change or withdrawal without notice, and represent goals and objectives only.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to actual people or business enterprises is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs. The sample programs are provided “AS IS”, without warranty of any kind. IBM shall not be liable for any damages arising out of your use of the sample programs.

© Copyright IBM Corp. 2018. All rights reserved. xiii

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation, registered in many jurisdictions worldwide. Other product and service names might be trademarks of IBM or other companies. A current list of IBM trademarks is available on the web at “Copyright and trademark information” at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks or registered trademarks of International Business Machines Corporation, and might also be trademarks or registered trademarks in other countries.

AIX®

DB2®

DS8000®

Easy Tier®

FlashCopy®

Global Technology Services®

HyperSwap®

IBM®

IBM FlashSystem®

IBM SmartCloud®

IBM Spectrum™

IBM Spectrum Control™

IBM Spectrum Protect™

IBM Spectrum Scale™

IBM Spectrum Virtualize™

PowerHA®

Real-time Compression™

Redbooks®

Redbooks (logo) ®

Storwize®

System Storage®

Tivoli®

The following terms are trademarks of other companies:

SoftLayer, and The Planet are trademarks or registered trademarks of SoftLayer, Inc., an IBM Company.

Celeron, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

Java, and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its affiliates.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Other company, product, or service names may be trademarks or service marks of others.

xiv Implementing the IBM Storwize V5000 Gen2 with IBM Spectrum Virtualize V8.1


Preface

Organizations of all sizes face the challenge of managing massive volumes of increasingly valuable data. But storing this data can be costly, and extracting value from the data is becoming more difficult. IT organizations have limited resources but must stay responsive to dynamic environments and act quickly to consolidate, simplify, and optimize their IT infrastructures. The IBM® Storwize® V5000 Gen2 system provides a smarter solution that is affordable, easy to use, and self-optimizing, which enables organizations to overcome these storage challenges.

The Storwize V5000 Gen2 delivers efficient, entry-level configurations that are designed to meet the needs of small and midsize businesses. Designed to provide organizations with the ability to consolidate and share data at an affordable price, the Storwize V5000 Gen2 offers advanced software capabilities that are found in more expensive systems.

This IBM Redbooks® publication is intended for pre-sales and post-sales technical support professionals and storage administrators.

It applies to the Storwize V5030, V5020, and V5010, and to IBM Spectrum Virtualize™ V8.1.

Authors

This book was produced by a team of specialists from around the world working at the International Technical Support Organization, San Jose Center.

Jon Tate is a Project Manager for IBM System Storage® SAN Solutions at the International Technical Support Organization (ITSO), San Jose Center. Before Jon joined the ITSO in 1999, he worked in the IBM Technical Support Center, providing Level 2 support for IBM storage products. Jon has 32 years of experience in storage software and management, services, and support. He is both an IBM Certified IT Specialist and an IBM SAN Certified Specialist. He is also the UK Chairman of the Storage Networking Industry Association.

Dharmesh Kamdar has been working in the IBM Systems group for over 15 years as a Senior Software Engineer. He works in the Open Systems Lab (OSL), where he focuses on interoperability testing of a range of IBM storage products with various vendor products, including operating systems, clustering solutions, virtualization platforms, volume managers, and file systems.


Hartmut Lonzer is an OEM Alliance Manager for IBM Storage. Before this position, he was a Client Technical Specialist for IBM Germany. He works in the IBM Germany headquarters in Ehningen. His main focus is on the IBM SAN Volume Controller, IBM Storwize Family, and IBM VersaStack. His experience with the IBM SAN Volume Controller and Storwize products goes back to the beginning of these products. Hartmut has been with IBM in various technical roles for 40 years.

Gustavo Tinelli Martins is a Storage Technical Leader who works for IBM Global Technology Services® in Brazil. He is also an IBM Certified IT Specialist and a member of IBM’s IT Specialist Advisory Board, which is responsible for evaluating candidates who want to earn the title of IBM IT Specialist. Gustavo has eight years of professional experience: two years in the Customer Service Center and six years in the Storage Service Line. Gustavo is certified in multiple IBM storage technologies and in storage products from other vendors.

Thanks to the following people for their contributions to this project:

James Whitaker

Catarina Castro

Martyn Spink

Djihed Afifi

Vanessa Howling

IBM Manchester Lab

Thanks to the following authors of the previous edition of this book:

Catarina Castro

Uwe Dubberke

Justin Heather

Andrew Hickey

Imran Imtiaz

Nancy Kinney

Hartmut Lonzer

Adam Lyon-Jones

Saiprasad Prabhakar Parkar

Edward Seager

Lee Sirett

Chris Tapsell

Paulo Tomiyoshi Takeda

Dieter Utesch

Thomas Vogel

Mikhail Zakharov


Now you can become a published author, too

Here’s an opportunity to spotlight your skills, grow your career, and become a published author, all at the same time. Join an ITSO residency project and help write a book in your area of expertise, while honing your experience using leading-edge technologies. Your efforts will help to increase product acceptance and customer satisfaction, as you expand your network of technical contacts and relationships. Residencies run from two to six weeks in length, and you can participate either in person or as a remote resident working from your home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us.

We want our books to be as helpful as possible. Send us your comments about this book or other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form:

ibm.com/redbooks

Send your comments in an email:

redbooks@us.ibm.com

Mail your comments:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html


Summary of changes

This section describes the technical changes made in this edition of the book and in previous editions. This edition might also include minor corrections and editorial changes that are not identified.

Summary of Changes

for SG24-8162-03

for Implementing the IBM Storwize V5000 Gen2 with IBM Spectrum Virtualize V8.1

as created or updated on March 20, 2018.

March 2018, Fourth Edition

This revision includes the following substantial new and changed information.

New information

New GUI

Encryption

Storage migration

Changed information

Screen captures for new GUI

Encryption


Chapter 1. Overview of the IBM Storwize V5000 Gen2 system

This chapter provides an overview of the IBM Storwize V5000 Gen2 architecture and includes a brief explanation of storage virtualization.

Specifically, this chapter provides information about the following topics:

Overview

Terminology

Models

IBM Storwize V5000 Gen1 and Gen2 compatibility

Hardware

Terms

Features

Problem management and support

More information resources


1.1 IBM Storwize V5000 Gen2 overview

The IBM Storwize V5000 Gen2 solution is a modular entry-level and midrange storage solution. The IBM Storwize V5000 Gen2 can virtualize its own internal Redundant Array of Independent Disks (RAID) storage and, on the Storwize V5030 only, existing external storage area network (SAN)-attached storage.

The three IBM Storwize V5000 Gen2 models (Storwize V5010, Storwize V5020, and Storwize

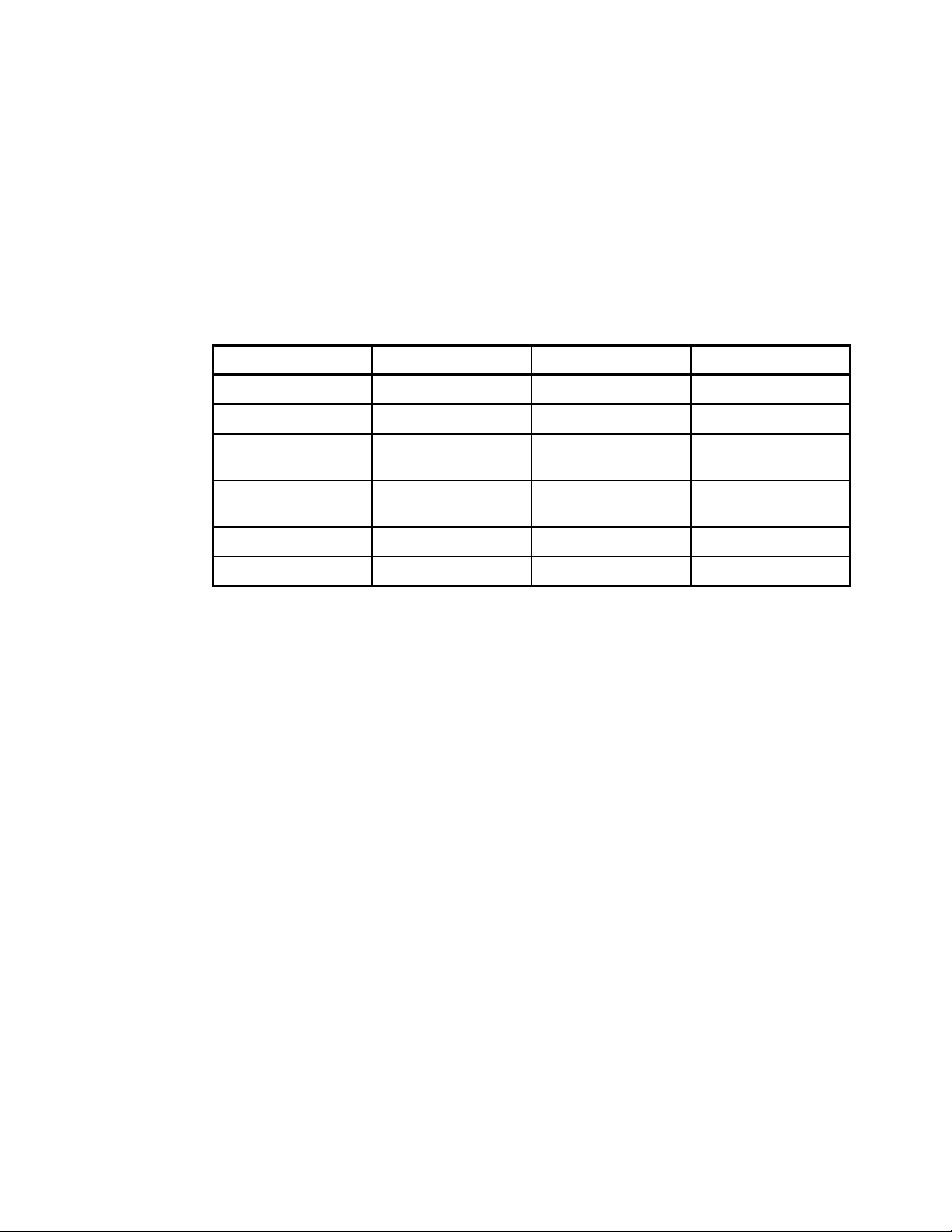

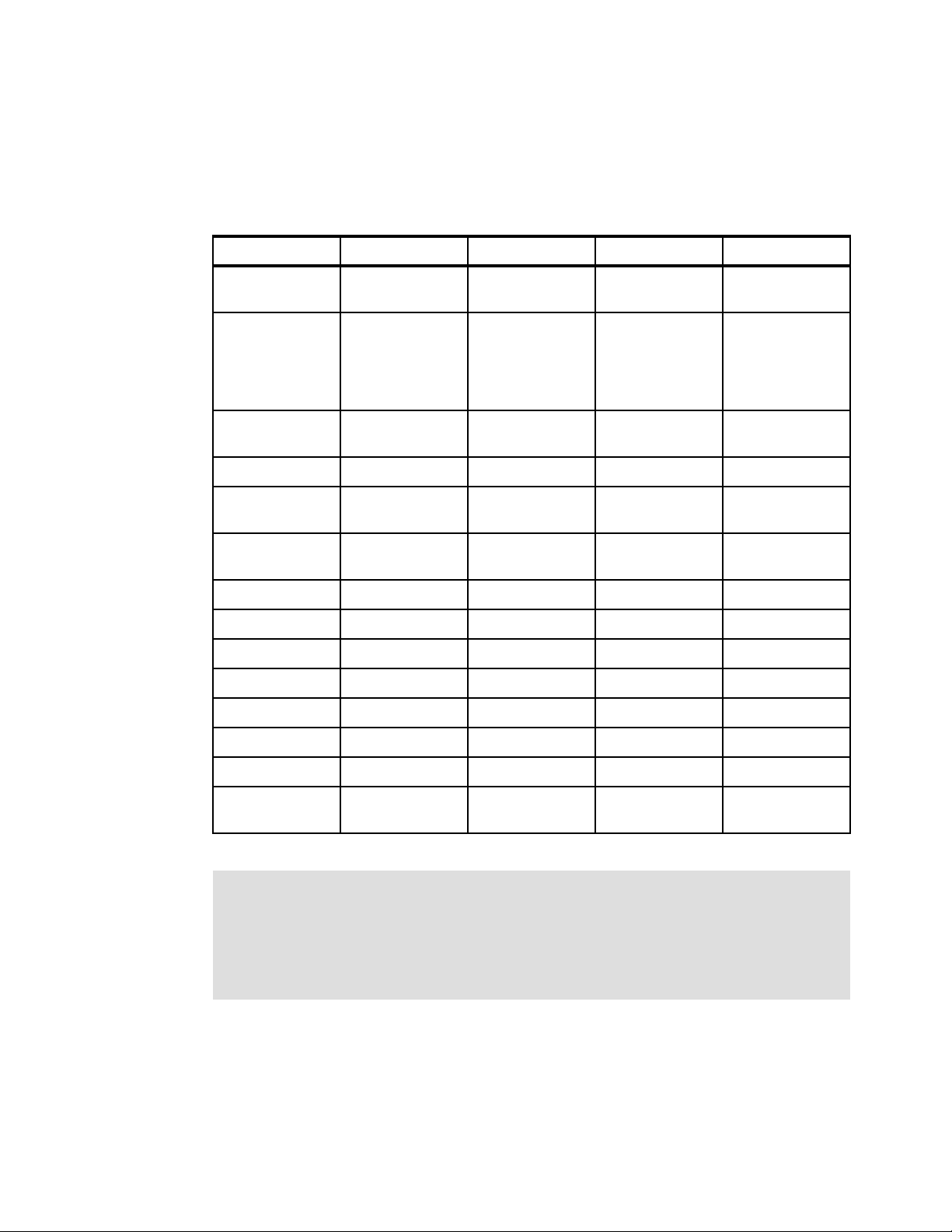

V5030) offer a range of performance scalability and functional capabilities. Table 1-1 shows a

summary of the features of these models.

Table 1-1 IBM Storwize V5000 Gen2 models

| Feature | Storwize V5010 | Storwize V5020 | Storwize V5030 |
|---|---|---|---|
| CPU cores | 2 | 2 | 6 |
| Cache | 16 GB | Up to 32 GB | Up to 64 GB |
| Supported expansion enclosures | 10 | 10 | 20 |
| External storage virtualization | No | No | Yes |
| Compression | No | No | Yes |
| Encryption | No | Yes | Yes |

For a more detailed comparison, see Table 1-3 on page 6.

IBM Storwize V5000 Gen2 features the following benefits:

Enterprise technology available to entry and midrange storage

Expert administrators are not required

Easy client setup and service

Simple integration into the server environment

Ability to grow the system incrementally as storage capacity and performance needs change

The IBM Storwize V5000 Gen2 addresses the block storage requirements of small and midsize organizations. Each IBM Storwize V5000 Gen2 system consists of one 2U control enclosure and, optionally, up to ten 2U expansion enclosures on the Storwize V5010 and Storwize V5020 systems, or up to twenty 2U expansion enclosures on the Storwize V5030 systems.

The control enclosure and its expansion enclosures are connected by serial-attached SCSI (SAS) cables and together make up one system that is called an I/O group.

With the Storwize V5030 systems, two I/O groups can be connected to form a cluster, providing a maximum of two control enclosures and 40 expansion enclosures. With High Density expansion drawers, up to 16 expansion enclosures can be attached to a cluster.
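The per-model enclosure limits above can be captured as a small configuration check. The following Python sketch is illustrative only: the dictionaries, the `ProposedConfig` class, and the `validate()` function are hypothetical names, the limits are transcribed from Table 1-1 and the preceding paragraphs, and High Density (5U) expansion enclosures are deliberately not modeled because their per-model rules are not spelled out here.

```python
from dataclasses import dataclass

# Per-model limits transcribed from Table 1-1 and the text above.
# These mappings are an illustrative assumption, not an IBM interface.
MAX_EXPANSIONS_PER_CONTROL = {"V5010": 10, "V5020": 10, "V5030": 20}
MAX_IO_GROUPS = {"V5010": 1, "V5020": 1, "V5030": 2}


@dataclass
class ProposedConfig:
    model: str                          # "V5010", "V5020", or "V5030"
    expansions_per_io_group: list[int]  # 2U expansions behind each control enclosure


def validate(cfg: ProposedConfig) -> list[str]:
    """Return the limit violations found; an empty list means the layout fits."""
    if cfg.model not in MAX_IO_GROUPS:
        return [f"unknown model {cfg.model!r}"]
    problems = []
    # Each entry in the list stands for one I/O group (one control enclosure).
    io_groups = len(cfg.expansions_per_io_group)
    if not 1 <= io_groups <= MAX_IO_GROUPS[cfg.model]:
        problems.append(
            f"{cfg.model} supports 1 to {MAX_IO_GROUPS[cfg.model]} I/O group(s), got {io_groups}"
        )
    # Check the 2U expansion count behind each control enclosure.
    limit = MAX_EXPANSIONS_PER_CONTROL[cfg.model]
    for index, count in enumerate(cfg.expansions_per_io_group):
        if not 0 <= count <= limit:
            problems.append(
                f"I/O group {index}: {count} expansion enclosures is outside 0..{limit}"
            )
    return problems
```

For example, `validate(ProposedConfig("V5030", [20, 20]))` returns an empty list, matching the two-control-enclosure, 40-expansion maximum described above, whereas `validate(ProposedConfig("V5020", [11]))` reports one violation.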

The control and expansion enclosures are available in the following form factors, and they can be intermixed within an I/O group:

12 x 3.5-inch (8.89-centimeter) drives in a 2U unit

24 x 2.5-inch (6.35-centimeter) drives in a 2U unit

92 x 3.5-inch drives (or 2.5-inch drives in carriers) in a 5U unit (High Density expansion enclosures only)


Two canisters are in each enclosure. Control enclosures contain two node canisters, and expansion enclosures contain two expansion canisters.

The IBM Storwize V5000 Gen2 supports up to 1,520 internal drives (2.5-inch, 3.5-inch, or a combination of both form factors) in a Storwize V5030 cluster with two I/O groups.
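One way to reconcile the 1,520-drive maximum with the enclosure limits given earlier is the arithmetic below. It is a sketch under stated assumptions, not a documented IBM sizing formula: it assumes that both control enclosures use the 24-drive 2U form factor and that all 16 High Density expansion enclosures are fully populated with 92 drives. The constant and function names are hypothetical.

```python
# Hypothetical reconstruction of the maximum internal drive count for a
# Storwize V5030 cluster with two I/O groups. The enclosure mix is an
# assumption made for illustration, not an IBM sizing rule.
CONTROL_ENCLOSURES = 2                # one control enclosure per I/O group
DRIVES_PER_CONTROL = 24               # assumed: 24 x 2.5-inch 2U control enclosures
HD_EXPANSIONS_PER_CLUSTER = 16        # High Density limit stated in the text
DRIVES_PER_HD_EXPANSION = 92          # 92-drive 5U High Density form factor
STANDARD_EXPANSIONS_PER_CLUSTER = 40  # 2U expansion limit for the cluster
DRIVES_PER_STANDARD_EXPANSION = 24    # assumed: 24 x 2.5-inch 2U expansions


def max_drives_with_hd() -> int:
    """Drives in both control enclosures plus 16 full High Density expansions."""
    return (CONTROL_ENCLOSURES * DRIVES_PER_CONTROL
            + HD_EXPANSIONS_PER_CLUSTER * DRIVES_PER_HD_EXPANSION)


def max_drives_2u_only() -> int:
    """Same calculation if only 24-drive 2U expansion enclosures are used."""
    return (CONTROL_ENCLOSURES * DRIVES_PER_CONTROL
            + STANDARD_EXPANSIONS_PER_CLUSTER * DRIVES_PER_STANDARD_EXPANSION)


print(max_drives_with_hd())   # 48 + 1472 = 1520
print(max_drives_2u_only())   # 48 + 960 = 1008
```

Under these assumptions, the 1,520 figure is reached only with High Density expansion enclosures; a layout that uses only 24-drive 2U expansions stops at 1,008 drives.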

SAS, Nearline (NL)-SAS, and flash drive types are supported.

The IBM Storwize V5000 Gen2 is designed to accommodate the most common storage network technologies to enable easy implementation and management. It can be attached to hosts through a Fibre Channel (FC) SAN fabric, an Internet Small Computer System Interface (iSCSI) infrastructure, or SAS. Hosts can be attached directly or through a network.

Important: For more information about supported environments, configurations, and restrictions, see the IBM System Storage Interoperation Center (SSIC):

https://ibm.biz/BdxQhe

For more information, see IBM Knowledge Center:

http://www.ibm.com/support/knowledgecenter/STHGUJ/

The IBM Storwize V5000 Gen2 is a virtualized storage solution that groups its internal drives

into RAID arrays, which are called

the Storwize V5030 systems by importing logical unit numbers (LUNs) from external FC