Page 1

IBM® SPSS® Amos™ 21

User’s Guide

James L. Arbuckle

Page 2

Note: Before using this information and the product it supports, read the information in the “Notices” section

on page 631.

This edition applies to IBM® SPSS® Amos™ 21 and to all subsequent releases and modifications until

otherwise indicated in new editions.

Microsoft product screenshots reproduced with permission from Microsoft Corporation.

Licensed Materials - Property of IBM

© Copyright IBM Corp. 1983, 2012. U.S. Government Users Restricted Rights - Use, duplication or

disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

© Copyright 2012 Amos Development Corporation. All Rights Reserved.

AMOS is a trademark of Amos Development Corporation.

Page 3

Contents

Part I: Getting Started

1 Introduction 1

Featured Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

About the Tutorial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

About the Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

About the Documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . .4

Other Sources of Information. . . . . . . . . . . . . . . . . . . . . . . . . . 4

Acknowledgements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

2 Tutorial: Getting Started with

Amos Graphics 7

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8

Launching Amos Graphics . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Creating a New Model. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Specifying the Data File . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Specifying the Model and Drawing Variables . . . . . . . . . . . . . . . 11

Naming the Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Drawing Arrows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Constraining a Parameter . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Altering the Appearance of a Path Diagram . . . . . . . . . . . . . . . . 15

To Move an Object . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

To Reshape an Object or Double-Headed Arrow . . . . . . . . . . . 15

To Delete an Object. . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

To Undo an Action . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

To Redo an Action . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

iii

Page 4

Setting Up Optional Output . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Performing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Viewing Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

To View Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

To View Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . 19

Printing the Path Diagram. . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Copying the Path Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Copying Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Part II: Examples

1 Estimating Variances and Covariances 23

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Bringing In the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Analyzing the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Specifying the Model. . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Naming the Variables . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Changing the Font . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Establishing Covariances . . . . . . . . . . . . . . . . . . . . . . . . 27

Performing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . 28

Viewing Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Viewing Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Optional Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Calculating Standardized Estimates . . . . . . . . . . . . . . . . . . 33

Rerunning the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . 34

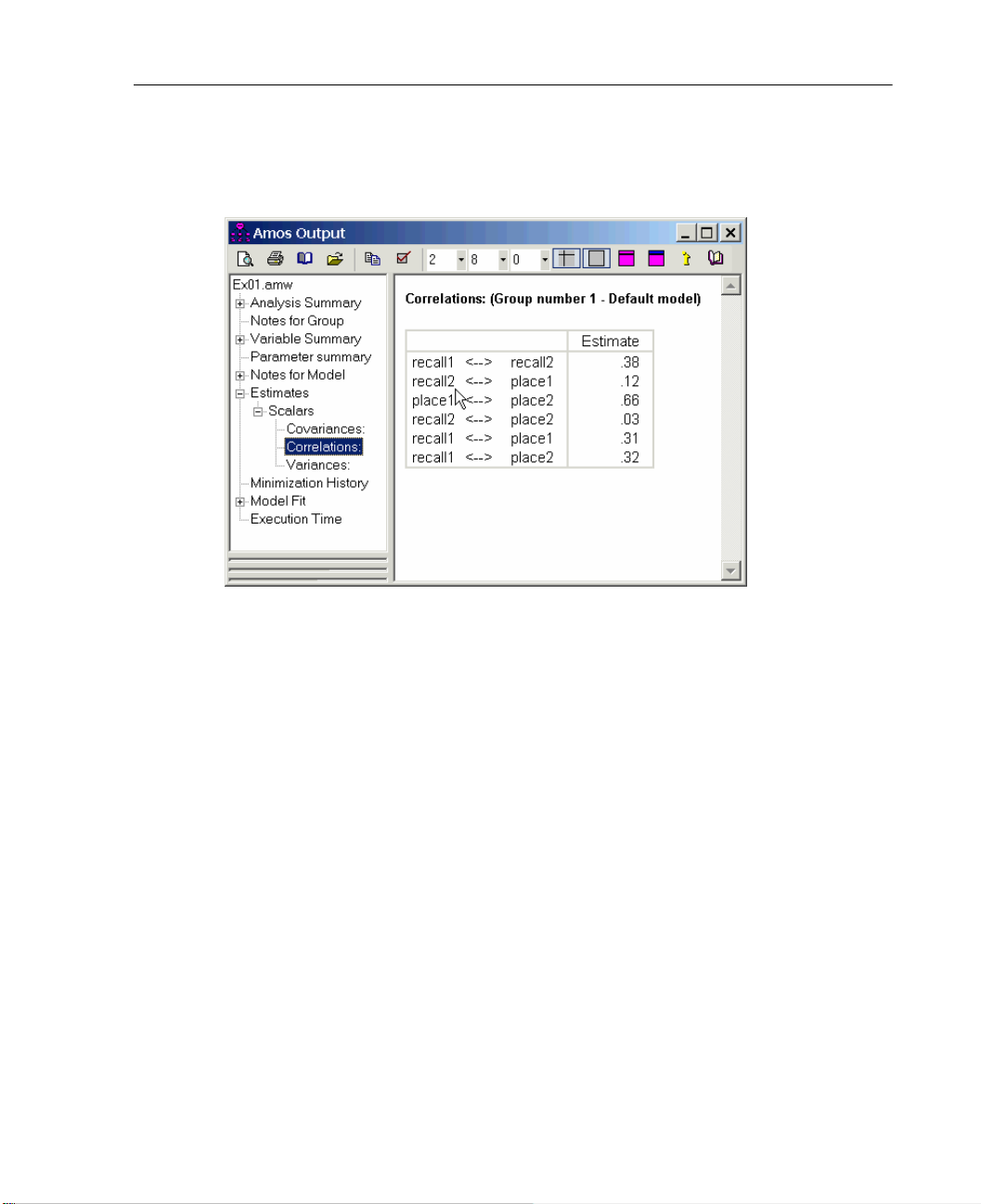

Viewing Correlation Estimates as Text Output . . . . . . . . . . . . 34

Distribution Assumptions for Amos Models . . . . . . . . . . . . . . . . 35

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Generating Additional Output . . . . . . . . . . . . . . . . . . . . . . 39

Modeling in C# . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Other Program Development Tools . . . . . . . . . . . . . . . . . . . . . 40

iv

Page 5

2 Testing Hypotheses 41

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .41

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .41

Parameters Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . .41

Constraining Variances . . . . . . . . . . . . . . . . . . . . . . . . . .42

Specifying Equal Parameters. . . . . . . . . . . . . . . . . . . . . . .43

Constraining Covariances . . . . . . . . . . . . . . . . . . . . . . . .44

Moving and Formatting Objects . . . . . . . . . . . . . . . . . . . . . . . .45

Data Input . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .46

Performing the Analysis. . . . . . . . . . . . . . . . . . . . . . . . . .47

Viewing Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . .47

Optional Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

Covariance Matrix Estimates. . . . . . . . . . . . . . . . . . . . . . .49

Displaying Covariance and Variance Estimates

on the Path Diagram. . . . . . . . . . . . . . . . . . . . . . . . . . . .51

Labeling Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .51

Hypothesis Testing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

Displaying Chi-Square Statistics on the Path Diagram . . . . . . . . . . .53

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .55

Timing Is Everything . . . . . . . . . . . . . . . . . . . . . . . . . . . .57

3 More Hypothesis Testing 59

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

Bringing In the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

Testing a Hypothesis That Two Variables Are Uncorrelated . . . . . . .60

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .60

Viewing Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .62

Viewing Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . .63

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .65

v

Page 6

4 Conventional Linear Regression 67

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Analysis of the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Identification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Fixing Regression Weights . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Viewing the Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Viewing Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Viewing Additional Text Output. . . . . . . . . . . . . . . . . . . . . . . . 75

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Assumptions about Correlations among Exogenous Variables . . . 77

Equation Format for the AStructure Method . . . . . . . . . . . . . 78

5 Unobserved Variables 81

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Measurement Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Structural Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Identification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Changing the Orientation of the Drawing Area . . . . . . . . . . . . 86

Creating the Path Diagram . . . . . . . . . . . . . . . . . . . . . . . 87

Rotating Indicators . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Duplicating Measurement Models. . . . . . . . . . . . . . . . . . . 88

Entering Variable Names . . . . . . . . . . . . . . . . . . . . . . . . 90

Completing the Structural Model . . . . . . . . . . . . . . . . . . . . 90

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Viewing the Graphics Output . . . . . . . . . . . . . . . . . . . . . . 93

vi

Page 7

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .93

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .94

Testing Model B against Model A. . . . . . . . . . . . . . . . . . . . . . .96

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .98

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .98

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .99

6 Exploratory Analysis 101

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Model A for the Wheaton Data . . . . . . . . . . . . . . . . . . . . . . . 102

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Identification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . 103

Dealing with Rejection . . . . . . . . . . . . . . . . . . . . . . . . . 104

Modification Indices. . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Model B for the Wheaton Data . . . . . . . . . . . . . . . . . . . . . . . 107

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Graphics Output for Model B . . . . . . . . . . . . . . . . . . . . . . 109

Misuse of Modification Indices . . . . . . . . . . . . . . . . . . . . 110

Improving a Model by Adding New Constraints . . . . . . . . . . . 110

Model C for the Wheaton Data . . . . . . . . . . . . . . . . . . . . . . . 114

Results for Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Testing Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Parameter Estimates for Model C . . . . . . . . . . . . . . . . . . . 115

Multiple Models in a Single Analysis . . . . . . . . . . . . . . . . . . . . 116

Output from Multiple Models . . . . . . . . . . . . . . . . . . . . . . . . 119

Viewing Graphics Output for Individual Models . . . . . . . . . . . 119

Viewing Fit Statistics for All Four Models. . . . . . . . . . . . . . . 119

Obtaining Optional Output . . . . . . . . . . . . . . . . . . . . . . . 121

Obtaining Tables of Indirect, Direct, and Total Effects . . . . . . . 122

vii

Page 8

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Fitting Multiple Models. . . . . . . . . . . . . . . . . . . . . . . . . 126

7 A Nonrecursive Model 129

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Felson and Bohrnstedt’s Model . . . . . . . . . . . . . . . . . . . . . . 130

Model Identification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Obtaining Standardized Estimates . . . . . . . . . . . . . . . . . . 133

Obtaining Squared Multiple Correlations . . . . . . . . . . . . . . 133

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Stability Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

8 Factor Analysis 137

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

A Common Factor Model . . . . . . . . . . . . . . . . . . . . . . . . . . 138

Identification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Drawing the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Obtaining Standardized Estimates . . . . . . . . . . . . . . . . . . 142

Viewing Standardized Estimates . . . . . . . . . . . . . . . . . . . 143

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

viii

Page 9

9 An Alternative to Analysis of Covariance 145

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Analysis of Covariance and Its Alternative . . . . . . . . . . . . . . . . 145

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Analysis of Covariance . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Model A for the Olsson Data. . . . . . . . . . . . . . . . . . . . . . . . . 147

Identification. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

Specifying Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Searching for a Better Model . . . . . . . . . . . . . . . . . . . . . . . . 149

Requesting Modification Indices . . . . . . . . . . . . . . . . . . . 149

Model B for the Olsson Data. . . . . . . . . . . . . . . . . . . . . . . . . 150

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Model C for the Olsson Data . . . . . . . . . . . . . . . . . . . . . . . . . 153

Drawing a Path Diagram for Model C . . . . . . . . . . . . . . . . . 154

Results for Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

Fitting All Models At Once . . . . . . . . . . . . . . . . . . . . . . . . . . 154

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Fitting Multiple Models . . . . . . . . . . . . . . . . . . . . . . . . . 157

10 Simultaneous Analysis of Several Groups 159

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

Analysis of Several Groups . . . . . . . . . . . . . . . . . . . . . . . . . 159

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

Conventions for Specifying Group Differences . . . . . . . . . . . 161

Specifying Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

ix

Page 10

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 168

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Multiple Model Input . . . . . . . . . . . . . . . . . . . . . . . . . . 173

11 Felson and Bohrnstedt’s Girls and Boys 175

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Felson and Bohrnstedt’s Model . . . . . . . . . . . . . . . . . . . . . . 175

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Specifying Model A for Girls and Boys . . . . . . . . . . . . . . . . . . 176

Specifying a Figure Caption . . . . . . . . . . . . . . . . . . . . . . 176

Text Output for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Graphics Output for Model A . . . . . . . . . . . . . . . . . . . . . . . . 181

Obtaining Critical Ratios for Parameter Differences . . . . . . . . 182

Model B for Girls and Boys . . . . . . . . . . . . . . . . . . . . . . . . . 182

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

Fitting Models A and B in a Single Analysis . . . . . . . . . . . . . . . 188

Model C for Girls and Boys . . . . . . . . . . . . . . . . . . . . . . . . . 188

Results for Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Fitting Multiple Models. . . . . . . . . . . . . . . . . . . . . . . . . 194

x

Page 11

12 Simultaneous Factor Analysis for

Several Groups 195

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Model A for the Holzinger and Swineford Boys and Girls . . . . . . . . 196

Naming the Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

Specifying the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

Model B for the Holzinger and Swineford Boys and Girls . . . . . . . . 200

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

13 Estimating and Testing Hypotheses

about Means 209

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Means and Intercept Modeling . . . . . . . . . . . . . . . . . . . . . . . 209

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

Model A for Young and Old Subjects . . . . . . . . . . . . . . . . . . . . 210

Mean Structure Modeling in Amos Graphics . . . . . . . . . . . . . . . 210

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Model B for Young and Old Subjects . . . . . . . . . . . . . . . . . . . . 214

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Comparison of Model B with Model A . . . . . . . . . . . . . . . . . . . 216

xi

Page 12

Multiple Model Input. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Mean Structure Modeling in VB.NET . . . . . . . . . . . . . . . . . . . 217

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218

Fitting Multiple Models. . . . . . . . . . . . . . . . . . . . . . . . . 219

14 Regression with an Explicit Intercept 221

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

Assumptions Made by Amos . . . . . . . . . . . . . . . . . . . . . . . . 221

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

15 Factor Analysis with Structured Means 229

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

Factor Means . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

Model A for Boys and Girls . . . . . . . . . . . . . . . . . . . . . . . . . 230

Specifying the Model. . . . . . . . . . . . . . . . . . . . . . . . . . 230

Understanding the Cross-Group Constraints . . . . . . . . . . . . . . . 232

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Model B for Boys and Girls . . . . . . . . . . . . . . . . . . . . . . . . . 235

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

Comparing Models A and B. . . . . . . . . . . . . . . . . . . . . . . . . 237

xii

Page 13

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

Fitting Multiple Models . . . . . . . . . . . . . . . . . . . . . . . . . 240

16 Sörbom’s Alternative to

Analysis of Covariance 241

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

Assumptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

Changing the Default Behavior . . . . . . . . . . . . . . . . . . . . . . . 243

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

Results for Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

Model C. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

Results for Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Model D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 252

Results for Model D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

Model E. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

Results for Model E . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

Fitting Models A Through E in a Single Analysis . . . . . . . . . . . . . 255

Comparison of Sörbom’s Method with the Method of Example 9 . . . . 256

Model X. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

Modeling in Amos Graphics . . . . . . . . . . . . . . . . . . . . . . . . . 256

Results for Model X . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

Model Y. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

Results for Model Y . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 259

Model Z. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

xiii

Page 14

Results for Model Z . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

Model C . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264

Model D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

Model E . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

Fitting Multiple Models. . . . . . . . . . . . . . . . . . . . . . . . . 267

Models X, Y, and Z . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

17 Missing Data 269

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

Incomplete Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 271

Saturated and Independence Models . . . . . . . . . . . . . . . . . . . 272

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

Graphics Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 275

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 275

Fitting the Factor Model (Model A) . . . . . . . . . . . . . . . . . . 276

Fitting the Saturated Model (Model B) . . . . . . . . . . . . . . . . 277

Computing the Likelihood Ratio Chi-Square Statistic and P . . . . 281

Performing All Steps with One Program . . . . . . . . . . . . . . . 282

18 More about Missing Data 283

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

Missing Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

Results for Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 287

xiv

Page 15

Graphics Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 287

Text Output. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 287

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 290

Output from Models A and B. . . . . . . . . . . . . . . . . . . . . . . . . 291

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 292

Model A . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 292

Model B . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 293

19 Bootstrapping 295

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

The Bootstrap Method . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 296

A Factor Analysis Model . . . . . . . . . . . . . . . . . . . . . . . . . . . 296

Monitoring the Progress of the Bootstrap . . . . . . . . . . . . . . . . . 297

Results of the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . 297

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

20 Bootstrapping for Model Comparison 303

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 303

Bootstrap Approach to Model Comparison . . . . . . . . . . . . . . . . 303

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 304

Five Models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 304

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 310

Modeling in VB.NET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 310

21 Bootstrapping to Compare

Estimation Methods 311

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

Estimation Methods. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

xv

Page 16

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 312

About the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 312

Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 315

Modeling in VB.NET . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 318

22 Specification Search 319

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 319

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 319

About the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 319

Specification Search with Few Optional Arrows. . . . . . . . . . . . . 320

Specifying the Model. . . . . . . . . . . . . . . . . . . . . . . . . . 320

Selecting Program Options . . . . . . . . . . . . . . . . . . . . . . 322

Performing the Specification Search . . . . . . . . . . . . . . . . 323

Viewing Generated Models . . . . . . . . . . . . . . . . . . . . . . 324

Viewing Parameter Estimates for a Model . . . . . . . . . . . . . 325

Using BCC to Compare Models . . . . . . . . . . . . . . . . . . . . 326

Viewing the Akaike Weights . . . . . . . . . . . . . . . . . . . . . 327

Using BIC to Compare Models . . . . . . . . . . . . . . . . . . . . 328

Using Bayes Factors to Compare Models . . . . . . . . . . . . . . 329

Rescaling the Bayes Factors . . . . . . . . . . . . . . . . . . . . . 331

Examining the Short List of Models. . . . . . . . . . . . . . . . . . 332

Viewing a Scatterplot of Fit and Complexity. . . . . . . . . . . . . 333

Adjusting the Line Representing Constant Fit . . . . . . . . . . . . 335

Viewing the Line Representing Constant C – df. . . . . . . . . . . 336

Adjusting the Line Representing Constant C – df . . . . . . . . . . 337

Viewing Other Lines Representing Constant Fit. . . . . . . . . . . 338

Viewing the Best-Fit Graph for C . . . . . . . . . . . . . . . . . . . 338

Viewing the Best-Fit Graph for Other Fit Measures . . . . . . . . 339

Viewing the Scree Plot for C . . . . . . . . . . . . . . . . . . . . . 340

Viewing the Scree Plot for Other Fit Measures . . . . . . . . . . . 342

Specification Search with Many Optional Arrows . . . . . . . . . . . . 344

Specifying the Model. . . . . . . . . . . . . . . . . . . . . . . . . . 345

Making Some Arrows Optional . . . . . . . . . . . . . . . . . . . . 345

Setting Options to Their Defaults . . . . . . . . . . . . . . . . . . . 345

xvi

Page 17

Performing the Specification Search . . . . . . . . . . . . . . . . . 346

Using BIC to Compare Models . . . . . . . . . . . . . . . . . . . . . 347

Viewing the Scree Plot . . . . . . . . . . . . . . . . . . . . . . . . . 348

Limitations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

23 Exploratory Factor Analysis by

Specification Search 349

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

About the Model. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 350

Opening the Specification Search Window . . . . . . . . . . . . . . . . 350

Making All Regression Weights Optional . . . . . . . . . . . . . . . . . 351

Setting Options to Their Defaults . . . . . . . . . . . . . . . . . . . . . . 351

Performing the Specification Search . . . . . . . . . . . . . . . . . . . . 353

Using BCC to Compare Models . . . . . . . . . . . . . . . . . . . . . . . 354

Viewing the Scree Plot . . . . . . . . . . . . . . . . . . . . . . . . . . . . 357

Viewing the Short List of Models . . . . . . . . . . . . . . . . . . . . . . 357

Heuristic Specification Search . . . . . . . . . . . . . . . . . . . . . . . 358

Performing a Stepwise Search . . . . . . . . . . . . . . . . . . . . . . . 359

Viewing the Scree Plot . . . . . . . . . . . . . . . . . . . . . . . . . . . . 360

Limitations of Heuristic Specification Searches . . . . . . . . . . . . . 361

24 Multiple-Group Factor Analysis 363

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

Model 24a: Modeling Without Means and Intercepts . . . . . . . . . . 363

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . 364

Opening the Multiple-Group Analysis Dialog Box . . . . . . . . . . 364

Viewing the Parameter Subsets . . . . . . . . . . . . . . . . . . . . 366

Viewing the Generated Models . . . . . . . . . . . . . . . . . . . . 367

Fitting All the Models and Viewing the Output . . . . . . . . . . . . 368

xvii

Page 18

Customizing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 369

Model 24b: Comparing Factor Means . . . . . . . . . . . . . . . . . . . 370

Specifying the Model. . . . . . . . . . . . . . . . . . . . . . . . . . 370

Removing Constraints . . . . . . . . . . . . . . . . . . . . . . . . . 371

Generating the Cross-Group Constraints . . . . . . . . . . . . . . 372

Fitting the Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . 373

Viewing the Output . . . . . . . . . . . . . . . . . . . . . . . . . . . 374

25 Multiple-Group Analysis 377

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

About the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 378

Constraining the Latent Variable Means and Intercepts . . . . . . . . 378

Generating Cross-Group Constraints . . . . . . . . . . . . . . . . . . . 379

Fitting the Models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 381

Viewing the Text Output . . . . . . . . . . . . . . . . . . . . . . . . . . . 381

Examining the Modification Indices . . . . . . . . . . . . . . . . . . . . 382

Modifying the Model and Repeating the Analysis . . . . . . . . . 383

26 Bayesian Estimation 385

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 385

Bayesian Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 385

Selecting Priors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 387

Performing Bayesian Estimation Using Amos Graphics . . . . . . 388

Estimating the Covariance. . . . . . . . . . . . . . . . . . . . . . . 388

Results of Maximum Likelihood Analysis . . . . . . . . . . . . . . . . . 389

Bayesian Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

Replicating Bayesian Analysis and Data Imputation Results . . . . . . 392

Examining the Current Seed. . . . . . . . . . . . . . . . . . . . . . 392

Changing the Current Seed . . . . . . . . . . . . . . . . . . . . . . 393

Changing the Refresh Options . . . . . . . . . . . . . . . . . . . . 395

xviii

Page 19

Assessing Convergence . . . . . . . . . . . . . . . . . . . . . . . . . . . 396

Diagnostic Plots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

Bivariate Marginal Posterior Plots . . . . . . . . . . . . . . . . . . . . . 404

Credible Intervals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

Changing the Confidence Level . . . . . . . . . . . . . . . . . . . . 407

Learning More about Bayesian Estimation . . . . . . . . . . . . . . . . 408

27 Bayesian Estimation Using a

Non-Diffuse Prior Distribution 409

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

About the Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 409

More about Bayesian Estimation . . . . . . . . . . . . . . . . . . . . . . 409

Bayesian Analysis and Improper Solutions . . . . . . . . . . . . . . . . 410

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 410

Fitting a Model by Maximum Likelihood . . . . . . . . . . . . . . . . . . 411

Bayesian Estimation with a Non-Informative (Diffuse) Prior. . . . . . . 412

Changing the Number of Burn-In Observations . . . . . . . . . . . 412

28 Bayesian Estimation of Values

Other Than Model Parameters 423

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

About the Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

The Wheaton Data Revisited . . . . . . . . . . . . . . . . . . . . . . . . 423

Indirect Effects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 424

Estimating Indirect Effects . . . . . . . . . . . . . . . . . . . . . . . 425

Bayesian Analysis of Model C . . . . . . . . . . . . . . . . . . . . . . . . 427

Additional Estimands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 428

Inferences about Indirect Effects . . . . . . . . . . . . . . . . . . . . . . 431

xix

Page 20

29 Estimating a User-Defined Quantity

in Bayesian SEM 437

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

About the Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

The Stability of Alienation Model . . . . . . . . . . . . . . . . . . . . . 437

Numeric Custom Estimands. . . . . . . . . . . . . . . . . . . . . . . . . 443

Dragging and Dropping . . . . . . . . . . . . . . . . . . . . . . . . 447

Dichotomous Custom Estimands . . . . . . . . . . . . . . . . . . . . . . 457

Defining a Dichotomous Estimand . . . . . . . . . . . . . . . . . . 457

30 Data Imputation 461

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

About the Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 461

Multiple Imputation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462

Model-Based Imputation . . . . . . . . . . . . . . . . . . . . . . . . . . 462

Performing Multiple Data Imputation Using Amos Graphics . . . . . . 462

31 Analyzing Multiply Imputed Datasets 469

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 469

Analyzing the Imputed Data Files Using SPSS Statistics . . . . . . . . 469

Step 2: Ten Separate Analyses . . . . . . . . . . . . . . . . . . . . . . . 470

Step 3: Combining Results of Multiply Imputed Data Files . . . . . . . 471

Further Reading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 473

32 Censored Data 475

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 475

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 475

Recoding the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . 477

Analyzing the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . 477

Performing a Regression Analysis . . . . . . . . . . . . . . . . . . 478

xx

Page 21

Posterior Predictive Distributions . . . . . . . . . . . . . . . . . . . . . . 481

Imputation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 484

General Inequality Constraints on Data Values . . . . . . . . . . . . . . 488

33 Ordered-Categorical Data 489

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 489

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 489

Specifying the Data File . . . . . . . . . . . . . . . . . . . . . . . . . 491

Recoding the Data within Amos . . . . . . . . . . . . . . . . . . . . 492

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . 500

Fitting the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 501

MCMC Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 504

Posterior Predictive Distributions . . . . . . . . . . . . . . . . . . . . . . 506

Posterior Predictive Distributions for Latent Variables. . . . . . . . . . 511

Imputation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 516

34 Mixture Modeling with Training Data 521

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 521

Performing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 524

Specifying the Data File . . . . . . . . . . . . . . . . . . . . . . . . . . . 526

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 530

Fitting the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 532

Classifying Individual Cases . . . . . . . . . . . . . . . . . . . . . . . . . 535

Latent Structure Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 537

35 Mixture Modeling without Training Data 539

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 539

About the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 539

Performing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 540

xxi

Page 22

Specifying the Data File . . . . . . . . . . . . . . . . . . . . . . . . . . . 542

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 545

Constraining the Parameters . . . . . . . . . . . . . . . . . . . . . 546

Fitting the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 548

Classifying Individual Cases . . . . . . . . . . . . . . . . . . . . . . . . 551

Latent Structure Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 553

Label Switching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 554

36 Mixture Regression Modeling 557

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

First Dataset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

Second Dataset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 559

The Group Variable in the Dataset . . . . . . . . . . . . . . . . . . 560

Performing the Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . 561

Specifying the Data File . . . . . . . . . . . . . . . . . . . . . . . . . . . 563

Specifying the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . 566

Fitting the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 567

Classifying Individual Cases . . . . . . . . . . . . . . . . . . . . . . . . 572

Improving Parameter Estimates . . . . . . . . . . . . . . . . . . . . . . 573

Prior Distribution of Group Proportions . . . . . . . . . . . . . . . . . . 575

Label Switching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 576

37 Using Amos Graphics

without Drawing a Path Diagram 577

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 577

About the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 578

A Common Factor Model . . . . . . . . . . . . . . . . . . . . . . . . . . 578

Creating a Plugin to Specify the Model . . . . . . . . . . . . . . . 578

Controlling Undo Capability . . . . . . . . . . . . . . . . . . . . . . 583

Compiling and Saving the Plugin . . . . . . . . . . . . . . . . . . . 585

Using the Plugin. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 586

xxii

Page 23

Other Aspects of the Analysis in Addition to Model Specification . . . 588

Defining Program Variables that Correspond to

Model Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 588

Part III: Appendices

A Notation 591

B Discrepancy Functions 593

C Measures of Fit 597

Measures of Parsimony . . . . . . . . . . . . . . . . . . . . . . . . . . . 598

NPAR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 598

DF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 598

PRATIO . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 599

Minimum Sample Discrepancy Function . . . . . . . . . . . . . . . . . . 599

CMIN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 599

P . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 599

CMIN/DF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 601

FMIN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 602

Measures Based On the Population Discrepancy . . . . . . . . . . . . 602

NCP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 602

F0. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 603

RMSEA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 603

PCLOSE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 605

Information-Theoretic Measures . . . . . . . . . . . . . . . . . . . . . . 605

AIC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 605

BCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 606

BIC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 606

CAIC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 607

ECVI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 607

MECVI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 608

xxiii

Page 24

Comparisons to a Baseline Model . . . . . . . . . . . . . . . . . . . . . 608

NFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 609

RFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 610

IFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 611

TLI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 611

CFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 612

Parsimony Adjusted Measures. . . . . . . . . . . . . . . . . . . . . . . 612

PNFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 613

PCFI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 613

GFI and Related Measures . . . . . . . . . . . . . . . . . . . . . . . . . 613

GFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 613

AGFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 614

PGFI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

Miscellaneous Measures . . . . . . . . . . . . . . . . . . . . . . . . . . 615

HI 90 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

HOELTER . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 615

LO 90 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 616

RMR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 616

Selected List of Fit Measures. . . . . . . . . . . . . . . . . . . . . . . . 617

D Numeric Diagnosis of Non-Identifiability 619

E Using Fit Measures to Rank Models 621

F Baseline Models for

Descriptive Fit Measures 625

G Rescaling of AIC, BCC, and BIC 627

Zero-Based Rescaling . . . . . . . . . . . . . . . . . . . . . . . . . . . . 627

Akaike Weights and Bayes Factors (Sum = 1) . . . . . . . . . . . . . . 628

Akaike Weights and Bayes Factors (Max = 1) . . . . . . . . . . . . . . 629

xxiv

Page 25

Notices 631

Bibliography 635

Index 647

xxv

Page 26

Page 27

spatial

visperc

cubes

lozenges

wordmean

paragraph

sentence

e1

e2

e3

e4

e5

e6

verbal

1

1

1

1

1

1

1

1

Input:

spatial

visperc

cubes

.43

lozenges

.54

wordmean

.71

paragraph

.77

sentence

.68

e1

e2

e3

e4

e5

e6

verbal

.70

.65

.74

.88

.83

.84

.49

Chi-square = 7.853 (8 df)

p = .448

Output:

Introduction

IBM SPSS Amos implements the general approach to data analysis known as

structural equation modeling (SEM), also known as analysis of covariance

structures, or causal modeling. This approach includes, as special cases, many well-

known conventional techniques, including the general linear model and common

factor analysis.

Chapter

1

IBM SPSS Amos (Analysis of Moment Structures) is an easy-to-use program for

visual SEM. With Amos, you can quickly specify, view, and modify your model

graphically using simple drawing tools. Then you can assess your model’s fit, make

any modifications, and print out a publication-quality graphic of your final model.

Simply specify the model graphically (left). Amos quickly performs the

computations and displays the results (right).

1

Page 28

2

Chapter 1

Structural equation modeling (SEM) is sometimes thought of as esoteric and difficult

to learn and use. This is incorrect. Indeed, the growing importance of SEM in data

analysis is largely due to its ease of use. SEM opens the door for nonstatisticians to

solve estimation and hypothesis testing problems that once would have required the

services of a specialist.

IBM SPSS Amos was originally designed as a tool for teaching this powerful and

fundamentally simple method. For this reason, every effort was made to see that it is

easy to use. Amos integrates an easy-to-use graphical interface with an advanced

computing engine for SEM. The publication-quality path diagrams of Amos provide a

clear representation of models for students and fellow researchers. The numeric

methods implemented in Amos are among the most effective and reliable available.

Featured Methods

Amos provides the following methods for estimating structural equation models:

Maximum likelihood

Unweighted least squares

Generalized least squares

Browne’s asymptotically distribution-free criterion

Scale-free least squares

Bayesian estimation

IBM SPSS Amos goes well beyond the usual capabilities found in other structural

equation modeling programs. When confronted with missing data, Amos performs

state-of-the-art estimation by full information maximum likelihood instead of relying

on ad-hoc methods like listwise or pairwise deletion, or mean imputation. The program

can analyze data from several populations at once. It can also estimate means for

exogenous variables and intercepts in regression equations.

The program makes bootstrapped standard errors and confidence intervals available

for all parameter estimates, effect estimates, sample means, variances, covariances,

and correlations. It also implements percentile intervals and bias-corrected percentile

intervals (Stine, 1989), as well as Bollen and Stine’s (1992) bootstrap approach to

model testing.

Multiple models can be fitted in a single analysis. Amos examines every pair of

models in which one model can be obtained by placing restrictions on the parameters

of the other. The program reports several statistics appropriate for comparing such

Page 29

models. It provides a test of univariate normality for each observed variable as well as

a test of multivariate normality and attempts to detect outliers.

IBM SPSS Amos accepts a path diagram as a model specification and displays

parameter estimates graphically on a path diagram. Path diagrams used for model

specification and those that display parameter estimates are of presentation quality.

They can be printed directly or imported into other applications such as word

processors, desktop publishing programs, and general-purpose graphics programs.

About the Tutorial

The tutorial is designed to get you up and running with Amos Graphics. It covers some

of the basic functions and features and guides you through your first Amos analysis.

Once you have worked through the tutorial, you can learn about more advanced

functions using the online Help, or you can continue working through the examples to

get a more extended introduction to structural modeling with IBM SPSS Amos.

3

Introduction

About the Examples

Many people like to learn by doing. Knowing this, we have developed many examples

that quickly demonstrate practical ways to use IBM SPSS Amos. The initial examples

introduce the basic capabilities of Amos as applied to simple problems. You learn

which buttons to click, how to access the several supported data formats, and how to

maneuver through the output. Later examples tackle more advanced modeling

problems and are less concerned with program interface issues.

Examples 1 through 4 show how you can use Amos to do some conventional

analyses—analyses that could be done using a standard statistics package. These

examples show a new approach to some familiar problems while also demonstrating

all of the basic features of Amos. There are sometimes good reasons for using Amos

to do something simple, like estimating a mean or correlation or testing the hypothesis

that two means are equal. For one thing, you might want to take advantage of the ability

of Amos to handle missing data. Or maybe you want to use the bootstrapping capability

of Amos, particularly to obtain confidence intervals.

Examples 5 through 8 illustrate the basic techniques that are commonly used

nowadays in structural modeling.

Page 30

4

Chapter 1

Example 9 and those that follow demonstrate advanced techniques that have so far not

been used as much as they deserve. These techniques include:

Simultaneous analysis of data from several different populations.

Estimation of means and intercepts in regression equations.

Maximum likelihood estimation in the presence of missing data.

Bootstrapping to obtain estimated standard errors and confidence intervals. Amos

makes these techniques especially easy to use, and we hope that they will become

more commonplace.

Specification searches.

Bayesian estimation.

Imputation of missing values.

Analysis of censored data.

Analysis of ordered-categorical data.

Mixture modeling.

Tip: If you have questions about a particular Amos feature, you can always refer to the

extensive online Help provided by the program.

About the Documentation

IBM SPSS Amos 21 comes with extensive documentation, including an online Help

system, this user’s guide, and advanced reference material for Amos Basic and the

Amos API (Application Programming Interface). If you performed a typical

installation, you can find the IBM SPSS Amos 21 Programming Reference Guide in

the following location: C:\Program

Files\IBM\SPSS\Amos\21\Documentation\Programming Reference.pdf.

Other Sources of Information

Although this user’s guide contains a good bit of expository material, it is not by any

means a complete guide to the correct and effective use of structural modeling. Many

excellent SEM textbooks are available.

Page 31

Structural Equation Modeling: A Multidisciplinary Journal contains

methodological articles as well as applications of structural modeling. It is

published by Taylor and Francis (http://www.tandf.co.uk).

Carl Ferguson and Edward Rigdon established an electronic mailing list called

Semnet to provide a forum for discussions related to structural modeling. You can

find information about subscribing to Semnet at

www.gsu.edu/~mkteer/semnet.html.

Acknowledgements

Many users of previous versions of Amos provided valuable feedback, as did many

users who tested the present version. Torsten B. Neilands wrote Examples 26 through

31 in this User’s Guide with contributions by Joseph L. Schafer. Eric Loken reviewed

Examples 32 and 33. He also provided valuable insights into mixture modeling as well

as important suggestions for future developments in Amos.

A last word of warning: While Amos Development Corporation has engaged in

extensive program testing to ensure that Amos operates correctly, all complicated

software, Amos included, is bound to contain some undetected bugs. We are

committed to correcting any program errors. If you believe you have encountered one,

please report it to technical support.

5

Introduction

James L. Arbuckle

Page 32

Page 33

Tutorial: Getting Started with Amos Graphics

Introduction

Remember your first statistics class when you sweated through memorizing formulas

and laboriously calculating answers with pencil and paper? The professor had you do

this so that you would understand some basic statistical concepts. Later, you

discovered that a calculator or software program could do all of these calculations in

a split second.

This tutorial is a little like that early statistics class. There are many shortcuts to

drawing and labeling path diagrams in Amos Graphics that you will discover as you

work through the examples in this user’s guide or as you refer to the online help. The

intent of this tutorial is to simply get you started using Amos Graphics. It will cover

some of the basic functions and features of IBM SPSS Amos and guide you through

your first Amos analysis.

Once you have worked through the tutorial, you can learn about more advanced

functions from the online help, or you can continue to learn incrementally by working

your way through the examples.

If you performed a typical installation, you can find the path diagram constructed

in this tutorial in this location:

C:\Program Files\IBM\SPSS\Amos\21\Tutorial\<language>. The file Startsps.amw

uses a data file in SPSS Statistics format. Getstart.amw is the same path diagram but

uses data from a Microsoft Excel file.

Chapter

2

Tip: IBM SPSS Amos 21 provides more than one way to accomplish most tasks. For

all menu commands except Tools > Macro, there is a toolbar button that performs the

same task. For many tasks, Amos also provides keyboard shortcuts. The user’s guide

7

Page 34

8

Chapter 2

demonstrates the menu path. For information about the toolbar buttons and keyboard

shortcuts, see the online help.

About the Data

Hamilton (1990) provided several measurements on each of 21 states. Three of the

measurements will be used in this tutorial:

Average SAT score

Per capita income expressed in $1,000 units

Median education for residents 25 years of age or older

You can find the data in the Tutorial directory within the Excel 8.0 workbook

Hamilton.xls in the worksheet named Hamilton. The data are as follows:

SAT Income Education

899 14.345 12.7

896 16.37 12.6

897 13.537 12.5

889 12.552 12.5

823 11.441 12.2

857 12.757 12.7

860 11.799 12.4

890 10.683 12.5

889 14.112 12.5

888 14.573 12.6

925 13.144 12.6

869 15.281 12.5

896 14.121 12.5

827 10.758 12.2

908 11.583 12.7

885 12.343 12.4

887 12.729 12.3

790 10.075 12.1

868 12.636 12.4

904 10.689 12.6

888 13.065 12.4

Page 35

Tutorial: Getting Started with Amos Graphics

The following path diagram shows a model for these data:

This is a simple regression model where one observed variable, SAT, is predicted as a

linear combination of the other two observed variables, Education and Income. As with

nearly all empirical data, the prediction will not be perfect. The variable Other

represents variables other than Education and Income that affect SAT.

Each single-headed arrow represents a regression weight. The number

1 in the

figure specifies that Other must have a weight of 1 in the prediction of SAT. Some such

constraint must be imposed in order to make the model identified, and it is one of the

features of the model that must be communicated to Amos.

9

Launching Amos Graphics

You can launch Amos Graphics in any of the following ways:

Click Start on the Windows task bar, and choose All Programs > IBM SPSS

Statistics

Double-click any path diagram (*.amw).

Drag a path diagram (*.amw) file from Windows Explorer to the Amos Graphics

window.

Click Start on the Windows task bar, and choose All Programs > IBM SPSS

Statistics

diagram in the View Path Diagrams window.

From within SPSS Statistics, choose Analyze > IBM SPSS Amos from the menus.

> IBM SPSS Amos 21 > Amos Graphics.

> IBM SPSS Amos 21 > View Path Diagrams. Then double-click a path

Page 36

10

Chapter 2

Creating a New Model

E From the menus, choose File > New.

Your work area appears. The large area on the right is where you draw path diagrams.

The toolbar on the left provides one-click access to the most frequently used buttons.

You can use either the toolbar or menu commands for most operations.

Page 37

Specifying the Data File

The next step is to specify the file that contains the Hamilton data. This tutorial uses a

Microsoft Excel 8.0 (*.xls) file, but Amos supports several common database formats,

including SPSS Statistics *.sav files. If you launch Amos from the Add-ons menu in

SPSS Statistics, Amos automatically uses the file that is open in SPSS Statistics.

E From the menus, choose File > Data Files.

E In the Data Files dialog box, click File Name.

E Browse to the Tutorial folder. If you performed a typical installation, the path is

C:\Program Files\IBM\SPSS\Amos\21\Tutorial\<language>.

E In the Files of type list, select Excel 8.0 (*.xls).

E Select Hamilton.xls, and then click Open.

E In the Data Files dialog box, click OK.

11

Tutorial: Getting Started with Amos Graphics

Specifying the Model and Drawing Variables

The next step is to draw the variables in your model. First, you’ll draw three rectangles

to represent the observed variables, and then you’ll draw an ellipse to represent the

unobserved variable.

E From the menus, choose Diagram > Draw Observed.

E In the drawing area, move your mouse pointer to where you want the Education

rectangle to appear. Click and drag to draw the rectangle. Don’t worry about the exact

size or placement of the rectangle because you can change it later.

E Use the same method to draw two more rectangles for Income and SAT.

E From the menus, choose Diagram > Draw Unobserved.

Page 38

12

Chapter 2

E In the drawing area, move your mouse pointer to the right of the three rectangles and

click and drag to draw the ellipse.

The model in your drawing area should now look similar to the following:

Naming the Variables

E In the drawing area, right-click the top left rectangle and choose Object Properties from

the pop-up menu.

E Click the Text tab.

E In the Variable name text box, type Education.

E Use the same method to name the remaining variables. Then close the Object

Properties dialog box.

Page 39

Your path diagram should now look like this:

Drawing Arrows

Now you will add arrows to the path diagram, using the following model as your guide:

13

Tutorial: Getting Started with Amos Graphics

E From the menus, choose Diagram > Draw Path.

E Click and drag to draw an arrow between Education and SAT.

E Use this method to add each of the remaining single-headed arrows.

E From the menus, choose Diagram > Draw Covariances.

E Click and drag to draw a double-headed arrow between Income and Education. Don’t

worry about the curve of the arrow because you can adjust it later.

Page 40

14

Chapter 2

Constraining a Parameter

To identify the regression model, you must define the scale of the latent variable Other.

You can do this by fixing either the variance of Other or the path coefficient from Other

to SAT at some positive value. The following shows you how to fix the path coefficient

at unity (1).

E In the drawing area, right-click the arrow between Other and SAT and choose Object

Properties

E Click the Parameters tab.

E In the Regression weight text box, type 1.

from the pop-up menu.

E Close the Object Properties dialog box.

There is now a

1 above the arrow between Other and SAT. Your path diagram is now

complete, other than any changes you may wish to make to its appearance. It should

look something like this:

Page 41

Tutorial: Getting Started with Amos Graphics

Altering the Appearance of a Path Diagram

You can change the appearance of your path diagram by moving and resizing objects.

These changes are visual only; they do not affect the model specification.

To Move an Object

E From the menus, choose Edit > Move.

15

E In the drawing area, click and drag the object to its new location.

To Reshape an Object or Double-Headed Arrow

E From the menus, choose Edit > Shape of Object.

E In the drawing area, click and drag the object until you are satisfied with its size and

shape.

To Delete an Object

E From the menus, choose Edit > Erase.

E In the drawing area, click the object you wish to delete.

Page 42

16

Chapter 2

To Undo an Action

E From the menus, choose Edit > Undo.

To Redo an Action

E From the menus, choose Edit > Redo.

Setting Up Optional Output

Some of the output in Amos is optional. In this step, you will choose which portions of

the optional output you want Amos to display after the analysis.

E From the menus, choose View > Analysis Properties.

E Click the Output tab.

E Select the Minimization history, Standardized estimates, and Squared multiple correlations

check boxes.

Page 43

Tutorial: Getting Started with Amos Graphics

17

E

Close the Analysis Properties dialog box.

Page 44

18

Chapter 2

Performing the Analysis

The only thing left to do is perform the calculations for fitting the model. Note that in

order to keep the parameter estimates up to date, you must do this every time you

change the model, the data, or the options in the Analysis Properties dialog box.

E From the menus, click Analyze > Calculate Estimates.

E Because you have not yet saved the file, the Save As dialog box appears. Type a name

for the file and click Save.

Amos calculates the model estimates. The panel to the left of the path diagram displays

a summary of the calculations.

Viewing Output

When Amos has completed the calculations, you have two options for viewing the

output: text and graphics.

To View Text Output

E From the menus, choose View > Text Output.

The tree diagram in the upper left pane of the Amos Output window allows you to

choose a portion of the text output for viewing.

E Click Estimates to view the parameter estimates.

Page 45

Tutorial: Getting Started with Amos Graphics

19

To View Graphics Output

E Click the Show the output path diagram button .

E In the Parameter Formats pane to the left of the drawing area, click Standardized

estimates.

Page 46

20

Chapter 2

Your path diagram now looks like this:

The value 0.49 is the correlation between Education and Income. The values 0.72 and

0.11 are standardized regression weights. The value 0.60 is the squared multiple

correlation of SAT with Education and Income.

E In the Parameter Formats pane to the left of the drawing area, click Unstandardized

estimates

.

Your path diagram should now look like this:

Printing the Path Diagram

E From the menus, choose File > Print.

The Print dialog box appears.

Page 47

E Click Print.

Copying the Path Diagram

21

Tutorial: Getting Started with Amos Graphics

Amos Graphics lets you easily export your path diagram to other applications such as

Microsoft Word.

E From the menus, choose Edit > Copy (to Clipboard).

E Switch to the other application and use the Paste function to insert the path diagram.

Amos Graphics exports only the diagram; it does not export the background.

Copying Text Output

E In the Amos Output window, select the text you want to copy.

E Right-click the selected text, and choose Copy from the pop-up menu.

E Switch to the other application and use the Paste function to insert the text.

Page 48

Page 49

Estimating Variances and Covariances

Introduction

This example shows you how to estimate population variances and covariances. It also

discusses the general format of Amos input and output.

Example

1

About the Data

Attig (1983) showed 40 subjects a booklet containing several pages of advertisements.

Then each subject was given three memory performance tests.

Test Explanation

recall

cued

place

Attig repeated the study with the same 40 subjects after a training exercise intended

to improve memory performance. There were thus three performance measures

before training and three performance measures after training. In addition, she

recorded scores on a vocabulary test, as well as age, sex, and level of education.

Attig’s data files are included in the Examples folder provided by Amos.

The subject was asked to recall as many of the advertisements as possible.

The subject’s score on this test was the number of advertisements recalled

correctly.

The subject was given some cues and asked again to recall as many of the

advertisements as possible. The subject’s score was the number of

advertisements recalled correctly.

The subject was given a list of the advertisements that appeared in the

booklet and was asked to recall the page location of each one. The subject’s

score on this test was the number of advertisements whose location was

recalled correctly.

23

Page 50

24

Example 1

Bringing In the Data

E From the menus, choose File > New.

E From the menus, choose File > Data Files.

E In the Data Files dialog box, click File Name.

E Browse to the Examples folder. If you performed a typical installation, the path is

C:\Program Files\IBM\SPSS\Amos\21\Examples\<language>.

E In the Files of type list, select Excel 8.0 (*.xls), select UserGuide.xls, and then click

Open.

E In the Data Files dialog box, click OK.

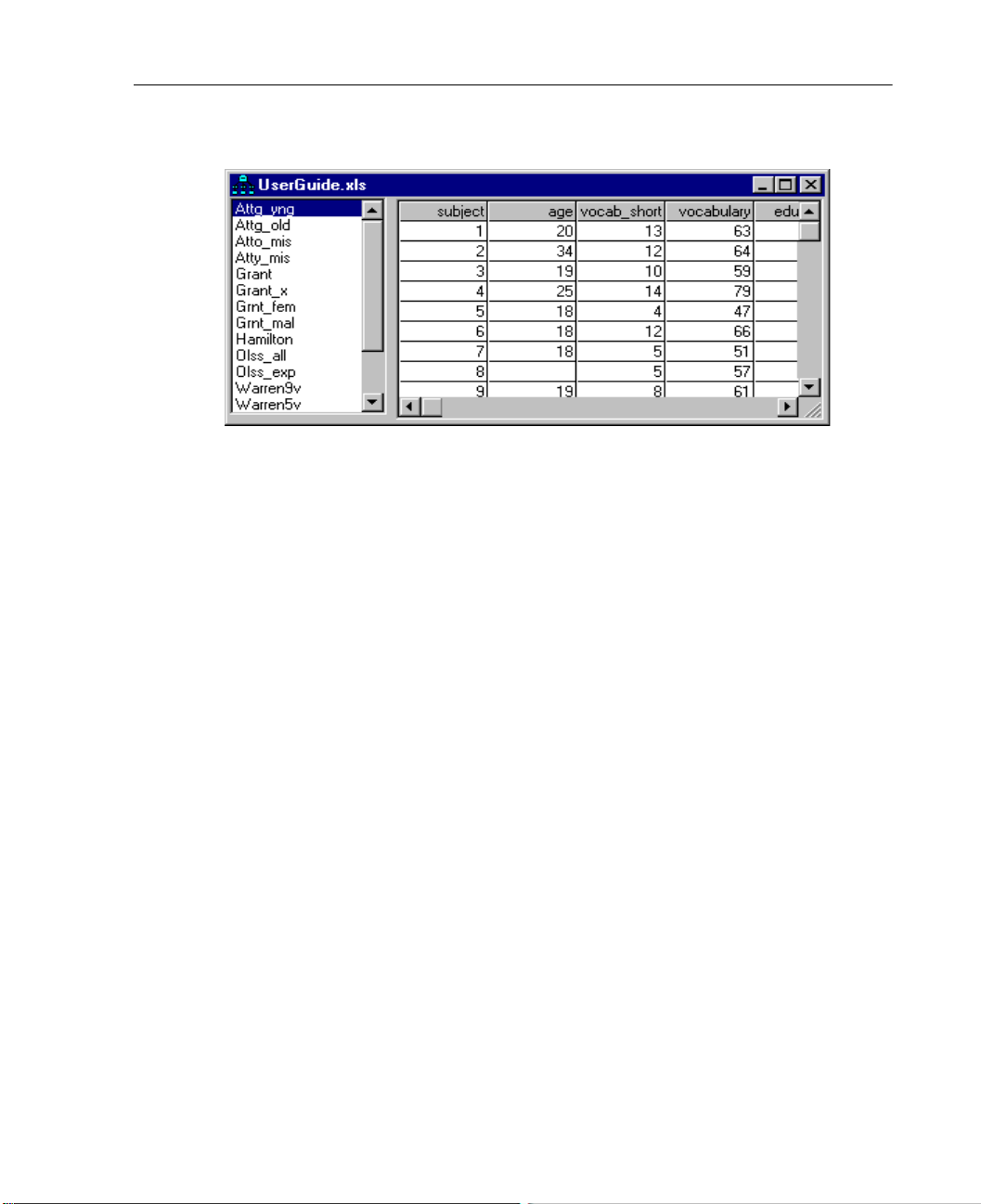

Amos displays a list of worksheets in the UserGuide workbook. The worksheet

Attg_yng contains the data for this example.

E In the Select a Data Table dialog box, select Attg_yng, then click View Data.

The Excel worksheet for the Attg_yng data file opens.

Page 51

Estimating Variances and Covariances

As you scroll across the worksheet, you will see all of the test variables from the Attig

study. This example uses only the following variables: recall1 (recall pretest), recall2

(recall posttest), place1 (place recall pretest), and place2 (place recall posttest).

E After you review the data, close the data window.

E In the Data Files dialog box, click OK.

25

Analyzing the Data

In this example, the analysis consists of estimating the variances and covariances of the

recall and place variables before and after training.

Specifying the Model

E From the menus, choose Diagram > Draw Observed.

E In the drawing area, move your mouse pointer to where you want the first rectangle to

appear. Click and drag to draw the rectangle.

E From the menus, choose Edit > Duplicate.

E Click and drag a duplicate from the first rectangle. Release the mouse button to

position the duplicate.

Page 52

26

Example 1

E Create two more duplicate rectangles until you have four rectangles side by side.

Tip: If you want to reposition a rectangle, choose Edit > Move from the menus and drag

the rectangle to its new position.

Naming the Variables

E From the menus, choose View > Variables in Dataset.

The Variables in Dataset dialog box appears.

E Click and drag the variable recall1 from the list to the first rectangle in the drawing

area.

E Use the same method to name the variables recall2, place1, and place2.

E Close the Variables in Dataset dialog box.

Page 53

Changing the Font

E Right-click a variable and choose Object Properties from the pop-up menu.

The Object Properties dialog box appears.

E Click the Text tab and adjust the font attributes as desired.

27

Estimating Variances and Covariances

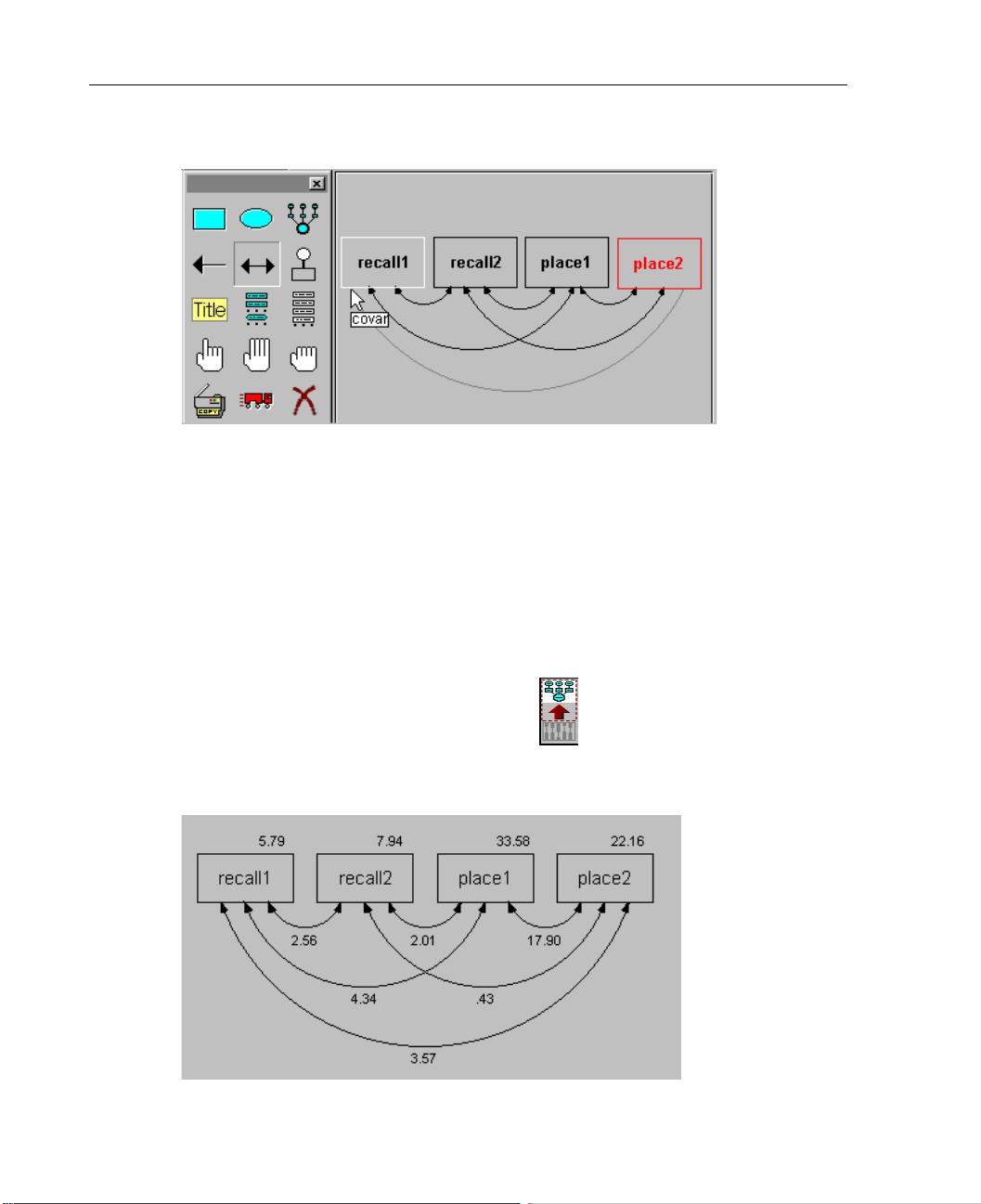

Establishing Covariances

If you leave the path diagram as it is, Amos Graphics will estimate the variances of the

four variables, but it will not estimate the covariances between them. In Amos

Graphics, the rule is to assume a correlation or covariance of 0 for any two variables

that are not connected by arrows. To estimate the covariances between the observed

variables, we must first connect all pairs with double-headed arrows.

E From the menus, choose Diagram > Draw Covariances.

E Click and drag to draw arrows that connect each variable to every other variable.

Your path diagram should have six double-headed arrows.

Page 54

28

Example 1

Performing the Analysis

E From the menus, choose Analyze > Calculate Estimates.

Because you have not yet saved the file, the Save As dialog box appears.

E Enter a name for the file and click Save.

Viewing Graphics Output