RAMAC Virtual Array,

Peer-to-Peer Remote Copy,

and IXFP/SnapShot for VSE/ESA

Alison Pate, Dionisio Dychioco, Guenter Rebmann, Bill Worthington

IBM

International Technical Support Organization

http://www.redbooks.ibm.com

This book was printed at 240 dpi (dots per inch). The final production redbook with the RED cover will

be printed at 1200 dpi and will provide superior graphics resolution. Please see “How to Get ITSO

Redbooks” at the back of this book for ordering instructions.

SG24-5360-00

January 1999

Take Note!

Before using this information and the product it supports, be sure to read the general information in

Appendix E, “Special Notices” on page 65.

First Edition (January 1999)

This edition applies to Version 6 of VSE Central Functions, Program Number 5686-066, Version 2, Release 3.1 of

VSE/ESA, Program Number 5590-VSE, and Version 1, Release 16 of Initialize Disk (ICKDSF), Program Number

5747-DS2 for use with the VSE/ESA operating system.

Comments may be addressed to:

IBM Corporation, International Technical Support Organization

Dept. QXXE Building 80-E2

650 Harry Road

San Jose, California 95120-6099

When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any

way it believes appropriate without incurring any obligation to you.

Copyright International Business Machines Corporation 1999. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Contents

Preface
The Team That Wrote This Redbook
Comments Welcome

Chapter 1. The IBM RAMAC Virtual Array
1.1 What Is an IBM RAMAC Virtual Array?
1.1.1 Overview of RVA and the Virtual Disk Architecture
1.1.2 Log Structured File
1.1.3 Data Compression and Compaction
1.2 VSE/ESA Support for the RVA
1.2.1 What Is IXFP/SnapShot for VSE/ESA?
1.2.2 What Is SnapShot?
1.2.3 What Is IXFP?
1.3 What Is Peer-to-Peer Remote Copy?

Chapter 2. RVA Benefits for VSE/ESA
2.1 RVA Simplifies Your Storage Management
2.1.1 Disk Capacity
2.1.2 Administration
2.1.3 IXFP
2.2 Batch Window Improvement
2.2.1 RAMAC Virtual Array
2.2.2 IXFP/SnapShot for VSE/ESA
2.3 Application Development
2.4 RVA Data Availability
2.4.1 Hardware
2.4.2 SnapShot
2.4.3 PPRC

Chapter 3. VSE/ESA Support for the RVA
3.1 Prerequisites
3.2 Volumes
3.3 Host Connection
3.3.1 VSE/ESA Input/Output Configuration Program
3.4 ICKDSF
3.4.1 Volume Minimal Init
3.4.2 Partial Disk Minimal Init

Chapter 4. IXFP/SnapShot for VSE/ESA
4.1 IXFP SNAP
4.1.1 A Full Volume
4.1.2 A Range of Cylinders
4.1.3 A Non-VSAM File
4.2 IXFP DDSR
4.2.1 Expired Files
4.2.2 A Total Volume
4.2.3 A Range of Cylinders
4.2.4 A Specified File
4.3 IXFP REPORT
4.3.1 Device Detail Report
4.3.2 Device Summary Report
4.3.3 Subsystem Summary Report

Chapter 5. Peer-to-Peer Remote Copy
5.1 PPRC and VSE/ESA Software Requirements
5.2 PPRC Hardware Requirements
5.3 Invoking peer-to-peer remote copy
5.4 SnapShot Considerations
5.4.1 Primary Devices of a PPRC Pair
5.4.2 Secondary Devices of a PPRC Pair
5.5 Examples of PPRCOPY Commands
5.5.1 Setting Up PPRC Paths and Pairs
5.5.2 Recovering from a Primary Site Failure
5.5.3 Recovering from a Secondary Site Failure
5.5.4 Deleting PPRC Pairs and Paths
5.6 Physical Connections to the RVA
5.6.1 Determining the Logical Control Unit Number for RVA
5.6.2 Determining the Channel Connection Address

Appendix A. RVA Functional Device Configuration

Appendix B. IXFP Command Examples
B.1 SNAP Command
B.1.1 Syntax
B.1.2 Using SnapShot to Copy One Volume to Another
B.1.3 Using SnapShot to Copy a File from One Volume to Another
B.1.4 Using SnapShot to Copy Files with Relocation
B.1.5 Other Uses of SnapShot
B.2 DDSR Command
B.2.1 Syntax
B.2.2 Using DDSR to Delete a Single Data Set
B.2.3 Using DDSR to Delete the Contents of a Volume
B.2.4 Using DDSR to Delete the Free Space on an RVA
B.3 Report Command
B.3.1 Syntax
B.3.2 Reporting on the Capacity of a Single Volume
B.3.3 Reporting on the Capacity of Multiple Volumes
B.3.4 Reporting on the Capacity of the RVA Subsystem
B.4 IXFP/SnapShot Setup Job Streams

Appendix C. VSE/VSAM Considerations
C.1 Backing up VSAM Volumes

Appendix D. IOCDS Example

Appendix E. Special Notices

Appendix F. Related Publications
F.1 International Technical Support Organization Publications
F.2 Redbooks on CD-ROMs
F.3 Other Publications

How to Get ITSO Redbooks
IBM Redbook Fax Order Form

Index

ITSO Redbook Evaluation

Preface

This redbook provides a foundation for understanding VSE/ESA's support for the

IBM 9393 RAMAC Virtual Array (RVA). It covers existing support and the

recently available IXFP/SnapShot for VSE/ESA and peer-to-peer remote copy

support for the RVA. It also covers using IXFP/SnapShot for VSE/ESA for

VSE/VSAM.

The redbook is intended for use by IBM client representatives, IBM technical

specialists, IBM Business Partners, and IBM customers who are planning to

implement IXFP/SnapShot for VSE/ESA on the RVA.

The Team That Wrote This Redbook

This redbook was produced by a team of specialists from around the world

working at the International Technical Support Organization, San Jose Center.

Alison Pate is a project leader in the International Technical Support

Organization, San Jose Center. She joined IBM in the UK after completing an

MSc in Information Technology. A large-systems specialist since 1990, Alison

has eight years of practical experience in using and implementing IBM DASD

solutions. She has acted as a consultant to some of the largest, leading-edge

customers in the United Kingdom—providing technical support and guidance to

their key business projects.

Guenter Rebmann is a DASD HW Support Specialist in Germany. He joined IBM

in 1983 as a Customer Engineer for large system customers. Since 1993 Guenter

has worked in the DASD-EPSG (EMEA Product Support Group) in Mainz,

Germany. His area of expertise is large system hardware products.

Dionisio Dychioco III is a Technical Support Manager in the Philippines. He has

19 years of experience in data processing, including 17 years in technical

support in the IBM mainframe environment. Dionisio has worked at the Far East

Bank & Trust Co. for 12 years. His areas of expertise include MVS, VSE, and

mainframe-PC connectivity.

Bill Worthington is a Consulting IT Technical Specialist in the United States. He

has 38 years of experience in data processing, including 34 years in technical

sales support within IBM. His areas of expertise include high-end disk storage

products as well as VSE/ESA and VM/ESA. Bill has been in his current position

supporting RAMAC DASD products since 1995.

Thanks to the following people for their invaluable contributions to this project:

Janet Anglin, Storage Systems Division, San Jose

Maria Sueli Almeida, International Technical Support Organization, San Jose

Fred Borchers, International Technical Support Organization, Poughkeepsie

Jack Flynn, Storage Systems Division, San Jose

Bob Haimowitz, International Technical Support Organization, Poughkeepsie

Dan Janda, Jr., Advanced Technical Support, Endicott

Teresa Leamon, Storage Systems Division, San Jose

Axel Pieper, VSE Development, Boeblingen, Germany

Hanns-Joachim Uhl, VSE Development, Boeblingen, Germany

Comments Welcome

Your comments are important to us!

We want our redbooks to be as helpful as possible. Please send us your

comments about this or other redbooks in one of the following ways:

• Fax the evaluation form found in “ITSO Redbook Evaluation” on page 73 to
  the fax number shown on the form.

• Use the online evaluation form found at http://www.redbooks.ibm.com/

• Send your comments in an Internet note to redbook@us.ibm.com

Chapter 1. The IBM RAMAC Virtual Array

In this chapter we describe the RAMAC Virtual Array (RVA) and the support that

VSE/ESA delivers for it.

1.1 What Is an IBM RAMAC Virtual Array?

We explain functions here on a level that is needed to understand how data is
stored and organized on an RVA. If you would like more detailed descriptions of
this interesting virtual disk architecture, see the redbook entitled IBM RAMAC
Virtual Array, SG24-4951.

1.1.1 Overview of RVA and the Virtual Disk Architecture

Traditional storage subsystems such as the 3990 and 3390 use the count key
data (CKD) architecture. The CKD architecture defines how and where on the
disk device the data is physically stored. Any updates to the data are written
directly to the same position on the physical disk from which the updated data
was read. This is referred to as update in place.

IBM's RVA provides a high-availability, high-performance storage solution thanks
to its revolutionary virtual disk architecture. To the host, the RVA appears as up
to four traditional 3990 storage controls, with up to 256 3380 or 3390 volumes
(64 functional volumes on each 3990). These devices do not physically exist in the
subsystem and are referred to as functional devices. Physically, the subsystem
contains RAID 6-protected arrays of fixed block architecture (FBA) disk devices.

1.1.2 Log Structured File

The RAID-protected FBA disk arrays that make up the RVA′s physical disk space

are sequentially filled with data. New and updated data is placed at the end of

the file, as it is on a sequential or log file. We call this architecture a

structured file

Updates leave areas in the log file that are no longer needed. A microcode

process called

there is always enough freespace for writing. This process runs as a

background task. You can control the freespace by observing the net capacity

load (NCL) of the RVA and using the IBM Extended Facilities Product (IXFP)

program. See the RVA redbook entitled

information. The RVA′s physical disk space typically should be kept below 75%

NCL. Above that level, the freespace collection process runs with higher priority,

and performance degradation may result. A service information message (SIM)

informs operators when this threshold is reached.

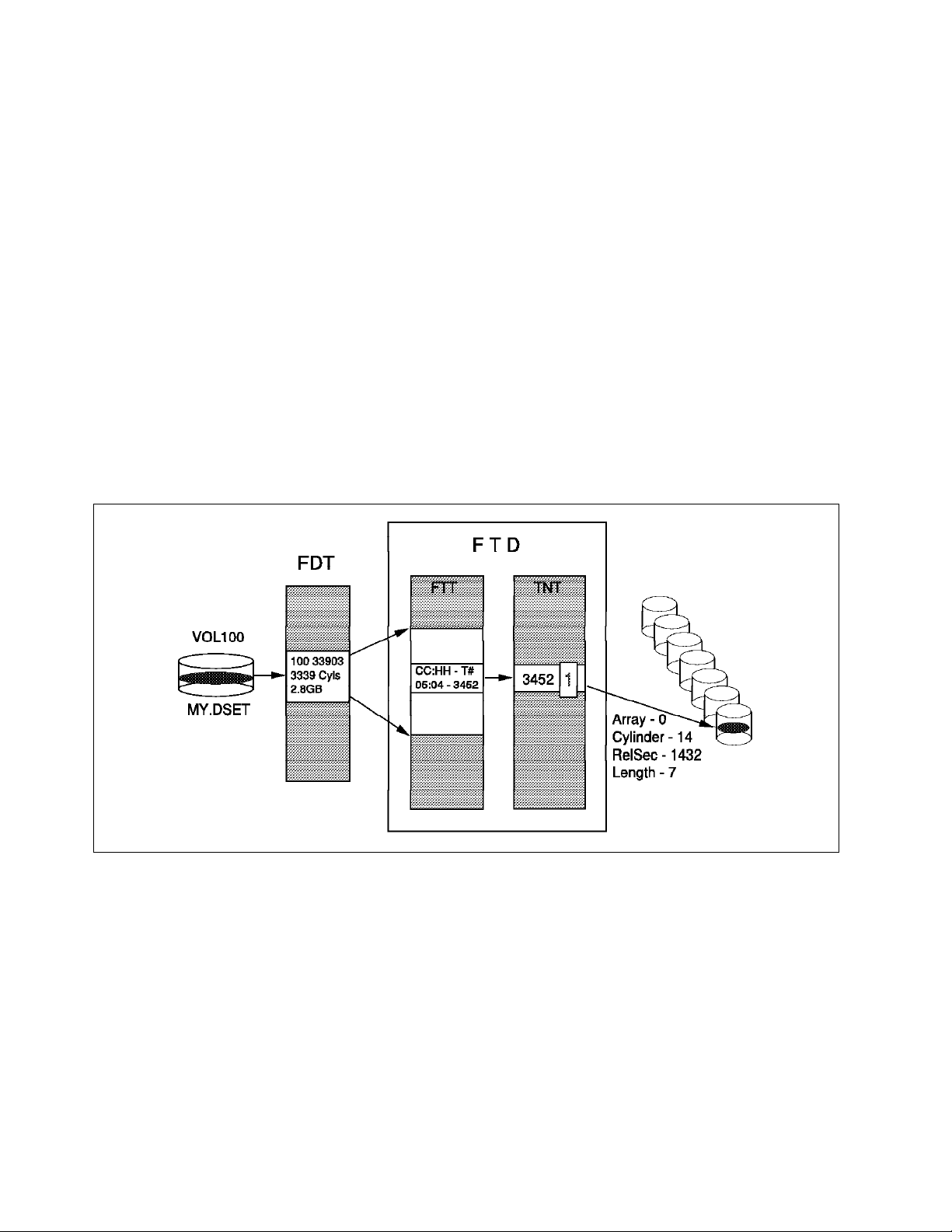

The following tables are used to map the tracks of functional devices to the FBA

blocks related to those tracks:

•

Functional device table

The functional device table (FDT) holds the information about the functional

volumes that have been defined to the RVA.

•

Functional track directory

.

freespace collection

log

ensures that these areas are put back so that

IBM RAMAC Virtual Array

for more

Copyright IBM Corp. 1999 1

Page 12

The functional track directory (FTD) is the collective name for two tables that
together map each functional track to an area in the RVA's physical storage:

− Functional track table

The functional track table (FTT) contains the host-related pointers, that is,
the functional-device-related track pointers of the FTD.

− Track number table

The track number table (TNT) contains the back-end data pointers of the
FTD. A reference counter is also part of this table.

Although the FTD consists of two tables, we discuss it as one entity (see
Figure 1). In fact, each functional track has an entry in the FTD. If a functional
track contains data, its associated FTD entry has a pointer to the FBA block
where the data starts. The data of a functional track may occupy one or more FBA
blocks.

If the data of a functional track is updated, the RVA puts the new data in a new
place in the log structured file, and the FTD entry points to the new data location.
There is no update in place.

Figure 1. The RAMAC Virtual Array Tables
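The interplay of the log structured file, the FTD pointers, and the reference counter can be sketched in a few lines of Python. This is a toy model, not the RVA microcode: the class name `LogStructuredStore`, its methods, the volume name, and the simplification of one log entry per functional track are all assumptions made for illustration.

```python
class LogStructuredStore:
    """Toy model of a log structured file with FTD pointers and reference counts."""

    def __init__(self):
        self.log = []        # back-end storage, filled sequentially
        self.ftd = {}        # (device, track) -> index of the data in self.log
        self.refcount = {}   # log index -> number of FTD pointers that reference it

    def write(self, device, track, data):
        """Append data at the end of the log; there is no update in place."""
        old = self.ftd.get((device, track))
        self.log.append(data)
        new = len(self.log) - 1
        self.ftd[(device, track)] = new
        self.refcount[new] = 1
        if old is not None:
            self.refcount[old] -= 1   # the old location may now be collectable

    def read(self, device, track):
        """Follow the FTD pointer to the current data of a functional track."""
        return self.log[self.ftd[(device, track)]]

    def collectable_blocks(self):
        """Log entries that no FTD pointer references any more."""
        return [index for index, count in self.refcount.items() if count == 0]


store = LogStructuredStore()
store.write("VOL001", 0, "payroll v1")
store.write("VOL001", 0, "payroll v2")   # the update lands in a new location
print(store.read("VOL001", 0))           # payroll v2
print(store.collectable_blocks())        # [0]
```

In this sketch the entry at index 0 is what a freespace collection pass would reclaim, because the only pointer to it was redirected by the update.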

1.1.3 Data Compression and Compaction

All data in the RVA is compressed; compression occurs when data enters an
RVA. In addition, the gaps between the records, as they appear on the CKD
devices, are removed before the data is written to disk. Similarly, the unused
space at the end of a track is removed. This is called compaction. The
compression and compaction ratio depends on the nature of the data.
Experience shows that RVA customers can achieve an overall compression and
compaction ratio of 3.6:1.

Data compression speeds up the transfer from and to the arrays. It also increases
the effectiveness of cache memory, because more data fits in cache when it is
compressed.
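As a rough worked example of what a compression and compaction ratio means for capacity, the arithmetic can be written out as follows. The function name and the 100 GB input are hypothetical; the 3.6:1 default is the overall ratio quoted above, and the ratio for any particular workload depends on the nature of its data.

```python
def effective_capacity_gb(physical_gb, ratio=3.6):
    """Estimate how much host data fits in the given physical space.

    ratio is the combined compression and compaction ratio (3.6 means 3.6:1).
    """
    return physical_gb * ratio


# Hypothetical subsystem with 100 GB of physical disk space.
print(round(effective_capacity_gb(100), 1))        # 360.0
# The same space with data that only compresses 2:1.
print(round(effective_capacity_gb(100, 2.0), 1))   # 200.0
```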

1.2 VSE/ESA Support for the RVA

The RVA has been supported by VSE/ESA since its introduction. Because the

RVA presents itself as logical 3380 or 3390 direct access storage devices (DASD)

attached to a logical 3990 Model 3 storage control, releases of VSE/ESA

supporting this logical environment have functioned with the RVA. However,

until now, VSE/ESA has not provided SnapShot, deleted data space release

(DDSR) or capacity reporting natively. It has depended on VM/ESA's IXFP and

SnapShot to provide that benefit to VSE/ESA guests.

1.2.1 What Is IXFP/SnapShot for VSE/ESA?

IXFP/SnapShot for VSE/ESA is a feature of VSE Central Functions in conjunction

with VSE/ESA Version 2, Release 3.1. It provides SnapShot, DDSR, and capacity

reporting support for the RVA. OS/390 and VM/ESA provide two distinct

products—IXFP and SnapShot—for supporting the RVA, whereas VSE/ESA has

integrated many of the functions provided by these products into a single feature

that implements the support in an Attention Routine command.

1.2.2 What Is SnapShot?

SnapShot, one of the three functions in IXFP/SnapShot for VSE/ESA, enables you

to produce almost instantaneous copies of non-VSAM data sets, volumes, and

data within CYLINDER ranges.

Note: Although not officially supported, VSAM data sets can be indirectly copied

through techniques we discuss in Appendix C, “VSE/VSAM Considerations” on

page 59.

The speed in copying is attained by exploiting the RVA′s virtual disk architecture.

Snapshot produces copies without data movement. We call making a copy with

snap

SnapShot a

Conventional methods of copying data on DASD consist of making a physical

copy of the data on either DASD or tape. Host processors, channels, tape, and

DASD controllers are involved in these conventional copy processes. Copying

may take a long time, depending on available system resources.

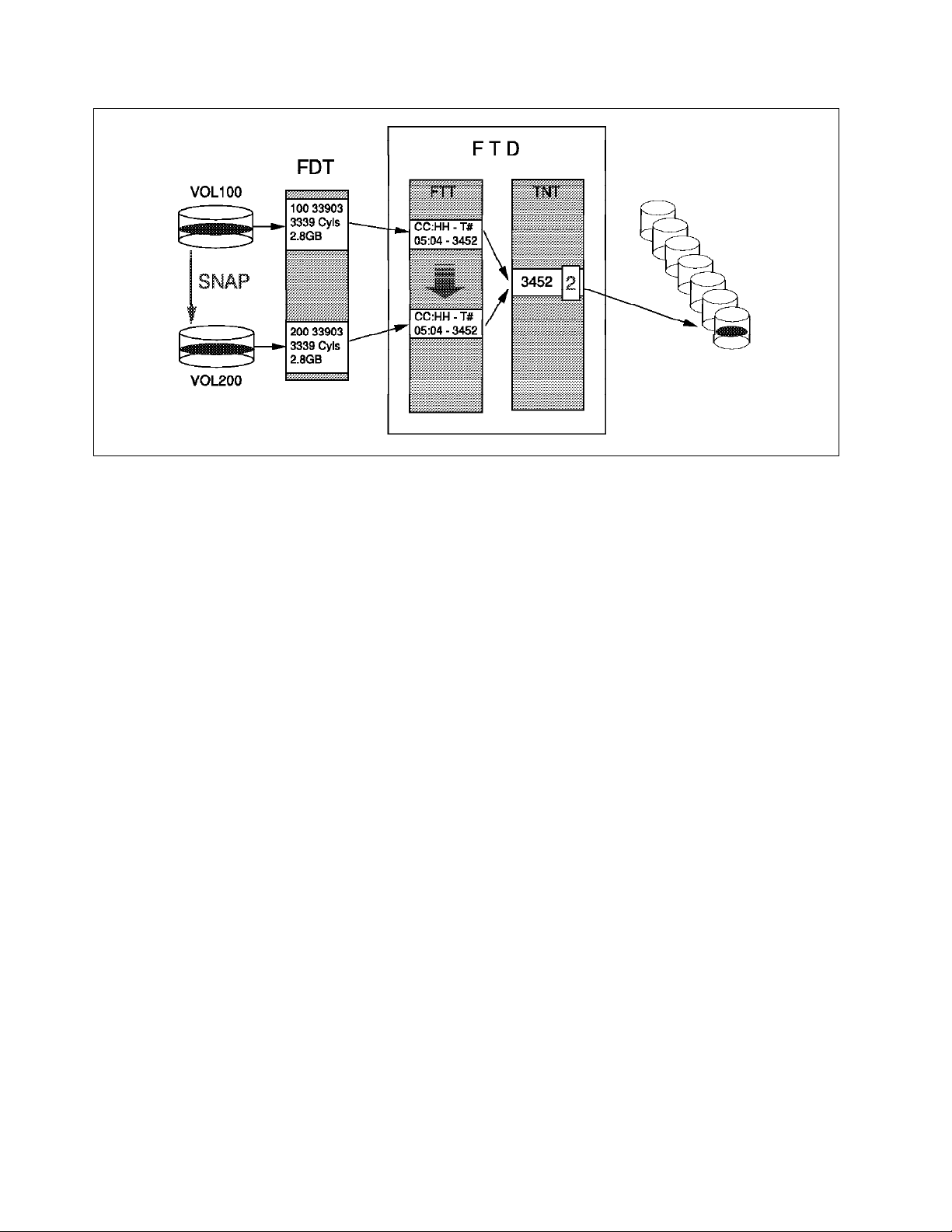

In the RVA′s virtual disk architecture, a functional device is represented by a

certain number of pointers in the FTD. Every used track has a pointer in the FTD

to its back-end data. Y ou

As you can imagine, snapping is a very fast process that takes seconds rather

than minutes or hours. No data movement takes place, and no additional

back-end physical space is used. Both FTD pointers, the original and the copy,

point to the same physical data location (see Figure 2 on page 4). Notice too

that the TNT entry now has a value of 2, which indicates that the data is in use

by two logical volumes.

. The result of a SnapShot is also called a

snap

a copy of the data by copying its FTD pointers.

snap

.

Chapter 1. The IBM RAMAC Virtual Array 3

Page 14

Figure 2. Data Snapping. SnapShot creates a logical copy by copying the FTD pointers.

Only when either the original or the copied track is updated is its associated FTD

pointer changed to point to the new data location. The other FTD pointer

remains unchanged. Additional space is needed in this case. As long as there

is a pointer to a data block in the physical disk storage, the block cannot become

free for the freespace collection process. A reference counter in the TNT

prevents the block from becoming free.

Although the creation of the copy is a logical manipulation of pointers, the data
exists on disk, so in this sense it is not a logical copy. As far as the operating
system is concerned, the two copies are completely independent of each other.
On the RVA hardware side, the snap is a virtual copy of the data.
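The pointer manipulation behind a snap can be sketched as follows. This is an illustrative model only: the function names `snap` and `update`, the dictionary layout, and the volume names PROD and COPY are assumptions, and the sketch assumes the target volume holds no data before the snap.

```python
def snap(ftd, refcount, source, target):
    """Copy the FTD pointers of source to target; no data is moved."""
    for (device, track), block in list(ftd.items()):
        if device == source:
            ftd[(target, track)] = block
            refcount[block] += 1      # data is now in use by one more volume


def update(log, ftd, refcount, device, track, data):
    """Write new data to a new location and redirect only this FTD pointer."""
    old = ftd[(device, track)]
    log.append(data)
    new = len(log) - 1
    ftd[(device, track)] = new
    refcount[new] = 1
    refcount[old] -= 1                # the other volume still references old


log = ["track 0 data", "track 1 data"]
ftd = {("PROD", 0): 0, ("PROD", 1): 1}
refcount = {0: 1, 1: 1}

snap(ftd, refcount, "PROD", "COPY")
print(len(log), refcount)             # 2 {0: 2, 1: 2}

update(log, ftd, refcount, "PROD", 0, "track 0 changed")
print(ftd[("PROD", 0)], ftd[("COPY", 0)])   # 2 0
print(refcount)                              # {0: 1, 1: 2, 2: 1}
```

After the snap the log still holds two entries, which mirrors the statement that no additional back-end space is used. Only the later update consumes new space, and the reference count of 1 on the old entry keeps it from being treated as freespace.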

1.2.3 What Is IXFP?

The IXFP portion of IXFP/SnapShot for VSE/ESA provides two functions: DDSR

and reporting.

1.2.3.1 Deleted Data Space Release

The RVA manages the freespace in the back-end storage by monitoring space

that is no longer occupied by active data. This may be space formerly occupied

by temporary files, such as DFSORT for VSE work files, or it may be space that

previously held data that has been updated and written back to a different array

track in the back-end storage of the RVA. The difference is that the RVA cannot

know that the sort work space is no longer needed unless it is explicitly

told to release it into the freespace pool.

The DDSR option of the IXFP operator command provides the ability to release

this freespace back to the RVA. Using DDSR, allocated space can be returned to

the RVA on a subsystem or volume basis. Individual files can also be deleted

and their space returned. DDSR is done using the new IXFP Attention Routine

command when space reclamation is needed. Either the operator can invoke

the commands, or a job stream can include them.
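Conceptually, DDSR tells the subsystem which functional tracks no longer hold wanted data, so that the back-end space behind them can be reclaimed. The sketch below models that idea only; it is not the IXFP command syntax (see Appendix B for that). The function name `ddsr_release`, the data layout, and the volume names are hypothetical.

```python
def ddsr_release(ftd, refcount, device, tracks):
    """Drop the FTD pointers for the given tracks.

    Returns the back-end blocks that are no longer referenced by any
    functional track and can therefore be treated as freespace.
    """
    freed = []
    for track in tracks:
        block = ftd.pop((device, track), None)
        if block is None:
            continue                  # this track held no data
        refcount[block] -= 1
        if refcount[block] == 0:
            freed.append(block)
    return freed


# Sort work space on WORK01, tracks 10 to 12; track 12 was also snapped to COPY01.
ftd = {("WORK01", 10): 5, ("WORK01", 11): 6, ("WORK01", 12): 7, ("COPY01", 12): 7}
refcount = {5: 1, 6: 1, 7: 2}

freed = ddsr_release(ftd, refcount, "WORK01", range(10, 14))
print(freed)          # [5, 6]
print(refcount[7])    # 1
```

Block 7 is not freed in this example because the snapped volume still points to it, which matches the reference counter behavior described in 1.2.2.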

1.2.3.2 Reporting Functions

The IXFP/SnapShot for VSE/ESA reporting function displays logical volume

utilization, such as space allocated and data stored on the volume. It can also

display a summary of the entire subsystem and its NCL, freespace, capacity

allocated and used, and the compression and compaction ratio.
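To show how the figures in a subsystem summary relate to each other, here is a small sketch. It assumes that NCL is the percentage of installed physical capacity currently holding data, and that the compression and compaction ratio is the functional data stored divided by the physical space it occupies; the function names and sample numbers are hypothetical. The 75% guideline is the one given in 1.1.2.

```python
NCL_GUIDELINE_PERCENT = 75.0   # section 1.1.2: keep the RVA below 75% NCL


def net_capacity_load(used_physical_gb, installed_physical_gb):
    """NCL as a percentage of installed physical capacity (assumed definition)."""
    return 100.0 * used_physical_gb / installed_physical_gb


def compression_ratio(functional_data_gb, used_physical_gb):
    """Functional data stored per unit of physical space used (assumed definition)."""
    return functional_data_gb / used_physical_gb


# Hypothetical subsystem: 300 GB installed, 240 GB in use, holding 720 GB of host data.
ncl = net_capacity_load(240.0, 300.0)
ratio = compression_ratio(720.0, 240.0)
needs_attention = ncl >= NCL_GUIDELINE_PERCENT

print(ncl, ratio, needs_attention)   # 80.0 3.0 True
```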

For the capacity management functions supported by OS/390 and VM/ESA, such

as reconfiguring the DASD, adding or deleting volumes, and draining an array,

you use the RVA's local operator panel.

1.3 What Is Peer-to-Peer Remote Copy?

We explain functions here on a level that is needed to understand how
peer-to-peer remote copy (PPRC) functions on an RVA. If you would like more
detailed descriptions of this data availability architecture, see the RVA redbook
entitled Implementing PPRC on RVA, SG24-4951.

PPRC allows the synchronous copying of data from a primary RVA to one or
more secondary RVAs at the same or a different location while providing
enterprisewide data protection, availability, and ease of system management.
The secondary site may be up to 43 km away from the primary. The
synchronous nature of PPRC offers a full recovery solution while delivering the
highest levels of data integrity and data availability for both operational and
disaster recovery environments.

The availability of PPRC on the RVA Model T82 now places the RVA at the heart

of mission-critical enterprise storage solutions—including remote site disaster

recovery protection, high availability, and data migration capabilities.
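The essential property of synchronous copying is that a write is reported complete only once both the primary and the secondary hold the data. The following sketch illustrates that property in isolation. The `Volume` class and `synchronous_write` function are hypothetical, and how a real subsystem reacts to an unreachable secondary is not modeled here.

```python
class Volume:
    """Minimal stand-in for a volume on a primary or secondary subsystem."""

    def __init__(self, name):
        self.name = name
        self.tracks = {}
        self.available = True

    def store(self, track, data):
        if not self.available:
            raise ConnectionError(f"{self.name} is unreachable")
        self.tracks[track] = data


def synchronous_write(primary, secondary, track, data):
    """Report completion to the host only after both copies hold the data."""
    primary.store(track, data)
    secondary.store(track, data)   # raises if the secondary cannot confirm
    return "complete"


primary = Volume("primary RVA")
secondary = Volume("secondary RVA")
status = synchronous_write(primary, secondary, 7, "ledger")
print(status, primary.tracks[7] == secondary.tracks[7])   # complete True

secondary.available = False
try:
    synchronous_write(primary, secondary, 8, "journal")
    outcome = "complete"
except ConnectionError:
    outcome = "not complete"
print(outcome)   # not complete
```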

Chapter 2. RVA Benefits for VSE/ESA

In this chapter we describe how VSE/ESA's support for the RVA assists in

managing storage, affects batch window characteristics, improves application

development, and increases the availability of data to the applications running

on the host system.

2.1 RVA Simplifies Your Storage Management

The RVA virtual disk architecture has many benefits to simplify your storage

management. In this section we discuss the improvements in storage

management that the functions and features of the RVA provide.

The space management improvements come from more effective use of space

through compression, reduced administration, and ease of management using

IXFP/SnapShot for VSE/ESA (see Figure 3).

Figure 3. Space Management Improvements

2.1.1 Disk Capacity

The virtual disk architecture and the use of data compression enable you to

store data more effectively in the arrays of the RVA than in a traditional disk

architecture subsystem. The virtual disk architecture reduces the cost per

megabyte in your subsystem and increases the internal transfer rate in the RVA.

There is no allocation of physical disk space for logical volumes, as there is in

traditional subsystems, so unused disk space is not given away. When data is

written to the RVA, the arrays are filled sequentially, independent of the volume

or data set to which the data belongs. The logical capacity that you define is

independent of the physical capacity that you have installed. Therefore you can
define more logical volumes than you would be able to define in a traditional
disk architecture, for the same physical space. Thus you can spread data over
more volumes to improve performance and data availability of the subsystem.
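The independence of defined logical capacity from installed physical capacity can be illustrated with simple arithmetic. All numbers and names below are hypothetical, chosen only to keep the calculation easy to follow: what must fit in the arrays is the compressed data actually written, not the capacity of the volumes that have been defined.

```python
def defined_capacity_gb(volume_count, volume_size_gb):
    """Logical capacity presented to the host."""
    return volume_count * volume_size_gb


def physical_space_used_gb(stored_data_gb, ratio):
    """Back-end space consumed by the data actually written."""
    return stored_data_gb / ratio


installed_gb = 80                          # hypothetical installed physical capacity
defined_gb = defined_capacity_gb(100, 2)   # 100 hypothetical volumes of 2 GB each
used_gb = physical_space_used_gb(60, 3)    # 60 GB written, hypothetical 3:1 ratio

print(defined_gb > installed_gb)   # True: more is defined than is installed
print(used_gb <= installed_gb)     # True: the data written still fits
```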

The hardware design of the RVA allows you to upgrade physical disk space to

726 GB without any subsystem outage time and without any change to the logical

device configuration. Cache upgrades are also concurrent with subsystem

operation. Therefore you have a high level of data availability and low

subsystem outage time for planned configuration changes.

2.1.2 Administration

The subsystem administration activity is reduced for space management. Space

management is simplified with the RVA, because there is no penalty for

overallocation. Data placement activity is also reduced. With the use of the log

structured file, the data from all volumes is spread across all physical disks in the

array. This eliminates the requirement to separate data for availability or

performance reasons, such as preventing high activity data sets, tables, or

indexes from being placed on the same disk volumes.

When the RVA is installed, you can define up to 256 volumes as either 3390 or

3380 devices. This gives you flexibility in your configuration and eases migration

from previously installed hardware. With the virtual disk architecture, data

management and tuning activities are minimized. Data is spread across all

disks in the array, automatically balancing the I/O load. When data is updated it

is written to another physical location in the array. As a result uncollected

freespace is created, which the RVA recycles independently in a background

routine to have it available for further use. This automatic freespace collection

keeps the level of the NCL as low as possible without any intervention from

space management, thus reducing the time and cost of storage management.

2.1.3 IXFP

With IXFP it is easy to manage the subsystem and monitor the NCL. With the

use of IXFP SnapShot, the time expended to copy volumes, data sets, or files is

considerably reduced. Backups can be done more often to increase the

consistency and data availability of your system.

The IXFP reporting function provides information about the space utilization of a

single RVA device or range of RVA devices. With the DDSR function of IXFP you

can release expired files residing on all VSE-managed RVA volumes whose

expiration date has been reached. You can process a complete volume, a

cylinder range, or a specified file. Thus the RVA can manage subsystem

freespace more efficiently than before.

2.2 Batch Window Improvement

Reducing the batch processing time increases the availability of online

applications. RVA and IXFP/SnapShot for VSE/ESA help reduce batch processing

time in several areas.

2.2.1 RAMAC Virtual Array

The RVA's virtual disk architecture enables performance improvement in batch

processing. This new architecture, coupled with data compression and

self-tuning capabilities, improves disk capacity utilization. Data from all logical

volumes is written across all the physical disks in the array. Automatic load

balancing across the volumes occurs as data is written to the array. Further, the

ability of the RVA to dynamically configure additional volumes of various sizes

eliminates volume contention and thereby reduces I/O bottlenecks.

In the RVA, space that has never been allocated or is allocated but unused takes

up no capacity. Data set size can increase and new files can be created with

minimal need to reorganize existing stored data. Users of traditional storage

systems keep lots of available free space to avoid batch application failures,

wasting valuable space and increasing storage costs. With the RVA it is not

necessary to keep lots of free space to avoid out-of-space conditions.

2.2.2 IXFP/SnapShot for VSE/ESA

Based on the belief that the best I/O is no I/O, IXFP/SnapShot for VSE/ESA

reduces online system outage by reducing the amount of elapsed time backups

and copies need to complete. IXFP/SnapShot for VSE/ESA improves these

aspects of batch processing:

• Data backup

Data is backed up during batch processing for many different reasons.
Copying data to protect against a hardware, software, or application failure
in the computer center is considered an operational backup. The backup
might be a complete copy of all data, or an incremental copy, that is, a
backup of the changes made since the last backup.

Data backups can be taken between processing steps to protect against data
loss if a subsequent job or step fails. These backups are called interim
backups. In some data centers, interim backups are done with tapes.

Using SnapShot to replace the backup and/or copy operations speeds up
processing. Tapes are no longer required, and interim backups are done
more quickly. Additional interim backup points are now possible because of
the instantaneous copy capability and reduced batch processing time. The
interim backups are deleted after successful completion of the batch
process.

In many cases the operational backups made are also used for disaster
recovery. Many of the considerations for disaster recovery are also valid for
operational backup. You can minimize the time for disaster backups using
SnapShot, by making a SnapShot copy to disk. You can then restart online
applications before making a tape backup of the SnapShot copy.

• Data set reorganization

Data set reorganization is very often a time-consuming part of nightly batch
processing. The REORG becomes important especially when it is part of the
critical path of batch processing. Traditional reorganization procedures copy
the affected VSAM key-sequenced data set (KSDS) into a sequential data set
on tape or on another DASD. This activity takes a long time when large data
sets are copied. SnapShot can dramatically reduce the run time when used
in place of IDCAMS REPRO to copy the KSDS into a sequential data set on
another DASD.

• Report generation

A large part of batch processing is often dedicated to generating output
reports from production data. Often read access only is required by the
applications. SnapShot can be used to decrease the contention of multiple
read jobs accessing the same data set by replicating critical files and
allowing parallel access to multiple copies of the data.

• Production problem resolution

You can use SnapShot to create a copy of the production database when you
need to simulate and resolve problem conditions in production. This
reduces disruption to the production environment as it is possible to snap
complete copies of the production database in a very short time.

• Application processing

SnapShot can be used to speed up any data copy steps during batch
processing.
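The effect on the batch window of snapping to disk first and writing the tape backup afterwards can be made concrete with a small calculation. The step names and durations below are invented for illustration and are not measurements; the point is only that the tape copy no longer runs while online applications are stopped.

```python
def online_outage_minutes(steps):
    """Sum the duration of the steps that run while online applications are stopped.

    Each step is a tuple: (description, minutes, online_applications_stopped).
    """
    return sum(minutes for _, minutes, stopped in steps if stopped)


# Hypothetical durations, for illustration only.
conventional = [
    ("copy volumes to tape", 120, True),
]
with_snapshot = [
    ("snap volumes to disk", 1, True),
    ("copy snap to tape", 120, False),   # runs after online applications restart
]

print(online_outage_minutes(conventional))    # 120
print(online_outage_minutes(with_snapshot))   # 1
```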

2.3 Application Development

In the area of application development, the RVA and IXFP/SnapShot for VSE/ESA

provide dynamic volume configuration and rapid data duplication.

• Dynamic volume configuration

Additional volumes can be easily created if and when required. Using the
RVA local operator panel and device address predefinition in the VSE I/O
Configuration Program (IOCP), you can dynamically create or remove
volumes. You can easily add volumes required to simulate the production
environment. Temporary scratch volumes needed as work areas or testing
areas can be easily created. Production volumes can be cloned to re-create
and resolve a problem.

• Data duplication (including Year 2000)

Several copies of test databases can be easily produced for several testing
units to use at the same time, for example, maintenance, user acceptance
testing, and enhancements. After a test cycle the test database can be reset
by resnapping from the original with SnapShot. Year 2000 testing requires
several iterations, to verify code changes with a new date. You can snap
your existing production database to create a new test database.

2.4 RVA Data Availability

The design and concept of the RVA are predicated on a very high level of data

availability. In this section we discuss the components, functions, and features

that guarantee and improve the data availability of the RVA.

2.4.1 Hardware

The RVA hardware is based on an N+1 concept. All functional areas in the

machine are duplicated. If one of these areas becomes inoperable because of a

hardware problem, other parts of the machine can take over its functions,

without losing data availability. In most cases there is no significant

performance degradation.

The disk arrays have two spare drives. If a drive fails, the data is immediately

reconstructed on one of the spare drives, and the broken drive is fenced. During

this process data availability is maintained without performance degradation.

All necessary repair actions due to a hardware failure are performed
concurrently with customer operation.

With the RVA, data availability is also maintained during upgrades. Upgrading
disk arrays or cache size can be done concurrently with subsystem operation,
without impact or performance degradation.

2.4.2 SnapShot

With SnapShot you can make a copy of your online data to use for different tests
while the original data is available to the production system. In the same way,
you can use a SnapShot copy to back up volumes to tape or other media while
online applications continue to have access to the original data.

2.4.3 PPRC

PPRC provides a synchronous copying capability for protection against loss of
access to data in the event of an outage at the primary site. If a disaster
occurs at the primary site and the data there is no longer available, you can
switch to the secondary site. The switch causes almost no impact to or outage
of your operating system, and all data is available again with minimal effort.


Chapter 3. VSE/ESA Support for the RVA

In this chapter we cover several utilities and the VSE/ESA Base Programs that

support the RVA.

3.1 Prerequisites

The RVA has been supported since VSE/ESA Version 1.4, with an RVA microcode
level of LIC 03.00.00 or higher. An equivalent microcode level is required for
the StorageTek Iceberg 9200.

To use IXFP/SnapShot and PPRC for VSE/ESA (see Chapter 4, “IXFP/SnapShot
for VSE/ESA” on page 17 and Chapter 5, “Peer-to-Peer Remote Copy” on
page 33), the prerequisites differ from those for basic RVA support. The
requirements for IXFP/SnapShot for VSE/ESA support are:

•

RAMAC Virtual Array Licensed Internal Code (LIC) 04.03.00 or higher. An
equivalent microcode level is required for the StorageTek Iceberg 9200.

Note: LIC level 03.02.00 contains support for SnapShot. However, level
04.03.00 introduced nondisruptive code load for the RVA's microcode and is
the recommended minimum level.

•

VSE/ESA 2.3.0 (5690-VSE) installed with VSE/AF Supervisor with PTF DY44820

or higher.

•

SnapShot requires Feature 6001 for existing 9393 systems or RPQ 8S0421 for

StorageTek Icebergs.

•

If IXFP/SnapShot for VSE/ESA is used in a VM/VSE environment, VM APAR

VM61486 is required for minidisk cache (MDC) support.

For PPRC, the requirements are discussed in 5.1, “PPRC and VSE/ESA Software

Requirements” on page 34 and 5.2, “PPRC Hardware Requirements” on

page 35.

The RVA subsystem provides a set of control and monitoring functions that

extend the set of IBM 3990 control functions. Many of these functions can be

invoked either from the host with the IXFP/SnapShot for VSE/ESA or directly from

the RVA. The Extended Control and Monitoring (ECAM) interface is the protocol

for communications between the host CPU and the RVA. The use of the ECAM

interface is generally transparent to the user.

These functions require that APAR DY44820 be installed and that the phase
$IJBIXFP be loaded into the SVA through the SET SDL interface. Once the phase
is loaded, any of the above functions can be invoked through simple operator
commands.

If you want to enable SnapShot on a StorageTek 9200, you must submit an RPQ

to IBM for each serial number.

Before you use IXFP/SnapShot or PPRC for the RVA, we recommend checking

with your local IBM support center to get information about the latest levels of

VSE/ESA, microcode, and required APARs.


3.2 Volumes

With VSE/ESA and the RVA, the subsystem volumes can be defined in different
emulation modes, so you can adapt the RVA to your needs.

The following device type emulations are supported with the RVA:

•

3380 models J, K, and KE (KE is a 3380K-compatible device with the same
number of cylinders (1770) as a 3380E)

•

3390 models 1, 2, and 3

3.3 Host Connection

Host connectivity options include parallel and/or ESCON attachment. Extended
connectivity parallel channel configurations of up to 32 channels are available
and supported without bus and tag output connections. The bus and tag outputs
are internally terminated, so the RVA must physically be the last in a
daisy-chained configuration. ESCON configurations include up to 128 logical
links, either as direct links or through an ESCON Director.

The physical channel configuration must match the definitions in the I/O
configuration data set (IOCDS). The physical connections on the CPU and the
RVA must reflect the statements in the IOCDS. Mistakes can go undetected until
later maintenance actions cause unnecessary impact. The channel configuration
should be planned such that the impact of a single component failure is
minimized. For an IOCDS example, refer to Appendix D, “IOCDS Example” on
page 63.

3.3.1 VSE/ESA Input/Output Configuration Program

Support for the stand-alone IOCP is supplied with the hardware system's service
processor or processor controller. IOCP describes a system's I/O configuration
to the CPU.

Before you install VSE/ESA natively (not under VM/ESA or an LPAR), make sure

that you have fully configured your system through IOCP. Starting with VSE/ESA

1.3, the VSE/ESA IOCP is automatically installed during initial installation of

VSE/ESA. You can use the IOCP batch program to create a new IOCDS when

you change the hardware configuration. You can use the IOCP batch program to

define and validate the IOCP macro instructions if you prepare for the installation

of a new processor. Use skeleton SKIOCPCN (available in VSE/ICCF library 59)

as a base for configuration changes.

An IOCDS must be generated for the hardware the first time through the

stand-alone IOCP delivered with the processor. For this program you can use

prepared IOCP macro instructions that have been generated on another

processor. The specified device numbers for VSE/ESA must be within the range

0000 through 0FFF. The devices themselves can be attached to any available

link.
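The device number rule above lends itself to a quick check before an IOCP deck is submitted. The following Python sketch is only an illustration; the function name is_valid_vse_device_number is hypothetical and is not part of IOCP or VSE/ESA.

```python
def is_valid_vse_device_number(cuu: str) -> bool:
    """Return True if cuu is a hexadecimal device number in 0000 through 0FFF."""
    try:
        value = int(cuu, 16)
    except ValueError:
        # Not a hexadecimal string at all.
        return False
    return 0x0000 <= value <= 0x0FFF


# Device addresses used in the examples of this book pass the check.
for cuu in ("80E", "80F", "151", "353", "1000"):
    print(cuu, is_valid_vse_device_number(cuu))
```

Only the last value, 1000, lies outside the range and is reported as invalid.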

For more information about IOCP, refer to the processor's IOCP manual and to
the IOCP User's Guide and ESCON Channel-to-Channel Reference, GC38-0401.


3.4 ICKDSF

Once the 9393 is installed and the functional devices are defined on the operator

panel (see Appendix A, “RVA Functional Device Configuration” on page 41) the

only initialization needed for the RVA functional devices is a minimal init,

ICKDSF INIT, with the additional parameters needed to define the VOLID and the

volume table of contents (VTOC) size and location. The CHECK parameter is not

supported, because surface checking is not needed on an RVA. Other ICKDSF

functions for surface checking, such as INSTALL or REVALIDATE, also are not

supported for the RVA.

The RVA internally uses FBA disk drives with internal data error recovery, so

surface checking in this way is inappropriate. If a media surface check is

needed, the IBM service representative can do this on the maintenance panel.

ICKDSF Release 16 with applicable PTFs is needed to support the RVA. To run
the VSE version of ICKDSF in batch mode, submit a job with an EXEC ICKDSF job
control statement.

3.4.1 Volume Minimal Init

In the example in Figure 4 a volume is initialized at minimal level under VSE. A

VSE format VTOC is written at cylinder 32, track 0 for a length of 20 tracks. The

volume is labeled 338001.

// JOB jobname

// ASSGN SYS002,151

// EXEC ICKDSF,SIZE=AUTO

INIT SYSNAME(SYS002) NOVERIFY -

VSEVTOC(X'20',X'0',X'14') VOLID(338001)

/*

/&

Figure 4. ICKDSF INIT Volume at the Minimal Level
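The VSEVTOC operands in Figure 4 are written in hexadecimal, whereas the accompanying text quotes decimal values. As a quick cross-check, the following Python sketch converts the three operands. The helper name decode_vsevtoc is hypothetical, and the operand order (cylinder, track, length in tracks) is taken from the description above rather than from the ICKDSF reference.

```python
def decode_vsevtoc(cyl_hex: str, track_hex: str, length_hex: str) -> dict:
    """Convert the three hexadecimal VSEVTOC operands to decimal values."""
    return {
        "cylinder": int(cyl_hex, 16),   # VTOC start cylinder
        "track": int(track_hex, 16),    # VTOC start track
        "tracks": int(length_hex, 16),  # VTOC length in tracks
    }


# Operands from Figure 4: VSEVTOC(X'20',X'0',X'14')
print(decode_vsevtoc("20", "0", "14"))
```

The result is cylinder 32, track 0, and a length of 20 tracks, which agrees with the text.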

3.4.2 Partial Disk Minimal Init

In the example in Figure 5, an emulated partial disk is initialized under VSE. A

VSE format VTOC is written at cylinder 0, track 1 for a length of one track. The

volume is labeled AA3380.

// JOB jobname

// ASSGN SYS000,353

// EXEC ICKDSF,SIZE=AUTO

INIT SYSNAME(SYS000) NVFY VSEVTOC(0,1,1) -

VOLID(AA3380) MIMIC(EMU(20))

/*

/&

Figure 5. ICKDSF INIT Emulated Partial Disk

For more information about and examples of running ICKDSF under VSE, refer to
the ICKDSF R16 User's Guide, GC35-0033.


Chapter 4. IXFP/SnapShot for VSE/ESA

IXFP/SnapShot for VSE/ESA is a combination of software and RVA microcode
functions. It has three main functions: SnapShot, DDSR, and Report. SnapShot
is the data duplication utility that exploits the RVA's virtual disk
architecture to make a virtually instantaneous copy without physically copying
the data. DDSR (deleted data space release) releases the space occupied by a
volume, a cylinder range, or a specified data set. The report functions provide
information about the space utilization of the RVA and a subsystem utilization
summary.

A new attention routine ($IJBIXFP) has been provided in VSE. You invoke

IXFP/SnapShot for VSE/ESA functions through the attention routine in three basic

ways:

1. From a console

You enter the IXFP/SnapShot for VSE/ESA functions on the command line of

the VSE operator console or through the interactive computing and control

facility (ICCF) console operator interface.

Note: Make sure that you are authorized to enter operator commands when

using the ICCF console operator interface.

Figure 6 illustrates invoking IXFP REPORT functions directly from the

operator console.

SYSTEM : VSE/ESA VSE/ESA 2.3 USER :

TIME :

AR 0015 1I40I READY

------------------------------------------------------------------------

==> IXFP REPORT<enter>

Figure 6. Sample Operator Console Screen to Invoke IXFP REPORT Functions


2. From a batch job

You can code the function you want to invoke in the PARM field of the

VSE-provided DTRIATTN module and submit the batch job for execution.

Figure 7 illustrates the report function (IXFP REPORT) being invoked by a

batch job using DTRIATTN.

// JOB jobname

// LOG

// EXEC DTRIATTN,PARM='IXFP REPORT'

/&

Figure 7. Sample Batch Job to Invoke IXFP Report Function

3. From a REXX CLIST

You can code the IXFP function in the SENDCMD of REXX. Use of a REXX

CLIST allows use of logic for automated procedures. Figure 8 shows the

statements required to catalog the REXX procedure. Figure 9 on page 19

illustrates invoking the report function (IXFP REPORT) from a REXX CLIST in

a batch job. The second SENDCMD in the job is a dummy. It is coded to

illustrate the possibility of coding other IXFP/SnapShot for VSE/ESA or VSE

commands, thus providing flexibility for creating automated procedures.

* $$ JOB JNM=IXFPREXC,CLASS=0,DISP=D

// JOB IXFPREXC

// EXEC LIBR

ACC S=PRD2.CONFIG

CAT IXFPREXX.PROC R=Y

/* -=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=- */

/* REXX/VSE procedure to issue console commands */

/* -=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=- */

trace off

arg parm_string

parse var parm_string command1 '#' command2

rc = SENDCMD( command1 ) /* Submit first command */

if rc = 0

then do

call sleep 5 /* Wait before submitting next command */

rc = SENDCMD( command2 ) /* Submit second command */

end

exit rc

/+

/*

/&

* $$ EOJ

Figure 8. Cataloging a REXX CLIST Procedure to Invoke an IXFP Function


* $$ JOB JNM=IXFPREXX,CLASS=0,DISP=D

// JOB IXFPREXX

// LIBDEF *,SEARCH=(PRD2.CONFIG,PRD1.BASE)

// EXEC REXX=IXFPREXX,PARM='IXFP REPORT#<your-console-cmd-2>'

/&

* $$ EOJ

Figure 9. Using a REXX CLIST As a Batch Job Step to Invoke an IXFP Function

Note: More information about the REXX/VSE console automation capability
can be found in Chapter 14 of the REXX/VSE V6 R1.2 Reference Manual,
SC33-6642-01.
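The REXX procedure in Figure 8 receives one PARM string and splits it at the first # character into two console commands. The following Python sketch mirrors only that splitting step, as an aid to understanding what the PARM value in Figure 9 must look like. The function name split_commands is hypothetical; the sketch does not issue any commands and is no substitute for SENDCMD.

```python
from typing import Tuple


def split_commands(parm_string: str) -> Tuple[str, str]:
    """Mimic: arg parm_string; parse var parm_string command1 '#' command2."""
    # The REXX ARG instruction translates the argument to uppercase.
    upper = parm_string.upper()
    # Everything before the first '#' is command1, the rest is command2.
    command1, _, command2 = upper.partition("#")
    return command1, command2


print(split_commands("ixfp report#ixfp report,80e"))
```

If the PARM string contains no # character, the second command is empty, just as command2 would be in the REXX procedure.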


Figure 10 displays the RVA subsystem status on the operator console.

ixfp report

AR 0015 SUBSYSTEM 1321117

AR 0015 *** DEVICE DETAIL REPORT ***

AR 0015 <---FUNC. CAPACITY (MB)---> <---CAPACITY (%)---> PHYS. COMP.

AR 0015 CUU DEF ALLOC STORED UNUSED ALLOC STORED UNUSED USED(MB) RATIO

AR 0015 80E 2838.0 N/A 369.2 2468.7 N/A 13.01 86.99 213.6 1.72

AR 0015 80F 2838.0 N/A 0.8 2837.1 N/A 0.03 99.97 0.0 9.65

AR 0015

AR 0015 *** DEVICE SUMMARY REPORT

AR 0015 CAPACITY <-----TOTAL-----> <------TOTALS %------> COMP.

AR 0015 DEFINED 5676.032 MB 100.00 RATIO

AR 0015 STORED 370.129 MB 6.52

AR 0015 PHYS.USED 213.688 MB 3.76 1.73

AR 0015 UNUSED 5305.903 MB 93.48

AR 0015

AR 0015 *** SUBSYSTEM SUMMARY REPORT ***

AR 0015 SYSTEM DEFINED-CAPACITY DISK-ARRAY-CAP FREE-DISK-ARRAY-CAP

AR 0015 PROD 726532.208 MB 117880.209 MB 57752.311 MB

AR 0015

AR 0015 NET-CAPACITY-LOAD(%) COLL.-FREE-SPACE(%) UNCOLL-FREE-SPACE(%)

AR 0015 TEST PROD OVERALL TEST PROD OVERALL TEST PROD OVERALL

AR 0015 0.00 51.01 51.01 0.00 47.55 47.55 0.00 1.44 1.44

AR 0015 1I40I READY

Figure 10. Sample Console Output of the IXFP REPORT Function

Figure 11 shows the general syntax of the IXFP command.

IXFP SNAP,source[(scyl-scyl)|(scyl.ncyl)]:target[(tcyl)][,VOL1=volid][,NOPROMPT]
IXFP SNAP,source(DSN='data-set-name'):target[(tcyl)][,NOPROMPT]
IXFP DDSR[,unit[(dcyl-dcyl)|(dcyl.ncyl)|(DSN='data-set-name')]][,NOPROMPT]
IXFP REPORT[,id[,id]...]

Brackets enclose optional operands, and a vertical bar separates alternatives.
The diagram indicates that the source:target and unit specifications, like id,
can be repeated in a comma-separated list.

Figure 11. General Syntax of IXFP Command

See Appendix B, “IXFP Command Examples” on page 43 for an explanation of
the IXFP specifications.


4.1 IXFP SNAP

SNAP identifies this command as a SNAP function. Figure 12 shows the syntax

of the IXFP SNAP command.

IXFP SNAP,source[(scyl-scyl)|(scyl.ncyl)]:target[(tcyl)][,VOL1=volid][,NOPROMPT]
IXFP SNAP,source(DSN='data-set-name'):target[(tcyl)][,NOPROMPT]

Figure 12. Syntax of IXFP SNAP Command

Note: SnapShot commands can be set up to be invoked by end users as well as
by database administrators and system programmers.

There are three ways to do a SnapShot. You can take a copy of:

•

A full volume

•

A range of cylinders

•

A non-VSAM file

4.1.1 A Full Volume

You copy a full volume by identifying the device address (cuu) or VOLID label of
the source and target in the IXFP SNAP command. Intermixing of the device
address and VOLID label to specify the source and target in the IXFP command
is allowed. Use the VOL1= parameter to specify the volume label that the
target will assume after the copy has completed. If the VOL1= parameter is
omitted, the source and target volumes will have the same VOLID label when the
copy is completed.

To illustrate, we use two volumes: PATEV1 and PATEV2. The device addresses

of these volumes are 80E and 80F, respectively. To copy the first volume onto

the second volume, issue any of the following commands:

•

IXFP SNAP,80E:80F,NOPROMPT

•

IXFP SNAP,PATEV1:PATEV2,NOPROMPT

•

IXFP SNAP,PATEV1:80F,NOPROMPT

In this case the source 80E and target 80F will have the same VOLID label
(PATEV1) after the snap completes. Use the VOL1= parameter to give the target
a specific VOLID label after the snap of the source volume. Continuing with the
example, to give the target volume the VOLID label PATEV3, issue one of the
following commands:

•

IXFP SNAP,80E:80F,VOL1=PATEV3,NOPROMPT

•

IXFP SNAP,PATEV1:80F,VOL1=PATEV3,NOPROMPT

The NOPROMPT parameter is included to prevent decision-type messages from

being issued. Otherwise, decision-type messages are issued for the operator to

verify and confirm (see Figure 13 on page 22).


AR 0015 1I40I READY

ixfp snap,80e:80f,vol1=patev3

AR+0015 IXFP23D SNAP FROM CUU=80E CYL='0000' TO CUU=80F CYL='0000' NCYL='0D0B'

- REPLY 'YES' TO PROCEED

15 yes

AR 0015 IXFP22I SNAP TO CUU= 80F STARTED AT 18:46:51 11/16/1998

AR 0015 IXFP20I SNAP FUNCTION COMPLETED AT 18:46:51 11/16/1998

AR 0015 1I40I READY

Figure 13. Decision-Type Message Issued to Confirm SNAP
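The confirmation message in Figure 13 reports the start cylinders and the cylinder count as quoted values, and the count 0D0B can only be hexadecimal. The following Python sketch decodes such a message for use in automation or logging. It is an illustration only: the function name parse_snap_prompt is hypothetical, the message layout is taken solely from the one sample in Figure 13, and all three quoted values are assumed to be hexadecimal.

```python
import re

# Layout inferred from the single sample in Figure 13 (an assumption).
PROMPT_PATTERN = re.compile(
    r"SNAP FROM CUU=(\w+) CYL='([0-9A-F]+)' "
    r"TO CUU=(\w+) CYL='([0-9A-F]+)' NCYL='([0-9A-F]+)'?"
)


def parse_snap_prompt(message: str) -> dict:
    """Extract devices and decimal cylinder values from an IXFP23D message."""
    match = PROMPT_PATTERN.search(message)
    if match is None:
        raise ValueError("not an IXFP23D snap prompt")
    source, scyl, target, tcyl, ncyl = match.groups()
    return {
        "source": source,
        "source_cyl": int(scyl, 16),
        "target": target,
        "target_cyl": int(tcyl, 16),
        "cylinders": int(ncyl, 16),
    }


sample = ("AR+0015 IXFP23D SNAP FROM CUU=80E CYL='0000' "
          "TO CUU=80F CYL='0000' NCYL='0D0B'")
print(parse_snap_prompt(sample))
```

For the sample, the cylinder count decodes to 3339, the number of cylinders of a 3390 model 3 volume, so the prompt describes a full volume snap from 80E to 80F.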

VSE/VSAM

VSE/VSAM support is not currently included in IXFP/SnapShot for VSE/ESA.
However, you can still take advantage of IXFP/SnapShot for VSE/ESA with
VSE/VSAM data sets. With a volume snap, VSAM data sets can be copied
indirectly when the VSAM catalog, space, and cluster are on the same volume.

In VSE, you can reference the two user catalogs (on the source and on the
target volume) individually through the ASSGN JCL statement; however, the
VSAM share option restrictions still apply. The ideal scenario is to assign the
target volume to another LPAR, so that the restrictions are not encountered,
and to do further processing or copy to tape there using IDCAMS REPRO. If the
purpose of the volume snap is backup using FASTCOPY or DDR (VM), the VSAM
share option restrictions will not be encountered.

See Appendix C, “VSE/VSAM Considerations” on page 59.

Notes:

If the source is identified by its VOLID, it must be either the only volume with
that VOLID or the only volume with that VOLID that is up (DVCUP).
Otherwise an error message will be issued.

The target device must be down (DVCDN) before you initiate the volume

snap.

If the target device is identified by its VOLID, it must be either the only

volume with that VOLID or the only volume with that VOLID which is down

(DVCDN). Otherwise an error message will be issued.

Only when doing full volume snaps can you use the VOL1 parameter.

Volume copying is done unconditionally within the specified or assumed

boundaries. VSE does not perform any VTOC checking on the specified

target device and thus does not provide any warning messages regarding

overlapping extents or secured or unexpired files.

When you duplicate a volume, both the source and the target must be within

the same RVA. (If you are using test partitions, the source and the target

must also be in the same partition.)
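The notes above amount to a short checklist that can be run before a volume snap is issued. The following Python sketch models that checklist under stated assumptions: the Device class and both function names are hypothetical, the device states would in practice have to come from the operator or from console output, and the test partition rule is not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Device:
    cuu: str        # device address, for example "80E"
    volid: str      # VOLID label currently on the volume
    up: bool        # True = DVCUP, False = DVCDN
    subsystem: str  # identifier of the RVA subsystem holding the device


def resolve_volid(devices: List[Device], volid: str, want_up: bool) -> Device:
    """Pick the device that a VOLID refers to, following the notes above."""
    matches = [d for d in devices if d.volid == volid]
    if len(matches) == 1:
        return matches[0]
    # Several volumes share the VOLID: exactly one may be in the wanted state.
    in_state = [d for d in matches if d.up == want_up]
    if len(in_state) == 1:
        return in_state[0]
    raise LookupError(f"VOLID {volid} does not identify a single device")


def volume_snap_problems(source: Device, target: Device) -> List[str]:
    """Return the rule violations that would block a full volume snap."""
    problems = []
    if target.up:
        problems.append("target device must be down (DVCDN)")
    if source.subsystem != target.subsystem:
        problems.append("source and target must be in the same RVA")
    return problems


# After a snap without VOL1=, both volumes carry the VOLID PATEV1.
devices = [
    Device("80E", "PATEV1", up=True, subsystem="1321117"),
    Device("80F", "PATEV1", up=False, subsystem="1321117"),
]
source = resolve_volid(devices, "PATEV1", want_up=True)
target = resolve_volid(devices, "PATEV1", want_up=False)
print(source.cuu, target.cuu, volume_snap_problems(source, target))
```

In this example the duplicate VOLID is still unambiguous, because only 80E is up and only 80F is down, and no problems are reported.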


4.1.2 A Range of Cylinders

You copy a range of cylinders by identifying the device address or VOL1 label of

the source and target in the IXFP SNAP command. In addition, the decimal start

cylinder (scyl) and end cylinder (-scyl) or number of cylinders (,ncyl) are

specified in parentheses and appended to the source specification. Optionally,

the target start cylinder (tcyl) can be included to specify where copying is to start

on the target device.

To illustrate, we use two volumes: PATEV1 and PATEV2. The device addresses

of these volumes are 80E and 80F, respectively. To copy cylinders 0 through 999

in the first volume onto the second volume, issue one of the following

commands:

•

IXFP SNAP,80E(0000-0999):80F,NOPROMPT

•

IXFP SNAP,80E(0000,1000):80F,NOPROMPT

•

IXFP SNAP,PATEV1(0000-0999):PATEV2,NOPROMPT

•

IXFP SNAP,PATEV1(0000,1000):PATEV2,NOPROMPT

Specify the target start cylinder (tcyl) to relocate the range of cylinders on
the target volume. For example, to place the copied cylinders starting at
cylinder 1000 of the target volume, issue one of the following modified
commands:

•

IXFP SNAP,80E(0000-0999):80F(1000),NOPROMPT

•

IXFP SNAP,80E(0000,1000):80F(1000),NOPROMPT

•

IXFP SNAP,PATEV1(0000-0999):PATEV2(1000),NOPROMPT

•

IXFP SNAP,PATEV1(0000,1000):PATEV2(1000),NOPROMPT

Include the NOPROMPT parameter to suppress decision-type messages, or omit it
to have them issued for confirmation (see Figure 13 on page 22).

Notes:

If the source is identified by its VOLID, it must be either the only volume with
that VOLID or the only volume with that VOLID which is up (DVCUP).
Otherwise an error message will be issued.

The target device must be down (DVCDN) before you initiate the volume

snap, except when the source and the target device are the same device.

If the target device is identified by its VOLID, it must be either the only

volume with that VOLID or the only volume with that VOLID which is down

(DVCDN). Otherwise an error message will be issued.

The highest (end) cylinder number must not exceed 32767 or the maximum

number of cylinders of the devices. The start cylinder number must not be

greater than the end cylinder number.

You cannot use the VOL1 parameter when copying cylinder ranges.

Cylinder range copying is done unconditionally within the specified or

assumed boundaries. VSE does not perform any VTOC checking on the

specified target device and thus does not provide any warning messages

regarding overlapping extents or secured or unexpired files.


The source and the target device must be of the same type and must be

within the same RVA subsystem. (If you are using test partitions, the source

and the target must also be in the same partition.)
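The commands in this section describe the same 1000 cylinders in two ways, either as a start and end cylinder or as a start cylinder and a count. The following Python sketch shows the arithmetic that links the two forms and applies the limits from the notes. The function names are hypothetical, and the highest cylinder number of the device is passed in by the caller because the notes do not say how VSE determines it.

```python
MAX_CYLINDER_NUMBER = 32767  # upper limit stated in the notes above


def end_from_count(start: int, count: int) -> int:
    """Return the end cylinder for a range given as start and count."""
    if count < 1:
        raise ValueError("cylinder count must be at least 1")
    return start + count - 1


def check_range(start: int, end: int, highest_cylinder: int) -> None:
    """Raise ValueError if the range breaks one of the rules in the notes."""
    limit = min(MAX_CYLINDER_NUMBER, highest_cylinder)
    if start < 0:
        raise ValueError("start cylinder must not be negative")
    if start > end:
        raise ValueError("start cylinder is greater than end cylinder")
    if end > limit:
        raise ValueError(f"end cylinder {end} exceeds limit {limit}")


# 1000 cylinders starting at cylinder 0 end at cylinder 999.
end = end_from_count(0, 1000)
# 3338 is an assumed highest cylinder number (a 3339-cylinder volume).
check_range(0, end, highest_cylinder=3338)
print(end)
```

A range such as 1000 through 0999, or an end cylinder beyond the device, is rejected with a ValueError.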

4.1.3 A Non-VSAM File

You copy a file by indicating the data set name on the source device, using the

DSN= (data set name) parameter, in addition to identifying the source and

target device address or VOLID labels. The file specified must be non-VSAM.

Optionally, the target start cylinder (tcyl) can be included to specify where

copying is to start on the target device if relocation is required.

To illustrate, we use two volumes: PATEV1 and PATEV2. The device addresses
of these volumes are 80E and 80F, respectively. To copy file 'test.data.1' in
volume 80E onto volume 80F, issue one of the following commands:

•

IXFP SNAP,80E(DSN='test.data.1'):80F,NOPROMPT

•

IXFP SNAP,PATEV1(DSN='test.data.1'):80F,NOPROMPT

If you want to copy file 'test.data.1' to cylinder 1000 on volume 80F, you must
specify the target start cylinder (tcyl). Issue one of the following modified
commands:

•

IXFP SNAP,80E(DSN='test.data.1'):80F(1000),NOPROMPT

•

IXFP SNAP,80E(DSN='test.data.1'):PATEV2(1000),NOPROMPT

Notes:

If the source is identified by its VOLID, it must be either the only volume with
that VOLID or the only volume with that VOLID that is up (DVCUP).
Otherwise an error message will be issued.

The target device must be down (DVCDN) before you initiate the volume

snap, except when the source and the target are the same device.

If the target device is identified by its VOLID, it must be either the only

volume with that VOLID or the only volume with that VOLID that is down

(DVCDN). Otherwise an error message will be issued.

The highest (end) cylinder number must not exceed 32767 or the maximum

number of cylinders of the devices. The start cylinder number must not be

greater than the end cylinder number.

You cannot use the VOL1 parameter when copying a non-VSAM file.

When you snap files, VSE performs VTOC checking on the specified target

device and provides warning messages regarding overlapping extents or

secured or unexpired files.

The source and the target device must be of the same type and must be

within the same RVA subsystem. (If you are using test partitions, the source

and the target must also be in the same partition.)

See B.1, “SNAP Command” on page 43 for additional illustrations.
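The three forms of the IXFP SNAP command shown in 4.1.1 through 4.1.3 follow one pattern, so the command text can be assembled by a small helper when snaps are driven from automation. The following Python sketch is one possible way to do this. The function name snap_command and its parameters are hypothetical, it produces only the start-end form of a cylinder range, and it enforces just the VOL1 restriction stated in the notes.

```python
from typing import Optional, Tuple


def snap_command(source: str, target: str, *,
                 cyl_range: Optional[Tuple[int, int]] = None,
                 dsn: Optional[str] = None,
                 tcyl: Optional[int] = None,
                 vol1: Optional[str] = None,
                 noprompt: bool = False) -> str:
    """Build the text of an IXFP SNAP command from its parts."""
    if cyl_range is not None and dsn is not None:
        raise ValueError("specify a cylinder range or a data set, not both")
    full_volume = cyl_range is None and dsn is None
    if vol1 is not None and not full_volume:
        # The notes allow VOL1 only for full volume snaps.
        raise ValueError("VOL1 can be used only for full volume snaps")

    src = source
    if cyl_range is not None:
        start, end = cyl_range
        src += f"({start:04d}-{end:04d})"
    elif dsn is not None:
        src += f"(DSN='{dsn}')"
    tgt = target if tcyl is None else f"{target}({tcyl:04d})"

    parts = [f"IXFP SNAP,{src}:{tgt}"]
    if vol1 is not None:
        parts.append(f"VOL1={vol1}")
    if noprompt:
        parts.append("NOPROMPT")
    return ",".join(parts)


print(snap_command("80E", "80F", vol1="PATEV3", noprompt=True))
print(snap_command("PATEV1", "PATEV2", cyl_range=(0, 999), tcyl=1000,
                   noprompt=True))
print(snap_command("80E", "80F", dsn="test.data.1", noprompt=True))
```

The three calls reproduce commands that appear earlier in this chapter. The resulting text could then be entered at the console or passed in the PARM field of a DTRIATTN job, as described at the start of the chapter.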


4.2 IXFP DDSR

DDSR identifies this command as a DDSR function. DDSR causes the release of

the physical storage space associated with:

•

Expired files

•

A total volume

•

A range of cylinders

•

A specified file

This function ensures that all space releases done on the VSE system (normally

through deletes) result in space releases in the RVA subsystem. Figure 14

shows the syntax of the IXFP DDSR command.

IXFP DDSR[,unit[(dcyl-dcyl)|(dcyl.ncyl)|(DSN='data-set-name')]][,NOPROMPT]

Figure 14. Syntax of IXFP DDSR Command

4.2.1 Expired Files

DDSR, when specified without any additional parameters, deletes the VTOC
entries and releases all physical space for all expired nonsecured files
residing on VSE-managed RVA devices. These extents are freed up and become
part of free space.

To illustrate, we use two volumes: PATEV1 and PATEV3. The device addresses

of these volumes are 80E and 80F, respectively. To delete all VSE-recognized

expired files, issue the following command:

IXFP DDSR,NOPROMPT

The NOPROMPT parameter is included to prevent decision-type messages from

being issued. Otherwise, decision-type messages are issued for the operator to

verify and confirm (see Figure 15).

AR 0015 1I40I READY

IXFP DDSR

AR+0015 IXFP26D 'TEST.DATA.1' HAS EXPIRED ON CUU=80E - REPLY 'YES' FOR DELETION

15 YES

AR+0015 IXFP26D 'TEST.DATA.1' HAS EXPIRED ON CUU=80F - REPLY 'YES' FOR DELETION

15 YES

AR 0015 1I40I READY

Figure 15. Decision-Type Message Issued to Confirm DDSR Expired Files


Notes:

When you do DDSR for expired files, VSE performs checking on the online

(up) units.

DDSR checks only those RVA devices managed by the VSE system.

DDSR considers only the files created by VSE.

4.2.2 A Total Volume

It is possible to delete and release space for an entire volume when you include

the device address or VOLID label with no other operands.

To illustrate, we use volume PATEV3 with device address 80F. To delete all data

including the VTOC and VOLID label of PATEV3, issue the following command:

IXFP DDSR,PATEV3

If you add the NOPROMPT parameter (IXFP DDSR,PATEV3,NOPROMPT), no
decision-type messages are issued. Without it, as in the command above,
decision-type messages are issued for the operator to verify and confirm
(see Figure 16).

AR 0015 1I40I READY

IXFP DDSR,PATEV3

AR+0015 IXFP29D DDSR FOR CUU=80F (WHOLE VOLUME) - REPLY 'YES' FOR DELETION

15 YES

AR 0015 1I40I READY

Figure 16. Decision-Type Message Issued to Confirm DDSR Volume

Notes:

The device should belong to the RVA subsystem managed by the VSE

system.

The device should be in the offline (DVCDN) condition.

The device should be reinitialized before you use it as a regular volume

again. However, if you are going to use it as a target for a volume snap, you

can leave it as is.

4.2.3 A Range of Cylinders

This command is similar to the DDSR volume command, except that additional

specifications are added to signify the decimal start cylinder and end cylinder

(dcyl-dcyl) or the decimal start cylinder and the number of cylinders (dcyl,ncyl) to

delete.

To illustrate, we use volume PATEV3 with device address 80F. To delete all data
from cylinder 0 through cylinder 999, issue the following command:

IXFP DDSR,PATEV3(0000-0999),NOPROMPT


The NOPROMPT parameter is included to prevent decision-type messages from

being issued. Otherwise, decision-type messages are issued for the operator to

verify and confirm (similar to Figure 16).

Notes:

The device should belong to the RVA subsystem managed by the VSE

system.

The device should be in the offline (DVCDN) condition.

The highest (end) cylinder number must not exceed 32767 or the maximum

number of cylinders of the devices. The start cylinder number must not be

greater than the end cylinder number.

4.2.4 A Specified File

This command is similar to doing DDSR for a specific range of cylinders. The

difference is that a DSN= specification is coded in place of the decimal start

cylinder and decimal end cylinder (dcyl-dcyl).

To illustrate, we use volume PATEV3 with device address 80F. To delete file
'test.data.3' on volume PATEV3, issue the following command:

IXFP DDSR,PATEV3(DSN='test.data.3'),NOPROMPT

The NOPROMPT parameter is included to prevent decision-type messages from

being issued. Otherwise, decision-type messages are issued for the operator to

verify and confirm (similar to Figure 16 on page 26).

Notes:

The device should belong to the RVA subsystem managed by the VSE system.

The device should be in the online (DVCUP) condition. Otherwise the
command is rejected, and an error message is displayed.

The file must be non-VSAM.

The file will be deleted unconditionally and the space returned to the RVA
free space.

When you process multivolume files, repeat DDSR for all volumes containing
the file extents.

See B.2, “DDSR Command” on page 49 for additional illustrations.

4.3 IXFP REPORT

The IXFP REPORT command provides information about the space utilization of a

single RVA device or a range of RVA devices. It also provides information about

the space utilization of all devices (that were added during IPL) of an RVA

subsystem as well as important subsystem utilization summary information.

Figure 17 on page 28 shows the syntax of the IXFP REPORT command.


IXFP REPORT[,id[,id]...]

Figure 17. Syntax of IXFP REPORT Command

When the command is issued without the id parameter, all information about

every RVA subsystem, including all devices known to the VSE system, is

displayed.

If you need information about a specific device, a specific range of devices, or a
specific RVA subsystem, include the id parameter to limit the scope of the report
output.

To illustrate, we use two volumes: PATEV1 and PATEV2. The device addresses
of these volumes are 80E and 80F, respectively. To display space utilization for
one of the devices, issue one of the following commands:

•

IXFP REPORT,80E

•

IXFP REPORT,80F

It is also possible to display the space utilization of the two devices in one
command by including the addresses of the two devices. Issue the following
command:

IXFP REPORT,80E,80F

To get all space information for the entire VSE system, issue the command

without any additional parameter:

IXFP REPORT

Notes:

The device must belong to the RVA subsystem managed by the VSE system.

If used under VM, REPORT only works for full-pack minidisks or dedicated

devices.

NOPROMPT is not a valid parameter for IXFP REPORT.

Issuing IXFP REPORT or IXFP REPORT,80 yields the same result because we

are only using one RVA subsystem and there is only one device address

range (80).
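The command forms described above can be sketched in a few lines of Python. This is only an illustration: the helper name build_report_command is hypothetical and is not part of IXFP or VSE/ESA. It mirrors the forms shown in this section (no id, one id, or several ids separated by commas) and rejects NOPROMPT, which the notes state is not valid for IXFP REPORT.

```python
def build_report_command(ids=()):
    """Assemble an IXFP REPORT command string (hypothetical helper)."""
    command = "IXFP REPORT"
    for dev_id in ids:
        dev_id = str(dev_id).strip().upper()
        if not dev_id:
            raise ValueError("empty id")
        if dev_id == "NOPROMPT":
            # The notes in this section state that REPORT does not accept it.
            raise ValueError("NOPROMPT is not a valid parameter for IXFP REPORT")
        command += "," + dev_id
    return command


if __name__ == "__main__":
    print(build_report_command())                 # report on everything
    print(build_report_command(["80E"]))          # one device
    print(build_report_command(["80E", "80F"]))   # two devices
```

The function only builds the text of the command; entering it at the VSE console is outside the scope of the sketch.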

Figure 18 on page 29 shows a sample IXFP REPORT output.


ixfp report
AR 0015 SUBSYSTEM 1321117
AR 0015                  *** DEVICE DETAIL REPORT ***
AR 0015    <---FUNC. CAPACITY (MB)--->  <---CAPACITY (%)--->   PHYS. COMP.
AR 0015 CUU    DEF ALLOC STORED UNUSED  ALLOC STORED UNUSED USED(MB) RATIO
AR 0015 80E 2838.0   N/A  369.2 2468.7    N/A  13.01  86.99    213.6  1.72
AR 0015 80F 2838.0   N/A    0.8 2837.1    N/A   0.03  99.97      0.0  9.65
AR 0015
AR 0015                  *** DEVICE SUMMARY REPORT
AR 0015 CAPACITY   <-----TOTAL----->  <------TOTALS %------>  COMP.
AR 0015 DEFINED       5676.032 MB            100.00           RATIO
AR 0015 STORED         370.129 MB              6.52
AR 0015 PHYS.USED      213.688 MB              3.76            1.73
AR 0015 UNUSED        5305.903 MB             93.48
AR 0015
AR 0015                *** SUBSYSTEM SUMMARY REPORT ***
AR 0015 SYSTEM  DEFINED-CAPACITY  DISK-ARRAY-CAP  FREE-DISK-ARRAY-CAP
AR 0015 PROD       726532.208 MB   117880.209 MB         57752.311 MB
AR 0015
AR 0015 NET-CAPACITY-LOAD(%)  COLL.-FREE-SPACE(%)  UNCOLL-FREE-SPACE(%)
AR 0015 TEST   PROD OVERALL   TEST   PROD OVERALL   TEST   PROD OVERALL
AR 0015 0.00  51.01   51.01   0.00  47.55   47.55   0.00   1.44    1.44
AR 0015 1I40I READY

Figure 18. Sample Output of IXFP REPORT Command

See B.3, “Report Command” on page 52 for further illustrations.
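If the console output is captured as text, the device detail rows can be picked out programmatically. The following Python sketch shows one way to do that for output shaped like Figure 18. It rests on assumptions that are not documented IXFP behavior: that each line starts with a two-token prefix such as "AR 0015", that report sections are introduced by a line starting with "***", and that a detail row has exactly ten whitespace-separated fields beginning with a hexadecimal device address. The names DeviceDetail and parse_device_detail are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Assumption: a device address (cuu) is three or four hexadecimal digits.
CUU_PATTERN = re.compile(r"^[0-9A-F]{3,4}$")


@dataclass
class DeviceDetail:
    cuu: str
    def_mb: Optional[float]
    alloc_mb: Optional[float]      # N/A under VSE/ESA, stored as None
    stored_mb: Optional[float]
    unused_mb: Optional[float]
    alloc_pct: Optional[float]     # N/A under VSE/ESA, stored as None
    stored_pct: Optional[float]
    unused_pct: Optional[float]
    phys_used_mb: Optional[float]
    comp_ratio: Optional[float]


def _num(token: str) -> Optional[float]:
    """Convert a report field to float, mapping N/A to None."""
    return None if token == "N/A" else float(token)


def parse_device_detail(lines: List[str]) -> List[DeviceDetail]:
    """Return one DeviceDetail per row of the device detail report."""
    devices = []
    in_detail = False
    for line in lines:
        tokens = line.split()
        if len(tokens) >= 2 and tokens[0] == "AR":
            tokens = tokens[2:]            # drop the assumed "AR nnnn" prefix
        text = " ".join(tokens)
        if text.startswith("***"):         # a section banner switches context
            in_detail = "DEVICE DETAIL REPORT" in text
            continue
        if in_detail and len(tokens) == 10 and CUU_PATTERN.match(tokens[0]):
            devices.append(DeviceDetail(tokens[0], *[_num(t) for t in tokens[1:]]))
    return devices


SAMPLE = [
    "AR 0015 *** DEVICE DETAIL REPORT ***",
    "AR 0015 CUU DEF ALLOC STORED UNUSED ALLOC STORED UNUSED USED(MB) RATIO",
    "AR 0015 80E 2838.0 N/A 369.2 2468.7 N/A 13.01 86.99 213.6 1.72",
    "AR 0015 80F 2838.0 N/A 0.8 2837.1 N/A 0.03 99.97 0.0 9.65",
    "AR 0015 *** DEVICE SUMMARY REPORT",
    "AR 0015 STORED 370.129 MB 6.52",
]

if __name__ == "__main__":
    for dev in parse_device_detail(SAMPLE):
        print(dev.cuu, dev.stored_mb, dev.phys_used_mb, dev.comp_ratio)
```

The column heading row is skipped because "CUU" is not a hexadecimal address, and rows after the device summary banner are ignored.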

In the sections that follow we define the field names and column headings of the

reports that IXFP REPORT provides.

4.3.1 Device Detail Report

cuu This is the device ID of the device to which the data applies.

<---FUNC. CAPACITY (MB)---> This heading covers the fields that provide
information about the functional capacity in megabytes for a certain
device. Functional capacity in this sense is the capacity that would
exist on traditional count key data (CKD) or extended count key data
(ECKD) devices.

DEF This field contains the functional capacity in megabytes
defined to the subsystem for this specific device.

ALLOC This field is the functional space allocated by the VTOC.
This data is not currently available for VSE/ESA.

STORED This field contains the functional capacity in megabytes
stored (occupying disk array storage) for the device or
subsystem.

UNUSED This field contains the part of the functional capacity
defined for the device or subsystem that is not mapped
and thus unused (that is, not occupying disk array
storage).

<---CAPACITY (%)---> This heading covers the fields that provide information
about the percentage of the defined functional capacity assigned to
the individual groups.

ALLOC This field is the percentage of functional space allocated
by the VTOC. This data is not currently available for
VSE/ESA.


STORED This field contains the percentage of the defined functional

capacity that contains stored data (occupying disk array

storage) for the device or subsystem.

UNUSED This field contains the percentage of the defined functional

capacity for the device or subsystem that does not yet

contain data and is thus unused (that is, not occupying

disk array storage).

PHYS. USED(MB) This heading covers the field containing the real capacity in
megabytes that actually occupies disk array storage, as opposed to the
functional capacity. The difference between the stored and the
physical used capacity is the savings due to compression and
compaction performed by the RVA subsystem for this device.

COMP. RATIO This heading covers the field containing the real compaction ratio,
which is the functional capacity stored divided by the physical used
capacity.
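The relationships between the device detail fields can be checked against the sample values for device 80E in Figure 18. The Python sketch below is a worked example under two assumptions drawn from that sample rather than from a documented formula: that the percentages are taken against DEF, and that UNUSED is DEF minus STORED. The function name device_figures is hypothetical.

```python
def device_figures(defined_mb, stored_mb, phys_used_mb):
    """Derive the percentage and ratio fields for one device (sketch)."""
    unused_mb = defined_mb - stored_mb          # assumption: unused = defined - stored
    return {
        "stored_pct": round(100 * stored_mb / defined_mb, 2),
        "unused_pct": round(100 * unused_mb / defined_mb, 2),
        # COMP. RATIO: functional capacity stored / physical used capacity
        "comp_ratio": stored_mb / phys_used_mb,
    }


if __name__ == "__main__":
    # Values for device 80E as displayed in Figure 18.
    figures = device_figures(defined_mb=2838.0, stored_mb=369.2, phys_used_mb=213.6)
    print(figures)
```

With the displayed values the percentages come out at 13.01 and 86.99, matching the report. The ratio computed from the displayed values is about 1.73, whereas the report shows 1.72; the subsystem presumably works from unrounded capacities, so small differences in the last digit are to be expected.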


4.3.2 Device Summary Report

CAPACITY This column covers the capacity groups that are being differentiated.

<-----TOTAL-----> This column covers the total capacity in megabytes that has

been allocated to the appropriate group in that line. The capacity is

the sum of all the devices that were selected for the report.

<------TOTALS %------> This column shows the percentage of capacity that the

group in the appropriate line is occupying, always compared to the

100% defined functional capacity in the first row. The percentage is

based on all the devices that were selected for the report.

COMP. RATIO This column shows the real compaction ratio, which is the

quotient of the total functional capacity stored/total physical used

capacity and is a measure of the overall compaction for the devices

selected for the report.
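Because the summary is described as the sum over all devices selected for the report, its figures can be approximated from the device detail rows. The Python sketch below adds up the displayed values for devices 80E and 80F from Figure 18 and derives the percentages relative to the defined total. The function name summarize is hypothetical, and the inputs are the rounded values shown in the detail report, so the megabyte totals differ slightly from the summary lines (for example, 5676.0 against 5676.032).

```python
def summarize(devices):
    """Aggregate (defined_mb, stored_mb, phys_used_mb) tuples (sketch)."""
    defined = sum(d[0] for d in devices)
    stored = sum(d[1] for d in devices)
    phys_used = sum(d[2] for d in devices)
    unused = defined - stored
    return {
        "stored_pct": round(100 * stored / defined, 2),
        "phys_used_pct": round(100 * phys_used / defined, 2),
        "unused_pct": round(100 * unused / defined, 2),
        # Overall compaction: total functional stored / total physical used
        "comp_ratio": round(stored / phys_used, 2),
    }


if __name__ == "__main__":
    # (DEF, STORED, PHYS. USED) for 80E and 80F as displayed in Figure 18.
    print(summarize([(2838.0, 369.2, 213.6), (2838.0, 0.8, 0.0)]))
```

For the two sample devices this gives 6.52, 3.76, and 93.48 percent and a ratio of 1.73, which agrees with the device summary report in Figure 18.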

4.3.3 Subsystem Summary Report

SYSTEM This column identifies the system to which the capacity outlined in the

appropriate line applies. If a test system has not been configured,

only the PROD system information is provided.

DEFINED-CAPACITY This column contains the functional capacity in megabytes

for the whole subsystem as it has been configured.

DISK-ARRAY-CAP This column contains the total disk array capacity in

megabytes for the whole subsystem that has been installed.

FREE-DISK-ARRAY-CAP This column contains the current free disk array

capacity in megabytes that is still available for allocation by the RVA

subsystem.

NET-CAPACITY-LOAD(%) This column contains the percentage of the disk array

capacity that is currently being occupied by the TEST, PROD, or both

(OVERALL) systems. This is probably the most important value and

has been placed at the beginning of the very last report line to make

it easy to find.

COLL.-FREE-SPACE(%) This column contains the percentage of the array

cylinders in the subsystem that are free array cylinders (that is, the

total space that can be written to).

UNCOLL-FREE-SPACE(%) This column contains the percentage of the array
capacity that is still occupied by the old copies of functional tracks
that have been rewritten to a new location in the disk array, and that
has not yet been collected as free space.
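The subsystem summary values in Figure 18 can be cross-checked with simple arithmetic. The Python sketch below treats the net capacity load as the share of the disk array capacity that is not reported as free. This relation is an observation that fits the sample output, not a formula stated by the product documentation, and the function name net_capacity_load_pct is hypothetical.

```python
def net_capacity_load_pct(disk_array_cap_mb, free_disk_array_cap_mb):
    """Percentage of the disk array capacity that is occupied (sketch)."""
    occupied_mb = disk_array_cap_mb - free_disk_array_cap_mb
    return round(100 * occupied_mb / disk_array_cap_mb, 2)


if __name__ == "__main__":
    # PROD line of the subsystem summary report in Figure 18.
    load = net_capacity_load_pct(117880.209, 57752.311)
    print(load)

    # In the sample, load plus collected and uncollected free space is 100%.
    print(round(load + 47.55 + 1.44, 2))
```

For the sample this yields 51.01, the value shown under NET-CAPACITY-LOAD(%), and the three OVERALL percentages (51.01, 47.55, and 1.44) add up to 100.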


Chapter 5. Peer-to-Peer Remote Copy

In this chapter we describe the VSE/ESA support for the RVA and PPRC.

As part of the continuing effort to meet customer requirements for 24-hour 7-day

availability, the RVA Model T82 provides remote copy for disaster and critical

volume protection. PPRC is supported by OS/390, VSE/ESA, and VM/ESA. PPRC

complements the existing availability functions of the RVA, such as the dual

power systems, nonvolatile storage (NVS), and nondisruptive installation and

repair.

PPRC standardizes and streamlines today's disaster recovery data backup

capabilities by providing data-type independent remote copy. The

implementation provides a choice based on business requirements such as

performance needs, data synchrony criteria, system resource considerations,

operational control requirements, and recovery distance requirements.

PPRC provides an RVA synchronous data copying capability for protection

against loss of access to data in the event of an outage at the primary site.

Updates are sent from the primary RVA directly to the recovery RVA in a cache

to cache communication through dedicated ESCON links between the two RVAs.

The RVA can be located up to 43 km (26.7 mi) from the host. The primary and
secondary volumes must be of the same track geometry.

Figure 19 shows two host systems with an RVA attached to each. The two RVAs

are attached on at least one ESCON path. ESCON Directors can be used to

extend the distance between the two hosts up to 43 km. The second host is

optional if PPRC is being used to migrate data from one RVA to a second within

the same data center.

Figure 19. Peer-to-Peer Remote Copy

Both RVAs can treat writes as DASD fast write (DFW) operations. The primary

RVA write is handled normally, and it is processed as a cache hit whenever

possible; the write to the secondary subsystem is always treated as a write hit.

This capability, and the inherent performance capabilities of the RVA, help to