Page 1

International Technical Support Organization

IBM PC Server and Novell NetWare Integration Guide

December 1995

SG24-4576-00

Page 2

Take Note!

Before using this information and the product it supports, be sure to read the general information under

“Special Notices” on page xv.

First Edition (December 1995)

This edition applies to IBM PC Servers, for use with an OEM operating system.

Order publications through your IBM representative or the IBM branch office serving your locality. Publications

are not stocked at the address given below.

An ITSO Technical Bulletin Evaluation Form for reader′s feedback appears facing Chapter 1. If the form has been

removed, comments may be addressed to:

IBM Corporation, International Technical Support Organization

Dept. HZ8 Building 678

P.O. Box 12195

Research Triangle Park, NC 27709-2195

When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any

way it believes appropriate without incurring any obligation to you.

Copyright International Business Machines Corporation 1995. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Page 3

Abstract

This document describes the procedures necessary to successfully implement

Novell NetWare on an IBM PC Server platform. It describes the current IBM PC

Server line and discusses the technology inside the machines. It outlines

step-by-step procedures for installing both NetWare V3.12 and V4.1 using both

IBM ServerGuide and the original product media. It has a detailed section on

performance tuning. It covers IBM′s NetFinity systems management tool, which

ships with every IBM PC Server and IBM premium brand PC.

This document is intended for IBM customers, dealers, systems engineers and

consultants who are implementing NetWare on an IBM PC Server platform.

A basic knowledge of PCs, file servers, DOS, and NetWare is assumed.

(212 pages)

Copyright IBM Corp. 1995 iii

Page 4

Contents

Abstract . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . iii

Special Notices

Preface

How This Document is Organized

Related Publications

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

......................... xvii

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xviii

International Technical Support Organization Publications

ITSO Redbooks on the World Wide Web (WWW)

Acknowledgments

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Chapter 1. IBM PC Server Technologies

1.1 Processors

1.1.1 Clock Rate

1.1.2 External Interfaces

1.1.3 Processor Types

1.2 Multiprocessing

1.3 Memory

1.3.1 Caches

1.3.2 Memory Interleaving

1.3.3 Dual Path Buses

1.3.4 SynchroStream Technology

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

................................ 7

. . . . . . . . . . . . . . . . . . . . . . . . . 8

1.4 Memory Error Detection and Correction

1.4.1 Standard (Parity) Memory

1.4.2 Error Correcting Code (ECC)

.......................... 9

........................ 9

................ xviii

...................... 1

.................... 9

1.4.3 Error Correcting Code-Parity Memory (ECC-P)

1.4.4 ECC on SIMMs (EOS) Memory

1.4.5 Performance Impact

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.4.6 Memory Options and Speed

1.5 Bus Architectures

1.5.1 ISA Bus

1.5.2 EISA Bus

1.5.3 Micro Channel Bus

1.5.4 P CI B u s

1.6 Disk Subsystem

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

.............................. 13

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.6.1 Hard Disk Interfaces

1.6.2 SCSI Technology

1.6.3 SCSI Adapters

1.6.4 Hard Disk Drives

1.6.5 RAID Technology

1.6.6 RAID Classifications

1.6.7 Recommendations

1.7 LAN Subsystem

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

............................... 21

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

1.7.1 Shared RAM Adapters

1.7.2 Bus Master Adapters

1.7.3 PeerMaster Technology

1.8 Security Features

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.8.1 Tamper-Evident Cover

1.8.2 Secure I/O Cables

1.8.3 Passwords

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

............................... 35

1.8.4 Secure Removable Media

............................. 16

............................ 31

............................. 32

. . . . . . . . . . . . . . . . . . . . . . . . . . . 33

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

....................... 11

......................... 12

.......................... 36

.......... xviii

.............. 10

Copyright IBM Corp. 1995 v

Page 5

1.8.5 Selectable Drive Startup ........................... 37

1.8.6 Unattended Start Mode

1.9 Systems Management

1.9.1 DMI

1.9.2 SNMP

1.9.3 NetFinity

1.9.4 SystemView

1.10 Fault Tolerance

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

1.10.1 NetWare SFT III

1.11 Uninterruptible Power Supply (UPS)

1.11.1 A PC PowerChute

............................ 37

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

................................ 48

...................... 52

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Chapter 2. IBM PC Server Family Overview

2.1 IBM PC Server Model Specifications

2.1.1 IBM PC Server 300

2.1.2 IBM PC Server 310

2.1.3 IBM PC Server 320 EISA

2.1.4 IBM PC Server 320 MCA

2.1.5 IBM PC Server 500

2.1.6 IBM PC Server 520 EISA

2.1.7 IBM PC Server 520 MCA

2.1.8 IBM PC Server 720

Chapter 3. Hardware Configuration

3.1 The Setup Program

3.1.1 Main Menu

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

3.1.2 Advanced Menu

3.1.3 Security

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

3.2 EISA Configuration Utility

3.3 SCSI Select Utility Program

3.4 System Programs

.............................. 56

.............................. 57

........................... 58

........................... 59

.............................. 60

........................... 61

........................... 62

.............................. 63

. . . . . . . . . . . . . . . . . . . . . . . . . 65

................................ 67

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

............................. 74

............................ 78

.................................. 82

3.4.1 Starting From the System Partition

3.4.2 Starting From the Reference Diskette

3.4.3 Main Menu Options

.............................. 84

3.4.4 Backup/Restore System Programs Menu

3.4.5 Set Configuration Menu

3.4.6 Set Features Menu

3.4.7 Test the Computer

3.4.8 More Utilities Menu

3.4.9 Advanced Diagnostic Program

3.5 RAID Controller Utility

3.5.1 Drive Information

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

3.5.2 Formatting the Disks

3.5.3 Defining a Hot-Spare Disk

3.5.4 Creating a Disk Array

3.5.5 Defining Logical Drives

3.5.6 Setting the Write Policy

3.5.7 Initializing the Array

........................... 86

.............................. 91

.............................. 97

.............................. 98

....................... 99

.............................. 101

............................ 104

......................... 106

........................... 108

........................... 109

.......................... 112

............................ 112

3.5.8 Backup/Restoring the Configuration

.................... 55

...................... 56

..................... 83

................... 84

................. 86

................... 112

Chapter 4. Novell NetWare Installation

4.1 ServerGuide Overview

4.2 Starting ServerGuide

4.3 Installing NetWare 4.1 with ServerGuide

4.4 Installing NetWare 3.12 with Diskettes

vi NetWare Integration Guide

...................... 115

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

............................... 115

................... 118

.................... 127

Page 6

4.4.1 Hardware Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . 127

4.4.2 Software Requirements

4.4.3 Information Requested at Time of Installation

4.4.4 Installation Files

4.4.5 Installation Procedure

4.5 Installing NetWare 4.1 with the Original CD-ROM

4.5.1 Hardware Requirements

4.5.2 Software Requirements

4.5.3 Installation Procedure

4.6 NetFinity Services for NetWare

4.6.1 System Requirements

4.6.2 Installing NetFinity Services for NetWare

4.7 The RAID Administration for NetWare Utility

4.7.1 Installing the Utility

4.8 Hard Disk Failure Simulation

4.8.1 Simulating with a Hot Spare Drive

4.8.2 Simulating without a Hot Spare Drive

. . . . . . . . . . . . . . . . . . . . . . . . . . 127

............. 127

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

. . . . . . . . . . . . . . . . . . . . . . . . . . . 128

.............. 135

. . . . . . . . . . . . . . . . . . . . . . . . . . 135

. . . . . . . . . . . . . . . . . . . . . . . . . . 135

. . . . . . . . . . . . . . . . . . . . . . . . . . . 135

......................... 144

. . . . . . . . . . . . . . . . . . . . . . . . . . . 144

................ 144

................. 151

............................. 151

.......................... 152

.................... 153

.................. 160

Chapter 5. Performance Tuning

5.1 Hardware Tuning

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

5.1.1 General Performance Characteristics

5.2 Performance Analysis Tools

5.2.1 DatagLANce

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

5.2.2 NetWare Monitoring Tools

5.3 Tuning NetWare

5.3.1 Disk Subsystem

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

5.3.2 Network Subsystem

5.3.3 System Memory

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

5.3.4 Memory Requirements

5.3.5 System Processor

Appendix A. EISA Configuration File

. . . . . . . . . . . . . . . . . . . . . . . . . . 167

.................. 168

.......................... 172

......................... 173

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

. . . . . . . . . . . . . . . . . . . . . . . . . . . 187

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

....................... 189

Appendix B. Hardware Compatibility, Device Driver, and Software Patch

Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

B.1 Finding Compatibility Information on the World Wide Web

B.2 Finding Device Drivers on the World Wide Web

B.3 Finding Software Patches on the World Wide Web

Appendix C. Configuring DOS CD-ROM Support

C.1 Installing CD-ROM Support for PCI Adapters.

C.2 Installing CD-ROM Support for Adaptec Adapters

.............. 200

............. 201

................ 203

................ 203

............. 203

C.3 Installing CD-ROM Support for Micro-Channel Adapters

........ 199

......... 203

List of Abbreviations

Index

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

................................. 205

Contents vii

Page 7

Figures

1. SMP Shared Secondary Cache ......................... 5

2. SMP with Dedicated Secondary Cache

3. Two-Way Interleaved Memory Banks

4. Dual Path Bus Implementation

5. ECC Memory Operation

6. ECC-P Memory Implementation

7. Micro Channel - Basic Data Transfer (20 MBps)

8. Micro Channel - Data Streaming Transfer (40 MBps)

9. Micro Channel - Data Streaming Transfer (80 MBps)

10. SCSI Disk Interface

11. RAID-0 (Block Interleave Data Striping without Parity)

12. RAID-1 (Disk Mirroring)

13. RAID-1 (Disk Duplexing)

14. RAID-1 Enhanced, Data Strip Mirroring

15. RAID-6,10 - Mirroring of RAID 0 Drives

16. RAID-2 (Bit Interleave Data Striping with Hamming Code)

17. RAID-3 (Bit Interleave Data Striping with Parity Disk)

18. RAID-4 (Block Interleave Data Striping with One Parity Disk)

19. RAID-5 (Block Interleave Data Striping with Skewed Parity)

20. NetFinity Services Folder

21. IBM PC Server Family of Products

22. Hardware Configuration Steps

23. PC Server 320 Setup Program - Main Menu

24. PC Server 320 Setup Program - Advanced Menu

25. PC Server 320 Setup Program - Boot Options Menu

26. PC Server 320 Setup Program - Integrated Peripherals Menu

27. PC Server 320 Setup Program - Security Menu

28. EISA Configuration Utility - Main Panel

29. EISA Configuration Utility - Steps

30. EISA Configuration Utility - Step 2

31. EISA Configuration Utility - Move Confirmation Panel

32. EISA Configuration Utility - Step 3

33. EISA Configuration Utility - Step 4

34. IBM PC Server SCSISelect Utility Program - Main Menu

35. IBM PC Server SCSI Select Utility Program - Host Adapter Settings

36. PC Server 320 SCSI Select Utility Program - SCSI Device Configuration 80

37. PC Server 320 SCSISelect Utility Program - Advanced Configuration

38. PC Server 320 SCSISelect Utility Program - DASD Information

39. System Programs - Main Menu

40. System Programs - Backup/Restore System Programs Menu

41. System Programs - Set Configuration Menu

42. System Programs - View Configuration Screen

43. Set Configuration - Memory Map

44. Set Configuration - SCSI Device Configuration

45. Set Features Menu

46. Set Passwords and Security Features

47. Set Startup Sequence Screen

48. Set Power-On Features Screen

49. More Utilities Menu

50. Display Revision Level Screen

51. System Error Log Screen

. . . . . . . . . . . . . . . . . . . . 6

. . . . . . . . . . . . . . . . . . . . . 7

. . . . . . . . . . . . . . . . . . . . . . . . . 8

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

. . . . . . . . . . . . . . . . . . . . . . . . 11

. . . . . . . . . . . . . . 14

. . . . . . . . . . . . 14

. . . . . . . . . . . . 15

................................ 17

........... 24

............................. 25

............................. 25

.................... 26

.................... 26

........ 27

........... 28

....... 29

....... 29

............................ 41

....................... 55

......................... 66

................. 67

.............. 68

............ 69

...... 70

............... 71

.................... 74

....................... 74

....................... 75

........... 75

....................... 76

....................... 77

......... 78

.. 78

.. 81

...... 82

........................ 84

...... 86

................. 87

............... 88

........................ 90

............... 91

................................ 92

..................... 93

.......................... 96

......................... 97

................................ 98

......................... 98

............................ 99

Copyright IBM Corp. 1995 ix

Page 8

52. Advanced Diagnostic Menu .......................... 100

53. Test Selection Menu

54. RAID Configuration Program - Adapter Selection

55. RAID Configuration Program - Main Menu

56. RAID Configuration Program - Drive Information

57. RAID Configuration Program - Advanced Functions Menu

58. RAID Configuration Program - DASD Formatting

59. RAID Configuration Program - Change RAID parameters

60. RAID Configuration Program - Create/Delete Array Menu

61. RAID Configuration Program - Hot-Spare Disk Definition

62. RAID Configuration Program - Disk Array Creation

63. RAID Configuration Program - Logical Drive Definition

.............................. 100

............. 102

................. 102

............. 103

........ 104

............. 105

........ 105

........ 107

........ 107

............ 108

......... 109

64. RAID Configuration Program - Logical Drive Definition - Array

Selection

65. RAID Configuration Program - RAID level Selection

66. RAID Configuration Program - Size Definition

67. RAID Configuration Program - Result

68. RAID Configuration Program - Advanced Functions Menu

69. ServerGuide Language

70. ServerGuide Main Menu

71. Installing NetWare

72. Configuring NetWare

73. Configuring IBM NetFinity

74. Partitioning the Hard Disk

75. Reviewing Configuration

76. Unlocking Programs

77. Installing NetWare Directory Services (NDS)

78. Assigning a Name to a Directory Tree

79. Assigning a Context for the Server

80. Server Context Information.

81. NetWare Installation

82. NetWare V3.12 Installation - Main Menu

83. Create Partition

84. Partition Information

85. Creating a New Volume

86. Volume Status

87. Copy System and Public Files

88. Path for STARTUP.NCF File

89. STARTUP.NCF File

90. AUTOEXEC.NCF File

91. Installation Menu

92. Disk Driver Options

93. Network Driver Options

94. Create Partition

95. Disk Partition Information

96. New Volume Information

97. Optional NetWare Files

98. Install NetWare Directory Services(NDS)

99. Assigning a Name to the Directory Tree

100. Context for the Server

101. Server Context Information

102. Editing STARTUP.NCF File

103. Editing AUTOEXEC.NCF File

104. File Copy Status

105. Other Installation Options

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

........... 110

............... 111

.................... 111

........ 113

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

............................ 117

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

........................... 121

........................... 122

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

................ 125

................... 125

..................... 126

.......................... 126

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

.................. 130

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

............................ 131

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

........................ 132

.......................... 133

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

............................... 137

............................ 137

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

........................... 138

............................ 139

............................ 139

.................. 140

.................. 140

............................. 141

.......................... 141

........................... 142

.......................... 142

................................. 143

........................... 143

x NetWare Integration Guide

Page 9

106. NetFinity Network Driver Configuration ................... 145

107. NetFinity Installation

108. NetFinity Services for NetWare

109. NetFinity Installation - Copying Files

110. Network Driver Configuration

111. Configuration Update

112. NetFinity Installation Complete

113. Raid Administration for NetWare - Main Menu

114. RAID Administration Utility - Main Menu

115. Verifying Array Configuration

116. Using RAID Manager to View Array Configuration

117. Detecting the Disk Failure

118. Disk Failure - NetFinity Alert

119. Disk Failure - NetFinity RAID Service

120. View Last Event Message

121. RAID Administration - Recovery Message

122. NetFinity Recovery Alert

123. Changes in Array Configuration

124. RAID Administration - Replace a Defunct Drive

125. RAID Administration - Verifying the Replacement of a Defunct Drive

126. NetFinity New Hot Spare Drive Alert

127. NetFinity RAID Service - New Hot Spare

128. RAID Administration - Array Configuration

129. NetFinity RAID Service - Verifying Configuration

130. Detecting the Disk Failure

131. NetFinity Alert Log

132. NetFinity RAID Service - Disk Failure

133. Last Event Message

134. RAID Administration Utility - Reviewing Disk Status

135. RAID Administration - Replace a Defunct Drive

136. RAID Administration - Rebuild Progress

137. RAID Administration - Verifying the Rebuild Status

138. NetFinity Alert - New Disk Online

139. LAN Server Controlled Subsystems

140. File Server Performance - General Characteristics

141. Differences in LAN Adapters

142. Differences in Disk Subsystems

143. MONITOR Utility

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

144. SERVMAN Utility

145. Sample Compatibility Report Showing Ethernet LAN Adapters

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

........................ 148

..................... 148

......................... 149

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

........................ 150

.............. 152

.................. 153

......................... 154

............ 154

........................... 155

......................... 155

.................... 156

........................... 156

................. 157

............................ 157

....................... 158

.............. 158

. 159

..................... 159

.................. 160

................. 160

............. 161

........................... 161

............................... 162

.................... 162

.............................. 163

........... 163

.............. 164

.................. 164

............ 165

...................... 165

..................... 167

............ 169

......................... 171

....................... 172

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

..... 200

Figures xi

Page 10

Tables

1. ECC Memory Performances . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2. Summary of Memory Implementations

3. SCSI Adapters Summary

4. PCI SCSI Adapters Summary

5. Summary of Disks Performance Characteristics

6. RAID Classifications

7. Summary of RAID Performance Characteristics

8. IBM PC Servers 300 Models

9. IBM PC Servers 310 Models

10. IBM PC Servers 320 EISA Models

11. IBM PC Servers 320 MCA Models

12. IBM PC Server 500 Models

13. IBM PC Servers 520 EISA Models

14. IBM PC Servers 520 MCA Models

15. IBM PC Servers 720 Models

16. Host Adapter SCSI Termination Parameter

17. Volume Block Size and Cache Buffer Size Recommendations

18. Default Block Sizes Based on Volume Size

19. NetWare Memory Pools

. . . . . . . . . . . . . . . . . . . . 12

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

. . . . . . . . . . . . . . . . . . . . . . . . . . 21

. . . . . . . . . . . . . . 22

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

. . . . . . . . . . . . . . . 30

. . . . . . . . . . . . . . . . . . . . . . . . . . 56

. . . . . . . . . . . . . . . . . . . . . . . . . . 57

....................... 58

....................... 59

........................... 60

....................... 61

....................... 62

.......................... 63

................. 79

..... 176

................ 177

............................ 185

Copyright IBM Corp. 1995 xiii

Page 11

Special Notices

This document is intended for IBM customers, dealers, systems engineers and

consultants who are implementing Novell NetWare on an IBM PC Server. The

information in this publication is not intended as the specification of any

programming interfaces that are provided by IBM.

References in this publication to IBM products, programs or services do not

imply that IBM intends to make these available in all countries in which IBM

operates. Any reference to an IBM product, program, or service is not intended

to state or imply that only IBM′s product, program, or service may be used. Any

functionally equivalent program that does not infringe any of IBM′s intellectual

property rights may be used instead of the IBM product, program or service.

Information in this book was developed in conjunction with use of the equipment

specified, and is limited in application to those specific hardware and software

products and levels.

IBM may have patents or pending patent applications covering subject matter in

this document. The furnishing of this document does not give you any license to

these patents. You can send license inquiries, in writing, to the IBM Director of

Licensing, IBM Corporation, 500 Columbus Avenue, Thornwood, NY 10594 USA.

The information contained in this document has not been submitted to any

formal IBM test and is distributed AS IS. The information about non-IBM

(VENDOR) products in this manual has been supplied by the vendor and IBM

assumes no responsibility for its accuracy or completeness. The use of this

information or the implementation of any of these techniques is a customer

responsibility and depends on the customer′s ability to evaluate and integrate

them into the customer′s operational environment. While each item may have

been reviewed by IBM for accuracy in a specific situation, there is no guarantee

that the same or similar results will be obtained elsewhere. Customers

attempting to adapt these techniques to their own environments do so at their

own risk.

Any performance data contained in this document was determined in a

controlled environment, and therefore, the results that may be obtained in other

operating environments may vary significantly. Users of this document should

verify the applicable data for their specific environment.

Reference to PTF numbers that have not been released through the normal

distribution process does not imply general availability. The purpose of

including these reference numbers is to alert IBM customers to specific

information relative to the implementation of the PTF when it becomes available

to each customer according to the normal IBM PTF distribution process.

The following terms are trademarks of the International Business Machines

Corporation in the United States and/or other countries:

AIX AIX/6000

AT DB2/2

DataHub DatagLANce

EtherStreamer First Failure Support Technology

IBM LANStreamer

Micro Channel NetFinity

Copyright IBM Corp. 1995 xv

Page 12

NetView OS/2

PS/2 Personal System/2

Power Series 800 Presentation Manager

SystemView Ultimedia

VM/ESA

The following terms are trademarks of other companies:

C-bus is a trademark of Corollary, Inc.

PC Direct is a trademark of Ziff Communications Company and is

used by IBM Corporation under license.

UNIX is a registered trademark in the United States and other

countries licensed exclusively through X/Open Company Limited.

Windows is a trademark of Microsoft Corporation.

386 Intel Corporation

486 Intel Corporation

AHA Adaptec, Incorporated

AppleTalk Apple Computer, Incorporated

Banyan Banyan Systems, Incorporated

CA Computer Associates

DECnet Digital Equipment Corporation

EtherLink 3COM Corporation

HP Hewlett-Packard Company

IPX Novell, Incorporated

Intel Intel Corporation

Lotus 1-2-3 Lotus Development Corporation

Lotus Notes Lotus Development Corporation

MS Microsoft Corporation

Micronics Micronics Electronics, Incorporated

Microsoft Microsoft Corporation

Microsoft Excel Microsoft Corporation

NFS Sun Microsystems Incorporated

NetWare Novell, Incorporated

Novell Novell, Incorporated

OpenView Hewlett-Packard Company

Pentium Intel Corporation

Phoenix Phoenix Technologies, Limited

PowerChute American Power Conversion

SCO The Santa Cruz Operation, Incorporated

SCSI Security Control Systems, Incorporated

SCSISelect Ada ptec, Incorporated

VINES Banyan Systems, Incorporated

Windows NT Microsoft Corporation

X/Open X/Open Company Limited

i386 Intel Corporation

i486 Intel Corporation

i960 Intel Corporation

Other trademarks are trademarks of their respective companies.

xvi NetWare Integration Guide

Page 13

Preface

This document describes the procedures necessary to implement Novell

NetWare on IBM PC Server platforms. It provides detailed information on

installation, configuration, performance tuning, and management of the IBM PC

Server in the NetWare environment. It also discusses the features and

technologies of the IBM PC Server brand and positions the various models in the

brand.

How This Document is Organized

The document is organized as follows:

•

Chapter 1, “IBM PC Server Technologies”

This chapter introduces many of the technologies used in the IBM PC Server

brand and gives examples of system implementations where they are used.

•

Chapter 2, “IBM PC Server Family Overview”

This chapter positions the various models within the IBM PC Server brand

and gives specifications for each model.

•

Chapter 3, “Hardware Configuration”

This chapter provides a roadmap for configuring the various models of the

IBM PC Server line and describes the configuration process in detail.

•

Chapter 4, “Novell NetWare Installation”

This chapter gives a step-by-step process for installing both NetWare V3.12

and V4.1 and the NetFinity Manager using both ServerGuide and the original

product diskettes and CD-ROM. It also contains an overview of the

ServerGuide product. It also covers the RAID administration tools and

details a process for simulating and recovering from a DASD failure.

•

Chapter 5, “Performance Tuning”

This chapter presents an in-depth discussion of tuning NetWare as it relates

to the major hardware subsystems of the file server. It also discusses

performance monitoring tools.

•

Appendix A, “EISA Configuration File”

This appendix contains a sample report printed from the EISA configuration

utility.

•

Appendix B, “Hardware Compatibility, Device Driver, and Software Patch

Information”

This appendix gives information on where to find the latest compatibility

information, device drivers, and code patches in the NetWare environment.

•

Appendix C, “Configuring DOS CD-ROM Support”

This appendix gives information on how to configure your IBM PC Server for

CD-ROM support in the DOS environment.

Copyright IBM Corp. 1995 xvii

Page 14

Related Publications

The publications listed in this section are considered particularly suitable for a

more detailed discussion of the topics covered in this document.

•

IBM PC Server 310 System Library,

•

IBM PC Server 320 System Library for Non-Array Models,

•

IBM PC Server 320 System Library for Array Models,

•

IBM PC Server 320 PCI/Micro Channel System Library,

•

IBM PC Server 520 System Library,

•

The PC Server 720 System Library

S52H-3697

S52H-3695

, S30H-1782

International Technical Support Organization Publications

•

Advanced PS/2 Servers Planning and Selection Guide

•

NetWare 4.0 from IBM: Directory Services Concepts

•

NetWare from IBM: Network Protocols and Standards

S19H-1175

S19H-1196

S30H-1778

, GG24-3927

, GG24-4078

, GG24-3890

A complete list of International Technical Support Organization publications,

known as redbooks, with a brief description of each, may be found in:

International Technical Support Organization Bibliography of Redbooks,

GG24-3070.

To get a catalog of ITSO redbooks, VNET users may type:

TOOLS SENDTO WTSCPOK TOOLS REDBOOKS GET REDBOOKS CATALOG

A listing of all redbooks, sorted by category, may also be found on MKTTOOLS

as ITSOCAT TXT. This package is updated monthly.

How to Order ITSO Redbooks

IBM employees in the USA may order ITSO books and CD-ROMs using

PUBORDER. Customers in the USA may order by calling 1-800-879-2755 or by

faxing 1-800-445-9269. Most major credit cards are accepted. Outside the

USA, customers should contact their local IBM office. Guidance may be

obtained by sending a PROFS note to BOOKSHOP at DKIBMVM1 or E-mail to

bookshop@dk.ibm.com.

Customers may order hardcopy ITSO books individually or in customized

sets, called BOFs, which relate to specific functions of interest. I BM

employees and customers may also order ITSO books in online format on

CD-ROM collections, which contain redbooks on a variety of products.

ITSO Redbooks on the World Wide Web (WWW)

Internet users may find information about redbooks on the ITSO World Wide Web

home page. To access the ITSO Web pages, point your Web browser to the

following URL:

http://www.redbooks.ibm.com/redbooks

xviii NetWare Integration Guide

Page 15

IBM employees may access LIST3820s of redbooks as well. Point your web

browser to the IBM Redbooks home page at the following URL:

http://w3.itsc.pok.ibm.com/redbooks/redbooks.html

Acknowledgments

This project was designed and managed by:

Tim Kearby

International Technical Support Organization, Raleigh Center

The authors of this document are:

Wuilbert Martinez Zamora

IBM Mexico

Jean-Paul Simoen

IBM France

Angelo Rimoldi

IBM Italy

Tim Kearby

International Technical Support Organization, Raleigh Center

This publication is the result of a residency conducted at the International

Technical Support Organization, Raleigh Center.

Thanks to the following people for the invaluable advice and guidance provided

in the production of this document:

Barry Nusbaum, Michael Koerner, Gail Wojton

International Technical Support Organization

Tom Neidhardt, Dave Laubscher, Marc Shelley

IBM PC Server Competency Center, Raleigh

Ted Ross, Ron Abbott

IBM PC Company, Raleigh

Gregg McKnight, Phil Horwitz, Paul Awoseyi

IBM PC Server Performance Laboratory, Raleigh

John Dinwiddie, Alison Farley, Victor Guess, Dottie Gardner-Lamontagne

IBM PC Server Unit, Raleigh

Parts of this document are based on an earlier version of the

Integration Guide,

Center in Basingstoke, U.K.

which was produced by the IBM European Personal Systems

NetWare

Thanks also to the many people, both within and outside IBM, who provided

suggestions and guidance, and who reviewed this document prior to publication.

Preface xix

Page 16

Chapter 1. IBM PC Server Technologies

IBM PC Servers use a variety of technologies. This chapter introduces many of

these technologies and gives examples of system implementations where they

are used.

1.1 Processors

The microprocessor is the central processing unit (CPU) of the server. It is the

place where most of the control and computing functions occur. All operating

system and application program instructions are executed here. Most

information passes through it, whether it is a keyboard stroke, data from a disk

drive, or information from a communication network.

The processor needs data and instructions for each processing operation that it

performs. Data and instructions are loaded from memory into data-storage

locations, known as registers, in the processor. Registers are also used to store

the data that results from each processing operation, until the data is transferred

to memory.

1.1.1 Clock Rate

The microprocessor is packaged as an integrated circuit which contains one or

more arithmetic logic units (ALUs), a floating point unit, on-board cache,

registers for holding instructions and data, and control circuitry.

Note: The ALUs and the floating point unit are often collectively referred to as

execution units.

A fundamental characteristic of all microprocessors is the rate at which they

perform operations. This is called the clock rate and is measured in millions of

cycles per second or Megahertz (MHz). The maximum clock rate of a

microprocessor is determined by how fast the internal logic of the chip can be

switched. As silicon fabrication processes are improved, the integrated devices

on the chip become smaller and can be switched faster. Thus, the clock speed

can be increased.

For example, the Pentium P54C processor in the IBM PC Server 720 operates at

a clock speed of 100 MHz. The P54C is based on a fabrication process where

transistors on the chip have a channel width of .6 microns (a .6 micron BiCMOS

process). The original P5 processor is based on a .8 micron process and could

only be clocked at a maximum of 66 MHz.

The clock rate of the external components can be different from the rate at which

the processor is clocked internally. Clock doubling is a technique used in the

Intel DX2 and DX4 class processors to clock the processor internally faster than

the external logic components. For example, the 486DX2 at 66/33 MHz clocks the

processor internally at 66 MHz, while clocking the external logic components at

33 MHz. This is an efficient systems design technique when faster external logic

components are not available or are prohibitively expensive.

One might think that the faster the clock speed, the faster the performance of the

system. This is not always the case. The speed of the other system

components, such as main memory, can also have a dramatic effect on

Copyright IBM Corp. 1995 1

Page 17

performance. (Please see 1.3, “Memory” on page 3 for a discussion of memory

speeds and system performance.) The point is that you cannot compare system

performance by simply looking at the speed at which the processor is running.

A 90 MHz machine with a set of matched components can out perform a 100

MHz machine which is running with slow memory. IBM PC Servers are always

optimized to incorporate these factors and they always deliver a balanced

design.

1.1.2 External Interfaces

The processor data interface, or data bus, is the data connection between the

processor and external logic components. The Pentium family of processors

utilizes a 64-bit data bus, which means that they are capable of reading in 8

bytes of data in one memory cycle from processor main memory. The Intel 486

has a data bus of only 32-bits, which limits its memory cycles to 4 bytes of data

per cycle.

The width of the processor address interface, or address bus, determines the

amount of physical memory the processor can address. A processor with a

24-bit address bus, such as the i286 class of processors, can address a

maximum of 16 megabytes (MB) of physical memory. Starting with the i386 class

of processors, the address bus was increased to 32 bits, which correlates to 4

gigabyte (GB) of addressability.

1.1.3 Processor Types

IBM currently uses two processors in the PC Server line:

•

80486DX2

The 80486DX2 has a 32-bit address bus and 32-bit data bus. I t utilizes clock

doubling to run at 50/25 MHz or 66/33 MHz. I t is software compatible with all

previous Intel processors. The 80486DX2 has an internal two-way set

associative 8KB cache.

•

Pentium

The Pentium has a 32-bit address bus and 64-bit data bus. It has internal

split data and instruction caches of 8KB each. The instruction cache is a

write-through cache and the data cache is a write-back design. The Pentium

microprocessor is a two-issue superscalar machine. This means that there

are two integer execution units (ALUs) in addition to the on-board floating

point unit. The superscalar architecture is one of the key techniques used to

improve performance over that of the previous generation i486 class

processors. Intel was able to achieve this design while maintaining

compatibility with applications written for the Intel i368/i486 family of

processors.

Note: A superscalar architecture is one where the microprocessor has

multiple execution units, which allow it to perform multiple operations during

the same clock cycle.

2 NetWare Integration Guide

Page 18

1.2 Multiprocessing

Multiprocessing uses two or more processors in a system to increase

throughput. Multiprocessing yields high performance for CPU intensive

applications such as database and client/server applications.

There are two types of multiprocessing:

•

Asymmetric Multiprocessing

•

Symmetric Multiprocessing

1.3 Memory

Asymmetric Multiprocessing:

In asymmetric multiprocessing the program tasks

(or threads) are strictly divided by type between processors and each processor

has its own memory address space. These features make asymmetric

multiprocessing difficult to implement.

Symmetric Multiprocessing (SMP):

Symmetric multiprocessing means that any

processor has access to all system resources including memory and I/O devices.

Threads are divided evenly between processors regardless of type. A process is

never forced to execute on a particular processor.

Symmetric multiprocessing is easier to implement in network operating systems

(NOSs) and is the method used most often in operating systems that support

multiprocessing. It is the technology currently used by OS/2 SMP, Banyan Vines,

SCO UNIX, Windows NT, and UnixWare 2.0.

The IBM PC Server 320, 520, and 720 support SMP. The PC Server 320 and 520

support two-way SMP via an additional Pentium processor in a socket on the

planar board. The 720 supports two-to-six way SMP via additional processor

complexes.

The system design of PC servers (in fact all microprocessor-based systems) is

centered around the basic memory access operation. System designers must

tune

always

this operation to be as fast as possible in order to achieve the

highest possible performance.

Processor architectures always allow a certain number of clock cycles in order

to read or write information to system memory. If the system design allows this

to be completed in the given number of clock cycles, then this is called a zero

wait state design.

If for some reason the operation does not complete in the given number of

clocks, the processor must

These are called

wait states

by inserting extra

and are always an integer multiple of clock cycles.

states

into the basic operation.

wait

The challenge is that as each new generation of processors is clocked faster, it

becomes more expensive to incorporate memory devices that have access times

allowing zero wait designs. For example, state of the art Dynamic Random

Access Memory, or DRAM, has a typical access time of about 60 nanoseconds

(ns). A 60 ns DRAM is not fast enough to permit a zero wait state design with a

Pentium class processor. Static RAM, or SRAM, has an access time of less than

10 ns. A 10 ns SRAM design would allow for zero waits at current processor

speeds but would be prohibitively expensive to implement as main memory. A

basic trade-off that all system designers must face is simply that as the access

time goes down, the price goes up.

Chapter 1. IBM PC Server Technologies 3

Page 19

1.3.1 Caches

The key is to achieve a balanced design where the speed of the processor is

matched to that of the external components. IBM engineers achieve a balanced

design by using several techniques to reduce the

effective

access time of main

system memory:

•

Cache

•

Interleaving

•

Dual path buses

•

SynchroStream technology

Research has shown that when a system uses data, it will be likely to use it

again. As previously discussed, the faster the access to this data occurs, the

faster the overall machine will operate. Caches are memory buffers that act as

temporary storage places for instructions and data obtained from slower, main

memory. They use static RAM and are much faster than the dynamic RAM used

for system memory (typically five to ten times faster). However, SRAM is more

expensive and requires more power, which is why it is not used for all memory.

Caches reduce the number of clock cycles required for a memory access since

they are implemented with fast SRAMs. Whenever the processor must perform

external memory read accesses, the cache controller always pre-fetches extra

bytes and loads them into the cache. When the processor needs the next piece

of data, it is likely that it is already in the cache. If so, processor performance is

enhanced, if not, the penalty is minimal.

Caches are cost-effective because they are relatively small as compared to the

amount of main memory.

There are several levels of cache implemented in IBM PC servers. The cache

incorporated into the main system processor is known as Level 1 (L1) cache.

The Intel 486 incorporates a single 8KB cache. The Intel Pentium family has two

8KB caches, one for instructions and one for data. Access to these on-board

caches are very fast and consume only a fraction of the time required to access

memory locations external to the chip.

The second level of cache, called second-level cache or L2 cache, provides

additional high speed memory to the L1 cache. If the processor cannot find what

it needs in the processor cache (a first-level

cache miss

additional cache memory. If it finds the code or data there (a second-level

) the processor will use it and continue. If the data is in neither of the caches,

hit

), it then looks in the

cache

an access to main memory must occur.

L2 caches are standard in all IBM PC server models.

With all types of caching, more is not always better. Depending on the system,

the optimum size of Level 2 Cache is usually 128KB to 512KB.

L2 Caches can be of two types:

•

4 NetWare Integration Guide

Write-Through Cache

Read operations are issued from the cache but write operations are sent

directly to the standard memory. Performance improvements are obtained

only for read operations.

Page 20

•

Write-Back Cache

Write operations are also performed on the cache. Transfer to standard

memory is done if:

− Memory is needed in the cache for another operation

− Modified data in the cache is needed for another application

The third level of cache or L3 cache is sometimes referred to as a victim cache.

This cache is a highly customized cache used to store recently evicted L2 cache

entries. It is a smaller cache usually less than 256 bytes. An L3 cache is

implemented in the IBM PC Server 720 SMP system.

1.3.1.1 SM P Caching

Within SMP designs, there are two ways in which a cache is handled:

•

Shared cache

•

Dedicated cache

Shared Cache:

expensive SMP design. However, the performance gains associated with a

shared cache are not as great as with a dedicated cache. With the shared

secondary cache design, adding a second processor can provide as much as a

30% performance improvement. Additional processors provide very little

incremental gain. If two many processors are added, the system will even run

slower due to memory bus bottlenecks caused by processor contention for

access to system memory.

The IBM PC server 320 supports SMP with a shared cache.

Figure 1 shows SMP with shared secondary cache.

Sharing a single L2 cache among processors is the least

┌───────────────┐ ┌───────────────┐

│ Pentium │ │ Pentium │

└───────┬───────┘ └───────┬───────┘

││

││

││

└────────────────┬────────────────┘

│

│

│

│

│

┌──────────────────┴────────────────────┐

│ 512KB Secondary (level2) Cache │

└──────────────────┬────────────────────┘

│

│

│

┌────────┴─────────┐

│ Main memory │

└────────┬─────────┘

│

Figure 1. SMP Shared Secondary Cache

Chapter 1. IBM PC Server Technologies 5

Page 21

Dedicated Cache:

processor. This allows more cache hits than a shared L2 cache. Adding a

second processor using a dedicated L2 cache can improve performance as

much as 80%. With current technology, adding even more processors can

further increase performance in an almost linear fashion up to the point where

the addition of more processors does not increase performance and can actually

decrease performance due to excessive overhead.

The IBM PC Server 720 implements SMP with dedicated caches.

Figure 2 shows SMP with dedicated secondary cache.

This SMP design supports a dedicated L2 cache for each

┌───────────────┐ ┌───────────────┐

│ Pentium │ │ Pentium │

└───────┬───────┘ └───────┬───────┘

││

││

││

┌────────────┴─────────────────┐ ┌──────────────┴────────────────┐

│512KB Secondary (level2) Cache│ │512KB Secondary(level2) Cache│ │

└────────────┬─────────────────┘ └──────────────┬────────────────┘

││

││

││

└────────────────────┬──────────────────┘

│

│

┌────────┴─────────┐

│ Main memory │

└────────┬─────────┘

│

Figure 2. SMP with Dedicated Secondary Cache

Dedicated caches are also more complicated to manage. Care needs to be

taken to ensure that a processor needing data always gets the latest copy of that

data. If this data happens to reside in another processor′ s cache, then the two

caches must be brought into sync with one another.

The cache controllers maintain this

another using a special protocol called MESI, which stands for

E

xclusive, Shared, or Invalid. These refer to tags that are maintained for each

line of cache, and indicate the state of each line.

The implementation of MESI in the IBM PC server 720 supports two sets of tags

for each cache line, which allows for faster cache operation than when only one

set of tags is provided.

1.3.2 Memory Interleaving

Another technique used to reduce effective memory access time is interleaving.

This technique greatly increases memory bandwidth when access to memory is

sequential such as in program instruction fetches.

coherency

by communicating with one

M

odified,

6 NetWare Integration Guide

Page 22

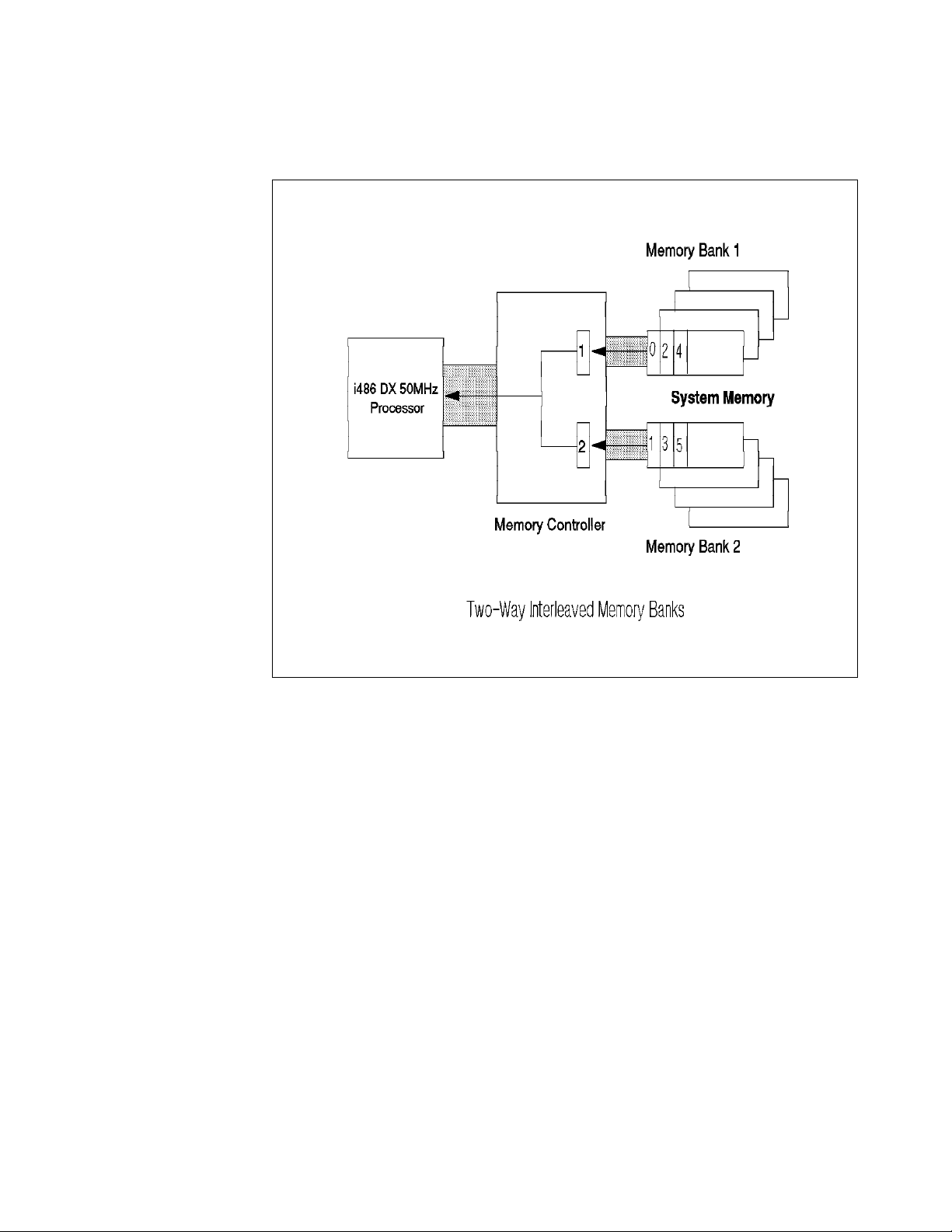

In interleaved systems, memory is currently organized in either two or four

banks. Figure 3 on page 7 shows a two-way interleaved memory

implementation.

Figure 3. Two-Way Interleaved Memory Banks

Memory accesses are overlapped so that as the controller is reading/writing

from bank 1, the address of the next word is presented to bank 2. This gives

bank 2 a head start on the required access time. Similarly, when bank 2 is being

read, bank 1 is fetching/storing the next word.

The PC server 500 uses a two-way interleaved memory. In systems

implementing two-way interleaved memory, additional memory must be added in

pairs of single in-line memory modules (SIMMs) operating at the same speed

(matched SIMMs).

The PC server 720 uses a four-way interleaved memory with a word length of 64

bits. In this system, in order to interleave using 32-bit SIMMs, it is necessary to

add memory in matched sets of eight SIMMs each.

1.3.3 Dual Path Buses

A dual path bus allows both the processor and a bus master to access system

memory simultaneously. Figure 4 on page 8 shows a dual path bus

implementation.

Chapter 1. IBM PC Server Technologies 7

Page 23

┌─────────┐ ┌─────────┐ ┌─────────┐ ┌─────────┐

│ CPU ├───┤ L2 Cache├───┤ Memory ├───┤ Memory │

│ ├───┤ ├───┤ Control.├───┤ │

└─────────┘ └─────────┘ └──┬───┬──┘ └─────────┘

│ │

│ │

┌──┴───┴──┐

│ I/0 │

│ Control.│

└──┬───┬──┘

│ │

│ │

┌──────────────────────────────┴───┴────────────────┐

││

│ BUS ISA/EISA/MCA/VL/PCI │

││

└────┬─┬───┬─┬───────────────────────┬─┬──┬─┬───────┘

││ ││ ││ ││

└─┘ └─┘ └─┘ └─┘

Slots SCSI VGA

Figure 4. Dual Path Bus Implementation

Without a dual path bus, there is often contention for system resources such as

main memory. When contention between the processor and a bus master

occurs, one has to wait for the other to finish its memory cycle before it can

proceed. Thus, fast devices like processors have to wait for much slower I/O

devices, slowing down the performance of the entire system to the speed of the

slowest device. This is very costly to the overall system performance.

1.3.4 SynchroStream Technology

SynchroStream is an extension of the dual bus path technique. The

SynchroStream controller synchronizes the operation of fast and slow devices

and streams data to these devices to ensure that all devices work at their

optimum levels of performance.

It works much like a cache controller in that it pre-fetches extra data on each

access to memory and buffers this data in anticipation of the next request. When

the device requests the data, the IBM SynchroStream controller provides it

quickly from the buffer and the device continues working. It does not have to

wait for a normal memory access cycle.

When devices are writing data into memory, the IBM SynchroStream controller

again buffers the data, and writes it to memory after the bus cycle is complete.

Since devices are not moving data to and from memory directly, but to the

SynchroStream controller, each device has its own logical path to memory. The

devices do not have to wait for other, slower devices.

8 NetWare Integration Guide

Page 24

1.4 Memory Error Detection and Correction

IBM PC servers implement four different memory systems:

•

Standard (parity) memory

•

Error Correcting Code-Parity

•

Error Correcting Code (ECC) memory

•

ECC Memory on SIMMs (EOS) Memory

1.4.1 Standard (Parity) Memory

Parity memory is standard IBM memory with 32 bits of data space and 4 bits of

parity information (one check bit/byte of data). The 4 bits of parity information

are able to tell you an error has occurred but do not have enough information to

locate which bit is in error. In the event of a parity error, the system generates a

non-maskable interrupt (NMI) which halts the system. Double bit errors are

undetected with parity memory.

Standard memory is implemented in the PC Servers 300 and 320 as well as in

the majority of the IBM desktops (for example the IBM PC 300, IBM PC 700, and

PC Power Series 800).

1.4.2 Error Correcting Code (ECC)

The requirements for system memory in PC servers has increased dramatically

over the past few years. Several reasons include the availability of 32 bit

operating systems and the caching of hard disk data on file servers.

As system memory is increased, the possibility for memory errors increase.

Thus, protection against system memory failures becomes increasingly

important. Traditionally, systems which implement only parity memory halt on

single-bit errors, and fail to detect double-bit errors entirely. Clearly, as memory

is increased, better techniques are required.

To combat this problem, the IBM PC servers employ schemes to detect and

correct memory errors. These schemes are called Error Correcting Code (or

sometimes Error Checking and Correcting but more commonly just ECC). ECC

can detect and correct single bit-errors, detect double-bit errors, and detect

some triple-bit errors.

ECC works like parity by generating extra check bits with the data as it is stored

in memory. However, while parity uses only 1 check bit per byte of data, ECC

uses 7 check bits for a 32-bit word and 8 bits for a 64-bit word. These extra

check bits along with a special hardware algorithm allow for single-bit errors to

be detected and corrected in real time as the data is read from memory.

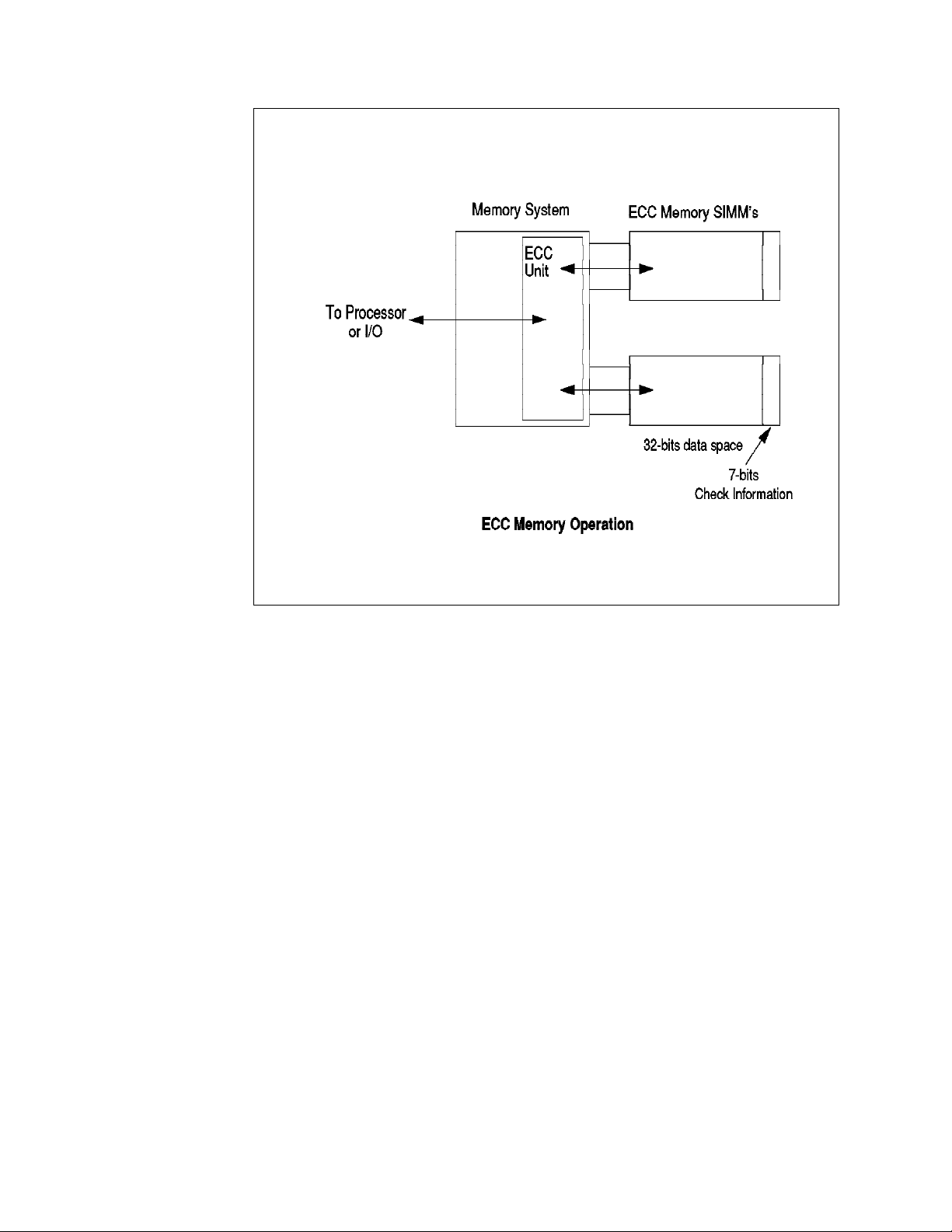

Figure 5 on page 10 shows how the ECC circuits operate. The data is scanned

as it is written to memory. This scan generates a unique 7-bit pattern which

represents the data stored. This pattern is then stored in the 7-bit check space.

Chapter 1. IBM PC Server Technologies 9

Page 25

Figure 5. ECC Memory Operation

As the data is read from memory, the ECC circuit again performs a scan and

compares the resulting pattern to the pattern which was stored in the check bits.

If a single-bit error has occurred (the most common form of error), the scan will

always detect it, automatically correct it and record its occurrence. In this case,

system operation will not be affected.

The scan will also detect all double-bit errors, though they are much less

common. With double-bit errors, the ECC unit will detect the error and record its

occurrence in NVRAM; it will then halt the system to avoid data corruption. The

data in NVRAM can then be used to isolate the defective component.

In order to implement an ECC memory system, you need an ECC memory

controller and ECC SIMMs. ECC SIMMs differ from standard memory SIMMs in

that they have additional storage space to hold the check bits.

The IBM PC Servers 500 and 720 have ECC circuitry and provide support for ECC

memory SIMMs to give protection against memory errors.

1.4.3 Error Correcting Code-Parity Memory (ECC-P)

Previous IBM servers such as the IBM Server 85 were able to use standard

memory to implement what is known as ECC-P. ECC-P takes advantage of the

fact that a 64-bit word needs 8 bits of parity in order to detect single-bit errors

(one bit/byte of data). Since it is also possible to use an ECC algorithm on 64

bits of data with 8 check bits, IBM designed a memory controller which

implements the ECC algorithm using the standard memory SIMMs.

10 NetWare Integration Guide

Page 26

Figure 6 on page 11 shows the implementation of ECC-P. When ECC-P is

enabled via the reference diskette, the controller reads/writes two 32-bit words

and 8 bits of check information to standard parity memory. Since 8 check bits

are available on a 64-bit word, the system is able to correct single-bit errors and

detect double-bit errors just like ECC memory.

┌───────────────────┐ ┌───────────────────┐

││││

││││

├─────────┬┬────────┤ ├─────────┬┬────────┤

│ 32 data ││4 parity│ │ 32 data ││4 parity│

└────┬────┘└───┬────┘ └─────┬───┘└────┬───┘

││ ││

│ └───────────────┬──────────┼─────────┘

│││

└─────────────────────┬───┼──────────┘

64 bits for data │ │

│ │8 bits for ECC

┌──┴───┴─────┐

│ Memory │

│ Controller │

└────────────┘

Figure 6. ECC-P Memory Implementation

While ECC-P uses standard non-expensive memory, it needs a specific memory

controller that is able to read/write the two memory blocks and check and

generate the check bits. Also, the additional logic necessary to implement the

ECC circuitry make it slightly slower than true ECC memory. Since the price

difference between a standard memory SIMM and an ECC SIMM has narrowed,

IBM no longer implements ECC-P.

1.4.4 ECC on SIMMs (EOS) Memory

A server that supports one hundred or more users can justify the additional cost

necessary to implement ECC on the system. It is harder to justify this cost for

smaller configurations. It would be desirable for a customer to be able to

upgrade his system at a reasonable cost to take advantage of ECC memory as

his business grows.

The problem is that the ECC and ECC-P techniques previously described use

special memory controllers imbedded on the planar board which contain the

ECC circuits. I t is impossible to upgrade a system employing parity memory

(with a parity memory controller) to ECC even if we upgrade the parity memory

SIMMs to ECC memory SIMMs.

To answer this problem, IBM has introduced a new type of memory SIMM which

has the ECC logic integrated on the SIMM. These are called ECC on SIMMs or

EOS memory SIMMs. With these SIMMs, the memory error is detected and

corrected directly on the SIMM before the data gets to the memory controller.

This solution allows a standard memory controller to be used on the planar

board and allows the customer to upgrade a server to support error checking

memory.

Chapter 1. IBM PC Server Technologies 11

Page 27

1.4.5 Performance Impact

As previously discussed, systems which employ ECC memory have slightly

longer memory access times depending on where the checking is done. It

should be stressed that this affects only the access time of external system

memory, not L1 or L2 caches. Table 1 shows the performance impacts as a

percentage of system memory access times of the different ECC memory

solutions.

Again, these numbers represent only the impact to accessing external memory.

They do not represent the impact to overall system performance which is harder

to measure but will be substantially less.

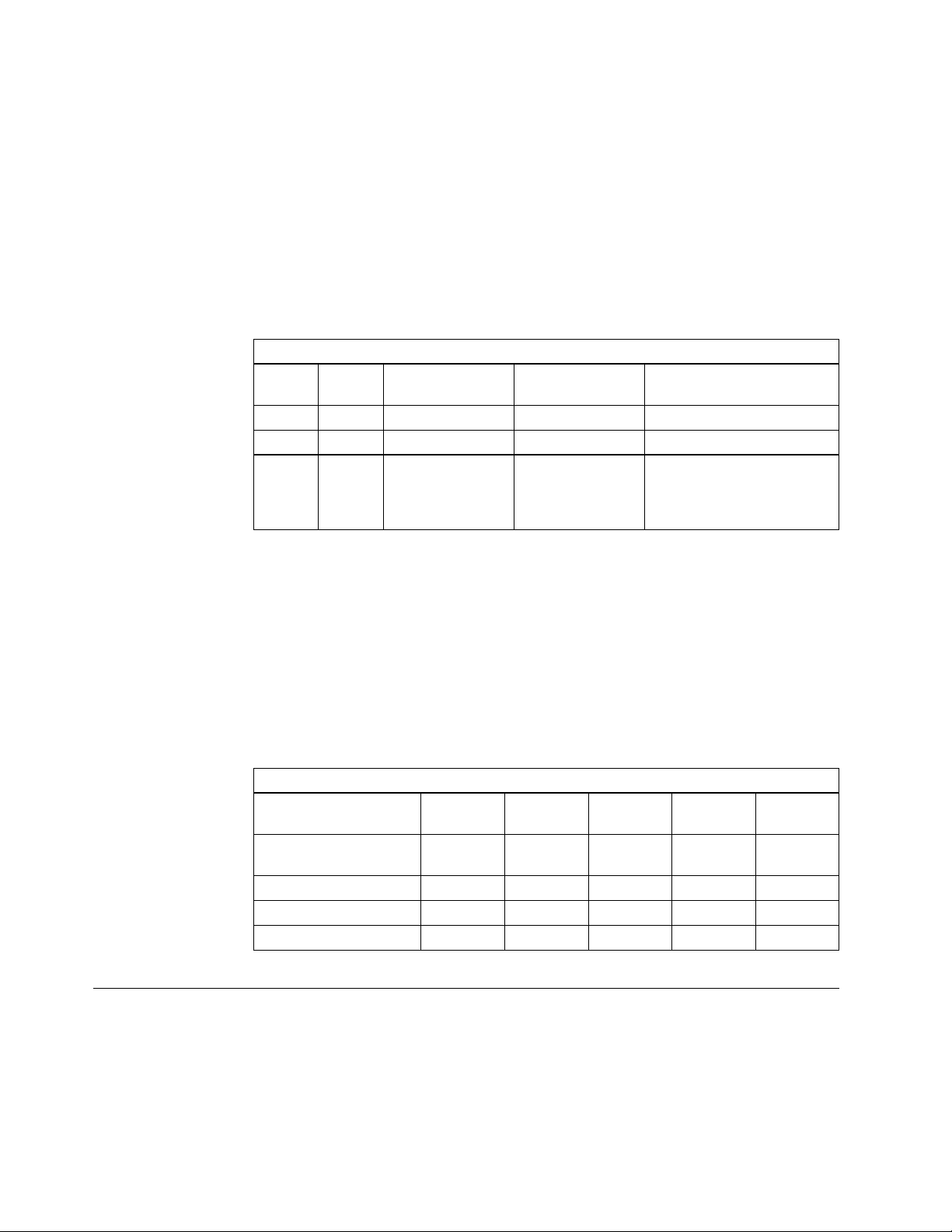

Table 1. ECC Memory Performances

ECC X X 3% PC Servers 500 and 720

ECC-P X 14% No more (Mod 85)

EOS X None Option for PC Servers

SIMM Memory

Controller

Impact to

Access Time

Systems where

implemented

300, 320

Standard for PC Servers

520

1.4.6 Memory Options and Speed

The following memory options are available from IBM:

•

4MB, 8MB, 16MB, 32MB 70 ns Standard (Parity) Memory SIMMs

•

4MB, 8MB, 16MB, 32MB 70 ns ECC Memory SIMMs

•

4MB, 8MB, 16MB, 32MB 60 ns ECC Memory SIMMs

•

4MB, 8MB, 16MB, 32MB 70 ns EOS Memory SIMMs

Table 2 shows the options used by each PC server.

Table 2. Summary of Memory Implementations

PS/2 Model 70 ns

PC Server

300/310/320

PC Server 500 X

PC Server 520 X

PC Server 720 X

1.5 Bus Architectures

70 ns

Standard

X OPT

ECC-P

70 ns

ECC

60 ns

ECC

70 ns

EOS

There are a number of bus architectures implemented in IBM PC servers:

•

•

•

•

12 NetWare Integration Guide

ISA

EISA

MCA

PCI

Page 28

1.5.1 ISA Bus

The Industry Standard Architecture (ISA) is not really an architecture at all but a

defacto standard based on the original IBM PC/AT bus design. The main

characteristics of the ISA bus include a 16-bit data bus and a 24-bit address bus.

The bus speed is limited to 8 MHz and it did not allow for DMA and bus masters

in its original form. It does not support automatically configuring adapters and

resolving resource conflicts among adapters nor does it allow for sharing of

interrupts. Nonetheless, it was an extremely successful design and even with

these disadvantages, it is estimated that the ISA bus is in 70% of the PCs

manufactured today.

1.5.2 EISA Bus

The Extended Industry Standard Bus Architecture (EISA) is a 32-bit superset of

the ISA bus providing improved functionality and greater data rates while

maintaining backward compatibility with the many ISA products already

available.

The main advancements of the EISA bus are 32-bit addressing and 16-bit data

transfer. It supports DMA and bus master devices. It is synchronized by an 8.33

MHz clock and can achieve data transfer of up to 33 MBps. A bus arbitration

scheme is also provided which allows efficient sharing of multiple EISA bus

devices. EISA systems can also automatically configure adapters.

1.5.3 Micro Channel Bus

The Micro Channel Architecture (MCA) was introduced by IBM in 1987. Micro

Channel is an improvement over ISA in all of the areas discussed in the previous

section on EISA. I n addition, it supports data streaming which is an important

performance feature of the MCA architecture.

1.5.3.1 Data Streaming

The data streaming transfer offers considerably improved I/O performance. In

order to understand data streaming transfers we need to see how data is

transferred between Micro Channel bus master adapters and memory.

The standard method of transfer across the Micro Channel is known as basic

data transfer. In order to transfer a block of data in basic data transfer mode, an

address is generated on the address bus to specify where the data should be

stored; then the data is put on the data bus.

This process is repeated until the entire block of data has been transferred.

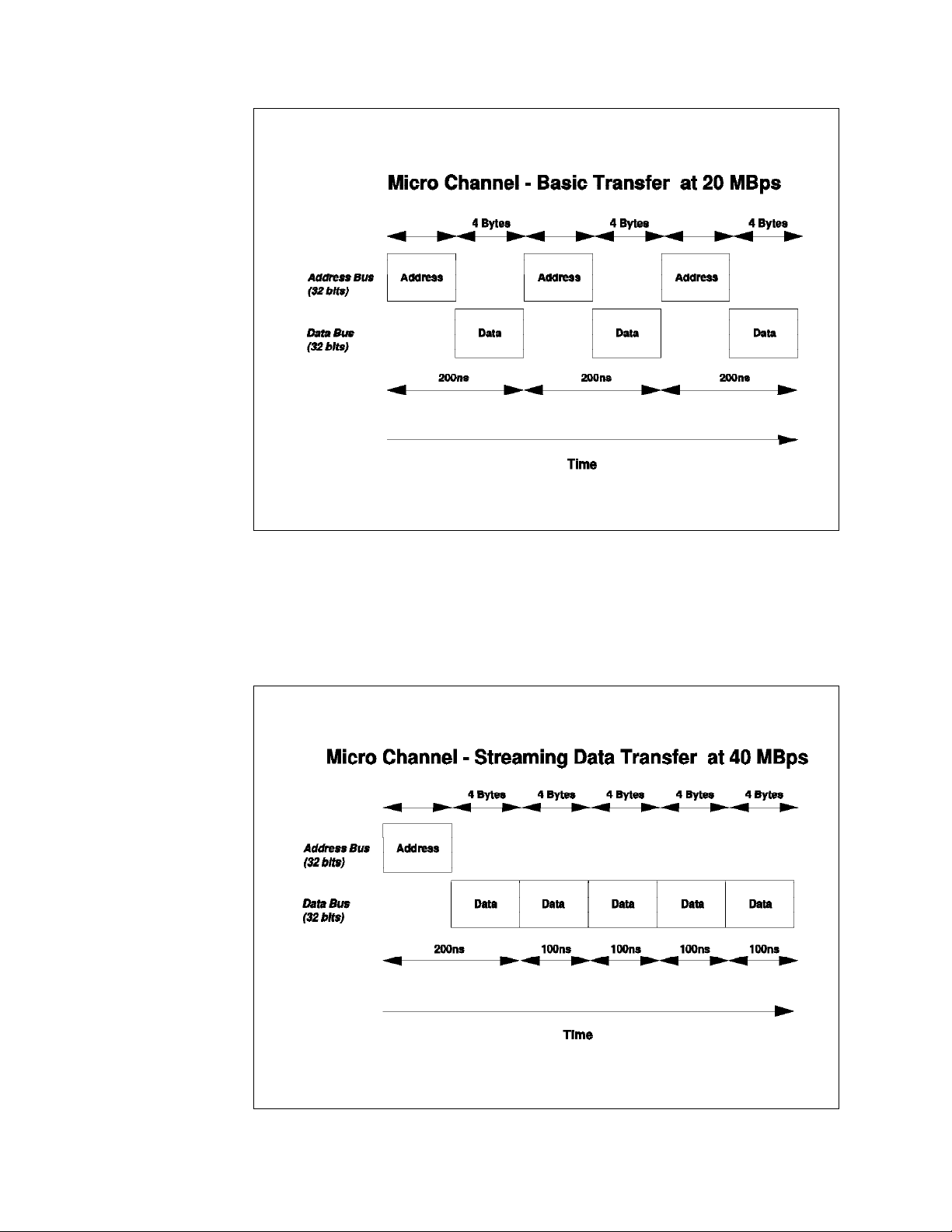

Figure 7 on page 14 shows basic data transfer in operation. Basic data transfer

on the Micro Channel runs at 20 MBps (each cycle takes 200 nanoseconds, and

32 bits or 4 bytes of data are transferred at a time).

Chapter 1. IBM PC Server Technologies 13

Page 29

Figure 7. Micro Channel - Basic Data Transfer (20 MBps)

However, in many cases, blocks transferred to and from memory are stored in

sequential addresses, so repeatedly sending the address for each 4 bytes is

unnecessary. With data streaming transfers, the initial address is sent, and then

the blocks of data are sent; it is then assumed that the data requests are

sequential. Figure 8 shows 40 MBps data streaming in operation.

Figure 8. Micro Channel - Data Streaming Transfer (40 MBps)

14 NetWare Integration Guide

Page 30

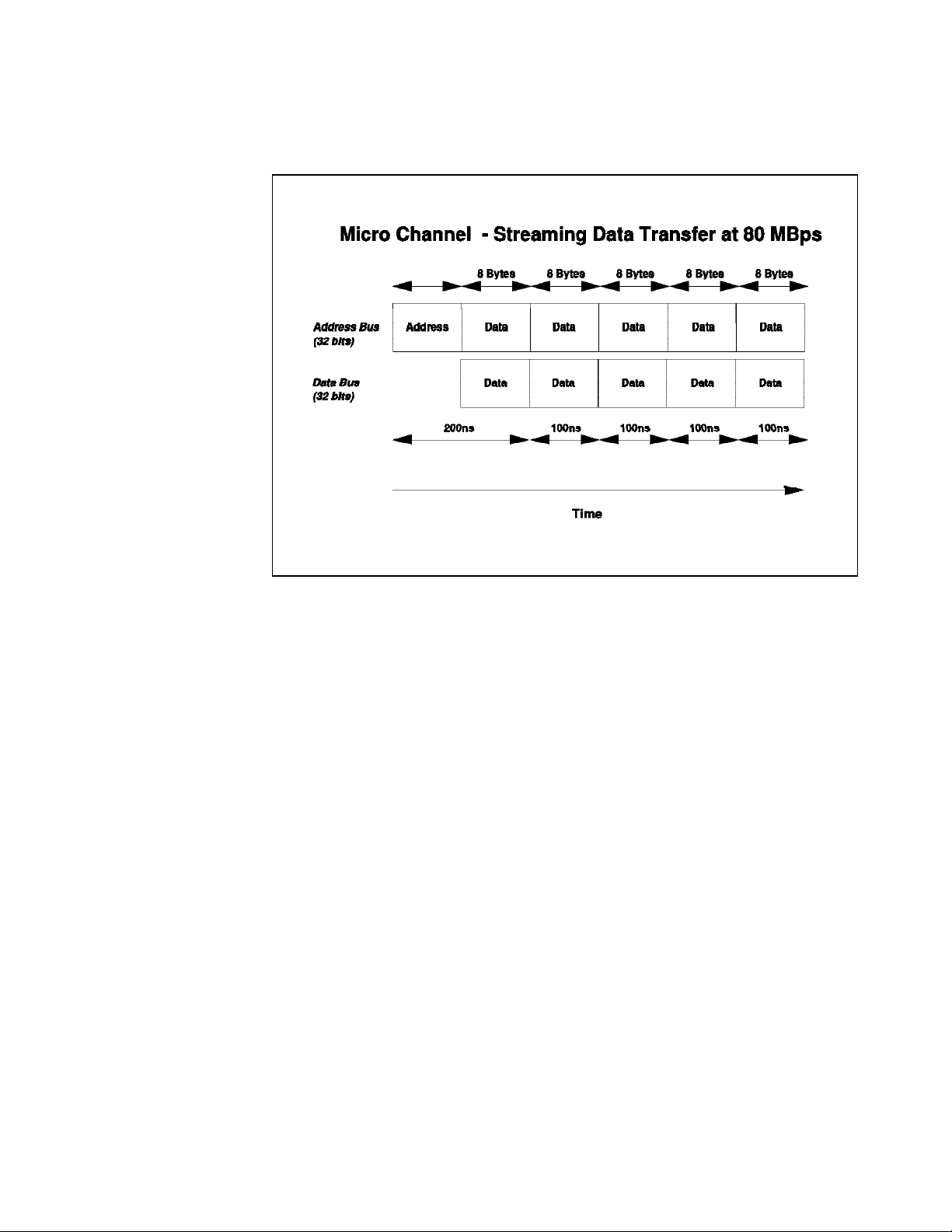

The Micro Channel supports another mode of data streaming whereby the

address bus can also be used to transfer data. This is depicted in Figure 9 on

page 15.

1.5.4 PCI Bus

Figure 9. Micro Channel - Data Streaming Transfer (80 MBps)

As can be seen from this figure, in this mode, after the initial address is

presented during the first bus cycle, the address bus is then multiplexed to carry

an additional 32 bits of data. This results in an effective data transfer rate of 80

MBps.

Data streaming, as well as improving the data transfer rate, also provides a

more efficient use of the Micro Channel. Since MCA operations complete in a

shorter amount of time, the overall throughput of the system is increased.

Data streaming is useful for any adapters that perform block transfers across the

Micro Channel such as the IBM SCSI-2 Fast/Wide Streaming RAID Adapter/A.

MCA is implemented in some models of the IBM PC Server 300 and 500 lines

and in all models of the PC Server 720.

In the later part of 1992, Intel, IBM and a number of other companies worked

together to define a new local component bus which was designed to provide a

number of new features and work with a wide range of new processors. The

result was the Peripheral Component Interconnect (PCI) bus. The PCI bus was

designed to provide the Pentium processor with all the bandwidth it needed and

to provide for more powerful processors in the future. It was also designed for

use in multiprocessing environments.

The PCI bus was designed to work with a number of buses including Micro

Channel, ISA and EISA buses. I t was designed to provide a local bus, more

tightly integrated with the processor, to provide more bandwidth to I/O devices

such as LAN adapters and DISK controllers, which require more bandwidth than

Chapter 1. IBM PC Server Technologies 15

Page 31

is available with previous bus architectures. In order to optimize performance,

the PCI architecture strictly limits the number of loads (hence the number of

adapters) on the bus. It therefore needs an I/O expansion bus to handle the

more routine I/O devices.

The bus has 32 or 64 bits of address and data, is processor independent and is

capable of speeds over 50 MHz. 8-bit and 16-bit devices are not supported. The

64-bit data bus width in combination with clock speeds over 50 MHz can result in

data transfer of several hundred megabytes per second. In addition to memory

space and I/O space, the bus includes a third address space to support

automatic resource allocation and configuration of system and adapter boards.

Unique features of the PCI include parity on all bus lines and control lines. The

parity is not optional as in other architectures, but is required. All PCI bus

masters must support data streaming to memory devices.

1.6 Disk Subsystem

The disk subsystem is a critical element of server design. In this section we

examine the controllers, the devices, and the interfaces between them. We will

specifically address SCSI technology and also examine RAID technology in some

detail.

1.6.1 Hard Disk Interfaces

The disk interface specifies the physical, electrical, and logical connections

between the controller and the Direct Access Storage Devices (DASD). There

have been four main interfaces developed thus far. Each possesses different

characteristics and performance levels. The interfaces are:

1. ST506 - This interface was the original standard for microcomputers. It has a

data transfer rate of 5 million bits per second (Mbps) between the controller

and the DASD Device. It is a serial rather than a parallel interface. This

interface is classified as a device level interface because the device itself

has no logic to interpret commands. Functions such as formatting, head

selection, and error detection are directed by the controller which is housed

in an adapter card. A device level interface requires specific adapters and

device drivers for each different type of device.

2. Enhanced Small Device Interface (ESDI) - This is an enhanced version of the

ST506 interface. I t provides a 10 Mbps data transfer rate (15 Mbps in some

implementations). ESDI devices were the first to use a type of data encoding

called Run Length Limited (RLL) which results in denser storage and faster

data transfer than the older modified frequency modulation (MFM) technique.

However, it is still a device level, serial interface.

3. Integrated Drive Electronics (IDE) - This is a bus level interface meaning that

the device controller is built into the device itself. The IDE interface was

designed for the low cost PC market segment. The interface is flexible and

has been enhanced over time. The latest enhancements include caching at

the adapter level, a CD-ROM interface, and an extension of the maximum

disk storage which was previously limited to 500 MB. However, most IDE

implementations still limit the maximum number of hard disks per interface

to two. This limitation makes IDE more applicable for desktop systems.

16 NetWare Integration Guide

Page 32

4. Small Computer System Interface (SCSI) - The SCSI interface is a high speed

parallel interface that transfers eight bits at a time rather than one bit at a

time for the ST506 and ESDI serial interfaces. Thus data transfer rates for

SCSI are measured in mega

faster than those of the serial interfaces. SCSI is also a bus level interface

which makes it very flexible. Since the commands are interpreted by the

device and not the SCSI host bus adapter, new devices (with new

commands) can be implemented and used with standard SCSI adapters. The

device driver then interacts with the device via the new commands. A n

example of this would be a CD-ROM device sharing the same adapter as a

hard disk drive. Figure 10 shows a SCSI subsystem with a host bus adapter

attached to an integrated controller and hard disk.

bytes

versus mega

bits

and are considerably

Figure 10. SCSI Disk Interface

The SCSI flexibility and high performance make it very suitable for the server

environment. In fact, SCSI is the most widely used disk subsystem

technology in advanced servers today. All the current IBM PC Servers

except for a few at the low end use this technology. For these reasons, we

will take a closer look at this interface.

1.6.2 SCSI Technology

As previously discussed, SCSI is a bus level interface through which computers