Page 1

CICS Transaction Server fo r z/OS

Version 4 Release 1

Recovery and Restart Guide

SC34-7012-01

Page 2

Page 3

CICS Transaction Server fo r z/OS

Version 4 Release 1

Recovery and Restart Guide

SC34-7012-01

Page 4

Note

Before using this information and the product it supports, read the information in “Notices” on page 243.

This edition applies to Version 4 Release 1 of CICS Transaction Server for z/OS (product number 5655-S97) and to

all subsequent releases and modifications until otherwise indicated in new editions.

© Copyright IBM Corporation 1982, 2010.

US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract

with IBM Corp.

Page 5

Contents

Preface ..............vii

What this book is about ..........vii

Who should read this book .........vii

What you need to know to understand this book vii

How to use this book ...........vii

Changes in CICS Transaction Server for

z/OS, Version 4 Release 1 .......ix

Part 1. CICS recovery and restart

concepts ..............1

Chapter 1. Recovery and restart facilities 3

Maintaining the integrity of data .......3

Minimizing the effect of failures ........4

The role of CICS .............4

Recoverable resources ...........5

CICS backward recovery (backout) .......5

Dynamic transaction backout ........6

Emergency restart backout .........6

CICS forward recovery ...........7

Forward recovery of CICS data sets......7

Failures that require CICS recovery processing . . . 8

CICS recovery processing following a

communication failure ..........8

CICS recovery processing following a transaction

failure ...............10

CICS recovery processing following a system

failure ...............10

Chapter 2. Resource recovery in CICS 13

Units of work .............13

Shunted units of work ..........13

Locks ...............14

Synchronization points .........15

CICS recovery manager ..........17

Managing the state of each unit of work....18

Coordinating updates to local resources ....19

Coordinating updates in distributed units of

work ...............20

Resynchronization after system or connection

failure ...............21

CICS system log .............21

Information recorded on the system log ....21

System activity keypoints.........22

Forward recovery logs ...........22

User journals and automatic journaling .....22

Chapter 3. Shutdown and restart

recovery ..............25

Normal shutdown processing ........25

First quiesce stage ...........25

Second quiesce stage ..........26

Third quiesce stage ...........26

Warm keypoints ............27

Shunted units of work at shutdown .....27

Flushing journal buffers .........28

Immediate shutdown processing (PERFORM

SHUTDOWN IMMEDIATE) .........28

Shutdown requested by the operating system . . . 29

Uncontrolled termination ..........30

The shutdown assist transaction .......30

Cataloging CICS resources .........31

Global catalog ............31

Local catalog .............32

Shutdown initiated by CICS log manager ....33

Effect of problems with the system log ....33

How the state of the CICS region is reconstructed 34

Overriding the type of start indicator .....35

Warm restart .............35

Emergency restart ...........35

Cold start ..............36

Dynamic RLS restart ..........37

Recovery with VTAM persistent sessions ....38

Running with persistent sessions support . . . 38

Running without persistent sessions support . . 40

||

Part 2. Recovery and restart

processes.............43

Chapter 4. CICS cold start ......45

Starting CICS with the START=COLD parameter . . 45

Files ................46

Temporary storage ...........47

Transient data ............47

Transactions .............48

Journal names and journal models......48

LIBRARY resources...........48

Programs ..............48

Start requests (with and without a terminal) . . 48

Resource definitions dynamically installed . . . 48

Monitoring and statistics .........49

Terminal control resources ........49

Distributed transaction resources ......50

Dump table .............50

Starting CICS with the START=INITIAL parameter 50

Chapter 5. CICS warm restart .....53

Rebuilding the CICS state after a normal shutdown 53

Files ................54

Temporary storage ...........55

Transient data ............55

Transactions .............56

LIBRARY resources...........56

Programs ..............56

Start requests .............57

Monitoring and statistics .........57

© Copyright IBM Corp. 1982, 2010 iii

Page 6

Journal names and journal models......58

Terminal control resources ........58

Distributed transaction resources ......59

URIMAP definitions and virtual hosts ....59

Chapter 6. CICS emergency restart . . 61

Recovering after a CICS failure ........61

Recovering information from the system log . . 61

Driving backout processing for in-flight units of

work ...............61

Concurrent processing of new work and backout 61

Other backout processing.........62

Rebuilding the CICS state after an abnormal

termination ..............62

Files ................62

Temporary storage ...........63

Transient data ............63

Start requests .............64

Terminal control resources ........64

Distributed transaction resources ......65

Chapter 7. Automatic restart

management ............67

CICS ARM processing ...........67

Registering with ARM ..........68

Waiting for predecessor subsystems .....68

De-registering from ARM.........68

Failing to register ...........69

ARM couple data sets ..........69

CICS restart JCL and parameters .......69

Workload policies ............70

Connecting to VTAM ...........70

The COVR transaction..........71

Messages associated with automatic restart . . . 71

Automatic restart of CICS data-sharing servers . . 71

Server ARM processing .........71

Chapter 8. Unit of work recovery and

abend processing ..........73

Unit of work recovery ...........73

Transaction backout ..........74

Backout-failed recovery .........79

Commit-failed recovery .........83

Indoubt failure recovery .........84

Investigating an indoubt failure .......85

Recovery from failures associated with the coupling

facility ................88

Cache failure support ..........88

Lost locks recovery ...........89

Connection failure to a coupling facility cache

structure ..............91

Connection failure to a coupling facility lock

structure ..............91

MVS system recovery and sysplex recovery . . 91

Transaction abend processing ........92

Exit code ..............92

Abnormal termination of a task.......93

Actions taken at transaction failure ......94

Processing operating system abends and program

checks ................94

Chapter 9. Communication error

processing .............97

Terminal error processing..........97

Node error program (DFHZNEP) ......97

Terminal error program (DFHTEP) .....97

Intersystem communication failures ......98

Part 3. Implementing recovery and

restart ..............99

Chapter 10. Planning aspects of

recovery .............101

Application design considerations ......101

Questions relating to recovery requirements . . 101

Validate the recovery requirements statement 102

Designing the end user ’s restart procedure . . 103

End user’s standby procedures ......103

Communications between application and user 103

Security ..............104

System definitions for recovery-related functions 104

Documentation and test plans ........105

Chapter 11. Defining system and

general log streams ........107

Defining log streams to MVS ........108

Defining system log streams ........108

Specifying a JOURNALMODEL resource

definition..............109

Model log streams for CICS system logs . . . 110

Activity keypointing ..........112

Defining forward recovery log streams .....116

Model log streams for CICS general logs . . . 117

Merging data on shared general log streams . . 118

Defining the log of logs ..........118

Log of logs failure ...........119

Reading log streams offline ........119

Effect of daylight saving time changes .....120

Adjusting local time ..........120

Time stamping log and journal records ....120

Chapter 12. Defining recoverability for

CICS-managed resources ......123

Recovery for transactions .........123

Defining transaction recovery attributes . . . 123

Recovery for files ............125

VSAM files .............125

Basic direct access method (BDAM) .....126

Defining files as recoverable resources ....126

File recovery attribute consistency checking

(non-RLS) .............129

Implementing forward recovery with

user-written utilities ..........131

Implementing forward recovery with CICS

VSAM Recovery MVS/ESA .......131

Recovery for intrapartition transient data ....131

Backward recovery ..........131

Forward recovery ...........133

Recovery for extrapartition transient data ....134

iv CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 7

Input extrapartition data sets .......134

Output extrapartition data sets ......135

Using post-initialization (PLTPI) programs . . 135

Recovery for temporary storage .......135

Backward recovery ..........135

Forward recovery ...........136

Recovery for Web services .........136

Configuring CICS to support persistent

messages ..............136

Defining local queues in a service provider . . 137

Persistent message processing .......138

Chapter 13. Programming for recovery 141

Designing applications for recovery ......141

Splitting the application into transactions . . . 141

SAA-compatible applications .......143

Program design ............143

Dividing transactions into units of work . . . 143

Processing dialogs with users .......144

Mechanisms for passing data between

transactions .............145

Designing to avoid transaction deadlocks . . . 146

Implications of interval control START requests 147

Implications of automatic task initiation (TD

trigger level) ............148

Implications of presenting large amounts of data

to the user .............148

Managing transaction and system failures ....149

Transaction failures ..........149

System failures ............151

Handling abends and program level abend exits 151

Processing the IOERR condition ......152

START TRANSID commands .......153

PL/I programs and error handling .....153

Locking (enqueuing on) resources in application

programs...............153

Implicit locking for files .........154

Implicit enqueuing on logically recoverable TD

destinations .............157

Implicit enqueuing on recoverable temporary

storage queues ............157

Implicit enqueuing on DL/I databases with

DBCTL ..............158

Explicit enqueuing (by the application

programmer) ............158

Possibility of transaction deadlock .....159

User exits for transaction backout ......160

Where you can add your own code .....160

XRCINIT exit ............161

XRCINPT exit ............161

XFCBFAIL global user exit ........161

XFCLDEL global user exit ........162

XFCBOVER global user exit .......162

XFCBOUT global user exit ........162

Coding transaction backout exits ......162

Chapter 14. Using a program error

program (PEP) ...........163

The CICS-supplied PEP ..........163

YourownPEP.............164

Omitting the PEP ............165

Chapter 15. Resolving retained locks

on recoverable resources ......167

Quiescing RLS data sets ..........167

The RLS quiesce and unquiesce functions . . . 168

Switching from RLS to non-RLS access mode. . . 172

Exception for read-only operations .....172

What can prevent a switch to non-RLS access

mode?...............173

Resolving retained locks before opening data

sets in non-RLS mode .........174

Resolving retained locks and preserving data

integrity ..............176

Choosing data availability over data integrity 177

The batch-enabling sample programs ....178

CEMT command examples ........178

A special case: lost locks.........180

Overriding retained locks ........180

Coupling facility data table retained locks ....182

Chapter 16. Moving recoverable data

sets that have retained locks ....183

Procedure for moving a data set with retained

locks ................183

Using the REPRO method ........183

Using the EXPORT and IMPORT functions . . 185

Rebuilding alternate indexes .......186

Chapter 17. Forward recovery

procedures ............187

Forward recovery of data sets accessed in RLS

mode ................187

Recovery of data set with volume still available 188

Recovery of data set with loss of volume . . . 189

Forward recovery of data sets accessed in non-RLS

mode ................198

Procedure for failed RLS mode forward recovery

operation ...............198

Procedure for failed non-RLS mode forward

recovery operation ...........201

Chapter 18. Backup-while-open (BWO) 203

BWO and concurrent copy .........203

BWO and backups ..........203

BWO requirements ...........204

Hardware requirements .........205

Which data sets are eligible for BWO .....205

How you request BWO ..........206

Specifying BWO using access method services 206

Specifying BWO on CICS file resource

definitions .............207

Removing BWO attributes .........208

Systems administration ..........208

BWO processing ............209

File opening .............210

File closing (non-RLS mode) .......212

Shutdown and restart .........213

Data set backup and restore .......213

Contents v

Page 8

Forward recovery logging ........215

Forward recovery ...........216

Recovering VSAM spheres with AIXs ....217

An assembler program that calls DFSMS callable

services ...............218

Chapter 19. Disaster recovery ....223

Why have a disaster recovery plan? ......223

Disaster recovery testing .........224

Six tiers of solutions for off-site recovery ....225

Tier 0: no off-site data .........225

Tier 1 - physical removal ........225

Tier 2 - physical removal with hot site ....227

Tier 3 - electronic vaulting ........227

Tier 0–3 solutions ...........228

Tier 4 - active secondary site .......229

Tier 5 - two-site, two-phase commit .....231

Tier 6 - minimal to zero data loss......231

Tier 4–6 solutions ...........233

Disaster recovery and high availability .....234

Peer-to-peer remote copy (PPRC) and extended

remote copy (XRC) ..........234

Remote Recovery Data Facility ......236

Choosing between RRDF and 3990-6 solutions 237

Disaster recovery personnel considerations . . 237

Returning to your primary site ......238

Disaster recovery facilities .........238

MVS system logger recovery support ....238

CICS VSAM Recovery QSAM copy .....239

Remote Recovery Data Facility support....239

CICS VR shadowing ..........239

CICS emergency restart considerations .....239

Indoubt and backout failure support ....239

Remote site recovery for RLS-mode data sets 239

Final summary .............240

Part 4. Appendixes ........241

Notices ..............243

Trademarks ..............244

Bibliography............245

CICS books for CICS Transaction Server for z/OS 245

CICSPlex SM books for CICS Transaction Server

for z/OS ...............246

Other CICS publications ..........246

Accessibility............247

Index ...............249

vi

CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 9

Preface

What this book is about

This book contains guidance about determining your CICS®recovery and restart

needs, deciding which CICS facilities are most appropriate, and implementing your

design in a CICS region.

The information in this book is generally restricted to a single CICS region. For

information about interconnected CICS regions, see the CICS Intercommunication

Guide.

This manual does not describe recovery and restart for the CICS front end

programming interface. For information on this topic, see the CICS Front End

Programming Interface User's Guide.

Who should read this book

This book is for those responsible for restart and recovery planning, design, and

implementation—either for a complete system, or for a particular function or

component.

What you need to know to understand this book

To understand this book, you should have experience of installing CICS and the

products with which it is to work, or of writing CICS application programs, or of

writing exit programs.

You should also understand your application requirements well enough to be able

to make decisions about realistic recovery and restart needs, and the trade-offs

between those needs and the performance overhead they incur.

How to use this book

This book deals with a wide variety of topics, all of which contribute to the

recovery and restart characteristics of your system.

It’s unlikely that any one reader would have to implement all the possible

techniques discussed in this book. By using the table of contents, you can find the

sections relevant to your work. Readers new to recovery and restart should find

the first section helpful, because it introduces the concepts of recovery and restart.

© Copyright IBM Corp. 1982, 2010 vii

Page 10

viii CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 11

Changes in CICS Transaction Server for z/OS, Version 4

Release 1

For information about changes that have been made in this release, please refer to

What's New in the information center, or the following publications:

v CICS Transaction Server for z/OS What's New

v CICS Transaction Server for z/OS Upgrading from CICS TS Version 3.2

v CICS Transaction Server for z/OS Upgrading from CICS TS Version 3.1

v CICS Transaction Server for z/OS Upgrading from CICS TS Version 2.3

Any technical changes that are made to the text after release are indicated by a

vertical bar (|) to the left of each new or changed line of information.

© Copyright IBM Corp. 1982, 2010 ix

Page 12

x CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 13

Part 1. CICS recovery and restart concepts

It is very important that a transaction processing system such as CICS can restart

and recover following a failure. This section describes some of the basic concepts

of the recovery and restart facilities provided by CICS.

© Copyright IBM Corp. 1982, 2010 1

Page 14

2 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 15

Chapter 1. Recovery and restart facilities

Problems that occur in a data processing system could be failures with

communication protocols, data sets, programs, or hardware. These problems are

potentially more severe in online systems than in batch systems, because the data

is processed in an unpredictable sequence from many different sources.

Online applications therefore require a system with special mechanisms for

recovery and restart that batch systems do not require. These mechanisms ensure

that each resource associated with an interrupted online application returns to a

known state so that processing can restart safely. Together with suitable operating

procedures, these mechanisms should provide automatic recovery from failures

and allow the system to restart with the minimum of disruption.

The two main recovery requirements of an online system are:

v To maintain the integrity and consistency of data

v To minimize the effect of failures

CICS provides a facility to meet these two requirements called the recovery

manager. The CICS recovery manager provides the recovery and restart functions

that are needed in an online system.

Maintaining the integrity of data

Data integrity means that the data is in the form you expect and has not been

corrupted. The objective of recovery operations on files, databases, and similar data

resources is to maintain and restore the integrity of the information.

Recovery must also ensure consistency of related changes, whereby they are made

as a whole or not at all. (The term resources used in this book, unless stated

otherwise, refers to data resources.)

Logging changes

One way of maintaining the integrity of a resource is to keep a record, or log, of all

the changes made to a resource while the system is executing normally. If a failure

occurs, the logged information can help recover the data.

An online system can use the logged information in two ways:

1. It can be used to back out incomplete or invalid changes to one or more

resources. This is called backward recovery, or backout. For backout, it is

necessary to record the contents of a data element before it is changed. These

records are called before-images. In general, backout is applicable to processing

failures that prevent one or more transactions (or a batch program) from

completing.

2. It can be used to reconstruct changes to a resource, starting with a backup copy

of the resource taken earlier. This is called forward recovery. For forward

recovery, it is necessary to record the contents of a data element after it is

changed. These records are called after-images.

© Copyright IBM Corp. 1982, 2010 3

Page 16

In general, forward recovery is applicable to data set failures, or failures in

similar data resources, which cause data to become unusable because it has

been corrupted or because the physical storage medium has been damaged.

Minimizing the effect of failures

An online system should limit the effect of any failure. Where possible, a failure

that affects only one user, one application, or one data set should not halt the

entire system.

Furthermore, if processing for one user is forced to stop prematurely, it should be

possible to back out any changes made to any data sets as if the processing had

not started.

If processing for the entire system stops, there may be many users whose updating

work is interrupted. On a subsequent startup of the system, only those data set

updates in process (in-flight) at the time of failure should be backed out. Backing

out only the in-flight updates makes restart quicker, and reduces the amount of

data to reenter.

Ideally, it should be possible to restore the data to a consistent, known state

following any type of failure, with minimal loss of valid updating activity.

The role of CICS

The CICS recovery manager and the log manager perform the logging functions

necessary to support automatic backout. Automatic backout is provided for most

CICS resources, such as databases, files, and auxiliary temporary storage queues,

either following a transaction failure or during an emergency restart of CICS.

If the backout of a VSAM file fails, CICS backout failure processing ensures that all

locks on the backout-failed records are retained, and the backout-failed parts of the

unit of work (UOW) are shunted to await retry. The VSAM file remains open for

use. For an explanation of shunted units of work and retained locks, see “Shunted

units of work” on page 13.

If the cause of the backout failure is a physically damaged data set, and provided

the damage affects only a localized section of the data set, you can choose a time

when it is convenient to take the data set offline for recovery. You can then use the

forward recovery log with a forward recovery utility, such as CICS VSAM

Recovery, to restore the data set and re-enable it for CICS use.

Note: In many cases, a data set failure also causes a processing failure. In this

event, forward recovery must be followed by backward recovery.

You don't need to shut CICS down to perform these recovery operations. For data

sets accessed by CICS in VSAM record-level sharing (RLS) mode, you can quiesce

the data set to allow you to perform the forward recovery offline. On completion

of forward recovery, setting the data set to unquiesced causes CICS to perform the

backward recovery automatically.

For files accessed in non-RLS mode, you can issue a SET DSNAME RETRY command

after the forward recovery, which causes CICS to perform the backward recovery

online.

4 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 17

Another way is to shut down CICS with an immediate shutdown and perform the

forward recovery, after which a CICS emergency restart performs the backward

recovery.

Recoverable resources

In CICS, a recoverable resource is any resource with recorded recovery information

that can be recovered by backout.

The following resources can be made recoverable:

v CICS files that relate to:

– VSAM data sets

– BDAM data sets

v Data tables (but user-maintained data tables are not recovered after a CICS

failure, only after a transaction failure)

v Coupling facility data tables

v The CICS system definition (CSD) file

v Intrapartition transient data destinations

v Auxiliary temporary storage queues

v Resource definitions dynamically installed using resource definition online

(RDO)

In some environments, a VSAM file managed by CICS file control might need to

remain online and open for update for extended periods. You can use a backup

manager, such as DFSMSdss, in a separate job under MVS

file at regular intervals while it is open for update by CICS applications. This

operation is known as backup-while-open (BWO). Even changes made to the

VSAM file while the backup is in progress are recorded.

DFSMSdss is a functional component of DFSMS/MVS, and is the primary data

mover. When used with supporting hardware, DFSMSdss also provides a

concurrent copy capability. This capability enables you to copy or back up data

while that data is being used.

If a data set failure occurs, you can use a backup of the data set and a forward

recovery utility, such as CICS VSAM Recovery (CICSVR), to recover the VSAM file.

CICS backward recovery (backout)

Backward recovery, or backout, is a way of undoing changes made to resources

such as files or databases.

Backout is one of the fundamental recovery mechanisms of CICS. It relies on

recovery information recorded while CICS and its transactions are running

normally.

Before a change is made to a resource, the recovery information for backout, in the

form of a before-image, is recorded on the CICS system log. A before-image is a

record of what the resource was like before the change. These before-images are

used by CICS to perform backout in two situations:

v In the event of failure of an individual in-flight transaction, which CICS backs

out dynamically at the time of failure (dynamic transaction backout)

™

, to back up a VSAM

Chapter 1. Recovery and restart facilities 5

Page 18

v In the event of an emergency restart, when CICS backs out all those transactions

that were in-flight at the time of the CICS failure (emergency restart backout).

Although these occur in different situations, CICS uses the same backout process in

each case. CICS does not distinguish between dynamic backout and emergency

restart backout. See Chapter 6, “CICS emergency restart,” on page 61 for an

explanation of how CICS reattaches failed in-flight units of work in order to

perform transaction backout following an emergency restart.

Each CICS region has only one system log, which cannot be shared with any other

CICS region. The system log is written to a unique MVS system logger log stream.

The CICS system log is intended for use only for recovery purposes, for example

during dynamic transaction backout, or during emergency restart. It is not meant

to be used for any other purpose.

CICS supports two physical log streams - a primary and a secondary log stream.

CICS uses the secondary log stream for storing log records of failed units of work,

and also some long running tasks that have not caused any data to be written to

the log for two complete activity key points. Failed units of work are moved from

the primary to the secondary log stream at the next activity keypoint. Logically,

both the primary and secondary log stream form one log, and as a general rule are

referred to as the system log.

Dynamic transaction backout

In the event of a transaction failure, or if an application explicitly requests a

syncpoint rollback, the CICS recovery manager uses the system log data to drive

the resource managers to back out any updates made by the current unit of work.

This process, known as dynamic transaction backout, takes place while the rest of

CICS continues normally.

For example, when any updates made to a recoverable data set are to be backed

out, file control uses the system log records to reverse the updates. When all the

updates made in the unit of work have been backed out, the unit of work is

completed. The locks held on the updated records are freed if the backout is

successful.

For data sets open in RLS mode, CICS requests VSAM RLS to release the locks; for

data sets open in non-RLS mode, the CICS enqueue domain releases the locks

automatically.

See “Units of work” on page 13 for a description of units of work.

Emergency restart backout

If a CICS region fails, you restart CICS with an emergency restart to back out any

transactions that were in-flight at the time of failure.

During emergency restart, the recovery manager uses the system log data to drive

backout processing for any units of work that were in-flight at the time of the

failure. The backout of units of work during emergency restart is the same as a

dynamic backout; there is no distinction between the backout that takes place at

emergency restart and that which takes place at any other time. At this point,

while recovery processing continues, CICS is ready to accept new work for normal

processing.

6 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 19

The recovery manager also drives:

v The backout processing for any units of work that were in a backout-failed state

at the time of the CICS failure

v The commit processing for any units of work that had not finished commit

processing at the time of failure (for example, for resource definitions that were

being installed when CICS failed)

v The commit processing for any units of work that were in a commit-failed state

at the time of the CICS failure

See “Unit of work recovery” on page 73 for an explanation of the terms

commit-failed and backout-failed.

The recovery manager drives these backout and commit processes because the

condition that caused them to fail might be resolved by the time CICS restarts. If

the condition that caused a failure has not been resolved, the unit of work remains

in backout- or commit-failed state. See “Backout-failed recovery” on page 79 and

“Commit-failed recovery” on page 83 for more information.

CICS forward recovery

Some types of data set failure cannot be corrected by backward recovery; for

example, failures that cause physical damage to a database or data set.

Recovery from failures of this type is usually based on the following actions:

1. Take a backup copy of the data set at regular intervals.

2. Record an after-image of every change to the data set on the forward recovery

log (a general log stream managed by the MVS system logger).

3. After the failure, restore the most recent backup copy of the failed data set, and

use the information recorded on the forward recovery log to update the data

set with all the changes that have occurred since the backup copy was taken.

These operations are known as forward recovery. On completion of the forward

recovery, as a fourth step, CICS also performs backout of units of work that failed

in-flight as a result of the data set failure.

Forward recovery of CICS data sets

CICS supports forward recovery of VSAM data sets updated by CICS file control

(that is, by files or CICS-maintained data tables defined by a CICS file definition).

CICS writes the after-images of changes made to a data set to a forward recovery

log, which is a general log stream managed by the MVS system logger.

CICS obtains the log stream name of a VSAM forward recovery log in one of two

ways:

1. For files opened in VSAM record level sharing (RLS) mode, the explicit log

stream name is obtained directly from the VSAM ICF catalog entry for the data

set.

2. For files in non-RLS mode, the log stream name is derived from:

v The VSAM ICF catalog entry for the data set if it is defined there, and if

RLS=YES is specified as a system initialization parameter. In this case, CICS

file control manages writes to the log stream directly.

v A journal model definition referenced by a forward recovery journal name

specified in the file resource definition.

Chapter 1. Recovery and restart facilities 7

Page 20

Forward recovery journal names are of the form DFHJnn where nn is a

number in the range 1–99 and is obtained from the forward recovery log id

(FWDRECOVLOG) in the FILE resource definition.

In this case, CICS creates a journal entry for the forward recovery log, which

can be mapped by a JOURNALMODEL resource definition. Although this

method enables user application programs to reference the log, and write

user journal records to it, you are recommended not to do so. You should

ensure that forward recovery log streams are reserved for forward recovery

data only.

Note: You cannot use a CICS system log stream as a forward recovery log.

The VSAM recovery options or the CICS file control recovery options that you

require to implement forward recovery are explained further in “Defining files as

recoverable resources” on page 126.

For details of procedures for performing forward recovery, see Chapter 17,

“Forward recovery procedures,” on page 187.

Forward recovery for non-VSAM resources

CICS does not provide forward recovery logging for non-VSAM resources, such as

BDAM files. However, you can provide this support yourself by ensuring that the

necessary information is logged to a suitable log stream. In the case of BDAM files,

you can use the CICS autojournaling facility to write the necessary after-images to

a log stream.

Failures that require CICS recovery processing

The following section briefly describes CICS recovery processing after a

communication failure, transaction failure, and system failure.

Whenever possible, CICS attempts to contain the effects of a failure, typically by

terminating only the offending task while all other tasks continue normally. The

updates performed by a prematurely terminated task can be backed out

automatically.

CICS recovery processing following a communication failure

Causes of communication failure include:

v Terminal failure

v Printer terminal running out of paper

v Power failure at a terminal

v Invalid SNA status

v Network path failure

v Loss of an MVS image that is a member of a sysplex

There are two aspects to processing following a communications failure:

1. If the failure occurs during a conversation that is not engaged in syncpoint

protocol, CICS must terminate the conversation and allow customized handling

of the error, if required. An example of when such customization is helpful is

for 3270 device types. This is described below.

8 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 21

2. If the failure occurs during the execution of a CICS syncpoint, where the

conversation is with another resource manager (perhaps in another CICS

region), CICS handles the resynchronization. This is described in the CICS

Intercommunication Guide.

If the link fails and is later reestablished, CICS and its partners use the SNA

set-and-test-sequence-numbers (STSN) command to find out what they were doing

(backout or commit) at the time of link failure. For more information on link

failure, see the CICS Intercommunication Guide.

When communication fails, the communication system access method either retries

the transmission or notifies CICS. If a retry is successful, CICS is not informed.

Information about the error can be recorded by the operating system. If the retries

are not successful, CICS is notified.

When CICS detects a communication failure, it gives control to one of two

programs:

®

v The node error program (NEP) for VTAM

logical units

v The terminal error program (TEP) for non-VTAM terminals

Both dummy and sample versions of these programs are provided by CICS. The

dummy versions do nothing; they allow the default actions selected by CICS to

proceed. The sample versions show how to write your own NEP or TEP to change

the default actions.

The types of processing that might be in a user-written NEP or TEP are:

v Logging additional error information. CICS provides some error information

when an error occurs.

v Retrying the transmission. This is not recommended because the access method

will already have made several attempts.

v Leaving the terminal out of service. This means that it is unavailable to the

terminal operator until the problem is fixed and the terminal is put back into

service by means of a master terminal transaction.

v Abending the task if it is still active (see “CICS recovery processing following a

transaction failure” on page 10).

v Reducing the amount of error information printed.

For more information about NEPs and TEPs, see Chapter 9, “Communication error

processing,” on page 97.

XCF/MRO partner failures

Loss of communication between CICS regions can be caused by the loss of an MVS

image in which CICS regions are running.

If the regions are communicating over XCF/MRO links, the loss of connectivity

may not be immediately apparent because XCF waits for a reply to a message it

issues.

The loss of an MVS image in a sysplex is detected by XCF in another MVS, and

XCF issues message IXC402D. If the failed MVS is running CICS regions connected

through XCF/MRO to CICS regions in another MVS, tasks running in the active

regions are initially suspended in an IRLINK WAIT state.

XCF/MRO-connected regions do not detect the loss of an MVS image and its

resident CICS regions until an operator replies to the XCF IXC402D message.

Chapter 1. Recovery and restart facilities 9

Page 22

When the operator replies to IXC402D, the CICS interregion communication

program, DFHIRP, is notified and the suspended tasks are abended, and MRO

connections closed. Until the reply is issued to IXC402D, an INQUIRE

CONNECTION command continues to show connections to regions in the failed

MVS as in service and normal.

When the failed MVS image and its CICS regions are restarted, the interregion

communication links are reopened automatically.

CICS recovery processing following a transaction failure

Transactions can fail for a variety of reasons, including a program check in an

application program, an invalid request from an application that causes an abend,

a task issuing an ABEND request, or I/O errors on a data set that is being accessed

by a transaction.

During normal execution of a transaction working with recoverable resources,

CICS stores recovery information in the system log. If the transaction fails, CICS

uses the information from the system log to back out the changes made by the

interrupted unit of work. Recoverable resources are thus not left in a partially

updated or inconsistent state. Backing out an individual transaction is called

dynamic transaction backout.

After dynamic transaction backout has completed, the transaction can restart

automatically without the operator being aware of it happening. This function is

especially useful in those cases where the cause of transaction failure is temporary

and an attempt to rerun the transaction is likely to succeed (for example, DL/I

program isolation deadlock). The conditions when a transaction can be

automatically restarted are described under “Abnormal termination of a task” on

page 93.

If dynamic transaction backout fails, perhaps because of an I/O error on a VSAM

data set, CICS backout failure processing shunts the unit of work and converts the

locks that are held on the backout-failed records into retained locks. The data set

remains open for use, allowing the shunted unit of work to be retried. If backout

keeps failing because the data set is damaged, you can create a new data set using

a backup copy and then perform forward recovery, using a utility such as CICSVR.

When the data set is recovered, retry the shunted unit of work to complete the

failed backout and release the locks.

Chapter 8, “Unit of work recovery and abend processing,” on page 73 gives more

details about CICS processing of a transaction failure.

CICS recovery processing following a system failure

Causes of a system failure include a processor failure, the loss of a electrical power

supply, an operating system failure, or a CICS failure.

During normal execution, CICS stores recovery information on its system log

stream, which is managed by the MVS system logger. If you specify

START=AUTO, CICS automatically performs an emergency restart when it restarts

after a system failure.

During an emergency restart, the CICS log manager reads the system log backward

and passes information to the CICS recovery manager.

10 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 23

The CICS recovery manager then uses the information retrieved from the system

log to:

v Back out recoverable resources.

v Recover changes to terminal resource definitions. (All resource definitions

installed at the time of the CICS failure are initially restored from the CICS

global catalog.)

A special case of CICS processing following a system failure is covered in

Chapter 6, “CICS emergency restart,” on page 61.

Chapter 1. Recovery and restart facilities 11

Page 24

12 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 25

Chapter 2. Resource recovery in CICS

Before you begin to plan and implement resource recovery in CICS, you should

understand the concepts involved, including units of work, logging and journaling.

Units of work

When resources are being changed, there comes a point when the changes are

complete and do not need backout if a failure occurs later. The period between the

start of a particular set of changes and the point at which they are complete is

called a unit of work (UOW). The unit of work is a fundamental concept of all CICS

backout mechanisms.

From the application designer's point of view, a UOW is a sequence of actions that

needs to be complete before any of the individual actions can be regarded as

complete. To ensure data integrity, a unit of work must be atomic, consistent,

isolated, and durable.

The CICS recovery manager operates with units of work. If a transaction that

consists of multiple UOWs fails, or the CICS region fails, committed UOWs are not

backed out.

A unit of work can be in one of the following states:

v Active (in-flight)

v Shunted following a failure of some kind

v Indoubt pending the decision of the unit of work coordinator.

v Completed and no longer of interest to the recovery manager

Shunted units of work

A shunted unit of work is one awaiting resolution of an indoubt failure, a commit

failure, or a backout failure. The CICS recovery manager attempts to complete a

shunted unit of work when the failure that caused it to be shunted has been

resolved.

A unit of work can be unshunted and then shunted again (in theory, any number

of times). For example, a unit of work could go through the following stages:

1. A unit of work fails indoubt and is shunted.

2. After resynchronization, CICS finds that the decision is to back out the indoubt

unit of work.

3. Recovery manager unshunts the unit of work to perform backout.

4. If backout fails, it is shunted again.

5. Recovery manager unshunts the unit of work to retry the backout.

6. Steps 4 and 5 can occur several times until the backout succeeds.

These situations can persist for some time, depending on how long it takes to

resolve the cause of the failure. Because it is undesirable for transaction resources

to be held up for too long, CICS attempts to release as many resources as possible

while a unit of work is shunted. This is generally achieved by abending the user

task to which the unit of work belongs, resulting in the release of the following:

v Terminals

v User programs

© Copyright IBM Corp. 1982, 2010 13

Page 26

v Working storage

v Any LU6.2 sessions

v Any LU6.1 links

v Any MRO links

The resources CICS retains include:

v Locks on recoverable data. If the unit of work is shunted indoubt, all locks are

retained. If it is shunted because of a commit- or backout-failure, only the locks

on the failed resources are retained.

v System log records, which include:

– Records written by the resource managers, which they need to perform

recovery in the event of transaction or CICS failures. Generally, these records

are used to support transaction backout, but the RDO resource manager also

writes records for rebuilding the CICS state in the event of a CICS failure.

– CICS recovery manager records, which include identifiers relating to the

original transaction such as:

- The transaction ID

- The task ID

- The CICS terminal ID

- The VTAM LUNAME

- The user ID

- The operator ID.

Locks

For files opened in RLS mode, VSAM maintains a single central lock structure

using the lock-assist mechanism of the MVS coupling facility. This central lock

structure provides sysplex-wide locking at a record level. Control interval (CI)

locking is not used.

The locks for files accessed in non-RLS mode, the scope of which is limited to a

single CICS region, are file-control managed locks. Initially, when CICS processes a

read-for-update request, CICS obtains a CI lock. File control then issues an ENQ

request to the enqueue domain to acquire a CICS lock on the specific record. This

enables file control to notify VSAM to release the CI lock before returning control

to the application program. Releasing the CI lock minimizes the potential for

deadlocks to occur.

For coupling facility data tables updated under the locking model, the coupling

facility data table server stores the lock with its record in the CFDT. As in the case

of RLS locks, storing the lock with its record in the coupling facility list structure

that holds the coupling facility data table ensures sysplex-wide locking at record

level.

For both RLS and non-RLS recoverable files, CICS releases all locks on completion

of a unit of work. For recoverable coupling facility data tables, the locks are

released on completion of a unit of work by the CFDT server.

Active and retained states for locks

CICS supports active and retained states for locks.

14 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 27

When a lock is first acquired, it is an active lock. It remains an active lock until

successful completion of the unit of work, when it is released, or is converted into

a retained lock if the unit of work fails, or for a CICS or SMSVSAM failure:

v If a unit of work fails, RLS VSAM or the CICS enqueue domain continues to

hold the record locks that were owned by the failed unit of work for recoverable

data sets, but converted into retained locks. Retaining locks ensures that data

integrity for those records is maintained until the unit of work is completed.

v If a CICS region fails, locks are converted into retained locks to ensure that data

integrity is maintained while CICS is being restarted.

v If an SMSVSAM server fails, locks are converted into retained locks (with the

conversion being carried out by the other servers in the sysplex, or by the first

server to restart if all servers have failed). This means that a UOW that held

active RLS locks will hold retained RLS locks following the failure of an

SMSVSAM server.

Converting active locks into retained locks not only protects data integrity. It also

ensures that new requests for locks owned by the failed unit of work do not wait,

but instead are rejected with the LOCKED response.

Synchronization points

The end of a UOW is indicated to CICS by a synchronization point, usually

abbreviated to syncpoint.

A syncpoint arises in the following ways:

v Implicitly at the end of a transaction as a result of an EXEC CICS RETURN

command at the highest logical level. This means that a UOW cannot span tasks.

v Explicitly by EXEC CICS SYNCPOINT commands issued by an application program

at appropriate points in the transaction.

v Implicitly through a DL/I program specification block (PSB) termination (TERM)

call or command. This means that only one DL/I PSB can be scheduled within a

UOW.

Note that an explicit EXEC CICS SYNCPOINT command, or an implicit syncpoint at

the end of a task, implies a DL/I PSB termination call.

v Implicitly through one of the following CICS commands:

– EXEC CICS CREATE TERMINAL

– EXEC CICS CREATE CONNECTION COMPLETE

– EXEC CICS DISCARD CONNECTION

– EXEC CICS DISCARD TERMINAL

v Implicitly by a program called by a distributed program link (DPL) command if

the SYNCONRETURN option is specified. When the DPL program terminates

with an EXEC CICS RETURN command, the CICS mirror transaction takes a

syncpoint.

It follows from this that a unit of work starts:

v At the beginning of a transaction

v Whenever an explicit syncpoint is issued and the transaction does not end

v Whenever a DL/I PSB termination call causes an implicit syncpoint and the

transaction does not end

v Whenever one of the following CICS commands causes an implicit syncpoint

and the transaction does not end:

– EXEC CICS CREATE TERMINAL

Chapter 2. Resource recovery in CICS 15

Page 28

– EXEC CICS CREATE CONNECTION COMPLETE

– EXEC CICS DISCARD CONNECTION

– EXEC CICS DISCARD TERMINAL

A UOW that does not change a recoverable resource has no meaningful effect for

the CICS recovery mechanisms. Nonrecoverable resources are never backed out.

A unit of work can also be ended by backout, which causes a syncpoint in one of

the following ways:

v Implicitly when a transaction terminates abnormally, and CICS performs

dynamic transaction backout

v Explicitly by EXEC CICS SYNCPOINT ROLLBACK commands issued by an application

program to backout changes made by the UOW.

Examples of synchronization points

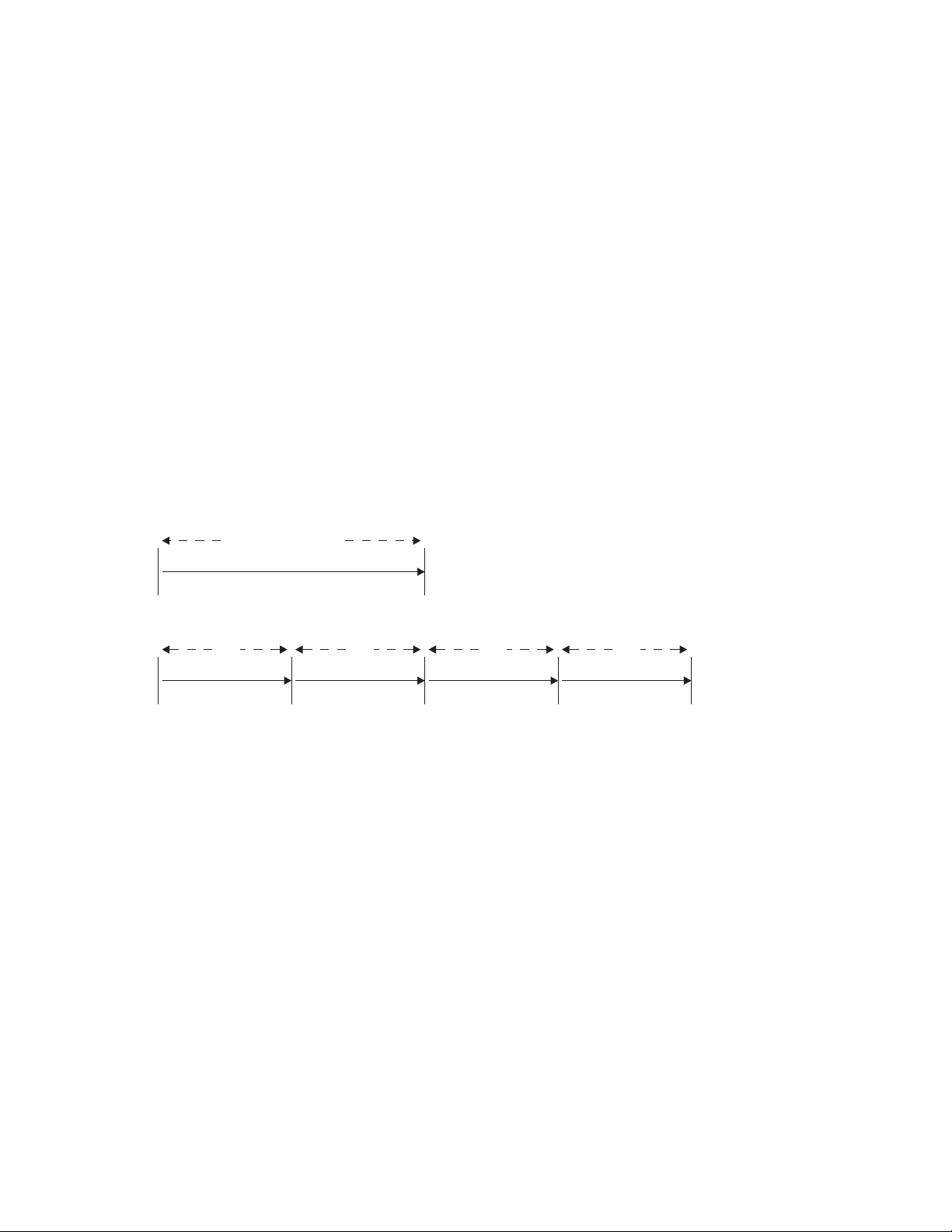

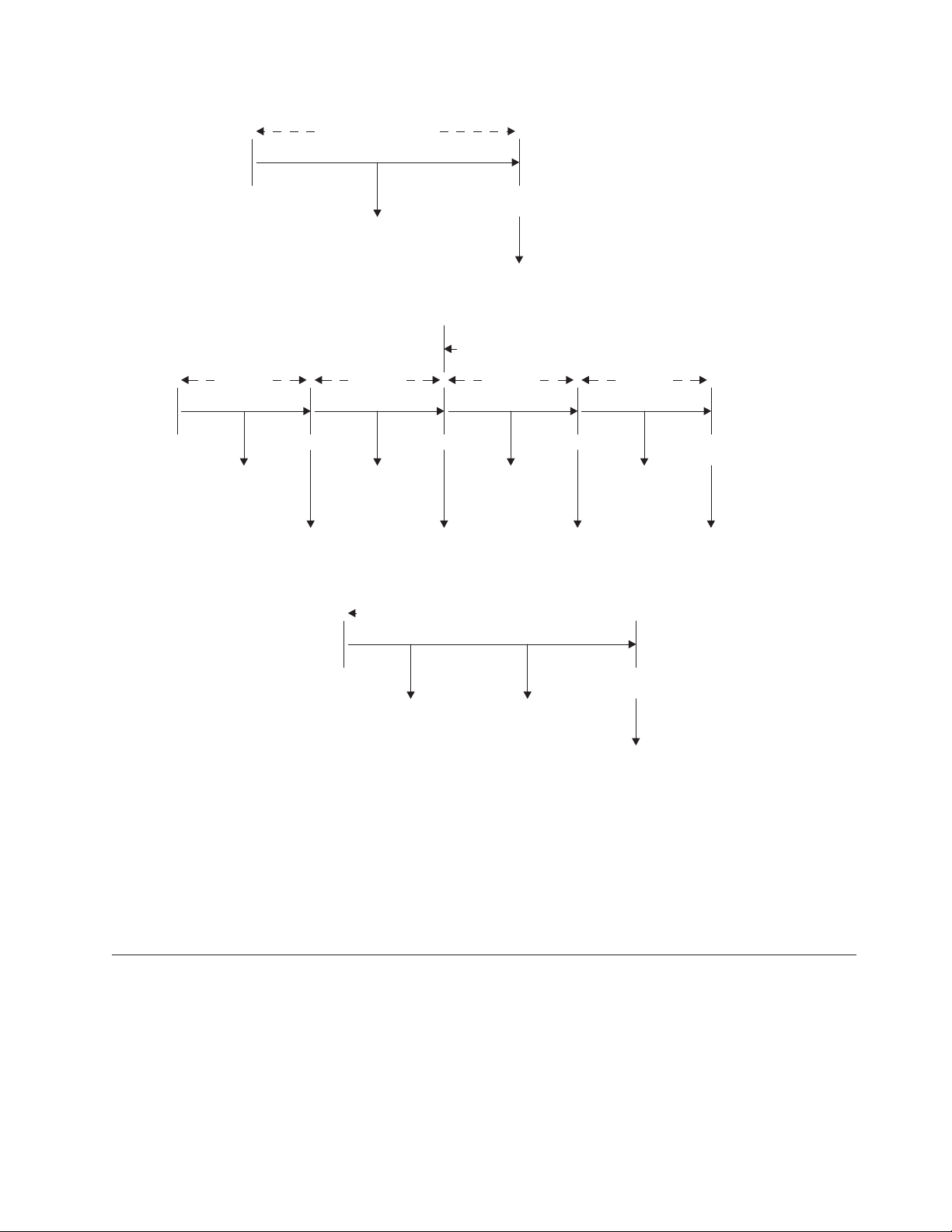

In Figure 1, task A is a nonconversational (or pseudoconversational) task with one

UOW, and task B is a multiple UOW task (typically a conversational task in which

each UOW accepts new data from the user). The figure shows how UOWs end at

syncpoints. During the task, the application program can issue syncpoints

explicitly, and, at the end, CICS issues a syncpoint.

Unit of work

Task A

SOT EOT

UOW UOW UOW UOW

Task B

SOT SP SP SP EOT

Abbreviations:

EOT: End of task SOT: Start of task

UOW: Unit of work SP: Syncpoint

Figure 1. Units of work and syncpoints

Figure 2 on page 17 shows that database changes made by a task are not

committed until a syncpoint is executed. If task processing is interrupted because

of a failure of any kind, changes made within the abending UOW are

automatically backed out.

If there is a system failure at time X:

v The change(s) made in task A have been committed and are therefore not backed

out.

v In task B, the changes shown as Mod 1 and Mod 2 have been committed, but

the change shown as Mod 3 is not committed and is backed out.

v All the changes made in task C are backed out.

(SP)

(SP)

16 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 29

Task B

X

Unit of work .

.

Task A .

.

SOT EOT .

(SP).

Mod .

.

.

Commit.

Mod .

.

.

Backout .

===========.

.

UOW 1 UOW 2 UOW 3 . UOW 4

.

.

SOT SP SP . SP EOT

. (SP)

Mod Mod Mod . Mod

123.4

.

.

Commit Commit .Commit Commit

Mod 1 Mod 2 .Mod 3 Mod 4

.

.

Backout .

=======================.

.

Task C

.

SOT . EOT

. (SP)

Mod Mod .

.

.

. Commit

Abbreviations: . Mods

EOT: End of task .

UOW: Unit of work X

Mod: Modification to database

SOT: Start of task

SP: Syncpoint

X: Moment of system failure

Figure 2. Backout of units of work

CICS recovery manager

The recovery manager ensures the integrity and consistency of resources (such as

files and databases) both within a single CICS region and distributed over

interconnected systems in a network.

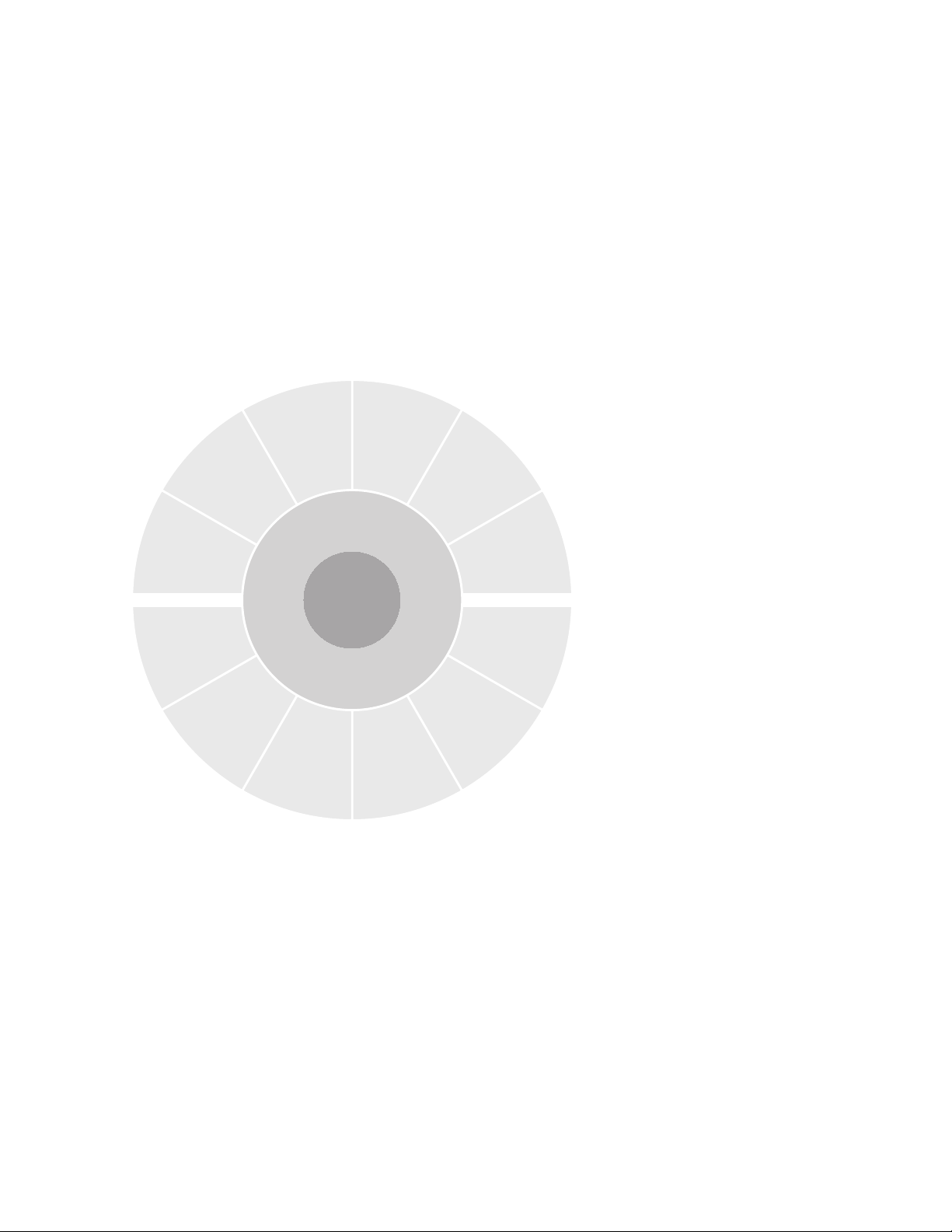

Figure 3 on page 18 shows the resource managers and their resources with which

the CICS recovery manager works.

The main functions of the CICS recovery manager are:

Chapter 2. Resource recovery in CICS 17

Page 30

c

o

L

RDO

v Managing the state, and controlling the execution, of each UOW

v Coordinating UOW-related changes during syncpoint processing for recoverable

resources

v Coordinating UOW-related changes during restart processing for recoverable

resources

v Coordinating recoverable conversations to remote nodes

v Temporarily suspending completion (shunting), and later resuming completion

(unshunting), of UOWs that cannot immediately complete commit or backout

processing because the required resources are unavailable, because of system,

communication, or media failure

r

c

e

u

a

o

s

e

R

l

TS

M

a

n

a

g

e

r

s

TD

FC

Recovery

LOG

C

o

m

Figure 3. CICS recovery manager and resources it works with

m

u

LU6.2

n

i

c

a

Manager

DBCTL

t

LU6.1

i

o

n

s

M

a

n

a

g

Managing the state of each unit of work

The CICS recovery manager maintains, for each UOW in a CICS region, a record of

the changes of state that occur during its lifetime.

Typical events that cause state changes include:

v Creation of the UOW, with a unique identifier

v Premature termination of the UOW because of transaction failure

v Receipt of a syncpoint request

v Entry into the indoubt period during two-phase commit processing (see the

MRO

e

r

s

CICS Transaction Server for z/OS Glossary for a definition of two-phase commit)

MQM

R

M

o

f

I

FC/RLS

DB2

t

o

m

e

R

r

e

R

e

e

c

r

u

o

s

18 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 31

v Notification that the resource is not available, requiring temporary suspension

(shunting) of the UOW

v Notification that the resource is available, enabling retry of shunted UOWs

v Notification that a connection is reestablished, and can deliver a commit or

rollback (backout) decision

v Syncpoint rollback

v Normal termination of the UOW

The identity of a UOW and its state are owned by the CICS recovery manager, and

are recorded in storage and on the system log. The system log records are used by

the CICS recovery manager during emergency restart to reconstruct the state of the

UOWs in progress at the time of the earlier system failure.

The execution of a UOW can be distributed over more than one CICS system in a

network of communicating systems.

The CICS recovery manager supports SPI commands that provide information

about UOWs.

Coordinating updates to local resources

The recoverable local resources managed by a CICS region are files, temporary

storage, and transient data, plus resource definitions for terminals, typeterms,

connections, and sessions.

Each local resource manager can write UOW-related log records to the local system

log, which the CICS recovery manager may subsequently be required to re-present

to the resource manager during recovery from failure.

To enable the CICS recovery manager to deliver log records to each resource

manager as required, the CICS recovery manager adds additional information

when the log records are created. Therefore, all logging by resource managers to

the system log is performed through the CICS recovery manager.

During syncpoint processing, the CICS recovery manager invokes each local

resource manager that has updated recoverable resources within the UOW. The

local resource managers then perform the required action. This provides the means

of coordinating the actions performed by individual resource managers.

If the commit or backout of a file resource fails (for example, because of an I/O

error or the inability of a resource manager to free a lock), the CICS recovery

manager takes appropriate action with regard to the failed resource:

v If the failure occurs during commit processing, the UOW is marked as

commit-failed and is shunted awaiting resolution of whatever caused the

commit failure.

v If the failure occurs during backout processing, the UOW is marked as

backout-failed, and is shunted awaiting resolution of whatever caused the

backout to fail.

Note that a commit failure can occur during the commit phase of a completed

UOW, or the commit phase that takes place after successfully completing backout.

(These two phases (or ‘directions’) of commit processing—commit after normal

completion and commit after backout—are sometimes referred to as ‘forward

commit’ and ‘backward commit’ respectively.) Note also that a UOW can be

backout-failed with respect to some resources and commit-failed with respect to

Chapter 2. Resource recovery in CICS 19

Page 32

others. This can happen, for example, if two data sets are updated and the UOW

has to be backed out, and the following happens:

v One resource backs out successfully

v While committing this successful backout, the commit fails

v The other resource fails to back out

These events leave one data set commit-failed, and the other backout-failed. In this

situation, the overall status of the UOW is logged as backout-failed.

During emergency restart following a CICS failure, each UOW and its state is

reconstructed from the system log. If any UOW is in the backout-failed or

commit-failed state, CICS automatically retries the UOW to complete the backout

or commit.

Coordinating updates in distributed units of work

If the execution of a UOW is distributed across more than one system, the CICS

recovery manager (or their non-CICS equivalents) in each pair of connected

systems ensure that the effects of the distributed UOW are atomic.

Each CICS recovery manager (or its non-CICS equivalent) issues the requests

necessary to effect two-phase syncpoint processing to each of the connected

systems with which a UOW may be in conversation.

Note: In this context, the non-CICS equivalent of a CICS recovery manager could

be the recovery component of a database manager, such as DBCTL or DB2

equivalent function where one of a pair of connected systems is not CICS.

|

|

|

|

|

In each connected system in a network, the CICS recovery manager uses interfaces

to its local recovery manager connectors (RMCs) to communicate with partner

recovery managers. The RMCs are the communication resource managers (IPIC,

LU6.2, LU6.1, MRO, and RMI) which have the function of understanding the

transport protocols and constructing the flows between the connected systems.

As remote resources are accessed during UOW execution, the CICS recovery

manager keeps track of data describing the status of its end of the conversation

with that RMC. The CICS recovery manager also assumes responsibility for the

coordination of two-phase syncpoint processing for the RMC.

®

,orany

Managing indoubt units of work

During the syncpoint phases, for each RMC, the CICS recovery manager records

the changes in the status of the conversation, and also writes, on behalf of the

RMC, equivalent information to the system log.

If a session fails at any time during the running of a UOW, it is the RMC

responsibility to notify the CICS recovery manager, which takes appropriate action

with regard to the unit of work as a whole. If the failure occurs during syncpoint

processing, the CICS recovery manager may be in doubt and unable to determine

immediately how to complete the UOW. In this case, the CICS recovery manager

causes the UOW to be shunted awaiting UOW resolution, which follows

notification from its RMC of successful resynchronization on the failed session.

During emergency restart following a CICS failure, each UOW and its state is

reconstructed from the system log. If any UOW is in the indoubt state, it remains

shunted awaiting resolution.

20 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 33

Resynchronization after system or connection failure

Units of work that fail while in an indoubt state remain shunted until the indoubt

state can be resolved following successful resynchronization with the coordinator.

Resynchronization takes place automatically when communications are next

established between subordinate and coordinator. Any decisions held by the

coordinator are passed to the subordinate, and indoubt units of work complete

normally. If a subordinate has meanwhile taken a unilateral decision following the

loss of communication, this decision is compared with that taken by the

coordinator, and messages report any discrepancy.

For an explanation and illustration of the roles played by subordinate and

coordinator CICS regions, and for information about recovery and

resynchronization of distributed units of work generally, see the CICS

Intercommunication Guide.

CICS system log

CICS system log data is written to two MVS system logger log streams, the

primary log stream and secondary log stream, which together form a single logical

log stream.

The system log is the only place where CICS records information for use when

backing out transactions, either dynamically or during emergency restart

processing. CICS automatically connects to its system log stream during

initialization, unless you have specified a journal model definition that defines the

system log as DUMMY (in which case CICS can perform only an initial start).

The integrity of the system log is critical in enabling CICS to perform recovery. If

any of the components involved with the system log—the CICS recovery manager,

the CICS log manager, or the MVS system logger—experience problems with the

system log, it might be impossible for CICS to perform successfully recovery

processing. For more information about errors affecting the system log, see “Effect

of problems with the system log” on page 33.

The CICS System Definition Guide tells you more about CICS system log streams,

and how you can use journal model definitions to map the CICS journal names for

the primary system log stream (DFHLOG) and the secondary system log stream

(DFHSHUNT) to specific log stream names. If you don't specify journal model

definitions, CICS uses default log stream names.

Information recorded on the system log

The information recorded on the system log is sufficient to allow backout of

changes made to recoverable resources by transactions that were running at the

time of failure, and to restore the recoverable part of CICS system tables.

Typically, this includes before-images of database records and after-images of

recoverable parts of CICS tables—for example, transient data cursors or TCTTE

sequence numbers. You cannot use the system log for forward recovery

information, or for terminal control or file control autojournaling.

Your application programs can write user-defined recovery records to the system

log using EXEC CICS WRITE JOURNALNAME commands. Any user-written log

records to support your own recovery processes are made available to global user

exit programs enabled at the XRCINPT exit point.

Chapter 2. Resource recovery in CICS 21

Page 34

CICS also writes “backout-failed” records to the system log if a failure occurs in

backout processing of a VSAM data set during dynamic backout or emergency

restart backout.

Records on the system log are used for cold, warm, and emergency restarts of a

CICS region. The only type of start for which the system log records are not used is

an initial start.

System activity keypoints

The recovery manager controls the recording of keypoint information, and the

delivery of the information to the various resource managers at emergency restart.

The recovery manager provides the support that enables activity keypoint

information to be recorded at frequent intervals on the system log. You specify the

activity keypoint frequency on the AKPFREQ system initialization parameter. See the

CICS System Definition Guide for details. Activity keypoint information is of two

types:

1. A list of all the UOWs currently active in the system

2. Image-copy type information allowing the current contents of a particular

resource to be rebuilt

During an initial phase of CICS restart, recovery manager uses this information,

together with UOW-related log records, to restore the CICS system to its state at

the time of the previous shutdown. This is done on a single backward scan of the

system log.

Frequency of taking activity keypoints: You are strongly recommended to specify a

nonzero activity keypoint frequency. Choose an activity keypoint frequency that is

suitable for the size of your system log stream. Note that writing activity keypoints

at short intervals improves restart times, but at the expense of extra processing

during normal running.

The following additional actions are taken for files accessed in non-RLS mode that

use backup while open (BWO):

v Tie-up records are recorded on the forward recovery log stream. A tie-up record

associates a CICS file name with a VSAM data set name.

v Recovery points are recorded in the integrated catalog facility (ICF) catalog.

These define the starting time for forward recovery. Data recorded on the

forward recovery log before that time does not need to be used.

Forward recovery logs

CICS writes VSAM forward recovery logs to a general log stream defined to the

MVS system logger. You can merge forward recovery log data for more than one

VSAM data set to the same log stream, or you can dedicate a forward recovery log

stream to a single data set.

See “Defining forward recovery log streams” on page 116 for information about the

use of forward recovery log streams.

User journals and automatic journaling

User journals and autojournals are written to a general log stream defined to the

MVS system logger.

22 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 35

v User journaling is entirely under your application programs’ control. You write

records for your own purpose using EXEC CICS WRITE JOURNALNAME

commands. See “Flushing journal buffers” on page 28 for information about

CICS shutdown considerations.

v Automatic journaling means that CICS automatically writes records to a log

stream, referenced by the journal name specified in a journal model definition,

as a result of:

– Records read from or written to files. These records represent data that has

been read, or data that has been read for update, or data that has been

written, or records to indicate the completion of a write, and so on,

depending on what types of request you selected for autojournaling.

You specify that you want autojournaling for VSAM files using the

autojournaling options on the file resource definition in the CSD. For BDAM

files, you specify the options on a file entry in the file control table.

– Input or output messages from terminals accessed through VTAM.

You specify that you want terminal control autojournaling on the JOURNAL

option of the profile resource definition referenced by your transaction

definitions. These messages could be used to create audit trails.

Automatic journaling is used for user-defined purposes; for example, for an

audit trail. Automatic journaling is not used for CICS recovery purposes.

Chapter 2. Resource recovery in CICS 23

Page 36

24 CICS TS for z/OS 4.1: Recovery and Restart Guide

Page 37

Chapter 3. Shutdown and restart recovery

CICS can shut down normally or abnormally and this affects the way that CICS

restarts after it shuts down.

CICS can stop executing as a result of:

v A normal (warm) shutdown initiated by a CEMT, or EXEC CICS, PERFORM

SHUT command

v An immediate shutdown initiated by a CEMT, or EXEC CICS, PERFORM SHUT

IMMEDIATE command

v An abnormal shutdown caused by a CICS system module encountering an

irrecoverable error

v An abnormal shutdown initiated by a request from the operating system

(arising, for example, from a program check or system abend)

v A machine check or power failure

Normal shutdown processing

Normal shutdown is initiated by issuing a CEMT PERFORM SHUTDOWN

command, or by an application program issuing an EXEC CICS PERFORM

SHUTDOWN command. It takes place in three quiesce stages, as follows:

First quiesce stage

During the first quiesce stage of shutdown, all terminals are active, all CICS

facilities are available, and the a number of activities are performed concurrently

The following activities are performed:

v CICS invokes the shutdown assist transaction specified on the SDTRAN system

initialization parameter or on the shutdown command.

Because all user tasks must terminate during the first quiesce stage, it is possible

that shutdown could be unacceptably delayed by long-running tasks (such as

conversational transactions). The purpose of the shutdown assist transaction is to

allow as many tasks as possible to commit or back out cleanly, while ensuring

that shutdown completes within a reasonable time.

CICS obtains the name of the shutdown assist transaction as follows:

1. If SDTRAN(tranid) is specified on the PERFORM SHUTDOWN command, or

as a system initialization parameter, CICS invokes that tranid.

2. If NOSDTRAN is specified on the PERFORM SHUTDOWN command, or as

a system initialization parameter, CICS does not start a shutdown

transaction. Without a shutdown assist transaction, all tasks that are already

running are allowed to complete.

3. If the SDTRAN (or NOSDTRAN) options are omitted from the PERFORM

SHUTDOWN command, and omitted from the system initialization

parameters, CICS invokes the default shutdown assist transaction, CESD,

which runs the CICS-supplied program DFHCESD.

The SDTRAN option specified on the PERFORM SHUT command overrides any

SDTRAN option specified as a system initialization parameter.

© Copyright IBM Corp. 1982, 2010 25

Page 38

v The DFHCESD program started by the CICS-supplied transaction, CESD,

attempts to purge and back out long-running tasks using increasingly stronger

methods (see “The shutdown assist transaction” on page 30).

v Tasks that are automatically initiated are run—if they start before the second

quiesce stage.

v Any programs listed in the first part of the shutdown program list table (PLT)

are run sequentially. (The shutdown PLT suffix is specified in the PLTSD system

initialization parameter, which can be overridden by the PLT option of the

CEMT or EXEC CICS PERFORM SHUTDOWN command.)

v A new task started as a result of terminal input is allowed to start only if its

transaction code is listed in the current transaction list table (XLT) or has been

defined as SHUTDOWN(ENABLED) in the transaction resource definition. The

XLT list of transactions restricts the tasks that can be started by terminals and

allows the system to shut down in a controlled manner. The current XLT is the

one specified by the XLT=xx system initialization parameter, which can be

overridden by the XLT option of the CEMT or EXEC CICS PERFORM

SHUTDOWN command.

Certain CICS-supplied transactions are, however, allowed to start whether their

code is listed in the XLT or not. These transactions are CEMT, CESF, CLR1,

CLR2, CLQ2, CLS1, CLS2, CSAC, CSTE, and CSNE.

v Finally, at the end of this stage and before the second stage of shutdown, CICS

unbinds all the VTAM terminals and devices.

The first quiesce stage is complete when the last of the programs listed in the first

part of the shutdown PLT has executed and all user tasks are complete. If the

CICS-supplied shutdown transaction CESD is used, this stage does not wait

indefinitely for all user tasks to complete.

Second quiesce stage

During the second quiesce stage of shutdown:

v Terminals are not active.

v No new tasks are allowed to start.

v Programs listed in the second part of the shutdown PLT (if any) run

sequentially. These programs cannot communicate with terminals, or make any

request that would cause a new task to start.

The second quiesce stage ends when the last of the programs listed in the PLT has

completed executing.

Third quiesce stage

During the third quiesce stage of shutdown:

v CICS closes all files that are defined to CICS file control. However, CICS does

not catalog the files as UNENABLED; they can then be opened implicitly by the

first reference after a subsequent CICS restart.