IBM Power Systems S822LC for High Performance Computing

Technical Overview and Introduction

Alexandre Caldeira

Volker Haug

Scott Vetter

Redpaper

International Technical Support Organization

IBM Power Systems S822LC for High Performance Computing

September 2016

REDP-5405-00

Note: Before using this information and the product it supports, read the information in “Notices” on page v.

First Edition (September 2016)

This edition applies to the IBM Power Systems S822LC model 8335-GTB.

© Copyright International Business Machines Corporation 2016. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule

Contract with IBM Corp.

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .v

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vi

IBM Redbooks promotions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Authors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Chapter 1. Architecture and technical description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Server features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.1.1 Minimum features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.2 System cooling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 The NVIDIA Tesla P100 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 Operating system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.1 Ubuntu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.2 Additional information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.4 Operating environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.5 Physical package . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.6 System architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.7 The POWER8 processor. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.7.1 POWER8 processor overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.7.2 POWER8 processor core . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.7.3 Simultaneous multithreading. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.7.4 Memory access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.7.5 On-chip L3 cache innovation and Intelligent Cache . . . . . . . . . . . . . . . . . . . . . . . 13

1.7.6 L4 cache and memory buffer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.7.7 Hardware transactional memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.7.8 Coherent Accelerator Processor Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.7.9 NVLink . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.8 Memory subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.8.1 Memory riser cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.8.2 Memory placement rules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.8.3 Memory bandwidth . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.9 System bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

1.10 Internal I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

1.11 Slot configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

1.12 System ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

1.13 PCI adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

1.13.1 PCI Express . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

1.13.2 LAN adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.13.3 Compute Intensive Accelerator. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.13.4 Fibre Channel adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

1.13.5 CAPI enabled Infiniband adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

1.14 Internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

1.14.1 Disk and media features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.15 External I/O subsystems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

© Copyright IBM Corp. 2016. All rights reserved. iii

Page 6

1.16 IBM System Storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.17 Java. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Chapter 2. Management, Reliability, Availability, and Serviceability . . . . . . . . . . . . . . 31

2.1 Main management components overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.2 Service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.2.1 Open Power Abstraction Layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.2.2 Intelligent Platform Management Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.3 Reliability, availability, and serviceability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.3.2 IBM terminology versus x86 terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.3.3 Error handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.3.4 Serviceability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

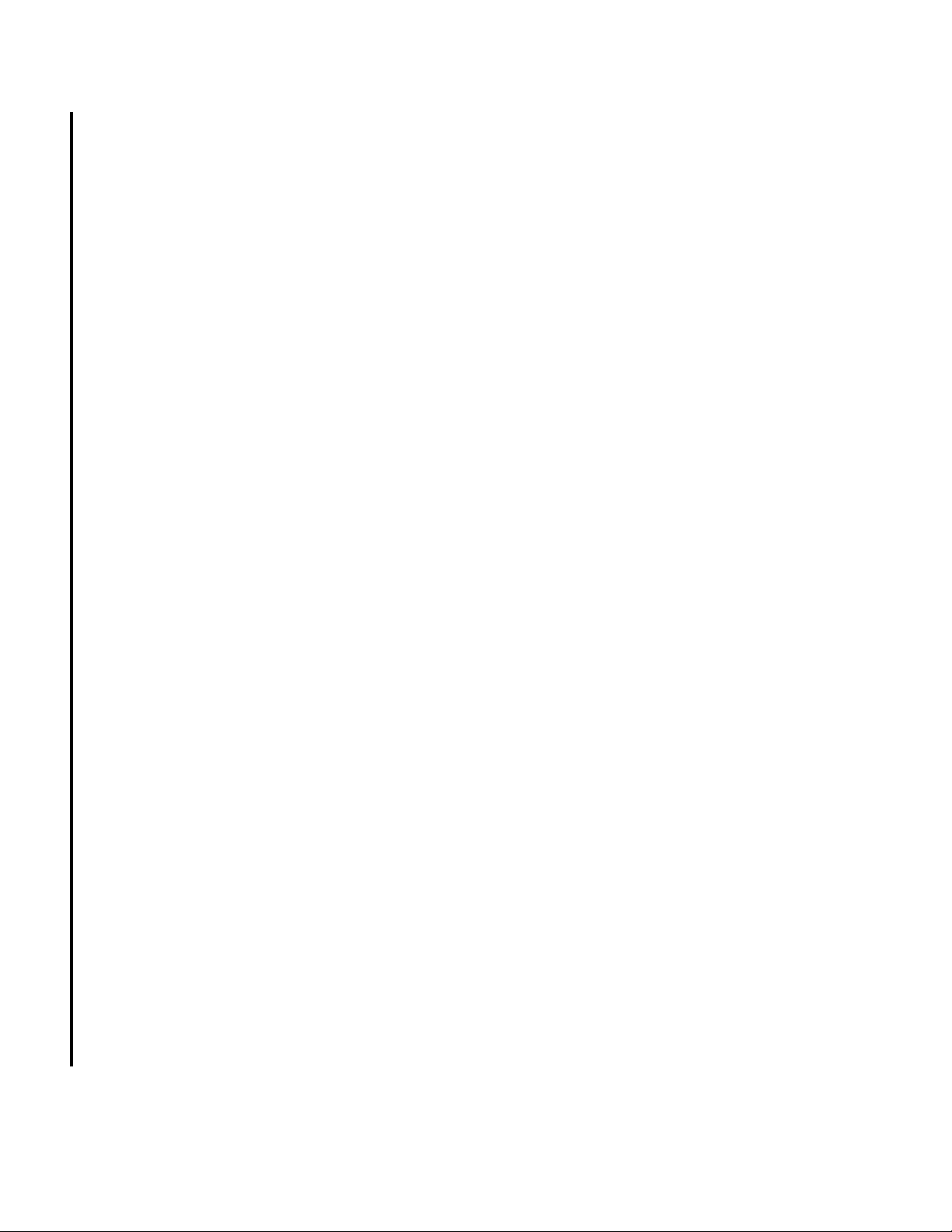

2.3.5 Manageability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Appendix A. Server racks and energy management . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

IBM server racks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Water cooling option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

IBM 7014 Model S25 rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

IBM 7014 Model T00 rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

IBM 7014 Model T42 rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

IBM 42U SlimRack 7965-94Y . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

The AC power distribution unit and rack content . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

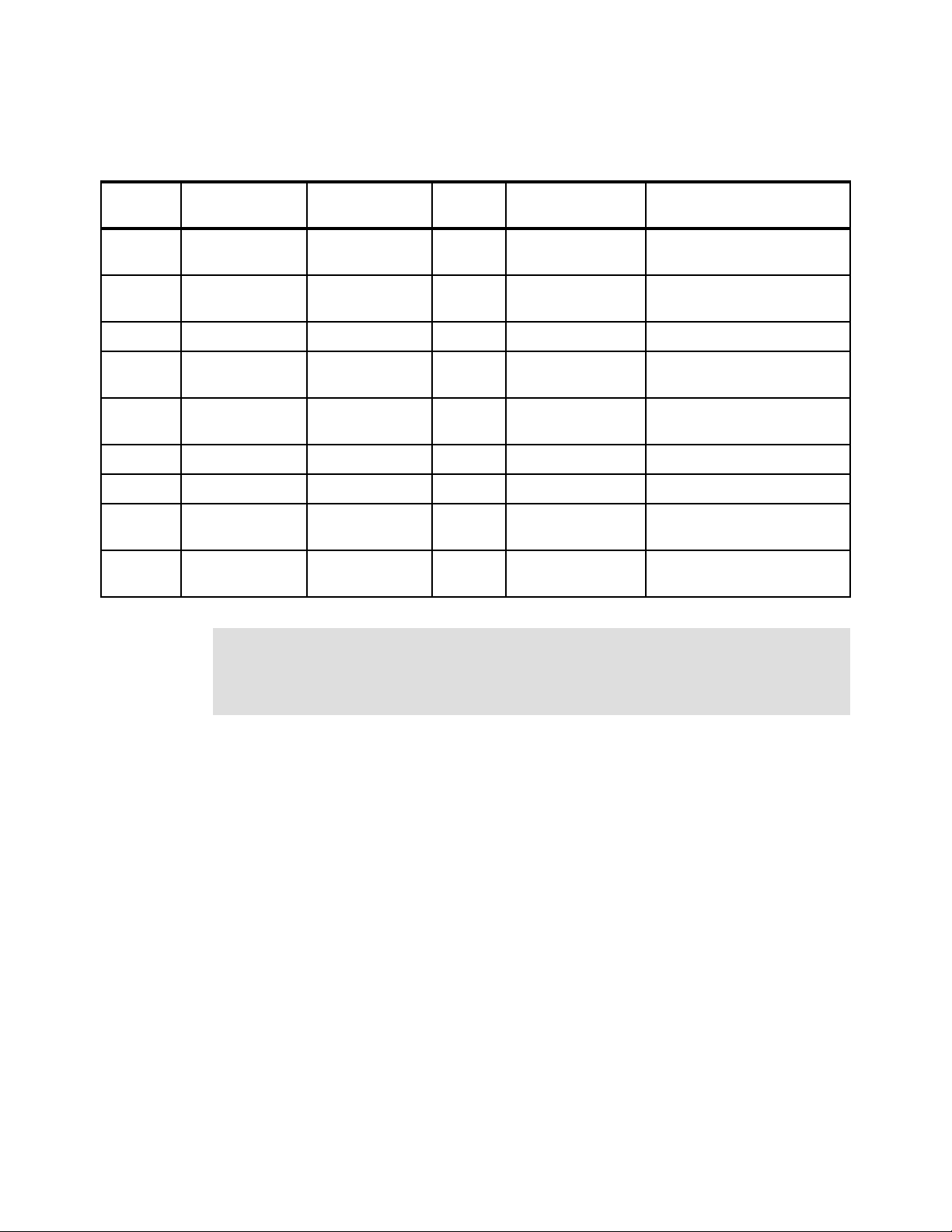

Rack-mounting rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Useful rack additions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

OEM racks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Energy management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

IBM EnergyScale technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

On Chip Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Energy consumption estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

iv IBM Power Systems S822LC for High Performance Computing

Page 7

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area. Any

reference to an IBM product, program, or service is not intended to state or imply that only that IBM product,

program, or service may be used. Any functionally equivalent product, program, or service that does not

infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not grant you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such

provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION

PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR

IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any

manner serve as an endorsement of those websites. The materials at those websites are not part of the

materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring

any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results

obtained in other operating environments may vary significantly. Some measurements may have been made

on development-level systems and there is no guarantee that these measurements will be the same on

generally available systems. Furthermore, some measurements may have been estimated through

extrapolation. Actual results may vary. Users of this document should verify the applicable data for their

specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs.

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines

Corporation in the United States, other countries, or both. These and other IBM trademarked terms are

marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US

registered or common law trademarks owned by IBM at the time this information was published. Such

trademarks may also be registered or common law trademarks in other countries. A current list of IBM

trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

AIX®

DS8000®

Easy Tier®

EnergyScale™

IBM®

IBM FlashSystem®

IBM z™

POWER®

POWER Hypervisor™

Power Systems™

POWER8®

PowerHA®

PowerLinux™

PowerPC®

PowerVM®

Real-time Compression™

Redbooks®

Redpaper™

Redbooks (logo) ®

Storwize®

System Storage®

XIV®

The following terms are trademarks of other companies:

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Java, and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its

affiliates.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Other company, product, or service names may be trademarks or service marks of others.

Preface

This IBM® Redpaper™ publication is a comprehensive guide that covers the IBM Power

Systems™ S822LC (8335-GTB) server. The server runs the Linux operating system (OS) and

is designed for High Performance Computing applications, High Performance Data

Analytics, the enterprise data center, and accelerated cloud deployments.

The objective of this paper is to introduce the major innovative Power S822LC features and

their relevant functions:

Powerful POWER8® processors that offer 16 cores at 3.259 GHz or 3.857 GHz turbo performance or 20 cores at 2.860 GHz or 3.492 GHz turbo

A 19-inch rack-mount 2U configuration

NVIDIA NVLink technology for exceptional processor to accelerator intercommunication

Four SXM2 form factor connectors for the NVIDIA Tesla P100 GPU

This publication is for professionals who want to acquire a better understanding of IBM Power

Systems products. The intended audience includes the following roles:

Clients

Sales and marketing professionals

Technical support professionals

IBM Business Partners

Independent software vendors

This paper expands the set of IBM Power Systems documentation by providing a desktop

reference that offers a detailed technical description of the Power S822LC server.

This paper does not replace the latest marketing materials and configuration tools. It is

intended as an additional source of information that, together with existing sources, can be

used to enhance your knowledge of IBM server solutions.

Authors

This paper was produced by a team of specialists from around the world working at the

International Technical Support Organization, Austin Center.

Alexandre Caldeira is a Certified IT Specialist and is the Product Manager for Power

Systems Latin America. He holds a degree in Computer Science from the Universidade

Estadual Paulista (UNESP) and an MBA in Marketing. His major areas of focus are

competition, sales, marketing and technical sales support. Alexandre has more than 16 years

of experience working on IBM Systems Solutions and has worked also as an IBM Business

Partner on Power Systems hardware, AIX, and IBM PowerVM® virtualization products.

Volker Haug is an Executive IT Specialist & Open Group Distinguished IT Specialist within

IBM Systems in Germany supporting Power Systems clients and Business Partners. He

holds a Diploma degree in Business Management from the University of Applied Studies in

Stuttgart. His career includes more than 29 years of experience with Power Systems, AIX,

and PowerVM virtualization. He has written several IBM Redbooks® publications about

Power Systems and PowerVM. Volker is an IBM POWER8® Champion and a member of the

German Technical Expert Council, which is an affiliate of the IBM Academy of Technology.

The project that produced this publication was managed by:

Scott Vetter

Executive Project Manager, PMP

Thanks to the following people for their contributions to this project:

George Ahrens, Nick Bofferding, Charlie Burns, Sertac Cakici, Dan Crowell,

Daniel Henderson, Yesenia Jimenez, Ann Lund, Benjamin Mashak, Chris Mann,

Michael J Mueller, Kanisha Patel, Matt Spinler, Jeff Stuecheli, Uma Yadlapati, and

Maury Zipse.

Now you can become a published author, too!

Here’s an opportunity to spotlight your skills, grow your career, and become a published

author—all at the same time! Join an ITSO residency project and help write a book in your

area of expertise, while honing your experience using leading-edge technologies. Your efforts

will help to increase product acceptance and customer satisfaction, as you expand your

network of technical contacts and relationships. Residencies run from two to six weeks in

length, and you can participate either in person or as a remote resident working from your

home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this paper or

other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks

weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html

Chapter 1. Architecture and technical description

The IBM Power System S822LC for High Performance Computing (8335-GTB) server, the

first Power System offering with NVIDIA NVLink Technology, removes GPU computing

bottlenecks by employing the high-bandwidth and low-latency NVLink interface from CPU to

GPU and GPU to GPU. This unlocks new performance and new applications for accelerated

computing.

Power System LC servers are a product of a co-design with OpenPOWER Foundation

ecosystem members. Power S822LC for High Performance Computing innovation partners

include IBM, NVIDIA, Mellanox, Canonical, Wistron, and more.

The Power S822LC server offers a modular design to scale from single racks to hundreds,

simplicity of ordering, and a strong innovation roadmap for GPUs.

The IBM Power S822LC for High Performance Computing (8335-GTB) server offers two

processor sockets for a total of 16 cores at 3.259 GHz (3.857 GHz turbo) or 20 cores at 2.860

GHz (3.492 GHz turbo) in a 19-inch rack-mount, 2U (EIA units) drawer configuration. All the

cores are activated.

The server provides eight memory daughter cards of 16 GB (4x4 GB), 32 GB (4x8 GB),

64 GB (4x16 GB), or 128 GB (4x32 GB) each, allowing for a maximum system memory of

1024 GB (eight 128 GB cards).
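The capacity arithmetic above can be checked with a short Python sketch. The names `RISER_COUNT`, `CARD_OPTIONS_GB`, and `total_memory_gb` are illustrative and do not come from any IBM tool, and the sketch assumes that all eight cards have the same capacity, which is a simplification rather than a stated placement rule.

```python
# Illustrative sketch only: capacities are taken from the paragraph above.
RISER_COUNT = 8  # eight memory daughter (riser) cards per server

# card capacity in GB -> (DIMMs per card, GB per DIMM)
CARD_OPTIONS_GB = {16: (4, 4), 32: (4, 8), 64: (4, 16), 128: (4, 32)}


def total_memory_gb(card_gb: int, cards: int = RISER_COUNT) -> int:
    """Return system memory in GB, assuming every card has the same capacity."""
    dimms, dimm_gb = CARD_OPTIONS_GB[card_gb]
    # Each card capacity is the product of its DIMM count and DIMM size.
    assert dimms * dimm_gb == card_gb
    return cards * card_gb


if __name__ == "__main__":
    for card_gb in sorted(CARD_OPTIONS_GB):
        print(f"{card_gb:>3} GB cards -> {total_memory_gb(card_gb)} GB total")
```

With eight 128 GB cards the function returns the 1024 GB maximum quoted in the text; with eight 16 GB cards it returns 128 GB.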

Figure 1-1 shows the front view of a Power S822LC server.

POWER8 Processor (2x)

Operator Interface

• 1 USB 3.0

• Green, Amber, Blue LEDs

Cooling Fans (4x)

• Counter-rotating

• Hot swap

PCIe slot (1x)

• PCIe Gen3 x8 (CAPI)

PCIe slot (2x)

• PCIe Gen3 x16 (CAPI)

• PCIe Gen3 x8

NVIDIA P100 GPU (4x)

• PCIe Gen3 (CAPI)

• 300 W capable

• PCIe riser cards

Power Supplies (2x)

• 1,300 W

• Hot swap

Memory riser cards (8x)

• 1 buffer chip per riser

• 4 DIMMs per riser

• 32 DIMMs total

Front bezel removed

Internal Storage

• 2 SFF-4 bays

• HDD or SSD

Figure 1-1 Front view of the Power S822LC server

1.1 Server features

The server chassis of the Power S822LC server contains two processor modules attached

directly to the board. Each POWER8 processor module is either 8-core or 10-core and has a

64-bit architecture, up to 512 KB of L2 cache per core, and up to 8 MB of L3 cache per core.

The clock speed of each processor available varies based on the model of the server that is

used.

The Power S822LC server provides eight memory riser card slots. Memory features

that are supported are 16 GB (#EM55), 32 GB (#EM56), 64 GB (#EM57), and 128 GB

(#EM58), allowing for a maximum of 1024 GB DDR4 system memory.

The physical locations of the main server components are shown in Figure 1-2.

Figure 1-2 Location of server main components

The servers support four SXM2 form factor connectors for NVIDIA Tesla P100 GPU (#EC4C,

#EC4D, or #EC4F) only. Optional water cooling is available.

This summary describes the standard features of the Power S822LC model 8335-GTB

server:

19” rack-mount (2U) chassis

Two POWER8 processor modules:

– 8-core 3.259 GHz processor module

– 10-core 2.860 GHz processor module

Up to 1024 GB of 1333 MHz DDR4 ECC memory

Two SFF bays for two HDDs or two SSDs that support:

– Two 1 TB 7200 RPM NL SATA disk drives (#ELD0)

– Two 2 TB 7200 RPM NL SATA disk drives (#ES6A)

– Two 480 GB SATA SSDs (#ELS5)

– Two 960 GB SATA SSDs (#ELS6)

– Two 1.92 TB SATA SSDs (#ELSZ)

– Two 3.84 TB SATA SSDs (#ELU0)

Integrated SATA controller

Three PCIe Gen 3 slots:

– One PCIe x8 Gen3 Low Profile slot, CAPI enabled

– Two PCIe x16 Gen3 Low Profile slots, CAPI enabled

Four SXM2 form factor connectors for NVIDIA Tesla P100 GPU (#EC4C, #EC4D, or

#EC4F) only

Integrated features:

– EnergyScale™ technology

– Hot-swap and redundant cooling

– One front USB 2.0 port for general usage

– One rear USB 3.0 port for general usage

– One system port with RJ45 connector

Two power supplies

1.1.1 Minimum features

The minimum Power S822LC model 8335-GTB server initial order must include:

Two processor modules with a total of at least 16 cores

128 GB of memory (eight 16 GB memory daughter cards)

Two #EC4C Compute Intensive Accelerator - NVIDIA GP100

Two power supplies and power cords

An OS indicator

A rack integration indicator

A Language Group Specify

Linux is the supported OS. The Integrated 1Gb Ethernet port can be used as the base LAN

port.

1.1.2 System cooling

Air or water cooling depends on the GPU that is installed. See 1.13.3, “Compute Intensive

Accelerator” on page 26 for a list of GPUs available.

Feature code #ER2D is the water cooling indicator for the 8335-GTB.

Note: If #ER2D is ordered, you must order #EJTX fixed rail kit. Ordering #ER2D with

#EJTY slide rails is not supported.
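The ordering rules in 1.1.1 and 1.1.2 can be expressed as a small check. The following Python sketch is a hypothetical illustration, not an IBM configurator: the function name `check_order` and the way an order is represented (a set of feature codes plus core and memory totals) are assumptions, and only the rules stated in this section are encoded.

```python
def check_order(feature_codes: set[str], total_cores: int, memory_gb: int) -> list[str]:
    """Return a list of rule violations; an empty list means none were found.

    Hypothetical helper: covers only the rules quoted in sections 1.1.1
    and 1.1.2, not the full set of configuration rules.
    """
    problems = []
    if total_cores < 16:
        problems.append("minimum order needs at least 16 cores")
    if memory_gb < 128:
        problems.append("minimum order needs at least 128 GB of memory")
    if "ER2D" in feature_codes:  # water cooling indicator
        if "EJTX" not in feature_codes:
            problems.append("#ER2D requires the #EJTX fixed rail kit")
        if "EJTY" in feature_codes:
            problems.append("#ER2D with #EJTY slide rails is not supported")
    return problems


if __name__ == "__main__":
    print(check_order({"ER2D", "EJTX"}, total_cores=16, memory_gb=128))
    print(check_order({"ER2D", "EJTY"}, total_cores=20, memory_gb=256))
```

Returning a list of messages instead of raising on the first failure lets a caller report every problem with an order at once.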

1.2 The NVIDIA Tesla P100

The NVIDIA Tesla P100 accelerator (see Figure 1-3) is the GPU that is supported in the

Power S822LC server. This section summarizes its key features and performance.

Figure 1-3 NVIDIA Tesla P100 accelerator

With a 15.3 billion transistor GPU, a new high performance interconnect that greatly

accelerates GPU peer-to-peer and GPU-to-CPU communications, new technologies to

simplify GPU programming, and exceptional power efficiency, Tesla P100 is not only the most

powerful, but also the most architecturally complex GPU accelerator ever built.

Key features of Tesla P100 include:

Extreme performance

Powering HPC, Deep Learning, and many more GPU computing areas

NVLink

NVIDIA’s new high-speed, high-bandwidth interconnect for maximum application scalability

HBM2

Fast, high-capacity, extremely efficient CoWoS (Chip-on-Wafer-on-Substrate) stacked memory architecture

Unified Memory, Compute Preemption, and New AI Algorithms

Significantly improved programming model and advanced AI software optimized for the Pascal architecture

16 nm FinFET

Enables more features, higher performance, and improved power efficiency

The Tesla P100 was built to deliver exceptional performance for the most demanding

compute applications, delivering:

5.3 TFLOPS of double precision floating point (FP64) performance

10.6 TFLOPS of single precision (FP32) performance

21.2 TFLOPS of half-precision (FP16) performance
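The three figures above double at each step down in precision. The following Python sketch makes that ratio explicit and multiplies the per-GPU peak by the number of GPUs in a server. The names `P100_PEAK_TFLOPS`, `GPU_SLOTS`, and `server_peak_tflops` are illustrative, the default of four GPUs assumes that all four SXM2 connectors are populated, and the result is a theoretical peak, not a measured application rate.

```python
# Per-GPU peak figures quoted in the list above (TFLOPS).
P100_PEAK_TFLOPS = {"FP64": 5.3, "FP32": 10.6, "FP16": 21.2}

GPU_SLOTS = 4  # assumption: all four SXM2 connectors hold a Tesla P100


def precision_ratio(precision: str, baseline: str = "FP64") -> float:
    """Return how many times faster the peak rate is than the baseline precision."""
    return P100_PEAK_TFLOPS[precision] / P100_PEAK_TFLOPS[baseline]


def server_peak_tflops(precision: str, gpus: int = GPU_SLOTS) -> float:
    """Return the theoretical aggregate GPU peak for one server."""
    return P100_PEAK_TFLOPS[precision] * gpus


if __name__ == "__main__":
    for name in P100_PEAK_TFLOPS:
        print(
            f"{name}: {precision_ratio(name):.1f}x FP64 per GPU, "
            f"{server_peak_tflops(name):.1f} TFLOPS with {GPU_SLOTS} GPUs"
        )
```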

In addition to the numerous areas of high performance computing that NVIDIA GPUs have

accelerated for a number of years, most recently Deep Learning has become a very important

area of focus for GPU acceleration. NVIDIA GPUs are now at the forefront of deep neural

networks (DNNs) and artificial intelligence (AI). They are accelerating DNNs in various

applications by a factor of 10x to 20x compared to CPUs, and reducing training times from

weeks to days. In the past three years, NVIDIA GPU-based computing platforms have helped

speed up Deep Learning network training times by a factor of fifty. In the past two years, the

number of companies NVIDIA collaborates with on Deep Learning has jumped nearly 35x to

over 3,400 companies.

New innovations in the Pascal architecture, including native 16-bit floating point (FP)

precision, allow GP100 to deliver great speedups for many Deep Learning algorithms. These

algorithms do not require high levels of floating-point precision, but they gain large benefits

from the additional computational power FP16 affords, and the reduced storage requirements

for 16-bit datatypes.
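The storage and precision trade-off of 16-bit floating point can be seen with the Python standard library alone. This sketch runs on the CPU and only illustrates the IEEE 754 half-precision data format through the `struct` module's `e` format code; it does not exercise the GPU, and the helper names `roundtrip_fp16` and `storage_bytes` are illustrative.

```python
import struct


def roundtrip_fp16(x: float) -> float:
    """Store x as IEEE 754 half precision and read it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def storage_bytes(count: int, fmt: str) -> int:
    """Return the bytes needed for `count` values of a struct format code."""
    return count * struct.calcsize("<" + fmt)


if __name__ == "__main__":
    n = 1_000_000  # for example, one million weights
    print("FP16 bytes:", storage_bytes(n, "e"))
    print("FP32 bytes:", storage_bytes(n, "f"))
    for value in (1.0, 0.1, 3.14159):
        stored = roundtrip_fp16(value)
        print(f"{value} stored as FP16 -> {stored!r} (error {abs(stored - value):.2e})")
```

Half precision halves the storage of single precision, at the cost of a rounding error that is visible for values such as 0.1 but absent for values that the format represents exactly, such as 1.0.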

For more information about the NVIDIA Tesla P100, see:

https://devblogs.nvidia.com/parallelforall/inside-pascal/

1.3 Operating system support

The Power S822LC (8335-GTB) server supports Linux, which provides a UNIX-like

implementation across many computer architectures.

For more information about the software that is available on Power Systems, see the Linux on

Power Systems website:

http://www.ibm.com/systems/power/software/linux/index.html

The Linux operating system is an open source, cross-platform OS. It is supported on every

Power Systems server IBM sells. Linux on Power Systems is the only Linux infrastructure that

offers both scale-out and scale-up choices.

1.3.1 Ubuntu

Ubuntu Server 16.04, at the time of writing, is the supported OS for the S822LC.

For more information about Ubuntu Server for POWER8, see the following

website:

http://www.ubuntu.com/download/server/power8

1.3.2 Additional information

For more information about the IBM PowerLinux™ Community, see the following website:

https://www.ibm.com/developerworks/group/tpl

For more information about the features and external devices that are supported by Linux,

see the following website:

http://www.ibm.com/systems/power/software/linux/index.html

1.4 Operating environment

Table 1-1 provides the operating environment specifications for the Power S822LC servers.

Table 1-1 Operating environment for Power S822LC servers

Power S822LC server operating environment

| Description | Operating | Non-operating |
|---|---|---|
| Temperature | Allowable: 5 - 40 degrees C (a) (41 - 104 degrees F); Recommended: 18 - 27 degrees C (64 - 80 degrees F) | 1 - 60 degrees C (34 - 140 degrees F) |
| Relative humidity | 8 - 80% | 8 - 80% |
| Maximum dew point | 24 degrees C (75 degrees F) | 27 degrees C (80 degrees F) |
| Operating voltage | 200 - 240 V AC | N/A |
| Operating frequency | 50 - 60 Hz +/- 3 Hz | N/A |
| Power consumption | 2550 watts maximum | N/A |
| Power source loading | 2.6 kVA maximum | N/A |
| Thermal output | 8703 BTU/hr maximum | N/A |
| Maximum altitude | 3,050 m (10,000 ft) | N/A |
| Noise level and sound power | 7.6/6.7 bels operating/idling | N/A |

a. Heavy workloads might see some performance degradation above 35 degrees C if internal temperatures trigger a CPU clock reduction.

Tip: The maximum measured value is expected from a fully populated server under an

intensive workload. The maximum measured value also accounts for component tolerance

and operating conditions that are not ideal. Power consumption and heat load vary greatly

by server configuration and usage. Use the IBM Systems Energy Estimator to obtain a

heat output estimate that is based on a specific configuration, which is available at the

following website:

http://www-912.ibm.com/see/EnergyEstimator
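The relationship between the power consumption, power source loading, and thermal output rows of Table 1-1 can be checked with a short calculation. The following Python sketch is illustrative only: the conversion constant is the standard watts to BTU/hr factor, and the function names are hypothetical. It is not a substitute for the IBM Systems Energy Estimator, which accounts for the actual configuration.

```python
# Standard physical conversion: 1 watt is approximately 3.412142 BTU/hr.
BTU_PER_HOUR_PER_WATT = 3.412142


def watts_to_btu_per_hour(watts: float) -> float:
    """Convert electrical power draw (W) to an equivalent heat load (BTU/hr)."""
    return watts * BTU_PER_HOUR_PER_WATT


def implied_power_factor(watts: float, kva: float) -> float:
    """Ratio of real power (W) to apparent power (VA) for the listed maxima."""
    return watts / (kva * 1000.0)


if __name__ == "__main__":
    max_watts = 2550.0   # Table 1-1: power consumption, maximum
    max_kva = 2.6        # Table 1-1: power source loading, maximum

    # The result lands within a few BTU/hr of the 8703 BTU/hr listed in
    # Table 1-1; the small gap comes from rounding of the conversion factor.
    print(f"Thermal output: {watts_to_btu_per_hour(max_watts):.0f} BTU/hr")
    print(f"Implied power factor: {implied_power_factor(max_watts, max_kva):.2f}")
```

The same functions can be applied to a measured or estimated wattage for a smaller configuration to obtain a rough heat load figure for facility planning.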

1.5 Physical package

Table 1-2 on page 7 shows the physical dimensions of the Power S822LC chassis. The

servers are available only in a rack-mounted form factor and take 2U (2 EIA units) of rack

space.

Table 1-2 Physical dimensions for the Power S822LC servers

| Dimension | Power S822LC (8335-GTB) server |
|---|---|
| Width | 441.5 mm (17.4 in.) |
| Depth | 822 mm (32.4 in.) |
| Height | 86 mm (3.4 in.) |
| Weight (maximum configuration) | 30 kg (65 lbs.) |

1.6 System architecture

This section describes the overall system architecture for the Power S822LC computing

servers. The bandwidths that are provided throughout the section are theoretical maximums

that are used for reference.

The speeds that are shown are at an individual component level. Multiple components and

application implementation are key to achieving the preferred performance. Always do the

performance sizing at the application workload environment level and evaluate performance

by using real-world performance measurements and production workloads.

The Power S822LC server is a two single chip module (SCM) system. Each SCM is attached

to four memory riser cards that have buffer chips for the L4 Cache and four memory RDIMM

slots. The server has a maximum capacity of 32 memory DIMMs when all the memory riser

cards are populated, which allows for up to 1024 GB of memory.

The servers have a total of three PCIe Gen3 slots, all of which are CAPI-capable.

The system has sockets for four GPUs, each capable of 300 W.

An integrated SATA controller is fed through a dedicated PCI bus on the main system board

and allows for up to two SATA HDDs or SSDs to be installed. This bus also drives the

integrated Ethernet and USB port.

Figure 1-4 shows the logical system diagram for the Power S822LC servers.

[Figure 1-4 diagram labels: POWER8 SCM0 and POWER8 SCM1, each with memory controllers, PHB0, PHB1, and SMP A Bus; eight memory riser cards, each with a Buffer/L4 Cache chip and four RDIMMs; NVLink connections to four NVIDIA P100 SXM2.0 GPUs; one PCIe Gen3 x8 and two PCIe Gen3 x16 connections, all labeled CAPI support; PLX switch; SATA controller with two SFF-4 HDD/SSD bays; USB 3.0 front and rear; VGA; Management 1 Gbps Ethernet; System Planar; link speeds 12.8 GBps and 28.8 GBps]

Figure 1-4 Power S822LC server logical system diagram

1.7 The POWER8 processor

This section introduces the latest processor in the Power Systems product family and

describes its main characteristics and features in general.

The POWER8 processor in the S822LC for High Performance Computing is unique to the 8335-GTB. Engineers removed the A-Bus interface along with SMP over PCI support to make room for the NVLink interface. The resulting chip grows slightly from 649 mm² to 659 mm².

1.7.1 POWER8 processor overview

The POWER8 processor is manufactured by using the IBM 22 nm Silicon-On-Insulator (SOI) technology. Each chip is 649 mm² and contains 4.2 billion transistors. As shown in Figure 1-5, the chip contains up to 12 cores, two memory controllers, Peripheral Component Interconnect Express (PCIe) Gen3 I/O controllers, and an interconnection system that connects all components within the chip. Each core has 512 KB of L2 cache, and all cores share 96 MB of L3 embedded DRAM (eDRAM). The interconnect also extends through module and system board technology to other POWER8 processors in addition to DDR4 memory and various I/O devices.

POWER8 processor-based systems use memory buffer chips to interface between the POWER8 processor and DDR4 memory. Each buffer chip also includes an L4 cache to reduce the latency of local memory accesses.

Figure 1-5 The POWER8 processor chip

Here are additional features that can augment the performance of the POWER8 processor:

Support for DDR4 memory through memory buffer chips that offload the memory support

from the POWER8 memory controller.

An L4 cache within the memory buffer chip that reduces the memory latency for local

access to memory behind the buffer chip; the operation of the L4 cache is not apparent to

applications running on the POWER8 processor. Up to 128 MB of L4 cache can be

available for each POWER8 processor.

Hardware transactional memory.

On-chip accelerators, including on-chip encryption, compression, and random number

generation accelerators.

CAPI, which allows accelerators that are plugged into a PCIe slot to access the processor

bus by using a low latency, high-speed protocol interface.

Adaptive power management.

Table 1-3 summarizes the technology characteristics of the POWER8 processor.

Table 1-3 Summary of POWER8 processor technology

| Technology | 8335-GTB POWER8 processor |
|---|---|
| Die size | 659 mm² |
| Fabrication technology | 22 nm lithography; copper interconnect; SOI; eDRAM |
| Maximum processor cores | 12 |
| Maximum execution threads core/chip | 8/96 |
| Maximum L2 cache core/chip | 512 KB/6 MB |
| Maximum On-chip L3 cache core/chip | 8 MB/96 MB |
| Maximum L4 cache per chip | 128 MB |
| Maximum memory controllers | 2 |
| SMP design-point | 16 sockets with POWER8 processors |
| Compatibility | Specific to the 8335-GTB |

Figure 1-6 on page 11 shows the areas of the processor that were modified to include the NVLink and additional CAPI interface.

[Figure 1-6 annotations, as far as they can be recovered: 2 C4 rows added; x8 IOP; x8 PHB; NVLink support added in extended ES; A-bus removed, NVLink added; chip height (value not recoverable); 2nd CAPP unit added, X2 removed]

Figure 1-6 Areas modified on the POWER8 processor core

1.7.2 POWER8 processor core

The POWER8 processor core is a 64-bit implementation of the IBM Power Instruction Set

Architecture (ISA) Version 2.07 and has the following features:

Multi-threaded design, which is capable of up to eight-way simultaneous multithreading

(SMT)

32 KB, eight-way set-associative L1 instruction cache

64 KB, eight-way set-associative L1 data cache

Enhanced prefetch, with instruction speculation awareness and data prefetch depth

awareness

Enhanced branch prediction, which uses both local and global prediction tables with a

selector table to choose the preferred predictor

Improved out-of-order execution

Two symmetric fixed-point execution units

Two symmetric load/store units and two load units, all four of which can also run simple

fixed-point instructions

An integrated, multi-pipeline vector-scalar floating point unit for running both scalar and

SIMD-type instructions, including the Vector Multimedia eXtension (VMX) instruction set

and the improved Vector Scalar eXtension (VSX) instruction set, and capable of up to

eight floating point operations per cycle (four double precision or eight single precision)

In-core Advanced Encryption Standard (AES) encryption capability

Hardware data prefetching with 16 independent data streams and software control

Hardware decimal floating point (DFP) capability.

More information about Power ISA Version 2.07 can be found at the following website:

https://www.power.org/wp-content/uploads/2013/05/PowerISA_V2.07_PUBLIC.pdf

Figure 1-7 shows a picture of the POWER8 core, with some of the functional units

highlighted.

Figure 1-7 POWER8 processor core

1.7.3 Simultaneous multithreading

Simultaneous multithreading (SMT) allows a single physical processor core to simultaneously

dispatch instructions from more than one hardware thread context. With SMT, each

POWER8 core can present eight hardware threads. Because there are multiple hardware

threads per physical processor core, additional instructions can run at the same time. SMT is

primarily beneficial in commercial environments where the speed of an individual transaction

is not as critical as the total number of transactions that are performed. SMT typically

increases the throughput of workloads with large or frequently changing working sets, such

as database servers and web servers.

Table 1-4 compares the POWER8 processor options for a

Power S822LC server and the number of threads that are supported by each SMT mode.

Table 1-4 SMT levels that are supported by a Power S822LC server

| Cores per system | SMT mode | Hardware threads per system |
|---|---|---|
| 16 | Single Thread (ST) | 16 |
| 16 | SMT2 | 32 |
| 16 | SMT4 | 64 |
| 16 | SMT8 | 128 |
| 20 | Single Thread (ST) | 20 |
| 20 | SMT2 | 40 |
| 20 | SMT4 | 80 |
| 20 | SMT8 | 160 |

[Diagram labels belonging to Figure 1-8: POWER8 SCM with two memory controllers; buffer chips, each with 16 MB of L4 cache; memory riser card with 4 x RDIMMs; 28.8 GBps link]

The architecture of the POWER8 processor, with its larger caches, larger cache bandwidth,

and faster memory, allows threads to have faster access to memory resources, which

translates into a more efficient usage of threads. Therefore, POWER8 allows more threads

per core to run concurrently, increasing the total throughput of the processor and of the

system.
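The thread counts in Table 1-4 follow directly from multiplying the number of cores by the number of hardware threads per core in each SMT mode. The following Python sketch regenerates those rows; the function and constant names are illustrative and are not part of any IBM tool.

```python
# Hardware threads per core for each SMT mode listed in Table 1-4.
SMT_THREADS_PER_CORE = {
    "ST": 1,
    "SMT2": 2,
    "SMT4": 4,
    "SMT8": 8,
}


def hardware_threads(cores: int, smt_mode: str) -> int:
    """Return the hardware threads per system for a core count and SMT mode."""
    return cores * SMT_THREADS_PER_CORE[smt_mode]


def smt_table(core_counts=(16, 20)):
    """Build (cores, mode, threads) rows in the same order as Table 1-4."""
    return [
        (cores, mode, hardware_threads(cores, mode))
        for cores in core_counts
        for mode in SMT_THREADS_PER_CORE
    ]


if __name__ == "__main__":
    for cores, mode, threads in smt_table():
        print(f"{cores:>2} cores  {mode:<4}  {threads:>3} hardware threads")
```

Whether a higher SMT mode improves throughput depends on the workload, so these counts describe available hardware thread contexts rather than expected performance.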

1.7.4 Memory access

On the Power S822LC server, each POWER8 module has two memory controllers, each

connected to two memory channels. Each memory channel operates at 1600 MHz and

connects to a memory riser card. Each memory riser card has a memory buffer that is

responsible for many functions that were previously on the memory controller, such as

scheduling logic and energy management. The memory buffer also has 16 MB of L4 cache.

Also, the memory riser card houses four industry-standard RDIMMs.

Each memory channel can address up to 128 GB. Therefore, with eight memory channels, the Power S822LC server can

address up to 1 TB (1024 GB) of total memory.

Figure 1-8 shows a POWER8 processor that is connected to four memory riser cards and its

components.

Figure 1-8 Logical diagram of the POWER8 processor connected to four memory riser cards

1.7.5 On-chip L3 cache innovation and Intelligent Cache

The POWER8 processor uses a breakthrough in material engineering and microprocessor

fabrication to implement the L3 cache in eDRAM and place it on the processor die. L3 cache

is critical to a balanced design, as is the ability to provide good signaling between the L3

cache and other elements of the hierarchy, such as the L2 cache or SMP interconnect.

The on-chip L3 cache is organized into separate areas with differing latency characteristics.

Each processor core is associated with a fast 8 MB local region of L3 cache (FLR-L3), but

also has access to other L3 cache regions as shared L3 cache. Additionally, each core can

negotiate to use the FLR-L3 cache that is associated with another core, depending on

reference patterns. Data can also be cloned to be stored in more than one core’s FLR-L3

cache, again depending on reference patterns. This Intelligent Cache management enables the POWER8 processor to optimize the access to L3 cache lines and minimize overall cache latencies.

Figure 1-5 on page 9 shows the on-chip L3 cache and highlights the fast 8 MB L3 region that is closest to a processor core.

The innovation of using eDRAM on the POWER8 processor die is significant for several reasons:

Latency improvement

A six-to-one latency improvement occurs by moving the L3 cache on-chip compared to L3 accesses on an external (on-ceramic) Application Specific Integrated Circuit (ASIC).

Bandwidth improvement

A 2x bandwidth improvement occurs with on-chip interconnect. Frequency and bus sizes are increased to and from each core.

No off-chip driver or receivers

Removing drivers or receivers from the L3 access path lowers interface requirements, conserves energy, and lowers latency.

Small physical footprint

The performance of eDRAM when implemented on-chip is similar to conventional SRAM

but requires far less physical space. IBM on-chip eDRAM uses only a third of the

components that conventional SRAM uses, which has a minimum of six transistors to

implement a 1-bit memory cell.

Low energy consumption

The on-chip eDRAM uses only 20% of the standby power of SRAM.

1.7.6 L4 cache and memory buffer

POWER8 processor-based systems introduce an additional level in memory hierarchy. The

L4 cache is implemented together with the memory buffer in the memory riser cards. Each

memory buffer contains 16 MB of L4 cache. On a Power S822LC server, you can have up to

128 MB of L4 cache by using all the eight memory riser cards.

Figure 1-9 shows a picture of the memory buffer, where you can see the 16 MB L4 cache and

processor links and memory interfaces.

Figure 1-9 Memory buffer chip

1.7.7 Hardware transactional memory

Transactional memory is an alternative to lock-based synchronization. It attempts to simplify

parallel programming by grouping read and write operations and running them as a single

operation. Transactional memory is like database transactions, where all shared memory

accesses and their effects are either committed all together or discarded as a group. All

threads can enter the critical region simultaneously. If there are conflicts in accessing the

shared memory data, threads try accessing the shared memory data again or are stopped

without updating the shared memory data. Therefore, transactional memory is also called a

lock-free synchronization. Transactional memory can be a competitive alternative to

lock-based synchronization.

Transactional memory provides a programming model that makes parallel programming

easier. A programmer delimits regions of code that access shared data and the hardware

runs these regions atomically and in isolation, buffering the results of individual instructions,

and trying execution again if isolation is violated. Generally, transactional memory allows

programs to use a programming style that is close to coarse-grained locking to achieve

performance that is close to fine-grained locking.

Most implementations of transactional memory are based on software. The POWER8

processor-based systems provide a hardware-based implementation of transactional memory

that is more efficient than the software implementations and requires no interaction with the

processor core, therefore allowing the system to operate at maximum performance.

1.7.8 Coherent Accelerator Processor Interface

Coherent Accelerator Processor Interface (CAPI) defines a coherent accelerator interface

structure for attaching special processing devices to the POWER8 processor bus.

The CAPI can attach accelerators that have coherent shared memory access with the

processors in the server and share full virtual address translation with these processors,

which use a standard PCIe Gen3 bus.

Applications can have customized functions in FPGAs and enqueue work requests directly in shared memory queues to the FPGA by using the same effective addresses (pointers) that they use for any of their threads running on a host processor. From a practical perspective, CAPI allows a specialized hardware accelerator to be seen as an additional processor in the system, with access to the main system memory, and coherent communication with other processors in the system.

The benefits of using CAPI include the ability to access shared memory blocks directly from the accelerator, perform memory transfers directly between the accelerator and processor cache, and reduce the code path length between the adapter and the processors. This is possible because the adapter is not operating as a traditional I/O device, and there is no device driver layer to perform processing. It also presents a simpler programming model.

Figure 1-10 shows a high-level view of how an accelerator communicates with the POWER8 processor through CAPI. The POWER8 processor provides a Coherent Attached Processor Proxy (CAPP), which is responsible for extending the coherence in the processor communications to an external device. The coherency protocol is tunneled over standard PCIe Gen3, effectively making the accelerator part of the coherency domain.

[Figure 1-10 diagram labels: POWER8 with CAPP on the Coherence Bus; PCIe Gen3 as the transport for encapsulated messages; FPGA or ASIC with PSL and the Custom Hardware Application]

Figure 1-10 CAPI accelerator that is attached to the POWER8 processor

The accelerator adapter implements the Power Service Layer (PSL), which provides address

translation and system memory cache for the accelerator functions. The custom processors

on the system board, consisting of an FPGA or an ASIC, use this layer to access shared

memory regions, and cache areas as though they were a processor in the system. This ability

enhances the performance of the data access for the device and simplifies the programming

effort to use the device. Instead of treating the hardware accelerator as an I/O device, it is

treated as a processor, which eliminates the requirement of a device driver to perform

communication, and the need for Direct Memory Access that requires system calls to the

operating system (OS) kernel. By removing these layers, the data transfer operation requires

far fewer clock cycles in the processor, improving the I/O performance.

The implementation of CAPI on the POWER8 processor allows hardware companies to

develop solutions for specific application demands and use the performance of the POWER8

processor for general applications and the custom acceleration of specific functions by using

a hardware accelerator, with a simplified programming model and efficient communication

with the processor and memory resources.

For a list of supported CAPI adapters, see 1.13.5, “CAPI enabled Infiniband adapters” on

page 27.

1.7.9 NVLink

NVLink is NVIDIA’s new high-speed interconnect technology for GPU-accelerated computing.

Supported on SXM2 based Tesla P100 accelerator boards, NVLink significantly increases

performance for both GPU-to-GPU communications, and for GPU access to system memory.

Today, multiple GPUs are common in workstations as well as the nodes of HPC computing

clusters and deep learning training systems. A powerful interconnect is extremely valuable in

multiprocessing systems. NVIDIA’s vision for NVLink was to create an interconnect for GPUs that

would offer much higher bandwidth than PCI Express Gen3 (PCIe), and be compatible with

the GPU ISA to support shared memory multiprocessing workloads.

Support for the GPU ISA means that programs running on NVLink-connected GPUs can

execute directly on data in the memory of another GPU as well as on local memory. GPUs

can also perform atomic memory operations on remote GPU memory addresses, enabling

much tighter data sharing and improved application scaling.

NVLink uses NVIDIA’s new High-Speed Signaling interconnect (NVHS). NVHS transmits data over a differential pair running at up to 20 Gb/sec. Eight of these differential connections form a Sub-Link that sends data in one direction, and two sub-links - one for each direction - form a Link that connects two processors (GPU-to-GPU or GPU-to-CPU). A single Link supports up to 40 GB/sec of bidirectional bandwidth between the endpoints. Multiple Links can be combined to form Gangs for even higher-bandwidth connectivity between processors. The NVLink implementation in Tesla P100 supports up to four Links, allowing for a gang with an aggregate maximum theoretical bandwidth of 160 GB/sec bidirectional bandwidth.

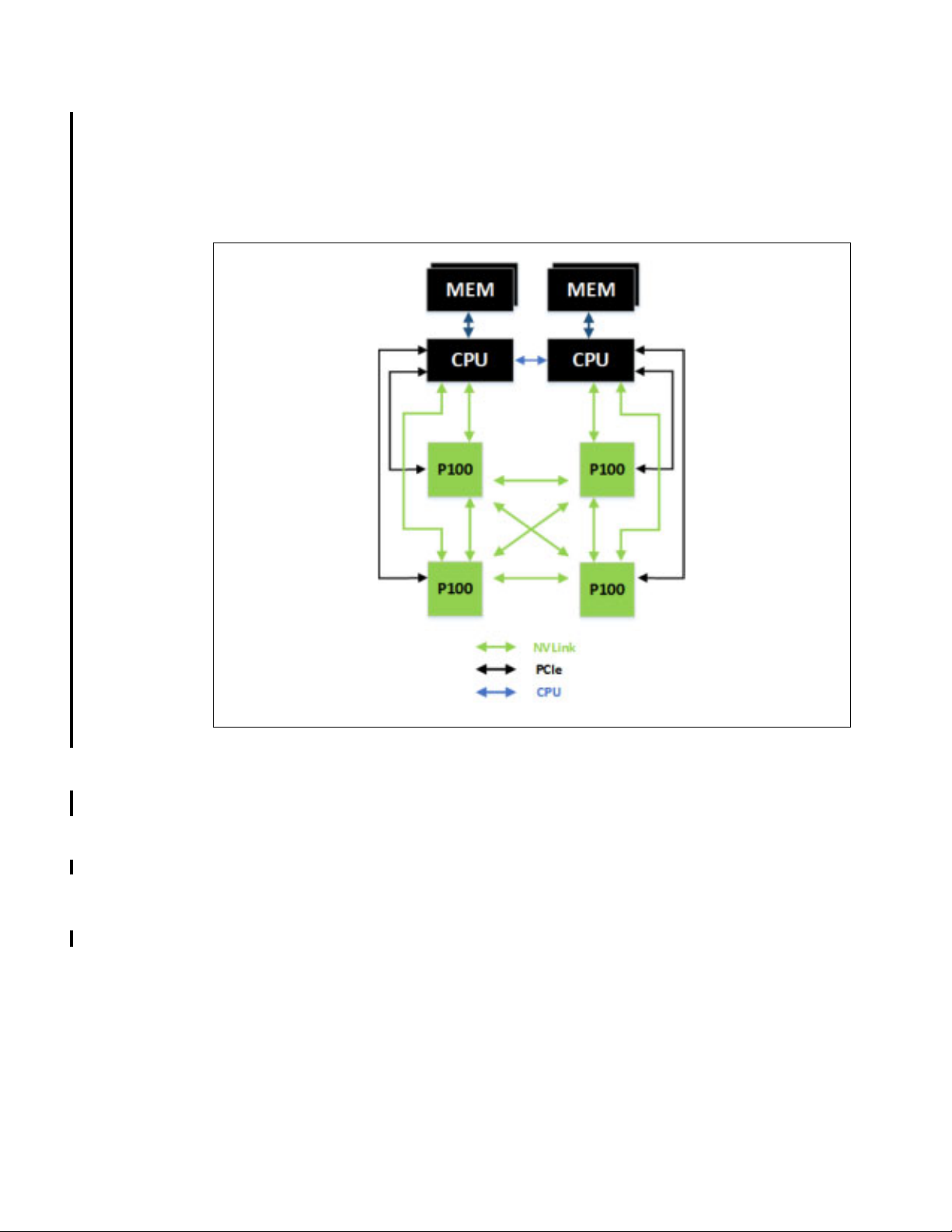

While NVLink primarily focuses on connecting multiple NVIDIA Tesla P100s together, it can

also connect Tesla P100 GPUs with IBM Power CPUs with NVLink support. Figure 1-11 on

page 18 highlights an example of a four-GPU system with dual NVLink-capable CPUs

connected with NVLink. In this configuration, each GPU has 120 combined GB/s bidirectional

bandwidth to the other three GPUs in the system, and 40 GB/s bidirectional bandwidth to a

CPU.
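The NVLink bandwidth figures quoted in this section can be derived from the per-pair signaling rate. The following Python sketch works through that arithmetic under the numbers stated in the text (20 Gb/sec per differential pair, eight pairs per sub-link, two sub-links per link, up to four links per Tesla P100). The names are illustrative, and the results are theoretical maximums rather than measured throughput.

```python
GBITS_PER_SEC_PER_PAIR = 20   # NVHS differential pair, one direction
PAIRS_PER_SUBLINK = 8         # eight pairs form one sub-link
SUBLINKS_PER_LINK = 2         # one sub-link per direction
MAX_LINKS_PER_P100 = 4        # links available on a Tesla P100
BITS_PER_BYTE = 8


def sublink_gbytes_per_sec() -> float:
    """One-directional bandwidth of a single sub-link in GB/sec."""
    return GBITS_PER_SEC_PER_PAIR * PAIRS_PER_SUBLINK / BITS_PER_BYTE


def link_gbytes_per_sec() -> float:
    """Bidirectional bandwidth of a single link in GB/sec."""
    return sublink_gbytes_per_sec() * SUBLINKS_PER_LINK


def gang_gbytes_per_sec(links: int) -> float:
    """Aggregate bidirectional bandwidth of a gang of links in GB/sec."""
    if not 1 <= links <= MAX_LINKS_PER_P100:
        raise ValueError(f"a P100 supports 1 to {MAX_LINKS_PER_P100} links")
    return link_gbytes_per_sec() * links


if __name__ == "__main__":
    print(f"Sub-link: {sublink_gbytes_per_sec():.0f} GB/sec per direction")
    print(f"Link:     {link_gbytes_per_sec():.0f} GB/sec bidirectional")
    for links in range(1, MAX_LINKS_PER_P100 + 1):
        print(f"{links} link(s): {gang_gbytes_per_sec(links):.0f} GB/sec bidirectional")
```

With three links the sketch gives the 120 GB/s combined figure mentioned for GPU-to-GPU traffic in the example configuration, and with one link it gives the 40 GB/s figure mentioned for the CPU connection.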

Figure 1-11 CPU to GPU and GPU to GPU interconnect using NVLink

1.8 Memory subsystem

The Power S822LC server is a two-socket system that supports two POWER8 SCM

processor modules. The server supports a maximum of 32 DDR4 RDIMM slots housed in

eight memory riser cards.

Memory features equate to a riser card with four memory DIMMs. Memory feature codes that

are supported are 16 GB, 32 GB, 64 GB, and 128 GB, and run at speeds of 1600 MHz,

allowing for a maximum system memory of 1024 GB.

1.8.1 Memory riser cards

Memory riser cards are designed to house up to four industry-standard DRAM memory

DIMMs and include a set of components that allow for higher bandwidth and lower latency

communications:

Memory Scheduler

Memory Management (RAS Decisions & Energy Management)

Buffer Cache

[Figure 1-12 diagram labels: Connection to system backplane; DDR3 DIMM (4x); Memory Buffer]

By adopting this architecture, several decisions and processes regarding memory

optimizations are run outside the processor, saving bandwidth and allowing for faster

processor to memory communications. It also allows for more robust reliability, availability,

and serviceability (RAS). For more information about RAS, see 2.3, “Reliability, availability,

and serviceability” on page 33.

A detailed diagram of the memory riser card that is available for the Power S822LC server

and its location on the server are shown in Figure 1-12.

Figure 1-12 Memory riser card components and server location

The buffer cache is a L4 cache and is built on eDRAM technology (same as the L3 cache),

which has lower latency than regular SRAM. Each memory riser card has a buffer chip with

16 MB of L4 cache, and a fully populated Power S822LC server (two processors and eight

memory riser cards) has 128 MB of L4 cache. The L4 cache performs several functions that

have a direct impact on performance and brings a series of benefits for the Power S822LC

server:

Reduces energy consumption by reducing the number of memory requests.

Increases memory write performance by acting as a cache and by grouping several

random writes into larger transactions.

Partial write operations that target the same cache block are “gathered” within the L4

cache before being written to memory, becoming a single write operation.

Reduces latency on memory access. Memory access for cached blocks has up to 55%

lower latency than non-cached blocks.

1.8.2 Memory placement rules

Each feature code equates to a riser card with four memory DIMMs.

The following memory feature codes are orderable:

16 GB DDR4: A riser card with four 4 GB 1600 MHz DDR4 DRAMs (#EM55)

32 GB DDR4: A riser card with four 8 GB 1600 MHz DDR4 DRAMs (#EM56)

64 GB DDR4: A riser card with four 16 GB 1600 MHz DDR4 DRAMs (#EM57)

128 GB DDR4: A riser card with four 32 GB 1600 MHz DDR4 DRAMs (#EM58)

The supported maximum memory is 1024 GB by installing a quantity of eight #EM58

components.

For the Power S822LC model 8335-GTB server:

It is required that all the memory modules be populated.

Memory features cannot be mixed.

The base memory is 128 GB with eight 16 GB, 1600 MHz DDR4 memory modules

(#EM55).

Memory upgrades are not supported.
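Because every riser card must be populated and features cannot be mixed, each valid configuration is one feature code installed eight times. The following Python sketch derives the resulting totals from the feature list above. The dictionary and function names are illustrative and are not part of any IBM configurator.

```python
# Capacity in GB of each orderable riser card feature (four DIMMs per riser).
RISER_FEATURE_GB = {
    "EM55": 16,
    "EM56": 32,
    "EM57": 64,
    "EM58": 128,
}

RISER_CARDS_PER_SERVER = 8   # four riser cards per POWER8 SCM, two SCMs
DIMMS_PER_RISER = 4
L4_CACHE_MB_PER_RISER = 16   # one buffer chip with 16 MB of L4 per riser


def total_memory_gb(feature: str) -> int:
    """Total installed memory when all eight risers carry the same feature."""
    return RISER_FEATURE_GB[feature] * RISER_CARDS_PER_SERVER


def dimm_size_gb(feature: str) -> int:
    """Capacity of each individual DIMM on a riser of the given feature."""
    return RISER_FEATURE_GB[feature] // DIMMS_PER_RISER


if __name__ == "__main__":
    print(f"DIMM slots: {RISER_CARDS_PER_SERVER * DIMMS_PER_RISER}")
    print(f"L4 cache:   {RISER_CARDS_PER_SERVER * L4_CACHE_MB_PER_RISER} MB")
    for code in RISER_FEATURE_GB:
        print(f"8 x #{code}: {dimm_size_gb(code)} GB DIMMs, "
              f"{total_memory_gb(code)} GB total")
```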

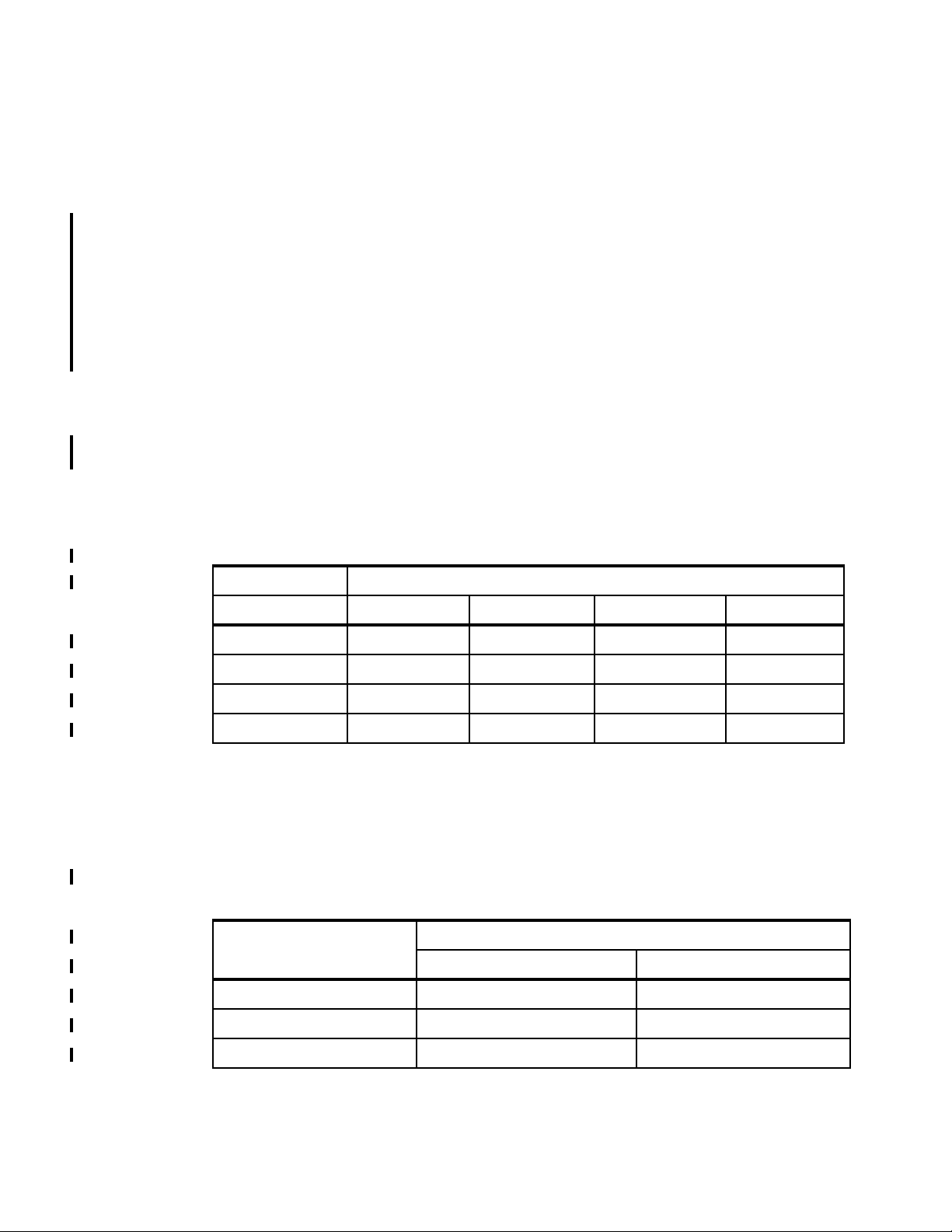

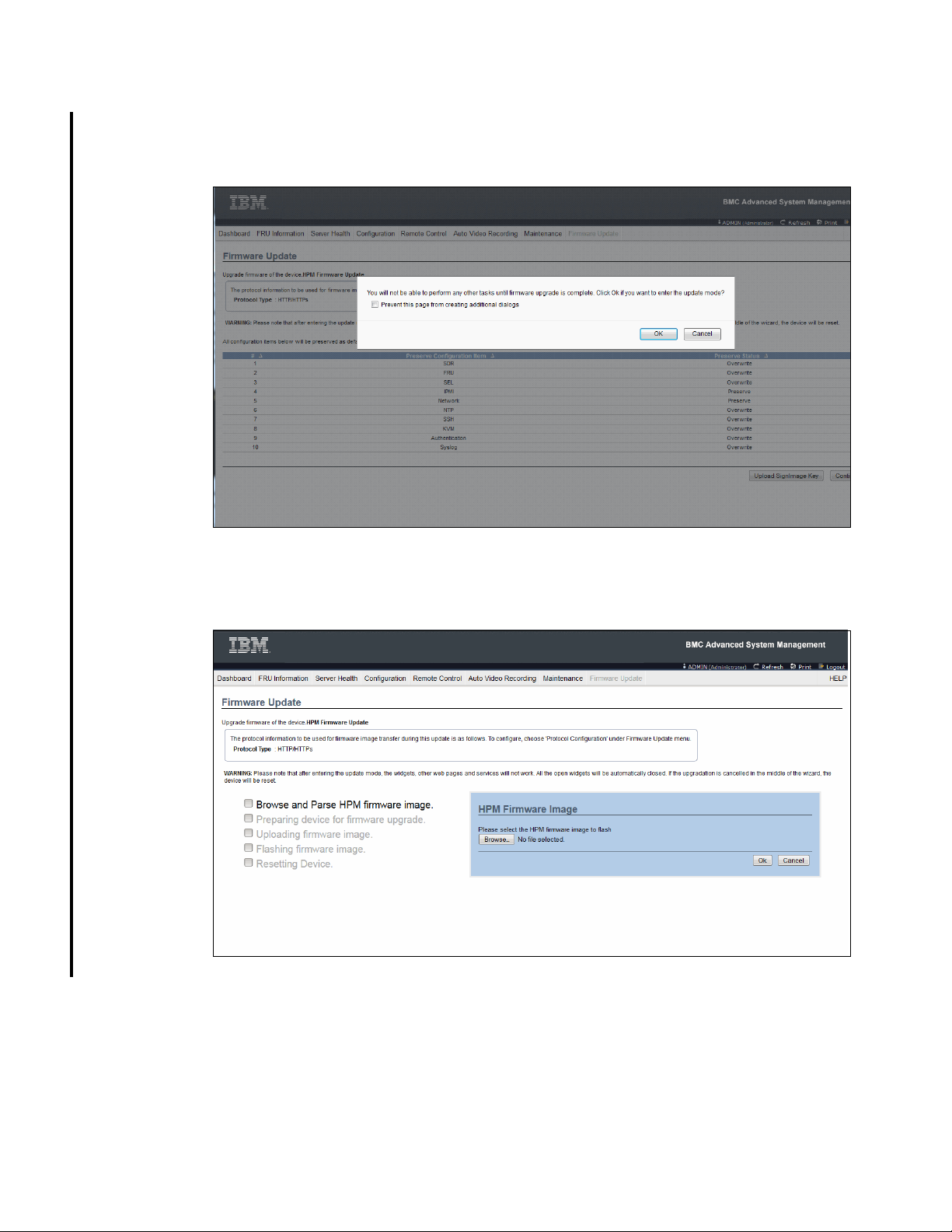

Table 1-5 shows the supported quantities for each memory feature code.

Table 1-5 Supported quantity of feature codes for model 8335-GTB

| Memory features | 128 GB total installed memory | 256 GB total installed memory | 512 GB total installed memory | 1024 GB total installed memory |
|---|---|---|---|---|
| 16 GB (#EM55) | 8 | | | |
| 32 GB (#EM56) | | 8 | | |
| 64 GB (#EM57) | | | 8 | |
| 128 GB (#EM58) | | | | 8 |

1.8.3 Memory bandwidth

The POWER8 processor has exceptional cache, memory, and interconnect bandwidths.

Table 1-6 shows the maximum bandwidth estimates for a single core on the Power S822LC server.

Table 1-6 Power S822LC single-core bandwidth estimates

| Single core | 8335-GTB, 2.860 GHz | 8335-GTB, 3.259 GHz |
|---|---|---|
| L1 (data) cache | 137.28 GBps | 156.43 GBps |
| L2 cache | 137.28 GBps | 156.43 GBps |
| L3 cache | 183.04 GBps | 208.57 GBps |

The bandwidth figures for the caches are calculated as follows:

L1 cache: In one clock cycle, two 16-byte load operations and one 16-byte store operation

can be accomplished. The value varies depending on the clock of the core, and the

formulas are as follows:

– 2.860 GHz Core: (2 * 16 B + 1 * 16 B) * 2.860 GHz = 137.28 GBps

– 3.259 GHz Core: (2 * 16 B + 1 * 16 B) * 3.259 GHz = 156.43 GBps

L2 cache: In one clock cycle, one 32-byte load operation and one 16-byte store operation

can be accomplished. The value varies depending on the clock of the core, and the

formula is as follows:

– 2.860 GHz Core: (1 * 32 B + 1 * 16 B) * 2.860 GHz = 137.28 GBps

– 3.259 GHz Core: (1 * 32 B + 1 * 16 B) * 3.259 GHz = 156.43 GBps

L3 cache: One 32-byte load operation and one 32-byte store operation can be

accomplished at half-clock speed, and the formula is as follows:

– 2.860 GHz Core: (1 * 32 B + 1 * 32 B) * 2.860 GHz = 183.04 GBps

– 3.259 GHz Core: (1 * 32 B + 1 * 32 B) * 3.259 GHz = 208.57 GBps
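The per-core cache formulas above all have the same shape: bytes moved per clock cycle multiplied by the core clock in GHz. The following Python sketch applies the formulas exactly as they are written in this section. The function and table names are illustrative, and the results are theoretical estimates, not measurements.

```python
# Bytes transferred per cycle, taken from the formulas in this section:
# (load operations * load width) + (store operations * store width).
BYTES_PER_CYCLE = {
    "L1": 2 * 16 + 1 * 16,   # two 16-byte loads and one 16-byte store
    "L2": 1 * 32 + 1 * 16,   # one 32-byte load and one 16-byte store
    "L3": 1 * 32 + 1 * 32,   # one 32-byte load and one 32-byte store
}


def core_bandwidth_gbytes(level: str, clock_ghz: float) -> float:
    """Single-core bandwidth estimate in GBps for a cache level and clock."""
    return BYTES_PER_CYCLE[level] * clock_ghz


if __name__ == "__main__":
    for clock_ghz in (2.860, 3.259):
        for level in ("L1", "L2", "L3"):
            estimate = core_bandwidth_gbytes(level, clock_ghz)
            print(f"{level} at {clock_ghz:.3f} GHz: {estimate:.2f} GBps")
```

Multiplying a per-core estimate by the number of cores in the system gives the corresponding system-wide cache figure.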

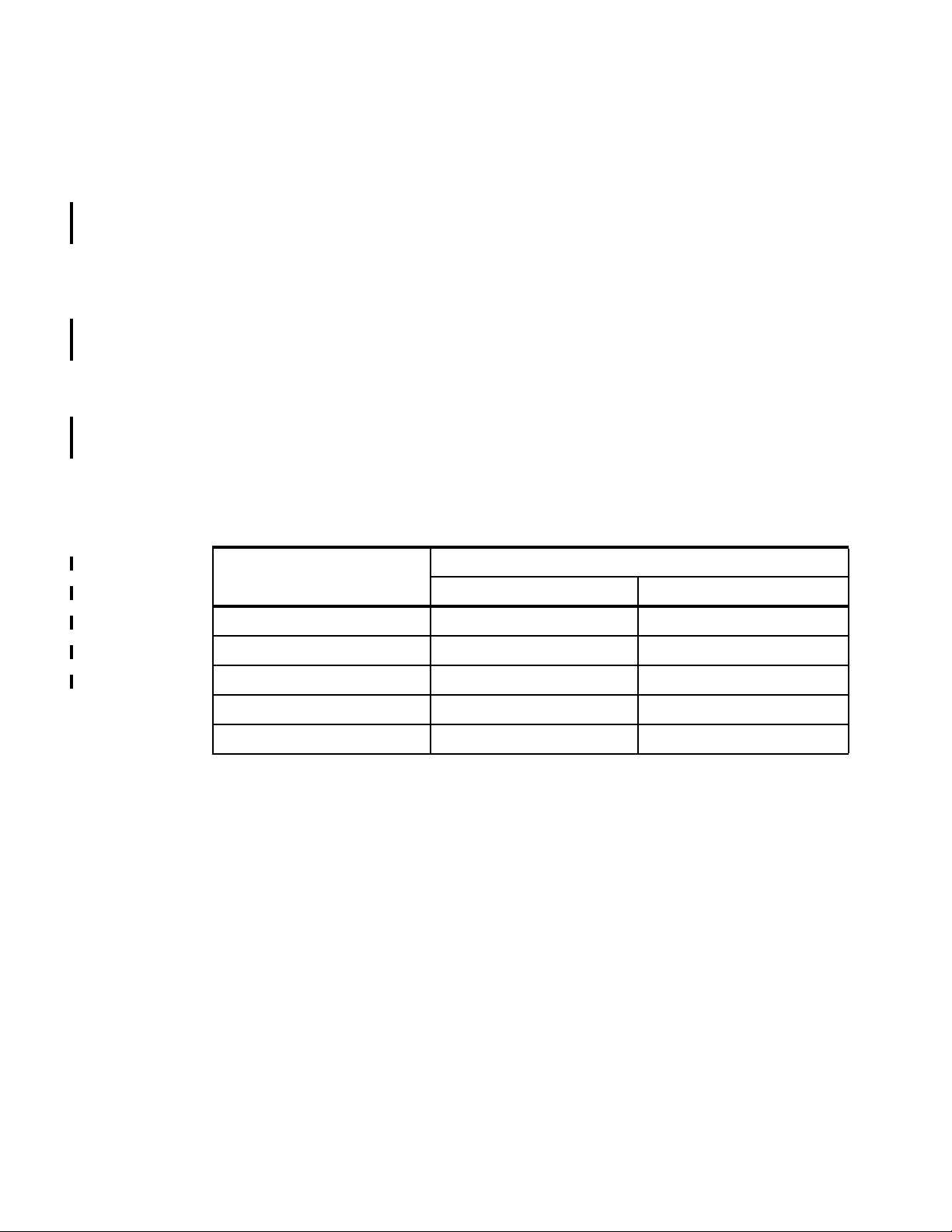

For the entire Power S822LC server populated with the two processor modules, the overall

bandwidths are shown in Table 1-7.

Table 1-7 Power S822LC server - total bandwidth estimates

| Total bandwidths | 8335-GTB, 20 cores @ 2.860 GHz | 8335-GTB, 16 cores @ 3.259 GHz |
|---|---|---|
| L1 (data) cache | 2,746 GBps | 2,503 GBps |
| L2 cache | 2,746 GBps | 2,503 GBps |
| L3 cache | 3,661 GBps | 3,337 GBps |
| Total memory | 230 GBps | 230 GBps |
| PCIe Interconnect | 128 GBps | 128 GBps |

Where:

Total memory bandwidth: Each POWER8 processor has four memory channels running at 9.6 GBps capable of writing 2 bytes and reading 1 byte at a time. The bandwidth formula is calculated as follows:

Four channels * 9.6 GBps * 3 bytes = 115.2 GBps per processor module (230.4 GBps for two processor modules)

SMP interconnect: The POWER8 processor has two 2-byte 3-lane A buses working at

6.4 GHz. Each A bus has three active lanes. The bandwidth formula is calculated as

follows:

3 A buses * 2 bytes * 6.4 GHz = 38.4 GBps

PCIe Interconnect: Each POWER8 processor has 32 PCIe lanes running at 8 Gbps

full-duplex. The bandwidth formula is calculated as follows:

Thirty-two lanes * 2 processors * 8 Gbps * 2 = 128 GBps
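The system-level rows of Table 1-7 can be reproduced from the per-processor figures. The following Python sketch follows the memory and PCIe formulas as they are written in this section and scales a per-core cache estimate by the core count. All names and default values are illustrative assumptions drawn from the text, and the results are theoretical maximums.

```python
def total_memory_bandwidth_gbytes(processors: int = 2,
                                  channels_per_processor: int = 4,
                                  channel_rate: float = 9.6,
                                  bytes_per_transfer: int = 3) -> float:
    """Memory bandwidth in GBps: channels * rate * (2 B write + 1 B read)."""
    return (processors * channels_per_processor
            * channel_rate * bytes_per_transfer)


def total_pcie_bandwidth_gbytes(processors: int = 2,
                                lanes_per_processor: int = 32,
                                lane_gbits_per_sec: float = 8.0,
                                directions: int = 2) -> float:
    """PCIe bandwidth in GBps: lanes * Gbps per lane * 2 directions / 8 bits."""
    total_gbits = (processors * lanes_per_processor
                   * lane_gbits_per_sec * directions)
    return total_gbits / 8


def total_cache_bandwidth_gbytes(per_core_gbytes: float, cores: int) -> float:
    """System-wide cache bandwidth: per-core estimate times core count."""
    return per_core_gbytes * cores


if __name__ == "__main__":
    print(f"Total memory: {total_memory_bandwidth_gbytes():.1f} GBps")
    print(f"PCIe:         {total_pcie_bandwidth_gbytes():.0f} GBps")
    print(f"L1, 20 cores: {total_cache_bandwidth_gbytes(137.28, 20):.0f} GBps")
    print(f"L1, 16 cores: {total_cache_bandwidth_gbytes(156.43, 16):.0f} GBps")
```

Passing `processors=1` to either of the first two functions gives the per-processor-module figure.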

1.9 System bus

This section provides more information about the internal buses.

The Power S822LC servers have internal I/O connectivity through Peripheral Component

Interconnect Express Gen3 (PCI Express Gen3 or PCIe Gen3) slots.

The internal I/O subsystem on the systems is connected to the PCIe controllers on a

POWER8 processor in the system. Each POWER8 processor has a bus that has 32 PCIe

lanes running at 8 Gbps full-duplex and provides 64 GBps of I/O connectivity to the PCIe

slots, SAS internal adapters, and USB ports.

Some PCIe devices are connected directly to the PCIe Gen3 buses on the processors, and

other devices are connected to these buses through PCIe Gen3 Switches. The PCIe Gen3

switches are high-speed devices (512 GBps - 768 GBps each) that allow for the optimal

usage of the processor’s PCIe Gen3 x16 buses by grouping slower x8 or x4 devices that might

plug into a x8 slot and not use its full bandwidth. For more information about which slots are

connected directly to the processor and which ones are attached to PCIe Gen3 switches

(referred to as PLX), see 1.7, “The POWER8 processor” on page 9.

The Power S822LC server buses and logical architecture are shown in Figure 1-13.

[Figure 1-13 diagram labels: POWER8 SCM0 and POWER8 SCM1, each with memory controllers, PHB0, PHB1, and SMP A Bus; NVLink connections to four NVIDIA P100 SXM2.0 GPUs; one PCIe Gen3 x8 and two PCIe Gen3 x16 connections, all labeled CAPI support; PLX switch; SATA controller with two SFF-4 HDD/SSD bays; USB 3.0 front and rear; VGA; Management 1 Gbps Ethernet; link speed 12.8 GBps]

Figure 1-13 Power S822LC server buses and logical architecture

Each processor has 32 PCIe lanes split into three channels: two channels are PCIe Gen3 x8

and one channel is PCIe Gen3 x16.

The PCIe channels are connected to the PCIe slots, which can support GPUs and other

high-performance adapters, such as InfiniBand.

Table 1-8 lists the total I/O bandwidth of a Power S822LC server.

Table 1-8 I/O bandwidth

I/O                    I/O bandwidth (maximum theoretical)

Total I/O bandwidth    64 GBps simplex
                       128 GBps duplex

For the PCIe interconnect, each POWER8 processor has 32 PCIe lanes running at 8 Gbps

full-duplex. The bandwidth is calculated as follows:

32 lanes * 2 processors * 8 Gbps * 2 = 1024 Gbps = 128 GBps
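
The arithmetic behind Table 1-8 can be reproduced with a short sketch. It assumes the PCIe Gen3 signaling rate of 8 Gbps per lane in each direction and ignores encoding overhead, as maximum theoretical figures usually do. The constant and function names are illustrative only and do not come from any IBM tool.

```python
# Assumption: PCIe Gen3 signals at 8 Gbps per lane in each direction.
# Encoding overhead is ignored (maximum theoretical bandwidth).
LANES_PER_PROCESSOR = 32
PROCESSORS = 2
GBPS_PER_LANE = 8       # gigabits per second, one direction
BITS_PER_BYTE = 8


def total_io_bandwidth_gbytes(duplex: bool) -> float:
    """Return the maximum theoretical I/O bandwidth in GBps."""
    directions = 2 if duplex else 1
    gbits = LANES_PER_PROCESSOR * PROCESSORS * GBPS_PER_LANE * directions
    return gbits / BITS_PER_BYTE


simplex = total_io_bandwidth_gbytes(duplex=False)
duplex = total_io_bandwidth_gbytes(duplex=True)
print(f"simplex: {simplex:.0f} GBps, duplex: {duplex:.0f} GBps")
```

Under these assumptions, the sketch yields 64 GBps simplex and 128 GBps duplex, which matches the values in Table 1-8.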


1.10 Internal I/O subsystem

[Figure 1-14 callouts: PCIe Slot 1 (Gen3 x16 interface, CAPI enabled, full height, full length slot); PCIe Slot 2 (Gen3 x8 interface, half height, half length slot, PLX switch based); PCIe Slot 3 (Gen3 x16 interface, half height, half length slot, CAPI enabled); USB 3.0; 1 Gb Ethernet; IPMI; VGA.]

The internal I/O subsystem is on the system board, which supports PCIe slots. PCIe adapters

on the Power S822LC server are not hot-pluggable.

1.11 Slot configuration

The Power S822LC server has three PCIe Gen3 slots.

Figure 1-14 is a rear view diagram of the PCIe slots for the Power S822LC server.

Figure 1-14 Power S822LC server rear view PCIe slots and connectors

Table 1-9 shows the PCIe Gen3 slot configuration for the Power S822LC server.

Table 1-9 Power S822LC server PCIe slot properties

Slot     Description     Card size                  CAPI capable   Power limit

Slot 1   PCIe Gen3 x16   Half height, half length   Yes            75 W

Slot 2   PCIe Gen3 x8    Half height, half length   Yes            50 W

Slot 3   PCIe Gen3 x16   Full height, half length   Yes            75 W


The two x16 slots that are provided by the internal PCIe riser (Slot 1 and Slot 3) can be

populated with GPU adapters (NVIDIA) or can be used for any high-profile (not LP) adapters.

Mixing of GPU and other high-profile adapters on the internal PCIe riser is supported.

Only LP adapters can be placed in LP slots. An x8 adapter can be placed in an x16 slot, but

an x16 adapter cannot be placed in an x8 slot. One LP slot must be used for a required

Ethernet adapter (#5260, #EL3Z, or #EN0T).
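
The two placement rules above (lane width and low-profile form factor) can be expressed as a small check. This is a minimal sketch and not an IBM configuration tool: the `Slot` and `Adapter` types, the `adapter_fits` function, and the example slots are hypothetical. The text does not say whether an LP adapter may go in a high-profile slot, so the sketch does not reject that case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    name: str
    lanes: int          # connector width, for example 16 for x16
    low_profile: bool   # True for an LP slot


@dataclass(frozen=True)
class Adapter:
    name: str
    lanes: int
    low_profile: bool


def adapter_fits(slot: Slot, adapter: Adapter) -> bool:
    """Apply the two placement rules stated in the text."""
    if adapter.lanes > slot.lanes:
        return False    # an x16 adapter cannot be placed in an x8 slot
    if slot.low_profile and not adapter.low_profile:
        return False    # only LP adapters can be placed in LP slots
    return True


# Hypothetical slots and adapters, for illustration only.
x16_high_profile = Slot("x16 high-profile", lanes=16, low_profile=False)
x8_lp = Slot("x8 LP", lanes=8, low_profile=True)

print(adapter_fits(x16_high_profile, Adapter("x8 high-profile", 8, False)))
print(adapter_fits(x8_lp, Adapter("x16 LP", 16, True)))
```

A check like this covers only the physical placement rules. The required Ethernet adapter and the per-slot power limits still need to be validated separately.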

1.12 System ports

The system board has one 1 Gbps Ethernet port, one Intelligent Platform Management

Interface (IPMI) port and a VGA port, as shown in Figure 1-14 on page 24.

The integrated system ports are supported for modem and asynchronous terminal

connections with Linux. Any other application that uses serial ports requires a serial port

adapter to be installed in a PCI slot. The integrated system ports do not support IBM

PowerHA® configurations. The VGA port does not support cable lengths that exceed

3 meters.

1.13 PCI adapters

This section covers the various types and functions of the PCI adapters that are supported by

the Power S822LC servers.

1.13.1 PCI Express

Peripheral Component Interconnect Express (PCIe) uses a serial interface and allows for

point-to-point interconnections between devices (by using a directly wired interface between

these connection points). A single PCIe serial link is a dual-simplex connection that uses two

pairs of wires, one pair for transmit and one pair for receive, and can transmit only one bit per

cycle. These two pairs of wires are called a lane. A PCIe link can consist of multiple lanes. In these configurations, the connection is labeled as x1, x2, x8, x12, x16, or x32, where the number is effectively the number of lanes.

The PCIe interfaces that are supported on this server are PCIe Gen3, which are capable of 16 GBps simplex (32 GBps duplex) on a single x16 interface. PCIe Gen3 slots also support previous generation (Gen2 and Gen1) adapters, which operate at lower speeds, according to the following rules:

Place x1, x4, x8, and x16 speed adapters in the same size connector slots first, before mixing adapter speed with connector slot size.

Adapters with lower speeds are allowed in larger sized PCIe connectors, but higher speed adapters are not compatible with smaller connector sizes (that is, an x16 adapter cannot go in an x8 PCIe slot connector).

PCIe adapters use a different type of slot than PCI adapters. If you attempt to force an adapter into the wrong type of slot, you might damage the adapter or the slot.
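
To show how lane count and PCIe generation combine into link bandwidth, the following sketch computes per-direction figures. It assumes the standard per-lane transfer rates (2.5, 5, and 8 GT/s for Gen1, Gen2, and Gen3) and line encodings (8b/10b for Gen1 and Gen2, 128b/130b for Gen3). The `GENERATIONS` table and the `link_bandwidth_gbytes` function are illustrative names only.

```python
# Per-lane transfer rate in GT/s and line-encoding efficiency.
# Assumption: values are the standard PCIe figures, not taken from this paper.
GENERATIONS = {
    "Gen1": (2.5, 8 / 10),
    "Gen2": (5.0, 8 / 10),
    "Gen3": (8.0, 128 / 130),
}


def link_bandwidth_gbytes(gen: str, lanes: int, encoded: bool = False) -> float:
    """Return the one-direction (simplex) link bandwidth in GBps.

    With encoded=False, the raw signaling rate is used, which is how the
    16 GBps figure for a Gen3 x16 interface is derived.
    """
    rate, efficiency = GENERATIONS[gen]
    gbits = rate * lanes * (efficiency if encoded else 1.0)
    return gbits / 8


for gen in GENERATIONS:
    raw = link_bandwidth_gbytes(gen, 16)
    usable = link_bandwidth_gbytes(gen, 16, encoded=True)
    print(f"{gen} x16: {raw:.1f} GBps raw, {usable:.2f} GBps after encoding")
```

The raw Gen3 x16 result is 16 GBps per direction, which agrees with the figure quoted for this server. The encoded values are slightly lower and are closer to what an adapter can actually move.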

POWER8 based servers can support two different form factors of PCIe adapters:

PCIe low profile (LP) cards, which are used with the Power S822L server.

PCIe full-height cards, which are designed for the 4 EIA scale-out servers, such as the Power S824L server.

Before adding or rearranging adapters, use the System Planning Tool to validate the new

adapter configuration. For more information, see the System Planning Tool website:

http://www.ibm.com/systems/support/tools/systemplanningtool/

If you are installing a new feature, ensure that you have the software that is required to

support the new feature and determine whether there are any existing update prerequisites to

install. To obtain this information, use the IBM prerequisite website:

https://www-912.ibm.com/e_dir/eServerPreReq.nsf

The following sections describe the supported adapters and provide tables of orderable

feature code numbers.

1.13.2 LAN adapters

To connect the Power S822LC servers to a local area network (LAN), you can use the LAN

adapters that are supported in the PCIe slots of the system unit. Table 1-10 lists the