
Front cover

IBM Power 750 and 760 Technical Overview and Introduction

Features the 8408-E8D and 9109-RMD based on the latest POWER7+ processor technology

Discusses the dual chip module architecture

Describes the enhanced I/O subsystem

James Cruickshank

Sorin Hanganu

Volker Haug

Stephen Lutz

John T Schmidt

Marco Vallone

ibm.com/redbooks

Redpaper


International Technical Support Organization

IBM Power 750 and 760 Technical Overview and Introduction

May 2013

REDP-4985-00


Note: Before using this information and the product it supports, read the information in “Notices” on page vii.

First Edition (May 2013)

This edition applies to the IBM Power 750 (8408-E8D) and Power 760 (9109-RMD) Power Systems servers.

© Copyright International Business Machines Corporation 2013. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.


Contents

Notices

Trademarks

Preface

Authors

Now you can become a published author, too!

Comments welcome

Stay connected to IBM Redbooks

Chapter 1. General description

1.1 Systems overview

1.1.1 IBM Power 750 Express server

1.1.2 IBM Power 760 server

1.2 Operating environment

1.3 IBM Systems Energy Estimator

1.4 Physical package

1.5 System features

1.5.1 Power 750 Express system features

1.5.2 Power 760 system features

1.5.3 Minimum features

1.5.4 Power supply features

1.5.5 Processor card features

1.5.6 Memory features

1.6 Disk and media features

1.7 I/O drawers

1.7.1 12X I/O Drawer PCIe

1.7.2 EXP30 Ultra SSD I/O drawer

1.7.3 EXP24S SFF Gen2-bay drawer

1.7.4 EXP12S SAS drawer

1.7.5 I/O drawers and usable PCI slots

1.8 Comparison between models

1.9 Build to order

1.10 IBM editions

1.11 Model upgrades

1.12 Server and virtualization management

1.13 System racks

1.13.1 IBM 7014 Model T00 rack

1.13.2 IBM 7014 Model T42 rack

1.13.3 Feature code 0551 rack

1.13.4 Feature code 0553 rack

1.13.5 The AC power distribution unit and rack content

1.13.6 Useful rack additions

1.13.7 OEM rack

Chapter 2. Architecture and technical overview

2.1 The IBM POWER7+ processor

2.1.1 POWER7+ processor overview

2.1.2 POWER7+ processor core

2.1.3 Simultaneous multithreading


2.1.4 Memory access

2.1.5 On-chip L3 cache innovation and Intelligent Cache

2.1.6 POWER7+ processor and Intelligent Energy

2.1.7 Comparison of the POWER7+, POWER7, and POWER6 processors

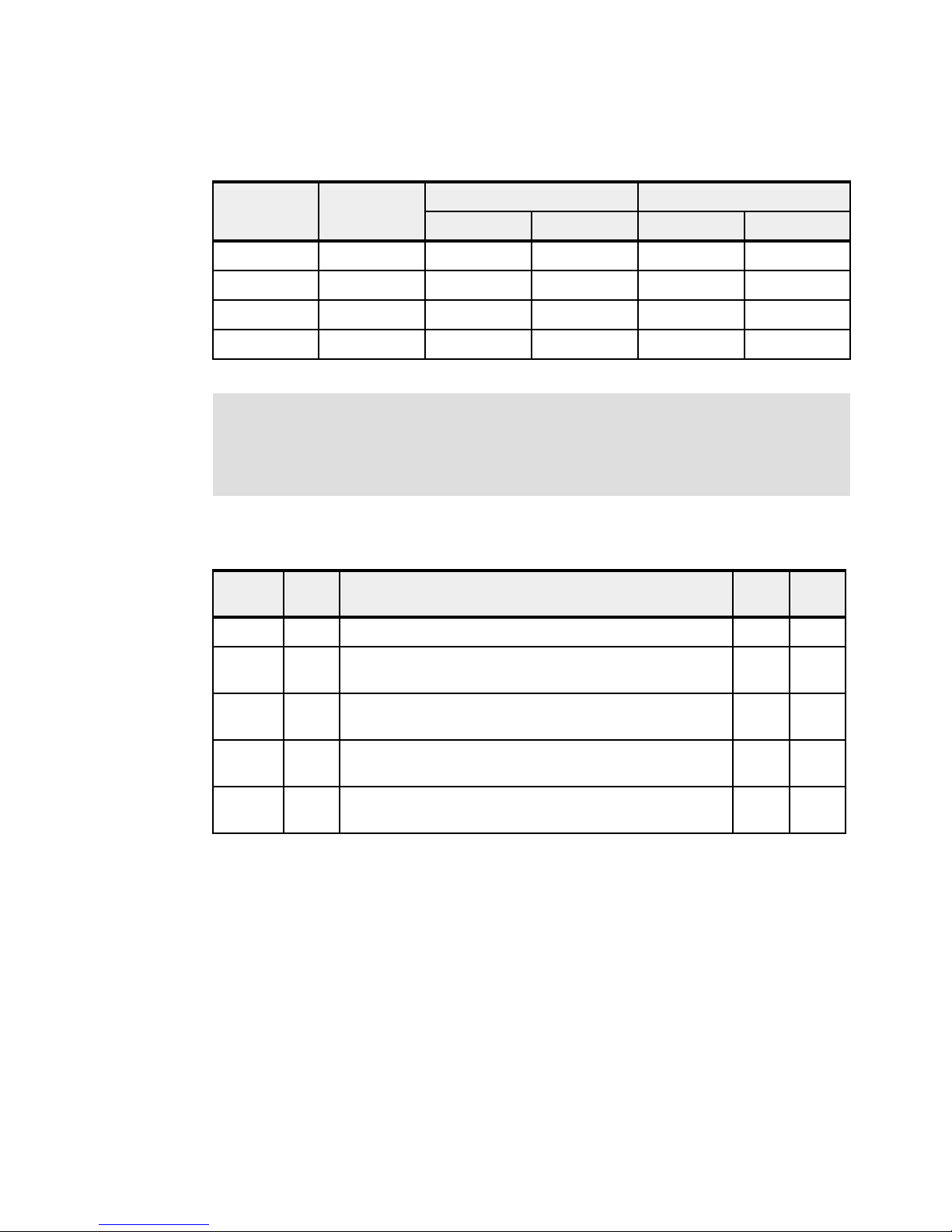

2.2 POWER7+ processor card

2.2.1 Overview

2.2.2 Processor interconnects

2.3 Memory subsystem

2.3.1 Registered DIMM

2.3.2 Memory placement rules

2.3.3 Memory bandwidth

2.4 Capacity on Demand

2.4.1 Capacity Upgrade on Demand

2.4.2 Capacity Backup offering (applies only to IBM i)

2.4.3 Software licensing and CoD

2.5 System bus

2.5.1 I/O buses and GX++ card

2.6 Internal I/O subsystem

2.6.1 Blind swap cassettes

2.6.2 Integrated multifunction card

2.7 PCI adapters

2.7.1 PCI Express

2.7.2 PCI-X adapters

2.7.3 IBM i IOP adapters

2.7.4 PCIe adapter form factors

2.7.5 LAN adapters

2.7.6 Graphics accelerator adapters

2.7.7 SCSI and SAS adapters

2.7.8 iSCSI adapters

2.7.9 Fibre Channel adapters

2.7.10 Fibre Channel over Ethernet

2.7.11 InfiniBand host channel adapter

2.7.12 Asynchronous and USB adapters

2.7.13 Cryptographic coprocessor

2.8 Internal Storage

2.8.1 Dual split backplane mode

2.8.2 Triple split backplane mode

2.8.3 Dual storage I/O Adapter (IOA) configurations

2.8.4 DVD

2.9 External I/O subsystems

2.9.1 PCI-DDR 12X expansion drawer

2.9.2 12X I/O Drawer PCIe

2.9.3 12X I/O Drawer PCIe configuration and cabling rules

2.10 External disk subsystems

2.10.1 EXP30 Ultra SSD I/O drawer

2.10.2 EXP24S SFF Gen2-bay drawer

2.10.3 EXP12S SAS expansion drawer

2.10.4 TotalStorage EXP24 disk drawer and tower

2.10.5 IBM 7031 TotalStorage EXP24

2.10.6 IBM System Storage

2.11 Hardware Management Console

2.11.1 HMC connectivity to the POWER7+ processor-based systems

2.11.2 High availability HMC configuration


2.12 Operating system support

2.12.1 IBM AIX operating system

2.12.2 IBM i operating system

2.12.3 Linux operating system

2.12.4 Virtual I/O Server

2.12.5 Java versions that are supported

2.12.6 Boosting performance and productivity with IBM compilers

2.13 Energy management

2.13.1 IBM EnergyScale technology

2.13.2 Thermal power management device card

2.13.3 Energy consumption estimation

Chapter 3. Virtualization

3.1 POWER Hypervisor

3.2 POWER processor modes

3.3 Active Memory Expansion

3.4 PowerVM

3.4.1 PowerVM editions

3.4.2 Logical partitions

3.4.3 Multiple shared processor pools

3.4.4 Virtual I/O Server

3.4.5 PowerVM Live Partition Mobility

3.4.6 Active Memory Sharing

3.4.7 Active Memory Deduplication

3.4.8 Dynamic Platform Optimizer

3.4.9 Dynamic System Optimizer

3.4.10 Operating system support for PowerVM

3.4.11 Linux support

3.5 System Planning Tool

3.6 New PowerVM Version 2.2.2 features

Chapter 4. Continuous availability and manageability

4.1 Reliability

4.1.1 Designed for reliability

4.1.2 Placement of components

4.1.3 Redundant components and concurrent repair

4.2 Availability

4.2.1 Partition availability priority

4.2.2 General detection and deallocation of failing components

4.2.3 Memory protection

4.2.4 Cache protection

4.2.5 Special Uncorrectable Error handling

4.2.6 PCI Enhanced Error Handling

4.3 Serviceability

4.3.1 Detecting

4.3.2 Diagnosing

4.3.3 Reporting

4.3.4 Notifying

4.3.5 Locating and servicing

4.4 Manageability

4.4.1 Service user interfaces

4.4.2 IBM Power Systems firmware maintenance

4.4.3 Concurrent firmware update improvements with POWER7+


4.4.4 Electronic Services and Electronic Service Agent

4.5 POWER7+ RAS features

4.6 Power-On Reset Engine

4.7 Operating system support for RAS features

Related publications

IBM Redbooks

Other publications

Online resources

Help from IBM


Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not grant you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any manner serve as an endorsement of those websites. The materials at those websites are not part of the materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results obtained in other operating environments may vary significantly. Some measurements may have been made on development-level systems and there is no guarantee that these measurements will be the same on generally available systems. Furthermore, some measurements may have been estimated through extrapolation. Actual results may vary. Users of this document should verify the applicable data for their specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

Active Memory™

AIX®

AS/400®

BladeCenter®

DS8000®

Dynamic Infrastructure®

Electronic Service Agent™

EnergyScale™

Focal Point™

IBM®

IBM Flex System™

IBM Systems Director Active Energy Manager™

Micro-Partitioning®

POWER®

POWER Hypervisor™

Power Systems™

Power Systems Software™

POWER6®

POWER6+™

POWER7®

POWER7+™

PowerHA®

PowerPC®

PowerVM®

pSeries®

PureFlex™

Rational®

Rational Team Concert™

Real-time Compression™

Redbooks®

Redpaper™

Redpapers™

Redbooks (logo) ®

RS/6000®

Storwize®

System p®

System Storage®

System x®

System z®

Tivoli®

XIV®

The following terms are trademarks of other companies:

Intel, Intel Xeon, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

ITIL is a registered trademark, and a registered community trademark of The Minister for the Cabinet Office, and is registered in the U.S. Patent and Trademark Office.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

LTO, Ultrium, the LTO Logo and the Ultrium logo are trademarks of HP, IBM Corp. and Quantum in the U.S. and other countries.

Microsoft, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

Java, and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its affiliates.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Other company, product, or service names may be trademarks or service marks of others.


Preface

This IBM® Redpaper™ publication is a comprehensive guide covering the IBM Power 750 (8408-E8D) and Power 760 (9109-RMD) servers that support IBM AIX®, IBM i, and Linux operating systems. The goal of this paper is to introduce the major innovative Power 750 and Power 760 offerings and their prominent functions:

The IBM POWER7+™ processor, available at frequencies of 3.1 GHz, 3.4 GHz, 3.5 GHz, and 4.0 GHz.

The larger IBM POWER7+ Level 3 cache provides greater bandwidth, capacity, and reliability.

The newly introduced POWER7+ dual chip module (DCM).

New 10 GBaseT options for the Integrated Multifunction Card that provides two USB ports, one serial port, and four Ethernet connectors for a processor enclosure and does not require a PCI slot.

New IBM PowerVM® V2.2.2 features, such as 20 LPARs per core.

The improved IBM Active Memory™ Expansion technology that provides more usable memory than is physically installed in the system.

IBM EnergyScale™ technology that provides features such as power trending, power-saving, capping of power, and thermal measurement.

Improved reliability, serviceability, and availability.

Dynamic Platform Optimizer.

High-performance SSD drawer.

This publication is for professionals who want to acquire a better understanding of IBM Power Systems™ products. The intended audience includes the following roles:

Clients

Sales and marketing professionals

Technical support professionals

IBM Business Partners

Independent software vendors

This paper expands the current set of IBM Power Systems documentation by providing a desktop reference that offers a detailed technical description of the Power 750 and Power 760 systems.

This paper does not replace the latest marketing materials and configuration tools. It is intended as an additional source of information that, together with existing sources, can be used to enhance your knowledge of IBM server solutions.


Authors

This paper was produced by a team of specialists from around the world working at the International Technical Support Organization, Poughkeepsie Center.

James Cruickshank works in the Power Systems Client Technical Specialist team for IBM in the UK. He holds an Honors degree in Mathematics from the University of Leeds. James has over 11 years of experience working with IBM pSeries®, IBM System p® and Power Systems products and is a member of the EMEA Power Champions team. James supports customers in the financial services sector in the UK.

Sorin Hanganu is an Accredited Product Services professional. He has eight years of experience working on Power Systems and IBM i products. He is an IBM Certified Solution Expert for IBM Dynamic Infrastructure® and also an IBM Certified Systems Expert for Power Systems, AIX, PowerVM virtualization, ITIL and ITSM. Sorin works as a System Services Representative for IBM in Bucharest, Romania.

Volker Haug is an Open Group Certified IT Specialist within IBM Germany supporting Power Systems clients and Business Partners as a Client Technical Specialist. He holds a diploma degree in Business Management from the University of Applied Studies in Stuttgart. His career includes more than 25 years of experience with Power Systems, AIX, and PowerVM virtualization; he has written several IBM Redbooks® publications about Power Systems and PowerVM. Volker is an IBM POWER7® Champion and a member of the German Technical Expert Council, an affiliate of the IBM Academy of Technology.

Stephen Lutz is a Certified Senior Technical Sales Professional for Power Systems working for IBM Germany. He holds a degree in Commercial Information Technology from the University of Applied Science Karlsruhe, Germany. He is a POWER7 Champion and has 14 years of experience in AIX, Linux, virtualization, and Power Systems and its predecessors, providing pre-sales technical support to clients, Business Partners, and IBM sales representatives all over Germany. Stephen is also an expert in IBM Systems Director, its plug-ins, and IBM SmartCloud® Entry with a focus on Power Systems and AIX.

John T Schmidt is an Accredited IT Specialist for IBM and has twelve years of experience with IBM and Power Systems. He has a degree in Electrical Engineering from the University of Missouri - Rolla and an MBA from Washington University in St. Louis. In addition to contributing to eight other Power Systems IBM Redpapers™ publications, in 2010, he completed an assignment with the IBM Corporate Service Corps in Hyderabad, India. He is currently working in the United States as a pre-sales Field Technical Sales Specialist for Power Systems in Boston, MA.

Marco Vallone is a certified IT Specialist at IBM Italy. He joined IBM in 1989 starting in the

Power Systems production plant (Santa Palomba) as a product engineer and afterward he

worked for the ITS AIX support and delivery service center. For the last eight years of his

career, he has worked as IT Solution Architect in the ITS Solution Design Competence

Center of Excellence in Rome, where he mainly designs infrastructure solutions on distributed

environments with a special focus on Power Systems solutions.

The project that produced this publication was managed by:

Scott Vetter

Executive Project Manager, PMP


Thanks to the following people for their contributions to this project:

Larry L. Amy, Ron Arroyo, Hsien-I Chang, Carlo Costantini, Kirk Dietzman,

Gary Elliott, Michael S. Floyd, James Hermes, Pete Heyrman, John Hilburn,

Roberto Huerta de la Torre, Dan Hurlimann, Roxette Johnson, Sabine Jordan,

Kevin Kehne, Robert Lowden, Jia Lei Ma, Hilary Melville, Hans Mozes,

Thoi Nguyen, Mark Olson, Pat O’Rourke, Jan Palmer, Velma Pavlasek,

Dave Randall, Robb Romans, Todd Rosedahl, Jeff Stuecheli, Madeline Vega

IBM

Udo Sachs

SVA Germany

Louis Bellanger

Bull

Simon Higgins

FIL Investment Management Limited

Tamikia Barrow

International Technical Support Organization, Poughkeepsie Center

Now you can become a published author, too!

Here’s an opportunity to spotlight your skills, grow your career, and become a published

author—all at the same time! Join an ITSO residency project and help write a book in your

area of expertise, while honing your experience using leading-edge technologies. Your efforts

will help to increase product acceptance and customer satisfaction, as you expand your

network of technical contacts and relationships. Residencies run from two to six weeks in

length, and you can participate either in person or as a remote resident working from your

home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this paper or

other IBM Redbooks® publications in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400


Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks

weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html


Chapter 1. General description


The IBM Power 750 Express server (8408-E8D) and IBM Power 760 server (9109-RMD) use

the latest POWER7+ processor technology that is designed to deliver unprecedented

performance, scalability, reliability, and manageability for demanding commercial workloads.

The IBM Power 750 Express server and the Power 760 server deliver the outstanding

performance of the POWER7+ processor. The performance, capacity, energy efficiency, and

virtualization capabilities of the Power 750 or Power 760 make it an ideal consolidation,

database or multi-application server. As a consolidation or highly virtualized multi-application

server, the Power 750 Express and the Power 760 servers offer tremendous configuration

flexibility to meet the most demanding capacity and growth requirements. Use the full

capability of the system by leveraging industrial-strength PowerVM virtualization for AIX,

IBM i, and Linux. PowerVM offers the capability to dynamically adjust system resources

based on workload demands so that each partition gets the resources it needs. Active

Memory Expansion is a technology, introduced with POWER7, that enables the effective

maximum memory capacity to be much larger than the true physical memory. The POWER7+

processor includes built-in accelerators that increase the efficiency of the compression and

decompression process, allowing greater levels of expansion up to 125%. This can enable a

partition to do significantly more work or enable a server to run more partitions with the same

physical amount of memory.

The Power 750 and Power 760 servers are 5U 19-inch rack-based systems. The Power 750

offers configurations of up to 32 POWER7+ cores and 1 TB of memory. The Power 760 offers

configurations of up to 48 POWER7+ cores and 2 TB of memory. Both are able to contain

internal I/O and also connections to additional drawers for external I/O. These systems

contain a single processor planar board with up to four pluggable processor modules. The

processor modules have eight installed cores (8408-E8D) or 12 installed cores (9109-RMD).

The POWER7+ module, built with 32 nm technology, dramatically increases the number of

circuits available, supporting a larger L3 cache (80 MB: 2.5 times greater than its POWER7

predecessor), and new performance acceleration features for Active Memory Expansion and

hardware-based data encryption. Power servers using this new module will be able to achieve

higher frequencies within the same power envelope and improved performance per core

when compared to POWER7 based offerings.


1.1 Systems overview

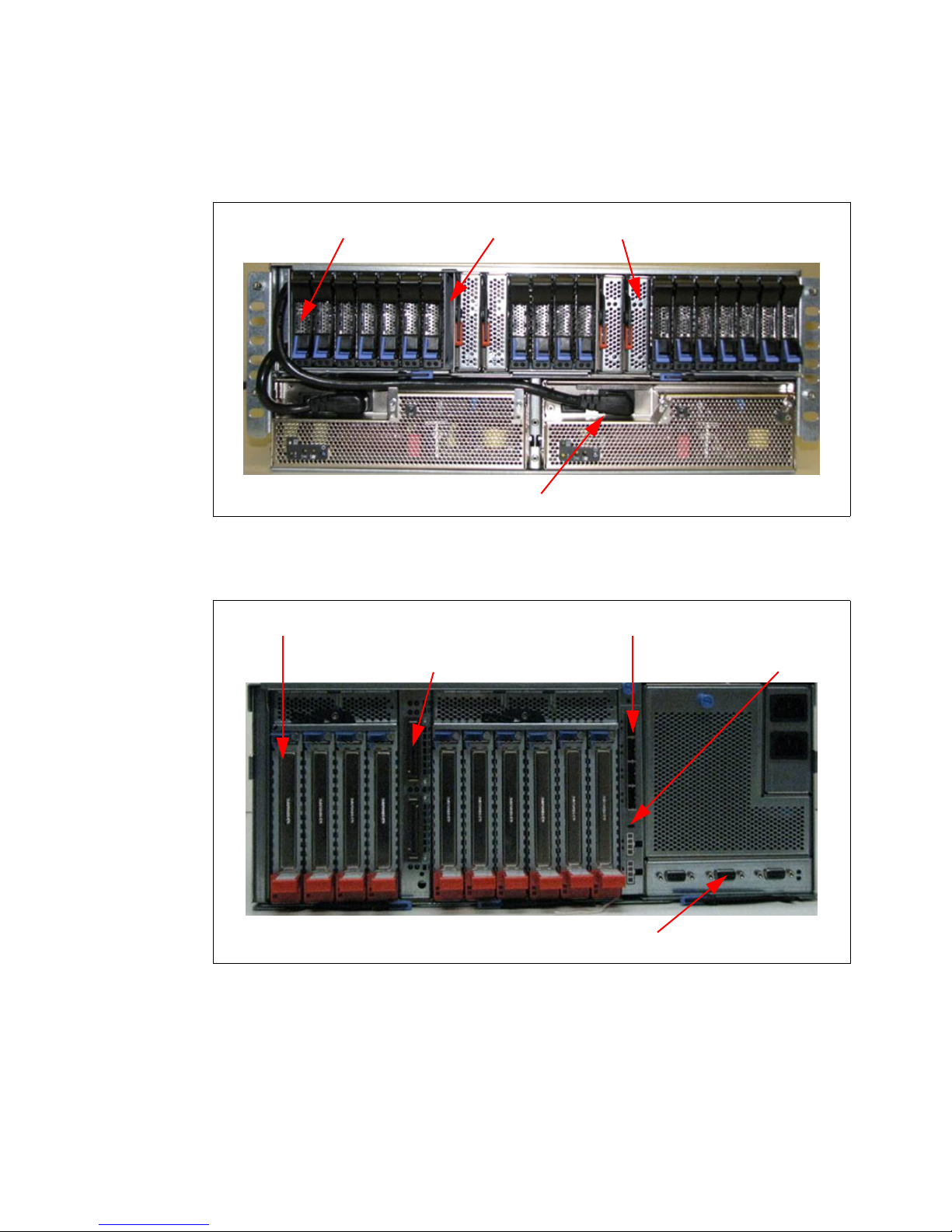

Power 760 (front views)

Power 750 Express (front views)

Detailed information about the Power 750 Express server and Power 760 systems is within

the following sections. Figure 1-1 shows the front view of the Power 750 and Power 760.

Figure 1-1 Front view of the Power 750 Express and Power 760

1.1.1 IBM Power 750 Express server

The Power 750 Express server (8408-E8D) supports up to four POWER7+ processor dual

chip modules (DCMs). Each of the four processor DCMs is an 8-core DCM packaged with

2 x 4-core chips. All 8-core processor DCMs are either 3.5 or 4.0 GHz mounted on a

dedicated card. The Power 750 is in a 19-inch rack-mount, 5U (EIA units) drawer

configuration. Each POWER7+ processor DCM is a 64-bit, 8-core processor packaged on a

dedicated card with a maximum of 16 DDR3 DIMMs, 10 MB of L3 cache per core, and

256 KB of L2 cache per core. A Power 750 Express server can be populated with one, two,

three, or four DCMs providing 8, 16, 24, or 32 cores. All the cores are active.

The Power 750 Express server supports a maximum of 64 DDR3 DIMM slots, 16 per 8-core

DCM. Memory features (two DIMMs per memory feature) supported are 8, 16, and 32 GB

and run at a speed of 1066 MHz. A system with four DCMs installed has a maximum memory

of 1024 GB. The optional Active Memory Expansion feature enables the effective maximum

memory capacity to be much larger than the true physical memory. Innovative compression

and decompression of memory content using a new hardware accelerator can allow memory

expansion up to 125% for AIX partitions. A server with a maximum of 1024 GB can effectively

be expanded to more than 2 TB. This can enhance virtualization and server

consolidation by allowing more partitions or running more work with the same physical

amount of memory.
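The expansion arithmetic in the preceding paragraph can be sketched as follows. This is a minimal illustration, not an IBM tool: the function name `effective_memory_gb` is hypothetical, and the sketch assumes that "expansion up to 125%" means the effective capacity is the physical capacity plus 125% of it. The expansion actually achieved depends on how compressible the workload data is.

```python
def effective_memory_gb(physical_gb: float, expansion_percent: float) -> float:
    """Return effective memory, assuming expansion is added on top of physical memory.

    Hypothetical helper for illustration only.
    """
    if physical_gb < 0 or expansion_percent < 0:
        raise ValueError("physical_gb and expansion_percent must be non-negative")
    return physical_gb * (1 + expansion_percent / 100)


# Power 750 with the maximum 1024 GB and the maximum 125% expansion
print(effective_memory_gb(1024, 125))  # 2304.0 GB, which is more than 2 TB (2048 GB)
```

Under the same assumption, a Power 760 with 2048 GB would reach 4608 GB, which agrees with the "greater than 4 TB" statement for that model.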

The Power 750 Express server delivers great I/O expandability. In addition to the six PCIe

Gen2 slots in the system unit, up to four 12X-attached I/O drawers (FC 5802 or FC 5877)

add up to 40 PCIe Gen1 slots. This set of PCIe slots can provide extensive connectivity to

LANs, switches, SANs, asynchronous devices, SAS storage, tape storage, and more. For

example, more than 64 TB of SAS disk storage is supported.

The Power 750 Express system unit includes six small form factor (SFF) SAS bays. This

offers up to 5.4 TB HDD capacity or up to 3.6 TB SSD capacity. All SAS disks and SSDs are

2.5-inch SFF and hot swappable. The six SAS SFF bays can be split into two sets of three

bays for additional configuration flexibility using just the integrated SAS adapters.

Two new SSD packages offer ordering convenience and price savings for a new server order.

Each 6-pack SSD feature (FC ESR2 or FC ESR4) for the EXP30 Ultra SSD I/O Drawer can

provide up to 140,000 I/O operations per second (IOPS) in just one-fifth of a 1U drawer. The

4-pack SSD features (FC ESRA, FC ESRB, FC ESRC, and FC ESRD) can provide up to

90,000 IOPS. The 6-pack or 4-pack SSD must be ordered with the server, not as a later

miscellaneous equipment specification (MES) order.

Other integrated features include the following items:

Enhanced I/O bandwidth with PCIe Gen2 slots compared to the PCIe Gen1 and PCI-X

slots of the POWER7-based Power 750 (8233-E8B)

Enhanced I/O redundancy and flexibility with two new, integrated POWER7 I/O controllers

One hot-plug, slim-line SATA media bay (optional)

Choice of Integrated Multifunction Card options (maximum one per system):

– Dual 10 Gb Copper + Dual 1 Gb Ethernet (FC 1768)

– Dual 10 Gb Optical + Dual 1 Gb Ethernet (FC 1769)

– Dual 10 Gb Copper + Dual 1/10 Gb (RJ45) Ethernet (FC EN10)

– Dual 10 Gb Optical + Dual 1/10 Gb (RJ45) Ethernet (FC EN11)

One serial port and two USB ports on the Integrated Multifunction Card, plus another USB

port on the system unit (maximum of three usable USB ports per system)

Service processor

EnergyScale technology

Two SPCN ports and two Hardware Management Console (HMC) ports (HMC is optional)

Redundant and hot-swap AC power supplies

Redundant and hot-swap cooling

4-pack and 6-pack SSD features that can be ordered with a new server

1.1.2 IBM Power 760 server

The IBM Power 760 server (9109-RMD) supports up to four POWER7+ processor DCMs and

is in a 5U (EIA units) drawer configuration. Each of the four processor DCMs is a 0/12-core

Capacity Upgrade on Demand (CUoD) DCM packaged with 2 x 6-core chips. All 0/12-core

CUoD processor DCMs are 64-bit, either 3.1 GHz or 3.4 GHz mounted on a dedicated card

with a maximum of 16 DDR3 DIMMs, 10 MB of L3 cache per core, and 256 KB of L2 cache

per core. A fully populated Power 760 server with four DCMs has a minimum of eight cores

activated and up to a maximum of 48 cores with a CUoD granularity of one core.

Note: 0/12-core means 0-core through 12-core. For example, 16 slots per 0 to 12 core

DCM is indicated as 16 per 0/12-core.


The Power 760 server supports a maximum of 64 DDR3 DIMM slots, 16 per 0/12-core

processor DCM. Memory features (two memory DIMMs per feature) supported are 8, 16, 32,

and 64 GB and run at a speed of 1066 MHz. A system with four DCMs installed has a

maximum memory of 2048 GB. Also, the optional Active Memory Expansion can enable the

effective maximum memory capacity to be much larger than the true physical memory.

Innovative compression and decompression of memory content using processor cycles can

enable memory expansion up to 125% for AIX partitions. A server with a maximum of

2048 GB can effectively be expanded to greater than 4 TB. This can enhance virtualization

and server consolidation by allowing more partitions or running more work with the same

physical amount of memory.
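The maximum memory figures follow directly from the DIMM slot counts above. The sketch below reproduces that arithmetic; the function name `max_memory_gb` and the constant names are hypothetical, and the calculation assumes every DIMM slot is filled with the same memory feature.

```python
DIMM_SLOTS_PER_DCM = 16   # from the text: 16 DDR3 DIMM slots per DCM
DIMMS_PER_FEATURE = 2     # from the text: two DIMMs per memory feature


def max_memory_gb(dcms: int, feature_gb: int) -> int:
    """Maximum memory when every DIMM slot is filled with the given feature size."""
    if not 1 <= dcms <= 4:
        raise ValueError("a system holds one to four DCMs")
    features_per_dcm = DIMM_SLOTS_PER_DCM // DIMMS_PER_FEATURE
    return dcms * features_per_dcm * feature_gb


print(max_memory_gb(4, 64))  # Power 760 with 64 GB features: 2048
print(max_memory_gb(4, 32))  # Power 750 with 32 GB features: 1024
```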

The Power 760 server offers great I/O expandability. In addition to the six PCIe Gen2 slots in

the system unit, up to four 12X-attached I/O drawers (FC 5802 or FC 5877) add up to 40 PCIe

Gen1 slots. This set of PCIe slots can deliver extensive connectivity to LANs, switches, SANs,

asynchronous devices, SAS storage, tape storage, and more. For example, more than 64 TB

of SAS disk storage is supported.

The Power 760 server includes six SFF SAS bays. This offers up to 5.4 TB HDD capacity or

up to 3.6 TB SSD capacity. All SAS disks and SSDs are 2.5-inch SFF and hot swappable. The

six SAS or SSD bays can be split into two sets of three bays for additional configuration

flexibility using just the integrated SAS adapters.

Two new SSD packages offer ordering convenience and price savings for a new server order.

Each 6-pack SSD feature (FC ESR2 or FC ESR4) for the EXP30 Ultra SSD I/O Drawer can

provide up to 140,000 I/O operations per second (IOPS) in just one-fifth of a 1U drawer. The

4-pack SSD features (FC ESRA, FC ESRB, FC ESRC, and FC ESRD) can provide up to

90,000 IOPS. A 6-pack or 4-pack SSD must be ordered with the server, not as a later MES

order.

Other integrated features include:

Enhanced I/O bandwidth with PCIe Gen2 slots compared to the PCIe Gen1 and PCI-X

slots of the POWER7-based Power 750

Enhanced I/O redundancy and flexibility with two new, integrated POWER7 I/O controllers

One hot-plug, slim-line SATA media bay per enclosure (optional)

Choice of Integrated Multifunction Card options (maximum one per system):

– Dual 10 Gb Copper + Dual 1 Gb Ethernet (FC 1768)

– Dual 10 Gb Optical + Dual 1 Gb Ethernet (FC 1769)

– Dual 10 Gb Copper + Dual 1/10 Gb (RJ45) Ethernet (FC EN10)

– Dual 10 Gb Optical + Dual 1/10 Gb (RJ45) Ethernet (FC EN11)

One serial port on the Integrated Multifunction Card

Two USB ports on the Integrated Multifunction Card plus another USB port on the base

system unit

Service processor

EnergyScale technology

Two SPCN ports and two hardware management console (HMC) ports (HMC is optional)

Redundant and hot-swap AC power supplies

Redundant and hot-swap cooling

4-pack and 6-pack SSD features that can be ordered with a new server


1.2 Operating environment

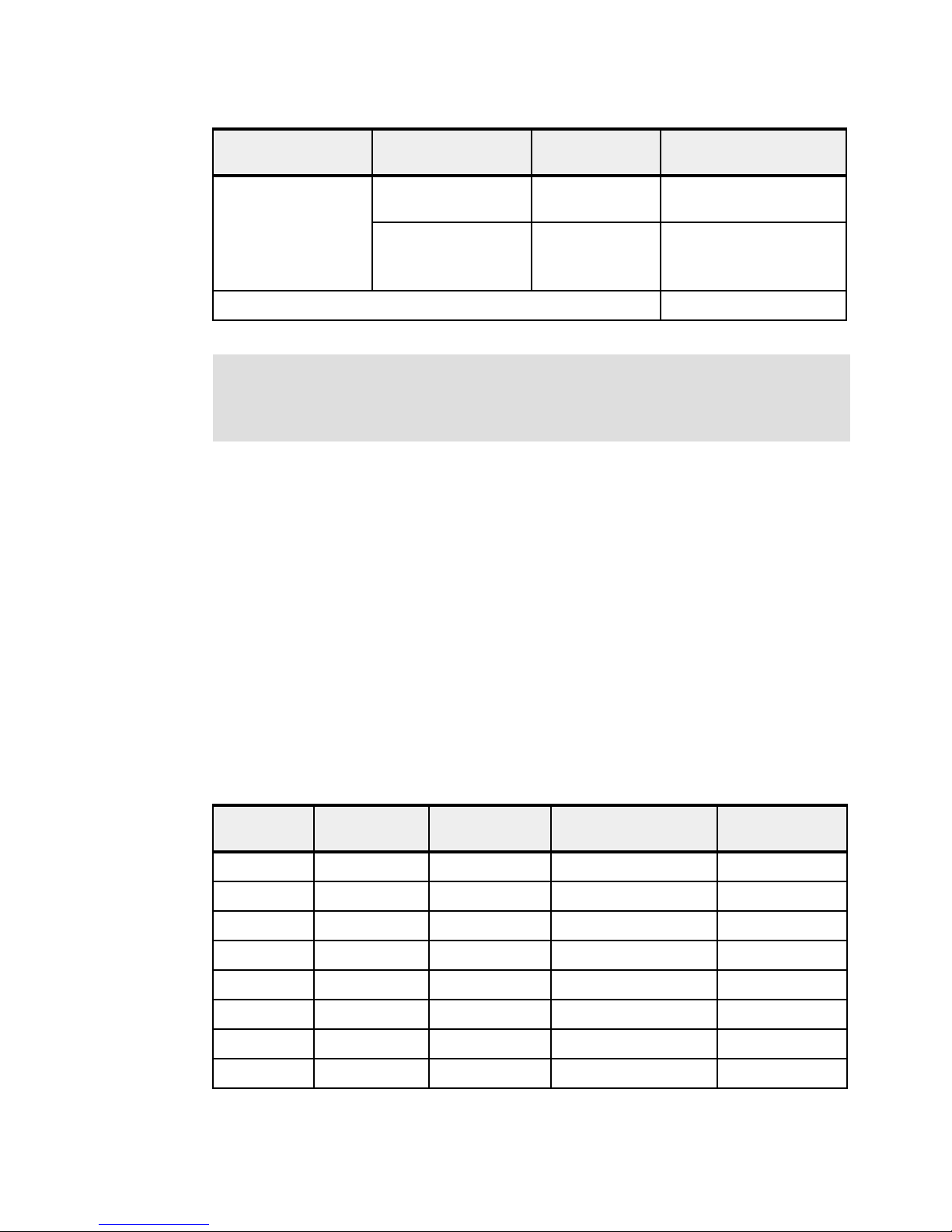

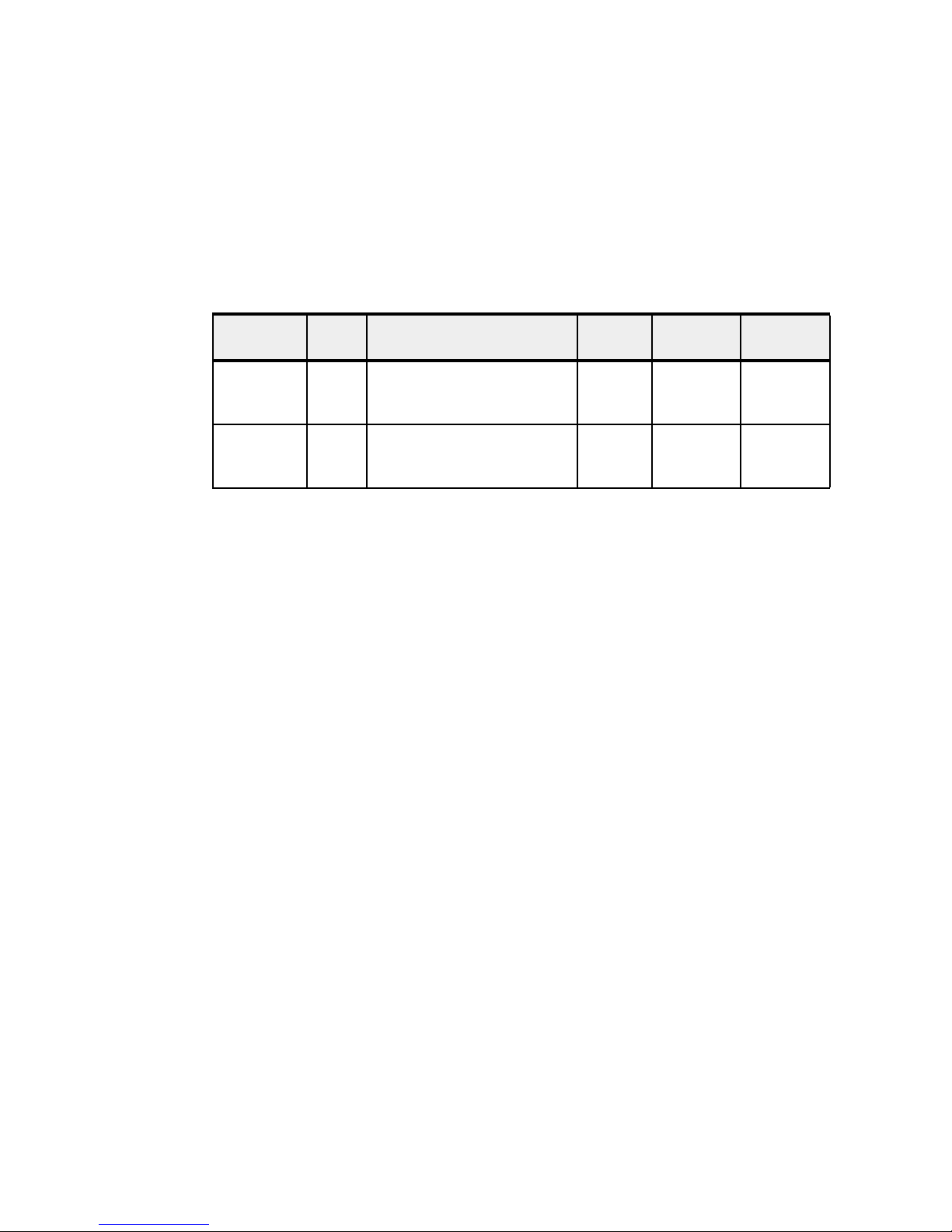

Table 1-1 lists the operating environment specifications for the servers.

Table 1-1 Operating environment for Power 750 Express and Power 760

| Description | Operating | Non-operating |
|---|---|---|
| Temperature | 5 - 35 degrees C (41 - 95 degrees F) | 5 - 45 degrees C (41 - 113 degrees F) |
| Relative humidity | 20 - 80% | 8 - 80% |
| Maximum dew point | 29 degrees C (84 degrees F) | 28 degrees C (82 degrees F) |
| Operating voltage | 200 - 240 V AC | Not applicable |
| Operating frequency | 50 - 60 ± 3 Hz | Not applicable |
| Power consumption | Power 750: 2400 watts maximum (system unit with 32 cores installed); Power 760: 2400 watts maximum (system unit with 48 cores active) | Not applicable |
| Power source loading | Power 750: 2.45 kVA maximum (system unit with 32 cores installed); Power 760: 2.45 kVA maximum (system unit with 48 cores active) | Not applicable |
| Thermal output | Power 750: 8,189 BTU/hr maximum (system unit with 32 cores installed); Power 760: 8,189 BTU/hr maximum (system unit with 48 cores active) | Not applicable |
| Maximum altitude | 3048 m (10,000 ft) | Not applicable |
| Noise level for system unit | Power 750 (system unit with 32 installed cores): 7.1 bels (operating or idle); 6.6 bels (operating or idle) with acoustic rack doors. Power 760 (system unit with 48 active cores): 7.1 bels (operating or idle); 6.6 bels (operating or idle) with acoustic rack doors | |
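The power, loading, and thermal figures in Table 1-1 are related by simple conversions, which the following sketch checks. The conversion factor of about 3.412 BTU/hr per watt is the standard value and does not come from this paper; the function names are hypothetical, and the power factor is only what the two published maximums imply, not a specification.

```python
BTU_PER_HR_PER_WATT = 3.412142  # standard conversion factor, not taken from the source


def watts_to_btu_per_hr(watts: float) -> float:
    """Convert electrical power in watts to heat output in BTU/hr."""
    return watts * BTU_PER_HR_PER_WATT


def implied_power_factor(watts: float, kva: float) -> float:
    """Ratio of real power (W) to apparent power (VA)."""
    return watts / (kva * 1000)


print(round(watts_to_btu_per_hr(2400)))            # 8189
print(round(implied_power_factor(2400, 2.45), 2))  # 0.98
```

The 2400 W maximum therefore corresponds to the 8,189 BTU/hr thermal output listed in the table.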


1.3 IBM Systems Energy Estimator

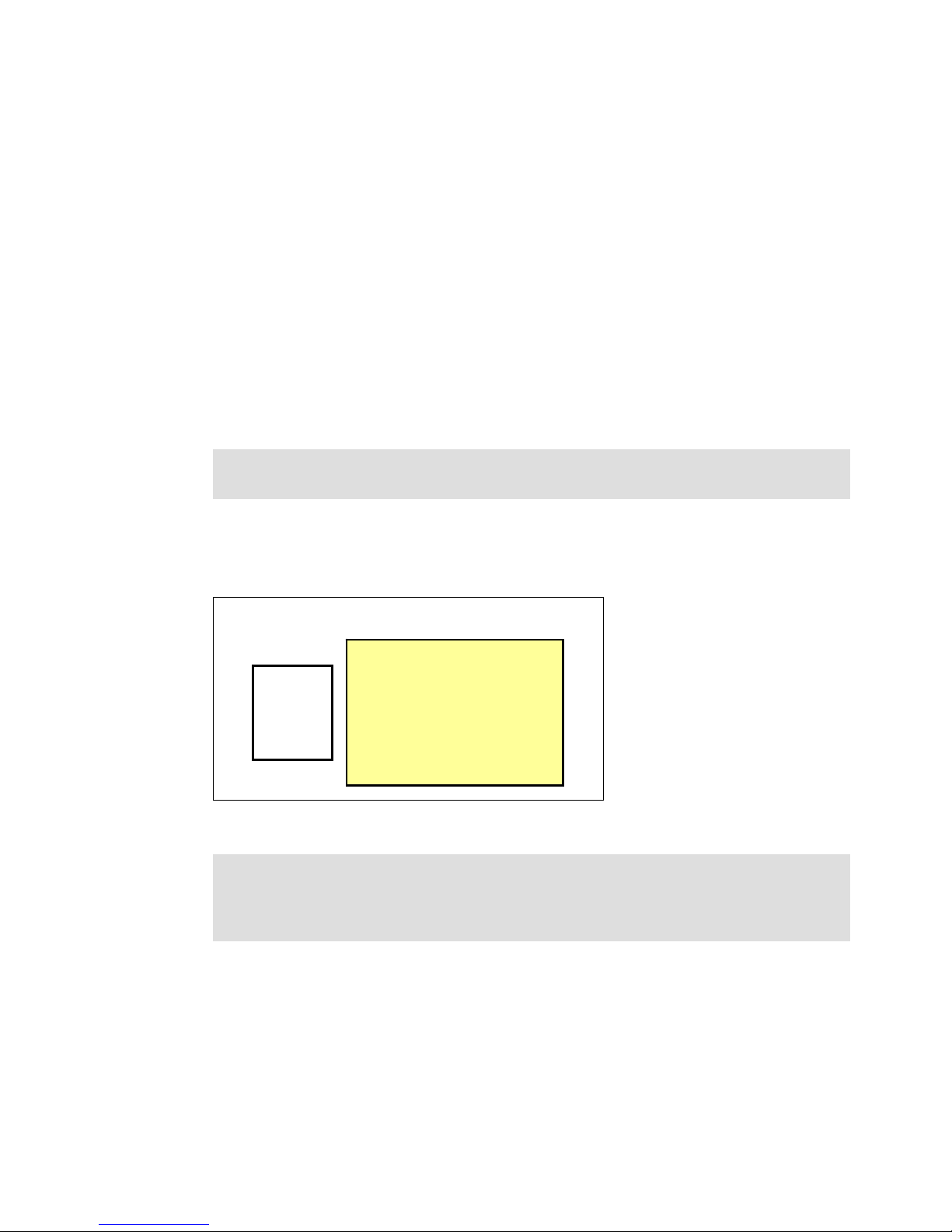

Integrated Multifunction Card

PCIe Gen2

slots

GX++

slots

Power

Supplies

HMC

portsUSB port

SPCN

ports

USB ports

VPD

card

External SAS

port

Ethernet

ports

Serial port

The IBM Systems Energy Estimator is a web-based tool for estimating power requirements

for IBM Power Systems. You can use this tool to estimate typical power requirements (watts)

for a specific system configuration under normal operating conditions:

http://www-912.ibm.com/see/EnergyEstimator/

1.4 Physical package

Table 1-2 lists the physical dimensions of an individual system unit. Both servers are available

only in a rack-mounted form factor and each can take five EIA units (5U) of rack space.

Table 1-2 Physical dimensions of a Power 750 Express and Power 760 server

Dimension Power 750 (Model 8408-E8D) Power 760 (Model 9109-RMD)

Width 447 mm (17.6 in) 447 mm (17.6 in)

Depth 858 mm (33.8 in) 858 mm (33.8 in)

Height 217 mm (8.56 in), 5U (EIA units) 217 mm (8.56 in), 5U (EIA units)

Weight 70.3 kg (155 lb) 70.3 kg (155 lb)

Figure 1-2 shows the rear view of the Power 750 Express and Power 760.

Figure 1-2 Rear view of the Power 750 Express and Power 760


1.5 System features

This section describes the features available on the Power 750 and Power 760 systems.

1.5.1 Power 750 Express system features

The following features are available on the Power 750 Express:

A 5U 19-inch rack-mount system enclosure

One to four 8-core DCMs:

– 8-core (2 x 4-core) 3.5 GHz processor DCM (FC EPT8)

– 8-core (2 x 4-core) 4.0 GHz processor DCM (FC EPT7)

Additional processor considerations:

Each system must have a minimum of one processor DCM (eight cores).

There is a maximum of four DCMs per system (32 cores).

If you have more than one processor DCM in one server, then all processor DCM

features must be identical: All 3.5 GHz processor DCMs (FC EPT8) or all 4.0 GHz

processor DCMs (FC EPT7).

All the cores must be activated using FC EPTC, FC EPTD, FC EPTE or FC EPTF.

A minimum of 8 GB per core is required to use FC EPTC or FC EPTD zero-priced

1-core activation features.

POWER7+ DDR3 Memory DIMMs (two per feature):

– 8 GB (2 x 4 GB), 1066 MHz (FC EM08)

– 16 GB (2 x 8 GB), 1066 MHz (FC EM4B)

– 32 GB (2 x 16 GB), 1066 MHz (FC EM4C)

Active Memory Expansion with POWER7+ hardware accelerator (FC 4792)

Six hot-swappable, 2.5-inch, small form factor, SAS disks or SSD bays per system

One hot-plug, slim-line, SATA media bay per system

Redundant hot-swap 1,925 Watts AC power supplies

Choice of Integrated Multifunction Card options (maximum one per system):

– Dual 10 Gb Copper and Dual 1 Gb Ethernet (FC 1768)

– Dual 10 Gb Optical and Dual 1 Gb Ethernet (FC 1769)

– Dual 10 Gb Copper and Dual 1/10 Gb (RJ45) Ethernet (FC EN10)

– Dual 10 Gb Optical and Dual 1/10 Gb (RJ45) Ethernet (FC EN11)

One serial port on the Integrated Multifunction Card

Two USB ports on the Integrated Multifunction Card plus another USB port on the base

system unit

DASD and Media backplane with 6 x 2.5-inch HDD or SSD (FC EPTS):

– One to six SFF SAS DASD or SSDs (mixing allowed)

– Two integrated SAS controllers to run SAS bays

– One slim bay for a DVD-RAM (required)

– One integrated SATA controller to run the DVD-RAM

Two HMC ports


Eight I/O expansion slots per system

– Six Gen2 PCIe 8x slots plus two GX++ slots

PowerVM (optional)

– IBM Micro-Partitioning®

– Virtual I/O Server (VIOS)

– Automated CPU and memory reconfiguration support for dedicated and shared

processor logical partition groups (dynamic LPAR)

– PowerVM Live Partition Mobility (requires PowerVM Enterprise Edition)

12X I/O drawer with PCIe slots for 16-core or larger Power 750 systems:

– Up to four PCIe I/O drawers (FC 5802 or FC 5877)

Disk or SSD-only I/O drawers:

– Up to two EXP30 Ultra SSD I/O drawers (FC EDR1) with integrated, high-performance

SAS controllers

– Up to 51 EXP24S SFF SAS I/O drawers (FC 5887) on SAS PCIe controllers (optionally

one of the 51 drawers can be attached to the external SAS port of the system unit)

– Up to 27 EXP12S 3.5-inch SAS I/O drawers (FC 5886) on SAS PCIe controllers

(supported but not orderable)

1.5.2 Power 760 system features

The following features are available on the Power 760:

A 5U 19-inch rack-mount system enclosure

One to four 0/12-core CUoD processor DCMs:

– 0/12-core (2 x 6-core) 3.1 GHz processor DCM (FC EPT5)

– 0/12-core (2 x 6-core) 3.4 GHz processor DCM (FC EPT6)

Additional processor considerations:

Each system must have a minimum of one processor DCM (12 cores).

There is a maximum of four DCMs per system (48 cores).

If you have more than one processor DCM in one server, then all processor DCM

features must be identical: All 3.1 GHz processor DCMs (FC EPT5) or all 3.4 GHz

processor DCMs (FC EPT6).

Each system must have a minimum of eight processor activations (FC EPTA or

FC EPTB).

All processor DCMs are placed on a mandatory processor and memory backplane

(FC EPT1).

POWER7+ DDR3 Memory DIMMs (two per feature):

– 8 GB (2 x 4 GB), 1066 MHz (FC EM08)

– 16 GB (2 x 8 GB), 1066 MHz (FC EM4B)

– 32 GB (2 x 16 GB), 1066 MHz (FC EM4C)

– 64 GB (2 x 32 GB), 1066 MHz (FC EM4D)

Active Memory Expansion with POWER7+ hardware accelerator (FC 4792)

Six hot-swappable, 2.5-inch, small form-factor SAS disks or SSD bays per system


One hot-plug, slim-line SATA media bay per system

Redundant hot-swap 1,925 Watts AC power supplies

Choice of Integrated Multifunction Card options (maximum one per system):

– Dual 10 Gb Copper and Dual 1 Gb Ethernet (FC 1768)

– Dual 10 Gb Optical and Dual 1 Gb Ethernet (FC 1769)

– Dual 10 Gb Optical and Dual 1/10 Gb (RJ45) Ethernet (FC EN11)

– Dual 10 Gb Copper and Dual 1/10 Gb (RJ45) Ethernet (FC EN10)

One serial port on the Integrated Multifunction Card

Two USB ports on the Integrated Multifunction Card plus another USB port on the base

system unit

DASD and Media Backplane with 6 x 2.5-inch DASD or SSD (FC EPTS):

– One to six SFF SAS DASD or SSDs (mixing allowed)

– Two integrated SAS controllers to run the SAS bays

– One slim bay for a DVD-RAM (required)

– One integrated SATA controller to run the DVD-RAM

Eight I/O expansion slots per system

– Six Gen2 PCIe 8x slots plus two GX++ slots

Two HMC ports

Permanent Processor CUoD

PowerVM (optional)

– Micro-Partitioning

– Virtual I/O Server (VIOS)

– Automated CPU and memory reconfiguration support for dedicated and shared

processor logical partition (LPAR) groups

– PowerVM Live Partition Mobility (requires PowerVM Enterprise Edition)

12X I/O drawers with PCIe slots for 24-core or larger Power 760:

– Up to four PCIe I/O drawers (FC 5802 or FC 5877)

Disk or SSD-only I/O drawers:

– Up to two EXP30 Ultra SSD I/O drawers with integrated, high performance, SAS

controllers (FC EDR1)

– Up to 51 EXP24S SFF SAS I/O drawers (FC 5887) on SAS PCIe controller (optionally

one of the 51 drawers can be attached to the external SAS port of the system unit)

– Up to 27 EXP12S 3.5-inch SAS I/O drawers (FC 5886) on SAS PCIe controllers


1.5.3 Minimum features

Each system has a minimum feature set to be valid. Table 1-3 shows the minimum system

configuration for a Power 750.

Table 1-3 Minimum features for Power 750 Express system

| Power 750 minimum features | Notes |
|---|---|
| One system enclosure (5U) | The base machine includes the bezels for the rack. No feature code is required. One service processor (FC EPTR); Processor and memory backplane (FC EPT1); One DASD backplane (FC EPTS); Two power cords rated at 200-240 V and 10 A; Two AC power supplies (FC 5532); One Integrated Multifunction Card chosen from: Quad Ethernet 2 x 1 Gb and 2 x 10 Gb Optical (FC 1769), Quad Ethernet 2 x 1 Gb and 2 x 10 Gb Copper (FC 1768), Dual 10 Gb Copper + Dual 1/10 Gb (RJ45) Ethernet (FC EN10), or Dual 10 Gb Optical + Dual 1/10 Gb (RJ45) Ethernet (FC EN11) |
| One primary operating system | AIX (FC 2146); IBM i (FC 2145); Linux (FC 2147) |
| One processor card | 8-core, 3.5 GHz processor card DCM (FC EPT8); 8-core, 4.0 GHz processor card DCM (FC EPT7) |
| Eight processor activations | For processor card FC EPT7, one of the following items: 8 x (FC EPTE), or 4 x (FC EPTC) plus 4 x (FC EPTE). For processor card FC EPT8, one of the following items: 8 x (FC EPTF), or 4 x (FC EPTD) plus 4 x (FC EPTF) |
| 32 GB minimum DDR3 memory. A minimum of two identical features from: | 8 GB (2 x 4 GB), 1066 MHz (FC EM08); 16 GB (2 x 8 GB), 1066 MHz (FC EM4B); 32 GB (2 x 16 GB), 1066 MHz (FC EM4C) |
| For AIX and Linux: One disk drive | 900 GB 10K RPM SAS SFF Disk Drive (FC 1751); 900 GB 10K RPM SAS SFF-2 Disk Drive (FC 1752); 600 GB 10K RPM SAS SFF Disk Drive (FC 1790); 600 GB 10K RPM SAS SFF-2 Disk Drive (FC 1964); 300 GB 15K RPM SAS SFF Disk Drive (FC 1880); 300 GB 15K RPM SAS SFF-2 Disk Drive (FC 1953); 300 GB 10K RPM SFF SAS Disk Drive (FC 1885); 300 GB 10K RPM SAS SFF-2 Disk Drive (FC 1925); 146 GB 15K RPM SFF SAS Disk Drive (FC 1886); 146 GB 15K RPM SAS SFF-2 Disk Drive (FC 1917). If SAN boot (FC 0837) is selected then no disk drive is required |
| For IBM i: Two disk drives | 856 GB 10K RPM SAS SFF Disk Drive (FC 1737); 856 GB 10K RPM SAS SFF-2 Disk Drive (FC 1738); 571 GB 10K RPM SAS SFF Disk Drive (FC 1916); 571 GB 10K RPM SAS SFF-2 Disk Drive (FC 1962); 283 GB 15K RPM SAS SFF Disk Drive (FC 1879); 283 GB 15K RPM SAS SFF-2 Disk Drive (FC 1948); 283 GB 10K RPM SFF SAS Disk Drive (FC 1911); 283 GB 10K RPM SAS SFF-2 Disk Drive (FC 1956); 139 GB 15K RPM SFF SAS Disk Drive (FC 1888); 139 GB 15K RPM SAS SFF-2 Disk Drive (FC 1947). If SAN boot (FC 0837) is selected then no disk drive is required |
| One Language Group Specify | FC 9300 or FC 97xx Language Group Specify |
| One removable media device | SATA Slimline DVD-RAM Drive (FC 5771) |
| One HMC | HMC is optional |

Considerations:

The no-charge processor core activations, FC EPTC and FC EPTD, have a minimum

prerequisite of 8 GB memory per core before they can be ordered. That is, a minimum of 64 GB

of active memory per DCM is a prerequisite before ordering the no-charge processor core

activations. When either FC EPTC or FC EPTD is ordered, 50% of the DCM processor core

activations can be no-charge FC EPTC or FC EPTD and at least 50% must be priced FC EPTE or

FC EPTF.

The Ethernet ports and serial port of the Integrated Multifunction Card are not natively supported

by IBM i and thus cannot be used for IBM i LAN console support. The FC 5899 4-port Ethernet

adapter is usually used with this function or an optional HMC can be used for IBM i console

functions.

If IBM i native support is required, choose an Ethernet card:

– 2-Port 10/100/1000 Base-TX Ethernet PCI Express Adapter (FC 5767)

– 2-Port Gigabit Ethernet-SX PCI Express Adapter (FC 5768)

– 10 Gigabit Ethernet-LR PCI Express Adapter (FC 5772)

– PCIe2 4-Port 1 Gb Ethernet Adapter (FC 5899)
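The Power 750 activation rules in the considerations above can be expressed as a small check. This is a sketch under stated assumptions: the function name `check_750_activations` is hypothetical, the 50% limit is applied to the system total rather than to each DCM separately, and the 8 GB per core prerequisite is applied to all installed cores. It is not an IBM configurator.

```python
CORES_PER_DCM = 8      # Power 750 DCMs are 8-core
MIN_GB_PER_CORE = 8    # memory prerequisite for the no-charge activations


def check_750_activations(dcms: int, no_charge: int, priced: int, memory_gb: int) -> list:
    """Return a list of rule violations; an empty list means no rule is broken."""
    problems = []
    cores = dcms * CORES_PER_DCM
    if no_charge + priced != cores:
        problems.append(f"all {cores} cores must be activated")
    if no_charge > cores // 2:
        problems.append("at most 50% of activations can be no-charge")
    if no_charge > 0 and memory_gb < cores * MIN_GB_PER_CORE:
        problems.append(f"no-charge activations need at least {cores * MIN_GB_PER_CORE} GB")
    return problems


# One DCM, 4 x FC EPTC plus 4 x FC EPTE, 64 GB of memory
print(check_750_activations(dcms=1, no_charge=4, priced=4, memory_gb=64))  # []
# Same order with only 32 GB reports the memory prerequisite
print(check_750_activations(dcms=1, no_charge=4, priced=4, memory_gb=32))
```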

Table 1-4 shows the minimum system configuration for a Power 760 system.

Table 1-4 Minimum features for Power 760 system

| Power 760 minimum features | Notes |
|---|---|
| One system enclosure (5U) | The base machine includes the bezels for the rack. No feature code is required. One service processor (FC EPTR); Processor and Memory Backplane (FC EPT1); One DASD Backplane (FC EPTS); Two power cords rated at 200-240 V and 10 A; Two AC power supplies (FC 5532); One Integrated Multifunction Card chosen from: Quad Ethernet 2 x 1 Gb and 2 x 10 Gb Optical (FC 1769), Quad Ethernet 2 x 1 Gb and 2 x 10 Gb Copper (FC 1768), Dual 10 Gb Copper + Dual 1/10 Gb (RJ45) Ethernet (FC EN10), or Dual 10 Gb Optical + Dual 1/10 Gb (RJ45) Ethernet (FC EN11) |
| One primary operating system | AIX (FC 2146); IBM i (FC 2145); Linux (FC 2147) |
| One processor card | 0/12-core, 3.1 GHz POWER7+ processor card (FC EPT5); 0/12-core, 3.4 GHz POWER7+ processor card (FC EPT6) |
| Eight processor activations | 0/12-core, 3.1 GHz POWER7+ processor card (FC EPT5) requires a minimum of eight FC EPTA; 0/12-core, 3.4 GHz POWER7+ processor card (FC EPT6) requires a minimum of eight FC EPTB |
| 32 GB minimum DDR3 memory. A minimum of two identical features from: | 8 GB (2 x 4 GB), 1066 MHz (FC EM08); 16 GB (2 x 8 GB), 1066 MHz (FC EM4B); 32 GB (2 x 16 GB), 1066 MHz (FC EM4C); 64 GB (2 x 32 GB), 1066 MHz (FC EM4D) |
| For AIX and Linux: One disk drive | 900 GB 10K RPM SAS SFF Disk Drive (FC 1751); 900 GB 10K RPM SAS SFF-2 Disk Drive (FC 1752); 600 GB 10K RPM SAS SFF Disk Drive (FC 1790); 600 GB 10K RPM SAS SFF-2 Disk Drive (FC 1964); 300 GB 15K RPM SAS SFF Disk Drive (FC 1880); 300 GB 15K RPM SAS SFF-2 Disk Drive (FC 1953); 300 GB 10K RPM SFF SAS Disk Drive (FC 1885); 300 GB 10K RPM SAS SFF-2 Disk Drive (FC 1925); 146 GB 15K RPM SFF SAS Disk Drive (FC 1886); 146 GB 15K RPM SAS SFF-2 Disk Drive (FC 1917). If SAN boot (FC 0837) is selected then no disk drive is required |
| For IBM i: Two disk drives | 856 GB 10K RPM SAS SFF Disk Drive (FC 1737); 856 GB 10K RPM SAS SFF-2 Disk Drive (FC 1738); 571 GB 10K RPM SAS SFF Disk Drive (FC 1916); 571 GB 10K RPM SAS SFF-2 Disk Drive (FC 1962); 283 GB 15K RPM SAS SFF Disk Drive (FC 1879); 283 GB 15K RPM SAS SFF-2 Disk Drive (FC 1948); 283 GB 10K RPM SFF SAS Disk Drive (FC 1911); 283 GB 10K RPM SAS SFF-2 Disk Drive (FC 1956); 139 GB 15K RPM SFF SAS Disk Drive (FC 1888); 139 GB 15K RPM SAS SFF-2 Disk Drive (FC 1947). If SAN boot (FC 0837) is selected then no disk drive is required |
| One Language Group Specify | FC 9300 or FC 97xx Language Group Specify |
| One HMC | Required for the Power 760 |

Considerations:

The Ethernet ports and serial port of the Integrated Multifunction Card are not natively supported

by IBM i and thus cannot be used for IBM i LAN console support. The FC 5899 4-port Ethernet

adapter is usually used with this function or an optional HMC can be used for IBM i console

functions.

If IBM i native support is required, choose an Ethernet card:

– 2-Port 10/100/1000 Base-TX Ethernet PCI Express Adapter (FC 5767)

– 2-Port Gigabit Ethernet-SX PCI Express Adapter (FC 5768)

– 10 Gigabit Ethernet-LR PCI Express Adapter (FC 5772)

– PCIe2 4-Port 1 Gb Ethernet Adapter (FC 5899)


1.5.4 Power supply features

Two system AC power supplies (FC 5532) are required for each system enclosure. The

second power supply provides redundant power for enhanced system availability. To provide

full redundancy, the two power supplies must be connected to separate power distribution

units (PDUs).

The system will continue to function with one working power supply. A failed power supply can

be hot-swapped but must remain in the system until the replacement power supply is

available for exchange.

The Power 750 and the Power 760 require 200-240 V AC for all configurations.

1.5.5 Processor card features

The Power 750 and Power 760 systems contain a processor planar board (FC EPT1) that has

the following sockets:

Four processor sockets

Eight memory riser sockets (two per processor module) with eight DIMM sockets per riser

Five power regulator sockets (one regulator socket is preinstalled on the planar)

The processor planar is populated with one, two, three, or four processor modules. The

processor modules can be installed in the field but must be installed by an IBM customer

engineer.

The Power 750 has two types of processor cards:

FC EPT8 offering 8-core POWER7+ processor card at 3.5 GHz

FC EPT7 offering 8-core POWER7+ processor card at 4.0 GHz

The Power 760 has two types of processor cards:

FC EPT5 offering 12-core POWER7+ processor card at 3.1 GHz

FC EPT6 offering 12-core POWER7+ processor card at 3.4 GHz


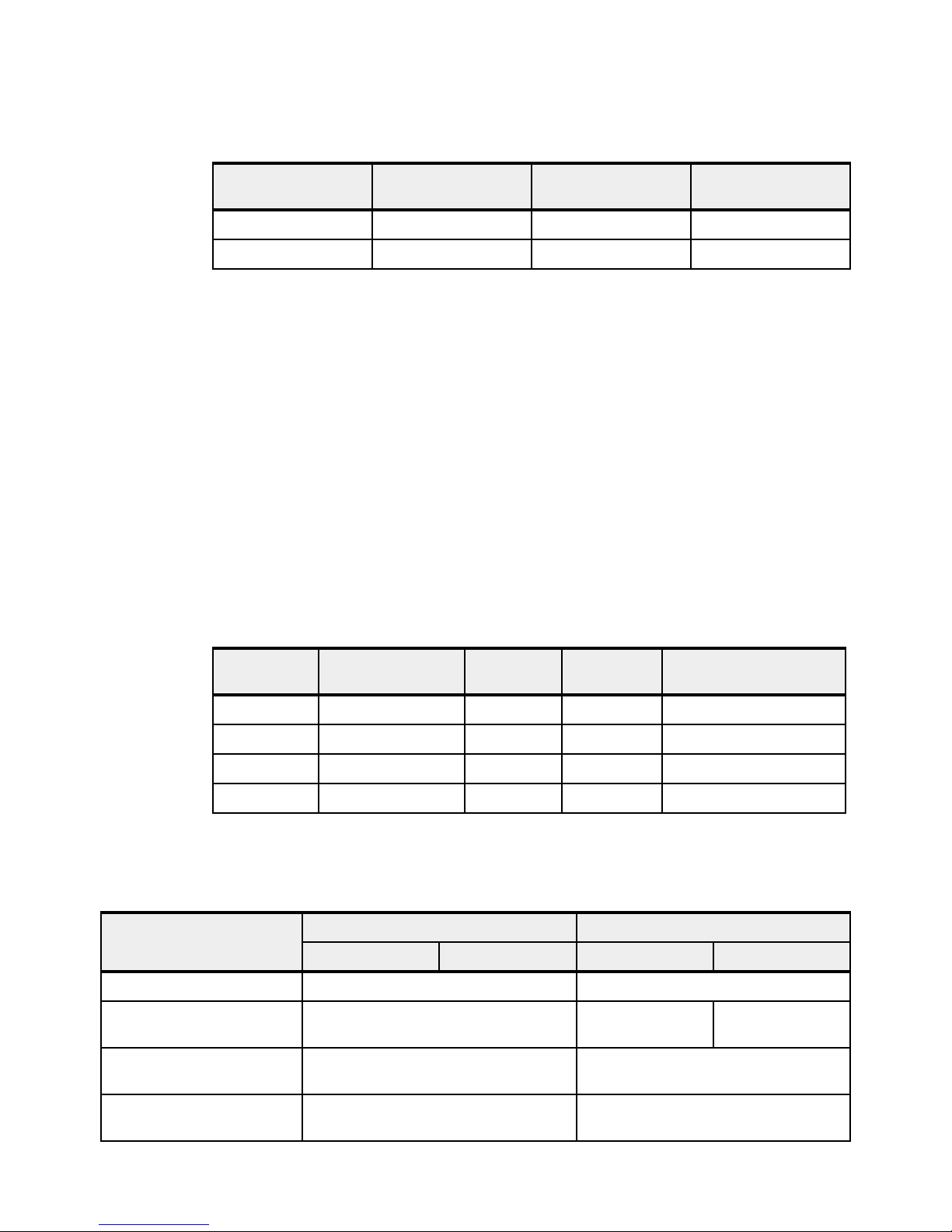

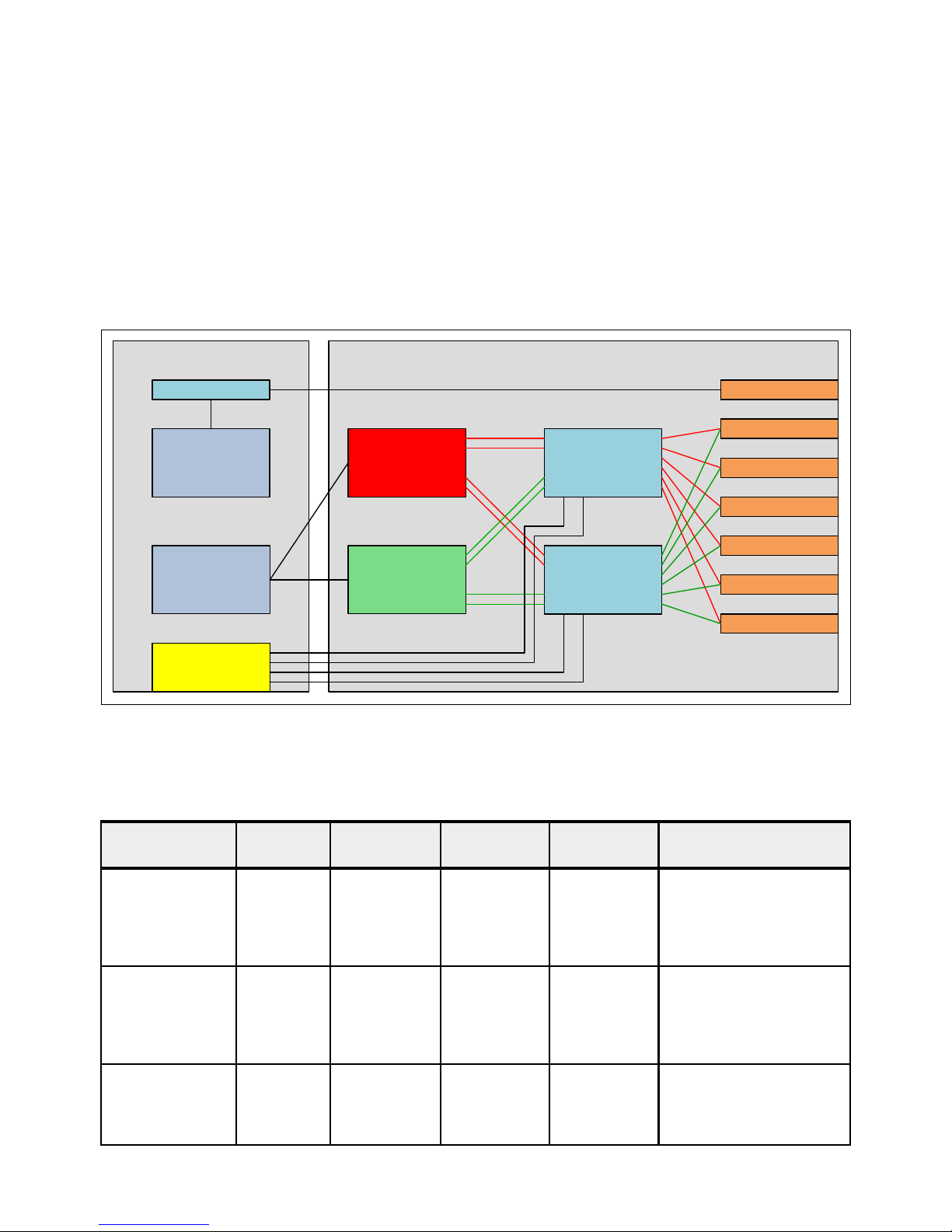

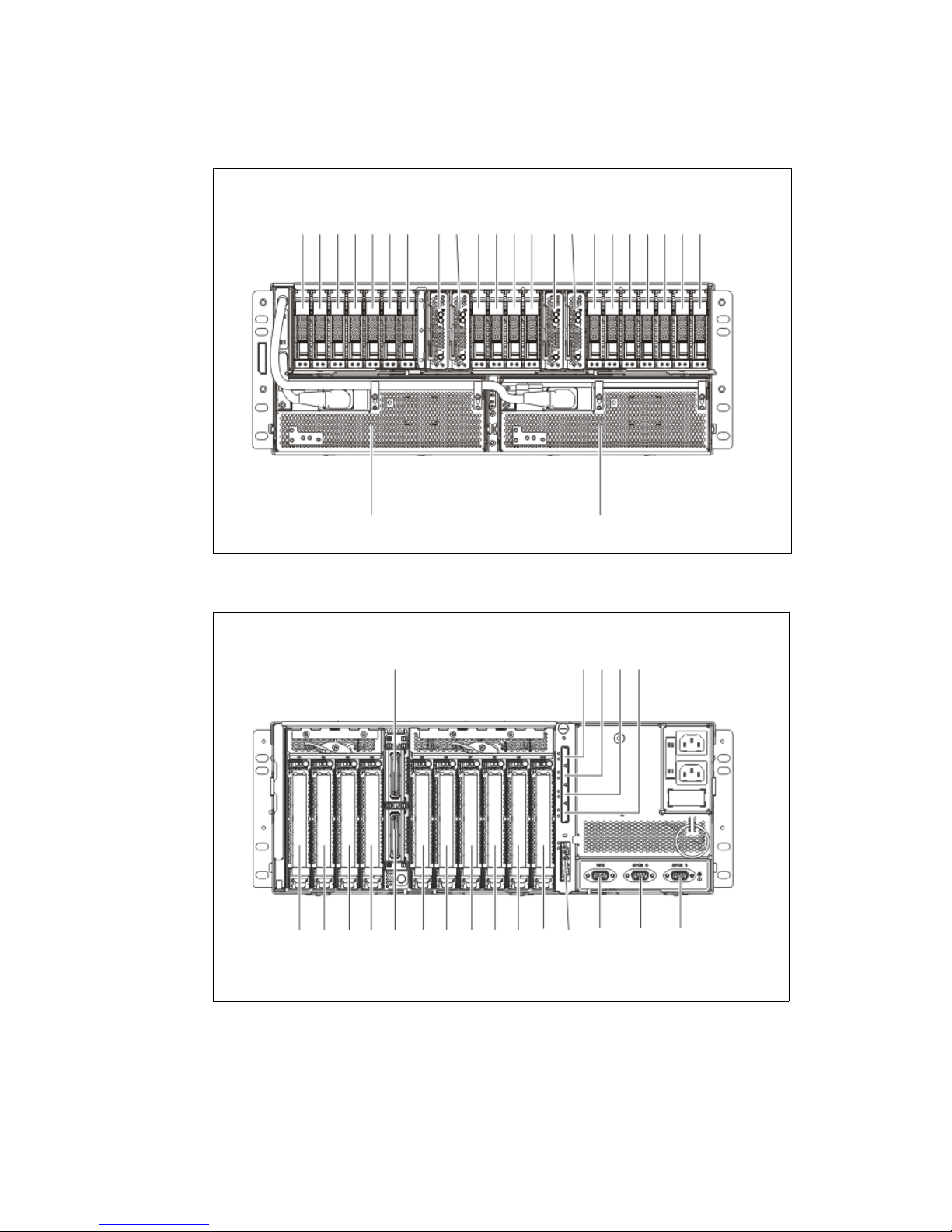

Figure 1-3 shows the top view of the Power 750 and Power 760 system with four DCMs

PCIe slot #1

PCIe slot #3

PCIe slot #2

PCIe slot #4

PCIe slot #5

PCIe slot #6

Memory Riser #1

Memory Riser #2

Memory Riser #3

Memory Riser #4

Memory Riser #5

Memory Riser #6

Memory Riser #7

Memory Riser #8

Regulator #5

TPMD Slot

Regulator #1

Regulator #2

Regulator #3

Regulator #4

DCM1

DCM0

DCM4

DCM3

Fans

installed.

Figure 1-3 View of the Power 750 and Power 760 with four DCMs installed

The Power 750 server does not support Capacity Upgrade on Demand for processors; every installed processor core must be activated.

The Power 760 supports Capacity Upgrade on Demand for processors only. A minimum of eight processor activations is required per system. Additional processor activations can be purchased with the initial configuration or at a later time. More information about Capacity Upgrade on Demand is in 2.4.1, “Capacity Upgrade on Demand” on page 61.
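The activation rules above can be expressed as a small check. The following Python sketch is illustrative only and is not an IBM configuration tool. The function name `activations_valid` and the model strings are hypothetical, and the limit of four DCMs per system is taken from the processor planar description in 1.5.5.

```python
# Cores per DCM, taken from the processor card descriptions in 1.5.5.
CORES_PER_DCM = {"Power 750": 8, "Power 760": 12}

# System-wide minimum number of activations stated for the Power 760.
MIN_760_ACTIVATIONS = 8


def activations_valid(model, dcms, activations):
    """Return True if the activation count fits the rules described above."""
    if model not in CORES_PER_DCM or not 1 <= dcms <= 4:
        return False
    installed = CORES_PER_DCM[model] * dcms
    if model == "Power 750":
        # No Capacity Upgrade on Demand: every installed core is active.
        return activations == installed
    # Power 760: at least eight activations, at most the installed cores.
    return MIN_760_ACTIVATIONS <= activations <= installed
```

For example, `activations_valid("Power 760", 2, 10)` returns `True` because 10 lies between the minimum of 8 and the 24 installed cores, whereas `activations_valid("Power 750", 2, 10)` returns `False` because a two-DCM Power 750 must have all 16 cores active.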


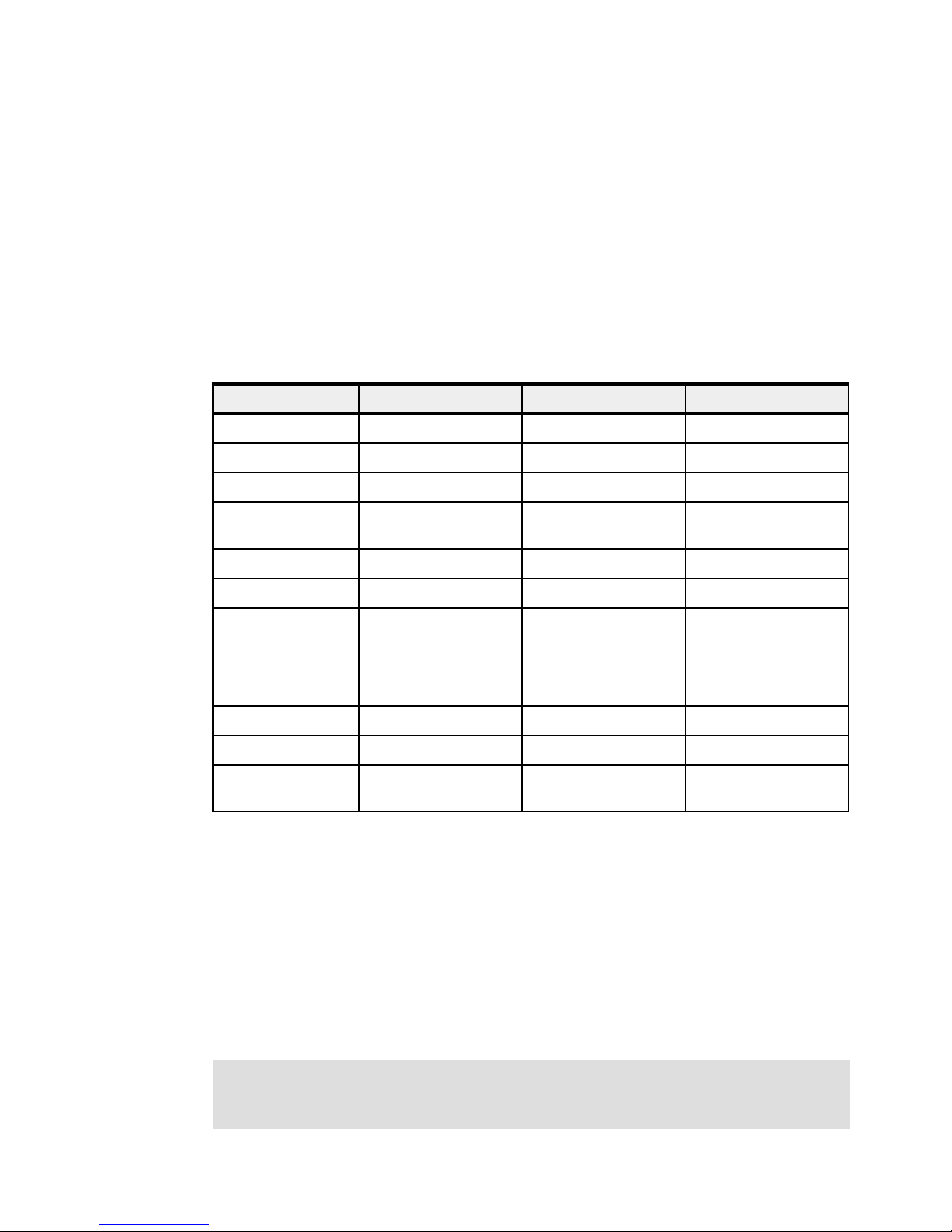

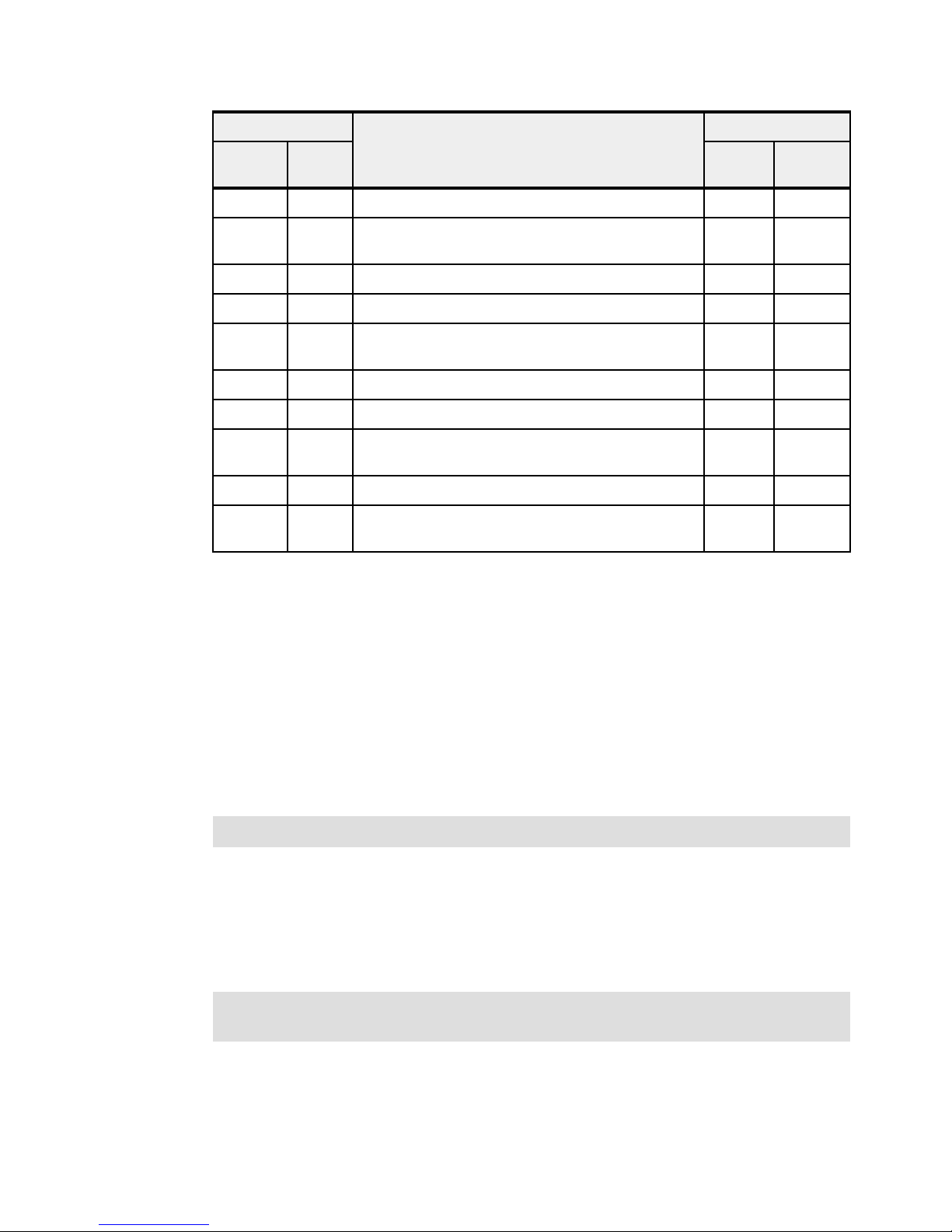

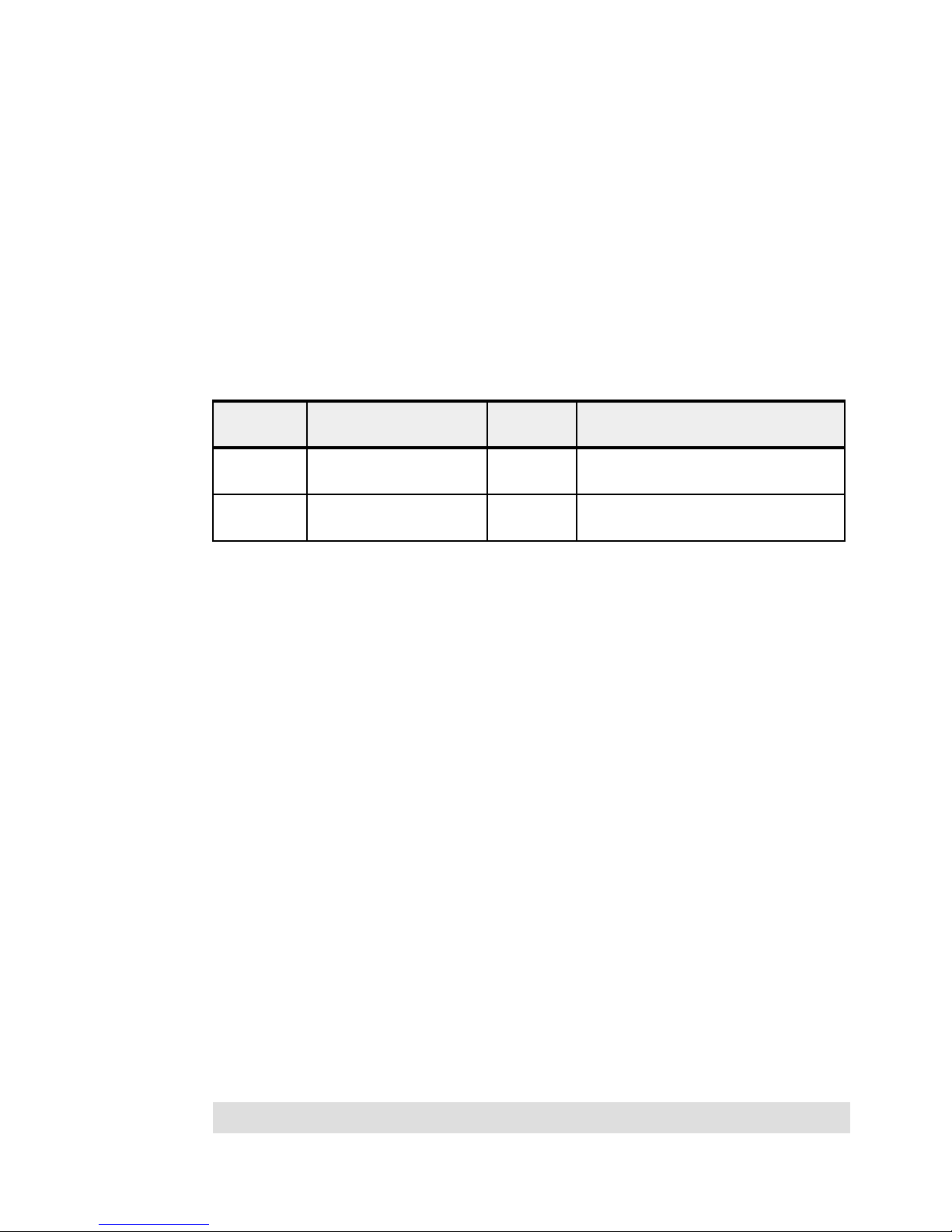

Summary of processor features

Table 1-5 summarizes the processor feature codes for the Power 750 Express server. Cells marked N/A indicate that bulk ordering codes and the Custom Card Identification Number (CCIN) are not applicable. A blank CCIN cell indicates that the CCIN is not available.

Table 1-5 Summary of processor features for the Power 750 Express server

| Feature code | CCIN | Description | OS support |
|---|---|---|---|
| EPT1 | 2B61 | Processor & Memory Backplane + Base Memory VRM + Clock Card | AIX, IBM i, Linux |
| EPT8 | 54A1 | 3.5 GHz, 8-core POWER7+ Processor DCM (2x4-core) | AIX, IBM i, Linux |
| EPT7 | | 4.0 GHz, 8-core POWER7+ Processor DCM (2x4-core) | AIX, IBM i, Linux |
| EPTC | N/A | 1-core activation of FC EPT7 (No charge) | AIX, IBM i, Linux |
| EPTD | N/A | 1-core activation of FC EPT8 (No charge) | AIX, IBM i, Linux |
| EPTE | N/A | 1-core activation of FC EPT7 | AIX, IBM i, Linux |
| EPTF | N/A | 1-core activation of FC EPT8 | AIX, IBM i, Linux |
| EPTR | 2B67 | Service processor | AIX, IBM i, Linux |

Table 1-6 summarizes the processor feature codes for the Power 760.

Table 1-6 Summary of processor features for the Power 760

| Feature code | CCIN | Description | OS support |
|---|---|---|---|
| EPT1 | 2B61 | Processor & Memory Backplane + Base Memory VRM + Clock Card | AIX, IBM i, Linux |
| EPT5 | | 3.1 GHz, Proc DCM, 0/12-core POWER7+ (2x6-core) | AIX, IBM i, Linux |
| EPT6 | | 3.4 GHz, Proc DCM, 0/12-core POWER7+ (2x6-core) | AIX, IBM i, Linux |
| EPTA | N/A | 1-core activation of FC EPT5 | AIX, IBM i, Linux |
| EPTB | N/A | 1-core activation of FC EPT6 | AIX, IBM i, Linux |
| EPTR | 2B67 | Service processor | AIX, IBM i, Linux |


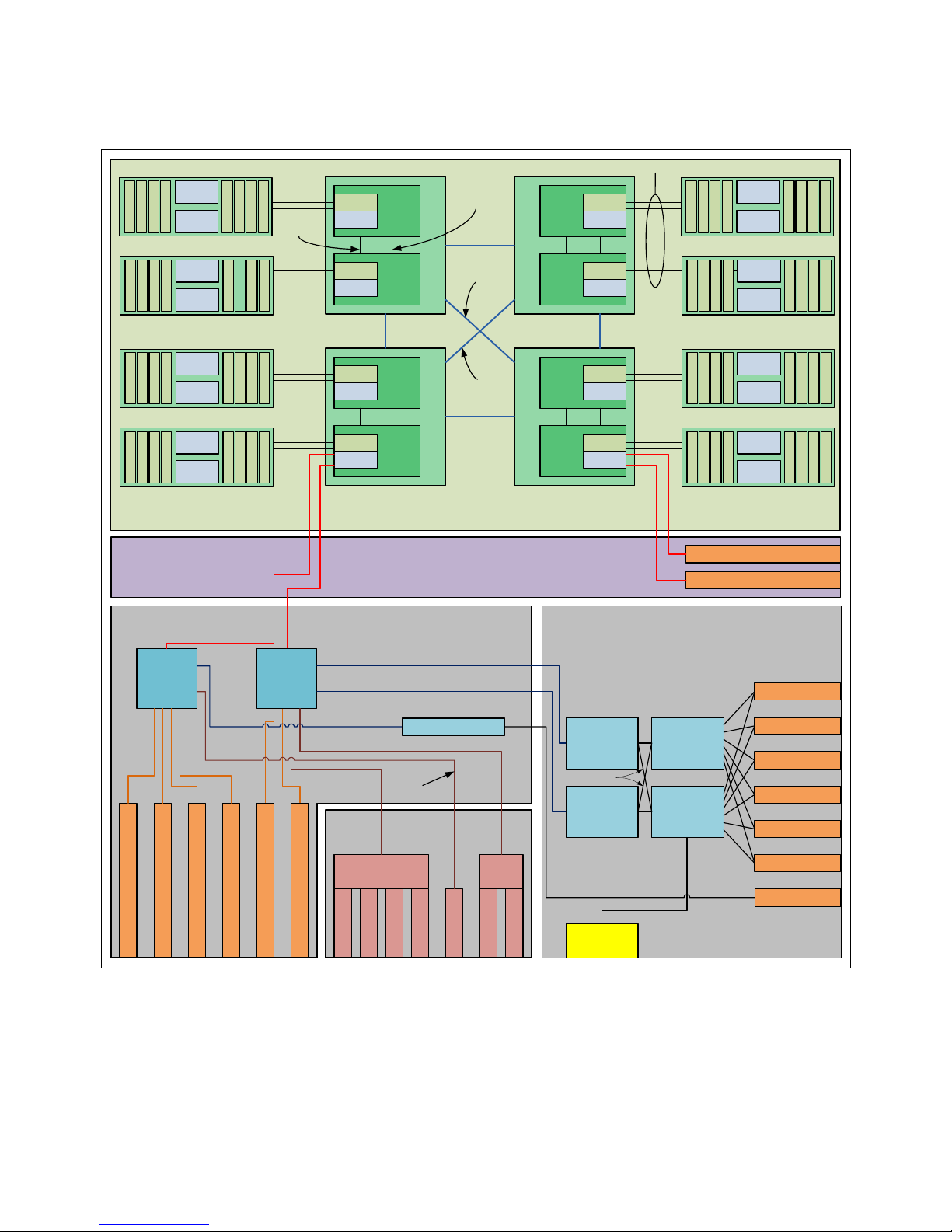

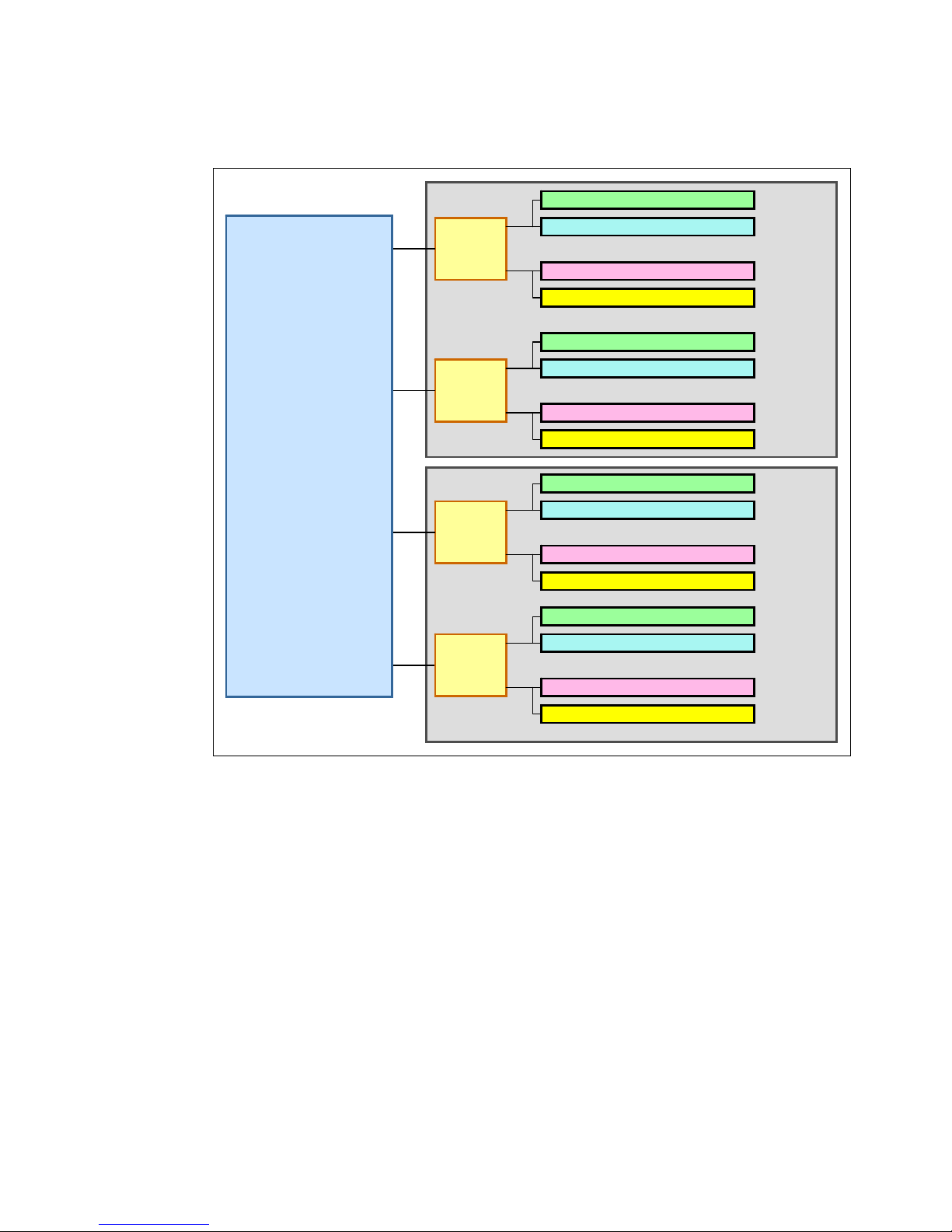

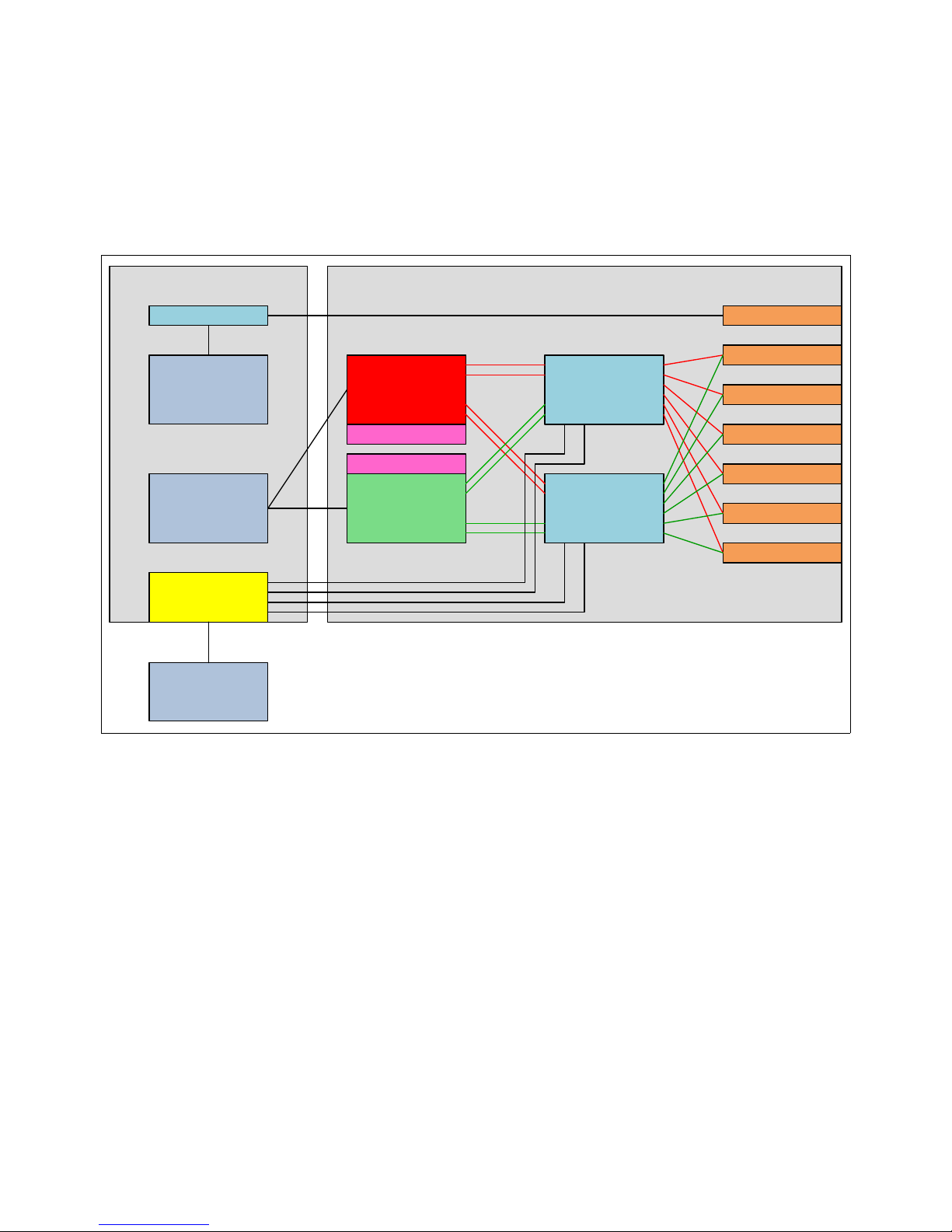

1.5.6 Memory features

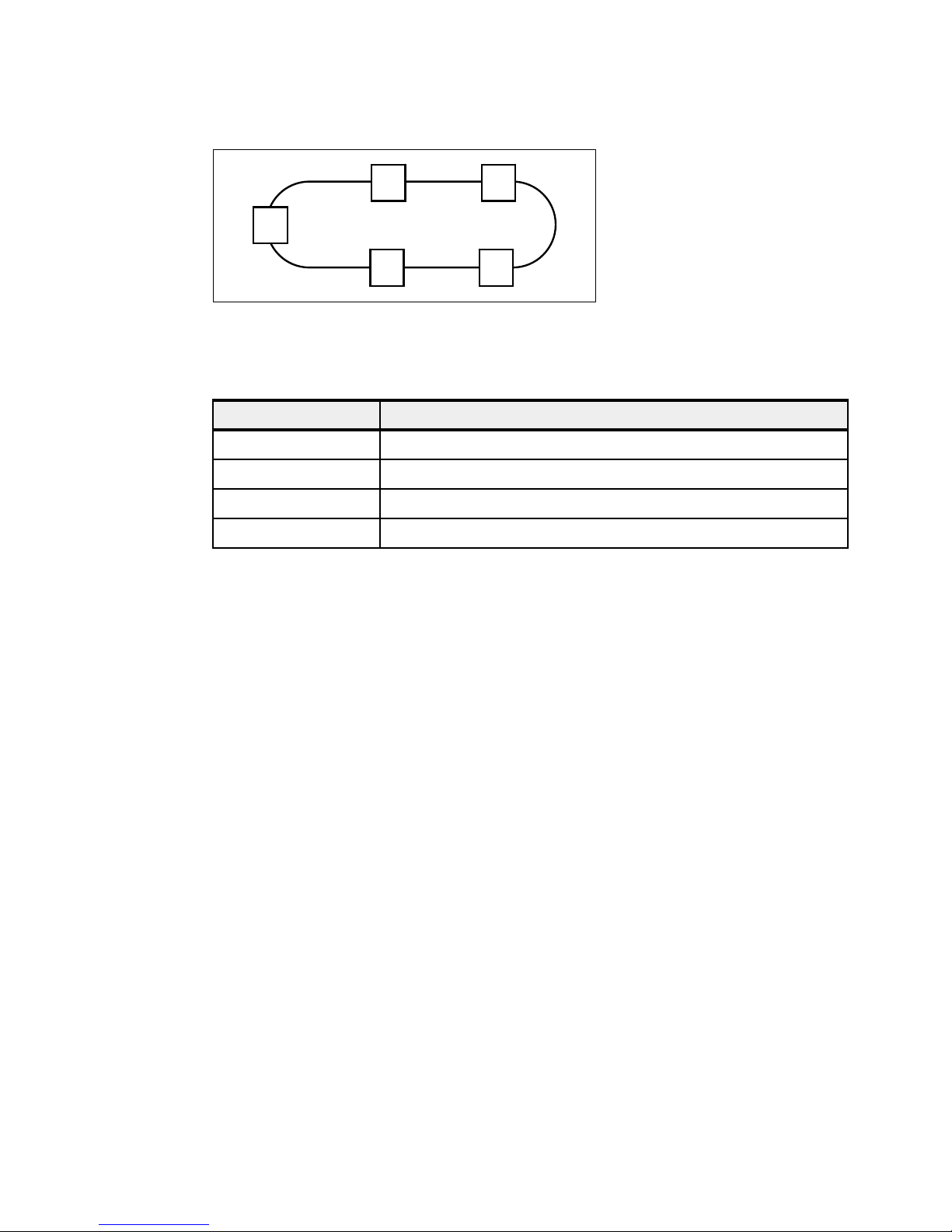

[Figure 1-4 labels: one DCM with two POWER7+ chips (Chip 0 and Chip 1); MC0 with Channel A and Channel B; MC1 with Channel C and Channel D; Memory Riser Card #1 and Memory Riser Card #2, each with memory buffers BC-A and BC-B and DDR3 RDIMM Slots 1 to 8. MC: Memory Controller. BC: Memory Buffer.]

In POWER7+ systems, DDR3 memory is used throughout. Four memory features are available, each consisting of two DIMMs: 8 GB, 16 GB, 32 GB, and 64 GB. The Power 760 supports all four memory features. The Power 750 does not support the 64 GB feature.

The POWER7+ DDR3 memory has been redesigned to provide greater bandwidth and capacity. The 16 GB, 32 GB, and 64 GB memory features use 4 GB DRAMs, which enables operation at a higher data rate for large memory configurations. Every memory riser card has eight DIMM slots that run at 1066 MHz and must be populated with POWER7+ DDR3 memory DIMMs. Each DCM supports two memory riser cards.

The DIMMs are plugged into memory riser cards (FC EM01) located on the processor and

memory backplane (FC EPT1). Each riser card has eight DIMM slots.

Figure 1-4 outlines the general connectivity of a POWER7+ DCM and DDR3 memory DIMMs. The figure shows the four memory channels of the DCM (two per memory controller).

Figure 1-4 Outline of POWER7+ processor connectivity to DDR3 DIMMs in Power 750 and Power 760


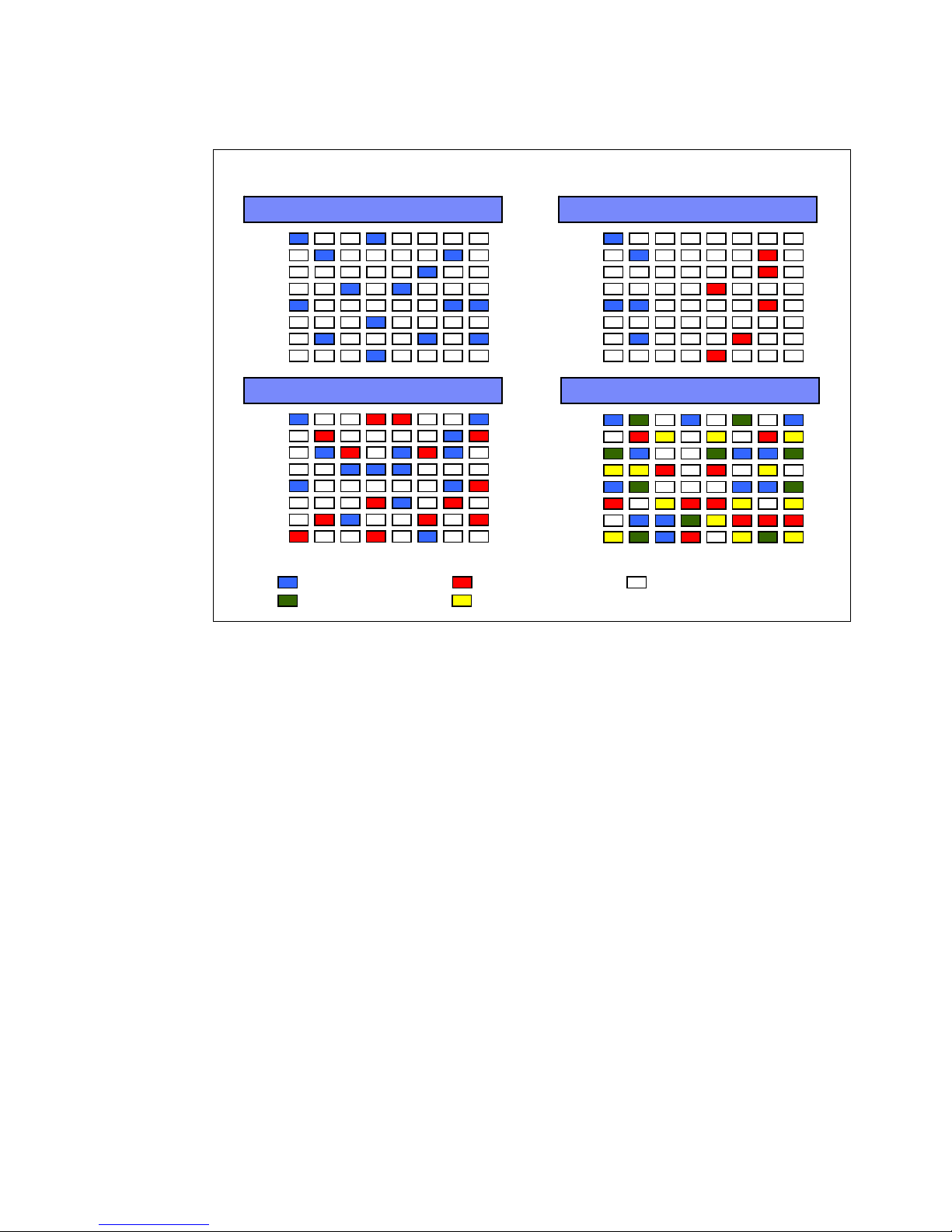

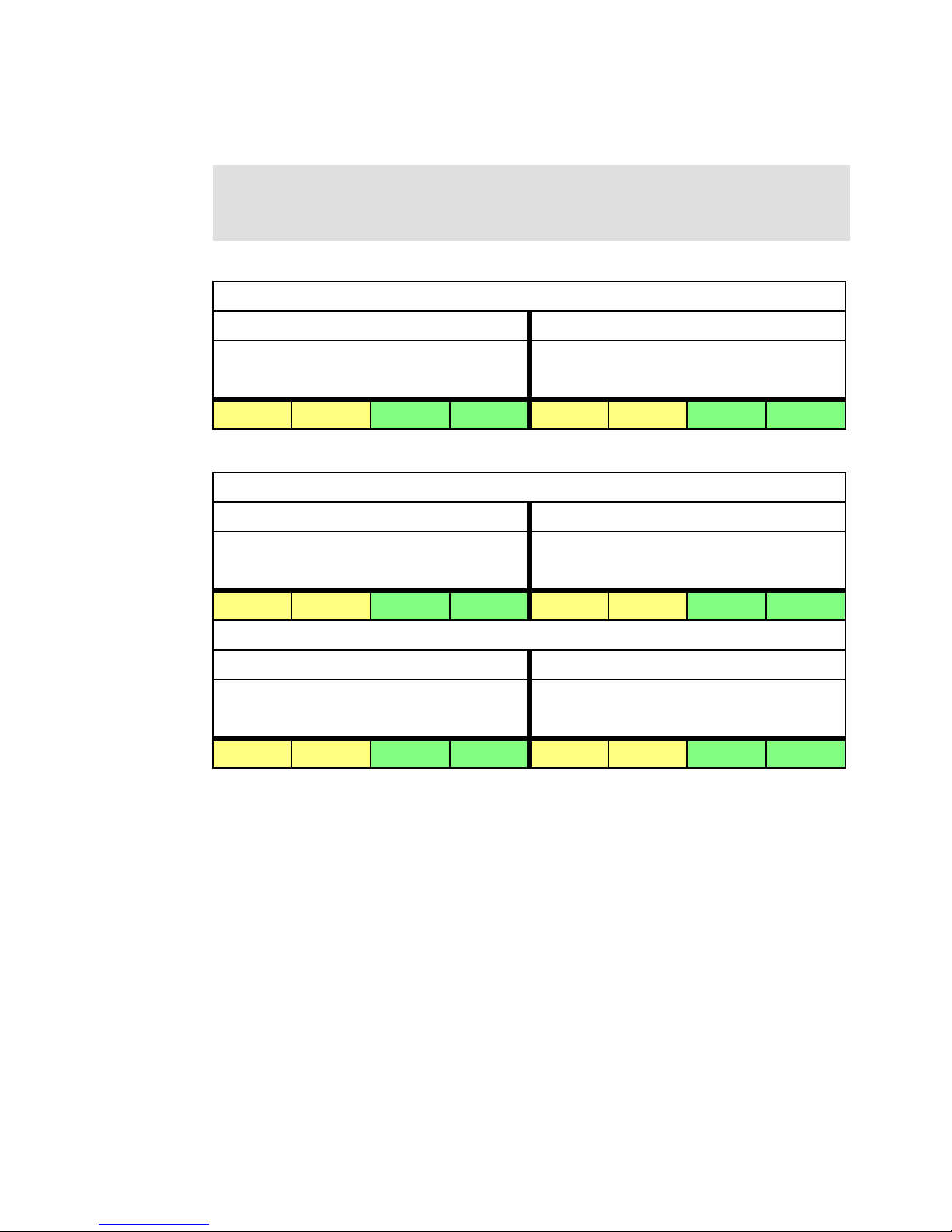

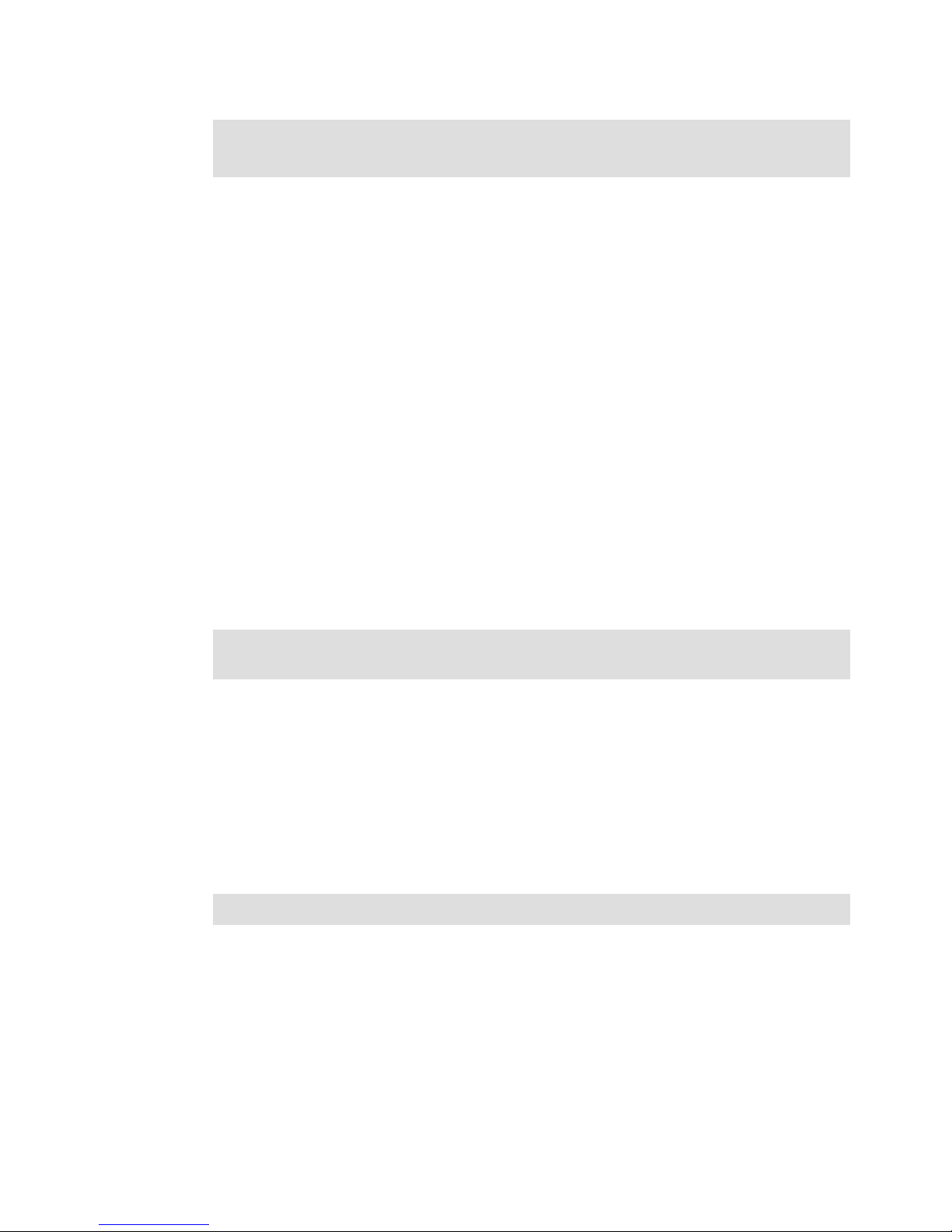

Table 1-7 gives the maximum and minimum memory configurations for the Power 750 and Power 760 with different numbers of DCMs installed.

Table 1-7 Maximum and minimum memory configurations of the Power 750 and Power 760

| DCMs in system | DIMM slots | Power 750 minimum memory | Power 750 maximum memory | Power 760 minimum memory | Power 760 maximum memory |
|---|---|---|---|---|---|
| 1 | 16 | 32 GB | 256 GB | 32 GB | 512 GB |
| 2 | 32 | 32 GB | 512 GB | 32 GB | 1024 GB |
| 3 | 48 | 48 GB | 768 GB | 48 GB | 1536 GB |
| 4 | 64 | 64 GB | 1024 GB | 64 GB | 2048 GB |
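The values in Table 1-7 follow from the riser and DIMM counts given in this section. The following Python sketch reproduces them under stated assumptions. The 32 GB system floor is inferred from the one-DCM row of the table and is not stated as a rule in the text. The largest DIMM sizes (16 GB for the Power 750, 32 GB for the Power 760) are derived from the largest memory feature that each model supports. The function name `memory_limits` and the model strings are hypothetical.

```python
RISERS_PER_DCM = 2          # each DCM supports two memory riser cards
DIMM_SLOTS_PER_RISER = 8    # each riser card has eight DIMM slots
SMALLEST_FEATURE_GB = 8     # 8 GB feature (2 x 4 GB DIMMs), one per riser
SYSTEM_MINIMUM_GB = 32      # assumption: inferred from the one-DCM table row

# Largest single DIMM per model: the Power 750 tops out at the 32 GB
# feature (2 x 16 GB), the Power 760 at the 64 GB feature (2 x 32 GB).
LARGEST_DIMM_GB = {"Power 750": 16, "Power 760": 32}


def memory_limits(model, dcms):
    """Return (DIMM slots, minimum GB, maximum GB) for a given DCM count."""
    risers = RISERS_PER_DCM * dcms
    slots = risers * DIMM_SLOTS_PER_RISER
    # Every riser needs at least one memory feature, subject to the floor.
    minimum = max(SYSTEM_MINIMUM_GB, risers * SMALLEST_FEATURE_GB)
    maximum = slots * LARGEST_DIMM_GB[model]
    return slots, minimum, maximum
```

For example, `memory_limits("Power 760", 3)` returns `(48, 48, 1536)`, which matches the three-DCM row of the table.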

Additional memory configuration rules:

Different system memory feature codes may be mixed on each of the two memory riser

cards servicing each DCM.

Each riser card must have at least one memory feature code (two identical DIMMs).

Table 1-8 details the memory features available in the Power 750 and Power 760.

Table 1-8 Summary of memory features available on Power 750 and Power 760

| Feature code | CCIN | Description | Min (a) | Max (a) |
|---|---|---|---|---|
| EM01 | 2C1C | Memory Riser Card | 2 | 8 |
| EM08 | | 8 GB (2 x 4 GB) Memory DIMMs, 1066 MHz, 2 GB DDR3 DRAM | 0 | 32 |
| EM4B | 31FA | 16 GB (2 x 8 GB) Memory DIMMs, 1066 MHz, 4 GB DDR3 DRAM | 0 | 32 |
| EM4C | | 32 GB (2 x 16 GB) Memory DIMMs, 1066 MHz, 4 GB DDR3 DRAM | 0 | 32 |
| EM4D | | 64 GB (2 x 32 GB) Memory DIMMs, 1066 MHz, 4 GB DDR3 DRAM | 0 | 32 |

a. Minimum and maximum

A memory riser card can have two, four, six, or eight DIMMs ordered by one, two, three, or

four memory features. All the memory features can be the same in the riser card, or two

different size memory features can be used in the same riser card. If using two different sized

memory features, valid configurations are as follows:

Four DIMMs total: one memory feature plus one different memory feature

Six DIMMs total: two memory features plus one different memory feature

Eight DIMMs total: two memory features plus two different memory features

Invalid configurations using more than one memory size feature are as follows:

More than two sizes of memory features. For example: 8 GB plus 16 GB plus 32 GB on

one riser.

Three of one memory feature plus one of another memory feature. Use two sets of two features instead if installing eight DIMMs.
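The valid and invalid combinations listed above can be captured in a short check. The following Python sketch is illustrative only. It assumes that a riser card is described by a list with one entry per installed memory feature (each feature being a pair of DIMMs), and the function name `riser_mix_valid` is hypothetical. It does not check which feature sizes a particular model supports.

```python
from collections import Counter

# Feature sizes in GB described in this section.
FEATURE_SIZES_GB = {8, 16, 32, 64}


def riser_mix_valid(features_gb):
    """Check the mix of memory features (one entry per feature) on one riser."""
    # A riser holds one to four features, that is, two to eight DIMMs.
    if not 1 <= len(features_gb) <= 4:
        return False
    if any(size not in FEATURE_SIZES_GB for size in features_gb):
        return False
    # Count how many features of each size are present, largest group first.
    counts = sorted(Counter(features_gb).values(), reverse=True)
    if len(counts) == 1:
        return True  # all features are the same size
    # Two sizes: 1+1, 2+1, or 2+2. Three sizes or 3+1 are rejected.
    return counts in ([1, 1], [2, 1], [2, 2])
```

For example, `riser_mix_valid([8, 8, 16, 16])` returns `True`, whereas `riser_mix_valid([8, 8, 8, 16])` and `riser_mix_valid([8, 16, 32])` both return `False`.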


Different memory feature codes can be mixed on each of the two memory riser cards

associated with each DCM. Likewise, riser cards on multiple processor DCMs can have the

same or different memory features.

For better performance, two guidelines are important:

Be sure that the quantity of DIMMs is evenly distributed across the riser cards.

Be sure that the total quantity of GB on each riser card is balanced as evenly as possible.

Where possible, avoid having one riser card with more than twice the gigabytes of another

riser card on the server.

These are general performance guidelines, not mandatory configuration rules. The first

guideline is typically more significant than the second guideline.
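The two guidelines can be turned into advisory checks. The following Python sketch is illustrative only. It assumes that each riser card is given as a list of its DIMM sizes in GB, and the function name `balance_warnings` and the warning texts are hypothetical. Because these are guidelines and not rules, the function returns warnings and does not reject a configuration.

```python
def balance_warnings(risers):
    """Return advisory warnings for a list of risers (lists of DIMM sizes in GB)."""
    warnings = []
    if not risers:
        return warnings
    dimm_counts = [len(riser) for riser in risers]
    totals_gb = [sum(riser) for riser in risers]
    # Guideline 1: the same number of DIMMs on every riser card.
    if len(set(dimm_counts)) > 1:
        warnings.append("DIMM counts differ between riser cards")
    # Guideline 2: no riser with more than twice the capacity of another.
    if max(totals_gb) > 2 * min(totals_gb):
        warnings.append("one riser card has more than twice the GB of another")
    return warnings
```

For example, two risers with `[4, 4]` and `[16, 16]` have equal DIMM counts but capacities of 8 GB and 32 GB, so only the second warning is returned.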

The eight DIMM slots in a riser card are labeled C1, C2, C3, C4, C5, C6, C7, and C8. DIMM

placement rules are as follows:

The DIMMs in C1 and C3 must be identical. Similarly, the DIMMs in C2 and C4 must be identical, the DIMMs in C5 and C7 must be identical, and the DIMMs in C6 and C8 must be identical.

The four DIMMs, if present in C1, C2, C3, and C4, must be identical in a riser card. The

four DIMMs, if present in C5, C6, C7, and C8, must be identical in a riser card.
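The placement rules above can be sketched as a validation function. The following Python sketch is illustrative only and makes two assumptions that the text does not state explicitly: "identical" is modeled as equal capacity in GB, and a slot pair is either fully populated or fully empty because memory features are ordered as DIMM pairs. The function name `placement_valid` is hypothetical.

```python
# Slot pairs that must hold identical DIMMs.
PAIRS = [("C1", "C3"), ("C2", "C4"), ("C5", "C7"), ("C6", "C8")]

# Slot groups whose DIMMs must all match when all four slots are populated.
QUADS = [("C1", "C2", "C3", "C4"), ("C5", "C6", "C7", "C8")]


def placement_valid(slots):
    """slots maps a slot label to a DIMM size in GB; empty slots are omitted."""
    for first, second in PAIRS:
        # Both slots empty (None == None) or both holding the same size.
        if slots.get(first) != slots.get(second):
            return False
    for quad in QUADS:
        sizes = [slots.get(label) for label in quad]
        fully_populated = all(size is not None for size in sizes)
        if fully_populated and len(set(sizes)) != 1:
            return False
    return True
```

For example, `placement_valid({"C1": 4, "C3": 4, "C5": 8, "C7": 8})` returns `True` because the two sizes sit in different slot groups, whereas `placement_valid({"C1": 4, "C3": 4, "C2": 8, "C4": 8})` returns `False` because C1 through C4 are all populated but not identical.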

Plans for future memory upgrades should be taken into account when deciding which

memory feature size to use at the time of initial system order.

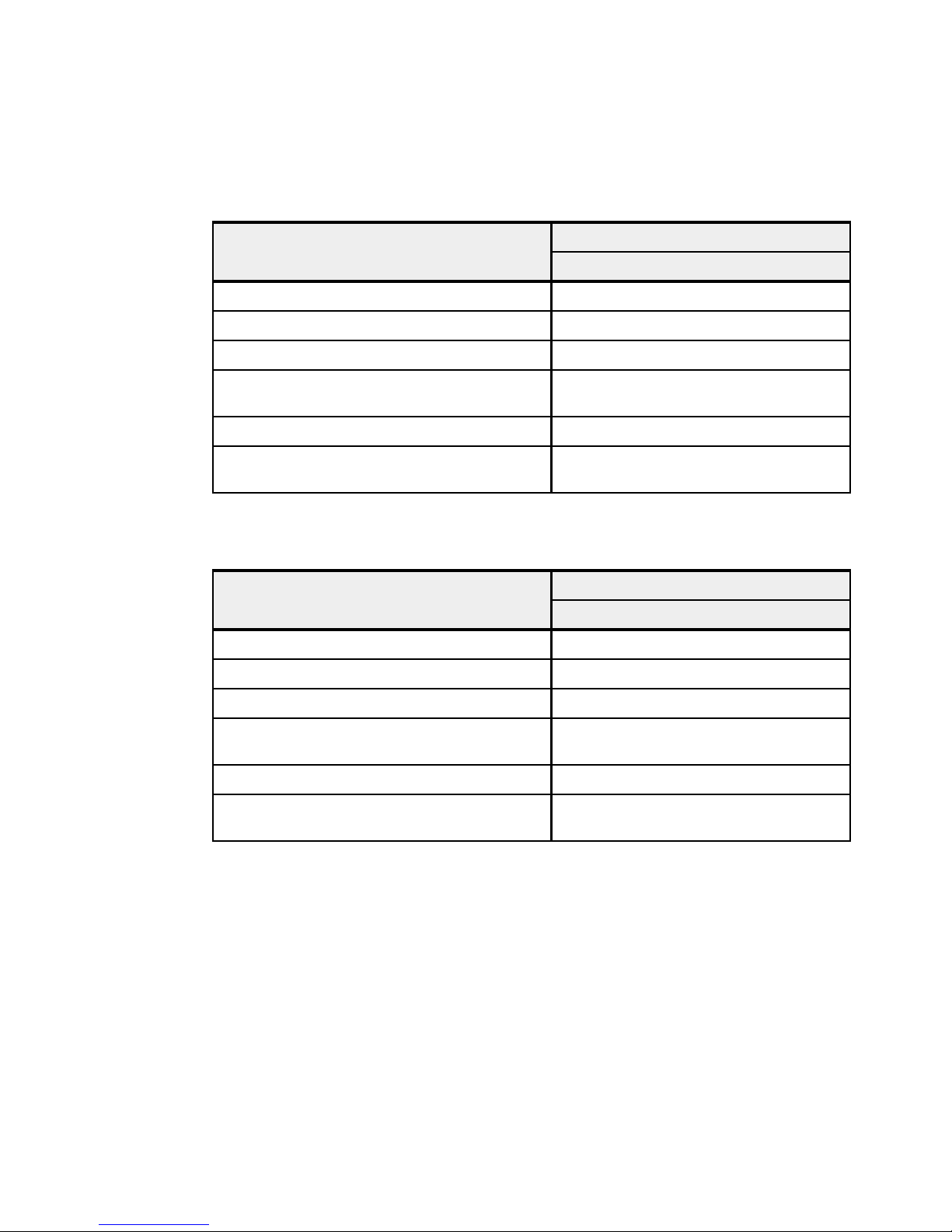

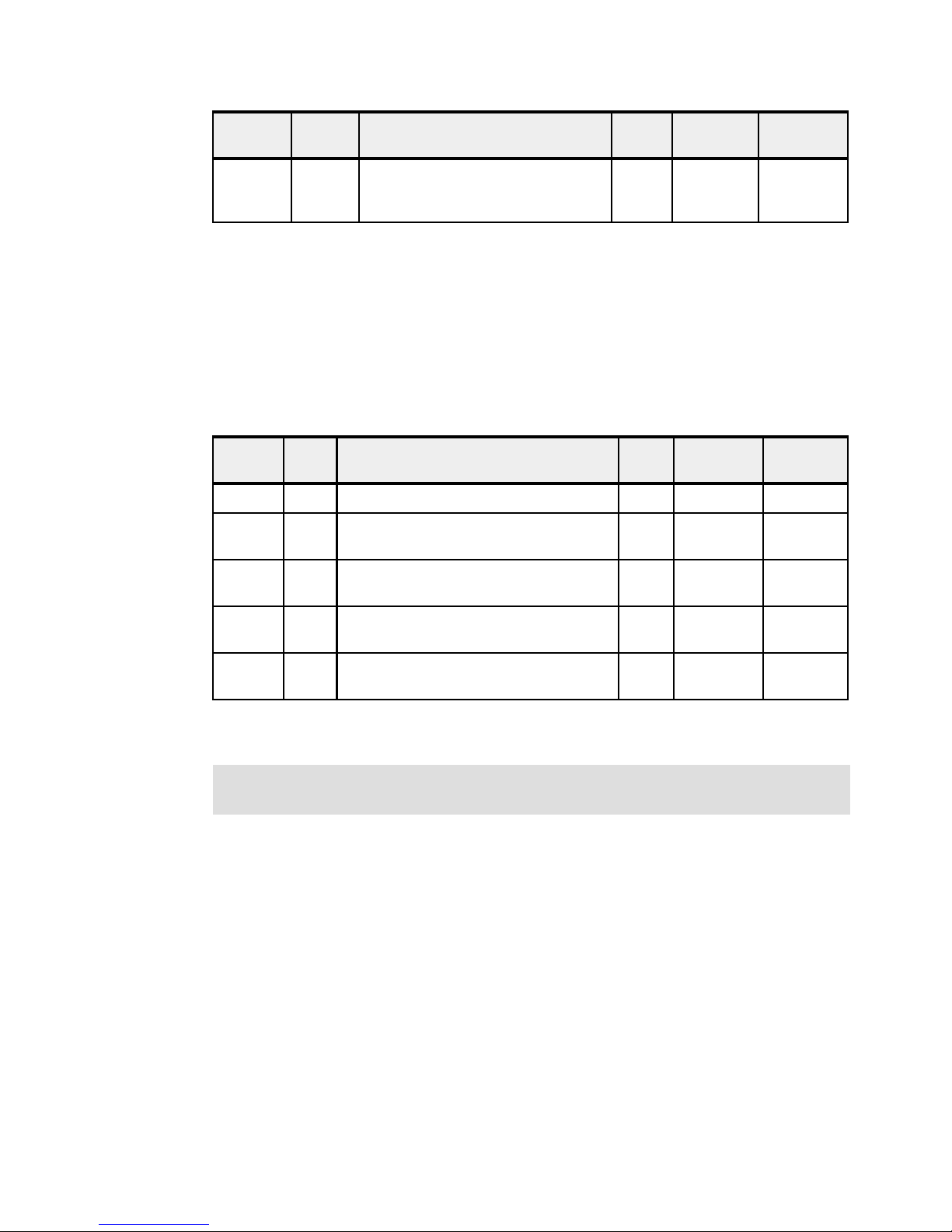

1.6 Disk and media features

The Power 750 and Power 760 system units each include six SFF SAS bays, which offer up to 5.4 TB of HDD capacity or up to 3.6 TB of SSD capacity. All SAS HDDs and SSDs are 2.5-inch SFF and hot swappable. The six SAS SFF bays can be split into two sets of three bays for additional configuration flexibility using just the integrated SAS adapters.

Table 1-9 shows the available disk drive feature codes that each bay can contain.

Table 1-9 Disk drive feature code description

| Feature code | CCIN | Description | OS support |
|---|---|---|---|
| 1886 | | 146 GB 15K RPM SFF SAS Disk Drive | AIX, Linux |
| 1917 | | 146 GB 15K RPM SAS SFF-2 Disk Drive | AIX, Linux |
| 1775 | | 177 GB SFF SSD with eMLC | AIX, Linux |
| 1793 | | 177 GB SFF-2 SSD with eMLC | AIX, Linux |
| 1995 | | 177 GB SSD Module with eMLC | AIX, Linux |
| 1885 | | 300 GB 10K RPM SFF SAS Disk Drive | AIX, Linux |
| 1925 | | 300 GB 10K RPM SAS SFF-2 Disk Drive | AIX, Linux |
| 1880 | 169C | 300 GB 15K RPM SAS SFF Disk Drive | AIX, Linux |
| 1953 | | 300 GB 15K RPM SAS SFF-2 Disk Drive | AIX, Linux |
| ES02 | | 387 GB 1.8 inch SAS SSD for AIX and Linux with eMLC | AIX, Linux |
| ES0A | | 387 GB SFF SSD with eMLC | AIX, Linux |
| ES0C | | 387 GB SFF-2 SSD eMLC | AIX, Linux |
| 1790 | | 600 GB 10K RPM SAS SFF Disk Drive | AIX, Linux |
| 1964 | | 600 GB 10K RPM SAS SFF-2 Disk Drive | AIX, Linux |
| 1751 | | 900 GB 10K RPM SAS SFF Disk Drive | AIX, Linux |
| 1752 | | 900 GB 10K RPM SAS SFF-2 Disk Drive | AIX, Linux |
| 1888 | 198C | 139 GB 15K RPM SFF SAS Disk Drive | IBM i |
| 1947 | 19B0 | 139 GB 15K RPM SAS SFF-2 Disk Drive | IBM i |
| 1787 | 58B3 | 177 GB SFF SSD with eMLC | IBM i |
| 1794 | 58B4 | 177 GB SFF-2 SSD with eMLC | IBM i |
| 1996 | 58B2 | 177 GB SSD Module with eMLC | IBM i |
| 1911 | 198D | 283 GB 10K RPM SFF SAS Disk Drive | IBM i |
| 1956 | 19B7 | 283 GB 10K RPM SAS SFF-2 Disk Drive | IBM i |
| 1879 | | 283 GB 15K RPM SAS SFF Disk Drive | IBM i |
| 1948 | 19B1 | 283 GB 15K RPM SAS SFF-2 Disk Drive | IBM i |
| ES0B | | 387 GB SFF SSD eMLC | IBM i |
| ES0D | | 387 GB SFF-2 SSD eMLC | IBM i |
| 1916 | 19A3 | 571 GB 10K RPM SAS SFF Disk Drive | IBM i |
| 1962 | 19B3 | 571 GB 10K RPM SAS SFF-2 Disk Drive | IBM i |
| 1737 | 19A4 | 856 GB 10K RPM SAS SFF Disk Drive | IBM i |
| 1738 | 19B4 | 856 GB 10K RPM SAS SFF-2 Disk Drive | IBM i |