Page 1

Sterling B2B Integrator

Performance Management

Version 5.2

IBM

Page 2

Page 3

Sterling B2B Integrator

Performance Management

Version 5.2

IBM

Page 4

Note

Before using this information and the product it supports, read the information in “Notices” on page 247.

Copyright

This edition applies to Version 5 Release 2 of Sterling B2B Integrator and to all subsequent releases and

modifications until otherwise indicated in new editions.

© Copyright IBM Corporation 2000, 2015.

US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract

with IBM Corp.

Page 5

Contents

Performance Management ....... 1

Overview ............... 1

Intended Audience ........... 2

System Components ........... 2

Performance Tuning Methodology ...... 4

Performance Recommendations Checklists .... 5

Sterling B2B Integrator: General Recommendations

Checklist .............. 5

IBM Sterling File Gateway: Specific

Recommendations Checklist ........ 9

EBICS Banking Server: Specific

Recommendations ........... 12

Database Management........... 13

Planning .............. 13

Server Sizing ............. 14

Storage and File Systems ......... 14

Database management for Sterling B2B Integrator 16

Oracle Database Configuration and Monitoring 22

IBM DB2 for LUW Configuration and Monitoring 35

Microsoft SQL Server Configuration and

Monitoring ............. 51

Java Virtual Machine ........... 61

Garbage Collection Statistics ........ 62

JVM Verbose Garbage Collection ...... 62

IBM JVM Garbage Collection Example .... 62

Introduction to HotSpot JVM Performance and

Tuning ............... 63

Introduction to the IBM JVM Performance and

Tuning Guidelines ........... 78

Monitoring Operations .......... 82

Managing System Logs ......... 82

Auditing .............. 93

Monitoring a Business Process Thread .... 94

Monitoring Messages .......... 96

Reviewing System Information ....... 97

Monitoring Node Status ......... 106

Monitoring Deprecated Resources ..... 109

Soft Stop of Sterling B2B Integrator ..... 110

Hard Stop of Sterling B2B Integrator .... 120

Performance Tuning ........... 120

Performance Tuning Utility........ 120

Manual Performance Tuning ....... 166

Performance Statistics .......... 203

Turning On and Turning Off Performance

Statistics .............. 213

Reporting Performance Statistics ...... 213

Troubleshooting ............ 214

Database Maintenance Check ....... 214

Full Database Issues and Resolution ..... 218

Database Connection Issues ....... 222

Database Down Check ......... 224

Tracking JDBC Connections ....... 226

Types of Cache Memory......... 227

Symptoms and Causes of Inefficient Cache

Usage ............... 228

Resolving Inefficient Cache Usage ..... 228

Correcting Dropped Data Problems ..... 229

Correcting Out-Of-Memory Errors ..... 230

Understanding Business Process ...... 230

Symptoms and Causes of Poor Business Process

Execution Time ............ 230

Resolving Halted, Halting, Waiting, or

Interrupted Business Processes ...... 232

Slow System: Symptoms, Causes, and

Resolution ............. 234

Performing a Thread Dump ....... 236

Scenario-Based Troubleshooting Tips and

Techniques ............. 239

Performance and Tuning Worksheet ...... 241

workflowLauncher: Running a Business Process

from a Command Line .......... 244

Notices .............. 247

Index ............... 251

© Copyright IBM Corp. 2000, 2015 iii

Page 6

iv Sterling B2B Integrator: Performance Management

Page 7

Performance Management

You can manage the performance of Sterling B2B Integrator according to your

needs.

Overview

Typically, performance in general, and optimal performance in particular, are

associated with the following criteria: latency, throughput, scalability, and load.

v Latency – The amount of time taken to generate a response to a request (speed).

v Throughput – The amount of data transferred during a specified period

(volume).

v Scalability – The ability of the system to adapt to increasing workload

(additional hardware).

v Load – The ability of the system to continue performing at optimal level even

when the load on the system increases.

Sterling B2B Integrator performance can be tuned to meet various processing

requirements, including higher processing speed and ability to sustain high

volumes. The amount of resources given to the interactive and batch components

of a mixed workload determines the trade-off between responsiveness (speed) and

throughput (work completed in a certain amount of time).

When using Sterling B2B Integrator, if you face any performance issues, perform

the applicable task or tasks from the following list:

v Change performance parameters in the properties files or through the

performance tuning utility. For more information about changing performance

parameters, refer to “Performance Tuning Utility” on page 120.

v Add additional hardware.

v Tune your business processes to run more efficiently on Sterling B2B Integrator.

v Monitor and archive the database to free up resources.

v Create Sterling B2B Integrator cluster for load balancing and scaling.

Before You Begin Performance Tuning

Before you carry out performance tuning actions, you must consider capacity

planning issues. The “Performance and Tuning Worksheet” on page 241 provides

information about how to determine your capacity requirements. This worksheet,

and other capacity planning tools, also help you adjust your current workload,

regardless of your future requirements.

Following is a list of some capacity issues that impact performance and tuning:

v Daily volume requirements, including the average size and number of

transactions to be processed.

v Additional processing requirements, for example, translation, and

straight-through processing.

v Types of pay loads, including EDIFACT, XML, and other formats.

v Translation requirements, for example, translation from EDIFACT to XML.

© Copyright IBM Corp. 2000, 2015 1

Page 8

v Enterprise Resource Planning (ERP) integration requirements, for example,

integration with SAP®or PeopleSoft®.

v Number of processors that are available and can be dedicated to Sterling B2B

Integrator.

v Memory available to meet your processing requirements.

v Disk space available.

v Hard disk Redundant Array of Independent Disks (RAID) level. RAID arrays

use two or more drives in combination for fault tolerance and performance. The

recommended RAID level for Sterling B2B Integrator is Level 5.

v Database size requirements.

Note: When conducting a performance tuning activity, keep the information

provided in this topic at hand for easy reference and future planning.

Intended Audience

This document is intended for, but not limited to:

v Technical Architects

v Performance Engineers

v Configuration Engineers

v Application Administrators

v Database Administrators

v System Administrators

System Components

Performance management activities affect all aspects of the system, including

computer nodes, network, disks, database, and so on.

One person or role may be responsible for one, several, or all the components.

Some of the roles include:

v Hardware Engineer

v System Administrator

v Network Engineer - Local Area or Wide Area

v Application Server Administrator

v Database Administrator

v Capacity Planning Engineer

v Performance Analyst

v IBM®Sterling B2B Integrator Administrator

Performance management documentation includes the following information:

v Background information about the different performance and tuning issues

when running Sterling B2B IntegratorSterling B2B Integrator.

v Step-by-step information that helps you:

– Optimize the performance.

– Diagnose and resolve performance issues, if any, to suit your environment.

You can work through most performance and tuning issues using the following

tools:

v Properties file settings, which you can access through the properties directory in

your installation directory.

2 Sterling B2B Integrator: Performance Management

Page 9

v The Sterling B2B Integrator user interface. Use the Operations option in the

Administration menu to access the following tools:

– JDBC Monitor (Operations > JDBC Monitor)

– JVM Monitor (Operations > System > Performance > JVM Monitor)

– Message Monitor (Operations > Message Monitor)

– Performance Statistics Report (Operations > System > Performance >

Statistics)

– Performance Tuning Wizard (Operations > System > Performance > Tuning)

– System Troubleshooting (Operations > System > Troubleshooter)

– Thread Monitor (Operations > Thread Monitor)

Based on the diversity of the roles and the responsibilities associated with them,

the Sterling B2B Integrator Performance Management Guide is divided into the

following sections:

v Overview - Provides performance tuning overview, audience information,

performance concepts overview, performance tuning methodologies, and tuning

issues that may arise when running Sterling B2B Integrator.

v Performance recommendations checklist - Provides general, planning, and

implementation (single node and cluster) checklists. This topic caters to

hardware engineers, system administrators, network engineers, capacity

planners, and Sterling B2B Integrator administrators.

v Database management system - Discusses key recommendations for Oracle®,

IBM DB2®, Microsoft SQL Server™, and MySQL databases. This topic caters to

database administrators.

v Java™Virtual Machines - Explains configuration, recommendations, and so on.

This topic caters to application server administrators.

v Monitoring operations, performance tuning, and performance statistics -

Discusses monitoring operations, performance tuning (utility and manual), and

management of performance statistics.

v Monitoring operations - Provides information about system logs, auditing,

business process threads, messages, system information, and cluster node status.

v Performance tuning - Explains performance tuning using the performance tuning

wizard, and manual performance tuning recommendations such as scheduling

policies, cache contents, persistence levels, property files, and system recovery.

v Performance statistics - Provides information about managing, enabling and

disabling, and reporting performance statistics.

v Troubleshooting - Provides information about resolving full database issues, Java

Database Connectivity (JDBC™) connections, cache issues, memory issues, slow

system issues, and improving business process execution time.

v Performance and tuning worksheet - Helps you to take an inventory of the

different parts of your Sterling B2B Integrator installation that affects

performance.

v workFlow Launcher - Explains how to run business processes from the

command line.

Note: It is recommended that the performance analyst, capacity planner, and

Sterling B2B Integrator administrators read all the topics.

Performance Management 3

Page 10

Performance Tuning Methodology

For effective performance tuning, you must first identify the performance issues

and determine the corrective actions. Following is a methodology that helps you

focus your efforts and reduce redundant tasks.

Use the following methodology to identify and resolve performance issues in

Sterling B2B Integrator:

1. Fill out the Requirements Planning Worksheet to determine the hardware

requirements for a given processing volume and speed.

Note: If you purchased IBM Consulting Services, you would have received a

report containing information about your hardware requirements based on

your business needs.

2. Verify that your hardware and memory specifications match the hardware and

memory recommendations provided during the performance planning phase.

3. Verify that you have installed the requisite patches on your system, which will

help you fix your performance issues from the IBM Customer Center Web site

at https://cn.sterlingcommerce.com/login.jsp.

4. Verify that you have the supported Java Virtual Machine (JVM™) on the

computer running Sterling B2B Integrator, and on the DMZ computer if you

are running perimeter servers. Both the JVM versions must match each other

and your Sterling B2B Integrator version requirements. The build date and

lower release numbers must also match.

5. Verify that you are running the supported version of your operating system.

6. Verify that you are running the supported versions of the JDBC drivers.

7. Verify that you have created your business processes using the most current

adapters and services, well-structured XPath statements, and the lowest

persistence by step and business process.

8. Tune Sterling B2B Integrator using the Performance Tuning Utility and the

information that you provided in the Requirements Planning Worksheet (refer

to the topic Performance and Tuning Worksheet). The Performance Tuning

Utility enables you to tune the cache, memory, and other system components,

but not the business processes.

For more information about this utility, refer to “Performance Tuning Utility”

on page 120.

9. Navigate to Operations > System > Performance > Statistics. In the Statistics

page, select the Enable Performance Statistics option. The Performance

Statistics reports provide information about the time taken to perform

business processes and execute activities, database connections and

persistence, and business process queue performance. You can use this

information to tune your system according to your volume and speed

requirements.

For more information about performance statistics, refer to the topic Manage

Performance Statistics.

10. Review your history of incoming and outgoing documents and enter this

information in charts, showing daily, weekly, and monthly processing trends.

Use these charts to determine your peak volume processing levels based on

your processing pattern history.

11. Conduct initial performance testing by running your business processes with

sample data that is the same size as the data that will be processed in

production. In addition, run your business processes with data that

4 Sterling B2B Integrator: Performance Management

Page 11

approximates your anticipated peak processing volume. This helps you tune

your system as close to your production environment as possible.

12. Review the Performance Statistics Report for processing speeds, volumes, and

database connections.

13. Review the other reports, such as the Database Usage Report and the Cache

Usage Report, for information about the areas that can be tuned.

14. Retune Sterling B2B Integrator using the Performance Tuning Utility, based on

the information you gathered from your initial performance testing.

Continue this process until your processing time and volume requirements are

met.

15. Create a new Performance Statistics Report called Benchmarksdd/mm/yy.

16. Conduct the same test that you conducted in step 11.

17. Review the Benchmarksdd/mm/yy Performance Statistics Report. If the statistics

in this report are not similar to your previous statistics, repeat steps 11 - 14.

18. Compare your monthly or weekly Performance Statistics Reports with this

Benchmark report to verify that your system is processing business processes

efficiently and that your resources are being used efficiently. Using this

methodology as a proactive measure may reduce downtime and processing

issues.

Performance Recommendations Checklists

Performance Recommendations Checklists provide guidelines to plan for the

required elements, and enhance the performance of Sterling B2B Integrator and

related components.

Sterling B2B Integrator: General Recommendations Checklist

The general recommendations checklist provides a list of guidelines to plan for the

required elements, and to enhance the performance of Sterling B2B Integrator.

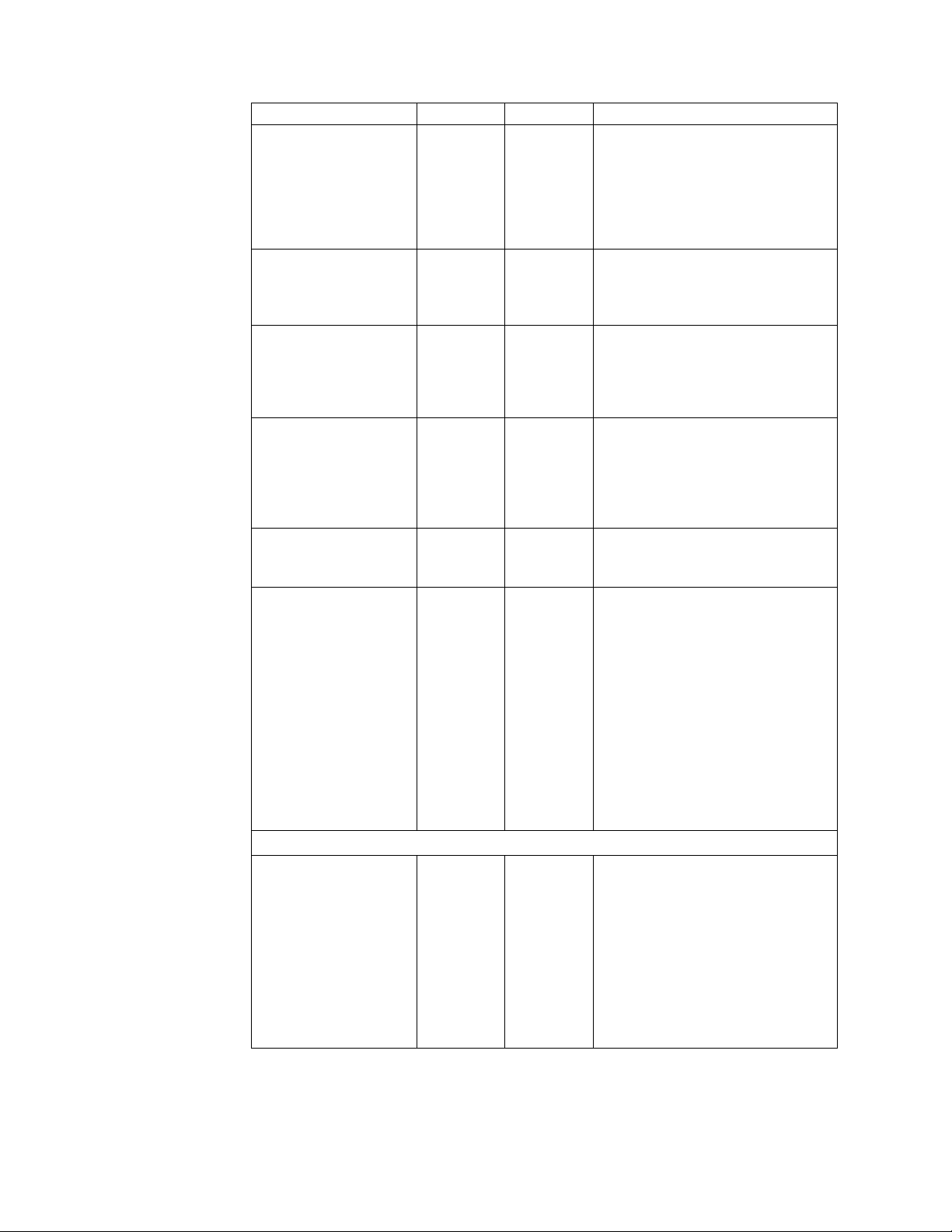

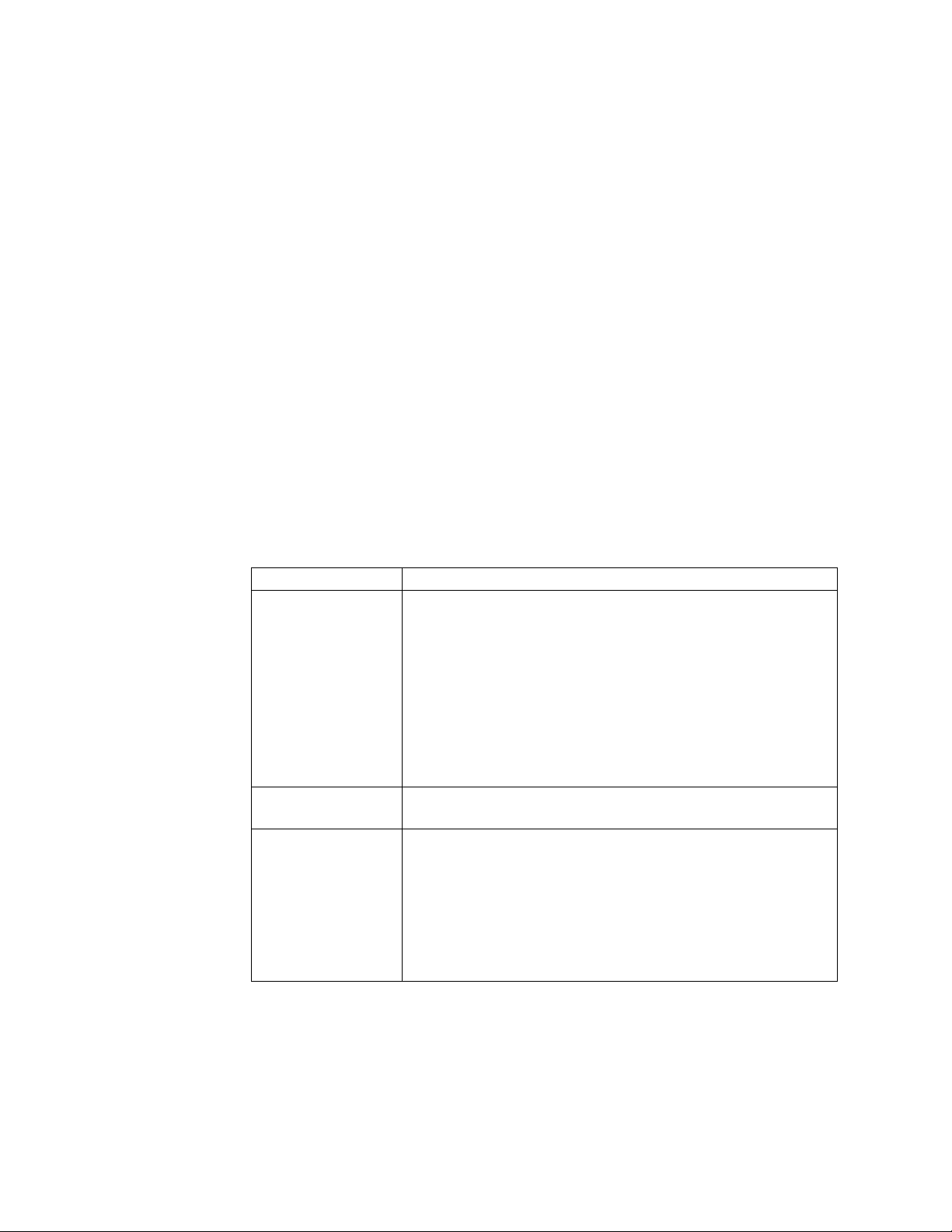

In the following table, the Test and Production columns indicate whether the

recommendations are Recommended (R), Critical (C), or Not Applicable (NA) in

the test and production environments.

Note: It is recommended to setup a Sterling B2B Integrator test environment with

a sample set of data to verify the recommendations provided in this checklist.

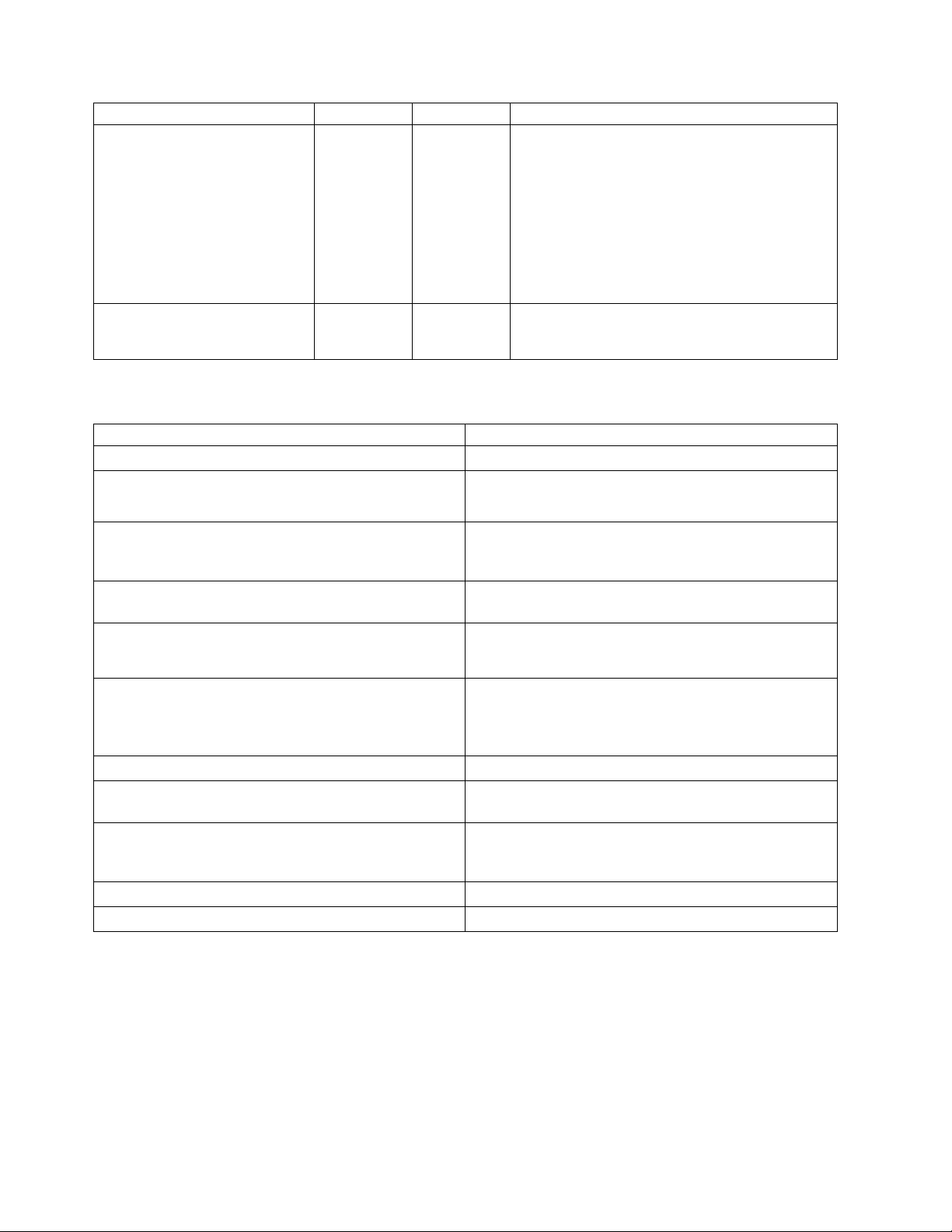

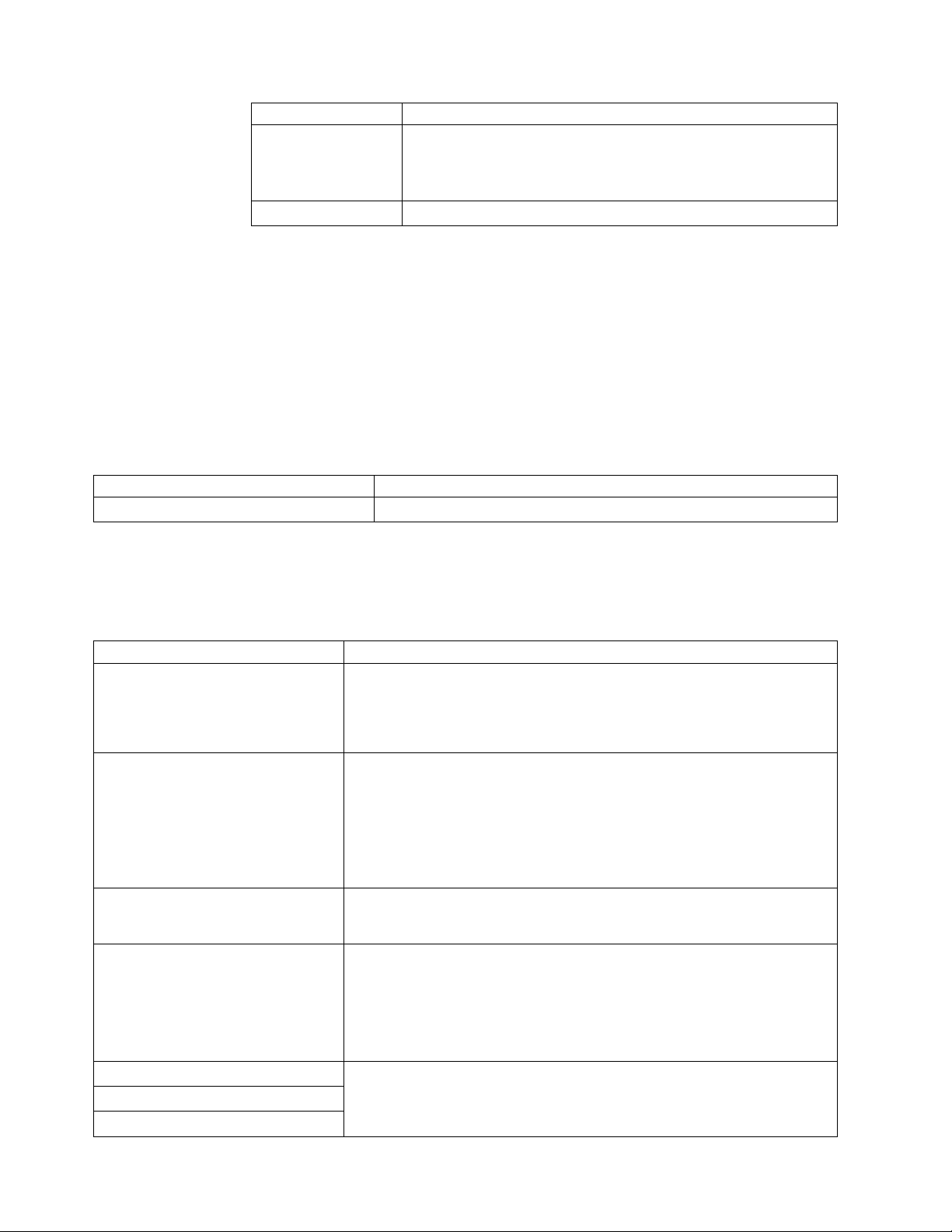

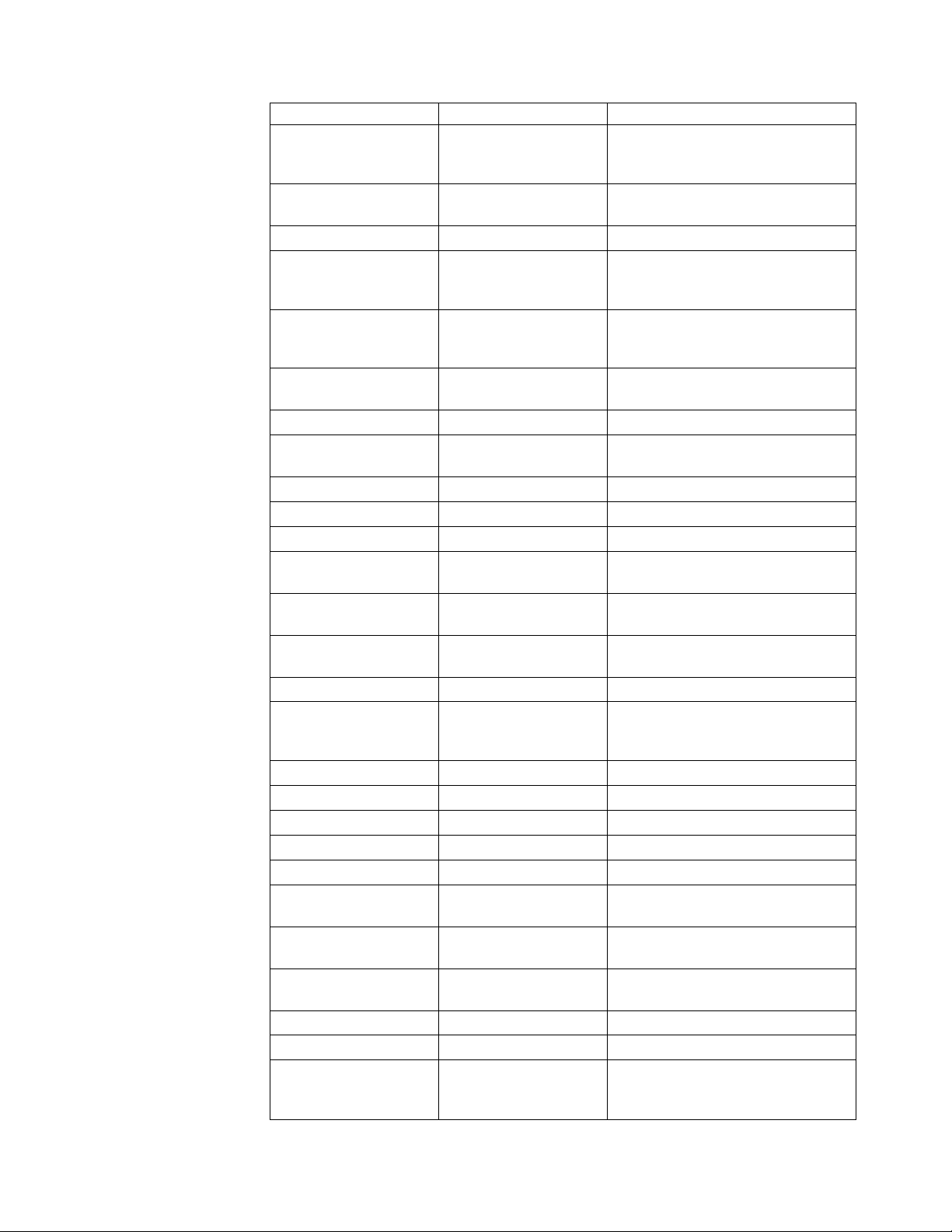

Recommendation Test Production Comments

OS version and OS

kernel parameters

Network speed C C You should ensure that your

C C You should ensure that you install

Sterling B2B Integrator on certified

OS versions and levels.

Refer to the System Requirements

documentation of the corresponding

OS versions.

network cards are operating at the

highest speeds. The network

interface and the network switch can

negotiate to lower speed. When that

happens, performance degrades even

under normal processing periods.

Performance Management 5

Page 12

Recommendation Test Production Comments

AIX page space

allocation

Monitor CPU Utilization NA C You should monitor CPU utilization

Monitor Swap Usage C C If not enough space is left on the

Monitor Paging C C The JVMs and database management

C C The AIX default page space

allocation policy does not reserve

swap space when processes allocate

memory. This can lead to excessive

swap space, which forces AIX to kill

processes when it runs out of swap

space.

You should ensure that you either

have sufficient swap space, or set the

following environment policy

variables:

PSALLOC=EARLY

NODISCLAIM=TRUE

to ensure that there is no CPU

contention.

swap device (or paging file), the

operating system may prevent

another process from starting, or in

some cases, be forced to kill the

running processes.

systems rely on large memory

buffers or heaps, and are sensitive to

paging. Performance can noticeably

degrade if enough memory is not

available to keep the JVM heap in

memory.

Monitor Heap

Garbage Collection

Performance

You can monitor paging levels using

standard operating system or

third-party measurement tools, such

as:

v UNIX/Linux – SAR

v Windows – System Monitor

C C Monitoring heap GC performance is

critical for performance and

availability. For example, if the

amount of heap that is free after a

GC is continually increasing, and

approaching the maximum heap

size, the JVM can experience

OutOfMemory exceptions.

6 Sterling B2B Integrator: Performance Management

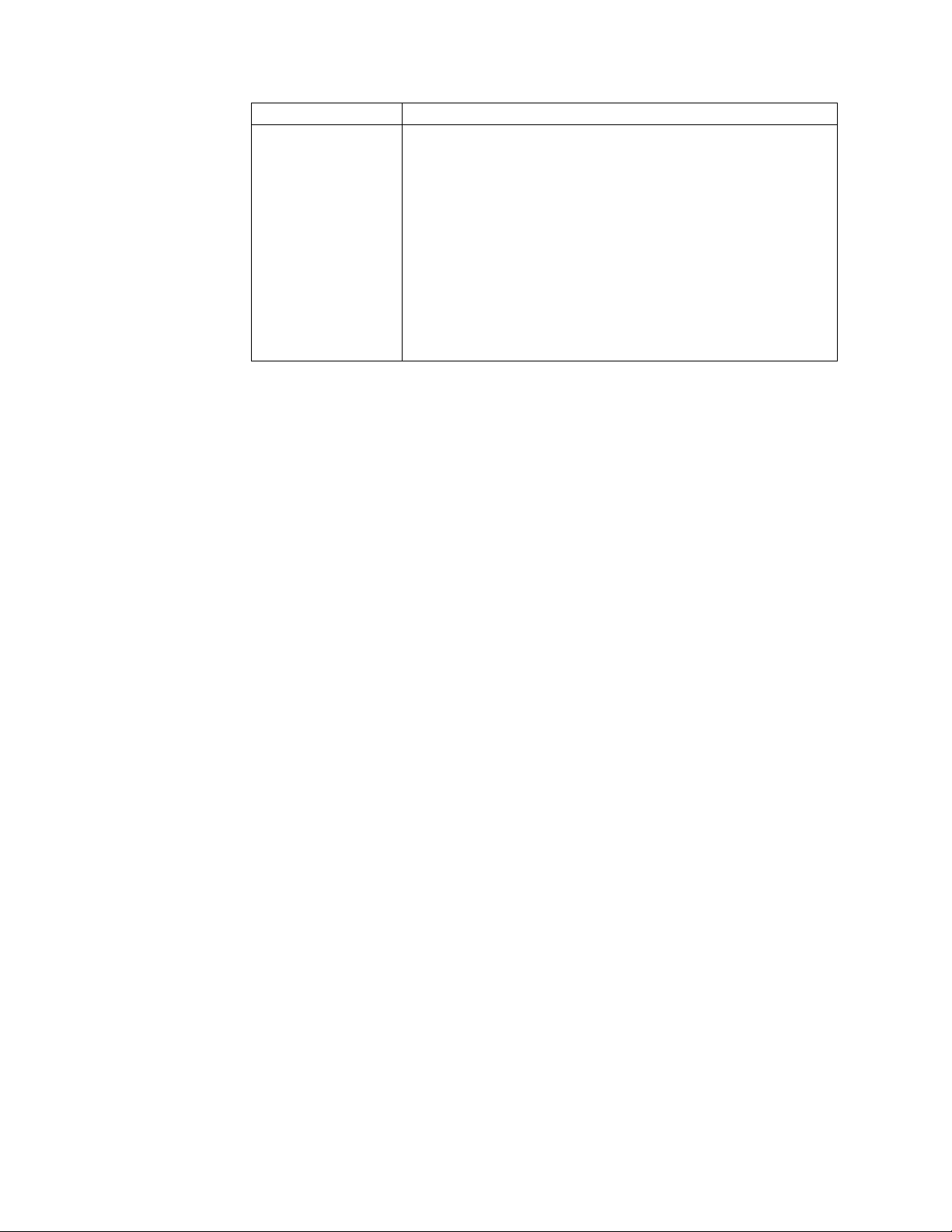

Page 13

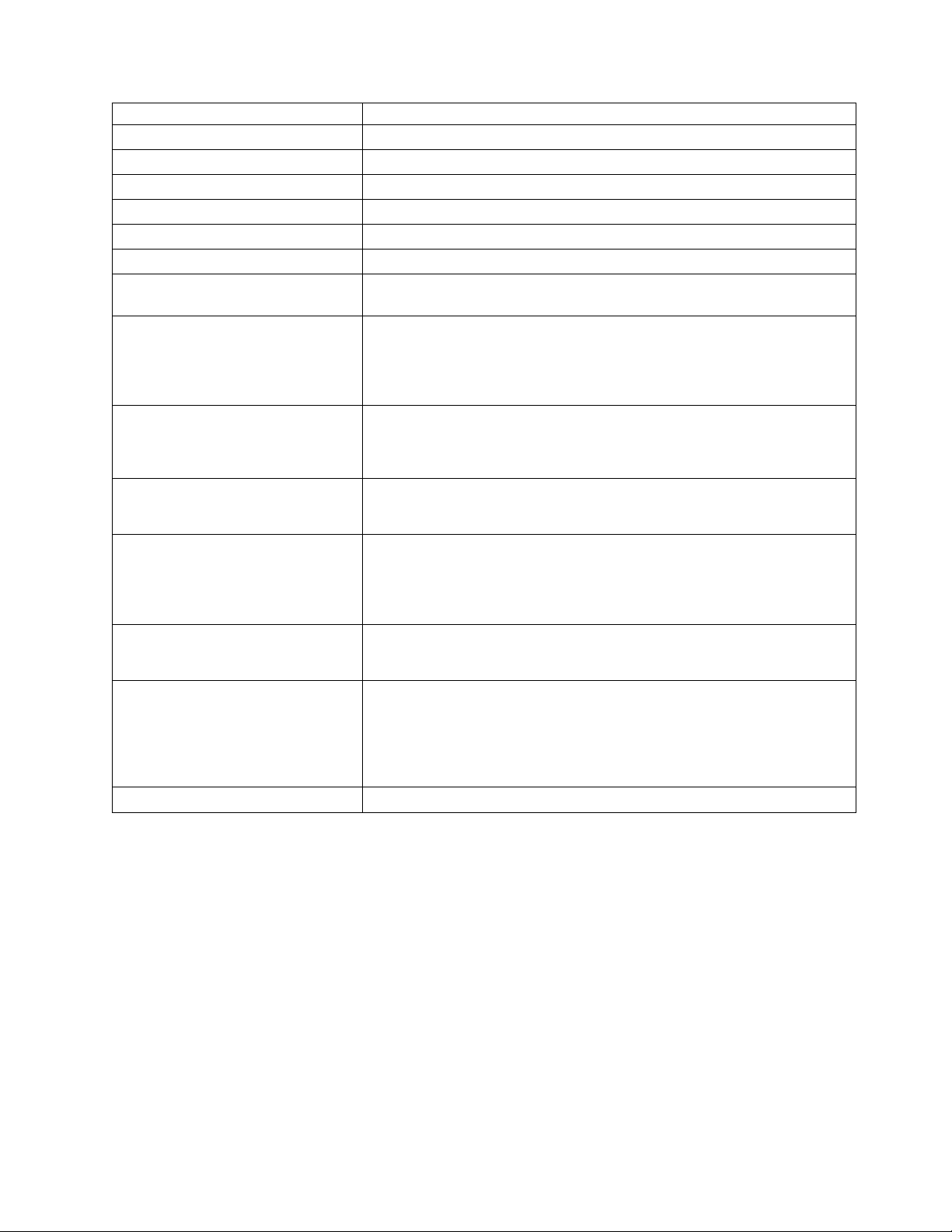

Recommendation Test Production Comments

EDI Encoder Service

R R As of Sterling B2B Integrator 5.2, the

EDI Encoder Service and EDI

EDI Envelope Service

Envelope Service notify the user of

an incorrect value or mismatch in

the Mode parameter by writing

information on how to correct the

problem in the status report of the

business process step. This can cause

a performance degradation in some

very heavy usage scenarios by

adding additional load on the

database. It is recommended that all

business processes that use the EDI

Encoder Service and/or EDI

Envelope Service be reviewed to

make certain they are using the

correct Mode parameter, and that the

values match in the business process.

v EDI Encoder Service should be set

as Mode. For example: <assign

to="Mode">IMMEDIATE</assign>

v EDI Envelope Service should be

set as MODE. For example: <assign

to="MODE">IMMEDIATE</assign>

Planning

Server node sizing NA C You should ensure that you have

sufficient computing capacity to

process peak transaction volumes.

Refer to System Requirements

documentation to get an estimate of

the processor, memory, and other

requirements.

You can also engage IBM

Professional Services to conduct a

capacity plan study of your system.

This study involves measuring your

system, and using the measurements

to forecast resource requirements at

anticipated peak processing periods.

Database disk sizing NA C You should ensure that you have

sufficient disk space for the database

server.

The size of the database disk

subsystem may vary from a few

gigabytes to several terabytes. The

size depends on the processing

volume, its complexity, and the

length of time you want to keep the

data active in the database.

JVM

Performance Management 7

Page 14

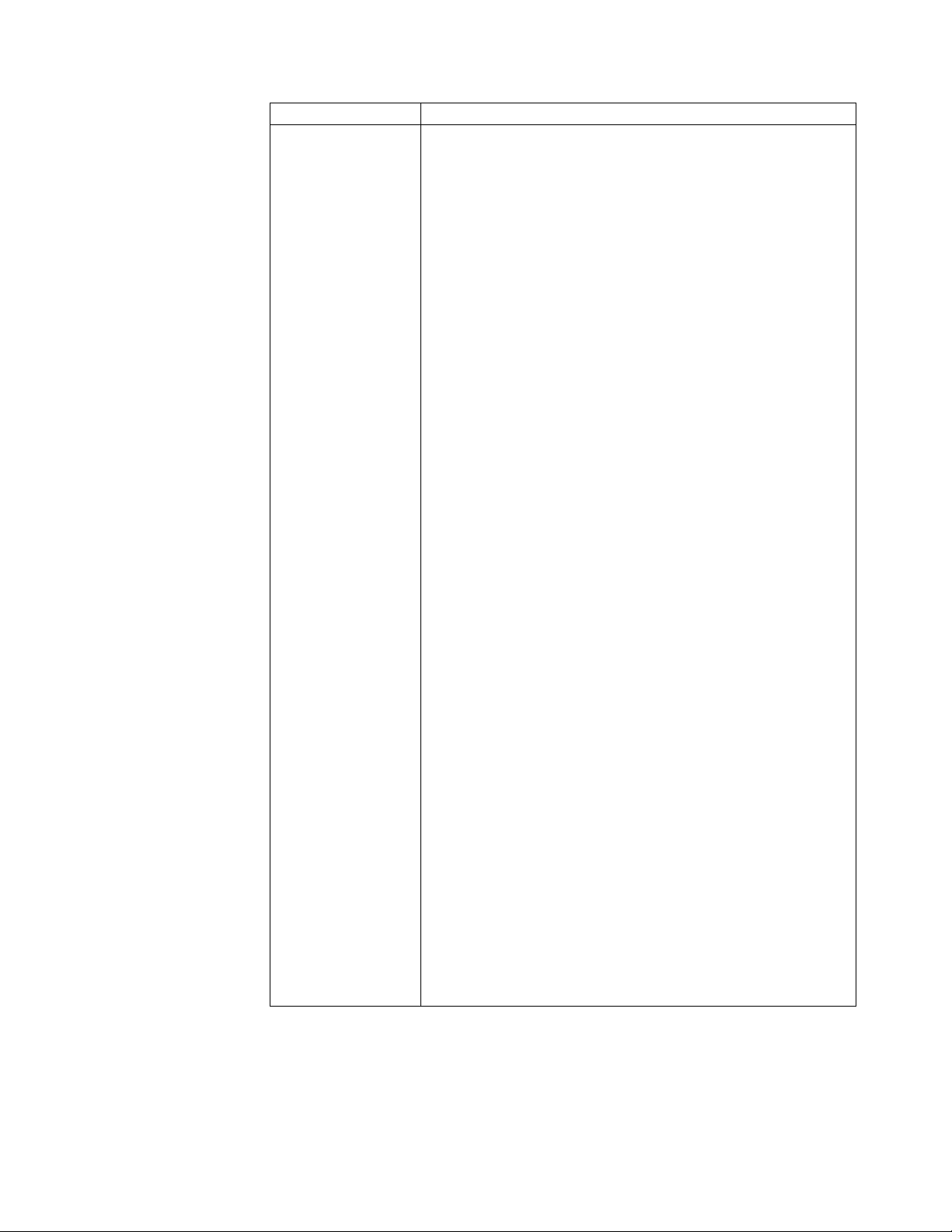

Recommendation Test Production Comments

JVM version C C You must ensure that you install

Sterling B2B Integrator on certified

JVM versions and levels.

Run the –version command in the

command prompt to ensure that you

have installed the correct version.

Verbose GC statistics NA C You can enable verbose GC statistics

collection. Understanding the health

of GCs for each JVM is critical for

performance.

Paging C C The JVM heap must be resident in

the memory. The performance can

noticeably degrade if the operating

system has to page portions of the

heap out to disk.

OutOfMemory

Exceptions

JVM VM mode C C For HotSpot JVM, the server mode is

Heap size C C Correct heap size configuration is

C C OutOfMemory exceptions can cause

unpredictable behavior. You should

ensure that you have allocated

sufficient physical memory to

Sterling B2B Integrator based on

your processing requirements.

applicable for long-running

workloads.

critical for both performance and

availability. If the heap size is too

big, the GC pauses can be long.

Similarly, if the heap size is too

small, it can lead to OutOfMemory

exceptions. You should ensure that

heap size is not set larger than

physical memory to avoid thrashing.

Database

Monitor and regulate

indexes

8 Sterling B2B Integrator: Performance Management

Sterling B2B Integrator supports both

32-bit and 64-bit JVMs. You should

allocate more heap space when you

are running a 64-bit JVM.

C C Sterling B2B Integrator comes with a

default set of indexes. In some cases,

the indexes may not apply to your

operational environment.

You should regularly monitor the

resource cost of frequently used

queries to check if additional indexes

are required. Similarly, you can also

monitor the database to delete

indexes if they are not required.

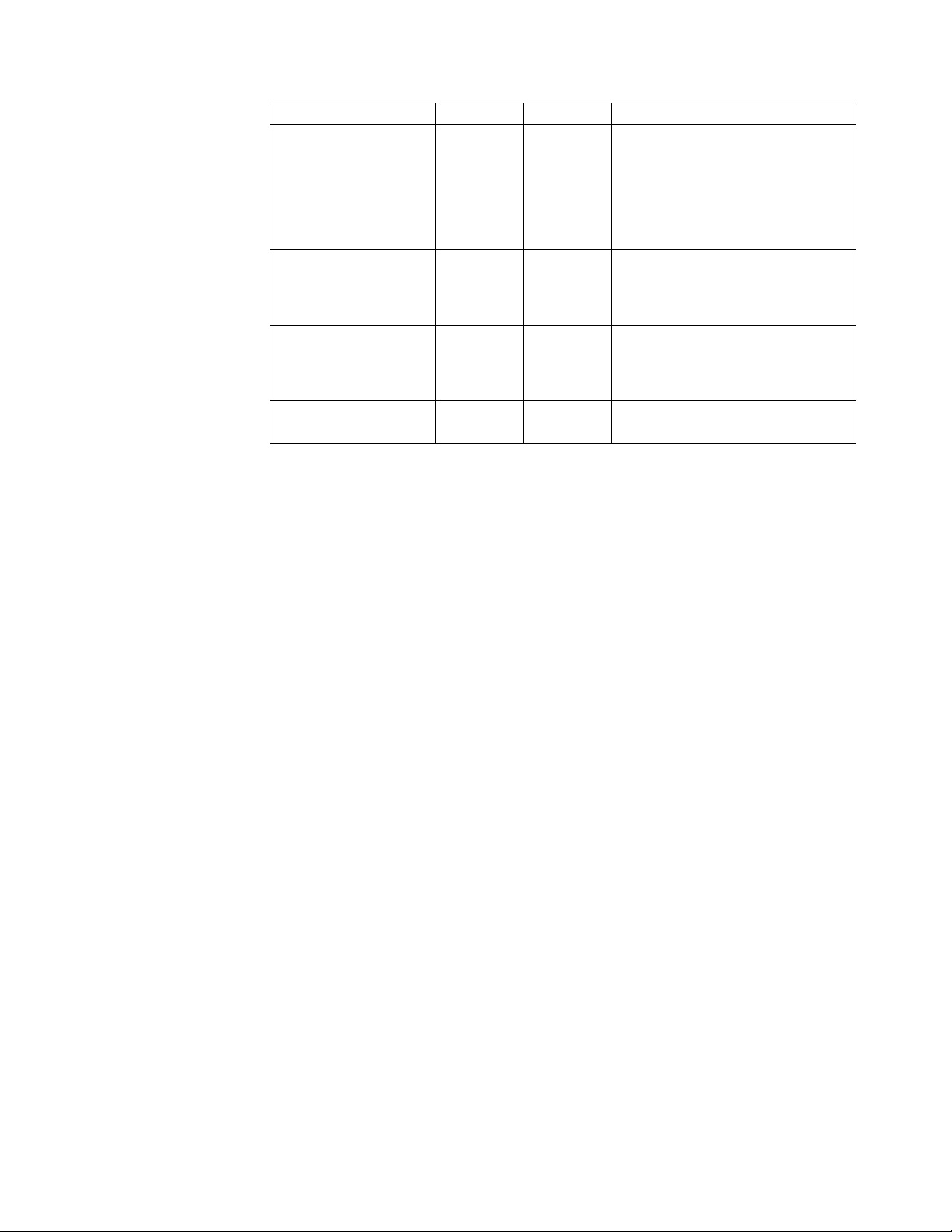

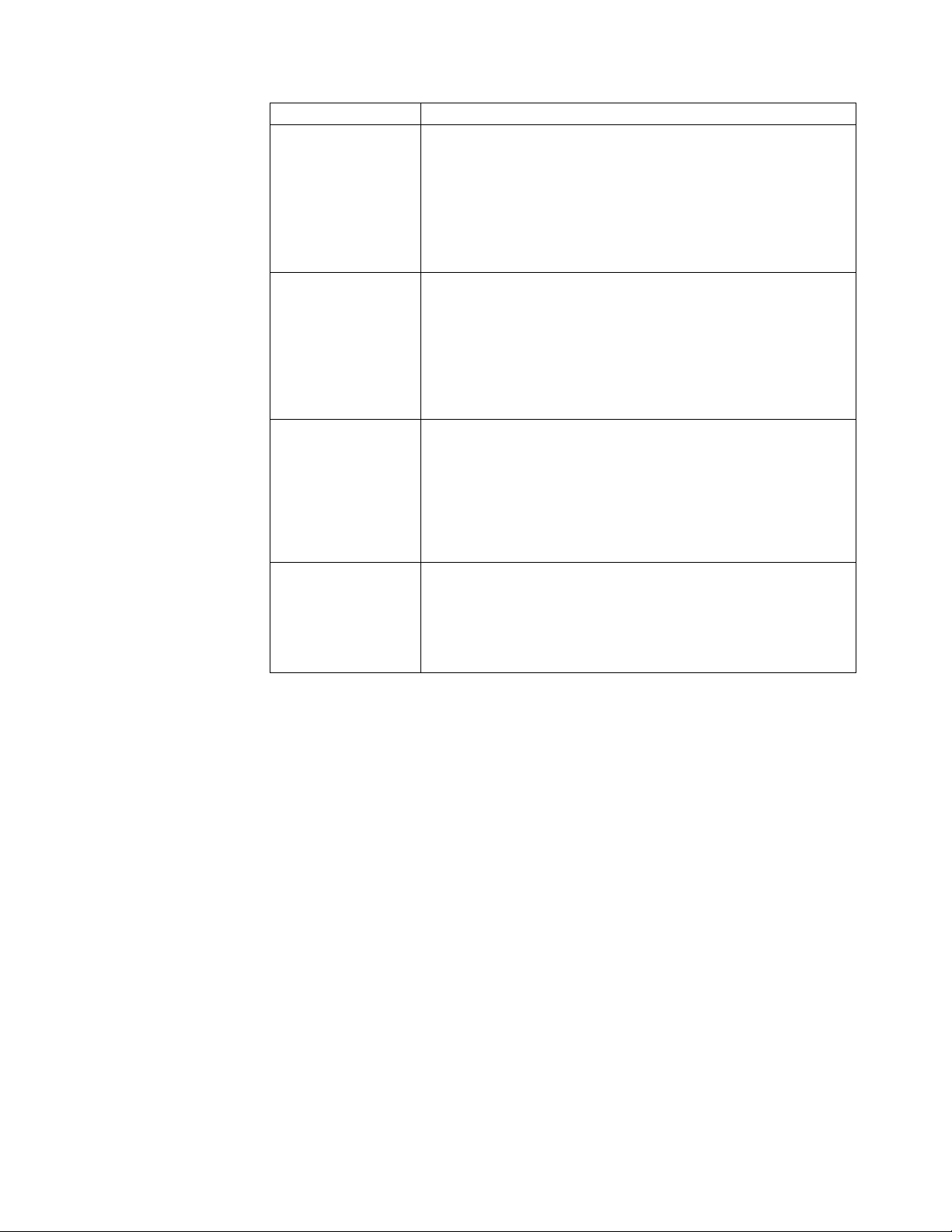

Page 15

Recommendation Test Production Comments

Cursor sharing C C If you are using Oracle database,

cursor sharing enables dynamic SQL

to be reusable, thereby reducing the

contention on the shared pool.

You should ensure that you set

cursor_sharing=EXACT

Parameters governing

DB2 locking strategy

Parameters governing

DB2 memory

Volatile table NA C Mark tables that change significantly,

C C Set DB2_EVALUNCOMMITTED,

DB2_SKIPDELETED, and

DB2_SKIPINSERTED to reduce lock

contention.

C C Set parameters to manage various

memory structures such as

LOCKLIST, SORTHEAP, and so on,

to AUTOMATIC.

as volatile.

IBM Sterling File Gateway: Specific Recommendations Checklist

IBM Sterling File Gateway is installed on an instance of Sterling B2B Integrator,

and shares many of the resources with the latter, including:

v Communication Adapters

v Business Processes

v Security Services

v Perimeter Services

v Encryption

v Decryption

v Account Management

You should, therefore, tune your Sterling B2B Integrator installation first, and then

perform the Sterling File Gateway-specific tuning and troubleshooting tasks. Be

aware that the changes you make to Sterling File Gateway can also affect the

performance of Sterling B2B Integrator.

Note: Do not edit the properties files. Make all the changes in the

customer_overrides.properties file. For example, to change the

pgpCmdline2svcname property, enter the following line in the

customer_overrides.properties file:

filegateway.pgpCmdline2svcname=CUSTOM

In this line, replace CUSTOM with the name of your Command Line 2 adapter. For

more information about the customer_overrides.properties file, refer to the

property files documentation in the online documentation library.

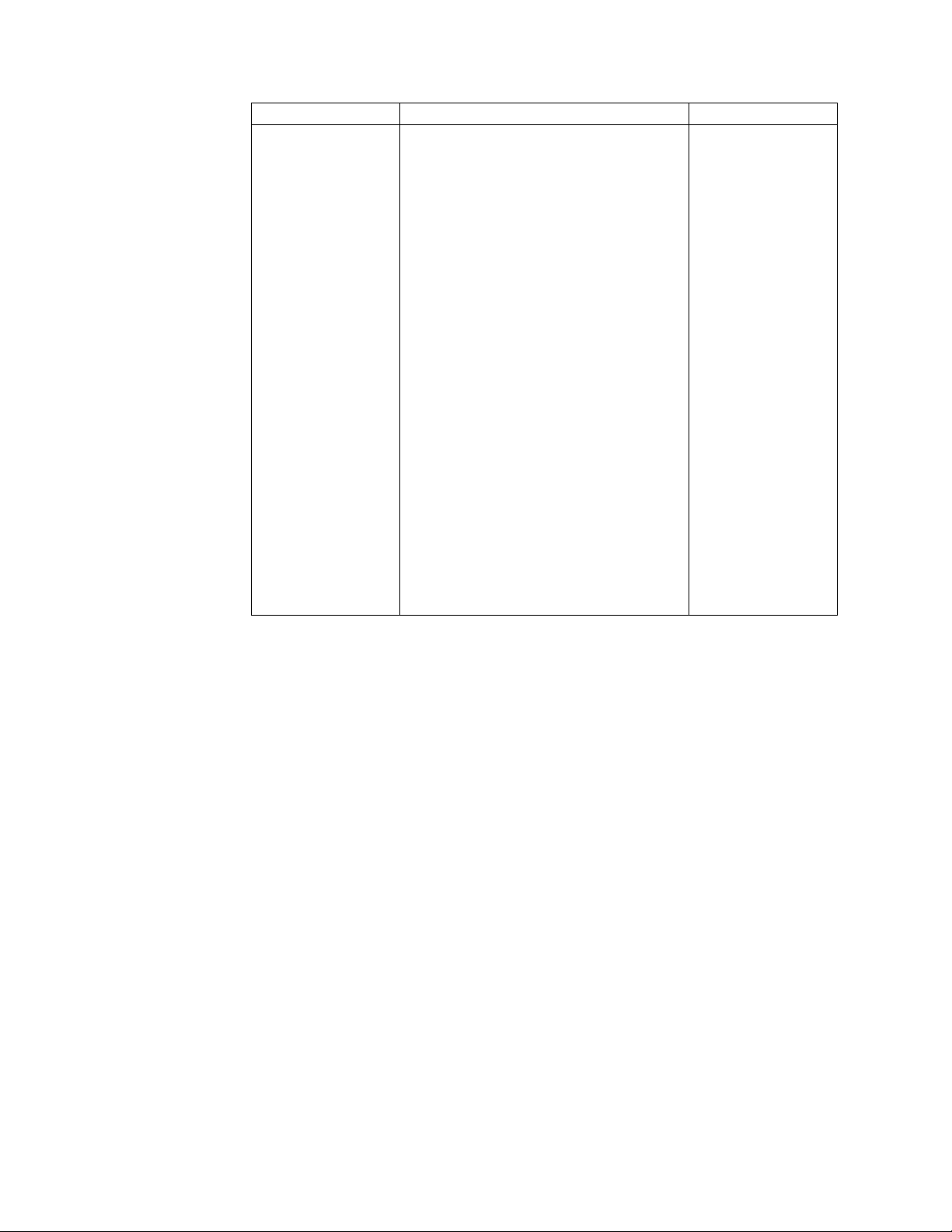

The following table describes some of the key parameters that must be configured

to optimize Sterling File Gateway performance.

In the following table, the Test and Production columns indicate whether the

recommendations are Recommended (R), Critical (C), or Not Applicable (NA) in

the test and production environments.

Performance Management 9

Page 16

Recommendation Test Production Comments

Increase the value of Sterling File

Gateway.

If you are processing very large

files, increase the probe values to

avoid timeout conditions.

R R Number of Sterling File Gateway services that can

be run concurrently. The services are split into

two groups, and each group has this value as the

limit. Therefore, the total number of services that

can run concurrently is equal to the value for this

property multiplied by two. Set this to a value

that is higher than the sum of business process

threads in queues 4 and 6 (where Sterling File

Gateway services run).

Default value: 8 (Maximum: 64)

R R Timeouts and sleep intervals that control the

period for which Sterling File Gateway waits for

each of the sub-business process it invokes. The

timeouts and sleep intervals control the timeouts

when a business process is executed

synchronously during routing. The types of

business processes that run during routing are

consumer identification and PGP processing.

Setting the values for these properties also enables

one set of relatively quick probes, followed by a

second set of slower probes. The first set will be

reactive, but consumes more processor capacity.

The second set will be activated for

longer-running processes and will consume less

processor capacity.

If you have a high volume of

PGP traffic, you can improve

your performance by specifying a

group for the file gateway.

If you have very large files that

will be processed by PGP,

increase the value of the file

gateway.

First, probe 120 times, with 100 milliseconds

between each probe, for a total of 12 seconds.

Default value:

v bpCompletionProbes.1=120

v bpCompletionSleepMsec.1=100

Then, probe 600 times with 2000 milliseconds

between each probe, for a total of 1200 seconds

(20 minutes).

Default value:

v bpCompletionProbes.2=600

v bpCompletionSleepMsec.2=2000

R R The name of the Command Line 2 adapter to be

used for PGP packaging and unpackaging. You

can override this property in the

customer_overrides.properties file if a custom

Command Line 2 adapter is used for PGP

operations. You can also specify an adapter group

name to balance the outbound PGP sessions load

across multiple adapter instances.

Default value: pgpCmdline2svcname=

PGPCmdlineService

R R Timeout value, in milliseconds, for PGP package

and unpackage operations invoked by Sterling

File Gateway.

Default value: 240000 milliseconds (4 minutes)

10 Sterling B2B Integrator: Performance Management

Page 17

Recommendation Test Production Comments

R R The FTP Client Adapter instance or service group

If you have high volumes of FTP

traffic, you can improve your

performance by specifying a

group.

that the FileGatewayDeliverFTP business process

will use. You can override this property in the

customer_overrides.properties file to use a

custom FTP Client Adapter instance to contact

trading partners. You can also specify an adapter

group name to balance the outbound FTP sessions

load across multiple adapter instances.

Default value: ftpClientAdapterName=

FTPClientAdapter

Decrease the value of evaluation

frequency.

R R You can enable either

MailboxEvaluateAllAutomaticRules or

MailboxEvaluateAll

AutomaticRulesSubMin.

MailboxEvaluateAll

AutomaticRulesSubMin verifies the presence of

routable messages once every 10 seconds, and can

be edited for other intervals of less than one

minute by modifying the MailboxEvaluateAll

AutomaticRulesSubMin business process.

Suppress Duplicate Messages R R Prevents duplicate messages from using system

resources.

Increase the number of steps a

business process must complete

prior to returning to the queue.

R R Number of steps involved in the completion of a

business process before the business process

returns to the queue. Higher values will accelerate

individual business process execution, while

lower values will provide smoother multitasking

capabilities. Interactive use favors a lower number

of steps, while batch processing favors a higher

number of steps. The value of

noapp.AE_ExecuteCycle.# can be different for

each queue. .# indicates the queue number.

Increase the time period that a

business process can use a

thread, before releasing it to be

used for another business

process.

When a business process has one service to begin

a protocol session and another service to use the

protocol session, a very low AE_ExecuteCycle

may lead many business processes to be in the

queue, with only the first service running. This

may result in many protocol sessions

accumulating in an open state, and session limits

being met sooner than is necessary.

R R Maximum time period, in milliseconds, for which

a business process can use a thread before

releasing it for use by another business process.

This value will override the value set for

AE_ExecuteCycle. Tuning the value for this

property ensures that a series of unusually slow

steps will not tie up a thread completely. This

value can be different for each queue. .# indicates

the queue number. A value that is too low may

result in the accumulation of more sessions than

are recommended.

Performance Management 11

Page 18

Recommendation Test Production Comments

Increase the number of

concurrent threads.

Set storage type. R R File System is more efficient.

R R Total number of concurrent threads that Sterling

File Gateway is allowed to use. This number may

be verified against the licensed number of

threads. This value is the total number of threads

available to a workflow engine to execute

business process steps. Other, non-workflow

engine threads do not come under the purview of

this limit. For example, the threads set in

fgRouteConcurrentSessionLimit do not come

under the purview of this limit.

Default value: database

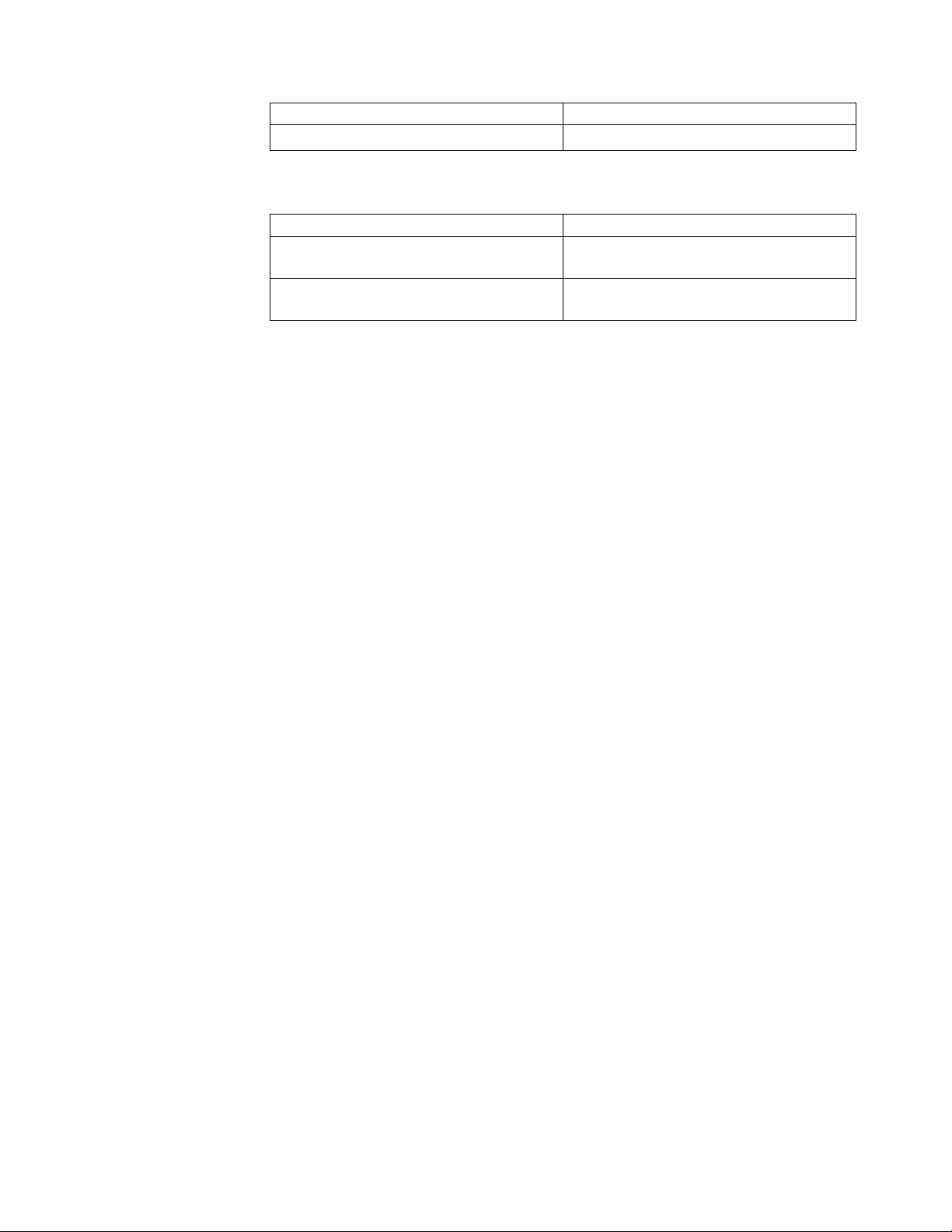

The following table shows the properties that control the above parameters:

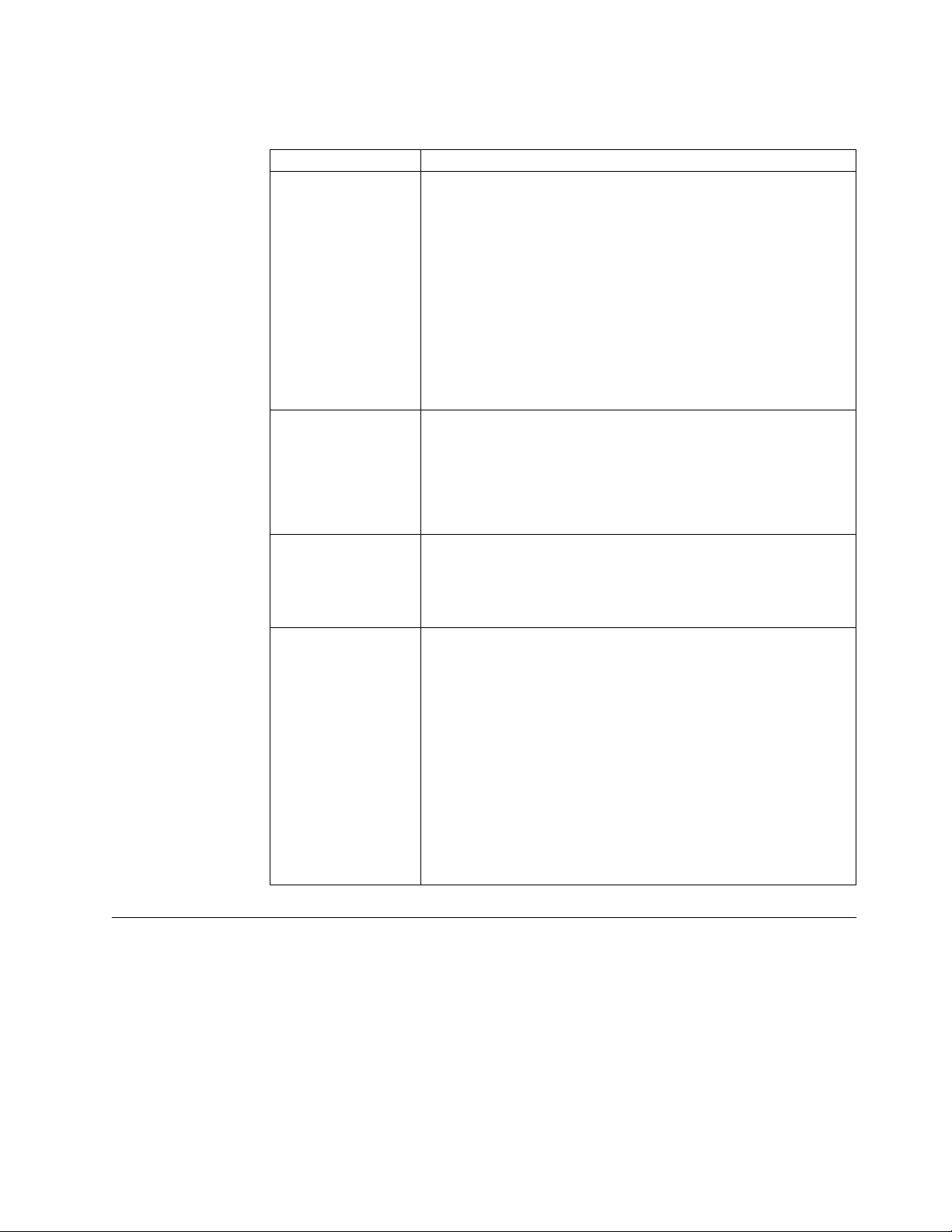

Recommendation Property

Increase the value of Sterling File Gateway. fgRouteConcurrentSessionLimit

If you are processing very large files, increase the probe

values to avoid timeout conditions.

If you have a high volume of PGP traffic, you can

improve your performance by specifying a group for the

file gateway.

If you have very large files that will be processed by

PGP, increase the value of the file gateway.

If you have high volumes of FTP traffic, you can

improve your performance by specifying a group.

Decrease the value of evaluation frequency.

Suppress Duplicate Messages mailbox.disallowDuplicateMessages=true

Increase the number of steps a business process must

complete prior to returning to the queue.

Increase the time period that a business process can use

a thread, before releasing it to be used for another

business process.

Increase the number of concurrent threads. noapp.MaxThreads

Set storage type. N/A

v filegateway.bpCompletionProbes.2

v filegateway.bpCompletionSleepMsec.2

pgpCmdline2svcname

fgRoutePGPCmdLineSocketTimeout

filegateway.ftpClientAdapterName

v MailboxEvaluateAllAutomaticRules

or

v MailboxEvaluateAllAutomaticRulesSubMin

noapp.AE_ExecuteCycle.#

noapp.AE_ExecuteCycleTime.#

EBICS Banking Server: Specific Recommendations

The EBICS Banking Server is installed on an instance of Sterling B2B Integrator,

and shares many of the resources with the latter.

You should, therefore, tune your Sterling B2B Integrator installation first, and then

perform the EBICS Banking Server-specific tuning. Be aware that the changes you

make to the EBICS Banking Server can also affect the performance of Sterling B2B

Integrator.

12 Sterling B2B Integrator: Performance Management

Page 19

The following table describes some of the key parameters that must be configured

to optimize the EBICS Banking Server's performance:

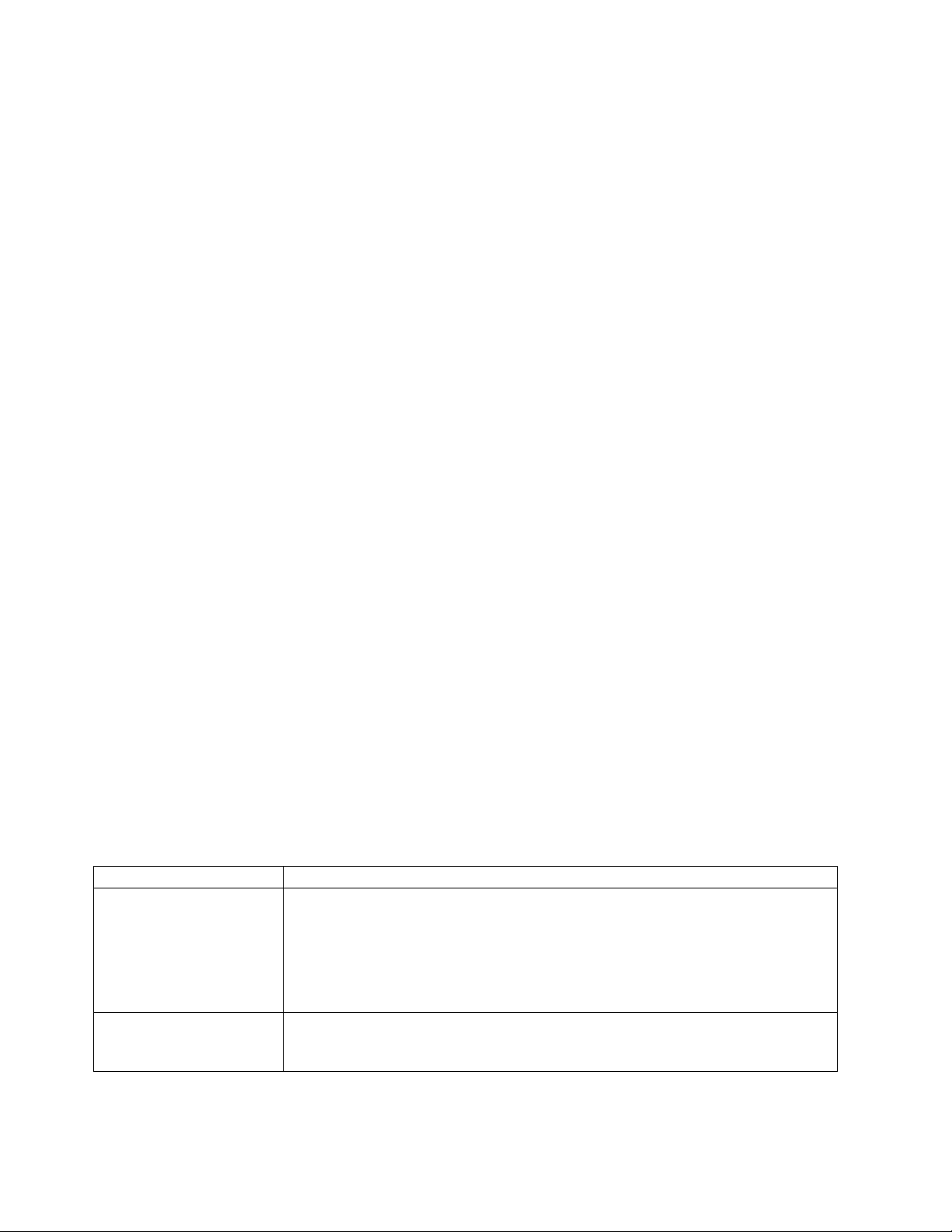

Recommendation Comments

Allocation of

additional threads to

the HTTP Server

Adapter

Reduction of the disk

I/O amount

Storage size The database space required to store the files processed by the

Purge documents The document lifespan in an EBICS transaction is set to ten years.

You can allocate additional threads to the HTTP Server adapter

when the rate of concurrent requests to the EBICS Banking Server

is moderately high. Complete the following steps to allocate

additional threads to the HTTP Server adapter:

1. Modify the numOfmaxThread parameter in the

http.properties.in file by setting numOfmaxThread to four

times the number of processor cores on the system the HTTP

Server Adapter is running on. For more information about

calculating the settings to tune the performance of your system,

refer to the topic “View and Restore Performance Configuration

Settings” on page 121.

2. Run the setupfiles.sh (setupfiles.cmd for Windows) script to

apply the changes.

In order to reduce the amount of disk I/O on the system, change

the persistence of the following EBICS business processes to Error

Only:

v handleEBICSRequest

v EBICSOrderAuthorisationProcessing

v EBICSOrderProcessing

EBICS Banking Server is approximately 2.5 times the size of the

transacted files. For more information about managing databases in

Sterling B2B Integrator, refer to “Database management for Sterling

B2B Integrator” on page 16.

As a result, the business processes associated with the documents

remain in the live system databases, and may occupy a large

memory and slow down the performance of the system.

Database Management

For optimal performance in Sterling B2B Integrator, you must properly plan,

configure and manage your database.

Planning

This section provides information about the tasks elements that have to be

completed prior to the implementation phase. The key planning tasks include, but

are not limited to:

The Index Business Process service scans the live systems, and

flags the records that have reached their purge eligibility date and

time. To reset the document lifespan, schedule the Index Business

Process to run every ten minutes. The Index Business Process

resets the lifespan after you delete the messages from the mailbox.

For more information about implementing and configuring the

Index Business Process service and scheduled purge, refer to Index

Business Process Service and Purge Service documentation

respectively in the online documentation library.

Performance Management 13

Page 20

v Selecting a certified database management server software and version. Refer to

the Sterling B2B Integrator System Requirements for a list of supported database

configurations.

v Determining the size and configuration of the database server node

v Determining the size and configuration of the database disk subsystem

v Determining the disk technology

Monitoring Disk Space

At the operating system level, it is important to monitor disk space utilization, as

this is one of the most common causes of database freeze up. On various

UNIX/Linux-based platforms, run the df command as a line command or in a

shell script. Various third-party tools are also available.

Server Sizing

At appropriate times in the project lifecycle, you can request a Server Sizing study

from your IBM Professional Services Project Manager or a IBM Sales Executive.

This study starts with the Sterling B2B Integrator Server Sizing Questionnaire. IBM

Performance Engineering creates a sizing document that provides information

about the estimated processor, memory, and network requirements for the

standard/baseline Sterling B2B Integrator. On your part, you must consider

additional requirements such as other workloads on the same node (for example,

additional third-party software, customization, performance monitors, and so on).

Storage and File Systems

This section covers the following topics:

v Capacity Requirements

v Use of Native File Systems

v Monitoring Disk Space

Capacity Requirements

Your disk capacity requirement is a very important input to the disk configuration

planning process. This involves many considerations to ensure survivability,

manageability, scalability, and performance.

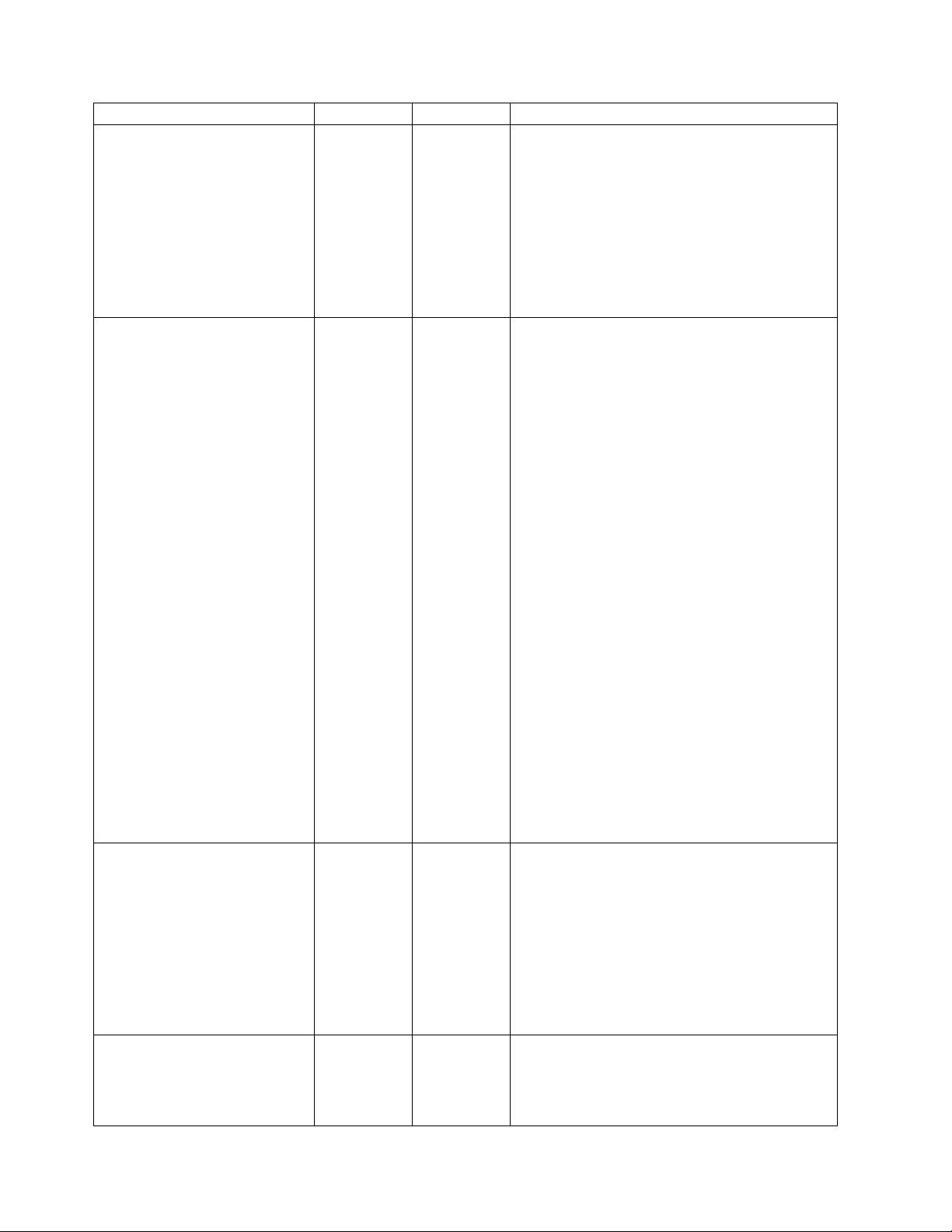

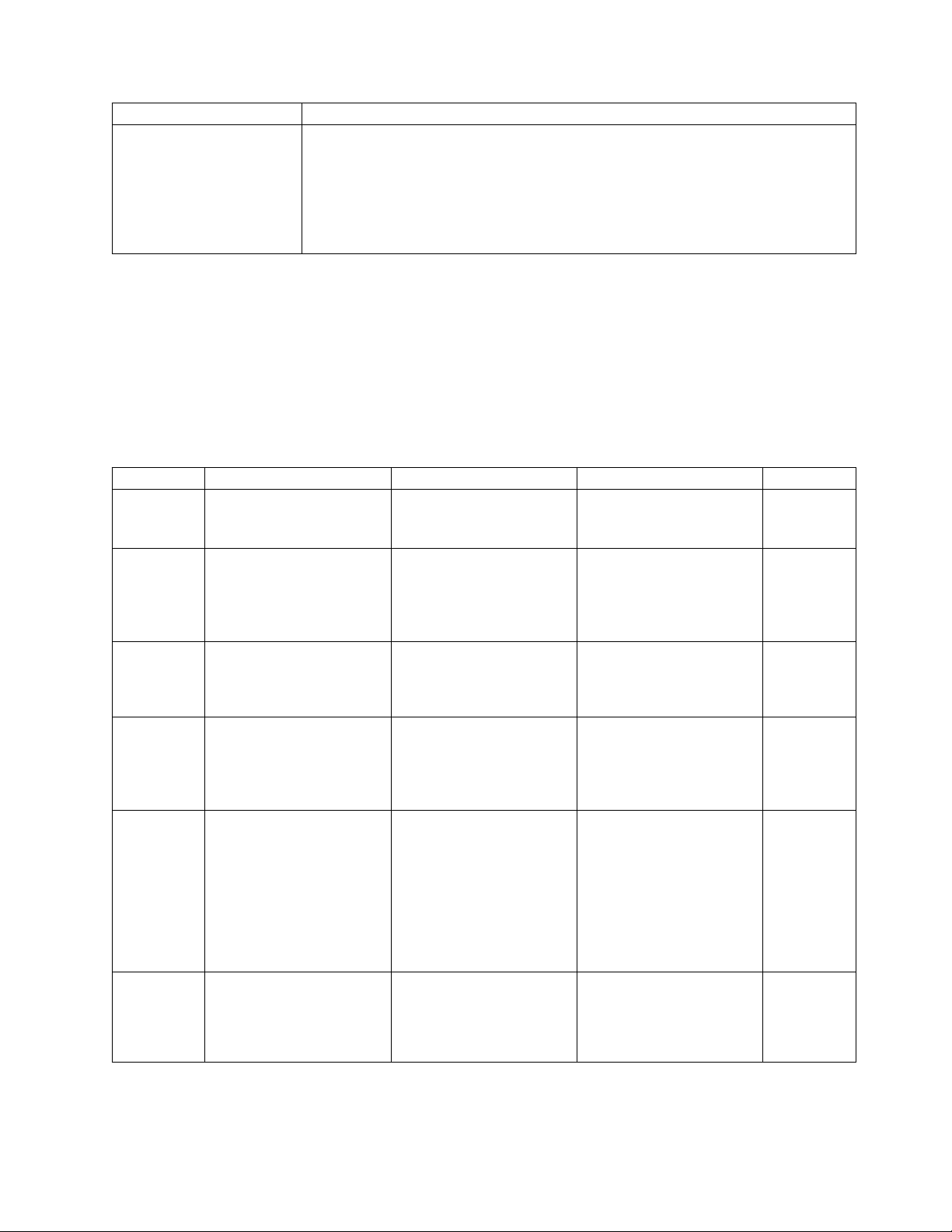

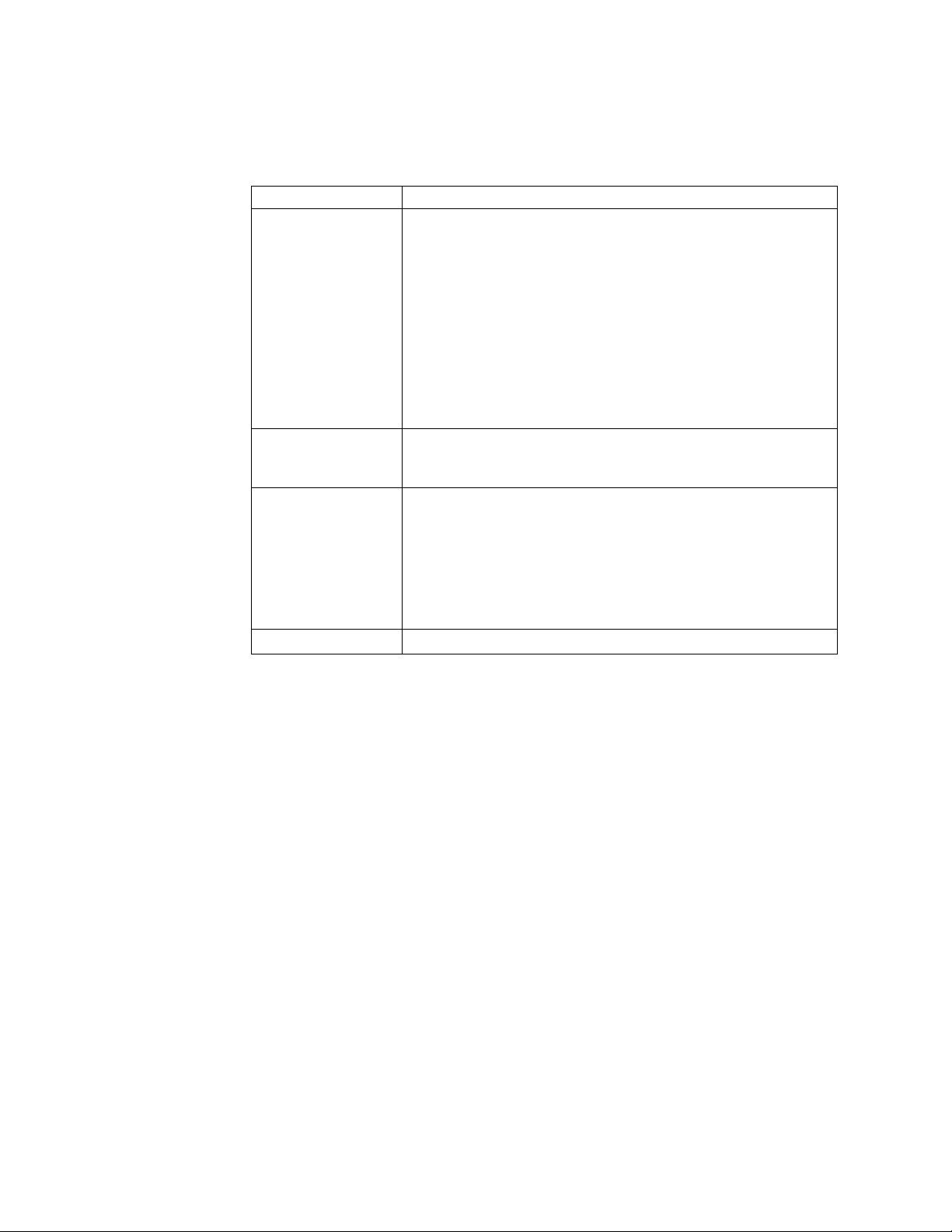

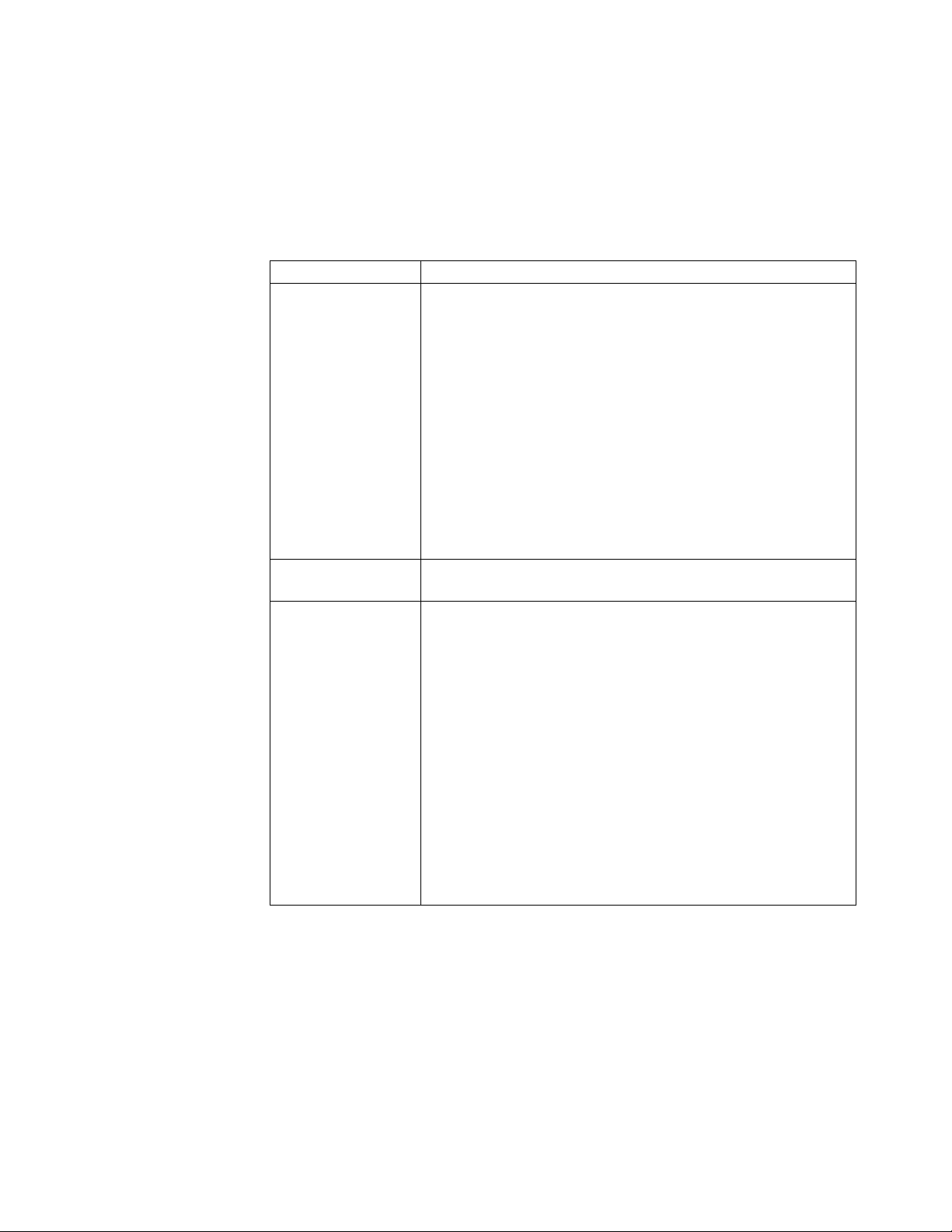

The following table provides information about the tasks that you must perform to

ensure that the required behavior is achieved.

Goal Strategy

Survivability

Manageability If you have limited-time windows to back up the database, select techniques such as

v Configure disks with the ability to survive single or multiple disk failures (for

example, RAID-1, RAID-5, or RAID-10).

v Configure the disk array with multiple I/O paths to the server to survive I/O path

failures.

v Configure disks to be accessible from multiple server nodes to tolerate single-node

failures.

array snapshots or SAN-based (storage area network) mirroring that allow logical

backups.

14 Sterling B2B Integrator: Performance Management

Page 21

Goal Strategy

Scalability and Performance

v Configure the disk array with many small disks instead of a few large disks to

increase the number of I/O paths.

v Configure the disk array with large NVRAM cache to improve read and write

performance.

v Configure the disks with stripping (for example, RAID-0 or RAID-10).

v In some circumstances, you may also want to consider using solid-state disks.

Capacity Requirements: An Example

Let us consider the following example to understand the concept of capacity

requirements better. Assume you require 900 GB of storage and you have disk

arrays or SANs that are made up of 93 GB disks. Let us further assume that the

database is implemented over ninety 10 GB data files.

The following table summarizes the choices for the common disk organizations

and the trade-offs involved.

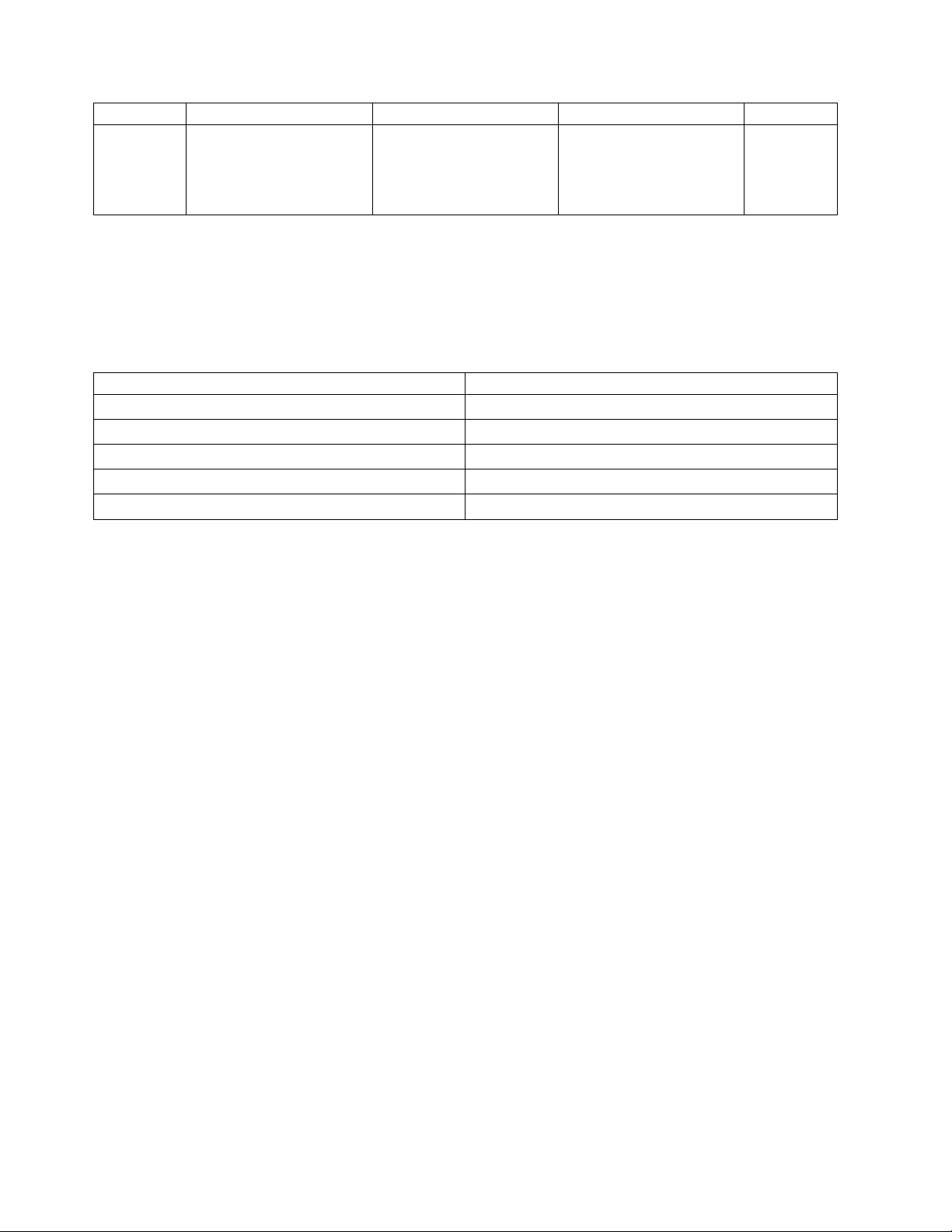

Technology Scalability Survivability Maintainability Num Disks

JBOD Poor. Subject to throughput

of individual disks

RAID-0 Excellent. Striping n disks

provides read/write

throughput at n times a

single disk.

RAID-1 Poor. Similar performance

to that of JBOD.

RAID-5 Excellent for read (similar

to RAID-0). Potentially

poor for write performance.

RAID-6 Excellent for read (similar

to RAID-0). Potentially

poor for write performance

as parity calculations need

to happen. The

performance of RAID-6 and

RAID-5 is about the same

and dependent on the

controllers.

RAID-01 Excellent read/write

performance.

Poor. Single-disk failure

creates outage and requires

database recovery

Poor. Single-disk failure

creates outage and requires

database recovery.

Better. Can survive

multiple disk failures,

assuming that these occur

in different mirrored sets.

Better. Able to survive a

single-disk failure. Multiple

disk failures create an

outage and require

database recovery.

Better. Can survive a

double-disk failure. This

gives it an edge over

RAID-5. A failure of more

than two disks creates an

outage.

Can tolerate up to two disk

failures as long as both

failures are not in the same

mirrored set.

Poor. High disk utilization

skew.

Excellent. Expect

near-uniform disk

utilization within a logical

unit. Potential LUN

utilization skew.

Poor. High disk utilization

skew.

Excellent. Low disk

utilization skew. Possible

LUN utilization skew.

Excellent. Low disk

utilization skew. Possible

LUN utilization skew.

Excellent. Low disk

utilization skew.

Possible LUN utilization

skew.

10

10

20

11

12

20

Performance Management 15

Page 22

Technology Scalability Survivability Maintainability Num Disks

RAID-10 Excellent read/write

performance.

Can tolerate up to n disk

failures as long as two

failures do not occur in the

same mirrored set.

Excellent. Low disk

utilization skew.

Possible LUN utilization

skew.

20

Use of Native File Systems

Using file systems for storage can simplify administration tasks, although

potentially at some loss of some performance over using raw disks. It is

recommended that you consult with your storage administrator and storage

vendor.

Operating System Native File System

Windows NTFS

Linux EXT3

Solaris UFS

®

AIX

HP-UX VxFS

JFS2

Database management for Sterling B2B Integrator

Sterling B2B Integrator uses a database server as a repository for transactional,

reference, and history data that it generates and uses.

Refer to the system requirements for a list of supported database configurations.

This topic covers the following concepts:

v JDBC Connection Pool Overview

v Configuring Parameters for a Database Pool

v Schema Objects

JDBC connection pool overview

Sterling B2B Integrator uses internal connection pooling to minimize delays in the

creation of new connections to the database server. When a connection is

requested, an existing connection is used whenever possible. Internal connection

pooling improves performance by removing the need to go to the driver and

creating and delete a new connection each time one is needed.

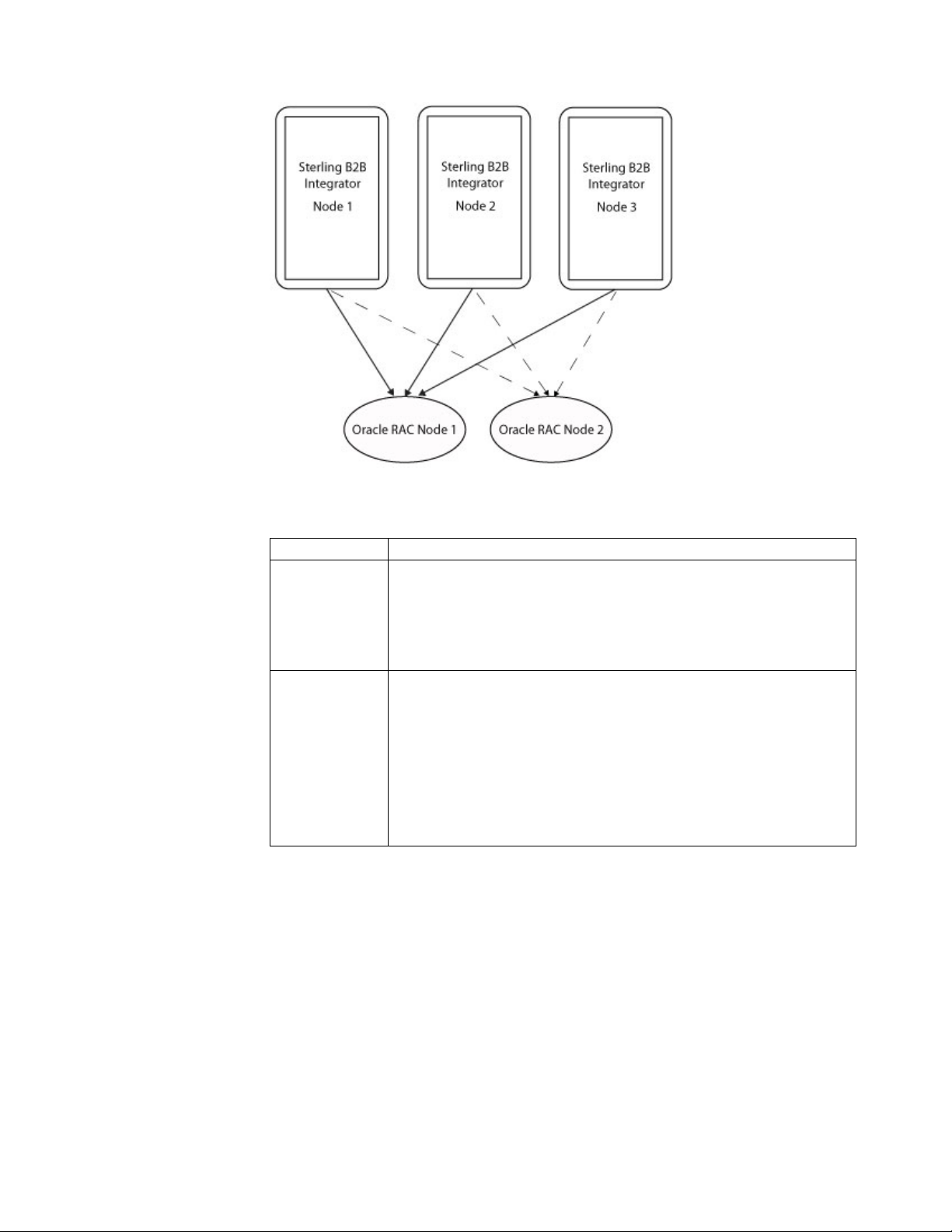

Internal connection pooling mechanism implements a connection pool in every

JVM started for the product. In a cluster with multiple nodes, the number of

connections the database must establish is the total of all connections for all the

connection pools.

For example, if you have four JVM instances and each connection pool averages

around 30 connections, your database server must establish 120 database

connections.

16 Sterling B2B Integrator: Performance Management

Page 23

With this implementation, the database reports any idle connections to the system,

which is the expected behavior. JDBC properties can be tuned in the

jdbc.properties file to delete idle connections and minimize usage.

Note: Override the JDBC properties in the customer_overrides.properties file. Do

not modify the jdbc.properties file directly. For more information about the

jdbc.properties file, see the properties file documentation.

Implementation of connection pooling

Each database pool is created with an initial number of connections, as defined by

the initsize property in the jdbc.properties file. As more connections are

required, Sterling B2B Integrator requests additional connections up to the

maximum number defined by the maxsize property. When Sterling B2B Integrator

finishes with a connection, the connection is returned to the pool of connections for

later use.

If Sterling B2B Integrator requires connections beyond the maximum size limit for

each database pool, and every pool is in use, Sterling B2B Integrator can request

new connections up to the value defined by the buffer_size property. Connections

in this “buffer range” (that is, beyond the number of connections that are defined

by the maxsize property) are created as needed, and deleted when the calling

process is completed. To detect this condition, search for warning messages such as

connections exceeded in the noapp.log file. Performance drops if Sterling B2B

Integrator runs in this range for a long time.

The buffer_max value is a hard maximum. When the number of connections that

are defined by buffer_max (maxsize + buffersize) is reached, additional requests

for connections are denied. An error is written to the logs that indicates that the

pool is exhausted and the attempt to create a new connection failed.

Summary

Each JVM can create connections for each of the pools that are defined in the

jdbc.properties file and configured in the customer_overrides.properties file.

Each pool grabs the initial number of connections (initsize) configured.

Because a pool requires additional connections beyond the initial size, it creates

new connections up to the maxsize limit for that pool. Connections that are created

with fewer than the maxsize are returned to that pool for reuse, thus improving

performance.

If a pool is using all the connections up to the maxsize limit, it creates a new

connection as needed within the buffer size limit. Connections beyond maxsize and

below the buffersize are not reused. Each connection is created when needed, and

deleted when no longer needed by the calling process. This method is expensive

and harms performance if Sterling B2B Integrator runs for continued amounts of

time in this state.

When the number of connections (maxsize + buffersize) is reached, new requests

for connections are refused.

You can now manage JDBC pools dynamically. For more information about

dynamically managing JDBC pools, see “Dynamically Manage JDBC Pools” on

page 20.

Performance Management 17

Page 24

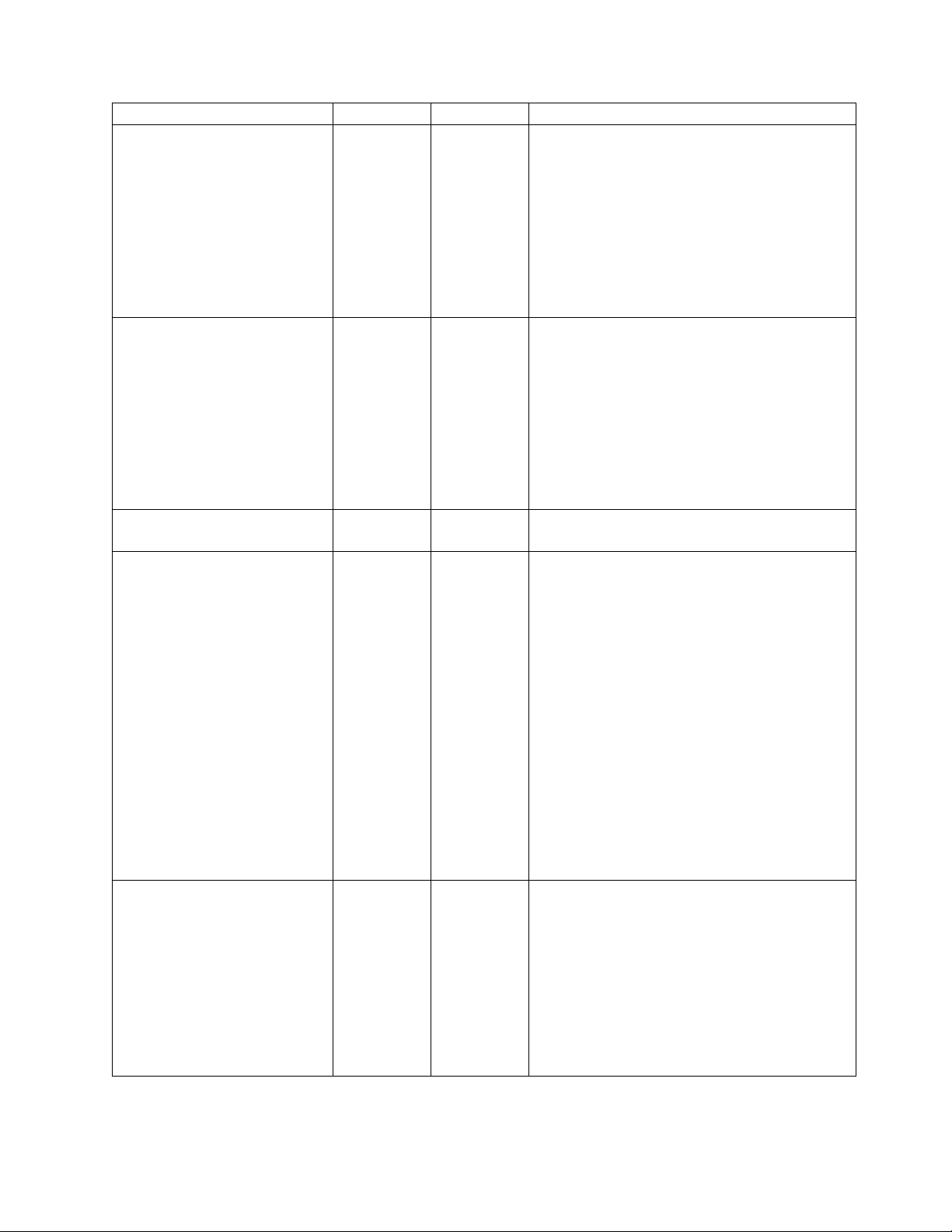

Configuring parameters for a database pool

The following table describes the primary parameters of a database pool and the

recommended value for each parameter:

Parameter Description and recommended value

initsize When a JVM is created and connection pool objects are instantiated

for each of the pools, a call is made to the JDBC driver for each

pool and an initial number of connections are created for each

pool. Connections that are created from the initsize are part of

the connections that exist in the pool for reuse, as needed.

Since various JVMs can load the initial number of connections, but

might not really need them, it is recommended that you do not set

the initsize to a large value.

Default value: 0 or 1

Recommended value: 1

maxsize Maximum size of the connection pool that pertains to a database

pool. After a connection is created up to this value, it is not

deleted. It remains idle until needed.

Buffersize Maximum number of connections that can be created. Connections

that are created beyond the maxsize value are created and deleted

as needed.

After all the connections are used (maxsize + buffersize), and a

new request for a connection comes in, the new request is denied,

and an error is written stating that a connection cannot be created

and that the connection pool is exhausted.

maxConn This parameter is not used in Sterling B2B Integrator.

Frequently asked questions

v Q: How many connections will I need?

A: You can start with the recommended settings provided by the tuning wizard

and then monitor the noapp.log file to ensure that you are not close to the

maximum size of the connection pool (maxsize). Monitor the usage over a

period of time and observe if the usage is increasing. If the usage limits exceed

25% of the settings that are recommended by the tuning wizard and the demand

for connections is increasing, contact the IBM Professional Services onsite

consultant or IBM Customer Support.

v Q: Why are connections shown as being idle?

A: Getting a new connection from the database is expensive. Connections are

held in a pool to be available when the system needs them, which means that

connections are often shown as being idle. This method is a performance

trade-off that enables connections to be available when needed.

v Q: Can I kill idle connections?

A: Configure the pool to keep fewer connections, and release connections after a

specified amount of time.

Schema objects

This topic covers the following concepts:

v Placement of Schema Table Objects

18 Sterling B2B Integrator: Performance Management

Page 25

v Placement of Indexes

v Sterling B2B Integrator Database Tables

Placement of Schema Table Objects

The Sterling B2B Integrator installation guide provides directions about how to

create the database, including the necessary changes to some critical parameters.

The DDL statements allow you to create a simple schema that is suitable for

general use. You need to review, and possibly modify, these statements before

production.

Placement of Indexes

The DDL statements create a minimal set of indexes for general use. You might

need to create more indexes to suit your business needs. Contact IBM Professional

Services or IBM Customer Support to create more indexes. You should also

maintain a list of the indexes added and ensure that they are present after you

upgrade Sterling B2B Integrator and monitor its usage. These indexes may or may

not be added to the Sterling B2B Integrator and is specific to your operating

environment.

You might want to create more table spaces for storing indexes in addition to those

table spaces for the base tables. Table spaces should have multiple data files for

I/O load balancing.

Important: Indexes can also be separated out into different storage areas. This

action should be done in coordination with your database administrator. However,

if you are moving tables to different storage areas, do so only in coordination with

IBM Professional Services to prevent upgrade issue in the future.

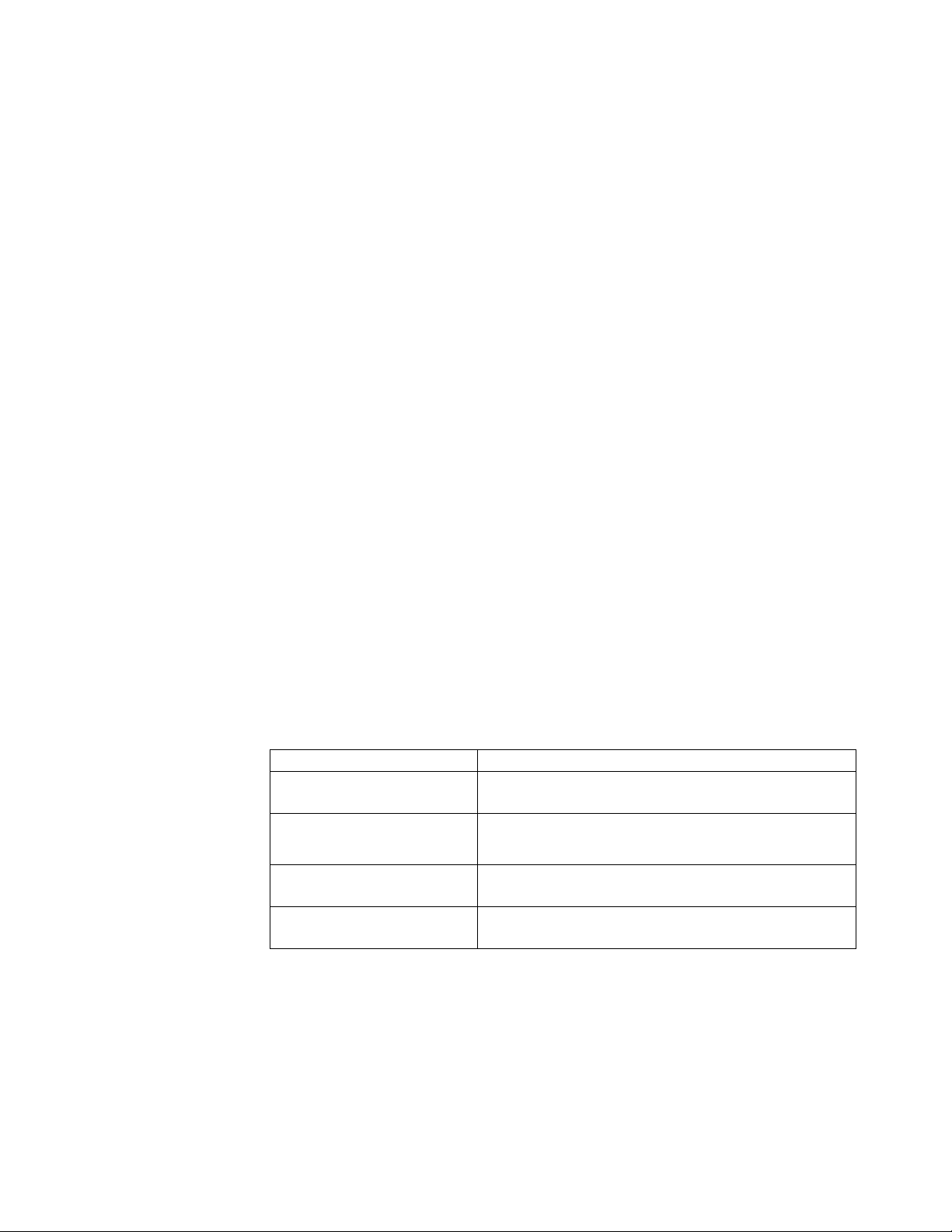

Database tables that can substantially grow

The following table lists some of the tables that are defined in Sterling B2B

Integrator experience substantial input, output, and rapid growth.

Table name Table function

DATA_TABLE These tables hold the binary payload of documents that

are used for processing within Sterling B2B Integrator.

v DOCUMENT

v CORRELATION_SET

WORKFLOW_CONTEXT Contains step status and business process flow

ARCHIVE_INFO Holds lifespan information that pertains to all business

These tables are candidates for moving to a table space or segment that is separate

from the rest of the database.

Contain document metadata that is used for searchability

and enablement of various document processing tasks.

information.

processes and document storage in the system.

Important: Move these tables only in coordination with IBM Professional services.

When patches or upgrades that need to re-create a table are installed, the

installation attempts to create them in the default table space.

Performance Management 19

Page 26

Controlling entries in the CORRELATION_SET table

You can control when correlation entries for different activities of Sterling B2B

Integrator are written to the CORRELATION_SET table. Reducing the number of

records in the CORRELATION_SET table improves the performance of Sterling B2B

Integrator.

The doc_tracking.properties file includes properties that enable or disable

correlation entries for the following activities. The properties are in parentheses.

v Mailbox (makeCorrelationForMailbox)

v FTP (makeCorrelationForFTP)

v SFTP (makeCorrelationForSFTP)

v System tracking extension (makeTrackingExtensions)

The default value for each property is true, which means that correlation entries

are written to the CORRELATION_SET table.

Attention: If makeTrackingExtensions=false, then no mailbox correlation entries

are created, even if makeCorrelationForMailbox=true.

The doc_tracking.properties file is in the install_dir\install\properties

directory.

Dynamically Manage JDBC Pools

Sterling B2B Integrator now has the ability to dynamically add, modify, remove

JDBC Pools and manage JDBC pools with effective dates for passwords.

Adding JDBC Pools:

About this task

This procedure shows how to add JDBC pools using the

customer_overides.properties file.

Procedure

1. In the customer_overrides.properties file, specify the database server name, port

number, database/catalog name, user ID, and password. To encrypt your

database password, use the encrypt_string.sh or encrypt_string.cmd utility in

the bin directory. Then place the encrypted password, prefixed by an

encryption indicator, in the customer_overrides.properties file.

2. To update Sterling B2B Integrator with this new pool information, restart the

application.

Modifying a Database Pool in jdbc.properties:

Procedure

1. In the install_dir/install/properties directory, locate the

customer_overrides.properties file.

2. Open the customer_overrides.properties file in a text editor.

3. Modify the properties you want to change in the customer pools list of

properties.

Note: You can modify any properties for user added pools. For system pools,

you cannot change the database type (for example, Oracle to MSSQL), but you

can change the database type for customer pools.

4. Save the customer_overrides.properties file.

20 Sterling B2B Integrator: Performance Management

Page 27

5. After modifying the pool properties in customer_overrides.properties, go to

JDBC monitor page and click the Refresh JDBC Pools button, or run the

REFRESHJDBC OPS command from the install root directory: ./bin/opscmd.sh

-cREFRESHJDBC -nnode1

Removing Pool from jdbc.properties:

Procedure

1. In the install_dir/install/properties directory, locate the

customer_overrides.properties file.

2. In the customer_overrides.properties file, delete the pool you want to remove.

Note: Verify that all the pool properties are removed for the pool you want to

delete, including, jdbc.properties_*_ext, jdbc_customer.properties,

customer_overrides.properties files and system_overrides.properties.

3. Save the customer_overrides.properties file.

4. After removing the pool properties in customer_overrides.properties, go to

JDBC monitor page and click the Refresh JDBC Pools button, or run the

REFRESHJDBC OPS command from the install root directory: ./bin/opscmd.sh

-cREFRESHJDBC -nnode1

Controlling User and Password Credentials with Effective Dates: You can now

change database passwords on a scheduled basis in Sterling B2B Integrator. When

you add or modify a pool, you now can control user and password credentials

with effective dates. Multiple user and password credentials are associated with a

pool. A date/time entry indicates to Sterling B2B Integrator when to start using

that credential for new connections. This applies primarily to external pools,

although Sterling B2B Integrator database pools will also work.

You can use the following variables for the date format:

v 15:00:00 3/16/09

v 3/16/09 15:00:00

v 3/16/2009 15:00:00

v Sat, 12 Aug 1995 13:30:00 GMT

v Sat, 12 Aug 1995 13:30:00 GMT+0430

Note: Other formats may be used as long as they follow the Internet Engineering

Task Force (IETF) standard date syntax. For additional information, see

http://www.ietf.org/rfc/rfc3339.txt.

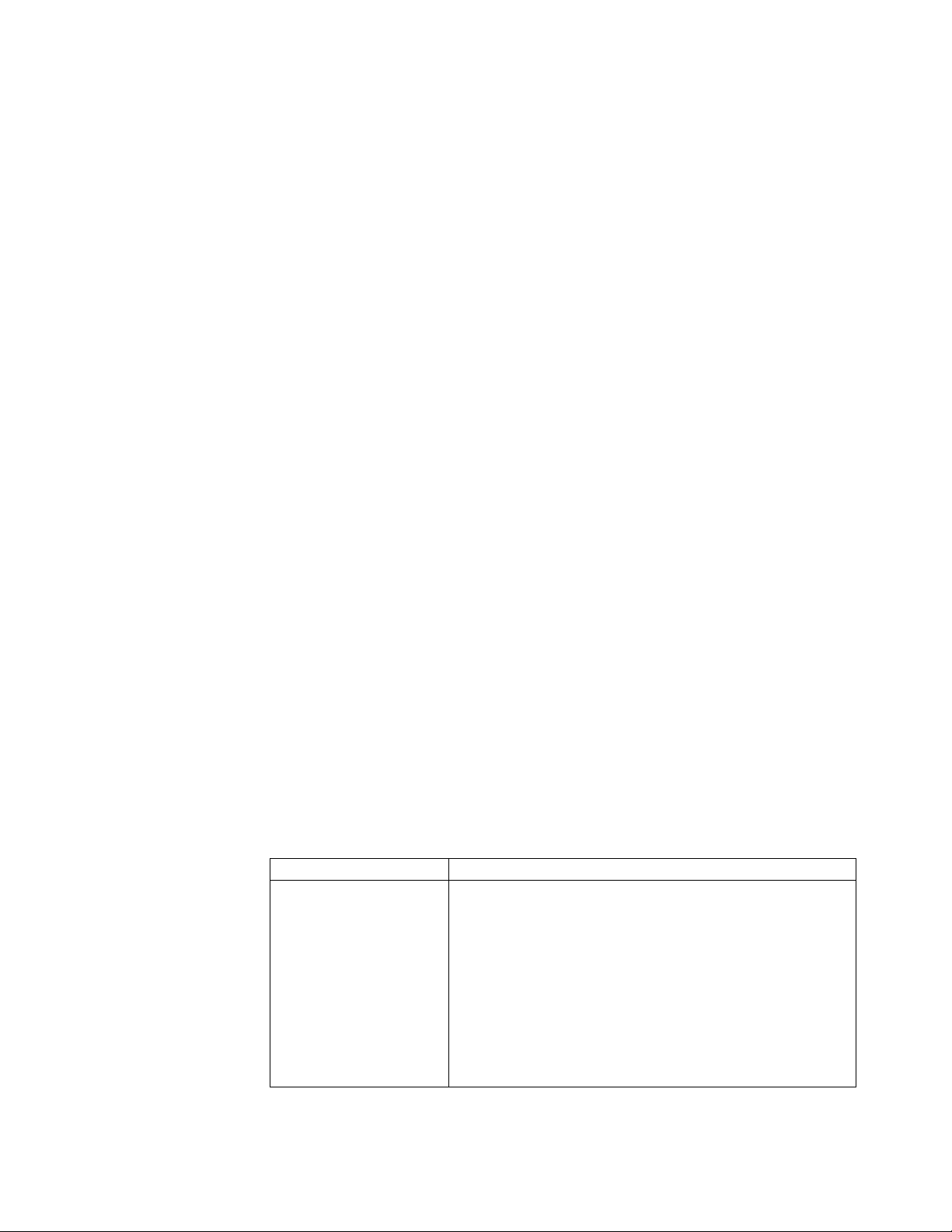

Pool Property Description

newDBPool.password.1 =

<new password>

You can specify alphabets and combination of alphabets and

numbers for the password. You can use numbers for

newDBPool.password.1 or newDBPool.password.2 as well as

following examples:

v newDBPool.password.a=password_a

v newDBPool.effective.a=1/01/2005 09:35:00

v newDBPool.password.b=password_b

v newDBPool.effective.b=02/01/2009 09:35:00

v newDBPool.password.c=password_c

v newDBPool.effective.c=06/18/2009 11:07:00

Performance Management 21

Page 28

newDBPool.effective.1 =

<The date for the new

password starts to take

affect>

You can specify alphabets and combination of alphabets and

numbers for the password. You can use numbers for

newDBPool.password.1 or newDBPool.password.2 as well as

following examples:

v newDBPool.password.a=password_a

v newDBPool.effective.a=1/01/2005 09:35:00

v newDBPool.password.b=password_b

v newDBPool.effective.b=02/01/2009 09:35:00

v newDBPool.password.c=password_c

v newDBPool.effective.c=06/18/2009 11:07:00

System Logs and Error Logs: System Logs

When applicable, the following items are logged in system logs:

v Logging the switch from one credential to the next, as well as the initialization

of the pool dates and user IDs being used (not the passwords).

v Logging if the connection is expired when it returns to the pool.

v Logging if two passwords have the same effective dates. In this case, the system

randomly selects a password and log that two passwords had the same effective

dates. Additional logs on passwords and effective dates may be added.

v Logging when pool properties are changed. If you changed the pool related

property like maxSize, or lifespan the following message appears in the system

log: "for pool name ***** <PROPERTY> is changed".

Error Logs

The following list provides descriptions of the different types of errors that can be

logged:

v Failed to add the pool <pool name>

v Failed to delete the pool <pool name>

v Failed to modify the pool <pool name>

v Failed to create the connections from the pool <pool name>

Oracle Database Configuration and Monitoring

This topic provides information about configuring and monitoring Oracle database.

It also provides information about concepts such as init parameter configuration,

rolling back or undoing changes to the database, database storage, and monitoring

with Automatic Workload Repository (AWR) reports.

Oracle init parameter configuration checklist

The Oracle init parameters have mandatory and recommended settings for Sterling

B2B Integrator performance with an Oracle database.

Mandatory Oracle init parameters:

The Oracle init parameters have mandatory settings for Sterling B2B Integrator

performance with an Oracle database.

Parameter Mandatory value

cursor_sharing Exact

Character set AL32UTF8

22 Sterling B2B Integrator: Performance Management

Page 29

Recommended Oracle init parameters:

The Oracle init parameters have recommended settings for Sterling B2B Integrator

performance with an Oracle database.

Parameter Recommended value

processes Must be greater than the number of connections that are

required by Sterling B2B Integrator (sum of transactional or

local and NoTrans pools in the jdbc.properties file), and

operational management tools.

v sga_max_size

v sga_target

v pga_aggregate_target

timed_statistics True

optimizer_mode All_rows

open_cursors 2000 or higher if prepared statement caching is to be used.

1 GB to n GB, depending on the amount of physical

memory on your database server. If the server is running

only this database, up to 80% of physical memory.

To size SGA (Shared Global Area) pools automatically, see

“SGA pool sizing” on page 25.

Oracle init parameter descriptions:

The Oracle init parameters have mandatory and recommended settings for the

performance of Sterling B2B Integrator with an Oracle database.

Parameter Description

Number of processes Maximum number of processes that the Oracle server can create.

Each inbound connection from a client requires an available

process on the Oracle serverand internal processes that run in the

Oracle server itself.

This setting needs to be set high enough to accommodate the

expected peak connections from Sterling B2B Integrator as well as

additional processes for internal server processes plus the possible

usage of buffer connections from the Sterling B2B Integrator pools.

Note that the expected peak connections from Sterling B2B

Integrator are per node, and this number should be multiplied by

the number of nodes in a cluster.

Important: Exceeding the allocated processes can destabilize the

entire Oracle server and cause unpredictable issues.

Normally, the Sterling B2B Integrator logs a report if this has

occurred, by logging an “ORA-00020 maximum number of

processes exceeded” error.

Performance Management 23

Page 30

Parameter Description

open_cursors Number of cursors that the Oracle server can create. Each process

that is servicing a connection will normally spawn two or more

cursors, plus additional cursors that are needed for internal server

processes.

As with the processes, this needs to be set high enough to

accommodate the expected peak connections, multiplied by two,

plus an allocation for possible buffer connections. This should also

be multiplied by the number of nodes in the cluster.

A simple rule of thumb is to set this to four times the number of

processes, four times the number of nodes of Sterling B2B

Integrator, plus an additional 10%.

If the number of cursors is exceeded, Sterling B2B Integrator logs

“ORA-01000: maximum open cursors exceeded.”

Character Set Controls the storage of character data in the Oracle database. The

UTF8 set most closely matches the default character set for Java

and will prevent any conversion of character data.

AL32UTF8 is the preferred setting for Oracle database.

cursor_sharing Controls whether SQL sent from a client is reparsed each time, or

if the server can reuse (“share”) the complied plan.

Sterling B2B Integrator requires the setting to be “exact” for both

performance and data integrity.

sga_max_size Maximum size of the memory allocated to the System Global Area

(SGA). This controls the maximum memory that can be allocated

to the system area of the Oracle instance. The size of the SGA

should never exceed the size of the actual memory (RAM) installed

on the server.

A rule of thumb is that the SGA maximum size should be allotted

as much memory as possible, but should not exceed 80% of the

total memory of the server.

sga_max_target Target value that Oracle uses for automatic allocation of SGA pool

resources.

Recommended setting is equal to the sga_max_size. The exception

is for extremely large databases, where this may need to be

adjusted manually.

pga_aggregate_target Specifies the target aggregate Program Global Area memory

available to all server processes attached to the Oracle instance.

These are normally internal Oracle processes, and are not used by

clients connecting to the instance.

Recommended setting is a non-zero value. This allows the Oracle

instance to size the SQL working areas automatically as needed.

timed_statistics Controls whether database statistics for particular times are logged

by Oracle. This information may be useful to monitor system or

application performance.

Setting timed_statistics to TRUE may incur a slight overall load

increase on the Oracle instance. However, the information it creates

is valuable for diagnosing issues with performance of the instance.

24 Sterling B2B Integrator: Performance Management

Page 31

Parameter Description

optimizer_mode Controls the mode that the optimizer uses to select the proper

execution path for fulfilling query results, among other functions.

Setting this to an incorrect mode can dramatically affect the overall

performance of the server.

Recommended setting for Sterling B2B Integrator: all_rows

SGA pool sizing:

As an alternative to manually sizing the main SGA pools in an Oracle database,

IBM recommends that you use Automatic Shared Memory Management (ASMM).

This feature controls pool size dynamically at run time, readjusting the sizes of the

main pools (db_cache_size, shared_pool_size, large_pool_size, java_pool_size)

based on existing workloads.

Set the following values to enable Oracle ASMM:

v Use an spfile for the init.ora values

v Set sga_target to a non-zero value

v Set statistics_level to TYPICAL (the default) or ALL

v Set shared_pool_size to a non-zero value

Rolling Back or Undoing Changes in Oracle Database

Oracle database supports AUTO UNDO management. Its use is recommended to

avoid manual monitoring of UNDO segments. Set the

UNDO_MANAGEMENT=AUTO parameter in init<SID>.ora. Your database

administrator needs to determine the UNDO_RETENTION setting.

Ensure that the file system where UNDOTBS1 tablespace is located has enough

space to use the AUTOGROW setting. As a starting point, size the undo tablespace

at 20% of the total database size.

Redo Logs

Redo logs are critical for database and instance recovery. Correct redo log

configuration is also critical for performance. We have seen best performance while

implementing redo logs on ASM. The following is recommended:

v Consider implementing redo logs on dedicated disk devices, preferably RAID 10.

v Consider implementing redo log group log files on alternating disks.

Redo File Size

Your choice of redo file size depends on your trade-off between performance and

availability, specifically the time required to recover the Oracle instance in the

event of a failure. For performance, some installations opt to create large redo logs

in order to reduce the frequency of log switches. However, this means potentially

more transactions in the redo logs that must be replayed during recovery.

The general rule for sizing redo log files is to consider the time it takes to switch

log files. Generally, do not switch logs more than once every 20 to 30 minutes.

Issue the following query to see how often the redo log files are changing:

Performance Management 25

Page 32

select * from v$loghist order by first_time desc

Following is an example of the output:

THREAD# SEQUENCE# FIRST_CHANGE# FIRST_TIME SWITCH_CHANGE#

1 97 7132082 10/20/2008 11:47:53 PM 7155874

1 96 7086715 10/20/2008 11:32:04 PM 7132082

1 95 7043684 10/20/2008 11:15:07 PM 7086715

1 94 6998984 10/20/2008 11:00:57 PM 7043684

1 93 6950799 10/20/2008 10:48:03 PM 6998984

In this example, the logs are switched every 15 minutes.

Rollback Transaction Errors

If Sterling B2B Integrator is running with Oracle and is under load, the snap shot

too old SQL error may be found in the wf.log file. The SQL error can be resolved

with the following:

v Tune the Oracle database by increasing undo_retention and redo table space.

v If the SQL error is from WFReportService (from the stack trace in wf.log),

change the useNewStateAndStatusLogic property in jdbc.properties and

jdbc.properties.in to false. This will prevent the snap shot too old SQL error, but

it might cause the Recover process to run longer.

Database Storage

This topic provides information about database storage.

ASM

It is recommended that you use Oracle Automatic Storage Management (ASM) to

manage database storage. The benefits of using ASM include:

v Improved I/O performance and scalability

v Simplified database administration

v Automatic I/O tuning

v Reduction in number of objects to manage

For more information, consult these sources:

v ASM documents available on the Oracle Web site.

v Storage vendor best practice papers about how to configure ASM for their

storage products.

Raw Disks

For performance-critical systems, importing only raw devices into ASM is

recommended. For high-volume processing environments, ensure that ASM is

configured with disk devices from high-performance disk storage arrays. Some

characteristics that you should look for include large NVRAM caches in order to

buffer the disk reads and writes, and for efficient RAID implementation.

26 Sterling B2B Integrator: Performance Management

Page 33

Configure ASM with External Redundancy to ensure that redundancy is provided

by your storage array.

Raw devices (raw partitions or raw volumes) can be used directly under Oracle on

UNIX-based or Linux-based systems and can help performance. However, raw

disks have the following potential disadvantages:

v Raw devices may not solve problems with file size writing limits.

To display current UNIX file size limits, run the following command:

ulimit -a

v It may not be possible to move files to other disk drives if you are using raw

devices. If a particular disk drive has intense I/O activity, and performance will

benefit from the movement of an Oracle data file to another drive, it is likely

that no acceptably-sized partition or volume exists on a drive with less I/O

activity.

v Raw devices are more difficult to administer than data files stored on a file

system or in an Automatic Storage Management disk group.

When deciding whether to use raw devices, consider the following issues:

v Raw disk partition availability

Use raw partitions for Oracle files only if you have at least as many raw disk

partitions as Oracle data files. If disk space is a consideration, and the raw disk

partitions are already created, match the data file size to the partition size as

closely as possible to avoid wasting space.

You must also consider the performance implications of using all the disk space

on a few disks as opposed to using less space on more disks.

v Logical volume manager

Logical volume managers manage disk space at a logical level and hide some of

the complexity of raw devices. With logical volumes, you can create logical disks

based on raw partition availability.

The logical volume manager controls fixed-disk resources by:

– Mapping data between logical and physical storage

– Enabling data to span multiple disks and to be discontiguous, replicated, and

dynamically expanded

For RAC, you can use logical volumes for drives associated with a single

system, as well as those that can be shared with more than one system of a

cluster. Shared drives enables all the files associated with a RAC database to be

placed on these shared logical volumes.

v Dynamic performance tuning

To optimize disk performance, you can move files from disk drives with high

activity to disk drives with low activity. Most hardware vendors who provide

the logical disk facility also provide a graphical user interface (GUI) that you can

use for tuning.

Refer to your operating system documentation on how to use raw devices with

your OS.

Tablespaces (Single or Multiple)

Prior to production, you should plan the overall storage strategy. The DDLs to

create temporary tablespaces and data tablespaces are left to the discretion of the

customer. General recommendations include:

Performance Management 27

Page 34

v Implement these tablespaces as locally managed tablespaces (LMTs) by

specifying extent management as local when creating the tablespace.

v Implement tablespaces with Automatic Space Management by specifying

segment space management as auto.

v With LMTs, you may want to consider creating tablespaces that store small

reference tables with the autoallocate extent allocation model.

v Consider putting large tables into their own tablespace and using the uniform

extent allocation model. Use the default extent size 1 MB.

v Create your temporary tablespace as a temporary data file (temp files). Temp

files are used to store intermediate results, such as from large sort operation.

Changes to temp files are not recorded in the redo logs.

I/O Sub-System Response Time

Sterling B2B Integrator is an On-Line Transaction Processing (OLTP) application.

As an OLTP application, database response time to the I/O sub-system needs to be

in the single digit range even during the peak periods. The database response time

to the I/O sub-system should be less than:

v 5 ms for logs. 1ms or better is recommended.

v 10 ms or better for data. 5ms is recommended.

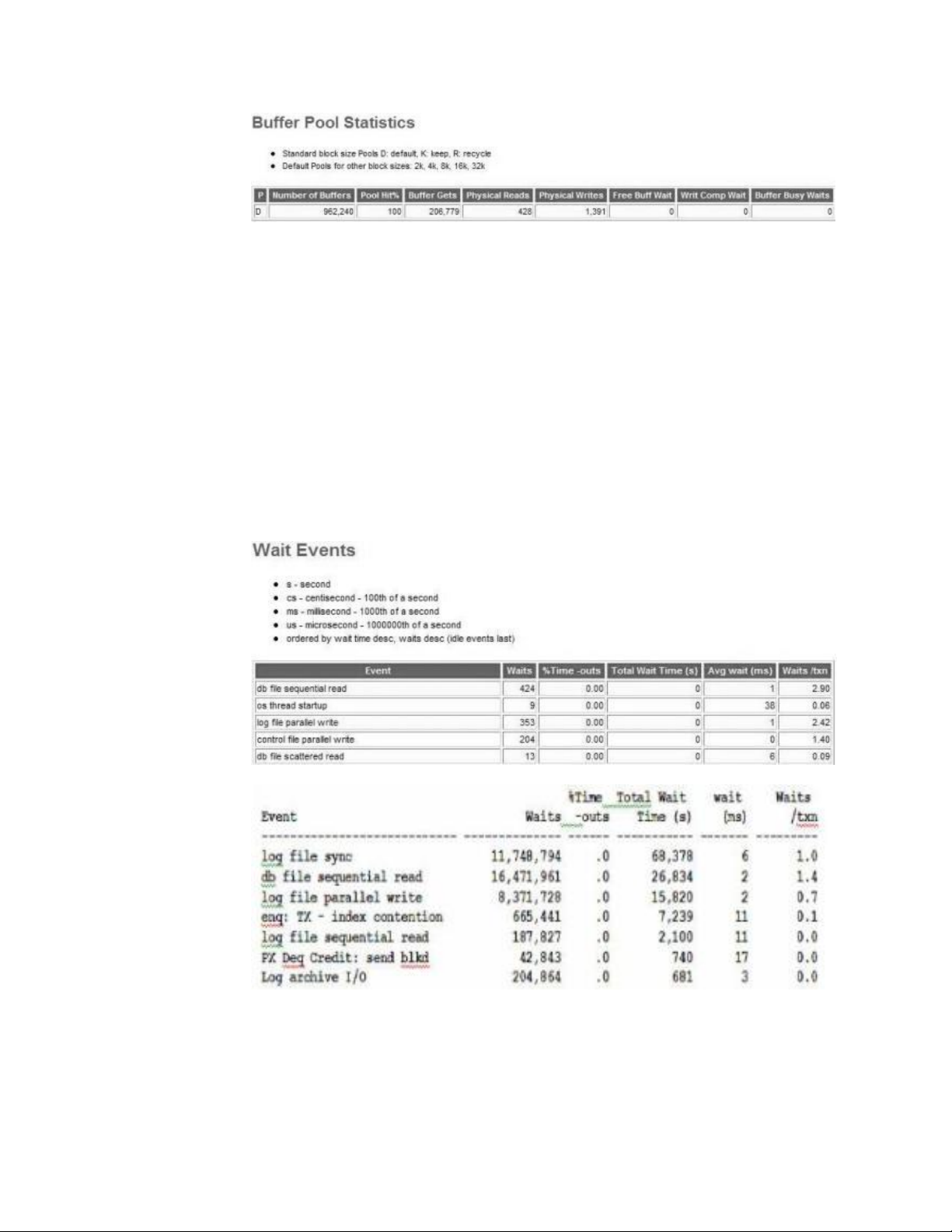

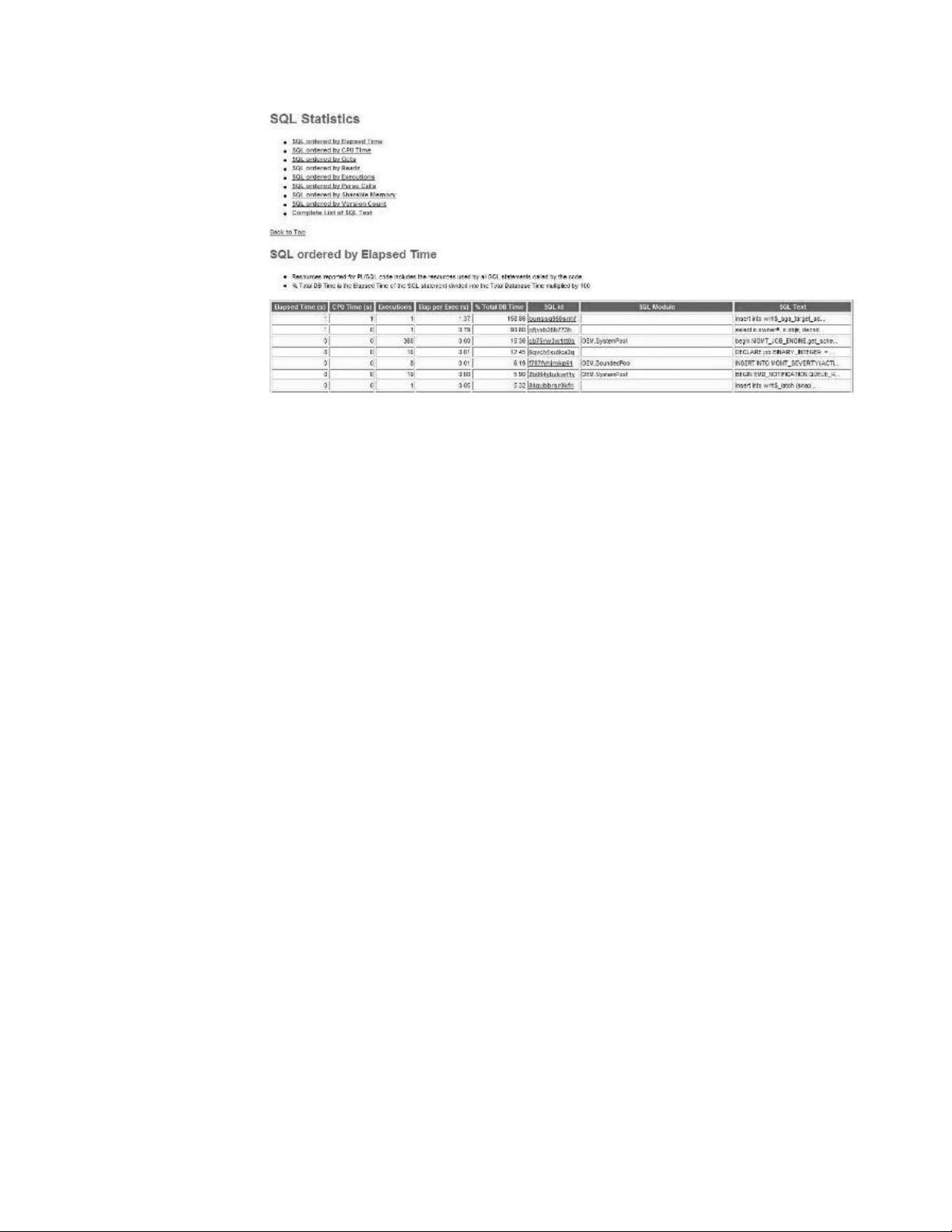

Monitoring with the AWR Report

Important: The information in an Automatic Workload Repository (AWR) report

needs to be considered in relation to Sterling B2B Integrator performance. This

information is not meaningful in isolation. You should monitor AWR over a period

of time to establish your steady state performance baseline. You should monitor

AWR even if Sterling B2B Integrator is performing to your satisfaction; doing so

could uncover issues before they become noticeable.

The AWR is used to collect performance statistics, including:

v Wait events used to identify performance problems

v Time model statistics indicating the amount of DB time associated with a process

from the V$SESS_TIME_MODEL and V$SYS_TIME_MODEL views

v Active Session History (ASH) statistics from the V$ACTIVE_SESSION_HISTORY

view

v Selected system and session statistics from the V$SYSSTAT and V$SESSTAT

views

v Object usage statistics

v Resource-intensive SQL statements

The repository is a source of information for several other Oracle database features,

including:

v Automatic Database Diagnostic Monitor

v SQL Tuning Advisor

v Undo Advisor

v Segment Advisor

By default, AWR snapshots of the relevant data are taken every hour and retained

for seven days. It is recommended to set shorter intervals, for example, 30 minutes,

because at longer intervals, the issues are less noticeable. Default values for these

settings can be altered with statements such as:

28 Sterling B2B Integrator: Performance Management

Page 35

BEGIN

DBMS_WORKLOAD_REPOSITORY.modify_snapshot_settings(

retention => 43200, -- Minutes (= 30 Days).

Current value retained if NULL.

interval => 30); -- Minutes. Current value retained if NULL.

END;

/

A typical Sterling B2B Integrator deployment contains characteristics of both