Page 1

IBM

IMS/ESA V6

Parallel Sysplex Migration Planning Guide

for IMS TM and DBCTL

Bob Gendry, Bill Keene, Rich Lewis, Bill Stillwell, Scott Chen

International Technical Support Organization

http://www.redbooks.ibm.com

SG24-5461-00

Page 2

Page 3

IBM

International Technical Support Organization

SG24-5461-00

IMS/ESA V6

Parallel Sysplex Migration Planning Guide

for IMS TM and DBCTL

June 1999

Page 4

Take Note!

Before using this information and the product it supports, be sure to read the general information in

Appendix F, “Special Notices” on page 239.

First Edition (June 1999)

This edition applies to Version 6, Release Number 1 of IMS/ESA, Program Number 5655-158 for use with the

MVS/ESA or OS/390 operating system.

Comments may be addressed to:

IBM Corporation, International Technical Support Organization

Dept. QXXE Building 80-E2

650 Harry Road

San Jose, California 95120-6099

When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any

way it believes appropriate without incurring any obligation to you.

Copyright International Business Machines Corporation 1999. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Page 5

Contents

Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Tables

Preface

The Team That Wrote This Redbook

Comments Welcome

Chapter 1. Introduction

1.1 Purpose of This Redbook

1.2 Organization of This Redbook

1.3 Prerequisite Knowledge

1.4 Assumptions

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

......................... xv

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvi

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

............................. 1

.......................... 1

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Part 1. Developing the Plan ........................................ 3

Chapter 2. Plan Development

2.1 Planning for Migration

2.1.1 Planning Phase

2.1.2 Preparation Phase

2.1.3 Implementation Phase

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

............................... 5

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Part 2. Planning Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Chapter 3. Planning Considerations with IMS/ESA V6 in Mind

3.1 Discussing the Use of Traditional Queuing and Shared Queues

......... 13

...... 17

Chapter 4. Planning Configurations After Failures

4.1 IRLMs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

................. 19

4.1.1 Restarting Batch (DLI and DBB) Data-Sharing Jobs

4.2 IMS Subsystem Configurations

4.3 IMS Data-Sharing Subsystems

4.4 IMS Subsystems Utilizing Shared Queues

.......................... 22

.......................... 22

................... 23

4.5 IMS Subsystems Utilizing VTAM Generic Resources

4.6 FDBR Action After an IMS Failure

4.7 Restarting BMPs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

4.8 Degraded Mode Processing

Chapter 5. System Environment Consideration

5.1 Naming Conventions

5.2 IMS Subsystem Data Sets

5.2.1 IMS Data Sets

5.2.2 CQS Data Sets

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

............................. 28

................................. 28

................................. 29

5.2.3 Data Set Characteristics

5.3 Executable Code

5.3.1 IMS System Code

5.3.2 CQS System Code

5.3.3 Exit Code

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

............................... 30

.............................. 31

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

5.3.4 Application Program Code

5.4 Control Blocks

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

........................ 25

........................... 25

.................. 27

........................... 29

.......................... 32

.......... 21

............. 24

Copyright IBM Corp. 1999 iii

Page 6

5.4.1 CQS Access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

5.4.2 Online Access

5.5 Parameter Libraries

5.6 Dynamic Allocation

5.7 JCL Libraries

5.7.1 Procedures

5.7.2 Jobs

....................................... 34

5.7.3 DBRC JCL Libraries

5.8 Making the Decision

5.8.1 Data Sets That Must Be Unique

5.8.2 Data Sets That Must Be Shared

5.8.3 Data Sets That Are Probably Shared

5.8.4 Data Sets That Are Probably Unique

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

............................. 36

................................ 37

...................... 37

...................... 38

................... 38

................... 40

Chapter 6. Applications and Databases

6.1 Applications

6.2 Databases

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

6.3 Partitioned Applications and Databases

6.4 Cloned Applications and Data Sets

6.5 Handling Databases That Are Not Shared

6.5.1 Routing Transactions

6.5.2 Copying Databases

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

....................... 41

.................... 42

....................... 42

................... 42

Part 3. Planning Considerations for IMS TM ........................... 45

Chapter 7. Introduction to IMS TM Considerations

7.1 Overview

7.2 IMS TM Configuration Considerations

7.2.1 Cloning

7.2.2 Joining

7.2.3 Front-End and Back-End

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

..................... 48

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

........................... 50

Chapter 8. IMS TM Network Considerations

8.1 Overview

8.2 Special Network Considerations

8.2.1 SLUTYPEP

8.2.2 ISC

8.3 APPC (LU 6.2)

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

......................... 54

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

.................................... 58

8.4 ETO Considerations with Shared Queues

8.4.1 Duplicate Terminal Names

8.4.2 Solutions

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

......................... 59

8.4.3 Limiting the Number of End-User Signons

8.4.4 Dead Letter Queue Considerations

8.5 Conversational Transaction Processing

8.6 Application Program Considerations

8.7 Application Affinities

8.8 IMS APPLIDs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

...................... 62

8.9 VTAM Model Application Program Definitions

8.10 A Model Application Program Definition Example

................ 47

.................... 53

................... 59

................ 61

.................... 61

.................... 62

................. 64

.............. 64

Chapter 9. IMS TM Workload Balancing Considerations

9.1 Overview.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

9.2 Network Workload Balancing

9.2.1 Instructions to the End-Users

iv IMS Parallel Sysplex Migration Planning Guide

............. 65

........................... 66

........................ 67

Page 7

9.2.2 USSTAB Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

9.2.3 CLSDST PASS VTAM Application Program

9.2.4 USERVAR Processing

9.2.5 Use of VTAM Generic Resources

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

...................... 69

9.2.6 VTAM Generic Resources for APPC/IMS

9.2.7 Printers and Network Workload Balancing

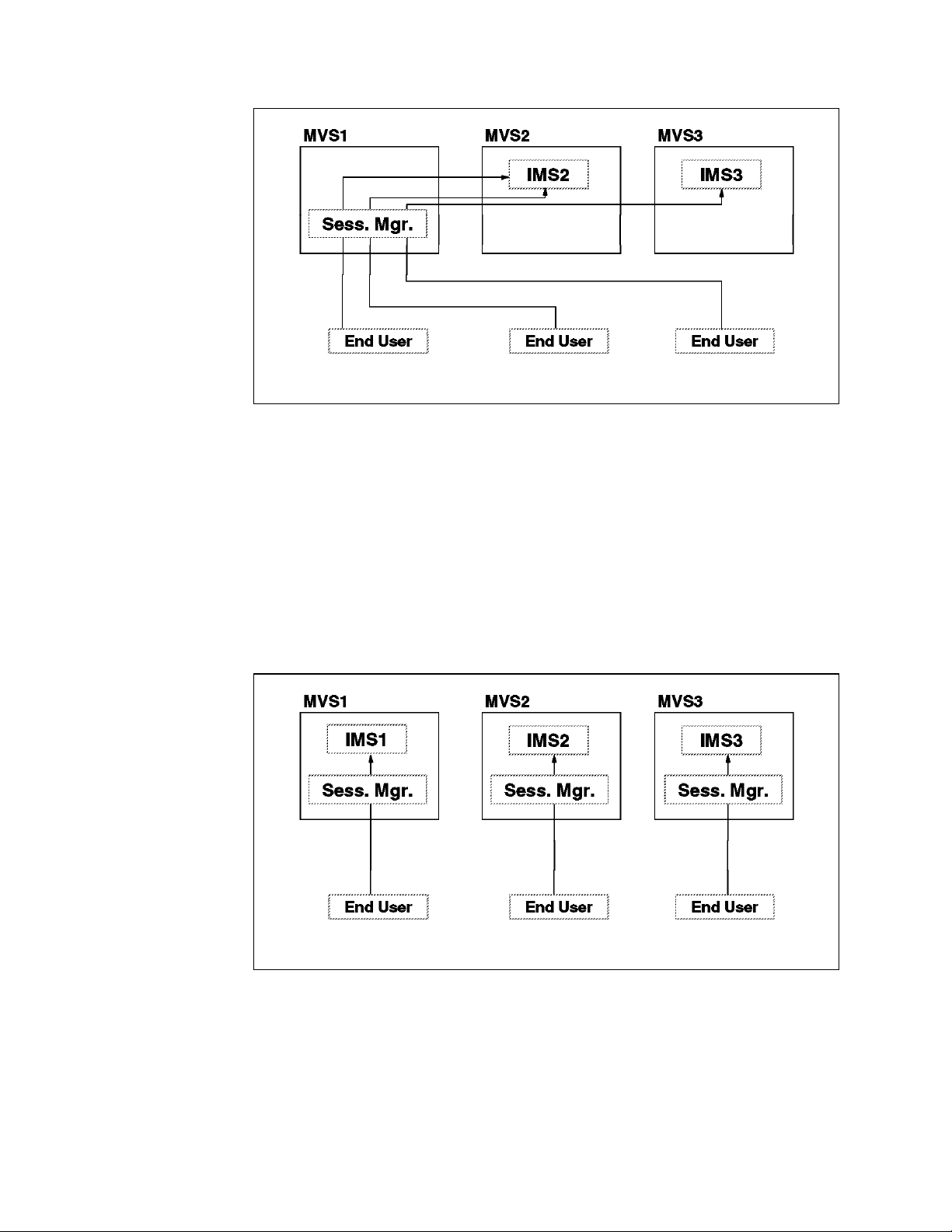

9.2.8 Network Workload Balancing With Session Managers

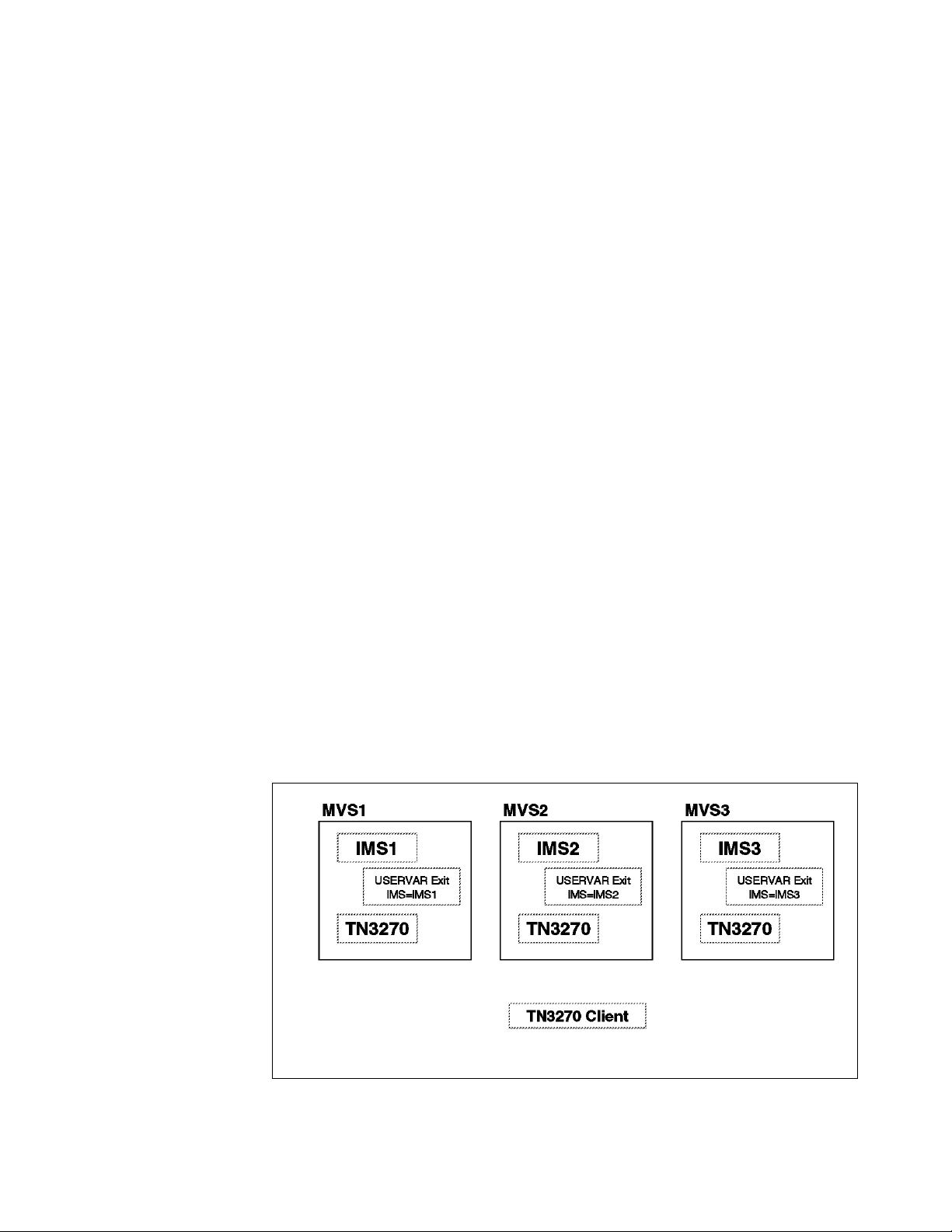

9.2.9 Network Workload Balancing With TCP/IP and TN3270

9.3 Application Workload Balancing

9.3.1 Network Workload Balancing

9.3.2 MSC Balancing

9.3.3 IMS Workload Router

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

............................. 82

......................... 81

........................ 81

9.3.4 Shared Queues and Application Workload Balancing

9.4 Transaction Scheduling Considerations

9.5 Summary of Workload Balancing Considerations

................ 67

................. 72

................ 73

......... 76

......... 79

......... 83

.................... 85

............... 85

Chapter 10. IMS TM System Definition Considerations

10.1 Master Terminals With IMS/ESA Version 5

10.2 Master Terminals With IMS/ESA Version 6

10.3 Security

10.4 Resource Definitions and Usage

10.5 MSC Considerations

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

........................ 89

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

10.5.1 Inter-IMSplex MSC Without Shared Queues

10.5.2 Migration to Shared Queues

........................ 91

10.5.3 Example: Migration of Two MSC-Connected Systems

.............. 87

.................. 87

.................. 88

............... 90

........ 95

10.5.4 Example: MSC Link from a Shared Queues Group Member to a

Remote IMS

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

10.5.5 Example: MSC Link Between Members of Two Shared Queues

Groups

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

10.5.6 Example: Multiple MSC Links between Members of Two Shared

Queues Groups

10.5.7 Example: Backup MSC Link Configuration

10.6 Serial Transactions

10.6.1 Serial Transactions With Traditional Queuing

10.6.2 Serial Transactions With Shared Queues

10.7 Undefined Destinations

10.7.1 Destination Determination

10.7.2 Back-End Processing of Input Transactions

10.7.3 Comments and Recommendations

10.8 Online Change

10.9 SPOOL Terminals

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

.............. 100

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

............. 102

............... 102

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

. . . . . . . . . . . . . . . . . . . . . . . . 104

.............. 104

................... 104

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Part 4. Data-Sharing and Shared Queues Considerations .................. 107

Chapter 11. Data-Sharing Enablement

11.1 IMS System Definition

11.2 IRLM

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

............................. 109

11.2.1 IRLM Subsystem Names

11.2.2 IRLM as Lock Manager

11.2.3 IRLM Scope

11.3 DBRC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

11.3.1 SHARECTL

11.3.2 SHARELVL

11.3.3 Skeletal JCL

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

. . . . . . . . . . . . . . . . . . . . . . . 109

......................... 110

.......................... 110

Contents v

Page 8

11.4 Database Data Set Sharability ........................ 112

11.4.1 VSAM SHAREOPTION(3 3)

11.4.2 DISP=SHR in JCL or DFSMDA

11.5 CFNAMES Statement

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

11.6 DEDB Statements in the DFSVSMxx Member

11.7 Order of Implementation Steps

11.8 IMS Procedures for Data-Sharing

11.9 Data-Sharing Performance Considerations

11.10 Sources of Performance Information

11.11 Data Sharing Coupling Facility Performance Impacts

11.11.1 IRLM CF Access

11.11.2 IMS CF Access

............................. 122

.............................. 123

........................ 112

..................... 112

............... 113

........................ 114

...................... 116

................. 118

.................... 120

.......... 121

Chapter 12. Shared Queues Enablement

12.1 Program Properties Table

12.2 Structure Definitions

12.3 Log Stream Definitions

12.4 System Checkpoint Data Sets

12.5 Structure Recovery Data Sets

12.6 CQS Procedure

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

........................... 125

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

............................. 125

......................... 125

......................... 126

12.7 BPE Configuration PROCLIB Member

12.8 CQS Initialization Parameters PROCLIB Member (CQSIPxxx)

12.9 CQS Local Structure Definition PROCLIB Member (CQSSLxxx)

12.10 CQS Global Structure Definition PROCLIB Member (CQSSGxxx)

12.11 Security for CQS Structures

12.12 ARM Policy Updates

.............................. 127

......................... 127

12.13 IMS Shared Queues PROCLIB Member (DFSSQxxx)

12.14 QMGR and Shared Queues Traces

12.15 IMS Procedure

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

12.16 IMS Procedures for Shared Queues

..................... 125

.................... 126

...... 126

..... 126

... 126

.......... 127

..................... 127

.................... 127

Part 5. MVS Parallel Sysplex Conderations ............................ 129

Chapter 13. VTAM Generic Resources Enablement

13.1 VTAM Requirements

13.2 IMS Requirements

13.3 APPC/IMS Requirements

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

. . . . . . . . . . . . . . . . . . . . . . . . . . . 131

............... 131

Chapter 14. Automatic Restart Manager (ARM)

14.1 Exceptions to Automated Restarts of IMS

14.2 Restart Conditions

14.3 Restart Groups

14.4 Other ARM Capabilities

14.5 ARM with IRLM

14.5.1 Restarting after IRLM Abends

14.5.2 Restarting after System Failures

14.6 ARM with CQS

14.7 ARM with FDBR

14.8 Information for ARM Policies

Chapter 15. Coupling Facility

15.1 Coupling Facility Planning Guidelines

15.1.1 Structure Placement Rules

15.1.2 Initial Structure Placement

vi IMS Parallel Sysplex Migration Planning Guide

................. 133

.................. 133

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

............................ 135

................................. 136

...................... 136

.................... 136

.................................. 136

................................. 137

......................... 137

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

.................... 139

........................ 139

........................ 140

Page 9

15.1.3 Structure Sizing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

15.1.4 IMS Database Manager

15.1.5 IRLM

15.1.6 Shared Queues

..................................... 146

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

15.1.7 Summary of Structure Characteristics

15.2 Changing CF Structure Sizes

15.2.1 Connection and Structure Persistence

15.2.2 IMS Buffer Invalidation Structure Changes

15.2.3 DEDB VSO Cache Structure Changes

15.2.4 IRLM Lock Structure Changes

15.2.5 Automatic Rebuilds

15.2.6 Shared Queues Structure Changes

15.2.7 Alter and Rebuild for Shared Queues Structures

.......................... 141

................. 150

......................... 151

................. 151

.............. 151

................. 152

...................... 152

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

................... 154

........... 154

Part 6. Operation Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

Chapter 16. IMS Connections, Security and User Exits

16.1 IMS Connections

16.2 Security

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

16.2.1 RACF Security

16.3 IMS SMU Security

16.4 Database Data Set Dispositions

16.5 User Exits

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

16.5.1 IMS System Exits

16.5.2 IMS Database Manager Exits

16.5.3 IMS Transaction Manager Exits

16.5.4 Common Queue Server Exit Routines

Chapter 17. IMS and User JCL

17.1 IMS Procedures

17.1.1 Started Procedures

17.1.2 Executed Procedures

17.2 IMS Jobs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

17.3 DBRC Skeletal JCL

17.4 Other Backup and Recovery JCL

17.5 Other Application JCL

17.6 BMP JCL

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

................................ 161

....................... 161

.............................. 162

...................... 163

..................... 163

................. 164

........................... 167

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

. . . . . . . . . . . . . . . . . . . . . . . . . . . 170

............................... 172

....................... 173

............................. 173

17.6.1 Using the Function Delivered by APAR PQ21039

17.6.2 The IMSGROUP Function

......................... 174

17.6.3 Handling IMSID Without APAR PQ21039 Function

17.6.4 Maintenance Levels of RESLIB

17.6.5 Routing BMP Jobs

............................. 177

..................... 177

............. 159

........... 173

.......... 174

Chapter 18. Operations

18.1 Operational Procedures

18.2 Automated Operations

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

18.2.1 IMS Time-Control Operations

18.2.2 IMS AO Exits

18.2.3 Other AO Products

................................ 180

............................. 180

18.3 Use of IRLM SCOPE=NODISCON

18.3.1 Use of RDI Regions

18.4 Recovery Procedures

18.5 Support Procedures

18.6 IMS Log Management

............................ 181

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

............................... 182

............................. 183

...................... 179

...................... 180

Contents vii

Page 10

18.6.1 IMS Log Archive .............................. 183

18.7 Job Scheduling Procedures

18.8 Online Change Procedures

18.9 IMS Commands from MVS Consoles

18.9.1 GLOBAL Commands

18.9.2 Alternative to GLOBAL Commands

18.9.3 Recommendations for IMS Commands From MVS Consoles

.......................... 183

.......................... 184

.................... 184

. . . . . . . . . . . . . . . . . . . . . . . . . . . 185

................... 187

... 187

Chapter 19. Fast Database Recovery (FDBR)

19.1 FDBR Monitoring

19.1.1 Log Monitoring

19.1.2 XCF Status Monitoring

19.2 Invoking Recovery

19.3 FDBR Failures

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189

.......................... 190

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

19.4 Restarting IMS after FDBR Completion

19.5 DBRC Authorizations with FDBR

19.6 ARM and FDBR

................................. 192

19.6.1 ARM Support for IMS With FDBR Active

19.6.2 ARM Support for FDBR

19.7 FDBR, XRF, and ARM

.......................... 193

.............................. 193

19.7.1 FDBR Advantages and Disadvantages

19.7.2 XRF Advantages and Disadvantages

19.7.3 ARM Advantages and Disadvantages

19.8 Recommendations

Chapter 20. Recovery Procedures

20.1 Image Copies

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

. . . . . . . . . . . . . . . . . . . . . . . . . 197

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

20.1.1 Database Image Copy (DFSUDMP0)

................... 189

................... 192

....................... 192

................ 192

................. 193

.................. 194

................. 194

.................. 197

20.1.2 Concurrent Image Copy (DFSUDMP0 with CIC Option)

20.1.3 Online Database Image Copy (DFSUICP0)

20.1.4 Database Image Copy 2 (DFSUDMT0)

20.1.5 Summary of Image Copies

20.2 Database Recoveries

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

........................ 198

20.2.1 Time-Stamp Recovery Considerations

20.3 IMS Batch (DBB and DLI) Job Abends

20.4 IMS Online (TM or DBCTL) Abends

20.5 IRLM Abends

20.6 MVS Failures

20.7 Lock Structure Failures

................................... 200

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

............................ 201

20.8 OSAM and VSAM Structure Failures

20.9 DEDB VSO Structure Failures

......................... 202

..................... 200

..................... 202

20.9.1 Procedure for Failure of One of Two Structures

............... 197

................. 198

................. 199

................... 200

........... 203

20.9.2 Procedure for Failure of Only Structure or Both Structures

20.10 CF Connection Failures

20.11 CF Connection Failure to Lock Structure

............................ 203

................. 203

20.12 CF Connection Failure to an OSAM or VSAM Structure

20.13 CF Connection Failure to a DEDB VSO Structure

............ 204

20.13.1 Procedure with Connectivity to a Second Structure

20.13.2 Procedure without Connectivity to a Second Structure

20.14 Disaster Recovery

20.14.1 Image Copy Only Disaster Recovery

20.14.2 Time-Stamp Recovery Disaster Recovery

20.14.3 Latest Archived Log Disaster Recovery

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

................. 205

.............. 206

............... 206

20.14.4 Real-Time Electronic Log Vaulting Disaster Recovery

....... 197

.... 203

......... 204

........ 205

...... 205

....... 207

viii IMS Parallel Sysplex Migration Planning Guide

Page 11

Appendix A. Naming Convention Suggestions .................. 209

Appendix B. IMS System Data Sets

B.1 Data Sets That Must Be Unique

B.2 Data Sets That Must Be Shared

B.3 Data Sets That Are Probably Unique

B.4 Data Sets That Are Probably Shared

........................ 215

........................ 215

........................ 216

..................... 216

..................... 216

Appendix C. Sample USERVAR Exit for Network Balancing

Appendix D. Parallel Sysplex Publications

Appendix E. Migration Plan Task List

E.1 Planning Phase

E.2 Preparation Phase

E.3 Implementation Phase

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

E.3.1 Data Sharing Environment Implementation

E.3.2 Generic Resources Environment Implementation

E.3.3 Shared Queues Environment Implementation

E.3.4 Implementation of Second System (Clone)

Appendix F. Special Notices

Appendix G. Related Publications

. . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

. . . . . . . . . . . . . . . . . . . . . . . . . 241

.................... 231

....................... 233

............... 237

........... 237

............. 238

............... 238

G.1 International Technical Support Organization Publications

G.2 Redbooks on CD-ROMs

G.3 Other Publications

............................. 241

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

.......... 219

........ 241

How to Get ITSO Redbooks

IBM Redbook Fax Order Form

Glossary

List of Abbreviations

Index

ITSO Redbook Evaluation

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

................................. 249

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

............................... 253

............................. 243

............................ 244

Contents ix

Page 12

x IMS Parallel Sysplex Migration Planning Guide

Page 13

Figures

1. Using Unique IRLM Names and Moving IRLMs . . . . . . . . . . . . . . . 20

2. Using Unique IRLM Names and Changing IRLMs Used by IMSs

3. Using ″IRLM″ for all IRLM Names

. . . . . . . . . . . . . . . . . . . . . . . 21

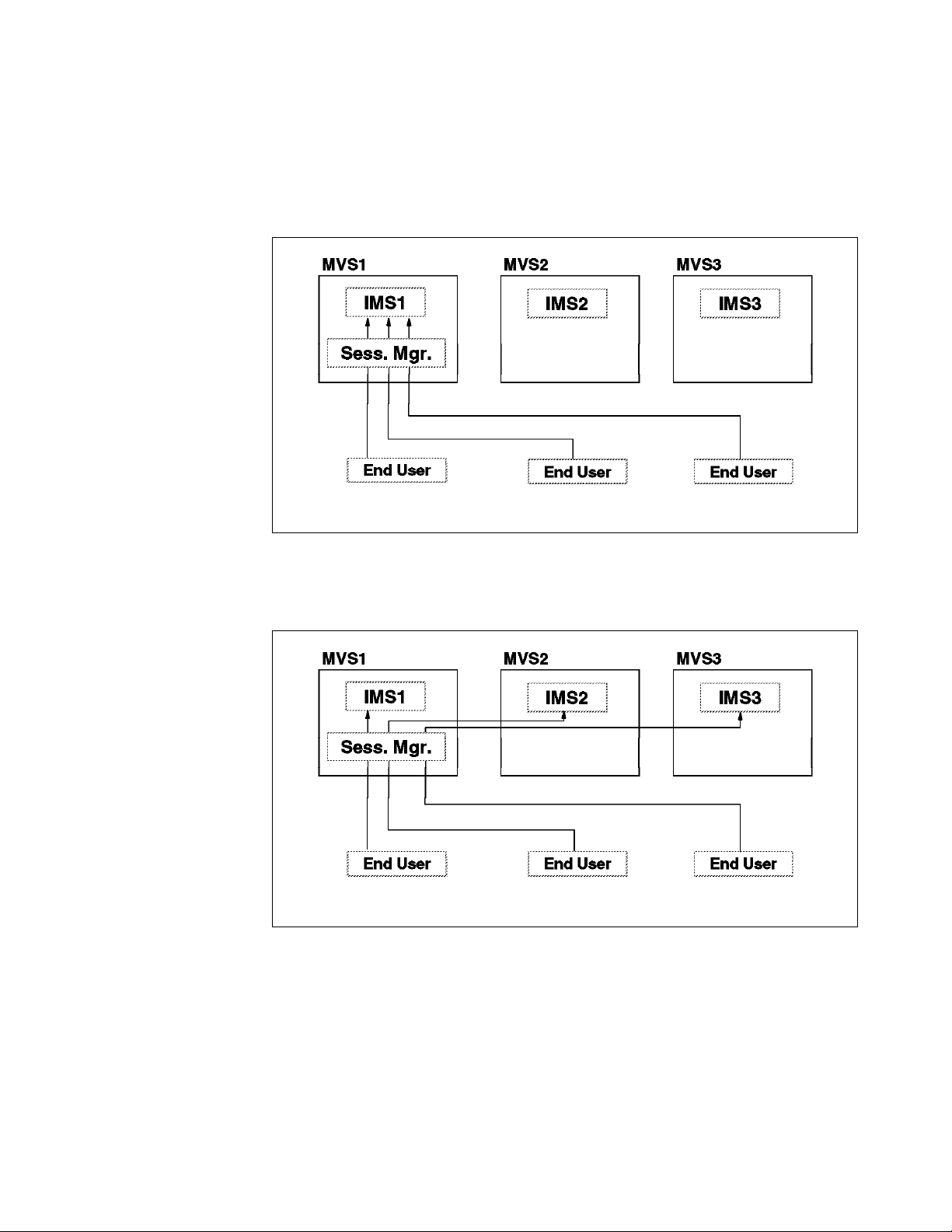

4. Using One Instance of a Session Manager Without ISTEXCGR

5. Using One Instance of a Session Manager With ISTEXCGR

6. Placing the Session Manager on an MVS Without IMS

7. Session Managers With Generic Resource Support

8. Using DNS With TN3270 and VTAM GR

9. Using DNS With TN3270 and VTAM GR

. . . . . . . . . . . . . . . . . . . . 79

. . . . . . . . . . . . . . . . . . . . 80

. . . . . . . . . . . 78

. . . . . . . . . . . . 78

10. Using DNS With TN3270 and VTAM GR After a Failure of IMS1

11. Two IMS systems With an MSC connection

12. Migration of IMSB to a Shared Queues Group

................. 95

................ 96

13. Migration of IMSA and IMSB to a shared queues group is completed

14. MSC link from shared queues group member to remote IMS

15. MSC links between members of two shared queues groups

. . . . . 21

. . . . . . 77

........ 77

...... 81

. 96

....... 98

....... 99

16. MSC connections from shared queues group members to remote IMS

systems

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

17. Backing up logical links from a shared queues group to a remote IMS 101

18. Starting a backup link to a shared queues group

............. 101

Copyright IBM Corp. 1999 xi

Page 14

xii IMS Parallel Sysplex Migration Planning Guide

Page 15

Tables

1. IMS Online Region Library . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2. IMS Procedures Summary

3. IMS Shared Queues Procedure Parameters

4. ARM Element Terms

5. Structure Characteristics

6. Summary of IMS Connectors

7. IMSID Usage Before Cloning

8. IMSID Usage After Cloning

9. Image Copy With Concurrent Updates

10. Task List

11. Task List

12. Task List

13. Task List

14. Task List

15. Task List

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

. . . . . . . . . . . . . . . . . . . . . . . . . . . 116

. . . . . . . . . . . . . . . . 128

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

. . . . . . . . . . . . . . . . . . . . . . . . . . . 150

. . . . . . . . . . . . . . . . . . . . . . . . . 159

. . . . . . . . . . . . . . . . . . . . . . . . . 174

. . . . . . . . . . . . . . . . . . . . . . . . . . 174

. . . . . . . . . . . . . . . . . . . 198

Copyright IBM Corp. 1999 xiii

Page 16

xiv IMS Parallel Sysplex Migration Planning Guide

Page 17

Preface

This redbook provides information for those planning for migrating IMS/ESA 6.1

system to a Parallel Sysplex environment using data sharing and shared queues.

The redbook lists all important factors to be consider during the planning stage.

It also lists important features and describes the relationships between IMS/ESA

V6 and OS/390. Readers will, therefore, be able to understand what benefits can

be derived even before the complete implementation to the Parallel Sysplex

environment.

The target audience is customer IT architects, system designers, IT managers

and IBM representatives who are helping customers on migration projects.

The Team That Wrote This Redbook

This redbook was written by a team of IMS specialists from the IMS Advanced

Technical Support Department at the IBM Dallas Systems Center.

Bob Gendry has been a member of the IMS Advanced Technical Support

Department at the IBM Dallas Systems Center since 1978 and has over 25 years

of experience in providing technical support for IMS. He has given multiple

presentations on IMS-related topics to user groups and at the IMS Technical

Conferences in the United States and Europe. He has provided assistance to

multiple IMS users in planning and implementating IMS in a Parallel Sysplex

environment for the past several years. In addition, he has prepared and taught

educational materials directly related to the understanding, implementation, and

use of IMS in a Parallel Sysplex environment.

Bill Keene was a member of the IMS Advanced Technical Support team at the

IBM Dallas Systems Center. He has over 25 years of experience in providing

technical support for IMS. He is a frequent speaker at GUIDE, SHARE, and IMS

Technical Conferences on IMS-related topics. He has provided assistance to

multiple IMS users in planning for and implementing the use of IMS in a Parallel

Sysplex environment for the past several years. In addition, he has prepared

and taught educational materials directly related to the understanding,

implementation, and use of IMS in a Parallel Sysplex environment.

After contributing to this redbook, Bill Keene retired from IBM.

Rich Lewis has been a member of the IMS Advanced Technical Support

Department at the IBM Dallas Systems Center since 1979. He has over 25 years

of experience in providing technical support for IMS. Since the introduction of

Parallel Sysplex, he has been assisting users in implementing IMS data-sharing.

He has written technical documents, created presentations, and developed an

IMS Parallel Sysplex data sharing course. He has provided planning services to

many customers for the introduction of IMS into their Parallel Sysplex

environments. Rich regularly presents Parallel Sysplex topics at IMS Technical

Conferences and user group meetings in the United States and Europe.

Bill Stillwell has been providing technical support and consulting services to IMS

customers as a member of the Dallas Systems Center for 17 years. During that

time, he developed expertise in application and database design, IMS

Copyright IBM Corp. 1999 xv

Page 18

performance, fast path, data sharing, planning for IMS Parallel Sysplex

exploitation and migration, DBRC, and database control (DBCTL).

He also develops and teaches IBM Education and Training courses, including

IMS/ESA Version 6 Product Enhancements, IMS Shared Queues, and IMS Fast

Path Implementation, and is a regular speaker at the annual IMS Technical

Conferences in the United States and Europe.

Scott Chen is a member of International Technical Support Organization, San

Jose Center. Scott has installing, configuring, debugging, tuning, consulting,

application designing, programming and studying MVS and OS/390 internal,

database and transaction management system (includes IMS) and digital library

softwares for over 25 years.

Thanks to the following people for their invaluable contributions to this project:

Dick Hannan

IBM Santa Teresa Laboratory

IMS Development

Comments Welcome

Your comments are important to us!

We want our redbooks to be as helpful as possible. Please send us your

comments about this or other redbooks in one of the following ways:

•

Fax the evaluation form found in “ITSO Redbook Evaluation” on page 253 to

the fax number shown on the form.

•

Use the online evaluation form found at http://www.redbooks.ibm.com/

•

Send your comments in an Internet note to redbook@us.ibm.com

xvi IMS Parallel Sysplex Migration Planning Guide

Page 19

Chapter 1. Introduction

1.1 Purpose of This Redbook

This redbook provides information for those creating a plan for migrating an

IMS/ESA 6.1 system to a Parallel Sysplex environment using data sharing and

shared queues. The reader is assumed to be familiar with the requirements for

data sharing and shared queues implementation. This information may be

obtained from the IBM Education and Training classes for IMS/ESA Block Level

Data Sharing, Shared Queues, and IMS/ESA Version 6 and from IMS/ESA Data

Sharing in a Parallel Sysplex, SG24-4303.

The redbook applies to both IMS/ESA TM and Database Control (DBCTL) users.

Some Parallel Sysplex functions and facilities, such as Shared queues, apply

only to IMS Transaction Manager (TM) users. Sections of this redbook which

apply only to IMS TM users or only to DBCTL users are identified.

1.2 Organization of This Redbook

The main body of this redbook is divided into six sections plus several

appendices.

1. Developing the Plan

This section addresses the plan itself, including the purpose of the plan, its

content, and the migration tasks that should be identified within the plan.

2. Planning Considerations

This section addresses some of the general technical issues that must be

considered when developing and implementing the plan and is intended to

help make decisions.

3. Planning Considerations for IMS TM

This section focuses on technical issues related to the IMS TM environment.

4. Data Sharing and Shared Queues Considerations

This section reviews some technical issues related to IMS data sharing and

shared queues.

5. MVS Parallel Sysplex Considerations

Here, we look at some technical topics related to IMS interact with MVS

Parallel Sysplex.

6. Operation Considerations

Finally, we review technical topics related to IMS operation and recovery

procedures.

7. Appendixes

The appendixes provide additional technical information that might be useful

in performing some of the tasks. A sample list of tasks is included at the

end of this redbook in Appendix E, “Migration Plan Task List” on page 233.

This list includes references to the parts of this redbook that apply to each

task in the list.

Copyright IBM Corp. 1999 1

Page 20

A list of useful Parallel Sysplex publications, other than the standard

IMS/ESA V6.1 product publications, is provided in Appendix D, “Parallel

Sysplex Publications” on page 231.

1.3 Prerequisite Knowledge

This redbook is written for those who are familiar with the following:

•

IMS block-level data sharing definition requirements for IMS, IRLM, and the

Coupling Facility

•

IMS shared queues definition requirements for IMS (including the Common

Queue Server) and the Coupling Facility

•

Recovery procedures for failures in the IMS block-level data sharing

environment

•

Recovery procedures for failures in the shared queues environment

•

Roles of IRLM and the Coupling Facility in supporting block-level data

sharing

•

Roles of the Common Queue Server and the Coupling Facility in supporting

shared queues

1.4 Assumptions

The assumptions about the installation for which the plan is being developed

are:

•

The installation is in production with IMS/ESA V6.1 prior to the

implementation of this plan.

•

A Parallel Sysplex environment has been implemented prior to the

implementation of this plan.

•

The application (system) to be migrated has been identified.

•

The existing IMS environment and its applications are to be cloned.

There is only one current IMS system to be cloned. Its workload will be split

across multiple IMS systems which are as identical in function as possible.

They can have different workload capacities.

2 IMS Parallel Sysplex Migration Planning Guide

Page 21

Part 1. Developing the Plan

Copyright IBM Corp. 1999 3

Page 22

4 IMS Parallel Sysplex Migration Planning Guide

Page 23

Chapter 2. Plan Development

This section addresses the development of the migration plan and identifies

some of the steps and considerations you might encounter when developing the

plan. The result of this exercise is not to ″perform″ any of the implementation

tasks but to identify those tasks which must be done and to create a plan for

accomplishing them. For example, the plan can identify as a task the

establishment of a naming convention for system data sets. The naming

convention itself is not a part of the plan, but is a result of implementing the

plan.

2.1 Planning for Migration

The process of migrating to an IMS data sharing and/or shared queues Parallel

Sysplex environment should be accomplished in three phases: a planning phase,

a preparation phase, and an implementation phase.

2.1.1 Planning Phase

The purpose of this phase is to identify/document where you are coming from,

where you are going, what you will do if there are failures, and to determine how

the plan will be created, who has responsibility, what will be its content and

format, what planning or project management tools will be used, and finally to

develop the plan itself.

Below, we have identified four major steps in the planning phase. You might

recognize fewer or more, but each step below has a purpose, and that purpose,

must be satisfied in any planning process.

2.1.1.1 Understand the Existing Environment

The first step in the planning phase is to understand the existing environment.

This includes knowing, for each application, the resource requirements (such as,

CPU, I/O), service level requirements, schedules and workloads, connections to

other subsystems, and the reasons for migrating (for instance, reduced costs or

improved performance, capacity, or availability). You should also identify any

″inhibitors″ to migration, such as non-sharable resources.

The assumption here is that the target environment is replacing an existing

environment for one or more reasons (for example, capacity, performance,

availability, flexibility,...). However, the target environment must continue to

provide the equivalent function with performance and availability at least as

good as the existing environment. So, in order to define a target environment

which will do this, it is first necessary to understand the existing environment.

The following describes the characteristics of the existing environment that

should be known before defining the target.

•

Why are you migrating to this environment?

A major part of developing a migration plan is to choose the configuration to

which the migration will be done. This configuration is affected by the

reasons for making the migration. The migration to IMS data sharing and

shared queues with Parallel Sysplex can be used to provide multiple

benefits. These include, but are not limited to:

− Increased availability

Copyright IBM Corp. 1999 5

Page 24

− Increased capacity

− Incremental growth capability

− Operational flexibility

•

What is the current workload?

This should be documented in terms that will facilitate the distribution of that

workload over two or more (perhaps) processors and should include

transaction volumes as well as batch/BMP and support jobs such as image

copies, reorganizations, and so forth.

•

Who are the heavy resource users?

Which of the above transactions or batch processes require the greatest

number of, CPU or I/O resources, and which transactions are the highest

volumes. It might be necessary to make special provisions for them in the

target environment.

•

What are the service level commitments?

What agreements exist for transaction response times, batch elapsed times

and availability? Are users billed according to the resources they use?

•

To what systems is the existing IMS connected?

This should include other IMSs, DB2s, CICSs, and any other ″intelligent″

systems or devices that might be sensitive to the identity of the IMS system

to which they are connected.

•

What are the online and batch schedules?

What are the hours of availability for online access and what is the ″batch

window″ (if there is one)?

•

Are there any batch or online dependencies?

Are there ″sequences″ of processes that must be maintained in the target

environment. For example, transactions defined as SERIAL, implying a FIFO

processing sequence, should be identified.

•

Are any user exits sensitive to the identity of the IMS system on which they

execute?

Look particularly at transaction manager exits and system exits as there will

be multiple transaction managers with different IDs, connected, perhaps, to

different subsystems (for instance, different CICSs or different DB2s) and with

only part of the original network.

•

Do any user-written programs process the IMS logs?

The logs will be quite different, with each log containing only part of the

information that was on the original single-image log.

•

What are the business-critical applications?

If one component of the target environment fails (for instance, one of two IMS

systems) and can′t be immediately restarted, it might be necessary to

quiesce relatively unimportant work on the surviving system in order to shift

the entire critical workload to that system. It might also be necessary to shift

part (or all) of the network to the surviving system.

•

Are there any non-sharable resources?

6 IMS Parallel Sysplex Migration Planning Guide

Page 25

An installation can choose not to share some databases. (See 6.5, “Handling

Databases That Are Not Shared” on page 42). These must be identified and

plans made for their migration to the target system.

2.1.1.2 Define Target Environment

The next step in the migration planning phase is to define the configuration of

the target environment. This includes the number of IMS subsystems in the data

sharing group, shared queues group, VTAM generic resource group, the MVS

system on which each IMS will run, other subsystems outside of the Parallel

Sysplex environment to which the target IMS will connect ( for example, other

IMSs, CICS, DB2), the coupling facilities to be used, and which systems will be

used for various purposes. For example, one must know on which systems IMS

BMPs will be run, or if applications are to be partitioned, on which systems they

will run. Be sure the target environment satisfies the reasons for migration.

The elements of the target configuration include the following:

•

MVS Systems

The MVS systems in the target configuration should be identified by the

processors and LPARs on which they run and the types of work they will

support. The types of work include the IMS subsystems and support

processes they will handle.

•

IMS Subsystems and Processes

These are IMS online systems (either IMS Transaction Manager, DCCTL, or

DBCTL), IMS batch jobs, and IMS support processes. Support processes

include database image copies, database reorganizations, log archiving, and

definition jobs. The MVS systems on which they will run should be identified.

The use of each IMS online system should be identified.

− IMS TM

For IMS TM this includes the terminal networks that will use them, the

ISC and MSC connections to other systems, APPC connections, the

associated DB2 subsystems, the application programs, and transactions

that will run on each system. Application programs include BMPs.

For IMS TM and DCCTL subsystems, special planning considerations will

be required if shared queues, VTAM generic resources, and/or other

Parallel Sysplex enhancements delivered with IMS/ESA V6.1 are to be

used.

− DBCTL

For DBCTL this includes the CICS systems that will attach to them, the

DB2 systems used by BMPs, and the application programs that will run

on each system. Application programs include BMPs.

For cloned systems, it is assumed that all online transactions and programs

will be run on each system. For performance, operational, or affinity

reasons, there may be exceptions. These should be understood, and the

target configuration must account for these considerations. Typically, BMPs

and IMS batch jobs will be directed to particular IMS or MVS systems. Many

installations will want to run them on only one MVS system.

•

Coupling Facilities

Chapter 2. Plan Development 7

Page 26

The coupling facilities and coupling facility structures to support IMS should

be identified. This includes structure sizes and the placement of these

structures in support of:

− Data sharing structures

- IRLM (lock structure)

- VSAM buffer invalidation structure (directory-only cache structure)

- OSAM buffer invalidation/cache structure (store-through cache

structure)

- Shared DEDB VSO structure(s) (store-in cache structure)

− Shared Queues

- Message queue and EMH queue primary and overflow structures (list

structures)

- MVS logger structures (list structures)

− VTAM Generic Resource structure (list structure)

2.1.1.3 Define Degraded Mode Environment

Next you must decide what you will do if something fails. Since a Parallel

Sysplex consists of multiple MVS and IMS systems, an installation should plan

what it will do if one or more components fail. For example, there may be

certain applications or systems that are more critical to the business and

therefore should have preference to be available when there is an outage of part

of the system. This is called degraded mode processing.

During this phase, you should determine both the processing and business

impact of the failure of any component of the target environment. Identify those

applications which must be given priority in a degraded processing environment.

You must also consider what users who are connected to a failed component

should do (such as, log on to another IMS?).

Some tasks which should be included in this phase are:

•

Perform a ″component failure impact analysis (CFIA)″ for critical components

•

Prioritize applications for degraded mode processing

•

Identify applications to run in degraded mode

•

Identify network terminals and connections to be reconnected to another

system if one system fails

2.1.1.4 Develop the Plan

The plan should recognize the following two phases of the migration process:

preparation and implementation. Although this document does not prescribe a

format for the migration plan, the following elements should be included:

•

Tasks - What must be done?

•

Responsibility - Who is responsible to see that it gets done?

•

Dependencies - Is any task a prerequisite to another task?

•

Duration - How long should each task take?

•

Schedule - When must it be done - start/complete/drop-dead dates?

•

Status - A mechanism for monitoring progress?

8 IMS Parallel Sysplex Migration Planning Guide

Page 27

Appendix E, “Migration Plan Task List” on page 233 is a list of tasks that have

been identified which should be a part of the migration plan.

2.1.2 Preparation Phase

Most of the tasks identified in the migration plan are implemented during the

preparation phase. The plan may say, for example, that a naming convention

must be established for system data sets. During this phase, that naming

convention will be developed. Or the plan may say that operational procedures

must be updated. During this phase, those procedures are updated.

Some of the tasks in this phase will be ″decision″ type tasks (for instance, how

many copies of RESLIB do I want?). Others will be ″implementing″ some of

these decisions (such as, making two copies of RESLIB). At the conclusion of

this phase, you are ready to migrate your existing system to production.

2.1.3 Implementation Phase

The final phase in the migration process is the actual implementation. That is,

the existing environment will be converted to an operational IMS Parallel

Sysplex environment. The actual ″implementation plan″ will probably be

produced as part of the preparation phase as it is unlikely that enough

information will be available during planning to generate this plan.

Chapter 2. Plan Development 9

Page 28

10 IMS Parallel Sysplex Migration Planning Guide

Page 29

Part 2. Planning Considerations

Copyright IBM Corp. 1999 11

Page 30

12 IMS Parallel Sysplex Migration Planning Guide

Page 31

Chapter 3. Planning Considerations with IMS/ESA V6 in Mind

IMS/ESA V5.1 was the first release of IMS to exploit Parallel Sysplex facilities. It

supported data sharing for full function and Fast Path databases. IMS/ESA V6.1

contains many enhancements for the use of IMS in a Parallel Sysplex. These

enhancements and others are listed below with brief comments about their use

or impact on IMS systems. Wherever appropriate, planning and migration

considerations for these enhancements are explained in the following sections of

this publication.

•

Shared Queues is a replacement for the manner in which input transactions

and output messages are physically managed and queued for processing.

With Shared Queues, the use of the message queue data sets has been

eliminated and replaced by queuing messages to list structures in one or

more coupling facilities.

Since a Coupling Facility can be accessed by multiple IMS subsystems, the

messages queued in a Coupling Facility can be accessed and processed by

any IMS that is interested in processing a particular resource name. For

example, all IMSs with access to the queue structures and with TRANA

defined are ′interested′ in processing TRANA. A given instance of TRANA,

therefore, can be processed by any IMS with access to the shared queues on

the Coupling Facility regardless of which IMS queued TRANA to the shared

queues as a result of terminal input or application output. This example of

′sharing′ the processing load among multiple IMS subsystems is one of the

key benefits of shared queues: That is, shared queues enable:

− Workload balancing. Multiple IMS subsystems can balance dependent

region application workloads among themselves automatically.

− Improved availability. If one IMS fails, the surviving IMSs can continue

processing.

− Incremental growth. IMS subsystems can be added to the Parallel

Sysplex environment without disruption.

•

VTAM Generic Resource support allows terminals to log on using a generic

name rather than a specific IMS APPLID when requesting a session with one

of the IMSs in a Generic Resource Group. VTAM Generic Resource support

selects one of the IMS subsystems in the generic resource group to which

the session request will be routed. VTAM′s session selection algorithm

enables the session requests to be evenly distributed across the IMS

subsystems to achieve network workload balancing while simplifying the

terminal end-user interface to the IMSs in the Parallel Sysplex.

•

Data sharing for DEDBs with SDEPs and for DEDBs with the VSO option is

enabled with IMS/ESA V6.1. The lack of data sharing support for these

particular types of DEDBs were considerations when migrating to data

sharing in a Parallel Sysplex environment with IMS/ESA V5.1.

•

OSAM Coupling Facility Caching allows OSAM blocks to be cached in a

coupling facility structure.

One of the costs of data sharing among IMS subsystems is known as ′buffer

invalidation.′ Whenever a data sharing IMS updates a block in its local buffer

pool, if the block is also in another system′s buffer, that buffer must be

invalidated. A future reference by that IMS to the block in the invalidated

buffer requires it to reread the block from the database data set on DASD.

Copyright IBM Corp. 1999 13

Page 32

With OSAM Caching capability, IMS users now have the option of storing

updated blocks in a coupling facility structure such that references to

invalidated blocks can be satisfied from the coupling facility, thus avoiding a

reread from a data set on DASD.

The OSAM Caching capability has three options: Store all selected database

data set blocks in the coupling facility or, store only blocks that have been

changed in the coupling facility, or, do not store any OSAM blocks in the

coupling facility.

•

Fast Database Recovery (FDBR) is a facility or function that addresses a

data-sharing problem known as the ″retained locks problem.″

At the time a data sharing subsystem fails, it might hold non-sharable locks

on database resources that cannot be released until the retained locks are

released. Retained locks are usually released by dynamic backout during

emergency restart of the failed IMS. The execution of a successful

emergency restart typically takes a few minutes, but can take much longer

depending upon the type of failure and nature of the emergency restart.

Given that a data-sharing IMS has failed, the other data sharing partners are

still executing. If application programs executing on the the remaining IMS

subsystems try to access a database resource that is protected by a retained

lock, the program is abended with a U3303 abend code, and the input

transaction is placed on the suspend queue. If access attempts to retained

locks result in many U3303 abends, then the impact on the application

programs executing on the surviving IMSs can be severe (that is, application

programs are unable to get their work done, and terminal operators might be

hung in response mode).

Application program failures because of retained locks can be avoided or

minimized in two ways. Application programs can be changed to issue an

INIT STATUS GROUPA call and to check for BA and BB status codes for calls

to databases. These application changes eliminate the U3303 abend when a

database call attempts to access a resource protected by a retained lock.

This method of avoiding application abends because of access to retained

locks has been available with IMS for many years. Unfortunately, not many

IMS users have modified their application programs to take advantage of

checking for the BA and BB status codes.

FDBR provides a second way to address the retained lock problem by

dynamically backing out in-flight units of work whenever an IMS subsystem

in a data-sharing group fails while holding retained locks. FDBR minimizes

the impact of the problem by reducing the amount of time that retained locks

are held.

•

Sysplex communications for and among the IMSs in a Parallel Sysplex

environment has been enhanced by:

− Allowing commands to be submitted to any IMS TM, DCCTL, or DBCTL

subsystem in a Parallel Sysplex environment from a single MCS or

E-MCS console in the Sysplex.

− Routing synchronous and asynchronous responses to a command to the

MCS or E-MCS console which entered the command.

− Providing RACF (or equivalent product) and/or Command Authorization

Exit (DFSCCMD0) security for commands entered from MCS or E-MCS

consoles.

14 IMS Parallel Sysplex Migration Planning Guide

Page 33

These improvements in Sysplex communications capability allow users to

control multiple IMSs within a Parallel Sysplex from a single MVS console

rather than from multiple MVS consoles or IMS Master Terminals.

•

MSC dynamic MSNAMEs and shared local SYSIDs are functions designed

explicitly to assist in the maintenance of IMS systems in a shared queues

group when one or more of the IMSs in the group has external MSC links to

other IMS systems outside the group or when such MSC links are added to

one or more of the IMSs in the group. As the label implies, ′dynamic

MSNAMEs′ creates MSNAMEs dynamically whenever an IMS joins the group

and does not have the MSNAMEs defined to the existing members of the

group defined to it or the existing members of the group do not have the new

shared queues group when member′s MSC definitions defined to them.

Dynamic MSNAMEs, as implemented, remove the requirement for each IMS

in a shared queues group to SYSGEN the definitions of the MSC network. In

some situations, an IMS remote to the shared queues group might be added

to the MSC network with minimal disruption to the IMSs in the shared

queues group.

Shared local SYSIDs among the members of a shared queues group allow all

members of the group to properly handle MSC-related messages that are

received or sent by members of the group from/to IMS systems connected

through IMS to one or more members of the group.

•

Primary Master/Secondary Master Terminal definitions and use:

One of the advantages of a Parallel Sysplex environment is the capability to

clone the IMS system definitions for all of the IMSs, thus making the

definition process less error prone. With IMS/ESA V5.1, the Master and

Secondary Master Terminal Operator physical terminal definitions could be

cloned if these physical terminals did not actually exist. Some automation

products have the capability of providing the Master Terminal function. Also,

the Master and Secondary Master LTERM definitions can be cloned because

the LTERMs are directly associated with the IMS system that had output

queued to them.

Shared queues with IMS/ESA V6.1 introduces the possibility of duplicate

LTERM names. With shared queues, duplicate LTERM names associated

with active sessions are to be avoided because the delivery of output

messages then becomes similar to a lottery for the delivery of those output

messages. The results of the lottery (competition to deliver Master Terminal

output) would sometimes deliver an output message to the correct Master

Terminal and sometimes not. ′Sometimes not′ is unacceptable, and without

a change to IMS, would require the system definitions of all of the IMSs in a

shared queues group to define unique Master and Secondary Master

Terminal LTERM names.

IMS/ESA V6.1 allows the physical terminal and LTERM name definitions for

the Primary and Secondary Master Terminals defined in an IMS system

definition to be overridden at execution time through a new, unique proclib

member associated with each IMS subsystem. This new proclib member

eliminates the problem previously described.

IMS/ESA V6.1 also includes new, or enhancements to existing, functions that

enhance the use of IMS in a Parallel Sysplex environment. These enhancements

are also available to IMS subsystems outside the Parallel Sysplex arena. A list

of these enhancements follows:

Chapter 3. Planning Considerations with IMS/ESA V6 in Mind 15

Page 34

•

The /STOP REGION ABDUMP command has been enhanced to detect whether the

region which is to be stopped is waiting on a lock request within the IRLM. If

it is waiting on a lock, when the command is issued, the waiting dependent

region task is resumed and forced to return to its dependent region address

space, where it is abended (U0474).

In prior releases, the execution of the command was waited upon if the

dependent region to be aborted was waiting for an IRLM lock. If the wait for

the command to execute is excessive, the operator wanting to terminate the

dependent region can issue another form of the command,

/STOP REGION

CANCEL, to force the region to terminate. This form of the command causes

the entire IMS online system to abnormally terminate if the dependent region

were waiting on a lock.

•

CI reclaim is not turned off in data-sharing or XRF environments with

IMS/ESA V6.1. It was turned off in prior releases, which had the potential for

causing performance problems when using primary and secondary indexes.

•

Improved security is accomplished with IMS/ESA V6.1 by propagating the

security environment to a back-end IMS when it is processing a transaction

that requires signon security. This is an important enhancement in either a

shared queues or MSC environment.

•

UCB VSCR/10,000 DD-names provide virtual storage constraint relief for

private address space storage below the 16 MB line and the ability to

allocate as many as 10,000 data sets to an address space. Note, there is a

limit of 8,189 full-function data base data sets that can be opened

concurrently. For DEDBs, which are allocated to the control region, 10,000

area data sets can be opened as well as allocated. These enhancements

allow the number of data sets that can be allocated and opened in the

Control Region and DL/I SAS address spaces to be greatly increased.

•

DBRC performance has been enhanced in several ways. These performance

enhancements are an integral part of IMS/ESA V6.1. Nothing has to be done

by the IMS user to receive the benefit.

•

Increased number of MSC links and SYSIDs gives the ability to define more

MSC resources to an MSC network. These changes remove the concern of

the addition of multiple IMS TM systems to an MSC network in a Parallel

Sysplex environment.

•

Generic /START region capability allows a single proclib member to be used

to start a dependent region under any IMS TM system. This is useful when

starting Message Processing Regions (MPRs) and Interactive Fast Path

Regions (IFPs) and can have some use when starting Batch Message

Processing (BMP) Regions.

•

Online change for DEDBs allows changes to be made without bringing down

IMS TM.

•

SMS Concurrent Copy is a new form of image copy that produces an image

copy with high performance with no or minimal data base data set outage.

The fuzzy image copy allows the data base data set to be copied to remain

fully accessible by the online system(s). A clean image copy does require a

short outage (a few seconds). SMS Concurrent Copy supports all types of

database data set organizations (KSDS, ESDS, and OSAM) used by IMS.

•

Conversational SPA Truncated Data Option supports a variable length SPA

size when conversational processing involves multiple program-to-program

16 IMS Parallel Sysplex Migration Planning Guide

Page 35

switches. This support is useful when the processing IMS is not the owner of

the conversation, such as in a shared queues environment.

•

DBRC NOPFA Option prevents the setting of the Prohibit Further

Authorization flag when the GLOBAL form of a database command is issued,

such as

issuing the command, for example,

/DBR, /STOP, or /DBD with the GLOBAL keyword option. If the intent of

/DBR DB GLOBAL, was to deallocate a

database from multiple data sharing IMS TM systems so that stand-alone

batch processing could be started against the database, the use of the

keyword option with the /DBR command eliminates the need to turn off the

′Prohibit Further Authorization′ flag in DBRC before starting the stand-alone

batch processing.

•

DBRC DST/Year 2000: DBRC DST support eliminates the need to shut down

IMS twice a year when the switch to or from daylight savings time (DST)

takes place. The elimination of the requirement to shut down twice a year is

only true when all of the IMS systems sharing the RECON data sets are at

the IMS/ESA 6.1 level.

DBRC Year 2000 support provides support for a four-digit year not only

internally within IMS but also externally for IMS users, such as, application

programs executing in a dependent region.

•

BMP Scheduling Flexibility: APAR PQ21039 for IMS/ESA V6 simplifies the

specification of the IMSID for users whose BMPs can execute under multiple

control regions in a Parallel Sysplex. Without this enhancement, moving a

BMP from one control region to another typically requires a change in the

BMP IMSID parameter. With this enhancement, no changes to BMP JCL are

required even when a BMP is executed under different control regions. A

detailed description of this enhancement is found in 17.6.1, “Using the

Function Delivered by APAR PQ21039” on page 173.

NOPFA

3.1 Discussing the Use of Traditional Queuing and Shared Queues

The use of shared queues is optional. With IMS/ESA V6.1, one can choose to

use the new shared queues function or to use traditional queuing (for example,

continue to use the message queue data sets). Depending upon which form of

queuing is to be used, the Parallel Sysplex implementation considerations can

be quite different. For this reason, the discussions that follow differentiate

between the implementation of IMS in a Parallel Sysplex environment using

traditional queuing versus shared queues.

Chapter 3. Planning Considerations with IMS/ESA V6 in Mind 17

Page 36

18 IMS Parallel Sysplex Migration Planning Guide

Page 37

Chapter 4. Planning Configurations After Failures

An installation should have a plan for what it will do when any component of the

Parallel Sysplex fails. For example, if a processor is unavailable, the installation

should have a plan for what subsystems, such as IMSs or IRLMs, will be moved

to another processor. Decisions about the configurations that will be used after

failures will determine what will be included in recovery procedures. These

decisions can also affect what names are chosen for subsystems. This means

that these decisions must be made early in the planning process.

Components that might fail include a processor, an MVS system, an IRLM, an

IMS subsystem, a CQS address space, an FDBR address space, a component of

the MVS logger, one or more Coupling Facility links, a Coupling Facility

structure, or a Coupling Facility. Some failures can be addressed by moving a

component. This, in turn, might affect the execution of other components. For

example, the movement of an IMS subsystem to a different MVS might affect

where BMPs are run.

The following are considerations that should be understood in making decisions

about configurations after failures.

4.1 IRLMs

The following rules apply to the use of IRLMs in a data-sharing group.

•

Each IMS subsystem must specify the name of the IRLM that it uses. This is

IRLMNM parameter.

the

•

An IMS can be restarted using any IRLM in the data-sharing group. This

applies to both normal and emergency restarts.

•

IRLM names are subsystem names and all subsystems on an MVS must

have unique names.

•

IRLM names do not have to be unique in a data-sharing group. IRLMs

running on different MVS systems can use the same IRLM name.

•

IRLM IDs must be unique in a data-sharing group. IRLMs must have

different IDs even when they run on different MVS systems.

•

IRLM names of all IRLMs that will be run on an MVS must be known to that

MVS.

An installation can choose to have either a unique name for each IRLM in the

data-sharing group or a common name shared by all of the IRLMs. If a common

name is used, an IMS can be restarted on any MVS without changing the

IRLMNM

parameter for the IMS. Of course, only one IRLM per MVS need be used for the

data-sharing group. If unique names are used, an installation has two options

for restarting an IMS on a different MVS. It can either change the IRLM name

used by the IMS or start the second IRLM on the MVS before restarting IMS.

An IMS batch (DLI or DBB) data-sharing job specifies the IRLM name. If

movement of work requires that the batch job use an

IRLM with a different name, its JCL must be changed. If an installation wants to

be able to run an IMS batch job on any MVS system without changing its JCL, all

IRLMs must have the same name.

Copyright IBM Corp. 1999 19

Page 38

The IRLM name is made known to MVS by specifying it in the IEFSSNxx member

of the MVS PARMLIB. MVS is preconditioned with the names of IRLM and

JRLM; so these names do not have to be included in IEFSSNxx. All other IRLM

names that might be used on an MVS must be specified in its IEFSSNxx member.

The following figures illustrate three cases for handling the failure of an MVS

system.

•

Figure 1 shows the use of unique IRLM names an moving an IRLM and its

associated IMS when their MVS fails.

•

Figure 2 on page 21 shows the use of unique names for all of the IRLMs in

the data sharing group and moving only the IMS when an MVS fails. In this

case, the IRLMNM parameter for the IMS must be changed to match the

IRLM on the MVS to which it is moved.

•

Figure 3 on page 21 shows the use of a common name by all IRLMs. When

an MVS fails, only its IMS is moved to another MVS. The IRLM does not

have to be moved and the IRLMNM specified by the moved, IMS does not

have to change.

All figures show the movement of IMSA from MVSA to MVSB after MVSA

becomes unavailable.

MVSA MVSB MVSC

┌─────────┐ ┌─────────┐ ┌─────────┐

Before │ IRLA │ │ IRLB │ │ IRLC │

MVSA │ IMSA │ │ IMSB │ │ IMSC │

Failure └─────────┘ └─────────┘ └─────────┘

────────────────────────────────────────────────────────

MVSA MVSB MVSC

┌─────────┐ ┌─────────────────┐ ┌─────────┐

After │ │ │ IRLB │ │ IRLC │

MVSA │ │ │ IMSB using IRLB │ │ IMSC │

Failure └─────────┘ │ IRLA │ └─────────┘

│ IMSA using IRLA │

└─────────────────┘

Figure 1. Using Unique IRLM Names and Moving IRLMs

20 IMS Parallel Sysplex Migration Planning Guide

Page 39

MVSA MVSB MVSC

┌─────────┐ ┌─────────┐ ┌─────────┐

Before │ IRLA │ │ IRLB │ │ IRLC │

MVSA │ IMSA │ │ IMSB │ │ IMSC │

Failure └─────────┘ └─────────┘ └─────────┘

────────────────────────────────────────────────────────

MVSA MVSB MVSC

┌─────────┐ ┌─────────────────┐ ┌─────────┐

After │ │ │ IRLB │ │ IRLC │

MVSA │ │ │ IMSB using IRLB │ │ IMSC │

Failure └─────────┘ │ IMSA using IRLA │ └─────────┘

└─────────────────┘

Figure 2. Using Unique IRLM Names and Changing IRLMs Used by IMSs

MVSA MVSB MVSC

┌─────────┐ ┌─────────┐ ┌─────────┐

Before │ IRLM │ │ IRLM │ │ IRLM │

MVSA │ IMSA │ │ IMSB │ │ IMSC │

Failure └─────────┘ └─────────┘ └─────────┘

────────────────────────────────────────────────────────

MVSA MVSB MVSC

┌─────────┐ ┌─────────┐ ┌─────────┐

After │ │ │ IRLM │ │ IRLM │

MVSA │ │ │ IMSB │ │ IMSC │

Failure └─────────┘ │ IMSA │ └─────────┘

└─────────┘

Figure 3. Using ″IRLM″ for all IRLM Names

4.1.1 Restarting Batch (DLI and DBB) Data-Sharing Jobs

When a data-sharing batch (DLI or DBB) job fails, dynamic backout generally is

not invoked. The only exception is for IMS pseudo abends of jobs using a DASD

log and specifying

In a data-sharing environment, this backout can be run using any IRLM in the

data-sharing group. Of course, the batch log must be available to the backout.

It is usually important to run this backout as quickly as possible since any modify

locks held by the failed batch job are retained and cause requestors of the locks

to receive a ″lock reject″ condition. Lock rejects usually result in U3303 abends.

After a batch job is backed out, it will normally need to be restarted. The restart

of the batch job can be done using any IRLM in the data-sharing group. If the

restarted batch job reads a checkpoint record from its log, this log must be

available on the MVS system used for restarting the batch job.

BKO=Y. In general, batch backout must be run for an updater.

Chapter 4. Planning Configurations After Failures 21

Page 40

4.2 IMS Subsystem Configurations

The exploitation of a Parallel Sysplex environment by IMS began with IMS/ESA

V5.1 and the changes introduced in that release to enhance data-sharing in a

Parallel Sysplex environment. IMS subsystems in a Parallel Sysplex