Power Systems

IBM Flex System p260 and p460

Compute Nodes Installation and

Service Guide

IBM

Note

Before using this information and the product it supports, read the information in “Safety notices” on page v, “Notices,” on page 501, the IBM Systems Safety Notices manual, G229-9054, and the IBM Environmental Notices and User Guide, Z125-5823.

This edition applies to IBM Power Systems servers that contain the POWER7 processor and to all associated models.

© Copyright IBM Corporation 2012, 2015.

US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Safety notices ............ v

Chapter 1. Introduction ........ 1

Product registration ............ 1

Related documentation ........... 3

IBM documentation CD .......... 4

Hardware and software requirements ..... 4

Using the documentation browser ...... 4

Notices and statements ........... 5

Features and specifications.......... 5

Features and specifications of the IBM Flex System p260 Compute Node ........... 5

Features and specifications of the IBM Flex System p460 Compute Node ........... 7

What your compute node offers ........ 9

Chapter 2. Power, controls, indicators, and connectors ........... 11

Compute node control panel button and LEDs... 11

Turning on the compute node ........ 12

Turning off the compute node ........ 13

System-board layouts ........... 13

System-board connectors ......... 14

System-board LEDs........... 17

Input/output connectors and devices..... 19

Chapter 3. Configuring the compute node ................ 21

Updating the firmware .......... 22

Starting the TEMP image ......... 24

Verifying the system firmware levels ..... 24

Using the SMS utility ........... 24

Starting the SMS utility ......... 25

SMS utility menu choices......... 25

Creating a CE login............ 25

Configuring processor cores ......... 26

MAC addresses for integrated Ethernet controllers 27

Configuring a RAID array ......... 28

Chapter 4. Installing the operating system ............... 29

Locating the installation instructions ...... 29

Installing service and productivity tools for Linux 31

Chapter 5. Accessing the service processor ............. 33

Chapter 6. Installing and removing components ............ 35

Returning a device or component ....... 35

Installation guidelines ........... 35

System reliability guidelines ........ 36

Handling static-sensitive devices ...... 36

Removing the compute node from an IBM Flex System Enterprise Chassis ......... 37

Reseating the compute node in a chassis ..... 38

Removing and replacing tier 1 CRUs ...... 39

Removing the compute node cover ..... 39

Installing and closing the compute node cover .. 41

Removing the bezel assembly ....... 43

Installing the bezel assembly ....... 45

Removing a SAS hard disk drive ...... 46

Installing a SAS hard disk drive ...... 48

Removing a solid-state drive carrier ..... 49

Installing a solid-state drive carrier ..... 51

Removing a SATA solid-state drive ..... 53

Installing a SATA solid-state drive ...... 54

Removing a DIMM ........... 55

Installing a DIMM ........... 58

Supported DIMMs ........... 59

Removing a network adapter ....... 61

Installing a network adapter ........ 63

Removing the battery .......... 64

Installing the battery .......... 65

Replacing the thermal sensor on an IBM Flex System p460 Compute Node ........ 67

Removing and replacing tier 2 CRUs ...... 68

Removing a DIMM ........... 68

Installing a DIMM ........... 71

Removing the management card ...... 73

Installing the management card ...... 75

Obtaining a PowerVM Virtualization Engine system technologies activation code ..... 77

Removing the light path diagnostics panel ... 81

Installing the light path diagnostics panel ... 82

Removing and replacing FRUs (trained service technician only) ............. 83

Replacing the system-board and chassis assembly 83

Completing the installation ......... 90

Installing and closing the compute node cover .. 91

Installing the compute node in an IBM Flex System Enterprise Chassis ........ 93


Chapter 7. Parts listing for IBM Flex System p260 and p460 Compute Nodes 97

Chapter 8. Troubleshooting ..... 105

Introduction to problem solving ....... 105

Solving problems ............ 105

Diagnostics .............. 107

Diagnostic tools ........... 107

Collecting dump data ......... 109

Location codes ............ 109

Reference codes ........... 115

System reference codes (SRCs)...... 116

1xxxyyyy SRCs .......... 117

6xxxyyyy SRCs.......... 128

A1xxyyyy service processor SRCs ... 134

A2xxyyyy logical partition SRCs .... 134

A6xxyyyy licensed internal code or hardware event SRCs ........ 135

A7xxyyyy licensed internal code SRCs .. 138

AAxxyyyy partition firmware attention codes ............. 140

B1xxyyyy service processor SRCs .... 143

B2xxyyyy logical partition SRCs .... 146

B6xxyyyy licensed internal code or hardware event SRCs ........ 166

B7xxyyyy licensed internal code SRCs .. 169

BAxxyyyy partition firmware SRCs ... 189

POST progress codes (checkpoints) .... 233

C1xxyyyy service processor checkpoints 234

C2xxyyyy virtual service processor checkpoints ........... 250

IPL status progress codes ...... 261

C7xxyyyy compute node firmware IPL status checkpoints ......... 261

CAxxyyyy partition firmware checkpoints 262

D1xx1yyy service processor dump status codes ............. 289

D1xx3yzz service processor dump codes 297

D1xx9yyy to D1xxCyyy service processor power-off checkpoints ....... 300

Service request numbers (SRNs) ..... 301

Using the SRN tables........ 301

101-711 through FFC-725 SRNs .... 302

A00-FF0 through A24-xxx SRNs .... 438

SCSD devices SRNs (ssss-102 to ssss-640) 438

Failing function codes ....... 446

Controller maintenance analysis procedures ........... 448

Error logs ............. 450

Checkout procedure .......... 451

About the checkout procedure...... 451

Performing the check-out procedure.... 451

Verifying the partition configuration..... 453

Running the diagnostics program ..... 453

Starting AIX concurrent diagnostics .... 453

Starting stand-alone diagnostics ..... 453

Starting stand-alone diagnostics from a NIM server .............. 454

Using the diagnostics program ..... 455

Boot problem resolution ......... 456

Troubleshooting by symptom ....... 457

Intermittent problems ........ 457

Connectivity problems ........ 459

PCI expansion card (PIOCARD) problem isolation procedure ......... 462

Hypervisor problems......... 463

Service processor problems ....... 467

Software problems.......... 485

Light path diagnostics ......... 485

Viewing the light path diagnostic LEDs .. 486

Light path diagnostics LEDs ...... 488

Isolating firmware problems ....... 492

Save vfchost map data ......... 492

Restore vfchost map data ........ 493

Recovering the system firmware ...... 494

Starting the PERM image ....... 494

Starting the TEMP image ....... 494

Recovering the TEMP image from the PERM image .............. 495

Verifying the system firmware levels ... 495

Committing the TEMP system firmware image .............. 496

Solving shared IBM Flex System Enterprise Chassis resource problems ........ 496

Solving shared network connection problems 497

Solving shared power problems ..... 498

Solving undetermined problems ...... 498

Appendix. Notices ......... 501

Trademarks .............. 502

Electronic emission notices ......... 503

Class A Notices............ 503

Class B Notices ............ 507

Terms and conditions........... 510

Safety notices

Safety notices may be printed throughout this guide.

• DANGER notices call attention to a situation that is potentially lethal or extremely hazardous to people.
• CAUTION notices call attention to a situation that is potentially hazardous to people because of some existing condition.
• Attention notices call attention to the possibility of damage to a program, device, system, or data.

World Trade safety information

Several countries require the safety information contained in product publications to be presented in their national languages. If this requirement applies to your country, safety information documentation is included in the publications package (such as in printed documentation, on DVD, or as part of the product) shipped with the product. The documentation contains the safety information in your national language with references to the U.S. English source. Before using a U.S. English publication to install, operate, or service this product, you must first become familiar with the related safety information documentation. You should also refer to the safety information documentation any time you do not clearly understand any safety information in the U.S. English publications.

Replacement or additional copies of safety information documentation can be obtained by calling the IBM Hotline at 1-800-300-8751.

German safety information

The product is not suitable for use at visual display workstations within the meaning of § 2 of the Bildschirmarbeitsverordnung (the German ordinance on work with visual display units).

Laser safety information

The servers can use I/O cards or features that are fiber-optic based and that utilize lasers or LEDs.

Laser compliance

The servers may be installed inside or outside of an IT equipment rack.

DANGER

When working on or around the system, observe the following precautions:

Electrical voltage and current from power, telephone, and communication cables are hazardous. To avoid a shock hazard:
• Connect power to this unit only with the IBM provided power cord. Do not use the IBM provided power cord for any other product.
• Do not open or service any power supply assembly.
• Do not connect or disconnect any cables or perform installation, maintenance, or reconfiguration of this product during an electrical storm.
• The product might be equipped with multiple power cords. To remove all hazardous voltages, disconnect all power cords.
• Connect all power cords to a properly wired and grounded electrical outlet. Ensure that the outlet supplies proper voltage and phase rotation according to the system rating plate.
• Connect any equipment that will be attached to this product to properly wired outlets.
• When possible, use one hand only to connect or disconnect signal cables.
• Never turn on any equipment when there is evidence of fire, water, or structural damage.
• Disconnect the attached power cords, telecommunications systems, networks, and modems before you open the device covers, unless instructed otherwise in the installation and configuration procedures.
• Connect and disconnect cables as described in the following procedures when installing, moving, or opening covers on this product or attached devices.

To Disconnect:
1. Turn off everything (unless instructed otherwise).
2. Remove the power cords from the outlets.
3. Remove the signal cables from the connectors.
4. Remove all cables from the devices.

To Connect:
1. Turn off everything (unless instructed otherwise).
2. Attach all cables to the devices.
3. Attach the signal cables to the connectors.
4. Attach the power cords to the outlets.
5. Turn on the devices.

(D005)

DANGER

Observe the following precautions when working on or around your IT rack system:
• Heavy equipment–personal injury or equipment damage might result if mishandled.
• Always lower the leveling pads on the rack cabinet.
• Always install stabilizer brackets on the rack cabinet.
• To avoid hazardous conditions due to uneven mechanical loading, always install the heaviest devices in the bottom of the rack cabinet. Always install servers and optional devices starting from the bottom of the rack cabinet.
• Rack-mounted devices are not to be used as shelves or work spaces. Do not place objects on top of rack-mounted devices.
• Each rack cabinet might have more than one power cord. Be sure to disconnect all power cords in the rack cabinet when directed to disconnect power during servicing.
• Connect all devices installed in a rack cabinet to power devices installed in the same rack cabinet. Do not plug a power cord from a device installed in one rack cabinet into a power device installed in a different rack cabinet.
• An electrical outlet that is not correctly wired could place hazardous voltage on the metal parts of the system or the devices that attach to the system. It is the responsibility of the customer to ensure that the outlet is correctly wired and grounded to prevent an electrical shock.

CAUTION

• Do not install a unit in a rack where the internal rack ambient temperatures will exceed the manufacturer's recommended ambient temperature for all your rack-mounted devices.
• Do not install a unit in a rack where the air flow is compromised. Ensure that air flow is not blocked or reduced on any side, front, or back of a unit used for air flow through the unit.
• Consideration should be given to the connection of the equipment to the supply circuit so that overloading of the circuits does not compromise the supply wiring or overcurrent protection. To provide the correct power connection to a rack, refer to the rating labels located on the equipment in the rack to determine the total power requirement of the supply circuit.
• (For sliding drawers.) Do not pull out or install any drawer or feature if the rack stabilizer brackets are not attached to the rack. Do not pull out more than one drawer at a time. The rack might become unstable if you pull out more than one drawer at a time.
• (For fixed drawers.) This drawer is a fixed drawer and must not be moved for servicing unless specified by the manufacturer. Attempting to move the drawer partially or completely out of the rack might cause the rack to become unstable or cause the drawer to fall out of the rack.

(R001)

CAUTION:

Removing components from the upper positions in the rack cabinet improves rack stability during relocation. Follow these general guidelines whenever you relocate a populated rack cabinet within a room or building:
• Reduce the weight of the rack cabinet by removing equipment starting at the top of the rack cabinet. When possible, restore the rack cabinet to the configuration of the rack cabinet as you received it. If this configuration is not known, you must observe the following precautions:
  – Remove all devices in the 32U position and above.
  – Ensure that the heaviest devices are installed in the bottom of the rack cabinet.
  – Ensure that there are no empty U-levels between devices installed in the rack cabinet below the 32U level.
• If the rack cabinet you are relocating is part of a suite of rack cabinets, detach the rack cabinet from the suite.
• Inspect the route that you plan to take to eliminate potential hazards.
• Verify that the route that you choose can support the weight of the loaded rack cabinet. Refer to the documentation that comes with your rack cabinet for the weight of a loaded rack cabinet.
• Verify that all door openings are at least 760 x 2030 mm (30 x 80 in.).
• Ensure that all devices, shelves, drawers, doors, and cables are secure.
• Ensure that the four leveling pads are raised to their highest position.
• Ensure that there is no stabilizer bracket installed on the rack cabinet during movement.
• Do not use a ramp inclined at more than 10 degrees.
• When the rack cabinet is in the new location, complete the following steps:
  – Lower the four leveling pads.
  – Install stabilizer brackets on the rack cabinet.
  – If you removed any devices from the rack cabinet, repopulate the rack cabinet from the lowest position to the highest position.
• If a long-distance relocation is required, restore the rack cabinet to the configuration of the rack cabinet as you received it. Pack the rack cabinet in the original packaging material, or equivalent. Also lower the leveling pads to raise the casters off of the pallet and bolt the rack cabinet to the pallet.

(R002)

(L001)

(L002)

(L003)

All lasers are certified in the U.S. to conform to the requirements of DHHS 21 CFR Subchapter J for class 1 laser products. Outside the U.S., they are certified to be in compliance with IEC 60825 as a class 1 laser product. Consult the label on each part for laser certification numbers and approval information.

CAUTION:

This product might contain one or more of the following devices: CD-ROM drive, DVD-ROM drive, DVD-RAM drive, or laser module, which are Class 1 laser products. Note the following information:
• Do not remove the covers. Removing the covers of the laser product could result in exposure to hazardous laser radiation. There are no serviceable parts inside the device.
• Use of the controls or adjustments or performance of procedures other than those specified herein might result in hazardous radiation exposure.

(C026)

CAUTION:

Data processing environments can contain equipment transmitting on system links with laser modules that operate at greater than Class 1 power levels. For this reason, never look into the end of an optical fiber cable or open receptacle. (C027)

CAUTION:

This product contains a Class 1M laser. Do not view directly with optical instruments. (C028)

CAUTION:

Some laser products contain an embedded Class 3A or Class 3B laser diode. Note the following information: laser radiation when open. Do not stare into the beam, do not view directly with optical instruments, and avoid direct exposure to the beam. (C030)

CAUTION:

The battery contains lithium. To avoid possible explosion, do not burn or charge the battery.

Do Not:
• Throw or immerse into water
• Heat to more than 100°C (212°F)
• Repair or disassemble

Exchange only with the IBM-approved part. Recycle or discard the battery as instructed by local regulations. In the United States, IBM has a process for the collection of this battery. For information, call 1-800-426-4333. Have the IBM part number for the battery unit available when you call. (C003)

Power and cabling information for NEBS (Network Equipment-Building System) GR-1089-CORE

The following comments apply to the servers that have been designated as conforming to NEBS (Network Equipment-Building System) GR-1089-CORE:

The equipment is suitable for installation in the following:
• Network telecommunications facilities
• Locations where the NEC (National Electrical Code) applies

The intrabuilding ports of this equipment are suitable for connection to intrabuilding or unexposed wiring or cabling only. The intrabuilding ports of this equipment must not be metallically connected to the interfaces that connect to the OSP (outside plant) or its wiring. These interfaces are designed for use as intrabuilding interfaces only (Type 2 or Type 4 ports as described in GR-1089-CORE) and require isolation from the exposed OSP cabling. The addition of primary protectors is not sufficient protection to connect these interfaces metallically to OSP wiring.

Note: All Ethernet cables must be shielded and grounded at both ends.

The ac-powered system does not require the use of an external surge protection device (SPD).

The dc-powered system employs an isolated DC return (DC-I) design. The DC battery return terminal shall not be connected to the chassis or frame ground.

Chapter 1. Introduction

The IBM® Flex System p260 Compute Node or IBM Flex System p460 Compute Node is based on IBM POWER® technologies. These compute nodes run in IBM Flex System Enterprise Chassis units to provide a high-density, high-performance compute-node environment with advanced processing technology.

The Installation and User's Guide includes the compute node information on the IBM Flex System Enterprise Chassis Documentation CD. All of the following information is in the document and also in the information center:
• Setting up the compute node
• Starting and configuring the compute node
• Installing optional hardware devices
• A reference to more information about installing supported operating systems
• Performing basic troubleshooting of the compute node

Packaged with the printed Installation and User's Guide are software CDs that help you to configure hardware, install device drivers, and install the operating system.

The compute node comes with a limited warranty. For information about the terms of the warranty and getting service and assistance, see the information center or the Warranty and Support Information document on the IBM Flex System Enterprise Chassis Documentation CD.

The compute node might have features that are not described in the documentation that comes with the compute node. Occasionally, the documentation might be updated to include information about those features. Technical updates might also become available to provide additional information that is not included in the original compute node documentation. The most recent version of all IBM Flex System Enterprise Chassis documentation is in the IBM Flex System Information Center.

The online information for the IBM Flex System Enterprise Chassis is available in the IBM Flex System Information Center at http://publib.boulder.ibm.com/infocenter/flexsys/information/index.jsp.

Related information:

http://www14.software.ibm.com/webapp/set2/sas/f/lopdiags/home.html

http://publib.boulder.ibm.com/infocenter/flexsys/information/index.jsp

Product registration

Record vital data about your compute node.

Vital product data

Print Table 1 and use it to record information about your compute node.

You will need this information when you register the compute node with IBM. You can register the compute node at http://www.ibm.com/support/mynotifications.

To determine the values for your compute node, use the management module and the lsvpd command. If you are running the Linux operating system, download and install the service and productivity tools for the Linux operating system to install the lsvpd command.

The model number and serial number are on the ID label that is behind the control panel door on the front of the compute node, and on a label on the side of the compute node that is visible when the compute node is not in the IBM Flex System Enterprise Chassis.

A set of blank labels comes with the compute node. When you install the compute node in the IBM Flex System Enterprise Chassis, write identifying information on a label and place the label on the bezel. See the documentation for your IBM Flex System Enterprise Chassis for the location of the label placement.

Important: Do not place the label where it blocks any ventilation holes on the compute node or the IBM Flex System Enterprise Chassis.

Table 1. Vital product data

Vital product data field: Product name
  Vital product data: IBM Flex System p260 Compute Node and IBM Flex System p460 Compute Node

Vital product data field: Type model number
  Vital product data:
    IBM Flex System p260 Compute Node: 7895-22X, 7895-23A, 7895-23X
    IBM Flex System p460 Compute Node: 7895-42X, 7895-43X
  How to find this data:
    • For FSM: Chassis Manager in the management software web interface of the IBM Flex System Manager
    • For Hardware Management Console (HMC):
      1. In the navigation area, click Systems Management > Servers.
      2. In the content pane, select the server you want to work with.
      3. Click Tasks > Properties.
    • For Integrated Virtualization Manager (IVM), see IVM lssyscfg command (http://pic.dhe.ibm.com/infocenter/powersys/v3r1m5/topic/p7hcg/lssyscfg.htm).

Vital product data field: Serial number
  Vital product data: ________________________ (7 characters)
  How to find this data:
    • For FSM: Chassis Manager in the management software web interface of the IBM Flex System Manager
    • For HMC:
      1. In the navigation area, click Systems Management > Servers.
      2. In the content pane, select the server you want to work with.
      3. Click Tasks > Properties.
    • For IVM, see IVM lssyscfg command.

Vital product data field: System unique ID
  Vital product data: _________________________________ (12 characters)
  How to find this data: lsvpd | grep SU command

Vital product data field: Worldwide port number
  Vital product data: _________________________________ (12 characters)
  How to find this data: lsvpd | grep WN command

Vital product data field: Brand
  Vital product data: B0 (B followed by zero)
  How to find this data: lsvpd | grep BR command
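The lsvpd lookups listed in Table 1 can also be scripted. The following Python sketch is illustrative only. It assumes that lsvpd prints one keyword per line in the form `*KW value`; the sample text, its values, and the helper name `extract_vpd` are hypothetical and are not taken from a real compute node.

```python
# Hypothetical captured output; real lsvpd output can differ by
# operating system and tool version.
SAMPLE_LSVPD = """\
*BR B0
*SU 0123456789AB
*WN C0507601234A
*TM IBM,7895-22X
"""

# Keywords named in Table 1 and the fields they identify.
FIELDS = {"BR": "Brand", "SU": "System unique ID", "WN": "Worldwide port number"}


def extract_vpd(text, keywords=FIELDS):
    """Return {keyword: value} for the requested keywords (first match wins)."""
    found = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("*"):
            continue
        parts = line[1:].split(None, 1)  # keyword, then the rest of the line
        if len(parts) != 2:
            continue
        key, value = parts
        if key in keywords and key not in found:
            found[key] = value.strip()
    return found


if __name__ == "__main__":
    for key, value in extract_vpd(SAMPLE_LSVPD).items():
        print(f"{FIELDS[key]} ({key}): {value}")
```

To try it against a live system, replace the sample string with the captured text output of the lsvpd command and compare the results with the values that you recorded in Table 1.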

Related documentation

Documentation for the IBM Flex System p260 Compute Node or IBM Flex System p460 Compute Node includes PDF files on the IBM Flex System Enterprise Chassis Documentation CD and in the information center.

The most recent version of all IBM Flex System Enterprise Chassis documentation is in the IBM Flex System Information Center.

PDF versions of the following documents are on the IBM Flex System Enterprise Chassis Documentation CD and in the information center:
• Problem Determination and Service Guide
  This document contains information to help you solve problems, and it contains information for service technicians.
• Safety Information
  This document contains translated caution and danger statements. Each caution and danger statement that appears in the documentation has a number that you can use to locate the corresponding statement in your language in the Safety Information document.
• Warranty and Support Information
  This document contains information about the terms of the warranty and about getting service and assistance.

The compute node might have features that are not described in the documentation that comes with the compute node. Occasional updates to the documentation might include information about those features, or technical updates might be available to provide additional information that is not included in the documentation that comes with the compute node.

Review the IBM Flex System Information Center or the Planning Guide and the Installation Guide for your IBM Flex System Enterprise Chassis. The information can help you prepare for system installation and configuration. The most current version of each document is available in the IBM Flex System Information Center.

Related information:

IBM Flex System Information Center

IBM documentation CD

You can run the IBM Flex System Enterprise Chassis Documentation CD on any personal computer that meets the hardware and software requirements.

The CD contains documentation for your compute node in a PDF file and includes the IBM documentation browser to help you find information quickly.

Hardware and software requirements

The IBM Documentation CD requires the following minimum hardware and software levels:
• Microsoft Windows XP Professional, Windows 2000, or Red Hat Enterprise Linux
• 100 MHz microprocessor
• 32 MB of RAM
• Adobe Acrobat Reader 3.0 (or later) or xpdf viewer, which comes with Linux operating systems

Using the documentation browser

Use the documentation browser to browse the contents of the CD, to read brief descriptions of the documents, and to view documents by using Adobe Acrobat Reader or xpdf viewer.

About this task

The documentation browser automatically detects the regional settings in your system and displays the documents in the language for that region (if available). If a document is not available in the language for that region, the English-language version is displayed.

To start the documentation browser, use one of the following procedures:
• If Autostart is enabled, insert the CD into the CD or DVD drive. The documentation browser starts automatically.
• If Autostart is disabled or is not enabled for all users, use one of the following procedures:
  – If you are using a Windows operating system, insert the CD into the CD or DVD drive and click Start > Run. In the Open field, type the following string, where e is the drive letter of the CD or DVD drive, and click OK:
    e:\win32.bat
  – If you are using Red Hat Enterprise Linux, insert the CD into the CD or DVD drive, and then run the following command from the /mnt/cdrom directory:
    sh runlinux.sh
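The manual start procedures above can be summarized in a short Python sketch. It is an illustration only, not part of the documentation CD. The function name `browser_start_command`, its arguments, and the operating system labels are hypothetical; only the launcher names `win32.bat` and `runlinux.sh` and the `/mnt/cdrom` directory come from this guide.

```python
def browser_start_command(os_name, drive_letter="e", mount_point="/mnt/cdrom"):
    """Return (command, working_directory) for starting the documentation browser.

    os_name is "windows" or "linux" (hypothetical labels for this sketch).
    working_directory is None when the command does not depend on it.
    """
    name = os_name.strip().lower()
    if name == "windows":
        # String typed into the Open field of Start > Run.
        return (f"{drive_letter}:\\win32.bat", None)
    if name == "linux":
        # Command run from the directory where the CD is mounted.
        return ("sh runlinux.sh", mount_point)
    raise ValueError(f"no documented start procedure for {os_name!r}")


if __name__ == "__main__":
    for system in ("windows", "linux"):
        command, directory = browser_start_command(system)
        print(system, "->", command, "(run from", directory, ")")
```

The sketch only builds the command text. It does not check whether Autostart is enabled or whether the CD is mounted at the assumed location.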

Select the compute node from the Product menu. The Available Topics list displays all the documents for the compute node. Some documents might be in folders. A plus sign (+) indicates each folder or document that has additional documents under it. Click the plus sign to display the additional documents.

When you select a document, a description of the document is displayed under Topic Description. To select more than one document, press and hold the Ctrl key while you select the documents. Click View Book to view the selected documents in Acrobat Reader or xpdf viewer.

To search all the documents, type a word or text string in the Search field and click Search. The documents in which the word or text string occurs are listed in order of the most occurrences. Click a document to view it, and press Ctrl+F to use the Acrobat Reader search function, or press Alt+F to use the xpdf viewer search function within the document.

Notices and statements

The CAUTION and DANGER statements in this document are also in the multilingual Safety Information. Each statement is numbered for reference to the corresponding statement in your language in the Safety Information document.

The following notices and statements are used in this document:
• Note: These notices provide important tips, guidance, or advice.
• Important: These notices provide information or advice that might help you avoid inconvenient or problem situations.
• Attention: These notices indicate potential damage to programs, devices, or data. An attention notice is placed just before the instruction or situation in which damage might occur.
• CAUTION: These statements indicate situations that can be potentially hazardous to you. A CAUTION statement is placed just before the description of a potentially hazardous procedural step or situation.
• DANGER: These statements indicate situations that can be potentially lethal or extremely hazardous to you. A DANGER statement is placed just before the description of a potentially lethal or extremely hazardous procedural step or situation.

Features and specifications

Features and specifications of the IBM Flex System p260 Compute Node and IBM Flex System p460 Compute Node are summarized in these topics.

Features and specifications of the IBM Flex System p260 Compute Node

Features and specifications of the IBM Flex System p260 Compute Node are summarized in this overview.

The IBM Flex System p260 Compute Node is a one-bay compute node and is used in an IBM Flex System Enterprise Chassis.

Notes:
• Power, cooling, removable-media drives, external ports, and Advanced System Management (ASM) are provided by the IBM Flex System Enterprise Chassis.
• The operating system in the compute node must provide support for the Universal Serial Bus (USB) to enable the compute node to recognize and communicate internally with the removable-media drives and front-panel USB ports.

Core electronics:

64-bit 2 x POWER7® processors

IBM Flex System p260 Compute Node 1-bay:

v Model 7895-22X 16-way SMP 1-bay: 2 socket, 4-core or 8-core at 3.2, 3.3, or 3.5 GHz

v Model 7895-23A 4-way SMP 1-bay: 2 socket, 2-core at 4.0 GHz

v Model 7895-23X 16-way SMP 1-bay: 2 socket, 4-core at 4.0 GHz or 8-core at 3.6 or 4.1 GHz

v 16 DIMM DDR3 slots. Maximum capacity is 512 GB. With hard disk drives (HDDs) or solid-state drives (SSDs) installed, supports 4 GB and 8 GB very low profile (VLP) DIMMs. With SSDs installed or in diskless configurations, also supports 2 GB, 16 GB, and 32 GB low profile (LP) DIMMs.

POWER7 IOC I/O hub x 2 for the IBM Flex System p260 Compute Node

On-board, integrated features:

v Service processor: IPMI, serial over LAN (SOL)

v SAS controller

v USB 2.0

Local storage:

v Zero, one, or two SAS 2.5 in. 300 GB, 600 GB, or 900 GB HDDs

v Zero, one, or two SATA 1.8 in. 177 GB SSDs with SAS-to-SATA conversion

v Hardware mirroring supported

Network and storage adapter card I/O options:

For a mapping of location codes, see “System-board connectors” on page 14.

v 1 Gb Ethernet 4-port or 10 Gb Ethernet KR 4-port card or 8-port 10 Gb converged network adapter card in the I/O expansion card slots (P1-C18, P1-C19) of the IBM Flex System p260 Compute Node

v 8 Gb 2-port, 16 Gb 2-port, and 16 Gb 4-port Fibre Channel cards in I/O expansion card slot P1-C19 of the IBM Flex System p260 Compute Node

v 2-port 4X InfiniBand QDR network adapter form factor expansion card in the P1-C19 slot of the IBM Flex System p260 Compute Node

v 2-port 10 Gb RoCE card form factor expansion card in the P1-C19 slot of the IBM Flex System p260 Compute Node

Integrated functions:

v Two 1 Gb Ethernet ports for communication with the management module

v Automatic compute node restart

v SOL over the management network

v Single USB 2.0 on base system board for communication with removable-media drives

v Optical media available by shared chassis feature

Environment: These compute nodes comply with ASHRAE class A3 specifications. For details, see the Environment specifications at

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.8721.doc/features_and_specifications.html.

Size:

v Height: 55 mm (2.2 in.)

v Depth: 492 mm (19.4 in.)

v Width: 215 mm (8.5 in.)

Systems management:

v Supported by IBM Flex System Enterprise Chassis management module (CMM)

v Front panel LEDs

v Management console: IBM Flex System Manager, Hardware Management Console (HMC), or Integrated Virtualization Manager (IVM)

Note: The compute node can be managed by only one management console at a time.

v Energy scale thermal management for power management, power oversubscription (throttling), and environmental sensing

v Field core override to disable cores and save on licensing costs

v Concurrent code update by using IBM Flex System Manager Update Manager, Inventory Collection, multiple VIOS, and PowerVM® Enterprise

Reliability and service features:

v Dual alternating current power supply

v IBM Flex System Enterprise Chassis: chassis redundant and hot-plug power and cooling modules

v Boot-time processor deallocation

v Compute node hot plug

v Customer setup and expansion

v Automatic reboot on power loss

v Internal and chassis-external temperature monitors

v ECC, chipkill memory

v System management alerts

v Light path diagnostics

v Electronic Service Agent™ call-home capability

Electrical input: 12 V dc

Security: Fully compliant with NIST 800-131A. The security cryptography mode set by the managing device (CMM or FSM node) determines the security mode in which the compute node operates.

See the ServerProven website for information about supported operating-system versions and all compute

node optional devices.
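The SMP and memory figures in the list above follow from simple multiplication. The following Python sketch recomputes them from the socket, core, and DIMM-slot counts given for the p260. The function names are illustrative only and are not part of any IBM tool.

```python
# Recompute the p260 headline figures from the counts in the specification list.
# smp_ways and max_memory_gb are hypothetical helper names used for illustration.

def smp_ways(sockets, cores_per_socket):
    """Number of SMP 'ways' = total processor cores across all sockets."""
    return sockets * cores_per_socket


def max_memory_gb(dimm_slots, largest_dimm_gb):
    """Maximum capacity when every slot holds the largest supported DIMM."""
    return dimm_slots * largest_dimm_gb


# Models 7895-22X and 7895-23X: 2 sockets, up to 8 cores per socket
print(smp_ways(2, 8))          # 16
# Model 7895-23A: 2 sockets, 2 cores per socket
print(smp_ways(2, 2))          # 4
# 16 DIMM slots, each holding a 32 GB LP DIMM
print(max_memory_gb(16, 32))   # 512
```

Reaching the 512 GB maximum requires 32 GB LP DIMMs, which the list supports only with SSDs installed or in diskless configurations.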

Features and specifications of the IBM Flex System p460 Compute Node

Features and specifications of the IBM Flex System p460 Compute Node are summarized in this

overview.

The IBM Flex System p460 Compute Node is a two-bay symmetric multiprocessing (SMP) unit and is

used in an IBM Flex System Enterprise Chassis.

Notes:

v Power, cooling, removable-media drives, external ports, and Advanced System Management (ASM) are

provided by the IBM Flex System Enterprise Chassis.

v The operating system in the compute node must provide support for the Universal Serial Bus (USB) to

enable the compute node to recognize and communicate internally with the removable-media drives

and front-panel USB ports.

Core electronics:

64-bit 4 x POWER7 processors

IBM Flex System p460 Compute Node 2-bay:

v Model 7895-42X 32-way SMP 2-bay: 4 socket, 4-core or 8-core at 3.2, 3.3, or 3.5 GHz

v Model 7895-43X 32-way SMP 2-bay: 4 socket, 4-core at 4.0 GHz or 8-core at 3.6 or 4.1 GHz

v 32 DIMM DDR3 slots. Maximum capacity is 1024 GB. With hard disk drives (HDDs) or solid-state drives (SSDs) installed, supports 4 GB and 8 GB very low profile (VLP) DIMMs. With SSDs installed or in diskless configurations, also supports 2 GB (7895-42X only), 16 GB, and 32 GB low profile (LP) DIMMs.

POWER7 IOC I/O hub x 4 for the IBM Flex System p460 Compute Node

On-board, integrated features:

v Service processor: IPMI, serial over LAN (SOL)

v SAS controller

v USB 2.0

Local storage:

v Zero, one, or two SAS 2.5 in. 300 GB, 600 GB, or 900 GB HDDs

v Zero, one, or two SATA 1.8 in. 177 GB SSDs with SAS-to-SATA conversion

v Hardware mirroring supported

Network and storage adapter card I/O options:

For a mapping of location codes, see “System-board connectors” on page 14.

v 1 Gb Ethernet 4-port or 10 Gb Ethernet KR 4-port card or 8-port 10 Gb converged network adapter card in the I/O expansion card slots (P1-C34 through P1-C37) of the IBM Flex System p460 Compute Node

v 8 Gb 2-port, 16 Gb 2-port, and 16 Gb 4-port Fibre Channel cards in the M2 and M4 slots (P1-C35, P1-C37) of the IBM Flex System p460 Compute Node

v 2-port 4X InfiniBand QDR network adapter form factor expansion card in the M2 and M4 slots (P1-C35, P1-C37) of the IBM Flex System p460 Compute Node

v 2-port 10 Gb RoCE card form factor expansion card in the M2, M3, and M4 slots (P1-C35 through P1-C37) of the IBM Flex System p460 Compute Node

Integrated functions:

v Two 1 Gb Ethernet ports for communication with the management module

v Automatic compute node restart

v SOL over the management network

v Single USB 2.0 on base system board for communication with removable-media drives

v Optical media available by shared chassis feature

Environment: These compute nodes comply with ASHRAE class A3 specifications. For details, see the Environment specifications at

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.8721.doc/features_and_specifications.html.

Size:

v Height: 55 mm (2.2 in.)

v Depth: 492 mm (19.4 in.)

v Width: 437 mm (17.2 in.)

Systems management:

v Supported by IBM Flex System Enterprise Chassis management module (CMM)

v Front panel LEDs

v Management console: IBM Flex System Manager, Hardware Management Console (HMC), or Integrated Virtualization Manager (IVM)

Note: The compute node can be managed by only one management console at a time.

v Energy scale thermal management for power management, power oversubscription (throttling), and environmental sensing

v Field core override to disable cores and save on licensing costs

v Concurrent code update by using IBM Flex System Manager Update Manager, Inventory Collection, multiple VIOS, and PowerVM Enterprise

Reliability and service features:

v Dual alternating current power supply

v IBM Flex System Enterprise Chassis: chassis redundant and hot-plug power and cooling modules

v Boot-time processor deallocation

v Compute node hot plug

v Customer setup and expansion

v Automatic reboot on power loss

v Internal and chassis-external temperature monitors

v ECC, chipkill memory

v System management alerts

v Light path diagnostics

v Electronic Service Agent call-home capability

Electrical input: 12 V dc

Security: Fully compliant with NIST 800-131A. The security cryptography mode set by the managing device (CMM or FSM node) determines the security mode in which the compute node operates.

See the ServerProven website for information about supported operating-system versions and all compute

node optional devices.
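The DIMM rules for the p460 depend on both the model and the installed drive type. The following Python sketch encodes one reading of those rules: 4 GB and 8 GB VLP DIMMs are accepted in every configuration, and the LP sizes are added only with SSDs or no drives. The function name and the "hdd", "ssd", and "none" labels are assumptions made for this illustration.

```python
# Sketch of the p460 DIMM-size rules from the specification list.
# supported_dimm_sizes_gb and the drive labels are hypothetical names.

def supported_dimm_sizes_gb(model, drives):
    """Return the DIMM sizes (in GB) allowed for a model and drive configuration.

    drives is "hdd", "ssd", or "none" (diskless).
    """
    if drives not in ("hdd", "ssd", "none"):
        raise ValueError("drives must be 'hdd', 'ssd', or 'none'")
    sizes = {4, 8}                      # VLP DIMMs
    if drives in ("ssd", "none"):
        sizes |= {16, 32}               # LP DIMMs need the extra clearance
        if model == "7895-42X":
            sizes.add(2)                # 2 GB LP DIMM: 7895-42X only
    return sorted(sizes)


print(supported_dimm_sizes_gb("7895-42X", "hdd"))
print(supported_dimm_sizes_gb("7895-43X", "ssd"))
print(supported_dimm_sizes_gb("7895-42X", "none"))
```

With 32 slots, the 1024 GB maximum is reached only with 32 GB LP DIMMs, so it is not available when HDDs are installed.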

What your compute node offers

The design of the compute node takes advantage of advancements in chip technology, memory

management, and data storage.

The compute node uses the following features and technologies:

v Service processor

The service processor for the IBM Flex System p260 Compute Node or IBM Flex System p460 Compute

Node provides support for the following functions:

– Intelligent Platform Management Interface (IPMI)

– The operating system

– Power control and advanced power management

– Reliability, availability, and serviceability (RAS) features

– Serial over LAN (SOL)

– Continuous health monitoring and control

– Configurable notification and alerts

– Event logs that are time stamped and saved in nonvolatile memory and that can be attached to

email alerts

– Point-to-Point Protocol (PPP) support

– Remote power control

– Remote firmware update and access to critical compute node settings

v Disk drive support

The compute node supports either Serial Advanced Technology Attachment (SATA) solid-state drives

(SSDs) or serial-attached SCSI (SAS) hard disk drives (HDDs) in one of the following configurations:

– Up to two 1.8 in. SATA SSDs

– Up to two 2.5 in. SAS HDDs

v Impressive performance using the latest microprocessor technology

The compute node comes with two POWER7 microprocessors for the IBM Flex System p260 Compute

Node and four POWER7 microprocessors for the IBM Flex System p460 Compute Node.

v I/O expansion

The compute node has connectors on the system board for optional PCI Express (PCIe) network

adapter cards for adding more network communication capabilities to the compute node.

v Large system memory capacity

The memory bus in the IBM Flex System p260 Compute Node supports up to 512 GB of system

memory, and the IBM Flex System p460 Compute Node supports up to 1024 GB of system memory.

For the official list of supported dual-inline memory modules (DIMMs), see

http://www.ibm.com/systems/info/x86servers/serverproven/compat/us/.

v Light path diagnostics

Light path diagnostics provides light-emitting diodes (LEDs) to help diagnose problems. An LED on

the compute node control panel is lit if an unusual condition or a problem occurs. If this happens, you

can look at the LEDs on the system board to locate the source of the problem.

v Power throttling

If your IBM Flex System Enterprise Chassis supports power management, the power consumption of

the compute node can be dynamically managed through the management module. For more information, see

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.cmm.doc/cmm_product_page.html

or the IBM support site at http://www.ibm.com/support/entry/portal/Overview.

Chapter 2. Power, controls, indicators, and connectors

You can use the control panel to turn the compute nodes on or off and to view some controls and

indicators. Other indicators are on the system board. The system board also has connectors for various

components.

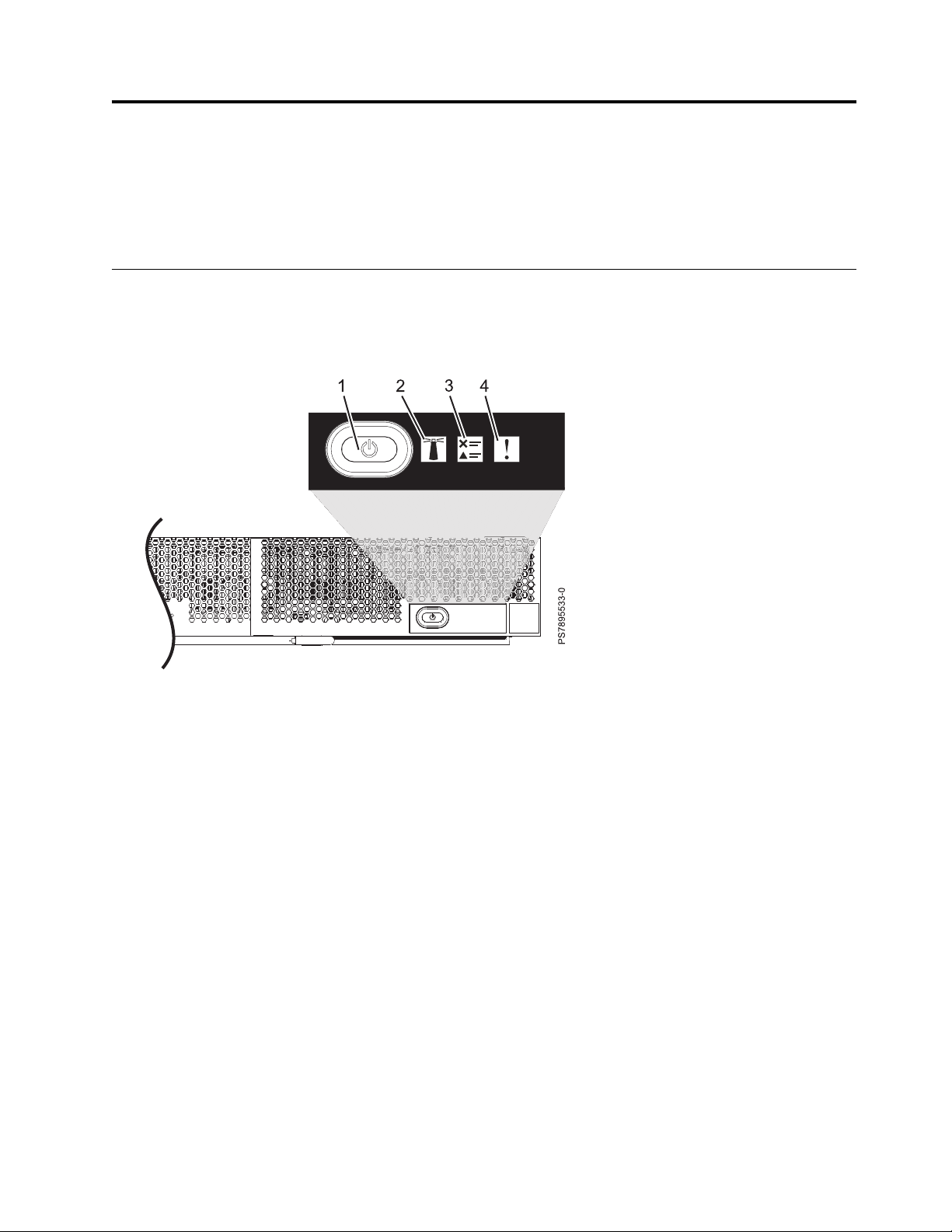

Compute node control panel button and LEDs

Compute node control panel button and LEDs provide operational controls and status indicators.

Figure 1. Compute node control panel button and LEDs

1. Power-control button and light path LED: Press this button to turn on or turn off the compute node

or to view light path diagnostic LEDs.

The power-control button has an effect only if local power control is enabled for the compute node.

Local power control is enabled and disabled through the web interface of the management module.

Press the power-control button for 5 seconds to begin powering down the compute node.

The green light path LED indicates the power status of the compute node in the following manner:

v Flashing rapidly: The service processor is initializing the compute node.

v Flashing slowly: The compute node has completed initialization and is waiting for a power-on

command.

v Lit continuously: The compute node has power and is turned on.

Note: The enhanced service processor can take as long as 3 minutes to initialize after you install the

compute node, at which point the LED begins to flash slowly.

2. Location LED: When this blue LED is lit, it has been turned on by the system administrator to aid in

visually locating the compute node. The location LED can be turned off through the management

console.

3. Check log LED: When this amber LED is lit, it indicates that an error for the compute node has been

detected that must be addressed by the user. See the error log repository to further investigate this

serviceable event. The LED can be turned off through the management console.

4. Enclosure fault LED: When this amber LED is lit, it indicates that a system error has occurred in the

compute node. The enclosure fault LED turns off after one of the following events:

v Correcting the error

v Reseating the compute node in the IBM Flex System Enterprise Chassis

v Cycling the IBM Flex System Enterprise Chassis power
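The three states of the green power LED, together with the local power control setting, determine whether pressing the power-control button has any effect. The following Python sketch captures that relationship as a lookup table. The enum and function names are illustrative, and the statement that the button is ignored during initialization is taken from the power-on procedure in this chapter.

```python
from enum import Enum


class PowerLed(Enum):
    """Observed behavior of the green power LED on the control panel."""
    FLASHING_RAPIDLY = "flashing rapidly"
    FLASHING_SLOWLY = "flashing slowly"
    LIT = "lit continuously"


# LED pattern -> (meaning, does the power-control button respond?)
POWER_LED_MEANING = {
    PowerLed.FLASHING_RAPIDLY: (
        "service processor is initializing the compute node", False),
    PowerLed.FLASHING_SLOWLY: (
        "initialization complete, waiting for a power-on command", True),
    PowerLed.LIT: (
        "compute node has power and is turned on", True),
}


def describe(led, local_power_control=True):
    """Return (meaning, button_responds) for an LED pattern.

    The button has an effect only if local power control is enabled
    through the management module.
    """
    meaning, responds = POWER_LED_MEANING[led]
    return meaning, responds and local_power_control


print(describe(PowerLed.FLASHING_SLOWLY))
```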

Related tasks:

“Viewing the light path diagnostic LEDs” on page 486

After reading the required safety information, look at the control panel to determine whether the LEDs

indicate a suboptimal condition or an error.

Turning on the compute node

After you connect the compute node to power through the IBM Flex System Enterprise Chassis, you can

start the compute node after the discovery and initialization process is complete.

About this task

To start the compute node, use one of the following methods:

Procedure

v Start the compute node by pressing the power-control button on the front of the compute node.

After you push the power-control button, the power-on LED continues to flash slowly for about 15

seconds, and then is lit solidly when the power-on process is complete.

Wait until the power-on LED on the compute node flashes slowly before you press the compute node

power-control button. If the power-on LED is flashing rapidly, the service processor is initializing the

compute node. The power-control button does not respond during initialization.

Note: The enhanced service processor can take as long as 3 minutes to initialize after you install the

compute node, at which point the LED begins to flash slowly.

v Start the compute node automatically when power is restored after a power failure.

If a power failure occurs, the IBM Flex System Enterprise Chassis and then the compute node can start

automatically when power is restored. You must configure the compute node to restart through the

management module.

v Start the compute node remotely using the management module.

After you initiate the power-on process, the power-on LED flashes slowly for about 15 seconds, and

then is lit solidly when the power-on process is complete.

Turning off the compute node

When you turn off the compute node, it is still connected to power through the IBM Flex System

Enterprise Chassis. The compute node can respond to requests from the service processor, such as a

remote request to turn on the compute node. To remove all power from the compute node, you must

remove it from the IBM Flex System Enterprise Chassis.

Before you begin

Shut down the operating system before you turn off the compute node. See the operating-system

documentation for information about shutting down the operating system.

About this task

To turn off the compute node, use one of the following methods:

Procedure

v Turn off the compute node by pressing the power-control button for at least 5 seconds.

Note: The power-control LED can remain on solidly for up to 1 minute after you push the

power-control button. After you turn off the compute node, wait until the power-control LED is

flashing slowly before you press the power-control button to turn on the compute node again.

If the operating system stops functioning, press and hold the power-control button for more than 5

seconds to force the compute node to turn off.

v Use the management module to turn off the compute node.

The power-control LED can remain on solidly for up to 1 minute after you initiate the power-off

process. After you turn off the compute node, wait until the power-control LED is flashing slowly

before you initiate the power-on process from the Chassis Management Module (CMM) to turn on the

compute node again.

Use the management-module web interface to configure the management module to turn off the

compute node if the system is not operating correctly.

For additional information, see

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.cmm.doc/cmm_product_page.html.
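Both power-off methods end with the same rule: wait for the LED to flash slowly before powering on again. If the LED state is available to a script, that wait can be written as a polling loop with a timeout, as in the following Python sketch. The read_led callable is hypothetical (this guide does not describe a programmatic LED interface), and the 90-second default is an assumed margin over the 1 minute that the LED can stay lit.

```python
import time


def wait_until_ready(read_led, timeout_s=90.0, poll_s=1.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll until the power LED reports 'flashing slowly'.

    read_led is a hypothetical callable that returns the current LED
    pattern as a string. Returns True when the node is ready to be
    powered on again, or False if the timeout expires first.
    """
    deadline = clock() + timeout_s
    while True:
        if read_led() == "flashing slowly":
            return True
        if clock() >= deadline:
            return False
        sleep(poll_s)


# Example with a simulated LED that stays lit for two polls.
states = iter(["lit continuously", "lit continuously", "flashing slowly"])
print(wait_until_ready(lambda: next(states), sleep=lambda s: None))
```

The sleep and clock parameters exist only so that the loop can be exercised without real delays.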

System-board layouts

Illustrations show the connectors and LEDs on the system board. The illustrations might differ slightly

from your hardware.

System-board connectors

Compute node components attach to the connectors on the system board.

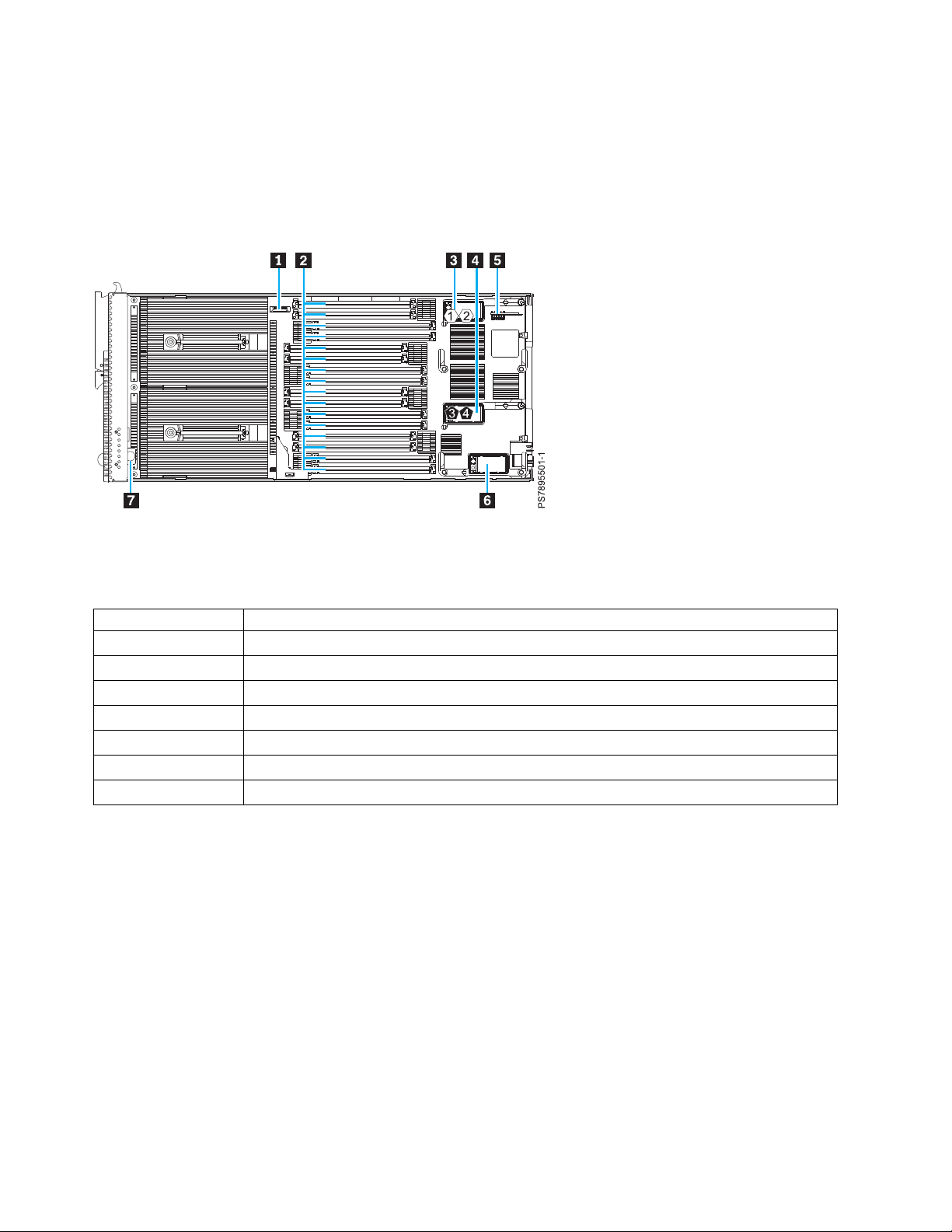

The following figure shows the connectors on the base-unit system board in the IBM Flex System p260

Compute Node.

Figure 2. System-board connectors for the IBM Flex System p260 Compute Node

The following table identifies and describes the connectors for the IBM Flex System p260 Compute Node.

Table 2. Connectors for the IBM Flex System p260 Compute Node

Callout IBM Flex System p260 Compute Node connectors

▌1▐ 3 V lithium battery connector (P1-E1)

▌2▐ DIMM connectors (See Figure 4 on page 16 for individual connectors.)

▌3▐ I/O expansion card top connector for chassis bays 1 and 2 (P1-C18)

▌4▐ I/O expansion card bottom connector for chassis bays 3 and 4 (P1-C19)

▌5▐ Management card connector (P1-C21)

▌6▐ Everything-to-Everywhere (ETE) connector (P1-C20)

▌7▐ Light path card

The following figure shows the connectors on the base-unit system board in the IBM Flex System p460

Compute Node.

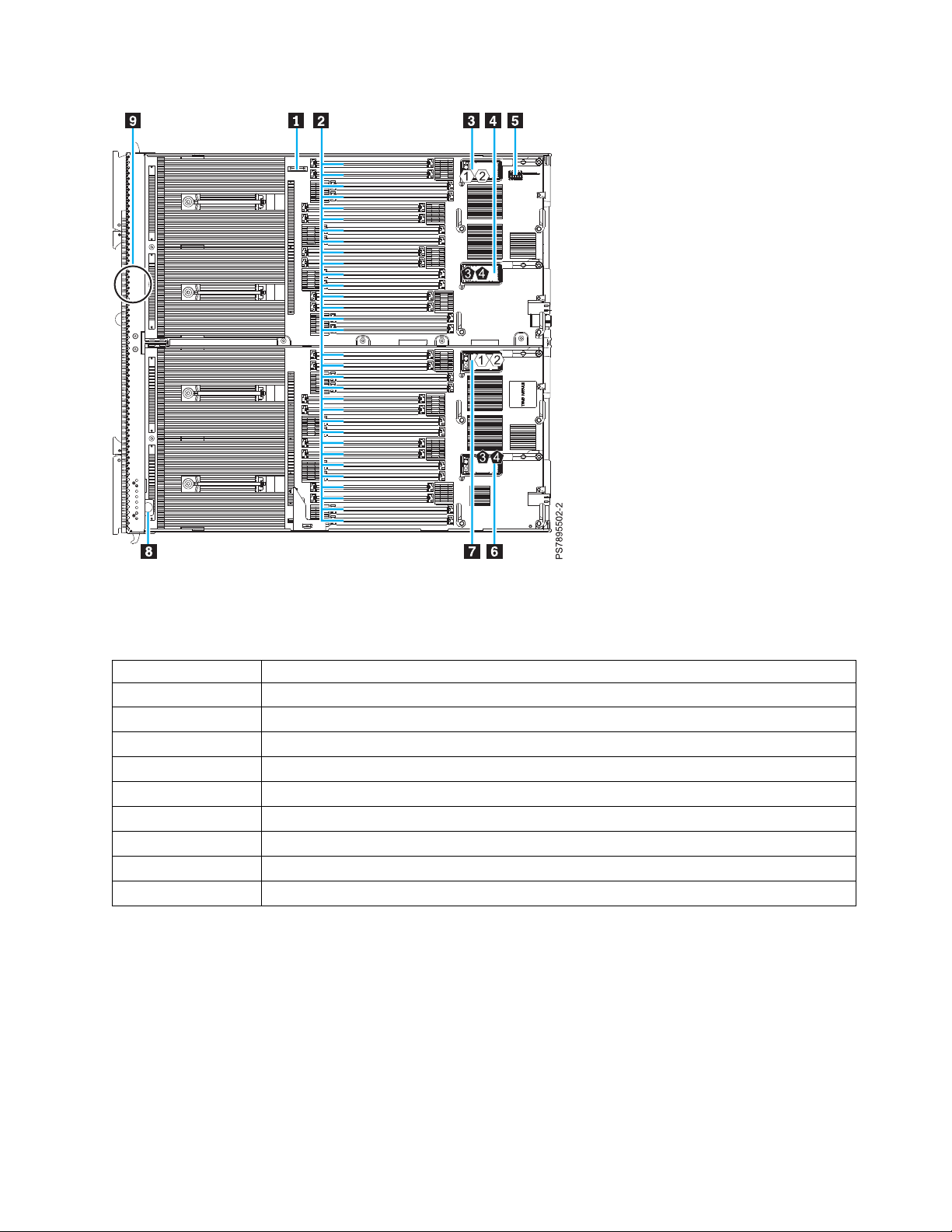

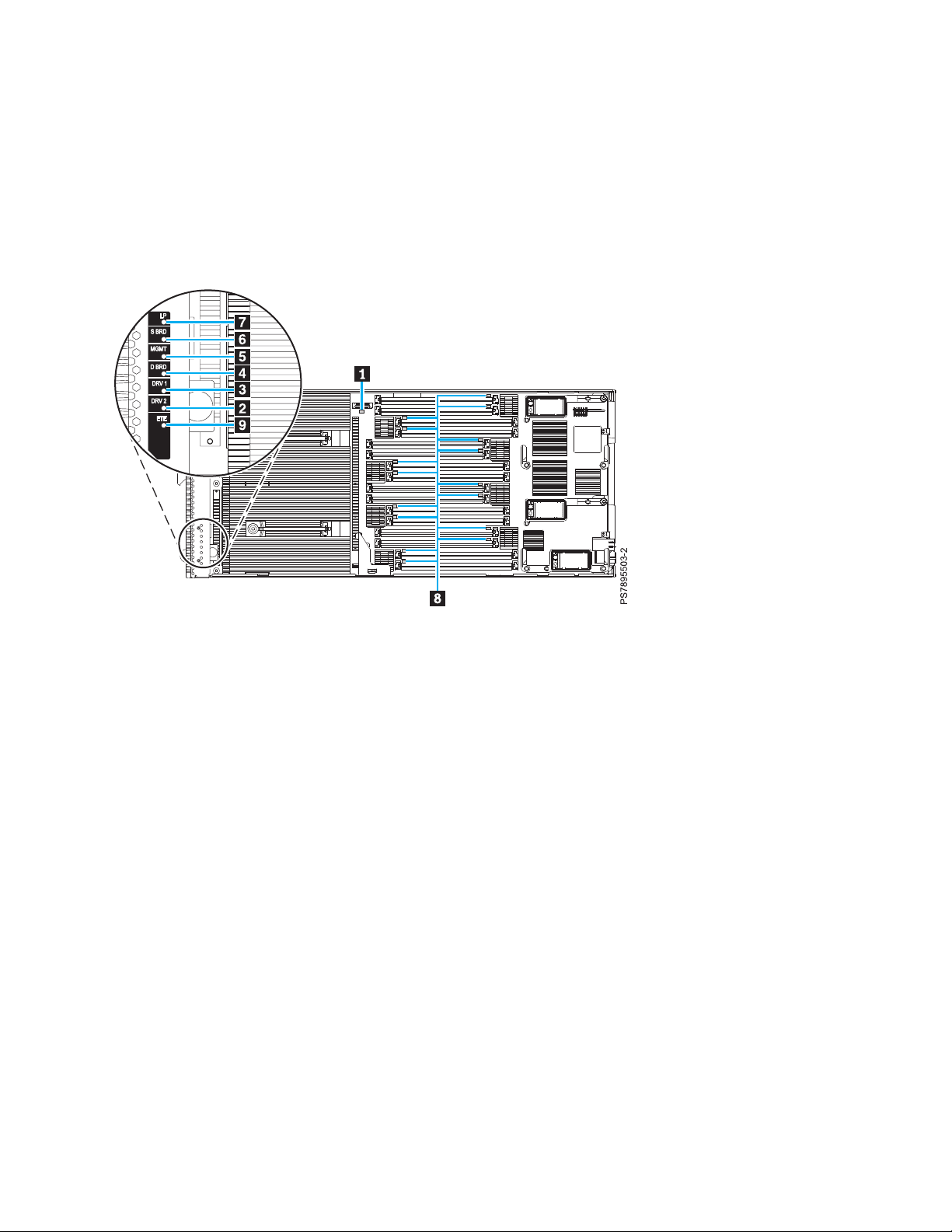

Figure 3. Base-unit connectors for the IBM Flex System p460 Compute Node

The following table identifies and describes the connectors for the IBM Flex System p460 Compute Node.

Table 3. Connectors for the IBM Flex System p460 Compute Node

Callout IBM Flex System p460 Compute Node connectors

▌1▐ 3 V lithium battery connector (P1-E1)

▌2▐ DIMM connectors (See Figure 5 on page 16 for individual connectors.)

▌3▐ I/O expansion card M1 connector for chassis bays 1 and 2 (P1-C34)

▌4▐ I/O expansion card M2 connector for chassis bays 3 and 4 (P1-C35)

▌5▐ Management card connector (P1-C38)

▌6▐ I/O expansion card M4 connector for chassis bays 3 and 4 (P1-C37)

▌7▐ I/O expansion card M3 connector for chassis bays 1 and 2 (P1-C36)

▌8▐ Light path card

▌9▐ Thermal sensor
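When planning adapter placement, it can help to look up which p460 expansion connectors reach a given pair of chassis bays. The following Python sketch transcribes the I/O expansion rows of Table 3 into a dictionary. The variable and function names are illustrative only.

```python
# I/O expansion connectors of the p460, transcribed from Table 3.
# The dictionary layout and helper name are assumptions for illustration.
P460_IO_CONNECTORS = {
    "M1": {"location": "P1-C34", "chassis_bays": (1, 2)},
    "M2": {"location": "P1-C35", "chassis_bays": (3, 4)},
    "M3": {"location": "P1-C36", "chassis_bays": (1, 2)},
    "M4": {"location": "P1-C37", "chassis_bays": (3, 4)},
}


def connectors_for_bay(bay):
    """Return the names of the connectors that are wired to a chassis bay."""
    return sorted(
        name for name, info in P460_IO_CONNECTORS.items()
        if bay in info["chassis_bays"]
    )


print(connectors_for_bay(1))   # ['M1', 'M3']
print(connectors_for_bay(4))   # ['M2', 'M4']
```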

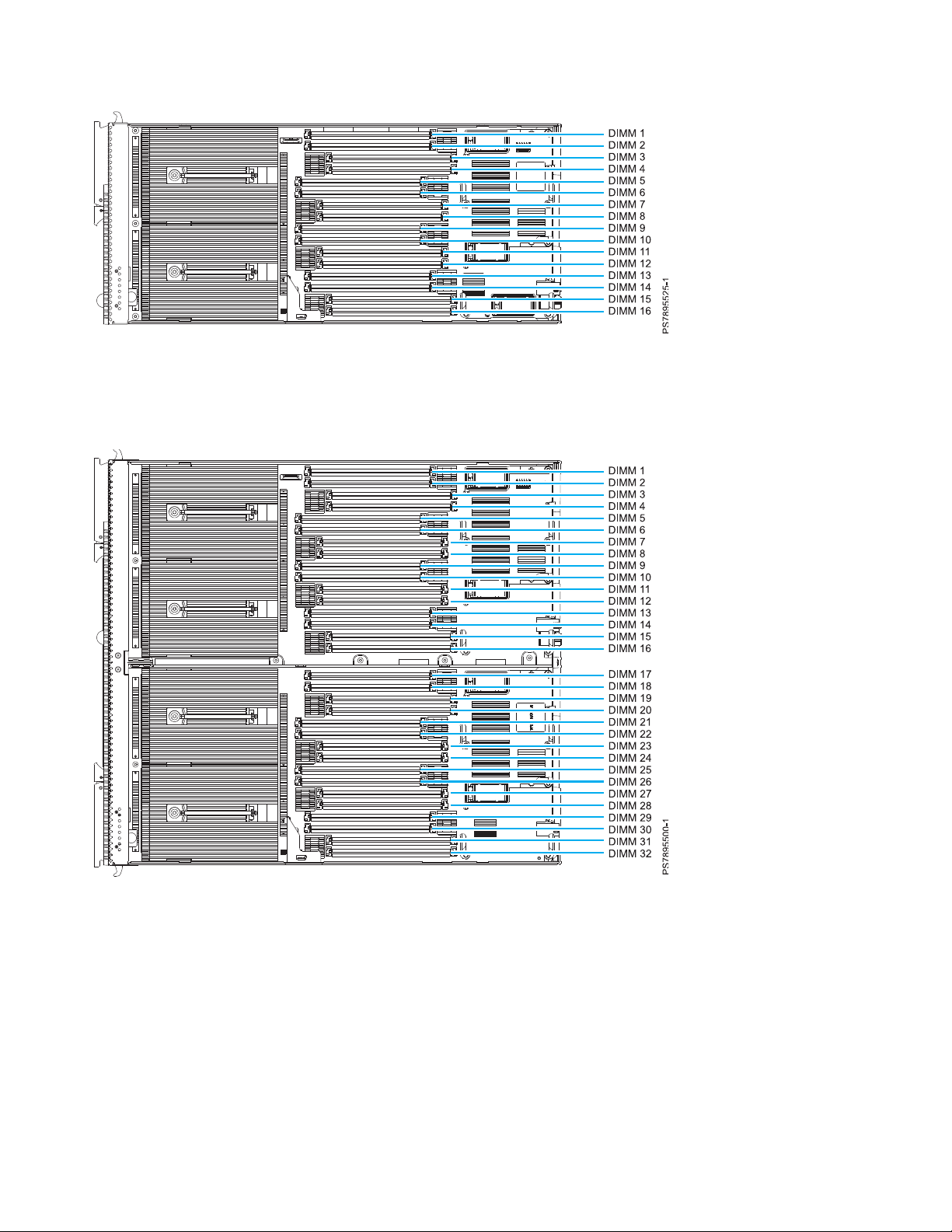

The following figure shows individual DIMM connectors for the IBM Flex System p260 Compute Node

system board.

Figure 4. DIMM connectors for the IBM Flex System p260 Compute Node

The following figure shows individual DIMM connectors for the IBM Flex System p460 Compute Node

system board.

Figure 5. DIMM connectors for the IBM Flex System p460 Compute Node

System-board LEDs

Use the illustration of the LEDs on the system board to identify a light-emitting diode (LED).

Press and hold the front power-control button to see any light path diagnostic LEDs that were turned on

during error processing. Use the following figure to identify the failing component.

The following figure shows LEDs on the IBM Flex System p260 Compute Node. The following figures

and table show the system-board LEDs.

Figure 6. LED locations on the system board of the IBM Flex System p260 Compute Node

The following figure shows LEDs on the system board of the IBM Flex System p460 Compute Node.

Figure 7. LED locations on the system board of the IBM Flex System p460 Compute Node

The following table identifies the light path diagnostic LEDs.

Table 4. IBM Flex System p260 Compute Node and IBM Flex System p460 Compute Node LEDs

Callout Unit LEDs

▌1▐ 3 V lithium battery LED

▌2▐ DRV2 LED (HDD or SSD)

▌3▐ DRV1 LED (HDD or SSD)

▌4▐ Drive board LED (solid-state drive interposer, which is integrated in the cover)

▌5▐ Management card LED

▌6▐ System board LED

▌7▐ Light path power LED

▌8▐ DIMM LEDs

▌9▐ ETE connector LED

Input/output connectors and devices

The input/output connectors that are available to the compute node are supplied by the IBM Flex System

Enterprise Chassis.

See the documentation that comes with the IBM Flex System Enterprise Chassis for information about the

input/output connectors.

The Ethernet controllers on the compute node communicate with the network through the

Ethernet-compatible I/O modules on the IBM Flex System Enterprise Chassis.

Chapter 3. Configuring the compute node

While the firmware is running the power-on self-test (POST) and before the operating system starts, a

POST menu with POST indicators is displayed. The POST indicators are the words Memory, Keyboard,

Network, SCSI, and Speaker that are displayed as each component is tested. You can then select

configuration utilities from the POST menu.

About this task

The following configuration utilities are available from the POST menu:

v System management services (SMS)

Use the system management services (SMS) utility to view information about your system or partition

and to perform tasks such as setting up remote IPL, changing self-configuring SCSI device (SCSD)

settings, and selecting boot options. The SMS utility can be used for AIX® or Linux partitions.

v Default boot list

Use this utility to initiate a system boot in service mode through the default service mode boot list.

This mode attempts to boot from the first device of each type that is found in the list.

Note: This is the preferred method of starting the stand-alone AIX diagnostics from CD.

v Stored boot list

Use this utility to initiate a system boot in service mode by using the customized service-mode boot

list that was set up by the AIX operating system when the operating system was first booted, or

manually by using the AIX service aids.

v Open firmware prompt

This utility is for advanced users of the IEEE 1275 specifications only.

v Management module

Use the management module to change the boot list to determine which firmware image to boot, and

to perform other configuration tasks.

Related tasks:

“Using the SMS utility” on page 24

Use the System Management Services (SMS) utility to configure the IBM Flex System p260 Compute

Node or IBM Flex System p460 Compute Node.

Updating the firmware

IBM periodically makes firmware updates available for you to install on the compute node, on the

management module, or on expansion cards in the compute node.

Before you begin

Attention: Installing the wrong firmware update might cause the compute node to malfunction. Before

you install a firmware update, read any readme and change history files that are provided with the

downloaded update. These files contain important information about the update and the procedure for

installing the update, including any special procedure for updating from an early firmware version to the

latest version.

Important:

v To avoid problems and to maintain proper system performance, always verify that the compute node

BIOS, service processor, and diagnostic firmware levels are consistent for all compute nodes within the

IBM Flex System Enterprise Chassis.

v For a detailed summary of update procedures for all IBM Flex System components, see

http://www.ibm.com/support/entry/portal/docdisplay?lndocid=MIGR-5091991.

To update the firmware of the compute node, use one of the following methods.

v The IBM Flex System Manager. See

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.8731.doc/updating_firmware_and_software.html.

v The Hardware Management Console (HMC). See Managed system updates.

v The Integrated Virtualization Manager (IVM). See Updating the Integrated Virtualization Manager.

v In-band operating system capabilities. These include the update_flash command for the Linux

operating system and the AIX operating system or the ldfware command for Virtual I/O Server.

v The firmware update function of AIX diagnostics.

v The firmware update function of the stand-alone diagnostics boot image.

Attention: Before the installation of the new firmware to the temporary side begins, the contents of the

temporary side are copied into the permanent side. After the firmware installation begins, the previous

level of firmware on the permanent side is no longer available.

Notes:

v You must use the default USERID account and password in the management software to access a

Chassis Management Module (CMM) that is managing a chassis that contains Power Systems

compute nodes.

v Before you update the firmware for one or more Power Systems compute nodes, make sure that the

password for the default USERID account will not expire before the update is complete. If the

password expires during a code update, then the Power Systems compute nodes might not reconnect

to the management software, and each Power Systems compute node might have to be updated with

the new password.

v Firmware updates can take some time to load. To expedite the initial setup process, you can begin to

install your operating system while you wait for the firmware updates.

About this task

To install compute node firmware using an in-band method, complete the following steps:

Procedure

1. Download the IBM Flex System p260 Compute Node one-bay firmware or the IBM Flex System p460

Compute Node two-bay firmware.

a. Go to http://www.ibm.com/software/brandcatalog/puresystems/centre/update.

b. Select the update group that matches the IBM Flex System version to which you want to update.

For example, select the Flex System 1.2.1 tab.

c. Select the updates for the applicable compute node.

d. Download the compute node firmware and any firmware required for installed devices, such as

adapters or drives.

Note: Ensure that you download all files in the firmware update, including .rpm, .xml, dd.xml,

and pd.sdd files as well as the readme.txt file.

e. Use FTP to copy the update to a directory on the compute node (such as /tmp/fwrpms).

2. Log on to the AIX or Linux system as root, or log on to the Virtual I/O Server (VIOS) as padmin.

3. If you are logging on to VIOS, run the following command to obtain root access:

run oem_setup_env

4. Unpack the .rpm file.

For example, if you are installing the FW773 service pack 01AF773_051_033:

rpm -Uvh --ignoreos 01AF773_051_033.rpm

The output from the command should be similar to:

Preparing... #################################### [100%]

1:01AF773_051_033 #################################### [100%]

The resulting .img file is now in the /tmp/fwupdate subdirectory.

5. Install the firmware update with one of the following methods:

v Install the firmware with the AIX update_flash command:

cd /tmp/fwupdate

/usr/lpp/diagnostics/bin/update_flash -f 01AFxxx_yyy_zzz.img

v Install the firmware with the Linux update_flash command:

cd /tmp/fwupdate

/usr/sbin/update_flash -f 01AFxxx_yyy_zzz.img

v Return to VIOS and install the firmware with the ldfware command on VIOS:

exit

cd /tmp/fwupdate

ldfware -file 01AFxxx_yyy_zzz.img

Where 01AFxxx_yyy_zzz.img is the name of the firmware image.

Note: You can also use the firmware update function of AIX diagnostics or the firmware update

function of the stand-alone diagnostics boot image. For more information, see

http://publib.boulder.ibm.com/infocenter/powersys/v3r1m5/topic/p7ha5/fix_aix_diags.htm.

6. Restart the compute node to apply the firmware update.

7. Run the following command in AIX or Linux to verify that the firmware update was successful:

lsmcode -A

Run the following command in VIOS to verify that the firmware update was successful:

lsfware -all
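The install and verify commands in steps 5 and 7 differ only by operating environment. The following Python sketch collects them in one place, which can be useful when scripting the same update across mixed AIX, Linux, and VIOS partitions. The function name and the "aix", "linux", and "vios" labels are assumptions made for this illustration. The command paths and options are the ones shown in the procedure.

```python
# Map each operating environment to the install and verify commands
# from the procedure. firmware_commands is a hypothetical helper name.

def firmware_commands(platform, image):
    """Return (install_command, verify_command) as argument lists.

    platform is "aix", "linux", or "vios"; image is the firmware
    image file name, for example 01AFxxx_yyy_zzz.img.
    """
    if platform == "aix":
        return (["/usr/lpp/diagnostics/bin/update_flash", "-f", image],
                ["lsmcode", "-A"])
    if platform == "linux":
        return (["/usr/sbin/update_flash", "-f", image],
                ["lsmcode", "-A"])
    if platform == "vios":
        return (["ldfware", "-file", image],
                ["lsfware", "-all"])
    raise ValueError("platform must be 'aix', 'linux', or 'vios'")


install, verify = firmware_commands("linux", "01AFxxx_yyy_zzz.img")
print(" ".join(install))
print(" ".join(verify))
```

The function only builds the argument lists and does not run them. Run the install command from the directory that holds the image (/tmp/fwupdate in the procedure), restart the compute node, and then run the verify command.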

Starting the TEMP image

The system firmware is contained in separate temporary and permanent images in the flash memory of

the compute node. These images are referred to as TEMP and PERM, respectively. The compute node

normally starts from the TEMP image. Start the TEMP image before you update the firmware.

About this task

To start the TEMP image, see

http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.psm.hosts.doc/dpsm_managing_hosts_power_firmware.html.

Verifying the system firmware levels

The diagnostics program displays the current system firmware levels for the temporary (TEMP) and

permanent (PERM) images. This function also displays which image the compute node used to start.

Procedure

1. Start the diagnostics program.

2. From the Function Selection menu, select Task Selection and press Enter.

3. From the Tasks Selection List menu, select Update and Manage System Flash and press Enter.

The top of the Update and Manage System Flash menu displays the system firmware level for the

PERM and the TEMP images and the image that the compute node used to start.

Note: If the TEMP image level is more current than the PERM image, commit the TEMP image.

4. When you have verified the firmware levels, press F3 until the Diagnostic Operating Instructions

window is displayed, and then press F3 again to exit the diagnostic program.
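The Note in step 3 reduces to a comparison of two firmware levels. The following Python sketch shows one way to make that comparison. This guide does not reproduce the exact text that the menu displays, so the sketch assumes level strings of the form AFxxx_yyy, modeled on the image name 01AF773_051_033 used in the update procedure. The function names are illustrative.

```python
import re

# Assumed level format: "AF" + 3-digit release + "_" + 3-digit service pack.
_LEVEL = re.compile(r"^AF(\d{3})_(\d{3})$")


def parse_level(level):
    """Split an assumed AFxxx_yyy level string into (release, service_pack)."""
    match = _LEVEL.match(level)
    if match is None:
        raise ValueError("unrecognized firmware level: %r" % level)
    return int(match.group(1)), int(match.group(2))


def should_commit_temp(temp_level, perm_level):
    """True if the TEMP image is more current than the PERM image."""
    return parse_level(temp_level) > parse_level(perm_level)


print(should_commit_temp("AF773_051", "AF773_033"))   # True
print(should_commit_temp("AF773_051", "AF773_051"))   # False
```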

Using the SMS utility

Use the System Management Services (SMS) utility to configure the IBM Flex System p260 Compute

Node or IBM Flex System p460 Compute Node.

Starting the SMS utility

Start the SMS utility to configure the compute node.

Procedure

1. Turn on or restart the compute node, and establish an SOL session with it.

See the IBM Chassis Management Module Command-Line Interface Reference Guide for more information.

2. When the POST menu and indicators are displayed, and after the word Keyboard is displayed and

before the word Speaker is displayed, press 1.

3. Follow the instructions in the window.
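Step 2 defines a narrow window for the key press: after the word Keyboard appears and before the word Speaker appears. If the SOL console output is being captured, that window can be detected with a simple check, as in the following Python sketch. The function name is illustrative, and the way the console text is captured is outside the scope of this guide.

```python
def in_sms_key_window(console_text):
    """True while 1 can be pressed to start SMS.

    console_text is the POST output captured so far. The window opens
    when the Keyboard indicator has been displayed and closes when the
    Speaker indicator is displayed.
    """
    return "Keyboard" in console_text and "Speaker" not in console_text


print(in_sms_key_window("Memory"))                                  # False
print(in_sms_key_window("Memory Keyboard Network"))                 # True
print(in_sms_key_window("Memory Keyboard Network SCSI Speaker"))    # False
```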

SMS utility menu choices

Select SMS tasks from the SMS utility main menu. Choices on the SMS utility main menu depend on the

version of the firmware in the compute node.

Some menu choices might differ slightly from these descriptions:

• Select Language

Changes the language that is used to display the SMS menus.

• Setup Remote IPL (Initial Program Load)

Enables and sets up the remote startup capability of the compute node or partition.

• Change SCSI Settings

Changes the addresses of the self-configuring SCSI device (SCSD) controllers that are attached to the compute node.

• Select Console

Selects the console on which the SMS menus are displayed.

• Select Boot Options

Sets various options regarding the installation devices and boot devices.

Note: If a device that you are trying to select is not displayed in the Select Device Type menu, select List all Devices and select the device from that menu.

• Firmware Boot Side Options

Controls the booting of firmware from the permanent or temporary side.

Creating a CE login

If the compute node is running the AIX operating system, you can create a customer engineer (CE) login.

The CE login is used to perform operating system commands that are required to service the system

without being logged in as a root user.


About this task

The CE login must have a role of Run Diagnostics and must be in a primary group of System. This

setting enables the CE login to perform the following tasks:

• Run the diagnostics, including the service aids, certification, and formatting.

• Run all the operating-system commands that are run by system group users.

• Configure and unconfigure devices that are not in use.

In addition, this login can enable the Shutdown Group so that use of the Update System Microcode

service aid and the shutdown and reboot operations are available.

The preferred CE login user name is qserv.

Configuring processor cores

Learn how to increase or decrease the number of active processor cores in the compute node.

You can order your IBM Flex System p260 Compute Node or IBM Flex System p460 Compute Node with

a feature that instructs the factory to reduce the number of active processor cores in the compute node to

reduce software licensing costs. The factory uses the field core override option to reduce the number of

processor cores when feature code 2319: Factory deconfiguration of one core is ordered with a new system.

This option, available on the Advanced System Management Interface (ASMI), reduces the number of

processor cores by one.

The field core override option indicates the number of functional cores that are active in the compute

node. The field core override option provides the capability to increase or decrease the number of active

processor cores in the compute node. The compute node firmware sets the number of active processor

cores to the entered value. The value takes effect when the compute node is rebooted. The field core

override value can be changed only when the compute node is powered off.

To increase the number of active processor cores, for example to handle an increased workload on

the compute node, you must use this option.

To change the number of functional override cores in the compute node, you must access ASMI. See
http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.psm.hosts.doc/dpsm_managing_hosts_launch_asm.html.

For detailed information about the field core override feature, see
http://publib.boulder.ibm.com/infocenter/powersys/v3r1m5/topic/p7hby/fieldcore.htm.

Related information:

http://publib.boulder.ibm.com/infocenter/powersys/v3r1m5/topic/p7hby/viewprocconfig.htm


MAC addresses for integrated Ethernet controllers

Two integrated Ethernet ports are used by the service processor on the IBM Flex System p260 Compute

Node or IBM Flex System p460 Compute Node. Additional Ethernet ports are provided by the feature

cards that are plugged into the two expansion card slots. When used with a Virtual I/O Server (VIOS),

these expansion card Ethernet ports provide virtual logical Ethernet to client logical partitions (LPARs).

The VIOS software uses the logical Ethernet ports as if they were physical ports.

About this task

The Media Access Control (MAC) addresses of the integrated Ethernet ports are listed on a label on the

compute node. The compute node label lists two MAC addresses. The MAC addresses of the integrated

Ethernet ports are also displayed in the Chassis Manager in the management software web interface of the

IBM Flex System Manager, in the Hardware Management Console (HMC), and in the Integrated

Virtualization Manager (IVM). The MAC addresses of the logical ports are generated by VIOS.

To view the MAC addresses of the Ethernet ports by using HMC, click HMC Management > Change

Network Settings > LAN Adapters.

To view the MAC addresses of the Ethernet ports by using IVM, click View/Modify TCP/IP Settings >

Properties > Connected Partitions.

Table 5 shows the relative addressing scheme.

Table 5. MAC addressing scheme for physical and logical integrated Ethernet controllers

Node | Name in management module | Relationship to the MAC that is listed on the IBM Flex System p260 Compute Node or IBM Flex System p460 Compute Node label | Example

Service processor built-in Enet0 | | Same as first MAC address | 00:1A:64:44:0e:c4

Service processor built-in Enet1 | | MAC + 1 | 00:1A:64:44:0e:c5

Logical Ethernet ports | | Generated by VIOS |

1. The Integrated Virtualization Manager (IVM), see Updating the Integrated Virtualization Manager.

For more information about planning, deploying, and managing the use of integrated Ethernet

controllers, see the Configuring section of the PowerVM Information Roadmap.
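The "MAC + 1" relationship in Table 5 is plain integer arithmetic on the 48-bit address. The following minimal sketch illustrates it using the example address from the table. The function name mac_offset is hypothetical and is not part of any IBM tool.

```python
def mac_offset(base_mac: str, offset: int) -> str:
    """Return base_mac plus offset as lowercase colon-separated hex octets."""
    value = int(base_mac.replace(":", ""), 16) + offset
    if not 0 <= value < 1 << 48:
        raise ValueError("result is outside the 48-bit MAC address range")
    return ":".join(f"{octet:02x}" for octet in value.to_bytes(6, "big"))


label_mac = "00:1A:64:44:0e:c4"    # first MAC address on the label (Table 5 example)
enet0 = mac_offset(label_mac, 0)   # Enet0: same as the first MAC address
enet1 = mac_offset(label_mac, 1)   # Enet1: MAC + 1
print(enet0, enet1)                # prints: 00:1a:64:44:0e:c4 00:1a:64:44:0e:c5
```

The sketch normalizes the output to lowercase hexadecimal. The addresses of the logical ports are generated by VIOS and cannot be derived this way.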


Configuring a RAID array

Use this information to configure a RAID array.

About this task

Configuring a RAID array applies to a compute node in which disk drives or solid-state drives are

installed.

Note: When configuring a RAID array, the hard disk drives must use the same type of interface and

must have identical capacity and speed.

Disk drives and solid-state drives in the IBM Flex System p260 Compute Node or IBM Flex System p460

Compute Node can be used to implement and manage various types of RAID arrays in operating

systems that are on the ServerProven list. For the compute node, you must configure the RAID array

through the smit sasdam utility, which is the SAS RAID Disk Array Manager for the AIX operating

system. The AIX Disk Array Manager is packaged with the Diagnostics utilities on the diagnostics CD.

Use the smit sasdam utility to configure the disk drives for use with the SAS controller. For more

information, see
http://publib.boulder.ibm.com/infocenter/systems/scope/hw/index.jsp?topic=/p7ebj/sasusingthesasdiskarraymanager.htm.

Important: Depending on your RAID configuration, you might have to create the array before you install

the operating system in the compute node.

Before you can create a RAID array, you must reformat the drives so that the sector size of the drives

changes from 512 bytes to 528 bytes. If you later decide to remove the drives, delete the RAID array

before you remove the drives. If you decide to delete the RAID array and reuse the drives, you must

reformat the drives so that the sector size of the drives changes from 528 bytes to 512 bytes.
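The drive requirements in this section (same interface type, identical capacity and speed, and 528-byte sectors before the array is created) can be expressed as a simple pre-check. The following sketch is an illustration only: the Drive record, its field names, and the sample values are hypothetical, and the sketch does not query real hardware or replace the smit sasdam utility.

```python
from dataclasses import dataclass

RAID_SECTOR_BYTES = 528  # sector size required before a RAID array is created


@dataclass(frozen=True)
class Drive:
    """Hypothetical record of the drive properties that matter for RAID."""
    name: str
    interface: str
    capacity_gb: int
    speed: str
    sector_bytes: int


def raid_precheck(drives: list[Drive]) -> list[str]:
    """Return a list of problems; an empty list means the drives qualify."""
    if not drives:
        return ["no drives supplied"]
    problems = []
    reference = drives[0]
    # All drives must match the first drive in interface, capacity, and speed.
    for drive in drives[1:]:
        for attr in ("interface", "capacity_gb", "speed"):
            if getattr(drive, attr) != getattr(reference, attr):
                problems.append(f"{drive.name}: {attr} differs from {reference.name}")
    # Every drive must already be formatted with 528-byte sectors.
    for drive in drives:
        if drive.sector_bytes != RAID_SECTOR_BYTES:
            problems.append(
                f"{drive.name}: reformat from {drive.sector_bytes}-byte "
                f"to {RAID_SECTOR_BYTES}-byte sectors"
            )
    return problems


# Hypothetical sample values.
drives = [
    Drive("hdisk0", "SAS", 300, "10K rpm", 528),
    Drive("hdisk1", "SAS", 300, "10K rpm", 512),
]
for problem in raid_precheck(drives):
    print(problem)
```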

Related information:

http://www.ibm.com/systems/info/x86servers/serverproven/compat/us/

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/index.jsp?topic=/p7ebj/sasusingthesasdiskarraymanager.htm


Chapter 4. Installing the operating system

Before you install the operating system on the compute node, verify that the compute node is installed in

the IBM Flex System Enterprise Chassis, that the management-module firmware is at the latest available

level, and that the compute node is turned on.

About this task

If you are not using an unattended network-installation method to install your operating system, you

must first provide a serial over LAN (SOL) connection to the compute node to install your operating

system. For information about starting an SOL session, see
http://publib.boulder.ibm.com/infocenter/flexsys/information/topic/com.ibm.acc.cmm.doc/dw1kt_cmm_cli_book.pdf.

Important:

• After you install the operating system on the compute node, you must install any service packs or

update packages that come with the operating system. For additional information, see the instructions

that come with your operating-system documentation and the service packs or update packages.

• If you plan to install an Ethernet I/O expansion card, first install the operating system so that the

onboard ports can be recognized and configured before the ports on the I/O expansion card. If you