ibm.com/redbooks

IBM Eserver xSeries

455 Planning and

Installation Guide

David Watts

Aubrey Applewhaite

Yonni Meza

Describes the technical details of the

new 64-bit server

Covers supported Windows and

Linux 64-bit operating systems

Helps you prepare for and

perform an installation

IBM Eserver xSeries 455 Planning and Installation

Guide

February 2004

International Technical Support Organization

SG24-7056-00

© Copyright International Business Machines Corporation 2004. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP

Schedule Contract with IBM Corp.

First Edition (February 2004)

This edition applies to the IBM Eserver xSeries 455, machine type 8855.

Note: Before using this information and the product it supports, read the information in

“Notices” on page vii.

© Copyright IBM Corp. 2004. All rights reserved.

Contents

Notices

Trademarks

Preface

The team that wrote this redbook

Become a published author

Comments welcome

Chapter 1. Technical description

1.1 Features

1.1.1 Comparing the x455 with the x450

1.1.2 Features not supported

1.2 The x455 base models

1.2.1 Front and rear views

1.3 System assembly

1.4 IBM XA-64 chipset

1.4.1 The processor-board assembly

1.4.2 The memory-board assembly

1.4.3 PCI-X board assembly

1.5 Remote Supervisor Adapter

1.6 RXE-100 Expansion Enclosure

1.7 Multinode scalable partitions

1.7.1 RXE-100 connectivity

1.7.2 Multinode configuration

1.7.3 Integrated I/O function support

1.7.4 Error recovery

1.8 Redundancy

1.9 Light path diagnostics

1.10 Extensible Firmware Interface

1.10.1 GUID Partition Table disk

1.10.2 EFI System Partition

1.10.3 EFI and the reduced-legacy concept

1.11 Operating system support

1.12 Enterprise X-Architecture

1.12.1 NUMA architecture

Chapter 2. Positioning

2.1 Migrating to a 64-bit platform

2.2 Scalable system partitioning

2.2.1 RXE-100 Expansion Enclosure

2.3 Operating system support

2.4 Server consolidation

2.5 ServerProven®

2.6 IBM Datacenter Solution Program

2.7 Application solutions

2.7.1 Database applications

2.7.2 Business logic

2.7.3 e-Business and security transactions

2.7.4 In-house developed compute-intensive applications

2.7.5 Science and technology industries

2.8 Why choose x455

Chapter 3. Planning

3.1 System hardware

3.1.1 Processors

3.1.2 Memory

3.1.3 PCI-X slot configuration

3.1.4 Broadcom Gigabit Ethernet controller

3.2 Cabling and connectivity

3.2.1 SMP Expansion connectivity

3.2.2 Remote Expansion Enclosure connectivity

3.2.3 Remote Supervisor Adapter connectivity

3.2.4 Serial connectivity

3.3 Storage considerations

3.3.1 xSeries storage solutions

3.3.2 Tape backup

3.4 Rack installation

3.5 Power considerations

3.6 Operating system support

3.6.1 Clustering

3.7 IBM Director support

3.8 Solution Assurance Review

3.8.1 Trigger Tool

3.8.2 Electronic Solution Assurance Review (eSAR)

Chapter 4. Installation

4.1 Using The Extensible Firmware Interface

4.1.1 EFI Firmware Boot Manager

4.1.2 The EFI shell

4.1.3 Driver Setup

4.1.4 Flash update

4.1.5 Configuration/Setup utility

4.1.6 Diagnostic utility

4.1.7 Boot Option Maintenance

4.2 Configuring scalable partitions

4.2.1 Creating a scalable partition

4.2.2 Booting a scalable partition

4.2.3 Multiple Monitors

4.2.4 Deleting a scalable partition

4.3 Installing Windows Server 2003

4.3.1 Important information

4.3.2 Preparing to install

4.3.3 Installation

4.3.4 Post-setup phase

4.4 Installing Linux

4.4.1 Linux IA-64 kernel overview

4.4.2 Choosing a Linux distribution

4.4.3 Installing SUSE LINUX Enterprise Server 8.0

4.4.4 Installing Red Hat Enterprise Linux AS

4.4.5 Linux boot process

4.4.6 Information about the installed system

4.4.7 Using the serial port for the Linux console

4.4.8 RXE-100 Expansion Enclosure

4.4.9 Upgrading drivers

Chapter 5. Management

5.1 IBM Director

5.1.1 Scalable Systems Manager

5.2 The Remote Supervisor Adapter

5.2.1 Connecting via a Web browser

5.2.2 Connecting via the ASM interconnect

5.2.3 Installing the device driver

5.2.4 Configuring the remote control password

5.3 Management using the Remote Supervisor Adapter

5.3.1 Configuring which alerts to monitor

5.3.2 Configuring SNMP

5.3.3 Sending alerts directly to IBM Director

5.3.4 Creating a test event action plan in IBM Director

5.4 Windows System Resource Manager

5.4.1 WSRM description

5.4.2 WSRM features

5.4.3 WSRM in the x455

Abbreviations and acronyms

Related publications

IBM Redbooks

Other publications

Online resources

How to get IBM Redbooks

Help from IBM

Index

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area.

Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM

product, program, or service may be used. Any functionally equivalent product, program, or service that

does not infringe any IBM intellectual property right may be used instead. However, it is the user's

responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document.

The furnishing of this document does not give you any license to these patents. You can send license

inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions

are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES

THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED,

INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer

of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may

make improvements and/or changes in the product(s) and/or the program(s) described in this publication at

any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without

incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm

the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on

the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrates programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the

sample programs are written. These examples have not been thoroughly tested under all conditions. IBM,

therefore, cannot guarantee or imply reliability, serviceability, or function of these programs. You may copy,

modify, and distribute these sample programs in any form without payment to IBM for the purposes of

developing, using, marketing, or distributing application programs conforming to IBM's application

programming interfaces.

Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Chipkill™

DB2 Connect™

DB2 Universal Database™

DB2®

DRDA®

Enterprise Storage Server®

ESCON®

Eserver™

eServer™

FlashCopy®

FICON™

IBM®

ibm.com®

iSeries™

LANClient Control Manager™

Notes®

OnForever™

Predictive Failure Analysis®

PS/2®

pSeries®

Redbooks™

Redbooks (logo) ™

RETAIN®

ServerGuide™

ServerProven®

ServeRAID™

ThinkPad®

Tivoli®

TotalStorage®

Wake on LAN®

X-Architecture™

xSeries®

zSeries®

The following terms are trademarks of International Business Machines Corporation and Rational Software

Corporation, in the United States, other countries or both.

Rational®

The following terms are trademarks of other companies:

Intel, Intel Inside (logos), MMX, and Pentium are trademarks of Intel Corporation in the United States, other

countries, or both.

Microsoft, Windows, Windows NT, and the Windows logo are trademarks of Microsoft Corporation in the

United States, other countries, or both.

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun

Microsystems, Inc. in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

SET, SET Secure Electronic Transaction, and the SET Logo are trademarks owned by SET Secure

Electronic Transaction LLC.

Other company, product, and service names may be trademarks or service marks of others.

Preface

The IBM Eserver xSeries® 455 is the second generation Enterprise

X-Architecture™ server using the 64-bit IBM® XA-64 chipset and the Intel®

Itanium 2 processor. Unlike the x450, its predecessor, the x455 supports the

merging of four server chassis to form a single 16-way image, providing even

greater expandability and investment protection.

This IBM Redbook is a comprehensive resource on the technical aspects of the

server, and is divided into five key subject areas:

Chapter 1, “Technical description” on page 1, introduces the server and its

subsystems and describes the key features and how they work. This includes

the Extensible Firmware Interface, which provides a powerful replacement to

the BIOS facility found on the IA-32 platform.

Chapter 2, “Positioning” on page 39, examines the types of applications that

would be used on a server such as the x455.

Chapter 3, “Planning” on page 55, describes the considerations when

planning to purchase and planning to install the x455. It covers such topics as

configuration, operating system specifics, scalability, and physical site

planning.

Chapter 4, “Installation” on page 91, covers the process of installing

Windows® Server 2003, SUSE LINUX Enterprise Server, and Red Hat

Enterprise Linux AS on the x455.

Chapter 5, “Management” on page 175, describes how to use the Remote

Supervisor Adapter to send alerts to an IBM Director management

environment.

The team that wrote this redbook

This redbook was produced by a team of specialists from around the world

working at the International Technical Support Organization, Raleigh Center.

David Watts is a Consulting IT Specialist at the International Technical Support

Organization in Raleigh. He manages residencies and produces IBM

Redbooks™ on hardware and software topics related to IBM xSeries systems

and associated client platforms. He has authored more than 30 IBM

Redbooks and redpapers; his most recent books include Implementing

Systems Management Solutions Using IBM Director, SG24-6188. He has a

Bachelor of Engineering degree from the University of Queensland (Australia)

and has worked for IBM for more than 14 years. He is an IBM Eserver™

Certified Specialist for xSeries and an IBM Certified IT Specialist.

Aubrey Applewhaite is a Senior IT Specialist working for the IBM Systems

Group in the United Kingdom. He is a member of the Server Implementation

Team and specializes in xSeries hardware, Microsoft® Windows, clustering and

VMware. He has worked in the IT industry for over 16 years and been at IBM for

eight years. He currently works in a customer-facing role providing consultancy

and practical assistance to help IBM customers implement new technology with

particular emphasis on xSeries hardware. He holds a Bachelor of Science

Degree in Sociology and Politics from Aston University and is an MCSE for both

Windows NT® and Windows 2000, IBM eServer™ Certified Systems Expert,

Cisco CNA, and Compaq Proliant ASE.

Yonni Meza is an xSeries Specialist in Peru who also works as the country’s PCI

Instructor, teaching courses on xSeries and Personal Computer Division (PCD)

products. Additionally, he supports the PCD team with pre- and post-sales

technical support, conducting demos and presentations on a regular basis. He

has four years of experience in personal computing systems as well as Intel

servers. Furthermore, he has implemented several xSeries solutions such as

clustering in Windows and Linux with SCSI, SAN and ESS. Yonni studied

Systems Engineering at the University of Lima.

The redbook team (l-r): David, Aubrey, Yonni

Thanks to the following people for their contributions to this project:

Henry Artner, Service Education Curriculum Manager, Raleigh

Pat Byers, Program Director, Linux xSeries Alliances & Marketing

Alex Candelaria, IBM Center for Microsoft Technologies, Seattle

Greg Clarke, IBM Advanced Technical Support, Dallas

Rufus Credle, International Technical Support Organization, Raleigh

Gary Hade, IBM Linux Technology Center, Beaverton

Jim Hanna, xSeries development, Austin

Cecil Lockett, Senior Engineer, Engineering Software, Raleigh

Gerry McGettigan, Advanced Technical Support, EMEA

Michael L Nelson, IBM Eserver Solutions Engineering, Raleigh

Lubos Nikolini, Systems Engineer, HT Computers

Charles Perkins, Course Developer, Service and Support Education, Raleigh

Steve Powell, Service and Support Education Team, Raleigh

Ken Rauch, Delivery Project Manager, Markham

Jose Rodriquez Ruibal, Advanced Technical Support, EMEA

Steve Russell, EMEA ATS xSeries Product Introduction Center, Hursley

Bob Zuber, x455 World Wide Product Manager, Raleigh

Julie Czubik, Technical Editor, ITSO, Poughkeepsie

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook

dealing with specific products or solutions, while getting hands-on experience

with leading-edge technologies. You'll team with IBM technical professionals,

Business Partners and/or customers.

Your efforts will help increase product acceptance and customer satisfaction. As

a bonus, you'll develop a network of contacts in IBM development labs, and

increase your productivity and marketability.

Find out more about the residency program, browse the residency index, and

apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our Redbooks to be as helpful as possible. Send us your comments

about this or other Redbooks in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an Internet note to:

redbook@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HZ8 Building 662

P.O. Box 12195

Research Triangle Park, NC 27709-2195

Chapter 1. Technical description

The IBM Eserver xSeries 455 is the latest IBM top-of-the-line server and is the

second implementation of the 64-bit IBM XA-64 chipset, code named “Summit”,

which forms part of the Enterprise X-Architecture strategy. The x455 completes

the xSeries product family, leveraging the proven Enterprise X-Architecture to

deliver robust and reliable 64-bit systems.

“Features” on page 2

“The x455 base models” on page 4

“System assembly” on page 6

“IBM XA-64 chipset” on page 7

“Remote Supervisor Adapter” on page 19

“RXE-100 Expansion Enclosure” on page 21

“Multinode scalable partitions” on page 22

“Redundancy” on page 27

“Light path diagnostics” on page 27

“Extensible Firmware Interface” on page 29

“Operating system support” on page 35

“Enterprise X-Architecture” on page 35

1.1 Features

The following are the key features of the x455:

One-way or two-way Intel Itanium 2 models, upgradeable to 4-way in a single node and 16-way in a 4-node partition.

64 MB XceL4 Server Accelerator Cache providing an extra level of cache, upgradeable to 256 MB in a 4-node partition.

1 GB or 2 GB RAM standard, upgradeable to 56 GB in a single node and 224 GB in a 4-node partition. Available options are 512 MB, 1 GB, and 2 GB ECC DDR SDRAM RDIMMs.

Memory enhancements such as memory mirroring, Chipkill™, Memory ProteXion, and hot swap.

Dual channel Ultra320 SCSI/RAID controller.

Six 64-bit Active PCI-X slots: two 133 MHz, two 100 MHz, and two 66 MHz, upgradeable to 24 x 64-bit PCI-X slots in a four-node partition.

Scalable system partitioning in two-node and four-node configurations via three scalability ports.

Connectivity to an RXE-100 external enclosure for an additional 12 PCI-X slots, upgradeable to 24 additional PCI-X slots in a 4-node partition.

Two hot-swap 1-inch drive bays, upgradeable to eight in a four-node partition.

Support for major storage subsystems, including SCSI and Fibre Channel.

Light path diagnostics for troubleshooting.

Remote Supervisor Adapter (RSA) for systems management and remote diagnostics.

Integrated dual 10/100/1000 Mbps Ethernet controller.

Integrated ATI Rage XL with 8 MB video RAM.

Three USB ports and one serial port.

Two 1050 W hot-swap power supplies.

24x combination DVD/CD-RW drive.

4U x 26” rack drawer design.
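The multinode figures in this list follow from multiplying the single-node maximums by the number of nodes. As a rough planning aid, the arithmetic can be sketched in Python. The class and function names here are illustrative assumptions, not part of any IBM tool, and the RXE-100 slot counts are left out because the list does not state them as a simple per-node multiple.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class NodeLimits:
    """Single-node maximums as quoted in the feature list (hypothetical container)."""
    processors: int = 4        # 4-way in a single node
    xcel4_cache_mb: int = 64   # XceL4 Server Accelerator Cache
    memory_gb: int = 56        # maximum RAM in a single node
    pci_x_slots: int = 6       # 64-bit Active PCI-X slots
    drive_bays: int = 2        # hot-swap 1-inch drive bays


# One chassis, or the two-node and four-node partitions named in the text.
SUPPORTED_NODE_COUNTS = (1, 2, 4)


def partition_limits(nodes, per_node=NodeLimits()):
    """Scale every per-node maximum by the number of nodes in the partition."""
    if nodes not in SUPPORTED_NODE_COUNTS:
        raise ValueError(f"unsupported node count: {nodes}")
    return {name: value * nodes for name, value in asdict(per_node).items()}


if __name__ == "__main__":
    for n in SUPPORTED_NODE_COUNTS:
        print(n, partition_limits(n))
```

For a four-node partition this reproduces the figures quoted above: 16 processors, 256 MB of XceL4 cache, 224 GB of memory, 24 PCI-X slots, and eight drive bays.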

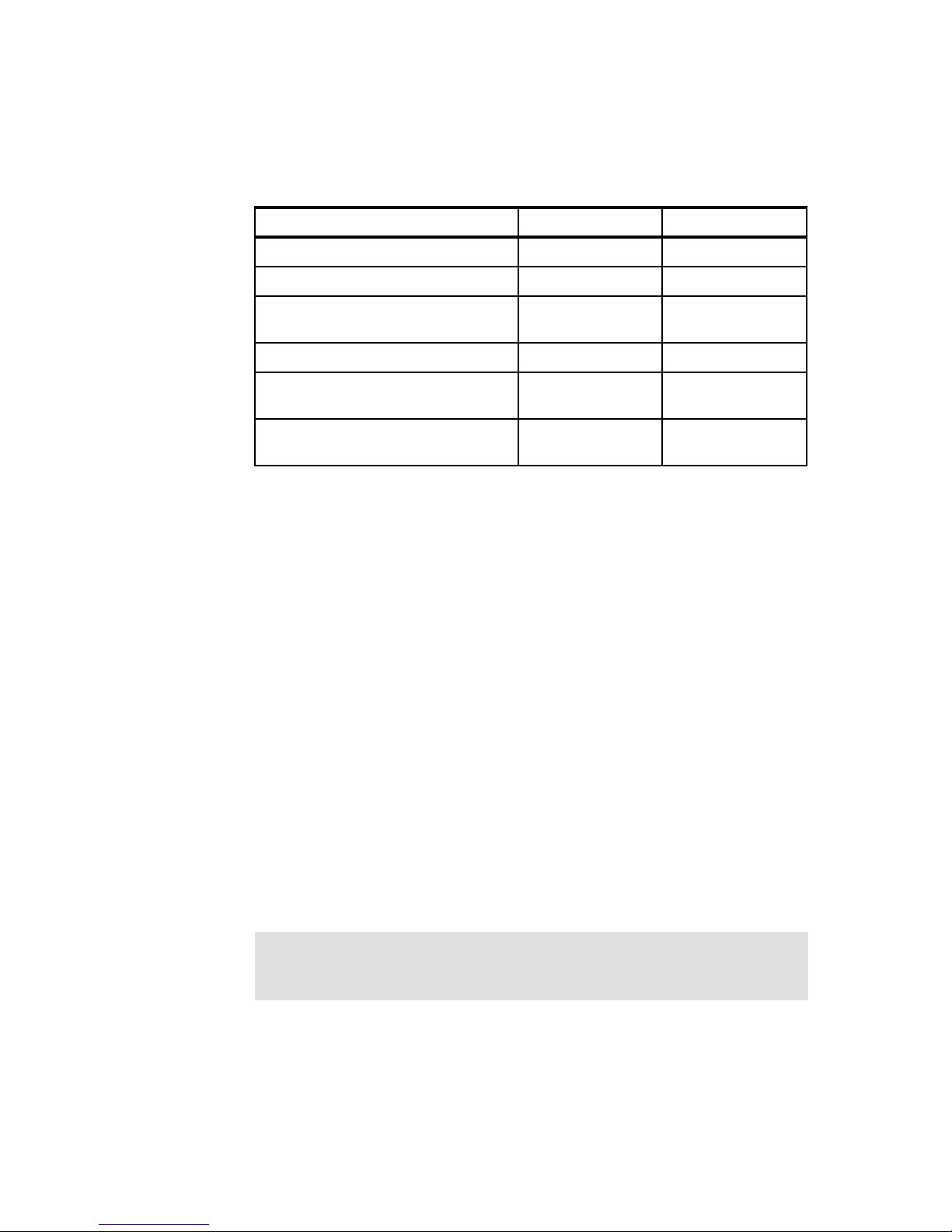

1.1.1 Comparing the x455 with the x450

The x455 builds on the proven and popular x450 and brings a number of

enhancements. Table 1-1 on page 3 summarizes the differences and

enhancements between the two servers.

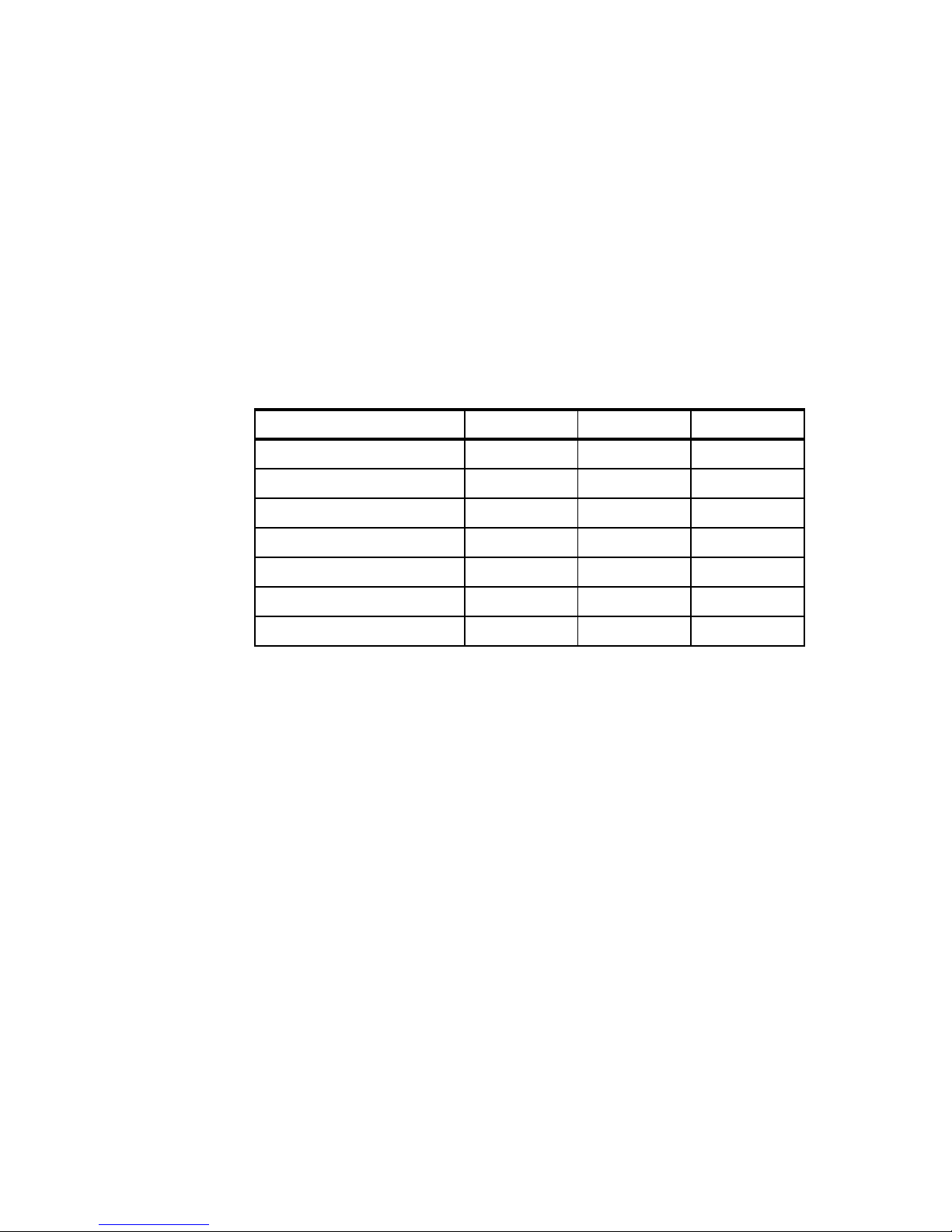

Table 1-1 Comparing the differences between the x455 and x450

| Component | x450 | x455 |
|---|---|---|
| Maximum memory (GB) | 40 | 56 |
| Active memory with hot-swap support | No | Yes |
| Multi chassis support | No | Yes (one, two or four chassis) |
| Shared RXE-100 between 2 machines | No | Yes |
| Redundant cabling to RXE-100 (only from a single independent machine) | No | Yes |
| Enterprise X-Architecture | First generation chipset | Second generation chipset |

1.1.2 Features not supported

Because of its 64-bit architecture, many existing 32-bit applications and tools are not supported on the x455. These include:

32-bit and 16-bit operating systems

ServerGuide™

Remote Deployment Manager (RDM)

LANClient Control Manager™ (LCCM)

UpdateExpress

Access Support

64-bit versions of some of these tools will be made available in the future.

The following functions are also not supported:

More than one RXE-100 connected to a single node

Hot add/remove of an RXE-100

Physical partitioning within a single node

Partial mirroring of memory

Hot add/swap of the CD-ROM or diskette drive (if installed)

Inter-Process Communications (IPC) over scalability ports

Hot adding memory (hot swap is supported)

PS/2® keyboard and mouse

Parallel port

Important: The x455 does not have PS/2 ports for a keyboard and mouse.

You need either a USB keyboard and mouse or the appropriate cables to

connect to a KVM switch.
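Several of the restrictions listed in this section can be checked mechanically before an order or installation plan is finalized. The following Python sketch is only an illustration of that idea. The function name, its parameters, and the "none", "full", and "partial" mirroring values are assumptions made for this example and do not correspond to any IBM configuration tool.

```python
# Node counts the text describes: a single chassis, or two-node and
# four-node partitions.
SUPPORTED_NODE_COUNTS = (1, 2, 4)


def check_plan(nodes, rxe100_per_node=0, memory_mirroring="none",
               needs_ps2=False, needs_parallel_port=False, os_bits=64):
    """Return a list of problems found in a planned x455 configuration.

    All parameter names are hypothetical; the rules are taken from the
    lists of unsupported features and functions in this section.
    """
    problems = []
    if nodes not in SUPPORTED_NODE_COUNTS:
        problems.append(f"{nodes} nodes: only 1, 2, or 4 chassis are supported")
    if rxe100_per_node > 1:
        problems.append("more than one RXE-100 connected to a single node")
    if memory_mirroring == "partial":
        problems.append("partial mirroring of memory is not supported")
    if needs_ps2:
        problems.append("no PS/2 ports: use USB or KVM cables instead")
    if needs_parallel_port:
        problems.append("no parallel port")
    if os_bits != 64:
        problems.append("32-bit and 16-bit operating systems are not supported")
    return problems


if __name__ == "__main__":
    for issue in check_plan(nodes=3, rxe100_per_node=2, needs_ps2=True):
        print("-", issue)
```

An empty list means that none of the checked restrictions were violated. It does not mean that the configuration as a whole is supported.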

1.2 The x455 base models

Powered by XA-64 Enterprise X-Architecture and the 64-bit Itanium 2 “Madison”

processors, the x455 server brings the future of 64-bit processing and

production-level reliability to your data centers today. With their

mainframe-inspired, mission-critical functions, these 16-way-capable

enterprise servers are designed to run your complex business

applications around the clock.

The initial models of the x455 are listed in Table 1-2.

Table 1-2 Initial x455 base models

The base models can also be connected together to form two-node (eight CPUs)

and four-node (16 CPUs) configurations. See “Multinode scalable partitions” on

page 22 for details.

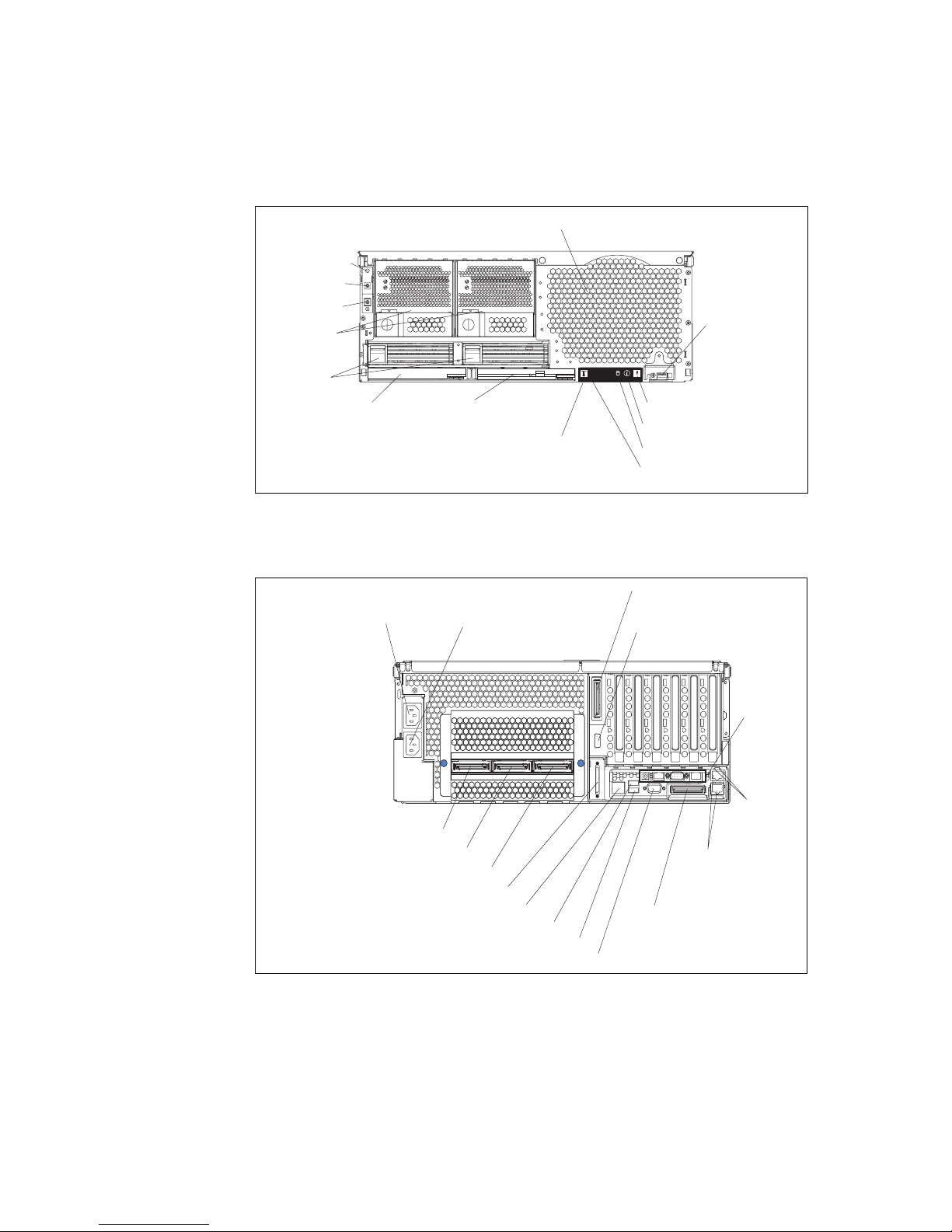

1.2.1 Front and rear views

Figure 1-1 on page 5 shows the front view of the x455 showing the system

components.

Base model 8855-1RX 8855-2RX 8855-3RX

Itanium 2 processors 1 x 1.3 GHz 2 x 1.4 GHz 2 x 1.5 GHz

Max SMP 4-way 4-way 4-way

Memory 1 GB 2 GB 2 GB

L1 cache 32 KB 32 KB 32 KB

L2 cache 256 KB 256 KB 256 KB

L3 cache 3 MB 4 MB 6 MB

XceL4 Accelerator Cache 64 MB 64 MB 64 MB

Figure 1-1 Front panel of the xSeries 455

Figure 1-1 callouts: power button; reset button; power-on light; hot-swap fans; USB port; system-error light (amber); information light (amber); SCSI activity light (green); locator light (blue); DVD/CD-RW drive; hot-swap power supplies; blank media bay; Light Path Diagnostics panel (pulls out); hot-swap drive bays.

Figure 1-2 shows the rear view of the x455 and its system connectors.

Figure 1-2 Rear view of the x455

Figure 1-2 callouts: system power connectors (1) and (2); RXE Expansion Port (A) and (B) connectors; Remote Supervisor Adapter connectors and LEDs; Ethernet LEDs; Gigabit Ethernet connectors; video connector; USB 1 and USB 2 connectors; RXE Management Port connector; SCSI connector; serial connector; SMP Expansion Port 1, 2, and 3 connectors.


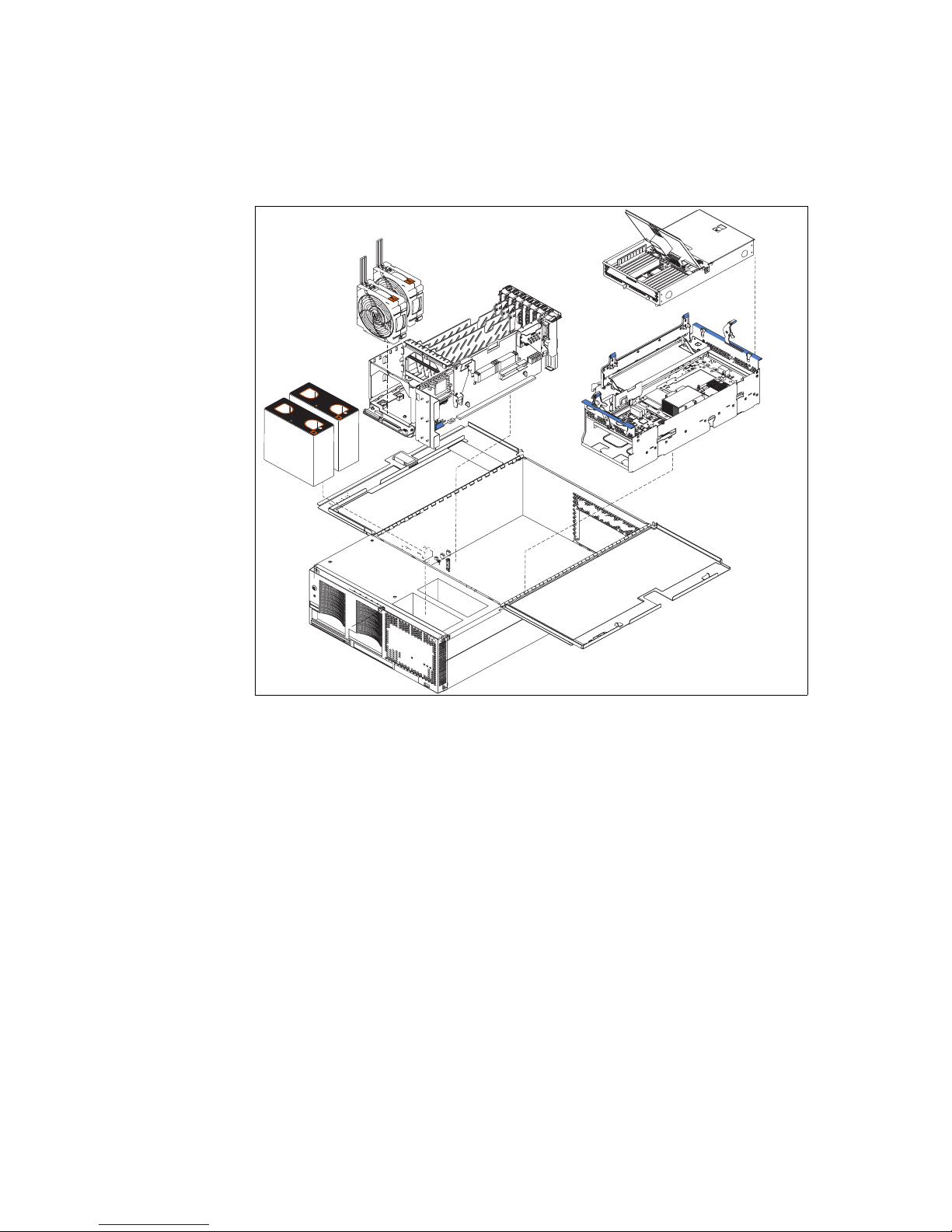

1.3 System assembly

The x455 has a similar internal design to the x450. The midplane board (viewed

from the front) interfaces with three major assemblies:

The processor-board assembly

This is located to the right of the midplane board and under the memory-board assembly. It houses the Itanium 2 processors, the Cache and Scalability Controller, and the 64 MB XceL4 cache.

The memory-board assembly

This is located to the right of the midplane board and above the

processor-board assembly. It houses the memory and memory controller.

The PCI-X board assembly

This is located to the left of the midplane board. It houses all the PCI-X slots

and all other I/O components.


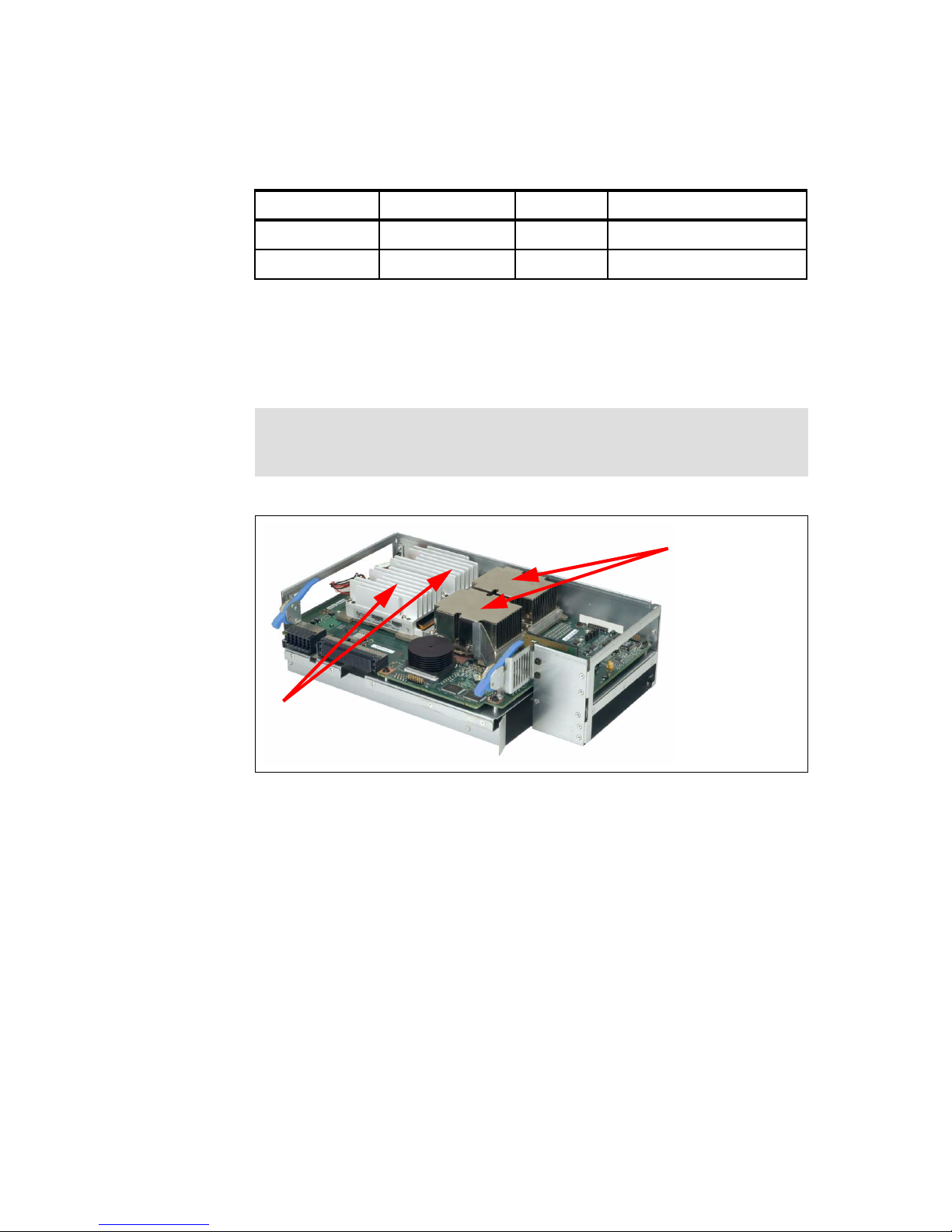

Figure 1-3 Memory-board and processor-board assembly locations

1.4 IBM XA-64 chipset

The IBM XA-64 chipset is the product name describing the chipset developed

under the code name “Summit” and implemented on the IA-64 platform. A

product of the IBM Microelectronics Division, the XA-64 chipset leverages the

proven Enterprise X-Architecture chipset used initially in the x440 and applies

the same technologies to the IA-64 architecture. The XA-64 chipset comprises

the following components:

Itanium 2 processor(s)

Cache and Scalability Controller

A single controller, code named “Tornado”, located within the processor-board

assembly.


Memory controller

A single memory controller, code named “Cyclone”, located within the

memory-board assembly.

Two PCI-X bridges

Two PCI-X bridges, code named “Winnipeg”, one located on the PCI-X board

and the other on the I/O board. These control both the PCI-X and Remote I/O.

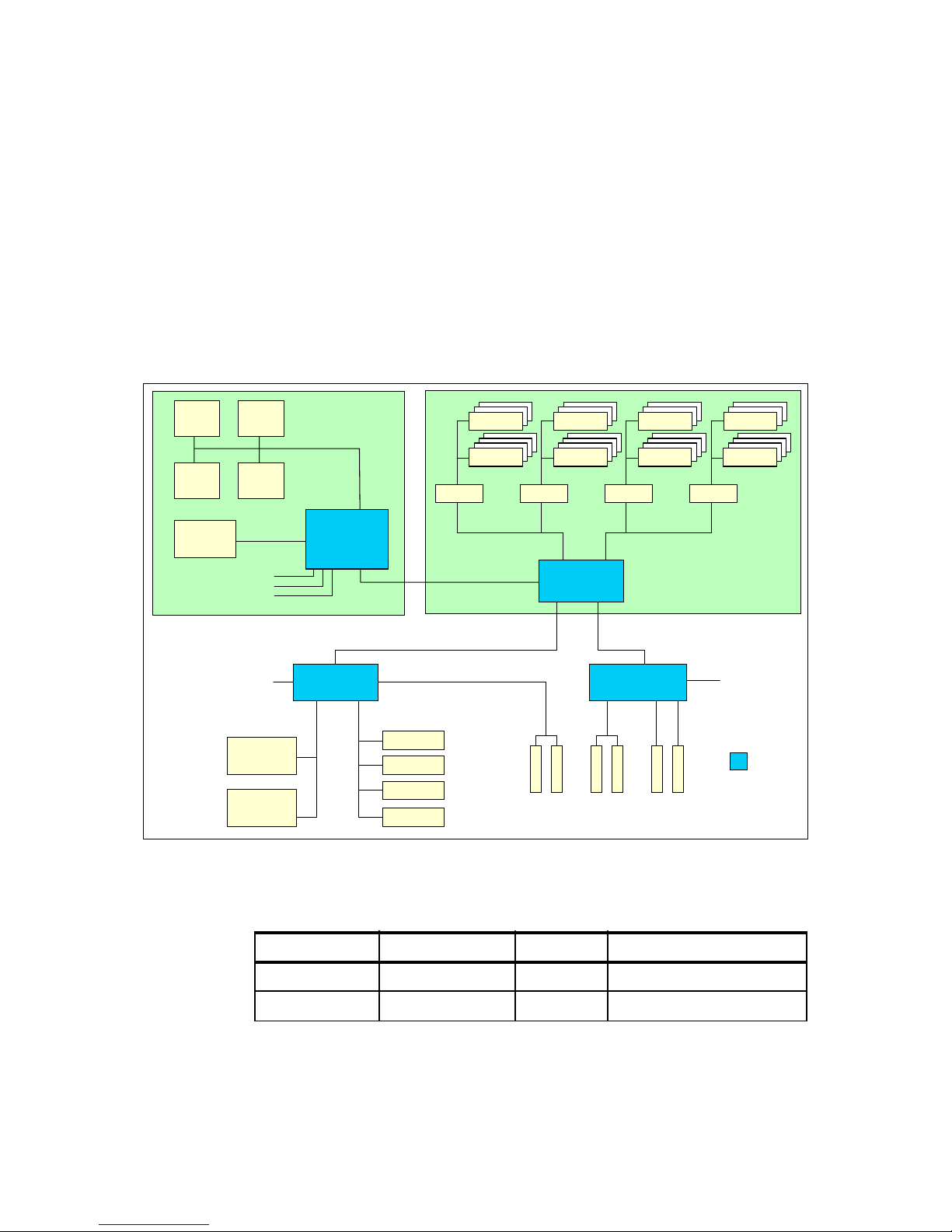

Figure 1-4 shows the various IBM XA-64 components in an x455 configuration.

Figure 1-4 xSeries 455 system block diagram

Table 1-3 shows how the bandwidths in Figure 1-4 are calculated.

Table 1-3 Bus speeds

Ultra320

SCSI

Gigabit

Ethernet

Video

3x USB

Serial

RSA

33 MHz66 MHz

64-bit

66 MHz

64-bit

100 MHz

64-bit

133 MHz

Bus A66 MHz

RXE

Expansion

Port A

(1 GBps)

B-100

D-133C-133

IBM XA-64

("Summit")

core chipset

6.4 GBps

64 MB

L4 cache

400 MHz

3.2 GBps

CPU 1 CPU 2

CPU 3 CPU 4

PCI-X bridge PCI-X bridge

RXE

Expansion

Port B

(1 GBps)

1 GBps1 GBps

200 MHz

2-way or 4-way

interleaved DDR

Port 2

3.2 GBps

Port 1

3.2 GBps

DDR

DDR

DDR

DDR

DDR

DDR

SMI-E

DDR

DDR

Memory

controller

SMI-E SMI-E SMI-E

6.4 GBps

Processor-board assembly Memory-board assembly

Cache and

scalability

controller

SMP scalability

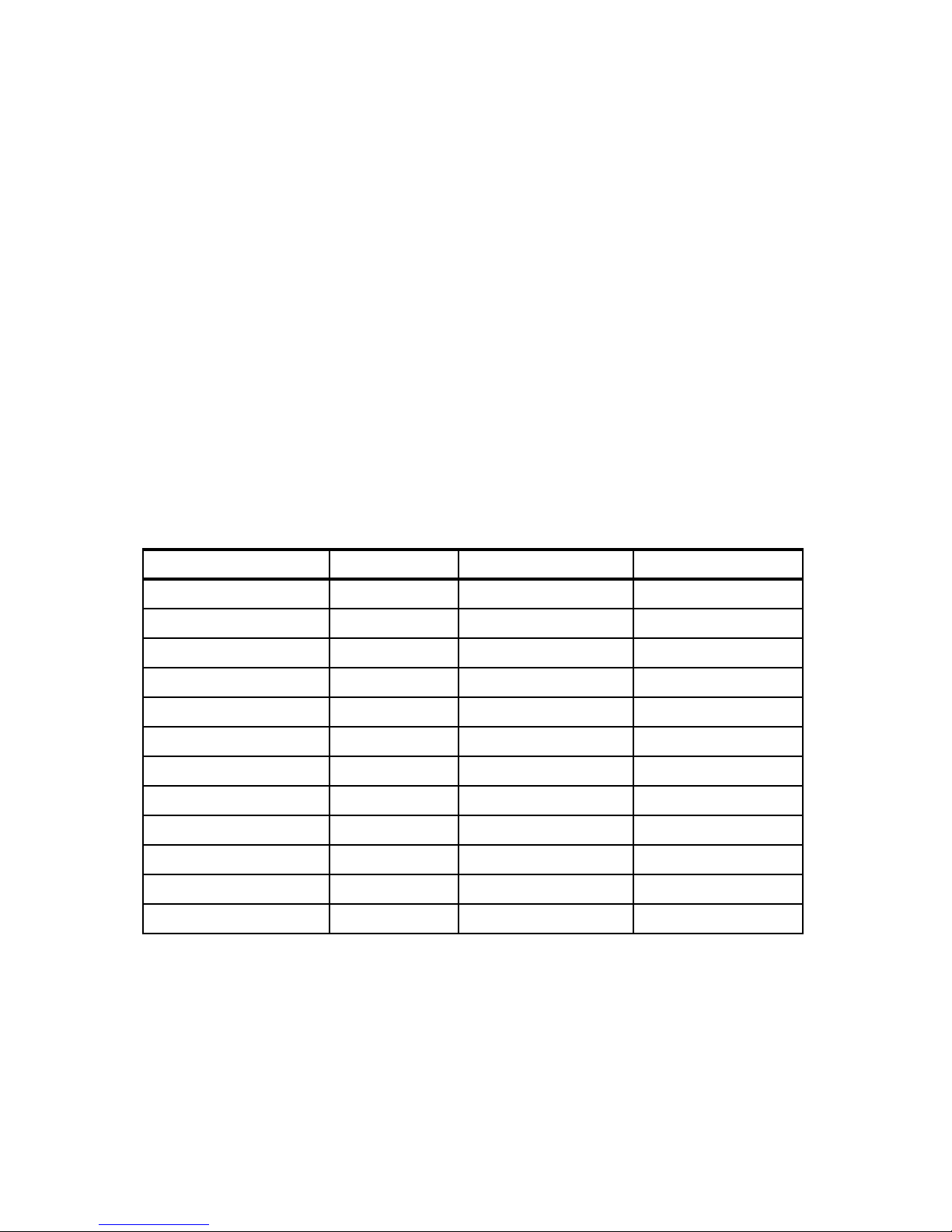

ports

From To Bandwidth Calculation

CPUs Cache controller 6.4 GBps 400 MHz x 128-bit data path

L4 Cache Cache controller 6.4 GBps 400 MHz x 128-bit data path

Page 23

Chapter 1. Technical description 9

1.4.1 The processor-board assembly

The processor-board assembly is located below the memory board. It is held in

place by retaining levers, an EMC shield and a retention bracket. For instructions

to remove or install please refer to the Option Installation Guide.

Figure 1-5 The processor-board assembly

The power modules shown in Figure 1-5 supply power to the processors and are

equivalent to VRMs in other systems.

Processors should be installed in the order 1, 2, 3, 4. The bootstrap processor

(BSP) may not necessarily be the processor located in processor socket 1. The

Intel Itanium Architecture processors are initialized and tested in parallel. The

first processor to complete initialization becomes the BSP.

The CPUs are connected together with a 200 MHz frontside bus, but supply data

at an effective rate of 400 MHz using the “dual-pump” design of the Intel

Itanium 2 architecture is described in “Intel Itanium 2 processors” on page 10.

SDRAM Memory controller 3.2 GBps 400 MHz x 64-bit data path

Cache controller Memory controller 3.2 GBps 400 MHz x 64-bit data path

From To Bandwidth Calculation

Warning: Be careful when removing or installing the processor-board

assembly or the memory-board assembly. It is possible to damage the

midplane if not done correctly.

Processors 1 & 3

(processors 2 &

4 are on the

underside of the

circuit board)

Power modules for

each processor

Page 24

10 IBM Eserver xSeries 455 Planning and Installation Guide

The processor-board assembly is also equipped with LEDs for light path

diagnostics for the following components:

Each processor

Each power module (“pod”)

In addition, a “remind” button is located on the upper side of the processor-board assembly. Pressing this button while the processor-board assembly is disconnected from AC power illuminates, for 10 seconds, any light path LEDs that were lit while the system was under power.

Intel Itanium 2 processors

The Itanium 2 processor used in the x455 is code named “Madison”. It uses a

ZIF socket design and its small form factor is what allows up to four processors in

a 4U dense machine.

Table 1-4 outlines some of the differences between the Itanium and Itanium 2 processors (both the “Madison” and the earlier “McKinley” processor).

Table 1-4 Itanium v Itanium 2 processors

| Feature | Itanium | Itanium 2 “McKinley” | Itanium 2 “Madison” |
|---|---|---|---|
| Processor core speed | 733 or 800 MHz | 900 MHz or 1.0 GHz | 1.3, 1.4 or 1.5 GHz |
| L3 Cache | 2 or 4 MB | 1.5 or 3 MB | 3, 4 or 6 MB |
| Frontside bus | 266 MHz | 400 MHz, 128 bit | 400 MHz, 128 bit |
| Frontside bus bandwidth | 2.1 GBps | 6.4 GBps | 6.4 GBps |
| Pipeline stages | 10 | 8 | 8 |
| Issue ports | 9 | 11 | 11 |
| On-board registers | 328 | 328 | 328 |
| Integer units | 3 | 6 | 6 |
| Branch units | 3 | 3 | 3 |
| Floating point units | 2 | 2 | 2 |
| SIMD units | 2 | 1 | 1 |
| Load and store units | 2 (total) | 2 load and 2 store | 2 load and 2 store |

The Itanium 2 processor has three levels of cache, all of which are on the processor die:

Level 1 cache, 32 KB

This is new and the “closest” to the processor, and is used to store micro-operations. These are decoded executable machine instructions. It serves these to the processor at rated speed. This additional level of cache saves decode time on cache hits.

Level 2 cache, 256 KB

This is equivalent to L1 cache on the Pentium® III Xeon.

Level 3 cache, 3–6 MB

This is equivalent to L2 cache on the Pentium III Xeon or the L3 cache on the

Pentium Xeon MP processor. Unlike the design of the original Itanium

processor, this L3 cache is now on the processor die, greatly improving

performance, up to 2 times greater than that of the original Itanium.

The x455 also implements a Level 4 cache as described in “IBM XceL4

Accelerator Cache” on page 12.

Intel has also introduced a number of features associated with its Itanium

micro-architecture. These are available in the x455, including:

400 MHz frontside bus

The Pentium III Xeon processor had a 100 MHz frontside bus that equated to

a burst throughput of 800 MBps. With protocols such as TCP/IP, this had been

shown to be a bottleneck in high-throughput situations. The Itanium 2

processor improves on this by using a single 200 MHz clock but using both

edges of each clock cycle to transmit data. This is shown in Figure 1-6.

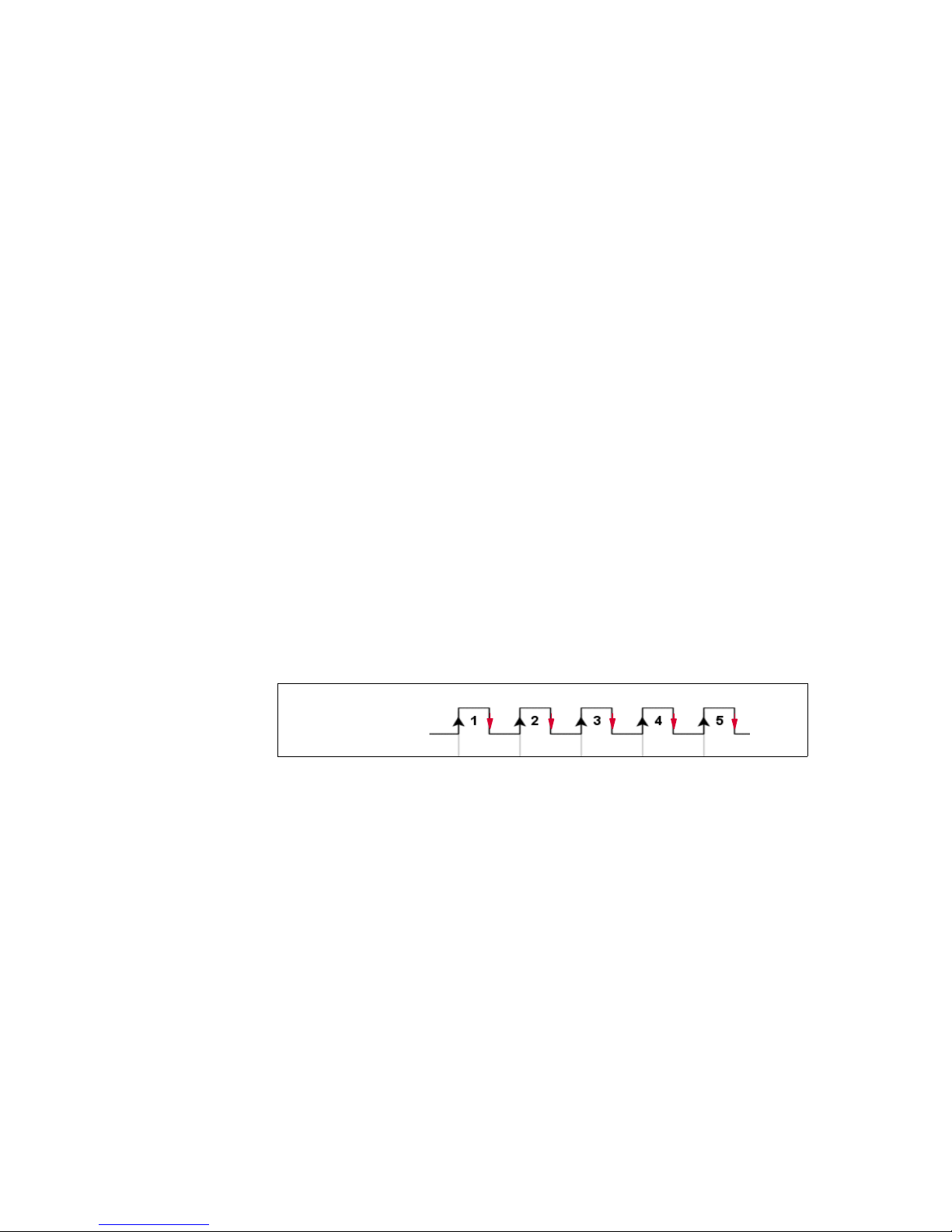

Figure 1-6 Dual-pumped frontside bus

This increases the performance of the frontside bus. The end result is an

effective burst throughput of 6.4 GBps (128-bit wide data path running at 400

MHz), which can have a substantial impact, especially on TCP/IP-based LAN

traffic. This is opposed to the Itanium processor, which had a burst throughput

of only 2.1 GBps (64-bit wide data path running at 266 MHz).

Explicitly Parallel Instruction Computing (EPIC)

EPIC technology, developed by Intel and HP, leads to more efficient, faster

processors because it eliminates numerous processing inefficiencies in

current processors and attacks the perennial data bottleneck problems by

increasing parallelism, rather than simply boosting the raw “clock” speed of

the processor.


Specifically, in today's 32-bit processors, much of the instruction scheduling

(the order in which computing instructions are executed) is done on the chip

itself, leading to a great deal of overhead and slowing down overall processor

performance. Moreover, today's processors are plagued by instruction flow

problems since the processor often has to stop what it is doing and

reconstruct the instruction flow due to inherent inefficiencies in instruction

handling.

EPIC makes the instruction scheduling more intelligent and handles much of

the scheduling off-chip, in the compiler program, before feeding “parallelized”

instructions to the Itanium 2 processor for execution. The parallelized

instructions allow the chip to process a number of instructions simultaneously,

increasing performance.

The Itanium 2 architecture is based on EPIC technology and has the following

features:

– Provides faster online transaction processing

– Has the capability to execute multiple instructions simultaneously

– Enables faster calculations and data analysis

– Allows for faster storage and movement of large models (CAD, CAE)

– Speeds up simulation and rendering times

For more information about the features of the Itanium 2 processor, go to:

http://www.intel.com/design/itanium2
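The bandwidth figures quoted for the frontside bus, and those listed in Table 1-3, are the product of the effective transfer rate and the width of the data path. The following Python sketch reproduces that arithmetic. The function name `peak_bandwidth_gbps` is illustrative only, and the sketch assumes that 1 GBps means 10^9 bytes per second.

```python
def peak_bandwidth_gbps(effective_mhz: float, width_bits: int) -> float:
    """Peak burst bandwidth in GBps, taking 1 GBps as 10**9 bytes per second."""
    bytes_per_transfer = width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


# Itanium 2: 200 MHz clock with data on both clock edges gives 400 MHz
# effective, over a 128-bit data path.
itanium2_fsb = peak_bandwidth_gbps(2 * 200, 128)

# Original Itanium: 266 MHz over a 64-bit data path.
itanium_fsb = peak_bandwidth_gbps(266, 64)

# Cache controller to memory controller (Table 1-3): 400 MHz, 64-bit path.
memory_link = peak_bandwidth_gbps(400, 64)

print(f"Itanium 2 frontside bus: {itanium2_fsb:.1f} GBps")
print(f"Itanium frontside bus:   {itanium_fsb:.1f} GBps")
print(f"Controller link:         {memory_link:.1f} GBps")
```

The same function applies to any row of Table 1-3, because each entry is a clock rate multiplied by a data-path width.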

IBM XceL4 Accelerator Cache

Integrated into the processor-board assembly is 64 MB of Level 4 cache, which is

shown in Figure 1-4 on page 8. This XceL4 Server Accelerator Cache provides

the necessary extra level of cache to maximize CPU throughput by reducing the

need for main memory access under demanding workloads, resulting in an

overall enhancement to system performance.

Cache memory is two-way interleaved 200 MHz DDR memory and is faster than

the main memory because it is directly connected to the Cache and Scalability

Controller and does not have additional latency associated with the large fan-out

necessary to support the 28 DIMM slots. Since the data interface to the controller

is 400 MHz, peak bandwidth for the XceL4 cache is 6.4 GBps.

The XceL4 Accelerator Cache is designed for commercial workloads, which tend to have high cache hit rates. The cache boosts performance and compensates in part for the 3.2 GBps bandwidth between the Cache and Scalability Controller and the memory controller.


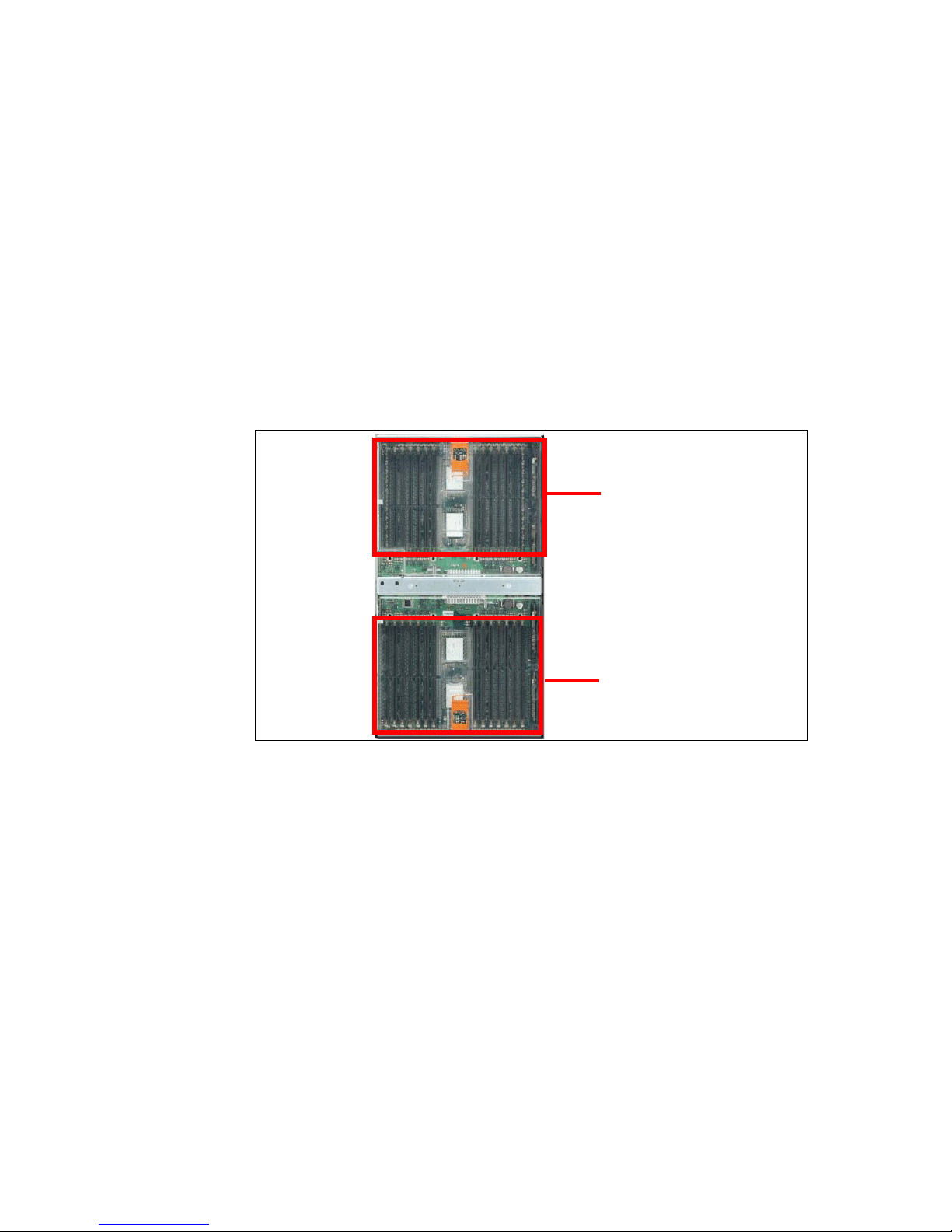

1.4.2 The memory-board assembly

The x455 memory-board assembly is installed in the top of the server and

mounts to the side of the midplane using two retaining levers on the top. This

location allows for easy access to all memory DIMMs without having to remove

any components from the system.

The memory-board assembly houses 28 DIMM slots. All DIMM slots can be used with 512 MB, 1 GB or 2 GB RDIMMs for a maximum of 56 GB. Memory can be hot swapped but not hot added. This function, however, depends on the operating system as much as on the hardware. Check your operating system documentation for details.

Figure 1-7 The memory-board assembly, showing the two memory ports

The memory-board assembly is also equipped with LEDs for light path

diagnostics for each DIMM. In addition, the assembly is equipped with LEDs for

the following:

Power to memory port 1

Power to memory port 2

Hot-plug memory enabled

Memory used in the x455 is standard PC2100 ECC DDR SDRAM RDIMMs. The

memory is 2-way interleaved; however, 4-way interleaving is also supported

when both ports are engaged. Interleaving requires DIMMs to be installed in

matched pairs and in specific DIMM sockets (see “Memory” on page 57).

There are 14 DIMM slots in each of the two memory ports, for a total of 28 DIMMs.

System memory

DIMMs must be installed in matched pairs, since the DIMMs are two-way

interleaved. However, if memory is installed in matched fours (a matched pair in

each port), the system automatically detects this and will enable 4-way

interleaving. With this, memory access is performed simultaneously from both

ports (two separate paths into the memory controller as shown in Figure 1-4 on

page 8), leading to improved memory performance.
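The pairing rule above can be sketched in a few lines of Python. This is a simplified model, not the server's actual detection logic: it treats two DIMMs as "matched" when they have the same size, and it reports 4-way interleaving only when both ports hold the same matched pairs. The function names and the list-of-sizes representation are assumptions made for illustration.

```python
from typing import List

DIMM_SLOTS_PER_PORT = 14                 # 14 slots in each of the two memory ports
SUPPORTED_DIMM_MB = (512, 1024, 2048)    # 512 MB, 1 GB and 2 GB RDIMMs


def check_port(dimms_mb: List[int]) -> None:
    """Raise ValueError unless the port holds matched pairs of supported DIMMs."""
    if len(dimms_mb) > DIMM_SLOTS_PER_PORT:
        raise ValueError("a memory port has only 14 DIMM slots")
    if len(dimms_mb) % 2 != 0:
        raise ValueError("DIMMs must be installed in pairs")
    # Consecutive entries are treated as one pair.
    for first, second in zip(dimms_mb[0::2], dimms_mb[1::2]):
        if first not in SUPPORTED_DIMM_MB or first != second:
            raise ValueError(f"unsupported or unmatched pair: {first} MB, {second} MB")


def interleave_mode(port1_mb: List[int], port2_mb: List[int]) -> str:
    """Return "4-way" when both ports hold the same matched pairs, else "2-way"."""
    check_port(port1_mb)
    check_port(port2_mb)
    if not port1_mb and not port2_mb:
        raise ValueError("no memory installed")
    return "4-way" if port1_mb == port2_mb else "2-way"


def installed_gb(port1_mb: List[int], port2_mb: List[int]) -> float:
    """Total installed memory in GB."""
    return (sum(port1_mb) + sum(port2_mb)) / 1024


print(interleave_mode([1024, 1024], [1024, 1024]))   # a matched pair in each port
print(interleave_mode([2048, 2048], []))             # one port populated
print(installed_gb([2048] * 14, [2048] * 14))        # all 28 slots with 2 GB RDIMMs
```

Filling all 28 slots with 2 GB RDIMMs gives the 56 GB maximum quoted for the memory-board assembly.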

Figure 1-8 Memory DIMMs are divided into two ports

There are a number of advanced features implemented in the x455 memory

subsystem, collectively known as

Active Memory:

Memory ProteXion

Memory ProteXion, also known as “redundant bit steering”, is the technology

behind using redundant bits in a data packet to provide backup in the event of

a DIMM failure.


Currently, other industry-standard servers use 8 bits of each 72-bit data packet for ECC functions and the remaining 64 bits for data. However, the x455 (like several other xSeries servers) uses an advanced ECC algorithm that is based not on bits but on memory symbols. Symbols are groups of multiple bits; in the x455, each symbol is 4 bits wide. With two-way interleaved memory, the algorithm needs only three symbols to perform the same ECC functions, leaving one symbol free (2 bits on each DIMM).

In the event that a chip failure on the DIMM is detected by memory scrubbing,

the memory controller can re-route data around that failed chip through the

spare symbol (similar to the hot-spare drive of a RAID array). It can do this

automatically without issuing a Predictive Failure Analysis® (PFA) or light

path diagnostics alert to the administrator. After the second DIMM failure, PFA

and light path diagnostics alerts would occur on that DIMM as normal.

Memory scrubbing

Memory scrubbing is an automatic daily test of all the system memory that

corrects soft errors and reports recoverable errors. An excessive rate of

recoverable errors reported triggers Memory ProteXion to replace the failing

locations.

Memory mirroring

Memory mirroring is equivalent to RAID-1 in disk arrays, in that memory is

divided in two ports and one port is mirrored to the other (see Figure 1-8 on

page 14). If 8 GB is installed, then the operating system sees 4 GB once

memory mirroring is enabled (it is disabled by default). All mirroring activities

are handled by the hardware without any additional support required from the

operating system.

When memory mirroring is enabled the data that is written to memory is

stored in two locations. One copy is kept in the port 1 DIMMs, while a second

copy is kept in the port 2 DIMMs.

During the execution of the read command, the data is read simultaneously

from both ports, and error-free data from either port is forwarded. This

provides an extra level of error recovery capability.

When an unrecoverable memory error from one of the memory ports is

encountered, good data from the non-failing memory port is forwarded to the

system. The failing DIMM is reported and indicated with light path. The failing

memory port is then disabled.

Certain restrictions exist with respect to placement and size of memory

DIMMs when memory mirroring is enabled. These are discussed in “Memory

mirroring” on page 58.


Chipkill memory

Chipkill is integrated into the XA-64 chipset and does not require special

Chipkill DIMMs. Chipkill corrects multiple single-bit errors to keep a DIMM

from failing. When combining Chipkill with Memory ProteXion and Active

Memory, the x455 provides very high reliability in the memory subsystem.

Chipkill memory is approximately 100 times more effective than ECC

technology, providing correction for up to 4 bits per DIMM, whether on a single

chip or multiple chips.

If a memory chip error does occur, Chipkill is designed to automatically take

the inoperative memory chip offline while the server keeps running. The

memory controller provides memory protection similar in concept to disk array

striping with parity, writing the memory bits across multiple memory chips on

the DIMM. The controller is able to reconstruct the “missing” bit from the failed

chip and continue working as usual.

Chipkill support is provided in the memory controller and implemented using

standard RDIMMs, so it is transparent to the operating system.
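The spare-symbol arithmetic described under Memory ProteXion earlier in this list can be checked with a short calculation. The Python sketch below uses only the figures given in the text (72-bit packets, 64 data bits, 4-bit symbols, two-way interleaving, three symbols needed for ECC). The variable names are illustrative.

```python
PACKET_BITS = 72             # bits in one DIMM data packet
DATA_BITS = 64               # bits of each packet that carry data
SYMBOL_BITS = 4              # width of one memory symbol in the x455
INTERLEAVE = 2               # two-way interleaving: two DIMMs accessed together
SYMBOLS_NEEDED_FOR_ECC = 3   # symbols the algorithm needs for ECC functions

# Bits left over for ECC across both interleaved DIMMs.
check_bits = (PACKET_BITS - DATA_BITS) * INTERLEAVE

# The same bits expressed as 4-bit symbols.
check_symbols = check_bits // SYMBOL_BITS

# Symbols not needed for ECC are available as spares.
spare_symbols = check_symbols - SYMBOLS_NEEDED_FOR_ECC

# The spare symbol is spread evenly over the two DIMMs.
spare_bits_per_dimm = spare_symbols * SYMBOL_BITS // INTERLEAVE

print(f"check bits: {check_bits}, check symbols: {check_symbols}")
print(f"spare symbols: {spare_symbols}, spare bits per DIMM: {spare_bits_per_dimm}")
```

The result agrees with the text: one symbol is left free, which corresponds to 2 bits on each DIMM.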

In addition, to maintain the highest levels of system availability, if a memory error

is detected during POST or memory configuration, the server can automatically

disable the failing DIMM and continue operating with reduced memory capacity.

You can manually re-enable the memory bank after the problem is corrected by using the Setup/Configuration option in the EFI Firmware Boot Manager menu. EFI is the Extensible Firmware Interface, the replacement for BIOS, as described in “Extensible Firmware Interface” on page 29.

Memory ProteXion, memory mirroring, and Chipkill provide multiple levels of redundancy to the memory subsystem. Combining Memory ProteXion with Chipkill allows each memory port (14 DIMMs) to tolerate up to two memory chip failures, so the two memory ports together can sustain up to four memory chip failures. Memory mirroring provides additional protection with the ability to continue operations with memory module failures. The sequence is as follows:

1. The first failure detected by the Chipkill algorithm on each port does not

generate a light path diagnostics error, since Memory ProteXion recovers

from the problem automatically.

2. Each memory port could then sustain a second chip failure without shutting

down.

3. Provided that memory mirroring is enabled, the third chip failure on that port

would send the alert and take the DIMM offline, but keep the system running

out of the redundant memory bank.

The combination of these technologies provides the most reliable memory

subsystem available.
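The three-step sequence above can be summarized as a small lookup. The Python sketch below is only a restatement of those steps for one memory port. The `Outcome` names are invented for illustration, and any case the text does not cover (for example, a third failure without mirroring) is reported as not described rather than guessed.

```python
from enum import Enum


class Outcome(Enum):
    SILENT_RECOVERY = "Memory ProteXion recovers automatically; no light path alert"
    STILL_RUNNING = "port sustains the second chip failure without shutting down"
    MIRROR_TAKEOVER = "alert sent, DIMM taken offline, system runs from the mirrored port"
    NOT_DESCRIBED = "behaviour not described in this section"


def outcome_for_failure(failure_number: int, mirroring_enabled: bool) -> Outcome:
    """Map the Nth chip failure on one memory port to the documented outcome."""
    if failure_number < 1:
        raise ValueError("failure_number starts at 1")
    if failure_number == 1:
        return Outcome.SILENT_RECOVERY
    if failure_number == 2:
        return Outcome.STILL_RUNNING
    if failure_number == 3 and mirroring_enabled:
        return Outcome.MIRROR_TAKEOVER
    return Outcome.NOT_DESCRIBED


for n in (1, 2, 3):
    print(n, outcome_for_failure(n, mirroring_enabled=True).value)
```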


1.4.3 PCI-X board assembly

Strictly speaking the PCI-X board assembly does not truly exist in the same way

that the processor board and memory board assemblies do. The term is used

here to loosely describe the PCI-X board and I/O subsystem that together

comprise the other components of the machine.

The two PCI-X bridges in the XA-64 chipset provide support for 33, 66, 100, and

133 MHz devices using 4 PCI-X buses (labelled A–D in Figure 1-4 on page 8).

The PCI-X bridges also have two 1 GBps bi-directional Remote Expansion I/O

(RXE) ports for connectivity to the RXE-100 Expansion Enclosure. The RXE-100

provides up to an additional 12 PCI-X slots and can be connected by a single

cable to port A or to both ports A and B to provide redundancy.

The rear panel of the x455, which indicates the location of the RXE Expansion

Ports, is shown in Figure 1-2 on page 5.

PCI-X subsystem

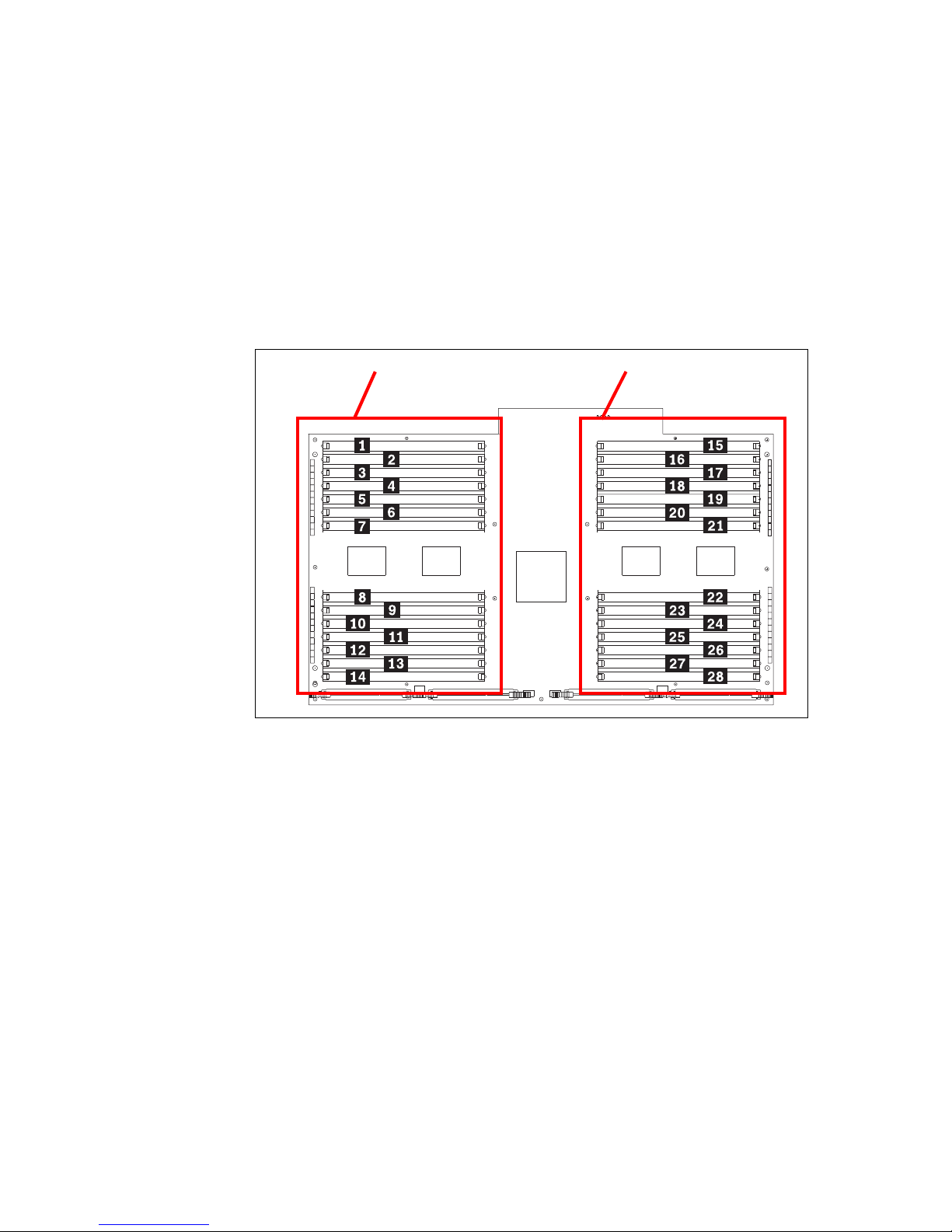

There are six available PCI-X slots in four buses. These are shown in Figure 1-9.

Figure 1-9 PCI-X slot information

Only 3.3 V cards are supported, but all slots will accept the following:

32-bit or 64-bit cards

33 MHz, 66 MHz, 100 MHz or 133 MHz cards

Figure 1-9 labels: PCI-X slots 1 and 2 (66 MHz); slots 3 and 4 (100 MHz); slots 5 and 6 (133 MHz); buses A to D; back of server.

Bear in mind that a bus runs at the speed of the slowest card installed on it. It is therefore important to consider the placement of cards carefully to get the best throughput.

The following guidelines will help you gain the best performance from the PCI-X

buses:

Put cards in a slot that will give the best performance but not in a slot rated

higher than the card.

If a 133 MHz card is in bus B then avoid using the other slot in the bus if

possible so the card will operate at its rated speed.

Use buses B, C and D in preference to A. Bus A is connected to the PCI-X

south bridge with legacy devices and competes with these “slower” devices

when sending and receiving data to and from the memory controller.

See “PCI-X slot configuration” on page 61 for details on what adapters are

supported and in what combinations.
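The placement guidelines can be explored with a small model of the four buses. The Python sketch below is a simplification under stated assumptions: bus A and bus B are taken to have two slots each and buses C and D one slot each (consistent with six slots on four buses), a bus runs no faster than its slowest card, and bus B, which is rated at 100 MHz when fully populated, is allowed to run a single card at 133 MHz. The names `BUSES` and `effective_bus_mhz` are illustrative.

```python
from typing import Dict, List

# Rated speed of each bus when all of its slots are populated, and its slot count.
# The split of slots across buses is an assumption made for this sketch.
BUSES: Dict[str, Dict[str, int]] = {
    "A": {"rated_mhz": 66, "slots": 2},
    "B": {"rated_mhz": 100, "slots": 2},
    "C": {"rated_mhz": 133, "slots": 1},
    "D": {"rated_mhz": 133, "slots": 1},
}


def effective_bus_mhz(bus: str, card_speeds_mhz: List[int]) -> int:
    """Speed at which a bus runs with the given cards installed."""
    info = BUSES[bus]
    if not card_speeds_mhz:
        raise ValueError("no cards installed on this bus")
    if len(card_speeds_mhz) > info["slots"]:
        raise ValueError(f"bus {bus} has only {info['slots']} slot(s)")
    limit = info["rated_mhz"]
    # A single card on bus B lets the bus run at 133 MHz.
    if bus == "B" and len(card_speeds_mhz) == 1:
        limit = 133
    # The slowest card sets the pace for every card on the bus.
    return min(limit, min(card_speeds_mhz))


print(effective_bus_mhz("B", [133]))        # single 133 MHz card on bus B
print(effective_bus_mhz("B", [133, 133]))   # fully populated bus B
print(effective_bus_mhz("A", [133, 66]))    # fast card sharing bus A
```

The second call shows why the guidelines suggest leaving the other bus B slot empty when a 133 MHz card is installed there.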

Important: The rating of bus B at 100 MHz is for a fully populated bus. If a single 133 MHz card is installed, the bus speed increases to 133 MHz. This is part of the PCI-X specification.

The PCI-X subsystem also supplies these I/O devices:

Dual channel Ultra320 SCSI/RAID controller with one internal port and one external port, using the LSI 53C1030 chipset

The internal port supports both single disks and RAID-1 mirrored pairs of disks. The external port will support additional RAID configurations, but this has a number of limitations and is not recommended.

Note: The LSI 53C1030 is a PCI-X to Dual Channel Ultra320 SCSI/RAID Multifunction Controller. Internally, however, each SCSI channel is managed separately and controlled by two separate PCI-X configuration spaces. As a result it may be viewed by software such as LSI configuration, ServeRAID™, and the operating system as two separate controllers, each with a single channel.

Dual Gigabit 10/100/1000 Ethernet ports using the Broadcom 5704 chipset

The BCM5704 supports full- and half-duplex performance at all speeds (10/100/1000 Mbps, auto-negotiated) and includes integrated on-chip memory for buffering data transmissions to ensure the highest network performance. It has dual onboard RISC processors for advanced packet parsing and backwards compatibility with today's 10/100 networks. It includes software support for failover, layer-3 load balancing, and comprehensive diagnostics.

Category 5 or better Ethernet cabling is required with RJ-45 connectors. If

you plan to implement a Gigabit Ethernet connection, ensure that your

network infrastructure is capable of the necessary throughput to match the

server’s I/O capacity.

SVGA with 8 MB video memory (ATI RageXL chipset)

Three USB ports: One on the front panel and two on the rear

All ports are USB 2.0 compliant.

One RS-232 serial port, located on the rear of the machine

Remote Supervisor Adapter with:

– 10/100 Ethernet management port

– RS-485 ASM interconnect bus

– Serial port

– External power port

There are no PS/2 keyboard or mouse ports on the x455. USB keyboards and mice are supported. If you require KVM support, the 1.5 M USB Conversion Option (UCO) (part number 73P5832) enables the x455 to be attached to one of the Advanced Connectivity Technology (ACT) switches for common management within the rack. This smart cable plugs into the USB and video ports on the server. It converts KVM signals to CAT5 signals for transmission over a CAT5 cable to either a Remote Console Manager (RCM) or Local Console Manager (LCM). USB servers can be managed on the same set of switches as legacy PS/2-based or C2T-based KVM servers.

1.5 Remote Supervisor Adapter

The x455 includes a Remote Supervisor Adapter (RSA), which is positioned horizontally in a dedicated PCI-X slot beneath the PCI-X adapter area of the system.


Figure 1-10 Remote Supervisor Adapter connectors

The Remote Supervisor Adapter provides remote management both out-of-band and in-band. Out-of-band refers to managing the server without the use of the operating system, through the Ethernet or serial port using a Web or telnet session. In-band refers to managing the server through the operating system, typically using IBM Director, SNMP, or both.

The features of the Remote Supervisor Adapter include:

In-band and out-of-band remote server access and alerting through IBM

Director

Full Web browser support with no other software required

Enhanced security features

Graphics/text console redirection for remote control

Dedicated 10/100 Ethernet access port

ASM interconnect bus for connection to other service processors

Serial dial in/out

E-mail, pager and SNMP alerting

Event log

Predictive Failure Analysis on memory, power, hard drives, and CPUs

Temperature and voltage monitoring with settable thresholds

Light path diagnostics

Automatic Server Restart (ASR) for operating system and POST

Remote firmware update

LAN access

Alert forwarding

Ability to manage and monitor an RXE-100

See the IBM Redbook Implementing Systems Management Solutions using IBM

Director, SG24-6188, for more information on the Remote Supervisor Adapter.

Figure 1-10 labels (rear of x455): external power supply, error LED (amber), power LED (green), ASM interconnect (RS-485) port, 10/100 Ethernet port, management COM port.

1.6 RXE-100 Expansion Enclosure

The x455 also supports the attachment of an RXE-100 Remote Expansion Enclosure in a number of configurations, some of which offer redundancy. The RXE-100 is connected to the x455 through one or two remote I/O cables and has a throughput of 1 GBps. It has six PCI-X slots as standard and can be upgraded to 12 PCI-X slots, giving a single x455 a total of 12 or 18 PCI-X slots, respectively, when the six slots in the server are included. In a multinode configuration, an RXE-100 can be shared by two machines. This allows a total of one RXE-100 in a 2-node partition and two RXE-100s in a 4-node partition.

For systems configured for RXE failover, one of the RXE Expansion Port connections may fail at either runtime or boot time and the system will continue to operate properly. Failover capability requires the RXE-100 to be configured with 12 slots, to be configured in unified (that is, non-shared) mode, and to have both RXE Expansion Ports connected. Runtime failures are logged in the System Error Log. The broken connection is not re-established at runtime; a reboot is required to recover failover capability.
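The slot totals and the failover conditions can be expressed as a short check. The Python sketch below is illustrative only: the `RxeAttachment` class and its field names are invented for this example, and the rules it encodes are just the three failover requirements and the slot counts stated in the text for a single x455.

```python
from dataclasses import dataclass

SERVER_PCIX_SLOTS = 6   # PCI-X slots in the x455 itself


@dataclass
class RxeAttachment:
    slots: int             # 6 (standard) or 12 (upgraded)
    unified_mode: bool     # True for unified (non-shared) mode
    ports_connected: int   # RXE Expansion Port data cables in use: 1 or 2

    def __post_init__(self) -> None:
        if self.slots not in (6, 12):
            raise ValueError("an RXE-100 has 6 or 12 slots")
        if self.ports_connected not in (1, 2):
            raise ValueError("one or two RXE Expansion Ports can be connected")


def total_slots(rxe: RxeAttachment) -> int:
    """PCI-X slots available to one x455 with one RXE-100 attached."""
    return SERVER_PCIX_SLOTS + rxe.slots


def failover_capable(rxe: RxeAttachment) -> bool:
    """True when all three stated failover requirements are met."""
    return rxe.slots == 12 and rxe.unified_mode and rxe.ports_connected == 2


standard = RxeAttachment(slots=6, unified_mode=True, ports_connected=1)
redundant = RxeAttachment(slots=12, unified_mode=True, ports_connected=2)
print(total_slots(standard), failover_capable(standard))
print(total_slots(redundant), failover_capable(redundant))
```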

An RXE-100 connection also requires the connection of the RXE management

port(s). There is no failover capability for RXE management port connections.

The following connectivity options are supported:

Connecting one 6-slot RXE to one x455

Connecting one 12-slot RXE to one x455 using one or (recommended) two

data cables

Sharing one 12-slot RXE between two independent x455s

Connecting one RXE to a two-node x455, configured so as to share the slots

between the nodes

Connecting one RXE to a 4-node x455 (one RXE connected to two of the

nodes, either nodes 1 and 2, or nodes 3 and 4)

Connecting two RXEs to a 4-node x455 (one RXE connected to two of the

nodes), configured so as to share the slots between nodes 1 and 2, and

between nodes 3 and 4

See “Remote Expansion Enclosure connectivity” on page 70 for details.

Important: Although other combinations of attaching RXE-100s in a

multinode partition will work, they are not supported configurations.


1.7 Multinode scalable partitions

The x455 can be configured as part of a multinode partition, which can comprise either two nodes or four nodes. The partition is a hardware partition and is invisible to the operating system, which sees all the hardware as a single machine.

All nodes in a scalable partition must have the following:

Same base model

Processors that have the same core speed and cache

Memory mirroring that is configured the same on all nodes, that is, either all

enabled or all disabled

When you have a multi-node configuration, all nodes must be fully populated with

processors. In other words, only eight-way and 16-way CPU configurations are

supported.
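The requirements above lend themselves to a pre-installation checklist. The Python sketch below is one hypothetical way to encode them. The `Node` class and its fields are invented for illustration, "cache" is assumed to mean the L3 cache size, and the check covers only the rules listed in this section.

```python
from dataclasses import dataclass
from typing import List

CPUS_PER_FULL_NODE = 4   # each x455 node is 4-way


@dataclass(frozen=True)
class Node:
    base_model: str
    cpu_count: int
    cpu_ghz: float
    l3_cache_mb: int
    mirroring_enabled: bool


def partition_problems(nodes: List[Node]) -> List[str]:
    """Return a list of rule violations; an empty list means the set looks valid."""
    problems: List[str] = []
    if len(nodes) not in (2, 4):
        problems.append("a multinode partition needs two or four nodes")
    if not nodes:
        return problems
    ref = nodes[0]
    for index, node in enumerate(nodes, start=1):
        if node.base_model != ref.base_model:
            problems.append(f"node {index}: base model differs")
        if (node.cpu_ghz, node.l3_cache_mb) != (ref.cpu_ghz, ref.l3_cache_mb):
            problems.append(f"node {index}: processor speed or cache differs")
        if node.mirroring_enabled != ref.mirroring_enabled:
            problems.append(f"node {index}: memory mirroring setting differs")
        if node.cpu_count != CPUS_PER_FULL_NODE:
            problems.append(f"node {index}: not fully populated with processors")
    return problems


good = Node("8855-3RX", 4, 1.5, 6, False)
half = Node("8855-3RX", 2, 1.5, 6, False)
print(partition_problems([good, good]))
print(partition_problems([good, half]))
```

A valid two-node set yields an empty list, which corresponds to an eight-way configuration; a valid four-node set corresponds to 16-way.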

Conceptually two-node and four-node partitions are depicted in Figure 1-11 and

Figure 1-12 on page 23. These also show the cable requirements.

Figure 1-11 Two-node partition (diagram: x455 nodes A and B, each with SMP scalability ports 1 to 3, cabled together)

Figure 1-12 Four-node partition (diagram: x455 nodes A, B, C and D, each with SMP scalability ports 1 to 3, cabled together)

The partition is powered on by turning on the primary node (Node 1), which powers on the other nodes. It is powered off in the same way: turning off the primary node powers off the other nodes.

Note: Powering on and off the other nodes will have the same effect, but this should always be done from the primary node.

The three scalability ports on the back of the machine are used to interconnect the machines, and the Configuration/Setup utility is used to define the partition and members of the partition. The scalability cables can be up to 3.5 meters long. This restriction normally means that all members of a partition will be in the same rack.

For properly configured two-node systems, one of the scalability port connections may fail at either runtime or boot time and the system will continue to operate properly. Runtime failures will be logged in the System Error Log.

For properly configured four-node systems, one of the scalability port connections may fail at runtime and the system will continue to operate properly. Failures will be logged in the System Error Log. Boot time scalability connection failure results in each node booting to the EFI shell independently.

Below are the maximum supported configurations for one-, two- and four-node partitions.

Table 1-5 Comparing one-node, two-node and four-node configurations

| Component | 1 node | 2 nodes | 4 nodes |
|---|---|---|---|
| Itanium 2 processors | 4 | 8 | 16 |
| Memory | 56 GB | 112 GB | 224 GB |
| XceL4 Cache | 64 MB | 128 MB | 256 MB |
| Internal hard disks | 2 | 4 | 8 |
| Active 64-bit PCI-X slots | 2 x 66 MHz, 2 x 100 MHz, 2 x 133 MHz | 4 x 66 MHz, 4 x 100 MHz, 4 x 133 MHz | 8 x 66 MHz, 8 x 100 MHz, 8 x 133 MHz |
| RXE-100 support | 1 | 1 | 2 |
| Active 64-bit PCI-X slots with RXE | 2 x 66 MHz, 2 x 100 MHz, 14 x 133 MHz | 4 x 66 MHz, 4 x 100 MHz, 16 x 133 MHz | 8 x 66 MHz, 8 x 100 MHz, 32 x 133 MHz |
| Dual channel integrated Ultra320 SCSI support | 1 | 2 | 4 |
| Dual port integrated Ethernet controller | 1 | 2 | 4 |
| Keyboard and mouse | 1 | 2 | 4 |
| USB ports | 3 | 6 | 12 |
| Video ports | 1 | 2 (note 1) | 4 (note 1) |
| Wake on LAN® Ethernet cards | 2 | 2 | 2 |

1. This is supported for Windows Server 2003 Enterprise Edition only

1.7.1 RXE-100 connectivity

RXE-100s may be attached to x455 systems. They can be connected using either optional 3.5 m Scalability Port Cables or optional 8 m RXE Cables. The RXE cable length allows placement of RXE-100s in a rack adjacent to the rack containing the x455 system.

Conceptually, two-node and four-node configurations with RXE-100s are depicted in Figure 1-13 on page 25 and Figure 1-14 on page 25. These also show the cable requirements.


Figure 1-13 Two-node configuration with one RXE-100

Figure 1-14 Four-node configuration with two RXE-100s

1.7.2 Multinode configuration

Multinode system configurations are defined to each x455 using the EFI Configuration/Setup menus. These options are fairly self-explanatory and allow you to configure the following:

– Partition size (two-node or four-node)


– Partition ID

– IP address of member nodes (IP address of the RSA)

– Additional shared resources (video and/or CD-ROM)

Every node that participates in a multinode system must be connected to the network via its Service Processor Ethernet port.
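Because the same settings are entered on each x455, it can help to write the planned values down and check them for consistency before going to the EFI menus. The following Python sketch is one way to do that. It is a planning aid only: it does not talk to EFI or to the RSA, and the names `PartitionConfig`, `validate`, and `ALLOWED_SHARED` are hypothetical. It also assumes that one RSA IP address is recorded for each member node, and the example addresses are placeholders.

```python
import ipaddress
from dataclasses import dataclass, field

# Shared resources named in the list of configurable options.
ALLOWED_SHARED = {"video", "cd-rom"}


@dataclass
class PartitionConfig:
    size: int                   # number of nodes in the partition: 2 or 4
    partition_id: int
    rsa_addresses: list         # RSA IP address of each member node
    shared_resources: set = field(default_factory=set)


def validate(cfg):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    if cfg.size not in (2, 4):
        problems.append("partition size must be 2 or 4 nodes")
    # Assumption: one RSA address is recorded per member node.
    if len(cfg.rsa_addresses) != cfg.size:
        problems.append("expected one RSA address per node")
    if len(set(cfg.rsa_addresses)) != len(cfg.rsa_addresses):
        problems.append("RSA addresses must be unique")
    for address in cfg.rsa_addresses:
        try:
            ipaddress.ip_address(address)
        except ValueError:
            problems.append(f"invalid IP address: {address}")
    unknown = set(cfg.shared_resources) - ALLOWED_SHARED
    if unknown:
        problems.append(f"unknown shared resources: {sorted(unknown)}")
    return problems


if __name__ == "__main__":
    plan = PartitionConfig(2, 1, ["192.168.70.125", "192.168.70.126"], {"video"})
    print(validate(plan))  # prints []
```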

1.7.3 Integrated I/O function support

The following integrated I/O functions are available in multinode x455 systems.

Table 1-6 Integrated I/O function support

Function                  Primary node    Secondary nodes
USB ports                 Yes             Yes [1]
Serial port               Yes             No
Disk drive bays (SCSI)    Yes             Yes
SCSI port                 Yes             Yes
Ethernet ports            Yes             Yes
Video ports               Yes             Yes [2, 3]
Media bays (IDE)          Yes             Yes
Wake on LAN               Yes             No

[1] Keyboard or mouse can be used on any machine.
[2] Windows Server 2003 Enterprise Edition only.
[3] Windows Server 2003 Datacenter Edition does not support multiple monitors as there currently is no certified driver for the ATI RageXL chipset.

1.7.4 Error recovery

With a multinode configuration, in the event there is a major problem with one of the nodes, the remaining nodes will boot to EFI as single node partitions. This is done to facilitate diagnostics and reconfiguration.
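The error recovery behavior described in 1.7.4 can be modeled in a few lines. The following Python sketch is a simplified illustration, not firmware logic: the function name `partitions_after_boot` and the node labels are hypothetical, and it assumes the failed node is already known, which sidesteps how a "major problem" is detected.

```python
def partitions_after_boot(nodes, failed):
    """Return the partitions formed at boot, each as a list of node names."""
    failed = set(failed)
    if not failed:
        # Normal case: all nodes join one multinode partition.
        return [list(nodes)]
    # Recovery case: each remaining node boots to EFI as its own
    # single-node partition so it can be diagnosed and reconfigured.
    return [[node] for node in nodes if node not in failed]


if __name__ == "__main__":
    print(partitions_after_boot(["A", "B", "C", "D"], []))
    print(partitions_after_boot(["A", "B", "C", "D"], ["C"]))
```

In the second call, the three healthy nodes come up separately rather than as a reduced three-node partition, which matches the two-node or four-node partition sizes listed in 1.7.2.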

1.8 Redundancy

The x455 has the following redundancy features to maintain high availability:

Four hot-swap multi-speed fans.

With four hot-swap redundant fans, the x455 has adequate cooling for each of its major component areas. Two fans located at the front of the server direct air through the memory-board assembly and processor-board assembly. These fans are accessible from the top of the server without having to open the system panels. If one of these fans fails, the other speeds up to continue to provide adequate cooling until the failed fan can be hot-swapped.

The other two fans are located just behind the power supplies and provide cooling for the I/O devices. Similar to the SMP Expansion Module fans, if one of these fans fails, the other speeds up to compensate for the reduction in air flow. In general, failed fans should be replaced within 24 hours following failure.

Due to airflow requirements, a fan should not be removed for longer than two minutes. The fan compartments need to be fully populated, even if a fan is defective. Therefore, remove a defective fan only when a new fan is available for immediate replacement.

Two hot-swap power supplies with separate power cords.

For large configurations, redundancy is achieved only when connected to a

220 V power supply. See 3.5, “Power considerations” on page 87 for details.

To ensure adequate power, a UPS with a rating of RMB 5000 or more is

recommended.

Two hot-swap hard disk drive bays. Using either the onboard LSI chipset or a

ServeRAID adapter, these drives can be configured to form a RAID-1 disk

array for the operating system.

The memory subsystem has a number of redundancy features, including

memory mirroring, as described in “System memory” on page 14.

Figure 1-1 on page 5 shows the layout of the front panel of the x455, including the location of the four fans, two drive bays, and two power supplies.
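The fan handling guidance in this section (replace a failed fan within 24 hours, and never leave a fan bay empty for more than two minutes) can be captured as a small checklist helper. The following Python sketch is illustrative only: the function `fan_actions`, the state names, and the fan labels are hypothetical, and the fan states are supplied by the caller rather than read from the server.

```python
REPLACE_WITHIN_HOURS = 24   # general guidance for replacing a failed fan
MAX_REMOVAL_MINUTES = 2     # a fan bay should not stay empty longer than this


def fan_actions(zone, fans):
    """Return suggested actions for one cooling zone.

    `fans` maps a fan name to "ok", "failed", or "removed".
    """
    actions = []
    for name, state in fans.items():
        if state == "failed":
            # A defective fan stays in its bay until the replacement is ready.
            actions.append(
                f"{zone}: replace {name} within {REPLACE_WITHIN_HOURS} hours, "
                "and leave it installed until the new fan is at hand"
            )
        elif state == "removed":
            actions.append(
                f"{zone}: refit a fan in the {name} bay within "
                f"{MAX_REMOVAL_MINUTES} minutes"
            )
    return actions


if __name__ == "__main__":
    for line in fan_actions("I/O", {"fan 3": "failed", "fan 4": "ok"}):
        print(line)
```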

1.9 Light path diagnostics

To limit the need to slide the server out of the rack to diagnose problems, a light path diagnostics panel has been incorporated in the front of the x455, as shown in Figure 1-15. This panel can be ejected from the server to view the status of all server subsystems monitored by light path diagnostics. If maintenance is required, the customer can slide the server out from the rack and, using the LEDs, find the failed or failing component.

As illustrated in Figure 1-15, light path diagnostics are able to monitor and report

on the health of CPUs, main memory, hard disk drives, PCI-X slots, fans, power

supplies, power modules and the internal system temperature.

Figure 1-15 Light path diagnostics panel on the x455

The light path diagnostics on the x455 have four levels:

Level 1 is the front panel fault LED.

Level 2 is the pop-out panel, as shown in Figure 1-15.

Level 3 is the set of light path diagnostics LEDs visible through the top of the server. Viewing them requires the server to be slid out of the rack.

Level 4 is the LEDs on major system components, which indicate the component causing the error.

As the processor-board assembly is not visible during normal operation, a light

path diagnostics button has been incorporated into it to assist with diagnosing

errors. You can light up the LEDs for a maximum of two minutes. After that time,

the circuit that powers the lights is exhausted.

Important: If a light path diagnostics LED has been illuminated and system

power is removed, there is no way to redisplay the LEDs on the system tray

without re-applying AC power. If the fault has not been rectified when power is

restored, the LED will re-light.

[Figure 1-15 panel labels: CPU, VRM, MEMORY, DASD, NMI, BOARD, EVENT LOG, FAN, POWER SUPPLY, PCI-X BUS, NON REDUND, OVER SPEC, TEMP, REMIND]

The pop-out panel (Figure 1-15 on page 28) also has a remind button. This

places the front panel system-error LED into remind mode, which means it

flashes briefly every two seconds. By pressing the button, you acknowledge the

failure but indicate that you will not take immediate action. If a new failure occurs,

the system-error LED will turn on again and no longer blink. The system-error

LED remains in the remind mode until one of the following situations occurs:

All known problems are resolved.

The system is restarted.

A new problem occurs, at which time the LED is illuminated continuously.
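The remind-mode behavior described above amounts to a small state machine with three LED states. The following Python sketch models it under stated assumptions: the class `SystemErrorLed` and its method names are hypothetical, faults are tracked by name for illustration, and the behavior after a restart with unresolved faults (the LED lights continuously again) is an assumption, because the text says only that a restart ends remind mode.

```python
class SystemErrorLed:
    """Simplified model of the front panel system-error LED."""

    OFF, ON, REMIND = "off", "on", "remind"

    def __init__(self):
        self.state = self.OFF
        self.open_faults = set()

    def fault(self, name):
        # A new problem always lights the LED continuously,
        # even if it was in remind mode.
        self.open_faults.add(name)
        self.state = self.ON

    def press_remind(self):
        # Acknowledge the failure: the LED flashes briefly every two seconds.
        if self.state == self.ON:
            self.state = self.REMIND

    def resolve(self, name):
        # The LED goes off only when all known problems are resolved.
        self.open_faults.discard(name)
        if not self.open_faults:
            self.state = self.OFF

    def restart(self):
        # A restart ends remind mode. Assumption: faults that are still
        # present are reported again with a continuously lit LED.
        self.state = self.ON if self.open_faults else self.OFF


if __name__ == "__main__":
    led = SystemErrorLed()
    led.fault("fan")
    led.press_remind()
    print(led.state)  # prints remind
    led.fault("power supply")
    print(led.state)  # prints on
```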

Tip: The remind button on the pop-out LPD panel does not function when AC power has been removed from the system. The button is used only to acknowledge a system error as described above.

1.10 Extensible Firmware Interface

The Extensible Firmware Interface (EFI) specification describes an interface between the operating system and platform firmware, as shown in Figure 1-16 on page 30. The interface offers platform-related information to the operating system as well as boot and runtime service calls that are available to the operating system and OS loader. Together, these provide a well-defined environment for booting the operating system and running pre-boot applications, such as diagnostics, system setup, and driver setup utilities.

In comparison to a BIOS-based legacy system, the EFI is an additional layer between the operating system and the firmware. In a legacy system, the OS loader calls BIOS functions directly. Consequently, to provide a stable boot environment, changes in the OS loader and the platform firmware must go hand-in-hand. Figure 1-16 on page 30 shows a conceptual view of the EFI.