
ibm.com/redbooks

Front cover

Planning, Installing, and Managing the IBM System x3950 M2

David Watts
Jens Reizel
Paul Tan
Kevin Galloway

Understand the IBM System x3950 M2 and IBM x3850 M2

Learn the technical details of these high-performance servers

See how to configure, install, and manage multinode complexes


Planning, Installing, and Managing the IBM System x3950 M2

November 2008

International Technical Support Organization

SG24-7630-00


© Copyright International Business Machines Corporation 2008. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

First Edition (November 2008)

This edition applies to the following systems:

IBM System x3950 M2, machine types 7141 and 7233

IBM System x3850 M2, machine types 7141 and 7233

Note: Before using this information and the product it supports, read the information in “Notices” on page ix.

Contents

Notices
Trademarks

Preface
The team that wrote this book
Become a published author
Comments welcome

Chapter 1. Technical overview
1.1 IBM eX4-based servers
1.1.1 Features of the x3950 M2 and x3850 M2 servers
1.1.2 x3950 M2: scalable hardware components
1.2 Model numbers and scalable upgrade options
1.2.1 Finding country-specific model information
1.2.2 x3850 M2 model information
1.2.3 x3950 M2 model information
1.2.4 Scalable upgrade option for x3850 M2
1.3 Multinode capabilities
1.4 x3950 M2 Windows Datacenter models
1.4.1 IBM Datacenter Unlimited Virtualization offering
1.4.2 IBM Datacenter Unlimited Virtualization with High Availability
1.4.3 Upgrading to Datacenter Edition
1.4.4 Datacenter multinode configurations
1.4.5 Datacenter cluster configurations
1.5 Integrated virtualization: VMware ESXi
1.5.1 Key features of VMware ESXi
1.5.2 VMware ESXi on x3850 M2
1.5.3 Comparing ESXi to other VI3 editions
1.5.4 VMware ESXi V3.5 licensing
1.5.5 Support for applications running on VMware ESX and ESXi
1.6 IBM fourth generation XA-64e chipset
1.6.1 Hurricane 4
1.6.2 XceL4v dynamic server cache
1.6.3 PCI Express I/O bridge chip
1.6.4 High-speed memory buffer chips
1.6.5 Ranks
1.6.6 Comparing IBM eX4 to X3 technologies
1.7 Processors
1.8 Memory subsystem
1.9 SAS controller and ports
1.10 PCI Express subsystem
1.11 Networking
1.11.1 Main features
1.11.2 Redundancy features
1.12 Systems management features
1.12.1 Light path diagnostics
1.12.2 BMC service processor
1.12.3 Remote Supervisor Adapter II
1.12.4 Active Energy Manager
1.13 Trusted Platform Module and where used

Chapter 2. Product positioning
2.1 Focus market segments and target applications
2.2 Positioning the IBM x3950 M2 and x3850 M2
2.2.1 Overview of scale-up, scale-out
2.2.2 IBM BladeCenter and iDataPlex
2.3 Comparing x3850 M2 to x3850
2.4 Comparing x3950 M2 to x3950
2.5 System scalability
2.6 Operating system scalability
2.6.1 Scaling VMware ESX
2.6.2 Scaling Microsoft Windows Server 2003
2.6.3 Scaling Microsoft Windows Server 2008 and Hyper-V
2.6.4 Scaling Linux server operating systems
2.7 Application scalability
2.7.1 Microsoft SQL Server 2005
2.7.2 Microsoft SQL Server 2008
2.8 Scale-up or scale-out
2.8.1 Scale-up
2.8.2 Scale-out

Chapter 3. Hardware configuration
3.1 Processor subsystem
3.1.1 Processor options
3.1.2 Installation of processor options
3.1.3 Processor (CPU) configuration options
3.2 Memory subsystem
3.2.1 Memory options
3.2.2 Memory card
3.2.3 Memory mirroring
3.2.4 Hot-swap memory
3.2.5 Hot-add memory
3.2.6 Memory configuration in BIOS
3.3 Internal drive options and RAID controllers
3.3.1 LSI 1078 SAS onboard controller
3.3.2 SAS disk drive options
3.3.3 ServeRAID-MR10k RAID controller
3.3.4 ServeRAID-MR10M SAS/SATA II controller
3.3.5 SAS expansion enclosure (unit)
3.3.6 Updating the SAS storage controllers
3.4 Configuring RAID volumes
3.4.1 Starting the LSI1078 controller BIOS
3.4.2 Starting the ServeRAID-MR10k controller WebBIOS
3.4.3 Working with LSI MegaRAID controller WebBIOS
3.5 PCI Express options
3.5.1 PCI and I/O devices
3.5.2 PCI device scan order
3.5.3 PCI adapter installation order
3.5.4 PCI Express device-related information in the BIOS
3.5.5 Supported PCI Express adapter options

Chapter 4. Multinode hardware configurations
4.1 Introduction and terminology
4.2 Multinode capabilities
4.3 Understanding scalability
4.3.1 Complex Descriptor
4.3.2 Complex Descriptor contents
4.4 Prerequisites to create a multinode complex
4.5 Upgrading an x3850 M2 to an x3950 M2
4.5.1 Installing the ScaleXpander key (chip)
4.5.2 Configuring for a LAN connection
4.5.3 Updating the code levels, firmware
4.6 Cabling of multinode configurations
4.6.1 Two-node configuration
4.6.2 Three-node configuration
4.6.3 Four-node configuration
4.7 Configuring partitions
4.7.1 Understanding the Scalable Partitioning menu
4.7.2 First steps in configuring the partition
4.7.3 Creating partitions
4.8 Working with partitions
4.8.1 Managing partitions
4.8.2 Behaviors of scalability configurations
4.9 Observations with scalability configurations
4.9.1 Problem with merging if prerequisites were met
4.9.2 Problems with merging if prerequisites were not met
4.9.3 Known problems

Chapter 5. Installation
5.1 Updating firmware and BIOS
5.1.1 Prerequisite checklist
5.1.2 Downloading the firmware
5.1.3 Performing the updates
5.2 Confirming BIOS settings
5.3 Supported operating systems
5.3.1 VMware ESX operating systems
5.3.2 Windows Server 2003 and 2008 operating systems
5.3.3 Red Hat Enterprise Linux operating systems
5.3.4 SUSE Linux Enterprise Server operating systems
5.3.5 Solaris operating systems
5.4 Installing the operating system
5.4.1 Installing (configuring) VMware ESXi 3.5 embedded
5.4.2 Installing VMware ESXi 3.5 Installable
5.4.3 Installing VMware ESX 3.5 Update 1
5.4.4 Installing Windows Server 2003
5.4.5 Installing Windows Server 2008
5.4.6 Installing Red Hat Enterprise Linux 5 Update 1
5.4.7 Installing SUSE Linux Enterprise Server 10 SP1

Chapter 6. Management
6.1 BMC configuration options
6.1.1 BMC connectivity
6.1.2 BMC LAN configuration in BIOS
6.1.3 Event Log
6.1.4 User Account Settings menus
6.1.5 Remote control using SMBridge
6.1.6 BMC monitoring features
6.1.7 BMC firmware update
6.1.8 Installing the BMC device drivers
6.1.9 Ports used by the BMC
6.2 Remote Supervisor Adapter II
6.2.1 RSA II connectivity
6.2.2 RSA LAN configuration in BIOS
6.2.3 Web interface
6.2.4 Remote console and media
6.2.5 Updating firmware
6.2.6 Implementing the RSA II in the operating system
6.2.7 TCP/UDP ports used by the RSA II
6.2.8 MIB files
6.2.9 Error logs
6.3 Use of IBM Director with VMware ESX
6.4 Active Energy Manager
6.4.1 Active Energy Manager terminology
6.4.2 Active Energy Manager components
6.4.3 Active Energy Manager tasks
6.4.4 Active Energy Manager 3.1 functions
6.5 IBM Director: Implementation of servers
6.5.1 Integrating x3850 M2 and x3950 M2 into IBM Director
6.5.2 Level 0: Implementation by service processors
6.5.3 Level 1: Implementation by the IBM Director Core Services
6.5.4 LSI MegaRAID Provider
6.5.5 Level 2: Implementation by the IBM Director agent
6.6 System management with VMware ESXi 3.5
6.6.1 Hypervisor systems
6.6.2 Implementation of x3850 M2 Hypervisor systems
6.7 Power management
6.7.1 Processor features
6.7.2 Power consumption measurement and capping
6.7.3 Virtualization
6.8 Power Distribution Units (PDU)
6.8.1 Key features
6.8.2 Availability and flexibility of Enterprise PDUs
6.8.3 Comparing PDU and intelligent PDU
6.8.4 Assembling of intelligent PDU
6.8.5 Intelligent PDU power management Web interface
6.9 DSA Preboot
6.9.1 Updating DSA Preboot
6.9.2 Working with the command line interface
6.9.3 Working with the graphical user interface (GUI)
6.9.4 Scalability partition management

Abbreviations and acronyms

Related publications
IBM Redbooks
Product publications
Online resources
How to get Redbooks
Help from IBM

Index


Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

Active Memory™
BladeCenter®
Chipkill™
Cool Blue™
DB2®
DPI®
IBM Systems Director Active Energy Manager™
IBM®
iDataPlex™
Lotus®
PowerExecutive™
PowerPC®
Predictive Failure Analysis®
Redbooks®
Redbooks (logo) ®
RETAIN®
ServeRAID™
ServerGuide™
ServerProven®
ServicePac®
System x™
Tivoli®
Wake on LAN®
WebSphere®
X-Architecture®
Xcelerated Memory Technology™
xSeries®

The following terms are trademarks of other companies:

Advanced Micro Devices, AMD, AMD-V, ATI, ES1000, Radeon, the AMD Arrow logo, and combinations thereof, are trademarks of Advanced Micro Devices, Inc.

Cognos, and the Cognos logo are trademarks or registered trademarks of Cognos Incorporated, an IBM Company, in the United States and/or other countries.

Snapshot, and the NetApp logo are trademarks or registered trademarks of NetApp, Inc. in the U.S. and other countries.

Novell, SUSE, the Novell logo, and the N logo are registered trademarks of Novell, Inc. in the United States and other countries.

Oracle, JD Edwards, PeopleSoft, Siebel, and TopLink are registered trademarks of Oracle Corporation and/or its affiliates.

SAP, and SAP logos are trademarks or registered trademarks of SAP AG in Germany and in several other countries.

Virtual SMP, VMotion, VMware, the VMware "boxes" logo and design are registered trademarks or trademarks of VMware, Inc. in the United States and/or other jurisdictions.

Java, OpenSolaris, Solaris, Ultra, and all Java-based trademarks are trademarks of Sun Microsystems, Inc. in the United States, other countries, or both.

BitLocker, Hyper-V, Microsoft, SQL Server, Windows NT, Windows Server, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

Intel Core, Intel SpeedStep, Intel Xeon, Intel, Itanium-based, Itanium, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.


Preface

The x3950 M2 server and x3850 M2 are the System x™ flagship servers and implement the fourth generation of the IBM® X-Architecture®. They deliver innovation with enhanced reliability and availability features to enable optimal performance for databases, enterprise applications, and virtualized environments.

The x3950 M2 four-socket system is designed for extremely complex, compute-intensive applications that require four sockets, plus processing power and large memory support.

The features of the x3950 M2 and x3850 M2 make these servers ideal for handling complex, business-critical On Demand Business applications such as database serving, business intelligence, transaction processing, enterprise resource planning, collaboration applications, and server consolidation.

Up to four x3950 M2 servers can be connected to form a single-system image comprising up to 16 six-core processors, up to 1 TB of high-speed memory, and support for up to 28 PCI Express adapters. This capacity gives you the ultimate in processing power, ideally suited for very large relational databases.

The x3850 M2 is equivalent to the x3950 M2; however, it can be used only as a single four-processor node.

This IBM Redbooks® publication describes the technical details of the x3950 M2 scalable server and the x3850 M2 server. We explain the configuration options, how 2-node, 3-node, and 4-node complexes are cabled and implemented, how to install key server operating systems, and which management tools are available to systems administrators.

The team that wrote this book

This book was produced by a team of specialists from around the world working at the International Technical Support Organization, Raleigh Center.

David Watts is a Consulting IT Specialist at the IBM ITSO Center in Raleigh. He manages residencies and produces IBM Redbooks publications on hardware and software topics related to IBM System x and BladeCenter® servers, and associated client platforms. He has authored over 80 books, papers, and technotes. He holds a Bachelor of Engineering degree from the University of Queensland (Australia) and has worked for IBM both in the United States and Australia since 1989. He is an IBM Certified IT Specialist.

Jens Reizel is a Support Specialist at IBM Germany and is responsible for the post-sales technical support teams in the EMEA region. He has been working in this function and with IBM for nine years. His areas of expertise include IBM System x high-end systems, management hardware, and Windows®, Linux®, and VMware® operating systems.

Paul Tan works as a presales System x, BladeCenter, and Storage Technical Specialist at IBM Systems and Technology Group in Melbourne, Australia. He regularly leads customer presentations and solution workshops based around key leading IBM technologies with a particular focus on x86-based virtualization products such as VMware. He has been working in this role for more than two years and, prior to that, worked for five years as an IBM Infrastructure Consultant, specializing in Microsoft® and Linux systems. He holds a Bachelor of Science (Computer Science) and Bachelor of Engineering (Computer Engineering) from the University of Melbourne, Australia. He also holds industry certifications such as Microsoft Certified Systems Engineer and Red Hat Certified Technician.

Kevin Galloway is a graduate student at the University of Alaska, Fairbanks. He is currently working toward a Master of Science degree in Computer Science, with a focus on computer security and software development. He joined the ITSO as an IBM Redbooks intern.

The team (left to right): David, Kevin, Jens, and Paul


Thanks to the following people for their contributions to this project:

From the International Technical Support Organization:

Jeanne Alderson

Tamikia Barrow

Emma Jacobs

Linda Robinson

Diane Sherman

Erica Wazewski

From IBM Marketing

Beth McElroy

Heather Richardson

Kevin Powell

Don Roy

Scott Tease

Bob Zuber

From IBM Development

Paul Anderson

Chia-Yu Chu

Richard French

Joe Jakubowski

Mark Kapoor

Don Keener

Dan Kelaher

Randy Kolvick

Josh Miller

Thanh Ngo

Chuck Stephan

From IBM Service and Support

Khalid Ansari

Brandon Church

Become a published author

Join us for a two- to six-week residency program! Help write a book dealing with specific products or solutions, while getting hands-on experience with leading-edge technologies. You will have the opportunity to team with IBM technical professionals, Business Partners, and Clients.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus, you will develop a network of contacts in IBM development labs, and increase your productivity and marketability.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our books to be as helpful as possible. Send us your comments about this book or other IBM Redbooks in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an e-mail to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400


Chapter 1. Technical overview

The IBM System x3950 M2 and IBM System x3850 M2 are the IBM System x flagship systems. They are based on eX4 technology, which is the fourth generation of IBM X-Architecture. This technology leverages extensive IBM research and development in the XA-64e chipset for scalable Intel® Xeon MP systems.

This chapter discusses the following topics:

1.1, “IBM eX4-based servers” on page 2

1.2, “Model numbers and scalable upgrade options” on page 9

1.3, “Multinode capabilities” on page 14

1.4, “x3950 M2 Windows Datacenter models” on page 15

1.5, “Integrated virtualization: VMware ESXi” on page 19

1.6, “IBM fourth generation XA-64e chipset” on page 27

1.7, “Processors” on page 33

1.8, “Memory subsystem” on page 39

1.9, “SAS controller and ports” on page 42

1.10, “PCI Express subsystem” on page 43

1.11, “Networking” on page 44

1.12, “Systems management features” on page 47

1.13, “Trusted Platform Module and where used” on page 51


1.1 IBM eX4-based servers

IBM eX4 technology offers a balanced system design with unique scalability, reliability, availability, and performance capabilities to take full advantage of Intel’s latest multi-core processors. By connecting four servers together, the single-system image can have up to 16 processor sockets (96 cores), up to 128 DIMM sockets and 1 TB of RAM, 28 PCI Express slots, and 34.1 GBps of memory bandwidth for each 256 GB RAM server. This results in a high-capacity system with significant processing and I/O performance, and greater power efficiency.
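The four-node totals above follow from multiplying the single-node maximums listed in 1.1.1 by the number of nodes. The following Python sketch reproduces that arithmetic. It assumes every node is fully populated with four six-core Xeon 7400 processors, 32 DIMMs of the largest supported (8 GB) size, and seven PCIe slots. The names `complex_totals` and `ComplexTotals` are illustrative only.

```python
from dataclasses import dataclass

# Per-node maximums taken from the standard-feature list in 1.1.1.
SOCKETS_PER_NODE = 4        # up to four processors per server
CORES_PER_SOCKET = 6        # six-core Xeon 7400 (Dunnington)
DIMM_SLOTS_PER_NODE = 32    # four memory cards x eight DIMM sockets
MAX_DIMM_GB = 8             # largest supported DDR2 registered DIMM
PCIE_SLOTS_PER_NODE = 7     # seven PCIe x8 slots


@dataclass(frozen=True)
class ComplexTotals:
    sockets: int
    cores: int
    dimm_slots: int
    ram_gb: int
    pcie_slots: int


def complex_totals(nodes: int) -> ComplexTotals:
    """Maximum resources of a fully populated complex of `nodes` servers."""
    if not 1 <= nodes <= 4:
        raise ValueError("an x3950 M2 complex has one to four nodes")
    return ComplexTotals(
        sockets=SOCKETS_PER_NODE * nodes,
        cores=SOCKETS_PER_NODE * CORES_PER_SOCKET * nodes,
        dimm_slots=DIMM_SLOTS_PER_NODE * nodes,
        ram_gb=DIMM_SLOTS_PER_NODE * MAX_DIMM_GB * nodes,
        pcie_slots=PCIE_SLOTS_PER_NODE * nodes,
    )


if __name__ == "__main__":
    print(complex_totals(4))
```

For four nodes, this gives 16 sockets, 96 cores, 128 DIMM slots, 1024 GB (1 TB) of RAM, and 28 PCIe slots. These values match the figures quoted above.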

The two servers based on IBM eX4 technology are:

IBM System x3850 M2

IBM System x3950 M2

Although they have the same technical specifications and features, the x3850 M2 cannot be used to form a multinode complex unless you upgrade it to an IBM System x3950 M2 by adding the ScaleXpander Option Kit, as described in section 1.2, “Model numbers and scalable upgrade options” on page 9.

1.1.1 Features of the x3950 M2 and x3850 M2 servers

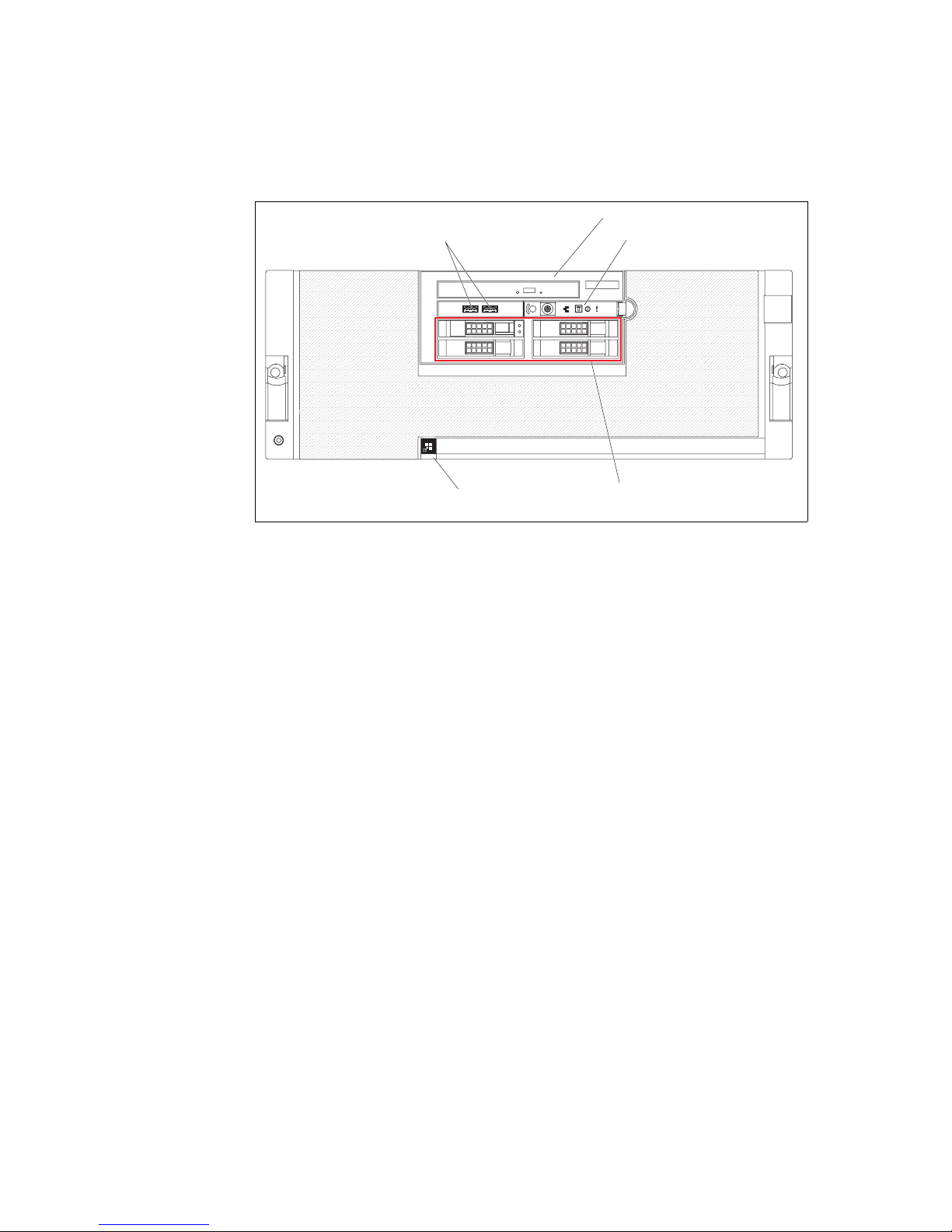

The x3950 M2 and x3850 M2 look very similar, as shown in Figure 1-1.

Figure 1-1 IBM System x3950 M2 and IBM System x3850 M2

Front and rear panels

The components and connectors on the front and rear of the system are shown in Figure 1-2 on page 3 and Figure 1-3 on page 4.

Figure 1-2 Front panel of x3850 M2 and x3950 M2

The front panel of the x3850 M2 and the x3950 M2, as shown in Figure 1-2, provides easy access to up to four hot-swap 2.5-inch SAS drives, the DVD-ROM drive, two USB 2.0 ports, an operator information panel with a power on/off button, and LEDs indicating information such as scalability, network activity, and system errors and warnings.

The scalability LED on an x3950 M2 indicates whether the node (a building block in a scalable system) is participating in a multinode x3950 M2 complex. After each node has successfully merged with the primary node in a partition, the scalability LED is lit on all nodes in that partition of the multinode complex.

[Figure 1-2 callouts: operator information panel; USB connectors; DVD-ROM drive; four hot-swap disk drive bays; scalability LED]

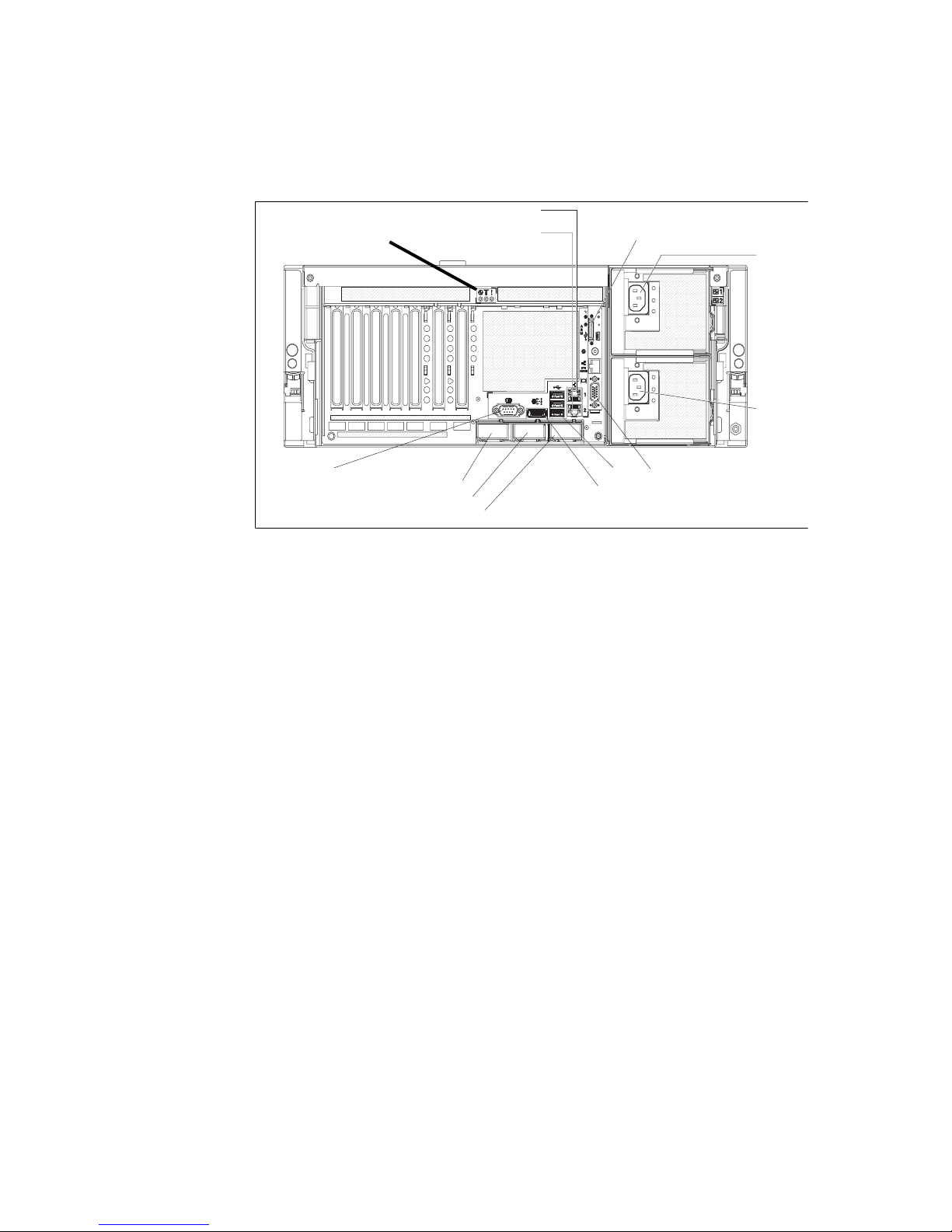

Figure 1-3 Rear panel of x3850 M2 and x3950 M2

The rear panel of the x3850 M2 and x3950 M2, as shown in Figure 1-3, has:

PCI Express (PCIe) slots 1 to 7 (from left to right on the panel)

System serial port

Three scalability SMP expansion ports used for multinode x3950 M2 complexes

External SAS port

Three USB 2.0 ports

Integrated dual-port Broadcom Gigabit Ethernet RJ45 ports

Remote Supervisor Adapter II panel, which contains the server's video connector port and a 10/100 Mbps RJ45 out-of-band remote management port (there is also a mini-USB port and a power adapter socket, which are not used on the x3850 M2/x3950 M2)

Two hot-swap redundant power supplies

Hypervisor models of the x3850 M2

Inside the server is an additional USB socket used exclusively for the embedded virtualization feature. The USB flash drive that plugs into this socket, shown in Figure 1-4 on page 5, is standard on hypervisor models of the x3850 M2.

[Figure 1-3 callouts: Gigabit Ethernet 1 and 2; SMP Expansion Ports 1, 2, and 3; USB; SAS; system serial; power supplies 1 and 2; Remote Supervisor Adapter II; video connector; power-on, locator, and system error LEDs]

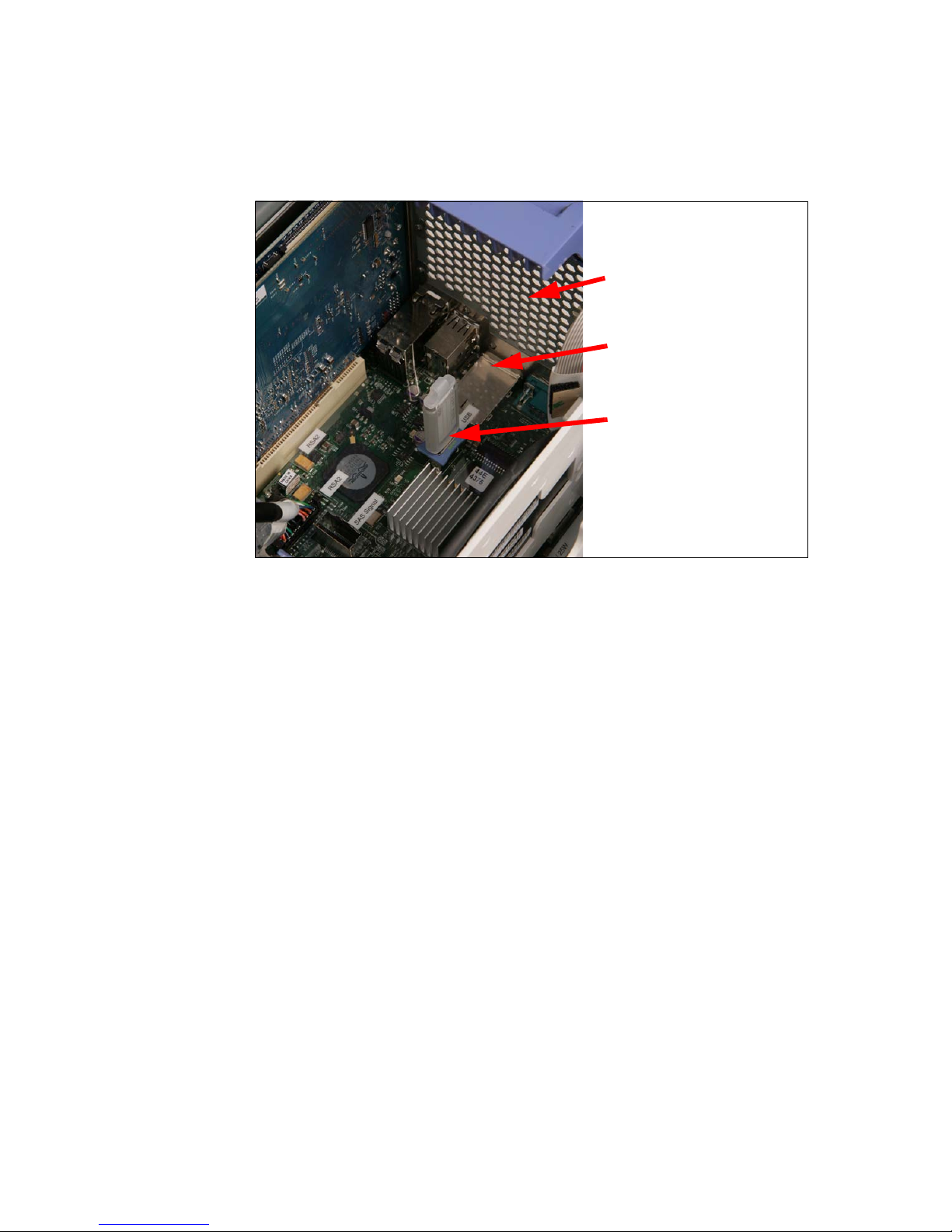

Figure 1-4 On hypervisor models of the x3850 M2, a USB flash drive pre-loaded with VMware ESXi 3.5 is pre-installed in the internal USB socket

[Figure 1-4 callouts: rear air ventilation panel, as viewed from inside the server; external SAS connector, as viewed from inside the server; IBM 4 GB USB Flash Disk pre-loaded with the integrated virtualization hypervisor]

Standard features for both systems

The x3950 M2 and x3850 M2 have the following standard features. We discuss these in greater detail later in this chapter.

Processors

Processor features include:

One 4U rack-optimized server with one of the following Intel processors:

– Xeon 7200 series (Tigerton) dual-core processors

– Xeon 7300 series (Tigerton) quad-core processors

– Xeon 7400 series (Dunnington) quad-core processors

– Xeon 7400 series (Dunnington) 6-core processors

Two processors standard, with support for up to four processors

One IBM eX4 “Hurricane 4” chipset with four 1066 MHz front-side buses

Support for Intel Virtualization Technology (Intel VT), Intel 64 technology (EM64T), and the Execute Disable Bit feature
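As a rough check on the 34.1 GBps figure quoted in 1.1, the aggregate peak bandwidth of the four 1066 MHz front-side buses can be computed directly. This sketch rests on two assumptions that are not stated in this section. First, each bus is 64 bits (8 bytes) wide. Second, "1066 MHz" denotes a 266.67 MHz bus clock with four transfers per clock (about 1066.67 million transfers per second). It illustrates the arithmetic only and is not an IBM-published formula.

```python
# Assumptions (not from the source): 64-bit data path per bus, and "1066 MHz"
# meaning a 266.67 MHz bus clock with four transfers per clock.
FSB_COUNT = 4                 # four front-side buses on the Hurricane 4 chipset
BUS_CLOCK_HZ = 800e6 / 3      # about 266.67 MHz
TRANSFERS_PER_CLOCK = 4       # quad-pumped: about 1066.67 MT/s
BYTES_PER_TRANSFER = 8        # 64-bit data path


def fsb_bandwidth_gbps(buses: int = FSB_COUNT) -> float:
    """Peak aggregate front-side bus bandwidth in decimal GB per second."""
    per_bus = BUS_CLOCK_HZ * TRANSFERS_PER_CLOCK * BYTES_PER_TRANSFER  # bytes/s
    return buses * per_bus / 1e9


if __name__ == "__main__":
    print(f"per bus: {fsb_bandwidth_gbps(1):.2f} GBps")  # per bus: 8.53 GBps
    print(f"total:   {fsb_bandwidth_gbps():.1f} GBps")   # total:   34.1 GBps
```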


Memory subsystem

Memory subsystem features include:

4 GB or 8 GB of memory standard, expandable to 256 GB

Support for 1, 2, 4, and 8 GB DDR2 registered DIMMs

Maximum of 32 DIMM slots, by installing four memory cards (each card has eight DIMM sockets)

Active Memory™ with Memory ProteXion, hot-swap memory with memory mirroring, hot-add memory with supported operating systems, and Chipkill™

I/O slots and integrated NICs

I/O subsystem features include:

Seven 64-bit PCIe x8 full-height (half-length) slots; two of these seven slots are hot-swap

Integrated dual-port Broadcom NetXtreme II 5709C PCI Express Gigabit Ethernet controller with Jumbo Frame support

SAS RAID controller and HDD slots

Disk subsystem features include:

Integrated LSI 1078 SAS controller with support for RAID-0 and RAID-1

External JBOD SAS storage through the external SAS x4 port (if the IBM ServeRAID™ MR10k SAS/SATA Controller is installed)

The SAS SFF-8088 connector is located above SMP Expansion Port 2 in Figure 1-3 on page 4.

Up to four hot-swap 2.5-inch SAS hard drives (up to a maximum of 584 GB of internal storage)
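The 584 GB maximum corresponds to four drive bays of 146 GB each (584 / 4). That per-drive size is inferred from the arithmetic and is not stated in this section. The sketch below shows how usable capacity changes with the RAID levels that the integrated LSI 1078 controller supports. It assumes that RAID-1 is built from mirrored pairs, so half of the raw capacity is usable. The function name is hypothetical.

```python
DRIVE_BAYS = 4
DRIVE_GB = 146  # inferred: 584 GB / 4 bays


def usable_capacity_gb(raid_level: int, drives: int = DRIVE_BAYS,
                       drive_gb: int = DRIVE_GB) -> int:
    """Usable capacity for the RAID levels of the integrated LSI 1078 controller."""
    if not 1 <= drives <= DRIVE_BAYS:
        raise ValueError("the server has at most four internal drive bays")
    raw = drives * drive_gb
    if raid_level == 0:
        return raw              # striping: all raw capacity is usable
    if raid_level == 1:
        if drives % 2:
            raise ValueError("this sketch models RAID-1 as mirrored pairs")
        return raw // 2         # mirroring: every block is stored twice
    raise ValueError("the integrated controller supports RAID-0 and RAID-1 only")


if __name__ == "__main__":
    print(usable_capacity_gb(0))  # 584
    print(usable_capacity_gb(1))  # 292
```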

Systems management and security

Management and security features include:

Onboard BMC that shares the integrated Broadcom Gigabit Ethernet 1 interface

Remote Supervisor Adapter II with dedicated 10/100 Mbps Ethernet management interface

The RSA II adapter's 10/100 Mbps Ethernet port is located above the video connector in Figure 1-3 on page 4.

Operator information panel (see Figure 1-2 on page 3), which provides light path diagnostics information

Note: TCP Offload Engine (TOE) support is planned.

Windows Hardware Error Architecture (WHEA) support in the BIOS

Trusted Platform Module support. The module provides a highly secure start-up process, from power-on through to the startup of the operating system boot loader. Advanced Configuration and Power Interface (ACPI) support is provided to allow ACPI-enabled operating systems to access the security features of this module.

System ports and media access

Ports and media access features include:

Six USB 2.0 ports: two on the front panel, three on the rear panel, and one internal port for the USB Flash Disk

The hypervisor models of the x3850 M2 include an integrated hypervisor for virtualization on a 4 GB USB Flash Disk with VMware ESXi pre-loaded. See Figure 1-4 on page 5.

An ATI™ Radeon™ ES1000™ SVGA video controller on the Remote Supervisor Adapter II (DB-15 video connector on the RSA II card, as shown in Figure 1-3 on page 4)

Optical drive:

– On machine type 7141: one standard 24x/8x IDE CD-RW/DVD-ROM combo drive

– On machine type 7233: SATA CD-RW/DVD-ROM combo drive

USB keyboard and mouse

System serial port

Three SMP expansion ports for use in scalable multinode complexes

Power

Two hot-swap redundant 1440 W power supplies are standard. At 220 V, one power supply is redundant. At 110 V, the power supplies are non-redundant.

1.1.2 x3950 M2: scalable hardware components

Compared to the x3850 M2, the x3950 M2 includes the following additional scalable hardware components as standard. These components enable the x3950 M2 to scale up to a multinode complex of up to four x3950 M2 servers.

ScaleXpander chip (see Figure 1-5 on page 8)

One 3.08 m scalability cable (see Figure 1-6 on page 9)

Larger cable management arm to accommodate the scalability cables connecting to the SMP expansion ports (see Figure 1-7 on page 12 and Figure 1-8 on page 13)

All necessary hardware components for forming a three-node x3950 M2 complex are provided when you order three x3950 M2 servers. However, to form a four-node x3950 M2 complex, you must have four x3950 M2 servers and a Scalability Upgrade Option 2, which contains one 3.08 m and one 3.26 m scalability cable (see Table 4-1 on page 202 for details of part numbers). Refer to Chapter 4, “Multinode hardware configurations” on page 195 for more details about scaling the x3950 M2 to complexes of two, three, and four nodes.

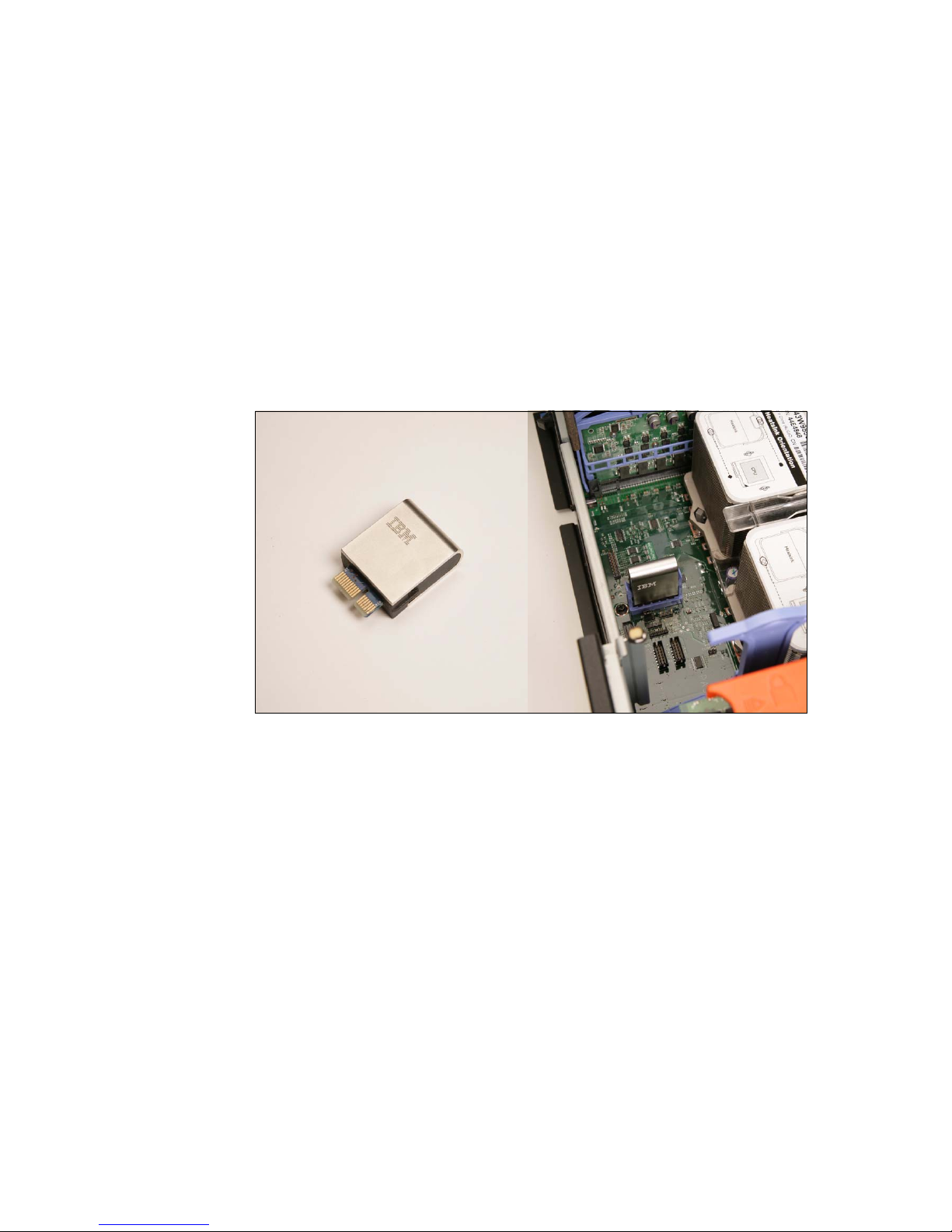

Figure 1-5 ScaleXpander chip (left); ScaleXpander chip installed on processor board near the front panel of the x3950 M2 (right)

Figure 1-6 Scalability cable (top); cable installed in SMP Expansion Port 1 (bottom)

1.2 Model numbers and scalable upgrade options

As discussed previously, the x3850 M2 and x3950 M2 servers are based on IBM eX4 technology. This section lists the available models for each server and where to find more information about models available in your country.

The tables in this section use the following nomenclature:

n: Indicates variations between server models relating to the processor type and the number of memory cards and memory DIMMs installed.

c: Indicates the country in which the model is available: U is for countries in North America and South America. G is for EMEA (for example, 1RG). For Asia-Pacific countries, the letter varies from country to country.
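To illustrate the nomenclature, the following Python sketch decodes a model number such as 7141-1RG into its parts. The model-type letters and processor families come from Table 1-1 and Table 1-2. Several details are assumptions for illustration: the function name, the return format, and treating every country letter other than U and G as Asia-Pacific. The sources listed in 1.2.1 remain authoritative for the models available in your country.

```python
import re

# Model-type letters from Table 1-1 (x3850 M2) and Table 1-2 (x3950 M2).
MODEL_TYPES = {
    "R": "x3850 M2 standard model",
    "H": "x3850 M2 integrated hypervisor model",
    "S": "x3950 M2 standard model",
    "A": "x3950 M2 Datacenter UVHA, 32-bit",
    "B": "x3950 M2 Datacenter UVHA, 64-bit",
    "D": "x3950 M2 Datacenter Unlimited Virtualization, 32-bit",
    "E": "x3950 M2 Datacenter Unlimited Virtualization, 64-bit",
}

PROCESSOR_FAMILY = {
    "7141": "Xeon 7200/7300 (Tigerton)",
    "7233": "Xeon 7400 (Dunnington)",
}

MODEL_RE = re.compile(r"^(7141|7233)-(\d)([A-Z])([A-Z])$")


def decode_model(model: str) -> dict:
    """Split a model number of the form MMMM-nXc into its documented parts."""
    match = MODEL_RE.match(model.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized model number: {model!r}")
    machine_type, variant, type_letter, country = match.groups()
    if type_letter not in MODEL_TYPES:
        raise ValueError(f"unknown model-type letter: {type_letter!r}")
    if type_letter in "ABDE" and machine_type != "7141":
        # Table 1-2 lists the Datacenter models only for machine type 7141.
        raise ValueError("Datacenter models are listed only for type 7141")
    region = {"U": "North and South America", "G": "EMEA"}.get(
        country, "Asia-Pacific (letter varies by country)"  # assumption
    )
    return {
        "machine_type": machine_type,
        "processors": PROCESSOR_FAMILY[machine_type],
        "variant": variant,              # the "n" position
        "model_type": MODEL_TYPES[type_letter],
        "region": region,                # the "c" position
    }


if __name__ == "__main__":
    print(decode_model("7141-1RG"))
```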


1.2.1 Finding country-specific model information

For the specific models available in your country, consult one of the following sources of information:

Announcement letters; search for the machine type (such as 7141):

http://www.ibm.com/common/ssi/

Configuration and Options Guide (COG) for System x:

http://www.ibm.com/support/docview.wss?uid=psg1SCOD-3ZVQ5W

Direct link to COG page for the System x3850/3950 M2 servers:

http://www.ibm.com/systems/xbc/cog/x3850m2/x3850m2aag.html

IBM BladeCenter and System x Reference Sheets (xREF):

http://www.redbooks.ibm.com/xref

1.2.2 x3850 M2 model information

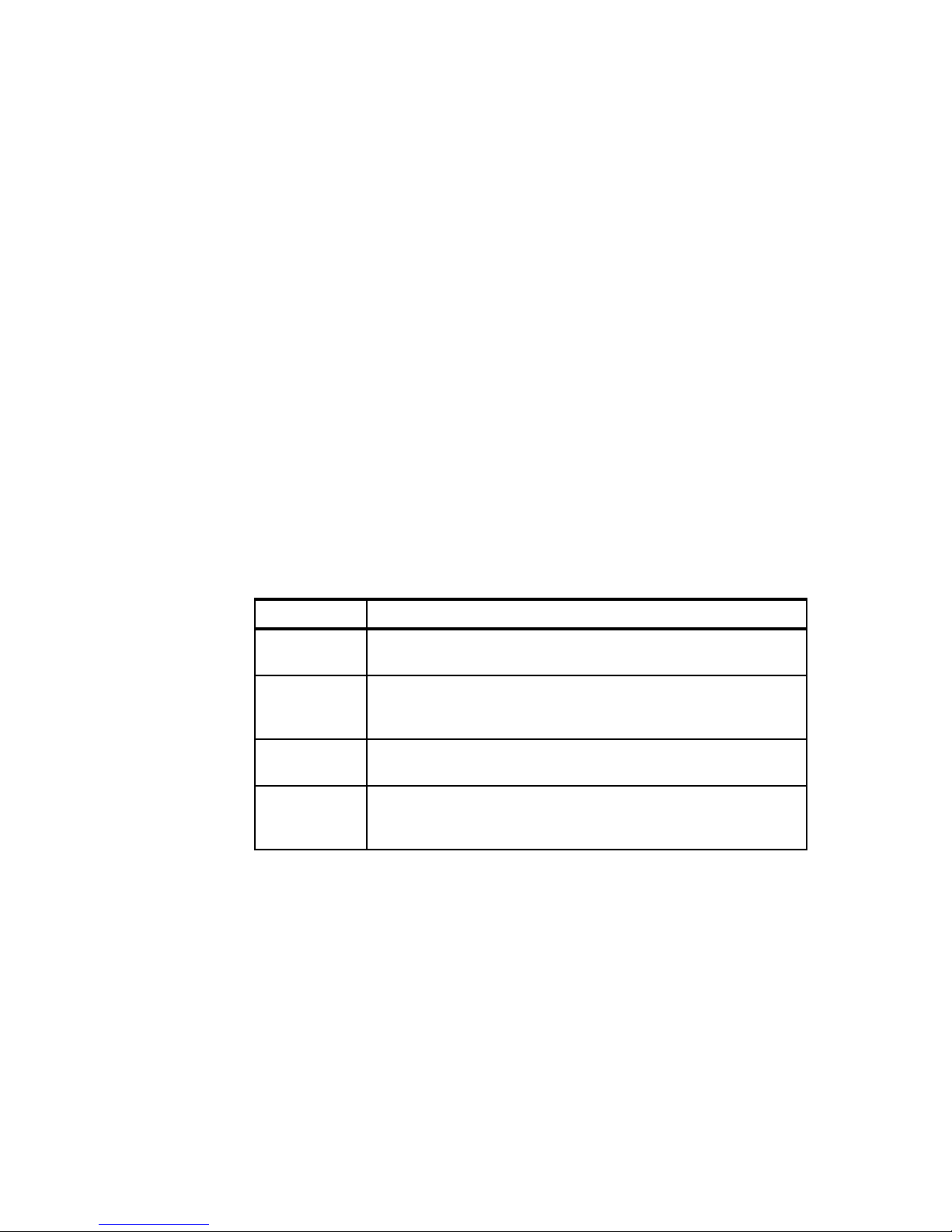

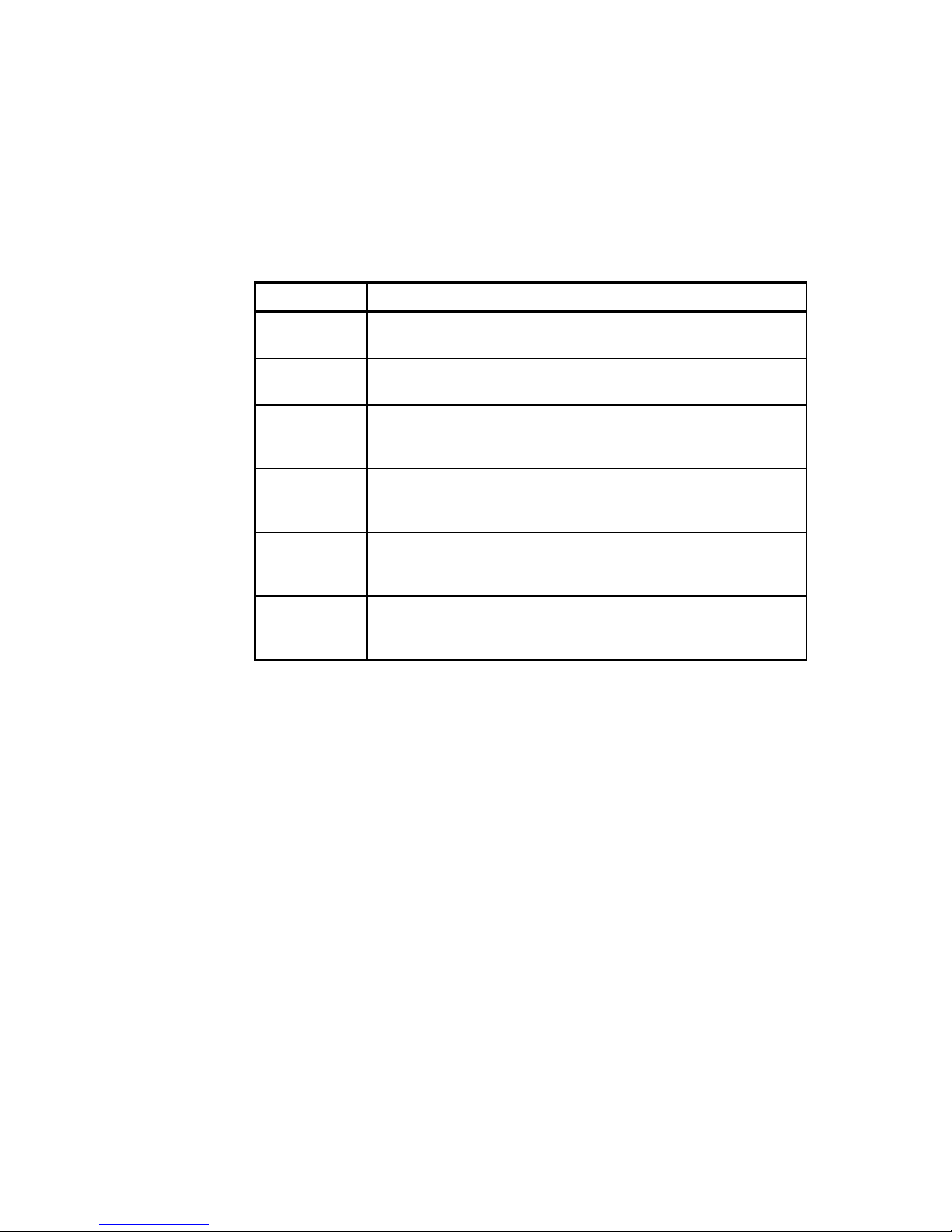

The model numbers of the x3850 M2 are listed in Table 1-1.

Table 1-1 Models of x3850 M2

Model      Description

7141-nRc   Standard models of x3850 M2 with dual-core or quad-core Xeon 7200 or Xeon 7300 (Tigerton) processors

7141-3Hc   Integrated hypervisor models of x3850 M2 with Xeon E7330 (Tigerton) processors. See 1.5, “Integrated virtualization: VMware ESXi” on page 19.

7233-nRc   Standard models of x3850 M2 with quad-core and six-core Xeon 7400 (Dunnington) processors

7233-4Hc   Integrated hypervisor models of x3850 M2 with quad-core Xeon E7440 (Dunnington) processors. See 1.5, “Integrated virtualization: VMware ESXi” on page 19.

1.2.3 x3950 M2 model information

The model numbers of the x3950 M2 are listed in Table 1-2.

Table 1-2 Models of x3950 M2

Model      Description

7141-nSc   Standard models of the x3950 M2 with dual-core or quad-core Xeon 7200 or Xeon 7300 (Tigerton) processors

7233-nSc   Standard models of the x3950 M2 with quad-core and six-core Xeon 7400 (Dunnington) processors (a)

7141-nAc   Datacenter Unlimited Virtualization with High Availability models certified for 32-bit Windows Server 2003 Datacenter Edition. See 1.4.2, “IBM Datacenter Unlimited Virtualization with High Availability” on page 16.

7141-nBc   Datacenter Unlimited Virtualization with High Availability models certified for 64-bit Windows Server 2003 Datacenter Edition. See 1.4.2, “IBM Datacenter Unlimited Virtualization with High Availability” on page 16.

7141-nDc   Datacenter Unlimited Virtualization models certified for 32-bit Windows Server 2003 Datacenter Edition. See 1.4.1, “IBM Datacenter Unlimited Virtualization offering” on page 16.

7141-nEc   Datacenter Unlimited Virtualization models certified for 64-bit Windows Server 2003 Datacenter Edition. See 1.4.1, “IBM Datacenter Unlimited Virtualization offering” on page 16.

a. Dunnington quad-core and six-core processors include L2 and L3 shared cache, unlike Tigerton processors, which have only L2 shared cache. See 1.7, “Processors” on page 33 for more details.

1.2.4 Scalable upgrade option for x3850 M2

Unlike the x3850 server (based on X3 technology), the x3850 M2 can be converted to an x3950 M2 through the use of the IBM ScaleXpander Option Kit, part number 44E4249. After this kit is installed, the x3850 M2 functionally becomes an x3950 M2 and is therefore able to form part of a multinode complex of up to four x3950 M2 servers.

The IBM ScaleXpander Option Kit contains the following items:

Scalability cable, 3.08 m (see Figure 1-6 on page 9)

Larger cable management arm, which replaces the existing arm to allow easy installation of the scalability cables. See Figure 1-7 on page 12 and Figure 1-8 on page 13.

ScaleXpander chip, required to convert the x3850 M2 to an x3950 M2. See Figure 1-5 on page 8.

x3950 M2 bezel, which replaces the existing bezel and shows that the x3850 M2 has the kit installed and is now functionally equivalent to an x3950 M2. See Figure 1-9 on page 13.

Figure 1-7 x3950 M2 enterprise cable management arm


Figure 1-8 x3950 M2 cable management arm mounted on server rails

Figure 1-9 x3950 M2 bezel

[Figure callouts: scalability cable brackets guide the scalability cables from the scalability expansion ports on one node to another node; route power, Ethernet, fibre, video, mouse, and keyboard cables through here; scalability expansion ports 1, 2, and 3, from left to right]

1.3 Multinode capabilities

The x3950 M2 is the base building block, or node, for a scalable system. At its most basic, each node is a four-way SMP-capable system with processors, memory, and I/O devices. The x3950 M2 is the building block that allows supported 8-way, 12-way, and 16-way configurations by adding more x3950 M2 servers as required.

Unlike the System x3950 and xSeries® 460, the x3950 M2 does not require a special modular expansion enclosure. The multinode configuration is simply formed by using another x3950 M2, or an x3850 M2 that has the ScaleXpander Option Kit installed as described previously in 1.2.4, “Scalable upgrade option for x3850 M2” on page 11.

Multinode configurations

The x3950 M2 can form a multinode configuration by adding one or more x3950 M2 servers. A number of configurations are possible, as shown in Figure 1-10.

Figure 1-10 Possible multinode configurations

Note: When we refer to an x3950 M2, we mean either an x3950 M2 or an x3850 M2 that has the ScaleXpander Option Kit installed.

[Figure 1-10 content: one node, 2-way or 4-way, up to 256 GB RAM; two nodes, 4-way or 8-way, up to 512 GB RAM; three nodes, 6-way or 12-way, up to 768 GB RAM; four nodes, 8-way or 16-way, up to 1 TB RAM. In multinode configurations, each node is 2-way or 4-way. Each node can be either an x3950 M2 or an x3850 M2 with the ScaleXpander Option Kit installed. All CPUs in every node must be identical.]

The possible configurations are:

A one-node system is one x3950 M2 server or one x3850 M2 server, with one, two, three, or four processors and up to 256 GB of RAM.

A two-node complex comprises two x3950 M2 servers, with up to eight processors and up to 512 GB of RAM installed.

A three-node complex comprises three x3950 M2 servers, with up to 12 processors and up to 768 GB of RAM installed.

A four-node complex comprises four x3950 M2 servers, with up to 16 processors and up to 1 TB of RAM installed.
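The configuration rules above, together with the notes in Figure 1-10, can be expressed as a small validation routine for planning. This Python sketch is hypothetical and is not part of any IBM tool. It makes the following assumptions:

- A complex has one to four nodes.
- Every processor in the complex is the same model.
- Each node holds at most 256 GB.
- In a multinode complex, each node is 2-way or 4-way.
- Because Figure 1-10 lists only totals in which every node has the same processor count, all nodes in a multinode complex have equal counts.

The processor model strings in the example are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Node:
    cpu_model: str   # example model name; must match across all nodes
    cpus: int        # installed processors in this node
    ram_gb: int      # installed memory in this node


def validate_complex(nodes: Sequence[Node]) -> Tuple[int, int]:
    """Check a proposed complex and return (total processors, total RAM in GB)."""
    if not 1 <= len(nodes) <= 4:
        raise ValueError("a complex has one to four nodes")
    if len({n.cpu_model for n in nodes}) != 1:
        raise ValueError("all CPUs in every node must be identical")
    for n in nodes:
        if n.ram_gb > 256:
            raise ValueError("each node supports at most 256 GB of RAM")
        if not 1 <= n.cpus <= 4:
            raise ValueError("each node holds one to four processors")
    if len(nodes) > 1:
        if any(n.cpus not in (2, 4) for n in nodes):
            raise ValueError("in a multinode complex each node is 2-way or 4-way")
        if len({n.cpus for n in nodes}) != 1:
            raise ValueError("sketch assumption: equal processor count per node")
    return sum(n.cpus for n in nodes), sum(n.ram_gb for n in nodes)


if __name__ == "__main__":
    four_node = [Node("X7460", 4, 256) for _ in range(4)]
    print(validate_complex(four_node))  # (16, 1024)
```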

Partitioning

Partitioning is the concept of logically splitting a multinode complex into separate systems. You can then install an operating system on a partition and have it run independently from all other partitions. The advantage of partitioning is that you can create and delete partitions without having to recable the complex. The only requirement is that partitions be formed on node boundaries.

The interface where you set up and maintain partitions is an extension of the Remote Supervisor Adapter II Web interface. It is used to create, delete, control, and view scalable partitions.

Multinode complexes support partitioning on node boundaries. This means, for example, that you can logically partition your 2-node 8-way system as two 4-way systems, while still leaving the complex cabled as 2 nodes. This increases flexibility: you can reconfigure the complex by using the Web interface without changing the systems or cabling.
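Because partitions must be formed on node boundaries, a partition layout is simply an assignment of whole nodes to partitions. The Python sketch below checks such a layout before you enter it in the Web interface. It is a hypothetical planning aid, not the Remote Supervisor Adapter II interface. It assumes that nodes are numbered 1 to N and that each node belongs to at most one partition; nodes not listed are treated as unassigned.

```python
from typing import Dict, FrozenSet, Iterable, Set


def validate_partitions(
    node_count: int, partitions: Dict[str, Iterable[int]]
) -> Dict[str, FrozenSet[int]]:
    """Validate that each partition is a non-empty set of whole, distinct nodes."""
    all_nodes = set(range(1, node_count + 1))
    used: Set[int] = set()
    result: Dict[str, FrozenSet[int]] = {}
    for name, members in partitions.items():
        nodes = frozenset(members)
        if not nodes:
            raise ValueError(f"partition {name!r} has no nodes")
        unknown = nodes - all_nodes
        if unknown:
            raise ValueError(f"partition {name!r} uses nonexistent nodes {sorted(unknown)}")
        overlap = nodes & used
        if overlap:
            raise ValueError(f"nodes {sorted(overlap)} are in more than one partition")
        used |= nodes
        result[name] = nodes
    return result


if __name__ == "__main__":
    # A cabled 2-node 8-way complex run as two independent 4-way systems.
    print(validate_partitions(2, {"partA": [1], "partB": [2]}))
```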

For more information about multinode complexes and partitioning, see Chapter 4, “Multinode hardware configurations” on page 195.

1.4 x3950 M2 Windows Datacenter models

IBM offers Windows Server 2003 Datacenter Edition as part of the following two IBM offerings, which are described in this section:

IBM Datacenter Unlimited Virtualization

IBM Datacenter Unlimited Virtualization with High Availability

Note: At the time of writing, only the Windows Server 2003 Enterprise and Datacenter 64-bit editions, RHEL 5 64-bit, and SLES 10 64-bit support the 1 TB of memory of a four-node complex. See 2.6, “Operating system scalability” on page 66 for details.


1.4.1 IBM Datacenter Unlimited Virtualization offering

The IBM Datacenter Unlimited Virtualization offering is ideal for customers who already have a well-managed IT infrastructure and want only a Windows operating system that scales from 4-way to 32-way and offers maximum performance and scalability in a nonclustered environment.

The IBM Datacenter Unlimited Virtualization solution is tested and certified on specific System x servers and with standard ServerProven® options:

Datacenter-specific, certified configurations are no longer required.

Supported on all ServerProven configurations for designated System x Datacenter servers.

Installation can be performed by IBM, the Business Partner, or the customer.

Optional System x Lab Services onsite and IBM Global Services - Remote Support Services can be provided.

Table 1-2 on page 11 shows the system models for both 32-bit and 64-bit versions of the operating system.

With the IBM Datacenter Unlimited Virtualization option, the x3950 M2 models come with two processors, 8 GB of memory (eight 1 GB DIMMs), four memory cards, and no disks. The system is shipped with the Datacenter installation CD, OS documentation, recovery CD, and a 4-socket Certificate of Authenticity (COA) to license the system. Windows Server® 2003 R2 Datacenter Edition is not preloaded. This offering is available in both English and Japanese languages.

Note: IBM no longer offers a Software Update Subscription for this offering. Customers should purchase a Microsoft Software Assurance contract for operating system maintenance and upgrades. For information see:

http://www.microsoft.com/licensing/sa

1.4.2 IBM Datacenter Unlimited Virtualization with High Availability

The IBM Datacenter Unlimited Virtualization with High Availability (UVHA) Program offering delivers a fully certified solution on 4-way through 32-way server configurations that support Microsoft cluster-certified solutions of up to eight nodes, providing a tightly controlled, end-to-end supported environment for maximum availability.

This end-to-end offering provides a fully configured and certified solution for customers who want to maintain a tightly controlled environment for maximum availability. To maintain this high availability, the solution must be maintained as a certified configuration.

IBM Datacenter UVHA solution offerings are tested and certified on specific System x servers and with standard ServerProven options, storage systems, and applications. All components must be both ServerProven and Microsoft cluster-logo certified.

The operating system is not preloaded. Installation must be performed by IBM System x Lab Services or IBM Partners certified under the EXAct program. Standard IBM Stage 2 manufacturing integration services can be used. IBM Global Services - Remote Support Services are mandatory.

Table 1-2 on page 11 shows the models for both 32-bit and 64-bit versions of the operating system.

With this option, the x3950 M2 models come with two processors, 8 GB of memory (eight 1 GB DIMMs), four memory cards, and no disks. Unlike previous high-availability offerings, Windows Server 2003 R2 Datacenter Edition is not preloaded. Also shipped with the system are a recovery CD, OS documentation, and a 4-socket Certificate of Authenticity (COA) to license the system. This offering is available in both English and Japanese languages.

Note: IBM no longer offers a Software Update Subscription for this offering. Customers should purchase a Microsoft Software Assurance contract for operating system maintenance and upgrades. For information see:

http://www.microsoft.com/licensing/sa

1.4.3 Upgrading to Datacenter Edition

If you are using another Windows operating system on your x3950 M2, such as Windows Server 2003 Enterprise Edition, and want to upgrade to Datacenter Edition, you can order the appropriate upgrade as described in this section.

IBM Datacenter preload upgrades can be ordered only after receiving approval from the IBM worldwide System x marketing team. IBM Sales Representatives should notify their geography Marketing Product Manager and Sales Managers of these opportunities, and the Product and Sales Managers should, in turn, notify the Worldwide Marketing Product Manager of the sales opportunity. Business Partners should notify their IBM Sales Representative, who should engage with geography Product Marketing and Sales Managers.

IBM validates the customer's current x3950 M2 hardware configuration as a certified Datacenter configuration. Orders are allowed only after a Solutions Assurance Review is completed. For the IBM Datacenter Unlimited Virtualization with High Availability offering, the appropriate service and support contracts must also be in place.

Upgrading to IBM Datacenter Unlimited Virtualization

To upgrade to the IBM Datacenter Unlimited Virtualization offering, order one or more of the part numbers listed in Table 1-3. You must have one 4-CPU license for each x3950 M2 in your configuration. Licenses are cumulative.

Table 1-3 Upgrade options for the IBM Datacenter Unlimited Virtualization offering

Upgrade kits   Order number

Windows Server 2003 Datacenter Edition R2, 32-bit, 1-4 CPUs   4818-NCU

Windows Server 2003 Datacenter Edition R2 x64, 1-4 CPUs   4818-PCU

Windows Server 2003 Datacenter Edition R2, 32-bit, 1-4 CPUs (Japanese)   4818-NCJ

Windows Server 2003 Datacenter Edition R2 x64, 1-4 CPUs (Japanese)   4818-PCJ

Note: These upgrade order numbers can only be ordered from the IBM World Wide System x Brand and might not appear in IBM standard configuration tools.

Upgrading to IBM Datacenter Unlimited Virtualization with High Availability

To upgrade to the IBM Datacenter Unlimited Virtualization with High Availability (UVHA) offering, order one or more of the part numbers listed in Table 1-4. You must have one 4-CPU license for each x3950 M2 in your configuration. Licenses are cumulative.

Table 1-4 Upgrade options for IBM Datacenter UVHA

Upgrade kits   Order number

Windows Server 2003 Datacenter Edition R2, 32-bit, 1-4 CPUs   4816-NCU

Windows Server 2003 Datacenter Edition R2 x64, 1-4 CPUs   4816-PCU

Windows Server 2003 Datacenter Edition R2, 32-bit, 1-4 CPUs (Japanese)   4816-NCJ

Windows Server 2003 Datacenter Edition R2 x64, 1-4 CPUs (Japanese)   4816-PCJ

Note: These upgrade order numbers can only be ordered from the IBM World Wide System x Brand and might not appear in IBM standard configuration tools.

1.4.4 Datacenter multinode configurations

Configurations greater than 4-way on the x3950 M2 consist of an x3950 M2 primary node and additional x3950 M2 systems, up to a maximum of four nodes, to make a 16-way Datacenter system. Forming these scalable systems requires additional scalability cables, as explained in 4.4, “Prerequisites to create a multinode complex” on page 201.

1.4.5 Datacenter cluster configurations

Microsoft Cluster Server (MSCS) is supported only under the UVHA offering. Check for updates to the Microsoft Hardware Compatibility List (HCL) at:

http://www.microsoft.com/whdc/hcl/default.mspx

1.5 Integrated virtualization: VMware ESXi

VMware ESXi is the next-generation hypervisor that is integrated into IBM servers such as the x3850 M2. It provides a cost-effective, high-capacity virtual machine platform with advanced resource management capabilities. This innovative architecture operates independently from any general-purpose operating system, offering improved security, increased reliability, and simplified management. The compact architecture is designed for integration directly into virtualization-optimized server hardware like the IBM x3850 M2, enabling rapid installation, configuration, and deployment.


1.5.1 Key features of VMware ESXi

As discussed in the white paper The Architecture of VMware ESX Server 3i [1], VMware ESXi has functions equivalent to those of ESX Server 3.5. However, the ESXi hypervisor footprint is less than 32 MB of memory because the Linux-based service console has been removed. The function of the service console is replaced by new remote command-line interfaces, together with adherence to systems management standards.

Like ESX Server, VMware ESXi supports the entire VMware Infrastructure 3 suite of products, including VMFS, Virtual SMP®, VirtualCenter, VMotion®, VMware Distributed Resource Scheduler, VMware High Availability, VMware Update Manager, and VMware Consolidated Backup.

The VMware ESXi architecture comprises the underlying operating system, called VMkernel, and the processes that run on it. VMkernel provides the means for running all processes on the system, including management applications and agents as well as virtual machines. VMkernel also manages all hardware devices on the server and manages resources for the applications.

The main processes that run on top of VMkernel are:

Direct Console User Interface (DCUI), which is the low-level configuration and management interface, accessible through the console of the server and used primarily for initial basic configuration.

The virtual machine monitor (VMM), which is the process that provides the execution environment for a virtual machine, as well as a helper process known as VMX. Each running virtual machine has its own VMM and VMX process.

Various agents that enable high-level VMware Infrastructure management from remote applications.

The Common Information Model (CIM) system, which is the interface that enables hardware-level management from remote applications through a set of standard APIs.

Figure 1-11 on page 21 shows a component diagram of the overall ESXi 3.5 architecture.

For a detailed examination of each of these components, refer to the previously mentioned white paper, The Architecture of VMware ESX Server 3i, at:

http://www.vmware.com/files/pdf/ESXServer3i_architecture.pdf

[1] Available from http://www.vmware.com/files/pdf/ESXServer3i_architecture.pdf. This section contains material from VMware. Used with permission.

Page 39

Chapter 1. Technical overview 21

Figure 1-11 The architecture of VMware ESXi eliminates the need for a service console

1.5.2 VMware ESXi on x3850 M2

Although VMware ESXi can be booted from flash memory or installed on a hard

disk, ESXi is currently available from IBM only on specific systems, including

specific models of the x3850 M2. These models have an integrated bootable

USB flash drive that is securely installed to an internal USB port.

With an IBM eX4 server running VMware ESXi (or VMware ESX), applications and services can be deployed in highly reliable and secure virtual machines. Virtual machines can be provisioned, consolidated, and managed centrally without first installing a host operating system, thus simplifying the IT infrastructure and driving down total cost of ownership for businesses with constrained IT budgets and resources.

An important consideration when selecting a server to run VMware ESX is to ensure that it has sufficient capacity headroom. The IBM x3850 M2 is optimized for VMware ESXi because of its vertical scalability in key areas such as the processor, memory, and I/O subsystems. VMware describes the benefits of CPU-dense ESX Server hosts as follows²:

The chance that the scheduler can find room for a particular workload without much reshuffling of virtual machines will always be better when the scheduler has more CPUs across which it can search for idle time. For this reason, it will generally be better to purchase two four-way ESX Server licenses than to purchase four two-way machines.

[Figure 1-11 diagram: the VMkernel (resource scheduling, storage stack, network stack, distributed VM file system, virtual Ethernet adapter and switch, device drivers, and the user world API) hosts hostd, DCUI, syslog, vpxa, SNMP, the CIM broker with third-party CIM plug-ins, and a VMX and VMM pair for each virtual machine and its guest OS.]

2 See Tips and Techniques for Implementing Infrastructure Services on ESX Server, available at: http://www.vmware.com/vmtn/resources/409. Reproduced by permission.


Similarly, two eight-way servers will provide more scheduling flexibility than four four-way servers. Refer to the white paper Tips and Techniques for Implementing Infrastructure Services on ESX Server, available from:

http://www.vmware.com/vmtn/resources/409

Table 1-5 shows that scheduling opportunities grow much faster than linearly as more cores become available: for a virtual machine with two vCPUs, they grow roughly with the square of the number of cores.
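The figures in Table 1-5 are consistent with counting the number of ways to choose 2 of n cores for a two-vCPU virtual machine, n(n-1)/2. The following Python sketch assumes that interpretation (the source does not state the formula, and the function name is hypothetical) and reproduces the table values 28, 120, and 496.

```python
from math import comb

# Hosts from Table 1-5: (description, sockets x cores per socket).
HOSTS = [
    ("4-way Dual Core", 4 * 2),
    ("8-way Dual Core", 8 * 2),
    ("8-way Quad Core", 8 * 4),
]


def scheduling_opportunities(cores: int, vcpus: int = 2) -> int:
    """Count the distinct sets of `vcpus` physical cores that a VM could be
    co-scheduled on, that is, the binomial coefficient C(cores, vcpus)."""
    return comb(cores, vcpus)


if __name__ == "__main__":
    for name, cores in HOSTS:
        print(f"{name:<16} {cores:>3} cores  {scheduling_opportunities(cores):>4}")
```

Because the count grows roughly as n²/2, doubling the cores in a host roughly quadruples the placement choices for a two-vCPU virtual machine, which is the effect the VMware quotation describes for larger hosts.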

Table 1-5 Scheduling opportunities scale exponentially when there are more cores

ESX host server    Number of cores    Scheduling opportunities (VM = 2 vCPUs)
4-way Dual Core    8                  28
8-way Dual Core    16                 120
8-way Quad Core    32                 496

1.5.3 Comparing ESXi to other VI3 editions

VMware ESXi is one of the new VMware Infrastructure (VI) editions offered by VMware and provides the same functionality as ESX 3.5. VMware ESXi can be upgraded to the VI Foundation, Standard, and Enterprise Editions to provide additional management features, as detailed in Table 1-6.

Table 1-6 Feature comparison

Feature                                          VMware ESXi   VI Foundation   VI Standard   VI Enterprise
VMFS, Virtual SMP                                Yes           Yes             Yes           Yes
VC Agent - Central management                    No            Yes             Yes           Yes
Update manager                                   No            Yes             Yes           Yes
Consolidated backup                              No            Yes             Yes           Yes
High availability                                No            No              Yes           Yes
DRS - Resource management                        No            No              No            Yes
DPM - Power management                           No            No              No            Yes
VMotion - Live VM migration                      No            No              No            Yes
Storage VMotion - Live VM disk file migration    No            No              No            Yes
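Because each column of Table 1-6 includes every feature of the column to its left, picking an edition for a set of required features reduces to finding the first column that covers all of them. The following Python sketch encodes the table as data; the shortened feature names and the function name are hypothetical, and it considers features only, not pricing or licensing terms.

```python
from typing import Iterable

# Columns of Table 1-6, ordered from fewest to most features.
EDITIONS = ["VMware ESXi", "VI Foundation", "VI Standard", "VI Enterprise"]

# Rows of Table 1-6: feature -> availability per edition (same order as EDITIONS).
FEATURES = {
    "VMFS, Virtual SMP":   (True,  True,  True,  True),
    "VC Agent":            (False, True,  True,  True),
    "Update Manager":      (False, True,  True,  True),
    "Consolidated Backup": (False, True,  True,  True),
    "High availability":   (False, False, True,  True),
    "DRS":                 (False, False, False, True),
    "DPM":                 (False, False, False, True),
    "VMotion":             (False, False, False, True),
    "Storage VMotion":     (False, False, False, True),
}


def minimum_edition(required: Iterable[str]) -> str:
    """Return the first edition, in table order, that includes every required feature."""
    required = list(required)  # accept any iterable; it is scanned once per edition
    unknown = [name for name in required if name not in FEATURES]
    if unknown:
        raise KeyError(f"features not in Table 1-6: {unknown}")
    for i, edition in enumerate(EDITIONS):
        if all(FEATURES[name][i] for name in required):
            return edition
    raise ValueError("no edition in Table 1-6 provides all requested features")
```

For example, `minimum_edition(["High availability", "Consolidated Backup"])` returns "VI Standard", and any request that includes "VMotion" returns "VI Enterprise".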


VMware Infrastructure is available in several editions, including:

VMware Infrastructure Enterprise Edition

This edition contains the entire array of virtual infrastructure capabilities for resource management, workload mobility, and high availability. It includes:

– VMware ESX Server

– VMware ESXi

– VMware Consolidated Backup

– VMware Update Manager

– VMware VMotion

– VMware Storage VMotion

– VMware DRS with Distributed Power Management (DPM)

– VMware HA

VMware Infrastructure Standard Edition

This edition is designed to bring higher levels of resiliency to IT environments at greater value. It includes:

– VMware HA

– VMware ESX Server

– VMware ESXi

– VMware Consolidated Backup

– VMware Update Manager

VMware Infrastructure Foundation Edition

Unlike the previous VMware Infrastructure 3 Starter Edition, VMware Infrastructure Foundation Edition has no restrictions on shared storage connectivity, memory utilization, or number of CPUs of the physical server. It includes:

– VMware ESX Server

– VMware ESXi

– VMware Consolidated Backup

– VMware Update Manager

New features such as VMware High Availability (VMware HA), Distributed Resource Scheduler (DRS), and Consolidated Backup provide higher availability, guaranteed service level agreements, and quicker recovery from failures than was previously possible, and come close to the availability you get from more expensive and complicated alternatives such as physically clustered servers.


VMware Infrastructure ESXi Edition

This edition has no restrictions on shared storage connectivity, memory utilization, or number of CPUs of the physical server. If you purchase an IBM x3850 M2 with the VMware ESXi integrated hypervisor and later require additional functionality, you can upgrade ESXi to the VI Enterprise, Standard, or Foundation Edition. See “License upgrades from ESXi to VI3 Editions” on page 25 for details about upgrade options.

The System x3850 M2 and x3950 M2 servers are designed for balanced system performance and are therefore well positioned to take advantage of the larger workloads that can now be virtualized.

Table 1-7 shows the supported limits of each VMware distribution on the x3850 M2 and x3950 M2 (single node).

Note: For all VMware Infrastructure 3 (VI3) editions (Enterprise, Standard, and Foundation), two-socket licenses must be purchased together with the corresponding subscription and support for the VI3 edition purchased. The licenses are also valid for use with ESXi Installable Edition. VMware ESXi is now available free of charge, with no subscription required; however, additional VI3 features are licensed separately.

Table 1-7 Features of the VMware ESX family

Feature                            ESX Server 3.0.2 Update 1    VMware ESXi and VMware ESX V3.5
Maximum logical CPUs (a)           32                           32 (64 logical CPUs are supported experimentally by VMware)
Maximum memory                     64 GB                        256 GB
Size of RAM per virtual machine    16,384 MB                    65,532 MB

a. Each core is equal to a logical CPU.

Note: The values in the table are correct at the time of writing and may change as testing completes. They are not theoretical maximums; they set the upper limit of support for each distribution.
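When planning a host, it can help to check a proposed configuration against the supported values in Table 1-7. The following Python sketch is a hypothetical planning helper, not a VMware or IBM tool; it uses only the supported limits from the table and deliberately ignores the experimental 64-logical-CPU figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SupportLimits:
    max_logical_cpus: int    # each core counts as one logical CPU (Table 1-7, note a)
    max_host_memory_gb: int
    max_vm_ram_mb: int


# Supported limits from Table 1-7 (single node). The experimental 64-CPU
# figure for ESXi / ESX V3.5 is intentionally not used.
LIMITS = {
    "ESX Server 3.0.2 Update 1": SupportLimits(32, 64, 16_384),
    "ESXi / ESX V3.5": SupportLimits(32, 256, 65_532),
}


def check_plan(distribution: str, cores: int, host_memory_gb: int,
               largest_vm_ram_mb: int) -> List[str]:
    """Return readable limit violations for a planned host (empty list if none)."""
    lim = LIMITS[distribution]
    problems = []
    if cores > lim.max_logical_cpus:
        problems.append(f"{cores} cores > {lim.max_logical_cpus} supported logical CPUs")
    if host_memory_gb > lim.max_host_memory_gb:
        problems.append(f"{host_memory_gb} GB host memory > {lim.max_host_memory_gb} GB supported")
    if largest_vm_ram_mb > lim.max_vm_ram_mb:
        problems.append(f"{largest_vm_ram_mb} MB VM RAM > {lim.max_vm_ram_mb} MB supported")
    return problems
```

For example, a four-socket, quad-core host (16 cores) with 128 GB of memory and a 32,768 MB virtual machine passes under ESXi / ESX V3.5 but reports two violations (host memory and per-VM RAM) under ESX Server 3.0.2 Update 1.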


For more information about the configuration maximums of ESX Server, see:

ESX Server 3.0.2 configuration maximums:

http://www.vmware.com/pdf/vi3_301_201_config_max.pdf

VMware ESX V3.5 and VMware ESXi V3.5 configuration maximums:

http://www.vmware.com/pdf/vi3_35/esx_3/r35/vi3_35_25_config_max.pdf

1.5.4 VMware ESXi V3.5 licensing

As described in 1.2.2, “x3850 M2 model information” on page 10, specific hypervisor models of the x3850 M2 include VMware ESXi V3.5, the embedded virtualization engine, on an IBM-customized USB flash disk. These models include a license for VMware ESXi V3.5 for up to four processor sockets.

Subscriptions for updates

In addition, subscriptions for updates to VMware ESXi V3.5 are recommended, but not mandatory. They can be purchased for each ESXi V3.5 (four-socket) license using product number 5773-VMW:

Subscription for two processor sockets: Feature code 0997

Subscription for four processor sockets: Feature code 0998

For more details, see the IBM Announcement Letter 208-071:

http://www.ibm.com/isource/cgi-bin/goto?it=usa_annred&on=208-071

License upgrades from ESXi to VI3 Editions

VMware ESXi can be upgraded to provide the additional features available in the VMware Infrastructure Enterprise, Standard, or Foundation Editions by purchasing licenses from IBM, as shown in Table 1-8 on page 26.

Note: VMware ESXi is available only in a dedicated model of the x3850 M2 (as described in 1.2.2, “x3850 M2 model information” on page 10) or in configure-to-order (CTO) models preloaded as a Factory Install (product number 5773-VMW).


Table 1-8 VMware license, subscription, support options for ESX 3.5 and ESXi

For details of part numbers, refer to VMware Offerings in the IBM System x Configuration and Options Guide:

http://www.ibm.com/systems/xbc/cog/vmwareesx.html

For example, to upgrade an ESXi four-socket license for an x3850 M2 hypervisor model (with all four processor sockets populated), purchase the following items:

Two VMware ESX Server 3i to Enterprise Upgrade, 2-socket license only

Two Subscription Only VMware ESX Server 3i to Enterprise Upgrade, 2-socket, 3-year support

Two VMware Infrastructure 3, Enterprise, 2-socket, 3-year support

The exact descriptions of the parts above might differ slightly from country to country, or with the length (in years) of subscription and support. License upgrades, subscription upgrades, and support must be purchased as a complete set to upgrade ESXi to the VI Foundation, Standard, or Enterprise Edition.
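The quantities in Table 1-8 and in the example above follow a simple rule for a single node: each item covers two processor sockets, and the license upgrade, subscription upgrade, and support are always bought together in equal numbers. The following Python sketch illustrates that rule only; the function name and the generic item strings are hypothetical, and real part descriptions and numbers must come from the Configuration and Options Guide.

```python
from typing import Dict

# Generic descriptions of the three items in an ESXi upgrade set. Actual part
# descriptions and support terms vary by country; these strings are illustrative.
UPGRADE_SET = (
    "VMware ESX Server 3i to {edition} Upgrade, 2-socket license only",
    "Subscription Only VMware ESX Server 3i to {edition} Upgrade, 2-socket",
    "VMware Infrastructure 3, {edition}, 2-socket support",
)


def esxi_upgrade_order(edition: str, populated_sockets: int) -> Dict[str, int]:
    """Quantity of each item needed to upgrade ESXi on one single-node server."""
    if edition not in ("Foundation", "Standard", "Enterprise"):
        raise ValueError(f"unknown VI3 edition: {edition}")
    if populated_sockets not in (2, 4):  # the two cases covered by Table 1-8
        raise ValueError("Table 1-8 covers 2-socket and 4-socket single nodes only")
    quantity = populated_sockets // 2  # each item covers two sockets
    # The set must be complete, so every item gets the same quantity.
    return {item.format(edition=edition): quantity for item in UPGRADE_SET}
```

For a four-socket hypervisor model, `esxi_upgrade_order("Enterprise", 4)` yields a quantity of 2 for each of the three items, matching the example list above.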

1.5.5 Support for applications running on VMware ESX and ESXi

Ensure that the applications you plan to use on the x3850 M2 and x3950 M2 running VMware ESX Server are supported by the application vendor:

Microsoft

See the following Microsoft support Web site for details about its support of applications and operating systems running on ESX Server:

http://support.microsoft.com/kb/897615/

Description                                       Quantity for single-node x3850 M2 / x3950 M2
                                                  with 2 sockets      with 4 sockets
VMware ESX 3i to [Enterprise, Standard, or        1                   2
Foundation] upgrade, 2-socket license only

Subscription only VMware ESX 3i to

[Enterprise, Standard, or Foundation]