Technical white paper

HP Virtual Connect FlexFabric Cookbook

– With HP Virtual Connect Flex-10/10D

(Version 3.70 through 4.01 Firmware Enhancements)

Purpose
Documentation feedback
Introduction to Virtual Connect Flex-10 and FlexFabric
  New Features:
  The Virtual Connect Cookbook Series:
  Virtual Connect Ethernet Modules
  Connecting VC Flex-10/10D or VC FlexFabric to the CORE
  Choosing VC Flex-10/10D or VC FlexFabric
  Choosing an Adapter for VC Flex-10/10D or VC FlexFabric
  Determining Network Traffic Patterns and Virtual Connect network design (Active/Standby vs. Active/Active)
  VMware ESXi 5.0/5.1
Single Domain/Enclosure Scenarios
  Overview
  Requirements
Scenario 1 – Simple vNet with Active/Standby Uplinks – Ethernet and FCoE – Windows 2008 R2
  Overview
  Requirements
  Installation and configuration
  Review
  Results – Windows 2008 R2 Networking Examples
  Results – Windows 2008 R2 SAN Connectivity
  Summary
Scenario 2 – Shared Uplink Sets with Active/Active uplinks and 802.3ad (LACP) - Ethernet and FCoE – Windows 2008 R2
  Overview
  Requirements
  Installation and configuration
  Review
  Results – Windows 2008 R2 Networking Examples
  Results – Windows 2008 R2 SAN Connectivity
  Summary
Scenario 3 – Shared Uplink Set with Active/Active Uplinks and 802.3ad (LACP) - Ethernet and FCoE Boot from SAN – Windows 2008 R2
  Overview
  Requirements
  Installation and configuration
  Review
  Results – Windows 2008 R2 Networking Examples
  Results – Windows 2008 R2 SAN Connectivity
  Summary
Scenario 4 – Shared Uplink Set with Active/Active Uplinks and 802.3ad (LACP) – Ethernet, FCoE SAN Windows 2008 R2 Hyper-V
  Overview
  Requirements
  Installation and configuration
  Review
  Results – Windows 2008 R2 Networking Examples
  Results – Windows 2008 R2 SAN Connectivity
  Summary
Scenario 5 – Shared Uplink Set with Active/Standby Uplinks and 802.3ad (LACP) - Ethernet and FCoE SAN vSphere
  Overview
  Requirements
  Installation and configuration
  Review
  Results – vSphere Networking Examples
  Results – vSphere SAN Connectivity
  Summary
Scenario 6 – Shared Uplink Set with Active/Active Uplinks, 802.3ad (LACP) - Ethernet and FCoE SAN – vSphere
  Overview
  Requirements
  Installation and configuration
  Review
  Results – vSphere Networking Examples
  Results – vSphere SAN Connectivity
  Summary
Scenario 7 – Tunneled VLANs and Shared Uplink Set with Active/Active Uplinks and 802.3ad (LACP) - Ethernet and FCoE SAN - vSphere
  Overview
  Requirements
  Installation and configuration
  Review
  Results – vSphere Networking Examples
  Results – vSphere SAN Connectivity
  Summary
Scenario 8 – Network Access Groups in a Shared Uplink Set - Ethernet and FCoE SAN – Windows 2008 R2
  Overview
  Requirements
  Installation and configuration
  Review
  Results – Windows 2008 R2 Networking Examples
  Summary
Scenario 9 – Shared Uplink Set with Active/Active Uplinks, 802.3ad (LACP) – Flex-10 and VC-Fibre Channel SAN – vSphere
  Overview
  Requirements
  Installation and configuration
  Review
  Results – vSphere Networking Examples
  Results – vSphere SAN Connectivity
  Summary
Appendix A1 – Scenario-based CISCO IOS Command Line Reference
Appendix A2 – Scenario-based CISCO NX-OS Command Line Reference
Appendix B1 – Scenario-based ProCurve Command Line Reference
Appendix B2 – Scenario-based Comware Command Line Reference
Appendix C – Acronyms and abbreviations
Appendix D – Useful VC CLI Command sets
  VC Domain Configuration
  FlexFabric Scripting Additions
  Release 3.30 Scripting Additions
Appendix E – Configuring QoS

Purpose

The purpose of this Virtual Connect Cookbook is to provide users of Virtual Connect with a better

understanding of the concepts and steps required when integrating HP BladeSystem and Virtual

Connect Flex-10 or FlexFabric components into an existing network.

The scenarios in this Cookbook range from simple to more complex and cover a range of typical building blocks for designing Virtual Connect Flex-10 or FlexFabric solutions. Although these scenarios are shown individually, some could be combined to create a more complex and versatile Virtual Connect environment. Examples include the combined use of Shared Uplink Sets (SUS) and vNet Tunnels, or Active/Active networks for North/South traffic flows, such as iSCSI or VDI, alongside primary network traffic configured in a separate Shared Uplink Set with Active/Standby uplinks.

Existing users of Virtual Connect will quickly realize that, as of VC firmware release 3.30, the selection between “Mapped” and “Tunneled” modes is no longer a concern. The capabilities provided in those modes are now available in the default installation of VC firmware 3.30 and beyond. These capabilities and changes are discussed in further detail later in this paper.

In addition to the features added in release 3.30, 4.01 is a major release containing several new

features, including QoS and Min/Max downlink speed settings among others. This Cookbook will

highlight and discuss some of these added features.

The scenarios as written are meant to be self-contained configurations and do not build on earlier scenarios. As a result, you may find some repetition or duplication of configuration across scenarios.

This paper is not meant to be a complete or detailed guide to Virtual Connect Flex-10 or FlexFabric,

but is intended to provide the reader with some valid examples of how Virtual Connect Flex-10 or

FlexFabric could be deployed within their environments. Many additional configurations or

scenarios could also be implemented. Please refer to the following section for additional reference

material on Virtual Connect, Flex-10 and FlexFabric.

Documentation feedback

HP welcomes your feedback. To make comments and suggestions about product documentation,

send a message to docsfeedback@hp.com. Include the document title and manufacturing part

number. All submissions become the property of HP.

Introduction to Virtual Connect Flex-10 and FlexFabric

Virtual Connect is an industry standards-based implementation of server-edge virtualization. It

puts an abstraction layer between the servers and the external networks so the LAN and SAN see a

pool of servers rather than individual servers. Once the LAN and SAN connections are physically

made to the pool of servers, the server administrator uses Virtual Connect management tools

(Virtual Connect Manager (VCM) or Virtual Connect Enterprise Manager (VCEM)) to create a profile

for each server.

Virtual Connect FlexFabric is an extension to Virtual Connect Flex-10 which leverages Fibre Channel

over Ethernet (FCoE) protocols. By leveraging FCoE for connectivity to existing Fibre Channel SAN

networks, we can reduce the number of switch modules and HBAs required within the server blade

and enclosure. This in turn further reduces cost, complexity, power and administrative overhead.

This paper will discuss the differences between Flex-10 and FlexFabric and provide information and

suggestions to assist the reader in determining the best option for their implementation of

BladeSystem and Virtual Connect. For additional information on Virtual Connect, Flex-10 and/or

FlexFabric, please review the documents below.

New Features:

Version 3.70 of Virtual Connect contains support for the following enhancements, which are described in the VC 3.70 user guide:

Discontinued support for old hardware:

o HP 1/10Gb Virtual Connect Ethernet Module

o HP 1/10Gb-F Virtual Connect Ethernet Module

Support for new hardware:

o HP Virtual Connect Flex-10/10D Module

o HP ProLiant BL660c Gen8 Server series

o HP ProLiant WS460c Gen8 Workstation series

o HP Integrity BL860c i4 Server Blades

o HP Integrity BL870c i4 Server Blades

o HP Integrity BL890c i4 Server Blades

o HP 7m C-series Active Copper SFP+ cables (QK701A)

o HP 10m C-series Active Copper SFP+ cables (QK702A)

o Cisco 7m copper active Twinax cables (SFP-H10GB-ACU7M)

o Cisco 10m copper active Twinax cables (SFP-H10GB-ACU10M)

Virtual Connect Direct-Attach Fibre Channel for HP 3PAR Storage Systems

Manageability enhancements:

o VCM GUI access to telemetry information

o Advanced telemetry and statistics for Link Aggregation Groups and FlexNICs

o GUI access to the FC Port Statistics for HP FlexFabric 10Gb/24-port Modules

o Improvements to the Statistics Throughput display and data collection

o Display of factory default MACs and WWNs in server profiles

o Added an FC/FCoE “Connect To” field to help identify how server ports are

connected to the uplink ports

o LLDP enhancements to more easily identify VC Ethernet modules on the network

o Improvements to the display of the MAC Address table to show the network name

and VLAN ID where the MAC address was learned, as well as display of the LAG

membership table

VCM GUI/CLI task progress activity indicator for predefined VCM operations

Security enhancements:

o Support for 2048 bit SSL certificates and configurable SSL-CSR

o Activity logging improvements for TACACS+ accounting

o Option to disable local account access when LDAP, RADIUS, or TACACS+

authentication is enabled

o Increased the default VCM local user account minimum required password length

o SNMP access security to prevent access from unauthorized management stations

SmartLink failover improvements

IGMP “NoFlood” option when IGMP snooping is enabled

Browser support:

o Internet Explorer 8 and 9

o Firefox 10 and 11

Firmware upgrade rollback from a previous firmware upgrade without domain deletion

Please refer to the VC 3.70 User Guide for additional VCEM feature enhancements

Please refer to the VC 3.70 Release notes and User Guides for further information

3.70 Release Notes

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c03478436/c03478436.pdf

3.70 CLI User Guide

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c03478433/c03478433.pdf

3.70 User Guide

http://h20628.www2.hp.com/km-ext/kmcsdirect/emr_na-c03478464-3.pdf

Virtual Connect Firmware 4.01 includes the following new features:

Version 4.01 of Virtual Connect contains support for the following enhancements:

Manageability enhancements:

Extended support for FCoE protocol on Flex-10/10D and FlexFabric modules, which

includes FIP snooping support but is limited to dual-hop configurations. FlexFabric

module dual-hop FCoE support is restricted to uplink ports X1-X4

IMPORTANT: For more information about the installation and limitations for Virtual

Connect dual-hop FCoE support, see the HP Virtual Connect Dual-Hop FCoE Cookbook,

which can be found on the Installing tab of the HP BladeSystem Technical Resources

website (http://www.hp.com/go/bladesystem/documentation)

Prioritization of critical application traffic with QoS

Minimum and maximum bandwidth optimization for efficient allocation of bandwidth

in virtualized environments with Flex-10 and FlexFabric adapters. Flex-10 and

FlexFabric adapter firmware and drivers must be updated to SPP version 2013.02.00,

or the latest hotfix thereafter, to take advantage of this enhancement

Note: This feature excludes support for the following adapters:

o HP NC551i Dual Port FlexFabric 10Gb Converged Network Adapter

o HP NC551m Dual Port FlexFabric 10Gb Converged Network Adapter

o HP NC550m 10Gb 2-port PCIe x8 Flex-10 Ethernet Adapter

VC SNMP MIB enhancements for improved troubleshooting and failure analysis. Virtual Connect SNMP Domain MIB (vc-domain-mib.mib) traps now contain detailed information with the root cause of each event. Update SNMP management stations with the HP MIB Kit version 9.30 prior to installing Virtual Connect version 4.01 to take advantage of this enhancement. Download the update from the HP website (http://h18006.www1.hp.com/products/servers/management/hpsim/mibkit.html).

Enhanced support for LLDP MIB, Bridge MIB, Interface MIB, and Link aggregation MIB

The domain status alerts screen includes cause and root cause for each alert

Customization of VC user roles and privileges

The VCM GUI now allows searching for Network Access Groups, modules, interconnect

bays, and device bay items from the left navigation tree

Configurable long or short LACP timer

VCM CLI TAB key auto-completion

The Network, SUS, and hardware pages now display the remote system name instead of the MAC address.

Security enhancements:

o IGMP Snooping enhancements with multicast group host membership filtering

o Ability to set session timeout for idle VCM CLI or VCM GUI management sessions

o Protection of VC Ethernet modules from buffer exhaustion due to flooding of

Pause packets from servers

VCEM compatibility:

If you are running VCEM 6.3.1 or later to manage a VC 4.01 domain, the 4.01 domain

can be in a VCDG in 3.30 firmware mode or later. To enable new features in VC 4.01,

you must upgrade to VCEM 7.2 or later. VCEM 7.2 does not support VC versions prior to

3.30

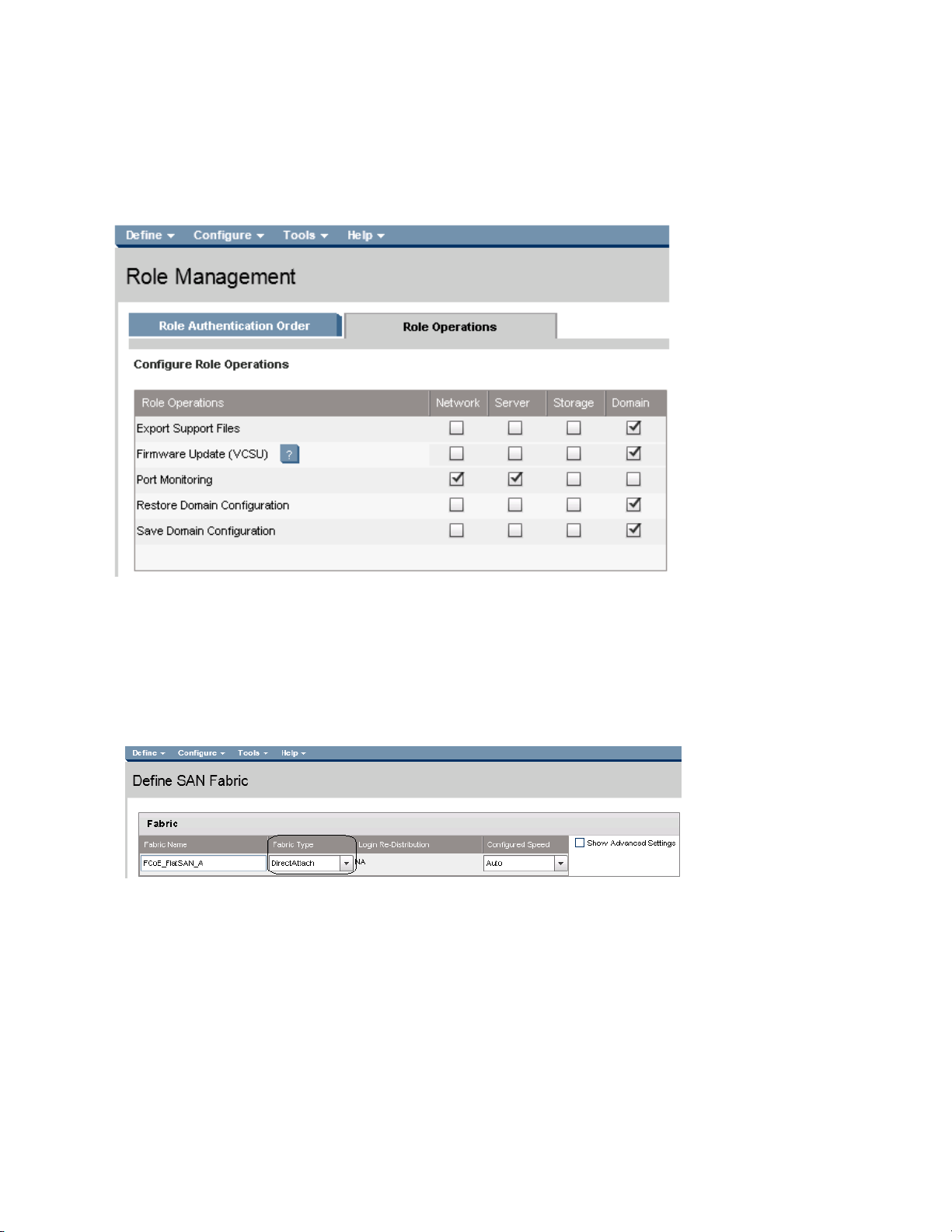

Configurable role operations must be delegated to one of the following roles if they

are to be performed while the domain is in Maintenance Mode: Network, Storage or

Domain. Administrators logging into VCM with a Server role account while the domain

is in Maintenance mode will be denied access to perform delegated operations such as

exporting support files, updating firmware, configuring port monitoring or saving or

restoring domain configuration

In VC 4.01, the telemetry port throughput is Enabled by default. You must do the following to add a fresh VC 4.01 installation to your existing VCDG:

3.30-3.70 VCDG with statistics throughput disabled: Clear the Enable Throughput Statistics checkbox on the Ethernet Settings (Advanced Settings) screen, or run the following VCM CLI command:

set statistics-throughput Enabled=false

3.30-3.70 VCDG with statistics throughput enabled: Add the domain as is. No change is required

In VC 4.01, the VLAN Capacity is set to Expanded by default. You must do the following to add a fresh VC 4.01 installation to your existing VCDG:

3.30-3.70 with Legacy VLAN VCDG: You cannot add the domain. Select a different VCDG

3.30-3.70 with Enhanced VLAN VCDG: Add the domain as is. No change is required
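The VCDG rules above can be read as a small decision procedure. The following Python sketch is only an illustration of that logic: the `Vcdg` class, its field names and the function name are hypothetical and are not part of VCM or VCEM. Only the CLI command string is taken from the text above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vcdg:
    """Hypothetical description of an existing 3.30-3.70 VCDG."""
    throughput_statistics_enabled: bool
    vlan_capacity: str  # "Legacy" or "Enhanced", as named in the rules above


def steps_to_add_fresh_401_domain(vcdg: Vcdg) -> List[str]:
    """Return what must happen before a fresh VC 4.01 domain joins the VCDG."""
    if vcdg.vlan_capacity == "Legacy":
        # A fresh 4.01 domain defaults to Expanded VLAN capacity,
        # so it cannot join a Legacy VLAN VCDG.
        return ["Cannot add the domain; select a different VCDG"]
    steps: List[str] = []
    if not vcdg.throughput_statistics_enabled:
        # 4.01 enables throughput statistics by default; match the VCDG.
        steps.append("set statistics-throughput Enabled=false")
    return steps or ["Add the domain as is"]


print(steps_to_add_fresh_401_domain(Vcdg(False, "Enhanced")))
```

The VLAN capacity check comes first because it is the only case in which the domain cannot be added at all.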

Please refer to the VC 4.01 Release notes for further information

4.01 Release Notes

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c03801912/c03801912.pdf

4.01 CLI User Guide

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c03790895/c03790895.pdf

4.01 User Guide

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c03791917/c03791917.pdf

Additional Virtual Connect Reference Material

The HP Virtual Connect technology site provides a great deal of reference information on HP Virtual Connect Flex-10 and FlexFabric.

http://h18000.www1.hp.com/products/blades/virtualconnect/

Overview of HP Virtual Connect Technologies

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c00814156/c00814156.pdf

HP Virtual Connect Traffic Flow

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c03154250/c03154250.pdf

HP Virtual Connect for c-Class BladeSystem Setup and Installation Guide

http://bizsupport1.austin.hp.com/bc/docs/support/SupportManual/c01732252/c01732252.pdf

Efficiently managing Virtual Connect environments

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c03028646/c03028646.pdf

HP Virtual Connect Direct-Attach Fibre Channel for HP 3PAR (FlatSAN) Solution brief

http://h20195.www2.hp.com/V2/GetPDF.aspx/4AA4-1557ENW.pdf

HP BladeSystem Network Reference Architecture - FlexFabric and VMware vSphere 5

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c03278211/c03278211.pdf

Virtual Connect User, Setup and CLI Guides

http://h20000.www2.hp.com/bizsupport/TechSupport/DocumentIndex.jsp?contentType=SupportManual&lang=en&cc=us&docIndexId=64180&taskId=101&prodTypeId=3709945&prodSeriesId=3794423

HP Virtual Connect FlexFabric Solutions Recipe

http://vibsdepot.hp.com/hpq/recipes/

Virtual Connect Multi-Enclosure Stacking Reference Guide

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c02102153/c02102153.pdf

Virtual Connect for the CISCO Administrator

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01386629/c01386629.pdf

(www.hp.com/go/blades)

The Virtual Connect Cookbook Series:

Virtual Connect 1Gb Ethernet Cookbook

Virtual Connect can be used to support both Ethernet and Fibre Channel connections. The Virtual Connect 1Gb Ethernet Cookbook provides basic Virtual Connect configurations in a 1Gb environment. Earlier releases of the Virtual Connect Ethernet Cookbook cover both 1Gb and 10Gb solutions; however, the most recent release of the Virtual Connect 1Gb Cookbook covers only 1Gb Ethernet solutions up to Virtual Connect firmware release 3.6x.

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01990371/c01990371.pdf

(www.hp.com/go/blades)

Virtual Connect Dual-Hop FCoE Cookbook

Virtual Connect 4.01 now provides the ability to pass FCoE (Dual Hop) to an external FCoE capable

network switch. This guide is focused on both the Virtual Connect and Network switch

configurations needed to support this connectivity.

For Dual Hop FCoE connectivity, please refer to the Dual-Hop FCoE with HP Virtual Connect modules

Cookbook

http://bizsupport1.austin.hp.com/bc/docs/support/SupportManual/c03808925/c03808925.pdf

(www.hp.com/go/blades)

Virtual Connect Fibre Channel Cookbook

Virtual Connect can be used to support both Ethernet and Fibre Channel connections; however, this

guide is focused completely on the Ethernet configuration.

For Fibre Channel connectivity, please refer to the Virtual Connect Fibre Channel Cookbook

http://bizsupport1.austin.hp.com/bc/docs/support/SupportManual/c01702940/c01702940.pdf

(www.hp.com/go/blades)

Virtual Connect iSCSI Cookbook

Virtual Connect can be used to support iSCSI accelerated connections, including iSCSI boot; however, this guide is focused completely on the Ethernet and iSCSI configuration.

For iSCSI connectivity, please refer to the Virtual Connect iSCSI Cookbook

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c02533991/c02533991.pdf

(www.hp.com/go/blades)

Virtual Connect Ethernet Modules

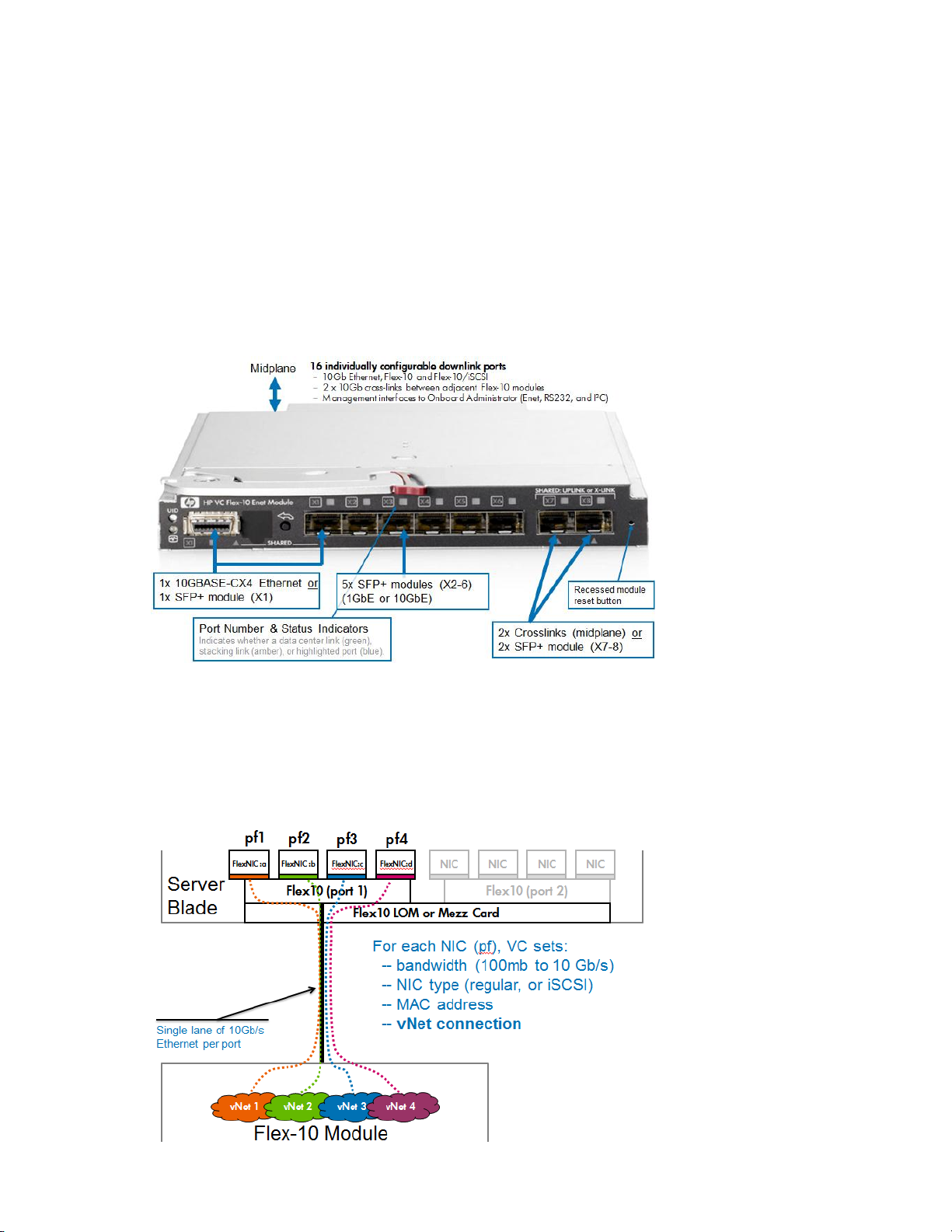

Virtual Connect Flex-10 Module Uplink Port Mappings

It is important to note how the external uplink ports on the Flex-10 module are configured. The

graphic below outlines the type and speed each port can be configured as.

Ports X1 – X8: Can be configured as 1Gb or 10Gb Ethernet

Ports X7 – X8: Are also shared as internal cross connects and should not be used for external connections; at the very least, one horizontal stacking link is required

Uplink ports X1-X8 support 0.5–7m length DAC as stacking or uplink

The CX-4 port is shared with port X1; only one of these connections can be used at a time

Figure 1 – Virtual Connect Flex-10 Module port configuration, speeds and types

Note: The Virtual Connect Flex-10 module shown above was introduced in late 2008 and is replaced by the Flex-10/10D module, shown next, which was released in August 2012. The Flex-10 module above will reach end of sales life in late 2013.

Figure 2 - FlexNIC Connections – It is important to note that Physical Function two (pf2) can be

configured as Ethernet or iSCSI (iSCSI is supported with Flex-10 and G7 and Gen 8 blades using the

Emulex based BE2 and BE3 chipsets). Physical Functions 1, 3 and 4 would be assigned as Ethernet

only connections

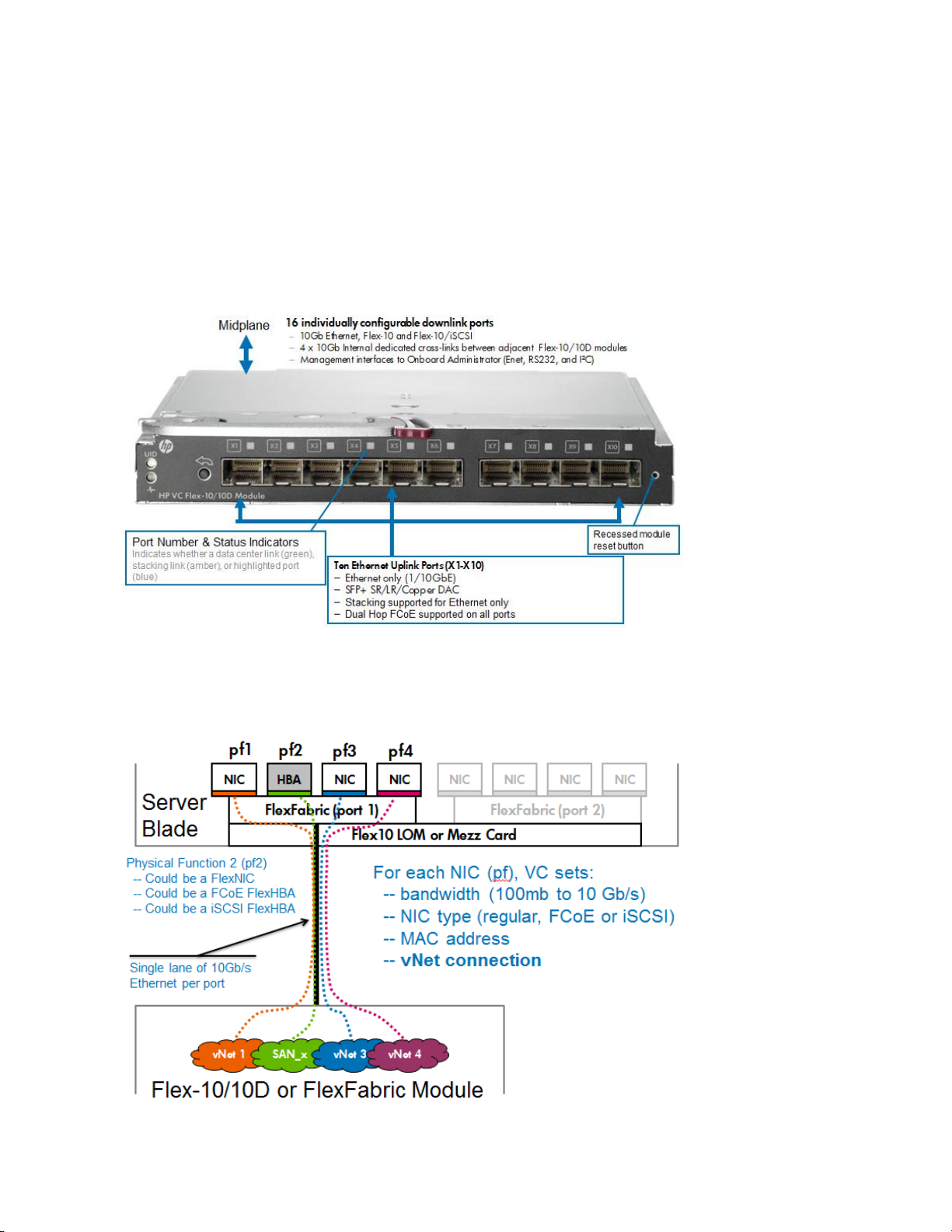

Virtual Connect Flex-10/10D Module Uplink Port Mappings

It is important to note how the external uplink ports on the Flex-10/10D module are configured. The graphic below outlines the type and speed each port can be configured as.

Ports X1 – X10: Can be configured as 1Gb or 10Gb Ethernet or FCoE (all external ports can be used; unlike previous modules, none of these ports are shared with internal stacking)

Ports X11-X14: Internal cross connections for horizontal stacking; these are NOT shared with any external connections

Uplink ports X1-X10 support 0.5–15m length DAC as stacking or uplink. If greater lengths are required, fibre optic cables are required

Figure 3 – Virtual Connect Flex-10/10D Module port configuration, speeds and types

Figure 4 - FlexNIC Connections – It is important to note that Physical Function two (pf2) can be

configured as Ethernet, iSCSI (iSCSI and Dual Hop FCoE are supported with Flex-10/10D and G7

blades using the Emulex based BE2 and BE3 chipsets). Physical Functions 1, 3 and 4 would be

assigned as Ethernet only connections. Dual Hop FCoE connections are supported on all external

uplink ports

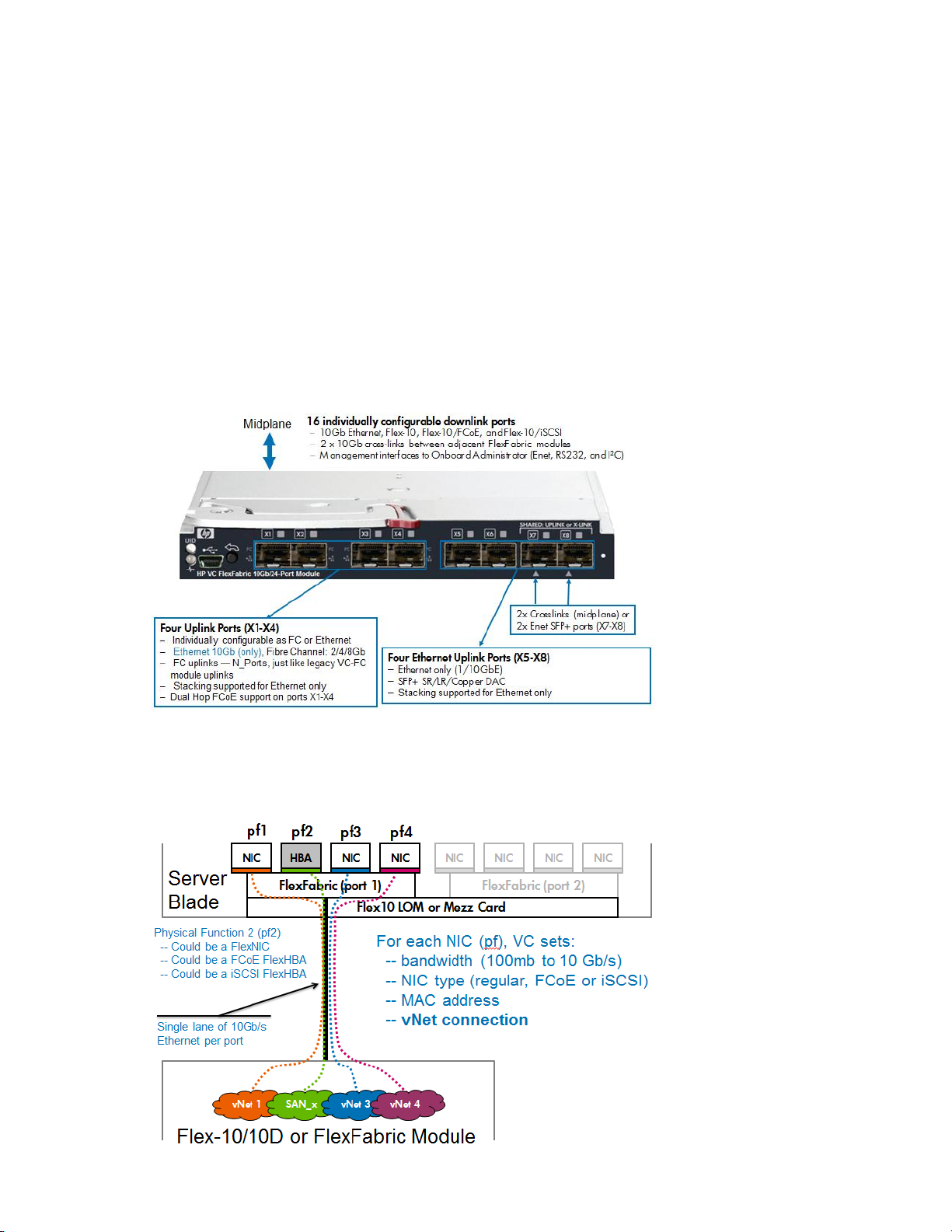

Virtual Connect FlexFabric Module Uplink Port Mappings

It is important to note how the external uplink ports on the FlexFabric module are configured. The

graphic below outlines the type and speed each port can be configured as.

Ports X1 – X4: Can be configured as 10Gb Ethernet or Fibre Channel. Supported FC speeds are 2Gb, 4Gb or 8Gb using 4Gb or 8Gb FC SFP modules. Please refer to the FlexFabric Quick Spec for a list of supported SFP modules

Ports X5 – X8: Can be configured as 1Gb or 10Gb Ethernet

Ports X7 – X8: Are also shared as internal stacking links and should not be used for external connections; at the very least, one horizontal stacking link is required if modules are in adjacent bays. Note: Within FlexFabric, stacking only applies to Ethernet traffic.

Uplink ports X1-X4 support 0.5–5m length DAC as stacking or uplink

Uplink ports X5-X8 support 0.5–7m length DAC as stacking or uplink

Note: 5m DAC cables are supported on all ports with FlexFabric; in addition, 7-15m DAC cables are also supported on ports X5 through X8. Flex-10 supports 15m DAC cables on ALL ports.

Figure 5 – Virtual Connect FlexFabric Module port configuration, speeds and types

Figure 6 - FlexNIC Connections – It is important to note that Physical Function two (pf2) can be

configured as Ethernet, iSCSI or FCoE (iSCSI and FCoE are supported with VC FlexFabric and G7

blades using the Emulex based BE2 and BE3 chipsets). Physical Functions 1, 3 and 4 would be

assigned as Ethernet only connections. Dual Hop FCoE connections are supported on external ports

X1 through X4

Virtual Connect 8Gb 20-Port Fibre Channel Module Uplink Port Mappings

It is important to note how the external uplink ports on the VC-FC module are configured. The

graphic below outlines the type and speed each port can be configured as.

Ports 1 - 4: Can operate at Fibre Channel speeds of 2Gb, 4Gb or 8Gb using 4Gb or 8Gb FC SFP modules

The VC 8Gb 20 Port module ships with NO SFP modules

Refer to the VC 8Gb 20 Port module Quick Spec for a list of supported SFP modules

Figure 7 - Virtual Connect 8Gb 20 Port Module port configuration and speed types

Virtual Connect 8Gb 24-Port Fibre Channel Module Uplink Port Mappings

It is important to note how the external uplink ports on the VC-FC module are configured. The

graphic below outlines the type and speed each port can be configured as.

Ports 1 - 8: Can operate at Fibre Channel speeds of 2Gb, 4Gb or 8Gb using 4Gb or 8Gb FC SFP modules

The VC 8Gb 24 Port module ships with TWO 8Gb FC SFP modules installed

Refer to the VC 8Gb 24 Port module Quick Spec for a list of supported SFP modules

Figure 8 - Virtual Connect 8Gb 24 Port Module port configuration and speed types

Connecting to Brocade Fibre Channel Fabric at 8Gb

NOTE: When VC 8Gb 20-port FC or VC FlexFabric 10Gb/24-port module Fibre Channel uplink ports are configured to operate at 8Gb speed and connect to HP B-series (Brocade) Fibre Channel SAN switches, the minimum supported version of the Brocade Fabric OS (FOS) is v6.3.1 or v6.4.x. In addition, the fill word on those switch ports must be configured with option “Mode 3” to prevent connectivity issues at 8Gb speed.

On HP B-series (Brocade) FC switches, use the portCfgFillWord command (portCfgFillWord <Port#> <Mode>) to configure this setting:

Mode      Link Init/Fill Word
Mode 0    IDLE/IDLE
Mode 1    ARBF/ARBF
Mode 2    IDLE/ARBF
Mode 3    If ARBF/ARBF fails use IDLE/ARBF

Although this setting only affects devices logged in at 8G, changing the mode is disruptive

regardless of the speed the port is operating at. The setting is retained and applied any time an 8G

device logs in. Upgrades to FOS v6.3.1 or v6.4 from prior releases supporting only modes 0 and 1

will not change the existing setting, but a switch or port reset to factory defaults with FOS v6.3.1 or

v6.4 will be configured to Mode 0 by default. The default setting on new units may vary by vendor.

Please use portcfgshow CLI to view the current portcfgfillword status for that port.

Modes 2 and 3 are compliant with FC-FS-3 specifications (standards specify the IDLE/ARBF behavior

of Mode 2 which is used by Mode 3 if ARBF/ARBF fails after 3 attempts). For most environments,

Brocade recommends using Mode 3, as it provides more flexibility and compatibility with a wide

range of devices. In the event that the default setting or Mode 3 does not work with a particular

device, contact your switch vendor for further assistance. When connecting to Brocade SAN

Switches at 8Gb, “portCfgFillWord” must be set to Mode 3 – If ARBF/ARBF fails use IDLE/ARBF. In

order to use Mode 3, FOS v6.3.1 or v6.4.x or better is required.
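When several switch ports need the same fill word setting, the command lines can be generated rather than typed. The Python sketch below is a minimal illustration. It uses only the mode table and the `portCfgFillWord <Port#> <Mode>` syntax given in this section. The helper name and the port numbers are hypothetical, and the output still has to be run on the switch by an administrator.

```python
from typing import List

# Fill word modes as listed in this section.
FILL_WORD_MODES = {
    0: "IDLE/IDLE",
    1: "ARBF/ARBF",
    2: "IDLE/ARBF",
    3: "If ARBF/ARBF fails use IDLE/ARBF",
}


def fill_word_commands(ports: List[int], mode: int = 3) -> List[str]:
    """Build one portCfgFillWord command line per switch port."""
    if mode not in FILL_WORD_MODES:
        raise ValueError(f"unknown fill word mode: {mode}")
    return [f"portCfgFillWord {port} {mode}" for port in ports]


# Hypothetical switch ports 4 and 5, using the Mode 3 setting required at 8Gb.
for line in fill_word_commands([4, 5]):
    print(line)
```

Because changing the mode is disruptive regardless of port speed, a generated list like this is best reviewed before it is applied.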

Tunneled VLAN and Mapped VLANs

Readers familiar with earlier releases of Virtual Connect firmware will notice that Virtual Connect 3.30 firmware removed the need to configure Virtual Connect in Mapped or Tunneled mode. As of the 3.30 firmware release, Virtual Connect can simultaneously take advantage of the features and capabilities that were previously provided in either Mapped or Tunneled mode, so there is no need to choose the domain’s mode of operation. The key feature gained is the ability to use Mapped VLANs (multiple networks) and Tunneled networks within the same profile.

Virtual Connect VLAN Support – Shared Uplink Set

Shared Uplink Sets provide administrators with the ability to distribute VLANs into discrete and defined Ethernet Networks (vNets). These vNets can then be mapped logically to a Server Profile Network Connection, allowing only the required VLANs to be associated with the specific server NIC port. This also allows the flexibility to have various network connections for different physical Operating System instances (e.g., a VMware ESX host and a physical Windows host).

Legacy VLAN Capacity

Legacy VLAN capacity mode allows up to 320 VLANs per Ethernet module, 128 VLANs per Shared Uplink Set, and up to 28 VLANs per FlexNIC port. Care must be taken not to exceed the limit per physical server port.

The following Shared Uplink Set rules apply to legacy capacity mode:

320 VLANs per Virtual Connect Ethernet Module

128 VLANs per Shared Uplink Set (single uplink port)

28 unique server mapped VLANs per server profile network connection

The above configuration rules apply only to a Shared Uplink Set. If support for a larger number of VLANs is required, a VLAN Tunnel can be configured to support a large number of VLANs. Please see the Virtual Connect Release Notes for further details.

Expanded VLAN Capacity – Added in Virtual Connect 3.30 Release

This mode allows up to 1000 VLANs per domain when implementing a Shared Uplink Set (SUS). The number of VLANs per Shared Uplink Set is restricted to 1000. In addition, up to 162 VLANs are allowed per physical server port, with no restriction on how those VLANs are distributed among the server connections mapped to the same physical server port. Care must be taken not to exceed the limit per physical server port. For example, if you configure 150 VLAN mappings for a server connection (FlexNIC:a) of a FlexFabric physical server port, then you can only map 12 VLANs to the remaining three server connections (FlexNIC:b, FlexNIC:c, and FlexNIC:d) of the same physical server port. If you exceed the 162 VLAN limit, the physical server port is disabled and the four server connections are marked as Failed. Also, keep in mind that the FCoE SAN or iSCSI connection is also counted as a network mapping. If a greater number of VLANs is needed, a vNet Tunnel can be used simultaneously with VLAN mapping.

The following Shared Uplink Set rules apply:

1000 VLANs per Virtual Connect Ethernet domain

162 VLANs per Ethernet server port

The above configuration rules apply only to a Shared Uplink Set. If support for a greater number of VLANs is required, a VLAN Tunnel can be configured to support a large number of VLANs. Please see the Virtual Connect Release Notes for further details.
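The per-port limit is easy to exceed when mappings are spread across several FlexNICs, so it can help to check a planned profile before applying it. The Python sketch below is a planning aid only, not something Virtual Connect provides. The function names and the dictionary layout are hypothetical; the 162 VLAN limit and the 150/12 example come from the text above.

```python
from typing import Dict

# Limit taken from the Expanded VLAN Capacity rules above.
MAX_VLANS_PER_PHYSICAL_PORT = 162


def remaining_vlan_budget(mappings: Dict[str, int]) -> int:
    """VLAN mappings still available on one physical server port.

    `mappings` holds the number of mapped VLANs per server connection
    (FlexNIC). Per the text, an FCoE SAN or iSCSI connection also counts
    as a network mapping, so the caller should include it in the counts.
    """
    return MAX_VLANS_PER_PHYSICAL_PORT - sum(mappings.values())


def within_limit(mappings: Dict[str, int]) -> bool:
    """True while the port stays at or below the 162 VLAN limit."""
    return remaining_vlan_budget(mappings) >= 0


# Example from the text: 150 mappings on FlexNIC:a leave 12 for b, c and d.
print(remaining_vlan_budget({"FlexNIC:a": 150}))  # 12
```

A plan for which `within_limit` returns False corresponds to the case described above, where the physical server port is disabled and its four server connections are marked as Failed.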

When creating the Virtual Connect Domain, the default configuration in 3.30 is Legacy VLAN Capacity mode (in Virtual Connect 4.01, the default mode is now Expanded VLAN Capacity); however, Multiple Networks and Tunnel mode can be used simultaneously. After Expanded VLAN Capacity mode is configured, you must delete and recreate the Virtual Connect Domain in order to revert to Legacy VLAN Capacity mode.

Note: Expanded VLAN Capacity mode is not supported on the following 1Gb based Virtual Connect Ethernet modules:

HP 1/10Gb VC Ethernet Module

HP 1/10Gb-F VC Ethernet Module

If these modules are inserted into an enclosure that is in Expanded VLAN Capacity mode, they are

marked as incompatible. If these modules are installed in an enclosure, converting to Expanded

VLAN Capacity mode will not be permitted.

Figure 9 - Configuring Expanded VLAN Capacity support

Bulk VLAN Creation

In addition to providing support for a greater number of VLANs, Virtual Connect now provides the ability to create several VLANs, within a Shared Uplink Set (SUS), in a single operation. Using the Bulk VLAN creation feature in the GUI or the add network-range command in the CLI, many VLANs can be added to a SUS. In addition, copying an existing SUS is now possible. When creating an Active/Active SUS configuration, you can create the first SUS and then copy it.

Figure 10 - Example of adding multiple VLANs to a SUS through the GUI

Here is an example of creating a Shared Uplink Set and then using the CLI command “add network-range” to create more than 300 VLANs in a single operation.

add uplinkset VLAN-Trunk-1

add uplinkport enc0:1:X5 Uplinkset=VLAN-Trunk-1 speed=auto

add uplinkport enc0:1:X6 Uplinkset=VLAN-Trunk-1 speed=auto

add network-range -quiet UplinkSet=VLAN-Trunk-1 NamePrefix=VLAN- NameSuffix=-1 VLANIds=101-105,2100-2400 State=enabled PrefSpeedType=auto SmartLink=enabled

Note: Earlier releases of Virtual Connect firmware supported only 320 VLANs. In addition, creating each VLAN with SmartLink enabled required two lines of script. In the example above, over 300 VLANs are created in a single statement.
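The VLANIds value in the command above is a comma-separated list of individual IDs and ranges. As a rough illustration of what that one statement covers, the Python sketch below expands the string and builds the network names. The naming scheme (NamePrefix, then the VLAN ID, then NameSuffix) is an assumption made for this example, and both function names are hypothetical.

```python
from typing import List


def expand_vlan_ids(spec: str) -> List[int]:
    """Expand a string such as "101-105,2100-2400" into individual VLAN IDs."""
    vlan_ids: List[int] = []
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            vlan_ids.extend(range(int(first), int(last) + 1))
        else:
            vlan_ids.append(int(part))
    return vlan_ids


def network_names(spec: str, prefix: str, suffix: str) -> List[str]:
    """Assumed naming scheme: NamePrefix + VLAN ID + NameSuffix."""
    return [f"{prefix}{vlan_id}{suffix}" for vlan_id in expand_vlan_ids(spec)]


names = network_names("101-105,2100-2400", "VLAN-", "-1")
print(len(names))           # 306
print(names[0], names[-1])  # VLAN-101-1 VLAN-2400-1
```

The count of 306 (5 IDs from the first range plus 301 from the second) is well within the 1000 VLAN limit of Expanded VLAN Capacity mode.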

Copying a Shared Uplink Set

Virtual Connect provides the ability to copy a Shared Uplink Set. This can be very handy when

defining an Active/Active Shared Uplink Set design. You simply create the first SUS, and then copy

it.

For example, after creating Shared Uplink Set VLAN-Trunk-1, you can copy it to VLAN-Trunk-2. You will then need to add uplinks to the new SUS and ensure all networks have SmartLink enabled. This can be accomplished as follows:

copy uplinkset VLAN-Trunk-1 VLAN-Trunk-2 fromVlanStr=1 toVlanStr=2 replace=last

add uplinkport enc0:2:X5 Uplinkset=VLAN-Trunk-2 speed=auto

add uplinkport enc0:2:X6 Uplinkset=VLAN-Trunk-2 speed=auto

set network-range -quiet UplinkSet=VLAN-Trunk-2 VLANIds=101-105,2100-2400 SmartLink=enabled
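The effect of fromVlanStr, toVlanStr and replace=last on the copied network names can be sketched in Python. This is an illustration only: it assumes that replace=last substitutes the last occurrence of fromVlanStr in each network name, and the "first" and "all" modes are included as assumptions for comparison. The function name is hypothetical.

```python
def rename_copied_network(name, from_str, to_str, replace="last"):
    """Sketch of how a network name might be rewritten when a SUS is copied."""
    if from_str not in name:
        return name
    if replace == "all":
        return name.replace(from_str, to_str)
    if replace == "first":
        return name.replace(from_str, to_str, 1)
    if replace == "last":
        # rpartition splits around the last occurrence of from_str
        head, _, tail = name.rpartition(from_str)
        return head + to_str + tail
    raise ValueError(f"unknown replace mode: {replace}")


print(rename_copied_network("VLAN-101-1", "1", "2"))  # VLAN-101-2
```

Using the last occurrence matters here because the VLAN ID itself (101) also contains the character being replaced; only the trailing SUS suffix should change.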

vNets, Tunnels and Shared Uplink Sets

There are two types of vNets. The first is a simple vNet that will pass only untagged frames. The

second is a vNet tunnel which will pass tagged frames for one or many VLANs.

vNet

The vNet is a simple network connection between one or many server NICs to one or many uplink

ports.

A vNet could be used to connect a single VLAN, without tagging, to one or many server NICs. If this

network is configured as a VLAN, by configuring the upstream switch port as an access or untagged

port, by extension, any server connected to this vNet would reside in that VLAN, but would not need

to be configured to interpret the VLAN tags.

Benefits of a vNet

A vNet can be utilized in one of two ways: as a simple vNet, used to pass untagged frames, or as a tunneled vNet. A tunneled vNet can be used to pass many VLANs without modifying the VLAN tags, functioning as a transparent VLAN Pass-Thru module.

vNet Tunnel

A tunneled vNet will pass VLAN tagged frames, without the need to interpret or forward those

frames based on the VLAN tag. Within a tunneled vNet the VLAN tag is completely ignored by

Virtual Connect and the frame is forwarded to the appropriate connection (server NIC[s] or uplinks)

depending on frame direction flow. In this case, the end server would need to be configured to

interpret the VLAN tags. This could be a server with a local operating system, in which the network

stack would need to be configured to understand which VLAN the server was in, or a virtualization

host with a vSwitch supporting multiple VLANs.

The tunneled vNet can support up to 4096 VLANs.

Benefits of a vNet Tunnel

A vNet Tunnel can present one or many VLANs to a server NIC. When additional VLANs are added to the upstream switch port, they are made available to the server with no changes required within Virtual Connect. All presented VLANs are passed through the tunnel, unchanged.


Shared Uplink Set (SUS)

The SUS provides the ability to support VLAN tagging and forward frames based on the VLAN tags

of those frames. The SUS connects one or many server NICs to one or many uplink ports. A SUS

would be configured for the specific VLANs it will support. If support for additional VLANs is

required, those VLANs need to be configured within the SUS.

When connecting a server NIC to a network within a SUS, there are two choices provided. The key

difference between these two options is the state in which the frame is passed to the server NIC.

When configuring a server NIC for network connection:

1. Selecting a single network – which would be mapped to a specific VLAN.

If a single network is selected, the frames will be presented to the server NIC WITHOUT a VLAN tag. In this case the host operating system does not need to understand which VLAN it resides in. When the server transmits frames back to Virtual Connect, those frames will not be tagged; however, Virtual Connect will add the VLAN tag and forward the frame onto the correct VLAN.

2. Selecting multiple networks – which would provide connectivity to several VLANs.

The Map VLAN Tags feature provides the ability to use a Shared Uplink Set to present

multiple networks to a single NIC. If you select Multiple Networks when assigning a

Network to a server NIC, you will have the ability to configure multiple Networks (VLANS) on

that server NIC. At this point Virtual Connect tags ALL the packets presented to the NIC —

unless the Native check box is selected for one of the networks, in which case packets from

this network (VLAN) will be untagged, and any untagged packets leaving the server will be

placed on this Network (VLAN).

With Mapped VLAN Tags, you can create a Shared Uplink Set that contains ALL the VLANs you want to present to your servers. Present only ONE network (the one associated with the VLAN you want the server NIC in) to the Windows, Linux or ESX Console NIC. Then select Multiple Networks for the NIC connected to the ESX vSwitch and select ALL the networks that you want presented to the ESX host vSwitch. The vSwitch will then break out the VLANs into port groups and present them to the guests. Using Mapped VLAN Tags minimizes the number of uplinks required.
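The two choices described above can be summarized in a small Python model. This is a simplified sketch, not Virtual Connect code: the function names are hypothetical, and a NIC is treated as being in "single network" mode whenever exactly one VLAN is assigned to it.

```python
def tag_presented_to_nic(frame_vlan, assigned_vlans, native_vlan=None):
    """Return the VLAN tag a server NIC sees on a frame, or None if untagged."""
    if frame_vlan not in assigned_vlans:
        raise ValueError(f"VLAN {frame_vlan} is not assigned to this NIC")
    if len(assigned_vlans) == 1:
        return None  # single network: frames arrive WITHOUT a VLAN tag
    if frame_vlan == native_vlan:
        return None  # multiple networks: the Native network is untagged
    return frame_vlan  # multiple networks: all other frames are tagged


def vlan_for_untagged_from_server(assigned_vlans, native_vlan=None):
    """Return the VLAN an untagged frame from the server is placed on."""
    if len(assigned_vlans) == 1:
        return assigned_vlans[0]  # Virtual Connect adds the tag for that VLAN
    return native_vlan  # None: the text above defines no target without a Native network


print(tag_presented_to_nic(101, [101]))                             # None
print(tag_presented_to_nic(102, [101, 102, 103], native_vlan=101))  # 102
```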

Benefits of a SUS

A Shared Uplink Set can be configured to support both tagged and untagged network traffic to a server NIC, which simplifies the overall configuration and minimizes the number of uplink cables required to support the network connections.

MAC Cache Failover

When a Virtual Connect Ethernet uplink that was previously in standby mode becomes active, it can

take several minutes for external Ethernet switches to recognize that the c-Class server blades can

now be reached on this newly-active connection. Enabling Fast MAC Cache Failover causes Virtual

Connect to transmit Ethernet packets on newly-active links, which enables the external Ethernet

switches to identify the new connection more quickly (and update their MAC caches appropriately).

This transmission sequence repeats a few times at the MAC refresh interval (5 seconds

recommended) and completes in about 1 minute.

When implementing Virtual Connect in an Active/Standby configuration, where some of the links connected to a Virtual Connect network (whether a SUS or vNet) are in standby, MAC Cache Failover would be employed to notify the switch as a link transitions from Standby to Active within Virtual Connect.

Note: Be sure to set switches to allow MAC addresses to move from one port to another without

waiting for an expiration period or causing a lock out.


Role Management

New to Virtual Connect 4.01 is the ability to provide a more granular control of each of the

operational user roles provided. In prior releases, each role had a set level of access.

Figure 11 – Role Operations provides the ability to set the level of access a specific operational role

is provided

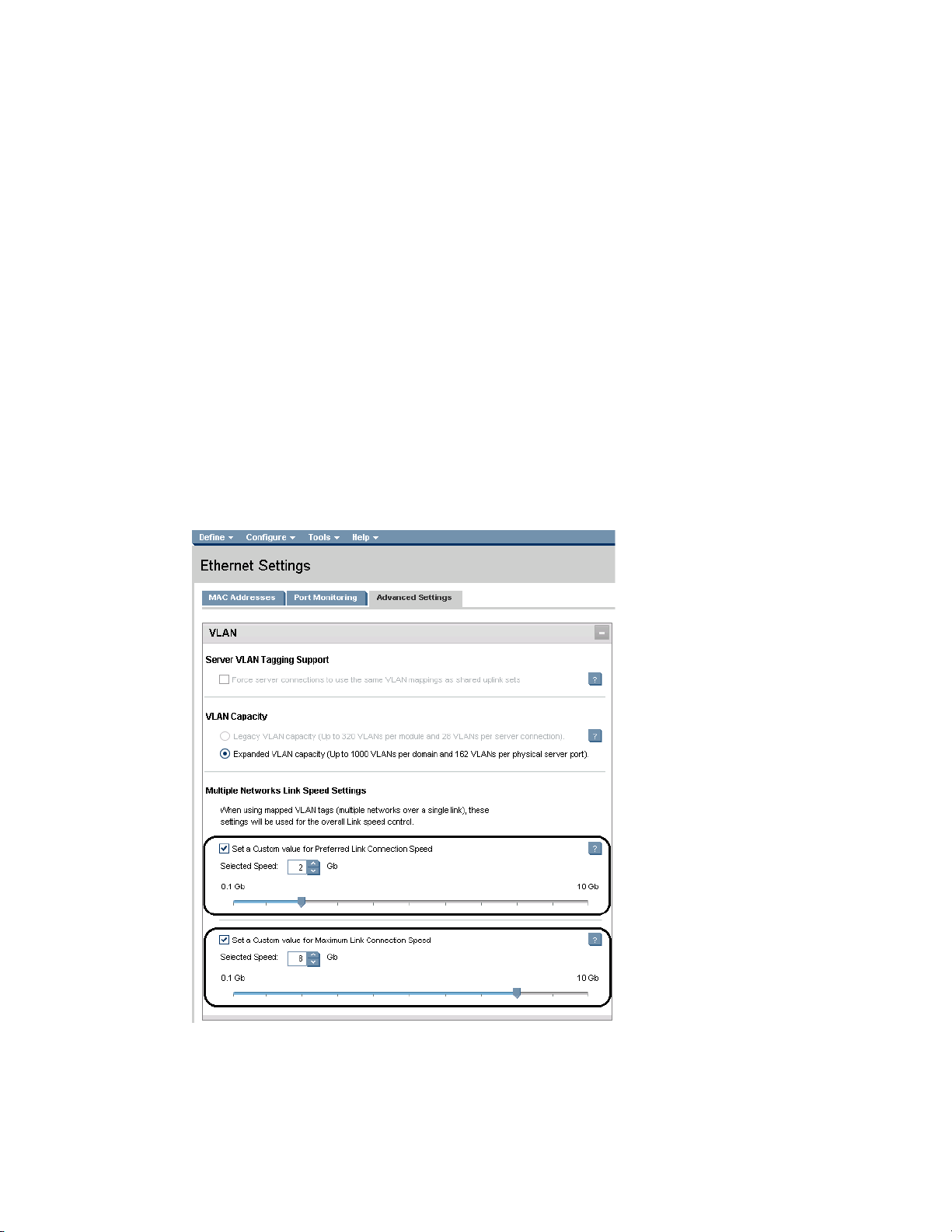

Virtual Connect DirectAttach SAN fabrics (FlatSAN with 3PAR)

Virtual Connect Direct Attached SAN fabrics provide the ability to directly connect HP FlexFabric to an HP 3PAR storage array and completely eliminate the need for a traditional SAN fabric and the administrative overhead associated with maintaining the fabric. FlatSAN is supported on FlexFabric modules through Ports X1-X4. Simply connect the FlexFabric modules to available ports on the 3PAR array and configure the Virtual Connect fabrics for “DirectAttach”.

Figure 12 - When configuring FlatSAN, choose the Fabric Type of “DirectAttach”

Note: See Scenario 6 in the Virtual Connect Fibre Channel Cookbook for details on the implementation of FlatSAN.

http://bizsupport1.austin.hp.com/bc/docs/support/SupportManual/c01702940/c01702940.pdf

Virtual Connect QoS

QoS is used to provide different priorities for designated networking traffic flows and guarantee a

certain level of performance through resource reservation. QoS is important for reasons such as:

Providing Service Level Agreements for network traffic and to optimize network utilization

Different traffic types such as management, back up, and voice having different

requirements for throughput, jitter, delays and packet loss

IP-TV, VoIP and the expansion of the internet are creating additional traffic and latency requirements


In some cases, capacity cannot be increased. Even when possible, increasing capacity may

still encounter issues if traffic needs to be re-routed due to a failure

Traffic must be categorized and then classified. Once classified, traffic is given priorities and

scheduled for transmission. For end to end QoS, all hops along the way must be configured with

similar QoS policies of classification and traffic management. Virtual Connect manages and

guarantees its own QoS settings as one of the hops within the networking infrastructure.

Network Access Groups (NAG)

Before Virtual Connect 3.30, any server profile could be assigned any set of networks. If policy

dictated that some networks should not be accessed by a system that accessed other networks (for

example, the Intranet and the Extranet or DMZ networks) there was no way to enforce that policy

automatically.

With Virtual Connect 3.30 and later, network access groups are defined by the network

administrator and associated with a set of networks that can be shared by a single server. Each

server profile is associated with one network access group. A network cannot be assigned to the

server profile unless the profile is a member of the network access group associated with that

network. A network access group can contain multiple networks. A network can reside in more than

one network access group, such as a management or VMotion VLAN.

Up to 128 network access groups are supported in the domain. Ethernet networks and server

profiles that are not assigned to a specific network access group are added to the domain Default

network access group automatically. The Default network access group is predefined by VCM and

cannot be removed or renamed.

If you are updating to Virtual Connect 3.30, all current networks are added to the Default network

access group and all server profiles are set to use the Default network access group. Network

communication within the network access group behaves similarly to earlier versions of Virtual

Connect firmware, because all profiles can reach all networks.

If you create a new network access group, NetGroup1, and copy or move existing networks from the

Default network access group to NetGroup1, then a profile that uses NetGroup1 cannot use

networks included in the Default network access group. Similarly, if you create a new network and

assign it to NetGroup1 but not to the Default network access group, then a profile that uses the

Default network access group cannot use the new network. Therefore, an administrator cannot

inadvertently, or intentionally, place a server on networks that reside in different Network Access

Groups.
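The assignment rule for network access groups can be expressed as a short Python sketch. The data structure and function name are hypothetical, and the model only captures the rule stated above: a profile belongs to exactly one group, and a network may be assigned only if it is a member of that group.

```python
DEFAULT_GROUP = "Default"


def can_assign(network, profile_group, network_groups):
    """Return True if the network may be assigned to a profile in profile_group.

    network_groups maps a network name to the set of network access groups it
    belongs to; networks without an entry fall into the Default group.
    """
    groups = network_groups.get(network, {DEFAULT_GROUP})
    return profile_group in groups


# Example: PROD was moved to NetGroup1, while MGMT resides in both groups.
network_groups = {
    "PROD": {"NetGroup1"},
    "MGMT": {"Default", "NetGroup1"},
}

print(can_assign("PROD", "NetGroup1", network_groups))  # True
print(can_assign("PROD", "Default", network_groups))    # False
print(can_assign("DMZ", "NetGroup1", network_groups))   # False
```

A network such as MGMT that resides in more than one group remains available to profiles in either group, which matches the management or VMotion example above.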

Virtual Connect LACP Timers

Virtual Connect provides two options for configuring uplink redundancy (Auto and Failover). When

the connection mode is set to "Auto", Virtual Connect uses Link Aggregation Control Protocol to

aggregate uplink ports from a Network or Shared Uplink Set into Link Aggregation Groups. As part

of the LACP negotiation to form a LAG, the remote switch sends a request for the frequency of the

control packets (LACPDU). This frequency can be "short" or "long." Short is every 1 second with a 3

second timeout. Long is every 30 seconds with a 90 second timeout.

Prior to Virtual Connect 4.01 this setting defaulted to short. Starting with Virtual Connect 4.01 this

setting can be set to short or long. The domain-wide setting can be changed on the Ethernet

Settings (Advanced Settings) screen. Additionally, each Network or Shared Uplink Set also has a

LACP timer setting. There are three possible values: Domain-Default, Short, or Long. The domain

default option sets the LACP timer to the domain-wide default value that is specified on the

Advanced Ethernet Settings screen.

This setting specifies the domain-wide default LACP timer. VCM uses this value to set the duration

of the LACP timeout and to request the rate at which LACP control packets are to be received on

LACP-supported interfaces. Changes to the domain-wide setting are immediately applied to all

existing networks and shared uplink sets.

Using the "long" setting can help prevent loss of LAGs while performing in-service upgrades on

upstream switch firmware.
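The relationship between the per-network LACP timer setting and the domain-wide default can be sketched as follows. The interval and timeout values come from the text above; the function and dictionary names are hypothetical and do not correspond to any Virtual Connect API.

```python
# (LACPDU interval in seconds, timeout in seconds), as described above
LACP_TIMERS = {"short": (1, 3), "long": (30, 90)}


def effective_lacp_timer(network_setting, domain_default):
    """Resolve a Network or SUS timer setting against the domain-wide default."""
    setting = network_setting.lower()
    if setting == "domain-default":
        setting = domain_default.lower()
    if setting not in LACP_TIMERS:
        raise ValueError(f"unknown LACP timer setting: {network_setting}")
    interval, timeout = LACP_TIMERS[setting]
    return setting, interval, timeout


print(effective_lacp_timer("Domain-Default", "Long"))  # ('long', 30, 90)
print(effective_lacp_timer("Short", "Long"))           # ('short', 1, 3)
```

With the long timer, a LAG tolerates up to 90 seconds without control packets, which is why it can help during in-service upgrades of upstream switches.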


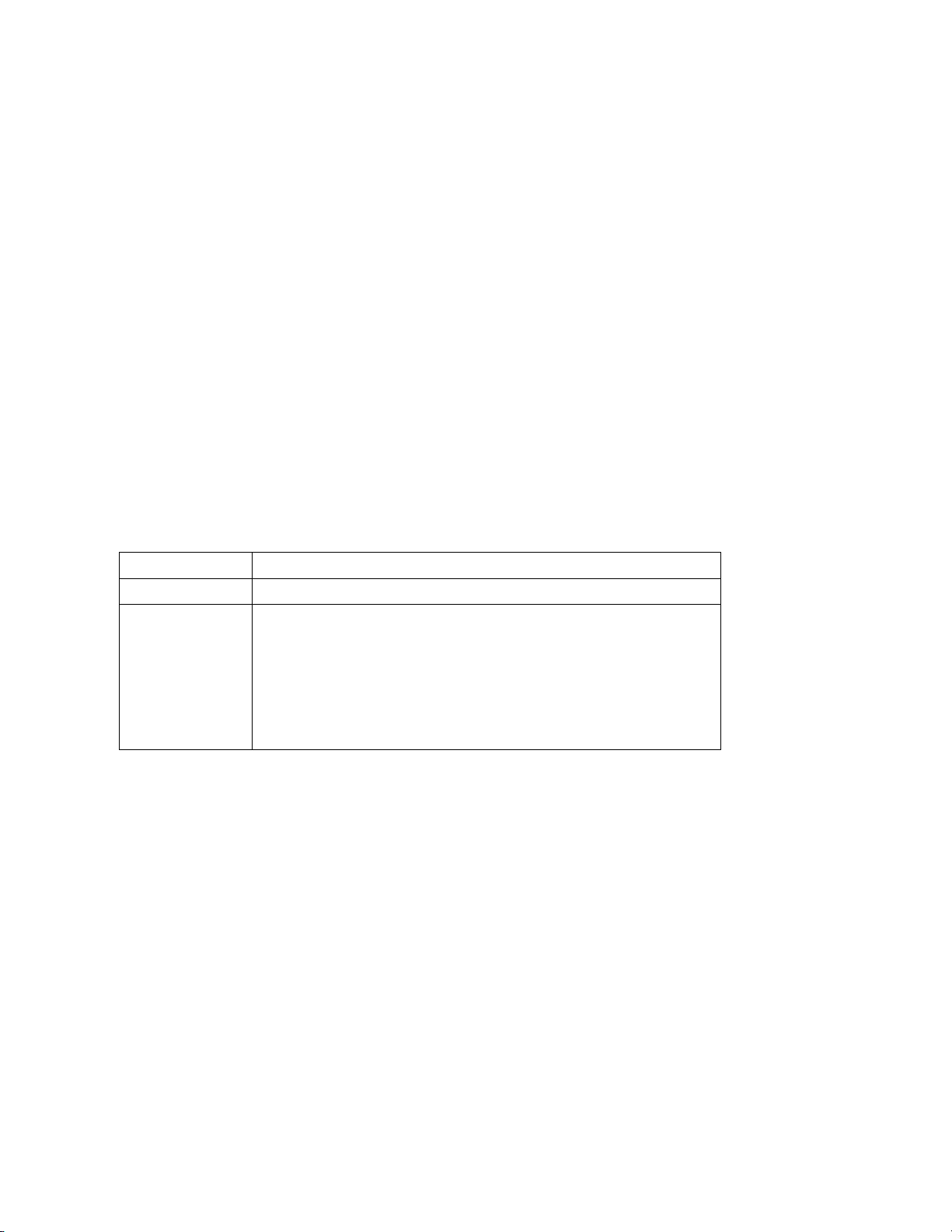

Multiple Networks Link Speed Settings (Min/Max Bandwidth Control)

A new feature in Virtual Connect 4.01 provides the ability to configure a minimum and maximum preferred NIC link speed for server downlinks. This setting can be configured as a global default for NICs configured with multiple networks, but can also be fine-tuned at the individual NIC level. The default global Preferred Speed is set to 10Gb. The new “Maximum Link Connection Speed” setting can be configured to enable a NIC to transmit at a speed greater than its configured speed. The default Maximum speed is set to 10Gb. If these settings remain at their defaults, each NIC, although configured for a set speed (minimum guaranteed speed), will be able to transmit at a rate as high as 10Gb. This feature is also known as “Min/Max”.

Configuring Multiple Networks Link Speed Settings (Min/Max)

Configure the global default setting for Preferred Link Speed to 2Gb and the Maximum Speed to

8Gb. This global setting applies to connections configured for Multiple Networks only.

On the Virtual Connect Manager screen, Left pane, click Ethernet Settings, Advanced

Settings

Select Set a Custom value for Preferred Link Connection Speed

o Set for 2Gb

Select Set a Custom value for Maximum Link Connection Speed

o Set for 8Gb

Select Apply

Figure 13 - Set Custom Link Speeds

The following commands can be copied and pasted into an SSH-based CLI session with Virtual Connect:

# Set Preferred and Maximum Connection Speeds

set enet-vlan PrefSpeedType=Custom PrefSpeed=2000

set enet-vlan MaxSpeedType=Custom MaxSpeed=8000
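For scripted deployments, the two commands above can be generated from the desired speeds. The Python sketch below assumes that the PrefSpeed and MaxSpeed values are expressed in Mb (2Gb corresponds to 2000, as in the commands above), that 10Gb is the upper bound, and that the preferred speed should not exceed the maximum. The helper name is hypothetical.

```python
def min_max_commands(preferred_gb, maximum_gb):
    """Build the set enet-vlan commands for custom preferred and maximum speeds."""
    pref_mb = int(round(preferred_gb * 1000))
    max_mb = int(round(maximum_gb * 1000))
    # Assumed sanity checks: 0 < preferred <= maximum <= 10Gb
    if not 0 < pref_mb <= max_mb <= 10000:
        raise ValueError("expected 0 < preferred <= maximum <= 10Gb")
    return [
        f"set enet-vlan PrefSpeedType=Custom PrefSpeed={pref_mb}",
        f"set enet-vlan MaxSpeedType=Custom MaxSpeed={max_mb}",
    ]


for command in min_max_commands(2, 8):
    print(command)
```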


Configuring Throughput Statistics

Telemetry support for network devices caters to seamless operations and interoperability by providing visibility into what is happening on the network at any given time. It offers extensive and useful detection capabilities which can be coupled with upstream systems for analysis and trending of observed activity.

The Throughput Statistics configuration determines how often the Throughput Statistics are collected and the supported time frame for sample collection before overwriting existing samples. When the time frame for sample collection is reached, the oldest sample is removed to allocate room for the new sample. Configuration changes can be made without having to enable Throughput Statistics. Applying configuration changes when Throughput Statistics is enabled clears all existing samples.

Some conditions can clear existing Throughput Statistics:

Disabling the collection of Throughput Statistics clears all existing samples.

Changing the sampling rate clears all existing samples.

Power cycling a Virtual Connect Ethernet module clears all Throughput Statistics samples for that module.

Collected samples are available for analysis on the Throughput Statistics screen (on page 226 of the Virtual Connect 4.01 User Guide), accessible by selecting Throughput Statistics from the Tools pull-down menu.

The following table describes the available actions for changing Throughput Statistics settings.

| Task | Action |
| --- | --- |
| Enable/disable | Select (enable) or clear (disable) the Enable Throughput Statistics checkbox |
| Change sampling rate | Select a sampling rate from the Configuration list. Supported sampling rates include: 1 minute (up to 5 hours of samples); 2 minutes (up to 10 hours); 3 minutes (up to 15 hours); 4 minutes (up to 20 hours); 5 minutes (up to 25 hours); 1 hour (up to 12.5 days) |
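The supported sampling rates and their retention periods are all consistent with a fixed buffer of 300 samples (for example, 1 minute x 300 = 5 hours and 1 hour x 300 = 12.5 days). The buffer size is inferred from those figures rather than stated in the documentation, so the Python sketch below should be read as an illustration of the arithmetic only.

```python
SAMPLE_BUFFER = 300  # inferred from the documented rates, not an official value


def retention_hours(sample_rate_minutes):
    """Hours of history kept before the oldest sample is overwritten."""
    return sample_rate_minutes * SAMPLE_BUFFER / 60


for rate in (1, 2, 3, 4, 5, 60):
    hours = retention_hours(rate)
    print(f"{rate:>2} min sampling -> {hours:g} hours ({hours / 24:g} days)")
```

The last line of output corresponds to the 1 hour rate: 300 hours, or 12.5 days.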

Connecting VC Flex-10/10D or VC FlexFabric to the CORE

The baseline Virtual Connect technology adds a virtualization layer between the edge of the server

and the edge of the existing LAN and SAN. As a result, the external networks connect to a shared

resource pool of MAC addresses and WWNs rather than to MACs/WWNs of individual servers.

LAN-Safe

From the external networking view, Virtual Connect FlexFabric, Flex-10, or Ethernet uplinks appear

to be multiple NICs on a large server. Virtual Connect ports at the enclosure edge look like server

connections. This is analogous to a VMware environment that provides multiple MAC addresses to

the network through a single NIC port on a server.

Virtual Connect works seamlessly with your external network:

Does not participate in Spanning Tree Protocol (STP) on the network uplinks to the data


center. This avoids potential STP configuration errors that can negatively affect switches in

the network and the servers connected to those switches

Uses an internal loop prevention algorithm to automatically detect and prevent loops

inside a Virtual Connect domain. Virtual Connect ensures that there is only one active

uplink for any single network at one time

Allows aggregation of uplinks to data center networks (using LACP and fail-over)

Supports VLAN tagging on egress or pass-thru of VLAN tags in tunneled mode

Supports Link Layer Discovery Protocol (LLDP) and Jumbo Frames

Virtual Connect was designed to connect to the network as an endpoint device. As such, it is capable of connecting to any network switch, at any layer, including directly to the core switch, providing the ability to flatten the network as required.

Choosing VC Flex-10/10D or VC FlexFabric

When choosing between Flex-10/10D and FlexFabric, the first question to ask is whether a direct connection to a Fibre Channel SAN fabric will be required, today or in the future. The key difference between Flex-10 and FlexFabric is that FlexFabric modules leverage the built-in Converged Network Adapter (CNA) provided in the G7 and Gen 8 BladeSystem servers to provide FCoE (Fibre Channel) connectivity. FCoE connectivity is provided through the integrated CNA and the FlexFabric modules. The FlexFabric modules connect directly to the existing Fibre Channel switch fabrics, so no additional components, such as a traditional HBA, are required.

With the release of Virtual Connect firmware 4.01, the Flex-10/10D and FlexFabric modules can also

be utilized to provide dual hop FCoE connectivity to a switch that supports FCoE connections, in

which case the FCoE traffic would traverse the Ethernet uplinks and connect to the SAN through the

ToR or Core switch.

Virtual Connect 3.70 provided a new capability when connecting to HP’s 3PAR storage arrays using

Fibre Channel, allowing the 3PAR array to be directly connected to the FlexFabric modules. This

feature is called “FlatSAN” and provides the ability to completely eliminate the need for a Fibre

channel SAN fabric, further reducing the cost of implementation and management of a blade server

environment.

If direct connection to a Fibre Channel SAN fabric is not required, then all the capabilities of the CNA in the G7 and Gen 8 blades and Virtual Connect can be obtained through the use of the Flex-10/10D modules; the only feature not available would be direct connection to a Fibre Channel SAN fabric. Fibre Channel connectivity could be added later through the use of traditional Virtual Connect Fibre Channel modules and FC HBAs. iSCSI support is provided through either FlexFabric or Flex-10 modules.

If Fibre Channel is not used, then the second Physical Function (pf) on each port would be used for Ethernet. If Flex-10 modules are used with Virtual Connect Fibre Channel modules, ensure an HBA is installed in the appropriate MEZZ slot in the blade, then configure a “FC HBA” within the server profile and map it to the appropriate FC SAN Fabrics. In this case, FCoE SAN Fabrics and FCoE CNAs would not be utilized. An example of this configuration is provided in Scenario 9.

The scenarios provided in this document could be implemented on Flex-10, Flex-10/10D (with VC-FC modules for FC connections) or FlexFabric modules, with the exception of dual hop FCoE, which is not supported on Flex-10 modules.

FlexFabric also provides the ability to support “Direct Attached” SAN fabrics to an HP 3PAR SAN,

which provides the ability to eliminate the SAN fabric.

Note: Dual hop FCoE connectivity is provided through Flex-10/10D and FlexFabric modules only.

The original Flex-10 module does not support dual hop FCoE.


Choosing an Adapter for VC Flex-10/10D or VC FlexFabric

The following adapters are supported with Virtual Connect Flex-10, Flex-10/10D and FlexFabric:

Gen 8 Blades – FlexFabric FCoE/iSCSI support

HP FlexFabric 10Gb 2-port 554FLB Adapter

HP FlexFabric 10Gb 2-port 554M Adapter

Gen 8 Blades – Flex-10 Ethernet only

HP Flex-10 10Gb 2-port 530FLB Adapter

HP Flex-10 10Gb 2-port 530M Adapter

HP Flex-10 10Gb 2-port 552M Adapter

Gen 7 and older Blades – FlexFabric FCoE/iSCSI support

HP NC553i 10Gb FlexFabric adapter

HP NC553m 10Gb 2-port FlexFabric Adapter

Gen 7 and older Blades – Flex-10 Ethernet Only

HP NC552m 10Gb Dual Port Flex-10 Ethernet Adapter

HP NC532m 10Gb Dual Port Flex-10 Ethernet Adapter

HP NC542m 10Gb Dual Port Flex-10 Ethernet Adapter

HP NC550m 10Gb Dual Port Flex-10 Ethernet Adapter

The Min/Max bandwidth optimization feature released in Virtual Connect 4.01 excludes support for

the following adapters:

HP NC551i Dual Port FlexFabric 10Gb Converged Network Adapter

HP NC551m Dual Port FlexFabric 10Gb Converged Network Adapter

HP NC550m 10Gb 2-port PCIe x8 Flex-10 Ethernet Adapter

The following adapters are NOT supported with Flex-10, Flex-10/10D or FlexFabric:

HP Ethernet 10Gb 2-port 560FLB FIO Adapter

HP Ethernet 10Gb 2-port 560M Adapter

Note: All 1Gb Blade LAN adapters will function with any of the Virtual Connect 10Gb Ethernet modules, but will operate at 1Gb.

Determining Network Traffic Patterns and Virtual Connect network design (Active/Standby vs. Active/Active)

When choosing which Virtual Connect network design to use (Active/Active (A/A) vs. Active/Standby (A/S) uplinks), consider the type of network traffic this enclosure will need to support. For example, will there be much server-to-server traffic within the enclosure, or is the traffic flow mainly in and out of the enclosure?

Network traffic patterns, North/South (N/S) vs. East/West (E/W), should be considered when

designing a Virtual Connect solution as network connectivity can be implemented in a way to

maximize the connected bandwidth and/or minimize the need for server to server traffic to leave

the enclosure when communicating on the same VLAN with other servers within the same

enclosure.


For example, if the solution being implemented will have a high level of in/out or North/South

traffic flow, an A/A network design would likely be the better solution as it would enable all

connected uplinks. However, if a greater level of network traffic is between systems within the

same enclosure/VLAN, such as a multi-tiered application, then a better design may be A/S, as this

would minimize or eliminate any server to server communications from leaving the enclosure.

Determining whether network connectivity is A/A vs. A/S is not a domain configuration issue or

concern. Networks are independent of one another and both A/A and A/S networks could be

implemented in the same Virtual Connect domains. As an example, an iSCSI connection could be

configured as A/A to support a high rate of N/S traffic between targets and initiators. Whereas the

LAN connectivity for the users and applications could be more E/W where an A/S network design

could be implemented.

In an active/standby network design, all servers would have both NICs connected to the same

Virtual Connect network. All communications between servers within the Virtual Connect Domain

would occur through this network, no matter which network adapter is active. In the example

below, if Windows Host 1 is active on NIC 1 and Windows Host 2 is active on NIC 2, the

communications between servers will cross the internal stacking links. For external

communications, all servers in the enclosure will use the Active uplink (currently) connected to Bay

1, no matter which NIC they are active on.

Figure 14 - This is an example of an Active/Standby network configuration. One uplink is active,

while the other is in standby, and available in the event of a network or module failure

In an A/A network design, all servers would have their NICs connected to opposite Virtual Connect networks. Communications between servers within the Virtual Connect Domain would depend on which NIC each server was active on. In the following example, if Windows Host 1 is active on NIC 1 and Windows Host 2 is active on NIC 2, the communications between servers will NOT cross the internal stacking links and would need to leave the enclosure and re-enter via the opposite module. However, if a higher rate of external communications is required, vs. peer to peer, then an active/active configuration may be preferred, as both uplinks would be actively forwarding traffic. Also, if both servers were active on the same NIC, then communications between servers would remain within the module/enclosure.
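The server-to-server traffic paths described for the two designs can be summarized in a small decision function. This Python sketch is a simplified model of the two-module examples above; the function name and the returned strings are illustrative only.

```python
def server_to_server_path(design, active_module_a, active_module_b):
    """Describe the path between two servers on the same VLAN in one enclosure.

    design is "A/S" or "A/A"; the module arguments are the I/O bays (1 or 2)
    that each server's active NIC connects to.
    """
    if design not in ("A/S", "A/A"):
        raise ValueError("design must be 'A/S' or 'A/A'")
    if active_module_a == active_module_b:
        return "stays within the module"
    if design == "A/S":
        # Both NICs share one Virtual Connect network, so stacking links carry it
        return "crosses the internal stacking links"
    # A/A: the NICs sit on opposite networks, so traffic must use the uplinks
    return "leaves the enclosure and re-enters via the opposite module"


print(server_to_server_path("A/S", 1, 2))  # crosses the internal stacking links
print(server_to_server_path("A/A", 1, 2))  # leaves the enclosure and re-enters via the opposite module
```

This makes the trade-off explicit: A/S keeps East/West traffic inside the enclosure, while A/A trades that for two actively forwarding uplinks.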


Figure 15 - This is an example of an Active/Active network configuration. Both uplinks are actively

forwarding traffic.

Figure 16 - Both A/A (iSCSI_x) and A/S (vNet_PROD) networks are used in this example.

Note: Alternatively, if Fibre Channel will not be required, the iSCSI networks could be connected as

iSCSI hardware accelerated and would be connected to the FlexHBA.


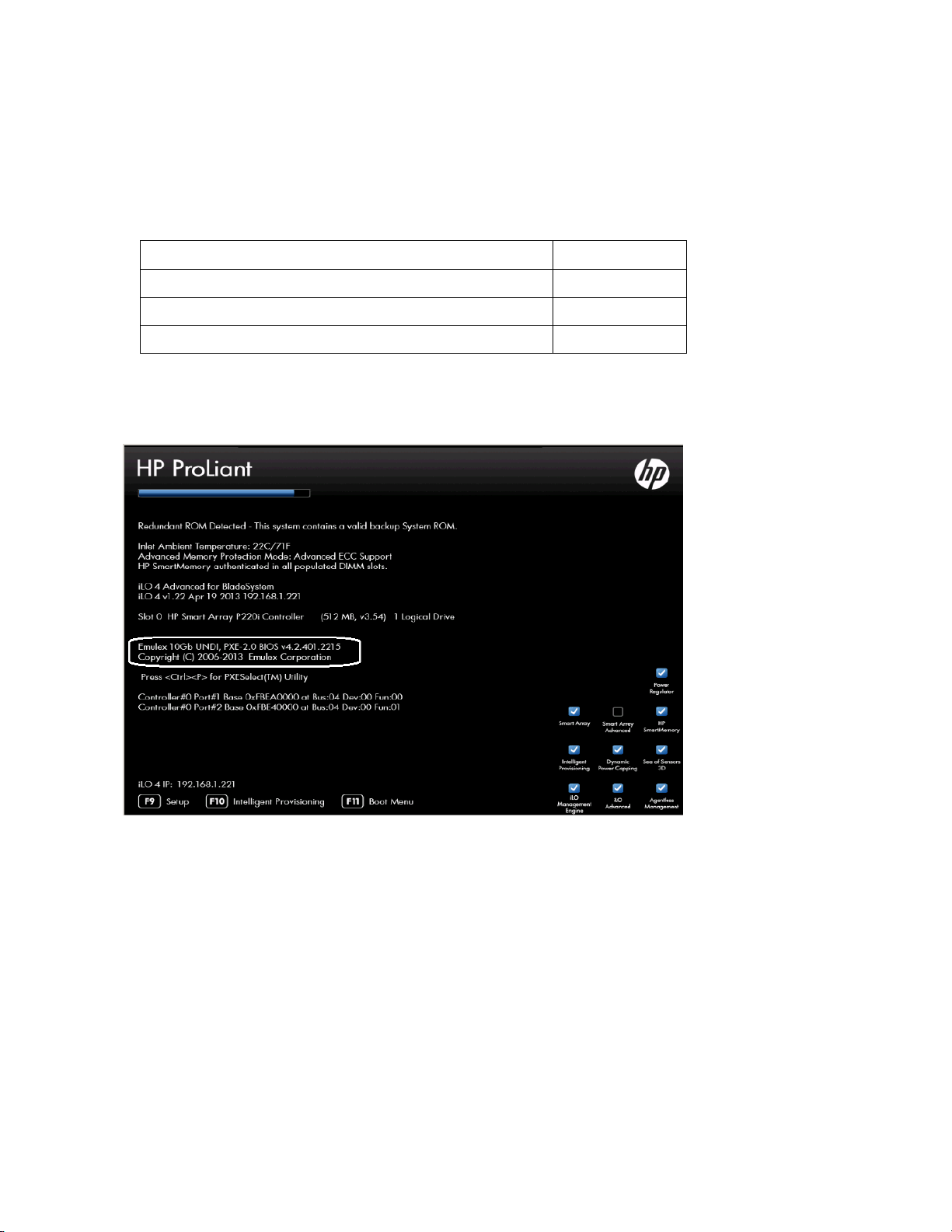

VMware ESXi 5.0/5.1

VMware ESXi 5.0 is fully supported with BladeSystem and Virtual Connect. However, it is important to ensure that the proper network adapter and HBA drivers and firmware are installed. As of this writing, the following drivers and firmware should be used.

CNA driver and firmware recommendations:

| Component | Version |
| --- | --- |
| Emulex NC55x CNA Firmware | 4.2.401.2155 |
| VMware ESXi 5.0/5.1 Driver CD for Emulex be2net | 4.2.327.0 |
| VMware ESXi50 Driver for Emulex iSCSI Driver | 4.2.324.12 |
| VMware ESX/ESXi Driver CD for Emulex FCoE/FC adapters | 8.2.4.141.55 |

Note: As noted in the “February 2013 VMware FW and Software Recipe”

http://vibsdepot.hp.com/hpq/recipes/February2013VMwareRecipe5.0.pdf.

Note: For the most up to date recipe document please visit “vibsdepot” at http://vibsdepot.hp.com

Figure 17 - Note the Emulex BIOS version as 4.2.401.2155


Figure 18 - Note the be2net driver and firmware level as displayed in vCenter, under the Hardware

Status tab


Single Domain/Enclosure Scenarios

Overview

This Cookbook will provide several configuration scenarios of Virtual Connect Flex-10/10D and FlexFabric, using an HP BladeSystem c7000 enclosure. Virtual Connect also supports Multi-Enclosure stacking, for up to 4 enclosures, which provides a single point of management and can further reduce cable connectivity requirements. For Virtual Connect stacked configurations, see the Virtual Connect Multi-Enclosure Stacking Reference Guide. Each scenario will provide an overview of the configuration, show how to complete that configuration and include both GUI and CLI (scripted) methods. Where possible, examples for Windows and/or VMware vSphere will also be provided.

Requirements

This Cookbook will utilize a single HP BladeSystem c7000 enclosure with TWO Virtual Connect FlexFabric or Flex-10/10D modules installed in I/O Bays 1 and 2 and a BL460c Gen 8 half-height BladeSystem server in server Bay 1. Some of the scenarios will provide Ethernet only connections, in which case Flex-10/10D modules may be used. In the scenarios where Fibre Channel connectivity is required, FlexFabric modules will be used, with the exception of Scenario 9, which uses Flex-10/10D and Virtual Connect Fibre Channel modules.

The server’s integrated converged network adapters (CNA) will connect to Bays 1 and 2, with two

10Gb FlexFabric adapter ports. Each FlexFabric Adapter port supports Ethernet and iSCSI or Fibre

Channel over Ethernet (FCoE) when connected to FlexFabric modules. Port 1 will connect to the

FlexFabric module in Bay 1 and Port 2 will connect to the FlexFabric module in Bay 2.

The Flex-10/10D modules are connected to a pair of 10Gb Ethernet switches for standard LAN

connectivity.

The FlexFabric modules and VC-FC modules are linked to a pair of 8Gb Brocade fibre channel

switches for SAN connectivity.

In each scenario, it’s assumed that a Virtual Connect Domain has been created either through the

GUI or a CLI script and no Virtual Connect Networks, uplink sets or Server Profiles have been

created. Virtual Connect scripting examples are provided within each scenario as well as additional

examples in Appendix C.

Figure 19 - c7000 enclosure front view with Half Height Gen 8 BladeSystem servers installed


Figure 20 - c7000 enclosure rear view with Virtual Connect FlexFabric Modules installed in Interconnect bays 1 & 2

Figure 21 - c7000 enclosure rear view with Virtual Connect Flex-10/10D modules in Bays 1 & 2 and

Virtual Connect 20 Port 8Gb Fibre Channel Modules installed in Interconnect bays 3 & 4. If Fibre

Channel connectivity is not required, the Fibre Channel modules would not be required


Scenario 1 – Simple vNet with Active/Standby Uplinks – Ethernet and FCoE – Windows 2008 R2

Overview

This simple configuration uses the Virtual Connect vNet along with FCoE for SAN connectivity.

When VLAN mapping is not required, the vNet is the simplest way to connect Virtual Connect to a

network and server. In this scenario, the upstream network switch connects a network to a single

port on each FlexFabric module. In addition, Fibre Channel uplinks will also be connected to the

FlexFabric modules to connect to the existing Fibre Channel infrastructure.

No special upstream switch configuration is required as the switch is in the factory default

configuration, typically configured as an Access or untagged port on either the default VLAN or a

specific VLAN. In this scenario, Virtual Connect does not receive VLAN tags.

When configuring Virtual Connect, we can provide several ways to implement network fail-over or

redundancy. One option would be to connect TWO uplinks to a single vNet; those two uplinks would

connect from different Virtual Connect modules within the enclosure and could then connect to the

same or two different upstream switches, depending on your redundancy needs. An alternative

would be to configure TWO separate vNets, each with a single or multiple uplinks configured. Each

option has its advantages and disadvantages. For example, an Active/Standby configuration places

the redundancy at the VC level, where Active/Active places it at the OS NIC teaming or bonding level.

We will review the first option in this scenario.

In addition, several vNets can be configured to support the required networks to the servers within

the BladeSystem enclosure. These networks could be used to separate the various network traffic

types, such as iSCSI, backup and vMotion, from production network traffic.

This scenario will also leverage the Fibre Channel over Ethernet (FCoE) capabilities of the FlexFabric

modules. Each Fibre Channel fabric will have one uplink connected to each of the FlexFabric

modules.

Requirements

This scenario will support both Ethernet and Fibre Channel connectivity. In order to implement this

scenario, an HP BladeSystem c7000 enclosure with one or more server blades and TWO Virtual

Connect FlexFabric modules, installed in I/O Bays 1 & 2, is required. In addition, we will require ONE

or TWO external Network switches. As Virtual Connect does not appear to the network as a switch

and is transparent to the network, any standard managed switch will work with Virtual Connect.

The Fibre Channel uplinks will connect to the existing FC SAN fabrics. The SAN switch ports will

need to be configured to support NPIV logins. One uplink from each FlexFabric module will be

connected to the existing SAN fabrics.


Figure 22 - Physical View; Shows one Ethernet uplink from Port X5 on each of Modules 1 and 2 to Port 1 on

each network switch. The SAN fabrics are also connected redundantly, with TWO uplinks per fabric,

from ports X1 and X2 on Module 1 to Fabric A and ports X1 and X2 on Module 2 to Fabric B.

Figure 23 - Logical View; Shows a single Ethernet uplink from Port X5 on Module 1 to the first

network switch and a single uplink from Port X5 on Module 2 to the second network switch. Both

Ethernet uplinks are connected to the same vNet, vNet-PROD. In addition, SAN Fabric FCoE_A

connects to the existing SAN Fabric A through port X1 on Module 1 (Bay 1) and FCoE_B connects to

the existing SAN Fabric B through port X1 on Module 2 (Bay 2).


Installation and configuration

Switch configuration

As the Virtual Connect module acts as an edge switch, Virtual Connect can connect to the network at

either the distribution level or directly to the core switch.

The appendices provide a summary of the CLI commands required to configure various switches for

connection to Virtual Connect. The configuration information provided in the appendices for this

scenario assumes the following:

The switch ports are configured as ACCESS or untagged ports, either presenting the

Default VLAN or a specific VLAN, and will be forwarding untagged frames.

As an alternative, if the switch ports were configured as TRUNK ports and forwarding

multiple VLANs, Virtual Connect would forward those tagged frames to the host NICs

configured for this network; however, the Virtual Connect network would need to be

configured for VLAN Tunneling. The connected host would then need to be configured to

interpret those VLAN tags.

The network switch port should be configured for Spanning Tree Edge, as Virtual Connect appears to

the switch as an access device and not another switch. Configuring the port as Spanning Tree Edge

allows the switch to place the port into a forwarding state much sooner, so a newly connected port

comes online and begins forwarding traffic more quickly.

The SAN switch ports connecting to the FlexFabric module must be configured to accept NPIV

logins.

Configuring the VC module

Physically connect Port 1 of network switch 1 to Port X5 of the VC module in Bay 1

Physically connect Port 1 of network switch 2 to Port X5 of the VC module in Bay 2

Note: if you have only one network switch, connect VC port X5 (Bay 2) to an alternate port on the

same switch. This will NOT create a network loop and Spanning Tree is not required.

Physically connect Port X1 on the FlexFabric in module Bay 1 to a switch port in SAN Fabric A

Physically connect Port X1 on the FlexFabric in module Bay 2 to a switch port in SAN Fabric B

VC CLI commands

In addition to the GUI, many of the configuration settings within VC can also be accomplished via a

CLI command set. In order to connect to VC via a CLI, open an SSH connection to the IP address of

the active VCM. Once logged in, VC provides a CLI with help menus. The Virtual Connect CLI guide

also provides many useful examples. Throughout this scenario the CLI commands to configure VC

for each setting are provided.
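For scripted use, the SSH session described above can also be driven from a management workstation. The following Python sketch only assembles the argument list for an OpenSSH client call. The address 192.0.2.10 and the account name Administrator are placeholders, and the sketch assumes the VC CLI accepts a single command passed on the ssh command line; verify this against the Virtual Connect CLI guide for your firmware before relying on it.

```python
import subprocess


def build_vc_ssh_command(host, user, vc_command):
    """Return the argv list for running one VC CLI command over SSH."""
    if not vc_command.strip():
        raise ValueError("vc_command must not be empty")
    return ["ssh", f"{user}@{host}", vc_command]


def run_vc_command(host, user, vc_command):
    """Run the command and return its output (needs a reachable active VCM)."""
    argv = build_vc_ssh_command(host, user, vc_command)
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


# Placeholder address and account, for illustration only.
argv = build_vc_ssh_command(
    "192.0.2.10", "Administrator", "set mac-cache Enabled=True Refresh=5"
)
print(" ".join(argv))
```

Only build_vc_ssh_command is exercised here; run_vc_command is left uncalled because it requires a live Virtual Connect domain.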

Configuring Expanded VLAN Capacity via GUI

Virtual Connect release 3.30 provided an expanded VLAN capacity mode when using Shared Uplink

Sets. This mode can be enabled through the Ethernet Settings tab or the VC CLI. The default

configuration for a new Domain install is “Expanded VLAN Capacity” mode; Legacy mode is no

longer available and the Domain cannot be downgraded.


To verify the VLAN Capacity mode

On the Virtual Connect Manager screen, Left pane, click Ethernet Settings, Advanced

Settings

Select Expanded VLAN capacity

Verify Expanded VLAN Capacity is configured and Legacy VLAN Capacity is greyed out.

Note: Legacy VLAN mode will only be presented if 1Gb Virtual Connect Modules are present, in

which case the domain would be limited to Firmware version 3.6x.

Configuring Expanded VLAN Capacity via CLI

The following command can be copied and pasted into an SSH based CLI session with Virtual

Connect:

# Set Expanded VLAN Capacity

set enet-vlan -quiet VlanCapacity=Expanded

Figure 24 - Enabling Expanded VLAN Capacity

Note: If a 1Gb VC Ethernet module is present in the Domain, Expanded VLAN capacity will be greyed

out, as this mode is only supported with 10Gb based VC modules. Also, once Expanded VLAN capacity is

selected, moving back to Legacy VLAN capacity mode will require a domain deletion and rebuild.

Configuring Fast MAC Cache Failover

When an uplink on a VC Ethernet Module that was previously in standby mode becomes active, it

can take several minutes for external Ethernet switches to recognize that the c-Class server blades

must now be reached on this newly active connection.

Enabling Fast MAC Cache Failover forces Virtual Connect to transmit Ethernet packets on newly

active links, which enables the external Ethernet switches to identify the new connection more

quickly (and update their MAC caches appropriately). This transmission sequence repeats a few

times at the MAC refresh interval (five seconds is the recommended interval) and completes in

about one minute.

Configuring the VC Module for Fast MAC Cache Fail-over via GUI (Ethernet settings)

Set Fast MAC Cache Fail-over to 5 Seconds

On the Virtual Connect Manager screen, Left pane, click Ethernet Settings, Advanced

Settings

Click the “Other” tab

Select Fast MAC Cache Fail-over with a refresh of 5

Select Apply


Configuring the VC Module for Fast MAC Cache Fail-over via CLI (Ethernet settings)

The following command can be copied and pasted into an SSH based CLI session with Virtual

Connect:

# Set Advanced Ethernet Settings to Enable Fast MAC cache fail-over

set mac-cache Enabled=True Refresh=5

Figure 25 - Set Fast MAC Cache, under Ethernet Settings “Advanced Settings”


Defining a new vNet via GUI

Create a vNet and name it “vNet-PROD”

Log in to Virtual Connect. If a Domain has not been created, create it now, but cancel out of

the configuration wizards after the domain has been created.

On the Virtual Connect Manager screen, click Define, Ethernet Network to create a vNet

Enter the Network Name of “vNet-PROD”

o Note: Do NOT select any of the options (i.e., SmartLink, Private Networks or Enable VLAN

Tunnel)

Select Add Port, then add the following ports:

o Enclosure 1 (enc0), Bay 1, Port X5

o Enclosure 1 (enc0), Bay 2, Port X5

Leave Connection Mode as Auto

Optionally, Select Advanced Network Settings and set the Preferred speed to 4Gb and the

Maximum speed to 6Gb.

Select Apply

Note: By connecting TWO Uplinks from this vNet we have provided a redundant path to the

network. As each uplink originates from a different VC module, one uplink will be Active and the

second will be in Standby. This configuration can tolerate the loss of an uplink cable, a network

switch or, depending on how the NICs are configured at the server (teamed or un-teamed), even a VC

module. An Active/Standby configuration also provides better East/West connectivity.
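The Active/Standby behaviour described in this note can be pictured with a small toy model. The Python sketch below is purely illustrative: choosing the first healthy port in list order is an assumption made for the example and does not represent Virtual Connect's actual uplink selection logic.

```python
def elect_active(uplinks):
    """Split an ordered list of (port, link_up) pairs into (active, standby).

    Simplified model: the first port with link up is active and every
    other healthy port is standby. Ports with link down are ignored.
    """
    healthy = [port for port, link_up in uplinks if link_up]
    if not healthy:
        return None, []
    return healthy[0], healthy[1:]


# Both uplinks healthy: one carries traffic, the other waits in standby.
both_up = elect_active([("enc0:1:X5", True), ("enc0:2:X5", True)])
print(both_up)

# The Bay 1 uplink fails: the Bay 2 uplink becomes active.
bay1_down = elect_active([("enc0:1:X5", False), ("enc0:2:X5", True)])
print(bay1_down)
```

The point of the model is only that the vNet keeps a path to the network as long as at least one of its uplinks has link.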

Note: SmartLink – In this configuration SmartLink should NOT be enabled. SmartLink is used to turn

off downlink ports within Virtual Connect, if ALL available uplinks to a vNet are down. We will use

SmartLink in a later scenario.

Figure 26 - Define Ethernet Network (vNet-PROD). Note the Port Status and Connected To

information. If the connected switch has LLDP enabled, the Connected To information should be

displayed as below.


Figure 27 - Configuring the Advanced network setting for Min/Max Network Speed. We will see how

this configuration is utilized when we create the server profile

Defining a new vNet via CLI

The following command(s) can be copied and pasted into an SSH based CLI session with Virtual

Connect:

# Create the vNet "vNet-PROD" and configure uplinks as discussed above

add Network vNet-PROD

add uplinkport enc0:1:X5 Network=vNet-PROD speed=auto

add uplinkport enc0:2:X5 Network=vNet-PROD speed=auto

set network vNet-PROD SmartLink=Disabled
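When several vNets follow the same pattern, the command lines above can be generated rather than typed. The following Python sketch reproduces exactly the four commands shown for vNet-PROD; the function name and the tuple layout for uplinks are illustrative choices, not part of Virtual Connect.

```python
def vnet_commands(name, uplinks, smartlink=False):
    """Build the VC CLI lines that create a vNet and attach its uplinks.

    uplinks is a list of (enclosure, bay, port) tuples, e.g. ("enc0", 1, "X5").
    """
    lines = [f"add Network {name}"]
    for enclosure, bay, port in uplinks:
        lines.append(
            f"add uplinkport {enclosure}:{bay}:{port} Network={name} speed=auto"
        )
    state = "Enabled" if smartlink else "Disabled"
    lines.append(f"set network {name} SmartLink={state}")
    return lines


commands = vnet_commands("vNet-PROD", [("enc0", 1, "X5"), ("enc0", 2, "X5")])
print("\n".join(commands))
```

The printed lines can then be pasted into the SSH based CLI session in the same way as the hand-written commands.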

Note: Optionally, if you wish to utilize the new Min/Max NIC speed setting provided within Virtual

Connect, you can set this Network to a “Preferred” Speed and a “Maximum Speed”. This provides

the ability to quickly create server profiles, using the NIC speed setting of “Preferred”, then allowing

Virtual Connect to configure the NIC speeds for both the minimum speed as well as the maximum

speed. Use the setting below to configure the minimum and maximum NIC speeds for this network. Note

that this does NOT affect the network uplink speed, which will remain at 10Gb

(or 1Gb if connected to a 1Gb switch port).

set network vNet-PROD SmartLink=Disabled PrefSpeedType=Custom PrefSpeed=4000 MaxSpeedType=Custom MaxSpeed=6000
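The speed values in the command above are expressed in Mb (4000 for 4Gb, 6000 for 6Gb). As a minimal sketch, the Python helper below converts speeds given in Gb and rejects a preferred speed that exceeds the maximum; the function name and the validation rule are assumptions for illustration, and any limits enforced by Virtual Connect itself are not modelled.

```python
def speed_command(name, preferred_gb, maximum_gb):
    """Build the 'set network' line for custom preferred/maximum NIC speeds.

    Speeds are given in Gb and converted to Mb, matching the 4000/6000
    values used in the cookbook command.
    """
    if not 0 < preferred_gb <= maximum_gb:
        raise ValueError("preferred speed must be positive and not exceed the maximum")
    pref_mb = int(round(preferred_gb * 1000))
    max_mb = int(round(maximum_gb * 1000))
    return (
        f"set network {name} SmartLink=Disabled "
        f"PrefSpeedType=Custom PrefSpeed={pref_mb} "
        f"MaxSpeedType=Custom MaxSpeed={max_mb}"
    )


line = speed_command("vNet-PROD", 4, 6)
print(line)
```

The result is emitted as a single line, since the command must be entered as one line in the CLI session.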

Defining a new (FCoE) SAN Fabric via GUI