
HP

P9000 RAID Manager User Guide

Abstract

This guide provides information on using HP StorageWorks P9000 RAID Manager Software on HP StorageWorks P9000 disk arrays. It covers command usage, configuration file examples, High Availability failover and failback, Fibre Channel addressing, and Standard input (STDIN) file formats.

HP Part Number: T1610-96043

Published: April 2012

Edition: Eighth


© Copyright 2010, 2012 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgements

Microsoft®, Windows®, Windows® XP, and Windows NT® are U.S. registered trademarks of Microsoft Corporation.

Java and Oracle are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Export Requirements

You may not export or re-export this document or any copy or adaptation in violation of export laws or regulations.

Without limiting the foregoing, this document may not be exported, re-exported, transferred or downloaded to or within (or to a national resident of) countries under U.S. economic embargo, including Cuba, Iran, North Korea, Sudan, and Syria. This list is subject to change.

This document may not be exported, re-exported, transferred, or downloaded to persons or entities listed on the U.S. Department of Commerce Denied Persons List, Entity List of proliferation concern or on any U.S. Treasury Department Designated Nationals exclusion list, or to parties directly or indirectly involved in the development or production of nuclear, chemical, biological weapons, or in missile technology programs as specified in the U.S. Export Administration Regulations (15 CFR 744).

Revision History

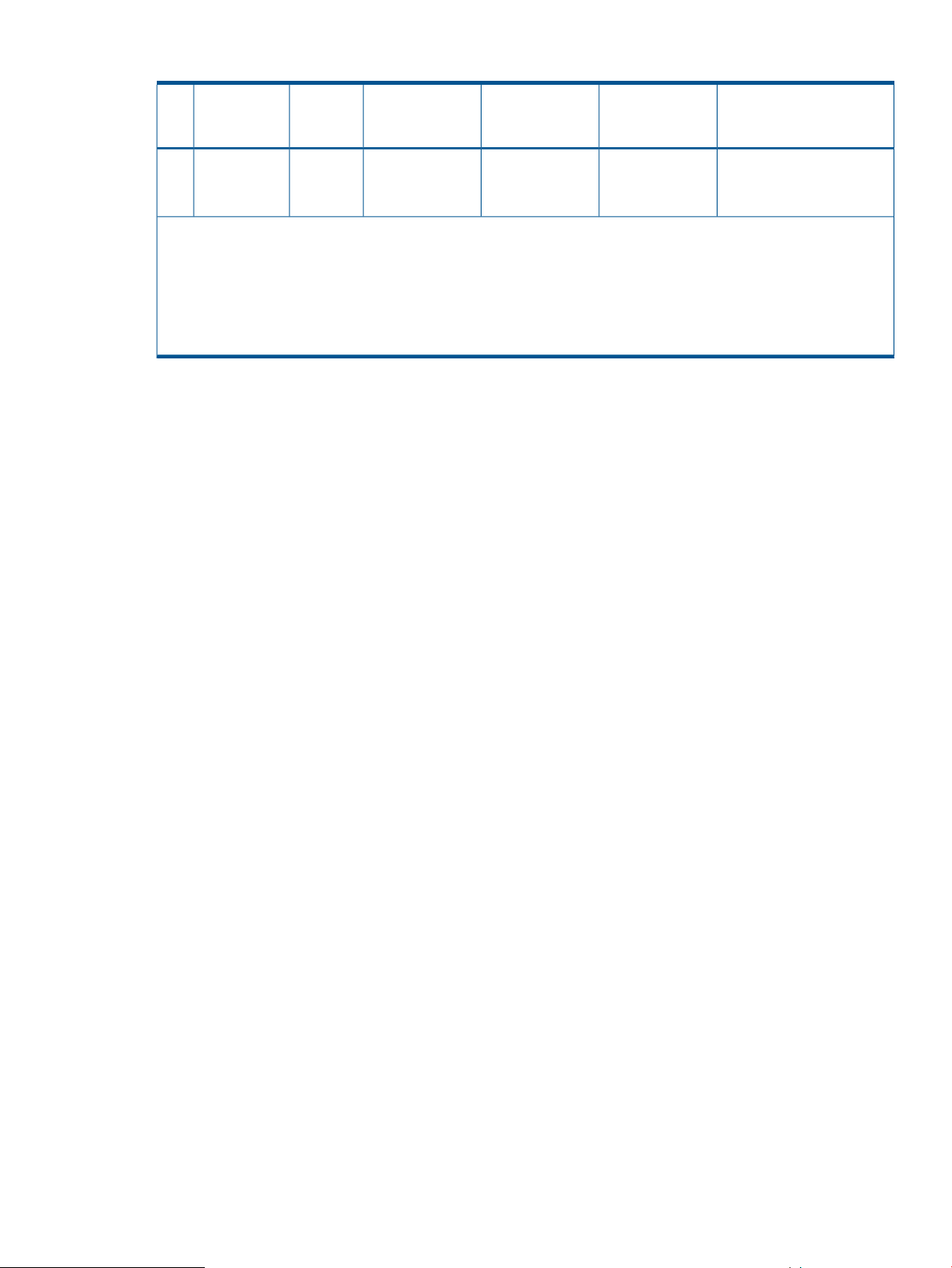

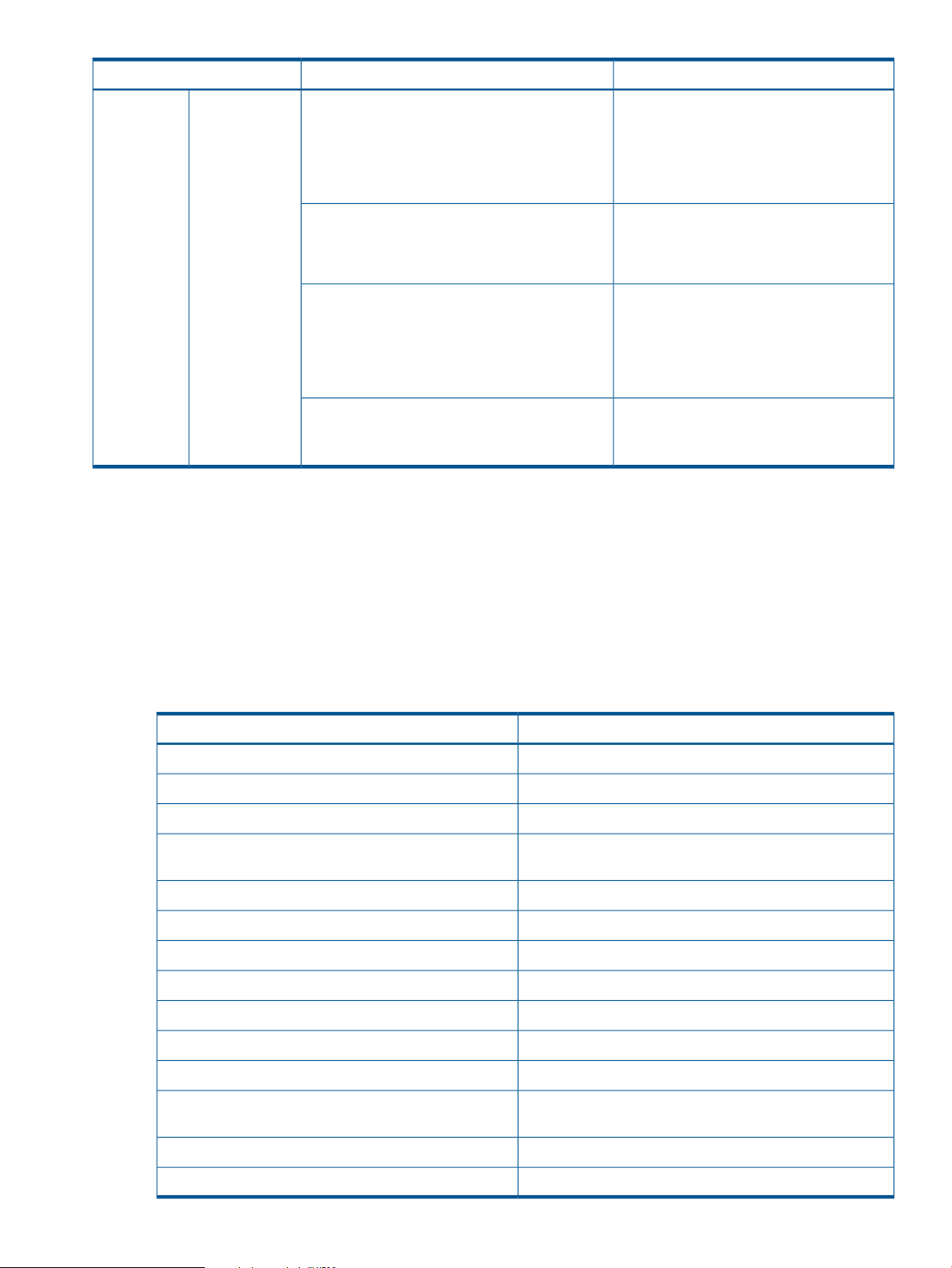

DescriptionDateEdition

Applies to version 01.24.13 or later.October 2010First

Applies to version 01.24.13 or later.November 2010Second

Applies to version 01.24.16 or later.January 2011Third

Applies to version 01.25.03 or later.May 2011Fourth

Applies to version 01-25-03/06 or later.August 2011Fifth

Applies to version 01-25-03/06 or later.September 2011Sixth

Applies to version 01-26-03 or later.November 2011Seventh

Applies to version 01-27-03/xx or laterApril 2012Eighth


Contents

1 Overview

About RAID Manager

RAID Manager functions available on the P9500 storage system

Provisioning function

Asynchronous command processing

Command execution modes

Precheck function

Command execution by the out-of-band method

User authentication

LDEV nickname function

LDEV grouping function

Resource group function

Resource group locking function

RAID Manager functions available on all RAID storage systems

In-system replication

Remote replication

Data protection

2 RAID Manager software environment..........................................................15

Overview of the RAID Manager software environment.................................................................15

RAID Manager components on the RAID storage system..............................................................15

Command device...............................................................................................................15

Command device guarding............................................................................................16

Alternate command device function.................................................................................17

Define the remote command device.................................................................................18

RAID Manager and the SCSI command interface...................................................................18

Command competition...................................................................................................19

Command flow.............................................................................................................19

Issuing commands for LDEVs within a LUSE device.............................................................20

RAID Manager instance components on the host server...............................................................20

HORCM operational environment........................................................................................20

RAID Manager instance configurations.................................................................................21

Host machines that can be paired........................................................................................23

Configuration definition file.................................................................................................24

Overview.....................................................................................................................24

Configuration definition file settings.................................................................................26

Configuration definition for cascading volume pairs...............................................................33

Configuration file and mirror descriptors...........................................................................33

Cascading connection and configuration files...................................................................34

Business Copy..............................................................................................................35

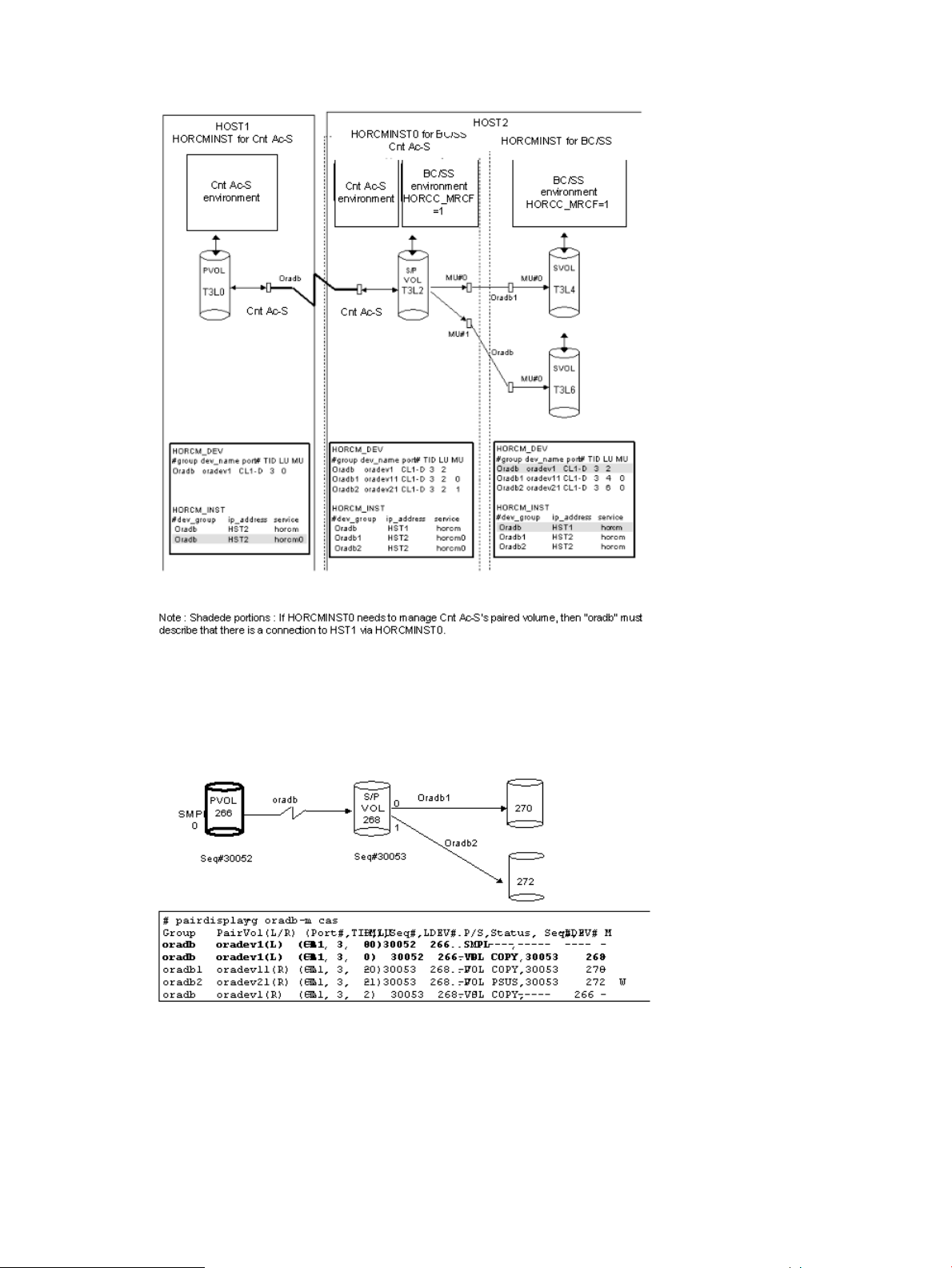

Cascading connections for Continuous Access Synchronous and Business Copy....................36

RAID Manager software files....................................................................................................39

RAID Manager files supplied with the software......................................................................39

RAID Manager files for UNIX-based systems.....................................................................39

RAID Manager files for Windows-based systems...............................................................40

RAID Manager files for OpenVMS-based systems..............................................................41

RAID Manager log and trace files.............................................................................................42

RAID Manager log files......................................................................................................42

RAID Manager trace files....................................................................................................44

RAID Manager trace control command.................................................................................44

Command error logging for audit........................................................................................45

User-created files....................................................................................................................46


3 RAID Manager functions on P9500............................................................48

Command execution using in-band and out-of-band methods.......................................................48

User authentication.................................................................................................................49

Command operation authority and user authentication................................................................49

Controlling User Role..........................................................................................................49

Controlling user resources...................................................................................................50

The commands that are executed depending on the operation authorities managed by Remote

Web Console and the SVP..................................................................................................51

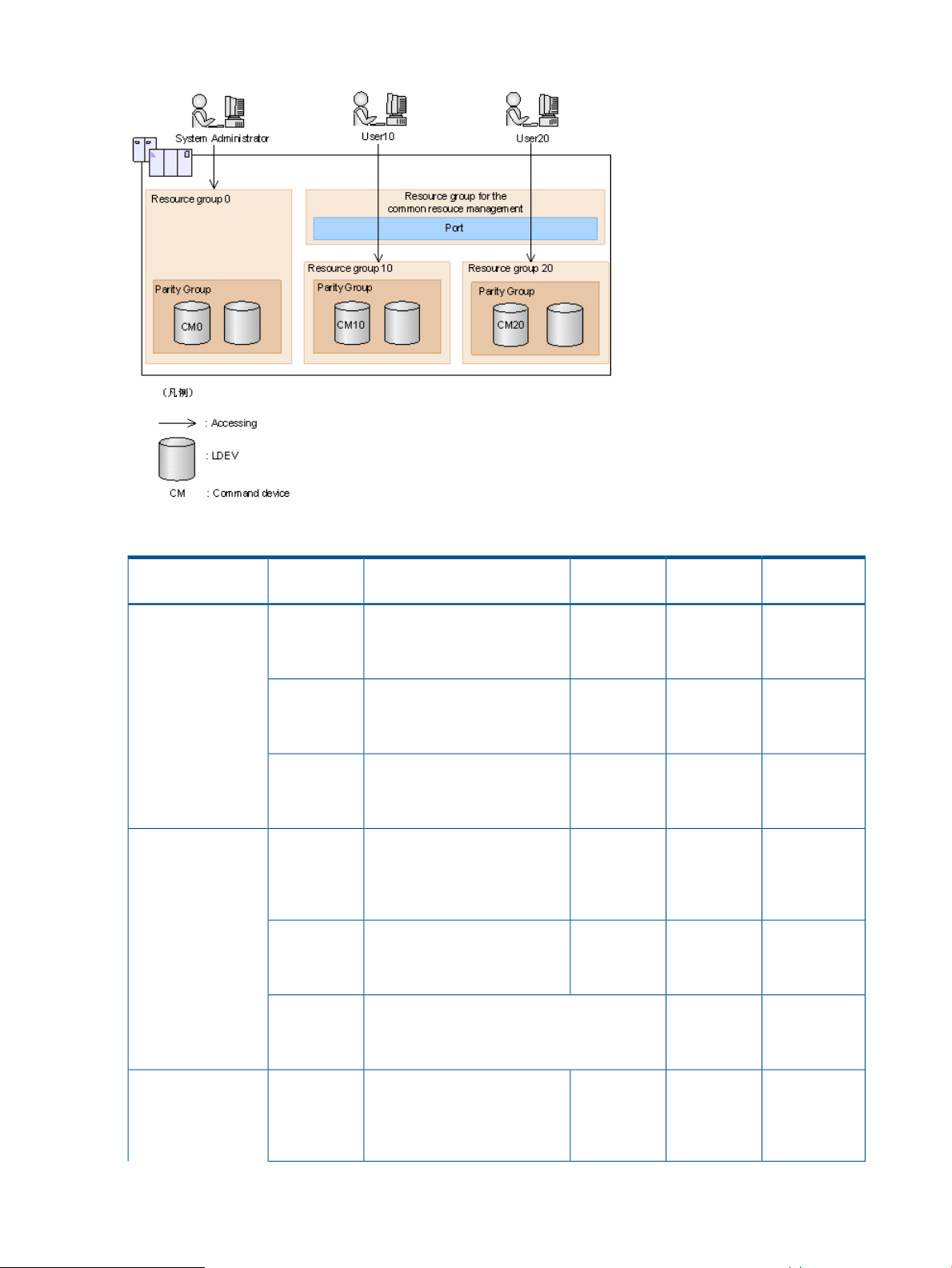

The relationship between resource groups and command operations.............................................53

Resource lock function.............................................................................................................55

Command execution modes.....................................................................................................56

Overview..........................................................................................................................56

Context check....................................................................................................................57

How to check...............................................................................................................57

Details of check contents................................................................................................58

Configuration check...........................................................................................................63

LDEV group function................................................................................................................63

Overview..........................................................................................................................63

Device group definition methods..........................................................................................65

Read operations and command device settings......................................................................66

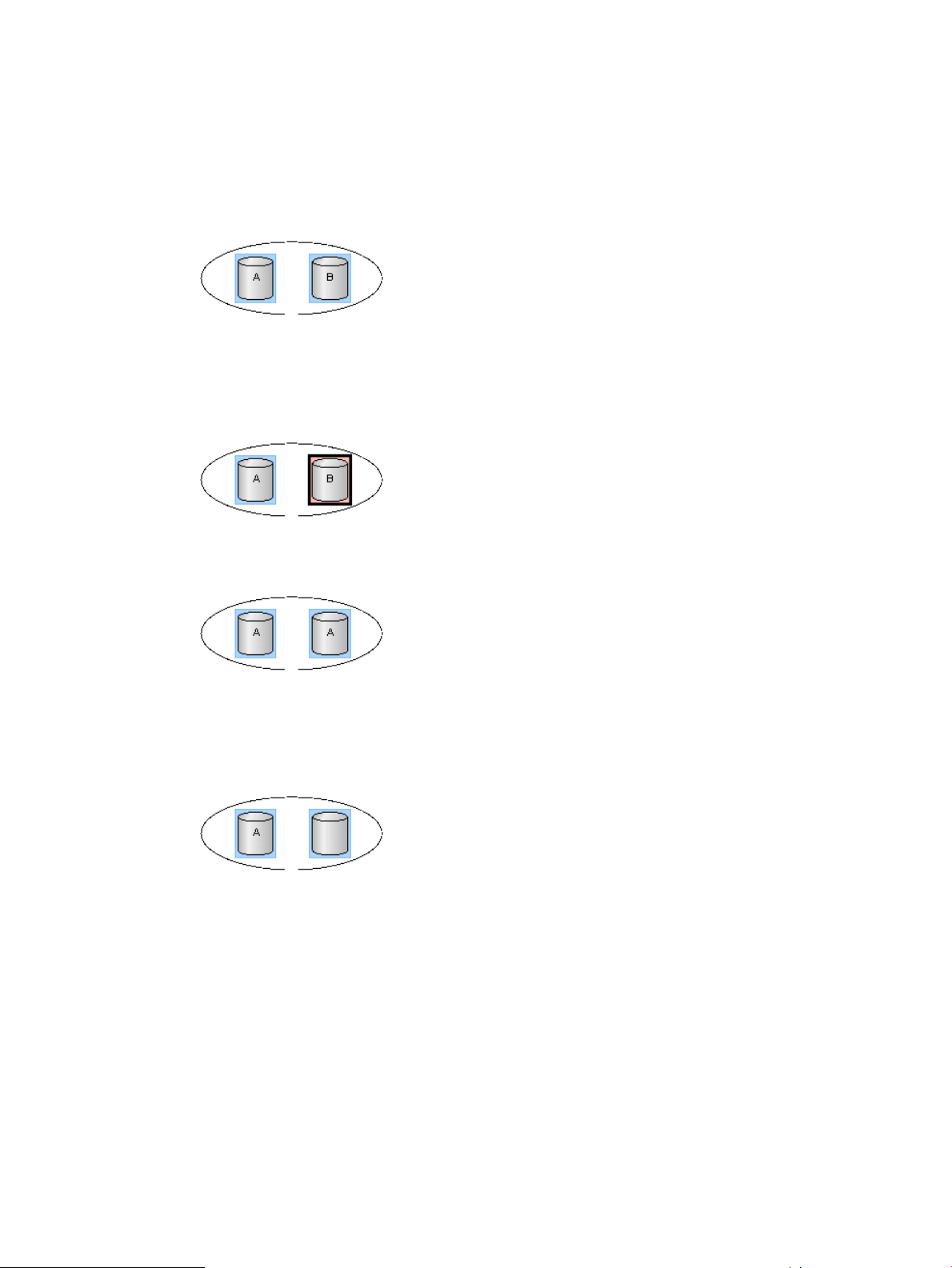

Device group function.........................................................................................................66

Device group creation....................................................................................................68

LDEV addition to device group........................................................................................68

LDEV deletion from device group.....................................................................................69

Device group deletion....................................................................................................70

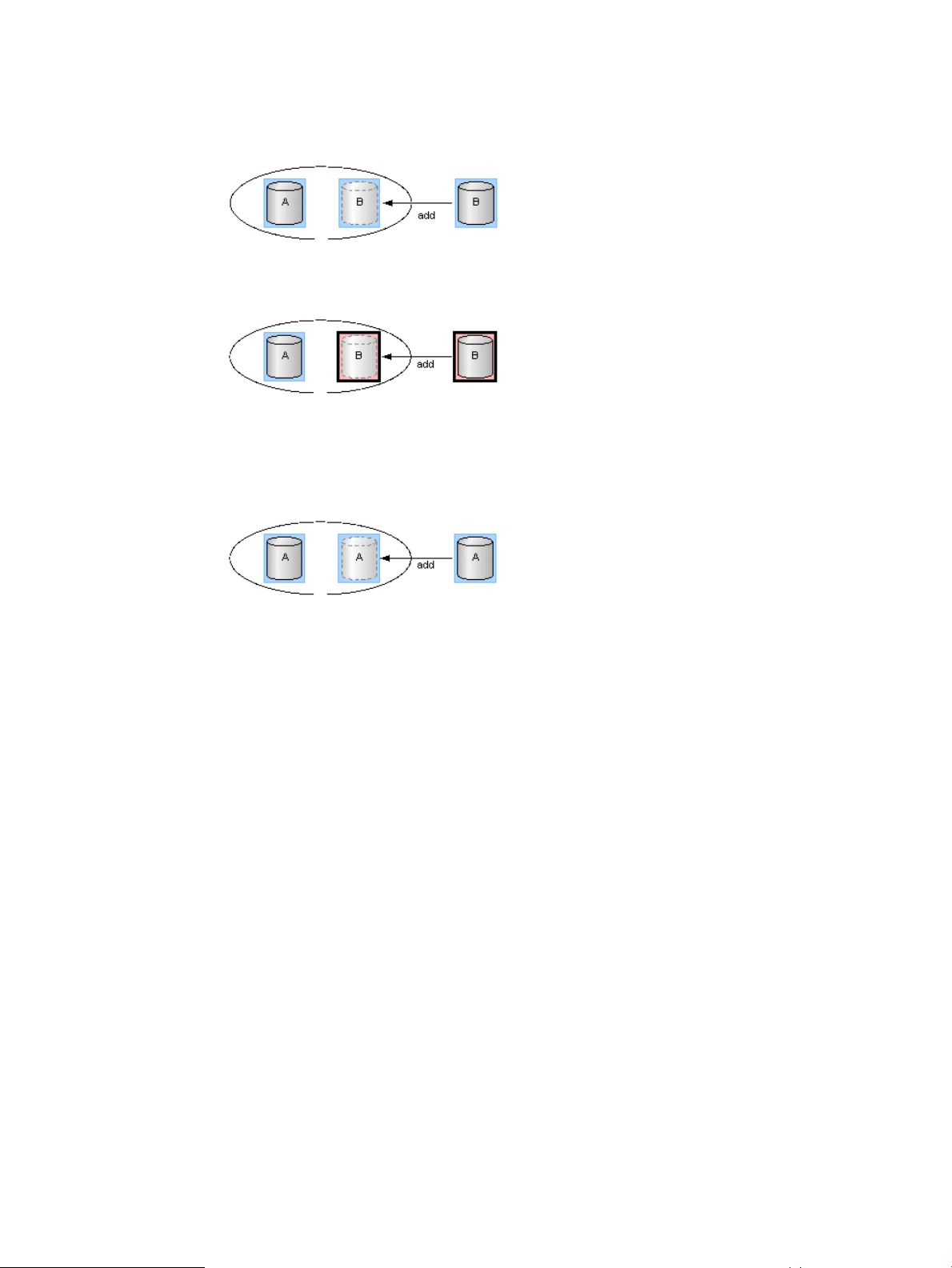

Copy group function...........................................................................................................71

Copy group creation.....................................................................................................73

LDEV addition to a copy group.......................................................................................73

LDEV deletion from copy group.......................................................................................74

Copy group deletion......................................................................................................75

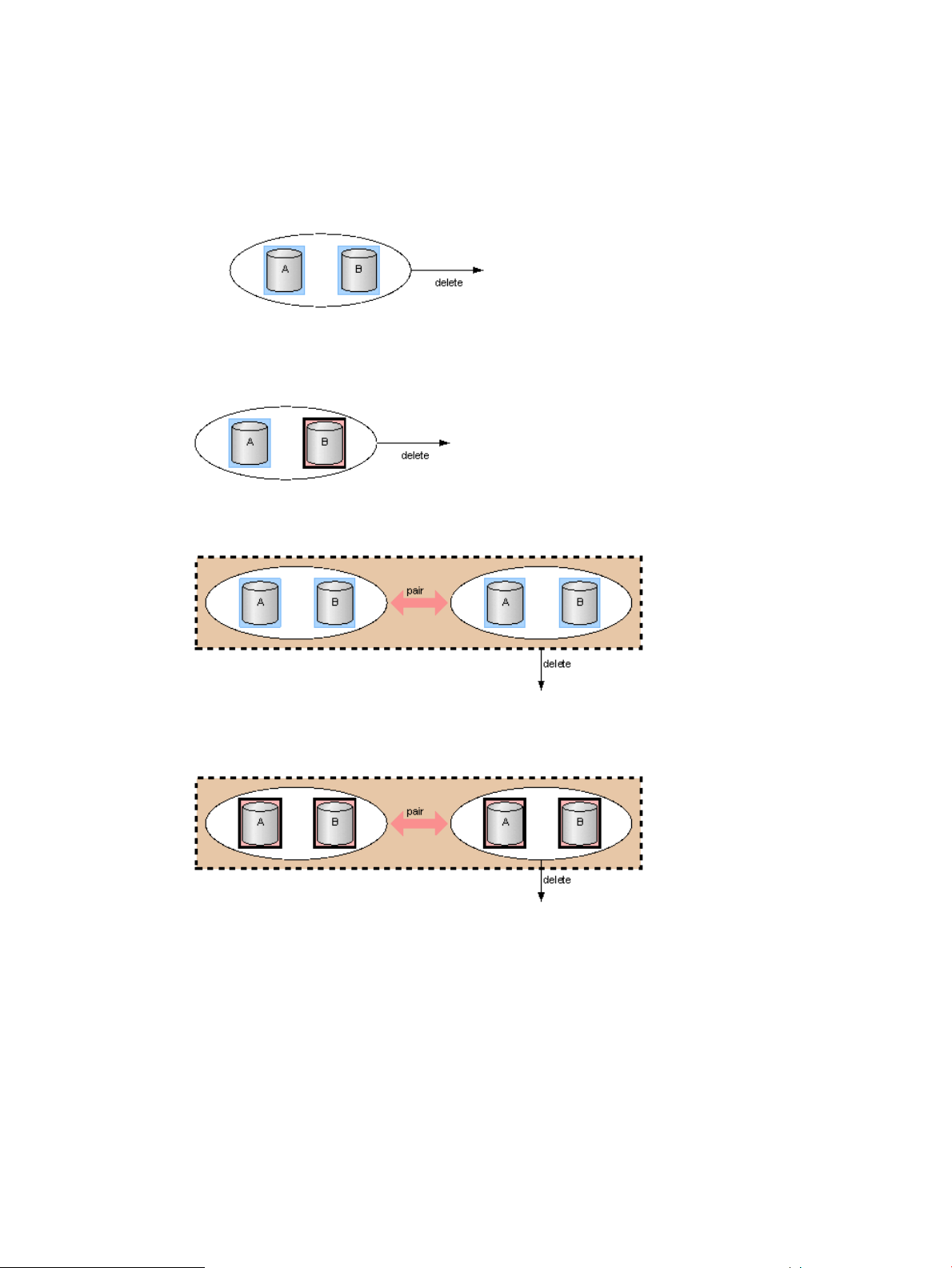

Pair operation by specifying a copy group.......................................................................76

Pair operations with volumes for mainframe................................................................................79

Using dummy LU................................................................................................................79

Displayed pair statuses.......................................................................................................80

Multi-platform volumes........................................................................................................81

Differences in replication commands....................................................................................82

4 Starting up RAID Manager........................................................................84

Starting up on UNIX systems....................................................................................................84

Starting up on Windows systems..............................................................................................85

Starting up on OpenVMS systems.............................................................................................86

Starting RAID Manager as a service (Windows systems)..............................................................87

5 Provisioning operations with RAID Manager................................................89

About provisioning operations..................................................................................................89

Overview of the configuration setting command.....................................................................89

Synchronous command processing..................................................................................89

Asynchronous command processing................................................................................89

Asynchronous commands...............................................................................................90

Help on configuration setting commands...............................................................................91

LDEV nickname function......................................................................................................91

Available provisioning operations.............................................................................................91

Available provisioning operation (specifying device group)..........................................................97

Summary..........................................................................................................................97

Operation method.............................................................................................................98

Common operations when executing provisioning operations.......................................................99


Resource group operations.....................................................................................................100

Creating resource groups..................................................................................................100

Deleting resource groups..................................................................................................100

Allocating resources that are allocated to resource groups to the other resource groups.............100

Execution example...........................................................................................................101

Internal volume operations.....................................................................................................101

Creating internal volumes (open volume).............................................................................101

Script examples...............................................................................................................102

Creating internal volumes (mainframe volume)..........................................................................104

Script examples....................................................................................................................105

Virtual volume (Thin Provisioning) operations............................................................................106

Creating virtual volumes (Thin Provisioning).........................................................................106

Script examples...............................................................................................................107

Virtual volume (Thin Provisioning Z) operations.........................................................................109

Creating virtual volumes (Thin Provisioning Z)......................................................................109

Script examples...............................................................................................................109

Virtual volume (Smart Tiers) operations....................................................................................110

Operational flow.............................................................................................................110

Creating virtual volumes (Smart Tiers).................................................................................112

Script examples...............................................................................................................113

External volume operations....................................................................................................116

Creating external volumes.................................................................................................116

Script Examples...............................................................................................................117

6 Data replication operations with RAID Manager.........................................120

About data replication operations...........................................................................................120

Features of paired volumes....................................................................................................120

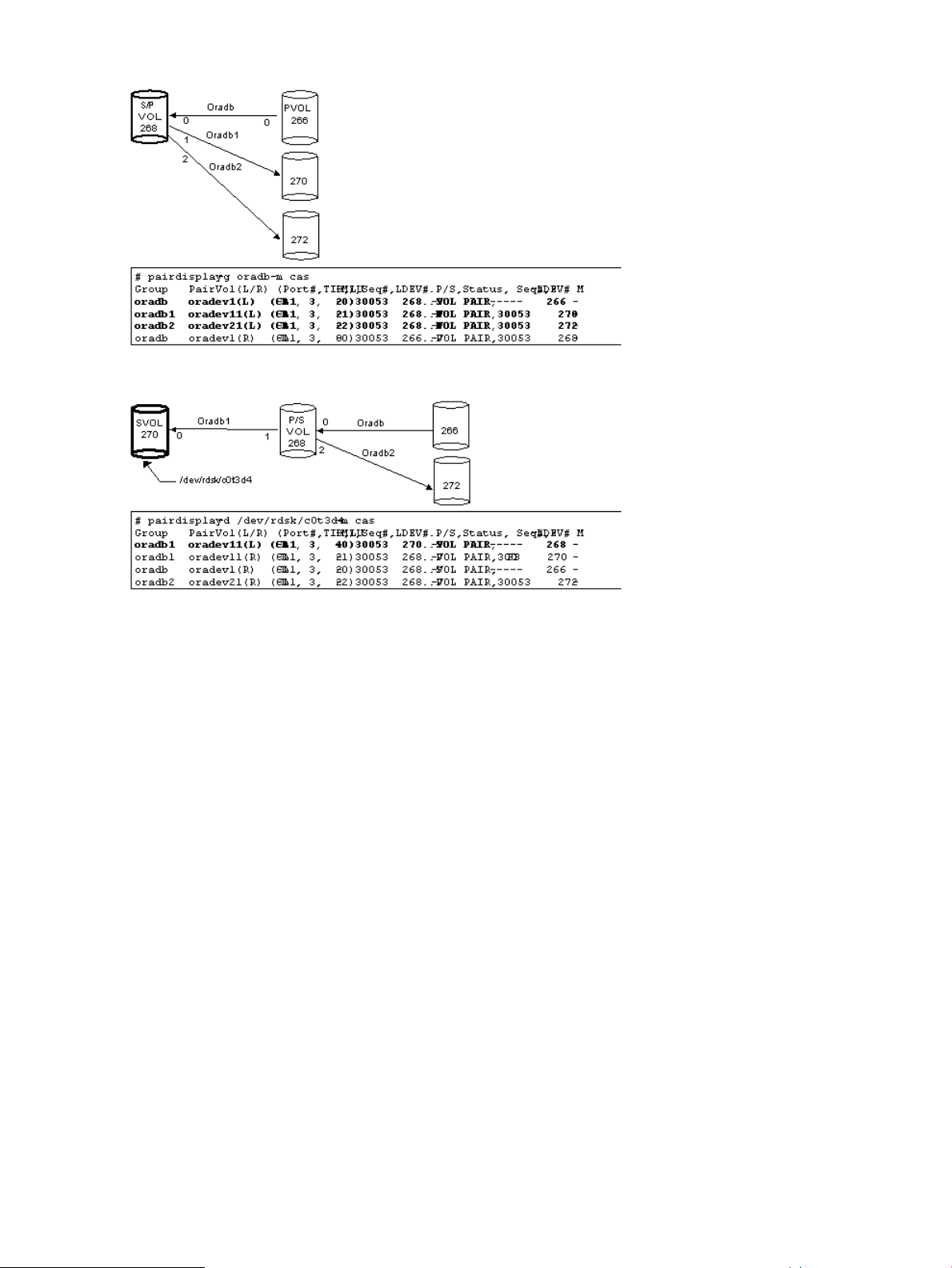

Using RAID Manager with Business Copy and Continuous Access Synchronous............................121

Business Copy operations......................................................................................................122

Business Copy duplicated mirroring....................................................................................122

Business Copy cascading pairs..........................................................................................123

Restrictions for Business Copy cascading volumes............................................................124

Restriction for Continuous Access Synchronous/Business Copy cascading volumes...............125

Continuous Access Synchronous operations..............................................................................125

Continuous Access Synchronous takeover commands............................................................125

Continuous Access Synchronous remote commands..............................................................126

Continuous Access Synchronous local commands.................................................................127

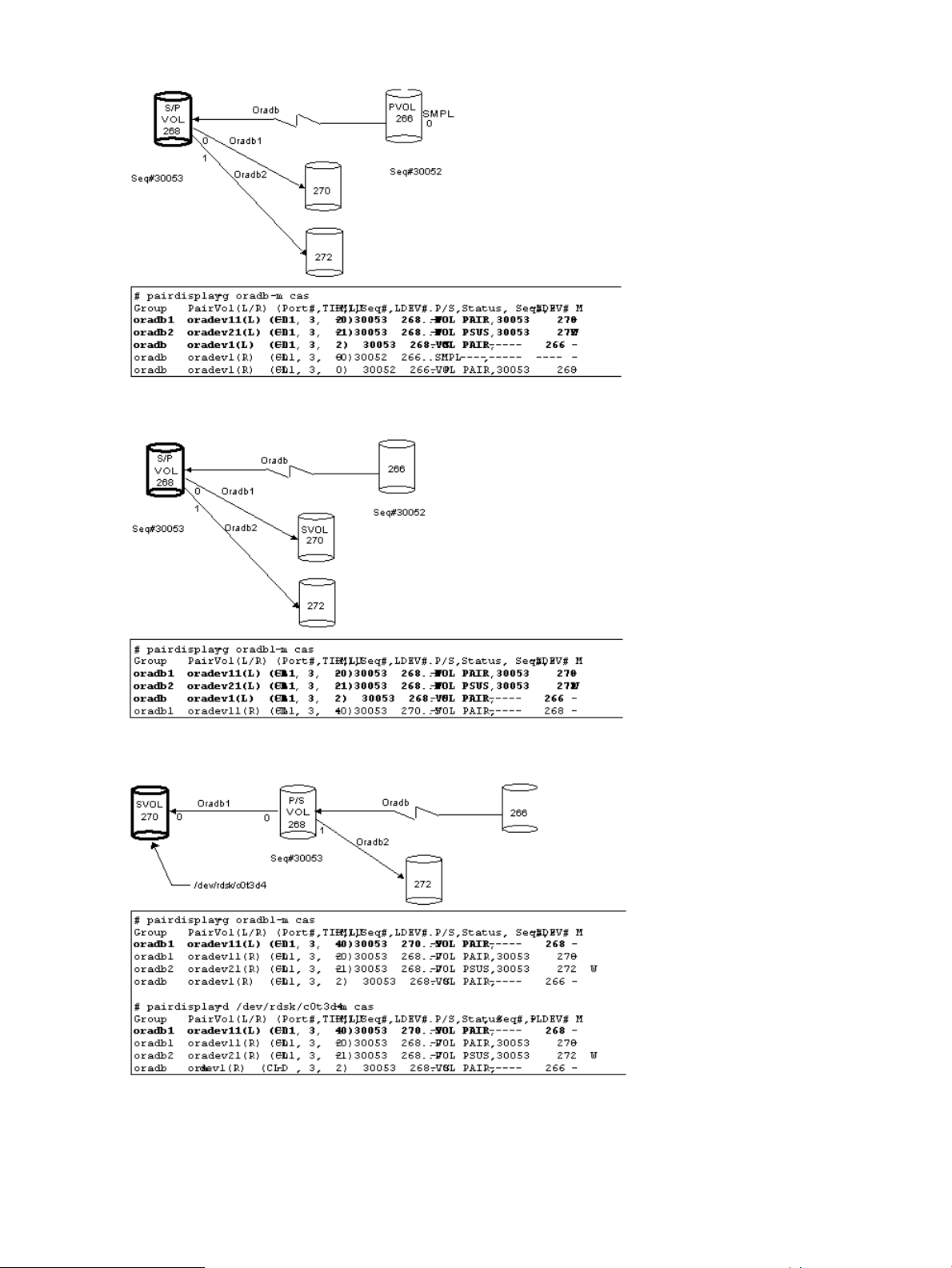

Continuous Access Synchronous, Business Copy, and Continuous Access Journal operations...........128

Continuous Access Synchronous/Business Copy volumes.......................................................128

Continuous Access Synchronous/Business Copy/Continuous Access Journal volume status.........129

Continuous Access Asynchronous, Continuous Access Synchronous, and Continuous Access Journal

volumes..........................................................................................................................133

Sidefile cache for Continuous Access Asynchronous.........................................................135

Continuous Access Asynchronous transition states and sidefile control................................136

Continuous Access Asynchronous/Continuous Access Journal error state.............................137

Continuous Access Synchronous/Continuous Access Asynchronous and Continuous Access Journal

fence level settings...........................................................................................................138

Setting the fence level..................................................................................................139

Snapshot operations.............................................................................................................139

Snapshot volumes............................................................................................................140

Creating a Snapshot pair..................................................................................................140

Snapshot pair status.........................................................................................................140

Pair status relationship to Snapshot commands.....................................................................141

Controlling Auto LUN............................................................................................................142

Specifications for Auto LUN...............................................................................................142


Commands to control Auto LUN

Relations between “cc” command issues and status

Restrictions for Auto LUN

Continuous Access Journal MxN configuration and control

Overview

Policy

horcm.conf

Command specifications

pairdisplay command

pairsplit command

Notice on system operation

Configuration examples

Remote volume discovery

Discovering a remote volume

7 Data protection operations with RAID Manager

Data protection operations

Data Retention

Restrictions on Data Retention volumes

Database Validator

Restrictions on Database Validator

Protection parameters and operations

Data Protection facility

Data Protection Facility specifications

Examples for configuration and protected volumes

Target commands for protection

permission command

New options for security

raidscan -find inst

raidscan -find verify [MU#]

raidscan -f[d]

pairdisplay -f[d]

Permitting protected volumes

With a $HORCMPERM file

Without a $HORCMPERM file: Commands to run on different operating systems

Environment variables

$HORCMPROMOD

$HORCMPERM

Determining the protection mode command device

8 Examples of using RAID Manager commands

Group version control for mixed storage system configurations

LDM volume discovery and flushing for Windows

Volume discovery function

Mountvol attached to Windows systems

System buffer flushing function

Special facilities for Windows systems

Signature changing facility for Windows systems

GPT disk for Windows

Directory mount facility for Windows systems

Host group control

Specifying a host group

Commands and options including a host group

Using RAID Manager SLPR security

Specifying the SLPR Protection Facility

SLPR configuration examples


9 Troubleshooting

General troubleshooting

Operational notes and restrictions for RAID Manager operations

Error messages and error codes

System log messages

Command error messages

Generic error codes (horctakeover and pair commands)

Generic error codes (raidscan, raidqry, raidar, horcctl)

Specific error codes

SSB codes

SSB code returned by a replication command

SSB code returned by the configuration setting command (raidcom command)

10 Support and other resources

Contacting HP

Subscription service

Documentation feedback

Related information

HP websites

Conventions for storage capacity values

Typographic conventions

Glossary

Index


1 Overview

Unless otherwise specified, the term P9000 in this guide refers to the following disk array:

• P9500 Disk Array

NOTE: The raidcom commands described in this guide are supported only on the P9000 disk arrays. All other commands are supported on both the P9000 and the XP24000/XP20000, XP12000/XP10000, SVS200, and XP1024/XP128 disk arrays.

The GUI illustrations in this guide were created using a Windows computer with the Internet Explorer browser. Actual windows may differ depending on the operating system and browser used. GUI contents also vary with licensed program products, storage system models, and firmware versions.


About RAID Manager

RAID Manager enables you to perform storage system configuration and data management operations by issuing commands to the RAID storage systems. RAID Manager operations can be used on the following storage systems:

• P9500 Disk Array

• XP24000/XP20000 Disk Array

• XP12000 Disk Array

• XP10000 Disk Array

• XP1024/XP128 Disk Array

RAID Manager continues to provide the proven functionality that has been available for the XP24000/XP20000 Disk Array storage systems and previous storage system models, including in-system replication, remote replication, and data protection operations.

In addition, RAID Manager now provides command-line access to the same provisioning and storage management operations that are available in the Remote Web Console graphical user interface. RAID Manager commands can be used interactively or in scripts to automate and standardize storage administration functions, thereby simplifying the job of the storage administrator and reducing administration costs. This new version of RAID Manager also provides improved ease of use while at the same time reducing the risk of error.

RAID Manager functions available on the P9500 storage system

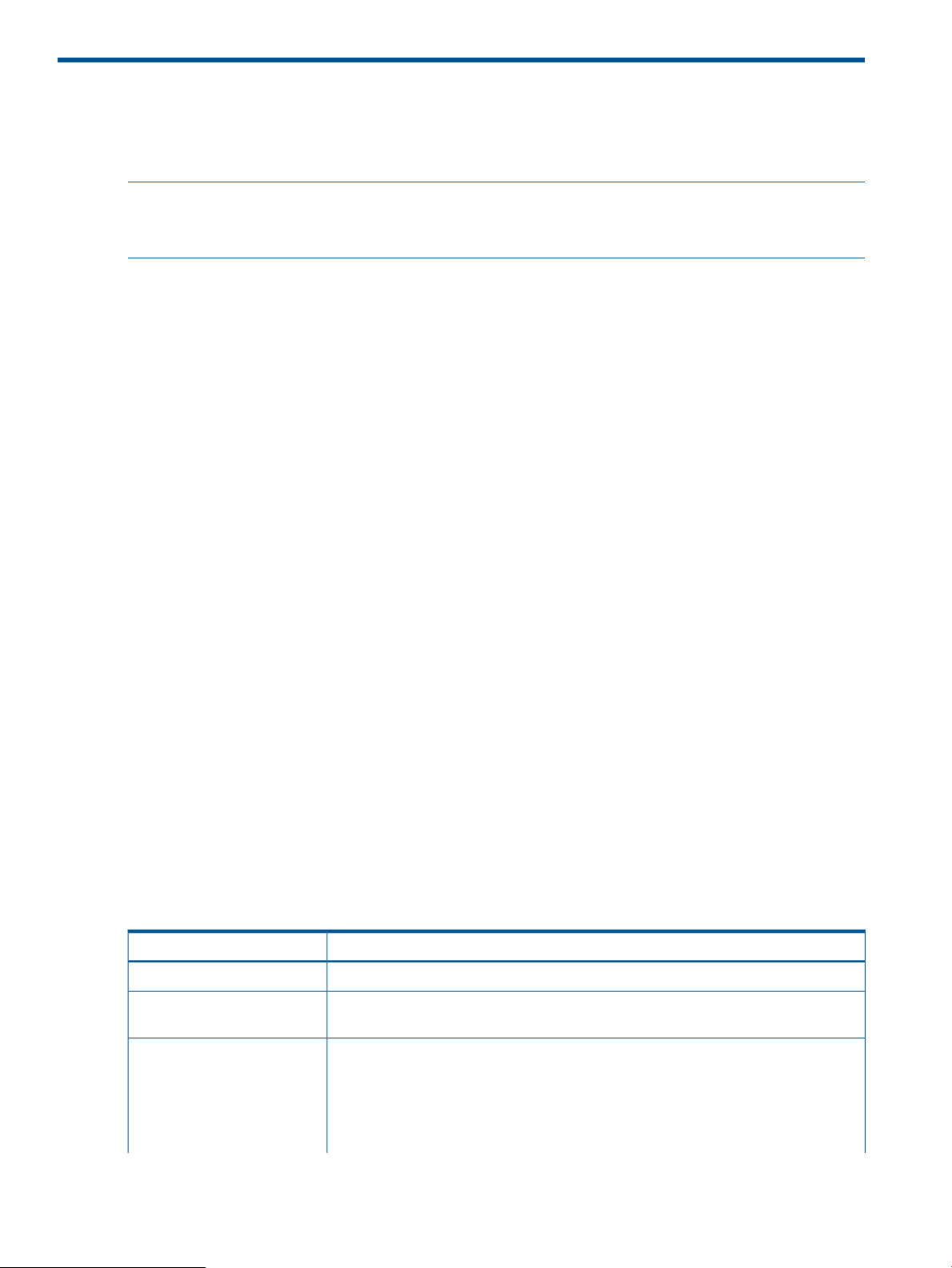

The following table lists and describes the new RAID Manager functions available on the P9500.

| Item | Description |
|---|---|
| Provisioning function | Supports configuration setting commands in addition to replication commands. |
| Asynchronous commands | Supports a command processing method that returns a response as soon as a command is received and performs the actual processing later. |
| Command execution modes | Supports transaction mode, which executes a script file specified by the -zt option, and line-by-line mode, which executes row-by-row input from the command line. Transaction mode performs a context check, which checks the consistency of the contents of the script file, and a configuration check, which checks the contents of the script file against the installed resources. The script file is executed only after these checks complete normally, and the progress of the checks and the execution is displayed on the console. |
| Precheck function | Performs command checking only. The command is not executed even if no problem is found in the check result. This function can be specified in both line-by-line mode and transaction mode (see “Command execution modes” (page 10)). |
| CLI command in out-of-band | Makes both replication and provisioning CLI commands executable by the out-of-band method (see “Command execution by the out-of-band method” (page 11)). |
| User authentication | Supports user authentication in conjunction with Remote Web Console/SVP. Once user authentication is enabled, commands are executed in accordance with the authentication controlled by Remote Web Console/SVP. User authentication is required when executing replication or provisioning operations by the out-of-band method, and when executing provisioning operations by the in-band method. It is optional when executing only replication operations by the in-band method. |
| LDEV nickname function | Supports giving a nickname to an LDEV. |
| LDEV grouping function | Groups multiple LDEVs so that they can be defined as one device group or one copy group. By using a defined group, the LDEVs can be operated on together. |
| Resource group function | Lets each user use resources effectively by grouping resources in the storage system (LDEVs, ports, host groups, and pools). |
| Resource group locking | Supports locking of resources (LDEVs, ports, and so on) by a user, between RAID Manager and the SVP or between RAID Manager and another RAID Manager. |

Provisioning function

By executing configuration setting commands (raidcom commands) from RAID Manager, you can perform provisioning operations such as changing settings and creating LDEVs. For information about the configuration setting commands (raidcom commands), see “Overview of the configuration setting command” (page 89).

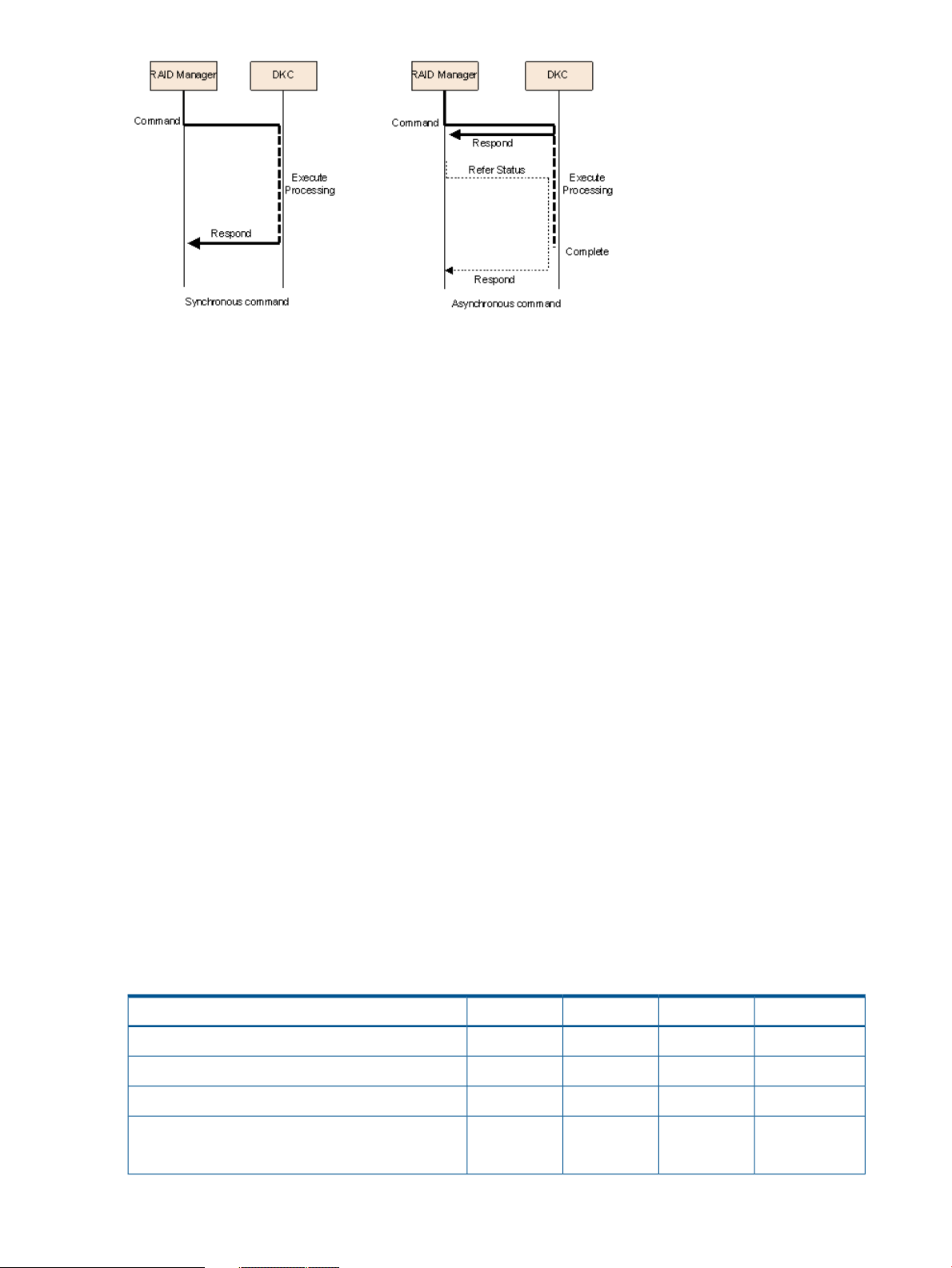

Asynchronous command processing

Among the configuration setting commands (raidcom commands), asynchronous processing is applied to commands that take a long time to process on the storage system. After such a command is issued, you can execute another command without waiting for the previous command to complete. You can monitor the completion status by using a status reference command.
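The following minimal Python model illustrates the control flow of asynchronous processing. It is not the RAID Manager interface: the class `AsyncCommandQueue`, its methods, and its status strings are hypothetical. `submit` returns immediately, `process_next` stands in for the storage system performing the work later, and `status` plays the role of the status reference command.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple


class AsyncCommandQueue:
    """Toy model of asynchronous command processing (hypothetical API)."""

    def __init__(self) -> None:
        self._pending: Deque[Tuple[int, Callable[[], None]]] = deque()
        self._status: Dict[int, str] = {}
        self._next_id = 1

    def submit(self, work: Callable[[], None]) -> int:
        """Accept a command and return at once with a command ID."""
        cmd_id = self._next_id
        self._next_id += 1
        self._pending.append((cmd_id, work))
        self._status[cmd_id] = "pending"
        return cmd_id

    def process_next(self) -> None:
        """Simulate the storage system executing the oldest pending command."""
        if not self._pending:
            return
        cmd_id, work = self._pending.popleft()
        try:
            work()
            self._status[cmd_id] = "completed"
        except Exception:
            self._status[cmd_id] = "failed"

    def status(self, cmd_id: int) -> str:
        """Role of the status reference command: report completion state."""
        return self._status[cmd_id]
```

A second command can be submitted while the first is still pending, and its result is collected later through `status`.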


Command execution modes

RAID Manager provides two command execution modes for the configuration setting commands (raidcom commands): transaction mode, which executes a script file specified with the -zt option, and line-by-line mode, which executes commands one row at a time.

Transaction mode can perform the following checks.

• Context check: This check is performed when a script file is specified by the -zt option. It checks the context of the preceding commands and determines whether a subsequent command can be executed.

Example:

> raidcom -zt <script_file>

• Configuration check: This check verifies that the resources specified in the commands (LDEVs, ports, pools, and so on) actually exist (are implemented) in the storage system configuration.

Syntax example:

> raidcom get ldev -ldev_id -cnt 65280 -store <work_file>

> raidcom -zt <script_file> -load <work_file>
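The following toy Python model shows how the context check differs from the configuration check. The three-word command language (`add`, `delete`, or `map` followed by a decimal LDEV ID) is hypothetical and is not raidcom syntax. The `installed` set stands in for the configuration saved with `-store` and read back with `-load`.

```python
from typing import Dict, List, Optional, Set

# Hypothetical toy command language: "<op> <ldev_id>".
VALID_OPS = ("add", "delete", "map")


def check_script(lines: List[str], installed: Optional[Set[int]] = None) -> List[str]:
    """Model of the checks that transaction mode (-zt) performs.

    Syntax check: every line must be "<op> <ldev_id>".
    Context check: a command is checked against the effects of the
    commands that precede it in the same script.
    Configuration check: if `installed` is given, commands on LDEVs that
    the script has not touched yet are checked against it.
    """
    known: Dict[int, bool] = {}  # LDEV ID -> exists, as implied by earlier lines
    errors: List[str] = []
    for n, line in enumerate(lines, start=1):
        parts = line.split()
        if len(parts) != 2 or parts[0] not in VALID_OPS or not parts[1].isdigit():
            errors.append(f"line {n}: syntax check: invalid command {line!r}")
            continue
        op, ldev = parts[0], int(parts[1])
        if ldev in known:
            kind, exists = "context", known[ldev]
        elif installed is not None:
            kind, exists = "configuration", ldev in installed
        else:
            kind, exists = None, None  # nothing known: this line cannot be checked
        if kind is not None:
            if op == "add" and exists:
                errors.append(f"line {n}: {kind} check: LDEV {ldev} already exists")
            elif op != "add" and not exists:
                errors.append(f"line {n}: {kind} check: LDEV {ldev} does not exist")
        # Record the effect of this command for later context checks.
        if op == "add":
            known[ldev] = True
        elif op == "delete":
            known[ldev] = False
    return errors
```

Without `installed`, only problems that the script causes for itself are detected. With `installed`, commands are also validated against the existing configuration.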

Precheck function

RAID Manager provides a precheck function that checks a configuration command before executing

the command for the configuration setting commands (raidcom commands):

In RAID Manager before supporting P9500, an error was returned when the syntax of a command

to be executed was not correct. With this precheck function, the command syntax can be checked

before the command is issued. This function can be specified using either the -checkmode

precheck option or the -zt option.
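The option combinations listed in Table 1 follow a simple pattern. The Python sketch below assumes the table is complete. The syntax check always runs. The context check runs only with -zt. The configuration check runs only when -load supplies the stored configuration. The -checkmode precheck option suppresses execution. The function name and its parameters are hypothetical.

```python
from typing import Dict


def checks_performed(script: bool, load: bool, precheck: bool) -> Dict[str, bool]:
    """Return which checks run for a raidcom invocation, following Table 1.

    script:   -zt <script_file> is given (transaction mode)
    load:     -load <work_file> is also given
    precheck: -checkmode precheck is given
    """
    if load and not script:
        # Table 1 lists -load only together with -zt.
        raise ValueError("-load is only listed together with -zt")
    return {
        "syntax": True,             # performed in every row of the table
        "context": script,          # only in transaction mode (-zt)
        "configuration": load,      # needs the configuration loaded with -load
        "execution": not precheck,  # precheck never executes the command
    }
```

For example, `checks_performed(script=True, load=False, precheck=True)` corresponds to the `raidcom -zt <script_file> -checkmode precheck` row.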

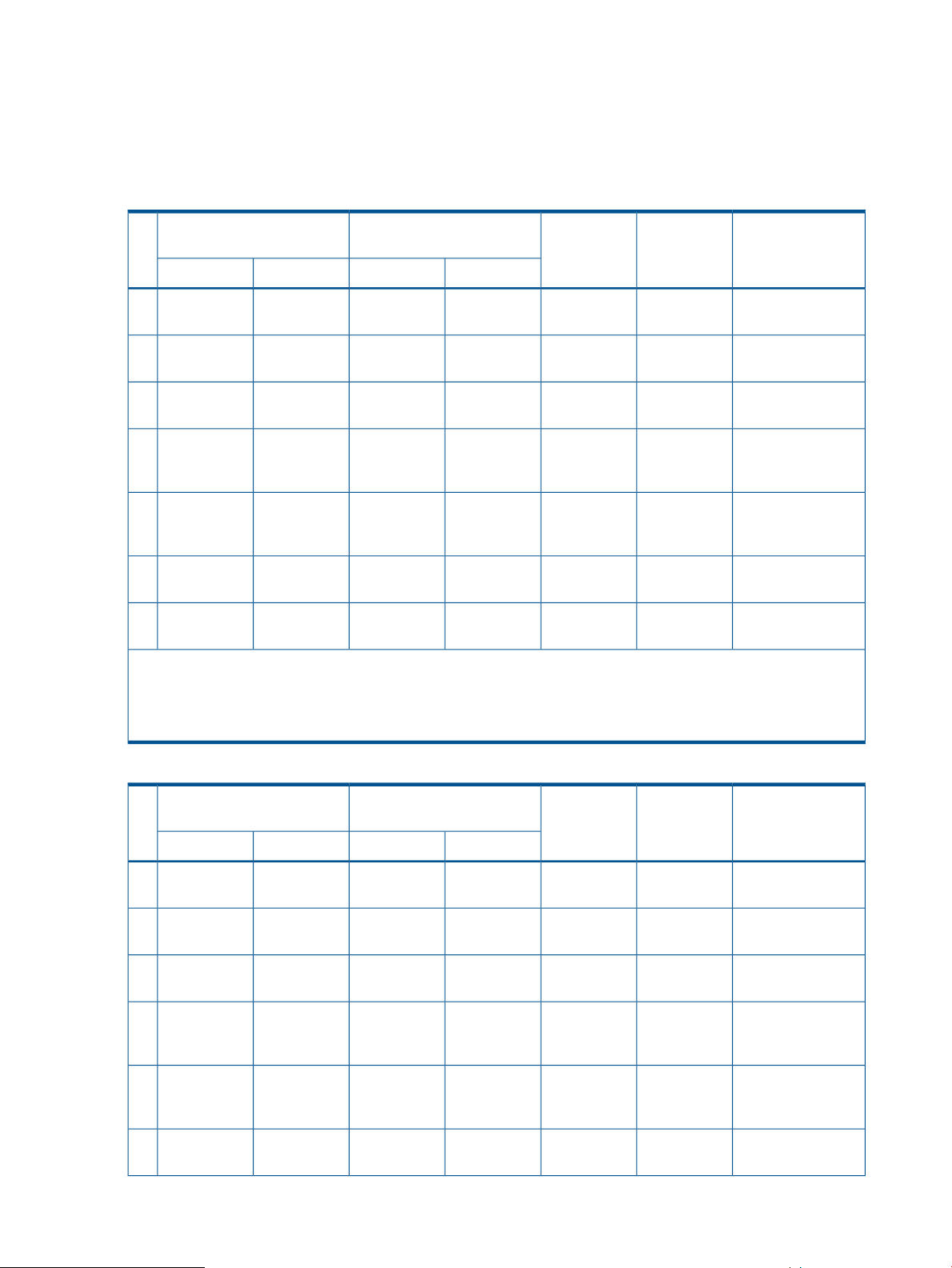

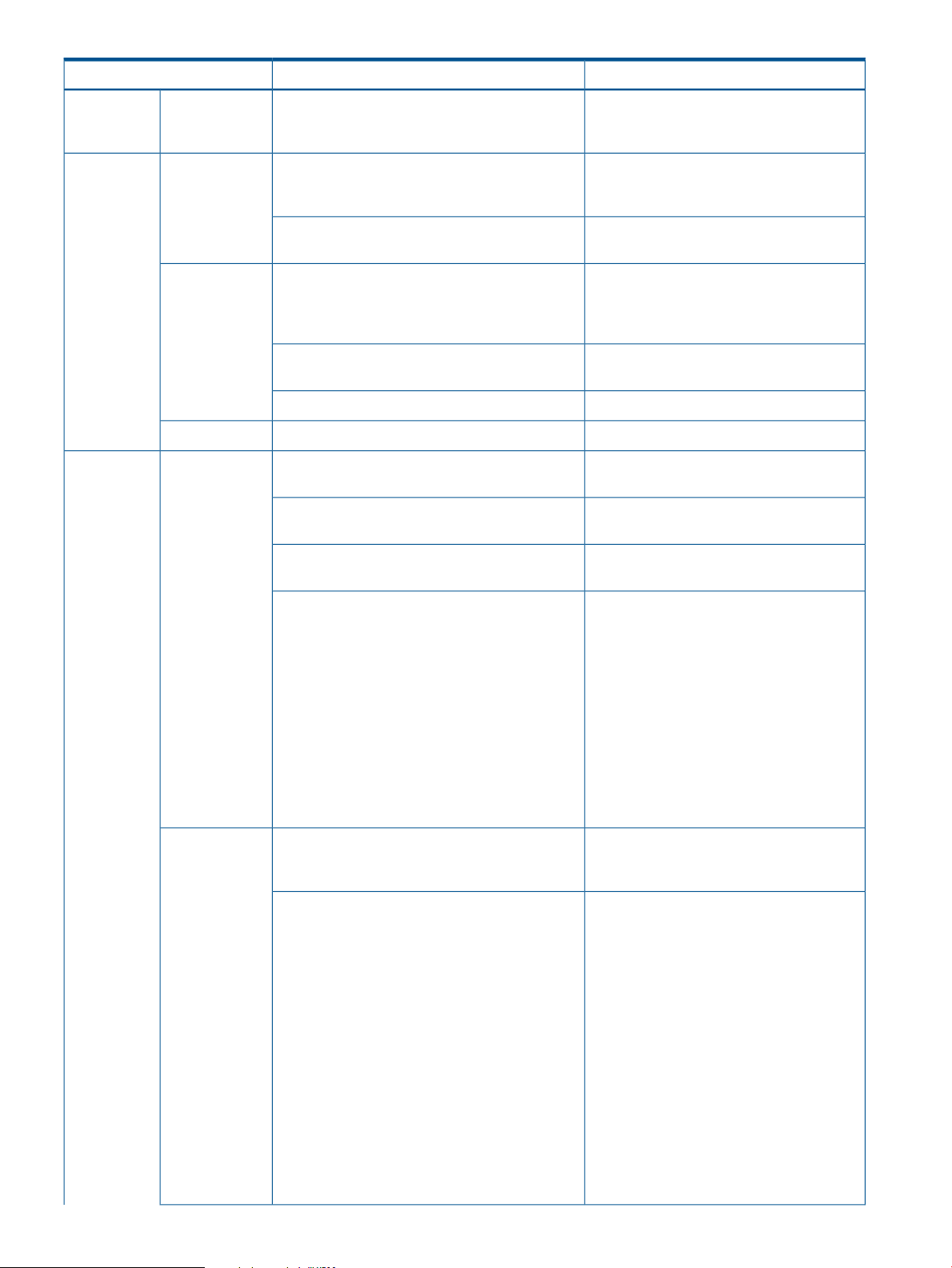

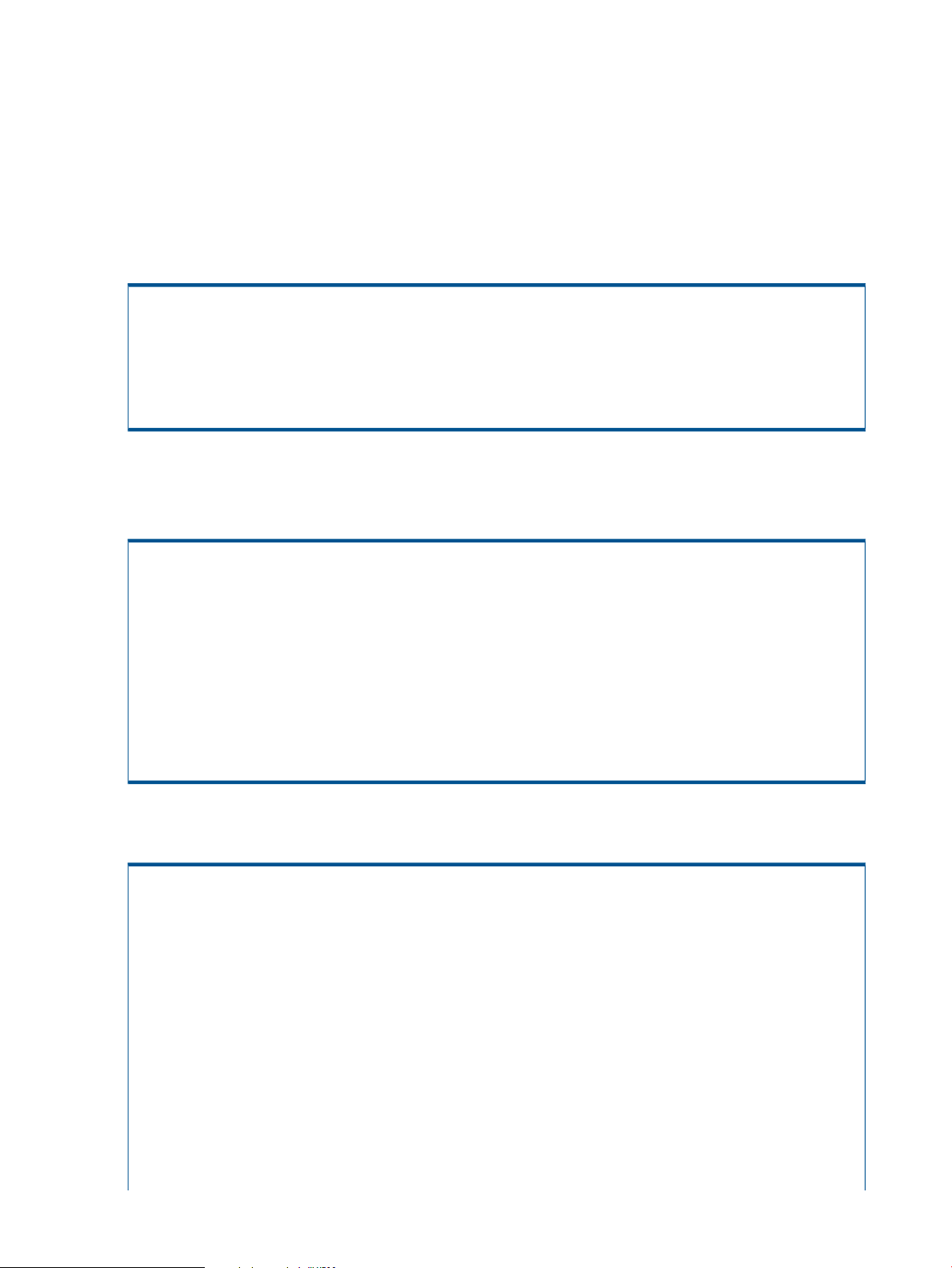

The following table shows the summary of checking function combinations between precheck

function and the transaction mode.

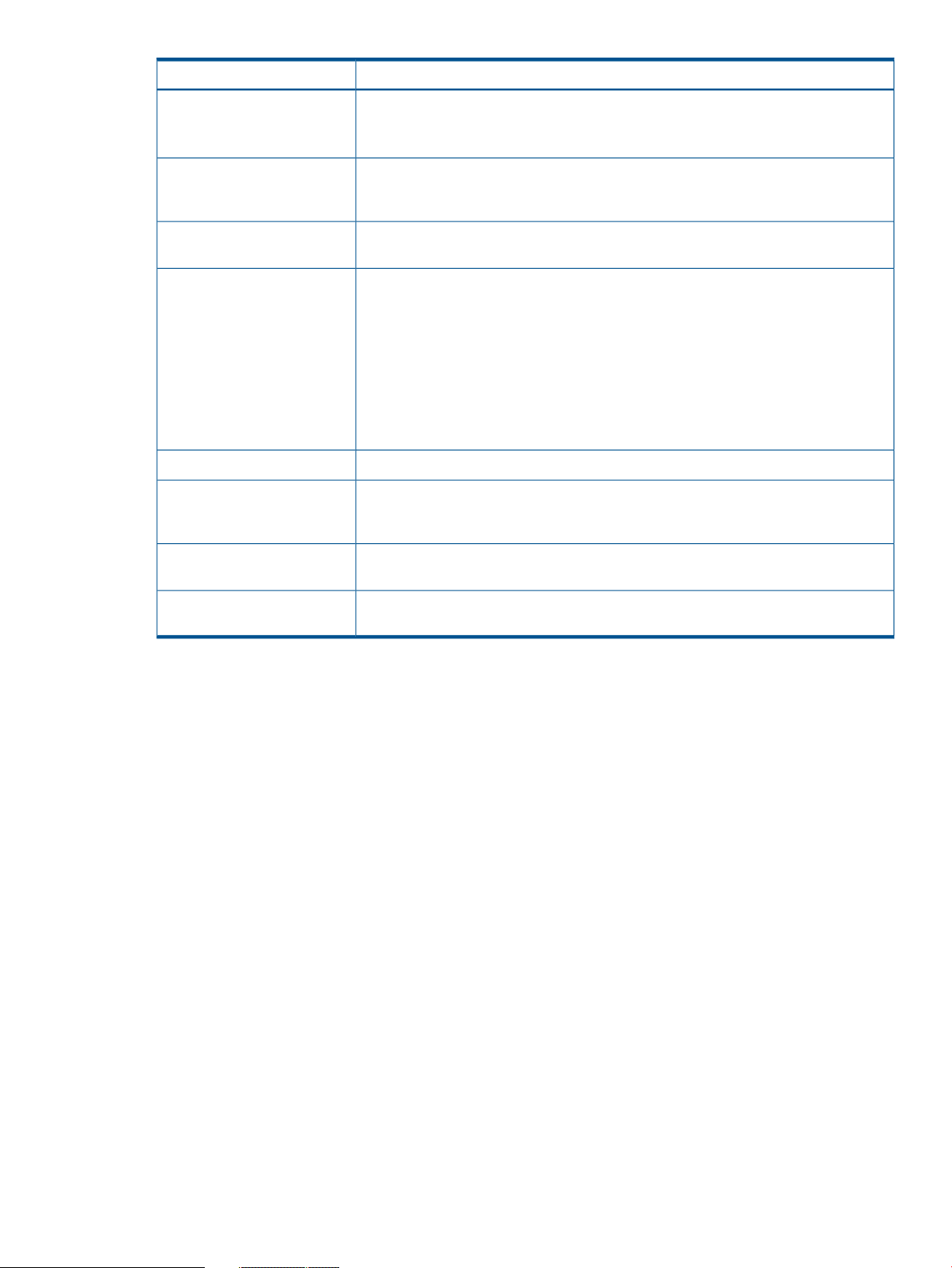

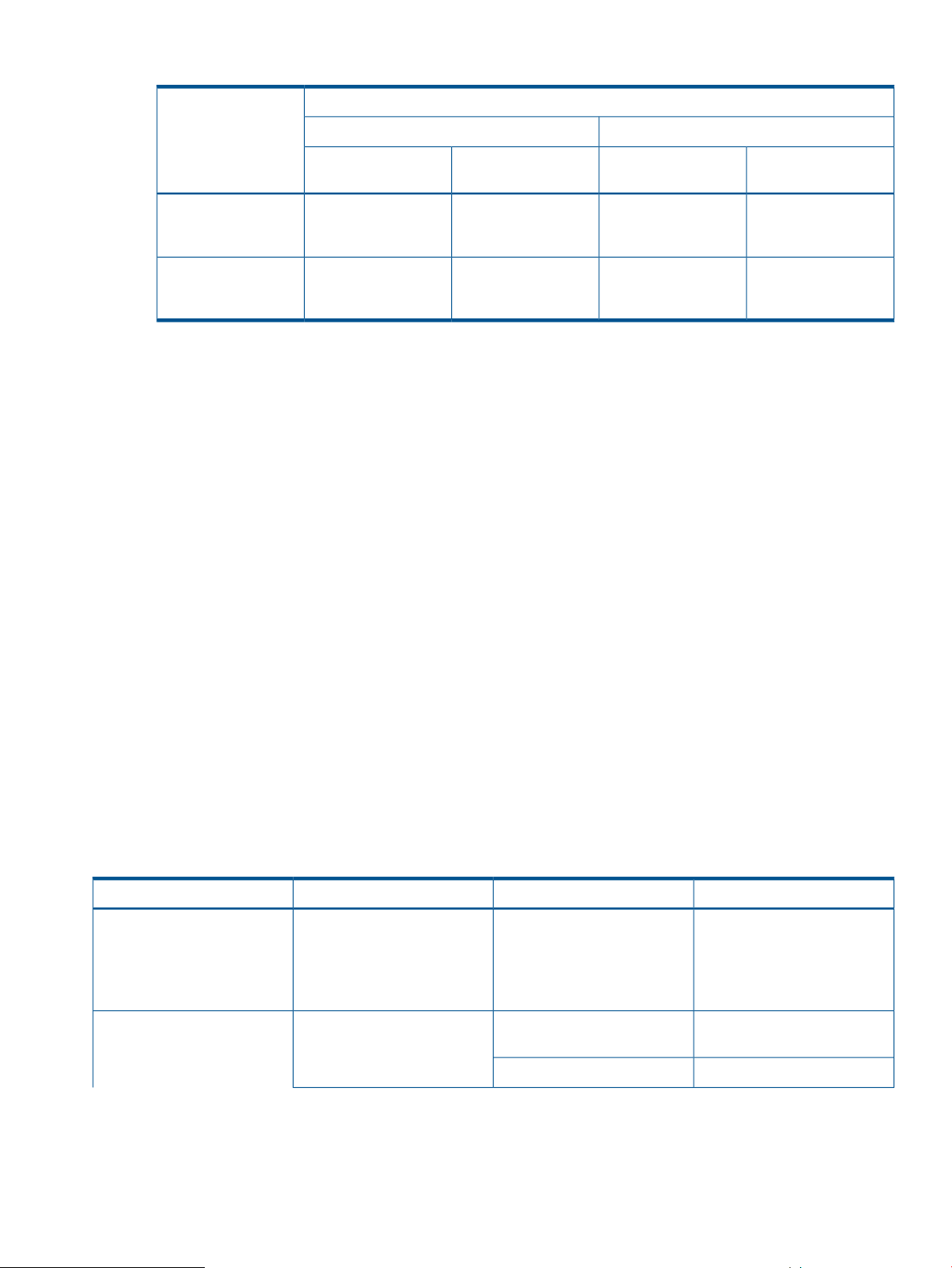

Table 1 Summary of the checking functions

raidcom -zt <script_file> -load <work_file>

10 Overview

ExecutionConfig checkContext checkSyntax checkCommand syntax

ExecutedNot executedNot executedExecutedraidcom <command>

Not executedNot executedNot executedExecutedraidcom <command> -checkmode precheck

ExecutedNot executedExecutedExecutedraidcom -zt <script file>

ExecutedExecutedExecutedExecutedraidcom get ldev -ldev -cnt 65280 -store<work_file>

Page 11

Table 1 Summary of the checking functions (continued)

raidcom -zt <script_file> -load <work_file> -checkmode

precheck

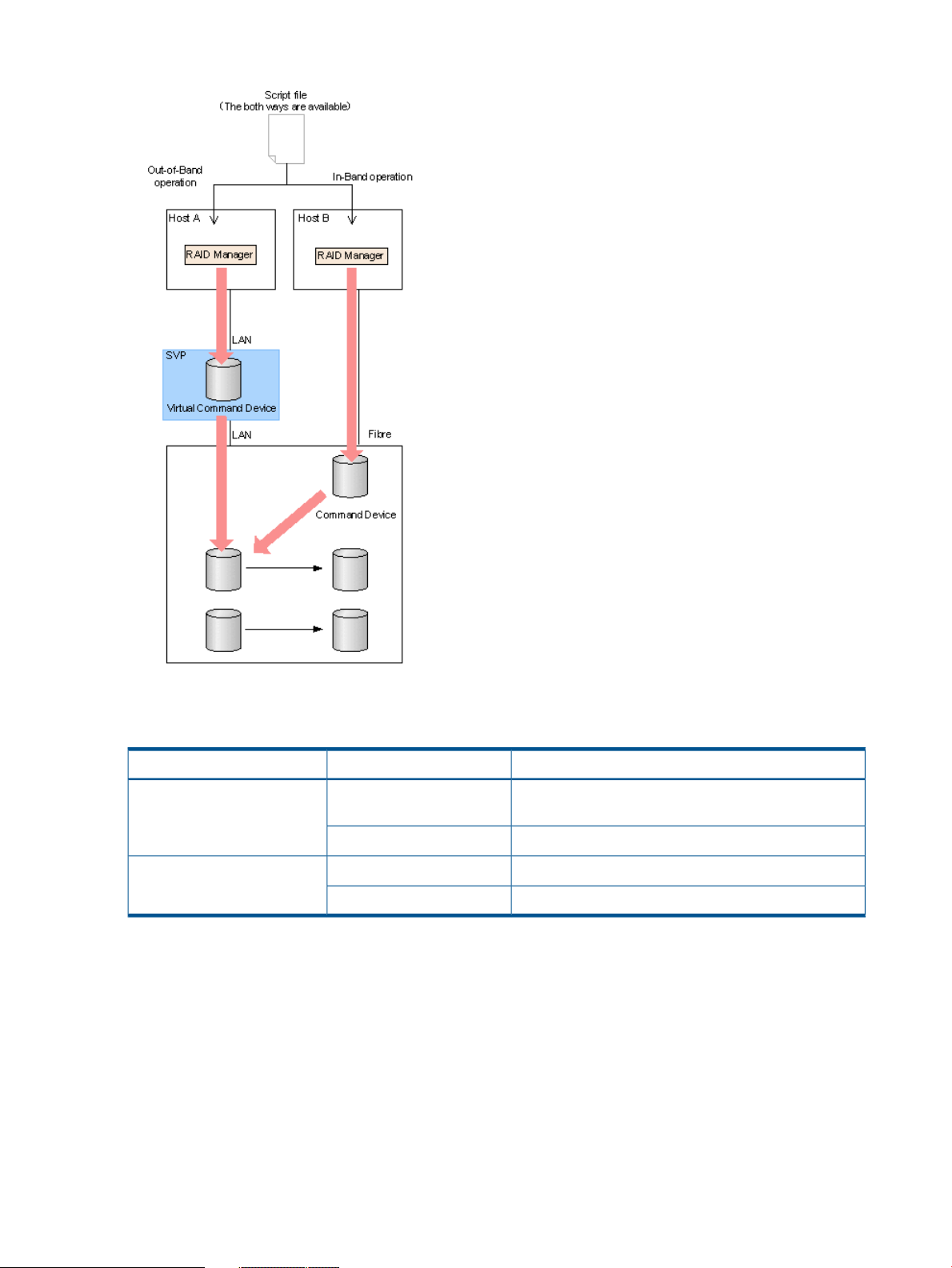

Command execution by the out-of-band method

In the RAID Manager before supporting P9500, a command can be executed only from the host

connected by the fibre channel directly. This is known as in-band operations. In the RAID Manager

supporting P9500, a command can be executed from any client PC connected to the storage

system via LAN, not just from connected hosts. This is known as out-of-band operations.

• For in-band RAID Manager operations, the command device is used, which is a user-selected

and dedicated logical volume on the storage system that functions as the interface to the

storage system on the UNIX/PC host. The command device accepts read and write commands

that are executed by the storage system.

• For out-of-band RAID Manager operations, a virtual command device is used. The virtual

command device is defined in the configuration definition file by an IP address on the SVP.

RAID Manager commands are issued from the client or the host server and transferred via

LAN to the virtual command device, and the requested operations are then performed by the

storage system.

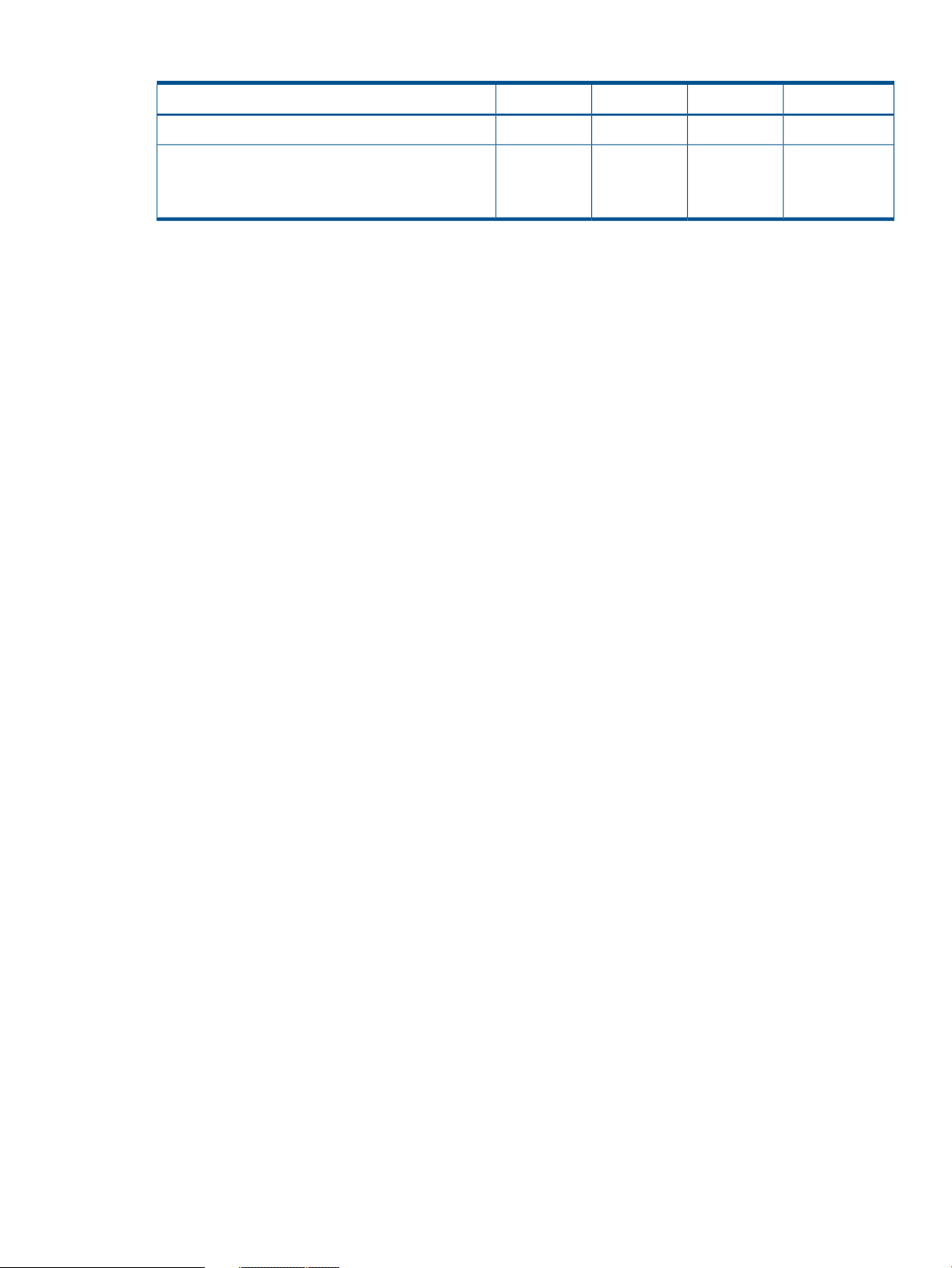

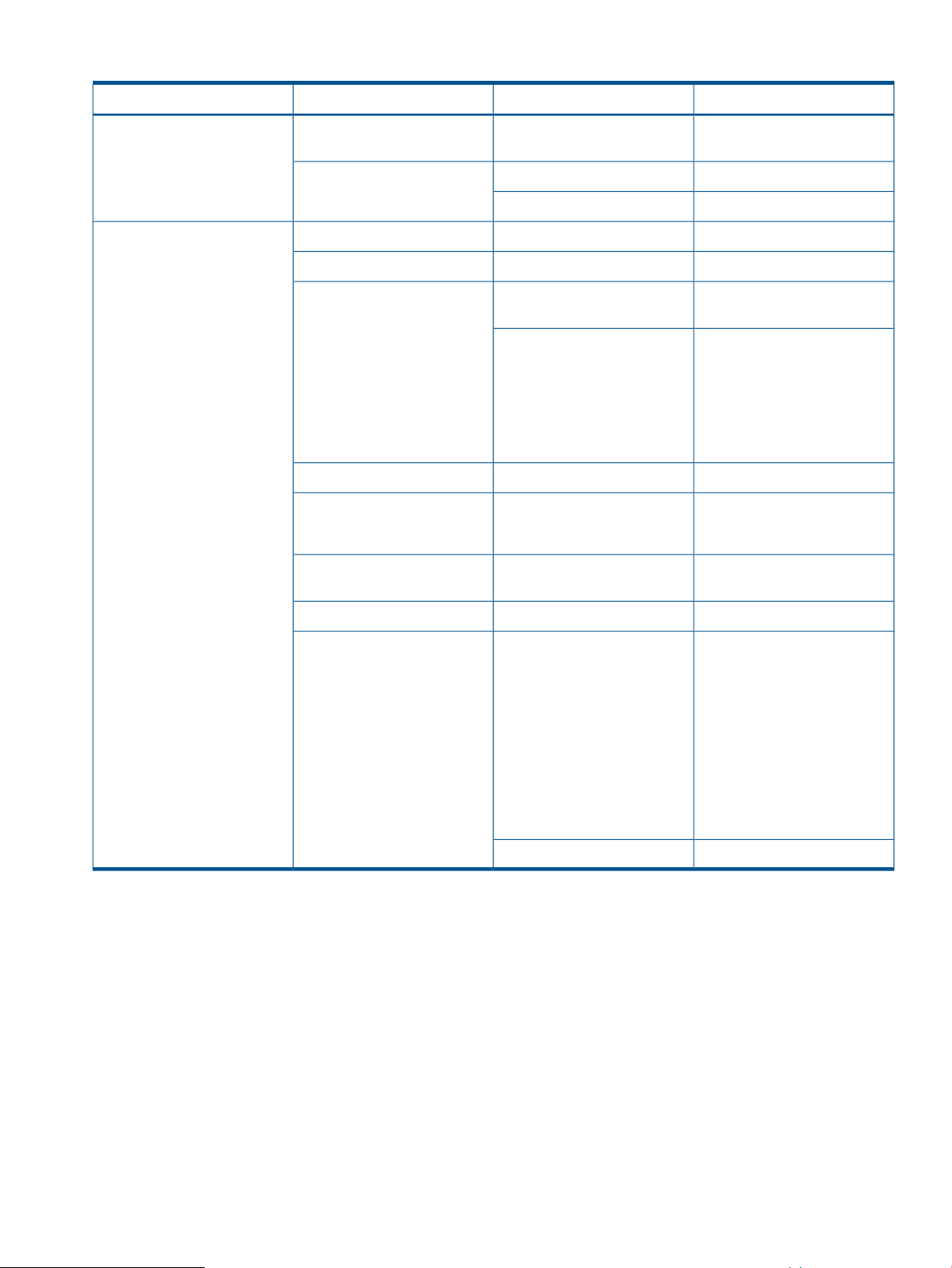

The following table illustrates in-band and out-of-band RAID Manager operations.

ExecutionConfig checkContext checkSyntax checkCommand syntax

Not executedNot executedExecutedExecutedraidcom -zt <script file> -checkmode precheck

Not executedExecutedExecutedExecutedraidcom get ldev -ldev -cnt 65280 -store<work_file>


Figure 1 Overview of out-of-band and in-band operations

The following table provides a comparison of in-band and out-of-band operations.

Table 2 Comparison of in-band and out-of-band operations

| Route | Command | Specification |
|---|---|---|
| In-band (issued from the host as if it were a command for the command device) | Replication | Whether user authentication is required depends on the user authentication setting. |
| | Provisioning | User authentication is required. |
| Out-of-band (communicating directly with the SVP) | Replication | User authentication is required. |
| | Provisioning | User authentication is required. |

User authentication

To use user authentication, you must enable the user authentication mode for the RAID Manager command device. If authentication is disabled, provisioning commands and out-of-band commands cannot be executed.

The user information (user ID and password) is the same as that used for Remote Web Console and the SVP.

LDEV nickname function

A unique nickname of up to 32 characters can be given to an LDEV.
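The nickname rules can be modeled with a small Python class, which is a sketch only. The 32-character limit and the one-nickname-per-LDEV rule come from this guide. Two further rules are assumptions drawn from the word "unique": a name already held by another LDEV is rejected, and empty names are rejected. The class and method names are hypothetical.

```python
from typing import Dict

MAX_NICKNAME_LEN = 32  # from the text: up to 32 characters


class NicknameRegistry:
    """Toy model of LDEV nicknames (hypothetical API).

    One nickname per LDEV; setting a new one replaces the old one.
    Rejecting a name already used by another LDEV, and rejecting empty
    names, are assumptions.
    """

    def __init__(self) -> None:
        self._by_ldev: Dict[int, str] = {}

    def set_nickname(self, ldev_id: int, name: str) -> None:
        if not 1 <= len(name) <= MAX_NICKNAME_LEN:
            raise ValueError(f"nickname must be 1-{MAX_NICKNAME_LEN} characters")
        for other, existing in self._by_ldev.items():
            if existing == name and other != ldev_id:
                raise ValueError(f"nickname {name!r} is already used by LDEV {other}")
        self._by_ldev[ldev_id] = name  # replaces any previous nickname

    def nickname(self, ldev_id: int) -> str:
        return self._by_ldev.get(ldev_id, "")
```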


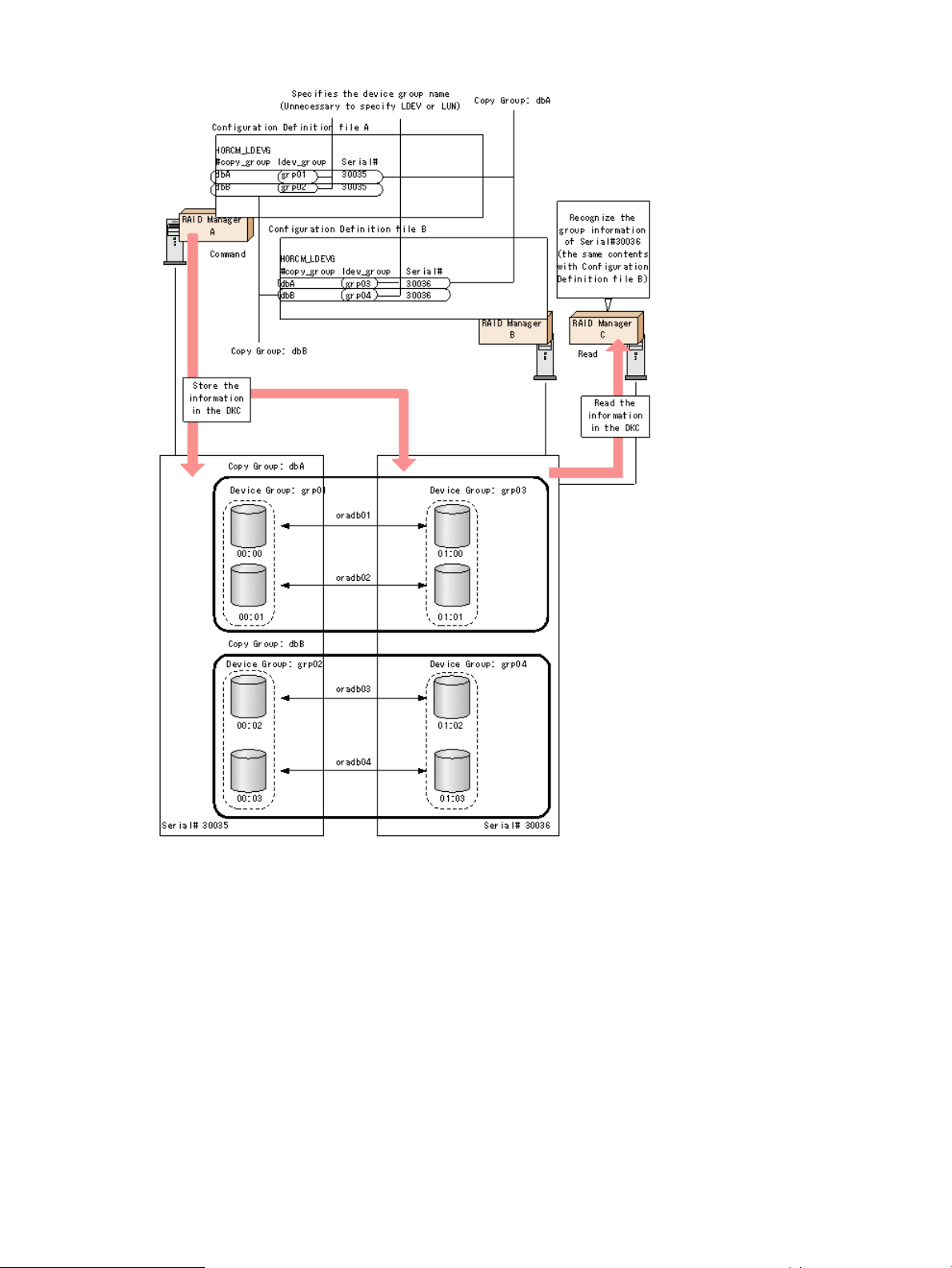

LDEV grouping function

In RAID Manager versions that predate P9500 support, a copy group had to be defined in the configuration definition file on each host, and changing copy group information required editing the configuration definition file on each host. In RAID Manager versions that support the P9500, group information can be defined once and stored in the storage system. When group information changes, only one configuration file needs to be edited. This saves time and effort and eliminates errors caused by mismatched edits.

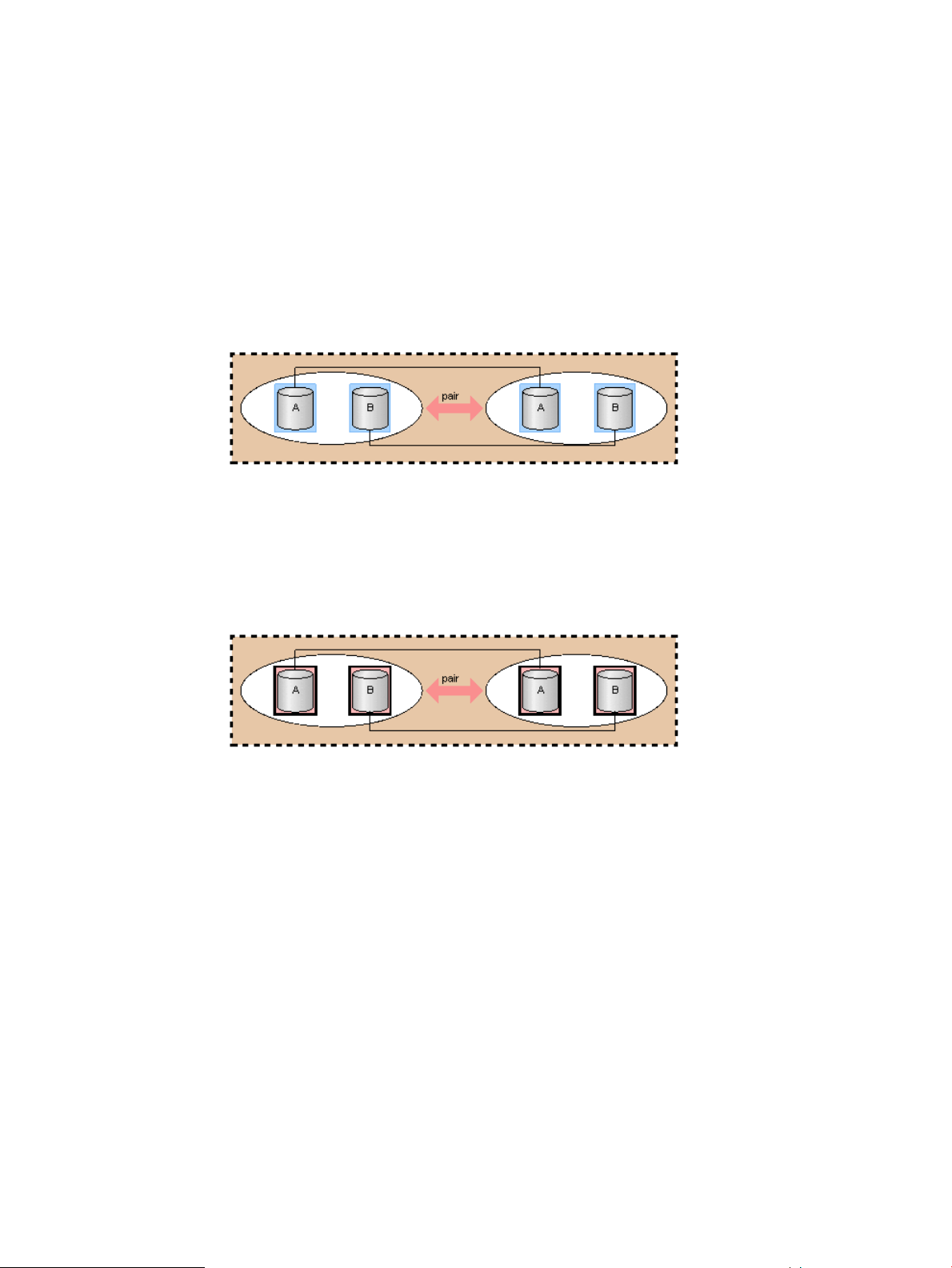

This new functionality is implemented by using LDEV names, device groups, and copy groups:

• Copy group: A group that is defined by specifying two device groups: one device group from the primary side and one device group from the secondary side.

• Device group:

◦ A group that is configured with one or more LDEVs.

◦ A device group can belong to only one copy group.

◦ When creating a mirrored or cascaded pair, each copy group must have unique device groups and device names.

• Device name:

◦ A name that can be given to one LDEV in each device group.

◦ Each name is associated with the device group to which the LDEV belongs.

◦ An LDEV nickname can be given to an LDEV as a unique name that is not related to any device group. Only one LDEV nickname can be given to each LDEV.
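The relationships above can be expressed as two small Python data classes. This is a sketch, and the class and field names are hypothetical. It enforces only two of the listed rules: a copy group pairs one primary and one secondary device group, and a device group can belong to only one copy group. It also assumes that a device name identifies one LDEV within its device group.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class DeviceGroup:
    name: str
    devices: Dict[str, int] = field(default_factory=dict)  # device name -> LDEV ID
    copy_group: Optional[str] = None  # name of the copy group it belongs to

    def add_device(self, device_name: str, ldev_id: int) -> None:
        # A device name refers to one LDEV within this device group.
        if device_name in self.devices:
            raise ValueError(f"device name {device_name!r} already used in {self.name}")
        self.devices[device_name] = ldev_id


@dataclass
class CopyGroup:
    name: str
    primary: DeviceGroup
    secondary: DeviceGroup

    def __post_init__(self) -> None:
        if self.primary is self.secondary:
            raise ValueError("primary and secondary must be different device groups")
        for dg in (self.primary, self.secondary):
            # A device group can belong to only one copy group.
            if dg.copy_group is not None:
                raise ValueError(f"{dg.name} already belongs to {dg.copy_group}")
        # Assign membership only after both checks pass.
        self.primary.copy_group = self.name
        self.secondary.copy_group = self.name
```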

Resource group function

With the resource group function, the storage administrator of each resource group can access only that resource group and cannot access any resources other than those the administrator manages. This reduces the risk of data being destroyed or leaked by a storage administrator of another resource group.
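The access rule can be reduced to one lookup, sketched here in Python. This is a conceptual model, not the storage system's implementation. The function name and the resource identifiers (such as `"LDEV:0x10"`) are hypothetical.

```python
from typing import Dict, Set


def can_access(admin_groups: Dict[str, Set[str]], resource_group_of: Dict[str, str],
               admin: str, resource: str) -> bool:
    """Return True only if `admin` manages the resource group that owns `resource`.

    admin_groups:      administrator -> resource groups the administrator manages
    resource_group_of: resource identifier -> resource group that owns it
    """
    group = resource_group_of.get(resource)
    return group is not None and group in admin_groups.get(admin, set())
```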

Resource group locking function

The resource group locking function prevents conflicts among multiple users. When there are multiple users (of Remote Web Console and the SVP, for example), user scripts cannot be guaranteed to work correctly. To ensure that a script runs to completion, use the Lock command while the script is running. To use the Lock command, user authentication is required.
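The following Python sketch models the locking behavior conceptually. It shows an authenticated user holding a lock on a resource group for the duration of a script while other users' changes are refused. The class, its methods, and the per-resource-group granularity are assumptions. It is not the Lock command's actual interface.

```python
import threading
from typing import Dict, Set


class ResourceLockTable:
    """Toy model of resource group locking between users (hypothetical API)."""

    def __init__(self, authenticated_users: Set[str]) -> None:
        self._authenticated = set(authenticated_users)
        self._owners: Dict[str, str] = {}  # resource group -> user holding the lock
        self._mutex = threading.Lock()     # protects _owners

    def lock(self, user: str, group: str) -> bool:
        """Try to lock `group`; return False if another user holds it."""
        if user not in self._authenticated:
            raise PermissionError("user authentication is required to lock")
        with self._mutex:
            return self._owners.setdefault(group, user) == user

    def unlock(self, user: str, group: str) -> None:
        with self._mutex:
            if self._owners.get(group) == user:
                del self._owners[group]

    def may_modify(self, user: str, group: str) -> bool:
        """A change is allowed if the group is unlocked or locked by `user`."""
        with self._mutex:
            owner = self._owners.get(group)
            return owner is None or owner == user
```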


RAID Manager functions available on all RAID storage systems

RAID Manager provides the following functionality on all HP RAID storage systems.

• In-system replication

• Remote replication

• Data protection

In-system replication

RAID Manager provides command-line control for in-system replication operations, including Business Copy and Snapshot. RAID Manager displays Business Copy and Snapshot information and allows you to perform operations by issuing commands or by executing a script file.

Remote replication

RAID Manager provides command-line control for remote replication operations, including Continuous Access Synchronous and Continuous Access Journal. RAID Manager displays Continuous Access Synchronous and Continuous Access Journal information and allows you to perform operations by issuing commands or by executing a script file.

For remote copy operations, RAID Manager interfaces with the system software and high-availability (HA) software on the host as well as the software on the RAID storage system. RAID Manager provides failover operation commands that support mutual hot standby in conjunction with industry-standard failover products (e.g., MC/ServiceGuard, HACMP, FirstWatch®). RAID Manager also supports a scripting function for defining multiple operations in a script (or text) file. Using RAID Manager scripting, you can set up and execute a large number of commands in a short period of time while integrating host-based high-availability control over copy operations.

Data protection

RAID Manager continues to support data protection operations, including Database Validator and Data Retention.

• Database Validator. The RAID Manager software provides commands to set and verify parameters for volume-level validation checking of Oracle database operations. Once validation checking is enabled, all write operations to the specified volumes must have valid Oracle checksums. RAID Manager reports a validation check error to the syslog file each time an error is detected. Database Validator is operated by using the RAID Manager software and cannot be controlled from the Remote Web Console software.

• Data Retention. The RAID Manager software enables you to set and verify the parameters for guarding at the volume level. Once guarding is enabled, the RAID storage system conceals the target volumes from SCSI commands such as SCSI Inquiry and SCSI Read Capacity, prevents reading from and writing to the volumes, and protects the volumes from being used as copy volumes (the Continuous Access Synchronous or Business Copy paircreate operation fails).


2 RAID Manager software environment

The RAID Manager software environment involves components on the RAID storage system(s) and RAID Manager instance components on the host server(s).

Overview of the RAID Manager software environment

The RAID Manager software environment involves components on the RAID storage systems and RAID Manager instance components on the host server(s). The RAID Manager components on the storage systems include the command devices and the data volumes. Each RAID Manager instance on a host server includes:

• RAID Manager application files, referred to as HORC Manager (HORCM):

◦ Log and trace files

◦ A command server

◦ Error monitoring and event reporting files

◦ A configuration management feature

• Configuration definition file (user-defined)

• User execution environments for the HP features, including the commands, a command log, and a monitoring function.

The RAID Manager commands also have interface considerations (see “RAID Manager and the SCSI command interface” (page 18)).

RAID Manager components on the RAID storage system

Command device

RAID Manager commands are issued by the RAID Manager software to the command device of the RAID storage system. The command device is a user-selected, dedicated logical volume on the storage system that functions as the interface to the RAID Manager software on the host. The command device is dedicated to RAID Manager communications and cannot be used by any other applications. The command device accepts RAID Manager read and write commands, which are executed by the storage system, and it returns the responses to read requests to the host. The volume designated as the command device is used only by the storage system and is blocked from the user. The command device uses 16 MB, and the remaining volume space is reserved for RAID Manager and its utilities. The command device can be any OPEN-x device (e.g., OPEN-V) that is accessible to the host. A LUN Expansion volume cannot be used as a command device. A Virtual LVI/Virtual LUN volume as small as 36 MB (e.g., OPEN-3-CVS) can be used as a command device.

CAUTION: Make sure the volume to be selected as the command device does not contain any user data, because the command device becomes inaccessible to the host.

The RAID Manager software on the host issues read and write commands to the command device. When RAID Manager receives an error notification in reply to a read or write request to the RAID storage system, the RAID Manager software switches to an alternate command device, if one is defined. If a command device is blocked (e.g., for online maintenance), you can switch to an alternate command device manually. If no alternate command device is defined or available, all Continuous Access Synchronous and Business Copy commands terminate abnormally, and the host cannot issue commands to the storage system. Therefore, one or more alternate command devices (see “Alternate command device function” (page 17)) must be set to avoid data loss and storage system downtime.


Each command device must be set by using the LUN Manager software on Remote Web Console. In addition, user authentication is required to use provisioning commands, so set the security attribute of the command device to enable user authentication. For information and instructions on setting a command device, see the HP P9000 Provisioning for Open Systems User Guide.

Each command device must also be defined in the HORCM_CMD section of the configuration file for the RAID Manager instance on the attached host. If an alternate command device is not defined in the configuration file, the RAID Manager software may not be able to use the device.

The RAID Manager Data Protection Facility uses an enhanced command device that has an attribute to indicate protection ON or OFF.

NOTE:

• For Solaris operations, the command device must be labeled.

• To enable dual pathing of the command device on Solaris systems, include all paths to the command device on a single line in the HORCM_CMD section of the configuration file. Example 1 “Example of alternate path for command device for Solaris systems” shows an example with two controller paths (c1 and c2) to the command device. Putting the path information on separate lines may cause parsing issues, and failover may not occur unless the HORCM startup script is restarted on the Solaris system.

Example 1 Example of alternate path for command device for Solaris systems

HORCM_CMD

#dev_name dev_name dev_name

/dev/rdsk/c1t66d36s2 /dev/rdsk/c2t66d36s2
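The single-line rule can be made concrete with a minimal Python sketch. It extracts the HORCM_CMD section from configuration text and keeps the paths from each line together as one entry. It assumes only what Example 1 shows: section names begin with HORCM_ and comments begin with #. The function name is hypothetical, and this is not how HORCM itself parses the file.

```python
from typing import List


def parse_horcm_cmd(conf_text: str) -> List[List[str]]:
    """Return the HORCM_CMD entries, one list of device paths per line."""
    entries: List[List[str]] = []
    in_cmd = False
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        if line.startswith("HORCM_"):
            in_cmd = line == "HORCM_CMD"  # a section header starts a new section
            continue
        if in_cmd:
            entries.append(line.split())  # all paths on this line stay together
    return entries
```

Applied to Example 1, the result is a single entry that holds both the c1 and c2 paths.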

Command device guarding

In a customer environment, a command device may be attacked by a maintenance program on a Solaris server, for example as a result of an incorrect operation by maintenance personnel for the UNIX server. When the usable instances are exhausted in this way, RAID Manager instances cannot start on any server except the attacked server. Because the command device is visible to maintenance personnel as a device file, it should be protected against such operator errors.

The RAID microcode (for the command device) and RAID Manager therefore support this protection to guard against such access.

Guarding method

In the current method, an instance is assigned through the command device in one phase. Therefore, if a maintenance tool or similar program reads the special instance allocation area of the command device, the command device interprets the read as an instance assignment from RAID Manager, and the instance space can become full.

In the improved method, RAID Manager attaches to the command device in two phases: it first reads to acquire a usable LBA, and then writes by using the acquired LBA. By detecting this sequence and adding two status bits to the instance assignment table, the command device can confirm whether an access was an instance assignment request from RAID Manager.

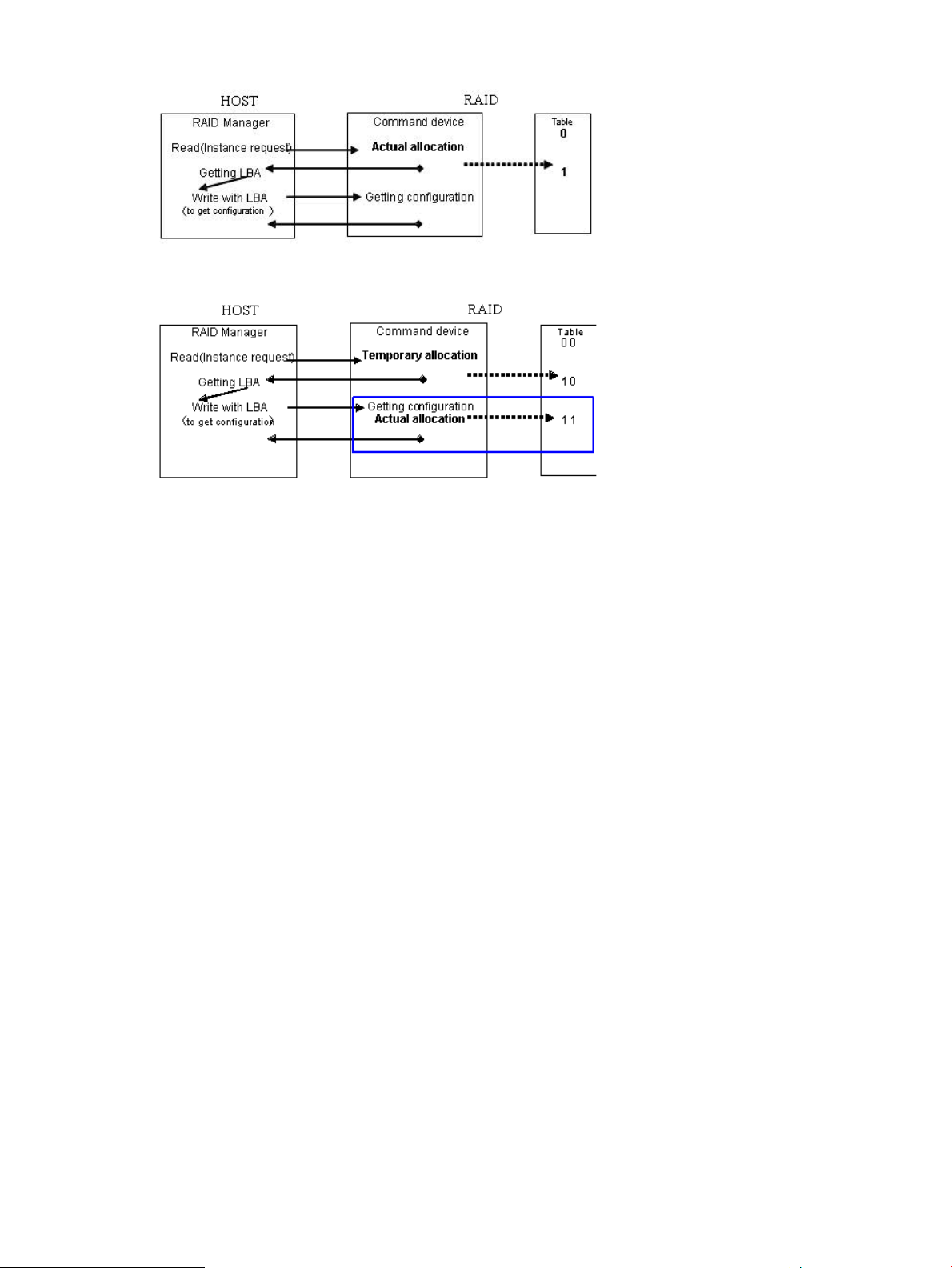

Figure 2 Current assignment sequence

Figure 3 Improved assignment sequence

The command device assigns an instance in two phases, recording “temporary allocation (1 0)” and then “actual allocation (1 1)” in the instance assignment table.

If the command device is attacked, the instance assignment table fills with “temporary allocation (1 0)” entries. The command device then detects that the instance assignment space is full, clears all “temporary allocation (1 0)” entries, and automatically reassigns the required instance.

As a result, service personnel no longer need to turn the command device OFF and ON to clear the instance table.
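The recovery behavior can be simulated with a toy Python model. The status bit values (1 0) and (1 1) come from the text. The table size, the class, and its method names are hypothetical. The model shows how stray reads leave only temporary entries, and how those entries are cleared once the table is full.

```python
from typing import Dict, List, Optional, Tuple

FREE: Tuple[int, int] = (0, 0)
TEMPORARY: Tuple[int, int] = (1, 0)  # "temporary allocation (1 0)"
ACTUAL: Tuple[int, int] = (1, 1)     # "actual allocation (1 1)"


class InstanceTable:
    """Toy model of the two-phase instance assignment table."""

    def __init__(self, size: int) -> None:
        self.slots: List[Tuple[int, int]] = [FREE] * size

    def _find_free(self) -> Optional[int]:
        return next((i for i, s in enumerate(self.slots) if s == FREE), None)

    def read_phase(self) -> Optional[int]:
        """Phase 1: a read reserves a slot as a temporary allocation."""
        slot = self._find_free()
        if slot is None:
            # Table full: clear every temporary allocation, then retry once.
            self.slots = [FREE if s == TEMPORARY else s for s in self.slots]
            slot = self._find_free()
        if slot is not None:
            self.slots[slot] = TEMPORARY
        return slot

    def write_phase(self, slot: int) -> None:
        """Phase 2: a write with the acquired LBA confirms the allocation."""
        if self.slots[slot] != TEMPORARY:
            raise ValueError("slot was not temporarily allocated")
        self.slots[slot] = ACTUAL

    def counts(self) -> Dict[str, int]:
        """Counts comparable to the AI and TI values shown by horcctl -DI."""
        return {"AI": self.slots.count(ACTUAL), "TI": self.slots.count(TEMPORARY)}
```

Only a read followed by a write produces an actual allocation, so a program that only reads the allocation area cannot permanently consume instances in this model.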

Verifying the RAID Manager instance number

RAID Manager provides a way to verify the number of “temporary allocations (1 0)” and “actual allocations (1 1)” in the instance table so that you can confirm the validity of the RAID Manager instance number in use. The horcctl -DI command shows the number of RAID Manager instances since HORCM was started, as follows.

Example without command device security:

# horcctl -DI

Current control device = /dev/rdsk/c0t0d0 AI = 14 TI = 0 CI = 1

Example with command device security:

# horcctl -DI

Current control device = /dev/rdsk/c0t0d0* AI = 14 TI = 0 CI = 1

AI: number of actual instances in use

TI: number of temporary instances in RAID

CI: number of instances using the current (own) instance
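For scripted monitoring, the horcctl -DI line can be parsed with a short Python sketch like the one below. It assumes the output layout matches the two examples above. A `*` immediately after the device name, as in the second example, is reported as command device security. The function name is hypothetical. Check the pattern against the actual output of your RAID Manager version.

```python
import re
from typing import Dict, Union

_DI_PATTERN = re.compile(
    r"Current control device = (?P<device>\S+?)(?P<secured>\*?)\s*"
    r"AI = (?P<AI>\d+)\s+TI = (?P<TI>\d+)\s+CI = (?P<CI>\d+)"
)


def parse_horcctl_di(output: str) -> Dict[str, Union[str, bool, int]]:
    """Extract the device, security flag, and AI/TI/CI counts."""
    m = _DI_PATTERN.search(output)
    if m is None:
        raise ValueError("unrecognized horcctl -DI output")
    return {
        "device": m.group("device"),
        "secured": m.group("secured") == "*",  # trailing '*' after the device
        "AI": int(m.group("AI")),
        "TI": int(m.group("TI")),
        "CI": int(m.group("CI")),
    }
```

A steadily growing TI value, for example, would suggest that something other than RAID Manager is reading the instance allocation area.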

Alternate command device function

The RAID Manager software issues commands to the command device through the UNIX/PC raw I/O interface. If the command device fails in any way, all RAID Manager commands terminate abnormally, and you cannot use any commands. Because alternate I/O pathing is platform dependent, its use is restricted. For example, on HP-UX systems, only devices subject to the LVM can use the PV-LINK alternate path. To avoid command device failure, RAID Manager supports an alternate command device function.

• Definition of alternate command devices. To use an alternate command device, you must define two or more command devices in the HORCM_CMD item of the configuration definition file. When two or more devices are defined, they are recognized as alternate command devices.

• Timing of alternate command devices. When HORCM receives an error notification from the operating system through the raw I/O interface, the alternate command device is used. You can force a switch to the alternate command device by issuing the horcctl -C switch command provided by RAID Manager.

• Operation of alternating command. If the command device is to be blocked for online maintenance, issue the switch command in advance. If the switch command is issued again after the online maintenance is complete, the previous command device is activated.

• Multiple command devices on HORCM startup. If at least one of the command devices described in the configuration definition file is available, HORCM can start by using the available command device and writes a warning message to the startup log. Confirm that all command devices can be switched to by using the horcctl -C command option, or confirm that HORCM started without a warning message in the HORCM startup log.
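The switching behavior described in the list above can be summarized as a small Python model. The class, its methods, and the warning text are hypothetical. `switch` only mirrors the effect of horcctl -C and does not call it. I/O errors are represented as `OSError`.

```python
from typing import Callable, List


class CommandDeviceSet:
    """Toy model of alternate command devices (hypothetical API).

    `devices` stands for the command devices defined in HORCM_CMD;
    `is_available` stands for whether raw I/O to a device succeeds.
    """

    def __init__(self, devices: List[str], is_available: Callable[[str], bool]) -> None:
        if not devices:
            raise ValueError("at least one command device must be defined")
        self.devices = devices
        self.warnings: List[str] = []
        usable = [d for d in devices if is_available(d)]
        if not usable:
            raise RuntimeError("cannot start: no command device is available")
        if len(usable) < len(devices):
            # Start anyway, but record a warning (like the startup log message).
            self.warnings.append("some command devices are unavailable")
        self.current = devices.index(usable[0])

    def switch(self) -> str:
        """Move to the next defined command device (the effect of horcctl -C)."""
        self.current = (self.current + 1) % len(self.devices)
        return self.devices[self.current]

    def issue(self, send: Callable[[str], str]) -> str:
        """Send a command; on an I/O error, try each alternate device once."""
        for _ in range(len(self.devices)):
            try:
                return send(self.devices[self.current])
            except OSError:
                self.switch()
        raise OSError("all command devices failed")
```

Because `issue` tries each defined device at most once, a single failing path causes a switch rather than an abnormal termination. Only when every device fails is the error raised to the caller.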

Figure 4 Alternate command device function

Define the remote command device

A command device of an external storage system that is mapped as a command device of the local storage system is called a remote command device. Issuing a command to the remote command device performs the operation on the external storage system.

The remote command device is defined by using Remote Web Console. For more information, see the HP StorageWorks P9000 External Storage for Open and Mainframe Systems User Guide.

RAID Manager and the SCSI command interface

RAID Manager commands are converted into a special SCSI command format, so a SCSI through driver that can send specially formatted SCSI commands to the RAID storage system is needed. As a result, OS support for RAID Manager depends on the capabilities of the OS. It is necessary to use a read/write command that can easily be issued on many UNIX/PC server platforms. For example, ioctl() can be used on the following platforms: HP-UX, Linux, Solaris, Windows, IRIX64, OpenVMS, and zLinux.

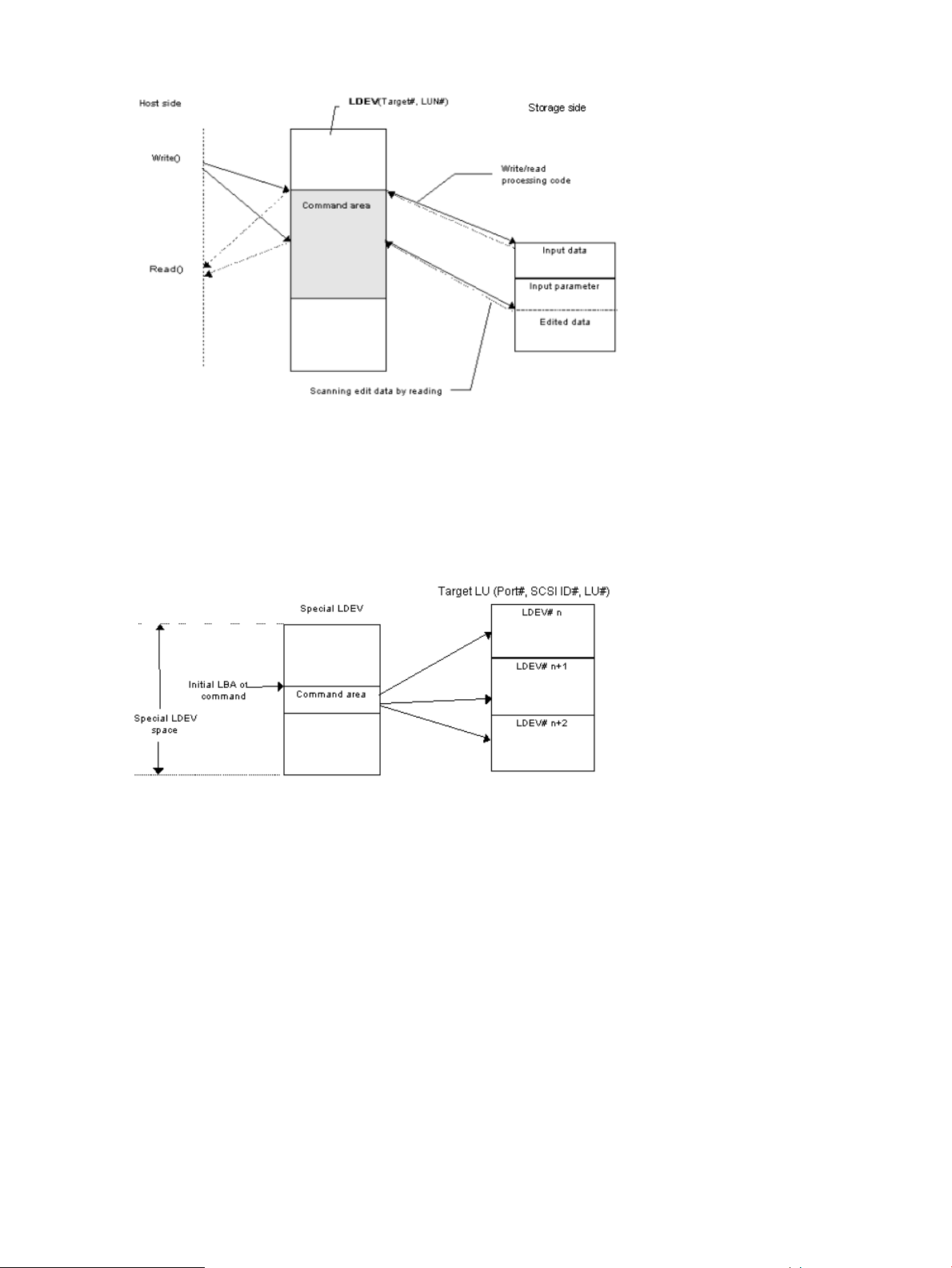

SCSI command format used. Use a RD/WR command that can be used with special LDEVs, because such commands must be distinguished from normal RD/WR commands.

Recognition of the control command area (LBA#). The host issues control commands through the raw I/O special file of a special LDEV. Because the specific LU (command device) that receives these commands is viewed as a normal disk by the SCSI interface, the OS can also access its local control area on that LU, and the RAID storage system must distinguish such accesses from control command accesses. Normally, the OS control area occupies several megabytes starting at the initial LBA#. To avoid this area, a specific LBA# area is defined, and control commands are issued within that area. The command LBA# recognized by the storage system is shown below, assuming that the maximum OS control area is 16 MB.

Figure 5 Relationship of the special file to the special LDEV

Acceptance of commands. A command is issued in the LBA area of the special LDEV described above. An RD/WR command that meets this requirement is received specifically as a RAID Manager command. A command is issued in the form of WR or WR-RD. When a command is issued in the form of RD, it is regarded as an inquiry (equivalent to a SCSI inquiry), and a RAID Manager recognition character string is returned.
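The address arithmetic and the acceptance rules above can be combined in a rough Python sketch. It rests on two assumptions that this guide does not state: a 512-byte logical block and 1 MB = 2^20 bytes. Under these assumptions, a 16 MB OS control area spans LBA 0 through 32767. The sketch also simplifies by treating every LBA at or beyond that boundary as the control command area. The actual area is the specific range shown in Figure 5. All names are hypothetical.

```python
BLOCK_SIZE = 512                   # assumed logical block size, in bytes
OS_AREA_BYTES = 16 * 1024 * 1024   # maximum OS control area from the text (16 MB)

# First LBA past the OS control area: 16 MB / 512 B = 32768 under these assumptions.
FIRST_CONTROL_LBA = OS_AREA_BYTES // BLOCK_SIZE


def classify_access(lba: int, ops: str) -> str:
    """Classify an access to the command device (simplified toy model).

    lba: starting LBA of the access; ops: "WR", "WR-RD", or "RD".
    """
    if lba < FIRST_CONTROL_LBA:
        return "os-control-area"       # ordinary OS access to its own area
    if ops in ("WR", "WR-RD"):
        return "raid-manager-command"  # accepted as a RAID Manager command
    if ops == "RD":
        return "inquiry"               # answered with the recognition string
    raise ValueError(f"unknown operation sequence {ops!r}")
```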

Command competition

The RAID Manager commands are asynchronous commands issued through the SCSI interface. As a result, if several processes issue these commands to a single LDEV, the storage system cannot take the proper action. To avoid this problem, command initiators should not issue two or more write commands to a single LDEV unless the storage system can simultaneously receive commands that are distinguished by an independent combination of initiator number and LDEV number.
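The rule of one outstanding write per LDEV can be sketched in Python with one lock per LDEV. The class and method names are hypothetical. The sketch illustrates only the serialization rule and does not represent how HORCM issues commands internally.

```python
import threading
from collections import defaultdict
from typing import Callable, DefaultDict


class LdevWriteSerializer:
    """Allow at most one outstanding command write per LDEV (toy model)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()  # protects creation of per-LDEV locks
        self._locks: DefaultDict[int, threading.Lock] = defaultdict(threading.Lock)

    def _lock_for(self, ldev: int) -> threading.Lock:
        with self._guard:
            return self._locks[ldev]

    def write(self, ldev: int, do_write: Callable[[], None]) -> None:
        # Writes to the same LDEV never overlap; different LDEVs can proceed in parallel.
        with self._lock_for(ldev):
            do_write()
```

Keying the locks by LDEV rather than using one global lock keeps independent LDEVs from blocking each other.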

Figure 6 HORCM and command issue process

Command flow

This figure shows the flow of read/write command control for a specified LBA#.


Figure 7 Command flow

Issuing commands for LDEVs within a LUSE device

A LUSE device is a group of LDEVs regarded as a single logical unit. Because the configuration of the LDEVs must be known when issuing a command, a new command is used to specify a target LU and acquire the LDEV configuration data (see Figure 8).

Figure 8 LUSE device and command issue

RAID Manager instance components on the host server

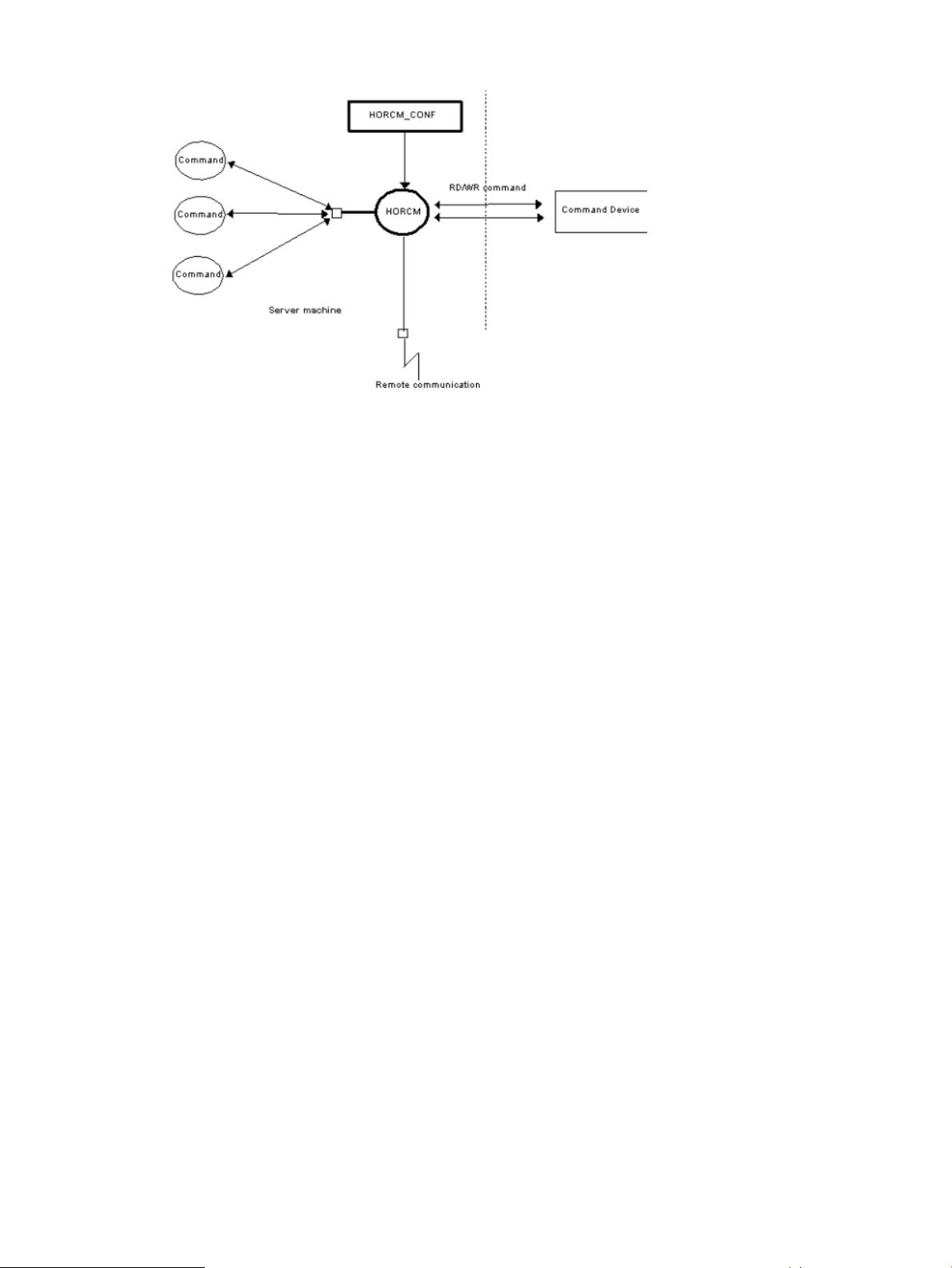

HORCM operational environment

HORCM operates as a daemon process on the host server and is activated either automatically when the server machine starts up or manually by the startup script. HORCM reads the definitions specified in the configuration file upon startup. The environment variable HORCM_CONF defines the location of the configuration file to be referenced.


Figure 9 HORCM operational environment

RAID Manager instance configurations

The basic unit of the RAID Manager software structure is the RAID Manager instance. Each copy of RAID Manager on a server is a RAID Manager instance. Each instance uses its own configuration definition file to manage volume relationships while maintaining awareness of the other RAID Manager instances. Each RAID Manager instance normally resides on a separate server (one node per instance). If two or more instances must run on a single server (e.g., for test operations), you can activate them by using instance numbers. The set of RAID Manager commands to be used (the command execution environment) is selected by the HORCC_MRCF environment variable. The default command execution environment for RAID Manager is Continuous Access Synchronous.
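When several instances run on one server, a wrapper script typically prepares the environment before invoking RAID Manager commands. The Python sketch below is based on the following understanding, which you should verify against the environment variable descriptions for your version. HORCMINST selects the instance number, though it is not named in this section. Setting HORCC_MRCF to 1 selects the Business Copy environment. Leaving HORCC_MRCF unset keeps the default Continuous Access Synchronous environment. The function name is hypothetical.

```python
import os
from typing import Dict, Mapping, Optional


def instance_env(instance: int, business_copy: bool,
                 base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Build an environment for running commands against one instance.

    HORCMINST: instance number (assumed variable name, see lead-in).
    HORCC_MRCF=1: Business Copy; unset: Continuous Access Synchronous.
    """
    env = dict(os.environ if base is None else base)
    env["HORCMINST"] = str(instance)
    if business_copy:
        env["HORCC_MRCF"] = "1"
    else:
        env.pop("HORCC_MRCF", None)  # fall back to the default environment
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when invoking a RAID Manager command, which leaves the caller's own environment unchanged.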

The RAID Manager instance shown in the following figure has a remote execution link and a connection to the RAID storage system. The remote execution link is a network connection to another PC that allows you to execute RAID Manager functions remotely. The connection between the RAID Manager instance and the storage system represents the connection between the RAID Manager software on the host and the command device. The command device accepts RAID Manager commands and communicates read and write I/Os between the host and the volumes on the storage system. The host does not send RAID Manager commands directly to the volumes on the storage system; RAID Manager commands always go through the command device.


Figure 10 RAID Manager instance configuration & components

The four possible RAID Manager instance configurations are:

• One host connected to one storage system. Connecting one host to one storage system allows

you to maintain multiple copies of your data for testing purposes or as an offline backup. Each

RAID Manager instance has its own operation manager, server software, and scripts and

commands, and each RAID Manager instance communicates independently with the command

device. The RAID storage system contains the command device that communicates with the

RAID Manager instances as well as the primary and secondary volumes of both RAID Manager

instances.

• One host connected to two storage systems. Connecting the host to two storage systems enables you to migrate data or implement disaster recovery by maintaining duplicate sets of data in two different storage systems. You can implement disaster recovery solutions by placing the storage systems in different geographic areas. Each RAID Manager instance has its own operation manager, server software, and scripts and commands, and each RAID Manager instance communicates independently with the command device. Each RAID storage system has a command device that communicates with each RAID Manager instance independently. Each storage system contains the primary volumes of its connected RAID Manager instance and the secondary volumes of the other RAID Manager instance (located on the same host in this case).

• Two hosts connected to one storage system. Attaching two hosts to one storage system, one host for the primary volumes and the other host for the secondary volumes, allows you to maintain and administer the primary volumes while the secondary volumes are taken offline for testing. The RAID Manager instances on the separate hosts are connected via the LAN so that they can maintain awareness of each other. The RAID storage system contains the command device that communicates with both RAID Manager instances (one on each host) and the primary and secondary volumes of both RAID Manager instances.

• Two hosts connected to two storage systems. Connecting two hosts to two storage systems allows the most flexible disaster recovery plan, because both sets of data are administered by different hosts. This guards against storage system failure as well as host failure. The RAID Manager instances on the separate hosts are connected via the LAN so that they can maintain awareness of each other. Each RAID storage system has a command device that communicates with each RAID Manager instance independently. Each storage system contains the primary volumes of its connected RAID Manager instance and the secondary volumes of the other RAID Manager instance (located on a different host in this case).

Host machines that can be paired

When you perform a pair operation, the version of RAID Manager should be the same on the primary and secondary sites. Because a particular application may use HORC, users sometimes use a HORC volume as the data backup volume for the server. In this case, RAID Manager requires a RAID Manager instance at the secondary site for each OS platform used by the primary servers whose data is being backed up.

However, because RAID Manager supports communication among different operating systems (including conversion between little-endian and big-endian byte order), you can prepare only one server at the secondary site.

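This guide does not describe how RAID Manager's little-endian/big-endian converter works internally. The short Python sketch below only illustrates what such a conversion means for a 32-bit integer exchanged between a little-endian host and a big-endian host; it is not RAID Manager code, and the function name swap_byte_order_u32 is hypothetical.

```python
import struct


def swap_byte_order_u32(value: int) -> int:
    """Return a 32-bit unsigned integer with its byte order reversed."""
    # Pack the value as little-endian bytes, then read the same bytes back as
    # big-endian: this is the value a big-endian host would read from those
    # bytes if no conversion were applied.
    (swapped,) = struct.unpack(">I", struct.pack("<I", value))
    return swapped


value = 0x12345678
little_endian_bytes = struct.pack("<I", value)  # bytes 78 56 34 12
big_endian_bytes = struct.pack(">I", value)     # bytes 12 34 56 78
```

Applying the swap twice returns the original value, which is why a converter is needed only where a little-endian platform and a big-endian platform communicate.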

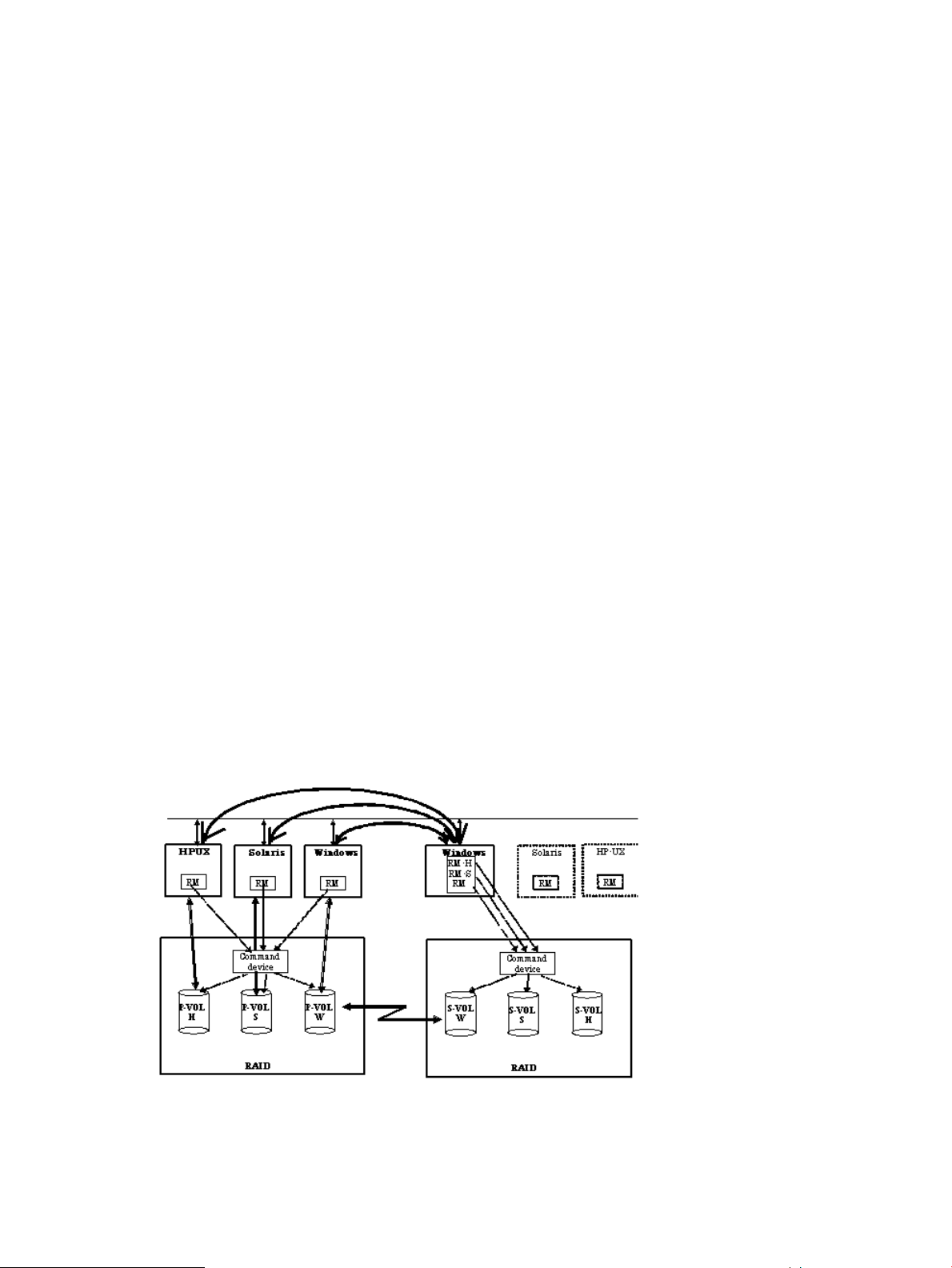

Figure 11 (page 23) represents RAID Manager’s communication among different OSs, and Table 3 (page 24) shows the supported communication (32-bit, 64-bit, MPE/iX) among different OSs. Note the following terms used in the example:

• RM-H: Value of the HORCMFCTBL environment variable for an HP-UX RAID Manager instance on Windows

• RM-S: Value of the HORCMFCTBL environment variable for a Solaris RAID Manager instance on Windows

Restriction: RAID Manager for MPE/iX cannot communicate with 64-bit HORCM.

Restriction: RAID Manager’s communication among different operating systems is supported on HP-UX, Solaris, AIX, Linux, and Windows (it is not supported on Tru64 UNIX/Digital UNIX).

Also, RAID Manager does not require the HORCMFCTBL environment variable to be set, except for RM-H and RM-S instances, where it ensures that the behavior of the operating system platform is consistent across different operating systems.

Figure 11 RAID Manager communication among different operating systems
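The support matrix in Table 3 (page 24) can be encoded as a lookup table, for example to check a planned primary/secondary platform combination before deployment. The Python sketch below copies the AV/NA/- entries of Table 3 verbatim; the names PLATFORMS, TABLE_3, and horcm_communication are hypothetical and not part of RAID Manager.

```python
# Rows and columns of Table 3, identified by (HORCM type, byte order).
PLATFORMS = [
    ("32 bit", "little"),
    ("32 bit", "big"),
    ("64 bit", "little"),
    ("64 bit", "big"),
    ("MPE/iX", "big"),
]

# Each row lists the Table 3 entries for the columns in PLATFORMS order.
# "AV" = available, "NA" = not available, "-" = shown as "-" in Table 3.
TABLE_3 = {
    ("32 bit", "little"): ["AV", "AV", "AV", "-", "AV"],
    ("32 bit", "big"):    ["AV", "AV", "AV", "-", "AV"],
    ("64 bit", "little"): ["AV", "AV", "AV", "-", "NA"],
    ("64 bit", "big"):    ["-", "-", "-", "-", "-"],
    ("MPE/iX", "big"):    ["AV", "AV", "NA", "-", "AV"],
}


def horcm_communication(src, dst):
    """Return the Table 3 entry for communication between two HORCM types."""
    return TABLE_3[src][PLATFORMS.index(dst)]
```

For example, horcm_communication(("MPE/iX", "big"), ("64 bit", "little")) returns "NA", matching the restriction that RAID Manager for MPE/iX cannot communicate with 64-bit HORCM.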


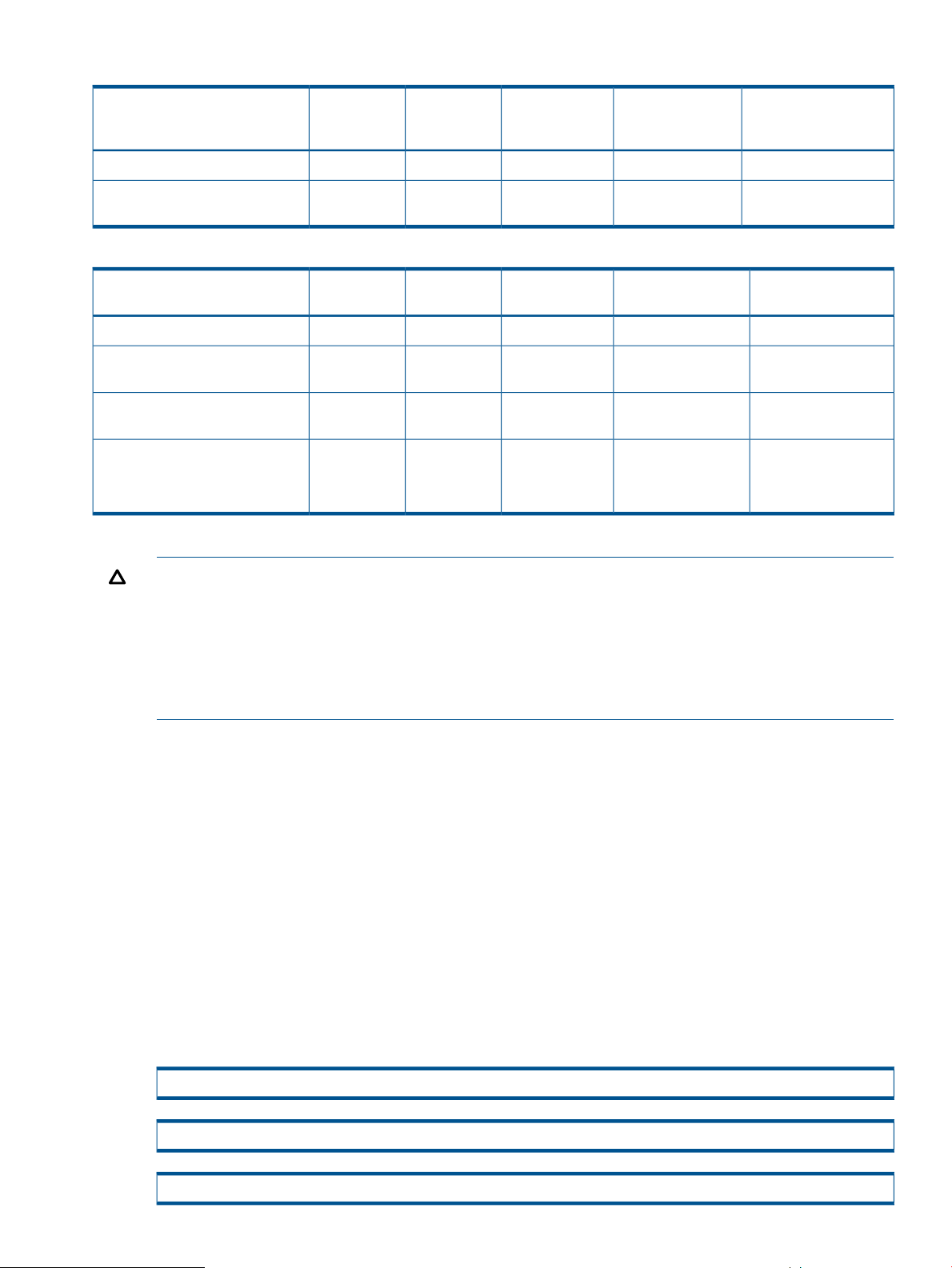

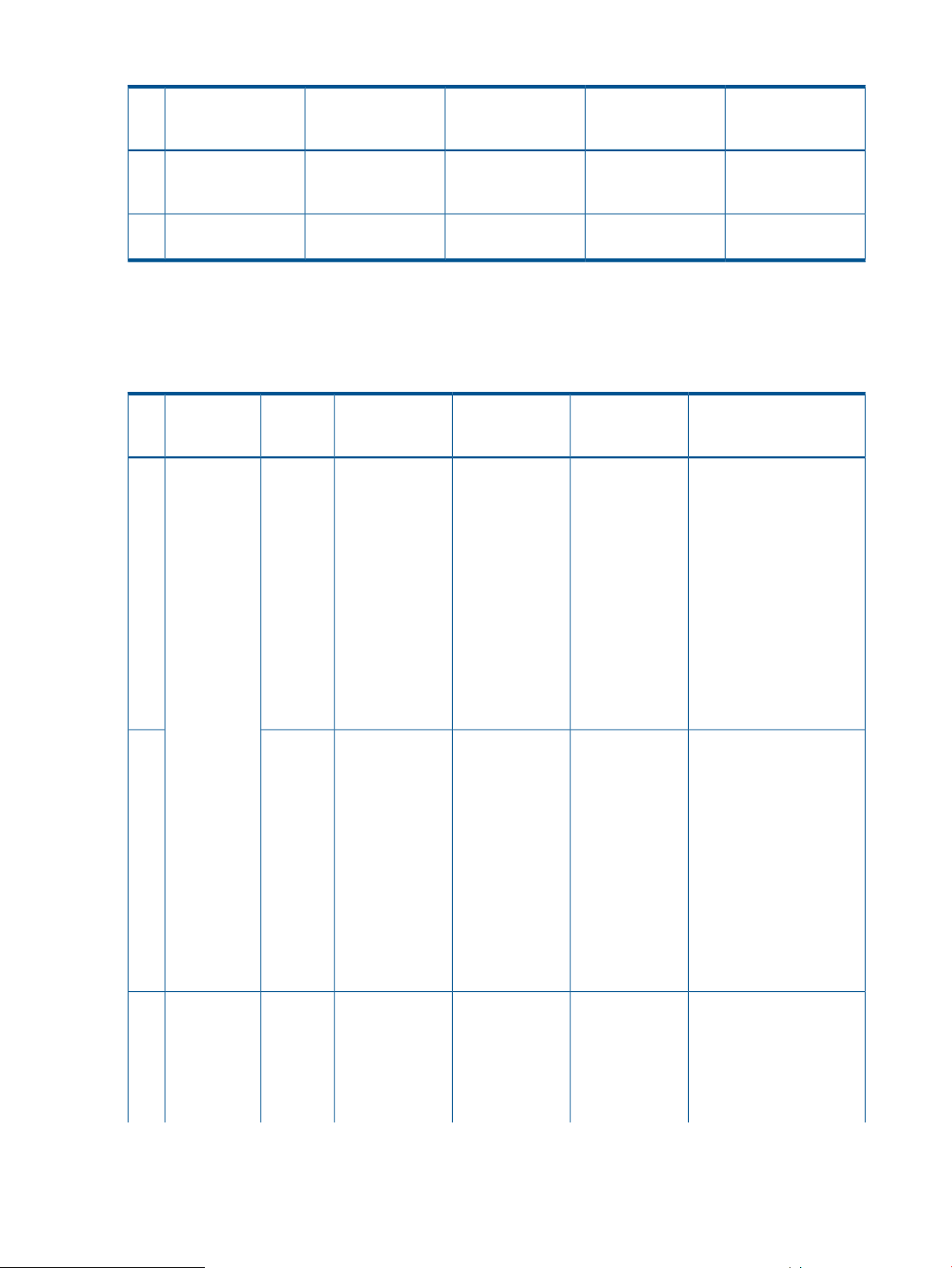

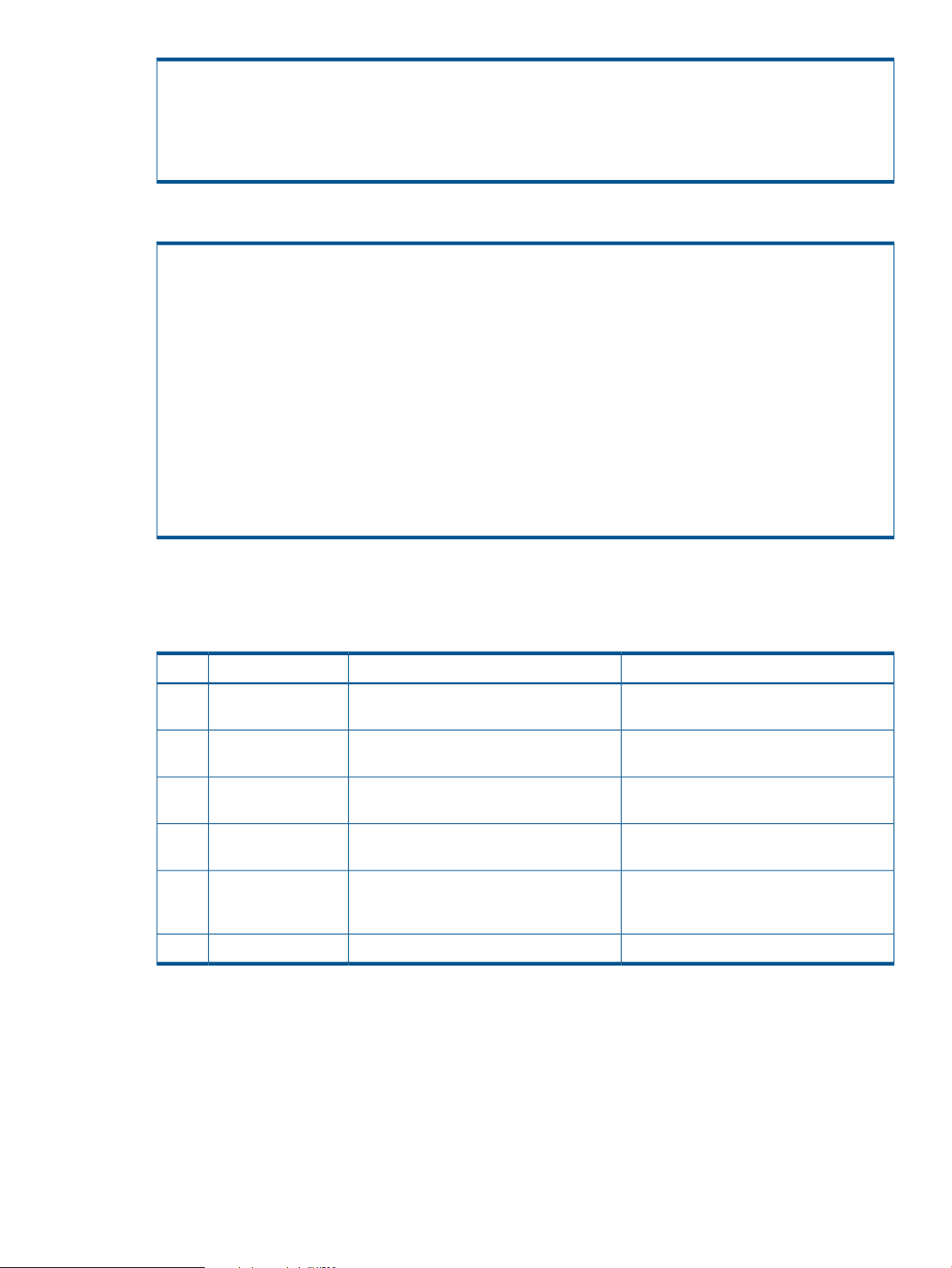

Table 3 Supported RAID Manager (HORCM) communication

Configuration definition file

Overview

The RAID Manager configuration definition file is a text file that defines a RAID Manager instance.

The connected hosts, volumes and groups known to the RAID Manager instance are defined in the

configuration definition file. Physical volumes (special files) used independently by the servers are

combined when paired logical volume names and group names are given to them. The configuration

definition file describes the correspondence between the physical volumes used by the servers and

the paired logical volumes and the names of the remote servers connected to the volumes. See the

HP StorageWorks P9000 RAID Manager Installation and Configuration User Guide for instructions

on creating the RAID Manager configuration definition file.

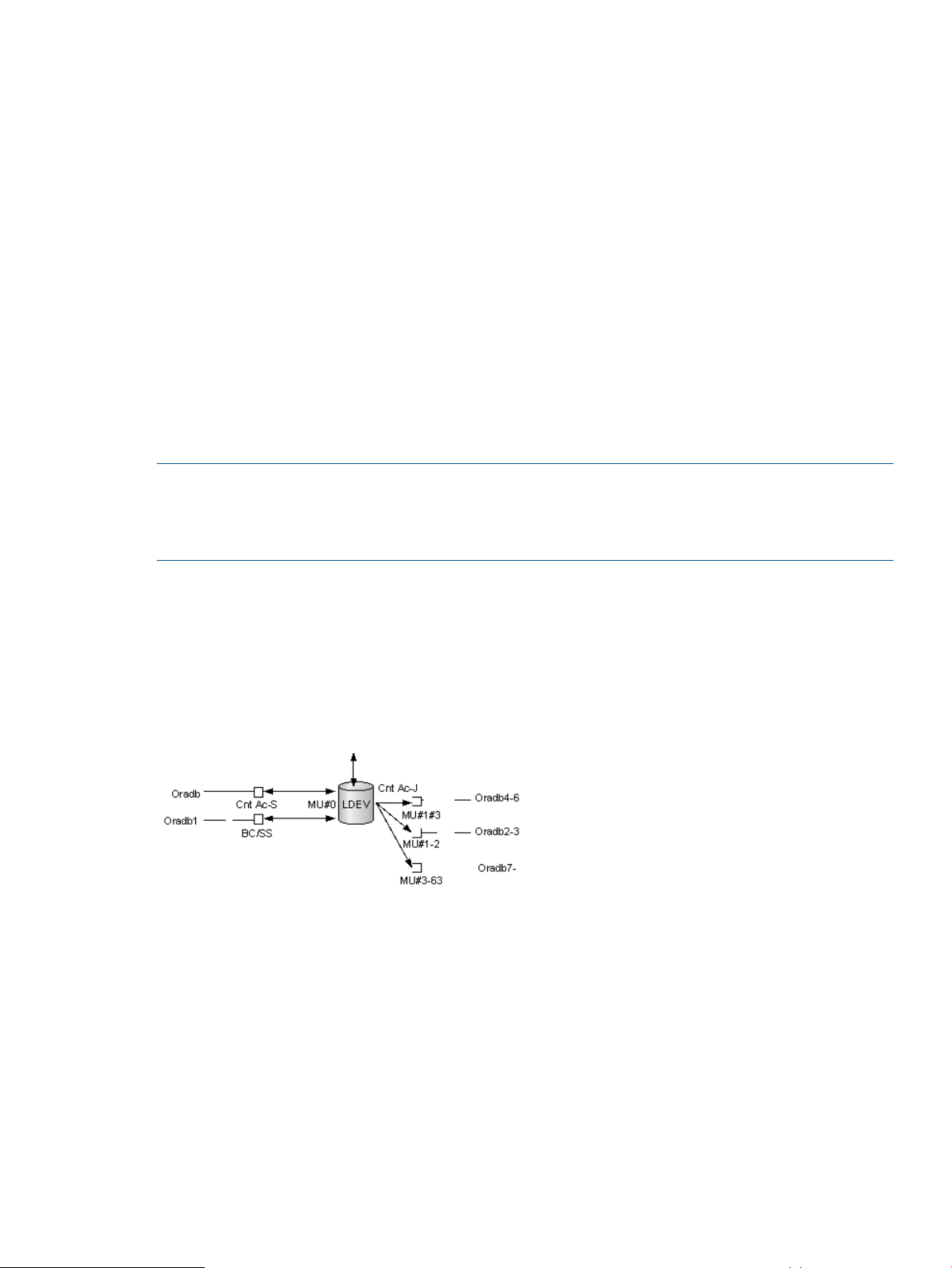

Figure 12 (page 24) illustrates the configuration definition of paired volumes.

Example 2 “Configuration file example — UNIX-based servers” shows a sample configuration file

for a UNIX-based operating system. Figure 13 (page 25) shows a sample configuration file for a

Windows operating system.

MPE/iXHORCM 64 bitHORCM 32 bitHORCM

bigbiglittlebiglittle

AV-AVAVAVlittle32 bit

AV-AVAVAVbig

NA-AVAVAVlittle64 bit

-----big

AV-NAAVAVbigMPE/iX

Figure 12 Configuration definition of paired volumes


A # at the beginning of a line marks that line as a comment in the configuration file.

Example 2 Configuration file example — UNIX-based servers

HORCM_MON
#ip_address   service   poll(10ms)   timeout(10ms)
HST1          horcm     1000         3000

HORCM_CMD
#unitID 0... (seq#30014)
#dev_name   dev_name   dev_name
/dev/rdsk/c0t0d0
#unitID 1... (seq#30015)
#dev_name   dev_name   dev_name
/dev/rdsk/c1t0d0

HORCM_DEV
#dev_group   dev_name   port#    TargetID   LU#   MU#
oradb        oradb1     CL1-A    3          1     0
oradb        oradb2     CL1-A    3          1     1
oralog       oralog1    CL1-A    5          0
oralog       oralog2    CL1-A1   5          0
oralog       oralog3    CL1-A1   5          1
oralog       oralog4    CL1-A1   5          1     h1

HORCM_INST
#dev_group   ip_address   service
oradb        HST2         horcm
oradb        HST3         horcm
oralog       HST3         horcm
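The layout of Example 2 (section keywords, whitespace-separated columns, and whole-line # comments) can be illustrated with a minimal Python parser. This is a sketch under stated assumptions: it assumes every section keyword starts with HORCM_ and that comments occupy whole lines. It is not the parser RAID Manager uses, and parse_horcm_conf is a hypothetical name.

```python
def parse_horcm_conf(text):
    """Group the data lines of a configuration definition file by section.

    Returns a dict that maps each section keyword (HORCM_MON, HORCM_CMD, ...)
    to a list of data lines, each split into whitespace-separated fields.
    """
    sections = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        if line.startswith("HORCM_"):
            current = line  # a section keyword starts a new section
            sections[current] = []
        elif current is not None:
            sections[current].append(line.split())
    return sections


example = """
HORCM_MON
#ip_address service poll(10ms) timeout(10ms)
HST1 horcm 1000 3000

HORCM_CMD
#dev_name dev_name dev_name
/dev/rdsk/c0t0d0

HORCM_DEV
#dev_group dev_name port# TargetID LU# MU#
oradb oradb1 CL1-A 3 1 0
oralog oralog4 CL1-A1 5 1 h1

HORCM_INST
#dev_group ip_address service
oradb HST2 horcm
"""

conf = parse_horcm_conf(example)
```

Here conf["HORCM_DEV"][1] is ["oralog", "oralog4", "CL1-A1", "5", "1", "h1"], so the optional MU# column simply appears as an extra field when present.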

Figure 13 Configuration file example — Windows servers

The following table lists the parameters defined in the configuration file and specifies the default value, type, and limit for each parameter.

Table 4 Configuration (HORCM_CONF) parameters

Parameter                | Default | Type                               | Limit
ip_address               | None    | Character string                   | 64 characters
Service                  | None    | Character string or numeric value  | 15 characters
poll (10 ms)             | 1000    | Numeric value*                     | None
timeout (10 ms)          | 3000    | Numeric value*                     | None
dev_name for HORCM_DEV   | None    | Character string                   | 31 characters
dev_group                | None    | Character string                   | 31 characters (recommended value: 8 characters or less)
port #                   | None    | Character string                   | 31 characters
target ID                | None    | Numeric value*                     | 7 characters
LU#                      | None    | Numeric value*                     | 7 characters
MU#                      | 0       | Numeric value*                     | 7 characters
Serial#                  | None    | Numeric value                      | 12 characters
CU:LDEV(LDEV#)           | None    | Numeric value                      | 6 characters
dev_name for HORCM_CMD   | None    | Character string                   | 63 characters (recommended value: 8 characters or less)

*Use decimal notation for numeric values (not hexadecimal).
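The limits in Table 4 lend themselves to a pre-flight check of HORCM_DEV lines before RAID Manager is restarted. The Python sketch below is hypothetical (check_dev_entry is not a RAID Manager tool); it checks column lengths, the recommended dev_group length, and decimal notation for TargetID and LU#. It does not check MU#, because Example 2 also uses the form h1 for that column.

```python
# Maximum lengths from Table 4 for the HORCM_DEV columns, in column order.
DEV_COLUMNS = [
    ("dev_group", 31),
    ("dev_name", 31),
    ("port#", 31),
    ("TargetID", 7),
    ("LU#", 7),
    ("MU#", 7),
]
RECOMMENDED_DEV_GROUP_LENGTH = 8


def check_dev_entry(fields):
    """Return a list of problems found in one HORCM_DEV line split into fields."""
    problems = []
    for (name, limit), value in zip(DEV_COLUMNS, fields):
        if len(value) > limit:
            problems.append(f"{name} exceeds {limit} characters")
    if fields and len(fields[0]) > RECOMMENDED_DEV_GROUP_LENGTH:
        problems.append(
            f"dev_group is longer than the recommended "
            f"{RECOMMENDED_DEV_GROUP_LENGTH} characters"
        )
    # TargetID and LU# are numeric values and must use decimal notation.
    # MU# is not checked here because Example 2 also uses the form h1.
    for (name, _limit), value in zip(DEV_COLUMNS[3:5], fields[3:5]):
        if not value.isdecimal():
            problems.append(f"{name} must be a decimal number")
    return problems
```

A line such as oradb oradb1 CL1-A 3 0x1A would be reported because 0x1A is hexadecimal, while every HORCM_DEV line of Example 2 passes.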

Do not edit the configuration definition file while RAID Manager is running. Shut down RAID Manager, edit the configuration file as needed, and then restart RAID Manager.

Do not mix pairs created with the "At-Time Split" option (-m grp) and pairs created without this option in the same group defined in the RAID Manager configuration file. If you do, a pairsplit operation might end abnormally, or the S-VOLs of the P-VOLs in the same consistency group (CTG) might not be created correctly at the time the pairsplit request is received.
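If you keep your own record of which pairs were created with -m grp, the rule above can be checked before a pairsplit. RAID Manager does not provide this record as a data structure; the tuple layout and the function name groups_mixing_at_time_split in the Python sketch below are hypothetical.

```python
def groups_mixing_at_time_split(pairs):
    """Return groups that contain both -m grp pairs and ordinary pairs.

    pairs: iterable of (dev_group, dev_name, created_with_m_grp) tuples, where
    created_with_m_grp is True for pairs created with the At-Time Split option.
    """
    kinds_by_group = {}
    for dev_group, _dev_name, created_with_m_grp in pairs:
        kinds_by_group.setdefault(dev_group, set()).add(bool(created_with_m_grp))
    # A group is mixed when it contains both True and False entries.
    return sorted(g for g, kinds in kinds_by_group.items() if len(kinds) > 1)
```

With oradb1 created with -m grp and oradb2 created without it, the function reports ["oradb"]; a group whose pairs were all created the same way is not reported.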

Configuration definition file settings

(1) HORCM_MON

The monitor parameter (HORCM_MON) defines the following values:

• ip_address: The IP address of the local host. When HORCM has two or more network addresses on different subnets for communication, set this value to NONE.

• Service: Specifies the UDP port name assigned to the HORCM communication path, as registered in "/etc/services" ("\WINNT\system32\drivers\etc\services" in Windows, "SYS$SYSROOT:[000000.TCPIP$ETC]SERVICES.DAT" in OpenVMS). If a port number is specified instead of a port name, that port number is used.

• Poll: The interval, in units of 10 ms, for monitoring paired volumes. To reduce the HORCM daemon load, make this interval longer. If set to -1, the paired volumes are not monitored. Specify -1 when two or more RAID Manager instances run on a single machine.

• Timeout: The time-out period, in units of 10 ms, for communication with the remote server.
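Because poll and timeout are given in units of 10 ms (Table 4), the values 1000 and 3000 in Example 2 correspond to 10 seconds and 30 seconds. The Python sketch below makes that conversion explicit for one HORCM_MON data line. HorcmMon and parse_horcm_mon are hypothetical names, and the sketch assumes the line has exactly the four columns shown in Example 2.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HorcmMon:
    ip_address: Optional[str]      # None when the file says NONE
    service: str                   # port name from the services file, or a port number
    poll_seconds: Optional[float]  # None when poll is -1 (paired volumes not monitored)
    timeout_seconds: float


def parse_horcm_mon(line):
    """Parse one HORCM_MON data line; poll and timeout are in units of 10 ms."""
    ip_address, service, poll, timeout = line.split()
    poll_units = int(poll)
    return HorcmMon(
        ip_address=None if ip_address == "NONE" else ip_address,
        service=service,
        poll_seconds=None if poll_units == -1 else poll_units * 10 / 1000,
        timeout_seconds=int(timeout) * 10 / 1000,
    )
```

For the Example 2 line HST1 horcm 1000 3000, the result has poll_seconds == 10.0 and timeout_seconds == 30.0.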

(2) HORCM_CMD

When using the in-band method, the command parameter (HORCM_CMD) defines the UNIX device path or Windows physical device number of a command device that RAID Manager can access.

The settings for the in-band and out-of-band methods are described below.

In-band method


The command device must be mapped to the SCSI/fibre using LUN Manager. You can define more than one command device to provide failover in case the original command device becomes unavailable (see “Alternate command device function” (page 17)). The mapped command devices can be identified by the “-CM” suffix in the PRODUCT_ID field of the inqraid command output.

# ls /dev/rdsk/c1t0* | /HORCM/usr/bin/inqraid -CLI -sort
DEVICE_FILE  PORT   SERIAL  LDEV  CTG  H/M/12  SSID  R:Group  PRODUCT_ID
c1t0d0s2     CL2-E  63502   576   -    -       -     -        OPEN-V-CM
c1t0d1s2     CL2-E  63502   577   -    s/s/ss  0006  1:02-01  OPEN-V -SUN
c1t0d2s2     CL2-E  63502   578   -    s/s/ss  0006  1:02-01  OPEN-V -SUN

In the example above, the command device of the UNIX (Solaris) host is specified as follows:

/dev/rdsk/c1t0d0s2

For a Windows host, the command device is specified as follows:

\\.\PhysicalDrive2 or \\.\CMD-63502
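Picking the command device out of inqraid -CLI output, as in the Solaris example above, can be scripted. The Python sketch below works on captured text of that output and does not run inqraid itself. It assumes the whitespace-separated -CLI layout shown above with PRODUCT_ID as the last column; command_device_files is a hypothetical name.

```python
def command_device_files(inqraid_output):
    """Return DEVICE_FILE entries whose PRODUCT_ID ends with -CM."""
    lines = inqraid_output.strip().splitlines()
    header = lines[0].split()
    product_col = header.index("PRODUCT_ID")
    devices = []
    for line in lines[1:]:
        fields = line.split()
        # PRODUCT_ID is the last column and may itself contain a space
        # (for example "OPEN-V -SUN"), so rejoin everything from that column on.
        product_id = " ".join(fields[product_col:])
        if product_id.endswith("-CM"):
            devices.append(fields[0])
    return devices


sample = """
DEVICE_FILE PORT SERIAL LDEV CTG H/M/12 SSID R:Group PRODUCT_ID
c1t0d0s2 CL2-E 63502 576 - - - - OPEN-V-CM
c1t0d1s2 CL2-E 63502 577 - s/s/ss 0006 1:02-01 OPEN-V -SUN
c1t0d2s2 CL2-E 63502 578 - s/s/ss 0006 1:02-01 OPEN-V -SUN
"""
```

Applied to the sample, only c1t0d0s2 is returned, because it is the only device whose product ID is OPEN-V-CM.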

After mapping the command device, set HORCM_CMD in the configuration definition file as follows:

• \\.\CMD-<Serial Number>:<Device special file name>

<Serial Number>: Specifies the serial number.

<Device special file name>: Specifies the device special file name of the command device.

Example

When the serial number is 64015 and the device special file name is /dev/rdsk/*:

HORCM_CMD

#dev_name dev_name dev_name

\\.\CMD-64015:/dev/rdsk/*
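The \\.\CMD-<Serial Number>:<Device special file name> format can be produced and split programmatically, for example when generating configuration files for several hosts. The Python helpers below are a hypothetical sketch of the string format described above; they are not part of RAID Manager. The parser also accepts the \\.\CMD-63502 form shown for Windows, returning an empty device file name.

```python
CMD_PREFIX = "\\\\.\\CMD-"  # the literal characters \\.\CMD-


def cmd_device_entry(serial, device_file):
    """Build a HORCM_CMD entry of the form CMD-<Serial Number>:<Device special file name>."""
    return f"{CMD_PREFIX}{serial}:{device_file}"


def parse_cmd_device_entry(entry):
    """Split a CMD- entry into (serial number, device special file name)."""
    if not entry.startswith(CMD_PREFIX):
        raise ValueError(f"not a CMD- entry: {entry!r}")
    serial, _sep, device_file = entry[len(CMD_PREFIX):].partition(":")
    return int(serial), device_file
```

cmd_device_entry(64015, "/dev/rdsk/*") produces the same string as the HORCM_CMD example above.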

Out-of-band method

When executing commands using the out-of-band method, create a virtual command device. To create a virtual command device, specify the following in the configuration definition file:

• \\.\IPCMD-<SVP IP address>-<UDP communication port number>[-Unit ID]

<SVP IP address>: Specifies the IP address of the SVP.

<UDP communication port number>: Specifies the UDP communication port number. This value is fixed (31001).

[-Unit ID]: Specifies the unit ID of the storage system in a multiple-unit connection configuration. This can be omitted.

The following example uses an IPv4 address:

HORCM_CMD
#dev_name dev_name dev_name
\\.\IPCMD-158.214.135.113-31001

The following example uses an IPv6 address:

HORCM_CMD
#dev_name dev_name dev_name
\\.\IPCMD-fe80::209:6bff:febe:3c17-31001
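The virtual command device string can be built and validated with Python's standard ipaddress module, which accepts both the IPv4 and the IPv6 forms shown above. This is a hypothetical sketch of the string format only. It assumes the unit ID is a non-negative integer (unit IDs are numbered 0, 1, and so on) and it does not contact the SVP.

```python
import ipaddress

IPCMD_PREFIX = "\\\\.\\IPCMD-"  # the literal characters \\.\IPCMD-
SVP_UDP_PORT = 31001            # fixed value given in this guide


def ipcmd_entry(svp_ip, unit_id=None):
    """Build a virtual command device entry for the out-of-band method."""
    ipaddress.ip_address(svp_ip)  # raises ValueError for a malformed address
    entry = f"{IPCMD_PREFIX}{svp_ip}-{SVP_UDP_PORT}"
    if unit_id is not None:
        entry += f"-{unit_id}"
    return entry


def parse_ipcmd_entry(entry):
    """Return (SVP IP address, UDP port, unit ID or None) from an IPCMD entry."""
    if not entry.startswith(IPCMD_PREFIX):
        raise ValueError(f"not an IPCMD entry: {entry!r}")
    # Plain IPv4 and IPv6 addresses contain no '-', so '-' separates the fields.
    parts = entry[len(IPCMD_PREFIX):].split("-")
    svp_ip = str(ipaddress.ip_address(parts[0]))
    port = int(parts[1])
    unit_id = int(parts[2]) if len(parts) > 2 else None
    return svp_ip, port, unit_id
```

Splitting on "-" is safe here because, unlike ":", the hyphen never appears in a plain IPv6 address.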

NOTE: To enable dual pathing of the command device on Solaris systems, include all paths to the command device on a single line in the HORCM_CMD section of the configuration definition file. Putting the paths on separate lines may cause parsing issues, and failover may not occur unless the HORCM startup script is restarted on the Solaris system.
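When HORCM_CMD sections are generated by a script, the single-line rule in the NOTE above is easy to enforce. In the hypothetical Python sketch below, the second path /dev/rdsk/c2t0d0s2 is an illustrative assumption, not a path taken from this guide.

```python
def horcm_cmd_section(paths):
    """Render a HORCM_CMD section with all paths to one command device on one line."""
    return "\n".join([
        "HORCM_CMD",
        "#dev_name dev_name dev_name",
        " ".join(paths),  # all paths on a single line, as the NOTE above requires
    ])


section = horcm_cmd_section(["/dev/rdsk/c1t0d0s2", "/dev/rdsk/c2t0d0s2"])
```

The resulting section has exactly three lines, with both paths on the last one.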

When a server is connected to two or more storage systems, HORCM identifies each storage system by its unit ID (see Figure 14 (page 28)). Unit IDs are assigned sequentially in the order in which the storage systems are described in this section of the configuration definition file. When a storage system is shared by two or more servers, each server must be able to verify that a given unit ID corresponds to the same Serial# (Seq#) on all servers. You can verify this using the raidqry command.
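The unit ID rule above can be expressed as a small consistency check. This Python sketch assumes that each HORCM_CMD data line corresponds to one storage system (as in the #unitID comments of Example 2) and that you have already collected the serial number behind each line on each server, for example from raidqry output; it does not run raidqry. unit_ids and mismatched_unit_ids are hypothetical names.

```python
def unit_ids(cmd_line_serials):
    """Map unit ID -> serial number in HORCM_CMD order (unit IDs 0, 1, 2, ...)."""
    return dict(enumerate(cmd_line_serials))


def mismatched_unit_ids(serials_by_server):
    """Return unit IDs that do not map to the same Serial# on every server."""
    maps = [unit_ids(serials) for serials in serials_by_server.values()]
    all_ids = set()
    for mapping in maps:
        all_ids.update(mapping)  # collect every unit ID seen on any server
    return sorted(
        uid for uid in all_ids
        if len({mapping.get(uid) for mapping in maps}) > 1
    )
```

If HST1 lists serials 30014 then 30015 but HST2 lists them in the opposite order, both unit IDs 0 and 1 are reported, because the same unit ID would refer to different storage systems on the two servers.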


Figure 14 Configuration and Unit IDs for Multiple Storage systems

dev_name for Windows

In a Windows SAN environment, “Volume{guid}” changes on every reboot under MSCS/Windows2k3 if Windows finds the same signature on the command device connected