
HP StorageWorks

Auto LUN XP user guide

for the XP12000/XP10000

Part number: T1715-96006

Sixth edition: September 2007


Legal and notice information

© Copyright 2005, 2007 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Adobe® and Acrobat are trademarks of Adobe Systems Incorporated.

ESCON, z/OS, and S/390 are registered trademarks or trademarks of International Business Machines Corporation (IBM).

HP-UX is a product name of Hewlett-Packard Company.

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries.

Netscape Navigator is a registered trademark of Netscape Communications Corporation in the United States and other countries.

Solaris is a trademark or registered trademark of Sun Microsystems, Inc. in the United States and other countries.

UNIX is a registered trademark of X/Open Company Limited in the United States and other countries and is licensed exclusively through X/Open Company Limited.

Windows and Windows NT are registered trademarks of Microsoft Corporation.

All other brand or product names are or may be trademarks or service marks of and are used to identify products or services of their respective owners.

Auto LUN XP user guide for the XP12000/XP10000


Contents

About this guide . . . 7
Supported storage platforms and firmware . . . 7
Intended audience . . . 7
Prerequisites . . . 7
Related documentation . . . 7
Document conventions and symbols . . . 8
HP technical support . . . 8
Subscription service . . . 9
HP web sites . . . 9
Documentation feedback . . . 9
1 Auto LUN XP for the XP12000/XP10000 . . . 11
Auto LUN XP features . . . 11
Auto LUN XP tasks . . . 11
Reserve volumes . . . 12
Volume migration . . . 12
Estimating usage rates . . . 13
Automatic migration . . . 14
Storage management by maximum disk usage . . . 14
Keep reserved volumes in high HDD classes . . . 14
Auto migration sequence of events . . . 16
Manual migration . . . 16
Requirements and restrictions . . . 16
Selection of CU group . . . 16
Logical volumes . . . 16
Source volumes . . . 17
LUSE source volumes . . . 18
Target volumes . . . 18
Number of volumes . . . 19
Calculating the number of Auto LUN XP concurrent migration plans . . . 19
Calculating differential tables for mainframe volume migration . . . 19
Calculating differential tables for open-system volume migration . . . 21
Auto migration planning . . . 22
Auto migration execution . . . 22
Manual migration execution . . . 22
Copy Threshold option . . . 22
Progress value . . . 23
Effects on other XP products . . . 23
Maintenance . . . 23
Auto migration parameters . . . 23
Powering off disk arrays . . . 23
Starting Auto LUN XP . . . 24
Auto LUN pane . . . 24
Tabs and selection trees . . . 24
Monitoring term section . . . 25
Monitoring data section . . . 25
Table section . . . 25
Graph section . . . 25
WWN, Port-LUN, LDEV, and Physical tabs . . . 27
WWN tab . . . 27
Port-LUN tab . . . 28
LDEV tab . . . 28
Physical tab . . . 29
Creating and executing migration plans . . . 30


Manual Migration tab . . . 31
Pane contents . . . 31
Tree view . . . 31
Table view . . . 31
Buttons . . . 32
Migrating volumes manually . . . 32
Deleting manual migration plans . . . 33
Auto Migration tab . . . 33
Pane contents . . . 34
Auto Migration plans section . . . 34
Auto Plan parameter section . . . 35
Setting auto migration parameters . . . 35
Deleting auto migration plans . . . 36
Remaking auto migration plans . . . 36
Attribute tab . . . 36
Pane contents . . . 36
Reserving target volumes . . . 38
Setting (fixing) parity groups . . . 38
Releasing (unfixing) parity groups . . . 39
Changing maximum disk usage rates for HDD classes . . . 39
History tab . . . 40
Pane contents . . . 40
Viewing migration history logs . . . 41
Troubleshooting Auto LUN XP . . . 44
Using RAID Manager for manual Auto LUN XP migrations . . . 44
2 Auto LUN/Performance Control Base Monitor . . . 49
Auto LUN XP statistics . . . 49
Auto LUN Monitoring Options pane . . . 50
XP12000/XP10000 disk arrays . . . 51
Usage statistics . . . 51
Collecting usage statistics about disk array resources . . . 51
Viewing parity group usage statistics . . . 52
Viewing logical volume usage statistics . . . 52
Viewing channel adapter (CHA) usage statistics . . . 53
Viewing channel processor (CHP) usage statistics . . . 54
Viewing disk adapter (DKA) usage statistics . . . 54
Viewing disk processor (DKP) usage statistics . . . 55
Viewing data recovery and reconstruction processor (DRR) usage statistics . . . 55
Viewing write pending rates . . . 56
Viewing access path usage statistics . . . 56
Workload and traffic statistics . . . 57
Collecting workload and traffic statistics about disk drives, ports, and LU paths . . . 57
Viewing disk drive workload statistics . . . 58
Viewing disk array port traffic statistics . . . 59
Viewing HBA/port traffic statistics . . . 60
Index . . . 63

Figures

1 Data Flow during an Auto LUN XP Operation . . . 12
2 Data Flow after an Auto LUN XP operation . . . 13
3 Auto migration function example 1 . . . 15
4 Auto migration function example 2 . . . 15
5 Auto LUN pane . . . 24
6 WWN, Port-LUN, LDEV, and Physical tabs . . . 27
7 Manual Migration tab . . . 31
8 Auto Migration tab . . . 34
9 Attribute pane . . . 36
10 Class table boxes . . . 37


11 Parity group table boxes . . . 37
12 Attribute tab tree . . . 39
13 History tab . . . 40
14 Pair status transitions during Auto LUN XP migrations using RAID Manager . . . 45
15 Auto LUN Monitoring Options pane . . . 51
16 Parity group usage statistics . . . 52
17 Logical volume usage statistics . . . 52
18 CHA usage statistics . . . 54
19 CHP usage statistics . . . 54
20 DKA usage statistics . . . 55
21 DKP usage statistics . . . 55
22 DRR usage statistics . . . 56
23 Write pending rates . . . 56
24 Usage statistics about paths between adapters and the cache switch . . . 57
25 Usage statistics about paths between adapters and shared memory . . . 57
26 Usage statistics about paths between cache switches and cache memory . . . 57
27 Workload statistics for all parity groups . . . 58
28 Workload statistics for a specific parity group . . . 58
29 Workload statistics for logical volumes in a parity group . . . 58
30 Traffic statistics about ports in a disk array . . . 59
31 Traffic statistics about host ports to which a disk array port is connected . . . 59
32 Traffic statistics about host ports in a host group . . . 60
33 Traffic statistics about LU paths . . . 60
34 Traffic statistics for host bus adapters . . . 61
35 Traffic statistics for each PFC group . . . 61
36 Traffic statistics for each port connected to a specified HBA . . . 61

Tables

1 Document conventions . . . 8
2 Movability of volumes in pairs consisting of CV and normal volumes . . . 16
3 Non-cascaded volumes that can be used as source volumes . . . 18
4 Cascaded volumes that can be used as source volumes . . . 18
5 Control cylinders by emulation type . . . 20
6 Total number of differential tables per migration plan . . . 21
7 Control cylinders by emulation type . . . 21
8 The estimated delay in the copying speed . . . 23
9 Graph contents based on list selection (Port-LUN tab) . . . 26
10 Auto LUN pane, Port-LUN tab icons . . . 28
11 Auto LUN pane, Physical tab icons . . . 29
12 Migration log messages . . . 44
13 Errors during Auto LUN XP migrations or migration cancellations using RAID Manager . . . 46


About this guide

This guide provides information about the following:

• "Auto LUN XP for the XP12000/XP10000" on page 11

• "Auto LUN XP features" on page 11

• "Auto LUN XP tasks" on page 11

• "Reserve volumes" on page 12

• "Volume migration" on page 12

• "Estimating usage rates" on page 13

• "Automatic migration" on page 14

• "Manual migration" on page 16

• "Requirements and restrictions" on page 16

• "Starting Auto LUN XP" on page 24

• "Creating and executing migration plans" on page 30

• "Troubleshooting Auto LUN XP" on page 44

• "Using RAID Manager for manual Auto LUN XP migrations" on page 44

• "Auto LUN/Performance Control Base Monitor" on page 49

• "Auto LUN XP statistics" on page 49

• "Usage statistics" on page 51

• "Workload and traffic statistics" on page 57

Supported storage platforms and firmware

In this guide, the term array refers to the following storage platforms:

• HP StorageWorks XP12000 Disk Array

• HP StorageWorks XP10000 Disk Array

For information about required firmware versions, see the HP StorageWorks XP Remote Web Console user guide for XP12000/XP10000.

Intended audience

This guide is intended for customers and HP-authorized service providers with knowledge of the following:

• Disk array hardware and software

• Data processing and RAID storage subsystems and their basic functions

Prerequisites

Prerequisites for using this product include:

• Installation of the HP StorageWorks disk array(s)

• Installation of the license key for this product

Related documentation

In addition to this guide, see the following documents for this product:

• HP StorageWorks Command View XP user guide for XP Disk Arrays

• HP StorageWorks XP Remote Web Console user guide for XP12000/XP10000

• HP StorageWorks Continuous Access XP Journal user guide

• HP StorageWorks Performance Control XP user guide

• HP StorageWorks XP Disk/Cache Partition user guide


• Hitachi True Copy for z/OS® for the XP128/XP1024/XP10000/XP12000

• HP StorageWorks RAID Manager XP user guide

You can find these documents at http://www.hp.com/support/

Document conventions and symbols

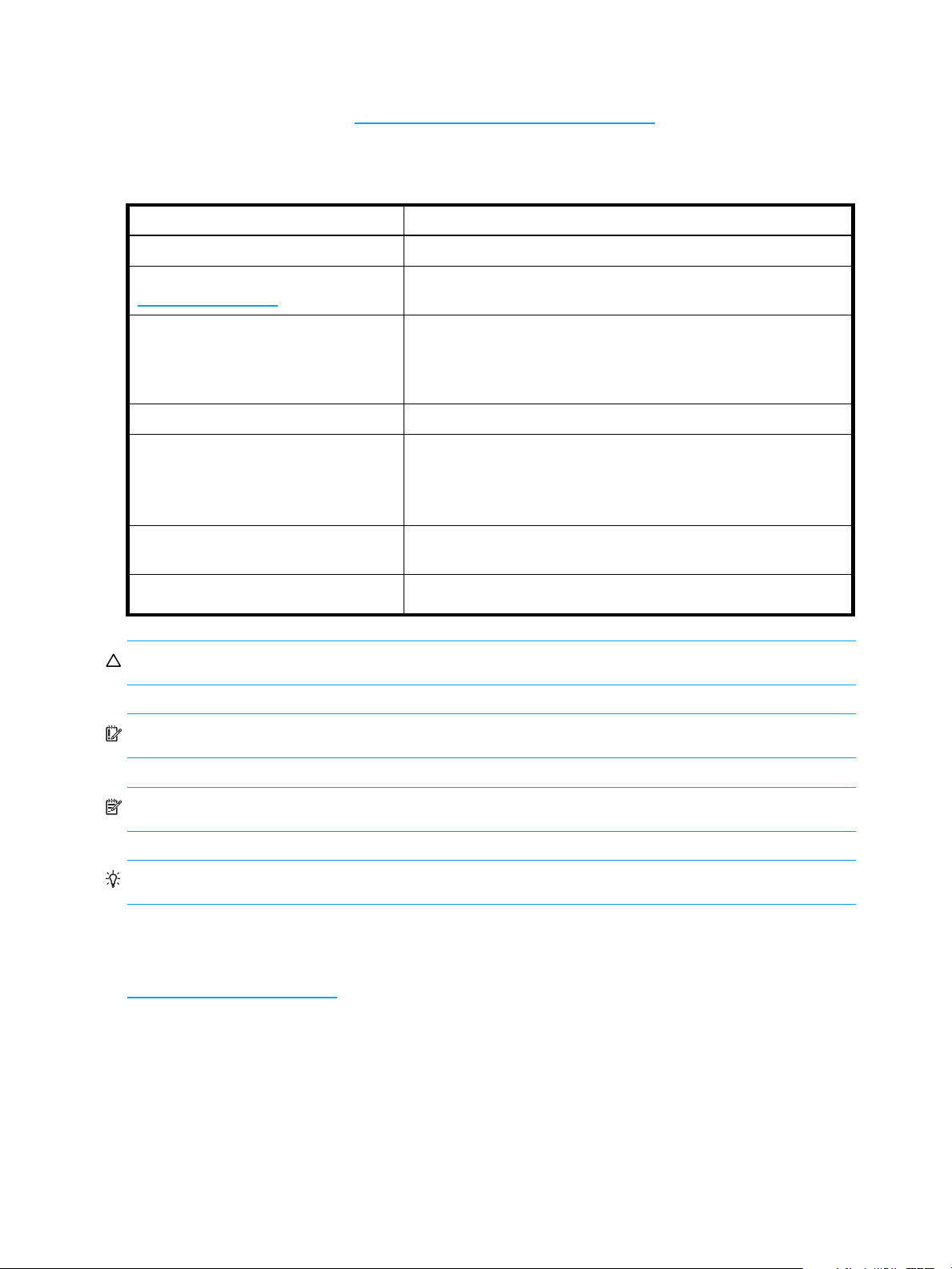

Table 1 Document conventions

Convention Element

Blue text: Table 1

Blue, underlined text:

(http://www.hp.com)

Bold text

Italic text

Monospace text

Monospace, italic text

Cross-reference links and e-mail addresses

Web site addresses

• Keys that are pressed

• Text typed into a GUI element, such as a box

• GUI elements that are clicked or selected, such as menu and list

Text emphasis

• File and directory names

• System output

• Code

• Commands, their arguments, and argument values

• Code variables

• Command variables

rwc/manuals.

items, buttons, and check boxes

Monospace, bold text

CAUTION: Indicates that failure to follow directions could result in damage to equipment or data.

IMPORTANT: Provides clarifying information or specific instructions.

NOTE: Provides additional information.

TIP: Provides helpful hints and shortcuts.

HP technical support

Telephone numbers for worldwide technical support are listed on the HP support web site:

http://www.hp.com/support/

Collect the following information before calling:

• Technical support registration number (if applicable)

• Product serial numbers

• Product model names and numbers

• Applicable error messages

• Operating system type and revision level

• Detailed, specific questions

Emphasized monospace text

.


For continuous quality improvement, calls may be recorded or monitored.

Subscription service

HP strongly recommends that customers register online using the Subscriber's choice web site:

http://www.hp.com/go/e-updates

Subscribing to this service provides you with e-mail updates on the latest product enhancements, newest driver versions, and firmware documentation updates as well as instant access to numerous other product resources.

After subscribing, locate your products by selecting Business support and then Storage under Product Category.

HP web sites

For additional information, see the following HP web sites:


• http://www.hp.com

• http://www.hp.com/go/storage

• http://www.hp.com/service_locator

• http://www.hp.com/support/manuals

• http://www.hp.com/support/downloads

• http://www.hp.com/support/rwc/manuals

Documentation feedback

HP welcomes your feedback.

To make comments and suggestions about product documentation, please send a message to storagedocs.feedback@hp.com. All submissions become the property of HP.


1 Auto LUN XP for the XP12000/XP10000

Auto LUN XP monitors resources in disk arrays and hosts connected to disk arrays. Auto LUN XP works with open-system and mainframe volumes. You can analyze the information Auto LUN XP provides to optimize data storage and retrieval on disk arrays, resolve activity bottlenecks, and optimize volume allocation.

Auto LUN XP features

Auto LUN XP provides the following features:

• When a migration plan (a set of detailed user-specified parameters) is in place, Auto LUN XP automatically migrates logical volumes in disk arrays.

• Auto LUN XP operations are completely non-disruptive. Data being migrated remains online to all hosts for read and write I/O operations throughout the volume migration process.

• Auto LUN XP supports manual volume migration operations and estimates performance improvements before migration.

Auto LUN XP begins by obtaining usage statistics about physical hard disk drives, logical volumes, processors, and other resources in disk arrays. Then, using manual or automatic migration, Auto LUN XP balances the workload among hard disk drives, logical volumes, and processors to improve system performance.
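The monitor-then-balance cycle described above can be pictured with a toy model. The following Python sketch is hypothetical and is not how Auto LUN XP selects volumes: it assumes that each parity group is summarized by a single average disk usage percentage over the monitoring term, and it simply pairs the busiest group (a candidate migration source) with the least busy group (a candidate target). The function name, the parity group IDs, and the `min_gap` threshold are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple


def pick_migration_candidates(
    usage: Dict[str, float], min_gap: float = 20.0
) -> Optional[Tuple[str, str]]:
    """Return (busiest, least_busy) parity groups, or None if already balanced.

    usage maps a hypothetical parity group ID to its average disk usage (%)
    over the monitoring term. min_gap is an assumed threshold, not an
    Auto LUN XP parameter.
    """
    if len(usage) < 2:
        return None  # nothing to balance against
    busiest = max(usage, key=usage.get)
    least_busy = min(usage, key=usage.get)
    if usage[busiest] - usage[least_busy] < min_gap:
        return None  # workload is already roughly even
    return busiest, least_busy


samples = {"1-1": 82.0, "1-2": 35.5, "2-1": 12.0}
print(pick_migration_candidates(samples))  # ('1-1', '2-1')
```

A real plan would also weigh RAID level, HDD type, and processor usage; this sketch looks at disk usage only.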

Use Performance Control XP to ensure that I/O operations requiring high performance receive higher priority than I/O operations from other, lower-priority hosts. You can set the priority of disk arrays, monitor I/O and transfer rates, and limit performance of less-critical arrays when necessary to maintain performance of high-priority arrays.

NOTE: Partition-level users in the StorageAdmins group cannot use Auto LUN XP, but users with full array access can use Auto LUN XP.

For users in the StorageAdmins group, the functions you can use are limited. For more information about these limitations, see the HP StorageWorks Command View XP user guide for XP Disk Arrays or the HP StorageWorks XP Remote Web Console user guide for XP12000/XP10000.

Auto LUN XP tasks

• Load balance disk array resources to improve performance. Balancing resource usage can significantly improve disk array performance. Use Auto LUN XP data to optimize several areas of performance, including front-end and back-end processor usage and allocation of logical volumes to physical disk drives and RAID level.

• Optimize disk drive access patterns. Auto LUN XP collects and analyzes information about disk drive access patterns and can migrate volumes to optimize host access to data. For example, RAID-1 technology might provide better performance than RAID-5 under certain conditions, and one disk drive type might provide better performance than another for certain types of access. Auto LUN XP fine-tunes logical volume allocation.

• Analyze disk array usage. Auto LUN XP displays performance data graphically to highlight peaks and trends. Use the graph to identify activity bottlenecks.

• Better utilize RAID levels and HDD types. The XP12000/XP10000 supports both RAID-1 and RAID-5 technologies and a mixture of RAID-1 and RAID-5 parity groups. The XP12000/XP10000 also supports several types of hard disk drives (HDDs) and allows a mixture of HDD types within each disk array domain to provide maximum flexibility in configuration. Auto LUN XP takes into account RAID level and physical HDD performance of each parity group, enabling reallocation of logical volumes and optimization with respect to both RAID level and HDD type. The proper combination of RAID level and HDD type for logical volumes can significantly improve disk array performance.


Reserve volumes

The reserve volume function reserves target volumes for automatic and manual migration operations. After you reserve a number of target volumes, Auto LUN XP maintains this number of reserved volumes by swapping the reserve attribute after each migration operation (following the migration operation, the original source volume becomes a reserved volume).
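The attribute swap that keeps the number of reserved volumes constant can be modeled in a few lines. This Python sketch is a hypothetical illustration, not an Auto LUN XP interface: the `ReservePool` class, its `migrate` method, and the LDEV ID strings are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ReservePool:
    """Toy model of the reserve attribute (LDEV IDs are hypothetical strings)."""

    reserved: Set[str] = field(default_factory=set)

    def migrate(self, source: str, target: str) -> None:
        # A migration target must already carry the reserve attribute.
        if target not in self.reserved:
            raise ValueError(f"{target} is not a reserved volume")
        # Swap the attribute: the target becomes a normal volume and the
        # original source becomes reserved, so the pool size is unchanged.
        self.reserved.remove(target)
        self.reserved.add(source)


pool = ReservePool({"00:10", "00:11"})
pool.migrate(source="00:01", target="00:10")
print(sorted(pool.reserved))  # ['00:01', '00:11']
print(len(pool.reserved))  # 2
```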

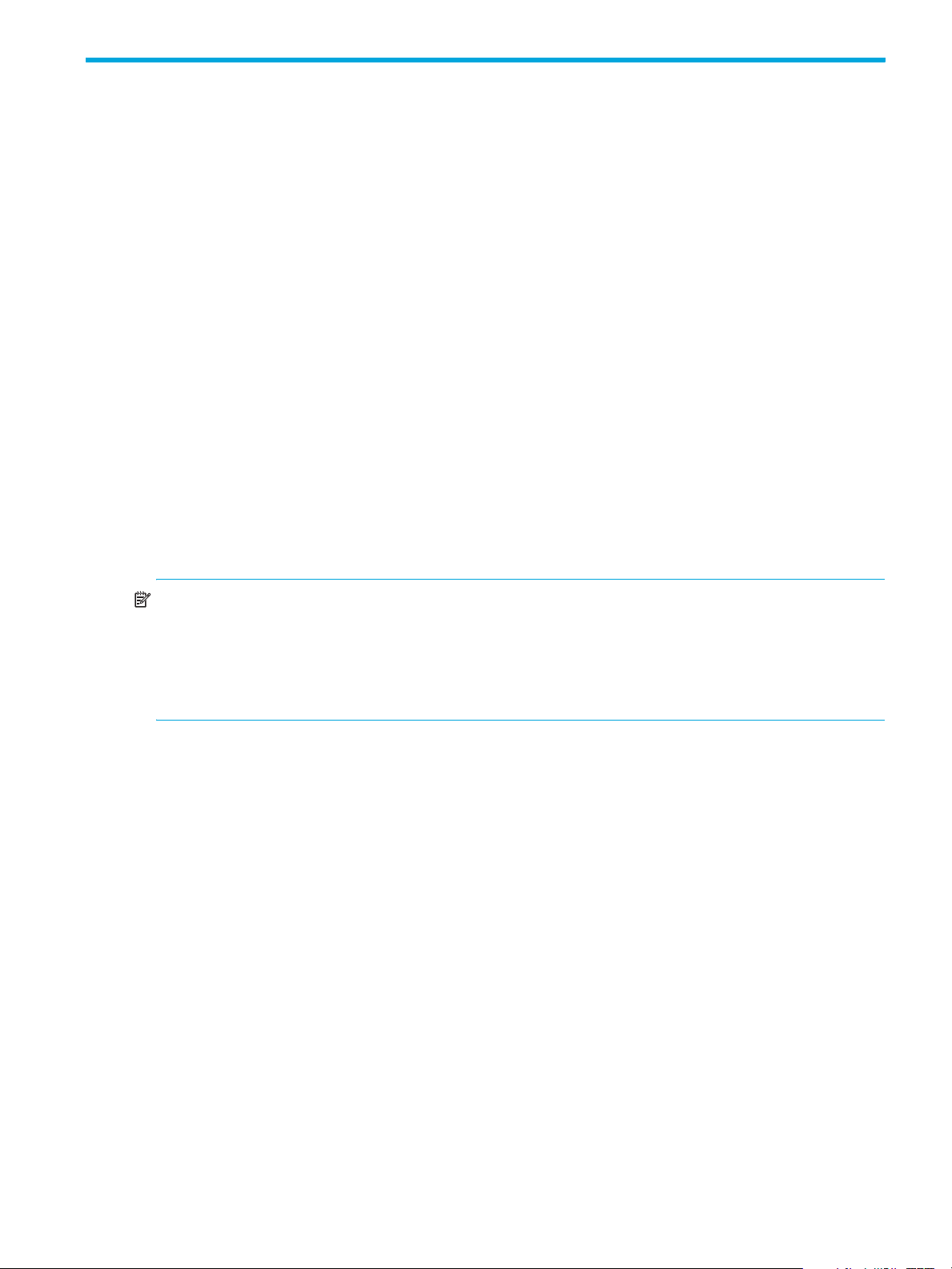

Volume migration

Auto LUN XP volume migration operations consist of two steps:

• Copy the data on the Auto LUN XP source volume to the Auto LUN XP target volume

• Transfer host access to the target volume to complete the migration

The Auto LUN XP source volume can be online to hosts during migration. The target volume is reserved prior to migration to prevent host access during migration. The source and target volumes can be anywhere in the disk array.

The Auto LUN XP copy operation copies the entire contents of the source volume to the target volume. If write I/Os update the source volume during the copy operation, the XP12000/XP10000 uses a cylinder map to track updates and performs additional copy operations after the initial copy is complete to duplicate the updates at the target volume.
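The initial-copy-then-update-copy behavior can be sketched as a loop over a set of changed cylinders, which plays the role of the cylinder map. This is a simplified, hypothetical Python model, not the array firmware: volumes are lists of per-cylinder byte strings, and the `host_writes` hook (an assumption for illustration) applies host writes only between copy passes rather than truly concurrently.

```python
from typing import Callable, List, Optional, Set


def migrate_copy(
    source: List[bytes],
    target: List[bytes],
    host_writes: Optional[Callable[[int], Set[int]]] = None,
) -> int:
    """Copy every cylinder, then re-copy cylinders updated during the copy.

    host_writes is a hypothetical hook standing in for host I/O: it is called
    after each pass with the pass number, may modify `source`, and returns the
    cylinder numbers it changed (the role of the cylinder map). Returns the
    number of copy passes needed to reach a synchronized state.
    """
    dirty: Set[int] = set(range(len(source)))  # initial copy covers everything
    passes = 0
    while dirty:
        passes += 1
        to_copy, dirty = dirty, set()
        for cyl in sorted(to_copy):
            target[cyl] = source[cyl]
        if host_writes is not None:
            dirty |= host_writes(passes)  # record updates for the next pass
    return passes  # no differential data remains


src = [b"a", b"b", b"c"]
tgt = [b""] * len(src)


def one_update(pass_no: int) -> Set[int]:
    # Simulate a single host write to cylinder 1 during the initial copy.
    if pass_no == 1:
        src[1] = b"B"
        return {1}
    return set()


print(migrate_copy(src, tgt, one_update), tgt)  # 2 [b'a', b'B', b'c']
```

Only the cylinders recorded as updated are copied again, which is why the follow-up passes are usually much shorter than the initial copy.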

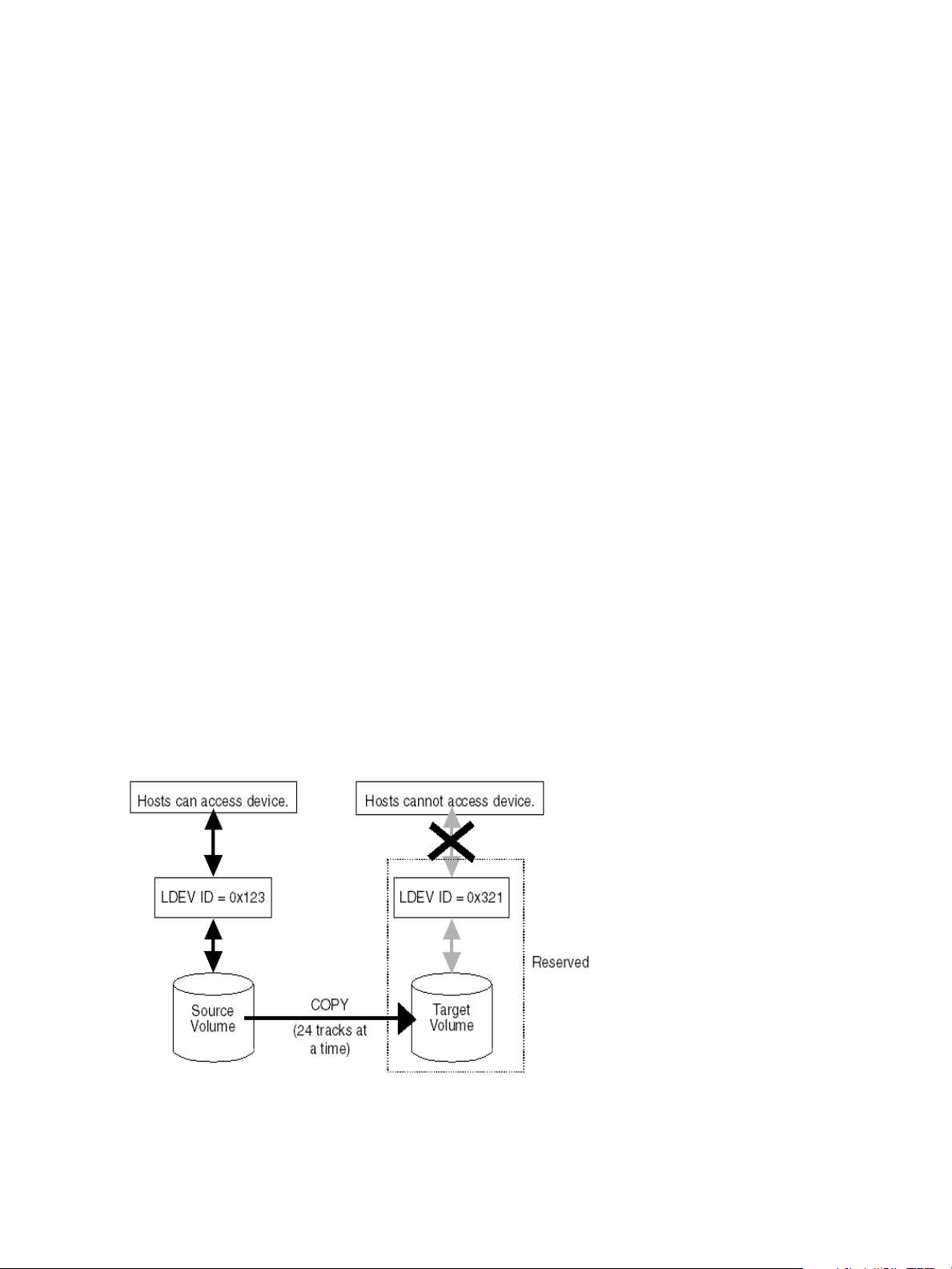

When volumes are fully synchronized (no differential data on source volume), the XP12000/XP10000

completes the migration by swapping the reserve attribute (target becomes normal and source becomes

reserved) and redirecting host access to the target volume. Auto LUN XP performs all migration operations

sequentially (one volume migration at a time).
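The copy-then-swap sequence described above can be sketched as a small Python model. This is a hypothetical illustration, not Auto LUN XP's internal implementation. The `Volume` class, the `migrate` function, and the idea that host writes arrive in batches between copy passes are simplifying assumptions. The sketch shows three things: the initial full copy, a cylinder map (here, a set of updated cylinder numbers) that drives the additional copy passes, and the reserve-attribute swap once no differential data remains.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    """Hypothetical model of a logical volume: cylinder contents plus the reserve attribute."""
    cylinders: list
    reserved: bool = False


def migrate(source: Volume, target: Volume, write_batches: list) -> int:
    """Copy source to target, re-copy updated cylinders, then swap reserve attributes.

    write_batches is a hypothetical list of host-write batches. Each batch is a list of
    (cylinder_number, data) pairs that arrive during one copy pass. The function returns
    the number of additional copy passes needed after the initial copy.
    """
    if not target.reserved or source.reserved:
        raise ValueError("target must be reserved and source must be a normal volume")
    if len(source.cylinders) != len(target.cylinders):
        raise ValueError("source and target must have the same capacity")

    # Initial copy of the entire source volume.
    target.cylinders[:] = source.cylinders

    pending = list(write_batches)
    extra_passes = 0
    while True:
        # Host writes from the previous pass update the source, and the
        # cylinder map records which cylinders changed.
        cylinder_map = set()
        if pending:
            for cyl, data in pending.pop(0):
                source.cylinders[cyl] = data
                cylinder_map.add(cyl)
        if not cylinder_map:
            break  # no differential data left: volumes are fully synchronized
        # Additional copy pass: duplicate only the updated cylinders at the target.
        for cyl in cylinder_map:
            target.cylinders[cyl] = source.cylinders[cyl]
        extra_passes += 1

    # Swap the reserve attribute. Host access now goes to the target volume.
    source.reserved, target.reserved = True, False
    return extra_passes
```

For example, migrating `Volume(["a", "b", "c"])` to a reserved three-cylinder target while two single-cylinder updates arrive takes two extra passes. After the swap, the original source is left holding the reserve attribute, which matches the reserve volume behavior described earlier.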

Typical copy times for open system volumes (with no other I/O activity) are:

• OPEN-3: 1.5 minutes

• OPEN-8/9: 4.5 minutes

• OPEN-E: 9 minutes

• OPEN-V: variable

For automatic and manual migration operations, Auto LUN XP checks the current write pending rate. If the

write pending rate is higher than 60%, Auto LUN XP cancels the migration. For auto migration, Auto LUN

XP also checks current disk usage of source volumes and source and target parity groups. If current disk

usage is higher than the user-specified maximum for auto migration, Auto LUN XP cancels the migration.
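The cancellation checks in the paragraph above can be written as a single predicate. The function name and parameters below are hypothetical. The exact boundary is also an assumption: this paragraph says "higher than 60%", while the restrictions later in this guide say "60% or higher". This sketch uses the strict comparison from this paragraph.

```python
from typing import Optional, Sequence

WRITE_PENDING_LIMIT_PCT = 60.0  # write pending threshold stated in the text


def migration_allowed(write_pending_pct: float,
                      auto: bool = False,
                      current_usages_pct: Sequence[float] = (),
                      max_auto_usage_pct: Optional[float] = None) -> bool:
    """Return False if the checks described above would cancel the migration.

    current_usages_pct holds the current disk usage of the source volume and of the
    source and target parity groups. It is only checked for auto migration.
    """
    # Automatic and manual migrations are both cancelled above the write pending limit.
    if write_pending_pct > WRITE_PENDING_LIMIT_PCT:
        return False
    if auto:
        if max_auto_usage_pct is None:
            raise ValueError("auto migration needs a user-specified maximum disk usage")
        # Auto migration is also cancelled if any current usage exceeds the user maximum.
        if any(u > max_auto_usage_pct for u in current_usages_pct):
            return False
    return True
```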

Figure 1 Data Flow during an Auto LUN XP Operation


Figure 2 Data Flow after an Auto LUN XP operation

NOTE: Immediately after a source volume and a target volume are swapped, the RAID level from before the swap might be displayed, or an internal error might occur in the disk array. In this case, select

Refresh to update the display.

Estimating usage rates

The estimate function calculates expected usage of source and target parity groups after a proposed

volume migration. The estimate function uses subsystem usage data collected by the monitor function, HDD

performance characteristics, and RAID level to estimate expected usage rates. The automatic and manual

migration functions use these estimated values to verify proposed migrations.

For each proposed migration operation, Auto LUN XP automatically estimates expected usage rates for

source and target parity groups. The estimate function calculates expected results of a proposed volume

migration based on XP12000/XP10000 monitor data collected during the user-specified monitor data

term. The estimate function considers RAID level and HDD type when estimating expected usage rates

(RAID-1 and RAID-5 have different access characteristics).

The estimate function is a critical component of the auto migration function. The estimate function calculates

expected parity group usage for source and target parity groups, and the auto migration function uses

these estimates to verify proposed migrations. If any condition prevents Auto LUN XP from estimating an

expected usage rate (for example, invalid monitor data), the auto migration function will not schedule the

migration operation.

For manual migration operations, when you select the source volume, Auto LUN XP displays expected

usage rates for the source parity group, so you can see the predicted effect of migrating the selected

volume out of its group. When you select the target volume, Auto LUN XP displays expected usage rates for

the target parity group, so you can see the predicted effect of adding the selected source volume to the

selected parity group.

Auto LUN XP does not estimate processor or access path usage. You can use Auto LUN XP migration

operations to improve DKP and DRR usage, but cannot use them to address CHP or access path usage.

Perform Auto LUN XP migration only when you expect a large improvement in disk array performance.

Auto LUN XP migration might not provide significant improvement if parity group or volume usage varies

only slightly or if overall DKP or DRR usage is relatively high. Also keep in mind that disk array tuning

operations might improve performance in one area while decreasing performance in another. For

example, suppose parity groups A and B have average usage values of 20% and 90%, respectively. Auto

LUN XP estimates that if one logical volume is migrated from parity group B to parity group A, the usage

values will become 55% and 55%. If you perform this migration operation, I/O response time for parity


group B will probably decrease and I/O response time for parity group A might increase, while the overall

throughput might increase or decrease.
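The arithmetic of this example can be made concrete with a deliberately naive sketch. It assumes something the text does not state: that both parity groups have the same HDD type and RAID level, so the migrated volume's usage moves unchanged from one group to the other. The real estimate function also weights RAID level and HDD type. Both functions and the 35-point volume contribution are hypothetical.

```python
def naive_estimate(source_group_pct: float, target_group_pct: float,
                   volume_pct: float) -> tuple:
    """Hypothetical estimate: the volume's usage moves unchanged between groups."""
    return source_group_pct - volume_pct, target_group_pct + volume_pct


def best_candidate(source_group_pct: float, target_group_pct: float,
                   volume_pcts: list) -> float:
    """Pick the volume whose move leaves the busier of the two groups least busy."""
    return min(volume_pcts,
               key=lambda v: max(naive_estimate(source_group_pct, target_group_pct, v)))


# Parity group B (90%) -> parity group A (20%), moving a volume that contributes 35 points.
print(naive_estimate(90, 20, 35))  # (55, 55)
```

A balanced 55%/55% result lowers the peak usage, but as the text notes, it does not by itself guarantee higher overall throughput.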

From an open-system host, you can also use RAID Manager commands to perform manual volume

migration. To use RAID Manager to perform Auto LUN XP migration, install Auto LUN XP on the

XP12000/XP10000. For more information, see “Using RAID Manager for manual Auto LUN XP

migrations” on page 44.

Automatic migration

Auto migration is the primary tuning method for disk arrays. Auto migration analyzes data and executes

migration plans automatically based on user-specified parameters. Use Auto LUN XP to schedule auto

migration operations, select data to be analyzed, and specify maximum time and performance impact of

auto migration operations. Auto LUN XP displays a detailed log of auto migration activities.

Auto migration operations are based on the following major parameters:

• Hierarchy of parity groups. Auto LUN XP arranges parity groups in the XP12000/XP10000 in a

hierarchy based on HDD type, and assigns each parity group to a class based on performance of its

HDD type. Classes are ordered from highest performance drive (class A) to lowest performance drive

(class B, C, or higher depending on number of HDD types available). The auto migration function uses

this hierarchy to identify target volumes for auto migration operations.

The auto migration function can also specify “fixed” parity groups that are excluded from auto

migration operations.

• Maximum disk usage. The auto migration function specifies the maximum disk usage rate for each

HDD class in the XP12000/XP10000, and uses these limits to identify source volumes for auto

migration operations.

You must identify and specify disk usage limits for your environment. When you use the same maximum

disk usage rate for all HDD classes, HDD performance is the only factor used in determining auto

migration plans. When you specify different usage limits for HDD classes, you can bias the auto

migration function to favor (or avoid) certain HDD types. Migrating high-usage volumes to higher HDD

classes should significantly improve host access to volumes, which can also have a large effect on disk

array performance.

The auto migration function also specifies the maximum disk usage rate during auto migration

operations, so you can control the impact of Auto LUN XP copy operations on disk array performance.

If source or target parity group usage exceeds the specified limit during migration, the auto migration

operation is canceled.
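The hierarchy of parity groups described in the list above can be sketched as a ranking of HDD types. This is a hypothetical illustration. Auto LUN XP ranks HDD types internally, and the numeric performance score used here is an assumed input. "Fixed" parity groups are left out of the result because they are excluded from auto migration.

```python
from string import ascii_uppercase


def assign_classes(group_hdd: dict, hdd_speed: dict,
                   fixed: frozenset = frozenset()) -> dict:
    """Map each non-fixed parity group to an HDD class letter (A = fastest HDD type).

    group_hdd maps parity group -> HDD type. hdd_speed maps HDD type -> a relative
    performance score (a hypothetical input; higher means faster).
    """
    # Rank the distinct HDD types present, from highest to lowest performance.
    ranked = sorted(set(group_hdd.values()), key=lambda t: hdd_speed[t], reverse=True)
    class_of_type = {hdd: ascii_uppercase[i] for i, hdd in enumerate(ranked)}
    # Fixed parity groups are excluded from auto migration, so they get no class here.
    return {g: class_of_type[t] for g, t in group_hdd.items() if g not in fixed}
```

With three HDD types present, the groups fall into classes A, B, and C. Adding a fourth type would simply produce a class D.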

Do not perform manual migration operations while the auto migration function is active. Always turn off the

auto migration function and cancel any existing auto migration plan before performing manual migration

operations.

Storage management by maximum disk usage

Use the auto migration function to specify maximum usage rate for each HDD class in an

XP12000/XP10000. When a parity group exceeds this limit, the auto migration function makes a plan to

migrate one or more volumes in this parity group to a parity group in a higher HDD class or to a parity

group in the same HDD class with lower usage.

This storage tuning method addresses and can eliminate disk drive activity bottlenecks. Auto LUN XP uses

its estimated usage rates to verify each proposed auto migration, and will not perform a migration

operation that might result in a target parity group exceeding the user-specified maximum disk usage rate.

The auto migration function identifies parity groups that exceed the user-specified usage limit, and selects

high-usage volumes as source volumes to be migrated to parity groups in higher HDD classes or to other

parity groups in the same HDD class with lower usage.
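The selection logic described above can be sketched as follows. This is a simplified, hypothetical model with three assumptions: usage is treated as additive (the volume's usage moves unchanged), only one plan is made per over-limit group, and targets are limited to the same class or the next higher class, following the "Auto migration planning" rules later in this guide. The real function uses its RAID- and HDD-aware estimates instead. `ParityGroup` and `plan_migrations` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ParityGroup:
    name: str
    hdd_class: str   # "A" is the highest-performance HDD class
    usage: float     # estimated usage, percent
    volumes: dict    # volume name -> usage contributed by that volume (hypothetical)


def plan_migrations(groups: list, max_usage: dict) -> list:
    """Return (volume, source group, target group) plans for over-limit parity groups."""
    plans = []
    for src in groups:
        if src.usage <= max_usage[src.hdd_class]:
            continue  # within the user-specified limit for its HDD class
        # The highest-usage volume in the over-limit group becomes the source volume.
        vol, load = max(src.volumes.items(), key=lambda item: item[1])

        def is_candidate(dst: ParityGroup) -> bool:
            step = ord(src.hdd_class) - ord(dst.hdd_class)  # 1 = next higher class
            better_place = step == 1 or (step == 0 and dst.usage < src.usage)
            # Reject targets whose estimated usage would exceed their class limit.
            return (dst is not src and better_place
                    and dst.usage + load <= max_usage[dst.hdd_class])

        candidates = [g for g in groups if is_candidate(g)]
        if candidates:
            target = min(candidates, key=lambda g: g.usage)
            plans.append((vol, src.name, target.name))
    return plans
```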

Keep reserved volumes in high HDD classes

When parity groups in the highest HDD classes start to run out of reserved (empty) volumes, Auto LUN XP

maintains available reserve volumes by automatically migrating low-usage volumes from higher HDD class

groups to lower HDD class groups.


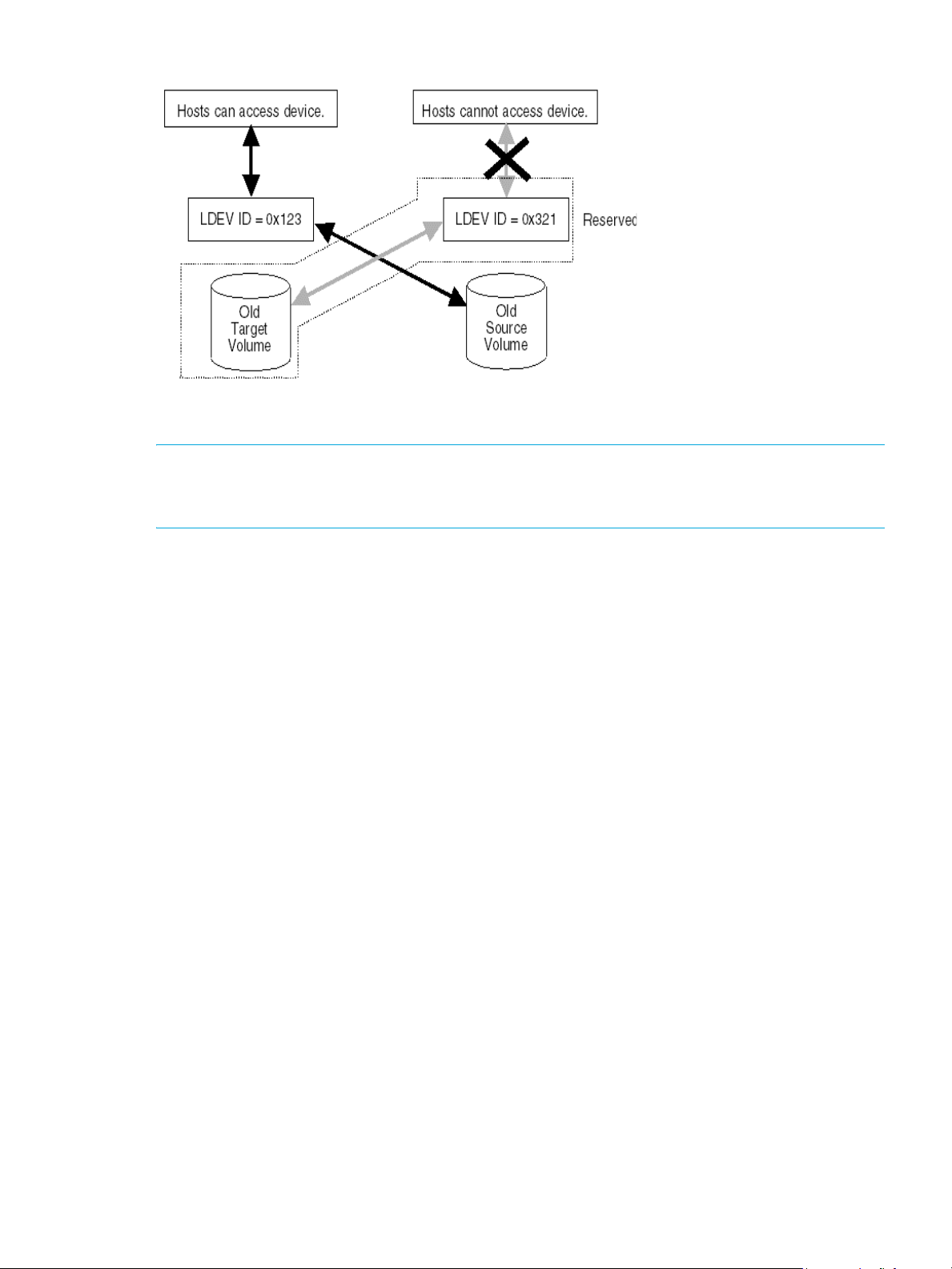

The auto migration function can move a high-usage volume to a higher HDD class group, forcing a

low-usage volume out of that HDD class group. To do so, the auto migration function requires a minimum of

5% difference in estimated disk usage between the two volumes. If the difference is less than 5%, this

migration is considered ineffective and the volume is not moved.

Figure 3 Auto migration function example 1

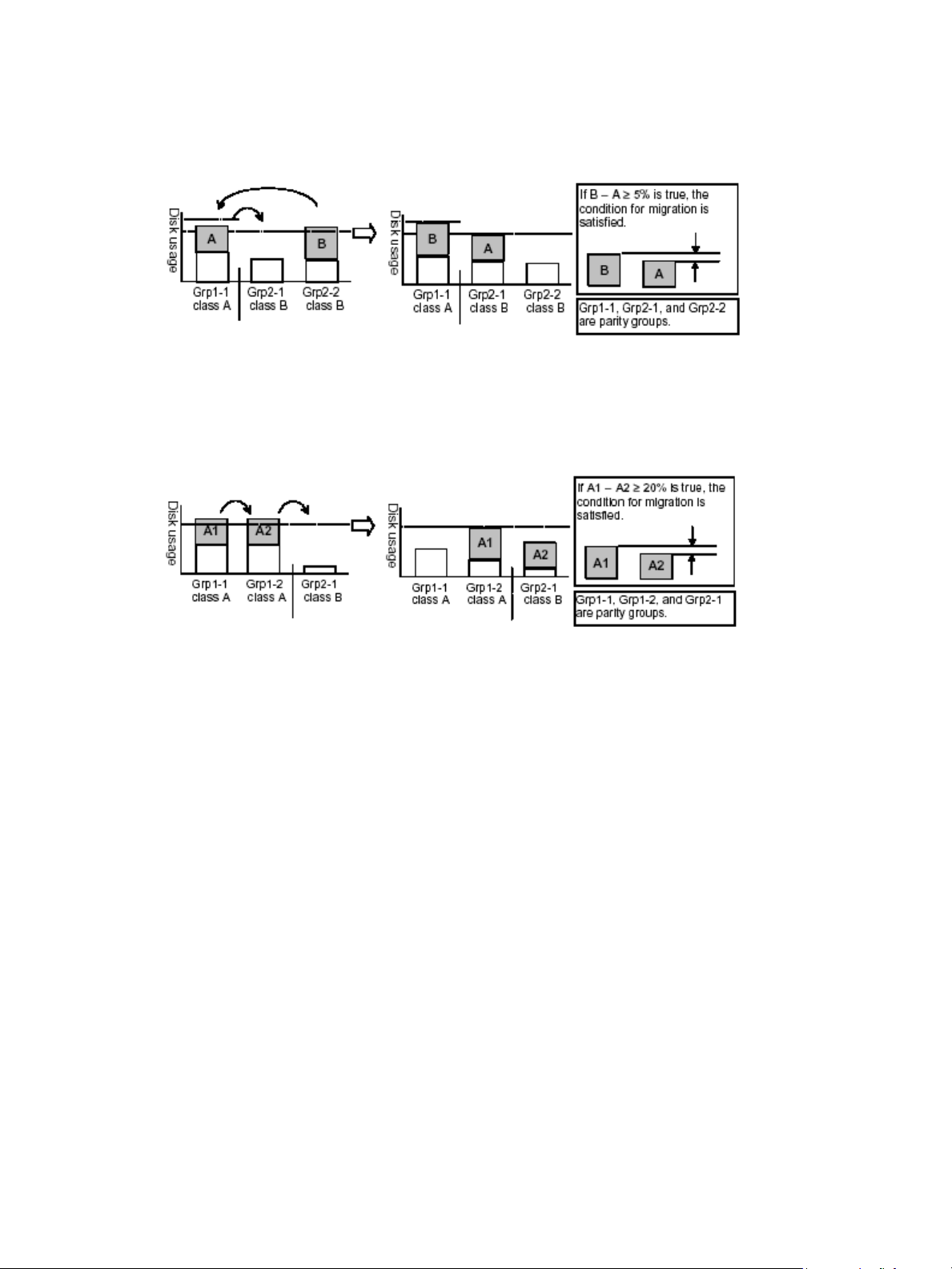

The auto migration function can also move a volume from one parity group to another group of the same

HDD class, forcing another volume out of the destination parity group. To do so, the auto migration

function requires a minimum of 20% difference in estimated disk usage between the two volumes. If the

difference is less than 20%, this migration is considered ineffective and the volume is not moved.

Figure 4 Auto migration function example 2
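The two minimum-difference rules illustrated in Figures 3 and 4 reduce to one comparison. This sketch assumes the 5% and 20% thresholds are differences in percentage points of estimated disk usage. The function name is hypothetical.

```python
def swap_is_effective(higher_usage_pct: float, lower_usage_pct: float,
                      same_hdd_class: bool) -> bool:
    """Apply the minimum estimated-usage difference required for a swap to be planned.

    The minimum is 5% between different HDD classes and 20% within the same HDD class.
    """
    minimum = 20.0 if same_hdd_class else 5.0
    return higher_usage_pct - lower_usage_pct >= minimum
```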


Auto migration sequence of events

The following are the typical steps to set up an auto migration plan:

1. Analyze monitor data. You specify the monitor data term to be analyzed.

2. Make auto migration plan. You specify the auto migration plan’s parameters.

3. Perform auto migration plan. You specify when the auto migration plan is executed.

4. Analyze monitor data to confirm tuning results.

Manual migration

Use manual migration to select and migrate logical volumes under direct, manual control. The manual

migration function displays estimated results of proposed migration operations, which you can use to

determine expected performance improvements prior to the actual migration.

While auto migration operations are based on disk usage and hierarchy of parity groups, you can use

manual migration operations to address back-end processor usage (DKPs and DRRs) and volume and

parity group usage. If monitoring shows high or unbalanced processor usage, use manual migration

operations to tune the processor performance of the XP12000/XP10000.

Requirements and restrictions

Selection of CU group

When you select CU group CU00-3F, the Auto LUN XP button is available. With all other CU group

selections, the button is deactivated (grayed out).

Logical volumes

Source and target volumes must be in the same XP12000/XP10000. Also, a combination of source and

target volumes must satisfy the following conditions:

• The source and target volumes have the same emulation type and capacity.

• If the emulation type is not OPEN-V, both volumes are customized volumes (CVs), or both volumes are

normal volumes.

• If you use LUN Security XP Extension with Auto LUN XP, volume management areas (VMAs) are set on

both the source and target volumes or on neither volume. You cannot set a VMA on a volume that is

already reserved by Auto LUN XP. To set a VMA on a volume, perform the VMA setting first, and then

reserve that volume.

• The CU:LDEV numbers of both volumes are between 00:00 and 3F:FF.
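The bulleted pairing conditions above can be expressed as a check function. This is a hypothetical sketch of those bullets only, and it applies the VMA rule unconditionally, as if LUN Security XP Extension were in use. Table 2 remains the authoritative source for which combinations are movable. `LogicalVolume` and `can_pair` are illustrative names, not part of any HP interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalVolume:
    ldev: str            # "CU:LDEV" in hexadecimal, for example "1A:2F"
    emulation: str       # for example "OPEN-V", "OPEN-3", "3390-3"
    capacity_kb: int
    is_cv: bool          # customized volume (CV) rather than a normal volume
    has_vma: bool = False


def _ldev_in_range(ldev: str) -> bool:
    """True if CU:LDEV lies between 00:00 and 3F:FF."""
    cu, ld = (int(part, 16) for part in ldev.split(":"))
    return 0x00 <= cu <= 0x3F and 0x00 <= ld <= 0xFF


def can_pair(src: LogicalVolume, tgt: LogicalVolume) -> bool:
    """Check the source/target conditions listed above."""
    if src.emulation != tgt.emulation or src.capacity_kb != tgt.capacity_kb:
        return False
    # Outside OPEN-V, CVs pair only with CVs and normal volumes only with normal volumes.
    if src.emulation != "OPEN-V" and src.is_cv != tgt.is_cv:
        return False
    # VMAs must be set on both volumes or on neither.
    if src.has_vma != tgt.has_vma:
        return False
    return _ldev_in_range(src.ldev) and _ldev_in_range(tgt.ldev)
```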

NOTE: For users in the StorageAdmins group, the functions you can use are limited. For more information

about these limitations, see the HP StorageWorks Command View XP user guide for XP Disk Arrays or the

HP StorageWorks XP Remote Web Console user guide for XP12000/XP10000.

Whether you can or cannot perform volume migration with a pair consisting of CVs and normal volumes

depends on the volumes’ emulation type. For details, see Table 2.

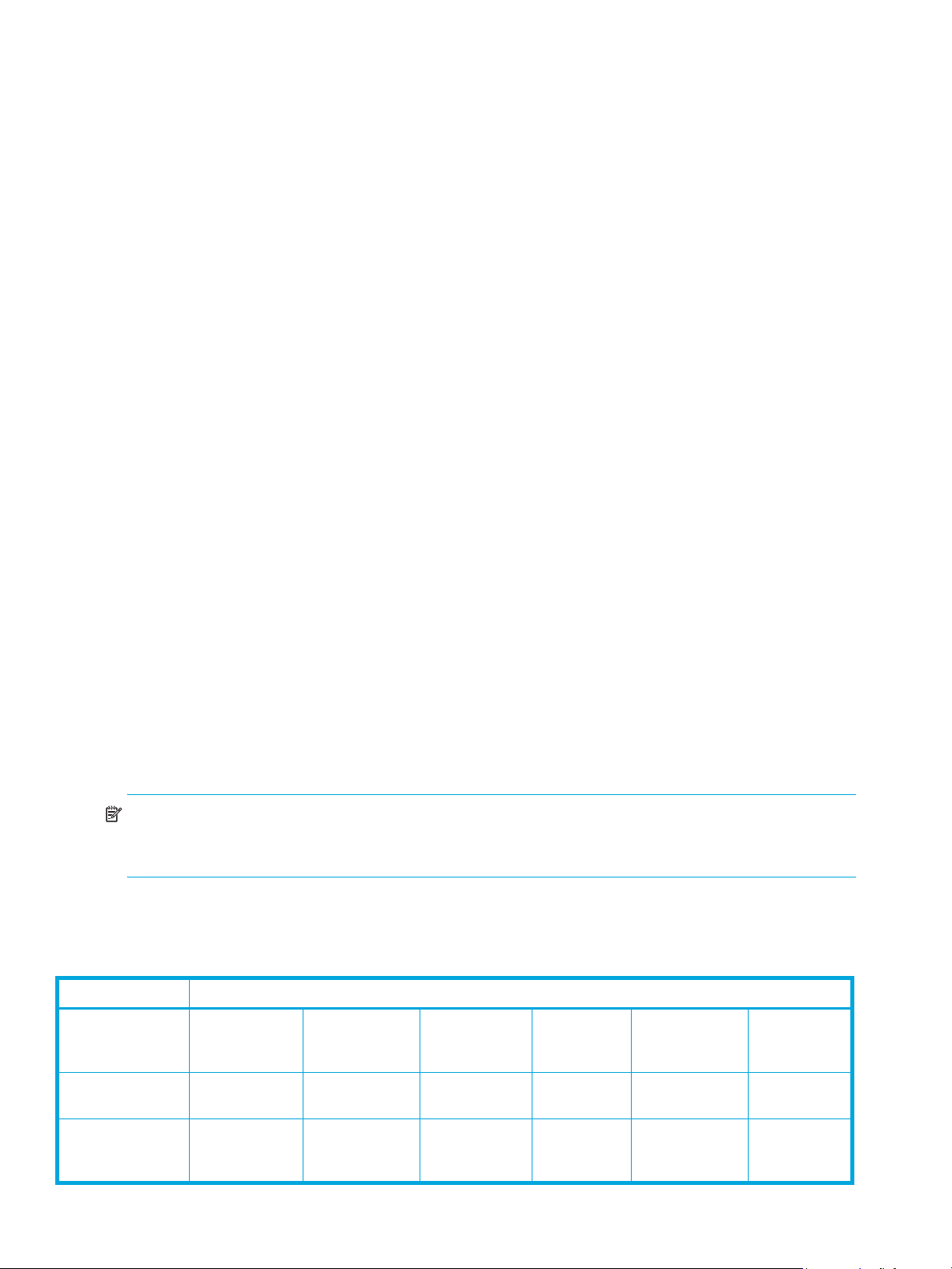

Table 2 Movability of volumes in pairs consisting of CVs and normal volumes

| Source volume (rows) / Target volume (columns) | Normal volume (not OPEN-V) | Normal volume (OPEN-V without VMA setting) | Normal volume (OPEN-V with VMA setting) | CV (not OPEN-V) | CV (OPEN-V without VMA setting) | CV (OPEN-V with VMA setting) |
|---|---|---|---|---|---|---|
| Normal volume (not OPEN-V) | Movable | Not movable | Not movable | Not movable | Not movable | Not movable |
| Normal volume (OPEN-V without VMA setting) | Not movable | Movable | Not movable | Not movable | Movable | Not movable |
| Normal volume (OPEN-V with VMA setting) | Not movable | Not movable | Movable | Not movable | Not movable | Not movable |
| CV (not OPEN-V) | Not movable | Not movable | Not movable | Movable | Not movable | Not movable |
| CV (OPEN-V without VMA setting) | Not movable | Movable | Not movable | Not movable | Movable | Not movable |
| CV (OPEN-V with VMA setting) | Not movable | Not movable | Movable | Not movable | Not movable | Movable |

Not movable: you cannot make pairs of the volumes.

Specify logical volumes that satisfy the conditions in Table 2 as the source and target volumes. The source and target volumes must be specified by LDEV ID.

Source volumes

The following describes whether volumes can be used as source volumes:

• Volumes set as command devices (reserved for use by hosts) cannot be used as source volumes.

• Volumes in an abnormal or inaccessible condition (for example, fenced) cannot be used as source volumes.

• Volumes with Cache LUN data stored in cache cannot be used as source volumes.

• iSCSI volumes (volumes to which paths are defined from iSCSI ports) cannot be used as source volumes.

• Volumes that HP StorageWorks Continuous Access XP Journal or Universal Replicator for z/OS® uses as a data or journal volume cannot be used as source volumes.

• Volumes reserved by a migration program other than Auto LUN XP cannot be used as source volumes.

• Volumes on which RAID Manager is performing volume migration operations cannot be used as source volumes.

• Volumes that form a Snapshot XP pair cannot be used as source volumes.

• Volumes used by Continuous Access XP Journal or Universal Replicator for z/OS® as a journal volume cannot be used as source volumes.

• Volumes used by the IBM 3990 Concurrent Copy (CC) host software function cannot be used as source volumes.

• Virtual and pool volumes cannot be used as source volumes.

• Inaccessible volumes or volumes in an abnormal state (for example, pinned track or fenced volumes) cannot be used as source volumes.

• Volumes being shredded cannot be used as source volumes.

• Universal Replicator for z/OS®: If the status of a Universal Replicator for z/OS® volume is Pending duplex or Duplex, the volume cannot be used as a source volume. If you specify a Universal Replicator for z/OS® volume as a source volume, you cannot specify an external volume as the target volume.

• Continuous Access XP Journal: If the status of an HP StorageWorks Continuous Access XP Journal volume is PSUS, PSUE, or SMPL, the volume can be used as a source volume. Otherwise, it cannot. When a Continuous Access XP Journal pair is deleted from the MCU, the status of both volumes changes to SMPL, and both volumes can be used as source volumes. When a Continuous Access XP pair is deleted from the RCU, the status of the P-VOL changes to PSUS, the status of the S-VOL changes to SMPL, and both volumes can be used as source volumes. If the status of a Continuous Access XP Journal volume is COPY or PAIR, the volume cannot be used as a source volume. If you specify a Continuous Access XP Journal volume as a source volume, you cannot specify an external volume as the target volume.

• Business Copy: Whether a BC volume can be used as a source volume depends on the status and cascade configuration of the volume. If the status of a BC volume is not split-pending (COPY(SP) or PSUS(SP)), the volume can be used as a source volume. If the status is COPY(SP) or PSUS(SP), the volume cannot be used as a source volume. This applies to both cascaded and non-cascaded volumes.

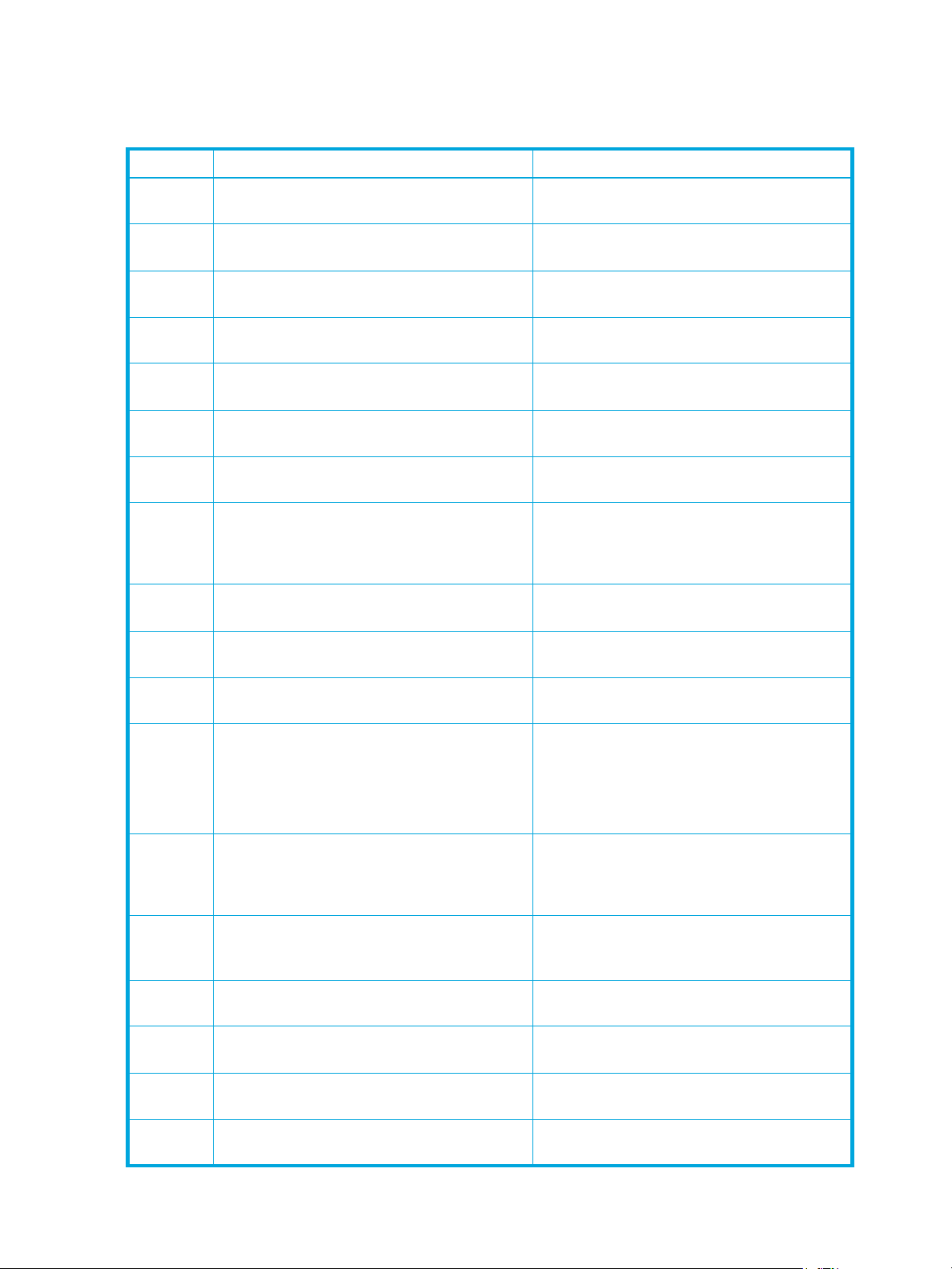

Table 3 shows which non-cascaded volumes can be used as source volumes.

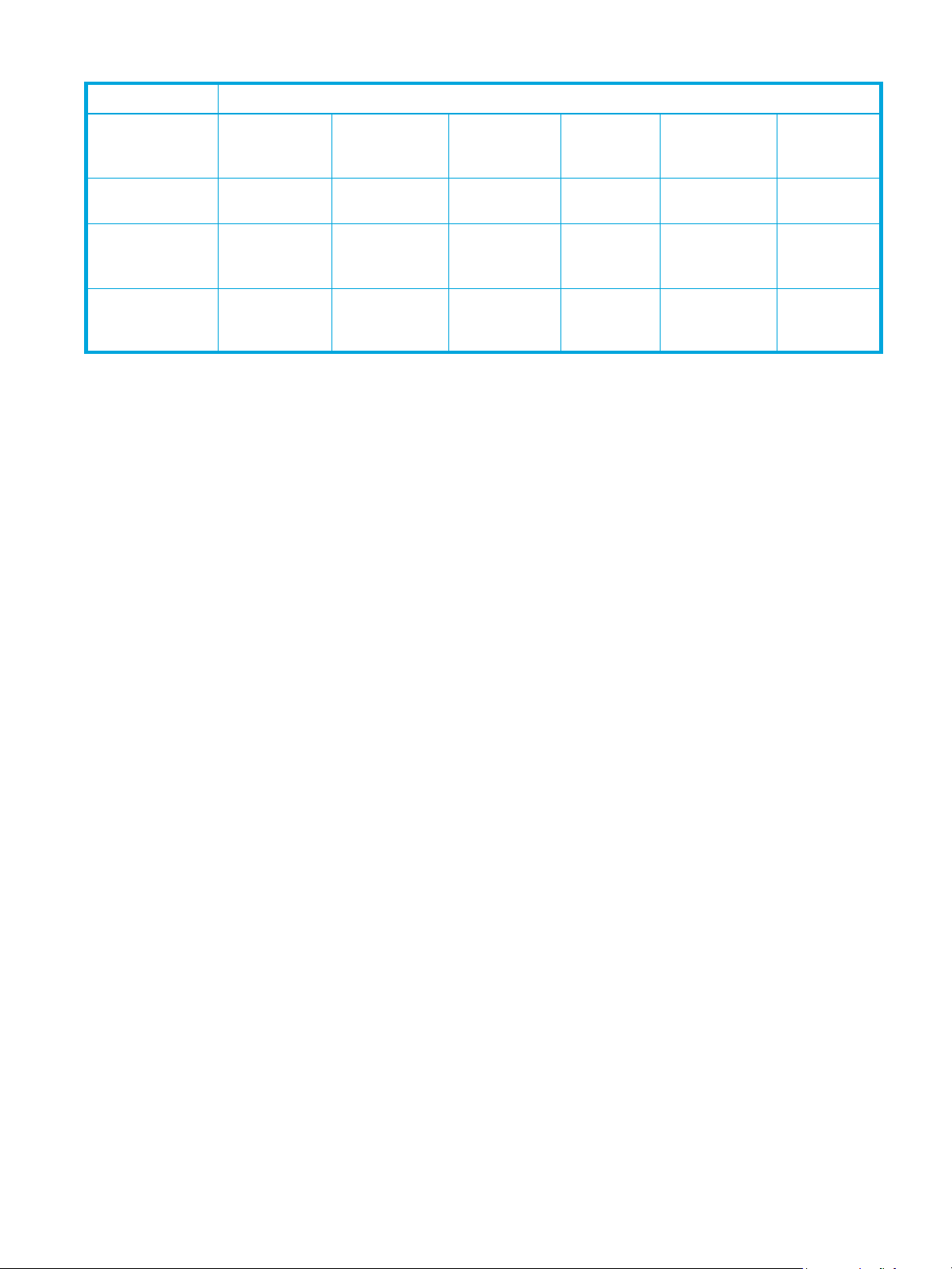

Table 3 Non-cascaded volumes that can be used as source volumes

Pair configuration Use P-VOL as source volume Use S-VOLs as source volumes

Ratio of P-VOL to S-VOLs is 1:1 Yes Yes

Ratio of P-VOL to S-VOLs is 1:2 Yes Yes

Ratio of P-VOL to S-VOLs is 1:3 No Yes

Table 4 shows which cascaded volumes can be used as source volumes.

Table 4 Cascaded volumes that can be used as source volumes

Pair Configuration Use P-VOL as source volume Use S-VOLs as source volumes

L1 pair, ratio of P-VOL to S-VOLs is 1:1 Yes Yes

L1 pair, ratio of P-VOL to S-VOLs is 1:2 Yes Yes

L1 pair, ratio of P-VOL to S-VOLs is 1:3 No Yes

L2 pair, ratio of P-VOL to S-VOLs is 1:1 Yes No

L2 pair, ratio of P-VOL to S-VOLs is 1:2 No No
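Tables 3 and 4, together with the split-pending rule above, can be encoded as a lookup. This is an illustrative sketch with two assumptions. First, configurations that neither table lists are treated as not usable. Second, the status string "PAIR" in the usage below is only an example value. The function name is hypothetical.

```python
# Table 3: number of S-VOLs per P-VOL -> (P-VOL usable, S-VOLs usable)
NON_CASCADED = {1: (True, True), 2: (True, True), 3: (False, True)}
# Table 4: (cascade layer, number of S-VOLs) -> (P-VOL usable, S-VOLs usable)
CASCADED = {
    ("L1", 1): (True, True),
    ("L1", 2): (True, True),
    ("L1", 3): (False, True),
    ("L2", 1): (True, False),
    ("L2", 2): (False, False),
}
SPLIT_PENDING = {"COPY(SP)", "PSUS(SP)"}


def bc_volume_usable_as_source(status: str, role: str, s_vol_count: int,
                               layer: str = None) -> bool:
    """Return True if a BC volume may be used as an Auto LUN XP source volume.

    role is "P-VOL" or "S-VOL". layer is None for non-cascaded pairs, else "L1" or "L2".
    """
    if role not in ("P-VOL", "S-VOL"):
        raise ValueError("role must be 'P-VOL' or 'S-VOL'")
    if status in SPLIT_PENDING:
        return False  # split-pending volumes are never usable, cascaded or not
    table = NON_CASCADED if layer is None else CASCADED
    key = s_vol_count if layer is None else (layer, s_vol_count)
    if key not in table:
        return False  # assumption: configurations not listed in the tables are rejected
    p_vol_ok, s_vol_ok = table[key]
    return p_vol_ok if role == "P-VOL" else s_vol_ok
```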

If any of the following operations are performed on an Auto LUN XP source volume during migration, the

Auto LUN XP volume migration process stops:

• Continuous Access XP operations that change the volume status to something other than PSUS, PSUE, or

SMPL

• BC operations that change the volume status to COPY(SP) or PSUS(SP)

• Continuous Access XP Journal operations

• Continuous Access XP Journal or Universal Replicator for z/OS® operation that changes the volume

status to COPY.

LUSE source volumes

To specify a LUSE source volume for migration, specify individual LDEVs within the LUSE volume (for

example, LDEVs with high usage). Auto LUN XP migrates only specified LDEVs. If needed, specify all LDEVs

of the LUSE volume to relocate the entire LUSE volume. In this case, ensure that the required reserved target

LDEVs are available.

Target volumes

Target volumes must be reserved prior to migration. Hosts cannot access reserved volumes. The following

volumes cannot be reserved:

• Logical Unit Size Expansion (LUSE) volumes

• Volumes set as command devices

• Volumes assigned to BC or Continuous Access XP pairs

• Volumes reserved for BC operations

• Volumes with Cache LUN data stored in cache

• iSCSI volumes (that is, volumes to which paths are defined from iSCSI ports)

• Volumes in abnormal or inaccessible conditions (for example, fenced)

• Volumes used by Continuous Access XP Journal

• Volumes specified with the Read Only or Protect attribute

• Volumes that Volume Security has disabled for use as secondary volumes

• Volumes that LUN Security XP Extension specified as Read Only or Protect or disabled for use as

secondary volumes


• Volumes reserved by a migration program other than Auto LUN XP

• Volumes on which the RAID Manager is performing volume migration operations.

• Volumes that form a Snapshot XP pair.

• Virtual and pool volumes.

Number of volumes

In manual migrations, the number of migration plans that can be executed concurrently might be restricted,

depending on use of other Command View XP or XP Remote Web Console programs and emulation types

and sizes of migrated volumes. Therefore, the number of migration plans that can be executed concurrently

is not constant.

A maximum of 36 Auto LUN XP copy operations can be requested at the same time. Auto LUN XP performs

migration operations sequentially (one volume at a time).

The maximum number of Auto LUN XP copy operations plus BC pairs is 1024.
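The two fixed limits above can be checked before requesting more copy operations. This is a hypothetical sketch. It covers only these two numbers. As the text explains, the differential table calculation that follows (and other Command View XP or XP Remote Web Console programs) can restrict concurrency further.

```python
MAX_REQUESTED_COPY_OPS = 36        # copy operations that can be requested at the same time
MAX_COPY_OPS_PLUS_BC_PAIRS = 1024  # Auto LUN XP copy operations plus BC pairs


def can_request(new_ops: int, requested_ops: int, bc_pairs: int) -> bool:
    """Check the two fixed limits before requesting new_ops more copy operations."""
    total_ops = requested_ops + new_ops
    return (total_ops <= MAX_REQUESTED_COPY_OPS
            and total_ops + bc_pairs <= MAX_COPY_OPS_PLUS_BC_PAIRS)
```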

Calculating the number of Auto LUN XP concurrent migration plans

When a migration plan is created, differential tables are used. Differential tables are disk array resources

that are shared by Flex Copy XP, Business Copy XP, ShadowImage for z/OS®, Hitachi FlashCopy

Mirroring, Hitachi FlashCopy Mirroring Version 2, Snapshot XP, and Auto LUN XP. The maximum number of

differential tables that Auto LUN XP can use is the total number of differential tables in the disk array minus

the number used by other applications.

When using Auto LUN XP for manual volume migration, the number of migration plans that can be

executed concurrently depends on the following:

• How much shared memory is available for differential tables. The number of differential tables

available in one disk array is determined by whether additional shared memory for differential tables is

installed:

• You can use 13,652 differential tables if no additional shared memory for differential tables is

installed.

• You can use 30,718 differential tables if additional shared memory for differential tables is installed.

NOTE: Contact your HP account support representative for more information about adding shared memory.

• The emulation type and capacity of each volume to be migrated. The number of differential tables

needed to migrate one volume differs depending on the emulation type and size of the volume. For the

number of differential tables needed for migrating a mainframe volume, see page 19. For the number of

differential tables needed for migrating an open-system volume, see page 21.

You can estimate the maximum number of migration plans that can be executed concurrently by applying

the previously described conditions to the following equation:

$$\Sigma(\alpha) \le (\beta)$$

where:

• Σ(α) is the total number of differential tables needed to migrate all volumes.

• (β) is the number of available differential tables.

For example, if you want to create 20 migration plans of OPEN-3 volumes (volume size = 2,403,360 KB),

calculate the number of required differential tables (page 21) and enter this number in the equation:

$$(1 \times 20) = 20 \le [13{,}652 \text{ or } 30{,}718]$$

Since this inequality is true, you can create 20 migration plans of OPEN-3 volumes.
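The inequality Σ(α) ≤ (β) can be evaluated directly. In this sketch, the number of tables used by other programs is an assumed input that you would read from your own configuration. The function names are hypothetical.

```python
TABLES_STANDARD = 13_652       # no additional shared memory for differential tables
TABLES_WITH_EXTRA_SM = 30_718  # additional shared memory installed


def available_tables(total: int, used_by_other_programs: int) -> int:
    """(beta): differential tables left for Auto LUN XP."""
    return total - used_by_other_programs


def plans_fit(tables_per_plan: list, beta: int) -> bool:
    """Check sum(alpha) <= beta for a set of planned migrations."""
    return sum(tables_per_plan) <= beta


def max_uniform_plans(tables_per_plan: int, beta: int) -> int:
    """Largest number of identical plans that still satisfies sum(alpha) <= beta."""
    return beta // tables_per_plan
```

For the OPEN-3 example, `plans_fit([1] * 20, TABLES_STANDARD)` is true. For maximum-size OPEN-V volumes (193 tables each), `max_uniform_plans` gives 70 plans without additional shared memory and 159 plans with it, assuming no other program is using differential tables.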

Calculating differential tables for mainframe volume migration

When you migrate mainframe volumes, use the following equation to calculate the total number of

required differential tables per migration plan:

$$\text{Total number of differential tables per migration plan} = \frac{(X + Y) \times 15}{Z}$$

where:

• X: The number of cylinders in the volume to be migrated. If the volume is a CVS volume, use the number

of cylinders in the custom volume instead of the default value for the emulation type.

• Y: The number of control cylinders (see Table 5).

• Z: The number of slots that can be managed by a differential table: $Z = 1{,}916 \times 32$.

NOTE: Round the result of the calculation up to the nearest whole number.

For example, if a volume has the emulation type 3390-3 and 3339 cylinders, calculate the total number of

differential tables with the following equation:

$$\frac{(3339 + 6) \times 15}{1{,}916 \times 32} = 0.81836$$

When you round 0.81836 up to the nearest whole number, it becomes 1. Therefore, the total number of

differential tables for one migration plan is 1 when emulation type is 3390-3.

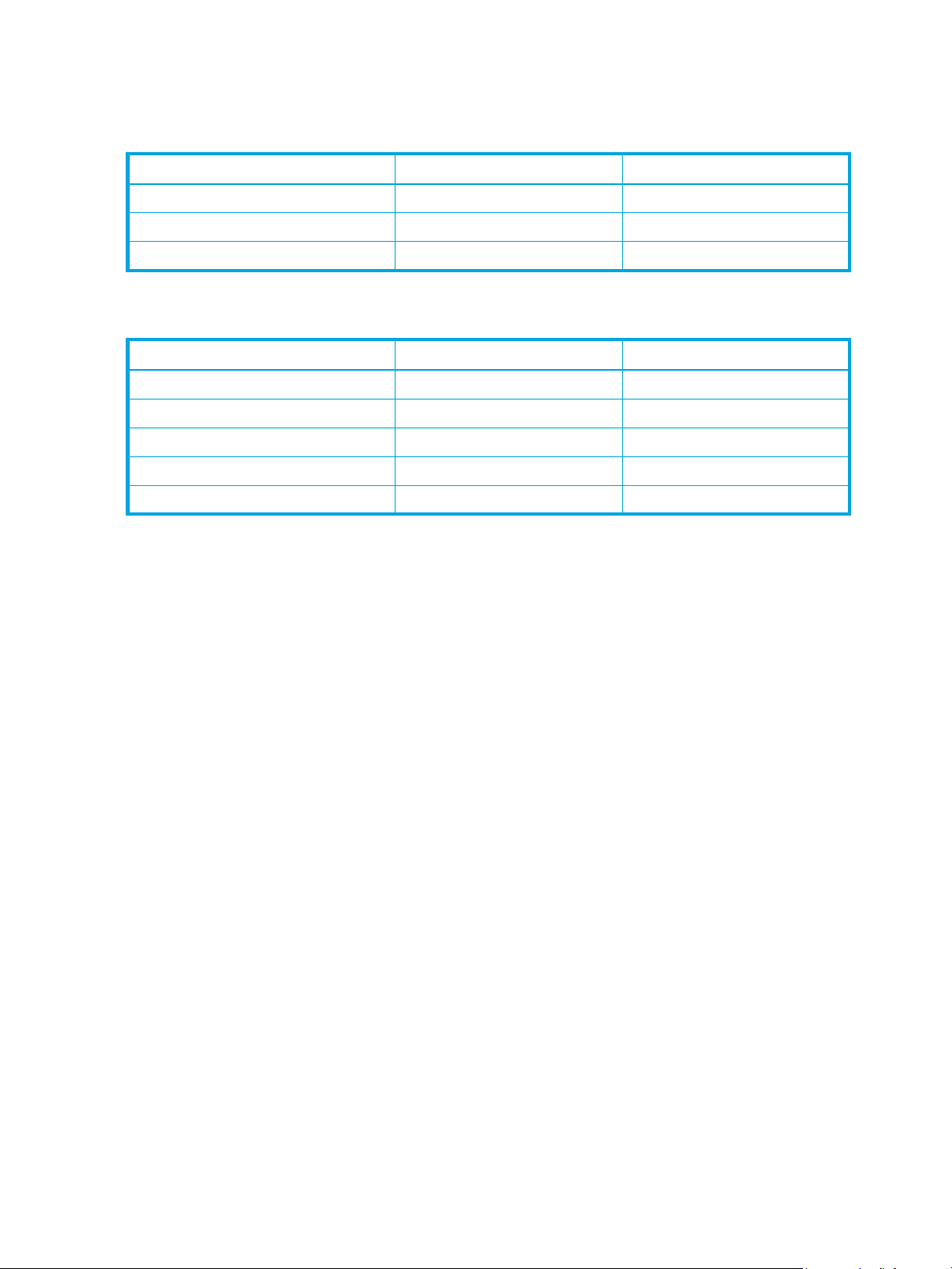

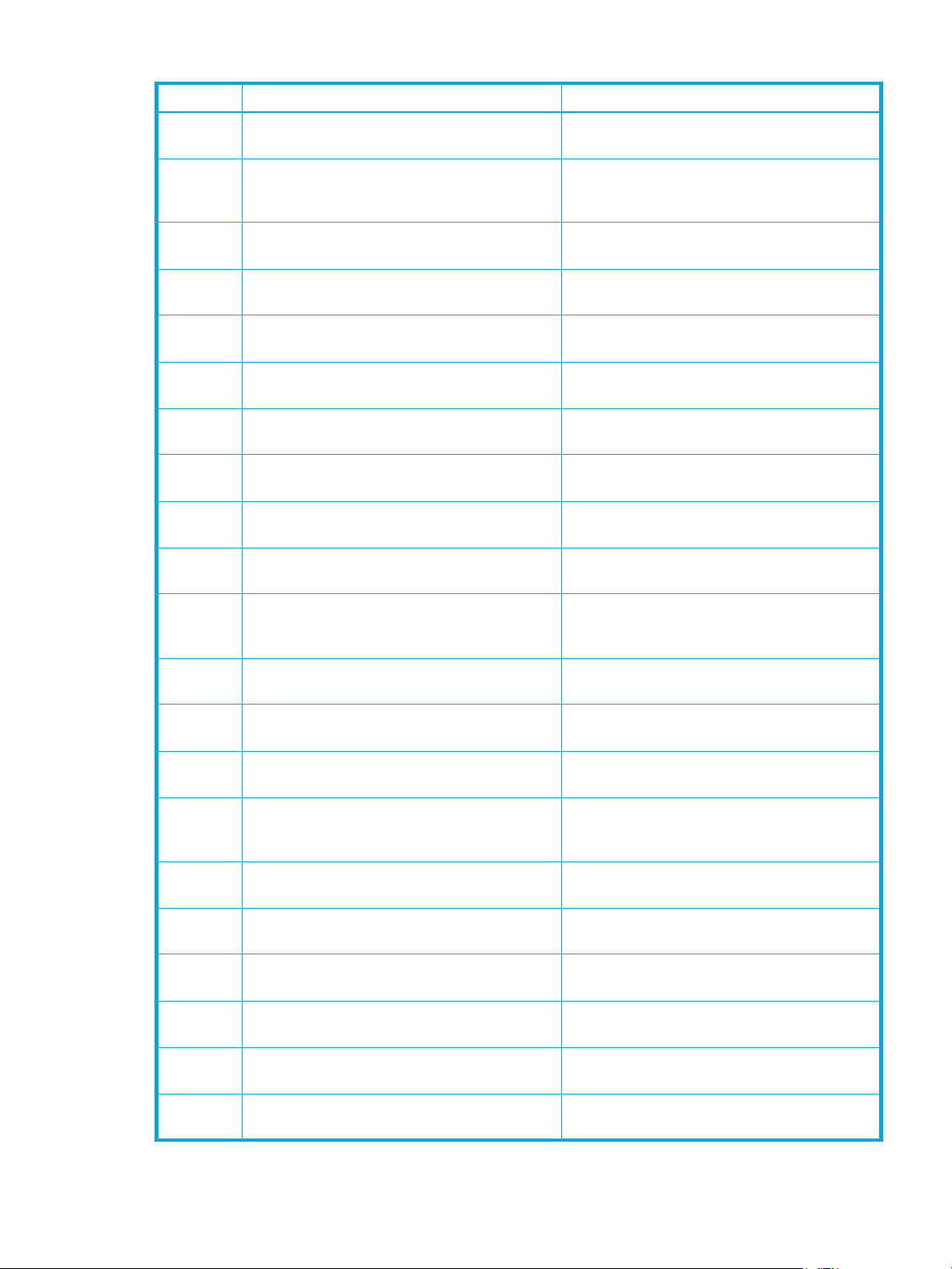

The following table shows the number of the control cylinders according to the emulation type.

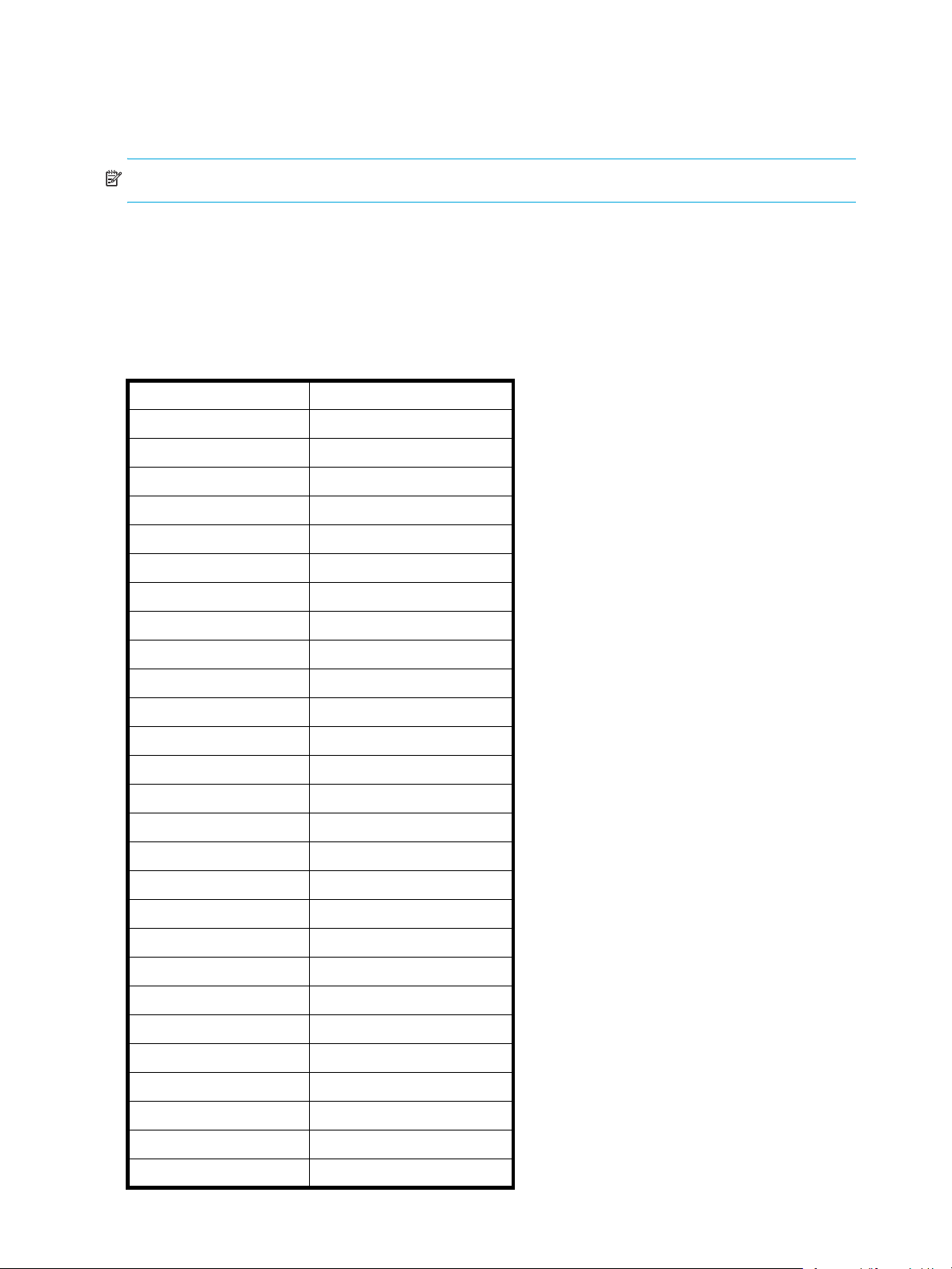

Table 5 Control cylinders by emulation type

Emulation type Number of control cylinders

3380-3 7

3380-3A 7

3380-3B 7

3380-3C 7

3380-F 22

3380-K 7

3380-KA 7

3380-KB 7

3380-KC 7

3390-3 6

3390-3A 6

3390-3B 6

3390-3C 6

3390-3R 6

3390-9 25

3390-9A 25

3390-9B 25

3390-9C 25

3390-L 23

3390-LA 23

3390-LB 23

3390-M 53

3390-MA 53

3390-MB 53

3390-MC 53

3390-LC 23

NF80-F 22


NF80-K 7

NF80-KA 7

NF80-KB 7

NF80-KC 7
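The mainframe equation can be written as a small function. This sketch copies only an excerpt of Table 5 into the hypothetical `CONTROL_CYLINDERS` mapping. It uses integer ceiling division, so the "round up" step involves no floating-point error. For a CVS volume, pass the custom cylinder count as X.

```python
SLOTS_PER_TABLE = 1916 * 32  # Z

# Excerpt of Table 5 (control cylinders by emulation type).
CONTROL_CYLINDERS = {
    "3380-K": 7,
    "3390-3": 6,
    "3390-9": 25,
    "3390-L": 23,
    "3390-M": 53,
}


def mainframe_tables(emulation: str, cylinders: int) -> int:
    """Differential tables per migration plan: ((X + Y) x 15) / Z, rounded up."""
    y = CONTROL_CYLINDERS[emulation]
    numerator = (cylinders + y) * 15
    # Integer ceiling division: -(-a // b) rounds a / b up to the next whole number.
    return -(-numerator // SLOTS_PER_TABLE)
```

With the worked example above, `mainframe_tables("3390-3", 3339)` returns 1.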

Calculating differential tables for open-system volume migration

When you migrate open-system volumes, use the information in Table 6 to calculate the total number of

required differential tables per migration plan.

Table 6 Total number of differential tables per migration plan

OPEN-3, OPEN-8, OPEN-9, OPEN-E, and OPEN-L (notes 1 and 2):

$$\text{Total number of differential tables per migration plan} = \frac{(X \div 48) + (Y \times 15)}{Z}$$

• (X): Capacity of the volume to be migrated (KB)

• (Y): Number of control cylinders associated with the emulation type (see Table 7)

• (Z): Number of slots that can be managed by a differential table: $Z = 1{,}916 \times 32$

NOTE: Round the result of the calculation up to the nearest whole number.

For example, if the volume's emulation type is OPEN-3 and it is not a custom volume, the volume capacity (X) is the default volume size, 2,403,360 KB, and the total number of differential tables is calculated as follows:

$$\frac{(2{,}403{,}360 \div 48) + (8 \times 15)}{1{,}916 \times 32} = 0.81860$$

When you round up to the nearest whole number, the result is 1. Therefore, the total number of differential tables for one migration plan is 1 when the emulation type is OPEN-3.

OPEN-V (note 2):

$$\text{Total number of differential tables per migration plan} = \frac{X \div 256}{Z}$$

• (X): Capacity of the volume to be migrated (KB)

• (Z): Number of slots that can be managed by a differential table: $Z = 1{,}916 \times 32$

NOTE: Round the result of the calculation up to the nearest whole number.

For example, if the volume capacity (X) is the maximum OPEN-V volume capacity of 3,019,898,880 KB, the total number of differential tables is calculated as follows:

$$\frac{3{,}019{,}898{,}880 \div 256}{1{,}916 \times 32} = 192.40084$$

When you round up to the nearest whole number, the result is 193. Therefore, the total number of differential tables for one migration plan is 193 when the emulation type is OPEN-V.

1. If the selected volume is a CVS (custom volume size) volume, use the capacity of the custom volume instead of the default volume capacity for the emulation type. Since the OPEN-L emulation type does not support custom volume operations, you must use the default volume capacity of OPEN-L for X.

2. 1 KB equals 1024 bytes.

The following table shows the number of control cylinders according to the emulation type.

Table 7 Control cylinders by emulation type

Emulation type Number of control cylinders

OPEN-3 8 (5,760KB)

OPEN-8 27 (19,440KB)

OPEN-9 27 (19,440KB)

OPEN-E 19 (13,680KB)

OPEN-L 7 (5,040KB)

OPEN-V 0 (0KB)
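The two Table 6 equations can be combined into one function. This sketch assumes that OPEN-9 has the same 27 control cylinders as OPEN-8, because Table 7 lists the two types together. It uses Python's `fractions.Fraction` for exact arithmetic before rounding up. The function and constant names are hypothetical.

```python
import math
from fractions import Fraction

SLOTS_PER_TABLE = 1916 * 32  # Z

# Control cylinders (Y) from Table 7. OPEN-V uses a separate formula with no Y term.
CONTROL_CYLINDERS = {"OPEN-3": 8, "OPEN-8": 27, "OPEN-9": 27, "OPEN-E": 19, "OPEN-L": 7}


def open_system_tables(emulation: str, capacity_kb: int) -> int:
    """Differential tables per migration plan for an open-system volume (Table 6)."""
    if emulation == "OPEN-V":
        value = Fraction(capacity_kb, 256) / SLOTS_PER_TABLE
    else:
        y = CONTROL_CYLINDERS[emulation]
        value = (Fraction(capacity_kb, 48) + y * 15) / SLOTS_PER_TABLE
    # Exact rational arithmetic, then round up as the NOTE requires.
    return math.ceil(value)
```

Both worked examples are reproduced: OPEN-3 at 2,403,360 KB needs 1 table, and the maximum OPEN-V volume needs 193.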

Auto migration planning

Auto LUN XP will not plan an auto migration when:

• There is no reserve volume with the same emulation type and capacity as the source volume in the

same or next higher/lower HDD class (auto migration is performed only between consecutive classes or

within the same class)

• Auto LUN XP cannot estimate the usage rate for the target parity group because of some condition (for

example, invalid monitor data)

• The estimated usage of the target parity group or volume is over the user-specified maximum disk usage

value for that HDD class

• The estimated performance improvement is not large enough

Auto migration execution

Auto LUN XP will not execute an auto migration operation when:

• The current usage (last 15 minutes) of the source or target parity group or volume is over the maximum

disk usage rate for auto migration

• The current write pending rate for the disk array is 60% or higher

Manual migration execution

Auto LUN XP will not execute a manual migration operation when the current write pending rate for the disk

array is 60% or higher.

Copy Threshold option

If Auto LUN XP performs migration operations when the disk array workload is heavy, host server I/O

performance might be degraded. To minimize this performance degradation, enable Copy Threshold,

which temporarily stops Auto LUN XP operations when the disk array has a heavy workload. Copy

operations that are stopped by the Copy Threshold option resume when the disk array workload is lighter.

CAUTION: A volume migration operation might fail if hosts frequently update the volumes being

migrated. If you enable Copy Threshold, Auto LUN XP temporarily stops copy processing when the disk

array is overloaded, which increases the possibility of a failed volume migration operation. Enabling the

Copy Threshold option is not recommended when hosts frequently update the volumes being migrated.

IMPORTANT: Contact your HP support account representative for more information about the Copy

Threshold option.

In addition to Auto LUN XP operations, Copy Threshold temporarily stops copy operations for the following

applications when the disk array workload is heavy:

• Business Copy XP

• ShadowImage for z/OS

• Hitachi FlashCopy Mirroring

• Hitachi FlashCopy Mirroring Version 2

• Snapshot XP

• Flex Copy XP


Progress value

If volumes with heavy and light workloads are migrated together, the migration might not complete (even

though the progress value indicates 100%). If this happens, perform one of the following tasks:

• Reduce the workloads between hosts and the disk array and complete the migration.

• Stop the migration for the volume with heavy workloads, migrate the other volume, and then retry the

stopped migration.

Effects on other XP products

When the update I/O workload between hosts and the disk array is heavy, volume migration takes longer

to complete because differential data is copied repeatedly. While this differential data is being copied,

other XP products might also require longer copying times. These XP products include Auto LUN XP,

Business Copy XP, ShadowImage for z/OS®, Compatible Mirroring for IBM® FlashCopy®, and Flex Copy XP.

See Table 8 for the estimated increase in copying time. The copying time of other XP products depends on

the number of pairs set for each XP product; however, the copying time might become up to twice as long.

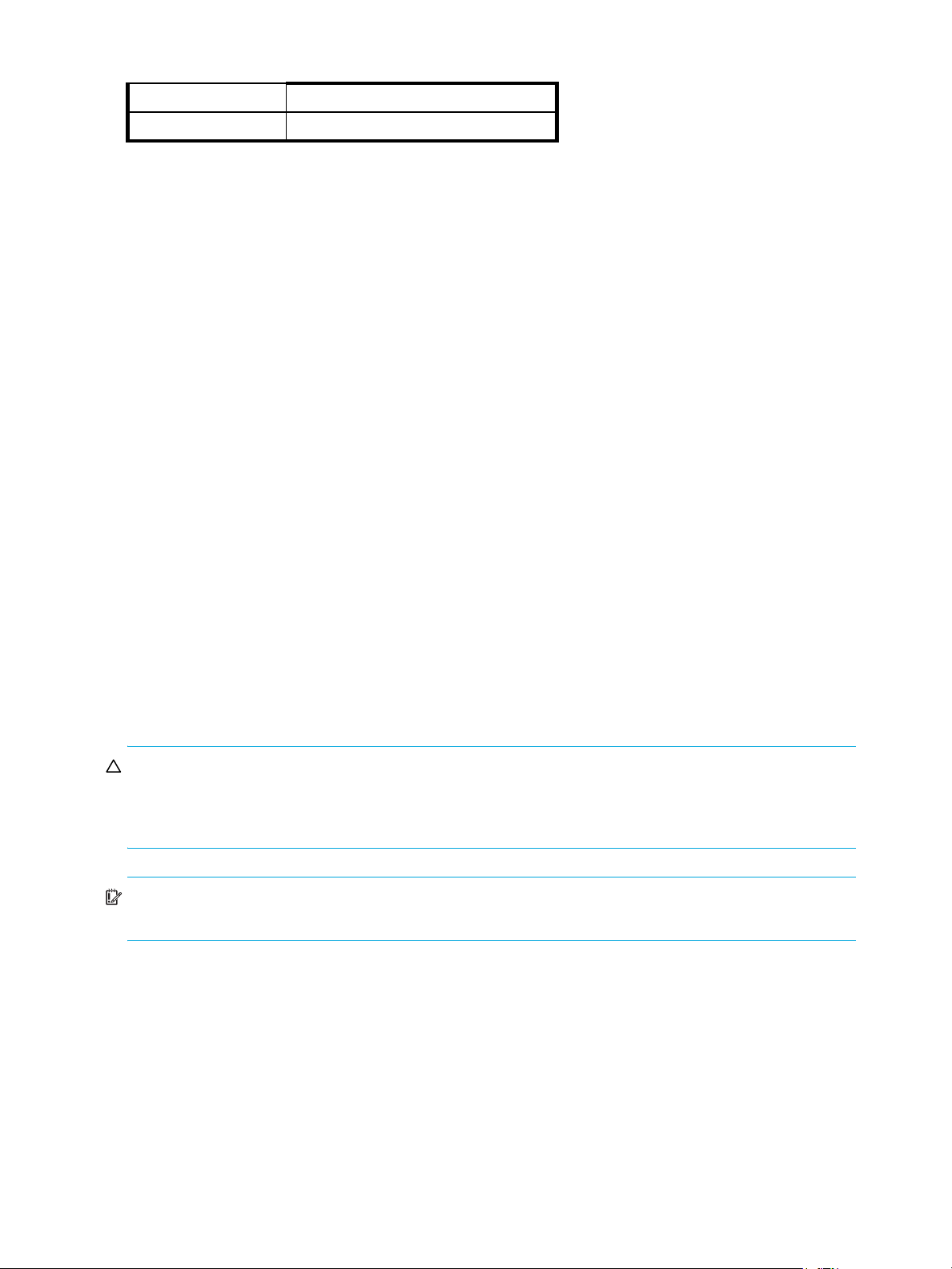

Table 8 The estimated delay in the copying speed

Capacity of Migrated Volume (MB) Estimated delay in the copying speed (in minutes)

0 - 1,000 4

1,001 - 5,000 18

5,001 - 10,000 37

10,001 - 50,000 186

50,001 - 100,000 372

100,001 - 500,000 1860

500,001 - 1,000,000 3720

1,000,001 - 2,150,400 9667

The above estimates are calculated based on the assumption that the

workload for update I/Os for the migrated volume is 50 IOPS for one volume.
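Table 8 can be used as a lookup keyed on the upper bound of each capacity range. This sketch inherits the table's own assumption of 50 update IOPS on the migrated volume. It rejects capacities outside the listed ranges instead of extrapolating. The function name is hypothetical.

```python
from bisect import bisect_left

# Upper bound of each capacity range in Table 8 (MB) and the matching delay (minutes).
RANGE_UPPER_MB = [1_000, 5_000, 10_000, 50_000, 100_000, 500_000, 1_000_000, 2_150_400]
DELAY_MINUTES = [4, 18, 37, 186, 372, 1_860, 3_720, 9_667]


def estimated_delay_minutes(capacity_mb: float) -> int:
    """Look up the Table 8 estimate (assumes 50 update IOPS on the migrated volume)."""
    if not 0 <= capacity_mb <= RANGE_UPPER_MB[-1]:
        raise ValueError("capacity outside the ranges listed in Table 8")
    # bisect_left finds the first range whose upper bound is >= capacity_mb.
    return DELAY_MINUTES[bisect_left(RANGE_UPPER_MB, capacity_mb)]
```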

Maintenance

Do not perform Auto LUN XP migration operations during disk array maintenance activities (for example,

cache or drive installation, replacement, de-installation, or replacement of the microprogram).

Auto migration parameters

Auto migration parameters are initialized when the disk array configuration is changed (for example, when

new drives or LDEVs are installed). Parameters include disk usage limit and migration time. After a change

is made to the subsystem configuration, you must specify auto migration parameters again.

Powering off disk arrays

To turn the power off at the disk array, ensure that volume migration is finished. If migration is not finished,

HP strongly recommends that you do not turn off the power until the migration is finished.

If you turn the power off while migration is in progress, data migration stops and some of the data is not

migrated. When you turn the power on again, Auto LUN XP resumes data migration. If data remains in shared

memory, which is volatile memory, Auto LUN XP attempts to copy only the data that has not yet been

migrated to the migration destination. However, if data is lost from shared memory, Auto LUN XP attempts

to copy all data to the migration destination, including both the data that has not been migrated and the

data that has already been copied; therefore, the copy operation takes more time.

To power off the disk array, obtain the monitoring results first. If you do not, the Physical tab will not display

some monitoring results. For example, if the Gathering Time option in the Auto LUN XP Monitoring Options

pane is set to 4:00 (a.m. and p.m.) and you turn off the power to the disk array at 5:00 p.m., the Physical

tab will not display the monitoring results from 4:00 p.m. to 5:00 p.m.

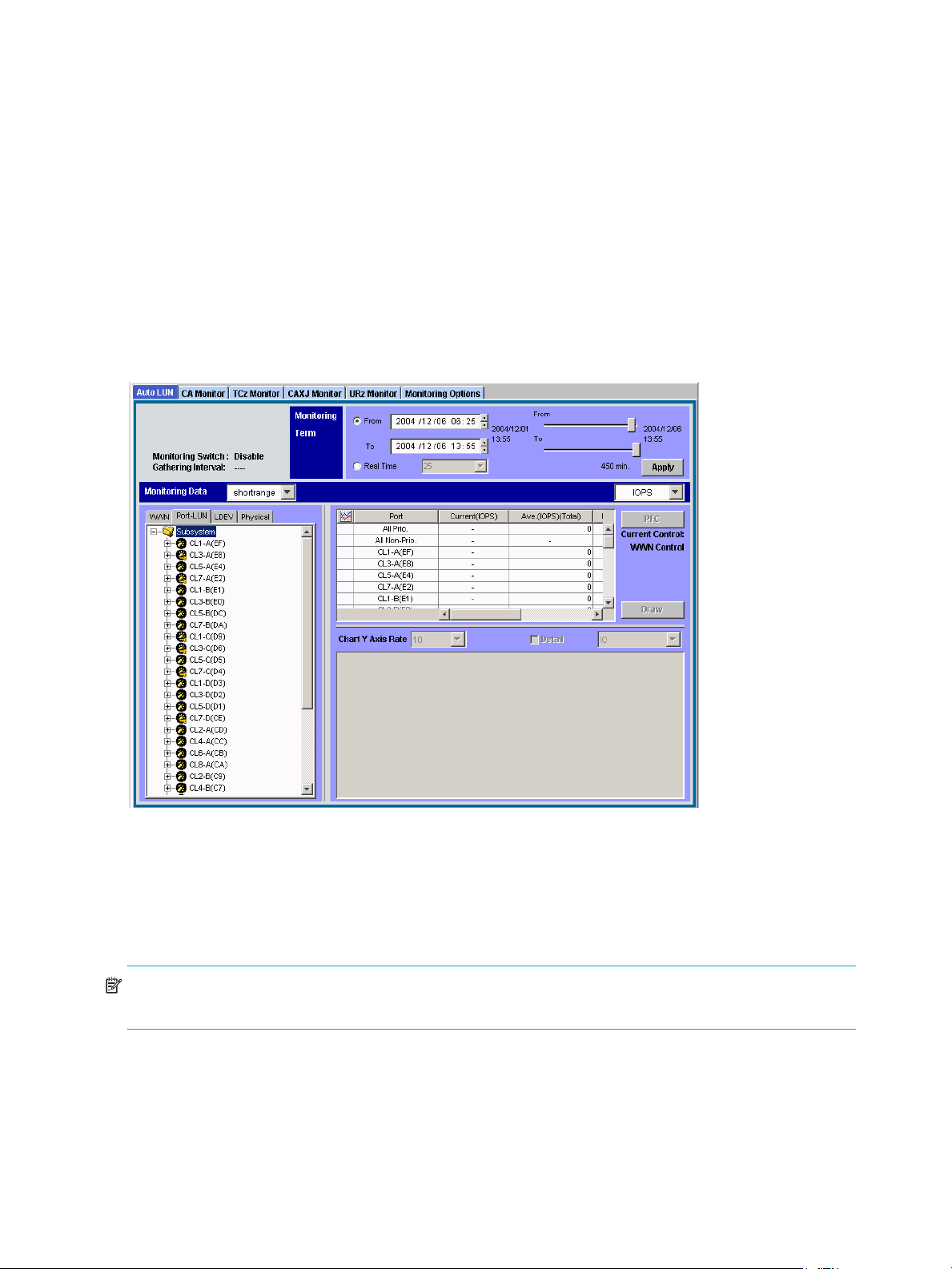

Starting Auto LUN XP

1. Log in to Command View XP or XP Remote Web Console. For more information, see the

HP StorageWorks Command View XP user guide for XP Disk Arrays or the HP StorageWorks XP Remote

Web Console user guide for XP12000/XP10000.

2. Ensure that you are in Modify mode. For more information, see the HP StorageWorks Command View XP user guide for XP Disk Arrays or the HP StorageWorks XP Remote Web Console user guide for XP12000/XP10000.

3. Click Auto LUN / Perf Control Base Monitor / PFC in the left pane. The Auto LUN pane appears.

Figure 5 Auto LUN pane

4. Click Monitoring Options. The Monitoring Options pane appears.

Auto LUN pane

The Auto LUN pane is the performance management pane. It displays usage rates for parity groups, logical volumes, channel processors, disk processors, and so forth. The pane has several sections.

NOTE: If a hyphen appears in monitoring data columns on the Auto LUN pane, statistics cannot be collected for that item.

Tabs and selection trees

When you click one of the tabs (WWN, Port-LUN, LDEV, or Physical) in the lower-left portion of the pane, the pane displays a different tree of items you can click.

The top left of the pane shows the status of the Short range Monitor and Long range Monitor. For information about these monitors, see “Auto LUN Monitoring Options pane” on page 50.


Monitoring term section

Use this section to specify the monitoring period. This setting affects all Auto LUN XP monitoring panes.

Use the From box and slide bar to specify the starting date for the monitoring period. Use the To box and slide bar to specify the ending date for the monitoring period.

Select the Real Time check box to display workload statistics in real time, and use the list to specify the data range to be displayed in the graph. The Real Time check box appears dimmed when the Physical or LDEV tab is active. If the service processor (SVP) is overloaded, gathering monitoring results might take extra time, and a portion of the results will not be displayed.

The Apply button applies the monitoring period settings to the disk array.

Monitoring data section

This section includes the following:

• A list on the left to specify the units in which usage is displayed (MB/s, IOPS, and so forth).

• The CAXJ Monitor pane displays information about the continuous access operation of Continuous Access XP Journal. This information is also displayed in the Usage Monitor pane. For more information, see the HP StorageWorks Continuous Access XP Journal user guide.

• The URz Monitor pane displays information about the continuous access operation of Universal Replicator for z/OS. This information is also displayed in the Usage Monitor pane. For more information, see the Hitachi True Copy for z/OS for the XP128/XP1024/XP10000/XP12000.

NOTE: If any continuous access function is not installed in your environment, the corresponding tab for that function is inactive and you cannot display that pane.

• A list on the right to specify the type of monitoring required: long range or short range. Long range monitoring is available only when the Physical tab is active.

Table section

This section displays the following:

• Statistics about traffic between host bus adapters (HBAs) in the hosts and ports on the disk array, if the WWN tab is active.

• Traffic statistics about WWNs and ports, if the Port-LUN tab is active.

• Workload statistics about logical volumes, if the LDEV tab is active.

• Statistics about parity group usage, processor usage, and so forth, if the Physical tab is active.

• The Plan button is displayed when the Physical tab is active. Clicking this button displays the Plan pane, which contains the Manual Migration, Auto Migration, Attribute, and History tabs.

• The Draw button draws a graph in the pane’s graph section. The graph charts the statistics displayed in the table section.

• The PFC button is displayed when the WWN or Port-LUN tab is active. Clicking this button displays the Performance Control pane. For details, see the HP StorageWorks Performance Control XP user guide.

• The Current Control status is displayed when the WWN or Port-LUN tab is active. It displays the status of Performance Control:

  • Port Control: System performance is controlled by the upper limits and threshold specified in the Port tab of the Performance Control pane.

  • WWN Control: System performance is controlled by the upper limits and threshold specified in the WWN tab of the Performance Control pane.

  • No Control: System performance is not controlled by Performance Control.

For further information, see the HP StorageWorks Performance Control XP user guide.
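The tab-dependent controls described in the Monitoring term, Monitoring data, and Table sections can be summarized as a lookup table. The sketch below is a hypothetical Python summary, not part of any Auto LUN XP or Command View XP interface; it assumes that the "Port" tab mentioned for the PFC button and Current Control status is the Port-LUN tab.

```python
TABS = ("WWN", "Port-LUN", "LDEV", "Physical")

# Hypothetical summary of the tab-dependent controls described above.
CONTROL_AVAILABILITY = {
    "Real Time check box": {"WWN", "Port-LUN"},  # dimmed on the Physical and LDEV tabs
    "Long range monitoring": {"Physical"},
    "Plan button": {"Physical"},
    "PFC button": {"WWN", "Port-LUN"},
    "Current Control status": {"WWN", "Port-LUN"},
}


def controls_for_tab(tab):
    """Return the names of the tab-dependent controls available on a tab."""
    if tab not in TABS:
        raise ValueError(f"unknown tab: {tab}")
    return sorted(name for name, tabs in CONTROL_AVAILABILITY.items() if tab in tabs)


if __name__ == "__main__":
    print(controls_for_tab("Physical"))  # ['Long range monitoring', 'Plan button']
```

Keeping the mapping as data rather than branching logic makes it easy to check each entry against the sentence in the guide that it summarizes.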

Graph section

The graph shows the statistics displayed in the table. The vertical axis shows usage values. The horizontal axis shows date or time.


Use the Chart Y Axis Rate list to select the highest value of the Y-axis (the vertical axis). This list is not displayed when the Plan button is active.

If you select the Detail check box, the graph displays detailed statistics. This check box is displayed when the Port-LUN or LDEV tab is active. The contents of the graph depend on the item selected in the list to the right of the table, as shown in the following table.

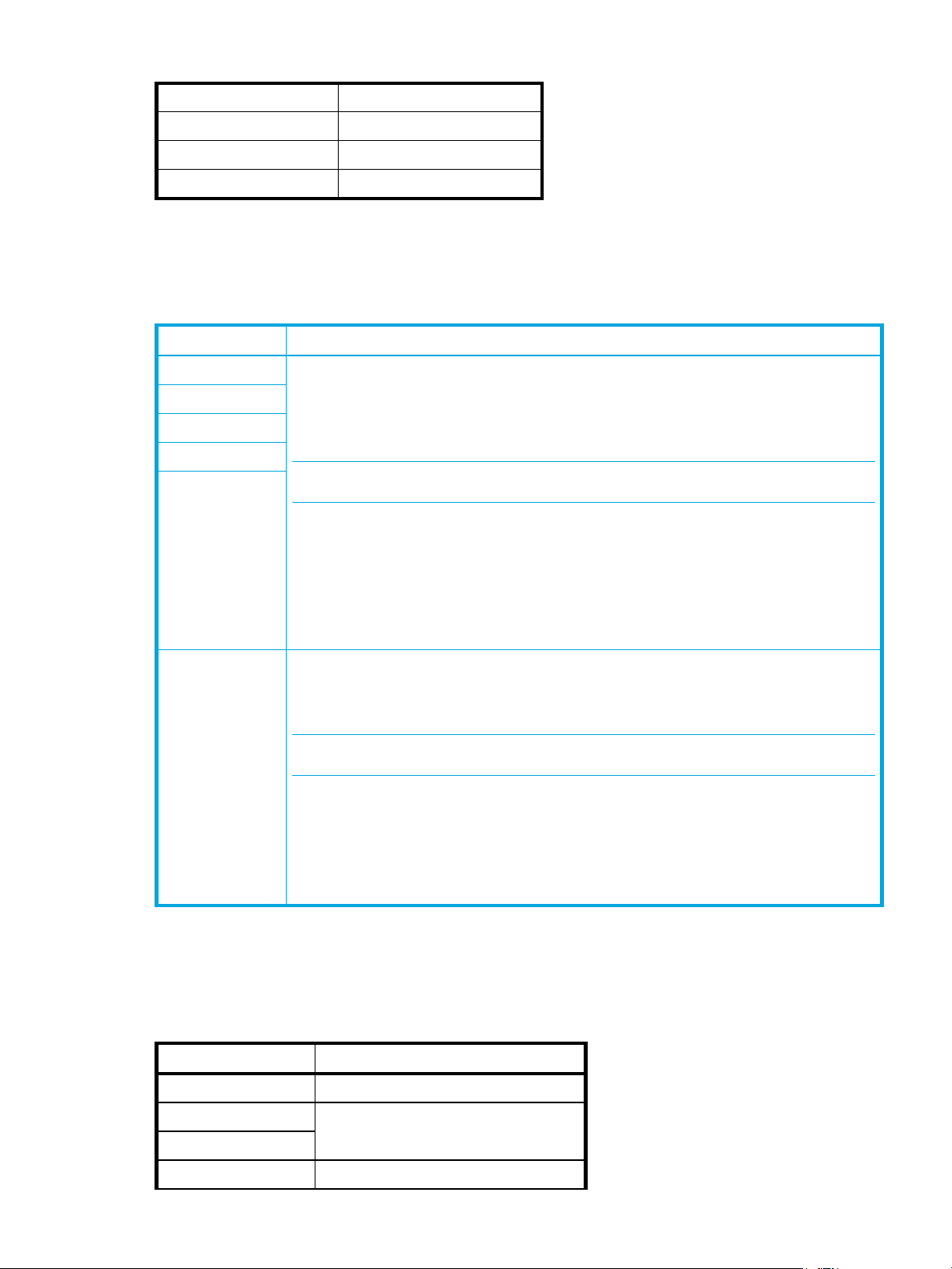

Table 9 Graph contents based on list selection (Port-LUN tab)

| Selection in list | Graph contents |
|---|---|
| I/O Rate (number of I/Os per second); Read (number of read accesses per second); Write (number of write accesses per second); Read Hit (read hit ratio)¹; Write Hit (write hit ratio)¹ | Statistics in sequential access mode; statistics in random access mode; statistics in CFW (cache fast write) mode. If the read hit ratio or write hit ratio is high, random access mode is used for transferring data instead of sequential access mode. For example, random access mode might be used for transferring data to disk areas where Cache LUN XP is applied. |
| Back Transfer (backend transfer: number of I/Os between the cache memory and disk drives) | Number of data transfers from the cache memory to disk drives; number of data transfers from disk drives to cache memory in sequential access mode; number of data transfers from disk drives to cache memory in random access mode |
| Trans. Rate (number of data transfers) | The graph does not display detailed information. |

¹ Available only when I/O rates are displayed.


WWN, Port-LUN, LDEV, and Physical tabs

Figure 6 WWN, Port-LUN, LDEV, and Physical tabs

Click one of the tabs (WWN, Port-LUN, LDEV, or Physical) in the lower-left portion of the pane to view data about ports, LDEVs, or physical components.

When you click a tab, the selection tree in the lower-left portion of the pane changes. You can then click entities to display information in the table and graph sections of the pane.

If I/O workloads between hosts and the disk array become heavy, the disk array gives higher priority to I/O processing than to monitoring processing; therefore, some monitoring data might be missing. If monitoring data are frequently missing, use the Gathering Interval option in the Monitoring Options pane to increase the collection interval.

WWN tab

This tab displays the Subsystem folder and PFC groups, which are groups of multiple WWNs.

Double-clicking a PFC group displays the host bus adapters in the PFC group. Double-clicking Not Grouped in the tree shows host bus adapters (WWNs) that do not belong to any PFC group.

Before you can start monitoring traffic between host bus adapters and disk array ports, you must specify the monitoring settings.

If the SVP is overloaded, updating the displayed monitoring data might take longer than the gathering interval. In this case, some portion of the monitoring data does not appear in the pane. For example, if the gathering interval is 1 minute, the display in the pane is updated at 9:00, and the next update occurs at 9:02, the pane (including the graph) does not display monitoring results for the period from 9:00 to 9:01. This situation occurs when you use a Command View XP or XP Remote Web Console system, and when the SVP is used to perform maintenance operations for the disk controller.
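The 9:00/9:02 example can be expressed as a simple gap check over display-update times. This Python sketch is a hypothetical interpretation of that example, not how the SVP actually tracks updates: it assumes that whenever two consecutive updates are more than one gathering interval apart, results are missing from the earlier update until one interval before the later update.

```python
from datetime import datetime, timedelta


def missing_periods(update_times, interval):
    """Return (start, end) periods for which no monitoring results are displayed.

    Hypothetical interpretation of the 9:00/9:02 example: when two consecutive
    display updates are more than one gathering interval apart, results are
    missing from the earlier update until one interval before the later update.
    """
    ordered = sorted(update_times)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > interval:
            gaps.append((earlier, later - interval))
    return gaps


if __name__ == "__main__":
    updates = [datetime(2007, 9, 1, 9, 0), datetime(2007, 9, 1, 9, 2)]
    for start, end in missing_periods(updates, timedelta(minutes=1)):
        print(start.strftime("%H:%M"), "to", end.strftime("%H:%M"))  # 09:00 to 09:01
```

If such gaps appear often, the guide's recommendation applies: increase the collection interval with the Gathering Interval option.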


After the microprogram is replaced, monitoring data is not stored until a service technician releases the SVP from Modify mode.

NOTE: If a host bus adapter’s WWN is displayed in red in the tree, the host bus adapter is connected to two or more ports, but Performance Control does not control traffic between the HBA and some of those ports. For information about controlling the traffic between the HBA and all connected ports, see the HP StorageWorks Performance Control XP user guide.

Port-LUN tab

This tab displays ports. The tree view displays the following icons.

Table 10 Auto LUN pane, Port-LUN tab icons

| Icon | Status |
|---|---|
| | Short-wave Fibre Channel port in standard mode with LUN security. |
| | Short-wave Fibre Channel port in standard mode without LUN security. |
| | Short-wave Fibre Channel port in high-speed or high-speed (2 port) mode with LUN security. |
| | Short-wave Fibre Channel port in high-speed or high-speed (2 port) mode without LUN security. |
| | Long-wave Fibre Channel port in standard mode with LUN security. |
| | Long-wave Fibre Channel port in standard mode without LUN security. |
| | Long-wave Fibre Channel port in high-speed or high-speed (2 port) mode with LUN security. |
| | Long-wave Fibre Channel port in high-speed or high-speed (2 port) mode without LUN security. |

If the port name is followed by its fibre address, the port is a Fibre Channel port. For example, CL1-A(EF) indicates the CL1-A port is a Fibre Channel port.

NOTE: High-speed (2 port) mode is available only if the XP disk array has firmware version 21.06.22 or later installed.

NOTE: Auto LUN/Performance Control Base Monitor obtains statistics about traffic only on ports connected to open-system host groups. Statistics for ports connected to mainframe host groups cannot be obtained.

LDEV tab

This tab displays parity groups. Box folders appear below the Subsystem folder. The number at the end of a Box folder name indicates the number at the beginning of the parity group ID. For example, if you double-click the Box 1 folder, the tree view displays a list of parity groups that have IDs beginning with 1 (such as 1-1 and 1-2).

If you double-click a parity group, the logical volumes in the parity group appear in the table. If a parity group ID starts with the letter E, the logical volumes in the parity group are external LUs.
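The Box folder naming rule and the E prefix for external LUs can be illustrated with a short grouping function. This Python sketch is hypothetical: group_into_boxes is an illustrative name, the ID "E1-1" is an assumed example of an external parity group ID, and because the guide does not say which Box folder external parity groups appear under, the sketch simply returns them separately.

```python
from collections import defaultdict


def group_into_boxes(parity_group_ids):
    """Group parity group IDs into Box folders by the number before the hyphen.

    IDs beginning with "E" (external LUs) are returned separately; this sketch
    makes no assumption about where the tree places them.
    """
    boxes = defaultdict(list)
    external = []
    for pg_id in parity_group_ids:
        if pg_id.startswith("E"):
            external.append(pg_id)
            continue
        box_number = int(pg_id.split("-", 1)[0])
        boxes[f"Box {box_number}"].append(pg_id)
    return dict(boxes), external


if __name__ == "__main__":
    boxes, external = group_into_boxes(["1-1", "1-2", "2-1", "E1-1"])
    print(boxes)     # {'Box 1': ['1-1', '1-2'], 'Box 2': ['2-1']}
    print(external)  # ['E1-1']
```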

The parity group icon represents a single parity group or two or more connected parity groups. If two or more parity groups are connected, logical volumes can be striped across two or more drives. Therefore, connected parity groups provide faster access (particularly faster sequential access) to data.


If the parity group icon represents a single parity group 1-3, the text 1-3 is displayed to the right of the icon. If the parity group icon represents two or more connected parity groups, all connected parity groups are displayed to the right of the icon. For example, if parity group 1-3 is connected with parity group 1-4,