
Veritas Storage Foundation™ 5.0

Cluster File System

Administration Guide Extracts

for the HP Serviceguard

Storage Management Suite

Second Edition

Manufacturing Part Number: T2771-90045

April 2008


Legal Notices

© Copyright 2005-2008 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use, or

copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software,

Computer Software Documentation, and Technical Data for Commercial Items are

licensed to the U.S. Government under vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only

warranties for HP products and services are set forth in the express warranty

statements accompanying such products and services. Nothing herein should be

construed as constituting an additional warranty. HP shall not be liable for technical or

editorial errors or omissions contained herein.

Oracle® is a registered trademark of Oracle Corporation.

Copyright © 2008 Symantec Corporation. All rights reserved. Symantec, the Symantec

Logo, Veritas, and Veritas Storage Foundation are trademarks or registered trademarks

of Symantec Corporation or its affiliates in the U.S. and other countries. Other names

may be trademarks of their respective owners.


Contents

1. Technical Overview
  Overview of Cluster File System Architecture
    Cluster File System Design
    Cluster File System Failover
    Group Lock Manager
  VxFS Functionality on Cluster File Systems
    Supported Features
    Unsupported Features
  Benefits and Applications
    Advantages To Using CFS
    When To Use CFS

2. Cluster File System Architecture
  Role of Component Products
    Cluster Communication
    Membership Ports
    Veritas™ Cluster Volume Manager Functionality
  About CFS
    Cluster File System and The Group Lock Manager
    Asymmetric Mounts
    Parallel I/O
    Cluster File System Backup Strategies
    Synchronizing Time on Cluster File Systems
    Distributing Load on a Cluster
    File System Tuneables
    Single Network Link and Reliability
    I/O Error Handling Policy
  About Veritas Cluster Volume Manager Functionality
    Private and Shared Disk Groups
    Activation Modes for Shared Disk Groups
    Connectivity Policy of Shared Disk Groups
    Limitations of Shared Disk Groups

3. Cluster File System Administration
  Cluster Messaging - GAB
  Cluster Communication - LLT
  Volume Manager Cluster Functionality Overview
  Cluster File System Overview
    Cluster and Shared Mounts
    Cluster File System Primary and Cluster File System Secondary
    Asymmetric Mounts
  Cluster File System Administration
    Cluster File System Commands
    Time Synchronization for Cluster File Systems
    Growing a Cluster File System
    The fstab file
    Distributing the Load on a Cluster
  Snapshots for Cluster File Systems
    Cluster Snapshot Characteristics
    Performance Considerations
    Creating a Snapshot on a Cluster File System

4. Cluster Volume Manager Administration
  Overview of Cluster Volume Management
    Private and Shared Disk Groups
    Activation Modes for Shared Disk Groups
    Connectivity Policy of Shared Disk Groups
    Disk Group Failure Policy
    Limitations of Shared Disk Groups
  Recovery in a CVM Environment

A. Troubleshooting
  Installation Issues
    Incorrect Permissions for Root on Remote System
    Resource Temporarily Unavailable
    Inaccessible System
  Cluster File System Problems
    Unmount Failures
    Mount Failures
    Command Failures
    Performance Issues
    High Availability Issues


Preface

The Veritas Storage Foundation™ 5.0 Cluster File System Administration Guide

Extracts for the HP Serviceguard Storage Management Suite contains information

extracted from the Veritas Storage Foundation

Guide - 5.0 - HP-UX, which has been modified to support the HP Serviceguard Storage

Management Suite bundles that include the Veritas Storage Foundation™ Cluster File

System by Symantec and the Veritas Storage Foundation™ Cluster Volume Manager by

Symantec.

Printing History

The last printing date and part number indicate the current edition.

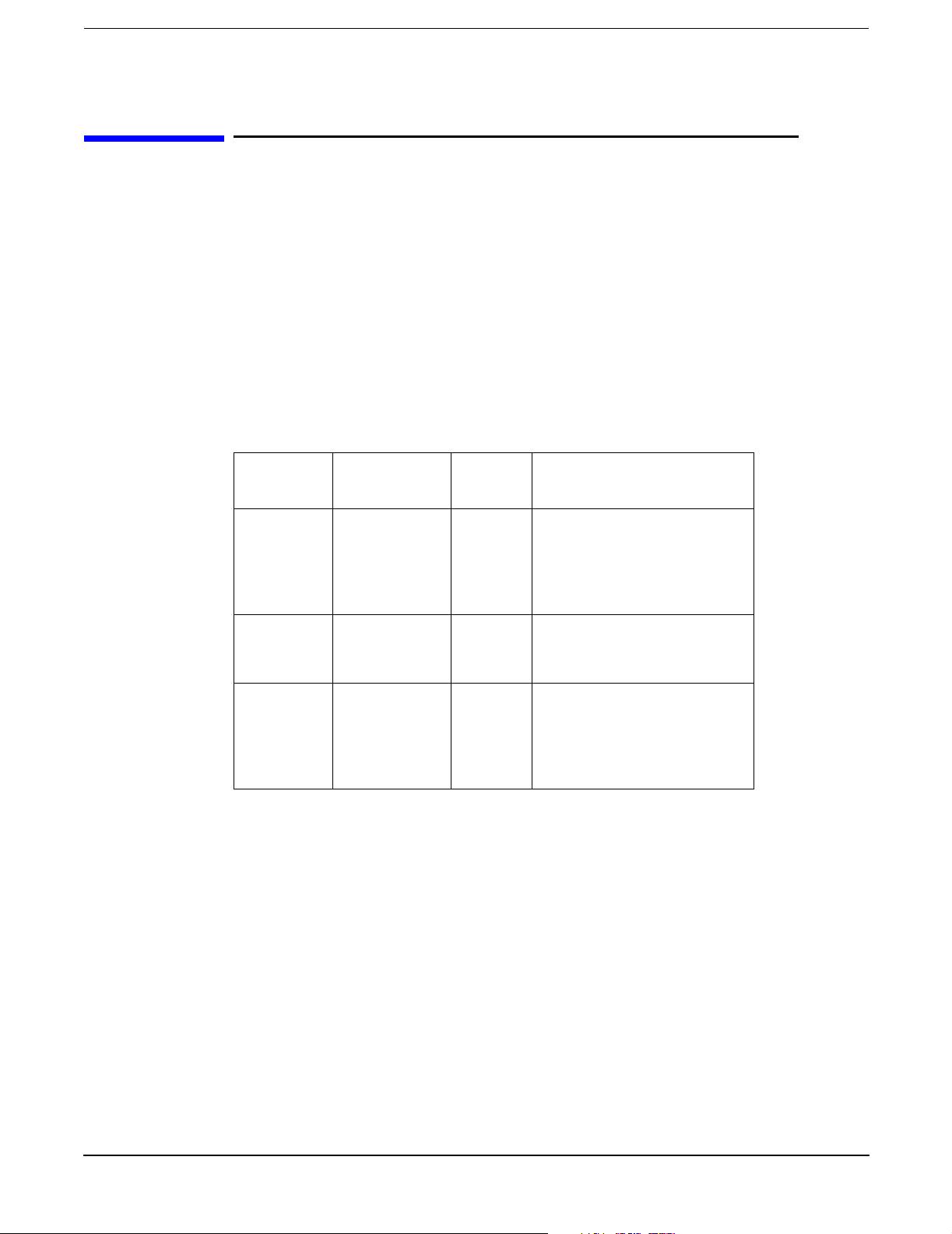

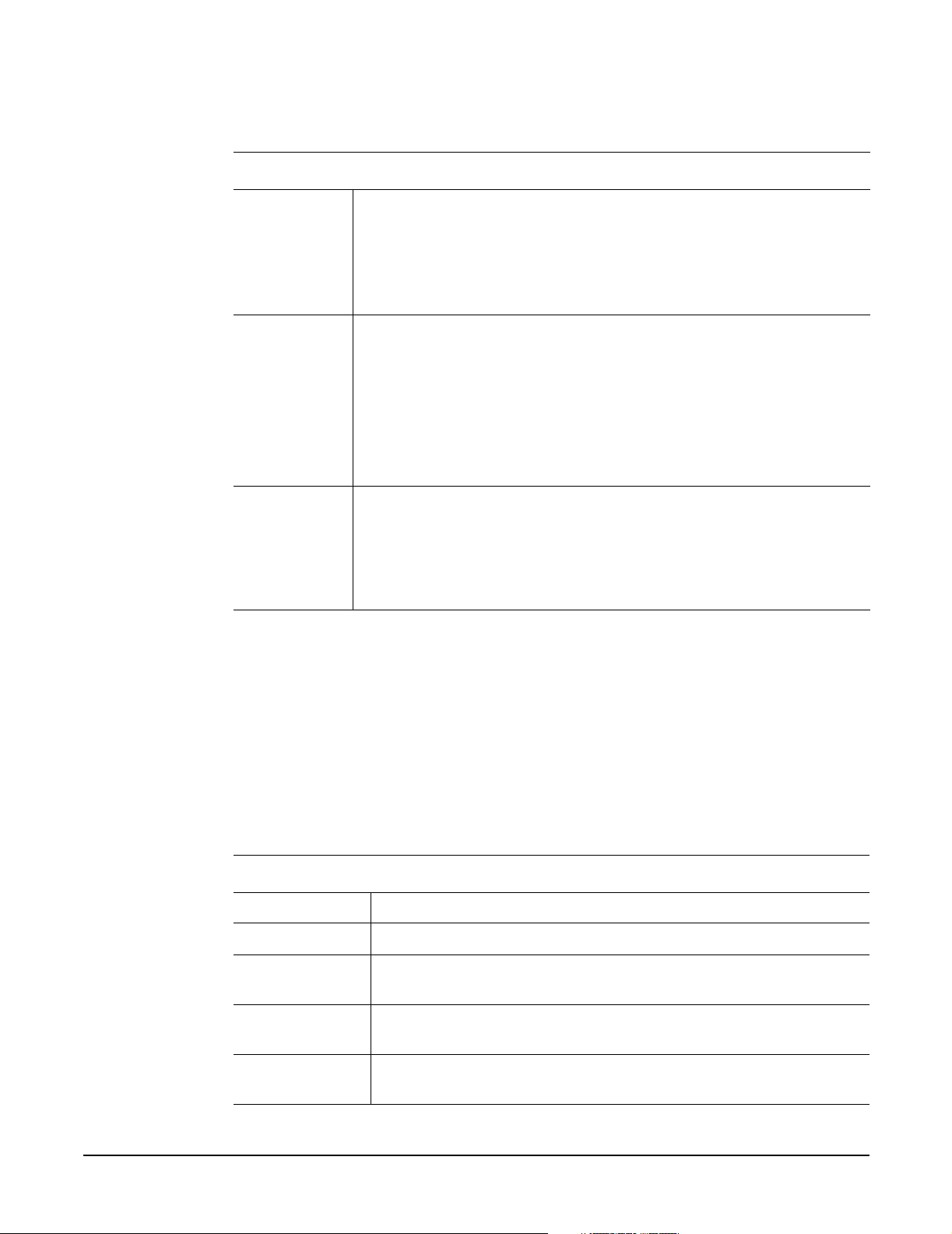

Table 1 Printing History

™

Cluster File System Administration

Printing

Date

June 2007 T2271-90034 First

January

2008

April 2008 T2271-90045 Second

Part

Number

T2271-90034 Reprint

Edition Changes

Edition

First

Edition

Edition

Original release to support

the HP Serviceguard

Storage Management Suite

A.02.00 release on HP-UX

11i v2.

CFS nested mounts are not

supported with HP

Serviceguard

Second edition to support

the HP Serviceguard

Storage Management Suite

version A.02.00 release on

HP-UX 11i v3


1 Technical Overview

This chapter includes the following topics:

• “Overview of Cluster File System Architecture” on page 8

• “VxFS Functionality on Cluster File Systems” on page 9

• “Benefits and Applications” on page 12

HP Serviceguard Storage Management Suite (SG SMS) bundles provide several options

for clustering and storage. The information in this document applies to the SG SMS

bundles that include the Veritas Storage Foundation™ 5.0 Cluster File System and

Cluster Volume Manager by Symantec:

• SG SMS version A.02.00 bundles T2775CA, T2776CA, and T2777CA for HP-UX 11i v2

• SG SMS version A.02.00 Mission Critical Operating Environment (MCOE) bundles T2795CA, T2796CA, and T2797CA for HP-UX 11i v2

• SG SMS version A.02.00 bundles T2775CB, T2776CB, and T2777CB for HP-UX 11i v3

• SG SMS version A.02.00 High Availability Operating Environment (HAOE) bundles T8685CB, T8686CB, and T8687CB for HP-UX 11i v3

• SG SMS version A.02.00 Data Center Operating Environment (DCOE) bundles T8695CB, T8696CB, and T8697CB for HP-UX 11i v3

SG SMS bundles that include the Veritas Storage Foundation Cluster File System (CFS)

allow clustered servers running HP-UX 11i to mount and use the same file system

simultaneously, as if all applications using the file system are running on the same

server. SG SMS bundles that include CFS also include the Veritas Storage Foundation

Cluster Volume Manager (CVM). CVM makes logical volumes and raw device

applications accessible throughout a cluster.

As SG SMS components, CFS and CVM are integrated with HP Serviceguard to

form a highly available clustered computing environment. SG SMS bundles

that include CFS and CVM do not include the Veritas™ Cluster Server by

Symantec (VCS). VCS functions that are required in an SG SMS environment

are performed by Serviceguard. This document focuses on CFS and CVM

administration in an SG SMS environment.

For more information on bundle features, options and applications, see the Application

Use Cases for the HP Serviceguard Storage Management Suite White Paper and the HP

Serviceguard Storage Management Suite Release Notes at:

http://www.docs.hp.com - select “High Availability”, then select “HP Serviceguard

Storage Management Suite”


Overview of Cluster File System Architecture

CFS allows clustered servers to mount and use the same file system simultaneously, as if

all applications using the file system are running on the same server. CVM makes logical

volumes and raw device applications accessible throughout a cluster.

Cluster File System Design

Beginning with version 5.0, CFS uses a symmetric architecture in which all nodes in the cluster can simultaneously function as metadata servers. CFS 5.0 retains some remnants of the master/slave node concept from version 4.1, but the functionality has changed in version 5.0 and the nodes are named differently. The first server to mount each cluster file system becomes the primary CFS node; all other nodes in the cluster are considered secondary CFS nodes. Applications access user data directly from the node they are running on. Each CFS node has its own intent log. File system operations, such as allocating or deleting files, can originate from any node in the cluster.

NOTE The master/slave node naming convention continues to be used when referring to Veritas

Cluster Volume Manager (CVM) nodes.

Cluster File System Failover

If the server designated as the CFS primary node fails, the remaining nodes in the

cluster elect a new primary node. The new primary node reads the intent log of the old

primary node and completes any metadata updates that were in process at the time of

the failure.

Failure of a secondary node does not require metadata repair, because nodes using a

cluster file system in secondary mode do not update file system metadata directly. The

Multiple Transaction Server distributes file locking ownership and metadata updates

across all nodes in the cluster, enhancing scalability without requiring unnecessary

metadata communication throughout the cluster. CFS recovery from secondary node

failure is therefore faster than from primary node failure.

Group Lock Manager

CFS uses the Veritas Group Lock Manager (GLM) to reproduce UNIX single-host file

system semantics in clusters. This is most important in write behavior. UNIX file

systems make writes appear to be atomic. This means that when an application writes a

stream of data to a file, any subsequent application that reads from the same area of the

file retrieves the new data, even if it has been cached by the file system and not yet

written to disk. Applications can never retrieve stale data, or partial results from a

previous write.

To reproduce single-host write semantics, system caches must be kept coherent and each

must instantly reflect any updates to cached data, regardless of the cluster node from

which they originate. GLM locks a file so that no other node in the cluster can

simultaneously update it, or read it before the update is complete.
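The write semantics described above can be illustrated with a single-process analogy. The sketch below is not GLM and does not use any Veritas interface; it assumes an ordinary in-process lock standing in for the cluster-wide lock, and the class and function names are hypothetical. It shows that when the writer holds the lock for the whole update, a reader never observes a partially written region.

```python
import threading


class CoherentRecord:
    """Single-process stand-in for a file region guarded by a cluster-wide lock."""

    def __init__(self, size):
        self._data = bytearray(size)
        # Assumption: a plain in-process lock plays the role GLM plays across nodes.
        self._lock = threading.Lock()

    def write(self, payload):
        # The writer holds the lock for the whole update, so readers
        # see either the old contents or the new contents, never a mix.
        with self._lock:
            for i, b in enumerate(payload):
                self._data[i] = b

    def read(self):
        # Readers wait until any in-progress update has finished.
        with self._lock:
            return bytes(self._data)


def run_demo(rounds=200, size=64):
    """Run one writer and one reader; return any torn (partial) reads seen."""
    record = CoherentRecord(size)
    torn_reads = []

    def writer():
        for n in range(rounds):
            record.write(bytes([n % 256]) * size)

    def reader():
        for _ in range(rounds):
            snapshot = record.read()
            # A snapshot with mixed byte values would mean a partial write was visible.
            if len(set(snapshot)) != 1:
                torn_reads.append(snapshot)

    threads = [threading.Thread(target=writer), threading.Thread(target=reader)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return torn_reads
```

Calling `run_demo()` returns an empty list, because every read is serialized against every write. In a cluster the same guarantee has to hold across nodes, which is the job the text assigns to GLM.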


VxFS Functionality on Cluster File Systems

The HP Serviceguard Storage Management Suite uses the Veritas File System (VxFS).

Most of the major features of VxFS local file systems are available on cluster file

systems, including:

• Extent-based space management that maps files up to 1 terabyte in size

• Fast recovery from system crashes using the intent log to track recent file system

metadata updates

• Online administration that allows file systems to be extended and defragmented

while they are in use

Supported Features

The following table lists the features and commands that are available and supported

with CFS. Every VxFS online manual page has a Cluster File System Issues section that

informs you if the command functions on cluster-mounted file systems, and indicates any

difference in behavior from how the command functions on local mounted file systems.

Table 1-1 CFS Supported Features

| Features and Commands | Supported on CFS |
|---|---|
| Quick I/O | The clusterized Oracle Disk Manager (ODM) is supported with CFS using the Quick I/O for Databases feature in the following HP Serviceguard Storage Management Suite CFS bundles for Oracle. For HP-UX 11i v2: T2776CA, T2777CA, T2796CA, and T2797CA. For HP-UX 11i v3: T2776CB, T2777CB, T8686CB, T8687CB, T8696CB, and T8697CB. |
| Storage Checkpoints | Storage Checkpoints are supported with CFS. |
| Freeze and Thaw | Synchronizing operations, which require freezing and thawing file systems, are done on a cluster-wide basis. |
| Snapshots | Snapshots are supported with CFS. |
| Quotas | Quotas are supported with CFS. |
| NFS Mounts | You can mount cluster file systems to NFS. |
| Memory Mapping | Shared memory mapping established by the mmap() function is supported on CFS. See the mmap(2) manual page. |
| Concurrent I/O | This feature extends current support for concurrent I/O to cluster file systems. Semantics for concurrent read/write access on a file in a cluster file system match those for a local mount. |
| Delaylog | The -o delaylog mount option is supported with cluster mounts. This is the default state for CFS. |
| Disk Layout Versions | CFS supports only disk layout Version 6 and Version 7. Cluster mounted file systems can be upgraded. A local mounted file system can be upgraded, unmounted, and mounted again, as part of a cluster. Use the fstyp -v special_device command to ascertain the disk layout version of a VxFS file system. Use the vxupgrade command to update the disk layout version. |
| Locking | Advisory file and record locking are supported on CFS. For the F_GETLK command, if there is a process holding a conflicting lock, the l_pid field returns the process ID of the process holding the conflicting lock. The nodeid-to-node name translation can be done by examining the /etc/llthosts file or with the fsclustadm command. Mandatory locking and deadlock detection supported by traditional fcntl locks are not supported on CFS. See the fcntl(2) manual page for more information. |
| Multiple Transaction Servers | With this feature, CFS moves from a primary/secondary architecture, where only one node in the cluster processes metadata operations (file creation, deletion, growth, etc.) to a symmetrical architecture, where all nodes in the cluster can simultaneously process metadata operations. This allows CFS to handle significantly higher metadata loads. |

See the HP Serviceguard Storage Management Suite Release Notes in the High Availability section at http://www.docs.hp.com for more information on bundle features and options.
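The Locking entry above mentions translating a node ID to a node name by examining the /etc/llthosts file. A minimal sketch of that lookup follows. It assumes the file holds one "node ID, node name" pair per line separated by whitespace; the sample contents and node names are hypothetical, and skipping blank lines and lines starting with "#" is a defensive assumption rather than documented behavior.

```python
def parse_llthosts(text):
    """Return {node_id: node_name} from llthosts-style text.

    Assumed format: one '<node id> <node name>' pair per line.
    """
    nodes = {}
    for line in text.splitlines():
        line = line.strip()
        # Defensive assumption: ignore blank lines and '#' comment lines.
        if not line or line.startswith("#"):
            continue
        node_id, name = line.split(None, 1)
        nodes[int(node_id)] = name.strip()
    return nodes


# Hypothetical file contents for a two-node cluster.
SAMPLE_LLTHOSTS = "0 node-a\n1 node-b\n"

node_names = parse_llthosts(SAMPLE_LLTHOSTS)
```

On a real system the text would come from reading /etc/llthosts, for example `parse_llthosts(open("/etc/llthosts").read())`. The fsclustadm command named in the table is the alternative when parsing the file directly is not desirable.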

Unsupported Features

Functionality that is documented as unsupported may not be expressly prevented from operating on CFS, but the actual behavior is indeterminate. HP advises against using unsupported functionality on CFS and against alternately mounting file systems that use unsupported features as local and cluster mounts.

Table 1-2 CFS Unsupported Features

| Features and Commands | Not Supported on CFS |
|---|---|
| qlog | Quick log is not supported on CFS. |
| Swap Files | Swap files are not supported on CFS. |
| The mknod command | You cannot use the mknod command to create devices on CFS. |
| Cache Advisories | Cache advisories are set with the mount command on individual file systems, but are not propagated to other nodes of a cluster. |
| Cached Quick I/O | This Quick I/O for Databases feature that caches data in the file system cache is not supported on CFS. |
| Commands that Depend on File Access Times | File access times may appear different across nodes because the atime file attribute is not closely synchronized in a cluster file system. Utilities that depend on checking access times may not function reliably. |
| Nested Mounts | HP Serviceguard does not support CFS nested mounts. |


Benefits and Applications

The following sections describe CFS benefits and some applications.

Advantages To Using CFS

CFS simplifies or eliminates system administration tasks resulting from hardware

limitations:

• The CFS single file system image administrative model simplifies administration by

allowing all file system management operations, resizing, and reorganization

(defragmentation) to be performed from any node.

• You can create and manage terabyte-sized volumes, so partitioning file systems to fit

within disk limitations is usually not necessary - only extremely large data farms

must be partitioned to accommodate file system addressing limitations. For

maximum supported file system sizes, see Supported File and File System Sizes for

HFS and JFS available at: http://docs.hp.com/en/oshpux11iv3.html#VxFS

• Keeping data consistent across multiple servers is automatic, because all servers in

a CFS cluster have access to cluster-shareable file systems. All cluster nodes have

access to the same data, and all data is accessible by all servers using single server

file system semantics.

• Applications can be allocated to different servers to balance the load or to meet other

operational requirements, because all files can be accessed by all servers. Similarly,

failover becomes more flexible, because it is not constrained by data accessibility.

• The file system recovery portion of failover time in an n-node cluster can be reduced

by a factor of n, by distributing the file systems uniformly across cluster nodes,

because each CFS file system can be on any node in the cluster.

• Enterprise storage arrays are more effective, because all of the storage capacity can

be accessed by all nodes in the cluster, but it can be managed from one source.

• Larger volumes with wider striping improve application I/O load balancing. Not only

is the I/O load of each server spread across storage resources, but with CFS shared

file systems, the loads of all servers are balanced against each other.

• Extending clusters by adding servers is easier because each new server’s storage

configuration does not need to be set up - new servers simply adopt the cluster-wide

volume and file system configuration.

• For the following HP Serviceguard Storage Management Suite CFS for Oracle

bundles, the clusterized Oracle Disk Manager (ODM) feature is available to

applications running in a cluster, enabling file-based database performance to

approach the performance of raw partition-based databases:

— T2776CA, T2777CA, T2796CA, and T2797CA

— T2776CB, T2777CB, T8686CB, T8687CB, T8696CB, and T8697CB
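The claim in the list above that distributing file systems uniformly can cut the file system recovery portion of failover time by a factor of n can be made concrete with a small calculation. The sketch below is only an illustration: it assumes recovery work is proportional to the number of file systems whose primary was on the failed node, and the mount points and node names are hypothetical.

```python
def assign_primaries(file_systems, nodes):
    """Round-robin assignment of each file system's primary role to a node."""
    return {fs: nodes[i % len(nodes)] for i, fs in enumerate(file_systems)}


def recovery_load(assignment, failed_node):
    """Count the file systems whose primary was on the failed node."""
    return sum(1 for node in assignment.values() if node == failed_node)


# Hypothetical cluster: 8 cluster file systems, 4 nodes.
file_systems = [f"/cfs{i}" for i in range(8)]
nodes = ["node1", "node2", "node3", "node4"]

# Uniform distribution versus every primary on a single node.
spread = assign_primaries(file_systems, nodes)
all_on_one = {fs: "node1" for fs in file_systems}

spread_load = recovery_load(spread, "node1")            # 2 file systems
concentrated_load = recovery_load(all_on_one, "node1")  # 8 file systems
```

With four nodes, the failed node was primary for 2 file systems instead of 8, which is the factor of n (here 4) described in the text. Real recovery time also depends on how much intent log each file system has to replay, which this count does not model.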


When To Use CFS

You should use CFS for any application that requires file sharing, such as for home

directories, web pages, and for cluster-ready applications. CFS can also be used when

you want highly available standby data in predominantly read-only environments, or

when you do not want to rely on NFS for file sharing.

Almost all applications can benefit from CFS. Applications that are not “cluster-aware”

can operate and access data from anywhere in a cluster. If multiple cluster applications

running on different servers are accessing data in a cluster file system, overall system

I/O performance improves due to the load balancing effect of having one cluster file

system on a separate underlying volume. This is automatic; no tuning or other

administrative action is required.

Many applications consist of multiple concurrent threads of execution that could run on different servers if they had a way to coordinate their data accesses. CFS provides this coordination. These applications can be made cluster-aware, allowing their instances to cooperate to balance the client and data access load and thereby scale beyond the capacity of any single server. In these applications, CFS provides shared data access, enabling application-level load balancing across cluster nodes.

• For single-host applications that must be continuously available, CFS can reduce

application failover time, because it provides an already-running file system

environment in which an application can restart after a server failure.

• For parallel applications, such as distributed database management systems and

web servers, CFS provides shared data to all application instances concurrently. CFS

also allows these applications to grow by the addition of servers, and improves their

availability by enabling them to redistribute load in the event of server failure

simply by reassigning network addresses.

• For workflow applications, such as video production, in which very large files are

passed from station to station, CFS eliminates time consuming and error prone data

copying by making files available at all stations.

• For backup, CFS can reduce the impact on operations by running on a separate

server, while accessing data in cluster-shareable file systems.

Some common applications for CFS are:

• Using CFS on file servers

Two or more servers connected in a cluster configuration (that is, connected to the

same clients and the same storage) serve separate file systems. If one of the servers

fails, the other recognizes the failure, recovers, assumes the role of primary node,

and begins responding to clients using the failed server’s IP addresses.

• Using CFS on web servers

Web servers are particularly suitable to shared clustering, because their application

is typically read-only. Moreover, with a client load balancing front end, a Web server

cluster’s capacity can be expanded by adding a server and another copy of the site. A

CFS-based cluster greatly simplifies scaling and administration for this type of

application.


2 Cluster File System Architecture

This chapter includes the following topics:

• “Role of Component Products” on page 16

• “About CFS” on page 17

• “About Veritas Cluster Volume Manager Functionality” on page 21


Role of Component Products

The HP Serviceguard Storage Management Suite bundles that include CFS also include the Veritas™ Volume Manager by Symantec (VxVM) and its cluster component, the Veritas Storage Foundation™ Cluster Volume Manager by Symantec (CVM). The following sections introduce cluster communication, membership ports, and CVM functionality.

Cluster Communication

Group Membership Atomic Broadcast (GAB) and Low Latency Transport (LLT) are protocols implemented directly on an Ethernet data link. They run on redundant data links that connect the nodes in a cluster. Serviceguard and CFS are, in most respects, two separate clusters. GAB provides membership and messaging for the clusters and their applications. GAB membership also provides orderly startup and shutdown of clusters. LLT is the cluster communication transport. The /etc/gabtab file is used to configure GAB and the /etc/llttab file is used to configure LLT. Serviceguard cmapplyconf creates these configuration files each time the CFS package is started and modifies them whenever you apply changes to the Serviceguard cluster configuration. This keeps the Serviceguard cluster synchronized with the CFS cluster.

Any direct modification of /etc/gabtab and /etc/llttab will be overwritten by cmapplyconf (or cmdeleteconf).

Membership Ports

Each component in a cluster file system registers with a membership port. The port membership identifies nodes that have formed a cluster for the individual components. Examples of port memberships include:

• port a: heartbeat membership

• port f: Cluster File System membership

• port u: temporarily used by CVM

• port v: Cluster Volume Manager membership

• port w: Cluster Volume Manager daemons on different nodes communicate with one another using this port

Port memberships are configured automatically and cannot be changed. To display port

memberships, enter the gabconfig -a command.
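For scripted checks, the output of gabconfig -a can be matched against the port list above. The sketch below assumes each membership line has the form "Port <letter> gen <generation> membership <members>"; the sample output, including the generation values, is hypothetical, and the membership string is kept as-is rather than interpreted.

```python
import re

# Port roles as listed in this section.
PORT_ROLES = {
    "a": "heartbeat membership",
    "f": "Cluster File System membership",
    "u": "temporarily used by CVM",
    "v": "Cluster Volume Manager membership",
    "w": "Cluster Volume Manager daemon communication",
}

# Assumed line format: "Port a gen 4a1c0001 membership 01"
PORT_LINE = re.compile(r"^Port\s+(\w)\s+gen\s+(\S+)\s+membership\s+(.*)$")


def parse_port_memberships(output):
    """Return {port: {'generation', 'members', 'role'}} from gabconfig-style text."""
    ports = {}
    for line in output.splitlines():
        match = PORT_LINE.match(line.strip())
        if match:
            port, generation, members = match.groups()
            ports[port] = {
                "generation": generation,
                "members": members.strip(),  # raw string, not interpreted
                "role": PORT_ROLES.get(port, "unknown"),
            }
    return ports


# Hypothetical output for a two-node cluster.
SAMPLE_OUTPUT = """\
GAB Port Memberships
===============================================================
Port a gen 4a1c0001 membership 01
Port f gen 4a1c0012 membership 01
Port v gen 4a1c000f membership 01
Port w gen 4a1c0011 membership 01
"""

ports = parse_port_memberships(SAMPLE_OUTPUT)
```

A monitoring script could, for instance, flag a node where port f is absent from the parsed result, since that would suggest the Cluster File System component has not registered its membership.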

Veritas™ Cluster Volume Manager Functionality

A VxVM cluster consists of nodes sharing a set of devices. The nodes are connected

across a network. CVM (the VxVM cluster component) presents a consistent logical view

of device configurations (including changes) on all nodes. CVM functionality makes

logical volumes and raw device applications accessible throughout a cluster. CVM

enables multiple hosts to concurrently access the logical volumes under its control. If one

node fails, the other nodes can still access the devices. You configure CVM shared storage

after the HP Serviceguard high availability (HA) cluster is configured and running.


About CFS

If the CFS primary node fails, the remaining cluster nodes elect a new primary node.

The new primary node reads the file system intent log and completes any metadata

updates that were in process at the time of the failure. Application I/O from other nodes

may block during this process and cause a delay. When the file system becomes

consistent again, application processing resumes.

Failure of a secondary node does not require metadata repair, because nodes using a

cluster file system in secondary mode do not update file system metadata directly. The

Multiple Transaction Server distributes file locking ownership and metadata updates

across all nodes in the cluster, enhancing scalability without requiring unnecessary

metadata communication throughout the cluster. CFS recovery from secondary node

failure is therefore faster than from primary node failure.

See “Distributing Load on a Cluster” on page 20.

Cluster File System and The Group Lock Manager

CFS uses the Veritas Group Lock Manager (GLM) to reproduce UNIX single-host file

system semantics in clusters. UNIX file systems make writes appear atomic. This means

when an application writes a stream of data to a file, a subsequent application reading

from the same area of the file retrieves the new data, even if it has been cached by the

file system and not yet written to disk. Applications cannot retrieve stale data or partial

results from a previous write.

To simulate single-host write semantics, system caches are kept coherent and each

node’s cache instantly reflects updates to cached data, regardless of the node from which

the update originates.

Asymmetric Mounts

A Veritas™ File System (VxFS) mounted with the mount -o cluster option is a cluster or

shared mount, as opposed to a non-shared or local mount. A file system mounted in

shared mode must be on a Veritas™ Volume Manager (VxVM) shared volume in a cluster

environment. A local mount cannot be remounted in shared mode and a shared mount

cannot be remounted in local mode. File systems in a cluster can be mounted with

different read/write options. These are called asymmetric mounts.

Asymmetric mounts allow shared file systems to be mounted with different read/write

capabilities. One node in the cluster can mount read/write, while other nodes mount

read-only.

You can specify the cluster read-write (crw) option when you first mount a file system, or

the options can be altered when doing a remount (mount -o remount). The first column

in Table 2-1 on page 18 shows the mode in which the primary node is mounted. The X

marks indicate the modes available to secondary nodes in the cluster.

See the mount_vxfs(1M) manual page for more information.

Table 2-1 Primary and Secondary Mount Options

                    Secondary: ro    Secondary: rw    Secondary: ro, crw
Primary: ro         X
Primary: rw                          X                X
Primary: ro, crw                     X                X

Mounting the primary node with only the -o cluster,ro option prevents the secondary

nodes from mounting in the read-write mode. Note that mounting the primary node with

the rw option implies read-write capability throughout the cluster.

Parallel I/O

Some distributed applications read and write to the same file concurrently from one or

more nodes in the cluster; for example, any distributed application where one thread

appends to a file and there are one or more threads reading from various regions in the

file. Several high-performance computing (HPC) applications can also benefit from this

feature, where concurrent I/O is performed on the same file. Applications do not require

any changes to use this parallel I/O feature.

Traditionally, the entire file is locked to perform I/O to a small region. To support

parallel I/O, CFS locks ranges in a file that correspond to an I/O request. Two I/O

requests conflict if at least one is a write request and its I/O range overlaps the I/O

range of the other I/O request.
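The conflict rule above can be sketched in a few lines of Python. This is an illustrative model only, not CFS code: the IORequest type and the use of half-open byte ranges are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IORequest:
    """Hypothetical I/O request covering bytes [offset, offset + length)."""
    offset: int
    length: int
    is_write: bool


def conflicts(a: IORequest, b: IORequest) -> bool:
    """Two requests conflict if at least one writes and their ranges overlap."""
    overlap = a.offset < b.offset + b.length and b.offset < a.offset + a.length
    return overlap and (a.is_write or b.is_write)


reader = IORequest(offset=0, length=4096, is_write=False)
appender = IORequest(offset=8192, length=4096, is_write=True)
overwriter = IORequest(offset=2048, length=1024, is_write=True)

print(conflicts(reader, appender))    # disjoint ranges
print(conflicts(reader, overwriter))  # overlapping ranges, one writer
```

In this model the reader and the appender could proceed concurrently, while the reader and the overwriter would have to be serialized.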

The parallel I/O feature enables I/O to a file by multiple threads concurrently, as long as

the requests do not conflict. Threads issuing concurrent I/O requests can execute on the

same node, or on a different node in the cluster.

An I/O request that requires allocation is not executed concurrently with other I/O

requests. Note that when a writer is extending the file and readers are lagging behind,

block allocation is not necessarily done for each extending write.

If the file size can be predetermined, the file can be preallocated to avoid block

allocations during I/O. This improves the concurrency of applications performing parallel

I/O to the file. Parallel I/O also avoids unnecessary page cache flushes and invalidations

using range locking, without compromising the cache coherency across the cluster.

For applications that update the same file from multiple nodes, the -nomtime mount

option provides further concurrency. Modification and change times of the file are not

synchronized across the cluster, which eliminates the overhead of increased I/O and

locking. The timestamp seen for these files from a node may not have the time updates

that happened in the last 60 seconds.

Cluster File System Backup Strategies

The same backup strategies used for standard VxFS can be used with CFS, because the

APIs and commands for accessing the namespace are the same. File system checkpoints

provide an on-disk, point-in-time copy of the file system. HP recommends file system

checkpoints over file system snapshots (described below) for obtaining a frozen image of

the cluster file system, because the performance characteristics of a checkpointed file

system are better in certain I/O patterns.

NOTE See the Veritas File System Administrator's Guide, HP-UX, 5.0 for a detailed explanation

and comparison of checkpoints and snapshots.

A file system snapshot is another method for obtaining a file system on-disk frozen

image. The frozen image is non-persistent, in contrast to the checkpoint feature. A

snapshot can be accessed as a read-only mounted file system to perform efficient online

backups. Snapshots implement “copy-on-write” semantics that incrementally copy data

blocks when they are overwritten on the “snapped” file system. Snapshots for cluster file

systems extend the same copy-on-write mechanism for the I/O originating from any

cluster node.
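The copy-on-write idea can be illustrated with a small in-memory model. This is only a sketch of the general technique, not the VxFS implementation: the class names are hypothetical, and a Python list stands in for on-disk blocks.

```python
class SnappedFileSystem:
    """Toy block store standing in for the snapped file system."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def write(self, index, data):
        # Before overwriting, let each active snapshot save the old block.
        for snap in self.snapshots:
            snap.preserve(index, self.blocks[index])
        self.blocks[index] = data


class Snapshot:
    """Frozen image that copies a block only when it is first overwritten."""

    def __init__(self, source):
        self.source = source
        self.saved = {}
        source.snapshots.append(self)

    def preserve(self, index, old_data):
        # Keep only the first (point-in-time) version of each block.
        self.saved.setdefault(index, old_data)

    def read(self, index):
        # Unchanged blocks are read through from the snapped file system.
        return self.saved.get(index, self.source.blocks[index])


fs = SnappedFileSystem(["a0", "b0", "c0"])
snap = Snapshot(fs)
fs.write(1, "b1")
fs.write(1, "b2")

print([snap.read(i) for i in range(3)])  # point-in-time image
print(fs.blocks)                         # current contents
```

Only the block that was overwritten is copied. The snapshot reads every other block directly from the snapped file system, which is why the copy is incremental.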

Mounting a snapshot file system for backups increases the load on the system because of

the resources used to perform copy-on-writes and to read data blocks from the snapshot.

In this situation, cluster snapshots can be used to do off-host backups. Off-host backups

reduce the load of a backup application on the primary server. Overhead from remote

snapshots is small when compared to overall snapshot overhead. Therefore, running a

backup application by mounting a snapshot from a relatively less loaded node is

beneficial to overall cluster performance.

There are several characteristics of a cluster snapshot, including:

• A snapshot for a cluster mounted file system can be mounted on any node in a

cluster. The file system can be a primary, secondary, or secondary-only. A stable

image of the file system is provided for writes from any node.

• Multiple snapshots of a cluster file system can be mounted on the same or different

cluster nodes.

• A snapshot is accessible only on the node it is mounted on. The snapshot device

cannot be mounted on two nodes simultaneously.

• The device for mounting a snapshot can be a local disk or a shared volume. A shared

volume is used exclusively by a snapshot mount and is not usable from other nodes

as long as the snapshot is active on that device.

• On the node mounting a snapshot, the snapped file system cannot be unmounted

while the snapshot is mounted.

• A CFS snapshot ceases to exist if it is unmounted or the node mounting the snapshot

fails. However, a snapshot is not affected if a node leaves or joins the cluster.

• A snapshot of a read-only mounted file system cannot be taken. It is possible to

mount a snapshot of a cluster file system only if the snapped cluster file system is

mounted with the crw option.

In addition to file-level frozen images, there are volume-level alternatives available for

shared volumes using mirror split and rejoin. Features such as Fast Mirror Resync and

Space Optimized snapshot are also available.

Synchronizing Time on Cluster File Systems

CFS requires that the system clocks on all nodes are synchronized using some external

component such as the Network Time Protocol (NTP) daemon. If the nodes are not in

sync, timestamps for inode change (ctime) and modification (mtime) may not be consistent

with the sequence in which operations actually happened.

Distributing Load on a Cluster

Distributing primary node designations across the cluster balances the load on the

cluster. For example, if you have eight file systems and four nodes, designating two file systems

per node as the primary is beneficial. The first node that mounts a file system becomes

the primary for that file system.
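The eight-file-system example can be planned with a simple round-robin assignment. The sketch below is illustrative only: the mount points and node names are hypothetical, and the function only computes a plan. The plan would still have to be carried out, for instance by mounting each file system first on its planned node.

```python
from collections import defaultdict
from itertools import cycle


def assign_primaries(file_systems, nodes):
    """Spread primary designations across nodes in round-robin order."""
    plan = defaultdict(list)
    for fs, node in zip(file_systems, cycle(nodes)):
        plan[node].append(fs)
    return dict(plan)


# Hypothetical mount points and node names.
mounts = [f"/mnt/fs{i}" for i in range(1, 9)]
nodes = ["node0", "node1", "node2", "node3"]

for node, primaries in assign_primaries(mounts, nodes).items():
    print(node, primaries)
```

With eight file systems and four nodes, each node ends up as the planned primary for two file systems.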

You can also use the fsclustadm command to designate a CFS primary. The fsclustadm

setprimary command can be used to change the primary. This change to the primary is

not persistent across unmounts or reboots. The change is in effect as long as one or more

nodes in the cluster have the file system mounted. The primary selection policy can also

be defined by an HP Serviceguard attribute associated with the CFS mount resource.

File System Tuneables

Tuneable parameters are updated at the time of mount using the tunefstab file or

vxtunefs command. The file system tunefs parameters are set to be identical on all

nodes by propagating the parameters to each cluster node. When the file system is

mounted on the node, the tunefs parameters of the primary node are used. The

tunefstab file on the node is used if this is the first node to mount the file system. HP

recommends that this file be identical on each node.

Single Network Link and Reliability

In some environments, you may prefer using a single private link, or a public network, for

connecting nodes in a cluster, despite the loss of redundancy if a network failure occurs.

The benefits of this approach include simpler hardware topology and lower costs;

however, there is obviously a tradeoff with high availability.

I/O Error Handling Policy

I/O errors can occur for several reasons, including failures of Fibre Channel links,

host-bus adapters, and disks. CFS disables the file system on the node that is

encountering I/O errors, but the file system remains available from other nodes. After

the I/O error is fixed, the file system can be forcibly unmounted and the mount resource

can be brought online from the disabled node to reinstate the file system.

About Veritas Cluster Volume Manager Functionality

CVM supports up to 8 nodes in a cluster to simultaneously access and manage a set of

disks under VxVM control (VM disks). The same logical view of the disk configuration

and any changes are available on each node. When the cluster functionality is enabled,

all cluster nodes can share VxVM objects. Features provided by the base volume

manager, such as mirroring, fast mirror resync, and dirty region logging, are also

supported in the cluster environment.

NOTE RAID-5 volumes are not supported on a shared disk group.

To implement cluster functionality, VxVM works together with the cmvx daemon

provided by HP. The cmvx daemon informs VxVM of changes in cluster membership.

Each node starts up independently and has its own copies of HP-UX, Serviceguard, and

CVM. When a node joins a cluster it gains access to shared disks. When a node leaves a

cluster, it no longer has access to shared disks. A node joins a cluster when Serviceguard

is started on that node.

Figure 2-1 illustrates a simple cluster consisting of four nodes with similar or identical

hardware characteristics (CPUs, RAM and host adapters), and configured with identical

software (including the operating system). The nodes are fully connected by a private

network and they are also separately connected to shared external storage (either disk

arrays or JBODs) via Fibre Channel. Each node has two independent paths to these

disks, which are configured in one or more cluster-shareable disk groups.

The private network allows the nodes to share information about system resources and

about each other’s state. Using the private network, any node can recognize which nodes

are currently active, which are joining or leaving the cluster, and which have failed. The

private network requires at least two communication channels to provide redundancy

against one of the channels failing. If only one channel is used, its failure will be

indistinguishable from node failure—a condition known as network partitioning.

Figure 2-1 Example of a Four-Node Cluster

[Figure labels: Redundant Private Network; Node 0 (Master); Node 1, Node 2, and Node 3 (Slave); Redundant Fibre Channel Connectivity; Cluster-Shareable Disks; Cluster-Shareable Disk Groups]

To the cmvx daemon, all nodes are the same. VxVM objects configured within shared disk

groups can potentially be accessed by all nodes that join the cluster. However, the cluster

functionality of VxVM requires one node to act as the master node; all other nodes in the

cluster are slave nodes. Any node is capable of being the master node, which is

responsible for coordinating certain VxVM activities.

NOTE You must run commands that configure or reconfigure VxVM objects on the master node.

Tasks that must be initiated from the master node include setting up shared disk groups

and creating and reconfiguring volumes.

VxVM designates the first node to join a cluster as the master node. If the master node

leaves the cluster, one of the slave nodes is chosen to be the new master node. In the

preceding example, node 0 is the master node and nodes 1, 2 and 3 are slave nodes.

Private and Shared Disk Groups

There are two types of disk groups:

• Private (non-CFS) disk groups, which belong to only one node.

A private disk group is only imported by one system. Disks in a private disk group

may be physically accessible from one or more systems, but import is restricted to

one system only. The root disk group is always a private disk group.

• Shared (CFS) disk groups, which are shared by all nodes.

A shared (or cluster-shareable) disk group is imported by all cluster nodes. Disks in a

shared disk group must be physically accessible from all systems that may join the

cluster.

Disks in a shared disk group are accessible from all nodes in a cluster, allowing

applications on multiple cluster nodes to simultaneously access the same disk. A volume

in a shared disk group can be simultaneously accessed by more than one node in the

cluster, subject to licensing and disk group activation mode restrictions.

You can use the vxdg command to designate a disk group as cluster-shareable. When a

disk group is imported as cluster-shareable for one node, each disk header is marked

with the cluster ID. As each node subsequently joins the cluster, it recognizes the disk

group as being cluster-shareable and imports it. You can also import or deport a shared

disk group at any time; the operation takes place in a distributed fashion on all nodes.

See “Cluster File System Commands” on page 34 for more information.

Each physical disk is marked with a unique disk ID. When cluster functionality for

VxVM starts on the master node, it imports all shared disk groups (except for any that

have the noautoimport attribute set). When a slave node tries to join a cluster, the

master node sends it a list of the disk IDs that it has imported, and the slave node checks

to see if it can access all of them. If the slave node cannot access one of the listed disks, it

abandons its attempt to join the cluster. If it can access all of the listed disks, it imports

the same shared disk groups as the master node and joins the cluster. When a node

leaves the cluster, it deports all of its imported shared disk groups, but they remain

imported on the surviving nodes.
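The join check described above amounts to a set comparison. The following sketch is a simplified model, not VxVM code: the function name and the disk IDs are hypothetical.

```python
def can_join(master_disk_ids, accessible_disk_ids):
    """Return (ok, missing): the node may join only if no listed disk is missing."""
    missing = sorted(set(master_disk_ids) - set(accessible_disk_ids))
    return (not missing, missing)


# Hypothetical disk IDs sent by the master node.
master_list = ["disk-01", "disk-02", "disk-03"]

# A slave node that sees every listed disk (plus an unrelated one) may join.
print(can_join(master_list, ["disk-01", "disk-02", "disk-03", "disk-09"]))

# A slave node that cannot access disk-02 abandons the join attempt.
print(can_join(master_list, ["disk-01", "disk-03"]))
```

Extra disks visible to the slave node do not matter in this model. A single inaccessible disk from the master's list is enough to block the join.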

Reconfiguring a shared disk group is performed with the co-operation of all nodes.

Configuration changes to the disk group happen simultaneously on all nodes and the

changes are identical. Such changes are atomic in nature, which means that they either

occur simultaneously on all nodes, or not at all.

Whether all members of the cluster have simultaneous read and write access to a

cluster-shareable disk group depends on its activation mode setting as described in

Table 2-2, “Activation Modes for Shared Disk Groups.” The data contained in a

cluster-shareable disk group is available as long as at least one node is active in the

cluster. The failure of a cluster node does not affect access by the remaining active nodes.

Regardless of which node accesses a cluster-shareable disk group, the configuration of

the disk group looks the same.

NOTE Applications running on each node can access the data on the VM disks simultaneously.

VxVM does not protect against simultaneous writes to shared volumes by more than one

node. It is assumed that applications control consistency (by using Veritas Storage

Foundation Cluster File System or a distributed lock manager, for example).

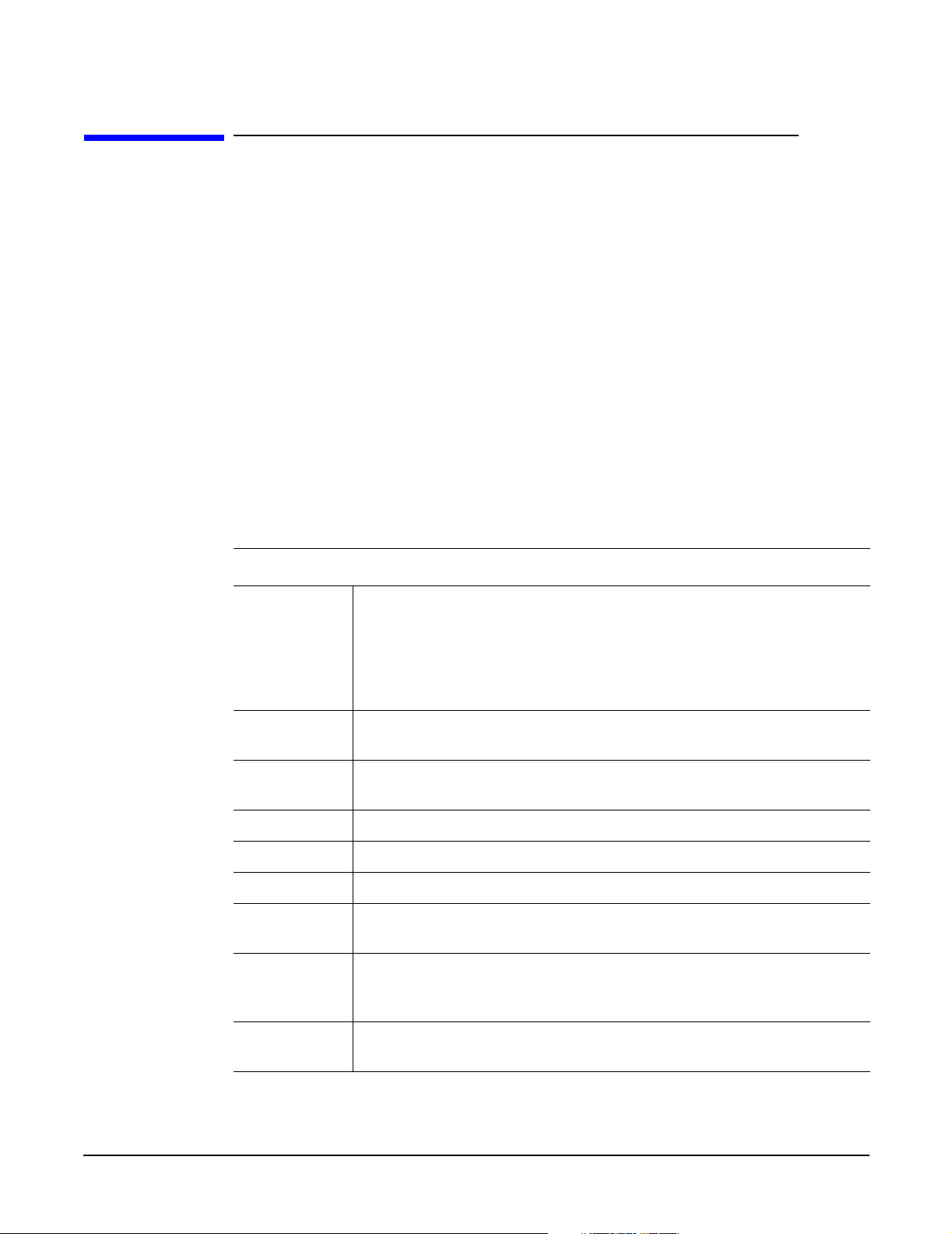

Activation Modes for Shared Disk Groups

A shared disk group must be activated on a node in order for the volumes in the disk

group to become accessible for application I/O from that node. The ability of applications

to read from, or to write to, volumes is dictated by the activation mode of a shared disk

group. Valid activation modes for a shared disk group are exclusivewrite, readonly,

sharedread, sharedwrite, and off (inactive). These activation modes are described in

Table 2-2, “Activation Modes for Shared Disk Groups.”

NOTE The default activation mode for shared disk groups is off (inactive).

Special use clusters, such as high availability (HA) applications and off-host backup, can

employ disk group activation to explicitly control volume access from different nodes in

the cluster.

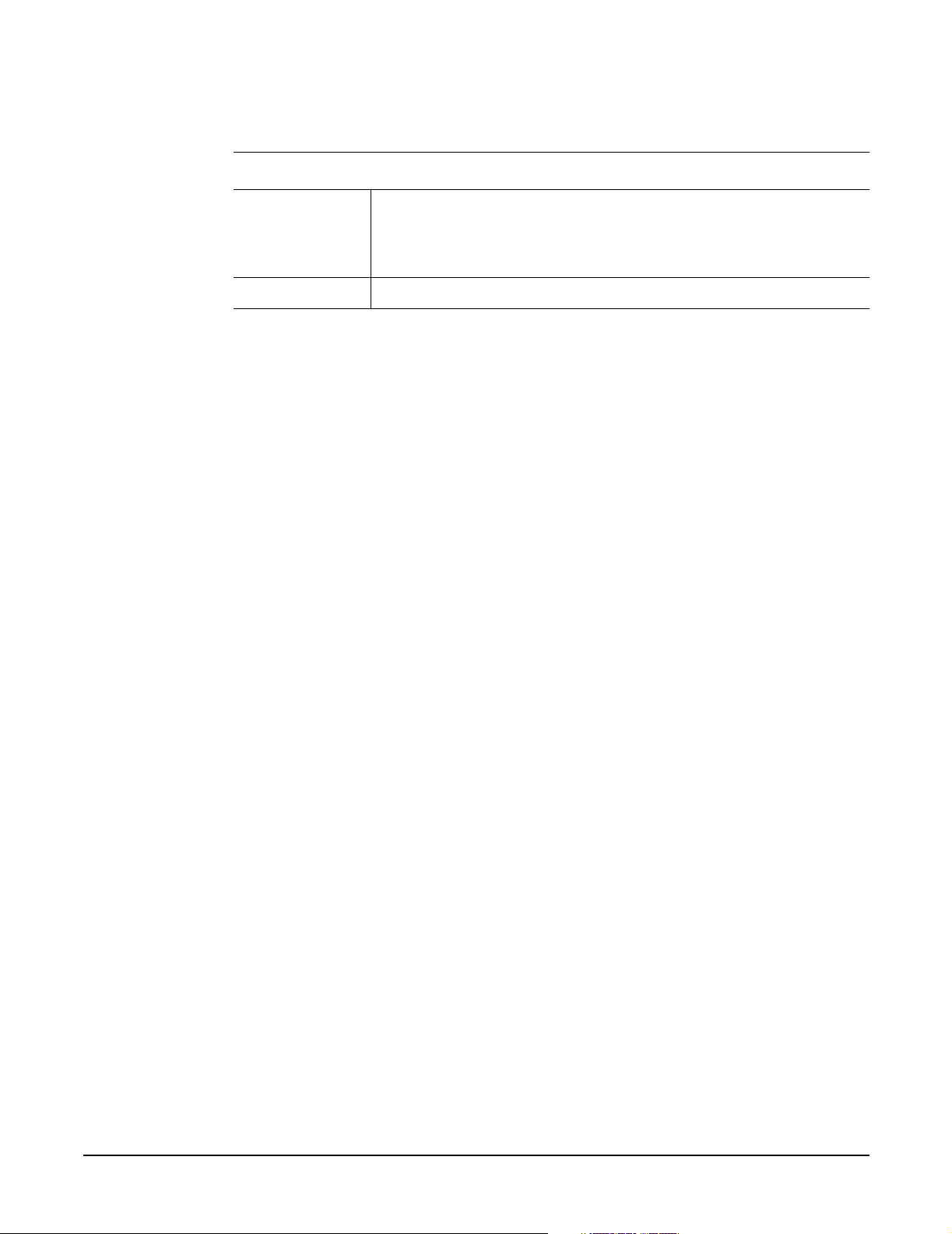

Table 2-2 Activation Modes for Shared Disk Groups

Activation Mode    Description

exclusivewrite     The node has exclusive write access to the disk group. No other
                   node can activate the disk group for write access.

readonly           The node has read access to the disk group and denies write
                   access for all other nodes in the cluster. The node has no write
                   access to the disk group. Attempts to activate a disk group for
                   either of the write modes on other nodes fail.

sharedread         The node has read access to the disk group. The node has no
                   write access to the disk group; however, other nodes can obtain
                   write access.

sharedwrite        The node has write access to the disk group.

off                The node has neither read nor write access to the disk group.
                   Query operations on the disk group are permitted.

The following table summarizes the allowed and conflicting activation modes for shared

disk groups:

Table 2-3 Allowed and conflicting activation modes

Disk group         Attempt to activate disk group on another node as:
activated in
cluster as:        exclusivewrite   readonly    sharedread   sharedwrite

exclusivewrite     Fails            Fails       Succeeds     Fails
readonly           Fails            Succeeds    Succeeds     Fails
sharedread         Succeeds         Succeeds    Succeeds     Succeeds
sharedwrite        Fails            Fails       Succeeds     Succeeds

Shared disk groups can be automatically activated in any mode during disk group

creation or during manual or auto-import. To control auto-activation of shared disk

groups, the defaults file /etc/default/vxdg must be created.

The defaults file /etc/default/vxdg must contain the following lines:

enable_activation=true

default_activation_mode=activation-mode

The activation-mode is one of exclusivewrite, readonly, sharedread, sharedwrite, or

off.

When a shared disk group is created or imported, it is activated in the specified mode.

When a node joins the cluster, all shared disk groups accessible from the node are

activated in the specified mode.

NOTE The activation mode of a disk group controls volume I/O from different nodes in the

cluster. It is not possible to activate a disk group on a given node if it is activated in a

conflicting mode on another node in the cluster. When enabling activation using the

defaults file, it is recommended that this file be made identical on all nodes in the

cluster. Otherwise, the results of activation are unpredictable.

If the defaults file is edited while the vxconfigd daemon is already running, the

vxconfigd process must be restarted for the changes in the defaults file to take effect.
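Because the defaults file should be identical on every node, a small check of its contents can be useful before copying it around. The sketch below is a hypothetical helper, not part of VxVM. It validates a text string rather than reading /etc/default/vxdg, and it assumes the file holds only simple key=value lines, which the text above does not confirm.

```python
VALID_MODES = {"exclusivewrite", "readonly", "sharedread", "sharedwrite", "off"}


def parse_vxdg_defaults(text):
    """Parse key=value lines and validate the two activation settings."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key=value line: {line!r}")
        settings[key.strip()] = value.strip()
    if settings.get("enable_activation") != "true":
        raise ValueError("enable_activation=true is required")
    mode = settings.get("default_activation_mode")
    if mode not in VALID_MODES:
        raise ValueError(f"invalid default_activation_mode: {mode!r}")
    return settings


# Sample contents; sharedwrite is chosen only for illustration.
sample = "enable_activation=true\ndefault_activation_mode=sharedwrite\n"
print(parse_vxdg_defaults(sample)["default_activation_mode"])
```

Comparing the parsed settings from each node is one way to confirm that the files agree.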

If the default activation mode is anything other than off, an activation following a cluster

join, or a disk group creation or import can fail if another node in the cluster has

activated the disk group in a conflicting mode.
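The allowed and conflicting combinations in Table 2-3 can be expressed as a lookup, which may help when planning activation modes for several nodes. This is a transcription of the table for illustration, not a VxVM interface, and the function name is hypothetical.

```python
MODES = ("exclusivewrite", "readonly", "sharedread", "sharedwrite")

# Rows: mode already active in the cluster. Columns: mode attempted on
# another node, in MODES order. Values transcribed from Table 2-3.
ALLOWED = {
    "exclusivewrite": (False, False, True, False),
    "readonly":       (False, True, True, False),
    "sharedread":     (True, True, True, True),
    "sharedwrite":    (False, False, True, True),
}


def activation_succeeds(active_mode, attempted_mode):
    """Return True if Table 2-3 lists the attempted activation as 'Succeeds'."""
    return ALLOWED[active_mode][MODES.index(attempted_mode)]


print(activation_succeeds("exclusivewrite", "sharedread"))
print(activation_succeeds("sharedwrite", "readonly"))
```

The first call models a node asking for sharedread while another node holds exclusivewrite, which the table allows. The second models a readonly request against an existing sharedwrite activation, which the table rejects.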

To display the activation mode for a shared disk group, use the vxdg list diskgroup

command.

You can also use the vxdg command to change the activation mode on a shared disk

group.

Connectivity Policy of Shared Disk Groups

The nodes in a cluster must always agree on the status of a disk. In particular, if one

node cannot write to a given disk, all nodes must stop accessing that disk before the

results of the write operation are returned to the caller. Therefore, if a node cannot

contact a disk, it should contact another node to check on the disk’s status. If the disk

fails, no node can access it and the nodes can agree to detach the disk. If the disk does

not fail, but rather the access paths from some of the nodes fail, the nodes cannot agree

on the status of the disk. Either of the following policies for resolving this type of

discrepancy may be applied:

• Under the global connectivity policy, the detach occurs cluster-wide (globally) if any

node in the cluster reports a disk failure. This is the default policy.

• Under the local connectivity policy, in the event of disks failing, the failures are

confined to the particular nodes that saw the failure. However, this policy is not

highly available because it fails the node even if one of the mirrors is available. Note

that an attempt is made to communicate with all nodes in the cluster to ascertain

the disks’ usability. If all nodes report a problem with the disk, a cluster-wide detach

occurs.
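The difference between the two policies can be modeled as a function from the set of nodes reporting a failure to the set of nodes affected by it. This is a deliberately simplified sketch: the function and node names are hypothetical, and it ignores mirrors and the communication step between nodes.

```python
def affected_nodes(policy, all_nodes, reporting_nodes):
    """Return the set of nodes for which the disk failure takes effect."""
    all_nodes = set(all_nodes)
    reporting = set(reporting_nodes) & all_nodes
    if not reporting:
        return set()
    if policy == "global":
        # Any single report detaches the disk cluster-wide.
        return all_nodes
    if policy == "local":
        # Confined to the reporting nodes, unless every node reports it.
        return all_nodes if reporting == all_nodes else reporting
    raise ValueError(f"unknown policy: {policy!r}")


cluster = {"node0", "node1", "node2", "node3"}
print(sorted(affected_nodes("global", cluster, {"node2"})))
print(sorted(affected_nodes("local", cluster, {"node2"})))
print(sorted(affected_nodes("local", cluster, cluster)))
```

Under the local policy a failure seen by one node stays on that node, and it becomes a cluster-wide detach only when all nodes report it.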

Limitations of Shared Disk Groups

The cluster functionality of VxVM does not support RAID-5 volumes, or task monitoring

for cluster-shareable disk groups. These features can, however, be used in private disk

groups that are attached to specific nodes of a cluster. Online relayout is supported

provided that it does not involve RAID-5 volumes.

The root disk group cannot be made cluster-shareable. It must be private.

Only raw device access may be performed via the cluster functionality of VxVM. It does

not support shared access to file systems in shared volumes unless the appropriate

software, such as the HP Serviceguard Storage Management Suite, is installed and

configured.

If a shared disk group contains unsupported objects, deport it and then re-import the

disk group as private on one of the cluster nodes. Reorganize the volumes into layouts

that are supported for shared disk groups, and then deport and re-import the disk group

as shared.

3 Cluster File System Administration

The following HP Serviceguard Storage Management Suite bundles include the Veritas

Storage Foundation™ 5.0 Cluster File System (CFS) and Cluster Volume Manager

(CVM) by Symantec:

• Bundles T2775CA, T2776CA, and T2777CA of the HP Serviceguard Storage

Management Suite version A.02.00 for HP-UX 11i v2

• Mission Critical Operating Environment (MCOE) bundles T2795CA, T2796CA, and

T2797CA of the HP Serviceguard Storage Management Suite version A.02.00 for

HP-UX 11i v2

• Bundles T2775CB, T2776CB, and T2777CB of the HP Serviceguard Storage

Management Suite version A.02.00 for HP-UX 11i v3

• High Availability Operating Environment (HAOE) bundles T8680CB, T8681CB, and

T8682CB of the HP Serviceguard Storage Management Suite version A.02.00 for

HP-UX 11i v3

• Data Center Operating Environment (DCOE) bundles T8684CB, T8685CB, and

T8686CB of the HP Serviceguard Storage Management Suite version A.02.00 for

HP-UX 11i v3

CFS enables multiple hosts to mount and perform file operations concurrently on the

same storage device. To operate in a cluster configuration, CFS requires the integrated

set of Veritas™ products by Symantec that are included in the HP Serviceguard Storage

Management Suite.

CFS includes Low Latency Transport (LLT) and Group Membership Atomic Broadcast

(GAB) packages. The LLT package provides node-to-node communications and monitors

network communications. The GAB package provides cluster state, configuration, and

membership services. It also monitors the heartbeat links between systems to ensure

that they are active.

There are other packages provided by HP Serviceguard that provide application failover

support when you install CFS as part of a high availability solution.

CFS also requires the cluster volume manager (CVM) component of the Veritas™

Volume Manager (VxVM) to create the shared volumes necessary for mounting cluster

file systems.

NOTE To install and administer CFS, you should have a working knowledge of cluster file

systems. To install and administer application failover functionality, you should have a

working knowledge of HP Serviceguard. For more information on these products, refer to

the HP Serviceguard documentation and the Veritas™ Volume Manager (VxVM)

documentation available in the /usr/share/doc/vxvm directory after you install CFS.

You can also access this documentation at: http://www.docs.hp.com

The HP Serviceguard Storage Management Suite Release Notes contain an extensive list

of release-specific HP and Veritas™ documentation with part numbers to facilitate

search and location at: http://www.docs.hp.com

Topics in this chapter include:

• “Cluster Messaging - GAB” on page 29

• “Cluster Communication - LLT” on page 30

• “Volume Manager Cluster Functionality Overview” on page 31

• “Cluster File System Overview” on page 32

• “Cluster File System Administration” on page 34

• “Snapshots for Cluster File Systems” on page 37

Cluster Messaging - GAB

GAB provides membership and messaging services for clusters and for groups of

applications running on a cluster. The GAB membership service provides orderly startup

and shutdown of clusters.

GAB is automatically configured initially when Serviceguard is installed, and GAB is

also automatically configured each time the CFS package is started.

For more information, see the gabconfig(1M) manual page.

Cluster Communication - LLT

LLT provides kernel-to-kernel communications and monitors network communications.

The LLT files /etc/llthosts and /etc/llttab can be configured to set system IDs

within a cluster, set cluster IDs for multiple clusters, and tune network parameters such

as heartbeat frequency. LLT is implemented so events such as state changes are

reflected quickly, which in turn enables fast responses.

LLT is automatically configured initially when Serviceguard is installed, and LLT is also

automatically configured each time the CFS package is started.

See the llttab(4) manual page.

Volume Manager Cluster Functionality Overview

The Veritas™ Cluster Volume Manager (CVM) component of the Veritas™ Volume

Manager by Symantec (VxVM) allows multiple hosts to concurrently access and manage

a given set of logical devices under VxVM control. A VxVM cluster is a set of hosts

sharing a set of devices; each host is a node in the cluster. The nodes are connected

across a network. If one node fails, other nodes can still access the devices. CVM

presents the same logical view of device configurations and changes on all nodes.

You configure CVM shared storage after HP Serviceguard sets up a cluster

configuration.

See “Cluster File System Administration” on page 34.

Cluster File System Overview

With respect to each shared file system, a cluster includes one primary node, and up to 7

secondary nodes. The primary and secondary designation of nodes is specific to each file

system, not the hardware. It is possible for the same cluster node to be primary for one

shared file system, while at the same time it is secondary for another shared file system.

Distribution of file system primary node designation to balance the load on a cluster is a

recommended administrative policy.

See “Distributing Load on a Cluster” on page 20.

For CVM, a single cluster node is the master node for all shared disk groups and shared

volumes in the cluster.

Cluster and Shared Mounts

A VxFS file system that is mounted with the mount -o cluster option is called a cluster or

shared mount, as opposed to a non-shared or local mount. A file system mounted in shared

mode must be on a VxVM shared volume in a cluster environment. A local mount cannot be

remounted in shared

mode and a shared mount cannot be remounted in local mode. File systems in a cluster

can be mounted with different read-write options. These are called asymmetric mounts.

Cluster File System Primary and Cluster File System Secondary

Both primary and secondary nodes handle metadata intent logging for a cluster file

system. The first node to mount a cluster file system is called the primary node; the

other nodes are called secondary nodes. If a primary node fails, an internal election

process determines which of the secondary nodes becomes the new primary node.

Use the following command to determine which node is primary:

# fsclustadm -v showprimary mount_point

Use the following command to designate a primary node:

# fsclustadm -v setprimary mount_point

Asymmetric Mounts

Asymmetric mounts allow shared file systems to be mounted with different read/write

capabilities. One node in the cluster can mount read-write, while other nodes mount

read-only.

You can specify the cluster read-write (crw) option when you first mount the file system.

The first column in the following table shows the mode in which the primary is mounted.

The “X” marks indicate the modes available to secondary nodes in the cluster.

See the mount_vxfs(1M) manual page for more information.

Table 3-1 Primary and Secondary Mount Options

                    Secondary: ro    Secondary: rw    Secondary: ro, crw
Primary: ro         X
Primary: rw                          X                X
Primary: ro, crw                     X                X

Mounting the primary node with only the -o cluster,ro option prevents the secondary

nodes from mounting in the read-write mode. Note that mounting the primary node with

the rw option implies read-write capability throughout the cluster.

Cluster File System Administration

This section describes some of the major aspects of cluster file system administration

and the ways that it differs from single-host VxFS administration.

Cluster File System Commands

The CFS commands are:

• cfscluster—cluster configuration command

• cfsmntadm—adds, deletes, modifies, and sets policy on cluster mounted file systems

• cfsdgadm—adds or deletes shared disk groups to/from a cluster configuration

• cfsmount/cfsumount—mounts/unmounts a cluster file system on a shared volume

IMPORTANT Once disk group and mount point multi-node packages are created with HP
Serviceguard, it is critical to use the CFS commands, including cfsdgadm, cfsmntadm,
cfsmount, and cfsumount. Using the HP-UX mount and umount commands instead can
cause serious problems, such as writes going to the local file system instead of the
cluster file system. You must not use the HP-UX mount command to provide or remove
access to a shared file system in a CFS environment (for example, mount -o cluster,
dbed_chkptmount, or sfrac_chkptmount). These non-CFS commands could conflict with
subsequent CFS command operations on the file system or the Serviceguard packages.
HP-UX mount commands do not create an appropriate multi-node package, so cluster
packages will not be aware of file system changes. Use cfsmount and cfsumount instead.

The fsclustadm and fsadm commands are useful for configuring cluster file systems.

• fsclustadm

The fsclustadm command reports various attributes of a cluster file system. Using

fsclustadm you can show and set the primary node in a cluster, translate node IDs

to host names and vice versa, list all nodes that currently have a cluster mount of the

specified file system mount point, and determine whether a mount is a local or

cluster mount. The fsclustadm command operates from any node in a cluster on

which the file system is mounted, and can control the location of the primary for a

specified mount point.

See the fsclustadm(1M) manual page.

• fsadm

The fsadm command performs selected administration tasks on file systems. It can be
invoked from a primary or secondary node. The available tasks may differ between
file system types. The file system is normally specified as a special device file
that contains an unmounted file system. If the file system type provides online
administration capabilities, it can instead be specified as a directory, which must
be the root of a mounted file system.

See the fsadm(1M) manual page.

• Running commands safely in a cluster environment

Any HP-UX command that can write to a raw device must be used carefully in a

shared environment to prevent data from being corrupted. For shared VxVM

volumes, CFS provides protection by reserving the volumes in a cluster to prevent

VxFS commands, such as fsck and mkfs, from inadvertently damaging a mounted

file system from another node in a cluster. However, commands such as dd execute

without any reservation, and can damage a file system mounted from another node.

Before running this kind of command on a file system, be sure the file system is not

mounted on a cluster. You can run the mount command with no options to see if a file

system is a shared or local mount.
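The mount check described above can be scripted. The following is a minimal sketch, not a supported tool: the function name mount_kind is hypothetical, and the sketch assumes a particular layout for mount output, stated in the comments, that you should confirm on your own system.

```shell
# Sketch only. Assumes each line of `mount` output has the form
#   <mount_point> on <device> <comma-separated options> on <date>
# and that a cluster mount lists "cluster" among its options. Verify both
# assumptions against the output on your own system before relying on this.
# Usage: mount | mount_kind /mnt1
mount_kind() {
    awk -v mp="$1" '
        $1 == mp {
            found = 1
            n = split($4, opts, ",")
            kind = "local"
            for (i = 1; i <= n; i++)
                if (opts[i] == "cluster") kind = "shared"
            print kind
            exit
        }
        END { if (!found) print "not mounted" }
    '
}
```

The function prints shared, local, or not mounted. The result reflects only the node where mount was run, so "not mounted" does not show that the file system is unmounted on other nodes in the cluster.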

Time Synchronization for Cluster File Systems

CFS requires that the system clocks on all nodes are synchronized using some external

component such as the Network Time Protocol (NTP) daemon. If the nodes are not in

sync, timestamps for inode change (ctime) and modification (mtime) may not be consistent

with the sequence in which operations actually happened.
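A small worked example shows the effect. All of the numbers below are invented for illustration: node B writes two seconds after node A, but its clock runs five seconds behind, so its timestamp appears older.

```shell
# Sketch only: shows how clock skew can reorder timestamps. All numbers are
# invented for illustration.
true_time_a=1000      # node A writes first (reference clock, in seconds)
true_time_b=1002      # node B writes 2 seconds later
skew_b=-5             # node B's clock runs 5 seconds behind the reference

mtime_a=$true_time_a                  # node A stamps the true time
mtime_b=$((true_time_b + skew_b))     # node B stamps its skewed local time

if [ "$mtime_b" -lt "$mtime_a" ]; then
    echo "B wrote later, but its mtime ($mtime_b) is older than A's ($mtime_a)"
fi
```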

Growing a Cluster File System

There is a CVM master node as well as a CFS primary node. When growing a file system,

you grow the volume from the CVM master node, and then grow the file system from any

CFS node. The CVM master node and the CFS primary node can be two different nodes.

To determine which node is the CFS primary for a file system, enter:

# fsclustadm -v showprimary mount_point

To determine whether the current node is the CVM master, and therefore whether the
CFS primary is also the CVM master node, enter:

# vxdctl -c mode

To increase the size of the file system, run the following commands:

On the CVM master node, enter:

# vxassist -g shared_disk_group growto volume_name newlength

On any CFS node, enter:

# fsadm -F vxfs -b newsize -r device_name mount_point
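The sequence above can be collected into a dry-run helper that prints the commands without executing them. This is a sketch under stated assumptions: the function name print_grow_plan and its argument order are hypothetical. The volume length and file system size are passed separately because the two commands may interpret units differently; see the vxassist(1M) and fsadm(1M) manual pages.

```shell
# Sketch only: prints the grow sequence from this section without running it.
# The function name and argument order are illustrative.
# Usage: print_grow_plan DISK_GROUP VOLUME NEW_LENGTH NEW_SIZE RAW_DEVICE MOUNT_POINT
print_grow_plan() {
    dg=$1 vol=$2 newlength=$3 newsize=$4 rawdev=$5 mnt=$6
    echo "# Identify the CFS primary and check for the CVM master:"
    echo "fsclustadm -v showprimary $mnt"
    echo "vxdctl -c mode"
    echo "# On the CVM master node, grow the volume:"
    echo "vxassist -g $dg growto $vol $newlength"
    echo "# On any CFS node, grow the file system:"
    echo "fsadm -F vxfs -b $newsize -r $rawdev $mnt"
}

# Example with the names used elsewhere in this chapter; newlength and
# newsize are left as placeholders.
print_grow_plan cfsdg vol1 newlength newsize /dev/vx/rdsk/cfsdg/vol1 /mnt1
```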

The fstab file

In the /etc/fstab file, do not specify any cluster file systems to mount-at-boot,

because mounts initiated from fstab occur before cluster configuration begins. For

cluster mounts, use the HP Serviceguard configuration file to determine which file

systems to enable following a reboot.
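One way to audit an existing fstab file for this mistake is sketched below. The function name find_cluster_fstab_entries is hypothetical, and the sketch assumes that a cluster mount would be recognizable by a cluster keyword in the options field of a vxfs entry.

```shell
# Sketch only. Assumes the usual six-field fstab layout
#   <device> <mount_point> <type> <options> <backup_freq> <pass>
# and that a cluster mount would carry "cluster" in the options field.
# Prints the mount point of every vxfs entry that requests a cluster mount.
# Usage: find_cluster_fstab_entries [FSTAB_FILE]
find_cluster_fstab_entries() {
    awk '
        /^[ \t]*#/ || NF < 4 { next }      # skip comments and short lines
        $3 == "vxfs" {
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++)
                if (opts[i] == "cluster") { print $2; break }
        }
    ' "${1:-/etc/fstab}"
}
```

Any mount point the function prints is a candidate for removal from fstab and for configuration through HP Serviceguard instead.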

Distributing the Load on a Cluster

Distributing the workload in a cluster provides performance and failover advantages.

For example, if you have eight file systems and four nodes, designating two file systems

per node as primary file systems will be beneficial. Primaryship is determined by which

node first mounts the file system. You can also use the fsclustadm setprimary

command to designate a CFS primary node. In addition, the fsclustadm setprimary

command can define the order in which primaryship is assumed if the current primary

node fails. After setup, the policy is in effect as long as one or more nodes in the cluster

have the file system mounted.
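The eight-file-system, four-node example can be planned with a short round-robin sketch. The function name plan_primaries and the node and mount point names are placeholders. The sketch only prints commands, and it assumes that fsclustadm -v setprimary is run on the node that is to become the primary.

```shell
# Sketch only: spreads CFS primaries round-robin across nodes and prints the
# command to run on each node; nothing is executed. Node and mount point
# names are placeholders.
# Usage: plan_primaries "NODE1 NODE2 ..." MOUNT_POINT...
plan_primaries() {
    nodes=$1; shift
    count=0
    for n in $nodes; do count=$((count + 1)); done
    [ "$count" -gt 0 ] || return 1       # need at least one node
    i=0
    for mnt in "$@"; do
        j=0
        for n in $nodes; do
            # Pick the node whose position matches this file system's turn.
            if [ "$j" -eq "$((i % count))" ]; then
                echo "$n: fsclustadm -v setprimary $mnt"
            fi
            j=$((j + 1))
        done
        i=$((i + 1))
    done
}

# Eight file systems over four nodes: each node is assigned two primaries.
plan_primaries "node1 node2 node3 node4" /fs1 /fs2 /fs3 /fs4 /fs5 /fs6 /fs7 /fs8
```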

Snapshots for Cluster File Systems

A snapshot provides a consistent point-in-time image of a VxFS file system. A snapshot

can be accessed as a read-only mounted file system to perform efficient online backups.

Snapshots implement copy-on-write semantics that incrementally copy data blocks when

they are overwritten on the “snapped” file system.

Snapshots for Serviceguard cluster file systems extend the same copy-on-write

mechanism for the I/O originating from any node in a CFS cluster.

Cluster Snapshot Characteristics

• A snapshot for a cluster mounted file system can be mounted on any node in a

cluster. The file system node can be a primary, secondary, or secondary-only node. A

stable image of the file system is provided for writes from any node.

• Multiple snapshots of a cluster file system can be mounted on the same node, or on a

different node in a cluster.

• A snapshot is accessible only on the node it is mounted on. The snapshot device

cannot be mounted on two different nodes simultaneously.

• The device for mounting a snapshot can be a local disk or a shared volume. A shared

volume is used exclusively by a snapshot mount and is not usable from other nodes

in a cluster as long as the snapshot is active on that device.

• On the node mounting a snapshot, the “snapped” file system cannot be unmounted

while the snapshot is mounted.

• A CFS snapshot ceases to exist if it is unmounted, or the node mounting the

snapshot fails. A snapshot is not affected if any other node leaves or joins the cluster.

• A snapshot of a read-only mounted file system cannot be taken. It is possible to

mount a snapshot of a cluster file system only if the “snapped” cluster file system is

mounted with the crw option.

Performance Considerations

Mounting a snapshot file system for backup increases the load on the system because of

the resources used to perform copy-on-writes and to read data blocks from the snapshot.

In this situation, cluster snapshots can be used to do off-host backups. Off-host backups

reduce the load of a backup application on the primary server. Overhead from remote

snapshots is small when compared to overall snapshot overhead. Running a backup

application by mounting a snapshot from a lightly loaded node is beneficial to overall

cluster performance.

Creating a Snapshot on a Cluster File System

The following example shows how to create and mount a snapshot on a two-node cluster

using CFS administrative interface commands.

1. Create a VxFS file system on a shared VxVM volume:

# mkfs -F vxfs /dev/vx/rdsk/cfsdg/vol1

    version 7 layout
    104857600 sectors, 52428800 blocks of size 1024, log size 16384 blocks
    unlimited inodes, largefiles not supported
    52428800 data blocks, 52399152 free data blocks
    1600 allocation units of 32768 blocks, 32768 data blocks

2. Mount the file system on all nodes (following previous examples, on system01 and

system02):

# cfsmntadm add cfsdg vol1 /mnt1 all=cluster

# cfsmount /mnt1

The cfsmntadm command adds an entry to the cluster manager configuration, then

the cfsmount command mounts the file system on all nodes.

3. Add the snapshot on a previously created volume (snapvol in this example) to the

cluster manager configuration:

# cfsmntadm add snapshot cfsdg snapvol /mnt1 /mnt1snap \

system01=ro

NOTE The snapshot of a cluster file system is accessible only on the node where it is

created; the snapshot file system itself cannot be cluster mounted.

4. Mount the snapshot:

# cfsmount /mnt1snap

5. A snapped file system cannot be unmounted until all of its snapshots are

unmounted. Unmount the snapshot before trying to unmount the snapped cluster

file system:

# cfsumount /mnt1snap
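Steps 3 through 5 can be parameterized as a dry-run helper, which makes the required ordering explicit: add, mount, back up, then unmount. This is a sketch only. The function name print_snapshot_plan is hypothetical, and the backup step is left as a placeholder comment because the guide does not prescribe a backup command.

```shell
# Sketch only: prints the snapshot lifecycle from the steps above in order,
# without running anything. The function name is illustrative.
# Usage: print_snapshot_plan DISK_GROUP SNAP_VOLUME MOUNT_POINT SNAP_MOUNT_POINT NODE
print_snapshot_plan() {
    dg=$1 snapvol=$2 mnt=$3 snapmnt=$4 node=$5
    echo "cfsmntadm add snapshot $dg $snapvol $mnt $snapmnt $node=ro"
    echo "cfsmount $snapmnt"
    echo "# ... back up from $snapmnt on $node ..."
    echo "cfsumount $snapmnt"
}

# Reproduces the commands used in the example above.
print_snapshot_plan cfsdg snapvol /mnt1 /mnt1snap system01
```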

4 Cluster Volume Manager Administration

A cluster consists of a number of hosts or nodes that share a set of disks. The main

benefits of cluster configurations are:

• Availability—If one node fails, the other nodes can still access the shared disks.

When configured with suitable software, mission-critical applications can continue

running by transferring their execution to a standby node in the cluster. This ability

to provide continuous uninterrupted service by switching to redundant hardware is

commonly termed failover.

Failover is transparent to users and high-level applications for database and

file-sharing. You must configure cluster management software, for example

Serviceguard, to monitor systems and services, and to restart applications on

another node in the event of either hardware or software failure. Serviceguard also

allows you to perform general administrative tasks such as joining or removing

nodes from a cluster.

• Off-host processing—Clusters can reduce contention for system resources by

performing activities such as backup, decision support and report generation on the