ProLiant Clusters for SCO UnixWare 7 U/300

Quick Install Guide for the Compaq ProLiant ML370

First Edition (January 2001)

Part Number 221540-001

Compaq Computer Corporation

Notice

© 2001 Compaq Computer Corporation

Compaq, the Compaq logo, NonStop, ProLiant, SmartStart, Compaq Insight Manager, ServerNet, and

ROMPaq Registered in U.S. Patent and Trademark Office. Microsoft, MS-DOS, Windows, and

Windows NT are trademarks of Microsoft Corporation in the United States and other countries. Intel and

Pentium are trademarks of Intel Corporation in the United States and other countries. UNIX is a

trademark of The Open Group in the United States and other countries. All other product names

mentioned herein may be trademarks or registered trademarks of their respective companies.

Compaq shall not be liable for technical or editorial errors or omissions contained herein. The

information in this document is provided “as is” without warranty of any kind and is subject to change

without notice. The warranties for Compaq products are set forth in the express limited warranty

statements accompanying such products. Nothing herein should be construed as constituting an

additional warranty.


Contents

About This Guide
Text Conventions
Symbols in Text
Symbols on Equipment
Getting Help
Compaq Technical Support
Compaq Support Website
Compaq Authorized Reseller

Chapter 1
Clustering Overview
Compaq ProLiant Clusters for SCO UnixWare 7 U/300
Hardware Components
Software Components
Overview of Cluster Assembly and Software Installation Steps
Resources for Application Installation
Other References
SCO UnixWare 7 NonStop Clusters Documentation

Chapter 2
Setting Up Cluster Hardware
Assembling the Rack
Stacking Components
Transporting Racks
Setting Up the Cluster Nodes
Installing the 64-Bit External Storage Fibre Channel HBA and GBIC-SWs
Installing Internal Disk Drives
Installing the Public LAN NIC into a Cluster Ethernet Interconnect
Installing the ServerNet I Cluster Interconnect
Setting Up the External Storage Hardware
Cabling the Components
Using Labeling Standards
Cabling the ServerNet I Interconnect
Cabling the Public LAN Connection
Cabling the Ethernet Interconnect
Cabling the CI Serial Cable
Cabling the RA4100
Cabling the Keyboard, Monitor, and Mouse
UPS Power Management Cabling

Chapter 3
Installing Cluster Software
Understanding Preinstallation Tasks and Considerations
Default Quick Install Settings
Internal Disk Drive Considerations
Obtaining UnixWare 7 Licenses
Configuring the Servers with SmartStart
Erasing the Configuration
Configuring the Servers
Updating Controller Firmware
Verifying ServerNet I Connections
Verifying the Local Adapter
Verifying Node-to-Node Communication
Installing the Cluster Using Quick Install
Installing Node 1
Installing Node 2
Verifying the Cluster Assembly
Additional Cluster Setup Tasks
Registering the ProLiant Cluster for SCO UnixWare 7
Viewing UnixWare and NonStop Clusters Documentation

Chapter 4
Managing Clusters
SCO UnixWare 7 NonStop Clusters Management Software
Clusterized SCOadmin
Event Processing Subsystem
NonStop Clusters Management Suite
SCO Clusterized Commands
Compaq ProLiant Cluster Management Software for SCO UnixWare 7 NonStop Clusters
Compaq Insight Manager Support
Compaq Insight Manager XE Support
NonStop Clusters Verification Utility
UPS-Initiated Shutdown

Chapter 5
Troubleshooting
Installation Problems
Quick Install Error Messages
Node-to-Node Communication Problems
Shared Storage Problems
Client-to-Cluster Connectivity Problems
Cluster Resource Problems
ServerNet I Messages
ServerNet I SAN Error Messages
ServerNet I Notice Messages
ServerNet I Warning Messages
ServerNet I Panic Messages
ServerNet I Continuation and Informative Messages

Appendix A
Software Versions

Appendix B
Quick Install Planning Worksheets

Glossary

Index

About This Guide

Use the Compaq ProLiant Clusters for SCO UnixWare 7 U/300 Quick Install Guide for the Compaq ProLiant ML370 as step-by-step instructions for installation and as a reference for cluster operation and troubleshooting.

Text Conventions

The following conventions distinguish elements of text:

Keys, Buttons: Keys and buttons appear in boldface. A plus sign (+) between two keys indicates that they should be pressed simultaneously.

User Input, File Names, Directory Names, Commands, Examples, Screen Elements: These elements appear in a different typeface.

Variables: Information supplied by the user appears in italics.

Menu Options, Dialog Box Names: These elements appear in initial capital letters.

Type: When you are instructed to type information, type the information without pressing the Enter key.

Enter: When you are instructed to enter information, type the information and then press the Enter key.


Symbols in Text

These symbols may be found in the text of this guide. They have the following

meanings.

WARNING: Text set off in this manner indicates that failure to follow directions

in the warning can result in bodily harm or loss of life.

CAUTION: Text set off in this manner indicates that failure to follow directions

could result in damage to equipment or loss of information.

IMPORTANT: Text set off in this manner presents clarifying information or specific

instructions.

NOTE: Text set off in this manner presents commentary, sidelights, or interesting points

of information.

Symbols on Equipment

These symbols may be located on equipment in areas where hazardous

conditions may exist.

This symbol, in conjunction with any of the following symbols, indicates the

presence of a potential hazard. The potential for injury exists if warnings

are not observed. Consult your documentation for specific details.

This symbol indicates the presence of hazardous energy circuits or electric

shock hazards. Refer all servicing to qualified personnel.

WARNING: To reduce the risk of injury from electric shock hazards, do not

open this enclosure. Refer all maintenance, upgrades, and servicing to

qualified personnel.

This symbol indicates the presence of electric shock hazards. The area

contains no user or field serviceable parts. Do not open for any reason.

WARNING: To reduce the risk of injury from electric shock hazards, do

not open this enclosure.


This symbol, on an RJ-45 receptacle, indicates a network interface

connection.

WARNING: To reduce the risk of electric shock, fire, or damage to the

equipment, do not plug telephone or telecommunications connectors into

this receptacle.

This symbol indicates the presence of a hot surface or hot component. If

this surface is contacted, the potential for injury exists.

WARNING: To reduce the risk of injury from a hot component, allow the

surface to cool before touching.

These symbols, on power supplies or systems, indicate that

the equipment is supplied by multiple sources of power.

WARNING: To reduce the risk of injury from electric shock,

remove all power cords to completely disconnect power from

the system.

This symbol, which shows the weight of the component in kilograms (kg) and pounds (lb), indicates that the component exceeds the recommended weight for one individual to handle safely.

WARNING: To reduce the risk of personal injury or damage to the

equipment, observe local occupational health and safety requirements and

guidelines for manual material handling.


Getting Help

If you have a problem and have exhausted the information in this guide, you

can obtain further information and other help in the following locations.

Compaq Technical Support

In North America, call the Compaq Technical Support Phone Center at

1-800-OK-COMPAQ. This service is available 24 hours a day, 7 days a week.

For continuous quality improvement, calls may be recorded or monitored.

Outside North America, call the nearest Compaq Technical Support Phone

Center. Telephone numbers for worldwide Technical Support Centers are

listed on the Compaq website. Access the Compaq website by logging on to

the Internet at

http://www.compaq.com

Be sure to have the following information available before you call Compaq:

■ Technical support registration number (if applicable)

■ Product serial number

■ Product model name and number

■ Applicable error messages

■ Add-on boards or hardware

■ Third-party hardware or software

■ Operating system type and revision level

Compaq Support Website

The Compaq Support website has information on this product and the latest

drivers and Flash ROM images. You can access the Compaq Support website

by logging on to the Internet at

http://www.compaq.com/support

Compaq Authorized Reseller

For the name of your nearest Compaq authorized reseller:

■ In the United States, call 1-800-345-1518.

■ In Canada, call 1-800-263-5868.

■ Elsewhere, see the Compaq website for locations and telephone

numbers.


Chapter 1

Clustering Overview

A Compaq ProLiant™ Cluster for SCO UnixWare 7 is a collection of servers, storage, and software that allows independent servers and storage to act as a single system. The cluster presents a single-system image to clients. It also protects against hardware, operating system, middleware, and application failures and provides configuration options for load balancing.

Clustering is an established technology that can provide the following benefits:

■ Availability

■ Scalability

■ Manageability

■ Investment protection

■ Operational efficiency

The SCO UnixWare 7 NonStop™ Clusters technology helps protect your applications and data against multiple error conditions. For more details on Compaq ProLiant Clusters for SCO UnixWare 7, see the Compaq High Availability website at

http://www.compaq.com/highavailability


Compaq ProLiant Clusters for SCO UnixWare 7 U/300

The Compaq ProLiant Clusters for SCO UnixWare 7 U/300 Quick Install

Cluster Kit (U/300 kit) for the ProLiant ML370 server supports specific

hardware components, enabling the cluster software to be installed in about an

hour. Cluster components include servers, internal disk drives, external

storage, cluster interconnect, software, and local area network (LAN)

hardware. Cluster software provides installation capabilities, the operating

system, and various Compaq cluster management utilities. To set up the

cluster, you must assemble the cluster components, initialize them, and install

the cluster software.

Hardware Components

Supported cluster hardware components for this Quick Install include ProLiant ML370 servers, the Compaq StorageWorks RAID Array 4100 (RA4100) storage subsystem, the hardware required for the cluster interconnect, the public network interface controller (NIC), and the Cluster Integrity (CI) serial cable.

Server Components

The U/300 kit for the ProLiant ML370 server supports the following server

hardware components:

■ Two identical ProLiant ML370 servers with an embedded NIC in each server

■ One 9.1-GB or larger disk drive in each server

■ One 64-bit Fibre Channel Host Bus Adapter (HBA) in slot 3 of each server

■ Two Gigabit Interface Converter-Shortwave modules (GBIC-SWs), one installed into the HBA in slot 3 of each server

■ One CI serial cable (provided in the cluster kit)

■ For clusters using Ethernet interconnect:

• One Compaq NC3123 Fast Ethernet NIC PCI 10/100 Wake on LAN (WOL) (NC3123 NIC) installed into slot 1 of each server for public network access

• One crossover cable for the cluster interconnect (provided in the cluster kit)


■ For clusters using ServerNet™ I interconnect:

• One ServerNet I PCI adapter installed into slot 1 of each server

• Two ServerNet I cables

Storage Components

The U/300 kit for the ProLiant ML370 server supports the following storage

hardware components:

■ One RA4100 storage subsystem, including one Compaq StorageWorks

RAID Array 4000 (RA4000) primary array controller

■ One RA4000 redundant array controller

■ Two GBIC-SWs, one in each controller

■ Two 9.1-GB or larger disk drives, one in each slot 0

■ Two multimode Fibre Channel cables
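The server and storage lists above can be checked against a planned configuration before the hardware is racked. The following Python sketch encodes those lists literally. The `Node` and `Storage` classes, the `check_plan` function, and the slot 1 adapter labels are hypothetical and are not part of any Compaq or SCO tool; the sketch only checks values you enter and does not query real hardware.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical planning model; these names are not part of any Compaq tool.
MIN_DISK_GB = 9.1  # smallest drive listed for the servers and the RA4100


@dataclass
class Node:
    model: str
    internal_disks_gb: List[float]  # the kit lists exactly one internal drive
    hba_slot: Optional[int]         # slot holding the 64-bit Fibre Channel HBA
    hba_has_gbic_sw: bool           # GBIC-SW installed in that HBA
    slot1_card: Optional[str]       # adapter fitted in PCI slot 1


@dataclass
class Storage:
    controllers_with_gbic_sw: int   # RA4000 primary and redundant controllers
    disks_gb: List[float]
    fc_cables: int                  # multimode Fibre Channel cables


# Adapter expected in slot 1 for each interconnect type (hypothetical labels).
SLOT1_CARD = {"ethernet": "NC3123", "servernet": "ServerNet I"}


def check_plan(nodes: List[Node], storage: Storage, interconnect: str) -> List[str]:
    """Return a list of problems; an empty list means the plan matches the kit lists."""
    problems: List[str] = []
    expected_card = SLOT1_CARD[interconnect]
    if len(nodes) != 2:
        problems.append("exactly two nodes are required")
    elif nodes[0].model != nodes[1].model:
        problems.append("the two servers must be identical")
    for i, n in enumerate(nodes, start=1):
        if n.model != "ProLiant ML370":
            problems.append(f"node {i}: unsupported server model {n.model!r}")
        if len(n.internal_disks_gb) != 1 or n.internal_disks_gb[0] < MIN_DISK_GB:
            problems.append(f"node {i}: needs one internal drive of at least {MIN_DISK_GB} GB")
        if n.hba_slot != 3 or not n.hba_has_gbic_sw:
            problems.append(f"node {i}: Fibre Channel HBA with GBIC-SW must be in slot 3")
        if n.slot1_card != expected_card:
            problems.append(f"node {i}: slot 1 must hold the {expected_card} adapter")
    if storage.controllers_with_gbic_sw != 2:
        problems.append("RA4100 needs primary and redundant controllers, each with a GBIC-SW")
    if len(storage.disks_gb) != 2 or min(storage.disks_gb) < MIN_DISK_GB:
        problems.append(f"RA4100 needs two drives of at least {MIN_DISK_GB} GB")
    if storage.fc_cables != 2:
        problems.append("two multimode Fibre Channel cables are required")
    return problems


# Example: an Ethernet-interconnect plan that matches the lists.
ml370 = Node("ProLiant ML370", [9.1], 3, True, "NC3123")
ra4100 = Storage(2, [18.2, 18.2], 2)
print(check_plan([ml370, ml370], ra4100, "ethernet"))  # prints []
```

Returning every problem at once, rather than stopping at the first, lets you correct a whole planning worksheet in one pass.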

Cluster Interconnect

ProLiant Clusters for SCO UnixWare 7 with the ProLiant ML370 server can

use either a high-speed ServerNet I network or a dedicated, private Ethernet

network to connect the cluster nodes. The cluster nodes use the interconnect

data path to support the following cluster features:

■ Cluster-wide file system

■ Cluster-wide process management, migration, and load balancing

■ Cluster-wide networking and Cluster Virtual IP (CVIP)

■ Cluster-wide system administration and management

The ServerNet I cluster interconnect uses two ServerNet I PCI adapters and

two ServerNet I cables to connect the nodes. The Ethernet cluster interconnect

uses the embedded NICs and an Ethernet crossover cable to connect the two

nodes.


Cluster Integrity Serial Cable

The Cluster Integrity (CI) serial cable listed with the server components is required for the U/300 Quick Install cluster for the ProLiant ML370 server. This cable prevents a condition in which more than one node in the cluster acts as the root node. Because the active root node mounts the root file system and runs several critical cluster-wide functions, more than one node behaving as the root node must be prevented.

NOTE: The CI serial cable may be referred to as the split-brain avoidance (SBA) serial

cable in UnixWare software and documentation.

Hardware Configuration

The U/300 kit for the ProLiant ML370 server supports the server and storage

hardware in specific configurations based on the type of cluster interconnect.

The CI serial cable is required for all configurations.

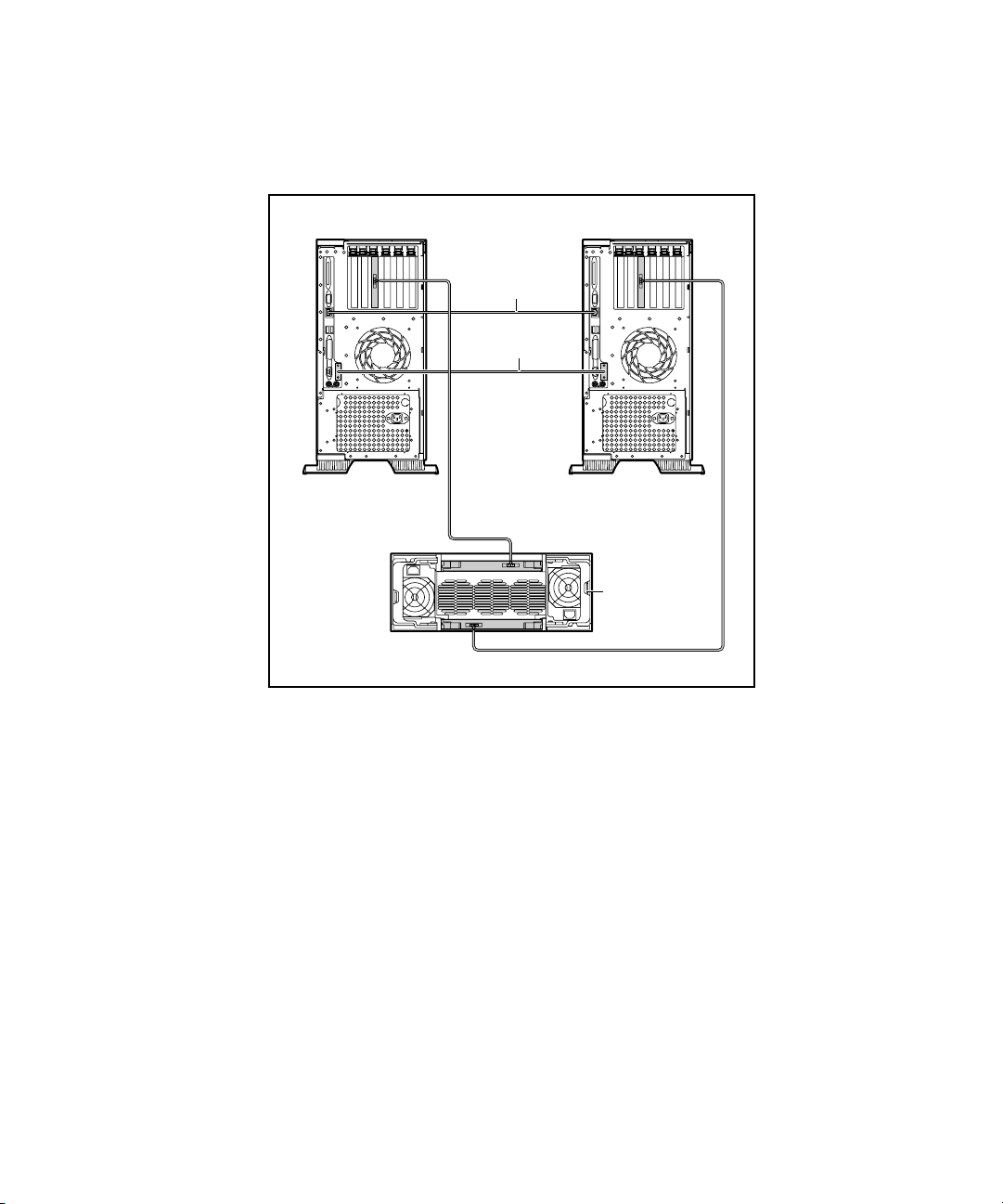

A ServerNet I cluster interconnect uses the two ServerNet I PCI adapters and two cables as shown in Figure 1-1.

Figure 1-1. Example of hardware components of the ServerNet I cluster interconnect configuration (labels: Node 1, Node 2, dedicated ServerNet I cables on the X and Y paths, CI serial cable, RA4100)


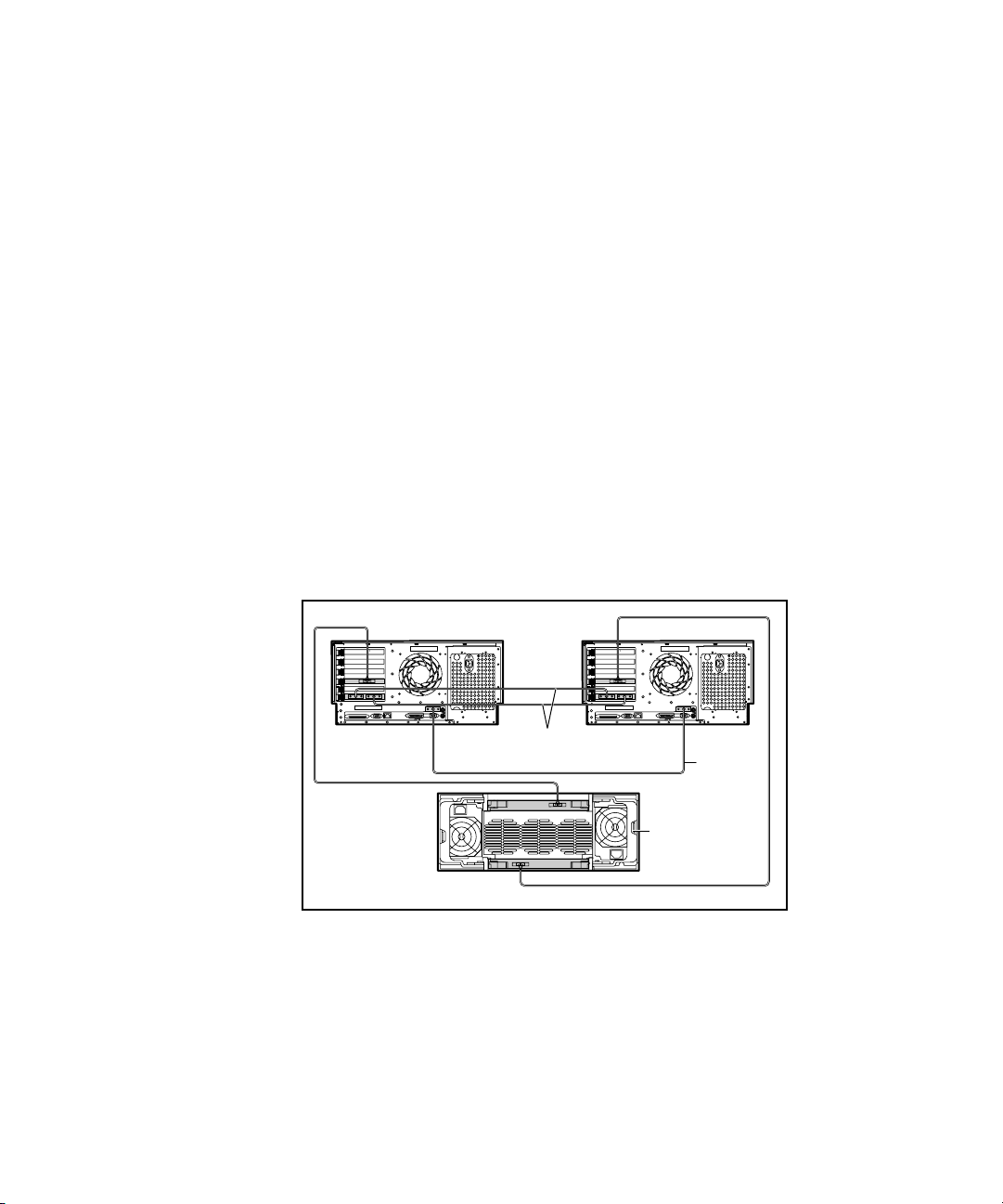

An Ethernet cluster interconnect uses the embedded NIC in each server, connected by one Ethernet crossover cable, as shown in Figure 1-2.

Figure 1-2. Example of hardware components of the Ethernet cluster interconnect configuration (labels: Node 1, Node 2, Ethernet crossover cable, CI serial cable, RA4100)

LAN Connection

Clusters using Ethernet interconnect require an NC3123 NIC installed into

slot 1 of each node before cluster software installation so that the cluster can

access a public network. These NICs must be on a different subnet from the

embedded Ethernet cluster interconnect. Multiple public network controllers

can be installed after cluster installation is complete. For a list of certified

NICs, see the Compaq High Availability website at

http://www.compaq.com/highavailability

Clusters that use ServerNet I interconnect access the public network using the

embedded NIC. Each server must be connected to the same network.
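For Ethernet-interconnect clusters, the rule that the public NICs must be on a different subnet from the interconnect can be checked before installation with Python's standard `ipaddress` module. This is a minimal sketch under stated assumptions: the `check_subnets` function and the example addresses are hypothetical, and it assumes that both public NC3123 NICs share one public subnet while the two embedded interconnect NICs share a separate private subnet.

```python
import ipaddress
from typing import Dict, List


def check_subnets(public: Dict[str, str], interconnect: Dict[str, str]) -> List[str]:
    """Each dict maps a node name to an 'address/prefix' string for one interface."""
    problems: List[str] = []
    pub_nets = {ipaddress.ip_interface(a).network for a in public.values()}
    ic_nets = {ipaddress.ip_interface(a).network for a in interconnect.values()}
    # Assumption: both public NICs sit on one common public subnet.
    if len(pub_nets) != 1:
        problems.append("public NICs are not on a single common subnet")
    # The crossover-cabled interconnect NICs must share their own subnet.
    if len(ic_nets) != 1:
        problems.append("interconnect NICs are not on a single common subnet")
    # The guide's rule: public NICs on a different subnet from the interconnect.
    for p in pub_nets:
        for c in ic_nets:
            if p.overlaps(c):
                problems.append(f"public subnet {p} overlaps interconnect subnet {c}")
    return problems


# Hypothetical addresses for illustration only.
public = {"node1": "192.168.10.11/24", "node2": "192.168.10.12/24"}
interconnect = {"node1": "10.0.0.1/24", "node2": "10.0.0.2/24"}
print(check_subnets(public, interconnect))  # prints []
```

Using `overlaps()` rather than comparing network addresses also catches the case where one subnet is a larger prefix that contains the other.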


Software Components

Software components of the U/300 kit for the ProLiant ML370 server include:

■ SCO UnixWare Release 7.1.1 Compact Media Kit

■ SCO UnixWare 7 NonStop Clusters Media Kit Version 7.1.1+IP

■ Compaq ProLiant Clusters for SCO UnixWare 7 ML370 Quick Install

CDs for the Compaq ProLiant ML370 server

Cluster-related software provided with the ProLiant ML370 server includes:

■ Compaq SmartStart™ and Support Software CD

■ Compaq Management CD

Additionally, you must obtain software licenses.

NOTE: SCO UnixWare 7 (with Mirroring Option or Online Data Manager) and UnixWare 7

NonStop Clusters software licenses must be purchased through your SCO reseller or

distributor. To locate a convenient SCO reseller or distributor to purchase licenses, see the

SCO website at

http://www.sco.com

SCO UnixWare Software

SCO UnixWare 7 and the SCO UnixWare 7 NonStop Clusters software

provide the operating environment for the ProLiant Clusters for

SCO UnixWare 7. The SCO UnixWare 7 NonStop Clusters software provides

the technology to:

■ Perform single-system image operations

■ Perform failover

■ Define and modify cluster members

■ Manually control and administer the cluster

■ View the current state of the cluster

This software is included in the U/300 kit.

NOTE: The U/300 kit includes SCO UnixWare 7.1.1 and SCO UnixWare NonStop

Clusters 7.1.1+IP. Other versions of the operating system and cluster software are not

supported by this kit.


Quick Install CDs for the ProLiant ML370 Server

The Quick Install CDs for the ProLiant ML370 server provide rapid and

simplified cluster installation. These CDs contain all the necessary software

already configured for immediate cluster boot. An installation wizard allows

you to enter parameters and licenses specific to your configuration.

The Quick Install CDs for the ProLiant ML370 server contain a readme.html file, which includes descriptions of potential problems and how to avoid or correct them.

The Quick Install CDs for the ProLiant ML370 server also include the

following utilities:

■ NonStop Clusters Verification Utility (NSCVU)

The NSCVU validates Compaq ProLiant Clusters for SCO UnixWare 7

and their components. The NSCVU is run from any node in the cluster

and tests cluster configuration in the following categories:

• ServerNet I connectivity tests verify that the nodes in the cluster can communicate over X and Y ServerNet I paths.

• Ethernet connectivity tests verify that the nodes can communicate over the Ethernet cluster interconnect.

• Storage tests verify the presence of, and minimum configuration requirements of, supported HBAs, array controllers, and external storage subsystems.

• System software tests verify that SCO UnixWare 7 and SCO UnixWare 7 NonStop Clusters software have been properly installed.

For further information on running the NSCVU, refer to the nscvu(1M)

manual page, which can be viewed with the man(1M) command or in the

SCOhelp online documentation set.

■ Uninterruptible Power Supply (UPS) software

This software provides management capabilities for UPSs connected to

the cluster.


■ Compaq Insight Manager™ Agents

These agents provide system information to the Compaq Insight Manager, which is available on the Management CD that comes with the ProLiant servers.

Compaq ServerNet Verification Utility (SVU)

The Compaq ServerNet Verification Utility (SVU) verifies proper installation

and cabling of the Compaq ServerNet I interconnect before a UnixWare

software installation. The SVU is a utility run from bootable diskettes inserted

into each cluster node. For more information on creating the diskettes and

running the SVU, refer to Chapter 3, “Installing Cluster Software,” of this

guide.

Compaq SmartStart and Support Software CD

SmartStart is located on the SmartStart and Support Software CD shipped with

ProLiant servers. This CD is required for ServerNet I configurations. You can

also use the CD to configure additional hardware. For information concerning

SmartStart, refer to the Compaq Server Setup and Management package that

comes with your server. The following utilities on the SmartStart CD are used

for your cluster:

■ Compaq Array Configuration Utility (ACU)

The Compaq ACU is an offline tool that is used to configure the array

controller, add disk drives to an existing configuration, and expand

capacity.

■ Options ROMPaq™ Utility

The SmartStart and Support Software CD contains the Options

ROMPaq Utility. Options ROMPaq updates the firmware on the disk

drives and controller.

■ Fibre Channel Fault Isolation Utility (FFIU)

The FFIU verifies the integrity of the Fibre Channel Arbitrated Loop

(FC-AL) installation. This utility provides fault detection and help in

locating a failing device on the FC-AL.


Compaq Management CD

The Compaq Management CD shipped with ProLiant servers contains software for managing Compaq clusters. The CD includes Compaq Insight Manager along with the Compaq Management Agents and Tools for Servers for SCO UnixWare 7 NonStop Clusters. The Quick Install process installs the agents and tools automatically.

■ Compaq Insight Manager

Compaq Insight Manager is an easy-to-use Microsoft Win32 software

utility for collecting server and cluster information. Compaq Insight

Manager performs the following functions:

• Monitors fault conditions and system status

• Monitors shared storage and interconnect adapters

• Forwards server alert fault conditions

• Remotely controls servers

In Compaq servers, each hardware subsystem, such as disk drive

storage, system memory, and system processor, has a robust set of

management capabilities. Compaq Insight Manager notifies the system

administrator of impending fault conditions.

For information concerning Compaq Insight Manager, refer to the

Compaq Server Setup and Management package. See Chapter 4,

“Managing Clusters,” for more information.

■ Compaq Management Agents and Tools for Servers for SCO UnixWare 7 NonStop Clusters

The agents and tools for SCO UnixWare 7 and SCO UnixWare 7 NonStop Clusters include the Compaq Insight Manager agents, the NSCVU software, and the UPS management software, which are described in “Quick Install CDs for the ProLiant ML370 Server” earlier in this chapter and in detail in Chapter 4.

Software Licenses

Licenses for UnixWare 7 (with Mirroring Option or Online Data Manager) and

UnixWare 7 NonStop Clusters are not provided in the cluster kit. The licenses

must be purchased from an authorized SCO reseller. To locate a SCO reseller,

visit the following URL:

http://www.sco.com


Overview of Cluster Assembly and Software Installation Steps

Use the following general steps to set up your cluster hardware, initialize the

hardware, and install the software. The specific procedures are found in the

sections noted in these steps:

1. Set up the cluster hardware.

Cluster hardware assembly includes the following tasks:

• Setting up the rack that contains the server and storage components, if your cluster uses a rack. Refer to the section “Assembling the Rack” in Chapter 2, “Setting Up Cluster Hardware.”

• Setting up the cluster nodes so that they include the hardware required for cluster operation, including internal disk drives, adapters, and NICs. To set up the cluster nodes, refer to “Setting Up the Cluster Nodes” in Chapter 2.

• Setting up external storage hardware components according to the documentation that came with them. Once you have set up the hardware, you must cable it. To set up the external storage, refer to “Setting Up the External Storage Hardware” in Chapter 2. For cabling, refer to “Cabling the Components” in Chapter 2.

2. Perform preinstallation tasks.

Before beginning any software installation procedures, you must

perform a few tasks to prepare for the installation. You must obtain SCO

UnixWare 7 and SCO UnixWare 7 NonStop Clusters licenses, read

through all the installation procedures to become familiar with them, fill

out the installation worksheets in Appendix B of this guide, and ensure

that the servers each contain a single disk drive. Refer to the section

“Understanding Preinstallation Tasks and Considerations” in Chapter 3, “Installing Cluster Software.”

3. Configure the servers.

Configuring the servers involves erasing any existing configuration and

using the SmartStart CD to set up the servers to use the SCO

UnixWare 7 operating system. Refer to “Configuring the Servers” in

Chapter 3.


4. Upgrade controller firmware.

Firmware provides an interface between hardware and software. It is

important to use the latest firmware for full hardware functionality.

Upgrading controller firmware is performed using a diskette created as

part of server configuration. Refer to “Updating Controller Firmware” in

Chapter 3.

5. Verify ServerNet I connections.

If your cluster uses the ServerNet I cluster interconnect, you must ensure

that the hardware for the interconnect is properly installed. This

procedure instructs you to perform tests of the adapters and the cables.

Refer to “Verifying ServerNet I Connections” in Chapter 3.

6. Install the software.

Installing the software provides your cluster with the SCO UnixWare 7

operating system and the SCO UnixWare 7 NonStop Clusters software

discussed in this chapter. You must select the Quick Install CDs for your

configuration and install the software on both nodes. Installation

prompts guide you through the installation and request the information

found in your completed worksheets. Refer to “Installing the Cluster

Using Quick Install” in Chapter 3.
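The order of the six steps above, including the ServerNet I verification that applies only to ServerNet I clusters, can be summarized in a small Python sketch. The `STEPS` table and the `install_plan` function are hypothetical illustrations built from the step titles in this section; they do not drive any installer.

```python
from typing import List, Tuple

# Hypothetical checklist built from the step titles above.
# Each entry: (task, where the procedure is documented, ServerNet I only?)
STEPS: List[Tuple[str, str, bool]] = [
    ("Set up the cluster hardware", "Chapter 2", False),
    ("Perform preinstallation tasks", "Chapter 3", False),
    ("Configure the servers", "Chapter 3", False),
    ("Upgrade controller firmware", "Chapter 3", False),
    ("Verify ServerNet I connections", "Chapter 3", True),
    ("Install the software", "Chapter 3", False),
]


def install_plan(interconnect: str) -> List[str]:
    """Return the numbered task list for an 'ethernet' or 'servernet' cluster."""
    if interconnect not in ("ethernet", "servernet"):
        raise ValueError(f"unknown interconnect: {interconnect!r}")
    plan: List[str] = []
    for task, ref, servernet_only in STEPS:
        if servernet_only and interconnect != "servernet":
            continue  # Ethernet clusters skip the ServerNet I verification step
        plan.append(f"{len(plan) + 1}. {task} ({ref})")
    return plan


for line in install_plan("ethernet"):
    print(line)
```

Numbering the tasks as they are appended keeps the list contiguous when the ServerNet I step is skipped.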

The following sections offer sources of information and support for

application installation and cluster documentation.

Resources for Application Installation

Client/server software applications are among the key components of any

cluster. Compaq is working with its key software partners to ensure that

cluster-aware applications are available and that the applications work

seamlessly on Compaq ProLiant Clusters for SCO UnixWare 7.

Compaq white papers provide information about installing applications in

Compaq ProLiant Clusters for SCO UnixWare 7. Visit the Compaq High

Availability website to download cluster-related white papers and other

technical documents at

http://www.compaq.com/highavailability

IMPORTANT: Some software applications may need to be updated to take advantage of

clustering. Contact the software vendors to check whether their software supports SCO

UnixWare 7 NonStop Clusters and to ask whether any patches or updates are available for

SCO UnixWare 7 NonStop Clusters operation.


Other References

For more information about the RA4100 storage subsystem or RA4000

redundant array controller, refer to the following guides, either as included

with your hardware or as found at the Compaq Support website at

http://www.compaq.com/support/

■ Compaq StorageWorks RAID Array 4100 User Guide

■ Compaq StorageWorks RAID Array 4100 Configuration poster

■ Compaq StorageWorks RAID Array 4000 Redundant Array Controller

Configuration poster

■ Compaq Fibre Channel Storage System User Guide

■ Compaq StorageWorks Fibre Channel Host Adapter Installation Guide

■ Compaq Fibre Channel Troubleshooting Guide

■ Compaq Fibre Channel Storage System Technology

For more information about cluster use and administration, refer to the SCO

UnixWare 7 NonStop Clusters System Administrator’s Guide, located in the

SCOhelp online documentation set in the NonStop Clusters Documentation

topic.

SCO UnixWare 7 NonStop Clusters Documentation

The SCO UnixWare 7 NonStop Clusters software includes online documentation, which you can view after the cluster is installed. The main documentation set is called SCOhelp and contains information that can answer many administrative questions. SCOhelp is available when you use the UnixWare Desktop and remotely using a Web browser when your cluster is connected to the public network. Additionally, you can access manual pages using the man(1M) command.

Access the online documentation in the following ways:

■ Click the book-and-question-mark icon in the toolbar on the UnixWare Desktop to access SCOhelp. A browser displays the main SCOhelp list of topics.

■ Type scohelp at the command line of a desktop terminal (dtterm) to access SCOhelp. A browser displays the main SCOhelp list of topics.

■ Use the following URL to access SCOhelp remotely when the cluster is attached to the public network:

http://clustername:457

Substitute the name of your cluster or its CVIP address for clustername. The browser displays the main SCOhelp list of topics.

■ Use the man command to access manual pages from any command line by entering man and the name of the command, file, or routine about which you want information. For example, enter:

man cluster

The man command displays the reference page for the cluster command.
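The remote access method above can be checked from a workstation before you open a browser. The following Python sketch is a hypothetical helper, not part of the NonStop Clusters software: it builds the SCOhelp URL from a cluster name or CVIP address and tests whether a TCP connection to port 457 succeeds. The function names, the example name mycluster, and the three-second timeout are assumptions.

```python
import socket

SCOHELP_PORT = 457  # port given in this guide for remote SCOhelp access


def scohelp_url(cluster: str, port: int = SCOHELP_PORT) -> str:
    """Build the remote SCOhelp URL from a cluster name or CVIP address."""
    host = cluster.strip()
    if not host:
        raise ValueError("a cluster name or CVIP address is required")
    return f"http://{host}:{port}"


def scohelp_reachable(cluster: str, port: int = SCOHELP_PORT,
                      timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the SCOhelp port succeeds."""
    try:
        with socket.create_connection((cluster.strip(), port), timeout=timeout):
            return True
    except OSError:
        # Covers name-resolution failures, refused connections, and timeouts.
        return False


if __name__ == "__main__":
    # "mycluster" is a hypothetical cluster name; substitute your own.
    print(scohelp_url("mycluster"))
```

A successful connection shows only that something is listening on port 457; open the URL in a browser to confirm that SCOhelp itself responds.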

Chapter 2

Setting Up Cluster Hardware

Setting up a cluster includes setting up, cabling, and verifying the hardware components. Use the following sections to set up the hardware for a Compaq ProLiant Clusters for SCO UnixWare 7 U/300 Quick Install cluster on the Compaq ProLiant ML370:

■ Assembling the Rack

■ Setting Up the Cluster Nodes

■ Setting Up the External Storage Hardware

■ Cabling the Components

For detailed information about individual components, including their setup steps and procedures, refer to the documentation that comes with each component.


Assembling the Rack

In clusters that use racks, rack assembly requires careful attention to avoid

problems.

Evaluate the site where the cluster is to be installed by checking the path and

setup area.

■ Check the path from the receiving dock to the installation area for the following conditions:

  • Height and width of doors
  • Ceiling height and overhead obstacles
  • Changes in floor slope or other changes in elevation
  • Floor roughness, texture, gaps, and obstacles
  • Floor load capacity

■ Check the area where the hardware is to be unpacked for the following conditions:

  • Adequate proximity to the installation area
  • Maneuvering room
  • Room to disassemble the crate
  • Room for the ramp and for rolling the hardware off the crate

■ Check the installation area for the following conditions:

  • Adequate power, including outlets, breakers, electrical quality, and grounding
  • Cooling capacity
  • Cable handling capacity
  • Floor load capacity
  • Clearance for the equipment

Stacking Components

Keep in mind the following considerations while stacking components in a

rack:

■ Put the UPSs in the bottom of the rack.

■ Assemble other components into the rack from the bottom up.

■ Put the heaviest equipment per U of height in the bottom of the rack

whenever possible.

■ Install non-flat-panel monitors toward the top of the rack.

■ Install components that require better cooling capacity toward the top of

the rack.

■ Purchase the rack stabilizer feet option when offered.

The typical stacking order has the UPSs at the bottom and progresses upward

according to the following list:

■ UPS

■ Storage subsystems

■ Node 1 and node 2


■ Keyboard/mouse/monitor switch

■ Monitor and expansion nodes

CAUTION: Load the racks from the bottom up to avoid tipping the rack.
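The stacking rules above amount to sorting components by tier. The following Python sketch assumes hypothetical category names for each tier of the typical order (UPS, storage, nodes, keyboard/mouse/monitor switch, then monitor and expansion nodes) and returns a bottom-to-top loading plan. It does not account for weight per U or cooling needs, which you still check by hand.

```python
from typing import List, Tuple

# Tier 0 is the bottom of the rack; tiers follow the typical stacking order.
# The category names are hypothetical labels chosen for this sketch.
STACK_TIER = {
    "ups": 0,
    "storage": 1,
    "node": 2,
    "kvm_switch": 3,
    "monitor": 4,
    "expansion_node": 4,
}


def stacking_plan(components: List[Tuple[str, str]]) -> List[str]:
    """Return component names in bottom-to-top loading order.

    Each component is a (name, category) pair.
    """
    unknown = [name for name, category in components if category not in STACK_TIER]
    if unknown:
        raise ValueError(f"no stacking tier defined for: {unknown}")
    # sorted() is stable, so components in the same tier keep their input order.
    ordered = sorted(components, key=lambda item: STACK_TIER[item[1]])
    return [name for name, _ in ordered]


if __name__ == "__main__":
    rack = [("Node 1", "node"), ("KVM switch", "kvm_switch"), ("UPS 1", "ups"),
            ("RA4100", "storage"), ("Node 2", "node")]
    for position, name in enumerate(stacking_plan(rack), start=1):
        print(position, name)
```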


Transporting Racks

Before transporting a filled rack, read the documentation that comes with the

rack to determine the safety measures to take for successful transportation.

Never transport a rack without first reviewing the documentation.

Develop standard procedures for securing rack equipment depending on the

rack and its components. Standard procedures include:

■ Verify that the rack is secured to the pallet.

■ Remove all loose items from the rack and the pallet.

■ Disconnect the cabling, ensuring that cables are disconnected from any

expansion cabinets and that you have labeled the cables for trouble-free

reconnection. Protect, coil, and stow the cables in the cabinet base.

■ Confirm that all major cable bundles are well-secured.

■ Insert anti-static foam between components in the rack.

■ Wrap the front and rear doors of the rack in bubble wrap before securely

closing them.

■ Crate the rack according to the documentation that comes with the rack,

including protective wrapping, banding components, and any external

packaging.

■ Label the crate properly with handling information, using statements

such as This End Up, No Forklifts, Top Heavy, and Do Not Double

Stack.

■ Attach tilt-watch indicators for the X and Y directions, and attach shock-watch indicators.

Use these guidelines in addition to rack documentation to secure the rack for

transportation.

Setting Up the Cluster Nodes

Setting up the cluster nodes includes:

■ Installing the 64-bit Fibre Channel Host Bus Adapter (HBA) into slot 3 of each node and a Gigabit Interface Converter-Shortwave (GBIC-SW) module into each adapter

■ Installing one 9.1-GB or larger internal disk drive in each node

■ Installing a Compaq NC3123 Fast Ethernet NIC PCI 10/100 WOL (NC3123 NIC) into slot 1 of each node as the public LAN NIC, for clusters that use the Ethernet interconnect

■ Installing the ServerNet I cluster interconnect

NOTE: No installation is required for Ethernet interconnects, which use the embedded

NIC. Refer to the section, “Cabling the Ethernet Interconnect,” later in this chapter for

installing the Ethernet interconnect cable.

Additional options, such as tape drives, other NICs, and Remote Insight

Lights-Out Edition boards can be installed after the cluster Quick Install

procedure has completed.


Installing the 64-Bit External Storage Fibre Channel HBA and GBIC-SWs

For a redundant fault-tolerant configuration, the storage system connects to

both ProLiant ML370 servers, so an HBA must be installed in each server.

Additionally, a GBIC-SW must be installed in each adapter.

The following steps explain how to install the external Fibre Channel HBAs,

which are required for the Compaq StorageWorks RAID Array 4100

(RA4100):

NOTE: Refer to the ProLiant ML370 server documentation for general PCI adapter

installation information.

1. Install one 64-bit HBA into slot 3 of each node.

IMPORTANT: Only one Fibre Channel HBA is supported in each node.

2. Install one GBIC-SW module into each HBA. For installation

instructions, refer to the documentation that comes with the Fibre

Channel hardware.


3. Do not install or update drivers. The Quick Install procedures install the

Fibre Channel drivers.

Installing Internal Disk Drives

One 9.1-GB or larger disk drive is required per node. The Quick Install automatically configures each internal drive with a 9.1-GB UnixWare partition, even if the disk drive is larger than 9.1 GB. This UnixWare partition cannot be modified, and other UnixWare partitions cannot be added to this disk drive. After the Quick Install is completed, you can add non-UnixWare partitions up to the maximum capacity of the disk drive, or install other disk drives for additional partitions.

To install the internal disk drives, refer to the documentation included with the

drive and with the ProLiant ML370 server.
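Because the Quick Install always creates a 9.1-GB UnixWare partition, the space left for later non-UnixWare partitions is simple subtraction. The following Python sketch assumes capacities are expressed in the same nominal gigabytes used in this guide and ignores formatting overhead; the function name is hypothetical.

```python
QUICK_INSTALL_PARTITION_GB = 9.1  # fixed UnixWare partition size stated in this guide


def space_for_other_partitions_gb(drive_capacity_gb: float) -> float:
    """Capacity left for non-UnixWare partitions after the Quick Install partition."""
    if drive_capacity_gb < QUICK_INSTALL_PARTITION_GB:
        raise ValueError("the Quick Install requires a 9.1-GB or larger drive")
    # Round to one decimal place to hide floating-point noise in the subtraction.
    return round(drive_capacity_gb - QUICK_INSTALL_PARTITION_GB, 1)
```

For example, an 18.2-GB drive leaves 9.1 GB for non-UnixWare partitions, and a 9.1-GB drive leaves none.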

Installing the Public LAN NIC in Clusters That Use an Ethernet Interconnect

To connect a cluster using Ethernet interconnect to a public network, install an

NC3123 NIC into slot 1 of each node using the documentation that comes with

the NIC. This step is not needed for clusters that use the ServerNet I

interconnect.

Installing the ServerNet I Cluster Interconnect

If your ProLiant ML370 cluster uses ServerNet I as the cluster interconnect,

you must install the ServerNet I PCI adapter into slot 1 of each server. To

install the ServerNet I PCI adapter, refer to the documentation that comes with

the adapter for general installation guidelines.

■ Install one ServerNet I PCI adapter version 1.5e into slot 1 of each node.

■ Ignore the ServerNet I driver installation instructions in the adapter

installation guide. The required UnixWare ServerNet I device driver is

included with the SCO UnixWare 7 NonStop Clusters software and is

automatically installed during software installation.

■ Ignore the ServerNet I connection testing instructions in the adapter

installation guide. The software installation procedure includes testing

the connection.

Setting Up the External Storage Hardware

IMPORTANT: The RA4100 is shipped with a single RAID controller. Each RA4100 array

used in Compaq ProLiant Clusters for SCO UnixWare 7 requires an additional, redundant

controller.

NOTE: The Quick Install automatically configures an RA4100 drive with a RAID 1, 9.1-GB UnixWare partition, even if the disk drive is larger than 9.1 GB. This partition cannot be modified, and other UnixWare partitions cannot be added to this disk drive. After the Quick Install is completed, non-UnixWare partitions can be added up to the maximum capacity of the disk drive, or other disk drives can be installed for additional UnixWare partitions.

The U/300 cluster for the ProLiant ML370 server uses external storage with

the following components:

■ RA4100 storage subsystem

■ Two Compaq StorageWorks RAID Array 4000 (RA4000) controllers, one of which is included with the RA4100

■ Two GBIC-SWs, one in each controller

■ Two 9.1-GB or larger disk drives

■ Fibre Channel cables

To configure the external storage for the U/300 Quick Install cluster, set up the

RA4100 storage subsystem according to the following steps:

1. Follow the setup instructions in the documentation that comes with the subsystem to set up the RA4100.

Review the section, “Fibre Channel Cable Precautions,” later in this

chapter for general information.

2. Refer to the user guide for the RA4100 for disk drive RAID array

options and considerations.


3. In each array, install one redundant controller into the lower slot

(rack-mount) or into the left slot (tower as viewed from the back)

according to the following steps:

a. Disconnect the power from the storage subsystem.

b. Remove the cover from the second controller slot.

c. Rotate the board 180 degrees from the position of the top controller.

d. Insert the RA4000 redundant controller.

e. Install the GBIC-SW modules.

4. Ignore the chapters on the Array Configuration Utility and the Options

ROMPaq in the documentation for the RA4100. These steps are part of

the installation procedure in Chapter 3, “Installing Cluster Software.”

5. Install a 9.1-GB or larger disk drive into slot 0 of each SCSI bus in the array.

Cabling the Components

Proper cabling can simplify service and assembly of the cluster, so following

appropriate cabling standards is vital to a successful cluster setup.

Using Labeling Standards

Proper labeling prevents incorrect connections and simplifies cluster assembly and service. Label each server with the node label provided.

Also, label the ends of the following cables:

■ Ethernet crossover cable (for cluster interconnects using Ethernet)

■ ServerNet I cables (for cluster interconnects using ServerNet I)

■ Cluster Integrity (CI) serial cable

■ Keyboard, monitor, and mouse cables

■ Server power cables

Each label must identify the node to which the cable connects.

Cabling the ServerNet I Interconnect

ServerNet I adapters include X and Y connections for redundancy. Figure 2-1

shows the ServerNet I adapter connections.

Figure 2-1. ServerNet I PCI adapter connections (callouts: Port X connector, Port Y connector, PCI bus connector)

IMPORTANT: Cable X and Y to their corresponding counterparts. Do not cable X connections to Y connections.

The ServerNet I cables directly connect the ServerNet I adapter in node 1 to

the ServerNet I adapter in node 2, as shown in Figure 2-2.

Figure 2-2. Example of cabling the cluster interconnect of a cluster that uses ServerNet I (callouts: node 1, node 2, dedicated ServerNet I X and Y cables, CI serial cable, connection to public network)

NOTE: Cabling for the external storage is intentionally not shown.

Use the cabling suggestions illustrated in Figure 2-3 to label the ServerNet I

cables.

Figure 2-3. ServerNet I cable labeling suggestion (callouts: X/Y fabric identifier, node identifier)

ServerNet I Node Number    Switch Port Number    Cable Tie Color
1                          0                     Pink
2                          1                     Orange

X ServerNet I cables are identified with white cable ties. Red ties are used only during shipment and are to be removed during onsite installation.

To cable the ServerNet I interconnect, follow these steps:

1. Connect a white-labeled ServerNet I X cable to the X connection on the

ServerNet I adapter in node 1.

2. Connect the other end of the cable to the corresponding ServerNet I

adapter X connection in node 2.

3. Complete the cabling by installing the ServerNet I Y cable in a similar

manner.
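The X-to-X and Y-to-Y rule can be checked against a written cabling plan before you connect anything. In the following Python sketch, each cable is a hypothetical pair of (node number, port letter) endpoints; the function returns a list of problems, and an empty list means the plan matches the two-node, directly connected layout described here.

```python
from typing import List, Tuple

Endpoint = Tuple[int, str]  # (node number, port letter "X" or "Y")


def check_servernet_cabling(cables: List[Tuple[Endpoint, Endpoint]]) -> List[str]:
    """Return a list of problems; an empty list means the plan follows the guide."""
    problems: List[str] = []
    fabrics_seen: List[str] = []
    for (n1, p1), (n2, p2) in cables:
        if p1 != p2:
            problems.append(f"node {n1} port {p1} is cabled to node {n2} port {p2}: "
                            "X must go to X and Y to Y")
            continue
        if {n1, n2} != {1, 2}:
            problems.append(f"{p1} cable must connect node 1 to node 2, not {n1} to {n2}")
        fabrics_seen.append(p1)
    # A direct two-node connection uses exactly one X cable and one Y cable.
    for fabric in ("X", "Y"):
        count = fabrics_seen.count(fabric)
        if count != 1:
            problems.append(f"expected one {fabric} cable, found {count}")
    return problems
```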


Cabling the Public LAN Connection

For clusters that use the ServerNet I interconnect, connect the public LAN Ethernet cable to the embedded NIC of each server. See Figure 2-2 earlier in this chapter.

For clusters that use the Ethernet interconnect, connect the public LAN Ethernet cable to the NC3123 NIC in slot 1 of each server. See Figure 2-4.

Figure 2-4. Example of cabling the cluster interconnect of a cluster that uses Ethernet (callouts: node 1, node 2, Ethernet crossover cable, CI serial cable, connection to public network)

NOTE: Cabling for the external storage is intentionally not shown.

Cabling the Ethernet Interconnect

An Ethernet crossover cable is required for interconnects using Ethernet. To

cable the Ethernet interconnect, connect one end of the Ethernet crossover

cable to the embedded NIC in node 1. Connect the other end of the Ethernet

crossover cable to the embedded NIC in node 2. Figure 2-4 illustrates the

proper cabling.

Cabling the CI Serial Cable

IMPORTANT: The CI serial cable is required.

To cable the CI serial cable, connect one end of the CI serial cable to serial

port connector B in node 1. Connect the other end of the CI serial cable to

serial port connector B in node 2. Figure 2-2 illustrates the proper cabling for

clusters that use ServerNet I interconnect. Figure 2-4 illustrates the proper

cabling for clusters that use Ethernet interconnect.

Cabling the RA4100

To cable the RA4100 components, follow these steps:

1. Connect the Fibre Channel cabling between the arrays and nodes using

the instructions in the user guide for the RA4100 and the documentation

that comes with the Fibre Channel cables. See Figure 2-5 for a cabling

illustration.

Figure 2-5. Supported cabling of the RA4100 storage subsystem for the U/300 configuration, viewed from rear (callouts: node 1 and node 2 Fibre Channel host controllers, Fibre Channel array controllers, GBICs in 4 places, dual fiber optic cables, drive bays 0 through 5 on SCSI bus 1 and SCSI bus 2)

2. Connect the first RAID controller in the upper slot (rack-mount) or right

slot (tower as viewed from the back) of the RA4100 to node 1. Connect

the redundant, or second, controller in the lower (rack-mount) or left

(tower) slot to node 2.

3. Confirm that all cables are properly connected to the appropriate arrays

and servers.

Figure 2-5 shows the supported cabling of the RA4100 storage subsystem for

the U/300 configuration.
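The slot-to-node rule in step 2 depends on whether the RA4100 is a rack-mount or tower model. The following Python sketch encodes that rule as a lookup table; the enclosure and slot names are hypothetical labels, and tower positions assume you are viewing the RA4100 from the back, as the step specifies.

```python
from typing import Dict, Tuple

# (enclosure type, slot position) -> node that the controller in that slot cables to.
# "rack" = rack-mount RA4100; "tower" = tower RA4100 viewed from the back.
CONTROLLER_TO_NODE: Dict[Tuple[str, str], int] = {
    ("rack", "upper"): 1,   # first RAID controller
    ("rack", "lower"): 2,   # redundant (second) controller
    ("tower", "right"): 1,  # first RAID controller
    ("tower", "left"): 2,   # redundant (second) controller
}


def node_for_controller(enclosure: str, slot: str) -> int:
    """Return the node that the controller in the given slot connects to."""
    key = (enclosure.lower(), slot.lower())
    if key not in CONTROLLER_TO_NODE:
        raise ValueError(f"unknown slot {slot!r} for a {enclosure!r} RA4100")
    return CONTROLLER_TO_NODE[key]
```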


Fibre Channel Cable Precautions

Keep the following precautions in mind when installing, handling, moving,

connecting, and disconnecting Fibre Channel cables:

■ Affix cable labels carefully, without over-tightening, to avoid breaking

the glass fibers within the cables.

■ Do not bend the Fibre Channel cable into an arc tighter than the

minimum allowable bend radius specified by the cable manufacturer.

The minimum bend radius is usually 10 to 20 times the outer diameter

of the cable. Protect the cables from pinching, abrasion, excess tension,

and any other mechanical stress.

■ When inserting or removing connectors, handle the Fibre Channel cable

only by the connector body, not by the strain relief or by the cable body.

Even moderate amounts of tension or pressure on the Fibre Channel

cable body can destroy the connector.

■ Type SC connectors include a white stripe along each side. Verify that the connectors mate with a positive click and that the white stripe is no longer visible. If the white stripe is visible, the connectors are not properly mated.

■ Do not subject connectors to abrasion, chemical contaminants, or rough

handling. Fibre Channel mating surfaces are cut, polished, and aligned

to extremely close tolerances, and they are much more sensitive to

mishandling than conventional electrical signal connectors.

■ Do not allow dust to enter the connectors.

■ Leave any protective dust covers on the GBIC-SW whenever it is not

connected to a Fibre Channel cable.

■ Failures caused by dust contamination or improper cable connector

handling can exhibit the same symptoms as a controller or GBIC-SW

failure, resulting in an unnecessary component replacement that does

not resolve the root cause of the problem.
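The bend-radius rule of thumb is easy to apply numerically. The following Python sketch computes the 10x to 20x range for a given outer diameter and checks a planned bend against the manufacturer's minimum when you have it, falling back to the conservative 20x figure otherwise. The function names are hypothetical, and the manufacturer's specification always takes precedence.

```python
from typing import Optional, Tuple


def bend_radius_range_mm(outer_diameter_mm: float) -> Tuple[float, float]:
    """Typical minimum bend radius range: 10 to 20 times the outer diameter."""
    if outer_diameter_mm <= 0:
        raise ValueError("outer diameter must be positive")
    return (10 * outer_diameter_mm, 20 * outer_diameter_mm)


def bend_is_safe(bend_radius_mm: float, outer_diameter_mm: float,
                 manufacturer_min_mm: Optional[float] = None) -> bool:
    """Check a planned bend against the manufacturer's minimum radius.

    Without a manufacturer figure, fall back to the conservative (20x) end
    of the rule of thumb.
    """
    if manufacturer_min_mm is not None:
        limit = manufacturer_min_mm
    else:
        limit = bend_radius_range_mm(outer_diameter_mm)[1]
    return bend_radius_mm >= limit
```

For example, for an assumed 3-mm cable, bend_radius_range_mm(3.0) returns (30.0, 60.0), so a 50-mm bend passes only if the manufacturer's stated minimum is 50 mm or less.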

Cabling the Keyboard, Monitor, and Mouse

To cable the keyboard, monitor, and mouse, refer to the documentation that

comes with these devices.

UPS Power Management Cabling

Compaq ProLiant Clusters for SCO UnixWare 7 support serial data

connections from UPS units to ProLiant server nodes in the cluster. This

feature provides the cluster with soft shutdown capability when an AC power

outage lasts until the UPS batteries approach the end of their holdup period.

To connect the UPS power management cable to the ProLiant server nodes:

1. Locate the cable. The UPS power management cable is a 3.66-m

(12.00-ft) serial cable included with most Compaq UPSs.

2. Connect one end of the cable to the COM port on the UPS chassis.

Connect the other end of the cable to any unused serial port on any

ProLiant server within the cluster. Because the power management

software is cluster-aware, you can connect the UPS power management

cable to any node in the cluster.

If a second UPS is used, repeat step 2, connecting its serial cable to the other server. Do not connect more than one UPS cable to the same server.
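The UPS cabling rules (any node is acceptable, but no server receives more than one UPS cable) can be checked against a planned assignment. The following Python sketch uses hypothetical UPS names and node numbers and returns a list of problems; an empty list means the plan follows this section.

```python
from collections import Counter
from typing import Dict, List


def check_ups_cabling(ups_to_node: Dict[str, int], nodes: List[int]) -> List[str]:
    """Return problems with a UPS-to-node cabling plan; empty means it is valid."""
    problems: List[str] = []
    for ups, node in ups_to_node.items():
        if node not in nodes:
            problems.append(f"{ups} is cabled to node {node}, which is not in the cluster")
    # Any node may take a UPS cable, but no server may take more than one.
    for node, count in Counter(ups_to_node.values()).items():
        if count > 1:
            problems.append(f"node {node} has {count} UPS cables; connect at most one per server")
    return problems
```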

Chapter 3

Installing Cluster Software

Installing the SCO UnixWare 7 NonStop Clusters software on a ProLiant ML370 cluster with the Compaq ProLiant Clusters for SCO UnixWare 7 ML370 Quick Install CDs involves several tasks. Use the following information to install your cluster:

■ Understanding Preinstallation Tasks and Considerations

■ Configuring the Servers with SmartStart

■ Updating Controller Firmware

■ Verifying ServerNet I Connections

■ Installing the Cluster Using Quick Install

■ Verifying the Cluster Assembly

■ Additional Cluster Setup Tasks

■ Registering the ProLiant Cluster for SCO UnixWare 7

■ Viewing UnixWare and NonStop Clusters Documentation

For specific information about individual software components, see the

documentation that comes with the component.


Understanding Preinstallation Tasks and Considerations

Before you begin the software installation, assemble the hardware for the

cluster, fill out the Quick Install planning worksheets in Appendix B of this

guide, and have four formatted diskettes on hand. Read through this chapter to

become familiar with the installation procedures as you fill out the worksheets.

Default Quick Install Settings

Table 3-1 lists the default settings used during the Quick Install installation

procedure. These parameters can be modified after the installation is complete

by running the International Settings Manager in the System folder of the

SCOadmin system administration tool.

Table 3-1

Quick Install Default Settings

Parameter Default

Locale C Standard

Keyboard United States C

Code set C

Time zone Configurable to any U.S. time zone

Internal Disk Drive Considerations

Before you begin any procedure in this chapter, remove all disk drives from the internal storage area except the one 9.1-GB or larger disk drive. The Quick Install procedures require that only one disk drive reside in each server during the installation.

Obtaining UnixWare 7 Licenses

Before installing the SCO UnixWare 7 NonStop Clusters software, obtain a

UnixWare 7 license that includes either the Mirroring Option or an OnLine

Data Manager (ODM) license. To locate a convenient SCO reseller or

distributor to purchase licenses, see the SCO website at

http://www.sco.com

Configuring the Servers with SmartStart

Before cluster installation on each node, you must erase any existing

configuration and configure each server using the SmartStart CD that comes

with the ProLiant ML370 server. You must also set two hardware

configuration items on each server. Start with the server that you plan to use as

node 1.

Erasing the Configuration

If you are using server or storage hardware that has been previously configured, you must erase that configuration on each node before using the Quick Install CDs for the ProLiant ML370 server. Perform the following steps separately on each node to erase the configuration:

CAUTION: This procedure erases any information currently stored on the node.

To prevent information loss, back up important files before attempting

installation.

NOTE: The SmartStart procedure may prompt you for the Server Profile Diskette that

comes with the server. If prompted, insert the diskette and follow the onscreen

instructions.


1. If you are performing this procedure on node 1, power up the Compaq

StorageWorks RAID Array 4100 (RA4100) and wait about 90 seconds

for the Compaq StorageWorks RAID Array 4000 (RA4000) controllers

to complete their Power-On Self-Tests (POSTs).

If you are performing this procedure on node 2, power down the

RA4100.

2. Power up the node, insert the SmartStart CD into the CD-ROM drive for

the node, and then wait for SmartStart to boot.

3. Select the Run System Erase Utility icon, and then click OK.

4. Click the Yes button when prompted to continue. Wait for the

configuration to be erased.


5. If you used the Server Profile Diskette, remove it. Power down the

RA4100 if you are erasing the configuration on node 1. When prompted,

power down, and then power up only the server.

IMPORTANT: Do not turn the RA4100 back on at this time.

Continue with the following procedure for server configuration. Begin with

step 2 because you have erased a previous configuration.

Configuring the Servers

To configure the nodes using SmartStart:

IMPORTANT: The RA4100 must not be powered up during this procedure. Do not

configure any RA4100 logical disks prior to software installation; an RA4100 logical disk

is automatically configured by the Quick Install procedure.

1. If you did not erase a configuration, power up the server, and then insert

the SmartStart CD into the CD-ROM drive for node 1. If you erased a

configuration according to the preceding steps, begin with step 2.

2. Select the language at the prompt. The Regional Settings screen displays.

3. Select the country and keyboard type from the Regional Settings screen.

4. Set the date, time, and daylight saving time adjustment, if applicable.

5. Click Next, and then click Continue. The License Agreement displays.

6. Accept the License Agreement to continue. An Installation Path window

displays.

7. Select Manual Configuration, and then click the Begin button.

8. Click the plus sign (+) preceding the SCO entry in the menu. A list of

operating systems displays.

9. Select SCO UnixWare 7.1.1, and then click Next. A warning screen displays.

10. Click Continue. Wait while the system configuration loads.

11. Use the arrow keys to select Review or modify hardware settings, and press Enter. The Steps in configuring your computer window displays.

12. Use the arrow keys to select Step 3: View or edit details, and then press

Enter.

13. Page down to the Embedded - Compaq Automated Server Recovery entry.


14. Verify that the following items are disabled:

  • Software Error Recovery
  • Standby Recovery Server
  • UPS Shutdown

Use the arrow keys to select the options and the Enter key to modify

them as necessary.

15. Page down to Embedded-Compaq Integrated Dual Channel Wide Ultra2 SCSI Controller (Port2).

  • Select Controller Order, and then press Enter.
  • Select First, and then press F10. The Configuration Changes screen displays.
  • Press Enter to accept the changes.

16. Press F10 to exit the Step 3: View or edit details window. The Steps in configuring your computer window displays.

17. Use the arrow keys to select Step 5: Save and exit, and then press Enter. The Step 5: Save and exit window displays.

18. Select Save the configuration and restart the computer, and then press Enter. A

Reboot window displays.

19. Press Enter. The Array Configuration Utility loads, and an error message indicates that no array controllers were detected. This error is expected.

20. Click OK to exit the Array Configuration Utility. The system reboots.

21. Wait while the system partition installation completes. The server reboots and sets up hardware. After multiple reboots, the Compaq SmartStart-Manual Path window displays.

22. Create a firmware diskette at this time if you are completing this step on

node 1. You will use this diskette later to upgrade the RA4000 controller

firmware. If performing this procedure on node 2, skip this step and

continue with the section “Updating Controller Firmware.”

To create a firmware diskette, obtain one DOS-formatted diskette, and follow these steps:

a. Click the Create Support Software button.

b. Click the plus sign (+) next to Compaq.


c. Page down to Options ROMPaq, select it, and then click Next. Although

the onscreen instructions indicate that you need 10 diskettes, this

procedure creates only a single diskette. A screen for creating the

first diskette displays.

d. Click Skip. The screen for the Firmware Upgrade diskette for the RA4000 Controller displays.

e. Insert the formatted diskette into the disk drive, and then click OK.

f. Wait for the software to be written to the diskette.

g. Remove the diskette from the drive after the software has been

written to the diskette.

h. Click Skip on each of the remaining screens, and then click Finish at the final screen to return to the Compaq SmartStart-Manual Path window.

23. Click Next. A Compaq SmartStart-Manual Path warning window displays.

24. Remove the SmartStart CD and power down the node.

25. Repeat the procedures for erasing a configuration (if necessary) and

configuring servers on node 2. Do not repeat step 22 on node 2.

If you have erased any existing configuration on both nodes of your cluster and have configured both nodes with SmartStart, continue with the following section, “Updating Controller Firmware,” to upgrade the controller firmware.

Updating Controller Firmware

Controller firmware must be updated on both nodes. Use the following

procedure to upgrade the controller firmware:

1. Turn on the RA4100 and wait about 90 seconds for the RA4000

controllers to complete their POSTs.

2. Insert the firmware upgrade diskette into the drive. (You made this

diskette in the preceding procedure.)

3. Boot the node from the diskette, and then follow the prompts on the

screen until the firmware is updated.

4. Remove the firmware diskette and upgrade the controller firmware on

node 2 using step 2 and step 3 of this procedure.

Continue with one of the following procedures when the firmware is

updated:

  • Continue with the “Verifying ServerNet I Connections” section for cluster interconnects using ServerNet I.
  • Continue with the “Installing the Cluster Using Quick Install” section for cluster interconnects using Ethernet.
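The RA4100 must be in a different power state for each procedure in this chapter, which is an easy detail to miss when you alternate between nodes. The following Python sketch summarizes the requirements from the erase, configuration, and firmware procedures; the procedure names are hypothetical labels, and you still wait about 90 seconds for the controllers to complete POST after powering up the RA4100.

```python
def ra4100_should_be_on(procedure: str, node: int) -> bool:
    """Required RA4100 power state for each procedure in this chapter.

    procedure is one of the hypothetical labels "erase", "configure", or "firmware".
    """
    if node not in (1, 2):
        raise ValueError("this guide covers a two-node cluster (node 1 or node 2)")
    if procedure == "erase":
        # Erasing the configuration: RA4100 on for node 1, off for node 2.
        return node == 1
    if procedure == "configure":
        # SmartStart server configuration: the RA4100 must not be powered up.
        return False
    if procedure == "firmware":
        # Controller firmware update: turn the RA4100 on and let POST finish.
        return True
    raise ValueError(f"unknown procedure: {procedure!r}")
```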


Verifying ServerNet I Connections

NOTE: This section applies only to clusters connected with ServerNet I. If the cluster is

connected with Ethernet, proceed to the next section, “Installing the Cluster Using Quick

Install.”

To verify the ServerNet I connections, create a ServerNet utility diskette for

each node. To create the diskettes, download the ServerNet Verification

Utilities Softpaq from the following site:

http://www.compaq.com/support

From the welcome window of that site, take the following path:

1. Select software & drivers. A download center window displays.

2. Select servers.


3. From the options presented to you, select the following:

  • Select your particular server from the list presented to you.
  • Select the appropriate model or All Models.
  • Select SCO UnixWare 7 from the list of operating systems.

4. Select the Softpaq for ServerNet Verification Utilities.

On the download page, follow the directions to download the Softpaq and create the diskettes. Create two ServerNet Verification Utilities diskettes, one for each node.

Verifying the Local Adapter

To verify that the local ServerNet I adapter is properly installed and functional, follow these steps on each node that has a ServerNet I adapter:

1. Be sure that you have a ServerNet Verification Utilities diskette for each node.

2. Insert a diskette into the node that you want to test, and then reboot the node. Wait for the DOS prompt to display.

3. Type spaf at the DOS prompt, and then press Enter. A title screen

displays.

4. Press any key to start the test of the ServerNet I links. The following

messages display:

LINK X IS ALIVE

LINK Y IS ALIVE

Verify that both links are alive. If either link is reported as NOT ALIVE, the adapter has a problem. Press Esc to stop the ServerNet I link test, power down the server, disconnect all power to the server, reseat the adapter board, and then repeat the test.

5. Press Esc to exit the ServerNet I utility, or press any other key to start the loopback test. The loopback test status displays. If a loopback error occurs, the error displays and the test fails.

6. Press any key to stop the loopback test.

Verifying Node-to-Node Communication

Node-to-node communication tests include a link test for the cables and a

loopback test for the adapters. Use the following steps to verify node-to-node

communication on a directly connected ServerNet I two-node cluster:

1. Insert a ServerNet Verification Utilities diskette into node 1 and into node 2, and then reboot both nodes. Wait for the DOS prompt to display on each node.

2. Type spaf 1 2 at the DOS prompt on node 1, and then press Enter. A title

screen displays.

3. Type spaf 2 1 at the DOS prompt on node 2, and then press Enter. A title

screen displays.

4. Press Enter on both nodes to start the link test for the cables. The

following messages display:

LINK X IS ALIVE

LINK Y IS ALIVE

Node 1 may report errors until the test starts on node 2. Be sure that the test has started on both nodes before checking for errors. Persistent errors indicate a problem with the cabling between the nodes.

5. If errors persist, exit the test by pressing Esc. Resolve the problem before continuing.

6. Press Enter on node 1 to begin the loopback test. Test messages similar to the following display:

spaf: path=0 Loopback=0 Option=1 11/29 14:08:20 600 pages

spaf: path=1 Loopback=0 Option=1 11/29 14:08:21 600 pages

If a loopback error occurs, the test stops and reports the error. The error

indicates a problem with the adapter on node 1. Exit the loopback test

by pressing Esc, and resolve the problem before continuing.

7. Press Enter on node 2 to begin the loopback test. Test messages similar to the following display:

spaf: path=0 Loopback=0 Option=1 11/29 14:08:25 19888 pages

spaf: path=1 Loopback=0 Option=1 11/29 14:08:26 19999 pages

If a loopback error occurs, the test stops and reports the error. The error

indicates a problem with the adapter on node 2. Exit the loopback test

by pressing Esc, and resolve the problem before continuing.

8. Press Enter on each node to exit the loopback test.

Installing the Cluster Using Quick Install

Before beginning the software installation, be sure to have the Quick Install

planning worksheets on hand and the following items available:

■ Cluster name and Cluster Virtual IP (CVIP) address

■ Node 1 hostname and IP address for the public network

■ Node 2 hostname and IP address for the public network

■ Netmask for the public network

■ For clusters using Ethernet interconnect, node 1 hostname and IP

address for the cluster interconnect

Default values of node1-ic and 10.1.0.1 are provided during the

installation.

■ For clusters using Ethernet interconnect, node 2 hostname and IP

address for the cluster interconnect

Default values of node2-ic and 10.1.0.2 are provided during installation.

IMPORTANT: The public network IP address for node 1 and node 2 and the CVIP address

must be on the same Ethernet subnet. The default router must be on the public network

subnet. The cluster interconnect IP addresses for node 1 and node 2 must be on a

different subnet from the public network.

■ For clusters using Ethernet interconnect, netmask for the cluster

interconnect interfaces

A value of 255.255.255.0 is provided during installation.

■ UnixWare and NonStop Clusters licenses, plus an add-on Mirroring Option license or ODM license if the UnixWare licenses do not include mirroring

■ Passwords and system owner information

■ NonStop Clusters simple network management protocol (SNMP) agent

configuration information

■ One formatted diskette

■ The correct set of Quick Install CDs for the ProLiant ML370 server: the ServerNet I set or the Ethernet set, according to your server and cluster configuration
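The addressing rules in the IMPORTANT note above can be checked against your planning worksheet before you start the installation. The following is a minimal sketch in Python, assuming only the standard `ipaddress` module; the function `check_cluster_addresses` is a hypothetical planning helper, not part of the Quick Install software, and the sample addresses are illustrative. It checks that the node 2 public address, the CVIP address, and the default router fall on node 1's public subnet, that both interconnect addresses share one subnet, and that the interconnect subnet does not overlap the public subnet. The interconnect defaults mirror the Quick Install defaults (10.1.0.1, 10.1.0.2, and 255.255.255.0); the interconnect checks are relevant only to clusters using Ethernet interconnect.

```python
import ipaddress


def check_cluster_addresses(node1_public, node2_public, cvip, public_netmask,
                            default_router, node1_ic="10.1.0.1",
                            node2_ic="10.1.0.2", ic_netmask="255.255.255.0"):
    """Hypothetical helper (not part of Quick Install).

    Returns a list of problems found; an empty list means the plan passes.
    """
    problems = []
    # Derive the subnets from node 1's addresses and the planned netmasks.
    public_net = ipaddress.ip_network(f"{node1_public}/{public_netmask}", strict=False)
    ic_net = ipaddress.ip_network(f"{node1_ic}/{ic_netmask}", strict=False)

    # Node 2, the CVIP, and the default router must share node 1's public subnet.
    for label, addr in (("node 2 public address", node2_public),
                        ("CVIP address", cvip),
                        ("default router", default_router)):
        if ipaddress.ip_address(addr) not in public_net:
            problems.append(f"{label} {addr} is not on public subnet {public_net}")

    # Both interconnect addresses must be on the same subnet...
    if ipaddress.ip_address(node2_ic) not in ic_net:
        problems.append(f"node 2 interconnect address {node2_ic} is not on {ic_net}")
    # ...and that subnet must be different from the public subnet.
    if ic_net.overlaps(public_net):
        problems.append(f"interconnect subnet {ic_net} overlaps public subnet {public_net}")

    return problems


if __name__ == "__main__":
    # Illustrative public addresses; the interconnect uses the Quick Install defaults.
    print(check_cluster_addresses("192.168.1.11", "192.168.1.12",
                                  "192.168.1.10", "255.255.255.0",
                                  "192.168.1.1"))  # prints []
```

An empty list means the plan satisfies the rules in the note; each string in a non-empty list names the address that breaks a rule, so it can be corrected on the worksheet before any screen asks for it.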

Installing Node 1

Before beginning the installation, select the set of Quick Install CDs for your

cluster configuration. Choose the CDs for either the ServerNet I cluster

interconnect or Ethernet cluster interconnect.

NOTE: To save time, you can install both nodes together: insert the CDs into the servers, power up the servers, and follow the procedure for each node at the same time. Be sure that node 1 has rebooted before you reboot node 2.

To install the software on node 1, follow these steps:

1. Turn on the RA4100 and wait about 90 seconds for the RA4000

controllers to complete their POSTs.

2. Power up node 1, and then insert the Quick Install Image CD for node 1 into the CD-ROM drive. Wait while node 1 boots from the CD. A warning message indicates that data will be lost.

3. Press Enter to continue, or power down the system to abort the

installation.

The software begins to load and a progress bar indicates the installation

progress. When all software has been loaded, several screens request

necessary information.

4. Provide the required information on each of the following screens. Each screen displays its fields and a brief description of each field as the field is selected for entry. For detailed help, press F1.

a. Read responses from previously saved diskette?

This screen provides the opportunity to restore responses from a

previous installation.

Use the arrow keys to select No if this is the first installation or if you do not want to read saved responses. Responses can be saved later.

Use the arrow keys to select Yes to read the answers from a diskette and present them as defaults in the remaining screens.

Use the arrow keys to select Force to read the answers from a diskette and apply them without modification. The system date is assumed to be correct and is not modified.

b. Date, Time, and Time Zone

Modify the current date, time, and time zone as necessary. Only U.S.

time zones are available during Quick Install. Time zone information

can be changed after Quick Install by using the UnixWare

SCOadmin system administration tools. For more information, see

the “Understanding Preinstallation Tasks and Considerations”

section in this chapter.

c. Cluster name

Enter the name of the cluster.

d. System owner identity and passwords

Enter the full name of the system owner, the system owner login ID,

and the system owner and root passwords. Passwords are not

displayed and must be repeated to verify that they are entered

correctly.

e. IP interconnect

NOTE: The IP interconnect screen is not displayed during the installation of ServerNet I

cluster interconnects.

Accept the default values, or enter the node 1 hostname and IP

address for the cluster interconnect, the node 2 hostname and IP

address for the cluster interconnect, and the netmask. The Ethernet

cluster interconnect addresses must be on the same network.

f. Network configuration

Enter the external network configuration: domain name, CVIP

address, netmask, node 1 hostname and IP address for the public

network, node 2 hostname and IP address for the public network,

and default route. The public network addresses must be on the same

network.

g. NSC SNMP agent configuration

Enter the name of the person responsible for the cluster and the location of the cluster. Default values are supplied for the other fields. As you tab to each field, a description of the field displays in the lower region of the screen. Press F1 for help in filling out the fields.

h. Save responses

All responses except the date can be saved to a formatted diskette for use in future installations. Unencrypted passwords are not saved.

A final screen indicates that the installation is complete.

5. Remove the CD, and then press Enter to reboot. The SCOadmin license