
Installing and Administering HyperFabric

HP-UX 11i v3

Edition 13

Manufacturing Part Number: B6257-90055

February 2007

Printed in U.S.A.

© Copyright 2007 Hewlett-Packard Company.


Legal Notices

Copyright 2007 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license required from HP for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Oracle is a registered US trademark of Oracle Corporation, Redwood City, California.

UNIX is a registered trademark of The Open Group.


Contents

1. Overview
Overview
Notice of non support for Oracle 10g RAC
HyperFabric Products
HyperFabric Adapters
Switches and Switch Modules
Other Product Elements
HyperFabric Concepts

2. Planning the Fabric
Preliminary Considerations
HyperFabric Functionality for TCP/UDP/IP and HMP Applications
TCP / UDP / IP
Application Availability
Features
Configuration Parameters
TCP/UDP/IP Supported Configurations
Point-to-Point Configurations
Switched
High Availability Switched
Hyper Messaging Protocol (HMP)
Application Availability
Features
Configuration Parameters
HMP Supported Configurations
Point to Point
Enterprise (Database)
Technical Computing

3. Installing HyperFabric
Checking HyperFabric Installation Prerequisites
Installing HyperFabric Adapters
Online Addition and Replacement
Planning and Preparation
Critical Resources
Card Compatibility
Installing the Software
File Structure
Loading the Software
Installing HyperFabric Switches
Before Installation
Installing the HF2 Switch
With the Rail Kit
Without the Rail Kit

4. Configuring HyperFabric
Configuration Overview
Information You Need
Configuration Information Example
Performing the Configuration
Using the clic_init Command
Examples of clic_init
Using SMH
Deconfiguring a HyperFabric Adapter with SMH
Configuring the HyperFabric EMS Monitor
Configuring HyperFabric with ServiceGuard
How HyperFabric Handles Adapter Failures
Configuring HyperFabric with the ServiceGuard Resource Monitor
Configuring ServiceGuard with HyperFabric Using the ASCII File
Configuring ServiceGuard with HyperFabric Using SMH
Configuring ServiceGuard for HyperFabric Relocatable IP Addresses
Configuring HMP for Transparent Local Failover Support
How Transparent Local Failover Works
Configuring HMP for Transparent Local Failover Support - Using SMH
Deconfiguring HMP for Local Failover support - Using SMH
Configuring HMP for Transparent Local Failover Support - Using the clic_init command

5. Managing HyperFabric
Starting HyperFabric
Using the clic_start Command
Using SMH
Verifying Communications within the Fabric
The clic_probe Command
Examples of clic_probe
Displaying Status and Statistics
The clic_stat Command
Examples of clic_stat
Viewing man Pages
Stopping HyperFabric
Using the clic_shutdown Command
Using SMH

6. Troubleshooting HyperFabric
Running Diagnostics
The clic_diag Command
Example of clic_diag
Using Support Tools Manager
Useful Files
LED Colors and Their Meanings
Adapter LEDs
HF2 Switch LEDs
Determining Whether an Adapter or a Cable is Faulty
Determining Whether a Switch is Faulty
Replacing a HyperFabric Adapter
Replacing a HyperFabric Switch
Safety Symbols
Regulatory Statements
Adapters and Switches
FCC Statement (USA only)
DOC Statement (Canada only)
Europe RFI Statement
Australia and New Zealand EMI Statement
Radio Frequency Interference (Japan Only)
Declarations of Conformity
Physical Attributes
Environmental

Figures

Figure 2-1. TCP/UDP/IP Point-To-Point Configurations
Figure 2-2. TCP/UDP/IP Basic Switched Configuration
Figure 2-3. TCP/UDP/IP High Availability Switched Configuration
Figure 2-4. HMP Point-To-Point Configurations
Figure 2-5. HMP Enterprise (Database) Configuration, Single Connection Between Nodes
Figure 2-6. Local Failover Supported Enterprise (Database) Configuration, Multiple Connections Between Nodes
Figure 3-1. HyperFabric File Structure
Figure 3-2. Front of HF2 Switch (A6388A Switch Module Installed)
Figure 3-3. Front of HF2 Switch (A6389A Switch Module Installed)
Figure 3-4. Parts of the Rail Kit
Figure 3-5. The Ends of the Rail Kit
Figure 4-1. Map for Configuration Information Example
Figure 4-2. An ServiceGuard Configuration (with Two HyperFabric Switches)
Figure 4-3. Node with Two Active HyperFabric Adapters
Figure 4-4. Node with One Failed HyperFabric Adapter
Figure 4-5. When All HyperFabric Adapters Fail
Figure 4-6. A Configuration supporting Local Failover
Figure 4-7. Adapter, Link or Switch Port Failover
Figure 4-8. Switch Failover
Figure 4-9. Cable Failover Between Two Switches
Figure 4-10. Configuring the Transparent Local Failover feature

Tables

Table 2-1. HF2 Speed and Latency w/ TCP/UDP/IP Applications
Table 2-2. HF2 Speed and Latency w/ HMP Applications
Table 3-1. Important OLAR Terms
Table 6-1. LED Names (by Adapter)
Table 6-2. HyperFabric Adapter LED Colors and Meanings
Table 6-3. HF2 Switch LED Colors and Meanings

Printing History

The manual printing date and part number indicate its current edition. The printing date will change when a new edition is printed. Minor changes may be made at reprint without changing the printing date. The manual part number will change when extensive changes are made.

Manual updates may be issued between editions to correct errors or document product changes. To ensure that you receive the updated or new editions, you should subscribe to the appropriate product support service. See your HP sales representative for details.

First Edition: March 1998

Second Edition: June 1998

Third Edition: August 1998

Fourth Edition: October 1998

Fifth Edition: December 1998

Sixth Edition: February 1999

Seventh Edition: April 1999

Eighth Edition: March 2000

Ninth Edition: June 2000

Tenth Edition: December 2000

Eleventh Edition: June 2001

Twelfth Edition: September 2002

Thirteenth Edition: March 2006

Fourteenth Edition: November 2006

Fifteenth Edition: February 2007


1 Overview

This chapter contains the following sections that give general information about HyperFabric:

• “Overview” on page 15

• “HyperFabric Products” on page 16

• “HyperFabric Concepts” on page 18


Overview

HyperFabric is an HP high-speed, packet-based interconnect for node-to-node communications. HyperFabric provides higher speed, lower network latency, and less CPU usage than other industry-standard protocols (for example, Fibre Channel and Gigabit Ethernet). Instead of using a traditional bus-based technology, HyperFabric is built around a switched fabric architecture, providing the bandwidth necessary for high-speed data transfer. This clustering solution delivers the performance, scalability, and high availability required by:

• Parallel Database Clusters:

Oracle 9i Real Application Clusters (RAC)

Oracle 8i Parallel Server (OPS)

• Parallel Computing Clusters

• Client/Server Architecture Interconnects (for example, SAP)

• Multi-Server Batch Applications (for example, SAS Systems)

• Enterprise Resource Planning (ERP)

• Technical Computing Clusters

• OpenView Data Protector (formerly known as Omniback)

• Network Backup

• Data Center Network Consolidation

• E-services

Notice of non support for Oracle 10g RAC

The HyperFabric product suite was designed to optimize the performance of Oracle 9i RAC databases running on HP-UX clusters. With the industry moving to standards-based networking technologies for database clustering solutions, HP and Oracle have worked together to optimize the features and performance of the Oracle 10g RAC database with standards-based interconnect technologies, including Gigabit Ethernet, 10 Gigabit Ethernet, and InfiniBand.

To align with the market trend toward standards-based interconnects, the Oracle 10g RAC database is not supported on configurations that include the HyperFabric product suite, and it will not be supported in the future. As a result, customers must switch to Gigabit Ethernet, 10 Gigabit Ethernet, or InfiniBand technology if they plan to use Oracle 10g RAC.

Configurations comprising HyperFabric and Oracle 9i continue to be supported.


HyperFabric Products

HyperFabric hardware consists of host-based interface adapter cards, interconnect cables, and optional switches. HyperFabric software resides in Application Specific Integrated Circuits (ASICs) and firmware on the adapter cards, and includes user space components and HP-UX drivers.

Currently, fibre-based HyperFabric hardware is available. In addition, a hybrid switch that has 8 fibre ports is available to support HF2 clusters.

The various HyperFabric products are described below. See the HP HyperFabric Release Note for information about the HP 9000 systems on which these products are supported.

NOTE This document uses the term HyperFabric (HF) in general to refer to the hardware and software that form the HyperFabric cluster interconnect product.

The term HyperFabric2 (HF2) refers to the fibre based hardware components:

• The A6386A adapter.

• The A6384A switch chassis.

• The A6388A and A6389A switch modules. (Although the A6389A switch module has 4 copper ports, it is still considered an HF2 component because it can only be used with the A6384A HF2 switch chassis.)

• The C7524A, C7525A, C7526A, and C7527A cables.

HyperFabric Adapters

The HyperFabric adapters include the following:

• A6386A HF2 PCI (4X) adapter with a fibre interface.

Switches and Switch Modules

The HyperFabric2 switches are as follows:

• A6384A HF2 fibre switch chassis with one integrated Ethernet management LAN adapter card, one integrated 8-port fibre card, and one expansion slot. For the chassis to be a functional switch, one of these two switch modules must be installed in the expansion slot:

— The A6388A HF2 8-port fibre switch module. This gives the switch 16 fibre ports (8 from the integrated fibre card and 8 from the A6388A).

— The A6389A HF2 4-port copper switch module. This gives the switch 12 ports: a mixture of 8 fibre ports (from the integrated fibre card) and 4 copper ports (from the A6389A module).
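
The port totals for the two switch modules are simple sums, which can be useful when sizing a fabric in a planning script. The following is a minimal sketch only: the function name hf2_switch_ports is hypothetical and is not part of the HyperFabric software; the port counts are the ones stated in the list above.

```shell
# hf2_switch_ports (hypothetical helper): print the total port count of an
# A6384A chassis for a given expansion module, using the figures in the text.
hf2_switch_ports() {
    integrated=8                 # fibre ports on the integrated card
    case "$1" in
        A6388A) module=8 ;;      # 8-port fibre switch module
        A6389A) module=4 ;;      # 4-port copper switch module
        *) echo "unknown switch module: $1" >&2; return 1 ;;
    esac
    echo $((integrated + module))
}

hf2_switch_ports A6388A   # prints 16
hf2_switch_ports A6389A   # prints 12
```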

The A6384A HF2 switch chassis with either module installed is supported beginning

with the following HyperFabric software versions:

• HP-UX 11i v3: HyperFabric software version B.11.31.01


NOTE In this manual, the terms HyperFabric2 switch and HF2 switch refer to the functional switch (the A6384A switch chassis with one of the switch modules installed).

IMPORTANT HF2 adapters and switches are not supported by software versions earlier than those listed in “HyperFabric Adapters” on page 16 and “Switches and Switch Modules” on page 16.

To determine the version of HyperFabric you have, issue this command:

swlist | grep -i hyperfabric
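
The version check can also be wrapped in a small script. The following is a minimal sketch, not an HP-supplied tool: the function name hf_listed is hypothetical, and the sketch assumes only that swlist prints one product per line, which the grep in the command above already relies on.

```shell
# hf_listed (hypothetical helper): print the lines of a software listing
# that mention HyperFabric. It reads the listing on standard input so the
# filter can be exercised on any system, not only on an HP-UX node.
hf_listed() {
    grep -i hyperfabric
}

# Run the real check only where swlist exists (that is, on HP-UX).
if command -v swlist >/dev/null 2>&1; then
    swlist | hf_listed || echo "HyperFabric is not listed by swlist" >&2
fi
```

Because grep exits with a nonzero status when nothing matches, the function can be used directly in a conditional to detect a node where the product is not installed.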

Other Product Elements

The other elements of the HyperFabric product family are the following:

• HF2 fibre cables:

— C7524A (2m length)

— C7525A (16m length)

— C7526A (50m length)

— C7527A (200m length)

• The HyperFabric software: The software resides in ASICs and firmware on the adapter cards and includes user space components and HP-UX drivers.

HyperFabric supports the IP network protocol stack, specifically TCP/IP and UDP/IP.

HyperFabric software includes Hyper Messaging Protocol (HMP). HMP provides higher bandwidth, lower CPU overhead, and lower latency (the time it takes a message to get from one point to another). However, these HMP benefits are available only when applications that were developed on top of HMP are running.


HyperFabric Concepts

Some basic HyperFabric concepts and terms are briefly described below.

The fabric is the physical configuration that consists of all of the HyperFabric adapters, the HyperFabric switches (if any are used), and the HyperFabric cables connecting them. The network software controls data transfer over the fabric.

A HyperFabric configuration contains two or more HP 9000 systems and optional HyperFabric switches. Each HP 9000 acts as a node in the configuration. Each node has a minimum of one and a maximum of eight HyperFabric adapters installed in it. (See Chapter 2, “Planning the Fabric,” on page 19 for information about the maximum number of adapters that can be installed in each system.) HyperFabric supports a maximum of four HyperFabric switches. HyperFabric switches can be meshed, and configurations with up to four levels of meshed switches are supported.

A HyperFabric cluster can be planned as a High Availability (HA) configuration when it is necessary to ensure that each node can always participate in the fabric. This is done by using MC/ServiceGuard, MC/LockManager, and the Event Monitoring Service (EMS). Configurations of up to eight nodes are supported under MC/ServiceGuard.

Relocatable IP addresses can be used as part of an HA configuration. Relocatable IP addresses permit a client application to reroute through an adapter on a remote node, allowing that application to continue processing without interruption. The rerouting is transparent. This function is associated with MC/ServiceGuard (see “Configuring ServiceGuard for HyperFabric Relocatable IP Addresses” on page 81). When the monitor for HyperFabric detects a failure and the backup adapter takes over, the relocatable IP address is transparently migrated to the backup adapter. Throughout this migration process, the client application continues to execute normally.

When you start HyperFabric (with the clic_start command, through SMH, or by booting the HP 9000 system), you start the management process. This process must be active for HyperFabric to run. If the HyperFabric management process on a node stops running for some reason (for example, if it is killed), all HyperFabric-related communications on that node stop immediately. This makes the node unreachable by other components in the fabric.

When you start HyperFabric, the fabric is, in effect, verified automatically, because each node performs a self-diagnosis and verification over each adapter installed in the node. Also, the management process performs automatic routing and configuring for each switch (if switches are part of the fabric). If needed, you can run the clic_stat command to get a textual map of the fabric, which can serve as another quick verification.

Notice that the commands you use to administer HyperFabric all have the prefix clic_, and some of the other components have CLIC as part of their name (for example, the CLIC firmware and the CLIC software). CLIC stands for CLuster InterConnect, and it is used to differentiate HyperFabric commands and components from other commands and components. For example, the HyperFabric command clic_init is different from the HP-UX init command.


2 Planning the Fabric

This chapter contains the following sections offering general guidelines and protocol-specific considerations for planning HyperFabric clusters that will run TCP/UDP/IP or HMP applications.

• “Preliminary Considerations” on page 21

• “HyperFabric Functionality for TCP/UDP/IP and HMP Applications” on page 22

• “TCP / UDP / IP” on page 23

• “Hyper Messaging Protocol (HMP)” on page 30


Preliminary Considerations

Before beginning to physically assemble a fabric, follow the steps below to be sure all appropriate issues have been considered:

Step 1. Read Chapter 1, “Overview,” on page 13 to get a basic understanding of HyperFabric and its components.

Step 2. Read this chapter, Planning the Fabric, to gain an understanding of protocol-specific configuration guidelines for TCP/UDP/IP and HMP applications.

Step 3. Read “Configuration Overview” on page 63, “Information You Need” on page 64, and “Configuration Information Example” on page 66 to gain an understanding of the information that must be specified when the fabric is configured.

Step 4. Decide the number of nodes that will be interconnected in the fabric.

Step 5. Decide the type of HP 9000 system that each node will be (for a list of supported HP 9000 systems, see the HP HyperFabric Release Note).

Step 6. Determine the network bandwidth requirements for each node.

Step 7. Determine the number of adapters needed for each node.

Step 8. Determine whether a High Availability (ServiceGuard) configuration will be needed. Remember, if MC/ServiceGuard is used, there must be at least two adapters in each node.

Step 9. Decide what the topology of the fabric will be.

Step 10. Determine how many switches will be used, based on the number of nodes in the fabric. Remember, the only configuration that can be supported without a switch is the node-to-node configuration (HA or non-HA). HyperFabric supports meshed switches up to a depth of four switches.

Step 11. Draw the cable connections from each node to the switches (if the fabric will contain switches). If you use an HA configuration with switches, note that for full redundancy and to avoid a single point of failure, your configuration will require more than one switch. For example, each adapter can be connected to its own switch, or two switches can be connected to four adapters.
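
The numeric limits mentioned in Steps 4 through 11 and in “HyperFabric Concepts” can be checked mechanically before any hardware is ordered. The following is a minimal sketch under stated assumptions, not an HP-supplied tool: the function name check_fabric_plan and its argument order are hypothetical, and the sketch reads “node-to-node configuration” as exactly two nodes. It does not check per-system adapter limits, which depend on the HP 9000 model.

```shell
# check_fabric_plan (hypothetical helper): sanity-check a planned fabric
# against the limits stated in this manual.
# Usage: check_fabric_plan NODES ADAPTERS_PER_NODE SWITCHES HA
#   HA is "yes" for a ServiceGuard (High Availability) configuration.
check_fabric_plan() {
    nodes=$1 adapters=$2 switches=$3 ha=$4
    status=0
    fail() { echo "plan error: $1" >&2; status=1; }

    [ "$nodes" -ge 2 ] || fail "a fabric needs at least two nodes"
    { [ "$adapters" -ge 1 ] && [ "$adapters" -le 8 ]; } ||
        fail "each node takes one to eight adapters"
    [ "$switches" -le 4 ] || fail "at most four switches are supported"
    # Assumption: only a two-node (node-to-node) fabric works without a switch.
    if [ "$switches" -eq 0 ] && [ "$nodes" -gt 2 ]; then
        fail "more than two nodes require at least one switch"
    fi
    if [ "$ha" = yes ]; then
        [ "$adapters" -ge 2 ] || fail "HA needs two or more adapters per node"
        [ "$nodes" -le 8 ] || fail "ServiceGuard supports up to eight nodes"
        # A single switch would be a single point of failure.
        [ "$switches" -ne 1 ] || fail "HA with switches needs more than one switch"
    fi
    return $status
}

# Example: four nodes, two adapters each, two switches, HA enabled.
check_fabric_plan 4 2 2 yes && echo "plan is within the stated limits"
```

The function reports every violated limit rather than stopping at the first one, so a single run shows everything that needs to change in the plan.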


HyperFabric Functionality for TCP/UDP/IP and HMP Applications

The following sections in this chapter define HyperFabric features, parameters, and supported configurations for TCP/UDP/IP applications and Hyper Messaging Protocol (HMP) applications. There are distinct differences in supported hardware, available features, and performance, depending on which protocol is used by applications running on the HyperFabric.


TCP / UDP / IP

TCP/UDP/IP applications are supported on all HF2 (fibre) hardware. Although all HyperFabric adapter cards support HMP applications as well, this section focuses on TCP/UDP/IP HyperFabric applications.

Application Availability

All applications that use the TCP/UDP/IP stack, such as Oracle 9i, are supported.

Features

• OnLine Addition and Replacement (OLAR): Supported

The OLAR feature allows the replacement or addition of HyperFabric adapter cards

while the system (node) is running. For a list of systems that support OLAR, see the

HyperFabric Release Notes (B6257-90056).

For more detailed information on OLAR, including instructions for implementing

this feature, see “Online Addition and Replacement” on page 42 in this manual, as

well as Interface Card OL* Support Guide (B2355-90698).

• Event Monitoring Service (EMS): Supported

In the HyperFabric version B.11.31.01, the HyperFabric EMS monitor allows the

system administrator to separately monitor each HyperFabric adapter on every node

in the fabric, in addition to monitoring the entire HyperFabric subsystem. The

monitor can inform the user if the resource being monitored is UP or DOWN. The

administrator defines the condition to trigger a notification (usually a change in

interface status). Notification can be sent as an SNMP trap, logged to the syslog file

with a chosen severity, or emailed to a user-defined address.

For more detailed information on EMS, including instructions for implementing this

feature, see “Configuring the HyperFabric EMS Monitor” on page 75 in this manual,

as well as the EMS Hardware Monitors User’s Guide (B6191-90028).

• ServiceGuard: Supported

Within a cluster, ServiceGuard groups application services (individual HP-UX

processes) into packages. In the event of a single service failure (node, network, or

other resource), EMS provides notification and ServiceGuard transfers control of the

package to another node in the cluster, allowing services to remain available with

minimal interruption.

ServiceGuard, via EMS, directly monitors cluster nodes, LAN interfaces, and services

(the individual processes within an application). ServiceGuard uses a heartbeat LAN

to monitor the nodes in a cluster. HyperFabric cannot be used as a heartbeat LAN.

Instead, a separate LAN must be used for the heartbeat.


For more detailed information on configuring ServiceGuard, see “Configuring

HyperFabric with ServiceGuard” on page 76 in this manual, as well as Managing

MC/ServiceGuard Part Number B3936-90065 March 2002 Edition.

• High Availability (HA): Supported


To create a highly available HyperFabric cluster, there cannot be any single point of

failure. Once the HP 9000 nodes and the HyperFabric hardware have been

configured with no single point of failure, ServiceGuard and EMS can be configured

to monitor and fail-over nodes and services using ServiceGuard packages.

If any HyperFabric resource in a cluster fails (adapter card, cable or switch port), the

HyperFabric driver transparently routes traffic over other available HyperFabric

resources with no disruption of service.

The ability of the HyperFabric driver to transparently fail-over traffic reduces the

complexity of configuring highly available clusters with ServiceGuard, because

ServiceGuard only has to take care of node and service failover.

A “heartbeat” is used by MC/ServiceGuard to monitor the cluster. The HyperFabric

links cannot be used for the heartbeat. Instead, an alternate LAN connection

(Ethernet, Gigabit Ethernet, or 10 Gigabit Ethernet) must be made between the nodes

for use as a heartbeat link.

End To End HA: HyperFabric provides End to End HA on the entire cluster fabric

at the link level. If any of the available routes in the fabric fails, HyperFabric will

transparently redirect all the traffic to a functional route and, if configured, notify

ServiceGuard or other enterprise management tools.

Active-Active HA: In configurations where there are multiple routes between

nodes, the HyperFabric software will use a hashing function to determine which

particular adapter/route to send messages through. This is done on a

message-by-message basis. All of the available HyperFabric resources in the fabric

are used for communication.

In contrast to Active-Passive HA, where one set of resources is not utilized until

another set fails, Active-Active HA provides the best return on investment because

all of the resources are utilized simultaneously. MC/ServiceGuard is not required for

Active-Active HA operation.

For more information on setting up HA HyperFabric clusters, see Figure 2-3

“TCP/UDP/IP High Availability Switched Configuration”.

• Dynamic Resource Utilization (DRU): Supported

When a new resource (node, adapter, cable or switch) is added to a cluster, the

HyperFabric subsystem will dynamically identify the added resource and start using

it. The same process takes place when a resource is removed from a cluster. The

difference between DRU and OLAR is that OLAR only applies to the addition or

replacement of adapter cards in nodes.

• Load Balancing: Supported

When an HP 9000 HyperFabric cluster is running TCP/UDP/IP applications, the

HyperFabric driver balances the load across all available resources in the cluster

including nodes, adapter cards, links, and multiple links between switches.


• Switch Management: Not Supported

Switch Management is not supported. Switch management will not operate properly

if it is enabled on a HyperFabric cluster.

• Diagnostics: Supported

Diagnostics can be run to obtain information on many of the HyperFabric

components via the clic_diag, clic_probe and clic_stat commands, as well as

the Support Tools Manager (STM).


For more detailed information on HyperFabric diagnostics see “Running

Diagnostics” on page 115 on page 149.
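The Active-Active HA behavior described in the feature list above (a hash selects the adapter/route for each message, and traffic moves to the remaining routes when one fails) can be pictured with a short sketch. This is an illustration only, not the HyperFabric driver's algorithm: the function name `pick_route`, the route names, and the use of CRC32 as the hash are all assumptions, because this manual does not document the actual hashing function.

```python
import zlib


def pick_route(message_id: int, routes: list, failed: frozenset = frozenset()) -> str:
    """Choose a route for one message from the routes that are still up."""
    available = [route for route in routes if route not in failed]
    if not available:
        raise RuntimeError("no functional route between the nodes")
    # CRC32 is a stand-in hash; the real HyperFabric hash is not documented here.
    digest = zlib.crc32(message_id.to_bytes(8, "big"))
    return available[digest % len(available)]
```

Because the choice is made message by message from the routes that are currently up, every working route can carry traffic, and removing a failed route from consideration redirects its share to the remaining routes without any action by the application.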

Configuration Parameters

This section details, in general, the maximum limits for TCP/UDP/IP HyperFabric

configurations. There are numerous variables that can impact the performance of any

particular HyperFabric configuration. See the “TCP/UDP/IP Supported Configurations”

section for guidance on specific HyperFabric configurations for TCP/UDP/IP

applications.

• HyperFabric is only supported on the HP 9000 series UNIX servers.

• TCP/UDP/IP is supported for all HyperFabric hardware and software.

• Maximum Supported Nodes and Adapter Cards:

Point to point configurations become complex and perform poorly as the number of

nodes grows, so larger clusters need switches in the fabric. Typically, point to point

configurations consist of only 2 or 3 nodes.

In switched configurations, HyperFabric supports a maximum of 64 interconnected

adapter cards.

A maximum of 8 HyperFabric adapter cards are supported per instance of the

HP-UX operating system. The actual number of adapter cards a particular node is

able to accommodate also depends on slot availability and system resources. See

node specific documentation for details.

A maximum of 8 configured IP addresses are supported by the HyperFabric

subsystem per instance of the HP-UX operating system.

• Maximum Number of Switches:

You can interconnect (mesh) up to 4 switches (16 port fibre or Mixed 8 fibre ports) in

a single HyperFabric cluster.

• Trunking Between Switches (multiple connections)

Trunking between switches can be used to increase bandwidth and cluster

throughput. Trunking is also a way to eliminate a possible single point of failure.

The number of trunked cables between nodes is only limited by port availability. To

assess the effects of trunking on the performance of any particular HyperFabric

configuration, consult with your HP representative.

• Maximum Cable Lengths:

HF2 (fibre): The maximum distance is 200m (4 standard cable lengths are sold and

supported: 2m, 16m, 50m and 200m).

TCP/UDP/IP supports up to four HF2 switches connected in series with a maximum

cable length of 200m between the switches and 200m between switches and nodes.


TCP/UDP/IP supports up to 4 hybrid HF2 switches connected in series with a

maximum cable length of 200m between fibre ports.


• Speed and Latency:

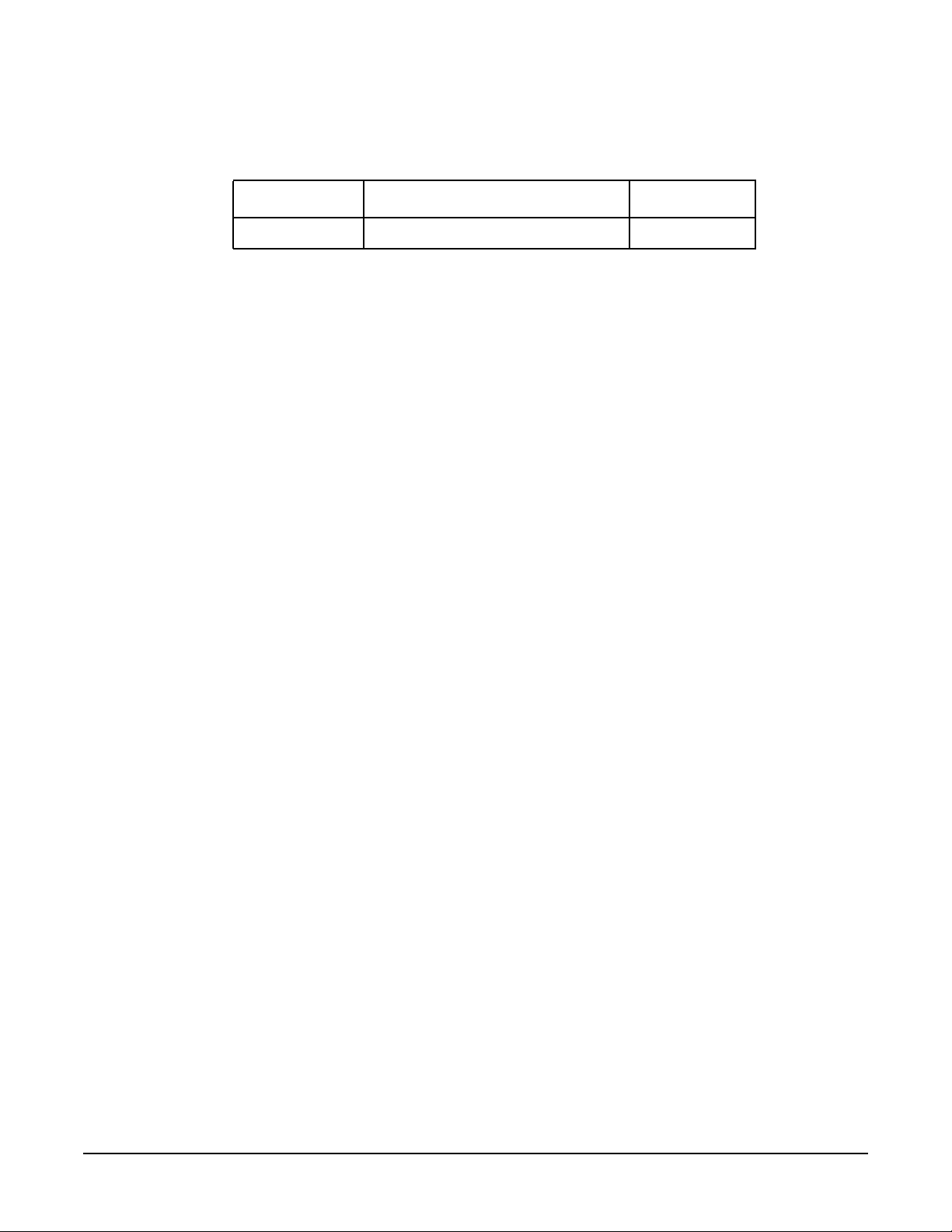

Table 2-1 HF2 Speed and Latency w/ TCP/UDP/IP Applications

| Server Class | Maximum Speed | Latency |
|---|---|---|
| rp7420 | 2 + 2 Gbps full duplex per link | < 42 microsec |

For a list of HF2 hardware that supports TCP/UDP/IP applications (HP-UX 11i v3), see

HyperFabric Release Notes (B6257-90056).
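The numeric limits listed in this section lend themselves to a simple pre-installation check. The sketch below is illustrative and is not part of the HyperFabric software: the constant names and the `limit_violations` function are hypothetical, and the input format (a node name mapped to its adapter count and configured IP address count) is an assumption made for the example. The limits themselves are the ones stated above.

```python
MAX_FABRIC_ADAPTERS = 64  # interconnected adapter cards, switched configurations
MAX_NODE_ADAPTERS = 8     # adapter cards per instance of HP-UX
MAX_NODE_IP_ADDRS = 8     # configured HyperFabric IP addresses per instance
MAX_SWITCHES = 4          # meshed switches in a single cluster


def limit_violations(nodes: dict, switches: int) -> list:
    """nodes maps a node name to (adapter_count, ip_address_count)."""
    found = []
    if switches > MAX_SWITCHES:
        found.append(f"{switches} switches exceeds {MAX_SWITCHES}")
    total = sum(adapters for adapters, _ in nodes.values())
    if switches > 0 and total > MAX_FABRIC_ADAPTERS:
        found.append(f"{total} adapters exceeds {MAX_FABRIC_ADAPTERS}")
    for name, (adapters, ips) in sorted(nodes.items()):
        if adapters > MAX_NODE_ADAPTERS:
            found.append(f"{name}: {adapters} adapters exceeds {MAX_NODE_ADAPTERS}")
        if ips > MAX_NODE_IP_ADDRS:
            found.append(f"{name}: {ips} IP addresses exceeds {MAX_NODE_IP_ADDRS}")
    return found
```

Passing these checks does not guarantee that a node can physically hold the cards. Slot availability and system resources still apply, as noted above.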

TCP/UDP/IP Supported Configurations

Multiple TCP/UDP/IP HyperFabric configurations are supported to match the cost,

scaling and performance requirements of each installation.

In the previous “Configuration Parameters” section, the maximum limits for TCP/UDP/IP

enabled HyperFabric hardware configurations were outlined. In this section the

TCP/UDP/IP enabled HyperFabric configurations that HP supports will be detailed.

These recommended configurations offer an optimal mix of performance, availability and

practicality for a variety of operating environments.

There are many variables that can impact HyperFabric performance. If you are

considering a configuration that is beyond the scope of the following HP supported

configurations, contact your HP representative.

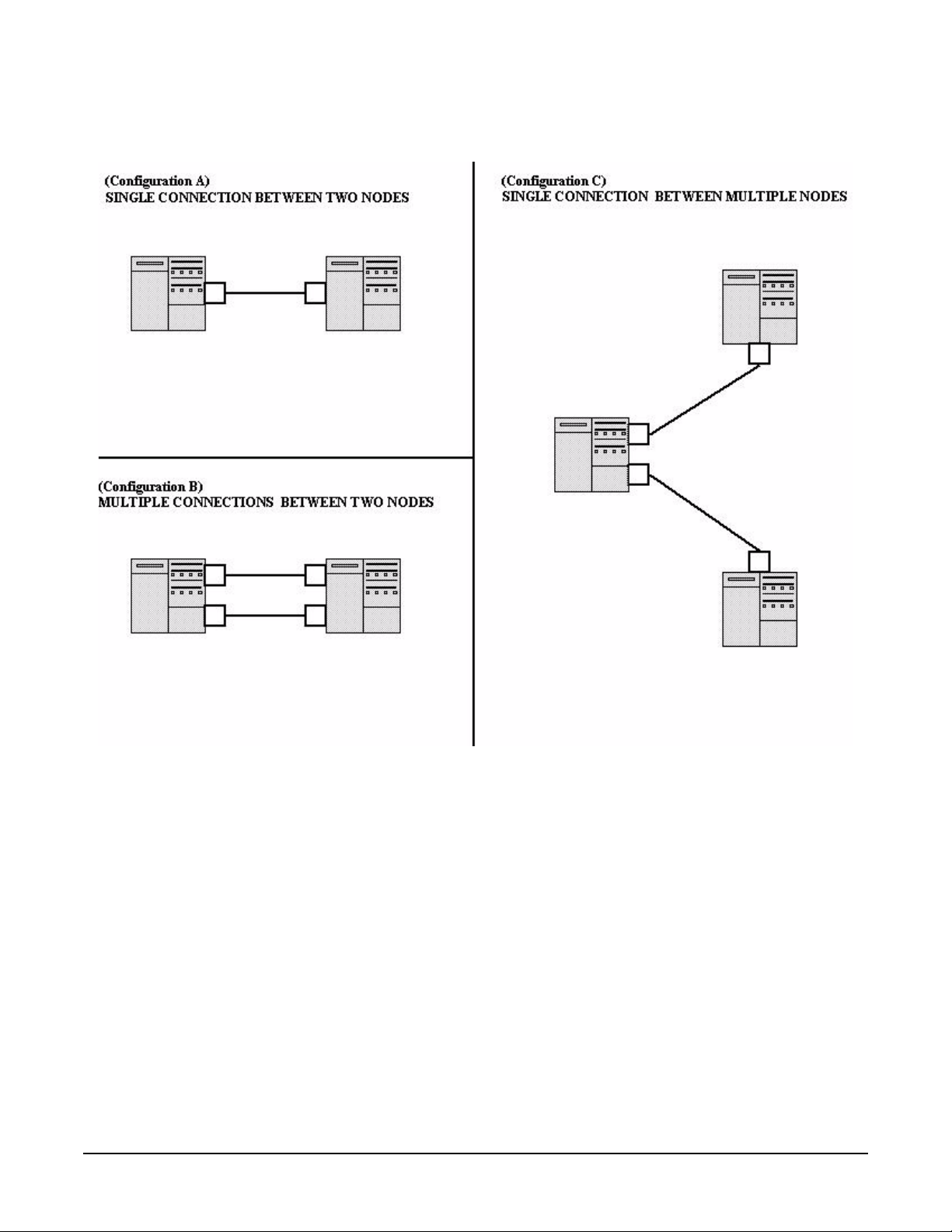

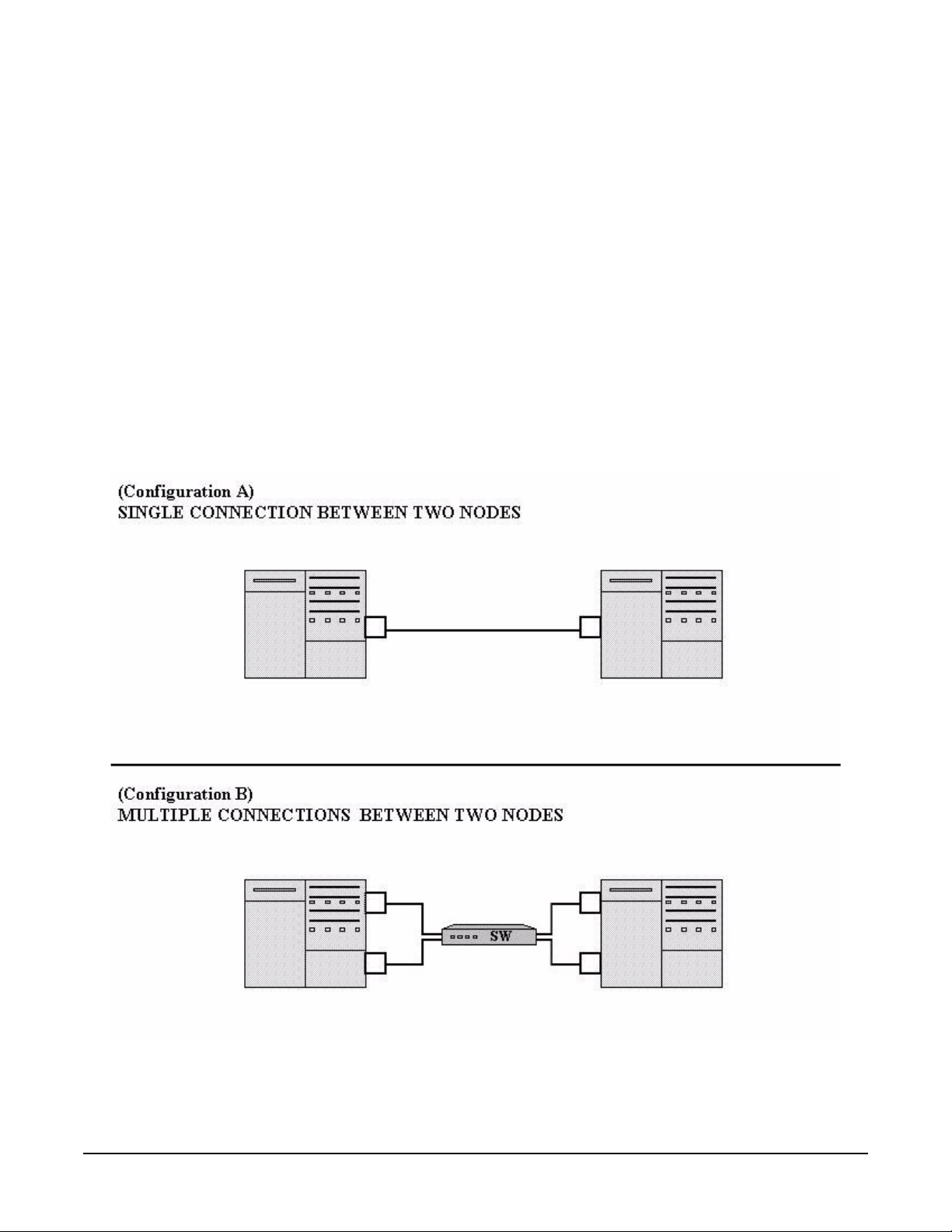

Point-to-Point Configurations

Large servers like HP’s Superdome can be interconnected to run Oracle RAC 9i and

enterprise resource planning applications. These applications are typically consolidated

on large servers.

Point to point connections between servers support the performance benefits of HMP

without investing in HyperFabric switches. This is a good solution in small

configurations where the benefits of a switched HyperFabric cluster might not be

required (see configuration A and configuration C in Figure 2-1).

If there are multiple point to point connections between two nodes, the traffic load will

be balanced over those links. If one link fails, the load will fail-over to the remaining

links (see configuration B in Figure 2-1).

Running applications using TCP/UDP/IP on a HyperFabric cluster provides major

performance benefits compared to other technologies (such as Ethernet). If a

HyperFabric cluster is originally set up to run enterprise applications using

TCP/UDP/IP and the computing environment stabilizes with a requirement for higher

performance, migration to HMP is always an option.


Figure 2-1 TCP/UDP/IP Point-To-Point Configurations


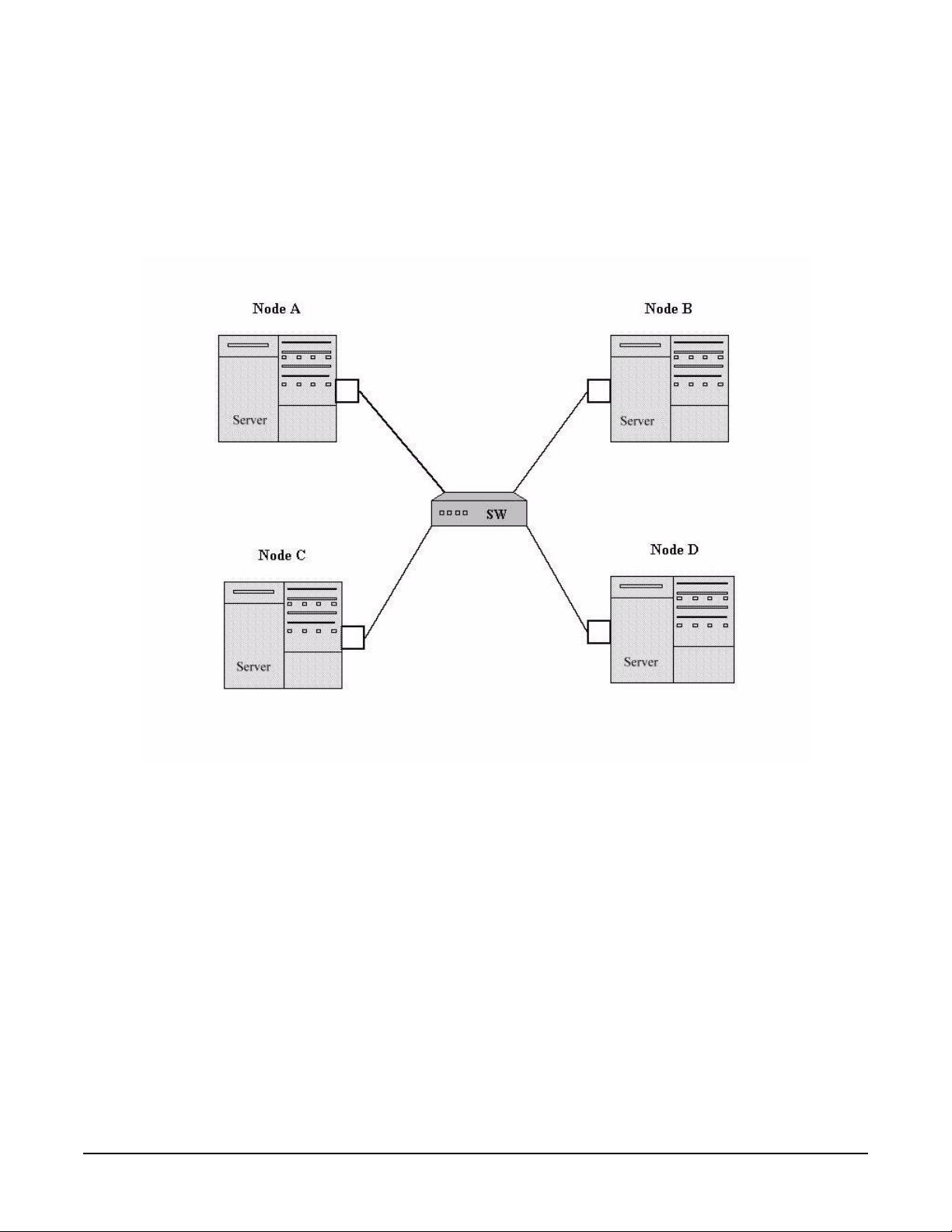

Switched

This configuration offers the same benefits as the point to point configurations

illustrated in Figure 2-1, but it has the added advantage of greater connectivity (see

Figure 2-2).

Figure 2-2 TCP/UDP/IP Basic Switched Configuration


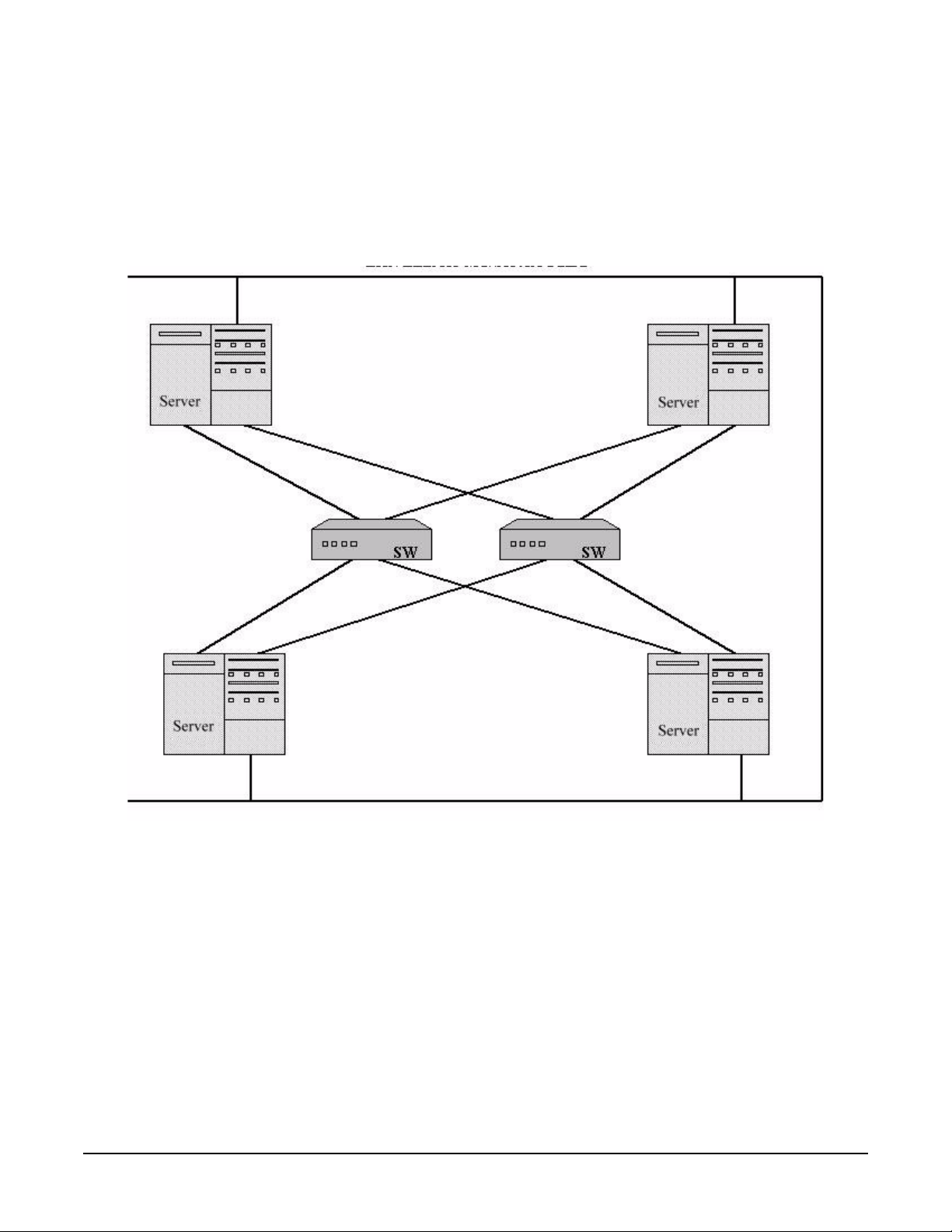

High Availability Switched

This configuration has no single point of failure. The HyperFabric driver provides end to

end HA. If any HyperFabric resource in the cluster fails, traffic will be transparently

rerouted through other available resources. This configuration provides high

performance and high availability (see Figure 2-3).

Figure 2-3 TCP/UDP/IP High Availability Switched Configuration


Hyper Messaging Protocol (HMP)

Hyper Messaging Protocol (HMP) is an HP-patented, high-performance cluster

interconnect protocol. HMP provides a reliable, high-speed, low-latency,

low-CPU-overhead datagram service to applications running on HP-UX platforms.

HMP was jointly developed with Oracle Corp. The resulting feature set was tuned to

enhance the scalability of the Oracle Cache Fusion clustering technology. It is

implemented using Remote DMA (RDMA) paradigms.

HMP is integral to the HP-UX HyperFabric driver. It is a feature that can be

enabled or disabled at HyperFabric initialization using clic_init or SMH. The HMP

functionality is used by the applications listed in the Application Availability section

below.

HMP significantly enhances the performance of parallel and technical computing

applications.

HMP firmware on HyperFabric adapter cards provides a “shortcut” that bypasses

several layers in the protocol stack, boosting link performance and lowering latency. By

avoiding interruptions and buffer copying in the protocol stack, communication task

processing is optimized.

Application Availability

Currently there are two families of applications that can use HMP over the HyperFabric

interface:

• Oracle 9i Database, Release 1 (9.0.1) and Release 2 (9.2.0.1.0).

HMP has been certified on Oracle 9i Database Release 1 with 11i v3.

HMP has been certified on Oracle 9i Database Release 2 with 11i v3.

• Technical Computing Applications

Features

• OnLine Addition and Replacement (OLAR): Not Supported

The OLAR feature, which allows the replacement or addition of HyperFabric adapter

cards while the system (node) is running, is not supported when applications use

HMP to communicate.


• Event Monitoring Service (EMS): Supported

The HyperFabric EMS monitor allows the system administrator to separately

monitor each HyperFabric adapter on every node in the fabric, in addition to

monitoring the entire HyperFabric subsystem. The monitor can inform the user if

the resource being monitored is UP or DOWN. The administrator defines the

condition to trigger a notification (usually a change in interface status). Notification

can be sent as an SNMP trap, logged to the syslog file with a chosen severity, or

emailed to a user-defined address.

For more detailed information on EMS, including instructions for implementing this

feature, see “Configuring the HyperFabric EMS Monitor” on page 75 in this manual,

as well as the EMS Hardware Monitors User’s Guide Part Number B6191-90028

September 2001 Edition.

• ServiceGuard: Supported

Within a cluster, ServiceGuard groups application services (individual HP-UX

processes) into packages. In the event of a single service failure (node, network, or

other resource), EMS provides notification and ServiceGuard transfers control of the

package to another node in the cluster, allowing services to remain available with

minimal interruption. ServiceGuard, via EMS, directly monitors cluster nodes, LAN

interfaces, and services (the individual processes within an application).

ServiceGuard uses a heartbeat LAN to monitor the nodes in a cluster. ServiceGuard

cannot use the HyperFabric interconnect as a heartbeat link. Instead, a separate

LAN must be used for the heartbeat.

For more detailed information on configuring ServiceGuard, see “Configuring

HyperFabric with ServiceGuard” on page 76 in this manual, as well as Managing

MC/ServiceGuard Part Number B3936-90065 March 2002 Edition.

• High Availability (HA): Supported

When applications use HMP to communicate between HP 9000 nodes in a

HyperFabric cluster, MC/ServiceGuard and the EMS monitor can be configured to

identify node failure and automatically fail-over to a functioning HP 9000 node.

For more detailed information on HA when running HMP applications, consult with

your HP representative.

• Transparent Local Failover: Supported

When a HyperFabric resource (adapter, cable, switch or switch port) fails in a cluster,

HMP transparently fails over traffic using other available resources. This is

accomplished using card pairs, each of which is a logical entity that comprises a pair

of HF2 adapters on an HP 9000 node. Only Oracle applications can make use of the

Local Failover feature. HMP traffic can only fail over between adapters that belong

to the same card pair. Traffic does not fail over if both the adapters in a card pair fail.

However, administrators do not need to configure HF2 adapters as card pairs if

TCP/UDP/IP is run over HF2.

When HMP is configured in the local failover mode, all the resources in the cluster

are utilized. If a resource fails in the cluster and is restored, HMP does not utilize

that resource until another resource fails.


For more information on Transparent Local Failover while running HMP

applications, see “Configuring HMP for Transparent Local Failover Support” on page

96.


• Dynamic Resource Utilization (DRU): Partially Supported

When a new HyperFabric resource (node, cable or switch) is added to a cluster

running an HMP application, the HyperFabric subsystem will dynamically identify

the added resource and start using it. The same process takes place when a resource

is removed from a cluster. The distinction for HMP is that DRU is supported when a

node with adapters installed in it is added or removed from a cluster running an

HMP application, but DRU is not supported when an adapter is added or removed

from a node that is running an HMP application. This is consistent with the fact that

OLAR is not supported when an HMP application is running on HyperFabric.

• Load Balancing: Supported

When an HP 9000 node that has multiple HyperFabric adapter cards is running

HMP applications, the HyperFabric driver only balances the load across the nodes,

available adapter cards on that node, links and multiple links between switches.

• Switch Management: Not Supported

Switch Management is not supported. Switch management will not operate properly

if it is enabled on a HyperFabric cluster.

• Diagnostics: Supported

Diagnostics can be run to obtain information on many of the HyperFabric

components via the clic_diag, clic_probe and clic_stat commands, as well as

the Support Tools Manager (STM).

For more detailed information on HyperFabric diagnostics, see “Running

Diagnostics” on page 115 on page 149.
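The Transparent Local Failover rules in the feature list above can be summarized in a toy model. The sketch below is not HMP code: the `CardPair` class, its method names, and the adapter names are hypothetical. It assumes that each adapter in a pair normally carries its own share of the traffic, which is one reading of the statement that all resources are utilized in local failover mode. It models three stated rules: traffic fails over only to the partner in the same card pair, traffic does not fail over if both adapters in the pair have failed, and a restored adapter is not used again until another resource fails. It does not model recovery after both adapters in a pair have failed.

```python
class CardPair:
    """Toy model of one card pair: two HF2 adapters on the same node."""

    def __init__(self, first: str, second: str):
        self.up = {first: True, second: True}
        # Each adapter starts out carrying its own share of the traffic.
        self.carrier = {first: first, second: second}

    def fail(self, adapter: str) -> None:
        self.up[adapter] = False
        partner = next(name for name in self.up if name != adapter)
        for share, current in self.carrier.items():
            if current == adapter:
                # Traffic moves only to the partner, and only if it is up.
                self.carrier[share] = partner if self.up[partner] else None

    def restore(self, adapter: str) -> None:
        # The adapter becomes usable again, but no traffic moves back to it
        # until a later failure forces a move.
        self.up[adapter] = True
```

In this model, failing one adapter moves both traffic shares to its partner, restoring the adapter changes nothing, and a later failure of the partner moves both shares onto the restored adapter.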

Configuration Parameters

This section details, in general, the maximum limits for HMP HyperFabric

configurations. There are numerous variables that can impact the performance of any

particular HyperFabric configuration. See the “HMP Supported Configurations” section

for guidance on specific HyperFabric configurations for HMP applications.

• HyperFabric is only supported on the HP 9000 series UNIX servers.

• The performance advantages HMP offers will not be fully realized unless it is used

with A6386A HF2 (fibre) adapters and related fibre hardware. The local failover

configuration of HMP is supported only on the A6386A HF2 adapters.

• Maximum Supported Nodes and Adapter Cards:

HyperFabric clusters running HMP applications are limited to supporting a

maximum of 64 adapter cards. However, in local failover configurations, a maximum

of only 52 adapters are supported.

Point to point configurations running HMP applications become complex and perform

poorly as the number of nodes grows, so larger clusters need switches in the fabric.

Typically, point to point configurations consist of only 2 or 3 nodes.


In switched configurations running HMP applications, HyperFabric supports a

maximum of 64 interconnected adapter cards.


A maximum of 8 HyperFabric adapter cards are supported per instance of the

HP-UX operating system. The actual number of adapter cards a particular node is

able to accommodate also depends on slot availability and system resources. See

node specific documentation for details.

A maximum of 8 configured IP addresses are supported by the HyperFabric

subsystem per instance of the HP-UX operating system.

• Maximum Number of Switches:

You can interconnect (mesh) up to 4 switches (16 port fibre or Mixed 8 fibre ports) in

a single HyperFabric cluster.

• Trunking Between Switches (multiple connections).

HMP is supported in configurations where switches are interconnected through

multiple cables. However, with the current release of HMP software, this

configuration will not eliminate a single point of failure or increase performance.

Instead, all of the traffic will be sent over a single connection with no failover

capability and without the performance increase that would come from balancing the

load over multiple connections.

• Maximum Cable Lengths:

HF2 (fibre): The maximum distance is 200m (4 standard cable lengths are sold and

supported: 2m, 16m, 50m and 200m).

HMP supports up to four HF2 switches connected in series with a maximum cable

length of 200m between the switches and 200m between switches and nodes.

HMP supports up to 4 hybrid HF2 switches connected in series with a maximum

cable length of 200m between fibre ports.

• HMP is supported on the PCI 4X adapters, A6386A.

• For a list of HF2 hardware that supports HMP on HP-UX 11i v3, see HyperFabric

Release Notes (B6257-90056).

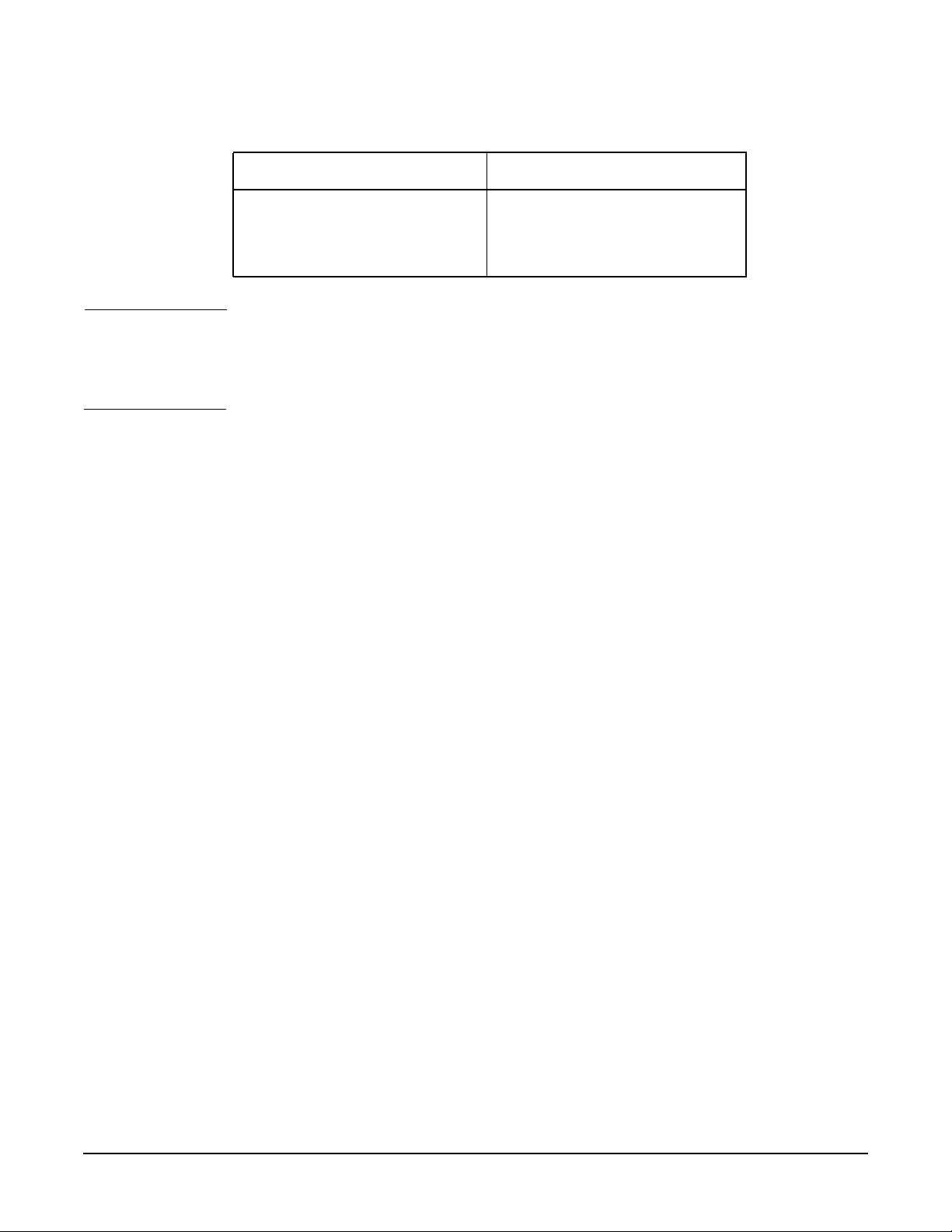

• Speed and Latency

Table 2-2 HF2 Speed and Latency w/ HMP Applications

| Server Class | Maximum Speed | Latency |
|---|---|---|
| rp7420 | 2 + 2 Gbps full duplex per link | < 22 microsec |

For a list of HF2 hardware that supports HMP on HP-UX 11i v3, see HyperFabric

Release Notes (B6257-90056).
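Two of the HMP limits above are easy to apply with a small helper when planning cable runs and adapter counts. The following sketch is illustrative only. The function names are hypothetical, and choosing the shortest standard cable that covers a measured distance is a planning convenience assumed for this example, not an HP recommendation. In practice, allow extra length for cable routing.

```python
STANDARD_CABLES_M = (2, 16, 50, 200)  # HF2 fibre cable lengths sold and supported


def shortest_cable(distance_m: float) -> int:
    """Return the shortest standard HF2 cable that covers distance_m."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    for length in STANDARD_CABLES_M:
        if distance_m <= length:
            return length
    raise ValueError("HF2 links are limited to 200 m")


def hmp_adapter_limit(local_failover: bool) -> int:
    """Maximum adapter cards in a cluster running HMP applications."""
    return 52 if local_failover else 64
```

For example, a 10 m run calls for the 16 m cable, and a cluster configured for local failover is limited to 52 adapters rather than 64.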

HMP Supported Configurations


Multiple HMP HyperFabric configurations are supported to match the performance, cost

and scaling requirements of each installation.

In the previous “Configuration Parameters” section, the maximum limits for HMP

enabled HyperFabric hardware configurations were outlined. In this section, the HMP

enabled HyperFabric configurations that HP supports will be detailed. These

recommended configurations offer an optimal mix of performance, availability and

practicality for a variety of operating environments.


There are many variables that can impact HyperFabric performance. If you are

considering a configuration that is beyond the scope of the following HP supported

configurations, contact your HP representative.

Point to Point

Large servers like HP’s Superdome can be interconnected to run Oracle RAC 9i and

enterprise resource planning applications. These applications are typically consolidated

on large servers.

Point to point connections between servers support the performance benefits of HMP

without investing in HyperFabric switches. This is a good solution in small

configurations where the benefits of a switched HyperFabric cluster might not be

required (see configurations A and B in Figure 2-4).

If an HMP application is running over the HyperFabric and another node is added to

either of the point to point configurations illustrated in Figure 2-4, it will be necessary to

also add a HyperFabric switch to the cluster.

Figure 2-4 HMP Point-To-Point Configurations


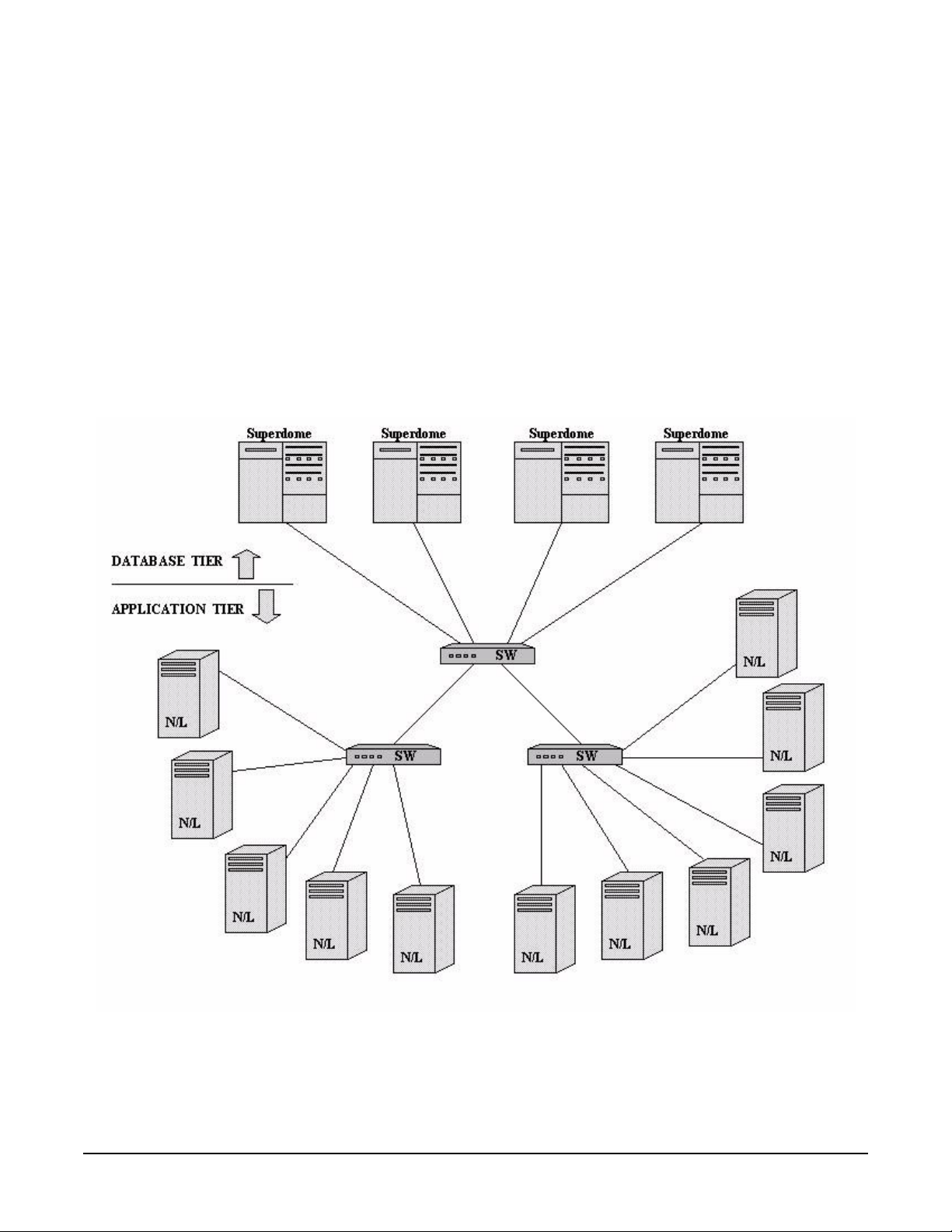

Enterprise (Database)

The HMP enterprise configuration illustrated in Figure 2-5 is very popular for running

Oracle RAC 9i.

Superdomes or other large servers make up the Database Tier.

Database Tier nodes communicate with each other using HMP.

Application Tier nodes communicate with each other and with the Database Tier using

TCP/UDP/IP.

Although each of the servers in the Application Tier could also have multiple adapter

cards and multiple connections to switches, link and adapter card failover capabilities

are not currently available for HMP.

Figure 2-5 HMP Enterprise (Database) Configuration, Single Connection Between

Nodes


Enterprise (Database) - Local Failover Supported Configuration

The HMP enterprise configuration is a scalable solution. If higher performance is

required, or if eliminating single points of failure is necessary, scaling up to the HMP

enterprise configuration with multiple connections between nodes is easily accomplished

(see Figure 2-6).

Figure 2-6 Local Failover Supported Enterprise (Database) Configuration,

Multiple Connections Between Nodes


Technical Computing

This configuration is typically used to run technical computing applications. A large

number of small nodes are interconnected to achieve high throughput. High availability

is not usually a requirement in technical computing environments.

HMP provides the high performance, low latency path necessary for these technical

computing applications. As many as 56 nodes can be interconnected using HP’s 16 port

switches. Not more than four 16 port switches can be linked in a single cluster.
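One way to read the 56-node figure is as simple port arithmetic. The sketch below is an interpretation, not a statement from HP: it assumes one adapter per node and assumes that four inter-switch links are used (for example, four switches cabled in a ring), each link consuming one port on each of two switches. The function name is hypothetical.

```python
def ports_left_for_nodes(switches: int, ports_per_switch: int, inter_switch_links: int) -> int:
    """Ports remaining for node connections after cabling the switches together."""
    total_ports = switches * ports_per_switch
    # Each inter-switch link uses one port on each of the two switches it joins.
    return total_ports - 2 * inter_switch_links
```

Under these assumptions, four 16 port switches provide 64 ports, the four inter-switch links use 8 of them, and 56 ports remain for nodes. Trunking additional cables between switches would reduce the number of ports available for nodes further.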


3 Installing HyperFabric

This chapter contains the following sections that describe installing HyperFabric:

• “Checking HyperFabric Installation Prerequisites” on page 41

• “Installing HyperFabric Adapters” on page 42

• “Installing the Software” on page 47


• “Installing HyperFabric Switches” on page 52


Checking HyperFabric Installation Prerequisites

Before installing HyperFabric, check to make sure the following hardware and software

prerequisites have been met:

✓ Check the HP HyperFabric Release Note for any known problems, required patches,

or other information needed for installation.

✓ Confirm the /usr/bin, /usr/sbin, and /sbin directories are in your PATH by

logging in as root and using the echo $PATH command.

✓ Confirm the HP-UX operating system is the correct version. Use the uname -a

command to determine the HP-UX version.

See the HP HyperFabric Release Note for information about the required operating

system versions.

✓ If you are installing an HF2 switch, confirm that you have four screws with

over-sized heads.

✓ Confirm there are cables of the proper length and type (fibre) to make each of the

connections in the fabric (adapter to adapter, adapter to switch, or switch to switch).

✓ Confirm there is at least one loopback plug for testing the adapters and switches (a

fibre loopback plug [HP part number A6384-67004] is shipped with each HF2

switch).

✓ Confirm the necessary tools are available to install the HyperFabric switch

mounting hardware. Also check the HP 9000 system documentation to determine if

any additional tools may be required for component installation.

✓ Confirm software media is correct.

✓ Create a map of the fabric (optional).

✓ Confirm HP-UX super-user privileges are available; they are necessary to

complete the HyperFabric installation.

The first HyperFabric installation step is installing HyperFabric adapter cards in the

nodes. Proceed to the next section “Installing HyperFabric Adapters”.
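The PATH and operating system checks in the list above are normally done by hand with echo $PATH and uname -a. If a Python interpreter happens to be available on the node (this manual does not require one), the same two checks can be scripted. The sketch below is an optional convenience under that assumption. The function name `missing_path_dirs` is hypothetical, and the required operating system version must still be compared against the HP HyperFabric Release Note.

```python
import os
import platform

REQUIRED_DIRS = ("/usr/bin", "/usr/sbin", "/sbin")


def missing_path_dirs(path_value: str) -> list:
    """Return the required directories that are absent from a PATH string."""
    entries = {entry.rstrip("/") for entry in path_value.split(":") if entry}
    return [directory for directory in REQUIRED_DIRS if directory not in entries]


if __name__ == "__main__":
    # Run as root so that the PATH being checked is root's PATH.
    print("missing from PATH:", missing_path_dirs(os.environ.get("PATH", "")))
    print("operating system:", platform.system(), platform.release())
```

An empty list in the first line of output means that all three directories are in the PATH.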


Installing HyperFabric Adapters

This section contains information about installing HyperFabric adapters in HP 9000

systems. Online Addition and Replacement (OLAR) information is provided in the

“Online Addition and Replacement” section on page 42.

CAUTION HyperFabric adapters contain electronic components that can easily be damaged by

small amounts of electricity. To avoid damage, follow these guidelines:

• Store adapters in their antistatic plastic bags until installation.

• Work in a static-free area, if possible.

• Handle adapters by the edges only. Do not touch electronic components or electrical

traces.

• Use the disposable grounding wrist strap provided with each adapter. Follow the

instructions included with the grounding strap.

• Use a suitable ground: any exposed metal surface on the computer chassis.

For specific instructions, see the system-specific documentation on installing networking

adapters for each type of HP 9000 system in which HyperFabric adapters will be

installed.

When the HyperFabric adapters have been installed, go to “Installing the Software” on

page 47.

Online Addition and Replacement

Online Addition and Replacement (OLAR) allows PCI I/O cards, adapters or

controllers to be replaced or added to HP 9000 systems, without the need for completely

shutting down and rebooting the system, or adversely affecting other system

components. This feature is only available on HP 9000 systems that are designed to

support OLAR. The system hardware uses the per-slot power control combined with OS

support to enable this feature.

NOTE OLAR is supported only on TCP/UDP/IP over HF2 adapters.

Not all add-in cards have this capability, but over time many cards will be gaining this

capability.

The latest HyperFabric Release Notes list the HP 9000 systems and HyperFabric

adapters for which OLAR is supported.


IMPORTANT At this time, Superdome systems are not intended for access by users. HP recommends

that these systems only be opened by a qualified HP engineer. Failure to observe this

requirement can invalidate any support agreement or warranty to which the owner

might otherwise be entitled.

There are two methods to add or replace OLAR-compatible cards:

• Using the SAM or SMH utility (see footnote 1).

NOTE System Administration Manager (SAM) is deprecated in the 11i v3 release of

HP-UX. HP recommends that you use HP System Management Homepage (HP

SMH) in an HP-UX 11i v3 system. For OLAR operations, use the new pdweb utility,

integrated in SMH.

• Issuing command-line commands, through rad, that refer to the HyperFabric OLAR

script (/usr/sbin/olard.d/clicd).

HP recommends that pdweb be used for OLAR procedures, instead of the rad command.

This is primarily because pdweb prevents the user from doing things that might have

adverse effects. This is not true when the rad command is used.

For detailed information about using either of these two procedures, see Interface Card

OL* Support Guide.

Table 3-1 below explains some important OLAR-related terms.

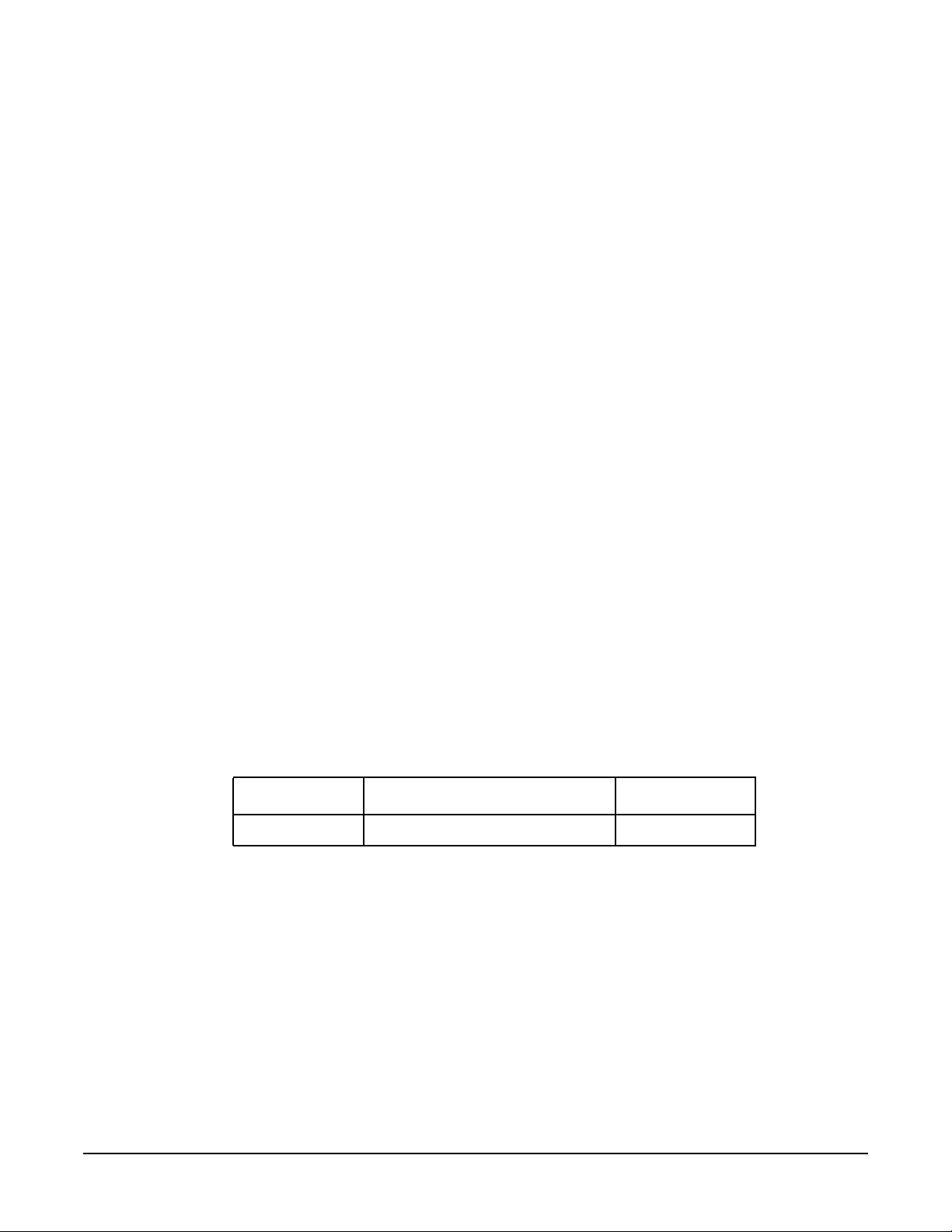

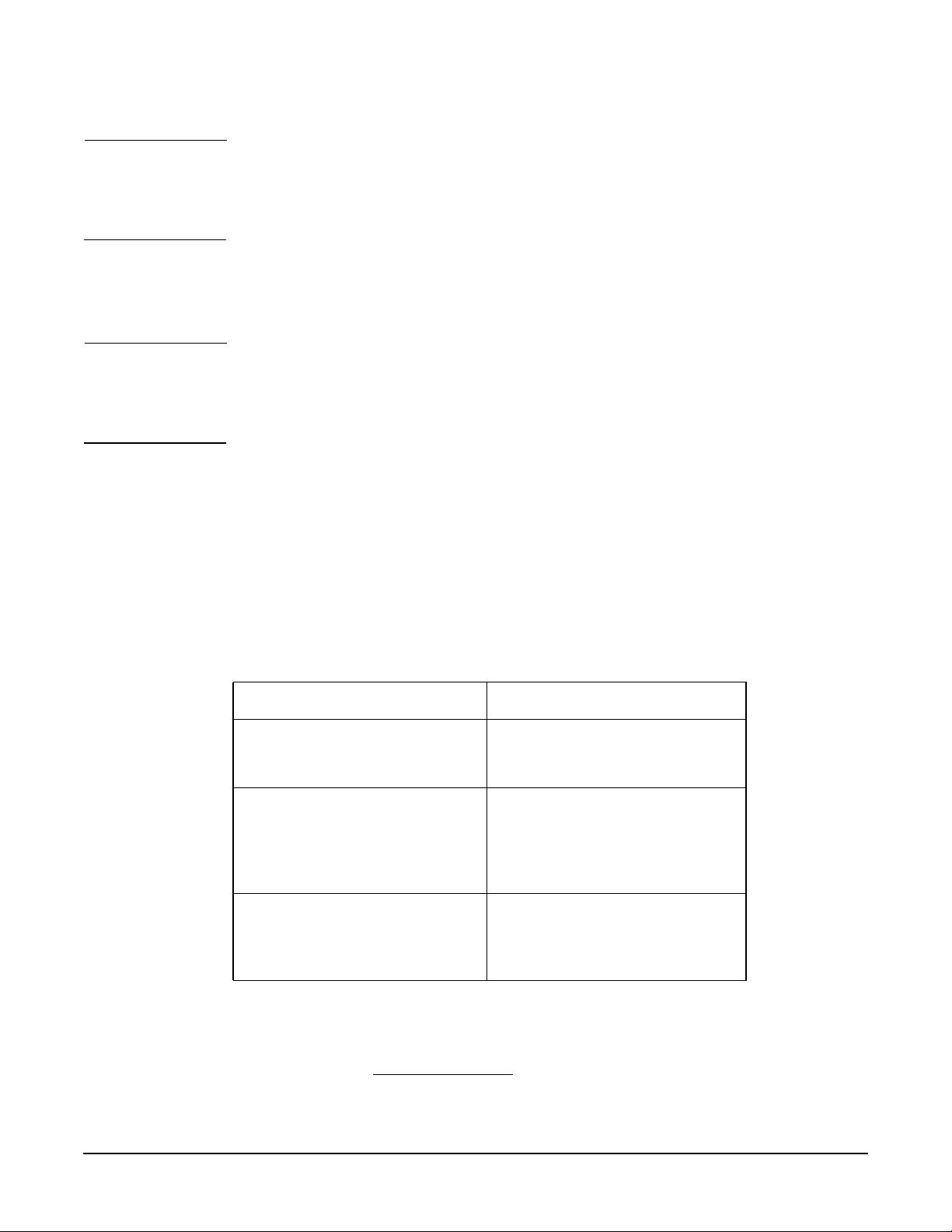

Table 3-1 Important OLAR Terms

| Term | Meaning |
|---|---|
| OLAR | All aspects of the OLAR feature, including Online Addition (OLA) and Online Replacement (OLR). |
| Power Domain | A grouping of 1 or more interface card slots that are powered on or off as a unit. (Note: Multi-slot power domains are not currently supported.) |
| target card / target card slot | The interface card which will be added or replaced using OLAR, and the card slot in which it resides. |
| affected card / affected card slot | Interface cards and the card slots they reside in, which are in the same power domain as the target slot. |

1. The HP System Management Homepage is an enhanced version of System

Administration Manager (SAM) and is introduced for managing HP-UX.

IMPORTANT In many cases, other interface cards and slots within the system are dependent on the

target card. For example, if the target card is a multiple-port card, suspending or

deleting drivers for the target card slot also suspends individual drivers for the multiple

hardware paths on that card.

During a card replacement operation, pdweb performs a Critical Resource Analysis

(CRA), which checks all ports on the target card for critical resources that would be

temporarily unavailable while the card is shut down.

Planning and Preparation

As mentioned previously, for the most part, pdweb prevents the user from performing

OLAR procedures that would adversely affect other areas of the HP 9000 system. See

Interface Card OL* Support Guide for detailed information.

Critical Resources

The effects of shutting down a card’s functions must be considered. Replacing a card that

is still operating can have extensive consequences. Power to a slot must be turned off

when a card is removed and a new card is inserted.

This is particularly important if there is no online failover or backup card to pick up

those functions. For example:

• Which mass storage devices will be temporarily disconnected when a card is shut

down?

• Will a critical networking connection be lost?

A critical resource is one that would cause a system crash or prevent an operation from

successfully completing if the resource were temporarily suspended or disconnected. For

example, if the SCSI controller is connected to the unmirrored root disk or swap space,

the system will crash when the SCSI controller is shut down.

During an OLAR procedure, it is essential to check the targeted card for critical

resources, as well as the effects of existing disk mirrors and other situations where a

card’s functions can be taken over by another card that will not be affected.


Fortunately, as mentioned earlier, OL* performs a thorough CRA automatically, and

presents options based on its findings. If it is determined that critical resources will be

affected by the OLAR procedure, the card could be replaced when the system is offline. If

action must be taken immediately, an online addition of a backup card and deletion of

the target card could be attempted using rad.
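The reasoning behind a Critical Resource Analysis can be sketched in a few lines of Python. This is only an illustration of the idea described above, not how pdweb or rad is implemented; the `Resource` and `Card` types, their fields, and the returned strings are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    name: str
    critical: bool              # e.g. root disk or swap space
    has_failover: bool = False  # mirrored, or served by a card that is not affected


@dataclass
class Card:
    slot: str                   # hypothetical slot label
    resources: list = field(default_factory=list)


def critical_resources(card):
    """Return the names of resources that would be lost while the card is shut down."""
    return [r.name for r in card.resources if r.critical and not r.has_failover]


def recommend(card):
    """Suggest offline replacement when any unprotected critical resource is found."""
    blocked = critical_resources(card)
    if blocked:
        return "replace offline (critical: " + ", ".join(blocked) + ")"
    return "online replacement can proceed"
```

A SCSI controller that serves an unmirrored root disk would be reported as blocked, while the same controller with a mirrored root disk on an unaffected card would not.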


Page 45


Card Compatibility

This section explains card compatibility considerations for doing OLAR.

Online Addition (OLA) Multiple cards can be added at the same time. When adding a

card online, the first issue to resolve is whether the new card is compatible with the

system. Each OLAR-capable PCI slot provides a set amount of power. The new

card cannot require more power than the slot has available.

The card must also operate at the slot’s bus frequency. A PCI card can run at any

frequency at or below its maximum capability, but a card that could operate at only 33

MHz would not work on a bus running at 66 MHz. rad provides information about the

bus frequency and power available at a slot, as well as other slot-related data.
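The two compatibility conditions above (power and bus frequency) can be expressed as a small check. The sketch below is illustrative only: the function name, its parameters, and the wattage figures used with it are assumptions, and in practice the slot values would come from rad.

```python
def slot_accepts_card(slot_power_w, slot_mhz, card_power_w, card_max_mhz):
    """Return True if the card fits the slot's power budget and bus frequency."""
    # The card may not draw more power than the slot provides.
    power_ok = card_power_w <= slot_power_w
    # A card can run at or below its maximum frequency, never above it.
    frequency_ok = slot_mhz <= card_max_mhz
    return power_ok and frequency_ok
```

With these rules, a 66 MHz-capable card is accepted on a 33 MHz bus, while a 33 MHz-only card is rejected on a 66 MHz bus.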

If an HP 9000 system has one or more slots that support OLAR and OLA will be used to

install a HyperFabric adapter in one of those slots, refer to the Interface Card OL*

Support Guide for information on how to install the adapter in the HP 9000 or Integrity

server.

After adding a new HyperFabric adapter, SMH tries to locate the HyperFabric software.

If SMH cannot locate the HyperFabric software, the new adapter cannot be used until

the software is installed (remember that software installation requires a system reboot).

If SMH locates the HyperFabric software, it determines whether the new adapter is

functional. If it is not functional, an error message is displayed.

If the new adapter is functional, SMH displays a message telling the user to configure

the adapter and start HyperFabric. If only one adapter is being added, issue the

clic_init -c command or use SMH to configure the adapter, and then issue the

clic_start command or use SMH to start HyperFabric. If multiple adapters are being

added, add all of the adapters first, and then run clic_init -c and clic_start or use

SMH. See “Performing the Configuration” on page 69 and “Starting HyperFabric” on

page 95 for more information about configuring and starting HyperFabric.
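The order of operations described above (add all adapters, then configure once, then start) could be scripted roughly as follows. This is a minimal sketch that assumes clic_init and clic_start are on the PATH and that it runs from a terminal, so any prompts from clic_init -c can still be answered; the `configure_and_start` function and its `run` parameter are hypothetical helpers, not part of HyperFabric.

```python
import subprocess


def configure_and_start(run=subprocess.run):
    """Run clic_init -c, then clic_start, stopping at the first failure."""
    for command in (["clic_init", "-c"], ["clic_start"]):
        # check=True makes subprocess.run raise CalledProcessError on a
        # non-zero exit status, so clic_start is skipped if configuration fails.
        run(command, check=True)
```

Call `configure_and_start()` only after every new adapter has been added, so that the configuration step runs once for all of them.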

CAUTION Do not change any configuration information for an existing HyperFabric adapter or

switch while you are using clic_init -c to configure a new adapter.

When you have completed the adapter installation, go to “Installing the Software” on

page 47.

Online Replacement (OLR) When replacing an interface card online, the

replacement card must be identical to the card being replaced (or at least be able to

operate using the same driver as the replaced card). This is referred to as like-for-like

replacement and should be adhered to, because using a similar but not identical card can

cause unpredictable results. For example, a newer version of the target card that is

identical to the older card in terms of hardware might contain an updated firmware

version that could potentially conflict with the current driver. An A6386A adapter,

for instance, must be replaced with another A6386A adapter. In addition, the old adapter

and new adapter must have the same revision levels.
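The like-for-like rule amounts to comparing the product number and the revision level of the two adapters. The following sketch shows that comparison; the `Adapter` type and the revision labels are hypothetical, and SMH's actual check may consider more than these two fields.

```python
from typing import NamedTuple


class Adapter(NamedTuple):
    product: str   # product number, e.g. "A6386A"
    revision: str  # hypothetical revision label


def is_like_for_like(old, new):
    """Return True only if both product number and revision level match."""
    return old.product == new.product and old.revision == new.revision
```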


When a replacement card is added to an HP 9000 system, the appropriate driver for that

card must be configured in the kernel before beginning the replacement operation. SMH

ensures the correct driver is present. (In most cases, the replacement card will be the

same type as a card already in the system, and this requirement will be automatically

met.) Keep the following things in mind:


Page 46


• If the necessary driver is not present and the driver is a dynamically loadable kernel

module (DLKM), it can be loaded manually. See the “Dynamically Loadable Kernel

Modules” section in “Interface Card OL* Support Guide” for more information.

• If the driver is static and not configured in the kernel, then the card cannot be added

online. The card could be physically inserted online, but no driver would claim it.

If there is any question about the driver’s presence, or if it is uncertain that the

replacement card is identical to the existing card, ioscan can be used together with rad

to investigate.
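The driver cases listed above reduce to a short decision. The sketch below restates them in Python for clarity; the function name and the returned strings are illustrative only and do not correspond to any SMH or rad output.

```python
def driver_action(present_in_kernel, is_dlkm):
    """Map the driver's state to the action described for card addition."""
    if present_in_kernel:
        return "proceed"
    if is_dlkm:
        # A dynamically loadable kernel module can be loaded by hand.
        return "load module manually, then proceed"
    # A static driver missing from the kernel leaves the card unclaimed.
    return "cannot add online: no driver would claim the card"
```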

If more than one operational HyperFabric adapter is present when SMH requests the

suspend operation for all ports on the target adapter, HyperFabric will redirect the

target adapter’s traffic to a local backup adapter using local failover. Client applications

using the replaced adapter will not be interrupted in any way.

If the adapter being replaced is active and it is the only operational HyperFabric

adapter on the HP 9000 system, SMH displays the following warning message:

WARNING: You have 1 operational HyperFabric card. If you go ahead with this

operation you will lose network access via HyperFabric until the on-line

replaced HyperFabric card becomes operational.

You are asked if you want to continue. If you reply Yes, client applications are

suspended. Replace the adapter according to the procedure described in the Interface

Card OL* Support Guide.

When an adapter has been replaced, client application activity resumes unless the TCP

timers or the application timers have expired.

CAUTION Do not use the clic_start command or the clic_shutdown command while an

installed adapter is suspended. Do not use SMH to start or stop HyperFabric while an

installed adapter is suspended. The operation will fail and an error message will be

displayed.

After a HyperFabric adapter has been replaced, SMH checks the replacement adapter to

make sure it is permitted according to the like-for-like rules. If the adapter is permitted,

SMH automatically activates it. If it is not permitted, SMH displays an error message.


Page 47

Installing the Software

This section describes the HyperFabric file structure and the steps necessary to load the

software. The software must be installed on each instance of the HP-UX operating

system in the fabric.

File Structure

The HyperFabric file structure is shown in Figure 3-1 below. Note that the structure is

shown for informational purposes only. The user cannot modify any of the files or move

them to a different directory.

Figure 3-1 HyperFabric File Structure

[Figure: directory tree rooted at /. Full paths for the files are given in the descriptions that follow.]

Directories shown: /etc, /opt, /resmon, /dictionary, /rc.config.d, /usr, /conf, /lib, /master.d, /clic, /bin, /sbin, /init.d, /firmware, /var/adm, /share, /man

Configuration and startup entries: clic_01, clic_global_conf, clic

Libraries: libclic_dlpi_drv.a, libha_drv.a, libclic_mgmt.a

Commands: clic_diag, clic_dump, clic_init, clic_mgmtd, clic_mond, clic_ping, clic_probe, clic_shutdown, clic_start, clic_stat

Firmware entries: clic_fw, clic_fw_1x32c, clic_fw_4x8c, clic_fw_4x32c, clic_fw_hf28c, clic_fw_hf232c, clic_fw_db

Trace and log files: clic_ip_drv.trc, clic_ip_drv.trc0, clic_ip_drv.trc1, clic_log, clic_log.old, OLDclic_log

Manual pages: man1m.Z


The commands and files used to administer HyperFabric typically have a prefix of

clic_. CLIC stands for CLuster InterConnect, and it is used to differentiate those

HyperFabric commands/files from other commands/files. For example, the HyperFabric

command clic_init is different from the HP-UX init command.

Each of the files shown in Figure 3-1 above is briefly described below:

• /etc/opt/resmon/dictionary/clic_01

The HyperFabric dictionary file for the Event Monitoring Service (EMS).

• /etc/rc.config.d/clic_global_conf

The global configuration file, which contains the IP addresses for each adapter and each HyperFabric switch (if any) in the fabric.

Page 48

• /sbin/init.d/clic

The system boot startup script for the HyperFabric management process.

• /var/adm/clic_ip_drv.trc

One of the software’s trace files. This file is created when the clic_diag -D TCP_IP command is run.

• /var/adm/clic_ip_drv.trc0

One of the HyperFabric software’s trace files. This is the primary file that is created when the clic_diag -C TCP_IP command is run.

• /var/adm/clic_ip_drv.trc1

One of the HyperFabric software’s trace files. This file is created when the clic_diag -C TCP_IP command is run, and the primary trace file (clic_ip_drv.trc0) becomes full.

• /var/adm/clic_log

The global log file that is updated by the HyperFabric management process.

• /var/adm/clic_log.old

The backup copy of the log file that is created when the log file grows larger than 100

Kbytes.

• /var/adm/OLDclic_log

The log file from the previous time the clic_start command was executed.

• /usr/conf/lib/libclic_dlpi_drv.a