Hitachi Universal Storage Platform V

Hitachi Universal Storage Platform VM

User and Reference Guide

FASTFIND LINKS

Document Organization

Product Version

Getting Help

Contents

MK-96RD635-04


Copyright © 2008 Hitachi Data Systems Corporation, ALL RIGHTS RESERVED

Notice: No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or stored in a database or retrieval system for any purpose without the express written permission of Hitachi Data Systems Corporation (hereinafter referred to as “Hitachi Data Systems”).

Hitachi Data Systems reserves the right to make changes to this document at any time without notice and assumes no responsibility for its use. Hitachi Data Systems products and services can only be ordered under the terms and conditions of Hitachi Data Systems’ applicable agreements. Some of the features described in this document may not be currently available. Refer to the most recent product announcement or contact your local Hitachi Data Systems sales office for information on feature and product availability.

This document contains the most current information available at the time of publication. When new and/or revised information becomes available, this entire document will be updated and distributed to all registered users.

Hitachi, the Hitachi logo, and Hitachi Data Systems are registered trademarks and service marks of Hitachi, Ltd. The Hitachi Data Systems logo is a trademark of Hitachi, Ltd.

Dynamic Provisioning, Hi-Track, ShadowImage, TrueCopy, and Universal Star Network are registered trademarks or trademarks of Hitachi Data Systems.

All other brand or product names are or may be trademarks or service marks of and are used to identify products or services of their respective owners.


Hitachi Universal Storage Platform V/VM User and Reference Guide


Contents

Preface
  Safety and Environmental Notices
  Intended Audience
  Product Version
  Document Revision Level
  Source Document(s) for this Revision
  Changes in this Revision
  Document Organization
  Referenced Documents
  Document Conventions
  Convention for Storage Capacity Values
  Getting Help
  Comments

Product Overview
  Universal Storage Platform V Family
  New and Improved Capabilities
  Specifications at a Glance
    Specifications for the Universal Storage Platform V
    Specifications for the Universal Storage Platform VM
  Software Products
Architecture and Components
  Hardware Architecture
    Multiple Data and Control Paths
    Storage Clusters
  Hardware Components
    Shared Memory
    Cache Memory
    Front-End Directors and Host Channels


    Back-End Directors and Array Domains
    Hard Disk Drives
    Service Processor
    Power Supplies
    Batteries
  Control Panel and Emergency Power-Off Switch
    Control Panel
    Emergency Power-Off Switch
  Intermix Configurations
    RAID-Level Intermix
    Hard Disk Drive Intermix
    Device Emulation Intermix
Functional and Operational Characteristics
  RAID Implementation
    Array Groups and RAID Levels
    Sequential Data Striping
    LDEV Striping Across Array Groups
  CU Images, LVIs, and LUs
    CU Images
    Logical Volume Images
    Logical Units
  Storage Navigator
  System Option Modes, Host Modes, and Host Mode Options
    System Option Modes
    Host Modes and Host Mode Options
  Mainframe Operations
    Mainframe Compatibility and Functionality
    Mainframe Operating System Support
    Mainframe Configuration
  Open-Systems Operations
    Open-Systems Compatibility and Functionality
    Open-Systems Host Platform Support
    Open-Systems Configuration
  Battery Backup Operations
Troubleshooting
  General Troubleshooting
  Service Information Messages
  Calling the Hitachi Data Systems Support Center


Units and Unit Conversions
Acronyms and Abbreviations
Index


Preface

This document describes the physical, functional, and operational characteristics of the Hitachi Universal Storage Platform V (USP V) and Hitachi Universal Storage Platform VM (USP VM) storage systems and provides general instructions for operating the USP V and USP VM.

Please read this document carefully to understand how to use this product, and maintain a copy for reference purposes.

This preface includes the following information:

Safety and Environmental Notices

Intended Audience

Product Version

Document Revision Level

Source Document(s) for this Revision

Changes in this Revision

Document Organization

Referenced Documents

Document Conventions

Convention for Storage Capacity Values

Getting Help

Comments

Notice: The use of the Hitachi Universal Storage Platform V and VM storage systems and all other Hitachi Data Systems products is governed by the terms of your agreement(s) with Hitachi Data Systems.


Safety and Environmental Notices

Federal Communications Commission (FCC) Statement

This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference, in which case the user will be required to correct the interference at his own expense.

“A READILY ACCESSIBLE DISCONNECT DEVICE WITH A CONTACT OPENING OF AT LEAST 3 MM MUST BE INSTALLED IN THE IMMEDIATE VICINITY OF THE CONSUMER INSTALLATION (4-POLE DISCONNECTION).”

German Machine Noise Information Ordinance (Maschinenlärminformationsverordnung, 3. GSGV), 18.01.1991: The maximum sound pressure level is 70 dB(A) or less in accordance with ISO 7779.

CLASS 1 LASER PRODUCT


WARNING: This is a Class A product. In a domestic environment this product may cause radio interference, in which case the user may be required to take adequate measures.



Intended Audience

This document is intended for system administrators, Hitachi Data Systems representatives, and authorized service providers who are involved in installing, configuring, and operating the Hitachi Universal Storage Platform V and/or Hitachi Universal Storage Platform VM storage systems.

This document assumes the following:

• The user has a background in data processing and understands RAID storage systems and their basic functions.

• The user is familiar with the host systems supported by the Hitachi Universal Storage Platform V/VM.

• The user is familiar with the equipment used to connect RAID storage systems to the supported host systems.

Product Version

This document revision applies to USP V/VM microcode 60-02-4x and higher.

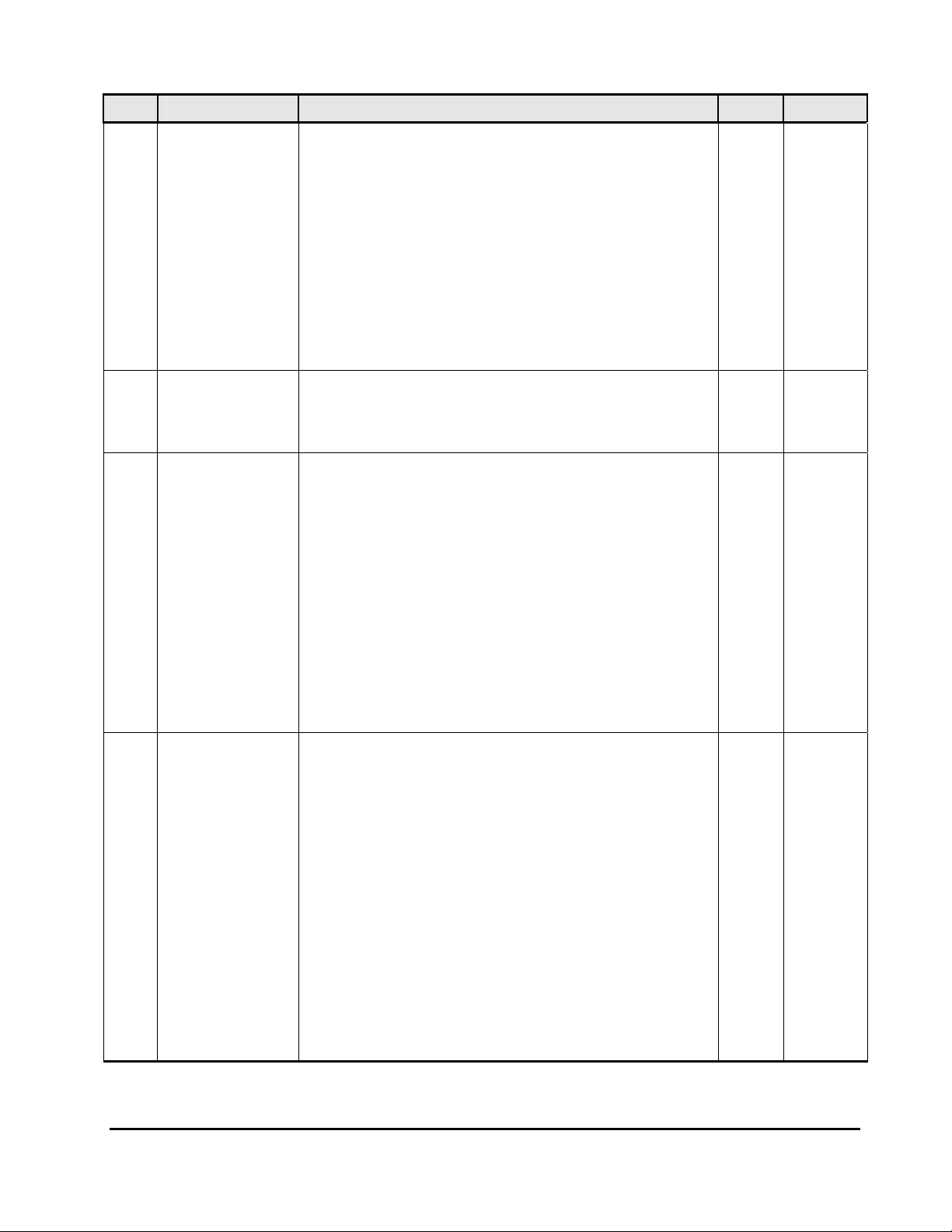

Document Revision Level

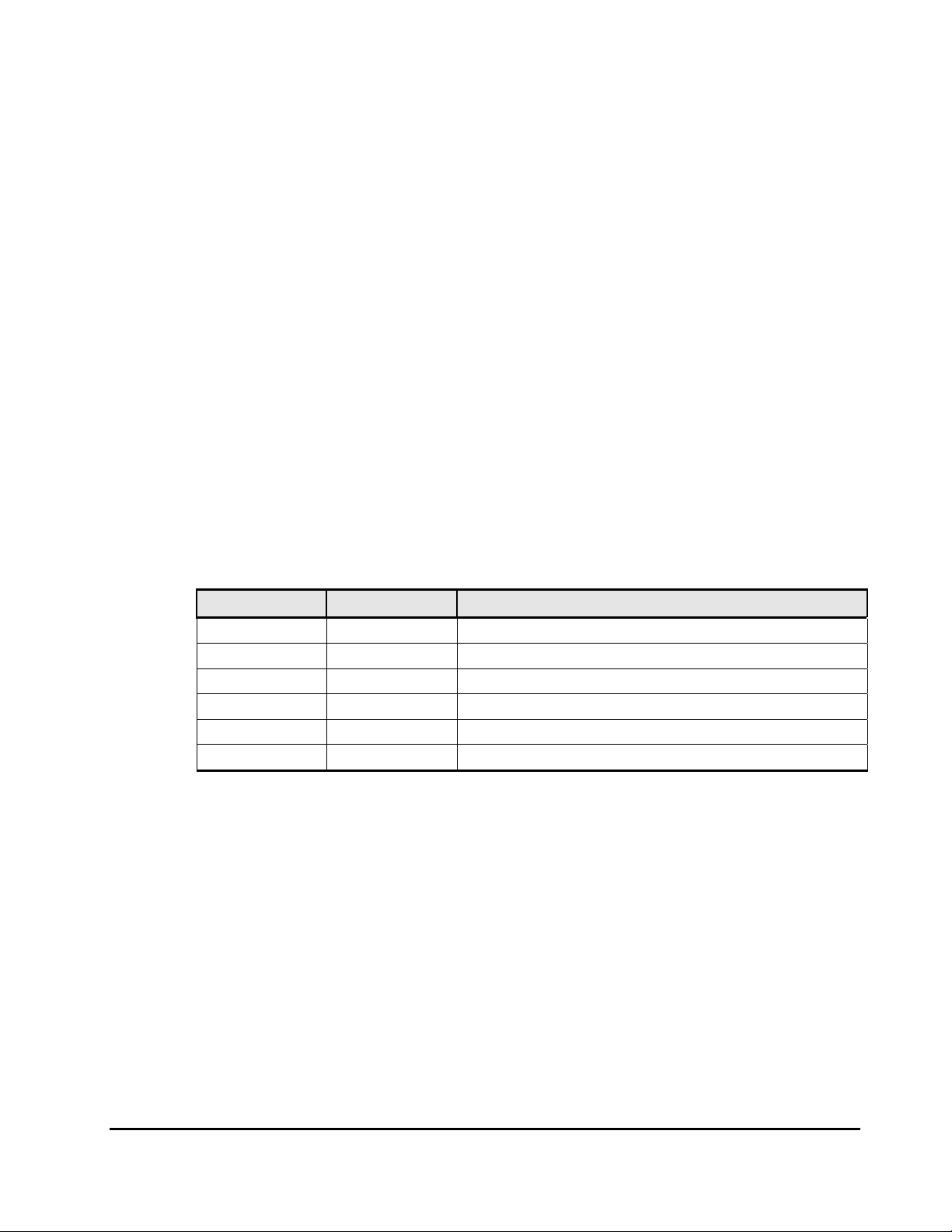

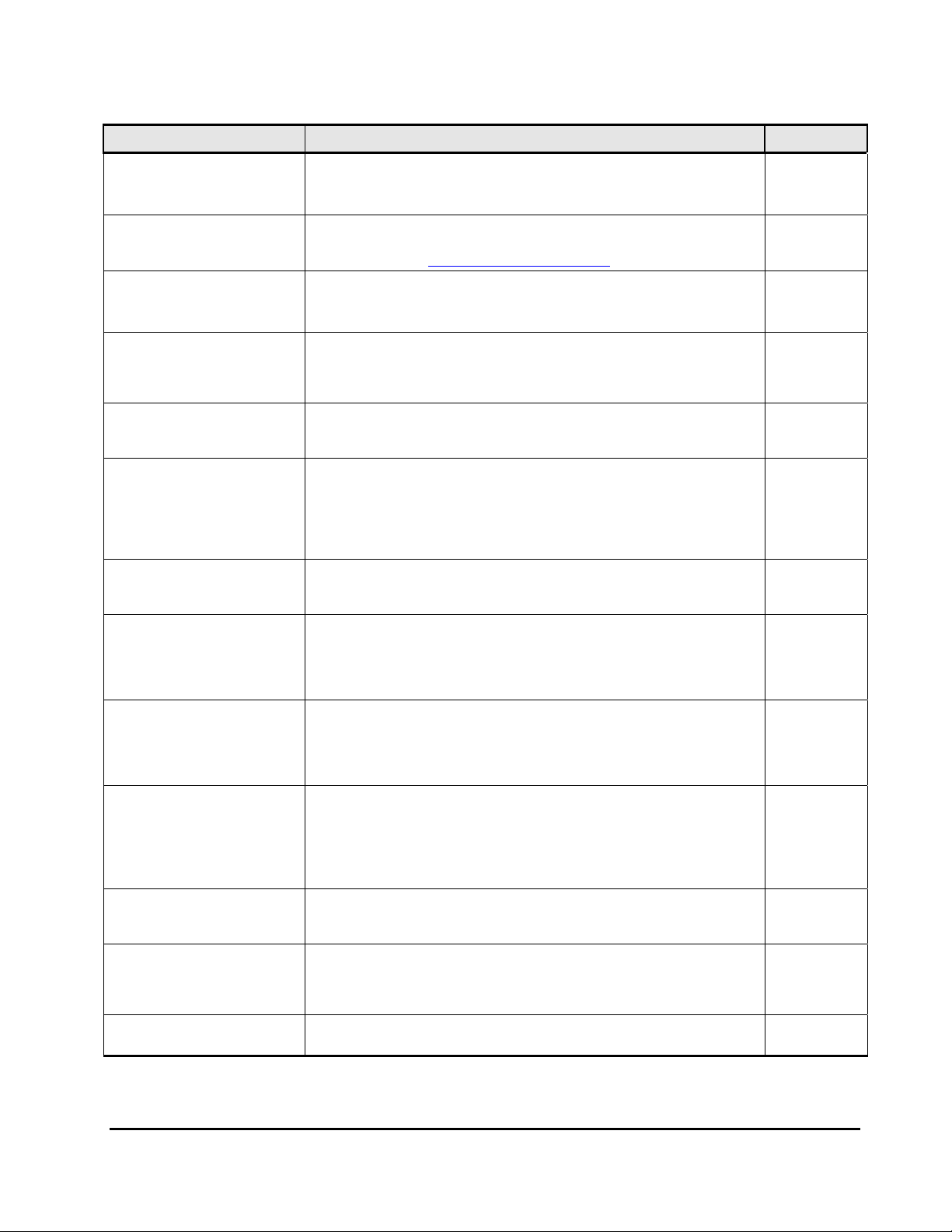

Revision        Date            Description
MK-96RD635-P    February 2007   Preliminary Release
MK-96RD635-00   May 2007        Initial Release, supersedes and replaces MK-96RD635-P
MK-96RD635-01   June 2007       Revision 1, supersedes and replaces MK-96RD635-00
MK-96RD635-02   September 2007  Revision 2, supersedes and replaces MK-96RD635-01
MK-96RD635-03   November 2007   Revision 3, supersedes and replaces MK-96RD635-02
MK-96RD635-04   April 2008      Revision 4, supersedes and replaces MK-96RD635-03

Source Document(s) for this Revision

• Exhibit M1, DKC610I Disk Subsystem, Hardware Specifications, revision 13

• Exhibit M1, DKC615I Disk Subsystem, Hardware Specifications, revision 5

• Public Mode for RAID600, R600_Public_Mode_2008_0314.xls


Changes in this Revision

• Added the 400-GB disk drive (Specifications at a Glance, Hard Disk Drives).

• Updated the maximum usable capacity values (Specifications at a Glance).

• Added a table of specifications for the disk drives (new Table 2-3).

• Updated the list of public system option modes (Table 3-1).

  – Added the following new modes: 545, 685, 689, 690, 697, 701, 704.

  – Modified the description of mode 467 as follows:

    • Changed the default from OFF to ON.

    • Added Universal Volume Manager to the list of affected functions.

    • Added a caution about setting mode 467 ON when using external volumes as secondary copy volumes.

    • Added a note about copy processing time and the prioritization of host I/O performance.

  – Removed mode 198.

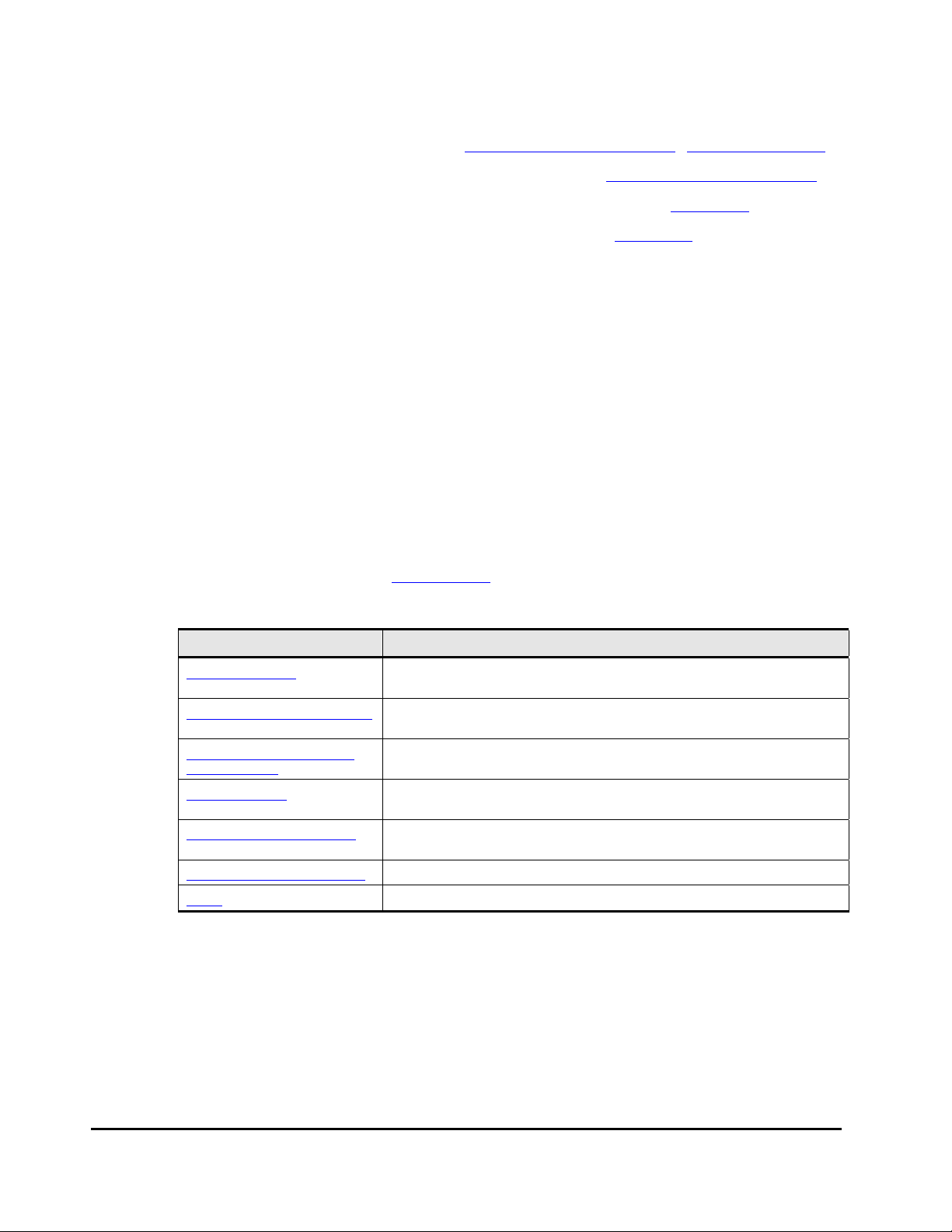

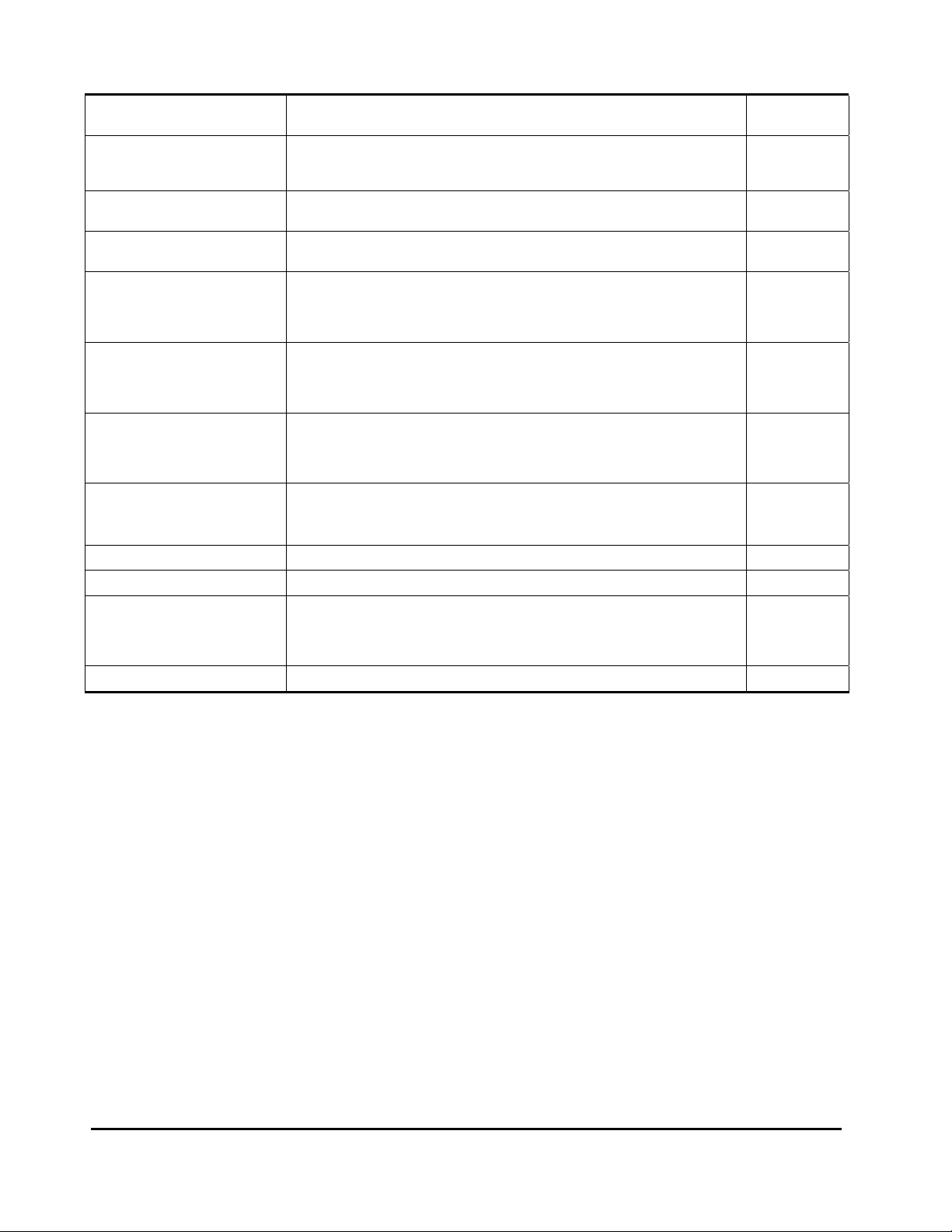

Document Organization

The following table provides an overview of the contents and organization of this document. Click a chapter title to go to that chapter. The first page of each chapter provides links to the sections in that chapter.

Product Overview
    Provides an overview of the Universal Storage Platform V/VM, including features, benefits, general function, and connectivity descriptions.

Architecture and Components
    Describes the Universal Storage Platform V/VM architecture and components.

Functional and Operational Characteristics
    Discusses the functional and operational capabilities of the Universal Storage Platform V/VM.

Troubleshooting
    Provides troubleshooting guidelines and customer support contact information for the Universal Storage Platform V/VM.

Units and Unit Conversions
    Provides conversions for standard (U.S.) and metric units of measure associated with the Universal Storage Platform V/VM.

Acronyms and Abbreviations
    Defines the acronyms and abbreviations used in this document.

Index
    Lists the topics in this document in alphabetical order.


Referenced Documents

Hitachi Universal Storage Platform V/VM documentation:

• Table 1-3 lists the user documents for Storage Navigator-based software.

• Table 1-4 lists the user documents for host- and server-based software.

• Table 3-5 lists the configuration guides for host attachment.

• Other referenced USP V/VM documents:

– USP V Installation Planning Guide, MK-97RD6668

– USP VM Installation Planning Guide, MK-97RD6679

IBM® documentation:

• Planning for IBM Remote Copy, SG24-2595

• DFSMSdfp Storage Administrator Reference, SC28-4920

• DFSMS MVS V1 Remote Copy Guide and Reference, SC35-0169

• OS/390 Advanced Copy Services, SC35-0395

• Storage Subsystem Library, 3990 Transaction Processing Facility Support RPQs, GA32-0134

• 3990 Operations and Recovery Guide, GA32-0253

• Storage Subsystem Library, 3990 Storage Control Reference for Model 6, GA32-0274


Document Conventions

The terms “Universal Storage Platform V” and “Universal Storage Platform VM” refer to all models of the Hitachi Universal Storage Platform V and VM storage systems, unless otherwise noted.

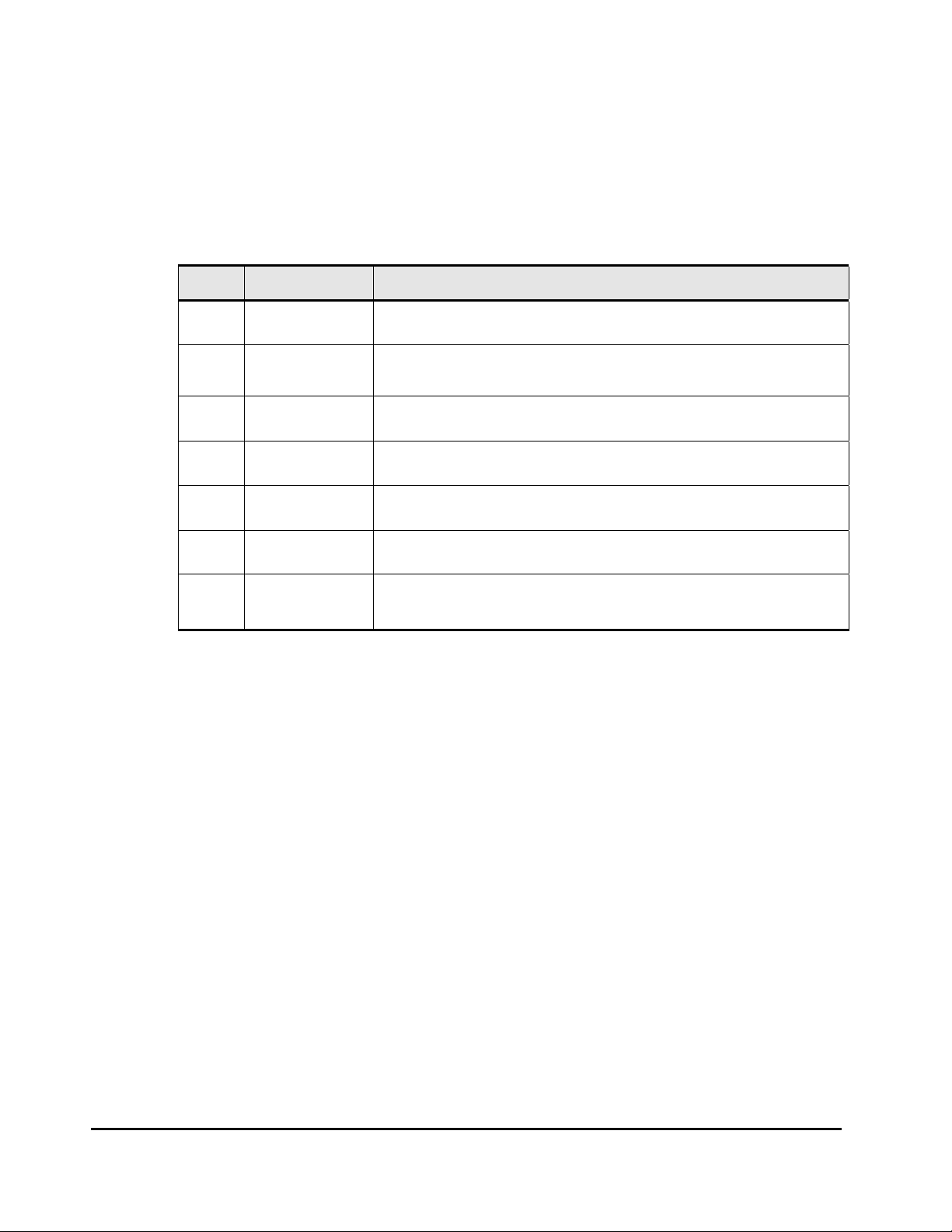

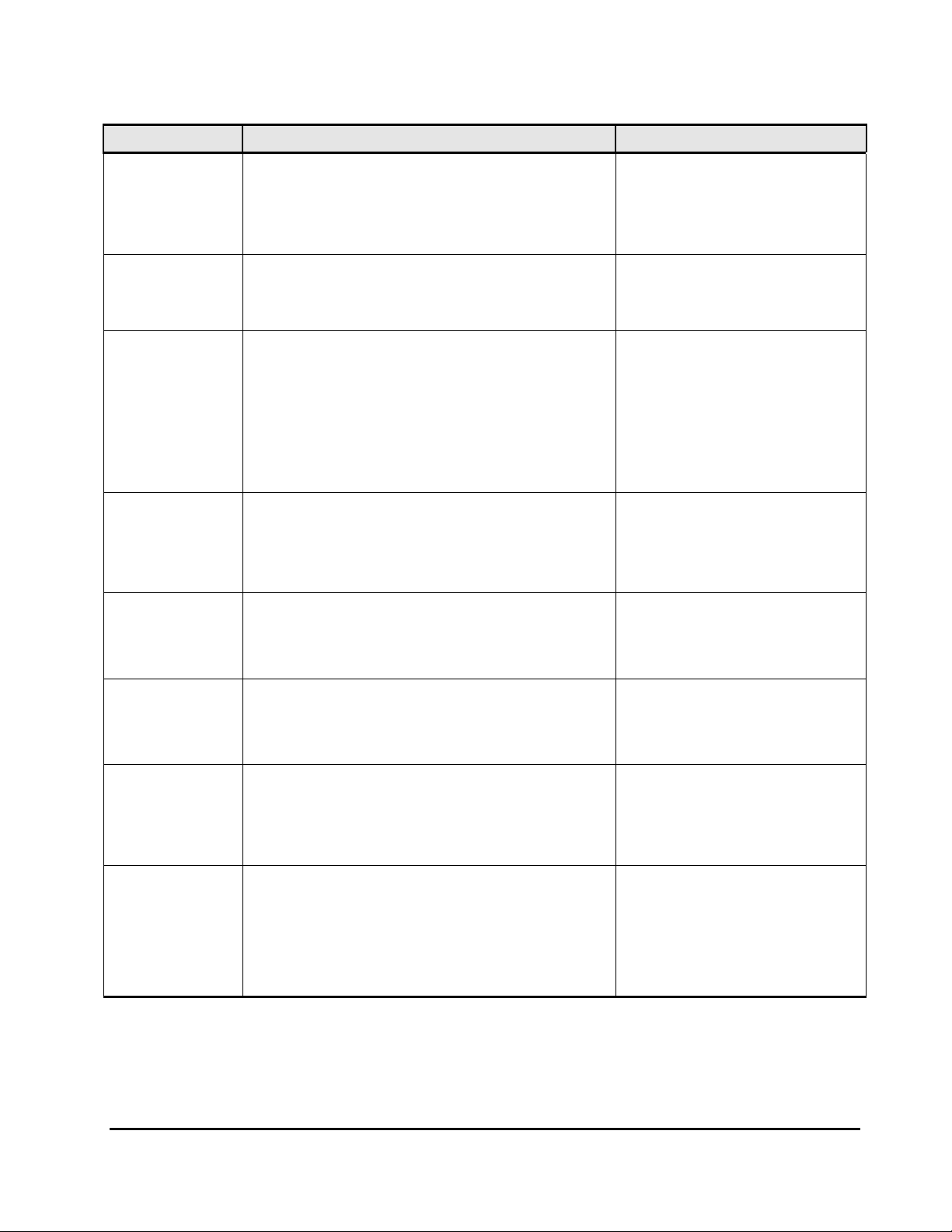

This document uses the following icons to draw attention to information:

Note
    Calls attention to important and/or additional information.

Tip
    Provides helpful information, guidelines, or suggestions for performing tasks more effectively.

Caution
    Warns the user of adverse conditions and/or consequences (e.g., disruptive operations).

WARNING
    Warns the user of severe conditions and/or consequences (e.g., destructive operations).

DANGER
    Provides information about how to avoid physical injury to yourself and others.

ELECTRIC SHOCK HAZARD!
    Warns the user of electric shock hazard. Failure to take appropriate precautions (e.g., do not touch) could result in serious injury.

ESD Sensitive
    Warns the user that the hardware is sensitive to electrostatic discharge (ESD). Failure to take appropriate precautions (e.g., grounded wrist strap) could result in damage to the hardware.

Convention for Storage Capacity Values

Physical storage capacity values (e.g., disk drive capacity) are calculated based on the following values:

1 KB = 1,000 bytes
1 MB = 1,000² bytes
1 GB = 1,000³ bytes
1 TB = 1,000⁴ bytes
1 PB = 1,000⁵ bytes

Logical storage capacity values (e.g., logical device capacity) are calculated based on the following values:

1 KB = 1,024 bytes
1 MB = 1,024² bytes
1 GB = 1,024³ bytes
1 TB = 1,024⁴ bytes
1 PB = 1,024⁵ bytes
1 block = 512 bytes
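Because the physical and logical conventions use different bases, the same number of bytes yields different figures under each. The following Python sketch applies the values listed above to convert a physical (decimal) capacity into bytes, logical (binary) units, and 512-byte blocks. The constant and function names are illustrative only and are not part of any Hitachi software.

```python
# Capacity conventions from "Convention for Storage Capacity Values".
# Names are illustrative only; they are not part of any Hitachi tool.

# Physical capacity (e.g., disk drive capacity): powers of 1,000.
DECIMAL = {"KB": 1000, "MB": 1000**2, "GB": 1000**3, "TB": 1000**4, "PB": 1000**5}

# Logical capacity (e.g., logical device capacity): powers of 1,024.
BINARY = {"KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4, "PB": 1024**5}

BLOCK_SIZE = 512  # 1 block = 512 bytes


def physical_to_bytes(value: float, unit: str) -> int:
    """Convert a physical (decimal) capacity such as '750 GB' to bytes."""
    return round(value * DECIMAL[unit])


def bytes_to_logical(num_bytes: int, unit: str) -> float:
    """Express a byte count in logical (binary) units."""
    return num_bytes / BINARY[unit]


def bytes_to_blocks(num_bytes: int) -> int:
    """Number of whole 512-byte blocks; any remainder is dropped."""
    return num_bytes // BLOCK_SIZE


if __name__ == "__main__":
    drive_bytes = physical_to_bytes(750, "GB")  # a drive rated at "750 GB"
    print(drive_bytes)                                   # 750000000000
    print(f"{bytes_to_logical(drive_bytes, 'GB'):.2f}")  # 698.49
    print(bytes_to_blocks(drive_bytes))                  # 1464843750
```

A 750-GB drive therefore corresponds to roughly 698.49 GB when the same bytes are counted in logical (1,024-based) units.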


Getting Help

If you need to call the Hitachi Data Systems Support Center, make sure to provide as much information about the problem as possible, including:

• The circumstances surrounding the error or failure.

• The exact content of any message(s) displayed on the host system(s).

• The exact content of any message(s) displayed by Storage Navigator.

• The service information messages (SIMs), including reference codes and severity levels, displayed by Storage Navigator and/or logged at the host.

The Hitachi Data Systems customer support staff is available 24 hours a day, seven days a week. If you need technical support, please call:

• United States: (800) 446-0744

• Outside the United States: (858) 547-4526

Comments

Please send us your comments on this document. Make sure to include the document title, number, and revision. Please refer to specific section(s) and paragraph(s) whenever possible.

• E-mail: doc.comments@hds.com

• Fax: 858-695-1186

• Mail:

Technical Writing, M/S 35-10

Hitachi Data Systems

10277 Scripps Ranch Blvd.

San Diego, CA 92131

Thank you! (All comments become the property of Hitachi Data Systems Corporation.)


1

Product Overview

This chapter provides an overview of the Universal Storage Platform V and VM storage systems.

Universal Storage Platform V Family

New and Improved Capabilities

Specifications at a Glance

Software Products


Universal Storage Platform V Family

The Hitachi Universal Storage Platform™ V family, the industry’s highest-performing and most scalable storage solution, represents the first implementation of a large-scale, enterprise-class virtualization layer combined with thin provisioning software, delivering virtualization of internal and external storage into one pool. Users realize the consolidation benefits of external storage virtualization together with the efficiency, power, and cooling advantages of thin provisioning in one integrated solution.

The Universal Storage Platform V family, which includes the USP V floor models and the rack-mounted USP VM, offers a wide range of storage and data services, including thin provisioning with Hitachi Dynamic Provisioning™ software, application-centric storage management and logical partitioning, and simplified and unified data replication across heterogeneous storage systems.

The Universal Storage Platform V family enables users to deploy applications within a new framework, leverage and add value to current investments, and more closely align IT with business objectives.

The Universal Storage Platform V family is an integral part of the Services Oriented Storage Solutions architecture from Hitachi Data Systems. These storage systems provide the foundation for matching application requirements to different classes of storage and deliver critical services such as:

• Business continuity services

• Content management services (search, indexing)

• Non-disruptive data migration

• Volume management across heterogeneous storage arrays

• Thin provisioning

• Security services (immutability, logging, auditing, data shredding)

• Data de-duplication

• I/O load balancing

• Data classification

• File management services

For further information on storage solutions and the Universal Storage Platform V and VM storage systems, please contact your Hitachi Data Systems account team.


New and Improved Capabilities

The Hitachi Universal Storage Platform V and VM storage systems offer the following new and improved capabilities as compared with the TagmaStore Universal Storage Platform and Network Storage Controller:

• NEW! Hitachi Dynamic Provisioning™

Hitachi Dynamic Provisioning is a new and advanced thin-provisioning software product that provides “virtual storage capacity” to simplify administration and addition of storage, eliminate application service interruptions, and reduce costs.

• Cache capacity

The USP V supports up to 256 GB (128 GB for TagmaStore USP).

• Shared memory capacity

The USP V supports up to 32 GB (12 GB for TagmaStore USP).

The USP VM supports up to 16 GB (6 GB for TagmaStore NSC).

• Total storage capacity (internal and external storage)

The USP V supports up to 247 PB (32 PB for TagmaStore USP).

The USP VM supports up to 96 PB (16 PB for TagmaStore NSC).

• Aggregate bandwidth

The USP V provides an aggregate bandwidth of up to 106 GB/sec (81 GB/sec for TagmaStore USP).

• Fibre-channel ports

The USP V supports up to 224 FC ports (192 for TagmaStore USP).

• FICON® ports

The USP V supports up to 112 FICON ports (96 for TagmaStore USP).

The USP VM supports up to 24 FICON ports (16 for TagmaStore NSC).

• ESCON® ports

The USP V supports up to 112 ESCON ports (96 for TagmaStore USP).

• Open-system logical devices

The USP VM supports up to 65,536 LDEVs (16,384 for TagmaStore NSC).


Specifications at a Glance

Specifications for the Universal Storage Platform V

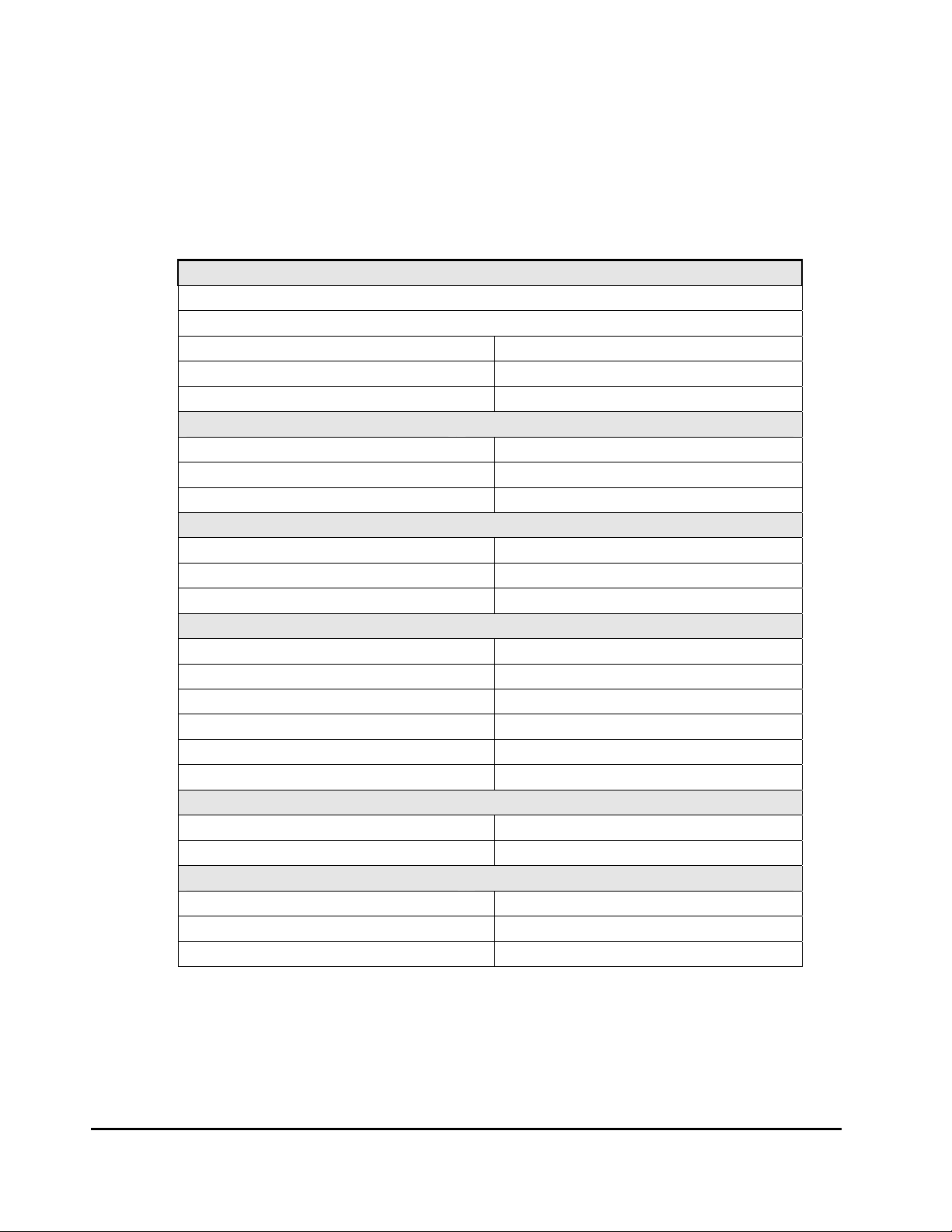

Table 1-1 provides a brief overview of the USP V specifications.

Table 1-1 Specifications – Universal Storage Platform V

Controller

Basic platform packaging unit: Integrated control/array frame and 1 to 4 optional array frames

Universal Star Network Crossbar Switch

Number of switches 8

Aggregate bandwidth 106 GB/sec

Aggregate IOPS 4.5 million

Cache Memory

Boards 32

Board capacity 4 GB or 8 GB

Maximum 256 GB

Shared Memory

Boards 8

Board capacity 4 GB

Maximum 32 GB

Front-End Directors (Connectivity)

Boards 14

Fibre-channel host ports per board 8 or 16

Maximum fibre-channel host ports 224

Virtual host ports 1,024 per physical port

Maximum FICON host ports 112

Maximum ESCON host ports 112

Logical Devices (LDEVs)—Maximum Supported

Open systems 65,536

Mainframe 65,536

Hard Disk Drives

Type (fibre channel) 73 GB, 146 GB, 300 GB, 400 GB, 750 GB

Number of drives (minimum–maximum) 4–1152

Spare drives per system (minimum–maximum) 1–16


Internal Raw Capacity

Minimum (73-GB disks) 82 TB

Maximum (750-GB disks) 850.8 TB

Maximum Usable Capacity—RAID-5

Open systems (750-GB disks) 739.3 TB

Mainframe (400-GB disks) 374.3 TB

Maximum Usable Capacity—RAID-6

Open systems (750-GB disks) 633.7 TB

Mainframe (400-GB disks) 318.5 TB

Maximum Usable Capacity—RAID-1+

Open systems (750-GB disks) 423.9 TB

Mainframe (400-GB disks) 207.7 TB

External Storage Support

Maximum internal and external capacity 247 PB

Virtual Storage Machines 32

Standard Back-End Directors 1-8

Operating System Support

Mainframe: IBM OS/390®, MVS/ESA™, MVS/XA™, VM/ESA®, VSE/ESA™, z/OS, z/OS.e, z/VM®, z/VSE™; Fujitsu MSP; Red Hat Linux for IBM S/390® and zSeries®

Open Systems: Sun Solaris, HP-UX, IBM AIX®, Microsoft® Windows, Novell NetWare, Red Hat and SuSE Linux, VMware ESX, HP Tru64, SGI IRIX, HP OpenVMS
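As a rough cross-check of the usable-capacity rows in Table 1-1, the following Python sketch scales the maximum raw capacity by the fraction of each array group that holds user data. The array-group layouts used here (7D+1P for RAID-5, 6D+2P for RAID-6, 2D+2D for RAID-1+) are assumptions made for illustration; Table 1-1 does not state which layouts its figures are based on. Under these assumptions, each listed value falls within about 1% below the simple estimate; the small remaining difference is not explained in this section.

```python
# Rough cross-check of the usable-capacity rows in Table 1-1 (USP V,
# open systems, 750-GB disks). The array-group layouts below are ASSUMED
# for illustration; the table itself does not state them.
ASSUMED_LAYOUTS = {
    "RAID-5": (7, 1),   # assumed 7D+1P: 7 data drives, 1 parity drive
    "RAID-6": (6, 2),   # assumed 6D+2P: 6 data drives, 2 parity drives
    "RAID-1+": (2, 2),  # assumed 2D+2D: 2 data drives, 2 mirror drives
}

RAW_TB = 850.8  # maximum internal raw capacity, 750-GB disks (Table 1-1)
LISTED_USABLE_TB = {"RAID-5": 739.3, "RAID-6": 633.7, "RAID-1+": 423.9}


def data_fraction(data_drives: int, redundant_drives: int) -> float:
    """Fraction of an array group's raw capacity that holds user data."""
    return data_drives / (data_drives + redundant_drives)


def estimated_usable_tb(raw_tb: float, level: str) -> float:
    """Raw capacity scaled by the data fraction of the assumed layout."""
    data, redundant = ASSUMED_LAYOUTS[level]
    return raw_tb * data_fraction(data, redundant)


if __name__ == "__main__":
    for level, listed in LISTED_USABLE_TB.items():
        estimate = estimated_usable_tb(RAW_TB, level)
        print(f"{level}: estimate {estimate:.1f} TB, listed {listed} TB, "
              f"listed/estimate {listed / estimate:.3f}")
```

The same function can be pointed at other rows of the table, but the result is only as good as the assumed layout for each RAID level.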

Product Overview 1-5

Hitachi Universal Storage Platform V/VM User and Reference Guide

Page 20

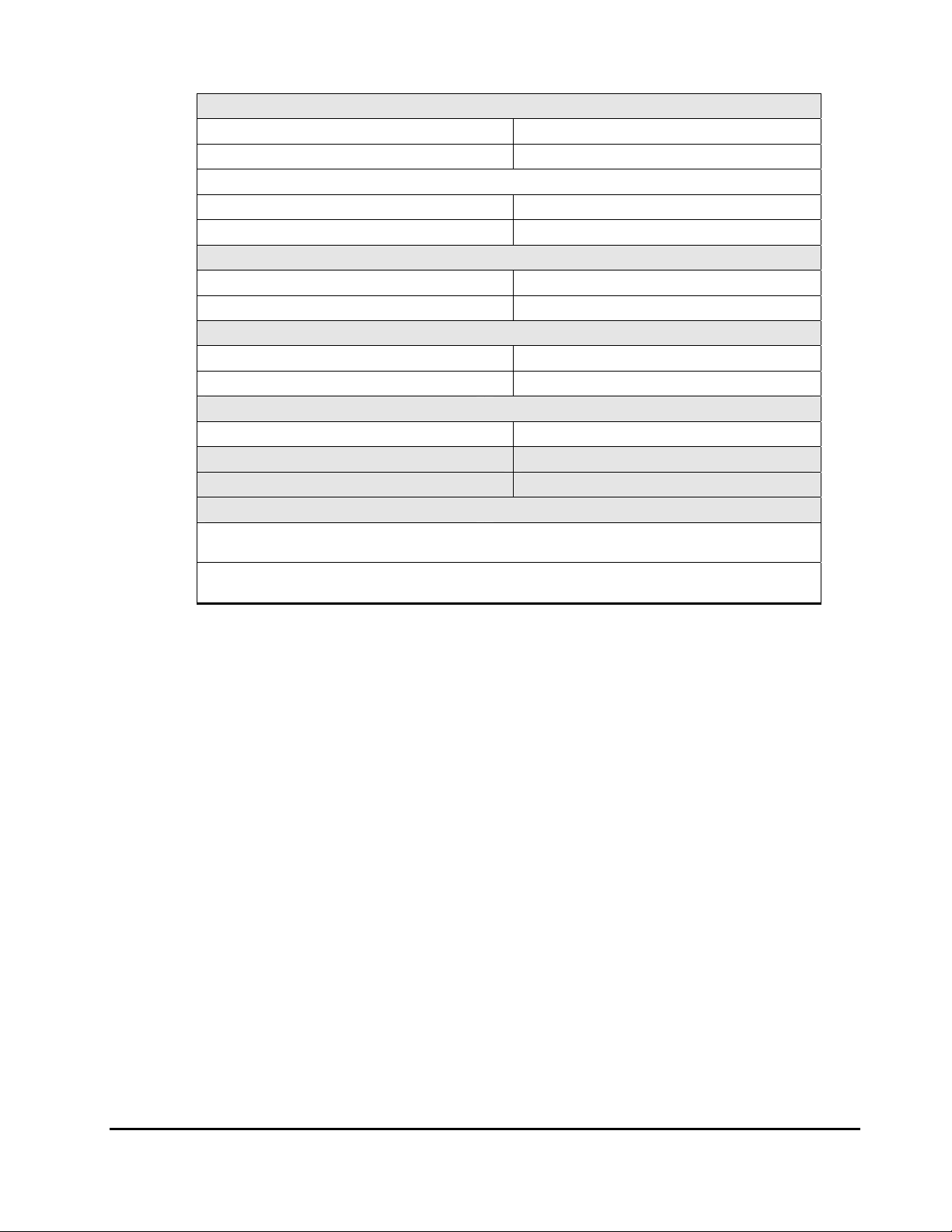

Specifications for the Universal Storage Platform VM

Table 1-2 provides a brief overview of the USP VM specifications.

Table 1-2 Specifications – Universal Storage Platform VM

Controller

Single-rack configuration: controller and up to two disk chassis

Optional second rack: up to two disk chassis

Universal Star Network Crossbar Switch

Number of switches 2

Aggregate bandwidth 13.3 GB/sec

Aggregate IOPS 1.2 million

Cache Memory

Boards 8

Board capacity 4 or 8 GB

Maximum 64 GB

Shared Memory

Boards 4

Board capacity 4 GB

Maximum 16 GB

Front-End Directors (Connectivity)

Boards 3

Fibre-channel host ports per feature 8 or 16

Fibre-channel port performance 4 Gb/sec

Maximum number of fibre-channel host ports 48

Virtual host ports 1,024 per physical port

Maximum FICON host ports 24

Maximum ESCON host ports 24

Logical Devices (LDEVs)—Maximum Supported

Open systems 65,536

Mainframe 65,536

Hard Disk Drives

Capacity (fibre channel) 73 GB, 146 GB, 300 GB, 400 GB, 750 GB

Number (minimum–maximum) 0–240

Spare drives per system (minimum–maximum) 1–16

Internal Raw Capacity

Minimum (73-GB disks) 0 GB (146 GB)

Maximum (750-GB disks) 177 TB


Maximum Usable Capacity—RAID-5

Open systems (750-GB disks) 144.7 TB

Mainframe (400-GB disks) 73.3 TB

Maximum Usable Capacity—RAID-6

Open systems (750-GB disks) 124 TB

Mainframe (400-GB disks) 62.4 TB

Maximum Usable Capacity—RAID-1+

Open systems (750-GB disks) 87.1 TB

Mainframe (400-GB disks) 42.7 TB

External Storage Support

Maximum internal and external capacity 96 PB

Virtual Storage Machines 8

Standard Back-End Director 1

Operating System Support

Mainframe: IBM OS/390®, MVS/ESA™, MVS/XA™, VM/ESA®, VSE/ESA™, z/OS, z/OS.e, z/VM®, z/VSE™; Fujitsu MSP; Red Hat Linux for IBM S/390® and zSeries®

Open Systems: Sun Solaris, HP-UX, IBM AIX®, Microsoft® Windows, Novell NetWare, Red Hat and SuSE Linux, VMware ESX, HP Tru64, HP OpenVMS


Software Products

The Universal Storage Platform V and VM provide many advanced features and functions that increase data accessibility and deliver enterprise-wide coverage of online data copy/relocation, data access/protection, and storage resource management. Hitachi Data Systems’ software products and solutions provide a full set of industry-leading copy, availability, resource management, and exchange software to support business continuity, database backup and restore, application testing, and data mining.

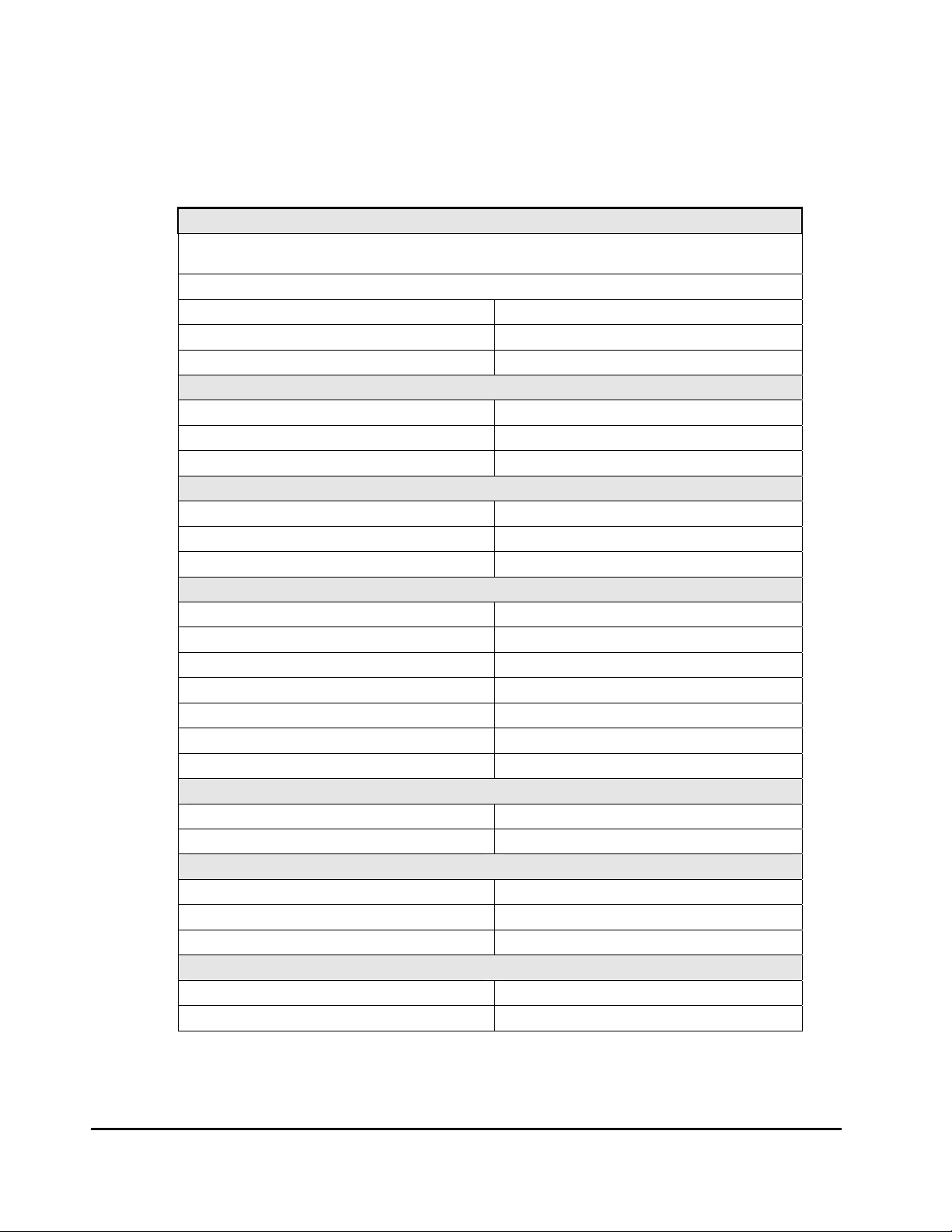

Table 1-3 lists and describes the Storage Navigator-based software for the Universal Storage Platform V and VM. Table 1-4 lists and describes the host/server-based software for the Universal Storage Platform V and VM.

NEW – Hitachi Dynamic Provisioning

Hitachi Dynamic Provisioning is a new and advanced thin-provisioning software product for the Universal Storage Platform V/VM that provides “virtual storage capacity” to simplify administration and addition of storage, eliminate application service interruptions, and reduce costs.

Dynamic Provisioning allows storage to be allocated to an application without being physically mapped until it is used. This “just-in-time” provisioning decouples the provisioning of storage to an application from the physical addition of storage capacity to the storage system, which achieves higher overall storage utilization. Dynamic Provisioning also transparently spreads many individual I/O workloads across multiple physical disks. This I/O workload balancing feature directly reduces performance and capacity management expenses by eliminating I/O bottlenecks across multiple applications.

For further information on Hitachi Dynamic Provisioning, please contact your Hitachi Data Systems account team, or visit Hitachi Data Systems online at www.hds.com.
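The “just-in-time” allocation described for Dynamic Provisioning can be illustrated with a toy model of thin provisioning. In this Python sketch, which is not the Dynamic Provisioning implementation, each virtual volume advertises a large virtual size but takes a page from a shared pool only on the first write to a given virtual page. The names Pool, VirtualVolume, and PoolExhaustedError, the page granularity, the disk count, and the round-robin placement of pool pages across disks (a simple stand-in for the workload spreading mentioned above) are all hypothetical.

```python
from dataclasses import dataclass, field


class PoolExhaustedError(Exception):
    """Raised when a write needs a new page but the pool has none left."""


@dataclass
class Pool:
    """Hypothetical shared pool of fixed-size physical pages."""
    total_pages: int
    num_disks: int = 4  # hypothetical number of disks backing the pool
    next_free: int = 0

    def allocate(self) -> int:
        if self.next_free >= self.total_pages:
            raise PoolExhaustedError("no free pages left in the pool")
        page = self.next_free
        self.next_free += 1
        return page

    def disk_for(self, page: int) -> int:
        # Round-robin placement spreads consecutive pages across disks.
        return page % self.num_disks


@dataclass
class VirtualVolume:
    """Volume whose virtual size may exceed the pool's physical size."""
    virtual_pages: int
    pool: Pool
    page_map: dict = field(default_factory=dict)  # virtual page -> pool page

    def write(self, vpage: int) -> int:
        """Return the pool page backing vpage, allocating it on first write."""
        if not 0 <= vpage < self.virtual_pages:
            raise IndexError("write beyond the volume's virtual size")
        if vpage not in self.page_map:
            self.page_map[vpage] = self.pool.allocate()
        return self.page_map[vpage]

    @property
    def allocated_pages(self) -> int:
        return len(self.page_map)


if __name__ == "__main__":
    pool = Pool(total_pages=100)
    vol_a = VirtualVolume(virtual_pages=1000, pool=pool)  # 10x the pool size
    vol_b = VirtualVolume(virtual_pages=1000, pool=pool)
    vol_a.write(0)
    vol_a.write(0)  # rewrite of the same page: no new allocation
    vol_b.write(999)
    print(vol_a.allocated_pages, vol_b.allocated_pages, pool.next_free)  # 1 1 2
```

The two 1,000-page volumes consume only two pages of the 100-page pool. The PoolExhaustedError path shows the cost of over-committing: once the shared pool is empty, writes to unallocated pages fail, so pool usage matters in any thin-provisioning scheme.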


Table 1-3 Storage Navigator-Based Software

Name (Documents)
    Description

Hitachi Storage Navigator (MK-96RD621)
Hitachi Storage Navigator Messages (MK-96RD613)
    Obtains system configuration and status information and sends user-requested commands to the storage systems. Serves as the integrated user interface for all Resource Manager components.

NEW: Hitachi Dynamic Provisioning (MK-96RD641)
    Provides “virtual storage capacity” to simplify administration and addition of storage, eliminate application service interruptions, and reduce costs. See Hitachi Dynamic Provisioning.

Hitachi TrueCopy (MK-96RD622)
Hitachi TrueCopy for IBM z/OS (MK-96RD623)
    Enables the user to perform remote copy operations between storage systems in different locations. TrueCopy provides synchronous and asynchronous copy modes for open-system and mainframe data.

Hitachi ShadowImage (MK-96RD618)
Hitachi ShadowImage for IBM z/OS (MK-96RD619)
    Allows the user to create internal copies of volumes for purposes such as application testing and offline backup. Can be used in conjunction with TrueCopy to maintain multiple copies of data at primary and secondary sites.

Hitachi Compatible Mirroring for IBM FlashCopy (MK-96RD614)
    Provides compatibility with the IBM FlashCopy mainframe host software function, which performs server-based data replication for mainframe data.

Hitachi Universal Replicator (MK-96RD624)
Hitachi Universal Replicator for IBM z/OS (MK-96RD625)
    Provides a RAID storage-based hardware solution for disaster recovery which enables fast and accurate system recovery, particularly for large amounts of data which span multiple volumes. Using UR, you can configure and manage highly reliable data replication systems using journal volumes to reduce chances of suspension of copy operations.

Hitachi Compatible Replication for IBM XRC* (MK-96RD610)
    Provides compatibility with the IBM Extended Remote Copy (XRC) mainframe host software function, which performs server-based asynchronous remote copy operations for mainframe LVIs.

Hitachi Copy-on-Write Snapshot (MK-96RD607)
    Provides ShadowImage functionality using less storage system capacity and less processing time than ShadowImage by using “virtual” secondary volumes. COW Snapshot is useful for copying and managing data in a short time with reduced cost. ShadowImage provides higher data integrity.

Hitachi Universal Volume Manager (MK-96RD626)
    Realizes the virtualization of the storage system. Users can connect other storage systems to the USP V/VM and access the data on the external storage system over virtual devices on the USP V/VM. Functions such as TrueCopy and Cache Residency can be performed on the external data.

Hitachi Virtual Partition Manager (MK-96RD629)
    Provides storage logical partition and cache logical partition:
    Storage logical partition allows you to divide the available storage among various users to reduce conflicts over usage.
    Cache logical partition allows you to divide the cache into multiple virtual cache memories to reduce I/O contention.

Hitachi LUN Manager (MK-96RD615)
    Enables users to configure the fibre-channel ports and devices (LUs) for operational environments (for example, arbitrated-loop and fabric topologies, host failover support).

Hitachi SNMP Agent (MK-96RD620)
    Provides support for SNMP monitoring and management. Includes Hitachi-specific MIBs and enables SNMP-based reporting on status and alerts. The SNMP agent on the SVP gathers usage and error information and transfers the information to the SNMP manager on the host.

Audit Log (MK-96RD606)
    Provides detailed records of all operations performed using Storage Navigator (and the SVP).

Product Overview 1-9

Hitachi Universal Storage Platform V/VM User and Reference Guide

Page 24

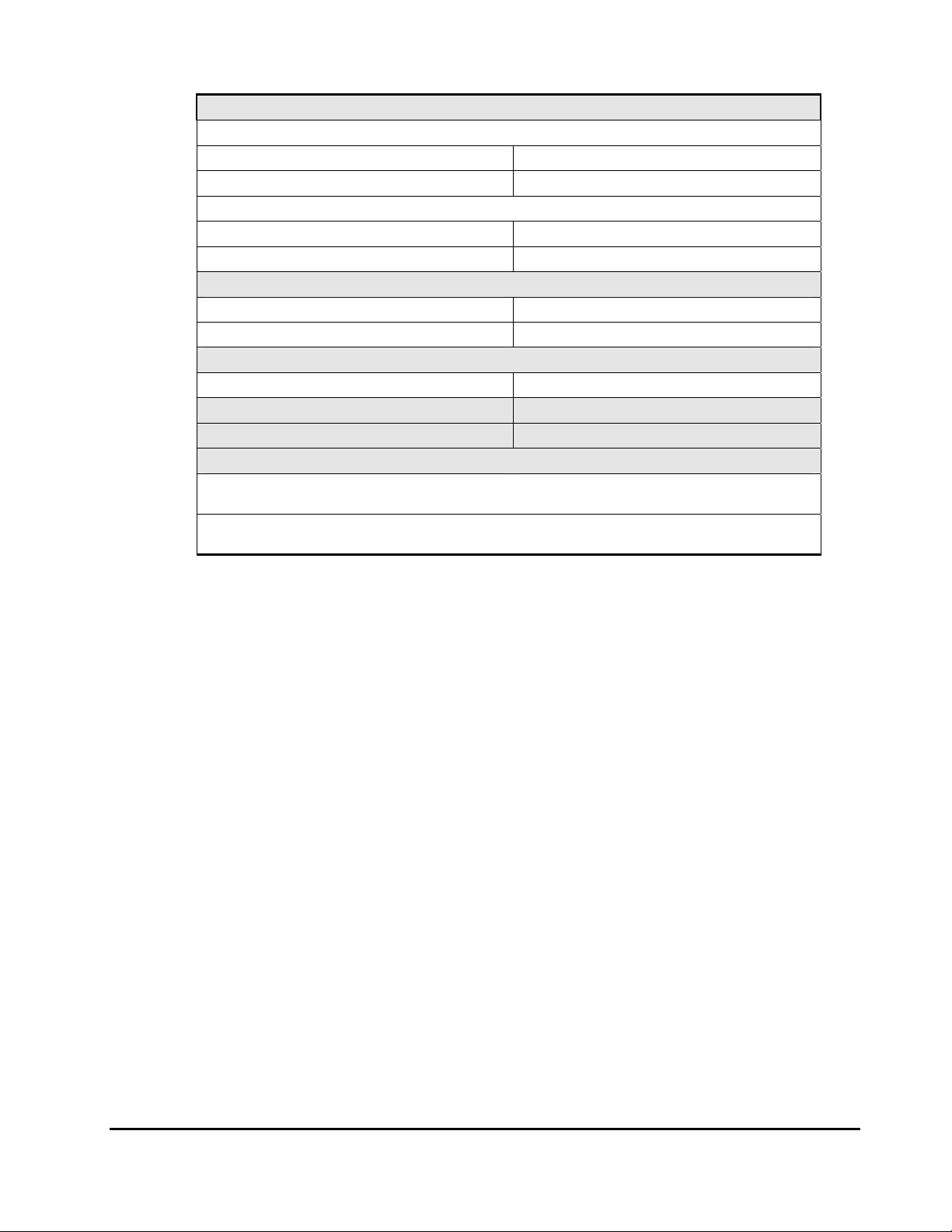

Encrypted Communications

Hitachi LUN Expansion

Hitachi Virtual LVI/LUN

Hitachi Cache Residency

Manager

Hitachi Compatible PAV

Hitachi LUN Security

Hitachi Volume Security

Hitachi Database Validator*

Hitachi Data Retention Utility

Hitachi Volume Retention

Manager

Hitachi Performance Monitor Performs detailed monitoring of storage system and volume activity. MK-96RD617

Hitachi Volume Migration Performs automatic relocation of volumes to optimize performance. MK-96RD617

Hitachi Server Priority

Manager*

Volume Shredder Enables users to overwrite data on logical volumes with dummy data. MK-96RD630

Allows users to employ SSL-encrypted communications with the

Hitachi Universal Storage Platfo r m V/VM.

Allows open-system users to concatenate multiple LUs into single LUs

to enable open-system hosts to acc ess the data on the entire

Universal Storage Platform V/VM using fewer logical units.

Enables users to convert single volumes (LVIs or LUs ) into multiple

smaller volumes to improve data access performance.

Allows users to “lock” and “unlock” data into cache in real time to

optimize access to your most frequently accessed data.

Enables the mainframe host to issue multiple I/O requests in parallel

to single LDEVs in the USP V/VM. Compatible PAV provides

compatibility with the IBM Workload Manager (WLM) host software

function and supports both static and dynamic PAV functionality.

Allows users to restrict host access to data on the USP V/VM. Opensystem users can restrict host access to LUs based on the host’s world

wide name (WWN). Mainframe users can restrict host access to LVIs

based on node IDs and logical partition (LPAR) number s.

Prevents corrupted data environments by identifying and rejecting

corrupted data blocks before they are written onto the storage disk,

thus minimizing risk and potential costs in backup, restore, and

recovery operations.

Allows users to protect data from I/O operations performed by hosts.

Users can assign an access attribute to each logi cal volume to restrict

read and/or write operations, preventing unauthorized access to data.

Allows open-system users to designate prioritized ports (for example,

for production servers) and non-prioritized ports (for example, for

development servers) and set thresholds and upper limits for the I/O

activity of these ports.

MK-96RD631

MK-96RD616

MK-96RD630

MK-96RD609

MK-96RD608

MK-96RD615

MK-96RD628

MK-96RD611

MK-96RD612

MK-96RD627

MK-96RD617

* Please contact your Hitachi Data Systems account team for the latest

information on the availability of these features.


Table 1-4 Host/Server-Based Software

Name Description Documents

Hitachi Command Control Interface

Hitachi Cross-OS File Exchange

Hitachi Code Converter

HiCommand Global Link Availability Manager

Hitachi Dynamic Link Manager

HiCommand Device Manager

HiCommand Provisioning Manager

Hitachi Business Continuity Manager

HiCommand Replication Monitor

Enables open-system users to perform data replication

and data protection operations by issuing commands

from the host to the Hitachi storage systems. The CCI

software supports scripting and provides failover and

mutual hot standby functionality in cooperation with

host failover products.

Enables users to transfer data between mainframe and

open-system platforms using the FICON and/or ESCON

channels, for high-speed data transfer without requiring

network communication links or tape.

Provides simple, integrated, single-point, multipath

storage connection management and reporting.

Improves system reliability and reduces downtime by

automated path health checks, reporting alerts and

error information from hosts, and assisting with rapid

troubleshooting. Administrators can optimize application

performance by controlling path bandwidth (per host

LUN load balancing), and keep applications online while

performing tasks that require taking a path down by

easily switching to and from alternate paths.

Provides automatic load balancing, path failover, and

recovery capabilities in the event of a path failure.

Enables users to manage the Hitachi storage systems

and perform functions (e.g., LUN Manager,

ShadowImage) from virtually any location via the

Device Manager Web Client, command line interface

(CLI), and/or third-party application.

Designed to handle a variety of storage systems to

simplify storage management operations and reduce

costs. Works together with HiCommand Device Manager

to provide the functionality to integrate, manipulate,

and manage storage using provisioning plans.

Enables mainframe users to make Point-in-Time (PiT)

copies of production data, without quiescing the

application or causing any disruption to end-user

operations, for such uses as application testing,

business intelligence, and disaster recovery for business

continuance.

Supports management of storage replication (copy pair)

operations, enabling users to view (report) the

configuration, change the status, and troubleshoot copy

pair issues. Replication Monitor is particularly effective

in environments that include multiple storage systems

or multiple physical locations, and in environments in

which various types of volume replication functionality

(such as both ShadowImage and TrueCopy) are used.

User and Reference Guide: MK-90RD011

User’s Guide: MK-96RD647

Code Converter: MK-94RD253

User’s Guide: MK-95HC106

Installation & Admin: MK-95HC107

Messages: MK-95HC108

Concepts & Planning: MK-96HC144

For AIX: MK-92DLM111

For HP-UX: MK-92DLM112

For Linux: MK-92DLM113

For Solaris: MK-92DLM114

For Windows: MK-92DLM129

Web Client: MK-91HC001

Server Inst & Config: MK-91HC002

CLI: MK-91HC007

Messages: MK-92HC016

Agent: MK-92HC019

User’s Guide: MK-93HC035

Server: MK-93HC038

Messages: MK-95HC117

Installation: MK-95HC104

Reference Guide: MK-95HC105

User’s Guide: MK-94RD247

Messages: MK-94RD262

Install & Config: MK-96HC131

Messages: MK-96HC132

User’s Guide: MK-94HC093


HiCommand Tuning Manager

HiCommand Protection Manager

HiCommand Tiered Storage Manager

Hitachi Copy Manager for TPF

Hitachi Cache Manager

Hitachi Dataset Replication for z/OS

Provides intelligent and proactive performance and

capacity monitoring as well as reporting and forecasting

capabilities of storage resources.

Systematically controls storage systems,

backup/recovery products, databases, and other system

components to provide efficient and reliable data

protection using simple operations without complex

procedures or expertise.

Enables users to relocate data non-disruptively from one

volume to another for purposes of Data Lifecycle

Management (DLM). Helps improve the efficiency of the

entire data storage system by enabling quick and easy

data migration according to the user’s environment and

requirements.

Enables TPF users to control DASD copy functions on

Hitachi RAID storage systems from TPF through an

interface that is simple to install and use.

Enables users to perform Cache Residency Manager

operations from the mainframe host system. Cache

Residency Manager allows you to place specific data in

cache memory to enable virtually immediate access to

this data.

Operates together with the ShadowImage feature.

Rewrites the OS management information (VTOC,

VVDS, and VTOCIX) and dataset name and creates a

user catalog for a ShadowImage target volume after a

split operation. Provides the prepare, volume divide,

volume unify, and volume backup functions to enable

use of a ShadowImage target volume.

Server Installation: MK-95HC109

Getting Started: MK-96HC120

Server Administration: MK-92HC021

User’s Guide: MK-92HC022

CLI: MK-96HC119

Performance Reporter: MK-93HC033

Agent Admin Guide: MK-92HC013

Agent Installation: MK-96HC110

Hardware Agent: MK-96HC111

OS Agent: MK-96HC112

Database Agent: MK-96HC113

Messages: MK-96HC114

User’s Guide: MK-94HC070

Console: MK-94HC071

Command Reference: MK-94HC072

Messages: MK-94HC073

Server: MK-94HC089

User’s Guide: MK-94HC090

CLI: MK-94HC091

Messages: MK-94HC092

Administrator’s Guide: MK-92RD129

Messages: MK-92RD130

Operations Guide: MK-92RD131

User’s Guide: MK-96RD646

User’s Guide: MK-96RD648


2

Architecture and Components

This chapter describes the architecture and components of the Hitachi Universal Storage Platform V and VM storage systems:

• Hardware Architecture

• Hardware Components

• Control Panel and Emergency Power-Off Switch

• Intermix Configurations


Hardware Architecture

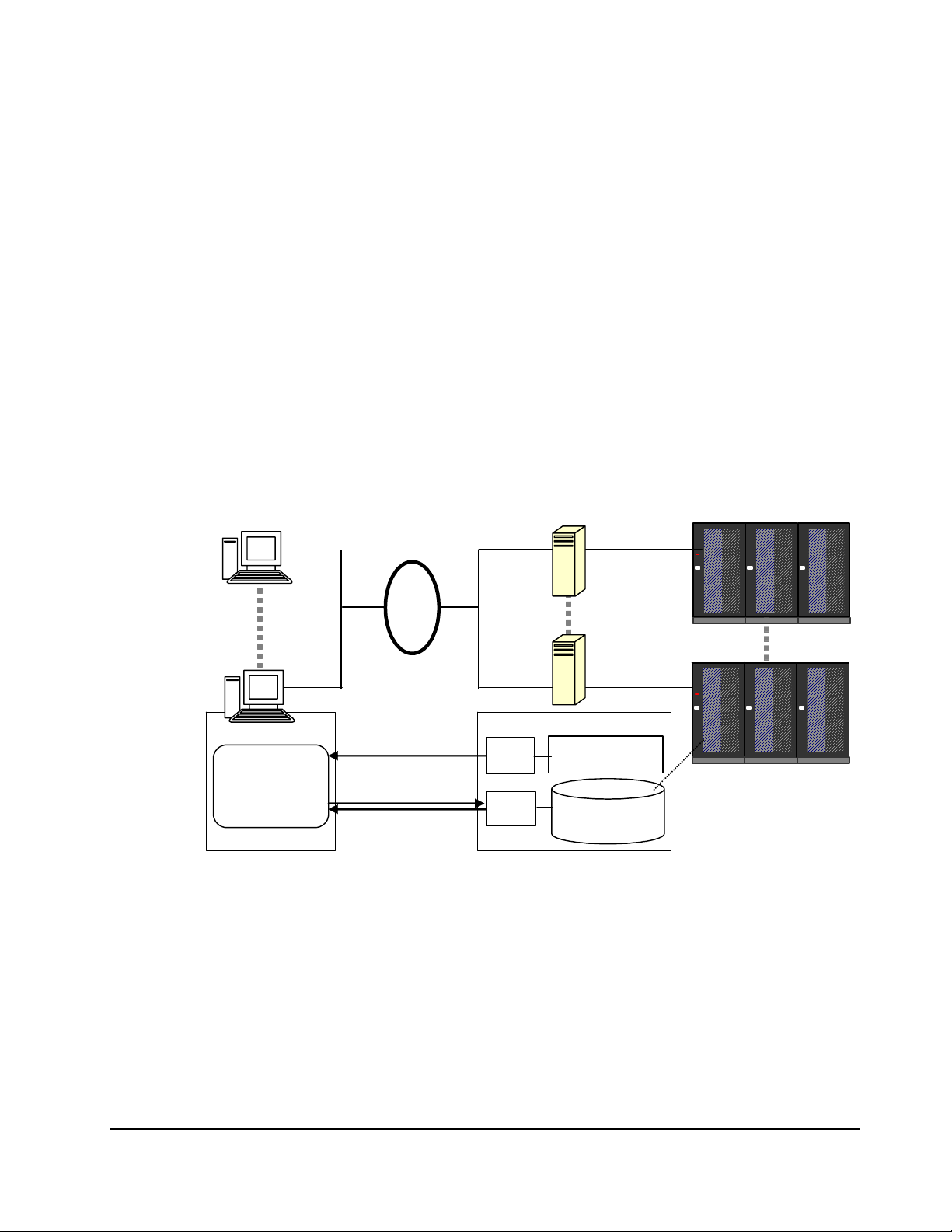

Figure 2-1 illustrates the hardware architecture of the Universal Storage Platform V storage system. Figure 2-2 illustrates the hardware architecture of the Universal Storage Platform VM storage system. As shown, the USP V and USP VM share the same hardware architecture, differing only in the number of features (FEDs, BEDs, etc.), the number of hard disk drives (HDDs), and the power supply.

Figure 2-1 Universal Storage Platform V Hardware Architecture (diagram labels: input power, AC-Boxes, AC-DC power supplies, and battery boxes in the power supply; FEDs, BEDs (DKAs), CSWs, CMAs (cache), SMAs, SM path, and cache path in the controller; channel interface; disk unit connected over FC-AL (4 Gbps/port) with up to 64 disk paths; max. 1,152 HDDs per storage system)


Figure 2-2 Universal Storage Platform VM Hardware Architecture (diagram labels: input power, PDB-Boxes, PDUs, AC-DC power supplies, and battery boxes in the power supply; CHAs, DKA, CSWs, CMAs, SMAs, SM path, and cache path in the controller; channel interface; disk unit connected over FC-AL (4 Gbps/port) with up to 8 disk paths; max. 240 HDDs per storage system)

Multiple Data and Control Paths

The Universal Storage Platform V/VM employs the proven Hi-Star™ crossbar switch architecture, which uses multiple point-to-point data and command paths to provide redundancy and improve performance. Each data and command path is independent. The individual paths between the front-end or back-end directors and cache are steered by high-speed cache switch cards (CSWs). The USP V/VM does not have any common buses, thus eliminating the performance degradation and contention that can occur in a bus architecture. All data stored on the USP V/VM is moved into and out of cache over the redundant high-speed paths.


Storage Clusters

Each controller consists of two redundant controller halves called storage

clusters. Each storage cluster contains all physical and logical elements (for

example, power supplies, channel adapters, disk adapters, cache, control

storage) needed to sustain processing within the storage system. Both storage

clusters should be connected to each host using an alternate path scheme, so

that if one storage cluster fails, the other storage cluster can continue

processing for the entire storage system.

The front-end and back-end directors are split between clusters to provide full

backup. Each storage cluster also contains a separate, duplicate copy of cache

and shared memory contents. In addition to the high-level redundancy that

this type of storage clustering provides, many of the individual components

within each storage cluster contain redundant circuits, paths, and/or

processors to allow the storage cluster to remain operational even with

multiple component failures. Each storage cluster is powered by its own set of

power supplies, which can provide power for the entire storage system in the

event of power supply failure. Because of this redundancy, the USP V/VM can

sustain the loss of multiple power supplies and still continue operation.

The redundancy and backup features of the USP V/VM eliminate all active

single points of failure, no matter how unlikely, to provide an additional level

of reliability and data availability.
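The alternate-path recommendation above can be illustrated from the host's side. This is a minimal sketch, assuming a host that simply uses the first healthy path and treats a storage-cluster failure as the loss of every path landing on that cluster; `HostPath`, `covers_both_clusters`, `fail_cluster`, and `select_path` are hypothetical names and do not correspond to any Hitachi or host multipath API (in practice, path management is handled by software such as Hitachi Dynamic Link Manager).

```python
from dataclasses import dataclass


@dataclass
class HostPath:
    name: str          # hypothetical label for one host-to-storage path
    cluster: int       # storage cluster (1 or 2) that the path lands on
    healthy: bool = True


def covers_both_clusters(paths: list[HostPath]) -> bool:
    """True if the host has at least one path to each storage cluster."""
    return {p.cluster for p in paths} >= {1, 2}


def fail_cluster(paths: list[HostPath], cluster: int) -> None:
    """Mark every path that lands on the failed storage cluster as unusable."""
    for p in paths:
        if p.cluster == cluster:
            p.healthy = False


def select_path(paths: list[HostPath]) -> HostPath:
    """Return the first healthy path; raise if neither cluster is reachable."""
    for p in paths:
        if p.healthy:
            return p
    raise RuntimeError("no healthy path to either storage cluster")


paths = [HostPath("hba0 -> CL1", 1), HostPath("hba1 -> CL2", 2)]
print(covers_both_clusters(paths))  # True
print(select_path(paths).name)      # hba0 -> CL1
fail_cluster(paths, 1)
print(select_path(paths).name)      # hba1 -> CL2
```

A host wired to only one cluster would fail `covers_both_clusters`, which is exactly the configuration the alternate path scheme is meant to avoid.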


Hardware Components

The USP V/VM hardware includes the controller, disk unit, and power supply

components. Each component is connected over the cache paths, shared

memory paths, and/or disk paths. The USP V/VM controller is fully redundant

and has no active single point of failure. All components can be repaired or

replaced without interrupting access to user data.

The main hardware components of the USP V and VM storage systems are:

• Shared Memory

• Cache Memory

• Host Channels and Front-End Directors

• Back-End Directors and Array Domains

• Hard Disk Drives

• Service Processor

• Power Supplies

• Batteries


Shared Memory

The nonvolatile shared memory contains the cache directory and configuration

information for the USP V/VM storage system. The path group arrays (for

example, for dynamic path selection) also reside in the shared memory. The

shared memory is duplexed, and each side of the duplex resides on the first

two shared memory cards, which are in clusters 1 and 2. In the event of a

power failure, shared memory is protected for at least 36 hours by battery

backup.

The Universal Storage Platform V can be configured with up to 32 GB of shared

memory, and the Universal Storage Platform VM can be configured with up to

16 GB of shared memory. The size of the shared memory is determined by

several factors, including total cache size, number of logical devices (LDEVs),

and replication function(s) in use. Any required increase beyond the base size

is automatically shipped and configured during the installation or upgrade

process.

Cache Memory

The Universal Storage Platform V can be configured with up to 256 GB of

cache, and the Universal Storage Platform VM can be configured with up to

64 GB of cache memory. All cache memory in the USP V/VM is nonvolatile and

is protected for at least 36 hours by battery backup.

The Universal Storage Platform V and VM storage systems place all read and

write data in cache. The amount of fast-write data in cache is dynamically

managed by the cache control algorithms to provide the optimum amount of

read and write cache, depending on the workload read and write I/O

characteristics.

The cache is divided into two equal areas (called cache A and cache B) on separate cards. Cache A is in cluster 1, and cache B is in cluster 2. Write data is normally written to both cache A and cache B with one channel write operation, so that the data is always duplicated (duplexed) across logic and power boundaries. If one copy of write data is defective or lost, the other copy is immediately destaged to disk. This “duplex cache” design ensures full data integrity in the unlikely event of a cache memory or power-related failure.

Note: Mainframe hosts can specify special attributes (for example, cache fast

write (CFW) command) to write data (typically sort work data) without write

duplexing. This data is not duplexed and is usually given a discard command

at the end of the sort, so that the data will not be destaged to the disk drives.
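A toy model of the duplex-cache behavior described above, assuming plain dictionaries stand in for cache A, cache B, and the disk drives. `DuplexCache` and its methods are hypothetical names for illustration only; the `duplex=False` flag loosely stands in for the CFW case in the note, and the model says nothing about how the cache control algorithms actually manage memory.

```python
class DuplexCache:
    """Toy model of duplexed write caching across cache A (cluster 1) and cache B (cluster 2)."""

    def __init__(self) -> None:
        self.cache_a: dict[str, bytes] = {}  # cluster 1
        self.cache_b: dict[str, bytes] = {}  # cluster 2
        self.disk: dict[str, bytes] = {}     # destaged data

    def write(self, track: str, data: bytes, duplex: bool = True) -> None:
        # A normal channel write places the data in both cache sides.
        self.cache_a[track] = data
        if duplex:
            self.cache_b[track] = data
        # duplex=False stands in for a CFW-style write (e.g. sort work data)
        # that the host asked to keep without write duplexing.

    def discard(self, track: str) -> None:
        # End-of-sort discard: the data is dropped and never destaged.
        self.cache_a.pop(track, None)
        self.cache_b.pop(track, None)

    def lose_side(self, side: str) -> None:
        """Simulate losing one cache side and destage the surviving copies at once."""
        lost, survivor = (
            (self.cache_a, self.cache_b) if side == "A" else (self.cache_b, self.cache_a)
        )
        for track in list(lost):
            if track in survivor:
                self.disk[track] = survivor[track]  # remaining copy goes to disk
        lost.clear()


cache = DuplexCache()
cache.write("vol1/track7", b"payroll")
cache.write("sortwk/track1", b"tmp", duplex=False)
cache.lose_side("A")
print(cache.disk)  # {'vol1/track7': b'payroll'}
```

The non-duplexed sort work track is not recovered when cache A is lost, which is the trade-off the host accepts when it requests that kind of write.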


Front-End Directors and Host Channels

The Universal Storage Platform V and VM support all-mainframe, all-open-system, and multiplatform configurations. The front-end directors (FEDs)

process the channel commands from the hosts and manage host access to

cache. In the mainframe environment, the front-end directors perform CKD-to-FBA and FBA-to-CKD conversion for the data in cache.

Each front-end director feature (pair of boards) is composed of one type of

host channel interface: fibre-channel, FICON, or Extended Serial Adapter

(ExSA) (compatible with ESCON protocol). The channel interfaces on each

board can transfer data simultaneously and independently.

The FICON and fibre-channel FED features are available in shortwave

(multimode) and longwave (single mode) versions. When configured with

shortwave features, the USP V/VM can be located up to 500 meters (approximately 1,640

feet) from the host. When configured with longwave features, the USP V/VM

can be located up to ten kilometers from the host(s).

• FICON. The FICON features provide data transfer speeds of up to 4 Gbps

and have 8 ports per feature (pair of boards).

Note: FICON data transmission rates vary according to configuration:

S/390 Parallel Enterprise Servers - Generation 5 (G5) and Generation 6

(G6) only support FICON at 1 Gbps.

z800 and z900 series hosts have the following possible configurations:

– FICON channel will operate at 1 Gbps ONLY.

– FICON EXPRESS channel transmission rates will vary according to

microcode release. If microcode is 3G or later, the channel will auto-negotiate to set a 1-Gbps or 2-Gbps transmission rate. If microcode is

earlier than 3G, the channel will operate at 1 Gbps ONLY.

For further information on FICON connectivity, refer to the Mainframe Host

Attachment and Operations Guide (MK-96RD645), or contact your Hitachi

Data Systems representative.

• ESCON. The ExSA features provide data transfer speeds of up to 17

MB/sec and have 8 ports per feature (pair of boards). Each ExSA channel

can be directly connected to a CHPID or a serial channel director. Shared

serial channels can be used for dynamic path switching. The USP V/VM also

supports the ESCON Extended Distance Feature (XDF).

• Fibre-Channel. The fibre-channel features provide data transfer speeds of

up to 4 Gbps and can have either 8 or 16 ports per feature (pair of

boards). The USP V/VM supports shortwave (multimode) and longwave

(single-mode) versions of fibre-channel ports on the same adapter board.

Note: Fibre-channel connectivity is also supported for IBM mainframe

attachment when host FICON channel paths are defined to operate in fibre-channel protocol (FCP) mode.
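The FICON note above is effectively a small decision table. The sketch below encodes only the host cases the note lists (S/390 G5/G6, and z800/z900 with FICON or FICON EXPRESS channels) plus the shortwave and longwave distance limits stated for the FED features. `ficon_link_rates_gbps`, `MAX_DISTANCE_M`, and the boolean microcode flag are hypothetical simplifications, not a Hitachi or IBM interface; any host not named in the note raises an error instead of guessing.

```python
# Distance limits stated for shortwave and longwave FED features, in meters.
MAX_DISTANCE_M = {"shortwave": 500, "longwave": 10_000}


def ficon_link_rates_gbps(host: str, channel: str = "FICON",
                          microcode_3g_or_later: bool = False) -> set[int]:
    """Possible FICON link rates (Gbps) for the host cases listed in the note."""
    host = host.upper()
    channel = channel.upper()
    if host in {"G5", "G6"}:  # S/390 Parallel Enterprise Servers
        return {1}
    if host in {"Z800", "Z900"}:
        if channel == "FICON":
            return {1}
        if channel == "FICON EXPRESS":
            # Microcode 3G or later auto-negotiates 1 or 2 Gbps; earlier is 1 Gbps only.
            return {1, 2} if microcode_3g_or_later else {1}
    raise ValueError(f"{host}/{channel} is not covered by the note; see MK-96RD645")


print(ficon_link_rates_gbps("z900", "FICON Express", microcode_3g_or_later=True))  # {1, 2}
```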


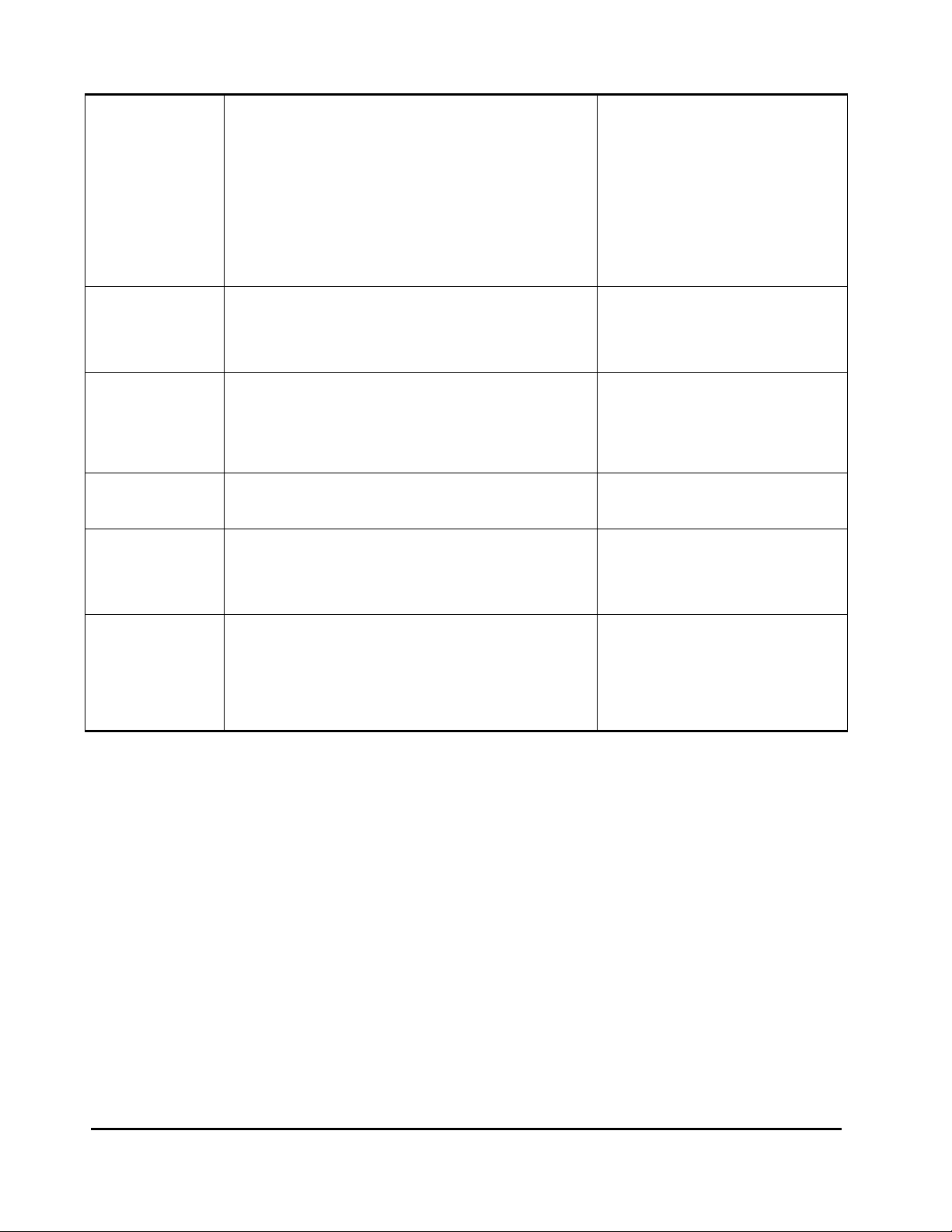

Table 2-1 lists the specifications and configurations for the front-end directors

and specifies the number of channel connections for each configuration.

Table 2-1 Front-End Director and Channel Specifications

| Parameter | Specifications |
|---|---|
| Number of front-end director features | USP V: 1 – 8, 14 when FEDs are installed in BED slots; USP VM: 1 – 3 |
| Simultaneous data transfers per FED pair: FICON | 8 |
| Simultaneous data transfers per FED pair: ExSA (ESCON) | 8 |
| Simultaneous data transfers per FED pair: Fibre-channel | 8, 16 |
| Maximum data transfer rate: FICON | 400 MB/sec (4 Gbps) |
| Maximum data transfer rate: ExSA (ESCON) | 17 MB/sec |
| Maximum data transfer rate: Fibre-channel | 400 MB/sec (4 Gbps) |
| Physical interfaces per FED pair: FICON | 8 |
| Physical interfaces per FED pair: ExSA (ESCON) | 8 |
| Physical interfaces per FED pair: Fibre-channel | 8, 16 |
| Max. physical FICON interfaces per system | USP V: 112; USP VM: 24 |
| Max. physical ExSA interfaces per system | USP V: 112; USP VM: 24 |
| Max. physical FC interfaces per system | USP V: 224; USP VM: 48 |
| Logical paths per FICON port | 2105 emulation: 65,536 (1024 host paths × 64 CUs); 2107 emulation: 261,120 (1024 host paths × 255 CUs) |
| Logical paths per ExSA (ESCON) port | 512 (32 host paths × 16 CUs)* |
| Max. FICON logical paths per system | 2105 emulation: 131,072; 2107 emulation: 522,240 |
| Max. ExSA (ESCON) logical paths per system | 8,192 |
| Maximum LUs per fibre-channel port | 2048 |
| Maximum LDEVs per storage system | USP V: 130,560 (256 LDEVs × 510 CUs); USP VM: 65,280 |

*Note: When the number of devices per CHL image is limited to a maximum of 1024, 16 CU images can be assigned per CHL image. If one CU includes 256 devices, the maximum number of CUs per CHL image is limited to 4.
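The logical-path and LDEV limits in Table 2-1 are simple products of host paths (or LDEVs) and CU images. The sketch below reproduces that arithmetic and encodes one reading of the CHL-image note: the usable CU images per CHL image are assumed to be the smaller of 16 and 1,024 divided by the devices per CU. `max_cus_per_chl_image` is a hypothetical helper, not part of any Hitachi tool.

```python
# Each per-port logical-path figure in Table 2-1 is host paths x CU images.
LOGICAL_PATHS_PER_PORT = {
    "FICON, 2105 emulation": 1024 * 64,
    "FICON, 2107 emulation": 1024 * 255,
    "ExSA (ESCON)": 32 * 16,
}

# Maximum LDEVs for the USP V = LDEVs per CU x CUs, as given in Table 2-1.
MAX_LDEVS_USP_V = 256 * 510


def max_cus_per_chl_image(devices_per_cu: int, device_limit: int = 1024,
                          cu_image_limit: int = 16) -> int:
    """CU images that fit in one CHL image under a per-image device limit (assumed rule)."""
    if devices_per_cu <= 0:
        raise ValueError("devices_per_cu must be positive")
    return min(cu_image_limit, device_limit // devices_per_cu)


for name, paths in LOGICAL_PATHS_PER_PORT.items():
    print(f"{name}: {paths:,}")
print(f"Max. LDEVs (USP V): {MAX_LDEVS_USP_V:,}")  # Max. LDEVs (USP V): 130,560
print(max_cus_per_chl_image(256))                   # 4
```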


Back-End Directors and Array Domains

The back-end director (BED) features control the transfer of data between the

disk drives and cache. The BEDs are installed in pairs for redundancy and

performance. The USP V can be configured with up to eight BED pairs,

providing up to 64 concurrent data transfers to and from the disk drives. The

USP VM is configured with one BED pair, which provides eight concurrent data

transfers to and from the disk drives.

The disk drives are connected to the BED pairs by fibre cables using an

arbitrated-loop (FC-AL) topology. Each BED pair has eight independent fibre

back-end paths controlled by eight back-end microprocessors. Each dual-ported fibre-channel disk drive is connected through its two ports to each

board in a BED pair over separate physical paths for improved performance as

well as redundancy.

Table 2-2 lists the BED specifications. Each BED pair contains eight buffers

(one per fibre path) that support data transfer to and from cache. Each dual-ported disk drive can transfer data over either port. Each of the two paths

shared by the disk drive is connected to a separate board in the BED pair to

provide alternate path capability. Each BED pair is capable of eight

simultaneous data transfers to or from the HDDs.

Table 2-2 BED Specifications

| Parameter | Specifications |
|---|---|
| Number of back-end director features | USP V: 1 – 8; USP VM: 1 |
| Back-end paths per BED feature | 8 |
| Back-end paths per storage system | USP V: 8 – 64; USP VM: 8 |
| Back-end array interface type | Fibre-channel arbitrated loop (FC-AL) |
| Back-end interface transfer rate (burst rate) | 400 MB/sec (4 Gbps) |
| Maximum concurrent back-end operations per BED feature | 8 |
| Maximum concurrent back-end operations per storage system | USP V: 64; USP VM: 8 |
| Back-end (data) bandwidth | USP V: 68 GB/sec; USP VM: 8.5 GB/sec |
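Table 2-2 gives back-end figures only for the minimum and maximum configurations. The sketch below scales them per BED feature, assuming (this is not stated in the table) that paths and bandwidth grow linearly with the number of installed BED features: 8 paths and 8.5 GB/sec per feature, which reproduces both the USP VM figures and the USP V maximum of 64 paths and 68 GB/sec. `backend_capacity` is a hypothetical helper.

```python
PATHS_PER_BED_FEATURE = 8             # Table 2-2
BANDWIDTH_PER_BED_FEATURE_GBS = 8.5   # 68 GB/sec / 8 features (assumed linear scaling)
MAX_BED_FEATURES = {"USP V": 8, "USP VM": 1}


def backend_capacity(bed_features: int, model: str = "USP V") -> tuple[int, float]:
    """Return (back-end paths, back-end bandwidth in GB/sec) for a BED feature count."""
    max_features = MAX_BED_FEATURES[model]
    if not 1 <= bed_features <= max_features:
        raise ValueError(f"{model} supports 1 to {max_features} BED features")
    return (bed_features * PATHS_PER_BED_FEATURE,
            bed_features * BANDWIDTH_PER_BED_FEATURE_GBS)


print(backend_capacity(8))            # (64, 68.0)
print(backend_capacity(1, "USP VM"))  # (8, 8.5)
```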


Figure 2-3 illustrates a conceptual array domain. All functions, paths, and disk drives controlled by one BED pair are called an “array domain.” An array domain can contain a variety of LVI and/or LU configurations. RAID-level intermix (all RAID types) is allowed within an array domain (under a BED pair) but not within an array group.

Figure 2-3 Conceptual Array Domain (diagram labels: BED pair made up of BED (CL1) and BED (CL2), each with fibre ports 0 to 7; one fibre loop per port with drive positions 00 to 63, max. 64 HDDs per FC-AL; RAID group (7D+1P / 4D+4D); RAID group (3D+1P / 2D+2D), see note *1)

*1: A RAID group (3D+1P/2D+2D) consists of fibre port number 0, 2, 4, and 6, or 1, 3, 5, and 7.
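Note *1 of Figure 2-3 states which fibre ports a four-drive RAID group occupies. A minimal sketch of that rule follows; the assumption that an eight-drive group (7D+1P or 4D+4D) places one drive on each of the eight fibre ports is inferred from the figure rather than stated in the text, and `fibre_ports` is a hypothetical name.

```python
ALL_PORTS = list(range(8))  # fibre ports 0-7 on each BED of the pair


def fibre_ports(layout: str, odd_half: bool = False) -> list[int]:
    """Fibre ports used by a RAID group of the given layout under one BED pair."""
    if layout in {"3D+1P", "2D+2D"}:
        # Note *1: ports 0, 2, 4, 6 or ports 1, 3, 5, 7.
        return list(range(1 if odd_half else 0, 8, 2))
    if layout in {"7D+1P", "4D+4D"}:
        # Assumption: an eight-drive group puts one drive on each fibre port.
        return list(ALL_PORTS)
    raise ValueError(f"layout not shown in Figure 2-3: {layout}")


print(fibre_ports("3D+1P"))                 # [0, 2, 4, 6]
print(fibre_ports("2D+2D", odd_half=True))  # [1, 3, 5, 7]
```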

Hard Disk Drives

The Universal Storage Platform V/VM uses disk drives with fixed-block architecture (FBA) format. Table 2-3 lists and describes the currently available hard disk drives: 72 GB, 146 GB, 300 GB, 400 GB, and 750 GB.

Table 2-3 Disk Drive Specifications

| Disk Drive Size | Formatted Capacity* | Revolution Speed | Interface | Interface Data Transfer Rate (maximum) |
|---|---|---|---|---|
| 72 GB | 71.50 GB | 15,000 rpm | FC | 400 MB/s |
| 146 GB | 143.76 GB | 15,000 rpm | FC | 400 MB/s |
| 300 GB | 288.20 GB | 10,000 rpm | FC | 200 MB/s |
| 300 GB | 288.20 GB | 15,000 rpm | FC | 400 MB/s |
| 400 GB | 393.85 GB | 10,000 rpm | FC | 400 MB/s |
| 750 GB | 738.62 GB | 7,200 rpm | SATA | 300 MB/s |

* The storage capacity values for the disk drives (raw capacity) are calculated based on the following values: 1 KB = 1,000 bytes, 1 MB = 1,000^2 bytes, 1 GB = 1,000^3 bytes, 1 TB = 1,000^4 bytes.
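Because the capacities in Table 2-3 use decimal units, some host tools that report capacity in binary units (1 GiB = 1,024^3 bytes) will show smaller numbers for the same drive. A short sketch of the conversion, using the formatted capacities from the table; `decimal_gb_to_gib` is a hypothetical helper name.

```python
# Formatted capacities from Table 2-3, in decimal GB (1 GB = 1,000^3 bytes).
FORMATTED_GB = {
    "72 GB": 71.50,
    "146 GB": 143.76,
    "300 GB": 288.20,
    "400 GB": 393.85,
    "750 GB": 738.62,
}


def decimal_gb_to_gib(gb: float) -> float:
    """Convert decimal gigabytes to binary gibibytes (1 GiB = 1,024^3 bytes)."""
    return gb * 1000**3 / 1024**3


for size, gb in FORMATTED_GB.items():
    print(f"{size} drive: {gb:.2f} GB = {decimal_gb_to_gib(gb):.2f} GiB")
```

For example, the 750 GB drive's 738.62 GB formatted capacity corresponds to about 687.89 GiB.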

Each disk drive can be replaced non-disruptively on site. The USP V/VM utilizes

diagnostic techniques and background dynamic scrubbing that detect and

correct disk errors. Dynamic sparing is invoked automatically if needed. For an

array group of any RAID level, any spare disk drive can back up any other disk

drive of the same rotation speed and the same or lower capacity anywhere in

the storage system, even if the failed disk and the spare disk are in different

array domains (attached to different BED pairs). The USP V/VM can be

configured with up to 16 spare disk drives. The standard configuration

provides one spare drive for each type of drive installed in the storage system.

The Hi-Track monitoring and reporting tool detects disk failures and notifies

the Hitachi Data Systems Support Center automatically, and a service

representative is sent to replace the disk drive.

Note: The spare disk drives are used only as replacements and are not

included in the storage capacity ratings of the storage system.
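The dynamic sparing rule above can be expressed as a single eligibility check: a spare can back up a failed drive of the same rotation speed whose capacity is the same as or lower than the spare's, regardless of which array domain either drive is in. The sketch below assumes that reading; the `Drive` type, `can_back_up`, and the smallest-eligible-spare policy in `pick_spare` are hypothetical illustrations, not the storage system's actual selection logic.

```python
from dataclasses import dataclass
from typing import Optional

MAX_SPARES = 16  # configuration limit stated in the text


@dataclass(frozen=True)
class Drive:
    capacity_gb: int   # nominal capacity
    rpm: int           # rotation speed
    array_domain: int  # BED pair the drive is attached to


def can_back_up(spare: Drive, failed: Drive) -> bool:
    """Same rotation speed, and the failed drive's capacity must not exceed the spare's.

    array_domain is deliberately not compared: a spare may sit in a
    different array domain from the failed drive.
    """
    return spare.rpm == failed.rpm and failed.capacity_gb <= spare.capacity_gb


def pick_spare(spares: list[Drive], failed: Drive) -> Optional[Drive]:
    """Return the smallest eligible spare (a hypothetical policy), or None."""
    if len(spares) > MAX_SPARES:
        raise ValueError(f"at most {MAX_SPARES} spare drives can be configured")
    eligible = [s for s in spares if can_back_up(s, failed)]
    return min(eligible, key=lambda s: s.capacity_gb, default=None)


spares = [Drive(300, 15_000, 0), Drive(146, 15_000, 3), Drive(400, 10_000, 1)]
failed = Drive(146, 15_000, 5)      # failed drive in a different array domain
print(pick_spare(spares, failed))   # Drive(capacity_gb=146, rpm=15000, array_domain=3)
```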

Service Processor

The Universal Storage Platform V/VM includes a built-in custom PC called the

service processor (SVP). The SVP is integrated into the controller and can only

be used by authorized Hitachi Data Systems personnel. The SVP enables the

Hitachi Data Systems representative to configure, maintain, service, and

upgrade the storage system. The SVP also provides the Storage Navigator

functionality, and it collects performance data for the key components of the

USP V/VM to enable diagnostic testing and analysis. The SVP is connected with

a service center for remote maintenance of the storage system.

Note: The SVP does not have access to any user data stored on the Universal

Storage Platform V/VM.


Power Supplies

Each storage cluster is powered by its own set of redundant power supplies,

and each power supply is able to provide power for the entire system, if

necessary. Because of this redundancy, the Universal Storage Platform V/VM

can sustain the loss of multiple power supplies and still continue to operate. To

make use of this capability, the USP V/VM should be connected either to dual

power sources or to different power panels, so if there is a failure on one of

the power sources, the USP V/VM can continue full operations using power

from the alternate source.

The AC power supplied to the USP V/VM is converted by the AC-DC power

supply to supply 56V/12V DC power to all storage system components. Each

component has its own DC-DC converter to generate the necessary voltage

from the 56V/12V DC power that is supplied.

Batteries

The Universal Storage Platform V/VM uses nickel-hydrogen batteries to provide

backup power for the control and operational components (cache memory,

shared memory, FEDs, BEDs) as well as the hard disk drives. The

configuration of the storage system and the operational conditions determine

the number and type of batteries that are required.


Control Panel and Emergency Power-Off Switch

Control Panel

Figure 2-4 shows the location of the control panel on the USP V, and Figure 2-5 shows the location of the control panel on the USP VM. Table 2-4 describes the items on the USP V/VM control panel. To open the control panel cover, push and release on the point marked PUSH.

Figure 2-4 Location of Control Panel on the USP V (front view; panel labels: SUB-SYSTEM READY, ALARM, MESSAGE, and RESTART; REMOTE MAINTENANCE PROCESSING and ENABLE/DISABLE; BS-ON; PS-ON; PS SW ENABLE/DISABLE; PS ON/OFF; EMERGENCY)

Figure 2-5 Location of Control Panel on the USP VM (front view; panel labels: SUB-SYSTEM READY, ALARM, MESSAGE, and RESTART; REMOTE MAINTENANCE PROCESSING and ENABLE/DISABLE; BS-ON; PS-ON; PS SW ENABLE/DISABLE; PS ON/OFF; EMERGENCY)


Table 2-4 Control Panel (USP V and USP VM)

No. | Name | Type | Description
1 | SUBSYSTEM READY | LED (green) | When lit, indicates that input/output operation on the channel interface is possible. Applies to both storage clusters.
2 | SUBSYSTEM ALARM | LED (red) | When lit, indicates that low DC voltage, high DC current, abnormally high temperature, or a failure has occurred. Applies to both storage clusters.
3 | SUBSYSTEM MESSAGE | LED (amber) | On: indicates that a SIM (message) was generated from either of the clusters. Applies to both storage clusters. Blinking: indicates that an SVP failure has occurred.
4 | SUBSYSTEM RESTART | Switch | Used to un-fence a fenced drive path and to release the Write Inhibit command. Applies to both storage clusters.
5 | REMOTE MAINTENANCE PROCESSING | LED (amber) | When lit, indicates that remote maintenance activity is in progress. If remote maintenance is not in use, this LED is not lit. Applies to both storage clusters.
6 | REMOTE MAINTENANCE ENABLE/DISABLE | Switch | Used for remote maintenance. Switching from ENABLE to DISABLE while remote maintenance is executing (the REMOTE MAINTENANCE PROCESSING LED in item 5 is blinking) interrupts remote maintenance. If the remote maintenance function is not used, this switch has no effect. Applies to both storage clusters.
7 | BS-ON | LED (amber) | Indicates that input power is available.
8 | PS-ON | LED (green) | Indicates that the storage system is powered on. Applies to both storage clusters.
9 | PS SW ENABLE | Switch | Used to enable the PS ON/PS OFF switch. To enable the PS ON/PS OFF switch, turn the PS SW ENABLE switch to the ENABLE position.
10 | PS ON/PS OFF | Switch | Used to power the storage system on and off. This switch is valid only when the PS REMOTE/LOCAL switch is set to LOCAL. Applies to both storage clusters.
11 | EMERGENCY | LED (red) | Shows the status of the EPO switch on the rear door. Off: indicates that the EPO switch is off. On: indicates that the EPO switch is on.
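The PS SW ENABLE and PS ON/PS OFF descriptions above amount to a simple interlock: the PS ON/PS OFF switch changes the power state only when PS SW ENABLE is set to ENABLE and the PS REMOTE/LOCAL switch is set to LOCAL. The following Python sketch encodes just those two stated conditions. The names `SwEnable`, `PowerControl`, `ps_switch_effective`, and `next_power_state` are hypothetical and do not correspond to any Hitachi interface.

```python
from enum import Enum


class SwEnable(Enum):
    """Position of the PS SW ENABLE switch (hypothetical model)."""
    ENABLE = "ENABLE"
    DISABLE = "DISABLE"


class PowerControl(Enum):
    """Position of the PS REMOTE/LOCAL switch (hypothetical model)."""
    LOCAL = "LOCAL"
    REMOTE = "REMOTE"


def ps_switch_effective(sw_enable: SwEnable, control: PowerControl) -> bool:
    """True if the PS ON/PS OFF switch can change the power state."""
    return sw_enable is SwEnable.ENABLE and control is PowerControl.LOCAL


def next_power_state(powered_on: bool, ps_switch_on: bool,
                     sw_enable: SwEnable, control: PowerControl) -> bool:
    """Return the power state after the PS ON/PS OFF switch is set to ps_switch_on."""
    if ps_switch_effective(sw_enable, control):
        return ps_switch_on
    return powered_on  # switch is ignored, so the state is unchanged


print(next_power_state(False, True, SwEnable.ENABLE, PowerControl.LOCAL))   # True
print(next_power_state(False, True, SwEnable.DISABLE, PowerControl.LOCAL))  # False
```

Real hardware behavior, including remote power control, is outside what this model covers.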


Emergency Power-Off Switch

Figure 2-6 shows the location of the emergency power-off (EPO) switch on the USP V (top right corner of the back of the controller frame). Figure 2-7 shows the location of the EPO switch on the USP VM (next to the control panel on the primary rack). Use the EPO switch only in an emergency.

To power off the USP V/VM storage system in an emergency, pull the EPO switch up and then out toward you, as illustrated on the switch. The EPO switch must be reset by service personnel before the storage system can be powered on again.

Figure 2-6 Location of EPO Switch on the USP V (rear view of the controller frame)

Figure 2-7 Location of EPO Switch on the USP VM (front view of the primary rack)


Intermix Configurations

RAID-Level Intermix

RAID technology provides full fault-tolerance capability for the disk drives of the Universal Storage Platform V/VM. The cache management algorithms enable the USP V to stage up to one full RAID stripe of data into cache ahead of the current access, so that subsequent accesses can be satisfied from cache at host channel transfer speeds.
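Why staging a full stripe helps sequential workloads can be shown with a toy cache model. This Python sketch is not the USP V cache management algorithm: the class name `StripeReadAheadCache`, the stripe size of 8 blocks, the cache size in stripes, and the LRU eviction policy are all assumptions. On a miss it stages the entire stripe containing the requested block, so later reads within that stripe are cache hits.

```python
from collections import OrderedDict


class StripeReadAheadCache:
    """Toy cache that stages a whole RAID stripe on a miss (illustrative only)."""

    def __init__(self, stripe_blocks: int, capacity_stripes: int) -> None:
        self.stripe_blocks = stripe_blocks        # blocks per stripe (assumed)
        self.capacity_stripes = capacity_stripes  # stripes that fit in cache (assumed)
        self._stripes = OrderedDict()             # stripe number -> None, in LRU order
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bool:
        """Return True on a cache hit, False on a miss (the stripe is then staged)."""
        stripe = block // self.stripe_blocks
        if stripe in self._stripes:
            self._stripes.move_to_end(stripe)     # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self._stripes[stripe] = None              # stage the full stripe into cache
        if len(self._stripes) > self.capacity_stripes:
            self._stripes.popitem(last=False)     # evict the least recently used stripe
        return False


cache = StripeReadAheadCache(stripe_blocks=8, capacity_stripes=4)
for b in range(32):                               # purely sequential read of 32 blocks
    cache.read(b)
print(cache.hits, cache.misses)                   # 28 4
```

Only the first block of each stripe misses; the remaining seven are served from cache, which is the behavior the paragraph describes for sequential access.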

The Universal Storage Platform V supports RAID-1, RAID-5, RAID-6, and

intermixed RAID-level configurations, including intermixed array groups within

an array domain.

Figure 2-8 illustrates an intermix of RAID levels. All types of array groups (RAID-5 3D+1P and 7D+1P; RAID-1 2D+2D and 4D+4D; RAID-6 6D+2P) can be intermixed under one BED pair.

Figure 2-8 Sample RAID Level Intermix (1st/3rd/5th/7th and 2nd/4th/6th/8th BED pairs, each with CL1 and CL2 BEDs; RAID groups 2D+2D, 3D+1P, 4D+4D, and 7D+1P/6D+2P)


Hard Disk Drive Intermix


All hard disk drives (HDDs) in one array group (parity group) must be of the same capacity and type. Different HDD types can be attached to the same BED pair, but all HDDs under a single BED pair must operate at the same data transfer rate (200 or 400 MB/sec). For example, when an array group of HDDs with a 200-MB/sec transfer rate is intermixed with an array group of HDDs with a 400-MB/sec transfer rate under the same BED pair, both array groups operate at 200 MB/sec.
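Because all HDDs under one BED pair run at a single rate, the effective rate for the pair is the slowest rate present. The following minimal Python sketch states that rule. The function name `bed_pair_rate_mb_s` is hypothetical, and the only accepted rates are the two values given above.

```python
def bed_pair_rate_mb_s(array_group_rates: list[int]) -> int:
    """Effective transfer rate (MB/sec) for all HDDs under one BED pair.

    All HDDs under a BED pair operate at the same rate, so the pair
    falls back to the slowest array group attached to it.
    """
    allowed = {200, 400}
    if not array_group_rates:
        raise ValueError("at least one array group is required")
    for rate in array_group_rates:
        if rate not in allowed:
            raise ValueError(f"unsupported rate: {rate} MB/sec")
    return min(array_group_rates)


print(bed_pair_rate_mb_s([400, 400]))  # 400
print(bed_pair_rate_mb_s([200, 400]))  # 200
```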

Device Emulation Intermix

Figure 2-9 illustrates an intermix of device emulation types. The Universal Storage Platform V supports an intermix of all device emulations on the same BED pair, with the restriction that the devices in each array group must have the same type of track geometry or format.

The Virtual LVI/LUN function enables different logical volume types to coexist. When Virtual LVI/LUN is not being used, an array group can be configured with only one device type (for example, 3390-3 or 3390-9, but not both 3390-3 and 3390-9). When Virtual LVI/LUN is being used, you can intermix 3390 device types, and you can intermix OPEN-x device types, but you cannot intermix 3390 and OPEN device types.

Note: For the latest information on supported LU types and intermix

requirements, please contact your Hitachi Data Systems account team.
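The per-array-group rules above can be expressed as a small validity check. This Python sketch is a simplification: it classifies emulations only by their `3390-` or `OPEN-` prefix, and the function names are hypothetical. It is not a substitute for the current supported-configuration information mentioned in the note.

```python
def emulation_family(emulation: str) -> str:
    """Classify an emulation by prefix: '3390' for 3390-x, 'OPEN' for OPEN-x."""
    if emulation.startswith("3390-"):
        return "3390"
    if emulation.startswith("OPEN-"):
        return "OPEN"
    raise ValueError(f"unrecognized emulation type: {emulation}")


def array_group_mix_allowed(emulations: list[str], virtual_lvi_lun: bool) -> bool:
    """Check the intermix rules for the volumes of a single array group."""
    if not emulations:
        raise ValueError("an array group needs at least one volume")
    types = set(emulations)
    families = {emulation_family(e) for e in types}  # also rejects unknown types
    if not virtual_lvi_lun:
        # Without Virtual LVI/LUN: exactly one device type per array group.
        return len(types) == 1
    # With Virtual LVI/LUN: types may differ, but 3390 and OPEN must not mix.
    return len(families) == 1


print(array_group_mix_allowed(["3390-3", "3390-9"], virtual_lvi_lun=False))  # False
print(array_group_mix_allowed(["3390-3", "3390-9"], virtual_lvi_lun=True))   # True
print(array_group_mix_allowed(["3390-9", "OPEN-V"], virtual_lvi_lun=True))   # False
```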

Figure 2-9 Sample Device Emulation Intermix (USP V controller frame with 1st to 4th BED pairs and array frames holding 3390-3, 3390-9, OPEN-3, and OPEN-V array groups)


3 Functional and Operational Characteristics

This chapter discusses the functional and operational capabilities of the USP V:

• RAID Implementation
• CU Images, LVIs, and LUs
• Storage Navigator
• System Option Modes, Host Modes, and Host Mode Options
• Mainframe Operations
• Open-Systems Operations
• Battery Backup Operations


RAID Implementation

This section provides an overview of the implementation of RAID technology

on the Universal Storage Platform V:

• Array Groups and RAID Levels

• Sequential Data Striping

• LDEV Striping Across Array Groups

Array Groups and RAID Levels

The array group (also called parity group) is the basic unit of storage capacity

for the USP V. Each array group is attached to both boards of a BED pair over

16 fibre paths, which enables all disk drives in the array group to be accessed

simultaneously by the BED pair. Each array frame has two canister mounts,

and each canister mount can have up to 128 physical disk drives.

The USP V supports the following RAID levels: RAID-1, RAID-5, RAID-6, and

RAID1+0 (also known as RAIDA). RAID-0 is not supported on the USP V.

When configured in four-drive RAID-5 parity groups (3D+1P), ¾ of the raw

capacity is available to store user data, and ¼ of the raw capacity is used for

parity data.
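The ¾ figure follows from a general ratio. For an array group with d data drives and r redundancy drives (parity drives or mirror copies), the raw fraction available for user data is d/(d + r). The following Python sketch applies that ratio to the array-group layouts named in this guide. It is raw-capacity arithmetic only and ignores formatting and other overhead, so usable capacity on a real system may be lower.

```python
from fractions import Fraction

# (data drives, redundancy drives) for the array-group layouts named in this guide
LAYOUTS = {
    "RAID-5 3D+1P": (3, 1),
    "RAID-5 7D+1P": (7, 1),
    "RAID-1 2D+2D": (2, 2),
    "RAID-1 4D+4D": (4, 4),
    "RAID-6 6D+2P": (6, 2),
}


def usable_fraction(data: int, redundant: int) -> Fraction:
    """Fraction of raw capacity available for user data."""
    return Fraction(data, data + redundant)


for name, (d, r) in LAYOUTS.items():
    f = usable_fraction(d, r)
    print(f"{name}: {f} of raw capacity for user data ({float(f):.1%})")
```

For 3D+1P this prints 3/4 (75.0%), matching the paragraph above.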

RAID-1. A RAID-1 (2D+2D) array group consists of two pairs of disk drives in a mirrored configuration, regardless of disk drive capacity. A RAID-1 (4D+4D) group* combines two RAID-1 (2D+2D) groups. Data is striped to two drives and mirrored to the other two drives. The stripe consists of two data chunks. The primary and secondary stripes are toggled back and forth across the physical disk drives for high performance. Each data chunk consists of either eight logical tracks (mainframe) or 768 logical blocks (open systems). If a drive fails, the corresponding mirrored drive takes over for the failed drive.
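The striping and failover described above can be sketched as an address-mapping function for an open-systems RAID-1 (2D+2D) group. The chunk size (768 logical blocks) and the two chunks per stripe come from this section. The drive numbering 0 to 3 and the exact toggle pattern (even stripes keep primary chunks on drives 0 and 1, odd stripes on drives 2 and 3) are assumptions for illustration, because the guide does not specify the physical layout. The names `locate` and `drive_to_read` are hypothetical.

```python
from typing import NamedTuple

CHUNK_BLOCKS = 768      # open-systems chunk size stated in the guide
CHUNKS_PER_STRIPE = 2   # a RAID-1 (2D+2D) stripe holds two data chunks


class Location(NamedTuple):
    stripe: int
    primary_drive: int
    mirror_drive: int
    block_offset: int   # offset of the block within its chunk


def locate(lba: int) -> Location:
    """Map a logical block address to its primary and mirror drives (assumed layout)."""
    chunk = lba // CHUNK_BLOCKS
    stripe, slot = divmod(chunk, CHUNKS_PER_STRIPE)
    # Assumed toggle: even stripes place primaries on drives 0-1, odd stripes on 2-3;
    # the mirror copy always lives on the other pair.
    primary_base = 0 if stripe % 2 == 0 else 2
    mirror_base = 2 - primary_base
    return Location(stripe, primary_base + slot, mirror_base + slot, lba % CHUNK_BLOCKS)


def drive_to_read(lba: int, failed: set[int]) -> int:
    """Pick the drive to read from, letting the mirror take over for a failed primary."""
    loc = locate(lba)
    if loc.primary_drive not in failed:
        return loc.primary_drive
    if loc.mirror_drive not in failed:
        return loc.mirror_drive
    raise RuntimeError("both copies of this chunk are unavailable")


print(locate(0))              # stripe 0, primary drive 0, mirror drive 2
print(drive_to_read(0, {0}))  # 2: the mirrored drive takes over
```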

Although the RAID-5 implementation is appropriate for many applications, the

RAID-1 option on the USP V is ideal for workloads with low cache-hit ratios.

*Note for RAID-1 (4D+4D): It is recommended that both RAID-1 (2D+2D) groups within a RAID-1 (4D+4D) group be configured under the same BED pair.