HPE Synergy 660 Gen10 Compute Module

User Guide

Abstract

This document is for the person who installs, administers, and troubleshoots server blades.

Hewlett Packard Enterprise assumes you are qualified in the servicing of computer equipment and

trained in recognizing hazards in products with hazardous energy levels.

Part Number: 876828-005

Published: April 2019

Edition: 5

© Copyright 2017, 2019 Hewlett Packard Enterprise Development LP

Notices

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard

Enterprise products and services are set forth in the express warranty statements accompanying such

products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett

Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use,

or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software

Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under

vendor's standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard

Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise

website.

Acknowledgments

Intel®, Itanium®, Pentium®, Xeon®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation

in the U.S. and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the

United States and/or other countries.

Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated.

Java® and Oracle® are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Component identification

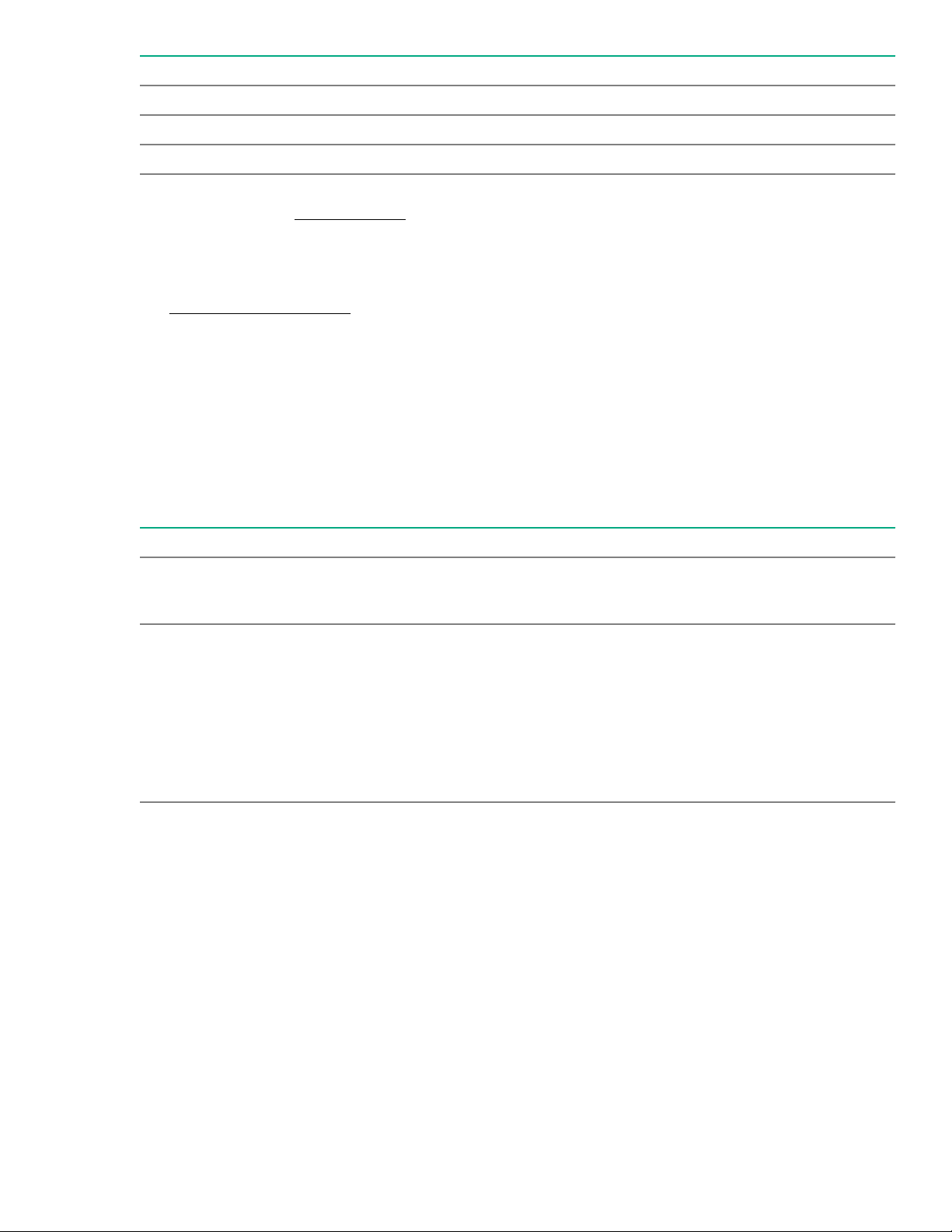

Front panel LEDs and buttons

Item Description Status

1 UID LED

2 Health status LED

Solid blue = Activated

Flashing blue (1 Hz/cycle per sec)

= Remote management or

firmware upgrade in progress

Off = Deactivated

Solid green = Normal

Flashing green (1 Hz/cycle per

sec) = iLO is rebooting

Flashing amber = System

degraded

Flashing red (1 Hz/cycle per sec) =

System critical

Table Continued

Component identification 3

Page 4

Item Description Status

1

2

3

4

7

8

7

5

6

6

5

3 Mezzanine NIC status LED

4 Power On/Standby button and

system power LED

1

If all other LEDs are off, then no power is present to the compute module. (For example, facility power is not present,

power cord is not attached, no power supplies are installed, power supply failure has occurred, or the compute module is

not properly seated.) If the health LED is flashing green while the system power LED is off, the Power On/Standby button

service is initializing or that an iLO reboot is in progress.

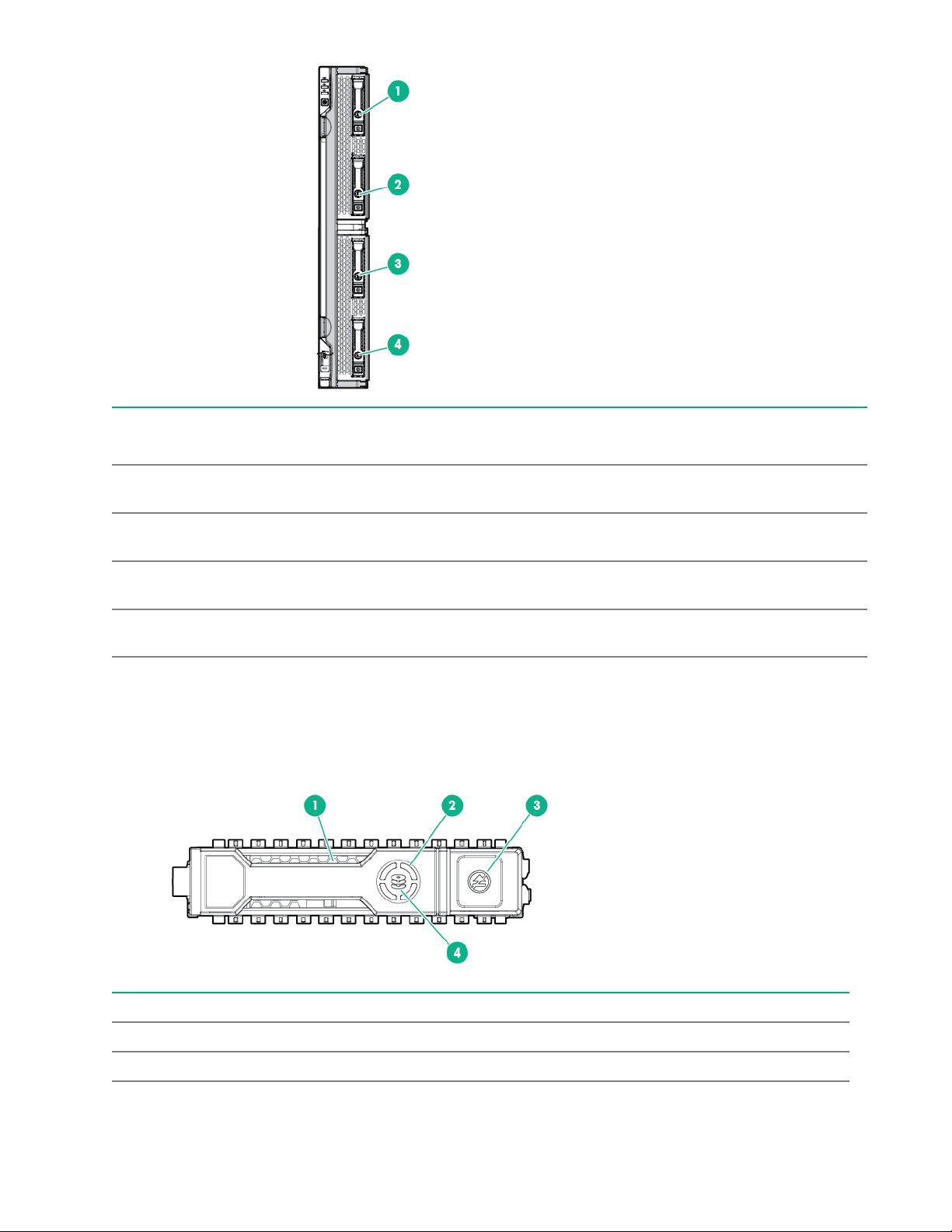

Front panel components

Solid green= Link on any

Mezzanine NIC

Flashing green= Activity on any

Mezzanine NIC

Off = No link or activity on any

Mezzanine NIC

Solid green = System on

Flashing green (1 Hz/cycle per

sec) = Performing power on

sequence

Solid amber = System in standby

Off = No power present

1

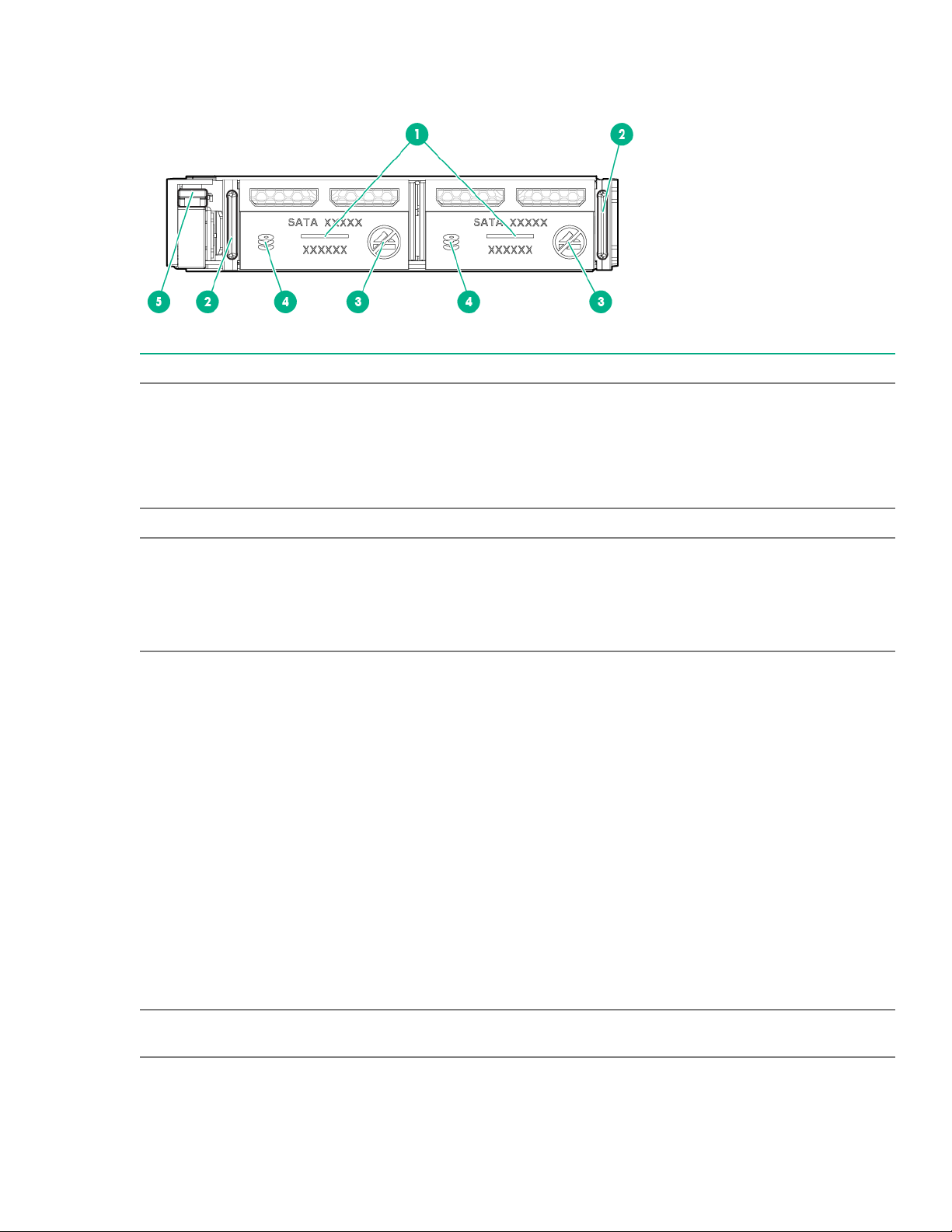

Item Description

1 Drive Box 1, Drive bay 1¹

2 Drive Box 1, Drive bay 2¹

3 Drive Box 2, Drive bay 1¹

4 Drive Box 2, Drive bay 2¹

5 iLO Service Port (169.254.1.2) (located behind the Serial label pull tab)

6 External USB (located behind the Serial label pull tab)

7 Compute module handle release latch

8 Compute module handle

¹ If uFF drives (the SFF Flash Storage Adapter) are installed in the drive bays, the drive bay numbering is different. For more information, see Drive numbering.

Serial label pull tab information

The serial label pull tab is located on the front panel of the compute module. To locate the serial label pull tab,

see Front panel components. The serial label pull tab provides the following information:

• Product serial number

• HPE iLO information

• QR code that points to mobile-friendly documentation

Drive numbering

Depending on the configuration, the drive bay numbering on this compute module will vary. Supported

configurations on this compute module are shown in the following table.

SAS model
• Configuration: Standard backplane with embedded controller or supported controller option
• Drive support: Four SFF hot-plug SAS drive bays with support for up to 4 SFF HDDs or SSDs

SATA model
• Configuration: Chipset SATA with embedded controller or supported controller option
• Drive support: Four SFF hot-plug SATA drive bays with support for the following:
  ◦ Up to 4 SFF SATA HDDs or SSDs
  ◦ Up to 8 uFF SATA HDDs (with the SFF flash adapter for each 2 uFF drives)


Item SAS configuration SATA configuration* Expansion storage configuration

1 1 Drive box 1, drive bay 1 and 101

2 2 Drive box 1, drive bay 2 and 102

3 1 Drive box 2, drive bay 1 and 101

4 2 Drive box 2, drive bay 2 and 102

The driveless model is not shown, as it does not have drive bays and does not support any drives.

*SATA drives are supported only with the HPE Synergy 480 Gen9 Compute Module backplane.

Hot-plug drive LED definitions

Drive box 1, drive bay 1

Drive box 1, drive bay 2

Drive box 2, drive bay 1

Drive box 2, drive bay 2

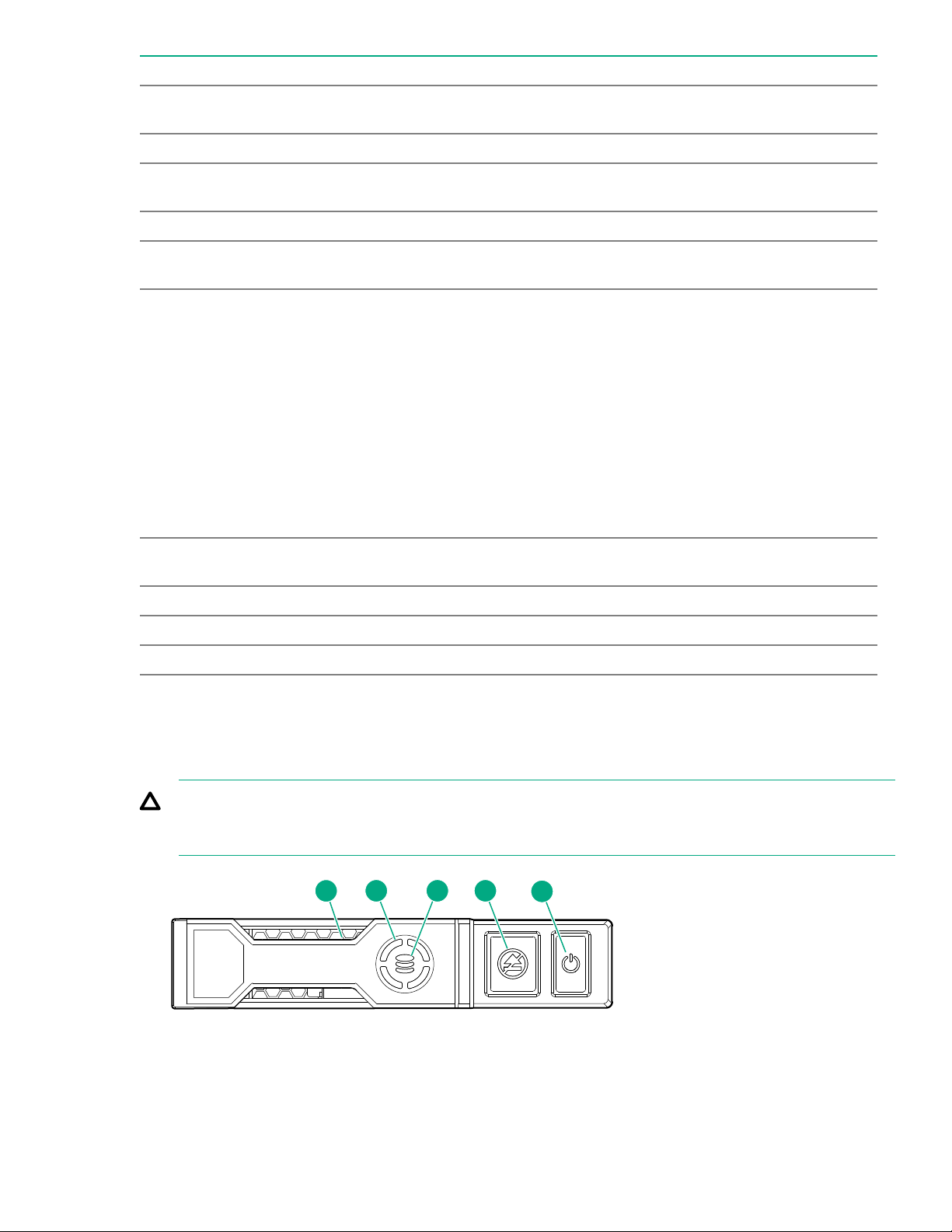

Item LED Status Definition

1 Locate
• Solid blue = The drive is being identified by a host application.
• Flashing blue = The drive carrier firmware is being updated or requires an update.

2 Activity ring
• Rotating green = Drive activity
• Off = No drive activity

3 Do not remove
• Solid white = Do not remove the drive. Removing the drive causes one or more of the logical drives to fail.
• Off = Removing the drive does not cause a logical drive to fail.

4 Drive status
• Solid green = The drive is a member of one or more logical drives.
• Flashing green = The drive is doing one of the following:
  ◦ Rebuilding
  ◦ Performing a RAID migration
  ◦ Performing a stripe size migration
  ◦ Performing a capacity expansion
  ◦ Performing a logical drive extension
  ◦ Erasing
  ◦ Spare part activation
• Flashing amber/green = The drive is a member of one or more logical drives and predicts the drive will fail.
• Flashing amber = The drive is not configured and predicts the drive will fail.
• Solid amber = The drive has failed.
• Off = The drive is not configured by a RAID controller or a spare drive.

NVMe SSD LED definitions

The NVMe SSD is a PCIe bus device. A device attached to a PCIe bus cannot be removed without allowing

the device and bus to complete and cease the signal/traffic flow.

CAUTION: Do not remove an NVMe SSD from the drive bay while the Do not remove LED is flashing.

The Do not remove LED flashes to indicate that the device is still in use. Removing the NVMe SSD

before the device has completed and ceased signal/traffic flow can cause loss of data.

Item LED Status Definition

1 Locate
• Solid blue = The drive is being identified by a host application.
• Flashing blue = The drive carrier firmware is being updated or requires an update.

2 Activity ring
• Rotating green = Drive activity
• Off = No drive activity

3 Drive status
• Solid green = The drive is a member of one or more logical drives.
• Flashing green = The drive is doing one of the following:
  ◦ Rebuilding
  ◦ Performing a RAID migration
  ◦ Performing a stripe size migration
  ◦ Performing a capacity expansion
  ◦ Performing a logical drive extension
  ◦ Erasing
• Flashing amber/green = The drive is a member of one or more logical drives and predicts the drive will fail.
• Flashing amber = The drive is not configured and predicts the drive will fail.
• Solid amber = The drive has failed.
• Off = The drive is not configured by a RAID controller.

4 Do not remove
• Solid white = Do not remove the drive. The drive must be ejected from the PCIe bus prior to removal.
• Flashing white = The drive ejection request is pending.
• Off = The drive has been ejected.

5 Power
• Solid green = Do not remove the drive. The drive must be ejected from the PCIe bus prior to removal.
• Flashing green = The drive ejection request is pending.
• Off = The drive has been ejected.

SFF flash adapter components and LED definitions

Item Component Description

1 Locate • Off—Normal

• Solid blue—The drive is being identified by a host application.

• Flashing blue—The drive firmware is being updated or requires

an update.

2 uFF drive ejection latch Removes the uFF drive when released.

3 Do not remove LED • Off—OK to remove the drive. Removing the drive does not

cause a logical drive to fail.

• Solid white—Do not remove the drive. Removing the drive

causes one or more of the logical drives to fail.

4 Drive status LED • Off—The drive is not configured by a RAID controller or a spare

drive.

• Solid green—The drive is a member of one or more logical

drives.

• Flashing green (4 Hz)—The drive is operating normally and has

activity.

• Flashing green (1 Hz)—The drive is rebuilding, erasing, or

performing a RAID migration, stripe size migration, capacity

expansion, logical drive extension, or spare activation.

• Flashing amber/green (1 Hz)—The drive is a member of one or

more logical drives and predicts the drive will fail.

• Solid amber—The drive has failed.

• Flashing amber (1 Hz)—The drive is not configured and predicts

the drive will fail.

5 Adapter ejection release latch and handle Removes the SFF flash adapter when released.


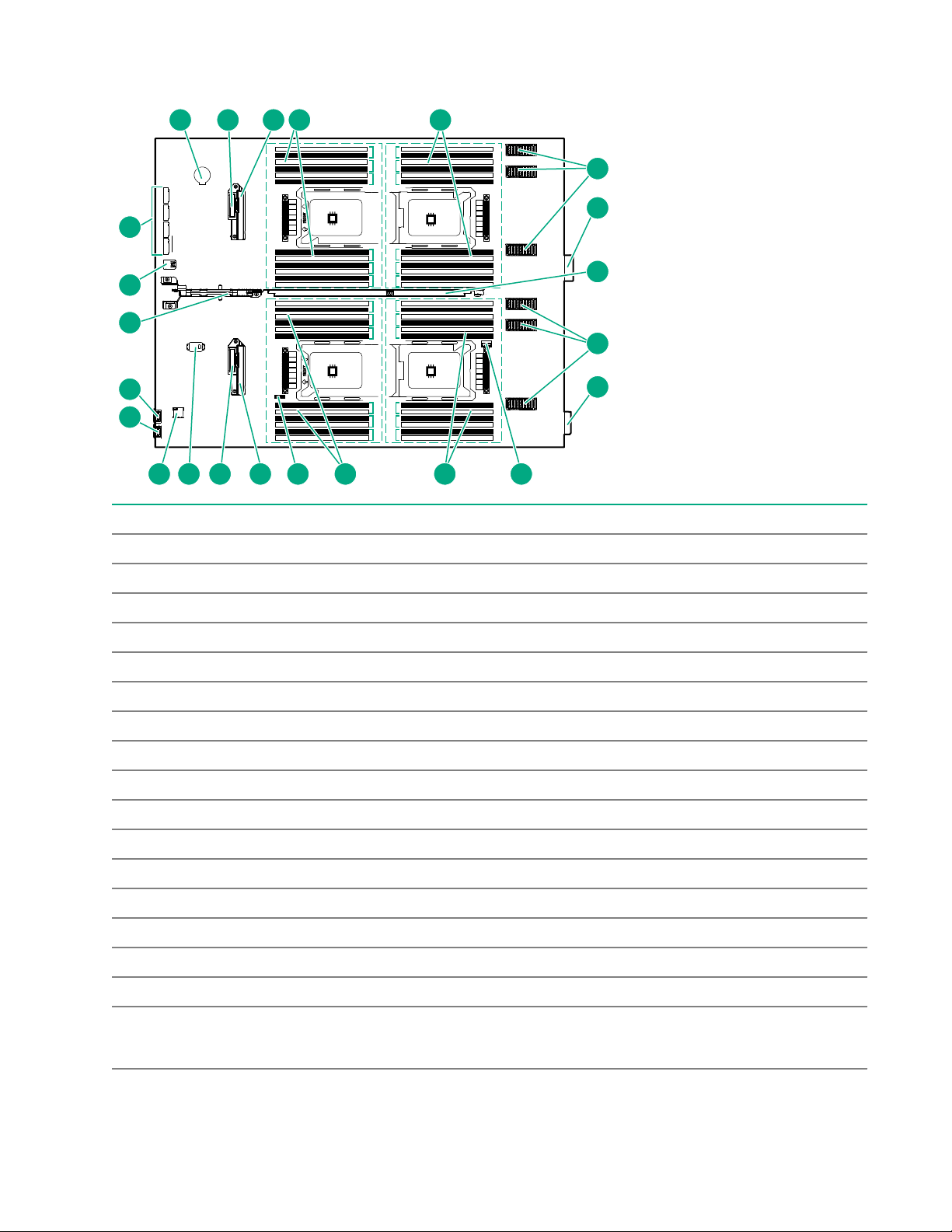

System board components

[Figure callouts: items 1 through 20, mezzanine connectors M1 through M6, processors P1 through P4, and memory channels Ch 1 through Ch 6 with DIMM slots 1 through 12 for each processor]

Item Description

1 System battery

2 SATA interconnect 1

3 Drive backplane connector 1

4 P1 DIMM slots (12 each)

5 P3 DIMM slots (12 each)

6 Mezzanine connectors

7 Management/power connector (2)

8 Handle

9 Energy pack option connector

10 P4 DIMM slots (12 each)

11 P2 DIMM slots (12 each)

12 System maintenance switch

13 Drive backplane connector 2

14 SATA interconnect 2

15 TPM connector

16 MicroSD connector

17 iLO Service Port (169.254.1.2) (located behind the Serial label pull tab)

18 External USB (located behind the Serial label pull tab)

19 Internal USB 3.0 connector

20 M.2 connectors

System maintenance switch

Position Default Function

S1¹ Off
• Off = HPE iLO security is enabled.
• On = HPE iLO security is disabled.

S2 Off
• Off = System configuration can be changed.
• On = System configuration is locked.

S3 Off Reserved

S4 Off Reserved

S5¹ Off
• Off = Power-on password is enabled.
• On = Power-on password is disabled.

S6¹,² Off
• Off = No function
• On = Restore default manufacturing settings

S7 Off
• Off = Set default boot mode to UEFI.
• On = Set default boot mode to legacy.

S8 — Reserved

S9 — Reserved

S10 — Reserved

S11 — Reserved

S12 — Reserved

¹ You can access the redundant ROM by setting S1, S5, and S6 to On.

² When the system maintenance switch position 6 is set to the On position, the system is prepared to restore all configuration settings to their manufacturing defaults.

When the system maintenance switch position 6 is set to the On position and Secure Boot is enabled, some configurations cannot be restored. For more information, see Secure Boot configuration.

IMPORTANT: Before using the S7 switch to change to Legacy BIOS Boot Mode, be sure the HPE

Dynamic Smart Array B140i Controller is disabled. Do not use the B140i controller when the compute

module is in Legacy BIOS Boot Mode.
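The switch table and its first footnote can be read as a small lookup. The following is a minimal sketch only: the names `SWITCH_FUNCTIONS`, `REDUNDANT_ROM_POSITIONS`, and `describe_switches` are hypothetical and are not part of any HPE tool. It reports the effect of each documented position and whether the S1, S5, and S6 combination for redundant ROM access is set.

```python
# Switch functions transcribed from the system maintenance switch table.
# Each entry maps a position to (meaning when Off, meaning when On).
SWITCH_FUNCTIONS = {
    "S1": ("HPE iLO security is enabled", "HPE iLO security is disabled"),
    "S2": ("System configuration can be changed", "System configuration is locked"),
    "S5": ("Power-on password is enabled", "Power-on password is disabled"),
    "S6": ("No function", "Restore default manufacturing settings"),
    "S7": ("Default boot mode is UEFI", "Default boot mode is legacy"),
}

# Footnote 1: setting S1, S5, and S6 to On gives access to the redundant ROM.
REDUNDANT_ROM_POSITIONS = frozenset({"S1", "S5", "S6"})


def describe_switches(on_positions):
    """Return the effect of each documented switch, given the positions set to On."""
    on = set(on_positions)
    report = {
        position: (on_meaning if position in on else off_meaning)
        for position, (off_meaning, on_meaning) in SWITCH_FUNCTIONS.items()
    }
    # True only when all three positions from footnote 1 are On.
    report["redundant_rom_access"] = REDUNDANT_ROM_POSITIONS <= on
    return report
```

For example, `describe_switches(["S6"])` reports that S6 restores default manufacturing settings while every other documented position keeps its Off meaning.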

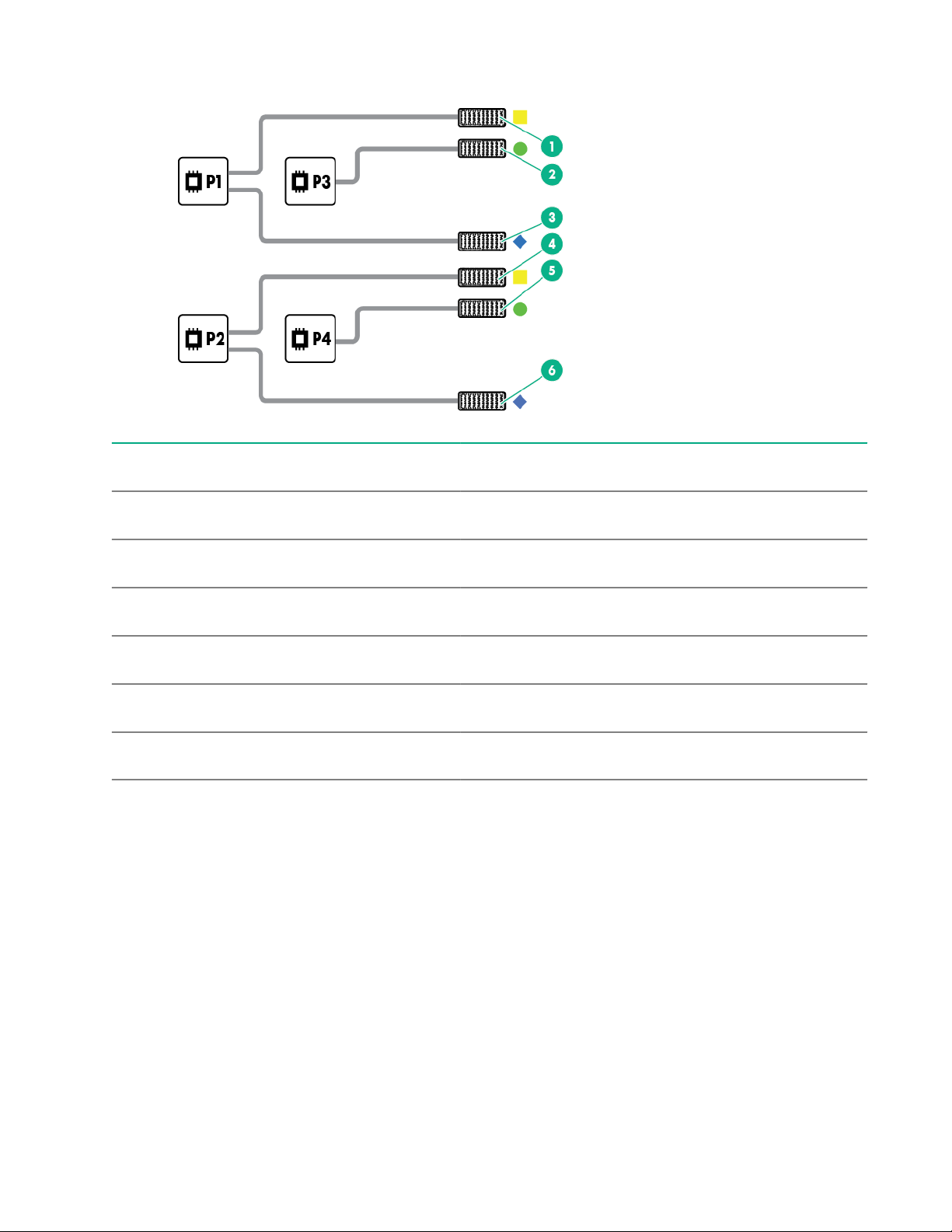

Mezzanine connector definitions

Item Connector

identification

1 Mezzanine

connector 1 (M1)

2 Mezzanine

connector 2 (M2)

3 Mezzanine

connector 3 (M3)

4 Mezzanine

connector 4 (M4)

5 Mezzanine

connector 5 (M5)

6 Mezzanine

connector 6 (M6)

1

When installing a mezzanine option on mezzanine connector 2, processor 3 must be installed.

2

When installing a mezzanine option on mezzanine connector 5, processor 4 must be installed.

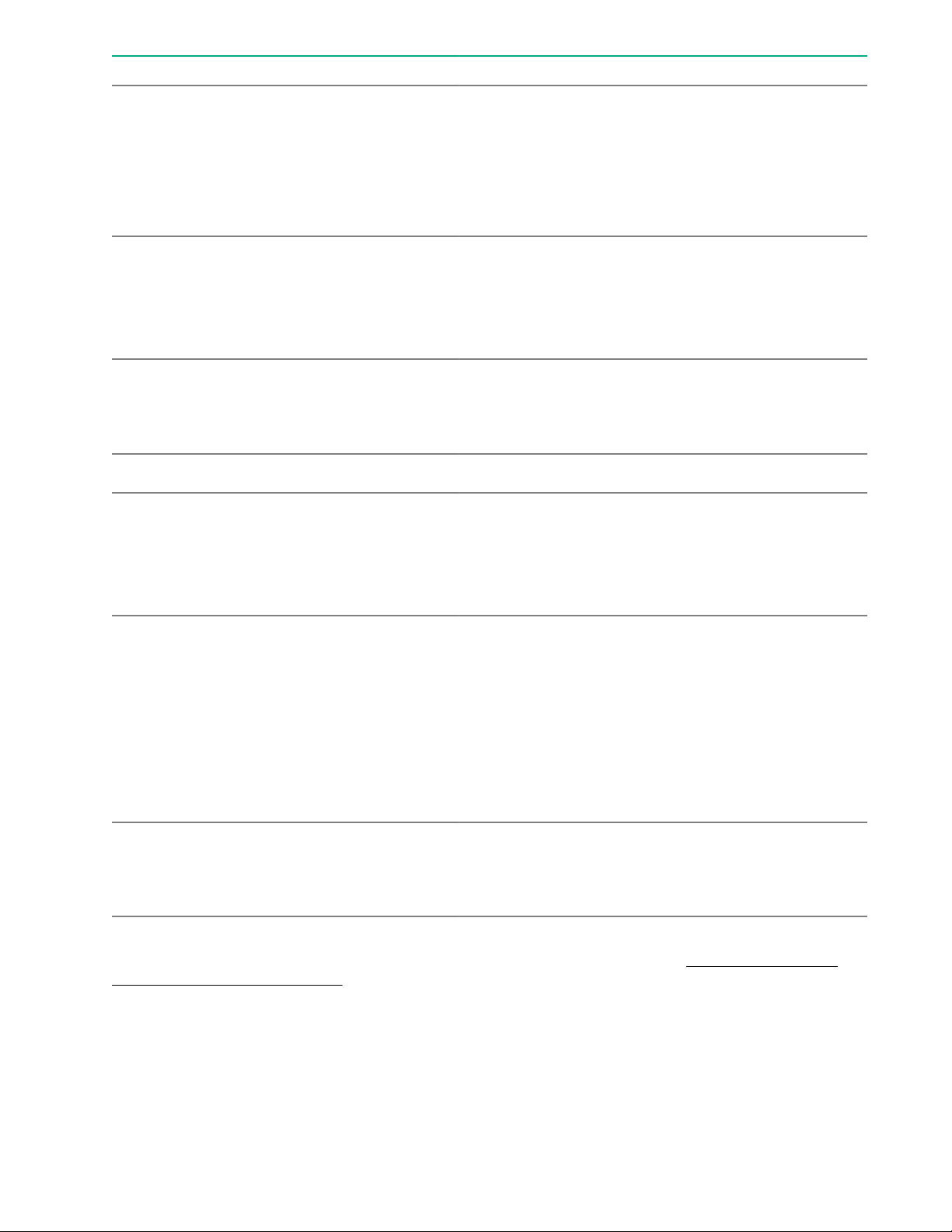

DIMM slot locations

DIMM slots are numbered sequentially (1 through 12) for each processor. The supported AMP modes use the

alpha assignments for population order, and the slot numbers designate the DIMM slot ID for spare

replacement.

Supported

FabricSupported ICM bays

card types

Type C and

1 ICM 1 and 4

Type D

Type C and

1

Type D

2 ICM 2 and 5

Type C only 3 ICM 3 and 6

Type C and

1 ICM 1 and 4

Type D

Type C and

2

Type D

2 ICM 2 and 5

Type C 3 ICM 3 and 6

The colored slots indicate the slot order within each channel:

• White — First slot of a channel

• Black — Second slot of a channel

The arrow points to the front of the compute module.

[Figure labels: processors P1 through P4, each with memory channels Ch 1 through Ch 6 and DIMM slots 1 through 12]

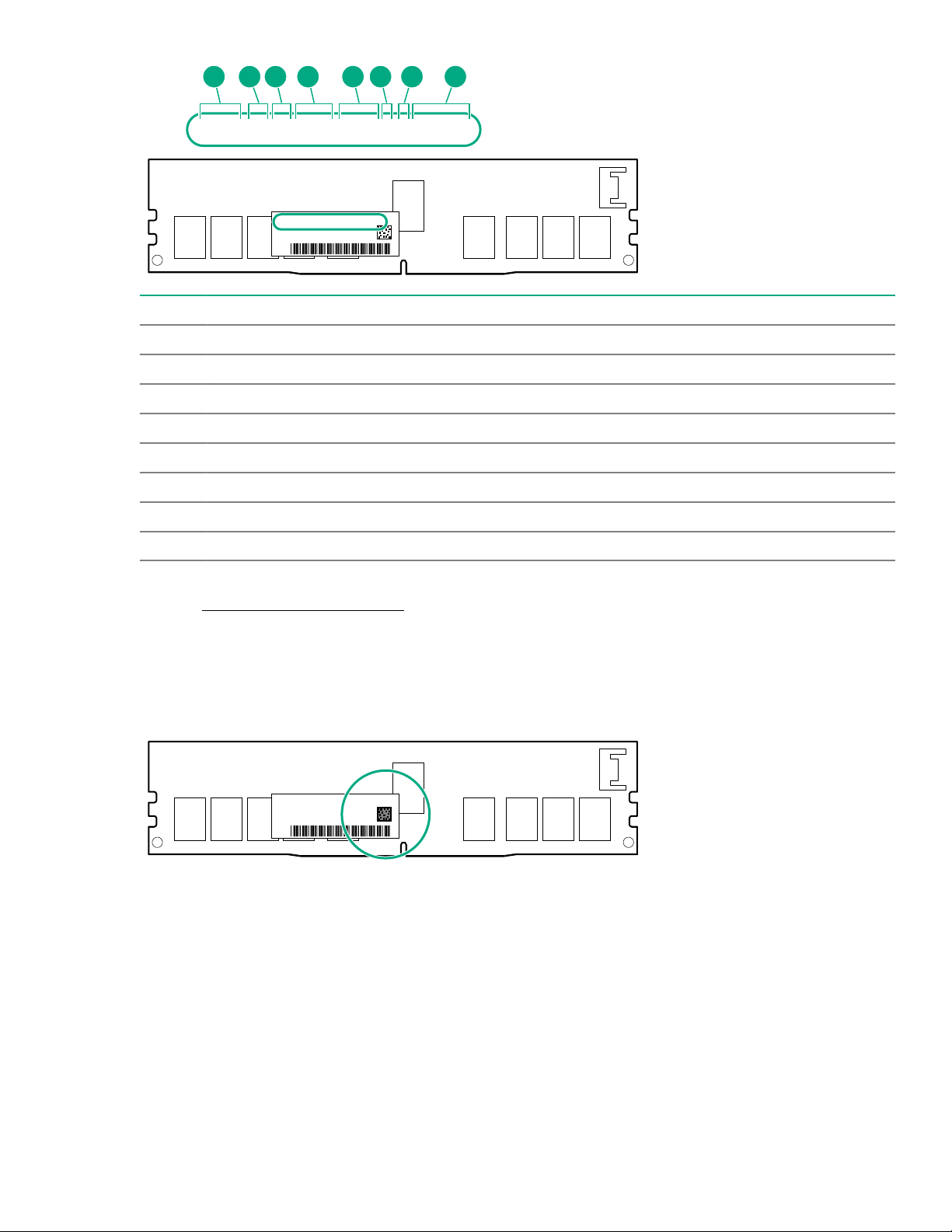

DIMM label identification

Example label: 8GB 1Rx4 DDR4-2666P-R

Label fields, left to right (items 1 through 7): 8GB, 1R, x4, DDR4, 2666, P, R

To determine DIMM characteristics, see the label attached to the DIMM. The information in this section helps

you to use the label to locate specific information about the DIMM.

Item Description Example

1 Capacity
• 8 GB
• 16 GB
• 32 GB
• 64 GB
• 128 GB

2 Rank
• 1R = Single rank
• 2R = Dual rank
• 4R = Quad rank
• 8R = Octal rank

3 Data width on DRAM
• x4 = 4-bit
• x8 = 8-bit
• x16 = 16-bit

4 Memory generation
• PC4 = DDR4

5 Maximum memory speed
• 2133 MT/s
• 2400 MT/s
• 2666 MT/s
• 2933 MT/s

6 CAS latency
• P = CAS 15-15-15
• T = CAS 17-17-17
• U = CAS 20-18-18
• V = CAS 19-19-19 (for RDIMM, LRDIMM)
• V = CAS 22-19-19 (for 3DS TSV LRDIMM)
• Y = CAS 21-21-21 (for RDIMM, LRDIMM)
• Y = CAS 24-21-21 (for 3DS TSV LRDIMM)

7 DIMM type
• R = RDIMM (registered)
• L = LRDIMM (load reduced)
• E = Unbuffered ECC (UDIMM)

For more information about product features, specifications, options, configurations, and compatibility, see the HPE DDR4 SmartMemory QuickSpecs on the Hewlett Packard Enterprise website (http://www.hpe.com/support/DDR4SmartMemoryQS).
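The seven label fields can be split mechanically. The following is a minimal sketch, assuming labels follow the exact layout of the example `8GB 1Rx4 DDR4-2666P-R`. The pattern and the name `parse_dimm_label` are illustrative assumptions, not an HPE utility, and labels with a different layout are rejected rather than guessed at.

```python
import re

# Pattern for labels shaped like the example "8GB 1Rx4 DDR4-2666P-R".
LABEL_RE = re.compile(
    r"^(?P<capacity>\d+)GB\s+"      # item 1: capacity in GB
    r"(?P<rank>\d)R"                # item 2: rank
    r"x(?P<width>\d+)\s+"           # item 3: data width on DRAM
    r"(?P<generation>[A-Z]+\d)-"    # item 4: memory generation
    r"(?P<speed>\d{4})"             # item 5: maximum memory speed in MT/s
    r"(?P<cas>[A-Z])-"              # item 6: CAS latency code
    r"(?P<dimm_type>[A-Z])$"        # item 7: DIMM type
)

RANKS = {"1": "Single rank", "2": "Dual rank", "4": "Quad rank", "8": "Octal rank"}
DIMM_TYPES = {
    "R": "RDIMM (registered)",
    "L": "LRDIMM (load reduced)",
    "E": "Unbuffered ECC (UDIMM)",
}


def parse_dimm_label(label):
    """Split a DIMM label into the seven fields described in the table."""
    match = LABEL_RE.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognized DIMM label: {label!r}")
    fields = match.groupdict()
    return {
        "capacity_gb": int(fields["capacity"]),
        "rank": RANKS.get(fields["rank"], "unknown"),
        "data_width_bits": int(fields["width"]),
        "generation": fields["generation"],
        "max_speed_mts": int(fields["speed"]),
        "cas_code": fields["cas"],
        "dimm_type": DIMM_TYPES.get(fields["dimm_type"], "unknown"),
    }
```

The CAS code is returned as the raw letter because the same letter maps to different timings depending on the DIMM technology, as the table shows for V and Y.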

NVDIMM identification

NVDIMM boards are blue instead of green. This change to the color makes it easier to distinguish NVDIMMs

from DIMMs.

To determine NVDIMM characteristics, see the full product description as shown in the following example:

16GB 1Rx4 NN4-2666V-RZZZ-10

Item Description Definition

1 Capacity 16 GiB

2 Rank 1R (Single rank)

3 Data width per DRAM chip x4 (4 bit)

4 Memory type NN4=DDR4 NVDIMM-N

5 Maximum memory speed 2667 MT/s

6 Speed grade V (latency 19-19-19)

7 DIMM type RDIMM (registered)

8 Other —

For more information about NVDIMMs, see the product QuickSpecs on the Hewlett Packard Enterprise

website (http://www.hpe.com/info/qs).

NVDIMM 2D Data Matrix barcode

The 2D Data Matrix barcode is on the right side of the NVDIMM label and can be scanned by a cell phone or

other device.

When scanned, the following information from the label can be copied to your cell phone or device:

• (P) is the module part number.

• (L) is the technical details shown on the label.

• (S) is the module serial number.

Example: (P)HMN82GR7AFR4N-VK (L)16GB 1Rx4 NN4-2666V-RZZZ-10(S)80AD-01-1742-11AED5C2
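Once scanned, the barcode text can be split on its `(P)`, `(L)`, and `(S)` markers. The following is a minimal sketch, assuming the scanned text has the same shape as the example above. The function name `parse_nvdimm_barcode` and the output keys are hypothetical.

```python
import re

# The three field markers described above, captured so that re.split keeps them.
FIELD_RE = re.compile(r"\((P|L|S)\)")

FIELD_NAMES = {"P": "part_number", "L": "label", "S": "serial_number"}


def parse_nvdimm_barcode(text):
    """Split scanned barcode text into part number, label details, and serial number."""
    # With a capturing group, re.split returns [prefix, tag, value, tag, value, ...].
    parts = FIELD_RE.split(text)
    result = {}
    for tag, value in zip(parts[1::2], parts[2::2]):
        result[FIELD_NAMES[tag]] = value.strip()
    return result
```

Calling it on the example string yields the part number `HMN82GR7AFR4N-VK`, the label text `16GB 1Rx4 NN4-2666V-RZZZ-10`, and the serial number `80AD-01-1742-11AED5C2`.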

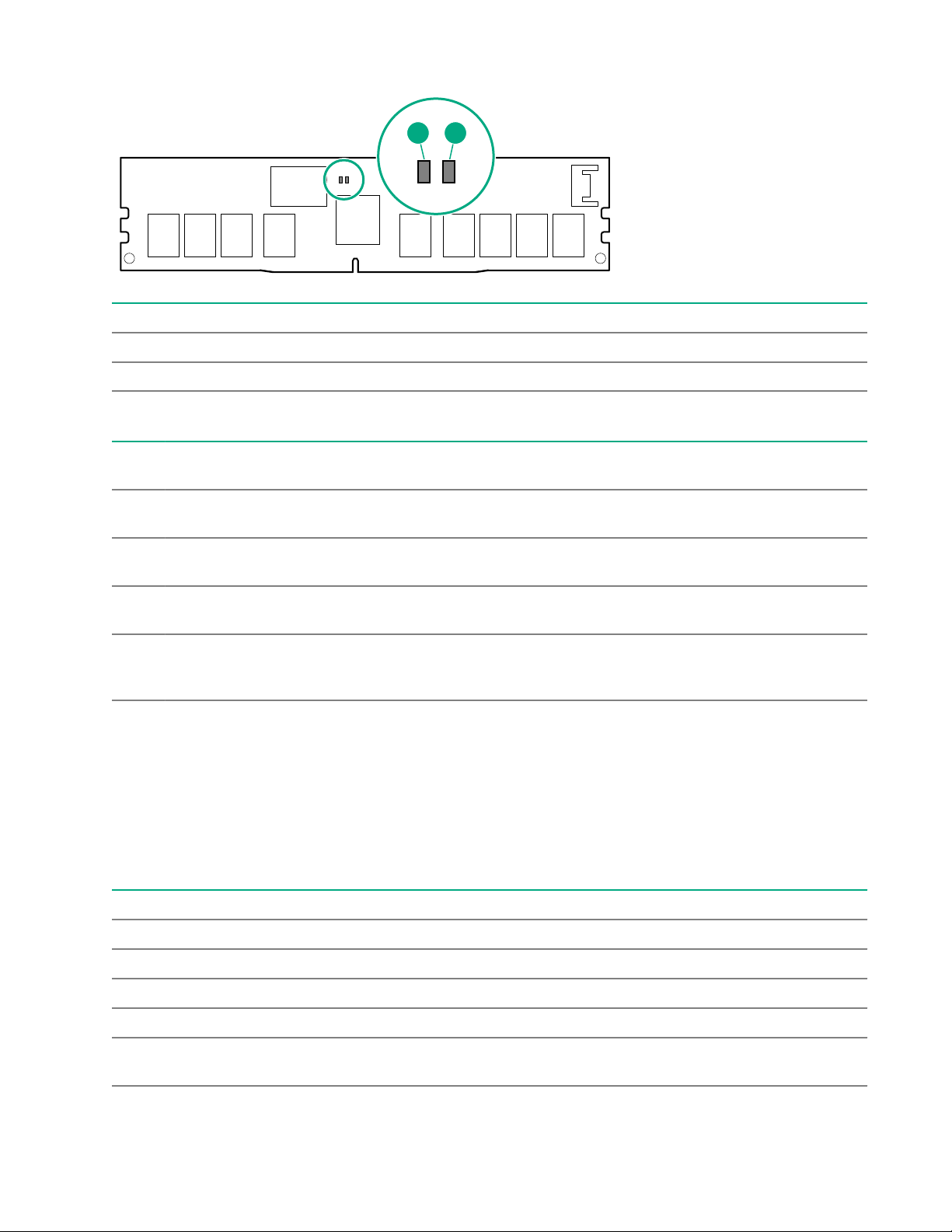

NVDIMM LED identification

Item LED description LED color

1 Power LED Green

2 Function LED Blue

NVDIMM-N LED combinations

State Definition NVDIMM-N Power LED (green) NVDIMM-N Function LED (blue)

0 AC power is on (12V rail) but the NVM controller is not working or not ready.
• Power LED (green): On
• Function LED (blue): Off

1 AC power is on (12V rail) and the NVM controller is ready.
• Power LED (green): On
• Function LED (blue): On

2 AC power is off or the battery is off (12V rail off).
• Power LED (green): Off
• Function LED (blue): Off

3 AC power is on (12V rail) or the battery is on (12V rail) and the NVDIMM-N is active (backup and restore).
• Power LED (green): On
• Function LED (blue): Flashing

NVDIMM Function LED patterns

For the purpose of this table, the NVDIMM-N LED operates as follows:

• Solid indicates that the LED remains in the on state.

• Flashing indicates that the LED is on for 2 seconds and off for 1 second.

• Fast-flashing indicates that the LED is on for 300 ms and off for 300 ms.

State Definition NVDIMM-N Function LED

0 The restore operation is in progress.
• Function LED: Flashing

1 The restore operation is successful.
• Function LED: Solid or On

2 Erase is in progress.
• Function LED: Flashing

3 The erase operation is successful.
• Function LED: Solid or On

4 The NVDIMM-N is armed, and the NVDIMM-N is in normal operation.
• Function LED: Solid or On

5 The save operation is in progress.
• Function LED: Flashing

6 The NVDIMM-N finished saving and battery is still turned on (12 V still powered).
• Function LED: Solid or On

7 The NVDIMM-N has an internal error or a firmware update is in progress. For more information about an NVDIMM-N internal error, see the IML.
• Function LED: Fast-flashing
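The two NVDIMM-N LED tables in this section can be encoded as lookups. The following is a minimal sketch with hypothetical names (`LED_COMBINATION_STATES`, `FUNCTION_LED_STATES`). The state numbers are transcribed from the tables, and a Function LED pattern on its own narrows the state to a set of candidates rather than a single value.

```python
# (Power LED, Function LED) -> state number in the LED combinations table.
LED_COMBINATION_STATES = {
    ("on", "off"): 0,       # NVM controller not working or not ready
    ("on", "on"): 1,        # NVM controller ready
    ("off", "off"): 2,      # AC power or battery off
    ("on", "flashing"): 3,  # NVDIMM-N active (backup and restore)
}

# Function LED pattern -> candidate state numbers in the Function LED table.
FUNCTION_LED_STATES = {
    "flashing": [0, 2, 5],       # restore, erase, or save in progress
    "solid": [1, 3, 4, 6],       # restore or erase done, armed, or save finished
    "fast-flashing": [7],        # internal error or firmware update
}


def combination_state(power_led, function_led):
    """Return the combination state number, or None if the pair is not listed."""
    return LED_COMBINATION_STATES.get((power_led.lower(), function_led.lower()))


def function_states(pattern):
    """Return the Function LED states that match an observed pattern."""
    return FUNCTION_LED_STATES.get(pattern.lower(), [])
```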

Component and LED identification for HPE Synergy hardware

For more information about component and LED identification for HPE Synergy components, see the product-specific maintenance and service guide or the HPE Synergy 12000 Frame Setup and Installation Guide in the Hewlett Packard Enterprise Information Library.


Operations

Powering up the compute module

To power up the compute module, press the Power On/Standby button after the power button LED has turned

amber.

Powering down the compute module

Before powering down the compute module for any upgrade or maintenance procedures, perform a backup of

the system and all data. Then, shut down, as appropriate, applications and operating systems. A successful

shutdown is indicated by the system power LED displaying amber.

IMPORTANT: Always attempt a graceful shutdown before forcing a nongraceful shutdown. Application

data can be lost when performing a nongraceful shutdown of applications and the OS.

Before proceeding, verify the following:

• The compute module is in standby mode by observing that the system power LED is amber.

• The UID LED is not flashing blue.

NOTE:

◦ When the compute module is in standby mode, auxiliary power is still being provided to the system.

◦ If the UID LED is flashing blue, a remote session is in progress.

To power down the compute module, use one of the following methods:

• To perform a graceful shutdown of applications and the OS when powering down the compute module to

standby mode, do one of the following:

◦ Press and release the Power On/Standby button.

◦ Select the Momentary press power off selection in HPE OneView.

◦ Select the Momentary press virtual power button selection in HPE iLO.

• If a graceful shutdown fails to power down the compute module to standby mode when an application or

OS stops responding, force a nongraceful shutdown of applications and the OS. Do one of the following:

◦ Press and hold the Power On/Standby button for more than four seconds.

◦ Select the Press and hold power off selection in HPE OneView.

◦ Select the Press and hold virtual power button selection in HPE iLO.
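The two virtual power button actions can also be requested programmatically. The following is a minimal sketch, assuming the iLO exposes the standard DMTF Redfish `ComputerSystem.Reset` action at `/redfish/v1/Systems/1` and that a session token has already been obtained. The path, the mapping of each method to a `ResetType` value, and the function name are assumptions to verify against the iLO RESTful API documentation for your firmware. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Assumed Redfish action path; verify it against your iLO firmware.
RESET_PATH = "/redfish/v1/Systems/1/Actions/ComputerSystem.Reset"

# Assumed mapping from this guide's two methods to Redfish ResetType values.
RESET_TYPES = {
    "momentary_press": "PushPowerButton",  # request a graceful shutdown
    "press_and_hold": "ForceOff",          # force a nongraceful shutdown
}


def build_power_request(ilo_host, method, session_token):
    """Build (but do not send) a Redfish reset request for the given method."""
    if method not in RESET_TYPES:
        raise ValueError(f"unknown power method: {method!r}")
    body = json.dumps({"ResetType": RESET_TYPES[method]}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{ilo_host}{RESET_PATH}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Auth-Token": session_token,
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. As with the physical button, try `momentary_press` first and use `press_and_hold` only when the OS stops responding.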

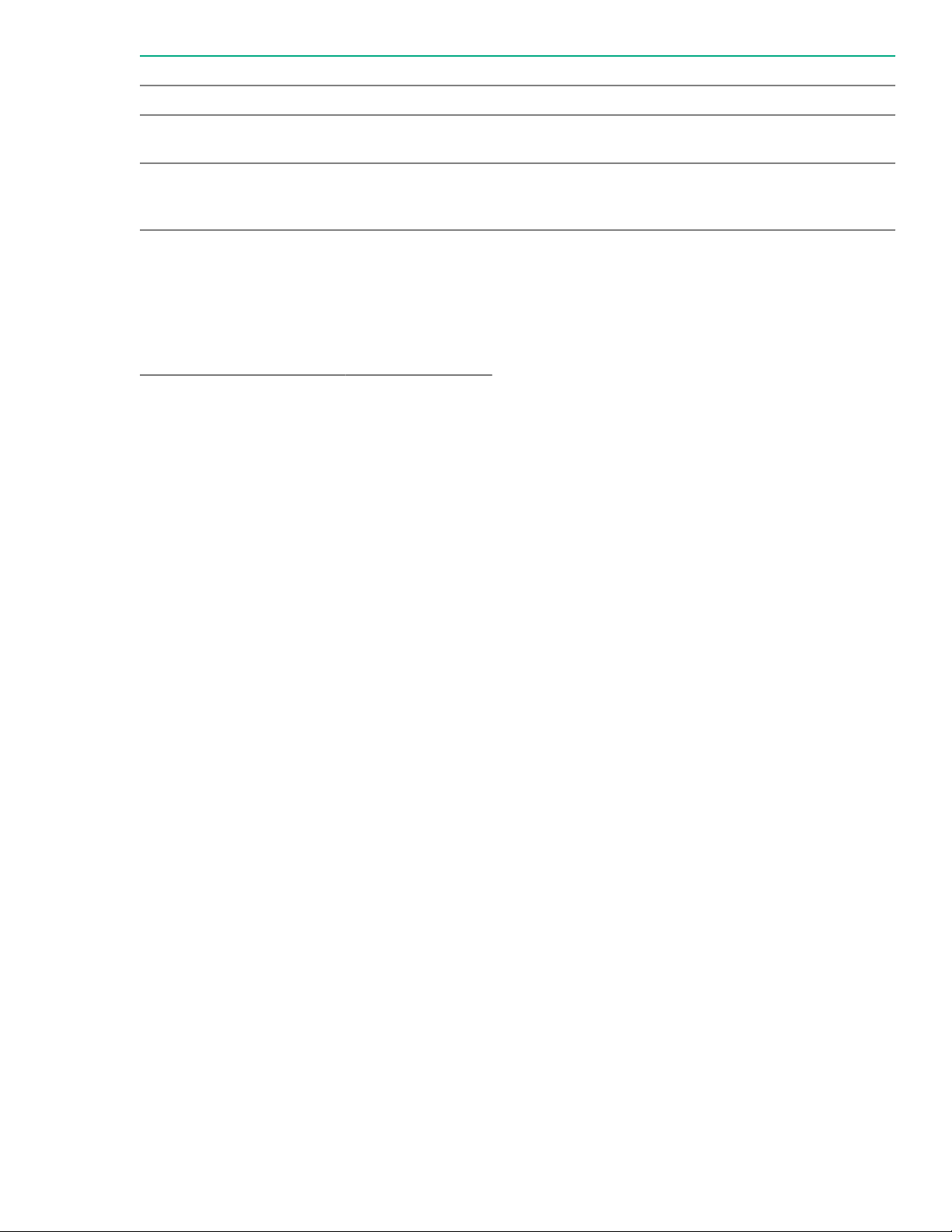

Removing the compute module

Procedure

1. Identify the proper compute module.

2. Power down the compute module.

3. Remove the compute module.

4. Place the compute module on a flat, level work surface.

WARNING: To reduce the risk of personal injury from hot surfaces, allow the drives and the internal

system components to cool before touching them.

CAUTION: To prevent damage to electrical components, properly ground the compute module

before beginning any installation procedure. Improper grounding can cause ESD.

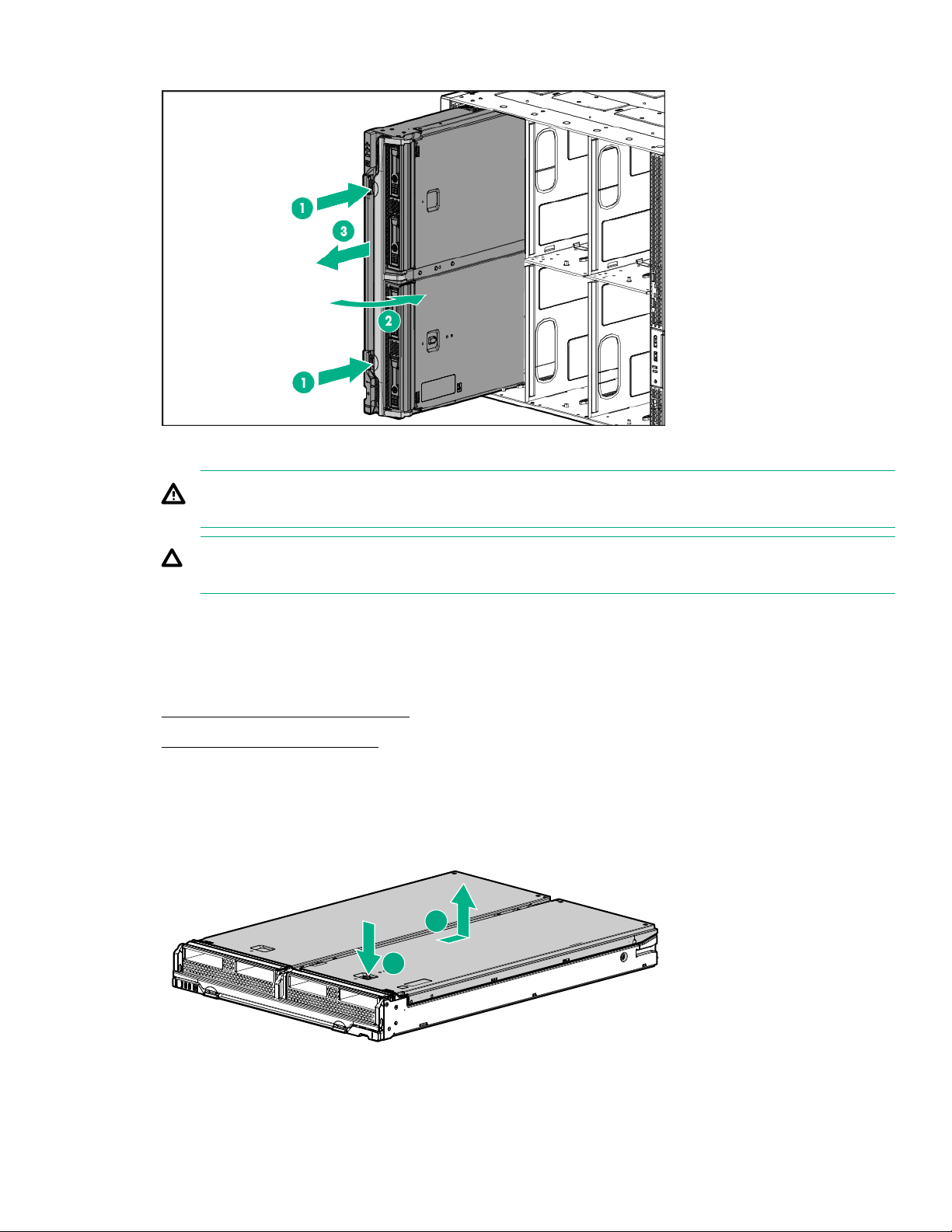

Removing the access panel

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Press the access panel release button.

5. Slide the access panel towards the rear of the compute module, and then lift the panel to remove it.

Installing the access panel

Procedure

1. Place the access panel on top of the compute module.

2. Slide the access panel forward until it clicks into place.

Removing and replacing a drive blank

Procedure

1. Press the drive release button.

2. Remove the drive blank.

CAUTION: To prevent improper cooling and thermal damage, do not operate the compute module

unless all bays are populated with either a component or a blank.

To replace the component, reverse the removal procedure.

Removing the DIMM baffle

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

IMPORTANT: When removing the right DIMM baffle, leave the energy pack option installed on the

baffle.

5. Remove one or more DIMM baffles.

Installing the DIMM baffles

Procedure

1. Install the DIMM baffles.

2. Install the access panel.

3. Install the compute module.

Removing the front panel/drive cage assembly

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Remove all drives.

6. Remove all drive blanks.

7. Remove the front panel/drive cage assembly.

Installing the front panel/drive cage assembly

Procedure

1. Install the front panel/drive cage assembly.

2. Install all drives.

3. Install drive blanks in unpopulated drive bays.

4. Install the access panel.

5. Install the compute module.

Setup

Installation overview

This section provides instructions for installing an HPE Synergy 660 Gen10 Compute Module in a frame for the first time.

Installation of a compute module requires the following steps:

Procedure

1. Installing supported hardware options for the compute module.

2. Installing the compute module.

3. Completing compute module configuration.

Installing the compute module

Procedure

1. Verify that the device bay is configured for a full-height compute module. For more information, see the

setup and installation guide for the compute module on the Hewlett Packard Enterprise website.

2. Remove the compute module end cap.

3. Prepare the compute module for installation by opening the compute module handle.

4. Install the compute module. Press the compute module handle near each release button to completely close the handle.

5. Review the compute module front panel LEDs to determine the compute module status. For more

information on the compute module LEDs, see "Component identification."

Installing the compute module options

Before installing and initializing the compute module, install any compute module options, such as an

additional processor, drive, or a mezzanine card.

Mezzanine options are classified as Type C and Type D mezzanine cards. The type of mezzanine card

determines where the card can be installed in the compute module. Some mezzanine cards require that an

interconnect module is installed in the associated ICM bay in the rear of the frame. Be sure to review the

mezzanine card mapping information in the HPE Synergy Configuration and Compatibility Guide on the

Hewlett Packard Enterprise website (http://www.hpe.com/info/synergy-docs).

For a list of supported options, see the product QuickSpecs on the Hewlett Packard Enterprise website

(http://www.hpe.com/info/qs).

For compute module options installation information, see Hardware options installation.

Completing the configuration

When a compute module is added to an existing configuration, HPE OneView automatically detects the new

hardware. HPE OneView is hosted on the HPE Synergy Composer appliance installed in the HPE Synergy

12000 Frame. You can use HPE OneView to comprehensively manage an HPE Synergy system throughout

the hardware life cycle.

To configure the compute module for the first time, log in to HPE OneView from the frame using your

assigned user name and password. For more information, see the HPE Synergy 12000 Frame Setup and

Installation Guide on the Hewlett Packard Enterprise website (http://www.hpe.com/info/synergy-docs). For

more information about HPE OneView, see the HPE OneView User Guide on the Hewlett Packard Enterprise

website (http://www.hpe.com/info/synergy-docs).

Hardware options installation

Introduction

If more than one option is being installed, read the installation instructions for all the hardware options and

identify similar steps to streamline the installation process.

WARNING: To reduce the risk of personal injury from hot surfaces, allow the drives and the internal

system components to cool before touching them.

CAUTION: To prevent damage to electrical components, properly ground the compute module before

beginning any installation procedure. Improper grounding can cause electrostatic discharge.

Installing the drive option

The compute module supports up to two SFF SAS, NVMe, solid state, or SATA (supported only with the HPE

Synergy 660 Gen10 compute module backplane) drives.

CAUTION: To prevent improper cooling and thermal damage, do not operate the compute module or

the frame unless all drive and device bays are populated with either a component or a blank.

Procedure

1. Remove the drive blank.

2. Prepare the drive:

• SAS, SATA, or solid state

• NVMe—Press Do Not Remove to open the release handle.

3. Install the drive:

• SAS, SATA, or solid state

• NVMe

4. Determine the status of the drive from the drive LED definitions.

Installing the SFF flash adapter option

CAUTION: To prevent improper cooling and thermal damage, do not operate the compute module or

the enclosure unless all drive and device bays are populated with either a component or a blank.

Prerequisites

The SFF flash adapter option is supported when any of the following components are installed:

• HPE Dynamic Smart Array S100i Controller

• HPE Smart Array E208i-c/P204i-c/P416ie-m Controller

• HPE Synergy 3830C 16G FC HBA

• HPE Synergy 3530C 16G FC HBA

Procedure

1. Remove the drive blank.

2. Install the uFF drives in the SFF flash adapter.

3. Install the SFF flash adapter by pushing firmly near the left-side adapter ejection handle until the latching

spring engages in the drive bay.

Installing the M.2 SSD flash drive

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Lay the compute module on a flat and level surface.

4. Remove the access panel.

5. Remove the front panel/drive cage assembly.

6. Locate the SSD slot, and install the SSD flash drive in the appropriate slot.

IMPORTANT: You must install the SSD flash drive to the connector at an angle first, and then lower

it down onto the mounting standoffs to ensure proper installation.

7. Secure the SSD flash drive to the board standoff.

8. Repeat the SSD flash drive installation on the second SSD flash drive, as applicable.

Installing the controller option

For more information about supported options, see the product QuickSpecs on the Hewlett Packard

Enterprise website.

CAUTION: Hewlett Packard Enterprise recommends performing a complete backup of all compute

module data before performing a controller or adapter installation or removal.

CAUTION: In systems that use external data storage, be sure that the compute module is the first unit

to be powered down and the last to be powered back up. Taking this precaution ensures that the system

does not erroneously mark the drives as failed when the compute module is powered up.

To install the component:

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Remove the access panel.

4. Remove the front panel/drive cage assembly.

5. Install the controller.

6. Install the front panel/drive cage assembly.

7. Install the access panel.

8. Install the compute module.

9. Power up the compute module.

HPE Smart Storage Battery

The HPE Smart Storage Battery supports the following devices:

• HPE Smart Storage SR controllers

• HPE Smart Storage MR controllers

A single 96W battery can support up to 24 devices.

After the battery is installed, it might take up to two hours to charge. Controller features requiring backup

power are not re-enabled until the battery is capable of supporting the backup power.

This server supports the 96W HPE Smart Storage Battery with the 260mm cable.

HPE Smart Storage Hybrid Capacitor

The HPE Smart Storage Hybrid Capacitor supports the following devices:

• HPE Smart Storage SR controllers

• HPE Smart Storage MR controllers

The capacitor pack can support up to three devices or 600 joules.

This server supports the HPE Smart Storage Hybrid Capacitor with the 260mm cable.

Before installing the HPE Smart Storage Hybrid Capacitor, verify that the system BIOS meets the minimum

firmware requirements to support the capacitor pack.

IMPORTANT: If the system BIOS or controller firmware is older than the minimum recommended

firmware versions, the capacitor pack will only support one device.

The capacitor pack is fully charged after the system boots.
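The device limit rule above, combined with the values listed under Minimum firmware versions, can be sketched as a version check. This is an illustration under stated assumptions: the function names are hypothetical, firmware versions are assumed to be plain dotted or dashed numeric strings, and the 2.00 system ROM minimum is read from this guide's table and should be verified before use.

```python
import re

# Minimum versions as listed under "Minimum firmware versions" in this guide.
MIN_FIRMWARE = {
    "system_rom": "2.00",
    "sr_controller": "1.90",
    "mr_controller": "24.23.0-0041",
}


def version_key(version):
    """Turn a version such as '24.23.0-0041' into a tuple of integers for comparison."""
    return tuple(int(part) for part in re.split(r"[.\-]", version))


def capacitor_device_limit(system_rom, controller_kind, controller_fw):
    """Return how many devices the capacitor pack supports: 3, or 1 if firmware is too old."""
    rom_ok = version_key(system_rom) >= version_key(MIN_FIRMWARE["system_rom"])
    fw_ok = version_key(controller_fw) >= version_key(MIN_FIRMWARE[controller_kind])
    return 3 if (rom_ok and fw_ok) else 1
```

Comparing integer tuples avoids the string-comparison trap where "1.100" sorts before "1.90". It does assume that minor versions are written with consistent width, so "1.9" and "1.90" are treated as different versions.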

Minimum firmware versions

Product Minimum firmware version

HPE Synergy 660 Gen10 Compute Module system ROM 2.00

HPE Smart Array SR controllers 1.90

HPE Smart Array MR controllers 24.23.0-0041

Installing the energy pack option

Hewlett Packard Enterprise now offers two options as a centralized backup power source for backing up write cache content on Smart Array controllers in case of an unplanned server power loss.

• HPE Smart Storage Battery

• HPE Smart Storage Hybrid Capacitor

IMPORTANT: The HPE Smart Storage Hybrid Capacitor is only supported on Gen10 and later servers that support the 96W HPE Smart Storage Battery.

Only one energy pack is required per server, as it can support multiple devices.

To install the component:

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Remove the DIMM baffle.

6. Install the energy pack.

7. Install the DIMM baffle.

8. Connect the energy pack to the system board. To locate the energy pack connector, see "System board

components."

9. Install the access panel.

10. Install the compute module.

11. Power up the compute module.

Installing the mezzanine card options

IMPORTANT: For more information about the association between the mezzanine bay and the

interconnect bays, see the HPE Synergy 12000 Frame Setup and Installation Guide in the Hewlett

Packard Enterprise Information Library. Where you install the mezzanine card determines where you

need to install the interconnect modules.

To install the component:


Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Locate the appropriate mezzanine connector. To locate the connector, see "System board components."

6. Remove the mezzanine connector cover, if installed.

7. Install the mezzanine card. Press firmly on the PRESS HERE label above the mezzanine connector to

seat the card.

8. If you are installing an HPE Smart Array Controller with an NVMe-enabled backplane and SAS hard

drives, you must connect a cable between the mezzanine card and the NVMe-enabled backplane.

9. Install the access panel.

10. Install the compute module.

11. Power up the compute module.

Memory options

IMPORTANT: This compute module does not support mixing LRDIMMs and RDIMMs. Attempting to mix

any combination of these DIMMs can cause the server to halt during BIOS initialization. All memory

installed in the compute module must be of the same type.

DIMM and NVDIMM population information

For specific DIMM and NVDIMM population information, see the DIMM population guidelines on the Hewlett

Packard Enterprise website (http://www.hpe.com/docs/memory-population-rules).


DIMM-processor compatibility

The installed processor determines the type of DIMM that is supported in the compute module:

• First Generation Intel Xeon Scalable Processors support DDR4-2666 DIMMs.

• Second Generation Intel Xeon Scalable Processors support DDR4-2933 DIMMs.

Mixing DIMM types is not supported. Install only the supported DDR4-2666 or DDR4-2933 DIMMs in the

compute module.

HPE SmartMemory speed information

For information about memory speeds, see the Hewlett Packard Enterprise website (https://www.hpe.com/docs/memory-speed-table).

Installing a DIMM

The server supports up to 24 DIMMs.

Prerequisites

Before installing this option, be sure you have the following:

The components included with the hardware option kit

For more information on specific options, see the compute module QuickSpecs on the Hewlett Packard

Enterprise website.

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Open the DIMM slot latches.

6. Install the DIMM.


7. Install the access panel.

8. Install the compute module.

9. Power up the compute module.

Use the BIOS/Platform Configuration (RBSU) in the UEFI System Utilities to configure the memory mode.

HPE 16GB NVDIMM option

HPE NVDIMMs are flash-backed NVDIMMs used as fast storage and are designed to eliminate smaller

storage bottlenecks. The HPE 16GB NVDIMM for HPE ProLiant Gen10 servers is ideal for smaller database

storage bottlenecks, write caching tiers, and any workload constrained by storage bottlenecks.

The HPE 16GB NVDIMM is supported on select HPE ProLiant Gen10 servers with first generation Intel Xeon

Scalable processors. The compute module can support up to 12 NVDIMMs in 2 socket servers (up to 192GB)

and up to 24 NVDIMMs in 4 socket servers (up to 384GB). The HPE Smart Storage Battery provides backup

power to the memory slots allowing data to be moved from the DRAM portion of the NVDIMM to the Flash

portion for persistence during a power down event.
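The capacity figures above follow directly from the 16 GB module size. A minimal Python sketch of the arithmetic, in which the function and constant names are illustrative rather than part of any HPE tool:

```python
NVDIMM_SIZE_GB = 16  # capacity of one HPE 16GB NVDIMM

# Maximum NVDIMM counts per socket configuration, as stated in this section.
MAX_NVDIMMS_BY_SOCKETS = {2: 12, 4: 24}


def max_nvdimm_capacity_gb(socket_count):
    """Return the maximum NVDIMM capacity in GB for a 2- or 4-socket configuration."""
    return MAX_NVDIMMS_BY_SOCKETS[socket_count] * NVDIMM_SIZE_GB


print(max_nvdimm_capacity_gb(2))  # 192
print(max_nvdimm_capacity_gb(4))  # 384
```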

For more information on HPE NVDIMMs, see the Hewlett Packard Enterprise website (http://www.hpe.com/

info/persistentmemory).

NVDIMM-processor compatibility

HPE 16GB NVDIMMs are only supported in servers with first generation Intel Xeon Scalable processors

installed.

Server requirements for NVDIMM support

Before installing an HPE 16GB NVDIMM in a compute module, make sure that the following components and

software are available:

• A supported HPE server using Intel Xeon Scalable Processors: For more information, see the NVDIMM

QuickSpecs on the Hewlett Packard Enterprise website (http://www.hpe.com/info/qs).

• An HPE Smart Storage Battery

• A minimum of one regular DIMM: The system cannot have only NVDIMM-Ns installed.

• A supported operating system with persistent memory/NVDIMM drivers. For the latest software

information, see the Hewlett Packard Enterprise website (http://persistentmemory.hpe.com).

• For minimum firmware versions, see the HPE 16GB NVDIMM User Guide on the Hewlett Packard

Enterprise website (http://www.hpe.com/info/nvdimm-docs).

To determine NVDIMM support for your compute module, see the compute module QuickSpecs on the

Hewlett Packard Enterprise website (http://www.hpe.com/info/qs).
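The requirements listed above can be expressed as a simple pre-installation checklist. The following Python sketch is only an illustration: the `ModuleInventory` fields and the `nvdimm_blockers` function are hypothetical, the values must be supplied by hand, and firmware minimums are left out because they are documented in the HPE 16GB NVDIMM User Guide rather than here.

```python
from dataclasses import dataclass


@dataclass
class ModuleInventory:
    """Hand-entered facts about a compute module (hypothetical structure)."""
    processor_generation: int       # 1 = first generation Intel Xeon Scalable
    has_smart_storage_battery: bool
    regular_dimm_count: int
    os_has_nvdimm_drivers: bool


def nvdimm_blockers(inv):
    """Return a list of unmet requirements; an empty list means none were found."""
    blockers = []
    if inv.processor_generation != 1:
        blockers.append("First generation Intel Xeon Scalable processors are required.")
    if not inv.has_smart_storage_battery:
        blockers.append("An HPE Smart Storage Battery is required.")
    if inv.regular_dimm_count < 1:
        blockers.append("At least one regular DIMM must be installed.")
    if not inv.os_has_nvdimm_drivers:
        blockers.append("The OS needs persistent memory/NVDIMM drivers.")
    return blockers


inventory = ModuleInventory(1, True, 0, True)
for problem in nvdimm_blockers(inventory):
    print(problem)
```

An empty result only means that the listed items are satisfied; it does not replace the QuickSpecs check.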

Installing an NVDIMM

CAUTION: To avoid damage to the hard drives, memory, and other system components, the air baffle,

drive blanks, and access panel must be installed when the server is powered up.

CAUTION: To avoid damage to the hard drives, memory, and other system components, be sure to

install the correct DIMM baffles for your server model.


CAUTION: DIMMs are keyed for proper alignment. Align notches in the DIMM with the corresponding

notches in the DIMM slot before inserting the DIMM. Do not force the DIMM into the slot. When installed

properly, not all DIMMs will face in the same direction.

CAUTION: Electrostatic discharge can damage electronic components. Be sure you are properly

grounded before beginning this procedure.

CAUTION: Failure to properly handle DIMMs can damage the DIMM components and the system board

connector. For more information, see the DIMM handling guidelines in the troubleshooting guide for your

product on the Hewlett Packard Enterprise website:

• HPE ProLiant Gen10 (http://www.hpe.com/info/gen10-troubleshooting)

• HPE Synergy (http://www.hpe.com/info/synergy-troubleshooting)

CAUTION: Unlike traditional storage devices, NVDIMMs are fully integrated with the compute module. Data loss can occur when system components, such as the processor or the HPE Smart Storage Battery, fail. The HPE Smart Storage Battery is a critical component required to perform the backup functionality of NVDIMMs. It is important to act when HPE Smart Storage Battery related failures occur. Always follow best practices for ensuring data protection.

Prerequisites

Before installing an NVDIMM, be sure the compute module meets the Server requirements for NVDIMM

support on page 36.

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Locate any NVDIMMs already installed in the compute module.

6. Verify that all LEDs on any installed NVDIMMs are off.

7. Install the NVDIMM.


8. Install and connect the energy pack option, if it is not already installed.

9. Install any components removed to access the DIMM slots and the energy pack option.

10. Install the access panel.

11. Install the compute module.

12. Power up the compute module.

13. If required, sanitize the NVDIMM-Ns. For more information, see NVDIMM sanitization on page 38.

Configuring the compute module for NVDIMMs

After installing NVDIMMs, configure the compute module for NVDIMMs. For information on configuring

settings for NVDIMMs, see the HPE 16GB NVDIMM User Guide on the Hewlett Packard Enterprise website

(http://www.hpe.com/info/nvdimm-docs).

The compute module can be configured for NVDIMMs using either of the following:

• UEFI System Utilities—Use System Utilities through the Remote Console to configure the compute

module for NVDIMM memory options by pressing the F9 key during POST. For more information about

UEFI System Utilities, see the Hewlett Packard Enterprise website (http://www.hpe.com/info/uefi/docs).

• iLO RESTful API for HPE iLO 5—For more information about configuring the system for NVDIMMs, see

https://hewlettpackard.github.io/ilo-rest-api-docs/ilo5/.
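As an illustration of the iLO RESTful API route, the following Python sketch builds, but does not send, a request that would stage a BIOS setting change. Everything specific in it is an assumption to verify against the iLO 5 API documentation linked above: the resource path, the `NvDimmNMemFunctionality` attribute name and its value, and the host name `ilo.example.net`. Authentication and TLS handling are omitted.

```python
import json
import urllib.request


def build_bios_settings_request(ilo_host, attributes):
    """Build a PATCH request for pending BIOS settings (assumed resource path)."""
    url = f"https://{ilo_host}/redfish/v1/systems/1/bios/settings/"
    body = json.dumps({"Attributes": attributes}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


# Attribute name and value are assumptions; confirm them in the BIOS
# attribute registry exposed by the compute module before use.
request = build_bios_settings_request(
    "ilo.example.net",
    {"NvDimmNMemFunctionality": "Enabled"},
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

BIOS changes staged this way typically take effect only after a reboot, which matches the behavior of changes made through UEFI System Utilities.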

NVDIMM sanitization

Media sanitization is defined by NIST SP800-88 Guidelines for Media Sanitization (Rev 1, Dec 2014) as "a

general term referring to the actions taken to render data written on media unrecoverable by both ordinary

and extraordinary means."

The specification defines the following levels:


• Clear: Overwrite user-addressable storage space using standard write commands; might not sanitize data

in areas not currently user-addressable (such as bad blocks and overprovisioned areas)

• Purge: Overwrite or erase all storage space that might have been used to store data using dedicated

device sanitize commands, such that data retrieval is "infeasible using state-of-the-art laboratory

techniques"

• Destroy: Ensure that data retrieval is "infeasible using state-of-the-art laboratory techniques" and render

the media unable to store data (such as disintegrate, pulverize, melt, incinerate, or shred)

The NVDIMM-N Sanitize options are intended to meet the Purge level.

For more information on sanitization for NVDIMMs, see the following sections in the HPE 16GB NVDIMM

User Guide on the Hewlett Packard Enterprise website (http://www.hpe.com/info/nvdimm-docs):

• NVDIMM sanitization policies

• NVDIMM sanitization guidelines

• Setting the NVDIMM-N Sanitize/Erase on the Next Reboot Policy

NIST SP800-88 Guidelines for Media Sanitization (Rev 1, Dec 2014) is available for download from the NIST

website (http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-88r1.pdf).

NVDIMM relocation guidelines

Requirements for relocating NVDIMMs or a set of NVDIMMs when the data must be preserved

• The destination compute module hardware must match the original compute module hardware

configuration.

• All System Utilities settings in the destination compute module must match the original System Utilities

settings in the original compute module.

• If NVDIMM-Ns are used with NVDIMM Interleaving ON mode in the original compute module, do the

following:

◦ Install the NVDIMMs in the same DIMM slots in the destination compute module.

◦ Install the entire NVDIMM set (all the NVDIMM-Ns on the processor) on the destination compute

module.

This guideline would apply when replacing a system board due to system failure.

If any of the requirements cannot be met during NVDIMM relocation, do the following:

◦ Manually back up the NVDIMM-N data before relocating NVDIMM-Ns to another compute module.

◦ Relocate the NVDIMM-Ns to another compute module.

◦ Sanitize all NVDIMM-Ns on the new compute module before using them.
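The decision described above, for the case where data must be preserved, can be sketched as a small function. This is a hypothetical planning aid, not an HPE utility; the parameter names are illustrative and the returned strings restate the steps from this section.

```python
def relocation_steps(hardware_matches, settings_match, interleaving_on,
                     same_slots=True, entire_set_moved=True):
    """Return the steps for relocating NVDIMM-Ns whose data must be preserved."""
    requirements_met = hardware_matches and settings_match
    if interleaving_on:
        # Interleaved sets must keep their slots and move as a complete set.
        requirements_met = requirements_met and same_slots and entire_set_moved
    if requirements_met:
        return ["Relocate the NVDIMM-Ns to the destination compute module."]
    return [
        "Manually back up the NVDIMM-N data.",
        "Relocate the NVDIMM-Ns to another compute module.",
        "Sanitize all NVDIMM-Ns on the new compute module before using them.",
    ]


for step in relocation_steps(True, True, interleaving_on=True, same_slots=False):
    print(step)
```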

Requirements for relocating NVDIMMs or a set of NVDIMMs when the data does not have to be

preserved

If data on the NVDIMM-N or set of NVDIMM-Ns does not have to be preserved, do the following:


• Move the NVDIMM-Ns to the new location and sanitize all NVDIMM-Ns after installing them to the new

location. For more information, see NVDIMM sanitization on page 38.

• Observe all DIMM and NVDIMM population guidelines. For more information, see DIMM and NVDIMM

population information on page 34.

• Observe the process for removing an NVDIMM.

• Observe the process for installing an NVDIMM.

• Review and configure the system settings for NVDIMMs. For more information, see Configuring the

compute module for NVDIMMs on page 38.

Installing the processor heatsink option

The server supports installation of 1–4 processors.

IMPORTANT: Existing HPE ProLiant and HPE Synergy Gen10 server products containing First

Generation Intel Xeon Scalable Processors may not be upgraded to Second Generation Intel Xeon

Scalable Processors at this time. For more information, see the product QuickSpecs on the Hewlett

Packard Enterprise website (http://www.hpe.com/info/qs).

Prerequisites

To complete this procedure, you need a T-30 Torx screwdriver.

Procedure

1. Observe the following cautions and warnings:

WARNING: To reduce the risk of personal injury from hot surfaces, allow the drives and the internal

system components to cool before touching them.

CAUTION: To prevent possible compute module malfunction and damage to the equipment,

multiprocessor configurations must contain processors with the same part number.

CAUTION: The heatsink thermal interface media is not reusable and must be replaced if the

heatsink is removed from the processor after it has been installed.

CAUTION: To prevent possible compute module overheating, always populate processor socket 2

with a processor and a heatsink or a processor socket cover and a heatsink blank.

CAUTION: To prevent damage to electrical components, properly ground the compute module

before beginning any installation procedure. Improper grounding can cause ESD.

IMPORTANT: Processor socket 1 must be populated at all times or the compute module does not

function.

2. Update the system ROM.

Locate and download the latest ROM version from the Hewlett Packard Enterprise website (http://www.hpe.com/support). Follow the instructions on the website to update the system ROM.

3. Power down the compute module.


4. Remove the compute module.

5. Remove the access panel.

6. Remove all DIMM baffles.

7. Remove the heatsink blank. Retain the heatsink blank for future use.

8. Align the processor heatsink assembly with the alignment pins and gently lower it down until it sits evenly

on the socket.

The heatsink alignment pins are keyed. The processor will only install one way.

A standard heatsink is shown. Your heatsink might look different.

9. Secure the heatsink using a T-30 screwdriver.

[Figure: heatsink screw locations, with the front and rear of the server marked. Callouts: T-30 Torx, 12 in-lb; screws numbered 1 through 4, with "Start here" at the first screw; "Fully tighten until screws will not turn."]

10. Install all DIMM baffles.

11. Install the access panel.

12. Installing supported hardware options for the compute module.

HPE Trusted Platform Module 2.0 Gen10 option

Overview

Use these instructions to install and enable an HPE TPM 2.0 Gen10 Kit in a supported compute module. This

option is not supported on Gen9 and earlier compute modules.

This procedure includes three sections:

1. Installing the Trusted Platform Module board.

2. Enabling the Trusted Platform Module.

3. Retaining the recovery key/password.

HPE TPM 2.0 installation is supported on specific operating systems, such as Microsoft® Windows Server® 2012 R2 and later. For more information about operating system support, see the product QuickSpecs on the Hewlett Packard Enterprise website (http://www.hpe.com/info/qs). For more information about the Microsoft® Windows® BitLocker Drive Encryption feature, see the Microsoft website (http://www.microsoft.com).

CAUTION: If the TPM is removed from the original compute module and powered up on a different

compute module, data stored in the TPM including keys will be erased.

IMPORTANT: In UEFI Boot Mode, the HPE TPM 2.0 Gen10 Kit can be configured to operate as TPM

2.0 (default) or TPM 1.2 on a supported compute module. In Legacy Boot Mode, the configuration can

be changed between TPM 1.2 and TPM 2.0, but only TPM 1.2 operation is supported.


HPE Trusted Platform Module 2.0 Guidelines

CAUTION: Always observe the guidelines in this document. Failure to follow these guidelines can cause

hardware damage or halt data access.

Hewlett Packard Enterprise SPECIAL REMINDER: Before enabling TPM functionality on this system, you

must ensure that your intended use of TPM complies with relevant local laws, regulations and policies, and

approvals or licenses must be obtained if applicable.

For any compliance issues arising from your operation/usage of TPM which violates the above mentioned

requirement, you shall bear all the liabilities wholly and solely. Hewlett Packard Enterprise will not be

responsible for any related liabilities.

When installing or replacing a TPM, observe the following guidelines:

• Do not remove an installed TPM. Once installed, the TPM becomes a permanent part of the system board.

• When installing or replacing hardware, Hewlett Packard Enterprise service providers cannot enable the

TPM or the encryption technology. For security reasons, only the customer can enable these features.

• When returning a system board for service replacement, do not remove the TPM from the system board.

When requested, Hewlett Packard Enterprise Service provides a TPM with the spare system board.

• Any attempt to remove the cover of an installed TPM from the system board can damage the TPM cover,

the TPM, and the system board.

• If the TPM is removed from the original server and powered up on a different server, data stored in the

TPM including keys will be erased.

• When using BitLocker, always retain the recovery key/password. The recovery key/password is required to

complete Recovery Mode after BitLocker detects a possible compromise of system integrity.

• Hewlett Packard Enterprise is not liable for blocked data access caused by improper TPM use. For

operating instructions, see the TPM documentation or the encryption technology feature documentation

provided by the operating system.

Installing and enabling the HPE TPM 2.0 Gen10 Kit

Installing the Trusted Platform Module board

Preparing the compute module for installation

Procedure

1. Observe the following warnings:


WARNING: The front panel Power On/Standby button does not shut off system power. Portions of

the power supply and some internal circuitry remain active until AC power is removed.

To reduce the risk of personal injury, electric shock, or damage to the equipment, remove power from

the compute module:

For rack and tower servers, remove the power cord.

For server blades and compute modules, remove the server blade or compute module from the

enclosure.

WARNING: To reduce the risk of personal injury from hot surfaces, allow the drives and the internal

system components to cool before touching them.

2. Update the system ROM.

Locate and download the latest ROM version from the Hewlett Packard Enterprise Support Center website (http://www.hpe.com/support/hpesc). To update the system ROM, follow the instructions on the website.

3. Power down the compute module.

a. Shut down the OS as directed by the OS documentation.

b. To place the compute module in standby mode, press the Power On/Standby button. When the compute module enters standby power mode, the system power LED changes to amber.

c. Disconnect the power cords (rack and tower servers).

4. Do one of the following:

• Remove the server from the rack, if necessary.

• Remove the server blade or compute module from the enclosure.

5. Place the compute module on a flat, level work surface.

6. Remove the access panel.

7. Remove any options or cables that may prevent access to the TPM connector.

8. Proceed to Installing the TPM board and cover on page 44.

Installing the TPM board and cover

Procedure

1. Observe the following alerts:

CAUTION: If the TPM is removed from the original compute module and powered up on a different

compute module, data stored in the TPM including keys will be erased.


CAUTION: The TPM is keyed to install only in the orientation shown. Any attempt to install the TPM in a different orientation might result in damage to the TPM or system board.

2. Align the TPM board with the key on the connector, and then install the TPM board. To seat the board,

press the TPM board firmly into the connector. To locate the TPM connector on the system board, see the

compute module label on the access panel.

3. Install the TPM cover:

a. Line up the tabs on the cover with the openings on either side of the TPM connector.

b. To snap the cover into place, firmly press straight down on the middle of the cover.

4. Proceed to Preparing the compute module for operation on page 46.


Preparing the compute module for operation

Procedure

1. Install any options or cables previously removed to access the TPM connector.

2. Install the access panel.

3. Do one of the following:

a. Install the server in the rack, if necessary.

b. Install the compute module in the enclosure.

4. Power up the compute module.

a. Connect the power cords (rack and tower servers).

b. Press the Power On/Standby button.

Enabling the Trusted Platform Module

When enabling the Trusted Platform Module, observe the following guidelines:

• By default, the Trusted Platform Module is enabled as TPM 2.0 when the compute module is powered on

after installing it.

• In UEFI Boot Mode, the Trusted Platform Module can be configured to operate as TPM 2.0 or TPM 1.2.

• In Legacy Boot Mode, the Trusted Platform Module configuration can be changed between TPM 1.2 and

TPM 2.0, but only TPM 1.2 operation is supported.
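The boot mode guidelines above can be summarized as a small lookup. In this Python sketch the function name and the string values for boot mode and TPM type are illustrative choices, not settings names from System Utilities.

```python
def tpm_operation_supported(boot_mode, tpm_type):
    """Return True if the TPM type is supported for operation in the boot mode."""
    if tpm_type not in ("1.2", "2.0"):
        raise ValueError(f"unknown TPM type: {tpm_type}")
    if boot_mode == "UEFI":
        return True  # both TPM 2.0 (default) and TPM 1.2 can operate
    if boot_mode == "Legacy":
        return tpm_type == "1.2"  # only TPM 1.2 operation is supported
    raise ValueError(f"unknown boot mode: {boot_mode}")


print(tpm_operation_supported("UEFI", "2.0"))    # True
print(tpm_operation_supported("Legacy", "2.0"))  # False
```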

Enabling the Trusted Platform Module as TPM 2.0

Procedure

1. During the compute module startup sequence, press the F9 key to access System Utilities.

2. From the System Utilities screen, select System Configuration > BIOS/Platform Configuration (RBSU)

> Server Security > Trusted Platform Module options.

3. Verify the following:

• "Current TPM Type" is set to TPM 2.0.

• "Current TPM State" is set to Present and Enabled.

• "TPM Visibility" is set to Visible.

4. If changes were made in the previous step, press the F10 key to save your selection.

5. If F10 was pressed in the previous step, do one of the following:

• If in graphical mode, click Yes.

• If in text mode, press the Y key.

6. Press the ESC key to exit System Utilities.


7. If changes were made and saved, the compute module prompts for a reboot. Press the Enter key to confirm the reboot.

If the following actions were performed, the compute module reboots a second time without user input.

During this reboot, the TPM setting becomes effective.

• Changing between TPM 1.2 and TPM 2.0

• Changing TPM bus from FIFO to CRB

• Enabling or disabling TPM

• Clearing the TPM

8. Enable TPM functionality in the OS, such as Microsoft Windows BitLocker or measured boot.

For more information, see the Microsoft website.

Enabling the Trusted Platform Module as TPM 1.2

Procedure

1. During the compute module startup sequence, press the F9 key to access System Utilities.

2. From the System Utilities screen, select System Configuration > BIOS/Platform Configuration (RBSU)

> Server Security > Trusted Platform Module options.

3. Change the "TPM Mode Switch Operation" to TPM 1.2.

4. Verify "TPM Visibility" is Visible.

5. Press the F10 key to save your selection.

6. When prompted to save the change in System Utilities, do one of the following:

• If in graphical mode, click Yes.

• If in text mode, press the Y key.

7. Press the ESC key to exit System Utilities.

The compute module reboots a second time without user input. During this reboot, the TPM setting

becomes effective.

8. Enable TPM functionality in the OS, such as Microsoft Windows BitLocker or measured boot.

For more information, see the Microsoft website.

Retaining the recovery key/password

The recovery key/password is generated during BitLocker setup, and can be saved and printed after

BitLocker is enabled. When using BitLocker, always retain the recovery key/password. The recovery key/

password is required to enter Recovery Mode after BitLocker detects a possible compromise of system

integrity.

To help ensure maximum security, observe the following guidelines when retaining the recovery key/

password:


• Always store the recovery key/password in multiple locations.

• Always store copies of the recovery key/password away from the compute module.

• Do not save the recovery key/password on the encrypted hard drive.


Cabling

Cabling resources

Cabling configurations and requirements vary depending on the product and installed options. For more information about product features, specifications, options, configurations, and compatibility, see the product QuickSpecs on the Hewlett Packard Enterprise website (http://www.hpe.com/info/qs).

Energy pack option cabling

Standard drive backplane cabling


P416ie-m Smart Array Controller cabling


Item Description

1 Port3i - SAS/SATA cable connector

2 Port4i - SAS/SATA cable connector

3 Mezzanine option card


Removing and replacing the system battery

If the compute module no longer automatically displays the correct date and time, then replace the battery

that provides power to the real-time clock. Under normal use, battery life is 5 to 10 years.

WARNING: The computer contains an internal lithium manganese dioxide, a vanadium pentoxide, or an

alkaline battery pack. A risk of fire and burns exists if the battery pack is not properly handled. To reduce

the risk of personal injury:

• Do not attempt to recharge the battery.

• Do not expose the battery to temperatures higher than 60°C (140°F).

• Do not disassemble, crush, puncture, short external contacts, or dispose of in fire or water.

• Replace only with the spare designated for this product.

To remove the component:

Procedure

1. Power down the compute module.

2. Remove the compute module.

3. Place the compute module on a flat, level work surface.

4. Remove the access panel.

5. Remove the front panel/drive cage assembly.

6. Locate the battery on the system board.

7. Remove the battery.

IMPORTANT: Replacing the system board battery resets the system ROM to its default

configuration. After replacing the battery, use BIOS/Platform Configuration (RBSU) in the UEFI

System Utilities to reconfigure the system.


To replace the component, reverse the removal procedure.

For more information about battery replacement or proper disposal, contact an authorized reseller or an

authorized service provider.


Electrostatic discharge

Preventing electrostatic discharge

To prevent damaging the system, be aware of the precautions you must follow when setting up the system or

handling parts. A discharge of static electricity from a finger or other conductor may damage system boards or

other static-sensitive devices. This type of damage may reduce the life expectancy of the device.

Procedure

• Avoid hand contact by transporting and storing products in static-safe containers.

• Keep electrostatic-sensitive parts in their containers until they arrive at static-free workstations.

• Place parts on a grounded surface before removing them from their containers.

• Avoid touching pins, leads, or circuitry.

• Always be properly grounded when touching a static-sensitive component or assembly.

Grounding methods to prevent electrostatic discharge

Several methods are used for grounding. Use one or more of the following methods when handling or

installing electrostatic-sensitive parts:

• Use a wrist strap connected by a ground cord to a grounded workstation or computer chassis. Wrist straps

are flexible straps with a minimum of 1 megohm ±10 percent resistance in the ground cords. To provide

proper ground, wear the strap snug against the skin.

• Use heel straps, toe straps, or boot straps at standing workstations. Wear the straps on both feet when

standing on conductive floors or dissipating floor mats.

• Use conductive field service tools.

• Use a portable field service kit with a folding static-dissipating work mat.

If you do not have any of the suggested equipment for proper grounding, have an authorized reseller install

the part.

For more information on static electricity or assistance with product installation, contact the Hewlett Packard

Enterprise Support Center.


Specifications

Environmental specifications

Specification                          Value

Temperature range¹

  Operating                            10°C to 35°C (50°F to 95°F)

  Nonoperating                         -30°C to 60°C (-22°F to 140°F)

Relative humidity (noncondensing)²

  Operating                            10% to 90% @ 28°C (82.4°F)

  Nonoperating                         5% to 95% @ 38.7°C (101.7°F)

Altitude³

  Operating                            3,050 m (10,000 ft)

  Nonoperating                         9,144 m (30,000 ft)

¹ The following temperature conditions and limitations apply:

• All temperature ratings shown are for sea level.

• An altitude derating of 1°C per 304.8 m (1.8°F per 1,000 ft) up to 3,048 m (10,000 ft) applies.

• No direct sunlight is allowed.

• The maximum permissible rate of change is 10°C/hr (18°F/hr).

• The type and number of options installed might reduce the upper temperature and humidity limits.

• Operating with a fan fault or above 30°C (86°F) might reduce system performance.

² Storage maximum humidity of 95% is based on a maximum temperature of 45°C (113°F).

³ Maximum storage altitude corresponds to a minimum pressure of 70 kPa (10.1 psia).
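The altitude derating stated above can be worked through numerically. The following Python sketch applies the 1°C per 304.8 m derating to the 35°C sea-level operating limit; the function and constant names are illustrative, and the result does not account for the other limitations listed, such as installed options or fan faults.

```python
SEA_LEVEL_MAX_C = 35.0            # upper operating temperature at sea level
DERATING_M_PER_DEG_C = 304.8      # 1°C of derating per 304.8 m of altitude
MAX_DERATING_ALTITUDE_M = 3048.0  # derating is specified up to this altitude


def max_operating_temp_c(altitude_m):
    """Return the derated upper operating temperature in °C at an altitude in meters."""
    if not 0 <= altitude_m <= MAX_DERATING_ALTITUDE_M:
        raise ValueError("altitude must be between 0 and 3,048 m")
    return SEA_LEVEL_MAX_C - altitude_m / DERATING_M_PER_DEG_C


print(f"{max_operating_temp_c(0):.1f}")     # 35.0
print(f"{max_operating_temp_c(1524):.1f}")  # 30.0
print(f"{max_operating_temp_c(3048):.1f}")  # 25.0
```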

Physical specifications

Specification Value

Height 430.30 mm (16.94 in)

Depth 606.6 mm (23.88 in)

Width 63.50 mm (2.50 in)

Weight (maximum) 17.41 kg (38.38 lb)

Weight (minimum) 13.75 kg (30.31 lb)


Documentation and troubleshooting resources for HPE Synergy

HPE Synergy documentation

The Hewlett Packard Enterprise Information Library (www.hpe.com/info/synergy-docs) is a task-based repository. It includes installation instructions, user guides, maintenance and service guides, best practices, and links to additional resources. Use this website to obtain the latest documentation, including:

• Learning about HPE Synergy technology

• Installing and cabling HPE Synergy

• Updating the HPE Synergy components

• Using and managing HPE Synergy

• Troubleshooting HPE Synergy

HPE Synergy Configuration and Compatibility Guide

The HPE Synergy Configuration and Compatibility Guide is in the Hewlett Packard Enterprise Information

Library (www.hpe.com/info/synergy-docs). It provides an overview of HPE Synergy management and fabric

architecture, detailed hardware component identification and configuration, and cabling examples.

HPE Synergy Frame Link Module User Guide

The HPE Synergy Frame Link Module User Guide is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It outlines frame link module management, configuration, and security.

HPE OneView User Guide for HPE Synergy

The HPE OneView User Guide for HPE Synergy is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It describes resource features, planning tasks, configuration quick start

tasks, navigational tools for the graphical user interface, and more support and reference information for HPE

OneView.

HPE OneView Global Dashboard

The HPE OneView Global Dashboard provides a unified view of health, alerting, and key resources managed

by HPE OneView across multiple platforms and data center sites. The HPE OneView Global Dashboard User

Guide is in the Hewlett Packard Enterprise Information Library (www.hpe.com/info/synergy-docs). It

provides instructions for installing, configuring, navigating, and troubleshooting the HPE OneView Global

Dashboard.

HPE Synergy Image Streamer User Guide

The HPE Synergy Image Streamer User Guide is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It describes the OS deployment process using Image Streamer,

the features of Image Streamer, and the purpose and life cycle of Image Streamer artifacts. It also includes

authentication, authorization, and troubleshooting information for Image Streamer.

HPE Synergy Image Streamer GitHub

The HPE Synergy Image Streamer GitHub repository (github.com/HewlettPackard) contains sample

artifacts and documentation on how to use the sample artifacts. It also contains technical white papers

explaining deployment steps that can be performed using Image Streamer.

HPE Synergy Software Overview Guide

The HPE Synergy Software Overview Guide is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It provides detailed references and overviews of the various software

and configuration utilities to support HPE Synergy. The guide is task-based and covers the documentation

and resources for all supported software and configuration utilities available for:

• HPE Synergy setup and configuration

• OS deployment

• Firmware updates

• Troubleshooting

• Remote support

Best Practices for HPE Synergy Firmware and Driver Updates

The Best Practices for HPE Synergy Firmware and Driver Updates is in the Hewlett Packard Enterprise

Information Library (www.hpe.com/info/synergy-docs). It describes how to update firmware and

recommends best practices for updating firmware and drivers through HPE Synergy Composer,

which is powered by HPE OneView.

HPE OneView Support Matrix for HPE Synergy

The HPE OneView Support Matrix for HPE Synergy is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It maintains the latest software and firmware requirements, supported

hardware, and configuration maximums for HPE OneView.

HPE Synergy Image Streamer Support Matrix

The HPE Synergy Image Streamer Support Matrix is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It maintains the latest software and firmware requirements, supported

hardware, and configuration maximums for HPE Synergy Image Streamer.

HPE Synergy Firmware Comparison Tool

The HPE Synergy Firmware Comparison Tool is on the Hewlett Packard Enterprise website

(http://www.hpe.com/info/synergy-fw-comparison-tool). HPE Synergy Software Releases are made up of a

management combination and an HPE Synergy Custom SPP. This tool provides a list of management

combinations and lets you compare the HPE Synergy SPPs supported by the selected management

combination.

HPE Synergy Upgrade Paths

HPE Synergy Upgrade Paths is a table on the Hewlett Packard Enterprise website

(http://www.hpe.com/info/synergy-fw-upgrade-table). The table provides information on HPE Synergy Composer

and HPE Synergy Image Streamer upgrade paths and management combinations.

HPE Synergy Glossary

The HPE Synergy Glossary, in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs), defines common terminology associated with HPE Synergy.

HPE Synergy troubleshooting resources

HPE Synergy troubleshooting resources are available within HPE OneView and in the Hewlett Packard

Enterprise Information Library (www.hpe.com/info/synergy-docs).

Troubleshooting within HPE OneView

The HPE OneView graphical user interface includes alert notifications and options for troubleshooting within

HPE OneView. The UI provides multiple views of HPE Synergy components, including colored icons that indicate

resource status and messages that suggest potential problem resolutions.

You can also use the Enclosure view and Map view to quickly see the status of all discovered HPE Synergy

hardware.

HPE Synergy Troubleshooting Guide

The HPE Synergy Troubleshooting Guide is in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs). It provides information for resolving common problems and courses of

action for fault isolation and identification, issue resolution, and maintenance for both HPE Synergy hardware

and software components.

Error Message Guide for HPE ProLiant Gen10 servers and HPE Synergy

The Error Message Guide for HPE ProLiant Gen10 servers and HPE Synergy is in the Hewlett Packard

Enterprise Information Library (www.hpe.com/info/synergy-docs). It provides information for resolving

common problems associated with specific error messages received for both HPE Synergy hardware and

software components.

HPE OneView Help and HPE OneView API Reference

The HPE OneView Help and the HPE OneView API Reference are readily accessible, embedded online help

available within the HPE OneView user interface. These help files include “Learn more” links to common

issues, as well as procedures and examples to troubleshoot issues within HPE Synergy.

The help files are also available in the Hewlett Packard Enterprise Information Library

(www.hpe.com/info/synergy-docs).

HPE Synergy QuickSpecs

HPE Synergy has system specifications as well as individual product and component specifications. For

complete specification information, see the HPE Synergy and individual HPE Synergy product QuickSpecs on

the Hewlett Packard Enterprise website (www.hpe.com/info/qs).

HPE Synergy document overview (documentation map)

www.hpe.com/info/synergy-docs

Planning

• HPE Synergy 12000 Frame Site Planning Guide

• HPE Synergy Configuration and Compatibility Guide

• HPE OneView Support Matrix for HPE Synergy

• HPE Synergy Image Streamer Support Matrix

• Setup Overview for HPE Synergy

• HPE Synergy Software Overview Guide

Installing hardware

• HPE Synergy Start Here Poster (included with frame)

• HPE Synergy 12000 Frame Setup and Installation Guide

• Rack Rails Installation Instructions for the HPE Synergy 12000 Frame (included with frame)

• HPE Synergy 12000 Frame Rack Template (included with frame)

• Hood labels

• User guides

• HPE Synergy Cabling Interactive Guide

• HPE OneView Help for HPE Synergy: Hardware setup

Configuring for managing and monitoring

• HPE OneView Help for HPE Synergy

• HPE OneView User Guide for HPE Synergy

• HPE OneView API Reference for HPE Synergy

• User Guides

Managing

• HPE OneView User Guide for HPE Synergy

• HPE Synergy Image Streamer Help

• HPE Synergy Image Streamer User Guide

• HPE Synergy Image Streamer API Reference

• HPE Synergy Image Streamer deployment workflow

• HPE Synergy Frame Link Module User Guide

Monitoring

• HPE OneView User Guide for HPE Synergy

• HPE OneView Global Dashboard User Guide

Maintaining

• Product maintenance and service guides

• Best Practices for HPE Synergy Firmware and Driver Updates

• HPE OneView Help for HPE Synergy

• HPE OneView User Guide for HPE Synergy

• HPE Synergy Firmware Comparison Tool

• HPE Synergy Upgrade Paths (website)

• HPE Synergy Appliances Maintenance and Service Guide for HPE Synergy Composer and HPE Synergy Image Streamer

Troubleshooting

• HPE OneView alert details

• HPE Synergy Troubleshooting Guide

• Error Message Guide for HPE ProLiant Gen10 servers and HPE Synergy

• Integrated Management Log Messages and Troubleshooting Guide for HPE ProLiant Gen10 and HPE Synergy