Page 1

A

HPE FlexFabric 5940 Switch Series

CL and QoS Configuration Guide

Part number: 5200-1002b

Software version: Release 25xx

Document version: 6W102-20170830

Page 2

© Copyright 2017 Hewlett Packard Enterprise Development LP

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard

Enterprise products and services are set forth in the express warranty statements acco mpanying such

products and services. Nothing herein should be construe d as constituting an additional warranty. Hewlett

Packard Enterprise shall not be liable for technical or editorial errors or omissions co ntained herein.

Confidential computer software. V alid license from Hewlett Packard Enterprise required for possession, use, or

copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software

Documentation, and T e chnical Data for Commercial Items are licensed to the U.S. Government under vendor’s

standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard

Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise

website.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the

United States and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the

United States and/or other countries.

Adobe® and Acrobat® are trademarks of Adobe Systems In corporated.

Java and Oracle are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Page 3

Contents

Configuring ACLs ············································································· 1

Overview ·································································································································· 1

ACL types ·························································································································· 1

Numbering and naming ACLs ································································································ 1

Match order ························································································································ 1

Rule numbering ·················································································································· 2

Fragment filtering with ACLs ·································································································· 3

Configuration restrictions and guidelines ························································································· 3

Configuration task list·················································································································· 4

Configuring a basic ACL ·············································································································· 4

Configuring an IPv4 basic ACL ······························································································· 4

Configuring an IPv6 basic ACL ······························································································· 5

Configuring an advanced ACL ······································································································ 6

Configuring an IPv4 advanced ACL ························································································· 6

Configuring an IPv6 advanced ACL ························································································· 7

Configuring a Layer 2 ACL ··········································································································· 8

Configuring a user-defined ACL ···································································································· 9

Copying an ACL ······················································································································ 10

Configuring packet filtering with ACLs ·························································································· 10

Applying an ACL to an interface for packet filtering ··································································· 10

Configuring the applicable scope of packet filtering on a VLAN interface ······································· 11

Configuring logging and SNMP notifications for packet filtering ··················································· 11

Setting the packet filtering default action················································································· 12

Displaying and maintaining ACLs ································································································ 12

ACL configuration examples ······································································································· 13

Interface-based packet filter configuration example ··································································· 13

QoS overview ················································································ 15

QoS service models ················································································································· 15

Best-effort service model ···································································································· 15

IntServ model ··················································································································· 15

DiffServ model ·················································································································· 15

QoS techniques overview ·········································································································· 15

Deploying QoS in a network ································································································ 16

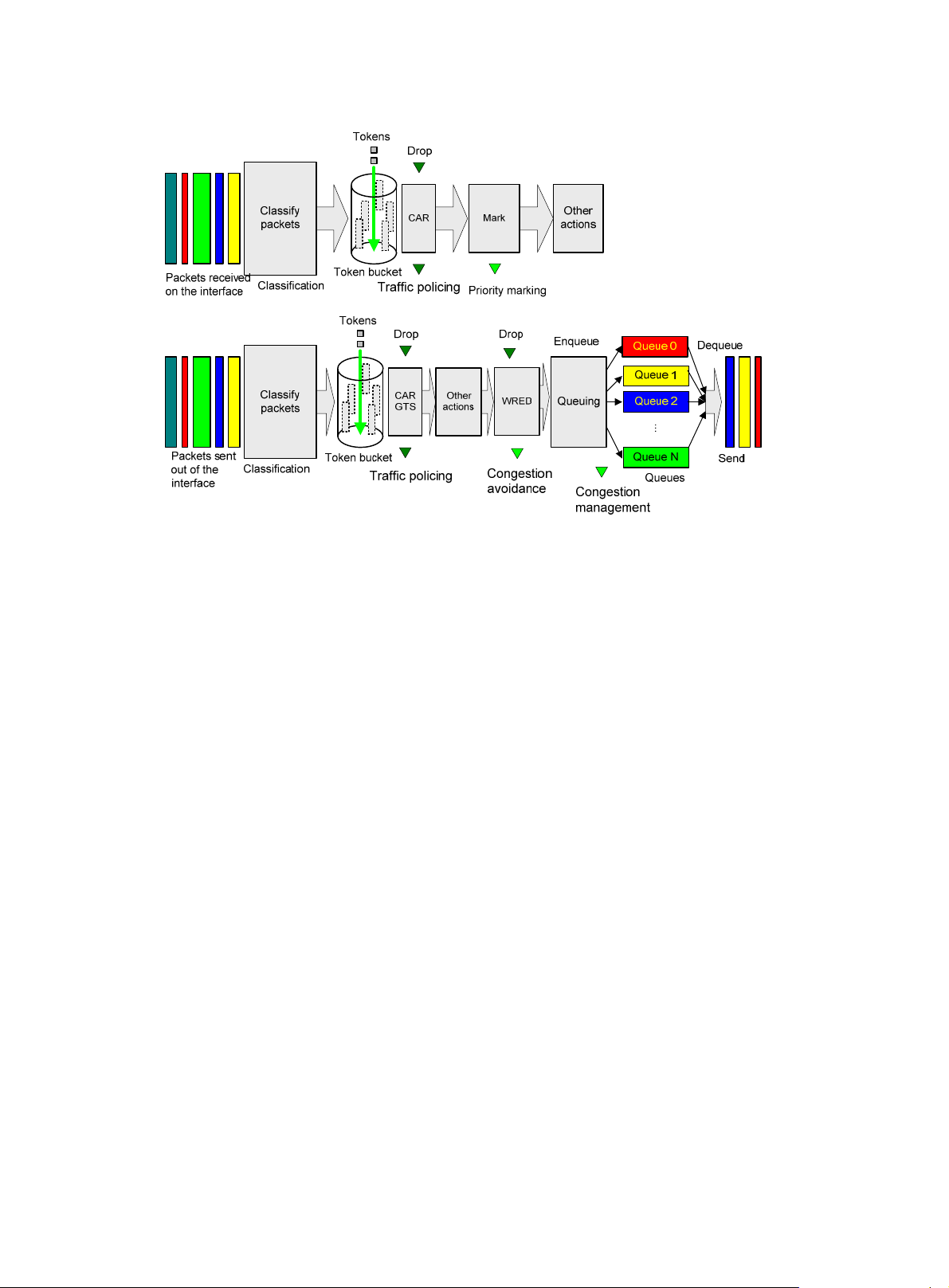

QoS processing flow in a device ··························································································· 16

Configuring a QoS policy ································································· 18

Non-MQC approach ················································································································· 18

MQC approach ························································································································ 18

Configuration procedure diagram ································································································ 18

Defining a traffic class ··············································································································· 19

Defining a traffic behavior ·········································································································· 19

Defining a QoS policy ··············································································································· 19

Applying the QoS policy ············································································································ 20

Applying the QoS policy to an interface ·················································································· 20

Applying the QoS policy to VLANs ························································································ 21

Applying the QoS policy globally ··························································································· 21

Applying the QoS policy to a control plane ·············································································· 21

Applying the QoS policy to a user profile ················································································ 22

Displaying and maintaining QoS policies ······················································································· 23

Configuring priority mapping ····························································· 24

Overview ································································································································ 24

Introduction to priorities ······································································································ 24

Priority maps ···················································································································· 24

Priority mapping configuration tasks ····························································································· 25

Configuring a priority map ·········································································································· 25

i

Page 4

Configuring an interface to trust packet priority for priority mapping ····················································· 26

Changing the port priority of an interface ······················································································· 26

Displaying and maintaining priority mapping ·················································································· 27

Priority mapping configuration examples ······················································································· 27

Port priority configuration example ························································································ 27

Priority mapping table and priority marking configuration example ··············································· 28

Configuring traffic policing, GTS, and rate limit ····································· 32

Overview ································································································································ 32

Traffic evaluation and token buckets ······················································································ 32

Traffic policing ·················································································································· 33

GTS ······························································································································· 34

Rate limit ························································································································· 35

Configuration restrictions and guidelines ······················································································· 36

Configuring traffic policing by using the MQC approach ···································································· 36

Configuring GTS by using the non-MQC approach ·········································································· 37

Configuring the rate limit for an interface ······················································································· 38

Displaying and maintaining traffic policing, GTS, and rate limit ··························································· 38

Traffic policing, GTS, and rate limit configuration example ································································ 38

Network requirements ········································································································ 38

Configuration procedure ····································································································· 39

Configuring congestion management ················································· 42

Overview ································································································································ 42

SP queuing ······················································································································ 42

WRR queuing ··················································································································· 43

WFQ queuing ··················································································································· 44

Configuration approaches and task list ························································································· 44

Configuring per-queue congestion management ············································································· 45

Configuring SP queuing ······································································································ 45

Configuring WRR queuing ··································································································· 45

Configuring WFQ queuing ··································································································· 46

Configuring SP+WRR queuing ····························································································· 46

Configuring SP+WFQ queuing ····························································································· 47

Configuring a queue scheduling profile ························································································· 48

Configuration restrictions and guidelines ················································································ 49

Configuration procedure ····································································································· 49

Queue scheduling profile configuration example ······································································ 50

Displaying and maintaining congestion management ······································································· 51

Configuring congestion avoidance ····················································· 52

Overview ································································································································ 52

Tail drop ·························································································································· 52

RED and WRED ··············································································································· 52

Relationship between WRED and queuing mechanisms ···························································· 53

ECN ······························································································································· 53

Configuring and applying a queue-based WRED table ····································································· 54

Configuration procedure ····································································································· 55

Configuration example ········································································································ 55

Displaying and maintaining WRED ······························································································ 56

Configuring traffic filtering ································································ 57

Configuration procedure ············································································································ 57

Configuration example ·············································································································· 57

Network requirements ········································································································ 57

Configuration procedure ····································································································· 58

Configuring priority marking ······························································ 59

Configuration procedure ············································································································ 59

Configuration example ·············································································································· 60

Network requirements ········································································································ 60

Configuration procedure ····································································································· 61

ii

Page 5

Configuring nesting ········································································· 63

Configuration procedure ············································································································ 63

Configuration example ·············································································································· 63

Network requirements ········································································································ 63

Configuration procedure ····································································································· 64

Configuring traffic redirecting ···························································· 66

Configuration procedure ············································································································ 66

Configuration example ·············································································································· 67

Network requirements ········································································································ 67

Configuration procedure ····································································································· 68

Configuring global CAR ··································································· 69

Overview ································································································································ 69

Aggregate CAR ················································································································· 69

Hierarchical CAR ··············································································································· 69

Configuring aggregate CAR by using the MQC approach ································································· 70

Displaying and maintaining global CAR ························································································ 70

Configuring class-based accounting ··················································· 71

Configuration procedure ············································································································ 71

Configuration example ·············································································································· 72

Network requirements ········································································································ 72

Configuration procedure ····································································································· 72

Appendixes ··················································································· 74

Appendix A Acronym ················································································································ 74

Appendix B Default priority maps ································································································· 74

Appendix C Introduction to packet precedence ··············································································· 75

IP precedence and DSCP values ·························································································· 75

802.1p priority ··················································································································· 77

EXP values ······················································································································ 77

Configuring time ranges ··································································· 79

Configuration procedure ············································································································ 79

Displaying and maintaining time ranges ························································································ 79

Time range configuration example ······························································································· 79

Configuring data buffers ·································································· 81

Configuration task list················································································································ 82

Enabling the Burst feature ·········································································································· 82

Configuring data buffers manually ······························································································· 83

Setting the total shared-area ratio ························································································· 83

Setting the maximum shared-area ratio for a queue ·································································· 83

Setting the fixed-area ratio for a queue··················································································· 83

Applying data buffer configuration ························································································· 84

Displaying and maintaining data buffers ························································································ 84

Burst configuration example ······································································································· 84

Configuring QCN ············································································ 86

Basic concepts ························································································································ 86

QCN message format ··············································································································· 86

Data flow format ················································································································ 86

CNM format ····················································································································· 87

How QCN works ······················································································································ 88

QCN algorithm ························································································································ 89

CP algorithm ···················································································································· 89

RP algorithm ···················································································································· 89

CND ······································································································································ 90

CND defense mode ··········································································································· 90

Priority mapping ················································································································ 90

iii

Page 6

Protocols and standards ············································································································ 90

QCN configuration task list ········································································································· 90

Enabling QCN ························································································································· 91

Configuration prerequisites ·································································································· 91

Configuration procedure ····································································································· 91

Configuring CND settings ·········································································································· 91

Configuring global CND settings ··························································································· 91

Configuring CND settings for an interface ··············································································· 92

Configuring congestion detection parameters ················································································· 92

Displaying and maintaining QCN ································································································· 93

QCN configuration examples ······································································································ 93

Basic QCN configuration example ························································································· 93

MultiCND QCN configuration example ··················································································· 96

Document conventions and icons ···················································· 102

Conventions ························································································································· 102

Network topology icons ··········································································································· 103

Support and other resources ·························································· 104

Accessing Hewlett Packard Enterprise Support ············································································ 104

Accessing updates ················································································································· 104

Websites ······················································································································· 105

Customer self repair ········································································································· 105

Remote support ·············································································································· 105

Documentation feedback ·································································································· 105

Index ························································································· 107

iv

Page 7

Configuring ACLs

Overview

An access control list (ACL) is a set of rules for identifying traffic based on criteria such as source IP

address, destination IP address, and port number. The rules are also called permit or deny

statements.

ACLs are primarily used for packet filtering. "Configuring packet filtering with ACLs" p

example. You can use ACLs in QoS, security, routing, and other modules for identifying traffic. The

packet drop or forwarding decisions depend on the modules that use ACLs.

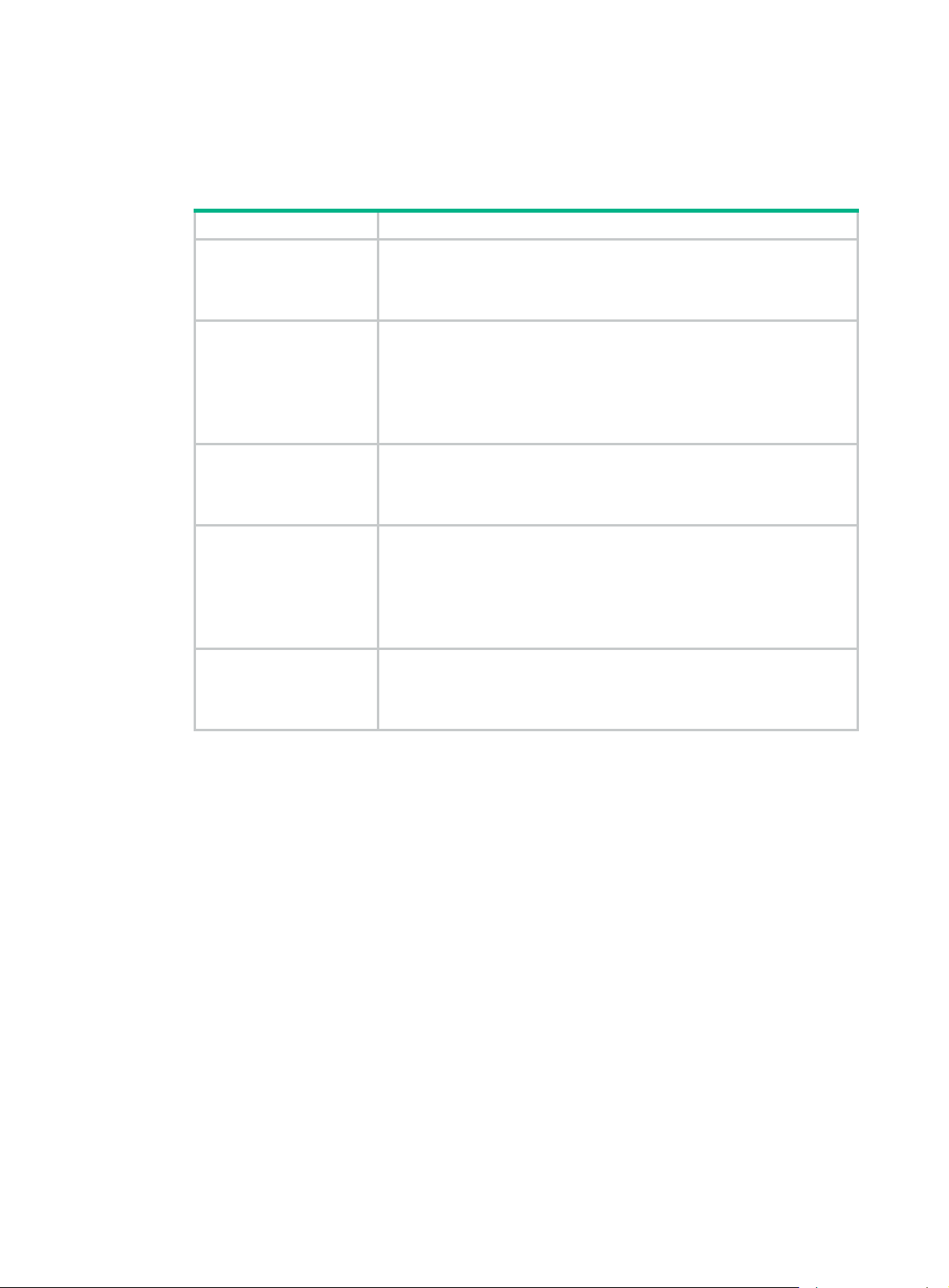

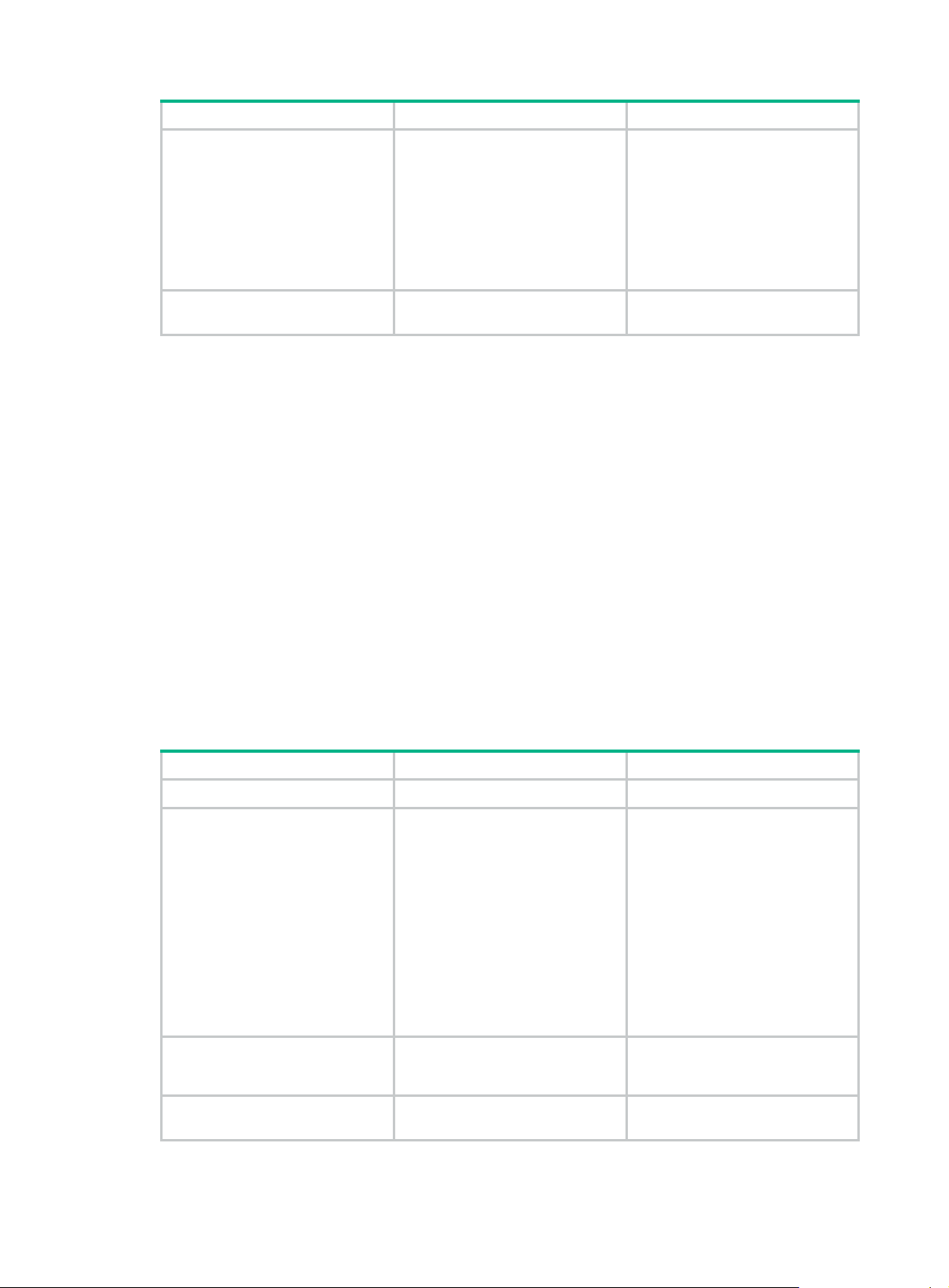

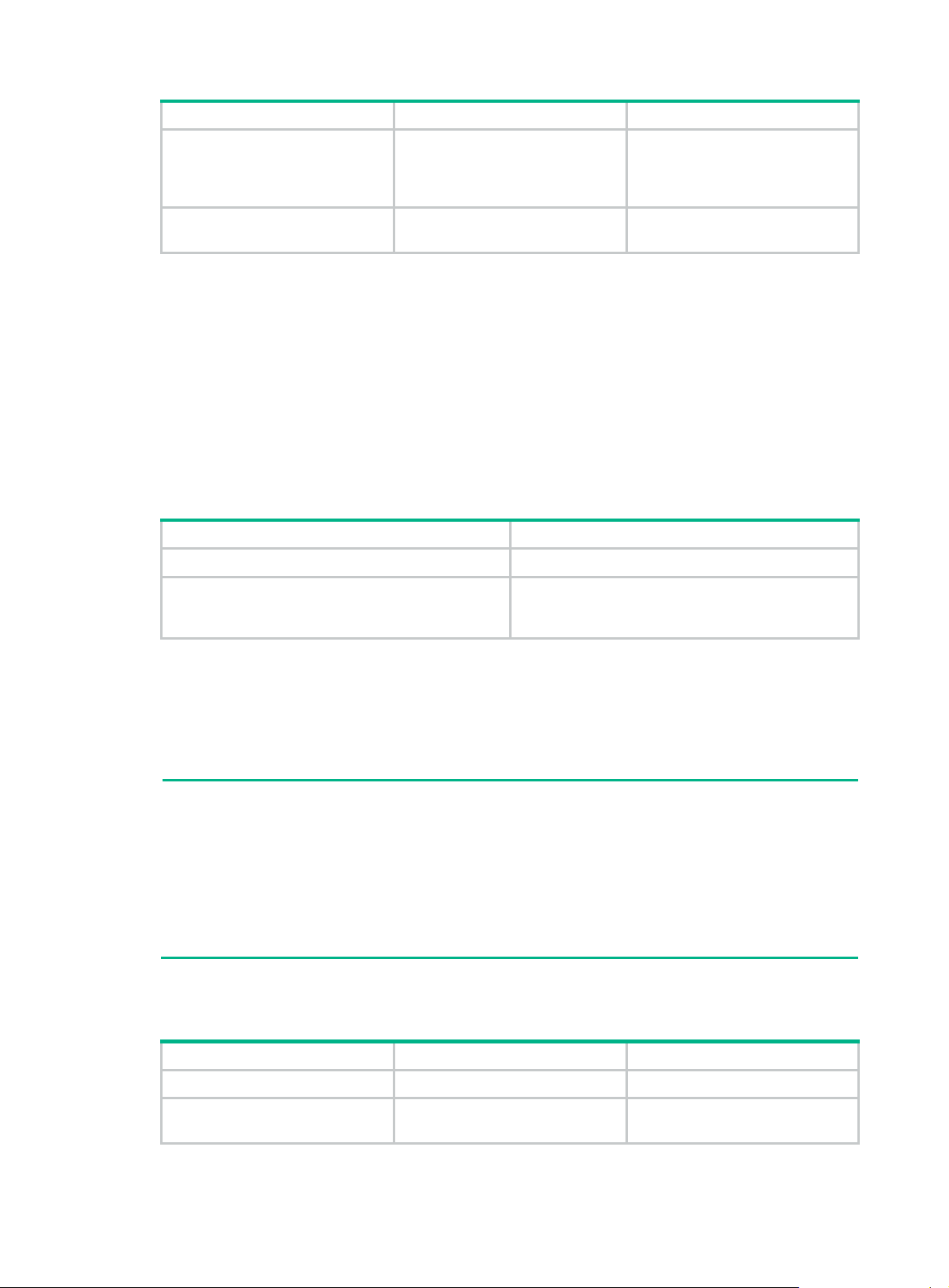

ACL types

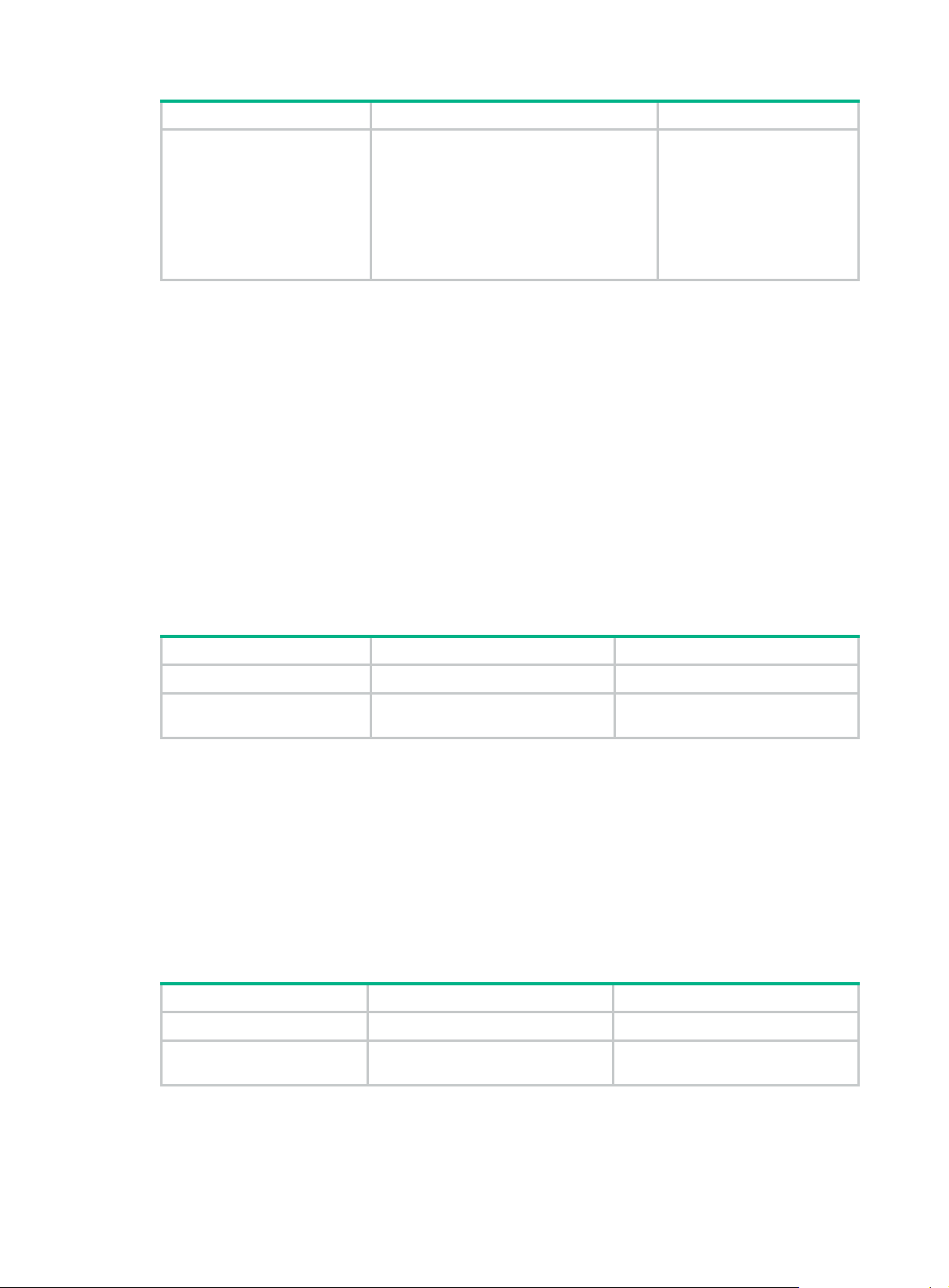

Type ACL number IP version Match criteria

Basic ACLs 2000 to 2999

Advanced ACLs 3000 to 3999

Layer 2 ACLs 4000 to 4999 IPv4 and IPv6

User-defined ACLs 5000 to 5999 IPv4 and IPv6

IPv4

IPv6 Source IPv6 address.

IPv4

IPv6

Source IPv4 address.

Source IPv4 address, destination IPv4

address, packet priority, protocol number, and

other Layer 3 and Layer 4 header fields.

Source IPv6 address, destination IPv6

address, packet priority, protocol number, and

other Layer 3 and Layer 4 header fields.

Layer 2 header fields, such as source and

destination MAC addresses, 802.1p priority,

and link layer protocol type.

User specified matching patterns in protocol

headers.

rovides an

Numbering and naming ACLs

When creating an ACL, you must assign it a number or name for identification. You can specify an

existing ACL by its number or name. Each ACL type has a unique range of ACL numbers.

For an IPv4 basic or advanced ACL, its ACL number or name must be unique in IPv4. For an IPv6

basic or advanced ACL, its ACL number and name must be unique in IPv6. For an ACL of some

other type, its number or name must be globally unique.

Match order

The rules in an ACL are sorted in a specific order. When a packet matches a rule, the device stops

the match process and performs the action defined in the rule. If an ACL contains overlapping or

conflicting rules, the matching result and action to take depend on the rule order.

The following ACL match orders are available:

• config—Sorts ACL rules in ascending order of rule ID. A rule with a lower ID is matched before

a rule with a higher ID. If you use this method, check the rules and their order carefully.

NOTE:

The match order of user-defined ACLs can only be config.

1

Page 8

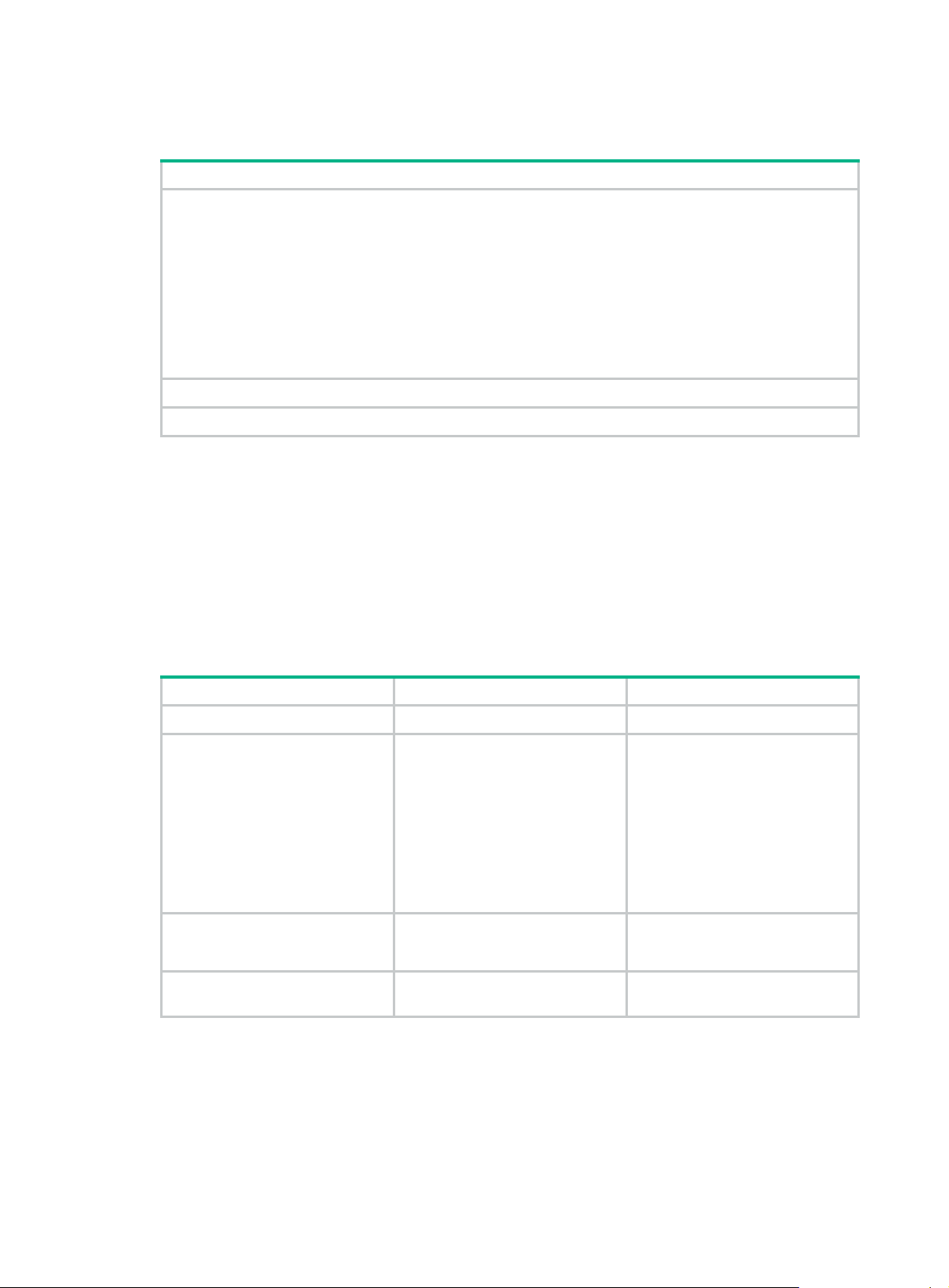

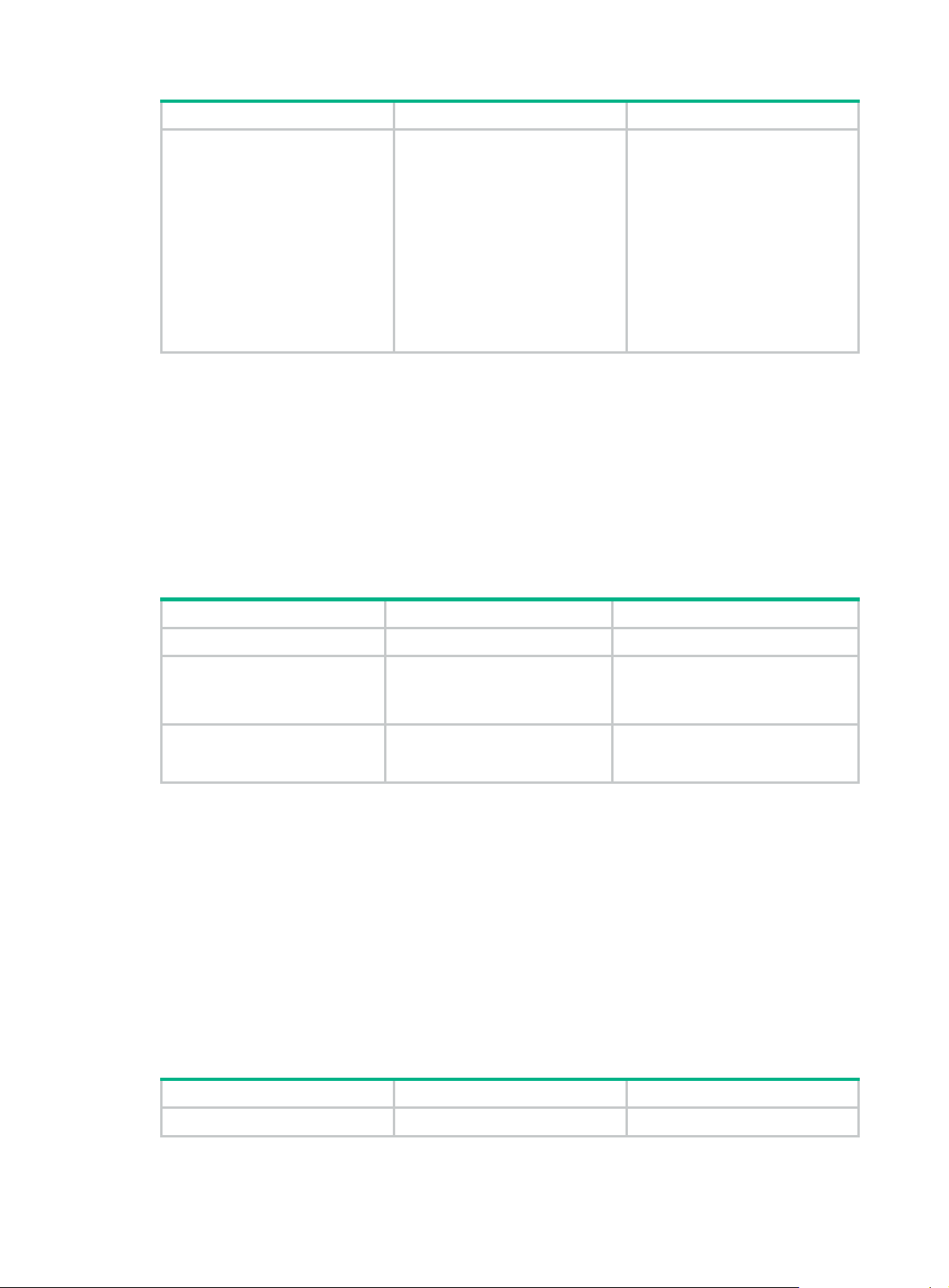

• auto—Sorts ACL rules in depth-first order. Depth-first ordering makes sure any subset of a rule

is always matched before the rule. Table 1 lists the se

quence of tie breakers that depth-first

ordering uses to sort rules for each type of ACL.

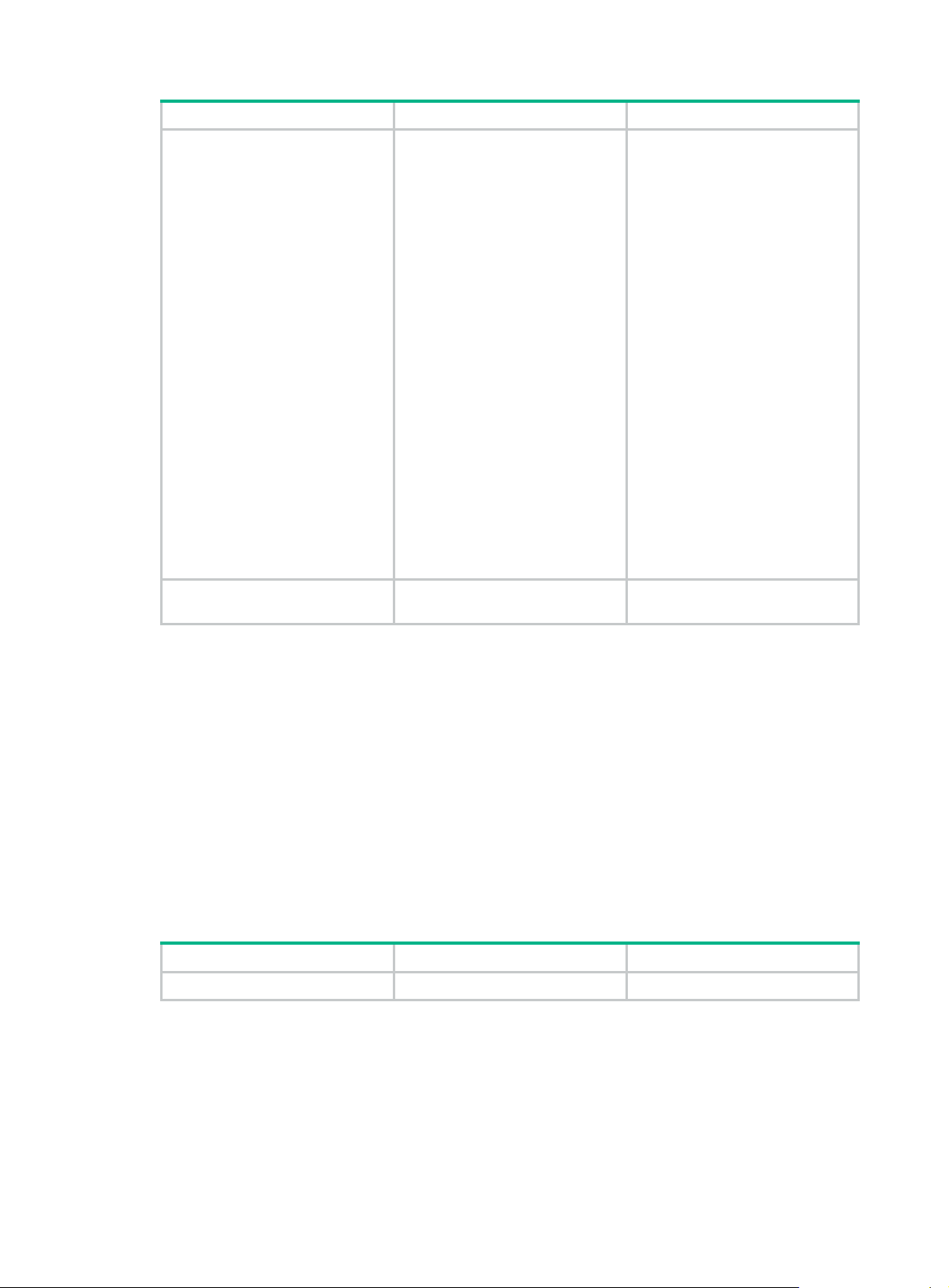

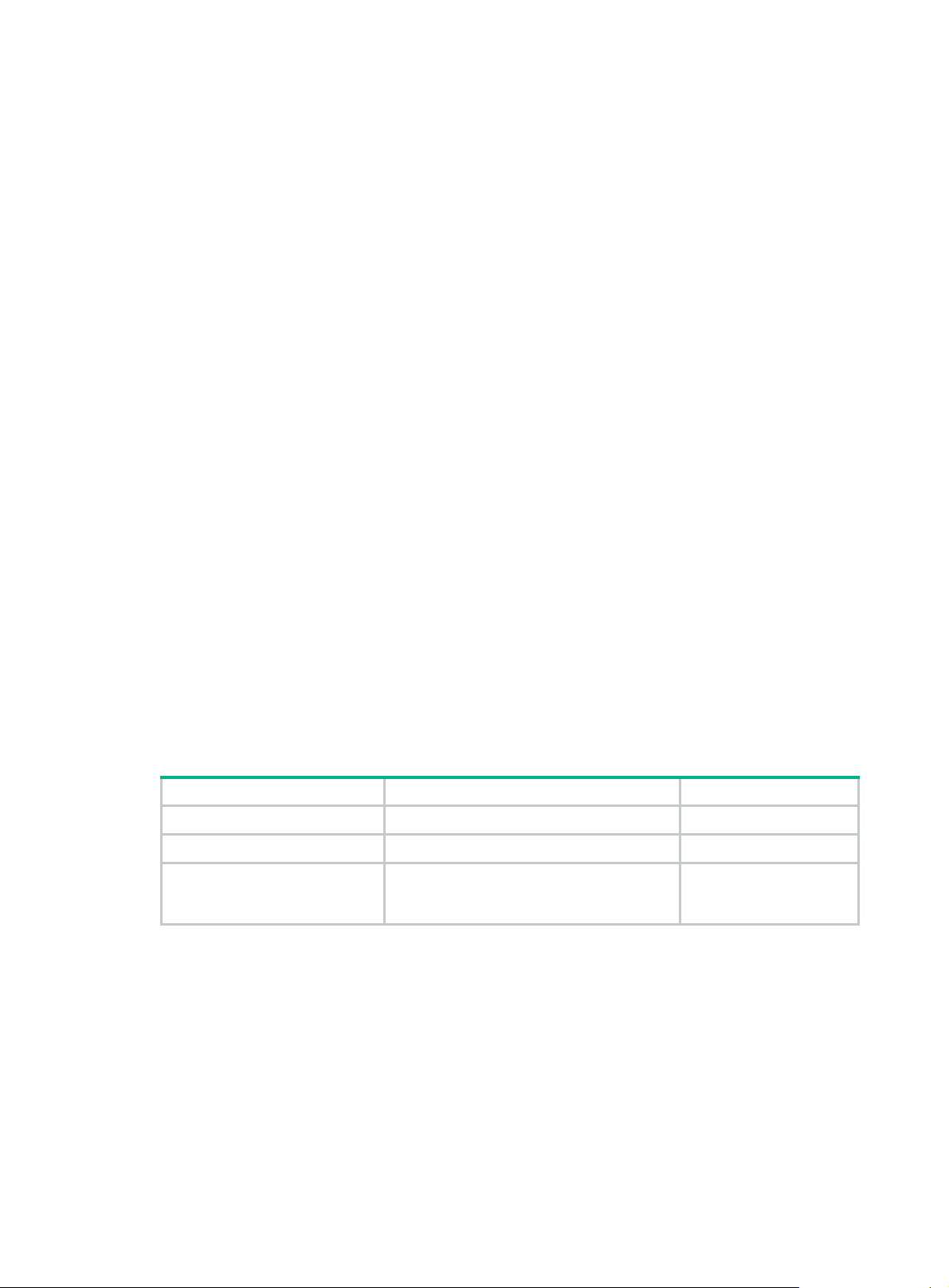

Table 1 Sort ACL rules in depth-first order

ACL type Sequence of tie breakers

1. VPN instance.

IPv4 basic ACL

IPv4 advanced ACL

IPv6 basic ACL

IPv6 advanced ACL

Layer 2 ACL

2. More 0s in the source IPv4 address wildcard (more 0s means a

narrower IPv4 address range).

3. Rule configured earlier.

1. VPN instance.

2. Specific protocol number.

3. More 0s in the source IPv4 address wildcard mask.

4. More 0s in the destination IPv4 address wildcard.

5. Narrower TCP/UDP service port number range.

6. Rule configured earlier.

1. VPN instance.

2. Longer prefix for the source IPv6 address (a longer prefix means a

narrower IPv6 address range).

3. Rule configured earlier.

1. VPN instance.

2. Specific protocol number.

3. Longer prefix for the source IPv6 address.

4. Longer prefix for the destination IPv6 address.

5. Narrower TCP/UDP service port number range.

6. Rule configured earlier.

1. More 1s in the source MAC address mask (more 1s means a smaller

MAC address).

2. More 1s in the destination MAC address mask.

3. Rule configured earlier.

A wildcard mask, also called an inverse mask, is a 32-bit binary number represented in dotted

decimal notation. In contrast to a network mask, the 0 bits in a wildcard mask represent "do care" bits,

and the 1 bits represent "don't care" bits. If the "do care" bits in an IP address are identical to the "do

care" bits in an IP address criterion, the IP address matches the criterion. All "don't care" bits are

ignored. The 0s and 1s in a wildcard mask can be noncontiguous. For example, 0.255.0.255 is a

valid wildcard mask.

Rule numbering

ACL rules can be manually numbered or automatically numbered. This section describes how

automatic ACL rule numbering works.

Rule numbering step

If you do not assign an ID to the rule you are creating, the system automatically assigns it a rule ID.

The rule numbering step sets the increment by which the system automatically numbers rules. For

example, the default ACL rule numbering step is 5. If you do not assign IDs to rules you are creating,

they are automatically numbered 0, 5, 10, 15, and so on. The wider the numbering step, the more

rules you can insert between two rules.

By introducing a gap between rules rather than contiguously numbering rules, you have the flexibility

of inserting rules in an ACL. This feature is important for a config-order ACL, where ACL rules are

matched in ascending order of rule ID.

2

Page 9

Automatic rule numbering and renumbering

The ID automatically assigned to an ACL rule takes the nearest higher multiple of the numbering step

to the current highest rule ID, starting with 0.

For example, if the step is 5, and there are five rules numbered 0, 5, 9, 10, and 12, the newly defined

rule is numbered 15. If the ACL does not contain a rule, the first rule is numbered 0.

Whenever the step changes, the rules are renumbered, starting from 0. For example, changing the

step from 5 to 2 renumbers rules 5, 10, 13, and 15 as rules 0, 2, 4, and 6.

Fragment filtering with ACLs

Traditional packet filtering matches only first fragments of packets, and allows all subsequent

non-first fragments to pass through. Attackers can fabricate non-first fragments to attack networks.

To avoid risks, the ACL feature is designed as follows:

• Filters all fragments by default, including non-first fragments.

• Allows for matching criteria modification for efficiency. For example, you can configure the ACL

to filter only non-first fragments.

Configuration restrictions and guidelines

When you configure ACLs, follow these restrictions and guidelines:

• Matching packets are forwarded through slow forwarding if an ACL rule contains match criteria

or has functions enabled in addition to the following match criteria and functions:

{ Source and destination IP addresses.

{ Source and destination ports.

{ Transport layer protocol.

{ ICMP or ICMPv6 message type, message code, and message name.

{ VPN instance.

{ Logging.

{ Time range.

Slow forwarding requires packets to be sent to the control plane for forwarding entry calculation,

which affects the device forwarding performance.

• On a border gateway in a VXLAN or EVPN network, an ACL applied to a Layer 3 Ethernet

interface or Layer 3 aggregate interface matches the packets on both the interface and its

subinterfaces. For information about VXLAN and EVPN, see VXLAN Configuration Guide and

EVPN Configuration Guide.

3

Page 10

Configuration task list

Tasks at a glance

(Required.) Configure ACLs according to the characteristics of the packets to be matched:

• Configuring a basic ACL

{ Configuring an IPv4 basic ACL

{ Configuring an IPv6 basic ACL

• Configuring an advanced ACL

{ Configuring an IPv4 advanced ACL

{ Configuring an IPv6 advanced ACL

• Configuring a Layer 2 ACL

• Configuring a user-defined ACL

(Optional.) Copying an ACL

(Optional.) Configuring packet filtering with ACLs

Configuring a basic ACL

This section describes procedures for configuring IPv4 and IPv6 basic ACLs.

Configuring an IPv4 basic ACL

IPv4 basic ACLs match packets based only on source IP addresses.

To configure an IPv4 basic ACL:

Step Command Remarks

1. Enter system view.

2. Create an IPv4 basic ACL

and enter its view.

3. (Optional.) Configure a

description for the IPv4 basic

ACL.

4. (Optional.) Set the rule

numbering step.

system-view

acl basic

acl-name } [

config

description

step

start-value ]

{ acl-number |

} ]

step-value [

match-order

text

start

name

auto

{

N/A

By default, no ACLs exist.

The value range for a numbered

IPv4 basic ACL is 2000 to 2999.

Use the

|

command to enter the view of a

numbered IPv4 basic ACL.

Use the

command to enter the view of a

named IPv4 basic ACL.

By default, an IPv4 basic ACL

does not have a description.

By default, the rule numbering

step is 5 and the start rule ID is 0.

acl basic

acl basic name

acl-number

acl-name

4

Page 11

Step Command Remarks

By default, no IPv4 basic ACL

rules exist.

logging

5. Create or edit a rule.

6. (Optional.) Add or edit a rule

comment.

rule

[ rule-id ] {

counting

[

source

{ source-address

source-wildcard |

time-range

vpn-instance

vpn-instance-name ] *

rule

rule-id

deny

fragment

|

any

time-range-name |

comment

permit

|

|

} |

text

}

logging

The

only when the module (for

example, packet filtering) that

uses the ACL supports logging.

If an IPv4 basic ACL is used for

QoS traffic classification or packet

filtering in a VXLAN network, the

ACL matches packets as follows:

|

• The ACL matches outgoing

VXLAN packets by outer

IPv4 header information on a

VTEP.

• The ACL matches incoming

VXLAN packets by outer

IPv4 header information on

an intermediate transport

device.

• The ACL matches

de-encapsulated incoming

VXLAN packets by IPv4

header information on a

VTEP.

By default, no rule comment is

configured.

keyword takes effect

Configuring an IPv6 basic ACL

IPv6 basic ACLs match packets based only on source IP addresses.

To configure an IPv6 basic ACL:

Step Command Remarks

1. Enter system view.

2. Create an IPv6 basic ACL

view and enter its view.

3. (Optional.) Configure a

description for the IPv6 basic

ACL.

4. (Optional.) Set the rule

numbering step.

system-view

acl ipv6 basic

name

acl-name } [

auto

{

description

step

start-value ]

config

|

step-value [

{ acl-number |

match-order

} ]

text

start

N/A

By default, no ACLs exist.

The value range for a numbered

IPv6 basic ACL is 2000 to 2999.

Use the

acl-number command to enter the

view of a numbered IPv6 basic

ACL.

Use the

acl-name command to enter the

view of a named IPv6 basic ACL.

By default, an IPv6 basic ACL

does not have a description.

By default, the rule numbering

step is 5 and the start rule ID is 0.

acl ipv6 basic

acl ipv6 basic name

5

Page 12

Step Command Remarks

5. Create or edit a rule.

6. (Optional.) Add or edit a rule

comment.

rule

[ rule-id ] {

counting

[

routing

source

source-prefix |

source-address/source-prefix |

any

time-range-name |

vpn-instance-name ] *

rule

[

{ source-address

time-range

} |

rule-id

deny

fragment

|

type

routing-type ] |

comment

permit

|

|

vpn-instance

text

Configuring an advanced ACL

This section describes procedures for configuring IPv4 and IPv6 advanced ACLs.

Configuring an IPv4 advanced ACL

IPv4 advanced ACLs match packets based on the following criteria:

• Source IP addresses.

• Destination IP addresses.

• Packet priorities.

• Protocol numbers.

• Other protocol header information, such as TCP/UDP source and destination port numbers,

TCP flags, ICMP message types, and ICMP message codes.

}

logging

|

By default, no IPv6 basic ACL

rules exist.

logging

The

only when the module (for

example, packet filtering) that

uses the ACL supports logging.

By default, no rule comment is

configured.

keyword takes effect

Compared to IPv4 basic ACLs, IPv4 advanced ACLs allow more flexible and accurate filtering.

To configure an IPv4 advanced ACL:

Step Command Remarks

1. Enter system view.

2. Create an IPv4 advanced

ACL and enter its view.

3. (Optional.) Configure a

description for the IPv4

advanced ACL.

4. (Optional.) Set the rule

numbering step.

system-view

acl advanced

name

acl-name } [

auto

{

description

step

start-value ]

config

|

step-value [

{ acl-number |

match-order

} ]

text

start

N/A

By default, no ACLs exist.

The value range for a numbered

IPv4 advanced ACL is 3000 to

3999.

Use the

acl-number command to enter the

view of a numbered IPv4

advanced ACL.

Use the

acl-name command to enter the

view of a named IPv4 advanced

ACL.

By default, an IPv4 advanced ACL

does not have a description.

By default, the rule numbering

step is 5 and the start rule ID is 0.

acl advanced

acl advanced name

6

Page 13

Step Command Remarks

By default, no IPv4 advanced ACL

rules exist.

logging

5. Create or edit a rule.

6. (Optional.) Add or edit a rule

comment.

rule

[ rule-id ] {

protocol [ { {

fin-value |

rst-value |

urg-value } * |

counting

{ dest-address dest-wildcard |

any

destination-port

} |

port1 [ port2 ] | {

precedence

{

tos } * } |

{ icmp-type [ icmp-code ] |

icmp-message } |

source

{ source-address

source-wildcard |

source-port

[ port2 ] |

ange-name |

time-r

vpn-instance-name ] *

rule

rule-id

deny

ack

ack-value |

psh

psh-value |

syn

syn-value |

established

destination

|

dscp

precedence |

fragment

time-range

|

logging

any

operator port1

comment

permit

|

rst

urg

} |

operator

dscp |

tos

icmp-type

|

} |

vpn-instance

text

fin

The

only when the module (for

}

example, packet filtering) that

uses the ACL supports logging.

If an IPv4 advanced ACL is used

for QoS traffic classification or

packet filtering in a VXLAN

network, the ACL matches

packets as follows:

• The ACL matches outgoing

VXLAN packets by outer

IPv4 header information on a

VTEP.

• The ACL matches incoming

VXLAN packets by outer

IPv4 header information on

an intermediate transport

device.

• The ACL matches

de-encapsulated incoming

VXLAN packets by IPv4

header information on a

VTEP.

By default, no rule comment is

configured.

keyword takes effect

Configuring an IPv6 advanced ACL

IPv6 advanced ACLs match packets based on the following criteria:

• Source IPv6 addresses.

• Destination IPv6 addresses.

• Packet priorities.

• Protocol numbers.

• Other protocol header fields such as the TCP/UDP source port number, TCP/UDP destination

port number, ICMPv6 message type, and ICMPv6 message code.

Compared to IPv6 basic ACLs, IPv6 advanced ACLs allow more flexible and accurate filtering.

To configure an IPv6 advanced ACL:

Step Command Remarks

1. Enter system view.

system-view

N/A

7

Page 14

Step Command Remarks

By default, no ACLs exist.

The value range for a numbered

IPv6 advanced ACL is 3000 to

3999.

2. Create an IPv6 advanced

ACL and enter its view.

3. (Optional.) Configure a

description for the IPv6

advanced ACL.

4. (Optional.) Set the rule

numbering step.

acl ipv6 advanced

name

acl-name } [

auto

{

description

step

start-value ]

config

|

step-value [

} ]

text

{ acl-number |

match-order

start

Use the

acl-number command to enter the

view of a numbered IPv6

advanced ACL.

Use the

acl-name command to enter the

view of a named IPv6 advanced

ACL.

By default, an IPv6 advanced ACL

does not have a description.

By default, the rule numbering

step is 5 and the start rule ID is 0.

acl ipv6 advanced

acl ipv6 advanced name

rule

[ rule-id ] {

ack

psh

psh-value |

syn

syn-value |

established

destination

|

dscp

dscp |

{ icmp6-type

routing

|

hop-by-hop

source

source-port

comment

5. Create or edit a rule.

6. (Optional.) Add or edit a rule

comment.

protocol [ { {

fin-value |

rst-value |

urg-value } * |

counting

{ dest-address dest-prefix |

dest-address/dest-prefix |

destination-port

[ port2 ] |

flow-label-value |

icmp6-type

icmp6-code | icmp6-message } |

logging

routing-type ] |

hop-type ] |

{ source-address source-prefix |

source-address/source-prefix |

y

an

} |

time-range-name |

vpn-instance-name ] *

rule

rule-id

Configuring a Layer 2 ACL

deny

permit

|

ack-value |

rst

urg

} |

any

operator port1

flow-label

fragment

type

[

|

vpn-instance

|

time-range

text

[

}

fin

} |

type

By default, no IPv6 advanced ACL

rules exist.

logging

The

only when the module (for

example, packet filtering) that

uses the ACL supports logging.

If an IPv6 advanced ACL is used

for outbound QoS traffic

classification or packet filtering,

do not specify the

parameter.

If an IPv6 advanced ACL is used

for packet filtering, do not specify

fragment

the

By default, no rule comment is

configured.

keyword takes effect

flow-label

keyword.

Layer 2 ACLs, also called "Ethernet frame header ACLs," match packets based on Layer 2 Ethernet

header fields, such as:

• Source MAC address.

• Destination MAC address.

• 802.1p priority (VLAN priority).

• Link layer protocol type.

To configure a Layer 2 ACL:

8

Page 15

Step Command Remarks

1. Enter system view.

2. Create a Layer 2 ACL and

enter its view.

3. (Optional.) Configure a

description for the Layer 2

ACL.

4. (Optional.) Set the rule

numbering step.

system-view N/A

By default, no ACLs exist.

The value range for a numbered

Layer 2 ACL is 4000 to 4999.

acl mac

acl-name } [

config

description

step

start-value ]

{ acl-number |

} ]

step-value [

match-order

text

start

name

{

auto

Use the

|

command to enter the view of a

numbered Layer 2 ACL.

Use the

command to enter the view of a

named Layer 2 ACL.

By default, a Layer 2 ACL does

not have a description.

By default, the rule numbering

step is 5 and the start rule ID is 0.

acl mac

acl mac name

acl-number

acl-name

5. Create or edit a rule.

6. (Optional.) Add or edit a rule

comment.

rule

[ rule-id ] {

cos

[

dot1p |

dest-address dest-mask | {

lsap-type lsap-type-mask |

protocol-type

protocol-type-mask } |

source-mac

source-mask |

time-range-name ] *

rule

rule-id

deny

permit

|

counting

source-address

time-range

comment

dest-mac

|

text

lsap

type

Configuring a user-defined ACL

User-defined ACLs allow you to customize rules based on information in protocol headers. You can

define a user-defined ACL to match packets. A specific number of bytes after an offset (relative to the

specified header) are compared against a match pattern after being ANDed with a match pattern

mask.

To configure a user-defined ACL:

Step Command Remarks

1. Enter system view.

system-view

}

By default

exist.

By default, no rule comment is

configured.

N/A

,

no Layer 2 ACL rules

2. Create a user-defined ACL

and enter its view.

3. (Optional.) Configure a

description for the

user-defined ACL.

acl user-defined

name

acl-name }

description

text

9

{ acl-number |

By default, no ACLs exist.

The value range for a numbered

user-defined ACL is 5000 to 5999.

Use the

acl-number command to enter the

view of a numbered user-defined

ACL.

Use the

acl-name command to enter the

view of a named user-defined

ACL.

By default, a user-defined ACL

does not have a description.

acl user-defined

acl user-defined name

Page 16

Step Command Remarks

4. Create or edit a rule.

5. (Optional.) Add or edit a rule

comment.

Copying an ACL

You can create an ACL by copying an existing ACL (source ACL). The new ACL (destination ACL)

has the same properties and content as the source ACL, but uses a different number or name than

the source ACL.

To successfully copy an ACL, make sure:

• The destination ACL number is from the same type as the source ACL number.

• The source ACL already exists, but the destination ACL does not.

To copy an ACL:

Step Command

1. Enter system view.

rule

[ rule-id ] {

l2

[ {

rule-string rule-mask

offset }&<1-8> ] [

time-range

rule

time-range-name ] *

comment

rule-id

deny

permit

|

counting

text

system-view

}

By default, no user-defined ACL

|

rules exist.

By default, no rule comment is

configured.

acl

ipv6

2. Copy an existing ACL to create a new ACL.

[

{ source-acl-number |

{ dest-acl-number |

mac | user-defined

|

name

name

dest-acl-name }

]

source-acl-name }

Configuring packet filtering with ACLs

This section describes procedures for using an ACL to filter packets. For example, you can apply an

ACL to an interface to filter incoming or outgoing packets.

NOTE:

• The packet filtering feature is available on Layer 2 Ethernet interfaces, Layer 2 aggregate

interfaces, Layer 3 Ethernet interfaces, Layer 3 Ethernet subinterfaces, Layer 3 aggregate

interfaces, VLAN interfaces, and VSI interfaces.

• For VSI interfaces, the packet filtering feature is available in Release 2510P01 and later.

• The term "interface" in this section collectively refers to these types of interfaces. You can use the

port link-mode command to configure an Ethernet port as a Layer 2 or Layer 3 interface (see

Layer 2—LAN Switching Configuration Guide).

Applying an ACL to an interface for packet filtering

copy

to

Step Command Remarks

1. Enter system view.

2. Enter interface view.

system-view

interface

interface-number

interface-type

10

N/A

N/A

Page 17

Step Command Remarks

By default, an interface does not

filter packets.

To the same direction of an

interface, you can apply a

3. Apply an ACL to the interface

to filter packets.

packet-filter

user-defined

name

acl-name } {

outbound

ipv6

[

} [

mac

|

] { acl-number |

inbound

hardware-count ]

|

|

maximum of four ACLs: one IPv4

ACL, one IPv6 ACL, one Layer 2

ACL, and one user-defined ACL.

You cannot apply an ACL to the

outbound direction of a Layer 2

aggregate interface, Layer 3

aggregate interface, or VSI

interface.

Configuring the applicable scope of packet filtering on a VLAN interface

You can configure the packet filtering on a VLAN interface to filter the following packets:

• Packets forwarded at Layer 3 by the VLAN interface.

• All packets, including packets forwarded at Layer 3 by the VLAN interface and packets

forwarded at Layer 2 by the physical ports associated with the VLAN interface.

To configure the applicable scope of packet filtering on a VLAN interface:

Step Command Remarks

1. Enter system view.

2. Create a VLAN interface

and enter its view.

3. Specify the applicable

scope of packet filtering on

the VLAN interface.

system-view

interface vlan-interface

vlan-interface-id

packet-filter filter

route

[

N/A

all ]

|

If the VLAN interface already exists,

you directly enter its view.

By default, no VLAN interface exists.

By default, the packet filtering filters

all packets.

Configuring logging and SNMP notifications for packet filtering

You can configure the ACL module to generate log entries or SNMP notifications for packet filtering

and output them to the information center or SNMP module at the output interval. The log entry or

notification records the number of matching packets and the matched ACL rules. If an ACL is

matched for the first time, the device immediately outputs a log entry or notification to record the

matching packet.

For more information about the information center and SNMP, see Network Management and

Monitoring Configuration Guide.

To configure logging and SNMP notifications for packet filtering:

Step Command Remarks

1. Enter system view.

system-view

11

N/A

Page 18

Step Command Remarks

2. Set the interval for outputting

packet filtering logs or

notifications.

acl

logging

{

interval

trap

|

interval

}

Setting the packet filtering default action

Step Command Remarks

1. Enter system view.

system-view

The default setting is 0 minutes.

By default, the device does not

generate log entries or SNMP

notifications for packet filtering.

N/A

2. Set the packet filtering

default action to deny.

packet-filter default deny

Displaying and maintaining ACLs

Execute display commands in any view and reset commands in user view.

Task Command

Display ACL configuration and match

statistics.

Display ACL application information for

packet filtering.

Display match statistics for packet filtering

ACLs.

Display the accumulated statistics for

packet filtering ACLs.

Display detailed ACL packet filtering

information.

display acl

name

display packet-filter

interface-number ] [

vlan-interface

slot

[

slot-number ] }

display packet-filter statistics interface

interface-number {

user-defined

display packet-filter statistics sum

ipv6

[

|

brief ]

[

display packet-filter verbose interface

interface-number {

user-defined

slot-number ]

ipv6

[

acl-name }

mac | user-defined

mac | user-defined

|

{

inbound

vlan-interface-number [

inbound

] { acl-number |

inbound

] { acl-number |

By default, the packet filter

permits packets that do not match

any ACL rule to pass.

] { acl-number |

interface

[ interface-type

outbound

|

outbound

|

name

] { acl-number |

outbound

|

name

] |

inbound

interface-type

} [ [

acl-name } ] [

inbound

{

interface-type

} [ [

acl-name } ]

interface

outbound

|

ipv6

mac

|

brief

outbound

|

name

acl-name }

ipv6 | mac

[ slot

all

|

]

|

]

}

|

Display QoS and ACL resource usage.

Clear ACL statistics.

Clear match statistics and accumulated

match statistics for packet filtering ACLs.

display qos-acl resource

reset acl

all

|

reset packet-filter statistics interface

interface-number ] {

user-defined

12

name

ipv6

[

mac | user-defined

|

acl-name }

] { acl-number |

inbound

slot

[

slot-number ]

]

outbound

|

name

acl-name } ]

counter

[ interface-type

{ acl-number |

ipv6 | mac

} [ [

|

Page 19

ACL configuration examples

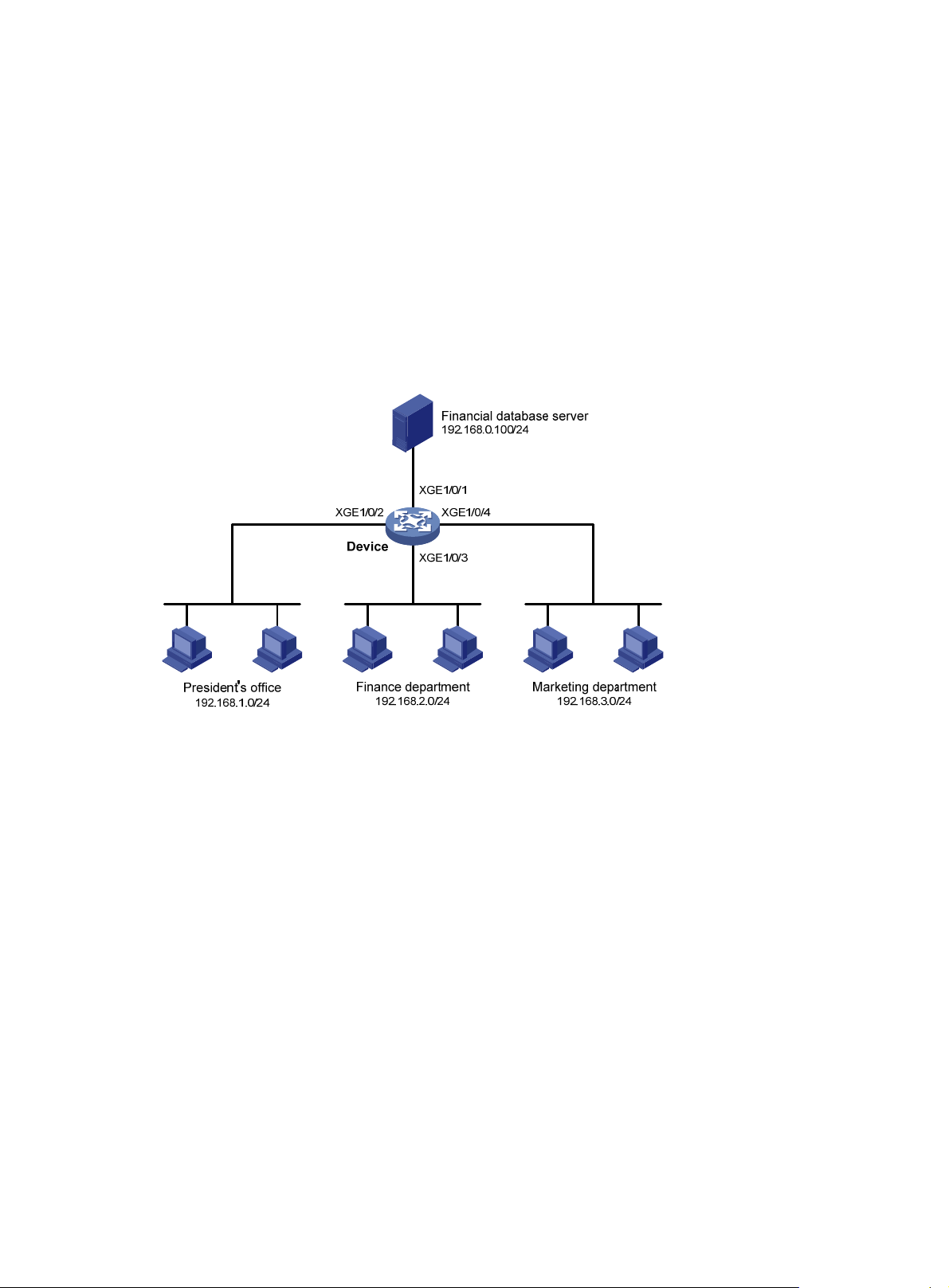

Interface-based packet filter configuration example

Network requirements

A company interconnects its departments through the device. Configure a packet filter to:

• Permit access from the President's office at any time to the financial database server.

• Permit access from the Finance department to the database server only during working hours

(from 8:00 to 18:00) on working days.

• Deny access from any other department to the database server.

Figure 1 Network diagram

Configuration procedure

# Create a periodic time range from 8:00 to 18:00 on working days.

<Device> system-view

[Device] time-range work 08:0 to 18:00 working-day

# Create an IPv4 advanced ACL numbered 3000.

[Device] acl advanced 3000

# Configure a rule to permit access from the President's office to the financial database server.

[Device-acl-ipv4-adv-3000] rule permit ip source 192.168.1.0 0.0.0.255 destination

192.168.0.100 0

# Configure a rule to permit access from the Finance department to the database server during

working hours.

[Device-acl-ipv4-adv-3000] rule permit ip source 192.168.2.0 0.0.0.255 destination

192.168.0.100 0 time-range work

# Configure a rule to deny access to the financial database server.

[Device-acl-ipv4-adv-3000] rule deny ip source any destination 192.168.0.100 0

[Device-acl-ipv4-adv-3000] quit

# Apply IPv4 advanced ACL 3000 to filter outgoing packets on interface Ten-GigabitEthernet 1/0/1.

[Device] interface ten-gigabitethernet 1/0/1

[Device-Ten-GigabitEthernet1/0/1] packet-filter 3000 outbound

13

Page 20

[Device-Ten-GigabitEthernet1/0/1] quit

Verifying the configuration

# Verify that a PC in the Finance department can ping the database server during working hours. (All

PCs in this example use Windows XP).

C:\> ping 192.168.0.100

Pinging 192.168.0.100 with 32 bytes of data:

Reply from 192.168.0.100: bytes=32 time=1ms TTL=255

Reply from 192.168.0.100: bytes=32 time<1ms TTL=255

Reply from 192.168.0.100: bytes=32 time<1ms TTL=255

Reply from 192.168.0.100: bytes=32 time<1ms TTL=255

Ping statistics for 192.168.0.100:

Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),

Approximate round trip times in milli-seconds:

Minimum = 0ms, Maximum = 1ms, Average = 0ms

# Verify that a PC in the Marketing department cannot ping the database server during working

hours.

C:\> ping 192.168.0.100

Pinging 192.168.0.100 with 32 bytes of data:

Request timed out.

Request timed out.

Request timed out.

Request timed out.

Ping statistics for 192.168.0.100:

Packets: Sent = 4, Received = 0, Lost = 4 (100% loss),

# Display configuration and match statistics for IPv4 advanced ACL 3000 on the device during

working hours.

[Device] display acl 3000

Advanced IPv4 ACL 3000, 3 rules,

ACL's step is 5

rule 0 permit ip source 192.168.1.0 0.0.0.255 destination 192.168.0.100 0

rule 5 permit ip source 192.168.2.0 0.0.0.255 destination 192.168.0.100 0 time-range work

(Active)

rule 10 deny ip destination 192.168.0.100 0

The output shows that rule 5 is active.

14

Page 21

QoS overview

In data communications, Quality of Service (QoS) provides differentiated service guarantees for

diversified traffic in terms of bandwidth, delay, jitter, and drop rate, all of which can affect QoS.

QoS manages network resources and prioritizes traffic to balance system resources.

The following section describes typical QoS service models and widely used QoS techniques.

QoS service models

This section describes several typical QoS service models.

Best-effort service model

The best-effort model is a single-service model. The best-effort model is not as reliable as other

models and does not guarantee delay-free delivery.

The best-effort service model is the default model for the Internet and applies to most network

applications. It uses the First In First Out (FIFO) queuing mechanism.

IntServ model

The integrated service (IntServ) model is a multiple-service model that can accommodate diverse

QoS requirements. This service model provides the most granularly differentiated QoS by identifying

and guaranteeing definite QoS for each data flow.

In the IntServ model, an application must request service from the network before it sends data.

IntServ signals the service request with the RSVP. All nodes receiving the request reserve resources

as requested and maintain state information for the application flow.

The IntServ model demands high storage and processing capabilities because it requires all nodes

along the transmission path to maintain resource state information for each flow. This model is

suitable for small-sized or edge networks. However, it is not suitable for large-sized networks, for

example, the core layer of the Internet, where billions of flows are present.

DiffServ model

The differentiated service (DiffServ) model is a multiple-service model that can meet diverse QoS

requirements. It is easy to implement and extend. DiffServ does not signal the network to reserve

resources before sending data, as IntServ does.

QoS techniques overview

The QoS techniques include the following features:

• Traffic classification.

• Traffic policing.

• Traffic shaping.

• Rate limit.

• Congestion management.

• Congestion avoidance.

15

Page 22

The following section briefly introduces these QoS techniques.

All QoS techniques in this document are based on the DiffServ model.

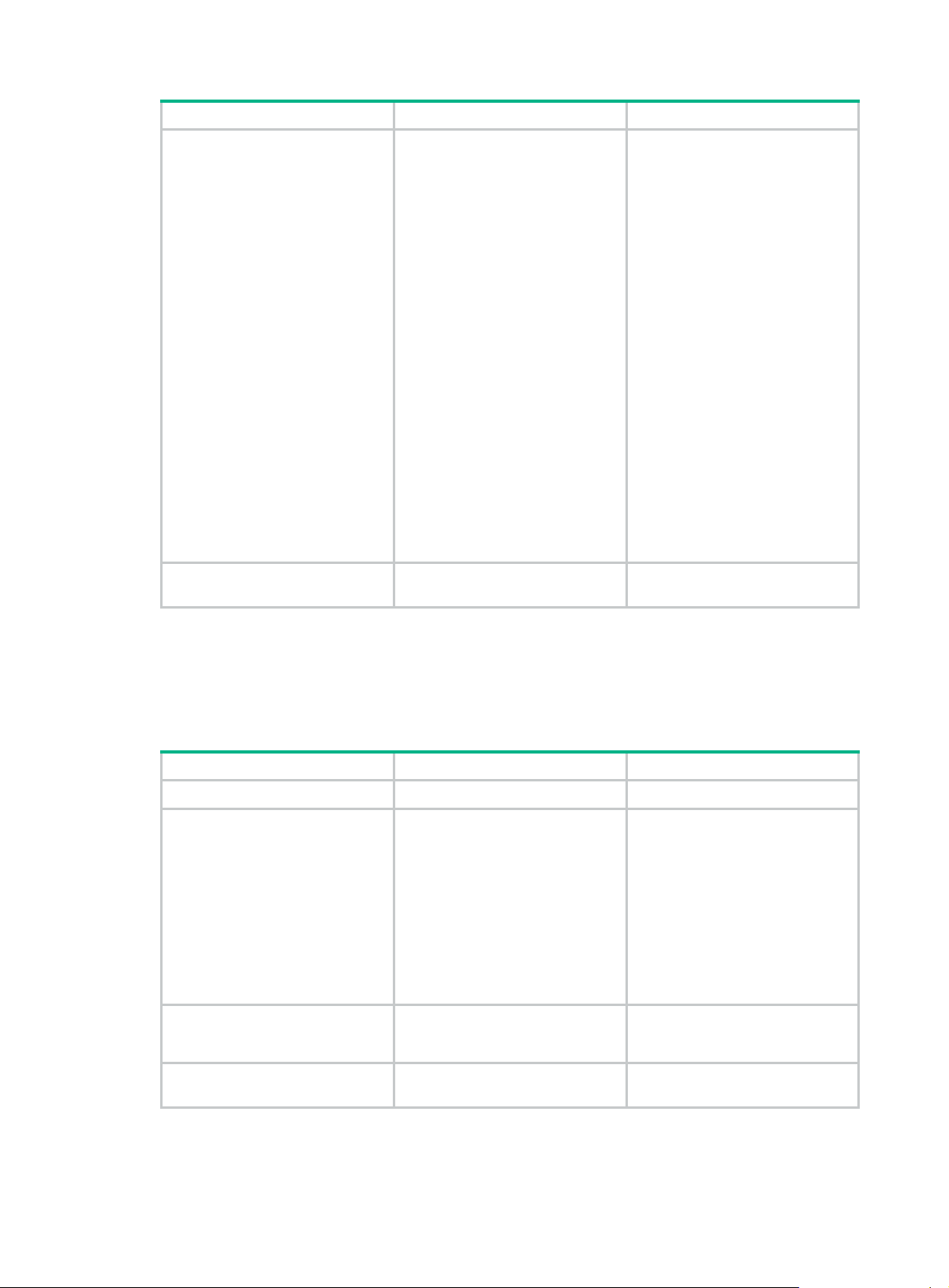

Deploying QoS in a network

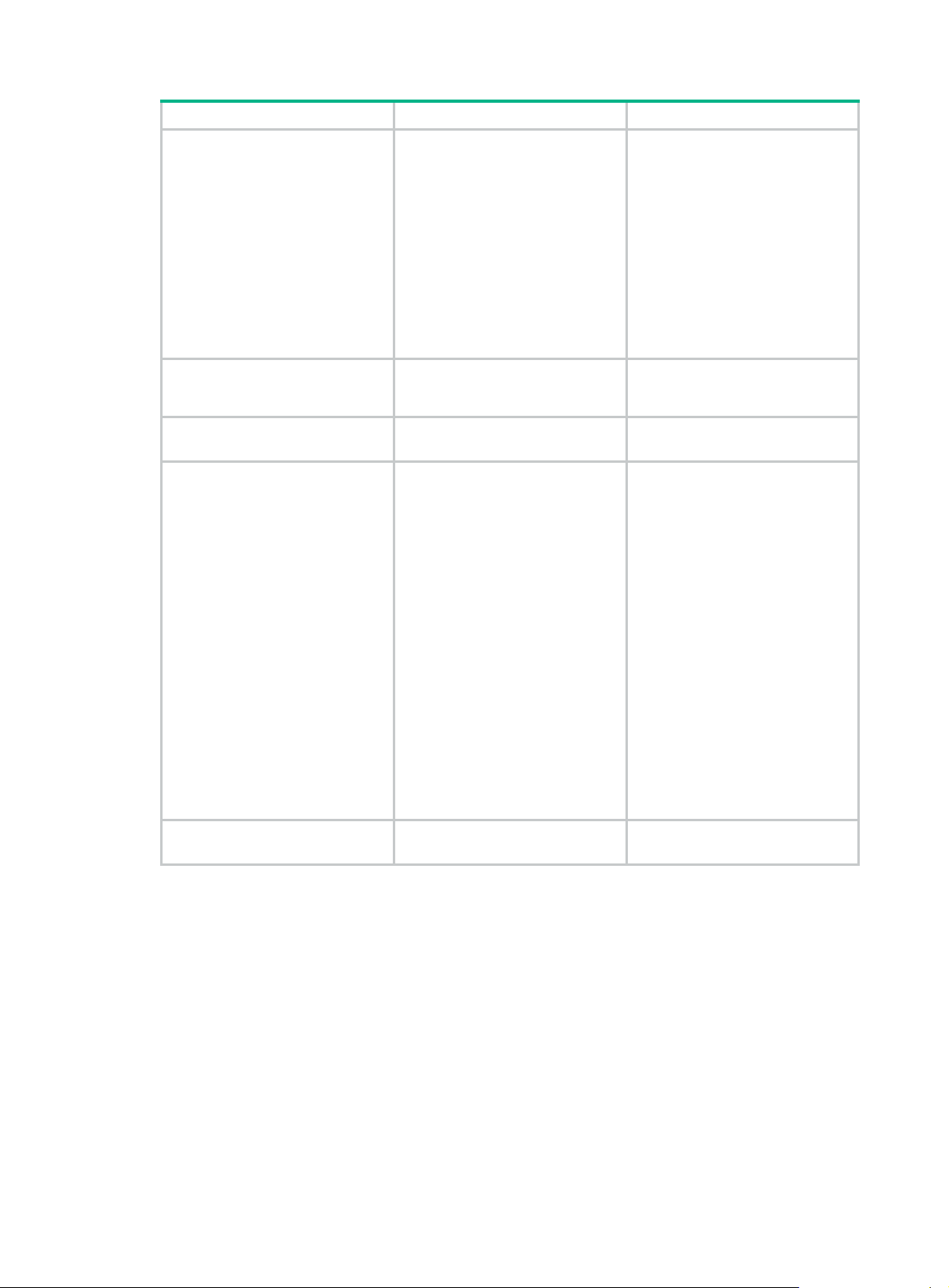

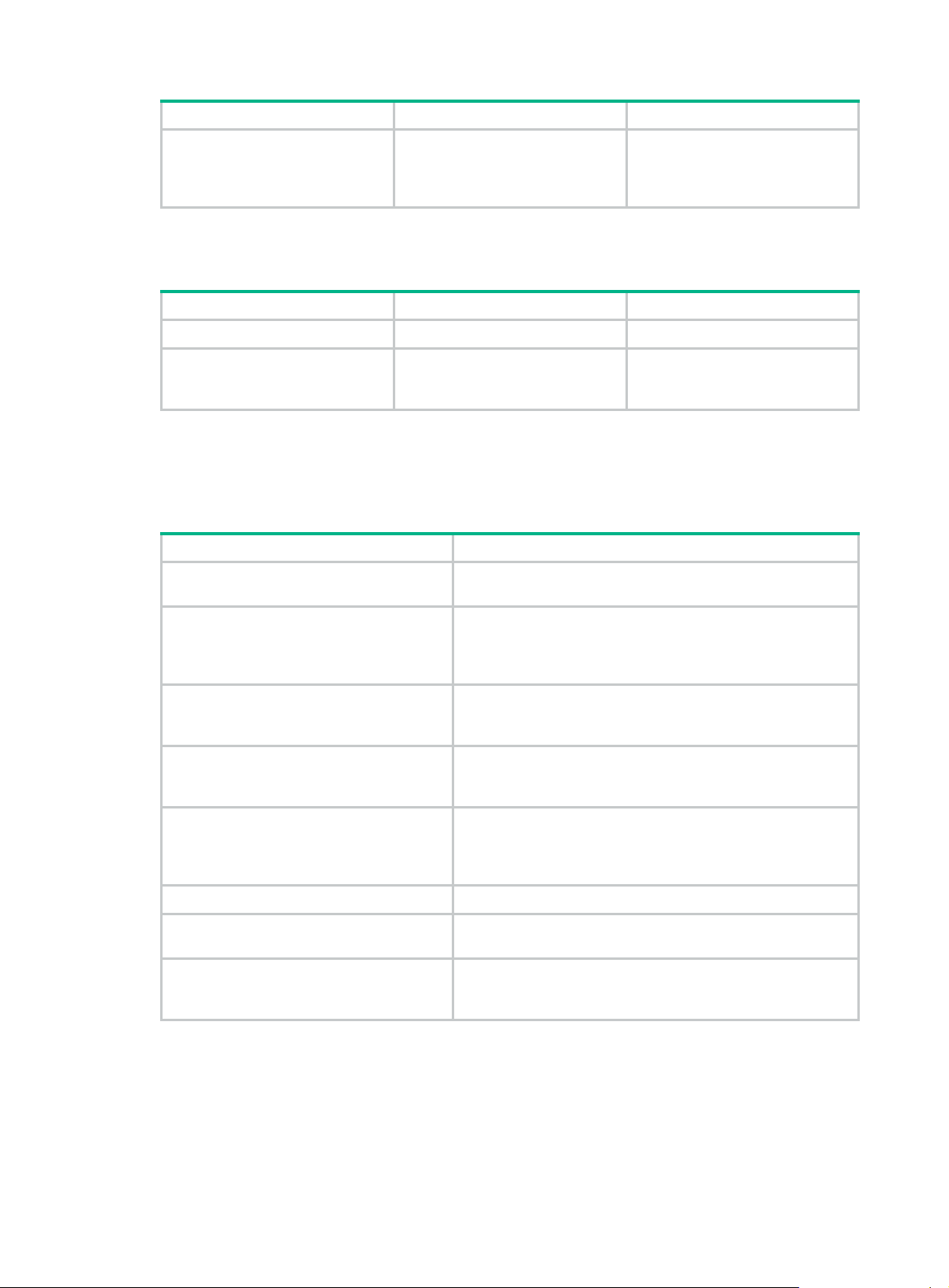

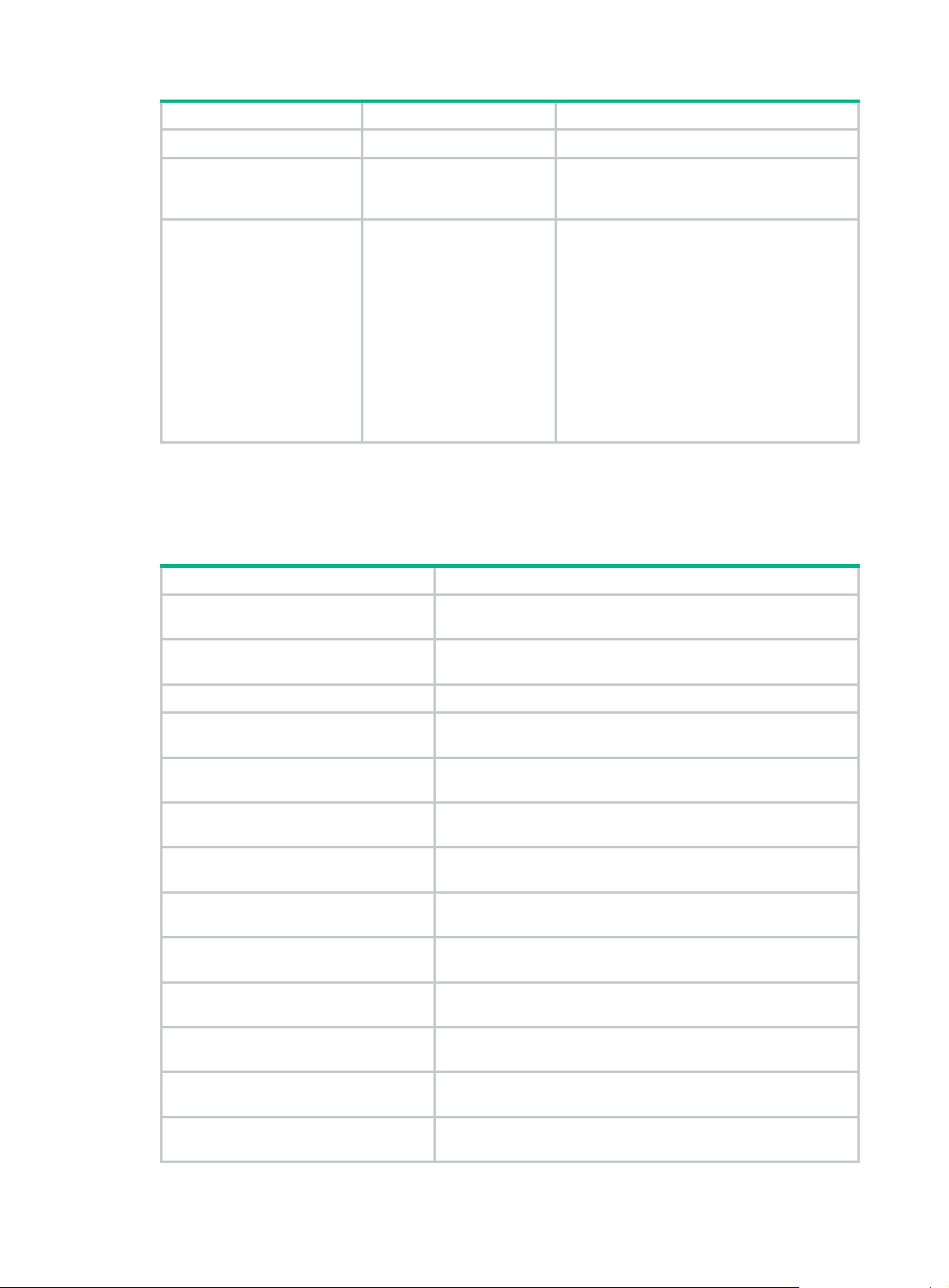

Figure 2 Position of the QoS techniques in a network

As shown in Figure 2, traffic classification, traffic shaping, traffic policing, congestion management,

and congestion avoidance mainly implement the following functions:

• Traffic classification—Uses match criteria to assign packets with the same characteristics to

a traffic class. Based on traffic classes, you can provide differentiated services.

• Traffic policing—Polices flows and imposes penalties to prevent aggressive use of network

resources. You can apply traffic policing to both incoming and outgoing traffic of a port.

• Traffic shaping—Adapts the output rate of traffic to the network resources available on the

downstream device to eliminate packet drops. Traffic shaping usually applies to the outgoing

traffic of a port.

• Congestion management—Provides a resource scheduling policy to determine the packet

forwarding sequence when congestion occurs. Congestion management usually applies to the

outgoing traffic of a port.

• Congestion avoidance—Monitors the network resource usage. It is usually applied to the

outgoing traffic of a port. When congestion worsens, congestion avoidance reduces the queue

length by dropping packets.

QoS processing flow in a device

Figure 3 briefly describes how the QoS module processes traffic.

1. Traffic classifier identifies and classifies traffic for subsequent QoS actions.

2. The QoS module takes various QoS actions on classified traffic as configured, depending on

the traffic processing phase and network status. For example, you can configure the QoS

module to perform the following operations:

{ Traffic policing for incoming traffic.

{ Traffic shaping for outgoing traffic.

{ Congestion avoidance before congestion occurs.

{ Congestion management when congestion occurs.

16

Page 23

Figure 3 QoS processing flow

17

Page 24

Configuring a QoS policy

You can configure QoS by using the MQC approach or non-MQC approach.

Non-MQC approach

In the non-MQC approach, you configure QoS service parameters without using a QoS policy. For

example, you can use the rate limit feature to set a rate limit on an interface without using a QoS

policy.

MQC approach

In the modular QoS configuration (MQC) approach, you configure QoS service parameters by using

QoS policies. A QoS policy defines the policing or other QoS actions to take on different classes of

traffic. It is a set of class-behavior associations.

A traffic class is a set of match criteria for identifying traffic, and it uses the AND or OR operator.

• If the operator is AND, a packet must match all the criteria to match the traffic class.

• If the operator is OR, a packet matches the traffic class if it matches any of the criteria in the

traffic class.

A traffic behavior defines a set of QoS actions to take on packets, such as priority marking and

redirect.

By associating a traffic behavior with a traffic class in a QoS policy, you apply QoS actions in the

traffic behavior to the traffic class.

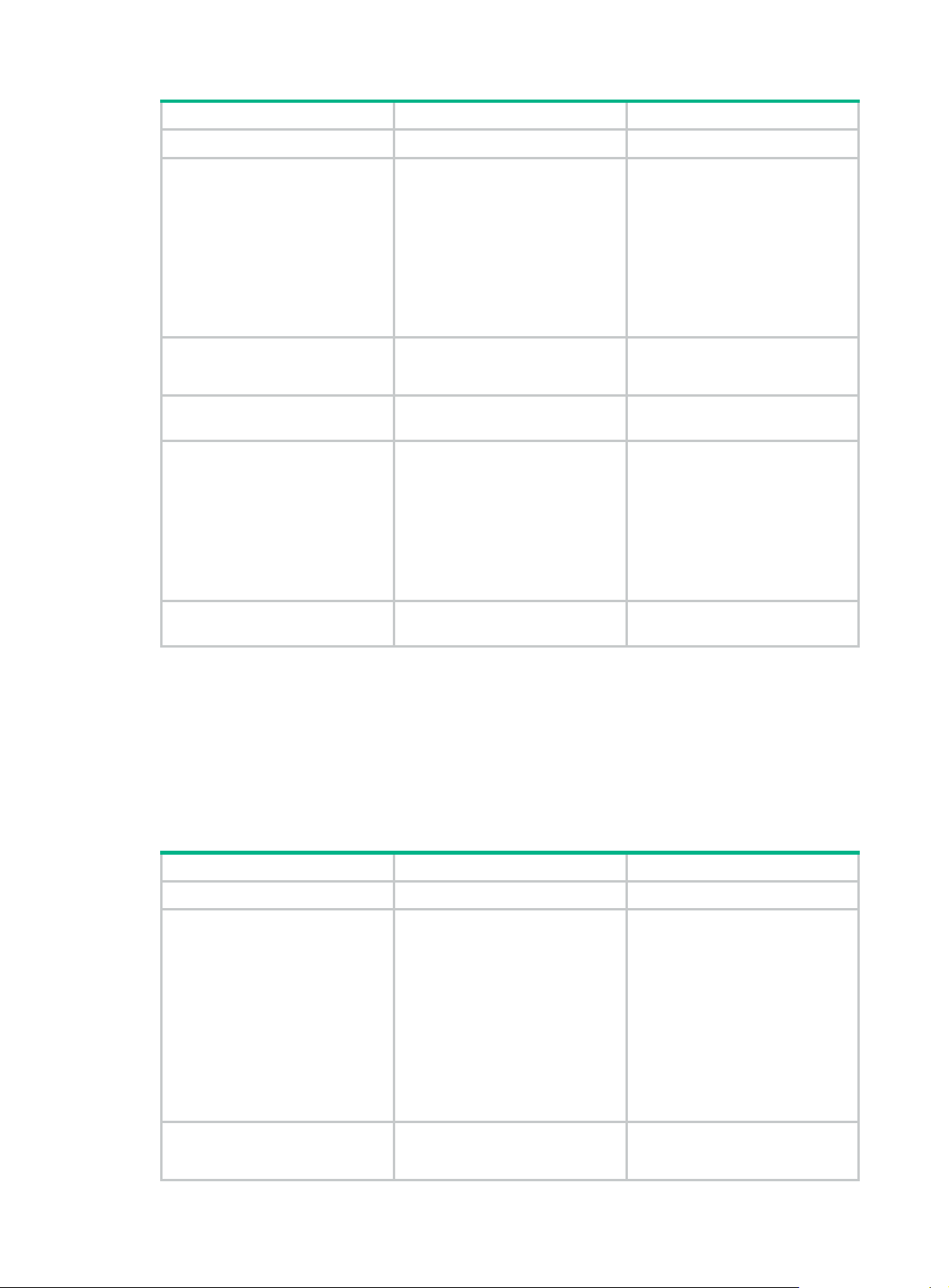

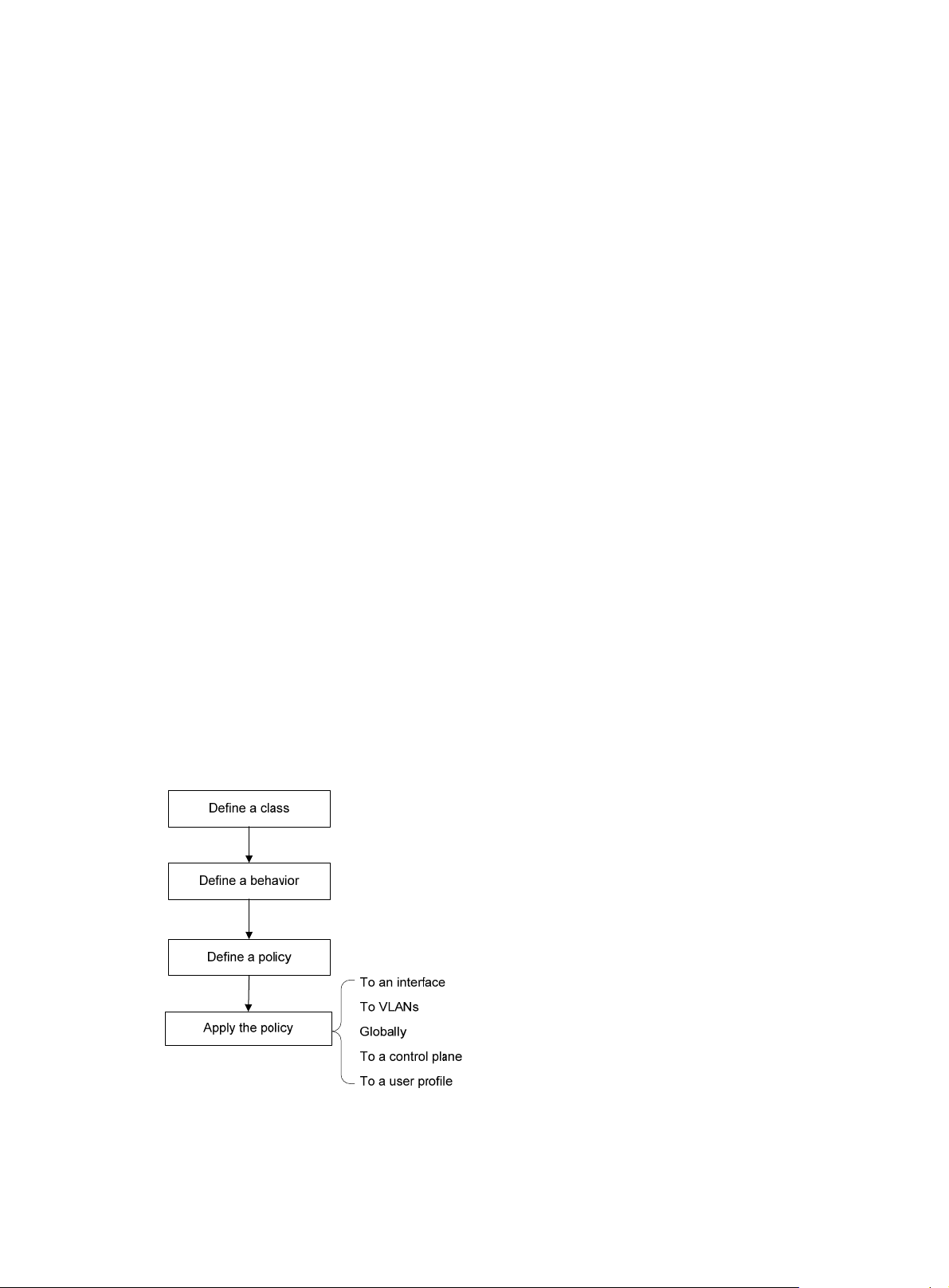

Configuration procedure diagram

Figure 4 shows how to configure a QoS policy.

Figure 4 QoS policy configuration procedure

18

Page 25

Defining a traffic class

Step Command Remarks

1. Enter system view.

2. Create a traffic class and

enter traffic class view.

3. Configure a match criterion.

system-view

traffic classifier

operator { and | or

[

if-match

match-criteria

Defining a traffic behavior

A traffic behavior is a set of QoS actions (such as traffic filtering, shaping, policing, and priority

marking) to take on a traffic class.

To define a traffic behavior:

classifier-name

} ]

N/A

By default, no traffic classes exist.

By default, no match criterion is

configured.

For more information, see the

if-match

QoS Command Reference.

command in ACL and

Step Command Remarks

1. Enter system view.

2. Create a traffic behavior and

enter traffic behavior view.

3. Configure an action in the

traffic behavior.

system-view

traffic behavior

See the subsequent chapters,

depending on the purpose of the

traffic behavior: traffic policing,

traffic filtering, priority marking,

traffic accounting, and so on.

Defining a QoS policy

To perform actions defined in a behavior for a class of packets, associate the behavior with the class

in a QoS policy.

To associate a traffic class with a traffic behavior in a QoS policy:

Step Command Remarks

1. Enter system view.

2. Create a QoS policy and

enter QoS policy view.

system-view

qos policy

N/A

behavior-name

policy-name By default, no QoS policies exist.

By default, no traffic behaviors

exist.

By default, no action is configured

for a traffic behavior.

N/A

3. Associate a traffic class with

a traffic behavior to create a

class-behavior association

in the QoS policy.

classifier

behavior

insert-before

[

before-classifier-name ]

classifier-name

behavior-name

19

By default, a traffic class is not

associated with a traffic behavior.

Repeat this step to create more

class-behavior associations.

Page 26

Applying the QoS policy

You can apply a QoS policy to the following destinations:

• Interface—The QoS policy takes effect on the traffic sent or received on the interface.

• VLAN—The QoS policy takes effect on the traffic sent or received on all ports in the VLAN.

• Globally—The QoS policy takes effect on the traffic sent or received on all ports.

• Control plane—The QoS policy takes effect on the traffic received on the control plane.

• User profile—The QoS policy takes effect on the traffic sent or received by the online users of

the user profile.

You can modify traffic classes, traffic behaviors, and class-behavior associations in a QoS policy

even after it is applied (except that it is applied to a user profile). If a traffic class uses an ACL for

traffic classification, you can delete or modify the ACL.

Applying the QoS policy to an interface

A QoS policy can be applied to multiple interfaces. However, only one QoS policy can be applied to

one direction (inbound or outbound) of an interface.

The QoS policy applied to the outgoing traffic on an interface does not regulate local packets. Local

packets refer to critical protocol packets sent by the local system for operation maintenance. The

most common local packets include link maintenance, routing, LDP, RSVP, and SSH packets.

QoS policies can be applied to Layer 2/Layer 3 Ethernet interfaces, Layer 3 Ethernet subinterfaces,

Layer 2/Layer 3 aggregate interfaces, and VSI interfaces.

For VSI interfaces, the QoS policy application feature is available in Release 2510P01 and later.

The term "interface" in this section collectively refers to these types of interfaces. You can use the

port link-mode command to configure an Ethernet port as a Layer 2 or Layer 3 interface (see Layer

2—LAN Switching Configuration Guide).

On a border gateway in a VXLAN or EVPN network:

• If a QoS policy without VLAN ID match criteria is applied to a Layer 3 Ethernet interface, the

QoS policy also takes effect on its subinterfaces.

• If a QoS policy is applied to any other interface, the match criteria for untagged packets

forwarded at Layer 3 do not take effect if the following conditions exist:

{ A class contains an inner or outer VLAN ID match criterion.

{ The class also contains match criteria configured to match untagged packets forwarded at

Layer 3.

For information about VXLAN and EVPN, see VXLAN Configuration Guide and EVPN Configuration

Guide.

To apply a QoS policy to an interface:

Step Command Remarks

1. Enter system view.

2. Enter interface view.

system-view

interface

interface-type interface-number

N/A

N/A

20

Page 27

Step Command Remarks

3. Apply the QoS policy to

the interface.

qos apply policy

outbound

}

policy-name {

Applying the QoS policy to VLANs

You can apply a QoS policy to VLANs to regulate traffic of the VLANs.

Configuration restrictions and guidelines

When you apply a QoS policy to VLANs, follow these restrictions and guidelines:

• QoS policies cannot be applied to dynamic VLANs, including VLANs created by GVRP.

• If the hardware resources of an IRF member device are insufficient, applying a QoS policy to

VLANs might fail on the IRF member device. The system does not automatically roll back the

QoS policy configuration already applied to other IRF member devices. To ensure consistency,

use the undo qos vlan-policy vlan command to manually remove the QoS policy

configuration applied to them.

inbound

By default, no QoS policy is

applied to an interface.

You cannot apply a QoS

|

policy to the outbound

direction of a Layer 2

aggregate interface, Layer 3

aggregate interface, or VSI

interface.

Configuration procedure

To apply the QoS policy to VLANs:

Step Command Remarks

1. Enter system view.

2. Apply the QoS policy to

VLANs.

system-view

qos vlan-policy

vlan-id-list {

inbound | outbound

policy-name

Applying the QoS policy globally

You can apply a QoS policy globally to the inbound or outbound direction of all ports.

If the hardware resources of an IRF member device are insufficient, applying a QoS policy globally

might fail on the IRF member device. The system does not automatically roll back the QoS policy

configuration already applied to other IRF member devices. To ensure consistency, use the undo

qos apply policy global command to manually remove the QoS policy configuration applied to

them.

To apply the QoS policy globally:

Step Command Remarks

1. Enter system view.

2. Apply the QoS policy

globally.

system-view

qos apply policy

global { inbound | outbound }

policy-name

vlan

N/A

By default, no QoS policy is applied

}

to a VLAN.

N/A

By default, no QoS policy is applied

globally.

Applying the QoS policy to a control plane

A device provides the data plane and the control plane.

21

Page 28

• Data plane—The units at the data plane are responsible for receiving, transmitting, and

switching (forwarding) packets, such as various dedicated forwarding chips. They deliver super

processing speeds and throughput.

• Control plane—The units at the control plane are processing units running most routing and

switching protocols. They are responsible for protocol packet resolution and calculation, such

as CPUs. Compared with data plane units, the control plane units allow for great packet

processing flexibility but have lower throughput.

When the data plane receives packets that it cannot recognize or process, it transmits them to the

control plane. If the transmission rate exceeds the processing capability of the control plane, the

control plane will be busy handling undesired packets. As a result, the control plane will fail to handle

legitimate packets correctly or timely. As a result, protocol performance is affected.

To address this problem, apply a QoS policy to the control plane to take QoS actions, such as traffic

filtering or rate limiting, on inbound traffic. This ensures that the control plane can correctly receive,

transmit, and process packets.

A predefined control plane QoS policy uses the protocol type or protocol group type to identify the

type of packets sent to the control plane. You can use protocol types or protocol group types in

if-match commands in traffic class view for traffic classification. Then you can reconfigure traffic

behaviors for these traffic classes as required. You can use the display qos policy control-plane

pre-defined command to display predefined control plane QoS policies.

Configuration restrictions and guidelines

When you apply a QoS policy to a control plane, follow these restrictions and guidelines:

• If the hardware resources of IRF member device are insufficient, applying a QoS policy globally

might fail on the IRF member device. The system does not automatically roll back the QoS

policy configuration already applied to other IRF member devices. To ensure consistency, use

the undo qos apply policy command to manually remove the QoS policy configuration applied

to them.

• If a class uses control plane protocols or control plane protocol groups as match criteria, the

action in the associated traffic behavior can only be car or the combination of car and

accounting packet. Only the cir keyword in the car action can be applied correctly.

Configuration procedure

To apply the QoS policy to a control plane:

Step Command Remarks

1. Enter system view.

2. Enter control plane view.

3. Apply the QoS policy to the

control plane.

system-view

control-plane slot

qos apply policy

slot-number

policy-name

inbound

Applying the QoS policy to a user profile

You can apply a QoS policy to multiple user profiles. In one direction of each user profile, only one

policy can be applied. To modify a QoS policy already applied to a direction, first remove the applied

QoS policy.

A user profile supports 802.1X authentication and MAC authentication.

To apply a QoS policy to a user profile:

N/A

N/A

By default, no QoS policy

is applied to a control

plane.

22

Page 29

Step Command Remarks

1. Enter system view.

system-view

N/A

2. Enter user profile view.

3. Apply the QoS policy to

the user profile.

user-profile

qos apply policy

policy-name {

outbound

profile-name

inbound

}

The configuration made in user profile view

takes effect only after it is successfully issued

to the driver.

By default, no QoS policy is applied to a user

profile.

Use the

policy to the incoming traffic of the device

(traffic sent by the online users). Use the

outbound

|

the outgoing traffic of the device (traffic

received by the online users).

A QoS policy that contains the action of

redirecting traffic to an interface cannot be

applied to the outbound direction for a user