
Functional MRI User's Guide

Michael A. Yassa

● The Division of Psychiatric Neuroimaging ●

● Department of Psychiatry and Behavioral Sciences ●

● The Johns Hopkins School of Medicine ●

● Baltimore, MD ●


Document written in OpenOffice.org Writer 2.0 by Sun Microsystems

Publication date: June 2005 (1st edition)

Online versions available at http://pni.med.jhu.edu/intranet/fmriguide/

Acknowledgments:

This document relies heavily on expertise and advice from the following individuals

and/or groups: John Ashburner, Karl Friston, and Will Penny (FIL-UCL: London), Kalina

Christoff (UBC: Canada), Matthew Brett (MRC-CBU: Cambridge), and Tom Nichols

(SPH-UMichigan, Ann Arbor). Some portions of this document are adapted or copied

verbatim from other sources, and are referenced as such.

Supplemental Reading:

Frackowiak RS, Friston K, Frith C, Dolan RJ, Price CJ, Zeki S, Ashburner J, & Penny W (2004). Human Brain Function, 2nd edition. Elsevier Academic Press, San Diego, CA.

Huettel SA, Song AW, & McCarthy G (2004). Functional Magnetic Resonance Imaging. Sinauer Associates, Sunderland, MA.


Table of Contents

Magnetic Resonance Physics

How the MR Signal is Generated

The BOLD Contrast Mechanism

Hemodynamic Modeling

Signal and Noise in fMRI

Thermal Noise

Cardiac and respiratory artifacts

N/2 Ghost

Subject motion

Draining veins

Scanner drift

Susceptibility artifacts

Experimental Design

Cognitive subtractions

Cognitive Conjunctions

Parametric Designs

Multi-factorial Designs

Optimizing fMRI Studies

Signal Processing

Confounding Factors

Control task

Latent (hidden) factor

Randomization and Counterbalancing

Nonlinear Hemodynamic Effects

Epoch (Blocked) and Event-Related Designs

Spatial and Temporal Pre-Processing

Overview

Raw Data

Getting Started

Requirements

Hardware Requirements

Software Requirements

Software Set-up

The SPM Environment

Data Transfer from Godzilla

Volume Separation and Analyze headers

Buffer Removal

Slice Timing Correction (For event-related data)

To Correct or Not to Correct

Philips Slice Acquisition Order

Which Slice to Use as a Reference Slice

Timing Parameters

Rigid-Body Registration (Correction for Head Motion)


Creating a Mean Image.........................................................................................26

Realignment...........................................................................................................27

Anatomical Co-registration (Optional)....................................................................29

Co-registering Whole Brain Volumes.....................................................................30

Co-registering Partial Brain Volumes.....................................................................30

Spatial Normalization to Standard Space..............................................................30

Correcting Scan Orientation..................................................................................31

Normalization Defaults...........................................................................................31

Normalization to a Standard EPI Template............................................................32

Gaussian Smoothing.............................................................................................33

Summary of Pre-processing Steps........................................................................34

Statistical Analysis using the General Linear Model.......................................35

Modeling and Inference in SPM.............................................................................35

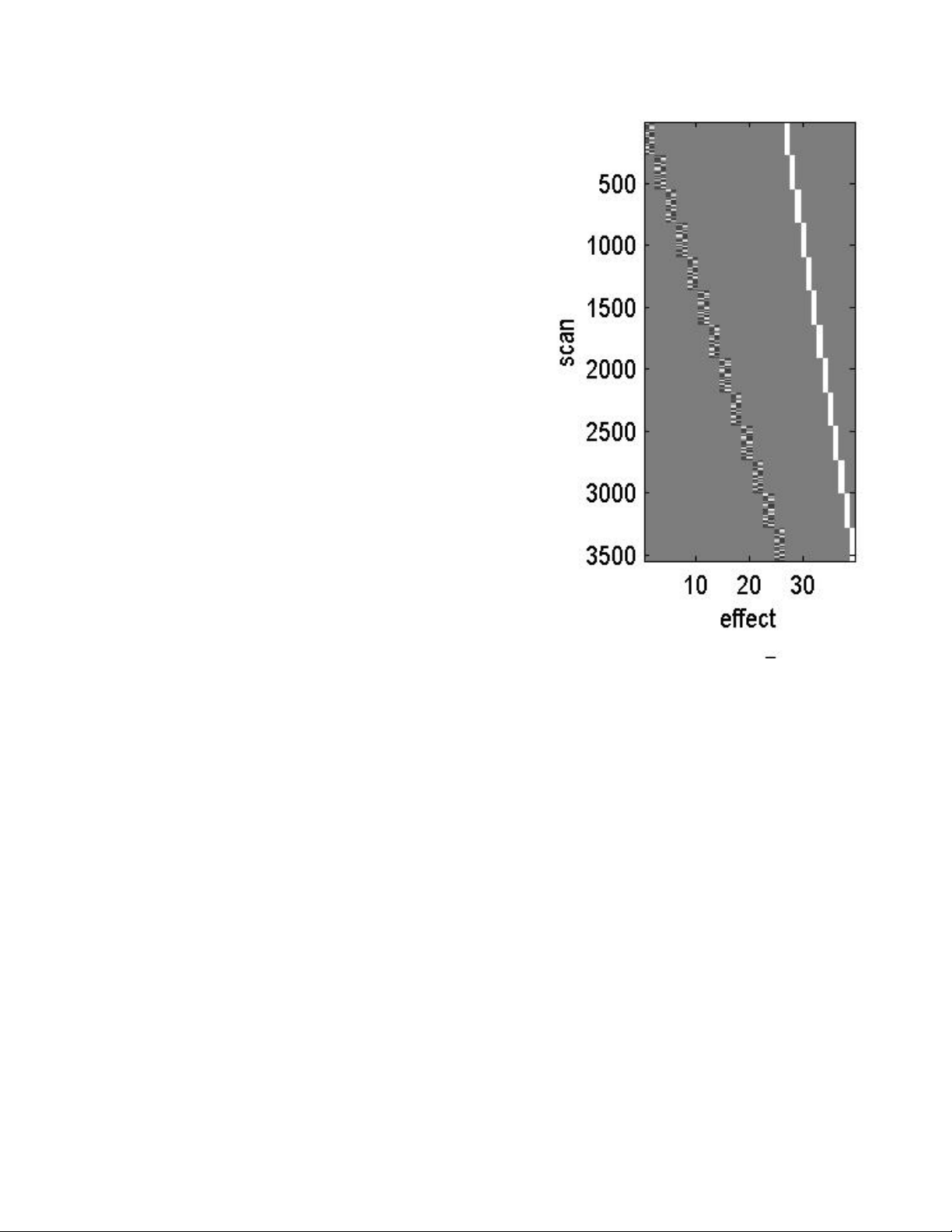

Model Specification and the SPM Design Matrix ..................................................35

Setting Up fMRI Defaults.......................................................................................36

Model Specification................................................................................................36

Estimating a Specified Model................................................................................39

Global Intensity Normalization................................................................................40

Temporal Filtering...................................................................................................40

Results and Statistical Inference............................................................................42

Contrast Specification............................................................................................42

Thresholding and Inference ..................................................................................43

Rejecting the Null Hypothesis.................................................................................43

Type I Error (Multiple Comparison Correction).......................................................44

Spatial Extent Threshold (Cluster analysis) ...........................................................46

Viewing Results using Maximum Intensity Projection ...........................................46

Small Volume Correction and Regional Hypotheses.............................................48

Extracting Results and Talairach Labeling.............................................................48

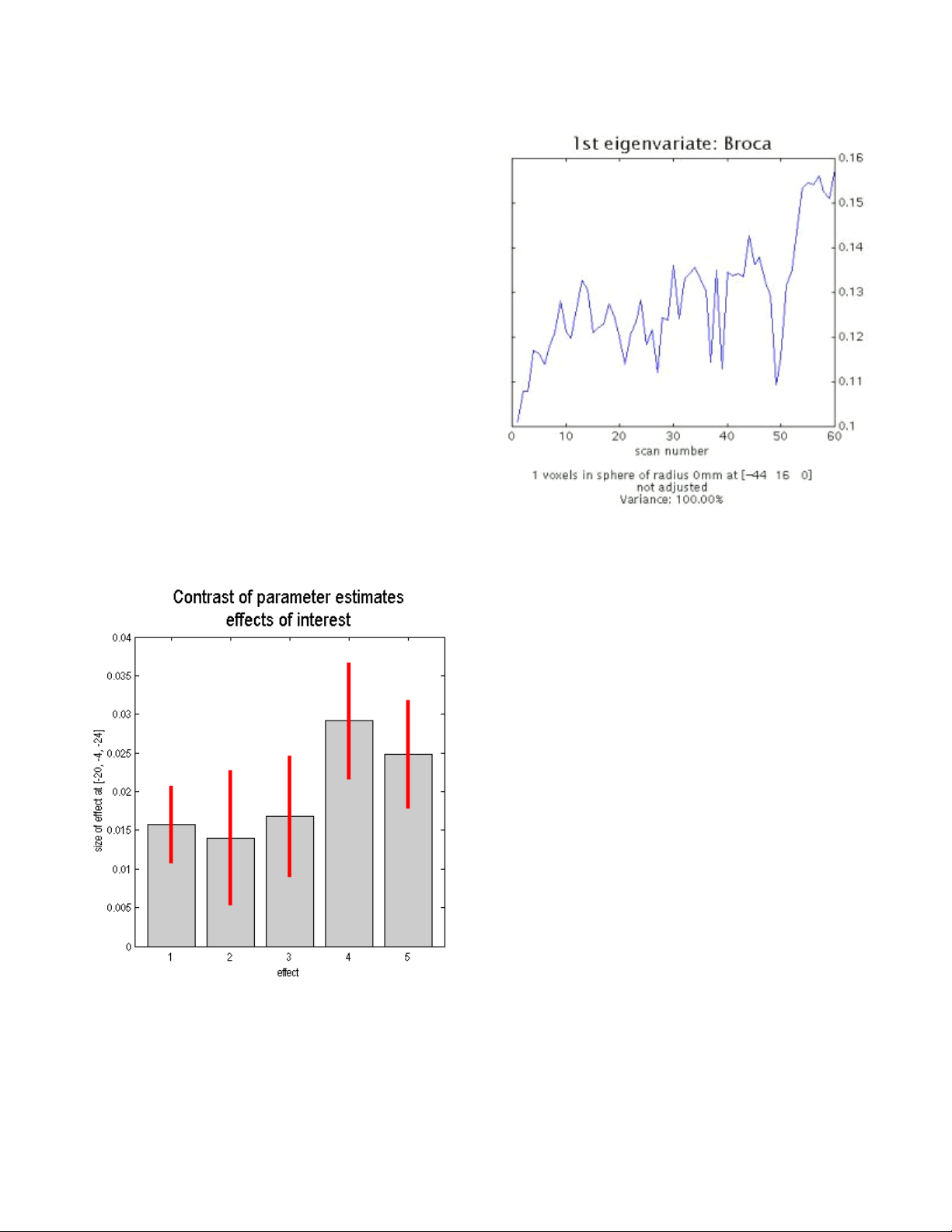

Time-Series Extraction and Local Eigenimage Analysis .......................................49

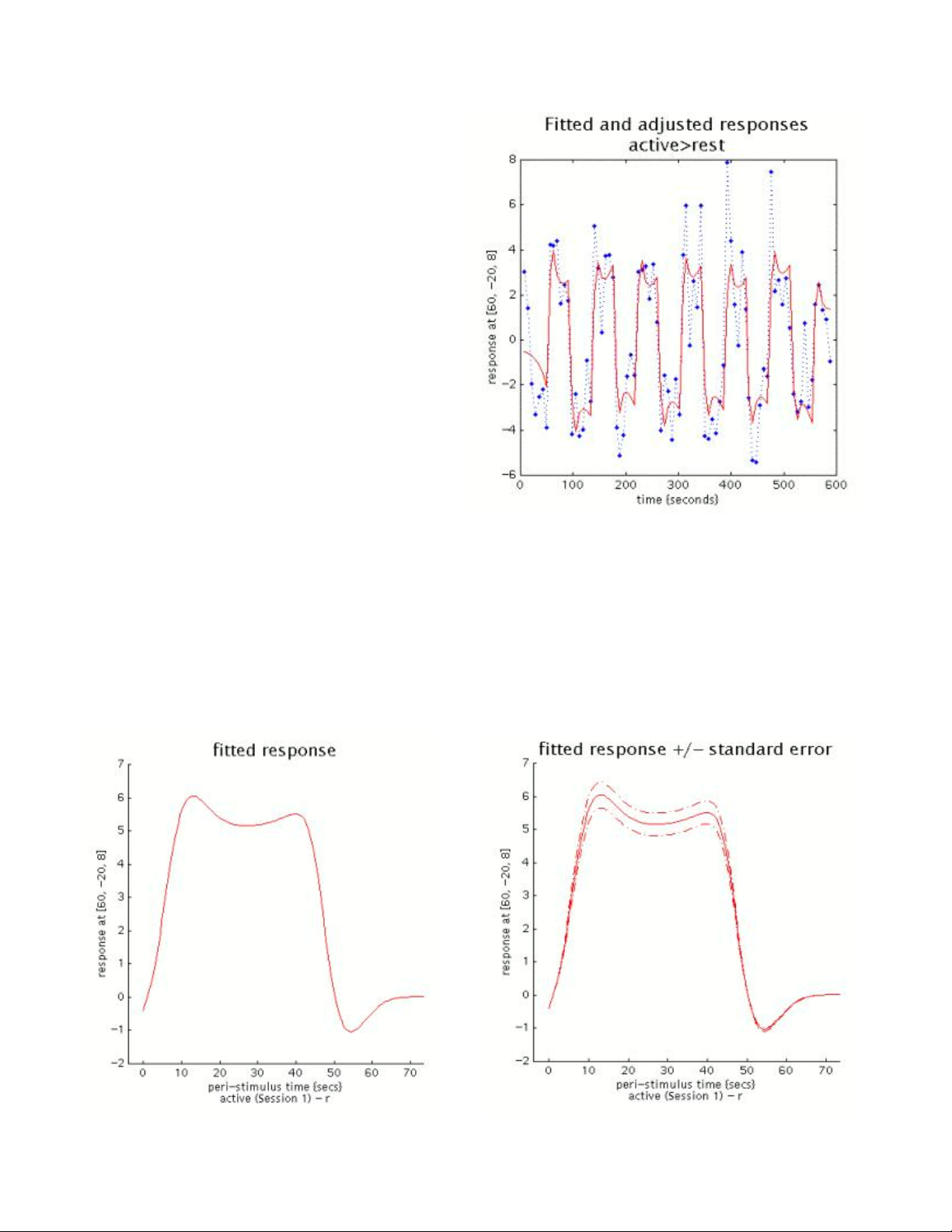

Plotting Responses and Parameter Estimates......................................................50

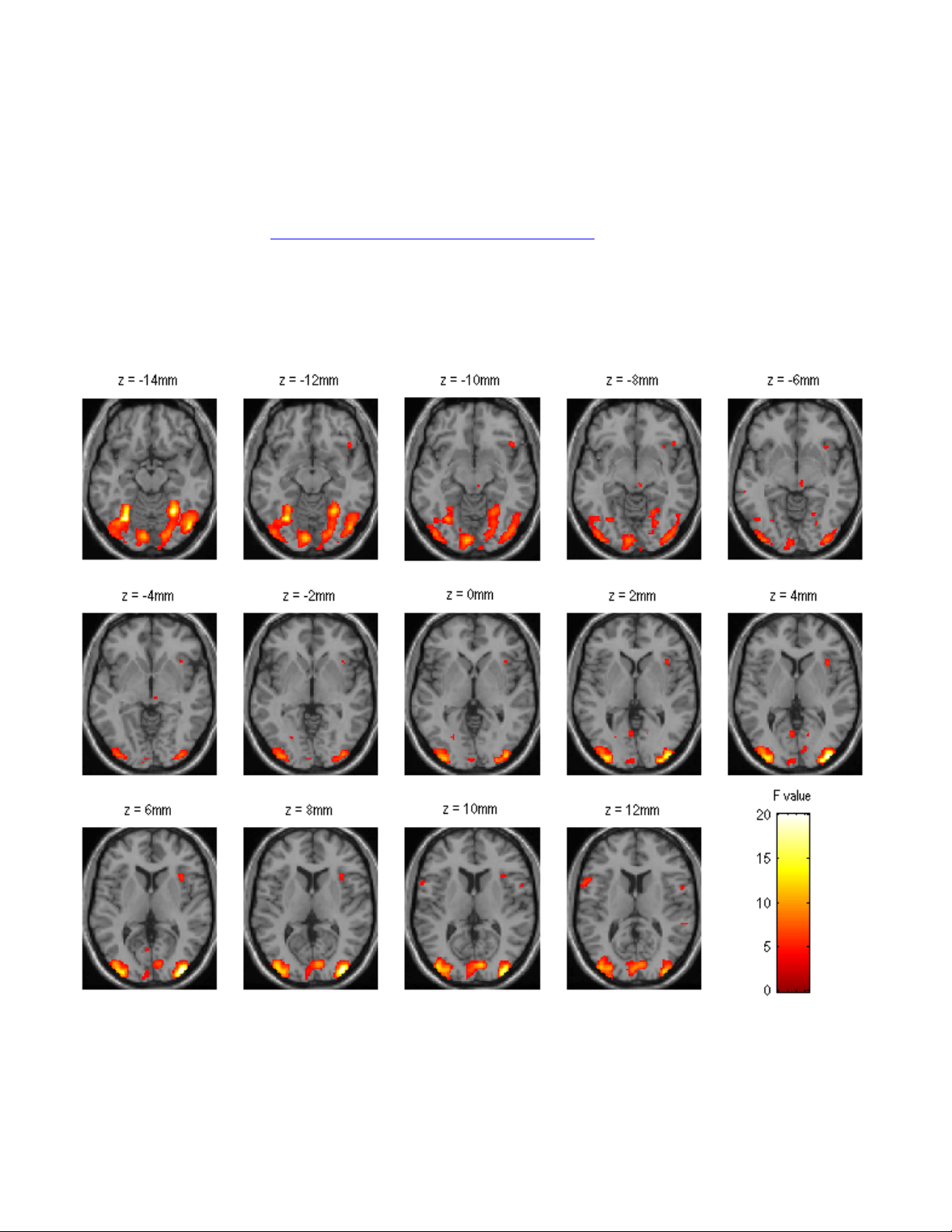

Anatomical Overlays..............................................................................................53

Editing, Printing and Exporting SPM output...........................................................55

Region of Interest (ROI) Analyses......................................................................56

Anatomical vs. Functional ROIs ............................................................................56

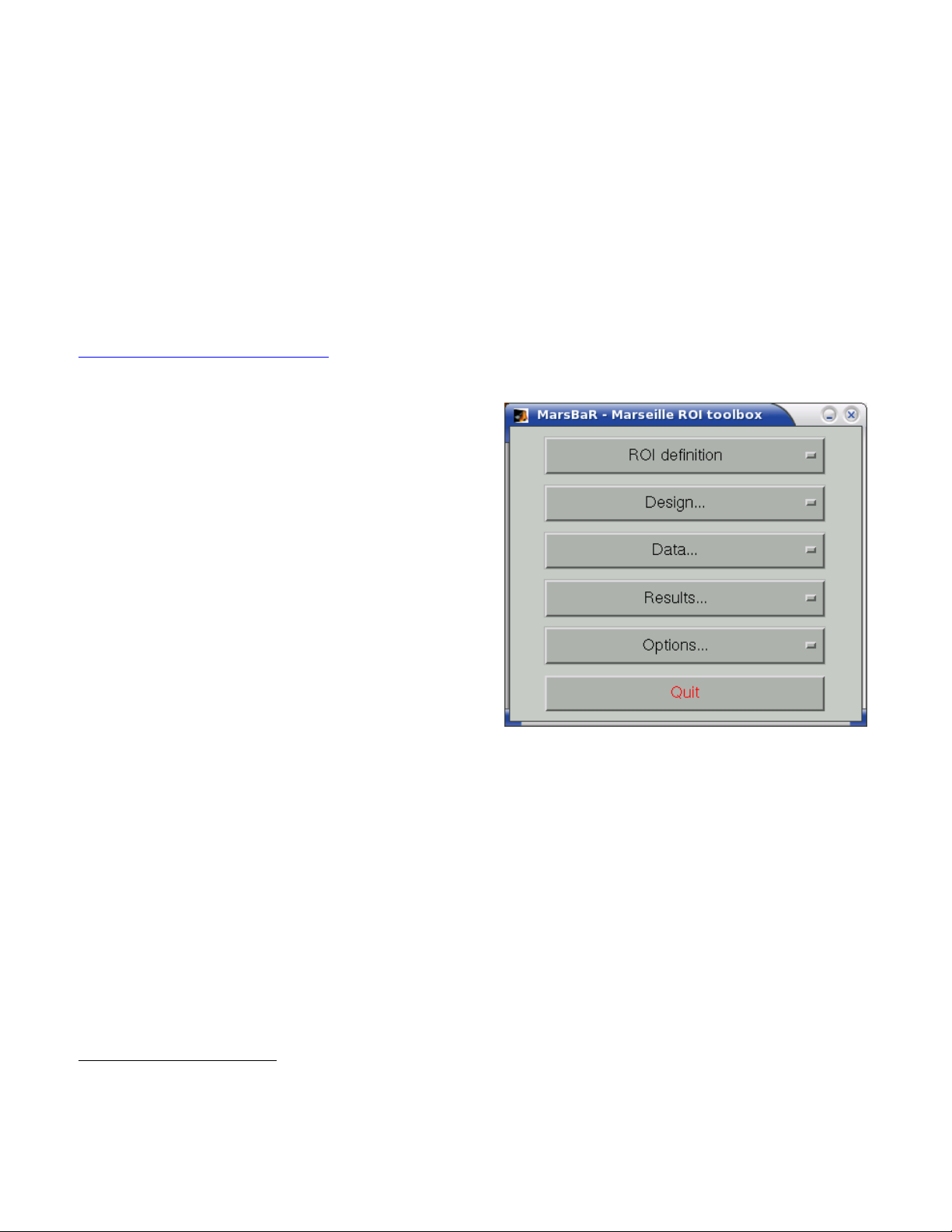

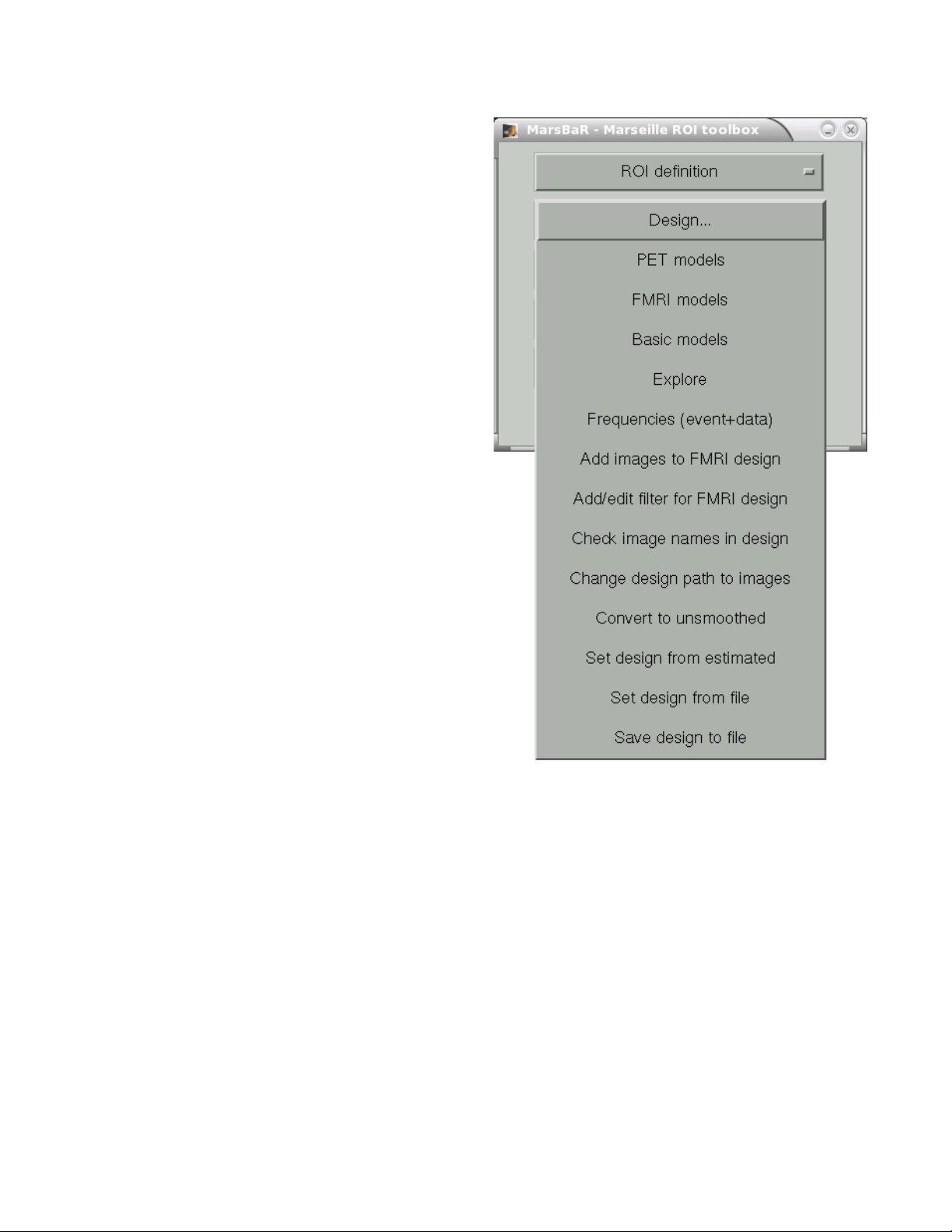

MarsBaR (MARSeille Boîte À Région d'Intérêt) ....................................................57

Overview of the Toolbox........................................................................................57

ROI Definition........................................................................................................57

Running an ROI Analysis ......................................................................................59

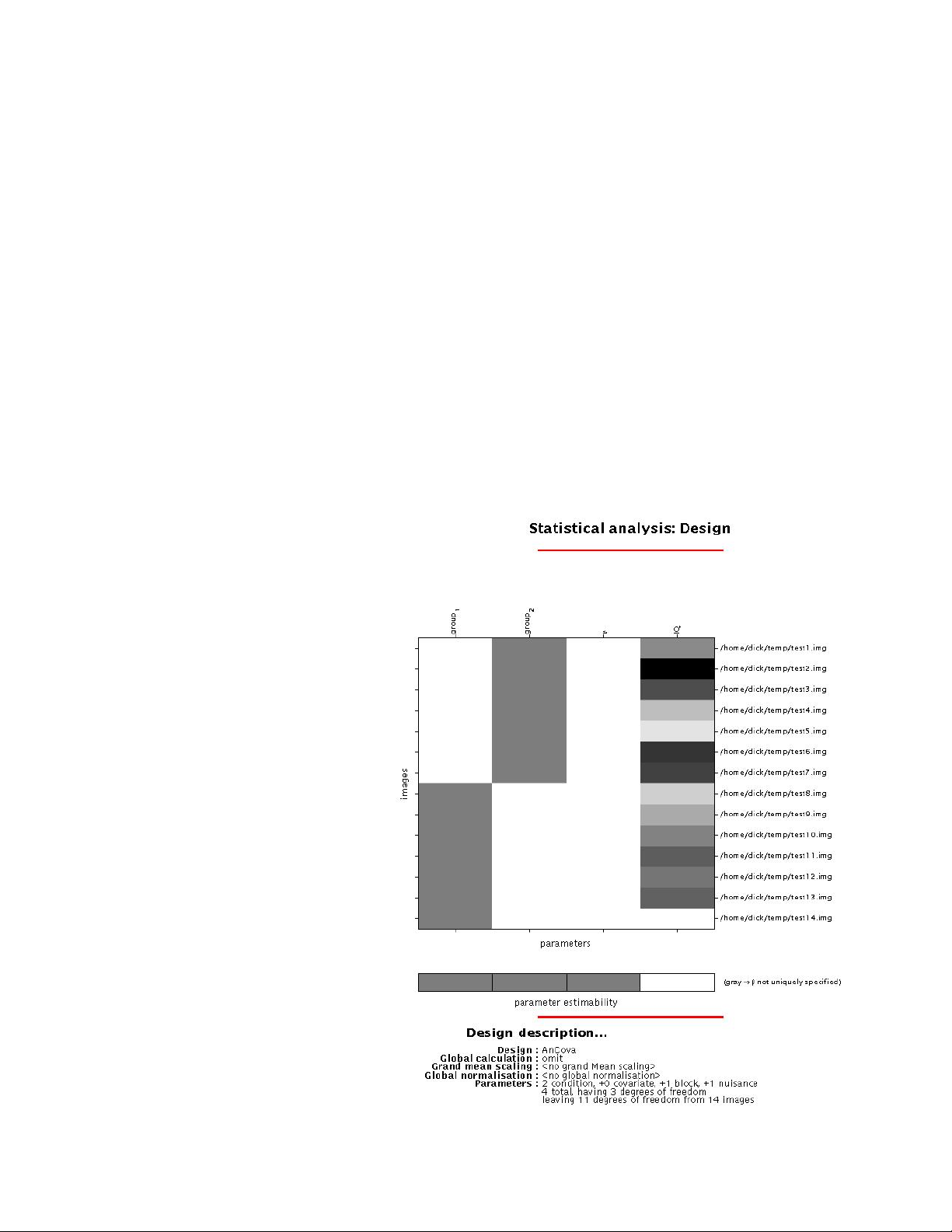

Group-Level Analysis and Population-level Inferences...................................63

Inter-subject Analyses............................................................................................63

Fixed-Effects Analysis............................................................................................63

Random-Effects Analysis.......................................................................................64

Conjunction Analysis .............................................................................................66

Nonparametric Approaches....................................................................................67

False Discovery Rate.............................................................................................68


Special Topics.....................................................................................................68

Cost Function Masking for Lesion fMRI.................................................................68

Advanced Spatial Normalization Methods.............................................................69

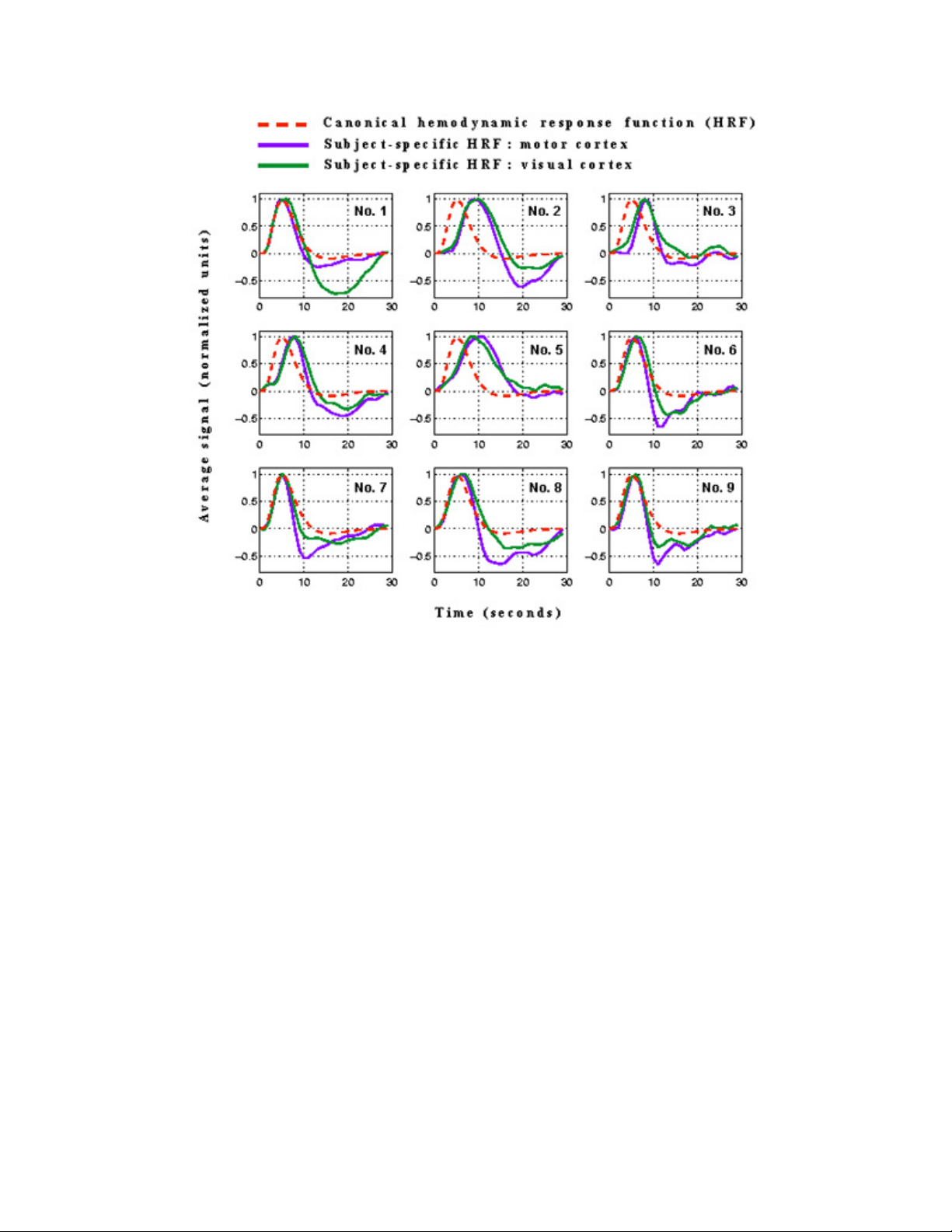

Using a Subject-Specific HRF in analysis .............................................................70

Guidelines for Presenting fMRI Data......................................................................73


Magnetic Resonance Physics

How the MR Signal is Generated

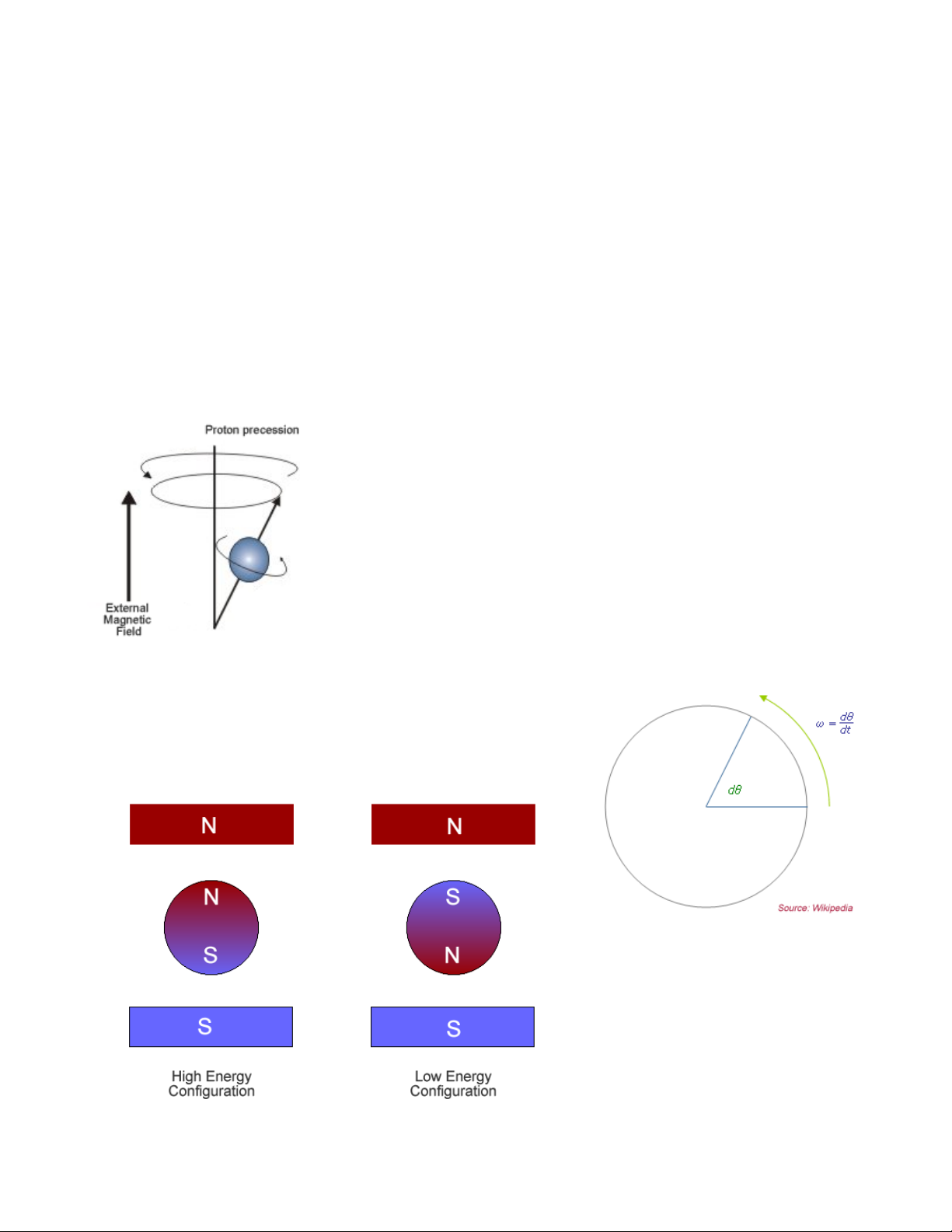

The magnetic resonance (MR) signal arises from hydrogen nuclei, which are the only dipoles abundant enough to be measured with reasonably high spatial resolution. The human body is made up mostly of water, and therefore contains a large number of hydrogen atoms. Hydrogen nuclei possess a magnetic property called spin, which can be thought of as a small magnetic field. Spin is a fundamental property of some nuclei (not all nuclei possess spin) and has two important parameters: (1) size: spin comes in multiples of ½, and (2) sign: spin can be positive or negative. Paired particles with spins of opposite sign can eliminate each other's spin effects. An unpaired proton (e.g. in the case of hydrogen) has a spin of +½.

In an external magnetic field, a particle with non-zero spin experiences a torque that causes it to precess (wobble) around the magnetic field axis as it aligns with the field (see figure on the left). The particle has an angular momentum, which is related to its magnetic dipole moment by the gyromagnetic ratio (γ) (the ratio of the magnetic dipole moment to the angular momentum of the particle). This value is unique to the nucleus of each element (for hydrogen, γ = 42.58 MHz/T, expressed here in frequency units). The value's derivation is too complex to explain here. Instead we will describe its relationship to the precession angular frequency (ω) of a proton. Angular frequency is a scalar measure of how fast a particle is rotating around an axis (see figure on the right).

$$\omega_{\text{Larmor}} = \gamma B$$

The above is known as the Larmor equation, named after Joseph Larmor, an Irish physicist (1857-1942). It describes the relationship between the angular frequency (ω) of precession and the strength of the magnetic field B.

There are two possible configurations for proton alignment; one configuration possesses higher energy than the other (see figure on the left). A proton can undergo a transition between the two energy states by absorbing a photon that has enough energy to match the energy difference between the two states. This energy E is related to the photon's frequency ν by Planck's constant h (6.626 × 10⁻³⁴ J·s):

$$E = h\nu$$

This frequency is associated with a spin flip and is often used to describe the Larmor frequency as well (with γ given in MHz/T as above, the Larmor equation yields this frequency directly in MHz):

$$\nu = \omega_{\text{Larmor}}$$
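As a rough numerical illustration of the two relations above, the following minimal Python sketch evaluates the Larmor equation and E = hν for hydrogen. The constants come from the text; the field strengths of 1.5 T and 3 T and the function names are assumptions chosen only for illustration.

```python
# Illustrative sketch only. The field strengths are assumed example values,
# not scanner settings taken from this guide.
GAMMA_H_MHZ_PER_T = 42.58   # gyromagnetic ratio of hydrogen (from the text)
PLANCK_J_S = 6.626e-34      # Planck's constant in J*s (from the text)


def larmor_frequency_mhz(b_tesla, gamma=GAMMA_H_MHZ_PER_T):
    """Larmor equation: frequency (MHz) = gamma (MHz/T) * B (T)."""
    return gamma * b_tesla


def photon_energy_joules(frequency_mhz):
    """E = h * nu, with nu converted from MHz to Hz."""
    return PLANCK_J_S * frequency_mhz * 1e6


for b in (1.5, 3.0):  # hypothetical field strengths in tesla
    f = larmor_frequency_mhz(b)
    e = photon_energy_joules(f)
    print(f"B = {b} T: Larmor frequency = {f:.2f} MHz, photon energy = {e:.3e} J")
```

The resulting frequencies fall in the radio-frequency range, which is why the excitation pulse described next is an RF pulse.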

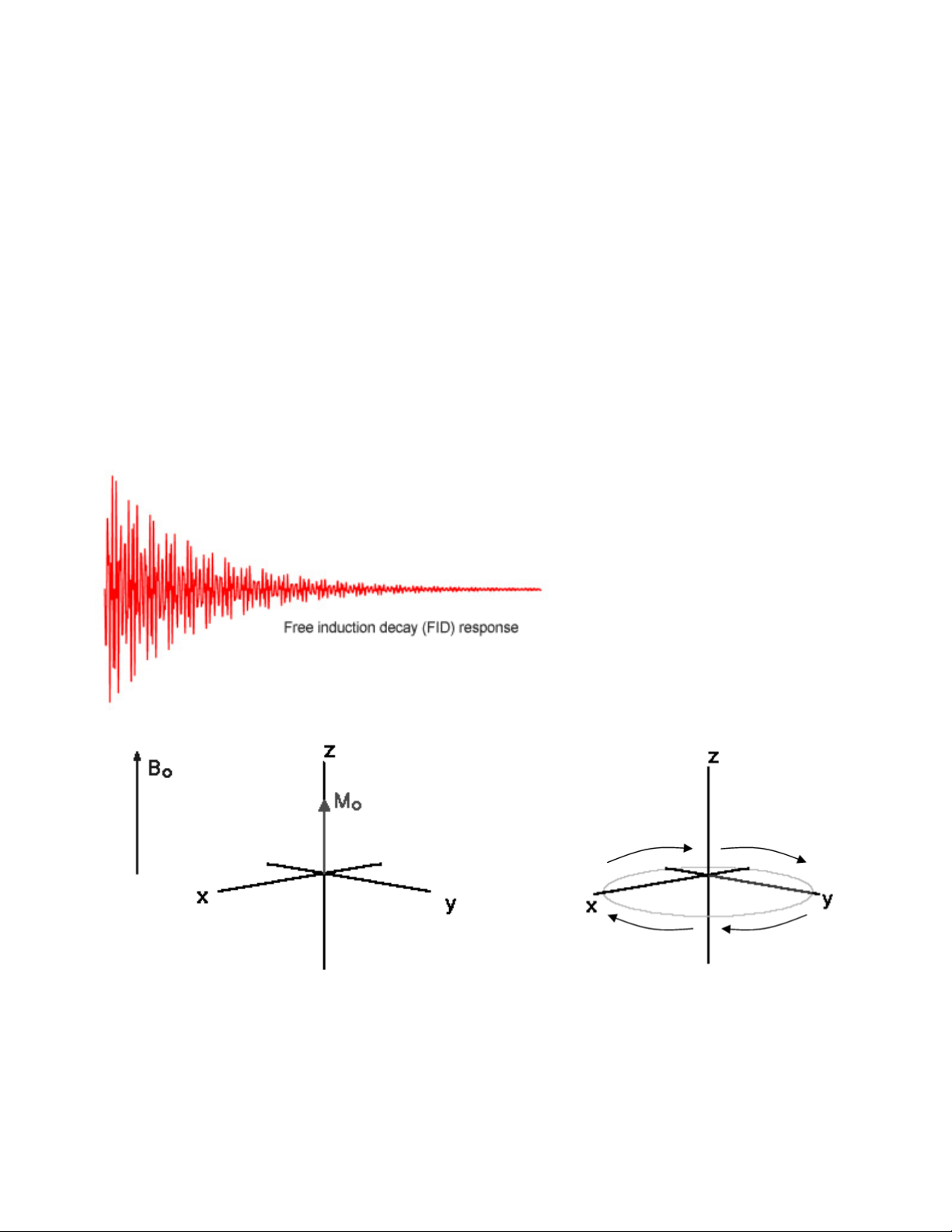

In the context of MRI, a radio-frequency (RF) pulse is applied perpendicular to the static magnetic field (B0). This pulse, which has a frequency equal to the Larmor frequency, shifts protons into a higher energy state. When the RF pulse (BRF) stops, the protons return to equilibrium such that their magnetic moment is again parallel to B0. During this process of nuclear relaxation, the nuclei lose energy by emitting their own RF signal. This is referred to as a free-induction decay (FID) response signal. The FID response signal is measured by an RF coil, and has the characteristic shape shown in the figure below.

The RF coil measures the relaxation of the dipoles in two dimensions. The Time-1 (T1) constant measures the time for the longitudinal relaxation in the direction of the B0 field (shown below on the left). It is referred to as spin-lattice relaxation.

The Time-2 (T2) constant measures the time it takes for the transverse relaxation of the dipole in the plane perpendicular to the B0 field (shown below on the right). It is referred to as spin-spin relaxation.

The T2 relaxation process is affected by molecular interactions and variations in B0. The combined time constant (in physiological tissue) is called T2* (T2 star). In the case of MRI, we take advantage of the fact that physiological tissue does not contain a homogeneous magnetic field, and thus the transverse relaxation is much faster. The size of these inhomogeneities depends on physiological processes, such as the composition of the local blood supply.
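To make the idea of a combined time constant concrete, the sketch below uses the standard rate-addition relation 1/T2* = 1/T2 + 1/T2', where T2' stands for the extra dephasing caused by field inhomogeneities. This relation is not stated in the guide and is brought in here as an assumption, and the tissue values are hypothetical.

```python
import math


def t2_star_ms(t2_ms, t2_prime_ms):
    """Combine T2 and inhomogeneity-related decay (T2') into T2*.

    Assumes the standard relation 1/T2* = 1/T2 + 1/T2'.
    """
    return 1.0 / (1.0 / t2_ms + 1.0 / t2_prime_ms)


def transverse_signal(t_ms, s0, time_constant_ms):
    """Mono-exponential decay of the transverse signal envelope."""
    return s0 * math.exp(-t_ms / time_constant_ms)


# Hypothetical values, chosen only for illustration.
T2_MS = 80.0
T2_PRIME_MS = 120.0

t2s = t2_star_ms(T2_MS, T2_PRIME_MS)
print(f"T2* = {t2s:.1f} ms (shorter than T2 = {T2_MS:.1f} ms)")
print("Signal at 30 ms with T2 only:", round(transverse_signal(30.0, 1.0, T2_MS), 3))
print("Signal at 30 ms with T2*:    ", round(transverse_signal(30.0, 1.0, t2s), 3))
```

Because the rates add, T2* is always shorter than T2, which matches the statement that transverse relaxation is faster in an inhomogeneous field.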


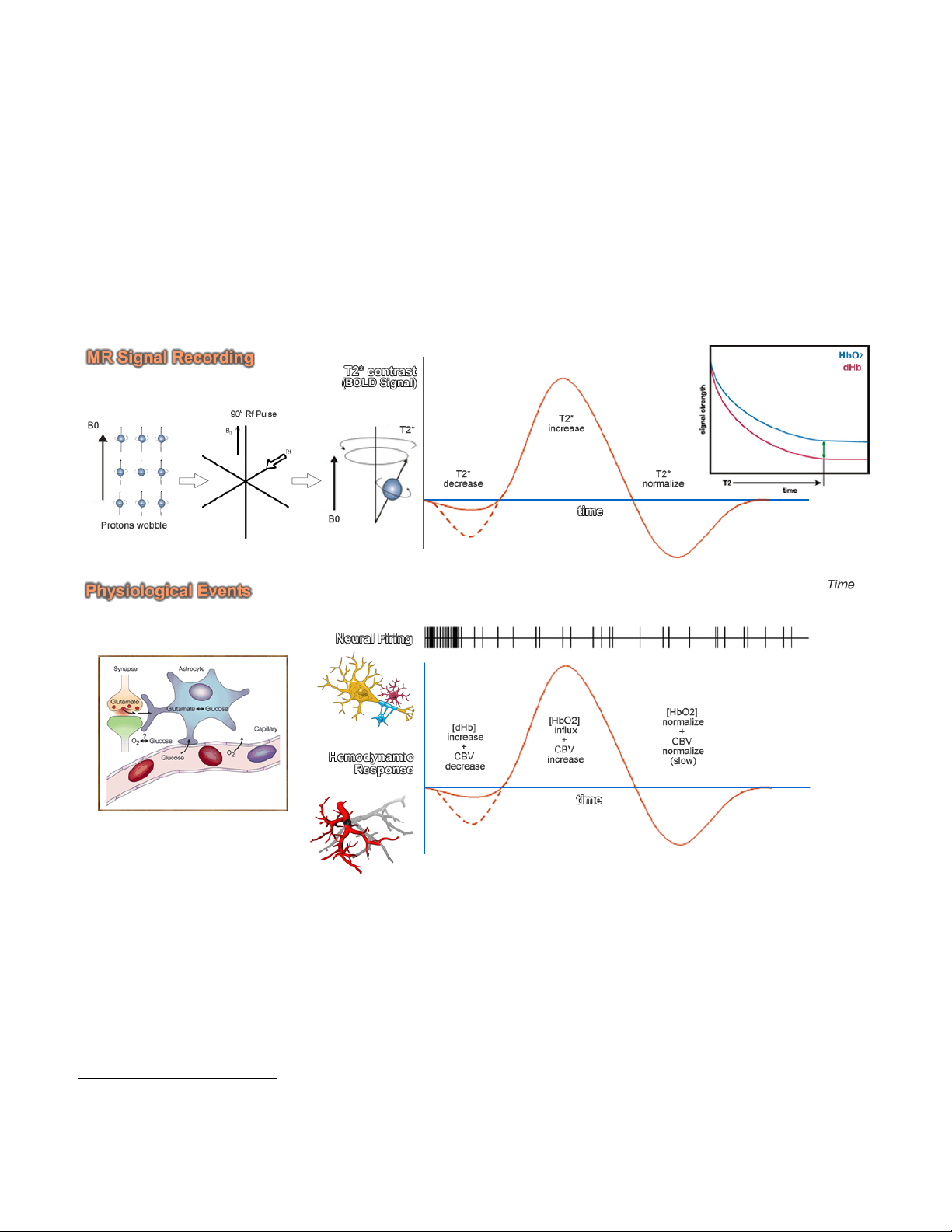

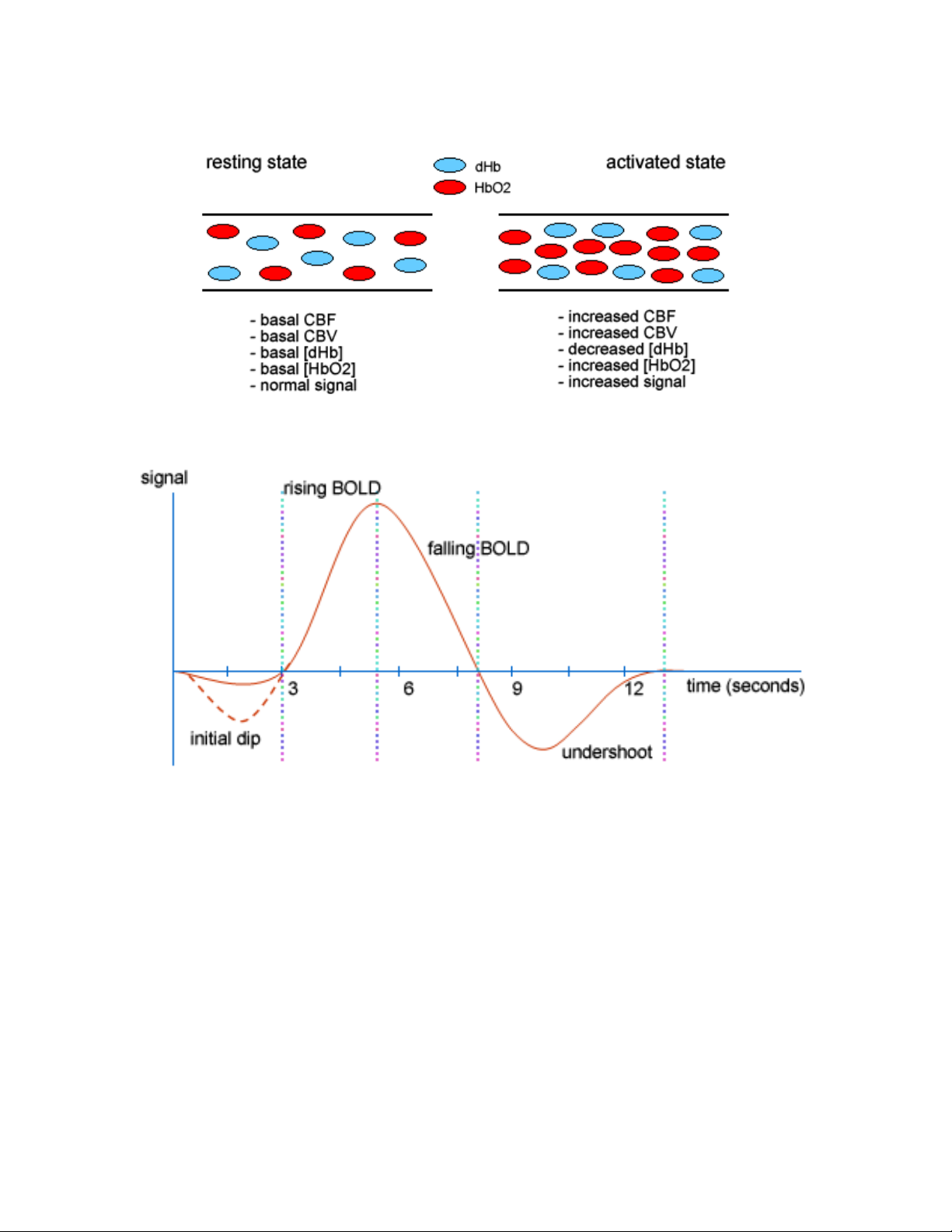

The BOLD Contrast Mechanism

This mechanism is employed in most fMRI studies. The idea is that neural activity changes the relative concentration of oxygenated and deoxygenated hemoglobin in the local blood supply. Deoxyhemoglobin (dHb) is paramagnetic (changes the MR signal), while oxyhemoglobin is diamagnetic (does not change the MR signal). An increase in dHb causes the T2* constant to decrease. This was first noticed by Ogawa et al. in 1990¹ in the rodent brain, and over the following few years became the mainstay of functional MRI. The BOLD (blood oxygenation level dependent) contrast refers to the difference in T2* signal between oxygenated (HbO2) and deoxygenated (dHb) hemoglobin.

The above figure illustrates the physiological events that underlie our recording of the MR

signal. Upon stimulation, neural activation occurs, which pulls oxygen from the local blood

supply. Theoretically, as the paramagnetic dHb increases, the field inhomogeneities are

enhanced and the BOLD signal is reduced. However, the dHb increase is tightly coupled with a

surge in cerebral blood flow (CBF) which compensates for the decrease in oxygen, delivering a

larger supply of oxygenated blood. The result is a net increase in cerebral blood volume (CBV)

and in Hb oxygenation, which decreases the susceptibility-related dephasing, increasing T2*

signal and in turn enhancing the BOLD contrast.

1 Ogawa S., Lee T.M., Nayak A.S., Glynn P. (1990). Oxygenation-sensitive contrast in magnetic resonance image

of rodent brain at high magnetic fields. Magn Reson Med 14:68-78.


The BOLD response can be thought of as the combination of four processes:

(1) An initial decrease (dip) in signal caused by a combination of a negative metabolic and

non-metabolic BOLD effect. The local flow change as a result of the immediate oxygen

extraction leads to a negative metabolic BOLD effect, while the vasodilation leads to a

non-metabolic (or volumetric) negative BOLD effect.

(2) A sustained signal increase or positive BOLD effect due to the significantly increased

blood flow and the corresponding shift in the deoxy/oxy hemoglobin ratio. As the blood

oxygenation level increases, the signal continues to increase.

(3) A sustained signal decrease which is induced by the return to normal flow and normal

deoxy/oxy hemoglobin ratios.

(4) A post-stimulus undershoot caused by the slow recovery in cerebral blood volume.


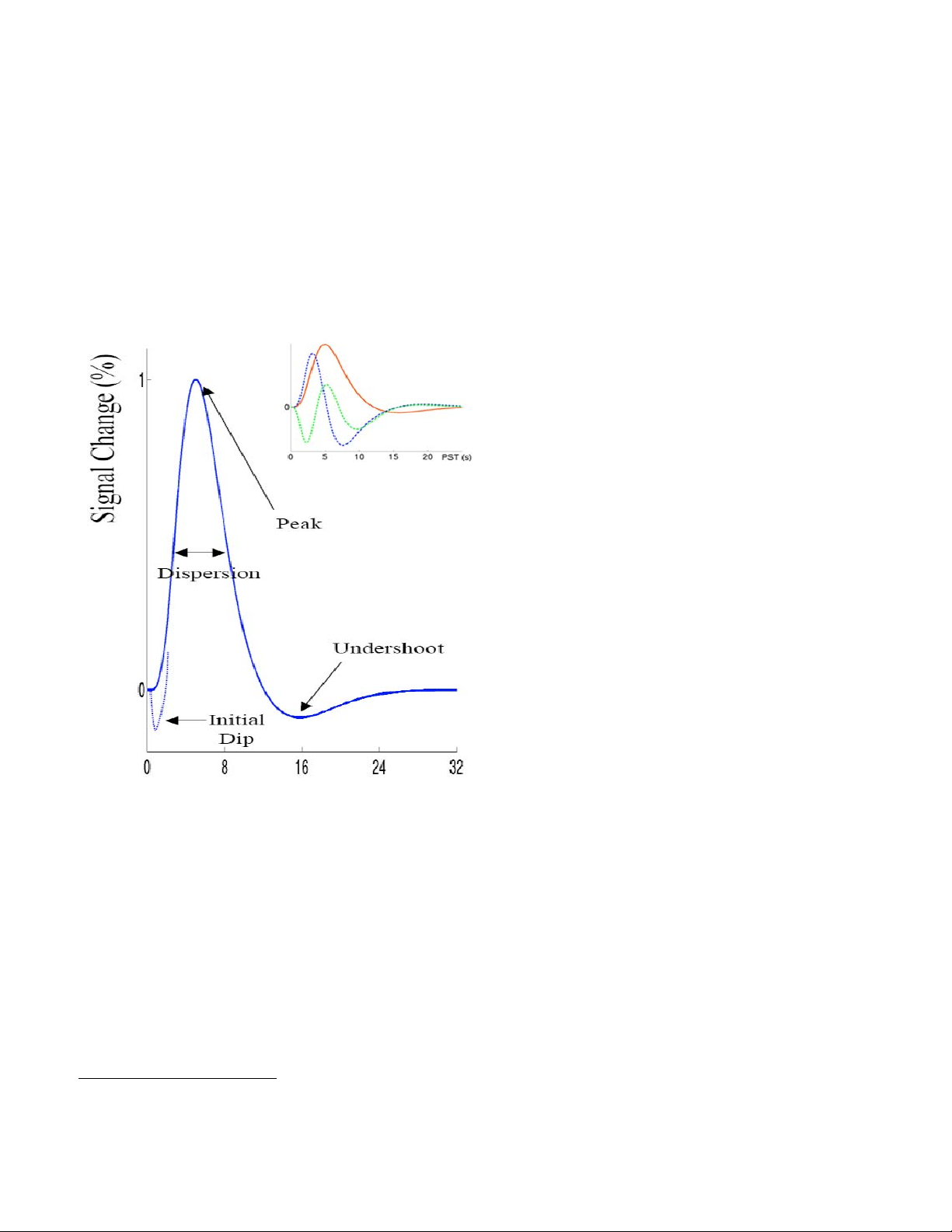

Hemodynamic Modeling

The BOLD response is very complex. The signal depends on the total amount of dHb, which means that the total blood volume is also a factor. Another factor is the amount of oxygen leaving the blood to enter the tissue (metabolic changes), which also changes the blood oxygenation level. Finally, due to the elasticity of vascular tissue, increasing blood flow changes blood volume. All these factors have to be modeled adequately in order for us to estimate the neural signal. The model currently employed in the research literature uses a canonical hemodynamic response function that linearly transforms neural activity to the observed MR signal. However, recovering the true neural signal from its hemodynamic counterpart is a harder problem.

Ideally, we would like to evaluate how well our linear transform model allows us to estimate the actual neural signal. This can be done using simultaneous measurements of the neural and BOLD signals.

Source: Logothetis and Wandell 2004²

The above figure shows these simultaneous measurements in a monkey brain, using extracellular field potential recording together with fMRI: (a) the black trace is the mean extracellular field potential (mEFP) signal and the red trace is the BOLD response; (b) spike activity derived from the mEFP; (c) frequency band separation of the mEFP; (d) estimated temporal pulse response function relating the neurophysiological and BOLD measurements in monkeys. Even though these recordings are problematic due to their invasive nature (they cannot be done in humans) and due to sampling bias, they provided useful evidence for the coupling of the neural signal and the hemodynamic response.

2 Logothetis NK, Wandell BA. (2004). Interpreting the BOLD signal. Ann Rev Physiol 66:735-69.

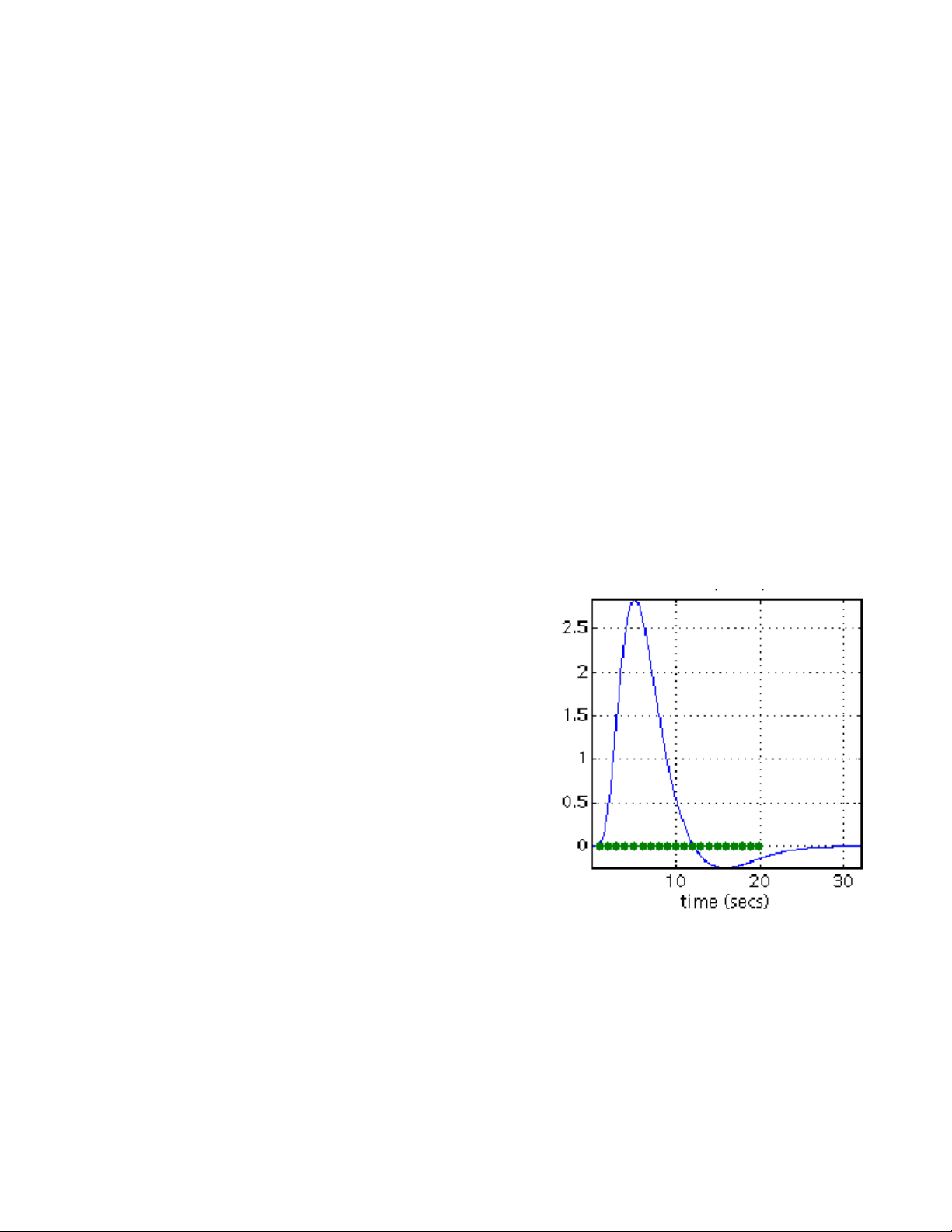

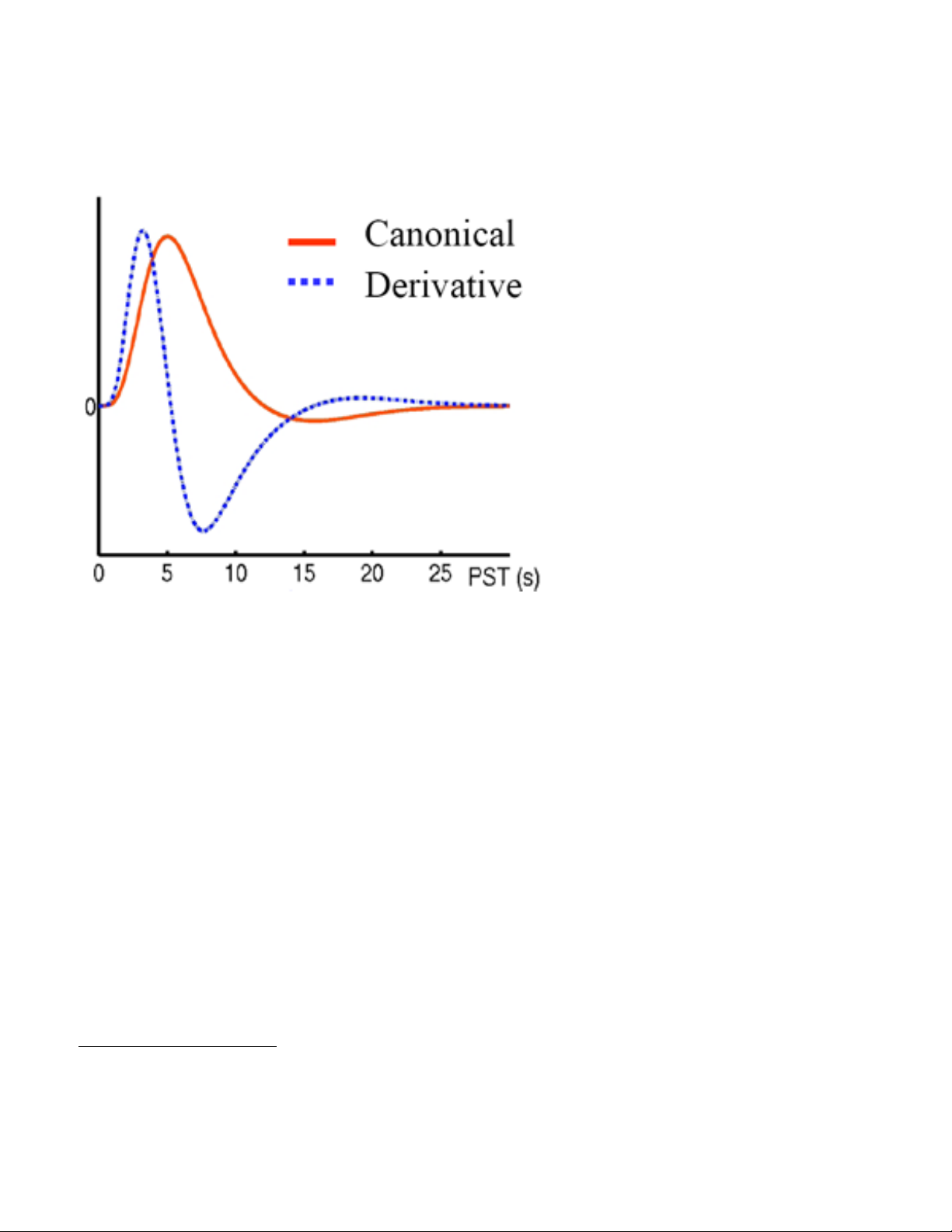

In human fMRI, we can estimate the hemodynamic response function using tasks with known and expected specific neural activation, e.g. visual, motor, etc. Results that are consistent with what we already know about specific structures' involvement in cognitive processes may provide some insight (even though it is at best speculative) into the neural activation and the related hemodynamic response. Over recent years, a more descriptive canonical hemodynamic response function has been developed that accounts for the timing delay (temporal derivative) as well as the duration (dispersion derivative) of the response. This set of functions is what SPM uses to estimate the neural signal. The mathematics behind the hemodynamic model are too complicated to explain here, but more details are given in the fMRI analysis section.

It is important to understand, however, that this model is a 'best fit' model, which means it does a good job of explaining variance in the hemodynamic response after neural stimulation. It does not, however, explain all the parameters. The metabolic and neural processes that couple action potentials to blood flow are still not well understood, and are the subject of much of today's fMRI research.

Animal research is attempting to carry out more multi-modal experiments to produce empirical data to support or reject this model, and human research is getting better at the deconvolution of the neural impulse using higher order mathematical modeling.
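The linear transform idea can be sketched in a few lines of Python. The example below is not the SPM implementation: it assumes a commonly used double-gamma shape for the canonical response (a positive gamma function minus a scaled, later one for the undershoot), and the shape values 6 and 16, the ratio 6, the 0.5 s sampling step and the event timings are all assumptions made for illustration.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale, evaluated at time t (seconds)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Double-gamma response: positive lobe minus a scaled late undershoot.

    The parameter values are assumed defaults, not taken from this guide.
    """
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def predict_bold(neural, hrf):
    """Linear model: neural time course convolved with the HRF."""
    out = [0.0] * len(neural)
    for i, n in enumerate(neural):
        if n == 0.0:
            continue
        for j, h in enumerate(hrf):
            if i + j < len(out):
                out[i + j] += n * h
    return out


DT = 0.5  # sampling step in seconds (assumed)
times = [k * DT for k in range(int(32 / DT) + 1)]  # 0 to 32 s
hrf = [canonical_hrf(t) for t in times]
peak_time = times[hrf.index(max(hrf))]    # time of the positive peak
trough_time = times[hrf.index(min(hrf))]  # time of the undershoot

# Two brief hypothetical neural events, 10 s apart.
neural = [0.0] * 120
neural[0] = 1.0
neural[20] = 1.0
bold = predict_bold(neural, hrf)

print(f"peak at {peak_time} s, undershoot at {trough_time} s")
```

Under the linearity assumption, the predicted signal for the two events is simply the sum of two shifted copies of the same response; any real departure from that sum is what the guide later calls a non-linearity.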

From the above we can see the entire cycle takes about 30 seconds to complete. Early event-related studies were limited by this, and thus had to use very long inter-stimulus intervals to allow the response to return to baseline before another one started. If the hemodynamic responses were perfectly linear, this would not have been a limitation, as the linear summation of HRFs can be deconvolved easily. However, BOLD response non-linearities exist, and pose a problem. This non-linearity can be thought of as a “saturation” effect where the response to a series of events is smaller than would be predicted by the sum of the BOLD responses from the individual events. Empirically, it has been found that for SOA³ of below ~8 seconds, the degree of saturation increases as the SOA decreases. However, for SOA of 2-4 seconds, the magnitude of saturation is small. This is important to think about in designing an fMRI experiment, and is of particular importance in discussing rapid event-related fMRI.

3 SOA: Stimulus Onset Asynchrony. This is the amount of delay between the onset of one experimental stimulus and the onset of the next.

To summarize, the general shape of the hemodynamic response is the same across

individuals and cortical areas. However, the precise shape varies from individual to individual

and from area to area. Canonical modeling however offers us a powerful tool to be able to

reasonably estimate the neural signal, based on the observed changes in regional cerebral

blood flow.

Signal and Noise in fMRI

The magnitude of the BOLD response signal we are trying to measure in fMRI is very small compared to the overall MR signal. We can improve our signal detection ability by increasing the amplitude of the signal or reducing the amplitude of the noise. The ratio of the two is referred to as the signal-to-noise ratio (SNR). There are many different sources of noise that produce artifacts in the scanner. Here is a brief description of some of the most common problems:

Thermal Noise

Thermal noise is produced by the thermal motion of electrons inside the subject's body and in the large electronic circuits of the MRI scanner. This type of intrinsic noise is uncorrelated with the task and the hemodynamic signal, and therefore can be described as “white” noise. Its contribution increases with increased resolution (smaller voxels), so controlling it is a trade-off with the resolution of the images.

Cardiac and respiratory artifacts

The pulsation of the blood and changes connected to breathing can change blood flow and oxygenation. These factors create high-frequency signal artifacts. For example, the cardiac cycle is too fast (500 ms) to be sampled adequately with a typical TR (2000 ms). When this is the case, the variability is attributed to a lower frequency (aliasing), creating an even larger problem.
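The aliasing effect can be illustrated with a short calculation. The sketch below folds a true frequency onto the band that a given TR can represent; the 2000 ms TR and the 500 ms cycle come from the text, while the 1.1 Hz cardiac rate and the function name are hypothetical additions.

```python
def aliased_frequency_hz(signal_hz, tr_s):
    """Apparent frequency of a periodic signal sampled once every TR."""
    sampling_hz = 1.0 / tr_s
    # Fold the true frequency onto the nearest multiple of the sampling rate.
    nearest_multiple = round(signal_hz / sampling_hz) * sampling_hz
    return abs(signal_hz - nearest_multiple)


TR_S = 2.0                 # repetition time from the text (2000 ms)
nyquist_hz = 0.5 / TR_S    # highest frequency that is sampled without aliasing

# 2.0 Hz corresponds to the 500 ms cycle in the text; 1.1 Hz is hypothetical.
for cardiac_hz in (2.0, 1.1):
    apparent = aliased_frequency_hz(cardiac_hz, TR_S)
    print(f"true {cardiac_hz} Hz -> appears at {apparent:.3f} Hz "
          f"(Nyquist limit {nyquist_hz} Hz)")
```

In both cases the fast physiological fluctuation shows up at a much lower frequency, which is the range where task-related signal is usually expected.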

N/2 Ghost

EPI scans in general suffer from ghosting artifacts in the phase encoding direction. During acquisition, k-space data are sampled by an alternating positive/negative read gradient. This results in a single ghost shifted by half a FOV, known as the “Nyquist” or N/2 ghost. Using readout gradients with the same polarity eliminates this problem at the expense of lengthened data acquisition.

Subject motion

Subject motion is the single most common source of serious artifacts. Even relatively small motion (in a range much smaller than the voxel size, e.g. 1.6-3.2 mm) can create serious artifacts due to partial volume effects. Typically, motion of about half a voxel in size will render the data useless. Subjects should be instructed not to move, and their heads should be restrained securely. The task design should also minimize the possibility of task-related movements.

Draining veins

Large draining vessels in the brain can induce a hemodynamic signal that may not be easily differentiated from the hemodynamic responses related to the neural signal. This is hard to control, so caution should be taken when considering activation occurring close to visible large vessels.

Scanner drift

Drift is most probably created by small instabilities in the scanner gradients. It can create slow changes in voxel intensity over time. Even though the magnet contains huge superconducting coils to maintain its magnetic field, this field occasionally drifts. This type of distortion can also be caused by non-system factors, e.g. the subject's head slowly moving downwards due to a possible leak in the vacuum pack holding the head in place.

Susceptibility artifacts

EPI images are very sensitive to changes in magnetic susceptibility. As a result, the signal from regions close to the sinuses and the bottom of the brain may disappear. This can also be caused by the presence of magnetic material in proximity to the gradients, e.g. implants, braces, buttons, or even another human body moving in the room.


Experimental Design

This section deals with the different designs that can be employed in neuroimaging

studies. Designs in general can be subdivided into categorical (or parametric) designs and multifactorial designs, with the latter being more complicated than the former.

Cognitive subtractions

These are one type of categorical design, which relies on the premise that the difference between two tasks can be characterized as a separate cognitive component that is distinct in space and therefore can be separated as an individual component of the hemodynamic response. An example is a study in which visual and motor stimulation are combined in the experimental task or condition, while the control task or condition consists of only the visual or only the motor stimulation. Subtracting the activation in one condition from the other is expected to show only the activation relevant to the specific type of stimulation. The problem with these designs is the underlying assumption that the neural processes underlying behavior are additive in nature. Due to the complexity of neural responses and the significant functional integration between various brain structures, this assumption may not always hold true.

Cognitive Conjunctions

These designs can be thought of as a series of subtractions. Instead of testing a single

hypothesis pertaining to the activation in one task over the other, conjunctions test several

hypotheses at a time, asking whether all activations are jointly significant. For example, if we are

interested in verbal working memory, then we can use a series of tasks that have that cognitive

component in common, but nothing else in common. The conjunction of these tasks should

show only the structures that are involved in verbal working memory. Conjunction analyses

allow us to demonstrate neural responses independent of context.

Note: Testing joint significance using conjunctions is a notion that we will return to when we

discuss group fMRI analysis.

Parametric Designs

The underlying premise in these designs is that regional activation will vary systematically with the degree of cognitive processing. For example, an fMRI study relating hemodynamic responses to performance on a cognitive task illustrates the utility of this design. Correlations or neurometric functions may or may not be linear. Clinical neuroscience can use parametric designs by looking for neuronal correlates of clinical ratings over subjects (e.g. symptom severity, IQ, performance on QNE, etc.). The statistical design can then be viewed as a multiple linear regression model. However, if one needs to investigate several clinical scores that are correlated, there is a problem with running the regression model, since the variables are not orthogonal. In this case, factor analysis or principal components analysis (PCA) is used to reduce the number of possible explanatory variables and render them orthogonal to each other.


Multi-factorial Designs

These designs are more prevalent than single factor designs, because they offer more information and allow us to investigate interesting interactions between variables, e.g. time by condition interactions. For example, pharmacological activation studies assess evoked responses before and after the administration of a drug. Interaction terms would reflect the pharmacological modulation of task-dependent activation. Interaction effects can be interpreted as (a) the integration of cognitive processes or (b) the modulation of one cognitive process by another.
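An interaction in a 2 x 2 factorial design is a difference of differences. The sketch below works through the pharmacological example with hypothetical mean responses; the condition labels, numbers and function names are assumptions used only to show the arithmetic.

```python
# Hypothetical mean responses (arbitrary units) for a 2 x 2 design:
# factor 1 = task (control vs. activation), factor 2 = drug (before vs. after).
responses = {
    ("control", "before"): 1.0,
    ("activation", "before"): 3.0,
    ("control", "after"): 1.5,
    ("activation", "after"): 2.5,
}


def task_effect(cells, drug_level):
    """Simple effect of the task at one level of the drug factor."""
    return cells[("activation", drug_level)] - cells[("control", drug_level)]


def interaction(cells):
    """Task-by-drug interaction: change in the task effect after the drug."""
    return task_effect(cells, "after") - task_effect(cells, "before")


print("task effect before drug:", task_effect(responses, "before"))
print("task effect after drug: ", task_effect(responses, "after"))
print("interaction:            ", interaction(responses))
```

A non-zero interaction here would mean that the drug changed the size of the task-dependent response, which is the pharmacological modulation described above.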

Optimizing fMRI Studies

Signal Processing

An fMRI time series can be thought of as a mixture of signal and noise. Signal corresponds to neurally mediated hemodynamic changes, while noise can be the result of many contributions that include scanner artifacts, subject drift, motion, and physiological changes (e.g. breathing), in addition to neuronal noise (or signal mediated by neural activity that is not modeled by explanatory variables). Noise in general can be classified as either white (completely random) or colored (e.g. the pulsatile motion of the brain caused by cardiac cycles and modulation of the static magnetic field by respiratory movement).

These effects are typically low-frequency or wideband. Thus, in order to optimize an fMRI study, one should place stimuli and the expected neural stimulation in a narrow band, at a higher frequency than the expected physiological noise. This makes the process of filtering and hemodynamic deconvolution easier. For example, the dominant frequency of the canonical HRF bandpass filter in SPM is ~0.03 Hz. In order to maximize the signal passed by this filter, the most efficient design would then be a sinusoidal modulation of neural response with period ~32 s. In terms of design, this means a blocked design using a box-car function with 16 s ON and 16 s OFF epochs would be optimal. The objective here is to comply with the natural constraints of the hemodynamic response and ensure that the experimental variance is detected in the appropriate frequencies.
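The box-car design described above can be written down directly. This minimal sketch builds a 16 s ON / 16 s OFF regressor and reports its fundamental frequency; the TR of 2 s, the number of scans and the function names are assumptions.

```python
def boxcar(n_scans, tr_s, on_s=16.0, off_s=16.0):
    """Return a 0/1 regressor: 1 during ON epochs, 0 during OFF epochs."""
    period = on_s + off_s
    return [1 if (k * tr_s) % period < on_s else 0 for k in range(n_scans)]


def fundamental_frequency_hz(on_s=16.0, off_s=16.0):
    """Frequency of one full ON/OFF cycle."""
    return 1.0 / (on_s + off_s)


TR_S = 2.0  # assumed repetition time in seconds
design = boxcar(n_scans=32, tr_s=TR_S)

print("first cycle:", design[:16])
print("fundamental frequency:", fundamental_frequency_hz(), "Hz")
```

With 16 s epochs the cycle lasts 32 s, so the design concentrates its variance at 1/32 Hz, close to the ~0.03 Hz quoted in the text.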

Confounding Factors

Any variable that co-varies with the independent variable is a confounding factor. These can be due to a variety of sources. For the most part, exerting experimental control on the task can help resolve these issues. Optimized fMRI designs are generally more successful at minimizing these factors.


Control task

The control task is very important in a subtraction design. The idea is to make the control

condition very similar to the experimental condition, except for the variable we are trying to

assess. For example, in a study of face perception, one can use the control condition of simple

fixation. However, the two conditions would differ in more than one aspect, e.g. brightness,

edges, etc. If we use this design, we may not be able to make inferences about the activation

of interest, since it could have been solely due to the perception of a picture in general, and not

a face in particular. We can optimize this design by making the control task stimuli out of the

same faces, but transformed somehow, so that they are no longer perceptible as faces, but rather as

images of noise (with a similar intensity histogram).

Latent (hidden) factor

This is one of the most dangerous confounding factors, and is due to the fact that

correlation does not imply causation. For example, you can give a group of Parkinson's disease

patients as well as a group of controls a motor activity task (repeated finger tapping) to

investigate activation in the motor cortex. You find that motor cortex activity is diminished in PD

patients compared to controls. This may lead one to conclude that PD patients under-activate

their motor cortex during motor movement. However, other explanations should also be

considered. In this case, it is possible that PD patients pressed the buttons less often, and

performed poorly on the task, which would explain the diminished activation. Here the latent

factor is performance, while our mis-interpretation of the data makes it seem like the diseased

state was really the causal factor.

Randomization and Counterbalancing

Trials and subjects should be sufficiently randomized so as not to induce any confounding
effects. For example, if you test both patients and controls by day and by night, you should
randomize whether night subjects are patients or controls. If you have two versions of the task
(or two conditions), you might want to randomize subjects to conditions, so that your subject-by-condition interaction is not a confounding factor. In the case where certain variables cannot be
adequately randomized, the investigator may choose to use a counterbalanced design. For
example, if subjects are randomly assigned to groups, it is possible that one group will have twice
as many men as the other. Counterbalancing ensures that this is not the case, by balancing the

number of men and women in each group. Whether you randomize or counterbalance may

depend on your sample size (for example, in a small sample, randomization may not yield a

perfectly balanced design).
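One way to picture counterbalancing is the minimal Python sketch below. It assumes a hypothetical list of (subject ID, gender) pairs and two groups: subjects are shuffled within each gender and then dealt out to the groups in turn, so the gender counts per group differ by at most one. The function name and the data are illustrative only.

```python
import random


def counterbalanced_groups(subjects, n_groups=2, seed=None):
    """Assign (subject_id, gender) pairs to groups, balancing gender.

    Subjects are shuffled within each gender and then dealt out to the
    groups in turn, so per-gender counts differ by at most one per group.
    """
    rng = random.Random(seed)
    groups = [[] for _ in range(n_groups)]
    by_gender = {}
    for subject_id, gender in subjects:
        by_gender.setdefault(gender, []).append(subject_id)
    next_group = 0
    for gender in sorted(by_gender):
        members = by_gender[gender]
        rng.shuffle(members)  # random order within the stratum
        for subject_id in members:
            groups[next_group % n_groups].append((subject_id, gender))
            next_group += 1
    return groups


# Hypothetical sample: six men and six women.
subjects = [(f"S{i:02d}", "M" if i < 6 else "F") for i in range(12)]
for number, group in enumerate(counterbalanced_groups(subjects, seed=1), start=1):
    men = sum(1 for _, gender in group if gender == "M")
    print(f"group {number}: {len(group)} subjects, {men} men")
```

Which subject lands in which group is still random; only the gender counts are fixed in advance, which is the distinction the text draws between randomizing and counterbalancing.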

Nonlinear Hemodynamic Effects

This is manifested as a hemodynamic refractoriness or saturation effect at high stimulus

presentation rates. This means that the simple addition of hemodynamic responses is not

enough to deconvolve the individual events. This effect has an important implication for event-related fMRI, in which trials are usually presented in quick succession. This issue will be

addressed in detail in the following section.


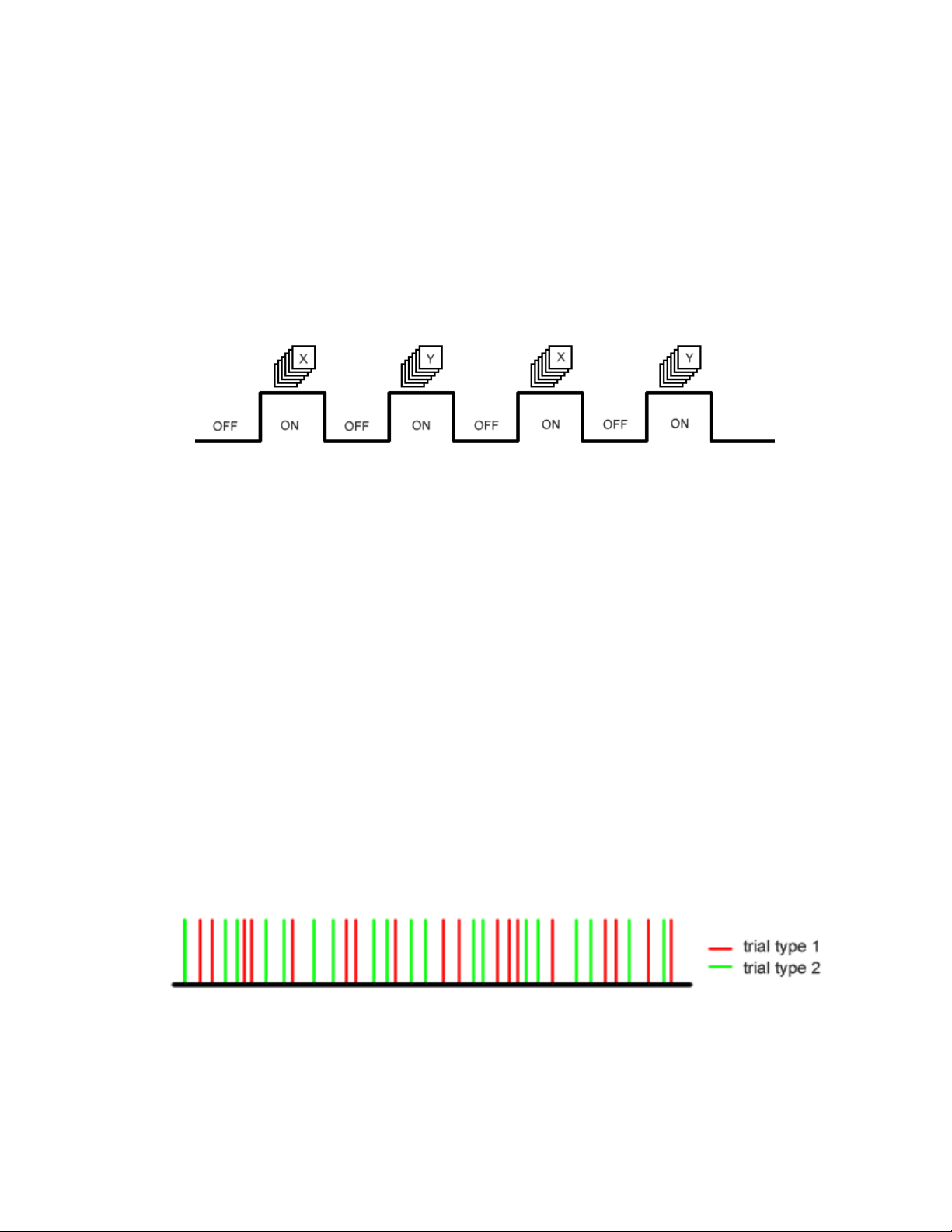

Epoch (Blocked) and Event-Related Designs

Typically, fMRI experimental design can be classified into two types: a blocked design

(epoch-related) and a single event design (event-related). Blocked designs are the more

traditional type and involve the presentation of stimuli as blocks containing many stimuli of the

same type. For example, one may use a blocked design for a sustained attention task, where

the subject is instructed to press the button every time he or she sees an X on the screen.

Typically blocks of stimulation are separated from each other by equivalent blocks of rest (where

the subject may be instructed to passively attend to a fixation cross on the screen). This type of

design is depicted below.

Blocked designs are simple to design and implement. They also have the added

advantage that we can present a large number of stimuli, and thus increase our signal to noise

ratio. They have excellent detection power, but are insensitive to the shape of the hemodynamic

response. We also have to assume a single mode of activity at a constant level during

stimulation. In other words, we cannot infer any information regarding the individual events. This

precludes us from being able to investigate interesting questions, such as the relationship of

activation to accuracy and performance or reaction time. We use blocked designs if we plan to

use a cognitive subtraction or conjunction to analyze our data.

The alternative to epoch designs is a more powerful estimation method. Event-related

fMRI has emerged as a much more informative method that allows for a number of other

analyses to be conducted. Rapid, randomized, event-related fMRI is the newest improvement

on this concept. The idea is to present individual stimuli of various condition types in randomized

order, with variable stimulus onset asynchrony (SOA). This provides us with enough information

for time-series deconvolution using a canonical or individual-derived HRF, and allows us to

conduct post-hoc analyses with trial sorting (accuracy, performance, etc.). This design is more
efficient, because the built-in randomization (jittering) ensures that preparatory or anticipatory
effects (which are common in blocked designs) do not confound event-related responses. A

typical event-related design is depicted below.

Mixed designs are also possible (combining aspects of blocked and event-related
designs); however, they are much more complicated to design and analyze. They usually contain
blocks of control and experimental stimuli, but within each block are multiple types of
stimuli. They allow us to simultaneously examine state-related processes (best evaluated using a

block design) and item-related processes (best evaluated using an event-related design).


Spatial and Temporal Pre-Processing

Overview

Functional MRI (fMRI) pre-processing is designed to accomplish several purposes. It

corrects for head motion artifacts during the scan (realignment), adjusts the data to a standard

anatomical template (normalization) and convolves the data with a smooth function suitable for

analysis (smoothing). The pre-processing is done within the Statistical Parametric Mapping

(SPM) environment which is a MATLAB package with a graphical user interface (GUI).

Additional MATLAB functions will be used and will be described in detail. Depending on the

computer speed and dataset size, pre-processing can take several hours or days.

Pre-processing also requires a lot of hard drive space. For example, if a single subject’s
dataset is 1000 MB (1 GB) in size, you will need 5000 MB (5 GB) of space to pre-process the
subject’s data. Of course, once the pre-processing is done, a lot of the data generated in the
intermediate steps can be deleted to save hard drive space. The pre-processing directory should be on either (1) an internal drive at 7200 RPM or more (RAID-0 SATA

or 10-15K SCSI preferred) or (2) an external drive at high throughput rates. FireWire is the

recommended medium, due to its reliability and high throughput rates (800 Mbps on machines

that support 1394b). Pre-processing, in general should not be done over the network (i.e. writing

images to a mapped network drive), as it takes longer, and makes the process more prone to

crashing (this is severely affected by network traffic). However, you may run pre-processing on

another computer on the network, using remote desktop (and the pre-processing computer's

native Matlab/SPM). For instructions on how to set up the remote desktop, please see

http://www.microsoft.com/windowsxp/using/mobility/default.mspx

Raw Data

fMRI datasets are saved at the point of origin (Philips scanner) as combinations of
.par/rec files. This data is saved on Godzilla (a large capacity UNIX-based server, maintained by
the F.M. Kirby Research Center; for questions about Godzilla or to set up a user account,
please contact its administrator, Joe Gillen (jgillen@jhu.edu)). Data is usually saved as a
combination of the subject’s last name and the reverse date of the scan, followed by the scan
number (scans are numbered in the same order in which they were acquired), e.g.
“yassa050103_3.rec”. You may let the technicians know to save the files using a different name
(HIPAA regulations somewhat preclude saving these files with the subject last name).

Getting Started

To start a new analysis on your computer, first you must create a new working directory

for storing all of the data files in your dataset. You have to make sure the drive on which you

save the data has enough space to contain all the images. Then you should create a directory

(without spaces in the directory name), e.g. “C:\fmri\subjID\” to contain all of the subject’s fMRI

data. It is a good idea to keep your imaging data organized by project and by subject. fMRI data

involves potentially thousands of files and thousands of data points, so it is essential to keep

everything organized and document this organizational structure somewhere safe.


Requirements

Hardware Requirements

Your computer must meet the following requirements before you begin:

- Windows XP Professional or Windows 2000 or Redhat Linux 9.0 and above.

- At least 20 GB of free space (60 recommended)

- At least 1 GB of RAM (2 – 4 GB recommended)

- 4 GB of swap space (also known as paging file on Windows)

- Dual processors recommended.

Software Requirements

You must have the following software on your computer, before you begin:

- Matlab 6.0 or higher with SPM99 and its latest updates (download)

- Secure Shell SSH Software

If you do not have any of these requirements, you should contact Arnold Bakker or Mike Yassa

to make sure you have the correct setup.

Software Set-up

Install Matlab 6.1 (or above) in its default directory. If you’re using a network installation

of Matlab, you may need to be on an enabled Matlab client (we have a limited number of client

licenses). We also have a personal licensed version of Matlab which is more convenient and

can be installed without the need for network setup.

Download SPM99 from http://www.fil.ion.ucl.ac.uk/spm/ and extract it in a suitable

directory, e.g. “C:\spm99” or “C:\Matlab6p1\spm99”. Find the file “r2a.m” under

\\Soma\Matlab_functions . If you do not have access to Soma, contact Mike Yassa or Arnold

Bakker to get a copy of r2a. Copy and paste the file in your SPM99 directory.

Open Matlab 6.1 and add SPM99’s directory to the Matlab path, by going to File> Set

Path, and adding the SPM99 folder. Save the appended path, and close the “Set path“ window.

To check that everything has been installed correctly, type “spm fmri” in the Matlab console and

wait for the SPM windows to pop up. If you get error messages at this point, then your

installation was unsuccessful or your options are not set correctly.

Note regarding SPM use: SPM is a very resource-hungry program that can be very

temperamental. Make sure you close other open windows and other “memory hogging”

programs, before you start pre-processing or analyzing using SPM. At times it may also

spontaneously suffer from an internal error and indicate this by printing a verbose and cryptic

output to the Matlab command window. It may also crash or lock up your Windows system

entirely. If this happens, then shut down SPM and restart Matlab (restarting Matlab clears its

cache memory, and is necessary before you start the same process again).


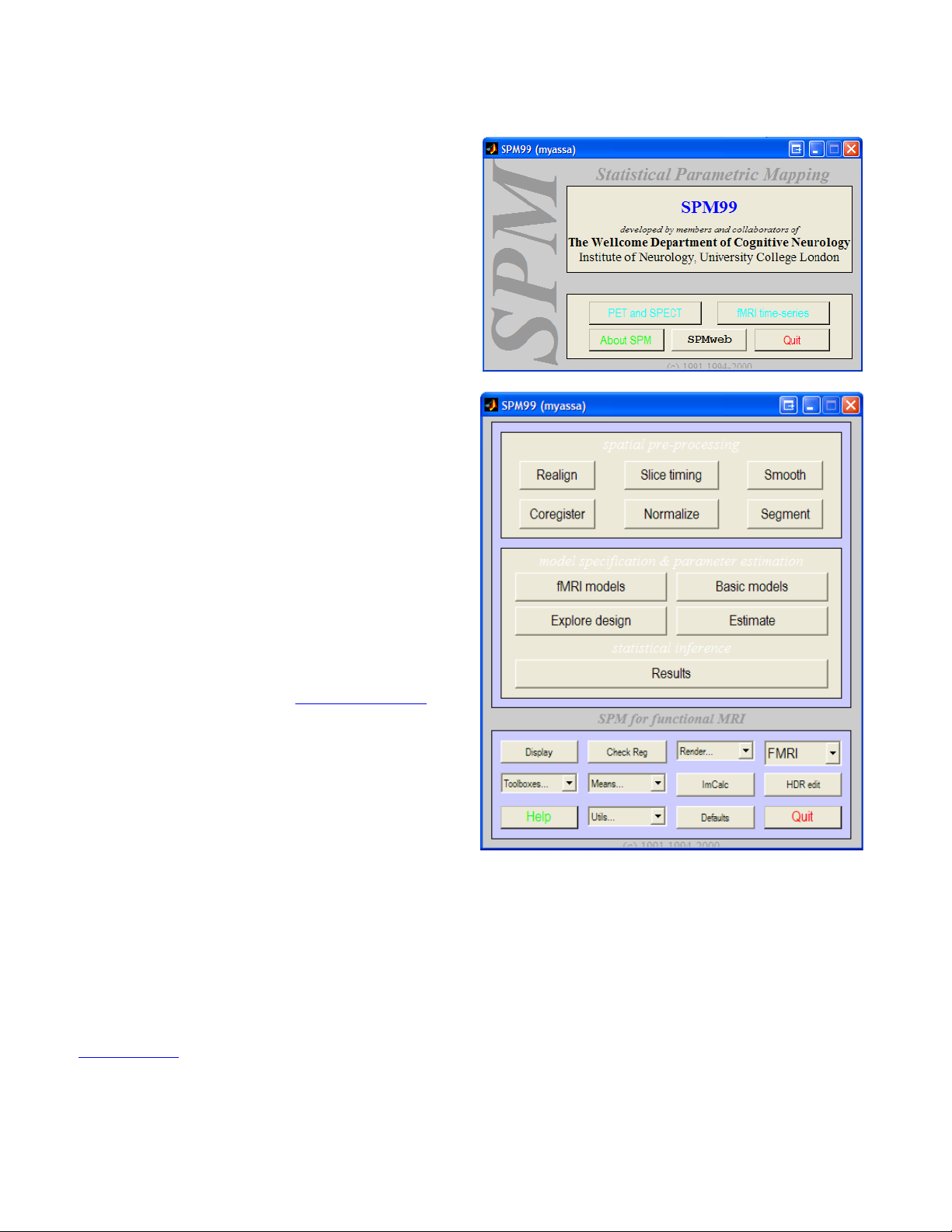

The SPM Environment

Statistical Parametric Mapping (SPM)

main panel allows you to select between two

interfaces, one for fMRI and one for

PET/SPECT modeling. In order to bring up this

screen, type >spm at the Matlab console. Click

on <fMRI Time-series> to bring up the fMRI

interface. If you are running spm2 as well, make

sure that the spm99 directory is prepended to

the top of the Matlab path. Matlab will run

whichever instance of spm it finds in its path

first.

Three SPM windows should appear. The

Upper window will be referred to as the fMRI

switchboard. The lower left window is the SPM

input window, and the right window is the SPM

graphics output window. The switchboard consists of three panels: a spatial pre-processing panel with
options for processing fMRI data, a statistical analysis panel containing the different linear
models that can be applied to the data, and a bottom panel containing useful tools for

displaying images, changing directories,

creating means, changing defaults, writing

headers, and running different toolbox options.

Toolboxes are installed in \\spm99\toolbox. The

<Defaults> button changes the defaults only for

the current session. If you close and restart

SPM or Matlab, those changes will be lost. You

can make permanent changes to fMRI defaults

by editing the spm_defaults.m file (or creating

an alternate version for your lab, and placing it

in the Matlab path before the spm directory).

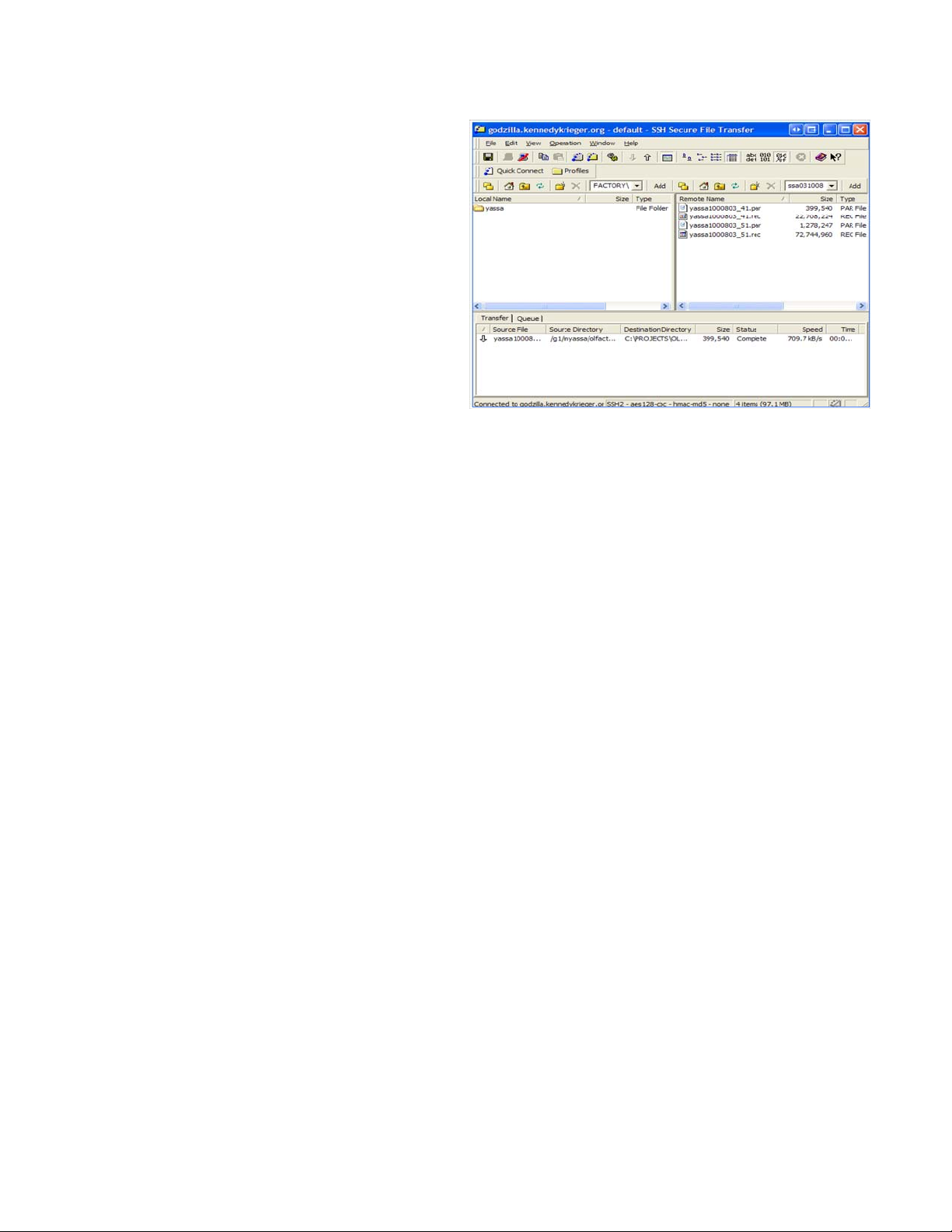

Data Transfer from Godzilla

Godzilla is a large RAID array, acting as a storage server at the F.M. Kirby Research

Center at Kennedy Krieger Institute. It is the default image repository. We use this server to

transfer subject data from the scanner to our laboratory. Once a subject's data is acquired, it is

exported from the scanner database to a specific directory on Godzilla. Usually this is under one

of the two main disks (g1 or g2). Each investigator has a directory for storage and transfer, e.g.
\\g1\myassa. Open Secure Shell (SSH) File Transfer Window, and connect to Godzilla
(godzilla.kennedykrieger.org) using your username and password. Once connected, in the top
menu bar go to <Operation> and select <Go to Folder>. In the folder window enter the folder
name e.g. “/g1/studyPI” and press <Enter>.

This is shown on the left.

In the left window, change the

local folder to the data folder you set up for the

study/subject. In the right window, navigate
through the remote directories and find the
subject whose data you would like to pre-process. Click and drag the directory with the

correct subject name/date to your local folder.

The individual files will be queued for transfer

sequentially. This process takes quite a bit of

time, and depends on network speed and

traffic. Wait for the transfer to be completed

before you close Secure Shell SSH.

Volume Separation and Analyze headers

This step involves the conversion of the Philips REC/PAR file format to the conventional

3D Analyze format (SPM can only handle Analyze images). The REC file contains all of the time

series images, and the PAR file is the text file containing all the parameters necessary to

separate the REC file into Analyze volumes. Rename the directories and par/rec combinations

to names that identify the subject ID and the session number, e.g. replace

“lastname051112_10_1.par” with “50100_4.par” where “50100” is the subject ID and “4” is the

session number. One way to separate the volumes uses the executable file “separate.exe”

which can be copied from \\Soma\Software\. If you do not have access to Soma, contact Mike

Yassa or Arnold Bakker to get a copy of the file. Separate uses a command line (DOS-like)

interface and requires you to know and/or calculate some of the parameters of your scan

acquisition. First you need to open your .par file. Right click the .par file and select “Open

With…”. Select Wordpad from the list of programs. The header file should look like this:

. Patient name : Yassa,Michael

. Examination name : #-#/g1/myassa/yassa050131

. Protocol name : Bold396 SENSE

. Examination date/time : 2005.01.31 / 10:12:59

. Scan Duration [sec] : 798

. Max. number of slices/locations : 39

. Max. number of dynamics : 396

. Image pixel size [8 or 16 bits] : 16

. Scan resolution (x, y) : 80 80

. Scan percentage : 100

. Recon resolution (x, y) : 128 128

. Number of averages : 1

. Repetition time [msec] : 2000.00

. FOV (ap,fh,rl) [mm] : 230.00 117.00 230.00

. Slice thickness [mm] : 3.00

. Slice gap [mm] : 0.00


The header file above has been truncated to only show the parameters of interest. The

Recon resolution is the reconstructed image matrix, and is what defines the image space. In the

case above, the matrix is 128 x 128 voxels (in the “x” and “y” planes). The plane of acquisition is
plane “z” and is determined by the Number of Slices parameter, which in this case is 39. Thus
the image matrix is 128 x 128 x 39.

The Number of dynamics parameter determines the number of functional scans or time
points in your series, for example 396 dynamics means your rec file will be separated into 396
Analyze volumes.

The FOV (ap, fh, rl) parameter describes the field of view in three dimensions (“ap” is

anterior-posterior, “fh” is foot-head, and “rl” is right-left). Since the direction of acquisition of this

scan is axial (foot-head) that means the “fh” parameter (in this case, it is 117.00) is in the z

orientation.

The voxel dimensions can be calculated from the image matrix and the field of view using
the following formula:

Voxel size (mm) = FOV (mm) / Matrix (voxels)

e.g. (230 x 230 x 117 mm) / (128 x 128 x 39 voxels) = 1.8 x 1.8 x 3.0 mm per voxel
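The voxel-size calculation can be checked with a few lines of Python. This is only a sketch: the function name is hypothetical, and the FOV values from the .par header (listed there as ap, fh, rl) are assumed to have been reordered so that the slice direction comes last.

```python
def voxel_size(fov_mm, matrix):
    """Divide the field of view (mm) by the image matrix, axis by axis."""
    return tuple(f / m for f, m in zip(fov_mm, matrix))


# FOV reordered to (x, y, z); "fh" is the slice (z) direction in this axial scan.
fov = (230.0, 230.0, 117.0)
matrix = (128, 128, 39)

size = voxel_size(fov, matrix)
print(" x ".join(f"{s:.1f}" for s in size), "mm")  # 1.8 x 1.8 x 3.0 mm
```

The in-plane value is 1.796875 mm before rounding, which is why the text quotes 1.8 mm.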

Once you locate the file “separate.exe” copy it to your “C:\Windows” or “C:\WINNT”

directory. Now click on Start>Run and type “cmd” to display the command prompt. Test that the

file is in the right location and works by typing “separate” at the console, then hitting enter. You

should get the following usage notification with a list of the arguments needed to separate

volumes.

Splits a set of volumes into individual files

Usage: separate <input_file_name> <output_filename> <head_bytes>

<volsize> <numvols> <bufsize> <avg value> <swap bytes? 0 or 1>

Here is an explanation of each of these arguments:

✗ <input_file_name> - this is the name of the .rec file you would like to separate. You have

to type the full location of the file e.g. “C:\my_fmri\scan1.rec”. Separate also does not like

spaces in folder or filenames.

✗ <output_file_name> - this is the root filename for the separated scans, for example

“scan1_sess1_”. Output files would be appended with the dynamic number, e.g.

scan1_sess1_0001.img etc…

✗ <head_bytes> - this is the number of bytes preceding the actual scan. Unless you have

specified this for your scan before acquisition, this parameter should be set to zero.

✗ <volsize> - this is the size of each volume in voxels, which is calculated from the

information retrieved from the header. This is the equivalent of the image matrix, e.g. 128

x 128 x 39. The product of those three numbers is the <volsize> parameter, which in this

case is 638976 voxels.

✗ <numvols> - this is the number of volumes in the dataset, which is also the number of

dynamics, e.g. 396. This is the number of volumes your dataset will be split into.

✗ <bufsize> - this is the number of blank “buffer” voxels you may add to the beginning and
end of each dynamic. We mostly do not use this parameter, but if you wanted to buffer
each dynamic with a two-dimensional slice you would enter a number equivalent to the
product of your XY matrix, e.g. 128 x 128 which is 16384.

✗ <avg_value> - we do not use this parameter. Enter the number 0

✗ <swap_bytes> - each voxel is represented by two bytes of data and the swap parameter
specifies the order in which those bytes are read in order to form a readable image.

Different operating systems read the bytes in different order. The scanner can be thought

of as a UNIX-based machine. Since we are operating on a Windows PC, we have to

swap the bytes to read the image. Enter the number 1.

Thus in order to separate the session 1 rec file in the example above, you would enter:

separate C:\fmri\50001_1.rec C:\fmri\50001_1_ 0 638976 396 0 0 1
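To reduce arithmetic mistakes, the command line can be assembled from the header values. The Python helper below is a hypothetical convenience and not part of separate.exe; it only follows the argument order given in the usage message above and uses the defaults recommended in the argument list.

```python
def separate_command(rec_path, out_root, matrix, n_dynamics,
                     head_bytes=0, bufsize=0, avg_value=0, swap_bytes=1):
    """Build the 'separate' command line from the .par header values."""
    x, y, z = matrix
    volsize = x * y * z  # voxels per volume, e.g. 128 * 128 * 39
    args = [rec_path, out_root, head_bytes, volsize,
            n_dynamics, bufsize, avg_value, swap_bytes]
    return "separate " + " ".join(str(a) for a in args)


cmd = separate_command(r"C:\fmri\50001_1.rec", r"C:\fmri\50001_1_",
                       matrix=(128, 128, 39), n_dynamics=396)
print(cmd)
```

With the example values this reproduces the command shown above, including the <volsize> of 638976.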

There is no interactive output written to the screen. You will know when the process is

finished because the console will return to input mode with the flashing cursor.

You may want to browse through the directory where all the files have been made to

make sure that things went well. Is there the right number of files, (the "numvols" parameter)?

Are they all the same size? Are they all the correct size? If any of these things seems wrong,

check the original commands that you entered, check for inconsistencies, check for math errors

on your part and then try again.

In our example above, there should be 396 files of size 1.21 MB each in the directory

C:\fmri\50001 and they should be numbered sequentially from 50001_1_0001.img to

50001_1_0396.img. Note that you cannot double-click any of these files to view them, without

first writing Analyze headers for them (the next step). You may now close the command prompt

screen. The next steps will all be handled by SPM99.
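The manual checks described above (number of files, identical and correct sizes) can also be scripted. The sketch below is a hypothetical helper, assuming 16-bit data (2 bytes per voxel) and no buffer voxels, so that each volume should occupy 128 x 128 x 39 x 2 = 1,277,952 bytes in the example dataset.

```python
import glob
import os


def check_separated_volumes(directory, root, n_volumes, matrix, bytes_per_voxel=2):
    """Return a list of problems found among the separated .img files."""
    x, y, z = matrix
    expected_size = x * y * z * bytes_per_voxel
    files = sorted(glob.glob(os.path.join(directory, root + "*.img")))
    problems = []
    if len(files) != n_volumes:
        problems.append(f"expected {n_volumes} files, found {len(files)}")
    for path in files:
        size = os.path.getsize(path)
        if size != expected_size:
            problems.append(
                f"{os.path.basename(path)}: {size} bytes, expected {expected_size}")
    return problems
```

Calling, for instance, `check_separated_volumes(data_dir, "50001_1_", 396, (128, 128, 39))` on the directory holding the output (here `data_dir` is a placeholder) returns an empty list when everything is consistent and a list of messages otherwise.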

Assuming Matlab and SPM99 are already installed and SPM99’s directory was appended

to the Matlab path, you may now create header (.hdr) files using SPM’s HDREdit facility.

Open Matlab and type “spm fmri” at the console. This should bring up the SPM windows.

At the fMRI switchboard window, click on <HDREdit>. The lower left box will contain a

series of options on a pull-down menu that asks you to set various values that describe the

images. Click on the drop down menu and select the first parameter:

Set Image Dimensions: This is the same as the image matrix which you retrieved from
the .par file. Enter the matrix parameters separated by spaces, e.g. 128 128 39

Set Voxel Dimensions: This was also calculated using the formula, which in our example

yields 1.8 1.8 3.0 (Entered with spaces as separators again)

Set Scalefactor: Scalefactor is 1 unless otherwise specified.

Set Datatype: Datatype from the Philips scanner is 16-bit integer data. Byte swapping is

optional and depends on the dataset. Try selecting Int16 first. If after header specification and

displaying the images they look incorrect, then you probably need to select byte-swapped Int16.

Set Offset into file: This is only specified if there is a buffer, otherwise it should be zero. (If
you do not set this option, it is set by default to zero).

Set Origin (x y z): This is the mathematical origin of the scan, and it is by default set to 0 0
0. (If you do not set this option, it is set by default to 0 0 0).

Set Image Description: Here you can type a text description of the images in the series,

e.g. subject ID, or a standard statement like “property of PNI”, etc… You may also leave this

field blank or choose not to set it.

Now select APPLY to images. The “SPMget” file selector window will be invoked. This is
the standard way of selecting files in SPM. You can change the present directory from
C:\Matlab\work to the directory where your images are kept, and select all the *.img which you
wrote using the separate function. You will notice that SPM does not list all of the files, but
instead it abbreviates the files with similar names and uses only the common root while the
number of files sharing this root is marked with subscript numbers to the left of the name, e.g.
₃₉₆50001_1_*.img. In this case click on the filename root, and you should see that files 1-396
were selected (they turn blue). You can select more than one file and more than one series to write

headers to. Once you have selected all the files for which you would like to write Analyze

headers, click Done. SPM will create header files for each image file you selected, using the

same filename as the image file, but using the extension .hdr instead. You will see the progress

in the bottom left window.

You can check that the headers were written correctly by double-clicking an image file,

and displaying it in MRIcro. If the images do not display correctly, it is possible that your

datatype should have been byte-swapped or that one or more of your parameters during

separation and/or header creation was incorrect.

Buffer Removal

In most fMRI acquisitions, the first few volumes acquired can be removed from the series

to be excluded from the analysis. This is done for two reasons. We have to make sure that the

net magnetization has reached steady state condition, and we also have to account for possible

hemodynamic effects that may be related to the start of the experiment, e.g. scanner noise,
shifting stimulus, etc. If these scans are included in the analysis there will be a large change in

signal that is not related to experimental conditions per se, which should be avoided.

Before you remove any volumes, you have to make sure that these volumes were

acquired during rest (or fixation) and be sure that your model or design accounts for the lag that

will result in the timing parameters. If you would rather not use the first few scans as a buffer,

you can also use dummy scans to get magnetization to reach steady state before you start the

actual experiment. This can be specified in your MRI protocol on the Philips scanner. Check

with the MRI technician to make sure that enough dummy scans are included before the trigger.

Slice Timing Correction (For event-related data)

To Correct or Not to Correct

Functional MRI data from the Philips scanner are acquired slice-wise so that a small

amount of time elapses between the acquisition of consecutive (or, in the Philips case, interleaved) slices. Given a TR of 2000 ms, for example, in a 20-slice acquisition, each slice would

roughly take 100 ms to be acquired. This becomes an issue only in event-related designs where

one typically uses stimulus durations that elicit BOLD responses lasting only a couple of

seconds. For these designs it is critical that an appropriate temporal model is used, as any

difference between the expected and actual onset times may decrease the sensitivity of the

analysis. For short TR's (i.e. less than 3 seconds), slice timing correction can be used to remedy

this problem. Essentially this pre-processing step will determine the midpoint slice in the

acquisition and temporally interpolate all the other slices to this point.

Note: If slice timing correction is used, then one can use a naïve HRF model in the

analysis. If slice timing correction is not possible or is not performed, one can still model event-related data using HRF derivatives (more information on this in the analysis section).

Philips Slice Acquisition Order

In order to perform slice timing correction, click on the <Slice timing correction> button in

the SPM fMRI switchboard. Select all images in the series you would like to correct. Under

<Sequence type> select <user specified>. The Philips scanner acquires slices in an odd-evens

interleaved pattern (i.e. 1, 3, 5, 7, … 2, 4, 6, 8, …). In the empty box enter the correct slice order

from your acquisition. For example, if you acquire 20 slices, enter: 1 3 5 7 9 11 13 15 17 19 2 4

6 8 10 12 14 16 18 20 . Numbers should be separated by a single space, and all slices in the

acquisition should be included. Once you’re done, press Enter.
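Typing the slice order by hand is error-prone for larger slice counts. The Python sketch below generates the odds-then-evens order described above and, assuming slices are spaced evenly across the TR, the approximate onset of each slice. The function names are hypothetical.

```python
def interleaved_order(n_slices):
    """Odd-numbered slices first, then even-numbered slices (1-based)."""
    odds = list(range(1, n_slices + 1, 2))
    evens = list(range(2, n_slices + 1, 2))
    return odds + evens


def slice_onsets(order, tr):
    """Map each slice number to its onset (s), assuming even spacing over the TR."""
    n = len(order)
    return {slice_no: position * tr / n for position, slice_no in enumerate(order)}


order = interleaved_order(20)
print(" ".join(str(s) for s in order))
# 1 3 5 7 9 11 13 15 17 19 2 4 6 8 10 12 14 16 18 20

onsets = slice_onsets(order, tr=2.0)
print(f"slice 2 is acquired about {onsets[2]:.1f} s after slice 1")
```

The printed string can be pasted directly into the slice order box. Note that with this order, spatially adjacent slices 1 and 2 are acquired roughly one second apart at a TR of 2 s.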

Note regarding slice acquisition order: At the point of scanning, you can specify and

let the MR technician know that you would like to acquire the scans in a sequential order (this is

the Kirby center default). If you do not change it, then they will be acquired according to the

Philips default (interleaved, odds then evens).

Which Slice to Use as a Reference Slice

The next prompt will be for the <Reference slice>. Enter the slice you want to consider as

a reference point. All other slices will be corrected to what they would have been if they were

acquired when the reference slice was acquired. The default is the middle slice (although,

please make sure the default value given is indeed the middle slice for the number of slices you

have).

The logic behind selecting the middle slice as a reference point for slice timing correction

is that this way there will be a minimum total shifting in time required, and therefore any

interpolation introduced by the correction procedure would be minimized. Some may argue that

in a perfect slice timing correction, the interpolation to any slice in the temporal sequence is the

same, and thus it doesn’t make any difference which slice you choose (even if it is in the space

outside the brain). However, SPM’s algorithm is not perfect and is worse for longer TR’s (more

10 as the reference slice.

Note: When the first slice in time is NOT used as a reference during correction, the default

sampled bin must be adjusted prior to analysis. More details in the analysis section.


Timing Parameters

Once you’ve specified the reference slice, SPM will prompt you for <Repetition Time

(TR)>. This parameter is in your .par file, and is quite simply the amount of time it takes the

scanner to acquire a full volume. SPM will suggest a suitable TR by default, but this may not be

the correct TR. You must specify the correct TR for slice timing correction to work properly;

otherwise temporal artifacts may be induced.

Next, SPM will ask you to input <Acquisition time (TA)>, which is the time between the

beginning of acquisition of the first slice and the beginning of acquisition of the last slice of one

scan. Typically, this is calculated using the formula TA = (TR/#slices)*(#slices – 1). For

example, if TR = 2, #slices = 39, TA = 1.949. This default value is calculated by SPM for you,

and is displayed in the input box. You may accept this default value, but you may want to

confirm that it is indeed correct. This step will produce a* files, which are acquisition corrected. It

typically takes 20 minutes or so to correct a typical session (300 scans).
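To confirm the default TA that SPM proposes, the formula above can be evaluated directly. The snippet is a minimal check with a hypothetical function name.

```python
def acquisition_time(tr, n_slices):
    """TA = (TR / #slices) * (#slices - 1), i.e. TR minus one slice duration."""
    return (tr / n_slices) * (n_slices - 1)


ta = acquisition_time(tr=2.0, n_slices=39)
print(f"TA = {ta:.3f} s")  # TA = 1.949 s
```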

Rigid-Body Registration (Correction for Head Motion)

Image registration is very important in fMRI, since signal changes due to hemodynamic

responses can be masked by signal changes resulting from subject movement. Although the

subject’s head is restrained as much as possible in the scanner, head motion cannot be

completely eliminated, thus retrospective motion correction (i.e. Realignment in SPM-speak) is

an essential pre-processing step. Image registration involves estimating a transformation matrix

that maps image A (the source image) onto image B (reference image (or target), which is

assumed to be stationary). A rigid-body transformation is defined by six parameters: 3

translations (x, y, z) and 3 rotations (x, y, z). This type of transformation is a subset of the more

general affine (linear) transformations.
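The six rigid-body parameters can be combined into a single 4 x 4 homogeneous matrix. The Python sketch below is illustrative only: the multiplication order (translation, then rotations about x, y and z) and the right-handed sign convention are assumptions, and SPM's own conventions may differ.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_body_matrix(tx, ty, tz, rx, ry, rz):
    """4x4 matrix T * Rx * Ry * Rz; translations in mm, rotations in radians."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    t = [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]
    r_x = [[1, 0, 0, 0], [0, cx, -sx, 0], [0, sx, cx, 0], [0, 0, 0, 1]]
    r_y = [[cy, 0, sy, 0], [0, 1, 0, 0], [-sy, 0, cy, 0], [0, 0, 0, 1]]
    r_z = [[cz, -sz, 0, 0], [sz, cz, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    return matmul(t, matmul(r_x, matmul(r_y, r_z)))


def apply(m, point):
    """Apply a 4x4 homogeneous matrix to an (x, y, z) point."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


# Example: shift by 2 mm along x and rotate 90 degrees about z.
m = rigid_body_matrix(2.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2)
print(apply(m, (1.0, 0.0, 0.0)))
```

Because the matrix contains only translations and rotations, distances between points are preserved; adding zooms and shears to the same framework gives the more general affine transformation mentioned above.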

Creating a Mean Image

Motion correction involves registering a source image to a target image. The target image

can be the first image in the series or it could be a mean image based on the entire series.

Since the subject could undergo some motion at the beginning of the scan session which

subsides as the scan goes on, it is better to calculate a mean image for the series and use this

image as the realignment target.

The output of the function spm_mean_ui.m is written to the current working directory, so

you should change this to your fmri directory before you create a mean. In the fMRI switchboard

click on the <Utils> drop down menu and select <CD> to change the current working directory.

Using the SPM folder selector window, navigate to the correct folder and select it. SPM should

display an alert with the new working directory name. Once this is done, you can click on the

<Means> drop down menu and select <Mean>. You will be prompted to select the images to be

averaged. Select all of your (slice timing corrected if event related) functional images. If you

have several sessions, you may want to select all images or a representative subset of images

from each session (MATLAB may crash if you try to average more than a few hundred images

at the same time). This process has no progress bar, but the output is printed to the MATLAB

screen. The mean image is written to the working directory. You can display the mean using

<Display> to see if it came out OK.
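
The memory concern mentioned above can be avoided in principle by averaging one volume at a time instead of loading the whole series. The sketch below shows that idea on plain Python lists. It is not how spm_mean_ui.m works; the function name and the representation of a volume as a flat list of voxel intensities are assumptions made for illustration.

```python
def running_mean(volumes):
    """Average an iterable of equally sized volumes, holding only one
    volume and the current mean in memory at any time."""
    mean = None
    count = 0
    for vol in volumes:
        count += 1
        if mean is None:
            mean = [float(v) for v in vol]
            continue
        if len(vol) != len(mean):
            raise ValueError("all volumes must have the same number of voxels")
        # Incremental update: new_mean = old_mean + (x - old_mean) / n
        mean = [m + (v - m) / count for m, v in zip(mean, vol)]
    if mean is None:
        raise ValueError("no volumes supplied")
    return mean

# Three tiny two-voxel "volumes" standing in for a functional series.
mean_image = running_mean([[1, 2], [3, 4], [5, 6]])
```

Since `volumes` can be a generator that reads one file per iteration, the memory footprint stays constant regardless of the number of scans.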


Realignment

Click on <Realign> in the spatial pre-processing tab in the fMRI switchboard. Under

<number of subjects> type 1 (you can also realign more than one subject at once). Under

<number of sessions> type the correct number of sessions. You will be asked to select the

appropriate files for each session. Here you should first select the mean image followed by the

rest of the series. This will instruct SPM to realign all images to the first image selected (the mean).

Under <Which option?> select <Coregister Only>. This will cause all files to be realigned by

creating transformation .mat files that contain the realignment parameters that need to be

applied to the corresponding images. Since reslicing causes the images to lose some resolution,

it is recommended only after normalization in the next step. Of course, it is still OK to select

<Coregister and Reslice> if you want to save motion-corrected volumes or use them for

other pre-processing. The logic here is that normalization will take into account the motion

correction parameters (written to .mat files), so that reslicing has to be performed only once.

Note that if you select <Coregister and Reslice> you will be given an option of the reslice

interpolation method. Here’s a brief description of these methods:

1. Trilinear Interpolation : this is the process of linearly interpolating points within a 3

dimensional box given the values at the vertices of the box. For example given the

intensities at the vertices of the three dimensional grid of voxels, one can interpolate the

intensity at a point inside the grid.

2. Sinc Interpolation : This involves convolving the image with a sinc function centered on

the point to be resampled. A true sinc interpolation would use every voxel in the image to

interpolate a single point, but because this is too slow in practice, an approximation

using a limited number of nearest neighbors (a 'window') is used instead.

3. Fourier space interpolation : This is an implementation of rigid-body rotations executed as

a series of shears, which are performed in Fourier space. This method can only be

applied to images with cubic voxels. For more information on this see Eddy et al.4
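
To make the first method concrete, here is a minimal sketch of trilinear interpolation on a small grid. It illustrates the general technique only and is not SPM's reslicing code; the function name and the nested-list layout `volume[x][y][z]` are assumptions for this example.

```python
import math

def trilinear(volume, x, y, z):
    """Interpolate the intensity at (x, y, z), given in voxel units,
    from the 8 voxels at the corners of the surrounding box."""
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    for coord, size in ((x, nx), (y, ny), (z, nz)):
        if size < 2 or not 0 <= coord <= size - 1:
            raise ValueError("point must lie inside a grid of at least 2 voxels per axis")
    # Lower corner of the enclosing box (clamped so the upper corner exists).
    x0 = min(int(math.floor(x)), nx - 2)
    y0 = min(int(math.floor(y)), ny - 2)
    z0 = min(int(math.floor(z)), nz - 2)
    fx, fy, fz = x - x0, y - y0, z - z0
    value = 0.0
    for i in (0, 1):
        wx = fx if i else 1.0 - fx
        for j in (0, 1):
            wy = fy if j else 1.0 - fy
            for k in (0, 1):
                wz = fz if k else 1.0 - fz
                # Each corner is weighted by its closeness to the point.
                value += wx * wy * wz * volume[x0 + i][y0 + j][z0 + k]
    return value

# A 2x2x2 grid whose intensity is 4x + 2y + z at each vertex.
cube = [[[0, 1], [2, 3]], [[4, 5], [6, 7]]]
center = trilinear(cube, 0.5, 0.5, 0.5)  # 3.5, the average of the 8 corners
```

Only the eight nearest voxels contribute to each resampled point, which is why trilinear interpolation is fast but smooths the image more than a windowed sinc.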

The best quality interpolation is given by the 'windowed' sinc interpolation (SPM selects

this option as the default). You may also use trilinear interpolation; however, the quality will be

degraded. Once you select an interpolation mode, you will be asked which images you would

like to create. Here you can select <All Images> or <Images 2..n> (remember that image 1 was

the mean you already created). If you choose not to output resliced files, you can create just the

mean image, and leave the other files without reslicing to prevent degradation of image quality.

Next, SPM will ask whether or not you want to <Adjust sampling errors>. This is a dated

function that works well with simulated data, but unfortunately not with real data. It is an

additional adjustment that is made to the data that removes a tiny amount of movement-related

confounds. It is based on the assumption that most of the realignment errors are from

interpolation artifacts, which does not appear to be the case. For this option, it is best to select

<No>.

During realignment, SPM 99 eliminates unnecessary voxels (voxels offering the least

information about intensity differences between images), before performing the realignment

using the best voxels to resample, i.e. the ones that provide the most information about the

registration, e.g. edge information. Realignment is SPM’s most time-consuming step. Depending

on the amount of data being realigned, this can take anywhere from an hour to several hours. It

also has a tendency to crash MATLAB and occasionally run out of memory. Be sure to shut

down all major programs while realignment is in progress.

4 Eddy, W. F., Fitzgerald, M., & Noll, D. C. (1996) Improved image registration by using Fourier interpolation, Magn

Reson Med. 36(6):923-931.

Realignment works in two stages. First, the first image from each session is realigned to

the first file of the first session that you selected (mean.img). Second, within each session, the

rest of the images (2..n) are realigned to the first image. As a consequence, after

realignment, all files are realigned to the first file selected (mean.img).

Realignment produces .mat files that correspond to the realigned volumes. If you asked

SPM to reslice at this stage, it will also produce r*.img files that are the resliced realigned

volumes. Realignment produces text files with the estimated realignment (or motion) parameters

for each session. These are the realignment_params_*mean.txt files stored in each session's

directory. They contain 6 columns and each row corresponds to an image. The columns are the

estimated translations in millimeters ("right", "forward", "up") and the estimated rotations in

radians ("pitch", "roll", "yaw") that are needed to shift each file. These text files can be used later

at the statistics stages, to enter the estimated motion parameters as user-specified regressors in

the design matrix (see section on motion parameters as confounds in analysis).
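
Because the parameter files are plain text with six columns per scan, they are easy to inspect outside SPM. The sketch below parses such a file's contents and reports the largest translation in millimeters and the largest rotation converted from radians to degrees. The function names and the example values are hypothetical; only the column layout (three translations in mm, then three rotations in radians) comes from the description above.

```python
import math

def parse_params(text):
    """Parse whitespace-separated rows of 6 motion parameters per scan."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if len(fields) != 6:
            raise ValueError("expected 6 columns, got %d" % len(fields))
        rows.append([float(f) for f in fields])
    return rows

def motion_summary(rows):
    """Return (peak |translation| in mm, peak |rotation| in degrees)."""
    max_trans = max(abs(v) for row in rows for v in row[:3])
    max_rot = max(math.degrees(abs(v)) for row in rows for v in row[3:])
    return max_trans, max_rot

# Made-up values standing in for the contents of a parameter file.
example = """\
0.00  0.00 0.00 0.000  0.000 0.000
0.10 -0.25 0.05 0.002 -0.001 0.000
0.30 -0.40 0.12 0.010 -0.004 0.003
"""
rows = parse_params(example)
max_trans_mm, max_rot_deg = motion_summary(rows)
```

In practice the text would come from reading one of the realignment_params_*mean.txt files, and the same rows could be passed on as regressors.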

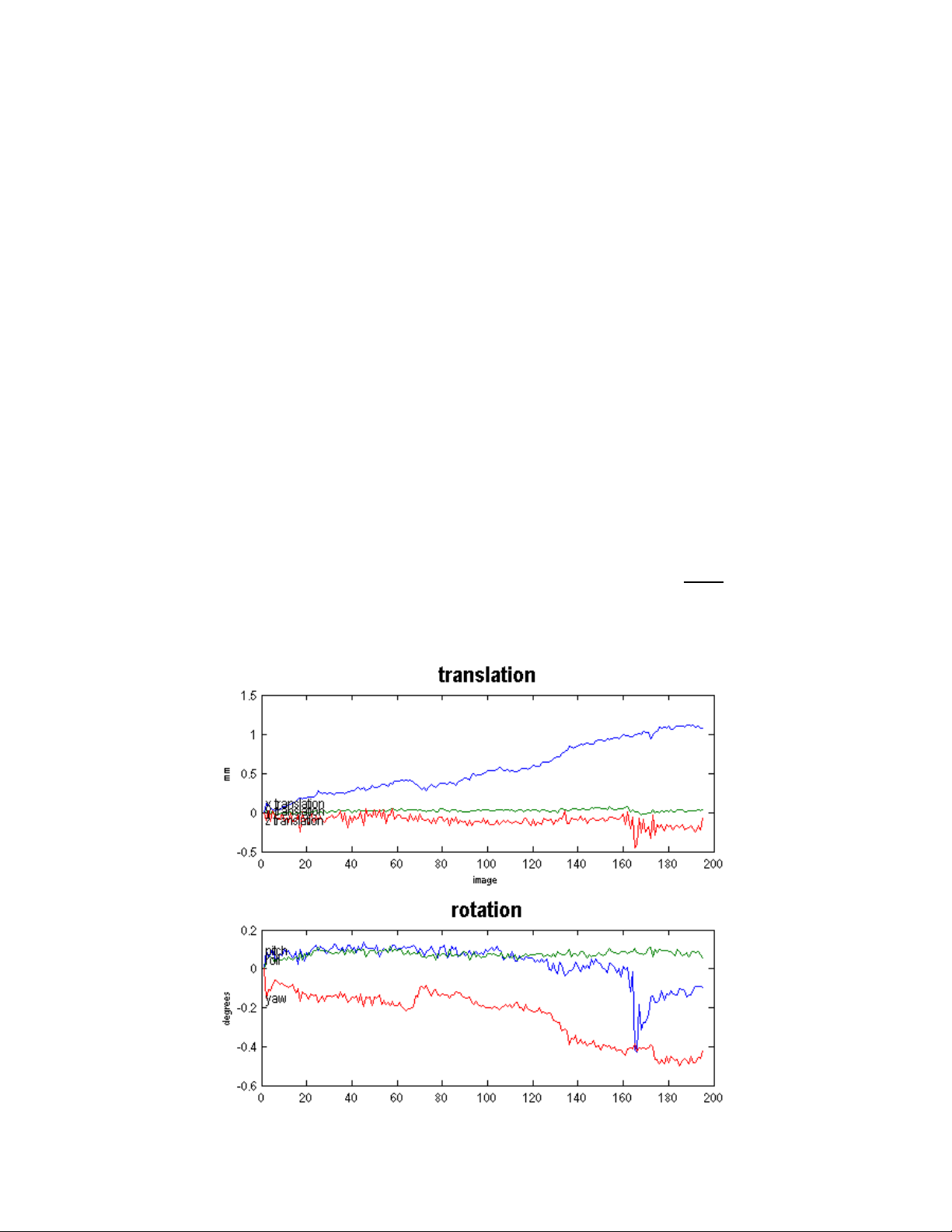

This stage also produces an spm99.ps PostScript file, which contains two plots of the

transformations. This file can be viewed using a PostScript viewer or can be converted to a PDF

using Adobe Acrobat Distiller. The top plot shows x, y, and z translations, and the bottom plot

shows x, y, and z rotations. Normally translations should be within 2 mm and rotations should be

within a few degrees (1 degree is about 0.0175 radians). If there are translations or rotations of more than 10 mm or 10 degrees, then

you should seriously consider using your motion correction parameters as confounds in the

statistical analysis. Otherwise, the large motion artifacts could cause signal changes that affect

your model. See the example plot for what to expect. Also, you should NOT see long runs of

identical values. If a set of consecutive scans appears to stay the same in translation or rotation

(straight line on the plot), that means something has gone terribly wrong. This could indicate a

calculation error that resulted in a meaningless loop in SPM computations, or it is possible that


all those files are merely copies of the same file. If this happens, you need to diagnose the

problem:

One potential reason for this problem is an error during the volume separation (if you are

using the old “separate and create headers” routine). You can check if this is the problem by

running separate again, or by using the r2a (rec2analyze) function to separate the volumes. If

this doesn’t work, run a short realignment on a smaller subset of volumes to determine if the

problem is consistent. It is also possible that data was corrupted either in the export process at

the scanner, or in the transfer from Godzilla. Check the data at all stages to make sure this is

not the case. If this is not due to a data handling error, it could be due to a scanner error, and

the data may be irrecoverable. Of course, you should exhaust all options first.
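
The flat-line symptom described above can also be checked numerically instead of by eye. The following sketch scans a list of per-scan parameter rows (six values each) and reports runs of consecutive scans whose parameters do not change. The function name, the `min_length` threshold and the tolerance are hypothetical choices for illustration, not values recommended by SPM.

```python
def flat_runs(rows, min_length=5, tol=0.0):
    """Return (start, end) index pairs, inclusive, of runs in which all
    six parameters stay the same (within tol) for at least min_length
    consecutive scans."""
    runs = []
    start = 0
    for i in range(1, len(rows) + 1):
        same = i < len(rows) and all(
            abs(a - b) <= tol for a, b in zip(rows[i], rows[i - 1]))
        if not same:
            # The run that began at `start` ends at scan i - 1.
            if i - start >= min_length:
                runs.append((start, i - 1))
            start = i
    return runs

# Made-up example: scans 1 to 3 have identical parameters.
params = [[0.0] * 6, [1.0] * 6, [1.0] * 6, [1.0] * 6, [2.0] * 6]
suspicious = flat_runs(params, min_length=3)
```

Any run reported here points to scans worth opening individually, for example to confirm whether the underlying image files are duplicates.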

Your realigned volumes at this point (if you elected to reslice) will be saved in the same

directory as your raw (or slice-timing corrected) volumes, using the same filenames, except the

names will be pre-pended with the letter “r” to indicate that these volumes have been realigned.

You can check the quality of the realignment by displaying a few of the realigned scans (a

few from the beginning, middle and end of the series) using the <Check Reg> button in SPM.

The images will be printed to the SPM graphical output window and you can click around using

the left mouse button to check the quality of the registration. If the registration looks poor, you may want to re-run the

registration using a higher quality interpolation (e.g. if you used Trilinear interpolation, you

should use Sinc interpolation). You can also change the default options in SPM. Under

<Defaults> select Realignment. Change the option for registration quality from 0.5 to 1.0

(slowest, but most accurate). You may also choose to adjust for interpolation <Adjust sampling

errors> to see if it improves the quality of the registration.

At this point, you may compress and save a copy of your motion-corrected volumes

(since this step is the most error-prone and time-consuming) to have a backup in the case of

data loss.

Anatomical Co-registration (Optional)

This step is recommended for single subject studies, as it offers better anatomical

localization of signal differences. It is also recommended for partial brain acquisitions. The idea

is to use the subject’s anatomical scan as a template to overlay functional activation and to

localize signal differences, instead of using a standard template such as the MNI (Montreal

Neurological Institute) or the Talairach atlas5.

During scanning, you should collect three types of scans:

1. EPI functional scans

2. An in-plane T1-weighted scan with the same parameters as the EPI. You can use a 2D

sequence like a Spin Echo.

3. A high resolution whole brain T1-weighted scan. Typically this scan has an isotropic (or

almost isotropic ~1 mm³) resolution and good gray/white contrast. An example is the

popular MP-RAGE (Magnetization Prepared Rapid Acquisition Gradient Echo)6. A good

MP-RAGE sequence can be used for structural morphometry and gray/white matter

segmentation, but it can also be used as a reference scan for EPI/in-plane T1 coregistration.

5 Talairach, J. & Tournoux, P. (1988) Co-planar Stereotaxic Atlas of the Human Brain: 3-Dimensional Proportional

System: An Approach to Cerebral Imaging. Thieme, New York.

6 Mugler, J. P., III & Brookeman, J. R. (1990) Three-dimensional magnetization-prepared rapid gradient-echo imaging

(3D MP RAGE), Magn Reson Med 15(1):152-157.


Co-registering Whole Brain Volumes

In this step, you co-register the in-plane T1 to the high resolution 3 dimensional T1 scan.

Click on the <Coregister> button in the fMRI switchboard. Select <1> for <number of subjects>.

Select <Coregister only> under <Which option?>. Select <target – T1 MRI> for <modality of first

target> and <object – T1 MRI> for <modality of first object image>. In the SPM selector window,

select the high resolution 3-D T1 scan as your target scan. Select the 2-D in-plane T1 scan as

your object scan. You will be prompted to select other images for your subject. Here you can

select the entire volume of motion-corrected EPI scans (or alternatively you can select you’re

the mean EPI image (other images can be registered at a later point if you desire). Once SPM is

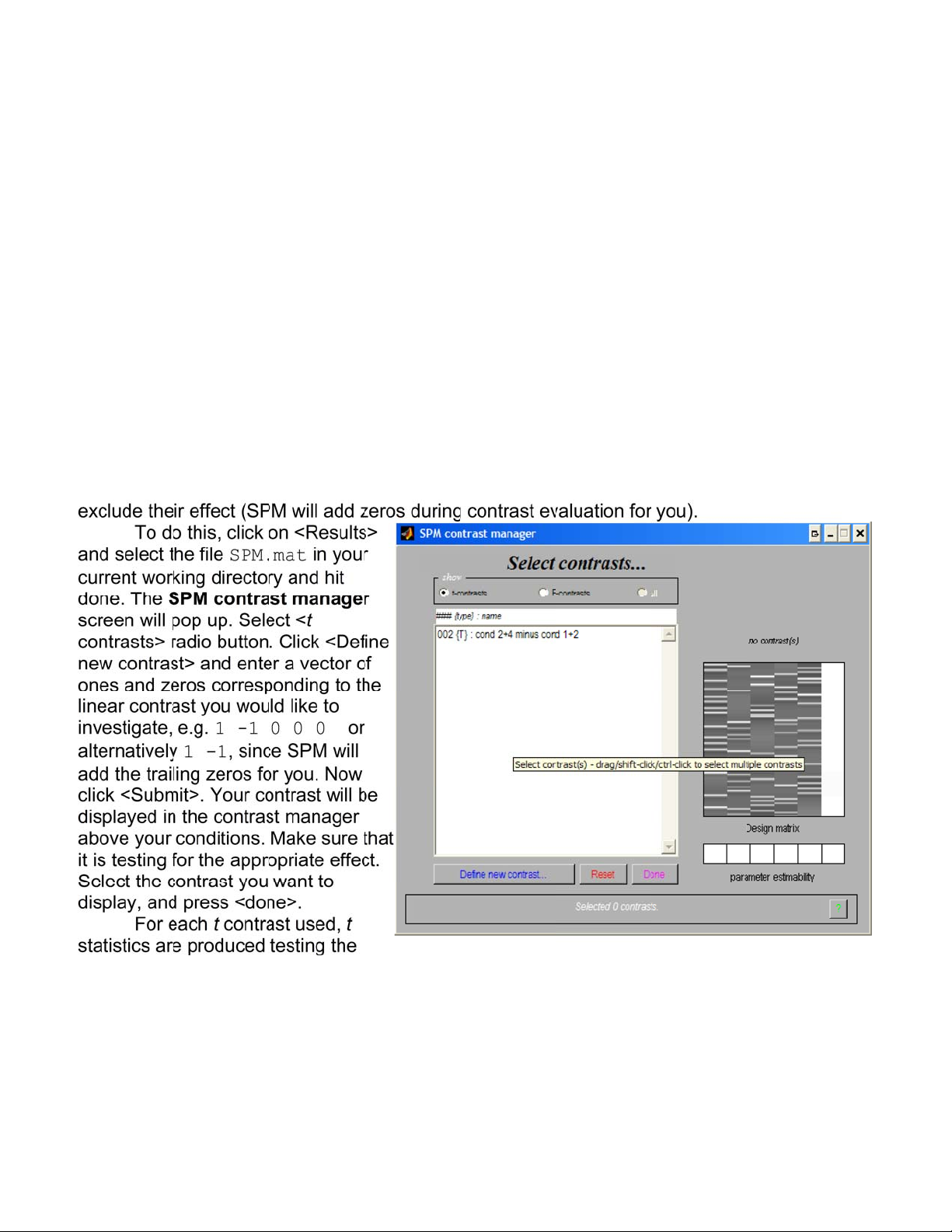

done, you will see the results of the registration in the graphics window. You can also use the